
- H3C S3610[5510] Series Ethernet Switches Operation Manual-Release 0001-(V1.02)

- 00-1Cover

- 00-2Product Overview

- 01-Login Operation

- 02-VLAN Operation

- 03-IP Address and Performance Operation

- 04-QinQ-BPDU Tunnel Operation

- 05-Port Correlation Configuration Operation

- 06-MAC Address Table Management Operation

- 07-MAC-IP-Port Binding Operation

- 08-MSTP Operation

- 09-Routing Overview Operation

- 10-IPv4 Routing Operation

- 11-IPv6 Routing Operation

- 12-IPv6 Configuration Operation

- 13-Multicast Protocol Operation

- 14-802.1x-HABP-MAC Authentication Operation

- 15-AAA-RADIUS-HWTACACS Operation

- 16-ARP Operation

- 17-DHCP Operation

- 18-ACL Operation

- 19-QoS Operation

- 20-Port Mirroring Operation

- 21-Cluster Management Operation

- 22-UDP Helper Operation

- 23-SNMP-RMON Operation

- 24-NTP Operation

- 25-DNS Operation

- 26-File System Management Operation

- 27-Information Center Operation

- 28-System Maintenance and Debugging Operation

- 29-NQA Operation

- 30-VRRP Operation

- 31-SSH Operation

- 32-Appendix


1.1.1 Comparison of Information Transmission Techniques

1.1.3 Advantages and Applications of Multicast

1.4 Multicast Packets Forwarding Mechanism

Chapter 2 IGMP Snooping Configuration

2.1.1 Principle of IGMP Snooping

2.1.2 Basic Concepts in IGMP Snooping

2.1.3 Work Mechanism of IGMP Snooping

2.1.4 Processing of Multicast Protocol Messages

2.2 IGMP Snooping Configuration Tasks

2.3 Configuring Basic Functions of IGMP Snooping

2.3.1 Configuration Prerequisites

2.3.3 Configuring the Version of IGMP Snooping

2.3.4 Configuring Port Aging Timers

2.4 Configuring IGMP Snooping Port Functions

2.4.1 Configuration Prerequisites

2.4.2 Configuring Static Member Ports

2.4.3 Configuring Simulated Joining

2.4.4 Enabling the Fast Leave Feature

2.4.5 Configuring IGMP Report Suppression

2.5 Configuring IGMP-Related Functions

2.5.1 Configuration Prerequisites

2.5.2 Enabling IGMP Snooping Querier

2.5.4 Configuring Source IP Address of IGMP Queries

2.6 Configuring a Multicast Group Policy

2.6.1 Configuration Prerequisites

2.6.2 Configuring a Multicast Group Filter

2.6.3 Configuring Maximum Multicast Groups that Can Pass Ports

2.6.4 Configuring Multicast Group Replacement

2.7 Displaying and Maintaining IGMP Snooping

2.8 IGMP Snooping Configuration Examples

2.8.1 Configuring Simulated Joining

2.8.2 Static Router Port Configuration

2.9 Troubleshooting IGMP Snooping Configuration

2.9.1 Switch Fails in Layer 2 Multicast Forwarding

2.9.2 Configured Multicast Group Policy Fails to Take Effect

Chapter 3 MLD Snooping Configuration

3.1.2 Basic Concepts in MLD Snooping

3.1.3 Work Mechanism of MLD Snooping

3.2 MLD Snooping Configuration Tasks

3.3 Configuring Basic Functions of MLD Snooping

3.3.1 Configuration Prerequisites

3.3.3 Configuring Port Aging Timers

3.4 Configuring MLD Snooping Port Functions

3.4.1 Configuration Prerequisites

3.4.2 Configuring Static Member Ports

3.4.3 Configuring Simulated Joining

3.4.4 Configuring the Fast Leave Feature

3.4.5 Configuring MLD Report Suppression

3.5 Configuring MLD-Related Functions

3.5.1 Configuration Prerequisites

3.5.2 Enabling MLD Snooping Querier

3.5.4 Configuring Source IPv6 Addresses of MLD Queries

3.6 Configuring an IPv6 Multicast Group Policy

3.6.1 Configuration Prerequisites

3.6.2 Configuring an IPv6 Multicast Group Filter

3.6.3 Configuring Maximum Multicast Groups that Can Pass Ports

3.6.4 Configuring IPv6 Multicast Group Replacement

3.7 Displaying and Maintaining MLD Snooping

3.8 MLD Snooping Configuration Examples

3.8.2 Static Router Port Configuration

3.9 Troubleshooting MLD Snooping

3.9.1 Switch Fails in Layer 2 Multicast Forwarding

3.9.2 Configured IPv6 Multicast Group Policy Fails to Take Effect

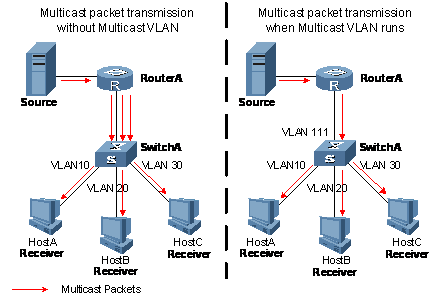

Chapter 4 Multicast VLAN Configuration

4.1 Introduction to Multicast VLAN

4.2 Configuring Multicast VLAN

4.4 Multicast VLAN Configuration Example

5.1.2 Work Mechanism of IGMPv1

5.1.3 Enhancements Provided by IGMPv2

5.1.4 Enhancements Provided by IGMPv3

5.3 Configuring Basic Functions of IGMP

5.3.1 Configuration Prerequisites

5.3.3 Configuring IGMP Versions

5.4 Adjusting IGMP Performance

5.4.1 Configuration Prerequisites

5.4.2 Configuring IGMP Message Options

5.4.4 Configure IGMP Fast Leave

5.5 Displaying and Maintaining IGMP

5.6 IGMP Configuration Example

5.7.1 No Multicast Group Member Information on the Receiver-Side Router

5.7.2 Inconsistent Memberships on Routers on the Same Subnet

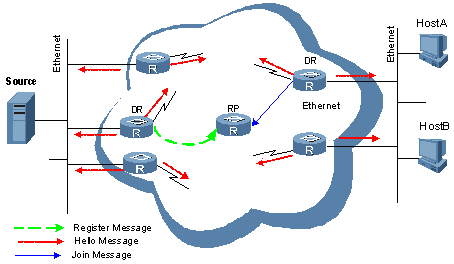

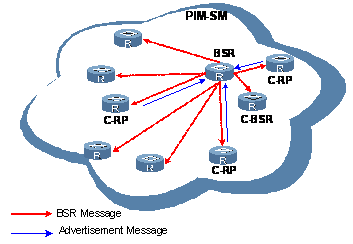

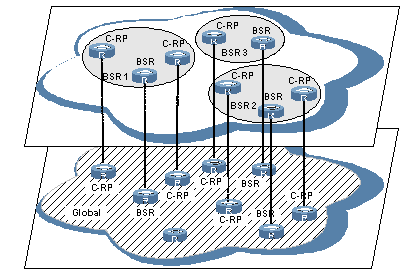

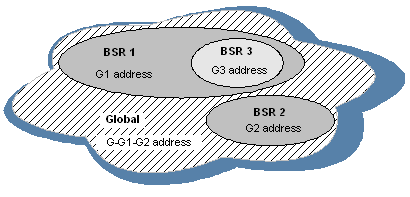

6.1.5 Introduction to BSR Admin-scope Regions in PIM-SM

6.1.6 SSM Model Implementation in PIM

6.2.1 PIM-DM Configuration Tasks

6.2.2 Configuration Prerequisites

6.2.5 Configuring State Refresh Parameters

6.2.6 Configuring PIM-DM Graft Retry Period

6.3.1 PIM-SM Configuration Tasks

6.3.2 Configuration Prerequisites

6.3.6 Configuring PIM-SM Register Messages

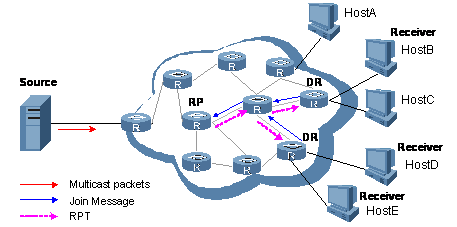

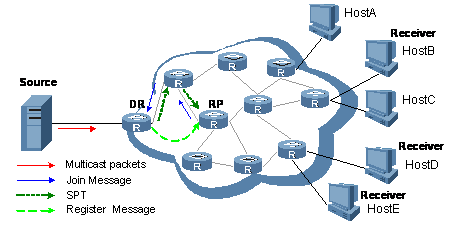

6.3.7 Configuring RPT-to-SPT Switchover

6.4.1 PIM-SSM Configuration Tasks

6.4.2 Configuration Prerequisites

6.4.4 Configuring the Range of PIM-SSM Multicast Groups

6.5 Configuring PIM Common Information

6.5.1 PIM Common Information Configuration Tasks

6.5.2 Configuration Prerequisites

6.5.3 Configuring a PIM Filter

6.5.4 Configuring PIM Hello Options

6.5.5 Configuring PIM Common Timers

6.5.6 Configuring Join/Prune Message Limits

6.6 Displaying and Maintaining PIM

6.7 PIM Configuration Examples

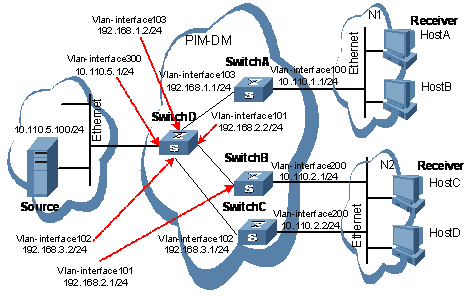

6.7.1 PIM-DM Configuration Example

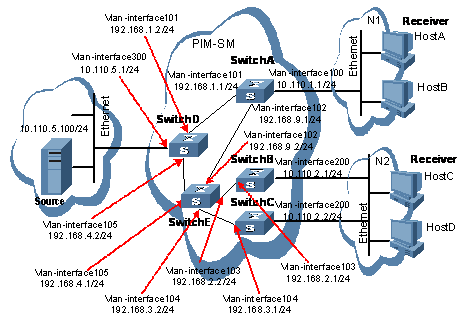

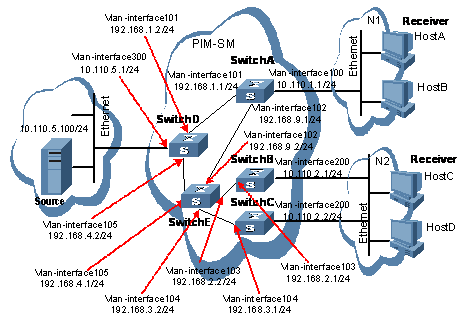

6.7.2 PIM-SM Configuration Example

6.7.3 PIM-SSM Configuration Example

6.8 Troubleshooting PIM Configuration

6.8.1 Failure of Building a Multicast Distribution Tree Correctly

6.8.2 Multicast Data Abnormally Terminated on an Intermediate Router

6.8.3 RPs Unable to Join SPT in PIM-SM

6.8.4 No Unicast Route Between BSR and C-RPs in PIM-SM

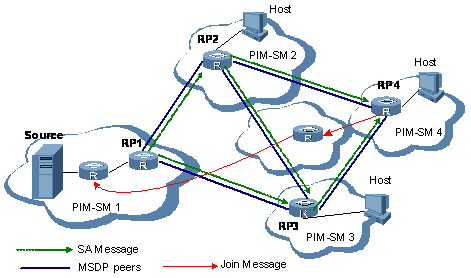

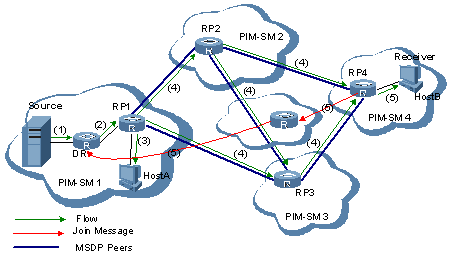

7.1.3 Operation Mechanism of MSDP

7.1.4 MSDP-Related Specifications

7.3 Configuring Basic Functions of MSDP

7.3.1 Configuration Prerequisites

7.3.3 Creating an MSDP Peer Connection

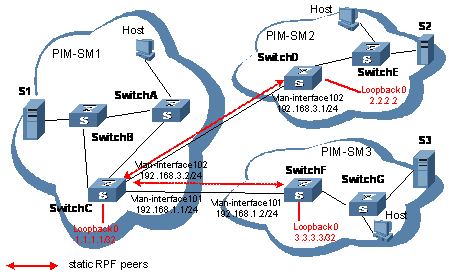

7.3.4 Configuring a Static RPF Peer

7.4 Configuring an MSDP Peer Connection

7.4.1 Configuration Prerequisites

7.4.2 Configuring MSDP Peer Description

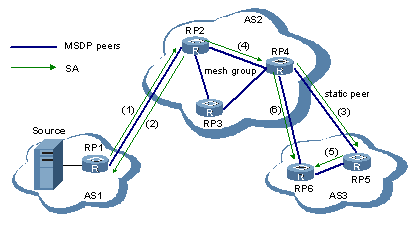

7.4.3 Configuring an MSDP Mesh Group

7.4.4 Configuring MSDP Peer Connection Control

7.5.1 Configuration Prerequisites

7.5.2 Configuring SA Message Content

7.5.3 Configuring SA Request Messages

7.5.4 Configuring an SA Message Filtering Rule

7.5.5 Configuring SA Message Cache

7.6 Displaying and Maintaining MSDP

7.7 MSDP Configuration Examples

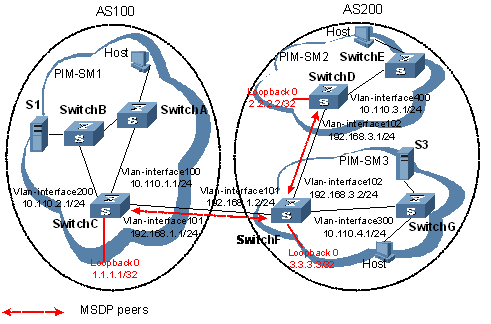

7.7.1 Example of Configuration Leveraging BGP Routes

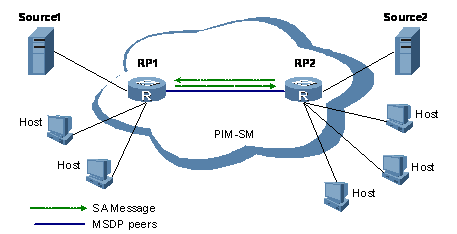

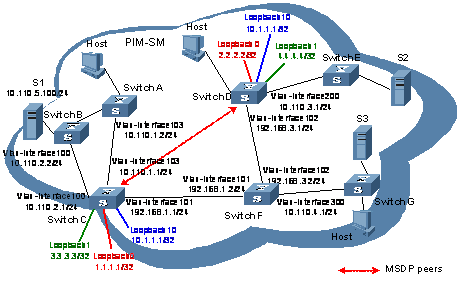

7.7.2 Example of Anycast RP Application Configuration

7.7.3 Static RPF Peer Configuration Example

7.8.1 MSDP Peers Stay in Down State

7.8.2 No SA Entries in the Router’s SA Cache

7.8.3 Inter-RP Communication Faults in Anycast RP Application

Chapter 8 Multicast Policy Configuration

8.1.1 Introduction to Multicast Policy

8.1.2 How a Multicast Policy Works

8.3 Configuring a Multicast Policy

8.3.1 Configuration Prerequisites

8.3.2 Enabling IP Multicast Routing

8.3.3 Configuring a Multicast Static Route

8.3.4 Configuring a Multicast Route Match Policy

8.3.5 Configuring Multicast Load Splitting

8.3.6 Configuring Multicast Forwarding Range

8.3.7 Configuring Multicast Forwarding Table Size

8.4 Displaying and Debugging a Multicast Policy

8.5.1 Multicast Static Route Configuration

8.6 Troubleshooting Multicast Policies

8.6.1 Multicast Static Route Failure

8.6.2 Multicast Data Fails to Reach Receivers

Chapter 1 Multicast Overview

1.1 Introduction to Multicast

Multicast coexists with unicast and broadcast and efficiently solves the problem of point-to-multipoint data transmission. Because it allows high-efficiency point-to-multipoint delivery over a network, multicast greatly saves network bandwidth and reduces network load.

With multicast, a network operator can easily provide new value-added services, such as live webcasting, Web TV, distance learning, telemedicine, Web radio, real-time videoconferencing, and other information services that demand high bandwidth and real-time data delivery.

1.1.1 Comparison of Information Transmission Techniques

I. Unicast

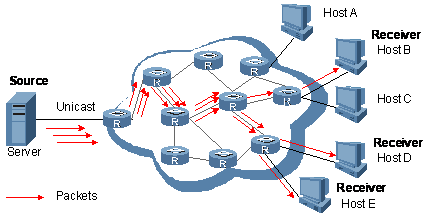

In unicast, the information source sends a separate copy of information to each host that needs the information, as shown in Figure 1-1.

Figure 1-1 Unicast transmission

Assume that Hosts B, D and E need this information. The information source establishes a separate transmission channel for each of these hosts.

In unicast transmission, network traffic grows in proportion to the number of hosts that need the information. If a large number of hosts need the same information, this places tremendous pressure on both the information source and the network bandwidth.

As this transmission process shows, unicast is not suitable for delivering the same information to many hosts.

II. Broadcast

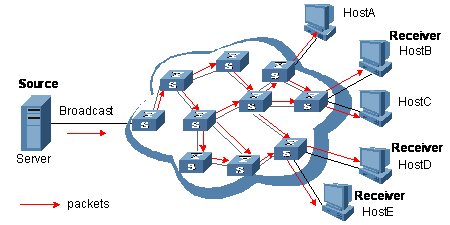

In broadcast, the information source sends information to all hosts on the network, even if some hosts do not need the information, as shown in Figure 1-2.

Figure 1-2 Broadcast transmission

Assume that only Hosts B, D, and E need the information. If the information source broadcasts the information, Hosts A and C also receive it. In addition to information security issues, this also causes traffic flooding on the same network.

Therefore, broadcast is unsuitable for delivering data to specific hosts, and it consumes a significant amount of network resources.

III. Multicast

As discussed above, the unicast and broadcast techniques are unable to provide point-to-multipoint data transmissions with the minimum network consumption.

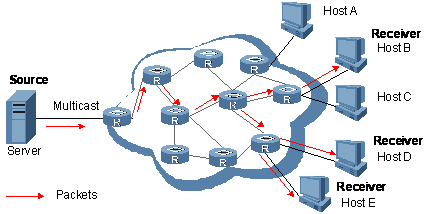

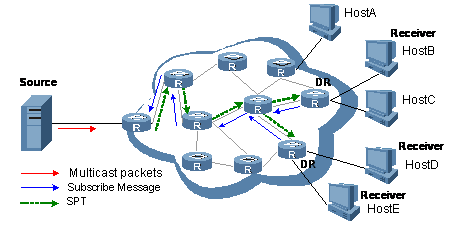

Multicast solves this problem. When some hosts on the network need the information, the multicast source (namely, the information source) sends the information only once. Multicast routing protocols establish tree-shaped routes for the multicast packets, and the packets are replicated only where the tree branches, as shown in Figure 1-3:

Figure 1-3 Multicast transmission

Assume that Hosts B, D and E need the information. To transmit the information to the right hosts, you can group Hosts B, D and E into a receiver set, and let the routers on the network duplicate and forward the information based on the distribution of the receivers in this set. Finally, the information is correctly delivered to Hosts B, D, and E.

To sum up, multicast has the following advantages:

- Over unicast: Multicast traffic travels as far from the source as possible before it is replicated and distributed, so an increase in the number of receiver hosts does not noticeably add to the network load.

- Over broadcast: Multicast data is sent only to the receivers that need it, so multicast uses network bandwidth efficiently, does not waste network resources, and enhances network security.
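To make the load comparison concrete, the following Python sketch counts how many packet copies cross the links of a small distribution tree under unicast and under multicast. The tree in `PARENT` and the host names are hypothetical and are not the topology of Figure 1-1 or Figure 1-3; the sketch only illustrates the counting argument above.

```python
# Hypothetical distribution tree (not taken from the figures):
# each node maps to its upstream neighbor, and "Source" is the root.
PARENT = {
    "R1": "Source",
    "R2": "R1",
    "R3": "R1",
    "HostA": "R2",
    "HostB": "R2",
    "HostC": "R3",
    "HostD": "R3",
    "HostE": "R3",
}


def path_links(host):
    """Return the links a packet crosses between the source and `host`."""
    links = []
    node = host
    while node in PARENT:
        links.append((PARENT[node], node))
        node = PARENT[node]
    return links


def unicast_link_copies(receivers):
    # Unicast: the source sends a separate copy to every receiver, and each
    # copy crosses every link on that receiver's path.
    return sum(len(path_links(host)) for host in receivers)


def multicast_link_copies(receivers):
    # Multicast: a link carries a single copy no matter how many receivers are
    # downstream of it, so each link on the tree is counted only once.
    return len({link for host in receivers for link in path_links(host)})


if __name__ == "__main__":
    wanted = ["HostB", "HostD", "HostE"]
    print("unicast link copies:", unicast_link_copies(wanted))
    print("multicast link copies:", multicast_link_copies(wanted))
```

With Hosts B, D, and E as receivers, this tree carries 9 link transmissions under unicast but only 6 under multicast, and the gap widens as more receivers sit behind the same branches.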

1.1.2 Roles in Multicast

The following roles are involved in multicast transmission:

- An information sender is referred to as a Multicast Source (“Source” in Figure 1-3).

- Each receiver is a Multicast Group Member (“Receiver” in Figure 1-3).

- All receivers interested in the same information form a Multicast Group. Multicast groups are not subject to geographic restrictions.

- A router capable of multicast routing is called a multicast router. In addition to providing the multicast routing function, a multicast router can also manage multicast group members.

To better understand the multicast concept, you can compare multicast transmission to the transmission of TV programs.

- The TV station (multicast source) transmits a TV program (multicast data) through a channel (multicast group).

- A viewer tunes the TV set (receiver) to the channel (joins the multicast group).

- The TV set then receives the program transmitted by the TV station (the receiver receives the multicast data sent by the multicast source).

Note:

- A multicast source does not necessarily belong to a multicast group. In other words, a multicast source is not necessarily a multicast data receiver.

- Multiple multicast sources can send data to the same multicast group at the same time.

- If the network contains routers that do not support multicast, multicast routers can encapsulate multicast packets in unicast IP packets and tunnel them to neighboring multicast routers, which then remove the outer unicast IP header and multicast the packets. This avoids significant changes to the network structure.

1.1.3 Advantages and Applications of Multicast

I. Advantages of multicast

Advantages of the multicast technique include:

- Enhanced efficiency: Reduces the CPU load of information sources and network devices.

- Optimal performance: Reduces redundant traffic.

- Distributed applications: Enables multipoint applications with minimal network resources.

II. Applications of multicast

Applications of the multicast technique include:

- Multimedia and streaming applications, such as Web TV, Web radio, and real-time video/audio conferencing.

- Communication for training and cooperative operations, such as distance learning and telemedicine.

- Data warehouse and financial applications (stock quotes).

- Any other point-to-multipoint data distribution application.

1.2 Multicast Models

Based on how multicast sources are handled, there are three multicast models:

- Any-Source Multicast (ASM)

- Source-Filtered Multicast (SFM)

- Source-Specific Multicast (SSM)

I. ASM model

In the ASM model, any sender can become a multicast source and send information to a multicast group, and any number of receivers can join a multicast group identified by a group address and obtain the multicast information addressed to that group. In this model, receivers are not aware of the location of a multicast source in advance, but they can join or leave the multicast group at any time.

II. SFM model

The SFM model is derived from the ASM model. From the sender's perspective, the two models have the same multicast group membership architecture.

Functionally, the SFM model is an extension of the ASM model. In the SFM model, upper-layer software checks the source address of received multicast packets to permit or deny multicast traffic from specific sources. As a result, receivers receive multicast data from only a subset of the multicast sources. From a receiver's perspective, not all multicast sources are valid, because they are filtered.

III. SSM model

In practice, users may be interested in multicast data from only certain multicast sources. The SSM model provides a transmission service that allows users to specify, at the client side, the multicast sources they are interested in.

The fundamental difference between the SSM model and the ASM model is that in the SSM model, receivers already know the locations of the multicast sources by some other means. In addition, the SSM model uses a multicast address range that is different from that of the ASM model, and dedicated multicast forwarding paths are established between receivers and the specified multicast sources.
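The following Python sketch contrasts how a receiver decides whether to accept traffic under the three models. The `subscription` dictionary and its keys are hypothetical; the SFM source filter is simplified to an allow-list of sources, and the SSM check uses the 232.0.0.0/8 range listed in Table 1-1.

```python
from ipaddress import IPv4Address, IPv4Network

# SSM group range as listed in Table 1-1.
SSM_RANGE = IPv4Network("232.0.0.0/8")


def receiver_accepts(model, source, group, subscription):
    """Return True if a receiver accepts traffic sent by `source` to `group`.

    `subscription` is a hypothetical structure used only for this sketch:
      "groups":   set of group addresses the receiver has joined (ASM, SFM)
      "sources":  set of permitted source addresses (SFM)
      "channels": set of (source, group) pairs the receiver asked for (SSM)
    """
    if model == "ASM":
        # Any sender may act as a source; joining the group is enough.
        return group in subscription.get("groups", set())
    if model == "SFM":
        # Same group membership as ASM, plus a filter on the source address.
        return (group in subscription.get("groups", set())
                and source in subscription.get("sources", set()))
    if model == "SSM":
        # The receiver names the source explicitly, and the group must fall
        # within the SSM address range.
        return (IPv4Address(group) in SSM_RANGE
                and (source, group) in subscription.get("channels", set()))
    raise ValueError(f"unknown multicast model: {model}")
```

In ASM the source address plays no part in the decision, SFM adds a source filter on top of ASM membership, and SSM replaces group membership with explicit (source, group) channels.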

Note:

For details about the concepts of SPT and RPT, refer to PIM Configuration in the IP Multicast Volume.

1.3 Framework of Multicast

IP multicast involves the following questions:

- Where should the multicast source transmit information to? (multicast addressing)

- What receivers exist on the network? (host registration)

- How should information be transmitted to the receivers? (multicast routing)

IP multicast is an end-to-end service. The multicast framework involves the following four parts:

- Addressing mechanism: Information is sent from a multicast source to a group of receivers through a multicast address.

- Host registration: Receiver hosts can join and leave multicast groups dynamically. This mechanism is the basis for group membership management.

- Multicast routing: A multicast distribution tree (namely, a forwarding path tree for multicast data on the network) is built to deliver multicast data from a multicast source to receivers.

- Multicast applications: Software that supports multicast applications, such as video conferencing, must be installed on multicast sources and receiver hosts, and the TCP/IP stack must support receiving and sending multicast data.

1.3.1 Multicast Addresses

To allow communication between multicast sources and multicast group members, network-layer multicast addresses (multicast IP addresses) must be provided. In addition, a mechanism must be available to map multicast IP addresses to link-layer multicast MAC addresses.

I. IPv4 multicast addresses

The Internet Assigned Numbers Authority (IANA) has assigned the Class D address space (224.0.0.0 to 239.255.255.255) to IPv4 multicast, as shown in Table 1-1.

Table 1-1 Class D IP address blocks and description

| Address block | Description |
|---|---|
| 224.0.0.0 to 224.0.0.255 | Reserved multicast addresses (addresses of permanent multicast groups). The address 224.0.0.0 is reserved; the other addresses can be used by routing protocols and for topology discovery and protocol maintenance. |
| 224.0.1.0 to 231.255.255.255; 233.0.0.0 to 238.255.255.255 | ASM/SFM multicast addresses available to users (addresses of temporary groups). They are globally scoped multicast addresses. |
| 232.0.0.0 to 232.255.255.255 | SSM multicast addresses available to users (addresses of temporary groups). They are valid across the entire network. |
| 239.0.0.0 to 239.255.255.255 | Administratively scoped multicast addresses. These addresses are restricted to a local group or organization. Administratively scoped addresses let you define the range of multicast domains flexibly and isolate addresses between different multicast domains, so that the same multicast address can be used in different multicast domains without collisions. |
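As an illustration of Table 1-1, this Python sketch classifies an IPv4 address into the blocks above using the standard `ipaddress` module. The function name and label strings are illustrative; only the address ranges come from the table.

```python
from ipaddress import IPv4Address, IPv4Network

# Address blocks from Table 1-1. Order matters: the specific blocks are
# checked before the catch-all 224.0.0.0/4 entry.
BLOCKS = [
    (IPv4Network("224.0.0.0/24"), "reserved (permanent groups)"),
    (IPv4Network("232.0.0.0/8"), "SSM"),
    (IPv4Network("239.0.0.0/8"), "administratively scoped"),
    # What remains of 224.0.0.0/4 is 224.0.1.0 to 231.255.255.255
    # and 233.0.0.0 to 238.255.255.255.
    (IPv4Network("224.0.0.0/4"), "ASM/SFM (globally scoped)"),
]


def classify_ipv4_multicast(address):
    """Return the Table 1-1 block that `address` falls into."""
    ip = IPv4Address(address)
    for network, label in BLOCKS:
        if ip in network:
            return label
    return "not a multicast address"
```

For example, 224.0.0.5 is reported as reserved, 232.1.1.1 as SSM, and 10.0.0.1 as not a multicast address.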

Note that:

1) Group membership is dynamic. Hosts can join or leave multicast groups at any time.

2) A multicast group can be either permanent or temporary.

- Permanent group addresses: Multicast addresses reserved by IANA for routing protocols. Such an address identifies a group of specific network devices (also known as reserved multicast groups). For details, see Table 1-2. A permanent group address never changes. A permanent multicast group can have any number of members, even zero.

- Temporary group addresses: Group addresses that are temporarily assigned to user multicast groups. Once the number of members in a group drops to 0, the address is released.

Table 1-2 Reserved IPv4 multicast addresses

| Address | Description |
|---|---|
| 224.0.0.1 | All systems on this subnet, including hosts and routers |
| 224.0.0.2 | All multicast routers on this subnet |
| 224.0.0.3 | Unassigned |
| 224.0.0.4 | DVMRP routers |
| 224.0.0.5 | OSPF routers |
| 224.0.0.6 | OSPF designated routers/backup designated routers |
| 224.0.0.7 | ST routers |
| 224.0.0.8 | ST hosts |
| 224.0.0.9 | RIPv2 routers |
| 224.0.0.11 | Mobile agents |
| 224.0.0.12 | DHCP server / relay agent |
| 224.0.0.13 | All PIM routers |
| 224.0.0.14 | RSVP encapsulation |
| 224.0.0.15 | All CBT routers |
| 224.0.0.16 | Designated SBM |
| 224.0.0.17 | All SBMs |
| 224.0.0.18 | VRRP |
| …… | …… |

II. Multicast MAC addresses

When a unicast IP packet is transmitted over an Ethernet network, the destination MAC address is the MAC address of the receiver. When a multicast packet is transmitted over an Ethernet network, however, a multicast MAC address is used as the destination address, because the packet is directed to a group with an uncertain number of members rather than to one specific receiver.

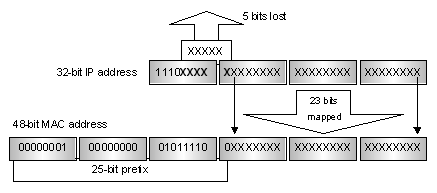

As stipulated by IANA, the upper 24 bits of a multicast MAC address are 0x01005E, bit 25 is 0, and the lower 23 bits of the MAC address are the lower 23 bits of the multicast IP address. The mapping relationship between a multicast IP address and the corresponding multicast MAC address is shown in Figure 1-4.

Figure 1-4 Mapping from multicast IP address to multicast MAC address

The upper four bits of a multicast IP address are 1110, which serve as the multicast flag. Of the remaining 28 bits, only 23 are mapped to the MAC address, so five bits of the multicast IP address are lost. As a result, 32 (2^5) multicast IP addresses map to the same MAC address. Therefore, in Layer 2 multicast forwarding, a device may receive multicast data destined for other IP multicast groups, and such redundant data must be filtered out at the upper layer.
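The mapping in Figure 1-4 can be sketched in Python as follows. The function name is hypothetical; the bit operations follow the rule stated above (0x01005E prefix, bit 25 set to 0, then the lower 23 bits of the IP address).

```python
from ipaddress import IPv4Address


def ipv4_multicast_to_mac(group):
    """Map an IPv4 multicast address to its Ethernet multicast MAC address."""
    ip = IPv4Address(group)
    if not ip.is_multicast:
        raise ValueError(f"{group} is not an IPv4 multicast address")
    # Keep only the lower 23 bits; the 5 bits after the 1110 prefix are lost.
    low23 = int(ip) & 0x7FFFFF
    # 0x01005E in the upper 24 bits; bit 25 stays 0 because low23 < 2**23.
    mac = (0x01005E << 24) | low23
    # Render the 48-bit value as six colon-separated bytes.
    return ":".join(f"{(mac >> shift) & 0xFF:02x}" for shift in range(40, -1, -8))
```

For example, 224.0.0.1, 224.128.0.1, and 225.0.0.1 all map to 01:00:5e:00:00:01, which is exactly the overlap that upper-layer filtering has to resolve.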

III. IPv6 Multicast Addresses

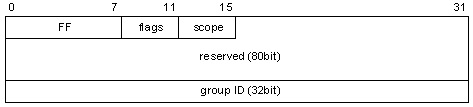

As defined in RFC 2373, an IPv6 multicast address has the following format:

Figure 1-5 IPv6 multicast format

- FF: 8 bits, indicating that this address is an IPv6 multicast address.

- Flags: 4 bits, of which the high-order 3 bits are reserved bits set to 0, and the low-order bit is the Transient (T) flag. When set to 0, the T flag indicates that the multicast address is a permanently-assigned (well-known) multicast address. When set to 1, the T flag indicates that the multicast address is a transient (not permanently assigned) multicast address.

- Scope: 4 bits, indicating the scope of the IPv6 internetwork for which the multicast traffic is intended. Possible values of this field are given in Table 1-3.

- Reserved: 80 bits, all currently set to 0.

- Group ID: 32 bits, identifying the multicast group. The group ID can be used to create a MAC multicast address. The space of IPv6 multicast addresses can be expanded in the future as required.

Table 1-3 Values of the Scope field

| Value | Meaning |
|---|---|
| 0 | Reserved |
| 1 | Node-local scope |
| 2 | Link-local scope |
| 3, 4, 6, 7, 9 through D | Unassigned |
| 5 | Site-local scope |
| 8 | Organization-local scope |
| E | Global scope |
| F | Reserved |
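The field layout in Figure 1-5 and the scope values in Table 1-3 can be decoded with a short Python sketch. The function and dictionary names are hypothetical. The MAC derivation (33:33 followed by the low-order 32 bits) comes from RFC 2464, not from this manual, and is included only to illustrate the statement that the group ID can be used to create a MAC multicast address.

```python
from ipaddress import IPv6Address

# Scope values from Table 1-3; every other value is reserved or unassigned.
SCOPE_NAMES = {
    0x1: "node-local",
    0x2: "link-local",
    0x5: "site-local",
    0x8: "organization-local",
    0xE: "global",
}


def parse_ipv6_multicast(address):
    """Split an IPv6 multicast address into the fields shown in Figure 1-5."""
    value = int(IPv6Address(address))
    if value >> 120 != 0xFF:  # the top 8 bits must be FF
        raise ValueError(f"{address} is not an IPv6 multicast address")
    flags = (value >> 116) & 0xF                # 4-bit Flags field
    scope = (value >> 112) & 0xF                # 4-bit Scope field
    reserved = (value >> 32) & ((1 << 80) - 1)  # 80-bit Reserved field
    group_id = value & 0xFFFFFFFF               # 32-bit Group ID
    # Assumption from RFC 2464 (not stated in this manual): the Ethernet MAC
    # address is 33:33 followed by the 32-bit group ID.
    mac = "33:33:" + ":".join(f"{(group_id >> shift) & 0xFF:02x}" for shift in (24, 16, 8, 0))
    return {
        "transient": bool(flags & 0x1),  # low-order bit of Flags is the T flag
        "scope": SCOPE_NAMES.get(scope, "reserved or unassigned"),
        "reserved_bits_zero": reserved == 0,
        "group_id": group_id,
        "mac": mac,
    }
```

For instance, FF02::1 decodes as a permanent, link-local address, while FF15::1:3 has the T flag set and site-local scope.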

1.3.2 Multicast Protocols

IP multicast protocols include multicast group management protocols and multicast routing protocols. Figure 1-6 describes the positions of multicast-related protocols in the network.

Figure 1-6 Positions of multicast-related protocols

I. Multicast management protocols

Typically, the Internet Group Management Protocol (IGMP) runs between hosts and the multicast routers directly connected to them. IGMP defines the mechanism for establishing and maintaining group memberships between hosts and multicast routers.

So far, there are three IGMP versions: IGMPv1, IGMPv2, and IGMPv3. Newer versions are fully compatible with older ones.

II. Multicast routing protocols

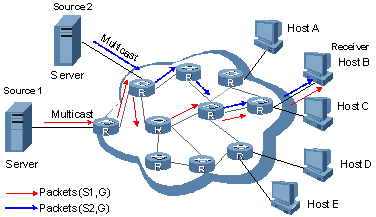

A multicast routing protocol runs between multicast routers to establish and maintain multicast routes and forward multicast packets correctly and efficiently. A multicast route is a loop-free data transmission path from a data source to multiple receivers. Namely, it is a multicast distribution tree.

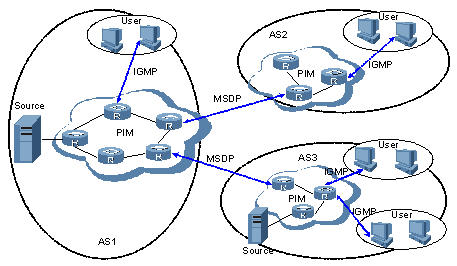

In the ASM model, multicast routes are divided into intra-domain routes and inter-domain routes.

- Among the mature intra-domain multicast routing protocols, Protocol Independent Multicast (PIM) is currently the most commonly used. It delivers information to receivers by discovering the multicast source and establishing a multicast distribution tree. Based on its forwarding mechanism, PIM has two modes: dense mode and sparse mode.

- For inter-domain routes, the principal issue is how routing information is transmitted between autonomous systems (ASs). So far, the Multicast Source Discovery Protocol (MSDP) is a mature solution.

In the SSM model, multicast routes are not divided into inter-domain routes and intra-domain routes. Because receivers already know the location of the multicast source, channels established through PIM-SM are sufficient for transporting multicast information.

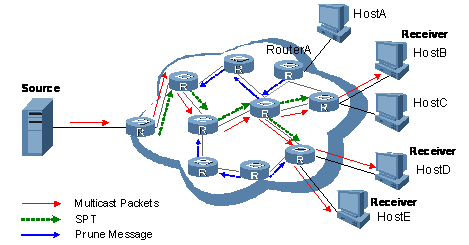

1.4 Multicast Packets Forwarding Mechanism

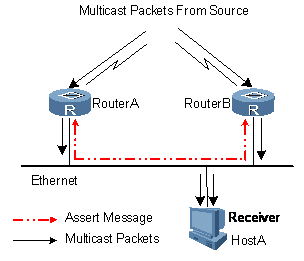

In the multicast model, a multicast source sends information to a host group, which is identified by the multicast group address in the destination address field of the IP packets. Therefore, to deliver multicast packets to receivers located in different parts of the network, multicast routers on the forwarding path usually need to forward multicast packets received on one incoming interface to multiple outgoing interfaces. Compared with the unicast model, the multicast model is more complex in the following aspects:

- To ensure multicast packet transmission in the network, multicast forwarding must be guided by unicast routing tables or by routing tables provided specifically for multicast.

- To handle the same multicast information received from different peers on different interfaces of the same device, every multicast packet is subject to a reverse path forwarding (RPF) check on its incoming interface. The result of the RPF check determines whether the packet is forwarded or discarded. The RPF check mechanism is the basis on which most multicast routing protocols implement multicast forwarding. For details about RPF, refer to “RPF mechanism”.
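A minimal sketch of the RPF check, assuming the RPF interface is simply the outgoing interface of the longest-prefix unicast route back to the packet's source. The routing table and interface names are hypothetical, and real implementations may also consult routing tables provided specifically for multicast, such as multicast static routes.

```python
from ipaddress import IPv4Address, IPv4Network

# Hypothetical unicast routing table: destination prefix -> outgoing interface.
UNICAST_ROUTES = {
    IPv4Network("10.110.0.0/16"): "Vlan-interface10",
    IPv4Network("10.110.1.0/24"): "Vlan-interface20",
    IPv4Network("0.0.0.0/0"): "Vlan-interface30",
}


def rpf_interface(source):
    """Interface of the best (longest-prefix) unicast route back to `source`."""
    ip = IPv4Address(source)
    matches = [net for net in UNICAST_ROUTES if ip in net]
    if not matches:
        return None  # no route back to the source: the check cannot pass
    return UNICAST_ROUTES[max(matches, key=lambda net: net.prefixlen)]


def rpf_check(source, incoming_interface):
    # Forward the packet only if it arrived on the interface that leads back
    # toward its source; otherwise it is discarded.
    return rpf_interface(source) == incoming_interface
```

Under this table, a packet from 10.110.1.5 passes the check only if it arrives on Vlan-interface20, because the /24 route is more specific than the /16 route.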

Chapter 2 IGMP Snooping Configuration

2.1 IGMP Snooping Overview

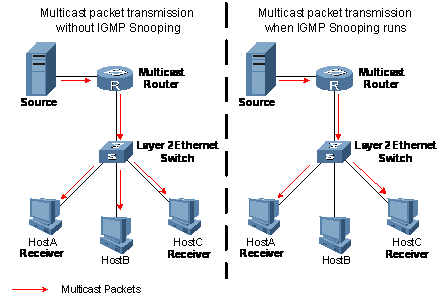

Internet Group Management Protocol Snooping (IGMP Snooping) is a multicast constraining mechanism that runs on Layer 2 devices to manage and control multicast groups.

2.1.1 Principle of IGMP Snooping

By analyzing received IGMP messages, a Layer 2 device running IGMP Snooping establishes mappings between ports and multicast MAC addresses and forwards multicast data based on these mappings.

As shown in Figure 2-1, when IGMP Snooping is not running, multicast packets are broadcast to all devices at Layer 2. When IGMP Snooping is running, multicast packets for known multicast groups are forwarded at Layer 2 only to the receivers.

Figure 2-1 Multicast forwarding before and after IGMP Snooping runs

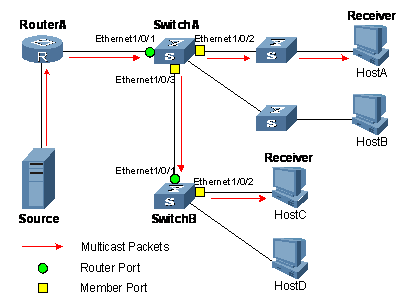
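The forwarding difference described in 2.1.1 can be sketched as follows. The table layout, function name, and port names are hypothetical, and flooding frames for unknown groups within the VLAN is an assumption, because this section only describes how known groups are handled.

```python
def egress_ports(dest_mac, ingress_port, vlan_ports, snooping_enabled, forwarding_table):
    """Return the ports a multicast frame is sent out of within one VLAN.

    forwarding_table: hypothetical mapping of multicast MAC address to the set
    of member ports learned from IGMP messages.
    """
    if snooping_enabled and dest_mac in forwarding_table:
        # Known group: forward only through the member ports of that group.
        return set(forwarding_table[dest_mac]) - {ingress_port}
    # Without IGMP Snooping the frame is broadcast in the VLAN. This sketch
    # assumes frames for unknown groups are flooded the same way.
    return set(vlan_ports) - {ingress_port}
```

With snooping enabled and a learned entry, only the member ports receive the frame; with snooping disabled, every other port in the VLAN does.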

2.1.2 Basic Concepts in IGMP Snooping

I. IGMP Snooping related ports

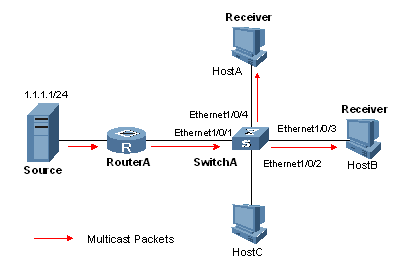

As shown in Figure 2-2, Router A connects to the multicast source, IGMP Snooping runs on Switch A and Switch B, and Host A and Host C are receiver hosts (namely, multicast group members).

Figure 2-2 IGMP Snooping related ports

Ports involved in IGMP Snooping, as shown in Figure 2-2, are described as follows:

- Router port: On an Ethernet switch, a router port connects the switch to a multicast router. In the figure, Ethernet 1/0/1 of Switch A and Ethernet 1/0/1 of Switch B are router ports. A switch registers all its local router ports in its router port list.

- Member port: On an Ethernet switch, a member port (also known as a multicast group member port) connects the switch to a multicast group member. In the figure, Ethernet 1/0/2 and Ethernet 1/0/3 of Switch A and Ethernet 1/0/2 of Switch B are member ports. The switch records all member ports on the local device in the IGMP Snooping forwarding table.

Note:

Whenever mentioned in this document, a router port is a router-connecting port on a switch, rather than a port on a router.

II. Port aging timers in IGMP Snooping and related messages and actions

Table 2-1 Port aging timers in IGMP Snooping and related messages and actions

| Timer | Description | Message before expiry | Action after expiry |
|---|---|---|---|
| Router port aging timer | For each router port, the switch sets a timer initialized to the router port aging time. | IGMP general query or PIM hello message whose source address is not 0.0.0.0 | The switch removes this port from its router port list. |
| Member port aging timer | When a port joins a multicast group, the switch sets a timer for the port, initialized to the member port aging time. | IGMP report message | The switch removes this port from the multicast group forwarding table. |

2.1.3 Work Mechanism of IGMP Snooping

A switch running IGMP Snooping performs different actions when it receives different IGMP messages, as follows:

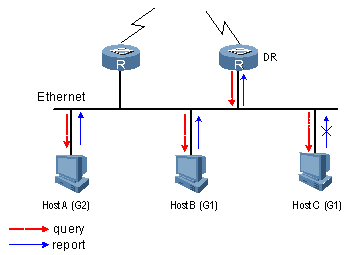

I. General queries

The IGMP querier periodically sends IGMP general queries to all hosts and routers on the local subnet to find out whether multicast group members exist on the subnet.

Upon receiving an IGMP general query, the switch forwards it through all ports in the VLAN except the receiving port and handles the receiving port as follows:

- If the receiving port is already in its router port list, the switch resets the aging timer of this router port.

- If the receiving port is not in its router port list, the switch adds it to the router port list and starts an aging timer for this router port.
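The router port handling above can be modeled in Python as a small class. The class, method names, and time values are hypothetical; the sketch only shows that adding a new router port and resetting an existing one both give the port a fresh aging timer, and that the port is removed from the list once its timer expires.

```python
class RouterPortList:
    """Hypothetical model of a switch's router port list with aging timers.

    Times are plain numbers (for example, seconds) supplied by the caller.
    """

    def __init__(self, aging_time):
        self.aging_time = aging_time
        self.expiry = {}  # router port -> time at which its aging timer expires

    def on_general_query(self, vlan_ports, receiving_port, now):
        # New port: add it and start its timer. Known port: reset its timer.
        # Both cases come down to setting a fresh expiry time.
        self.expiry[receiving_port] = now + self.aging_time
        # Forward the query through all other ports in the VLAN.
        return [port for port in vlan_ports if port != receiving_port]

    def age_out(self, now):
        # Remove every router port whose aging timer has expired.
        expired = sorted(port for port, t in self.expiry.items() if t <= now)
        for port in expired:
            del self.expiry[port]
        return expired

    def ports(self):
        return sorted(self.expiry)
```

A second general query received before expiry pushes the expiry time forward, so a router port that keeps hearing queries never ages out.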

II. Membership reports

A host sends an IGMP report to the multicast router in the following circumstances:

- Upon receiving an IGMP query, a multicast group member host responds with an IGMP report.

- When a host wants to join a multicast group, it sends an IGMP report to the multicast router to announce that it is interested in the multicast information addressed to that group.

Upon receiving an IGMP report, the switch forwards it through all router ports in the VLAN, resolves the address of the multicast group that the host has joined, and handles the receiving port as follows:

- If the port is already in the forwarding table, the switch resets the member port aging timer of the port.

- If the port is not in the forwarding table, the switch installs an entry for this port in the forwarding table and starts the member port aging timer of this port.
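A similar sketch for membership reports, assuming a single VLAN and numeric timestamps supplied by the caller. All names are hypothetical. It covers the two cases above, forwards reports only through router ports, and shows how member ports whose timers expire drop out of the forwarding table.

```python
class SnoopingTable:
    """Hypothetical IGMP Snooping forwarding table for one VLAN.

    `groups` maps a multicast group address to {member port: expiry time}.
    """

    def __init__(self, member_aging_time, router_ports):
        self.member_aging_time = member_aging_time
        self.router_ports = set(router_ports)
        self.groups = {}

    def on_report(self, group, receiving_port, now):
        members = self.groups.setdefault(group, {})
        # New member port: install an entry and start its timer.
        # Existing member port: reset its timer. Both set a fresh expiry.
        members[receiving_port] = now + self.member_aging_time
        # The report is forwarded only through the router ports in the VLAN.
        return sorted(self.router_ports - {receiving_port})

    def age_out(self, now):
        # Remove member ports whose timers have expired, then drop empty groups.
        for group in list(self.groups):
            members = self.groups[group]
            for port in [p for p, t in members.items() if t <= now]:
                del members[port]
            if not members:
                del self.groups[group]

    def member_ports(self, group):
        return sorted(self.groups.get(group, {}))
```

Because `on_report` never returns member ports, other hosts in the group do not see the report, which matches the note that follows.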

Note:

A switch does not forward an IGMP report through non-router ports. If it did, member hosts of that multicast group attached to those ports would, when IGMP report suppression is in effect, stop sending their own reports upon receiving the forwarded report. The switch would then be unable to tell whether members of that multicast group are still attached to these ports.

For a description of the IGMP report suppression mechanism, refer to “Chapter 5 IGMP Configuration”.

III. Leave messages

When an IGMPv1 host leaves a multicast group, the host does not send an IGMP leave message, so the switch cannot know immediately that the host has left the multicast group. However, as the host stops sending IGMP reports as soon as it leaves a multicast group, the switch deletes the forwarding entry for the member port corresponding to the host from the forwarding table when its aging timer expires.

When an IGMPv2 or IGMPv3 host leaves a multicast group, the host sends an IGMP leave message to the multicast router to announce that it has left the multicast group.

Upon receiving an IGMP leave message on the last member port, a switch forwards it out all router ports in the VLAN. Because the switch does not know whether any other member hosts of that multicast group still exists under the port to which the IGMP leave message arrived, the switch does not immediately delete the forwarding entry corresponding to that port from the forwarding table; instead, it resets the aging timer of the member port.

Upon receiving the IGMP leave message from a host, the IGMP querier resolves from the message the address of the multicast group that the host just left and sends an IGMP group-specific query for that multicast group through the port that received the leave message. Upon receiving the IGMP group-specific query, a switch forwards it through all router ports in the VLAN and all member ports of that multicast group, and then handles the member port that received the leave message as follows:

l If an IGMP report for that multicast group arrives on the member port in response to the group-specific query before the port's aging timer expires, other members of that group still exist under the port: the switch resets the aging timer of the member port.

l If no IGMP report for that multicast group arrives on the member port in response to the group-specific query before the port's aging timer expires, no members of that group remain under the port: the switch deletes the forwarding entry for the port from the forwarding table when the aging timer expires.
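The IGMPv2/IGMPv3 leave handling described above can be summarized as a small Python model of one group's member ports. It is an illustrative sketch, not the switch's implementation: the `GroupEntry` class is hypothetical, and so is the `leave_wait` value, because this section does not state what value the member port aging timer is reset to after a leave message.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class GroupEntry:
    """Hypothetical model of the member ports of one multicast group in a VLAN."""

    host_aging_time: float  # member port aging time applied when a report arrives
    leave_wait: float       # assumed timer value applied after a leave message
    ports: Dict[str, float] = field(default_factory=dict)  # port -> expiry time (s)

    def on_report(self, port: str, now: float) -> None:
        # A report (initial join or response to a query) adds the port
        # or resets its aging timer.
        self.ports[port] = now + self.host_aging_time

    def on_leave(self, port: str, now: float) -> None:
        # The entry is not deleted yet: other hosts may still sit behind
        # this port, so only the member port's aging timer is reset.
        if port in self.ports:
            self.ports[port] = now + self.leave_wait

    def expire(self, now: float) -> List[str]:
        # Delete every member port whose aging timer has run out.
        expired = [p for p, t in self.ports.items() if t <= now]
        for p in expired:
            del self.ports[p]
        return expired
```

For example, after a leave at t = 10 s, a report at t = 11 s keeps the port in the entry; without such a report, the port is removed once the reset timer expires.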

2.1.4 Processing of Multicast Protocol Messages

Under different conditions, an IGMP Snooping–capable switch processes multicast protocol messages differently, specifically as follows:

1) If only IGMP is enabled, or both IGMP and PIM are enabled on the switch, the switch handles multicast protocol messages in the normal way.

2) If only PIM is enabled on the switch:

l The switch broadcasts IGMP messages as unknown messages.

l Upon receiving a PIM hello message, the switch maintains the corresponding router port.

3) When IGMP is disabled on the switch, or when IGMP forwarding entries are cleared (by using the reset igmp group command):

l If PIM is disabled, the switch clears all its Layer 2 multicast entries and router ports.

l If PIM is enabled, the switch clears only its Layer 2 multicast entries without deleting its router ports.

4) When PIM is disabled on the switch:

l If IGMP is disabled, the switch clears all its router ports.

l If IGMP is enabled, the switch maintains all its Layer 2 multicast entries and router ports.
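Cases 3) and 4) above form a small decision table. The following Python sketch encodes it; the function names and the labels "layer2_entries" and "router_ports" are hypothetical and only restate the listed rules.

```python
from typing import Set


def cleared_on_igmp_disable_or_reset(pim_enabled: bool) -> Set[str]:
    """Case 3: IGMP disabled, or IGMP entries cleared with reset igmp group."""
    if pim_enabled:
        return {"layer2_entries"}  # router ports are kept
    return {"layer2_entries", "router_ports"}


def cleared_on_pim_disable(igmp_enabled: bool) -> Set[str]:
    """Case 4: PIM disabled on the switch."""
    if igmp_enabled:
        return set()  # Layer 2 entries and router ports are both kept
    return {"router_ports"}
```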

2.2 IGMP Snooping Configuration Tasks

Complete these tasks to configure IGMP Snooping:

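| Task | Remarks |
|---|---|
| 2.3.2 Enabling IGMP Snooping | Required |
| 2.3.3 Configuring the Version of IGMP Snooping | Optional |
| 2.3.4 Configuring Port Aging Timers | Optional |
| 2.4.2 Configuring Static Member Ports | Optional |
| 2.4.3 Configuring Simulated Joining | Optional |
| 2.4.4 Enabling the Fast Leave Feature | Optional |
| 2.4.5 Configuring IGMP Report Suppression | Optional |
| 2.5.2 Enabling IGMP Snooping Querier | Optional |
| 2.5.3 Configuring IGMP Timers | Optional |
| 2.5.4 Configuring Source IP Address of IGMP Queries | Optional |
| 2.6.2 Configuring a Multicast Group Filter | Optional |
| 2.6.3 Configuring Maximum Multicast Groups that Can Pass Ports | Optional |
| 2.6.4 Configuring Multicast Group Replacement | Optional |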

& Note:

l Configurations performed in IGMP Snooping view are effective for all VLANs, while configurations made in VLAN view are effective only for ports belonging to the current VLAN. Configurations made in VLAN view override the corresponding configurations made in IGMP Snooping view.

l Configurations performed in IGMP Snooping view are globally effective; configurations performed in Ethernet port view are effective only for the current port; configurations performed in port group view are effective for all ports in the current port group.

l The configurations made in Ethernet port view/port group view take precedence over those made in IGMP Snooping view. The configurations made in IGMP Snooping view are used only if the corresponding configurations have not been made in Ethernet port view/port group view.
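The precedence rule in the Note above can be illustrated with a minimal Python sketch, using the member port aging time as the example setting. The names and the VLAN 100 value are hypothetical. The Note does not say how VLAN-view and port-view settings rank against each other, so the sketch models only one "more specific view versus IGMP Snooping view" pair.

```python
from typing import Dict, Optional

GLOBAL_HOST_AGING = 260  # member port aging time in IGMP Snooping view (default)
vlan_host_aging: Dict[int, int] = {100: 120}  # hypothetical VLAN view settings


def effective_value(global_value: int, specific_value: Optional[int]) -> int:
    """Return the setting that applies in a VLAN (or on a port).

    A value configured in the more specific view wins; the IGMP Snooping
    view value applies only when the specific view has no configuration.
    """
    return global_value if specific_value is None else specific_value
```

With these values, VLAN 100 uses 120 seconds, while a VLAN without its own setting falls back to 260 seconds.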

2.3 Configuring Basic Functions of IGMP Snooping

2.3.1 Configuration Prerequisites

Before configuring the basic functions of IGMP Snooping, complete the following tasks:

l Configure the corresponding VLANs

l Configure the corresponding port groups

Before configuring the basic functions of IGMP Snooping, prepare the following data:

l Version of IGMP Snooping

l Aging time of router ports

l Aging time of member ports

2.3.2 Enabling IGMP Snooping

Follow these steps to enable IGMP Snooping:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enable IGMP Snooping globally and enter IGMP Snooping view | igmp-snooping | Required; disabled by default |
| Return to system view | quit | - |
| Enter VLAN view | vlan vlan-id | - |
| Enable IGMP Snooping in the VLAN | igmp-snooping enable | Required; disabled by default |

& Note:

l IGMP Snooping must be enabled globally before it can be enabled in a VLAN.

l If you enable IGMP Snooping in a specified VLAN, this function takes effect for Ethernet ports in this VLAN only.

2.3.3 Configuring the Version of IGMP Snooping

By configuring the IGMP Snooping version, you are actually configuring the version of IGMP messages that IGMP Snooping can analyze and process.

l In the case of version 2, IGMP Snooping can analyze and process IGMPv1 and IGMPv2 messages, but not IGMPv3 messages, which are broadcast in the VLAN.

l In the case of version 3, IGMP Snooping can analyze and process IGMPv1, IGMPv2, and IGMPv3 messages.
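The version rule above reduces to a single comparison. This Python sketch is illustrative only; the function name and the "process"/"broadcast" labels are hypothetical.

```python
def snooping_action(snooping_version: int, message_version: int) -> str:
    """Return how IGMP Snooping treats an IGMP message of a given version.

    "process" means IGMP Snooping analyzes the message; "broadcast" means
    the message is flooded in the VLAN without being analyzed.
    """
    if snooping_version not in (2, 3):
        raise ValueError("IGMP Snooping version must be 2 or 3")
    if message_version not in (1, 2, 3):
        raise ValueError("IGMP message version must be 1, 2, or 3")
    return "process" if message_version <= snooping_version else "broadcast"
```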

Follow these steps to configure the version of IGMP Snooping:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter VLAN view | vlan vlan-id | - |
| Configure the version of IGMP Snooping | igmp-snooping version version-number | Optional; version 2 by default |

Caution:

If you switch IGMP Snooping from version 3 to version 2, the system will clear all IGMP Snooping forwarding entries for dynamic joins, and will:

l Keep forwarding entries for version 3 static (*, G) joins;

l Clear forwarding entries for version 3 static (S, G) joins, which will be restored when IGMP Snooping is switched back to version 3.

For details about static joins, refer to "2.4.2 Configuring Static Member Ports".
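The effect of switching from version 3 to version 2 can be modeled as a partition of the forwarding entries. In this hypothetical Python sketch, `Entry` and `switch_v3_to_v2` are illustrative names, and a `source` of `None` stands for a (*, G) entry.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Entry:
    """Hypothetical forwarding entry: source None means a (*, G) entry."""

    group: str
    source: Optional[str]
    static: bool


def switch_v3_to_v2(entries: List[Entry]) -> Tuple[List[Entry], List[Entry]]:
    """Model the effect of switching IGMP Snooping from version 3 to 2.

    Returns (kept, suspended). Dynamic entries are dropped; static (*, G)
    entries are kept; static (S, G) entries are cleared but remembered so
    they can be restored when the version is switched back to 3.
    """
    kept = [e for e in entries if e.static and e.source is None]
    suspended = [e for e in entries if e.static and e.source is not None]
    return kept, suspended
```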

2.3.4 Configuring Port Aging Timers

If the switch does not receive an IGMP general query or a PIM hello message on a router port before the aging timer of that router port expires, the switch deletes the port from the router port list.

If the switch does not receive an IGMP report for a multicast group on a member port before the aging timer of that member port expires, the switch deletes the port from the forwarding table for that multicast group.

If multicast group memberships change frequently, you can set a relatively small value for the member port aging timer, and vice versa.
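The two aging timers can be sketched in Python as follows, using the default values given in the tables in this section (105 seconds for router ports, 260 seconds for member ports). The `PortTimers` class is a hypothetical model, and removing a group once its last member port ages out is an assumption of the sketch.

```python
from typing import Dict

ROUTER_AGING_TIME = 105  # seconds, default router port aging time
HOST_AGING_TIME = 260    # seconds, default member port aging time


class PortTimers:
    """Hypothetical model of router port and member port aging in one VLAN."""

    def __init__(self, router_aging: float = ROUTER_AGING_TIME,
                 host_aging: float = HOST_AGING_TIME) -> None:
        self.router_aging = router_aging
        self.host_aging = host_aging
        self.router_ports: Dict[str, float] = {}        # port -> expiry time
        self.members: Dict[str, Dict[str, float]] = {}  # group -> {port: expiry}

    def on_query_or_hello(self, port: str, now: float) -> None:
        # An IGMP general query or PIM hello adds the router port or resets its timer.
        self.router_ports[port] = now + self.router_aging

    def on_report(self, group: str, port: str, now: float) -> None:
        # An IGMP report adds the member port or resets its timer.
        self.members.setdefault(group, {})[port] = now + self.host_aging

    def age(self, now: float) -> None:
        # Drop router ports and member ports whose timers have expired.
        self.router_ports = {p: t for p, t in self.router_ports.items() if t > now}
        for group in list(self.members):
            alive = {p: t for p, t in self.members[group].items() if t > now}
            if alive:
                self.members[group] = alive
            else:
                del self.members[group]  # assumption: empty groups are removed
```

A smaller `host_aging` value makes stale member ports disappear sooner, which matches the advice above for networks where memberships change frequently.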

I. Configuring port aging timers globally

Follow these steps to configure port aging timers globally:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter IGMP Snooping view | igmp-snooping | - |
| Configure router port aging time | router-aging-time interval | Optional; 105 seconds by default |
| Configure member port aging time | host-aging-time interval | Optional; 260 seconds by default |

II. Configuring port aging timers in a VLAN

Follow these steps to configure port aging timers in a VLAN:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter VLAN view | vlan vlan-id | - |
| Configure router port aging time | igmp-snooping router-aging-time interval | Optional; 105 seconds by default |
| Configure member port aging time | igmp-snooping host-aging-time interval | Optional; 260 seconds by default |

2.4 Configuring IGMP Snooping Port Functions

2.4.1 Configuration Prerequisites

Before configuring IGMP Snooping port functions, complete the following task:

l Enable IGMP Snooping in the VLAN or enable IGMP on the desired VLAN interface

Before configuring IGMP Snooping port functions, prepare the following data:

l Multicast group and multicast source addresses

2.4.2 Configuring Static Member Ports

If the host attached to a port is interested in the multicast data addressed to a particular multicast group or the multicast data that a particular multicast source sends to a particular group, you can configure this port to be a group-specific or source-and-group-specific static member port (static (*, G) or (S, G) joining).

In a network with a stable topology structure, you can configure router ports of a switch to be static router ports, through which the switch can receive IGMP messages from routers.

Follow these steps to configure static member ports:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter the corresponding view: Ethernet port view | interface interface-type interface-number | Use either command |
| Enter the corresponding view: port group view | port-group { manual port-group-name \| aggregation agg-id } | Use either command |
| Configure a static member port | igmp-snooping static-group group-address [ source-ip source-address ] vlan vlan-id | Required; disabled by default |
| Configure a static router port | igmp-snooping static-router-port vlan vlan-id | Required; disabled by default |

& Note:

l When you enable or disable the static (*, G) or (S, G) joining function on a port, the port will not send an unsolicited IGMP report or an IGMP leave message.

l Static member ports and static router ports never age out. To delete such a port, you need to use the corresponding command.

2.4.3 Configuring Simulated Joining

Generally, a host running IGMP responds to IGMP queries from a multicast router. If a host fails to respond for some reason, the multicast router will consider that no member of this multicast group exists on the network segment and will therefore remove the corresponding forwarding path.

To prevent this, you can enable simulated joining on a port of the switch, that is, configure the port as a simulated member host of the multicast group. When an IGMP query arrives, the simulated member host on that port responds to it. As a result, the switch can continue receiving multicast data.

Through this configuration, the following functions can be implemented:

l When an Ethernet port is configured as a simulated member host, it sends an IGMP report.

l When receiving an IGMP general query, the simulated host responds with an IGMP report just like a real host.

l When the simulated joining function is disabled on an Ethernet port, the simulated host sends an IGMP leave message.
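The three behaviors listed above can be modeled with a small Python class. This is a hypothetical sketch: `SimulatedHost` is not a switch API, and messages are returned as strings instead of being transmitted.

```python
from typing import Optional


class SimulatedHost:
    """Hypothetical model of one simulated member host on a switch port.

    Each host-join configuration acts as an independent host. Messages are
    returned as strings instead of being transmitted.
    """

    def __init__(self, port: str, group: str, source: Optional[str] = None) -> None:
        self.port = port
        self.group = group
        self.source = source  # None for (*, G) joining
        self.active = False

    def _key(self) -> str:
        return f"({self.source or '*'}, {self.group})"

    def enable(self) -> str:
        # Configuring simulated joining sends an IGMP report.
        self.active = True
        return f"report {self._key()} on {self.port}"

    def on_general_query(self) -> Optional[str]:
        # While configured, the simulated host answers like a real host.
        if not self.active:
            return None
        return f"report {self._key()} on {self.port}"

    def disable(self) -> str:
        # Removing simulated joining sends an IGMP leave message.
        self.active = False
        return f"leave {self._key()} on {self.port}"
```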

Follow these steps to configure simulated joining:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter the corresponding view: Ethernet port view | interface interface-type interface-number | Use either command |
| Enter the corresponding view: port group view | port-group { manual port-group-name \| aggregation agg-id } | Use either command |
| Configure simulated (*, G) or (S, G) joining | igmp-snooping host-join group-address [ source-ip source-address ] vlan vlan-id | Required; disabled by default |

& Note:

l Each simulated host is equivalent to an independent host. For example, when an IGMP query arrives, the simulated host corresponding to each configuration responds separately.

l The IGMP version of a simulated host is the same as the IGMP Snooping version currently running on the device.

2.4.4 Enabling the Fast Leave Feature

By default, when receiving a group-specific IGMP leave message on a port, the switch first sends an IGMP group-specific query out that port rather than directly deleting the port from the multicast forwarding table. The switch deletes the port from the forwarding table only if it receives no IGMP report within a certain waiting period. With the fast leave feature enabled, the switch deletes the port from the forwarding table as soon as it receives the leave message.
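The difference between the default behavior and fast leave can be sketched in Python. The function name and return strings are hypothetical; the sketch only shows whether the port is removed immediately or kept pending a group-specific query.

```python
from typing import Set


def on_leave_message(members: Set[str], port: str, fast_leave: bool) -> str:
    """Return the action taken when a leave message arrives on a member port.

    members is the set of member ports for the group; it is modified in
    place when the port is deleted.
    """
    if fast_leave:
        members.discard(port)  # deleted at once; no query, no waiting
        return "deleted"
    # Default: query the port and keep it until the waiting period ends
    # without any IGMP report.
    return "group-specific query sent"
```

Because the fast leave branch removes the whole port, any other interested hosts behind that port lose the group's traffic as well, which is the risk described in the Caution at the end of this section.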

I. Configuring the fast leave feature globally

Follow these steps to configure the fast leave feature globally:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter IGMP Snooping view | igmp-snooping | - |
| Enable the fast leave feature | fast-leave [ vlan vlan-list ] | Required; disabled by default |

II. Configuring the fast leave feature on a port or a group of ports

Follow these steps to configure the fast leave feature on a port or a group of ports:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter the corresponding view: Ethernet port view | interface interface-type interface-number | Use either command |
| Enter the corresponding view: port group view | port-group { manual port-group-name \| aggregation agg-id } | Use either command |
| Enable the fast leave feature | igmp-snooping fast-leave [ vlan vlan-list ] | Required; disabled by default |

Caution:

If the fast leave feature is enabled on a port to which more than one host is connected, then when one host leaves a multicast group, the other hosts connected to the port that are interested in the same multicast group will fail to receive multicast data for that group.

2.4.5 Configuring IGMP Report Suppression

When a Layer 2 device receives an IGMP report from a multicast group member, it forwards the report to the Layer 3 device directly connected to it. Thus, when multiple members of a multicast group exist under the Layer 2 device, the directly connected Layer 3 device receives duplicate IGMP reports from these members.

With the IGMP report suppression function enabled, within a query interval, the Layer 2 device forwards only the first IGMP report of a multicast group to the Layer 3 device and will not forward the subsequent IGMP reports from the same multicast group to the Layer 3 device. This helps reduce the number of packets being transmitted over the network.
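Report suppression behaves like a per-interval "first report wins" filter. This Python sketch is a hypothetical model; in particular, treating the arrival of a general query as the start of a new query interval is an assumption made for illustration.

```python
from typing import Set


class ReportSuppressor:
    """Hypothetical model of IGMP report suppression on a Layer 2 device.

    Only the first report for a group within a query interval is forwarded
    to the Layer 3 device. Assumption: an interval starts when a general
    query arrives.
    """

    def __init__(self) -> None:
        self.forwarded: Set[str] = set()  # groups already reported this interval

    def on_general_query(self) -> None:
        self.forwarded.clear()  # a new query interval begins

    def on_report(self, group: str) -> bool:
        """Return True if this report is forwarded upstream."""
        if group in self.forwarded:
            return False  # duplicate within the interval: suppressed
        self.forwarded.add(group)
        return True
```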

Follow these steps to configure IGMP report suppression:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter IGMP Snooping view | igmp-snooping | - |
| Enable IGMP report suppression | report-aggregation | Optional; enabled by default |

2.5 Configuring IGMP-Related Functions

2.5.1 Configuration Prerequisites

Before configuring IGMP-related functions, complete the following task:

l Enable IGMP Snooping in the VLAN

Before configuring IGMP-related functions, prepare the following data:

l IGMP general query interval

l IGMP last-member query interval

l Maximum response time to IGMP general queries

l Source address of IGMP general queries

l Source address of IGMP group-specific queries

2.5.2 Enabling IGMP Snooping Querier

In a network that does not contain Layer 3 multicast devices, implementing an IGMP querier is a problem, because Layer 2 devices do not support IGMP. To solve this problem, you can enable the IGMP Snooping querier function on a Layer 2 device so that it works as an IGMP querier to create and maintain multicast forwarding entries at the data link layer.

Follow these steps to enable IGMP Snooping querier:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter VLAN view | vlan vlan-id | - |
| Enable IGMP Snooping querier | igmp-snooping querier | Required; disabled by default |

Caution:

l An IGMP Snooping querier does not take part in IGMP querier elections.

l It is meaningless to configure an IGMP Snooping querier in a multicast network running IGMP. Furthermore, it may affect IGMP querier elections because it sends IGMP general queries that contain low source IP addresses.

2.5.3 Configuring IGMP Timers

You can tune the IGMP general query interval based on actual network conditions.

Upon receiving an IGMP query (general query or group-specific query), a host starts a timer for each multicast group it has joined. This timer is initialized to a random value in the range of 0 to the maximum response time (the host obtains the maximum response time from the Max Response Time field of the received IGMP query). When the timer reaches 0, the host sends an IGMP report to the corresponding multicast group.

An appropriate setting of the maximum response time allows hosts to respond to queries quickly, while avoiding bursts of IGMP traffic caused by a large number of hosts sending reports at the same time because their timers expire simultaneously.

l For IGMP general queries, the configured maximum response time fills the Max Response Time field.

l For IGMP group-specific queries, the configured IGMP last-member query interval fills the Max Response Time field; that is, for IGMP group-specific queries, the maximum response time equals the IGMP last-member query interval.
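The host-side timer described above can be sketched in Python. The function is hypothetical, and drawing the delay from a uniform distribution is an assumption: the text only says the value is random within the range.

```python
import random
from typing import Dict, Iterable


def report_delays(groups: Iterable[str], max_response_time: float,
                  rng: random.Random) -> Dict[str, float]:
    """Return a random report delay (seconds) for each joined group.

    Each delay is drawn from [0, max_response_time]. max_response_time is
    the Max Response Time field of the received query: the configured
    maximum response time for a general query, or the last-member query
    interval for a group-specific query.
    """
    return {g: rng.uniform(0, max_response_time) for g in groups}
```

With the default maximum response time of 10 seconds, many hosts answering the same general query spread their reports over that 10-second window instead of sending them all at once.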

I. Configuring IGMP timers globally

Follow these steps to configure IGMP timers globally:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter IGMP Snooping view | igmp-snooping | - |
| Configure the maximum response time to IGMP general queries | max-response-time interval | Optional; 10 seconds by default |
| Configure the IGMP last-member query interval | last-member-query-interval interval | Optional; 1 second by default |

II. Configuring IGMP timers in a VLAN

Follow these steps to configure IGMP timers in a VLAN:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter VLAN view | vlan vlan-id | - |
| Configure IGMP general query interval | igmp-snooping query-interval interval | Optional; 60 seconds by default |
| Configure the maximum response time to IGMP general queries | igmp-snooping max-response-time interval | Optional; 10 seconds by default |
| Configure the IGMP last-member query interval | igmp-snooping last-member-query-interval interval | Optional; 1 second by default |

Caution:

In the configuration, make sure that the IGMP general query interval is larger than the maximum response time for IGMP general queries.

2.5.4 Configuring Source IP Address of IGMP Queries

Upon receiving an IGMP query whose source IP address is 0.0.0.0 on a port, the switch will not set that port as a router port. Therefore, we recommend that you configure a valid IP address as the source IP address of IGMP queries.

Follow these steps to configure source IP address of IGMP queries:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter VLAN view | vlan vlan-id | - |
| Configure the source address of IGMP general queries | igmp-snooping general-query source-ip { current-interface \| ip-address } | Optional; 0.0.0.0 by default |
| Configure the source IP address of IGMP group-specific queries | igmp-snooping special-query source-ip { current-interface \| ip-address } | Optional; 0.0.0.0 by default |

Caution:

The source address of IGMP query messages may affect IGMP querier election within the segment.

2.6 Configuring a Multicast Group Policy

2.6.1 Configuration Prerequisites

Before configuring a multicast group filtering policy, complete the following task:

l Enable IGMP Snooping in the VLAN or enable IGMP on the desired VLAN interface

Before configuring a multicast group filtering policy, prepare the following data:

l ACL rule for multicast group filtering

l The maximum number of multicast groups that can pass the ports

2.6.2 Configuring a Multicast Group Filter

On an IGMP Snooping–enabled switch, configuring a multicast group filter allows the service provider to define limits on the multicast programs available to different users.

In an actual application, when a user requests a multicast program, the user's host sends an IGMP report. Upon receiving the report, the switch checks it against the ACL rule configured on the receiving port. If the ACL permits the receiving port to join this multicast group, the switch adds the port to the IGMP Snooping multicast group list; otherwise, the switch drops the report. Multicast data for a group that has failed the ACL check is not sent to this port. In this way, the service provider can control the VOD programs provided for multicast users.
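The filter check can be sketched with Python's standard `ipaddress` module. The ACL representation (an ordered list of permit/deny rules over group ranges) and first-match evaluation are assumptions made for illustration, not the switch's ACL engine. Treating an unmatched group as filtered is consistent with the troubleshooting note later in this chapter that a nonexistent or empty ACL filters out all groups.

```python
import ipaddress
from typing import List, Optional, Tuple

# Hypothetical ACL representation: ordered (action, group range) rules.
Rule = Tuple[str, str]


def report_allowed(acl: Optional[List[Rule]], group: str) -> bool:
    """Decide whether a report for group passes the port's group policy.

    acl is None when no group policy is configured (every group passes).
    Rules are matched in order and the first match decides; a group that
    matches no rule is filtered out, so an empty ACL filters every group.
    """
    if acl is None:
        return True
    addr = ipaddress.ip_address(group)
    for action, network in acl:
        if addr in ipaddress.ip_network(network):
            return action == "permit"
    return False
```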

I. Configuring a multicast group filter globally

Follow these steps to configure a multicast group filter globally:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter IGMP Snooping view | igmp-snooping | - |
| Configure a multicast group filter | group-policy acl-number [ vlan vlan-list ] | Required; no filter configured by default |

II. Configuring a multicast group filter on a port or a group of ports

Follow these steps to configure a multicast group filter on a port or a group of ports:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter the corresponding view: Ethernet port view | interface interface-type interface-number | Use either command |
| Enter the corresponding view: port group view | port-group { manual port-group-name \| aggregation agg-id } | Use either command |
| Configure a multicast group filter | igmp-snooping group-policy acl-number [ vlan vlan-list ] | Required; no filter configured by default |

2.6.3 Configuring Maximum Multicast Groups that Can Pass Ports

By configuring the maximum number of multicast groups that can pass a port or a group of ports, you can limit the number of on-demand multicast programs available to users and thus control the traffic on the port.

Follow these steps to configure the maximum number of multicast groups that can pass the port(s):

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter the corresponding view: Ethernet port view | interface interface-type interface-number | Use either command |
| Enter the corresponding view: port group view | port-group { manual port-group-name \| aggregation agg-id } | Use either command |
| Configure the maximum number of multicast groups that can pass the port(s) | igmp-snooping group-limit limit [ vlan vlan-list ] | Optional; 1,000 by default |

& Note:

l When the number of multicast groups a port has joined reaches the maximum number configured, the system deletes this port from all the related IGMP Snooping forwarding entries, and hosts on this port need to join multicast groups again.

l If you have configured a port as a static member port or a simulated member of multicast groups, the system deletes this port from all the related IGMP Snooping forwarding entries and then reapplies those configurations until the number of multicast groups the port has joined reaches the configured maximum.

2.6.4 Configuring Multicast Group Replacement

For some reason, the number of multicast groups passing through a switch or Ethernet port may exceed the number configured for the switch or the port. To address this situation, you can enable the multicast group replacement function on the switch or on certain Ethernet ports. When the number of multicast groups an Ethernet port has joined reaches the limit:

l If multicast group replacement is enabled, the newly joined multicast group automatically replaces the existing multicast group with the lowest address.

l If multicast group replacement is not enabled, new IGMP reports are automatically discarded.
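The limit and replacement rules above can be combined in one Python sketch. The function `join_group` is hypothetical; it uses the standard `ipaddress` module only to compare group addresses numerically when choosing the lowest one.

```python
import ipaddress
from typing import List


def join_group(joined: List[str], new_group: str, limit: int, replace: bool) -> bool:
    """Try to add new_group to a port's joined groups under a group limit.

    Returns True if the port ends up in new_group. When the limit is
    reached, the report is discarded unless replacement is enabled, in
    which case the joined group with the lowest address is evicted.
    """
    if new_group in joined:
        return True
    if len(joined) < limit:
        joined.append(new_group)
        return True
    if not replace or not joined:
        return False  # limit reached and no replacement: report discarded
    lowest = min(joined, key=ipaddress.ip_address)
    joined.remove(lowest)
    joined.append(new_group)
    return True
```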

I. Configuring multicast group replacement globally

Follow these steps to configure multicast group replacement globally:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter IGMP Snooping view | igmp-snooping | - |
| Configure multicast group replacement | overflow-replace [ vlan vlan-list ] | Required; disabled by default |

II. Configuring multicast group replacement on a port or a group of ports

Follow these steps to configure multicast group replacement on a port or a group of ports:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter the corresponding view: Ethernet port view | interface interface-type interface-number | Use either command |
| Enter the corresponding view: port group view | port-group { manual port-group-name \| aggregation agg-id } | Use either command |
| Configure multicast group replacement | igmp-snooping overflow-replace [ vlan vlan-list ] | Required; disabled by default |

2.7 Displaying and Maintaining IGMP Snooping

| To do... | Use the command... | Remarks |
|---|---|---|
| View the information of multicast groups learned by IGMP Snooping | display igmp-snooping group [ vlan vlan-id ] [ verbose ] | Available in any view |
| View the statistics information of IGMP messages learned by IGMP Snooping | display igmp-snooping statistics | Available in any view |
| Clear IGMP Snooping entries | reset igmp-snooping group { group-address \| all } [ vlan vlan-id ] | Available in user view |
| Clear the statistics information of all kinds of IGMP messages learned by IGMP Snooping | reset igmp-snooping statistics | Available in user view |

& Note:

l The reset igmp-snooping group command works only on an IGMP Snooping–enabled VLAN, but not on a VLAN with IGMP enabled on its VLAN interface.

l The reset igmp-snooping group command cannot clear IGMP Snooping entries derived from static configuration.

2.8 IGMP Snooping Configuration Examples

2.8.1 Configuring Simulated Joining

I. Network requirements

After the configuration, Host A and Host B, regardless of whether they have joined the multicast group 224.1.1.1, can receive multicast data that the multicast source 1.1.1.1/24 sends to the multicast group 224.1.1.1. Figure 2-3 shows the network connections.

II. Network diagram

Figure 2-3 Network diagram for simulated joining configuration

III. Configuration procedure

1) Configuring a VLAN

# Create VLAN 100.

<SwitchA> system-view

[SwitchA] vlan 100

# Add ports Ethernet 1/0/1 through Ethernet 1/0/4 into VLAN 100.

[SwitchA-vlan100] port Ethernet 1/0/1 to Ethernet 1/0/4

[SwitchA-vlan100] quit

2) Configuring simulated (S, G) joining

# Enable IGMP Snooping in VLAN 100, and set its version to 3.

[SwitchA] igmp-snooping

[SwitchA-igmp-snooping] quit

[SwitchA] vlan 100

[SwitchA-vlan100] igmp-snooping enable

[SwitchA-vlan100] igmp-snooping version 3

[SwitchA-vlan100] quit

# Enable simulated (S, G) joining on Ethernet 1/0/3 and Ethernet 1/0/4 respectively.

[SwitchA] interface Ethernet 1/0/3

[SwitchA-Ethernet1/0/3] igmp-snooping host-join 224.1.1.1 source-ip 1.1.1.1 vlan 100

[SwitchA-Ethernet1/0/3] quit

[SwitchA] interface Ethernet 1/0/4

[SwitchA-Ethernet1/0/4] igmp-snooping host-join 224.1.1.1 source-ip 1.1.1.1 vlan 100

[SwitchA-Ethernet1/0/4] quit

3) Verifying the configuration

# View the detailed information of the multicast group in VLAN 100.

[SwitchA] display igmp-snooping group vlan 100 verbose

Total 1 IP Group(s).

Total 1 IP Source(s).

Total 1 MAC Group(s).

Port flags: D-Dynamic port, S-Static port, A-Aggregation port, C-Copy port

Subvlan flags: R-Real VLAN, C-Copy VLAN

Vlan(id):100.

Total 1 IP Group(s).

Total 1 IP Source(s).

Total 1 MAC Group(s).

Router port(s):total 1 port.

Ethernet1/0/1 (D) ( 00:01:30 )

IP group(s):the following ip group(s) match to one mac group.

IP group address:224.1.1.1

(1.1.1.1, 224.1.1.1):

Attribute: Host Port

Host port(s):total 2 port.

Ethernet1/0/3 (D) ( 00:03:23 )

Ethernet1/0/4 (D) ( 00:03:23 )

MAC group(s):

MAC group address:0100-5e01-0101

Host port(s):total 2 port.

Ethernet1/0/3

Ethernet1/0/4

As shown above, Ethernet 1/0/3 and Ethernet 1/0/4 of Switch A have joined the specified (S, G) entry (1.1.1.1, 224.1.1.1).

2.8.2 Static Router Port Configuration

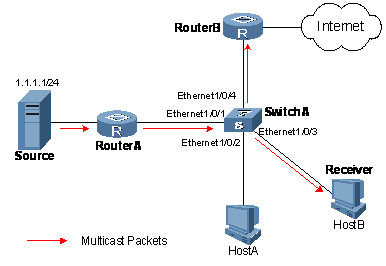

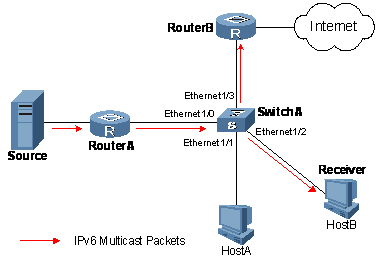

I. Network requirements

No multicast protocol is running on Router B. After the configuration, Switch A should be able to forward multicast data to Router B. Figure 2-4 shows the network connections.

II. Network diagram

Figure 2-4 Network diagram for static router port configuration

III. Configuration procedure

1) Configuring a VLAN

# Create VLAN 100.

<SwitchA> system-view

[SwitchA] vlan 100

# Add ports Ethernet 1/0/1 through Ethernet 1/0/4 into VLAN 100.

[SwitchA-vlan100] port Ethernet 1/0/1 to Ethernet 1/0/4

[SwitchA-vlan100] quit

2) Configuring a static router port

# Enable IGMP Snooping in VLAN 100.

[SwitchA] igmp-snooping

[SwitchA-igmp-snooping] quit

[SwitchA] vlan 100

[SwitchA-vlan100] igmp-snooping enable

[SwitchA-vlan100] quit

# Configure Ethernet 1/0/4 to be a static router port.

[SwitchA] interface Ethernet 1/0/4

[SwitchA-Ethernet1/0/4] igmp-snooping static-router-port vlan 100

[SwitchA-Ethernet1/0/4] quit

3) Verifying the configuration

# View the detailed information of the multicast group in VLAN 100.

[SwitchA] display igmp-snooping group vlan 100 verbose

Total 1 IP Group(s).

Total 1 IP Source(s).

Total 1 MAC Group(s).

Port flags: D-Dynamic port, S-Static port, A-Aggregation port, C-Copy port

Subvlan flags: R-Real VLAN, C-Copy VLAN

Vlan(id):100.

Total 1 IP Group(s).

Total 1 IP Source(s).

Total 1 MAC Group(s).

Router port(s):total 2 port.

Ethernet1/0/1 (D) ( 00:01:30 )

Ethernet1/0/4 (S) ( 00:01:30 )

IP group(s):the following ip group(s) match to one mac group.

IP group address:224.1.1.1

(1.1.1.1, 224.1.1.1):

Attribute: Host Port

Host port(s):total 1 port.

Ethernet1/0/3 (D) ( 00:03:23 )

MAC group(s):

MAC group address:0100-5e01-0101

Host port(s):total 1 port.

Ethernet1/0/3

As shown above, Ethernet 1/0/4 of Switch A has become a static router port.

2.9 Troubleshooting IGMP Snooping Configuration

2.9.1 Switch Fails in Layer 2 Multicast Forwarding

I. Symptom

A switch fails to implement Layer 2 multicast forwarding.

II. Analysis

IGMP Snooping is not enabled.

III. Solution

1) Use the display current-configuration command to check whether IGMP Snooping is enabled.

2) If IGMP Snooping is not enabled, use the igmp-snooping command in system view to enable IGMP Snooping globally, and then use the igmp-snooping enable command in VLAN view to enable IGMP Snooping in the VLAN.

3) If IGMP Snooping is disabled only for the corresponding VLAN, just use the igmp-snooping enable command in VLAN view to enable IGMP Snooping in the corresponding VLAN.

2.9.2 Configured Multicast Group Policy Fails to Take Effect

I. Symptom

Although a multicast group policy has been configured to allow hosts to join specific multicast groups, the hosts can still receive multicast data addressed to other multicast groups.

II. Analysis

l The ACL rule is incorrectly configured.

l The multicast group policy is not correctly applied.

l If a nonexistent ACL or an empty (null) ACL is used as a multicast group policy, all multicast groups are filtered out.

l Certain ports have been configured as static member ports of multicast groups, and this configuration conflicts with the configured multicast group policy.

III. Solution

1) Use the display acl command to check the configured ACL rule. Make sure that the ACL rule conforms to the multicast group policy to be implemented.

2) Use the display this command in IGMP Snooping view or in the corresponding interface view to check whether the correct multicast group policy has been applied. If not, use the group-policy or igmp-snooping group-policy command to apply the correct multicast group policy.

3) Use the display igmp-snooping group command to check whether any port has been configured as a static member port of any multicast group. If so, check whether this configuration conflicts with the configured multicast group policy. If any conflict exists, remove the port as a static member of the multicast group.

Chapter 3 MLD Snooping Configuration

3.1 MLD Snooping Overview

3.1.1 How MLD Snooping Works

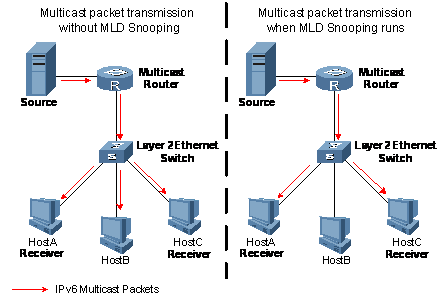

By analyzing received MLD messages, a Layer 2 device running MLD Snooping establishes mappings between ports and multicast MAC addresses and forwards IPv6 multicast data based on these mappings.

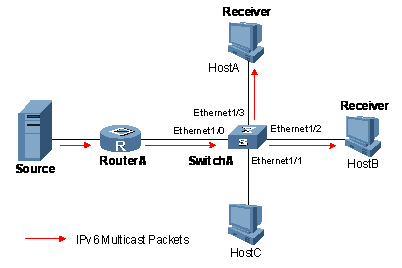

As shown in Figure 3-1, when MLD Snooping is not running, IPv6 multicast packets are broadcast to all devices at Layer 2. When MLD Snooping runs, multicast packets for known IPv6 multicast groups are multicast to the receivers at Layer 2.

Figure 3-1 IPv6 multicast before and after MLD Snooping runs

3.1.2 Basic Concepts in MLD Snooping

I. MLD Snooping related ports

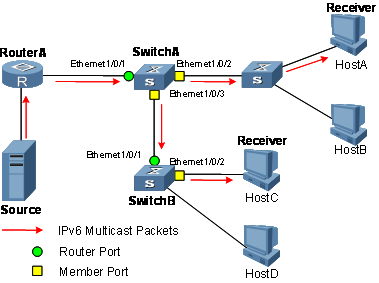

As shown in Figure 3-2, Router A connects to the multicast source, MLD Snooping runs on Switch A and Switch B, and Host A and Host C are receiver hosts (namely, IPv6 multicast group members).

Figure 3-2 MLD Snooping related ports

Ports involved in MLD Snooping, as shown in Figure 3-2, are described as follows:

l Router port: On an Ethernet switch, a router port connects the switch to a multicast router. In the figure, Ethernet 1/0/1 of Switch A and Ethernet 1/0/1 of Switch B are router ports. A switch registers all its local router ports in its router port list.

l Member port: On an Ethernet switch, a member port (also known as an IPv6 multicast group member port) connects the switch to an IPv6 multicast group member. In the figure, Ethernet 1/0/2 and Ethernet 1/0/3 of Switch A and Ethernet 1/0/2 of Switch B are member ports. The switch records all member ports on the local device in the MLD Snooping forwarding table.

& Note:

Whenever mentioned in this document, a router port is a router-connecting port on a switch, rather than a port on a router.

II. Port aging timers in MLD Snooping

Table 3-1 Port aging timers in MLD Snooping and related messages and actions

| Timer | Description | Message that resets the timer before expiry | Action after expiry |
|---|---|---|---|
| Router port aging timer | For each router port, the switch sets a timer initialized to the router port aging time | MLD general query whose source address is not 0::0, or IPv6 PIM hello message | The switch removes this port from its router port list |
| Member port aging timer | When a port joins an IPv6 multicast group, the switch sets a timer for the port, initialized to the member port aging time | MLD report message | The switch removes this port from the forwarding table for the IPv6 multicast group |
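Both timers in Table 3-1 follow the same pattern: a qualifying message starts or restarts the timer, and the port is removed once the timer runs out. The following is a minimal illustrative model of that pattern, not the switch implementation; the class name is hypothetical, times are plain seconds supplied by the caller, and the 260-second value is the default aging time given in the configuration tables of this chapter.

```python
from dataclasses import dataclass, field


@dataclass
class AgingTable:
    """Ports whose entries age out unless refreshed (illustrative model)."""
    aging_time: float
    expiry: dict = field(default_factory=dict)  # port -> absolute expiry time

    def refresh(self, port: str, now: float) -> None:
        # Start the timer for a new port, or reset it for a known port.
        self.expiry[port] = now + self.aging_time

    def expire(self, now: float) -> list:
        # Remove every port whose timer has run out and return those ports.
        stale = [p for p, t in self.expiry.items() if t <= now]
        for p in stale:
            del self.expiry[p]
        return stale

    def ports(self) -> set:
        return set(self.expiry)


if __name__ == "__main__":
    # Router port list using the 260-second default router port aging time.
    routers = AgingTable(aging_time=260)
    routers.refresh("Ethernet 1/0/1", now=0)    # general query or PIM hello
    routers.refresh("Ethernet 1/0/1", now=200)  # refreshed before expiry
    print(routers.expire(now=300), routers.ports())  # still listed
    print(routers.expire(now=460), routers.ports())  # aged out
```

The same class models a member port timer if it is created with the member port aging time and refreshed on MLD reports.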

3.1.3 Work Mechanism of MLD Snooping

A switch running MLD Snooping performs different actions when it receives different MLD messages, as follows:

I. General queries

Upon receiving an MLD general query, the switch forwards it through all ports in the VLAN except the receiving port and performs the following on the receiving port:

l If the receiving port is already in its router port list, the switch resets the aging timer of this router port.

l If the receiving port is not yet in its router port list, the switch adds it to the router port list and starts an aging timer for this router port.
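The general query handling above can be sketched as a single function. This is an illustration under stated assumptions: the function and parameter names are hypothetical, the router port list is a dictionary of port to expiry time, and 260 seconds is the default router port aging time from the configuration tables. Both cases in the list reduce to one assignment, because adding a new port and resetting a known one both start its timer at the current time.

```python
def handle_general_query(vlan_ports, router_ports, in_port, now,
                         router_aging_time=260):
    """Process an MLD general query received on in_port.

    vlan_ports: all ports in the VLAN, in order.
    router_ports: dict mapping router port -> expiry time, updated in place.
    Returns the ports the query is forwarded through.
    """
    # New router port: add it with a fresh timer.
    # Known router port: reset its timer. Both are the same assignment.
    router_ports[in_port] = now + router_aging_time
    # Forward through every port in the VLAN except the receiving port.
    return [p for p in vlan_ports if p != in_port]


if __name__ == "__main__":
    vlan = ["Ethernet 1/0/1", "Ethernet 1/0/2", "Ethernet 1/0/3"]
    routers = {}
    print(handle_general_query(vlan, routers, "Ethernet 1/0/1", now=0))
    print(routers)
```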

II. Membership reports

A host sends an MLD report to the multicast router in the following circumstances:

l Upon receiving an MLD query, an IPv6 multicast group member host responds with an MLD report.

l When it intends to join an IPv6 multicast group, a host sends an MLD report to the multicast router to announce that it is interested in the multicast information addressed to that IPv6 multicast group.

Upon receiving an MLD report, the switch forwards it through all the router ports in the VLAN, resolves the address of the IPv6 multicast group the host has joined, and performs the following on the receiving port:

l If the port is already in the forwarding table for that IPv6 multicast group, the switch resets the member port aging timer of the port.

l If the port is not yet in the forwarding table for that IPv6 multicast group, the switch installs an entry for the port in the forwarding table and starts the member port aging timer of the port.
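The report handling above can be sketched as follows. The function and parameter names are hypothetical; the forwarding table is modeled as a dictionary of group to {member port: expiry time}; 260 seconds is the default member port aging time from the configuration tables; and not sending the report back out of the receiving port is an assumption of this sketch.

```python
def handle_report(router_ports, members, group, in_port, now,
                  host_aging_time=260):
    """Process an MLD report for `group` received on in_port.

    router_ports: router ports in the VLAN.
    members: dict mapping group -> {member port: expiry time}, updated in place.
    Returns the ports the report is forwarded through.
    """
    # Install the member port, or reset its timer if it is already listed.
    members.setdefault(group, {})[in_port] = now + host_aging_time
    # Forward only through router ports, never through other member ports.
    return [p for p in router_ports if p != in_port]


if __name__ == "__main__":
    table = {}
    print(handle_report(["Ethernet 1/0/1"], table, "ff1e::101",
                        "Ethernet 1/0/2", now=0))
    print(table)
```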

& Note:

A switch does not forward an MLD report through non-router ports for the following reason: due to the MLD report suppression mechanism, if member hosts of that IPv6 multicast group still exist under other non-router ports, these hosts stop sending their own MLD reports when they receive the forwarded report. The switch would then be unable to tell whether members of that IPv6 multicast group are still attached to these ports.

III. Done messages

When a host leaves an IPv6 multicast group, the host sends an MLD done message to the multicast router to announce that it is leaving the IPv6 multicast group.

Upon receiving an MLD done message, a switch forwards it through all router ports in the VLAN. Because the switch does not know whether other member hosts of that IPv6 multicast group still exist under the port on which the MLD done message arrived, it does not immediately delete the forwarding entry for that port from the forwarding table; instead, it resets the aging timer of the member port.

Upon receiving an MLD done message from a host, the MLD querier resolves from the message the address of the IPv6 multicast group that the host just left and sends an MLD group-specific query to that IPv6 multicast group through the port that received the done message. Upon receiving the MLD group-specific query, a switch forwards it through all the router ports in the VLAN and all member ports of that IPv6 multicast group, and then handles each member port of that group as follows:

l If an MLD report for that IPv6 multicast group arrives at the member port in response to the group-specific query before its aging timer expires, some hosts under the port are still members of the group, and the switch resets the aging timer of the member port.

l If no MLD report for that IPv6 multicast group arrives at the member port in response to the group-specific query before its aging timer expires, no members of that group remain under the port, and the switch deletes the forwarding entry for the member port from the forwarding table when its aging timer expires.
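The leave sequence above can be sketched as three small steps: the done message only resets the member port timer, the group-specific query is forwarded to router ports and member ports, and the entry disappears only if the timer runs out. All names are hypothetical. The text does not say which value the timer is reset to, so this sketch takes it as a caller-supplied `reset_interval`, which is an assumption.

```python
def handle_done(members, group, in_port, now, reset_interval):
    """Handle an MLD done message for `group` received on member port in_port.

    members: dict mapping group -> {member port: expiry time}, updated in place.
    The entry is kept and only its timer is reset. reset_interval is an
    assumption of this sketch; the text does not give the value.
    """
    ports = members.get(group, {})
    if in_port in ports:
        ports[in_port] = now + reset_interval


def group_query_targets(router_ports, members, group, in_port):
    """Ports a group-specific query is forwarded through: router ports plus
    the group's member ports, excluding the port it arrived on."""
    targets = set(router_ports) | set(members.get(group, {}))
    targets.discard(in_port)
    return targets


def age_out(members, now):
    """Delete member ports whose timers have expired and drop empty groups.

    A port that answered the query with a report in time would already have
    had its timer restarted, so it survives this step.
    """
    for group in list(members):
        ports = members[group]
        for port in [p for p, t in ports.items() if t <= now]:
            del ports[port]
        if not ports:
            del members[group]


if __name__ == "__main__":
    g = "ff1e::101"  # example group address
    table = {g: {"Ethernet 1/0/2": 260, "Ethernet 1/0/3": 260}}
    handle_done(table, g, "Ethernet 1/0/2", now=100, reset_interval=2)
    print(sorted(group_query_targets(["Ethernet 1/0/1"], table, g,
                                     "Ethernet 1/0/1")))
    age_out(table, now=101)  # timer still running: entry kept
    age_out(table, now=102)  # no report arrived: Ethernet 1/0/2 removed
    print(table)
```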

3.2 MLD Snooping Configuration Tasks

Complete these tasks to configure MLD Snooping:

| Task | Remarks |
|---|---|
| | Required |
| | Optional |
| | Optional |
| | Optional |
| | Optional |
| | Optional |
| | Optional |
| | Optional |
| | Optional |
| | Optional |
| | Optional |
| | Optional |

& Note:

l Configurations performed in MLD Snooping view are effective for all VLANs, while configurations made in VLAN view are effective only for ports belonging to the current VLAN. Configurations made in VLAN view override the corresponding configurations made in MLD Snooping view.

l Configurations performed in MLD Snooping view are globally effective; configurations performed in Ethernet port view are effective only for the current port; configurations performed in port group view are effective only for the ports in the current port group.

l The system gives priority to configurations made in Ethernet port view or port group view. Configurations made in MLD Snooping view are used only if the corresponding configurations have not been carried out in Ethernet port view or port group view.
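The precedence rules in the note above amount to "a value set in the narrower view wins; otherwise the MLD Snooping view value applies". The sketch below is illustrative only: the function name is hypothetical, and it assumes each setting is checked against one narrower scope (a VLAN, or a port) because the note describes the two cases separately. A port group setting is modeled as the same value on every port in the group.

```python
def effective_value(global_cfg, scoped_cfg, scope_key, setting):
    """Return the value that applies to `setting` for one VLAN or one port.

    global_cfg: settings made in MLD Snooping view, e.g. {"host-aging-time": 260}.
    scoped_cfg: settings made in the narrower view, keyed by VLAN ID (VLAN
        view) or by port name (Ethernet port view or port group view).
    Returns None if the setting is configured in neither place.
    """
    narrow = scoped_cfg.get(scope_key, {})
    if setting in narrow:
        return narrow[setting]  # narrower view overrides MLD Snooping view
    return global_cfg.get(setting)  # fall back to MLD Snooping view


if __name__ == "__main__":
    global_cfg = {"host-aging-time": 260}
    vlan_cfg = {10: {"host-aging-time": 100}}  # configured in VLAN 10 only
    print(effective_value(global_cfg, vlan_cfg, 10, "host-aging-time"))  # 100
    print(effective_value(global_cfg, vlan_cfg, 20, "host-aging-time"))  # 260
```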

3.3 Configuring Basic Functions of MLD Snooping

3.3.1 Configuration Prerequisites

Before configuring the basic functions of MLD Snooping, complete the following tasks:

l Configure the corresponding VLANs

l Configure the corresponding port groups

Before configuring the basic functions of MLD Snooping, prepare the following data:

l Member port aging time

3.3.2 Enabling MLD Snooping

Follow these steps to enable MLD Snooping:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enable MLD Snooping globally and enter MLD Snooping view | mld-snooping | Required; disabled by default |
| Return to system view | quit | - |
| Enter VLAN view | vlan vlan-id | - |
| Enable MLD Snooping in the VLAN | mld-snooping enable | Required; disabled by default |

& Note:

l MLD Snooping must be enabled globally before it can be enabled in a VLAN.

l If you enable MLD Snooping in a specified VLAN, this function takes effect for Ethernet ports in this VLAN only.
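The table and notes above fix the order of the commands: MLD Snooping is enabled globally first, then in each VLAN. As an illustration, this hypothetical helper emits that command sequence for a list of VLAN IDs, using only the commands from the table; it does not check whether the VLANs exist on the switch.

```python
def mld_snooping_enable_commands(vlan_ids):
    """Return the CLI lines that enable MLD Snooping globally and per VLAN.

    The global `mld-snooping` step always comes first, because MLD Snooping
    must be enabled globally before it can be enabled in a VLAN.
    """
    lines = ["system-view", "mld-snooping", "quit"]
    for vlan_id in vlan_ids:
        # Enter VLAN view, enable MLD Snooping there, return to system view.
        lines += [f"vlan {vlan_id}", "mld-snooping enable", "quit"]
    return lines


if __name__ == "__main__":
    print("\n".join(mld_snooping_enable_commands([100, 200])))
```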

3.3.3 Configuring Port Aging Timers

If the switch receives neither an MLD general query nor an IPv6 PIM hello message on a router port before the aging timer of the port expires, the switch deletes the port from its router port list.

If the switch receives no MLD report for an IPv6 multicast group on a member port before the aging timer of the port expires, the switch deletes the port from the forwarding table for that IPv6 multicast group.

If IPv6 multicast group memberships change frequently, set a relatively small member port aging time so that the switch removes stale member ports sooner; if memberships rarely change, set a relatively large value.

I. Configuring port aging timers globally

Follow these steps to configure port aging timers globally:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter MLD Snooping view | mld-snooping | - |
| Configure router port aging time | router-aging-time interval | Optional; 260 seconds by default |
| Configure member port aging time | host-aging-time interval | Optional; 260 seconds by default |

II. Configuring port aging timers in a VLAN

Follow these steps to configure port aging timers in a VLAN:

| To do... | Use the command... | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enter VLAN view | vlan vlan-id | - |
| Configure router port aging time | mld-snooping router-aging-time interval | Optional; 260 seconds by default |
| Configure member port aging time | mld-snooping host-aging-time interval | Optional; 260 seconds by default |
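The two tables differ only in the view used and in the `mld-snooping` prefix that the VLAN-view commands carry. The hypothetical helper below builds either command sequence from the tables; values are in seconds, a timer passed as None is simply left out, and the sketch does not validate the interval because the permitted range is not given here.

```python
def aging_time_commands(router_aging=None, host_aging=None, vlan_id=None):
    """Build CLI lines that set port aging times, globally or in one VLAN.

    vlan_id=None: commands are entered in MLD Snooping view (global).
    Otherwise: commands are entered in VLAN view with the mld-snooping prefix.
    """
    if vlan_id is None:
        lines, prefix = ["system-view", "mld-snooping"], ""
    else:
        lines, prefix = ["system-view", f"vlan {vlan_id}"], "mld-snooping "
    if router_aging is not None:
        lines.append(f"{prefix}router-aging-time {router_aging}")
    if host_aging is not None:
        lines.append(f"{prefix}host-aging-time {host_aging}")
    return lines


if __name__ == "__main__":
    print("\n".join(aging_time_commands(host_aging=100)))
    print("\n".join(aging_time_commands(router_aging=300, vlan_id=10)))
```

Settings made this way in VLAN view override the corresponding settings made in MLD Snooping view for that VLAN.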

3.4 Configuring MLD Snooping Port Functions

3.4.1 Configuration Prerequisites

Before configuring MLD Snooping port functions, complete the following task:

l Enable MLD Snooping in the VLAN

Before configuring MLD Snooping port functions, prepare the following data:

l Addresses of the IPv6 multicast groups

3.4.2 Configuring Static Member Ports

In a network with a stable topology structure, you can configure router ports of a switch to be static router ports, through which the switch can receive MLD messages from routers or Layer 3 switches.

Follow these steps to configure static member ports:

|

To do... |

Use the command... |

Remarks |

|

|

Enter system view |

system-view |

— |

|

|

Enter the corresponding view |

Enter Ethernet port view |

interface interface-type interface-number |

Use either command |

|

Enter port group view |

port-group { manual port-group-name | aggregation agg-id } |