- Table of Contents

-

- 10-Segment Routing Configuration Guide

- 00-Preface

- 01-SR-MPLS configuration

- 02-SR-MPLS TE policy configuration

- 03-SRv6 configuration

- 04-SRv6 TE policy configuration

- 05-SRv6 VPN overview

- 06-IP L3VPN over SRv6 configuration

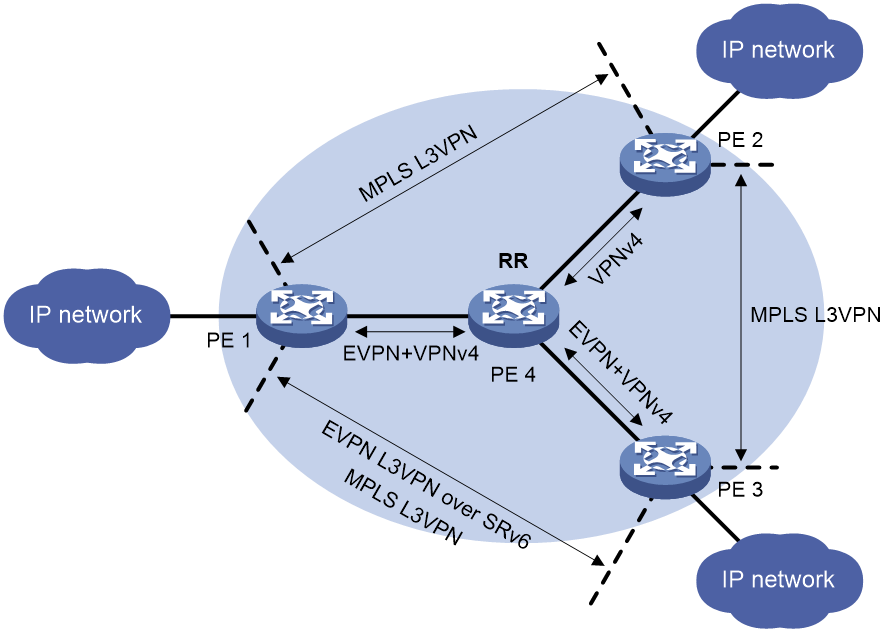

- 07-EVPN L3VPN over SRv6 configuration

- 08-EVPN VPWS over SRv6 configuration

- 09-EVPN VPLS over SRv6 configuration

- 10-Public network IP over SRv6 configuration

- 11-SRv6 OAM configuration

- 12-SRv6 network slicing configuration

- 13-SRv6 service chain configuration

- Related Documents

-

04-SRv6 TE policy configuration

Basic concepts in SRv6 TE Policy

SID list creation through dynamic path calculation

SID list computation using PCE

Traffic steering to an SRv6 TE policy

Service class-based traffic steering

TE class ID-based traffic steering

Other traffic steering methods

SRv6 TE policy-based traffic forwarding

Data encapsulation and forwarding through SRv6 TE policies

SRv6 TE policy transit node protection

SRv6 TE policy application scenarios

SRv6 TE policy application in the APN6 network

SRv6 TE policy application in the ARN network

Restrictions and guidelines: SRv6 TE policy configuration

SRv6 TE policy tasks at a glance

Manually creating an SRv6 TE policy and configuring its attributes

Automatically creating SRv6 TE policies by using ODN

Enabling the SRv6 capability for a PCC

Configuring PCEP session parameters

Configuring a candidate path and the SID lists of the path

Configuring a candidate path to use manually configured SID lists

Configuring a candidate path to create an SID list through affinity attribute-based path calculation

Configuring a candidate path to create an SID list through Flex-Algo-based path calculation

Configuring a candidate path to use PCE-computed SID lists

Configuring an ODN-created candidate path to use PCE-computed SID lists

Configuring PCE delegation to create candidate paths and SID lists

Enabling strict SID encapsulation for SID lists

Configuring dynamic path calculation timers

Enabling the device to distribute SRv6 TE policy candidate path information to BGP-LS

Shutting down an SRv6 TE policy

Configuring BGP to advertise BGP IPv6 SR policy routes

Restrictions and guidelines for BGP IPv6 SR policy routes advertisement

Enabling BGP to advertise BGP IPv6 SR policy routes

Configuring BGP to redistribute BGP IPv6 SR policy routes

Enabling advertising BGP IPv6 SR policy routes to EBGP peers

Enabling validity check for BGP IPv6 SR policy routes

Configuring BGP to control BGP IPv6 SR policy route selection and advertisement

Configuring SRv6 TE policy traffic steering

Configuring the SRv6 TE policy traffic steering mode

Configuring color-based traffic steering

Configuring tunnel policy-based traffic steering

Configuring DSCP-based traffic steering

Configuring 802.1p-based traffic steering

Configuring service class-based traffic steering

Configuring APN ID-based traffic steering

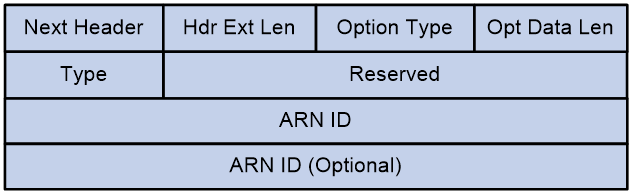

Configuring ARN ID-based traffic steering

Configuring TE class ID-based traffic steering

Configuring static route-based traffic steering

Configuring QoS policy-based traffic steering

Configuring Flowspec-based traffic steering

Enabling automatic route advertisement

Configuring the SRv6 TE policy encapsulation mode

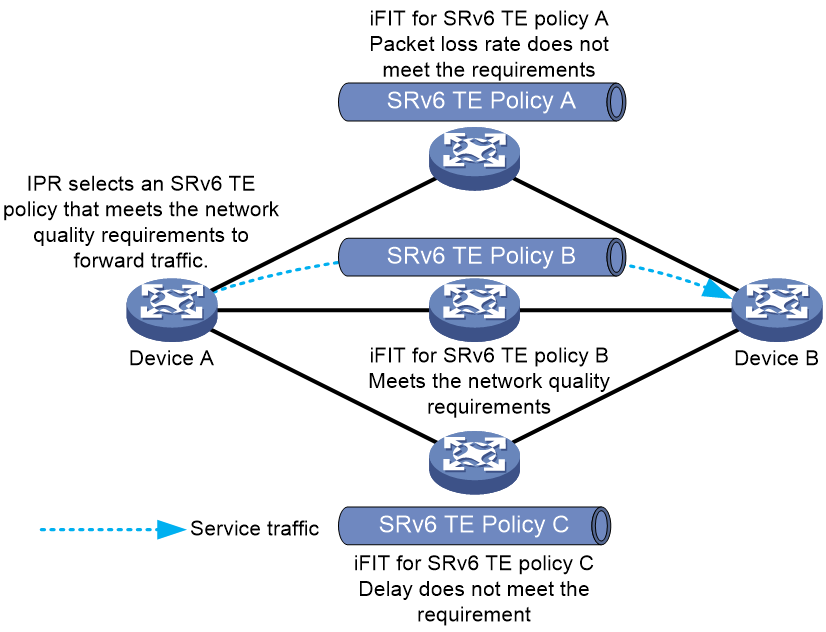

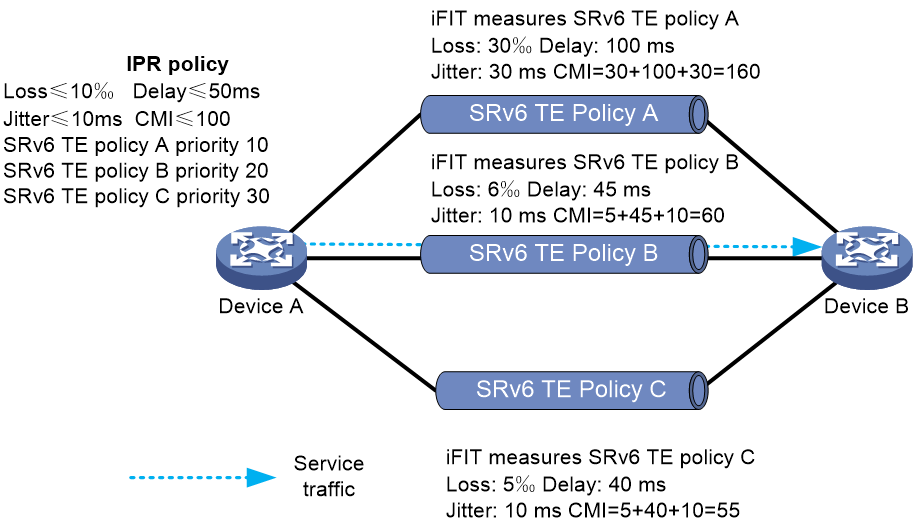

Configuring IPR for SRv6 TE policies

Restrictions and guidelines for IPR configuration

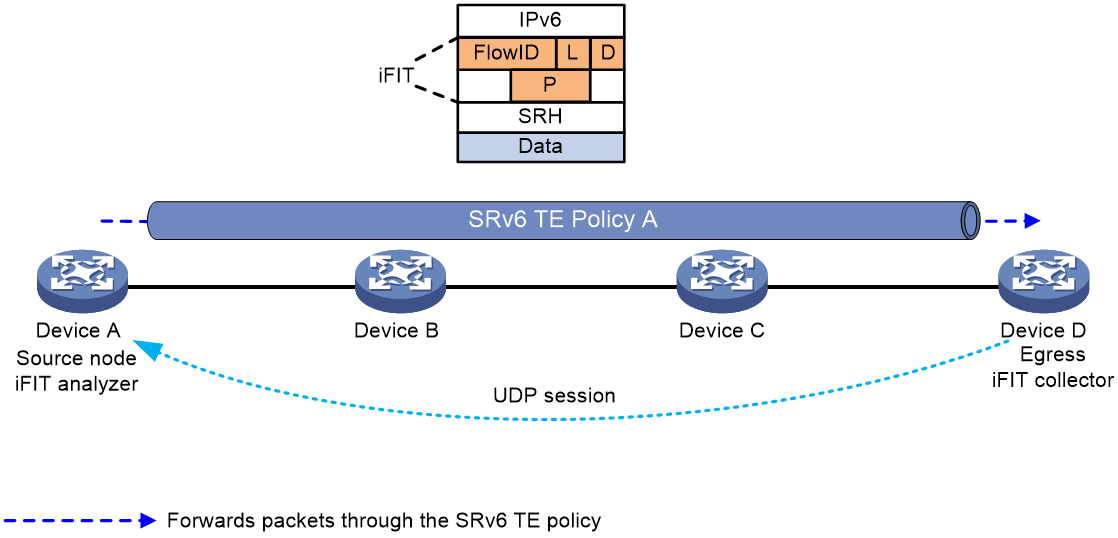

Configuring iFIT measurement for SRv6 TE policies

Configuring IPR path calculation for SRv6 TE policies

Enabling SBFD for SRv6 TE policies

Enabling echo BFD for SRv6 TE policies

Enabling the No-Bypass feature for SRv6 TE policies

Enabling BFD No-Bypass for SRv6 TE policies

Enabling hot standby for SRv6 TE policies

Configuring path switchover and deletion delays for SRv6 TE policies

Setting the delay time for bringing up SRv6 TE policies

Configuring path connectivity verification for SRv6 TE policies

Configuring SRv6 TE policy transit node protection

Configuring SRv6 TE policy egress protection

Restrictions and guidelines for SRv6 TE policy egress protection configuration

Configuring candidate path reoptimization for SRv6 TE policies

Configuring flapping suppression for SRv6 TE policies

Configuring the TTL processing mode of SRv6 TE policies

Configuring SRv6 TE policy CBTS

Configuring a rate limit for an SRv6 TE policy

Enabling the device to drop traffic when an SRv6 TE policy becomes invalid

Specifying the packet encapsulation type preferred in optimal route selection

Configuring SRv6 TE policy resource usage alarm thresholds

Enabling SRv6 TE policy logging

Enabling SNMP notifications for SRv6 TE policies

Configuring traffic forwarding statistics for SRv6 TE policies

Display and maintenance commands for SRv6 TE policies

SRv6 TE policy configuration examples

Example: Configuring SRv6 TE policy-based forwarding

Example: Configuring SRv6 TE policy egress protection

Example: Configuring SRv6 TE policy through ODN

Example: Configuring SRv6 TE policy-based forwarding with IPR

Example: Configuring color-based traffic steering for EVPN L3VPN over SRv6 TE Policy

Example: Configuring CBTS-based traffic steering for EVPN L3VPN over SRv6 TE Policy

Example: Configuring DSCP-based traffic steering for EVPN L3VPN over SRv6 TE Policy

Example: Configuring Flowspec-based traffic steering for EVPN L3VPN over SRv6 TE Policy

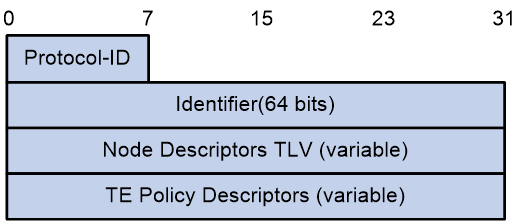

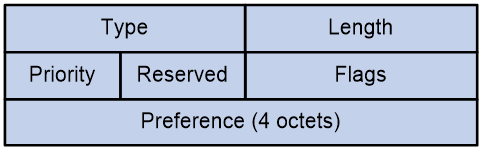

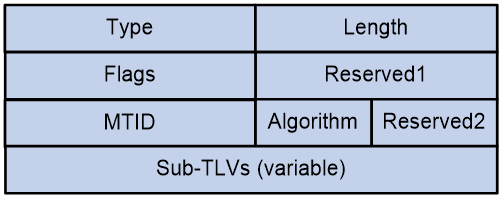

TE Policy NLRI in BGP-LS routes

SRv6 TE policy introduction

About SRv6 TE policies

IPv6 Segment Routing Traffic Engineering (SRv6 TE) policies apply to scenarios where multiple paths exist between a source node and a destination node on an SRv6 network. The device can use an SRv6 TE policy to flexibly steer traffic to a proper forwarding path.

Basic concepts in SRv6 TE Policy

SRv6 TE policy identification

An SRv6 TE policy is uniquely identified by the following triplet:

· Headend—Ingress node (source node).

· Color—Color attribute, which provides a mechanism for associating services with SRv6 TE policies. It is used to distinguish different SRv6 TE policies with the same source and destination nodes. SRv6 TE policies are colored by network administrators. You can use this attribute to implement service-specific traffic steering to SRv6 TE policies. For example, you can use an SRv6 TE policy with a color attribute of 10 to forward the traffic of a service that requires a link delay smaller than 10 ms.

· Endpoint—IPv6 address of the egress node (destination node).

On an ingress node, you can uniquely identify an SRv6 TE policy by its color and egress node.
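As a minimal illustration of this identification scheme, the following Python sketch models the policy table of a single ingress node. The class name, field names, and policy names are hypothetical and do not come from the device software. The sketch only shows that, once the headend is fixed, the color and endpoint pair is enough to look up a policy.

```python
from dataclasses import dataclass
from ipaddress import IPv6Address


@dataclass(frozen=True)
class PolicyKey:
    """Hypothetical lookup key for an SRv6 TE policy on one headend."""
    color: int
    endpoint: IPv6Address


# Policy table local to one ingress node, so the headend is implicit.
# The policy names are made up for illustration.
policies = {
    PolicyKey(10, IPv6Address("5::5")): "low-delay-policy",
    PolicyKey(20, IPv6Address("5::5")): "high-bandwidth-policy",
}

# Same endpoint, different colors: two distinct policies.
selected = policies[PolicyKey(10, IPv6Address("5::5"))]
print(selected)
```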

SRv6 TE policy contents

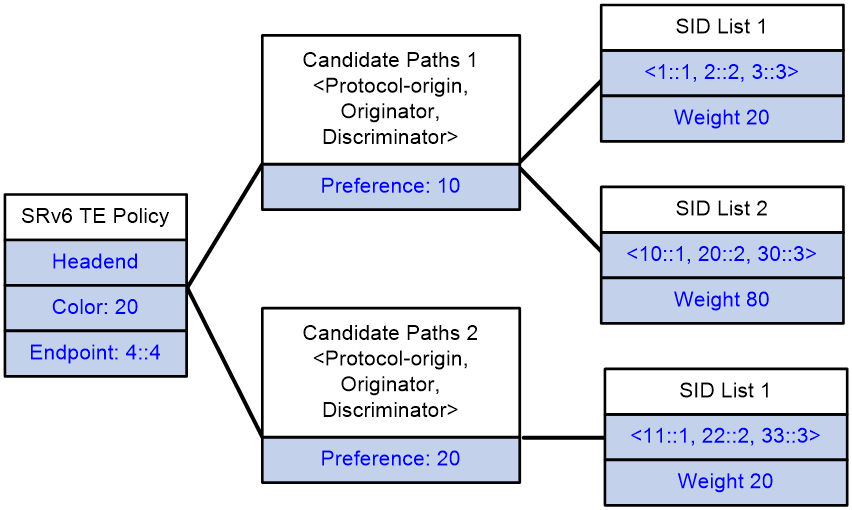

As shown in Figure 1, an SRv6 TE policy consists of candidate paths with different preferences. Each candidate path can have one or multiple subpaths identified by segment lists (also called SID lists).

· Candidate path

An SRv6 TE policy can have multiple candidate paths. The device selects the candidate path with the greatest preference value as the primary path in that SRv6 TE policy. A candidate path is uniquely identified by the <Protocol-origin,Originator,Discriminator> triplet:

¡ Protocol-origin—Protocol or method through which the candidate path was generated.

¡ Originator—Node that generated the candidate path. This field always consists of two portions, AS number and node IP address.

¡ Discriminator—Candidate path ID, which is used to distinguish candidate paths with the same Protocol-origin and Originator values. For example, the controller deploys three candidate paths to the ingress node of an SRv6 TE policy through BGP. In this situation, the Protocol-origin and Originator values of those candidate paths are BGP and controller, respectively, but their Discriminator values are different.

Two SRv6 TE policies cannot share the same candidate path.

· SID list

A SID list is a list of SIDs that indicates a packet forwarding path. Each SID is the IPv6 address of a node on the forwarding path.

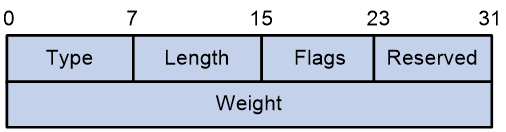

A candidate path can have a single SID list or multiple SID lists that use different weight values. After an SRv6 TE policy chooses a candidate path with multiple SID lists, the traffic will be load shared among the SID lists based on weight values.

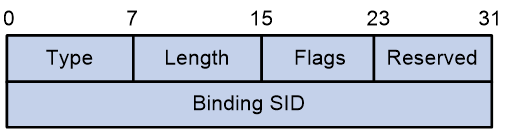

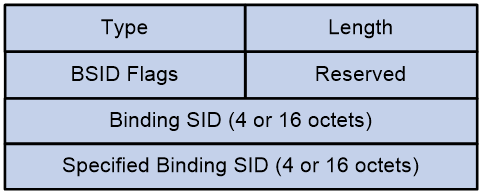

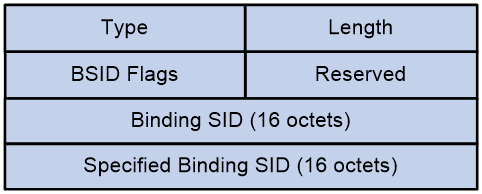

· Binding SID

SRv6 TE policies also support Binding SIDs (BSID). A BSID is typically an SRv6 SID, which represents a candidate path. If the destination address of a packet is a BSID, the packet will be steered to the related candidate path for further forwarding. Assume that an SRv6 TE policy is a network service and you want to forward traffic along a specific candidate path of that SRv6 TE policy. From a programming perspective, you can use the BSID of that candidate path as an interface to call that network service.

You can manually configure a BSID for an SRv6 TE policy or leave the SRv6 TE policy to automatically obtain a BSID from the specified locator. Because BSIDs are SRv6 SIDs, the SRv6 endpoint behavior for a BSID is Endpoint Bound to an SRv6 TE Policy (End.B6). End.B6 behaviors include the End.B6.Insert and End.B6.Encaps behaviors. For more information about End.B6 SRv6 SIDs, see SRv6 configuration in Segment Routing Configuration Guide.

Figure 1 SRv6 TE policy contents
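The relationship between candidate path preferences and SID list weights can be sketched as follows. This is an illustrative model only: the class and function names are hypothetical, the SIDs are made-up values, and the sketch ignores validity checks, which the device applies before a candidate path can be used.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SidList:
    sids: List[str]
    weight: int = 1


@dataclass
class CandidatePath:
    preference: int
    sid_lists: List[SidList] = field(default_factory=list)


def select_primary(paths):
    """Return the candidate path with the greatest preference value."""
    return max(paths, key=lambda p: p.preference)


def load_shares(path):
    """Return each SID list's share of traffic, proportional to its weight."""
    total = sum(s.weight for s in path.sid_lists)
    return [s.weight / total for s in path.sid_lists]


paths = [
    CandidatePath(100, [SidList(["a::1", "b::1"])]),
    CandidatePath(200, [SidList(["a::1", "c::1"], weight=3),
                        SidList(["d::1", "c::1"], weight=1)]),
]
primary = select_primary(paths)
shares = load_shares(primary)
print(primary.preference, shares)
```

In this example, the path with preference 200 becomes the primary path, and its two SID lists carry traffic in a 3:1 ratio.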

SRv6 TE policy creation

SRv6 TE policy creation modes

An SRv6 TE policy can be created in the following modes:

· CLI- or NETCONF-based configuration

In this mode, you need to manually configure the candidate path settings for the SRv6 TE policy, such as candidate path preferences, SID lists, and SID list weights.

· Learning from a BGP IPv6 SR policy route

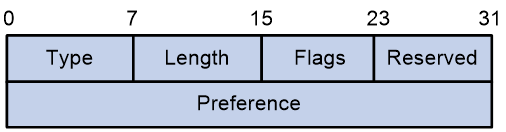

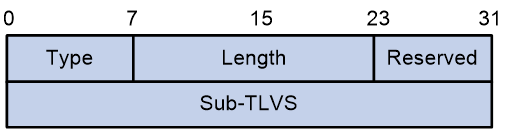

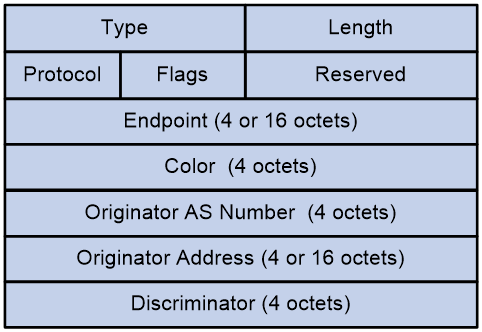

To support SRv6 TE policies, MP-BGP defines the BGP IPv6 SR policy address family and the SRv6 TE policy Network Layer Reachability Information (NLRI). The SRv6 TE policy NLRI is called the BGP IPv6 SR policy route. A BGP IPv6 SR policy route contains SRv6 TE policy settings, including the BSID, color, endpoint, candidate path preferences, SID lists, and SID list weights.

The device can advertise its local SRv6 TE policy settings to its BGP IPv6 SR policy peer through a BGP IPv6 SR policy route. The peer device can create an SRv6 TE policy according to the received SRv6 TE policy settings.

· Automatic creation by ODN

When the device receives a BGP route, it compares the color extended attribute value of the BGP route with the color value of the ODN template. If the color values match, the device automatically generates an SRv6 TE policy and two candidate paths for the policy.

¡ The policy uses the BGP route's next hop address as the endpoint address and the ODN template's color value as the color attribute value of the policy.

¡ The candidate paths use preferences 100 and 200. You need to configure the SID lists for the candidate path with preference 200 through dynamic calculation based on affinity attribute or Flex-Algo, and use PCE to compute the SID lists for the candidate path with preference 100. For more information about SID list computation using PCE, see "SID list computation using PCE."

You can also manually create candidate paths for an ODN-created SRv6 TE policy.
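The ODN matching logic described above can be sketched in Python as follows. The data structures and the function name are hypothetical. The sketch covers only the color comparison and the attributes copied into the generated policy, not SID list computation.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class BgpRoute:
    prefix: str
    next_hop: str
    color: Optional[int]  # color extended attribute value, if the route carries one


@dataclass
class OdnPolicy:
    color: int
    endpoint: str
    candidate_preferences: Tuple[int, int]


def odn_create(route, odn_template_color):
    """Return an auto-generated policy if the route color matches the ODN template."""
    if route.color is None or route.color != odn_template_color:
        return None
    return OdnPolicy(
        color=odn_template_color,          # color comes from the ODN template
        endpoint=route.next_hop,           # endpoint is the route's next hop
        candidate_preferences=(100, 200),  # two candidate paths are generated
    )


created = odn_create(BgpRoute("2.2.2.2/32", "5::5", 100), odn_template_color=100)
skipped = odn_create(BgpRoute("3.3.3.3/32", "5::5", 200), odn_template_color=100)
print(created, skipped)
```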

SID list creation through dynamic path calculation

SID list creation through dynamic path calculation is supported on the source node of manually created SRv6 TE policies and of SRv6 TE policies automatically generated through ODN.

The dynamic path calculation is performed based on affinity attribute or Flex-Algo.

Dynamic path calculation based on affinity attribute

An SRv6 TE policy performs dynamic path calculation based on affinity attribute as follows:

1. Select the links based on the affinity attribute rule.

The SRv6 TE policy selects links containing the bit values associated with the specified affinity attribute as required by the affinity attribute rule.

¡ Link attribute—A 32-bit binary number. Each bit represents an attribute with a value of 0 or 1.

¡ Affinity attribute bit position—The value range is 0 to 31. When the affinity attribute value is N, it is compared with the N+1 bit of the link attribute (from right to left). The affinity attribute applies to the link only if the N+1 bit value of the link attribute is 1.

For example, for affinity attribute name blue associated with bit 1 and affinity attribute name red associated with bit 5, the link selection varies by affinity attribute rule type:

¡ For the include-any affinity attribute rule, a link is available for use if the link attribute has the second bit (associated with affinity attribute blue) or sixth bit (associated with affinity attribute red) set to 1.

¡ For the include-all affinity attribute rule, a link is available for use if the link attribute has both the second bit (associated with affinity attribute blue) and sixth bit (associated with affinity attribute red) set to 1.

¡ For the exclude-any affinity attribute rule, a link is not available for use if the link attribute has the second bit (associated with affinity attribute blue) or sixth bit (associated with affinity attribute red) set to 1.

2. Select the links based on the specified metric.

The SRv6 TE policy supports the following metrics:

¡ Hop count—Selects the link with minimum hops.

¡ IGP link cost—Selects the link with minimum IGP link cost.

¡ Interface latency—Selects the link with the minimum interface latency.

¡ TE cost—Selects the link with minimum TE cost.

After path calculation, the device sorts all link- or node-associated SIDs on the path in an ascending order of distance, and creates an SID list for the SRv6 TE policy. During SID selection, End SIDs take precedence over End.X SIDs.
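The link selection in step 1 is a bitmask test, which the following Python sketch illustrates with the blue (bit 1) and red (bit 5) example. The function name and the link names are hypothetical, and combining all three rule types in one function is an assumption made for compactness. The sketch does not cover the metric-based selection in step 2.

```python
def link_allowed(link_attr, include_any=(), include_all=(), exclude_any=()):
    """Apply affinity rules to a 32-bit link attribute.

    Each rule is a collection of affinity bit positions, where position 0
    is the rightmost bit of the link attribute.
    """
    def bit_set(n):
        return ((link_attr >> n) & 1) == 1

    if include_any and not any(bit_set(n) for n in include_any):
        return False
    if include_all and not all(bit_set(n) for n in include_all):
        return False
    if any(bit_set(n) for n in exclude_any):
        return False
    return True


BLUE, RED = 1, 5  # bit positions from the example in the text

# Hypothetical links and their link attribute values.
links = {
    "L1": 0b000010,  # blue only
    "L2": 0b100010,  # blue and red
    "L3": 0b100000,  # red only
    "L4": 0b000000,  # neither
}

any_ok = [n for n, a in links.items() if link_allowed(a, include_any=(BLUE, RED))]
all_ok = [n for n, a in links.items() if link_allowed(a, include_all=(BLUE, RED))]
excl_ok = [n for n, a in links.items() if link_allowed(a, exclude_any=(BLUE, RED))]
print(any_ok, all_ok, excl_ok)
```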

Dynamic path calculation based on Flex-Algo

The SRv6 TE policy uses the specified Flex-Algo to perform dynamic path calculation. After path calculation, the device sorts all link- or node-associated SIDs on the path in an ascending order of distance, and creates an SID list for the SRv6 TE policy. During SID selection, End SIDs take precedence over End.X SIDs.

For more information about path calculation based on Flex-Algo, see IS-IS configuration in Layer 3—IP Routing Configuration Guide.

SID list computation using PCE

On an SRv6 TE policy network, an SRv6 node can act as a Path Computation Client (PCC) to use the paths computed by the Path Computation Element (PCE) to create SID lists for a candidate path.

Basic concepts

· PCE—An entity that provides path computation for network devices. It can provide intra-area path computation as well as complete SID list computation on a complicated network. A PCE can be stateless or stateful.

¡ Stateless PCE—Provides only path computation.

¡ Stateful PCE—Knows all path information maintained by a PCC, and performs intra-area path recomputation and optimization. A stateful PCE can be active or passive.

- Active stateful PCE—Accepts path delegation requests sent by a PCC and optimizes the paths.

- Passive stateful PCE—Only maintains path information for SID lists of a PCC. A passive stateful PCE does not accept path delegation requests sent by a PCC or optimize the paths.

· PCC—A PCC sends a request to a PCE for path computation and uses the path information returned by the PCE to establish forwarding paths. For a PCC to establish a PCEP session with a PCE, the PCC and PCE must be of the same type.

¡ Stateless PCC—Sends path computation requests to a PCE.

¡ Stateful PCC—Delegates SID list information to a stateful PCE. A stateful PCC can be active or passive.

- Active stateful PCC—Reports its SID list information to a PCE, and uses the paths computed by the PCE to create and update the SID lists.

- Passive stateful PCC—Only reports its SID list information to a PCE but does not use the PCE to compute or update path information for the SID lists.

· PCEP—Path Computation Element Protocol. PCEP runs between a PCC and a PCE, or between PCEs. It is used to establish PCEP sessions to exchange PCEP messages over TCP connections.

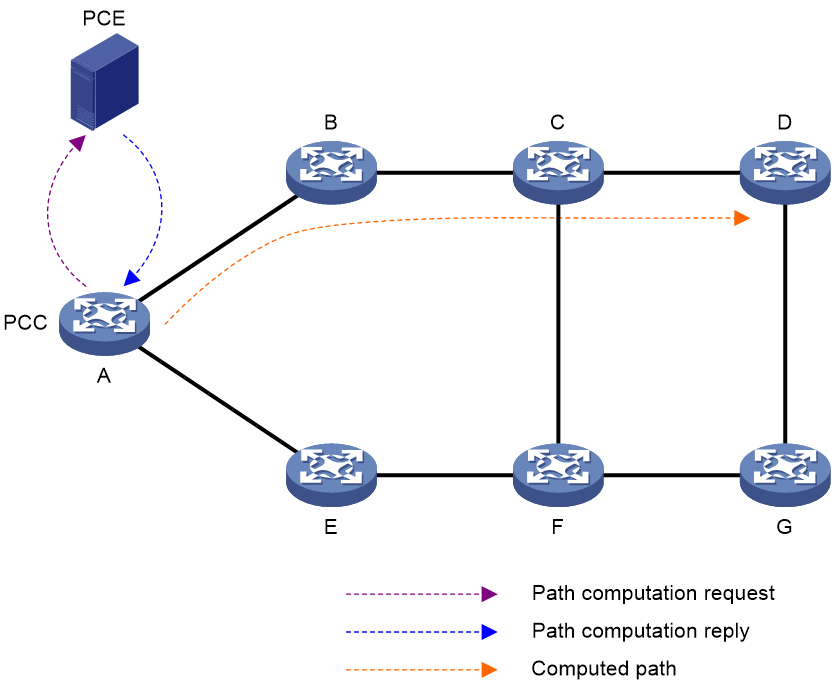

PCE path computation

As shown in Figure 2, the PCE path computation procedure is as follows:

1. The PCC sends a path computation request to the PCE.

2. The PCE computes paths after it receives the request.

3. The PCE replies to the PCC with the computed path information.

4. The PCC creates SID lists for the SRv6 TE policy candidate path according to the path information computed by the PCE.

Figure 2 Path computation using PCE

SRv6 TE policy validity

An SRv6 TE policy can be used for traffic forwarding only if it is valid. The device marks an SRv6 TE policy as valid only if that policy contains a minimum of one candidate path with valid SID lists. If all SID lists associated with candidate paths within an SRv6 TE policy are invalid, the device marks the SRv6 TE policy as invalid. An SID list is invalid in one of the following situations:

· The SID list is empty.

· The weight of the SID list is 0.

· The SRv6 source node cannot communicate with the IPv6 address of the first hop in the SID list.

· Path connectivity verification is enabled on the ingress node of the SRv6 TE policy, and an SID in the SID list is found unreachable.

· BFD or SBFD is enabled for the SRv6 TE policy and the related BFD or SBFD session goes down. BFD session down events are enabled to trigger SRv6 TE policy path reselection.
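The validity conditions above can be expressed as a short predicate. The following Python sketch is one possible reading of those conditions, not the device's implementation. All field and function names are hypothetical, and the sketch treats a policy as valid when any candidate path holds at least one valid SID list.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SidList:
    sids: List[str]
    weight: int = 1
    first_hop_reachable: bool = True
    unreachable_sid_found: bool = False  # result of path connectivity verification
    bfd_session_up: bool = True          # state of the related BFD or SBFD session


def sid_list_valid(s, verify_connectivity=False, bfd_enabled=False):
    """Return False if any invalidity condition from the list above applies."""
    if not s.sids:
        return False
    if s.weight == 0:
        return False
    if not s.first_hop_reachable:
        return False
    if verify_connectivity and s.unreachable_sid_found:
        return False
    if bfd_enabled and not s.bfd_session_up:
        return False
    return True


def policy_valid(candidate_paths, **options):
    """candidate_paths is a list of candidate paths, each a list of SidList."""
    return any(sid_list_valid(s, **options)
               for path in candidate_paths for s in path)


all_invalid = policy_valid([[SidList([])], [SidList(["a::1"], weight=0)]])
one_valid = policy_valid([[SidList([])], [SidList(["a::1"])]])
bfd_down = policy_valid([[SidList(["a::1"], bfd_session_up=False)]], bfd_enabled=True)
print(all_invalid, one_valid, bfd_down)
```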

SRv6 TE policy group

An SRv6 TE policy group is a group of SRv6 TE policies that have the same endpoint address. Upon receiving a packet destined for that endpoint address, the device searches for the SRv6 TE policy containing the color value mapped to the DSCP or 802.1p value of the packet. The device will use the SRv6 TE policy to forward the packet.

On the same SRv6 source node, you can create an SRv6 TE policy group and multiple SRv6 TE policies. Those SRv6 TE policies can be added into that SRv6 TE policy group only if the following conditions exist:

· The SRv6 TE policies have the same destination node.

· Traffic identifier-to-SRv6 TE policy mappings are configured for the SRv6 TE policy group.

An SRv6 TE policy group can participate in traffic forwarding only if it contains valid SRv6 TE policies.

An SRv6 TE policy group is identified by its group ID. It also has the following attributes:

· Color—Color attribute of the SRv6 TE policy group. BGP routes that carry the same color value as the SRv6 TE policy group are recursed to the SRv6 TE policy group.

· Endpoint—IPv6 address of the egress node (destination node). If an SRv6 TE policy and an SRv6 TE policy group have the same value for the endpoint attribute, the SRv6 TE policy belongs to the SRv6 TE policy group.

You can create an SRv6 TE policy group by using the following methods:

· Manual creation at the CLI

This method requires manually configuring the destination node address of the SRv6 TE policy group.

· Automatic creation by ODN

When the device receives a BGP route, it compares the color extended attribute value of the BGP route with the color value of the ODN template. If the color values match, the device automatically generates an SRv6 TE policy group.

¡ The policy group uses the BGP route's next hop address as the endpoint address and the ODN template's color value as the color attribute value.

¡ The device will assign the smallest ID that is not in use to the SRv6 TE policy group.
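Assigning the smallest ID that is not in use can be sketched as follows. The function name is hypothetical, and the lowest permitted group ID of 1 is an assumption, because this section does not state the ID range.

```python
def next_group_id(used_ids, lowest=1):
    """Return the smallest ID >= lowest that is not in used_ids.

    The default lower bound of 1 is an assumption, not a documented value.
    """
    candidate = lowest
    while candidate in used_ids:
        candidate += 1
    return candidate


assigned = next_group_id({1, 2, 4})
print(assigned)
```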

Traffic steering to an SRv6 TE policy

About this feature

During traffic steering to SRv6 TE policies, the device performs the following operations in sequence:

1. Finds recursive SRv6 TE policy tunnels for the route that directs traffic from the ingress node to the egress node.

2. Selects an SRv6 TE policy for traffic forwarding from the ingress node to the egress node.

Therefore, traffic steering to SRv6 TE policies involves two phases, route recursion and path selection. This section focuses on the path selection process. For more information about route recursion to SRv6 TE policies, see SRv6 VPN configuration in Segment Routing Configuration Guide.

Path selection in SRv6 TE policy-oriented traffic steering can be divided into two modes: direct steering and indirect steering. The methods for direct traffic steering include:

· Color-based traffic steering

· Automatic route advertisement

· Other traffic steering methods

During indirect traffic steering, the device performs the following operations in sequence:

1. Steers traffic to the matching SRv6 TE policy group.

2. Based on the forward type and forwarding policy mappings of that SRv6 TE policy group, selects an SRv6 TE policy for further forwarding.

The methods for indirect traffic steering include:

· 802.1p-based traffic steering

· Service class-based traffic steering

· TE class ID-based traffic steering

· APN ID-based traffic steering

· ARN ID-based traffic steering

BSID-based traffic steering

If the destination IPv6 address of a received packet is the BSID of an SRv6 TE policy, the device uses the SRv6 TE policy to forward the packet.

This traffic steering method is used in SID stitching scenarios. The BSID of an SRv6 TE policy is inserted into the segment list of another SRv6 TE policy, and the inserted BSID represents the segment lists of the optimal candidate path in the SRv6 TE policy. With this method, the SRH is shortened and different SRv6 TE policies can be stitched together.
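The effect of SID stitching on the end-to-end path can be sketched as follows. The sketch shows only how a BSID stands in for a longer SID list. It does not model how the node that owns the BSID processes the packet. All SID values and the function name are hypothetical.

```python
def expand_bsids(segment_list, bsid_table):
    """Return the effective path after each BSID is replaced by its SID list."""
    expanded = []
    for sid in segment_list:
        if sid in bsid_table:
            expanded.extend(bsid_table[sid])  # BSID stands for another policy's SID list
        else:
            expanded.append(sid)
    return expanded


# Hypothetical BSID of a second policy and the SID list it represents.
bsid_table = {"200::b6": ["300::1", "400::1", "450::1"]}

# Segment list of the first policy, which carries the BSID instead of three SIDs.
stitched = ["100::1", "200::b6", "500::1"]

effective_path = expand_bsids(stitched, bsid_table)
print(len(stitched), len(effective_path))
```

The headend encapsulates three SIDs, while the traffic effectively traverses a five-SID path.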

Color-based traffic steering

Traffic steering mechanism

In color-based traffic steering, the device searches for an SRv6 TE policy that has the same color and endpoint address as the color and next hop address of a BGP route. If a matching SRv6 TE policy exists, the device recurses the BGP route to that SRv6 TE policy. When the device receives packets that match the BGP route, it forwards those packets through the SRv6 TE policy.

Traffic steering workflow

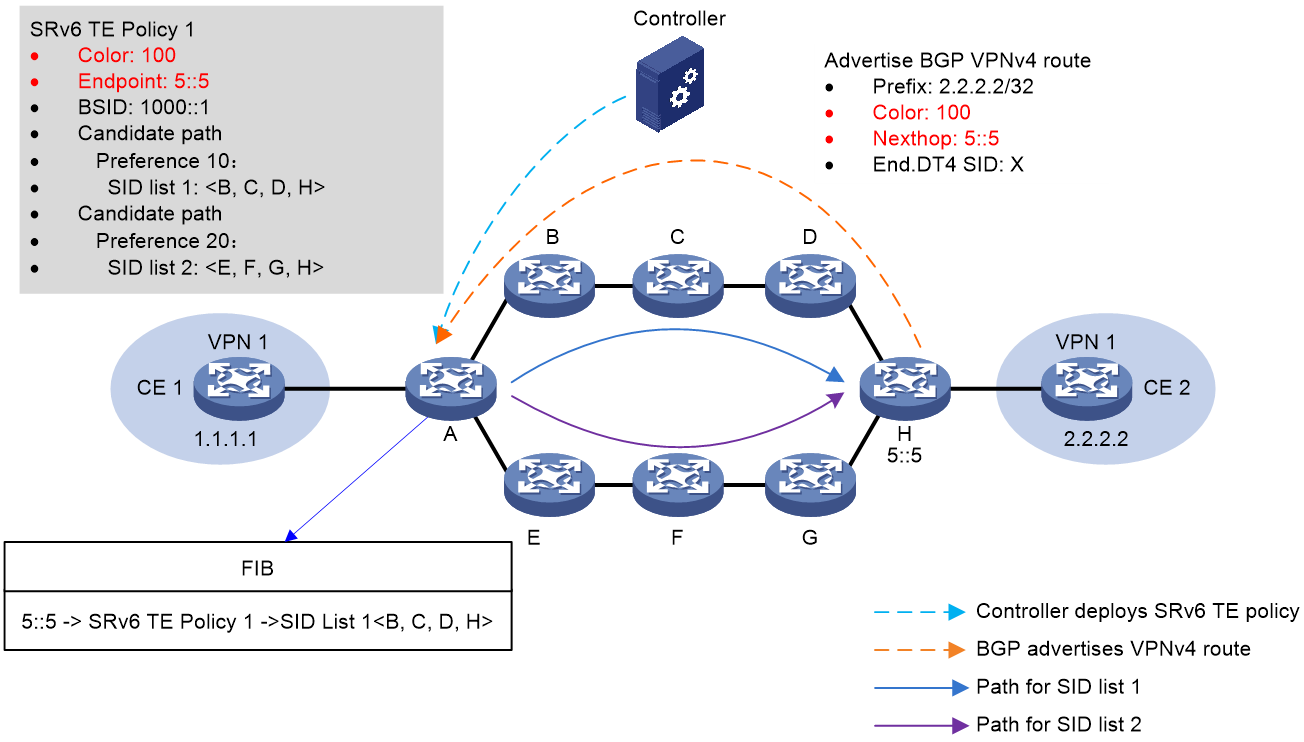

Figure 3 shows the process of color-based traffic steering:

1. The controller issues SRv6 TE policy 1 to Device A (source node). The color value and endpoint address of the SRv6 TE policy are 100 and 5::5 (IP address of Device H), respectively.

2. Device H advertises BGP VPNv4 route 2.2.2.2/32 to Device A. The color value and next hop address of the route are 100 and 5::5, respectively.

3. When Device A receives BGP VPNv4 route 2.2.2.2/32, it recurses this route to SRv6 TE policy 1 based on the route's color value and next hop address. Packets matching the BGP route will be forwarded through SRv6 TE policy 1.

Figure 3 Color-based traffic steering
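The matching rule in this workflow can be sketched in Python by using the values from the Figure 3 example. The table layout and function name are hypothetical. The sketch covers only the color and next hop comparison performed on Device A.

```python
from ipaddress import ip_address

# Policies on Device A, keyed by (color, endpoint address).
policies = {(100, ip_address("5::5")): "SRv6 TE policy 1"}


def recurse_route(color, next_hop):
    """Return the policy whose color and endpoint match the route, or None."""
    if color is None:
        return None
    # ip_address() normalizes the textual form before comparison.
    return policies.get((color, ip_address(next_hop)))


matched = recurse_route(100, "5::5")    # BGP VPNv4 route 2.2.2.2/32 in the example
unmatched = recurse_route(200, "5::5")  # no policy with color 200 exists
print(matched, unmatched)
```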

DSCP-based traffic steering

Traffic steering mechanism

DSCP-based traffic steering is available only after SRv6 TE policy groups are deployed on the device. Each SRv6 TE policy group consists of multiple SRv6 TE policies with different colors but the same endpoint address.

To achieve DSCP-based traffic steering, you can perform the following operations:

1. Add multiple SRv6 TE policies with different colors to the same SRv6 TE policy group, and configure color-to-DSCP mappings for that SRv6 TE policy group.

2. Use one of the following methods to steer traffic to the SRv6 TE policy group:

¡ Bind the desired destination address to the SRv6 TE policy group in a tunnel policy. Traffic destined for the destination address will be steered to the SRv6 TE policy group for further forwarding.

¡ Set SRv6 TE policy group as the preferred tunnel type in a tunnel policy. When the next hop address of a route is the endpoint address of the SRv6 TE policy group, the device preferentially steers traffic to the SRv6 TE policy group.

¡ Recurse BGP routes that can match the SRv6 TE policy group to the SRv6 TE policy group. A BGP route can match an SRv6 TE policy group only if its color value and next hop address can match the color value and endpoint address of that SRv6 TE policy group.

3. Look up the color value mapped to the DSCP value of a packet, and then use the color value to find the associated SRv6 TE policy in the SRv6 TE policy group.

The above task creates a DSCP > color > SRv6 TE policy mapping, enabling DSCP-based traffic steering to the desired SRv6 TE policy.

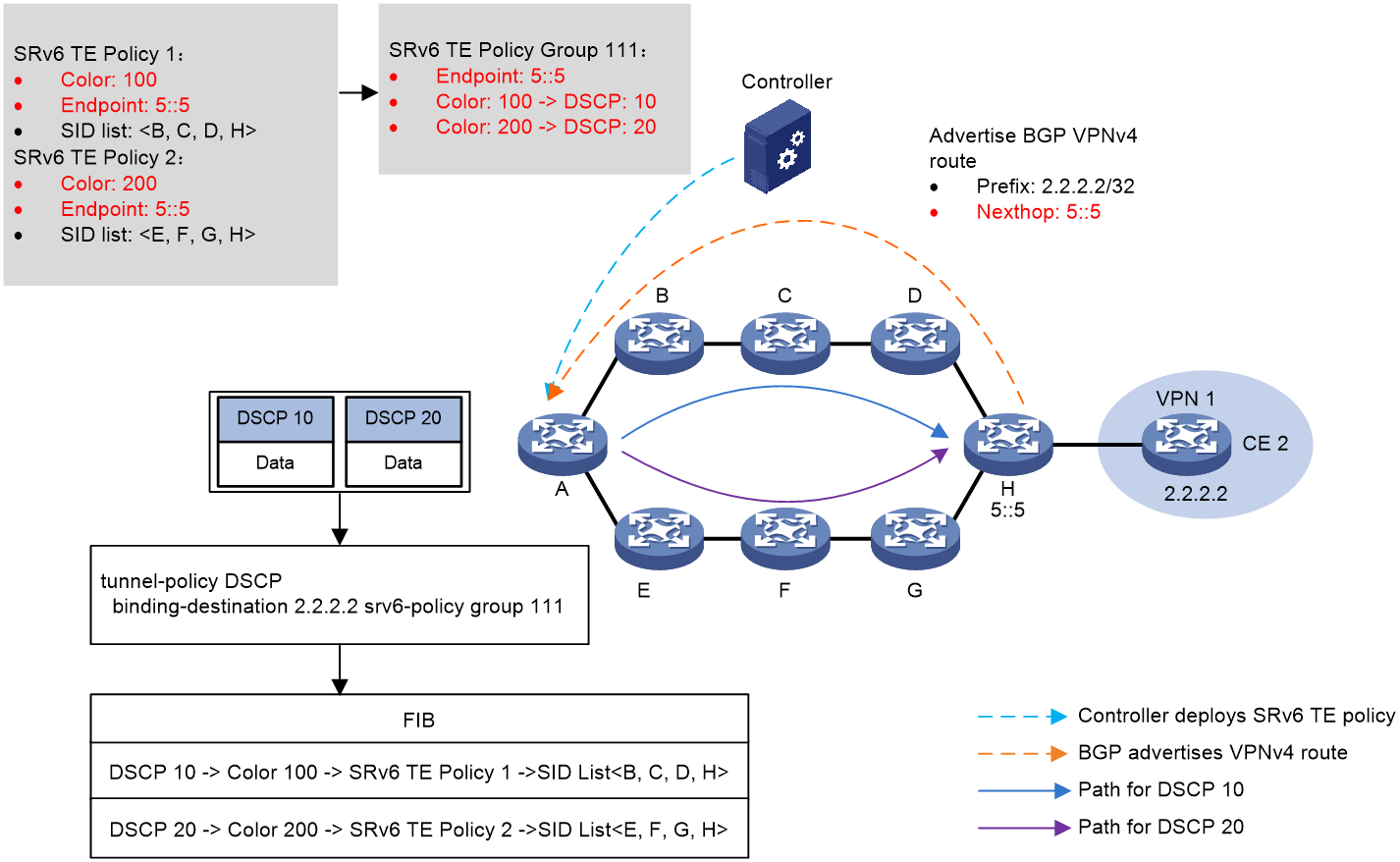

Traffic steering workflow

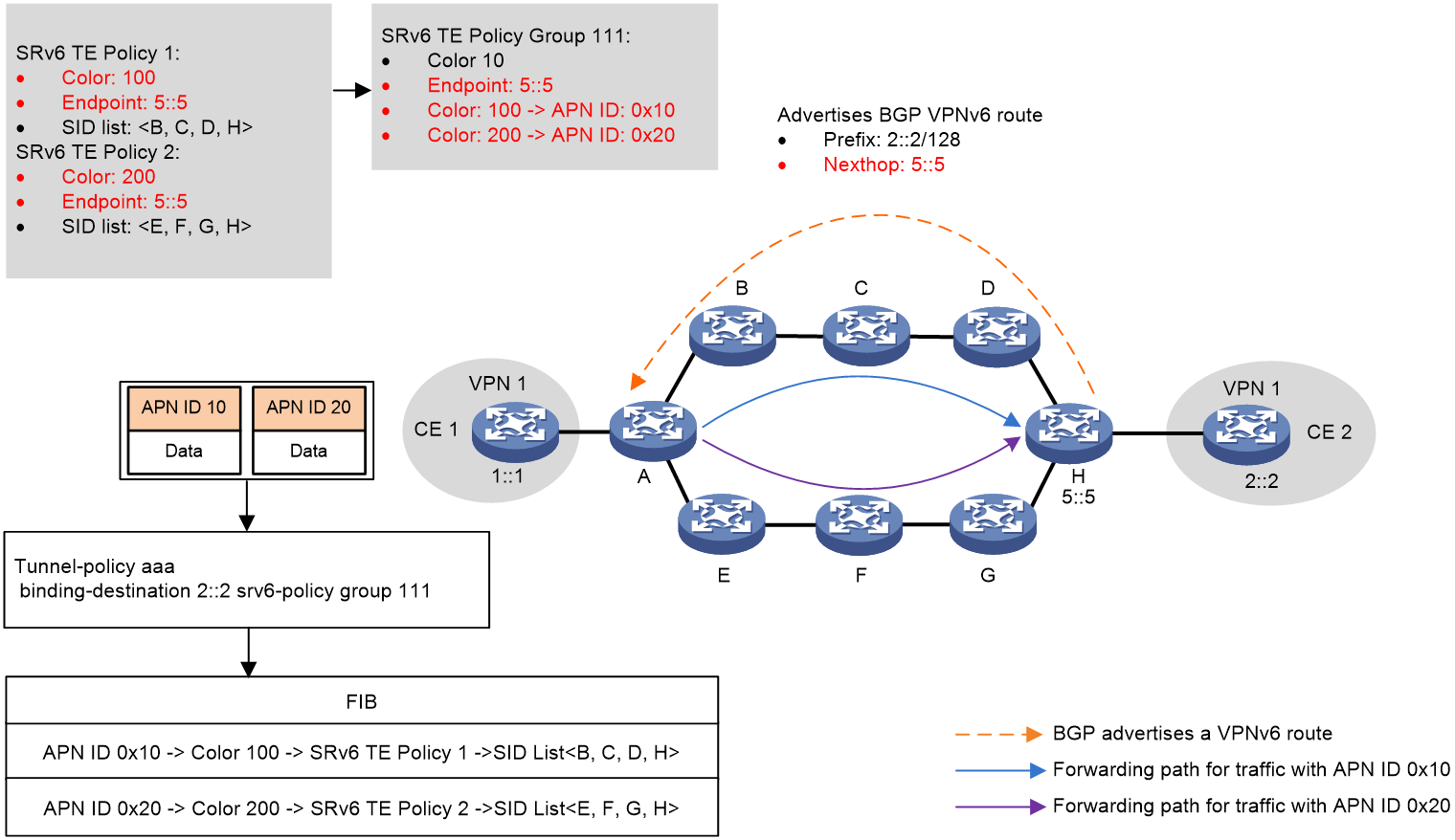

Figure 4 shows the process of DSCP-based traffic steering:

1. The controller issues SRv6 TE policy 1 and SRv6 TE policy 2 to Device A (source node). The color values of SRv6 TE policy 1 and SRv6 TE policy 2 are 100 and 200, respectively. Both SRv6 TE policies use 5::5 as the endpoint address, which is the IP address of Device H.

2. Device H advertises BGP VPNv4 route 2.2.2.2/32 to Device A. The next hop address of the route is 5::5.

3. SRv6 TE policy group 111 is created on Device A with its endpoint address as 5::5. Within the SRv6 TE policy group, color value 100 is mapped to DSCP value 10, and color value 200 is mapped to DSCP value 20. A tunnel policy is configured on Device A to bind the SRv6 TE policy group to destination address 2.2.2.2.

4. Device A performs DSCP-based traffic steering for a received packet as follows:

a. Finds the matching tunnel binding policy based on the packet's destination address, and then finds the related SRv6 TE policy group.

b. Uses the packet's DSCP value (10 in this example) to find the mapped color value, and then matches an SRv6 TE policy inside the SRv6 TE policy group based on the color value.

c. Uses the optimal candidate path in this SRv6 TE policy for packet forwarding. In this example, the packet is forwarded along the Device B > Device C > Device D > Device H path, which is in accordance with the SID list in the candidate path.

Figure 4 DSCP-based traffic steering
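The DSCP > color > SRv6 TE policy lookup in this workflow can be sketched as follows, using the values from the Figure 4 example. The dictionary layout and function name are hypothetical. Returning None for an unbound destination or an unmapped DSCP value is an assumption, because this section does not describe that case.

```python
# SRv6 TE policy group 111 on Device A, as described in the example.
groups = {
    111: {
        "endpoint": "5::5",
        "dscp_to_color": {10: 100, 20: 200},
        "policies": {100: "SRv6 TE policy 1", 200: "SRv6 TE policy 2"},
    }
}

# Tunnel policy binding: destination address -> policy group ID.
tunnel_bindings = {"2.2.2.2": 111}


def steer(dst, dscp):
    """Return the policy selected for a packet, or None if nothing matches."""
    group_id = tunnel_bindings.get(dst)
    if group_id is None:
        return None
    group = groups[group_id]
    color = group["dscp_to_color"].get(dscp)  # DSCP value -> color
    if color is None:
        return None
    return group["policies"].get(color)       # color -> policy in the group


first = steer("2.2.2.2", 10)
second = steer("2.2.2.2", 20)
print(first, second)
```

The 802.1p-based and service class-based methods described in the following sections differ only in the traffic identifier that is mapped to the color value.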

802.1p-based traffic steering

Traffic steering mechanism

802.1p-based traffic steering is available only after SRv6 TE policy groups are deployed on the device. Each SRv6 TE policy group consists of multiple SRv6 TE policies with different colors but the same endpoint address.

To achieve 802.1p-based traffic steering, you can perform the following operations:

1. Add multiple SRv6 TE policies with different colors to the same SRv6 TE policy group, and configure color-to-802.1p mappings for that SRv6 TE policy group.

2. Use one of the following methods to steer traffic to the SRv6 TE policy group:

¡ Bind the desired destination address to the SRv6 TE policy group in a tunnel policy. Traffic destined for the destination address will be steered to the SRv6 TE policy group for further forwarding.

¡ Set SRv6 TE policy group as the preferred tunnel type in a tunnel policy. When the next hop address of a route is the endpoint address of the SRv6 TE policy group, the device preferentially steers traffic to the SRv6 TE policy group.

¡ Recurse BGP routes that can match the SRv6 TE policy group to the SRv6 TE policy group. A BGP route can match an SRv6 TE policy group only if its color value and next hop address can match the color value and endpoint address of that SRv6 TE policy group.

3. Look up the color value mapped to the 802.1p value of a packet, and then use the color value to find the associated SRv6 TE policy in the SRv6 TE policy group.

The above task creates an 802.1p > color > SRv6 TE policy mapping, enabling 802.1p-based traffic steering to the desired SRv6 TE policy.

Traffic steering workflow

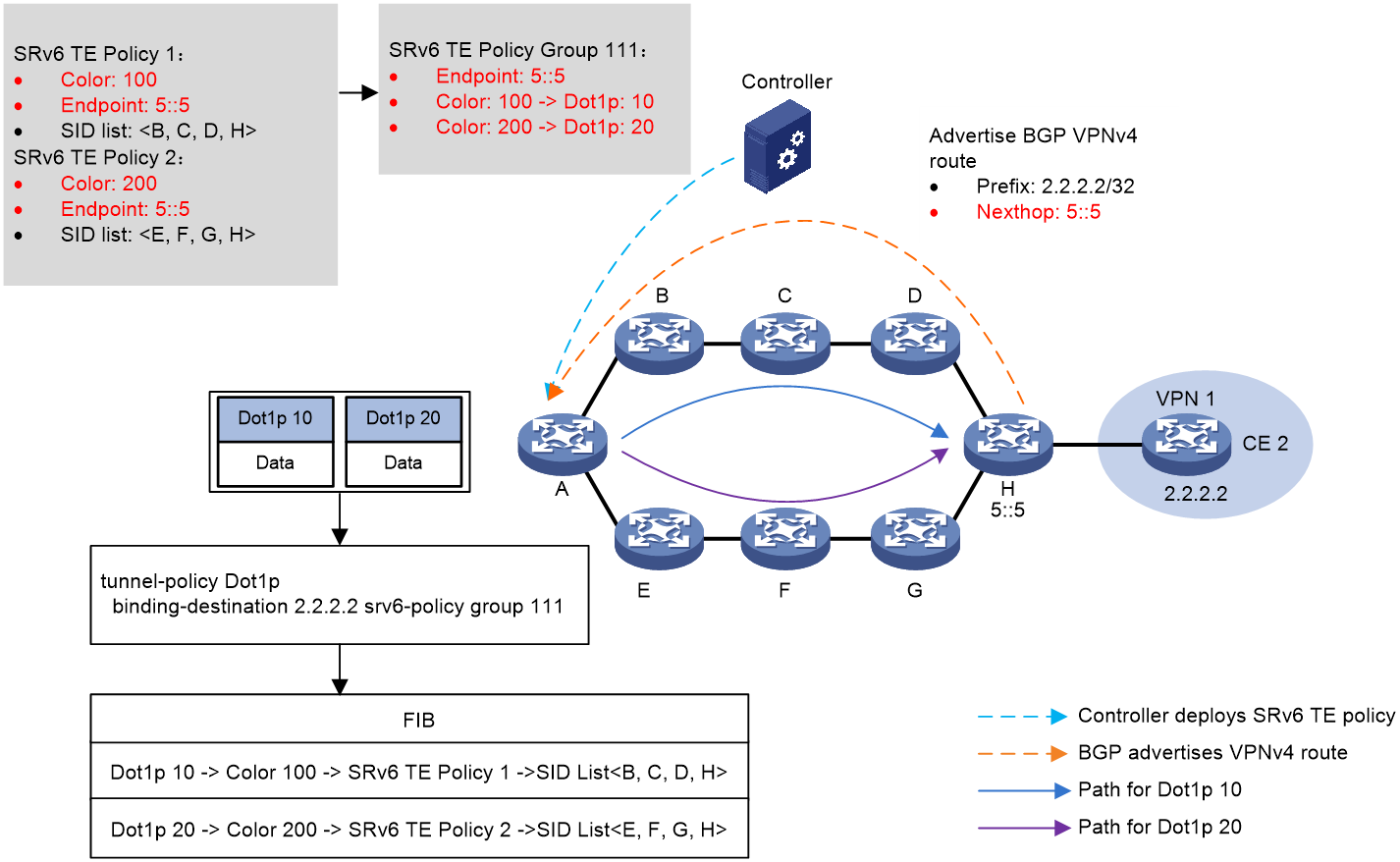

Figure 5 shows the process of 802.1p-based traffic steering:

1. The controller issues SRv6 TE policy 1 and SRv6 TE policy 2 to Device A (source node). The color values of SRv6 TE policy 1 and SRv6 TE policy 2 are 100 and 200, respectively. Both SRv6 TE policies use 5::5 as the endpoint address, which is the IP address of Device H.

2. Device H advertises BGP VPNv4 route 2.2.2.2/32 to Device A. The next hop address of the route is 5::5.

3. SRv6 TE policy group 111 is created on Device A with its endpoint address as 5::5. Within the SRv6 TE policy group, color value 100 is mapped to 802.1p value 1, and color value 200 is mapped to 802.1p value 2. A tunnel policy is configured on Device A to bind the SRv6 TE policy group to destination address 2.2.2.2.

4. Device A performs 802.1p-based traffic steering for a received packet as follows:

a. Finds the matching tunnel binding policy based on the packet's destination address, and then finds the related SRv6 TE policy group.

b. Uses the packet's 802.1p value (1 in this example) to find the mapped color value, and then matches an SRv6 TE policy inside the SRv6 TE policy group based on the color value.

c. Uses the optimal candidate path in this SRv6 TE policy for packet forwarding. In this example, the packet is forwarded along the Device B > Device C > Device D > Device H path, which is in accordance with the SID list in the candidate path.

Figure 5 802.1p-based traffic steering

Service class-based traffic steering

Traffic steering mechanism

A service class is a local traffic identifier on a device. The device identifies the service class of traffic by using a QoS policy. You can use the remark service-class command to assign a service class to traffic. For more information about this command, see QoS commands in ACL and QoS Command Reference. Service class-based traffic steering is available only after SRv6 TE policy groups are deployed on the device. Each SRv6 TE policy group consists of multiple SRv6 TE policies with different colors but the same endpoint address.

To achieve service class-based traffic steering, you can perform the following operations:

1. Add multiple SRv6 TE policies with different colors to the same SRv6 TE policy group, and configure color-to-service class mappings for that SRv6 TE policy group.

2. Use one of the following methods to steer traffic to the SRv6 TE policy group:

¡ Bind the desired destination address to the SRv6 TE policy group in a tunnel policy. Traffic destined for the destination address will be steered to the SRv6 TE policy group for further forwarding.

¡ Set SRv6 TE policy group as the preferred tunnel type in a tunnel policy. When the next hop address of a route is the endpoint address of the SRv6 TE policy group, the device preferentially steers traffic to the SRv6 TE policy group.

¡ Recurse BGP routes that can match the SRv6 TE policy group to the SRv6 TE policy group. A BGP route can match an SRv6 TE policy group only if its color value and next hop address can match the color value and endpoint address of that SRv6 TE policy group.

3. Look up the color value mapped to the service class of a packet, and then use the color value to find the associated SRv6 TE policy in the SRv6 TE policy group.

The above task creates a service class > color > SRv6 TE policy mapping, enabling service class-based traffic steering to the desired SRv6 TE policy.

Traffic steering workflow

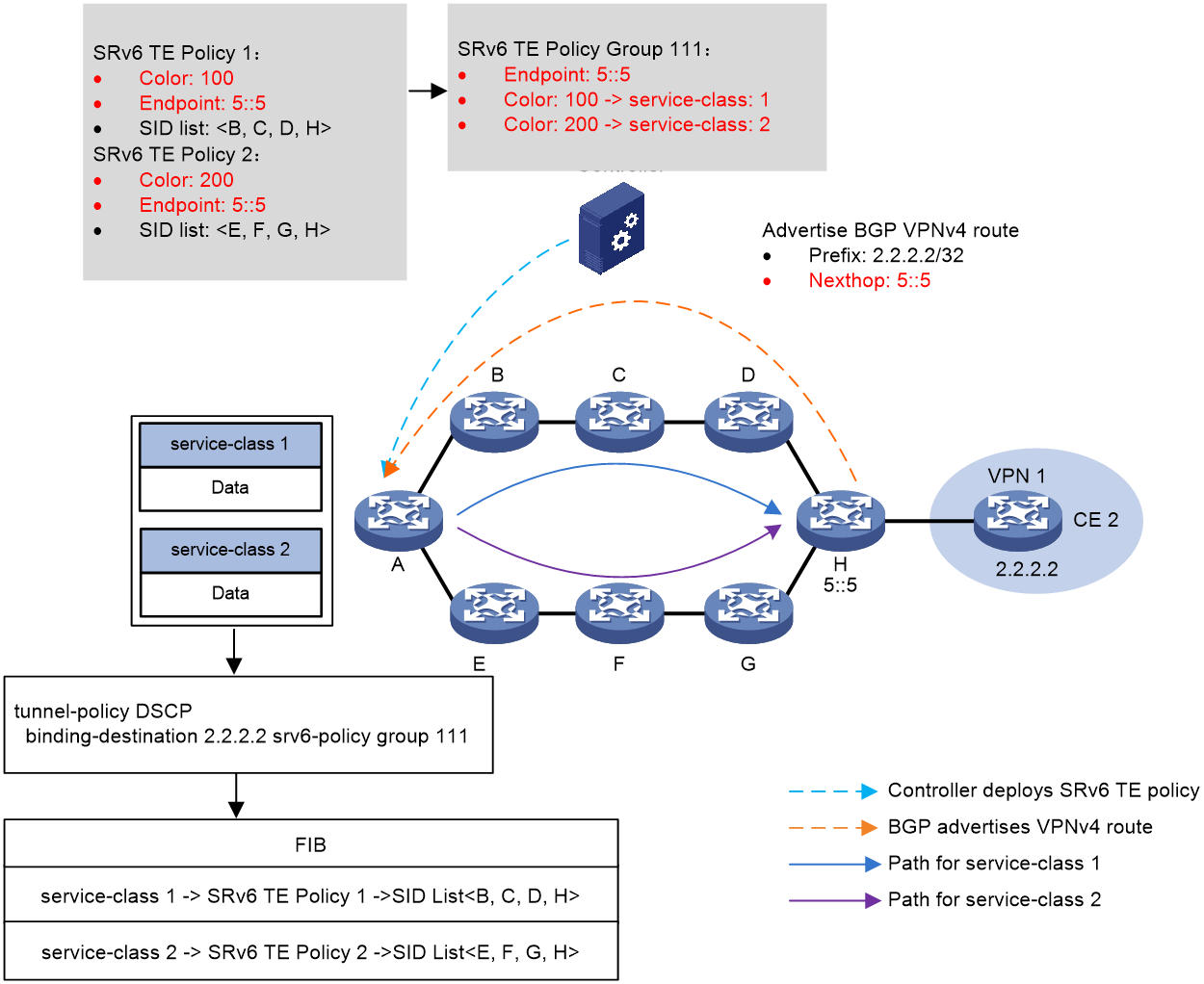

Figure 6 shows the process of service class-based traffic steering:

1. The controller issues SRv6 TE policy 1 and SRv6 TE policy 2 to Device A (source node). The color values of SRv6 TE policy 1 and SRv6 TE policy 2 are 100 and 200, respectively. Both SRv6 TE policies use 5::5 as the endpoint address, which is the IP address of Device H.

2. Device H advertises BGP VPNv4 route 2.2.2.2/32 to Device A. The next hop address of the route is 5::5.

3. SRv6 TE policy group 111 is created on Device A with its endpoint address as 5::5. Within the SRv6 TE policy group, color value 100 is mapped to service class 1, and color value 200 is mapped to service class 2. A tunnel policy is configured on Device A to bind the SRv6 TE policy group to destination address 2.2.2.2.

4. Device A performs service class-based traffic steering for a received packet as follows:

a. Finds the matching tunnel binding policy based on the packet's destination address, and then finds the related SRv6 TE policy group.

b. Uses the packet's service class (1 in this example) to find the mapped color value, and then matches an SRv6 TE policy inside the SRv6 TE policy group based on the color value.

c. Uses the optimal candidate path in this SRv6 TE policy for packet forwarding. In this example, the packet is forwarded along the Device B > Device C > Device D > Device H path, which is in accordance with the SID list in the candidate path.

Figure 6 Service class-based traffic steering

CBTS-based traffic steering

About SRv6 TE policy CBTS

SRv6 TE policy Class Based Tunnel Selection (CBTS) enables dynamic routing and forwarding of traffic with service class values over different SRv6 TE policy tunnels between the same tunnel headend and tailend. CBTS uses a dedicated tunnel for a certain class of service to implement differentiated forwarding for services.

How SRv6 TE policy CBTS works

SRv6 TE policy CBTS processes traffic mapped to a priority as follows:

1. Uses a traffic behavior to set a service class value for the traffic. For more information about setting a service class value in traffic behavior view, see the remark service-class command in ACL and QoS Command Reference.

2. Compares the service class value of the traffic with the service class values of the SRv6 TE policy tunnels and forwards the traffic to a matching tunnel.

SRv6 TE policy selection rules

SRv6 TE policy CBTS uses the following rules to select an SRv6 TE policy for the traffic to be forwarded:

· If an SRv6 TE policy has the same service class value as the traffic, CBTS uses this SRv6 TE policy.

· If multiple SRv6 TE policies have the same service class value as the traffic, CBTS selects an SRv6 TE policy based on the flow identification and load sharing mode:

¡ If only one flow exists and flow-based load sharing is used, CBTS randomly selects a matching SRv6 TE policy for packets of the flow.

¡ If multiple flows exist or if only one flow exists but packet-based load sharing is used, CBTS uses all matching SRv6 TE policies to load share the packets.

For more information about the flow identification and load sharing mode, see the ip load-sharing mode command in Layer 3—IP Services Command Reference.

· If the traffic does not match any SRv6 TE policy by service class value, CBTS randomly selects an SRv6 TE policy from all SRv6 TE policies with the lowest forwarding priority. An SRv6 TE policy that has a smaller service class value has a lower forwarding priority. An SRv6 TE policy that is not configured with a service class value has the lowest priority.
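The selection rules above can be sketched in Python as follows. The function name and the data layout are hypothetical. The function returns every eligible SRv6 TE policy and leaves the random pick or load sharing to the caller, so the result is deterministic.

```python
from typing import Dict, List, Optional

def cbts_candidates(policies: Dict[str, Optional[int]],
                    traffic_class: Optional[int]) -> List[str]:
    """Return the SRv6 TE policies eligible to carry traffic of a service class.

    policies maps a policy name to its service class value
    (None means that no service class value is configured).
    """
    if not policies:
        return []
    # Rule 1 and rule 2: policies whose service class equals that of the traffic.
    if traffic_class is not None:
        matches = sorted(n for n, sc in policies.items() if sc == traffic_class)
        if matches:
            return matches
    # Rule 3: no match, so fall back to the lowest forwarding priority.
    # Policies without a service class value have the lowest priority.
    unconfigured = sorted(n for n, sc in policies.items() if sc is None)
    if unconfigured:
        return unconfigured
    # Otherwise, a smaller service class value means a lower priority.
    lowest = min(policies.values())
    return sorted(n for n, sc in policies.items() if sc == lowest)

# Assumed setup: policy A has no service class value, B uses 3, and C uses 6.
tunnels = {"A": None, "B": 3, "C": 6}
print(cbts_candidates(tunnels, 4))
```

With this assumed setup, traffic with service class 4 or with no service class value falls back to policy A, while service classes 3 and 6 select policies B and C.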

SRv6 TE policy CBTS application scenario

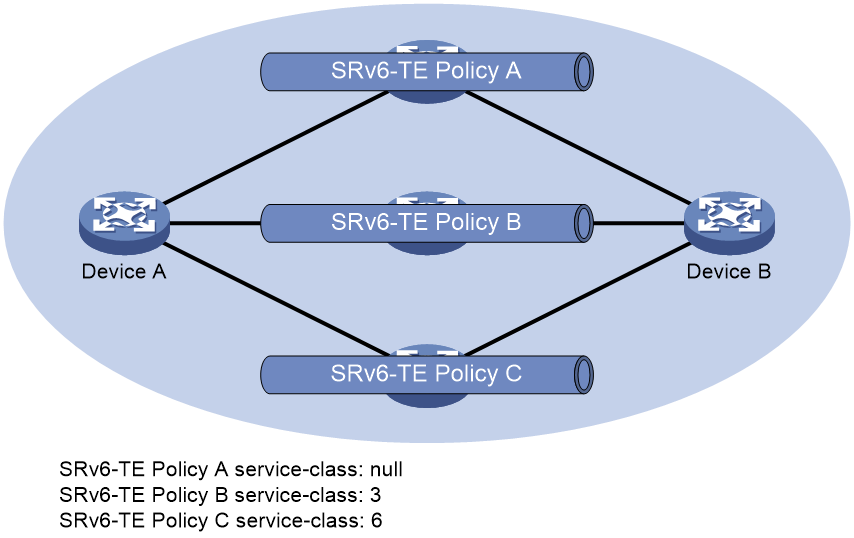

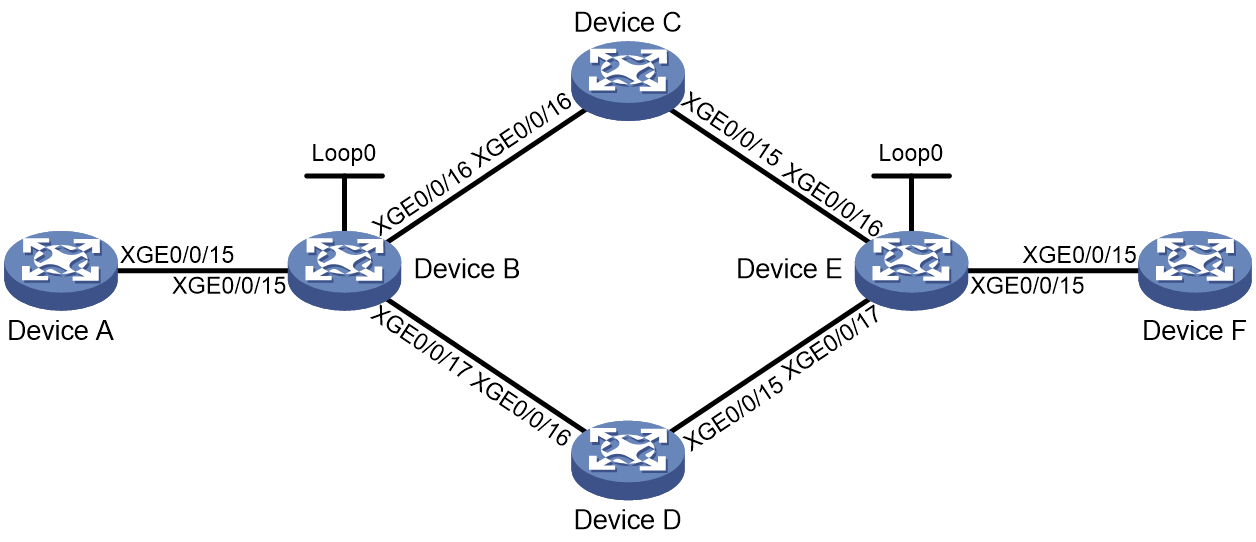

As shown in Figure 7, CBTS selects SRv6 TE policies for traffic from Device A to Device B as follows:

· Uses SRv6 TE policy B to forward traffic with service class value 3.

· Uses SRv6 TE policy C to forward traffic with service class value 6.

· Uses SRv6 TE policy A to forward traffic with service class value 4.

· Uses SRv6 TE policy A to forward traffic with no service class value.

Figure 7 SRv6 TE policy CBTS application scenario

TE class ID-based traffic steering

Traffic steering mechanism

A TE class ID is a traffic identifier that is locally significant to a device. The device marks traffic with a TE class ID by using a QoS policy. TE class ID-based traffic steering is more suitable than service class-based traffic steering, because it supports Intelligent Policy Route (IPR) and TE class IDs outnumber service classes. You can use the remark te-class command to assign a TE class ID to traffic. For more information about this command, see QoS commands in ACL and QoS Command Reference. TE class ID-based traffic steering is available only after SRv6 TE policy groups are deployed on the device. Each SRv6 TE policy group consists of multiple SRv6 TE policies with different colors but the same endpoint address.

To achieve TE class ID-based traffic steering, you can perform the following operations:

1. Add multiple SRv6 TE policies with different colors to the same SRv6 TE policy group, and configure color-to-TE class ID mappings for that SRv6 TE policy group.

2. Use one of the following methods to steer traffic to the SRv6 TE policy group:

¡ Bind the desired destination address to the SRv6 TE policy group in a tunnel policy. Traffic destined for the destination address will be steered to the SRv6 TE policy group for further forwarding.

¡ Set SRv6 TE policy group as the preferred tunnel type in a tunnel policy. When the next hop address of a route is the endpoint address of the SRv6 TE policy group, the device preferentially steers traffic to the SRv6 TE policy group.

¡ Recurse BGP routes that can match the SRv6 TE policy group to the SRv6 TE policy group. A BGP route can match an SRv6 TE policy group only if its color value and next hop address can match the color value and endpoint address of that SRv6 TE policy group.

3. Look up the color value mapped to the TE class ID of a packet, and then use the color value to find the associated SRv6 TE policy in the SRv6 TE policy group.

The above task creates a TE class ID > color > SRv6 TE policy mapping, enabling TE class ID-based traffic steering to the desired SRv6 TE policy.

Traffic steering workflow

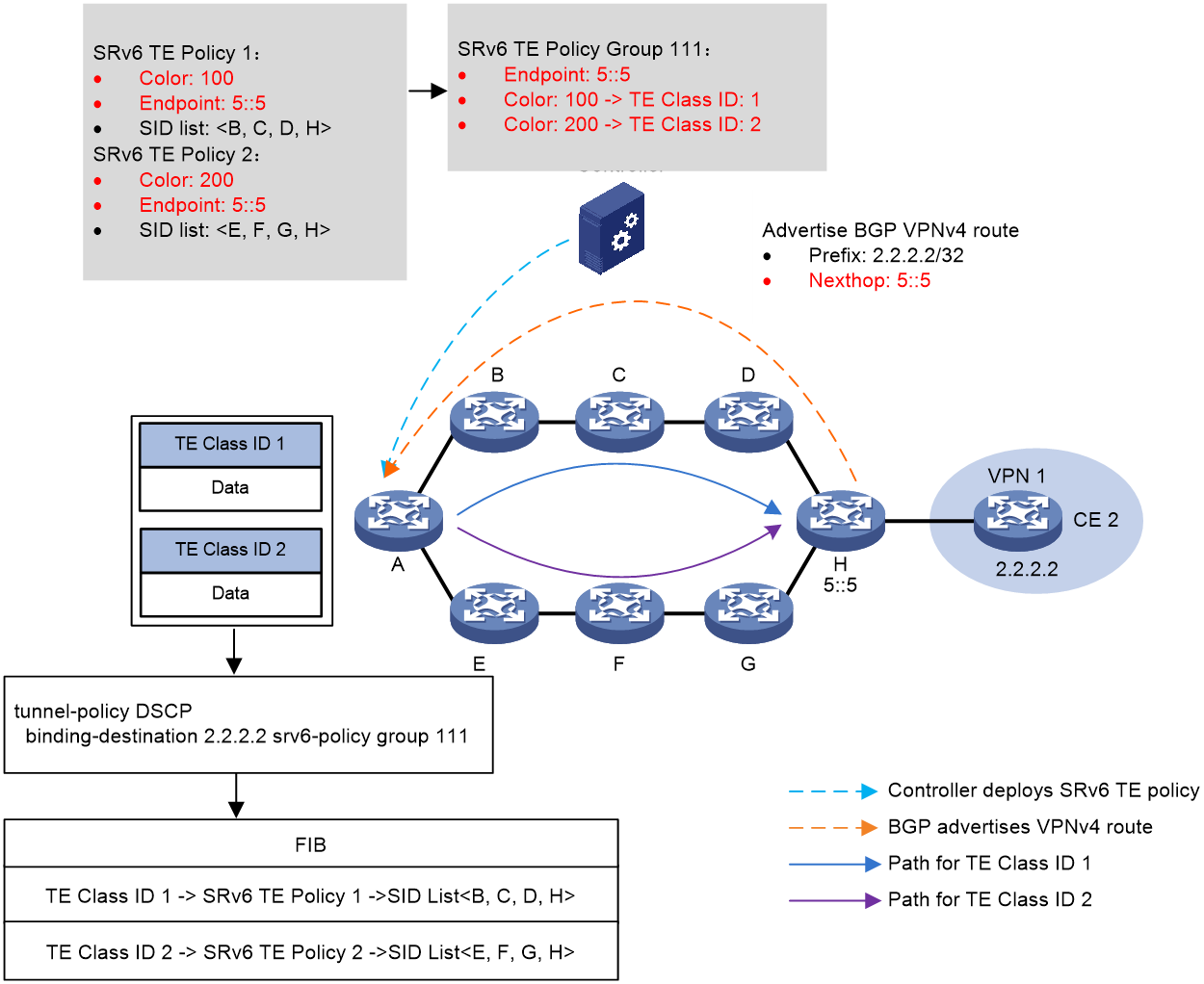

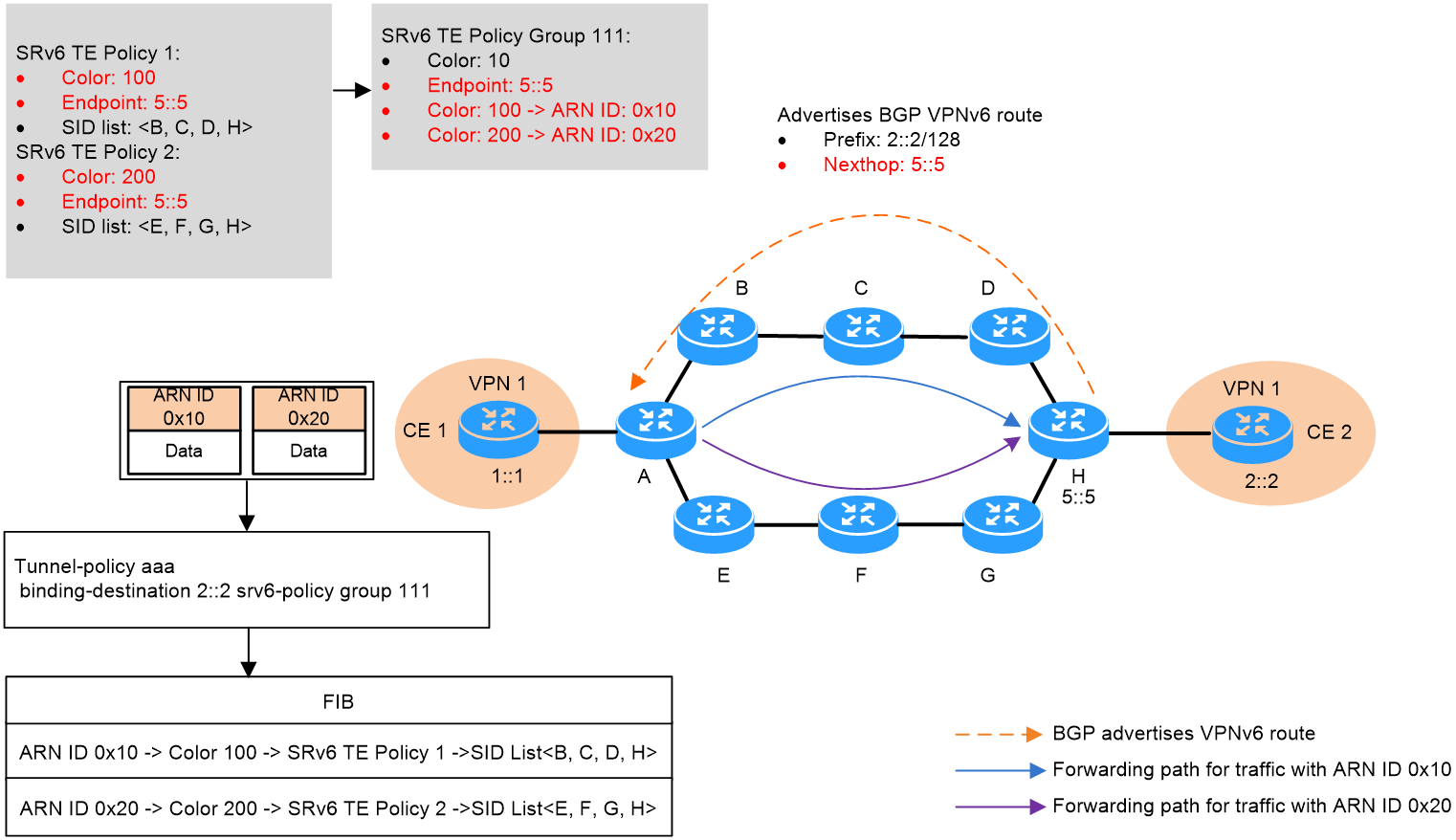

Figure 8 shows the process of TE class ID-based traffic steering:

1. The controller issues SRv6 TE policy 1 and SRv6 TE policy 2 to Device A (source node). The color values of SRv6 TE policy 1 and SRv6 TE policy 2 are 100 and 200, respectively. Both SRv6 TE policies use 5::5 as the endpoint address, which is the IP address of Device H.

2. Device H advertises BGP VPNv4 route 2.2.2.2/32 to Device A. The next hop address of the route is 5::5.

3. SRv6 TE policy group 111 is created on Device A with its endpoint address as 5::5. Within the SRv6 TE policy group, color value 100 is mapped to TE class ID 1, and color value 200 is mapped to TE class ID 2. A tunnel policy is configured on Device A to bind the SRv6 TE policy group to destination address 2.2.2.2.

4. Device A performs TE class ID-based traffic steering for a received packet as follows:

a. Finds the matching tunnel binding policy based on the packet's destination address, and then finds the related SRv6 TE policy group.

b. Uses the packet's TE class ID (1 in this example) to find the mapped color value, and then matches an SRv6 TE policy inside the SRv6 TE policy group based on the color value.

c. Uses the optimal candidate path in this SRv6 TE policy for packet forwarding. In this example, the packet is forwarded along the Device B > Device C > Device D > Device H path, which is in accordance with the SID list in the candidate path.

Figure 8 TE class ID-based traffic steering

APN ID-based traffic steering

Once service traffic is steered to an SRv6 TE policy group for forwarding, the device matches the APN ID of the traffic with the color-to-APN ID mappings in the SRv6 TE policy group. If a match is found, the device forwards the traffic through the SRv6 TE policy associated with the color value in the matching mapping.

For more information about APN ID-based traffic steering, see "SRv6 TE policy application in the APN6 network."

ARN ID-based traffic steering

Once service traffic is steered to an SRv6 TE policy group for forwarding, the device matches the ARN ID of the traffic with the color-to-ARN ID mappings in the SRv6 TE policy group. If a match is found, the device forwards the traffic through the SRv6 TE policy associated with the color value in the matching mapping.

For more information about ARN ID-based traffic steering, see "SRv6 TE policy application in the ARN network."

Automatic route advertisement

This feature advertises an SRv6 TE policy or an SRv6 TE policy group (a group of SRv6 TE policies) to IGP (IPv6 IS-IS or OSPFv3) for route computation. The device can then forward the matching traffic through the SRv6 TE policy or SRv6 TE policy group.

An SRv6 TE policy or SRv6 TE policy group supports only automatic route advertisement (also called autoroute announce) in IGP shortcut mode. With automatic route advertisement enabled, the device determines the SRv6 TE policy or SRv6 TE policy group as a link that connects the tunnel ingress and egress. The tunnel ingress includes the SRv6 TE policy or SRv6 TE policy group in IGP route computation.

NOTE: After traffic is steered to an SRv6 TE policy group through automatic route advertisement, the device looks up the matching SRv6 TE policy in the SRv6 TE policy group based on the DSCP or 802.1p value. Then, the device forwards the traffic through the matching SRv6 TE policy.

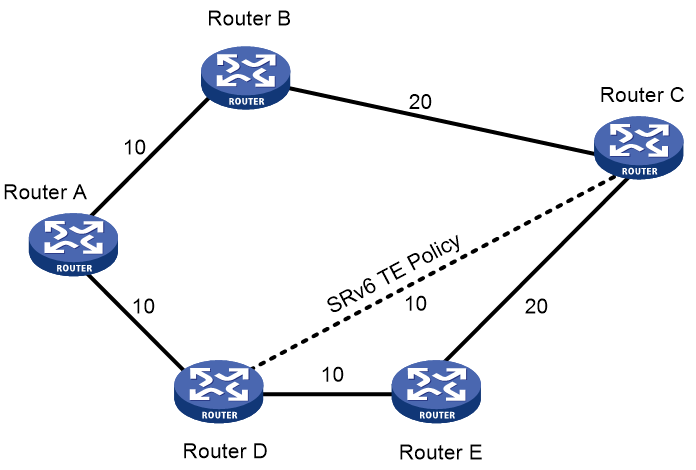

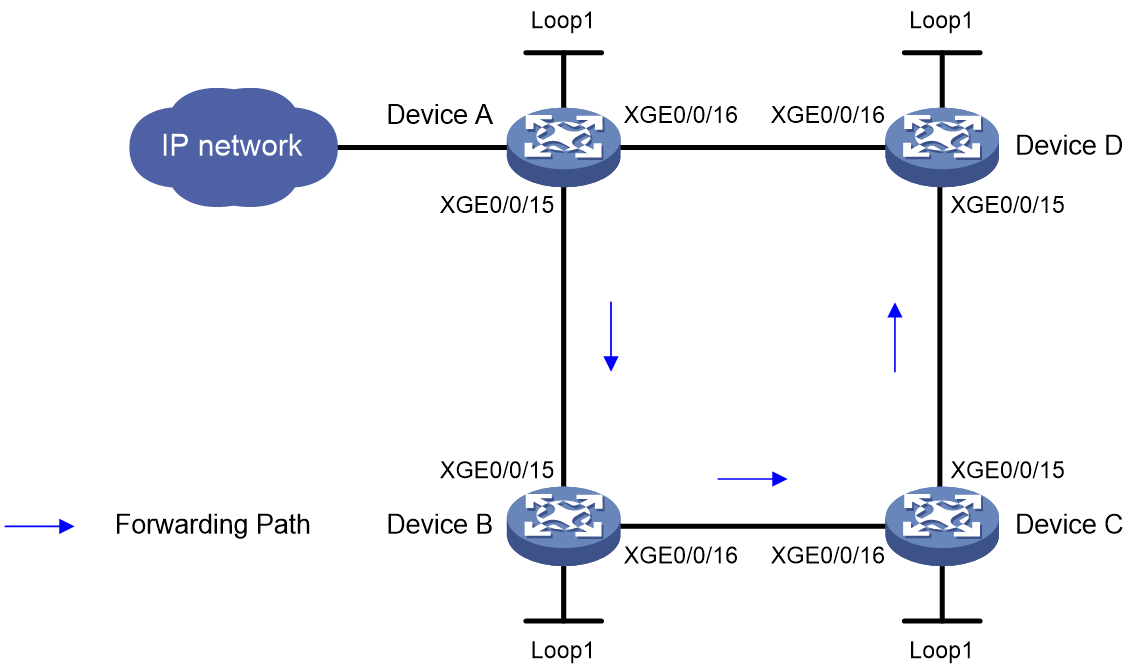

As shown in Figure 9, an SRv6 TE policy tunnel is deployed between Device D and Device C. IGP Shortcut enables the source node, Device D, to utilize this tunnel during IGP route computation. Consequently, Device D can steer incoming packets to the SRv6 TE policy tunnel between Device D and Device C.

Figure 9 Automatic route advertisement

Other traffic steering methods

· Tunnel policy-based traffic steering—By deploying a tunnel policy in an IP L3VPN, EVPN L3VPN, EVPN VPLS, or EVPN VPWS network, you can use an SRv6 TE policy as the public tunnel to forward VPN traffic. For more information about tunnel policies, see tunnel policy configuration in MPLS Configuration Guide.

· Static routing-based traffic steering—This traffic steering method requires recursing a static route to an SRv6 TE policy. The device can then use the SRv6 TE policy to forward packets that match the static route.

· PBR-based traffic steering—This traffic steering method directs traffic to an SRv6 TE policy through PBR. The device uses the SRv6 TE policy to forward packets that match the PBR policy. For more information about PBR, see PBR configuration in Layer 3—IP Routing Configuration Guide.

· QoS policy-based traffic steering—This traffic steering method redirects traffic to an SRv6 TE policy through a QoS policy. The device can then use the SRv6 TE policy to forward packets that match the traffic classes of the QoS policy. For more information about QoS policies, see QoS configuration in ACL and QoS Configuration Guide.

· Flowspec-based traffic steering—This traffic steering method redirects traffic to an SRv6 TE policy through a Flowspec rule. The device can then use the SRv6 TE policy to forward packets that match the Flowspec rule. For more information about Flowspec, see Flowspec configuration in ACL and QoS Configuration Guide.

SRv6 TE policy-based traffic forwarding

SRv6 TE policy path selection

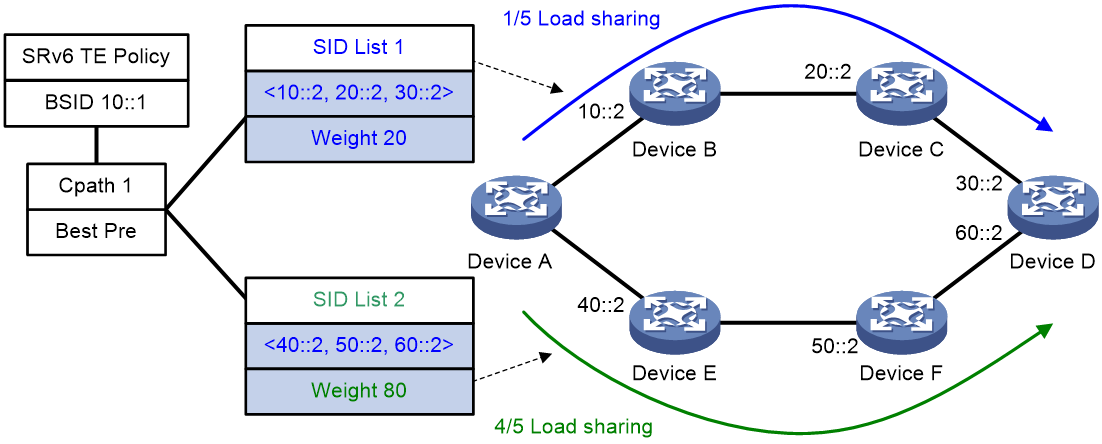

After traffic is steered into an SRv6 TE policy, the device selects a traffic forwarding path in that SRv6 TE policy as follows:

1. Selects the valid candidate path that has the highest preference.

2. Performs Weighted ECMP (WECMP) load sharing among the SID lists of the selected candidate path. The ratio of load on SID list x is equal to Weight x/(Weight 1 + Weight 2 + … + Weight n). The n argument represents the number of SID lists in the selected candidate path.

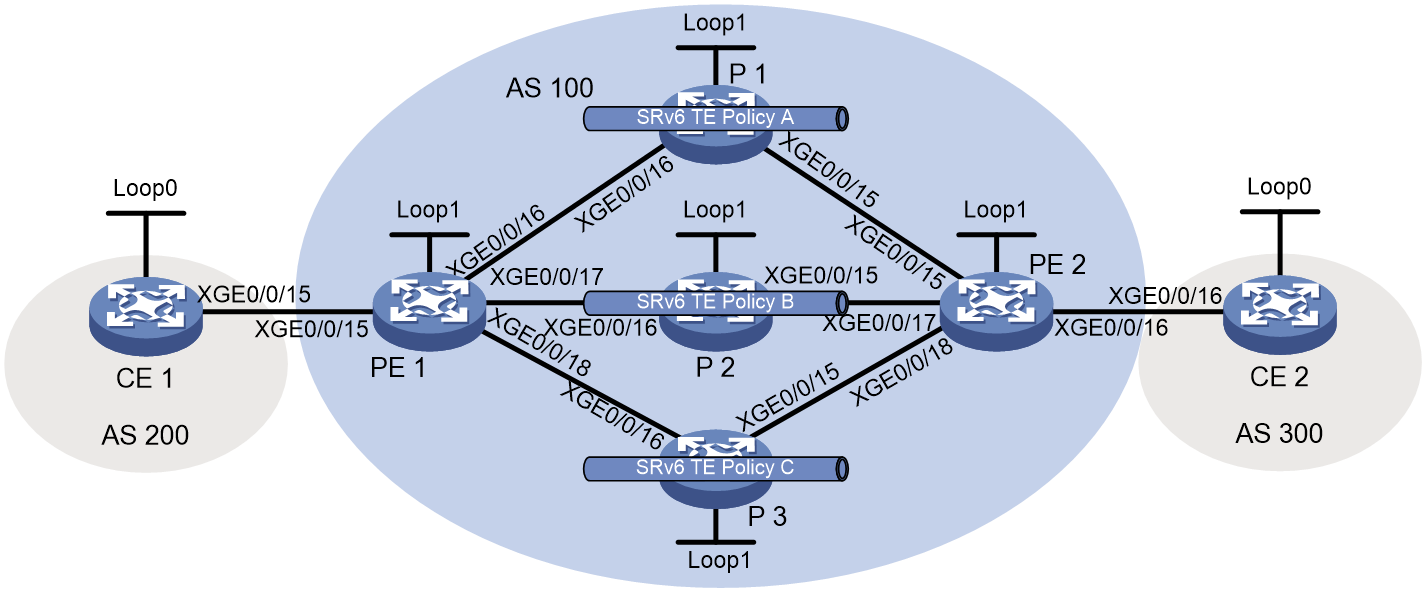

As shown in Figure 10, Device A first selects a valid SRv6 TE policy by BSID. Then, the device selects a candidate path by preference. The candidate path has two valid SID lists: SID list 1 and SID list 2. The weight value of SID list 1 is 20 and the weight value of SID list 2 is 80. One fifth of the traffic will be forwarded through the subpath identified by SID list 1. Four fifths of the traffic will be forwarded through the subpath identified by SID list 2.

Figure 10 SRv6 TE policy path selection
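The path selection and the WECMP ratio can be checked with a short Python sketch. The function names and the preference values are hypothetical. The weights 20 and 80 are the ones used in the Figure 10 description.

```python
from fractions import Fraction
from typing import Dict, List, Optional

def select_candidate_path(paths: List[dict]) -> Optional[dict]:
    """Pick the valid candidate path that has the highest preference."""
    valid = [p for p in paths if p["valid"]]
    return max(valid, key=lambda p: p["preference"]) if valid else None

def wecmp_shares(weights: Dict[str, int]) -> Dict[str, Fraction]:
    """Load ratio of SID list x = Weight x / (Weight 1 + ... + Weight n)."""
    total = sum(weights.values())
    return {name: Fraction(w, total) for name, w in weights.items()}

# Hypothetical candidate paths; only the SID list weights come from the text.
paths = [
    {"name": "path-300", "preference": 300, "valid": False, "weights": {}},
    {"name": "path-200", "preference": 200, "valid": True,
     "weights": {"SID list 1": 20, "SID list 2": 80}},
    {"name": "path-100", "preference": 100, "valid": True,
     "weights": {"SID list 3": 1}},
]
best = select_candidate_path(paths)
shares = wecmp_shares(best["weights"])
print(best["name"], shares)
```

In this sketch the path with preference 300 is skipped because it is not valid, and the selected path carries 1/5 of the traffic on SID list 1 and 4/5 on SID list 2.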

Data encapsulation and forwarding through SRv6 TE policies

For SRv6 TE policies, supported packet encapsulation methods include normal encapsulation and insert encapsulation. In normal encapsulation, the device adds a new IPv6 header and an SRH to each packet. In insert encapsulation, the device inserts an SRH extension header after the original IPv6 header.

The packet forwarding process varies by packet encapsulation method.

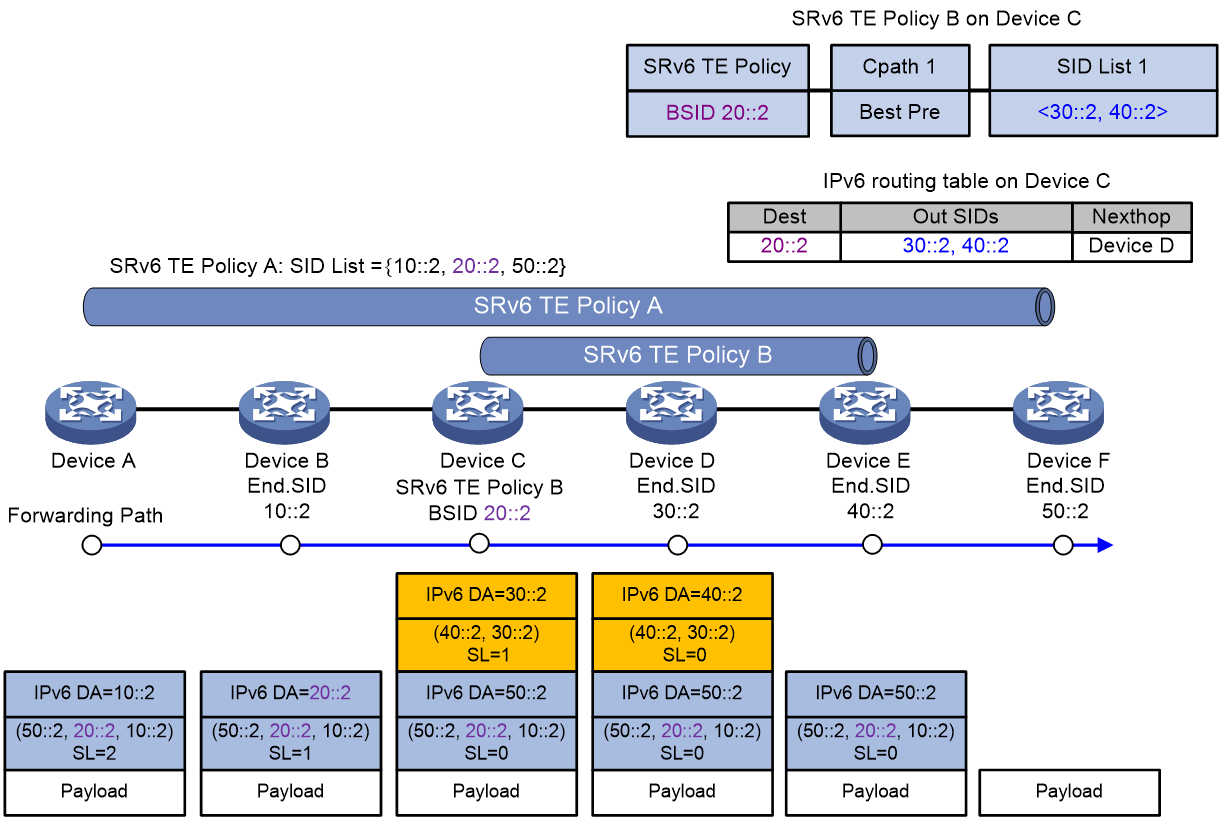

SRv6 TE policy-based packet forwarding with the normal encapsulation method

As shown in Figure 11, BSID-based traffic steering is used in the SID stitching scenario. The packet forwarding process is as follows:

1. Device A steers traffic to SRv6 TE policy A for further forwarding. The SIDs of SRv6 TE policy A are stitched with the BSID of SRv6 TE policy B, 20::2. According to SRv6 TE policy A, the device encapsulates the packet with an SRH header that carries an SID list of {10::2, 20::2, 50::2}. 10::2 represents the End SID of Device B, and 50::2 represents the End SID of Device F.

2. Device A transmits the encapsulated packet to the next hop, Device B.

3. Device B obtains next hop information (Device C) from the SRH of the encapsulated packet, and transmits the packet to Device C.

4. Device C finds that the encapsulated packet is heading for 20::2, which is the BSID of SRv6 TE policy B. The encapsulation method for SRv6 TE policy B is normal encapsulation. Therefore, Device C encapsulates the packet with an outer IPv6 header and an SRH according to SRv6 TE policy B. The SRH carries a SID list of {30::2, 40::2}, where 30::2 is the End SID for Device D, and 40::2 is the End SID for Device E. The destination address in the outer IPv6 header is updated to 30::2, with the next hop set to Device D. After packet encapsulation, Device C transmits the packet to Device D.

5. Device D finds that the next hop pointed by the outer SRH of the encapsulated packet is Device E, and then transmits the packet to Device E.

6. Device E finds that the SL value in the outer SRH of the encapsulated packet is 0, and thus performs the following operations:

a. Decapsulates the packet by removing its outer IPv6 header and SRH.

b. Transmits the packet to Device F, which is the destination address of the packet.

7. Device F finds that the SL value in the outer SRH of the encapsulated packet is 0. As the egress node of SRv6 TE policy A, Device F decapsulates the packet by removing its outer IPv6 header and SRH.

Figure 11 SRv6 TE policy-based packet forwarding with the normal encapsulation method
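The forwarding steps for normal encapsulation can be traced with the following Python sketch. It is a simplified model, not a wire-format implementation. The `Header` class and the helper names are hypothetical. The sketch assumes that the SRH stores the segment list in reverse order with SL pointing at the active SID (as in RFC 8754), and that Device C advances the inner SRH to 50::2 before it adds the outer header (as End.B6.Encaps is defined in RFC 8986).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Header:
    """One IPv6 header with its SRH (simplified model)."""
    dst: str             # IPv6 destination address
    segments: List[str]  # SRH segment list, stored last-SID-first
    sl: int              # Segments Left

def encapsulate(sid_list: List[str]) -> Header:
    """Build a header for a SID list given in forwarding order."""
    return Header(dst=sid_list[0], segments=list(reversed(sid_list)),
                  sl=len(sid_list) - 1)

def advance(hdr: Header) -> None:
    """Decrement SL and copy the next SID into the destination address."""
    hdr.sl -= 1
    hdr.dst = hdr.segments[hdr.sl]

def walk() -> List[Tuple[str, Optional[str], Optional[int], int]]:
    """Return (device, outer destination, outer SL, header count) after each hop."""
    bsid_b, sids_b = "20::2", ["30::2", "40::2"]         # SRv6 TE policy B
    stack = [encapsulate(["10::2", "20::2", "50::2"])]   # Device A, policy A
    trace = [("A", stack[-1].dst, stack[-1].sl, len(stack))]
    for device in ("B", "C", "D", "E", "F"):
        outer = stack[-1]
        if outer.dst == bsid_b:
            # Device C: the destination is the BSID of policy B.
            advance(outer)                      # inner header now targets 50::2
            stack.append(encapsulate(sids_b))   # add outer IPv6 header and SRH
        elif outer.sl == 0:
            stack.pop()                         # Device E and Device F: decapsulate
        else:
            advance(outer)                      # Device B and Device D: next SID
        top = stack[-1] if stack else None
        trace.append((device, top.dst if top else None,
                      top.sl if top else None, len(stack)))
    return trace

for hop in walk():
    print(hop)
```

The trace shows two headers between Device C and Device E, and the inner destination 50::2 becoming visible again after Device E removes the outer header.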

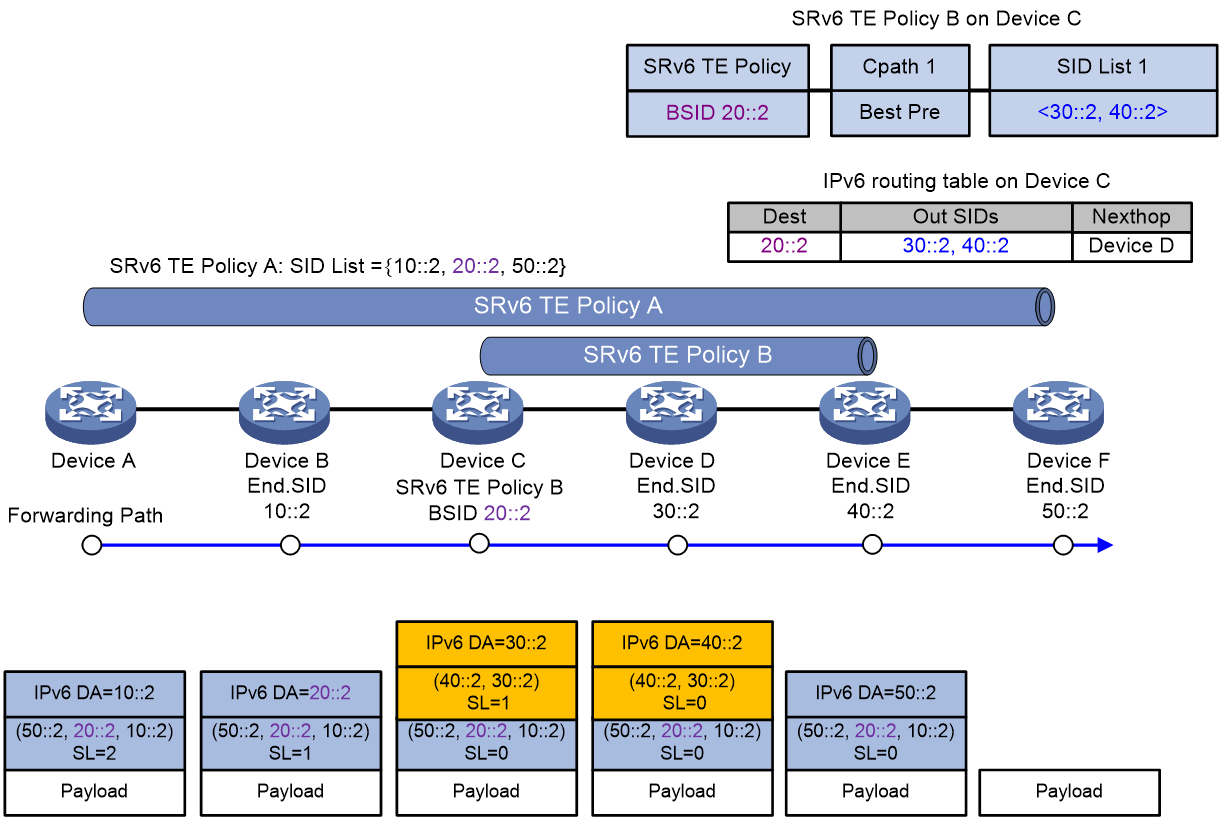

SRv6 TE policy-based packet forwarding with the insert encapsulation method

As shown in Figure 12, BSID-based traffic steering is used in the SID stitching scenario. The packet forwarding process is as follows:

1. Device A steers traffic to SRv6 TE policy A for further forwarding. The SIDs of SRv6 TE policy A are stitched with the BSID of SRv6 TE policy B, 20::2. According to SRv6 TE policy A, the device encapsulates the packet with an SRH that carries an SID list of {10::2, 20::2, 50::2}. 10::2 represents the End SID of Device B, and 50::2 represents the End SID of Device F.

2. Device A transmits the encapsulated packet to the next hop, Device B.

3. Device B obtains next hop information (Device C) from the SRH of the encapsulated packet, and transmits the packet to Device C.

4. Device C finds that the encapsulated packet is heading for 20::2, which is the BSID of SRv6 TE policy B. The encapsulation method for SRv6 TE policy B is insert encapsulation. Therefore, Device C inserts an SRH after the original IPv6 header of the packet according to SRv6 TE policy B. The SRH carries a SID list of {30::2, 40::2}, where 30::2 is the End SID for Device D, and 40::2 is the End SID for Device E. The destination address in the outer IPv6 header is updated to 30::2, with the next hop set to Device D. After packet encapsulation, Device C transmits the packet to Device D.

5. Device D finds that the next hop pointed by the outer SRH of the encapsulated packet is Device E, and then transmits the packet to Device E.

6. Device E finds that the SL value in the outer SRH of the encapsulated packet is 0, and thus performs the following operations for the packet:

a. Removes the outer SRH and updates the destination address in the outer IPv6 header to 50::2.

b. Transmits the packet to Device F, which is the destination address of the packet.

7. Device F finds that the SL value in the outer SRH of the encapsulated packet is 0. As the egress node of SRv6 TE policy A, Device F decapsulates the packet by removing its outer IPv6 header and SRH.

Figure 12 SRv6 TE policy-based packet forwarding with the insert encapsulation method

SRv6 TE policy reliability

SRv6 TE policy hot standby

If an SRv6 TE policy has multiple valid candidate paths, the device chooses the candidate path with the greatest preference value. If the chosen path fails, the SRv6 TE policy must select another candidate path. During path reselection, packet loss might occur and thus affect service continuity.

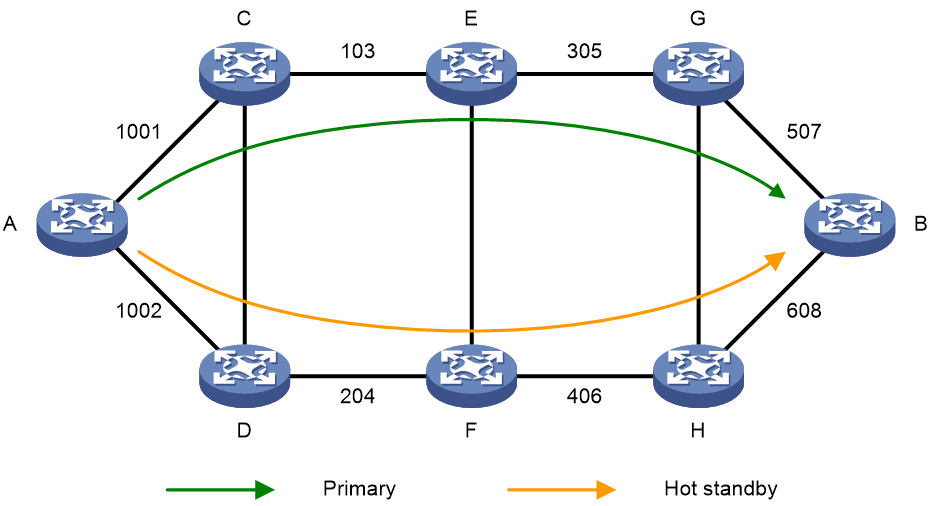

The SRv6 TE policy hot standby feature can address this issue. This feature takes the candidate path with the greatest preference value as the primary path and that with the second greatest preference value as the backup path in hot standby state. As shown in Figure 13, when the forwarding paths corresponding to all SID lists of the primary path fail, the standby path immediately takes over to minimize service interruption.

Figure 13 SRv6 TE policy hot standby

You can configure both the hot standby and SBFD features for an SRv6 TE policy. Use SBFD to detect the availability of the primary and standby paths specified for hot standby. If all SID lists of the primary path become unavailable, the standby path takes over and a path recalculation is performed. The standby path becomes the new primary path, and a new standby path is selected. If both the primary and standby paths fail, the SRv6 TE policy will calculate new primary and standby paths.
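The interaction between hot standby and SBFD can be sketched in Python as follows. The data layout and names are hypothetical. A candidate path is treated as available while SBFD reports at least one of its SID lists as up, and the primary and standby roles are recomputed after each change.

```python
from typing import List, Optional, Tuple

def path_available(path: dict) -> bool:
    """A path fails only when all of its SID lists are unavailable."""
    return any(path["sid_lists_up"])

def pick_primary_and_standby(paths: List[dict]) -> Tuple[Optional[str], Optional[str]]:
    """Highest preference is primary; second highest is the hot standby."""
    usable = sorted((p for p in paths if path_available(p)),
                    key=lambda p: p["preference"], reverse=True)
    names = [p["name"] for p in usable]
    primary = names[0] if names else None
    standby = names[1] if len(names) > 1 else None
    return primary, standby

# Hypothetical candidate paths with SBFD results per SID list.
paths = [
    {"name": "P300", "preference": 300, "sid_lists_up": [True, True]},
    {"name": "P200", "preference": 200, "sid_lists_up": [True]},
    {"name": "P100", "preference": 100, "sid_lists_up": [True]},
]
before = pick_primary_and_standby(paths)
paths[0]["sid_lists_up"] = [False, False]   # all SID lists of the primary fail
after = pick_primary_and_standby(paths)
print(before, after)
```

After the failure, the former standby path P200 becomes the primary path and P100 is selected as the new standby path.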

BFD for SRv6 TE policy

Echo BFD for SRv6 TE policy

Echo BFD (BFD in echo packet mode) does not require the initiator and the reflector to use the same discriminator for testing the connectivity of an SRv6 TE policy. As such, you do not need to plan local and remote discriminators. This makes echo BFD easier to configure than SBFD for SRv6 TE policy.

Echo BFD tests the connectivity of an SRv6 TE policy as follows:

1. The source node sends BFD echo packets that each encapsulate a SID list of the SRv6 TE policy.

2. After the endpoint node receives a BFD echo packet, it sends the BFD echo packet back to the source node along the shortest path selected through IPv6 routing table lookup.

3. If the source node receives the BFD echo packet within the detection timeout time, it determines that the SID list (forwarding path) under test is available. If no BFD echo packet is received, the device determines that the SID list is faulty. If all the SID lists for the primary path are faulty, BFD triggers a primary-to-backup path switchover.

If multiple SID lists are present in the selected candidate path, the SRv6 TE policy establishes separate BFD sessions to monitor the forwarding path corresponding to each SID list.

When echo BFD is enabled for an SRv6 TE policy, BFD packets can be encapsulated in Insert or Encaps mode. By default, BFD packets use the Insert encapsulation mode.

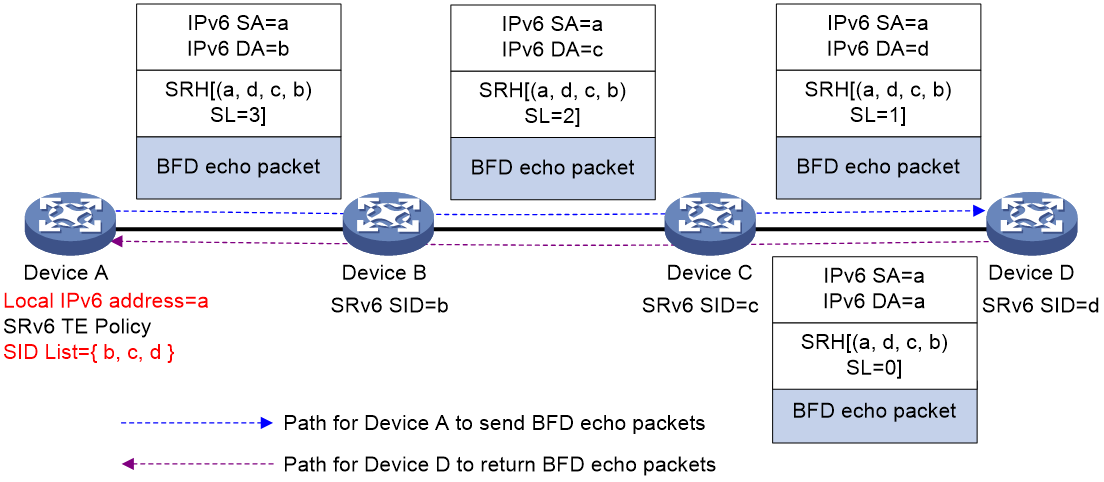

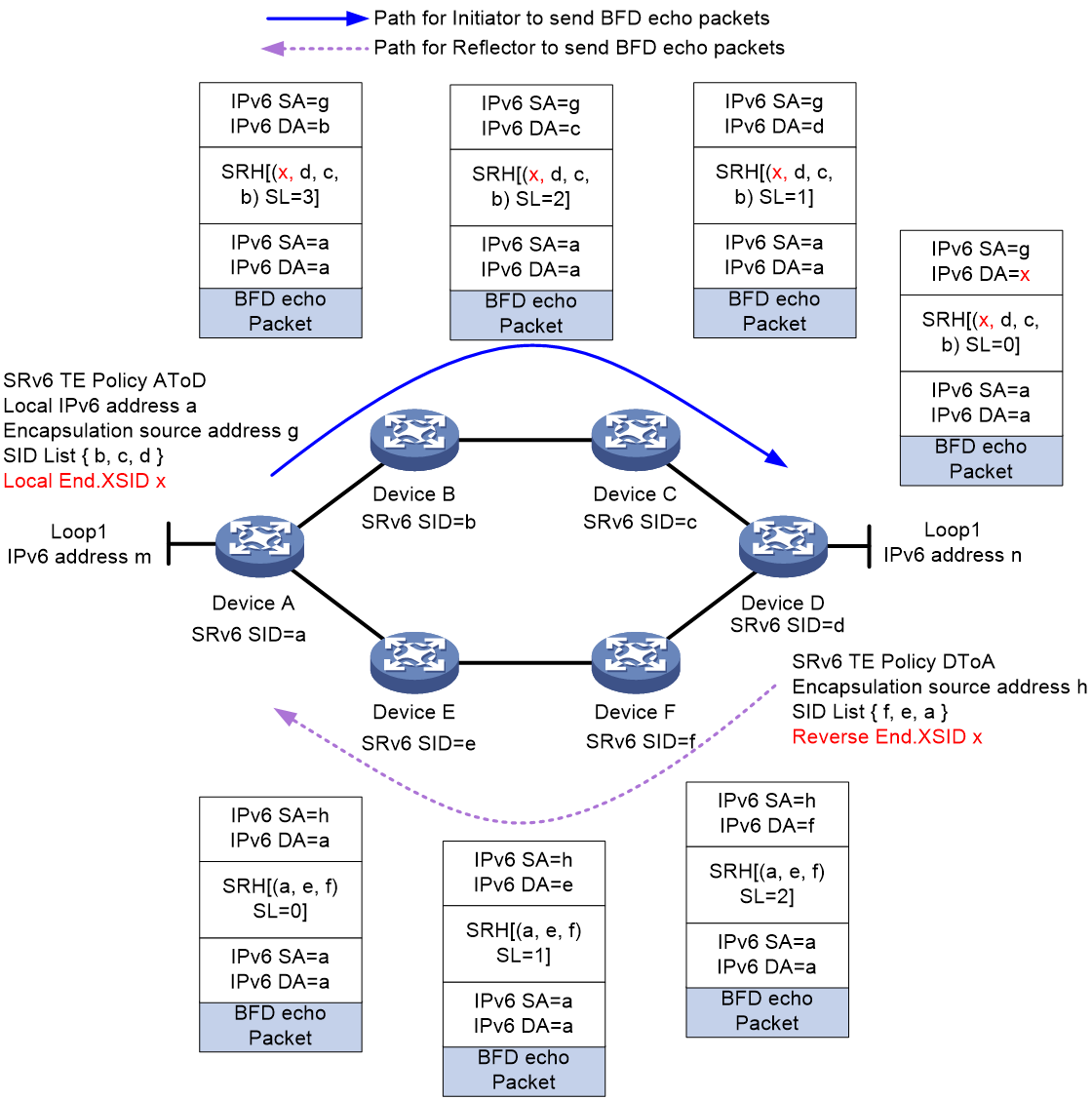

As shown in Figure 14, configure an SRv6 TE policy on Device A and use echo BFD to detect the policy connectivity. If Insert encapsulation is used for BFD packets, Device A constructs a special BFD packet with its local IPv6 address (a) as the source address and inserts IPv6 address a to the SL=0 position in the SID list. When Device D receives the BFD packet, it updates the destination address in the IPv6 header to a, and uses IPv6 address a to look up the routing table to send the packet back to Device A.

NOTE: For more information about selecting the source address for BFD packets, see "Enabling echo BFD for SRv6 TE policies."

Figure 14 Echo BFD for SRv6 TE policy with Insert encapsulation
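The way Insert encapsulation loops the echo packet back can be sketched in Python as follows. This is a simplified model with hypothetical addresses and helper names. It assumes that the SRH stores the segment list in reverse order, so the SL=0 position is index 0 of the list.

```python
from typing import List

def build_echo_packet(policy_sid_list: List[str], local_addr: str) -> dict:
    """Place the local address at the SL=0 position, below the policy SIDs."""
    segments = [local_addr] + list(reversed(policy_sid_list))
    return {
        "src": local_addr,
        "dst": policy_sid_list[0],   # first SID of the policy
        "segments": segments,
        "sl": len(segments) - 1,
    }

def process_at_sid_owner(pkt: dict) -> None:
    """Decrement SL and copy the next segment into the destination address."""
    pkt["sl"] -= 1
    pkt["dst"] = pkt["segments"][pkt["sl"]]

# Hypothetical addresses: a::1 is Device A; the SIDs belong to Devices B, C, and D.
pkt = build_echo_packet(["b::1", "c::1", "d::1"], "a::1")
for _ in ("B", "C", "D"):
    process_at_sid_owner(pkt)
print(pkt["dst"], pkt["sl"])
```

After Device D processes the packet, the destination address is the local address of Device A and SL is 0, so an ordinary IPv6 routing table lookup returns the packet to Device A.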

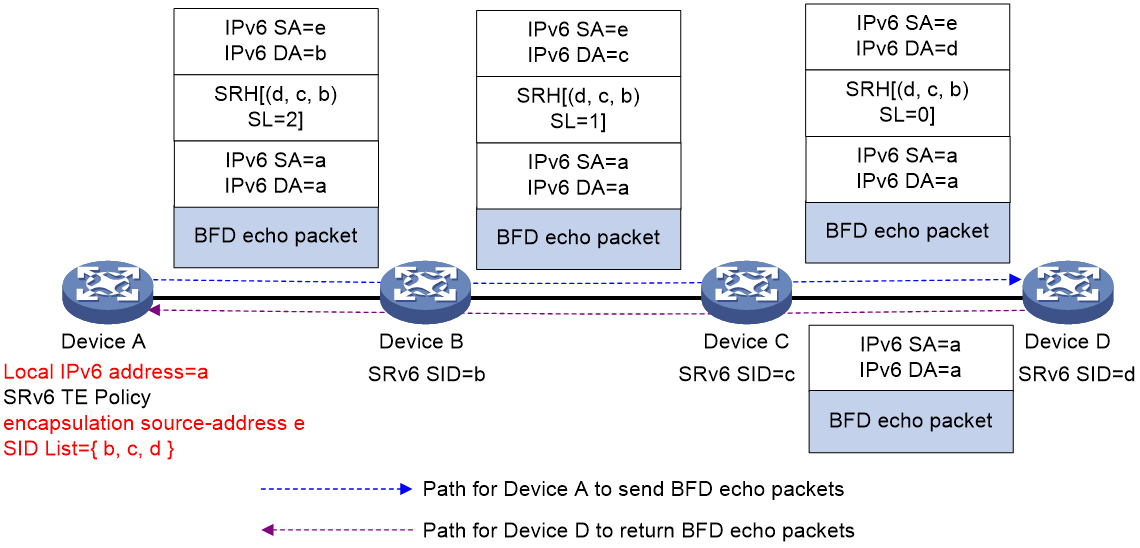

As shown in Figure 15, Device A configures an SRv6 TE policy and uses echo BFD to detect connectivity for this policy. If Encaps encapsulation is used for BFD packets, Device A first constructs a BFD packet with both source and destination addresses set to its local IPv6 address a. Then, Device A encapsulates the BFD packet with an additional IPv6 header and an SRH header. The outer IPv6 header's source address is specified by the encapsulation source-address command, and the SRH header contains the SID list of the SRv6 TE policy. When Device D receives the BFD packet, it performs decapsulation on the outer IPv6 and SRH headers, and then uses IPv6 address a to look up the IPv6 routing table to send the packet back to Device A.

NOTE: For more information about selecting the source address for BFD packets, see "Enabling echo BFD for SRv6 TE policies."

Figure 15 Echo BFD for SRv6 TE policy with Encaps encapsulation

SBFD for SRv6 TE policy

SRv6 uses seamless BFD (SBFD) to detect the path connectivity of SRv6 TE policies, instead of sending messages between nodes. SBFD enables an SRv6 TE policy to detect path failures in milliseconds for fast path switchover.

SBFD detects the connectivity of an SRv6 TE policy as follows:

1. The source node (the initiator) sends SBFD packets that encapsulate the SID lists of the primary and backup candidate paths of the SRv6 TE policy.

2. After the endpoint node (the reflector) receives an SBFD packet, it checks whether the remote discriminator carried in the packet is the same as the local discriminator. If yes, the reflector sends the SBFD response packet to the initiator by using the IPv6 routing table. If not, the reflector drops the SBFD packet.

3. If the source node receives the SBFD response within the detection timeout time, it determines that the corresponding SID list (forwarding path) of the SRv6 TE policy is available. If no response is received, the source node determines that the SID list is faulty.

If multiple SID lists are present in the selected candidate path, the SRv6 TE policy establishes separate SBFD sessions to monitor the forwarding path corresponding to each SID list.
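The reflector check and the initiator decision can be sketched in Python as follows. The function names, the discriminator values, and the timer values are hypothetical and serve only to illustrate the three steps above.

```python
from typing import Optional

def reflect(remote_discriminator_in_packet: int, local_discriminator: int) -> str:
    """Reflector: respond only when the discriminators match, otherwise drop."""
    if remote_discriminator_in_packet == local_discriminator:
        return "respond"
    return "drop"

def sid_list_state(response_delay_ms: Optional[float],
                   detection_timeout_ms: float) -> str:
    """Initiator: None means that no response was received at all."""
    if response_delay_ms is not None and response_delay_ms <= detection_timeout_ms:
        return "available"
    return "faulty"

# Hypothetical values for one SBFD session of one SID list.
print(reflect(1000001, 1000001), sid_list_state(12.0, 30.0))
```

Because the device establishes one session per SID list, the initiator would evaluate `sid_list_state` separately for each SID list of the candidate path.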

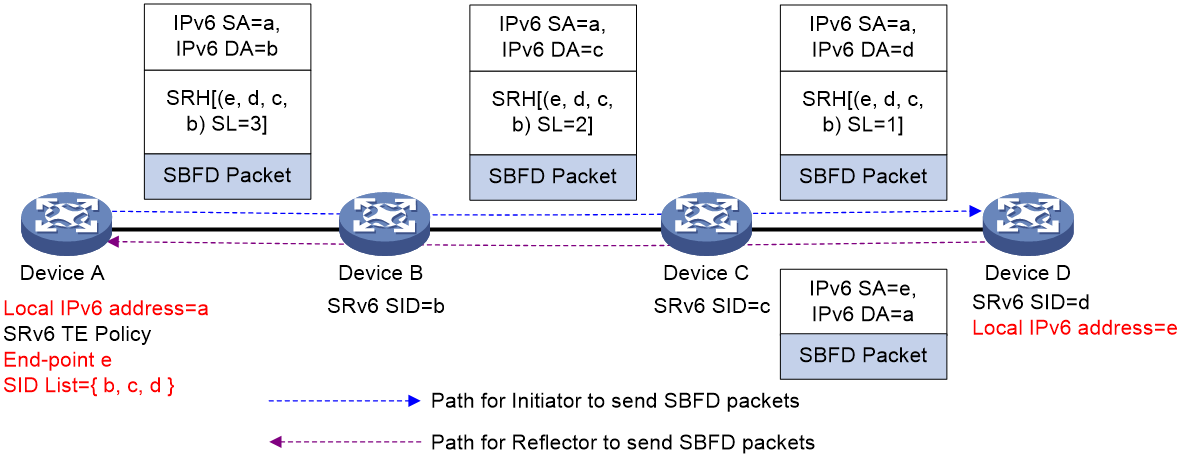

When SBFD is enabled for an SRv6 TE policy, SBFD packets can be encapsulated in Insert or Encaps mode. By default, SBFD packets use the Insert encapsulation mode.

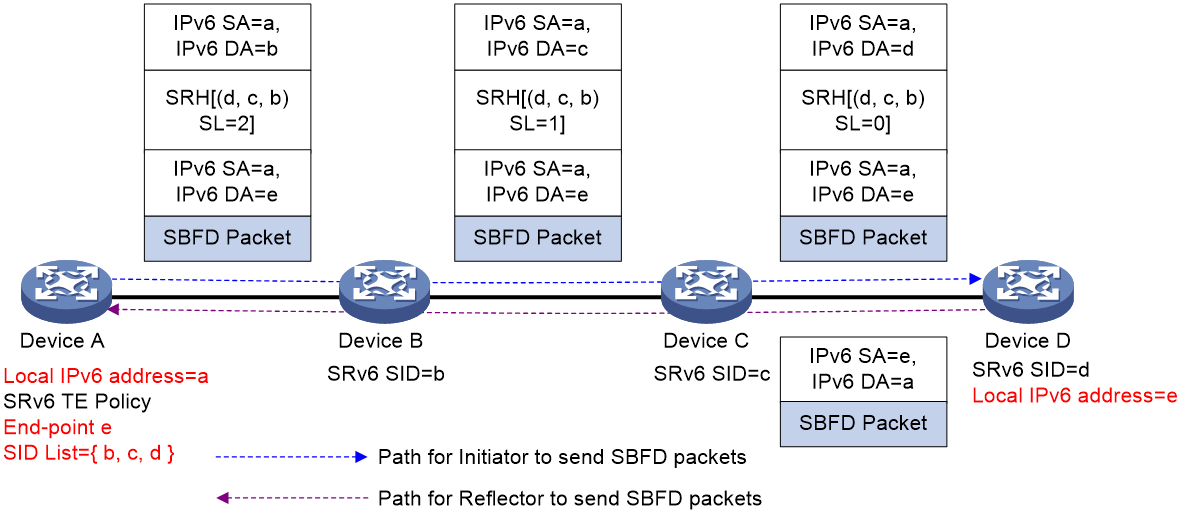

As shown in Figure 16, Device A configures an SRv6 TE policy and uses SBFD to detect connectivity for this policy. When using Insert encapsulation for SBFD packets, Device A constructs an SBFD packet with its local IPv6 address a as the source address and inserts the endpoint address e of the SRv6 TE policy to the SL=0 position in the SID list. When Device D receives the SBFD packet, it performs decapsulation on the IPv6 and SRH headers. Then, it uses IPv6 address a to look up the IPv6 routing table, reconstructs the SBFD packet, and returns the SBFD packet to Device A.

Figure 16 SBFD for SRv6 TE policy with Insert encapsulation

As shown in Figure 17, Device A configures an SRv6 TE policy and uses SBFD to detect connectivity for this policy. When using Encaps encapsulation for SBFD packets, Device A constructs an SBFD packet with its local IPv6 address a as the source address and the endpoint address e of the SRv6 TE policy as the destination address. Additionally, it encapsulates the SBFD packet with an outer IPv6 header and an SRH header, using IPv6 address a as the IPv6 header's source address. When Device D receives the SBFD packet, it performs decapsulation on the outer IPv6 and SRH headers. Then, it uses IPv6 address a to look up the IPv6 routing table, reconstructs the SBFD packet, and returns the SBFD packet to Device A.

Figure 17 SBFD for SRv6 TE policy with Encaps encapsulation

NOTE: Because SBFD responses are forwarded according to the IPv6 routing table lookup, all SBFD sessions for the SRv6 TE policies that have the same source and destination nodes use the same path to send responses. A failure of the SBFD response path will cause all the SBFD sessions to be down and packets cannot be forwarded through the SRv6 TE policies.

BFD/SBFD No-Bypass

When you use BFD or SBFD to detect the connectivity of an SRv6 TE policy, the following conditions might exist:

· All SID lists for the primary candidate path fail.

· A local protection path (for example, a backup path calculated with TI-LFA) is available.

In this situation, all the BFD/SBFD packets will be forwarded through the local protection path. The BFD/SBFD session and primary candidate path will remain in up status, and traffic will be forwarded through the local protection path.

In certain scenarios, the local protection path might have unstable bandwidth and delay issues and fail to meet specific service requirements. In this case, the local protection path can only be used to protect traffic temporarily. When you enable the BFD No-Bypass feature, if all SID lists for the primary candidate path fail, the local protection path does not forward BFD/SBFD packets. The associated BFD/SBFD session then goes down, and the primary candidate path goes down as a result. Traffic will switch over to the backup candidate path or another SRv6 TE policy for forwarding. The BFD No-Bypass feature prevents traffic from being forwarded through the local protection path for a long time.
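The effect of the No-Bypass feature can be summarized in a small Python decision function. The function and parameter names are hypothetical, and the model covers only the cases described above.

```python
from typing import List, Tuple

def primary_path_state(sid_lists_ok: List[bool],
                       local_protection_available: bool,
                       no_bypass: bool) -> Tuple[str, str]:
    """Return (BFD/SBFD session state, where traffic is forwarded)."""
    if any(sid_lists_ok):
        return ("up", "primary candidate path")
    # All SID lists of the primary candidate path have failed.
    if local_protection_available and not no_bypass:
        # Detection packets follow the local protection path, so the
        # session and the primary candidate path stay up.
        return ("up", "local protection path")
    # With No-Bypass, detection packets are not sent over the protection
    # path, so the session and the primary candidate path go down.
    return ("down", "backup candidate path or another SRv6 TE policy")

print(primary_path_state([False, False], True, no_bypass=False))
print(primary_path_state([False, False], True, no_bypass=True))
```

The two calls differ only in the No-Bypass setting: without it the session stays up over the local protection path, and with it traffic switches over to the backup candidate path or another SRv6 TE policy.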

SBFD for SRv6 TE policy by using specific reverse path

By default, the SBFD return packets used for SRv6 TE policy connectivity detection are forwarded based on the IP forwarding path. If a transit node fails, all the return packets will be discarded, and the SBFD sessions will go down as a result. If multiple SRv6 TE policies exist between the source and endpoint nodes, SBFD will mistakenly determine that the SID lists of all SRv6 TE policies are faulty.

To resolve this issue, you can enable SBFD return packets to be forwarded based on the specified SID list. Generally for SRv6 TE policies, the path specified for SBFD return packets (reverse path) is consistent with the path for forwarding SBFD packets (forward path). This scenario is known as SBFD forward and reverse path consistency.

You can specify the SBFD reverse path by specifying a reverse BSID or a path segment (End.PSID).

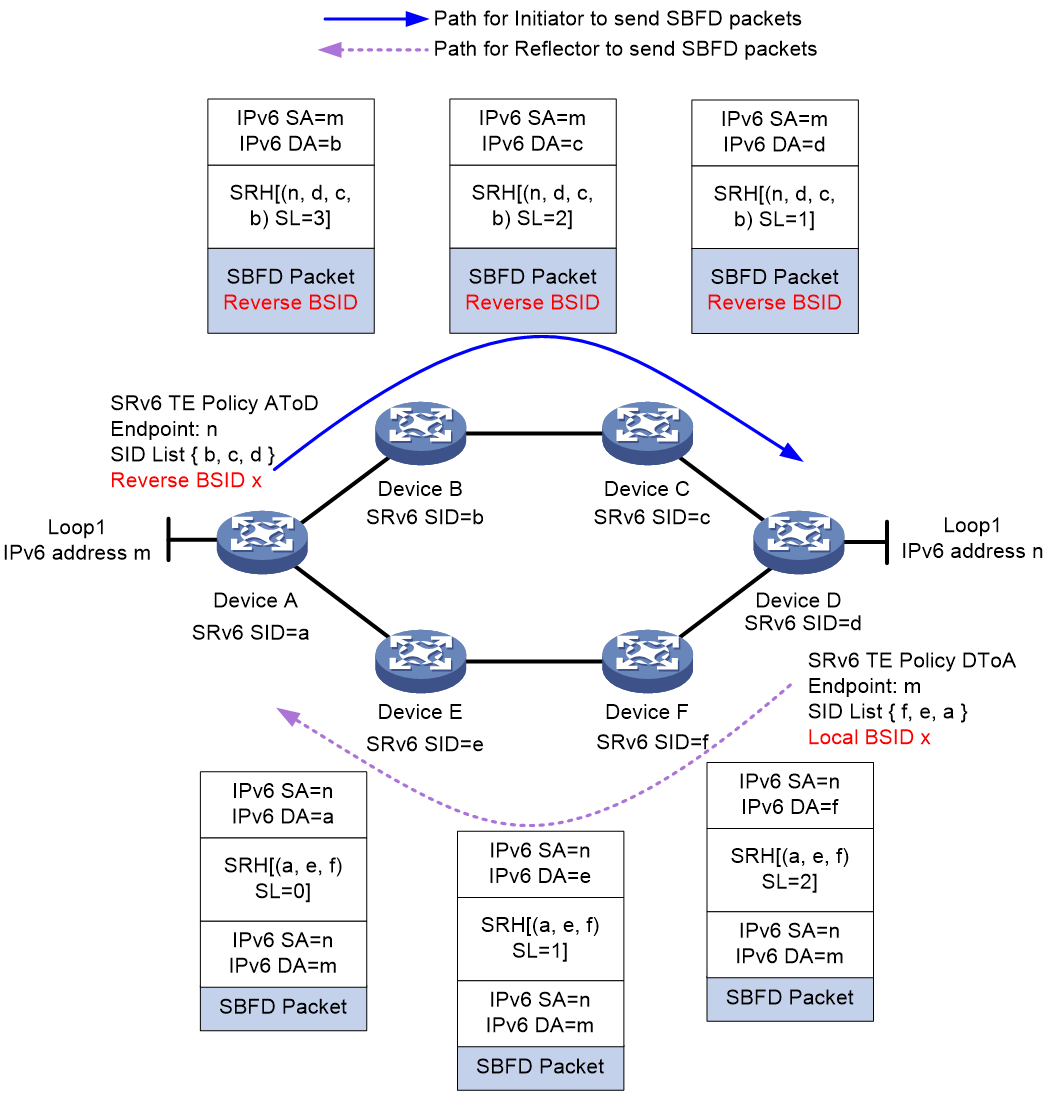

As shown in Figure 18, the reverse BSID method is implemented as follows (using the Insert encapsulation mode):

1. Create an SRv6 TE policy on both Device A and Device D, named AtoD and DtoA, respectively. The forwarding path for SRv6 TE policy AtoD is A > B > C > D and that for SRv6 TE policy DtoA is D > F > E > A. On Device D, assign local BSID x to SID list D > F > E > A.

2. Enable SBFD for SRv6 TE policy AtoD on Device A, and set the reverse BSID for SBFD return packets to x, which is the same as the local BSID specified on Device D. When Device A sends an SBFD packet, it encapsulates an Aux Path TLV (TLV for the backup path) in the packet, which includes the reverse BSID.

3. When Device D receives the SBFD packet, it compares the reverse BSID in the packet with the local BSID. If they are the same, Device D re-encapsulates the IPv6 header and SRH for the return SBFD packet, where the SRH contains the SID list associated with the local BSID.

4. The return packet will then follow path D > F > E > A in the SID list back to Device A.

Figure 18 SBFD for SRv6 TE policy by using specific reverse path (reverse BSID)
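The reverse BSID comparison on Device D can be sketched in Python as follows. The packet layout and function names are hypothetical, and device names stand in for the actual SIDs. The source does not describe what happens when the reverse BSID does not match a local BSID, so the sketch returns None in that case without modeling further behavior.

```python
from typing import Dict, List, Optional

def build_sbfd_packet(reverse_bsid: Optional[str]) -> dict:
    """Initiator: carry the reverse BSID in an Aux Path TLV when one is set."""
    pkt = {"type": "SBFD"}
    if reverse_bsid is not None:
        pkt["aux_path_tlv"] = {"reverse_bsid": reverse_bsid}
    return pkt

def choose_return_sid_list(pkt: dict,
                           local_bsids: Dict[str, List[str]]) -> Optional[List[str]]:
    """Reflector: return the SID list bound to the matching local BSID."""
    tlv = pkt.get("aux_path_tlv")
    if tlv is None:
        return None  # No reverse BSID: the return packet follows the IP forwarding path.
    # A mismatch also yields None; its handling is not described in the source.
    return local_bsids.get(tlv["reverse_bsid"])

# On Device D, local BSID x is assigned to SID list D > F > E > A.
device_d_bsids = {"x": ["F", "E", "A"]}
print(choose_return_sid_list(build_sbfd_packet("x"), device_d_bsids))
```

When the reverse BSID in the packet equals local BSID x, the return packet is built with the SID list for path D > F > E > A.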

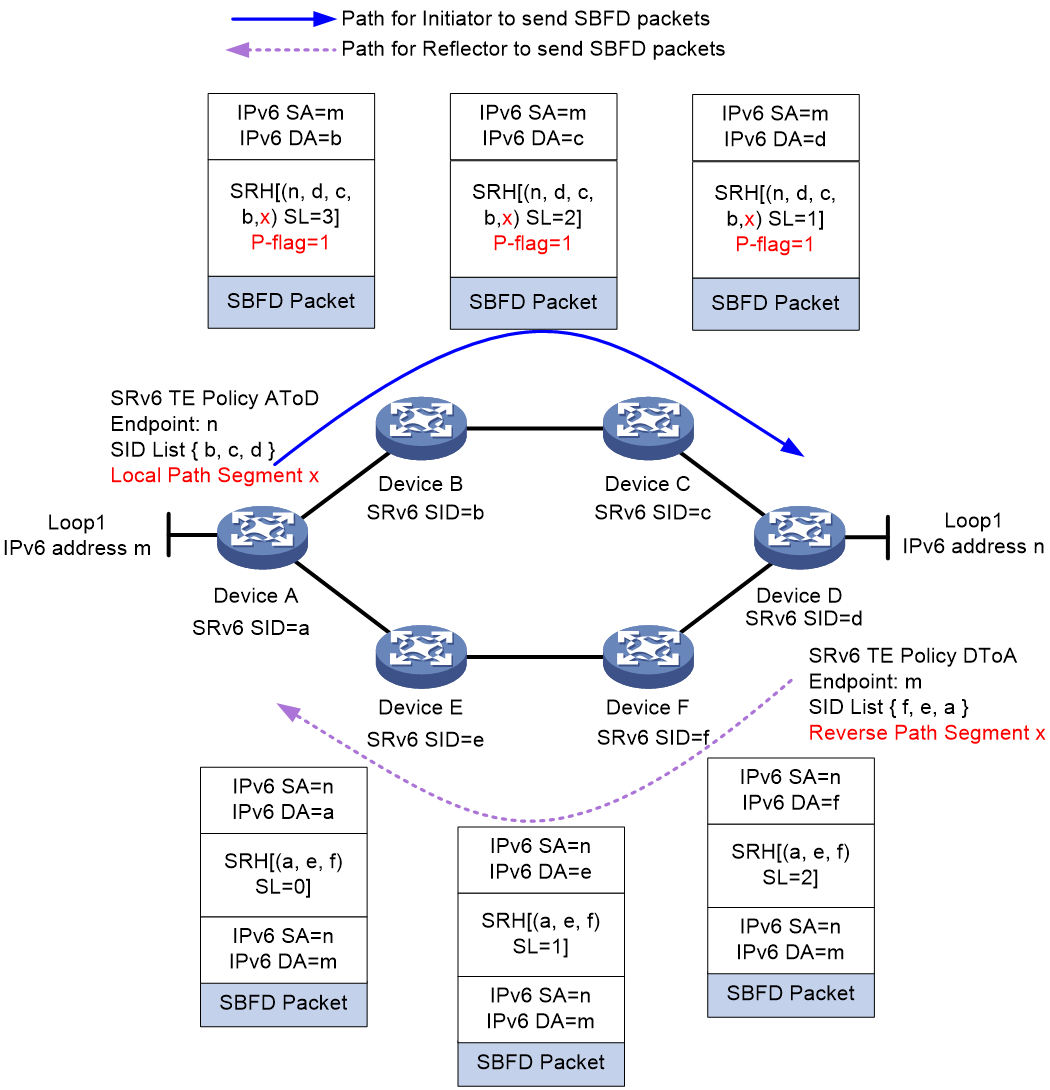

As shown in Figure 19, the path segment (End.PSID) method is implemented as follows (using the Insert encapsulation mode):

1. Create an SRv6 TE policy on both Device A and Device D, named AtoD and DtoA, respectively. The forwarding path for SRv6 TE policy AtoD is A > B > C > D and that for SRv6 TE policy DtoA is D > F > E > A. On Device D, assign reverse path segment x to SID list D > F > E > A.

2. On Device A, enable SBFD for SRv6 TE policy AtoD, and set the local path segment for SBFD return packets to x, which is the same as the reverse path segment specified on Device D. When Device A sends an SBFD packet, it sets the fifth bit of the Flags field in the SRH header, known as the P-flag, to indicate that the SRH header carries a path segment. Then, it encapsulates the local path segment (End.PSID) into the SRH header, at the SRH[SL +1] position in the SID list.

3. After Device D receives the SBFD packet and finds that the P-flag is set, it retrieves the path segment information from the packet. On Device D, the reverse path segment value specified for local SID list D > F > E > A is the same as the path segment value in the SRH header of the received packet. Therefore, Device D decapsulates the packet and re-encapsulates an IPv6 header and SRH for the return SBFD packet, where the SRH contains the SID list associated with the reverse path segment.

4. The return packet will then follow path D > F > E > A in the SID list back to Device A.

Figure 19 SBFD for SRv6 TE policy by using specific reverse path (path segment)

BFD for SRv6 TE policy by using specific reverse path

By default, the BFD return packets used for SRv6 TE policy connectivity detection are forwarded based on the IP forwarding path. If a transit node fails, all the return packets will be discarded, and the BFD sessions will go down as a result. If multiple SRv6 TE policies exist between the source and endpoint nodes, BFD will mistakenly determine that the SID lists of all SRv6 TE policies are faulty.

To resolve this issue, you can enable the return packets for BFD echo packets to be forwarded based on the specified SID list. Generally for SRv6 TE policies, the path specified for BFD echo return packets (reverse path) is consistent with the path for forwarding BFD echo packets (forward path). This scenario is known as BFD forward and reverse path consistency.

You can specify the BFD reverse path by using the following methods:

· Specifying a reverse BSID

· Specifying an End.XSID

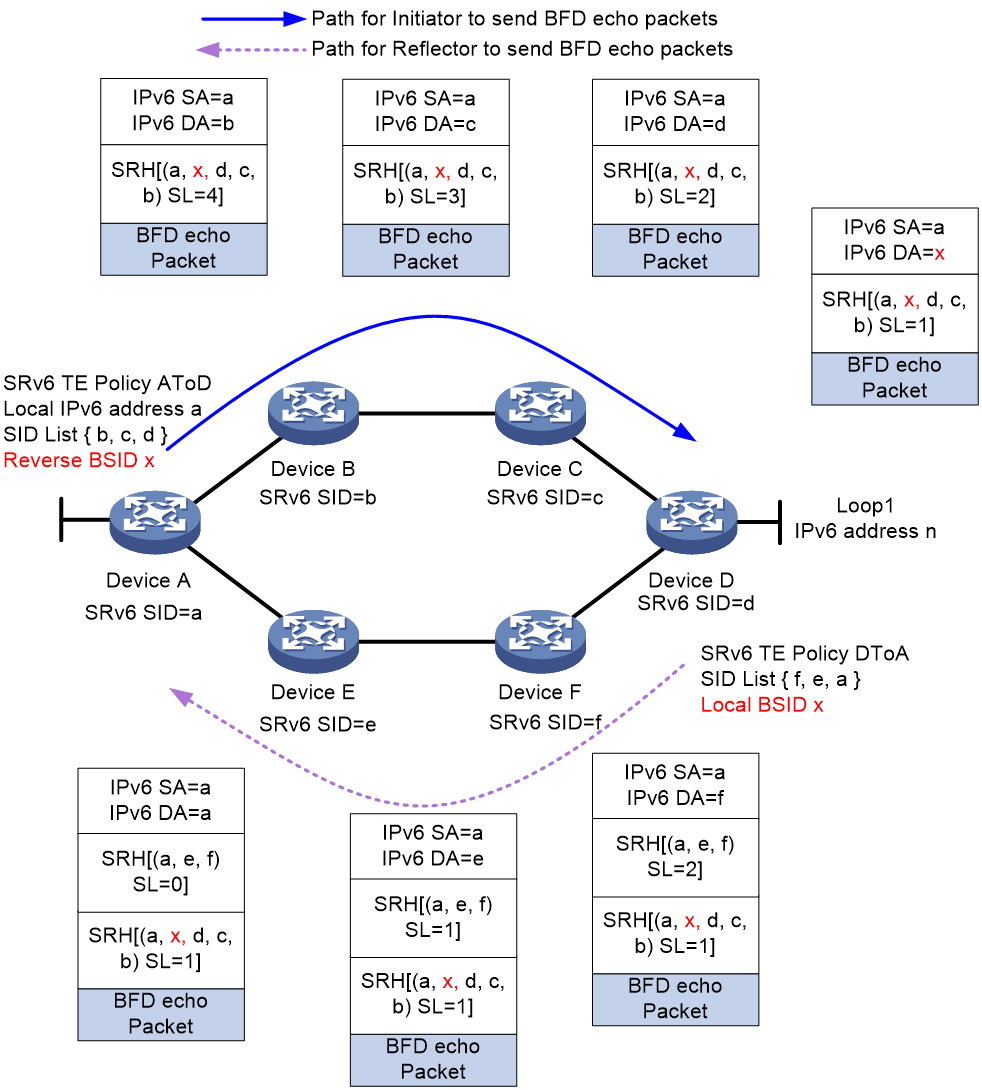

As shown in Figure 20, the reverse BSID method is implemented as follows (using the Insert encapsulation mode):

1. Create an SRv6 TE policy on both Device A and Device D, named AtoD and DtoA, respectively. The forwarding path for SRv6 TE policy AtoD is A > B > C > D and that for SRv6 TE policy DtoA is D > F > E > A. On Device D, assign local BSID x to SID list D > F > E > A.

2. Enable echo BFD for SRv6 TE policy AtoD on Device A, and set the reverse BSID for BFD echo return packets to x, which is the same as the local BSID specified on Device D. When Device A sends a BFD echo packet, it encapsulates the reverse BSID into the SRH header, at the SRH[1] position in the SID list.

3. When Device D receives the BFD echo packet, it finds that SL is 1 and the destination address is x in the packet. The destination address x is the same as the local BSID specified for the local SID list D > F > E > A. As a result, Device D inserts a new SRH into the BFD echo return packet, carrying the SID list associated with the local BSID.

4. The return packet will then follow path D > F > E > A in the SID list back to Device A.

Figure 20 BFD for SRv6 TE policy by using specific reverse path (reverse BSID)

As shown in Figure 21, the End.XSID method is implemented as follows (using the Encaps encapsulation mode):

1. Create an SRv6 TE policy on both Device A and Device D, named AtoD and DtoA, respectively. The forwarding path for SRv6 TE policy AtoD is A > B > C > D and that for SRv6 TE policy DtoA is D > F > E > A. On Device D, assign reverse End.XSID x for SID list D > F > E > A.

2. Enable echo BFD for SRv6 TE policy AtoD on Device A, and set the local End.XSID for BFD echo return packets to x, which is the same as the reverse End.XSID specified on Device D. When Device A sends a BFD echo packet, it encapsulates a new IPv6 header and SRH header outside the BFD echo packet header, where the SRH contains the local End.XSID at the SRH[0] position in the SID list.

3. When Device D receives the BFD echo packet, it finds that SL is 0 and the destination address is x in the packet. The destination address x is the same as the reverse End.XSID specified for the local SID list D > F > E > A. As a result, Device D executes the forwarding behavior of the reverse End.XSID: it decapsulates the IPv6 header and SRH of the BFD echo packet, and then encapsulates the packet with a new IPv6 header and SRH that carries the SID list associated with the reverse End.XSID.

4. The return packet will then follow path D > F > E > A in the SID list back to Device A.

Figure 21 BFD for SRv6 TE policy by using specific reverse path (End.XSID)
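The decapsulate-then-encapsulate behavior in step 3 of the End.XSID method can be sketched as follows. This is a simplified Python model under stated assumptions: the class `Encap`, the function `process_on_device_d`, and the SID names `f`, `e`, and `a` are hypothetical. The segment list is stored in reverse order, as defined for the SRH in RFC 8754, so the first SID to visit sits at the highest index.

```python
from dataclasses import dataclass


@dataclass
class Encap:
    da: str          # destination address of the outer IPv6 header
    sl: int          # Segments Left value of the SRH
    segments: tuple  # SRH segment list, SRH[0] first
    payload: object  # inner BFD echo packet


def process_on_device_d(pkt, reverse_end_xsids):
    """Apply the reverse End.XSID behavior if the packet matches it.

    reverse_end_xsids maps a reverse End.XSID to the SID list it is
    assigned to, in visiting order (hypothetical table layout).
    """
    path = reverse_end_xsids.get(pkt.da)
    if pkt.sl != 0 or path is None:
        return pkt  # not an End.XSID match; left to normal processing
    inner = pkt.payload  # decapsulate the outer IPv6 header and SRH
    # Encapsulate a new IPv6 header and SRH that carries the return SID list.
    return Encap(
        da=path[0],
        sl=len(path) - 1,
        segments=tuple(reversed(path)),
        payload=inner,
    )


echo = Encap(da="x", sl=0, segments=("x",), payload="bfd-echo")
reply = process_on_device_d(echo, {"x": ["f", "e", "a"]})
```

Unlike the reverse BSID method, the match happens at SL = 0 and the original outer headers are discarded instead of kept.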

BFD down triggering candidate path reselection for an SRv6 TE policy

This feature enables collaboration between the BFD scheme and hot standby scheme for an SRv6 TE policy. It allows BFD session down events to trigger candidate path reselection for SRv6 TE policies.

By default, when the SRv6 TE policy has multiple valid candidate paths, the device behaves as follows:

· If the hot standby feature is disabled, BFD or SBFD detects all SID lists for only the optimal valid candidate path of the SRv6 TE policy. The device establishes a separate BFD or SBFD session for each SID list. When all BFD or SBFD sessions go down, the SRv6 TE policy will not select other valid candidate paths, and the device will not forward packets through the SRv6 TE policy.

· If the hot standby feature is enabled, BFD or SBFD detects all SID lists for the primary and backup paths of the SRv6 TE policy. The device establishes a separate BFD or SBFD session for each SID list.

¡ If all BFD or SBFD sessions for the primary path go down, the SRv6 TE policy will use the backup path to forward packets without reselecting other valid candidate paths.

¡ If all BFD or SBFD sessions for the primary and backup paths go down, the SRv6 TE policy will not select other valid candidate paths, and the device will not forward packets through the SRv6 TE policy.

If you enable BFD session down events to trigger SRv6 TE policy path reselection, the device behaves as follows when the SRv6 TE policy has multiple valid candidate paths:

· If the hot standby feature is disabled, BFD or SBFD detects all SID lists for only the optimal valid candidate path of the SRv6 TE policy. The device establishes a separate BFD or SBFD session for each SID list. When all BFD or SBFD sessions go down, the SRv6 TE policy will reselect another valid candidate path for packet forwarding. If no valid candidate paths are available for the SRv6 TE policy, the device cannot forward packets through the SRv6 TE policy.

· If the hot standby feature is enabled, BFD or SBFD detects all SID lists for the primary and backup paths of the SRv6 TE policy. The device establishes a separate BFD or SBFD session for each SID list.

¡ If all BFD or SBFD sessions for the primary path go down, the SRv6 TE policy will use the backup path to forward packets, and reselect the primary and backup paths.

¡ If all BFD or SBFD sessions for the primary and backup paths go down, the SRv6 TE policy will reselect other valid candidate paths as the primary and backup paths. The device will forward packets through the new primary path of the SRv6 TE policy.

¡ During optimal path reselection, if no valid candidate paths are available for the SRv6 TE policy, the device cannot forward packets through the SRv6 TE policy.
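The default behavior and the reselection behavior described above can be compared with a small decision model. This is a sketch under stated assumptions, not the device algorithm: the names `CandidatePath` and `forwarding_path` are hypothetical, a higher preference value is assumed to be preferred, and `bfd_up` stands for "at least one BFD or SBFD session for the SID lists of the path is up or would come up after the path is selected".

```python
from dataclasses import dataclass


@dataclass
class CandidatePath:
    name: str
    preference: int
    bfd_up: bool  # False when all BFD or SBFD sessions of the path are down


def forwarding_path(paths, hot_standby, reselect_on_bfd_down):
    """Return the name of the path used for forwarding, or None."""
    ranked = sorted(paths, key=lambda p: p.preference, reverse=True)
    # Without hot standby only the optimal path is monitored.
    # With hot standby the primary and backup paths are monitored.
    monitored = ranked[:2] if hot_standby else ranked[:1]
    for path in monitored:
        if path.bfd_up:
            return path.name
    if reselect_on_bfd_down:
        # All monitored paths are down: reselect among the remaining paths.
        for path in ranked[len(monitored):]:
            if path.bfd_up:
                return path.name
    return None  # the device cannot forward through the SRv6 TE policy


paths = [
    CandidatePath("cp1", 200, bfd_up=False),
    CandidatePath("cp2", 100, bfd_up=False),
    CandidatePath("cp3", 50, bfd_up=True),
]
default_result = forwarding_path(paths, hot_standby=True, reselect_on_bfd_down=False)
reselect_result = forwarding_path(paths, hot_standby=True, reselect_on_bfd_down=True)
```

With the sample data, the default behavior leaves the policy without a forwarding path, while reselection moves traffic to `cp3`.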

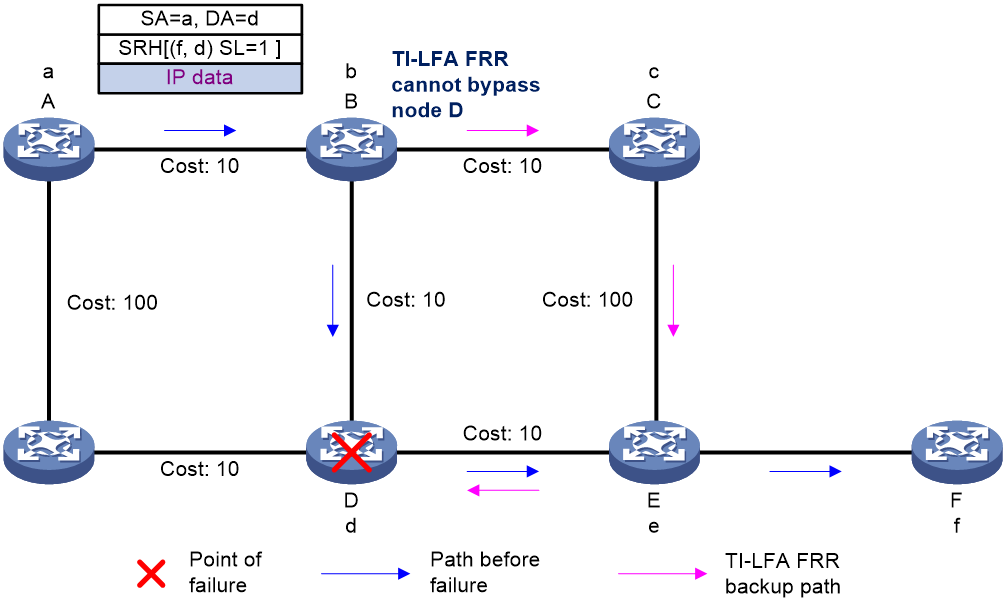

SRv6 TE policy transit node protection

Protection path failure

The SID list of an SRv6 TE policy specifies the nodes or links that packets must traverse. As shown in Figure 22, node A forwards packets to node F through an SRv6 TE policy. The SID list of the optimal candidate path contains the End.SIDs of node D and egress node F. Therefore, a packet must pass through node D before it reaches egress node F. If TI-LFA FRR is enabled on node B and node D fails, the backup path calculated by TI-LFA FRR still uses the End.SID of node D as the destination. As a result, the backup path cannot bypass node D and cannot provide service protection.

Figure 22 Protection path failure

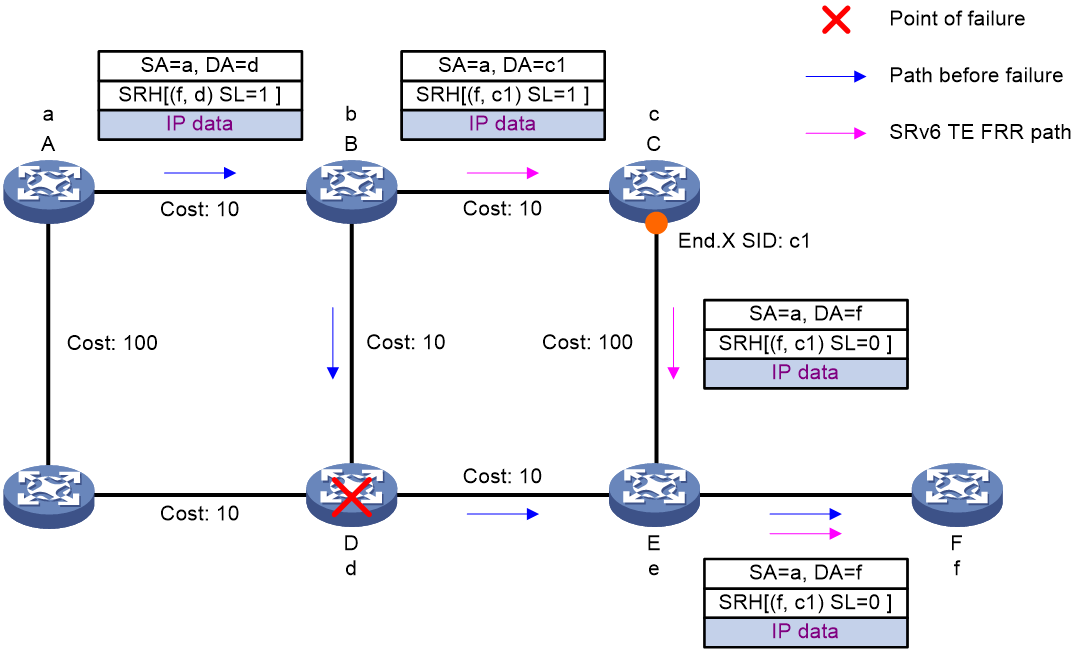

Transit node protection (SRv6 TE FRR)

To resolve the protection path failure caused by strict node constraints in an SRv6 TE policy, SRv6 TE FRR is introduced.

After SRv6 TE FRR is enabled, when a transit node of an SRv6 TE policy fails, the upstream node (enabled with SRv6 TE FRR) of the faulty node can take over to forward packets. The upstream node is called a proxy forwarding node. During proxy forwarding, the faulty node is bypassed by traffic.

After SRv6 TE FRR is enabled on a node, upon receiving a packet that contains an SRH and the SL in the SRH is greater than 0 (SL>0), the node will act as a proxy forwarding node in any of the following scenarios:

· The node does not find a matching forwarding entry in the IPv6 FIB.

· The next hop address of the packet is the destination address of the packet, and the outgoing interface for the destination address is in DOWN state.

· The matching local SRv6 SID is an End.X SID, and the outgoing interface for the End.X SID is in DOWN state.

· The outgoing interface in the matching route is NULL0.

A proxy forwarding node forwards packets as follows:

· Decrements the SL value in the SRH of a packet by 1.

· Copies the next SID to the DA field in the outer IPv6 header, so as to use the SID as the destination address of the packet.

· Looks up the forwarding table by the destination address and then forwards the packet.

In this way, the proxy forwarding node bypasses the faulty node. This transit node failure protection technology is referred to as SRv6 TE FRR.
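The trigger conditions and the three proxy forwarding steps can be sketched as follows. This is an illustrative Python model: the class and field names (`Packet`, `LookupResult`, `fib_hit`, and so on) are assumptions, and the segment list is stored in SRH order, where `segments[sl]` is the active SID.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    da: str          # destination address in the outer IPv6 header
    sl: int          # Segments Left value in the SRH
    segments: list   # SRH segment list; segments[sl] is the active SID


@dataclass
class LookupResult:
    fib_hit: bool = True           # a matching IPv6 FIB entry exists
    next_hop_is_da: bool = False   # next hop address equals the packet DA
    out_if_up: bool = True         # state of the outgoing interface
    out_if_null0: bool = False     # outgoing interface of the route is NULL0
    local_end_x: bool = False      # DA matches a local End.X SID


def needs_proxy_forwarding(pkt, res):
    """Return True if any of the four trigger conditions holds and SL > 0."""
    if pkt.sl <= 0:
        return False
    return (
        not res.fib_hit
        or (res.next_hop_is_da and not res.out_if_up)
        or (res.local_end_x and not res.out_if_up)
        or res.out_if_null0
    )


def proxy_forward(pkt):
    """Decrement SL, copy the next SID to the DA, and return the new DA."""
    pkt.sl -= 1
    pkt.da = pkt.segments[pkt.sl]
    return pkt.da  # the caller looks up the forwarding table by this address


# SID list {d, f}: d is the active SID, and the link toward node D is down.
pkt = Packet(da="d", sl=1, segments=["f", "d"])
failure = LookupResult(next_hop_is_da=True, out_if_up=False)
triggered = needs_proxy_forwarding(pkt, failure)
new_da = proxy_forward(pkt) if triggered else pkt.da
```

After proxy forwarding, the packet is addressed to `f` with SL = 0, so a second failure at the same node does not trigger proxy forwarding again for this packet.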

Figure 23 SRv6 TE FRR for transit node failure protection

As shown in Figure 23, after a packet is steered into an SRv6 TE policy, the packet is forwarded as instructed by the SID list {d, f}.

When node D fails, the node failure protection process is as follows:

1. Upstream node B detects that the next hop of the packet is faulty. In this situation, the outgoing interface for the destination address of the packet is in DOWN state, and the SL value is greater than 0. Therefore, node B performs the proxy forwarding behavior: it decrements the SL value by 1, copies the next SID to the DA field in the outer IPv6 header, and then forwards the packet according to the SID (destination address of the packet). Because the SL value is now 0, node B can remove the SRH and then look up the forwarding table by the destination address (f) to forward the packet.

2. Node B forwards the packet as follows:

¡ If the route to node F is converged (the next hop in the route is node C), node B uses the converged shortest path to forward the packet to node C.

¡ If the route to node F is not converged (the primary next hop in the route is node D), node B uses the TI-LFA computed backup path to forward the packet. The repair list of the backup path is <c1>. Therefore, node B encapsulates the packet with an SRH that carries the backup path segment list c1, and then forwards the packet over the backup path to node F.

Special processing on the source node

If source node A (ingress node) of the SRv6 TE policy detects the failure of node D (the first SID in the SID list is unreachable), node A places the SRv6 TE policy in the down state. In this state, the device cannot forward packets through the SRv6 TE policy or trigger SRv6 TE FRR.

To resolve this issue, enable the bypass feature for the SRv6 TE policy on the source node. This feature enables the source node to generate a route that uses the first SID as the destination address and the NULL0 interface as the outgoing interface. The route ensures that the SRv6 TE policy is in up state when the first SID is unreachable, so as to trigger SRv6 TE FRR.
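The effect of the bypass route can be illustrated with a toy model. The sketch below is an assumption-laden simplification: the forwarding table is an exact-match dictionary from SID to outgoing interface, and the names `first_sid_route`, `policy_state`, and the interface name `GE1/0/1` are hypothetical.

```python
NULL0 = "NULL0"


def first_sid_route(first_sid, fib, bypass_enabled):
    """Return the outgoing interface used for the first SID, or None."""
    if first_sid in fib:
        return fib[first_sid]
    # Bypass feature: a route to the first SID with NULL0 as outgoing interface.
    return NULL0 if bypass_enabled else None


def policy_state(first_sid, fib, bypass_enabled):
    """The policy stays up as long as some route to the first SID exists."""
    return "up" if first_sid_route(first_sid, fib, bypass_enabled) else "down"


healthy_fib = {"d": "GE1/0/1"}   # node D reachable
failed_fib = {}                  # node D failed, route to SID d withdrawn
state_without_bypass = policy_state("d", failed_fib, bypass_enabled=False)
state_with_bypass = policy_state("d", failed_fib, bypass_enabled=True)
out_if = first_sid_route("d", failed_fib, bypass_enabled=True)
```

Because the surviving route points to NULL0, a lookup for the first SID meets the "outgoing interface in the matching route is NULL0" condition, which is one of the scenarios in which a node acts as a proxy forwarding node.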

After node A triggers SRv6 TE FRR, node A decrements the SL value by 1, copies the next SID (f) to the DA field in the outer IPv6 header, and then forwards the packet to node B according to the SID (destination address of the packet).

When node B receives the packet, it processes the packet as follows:

· If the route to node F is converged (the next hop in the route is node C), node B uses the converged shortest path to forward the packet to node C.

· If the route to node F is not converged (the primary next hop in the route is node D), node B uses the TI-LFA computed backup path to forward the packet to node F.

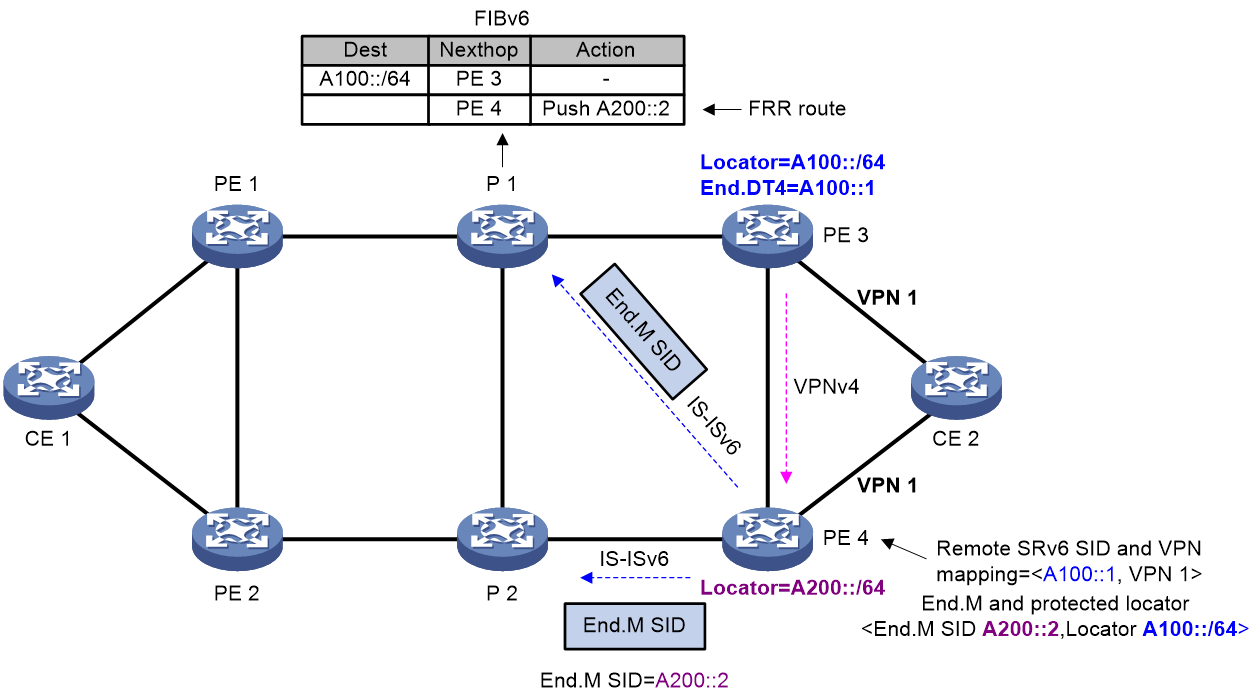

SRv6 egress protection

This feature provides egress node protection in IP L3VPN over SRv6, EVPN L3VPN over SRv6, EVPN VPLS over SRv6, EVPN VPWS over SRv6, or IP public network over SRv6 networks where the public tunnel is an SRv6 TE policy tunnel.

SRv6 TE policy egress protection applies only to the dual homing scenario where a CE is dual-homed to PEs. To implement SRv6 TE policy egress protection, the egress node and the protected egress node must have the same forwarding entries.

IMPORTANT:

· SRv6 TE policy egress protection is not supported in EVPN VPLS over SRv6 and EVPN VPWS over SRv6 networks if dual homing is configured in the networks and the redundancy mode is single-active.

· In addition to scenarios where SRv6 TE policies act as tunnels, SRv6 TE policy egress protection also takes effect on scenarios where SRv6 BE-based forwarding is used.

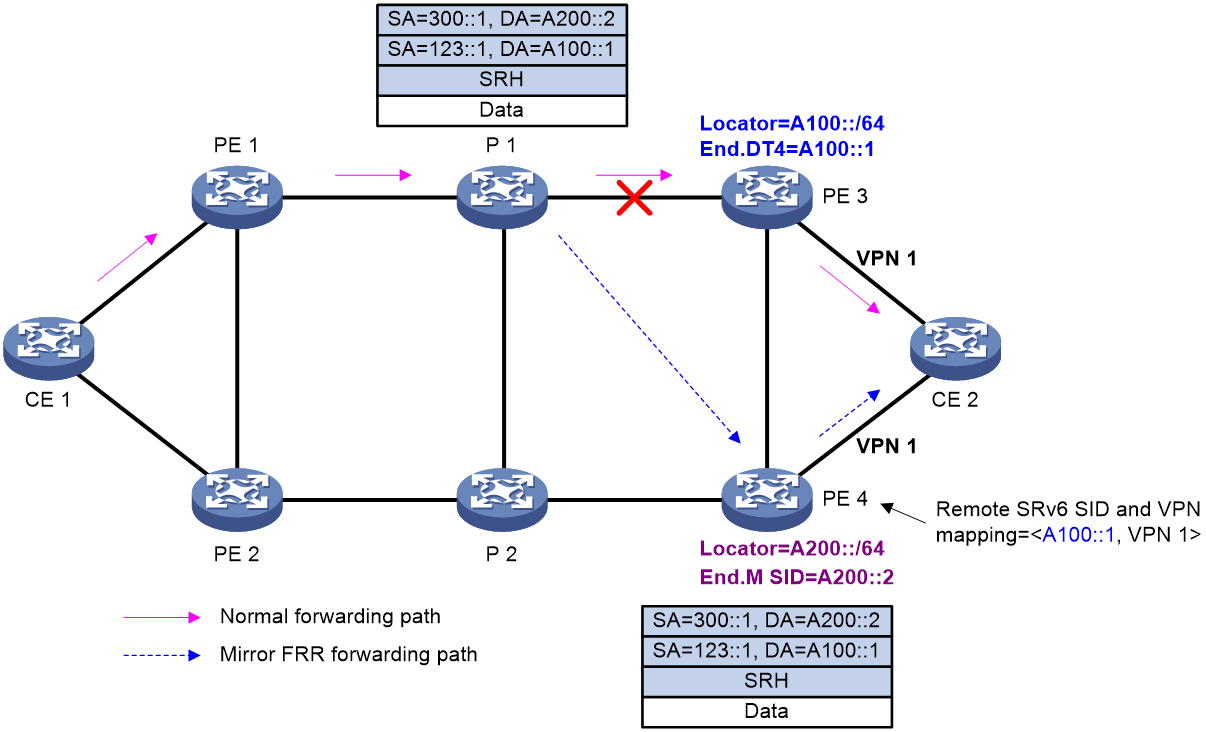

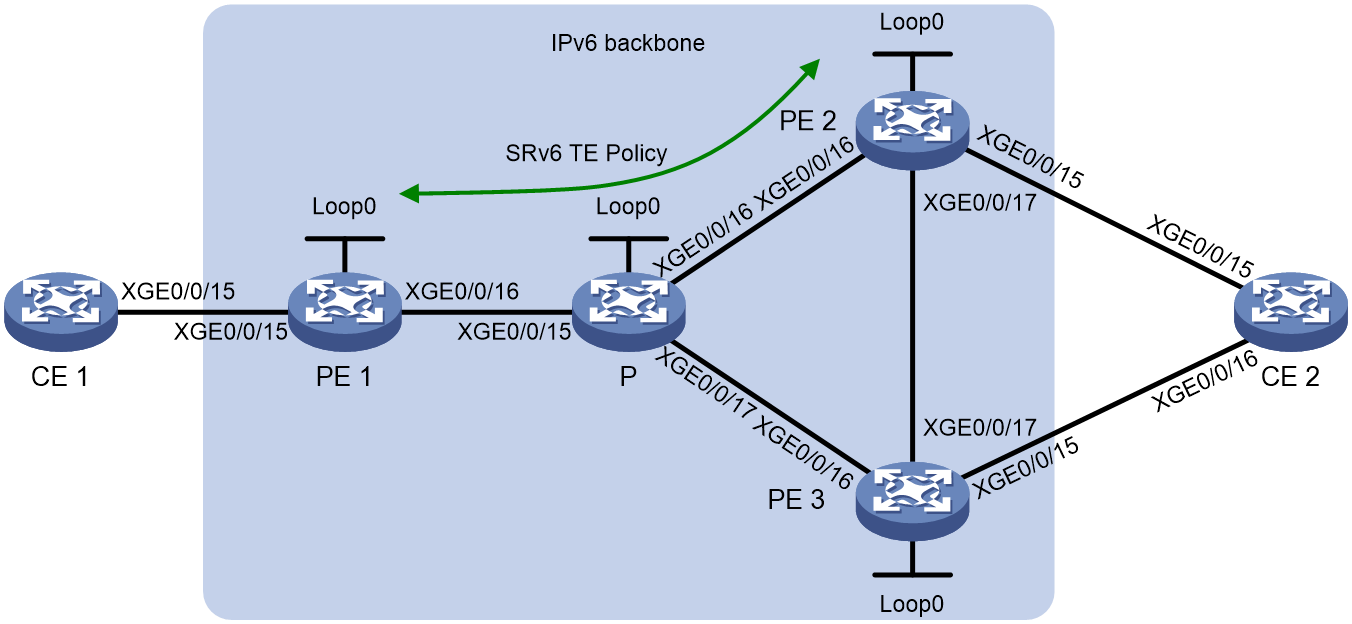

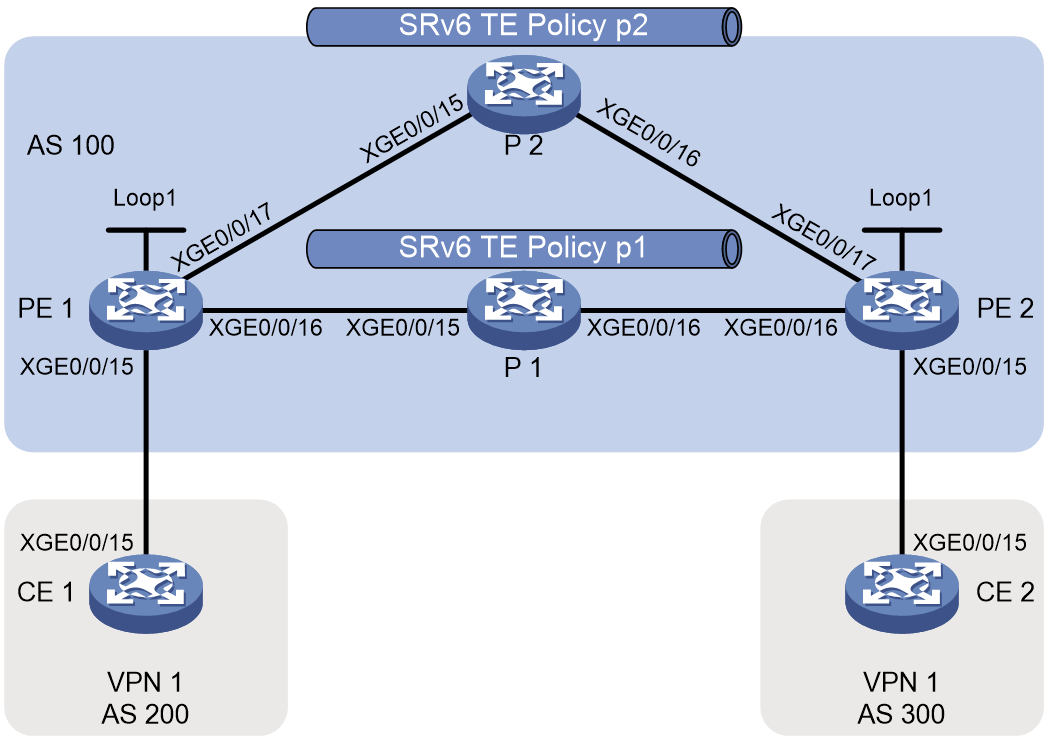

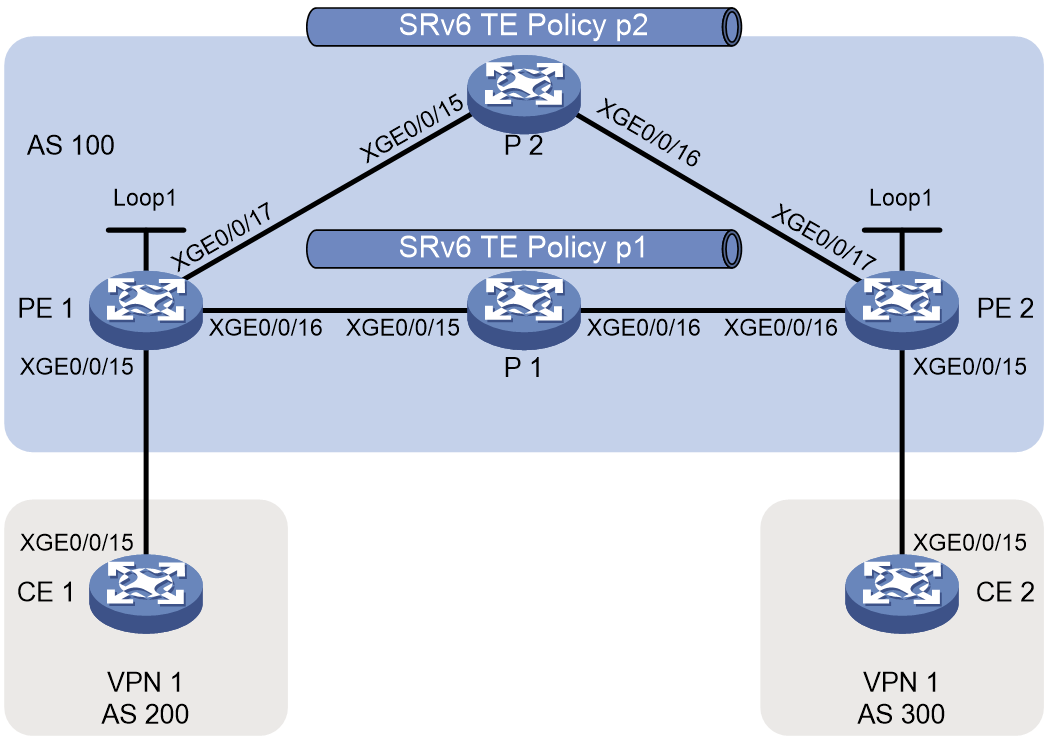

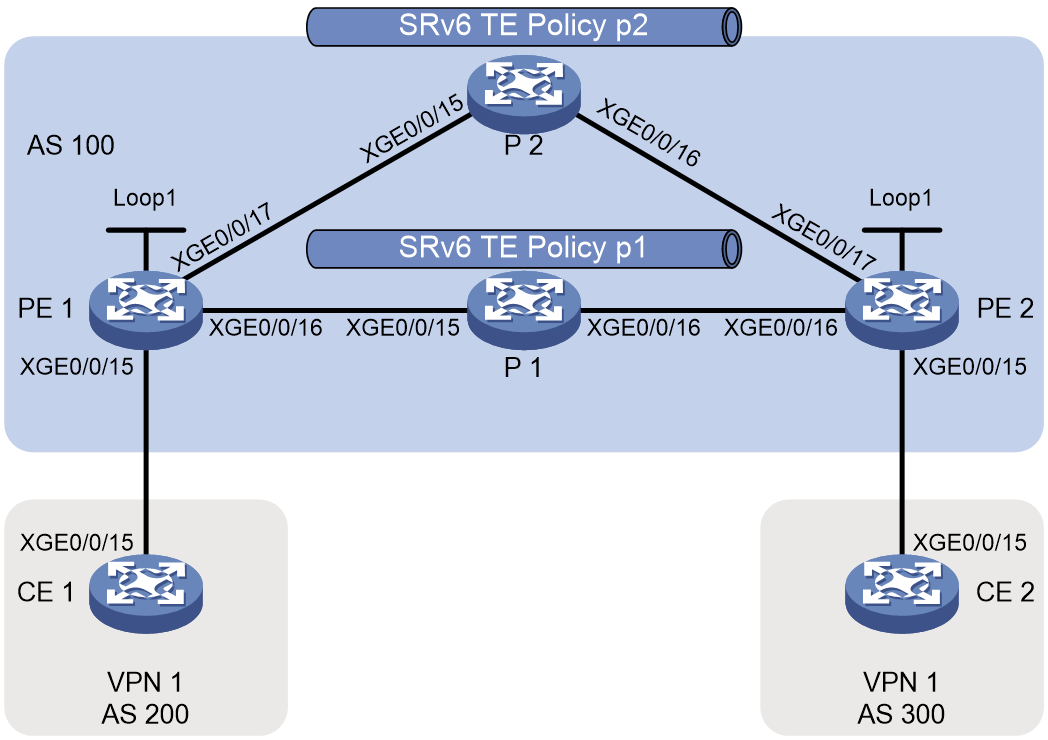

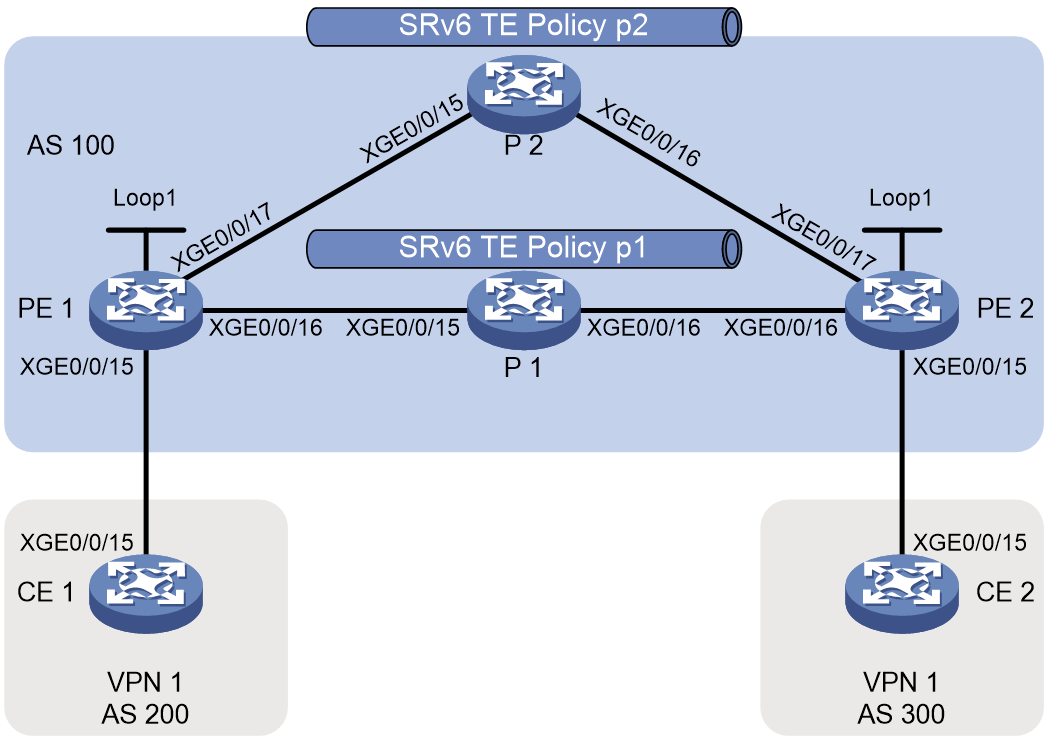

As shown in Figure 24, an SRv6 TE policy is deployed between PE 1 and PE 3. PE 3 is the egress node (endpoint node) of the SRv6 TE policy. To improve forwarding reliability, CE 2 is dual-homed to PE 3 and PE 4, and PE 4 is enabled to protect PE 3.

Figure 24 SRv6 TE policy egress protection

End.M SID

In SRv6 TE policy egress protection, an End.M SID is used to protect the SRv6 SIDs in a specific locator. If an SRv6 SID advertised by the remote device (PE 3 in this example) is within the locator, the protection node (PE 4) uses the End.M SID to protect that SRv6 SID (remote SRv6 SID).

PE 4 takes different actions as instructed by an End.M SID in different network scenarios:

· IP/EVPN L3VPN/IP public network over SRv6 TE policy scenario

a. Removes the outer IPv6 header to obtain the remote SRv6 SID from the inner packet.

b. Searches the remote SRv6 SID and VPN/public instance mapping table to find the VPN/public instance mapped to the remote SRv6 SID.

c. Forwards the packet by looking up the routing table of the VPN/public instance.

· EVPN VPWS over SRv6 TE policy scenario

a. Removes the outer IPv6 header to obtain the remote SRv6 SID from the inner packet.

b. Searches the remote SRv6 SID and cross-connect mapping table to find the cross-connect mapped to the remote SRv6 SID.

c. Forwards the packet to the AC associated with the cross-connect.

In this scenario, the remote SRv6 SID must be an End.DX2 SID.

· EVPN VPLS over SRv6 TE policy scenario

a. Removes the outer IPv6 header to obtain the remote SRv6 SID from the inner packet.

b. Searches the remote SRv6 SID and VSI mapping table to find the VSI mapped to the remote SRv6 SID.

c. Forwards the packet according to the MAC address forwarding table of the VSI.

In this scenario, the remote SRv6 SID must be an End.DT2U SID.
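The per-scenario actions of an End.M SID follow the same pattern: obtain the remote SRv6 SID from the inner packet, look it up in a mapping table, and forward accordingly. A minimal Python sketch of this dispatch is shown below. The scenario keys, action labels, function name `end_m_action`, and the `drop` result for a missing mapping are assumptions made for illustration.

```python
# Required remote SRv6 SID type per scenario (None means no type is stated).
REQUIRED_SID_TYPE = {"l3": None, "evpn-vpws": "End.DX2", "evpn-vpls": "End.DT2U"}

# Forwarding action per scenario (hypothetical labels).
ACTION = {
    "l3": "route-lookup",         # routing table of the VPN/public instance
    "evpn-vpws": "forward-to-ac", # AC associated with the cross-connect
    "evpn-vpls": "mac-lookup",    # MAC address forwarding table of the VSI
}


def end_m_action(scenario, remote_sid, sid_type, mapping):
    """Return (action, target) for a packet after the outer IPv6 header is removed.

    mapping maps a remote SRv6 SID to a VPN/public instance, cross-connect,
    or VSI, depending on the scenario.
    """
    if scenario not in ACTION:
        raise ValueError(f"unknown scenario: {scenario}")
    required = REQUIRED_SID_TYPE[scenario]
    if required is not None and sid_type != required:
        raise ValueError(f"{scenario} requires an {required} SID")
    target = mapping.get(remote_sid)
    if target is None:
        return ("drop", None)  # assumption: no mapping means no forwarding
    return (ACTION[scenario], target)


l3_result = end_m_action("l3", "sid-1", "End.DT4", {"sid-1": "vpn1"})
vpws_result = end_m_action("evpn-vpws", "sid-2", "End.DX2", {"sid-2": "xc-a"})
```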

Remote SRv6 SID

As shown in Figure 24, PE 4 receives a BGP route from PE 3. If the SRv6 SID in the BGP route is within the locator protected by the End.M SID on PE 4, PE 4 regards the SRv6 SID as a remote SRv6 SID and generates a mapping between the remote SRv6 SID and the VPN instance, public instance, cross-connect, or VSI.

When the adjacency between PE 4 and PE 3 breaks, PE 4 deletes the BGP route received from PE 3. As a result, the mapping between the remote SRv6 SID and the VPN instance, public instance, cross-connect, or VSI is deleted, which causes packet loss. To avoid this issue, you can configure a deletion delay for the mappings on PE 4. The delay ensures that traffic continues to be forwarded through PE 4 until PE 1 detects the PE 3 failure and computes a new forwarding path.

Route advertisement

The route advertisement procedure is similar in IP L3VPN, EVPN L3VPN, EVPN VPWS, EVPN VPLS, or IP public network over SRv6 TE policy egress protection scenarios. The following example describes the route advertisement in an IP L3VPN over SRv6 TE policy egress protection scenario.

As shown in Figure 24, the FRR path is generated on P 1 as follows:

1. PE 4 advertises the End.M SID and the protected locator to P 1 through an IPv6 IS-IS route. Meanwhile, PE 4 generates a local SID entry for the End.M SID.

2. Upon receiving the route that carries the End.M SID and the protected locator, P 1 installs a Mirror FRR backup route into its routing table for the protected locator. The next hop of the route is PE 4. To ensure that traffic can bypass PE 3 and arrive at PE 4 without causing loops, the device must calculate a TI-LFA backup path and push the End.M SID to the end of the TI-LFA backup path's SID list.

On PE 4, a <remote SRv6 SID, VPN instance> mapping entry is generated as follows:

1. Upon receiving the private route from CE 2, PE 3 encapsulates the route as a VPNv4 route and sends it to PE 4. The VPNv4 route carries the SRv6 SID, RT, and RD information.

2. After PE 4 receives the VPNv4 route, it obtains the SRv6 SID and the VPN instance. Then, PE 4 performs longest matching of the SRv6 SID against the locators protected by End.M SIDs. If a match is found, PE 4 uses the SRv6 SID as a remote SRv6 SID and generates a <remote SRv6 SID, VPN instance> mapping entry.
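The longest matching in step 2 can be sketched with the Python standard `ipaddress` module. The function names, the table layout, and the sample addresses (taken from the 2001:db8::/32 documentation prefix) are assumptions made for illustration only.

```python
import ipaddress


def match_protected_locator(srv6_sid, protected_locators):
    """Return (locator, End.M SID) for the longest matching locator, or None.

    protected_locators maps a locator prefix to the End.M SID that
    protects it (hypothetical table layout).
    """
    sid = ipaddress.IPv6Address(srv6_sid)
    best = None
    for prefix, end_m_sid in protected_locators.items():
        locator = ipaddress.IPv6Network(prefix)
        if sid in locator and (best is None or locator.prefixlen > best[0].prefixlen):
            best = (locator, end_m_sid)
    return best


def learn_remote_sid(srv6_sid, vpn_instance, protected_locators, mappings):
    """Create a <remote SRv6 SID, VPN instance> entry if a locator matches."""
    if match_protected_locator(srv6_sid, protected_locators) is None:
        return False
    mappings[srv6_sid] = vpn_instance
    return True


protected = {
    "2001:db8:3::/48": "2001:db8:4::100",
    "2001:db8:3:1::/64": "2001:db8:4::200",
}
mappings = {}
learned = learn_remote_sid("2001:db8:3:1::10", "vpn1", protected, mappings)
ignored = learn_remote_sid("2001:db8:9::1", "vpn1", protected, mappings)
best = match_protected_locator("2001:db8:3:1::10", protected)
```

In the sample data both locators contain the SID, and the /64 locator wins because it is the longer match. The SID outside both locators does not produce a mapping entry.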

Packet forwarding

The packet forwarding procedure is similar in IP L3VPN, EVPN L3VPN, EVPN VPWS, EVPN VPLS, or IP public network over SRv6 TE policy egress protection scenarios. The following example describes the packet forwarding in an IP L3VPN over SRv6 TE policy egress protection scenario.

Figure 25 Data forwarding in SRv6 TE policy egress protection

As shown in Figure 25, typically traffic is forwarded along the CE 1-PE 1-P 1-PE 3-CE 2 path. When the egress node PE 3 fails, a packet is forwarded as follows: