03-SRv6 configuration


SRv6 basics

About SRv6

Segment Routing (SR) is a source routing technology. The source node selects a path for packet forwarding, and then encodes the path in the packet header as an ordered list of segments. Each segment is identified by a segment identifier (SID). The SR nodes along the path forward the packets based on the SIDs in the packets. None of the nodes except the source node needs to maintain the path state.

IPv6 SR (SRv6) uses IPv6 addresses as SIDs to forward packets.

Basic concepts

SR node roles

The nodes on an SRv6 network can have one or more of the following roles:

· Source node—Inserts a Segment Routing Header (SRH) into IPv6 packets, or encapsulates IPv6 packets with an outer IPv6 header and an SRH. A source node steers traffic to the SRv6 path defined by the segment list in the SRH.

○ If the segment list contains only one SID and the SRH does not need to carry additional information or TLVs, the source node only sets the SID as the destination address in the IPv6 header and does not insert an SRH.

○ If the segment list contains multiple SIDs, the source node must insert an SRH into the packets.

A source node can be the originator of SRv6 packets or the edge device of an SRv6 domain.

· Transit node—Forwards IPv6 packets along the SRv6 path. A transit node does not participate in SRv6 processing. It can be an SRv6-aware or SRv6-unaware node.

· Endpoint node—Performs an SRv6 function for received SRv6 packets. The IPv6 destination address of the received SRv6 packets must be an SRv6 SID configured on the endpoint node. The endpoint node processes the packets based on the SRv6 SID and updates the SRH.

A node can be the source node in one SRv6 path and a transit node or endpoint node in another SRv6 path.

SID portions

In SRv6, a SID identifies a segment, which represents a network function or an instruction to execute on a packet.

An SRv6 SID has the format of an IPv6 address, but the address is not assigned to any interface on any device.

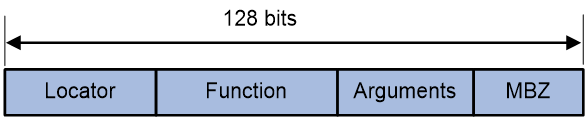

As shown in Figure 1, an SRv6 SID contains the Locator, Function, Arguments, and Must be zero (MBZ) portions.

· Locator—Identifies the network segment of the SID. An SRv6 node advertises its locators as IPv6 network segments through routing protocols such as an IGP, so that other devices can forward packets to that SRv6 node. Therefore, locators are used for SRv6 routing and addressing. The locator of an SRv6 SID must be unique in the SR domain.

· Function—Contains an opcode that identifies the network function (local instruction) bound to the SID. When an SRv6 node receives an SRv6 packet whose IPv6 destination address matches an SRv6 SID in the local SID table, the node parses the Function field, and then locates and executes the local instruction for that function. For example, if an SRv6 node is configured with the opcode 101 end-x interface A command, opcode value 101 in the Function field is associated with the End.X behavior. When the destination address of an incoming SRv6 packet matches this local SRv6 SID, the node forwards the packet out of interface A, the interface bound to the End.X SID.

· Arguments—Defines flow and service parameters for SRv6 packets. This portion is optional.

· MBZ—When the Locator, Function, and Arguments portions total fewer than 128 bits, the remaining low-order bits are padded with 0s.

All SRv6 SIDs are allocated from locators configured by using the locator command. SRv6 SIDs fall into different categories depending on the locator type and length. Typically, 128-bit SRv6 SIDs are encapsulated into SRv6 packets. SRv6 SIDs of this type are allocated from common locators.

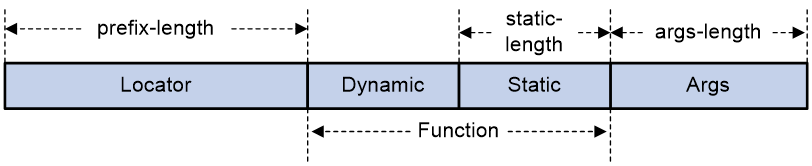

Figure 2 Common locator

Common locators allocate common SRv6 SIDs, which can be static or dynamic. In the following formulas, + denotes concatenation of bit fields. The formats are as follows:

· A static SRv6 SID is generated based on the following formula: static SRv6 SID = ipv6-prefix + 0 + opcode + 0.

○ The ipv6-prefix portion represents the IPv6 prefix specified by the ipv6-address and prefix-length arguments in the locator command. It occupies the number of bits specified by the prefix-length argument.

○ The 0s following the ipv6-prefix portion occupy 128 - prefix-length - static-length - args-length bits.

○ The opcode portion represents the static portion of the Function field and occupies the number of bits specified by the static-length argument. The 0s following the opcode portion occupy the number of bits specified by the args-length argument.

· A dynamic SRv6 SID is generated based on the following formula: dynamic SRv6 SID = ipv6-prefix + dynamic + static + 0.

○ The ipv6-prefix portion represents the IPv6 prefix specified by the ipv6-address and prefix-length arguments in the locator command. It occupies the number of bits specified by the prefix-length argument.

○ The dynamic portion represents the dynamic portion of the Function field. Its value cannot be all zeros. It occupies 128 - prefix-length - static-length - args-length bits.

○ The static portion represents the static portion of the Function field. It occupies static-length bits and can use any value. The 0s following it occupy the number of bits specified by the args-length argument.

Assume that you configure the locator test1 ipv6-prefix 100:200:DB8:ABCD:: 64 static 24 args 32 command.

○ The locator is 100:200:DB8:ABCD::, and its length is 64 bits.

○ The static portion length is 24 bits.

○ The Args portion length is 32 bits.

○ The dynamic portion length is 8 bits (128 - 64 - 24 - 32).

In this example, the following non-compressible static SRv6 SID range and dynamic SRv6 SID range are obtained on the locator:

○ The start value for static SRv6 SIDs is 100:200:DB8:ABCD:0:1::.

○ The end value for static SRv6 SIDs is 100:200:DB8:ABCD:FF:FFFF::.

○ The start value for dynamic SRv6 SIDs is 100:200:DB8:ABCD:100::.

○ The end value for dynamic SRv6 SIDs is 100:200:DB8:ABCD:FFFF:FFFF::.
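The SID arithmetic above can be checked with a short Python sketch. It assumes the bit layout given by the formulas (ipv6-prefix, then the dynamic portion, then the static portion or opcode, then the Args bits) and, following the example, treats opcode 1 as the first static value. The function name common_locator_ranges is hypothetical and is not part of any device software.

```python
import ipaddress


def common_locator_ranges(prefix: str, prefix_len: int, static_len: int, args_len: int):
    """Return (static start, static end, dynamic start, dynamic end) for a common locator.

    Assumed layout, most significant bits first:
    ipv6-prefix (prefix_len) | dynamic | static/opcode (static_len) | Args (args_len)
    """
    dyn_len = 128 - prefix_len - static_len - args_len
    if dyn_len < 1:
        # This sketch needs at least 1 bit for the dynamic portion.
        raise ValueError("no room for a dynamic portion")
    # IPv6Network rejects a prefix with host bits set, which catches typos.
    base = int(ipaddress.IPv6Network(f"{prefix}/{prefix_len}").network_address)

    def sid(dynamic: int, static: int) -> ipaddress.IPv6Address:
        # Concatenate prefix + dynamic + static; the Args bits stay 0.
        return ipaddress.IPv6Address(
            base | (dynamic << (static_len + args_len)) | (static << args_len)
        )

    max_static = (1 << static_len) - 1
    max_dynamic = (1 << dyn_len) - 1
    return (
        sid(0, 1),                     # static SIDs: dynamic portion is 0
        sid(0, max_static),
        sid(1, 0),                     # dynamic SIDs: dynamic portion is nonzero
        sid(max_dynamic, max_static),
    )


for value in common_locator_ranges("100:200:DB8:ABCD::", 64, 24, 32):
    print(value)
```

With the locator test1 values, the function returns the four boundary SIDs listed above, in the order static start, static end, dynamic start, dynamic end (printed in lowercase, compressed form).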

SRv6 endpoint behaviors

The local instruction identified by the Function field of an SRv6 SID is a node behavior that guides packet forwarding and processing. This local instruction is called an SRv6 endpoint behavior. RFC 8986 defines most types of endpoint behaviors. From a configuration perspective, each endpoint behavior corresponds to a functional type of SRv6 SID. SRv6 SID types include, but are not limited to, the following:

· End SID—Identifies a node in the network and represents the prefix of a destination address. When a packet arrives at the node and the Segments Left (SL) value is greater than 0, the node decreases the SL by 1, copies the next SID from the SRH to the destination address field of the IPv6 header, looks up the routing table, and then forwards the packet. End(COC32) and End(COCNONE) are two special types of End SIDs. For more information about these types of SIDs, see the G-SRv6 section.

· End.X SID—Identifies a link in the network. When a packet arrives at the node that generated the SID and the SL value is greater than 0, the node decreases the SL by 1, copies the next SID from the SRH to the destination address field of the IPv6 header, and then forwards the packet out of the link identified by the End.X SID. End.X(COC32) and End.X(COCNONE) are two special types of End.X SIDs. For more information about these types of SIDs, see the G-SRv6 section.

· End.DT4 SID—Identifies an IPv4 VPN instance in the network, similar to a private network label in an MPLS L3VPN network. The node decapsulates packets and forwards them by looking up the routing table of the corresponding IPv4 VPN instance. End.DT4 SIDs apply to IPv4 private network access scenarios.

· End.DT6 SID—Identifies an IPv6 VPN instance in the network, similar to a private network label in an MPLS L3VPN network. The node decapsulates packets and forwards them by looking up the routing table of the corresponding IPv6 VPN instance. End.DT6 SIDs apply to IPv6 private network access scenarios.

· End.DT46 SID—Identifies an IPv4 or IPv6 VPN instance in the network, similar to a private network label in an MPLS L3VPN network. The node decapsulates packets and looks up the IPv4 or IPv6 routing table of the corresponding VPN instance, depending on the type of the inner packet. End.DT46 SIDs apply to scenarios where IPv4 and IPv6 private networks are accessed concurrently.

· End.DX4 SID—Identifies an IPv4 next hop from a PE to a CE in an IPv4 VPN instance. The node decapsulates packets and forwards the decapsulated IPv4 packets out of the Layer 3 interface bound to the SID to the specified next hop. End.DX4 SIDs apply to IPv4 private network access scenarios.

· End.DX6 SID—Identifies an IPv6 next hop from a PE to a CE in an IPv6 VPN instance. The node decapsulates packets and forwards the decapsulated IPv6 packets out of the Layer 3 interface bound to the SID to the specified next hop. End.DX6 SIDs apply to IPv6 private network access scenarios.

· End.DX2 SID—Identifies one end of a Layer 2 cross-connect in the EVPN VPWS over SRv6 scenario. The node decapsulates packets and forwards the decapsulated packets out of the outgoing interface bound to the SID.

· End.DX2L SID—Identifies packets that come from a bypass SRv6 PW. To prevent loops, these packets are not forwarded back to the bypass SRv6 PW. The node removes the outer IPv6 header and SRH of packets and forwards the decapsulated packets out of the outgoing interface bound to the SID. End.DX2L SIDs apply to EVPN VPWS over SRv6 multihomed sites.

· End.DT2M SID—Identifies one end of a Layer 2 cross-connect for EVPN VPLS over SRv6 broadcast, unknown unicast, and multicast (BUM) traffic. The node decapsulates packets and floods the decapsulated packets in the VSI.

· End.DT2U SID—Identifies one end of a Layer 2 cross-connect for unicast forwarding. The node removes the outer IPv6 header and SRH of packets, looks up the destination MAC address in the MAC address table, and forwards the packets out of the outgoing interface in the matching MAC address entry. End.DT2U SIDs apply to EVPN VPLS over SRv6 unicast traffic.

· End.DT2UL SID—Identifies packets that come from a bypass SRv6 PW. To prevent loops, these packets are not forwarded back to the bypass SRv6 PW. The node removes the outer IPv6 header and SRH of packets, looks up the destination MAC address, and forwards the packets out of the matching outgoing interface. End.DT2UL SIDs apply to EVPN VPLS over SRv6 multihomed sites.

· End.OP SID—Applies to the SRv6 OAM scenario. For more information about End.OP SIDs, see "Configuring SRv6 OAM."

· End.M SID—Applies to the SRv6 TE policy tailend protection scenario. For more information about End.M SIDs, see "Configuring SRv6 TE policies."

· End.T SID—Applies to the inter-AS option B solution. For more information about End.T SIDs, see "Configuring IP L3VPN over SRv6" and "Configuring EVPN L3VPN over SRv6."

· End.R SID—Applies to SRv6 VPN option B inter-domain communication scenarios. The node removes the outer IPv6 header, looks up the IPv6 FIB based on the End.R SID, and re-encapsulates the packet with a new outer IPv6 header for forwarding based on the lookup result. For more information about End.R SIDs, see "Configuring IP L3VPN over SRv6."

· End.AS SID—Applies to the SRv6 service chain static proxy scenario. For more information about End.AS SIDs, see "Configuring SRv6 service chains."

· End.AM SID—Applies to the SRv6 service chain masquerading scenario. For more information about End.AM SIDs, see "Configuring SRv6 service chains."

· End.B6.Encaps—Applies to scenarios where an SRv6 ingress node steers traffic to an SRv6 TE policy or stitches SRv6 TE policies by using a BSID. The node encapsulates a new IPv6 header and SRH onto the received packet.

· End.B6.Encaps.Red—Applies to scenarios where an SRv6 ingress node steers traffic to an SRv6 TE policy or stitches SRv6 TE policies by using a BSID. When encapsulating a new IPv6 header and SRH onto the received packet, the node omits the first SID of the SRv6 TE policy's SID list from the SRH (that SID is carried only in the IPv6 destination address) to reduce the SRH length.

· End.B6.Insert—Applies to scenarios where an SRv6 ingress node steers traffic to an SRv6 TE policy or stitches SRv6 TE policies by using a BSID. The node inserts an SRH into the received IPv6 packet.

· End.B6.Insert.Red—Applies to scenarios where an SRv6 ingress node steers traffic to an SRv6 TE policy or stitches SRv6 TE policies by using a BSID. The node inserts an SRH into the received IPv6 packet and omits the first SID of the SRv6 TE policy's SID list from the SRH to reduce the SRH length.

· End.XSID—Applies to scenarios where BFD detects the specified reverse path of an SRv6 TE policy. The node encapsulates a new IPv6 header and SRH onto the BFD echo packet and places the local End.XSID in SRH[0] of the SID list. For more information about SRv6 TE policy reverse path detection by BFD, see "Configuring SRv6 TE policies."

· Src.DT4 SID—Identifies the source address for a BIERv6 tunnel in an IPv4 multicast VPN. In BIER multicast VPN scenarios, the forwarding action is to decapsulate the packet and look up IPv4 table entries. For more information about BIERv6 tunnel source addresses, see "Configuring multicast VPN" in IP Multicast Configuration Guide.

· Src.DT6 SID—Identifies the source address for a BIERv6 tunnel in an IPv6 multicast VPN. In BIER multicast VPN scenarios, the forwarding action is to decapsulate the packet and look up IPv6 table entries. For more information about BIERv6 tunnel source addresses, see "Configuring multicast VPN" in IP Multicast Configuration Guide.

· End.BIER SID—Applies to BIERv6 scenarios. For more information about End.BIER SIDs, see "Configuring BIER" in BIER Configuration Guide.

· End.RGB SID—Used in MSR6 scenarios. For more information about End.RGB SIDs, see "Configuring BIER" in BIER Configuration Guide.

· End.DX2.AUTO—H3C-proprietary SID used only in rapid service deployment scenarios, such as PPPoE over IPv6 in access networks. In scenarios such as PPPoE dial-up access or dedicated access, a large number of customer-side CPE devices are deployed. To deploy services quickly without on-site configuration, you can deploy a centralized controller that manages the CPE devices through TR-069 and assigns the SRv6 SID of the service gateway (SGW) to them. This SID identifies the traffic from the CPEs to the SGW, and the SGW acts as the egress for CPE services. The following uses PPPoE authentication and traffic forwarding as an example to describe the communication between a customer CPE and the SGW:

a. After a CPE comes online, it uses PPPoE for authentication on the SGW. It encapsulates the PPPoE packets with an IPv6 header and an Ethernet frame header for PPPoE over IPv6 encapsulation. The destination IP address in the IPv6 header is the SGW's End.DX2.AUTO type SRv6 SID, and the source address is the CPE's service SID.

b. The SGW authenticates the CPE through an AAA server and allocates the address for the PPPoE WAN port to the CPE after the CPE passes authentication. The SGW records the mappings between the PPPoE session ID and the outer source IP address. It then creates an <IP address, PPPoE MAC> entry based on the End.DX2.AUTO type SRv6 SID in the outer IPv6 header of the PPPoE packet.

c. After authentication, the SGW obtains the related authorization information allocated by the AAA server, for example, rate limits.

d. When the CPE receives a service packet from the internal network, it encapsulates the original service packet with an IPv6 header and an Ethernet frame header. The IPv6 packet's destination IP address is the SGW's End.DX2.AUTO type SRv6 SID, and the source address is the CPE's service SID. After the SGW receives the service packet, it decapsulates the outer IPv6 header based on the action associated with the End.DX2.AUTO type SRv6 SID, and then forwards the remaining packet to the L2VE interface associated with the SID, terminating the L2VPN packet. Then, it looks up the routing table in the associated VRF to determine the packet's next destination, and then forwards the packet through the L3VE interface associated with that VRF.

An SR node uses an IGP to advertise its SRv6 SIDs. The other SR nodes generate route entries for that SR node based on the advertised information.

SRv6 SID flavors

SID flavors can be combined with some node behaviors to form new node behaviors. For example, the End.X behavior combined with the PSP flavor forms a new node behavior called End.X with PSP. You can use SRv6 SID flavors to change the forwarding behaviors of SRv6 SIDs to meet different service requirements. The following SRv6 SID flavors are supported:

· NO-FLAVOR—The SRv6 SID does not carry any flavors.

· Penultimate Segment POP of the SRH (PSP)—The penultimate SRv6 node removes the SRH to reduce the workload of the ultimate SRv6 node and improve forwarding efficiency. The ultimate SRv6 node does not need to read the SRH. It only looks up the destination IPv6 address of packets in the local SID table to forward the packets.

· NO PSP—The penultimate SRv6 node does not remove the SRH. For correct connectivity detection in the SRv6 OAM scenario, make sure the SRH is not removed on the penultimate SRv6 node. The device needs to obtain the SID from the SRH to identify the link connectivity. (This flavor type is not supported in the current software version.)

· Ultimate Segment POP of the SRH (USP)—The ultimate SRv6 node (endpoint node) removes the SRH from the packets. In an SRv6 VPN network, upon obtaining the forwarding action based on the SID, the PE removes the SRH from the packets and forwards the packets to the CE.

· Ultimate Segment Decapsulation (USD)—The ultimate SRv6 node (endpoint node) removes the outer IPv6 header from the packets. In the TI-LFA scenario, the endpoint node in the repair list removes the outer IPv6 header from the packets and forwards the decapsulated packets to the destination node.

· Continue of Compression (COC)—Indicates that the next SID in packet encapsulation is a compressed G-SID, either 16-bit or 32-bit. In packet forwarding, SIDs that carry the COC flavor support the replace action. When an SRv6 packet arrives at an endpoint node, if the local SID of that node has the COC flavor and the current SID is the last SID or G-SID in the IPv6 destination address, the node extracts the next 16-bit or 32-bit G-SID from the 128-bit SID encapsulated in the SRH. The node then writes this G-SID into the IPv6 destination address, forming a new SID with the common prefix. This new SID guides G-SRv6 packet forwarding. For more information about the replace action, see "16-bit G-SRv6 compression."

· NEXT—A NEXT flavor identifies a 16-bit compressed G-SID in packet encapsulation. In packet forwarding, SIDs that carry the NEXT flavor support the move action. When an SRv6 packet is forwarded to an endpoint node, if the local SID of that node has the NEXT flavor and the current G-SID is not the last G-SID in the IPv6 destination address, the node moves the next G-SID to the position of the current G-SID to guide G-SRv6 packet forwarding. For more information about the move action, see "16-bit G-SRv6 compression."

The device supports advertising SRv6 SIDs with multiple flavor types through IGP or BGP. Therefore, a SID can carry multiple flavors, for example, End.X with PSP&USD.
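The following Python sketch models how the End behavior combines with the PSP, USP, and USD flavors described above. It is a simplified model under stated assumptions, not device code: Hop Limit processing, error handling, and the NEXT and COC flavors are omitted, and the Packet and SRH classes and the addresses are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass
class SRH:
    segment_list: List[str]   # Segment List [0] holds the LAST segment of the path
    segments_left: int


@dataclass
class Packet:
    dst: str                          # outer IPv6 destination address
    srh: Optional[SRH] = None         # None when no SRH is present
    inner: Optional["Packet"] = None  # encapsulated original packet, if any


def end_with_flavors(pkt: Packet, flavors: FrozenSet[str] = frozenset()) -> Packet:
    """Simplified End processing with optional PSP, USP, and USD flavors."""
    srh = pkt.srh
    if srh is None or srh.segments_left == 0:
        # This node is the ultimate segment endpoint.
        if "USD" in flavors and pkt.inner is not None:
            return pkt.inner          # USD: remove the outer IPv6 header (and any SRH)
        if "USP" in flavors:
            pkt.srh = None            # USP: remove the SRH, keep the outer IPv6 header
        return pkt
    srh.segments_left -= 1                          # decrease SL by 1
    pkt.dst = srh.segment_list[srh.segments_left]   # next SID becomes the destination
    if "PSP" in flavors and srh.segments_left == 0:
        pkt.srh = None                # PSP: the penultimate node removes the SRH
    return pkt


inner = Packet(dst="2001:db8:100::1")
pkt = Packet(dst="2001:db8:c::1",
             srh=SRH(["2001:db8:e::1", "2001:db8:c::1"], 1), inner=inner)
pkt = end_with_flavors(pkt, frozenset({"PSP"}))  # penultimate endpoint with PSP
print(pkt.dst, pkt.srh)
```

With PSP configured on the penultimate endpoint, the packet leaves that node without an SRH. A USD-flavored SID on the ultimate node then returns the inner packet, while a USP-flavored SID would only strip the SRH.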

Local SID forwarding table

An SRv6-enabled node maintains a local SID forwarding table that records the SRv6 SIDs generated on the local node. The local SID forwarding table has the following functions:

· Stores forwarding information for locally generated SRv6 SIDs.

· Stores SRv6 SID operation types.
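A local SID table can be pictured as an exact-match map from SRv6 SIDs to their operation types and forwarding information. The sketch below is a hypothetical illustration: the class names are invented, and matching of Args bits is not modeled. The sample SID 100:200:DB8:ABCD:0:65:: is what opcode 101 (hex 65) would produce on the locator test1 example in "SID portions", assuming the opcode is placed in the static portion as described there.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class LocalSidEntry:
    behavior: str                  # SRv6 SID operation type, for example "End.X"
    flavors: Tuple[str, ...] = ()  # for example ("PSP",)
    forwarding_info: str = ""      # hypothetical: outgoing interface, VPN instance, ...


class LocalSidTable:
    """Hypothetical exact-match table of SRv6 SIDs generated on the local node."""

    def __init__(self) -> None:
        self._entries: Dict[ipaddress.IPv6Address, LocalSidEntry] = {}

    def add(self, sid: str, entry: LocalSidEntry) -> None:
        # Normalize the text form so that equivalent spellings map to one key.
        self._entries[ipaddress.IPv6Address(sid)] = entry

    def lookup(self, dst: str) -> Optional[LocalSidEntry]:
        """Return the entry whose SID equals the IPv6 destination address, or None."""
        return self._entries.get(ipaddress.IPv6Address(dst))


table = LocalSidTable()
table.add("100:200:DB8:ABCD:0:65::", LocalSidEntry("End.X", ("PSP",), "interface A"))
print(table.lookup("100:200:db8:abcd:0:65:0:0"))
```

In this model, a lookup that returns None means the destination address is not a local SID, so the packet would be forwarded by a normal IPv6 routing table lookup.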

Segment List

A Segment List is an ordered list of SIDs, which is also referred to as a Segment Identifier (SID) list in this document. The SR nodes forward packets based on the SIDs in the order that they are arranged in the SID list.

SRv6 tunnel

An SRv6 tunnel is a virtual point-to-point connection established between the SRv6 ingress node and egress node. IPv6 packets are encapsulated at the ingress node and decapsulated at the egress node.

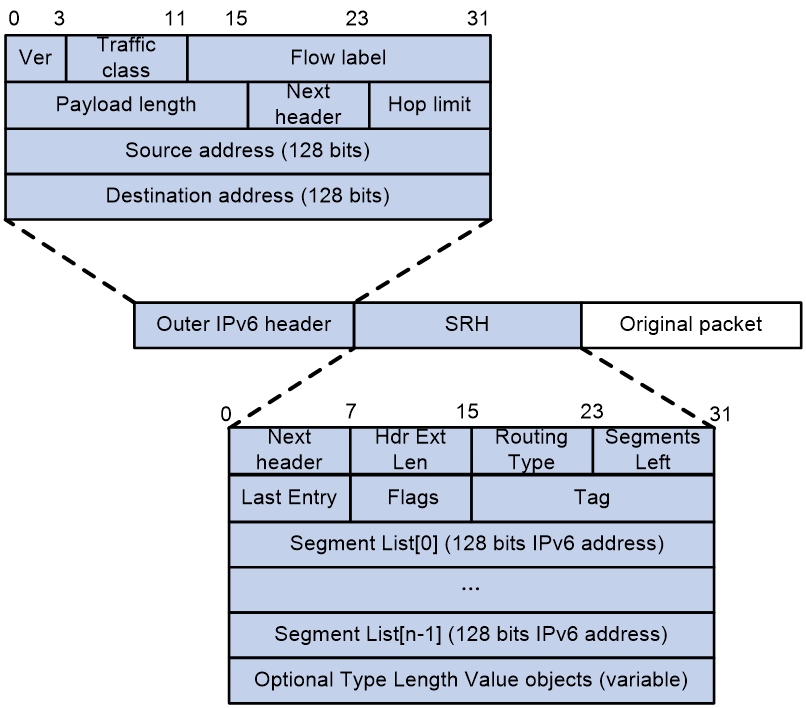

SRv6 packet format

An outer IPv6 header and a Segment Routing Header (SRH) are added to the original Layer 3 data packet to form an SRv6 packet.

As shown in Figure 3, the Next Header field in the outer IPv6 header is 43, which indicates that the header following the IPv6 header is a Routing extension header. The Routing Type field in the Routing extension header is 4, which indicates that the Routing extension header is an SRH. The SRH contains the following fields:

· 8-bit Next Header—Identifies the type of the header next to the SRH.

· 8-bit Hdr Ext Len—Length of the SRH in 8-octet units, not including the first 8 octets.

· 8-bit Routing Type—The value for this field is 4, which represents SRH.

· 8-bit Segments Left—Contains the index of the next segment to inspect in the Segment List. The source node sets Segments Left to n-1, where n is the number of segments in the Segment List. Each endpoint node decrements Segments Left by 1.

· 8-bit Last Entry—Contains the index of the last element of the Segment List, which holds the first segment of the path.

· 8-bit Flags—Contains flags.

· 16-bit Tag—Tags a packet as part of a class or group of packets, for example, packets sharing the same set of properties.

· Segment List—Contains 128-bit IPv6 addresses representing the ordered segments. The Segment List is encoded starting from the last segment of the path. The first element of the segment list (Segment List [0]) contains the last segment of the path, the second element (Segment List [1]) contains the penultimate segment of the path and so on. The number enclosed in a pair of brackets is the index of a segment.
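To make the field layout concrete, the Python sketch below packs an SRH without TLVs for a path given in travel order. It reverses the path so that Segment List [0] holds the last segment, and derives Hdr Ext Len, Segments Left, and Last Entry from the number of segments. Assumptions: Flags are 0, no TLVs are present, and Next Header defaults to 41 (an encapsulated IPv6 packet). The function name build_srh and the SIDs are hypothetical.

```python
import ipaddress
import struct
from typing import List

ROUTING_TYPE_SRH = 4  # Routing Type value that identifies an SRH


def build_srh(path: List[str], next_header: int = 41, tag: int = 0) -> bytes:
    """Pack an SRH without TLVs; `path` lists SIDs in travel order (first segment first)."""
    n = len(path)
    if not 1 <= n <= 127:  # Hdr Ext Len (2 * n) must fit in 8 bits
        raise ValueError("path must contain 1 to 127 SIDs")
    segment_list = list(reversed(path))   # Segment List [0] = last segment of the path
    hdr_ext_len = (8 + 16 * n) // 8 - 1   # 8-octet units, excluding the first 8 octets
    segments_left = n - 1                 # points to the first segment of the path
    last_entry = n - 1                    # index of the last Segment List element
    flags = 0
    fixed = struct.pack("!BBBBBBH", next_header, hdr_ext_len, ROUTING_TYPE_SRH,
                        segments_left, last_entry, flags, tag)
    return fixed + b"".join(ipaddress.IPv6Address(sid).packed for sid in segment_list)


srh = build_srh(["2001:db8:c::1", "2001:db8:e::1"])  # hypothetical SIDs of C and E
print(len(srh), srh[:8].hex())  # prints: 40 2904040101000000
```

For a two-segment path such as the one in the forwarding example (Device C, then Device E), the result is 40 bytes: an 8-byte fixed part with Hdr Ext Len 4, followed by two 16-byte SIDs starting with the SID of Device E.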

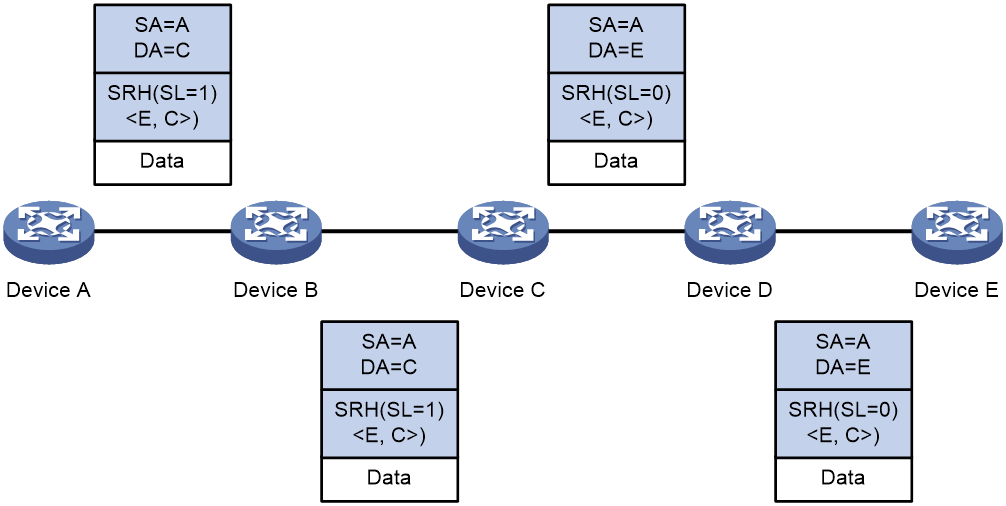

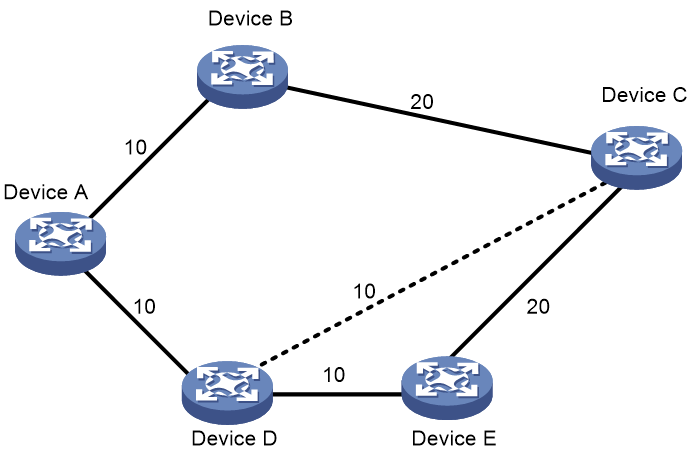

SRv6 packet forwarding

As shown in Figure 4, a source node receives a packet that matches an SRv6 path. Device A is the source node, Device C and Device E are endpoint nodes, and Device B and Device D are transit nodes. The packet is forwarded through the SRv6 path as follows:

1. Upon receiving an IPv6 packet, Device A performs the following operations:

a. Adds an SRH to the packet. The packet must traverse two segments to reach Device E, so the Segments Left (SL) in the SRH is set to 1 (the number of segments along the path minus 1). The Segment List contains Segment List [0]=E and Segment List [1]=C.

b. Adds an outer IPv6 header to the packet. The source address of the IPv6 header is an IP address on Device A, and the destination address is determined by the SL value. On Device A, the SL value is 1, which points to the SID on Device C, so the destination address is the SID on Device C.

c. Looks up the routing table based on the destination address of the outer IPv6 header and forwards the packet to Device B.

2. Device B looks up the routing table based on the destination address of the outer IPv6 header and forwards the packet to Device C.

3. Device C performs the following operations:

a. Checks the SL value in the SRH and decreases the value by 1 if the SL value is greater than 0.

b. Updates the destination address to the SID pointed to by the SL. In this example, the SL is now 0, which points to the SID on Device E. Device C replaces the destination address in the outer IPv6 header with the SID on Device E.

c. Looks up the routing table based on the destination address of the outer IPv6 header and forwards the packet to Device D.

4. Device D looks up the routing table based on the destination address of the outer IPv6 header and forwards the packet to Device E.

5. Device E checks the SL value in the SRH and finds that the value has decreased to 0. The device performs the following operations:

a. Decapsulates the packet by removing the outer IPv6 header and the SRH.

b. Forwards the original packet to the destination based on the destination address.

Figure 4 SRv6 packet forwarding
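The walk-through above can be replayed with a small Python simulation. The SIDs for Device C and Device E, the inner destination address, and the fixed hop order A-B-C-D-E are hypothetical stand-ins for Figure 4, and routing table lookups are not modeled.

```python
from typing import Dict, List, Set

# Hypothetical SIDs and local SID tables standing in for Figure 4.
SID_C = "2001:db8:c::1"
SID_E = "2001:db8:e::1"
LOCAL_SIDS: Dict[str, Set[str]] = {"C": {SID_C}, "E": {SID_E}}
HOPS = ["B", "C", "D", "E"]  # nodes after source node A, in path order (assumed)


def simulate(inner_dst: str) -> List[str]:
    log = []
    # Device A: build the SRH (Segment List [0]=E, [1]=C), set SL = n - 1 = 1,
    # and copy Segment List [SL] into the outer destination address.
    segment_list = [SID_E, SID_C]
    sl = len(segment_list) - 1
    dst = segment_list[sl]
    log.append(f"A: encapsulate, SL={sl}, DA={dst}")
    for node in HOPS:
        if dst not in LOCAL_SIDS.get(node, set()):
            log.append(f"{node}: transit, forward to DA={dst}")  # plain IPv6 forwarding
        elif sl > 0:
            sl -= 1                                               # endpoint: decrease SL
            dst = segment_list[sl]                                # and update the DA
            log.append(f"{node}: endpoint, SL={sl}, DA={dst}")
        else:
            log.append(f"{node}: decapsulate, forward to {inner_dst}")  # SL is 0: egress
    return log


for line in simulate("2001:db8:100::1"):
    print(line)
```

Transit nodes B and D never touch the SRH in this model; only the nodes that own the destination SID change the SL or decapsulate the packet.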

Directing traffic to an SRv6 tunnel

After an SRv6 tunnel is established, traffic is not forwarded on the tunnel automatically. You must direct the traffic to the tunnel by configuring a static route or automatic route advertisement.

Static routing

You can direct traffic to an SRv6 tunnel by creating a static route that reaches the destination through the tunnel interface on the source node. This is the easiest way to implement SRv6 tunnel forwarding. When traffic to multiple networks is to be forwarded through the SRv6 tunnel, you must configure multiple static routes, resulting in increased configuration and maintenance workloads.

For more information about static routing, see Layer 3—IP Routing Configuration Guide.

Automatic route advertisement

Automatic route advertisement distributes the SRv6 tunnel to the IGP (OSPF or IS-IS), so the SRv6 tunnel can participate in IGP route calculation. Automatic route advertisement is easy to configure and maintain.

Automatic route advertisement can be implemented by using the following methods:

· IGP shortcut—Also known as AutoRoute Announce. It considers the SRv6 tunnel as a link that directly connects the tunnel ingress node and the egress node. Only the ingress node uses the SRv6 tunnel during IGP route calculation.

· Forwarding adjacency—Considers the SRv6 tunnel as a link that directly connects the tunnel ingress node and the egress node, and advertises the link to the network through an IGP. Every node in the network uses the SRv6 tunnel during IGP route calculation.

IMPORTANT: Only IGP shortcut is supported in the current software version.

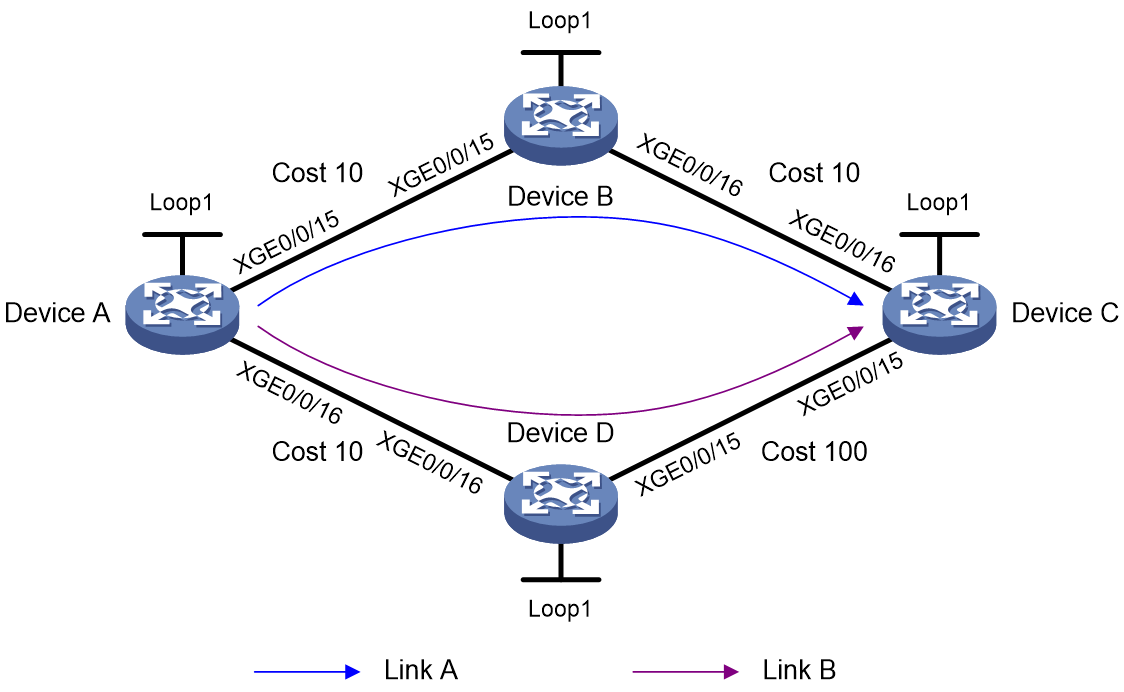

As shown in Figure 5, an SRv6 tunnel exists from Device D to Device C. IGP shortcut enables only the ingress node Device D to use the SRv6 tunnel in IGP route calculation. Device A cannot use this tunnel to reach Device C. With forwarding adjacency enabled, Device A can learn this SRv6 tunnel and transfer traffic to Device C by forwarding the traffic to Device D.

Figure 5 IGP shortcut and forwarding adjacency diagram

G-SRv6

Background

In an SRv6 TE policy scenario, the administrator needs to add the 128-bit SRv6 SIDs of SRv6 nodes on the packet forwarding path into the SID list of the SRv6 TE policy. If the packet forwarding path is long, a large number of SRv6 SIDs will be added to the SID list of the SRv6 TE policy. This greatly increases the size of the SRv6 packet header, which lowers device forwarding efficiency and chip processing speed. The situation might be worse in scenarios that span multiple ASs, where many more end-to-end SRv6 SIDs exist.

Generalized SRv6 (G-SRv6) encapsulates shorter SRv6 SIDs (G-SIDs) in the segment list of the SRH by compressing the 128-bit SRv6 SIDs. This reduces the size of the SRv6 packet header and improves the efficiency for forwarding SRv6 packets. In addition, G-SRv6 supports both 128-bit SRv6 SIDs and G-SIDs in a segment list.

About G-SRv6

Typically, an address space is reserved for SRv6 SID allocation in an SRv6 subnet. This address space is called a SID space. In the SRv6 subnet, all SIDs are allocated from the SID space. The SIDs have the same prefix (common prefix). The SID common prefix is redundant information in the SRH.

G-SRv6 removes the common prefix and carries only the variable portion of SRv6 SIDs (G-SIDs) in the segment list, effectively reducing the SRv6 packet header size. To forward a packet by routing table lookup, the node replaces the destination IPv6 address of the packet with the combination of the common prefix and the G-SID taken from the segment list of the SRH. An SRH that carries G-SIDs is called a G-SRH.

The following G-SRv6 compression schemes are available:

· 16-bit G-SRv6 compression—A 128-bit SRv6 SID is compressed into a 16-bit G-SID when encapsulated in an SRH.

· 32-bit G-SRv6 compression—A 128-bit SRv6 SID is compressed into a 32-bit G-SID when encapsulated in an SRH.

32-bit G-SRv6 compression

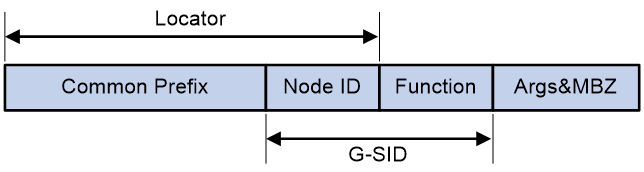

G-SID format in 32-bit G-SRv6 compression

As shown in Figure 6, the locator portion of an SRv6 SID contains the Common Prefix and Node ID portions. The Common Prefix portion represents the address of the common prefix. The Node ID portion identifies a node. G-SRv6 can compress all SIDs with the same common prefix into 32-bit G-SIDs. A G-SID contains the Node ID and Function portions of a 128-bit SRv6 SID. A 128-bit SRv6 SID is formed by the Common Prefix portion, a 32-bit G-SID, and the 0 (Args&MBZ) portion.

Figure 6 Compressible SRv6 SID
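The relationship between a 128-bit compressible SID and its 32-bit G-SID can be sketched in Python as follows. The sketch assumes that the G-SID immediately follows the common prefix and that everything after the G-SID (Args and MBZ) is 0 when the SID is restored. The 48-bit common prefix 100:200:DB8::/48 and the function names compress and restore are hypothetical examples.

```python
import ipaddress

GSID_BITS = 32


def compress(sid: str, common_prefix_len: int) -> int:
    """Return the 32-bit G-SID (Node ID + Function) that follows the common prefix."""
    shift = 128 - common_prefix_len - GSID_BITS
    if shift < 0:
        raise ValueError("common prefix too long for a 32-bit G-SID")
    return (int(ipaddress.IPv6Address(sid)) >> shift) & ((1 << GSID_BITS) - 1)


def restore(common_prefix: str, common_prefix_len: int, gsid: int) -> ipaddress.IPv6Address:
    """Rebuild the 128-bit SID as Common Prefix + G-SID + 0 (Args and MBZ)."""
    shift = 128 - common_prefix_len - GSID_BITS
    if shift < 0 or not 0 <= gsid < (1 << GSID_BITS):
        raise ValueError("invalid common prefix length or G-SID")
    prefix = int(ipaddress.IPv6Network(f"{common_prefix}/{common_prefix_len}").network_address)
    return ipaddress.IPv6Address(prefix | (gsid << shift))


gsid = compress("100:200:DB8:ABCD:100::", 48)
print(hex(gsid), restore("100:200:DB8::", 48, gsid))  # prints: 0xabcd0100 100:200:db8:abcd:100::
```

Because restore always fills the bits after the G-SID with 0s, any Args value in the original SID is not carried by the G-SID in this model.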

In 32-bit compression, G-SIDs can be allocated from COC32 and COC-both locators.

COC32 locators

Figure 7 COC32 locator

A COC32 locator can allocate SRv6 SIDs that carry the COC flavor (End(COC32) SIDs and End.X(COC32) SIDs) and common SRv6 SIDs that do not carry the COC flavor. You can specify these SRv6 SIDs statically or use IGP to allocate compressible SRv6 SIDs dynamically. Assume that you configure the locator test1 ipv6-prefix 100:200:DB8:ABCD:: 64 common-prefix 48 coc32 static 8 args 16 command. The G-SID contains the node ID, dynamic portion, and static portion, and has a fixed length of 32 bits. The common prefix, G-SID, and Args portions occupy only 96 bits (48 + 32 + 16), so the last 32 bits of the SRv6 SID are MBZ (must be zero) and are all set to 0. In this command:

· The locator is 100:200:DB8:ABCD::. The length is 64 bits.

· The common prefix length is 48 bits.

· The static portion length is 8 bits.

· The Args portion length is 16 bits.

· The dynamic portion length is 8 bits.

· The MBZ is 32 bits.

In this example, the following compressible static SRv6 SID range and dynamic SRv6 SID range are obtained on the locator:

· The start value for static SRv6 SIDs is 100:200:DB8:ABCD:1::.

· The end value for static SRv6 SIDs is 100:200:DB8:ABCD:FF::.

· The start value for dynamic SRv6 SIDs is 100:200:DB8:ABCD:100::.

· The end value for dynamic SRv6 SIDs is 100:200:DB8:ABCD:FFFF::.
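The ranges above can be reproduced with a short Python sketch. It assumes, as the example suggests, that the static portion uses the low-order values of the Function bits inside the G-SID, that the dynamic portion uses the remaining higher values, and that Function value 0 is not allocated. The function name is hypothetical.

```python
import ipaddress


def coc32_ranges(prefix: str, prefix_len: int, common_prefix_len: int, static_len: int):
    """Return the (start, end) SIDs of the static and dynamic ranges of a COC32 locator."""
    node_id_len = prefix_len - common_prefix_len   # Node ID bits inside the 32-bit G-SID
    function_len = 32 - node_id_len                # Function bits inside the G-SID
    if not 0 <= static_len <= function_len:
        raise ValueError("the static portion does not fit in the G-SID Function bits")
    base = int(ipaddress.IPv6Network(f"{prefix}/{prefix_len}").network_address)
    shift = 128 - prefix_len - function_len        # Function bits follow the locator

    def sid(function: int) -> ipaddress.IPv6Address:
        return ipaddress.IPv6Address(base | (function << shift))

    # Dynamic portion length = function_len - static_len (8 bits in the example).
    static = (sid(1), sid((1 << static_len) - 1))
    dynamic = (sid(1 << static_len), sid((1 << function_len) - 1))
    return static, dynamic


static, dynamic = coc32_ranges("100:200:DB8:ABCD::", 64, 48, 8)
print(f"static:  {static[0]} to {static[1]}")    # static:  100:200:db8:abcd:1:: to 100:200:db8:abcd:ff::
print(f"dynamic: {dynamic[0]} to {dynamic[1]}")  # dynamic: 100:200:db8:abcd:100:: to 100:200:db8:abcd:ffff::
```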

COC-both locators

Figure 8 COC-both locator

For more flexible SRv6 SID allocation, a new locator type, COC-both, has been introduced. A COC-both locator provides both compressible and non-compressible SID portions. SIDs that carry the COC flavor (for example, End(COC32) and End.X(COC32) SIDs) and SIDs that do not carry the COC flavor (for example, End(COCNONE) and End.X(COCNONE) SIDs) are allocated dynamically or statically from the compressible SID portions. Only common SIDs, such as End and End.X SIDs, are allocated from the non-compressible SID portions. The SRv6 SIDs allocated from different portions are categorized as follows:

· SRv6 SIDs allocated from the static compressible portion.

· SRv6 SIDs allocated from the dynamic compressible portion.

· SRv6 SIDs allocated from the static non-compressible portion.

· SRv6 SIDs allocated from the dynamic non-compressible portion.

Assume that you configure the locator test1 ipv6-prefix 100:200:DB8:ABCD:: 64 common-prefix 48 coc-both non-compress-static 16 static 8 args 16 command. In this command:

· The locator is 100:200:DB8:ABCD::. The length is 64 bits.

· The common prefix length is 48 bits. A compressed SRv6 SID does not contain this portion.

· The compressible static portion length is 8 bits.

· The compressible dynamic portion length is 8 bits. This value is calculated by using the following formula: 32 - (prefix-length - common-prefix-length) - compressible-static-length, that is, 32 - (64 - 48) - 8 = 8.

· The non-compressible static portion length is 16 bits.

· The non-compressible dynamic portion length is 16 bits. This value is calculated by using the following formula: 128 - common-prefix-length - args-length - 32 - non-compressible-static-length.

· The Args portion length is 16 bits.

In this example, the following static compressible SRv6 SID range and dynamic compressible SRv6 SID range are obtained on the locator:

· The start value for compressible static SRv6 SIDs is 100:200:DB8:ABCD:1::.

· The end value for compressible static SRv6 SIDs is 100:200:DB8:ABCD:FF::.

· The start value for compressible dynamic SRv6 SIDs is 100:200:DB8:ABCD:100::.

· The end value for compressible dynamic SRv6 SIDs is 100:200:DB8:ABCD:FFFF::.

In this example, the following static non-compressible SRv6 SID range and dynamic non-compressible SRv6 SID range are also obtained on the locator:

· The start value for static non-compressible SRv6 SIDs is 100:200:DB8:ABCD::1:0.

· The end value for static non-compressible SRv6 SIDs is 100:200:DB8:ABCD::FFFF:0.

· The start value for dynamic non-compressible SRv6 SIDs is 100:200:DB8:ABCD:0:1::.

· The end value for dynamic non-compressible SRv6 SIDs is 100:200:DB8:ABCD:0:FFFF:FFFF:0.
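The following Python sketch computes the portion lengths and the four SID ranges of the COC-both example. It assumes that the non-compressible Function portion sits between the 32-bit G-SID and the Args portion, a layout inferred from the length formulas and the example ranges rather than stated explicitly, and that value 0 is not allocated in either portion. The function and key names are hypothetical.

```python
import ipaddress


def coc_both_layout(prefix: str, prefix_len: int, common_prefix_len: int,
                    comp_static_len: int, noncomp_static_len: int, args_len: int) -> dict:
    """Compute portion lengths and SID ranges of a COC-both locator (sketch)."""
    comp_func_len = 32 - (prefix_len - common_prefix_len)       # Function bits in the G-SID
    comp_dynamic_len = comp_func_len - comp_static_len           # 32 - (64 - 48) - 8 = 8
    noncomp_func_len = 128 - common_prefix_len - args_len - 32  # bits between G-SID and Args
    noncomp_dynamic_len = noncomp_func_len - noncomp_static_len  # 128 - 48 - 16 - 32 - 16 = 16
    if min(comp_dynamic_len, noncomp_dynamic_len) < 0:
        raise ValueError("a static portion is longer than its field")

    base = int(ipaddress.IPv6Network(f"{prefix}/{prefix_len}").network_address)
    comp_shift = 128 - prefix_len - comp_func_len   # compressible Function follows the locator
    noncomp_shift = args_len                        # non-compressible Function precedes Args

    def comp(value: int) -> str:
        return str(ipaddress.IPv6Address(base | (value << comp_shift)))

    def noncomp(value: int) -> str:
        return str(ipaddress.IPv6Address(base | (value << noncomp_shift)))

    return {
        "comp_dynamic_len": comp_dynamic_len,
        "noncomp_dynamic_len": noncomp_dynamic_len,
        "comp_static": (comp(1), comp((1 << comp_static_len) - 1)),
        "comp_dynamic": (comp(1 << comp_static_len), comp((1 << comp_func_len) - 1)),
        "noncomp_static": (noncomp(1), noncomp((1 << noncomp_static_len) - 1)),
        "noncomp_dynamic": (noncomp(1 << noncomp_static_len), noncomp((1 << noncomp_func_len) - 1)),
    }


layout = coc_both_layout("100:200:DB8:ABCD::", 64, 48, comp_static_len=8,
                         noncomp_static_len=16, args_len=16)
for name, value in layout.items():
    print(name, value)
```

Python prints addresses in their shortest lowercase form, so 100:200:DB8:ABCD::1:0 appears as 100:200:db8:abcd::1:0.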

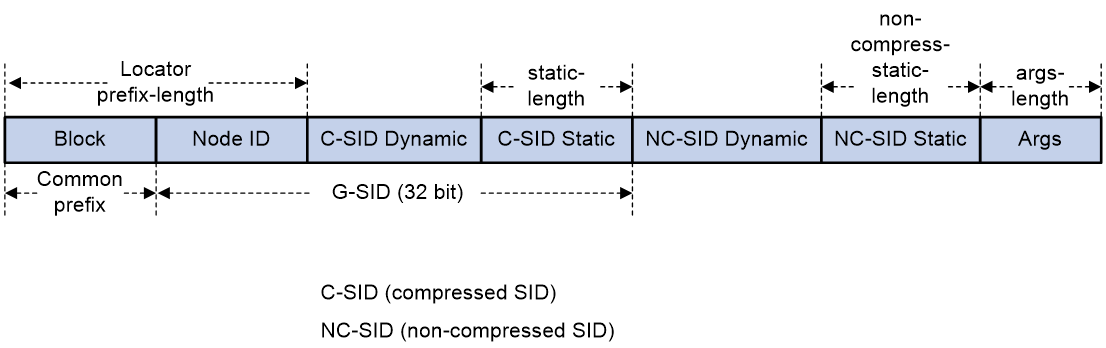

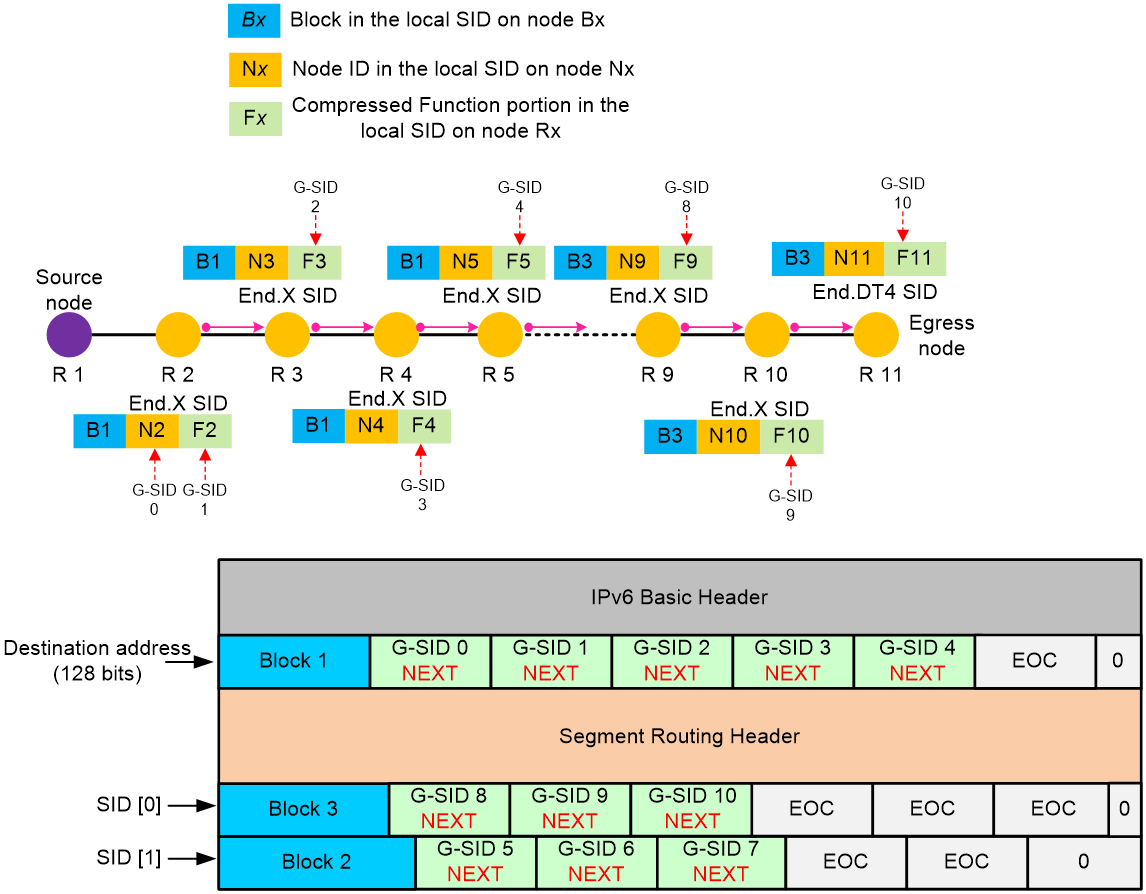

G-SRv6 packet in 32-bit G-SRv6 compression

G-SRv6 packet format

As shown in Figure 9, G-SRv6 can encapsulate both G-SIDs and 128-bit SRv6 SIDs in the segment list of the SRH. G-SIDs are encapsulated in groups of four, and each group occupies the position of one 128-bit SRv6 SID. If a group contains fewer than four G-SIDs (less than 128 bits), G-SRv6 pads the remaining bits with 0s. Multiple consecutive G-SIDs form a compressed path, called a G-SID list. A G-SID list can contain one or more groups of G-SIDs.
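The grouping rule can be sketched in Python as follows. The placement inside each 128-bit group is an assumption drawn from the SI description later in this section: SI 0 is the leftmost G-SID, SI 3 the rightmost, and the first G-SID of a group is read at SI 3. The groups are returned in processing order; in the SRH itself, the segment list is stored in reverse order. The function name and sample values are hypothetical.

```python
def pack_gsid32_groups(gsids: list[int]) -> list[int]:
    """Pack 32-bit G-SIDs, given in processing order, into 128-bit groups.

    Assumed placement: the first G-SID of each group takes SI 3 (rightmost 32 bits),
    the next takes SI 2, and so on; unused slots on the left stay 0 (padding).
    """
    groups = []
    for start in range(0, len(gsids), 4):
        group = 0
        for offset, gsid in enumerate(gsids[start:start + 4]):
            if not 0 <= gsid < 1 << 32:
                raise ValueError(f"G-SID {gsid:#x} does not fit in 32 bits")
            si = 3 - offset
            group |= gsid << (32 * (3 - si))  # SI 0 is leftmost, SI 3 is rightmost
        groups.append(group)
    return groups


for group in pack_gsid32_groups([0x10001, 0x10002, 0x10003, 0x10004, 0x10005]):
    print(f"{group:032x}")
# 00010004000100030001000200010001
# 00000000000000000000000000010005
```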

NOTE: If the SRv6 SID of the next node requires compression, the routing protocol adds the Continue of Compression (COC) flag to the advertised SRv6 SID of the local node. The COC flag indicates that the next SRv6 SID is a G-SID. A COC flag only identifies the forwarding behavior of an SRv6 SID, and is not actually carried in the packet. The COC flags in Figure 9 are for illustration purposes only.

The G-SIDs in the segment list are arranged as follows:

· The SRv6 SID before the G-SID list is a 128-bit SRv6 SID with the COC flag, indicating that the next SID is a 32-bit G-SID.

· Except for the last G-SID, all G-SIDs in the G-SID list must carry the COC flag to indicate that the next SID is a 32-bit G-SID.

· The last G-SID in the G-SID list must be a 32-bit G-SID without the COC flag, indicating that the next SID is a 128-bit SRv6 SID.

· The next SRv6 SID after the G-SID list is a 128-bit SRv6 SID, which may or may not carry the COC flag.

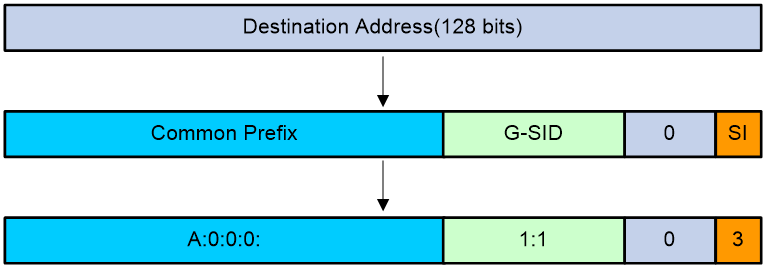

Calculating the destination address with G-SID

As shown in Figure 10, G-SRv6 combines the G-SID and Common Prefix in the segment list to form a new destination address.

· Common Prefix—Common prefix address manually configured by the administrator.

· G-SID—Compressed 32-bit SID obtained from the SRH.

· SID Index (SI)—Index that identifies a G-SID in a group of G-SIDs. This field occupies the least significant two bits of the destination IPv6 address, and its value range is 0 to 3. In a group of G-SIDs in the segment list, the G-SIDs are arranged from left to right by SI value: the leftmost G-SID has SI value 0, and the rightmost G-SID has SI value 3. The SI value decreases by 1 at each node that processes a G-SID. When the SI value is 0 and the next SID is still a G-SID, the SL value decreases by 1 and the SI value is reset to 3.

· 0—If the total length of the Common Prefix, G-SID, and SI portions is less than 128 bits, the remaining bits between the G-SID and the SI are padded with 0s.

Figure 10 Destination address calculated with G-SID

Suppose the following conditions exist:

· The Common Prefix deployed on the SRv6 node is A:0:0:0::/64.

· The G-SID in the SRv6 packet is 1:1.

· The SI value associated with the G-SID is 3.

Based on the previous conditions, the device calculates the destination address as A:0:0:0:1:1::3.
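The same calculation as a minimal Python sketch, assuming the layout Common Prefix, G-SID, zero padding, and a 2-bit SI in the least significant bits. The G-SID 1:1 is the 32-bit value 0x00010001. Python prints the address in its shortest form, a::1:1:0:3, which is the same address as A:0:0:0:1:1::3. The function name is hypothetical.

```python
import ipaddress


def gsid32_destination(common_prefix: str, prefix_len: int, gsid: int, si: int) -> ipaddress.IPv6Address:
    """Destination address = Common Prefix | G-SID | 0 padding | SI (2 bits)."""
    if not 0 <= si <= 3:
        raise ValueError("SI is a 2-bit field (0 to 3)")
    if not 0 <= gsid < 1 << 32:
        raise ValueError("the G-SID must fit in 32 bits")
    if prefix_len + 32 > 126:
        raise ValueError("no room left for the SI bits")
    base = int(ipaddress.IPv6Network(f"{common_prefix}/{prefix_len}").network_address)
    return ipaddress.IPv6Address(base | (gsid << (128 - prefix_len - 32)) | si)


print(gsid32_destination("A:0:0:0::", 64, 0x00010001, 3))  # a::1:1:0:3
```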

Upon receiving the G-SRv6 packet, the SRv6 node calculates the destination address for the packet as follows:

· If the destination address of the packet is a 128-bit SRv6 SID with the COC flag in the segment list, the next SID is a G-SID. The device decreases the SL value by 1, sets the SI value to 3, and searches for the G-SID group corresponding to the new SL value. Then, the device calculates the destination address based on the 32-bit G-SID identified by SI value 3.

· If the destination address of the packet is a 32-bit SRv6 SID with the COC flag in the segment list, the next SID is a G-SID.

  o If the SI value is greater than 0, the device decreases the SI value by 1 and searches for the G-SID group corresponding to the SL value of the packet. Then, the device calculates the destination address based on the 32-bit G-SID identified by [SI-1].

  o If the SI value is equal to 0, the device decreases the SL value by 1, resets the SI value to 3, and searches for the G-SID group corresponding to the SL value of the packet. Then, the device calculates the destination address based on the 32-bit G-SID identified by SI value 3.

· If the destination address of the packet is a 32-bit SRv6 SID without the COC flag in the segment list, the device decreases the SL value by 1 and searches for the 128-bit SRv6 SID corresponding to [SL-1]. Then, the device replaces the destination address in the IPv6 header with the SRv6 SID.

· If the destination address of the packet is a 128-bit SRv6 SID without the COC flag in the segment list, the device decreases the SL value by 1 and searches for the 128-bit SRv6 SID corresponding to [SL-1]. Then, the device replaces the destination address in the IPv6 header with the SRv6 SID.
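The four cases can be modeled as a small state machine. The following Python sketch is a hypothetical model, not the device implementation: it tracks only the SL, the SI, and whether the active SID is a 32-bit G-SID, and it assumes the group layout described above (SI 0 leftmost, SI 3 rightmost). The class, function names, and sample values are illustrative.

```python
import ipaddress
from dataclasses import dataclass

MASK32 = 0xFFFFFFFF


@dataclass(frozen=True)
class Active:
    sl: int           # Segments Left: index of the active segment list entry
    si: int           # SID Index of the active G-SID in its group (0 to 3)
    compressed: bool  # True if the active SID is a 32-bit G-SID


def gsid_at(entry: int, si: int) -> int:
    """Read the G-SID at index SI from a 128-bit group (SI 0 leftmost, SI 3 rightmost)."""
    return (entry >> (32 * (3 - si))) & MASK32


def next_active(cur: Active, has_coc: bool) -> Active:
    """Select the next active SID according to the four cases above."""
    if has_coc:
        if cur.compressed and cur.si > 0:
            return Active(cur.sl, cur.si - 1, True)   # next G-SID in the same group
        return Active(cur.sl - 1, 3, True)            # SI 3 of the next group
    return Active(cur.sl - 1, 0, False)               # next SID is a 128-bit SID


def destination(cur: Active, segment_list: list[int], prefix: int, prefix_len: int) -> int:
    """Build the destination address: the 128-bit SID itself, or prefix | G-SID | SI."""
    if not cur.compressed:
        return segment_list[cur.sl]
    gsid = gsid_at(segment_list[cur.sl], cur.si)
    return prefix | (gsid << (128 - prefix_len - 32)) | cur.si


# Segment List[2]: 128-bit SID with COC; [1]: G-SIDs at SI 3 and SI 2; [0]: final 128-bit SID.
segments = [0xC, (0x00020002 << 32) | 0x00010001, 0xE]
step1 = next_active(Active(2, 0, False), has_coc=True)
step2 = next_active(step1, has_coc=True)
step3 = next_active(step2, has_coc=False)
print(ipaddress.IPv6Address(destination(step1, segments, 0xA << 112, 64)))  # a::1:1:0:3
```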

16-bit G-SRv6 compression

Basic concepts

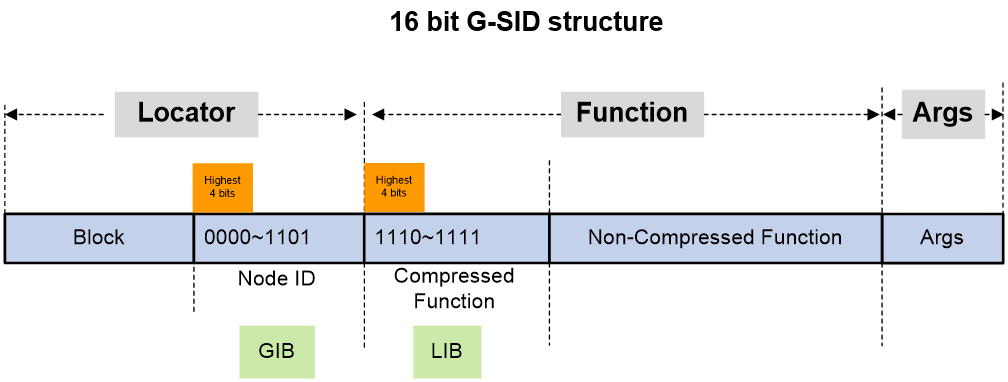

G-SID format

As shown in Figure 11, in 16-bit G-SRv6 compression, an SRv6 SID contains the Locator, Function, and Arguments portions.

The locator portion of an SRv6 SID contains the Block and Node ID portions.

· Block—Common prefix, also known as Locator Block, is redundant information in a G-SRv6 packet. Its length is the total length of the locator portion (prefix-length) minus 16 bits.

· Node ID—Identifies a node, also known as Locator Node. It has a fixed length of 16 bits. The Node ID is advertised to all nodes within the SRv6 domain along with the locator through IGP. After learning the routing prefix that contains the Block and Node ID, other nodes can forward SRv6 packets based on the Block and Node ID.

The Function portion of an SRv6 SID is locally significant and guides packet forwarding only on the local node. The Function portion is divided into Compressed Function and Non-Compressed Function portions.

· Compressed Function—This portion has a fixed length of 16 bits. G-SIDs that carry COC and NEXT flavors can be allocated from this portion. This portion contains the dynamic compressed G-SID (dynamic portion) and static compressed G-SID (static portion, where the length of the static portion can be specified in the CLI).

· Non-Compressed Function—Common SRv6 SIDs that do not carry the COC flavor are allocated from this portion.

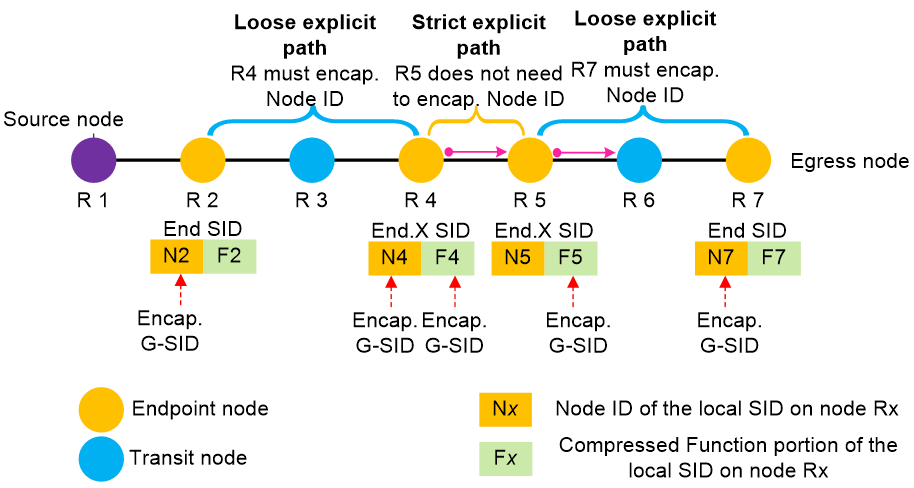

Strict explicit path and loose explicit path

Typically, SRv6 SIDs with the same common prefix can be compressed by encapsulating either the 16-bit Node ID or the 16-bit compressed Function portion of each SID as a G-SID in a G-SRv6 packet.

An SRv6 endpoint node can encapsulate the 16-bit Node ID portion of the local SRv6 SID, the 16-bit compressed Function portion, or both as G-SIDs into a G-SRv6 packet. As shown in Figure 12, the encapsulation mode depends on the actual scenario:

· Loose explicit path—A scenario where packets cannot be forwarded to the current node based on the SID of the previous hop (for example, R2 to R4 and R5 to R7 shown in Figure 12). That is, the current node and the previous endpoint node on the SRv6 forwarding path are not adjacent, and the previous node cannot forward packets to the current node even if it uses an End.X G-SID. In this case, you must configure the device to encapsulate the Node ID of the local SID of this node as a G-SID for routing purposes. You can also choose whether to encapsulate the Function portion of the local SID to control the forwarding behavior as needed.

· Strict explicit path—A scenario where packets can be forwarded to a node based on the SID of the previous hop for that node (for example, R4 to R5 shown in Figure 12). The previous hop uses a local End.X G-SID to specify the forwarding path, and the packet reaches this node through the next hop and outgoing interface of that End.X G-SID. In this case, only the compressed Function portion of the local SID of this node needs to be encapsulated, and the packet is forwarded according to the forwarding behavior bound to that compressed Function portion.

Figure 12 Strict explicit path and loose explicit path

Container for G-SIDs

In a G-SRv6 packet, a container is a 128-bit space used to store G-SIDs. The 128-bit destination address in the IPv6 basic header of a G-SRv6 packet can be used as a container to store multiple G-SIDs. Each 128-bit SID in the SRH extension header can also be used as a container.

GIB and LIB

H3C uses the Global Identifiers Block (GIB) and Local Identifiers Block (LIB) to distinguish between a Node ID and compressed Function portion encapsulated as a 16-bit G-SID into a G-SRv6 packet.

As shown in Figure 13, the 16 possible values of the highest 4 bits of a 16-bit G-SID are divided between two non-overlapping subspaces: the GIB (global G-SID space) and the LIB (local G-SID space).

· In the GIB, a G-SID is the Node ID in the local SID of an endpoint node. By default, the highest 4 bits of a G-SID in the GIB are set to 0x0 to 0xD (binary 0000 to 1101, 14 in total), indicating that the G-SID is the Node ID in the local SID of an endpoint node. The G-SID is used for IP addressing by the endpoint node.

· In the LIB, a G-SID is the Function portion in the local SID of an endpoint node. By default, the highest 4 bits of a G-SID in the LIB are set to 0xE to 0xF (binary 1110 to 1111, 2 in total), indicating that the G-SID is the compressed Function portion in the local SID of an endpoint node. The G-SID is used to identify different forwarding behaviors of the endpoint node.

By default, the ratio of G-SIDs between the GIB and LIB is 14:2. You can change this ratio in the CLI.
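A minimal Python sketch of the GIB/LIB split, classifying a 16-bit G-SID by its highest 4 bits. It assumes that a non-default ratio still assigns high-nibble values to the GIB counting up from 0x0, with the rest going to the LIB; the configurable parameter here is a hypothetical stand-in for the CLI setting, not its actual syntax.

```python
def classify_gsid16(gsid: int, gib_values: int = 14) -> str:
    """Return "GIB" (Node ID) or "LIB" (compressed Function) for a 16-bit G-SID.

    gib_values is the number of high-nibble values, counted up from 0x0, that belong
    to the GIB. The default 14 gives 0x0-0xD to the GIB and 0xE-0xF to the LIB.
    """
    if not 0 <= gsid <= 0xFFFF:
        raise ValueError("a 16-bit G-SID must be in the range 0x0000 to 0xFFFF")
    if not 1 <= gib_values <= 15:
        raise ValueError("both the GIB and the LIB need at least one high-nibble value")
    return "GIB" if gsid >> 12 < gib_values else "LIB"


for value in (0x0001, 0xD123, 0xE001, 0xFFF0):
    print(f"{value:#06x} -> {classify_gsid16(value)}")
```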

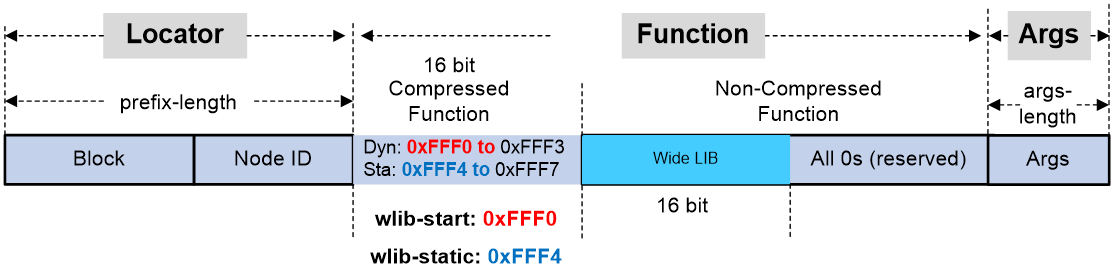

COC16 locators

To allocate 16-bit compressed G-SIDs, H3C has defined a COC16 locator. 16-bit compressed G-SIDs can be allocated from the address space of the COC16 locator. Based on G-SID allocation methods and the flavors carried by G-SIDs, COC16 locators in three different modes have been defined, as shown in Figure 14.

· COC16 locator in default mode—From the compressed Function portion of a COC16 locator in default mode, the device can allocate G-SIDs that carry the COC flavor, the NEXT flavor, or both, and SIDs that do not carry the COC flavor (COC-none). From the non-compressed Function portion, the device can allocate common SIDs that carry neither the COC nor the NEXT flavor. COC16 locators in default mode support the most SID types and flavors.

· COC16 locator in next mode—A COC16 locator in next mode differs from one in default mode only in that it allocates only SIDs that carry the NEXT flavor, that is, G-SIDs that support the move action. This mode is used only for interoperation with third-party 16-bit G-SRv6 compression implementations.

· COC16 locator in W-LIB mode—In the 16-bit G-SRv6 compression scheme, each G-SID in the 16-bit compressed Function portion must be bound to a different endpoint behavior, so the address space of the compressed Function portion might be insufficient. To address this issue, H3C introduced COC16 locators in W-LIB mode. In this type of locator, the highest 16 bits of the non-compressed Function portion are used to expand the address space of the compressed Function portion. This expanded 16-bit address space is called the Wide LIB (W-LIB).

A G-SID is allocated from the W-LIB of a COC16 locator in W-LIB mode only when the preceding compressed Function portion in a G-SRv6 packet is one of eight specific values (configurable in the CLI). For example, if the compressed Function portion encapsulated in a G-SRv6 packet is in the range of 0xFFF0 to 0xFFF7, the next 16-bit G-SID is allocated from the W-LIB. When forwarding the packet, the device combines the compressed Function portion with the W-LIB G-SID to look up the local SID table.

· For a COC16 locator in W-LIB mode, G-SIDs that carry the NEXT flavor can be allocated from the W-LIB or from the compressed Function portion. In addition, SIDs that do not carry the COC flavor (COC-none) can be allocated from the compressed Function portion. G-SIDs allocated from the compressed Function portion use values other than 0xFFF0 through 0xFFF7.

You can use the locator command to specify the start value for the compressed Function portion or the start value of a static portion. For example, if you set wlib-start to 0xFFF0, you set the start value for the compressed Function portion. If you set wlib-start to 0xFFF4, you set the start value for a static portion in the compressed Function portion. G-SIDs in the W-LIB identified by 0xFFF0 to 0xFFF3 are allocated dynamically, and G-SIDs in the W-LIB identified by 0xFFF4 to 0xFFF7 are statically allocated.

In the current software version, COC16 locators in W-LIB mode are used to allocate VPN SIDs for both L3VPN and L2VPN services.
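The following Python sketch models the W-LIB check with the example values 0xFFF0 through 0xFFF7 and the example dynamic/static split at 0xFFF4. The exact format of the local SID table key is not documented here; appending the W-LIB G-SID to the compressed Function portion as a 32-bit key is an assumption, and all names are hypothetical.

```python
WLIB_INDICATORS = range(0xFFF0, 0xFFF8)  # example values from the text; configurable on the device
WLIB_STATIC_START = 0xFFF4               # example split: 0xFFF0-0xFFF3 dynamic, 0xFFF4-0xFFF7 static


def local_gsid_key(compressed_function: int, following_gsid: int) -> tuple[int, bool]:
    """Return (lookup key, uses_wlib) for a compressed Function G-SID (hypothetical model).

    If the compressed Function is a W-LIB indicator, the next 16-bit G-SID comes from the
    W-LIB and is appended to it as a 32-bit key; otherwise the 16-bit value is the key.
    """
    if compressed_function in WLIB_INDICATORS:
        return (compressed_function << 16) | following_gsid, True
    return compressed_function, False


def wlib_allocation(indicator: int) -> str:
    """Tell whether W-LIB G-SIDs behind an indicator value are allocated dynamically or statically."""
    if indicator not in WLIB_INDICATORS:
        raise ValueError(f"{indicator:#x} is not a W-LIB indicator")
    return "static" if indicator >= WLIB_STATIC_START else "dynamic"


print(hex(local_gsid_key(0xFFF2, 0x0123)[0]))            # 0xfff20123
print(local_gsid_key(0xE005, 0x0123))                    # (57349, False)
print(wlib_allocation(0xFFF3), wlib_allocation(0xFFF4))  # dynamic static
```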

Figure 14 COC16 locator in W-LIB mode

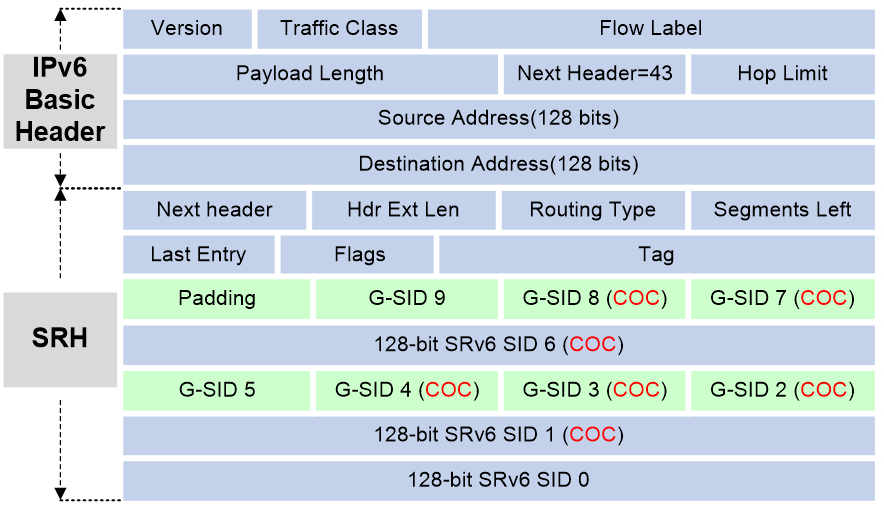

16-bit G-SRv6 compression classification

The following 16-bit compression schemes are available depending on the encapsulation and forwarding modes of SRv6 packets:

· Combination of NEXT and COC flavors—Multiple G-SIDs encapsulated in a G-SRv6 packet carry both COC and NEXT flavors, and the device performs the move or replace actions when it forwards the packet. This scheme offers higher compression efficiency for packet encapsulation. However, all endpoint nodes on the forwarding path must have the same Block (common prefix). If the Block changes, a new container that carries the new Block must be encapsulated, reducing compression efficiency.

· NEXT flavor only—Multiple G-SIDs encapsulated in a G-SRv6 packet carry only the NEXT flavor, and the device performs the move action when it forwards the packet. Although this scheme sacrifices some packet encapsulation compression efficiency, endpoint nodes along the forwarding path can have different Blocks, allowing for more flexible planning of common prefix addresses.

· COC flavor only—Multiple G-SIDs encapsulated in a G-SRv6 packet carry only the COC flavor, and the device performs the replace action when it forwards the packet. The packet encapsulation and forwarding process of this scheme is similar to that of the 32-bit G-SRv6 compression scheme. G-SIDs are encapsulated only in the SID list of the SRH, and the destination IPv6 address is not used as the first container for G-SIDs. Therefore, this scheme provides lower compression efficiency.

You can select the schemes as needed.

16-bit compression with a combination of NEXT and COC flavors

G-SRv6 packet encapsulation in 16-bit compression

Packet encapsulation in the 16-bit compression scheme is controlled by the configuration of the index command. Incorrect G-SID planning or incorrect index command configuration can cause incorrect G-SRv6 packet encapsulation or even encapsulation failure. The following uses the index command to describe the packet encapsulation procedure.

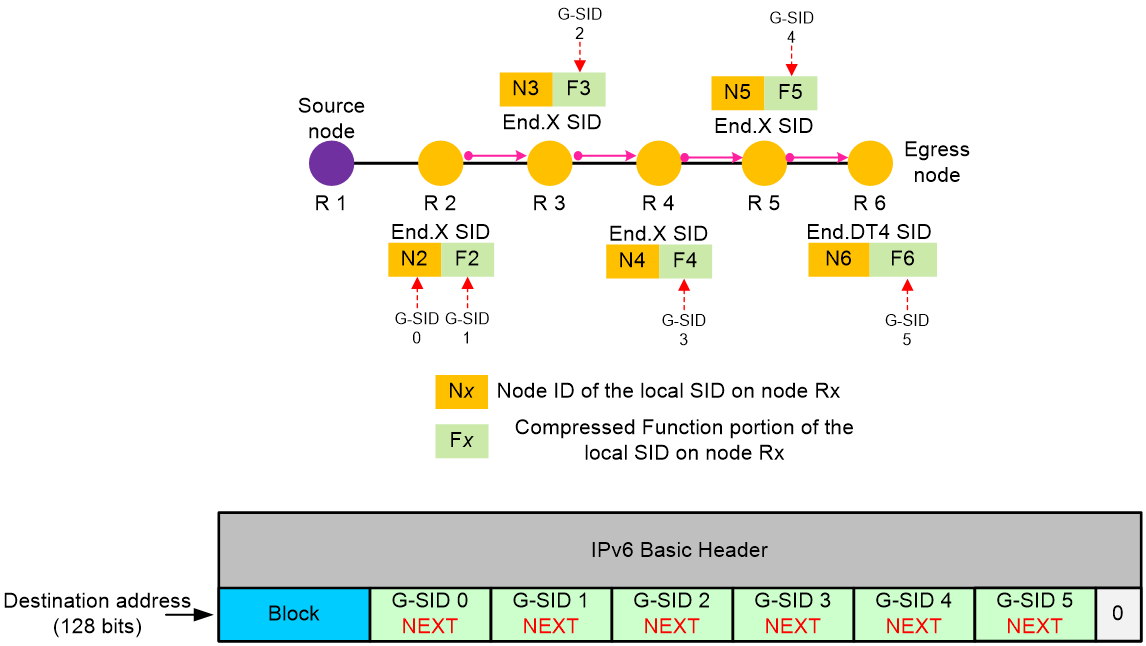

As shown in Figure 15, a packet is forwarded along a short path from R1 to R6. The total length of the Block and the 16-bit G-SIDs of all nodes does not exceed 128 bits, and neither the SRH flags field nor SRH TLVs are required. In this case, the source node can encapsulate the Block and the G-SID list directly into the destination address field of the IPv6 basic header without encapsulating an SRH. Any unused space in the 128-bit container is padded with zeros. The G-SIDs are encapsulated from left to right in order of proximity to the source node. When you configure the index command, you must specify the coc-next or next keyword for G-SID 0 through G-SID 5, indicating that G-SIDs 0 through 5 are 16-bit compressed G-SIDs.

Figure 15 G-SRv6 packet encapsulation
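The following Python sketch builds such a destination address: the Block first, then the 16-bit G-SIDs from left to right, with the rest padded with zeros. The 32-bit Block 2001:db8::/32 and the G-SID values are hypothetical, and the sketch ignores flavors and the index command, which control encapsulation on the device.

```python
import ipaddress


def pack_destination(block: str, block_len: int, gsids: list[int]) -> ipaddress.IPv6Address:
    """Place the Block first, then the 16-bit G-SIDs from left to right; pad the rest with 0s."""
    if block_len + 16 * len(gsids) > 128:
        raise ValueError("the Block and G-SIDs do not fit in one 128-bit container")
    value = int(ipaddress.IPv6Network(f"{block}/{block_len}").network_address)
    shift = 128 - block_len
    for gsid in gsids:  # G-SID 0 (closest to the source node) comes first
        if not 0 <= gsid <= 0xFFFF:
            raise ValueError(f"G-SID {gsid:#x} does not fit in 16 bits")
        shift -= 16
        value |= gsid << shift
    return ipaddress.IPv6Address(value)


print(pack_destination("2001:db8::", 32, [0x1, 0xE001, 0x3]))  # 2001:db8:1:e001:3::
```

With a 32-bit Block, exactly six 16-bit G-SIDs fit, which matches the six G-SIDs (G-SID 0 through G-SID 5) in the R1 to R6 example.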

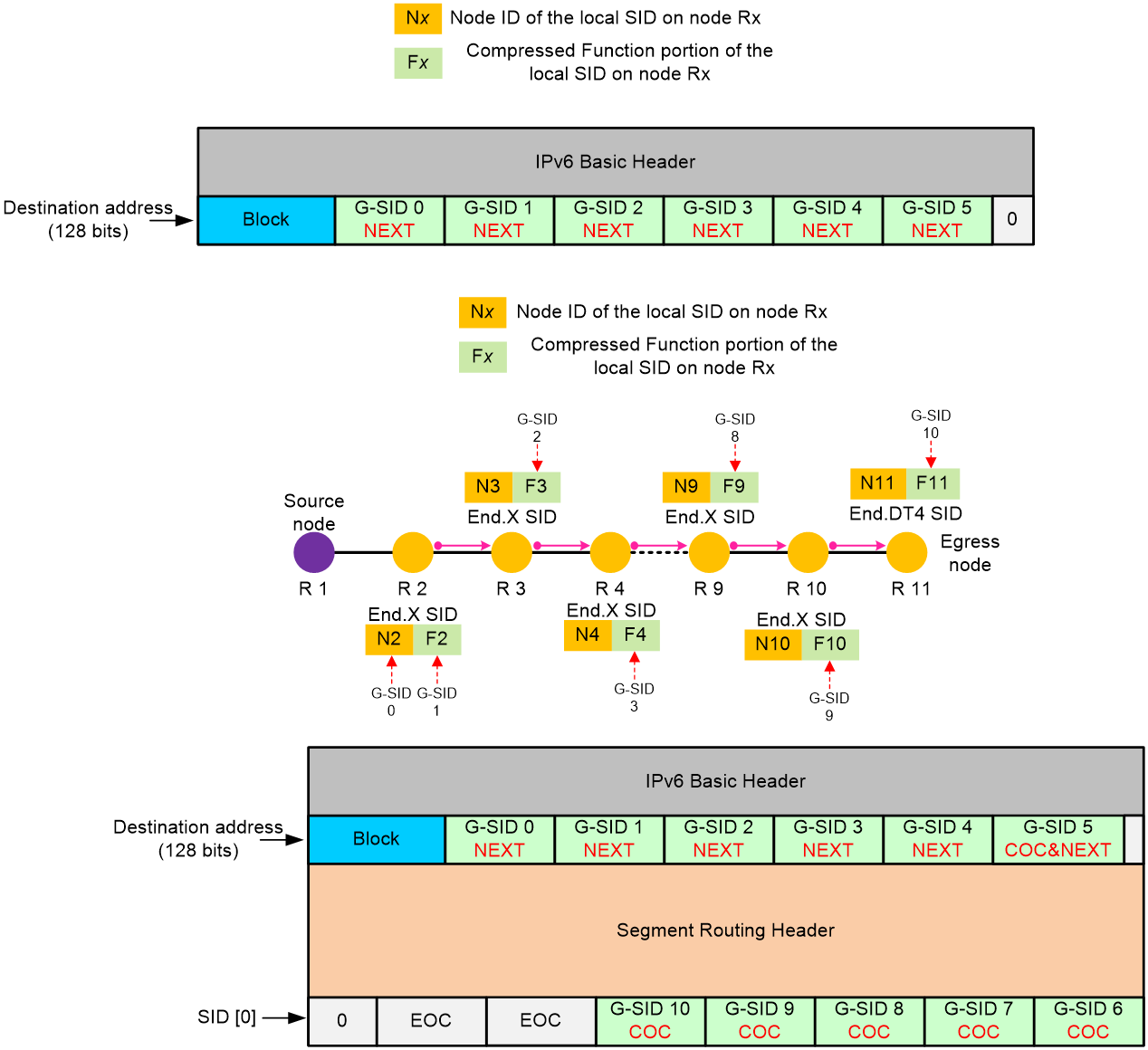

As shown in Figure 16, a packet is forwarded along a longer path from R1 to R11. The total length of the Block and the 16-bit G-SIDs of all nodes exceeds 128 bits, so a single container cannot store all G-SIDs. In this case, the source node encapsulates the Block and the first G-SIDs in the destination address of the IPv6 basic header, and encapsulates the G-SIDs that exceed 128 bits in the SID list of the SRH extension header. The first container of the SID list does not carry the Block, and its G-SIDs are encapsulated from right to left in order of proximity to the source node. When you configure the index command:

· Specify the coc-next or next keyword for G-SID 0 to G-SID 4.

· G-SID 5 is the last G-SID in the destination address container, but its next SID is still a 16-bit compressed G-SID. Therefore, G-SID 5 must carry the COC flavor, and you must specify the coc or coc-next keyword for it.

· For G-SID 6 to G-SID 9, the next SID of each G-SID is also a 16-bit compressed G-SID. Therefore, you must specify the coc or coc-next keyword for them.

· Whether G-SID 10, the last G-SID in the SID list, carries the COC flavor depends on whether the next SID is a compressed G-SID.

Unused space in a container is filled with zeros. A run of 16 consecutive zero bits is called End of Container (EOC), which indicates that the rest of the current container holds no valid G-SIDs.

Figure 16 G-SRv6 packet encapsulation
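The split between the destination address and the SRH can be sketched in Python as follows. This is a hypothetical model under stated assumptions: the destination address is filled first, the remaining G-SIDs fill 128-bit containers of eight from right to left (the first one at CI 7, CI 0 being the leftmost slot), and flavors and the index command are ignored. The Block 2001:db8::/32 and the G-SID values 0x100 to 0x10A (standing for G-SID 0 to G-SID 10) are illustrative.

```python
import ipaddress


def slot(container: int, ci: int) -> int:
    """Read the 16-bit G-SID at index CI (CI 0 leftmost, CI 7 rightmost)."""
    return (container >> (16 * (7 - ci))) & 0xFFFF


def encapsulate(block: str, block_len: int, gsids: list[int]) -> tuple[ipaddress.IPv6Address, list[int]]:
    """Split a 16-bit G-SID list between the IPv6 destination address and SRH containers.

    - The destination address holds the Block and as many G-SIDs as fit, left to right.
    - The remaining G-SIDs fill 128-bit containers of eight, right to left, so the first one
      sits at CI 7; unused slots stay 0 and form the EOC.
    - Containers are returned in processing order (the SRH stores them in reverse).
    """
    if any(not 0 <= g <= 0xFFFF for g in gsids):
        raise ValueError("every G-SID must fit in 16 bits")
    da_slots = (128 - block_len) // 16
    head, rest = gsids[:da_slots], gsids[da_slots:]
    da = int(ipaddress.IPv6Network(f"{block}/{block_len}").network_address)
    for i, gsid in enumerate(head):
        da |= gsid << (128 - block_len - 16 * (i + 1))
    containers = []
    for start in range(0, len(rest), 8):
        container = 0
        for offset, gsid in enumerate(rest[start:start + 8]):
            container |= gsid << (16 * offset)  # offset 0 -> CI 7 (rightmost 16 bits)
        containers.append(container)
    return ipaddress.IPv6Address(da), containers


da, containers = encapsulate("2001:db8::", 32, list(range(0x100, 0x10B)))
print(da)  # 2001:db8:100:101:102:103:104:105
print([hex(slot(containers[0], ci)) for ci in range(8)])
# ['0x0', '0x0', '0x0', '0x10a', '0x109', '0x108', '0x107', '0x106']
```

In this model, G-SID 10 ends up at CI 3 and the all-zero slot at CI 2 acts as the EOC.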

G-SRv6 packet forwarding

The following uses the scenario shown in Figure 17 as an example to describe the packet forwarding process. G-SRv6 packets can be forwarded correctly only when local endpoint nodes are configured with SIDs that carry the appropriate flavors.

G-SRv6 packet forwarding proceeds as follows:

1. On source node R1, after the G-SRv6 packet is encapsulated, R1 looks up the routing table based on the destination address of the IPv6 packet. Using the longest mask match rule, R1 finds that Block+G-SID 0 matches the locator prefix of R2. Therefore, R1 forwards the packet to node R2 through the outgoing interface and next hop of the matching route.

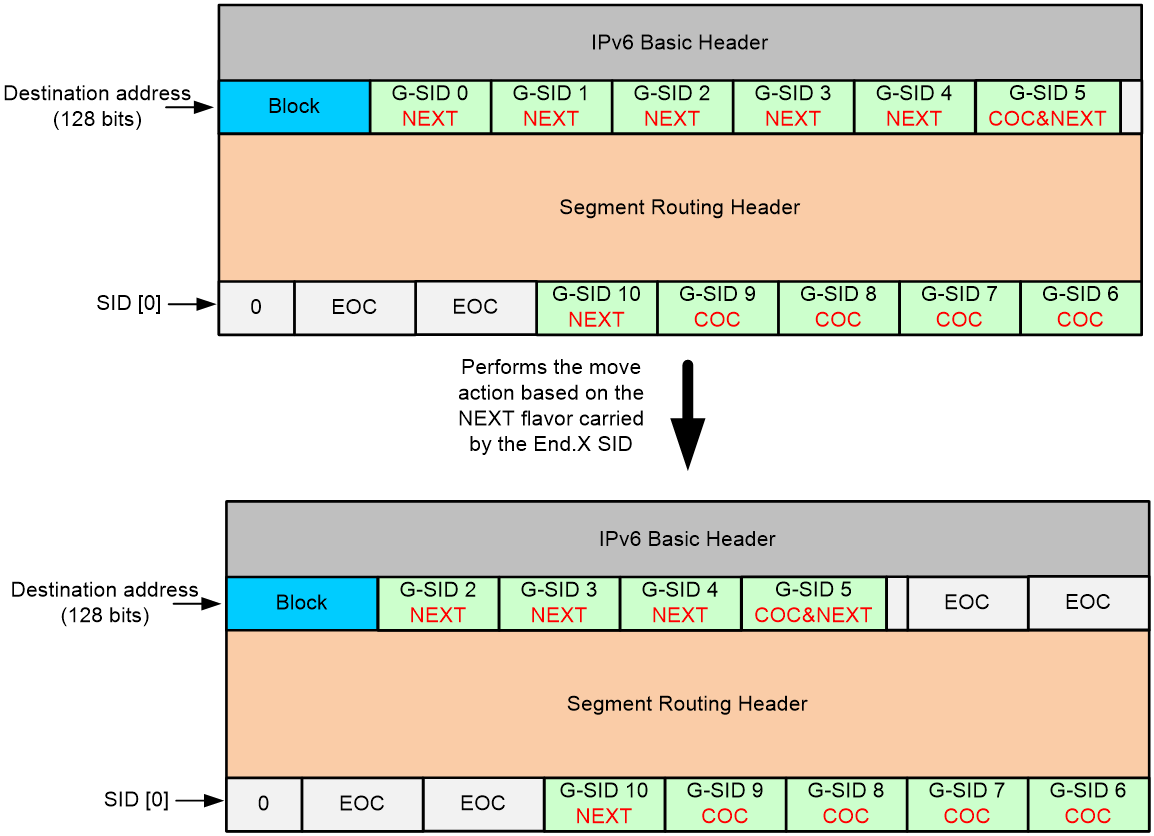

2. Using the longest mask match rule, node R2 finds that Block+G-SID 0+G-SID 1 is a local End.X SID that carries the NEXT flavor. Therefore, R2 performs the move action. As shown in Figure 17, it moves the G-SIDs that follow G-SID 1 in the container to right after the Block, and fills the vacated bits with 0s to generate an EOC. R2 then forwards the packet to node R3 through the outgoing interface bound to the End.X SID.

3. Every endpoint on the forwarding path has a special local G-SID entry. Unlike a common local SID, a local G-SID entry is formed by the Block + compressed Function portion, or by the Block + compressed Function portion + W-LIB, without a Node ID. Using the longest mask match rule, node R3 finds that the destination address Block + G-SID 2 matches a local G-SID entry bound to End.X, and the G-SID carries the NEXT flavor. Therefore, R3 moves all G-SIDs after G-SID 2 in the container to right after the Block, and fills the vacated bits with 0s to generate an EOC. R3 forwards the packet to node R4 through the outgoing interface bound to the End.X SID.

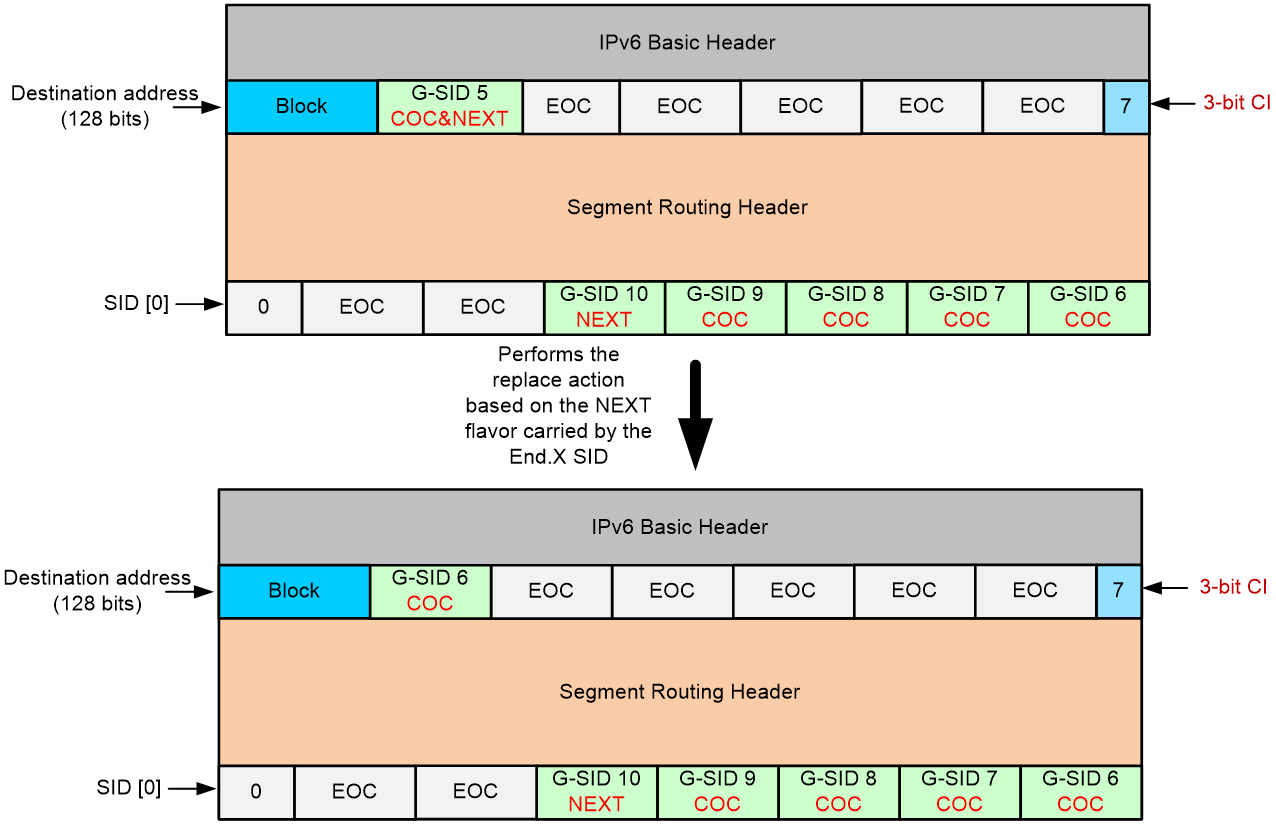

4. Nodes R4 and R5 repeat the previous steps until the G-SRv6 packet is forwarded to node R6. Using the longest mask match rule, R6 finds that the IPv6 destination address Block + G-SID 5 matches a local G-SID entry, and the G-SID carries the COC flavor, indicating that the next SID is still a 16-bit compressed G-SID. G-SID 5 is the last G-SID in the current container and is followed by an all-zero EOC. As shown in Figure 18, R6 uses the last 3 bits of the destination address as the Compressed-SID Index (CI) and sets its value to 7. The CI identifies the position of G-SID 6 within the container, with a value in the range of 0 to 7. R6 then performs the replace action required by the COC flavor: it extracts the 16-bit G-SID 6 from the SID[0] container and writes it into the destination address right after the Block, replacing G-SID 5. Finally, R6 forwards the packet to R7 through the outgoing interface bound to the End.X behavior of Block + G-SID 5.

5. Nodes R7 to R10 repeat the steps performed by R6 until the G-SRv6 packet reaches R11. R11 is the egress node, G-SID 10 is the last SID in the container, and the SL is now 0. Therefore, R11 stops processing the SRv6 packet, decapsulates it based on the forwarding behavior associated with G-SID 10, looks up the VPN routing table, and forwards the decapsulated packet to the VPN. If instead the forwarding behavior of G-SID 10 is End or End.X, the SL is greater than 0, and G-SID 10 carries the COC flavor, R11 decreases the CI to 2. The slot at CI 2 is an all-zero EOC, which marks the end of the current container. Therefore, R11 decreases the SL by 1, sets the CI to 7, and retrieves the G-SID from the corresponding position in the next container. If G-SID 10 does not carry the COC flavor, the next SID is a common 128-bit SID, and the packet is forwarded through the common SRv6 packet forwarding process.

Figure 17 Execution of the move action based on the NEXT flavor carried by the SID

Figure 18 Execution of the replace action based on the COC flavor carried by the SID
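The move action described in steps 2 and 3 can be sketched in Python as a bit shift of everything after the Block. This is a hypothetical model: it assumes the number of G-SIDs matched by the local SID entry is already known (two at R2 for Node ID plus Function, one at R3 for a local G-SID entry), and the Block 2001:db8::/32 and G-SID values are illustrative.

```python
import ipaddress


def move_action(da: ipaddress.IPv6Address, block_len: int, consumed: int) -> ipaddress.IPv6Address:
    """Apply the move action triggered by the NEXT flavor.

    consumed is the number of 16-bit G-SIDs after the Block that matched the local SID
    (2 for Block + G-SID 0 + G-SID 1 at R2, 1 for Block + G-SID 2 at R3). The remaining
    G-SIDs shift left to follow the Block, and the vacated bits become 0 (the EOC).
    """
    payload_len = 128 - block_len
    if not 0 < 16 * consumed <= payload_len:
        raise ValueError("the consumed G-SIDs must fit after the Block")
    value = int(da)
    block = value >> payload_len << payload_len
    payload = (value << (16 * consumed)) & ((1 << payload_len) - 1)
    return ipaddress.IPv6Address(block | payload)


da = ipaddress.IPv6Address("2001:db8:1:2:3:4:5:6")  # hypothetical Block 2001:db8::/32 + 6 G-SIDs
after_r2 = move_action(da, 32, consumed=2)
after_r3 = move_action(after_r2, 32, consumed=1)
print(after_r2, after_r3)  # 2001:db8:3:4:5:6:: 2001:db8:4:5:6::
```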

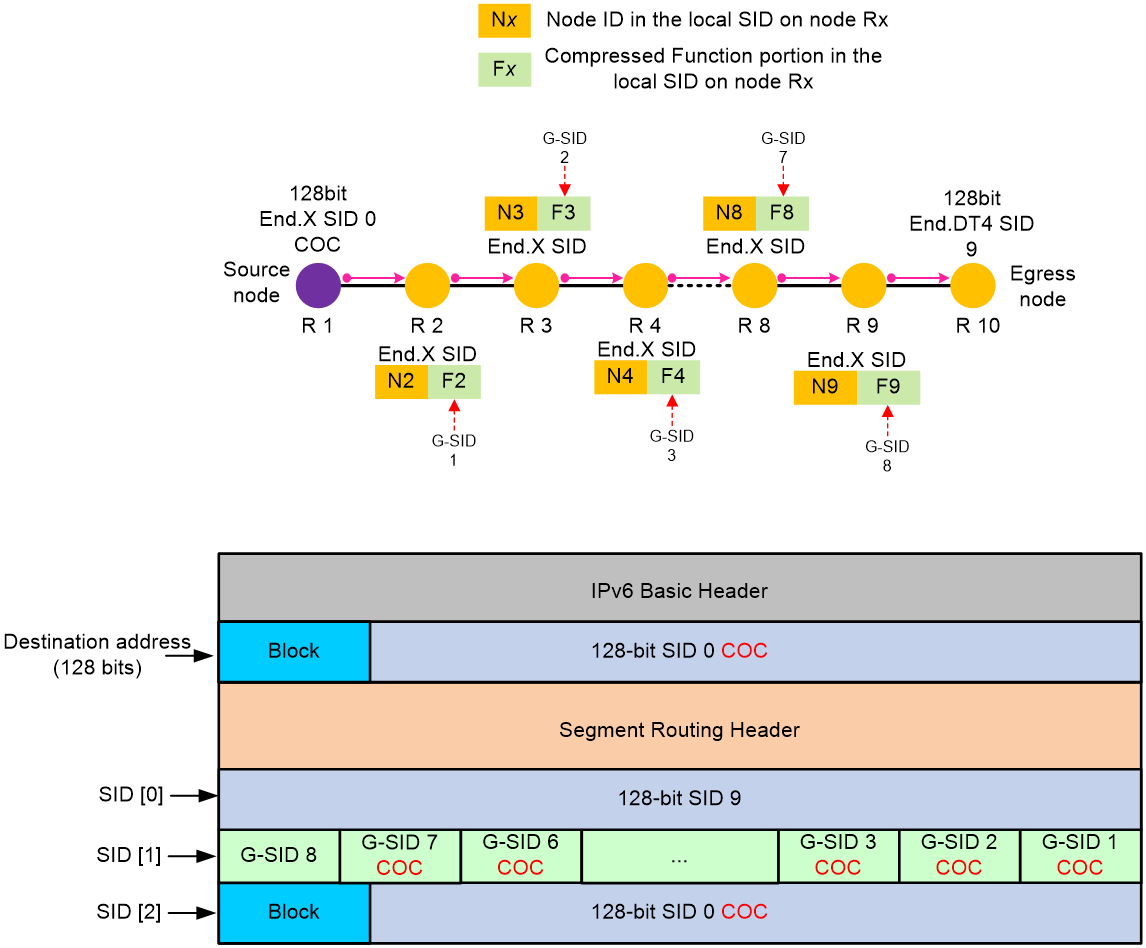

16-bit compression scheme where only the NEXT flavor is supported

G-SRv6 packet encapsulation

This scheme is similar to the 16-bit G-SRv6 compression scheme with a combination of NEXT and COC flavors. The following uses the index command to describe the packet encapsulation procedure.

As in the scheme that combines the NEXT and COC flavors, if the forwarding path has few nodes and they share the same Block, the G-SIDs and Block can be encapsulated in the destination address of the IPv6 header without an SRH. However, if the forwarding path has many nodes or the nodes have different Blocks, an SRH must be encapsulated.

As shown in Figure 19, three different Blocks exist on nodes R1 through R11. The Blocks for nodes R2 through R5, R6 through R8, and R9 through R11 are Block 1, Block 2, and Block 3, respectively. When the device encapsulates a G-SRv6 packet, it must encapsulate the three Blocks in different containers. When you configure the index command, you must specify the coc-next or next keyword for G-SIDs 0 through 3, G-SIDs 5 through 6, and G-SIDs 8 through 9 to ensure that the G-SIDs are encapsulated in the containers with Blocks from left to right in order. For the next G-SID to be encapsulated correctly, do not specify the coc or coc-next keyword for the last G-SID in each container. Spaces that are shorter than 128 bits in the container are filled with zeros to generate an EOC.

Figure 19 G-SRv6 packet encapsulation

G-SRv6 packet forwarding

The following uses the scenario shown in Figure 19 as an example to describe the packet forwarding process. G-SRv6 packets can be forwarded correctly only when a local endpoint node is configured with SIDs that carry appropriate flavors.

In this scheme, only the move action is taken in G-SRv6 packet forwarding. The packet forwarding process is as follows:

1. On source node R1, after the G-SRv6 packet is encapsulated, R1 searches the routing table based on the destination address of the IPv6 packet. Using the longest mask match rule, R1 detects that Block 1+G-SID 0 is the locator network segment for R2. Therefore, it forwards the packet to node R2 through the output interface and next hop in the routing table.

2. Using the longest mask match rule, node R2 detects that Block 1+G-SID 0+G-SID 1 is a local End.X SID and the SID carries the NEXT flavor. Therefore, the node performs the move action to move the G-SIDs following G-SID 1 in the container to right after Block 1, and fills the vacated bits with 0s to generate an EOC. R2 forwards the packet to node R3 through the outgoing interface bound to the End.X SID.

3. Every endpoint on the forwarding path has a special local G-SID entry. Unlike a common local SID, a local G-SID entry is formed by the Block + compressed Function portion, or by the Block + compressed Function portion + W-LIB, without a Node ID. Using the longest mask match rule, node R3 detects that the destination address Block 1 + G-SID 2 is a local G-SID entry with the End.X behavior bound, and the G-SID carries the NEXT flavor. Therefore, R3 moves all G-SIDs after G-SID 2 in the container to right after Block 1, and fills the vacated bits with 0s to generate an EOC. R3 forwards the packet to node R4 through the outgoing interface bound to the End.X SID.

4. Node R4 repeats the previous steps, and the G-SRv6 packet is forwarded to node R5. Using the longest mask match rule, R5 detects that the IPv6 destination address Block 1 + G-SID 4 is a local G-SID entry and the G-SID does not carry the COC flavor. G-SID 4 is the last G-SID in the current container and is followed by an all-zero EOC, so the next SID is in the next container, which carries Block 2. R5 decreases the SL by 1, so the SL is 1, and copies SID[1] to the destination address. Finally, R5 forwards the packet to R6 through the outgoing interface bound to the End.X behavior of Block 1 + G-SID 4.

5. Nodes R6 to R10 repeat the previous forwarding steps until the G-SRv6 packet reaches R11. R11 is the egress node, and G-SID 10 is the last SID in the container. The SL is now 0. Therefore, R11 stops processing the SRv6 packet, decapsulates it based on the forwarding behavior associated with G-SID 10, looks up the VPN routing table, and forwards the decapsulated packet to the VPN.
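One forwarding step in this scheme can be sketched in Python: if a G-SID still follows the matched ones, the node performs the move action; if only the EOC remains, the node decreases the SL and copies the next container into the destination address. This is a hypothetical model that assumes the SRH carries all three containers (so the SL is 2 when the packet reaches R5) and that all Blocks have the same length; the Block value and the container placeholders are illustrative.

```python
def next_flavor_step(da: int, block_len: int, consumed: int, sl: int,
                     segment_list: list[int]) -> tuple[int, int, str]:
    """One NEXT-flavor forwarding step: returns (new destination address, new SL, action)."""
    payload_len = 128 - block_len
    mask = (1 << payload_len) - 1
    block = da >> payload_len << payload_len
    rest = (da << (16 * consumed)) & mask           # G-SIDs behind the matched ones
    if rest >> (payload_len - 16):                  # a G-SID, not the EOC, follows the Block
        return block | rest, sl, "move"
    if sl == 0:
        return da, sl, "last segment"               # left to the bound behavior, such as decapsulation
    sl -= 1
    return segment_list[sl], sl, "next container"   # copy Segment List[SL] into the DA


BLOCK1 = 0x20010DB8 << 96            # hypothetical Block 1 = 2001:db8::/32
segments = [0xC, 0xB, 0xA]           # placeholders for the Block 3, Block 2, Block 1 containers
at_r5 = BLOCK1 | (0x4 << 80)         # Block 1 + G-SID 4, followed by the EOC
print(next_flavor_step(at_r5, 32, 1, 2, segments))  # (11, 1, 'next container')
```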

16-bit compression scheme where only the COC flavor is supported

G-SRv6 packet encapsulation

This scheme is similar to the 16-bit G-SRv6 compression scheme with a combination of NEXT and COC flavors. The following uses the index command to describe the packet encapsulation procedure.

As shown in Figure 20, when you execute the index command for G-SRv6 packet encapsulation on source node R1, you must specify the coc keyword for SID 0, the first SID in the SID list. SID 0 is an uncompressed 128-bit SID, and its COC flavor indicates that the next SID, G-SID 1, is a 16-bit compressed G-SID placed in the next container. For G-SIDs 1 through 7, the next SID of each G-SID is also a 16-bit compressed G-SID. Therefore, you must specify the coc or coc-next keyword for these G-SIDs. These G-SIDs are encapsulated in the next container from right to left, in order of proximity to the source node. If a container is not fully occupied by G-SIDs, the unused space is filled with zeros. A run of 16 consecutive zero bits is called End of Container (EOC), which indicates that the rest of the current container holds no valid G-SIDs. Whether G-SID 8, the last G-SID in the SID list, carries the COC flavor depends on whether the next SID is a 16-bit compressed G-SID. In the figure, the coc keyword is not specified for G-SID 8, because the next SID, SID 9, is a common 128-bit End.DT4 SID.

Figure 20 G-SRv6 packet encapsulation

G-SRv6 packet forwarding

The following uses the scenario shown in Figure 20 as an example to describe the packet forwarding process. G-SRv6 packets can be forwarded correctly only when local endpoint nodes are configured with appropriate flavors.

In this scheme, only the replace action is taken in G-SRv6 packet forwarding. The packet forwarding process is as follows:

1. After encapsulating the G-SRv6 packet, source node R1, with the SL set to 2, copies SID[2] to the destination address. Using the longest mask match rule, R1 detects that End.X SID 0 is a local SID that carries the COC flavor, indicating that the next SID is a 16-bit compressed G-SID, and SID 0 is the last SID in the current container. Therefore, R1 decreases the SL to 1, uses the last 3 bits of the destination address as the CI, and sets the CI value to 7. The CI identifies the position of G-SID 1 within the container, with a value in the range of 0 to 7. R1 performs the replace action to extract the 16-bit G-SID 1 from the SID[1] container and place it right after the Block in the destination address. Finally, R1 forwards the packet to node R2 through the outgoing interface and next hop bound to End.X SID 0.

2. Using the longest mask match rule, node R2 finds that Block+G-SID 1 is a local End.X SID that carries the COC flavor, which means that the next SID is a 16-bit compressed G-SID. At this point, the CI is 7. R2 decrements the CI by 1, performs the replace action to extract G-SID 2 from the position indicated by the CI, and places G-SID 2 after the Block in the destination address. R2 then forwards the packet to node R3 through the output interface bound to the End.X SID.

3. Nodes R3 to R8 repeat the forwarding behavior of node R2 until the packet reaches node R9. At R9, the CI is 0, which means that G-SID 8 is the last G-SID in the container. G-SID 8 does not carry the COC flavor, which means that the next SID, SID 9, is a 128-bit common SID. R9 decrements SL by 1 to 0, copies SID[0] to the destination address of the IPv6 packet, and forwards the packet to node R10 through the output interface bound to G-SID 8.

4. R10 is the endpoint node, and SL is 0. Therefore, R10 stops processing the SRH, decapsulates the SRv6 packet based on the End.DT4 forwarding behavior associated with SID 9, looks up the VPN routing table, and forwards the decapsulated packet to the VPN.
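Steps 1 through 4 can be modeled as a small state machine over SL and the CI. The following Python sketch is a simplified model, not device code. SIDs are labels instead of IPv6 addresses, and SID[1] is an 8-slot container in which slot 7 holds G-SID 1 and slot 0 holds G-SID 8. The sketch also generalizes step 1 into an assumed rule: when the active SID carries the COC flavor and is either uncompressed or at CI 0, processing moves to the next container with the CI set to 7.

```python
from dataclasses import dataclass
from typing import List, Optional, Union

SLOTS = 8  # 16-bit G-SIDs per 128-bit container


@dataclass
class Packet:
    srh: List[Union[str, List[str]]]  # srh[i] is SID[i]: a full SID or a container
    sl: int                           # Segments Left
    active: str                       # SID or G-SID currently in the destination address
    ci: Optional[int] = None          # None while the DA holds a full 128-bit SID


def endpoint_process(pkt: Packet, coc: bool) -> None:
    """Apply one endpoint node's replace action to the packet in place."""
    if coc:
        if pkt.ci is not None and pkt.ci > 0:
            pkt.ci -= 1            # next G-SID is in the same container
        else:
            pkt.sl -= 1            # assumed: move to the next container
            pkt.ci = SLOTS - 1
        # Replace action: put the G-SID at position CI after the Block.
        pkt.active = pkt.srh[pkt.sl][pkt.ci]
    else:
        pkt.sl -= 1                # next SID is a full 128-bit SID
        pkt.ci = None
        pkt.active = pkt.srh[pkt.sl]


# Figure 20 scenario: slot 0 holds G-SID 8 and slot 7 holds G-SID 1.
container = [f"G-SID {8 - i}" for i in range(SLOTS)]
pkt = Packet(srh=["SID 9", container, "SID 0"], sl=2, active="SID 0")

# SID 0 and G-SIDs 1 to 7 carry the COC flavor; G-SID 8 does not.
carries_coc = {"SID 0": True, **{f"G-SID {n}": n < 8 for n in range(1, 9)}}

trace = []
while pkt.sl > 0:  # R1 through R9 each update the destination address
    endpoint_process(pkt, carries_coc[pkt.active])
    trace.append((pkt.active, pkt.sl, pkt.ci))

for step in trace:
    print(step)
```

The loop produces nine destination address updates, one for each node from R1 through R9. After the last update, SL is 0 and the destination address holds SID 9, which R10 processes as an End.DT4 SID.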

IMPORTANT: The three 16-bit G-SRv6 compression encapsulation and forwarding schemes, the 32-bit G-SRv6 compression scheme, and the non-compressed SRv6 encapsulation scheme can be used together. How SRv6 packets are encapsulated and forwarded depends on the service requirements, the index command configuration, and the local SID configuration. This section describes only the three mainstream 16-bit G-SRv6 compression schemes, which differ significantly from one another.

BGP-EPE

About BGP-EPE

When SRv6 SIDs are advertised through an IGP, they can be orchestrated only within an AS to forward traffic optimally based on the SID list. In a large network that spans multiple ASs, the IGP alone cannot orchestrate SIDs into a complete inter-AS forwarding path. A BGP extension for SRv6 is required to allocate and advertise SIDs across ASs.

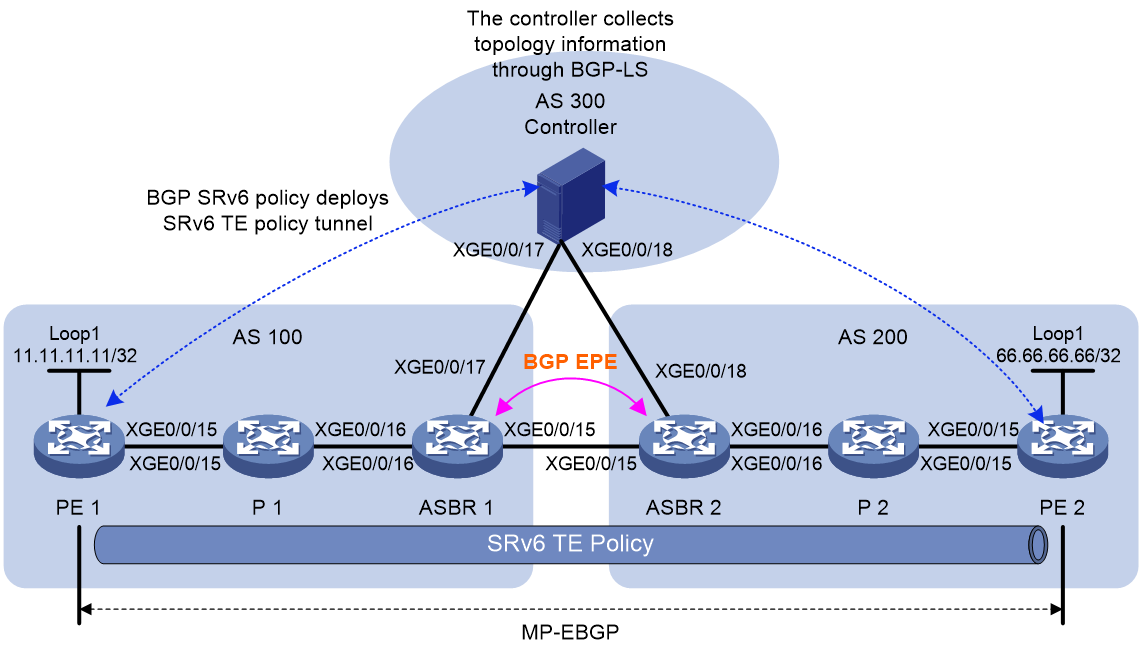

BGP Egress Peer Engineering (BGP-EPE) is a BGP extension for SRv6 that allocates BGP peer SIDs to inter-AS segments. The peer SIDs are advertised to the SDN controller through extended BGP-LS messages, and the controller orchestrates IGP SIDs and BGP peer SIDs to generate inter-AS packet forwarding paths. Typically, in an inter-AS network, at least one forwarding device in each AS (but not necessarily every device) must establish a BGP-LS peer relationship with the SDN controller. These devices collect all IGP SIDs and BGP peer SIDs within their AS and advertise them to the controller through BGP-LS messages, so that the controller obtains network-wide information.

Operating mechanism

BGP-EPE supports automatic peer SID allocation and static peer SID allocation. As shown in Figure 21, BGP-EPE can allocate the following peer SIDs:

· PeerNode SID—Identifies a BGP-EPE peer node. BGP-EPE allocates a PeerNode SID to each BGP peer. If the device establishes an EBGP peer relationship with a peer through loopback interfaces, multiple physical links might exist between the BGP-EPE peers. In this case, the PeerNode SID for the peer is associated with multiple output interfaces, and traffic forwarded to the peer based on the PeerNode SID is load shared among these interfaces.

· PeerAdj SID—Identifies an adjacency link that can reach a BGP-EPE peer. If the device establishes an EBGP peer relationship with a peer through loopback interfaces, multiple physical links might exist between the BGP-EPE peers, and each link is allocated a PeerAdj SID. When the device forwards traffic based on a PeerAdj SID, it sends the traffic out of the interface attached to the link that the PeerAdj SID identifies.

· PeerNode-Adj SID—Identifies a peer node and one or multiple adjacency links that can reach the peer node.

· PeerSet SID—Identifies a group of peer nodes in a BGP-EPE SRv6 peer set. A PeerSet SID corresponds to multiple PeerNode SIDs and PeerAdj SIDs. When the device forwards traffic based on a PeerSet SID, it distributes the traffic among multiple peers.

Figure 21 BGP-EPE network diagram

As shown in Figure 21, BGP-EPE allocates peer SIDs as follows:

· ASBR 1 and ASBR 3 are connected by two direct physical links and establish an EBGP peer relationship through loopback interfaces. On ASBR 1, BGP-EPE allocates PeerNode SID 100:AB::1 to ASBR 3 and allocates PeerAdj SIDs 100:AB:1::2 and 100:AB:1::3 to the two physical links. When ASBR 1 forwards traffic to ASBR 3 based on the PeerNode SID, the traffic is load shared across the two physical links.

· ASBR 1 and ASBR 5, ASBR 2 and ASBR 4, and ASBR 2 and ASBR 5 establish EBGP peer relationships through directly connected physical interfaces. On ASBR 1, BGP-EPE allocates PeerNode SID 100:AB::2 to ASBR 5. On ASBR 2, BGP-EPE allocates PeerNode SIDs 100:AB::4 and 100:AB::5 to ASBR 4 and ASBR 5, respectively.

· ASBR 4 and ASBR 5 have each established an EBGP peer relationship with ASBR 2. On ASBR 2, peers ASBR 4 and ASBR 5 are added to a peer set, and BGP-EPE allocates PeerSet SID 100:AB::3 to the peer set. When ASBR 2 forwards traffic based on the PeerSet SID, the traffic is load shared between ASBR 4 and ASBR 5.

The SIDs allocated to peers by BGP-EPE are not advertised to the peers. Route types used by the peers do not affect BGP-EPE.
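The following Python sketch shows how the Figure 21 peer SIDs might map to output interfaces. It models the peer SID tables on ASBR 1 and ASBR 2 as dictionaries. The SID values come from the example. The interface names, the flow tuple, and the CRC32-based per-flow hashing are assumptions for illustration only, because this section does not describe the device's load-sharing algorithm. The PeerSet SID is modeled simply as the union of its member peers' links.

```python
import zlib

# SIDs from the Figure 21 example; interface names are hypothetical.
ASBR1_PEER_SIDS = {
    "100:AB::1":   ("PeerNode", ["link1-to-ASBR3", "link2-to-ASBR3"]),
    "100:AB:1::2": ("PeerAdj",  ["link1-to-ASBR3"]),
    "100:AB:1::3": ("PeerAdj",  ["link2-to-ASBR3"]),
    "100:AB::2":   ("PeerNode", ["link-to-ASBR5"]),
}
ASBR2_PEER_SIDS = {
    "100:AB::4": ("PeerNode", ["link-to-ASBR4"]),
    "100:AB::5": ("PeerNode", ["link-to-ASBR5"]),
    # Assumed model: a PeerSet SID load shares across all member peers' links.
    "100:AB::3": ("PeerSet",  ["link-to-ASBR4", "link-to-ASBR5"]),
}


def select_interface(table, sid, flow):
    """Return the output interface for a flow forwarded based on a peer SID.

    SIDs bound to several interfaces are load shared per flow, so packets
    of the same flow always leave through the same interface.
    """
    _kind, interfaces = table[sid]
    bucket = zlib.crc32(repr(flow).encode()) % len(interfaces)
    return interfaces[bucket]


flow = ("10.1.1.1", "10.2.2.2", 6, 1234, 80)  # src, dst, protocol, sport, dport
print(select_interface(ASBR1_PEER_SIDS, "100:AB:1::3", flow))  # always link 2
print(select_interface(ASBR2_PEER_SIDS, "100:AB::3", flow))    # ASBR 4 or ASBR 5
```

The sketch hashes a stable checksum instead of Python's built-in `hash()` because `hash()` on strings is randomized per process. Randomized hashing would move a flow to a different link after every restart.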

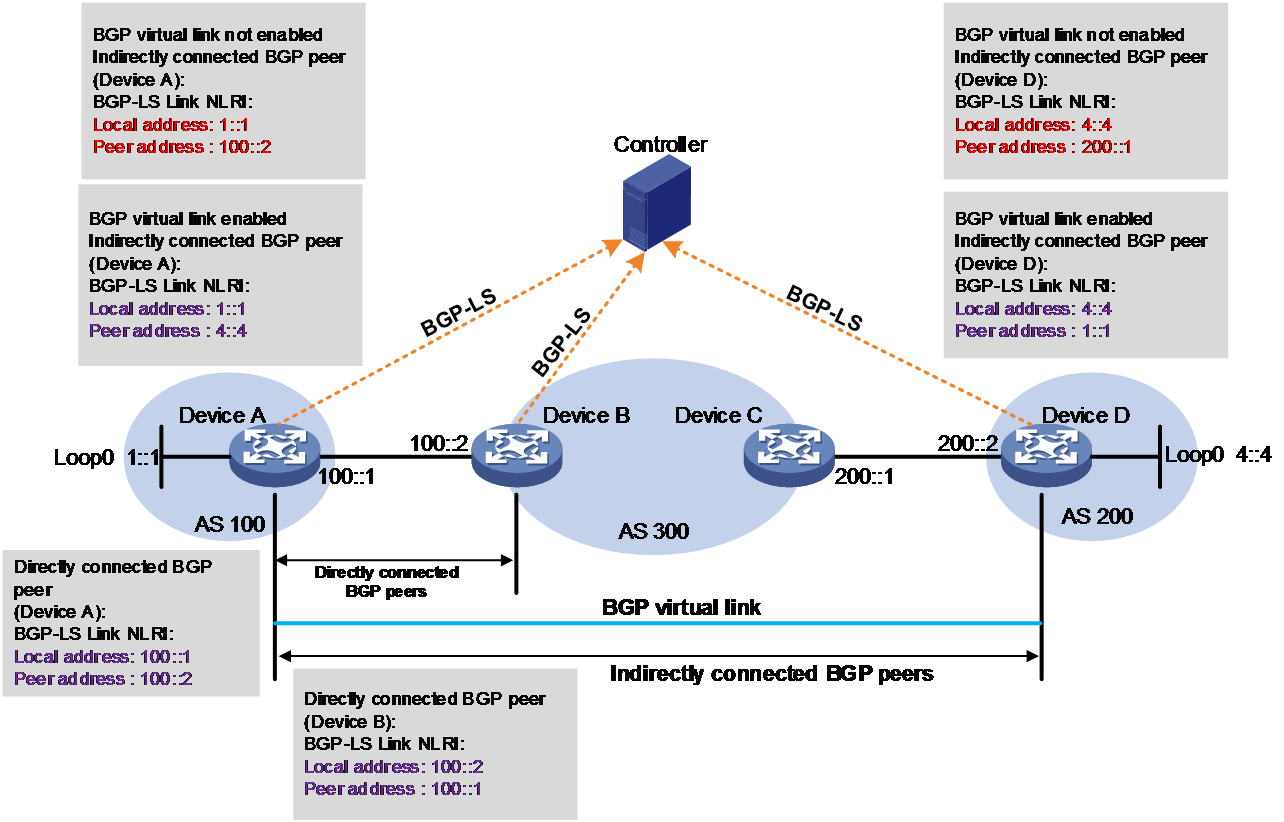

BGP virtual links

As shown in Figure 22, the controller can generate inter-AS forwarding paths by orchestrating IGP SIDs and the BGP peer SIDs that BGP-EPE allocates to inter-AS links, based on the link information reported through BGP-LS. Typically, Device A and Device B in different ASs are directly connected and establish an EBGP peer relationship through the directly connected interfaces. In this case, the local address in the BGP-LS Link NLRI that Device A reports to the controller is 100::1, and the remote address is the next hop 100::2. For Device B, the local address is 100::2 and the remote address is the next hop 100::1. The controller detects that the remote addresses advertised by Device A and Device B belong to the same network segment and that Device A's local address matches Device B's remote address. Therefore, the controller creates a direct inter-AS link and orchestrates SIDs based on this link to form a complete inter-AS traffic forwarding path.

Device A and Device D, which are in different ASs and are not directly connected, establish an EBGP peer relationship through loopback interfaces. The local address in the BGP-LS Link NLRI that Device A reports to the controller is its loopback interface address 1::1, and the remote address is 100::2, the next hop toward the remote loopback interface. For Device D, the local address is 4::4 and the remote address is 200::1. In this case, the remote addresses of Device A and Device D belong to different network segments, and Device A's local address does not match Device D's remote address. Therefore, the controller cannot create a complete inter-AS link based on the address information in the BGP-LS Link NLRIs.

Figure 22 Network diagram for BGP virtual link

To enable the controller to orchestrate SIDs and create a complete inter-AS link between the indirectly connected Device A and Device D, you can configure a BGP virtual link. With this feature configured, the local address in the BGP-LS Link NLRI that Device A reports to the controller is its loopback interface address 1::1, and the remote address is 4::4, the loopback interface address of Device D. The local address in the BGP-LS Link NLRI that Device D reports is 4::4, and the remote address is 1::1. Because the local and remote addresses of the two NLRIs now match, the controller can create a reachable virtual link between the two devices.
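The address matching described above can be sketched in Python. This simplified model checks only that each Link NLRI's local address equals the other NLRI's remote address. The controller might apply further checks, such as the same-network-segment test described for directly connected devices. The `LinkNLRI` type and the `can_pair` function are hypothetical.

```python
import ipaddress
from typing import NamedTuple


class LinkNLRI(NamedTuple):
    """Hypothetical subset of a BGP-LS Link NLRI: reporting device and addresses."""
    device: str
    local: str
    remote: str


def same_address(a: str, b: str) -> bool:
    # Normalize so that equivalent IPv6 spellings (100::1, 100:0::1) compare equal.
    return ipaddress.IPv6Address(a) == ipaddress.IPv6Address(b)


def can_pair(x: LinkNLRI, y: LinkNLRI) -> bool:
    """True if the two NLRIs look like the two ends of one link."""
    return same_address(x.local, y.remote) and same_address(y.local, x.remote)


# Directly connected EBGP peers (Device A and Device B).
a_direct = LinkNLRI("Device A", "100::1", "100::2")
b_direct = LinkNLRI("Device B", "100::2", "100::1")

# Loopback-based EBGP peers without a BGP virtual link (Device A and Device D).
a_loop = LinkNLRI("Device A", "1::1", "100::2")
d_loop = LinkNLRI("Device D", "4::4", "200::1")

# The same peers with a BGP virtual link configured.
a_virtual = LinkNLRI("Device A", "1::1", "4::4")
d_virtual = LinkNLRI("Device D", "4::4", "1::1")

for x, y in [(a_direct, b_direct), (a_loop, d_loop), (a_virtual, d_virtual)]:
    print(x.device, "<->", y.device, can_pair(x, y))
```

The three pairs print True, False, and True. The results match the three cases in the text: a direct link, no link without the virtual link feature, and a virtual link once the feature is configured.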

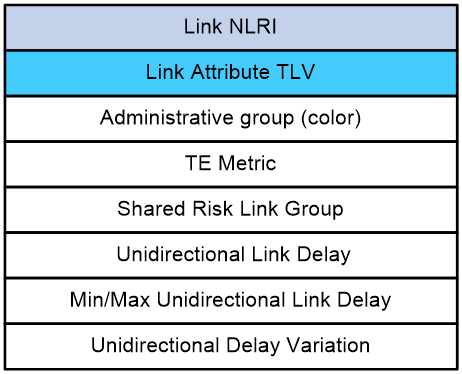

BGP-LS advertisement of link attribute information

Within an AS, IGP extensions can carry link attribute information. With this information, devices running the IGP can use the Constraint-based Shortest Path First (CSPF) algorithm to implement TE capabilities.

When the controller calculates optimal inter-AS paths, it needs the attributes of both intra-AS and inter-AS links to implement TE capabilities. Therefore, BGP-LS uses Link Attribute TLVs to carry link attribute information. Figure 23 shows some of the link attribute information carried in a Link Attribute TLV.

Table 1 Link Attribute TLV description

| Field | Description |
|---|---|
| Link NLRI | Link Network Layer Reachability Information (NLRI). This information can contain Link Attribute TLVs. |
| Link Attribute TLV | Link Attribute TLV type. Options include: · Administrative group (color)—Affinity attribute value, which indicates the color of links. The TLV Type code is 1088. · TE Metric—The TLV Type code is 1092. · Shared Risk Link Group—A set of links that share a resource. The TLV Type code is 1096. · Unidirectional Link Delay—The TLV Type code is 1114. · Min/Max Unidirectional Link Delay—The TLV Type code is 1115. · Unidirectional Delay Variation—The TLV Type code is 1116. |
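BGP-LS TLVs use a common layout: a 2-byte Type, a 2-byte Length, and a Value of Length bytes (RFC 7752). The Python sketch below walks a byte string of such TLVs and names the types listed in Table 1. It assumes the input is exactly a sequence of Link Attribute TLVs, with no surrounding BGP path attribute header, and it leaves each value undecoded. The function name is hypothetical.

```python
import struct

# Type codes from Table 1.
LINK_ATTR_TLV_NAMES = {
    1088: "Administrative group (color)",
    1092: "TE Metric",
    1096: "Shared Risk Link Group",
    1114: "Unidirectional Link Delay",
    1115: "Min/Max Unidirectional Link Delay",
    1116: "Unidirectional Delay Variation",
}


def parse_link_attr_tlvs(data: bytes):
    """Split a byte string into (type, name, raw value) tuples."""
    tlvs = []
    offset = 0
    while offset < len(data):
        if offset + 4 > len(data):
            raise ValueError("truncated TLV header")
        # Network byte order: 2-byte type followed by 2-byte length.
        tlv_type, length = struct.unpack_from("!HH", data, offset)
        offset += 4
        value = data[offset:offset + length]
        if len(value) != length:
            raise ValueError(f"truncated value for TLV {tlv_type}")
        offset += length
        tlvs.append((tlv_type, LINK_ATTR_TLV_NAMES.get(tlv_type, "Unknown"), value))
    return tlvs


sample = struct.pack("!HHI", 1092, 4, 10)  # TE Metric TLV with an assumed 4-byte value of 10
for tlv_type, name, value in parse_link_attr_tlvs(sample):
    print(tlv_type, name, int.from_bytes(value, "big"))
```

The sketch returns raw values because each TLV type has its own value format. For example, the delay TLVs carry flag bits as well as a delay value.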

Topology-Independent Loop-Free Alternate Fast Re-Route (TI-LFA FRR)

Topology-Independent Loop-Free Alternate Fast Re-Route (TI-LFA FRR) provides link and node protection for SRv6 tunnels. When a link or node fails, TI-LFA FRR switches the traffic to the backup path to ensure continuous data forwarding.

TI-LFA FRR background

To minimize traffic loss during route reconvergence, you can enable FRR on the device directly connected to the protected link or node. The device enabled with FRR is called the Point of Local Repair (PLR). The PLR calculates both the shortest path to the destination and an FRR backup path, and writes them to the FIB. When a protected link or node fails, the PLR immediately reroutes traffic through the FRR backup path without waiting for route reconvergence, which significantly reduces traffic loss. FRR has the following mechanisms:

· Loop-Free Alternate Fast Reroute (LFA FRR)—To calculate the backup path, LFA FRR identifies a neighbor of the PLR (LFA node) through which traffic can reach the destination node without passing through the protected link or node. In some scenarios, especially ring networks, LFA FRR cannot calculate a backup path, so its protection is topology dependent. According to RFC 6571, LFA FRR has a topology coverage of 80% to 90%.

· Remote Loop-Free Alternate Fast Reroute (RLFA FRR)—To improve the topology coverage of LFA FRR, RFC 7490 defines RLFA FRR. With RLFA FRR, traffic is forwarded from the PLR to a remote LFA (RLFA) node and then reaches the destination node without passing through the protected link or node. Unlike LFA FRR, RLFA FRR does not require the repair node to be a neighbor of the PLR. This provides more protection possibilities and increases the topology coverage to 95% to 99%.

· TI-LFA FRR (applicable to both SRv6 and SR-MPLS)—Unlike LFA FRR and RLFA FRR, TI-LFA FRR is topology independent, which means that backup path calculation is not restricted by the network topology. The PLR can automatically calculate a TI-LFA FRR backup path as long as a bypass forwarding path exists.

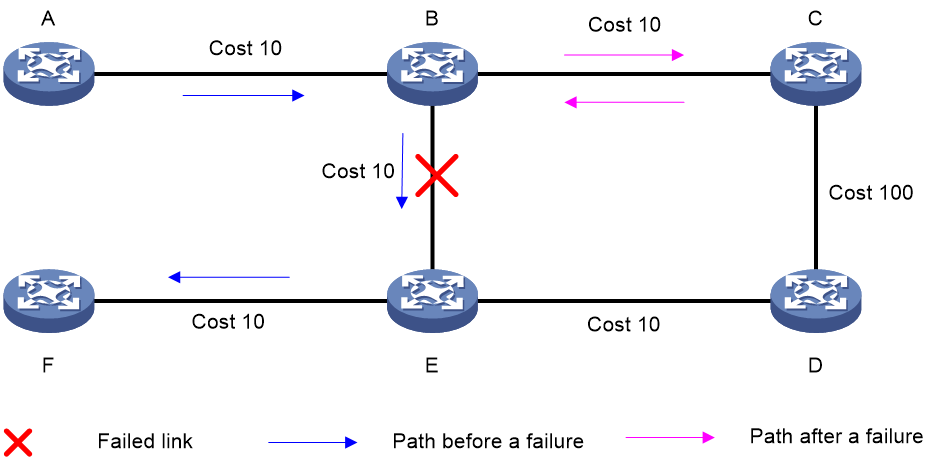

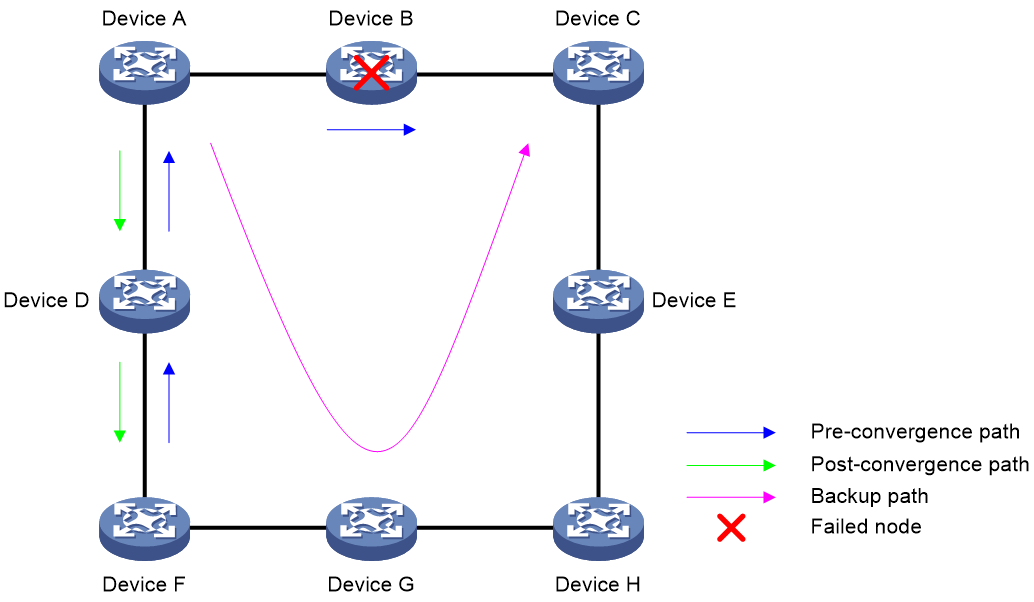

As shown in Figure 24, node A sends data packets to node F. When the link between node B and node E fails, node B forwards the packets to node C. The cost of the link between node C and node D is 100, which is much higher than the cost of the path from node C through node B, and the routes on node C have not yet converged. As a result, node C still determines that node B is the next hop of the optimal path to node F and forwards the packets back to node B, which causes a loop.

Figure 24 TI-LFA application scenario

To resolve this issue, deploy TI-LFA on the SRv6 network. As shown in Figure 25, when the link between node B and node E fails, node B uses the backup path calculated by TI-LFA to forward the packets along the B->C->D->E path.

Figure 25 TI-LFA forwarding network diagram
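The loop in the Figure 24 scenario can be reproduced with a short Python sketch that compares next hops before and after the B-E link failure. Only the C-D cost of 100 comes from the text. All other link costs are assumed to be 10. The sketch also assumes that node B has already recalculated its routes while node C still uses its pre-failure next hop. The helper names are hypothetical.

```python
import heapq

# Only the C-D cost of 100 comes from the text; the other costs are assumed.
LINKS = [("A", "B", 10), ("B", "E", 10), ("E", "F", 10),
         ("B", "C", 10), ("C", "D", 100), ("D", "E", 10)]


def build(links, failed_link=frozenset()):
    """Undirected graph as node -> {neighbor: cost}, without the failed link."""
    graph = {}
    for u, v, cost in links:
        if {u, v} == failed_link:
            continue
        graph.setdefault(u, {})[v] = cost
        graph.setdefault(v, {})[u] = cost
    return graph


def next_hop(graph, src, dst):
    """First hop on a shortest path from src to dst (Dijkstra)."""
    dist, first = {src: 0}, {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue  # stale heap entry
        for nbr, cost in graph[node].items():
            if d + cost < dist.get(nbr, float("inf")):
                dist[nbr] = d + cost
                first[nbr] = nbr if node == src else first[node]
                heapq.heappush(heap, (d + cost, nbr))
    return first[dst]


before = build(LINKS)
after = build(LINKS, failed_link=frozenset({"B", "E"}))

# Assumed state: A and B have reconverged; C, D, and E still use old routes.
tables = {"A": after, "B": after, "C": before, "D": before, "E": before}


def trace(src, dst, max_hops=6):
    """Follow per-node next hops until dst is reached or max_hops is exceeded."""
    path = [src]
    while path[-1] != dst and len(path) <= max_hops:
        node = path[-1]
        path.append(next_hop(tables[node], node, dst))
    return path


print(trace("A", "F"))  # packets bounce between B and C
```

The trace never reaches F because B sends the packets to C and C sends them back to B. TI-LFA avoids this loop by steering the packets explicitly over the C-D link instead of relying on C's unconverged routing table.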

TI-LFA FRR concepts

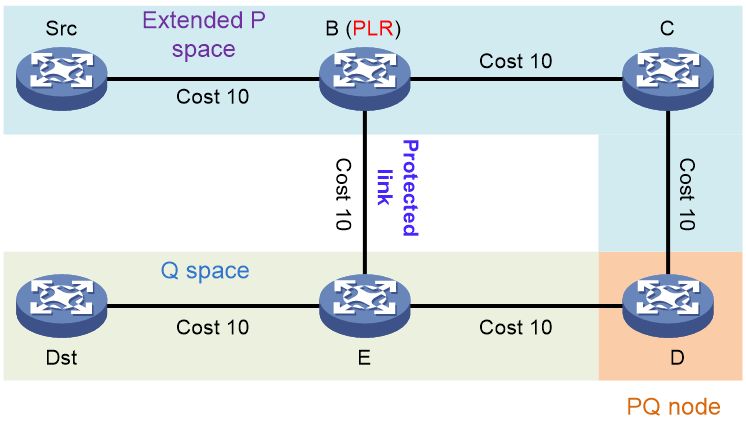

TI-LFA FRR uses the concepts of RLFA FRR defined in RFC 7490:

· P space—A set of nodes reachable (using pre-convergence paths) from the PLR without using the protected link or node (including equal-cost path splits). Nodes in the P space are called P nodes. P nodes are typically calculated by building an SPF tree rooted at the PLR and then identifying the nodes on the tree that meet the loop-free requirement.

· Extended P space—A set of nodes reachable (using pre-convergence paths) from the neighbors of the PLR (except for the protected node) without using the protected link or node (including equal-cost path splits). The P space is a subset of the extended P space. Nodes in the extended P space are also called P nodes, and the neighbors of the PLR are called N nodes. As shown in Figure 26, the extended P space contains nodes Src, B, C, and D. The P nodes meet the following loop-free requirement: Distance (N, P) < Distance (N, PLR) + Distance (PLR, P).

· Q space—A set of nodes that can reach (using pre-convergence paths) the destination without using the protected link or node (including equal-cost path splits). Nodes in the Q space are called Q nodes.
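The P space and Q space can be computed from shortest-path distances. The Python sketch below covers node protection and is not the device's algorithm. It applies the definitions directly: every shortest path from S to X avoids the protected node F exactly when Distance (S, X) < Distance (S, F) + Distance (F, X). The six-node topology and its link costs are hypothetical. They were chosen so that the results match the extended P space (P 2) and Q space (PE 2 and P 4) given for the Figure 27 example.

```python
import heapq


def distances(graph, src):
    """Dijkstra shortest-path distances from src; graph maps node -> {nbr: cost}."""
    dist = {src: 0}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue  # stale heap entry
        for nbr, cost in graph[node].items():
            if d + cost < dist.get(nbr, float("inf")):
                dist[nbr] = d + cost
                heapq.heappush(heap, (d + cost, nbr))
    return dist


def build(links):
    graph = {}
    for u, v, cost in links:
        graph.setdefault(u, {})[v] = cost
        graph.setdefault(v, {})[u] = cost
    return graph


def avoids(dist, src, dst, failed):
    """True if every pre-convergence shortest path from src to dst avoids failed."""
    return dist[src][dst] < dist[src][failed] + dist[failed][dst]


def extended_p_space(graph, plr, failed):
    dist = {n: distances(graph, n) for n in graph}
    # The PLR itself plus its neighbors (N nodes) other than the protected node.
    sources = [plr] + [n for n in graph[plr] if n != failed]
    return {x for s in sources for x in graph
            if x not in (plr, failed) and avoids(dist, s, x, failed)}


def q_space(graph, dest, failed):
    dist = {n: distances(graph, n) for n in graph}
    return {x for x in graph if x != failed and avoids(dist, x, dest, failed)}


# Hypothetical topology: PE1 -> P1 -> PE2 is the primary path.
LINKS = [("PE1", "P1", 10), ("P1", "PE2", 10), ("PE1", "P2", 10),
         ("P2", "P3", 100), ("P3", "P4", 10), ("P4", "PE2", 10),
         ("P1", "P3", 10)]
graph = build(LINKS)
print(sorted(extended_p_space(graph, "PE1", "P1")))  # ['P2']
print(sorted(q_space(graph, "PE2", "P1")))           # ['P4', 'PE2']
```

In this hypothetical topology, no node is in both sets. Traffic that reaches the P node P2 therefore still needs explicit segments, such as End.X SIDs, to reach the Q node P4.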

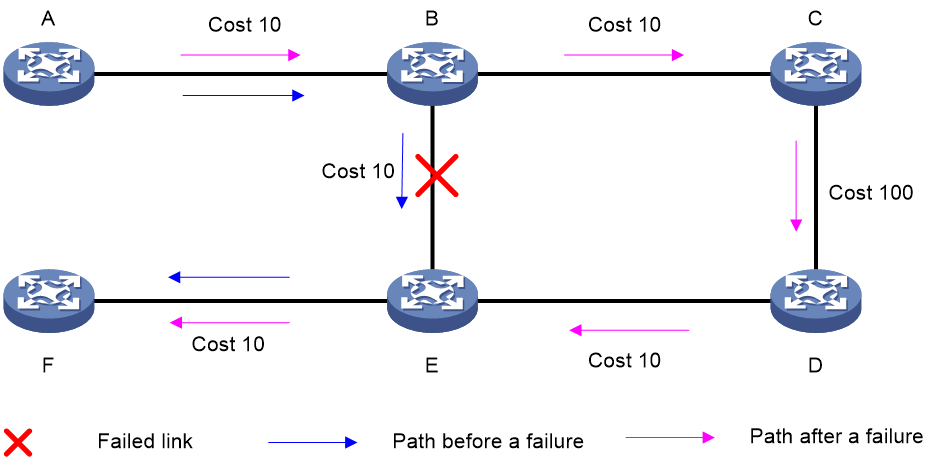

TI-LFA FRR path calculation

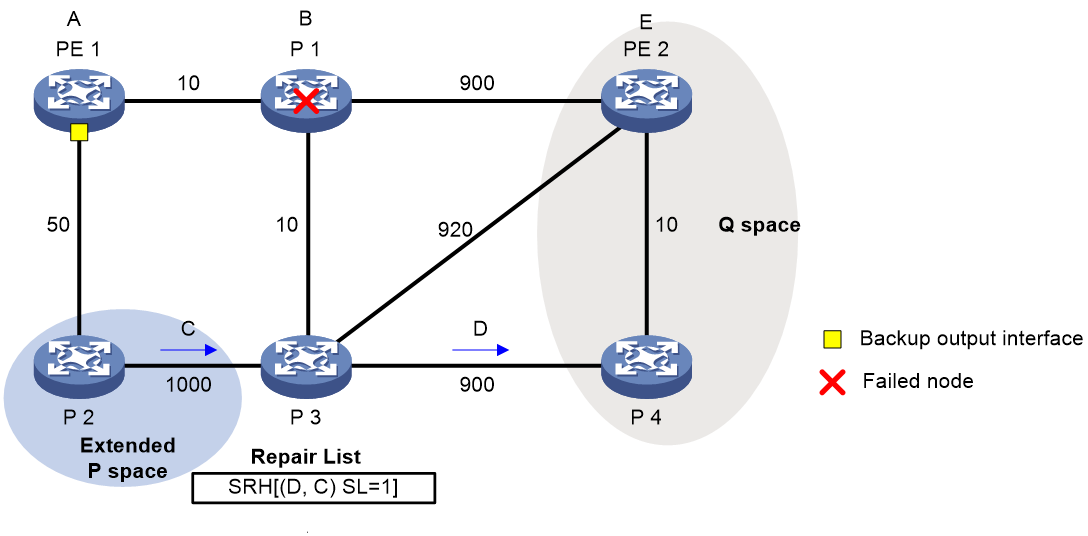

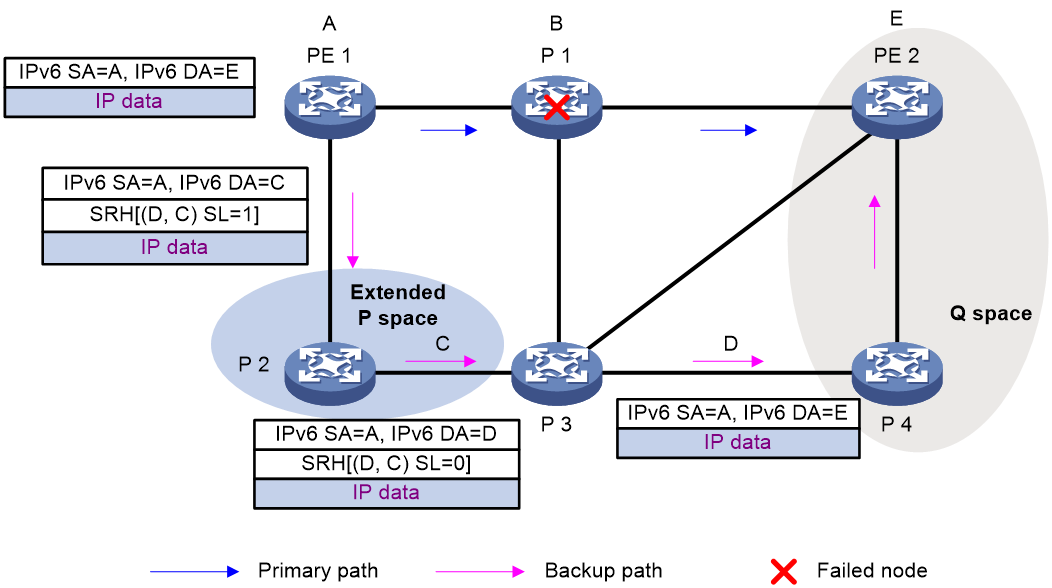

As shown in Figure 27, PE 1 is the source node, P 1 is the faulty node, and PE 2 is the destination node. The numbers on the links represent link costs. A data flow traverses PE 1, P 1, and PE 2. To protect the traffic against a failure of P 1, TI-LFA FRR calculates the extended P space, the Q space, the shortest path tree converged after P 1 fails, the repair list, and the backup output interface, and then creates the backup forwarding entry.

TI-LFA FRR calculates the backup path by using the following steps:

1. Calculates the extended P space: P 2.

2. Calculates the Q space: PE 2 and P 4.

3. Calculates the shortest path tree converged after P 1 fails: PE 1 --> P 2 --> P 3 --> P 4 --> PE 2.

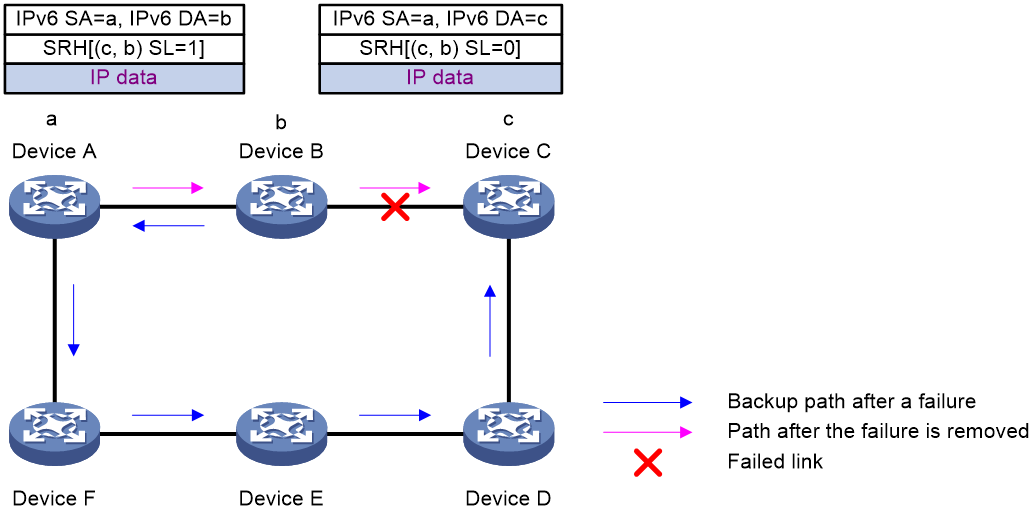

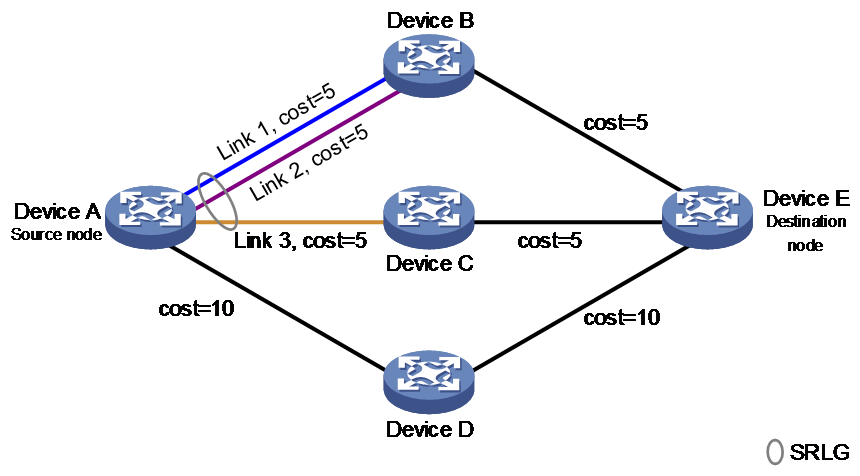

4. Calculates the repair list: End.X SID C of the link between P 2 and P 3 and End.X SID D of the link between P 3 and P 4.