Preference-based link selection

Bandwidth-based link selection

About bandwidth-based link selection

Bandwidth-based link selection policy

Per-session weight-based link selection mode

Per-session periodic link adjustment mode

Link selection delay and suppression

Link selection workflow summary

Preference-based primary link selection

Preference-based backup link selection

Quality tolerant link selection

Bandwidth tolerant link selection

Restrictions: Hardware compatibility with RIR

Restrictions and guidelines: RIR configuration

Spoke configuration tasks at a glance

Hub configuration tasks at a glance

Prerequisites for RIR configuration

Specifying an RIR client synchronization port

Configuring NQA link connectivity probe parameters

Configuring an NQA link quality operation

Specifying an RIR server synchronization port

Creating an SLA and an NQA link quality operation

Assigning a link type and index to a VSI interface

Configuring the link bandwidth of a VXLAN tunnel interface

About flow template configuration

Configuring a quality policy for the flow template

Specifying the per-session expected bandwidth

Specifying link preference values for links

Configuring the link load balancing mode

Restrictions and guidelines for link load balancing mode configuration

Setting the per-session periodic link adjustment mode

Setting the per-packet load balancing mode

Configuring flow priority-based traffic scheduling

Setting the link selection delay and link selection suppression period

Configuring a QoS policy to mark matching packets with a flow ID

About configuring a QoS policy to mark matching packets with a flow ID

Creating a traffic class and defining packet match criteria

Creating a traffic behavior and configuring a flow ID marking action

Applying the QoS policy to an interface

Restrictions and guidelines for RIR collaboration configuration

Setting up RIR dedicated links between local and peer devices

Applying QoS policies to interfaces interconnecting local and peer devices

Assigning links to an RIR collaboration link group

Establishing RIR collaboration relationship for each pair of local and peer devices

Configuring RIR packet redirection

Configuring flow ID-based traffic rate statistics for tunnels

Display and maintenance commands for RIR

Configuring RIR

About RIR

Resilient Intelligent Routing (RIR) dynamically selects the most suitable links for traffic forwarding based on service requirements (for example, link quality and link bandwidth). RIR can not only select the optimal link from a specific type of transport network but also automatically switch traffic to another link when the current link becomes unqualified.

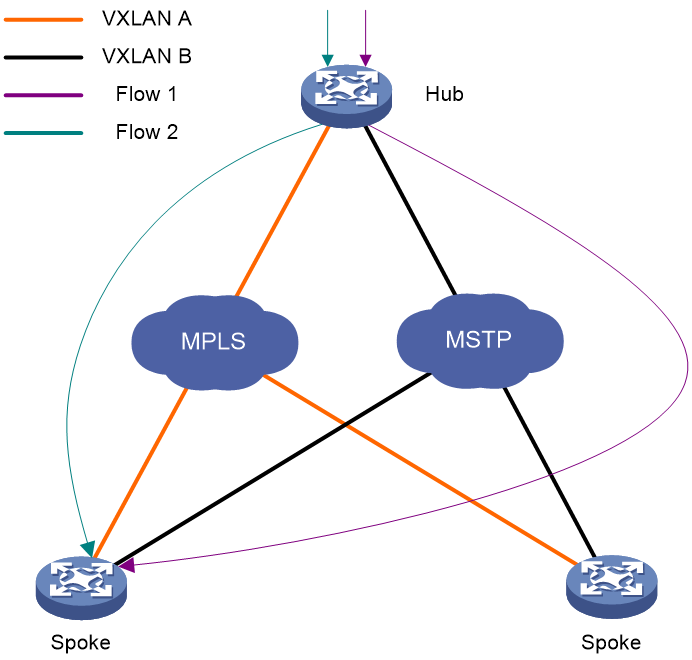

Application scenario

As shown in Figure 1, RIR is used in a VXLAN-based hub-spoke network. RIR can select different VXLAN tunnels to forward traffic for different services, depending on parameters such as the link preference, link primary/backup role, link quality, and link bandwidth. RIR performs link selection both for traffic from a hub to a spoke and for traffic from a spoke to a hub, and it might select different links for the two directions of traffic between a hub and a spoke.

Figure 1 RIR application scenario

Flow template

A flow template defines link selection policies for a type of service flow. A flow ID uniquely identifies a flow template.

The device applies the link selection policies under a flow template to the service flow marked with the flow ID of the flow template.

The device can use QoS policies to mark service flows with flow IDs. After QoS identifies the service of a packet based on the quintuple and DSCP value of the packet, it assigns a flow ID to the packet. RIR then performs link selection for the packet based on the flow template that uses that flow ID.

The flow ID is used only within RIR processing and is not added to any outgoing packets.
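The classification step can be pictured with the following minimal Python sketch. It assumes a hypothetical rule list in which each rule maps optional destination port, protocol, and DSCP criteria to a flow ID. On the device, this matching is performed by the QoS policy; the class and function names here are illustrative only and do not correspond to device commands. The function returns the flow ID instead of writing it into the packet, which mirrors the fact that the flow ID is not carried in outgoing packets.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str
    dscp: int


@dataclass(frozen=True)
class MatchRule:
    """Hypothetical QoS match criteria; None means "match any value"."""
    flow_id: int
    dst_port: Optional[int] = None
    protocol: Optional[str] = None
    dscp: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        pairs = ((self.dst_port, pkt.dst_port),
                 (self.protocol, pkt.protocol),
                 (self.dscp, pkt.dscp))
        return all(want is None or want == got for want, got in pairs)


def mark_flow_id(pkt: Packet, rules: List[MatchRule]) -> Optional[int]:
    """Return the flow ID of the first matching rule, or None if nothing matches.

    The packet itself is not modified: the flow ID stays internal to RIR.
    """
    for rule in rules:
        if rule.matches(pkt):
            return rule.flow_id
    return None
```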

For more information about QoS marking, see QoS overview, QoS policies, and marking configuration in ACL and QoS Configuration Guide.

Link type

RIR uses the link type to identify the network type of a link and uses the link index to distinguish links of the same network type. RIR supports 4G, Internet, MPLS, and MSTP link types. The link type is used only for identifying links, and it does not affect packet encapsulation.

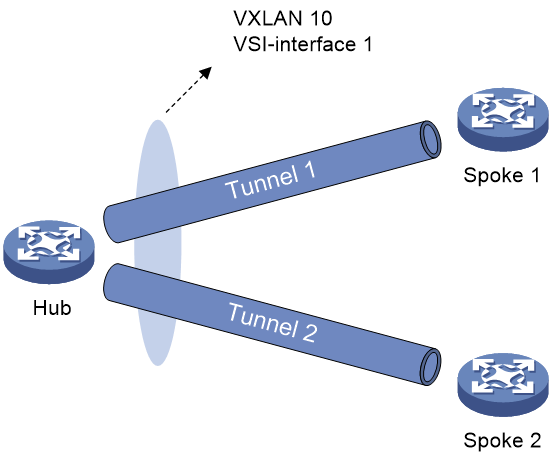

As shown in Figure 2, assign a link type and link index to the VSI interface to identify the VXLAN tunnels between the hub and the spokes in the VXLAN. To uniquely identify a VXLAN tunnel between a hub and a spoke, VXLAN-based RIR allows the hub and spoke to have only one VXLAN tunnel for each VSI interface.

Figure 2 Links in a VXLAN network

Preference-based link selection

Link preference

You can assign a preference to a link based on factors such as the service requirements, the link conditions, and the link cost. RIR preferentially selects links with higher preference.

In flow template view, VXLAN-based RIR allows you to assign a link preference to links of a specific type with a specific link index. The link type and link index identify a VSI interface. Because VXLAN-based RIR allows a hub and a spoke to have only one VXLAN tunnel for each VSI interface, the link preference of the VSI interface is the link preference of the VXLAN tunnel between the hub and the spoke.

Link selection rules

You can assign the same preference value to different links in the same flow template.

RIR selects a link for a type of service flow from the links in the flow template in descending order of link preference. If the links with the highest preference cannot meet the service requirements, RIR tries the links with the second highest preference, and so on down to the links with the lowest preference.

If the flow template has two or more links with the same preference, RIR performs link selection based on RIR link load sharing criteria.
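The preference rules above can be sketched in Python as follows. This is a simplified illustration, not the device implementation: it assumes each link already carries a hypothetical `meets_requirements` flag (the result of the quality and bandwidth checks), and it returns the whole qualified tier instead of modeling the load sharing step that picks among links of equal preference.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Link:
    name: str
    preference: int
    meets_requirements: bool  # result of the quality/bandwidth checks, computed elsewhere


def candidate_links(links: List[Link]) -> List[Link]:
    """Return the qualified links of the highest preference tier that has any.

    An empty list means no link in the flow template is suitable. When more than
    one link is returned, RIR load sharing would choose among them (not modeled).
    """
    tiers: Dict[int, List[Link]] = defaultdict(list)
    for link in links:
        tiers[link.preference].append(link)
    for preference in sorted(tiers, reverse=True):
        qualified = [link for link in tiers[preference] if link.meets_requirements]
        if qualified:
            return qualified
    return []
```

For example, if the highest-preference link fails its checks and two links share the next preference value, both of those links are returned as equal candidates.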

Redundant link selection

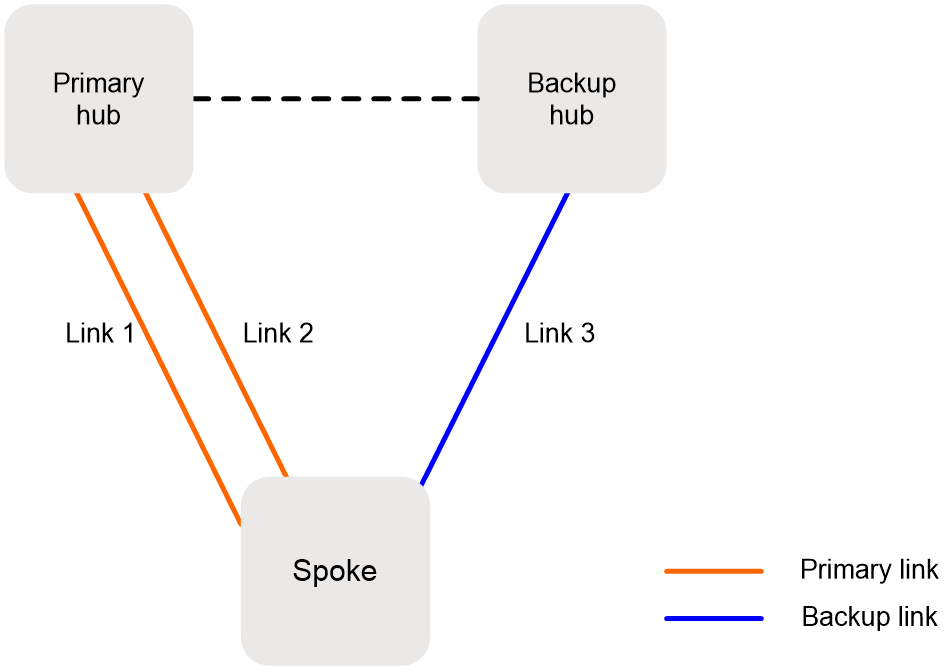

To ensure high service availability, redundant hubs are deployed. Typically, the links between a spoke and the primary hub are used as primary links, and the links between the spoke and the backup hub are used as backup links. When no link to the primary hub is available, the spoke can switch traffic to the backup hub to ensure service continuity.

A VXLAN tunnel is a primary link by default after it is assigned a link preference based on the link type and index on its VSI interface. To specify a VXLAN tunnel associated with a VSI interface as a backup link, specify that VXLAN tunnel as an RIR backup tunnel.

As shown in Figure 3, when primary links 1 and 2 are not available, the spoke uses backup link 3 to forward traffic to the backup hub.

Figure 3 Primary and backup links

Quality-based link selection

RIR server and RIR client

About the RIR server and RIR client

In a hub-spoke network, a hub is typically connected to multiple spokes. To prevent the hub from consuming too many resources on link quality detection by Network Quality Analyzer (NQA) probes, RIR provides the following roles:

· RIR server—An RIR server does not perform NQA link probes. It performs link selection based on the link quality probe results synchronized from RIR clients.

· RIR client—An RIR client performs NQA link probes to detect the link quality and synchronizes the link quality probe results to RIR servers.

Configure a hub as an RIR server and spokes as RIR clients, so the hub can perform link selection based on the link quality probe results synchronized from the RIR clients.

RIR server function

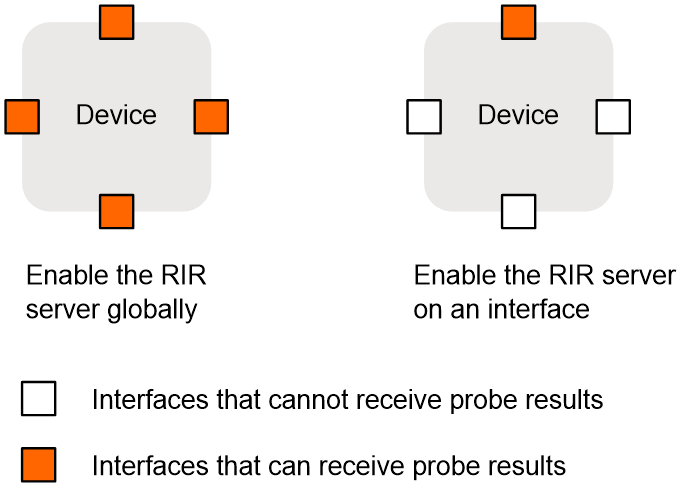

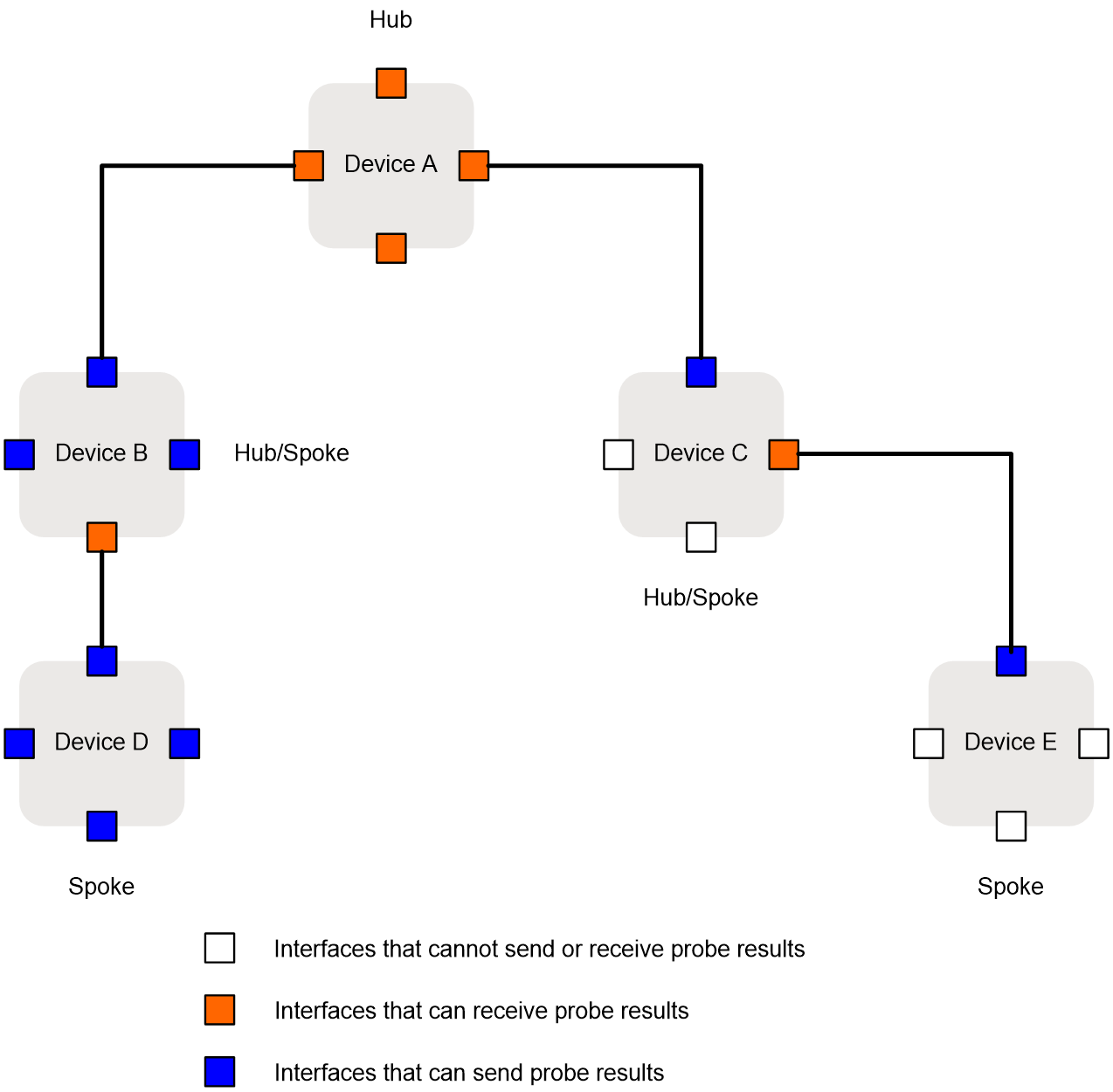

You can enable the RIR server globally or on an interface, as shown in Figure 4.

· If you enable the RIR server globally, the RIR server is also enabled on all interfaces on the device. The interfaces can receive RIR link quality probe results from RIR clients.

· If you enable the RIR server on an interface, only that interface can receive RIR link quality probe results from RIR clients.

IMPORTANT: In a VXLAN network, only tunnel interfaces support enabling the RIR server. The RIR server uses the tunnel interfaces to receive RIR link quality probe results from RIR clients.

Figure 4 Enabling the RIR server

RIR client function

The RIR client synchronizes link quality probe results to RIR servers. You can enable the RIR client globally or on an interface, in the same way as the RIR server.

RIR server and client enabling policy

Enable the RIR server or RIR client, or use them in combination, depending on the role of the device in the network.

· If the device acts only as a hub, you can enable the RIR server globally.

· If the device acts only as a spoke, you can enable the RIR client globally.

· If the device acts as both a hub and a spoke, you can enable the RIR server and RIR client on the corresponding interfaces.

The RIR server and RIR client cannot both be enabled on the same interface. If the role enabled on an interface (RIR server or RIR client) differs from the globally enabled role, the interface-specific role takes effect on that interface.
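The override rule can be expressed as a small Python function. This is a sketch under simplifying assumptions: the function name is hypothetical, roles are plain strings, and the device is assumed to have at most one globally enabled role.

```python
from typing import Optional

VALID_ROLES = {None, "server", "client"}


def effective_role(global_role: Optional[str],
                   interface_role: Optional[str]) -> Optional[str]:
    """Return the RIR role that takes effect on one interface.

    An interface-specific role overrides the global role; otherwise the
    interface inherits the global role. An interface holds at most one role.
    """
    if global_role not in VALID_ROLES or interface_role not in VALID_ROLES:
        raise ValueError("role must be 'server', 'client', or None")
    return interface_role if interface_role is not None else global_role
```

For Device B in Figure 5 (RIR client enabled globally, RIR server enabled on one interface), that interface resolves to `"server"` and every other interface resolves to `"client"`.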

As shown in Figure 5, enable the global and interface-specific RIR server and RIR client in combination as follows:

· Device A—Enable the RIR server globally.

· Device B—Enable the RIR client globally and enable the RIR server on an interface.

· Device C—Enable the RIR client on an interface and enable the RIR server on an interface.

· Device D—Enable the RIR client globally.

· Device E—Enable the RIR client on an interface.

Figure 5 Enabling the RIR server and client

NQA link probes

RIR uses NQA to detect the status of candidate links and selects the most suitable link based on the NQA link quality probe results. For more information about NQA, see Network Management and Monitoring Configuration Guide.

A hub performs link selection based on the link quality probe results synchronized from spokes. RIR uses spokes as NQA clients and hubs as NQA servers. The following types of NQA link probe operations are defined:

· NQA link connectivity probe operation—Performs ICMP echo probes to check the connectivity of each link. If a link is disconnected, RIR does not perform NQA link quality probes on that link. You can configure only one NQA link connectivity probe operation.

· NQA link quality probe operations—Perform UDP jitter probes to measure the delay, jitter, and packet loss ratio of links that pass the NQA link connectivity check. These operations are also referred to as NQA link quality operations. You can configure multiple NQA link quality operations, and different operations might produce different link quality probe results for the same link.

The device performs NQA probes only for links that are assigned link types and link indexes.

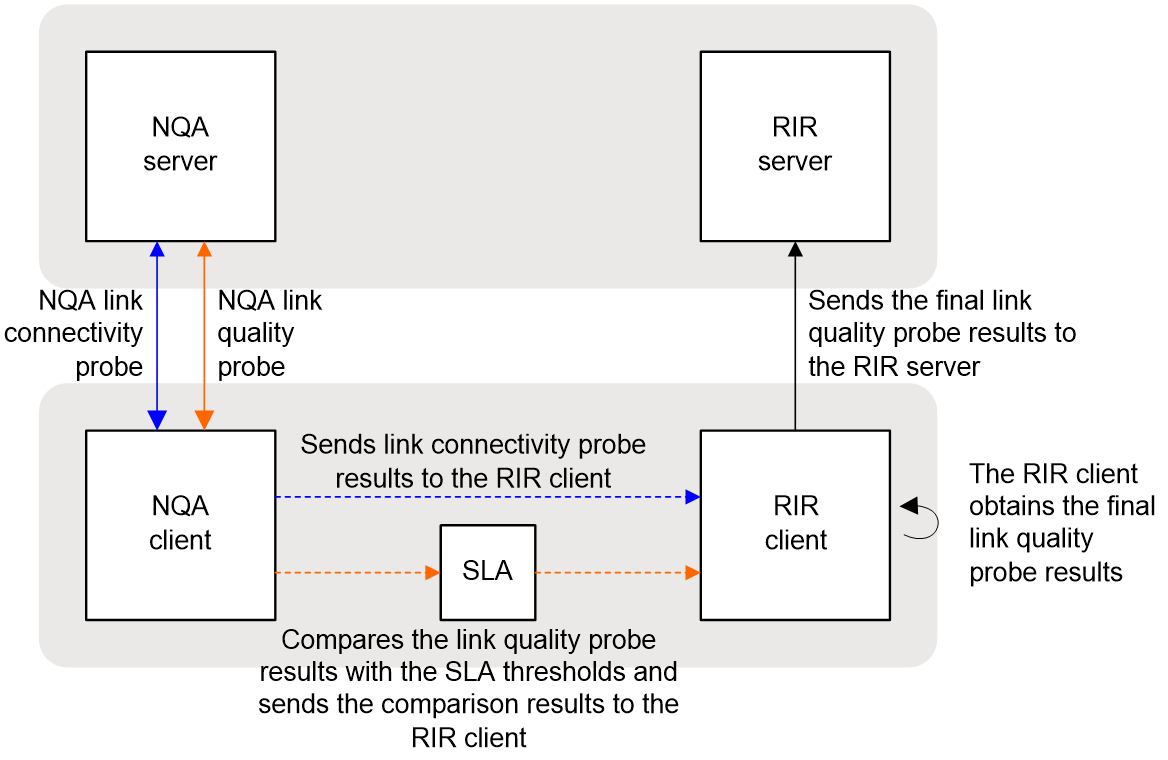

SLA

To meet the differentiated requirements of services on link quality, configure a Service Level Agreement (SLA) for each service. An SLA contains a set of link quality evaluation thresholds, including the link delay threshold, packet loss threshold, and jitter threshold.

The quality policy of a flow template contains an SLA and an NQA link quality operation. By comparing the NQA link quality probe results with the thresholds in the SLA, the device determines whether a link meets the quality requirements of the service. If all parameter values in the probe results of a link are lower than or equal to the thresholds in the SLA, the link is qualified for the service.

Quality policy

Link quality probe results

As shown in Figure 6, if a flow template is configured with a quality policy, the spoke determines whether a link is qualified for that type of service flow based on the NQA link quality probe results. In addition, the spoke synchronizes the link quality probe results to the hub. The hub performs link selection based on the link quality probe results synchronized from the spoke. You only need to configure the quality policy on the spoke.

RIR uses the following rules to determine whether a link is qualified for a type of service flow:

· If the link fails the NQA link connectivity check, RIR determines that the link is unqualified.

· If the link passes the NQA link connectivity check, RIR checks the NQA link quality probe results for the link.

¡ If all link quality parameter values in the probe results are lower than or equal to the link quality thresholds in the SLA, RIR determines that the link is qualified.

¡ If any link quality parameter value in the probe results is higher than the corresponding link quality threshold in the SLA, RIR determines that the link is unqualified.
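The qualification rules above can be sketched in Python as follows. The SLA field names and units (milliseconds and percent) are assumptions made for illustration; on the device, the thresholds come from the configured SLA and the measurements come from the NQA operations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sla:
    """Hypothetical SLA thresholds; field names and units are illustrative."""
    max_delay_ms: float
    max_jitter_ms: float
    max_loss_pct: float


@dataclass(frozen=True)
class ProbeResult:
    connected: bool   # NQA link connectivity probe (ICMP echo) result
    delay_ms: float   # NQA link quality operation (UDP jitter) results
    jitter_ms: float
    loss_pct: float


def link_qualified(result: ProbeResult, sla: Sla) -> bool:
    if not result.connected:
        return False  # fails the connectivity check, so the link is unqualified
    # Qualified only if every value is lower than or equal to its threshold.
    return (result.delay_ms <= sla.max_delay_ms
            and result.jitter_ms <= sla.max_jitter_ms
            and result.loss_pct <= sla.max_loss_pct)
```

Note that a value exactly equal to its threshold still counts as qualified, matching the "lower than or equal to" wording.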

Figure 6 Link quality probe result processing network diagram

Quality-based link selection on a hub and a spoke

When a spoke performs quality-based link selection, it considers only the quality policy configured on the spoke. If a quality policy has been configured in a flow template on the spoke, the spoke calculates link quality probe results based on the NQA link quality operation and SLA in the quality policy. Then, the spoke performs link selection for the flow that matches the flow template based on the link quality probe results. If no quality policy is configured in a flow template on the spoke, the spoke does not consider link quality when it performs link selection, and all links in the flow template are considered to meet the quality requirements of the service.

When a hub performs quality-based link selection for traffic sent to a spoke, it considers the quality policies both on the hub and spoke. Table 1 shows the link selection rules for a flow that matches a flow template on a hub.

Table 1 Quality-based link selection rules on a hub

| Quality policy configured on the hub | Quality policy configured on the spoke | Quality-based link selection rules on the hub |
|---|---|---|
| Yes | Yes | The hub performs link selection based on the link quality probe results synchronized from the spoke. |
| Yes | No | The spoke does not synchronize link quality probe results to the hub. The hub determines that no link in the flow template meets the quality requirements. |
| No | Yes | The spoke synchronizes link quality probe results to the hub. The hub determines that all links in the flow template are qualified for packets that match the flow template. |
| No | No | The spoke does not synchronize link quality probe results to the hub. The hub determines that all links in the flow template are qualified for packets that match the flow template. |

As a best practice, for each type of service flow, either configure a quality policy on both the hub and the spoke, or configure a quality policy on neither of them.
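The four rows of Table 1 reduce to a short decision function. In this Python sketch, `spoke_results_qualified` is a hypothetical input that stands for whether the probe results synchronized from the spoke show the link as qualified; the function name is also illustrative.

```python
def hub_link_qualified(hub_has_policy: bool, spoke_has_policy: bool,
                       spoke_results_qualified: bool) -> bool:
    """Apply the rules of Table 1 to one link of a flow template on the hub.

    spoke_results_qualified is ignored when the spoke synchronizes nothing.
    """
    if not hub_has_policy:
        # Rows 3 and 4: without a hub quality policy, every link is qualified.
        return True
    if not spoke_has_policy:
        # Row 2: the spoke synchronizes nothing, so no link is qualified.
        return False
    # Row 1: rely on the results synchronized from the spoke.
    return spoke_results_qualified
```

Row 2 is the case the best practice guards against: a quality policy on the hub alone makes every link in the flow template unqualified on the hub.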

Bandwidth-based link selection

About bandwidth-based link selection

Bandwidth-based link selection can not only select links that meet the service bandwidth requirements but also load share service traffic among multiple links. This prevents any single link from becoming overloaded or congested.

Bandwidth-based link selection policy

Bandwidth-based link selection enables the device to select a suitable link for service traffic based on the used bandwidth of the link, the total link bandwidth, and the per-session expected bandwidth. The device can automatically obtain the used bandwidth of the link. The total bandwidth of the link and the per-session expected bandwidth are manually configured or calculated based on the user configuration.

The device uses sessions as the minimum granularity and performs bandwidth-based link selection to achieve refined link bandwidth management. A session is uniquely defined by a quintuple including the source IP address, destination IP address, source port, destination port, and transport layer protocol.

When the device selects a link for traffic of a session, it first performs bandwidth detection based on the per-session expected bandwidth in the flow template to which the session belongs. If the used bandwidth of a candidate link plus the per-session expected bandwidth is less than 80% of the total bandwidth of the link, the available bandwidth of the link meets the session bandwidth requirements and the link passes the bandwidth detection.
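The bandwidth detection rule amounts to a single comparison, sketched here in Python. The function name and the Mbps units are assumptions; the 80% limit and the strict "less than" comparison come from the text above.

```python
BANDWIDTH_LIMIT_RATIO = 0.8  # the 80% limit described above


def passes_bandwidth_check(used_mbps: float, total_mbps: float,
                           expected_per_session_mbps: float) -> bool:
    """True if adding one more session keeps the link below 80% of its total bandwidth."""
    return used_mbps + expected_per_session_mbps < BANDWIDTH_LIMIT_RATIO * total_mbps
```

For a 100 Mbps link with 70 Mbps in use, a session that expects 5 Mbps passes (75 < 80), while a link with 76 Mbps in use and a 4 Mbps session does not (80 is not less than 80).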

Different sessions of the same service type might be assigned different links even though they use the same link selection policy.

Load balancing

Load balancing modes

If multiple links are available for sessions that match a flow template, the device distributes the traffic of the sessions to these links for load balancing based on the link bandwidths. RIR supports the following load balancing modes:

· Per-session weight-based link selection mode—A global RIR link load balancing mode that takes effect on all RIR flows. This mode distributes the sessions that match the same flow template to different links according to the weights of the links. RIR selects only one link to transmit a session.

· Per-session periodic link adjustment mode—A global RIR link load balancing mode that takes effect on all RIR flows. This mode not only distributes the sessions that match the same flow template to different links, but also periodically adjusts the links used by the sessions. Within one adjustment period, RIR selects only one link to transmit a session.

· Per-packet mode—A flow-specific link load balancing mode that takes effect only on traffic that matches the flow template where this mode is enabled. This mode can distribute the packets of the same session to different links for transmission.

The per-packet mode takes precedence over the per-session modes.

Load balancing concepts

Bandwidth weight

The bandwidth of a link is used as the weight of the link. If the total bandwidth of a link is used as the link weight, the weight is called the total bandwidth weight. If the remaining bandwidth of a link is used as the link weight, the weight is called the remaining bandwidth weight.

When RIR selects links from multiple links based on their bandwidth weights, the probability that each link is selected equals the ratio of the bandwidth of that link to the bandwidth sum of all the links. The bandwidth sum of all the links is the weight sum.

For example, the total bandwidths of links 1, 2, and 3 are 10 Mbps, 10 Mbps, and 20 Mbps, respectively. The remaining bandwidths of links 1, 2, and 3 are 8 Mbps, 4 Mbps, and 8 Mbps, respectively. All the links meet the service requirements. To select links based on the total bandwidth weights, the probabilities that links 1, 2, and 3 are selected are 25%, 25%, and 50%, respectively. To select links based on the remaining bandwidth weights, the probabilities that links 1, 2, and 3 are selected are 40%, 20%, and 40%, respectively.
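The following Python sketch reproduces the arithmetic of this example. The function name is illustrative; it simply divides each link's bandwidth weight by the weight sum.

```python
from typing import Dict


def selection_probabilities(weights: Dict[str, float]) -> Dict[str, float]:
    """Probability of selecting each link = its bandwidth weight / sum of all weights."""
    weight_sum = sum(weights.values())
    if weight_sum <= 0:
        raise ValueError("the weight sum must be positive")
    return {name: weight / weight_sum for name, weight in weights.items()}


total_bw = {"link1": 10, "link2": 10, "link3": 20}     # Mbps
remaining_bw = {"link1": 8, "link2": 4, "link3": 8}    # Mbps
print(selection_probabilities(total_bw))      # {'link1': 0.25, 'link2': 0.25, 'link3': 0.5}
print(selection_probabilities(remaining_bw))  # {'link1': 0.4, 'link2': 0.2, 'link3': 0.4}
```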

Remaining bandwidth ratio

The remaining bandwidth ratio of a link is the ratio of its remaining bandwidth to its total bandwidth. Among multiple links, the highest remaining bandwidth ratio is called the largest remaining bandwidth ratio, and the lowest is called the smallest remaining bandwidth ratio.

Per-session weight-based link selection mode

The mechanisms of the per-session weight-based link selection mode are as follows:

· For preference-based primary link selection, preference-based backup link selection, and quality tolerant link selection—If multiple links with the same preference meet the requirements of a flow template, RIR selects one optimal link for each session of the flow template from these links. In this mode, RIR performs link selection based on the remaining bandwidth weight of each link. The used bandwidth is the actually used bandwidth plus the per-session expected bandwidth.

· For bandwidth tolerant link selection—If multiple links meet the requirements of a flow template, RIR selects one optimal link for each session of the flow template from these links. In this scenario, RIR performs link selection based on the total bandwidth weight of each link.

For more information about the preference-based primary or backup link selection, quality tolerant link selection, and bandwidth tolerant link selection, see "RIR working mechanisms."
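A per-session weighted choice along these lines can be sketched with Python's `random.choices`. This is an illustration under stated assumptions, not the device algorithm: the `Link` fields and the `pick_link` name are hypothetical, negative remaining bandwidth is clamped to zero weight, and the random generator is injectable so the behavior can be reproduced.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Link:
    name: str
    total_mbps: float
    used_mbps: float


def pick_link(links: List[Link], expected_mbps: float, use_remaining: bool = True,
              rng: Optional[random.Random] = None) -> Optional[Link]:
    """Pick one link for a new session by weighted random choice.

    use_remaining=True: remaining bandwidth weight, where the used bandwidth
    counts the per-session expected bandwidth as already consumed.
    use_remaining=False: total bandwidth weight (bandwidth tolerant case).
    """
    if use_remaining:
        weights = [max(link.total_mbps - (link.used_mbps + expected_mbps), 0.0)
                   for link in links]
    else:
        weights = [link.total_mbps for link in links]
    if sum(weights) <= 0:
        return None  # no link has usable weight
    chooser = rng if rng is not None else random.Random()
    return chooser.choices(links, weights=weights, k=1)[0]
```

Because the selection is made once per session, all packets of that session then follow the same link, which is what distinguishes this mode from the per-packet mode.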

Per-session periodic link adjustment mode

The mechanisms of the per-session periodic link adjustment mode are as follows:

· For preference-based primary link selection, preference-based backup link selection, and quality tolerant link selection—If multiple links with the same preference meet the requirements of a flow template, RIR selects one optimal link for each session of the flow template from these links. RIR preferentially selects the link with the lowest bandwidth usage for a session. The used bandwidth is the actually used bandwidth plus the per-session expected bandwidth.

· For bandwidth tolerant link selection—If multiple links meet the requirements of a flow template, RIR selects one optimal link for each session of the flow template from these links. The link selected the last time for a session takes precedence over the other links for that session. If RIR performs link selection for a session for the first time, it selects a link based on the remaining bandwidth weights of the available links.

In per-session periodic link adjustment mode, the device checks the bandwidth usage of all links that carry RIR sessions at the configured adjustment interval. RIR reselects links for the sessions that match a flow template when the difference between the largest remaining bandwidth ratio and the smallest remaining bandwidth ratio of the links in the flow template becomes greater than or equal to the periodic adjustment upper threshold. Link adjustment might last for several adjustment intervals. RIR stops link adjustment when one of the following conditions is met:

· The difference between the largest remaining bandwidth ratio and the smallest remaining bandwidth ratio of the links becomes smaller than the periodic adjustment lower threshold.

· The adjustment interval is the 20th interval after link reselection is triggered.
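The start and stop conditions can be modeled as a small state machine, called once per adjustment interval. This Python sketch rests on several assumptions: the thresholds are expressed as fractions (for example, 0.5 for 50%), each link is given as a (remaining, total) bandwidth pair, the class name is hypothetical, and the interval in which reselection is triggered is counted as interval 0.

```python
from typing import Iterable, Optional, Tuple

MAX_ADJUST_INTERVALS = 20  # stop at the 20th interval after reselection is triggered


def ratio_spread(links: Iterable[Tuple[float, float]]) -> float:
    """links: (remaining_mbps, total_mbps) pairs. Returns largest minus smallest ratio."""
    ratios = [remaining / total for remaining, total in links]
    return max(ratios) - min(ratios)


class PeriodicAdjuster:
    """Decides, once per adjustment interval, whether RIR reselects links for a flow template."""

    def __init__(self, upper: float, lower: float) -> None:
        self.upper = upper  # periodic adjustment upper threshold (as a fraction)
        self.lower = lower  # periodic adjustment lower threshold (as a fraction)
        self.elapsed: Optional[int] = None  # intervals since trigger; None when idle

    def on_interval(self, links: Iterable[Tuple[float, float]]) -> bool:
        spread = ratio_spread(links)
        if self.elapsed is None:
            if spread >= self.upper:
                self.elapsed = 0  # the triggering interval is counted as interval 0
                return True
            return False
        self.elapsed += 1
        if spread < self.lower or self.elapsed >= MAX_ADJUST_INTERVALS:
            self.elapsed = None  # stop adjusting
            return False
        return True
```

Using separate upper and lower thresholds gives hysteresis: adjustment starts only when the imbalance is large and continues until it has clearly shrunk, so links whose load hovers near one threshold do not toggle adjustment on and off every interval.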

Per-packet mode

The mechanisms of the per-packet mode are as follows:

· For preference-based primary link selection, preference-based backup link selection, and quality tolerant link selection—If multiple links with the same preference meet the requirements of a session, all these links are candidate optimal links for this session. When forwarding traffic for the session, the device distributes the traffic to these links packet by packet according to the remaining bandwidth weight of each link.

For example, the device needs 10 Mbps of bandwidth to transmit traffic for a session with flow ID 1. Links 1 and 2 are available. The remaining bandwidth of link 1 is 20 Mbps and the remaining bandwidth of link 2 is 30 Mbps. As a result, the traffic of this session uses 4 Mbps of bandwidth on link 1 and 6 Mbps of bandwidth on link 2.

· For bandwidth tolerant link selection—If multiple links meet the requirements of a session, all these links are candidate optimal links for this session. When forwarding traffic for the session, the device distributes the traffic to these links packet by packet. Each link has the same probability of being selected.
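The expected per-link share of a session's traffic in per-packet mode can be computed as follows. This Python sketch models only the resulting average split, not the packet-by-packet scheduler; the function name is hypothetical. It reproduces the 4 Mbps and 6 Mbps figures from the example above.

```python
from typing import Dict


def per_packet_shares(session_mbps: float, remaining_mbps: Dict[str, float],
                      equal: bool = False) -> Dict[str, float]:
    """Expected bandwidth each candidate link carries for one session.

    equal=False: shares follow the remaining bandwidth weights.
    equal=True: every link is equally likely (bandwidth tolerant case).
    """
    if equal:
        return {name: session_mbps / len(remaining_mbps) for name in remaining_mbps}
    weight_sum = sum(remaining_mbps.values())
    return {name: session_mbps * r / weight_sum for name, r in remaining_mbps.items()}


print(per_packet_shares(10, {"link1": 20, "link2": 30}))  # {'link1': 4.0, 'link2': 6.0}
```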

Link selection delay and suppression

To improve packet forwarding efficiency, the device does not repeatedly perform link selection for traffic of the same session. After the device performs link selection for traffic of a session, it forwards the subsequent traffic of that session according to the previous link selection result. Link reselection is triggered when any link in the session's flow template has one of the following changes:

· The quality of a link changes from unqualified to qualified, or from qualified to unqualified.

· The bandwidth usage of a link has reached the maximum.

To avoid frequent link selection caused by link flapping, RIR defines a link selection delay and link selection suppression period.

After the device performs link selection, it starts the link selection suppression period if the period has been configured. Within the link selection suppression period, the device does not perform link reselection, but it continues to maintain link state data. When the link selection suppression period ends, the link selection delay timer starts. If the link state still meets the conditions for triggering link reselection when the delay timer expires, the device performs link reselection. If the link state changes within the delay time so that it no longer meets these conditions, the device does not perform link reselection.
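The interaction of the suppression period and the delay timer can be traced on a timeline with the Python sketch below. It is a deliberate simplification: it samples the link state only when the suppression period ends and when the delay timer expires, and it assumes the delay timer matters only if a reselection condition is pending when suppression ends. The function name and the `trigger_active` callback are hypothetical.

```python
from typing import Callable, Optional


def reselection_time(selected_at: float, suppression_s: float, delay_s: float,
                     trigger_active: Callable[[float], bool]) -> Optional[float]:
    """Return the time at which link reselection happens, or None.

    trigger_active(t) reports whether the link state meets a reselection
    condition at time t. Changes inside the suppression period are not acted on.
    """
    delay_start = selected_at + suppression_s  # suppression period ends here
    if not trigger_active(delay_start):
        return None  # nothing pending when the delay timer would start
    expiry = delay_start + delay_s
    # Reselect only if the condition still holds when the delay timer expires.
    return expiry if trigger_active(expiry) else None
```

With a 10-second suppression period and a 5-second delay, a condition that persists from second 5 onward causes reselection at second 15, whereas a flap that clears before second 15 causes none.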

RIR collaboration

About RIR collaboration

RIR collaboration enables multiple RIR-capable devices to share link data, load share traffic, and implement distributed link scheduling by establishing RIR dedicated links between each pair of devices.

Network structure

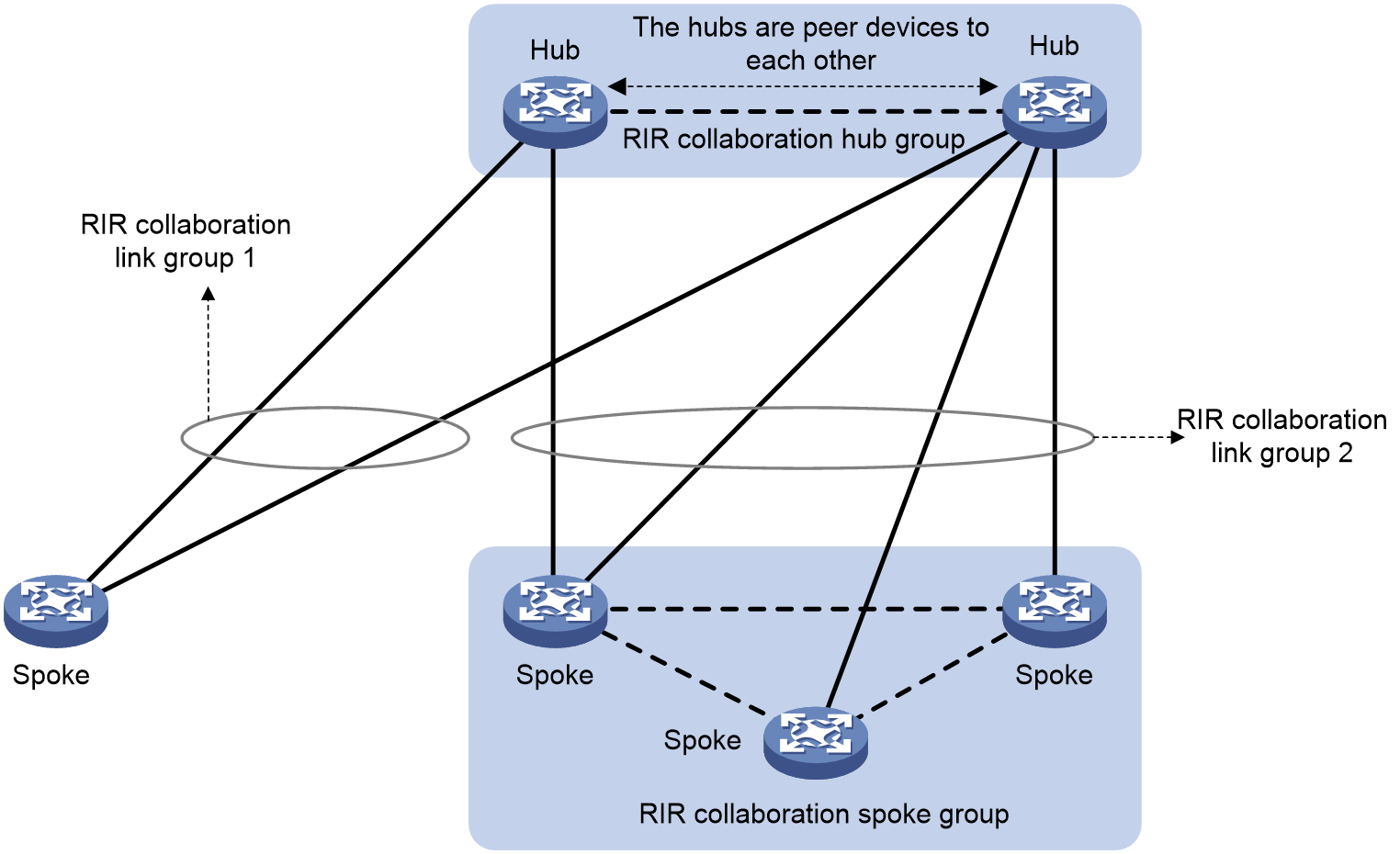

As shown in Figure 7, RIR collaboration has the following concepts:

· RIR collaboration device group—Contains a group of devices that select links collaboratively. Any two devices in an RIR collaboration device group are peer devices of each other and share links for RIR.

Typically, devices in the same RIR collaboration device group are deployed in the same physical area, for example, the same equipment room or campus.

¡ A group of hubs can form an RIR collaboration hub group.

¡ A group of spokes can form an RIR collaboration spoke group.

An RIR collaboration device group acts as a logical device. The logical device can form a hub-spoke network with RIR collaboration device groups or physical devices.

· RIR collaboration link group—Contains a group of links that connect an RIR collaboration device group and another RIR collaboration device group or a physical device (hub or spoke). As shown in Figure 7, RIR collaboration link groups 1 and 2 connect the RIR collaboration hub group to a spoke and an RIR collaboration spoke group, respectively.

· Local packets and peer packets—In RIR collaboration, packets first processed by the local device are called local packets, and packets first processed by a peer device are called peer packets. Devices in an RIR collaboration device group use different link selection policies to process local packets and peer packets. If a device does not belong to an RIR collaboration device group, all service packets received by the device are local packets.

Figure 7 RIR collaboration network structure

Working mechanism

The RIR collaboration mechanism is as follows:

1. After each pair of devices in an RIR collaboration device group establishes an RIR collaboration relationship, the device with the lower IP address acts as the client. The client initiates a TCP connection to the peer device.

2. Through TCP connections, a device synchronizes the configuration and status data of local links that meet the service requirements to its peer devices. The data does not include the link data synchronized from other devices in the same RIR collaboration device group. As a result, the devices in the RIR collaboration device group can obtain link information from one another, and can update link information in real time.

3. When a device in the RIR collaboration device group receives a service packet for a session, it handles the packet as shown in Table 2.

Table 2 Collaborative link selection policies

| Packet condition | Routes available to forward the packet | Collaborative link selection policy |
|---|---|---|
| Local packet received for the first time | Yes | If optimal link information exists for the session, the local device forwards the packet according to that information. If no optimal link information exists for the session, the local device examines all links in the same flow template on the local device and its peer devices, and then selects the most suitable link for the packet. If the selected link is on the local device, the local device forwards the packet directly. If the selected link is on a peer device, the local device forwards the packet to that peer device. |
| Local packet received for the first time | No | The local device selects a peer device in the same RIR collaboration device group and forwards the packet to that peer device. |
| Local packet not received for the first time | Yes | The packet was previously forwarded to a peer device, which returned it to the local device because the peer device has no route to forward it. For the returned packet, the local device examines only its local links to find the most suitable link. The local device performs link reselection for the session every 60 seconds, so the traffic of the session can be switched to the peer device promptly after the routes on the peer device recover. |
| Local packet not received for the first time | No | The local device selects a peer device to which it has never forwarded traffic of the session and forwards the packet to that peer device. If no such peer device is available, the local device discards the packet. |
| Peer packet | Yes | If optimal link information exists for the session, the local device forwards the packet according to that information. If no optimal link information exists for the session, the local device selects the most suitable link from its local links to forward the packet. |
| Peer packet | No | The local device returns the packet to the original peer device. |
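Step 1 relies on comparing IP addresses numerically, not as text. The following Python sketch uses the standard `ipaddress` module to pick the client of a collaboration pair. Which address each device uses for this comparison is not stated here, so the inputs are assumed to be the two devices' collaboration addresses; the function name is hypothetical.

```python
import ipaddress


def collaboration_client(ip_a: str, ip_b: str) -> str:
    """Return the address of the device that acts as the client (the lower IP address)."""
    addr_a = ipaddress.ip_address(ip_a)
    addr_b = ipaddress.ip_address(ip_b)
    if addr_a.version != addr_b.version:
        raise ValueError("both addresses must be of the same IP version")
    return str(min(addr_a, addr_b))


# Numeric comparison: 192.168.1.9 is lower than 192.168.1.10,
# although a plain string comparison would order them the other way.
print(collaboration_client("192.168.1.10", "192.168.1.9"))  # 192.168.1.9
```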

RIR working mechanisms

Preparation

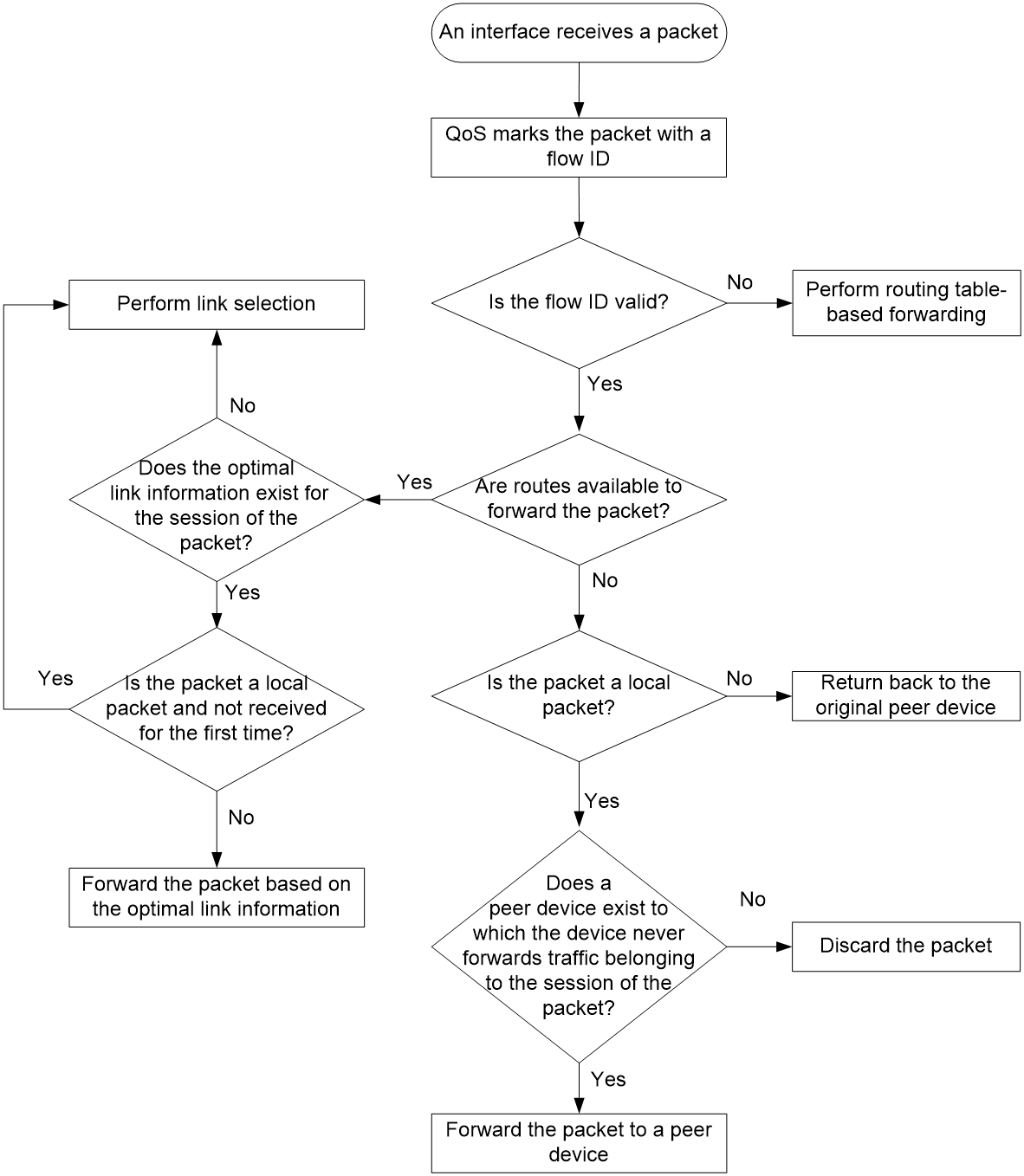

When the device receives a packet on an interface, it handles the packet as follows:

1. Uses QoS to mark the packet with a flow ID based on the quintuple and DSCP of the packet.

2. Identifies whether the flow ID of the packet is valid.

¡ If the flow ID is invalid, the device performs routing table-based forwarding for the packet.

¡ If the flow ID is valid, go to the next step.

3. Performs a routing table lookup to identify whether routes are available to forward the packet.

¡ If no route is available, the device identifies whether the packet is a local packet.

- If the packet is not a local packet, the device returns the packet to the original peer device.

- If the packet is a local packet, the device selects a peer device to which it has never forwarded traffic for the packet's session and forwards the packet to that peer device. If no such peer device is available, the device discards the packet.

¡ If routes are available, go to the next step.

4. Examines whether the optimal link information exists for the session of the packet.

¡ If the optimal link information exists, the device forwards the packet based on the information.

¡ If no optimal link information exists, the device performs link selection for the packet.
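The preparation steps map onto a chain of checks, sketched below in Python. The `Action` names and the `prepare` function are hypothetical, and the inputs (flow ID validity, route availability, and so on) are assumed to be computed elsewhere. When several untried peers exist, the sketch takes the first one, because the text does not state how a peer is chosen.

```python
from enum import Enum
from typing import List, Optional, Tuple


class Action(Enum):
    ROUTING_TABLE = "forward based on the routing table"
    RETURN_TO_PEER = "return to the original peer device"
    FORWARD_TO_PEER = "forward to a peer device not yet tried"
    DISCARD = "discard"
    USE_OPTIMAL_LINK = "forward based on optimal link information"
    SELECT_LINK = "perform link selection"


def prepare(flow_id_valid: bool, has_route: bool, is_local: bool,
            untried_peers: List[str], has_optimal_link: bool
            ) -> Tuple[Action, Optional[str]]:
    """Return the action for a received packet and, if applicable, the chosen peer."""
    if not flow_id_valid:  # step 2
        return Action.ROUTING_TABLE, None
    if not has_route:  # step 3
        if not is_local:
            return Action.RETURN_TO_PEER, None
        if untried_peers:
            return Action.FORWARD_TO_PEER, untried_peers[0]  # peer choice is an assumption
        return Action.DISCARD, None
    if has_optimal_link:  # step 4
        return Action.USE_OPTIMAL_LINK, None
    return Action.SELECT_LINK, None
```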

Figure 8 RIR preparation

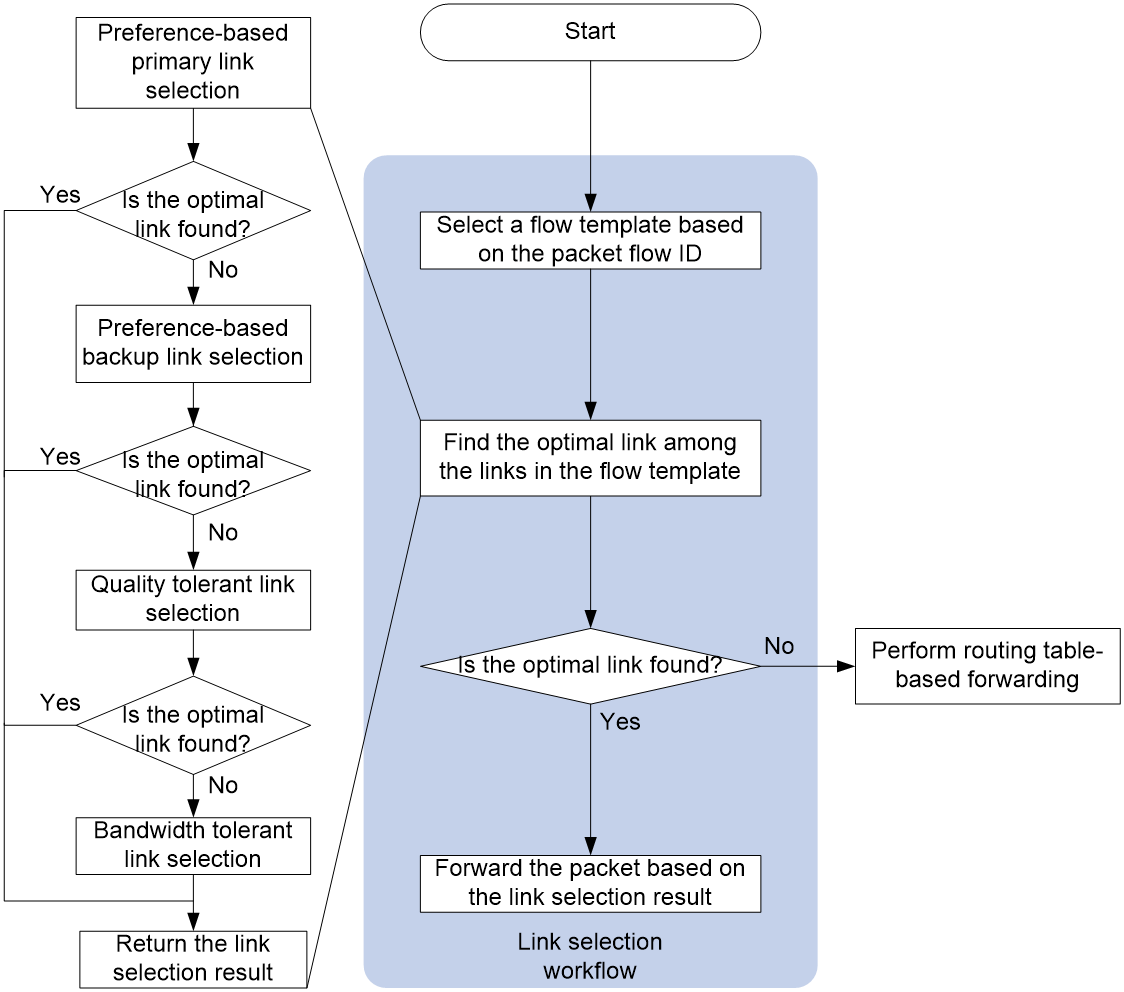

Link selection workflow summary

RIR uses the following workflow to select a link to forward a packet:

1. Selects the flow template that has the same flow ID as the packet.

2. Selects the most suitable link from the links in the flow template by using the following criteria in order:

a. Preference-based primary link selection.

b. Preference-based backup link selection.

c. Quality tolerant link selection.

d. Bandwidth tolerant link selection.

If the packet is a local packet and is received for the first time, the candidate links also include the links synchronized from RIR collaboration peer devices in the same flow template.

If the packet is a peer packet, or a local packet that is not received for the first time, the candidate links include only links on the local device.

3. If RIR finds a suitable link under a criterion, it returns the link selection result and stops examining other links. If no link is suitable under a criterion, RIR applies the next criterion. If no link is suitable under any criterion, RIR determines that no link is suitable and returns that result.

4. If no suitable link is found, the device forwards the packet based on the routing table. If a suitable link is found, RIR forwards the packet based on the link selection result.

o If the most suitable link belongs to the local device, the device forwards the packet directly through the link.

o If the most suitable link belongs to a peer device in the RIR collaboration device group, the device forwards the packet to the peer device.

After link selection, the device associates the quintuple of the packet with the most suitable link and records the association as the optimal link information for the session. The device forwards subsequent packets of the same session based on the optimal link information. If the session receives no traffic for a period of time, the device deletes the optimal link information.

Figure 9 RIR link selection workflow

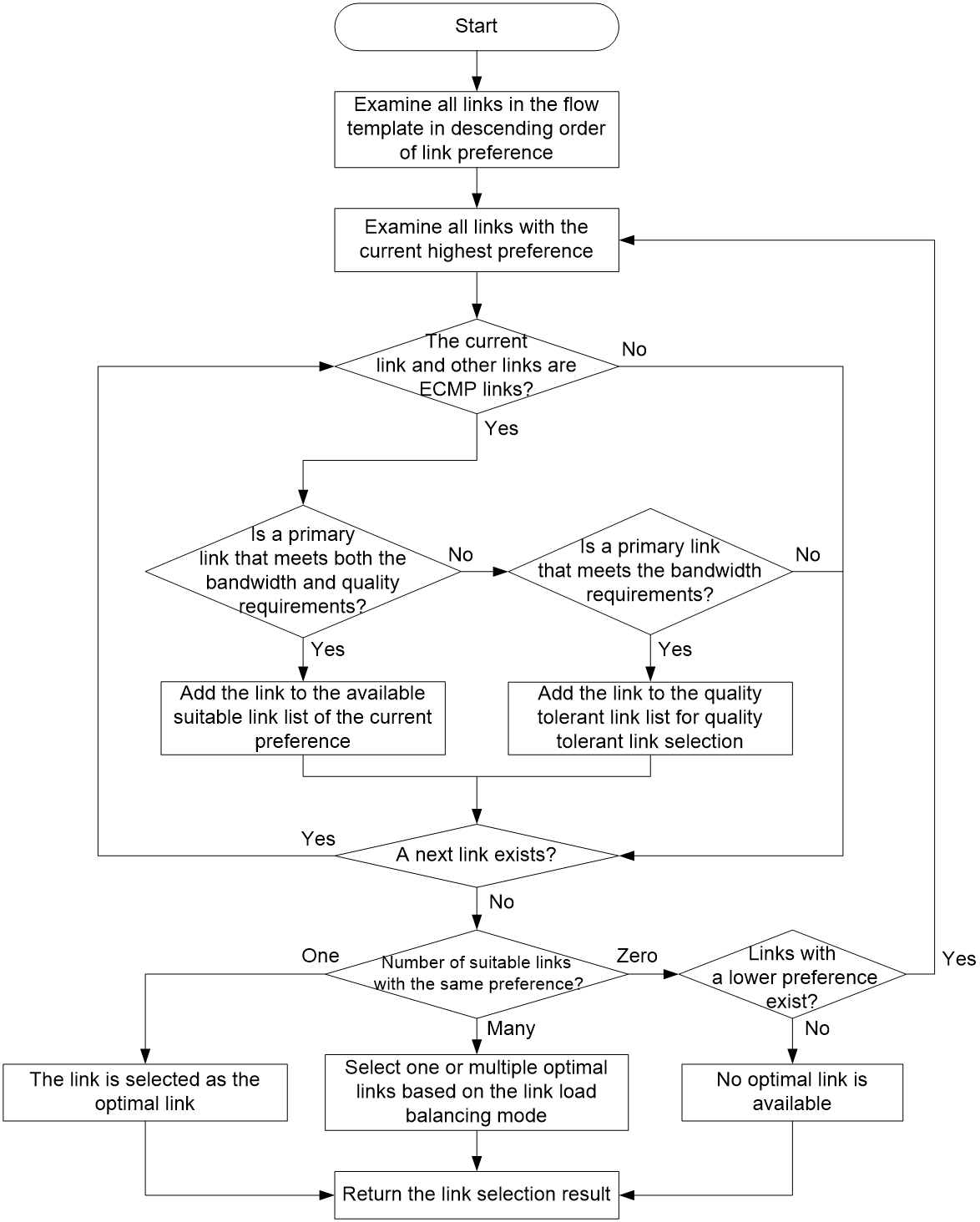
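The ordered fallback through the four criteria can be sketched as follows. This is an illustrative model only: each criterion is represented as a hypothetical function that returns the suitable links it found, and link names are plain strings.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# A criterion receives the candidate links and returns the suitable ones it found.
Criterion = Callable[[Sequence[str]], List[str]]

def select_links(local_links: Sequence[str],
                 peer_links: Sequence[str],
                 is_local: bool,
                 first_time: bool,
                 criteria: List[Tuple[str, Criterion]]) -> Tuple[Optional[str], List[str]]:
    """Apply the criteria in order and stop at the first one that finds a link."""
    candidates = list(local_links)
    # Links synchronized from RIR collaboration peers count only for a local
    # packet received for the first time.
    if is_local and first_time:
        candidates += list(peer_links)
    for name, criterion in criteria:
        found = criterion(candidates)
        if found:
            return name, found
    return None, []  # no suitable link: forward based on the routing table

def record(optimal: Dict[tuple, List[str]], session: tuple, links: List[str]) -> None:
    """Associate the session's quintuple with the selected link or links."""
    if links:
        optimal[session] = links
```

In practice the criteria list would hold, in order, preference-based primary, preference-based backup, quality tolerant, and bandwidth tolerant selection.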

Preference-based primary link selection

RIR preferentially selects primary links that meet both the quality and bandwidth requirements for a service flow that matches a flow template. As shown in Figure 10, the device selects a primary link from the primary links in the flow template by examining the links in descending order of link preference. The device uses the following process to examine links with the same preference:

1. The device examines all links with the preference and identifies whether a link forms ECMP routes with other links. If a link forms ECMP routes with other links, the device further identifies whether the link is a primary link that meets both the quality and bandwidth requirements of the service.

o If yes, the device adds the link to the suitable link list for that preference.

o If no, the device further identifies whether the link is a primary link that meets the bandwidth requirements of the service.

- If yes, the device adds the link to the quality tolerant link list for quality tolerant link selection. Then, the device continues to examine other links with the same preference.

- If no, the device continues to examine other links with the same preference.

If a link does not form ECMP routes with other links, the device continues to examine other links with the same preference.

2. When the device finishes examining all links with the preference, it identifies how many suitable links are available for the service flow.

o If only one suitable link is available, the device selects that link as the optimal link.

o If multiple suitable links are available, the device selects one or multiple optimal links from them based on the link load balancing mode. In the per-session load balancing modes, the device selects only one link as the optimal link of a session. In the per-packet load balancing mode, the device can select multiple links as the optimal links of a session.

o If no suitable link is available, the device examines the links with the next lower preference value.

If no primary link in the flow template is suitable, the device determines that no optimal primary link is found for the service flow.

For more information about identifying whether a link meets the quality requirements, see "Quality-based link selection." For more information about identifying whether a link meets the bandwidth requirements, see "Bandwidth-based link selection."

Figure 10 Preference-based primary link selection workflow
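The per-preference examination can be sketched as below. It is a simplified model, assuming each link already carries boolean results for the ECMP, quality, and bandwidth checks; the `Link` fields are hypothetical. Picking the first suitable link in per-session mode is only a placeholder for the weight-based or periodic adjustment logic, which this section does not detail.

```python
from dataclasses import dataclass
from itertools import groupby
from typing import List, Tuple

@dataclass
class Link:
    name: str
    preference: int
    in_ecmp: bool          # forms ECMP routes with other links
    meets_quality: bool    # passes the SLA check
    meets_bandwidth: bool  # passes the bandwidth check

def preference_select(links: List[Link]) -> Tuple[List[str], List[str]]:
    """Examine primary (or backup) links in descending preference order.

    Returns the suitable links at the first preference level that has any,
    plus the quality tolerant list collected along the way.
    """
    quality_tolerant: List[str] = []
    ordered = sorted(links, key=lambda link: link.preference, reverse=True)
    for _, group in groupby(ordered, key=lambda link: link.preference):
        suitable: List[str] = []
        for link in group:
            if not link.in_ecmp:
                continue  # links outside ECMP routes are skipped
            if link.meets_quality and link.meets_bandwidth:
                suitable.append(link.name)
            elif link.meets_bandwidth:
                quality_tolerant.append(link.name)
        if suitable:
            return suitable, quality_tolerant
    return [], quality_tolerant

def optimal_links(suitable: List[str], per_packet: bool) -> List[str]:
    # Per-packet mode may use several links; per-session modes pick one.
    # Taking the first link is a placeholder for the real per-session logic.
    return list(suitable) if per_packet else suitable[:1]
```

Backup link selection can reuse the same function on the backup links, and the combined quality tolerant lists feed quality tolerant link selection.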

Preference-based backup link selection

If no primary link is suitable for a service flow, the device tries to find a backup link that meets both the quality and bandwidth requirements for the flow in the flow template. The link selection process is the same as that for selecting a primary link.

Quality tolerant link selection

If preference-based link selection fails to select a suitable link from both primary and backup links, the device performs quality tolerant link selection.

The candidates for quality tolerant link selection are the links added to the quality tolerant link list during preference-based primary and backup link selection. These links meet the bandwidth requirements of the service but not its quality requirements.

If multiple quality tolerant links are available, the device selects one or multiple optimal links from them based on the link load balancing mode.

Bandwidth tolerant link selection

If quality tolerant link selection still cannot find a suitable link for a service flow, the device performs bandwidth tolerant link selection, which selects the optimal link from the links in the flow template that form ECMP routes.

If multiple links are available, the device selects one or multiple optimal links from them based on the link load balancing mode.

Restrictions: Hardware compatibility with RIR

| F1000 series | Models | RIR compatibility |
|---|---|---|
| F1000-X-G5 series | F1000-A-G5, F1000-C-G5, F1000-C-G5-LI, F1000-E-G5, F1000-H-G5, F1000-S-G5 | Yes |
| F1000-X-G3 series | F1000-A-G3, F1000-C-G3, F1000-E-G3, F1000-S-G3 | Yes |
| F1000-X-G2 series | F1000-A-G2, F1000-C-G2, F1000-E-G2, F1000-S-G2 | Yes |
| F1000-9X0-AI series | F1000-9390-AI, F1000-9385-AI, F1000-9380-AI, F1000-9370-AI, F1000-9360-AI, F1000-9350-AI, F1000-990-AI, F1000-980-AI, F1000-970-AI, F1000-960-AI, F1000-950-AI, F1000-930-AI, F1000-920-AI, F1000-910-AI, F1000-905-AI | Yes |
| F1000-9X0-AI series | F1000-9330-AI, F1000-9320-AI | No |
| F1000-C83X0 series | F1000-C8395, F1000-C8390, F1000-C8385, F1000-C8380, F1000-C8370, F1000-C8360, F1000-C8350 | Yes |
| F1000-C83X0 series | F1000-C8330 | No |
| F1000-C81X0 series | F1000-C8180, F1000-C8170, F1000-C8160, F1000-C8150, F1000-C8130, F1000-C8120, F1000-C8110 | Yes |
| F1000-7X0-HI series | F1000-770-HI, F1000-750-HI, F1000-740-HI, F1000-720-HI, F1000-710-HI | Yes |
| F1000-7X0-HI series | F1000-730-HI | No |
| F1000-C-X series | F1000-C-EI, F1000-C-HI, F1000-C-XI, F1000-E-XI | Yes |
| F1000-V series | F1000-E-VG, F1000-S-VG | Yes |
| SecBlade IV | LSPM6FWD8, LSQM2FWDSC8 | No |

| F100 series | Models | RIR compatibility |
|---|---|---|
| F100-X-G5 series | F100-E-G5, F100-A-G5, F100-C-G5, F100-M-G5, F100-S-G5 | Yes |
| F100-X-G3 series | F100-A-G3, F100-C-G3, F100-E-G3, F100-M-G3, F100-S-G3 | Yes |
| F100-X-G2 series | F100-A-G2, F100-C-G2, F100-E-G2, F100-M-G2, F100-S-G2 | Yes |
| F100-WiNet series | F100-A80-WiNet, F100-A91-WiNet, F100-C80-WiNet, F100-C60-WiNet, F100-C50-WiNet, F100-S80-WiNet | Yes |
| F100-WiNet series | F100-A81-WiNet | No |
| F100-C-A series | F100-C-A6, F100-C-A5, F100-C-A3, F100-C-A6-WL, F100-C-A5-W, F100-C-A3-W | Yes |
| F100-X-XI series | F100-A-EI, F100-A-HI, F100-A-SI, F100-C-EI, F100-C-HI, F100-C-XI, F100-E-EI, F100-S-HI, F100-S-XI | Yes |

Restrictions and guidelines: RIR configuration

When a link carries a large amount of traffic, do not change the link selection policy for that link, for example, its link preference. Otherwise, link selection might not work as expected. As a best practice, change the link selection policy for a link when the link has no traffic or only a small amount of traffic.

RIR tasks at a glance

Spoke configuration tasks at a glance

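To configure RIR on a spoke, perform the following tasks:

1. Enabling the RIR process

2. Configuring a spoke

o Enabling the RIR client

o Specifying an RIR client synchronization port

o Enabling the NQA client

o (Optional.) Configuring NQA link connectivity probe parameters

o Configuring an NQA link quality operation

o Configuring an SLA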

3. Configuring link attributes

o Assigning a link type and index to a VSI interface

o Configuring the link bandwidth of a VXLAN tunnel interface

o (Optional.) Configuring RIR backup links

4. Configuring a flow template

o Configuring a quality policy for the flow template

o (Optional.) Specifying the per-session expected bandwidth

o Specifying link preference values for links

5. (Optional.) Configuring the link load balancing mode

o Setting the per-session periodic link adjustment mode

o Setting the per-packet load balancing mode

By default, the per-session weight-based link selection mode is used.

6. (Optional.) Configuring flow priority-based traffic scheduling

7. (Optional.) Setting the link selection delay and link selection suppression period

8. Configuring a QoS policy to mark matching packets with a flow ID

a. Creating a traffic class and defining packet match criteria

b. Creating a traffic behavior and configuring a flow ID marking action

d. Applying the QoS policy to an interface

9. (Optional.) Configuring RIR collaboration

a. Setting up RIR dedicated links between local and peer devices

b. Applying QoS policies to interfaces interconnecting local and peer devices

c. Assigning links to an RIR collaboration link group

d. Establishing RIR collaboration relationship for each pair of local and peer devices

e. Configuring RIR packet redirection

10. (Optional.) Enabling RIR logging

11. (Optional.) Configuring flow ID-based traffic rate statistics for tunnels

Hub configuration tasks at a glance

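To configure RIR on a hub, perform the following tasks:

1. Enabling the RIR process

2. Configuring a hub

o Enabling the RIR server

o Specifying an RIR server synchronization port

o Configuring the NQA server

o (Optional.) Creating an SLA and an NQA link quality operation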

3. Configuring link attributes

o Assigning a link type and index to a VSI interface

o Configuring the link bandwidth of a VXLAN tunnel interface

o (Optional.) Configuring RIR backup links

4. Configuring a flow template

o Configuring a quality policy for the flow template

o (Optional.) Specifying the per-session expected bandwidth

o Specifying link preference values for links

5. (Optional.) Configuring the link load balancing mode

o Setting the per-session periodic link adjustment mode

o Setting the per-packet load balancing mode

By default, the per-session weight-based link selection mode is used.

6. (Optional.) Configuring flow priority-based traffic scheduling

7. (Optional.) Setting the link selection delay and link selection suppression period

8. Configuring a QoS policy to mark matching packets with a flow ID

a. Creating a traffic class and defining packet match criteria

b. Creating a traffic behavior and configuring a flow ID marking action

d. Applying the QoS policy to an interface

9. (Optional.) Configuring RIR collaboration

a. Setting up RIR dedicated links between local and peer devices

b. Applying QoS policies to interfaces interconnecting local and peer devices

c. Assigning links to an RIR collaboration link group

d. Establishing RIR collaboration relationship for each pair of local and peer devices

e. Configuring RIR packet redirection

10. (Optional.) Enabling RIR logging

11. (Optional.) Configuring flow ID-based traffic rate statistics for tunnels

Prerequisites for RIR configuration

Make sure the hub and each spoke have a minimum of two ECMP routes to reach each other.

Enabling the RIR process

1. Enter system view.

system-view

2. Enable the RIR process and enter RIR view.

rir

By default, the RIR process is disabled.

Configuring a spoke

Enabling the RIR client

About this task

To prevent NQA probes from occupying too many resources on a hub in a hub-spoke network, configure the hub as an RIR server and the spokes as RIR clients.

You can enable the RIR client globally or on an interface.

· Enabling the RIR client globally also enables the RIR client for all interfaces on the device. The interfaces can send link quality probe results for the RIR client.

· Enabling the RIR client on an interface allows only that interface to send link quality probe results for the RIR client.

Restrictions and guidelines

When you enable the RIR client, follow these restrictions and guidelines:

· In a VXLAN network, only tunnel interfaces support enabling the RIR client. The RIR client uses the tunnel interfaces to send link quality probe results.

· The RIR server and RIR client cannot both be enabled on the same interface.

· If the enabled role (RIR server or client) on an interface is different from the globally enabled role, the interface-specific role takes effect on that interface.
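The role precedence rules above can be modeled as a lookup in which an interface-specific role overrides the global role. This is a sketch for illustration; the interface names and the use of `None` for "not configured" are assumptions, not device behavior you can query this way.

```python
from typing import Dict, Optional

def effective_roles(global_role: Optional[str],
                    interface_roles: Dict[str, Optional[str]]) -> Dict[str, Optional[str]]:
    """Resolve the RIR role ("server", "client", or None) of each tunnel interface.

    Each interface holds at most one role, so server and client can never both
    be enabled on it. An interface-specific role overrides the global role.
    """
    return {ifname: role if role is not None else global_role
            for ifname, role in interface_roles.items()}
```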

Procedure

1. Enter system view.

system-view

2. Enable the RIR client. Perform the following tasks as needed:

o Execute the following commands in sequence to enable the RIR client globally:

rir

client enable

o Execute the following commands in sequence to enable the RIR client on a VXLAN tunnel interface:

interface tunnel tunnel-number

rir role client

By default, the RIR client is disabled globally and on an interface.

Specifying an RIR client synchronization port

About this task

Perform this task to specify a port for an RIR client to synchronize link quality probe results to RIR servers.

Restrictions and guidelines

Specify the same synchronization port on the RIR client and server for successful synchronization of link quality probe results.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Specify a port for the RIR client to synchronize probe information to RIR servers.

probe sync-port port-number

By default, no port is specified for an RIR client to synchronize probe information to RIR servers.

Enabling the NQA client

About this task

An RIR client also acts as an NQA client. You must enable the NQA client on a spoke to ensure that NQA link connectivity probes and link quality probes can be performed correctly.

Procedure

1. Enter system view.

system-view

2. Enable the NQA client.

nqa agent enable

By default, the NQA client is disabled.

For more information about this command, see NQA commands in Network Management and Monitoring Command Reference.

Configuring NQA link connectivity probe parameters

About this task

After RIR is enabled, an NQA client starts NQA link connectivity probes on all links configured in flow templates. A spoke (NQA client) performs consecutive probes at the configured interval and waits for responses to the probe packets. If the client receives no response before the probe packet timeout timer expires, the link has a connectivity issue.

In a VXLAN network, the NQA link connectivity probe targets are the VXLAN tunnel interfaces enabled with the RIR client.

A link connectivity probe packet uses the source IP address of a VXLAN tunnel as its source IP address and uses the tunnel destination IP address as its destination IP address.

Restrictions and guidelines

Setting a shorter probe interval obtains more precise probe results but requires more system resources.

Set a shorter probe packet timeout time if the requirement for link quality is high.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Configure NQA link connectivity probe parameters.

probe connect interval interval timeout timeout

By default, the NQA probe interval is 100 milliseconds and the NQA packet timeout time is 3000 milliseconds.
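The interaction between the probe interval and the packet timeout can be illustrated with a simplified monitor. It assumes one reading of the rule above: a link is flagged when no response at all has arrived within the last timeout period, and the timer starts when probing starts. The class and method names are hypothetical.

```python
from typing import List

class ConnectivityMonitor:
    """Track link connectivity from NQA probe responses (simplified model)."""

    def __init__(self, start_ms: int, interval_ms: int = 100, timeout_ms: int = 3000):
        self.interval_ms = interval_ms   # default probe interval
        self.timeout_ms = timeout_ms     # default packet timeout
        self.last_reply_ms = start_ms    # assumed: timer starts when probing starts

    def probe_times(self, start_ms: int, end_ms: int) -> List[int]:
        # Consecutive probes are sent every interval_ms.
        return list(range(start_ms, end_ms, self.interval_ms))

    def on_reply(self, now_ms: int) -> None:
        self.last_reply_ms = now_ms      # any response restarts the timeout timer

    def has_issue(self, now_ms: int) -> bool:
        return now_ms - self.last_reply_ms >= self.timeout_ms
```

With the default values, 3000 / 100 = 30 probes are sent within one timeout period, so a link is flagged only after roughly 30 consecutive probes go unanswered.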

Configuring an NQA link quality operation

About this task

An NQA link quality operation allows a flow template to start UDP jitter probes with the probe parameters in the operation to detect link quality.

You can configure a quality policy for a flow template to associate the flow template with an SLA and an NQA link quality operation. The device monitors the quality of links in the flow template based on the NQA link quality operation and compares the NQA probe results with the thresholds in the SLA. If all parameter values in the probe results of a link are lower than or equal to the thresholds in the SLA, the link is qualified for the flow.

To differentiate service flows that have different link quality requirements, associate the flow templates with NQA link quality operations that contain different probe parameter values. Two NQA link quality operations with different probe parameter values might offer different probe results for the same link.

In a VXLAN network, the NQA link quality probe targets are the VXLAN tunnel interfaces enabled with the RIR client.

A link quality probe packet uses the source IP address of a VXLAN tunnel as its source IP address and uses the tunnel destination IP address as its destination IP address.

Restrictions and guidelines

NQA link quality probes are used in conjunction with the NQA server and client features. For a spoke (NQA client) to perform NQA link quality probes, make sure UDP listening services have been configured on the NQA server.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Create an NQA link quality operation and enter its view.

nqa nqa-id

4. Configure NQA link quality probe parameters.

o Set the interval at which the NQA client performs consecutive probes.

probe interval interval

By default, the NQA link quality probe interval is 100 milliseconds.

o Set the DSCP value of NQA link quality probe packets.

probe packet-dscp dscp-value

By default, the DSCP value of NQA link quality probe packets is 63.

o Set the number of NQA link quality probe packets sent per probe.

probe packet-number number

By default, 100 NQA link quality probe packets are sent per probe.

o Set the interval at which NQA link quality probe packets are sent.

probe packet-interval interval

By default, NQA link quality probe packets are sent at intervals of 20 milliseconds.

o Set the timeout time for waiting for a response to an NQA link quality probe packet.

probe packet-timeout packet-timeout

By default, the timeout time is 3000 milliseconds.

o Specify a destination port for NQA link quality probes.

probe port port-number

By default, no destination port is specified for NQA link quality probes.

To correctly perform NQA link quality probes, the destination port number must be the same as the listening port on the NQA server.

Configuring an SLA

About this task

You can specify an SLA and NQA link quality operation for a flow template. The device monitors the quality of links in the flow template based on the NQA link quality operation and compares the NQA probe results with the thresholds in the SLA. The device selects only links that meet the quality requirements of the SLA for traffic that matches the flow template.

Perform this task to create an SLA and configure its link quality thresholds. Two SLAs might offer different quality results for the same NQA link quality operation.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Create an SLA and enter its view.

sla sla-id

4. Configure NQA link quality thresholds.

o Configure the link delay threshold.

delay threshold threshold-value

By default, the link delay threshold is 10 milliseconds.

o Configure the link jitter threshold.

jitter threshold threshold-value

By default, the link jitter threshold is 100 milliseconds.

o Configure the packet loss threshold.

packet-loss threshold threshold-value

By default, the packet loss threshold is 100‰.
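The SLA comparison rule (a link qualifies only if every probed value is lower than or equal to its threshold) can be sketched with the default thresholds listed above. The data types are hypothetical, and the per-mille loss formula (lost packets per 1000 sent) is an assumption about how the loss rate is expressed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Sla:
    delay_ms: float = 10.0         # default link delay threshold
    jitter_ms: float = 100.0       # default link jitter threshold
    loss_permille: float = 100.0   # default packet loss threshold (‰)

@dataclass(frozen=True)
class ProbeResult:
    delay_ms: float
    jitter_ms: float
    loss_permille: float

def loss_permille(sent: int, received: int) -> float:
    # Assumed formula: lost packets per thousand packets sent.
    return (sent - received) * 1000 / sent

def meets_sla(result: ProbeResult, sla: Sla) -> bool:
    """A link qualifies only if every measured value is <= its SLA threshold."""
    return (result.delay_ms <= sla.delay_ms
            and result.jitter_ms <= sla.jitter_ms
            and result.loss_permille <= sla.loss_permille)
```

For example, if 95 of the default 100 probe packets return, the loss rate is 50‰, which is within the default 100‰ threshold.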

Configuring a hub

Enabling the RIR server

About this task

To prevent NQA probes from occupying too many resources on a hub in a hub-spoke network, configure the hub as an RIR server and the spokes as RIR clients.

You can enable the RIR server globally or on an interface.

· Enabling the RIR server globally also enables the RIR server for all interfaces on the device. The interfaces can receive link quality probe results synchronized from RIR clients.

· Enabling the RIR server on an interface allows only that interface to receive link quality probe results synchronized from RIR clients.

Restrictions and guidelines

When you enable the RIR server, follow these restrictions and guidelines:

· In a VXLAN network, only tunnel interfaces support enabling the RIR server. The RIR server uses the tunnel interfaces to receive link quality probe results synchronized from RIR clients.

· The RIR server and RIR client cannot both be enabled on the same interface.

· If the enabled role (RIR server or client) on an interface is different from the globally enabled role, the interface-specific role takes effect on that interface.

Procedure

1. Enter system view.

system-view

2. Enable the RIR server. Perform the following tasks as needed:

o Execute the following commands in sequence to enable the RIR server globally:

rir

server enable

o Execute the following commands in sequence to enable the RIR server on a VXLAN tunnel interface:

interface tunnel tunnel-number

rir role server

By default, the RIR server is disabled globally and on an interface.

Specifying an RIR server synchronization port

About this task

Perform this task to specify a port for an RIR server to receive link quality probe results synchronized from RIR clients.

Restrictions and guidelines

Specify the same synchronization port on the RIR client and server for successful synchronization of link quality probe results.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Specify a port for receiving probe information synchronized from RIR clients.

probe sync-port port-number

By default, no port is specified for receiving probe information synchronized from RIR clients.

Configuring the NQA server

About this task

A hub that acts as an RIR server also acts as an NQA server. To ensure that NQA link connectivity probes can be performed correctly, you must enable the NQA server on the hub. To ensure that NQA link quality probes can be performed correctly, configure UDP listening services.

Restrictions and guidelines

The listening port specified on the hub (NQA server) must be the same as the destination port number specified in NQA link quality operations on the spokes (NQA clients). In addition, make sure the port is not used by any other services.
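Before deployment, you might check the port requirements above against an inventory of planned settings. The sketch below is a hypothetical helper, not a device feature: it assumes you have collected the `probe port` value configured on each spoke and the ports used by other services on the hub.

```python
from typing import Dict, Iterable, List

def check_nqa_ports(listen_port: int,
                    spoke_probe_ports: Dict[str, int],
                    ports_used_elsewhere: Iterable[int]) -> List[str]:
    """Check the hub's UDP listening port against the spokes' probe ports.

    spoke_probe_ports maps a spoke name to the destination port set with
    `probe port` in its NQA link quality operation (hypothetical inventory data).
    """
    problems: List[str] = []
    if listen_port in set(ports_used_elsewhere):
        problems.append(f"port {listen_port} is already used by another service")
    for spoke, port in sorted(spoke_probe_ports.items()):
        if port != listen_port:
            problems.append(f"{spoke}: probe port {port} != listening port {listen_port}")
    return problems
```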

Procedure

1. Enter system view.

system-view

2. Enable the NQA server.

nqa server enable

By default, the NQA server is disabled.

For more information about this command, see NQA commands in Network Management and Monitoring Command Reference.

3. Configure a UDP listening service for the NQA server to listen to a port on an IP address.

nqa server udp-echo ip-address port-number [ vpn-instance vpn-instance-name ] [ tos tos ]

For more information about this command, see NQA commands in Network Management and Monitoring Command Reference.

Creating an SLA and an NQA link quality operation

About this task

When you configure the quality policy of a flow template on a hub, you must specify an SLA and an NQA link quality operation. Perform this task to create the SLA and NQA link quality operation specified in the quality policy. On the hub, you do not need to configure the parameters in the SLA and NQA link quality operation. If you configure these parameters, the configuration does not take effect. For more information about quality policy configuration, see "Configuring a quality policy for the flow template."

Restrictions and guidelines

If you do not plan to configure the quality policy of a flow template on a hub, you do not need to create an SLA and NQA link quality operation.

The hub and spoke can have different SLA and NQA link quality operation settings in the quality policy of the same flow template. The difference does not affect how the quality policy is executed or applied. As a best practice, configure the same SLA and NQA link quality operation on the hub and spoke so that the quality policy is easy to identify.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Create an SLA and enter its view.

sla sla-id

4. Return to RIR view.

quit

5. Create an NQA link quality operation and enter its view.

nqa nqa-id

Configuring link attributes

Assigning a link type and index to a VSI interface

About this task

The link type and link index together uniquely identify a link between a hub and a spoke. For a flow template to use a link, you must assign a link type and index to the link. Perform this task to configure the link type as 4G, Internet, MPLS, or MSTP. The link type only marks the network type of the link and it does not affect packet encapsulation.

VXLAN-based RIR allows a hub and a spoke to have only one VXLAN tunnel for a VSI interface (a VXLAN). By assigning a link type and index to the VSI interface, RIR can identify the VXLAN tunnel between the hub and spoke.

A VSI interface on a hub (or spoke) can have a VXLAN tunnel to each spoke (or hub). The VXLAN tunnels of the same VSI interface are assigned the same link type and link index.

Restrictions and guidelines

Only 4G, Internet, MPLS, and MSTP link types are supported.

The link type is used only for identifying links, and it does not affect packet encapsulation.

A VSI interface can be associated with only one link type.

You must assign different link indexes to the same type of links on different VSI interfaces.
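The restrictions above can be checked before you apply the `rir link-type` command. This is a hypothetical validation sketch: assignments are keyed by VSI interface number, which by construction allows only one link type per VSI interface, and the function flags unsupported types and reuse of the same type and index on different VSI interfaces.

```python
from typing import Dict, List, Tuple

SUPPORTED_TYPES = {"4g", "internet", "mpls", "mstp"}

def validate_links(assignments: Dict[int, Tuple[str, int]]) -> List[str]:
    """Check planned (link type, link index) assignments per VSI interface."""
    errors: List[str] = []
    owner: Dict[Tuple[str, int], int] = {}
    for vsi, (link_type, index) in sorted(assignments.items()):
        if link_type not in SUPPORTED_TYPES:
            errors.append(f"vsi-interface {vsi}: unsupported link type {link_type}")
            continue
        key = (link_type, index)
        if key in owner:
            errors.append(f"vsi-interface {vsi}: {link_type} index {index} "
                          f"already used by vsi-interface {owner[key]}")
        else:
            owner[key] = vsi
    return errors
```

Links of different types can share an index, for example `mpls` index 1 and `internet` index 1.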

Procedure

1. Enter system view.

system-view

2. Enter VSI interface view.

interface vsi-interface vsi-interface-id

3. Assign a link type and index to the VSI interface.

rir link-type { 4g | internet | mpls | mstp } index link-index

By default, no link type or index is assigned to a VSI interface.

Configuring the link bandwidth of a VXLAN tunnel interface

1. Enter system view.

system-view

2. Enter VXLAN tunnel interface view.

interface tunnel tunnel-number

3. Configure the expected link bandwidth of the VXLAN tunnel interface.

bandwidth bandwidth-value

The default expected bandwidth (in kbps) is the interface maximum rate divided by 1000.

The expected bandwidth is an informational parameter used only by higher-layer protocols for calculation. You cannot adjust the actual bandwidth of an interface by using this command.

For more information about this command, see tunneling commands in Layer 3—IP Services Command Reference.

Configuring RIR backup links

About this task

The links between a spoke and a primary hub are typically primary links, and the links between a spoke and a backup hub are typically backup links. RIR selects qualified primary links prior to qualified backup links.

In a VXLAN network, the links (VXLAN tunnels) that are assigned link preference values are primary links by default. If you configure a VXLAN tunnel as an RIR backup tunnel, RIR uses the VXLAN tunnel as an RIR backup link.

Restrictions and guidelines

Configure VXLAN tunnels as backup links depending on the network requirements. You can configure a VXLAN tunnel between a spoke and a primary hub as a backup link.

Procedure

1. Enter system view.

system-view

2. Enter VXLAN tunnel interface view.

interface tunnel tunnel-number

3. Configure the tunnel as an RIR backup tunnel.

rir backup

By default, a tunnel is an RIR primary tunnel.

Configuring a flow template

About flow template configuration

Configure a flow template to determine the link selection policies for a type of service flow.

Creating a flow template

About this task

To define link selection policies for a type of service flow, you can create a flow template and configure link selection policies in the flow template. By marking the type of service flow with the flow ID of the flow template, the device can use the link selection policies in the flow template to select links for that type of service flow.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Create a flow template and enter its view.

flow flow-id

Configuring a quality policy for the flow template

About this task

If you configure the quality policy of a flow template on a hub, the hub performs link quality detection based on the link quality probe results synchronized from spokes. If you do not configure the quality policy for the same flow template on the spokes, the hub cannot obtain link quality probe results from any spoke. Because the hub does not perform link quality probes itself, it determines that all links in the flow template fail quality detection.

If you do not configure the quality policy of a flow template on a hub, the hub determines that all links in the flow template meet the service quality requirements.
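The hub-side decision described above reduces to three cases. In this sketch, modeling the synchronized probe result as a pass or fail flag per link is an assumption made for illustration; the function name is hypothetical.

```python
from typing import Optional

def hub_link_quality_ok(hub_has_policy: bool, synced_verdict: Optional[bool]) -> bool:
    """Quality decision on a hub for one link in a flow template.

    synced_verdict is the result synchronized from a spoke for this link
    (True = qualified, False = not qualified), or None if nothing was
    synchronized.
    """
    if not hub_has_policy:
        return True        # no quality policy on the hub: every link passes
    if synced_verdict is None:
        return False       # the hub never probes itself, so no data means failure
    return synced_verdict
```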

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Enter flow template view.

flow flow-id

4. Configure a quality policy for the flow template.

quality-policy sla sla-id nqa nqa-id

By default, no quality policy is configured for a flow template.

The specified SLA and NQA link quality operation must already exist.

Specifying the per-session expected bandwidth

About this task

To select a link for traffic of a session, a device first performs bandwidth detection based on the per-session expected bandwidth in the flow template to which the session belongs. A candidate link passes bandwidth detection if its used bandwidth plus the per-session expected bandwidth is less than 80% of its total bandwidth. In this case, the current available bandwidth of the link meets the session bandwidth requirements.

The per-session expected bandwidth is not the actual bandwidth of a session. It is only a value estimated based on the user services.
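The 80% rule can be expressed as a one-line check. This sketch assumes that a link's total bandwidth is the expected bandwidth of its VXLAN tunnel interface, which by default is the interface maximum rate (in bps) divided by 1000; both function names are hypothetical.

```python
def default_bandwidth_kbps(max_rate_bps: int) -> int:
    # Default expected bandwidth of a VXLAN tunnel interface: maximum rate / 1000.
    return max_rate_bps // 1000

def passes_bandwidth_check(used_kbps: int, expected_kbps: int, total_kbps: int) -> bool:
    """Used bandwidth plus the per-session expected bandwidth must stay below 80%."""
    # Integer arithmetic avoids floating-point rounding at the 80% boundary.
    return (used_kbps + expected_kbps) * 100 < 80 * total_kbps
```

For a 100 Mbps link (100000 kbps) that already carries 70000 kbps, a session with an expected bandwidth of 5000 kbps passes (75000 < 80000), while one with 10000 kbps does not (80000 is not less than 80000). With the default expected bandwidth of 0 kbps, only the used bandwidth is compared.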

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Enter flow template view.

flow flow-id

4. Specify the per-session expected bandwidth.

expect-bandwidth bandwidth

By default, the per-session expected bandwidth is 0 kbps.

Specifying link preference values for links

About this task

RIR preferentially selects links with higher preference for a type of service flow.

VXLAN-based RIR supports assigning a link preference in flow template view to links of a specific type with a specific link index. The link type and link index identify the links on a VSI interface. Because VXLAN-based RIR allows a hub and spoke to have only one VXLAN tunnel for a VSI interface, the link preference configured for the link type and index of a VSI interface is the link preference of the VXLAN tunnel between the hub and spoke.

Restrictions and guidelines

You can assign the same link preference value to links with different link types and indexes in the same flow template.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Enter flow template view.

flow flow-id

4. Configure a link preference for the specified type of links with the specified link index.

path link-type { 4g | internet | mpls | mstp } index link-index preference preference

By default, no link preference is configured for a type of links with a specific link index in a flow template.

Configuring the link load balancing mode

Restrictions and guidelines for link load balancing mode configuration

For a flow template, the per-packet load balancing mode takes precedence over the global per-session periodic link adjustment mode. If the per-packet load balancing mode is not enabled for a flow template, the flow template uses the global link load balancing mode.
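The precedence rule can be summarized as follows. This is an illustrative sketch; the boolean inputs stand for whether `load-balance per-session periodic-adjust enable` is configured in RIR view and whether `load-balance per-packet enable` is configured in the flow template.

```python
def effective_lb_mode(global_periodic_adjust: bool, template_per_packet: bool) -> str:
    """Resolve the link load balancing mode used by one flow template."""
    if template_per_packet:
        return "per-packet"  # the per-template setting takes precedence
    if global_periodic_adjust:
        return "per-session periodic link adjustment"
    return "per-session weight-based link selection"  # default mode
```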

Setting the per-session periodic link adjustment mode

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Set the link load balancing mode to the per-session periodic link adjustment mode.

load-balance per-session periodic-adjust enable

By default, the link load balancing mode is per-session weight-based link selection mode.

4. Set the adjustment interval for the per-session periodic link adjustment mode.

load-balance per-session periodic-adjust adjust-interval interval-value

By default, the adjustment interval for the per-session periodic link adjustment mode is 30 seconds.

5. Set the periodic adjustment thresholds in per-session periodic link adjustment mode.

load-balance per-session periodic-adjust threshold upper upper-threshold-value lower lower-threshold-value

By default, the periodic adjustment upper threshold is 50% and the periodic adjustment lower threshold is 20%.

The periodic adjustment upper threshold must be greater than or equal to the periodic adjustment lower threshold.
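The defaults and the single ordering rule stated in this procedure can be captured in a settings object. This is a hypothetical sketch for checking planned values before entering them. It does not model what the thresholds trigger, and it deliberately does not assume any value ranges beyond upper >= lower.

```python
from dataclasses import dataclass


@dataclass
class PeriodicAdjustSettings:
    enabled: bool = False        # default: per-session weight-based link selection mode
    adjust_interval_s: int = 30  # default adjustment interval, in seconds
    upper_pct: int = 50          # default periodic adjustment upper threshold (%)
    lower_pct: int = 20          # default periodic adjustment lower threshold (%)

    def validate(self) -> None:
        # The only rule stated here: upper threshold >= lower threshold.
        if self.upper_pct < self.lower_pct:
            raise ValueError(
                f"upper threshold {self.upper_pct}% is below "
                f"lower threshold {self.lower_pct}%"
            )
```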

Setting the per-packet load balancing mode

Restrictions and guidelines

Per-packet load balancing distributes packets of the same session across multiple links, so the receiver might receive packets out of order. As a best practice, do not enable per-packet load balancing for order-sensitive services unless they use a protocol that restores the correct packet order, such as TCP.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Enter flow template view.

flow flow-id

4. Set the link load balancing mode to the per-packet mode.

load-balance per-packet enable

By default, the flow template uses the RIR global link load balancing mode.

Configuring flow priority-based traffic scheduling

About this task

To ensure that services with higher priority preferentially use link resources, enable flow priority-based traffic scheduling.

The priority of a flow that matches a flow template is determined by the ID of the SLA associated with the flow template. The greater the SLA ID, the higher the flow priority. To specify an SLA for a flow template, use the quality-policy command. If this command is not configured in a flow template, flows that match the flow template have the lowest priority.

If flow priority-based traffic scheduling is enabled, scheduling is triggered when the bandwidth usage of a link exceeds the upper threshold. Scheduling might last for several scheduling periods. In each scheduling period, RIR moves the current lowest-priority flow on the link to other links. Scheduling continues until the bandwidth usage of all links for the current lowest-priority flow drops below the lower threshold, or until only the highest-priority flow is left on the link.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Enable flow priority-based traffic scheduling.

flow priority-based-schedule enable

By default, flow priority-based traffic scheduling is disabled.

4. Set the scheduling period for flow priority-based traffic scheduling.

flow priority-based-schedule schedule-period schedule-period-value

By default, the scheduling period for flow priority-based traffic scheduling is 30 seconds.

5. Set the bandwidth usage thresholds for flow priority-based traffic scheduling.

flow priority-based-schedule bandwidth-threshold upper upper-threshold lower lower-threshold

By default, the bandwidth usage upper threshold is 90% and the bandwidth usage lower threshold is 20%.

The upper threshold must be greater than or equal to the lower threshold.
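The following Python sketch models the scheduling loop described in this section with the default 90% and 20% thresholds. Flow priority comes from the SLA ID, and flows without a quality policy get the lowest priority. Several parts are simplifying assumptions and do not reflect the device's actual algorithm: the Flow and Link classes, the target-link rule (the least-used link that stays at or below the upper threshold), and a stop test that checks only the overloaded link's own usage.

```python
from dataclasses import dataclass, field
from typing import List, Optional

UPPER = 0.90  # default bandwidth usage upper threshold (90%)
LOWER = 0.20  # default bandwidth usage lower threshold (20%)


@dataclass
class Flow:
    name: str
    sla_id: Optional[int]  # None: no quality-policy in the flow template
    kbps: int

    @property
    def priority(self) -> int:
        # Greater SLA ID means higher priority; no SLA means lowest priority.
        return -1 if self.sla_id is None else self.sla_id


@dataclass
class Link:
    name: str
    capacity_kbps: int
    flows: List[Flow] = field(default_factory=list)

    def usage(self) -> float:
        return sum(f.kbps for f in self.flows) / self.capacity_kbps


def schedule(link: Link, others: List[Link], max_periods: int = 10) -> List[str]:
    """Run scheduling periods for one link and return the names of moved flows."""
    moved: List[str] = []
    if link.usage() <= UPPER:
        return moved  # scheduling starts only when usage exceeds the upper threshold
    for _ in range(max_periods):  # one iteration per scheduling period
        if link.usage() < LOWER:
            break
        top = max(f.priority for f in link.flows)
        lower_prio = [f for f in link.flows if f.priority < top]
        if not lower_prio:
            break  # only highest-priority flows remain on this link
        victim = min(lower_prio, key=lambda f: f.priority)
        # Assumed target rule: the least-used link that stays within UPPER.
        targets = [
            t for t in others
            if (sum(f.kbps for f in t.flows) + victim.kbps) / t.capacity_kbps <= UPPER
        ]
        if not targets:
            break  # nowhere to move the flow without overloading another link
        target = min(targets, key=Link.usage)
        link.flows.remove(victim)
        target.flows.append(victim)
        moved.append(victim.name)
    return moved
```

In this model, a 1000 kbps link carrying a 500 kbps flow with SLA 10, a 300 kbps flow with SLA 5, and a 150 kbps flow without an SLA (95% usage) first sheds the flow without an SLA, then the SLA 5 flow. It stops when only the SLA 10 flow remains.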

Setting the link selection delay and link selection suppression period

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Set the link selection delay.

link-select delay delay

By default, the link selection delay is 60 seconds.

4. Set the link selection suppression period.

link-select suppress-period period-value

By default, no link selection suppression period is configured, and the device does not start a suppression period after a link selection.

As a best practice, set the link selection suppression period to a multiple of the link selection delay, and make sure it is at least twice the link selection delay.
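The best practice above can be checked mechanically before you configure link-select delay and link-select suppress-period. This is a hypothetical helper, not a device feature. It assumes that both values are whole seconds and that the delay is positive.

```python
from typing import List, Optional


def check_suppress_period(delay_s: int, suppress_s: Optional[int]) -> List[str]:
    """Return best-practice warnings for link selection delay/suppression values."""
    if delay_s <= 0:
        raise ValueError("this sketch assumes a positive link selection delay")
    if suppress_s is None:
        return []  # no suppression period configured (the default)
    warnings = []
    if suppress_s % delay_s != 0:
        warnings.append("suppression period is not a multiple of the delay")
    if suppress_s < 2 * delay_s:
        warnings.append("suppression period is less than twice the delay")
    return warnings
```

With the default 60-second delay, 120 or 180 seconds pass both checks. A value of 90 seconds fails both checks, and 60 seconds is a multiple but shorter than twice the delay.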

Configuring a QoS policy to mark matching packets with a flow ID

About configuring a QoS policy to mark matching packets with a flow ID

Apply a QoS policy to an interface to mark matching packets received on the interface with a flow ID. RIR processes packets marked with a flow ID based on the flow template with that flow ID. For more information about QoS marking, see QoS overview, QoS policies, and marking configuration in ACL and QoS Configuration Guide.

Creating a traffic class and defining packet match criteria

1. Enter system view.

system-view

2. Create a traffic class and enter its view.

traffic classifier classifier-name [ operator { and | or } ]

3. Define packet match criteria, such as the 5-tuple and DSCP value.

if-match [ not ] match-criteria

By default, no packet match criteria are defined.

Creating a traffic behavior and configuring a flow ID marking action

1. Enter system view.

system-view

2. Create a traffic behavior and enter its view.

traffic behavior behavior-name

3. Configure the traffic behavior to mark matching traffic with the specified flow ID.

remark flow-id flow-id

By default, no flow ID marking action is configured.

Configuring a QoS policy

1. Enter system view.

system-view

2. Create a QoS policy and enter its view.

qos policy policy-name

3. Associate the traffic behavior with the traffic class in the QoS policy.

classifier classifier-name behavior behavior-name

By default, no traffic behavior is associated with a traffic class.

Applying the QoS policy to an interface

1. Enter system view.

system-view

2. Enter interface view.

interface interface-type interface-number

3. Apply the QoS policy to the inbound direction of the interface.

qos apply policy policy-name inbound

By default, no QoS policy is applied to an interface.
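The classifier, behavior, and policy steps above can be modeled in a few lines to show how an inbound packet ends up with a flow ID. This is a conceptual sketch only. The packet dictionary, the TrafficClassifier class, and the rule that the first matching classifier-behavior pair wins are assumptions made for illustration. The and/or operator mirrors the operator keyword of the traffic classifier command, and negate mirrors if-match not.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Packet = Dict[str, object]  # hypothetical packet: 5-tuple fields plus "dscp"
Criterion = Callable[[Packet], bool]


@dataclass
class TrafficClassifier:
    criteria: List[Criterion]
    operator: str = "and"  # mirrors traffic classifier ... operator { and | or }

    def matches(self, pkt: Packet) -> bool:
        results = [c(pkt) for c in self.criteria]
        return all(results) if self.operator == "and" else any(results)


def negate(c: Criterion) -> Criterion:
    """Model of if-match not: match packets that do NOT meet the criterion."""
    return lambda pkt: not c(pkt)


def mark_flow_id(pkt: Packet, policy: List[Tuple[TrafficClassifier, int]]) -> Optional[int]:
    """Return the flow ID of the first matching classifier-behavior pair, or None."""
    for classifier, flow_id in policy:
        if classifier.matches(pkt):
            return flow_id  # behavior action: remark flow-id flow-id
    return None  # no flow ID marked
```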

Configuring RIR collaboration

Restrictions and guidelines for RIR collaboration configuration

All devices in an RIR collaboration device group must have independent RIR capabilities and must mark the same type of service traffic with the same flow ID.

Perform the RIR collaboration tasks in this section on both the local and peer devices in an RIR collaboration device group.

Setting up RIR dedicated links between local and peer devices

Use RIR dedicated links to ensure that packets can be correctly forwarded between local and peer devices. You can configure direct routes or GRE tunnels as dedicated links as follows:

· For directly connected peer devices, you can configure multiple physical interfaces or subinterfaces on the devices. The interfaces or subinterfaces generate direct routes for packets in the public network and each VPN instance. For more information, see Ethernet interface configuration in Interface Configuration Guide.

· For indirectly-connected peer devices, manually configure GRE tunnels between them for packets in the public network and each VPN instance. For more information about GRE configuration, see Layer 3—IP Services Configuration Guide.

Applying QoS policies to interfaces interconnecting local and peer devices

The flow ID that RIR marks on packets is not carried in outgoing packets. To mark packets received from the peer device with a flow ID, apply a QoS policy to the interfaces that interconnect the local and peer devices. For more information, see "Configuring a QoS policy to mark matching packets with a flow ID."

Assigning links to an RIR collaboration link group

About this task

The local device can discover and select links synchronized from peer devices only after the links are assigned to an RIR collaboration link group. All links in an RIR collaboration link group, whether local or synchronized from peer devices, have the same destination.

Restrictions and guidelines

In an RIR collaboration device group, make sure all links to the same device or RIR collaboration device group are assigned to the same RIR collaboration link group.

In an RIR collaboration device group, make sure the links to different devices or RIR collaboration device groups are assigned to different RIR collaboration link groups.

In different RIR collaboration device groups, the links to the same device or RIR collaboration device group can be assigned to the same RIR collaboration link group. As a best practice, assign these links to different RIR collaboration link groups so that the links are easy to identify.

Procedure

1. Enter system view.

system-view

2. Enter VXLAN tunnel interface view.

interface tunnel number

3. Assign the VXLAN tunnel to an RIR collaboration link group.

rir collaboration-link-group group-id

By default, a VXLAN tunnel belongs to RIR collaboration link group 0.
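Within one RIR collaboration device group, the assignment rules above mean that destinations and link groups must map one to one. The hypothetical checker below flags violations. Each input tuple is (tunnel name, destination, group ID), and the tunnel and destination names are illustrative. The check is useful because every tunnel starts in group 0: if you leave tunnels to two different destinations at the default, they break the rule.

```python
from collections import defaultdict
from typing import Iterable, List, Set, Tuple


def check_link_groups(links: Iterable[Tuple[str, str, int]]) -> List[str]:
    """links: (tunnel name, destination, group ID) within one device group."""
    groups_per_dest = defaultdict(set)  # type: defaultdict[str, Set[int]]
    dests_per_group = defaultdict(set)  # type: defaultdict[int, Set[str]]
    for _tunnel, dest, group in links:
        groups_per_dest[dest].add(group)
        dests_per_group[group].add(dest)
    problems = []
    # Links to the same destination must share one link group.
    for dest, groups in sorted(groups_per_dest.items()):
        if len(groups) > 1:
            problems.append(f"links to {dest} span groups {sorted(groups)}")
    # Links to different destinations must use different link groups.
    for group, dests in sorted(dests_per_group.items()):
        if len(dests) > 1:
            problems.append(f"group {group} mixes destinations {sorted(dests)}")
    return problems
```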

Establishing RIR collaboration relationship for each pair of local and peer devices

About this task

Each pair of devices in an RIR collaboration device group must establish an RIR collaboration relationship for link data synchronization. After a pair of devices establishes the relationship, the device with the lower IP address acts as the client and initiates a TCP connection to the other device. Through this TCP connection, the local device can synchronize the configuration and status data of links that meet the service requirements to the peer device. The synchronized data does not include link data that the local device has learned from other devices in the same RIR collaboration device group.
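The role selection and synchronization scope described above can be sketched as follows. This is an illustration under assumptions, not the device's implementation. The helper names and the origin and meets_requirements fields are hypothetical, and the device that accepts the connection is simply labeled "server" here. The sketch compares addresses numerically with Python's ipaddress module, because a plain string comparison would rank 10.1.1.10 below 10.1.1.9.

```python
import ipaddress
from typing import Dict, List


def tcp_role(local_ip: str, peer_ip: str) -> str:
    """Return "client" if the local device initiates the TCP connection."""
    local = ipaddress.IPv4Address(local_ip)
    peer = ipaddress.IPv4Address(peer_ip)
    if local == peer:
        raise ValueError("local and peer addresses must differ")
    # Numeric comparison: as strings, "10.1.1.10" < "10.1.1.9" would be True.
    return "client" if local < peer else "server"


def links_to_sync(links: List[Dict[str, object]]) -> List[str]:
    """Return names of links the local device would synchronize to a peer."""
    return [
        str(link["name"])
        for link in links
        if link["origin"] == "local"    # links learned from other peers are not re-sent
        and link["meets_requirements"]  # hypothetical flag: meets service requirements
    ]
```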

Restrictions and guidelines

The local and peer IP addresses used to establish an RIR collaboration relationship must both belong to the public network or to the same VPN instance.

A pair of RIR collaboration devices can establish only one TCP connection to synchronize link data.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Enable the local device to establish RIR collaboration relationship with a peer device.

collaboration peer [ vpn-instance vpn-instance-name ] peer-ipv4-address local local-ipv4-address sync-port port-number

By default, the local device does not establish RIR collaboration relationship with any device.

Configuring RIR packet redirection

About this task

For the local device to select links from a peer device, you must configure RIR packet redirection. Perform this task to specify the redirect IP address for packets redirected to the peer device on the public network or a VPN instance. When the local device selects links from the peer device to forward packets on the public network or a VPN instance, it performs the following operations:

1. Looks up the routing table of the public network or VPN instance based on the redirect IP address.

2. Forwards the packets to the peer device through the RIR dedicated link.

Procedure

1. Enter system view.

system-view

2. Enter RIR view.

rir

3. Specify the IP address to which the local device redirects packets forwarded to an RIR collaboration peer.

collaboration peer [ vpn-instance vpn-instance-name ] peer-ipv4-address redirect [ vpn-instance redirect-vpn-instance-name ] redirect-ipv4-address

By default, no IP address is specified for the local device to redirect packets forwarded to an RIR collaboration peer.

The vpn-instance vpn-instance-name option specifies the VPN instance on which the local and peer devices establish RIR collaboration relationship. The vpn-instance redirect-vpn-instance-name option specifies a redirect VPN instance. When the local device selects a link from a peer device for forwarding traffic in the redirect VPN instance, the local device redirects the packets to the redirect IPv4 address.
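The redirection lookup can be pictured as a longest-prefix match of the redirect address in the routing table of the relevant VPN instance, with None standing for the public network. This sketch is conceptual. The table contents, interface names, and function are hypothetical, and it assumes the lookup uses the routing table of the VPN instance whose traffic is redirected.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical data: VPN instance (None = public network) -> {prefix: outgoing interface}
RoutingTables = Dict[Optional[str], Dict[str, str]]


def redirect_out_interface(
    redirects: Dict[Optional[str], str], tables: RoutingTables, vpn: Optional[str]
) -> Optional[str]:
    """Longest-prefix-match lookup of the redirect address in the VPN's routing table."""
    redirect_ip = redirects.get(vpn)
    if redirect_ip is None:
        return None  # no redirect address configured for this VPN instance
    addr = ipaddress.ip_address(redirect_ip)
    best = None
    for prefix, out_if in tables.get(vpn, {}).items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, out_if)
    return None if best is None else best[1]
```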

Enabling RIR logging

About this task

RIR logs record events that occur during RIR operation, such as link selection and reselection, quality changes, bandwidth changes, configuration changes, and link faults. The logs help the administrator analyze, maintain, and adjust the RIR network.