- H3C G3&G5 Servers Storage Controller User Guide-6W109

Configuring the embedded RSTe RAID controller or VROC SATA RAID controller

NOTE: The BIOS screens might vary by the BIOS version. The screenshots in this chapter are for illustration only.

Rack servers, blade servers, and compute modules may provide embedded RSTe RAID controllers.

About the embedded RSTe RAID controller

The embedded Intel Rapid Storage Technology enterprise (RSTe) RAID controller (referred to as the VROC SATA RAID controller on G5 servers) is integrated into the southbridge on the system board. It combines AHCI and RAID functions and is mainly used to manage drives attached to the Intel chipset, check the status of the drives, and provide RAID capability for systems that use SATA drives. If multiple drives are attached to the RAID controller, you can configure RAID arrays to protect data and improve read/write performance.

If you use the embedded RSTe RAID controller to configure RAID, you can create a maximum of two logical drives on a physical drive. The second logical drive uses the remaining capacity of the physical drive, and its size cannot be adjusted.

For the embedded RAID controller, you can configure RAID in both UEFI mode and legacy mode.

Features

Operating modes

The RAID controller supports the following operating modes:

· AHCI mode—This is the default mode. In this mode, all physical drives are exposed to the operating system (OS). The drives can be directly used for data storage. Native Command Queuing (NCQ) is supported, which improves the I/O performance of SATA drives.

· RAID mode—In this mode, RAID functions are enabled and RAID arrays can be created on physical drives to improve I/O performance and data security.

NOTE:

· For the new mode to take effect, restart the server after an operating mode change.

· The OS might fail to start up after the operating mode of the storage controller is changed. To resolve this issue, reinstall the OS. If the issue persists, contact Technical Support.

RAID levels

The supported RAID levels vary by storage controller model. For more information about the supported RAID levels of each storage controller, see H3C Servers Storage Controllers Technical Specifications.

Table 1 shows the minimum number of drives required by each RAID level and the maximum number of failed drives supported by each RAID level. For more information about RAID levels, see "Appendix B RAID arrays and fault tolerance."

Table 1 RAID levels and the numbers of drives for each RAID level

| RAID level | Min. drives required | Max. drives | Max. failed drives |
|---|---|---|---|
| RAID 0 | 2 | Maximum number of drives supported by the drive backplane | 0 |
| RAID 1 | 2 | 2 | 1 |
| RAID 5 | 3 | Maximum number of drives supported by the drive backplane | 1 |
| RAID 10 | 4 | 4 | n, where n is the number of RAID 1 arrays in the RAID 10 array |
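The limits in Table 1 can be expressed as a small lookup. The following Python sketch is for illustration only and is not part of any H3C or Intel tool. The names RAID_RULES, max_failed_drives, and check_raid_request are hypothetical, and backplane_slots stands in for the backplane-dependent maximum, which varies by server.

```python
# Illustrative sketch of the Table 1 limits (hypothetical helper names).

RAID_RULES = {
    # level: (minimum drives, fixed maximum drives or None if the
    #         maximum is set by the drive backplane)
    0: (2, None),
    1: (2, 2),
    5: (3, None),
    10: (4, 4),
}


def max_failed_drives(level, drive_count):
    """Return the maximum number of failed drives listed in Table 1."""
    if level == 0:
        return 0
    if level in (1, 5):
        return 1
    if level == 10:
        # n, where n is the number of RAID 1 arrays in the RAID 10 array
        # (each RAID 1 array uses two drives).
        return drive_count // 2
    raise ValueError(f"unsupported RAID level: {level}")


def check_raid_request(level, drive_count, backplane_slots):
    """Return True if drive_count is within the Table 1 range for level."""
    if level not in RAID_RULES:
        raise ValueError(f"unsupported RAID level: {level}")
    min_drives, fixed_max = RAID_RULES[level]
    max_drives = fixed_max if fixed_max is not None else backplane_slots
    return min_drives <= drive_count <= max_drives
```

For example, check_raid_request(5, 3, 8) accepts three drives for RAID 5 on an assumed eight-slot backplane, and check_raid_request(1, 3, 8) rejects three drives for RAID 1.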

Restrictions and guidelines for RAID configuration

· As a best practice, install drives that do not contain RAID information.

· To avoid degraded RAID performance or RAID creation failures, make sure all drives in the RAID are the same type (HDDs or SSDs) and have the same connector type (SAS or SATA).

· For efficient use of storage, use drives that have the same capacity to build a RAID. If the drives have different capacities, the lowest capacity is used across all drives in the RAID.

· If one drive is used by several logical drives, RAID performance might be affected and maintenance becomes more complex.
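The capacity guideline above can be checked with simple arithmetic. The following Python sketch assumes that every member drive contributes only as much capacity as the smallest member. The function name effective_member_capacity is hypothetical, and the sample values are arbitrary drive sizes in KiB.

```python
# Illustrative sketch: effect of mixing drive capacities in one RAID array.
# effective_member_capacity is a hypothetical helper name.

def effective_member_capacity(drive_capacities):
    """Return (capacity used per drive, total capacity left unused).

    All capacities must be in the same unit. The lowest capacity is
    used across all drives, so larger drives leave space unused.
    """
    if not drive_capacities:
        raise ValueError("at least one drive capacity is required")
    smallest = min(drive_capacities)
    unused = sum(capacity - smallest for capacity in drive_capacities)
    return smallest, unused


# Two larger drives combined with one smaller drive (sizes in KiB).
per_drive, unused = effective_member_capacity(
    [976762584, 976762584, 468851544]
)
print(per_drive, unused)
```

In this sample, each of the two larger drives leaves more than half of its capacity unused, which is why drives of the same capacity are recommended.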

Configuring RAID arrays in UEFI mode

This section describes how to configure RAID arrays through an embedded RSTe RAID controller in UEFI mode. For more information about how to enter the BIOS and set the boot mode to UEFI, see the BIOS user guide for the server.

RAID array configuration tasks at a glance

To configure RAID arrays in UEFI mode, perform the following tasks:

· Setting the RSTe operating mode

· Accessing the RSTe configuration screen

· Configuring RAID arrays

· (Optional.) Configuring hot spare drives

· (Optional.) Deleting RAID arrays

Setting the RSTe operating mode

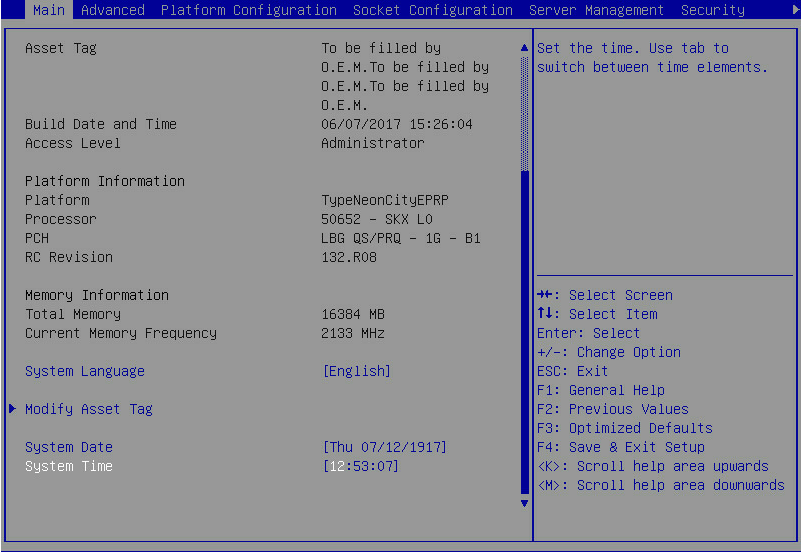

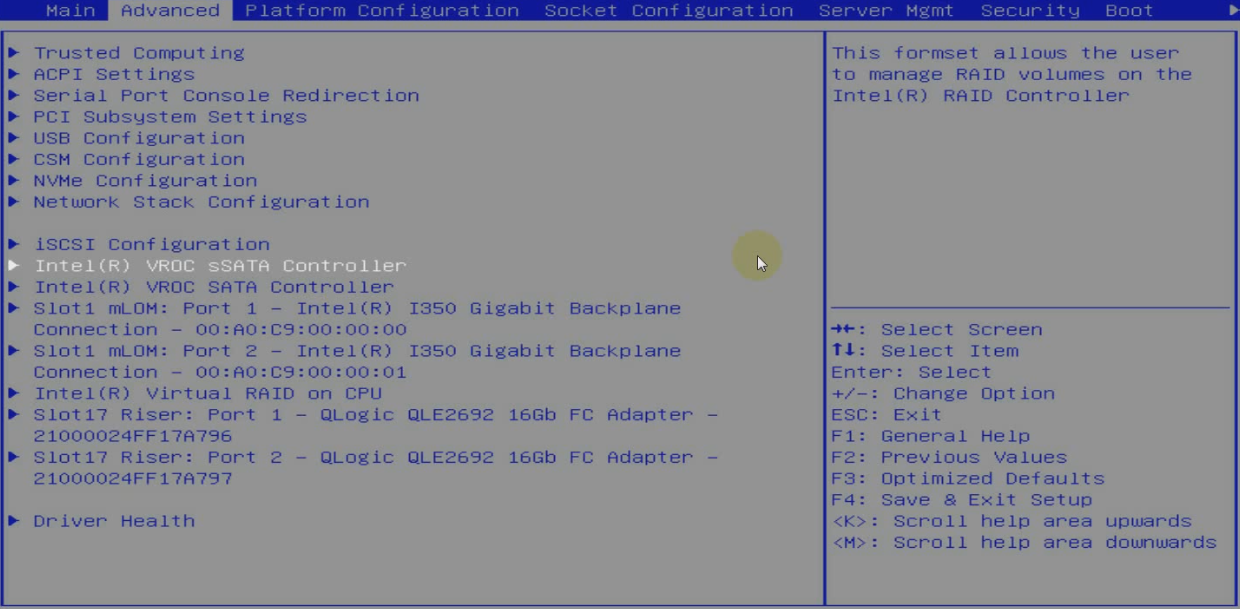

1. During server POST, press Delete, Esc, or F2 as prompted to open the BIOS setup screen as shown in Figure 1.

For how to navigate screens and modify settings, see the operation instructions at the lower right corner.

Figure 1 BIOS setup screen

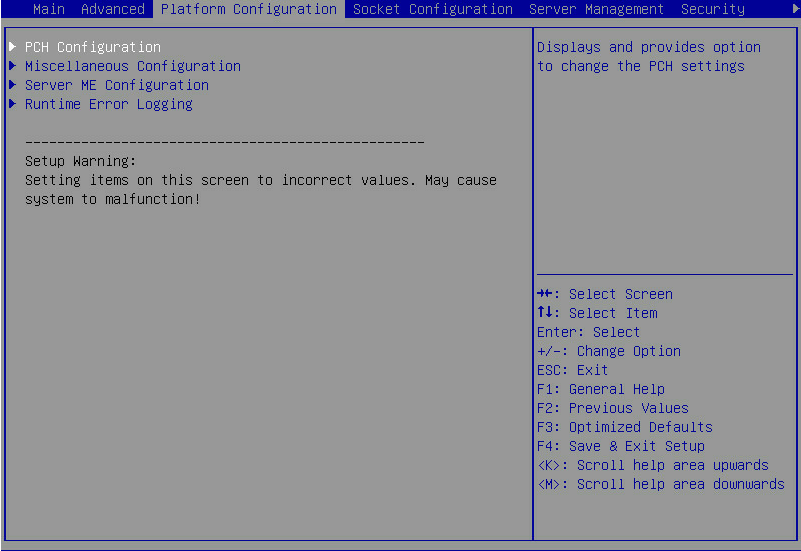

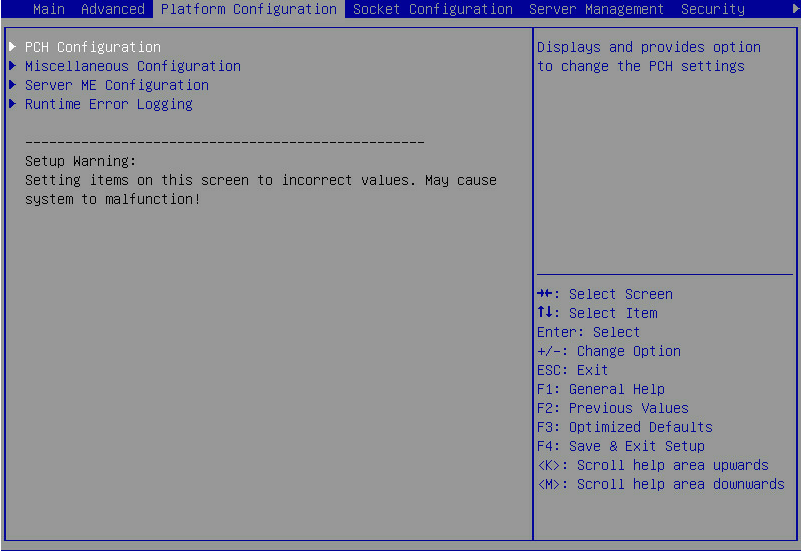

2. On the screen as shown in Figure 2, select Platform Configuration > PCH Configuration, and press Enter.

For G5 servers, the Platform Configuration options are in the Advanced menu.

Figure 2 Platform Configuration screen

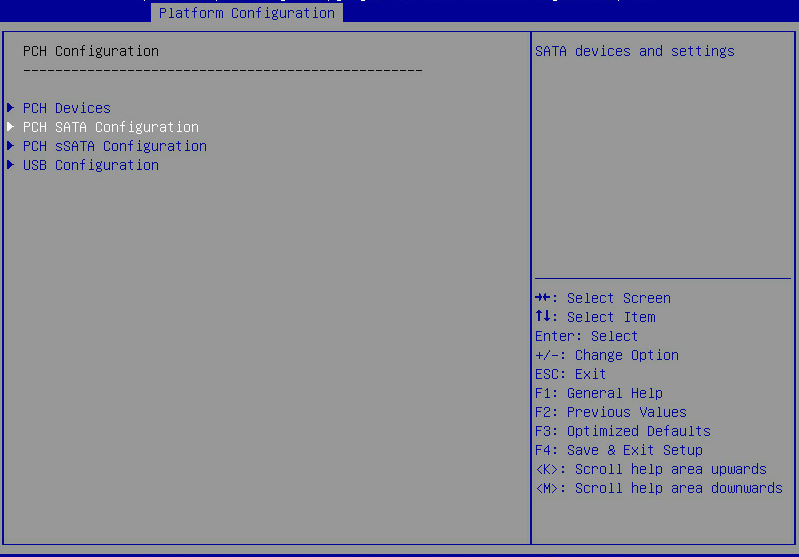

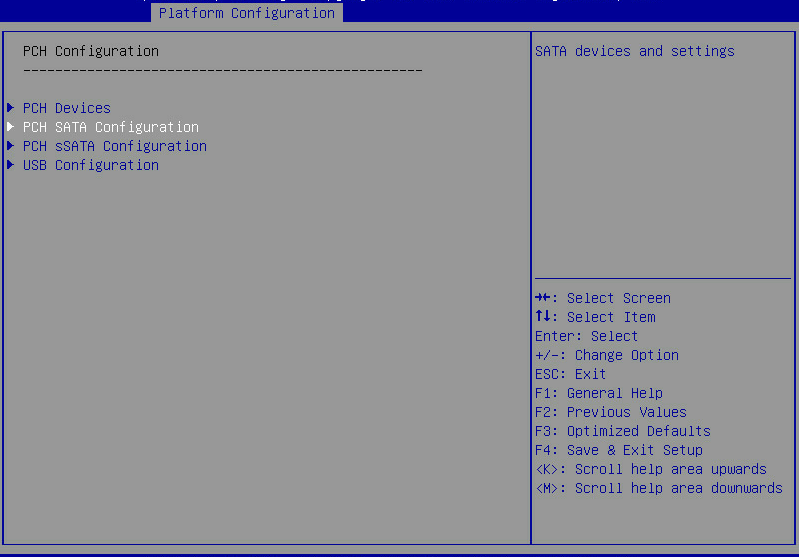

3. On the screen as shown in Figure 3, select the target controller, such as PCH SATA Configuration, and then press Enter.

Figure 3 PCH Configuration screen

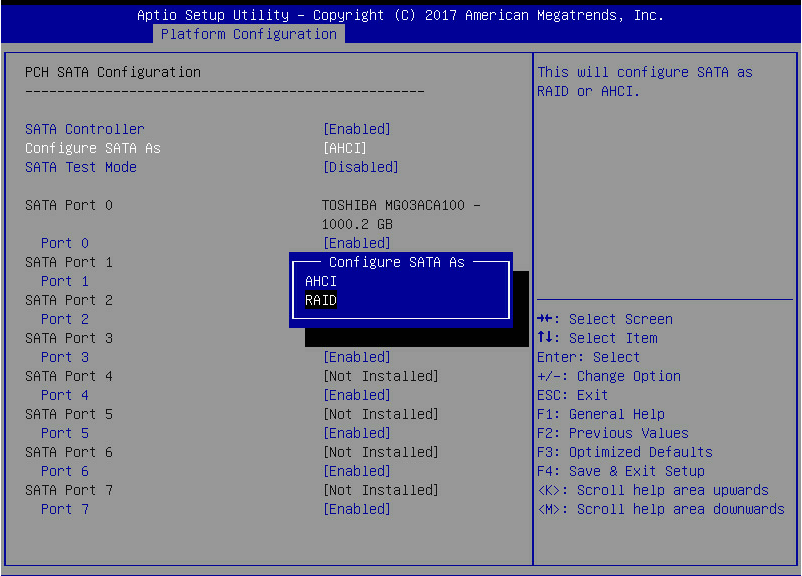

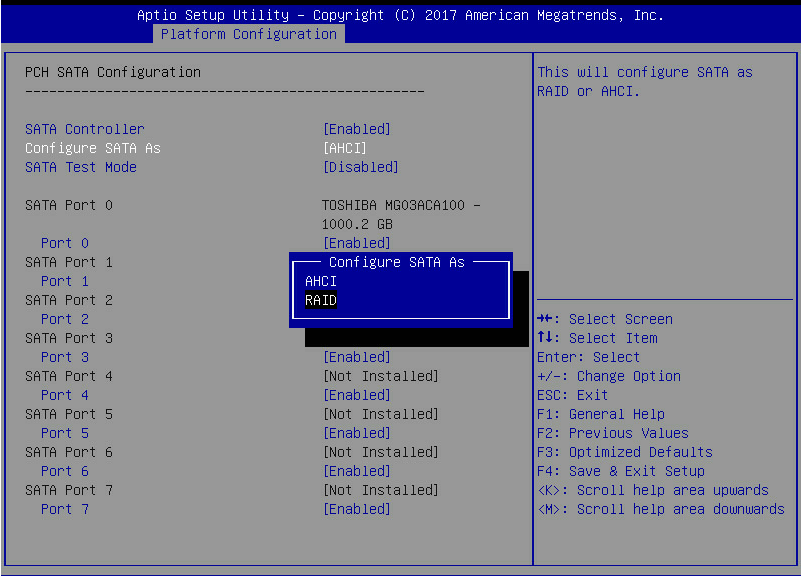

4. On the screen as shown in Figure 4, select Configure SATA As, press Enter, and select an operating mode.

For more information about setting the operating mode for an embedded RSTe RAID controller, see "Features."

Figure 4 Modifying the storage controller operating mode

5. After the configuration is complete, press F4. In the dialog box that opens, select Yes to save the current configuration and reboot the system. The new operating mode takes effect after the reboot.

Accessing the RSTe configuration screen

1. Access the BIOS setup screen.

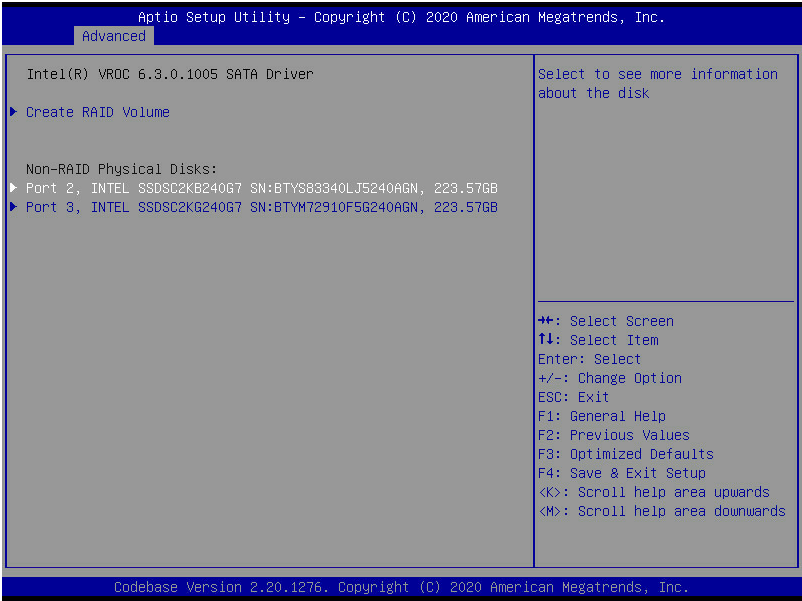

2. On the screen as shown in Figure 5, select Advanced > Dynamic Device Configuration > Target_Controller (such as Intel(R) VROC sSATA Controller) or select Advanced > Target_Controller (such as Intel(R) VROC sSATA Controller), and press Enter. Whether the Dynamic Device Configuration menu is displayed depends on the BIOS version.

NOTE: A controller option, such as Intel(R) VROC sSATA Controller, is displayed only when the controller has been configured to operate in RAID mode. For how to set the operating mode, see "Setting the RSTe operating mode."

Figure 5 Advanced screen

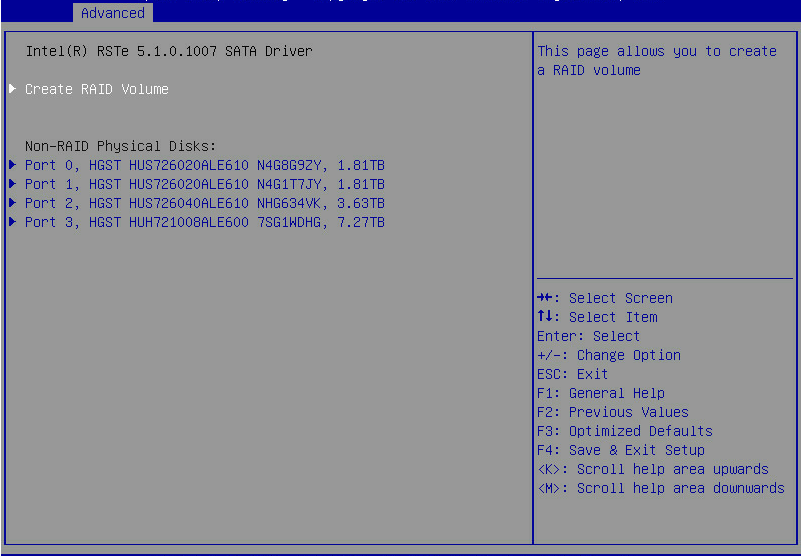

The RSTe configuration screen as shown in Figure 6 opens.

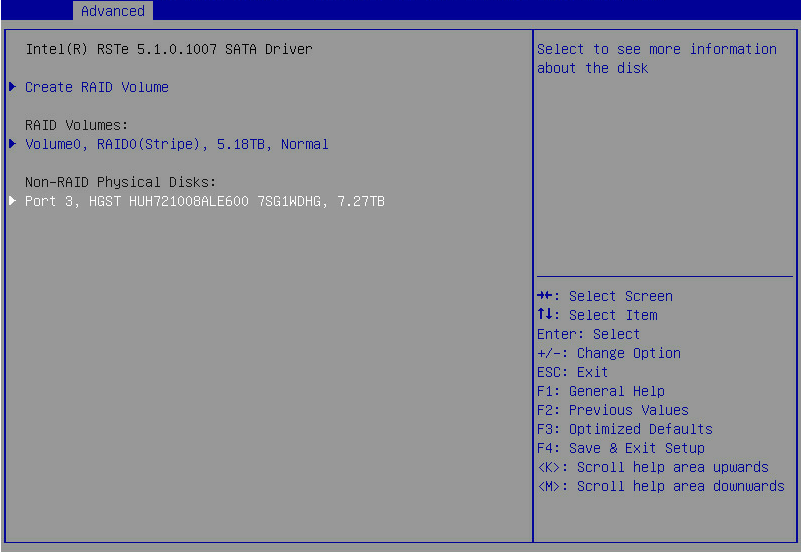

Figure 6 RSTe configuration screen

Configuring RAID arrays

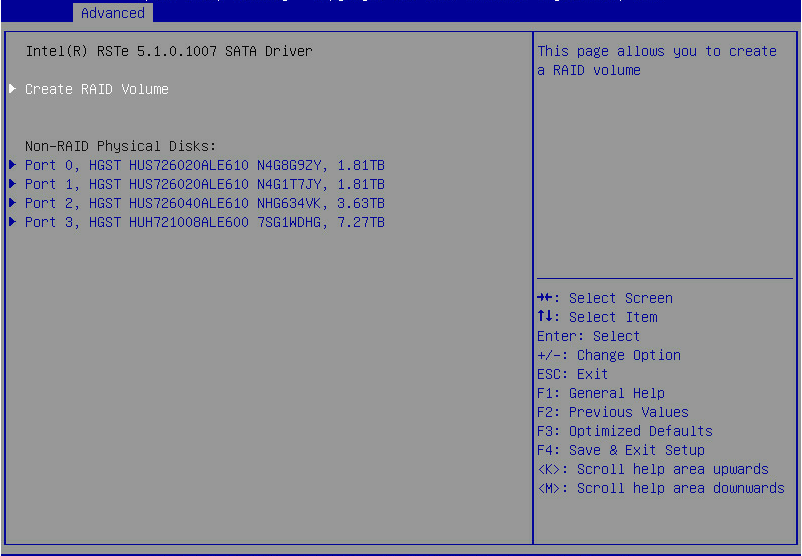

1. On the RSTe configuration screen as shown in Figure 7, select Create RAID Volume, and press Enter.

The Create RAID Volume option is available only when the interface corresponding to the controller connects to two or more drives.

Figure 7 Selecting Create RAID Volume

2. On the screen as shown in Figure 8, set the Name, RAID Level, Select Disks, Strip Size, and Capacity parameters, select Create Volume, and press Enter to create the RAID volume.

Table 2 describes the configuration parameters for creating a RAID volume.

Figure 8 Setting RAID parameters

Table 2 Configuration parameters

| Parameter | Description |
|---|---|
| Name | RAID array name. |
| RAID Level | RAID level, which determines the performance, fault tolerance capability, and capacity for the logical drive. |
| Select Disks | Select member drives for the RAID array. Available drives are displayed under Select Disks. Press Enter to select drives. [X] indicates that the corresponding drive has been selected. |
| Strip Size | Stripe size, which determines the size of the data block that can be written into a stripe on each drive. |
| Capacity | Logical drive capacity. |

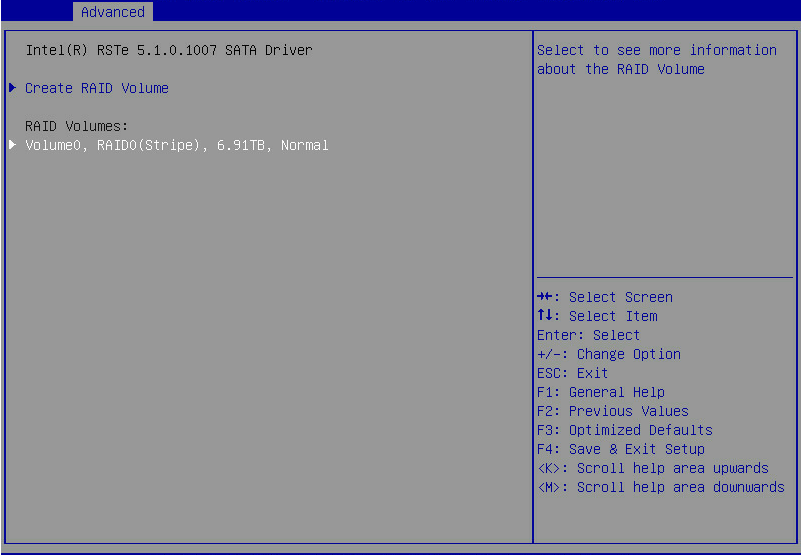

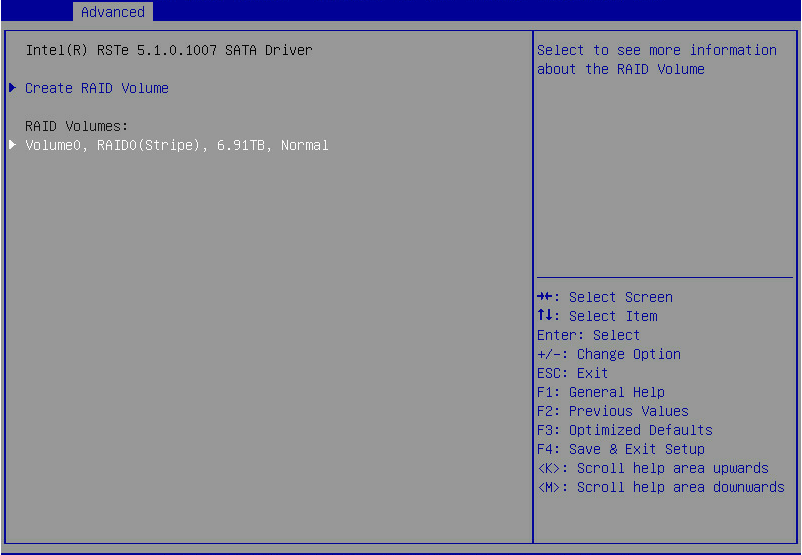

3. The screen as shown in Figure 9 opens.

After the RAID volume is created, the RAID volume is displayed in the RAID Volumes directory. To view details about a RAID volume, select the RAID volume, and press Enter. Details about a RAID volume include the RAID array name, RAID level, and member drives.

Figure 9 Viewing the created RAID volume

Configuring hot spare drives

CAUTION: With hot spare drives configured, if a RAID array is degraded because of drive failures, the hot spare drives replace the failed drives automatically. The system starts rebuilding the RAID array automatically after the OS starts.

This function configures dedicated hot spare drives. The hot spare drives take effect only for the first RAID volume, which is the volume listed first (from top to bottom) on the Intel Virtual RAID on CPU interface.

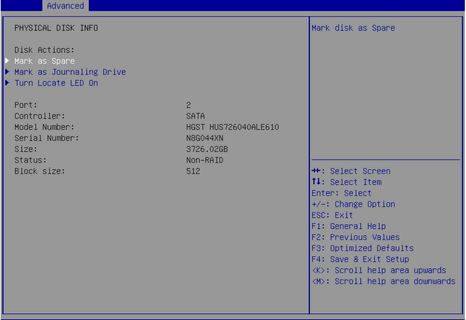

1. On the RSTe configuration screen as shown in Figure 10, select a drive to configure as a hot spare drive, and press Enter.

Figure 10 Selecting a drive for hot spare

2. Select Mark as Spare, and press Enter.

Figure 11 Configuring a spare drive

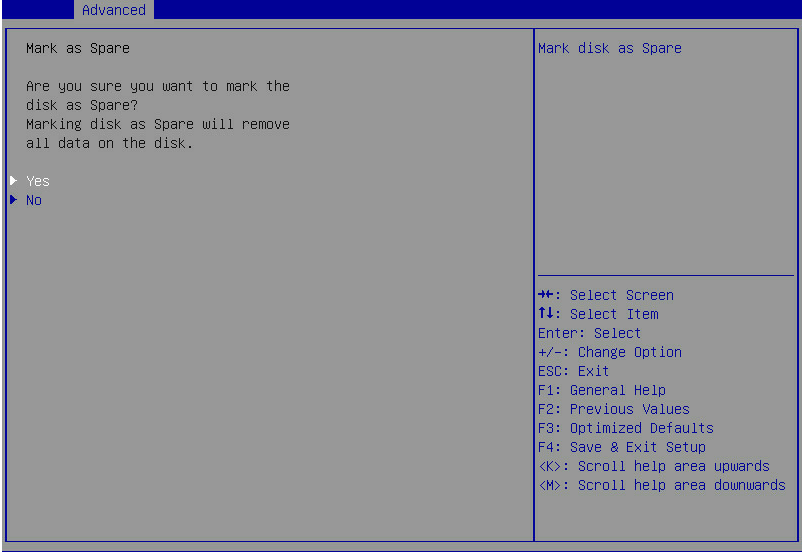

3. On the screen that opens, select Yes, and press Enter.

Figure 12 Confirming the hot spare configuration

Deleting RAID arrays

1. On the RSTe configuration screen as shown in Figure 13, select the RAID volume to be deleted under RAID Volumes, and press Enter.

Figure 13 Selecting the RAID volume to be deleted

2. On the screen as shown in Figure 14, select Delete and press Enter to delete the selected RAID volume.

Figure 14 RAID volume information screen

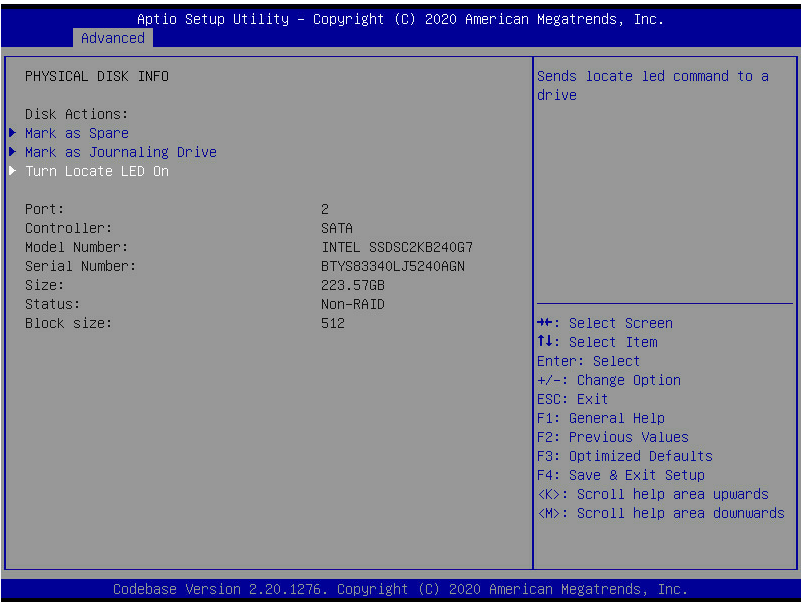

Locating a drive

1. On the RSTe configuration screen as shown in Figure 15, select the RAID volume to locate, and press Enter.

Figure 15 Selecting the RAID volume to locate

2. Select Turn Locate LED On and then press Enter.

For information about drive LEDs, see the user guide for the server.

Figure 16 Locating a logical drive

Initializing a logical drive

The embedded RAID controller automatically initializes the RAID array after RAID configuration. After initialization, the logical drive can be used by the OS. If the RAID level is redundant, initialization also brings the member drives into the state required by that RAID level.

Configuring RAID arrays in legacy mode

This section describes how to configure RAID through an embedded RSTe RAID controller in legacy mode. For more information about how to enter the BIOS and set the boot mode to legacy, see the BIOS user guide for the server.

RAID array configuration tasks at a glance

To configure RAID arrays in legacy mode, perform the following tasks:

· Setting the RSTe operating mode

· Accessing the RSTe configuration screen

· Configuring RAID arrays

· (Optional.) Configuring hot spare drives

· (Optional.) Deleting RAID arrays

Setting the RSTe operating mode

1. Access the BIOS setup screen.

2. On the screen as shown in Figure 17, select Platform Configuration > PCH Configuration, and press Enter.

Figure 17 Platform Configuration screen

3. On the screen as shown in Figure 18, select the target controller, such as PCH SATA Configuration, and then press Enter.

Figure 18 PCH Configuration screen

4. On the screen as shown in Figure 19, select Configure SATA As, press Enter, and select an operating mode.

Figure 19 Modifying the storage controller operating mode

Accessing the RSTe configuration screen

This section describes how to access the legacy BIOS configuration screen. To enter the RSTe configuration screen, make sure a minimum of one embedded RSTe RAID controller is configured to operate in RAID mode and each controller connects to a minimum of two drives. For how to set the operating mode, see "Setting the RSTe operating mode."

To access the RSTe configuration screen:

1. Power on or reboot the server.

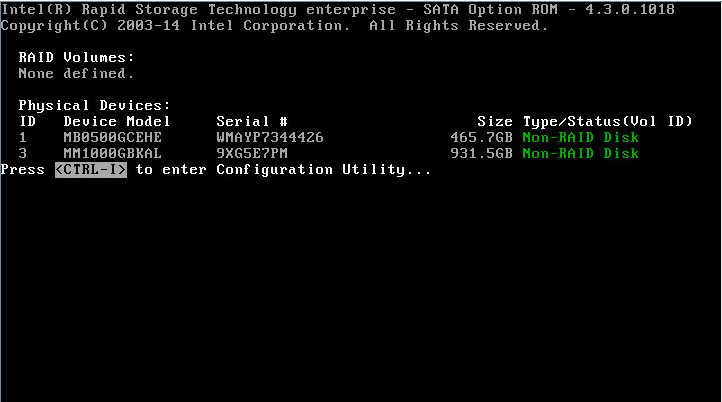

2. When the screen shown in Figure 20 appears during server POST, press Ctrl+I.

NOTE: If both the sSATA and SATA controllers are configured to operate in RAID mode, the prompt Press <CTRL-I> to enter Configuration Utility appears twice during server POST, once for the sSATA controller and once for the SATA controller. Select a controller according to the drives to be configured for a RAID array.

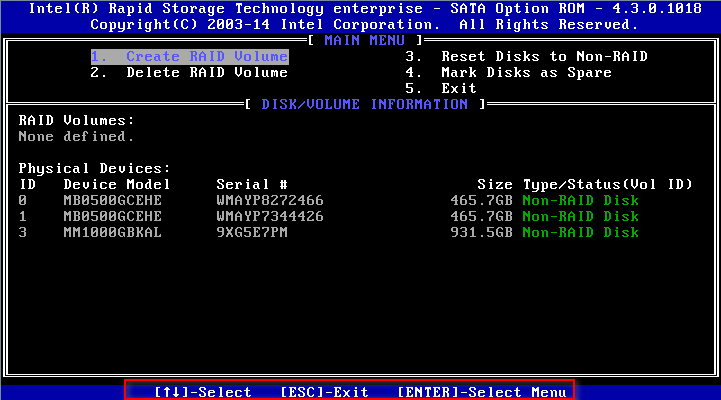

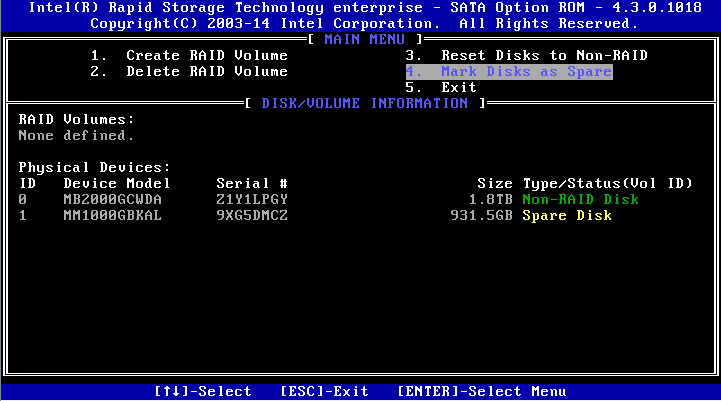

The RSTe configuration screen as shown in Figure 21 opens. Table 3 describes the configuration screen. For how to navigate screens and modify settings, see the operation instructions at the bottom of the screen.

Figure 21 RSTe configuration screen

Table 3 RSTe configuration screen description

| Section | Description |
|---|---|
| MAIN MENU | In the MAIN MENU section on the upper part of the page, you can perform the following tasks: · Create RAID arrays. · Delete RAID arrays. · Reset drives to non-RAID. · Mark drives as spare. · Exit. |
| DISK/VOLUME INFORMATION | In the DISK/VOLUME INFORMATION section on the lower part of the page, you can view brief information about existing RAID arrays and physical drives. |

Configuring RAID arrays

1. On the RSTe configuration screen as shown in Figure 22, select Create RAID Volume, and press Enter.

Figure 22 RSTe configuration screen

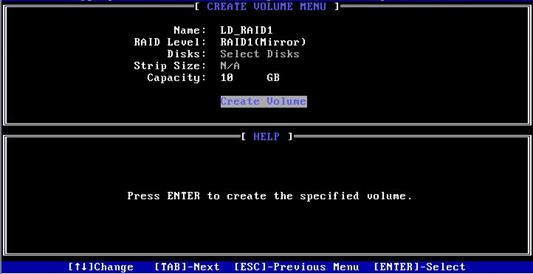

2. On the screen as shown in Figure 23, set the Name, RAID Level, Disks, Strip Size, and Capacity parameters, select Create Volume, and press Enter to create the RAID volume.

Table 4 describes the configuration parameters for creating a RAID volume.

Figure 23 Creating a RAID volume

Table 4 Configuration parameters

| Parameter | Description |
|---|---|
| Name | RAID array name. |
| RAID Level | RAID level, which determines the performance, fault tolerance capability, and capacity for the logical drive. |
| Disks | Select member drives for the RAID array. To select a drive, select Disks, press Enter, navigate to the drive, and then press the space bar. |
| Strip Size | Stripe size, which determines the size of the data block that can be written into a stripe on each drive. |
| Capacity | Logical drive capacity. |

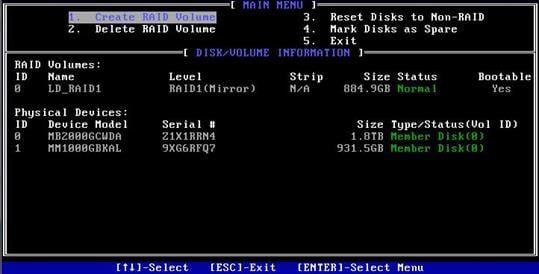

3. The screen as shown in Figure 24 opens.

The screen displays details about the created RAID volume, including the RAID array name, RAID level, and member drives.

Figure 24 RAID volume information screen

Configuring hot spare drives

CAUTION: With hot spare drives configured, if a RAID array is degraded because of drive failures, the hot spare drives replace the failed drives automatically. The system starts rebuilding the RAID array automatically after the OS starts.

This function configures dedicated hot spare drives. The hot spare drives take effect only for the first RAID volume, which is the volume listed first (from top to bottom) on the Intel Virtual RAID on CPU interface.

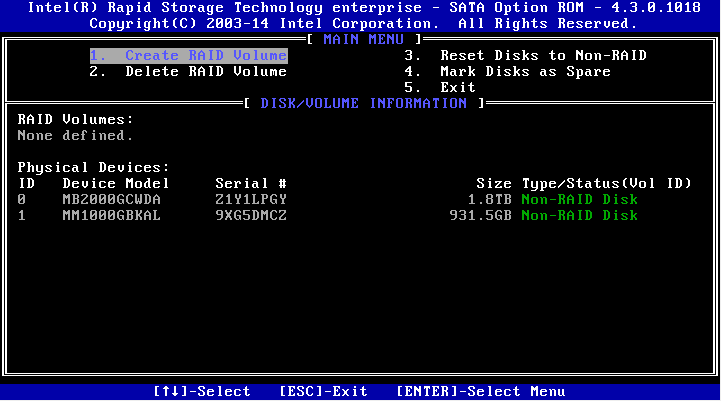

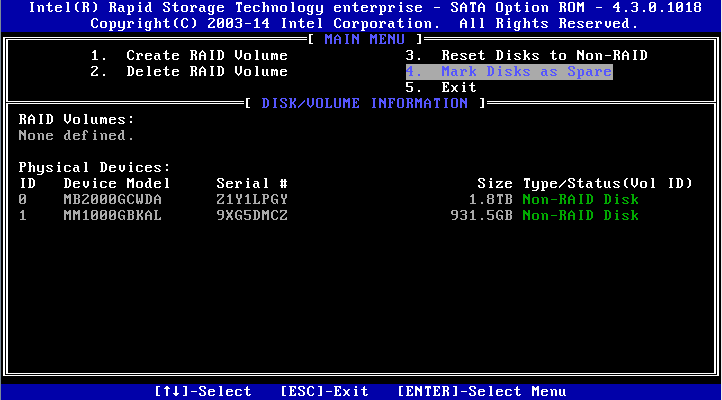

1. On the RSTe configuration screen, select Mark Disks as Spare, and press Enter.

Figure 25 RSTe configuration screen

2. Select a drive for hot spare, press SPACE, and then press Enter. On the prompt that opens, enter y and press Enter.

Figure 26 Selecting a drive for hot spare

3. View the configured hot spare information on the RSTe configuration screen.

Figure 27 Viewing hot spare information

Deleting RAID arrays

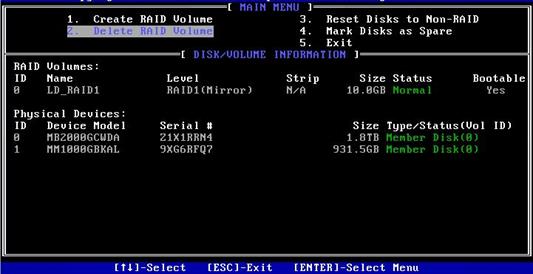

1. On the RSTe configuration screen as shown in Figure 28, select Delete RAID Volume, and press Enter.

Figure 28 RSTe configuration screen

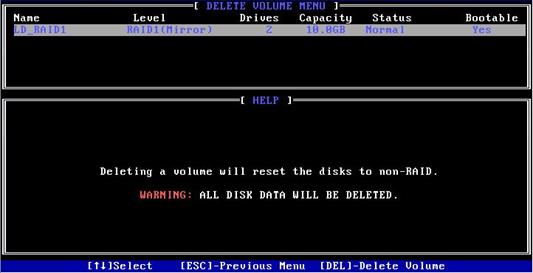

2. On the screen as shown in Figure 29, select the RAID volume to be deleted, and press Delete.

Figure 29 Selecting the RAID volume to be deleted

Configuring RAID in the operating system

Viewing physical drive letters

NOTE:

· To configure the embedded RSTe RAID controller, you must set the controller (such as PCH sSATA Configuration) in PCH Configuration to RAID, and restart the server for the configuration to take effect.

· After you change the operating mode of the storage controller, the system disk installed in the original mode might not work correctly, which might prevent the OS from starting. In this case, reinstall the OS. If the issue persists, contact Technical Support.

Perform this task to view physical drive letters used for RAID creation.

Syntax

lsscsi

Examples

# View physical drive letters used for RAID creation.

[root@localhost ~]# lsscsi

[0:0:0:0] disk ATA INTEL SSDSCKKB48 1132 /dev/sda

[1:0:0:0] disk ATA Micron_5300_MTFD U001 /dev/sdb

[N:0:0:1] disk INTEL SSDPE2KX010T8__1 /dev/nvme0n1

In this example, the system disk letter is /dev/nvme0n1, and the drive letters used for RAID creation are /dev/sda and /dev/sdb.
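If you need to pick out the candidate drives from the lsscsi output in a script, the following Python sketch shows one possible approach. It is a simplified parser, not a full implementation of the lsscsi output format. The function names parse_lsscsi and sata_disk_nodes are hypothetical, and the sketch assumes that drives on the embedded controller appear as /dev/sd* nodes, which can also match other SCSI-type disks on a real system.

```python
# Illustrative sketch: extract device nodes from lsscsi output.
# parse_lsscsi and sata_disk_nodes are hypothetical helper names.

SAMPLE = """\
[0:0:0:0] disk ATA INTEL SSDSCKKB48 1132 /dev/sda
[1:0:0:0] disk ATA Micron_5300_MTFD U001 /dev/sdb
[N:0:0:1] disk INTEL SSDPE2KX010T8__1 /dev/nvme0n1
"""


def parse_lsscsi(output):
    """Return (address, device type, device node) for each lsscsi line."""
    entries = []
    for line in output.splitlines():
        fields = line.split()
        # Skip blank lines and anything that does not start with [H:C:T:L].
        if len(fields) < 3 or not fields[0].startswith("["):
            continue
        entries.append((fields[0].strip("[]"), fields[1], fields[-1]))
    return entries


def sata_disk_nodes(output):
    """Return /dev/sd* disk nodes (assumption: these are the RAID candidates)."""
    return [
        node
        for _, dev_type, node in parse_lsscsi(output)
        if dev_type == "disk" and node.startswith("/dev/sd")
    ]


print(sata_disk_nodes(SAMPLE))
```

With the sample output from this section, the sketch returns /dev/sda and /dev/sdb and skips the NVMe system disk.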

Creating a container

Perform this task to create a container for logical drive creation.

Syntax

mdadm -C container_id phy_id -n num -e imsm

Parameters

container_id: Specifies the container name.

phy_id: Specifies the physical drive letters.

num: Specifies the number of physical drives.

Examples

# Create a container named /dev/md0.

[root@localhost ~]# mdadm -C /dev/md0 /dev/sda /dev/sdb -n 2 -e imsm

mdadm: container /dev/md0 prepared.
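When the container command is generated by a script, deriving num from the drive list avoids a mismatch between the two. The following Python sketch only builds and prints the command line from the syntax above and does not run mdadm. The function name build_container_cmd is hypothetical.

```python
# Illustrative sketch: build the container creation command from the
# syntax "mdadm -C container_id phy_id -n num -e imsm".
# build_container_cmd is a hypothetical helper name.
import shlex


def build_container_cmd(container_id, phy_ids):
    """Return the mdadm argument list for creating an IMSM container."""
    if not phy_ids:
        raise ValueError("at least one physical drive is required")
    # num is derived from the drive list so that the two always agree.
    return ["mdadm", "-C", container_id, *phy_ids,
            "-n", str(len(phy_ids)), "-e", "imsm"]


cmd = build_container_cmd("/dev/md0", ["/dev/sda", "/dev/sdb"])
print(shlex.join(cmd))
```

The printed command matches the example above. Running it requires root privileges and overwrites RAID metadata on the listed drives, so review the drive list before you execute it.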

Adding RAID arrays to a container

Perform this task to add RAID arrays to a container.

Syntax

mdadm -C RAID_id container_id -n num -l RAID_level

Parameters

RAID_id: Specifies the name of a RAID array.

container_id: Specifies the container name.

num: Specifies the number of physical drives.

RAID_level: Specifies the RAID level.

Examples

# Add a RAID 1 array named /dev/md/R1 to container /dev/md0.

[root@localhost ~]# mdadm -C /dev/md/R1 /dev/md0 -n 2 -l 1

mdadm: array /dev/md/R1 started.

# Add a RAID 0 array named /dev/md/R1 to container /dev/md0.

[root@localhost ~]# mdadm -C /dev/md/R1 /dev/md0 -n 2 -l 0

mdadm: array /dev/md/R1 started.
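The array creation syntax can be wrapped in the same way. The following Python sketch builds the command line and rejects RAID levels that are not listed in Table 1. It does not run mdadm. The names SUPPORTED_LEVELS and build_array_cmd are hypothetical, and the levels that a given server actually supports can be narrower than this set.

```python
# Illustrative sketch: build the array creation command from the
# syntax "mdadm -C RAID_id container_id -n num -l RAID_level".
# SUPPORTED_LEVELS and build_array_cmd are hypothetical names.

SUPPORTED_LEVELS = {0, 1, 5, 10}  # RAID levels listed in Table 1


def build_array_cmd(raid_id, container_id, num, raid_level):
    """Return the mdadm argument list for adding an array to a container."""
    if raid_level not in SUPPORTED_LEVELS:
        raise ValueError(f"unsupported RAID level: {raid_level}")
    if num < 2:
        raise ValueError("a RAID array requires at least two drives")
    return ["mdadm", "-C", raid_id, container_id,
            "-n", str(num), "-l", str(raid_level)]


print(" ".join(build_array_cmd("/dev/md/R1", "/dev/md0", 2, 1)))
```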

# Use two drives to create a RAID 0 array in container /dev/md0.

[root@localhost ~]# mdadm -C /dev/md0 /dev/sdc /dev/sdd -n 2 -e imsm

mdadm: /dev/sdc appears to contain an ext2fs file system

size=976762584K mtime=Wed Dec 31 19:00:00 1969

mdadm: /dev/sdd appears to contain an ext2fs file system

size=976762584K mtime=Wed Dec 31 19:00:00 1969

Continue creating array? y

mdadm: container /dev/md0 prepared.

[root@localhost ~]# mdadm -C /dev/md/R0 /dev/md0 -n 2 -l 0

mdadm: array /dev/md/R0 started.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0]

md125 : active raid0 sdc[1] sdd[0]

1953513472 blocks super external:/md0/0 128k chunks

md0 : inactive sdd[1](S) sdc[0](S)

2210 blocks super external:imsm

md126 : active raid0 sda[1] sdb[0]

890817536 blocks super external:/md127/0 128k chunks

md127 : inactive sdb[1](S) sda[0](S)

10402 blocks super external:imsm

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md125

/dev/md125:

Container : /dev/md0, member 0

Raid Level : raid0

Array Size : 1953513472 (1863.02 GiB 2000.40 GB)

Raid Devices : 2

Total Devices : 2

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 128K

Consistency Policy : none

UUID : cdba8f2d:aad3144b:8a4c2d03:b16742d0

Number Major Minor RaidDevice State

0 8 48 0 active sync /dev/sdd

1 8 32 1 active sync /dev/sdc
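To check fields such as Raid Level and State in a script, you can parse the key and value pairs in the mdadm -D output. The following Python sketch is a minimal parser under the assumption that each field uses the "key : value" layout shown above. The function name parse_mdadm_detail is hypothetical, and the sample text is an excerpt of the RAID 0 output above.

```python
# Illustrative sketch: parse "key : value" fields from mdadm -D output.
# parse_mdadm_detail is a hypothetical helper name.

SAMPLE_DETAIL = """\
/dev/md125:
Container : /dev/md0, member 0
Raid Level : raid0
Array Size : 1953513472 (1863.02 GiB 2000.40 GB)
Raid Devices : 2
State : clean
UUID : cdba8f2d:aad3144b:8a4c2d03:b16742d0
"""


def parse_mdadm_detail(output):
    """Return a dict of the fields found in mdadm -D output."""
    details = {}
    for line in output.splitlines():
        # Split on the first " : " only, so values that contain colons
        # (such as the UUID) stay intact.
        key, separator, value = line.partition(" : ")
        if separator:
            details[key.strip()] = value.strip()
    return details


detail = parse_mdadm_detail(SAMPLE_DETAIL)
print(detail["Raid Level"], detail["State"])
```

Lines without the " : " separator, such as the device name header and the member drive table, are ignored by this sketch.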

# Use three drives to create a RAID 5 array in container /dev/md0.

[root@localhost ~]# mdadm -C /dev/md0 /dev/sdc /dev/sdd /dev/sde -n 3 -e imsm

mdadm: /dev/sdc appears to contain an ext2fs file system

size=976762584K mtime=Wed Dec 31 19:00:00 1969

mdadm: /dev/sdd appears to contain an ext2fs file system

size=976762584K mtime=Wed Dec 31 19:00:00 1969

mdadm: /dev/sde appears to contain an ext2fs file system

size=468851544K mtime=Wed Dec 31 19:00:00 1969

Continue creating array? y

mdadm: container /dev/md0 prepared.

[root@localhost ~]# mdadm -C /dev/md/R1 /dev/md0 -n 3 -l 5

mdadm: array /dev/md/R1 started.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid6] [raid5] [raid4]

md125 : active raid5 sde[2] sdd[1] sdc[0]

937691136 blocks super external:/md0/0 level 5, 128k chunk, algorithm 0 [3/3] [UUU]

[>....................] resync = 0.3% (1692948/468845568) finish=59.7min speed=130226K/sec

md0 : inactive sde[2](S) sdd[1](S) sdc[0](S)

3315 blocks super external:imsm

md126 : active raid0 sda[1] sdb[0]

890817536 blocks super external:/md127/0 128k chunks

md127 : inactive sdb[1](S) sda[0](S)

10402 blocks super external:imsm

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md125

/dev/md125:

Container : /dev/md0, member 0

Raid Level : raid5

Array Size : 937691136 (894.25 GiB 960.20 GB)

Used Dev Size : 468845568 (447.13 GiB 480.10 GB)

Raid Devices : 3

Total Devices : 3

State : clean, resyncing

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-asymmetric

Chunk Size : 128K

Consistency Policy : resync

Resync Status : 4% complete

UUID : 62e76373:ba6fb28a:4ae39dba:bdf52d6c

Number Major Minor RaidDevice State

0 8 32 0 active sync /dev/sdc

1 8 48 1 active sync /dev/sdd

2 8 64 2 active sync /dev/sde

# Use four drives to create a RAID 10 array in container /dev/md0.

[root@localhost ~]# mdadm -C /dev/md0 /dev/sdc /dev/sdd /dev/sde /dev/sdf -n 4 -e imsm

mdadm: /dev/sdc appears to contain an ext2fs file system

size=976762584K mtime=Wed Dec 31 19:00:00 1969

mdadm: /dev/sdd appears to contain an ext2fs file system

size=976762584K mtime=Wed Dec 31 19:00:00 1969

mdadm: /dev/sdf appears to contain an ext2fs file system

size=3750738264K mtime=Wed Dec 31 19:00:00 1969

Continue creating array? y

mdadm: container /dev/md0 prepared.

[root@localhost ~]# mdadm -C /dev/md/R1 /dev/md0 -n 4 -l 10

mdadm: array /dev/md/R1 started.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid6] [raid5] [raid4] [raid10]

md125 : active raid10 sde[3] sdd[2] sdf[1] sdc[0]

937691136 blocks super external:/md0/0 128K chunks 2 near-copies [4/4] [UUUU]

[>....................] resync = 0.1% (1635136/937691136) finish=76.3min speed=204392K/sec

md0 : inactive sdf[3](S) sde[2](S) sdd[1](S) sdc[0](S)

4420 blocks super external:imsm

md126 : active raid0 sda[1] sdb[0]

890817536 blocks super external:/md127/0 128k chunks

md127 : inactive sdb[1](S) sda[0](S)

10402 blocks super external:imsm

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md125

/dev/md125:

Container : /dev/md0, member 0

Raid Level : raid10

Array Size : 937691136 (894.25 GiB 960.20 GB)

Used Dev Size : 468845568 (447.13 GiB 480.10 GB)

Raid Devices : 4

Total Devices : 4

State : clean, resyncing

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 128K

Consistency Policy : resync

Resync Status : 0% complete

UUID : bd08e6b3:90b4c83c:498c7d20:2b33be77

Number Major Minor RaidDevice State

0 8 32 0 active sync set-A /dev/sdc

1 8 80 1 active sync set-B /dev/sdf

2 8 48 2 active sync set-A /dev/sdd

3 8 64 3 active sync set-B /dev/sde
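The Array Size and Used Dev Size values in the RAID 5 and RAID 10 outputs above are consistent with the standard capacity formulas for these RAID levels. The following Python sketch restates those formulas. The function name expected_array_size is hypothetical, and the RAID 10 formula assumes two copies of the data, as reported by the near=2 layout.

```python
# Illustrative sketch: expected array size from the per-drive size.
# expected_array_size is a hypothetical helper name.

def expected_array_size(level, member_count, used_dev_size):
    """Return the usable array size in the same unit as used_dev_size."""
    if level == 0:
        return member_count * used_dev_size         # striping, no redundancy
    if level == 1:
        return used_dev_size                        # all members hold the same data
    if level == 5:
        return (member_count - 1) * used_dev_size   # one drive's worth of parity
    if level == 10:
        return (member_count // 2) * used_dev_size  # two copies of the data
    raise ValueError(f"unsupported RAID level: {level}")


# Used Dev Size reported by mdadm -D in the examples above (in KiB).
USED_DEV_SIZE = 468845568
print(expected_array_size(5, 3, USED_DEV_SIZE))
print(expected_array_size(10, 4, USED_DEV_SIZE))
```

Both calls give 937691136, which matches the Array Size reported for the three-drive RAID 5 array and the four-drive RAID 10 array.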

Viewing the states of all RAID arrays

Perform this task to view the state of all RAID arrays.

Syntax

cat /proc/mdstat

Examples

# View the states of all RAID arrays.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md127 : active raid1 sda[1] sdb[0]

468845568 blocks super external:/md0/0 [2/2] [UU]

[>....................] resync = 0.5% (2401792/468845568) finish=35.6min speed=218344K/sec

md0 : inactive sdb[1](S) sda[0](S)

2210 blocks super external:imsm

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md127

/dev/md127:

Container : /dev/md0, member 0

Raid Level : raid1

Array Size : 468845568 (447.13 GiB 480.10 GB)

Used Dev Size : 468845568 (447.13 GiB 480.10 GB)

Raid Devices : 2

Total Devices : 2

State : clean, resyncing

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Resync Status : 1% complete

UUID : af26b232:b11886cf:24caa39f:e04f675a

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 0 1 active sync /dev/sda

Wait for the resync process to complete, and then view the RAID array details again.

[root@localhost ~]# mdadm -D /dev/md127

/dev/md127:

Container : /dev/md0, member 0

Raid Level : raid1

Array Size : 468845568 (447.13 GiB 480.10 GB)

Used Dev Size : 468845568 (447.13 GiB 480.10 GB)

Raid Devices : 2

Total Devices : 2

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

UUID : af26b232:b11886cf:24caa39f:e04f675a

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 0 1 active sync /dev/sda
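To track the resync progress in a script instead of reading /proc/mdstat manually, you can extract the percentage and estimated finish time from the progress line. The following Python sketch is a simplified parser that handles only md device names of the form mdN and progress lines in the layout shown above. The names RESYNC_RE and resync_progress are hypothetical.

```python
# Illustrative sketch: read resync progress from /proc/mdstat text.
# RESYNC_RE and resync_progress are hypothetical names.
import re

RESYNC_RE = re.compile(
    r"(?:resync|recovery)\s*=\s*(?P<percent>\d+(?:\.\d+)?)%"
    r"\s*\(\d+/\d+\)\s*finish=(?P<finish>\d+(?:\.\d+)?)min"
)

SAMPLE_MDSTAT = """\
Personalities : [raid1]
md127 : active raid1 sda[1] sdb[0]
468845568 blocks super external:/md0/0 [2/2] [UU]
[>....................] resync = 0.5% (2401792/468845568) finish=35.6min speed=218344K/sec
md0 : inactive sdb[1](S) sda[0](S)
2210 blocks super external:imsm
unused devices: <none>
"""


def resync_progress(mdstat_text):
    """Return {md device: (percent done, minutes to finish)}."""
    progress = {}
    current = None
    for line in mdstat_text.splitlines():
        stripped = line.strip()
        device = re.match(r"(md\d+)\s*:", stripped)
        if device:
            current = device.group(1)
            continue
        found = RESYNC_RE.search(stripped)
        if found and current:
            progress[current] = (float(found.group("percent")),
                                 float(found.group("finish")))
    return progress


print(resync_progress(SAMPLE_MDSTAT))
```

On a live system, you would pass the contents of /proc/mdstat to resync_progress. An array that has finished resyncing has no progress line and therefore does not appear in the result.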

Creating RAID arrays in Windows

Installing the RAID controller driver

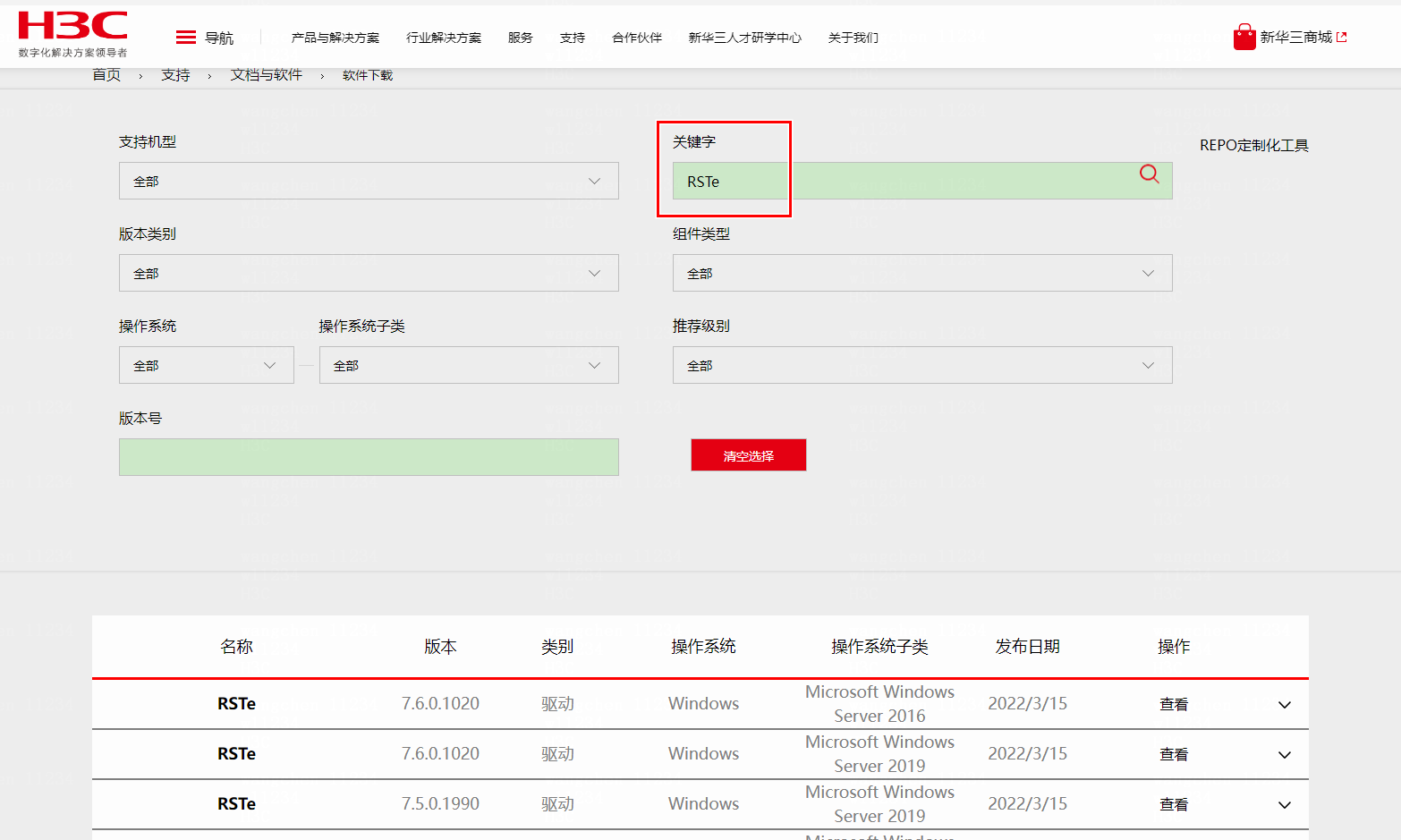

1. Go to the H3C official website, open the Support > Software Download > Servers page, and download the RSTe driver.

Figure 30 Downloading the driver

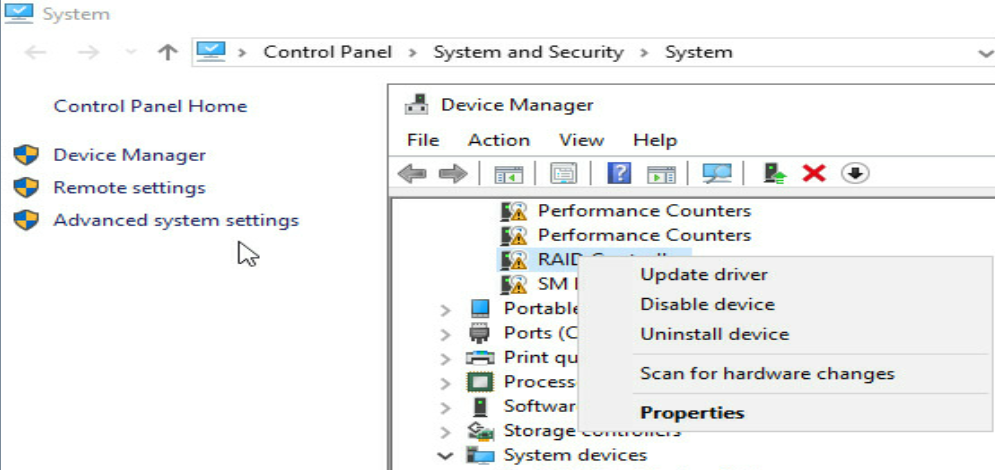

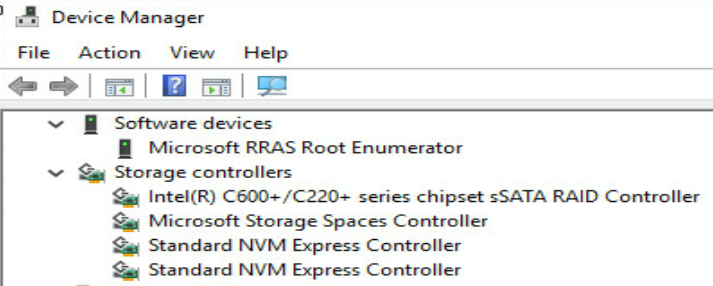

2. Open Device Manager, right-click RAID Controllers under Other devices, and click Update driver.

Figure 31 Device Manager

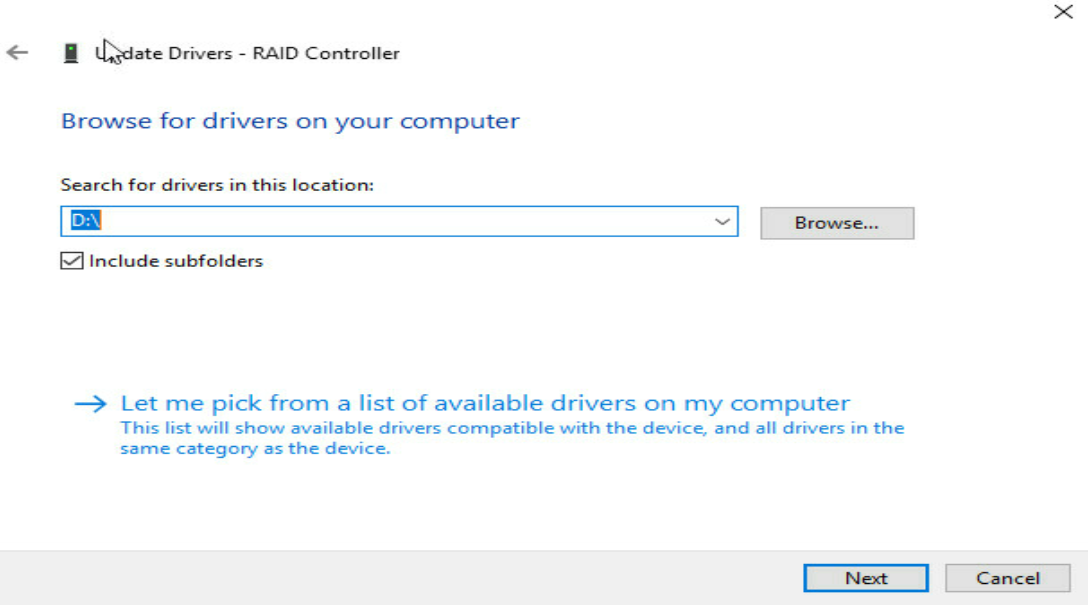

3. Specify the driver location, and install the driver.

Figure 32 Installing the driver

4. Verify that the newly installed driver is displayed under Storage controllers.

Figure 33 Verifying the installation

Installing the GUI tool for the RSTe RAID controller

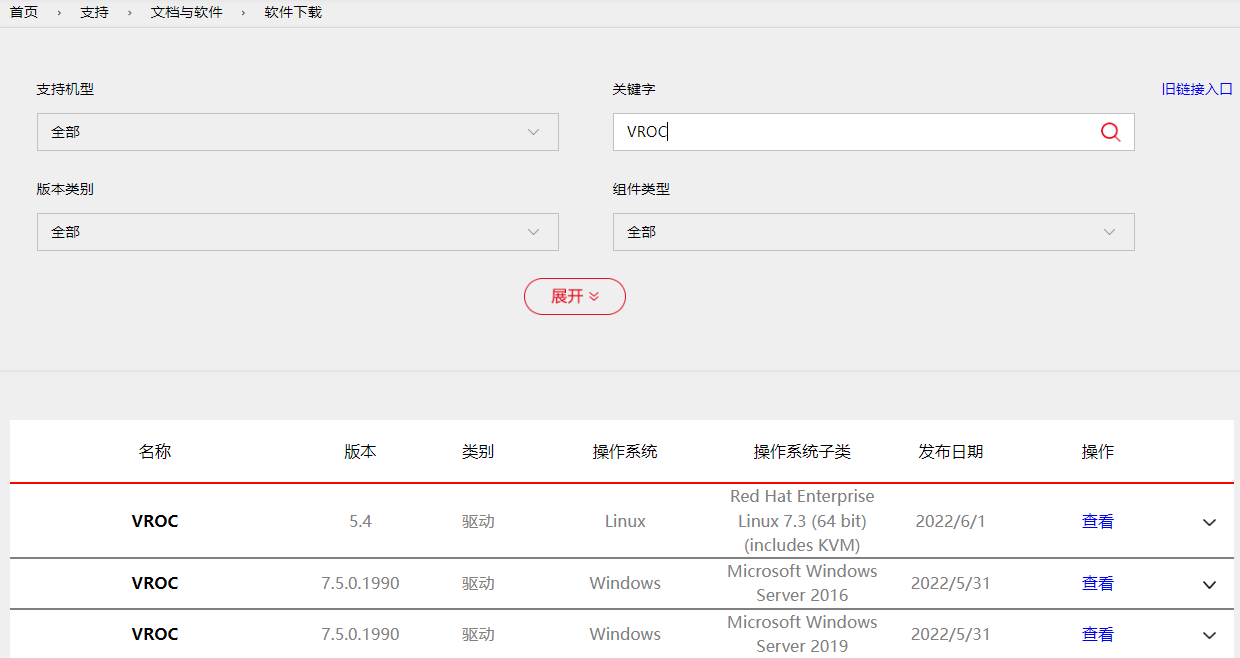

1. Download the VROC driver from the H3C official website.

Figure 34 Downloading the VROC driver

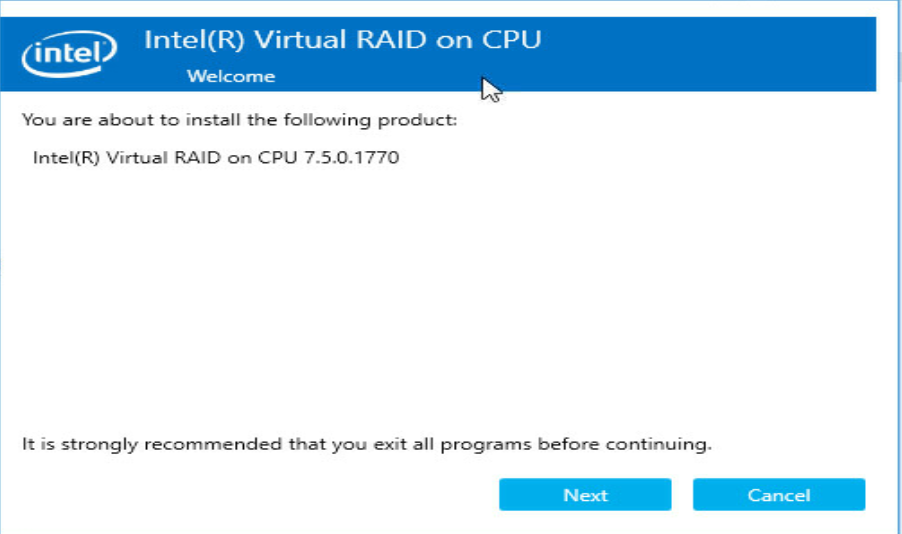

2. Upload the SetupVROC.exe file to the system, and double-click the file to open the installation window.

Figure 35 Installing the VROC tool

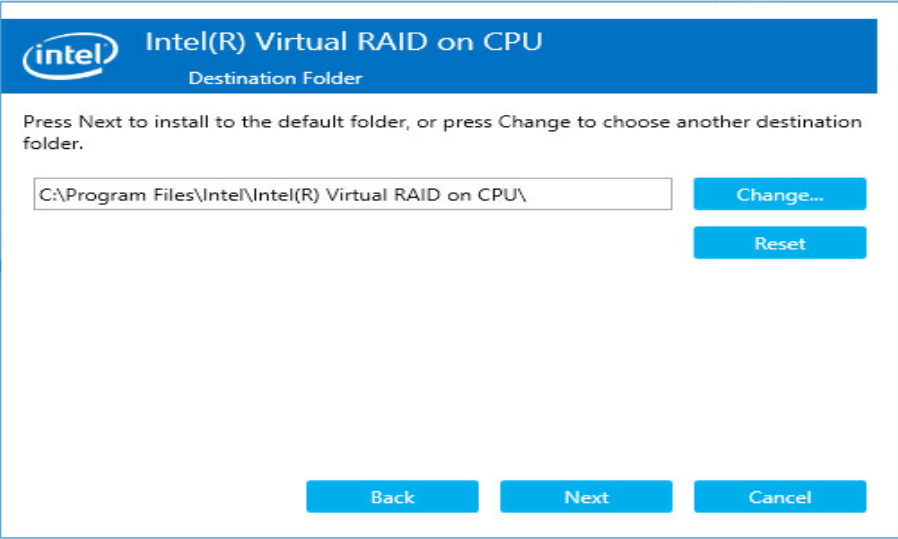

3. Select the installation location.

Figure 36 Selecting the installation location

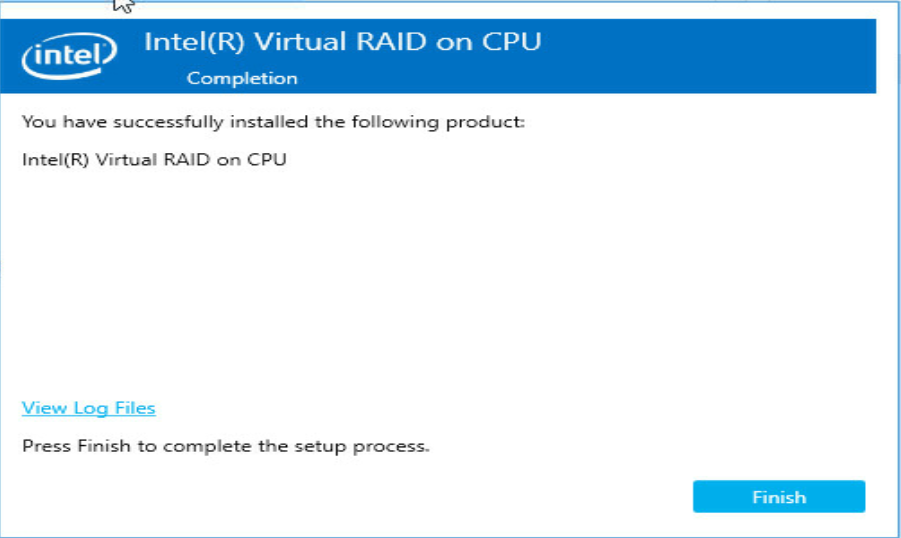

Figure 37 Installation completed

Creating a RAID array

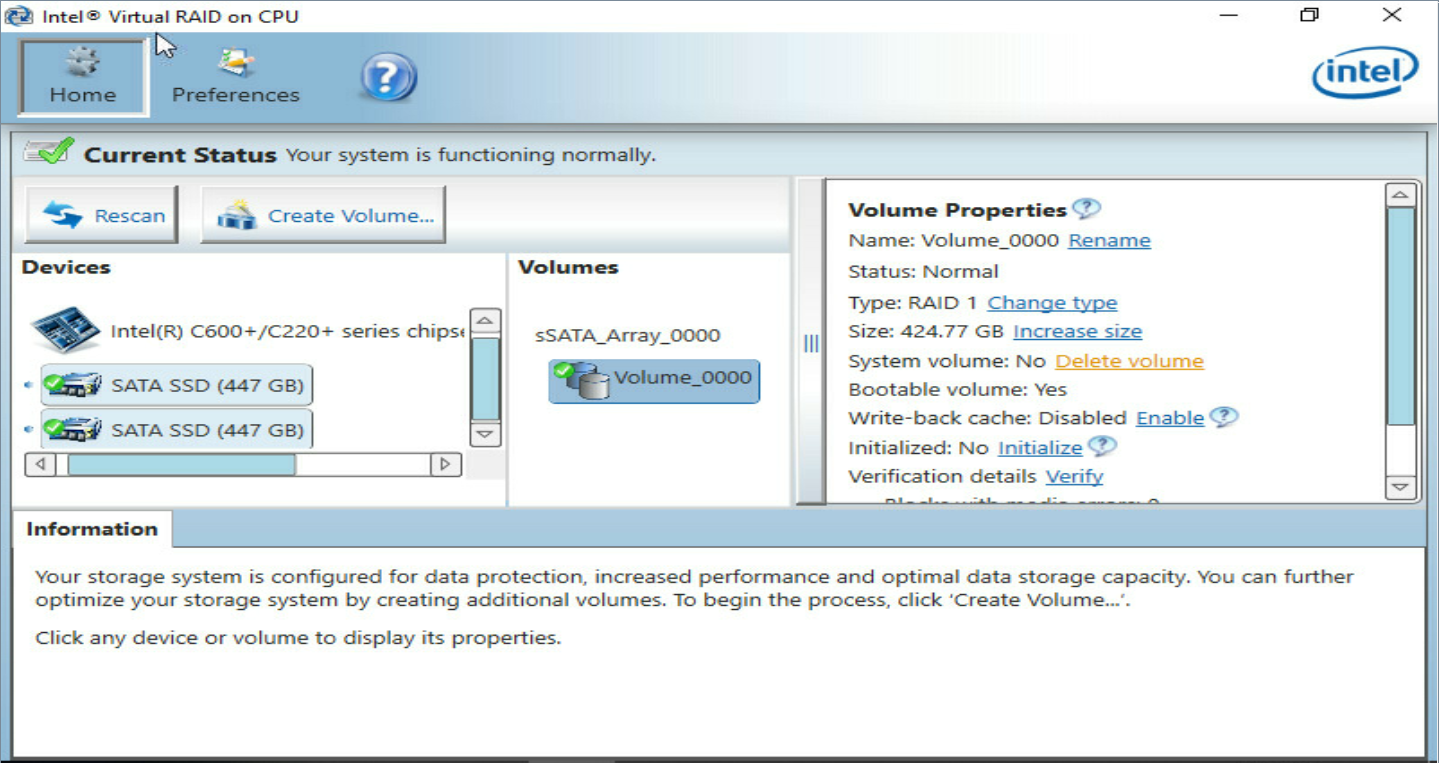

1. Open Intel Virtual RAID on CPU, which is installed together with the RSTe RAID controller GUI tool.

Figure 38 Creating a RAID array

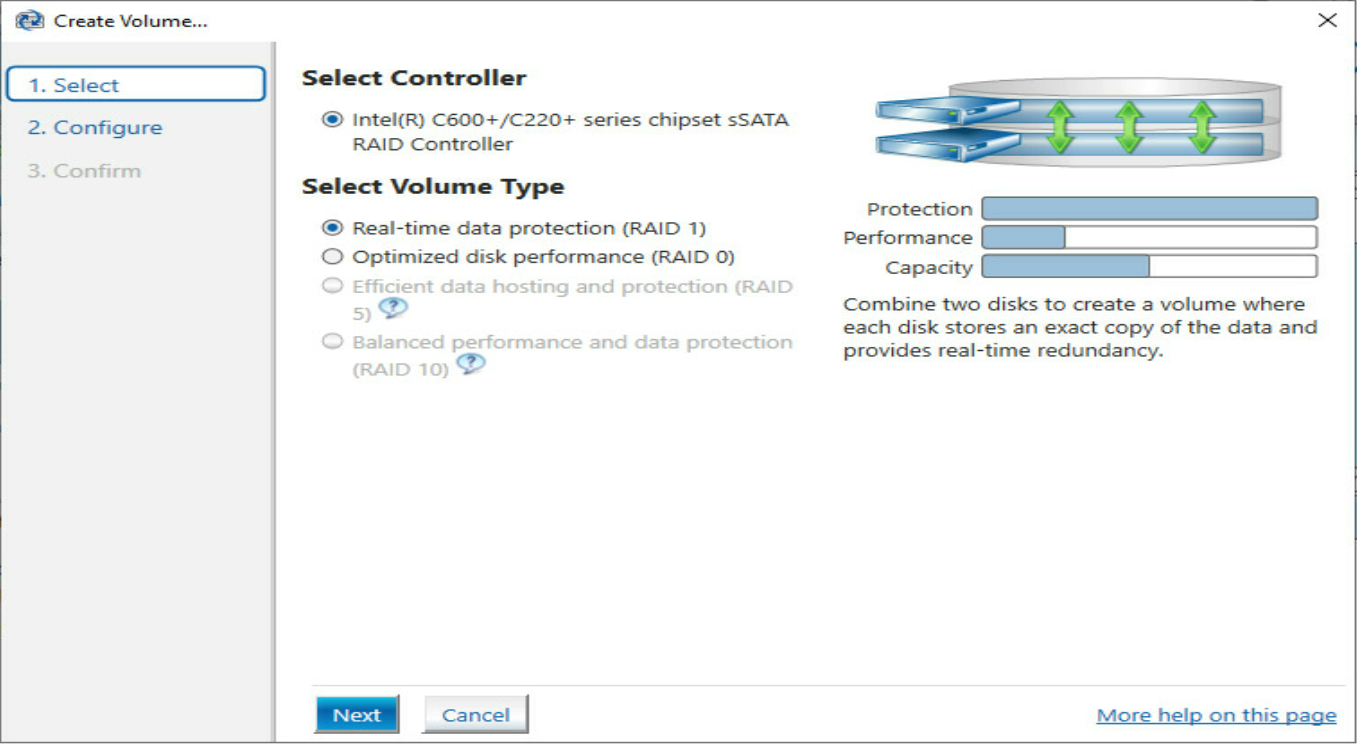

2. Select the RAID level and then click Next. In this example, two M.2 drives are used to create a RAID 1 array.

Figure 39 Selecting the RAID level

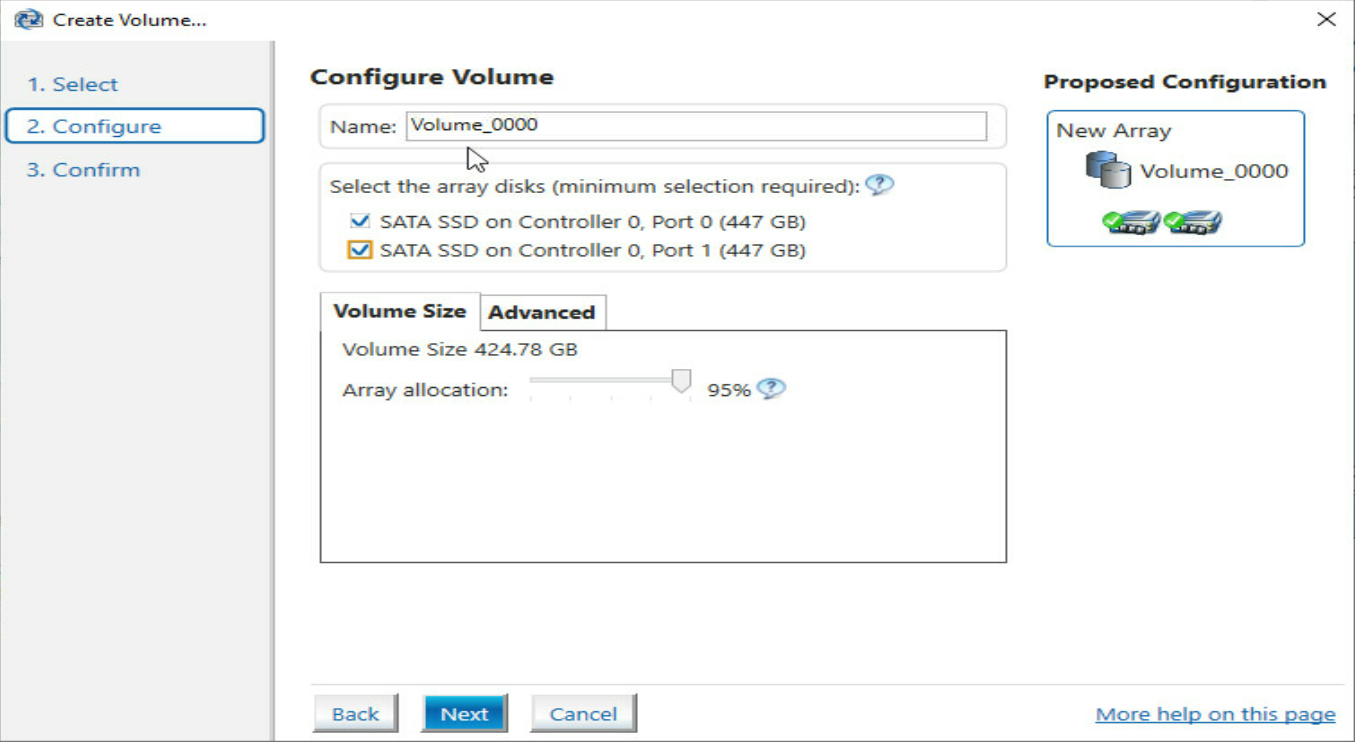

3. Enter the RAID array name, select drives, and then click Next.

Figure 40 Specifying RAID information

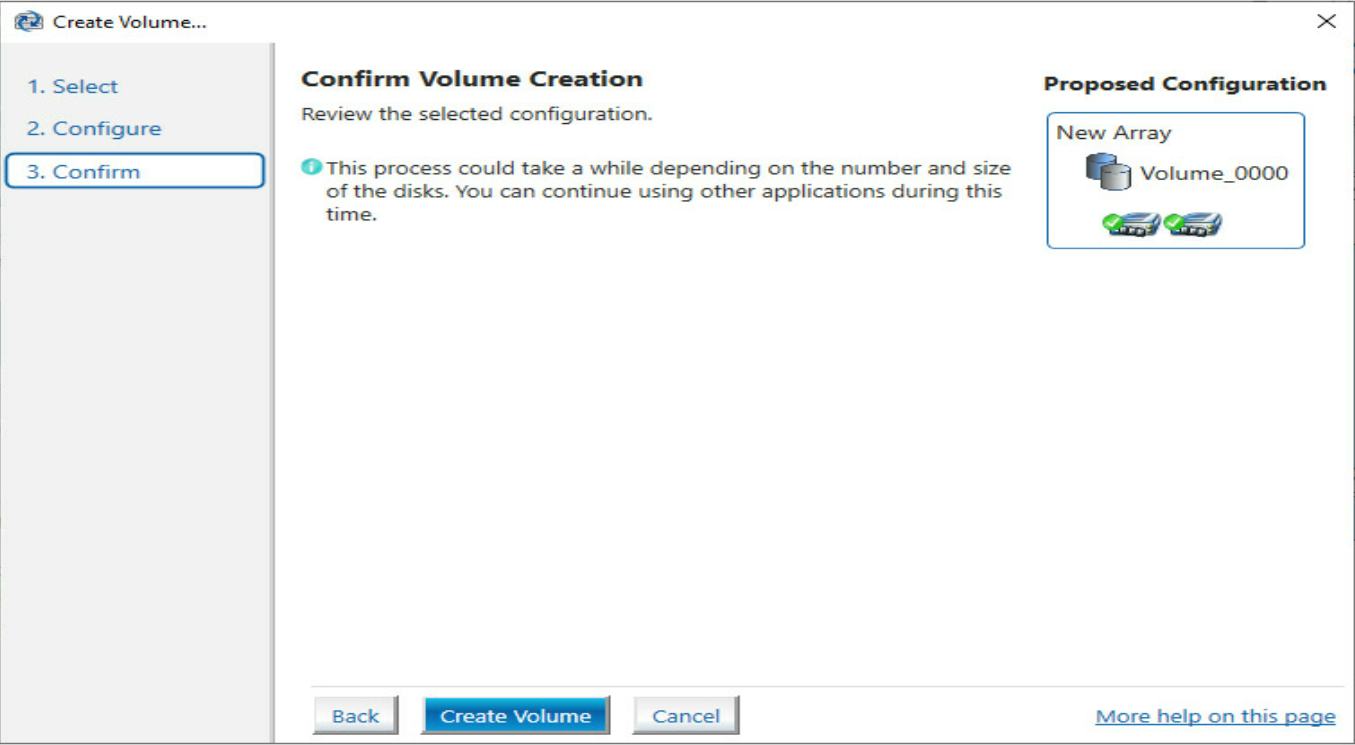

4. Click Create Volume.

Figure 41 Confirming volume creation

Deleting a RAID array

1. Select the RAID array to be deleted, and then click Delete Volume.

Figure 42 Selecting the RAID array to be deleted

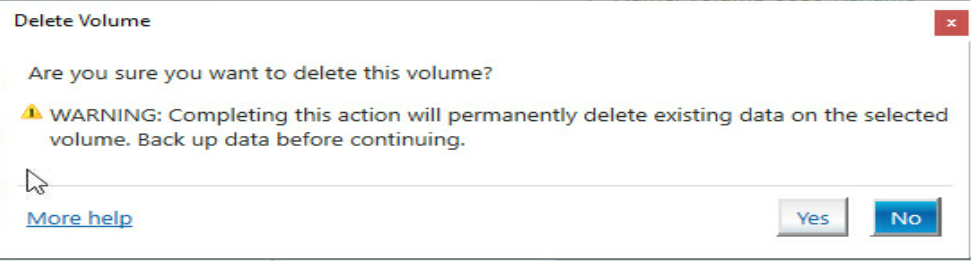

2. In the dialog box that opens, click Yes.

Figure 43 Confirming the deletion

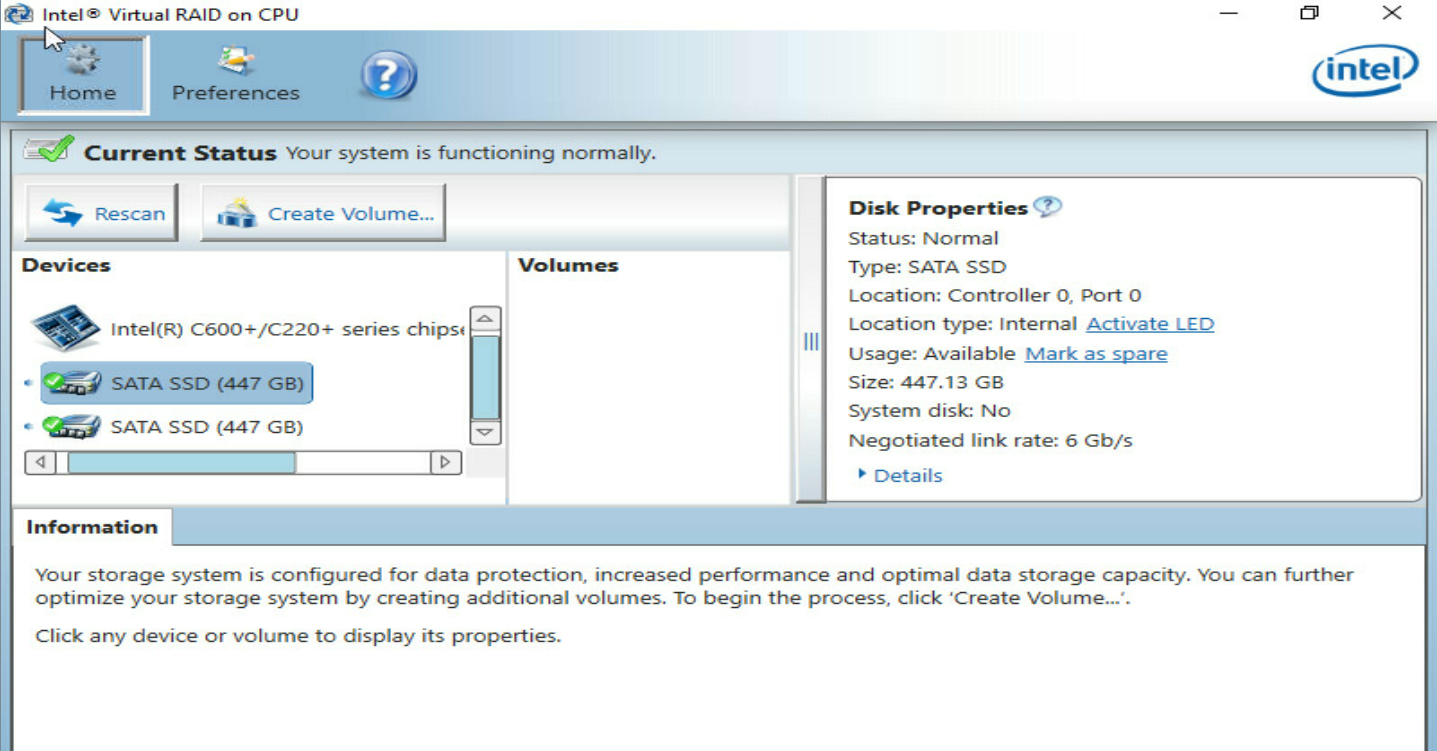

3. Verify that the RAID array has been deleted on the Volumes page.

Figure 44 Verifying the deletion result

Troubleshooting

For detailed information about collecting storage controller fault information, diagnosing and locating faults, and troubleshooting servers, see H3C Servers Troubleshooting Guide.

Compatibility

For information about storage controller and server compatibility, access http://www.h3c.com/en/home/qr/default.htm?id=66.

Downloading and installing drivers

Access the H3C official website to download the storage controller drivers. For more information about installing drivers, see the release notes for the driver program.