02-MPLS Basics Configuration

Table of Contents

Label Distribution and Management

Configuring MPLS Basic Capability

MPLS LDP Configuration Task List

Configuring MPLS LDP Capability

Configuring Local LDP Session Parameters

Configuring Remote LDP Session Parameters

Configuring the Policy for Triggering LSP Establishment

Configuring the Label Distribution Control Mode

Configuring LDP Loop Detection

Configuring LDP MD5 Authentication

Restarting MPLS LDP Gracefully

Configuring MPLS IP TTL Processing

Configuring MPLS IP TTL Propagation

Specifying the Type of the Paths for ICMP Responses

Setting the Interval for Reporting Statistics

Displaying and Maintaining MPLS

Example for Configuring LDP Sessions

Example for Configuring LDP to Establish LSPs

When performing MPLS basics configuration, go to these sections for information you are interested in:

- Configuring MPLS Basic Capability

- Configuring MPLS IP TTL Processing

- Displaying and Maintaining MPLS

Note:

- For detailed information about VPN, refer to MPLS L2VPN Configuration and MPLS L3VPN Configuration in the MPLS Volume.

- For detailed information about QoS, refer to the QoS Volume.

- At present, to support MPLS or MPLS-based functions, S7500E series Ethernet switches must use the LSQ1SRP1CB engine or use no other LPUs but the EA series.

MPLS Overview

Multiprotocol Label Switching (MPLS), originating in Internet Protocol version 4 (IPv4), was initially proposed to improve forwarding speed. However, its core technology can be extended to multiple network protocols, such as Internet Protocol version 6 (IPv6), Internetwork Packet Exchange (IPX), and Connectionless Network Protocol (CLNP). That is what the term multiprotocol means.

MPLS integrates both Layer 2 fast switching and Layer 3 routing and forwarding, satisfying the networking requirements of various new applications.

Note:

For details about the MPLS architecture, refer to RFC 3031 “Multiprotocol Label Switching Architecture”.

Basic Concepts of MPLS

FEC

As a forwarding technology based on classification, MPLS groups packets to be forwarded in the same manner into a class called a forwarding equivalence class (FEC). That is, packets of the same FEC are handled in the same way on an MPLS network.

The classification of FECs is very flexible. It can be based on any combination of source address, destination address, source port, destination port, protocol type and Virtual Private Network (VPN). For example, in traditional IP forwarding using the longest match algorithm, all packets to the same destination belong to the same FEC.

Label

A label is a short, fixed-length identifier for identifying a FEC. A FEC may correspond to multiple labels in scenarios where, for example, load sharing is required, while a label can only represent a single FEC.

A label is carried in the header of a packet. It does not contain any topology information and is only locally significant.

A label is four octets, or 32 bits, in length. Figure 1-1 illustrates its format.

![]()

A label consists of four fields:

- Label: Label value of 20 bits. Used as the pointer for forwarding.

- Exp: For QoS, three bits in length.

- S: Flag for indicating whether the label is at the bottom of the label stack, one bit in length. 1 indicates that the label is at the bottom of the label stack. This field is very useful when there are multiple levels of MPLS labels.

- TTL: Time to live (TTL) for the label. Eight bits in length. This field has the same meaning as that for an IP packet.
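The four fields above can be packed into a single 32-bit word. The following is a minimal Python sketch, assuming the fields are laid out in the order listed (Label, Exp, S, TTL, from the most significant bit down) and transmitted in network byte order; the function names are illustrative only and do not come from any device software.

```python
import struct

def pack_label_entry(label: int, exp: int, s: int, ttl: int) -> bytes:
    """Pack one 32-bit label stack entry: Label(20) | Exp(3) | S(1) | TTL(8)."""
    if not (0 <= label < 2**20 and 0 <= exp < 8 and s in (0, 1) and 0 <= ttl < 256):
        raise ValueError("field out of range")
    word = (label << 12) | (exp << 9) | (s << 8) | ttl
    return struct.pack("!I", word)  # "!I" = unsigned 32-bit, network byte order

def unpack_label_entry(data: bytes) -> dict:
    """Split the first four bytes of `data` back into the four fields."""
    (word,) = struct.unpack("!I", data[:4])
    return {
        "label": word >> 12,
        "exp": (word >> 9) & 0x7,
        "s": (word >> 8) & 0x1,
        "ttl": word & 0xFF,
    }

# Example values are arbitrary: label 1024 at the bottom of the stack, TTL 255.
entry = pack_label_entry(label=1024, exp=0, s=1, ttl=255)
fields = unpack_label_entry(entry)
```

Packing and unpacking are exact inverses, so `fields` holds the same four values that were passed in.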

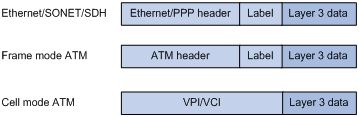

Similar to the VPI/VCI in ATM and the DLCI in frame relay, an MPLS label functions as a connection identifier. If the link layer protocol has a label field like VPI/VCI in ATM or DLCI in frame relay, the MPLS label is encapsulated in that field. Otherwise, it is inserted between the data link layer header and the network layer header as a shim. As such, an MPLS label can be supported by any link layer protocol.

Figure 1-2 shows the place of a label in a packet.

Figure 1-2 Place of a label in a packet

![]()

Currently, the device does not support the cell mode.

LSR

A label switching router (LSR) is a fundamental component on an MPLS network. All LSRs support MPLS.

LSP

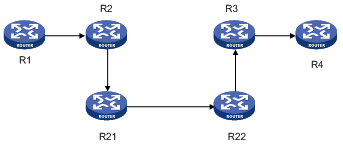

A label switched path (LSP) is the path along which a FEC travels through an MPLS network. Along an LSP, two neighboring LSRs are called upstream LSR and downstream LSR respectively. In Figure 1-3, R2 is the downstream LSR of R1, while R1 is the upstream LSR of R2.

An LSP is a unidirectional path from the ingress of the MPLS network to the egress. It functions like a virtual circuit in ATM or frame relay. Each node of an LSP is an LSR.

Label distribution protocol

A label distribution protocol is a protocol used by MPLS for control. It has the same functions as a signaling protocol on a traditional network. It classifies FECs, distributes labels, and establishes and maintains LSPs.

MPLS supports multiple label distribution protocols of either of the following two types:

- Protocols dedicated to label distribution, such as Label Distribution Protocol (LDP).

- Existing protocols that are extended to support label distribution, such as Border Gateway Protocol (BGP).

In addition, you can configure static LSPs.

Note:

For information about BGP, refer to BGP Configuration in the IP Routing Volume.

LSP tunneling

MPLS supports LSP tunneling.

An LSR and its downstream LSR on an LSP are not necessarily on a path provided by the routing protocol. That is, MPLS supports establishing an LSP along a path different from that established by the routing protocol. Such an LSP is called an LSP tunnel, and the two LSRs are respectively the start point and end point of the LSP tunnel. For example, the LSP <R2→R21→R22→R3> in Figure 1-3 is a tunnel between R2 and R3. This tunneling technology does not use the traditional network layer encapsulation tunneling technology.

If the path that a tunnel traverses is exactly the hop-by-hop route established by the routing protocol, the tunnel is called a hop-by-hop routed tunnel. Otherwise, the tunnel is called an explicitly routed tunnel.

Multi-level label stack

MPLS allows a packet to carry multiple levels of labels organized as a last-in first-out (LIFO) stack, which is called a label stack. A packet with multiple levels of labels can travel along more than one level of LSP tunnel. The ingress and egress of each tunnel perform Push and Pop operations respectively on the top of a stack.

MPLS has no limit to the depth of a label stack. For a label stack with a depth of m, the label at the bottom is of level 1, while the label at the top has a level of m. An unlabeled packet can be considered as a packet with an empty label stack, that is, a label stack whose depth is 0.
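The LIFO behavior and level numbering described above can be illustrated with a short Python sketch. The class and the label values 100 and 200 are hypothetical and exist only for this illustration.

```python
class LabelStack:
    """LIFO label stack; the last list element is the top of the stack."""

    def __init__(self):
        self._labels = []  # index 0 holds the level-1 (bottom) label

    def push(self, label: int) -> None:
        self._labels.append(label)

    def pop(self) -> int:
        return self._labels.pop()

    @property
    def depth(self) -> int:
        return len(self._labels)

    def entries(self):
        """Return (label, s_bit) pairs from top to bottom; S is 1 only at the bottom."""
        top_down = list(reversed(self._labels))
        last = len(top_down) - 1
        return [(lbl, 1 if i == last else 0) for i, lbl in enumerate(top_down)]

stack = LabelStack()   # depth 0: an unlabeled packet
stack.push(100)        # ingress of the first tunnel pushes the level-1 label
stack.push(200)        # ingress of a nested tunnel pushes the level-2 label
```

The egress of the nested tunnel pops label 200 first, and the egress of the first tunnel then pops label 100, which returns the packet to depth 0.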

Architecture of MPLS

Structure of the MPLS network

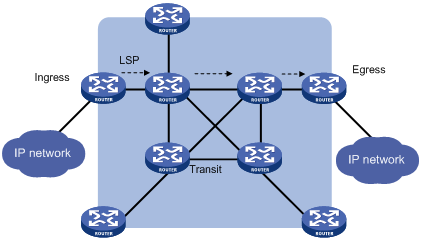

As shown in Figure 1-4, the basic element of an MPLS network is the LSR. LSRs in the same routing or administrative domain form an MPLS domain.

In an MPLS domain, LSRs residing at the domain border and connected with other networks are label edge routers (LERs), while those within the MPLS domain are core LSRs. All core LSRs, which can be routers running MPLS or ATM-LSRs upgraded from ATM switches, use MPLS to communicate, while LERs interact with devices outside the domain that use traditional IP technologies.

Each packet entering an MPLS network is labeled on the ingress LER and then forwarded along an LSP to the egress LER. All the intermediate LSRs are called transit LSRs.

Figure 1-4 Structure of the MPLS network

The following describes how MPLS operates:

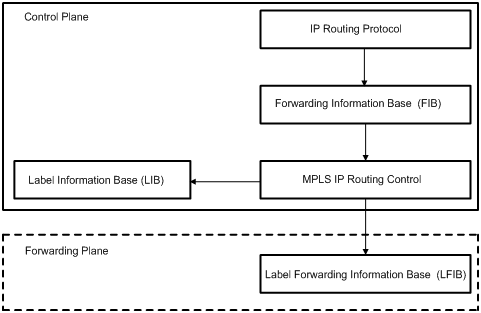

1) First, LDP and a traditional routing protocol (such as OSPF or IS-IS) work together on each LSR to establish the routing table and the label information base (LIB) for intended FECs.

2) Upon receiving a packet, the ingress LER completes the Layer 3 functions, determines the FEC to which the packet belongs, labels the packet, and forwards the labeled packet to the next hop along the LSP.

3) After receiving a packet, each transit LSR looks up its Label Forwarding Information Base (LFIB) for the next hop according to the label of the packet, swaps the label, and then forwards the packet to the next hop. None of the transit LSRs performs Layer 3 processing.

4) When the egress LER receives the packet, it removes the label of the packet and IP forwards the packet.

MPLS is therefore not a service or application, but a tunneling technology and a routing and switching platform that combines label switching with Layer 3 routing. This platform not only supports multiple upper layer protocols and services, but also secures transmission of information to a certain degree.

Structure of an LSR

Figure 1-5 Structure of an LSR

As shown in Figure 1-5, an LSR consists of two planes:

- Control plane: Implements label distribution and routing, establishes the LFIB, and builds and tears down LSPs.

- Forwarding plane: Forwards packets according to the LFIB.

An LER forwards both labeled packets and IP packets on the forwarding plane and therefore uses both the LFIB and the FIB. An ordinary LSR only needs to forward labeled packets and therefore uses only the LFIB.

MPLS and Routing Protocols

When establishing an LSP hop by hop, LDP uses the information in the routing tables of the LSRs along the path to determine the next hop. The information in the routing tables is provided by routing protocols such as IGPs and BGP. LDP only uses the routing information indirectly; it has no direct relationship with routing protocols.

On the other hand, existing protocols such as BGP can be extended to support label distribution.

In MPLS applications, it may be necessary to extend some routing protocols. For example, MPLS-based VPN applications require that BGP be extended to propagate VPN routing information.

Applications of MPLS

By integrating both Layer 2 fast switching and Layer 3 routing technologies, MPLS features improved route lookup speed. However, with the development of the application specific integrated circuit (ASIC) technology, route lookup speed is no longer the bottleneck hindering network development. This makes MPLS not so outstanding in improving forwarding speed.

Nonetheless, MPLS can easily implement the seamless integration between IP networks and Layer 2 networks of ATM, frame relay, and the like, and offer better solutions to Quality of Service (QoS), traffic engineering (TE), and VPN applications thanks to the following advantages.

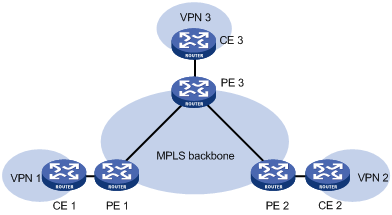

MPLS-based VPN

Traditional VPNs depend on tunneling protocols such as GRE, L2TP, and PPTP to transport data between private networks across public networks, while an LSP itself is a tunnel over public networks. Therefore, implementation of VPN using MPLS holds natural advantages.

An MPLS-based VPN uses LSPs to connect geographically dispersed branches of an organization to form a united network. MPLS-based VPN also supports the interconnection between VPNs.

Figure 1-6 shows the basic structure of an MPLS-based VPN. Two of the fundamental components are customer edge device (CE) and service provider edge router (PE). A CE can be a router, switch, or host. All PEs are on the backbone network.

PEs are responsible for establishing LSPs between them, managing VPN users, and advertising routes among different branches of the same VPN. Route advertisement among PEs is usually implemented by LDP or extended BGP.

MPLS-based VPN supports IP address multiplexing between branches and interconnection between VPNs. Compared with a traditional route, a VPN route requires the branch and VPN identification information. Therefore, it is necessary to extend BGP to carry VPN routing information.

MPLS Configuration Basics

Label Distribution and Management

In MPLS, the label that an LSR uses for an FEC is assigned by the downstream LSR. The downstream LSR then informs the upstream LSR of the assignment. That is, labels are advertised in the upstream direction.

Label advertisement mode

Two label advertisement modes are available:

- Downstream on demand (DoD): In this mode, an LSR distributes a label binding to another LSR only when it receives a label request from that LSR.

- Downstream unsolicited (DU): In this mode, an LSR does not wait for any label request before distributing a label binding.

An upstream LSR and its downstream LSR must use the same label advertisement mode; otherwise, no LSP can be established normally. For more information, refer to LDP Label Distribution.

Note:

Currently, S7500E Series Ethernet Switches support only the DU mode.

Label distribution control mode

There are two label distribution control modes:

- Independent: In this mode, an LSR can advertise label bindings upstream at any time. A consequence of this mode is that an LSR may have advertised a label binding to the upstream LSR before it receives a binding from its downstream LSR.

- Ordered: In this mode, an LSR advertises its label binding for a FEC upstream only when it receives a label binding from the next hop for the FEC or it is the egress of the FEC.

Label retention mode

Label retention mode dictates how to process a received label binding that is not useful at the moment.

There are two label retention modes:

- Liberal: In this mode, an LSR keeps any received label binding regardless of whether the binding is from its next hop for the FEC or not.

- Conservative: In this mode, an LSR keeps only label bindings that are from its next hops for the FECs.

In liberal mode, an LSR can adapt to route changes quickly, while in conservative mode, there are fewer label bindings for an LSR to advertise and keep.

The conservative label retention mode is usually used together with the DoD mode on LSRs with limited label spaces.
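The difference between the two retention modes can be sketched as a filter over received bindings. This is an illustration only: the function name, the tuple layout `(fec, peer, label)`, and the sample peers B and C are assumptions made for the example.

```python
def retain_bindings(received, next_hops, mode):
    """Return the bindings an LSR keeps.

    received:  list of (fec, peer, label) tuples learned from LDP peers
    next_hops: dict mapping each FEC to the peer that is its current next hop
    mode:      "liberal" or "conservative"
    """
    if mode == "liberal":
        return list(received)  # keep every binding, useful or not
    if mode == "conservative":
        return [b for b in received if next_hops.get(b[0]) == b[1]]
    raise ValueError(f"unknown retention mode: {mode}")

received = [
    ("10.1.1.0/24", "B", 1025),  # from the current next hop
    ("10.1.1.0/24", "C", 1026),  # from a peer that is not the next hop
]
next_hops = {"10.1.1.0/24": "B"}

kept_liberal = retain_bindings(received, next_hops, "liberal")
kept_conservative = retain_bindings(received, next_hops, "conservative")
```

If the route later changes so that C becomes the next hop, the liberal LSR already holds the binding from C, whereas the conservative LSR has to obtain it again.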

Note:

Currently, S7500E Series Ethernet Switches support only the liberal mode.

Basic concepts for label switching

- Next hop label forwarding entry (NHLFE): Operation to be performed on the label, which can be Push or Swap.

- FEC to NHLFE mapping (FTN): Mapping of a FEC to an NHLFE at the ingress node.

- Incoming label mapping (ILM): Mapping of each incoming label to a set of NHLFEs. The operations performed for each incoming label can be Null or Pop.

Label switching process

Each packet is classified into a certain FEC at the ingress LER. Packets of the same FEC travel along the same path in the MPLS domain, that is, the same LSP. For each incoming packet, an LSR examines the label, uses the ILM to map the label to an NHLFE, replaces the old label with a new label, and then forwards the labeled packet to the next hop.
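The lookup chain described above (FTN at the ingress, ILM at each subsequent LSR) can be traced with a small Python sketch. The node names R1 to R3, the prefix, and the label values are hypothetical. As a simplification, the pop at the egress is modeled as a third NHLFE operation rather than as a separate ILM action.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class NHLFE:
    op: str                    # "push", "swap", or "pop" (pop is a simplification)
    out_label: Optional[int]   # label carried to the next hop, None after a pop
    next_hop: Optional[str]    # next LSR, None at the egress

# FTN: consulted at the ingress LER, keyed by FEC.
FTN = {"R1": {"10.1.1.0/24": NHLFE("push", 1025, "R2")}}

# ILM: consulted at every other LSR, keyed by incoming label.
ILM = {
    "R2": {1025: NHLFE("swap", 1030, "R3")},
    "R3": {1030: NHLFE("pop", None, None)},
}

def forward(ingress: str, fec: str):
    """Trace one packet; return (node, label carried after that node) pairs."""
    entry = FTN[ingress][fec]
    trace = [(ingress, entry.out_label)]
    node, label = entry.next_hop, entry.out_label
    while label is not None:
        entry = ILM[node][label]
        label = entry.out_label if entry.op == "swap" else None
        trace.append((node, label))
        node = entry.next_hop
    return trace

trace = forward("R1", "10.1.1.0/24")
```

Here `trace` shows the packet leaving R1 with label 1025, leaving R2 with label 1030, and leaving R3 unlabeled.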

PHP

As described in Architecture of MPLS, each transit LSR on an MPLS network forwards an incoming packet based on the label of the packet, while the egress removes the label from the packet and forwards the packet based on the network layer destination address.

In fact, on a relatively simple MPLS application network, the label of a packet is useless for the egress, which only needs to forward the packet based on the network layer destination address. In this case, the penultimate hop popping (PHP) feature can pop the label at the penultimate node, relieving the egress of the label operation burden and improving the packet processing capability of the MPLS network.

TTL Processing in MPLS

MPLS TTL processing involves two aspects: IP TTL propagation and ICMP response path.

IP TTL propagation

An MPLS label contains an 8-bit long TTL field, which has the same meaning as that of an IP packet.

According to RFC 3031 “Multiprotocol Label Switching Architecture”, when an LSR labels a packet, it copies the TTL value of the original IP packet or the lower level label to the TTL field of the newly added label. When an LSR forwards a labeled packet, it decrements the TTL value of the label at the stack top by 1. When an LSR pops a label, it copies the TTL value of the label at the stack top back to the TTL field of the IP packet or the lower level label.

TTL can be used not only to prevent routing loops, but also to implement the tracert function:

- With IP TTL propagation enabled at ingress, whenever a packet passes a hop along the LSP, its IP TTL gets decremented by 1. Therefore, the result of tracert will reflect the path along which the packet has traveled.

- With IP TTL propagation disabled at ingress, the IP TTL of a packet does not decrement when the packet passes a hop along the LSP, and the result of tracert does not show the hops within the MPLS backbone, as if the ingress and egress were connected directly.
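The effect of the two settings on the IP TTL can be sketched as follows. This is a simplified model, not the device's implementation: it assumes that the label TTL starts at 255 when propagation is disabled, and that `lsp_hops` counts the LSRs that decrement the label TTL before the label is popped.

```python
def traverse_lsp(ip_ttl: int, lsp_hops: int, propagate: bool):
    """Return the IP TTL after the LSP, or None if the label TTL expires inside it."""
    # With propagation, the ingress copies the IP TTL into the new label.
    # Without it, the label starts from an assumed fixed value of 255.
    label_ttl = ip_ttl if propagate else 255
    for _ in range(lsp_hops):
        label_ttl -= 1
        if label_ttl == 0:
            return None  # expired on an LSR inside the MPLS domain
    if propagate:
        ip_ttl = label_ttl  # copied back to the IP header when the label is popped
    return ip_ttl

with_propagation = traverse_lsp(ip_ttl=64, lsp_hops=3, propagate=True)
without_propagation = traverse_lsp(ip_ttl=64, lsp_hops=3, propagate=False)
probe_with = traverse_lsp(ip_ttl=2, lsp_hops=3, propagate=True)
probe_without = traverse_lsp(ip_ttl=2, lsp_hops=3, propagate=False)
```

A tracert probe with a small TTL (the `probe_*` calls) expires inside the LSP only when propagation is enabled, which is why the backbone hops appear in the tracert output in that case and are hidden otherwise.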

Note:

- Within an MPLS domain, TTL propagation always occurs between the multi-level labels.

- The TTL value of a transmitted local packet is always copied regardless of whether IP TTL propagation is enabled or not. This ensures that the local administrator can tracert for network test.

ICMP response

On an MPLS VPN, P routers cannot route VPN packets carried by MPLS. When the TTL of an MPLS packet expires, an ICMP response will be generated and transported along the LSP until it reaches the destination router of the LSP, where it is forwarded by IP routing. Such processing increases the network traffic and the packet forwarding delay.

Note:

For description and configuration of P routers, refer to MPLS L3VPN Configuration and MPLS L2VPN Configuration in the MPLS Volume.

For an MPLS packet with only one level of label, the ICMP response message travels along the IP route when the TTL expires.

Inspecting an MPLS LSP

In MPLS, the MPLS control plane is responsible for establishing an LSP. However, it cannot detect the error when an LSP fails to forward data. This brings difficulty to network maintenance.

MPLS LSP ping and traceroute can be used to detect errors in LSPs and locate nodes with failures in time. Similar to IP ping and traceroute, MPLS LSP ping and traceroute use MPLS echo requests and MPLS echo replies to check the availability of LSPs. The MPLS echo request message carries the FEC information of the LSP to be detected, and is sent along the LSP like other data packets of the FEC. Thus, the LSP can be checked.

- MPLS LSP ping is a tool for checking the validity and availability of an LSP. It uses messages called MPLS echo requests. In a ping operation, MPLS echo requests are forwarded along an LSP to the egress, where the control plane confirms that the LSR is the egress of the FEC and responds with MPLS echo replies. If the ping initiator receives the replies, the LSP is considered usable for forwarding data.

- MPLS LSP traceroute is a tool for locating LSP errors. By sending MPLS echo requests to the control plane of each transit LSR, it can determine whether the LSR is really a transit node on the LSP.

Note:

The destination address in the IP header of an MPLS echo request is set to an address on 127.0.0.0/8 (a loopback address of the LSR) and the TTL is set to 1, so as to prevent further forwarding of the request when the request reaches the egress.

LDP Overview

Basic Concepts of LDP

The LDP protocol dictates the messages to be used in label distribution and the related processes.

Using LDP, LSRs can map network layer routing information to data link layer switching paths directly and further establish LSPs. LSPs can be established between both neighboring LSRs and LSRs that are not directly connected, making label switching possible at all transit nodes on the network.

Note:

For detailed description about LDP, refer to RFC 3036 “LDP Specification”.

LDP peer

Two LSRs with an LDP session established between them and using LDP to exchange label bindings are called LDP peers, each of which obtains the label bindings of its peer over the LDP session between them.

LDP session

LDP sessions are used to exchange messages for label binding and releasing.

LDP sessions come in two categories:

- Local LDP session: Established between two directly connected LSRs.

- Remote LDP session: Established between two indirectly connected LSRs.

LDP message type

There are four types of LDP messages:

- Discovery message: Used to declare and maintain the presence of LSRs on a network.

- Session message: Used to establish, maintain, and terminate sessions between LDP peers.

- Advertisement message: Used to create, alter, or remove label bindings.

- Notification message: Used to provide advisory information and to notify errors.

For reliable transport of LDP messages, TCP is used for LDP session messages, advertisement messages, and notification messages, while UDP is used only for discovery messages.

Label space and LDP identifier

A scope of labels that can be assigned to LDP peers is called a label space. A label space can be per interface or per platform. A per interface label space is interface-specific, while a per platform label space is for an entire LSR.

An LDP identifier is used to identify an LSR label space. It is a six-byte numerical value in the format of <LSR ID>:<Label space ID>, where the LSR ID is four bytes long. A label space ID of 1 means that the label space is per interface; a label space ID of 0 means that the label space is per platform.
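The six-byte layout can be illustrated with a short Python sketch: four bytes for the LSR ID followed by the remaining two bytes for the label space ID. The function names and the sample LSR ID 1.1.1.9 are assumptions for the example.

```python
import ipaddress
import struct

def pack_ldp_id(lsr_id: str, label_space: int) -> bytes:
    """Build the 6-byte LDP identifier: 4-byte LSR ID + 2-byte label space ID."""
    return ipaddress.IPv4Address(lsr_id).packed + struct.pack("!H", label_space)

def format_ldp_id(raw: bytes) -> str:
    """Render a 6-byte LDP identifier as <LSR ID>:<Label space ID>."""
    if len(raw) != 6:
        raise ValueError("an LDP identifier is exactly six bytes long")
    lsr_id = ipaddress.IPv4Address(raw[:4])
    (label_space,) = struct.unpack("!H", raw[4:])
    return f"{lsr_id}:{label_space}"

raw_id = pack_ldp_id("1.1.1.9", 0)   # label space ID 0: per-platform label space
text_id = format_ldp_id(raw_id)
```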

Note:

Currently, only per platform label space is supported by S7500E Series Ethernet Switches.

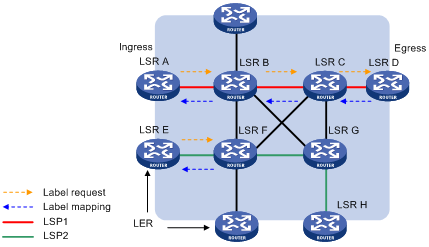

LDP Label Distribution

Figure 1-7 illustrates how LDP distributes labels.

In Figure 1-7, B is the upstream LSR of C along LSP 1.

As described previously, there are two label advertisement modes. The main difference between them is whether the downstream advertises the bindings unsolicitedly or on demand.

The following details the advertisement process in each of the two modes.

DoD mode

In DoD mode, an upstream LSR sends a label request message containing the description of a FEC to its downstream LSR. After receiving the message, the downstream LSR assigns a label to the FEC, encapsulates the binding information in a label mapping message and sends the message to the upstream LSR. However, the time when the downstream LSR sends label binding information depends on the label distribution control mode that it uses:

- In ordered mode, a downstream LSR sends label binding information only after it receives that of its downstream LSR.

- In independent mode, a downstream LSR sends label binding information immediately after it receives a label request message, no matter whether it has received the label binding information of its downstream LSR or not.

Usually, an upstream LSR selects its downstream LSR based on the information in its routing table. In Figure 1-7, all LSRs along LSP 1 work in ordered mode, while LSR F along LSP 2 works in independent mode.

DU mode

In DU mode, an LSR advertises label binding information to all its neighboring LSRs unsolicitedly after the LDP sessions are established. An LSR receiving the label binding information determines how to process the label binding information based on its label retention mode and routing table information.

Fundamental Operation of LDP

LDP goes through four phases in operation: discovery, session establishment and maintenance, LSP establishment and maintenance, and session termination.

Discovery

In this phase, an LSR wanting to establish a session sends Hello messages to its neighboring LSRs periodically, announcing its presence. This way, LSRs can automatically find their peers without manual configuration.

LDP provides two discovery mechanisms:

- Basic discovery mechanism

The basic discovery mechanism is used to discover local LDP peers, that is, LSRs directly connected at link layer, and to further establish local LDP sessions.

Using this mechanism, an LSR periodically sends LDP link Hello messages in the form of UDP packets out its interfaces to the multicast address known as “all routers on this subnet”. An LDP link Hello message carries information about the LDP identifier of a given interface and some other information. Receipt of an LDP link Hello message on an interface indicates that a potential LDP peer is connected to the interface at link layer.

- Extended discovery mechanism

The extended discovery mechanism is used to discover remote LDP peers, that is, LSRs that are not directly connected at link layer, and to further establish remote LDP sessions.

Using this mechanism, an LSR periodically sends LDP targeted Hello messages in the form of UDP packets to a given IP address.

An LDP targeted Hello message carries information about the LDP identifier of a given LSR and some other information. Receipt of an LDP targeted Hello message on an LSR indicates that a potential LDP peer is connected to the LSR at network layer.

At the end of the discovery phase, Hello adjacency is established between LSRs, and LDP is ready to initiate session establishment.

Session establishment and maintenance

In this phase, LSRs pass through two steps to establish sessions between them:

1) Establishing transport layer connections (that is, TCP connections) between them.

2) Initializing sessions and negotiating session parameters such as the LDP version, label distribution mode, timers, and label spaces.

After establishing sessions between them, LSRs send Hello messages and Keepalive messages to maintain those sessions.

LSP establishment and maintenance

Establishing an LSP is to bind FECs with labels and notify adjacent LSRs of the bindings. This is implemented by LDP. The following gives the primary steps when LDP works in DU mode and ordered mode:

1) When the network topology changes and an LER finds in its routing table a new destination address that does not correspond to any existing FEC, the LER creates a new FEC for the destination address.

2) If the LER has upstream LSRs and has at least one free label, it assigns a label to the FEC and sends the label binding information to the upstream LSRs.

3) Upon receiving the label binding information, an upstream LSR records the binding. Then, if the LSR which sent the binding information is the next hop of the FEC, it adds an entry in its LFIB, assigns a label to the FEC, and sends the new label binding information to its own upstream LSRs.

4) When the ingress LER receives the label binding message, it adds an entry in its LFIB. Thus, an LSP is established for the FEC, and packets of the FEC can be label switched along the LSP.
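The four steps above can be sketched for a single FEC on a linear path. This is a simplified model under stated assumptions: each LSR has exactly one upstream neighbor, the LSR names A to D are hypothetical, and labels are drawn from one shared counter only to make the values easy to tell apart (real labels are locally significant and may repeat on different LSRs).

```python
from itertools import count

def establish_lsp(path, first_label=1024):
    """Build per-LSR LFIB entries for one FEC in DU mode with ordered control.

    path: LSR names from ingress to egress.
    Labels are assigned starting at the egress and advertised upstream.
    """
    next_label = count(first_label)
    lfib = {}
    advertised = None   # label most recently advertised by the downstream LSR
    downstream = None   # the LSR that advertised it
    for lsr in reversed(path):
        is_ingress = lsr == path[0]
        # Every LSR except the ingress assigns a label for its upstream neighbor.
        in_label = None if is_ingress else next(next_label)
        lfib[lsr] = {"in_label": in_label, "out_label": advertised, "next_hop": downstream}
        advertised, downstream = in_label, lsr
    return lfib

lfib = establish_lsp(["A", "B", "C", "D"])
```

Each LSR's outgoing label equals the incoming label of its next hop, which is what allows a packet to be label switched hop by hop once the ingress entry exists.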

Session termination

LDP checks Hello messages to determine adjacency and checks Keepalive messages to determine the integrity of sessions.

LDP uses different timers for adjacency and session maintenance:

- Hello timer: LDP peers periodically send Hello messages to indicate that they intend to keep the Hello adjacency. If an LSR does not receive any Hello message from its peer in a Hello interval, it removes the Hello adjacency.

- Keepalive timer: LDP peers keep LDP sessions by periodically sending Keepalive messages over LDP session connections. If an LSR does not receive any Keepalive message from its peer during a Keepalive interval, it closes the connection and terminates the LDP session.

LDP Loop Detection

LSPs established in an MPLS domain may be looping. The LDP loop detection mechanism can detect looping LSPs and prevent LDP messages from looping forever.

For the LDP loop detection mechanism to work, all LSRs must have the same LDP loop detection configuration. However, establishing an LDP session does not require that the LDP loop detection configuration on the LDP peers be the same.

LDP loop detection can be in either of the following two modes:

Maximum hop count

A label request message or label mapping message may contain information about its hop count, which increments by 1 for each hop. When this value reaches the specified limit, LDP considers that a loop is present and the attempt to establish an LSP fails.

Path vector

A label request message or label mapping message may contain path information in the form of path vector list. When such a message reaches an LSR, the LSR checks the path vector list of the message to see whether its MPLS LSR ID is in the list. If either of the following cases occurs, the attempt to establish an LSP fails:

- The MPLS LSR ID of the LSR is already in the path vector list.

- The hop count of the path reaches the specified limit.

If the LSR does not find its MPLS LSR ID in the path vector list, it adds the ID into the list.
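The path vector check can be sketched as a single function. This is an illustration only: the exception and function names are made up for the example, and the hop count is assumed to equal the number of LSR IDs already in the list.

```python
class LoopDetected(Exception):
    """Raised when the attempt to establish an LSP must fail."""

def check_path_vector(path_vector, my_lsr_id, max_hops):
    """Return the path vector with this LSR appended, or raise LoopDetected.

    path_vector: list of LSR IDs carried in the received message
    my_lsr_id:   MPLS LSR ID of the receiving LSR
    max_hops:    configured hop count limit
    """
    if my_lsr_id in path_vector:
        raise LoopDetected("LSR ID already in the path vector list")
    if len(path_vector) >= max_hops:  # assumption: hop count == list length
        raise LoopDetected("hop count reached the specified limit")
    return path_vector + [my_lsr_id]

updated = check_path_vector(["1.1.1.1", "2.2.2.2"], "3.3.3.3", max_hops=32)
```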

LDP GR

Note:

For details about Graceful Restart (GR), refer to GR Configuration in the System Volume.

During MPLS LDP session establishment, the LDP peers need to perform Fault Tolerance (FT) and GR capability negotiation. Only when both devices support GR can the established session be FT/GR capable. To support GR, a GR device must back up the FECs and label information.

When an LDP session is GR capable:

1) Whenever the GR restarter restarts, a GR helper will detect that the related LDP session is down and will keep its neighborship with the GR restarter and retain information about the session until the reconnect timer times out.

2) If the GR helper receives a session request from the GR restarter before the reconnect timer times out, it retains the LSP and label information of the session and restores the session with the GR restarter. Otherwise, it deletes all LSP and label information associated with the session.

3) After the session recovers, the GR restarter and helper activate the neighbor liveness timers and recovery timers, restore all LSP information related to the session, and send to each other label mapping and label request messages.

4) Upon receipt of the mapping messages from each other, the GR restarter and helper delete the LSP stale flag. After the neighbor liveness timer and recovery timer time out, the GR restarter and helper delete any LSP information of the session that is still marked stale.

To summarize, during GR recovery, the LSP information is preserved for the forwarding plane and therefore MPLS packets can be forwarded without interruption.

Configuring MPLS Basic Capability

You need to configure MPLS basic capability on all routers for MPLS forwarding within an MPLS domain, and to configure MPLS basic capability before configuring any other MPLS features.

Note:

Currently, only VLAN interfaces support MPLS capability.

Configuration Prerequisites

Before configuring MPLS basic capability, be sure to complete these tasks:

- Configuring physical parameters on relevant interfaces

- Configuring link layer attributes on relevant interfaces

- Assigning IP addresses to relevant interfaces

- Configuring static routes or an IGP, ensuring that LSRs can reach each other at Layer 3

Note:

MPLS basic capability can be configured on LSRs even when LSRs cannot reach each other. However, you need to configure the mpls ldp transport-address command in this case.

Configuration Procedure

Follow these steps to configure MPLS basic capability:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Configure the MPLS LSR ID | mpls lsr-id lsr-id | Required. Not configured by default. |
| Enable MPLS globally and enter MPLS view | mpls | Required. Not enabled by default. |
| Exit to system view | quit | - |
| Enter interface view | interface interface-type interface-number | - |
| Enable MPLS for the interface | mpls | Required. Not enabled by default. |

Note:

- An MPLS LSR ID is in the format of an IP address and must be unique within an MPLS domain. It is recommended that you use the IP address of a loopback interface on an LSR as the MPLS LSR ID.

- At present, the S7500E series switches support enabling MPLS only on VLAN interfaces.

- Because MPLS encapsulates original packets with one or more layers of labels, after enabling MPLS on the VLAN interface of a VLAN, it is recommended that you enable the jumboframe function on the ports of the VLAN and configure a proper jumbo frame length to prevent packets from being dropped due to the size limit. For example, if two layers of MPLS labels are required for encapsulating FTP packets, you need to configure the jumbo frame length on related ports to 1544 bytes: 1518 bytes for the FTP packet + 4 bytes × 2 for the MPLS labels + 4 bytes for the VLAN tag + 14 bytes for the Ethernet frame header. For descriptions of the jumboframe function, refer to Ethernet Interface Configuration in the Access Volume.
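The jumbo frame length in the example above follows directly from the listed terms. The helper below simply reproduces that arithmetic so that it can be repeated for other label depths; the function and parameter names are illustrative and are not device commands.

```python
def jumbo_frame_length(packet_bytes=1518, label_depth=2, vlan_tags=1, eth_header_bytes=14):
    """Sum the terms from the example: packet + 4 bytes per label + 4 per VLAN tag + header."""
    return packet_bytes + 4 * label_depth + 4 * vlan_tags + eth_header_bytes

two_labels = jumbo_frame_length()                  # the case described in the note
three_labels = jumbo_frame_length(label_depth=3)   # one more label adds four bytes
```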

Configuring PHP

Configure PHP on an egress and select the type of labels for the egress to distribute based on whether the penultimate hop supports PHP.

Configuration Prerequisites

Before configuring PHP, be sure to complete the following task: Configuring MPLS basic capability on all LSRs.

Configuration Procedure

According to RFC 3032 “MPLS Label Stack Encoding”:

- A label value of 0 represents an IPv4 explicit null label and is valid only when it appears at the bottom of a label stack.

- A label value of 3 represents an implicit null label and never appears in the label stack. When an LSR finds that it is assigned an implicit null label, it directly performs a pop operation rather than substituting the implicit null label for the original label at the stack top.

Follow these steps to configure PHP:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enter MPLS view | mpls | — |
| Configure the egress to support PHP and specify the type of the label to be distributed to the penultimate hop | label advertise { explicit-null \| implicit-null \| non-null } | Optional. By default, an egress supports PHP and distributes an implicit null label to the penultimate hop. Note that you must reset LDP sessions for the configuration to take effect. |

For the S7500E series Ethernet switches, a label with a value of 0 can be at the top of a label stack. After receiving a packet with such a label, the switch will pop the label directly and check whether there is any inner layer label. If finding an inner layer label, the switch will forward the packet based on the inner layer label; otherwise, the switch will forward the packet based on the IP address.
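The label handling described in this section can be sketched in Python as follows. This is a simplified model under stated assumptions, not the switch's forwarding code: the function names are hypothetical, a label stack is represented as a list whose first element is the top label, and any label other than the implicit null label is assumed to be swapped in by the penultimate hop.

```python
IPV4_EXPLICIT_NULL = 0  # RFC 3032 label value 0
IMPLICIT_NULL = 3       # RFC 3032 label value 3


def penultimate_hop_action(label_from_egress: int) -> str:
    """Operation the penultimate hop applies to the top label."""
    # An implicit null label never appears in the stack: the LSR pops instead.
    if label_from_egress == IMPLICIT_NULL:
        return "pop"
    # Assumption: every other label is substituted for the top label.
    return "swap"


def handle_explicit_null(label_stack: list) -> tuple:
    """Model the behavior described above for a packet whose top label is 0.

    label_stack[0] is the top of the stack.
    Returns (forwarding basis, remaining label stack).
    """
    if not label_stack or label_stack[0] != IPV4_EXPLICIT_NULL:
        raise ValueError("top label must be the explicit null label (0)")
    remaining = label_stack[1:]            # pop the explicit null label
    if remaining:
        return ("inner-label", remaining)  # forward based on the inner label
    return ("ip", remaining)               # no label left: forward based on IP address
```

For example, `handle_explicit_null([0, 1025])` reports forwarding on the inner label 1025, while `handle_explicit_null([0])` falls back to IP forwarding.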

Configuring a Static LSP

An LSP can be static or dynamic. A static LSP is manually configured, while a dynamic LSP is established by MPLS LDP.

For a static LSP to work, all LSRs along the LSP must be configured properly.

Static LSPs can be used in MPLS L2VPN.

For configuration of MPLS L2VPN, refer to MPLS L2VPN Configuration in the MPLS Volume.

Configuration Prerequisites

Before configuring a static LSP, be sure to complete these tasks:

- Determining the ingress, transit LSRs, and egress for the static LSP,

- Configuring MPLS basic capability on all the LSRs.

Configuration Procedure

Follow these steps to configure a static LSP:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Configure a static LSP taking the current LSR as the ingress | static-lsp ingress lsp-name destination dest-addr { mask \| mask-length } nexthop next-hop-addr out-label out-label | Optional |
| Configure a static LSP taking the current LSR as a transit LSR | static-lsp transit lsp-name incoming-interface interface-type interface-number in-label in-label nexthop next-hop-addr out-label out-label | Optional |
| Configure a static LSP taking the current LSR as the egress | static-lsp egress lsp-name incoming-interface interface-type interface-number in-label in-label | Optional |

- The value of the next-hop-addr argument cannot be any local public network IP address.

- If you specify the next hop when configuring a static LSP, and the address of the next hop is present in the routing table, you also need to specify the next hop when configuring the static IP route.

- For information about configuring a static IP route, refer to Static Routing Configuration in the IP Routing Volume.

Configuring MPLS LDP

Configuration Prerequisites

Before configuring LDP, be sure to complete the following task:

- Configuring MPLS basic capability.

MPLS LDP Configuration Task List

Complete the following tasks to configure LDP:

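| Task | Remarks |
|---|---|
| Configuring MPLS LDP Capability | Required |
| Configuring Local LDP Session Parameters | Optional |
| Configuring Remote LDP Session Parameters | Optional |
| Configuring the Policy for Triggering LSP Establishment | Optional |
| Configuring the Label Distribution Control Mode | Optional |
| Configuring LDP Loop Detection | Optional |
| Configuring LDP MD5 Authentication | Optional |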

Configuring MPLS LDP Capability

Follow these steps to configure MPLS LDP capability:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enable LDP capability globally and enter MPLS LDP view | mpls ldp | Required. Not enabled by default. |
| Configure the LDP LSR ID | lsr-id lsr-id | Optional. MPLS LSR ID of the LSR by default. |
| Exit to system view | quit | — |
| Enter interface view | interface interface-type interface-number | — |
| Enable LDP capability for the interface | mpls ldp | Required. Not enabled by default. |

- Currently, only VLAN interfaces support LDP capability.

- Disabling LDP on an interface terminates all LDP sessions on the interface. As a result, all LSPs using the sessions will be deleted.

- Usually, you do not need to configure the LDP LSR ID, which defaults to the MPLS LSR ID. In some VPN applications (for example, MPLS L3VPN applications), however, you need to ensure that different LDP instances have different LDP LSR IDs if the address spaces overlap. Otherwise, the TCP connections cannot be established normally.

Configuring Local LDP Session Parameters

You can configure a local session transport address to be the IP address of an interface or a specified IP address.

Follow these steps to configure local LDP session parameters:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enter interface view | interface interface-type interface-number | — |
| Set the link Hello timer | mpls ldp timer hello-hold value | Optional. 15 seconds by default. |
| Set the link Keepalive timer | mpls ldp timer keepalive-hold value | Optional. 45 seconds by default. |
| Configure the LDP transport address | mpls ldp transport-address { ip-address \| interface } | Optional. MPLS LSR ID of the LSR by default. |

If you configure an LDP transport address by specifying an IP address, the specified IP address must be the IP address of an interface on the device. Otherwise, the LDP sessions cannot be established.

Configuring Remote LDP Session Parameters

Configure a remote session transport address by specifying an IP address.

Follow these steps to configure remote LDP session parameters:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Create a remote peer entity and enter MPLS LDP remote peer view | mpls ldp remote-peer remote-peer-name | Required |
| Configure the remote peer IP address | remote-ip ip-address | Required |
| Set the targeted Hello timer | mpls ldp timer hello-hold value | Optional. 45 seconds by default. |
| Set the targeted Keepalive timer | mpls ldp timer keepalive-hold value | Optional. 45 seconds by default. |
| Configure the transport address | mpls ldp transport-address ip-address | Optional. MPLS LSR ID of the LSR by default. |

In the current implementation, LDP itself does not send any label information through remote sessions, and remote sessions are used only to transfer messages for L2VPNs. For applications of remote sessions, refer to information about Martini MPLS L2VPN configuration in MPLS L2VPN Configuration of the MPLS Volume.

- If a Hello adjacency exists between two peers, no remote adjacency can be established between them. If a remote adjacency exists between two peers, you can still configure a local adjacency for them. However, the local adjacency can be established only when the transport address and keepalive settings of the two peers match; in that case, the remote adjacency is removed. In other words, only one session, either local or remote, can exist between two LSRs, and the local session takes precedence over the remote session.

- The remote peer IP address to be configured must be different from all existing remote peer IP addresses. Otherwise, the configuration fails.

- The IP address specified as the LDP transport address must be the IP address of an interface on the device.

Configuring the Policy for Triggering LSP Establishment

You can specify the routes that are allowed to trigger the establishment of LSPs:

- All static and IGP routes.

- Static and IGP routes permitted by an IP address prefix list.

Follow these steps to configure the policy for triggering LSP establishment:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enter MPLS view | mpls | — |
| Configure the LSP establishment triggering policy | lsp-trigger { all \| ip-prefix prefix-name } | Optional. By default, only local loopback addresses with 32-bit masks can trigger LDP to establish LSPs. |

- For an LSP to be established, an exactly matching routing entry must exist on the LSR. With loopback addresses using 32-bit masks, only exactly matching host routing entries can trigger LDP to establish LSPs.

- For information about IP address prefix list, refer to Routing Policy Configuration in the IP Routing Volume.
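The effect of the three triggering policies can be approximated with a short Python sketch. It rests on several assumptions that do not come from the switch software: the function `triggers_lsp` and its arguments are hypothetical, the default policy is modeled simply as "the route is a /32 host route" (the check that the address belongs to a local loopback interface is omitted), the `all` policy does not verify that the route is a static or IGP route, and the prefix list is modeled as a plain collection of exactly matching prefixes.

```python
import ipaddress


def triggers_lsp(route: str, policy: str = "default", prefix_list=()) -> bool:
    """Return True if the route may trigger LDP to establish an LSP."""
    network = ipaddress.ip_network(route)
    if policy == "all":
        # lsp-trigger all: every (static or IGP) route qualifies.
        return True
    if policy == "ip-prefix":
        # lsp-trigger ip-prefix: only routes permitted by the prefix list.
        permitted = {ipaddress.ip_network(p) for p in prefix_list}
        return network in permitted
    if policy == "default":
        # Default: only host routes with 32-bit masks.
        return network.prefixlen == 32
    raise ValueError("unknown policy: " + policy)


print(triggers_lsp("3.3.3.9/32"))          # True
print(triggers_lsp("20.1.1.0/24"))         # False
print(triggers_lsp("20.1.1.0/24", "all"))  # True
```

Under the default policy only the /32 route qualifies, which is why LSPs for network segments appear only after a broader policy such as `lsp-trigger all` is configured.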

Configuring the Label Distribution Control Mode

Follow these steps to configure the LDP label distribution control mode:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enable LDP capability globally and enter MPLS LDP view | mpls ldp | Required |
| Specify the label distribution control mode | label-distribution { independent \| ordered } | Optional. Ordered by default. Note that you need to reset LDP sessions for this command to take effect. |
| Enable label readvertisement for DU mode | du-readvertise | Optional. Enabled by default. |
| Set the interval for label readvertisement in DU mode | du-readvertise timer value | Optional. 30 seconds by default. |

Configuring LDP Loop Detection

Follow these steps to configure LDP loop detection:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enable LDP capability globally and enter MPLS LDP view | mpls ldp | Required |
| Enable loop detection | loop-detect | Required. Disabled by default. |
| Set the maximum hop count | hops-count hop-number | Optional. 32 by default. |
| Set the maximum path vector length | path-vectors pv-number | Optional. 32 by default. |

Changing the loop detection configurations does not affect existing LSPs.

You need to configure loop detection before enabling LDP capability on any interface.
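The maximum hop count and maximum path vector length bound the two loop checks that LDP defines in RFC 5036. As a rough Python sketch of those checks, under stated assumptions: the function `loop_detected` and its parameters are hypothetical, the limits default to the values in the table above, and treating a value that reaches the limit (rather than exceeds it) as a loop follows the RFC's wording, not a documented behavior of this switch.

```python
def loop_detected(own_lsr_id: str, hop_count: int, path_vector: list,
                  max_hops: int = 32, max_path_vector: int = 32) -> bool:
    """Return True if a received LDP message should be treated as looping."""
    # Hop count check: the message has traversed too many LSRs.
    if hop_count >= max_hops:
        return True
    # Path vector check: this LSR already appears in the recorded path.
    if own_lsr_id in path_vector:
        return True
    # The path vector itself has grown to the configured maximum length.
    if len(path_vector) >= max_path_vector:
        return True
    return False


print(loop_detected("1.1.1.9", 3, ["2.2.2.9", "3.3.3.9"]))  # False
print(loop_detected("1.1.1.9", 3, ["2.2.2.9", "1.1.1.9"]))  # True
```

Lower limits detect loops sooner, but must still exceed the longest legitimate LSP in the network.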

Configuring LDP MD5 Authentication

To improve the security of LDP sessions, you can configure MD5 authentication for the underlying TCP connections.

Follow these steps to configure LDP MD5 authentication:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enable LDP capability globally and enter MPLS LDP view | mpls ldp | Required |
| Enable LDP MD5 authentication and set the password | md5-password { cipher \| plain } peer-lsr-id password | Required. Disabled by default. |

Configuring LDP Instances

LDP instances are for carrier’s carrier networking applications of MPLS L3VPN. You need to configure LDP capability for existing VPN instances.

Except for the command for the LDP GR feature, all commands available in MPLS LDP view can be configured in MPLS LDP VPN instance view.

Configuration Prerequisites

Before configuring LDP instances, be sure to complete these tasks:

- Configuring VPN instances,

- Configuring MPLS basic capability,

- Configuring MPLS LDP capability.

Configuration Procedure

Usually, you do not need to configure the LDP LSR ID, which defaults to the MPLS LSR ID. In some VPN applications (for example, MPLS L3VPN applications), however, you need to ensure that different LDP instances have different LDP LSR IDs if the address spaces overlap. Otherwise, the TCP connections cannot be established normally.

Follow these steps to configure LDP instances:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enable LDP capability for a VPN instance and enter MPLS LDP VPN instance view | mpls ldp vpn-instance vpn-instance-name | Required |
| Configure the LDP LSR ID for the VPN instance | lsr-id lsr-id | Optional. MPLS LSR ID of the LSR by default. |

- Configurations in MPLS LDP VPN instance view affect only LDP-enabled interfaces bound to the VPN instances, while configurations in MPLS LDP view do not affect interfaces bound to VPN instances. When configuring the transport address of an LDP instance, you need to use the IP address of the interface bound to the VPN instance.

- By default, LDP adjacencies on a private network are established using addresses of the LDP-enabled interfaces, while those on the public network are established using the LDP LSR ID.

Configuring LDP GR

Configuration Prerequisites

Before configuring LDP GR, be sure to complete this task:

- Configuring MPLS LDP capability on each device that is to act as the GR restarter or a GR helper.

Configuration Procedure

The S7500E Series Ethernet Switches can act as both a GR restarter and a GR helper.

Follow these steps to configure LDP GR:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enter MPLS LDP view | mpls ldp | — |
| Enable MPLS LDP GR | graceful-restart | Required. Disabled by default. |
| Set the FT reconnect timer | graceful-restart timer reconnect timer | Optional. 300 seconds by default. |
| Set the LDP neighbor liveness timer | graceful-restart timer neighbor-liveness timer | Optional. 120 seconds by default. |
| Set the LDP recovery timer | graceful-restart timer recovery timer | Optional. 300 seconds by default. |

During MPLS LDP GR, a GR helper takes the lesser one between its LDP neighbor liveness time and the GR restarter’s FT reconnect time as its FT reconnect interval, and takes the lesser one between its LDP recovery time and that of the GR restarter as its LDP recovery interval.
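The timer selection described above amounts to taking two minimums. The following Python sketch illustrates it; the function name `helper_gr_intervals` and its parameter names are hypothetical, and all values are in seconds.

```python
def helper_gr_intervals(helper_neighbor_liveness: int,
                        helper_recovery: int,
                        restarter_reconnect: int,
                        restarter_recovery: int) -> tuple:
    """Return (FT reconnect interval, LDP recovery interval) used by a GR helper."""
    # Lesser of the helper's neighbor liveness time and the restarter's FT reconnect time.
    reconnect_interval = min(helper_neighbor_liveness, restarter_reconnect)
    # Lesser of the two devices' LDP recovery times.
    recovery_interval = min(helper_recovery, restarter_recovery)
    return reconnect_interval, recovery_interval


# With the default timers on both devices (120 s liveness, 300 s reconnect, 300 s recovery):
print(helper_gr_intervals(120, 300, 300, 300))  # (120, 300)
```

With default settings on both sides, the helper therefore waits at most 120 seconds for the restarter to reconnect, even though the restarter advertises 300 seconds.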

Restarting MPLS LDP Gracefully

To test MPLS LDP GR without a main/backup switchover, you can restart MPLS LDP gracefully. This operation is not recommended in normal cases.

Follow these steps to restart MPLS LDP gracefully:

| To do… | Use the command… | Remarks |
|---|---|---|
| Restart MPLS LDP gracefully | graceful-restart mpls ldp | Required. Available in user view. |

Configuring MPLS IP TTL Processing

Configuration Prerequisites

Before configuring MPLS IP TTL propagation, be sure to complete this task:

- Configuring MPLS basic capability.

Configuring MPLS IP TTL Propagation

Follow these steps to configure IP TTL propagation of MPLS:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enter MPLS view | mpls | Required |
| Enable MPLS IP TTL propagation | ttl propagate { public \| vpn } | Optional. Enabled for only public network packets by default. |

- The ttl propagate command affects only the propagation of the IP TTL to the TTL in an MPLS label. At the egress, the system uses the smaller of the IP TTL and the MPLS TTL as the TTL of the IP packet and decrements the value by 1.

- If you enable MPLS IP TTL propagation for VPN packets on one LSR, it is recommended that you do so on all related PEs as well, so that running tracert from any of those PEs gives the same result.
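The egress TTL rule in the first note can be written as a one-line calculation. The Python sketch below is illustrative only; the function name `egress_ip_ttl` is hypothetical.

```python
def egress_ip_ttl(ip_ttl: int, mpls_ttl: int) -> int:
    """TTL written into the IP packet when it leaves the MPLS domain at the egress."""
    # Take the smaller of the two TTLs, then decrement by 1.
    return min(ip_ttl, mpls_ttl) - 1


print(egress_ip_ttl(250, 253))  # 249
print(egress_ip_ttl(64, 60))    # 59
```

Because the smaller value always wins, the hops consumed inside the MPLS domain are reflected in the IP TTL whenever the MPLS TTL has dropped below it.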

Specifying the Type of the Paths for ICMP Responses

ICMP responses can use two kinds of paths: IP route and LSP.

For MPLS packets with one level of labels, you can configure MPLS to send back ICMP responses along IP routes instead of LSPs when the TTL expires.

In MPLS, an IP router generally maintains public network routes only, and MPLS packets with one level of labels carry public network payload. ICMP responses to such packets can therefore be sent back along IP routes, which is why this function applies to them.

In MPLS VPN, for autonomous system border routers (ASBRs), MPLS packets that carry VPN packets may have only one level of labels. To tracert the VPN packets on public networks in this case, you need to:

- Configure the ttl propagate vpn command on all relevant PEs to allow IP TTL propagation of VPN packets.

- Configure the undo ttl expiration pop command on the ASBRs to ensure that ICMP responses can travel along the original LSPs.

Follow these steps to specify the type of the paths for ICMP responses:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enter MPLS view | mpls | — |
| Specify that ICMP responses travel along the IP route when the TTL of an MPLS packet expires | ttl expiration pop | Optional. Configure one of the two commands as required. By default, ICMP response messages of an MPLS packet with a one-level label stack travel along the IP route. |
| Specify that ICMP responses travel along the LSP when the TTL of an MPLS packet expires | undo ttl expiration pop | Optional. Configure one of the two commands as required. |

Configuring MPLS Statistics

Setting the Interval for Reporting Statistics

To view LSP statistics, you must first set the interval for collecting statistics.

Follow these steps to set the interval for collecting statistics:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enter MPLS view | mpls | — |
| Set the interval for collecting statistics | statistics interval interval-time | Required. 0 seconds by default, meaning that the system does not collect statistics. |

Inspecting an MPLS LSP

| To do… | Use the command… | Remarks |
|---|---|---|
| Check the validity and reachability of an MPLS LSP | ping lsp [ -a source-ip \| -c count \| -exp exp-value \| -h ttl-value \| -m wait-time \| -r reply-mode \| -s packet-size \| -t time-out \| -v ] * ipv4 dest-addr mask-length [ destination-ip-addr-header ] | Available in any view |
| Locate an MPLS LSP error | tracert lsp [ -a source-ip \| -exp exp-value \| -h ttl-value \| -r reply-mode \| -t time-out ] * ipv4 dest-addr mask-length [ destination-ip-addr-header ] | Available in any view |

Enabling MPLS Trap

With the MPLS trap function enabled, trap packets of the notifications level will be generated to report critical MPLS events. Such trap packets will be sent to the information center of the device. Whether and where the packets will then be output depend on the configurations of the information center. For information on how to configure the information center, refer to Information Center Configuration in the System Volume.

Follow these steps to enable the MPLS trap function:

| To do… | Use the command… | Remarks |
|---|---|---|
| Enter system view | system-view | — |
| Enable the MPLS trap function | snmp-agent trap enable mpls | Required. Disabled by default. |

For detailed information about the snmp-agent trap enable mpls command, refer to the snmp-agent trap enable command in SNMP Commands of the System Volume.

Displaying and Maintaining MPLS

Resetting LDP Sessions

If you change any LDP session parameters while the sessions are up, the LDP sessions cannot function normally. In this case, you need to reset the LDP sessions so that the LDP peers renegotiate parameters and establish new sessions. Use the following command to reset LDP sessions:

| To do… | Use the command… | Remarks |
|---|---|---|
| Reset LDP sessions | reset mpls ldp [ all \| [ vpn-instance vpn-instance-name ] [ fec mask \| peer peer-id ] ] | Available in user view |

Displaying MPLS Operation

| To do… | Use the command… | Remarks |
|---|---|---|
| Display information about one or all interfaces with MPLS enabled | display mpls interface [ interface-type interface-number ] [ verbose ] | Available in any view |
| Display information about ILM entries | display mpls ilm [ label ] [ slot slot-number ] [ include text ] | Available in any view |
| Display information about specified labels or all labels | display mpls label { label-value1 [ to label-value2 ] \| all } | Available in any view |
| Display information about LSPs | display mpls lsp [ incoming-interface interface-type interface-number ] [ outgoing-interface interface-type interface-number ] [ in-label in-label-value ] [ out-label out-label-value ] [ asbr \| [ vpn-instance vpn-instance-name ] [ protocol { bgp \| bgp-ipv6 \| ldp \| static } ] ] [ egress \| ingress \| transit ] [ { exclude \| include } dest-addr mask-length ] [ verbose ] | Available in any view |
| Display information about NHLFE entries | display mpls nhlfe [ token ] [ slot slot-number ] [ include text ] | Available in any view |
| Display LSP statistics | display mpls lsp statistics | Available in any view |
| Display information about static LSPs | display mpls static-lsp [ lsp-name lsp-name ] [ { include \| exclude } dest-addr mask-length ] [ verbose ] | Available in any view |
| Display LSP-related route information | display mpls route-state [ vpn-instance vpn-instance-name ] [ dest-addr mask-length ] | Available in any view |
| Display statistics for all LSPs or the LSP with a specified index or name | display mpls statistics lsp { all \| index \| name lsp-name } | Available in any view |
| Display MPLS statistics for one or all interfaces | display mpls statistics interface { interface-type interface-number \| all } | Available in any view |

Displaying MPLS LDP Operation

|

To do… |

Use the command… |

Remarks |

|

Display information about LDP |

display mpls ldp [ all [ verbose ] [ | { begin | exclude | include } regular-expression ] ] |

Available in any view |

|

Display information about LDP-enabled interfaces |

display mpls ldp interface [ all [ verbose ] | [ vpn-instance vpn-instance-name ] [ interface-type interface-number | verbose ] ] [ | { begin | exclude | include } regular-expression ] |

Available in any view |

|

Display information about LDP session peers |

display mpls ldp peer [ all [ verbose ] | [ vpn-instance vpn-instance-name ] [ peer-id | verbose ] ] [ | { begin | exclude | include } regular-expression ] |

Available in any view |

|

Display information about remote LDP peers |

display mpls ldp remote-peer [ remote-name remote-peer-name ] [ | { begin | exclude | include } regular-expression ] |

Available in any view |

|

Display information about LDP sessions |

display mpls ldp session [ all [ verbose ] | [ vpn-instance vpn-instance-name ] [ peer-id | verbose ] ] [ | { begin | exclude | include } regular-expression ] |

Available in any view |

|

Display information about LSPs established by LDP |

display mpls ldp lsp [ all | [ vpn-instance vpn-instance-name ] [ dest-addr mask-length ] ] [ | { begin | exclude | include } regular-expression ] |

Available in any view |

|

Display information about a specified LDP instance |

display mpls ldp vpn-instance vpn-instance-name [ | { begin | exclude | include } regular-expression ] |

Available in any view |

Clearing MPLS Statistics

| To do… | Use the command… | Remarks |
|---|---|---|
| Clear MPLS statistics for one or all MPLS interfaces | reset mpls statistics interface { interface-type interface-number \| all } | Available in user view |
| Clear MPLS statistics for all LSPs or the LSP with a specified index or name | reset mpls statistics lsp { index \| all \| name lsp-name } | Available in user view |

MPLS Configuration Examples

Example for Configuring LDP Sessions

Network requirements

- Switch A, Switch B, and Switch C support MPLS and use OSPF as the IGP for the MPLS backbone.

- Local LDP sessions are established between Switch A and Switch B as well as between Switch B and Switch C, while a remote LDP session is required between Switch A and Switch C.

Network diagram

Figure 1-8 Network diagram for configuring LDP sessions

Configuration procedure

1) Configure the IP addresses of the interfaces

Configure the IP addresses and masks of the interfaces including the VLAN interfaces and loopback interfaces as required in Figure 1-8. The detailed configuration procedure is omitted here.

2) Configure the routes for OSPF to advertise

# Configure Switch A.

<Sysname> system-view

[Sysname] sysname SwitchA

[SwitchA] ospf

[SwitchA-ospf-1] area 0

[SwitchA-ospf-1-area-0.0.0.0] network 1.1.1.9 0.0.0.0

[SwitchA-ospf-1-area-0.0.0.0] network 10.1.1.0 0.0.0.255

[SwitchA-ospf-1-area-0.0.0.0] quit

[SwitchA-ospf-1] quit

# Configure Switch B.

<Sysname> system-view

[Sysname] sysname SwitchB

[SwitchB] ospf

[SwitchB-ospf-1] area 0

[SwitchB-ospf-1-area-0.0.0.0] network 2.2.2.9 0.0.0.0

[SwitchB-ospf-1-area-0.0.0.0] network 10.1.1.0 0.0.0.255

[SwitchB-ospf-1-area-0.0.0.0] network 20.1.1.0 0.0.0.255

[SwitchB-ospf-1-area-0.0.0.0] quit

[SwitchB-ospf-1] quit

# Configure Switch C.

<Sysname> system-view

[Sysname] sysname SwitchC

[SwitchC] ospf

[SwitchC-ospf-1] area 0

[SwitchC-ospf-1-area-0.0.0.0] network 3.3.3.9 0.0.0.0

[SwitchC-ospf-1-area-0.0.0.0] network 20.1.1.0 0.0.0.255

[SwitchC-ospf-1-area-0.0.0.0] quit

[SwitchC-ospf-1] quit

After completing the above configurations, you will see that every switch has learned the routes to other switches if you execute the display ip routing-table command. The following takes Switch A as an example:

[SwitchA] display ip routing-table

Routing Tables: Public

Destinations : 9 Routes : 9

Destination/Mask Proto Pre Cost NextHop Interface

1.1.1.9/32 Direct 0 0 127.0.0.1 InLoop0

2.2.2.9/32 OSPF 10 1563 10.1.1.2 Vlan1

3.3.3.9/32 OSPF 10 3125 10.1.1.2 Vlan1

10.1.1.0/24 Direct 0 0 10.1.1.1 Vlan1

10.1.1.1/32 Direct 0 0 127.0.0.1 InLoop0

10.1.1.2/32 Direct 0 0 10.1.1.2 Vlan1

20.1.1.0/24 OSPF 10 3124 10.1.1.2 Vlan1

127.0.0.0/8 Direct 0 0 127.0.0.1 InLoop0

127.0.0.1/32 Direct 0 0 127.0.0.1 InLoop0

Now, OSPF adjacency should have been established between Switch A and Switch B and between Switch B and Switch C respectively. If you execute the display ospf peer verbose command, you will find that the neighbors are in the state of Full. The following takes Switch A as an example:

[SwitchA] display ospf peer verbose

OSPF Process 1 with Router ID 1.1.1.9

Neighbors

Area 0.0.0.0 interface 10.1.1.1(Vlan-interface1)'s neighbors

Router ID: 2.2.2.9 Address: 10.1.1.2 GR State: Normal

State: Full Mode:Nbr is Master Priority: 1

DR: None BDR: None MTU: 1500

Dead timer due in 39 sec

Neighbor is up for 00:02:13

Authentication Sequence: [ 0 ]

3) Configure MPLS basic capability and enable LDP

# Configure Switch A.

[SwitchA] mpls lsr-id 1.1.1.9

[SwitchA] mpls

[SwitchA-mpls] quit

[SwitchA] mpls ldp

[SwitchA-mpls-ldp] quit

[SwitchA] interface vlan-interface 1

[SwitchA-Vlan-interface1] mpls

[SwitchA-Vlan-interface1] mpls ldp

[SwitchA-Vlan-interface1] quit

# Configure Switch B.

[SwitchB] mpls lsr-id 2.2.2.9

[SwitchB] mpls

[SwitchB-mpls] quit

[SwitchB] mpls ldp

[SwitchB-mpls-ldp] quit

[SwitchB] interface vlan-interface 1

[SwitchB-Vlan-interface1] mpls

[SwitchB-Vlan-interface1] mpls ldp

[SwitchB-Vlan-interface1] quit

[SwitchB] interface vlan-interface 2

[SwitchB-Vlan-interface2] mpls

[SwitchB-Vlan-interface2] mpls ldp

[SwitchB-Vlan-interface2] quit

# Configure Switch C.

[SwitchC] mpls lsr-id 3.3.3.9

[SwitchC] mpls

[SwitchC-mpls] quit

[SwitchC] mpls ldp

[SwitchC-mpls-ldp] quit

[SwitchC] interface vlan-interface 1

[SwitchC-Vlan-interface1] mpls

[SwitchC-Vlan-interface1] mpls ldp

[SwitchC-Vlan-interface1] quit

After completing the above configurations, local sessions should have been established between Switch A and Switch B and between Switch B and Switch C. You can execute the display mpls ldp session command to check whether the local sessions have been established, or use the display mpls ldp peer command to check the peers. The following takes Switch A as an example:

[SwitchA] display mpls ldp session

LDP Session(s) in Public Network

Total number of sessions: 1

----------------------------------------------------------------

Peer-ID Status LAM SsnRole FT MD5 KA-Sent/Rcv

----------------------------------------------------------------

2.2.2.9:0 Operational DU Passive Off Off 5/5

----------------------------------------------------------------

LAM : Label Advertisement Mode FT : Fault Tolerance

[SwitchA] display mpls ldp peer

LDP Peer Information in Public network

Total number of peers: 1

-----------------------------------------------------------------

Peer-ID Transport-Address Discovery-Source

----------------------------------------------------------------

2.2.2.9:0 2.2.2.9 Vlan-interface1

----------------------------------------------------------------

4) Configure the remote LDP session

# Configure Switch A.

[SwitchA] mpls ldp remote-peer peerc

[SwitchA-mpls-ldp-remote-peerc] remote-ip 3.3.3.9

[SwitchA-mpls-ldp-remote-peerc] quit

# Configure Switch C.

[SwitchC] mpls ldp remote-peer peera

[SwitchC-mpls-ldp-remote-peera] remote-ip 1.1.1.9

[SwitchC-mpls-ldp-remote-peera] quit

After completing the above configurations, you will find by issuing the following commands on Switch A that the remote LDP session with Switch C is already established:

[SwitchA] display mpls ldp session

LDP Session(s) in Public Network

Total number of sessions: 2

----------------------------------------------------------------

Peer-ID Status LAM SsnRole FT MD5 KA-Sent/Rcv

----------------------------------------------------------------

2.2.2.9:0 Operational DU Passive Off Off 35/35

3.3.3.9:0 Operational DU Passive Off Off 8/8

----------------------------------------------------------------

LAM : Label Advertisement Mode FT : Fault Tolerance

[SwitchA] display mpls ldp peer

LDP Peer Information in Public network

Total number of peers: 2

-----------------------------------------------------------------

Peer-ID Transport-Address Discovery-Source

-----------------------------------------------------------------

2.2.2.9:0 2.2.2.9 Vlan-interface1

3.3.3.9:0 3.3.3.9 Remote Peer : peerc

-----------------------------------------------------------------

Example for Configuring LDP to Establish LSPs

Network requirements

On the network in Figure 1-8, an LSP is required between Switch A and Switch C. Check the validity and reachability of the LSP.

Network diagram

See Figure 1-8.

Configuration procedure

1) Configure LDP sessions. Refer to Example for Configuring LDP Sessions.

2) Configure the LSP establishment triggering policy for LDP to establish LSPs.

For LDP to establish an LSP, LDP sessions are required between neighboring routers along the LSP. In Figure 1-8, an LDP LSP can be established from Switch A to Switch C provided that local LDP sessions exist between Switch A and Switch B, and between Switch B and Switch C; no remote LDP session is required between Switch A and Switch C.

# Configure Switch A.

[SwitchA] mpls

[SwitchA-mpls] lsp-trigger all

[SwitchA-mpls] return

# Configure Switch B.

[SwitchB] mpls

[SwitchB-mpls] lsp-trigger all

[SwitchB-mpls] quit

# Configure Switch C.

[SwitchC] mpls

[SwitchC-mpls] lsp-trigger all

[SwitchC-mpls] quit

After completing the above configurations, you will see that the LSPs have been established if you execute the display mpls ldp lsp command. The following takes Switch A as an example:

<SwitchA> display mpls ldp lsp

LDP LSP Information

-------------------------------------------------------------------

SN DestAddress/Mask In/OutLabel Next-Hop In/Out-Interface

------------------------------------------------------------------

1 1.1.1.9/32 3/NULL 127.0.0.1 Vlan1/InLoop0

2 2.2.2.9/32 NULL/3 10.1.1.2 ----/Vlan1

3 3.3.3.9/32 NULL/1025 10.1.1.2 ----/Vlan1

4 20.1.1.0/24 NULL/3 10.1.1.2 ----/Vlan1

-------------------------------------------------------------------

A '*' before an LSP means the LSP is not established

A '*' before a Label means the USCB or DSCB is stale

# Check the validity and reachability of the LSP.

<SwitchA> ping lsp ipv4 3.3.3.9 32

LSP PING FEC: LDP IPV4 PREFIX 3.3.3.9/32 : 100 data bytes, press CTRL_C to break

Reply from 20.1.1.2: bytes=100 Sequence=1 time = 1 ms

Reply from 20.1.1.2: bytes=100 Sequence=2 time = 1 ms

Reply from 20.1.1.2: bytes=100 Sequence=3 time = 1 ms

Reply from 20.1.1.2: bytes=100 Sequence=4 time = 1 ms

Reply from 20.1.1.2: bytes=100 Sequence=5 time = 1 ms

--- FEC: LDP IPV4 PREFIX 3.3.3.9/32 ping statistics ---

5 packet(s) transmitted

5 packet(s) received

0.00% packet loss

round-trip min/avg/max = 1/1/1 ms