
Contents

SeerEngine-DC Neutron plug-ins

SeerEngine-DC Neutron security plug-ins

Installing OpenStack cloud platforms

Installing SeerEngine-DC Neutron plug-ins and patches on OpenStack

Configuring interoperability in the KVM host-based overlay scenario

Installing and configuring plug-ins on the controller node

Installing and configuring plug-ins on a compute node

Configuring interoperability in the KVM network-based overlay scenario

Installing and configuring plug-ins on the controller node

Installing and configuring plug-ins on a compute node

(Optional.) Installing and configuring plug-ins on the DHCP failover node

CentOS 7.2.1511 operating system

OpenEuler 21.10 ARM operating system

Configuring interoperability in the network-based overlay with SR-IOV enabled scenario

Installing and configuring plug-ins on the controller node

Installing and configuring plug-ins on a compute node

Editing the configuration file

Configuring interoperability with F5 or third-party load balancers

Installing and configuring plug-ins on the controller node

Installing and configuring plug-ins on a compute node

Configuring interoperability with third-party firewalls

Installing and configuring plug-ins on the controller node

Installing and configuring plug-ins on a compute node

Configuring interoperability with Ironic

Installing and configuring plug-ins on the controller node

Installing and configuring OpenStack plug-ins on the controller node

Installing and configuring OpenStack plug-ins on the compute node

Setting up the environment for the traditional VLAN and VXLAN-based Metadata solution

Installing the SeerEngine-DC Neutron security plug-ins on OpenStack

Installing the security plug-ins on the controller node

Obtaining the installation package

Installing the security plug-ins on the OpenStack controller node

Editing the configuration files on the OpenStack controller node

(Optional.) Upgrading the SeerEngine-DC Neutron security plug-ins

Comparing and synchronizing firewall resource information between the cloud platform and controller

Comparing and synchronizing LB resource information between the cloud platform and controller

Upgrading non-converged plug-ins to converged plug-ins

Comparing and synchronizing resource information between the controller and cloud platform

CLI-based management of VPC connections and floating IP addresses

CLI-based management of VPC connections

CLI-based management of floating IP addresses

The Intel X700 Ethernet network adapter series fails to receive LLDP messages. What should I do?

VM instances fail to be created in a normal environment. What should I do?

Overview

This document describes how to install OpenStack plug-ins, including the SeerEngine-DC Neutron plug-ins, the Nova patch, the openvswitch-agent patch, and the DHCP failover components.

SeerEngine-DC Neutron plug-ins

Neutron is the OpenStack networking service. It manages all virtual networking infrastructures (VNIs) in an OpenStack environment and provides virtual network services to the devices managed by the OpenStack compute service.

SeerEngine-DC Neutron plug-ins are developed for SeerEngine-DC based on the OpenStack framework. They obtain network configuration from OpenStack through REST APIs, including the settings of tenant networks, subnets, routers, and ports, and synchronize the configuration to SeerEngine-DC.

CAUTION: To avoid service interruptions, do not modify the settings issued by the cloud platform on the controller, such as the virtual link layer network, vRouter, and vSubnet settings, after the plug-ins connect to the OpenStack cloud platform.

Nova patch

Nova is the OpenStack compute service that provides virtualization services for users. These services include creating, starting, shutting down, and migrating virtual machines, and configuring virtual machine settings such as CPU and memory.

In specific scenarios (such as a host-based overlay or vCenter network-based overlay scenario), you must install the Nova patch to enable virtual machines created by OpenStack to access networks managed by SeerEngine-DC. Kylin V10 operating systems do not support Nova patch installation.

Openvswitch-agent patch

The open source openvswitch-agent process on an OpenStack compute node might fail to deploy VLAN flow tables to open source vSwitches when the following conditions exist:

· The kernel-based virtual machine (KVM) technology is used on the node.

· The hierarchical port binding feature is configured on the node.

To resolve this issue, you must install the openvswitch-agent patch.

Kylin V10 operating systems do not support openvswitch-agent patch installation.

DHCP failover components

Kylin V10 operating systems do not support installing DHCP failover components.

DHCP component

In the network-based overlay scenario, only the controller can act as the DHCP server to assign addresses to virtual machines or bare metal servers. When the controller is disconnected from the southbound network, the virtual machines or bare metal servers cannot renew or reobtain addresses through DHCP. To resolve this issue, install the DHCP component on the DHCP failover node to provide DHCP failover in the network-based overlay scenario. Then, when the controller loses its connection to the southbound network, the virtual machines or bare metal servers can renew and reobtain addresses from the independently deployed DHCP server.

Metadata component

In the DHCP failover scenario, you must install a Metadata component on the DHCP failover node to provide the Metadata function for the DHCP component.

SeerEngine-DC Neutron security plug-ins

The SeerEngine-DC Neutron security plug-ins are designed for SeerEngine-DC and meet the OpenStack requirements. They implement all features of the OpenStack security plug-ins (such as FWaaS, LBaaS, and VPNaaS) and synchronize the security configurations obtained from OpenStack to SeerEngine-DC through REST APIs, which allows tenants to schedule security network resources. The synchronized security configurations include tenants' firewalls (FWs), load balancers (LBs), and VPNs.

Kylin V10 OS does not support installing security plug-ins.

Restrictions and guidelines

This document describes interoperability between SeerEngine-DC and one OpenStack platform that contains one controller node. In other scenarios, follow these restrictions and guidelines:

· SeerEngine-DC interoperates with one OpenStack platform that contains multiple controller nodes.

Configure all controller nodes on the OpenStack platform in the same way a single controller is configured, and make sure the configuration on all controller nodes is the same.

· SeerEngine-DC interoperates with multiple OpenStack platforms. Only OpenStack Queens and Rocky are supported.

¡ Install plug-ins on all controller nodes on each OpenStack platform, and configure the interoperability parameters, including the cloud_region_name parameter in the ml2_conf.ini file of the SeerEngine-DC Neutron plug-ins.

[SDNCONTROLLER]

cloud_region_name = default

cloud_region_name represents the name of the cloud platform. The default value is default. Make sure the value for this parameter is the same as the cloud platform name added on the Automation > Data Center Networks > Virtual Networking > OpenStack page on the controller. Make sure the cloud platform name and VXLAN VNI for each cloud platform and the host name of each node are unique across the OpenStack platforms.

¡ If each OpenStack platform uses an exclusive keystone service, verify that SeerEngine-DC can interoperate with each OpenStack platform and each platform can deploy services to its tenant.

¡ If multiple OpenStack platforms share the same keystone service, verify that SeerEngine-DC can interoperate with each OpenStack platform and all platforms can deploy services to the same tenant.

· Check the OpenStack version and OSs. Table 1 shows the software requirements for installing the SeerEngine-DC Neutron plug-ins, Nova patch, or openvswitch-agent patch.

Table 1 Software requirements

| OpenStack version (deployed on CentOS or Kylin V10 with YUM) | Operating system |
|---|---|
| Kilo 2015.1 | CentOS 7.1.1503 |
| Liberty | CentOS 7.1.1503 |
| Mitaka | CentOS 7.1.1503 |
| Newton | CentOS 7.2.1511 |
| Ocata | CentOS 7.2.1511 |
| Pike | CentOS 7.2.1511 or Kylin V10 |
| Queens | CentOS 7.4.1708 |
| Rocky | CentOS 7.2.1511 |
| Stein | CentOS 7.4.1708 |
| Train | CentOS 7, CentOS 8, or Kylin V10 |
| Ussuri | CentOS 8 or Kylin V10 |
| Victoria | CentOS 8 or Kylin V10 |
| Wallaby | CentOS Stream 8 or Kylin V10 |
| Xena | CentOS Stream 8 |
| Yoga | CentOS Stream 8 |

IMPORTANT:

· OpenStack security plug-ins cannot be installed on OpenStack Victoria, Wallaby, Xena, or Yoga.

· To install plug-ins on OpenStack Pike, the dnsmasq version must be 2.76. To display the dnsmasq version number, use the dnsmasq -v command.

· Make sure your system has a reliable Internet connection before you install the OpenStack plug-ins.
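The dnsmasq requirement for OpenStack Pike can be checked in a script. The following is a minimal sketch, not part of the plug-in package: the function name check_dnsmasq_version is hypothetical, and it assumes that the version number is the third field on the first line of the dnsmasq -v output (for example, "Dnsmasq version 2.76 ..."). It reads that output on standard input so that the check can be tested without dnsmasq installed.

```shell
# Hypothetical helper (not shipped with the plug-ins): check that dnsmasq is
# version 2.76, as required for installing the plug-ins on OpenStack Pike.
# Input: the output of "dnsmasq -v" on standard input.
# Assumption: the version is the third field of the first line, for example
# "Dnsmasq version 2.76  Copyright (c) 2000-2016 Simon Kelley".
check_dnsmasq_version() {
    local required="2.76"
    local actual
    actual=$(awk 'NR == 1 { print $3 }')
    if [ "$actual" = "$required" ]; then
        echo "OK: dnsmasq $actual"
        return 0
    fi
    echo "ERROR: dnsmasq ${actual:-unknown} found, $required required" >&2
    return 1
}
```

For example, run dnsmasq -v | check_dnsmasq_version on a Pike node. A non-zero return value means that the dnsmasq version does not meet the requirement.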

Installing OpenStack cloud platforms

See the installation guide for your OpenStack version on the OpenStack official website to install and deploy the OpenStack cloud platform. Verify that the /etc/hosts file on each node contains the host name-to-IP address mappings of all nodes, and that the OpenStack Neutron component has been deployed.
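One way to confirm the /etc/hosts requirement on a node is to look up every expected host name. The sketch below assumes a standard hosts file layout (an IP address followed by one or more names); check_hosts_file is a hypothetical name, and the host names passed to it are examples only.

```shell
# Hypothetical helper: print each host name that has no mapping in a hosts
# file, and return 1 if any name is missing.
# Usage: check_hosts_file /etc/hosts controller compute1 compute2
check_hosts_file() {
    local hosts_file=$1
    shift
    local name missing=0
    for name in "$@"; do
        # Skip comment lines; a name matches if it appears in any field
        # after the IP address (aliases included).
        if ! awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit (found ? 0 : 1) }' "$hosts_file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

Run the check on every node with the same list of host names so that all nodes resolve each other consistently.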

Preprovisioning SeerEngine-DC

SeerEngine-DC preprovisioning provides only basic configuration for SeerEngine-DC. For detailed configuration for different scenarios, see the configuration guides.

Table 2 SeerEngine-DC preprovisioning

| Configuration | Path |
|---|---|
| Fabrics | Automation > Data Center Networks > Fabrics > Fabrics |
| VDS | Automation > Data Center Networks > Common Network Settings > Virtual Distributed Switch |
| Address pools | Automation > Data Center Networks > Resource Pools > IP Address Pools |
| VNID pools (VLANs, VXLANs, and VLAN-VXLAN mappings) | Automation > Data Center Networks > Resource Pools > VNID Pools > VLANs; Automation > Data Center Networks > Resource Pools > VNID Pools > VXLANs; Automation > Data Center Networks > Resource Pools > VNID Pools > VLAN-VXLAN Mappings |
| Adding access and border devices to a fabric | Automation > Data Center Networks > Fabrics > Fabrics |
| L4-L7 physical devices, resource pools, and profiles | Automation > Data Center Networks > Resource Pools > Devices > Physical Devices; Automation > Data Center Networks > Resource Pools > Devices > L4-L7 Physical Resource Pools |
| Gateway | Automation > Data Center Networks > Common Network Settings > Gateways |
| Domains and hosts | Automation > Data Center Networks > Fabrics > Domains; Automation > Data Center Networks > Fabrics > Domains > Hosts |
| Interoperability with OpenStack | Automation > Data Center Networks > Virtual Networking > OpenStack (see the following note) |

NOTE: For interoperability with OpenStack:

· You must specify the cloud platform name. It is case sensitive and must be the same as the value of the cloud_region_name parameter in the ml2_conf.ini file of the Neutron plug-ins.

· Make sure the VNI range is the same as the VXLAN VNI range on the cloud platform.
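Because the cloud platform name on the controller must match cloud_region_name exactly, including case, it can help to read the value back from ml2_conf.ini before comparing. The following minimal sketch uses awk to read one key from one section of an INI file. The names ini_get and check_region_name are hypothetical, and the parser assumes simple key = value lines without multi-line values.

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an INI file.
# Assumes simple "key = value" lines; comment lines start with # or ;.
# Usage: ini_get /etc/neutron/plugins/ml2/ml2_conf.ini SDNCONTROLLER cloud_region_name
ini_get() {
    local file=$1 section=$2 key=$3
    awk -v s="[$section]" -v k="$key" '
        # A section header line: remove blanks, then check whether it is ours.
        /^[ \t]*\[/ { gsub(/[ \t]/, ""); in_sec = ($0 == s); next }
        in_sec {
            line = $0
            sub(/^[ \t]+/, "", line)
            if (line ~ /^[#;]/) next
            eq = index(line, "=")
            if (eq == 0) next
            name = substr(line, 1, eq - 1)
            sub(/[ \t]+$/, "", name)
            if (name == k) {
                val = substr(line, eq + 1)
                sub(/^[ \t]+/, "", val)
                sub(/[ \t]+$/, "", val)
                print val
                exit
            }
        }' "$file"
}

# Hypothetical check: return 0 only if cloud_region_name in FILE is exactly
# EXPECTED (case-sensitive, like the cloud platform name on the controller).
check_region_name() {
    local actual
    actual=$(ini_get "$1" SDNCONTROLLER cloud_region_name)
    [ "$actual" = "$2" ]
}
```

For example, check_region_name /etc/neutron/plugins/ml2/ml2_conf.ini default returns 0 only if cloud_region_name is exactly default.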

Installing SeerEngine-DC Neutron plug-ins and patches on OpenStack

The SeerEngine-DC Neutron plug-ins, Nova patch, openvswitch-agent patch, and DHCP failover components can be installed on different OpenStack versions. The installation package varies by OpenStack version. However, you can use the same procedure to install the Neutron plug-ins, Nova patch, or openvswitch-agent patch on different OpenStack versions.

Install the SeerEngine-DC Neutron plug-ins on an OpenStack controller node, the Nova patch and openvswitch-agent patch on an OpenStack compute node, and the DHCP failover components on the DHCP failover node. Before installation, you must install the Python tools on the associated node.

Installing the Python tools

Before installing the plug-ins, download and install the Python tools online.

To download and install the Python tools:

1. Update the software source list.

[root@localhost ~]# yum clean all

[root@localhost ~]# yum makecache

2. Download and install the Python tools.

¡ CentOS 8, CentOS Stream 8, and Kylin V10 operating systems (OpenStack version: Train, Ussuri, Victoria, or Wallaby):

[root@localhost ~]# yum install -y python3-pip python3-setuptools

¡ Other CentOS operating systems and Kylin V10 operating system (OpenStack version: Pike):

[root@localhost ~]# yum install -y python-pip python-setuptools

3. Log in to the controller node to edit the /etc/hosts file:

a. Add the IP address-to-host name mappings of all OpenStack hosts configured on the Automation > Data Center Networks > Fabrics > Domains > Hosts page on SeerEngine-DC.

b. Add the IP address-to-host name mappings of all leaf, spine, and border devices configured on the Automation > Data Center Networks > Resource Pools > Devices > Physical Devices page on SeerEngine-DC.

[root@localhost ~]# vim /etc/hosts

127.0.0.1 localhost

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

99.0.83.75 controller

99.0.83.76 compute1

99.0.83.77 compute2

99.0.83.78 nfs-server

99.0.83.79 compute3

99.0.83.74 compute4

4. Install websocket-client on the controller node.

¡ CentOS 8, CentOS Stream 8, and Kylin V10 operating systems (OpenStack version: Train, Ussuri, Victoria, or Wallaby):

[root@localhost ~]# yum install -y python3-websocket-client

¡ Other CentOS operating systems and Kylin V10 operating system (OpenStack version: Pike):

[root@localhost ~]# yum install -y python-websocket-client

IMPORTANT: The version requirement on websocket-client varies by operating system type as follows:

· For CentOS operating systems, python-websocket-client and python3-websocket-client must be version 0.56.

· For Kylin V10 operating systems, python-websocket-client and python3-websocket-client must be version 0.47 or 0.56.
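The websocket-client version requirement can be checked with a small script. This is a sketch under stated assumptions: websocket_version_ok is a hypothetical name, and it compares only the major.minor part of the version (for example, 0.56.0 is treated as 0.56), which is an interpretation of the requirement above.

```shell
# Hypothetical helper: check a websocket-client version string against the
# requirement for the operating system type:
#   centos  0.56
#   kylin   0.47 or 0.56
# Assumption: only the major.minor part is compared (0.56.0 -> 0.56).
websocket_version_ok() {
    local os=$1 version=$2 major_minor
    major_minor=$(printf '%s' "$version" | cut -d. -f1,2)
    case "$os:$major_minor" in
        centos:0.56) return 0 ;;
        kylin:0.47|kylin:0.56) return 0 ;;
        *) return 1 ;;
    esac
}
```

On a CentOS 8 node, for example, the installed version can be passed in with websocket_version_ok centos "$(rpm -q --qf '%{VERSION}' python3-websocket-client)".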

Configuring interoperability in the KVM host-based overlay scenario

Installing and configuring plug-ins on the controller node

Obtaining the SeerEngine-DC Neutron plug-in installation package

The SeerEngine-DC Neutron plug-ins are included in the SeerEngine-DC OpenStack package. Obtain the SeerEngine-DC OpenStack package of the required version and then save the package to the target installation directory on the server or virtual machine.

Alternatively, transfer the installation package to the target installation directory through a file transfer protocol such as FTP, TFTP, or SCP. Use the binary transfer mode to prevent the software package from being corrupted during transit.

Installing the SeerEngine-DC Neutron plug-ins on the controller node

CAUTION: The QoS feature will not operate correctly if you configure the database connection in configuration file neutron.conf as follows: This is an open source bug in OpenStack. To prevent this problem, configure the database connection as follows: The three dots (…) in the command line represent the neutron database link information.

Some parameters must be configured with the required values as described in "Parameters and fields."

To install the SeerEngine-DC Neutron plug-ins:

1. Access the directory where the SeerEngine-DC OpenStack package (an .egg, .whl, or .rpm file) is saved, and install the package on the OpenStack controller node. In the following examples, the SeerEngine-DC OpenStack package is in the /root directory.

The name and the installation method of the SeerEngine-DC OpenStack package vary by operating system type as follows:

¡ The name of an .egg file is SeerEngine_DC_PLUGIN-version-py2.7.egg or SeerEngine_DC_PLUGIN-version-py3.6.egg. The version argument represents the version of the package.

- CentOS operating systems (Python2.7):

[root@localhost ~]# easy_install SeerEngine_DC_PLUGIN-E3608-py2.7.egg

- Kylin V10 operating systems with OpenStack Pike:

[root@localhost ~]# easy_install SeerEngine_DC_PLUGIN-E6203-py2.7.egg

¡ The name of a .whl file is SeerEngine_DC_PLUGIN-version-py3-none-any.whl. The version argument represents the version of the package.

- CentOS operating systems (Python3):

[root@localhost ~]# pip3 install SeerEngine_DC_PLUGIN-E6401-py3-none-any.whl

- Kylin V10 operating systems with OpenStack Train, Ussuri, Victoria, or Wallaby:

[root@localhost ~]# pip3 install SeerEngine_DC_PLUGIN-E6401-py3-none-any.whl

¡ The name of an .rpm file is SeerEngine_DC_PLUGIN-version-1-py2.7.noarch.rpm or SeerEngine_DC_PLUGIN-version-1-py3.6.noarch.rpm. The version argument represents the version of the package.

To install an .rpm file on a CentOS operating system (Python2.7):

[root@localhost ~]# rpm -ivh SeerEngine_DC_PLUGIN-E6402-1-py2.7.noarch.rpm

To install an .rpm file on a CentOS operating system (Python3):

[root@localhost ~]# rpm -ivh SeerEngine_DC_PLUGIN-E6402-1-py3.6.noarch.rpm

NOTE: Kylin V10 operating systems do not support .rpm file installation.

2. Change the owner, group, and permissions of the plug-in files to be consistent with those of the Neutron files.

¡ CentOS 8 and CentOS Stream 8 operating systems:

[root@localhost ~]# cd /usr/local/lib/python3.6/site-packages

[root@localhost ~]# chown -R --reference=/usr/lib/python3.6/site-packages/neutron *h3c

[root@localhost ~]# chmod -R --reference=/usr/lib/python3.6/site-packages/neutron *h3c

[root@localhost ~]# cd /usr/local/bin

[root@localhost ~]# chown -R --reference=/usr/bin/neutron-server h3c*

[root@localhost ~]# chmod -R --reference=/usr/bin/neutron-server h3c*

¡ Other CentOS operating systems and Kylin V10 operating system (with OpenStack Pike):

[root@localhost ~]# cd /usr/lib/python2.7/site-packages

[root@localhost ~]# chown -R --reference=neutron SeerEngine*

[root@localhost ~]# chmod -R --reference=neutron SeerEngine*

[root@localhost ~]# cd /usr/bin

[root@localhost ~]# chown -R --reference=neutron-server h3c*

[root@localhost ~]# chmod -R --reference=neutron-server h3c*

¡ Kylin V10 operating systems (with OpenStack Train, Ussuri, Victoria, or Wallaby):

[root@localhost ~]# cd /usr/local/lib/python3.7/site-packages/

[root@localhost site-packages]# chown -R --reference=/usr/lib/python3.7/site-packages/neutron *h3c

[root@localhost site-packages]# chmod -R --reference=/usr/lib/python3.7/site-packages/neutron *h3c

[root@localhost site-packages]# cd /usr/local/bin

[root@localhost bin]# chown -R --reference=/usr/bin/neutron-server h3c*

[root@localhost bin]# chmod -R --reference=/usr/bin/neutron-server h3c*

3. Install the SeerEngine-DC Neutron plug-ins.

[root@localhost bin]# cd

[root@localhost ~]# h3c-sdnplugin controller install

¡ If Neutron is developed based on OpenStack Newton, execute the following command to install the SeerEngine-DC Neutron plug-ins:

[root@localhost ~]# h3c-sdnplugin controller install --neutron_version newton

¡ If Neutron is developed based on OpenStack Pike, execute the following command to install the SeerEngine-DC Neutron plug-ins:

[root@localhost ~]# h3c-sdnplugin controller install --neutron_version pike

IMPORTANT: Before executing the h3c-sdnplugin controller install command, make sure no neutron.conf file exists in the /root directory. If such a file exists, delete it or move it to another location.
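The package type determines the installation command in step 1, and step 3 requires that the /root directory contain no neutron.conf file. The following sketch collects both checks. The names plugin_install_cmd and check_no_root_neutron_conf are hypothetical, and the first function only prints the command from this procedure rather than running it, so you can review it before execution.

```shell
# Hypothetical helper: print (not run) the installation command that this
# procedure uses for a SeerEngine-DC OpenStack package file.
plugin_install_cmd() {
    local pkg=$1
    case "$pkg" in
        *.egg) echo "easy_install $pkg" ;;
        *.whl) echo "pip3 install $pkg" ;;
        *.rpm) echo "rpm -ivh $pkg" ;;   # .rpm files are not supported on Kylin V10
        *) echo "unsupported package type: $pkg" >&2; return 1 ;;
    esac
}

# Hypothetical pre-check for step 3: return 1 if neutron.conf exists in the
# given directory (default /root), because h3c-sdnplugin controller install
# requires that no such file exists there.
check_no_root_neutron_conf() {
    local dir=${1:-/root}
    if [ -e "$dir/neutron.conf" ]; then
        echo "Move or delete $dir/neutron.conf before installing." >&2
        return 1
    fi
    return 0
}
```

For example, plugin_install_cmd SeerEngine_DC_PLUGIN-E6401-py3-none-any.whl prints the pip3 install command shown in step 1.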

Editing the configuration file

1. Modify the neutron.conf configuration file.

a. Use the vi editor to open the neutron.conf configuration file.

[root@localhost ~]# vi /etc/neutron/neutron.conf

b. Press I to switch to insert mode, and modify the configuration file. For information about the parameters, see "neutron.conf."

If the operating system type is Kylin V10 and the OpenStack version is Train, Ussuri, Victoria, or Wallaby:

[DEFAULT]

core_plugin = ml2

service_plugins = h3c_l3_router,qos,h3c_port_forwarding

If the operating system type is CentOS and the OpenStack version is Train, Ussuri, Victoria, Wallaby, Xena, or Yoga:

[DEFAULT]

core_plugin = ml2

service_plugins = h3c_l3_router,qos,h3c_port_forwarding,h3c_bgp_neighbor,h3c_taas,h3c_trunk

[service_providers]

service_provider = BGP_NEIGHBOR:H3C:networking_h3c.l3_router.h3c_bgp_neighbors_driver.H3CBgpNeighborsDriver:default

service_provider = TAAS:H3C:networking_h3c.l3_router.h3c_tap_services.H3CTapServicesDriver:default

service_provider = EXROUTE:H3C:networking_h3c.l3_router.h3c_exroutes_driver.H3CExroutesDriver:default

If the OpenStack version is Queens, Rocky, or Stein:

[DEFAULT]

core_plugin = ml2

service_plugins = h3c_l3_router,qos,h3c_port_forwarding

If the OpenStack version is Pike:

[DEFAULT]

core_plugin = ml2

service_plugins = h3c_l3_router,qos,h3c_port_forwarding

If the OpenStack version is Mitaka and QoS services are deployed in OpenStack:

[DEFAULT]

core_plugin = ml2

service_plugins = h3c_l3_router,qos,h3c_bgp_neighbor,h3c_taas,h3c_trunk

[qos]

notification_drivers = message_queue,qos_h3c

[service_providers]

service_provider = BGP_NEIGHBOR:H3C:networking_h3c.l3_router.h3c_bgp_neighbors_driver.H3CBgpNeighborsDriver:default

service_provider = TAAS:H3C:networking_h3c.l3_router.h3c_tap_services.H3CTapServicesDriver:default

service_provider = EXROUTE:H3C:networking_h3c.l3_router.h3c_exroutes_driver.H3CExroutesDriver:default

If the OpenStack version is Liberty, Newton, or Ocata and QoS services are deployed in OpenStack:

[DEFAULT]

core_plugin = ml2

service_plugins = h3c_l3_router,qos

[qos]

notification_drivers = message_queue,qos_h3c

If the OpenStack version is Kilo 2015.1:

[DEFAULT]

core_plugin = ml2

service_plugins = h3c_l3_router

IMPORTANT:

· OpenStack Kilo does not support QoS. You do not need to specify qos in the service_plugins parameter.

· The open source port forwarding plug-in has known issues and is not compatible with the L3 plug-in of the Neutron plug-ins. As a best practice, use the h3c_port_forwarding plug-in provided by the Neutron plug-ins, and make sure the community Neutron version in use has fixed known bug #1799135.

c. Press Esc to quit insert mode, and enter :wq to exit the vi editor and save the neutron.conf file.

IMPORTANT: If the operating system is CentOS, you can allow inter-VPC traffic to pass through a firewall on OpenStack Mitaka, Pike, Queens, Rocky, Stein, Train, Ussuri, Victoria, Wallaby, Xena, and Yoga. To do so, add the configuration shown in Table 3 to the neutron.conf configuration file.

Table 3 Configuration for inter-VPC traffic to pass through the firewall

| OpenStack version | Scenario | Configuration to add to the neutron.conf configuration file |
|---|---|---|
| Mitaka | Network cloud scenario | |
| Pike | Non-network cloud scenario | If the service_plugins parameter is set to h3c_vpc_connection_pike in the pre-upgrade environment, change the parameter value to h3c_vpc_connection_general as a best practice. The api_extensions_path setting can be obtained as follows: |
| Queens, Rocky, and Stein | Non-network cloud scenario | The api_extensions_path setting can be obtained as follows: |
| Train, Ussuri, Victoria, Wallaby, Xena, and Yoga | Network cloud scenario | |
| Train, Ussuri, Victoria, Wallaby, Xena, and Yoga | Non-network cloud scenario | The api_extensions_path setting can be obtained as follows: |

2. Modify the ml2_conf.ini configuration file.

a. Use the vi editor to open the ml2_conf.ini configuration file.

[root@localhost ~]# vi /etc/neutron/plugins/ml2/ml2_conf.ini

b. Press I to switch to insert mode, and set the parameters in the ml2_conf.ini configuration file. For information about the parameters, see "ml2_conf.ini."

[ml2]

type_drivers = vxlan,vlan

tenant_network_types = vxlan,vlan

mechanism_drivers = ml2_h3c

extension_drivers = ml2_extension_h3c,qos,port_security

[ml2_type_vlan]

network_vlan_ranges = physicnet1:1000:2999

[ml2_type_vxlan]

vni_ranges = 1:500

c. Press Esc to quit insert mode, and enter :wq to exit the vi editor and save the ml2_conf.ini file.

3. Modify the ml2_conf.ini configuration file after the SeerEngine-DC Neutron plug-in is installed.

a. Use the vi editor to open the ml2_conf.ini configuration file.

[root@localhost ~]# vi /etc/neutron/plugins/ml2/ml2_conf.ini

b. Press I to switch to insert mode, and set the following parameters in the ml2_conf.ini configuration file. For information about the parameters, see "ml2_conf_h3c.ini."

[SDNCONTROLLER]

url = http://127.0.0.1:30000

username = admin

password = Pwd@12345

domain = sdn

timeout = 1800

retry = 10

vhostuser_mode = server

white_list = False

use_neutron_credential = False

output_json_log = False

vendor_rpc_topic = VENDOR_PLUGIN

hierarchical_port_binding_physicnets = ANY

hierarchical_port_binding_physicnets_prefix = physicnet

enable_dhcp_hierarchical_port_binding = False

enable_security_group = True

enable_https = False

neutron_plugin_ca_file =

neutron_plugin_cert_file =

neutron_plugin_key_file =

enable_iam_auth = False

sdnc_rpc_url = ws://127.0.0.1:30000

sdnc_rpc_ping_interval = 60

websocket_fragment_size = 102400

enable_l3_router_rpc_notify = False

qos_rx_limit_min = 0

cloud_region_name = default

enable_h3c_l3_exroute = False

neutron_black_list =

black_list_matching =

force_vlan_port_details_qvo = True

neutron_version =

neutron_domain_name =

enable_encrypted_authentication = False

enable_neutron_rpc = True

c. Press Esc to quit insert mode, and enter :wq to exit the vi editor and save the ml2_conf.ini file.

4. If you have set the white_list parameter to True, add an authentication-free user to the controller.

¡ Enter the IP address of the host where the Neutron server resides.

¡ Specify the role as Admin.

5. If you have set the use_neutron_credential parameter to True, perform the following steps:

a. Modify the neutron.conf configuration file.

# Use the vi editor to open the neutron.conf configuration file.

# Press I to switch to insert mode, and add the following configuration. For information about the parameters, see "neutron.conf."

[keystone_authtoken]

admin_user = neutron

admin_password = KEYSTONE_PASS

# Press Esc to quit insert mode, and enter :wq to exit the vi editor and save the neutron.conf file.

b. Add an admin user to the controller.

# Configure the username as neutron.

# Specify the role as Admin.

# Enter the password of the neutron user in OpenStack.

6. Restart the neutron-server service.

[root@localhost ~]# service neutron-server restart

neutron-server stop/waiting

neutron-server start/running, process 4583

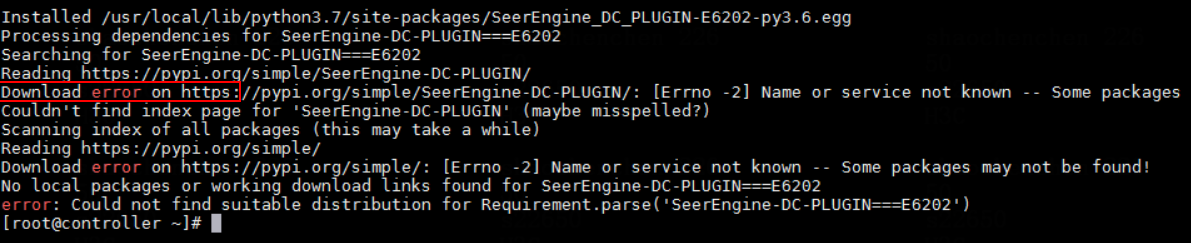
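The service_plugins values in step 1 depend on the operating system and the OpenStack version. The following lookup is a minimal sketch that restates those values. The name service_plugins_for is hypothetical, release names are expected in lowercase, and the Mitaka, Liberty, Newton, and Ocata values assume that QoS services are deployed, as in the step 1 examples. The sketch does not cover the inter-VPC firewall settings in Table 3.

```shell
# Hypothetical lookup: print the [DEFAULT] service_plugins value for
# neutron.conf, as listed in step 1 of "Editing the configuration file".
# Arguments: OS type (centos or kylin) and OpenStack release in lowercase.
# Assumption: QoS services are deployed for Mitaka, Liberty, Newton, and Ocata.
service_plugins_for() {
    local os=$1 release=$2
    local basic="h3c_l3_router,qos,h3c_port_forwarding"
    local full="$basic,h3c_bgp_neighbor,h3c_taas,h3c_trunk"
    case "$os:$release" in
        kylin:train|kylin:ussuri|kylin:victoria|kylin:wallaby) echo "$basic" ;;
        centos:train|centos:ussuri|centos:victoria|centos:wallaby|centos:xena|centos:yoga) echo "$full" ;;
        centos:pike|kylin:pike|centos:queens|centos:rocky|centos:stein) echo "$basic" ;;
        centos:mitaka) echo "h3c_l3_router,qos,h3c_bgp_neighbor,h3c_taas,h3c_trunk" ;;
        centos:liberty|centos:newton|centos:ocata) echo "h3c_l3_router,qos" ;;
        centos:kilo) echo "h3c_l3_router" ;;   # Kilo does not support QoS
        *) echo "no service_plugins example for $os $release" >&2; return 1 ;;
    esac
}
```

For example, service_plugins_for kylin train prints the value for Kylin V10 with OpenStack Train. Unsupported combinations, such as Kylin V10 with Xena, return an error instead of a guess.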

Verifying the installation

# Verify that the SeerEngine-DC OpenStack package is correctly installed. If the correct software and OpenStack versions are displayed, the package is successfully installed.

· .egg file

¡ CentOS 8 and CentOS Stream 8 operating systems:

[root@localhost ~]# pip3 freeze | grep PLUGIN

SeerEngine-DC-PLUGIN===E6102

¡ Other CentOS operating systems and Kylin V10 operating system (with OpenStack Pike):

[root@localhost ~]# pip freeze | grep PLUGIN

SeerEngine-DC-PLUGIN===E3608

¡ Kylin V10 operating systems (with OpenStack Train, Ussuri, Victoria, or Wallaby):

[root@localhost ~]# pip3 freeze | grep PLUGIN

SeerEngine-DC-PLUGIN===E3608

· .rpm file

[root@localhost ~]# rpm -qa | grep PLUGIN

SeerEngine-DC-PLUGIN===E3608.noarch

# Verify that the neutron-server service is enabled. The service is enabled if its state is running.

[root@localhost ~]# service neutron-server status

neutron-server start/running, process 1849
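The two verification checks can also be run non-interactively, for example from a deployment script. This sketch assumes the output formats shown in this section (SeerEngine-DC-PLUGIN===version from pip freeze or rpm -qa, and a status line that contains start/running, or (running) on systemd-based systems). The names plugin_version_from and status_is_running are hypothetical.

```shell
# Hypothetical helper: extract the plug-in version from the output of
# "pip freeze | grep PLUGIN", "pip3 freeze | grep PLUGIN", or "rpm -qa | grep PLUGIN".
# Example input lines: SeerEngine-DC-PLUGIN===E3608, SeerEngine-DC-PLUGIN===E3608.noarch
plugin_version_from() {
    # Keep the first matching line, drop everything up to the last "=",
    # and strip a trailing ".noarch" architecture suffix.
    grep -m 1 'SeerEngine-DC-PLUGIN' | sed -e 's/.*=//' -e 's/\.noarch$//'
}

# Hypothetical helper: return 0 if "service neutron-server status" output
# reports the running state (upstart "start/running" or systemd "(running)").
status_is_running() {
    grep -Eq 'start/running|\(running\)'
}
```

For example, pip3 freeze | plugin_version_from prints only the version string, and service neutron-server status | status_is_running returns 0 when the service is running.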

Parameters and fields

This section describes parameters in configuration files and fields included in parameters.

neutron.conf

| Parameter | Required value | Description |
|---|---|---|
| core_plugin | ml2 | Loads the ml2 core plug-in into OpenStack. |
| service_plugins | h3c_l3_router,qos,h3c_port_forwarding,h3c_vpc_connection | Loads the extension plug-ins into OpenStack. |
| service_provider | N/A | Directory where the extension plug-ins are saved. |
| notification_drivers | message_queue,qos_h3c | Names of the QoS notification drivers. |
| admin_user | N/A | Admin username for Keystone authentication in OpenStack, for example, neutron. |
| admin_password | N/A | Admin password for Keystone authentication in OpenStack, for example, 123456. |
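For parameters that take a comma-separated list, such as service_plugins and notification_drivers, a quick comparison against the values in the table can catch an omitted entry. This is a minimal sketch with a hypothetical function name, missing_list_entries; it treats the values as plain strings and ignores spaces around commas.

```shell
# Hypothetical helper: print each entry of a required comma-separated list
# that does not appear in a configured comma-separated list.
# Usage: missing_list_entries "<configured value>" "<required value>"
missing_list_entries() {
    local configured item
    # Remove spaces so that "a, b" and "a,b" compare the same.
    configured=$(printf '%s' "$1" | tr -d ' ')
    local IFS=,
    for item in $2; do
        case ",$configured," in
            *",$item,"*) ;;          # entry present
            *) echo "$item" ;;       # entry missing
        esac
    done
}
```

An empty result means that every required entry is present in the configured value.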

ml2_conf.ini

| Parameter | Required value | Description |
|---|---|---|
| type_drivers | vxlan,vlan | Network type drivers. vxlan must be specified as the first driver type. |
| tenant_network_types | vxlan,vlan | Types of the networks to which tenants belong. For the intranet, only vxlan is available. For the extranet, only vlan is available. · In the host overlay scenario and the network overlay with hierarchical port binding scenario, vxlan must be specified as the first network type. · In the network overlay without hierarchical port binding scenario, vlan must be specified as the first network type. · In the hybrid scenario of host overlay, network overlay with hierarchical port binding, and network overlay without hierarchical port binding, vxlan must be specified as the first network type. In this scenario, you can create a VLAN only from the background CLI, REST API, or Web administration interface. |
| mechanism_drivers | ml2_h3c | Name of the ml2 mechanism driver. To create SR-IOV instances for VLAN networks, set this parameter to sriovnicswitch,ml2_h3c. To create instances that use hierarchical port binding, set this parameter to ml2_h3c,openvswitch. |
| extension_drivers | ml2_extension_h3c,qos | Names of the ml2 extension drivers. Available names include ml2_extension_h3c, qos, and port_security. If the QoS feature is not enabled on OpenStack, you do not need to specify qos. If port security is not enabled on OpenStack, you do not need to specify port_security. OpenStack Kilo 2015.1, Liberty 2015.2, and Ocata 2017.1 do not support the port_security value. OpenStack Kilo 2015.1 does not support the QoS driver. |
| network_vlan_ranges | N/A | Value range of VLAN IDs for the extranet, for example, physicnet1:1000:2999. |
| vni_ranges | N/A | Value range of VXLAN IDs for the intranet, for example, 1:500. |
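The ordering rules for type_drivers and tenant_network_types are easy to get wrong when editing by hand. The following sketch checks the first entry of a comma-separated value against the scenario rules in the table above. The function first_type_ok and the scenario keywords host-overlay, hpb, no-hpb, and hybrid are hypothetical names introduced for this example.

```shell
# Hypothetical helper: check the first entry of tenant_network_types for a
# deployment scenario, following the ml2_conf.ini table.
#   host-overlay  host overlay: vxlan first
#   hpb           network overlay with hierarchical port binding: vxlan first
#   hybrid        hybrid of the three overlay scenarios: vxlan first
#   no-hpb        network overlay without hierarchical port binding: vlan first
# Returns 0 if the order is correct, 1 if not, 2 for an unknown scenario.
first_type_ok() {
    local scenario=$1 value=$2 first
    # Take the text before the first comma and drop surrounding spaces.
    first=$(printf '%s' "${value%%,*}" | tr -d ' ')
    case "$scenario" in
        host-overlay|hpb|hybrid) [ "$first" = "vxlan" ] ;;
        no-hpb) [ "$first" = "vlan" ] ;;
        *) echo "unknown scenario: $scenario" >&2; return 2 ;;
    esac
}
```

Because the table requires vxlan as the first type_drivers entry in all cases, the same function can check type_drivers with the host-overlay keyword, for example first_type_ok host-overlay "vxlan,vlan".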

ml2_conf_h3c.ini

| Parameter | Description |
|---|---|
| url | URL for logging in to the Unified Platform, for example, http://ip_address:30000 or https://ip_address:30000. |
| username | Username for logging in to the Unified Platform, for example, admin. You do not need to configure a username when the use_neutron_credential parameter is set to True. |
| password | Password for logging in to the Unified Platform, for example, Pwd@12345. You do not need to configure a password when the use_neutron_credential parameter is set to True. If the password contains a dollar sign ($), enter a backslash (\) before the dollar sign. |
| domain | Name of the domain where the controller resides, for example, sdn. This parameter is deprecated. |
| timeout | Time, in seconds, that the Neutron server waits for a response from the controller, for example, 1800. As a best practice, set this value to 1800 seconds or greater. |
| retry | Maximum number of connection requests that the Neutron server sends to the controller, for example, 10. |
| vif_type | Default vNIC type. Valid values: ovs, vhostuser (applies to the OVS DPDK solution), and None (supported only by Mitaka). When the value is vhostuser, you can also set the vhostuser_mode parameter. For Mitaka plug-ins with the value set to None, make sure the host name you specify when adding a compute node on the compute domain page is the same as the host name of the compute node. When the value is None, the vNIC type can be automatically identified in a host-based overlay environment. |
| vhostuser_mode | Default DPDK vHost-user mode. Valid values: server and client. The default value is server. This setting takes effect only when the vif_type parameter is set to vhostuser. |
| white_list | Whether to enable the authentication-free user feature on OpenStack. Valid values: True (enable) and False (disable). |
| use_neutron_credential | Whether to use the OpenStack Neutron username and password to communicate with the controller. Valid values: True (use) and False (do not use). |
| output_json_log | Whether to output the REST API messages exchanged between the SeerEngine-DC Neutron plug-ins and the controller to the OpenStack operating logs in JSON format. Valid values: True (enable) and False (disable). |
| vendor_rpc_topic | RPC topic of the vendor. This parameter is required when a vendor needs to obtain Neutron data from the SeerEngine-DC Neutron plug-ins. Valid values: VENDOR_PLUGIN (default value, which means the parameter does not take effect) and DP_PLUGIN (RPC topic of DPtech). The vendor and H3C must negotiate the value of this parameter. |
| hierarchical_port_binding_physicnets | Policy that OpenStack uses to select a physical network for VLAN ID assignment during hierarchical port binding. Valid values: ANY (select a VLAN from all physical networks) and PREFIX (select a VLAN from the physical networks whose names match the specified prefix). The default value is ANY. |
| hierarchical_port_binding_physicnets_prefix | Prefix used to match physical networks. The default value is physicnet. This parameter takes effect only when the hierarchical_port_binding_physicnets parameter is set to PREFIX. |
| enable_dhcp_hierarchical_port_binding | Whether to enable DHCP hierarchical port binding. Valid values: True (enable) and False (disable). The default value is False. |
| enable_security_group | Whether to deploy OpenStack security group rules to the controller. The default value is False. |
| enable_https | Whether to enable HTTPS bidirectional authentication. Valid values: True (enable) and False (disable). The default value is False. |
| neutron_plugin_ca_file | Save location of the CA certificate of the controller, for example, /etc/neutron/ca.crt. As a best practice, save the CA certificate in the /usr/share/neutron directory. |
| neutron_plugin_cert_file | Save location of the certificate file of the controller, for example, /etc/neutron/sna.pem. As a best practice, save the certificate file in the /usr/share/neutron directory. |
| neutron_plugin_key_file | Save location of the key file of the controller, for example, /etc/neutron/sna.key. As a best practice, save the key file in the /usr/share/neutron directory. |
| enable_iam_auth | Whether to enable IAM interface authentication. Valid values: True (enable) and False (disable). When connecting to the Unified Platform, you can set the value to True to use the IAM interface for authentication. The default value is False. This parameter is obsolete. |
| sdnc_rpc_url | RPC interface URL of the controller. Only WebSocket interfaces are supported, for example, ws://127.0.0.1:30000. Configure this parameter based on the URL of the Unified Platform. For example, if the URL of the Unified Platform is http://127.0.0.1:30000, set this parameter to ws://127.0.0.1:30000. If the Unified Platform uses an HTTPS URL, use the WebSocket Secure (wss) URL scheme instead, for example, wss://127.0.0.1:30000. |
| sdnc_rpc_ping_interval | Interval, in seconds, at which the plug-in sends an RPC ping message to the controller. Int type. The default value is 60. |
| websocket_fragment_size | Size, in bytes, of a WebSocket fragment that the plug-in sends to the controller in the DHCP failover scenario. Int type. The value must be an integer of 1024 or greater. The default value is 102400. If the value is 1024, messages are not fragmented. |
| enable_l3_router_rpc_notify | Whether to send Layer 3 routing events through RPC. Valid values: True (enable) and False (disable). |
| qos_rx_limit_min | Minimum inbound bandwidth, in kbps. If the minimum inbound QoS bandwidth configured on OpenStack is smaller than this value, this value takes effect. Only OpenStack Kilo 2015.1 supports this parameter. |
| cloud_region_name | Name of the cloud platform. String type. The default value is default. Make sure the value is the same as the cloud platform name configured on the Automation > Data Center Networks > Virtual Networking > OpenStack page of the controller. |
| enable_h3c_l3_exroute | Whether to enable routing extension. The default value is False. When the value is True, configure the api_extensions_path parameter in the [DEFAULT] section of the neutron.conf file as follows: · CentOS 8 and CentOS Stream 8 operating systems: · Other CentOS operating systems: Only OpenStack Mitaka, Train, Ussuri, Victoria, Wallaby, Xena, and Yoga support this parameter. Kylin V10 operating systems do not support routing extension, so the value must be False on these systems. |
| neutron_black_list | Neutron network denylist. This parameter must be used together with the black_list_matching parameter. No default value exists. If the value is flat and no denylist matching rule is specified, the denylist takes effect only on flat-type internal networks. |
| black_list_matching | Denylist matching rule. Valid values: prefix and suffix. With prefix, a network is added to the denylist if its name prefix matches a value of the neutron_black_list parameter. With suffix, a network is added to the denylist if its name suffix matches a value of the neutron_black_list parameter. A network resource on the denylist is not deployed to the controller after it is created on the cloud platform. By default, this parameter is not configured, which means the denylist function is disabled. The denylist function applies only to Layer 2 resources, such as networks, subnets, and ports. It does not apply to Layer 3 resources, for example, binding a subnet interface to a virtual router. |
| force_vlan_port_details_qvo | Whether to forcibly create a qvo-type vPort on the OVS bridge after a VM in a VLAN network comes online. If the value is true, the system forcibly creates a qvo-type vPort. If the value is false, the system creates a tap-type or qvo-type vPort as configured. As a best practice, set the value to false when you interoperate with the cloud platform for the first time. |
| neutron_version | Neutron version. Valid values: pike and newton. By default, this parameter is not configured. If Neutron is developed based on open source OpenStack Pike or Newton, set the value to pike or newton, respectively. You can also leave the parameter unconfigured. |
| neutron_domain_name | Domain name that Neutron uses to obtain sufficient permission when its permission is insufficient to obtain tenant or domain names from Keystone. Set this field to the current domain name, which you can obtain from the cloud platform. By default, this field is not configured. |
| enable_encrypted_authentication | Whether to base64-decode the username and password of the Unified Platform. When the value is False, the username and password are used as entered. When the value is True, the username and password are base64-decoded, so you must enter their base64-encoded values. For example, for username admin and password Pwd@12345, run echo -n 'admin' \| base64 and echo -n 'Pwd@12345' \| base64. The encoded username is YWRtaW4= and the encoded password is UHdkQDEyMzQ1. |
| enable_neutron_rpc | Whether to establish a connection to the Neutron message queue. The default value is true. |
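Several values in this table are derived from others. The following Python sketch illustrates three such relationships under the rules stated above. It derives sdnc_rpc_url from url (http becomes ws, https becomes wss). It produces the base64 values required when enable_encrypted_authentication is True, equivalent to echo -n 'value' | base64. It also flags timeout and websocket_fragment_size values outside the stated ranges. The helper names are hypothetical and are not part of the plug-ins.

```python
import base64
from typing import List
from urllib.parse import urlsplit, urlunsplit


def rpc_url_from_platform_url(url: str) -> str:
    """Derive sdnc_rpc_url from the Unified Platform url (http->ws, https->wss)."""
    parts = urlsplit(url)
    scheme = {"http": "ws", "https": "wss"}.get(parts.scheme)
    if scheme is None:
        raise ValueError(f"unsupported scheme in {url!r}")
    # Host and port stay the same; only the scheme changes.
    return urlunsplit((scheme, parts.netloc, parts.path, parts.query, parts.fragment))


def encode_credential(value: str) -> str:
    """Base64-encode a username or password (same as: echo -n 'value' | base64)."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def check_settings(timeout: int, websocket_fragment_size: int) -> List[str]:
    """Return warnings for values outside the ranges given in the table above."""
    warnings: List[str] = []
    if timeout < 1800:
        warnings.append("timeout is below the recommended 1800 seconds")
    if websocket_fragment_size < 1024:
        warnings.append("websocket_fragment_size must be 1024 or greater")
    return warnings
```

For example, rpc_url_from_platform_url("https://127.0.0.1:30000") returns wss://127.0.0.1:30000. encode_credential("admin") returns the YWRtaW4= value shown in the table.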

Upgrading the SeerEngine-DC Neutron plug-ins

CAUTION:

· Services might be interrupted during the SeerEngine-DC Neutron plug-ins upgrade procedure.

· The default parameter settings of the SeerEngine-DC Neutron plug-ins might vary by OpenStack version (Kilo 2015.1, Liberty, Mitaka, and Ocata). When you upgrade the OpenStack version, modify the default parameter settings of the plug-ins so that the plug-ins keep the same configuration before and after the upgrade.

To upgrade the SeerEngine-DC Neutron plug-ins:

1. Remove the SeerEngine-DC Neutron plug-ins.

[root@localhost ~]# h3c-sdnplugin controller uninstall

Restore config files

Uninstallation complete.

· If Neutron is developed based on open source OpenStack Newton, execute the following command to remove the SeerEngine-DC Neutron plug-ins:

[root@localhost ~]# h3c-sdnplugin controller uninstall --neutron_version newton

· If Neutron is developed based on open source OpenStack Pike, execute the following command to remove the SeerEngine-DC Neutron plug-ins:

[root@localhost ~]# h3c-sdnplugin controller uninstall --neutron_version pike

2. Remove the SeerEngine-DC OpenStack package.

· .egg or .whl file

- CentOS operating systems (Python 3) or Kylin V10 operating systems with OpenStack Train, Ussuri, Victoria, or Wallaby

[root@localhost ~]# pip3 uninstall SeerEngine-DC-PLUGIN

Found existing installation: SeerEngine-DC-PLUGIN E6401

Uninstalling SeerEngine-DC-PLUGIN-E6401:

Would remove:

/usr/local/bin/h3c-sdnplugin

/usr/local/bin/h3c-sdnplugin-extension

/usr/local/lib/python3.6/site-packages/SeerEngine_DC_PLUGIN-E6401.dist-info/*

/usr/local/lib/python3.6/site-packages/networking_h3c/*

/usr/local/lib/python3.6/site-packages/neutron_bgp_h3c/*

/usr/local/lib/python3.6/site-packages/neutron_exroute_h3c/*

/usr/local/lib/python3.6/site-packages/neutron_taas_h3c/*

/usr/local/lib/python3.6/site-packages/neutron_trunk_h3c/*

/usr/local/lib/python3.6/site-packages/neutron_vpc_h3c/*

Proceed (Y/n)? y

Successfully uninstalled SeerEngine-DC-PLUGIN-E6401

- CentOS operating systems (Python 2.7) or Kylin V10 operating systems with OpenStack Pike

[root@localhost ~]# pip uninstall SeerEngine-DC-PLUGIN

Uninstalling SeerEngine-DC-PLUGIN-E3608:

/usr/bin/h3c-sdnplugin

/usr/lib/python2.7/site-packages/SeerEngine_DC_PLUGIN-E3608-py2.7.egg

Proceed (y/n)? y

Successfully uninstalled SeerEngine-DC-PLUGIN-E3608

· .rpm file

[root@localhost ~]# rpm -e SeerEngine_DC_PLUGIN

3. Install plug-ins of the new version. For more information, see "Installing and configuring plug-ins on the controller node."
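The removal commands in steps 1 and 2 depend on the Neutron base release and on the package format. The following Python sketch only assembles the command strings shown above so that you can review them before running them. It does not execute anything. The function name is hypothetical.

```python
from typing import List, Optional


def controller_removal_commands(package_type: str, python3: bool = True,
                                neutron_version: Optional[str] = None) -> List[str]:
    """Return the removal commands from steps 1 and 2, in order.

    package_type: "egg", "whl", or "rpm" (the format that was installed).
    python3: True for pip3 environments, False for Python 2.7 environments.
    neutron_version: "newton" or "pike" if Neutron is based on those releases.
    """
    uninstall = "h3c-sdnplugin controller uninstall"
    if neutron_version is not None:
        if neutron_version not in ("newton", "pike"):
            raise ValueError("neutron_version must be 'newton' or 'pike'")
        uninstall += f" --neutron_version {neutron_version}"
    if package_type in ("egg", "whl"):
        pip = "pip3" if python3 else "pip"
        remove = f"{pip} uninstall SeerEngine-DC-PLUGIN"
    elif package_type == "rpm":
        remove = "rpm -e SeerEngine_DC_PLUGIN"
    else:
        raise ValueError(f"unknown package type: {package_type!r}")
    return [uninstall, remove]
```

After you run the returned commands, continue with step 3 to install the new version.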

Installing and configuring plug-ins on a compute node

Install the Nova patch only in the following scenarios:

· KVM host-based or network-based overlay scenario in which VMs are load balancer members and the load balancer must be aware of member status.

· vCenter network-based overlay scenario.

Obtaining the Nova patch installation package

The Nova patch is included in the SeerEngine-DC OpenStack package. Perform the following steps to download the SeerEngine-DC OpenStack package from the H3C website:

1. Obtain the SeerEngine-DC OpenStack package of the required version.

2. Copy the SeerEngine-DC OpenStack package to the installation directory on the server or virtual machine, or upload it to the installation directory through FTP, TFTP, or SCP.

NOTE: If you upload the SeerEngine-DC OpenStack package through FTP or TFTP, use binary mode to avoid damaging the package.

Installing the Nova patch

Step 3 contains separate nova.conf settings for KVM and VMware vCenter compute nodes. Apply the settings that match the hypervisor type of the compute node.

To install the Nova patch on the OpenStack compute node:

1. Access the directory where the SeerEngine-DC OpenStack package (an .egg or .rpm file) is saved, and install the package on the OpenStack compute node. The name of the SeerEngine-DC OpenStack package is SeerEngine_DC_PLUGIN-version1-py2.7.egg or SeerEngine_DC_PLUGIN-version1.noarch.rpm. version1 represents the version of the package.

In this example, the SeerEngine-DC OpenStack package is saved to the /root directory.

· .egg file

[root@localhost ~]# easy_install SeerEngine_DC_PLUGIN-E3608-py2.7.egg

· .rpm file

[root@localhost ~]# rpm -ivh SeerEngine_DC_PLUGIN-E3608.noarch.rpm

2. Install the Nova patch.

[root@localhost ~]# h3c-sdnplugin compute install

Install the nova patch

modifying:

/usr/lib/python2.7/site-packages/nova/virt/vmwareapi/vmops.py

modify success, backuped at: /usr/lib/python2.7/site-packages/nova/virt/vmwareapi/vmops.py.h3c_bak

NOTE: The lines below modifying: show the modified open source Nova file and the path of the backup copy saved before modification.

3. Perform the following steps:

a. Stop the neutron-openvswitch-agent service on the compute node and disable the system from starting the service at startup.

[root@localhost ~]# service neutron-openvswitch-agent stop

[root@localhost ~]# systemctl disable neutron-openvswitch-agent.service

b. Execute the neutron agent-list command on the controller node to identify whether the agent of the compute node exists in the database.

- If the agent of the compute node does not exist in the database, go to the next step.

- If the agent of the compute node exists in the database, execute the neutron agent-delete id command to delete the agent. The id argument represents the agent ID.

[root@localhost ~]# neutron agent-list

| id | agent_type | host |

| 25c3d3ac-5158-4123-b505-ed619b741a52 | Open vSwitch agent | compute3 |

[root@localhost ~]# neutron agent-delete 25c3d3ac-5158-4123-b505-ed619b741a52

Deleted agent: 25c3d3ac-5158-4123-b505-ed619b741a52

c. Use the vi editor on the compute node to open the nova.conf configuration file.

[root@localhost ~]# vi /etc/nova/nova.conf

d. Press I to switch to insert mode, and set the parameters in the nova.conf configuration file as follows. For descriptions of the parameters, see Table 4.

If the hypervisor type of the compute node is KVM, modify the nova.conf configuration file as follows:

[s1020v]

s1020v = False

member_status = True

[neutron]

ovs_bridge = vds1-br

If the hypervisor type of the compute node is VMware vCenter, modify the nova.conf configuration file as follows:

[DEFAULT]

compute_driver = vmwareapi.VMwareVCDriver

[vmware]

host_ip = 127.0.0.1

host_username = sdn

host_password = skyline123

cluster_name = vcenter

insecure = True

[s1020v]

s1020v = False

vds = VDS2

e. Press Esc to quit insert mode, and enter :wq to exit the vi editor and save the nova.conf file.

Table 4 Parameters in the configuration file

| Parameter | Description |
|---|---|
| s1020v | Whether to use the S1020V vSwitch to forward traffic between vSwitches and traffic between the vSwitches and the external network. Valid values: True (use the S1020V vSwitch) and False (do not use the S1020V vSwitch). This parameter is obsolete. |
| member_status | Whether to enable modifying the status of members on OpenStack load balancers. Valid values: True (enable) and False (disable). |
| vds | VDS to which the host in the vCenter belongs. In this example, the host belongs to VDS2. In host-based overlay networking, you can specify only the VDS that the controller synchronizes to the vCenter. In network-based overlay networking, you can specify any existing VDS as needed. |
| ovs_bridge | Name of the bridge on the H3C S1020V vSwitch. Make sure the bridges created on all H3C S1020V vSwitches use the same name. |
| compute_driver | Name of the driver that the compute node uses for virtualization. |
| host_ip | IP address used to log in to the vCenter, for example, 127.0.0.1. |
| host_username | Username for logging in to the vCenter, for example, sdn. |
| host_password | Password for logging in to the vCenter, for example, skyline123. If the password contains a dollar sign ($), enter a backslash (\) before the dollar sign. |
| cluster_name | Name of the cluster in the vCenter environment, for example, vcenter. |
| insecure | Whether to skip the security check. Valid values: True (do not perform the security check) and False (perform the security check). The False value is not supported in the current software version. |

4. Restart the openstack-nova-compute service.

[root@localhost ~]# service openstack-nova-compute restart
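If you prefer to script step 3d instead of editing nova.conf in vi, the following Python sketch merges the KVM settings shown above using the standard configparser module. It is an illustration under assumptions. apply_settings and KVM_SETTINGS are hypothetical names. Also, configparser rewrites the whole file, which drops comments and keeps only the last value of a repeated option. Back up nova.conf and review the output before you restart openstack-nova-compute.

```python
import configparser
import io

# Settings from step 3d for a KVM compute node (example values from this guide).
KVM_SETTINGS = {
    "s1020v": {"s1020v": "False", "member_status": "True"},
    "neutron": {"ovs_bridge": "vds1-br"},
}


def apply_settings(conf_text: str, settings: dict) -> str:
    """Merge settings into nova.conf text and return the rewritten text."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(conf_text)
    for section, options in settings.items():
        # [DEFAULT] always exists in configparser; other sections may not.
        if section != parser.default_section and not parser.has_section(section):
            parser.add_section(section)
        for key, value in options.items():
            parser.set(section, key, value)
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()
```

For a vCenter compute node, you could build a similar dictionary with the [DEFAULT], [vmware], and [s1020v] values from step 3d and pass it to the same function.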

Verifying the installation

# Verify that the SeerEngine-DC OpenStack package is correctly installed. If the correct software and OpenStack versions are displayed, the package is successfully installed.

· .egg file

[root@localhost ~]# pip freeze | grep PLUGIN

SeerEngine-DC-PLUGIN===E3608

· .rpm file

[root@localhost ~]# rpm -qa | grep PLUGIN

SeerEngine-DC-PLUGIN===E3608.noarch

# Verify that the openstack-nova-compute service is running, as indicated by the start/running state in the output.

[root@localhost ~]# service openstack-nova-compute status

nova-compute start/running, process 184
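To check the installed version from a script, the following Python sketch extracts the plug-in version from pip freeze output. The function name is hypothetical. The sample output in this guide uses the === separator, which pip typically prints for versions that are not PEP 440 compliant. The sketch therefore accepts both == and ===.

```python
import re
from typing import Optional

# Matches lines such as "SeerEngine-DC-PLUGIN===E3608" or "...==E3608".
_FREEZE_LINE = re.compile(r"^SeerEngine-DC-PLUGIN(?:===|==)(?P<version>\S+)$")


def plugin_version(freeze_output: str) -> Optional[str]:
    """Return the SeerEngine-DC-PLUGIN version found in pip freeze output."""
    for line in freeze_output.splitlines():
        match = _FREEZE_LINE.match(line.strip())
        if match:
            return match.group("version")
    return None
```

Pass it the text captured from pip freeze. A None result means the package is not installed in that Python environment.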

Upgrading the Nova patch

CAUTION: Services might be interrupted during the Nova patch upgrade procedure.

Before upgrading the Nova patch, you must remove the installed Nova patch.

To upgrade the Nova patch:

1. Remove the Nova patch.

[root@localhost ~]# h3c-sdnplugin compute uninstall

Uninstall the nova patch

2. Remove the SeerEngine-DC OpenStack package.

· .egg file

[root@localhost ~]# pip uninstall SeerEngine-DC-PLUGIN

Uninstalling SeerEngine-DC-PLUGIN-E3608:

/usr/bin/h3c-sdnplugin

/usr/lib/python2.7/site-packages/SeerEngine_DC_PLUGIN-E3608-py2.7.egg

Proceed (y/n)? y

Successfully uninstalled SeerEngine-DC-PLUGIN-E3608

· .rpm file

[root@localhost ~]# rpm -e SeerEngine_DC_PLUGIN

3. Install the new version of the Nova patch. For more information, see "Installing and configuring plug-ins on a compute node."

Verifying interoperability

1. Create a VXLAN network and a VM on OpenStack.

2. Log in to SeerEngine-DC, and access the Automation > Data Center Networks > All Tenant Networks > vPorts page to verify that the vPort exists. If the vPort information is correct and the vPort is up, interoperability is working.

Configuring interoperability in the KVM network-based overlay scenario

Installing and configuring plug-ins on the controller node

Installing the SeerEngine-DC Neutron plug-ins on the controller node

See "Installing the SeerEngine-DC Neutron plug-ins."

Editing the configuration file

IMPORTANT: You must configure a physical network name and VLAN range for all compute nodes in the network_vlan_ranges parameter in the ml2_conf.ini file. Make sure the physical network name in the bridge_mappings parameter in the openvswitch_agent.ini file is unique for a compute node.

To edit the configuration file:

1. Log in to a controller node as a root user.

2. Edit the network_vlan_ranges parameter in the /etc/neutron/plugins/ml2/ml2_conf.ini file. In each entry, the value to the left of the first colon is the physical network name, and the values to the right of it (start:end) are the VLAN range.

[ml2]

type_drivers = vxlan,vlan

tenant_network_types = vxlan,vlan

mechanism_drivers = ml2_h3c,openvswitch

[ml2_type_vlan]

network_vlan_ranges = physicnet1:1000:1999,physicnet2:2000:2999

[ml2_type_vxlan]

vni_ranges = 1:500

3. Restart the neutron-server service.

[root@localhost ~]# service neutron-server restart

neutron-server stop/waiting

neutron-server start/running, process 4583
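The IMPORTANT note above requires network_vlan_ranges to include a VLAN range for the physical network of every compute node. The following Python sketch cross-checks each node's bridge_mappings value against network_vlan_ranges. It reports physical networks that have no VLAN range. The function names and the example node inventory are hypothetical.

```python
from typing import Dict, List, Set


def ranged_physnets(network_vlan_ranges: str) -> Set[str]:
    """Physical networks that have a VLAN range, e.g. 'physicnet1:1000:1999'."""
    names: Set[str] = set()
    for entry in network_vlan_ranges.split(","):
        parts = entry.strip().split(":")
        if len(parts) == 3:
            names.add(parts[0])
    return names


def mapped_physnets(bridge_mappings: str) -> List[str]:
    """Physical networks named in a bridge_mappings value, e.g. 'physicnet1:br-ens33'."""
    return [entry.split(":", 1)[0].strip()
            for entry in bridge_mappings.split(",") if entry.strip()]


def nodes_missing_ranges(network_vlan_ranges: str,
                         node_bridge_mappings: Dict[str, str]) -> Dict[str, List[str]]:
    """Map each compute node to the physical networks that lack a VLAN range."""
    configured = ranged_physnets(network_vlan_ranges)
    missing: Dict[str, List[str]] = {}
    for node, mappings in node_bridge_mappings.items():
        absent = [name for name in mapped_physnets(mappings) if name not in configured]
        if absent:
            missing[node] = absent
    return missing
```

With network_vlan_ranges set to physicnet1:1000:1999,physicnet2:2000:2999 and a node mapped to physicnet3, the sketch reports physicnet3 for that node.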

Installing and configuring plug-ins on a compute node

Installing the LLDP service

· CentOS 8 or CentOS Stream 8 operating system

Install and start the lldpd service on the compute node.

[root@localhost ~]# yum install -y lldpd

[root@localhost ~]# systemctl enable lldpd.service

[root@localhost ~]# systemctl start lldpd.service

· Other CentOS operating systems:

a. Install and start the lldpad service on the compute node.

[root@localhost ~]# yum install -y lldpad

[root@localhost ~]# systemctl enable lldpad.service

[root@localhost ~]# systemctl start lldpad.service

b. Enable the uplink interface to send LLDP messages. eno2 is the uplink interface in this example.

[root@localhost ~]# lldptool set-lldp -i eno2 adminStatus=rxtx

[root@localhost ~]# lldptool -T -i eno2 -V sysName enableTx=yes

[root@localhost ~]# lldptool -T -i eno2 -V portDesc enableTx=yes

[root@localhost ~]# lldptool -T -i eno2 -V sysDesc enableTx=yes

[root@localhost ~]# lldptool -T -i eno2 -V sysCap enableTx=yes
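When a compute node has several uplink interfaces, the following Python sketch builds the lldptool commands shown in step b for each interface. The function names are hypothetical. By default, the sketch only prints the commands (dry run). With dry_run=False, it runs them through subprocess, which assumes lldptool is installed and the lldpad service is running.

```python
import subprocess
from typing import List

# TLVs enabled for transmission in step b.
TLVS = ["sysName", "portDesc", "sysDesc", "sysCap"]


def lldptool_commands(interface: str) -> List[List[str]]:
    """Build the lldptool invocations from step b for one uplink interface."""
    commands = [["lldptool", "set-lldp", "-i", interface, "adminStatus=rxtx"]]
    for tlv in TLVS:
        commands.append(["lldptool", "-T", "-i", interface, "-V", tlv, "enableTx=yes"])
    return commands


def enable_lldp(interfaces: List[str], dry_run: bool = True) -> None:
    """Print (dry run) or execute the lldptool commands for each interface."""
    for interface in interfaces:
        for cmd in lldptool_commands(interface):
            if dry_run:
                print(" ".join(cmd))
            else:
                subprocess.run(cmd, check=True)
```

For example, enable_lldp(["eno2", "eno3"]) prints ten commands, five per interface.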

Installing the Nova patch

Install the Nova patch only in the following scenarios:

· KVM host-based or network-based overlay scenario in which VMs are load balancer members and the load balancer must be aware of member status.

· vCenter network-based overlay scenario.

For the installation procedure, see "Installing and configuring plug-ins on a compute node."

Installing the openvswitch-agent patch

IMPORTANT:

· The openvswitch-agent patch applies only to open-source scenarios. If a third-party cloud platform has modified openvswitch-agent, the patch cannot be installed.

· OpenStack Rocky and later versions do not require the openvswitch-agent patch.

· Kylin V10 operating systems do not support openvswitch-agent patch installation.
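The applicability rules in the note above can be summarized as a small decision function. This Python sketch is an illustration only. The function name and the release list (Kilo through Yoga) are assumptions, and the function encodes nothing beyond the three rules stated in the note.

```python
# OpenStack releases in chronological order (assumed list, Kilo through Yoga).
RELEASES = ["kilo", "liberty", "mitaka", "newton", "ocata", "pike", "queens",
            "rocky", "stein", "train", "ussuri", "victoria", "wallaby", "xena", "yoga"]


def ovs_agent_patch_decision(release: str, os_name: str,
                             modified_by_third_party: bool = False) -> str:
    """Return 'install', 'not required', or 'not supported'."""
    release = release.strip().lower()
    if release not in RELEASES:
        raise ValueError(f"unknown OpenStack release: {release!r}")
    # Kylin V10 does not support installing the patch.
    if os_name.strip().lower().startswith("kylin v10"):
        return "not supported"
    # A third-party modified openvswitch-agent cannot be patched.
    if modified_by_third_party:
        return "not supported"
    # Rocky and later releases do not need the patch.
    if RELEASES.index(release) >= RELEASES.index("rocky"):
        return "not required"
    return "install"
```

For example, a Pike deployment on CentOS 7 returns install. A Rocky deployment returns not required.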

To install the openvswitch-agent patch:

1. Access the directory where the SeerEngine-DC OpenStack package (an .egg or .rpm file) is saved, and install the package on the OpenStack compute node. The name of the SeerEngine-DC OpenStack package is SeerEngine_DC_PLUGIN-version-py2.7.egg or SeerEngine_DC_PLUGIN-version.noarch.rpm. version represents the version of the package.

· .egg file

[root@localhost ~]# easy_install SeerEngine_DC_PLUGIN-E3608-py2.7.egg

· .rpm file

[root@localhost ~]# rpm -ivh SeerEngine_DC_PLUGIN-E3608-1.noarch.rpm

2. Install the openvswitch-agent patch.

[root@localhost ~]# h3c-sdnplugin openvswitch install

3. Restart the openvswitch-agent service.

[root@localhost ~]# service neutron-openvswitch-agent restart

Verifying the installation

# Verify that the SeerEngine-DC OpenStack package is correctly installed. If the correct software and OpenStack versions are displayed, the package is successfully installed.

· .egg file

[root@localhost ~]# pip freeze | grep PLUGIN

SeerEngine-DC-PLUGIN===E3608

· .rpm file

[root@localhost ~]# rpm -qa | grep PLUGIN

SeerEngine-DC-PLUGIN===E3608

# Verify that the openvswitch-agent service is running, as indicated by active (running) in the output.

[root@localhost ~]# service neutron-openvswitch-agent status

Redirecting to /bin/systemctl status neutron-openvswitch-agent.service

neutron-openvswitch-agent.service - OpenStack Neutron Open vSwitch Agent

Loaded: loaded (/usr/lib/systemd/system/neutron-openvswitch-agent.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2016-12-05 16:58:18 CST; 18h ago

Main PID: 807 (neutron-openvsw)

Upgrading the openvswitch-agent patch

CAUTION: Services might be interrupted during the openvswitch-agent patch upgrade procedure.

To upgrade the openvswitch-agent patch, you must first remove the current version and then install the new version.

To upgrade the openvswitch-agent patch:

1. Remove the openvswitch-agent patch.

[root@localhost ~]# h3c-sdnplugin openvswitch uninstall

2. Remove the SeerEngine-DC OpenStack package.

· .egg file

[root@localhost ~]# pip uninstall SeerEngine-DC-PLUGIN

Uninstalling SeerEngine-DC-PLUGIN-E3608:

/usr/bin/h3c-sdnplugin

/usr/lib/python2.7/site-packages/SeerEngine_DC_PLUGIN-E3608-py2.7.egg

Proceed (y/n)? y

Successfully uninstalled SeerEngine-DC-PLUGIN-E3608

· .rpm file

[root@localhost ~]# rpm -e SeerEngine_DC_PLUGIN

3. Install the new patch. For more information, see "Installing the openvswitch-agent patch."

Setting up the configuration environment

IMPORTANT: Make sure the physical network name in the bridge_mappings parameter in the openvswitch_agent.ini file is unique for a compute node.

To set up the configuration environment:

1. Log in to the compute node as a root user.

2. Edit the bridge_mappings parameter in the /etc/neutron/plugins/ml2/openvswitch_agent.ini file. The value to the left of the colon represents the physical network name, and the value to the right of the colon represents the manually created OVS bridge name.

Make sure the physical network name is the same as the physical network name of the bound NIC.

[ovs]

bridge_mappings = physicnet1:br-ens33

3. Create a bridge named br-ens33.

[root@localhost ~]# ovs-vsctl add-br br-ens33

4. Add the physical port ens33 to the bridge.

[root@localhost ~]# ovs-vsctl add-port br-ens33 ens33

5. Verify that the bridge was created successfully.

[root@localhost ~]# ovs-vsctl show

6. Delete the default tunnel bridge br-tun.

[root@localhost ~]# ovs-vsctl del-br br-tun

7. Edit the /etc/neutron/plugins/ml2/openvswitch_agent.ini file to comment out all tunnel-related parameters.

[agent]

# tunnel_types = vxlan

# vxlan_udp_port = 4789

# l2_population = true

[ovs]

# tunnel_bridge = br-tun

# local_ip = 192.168.1.100

8. Restart the neutron-openvswitch-agent and openvswitch-agent services, and then verify that the br-tun bridge no longer exists.

[root@localhost ~]# systemctl restart neutron-openvswitch-agent.service

[root@localhost ~]# systemctl restart openvswitch-agent.service

[root@localhost ~]# ovs-vsctl show
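Step 7 can also be scripted. The following Python sketch comments out the tunnel-related options shown in step 7 within their [agent] and [ovs] sections and leaves all other lines untouched. The function name and the TUNNEL_KEYS mapping are hypothetical. The mapping covers only the options listed in step 7, so add any other tunnel options that your file contains.

```python
import re

# Tunnel-related options from step 7, grouped by section (assumed complete list).
TUNNEL_KEYS = {
    "agent": {"tunnel_types", "vxlan_udp_port", "l2_population"},
    "ovs": {"tunnel_bridge", "local_ip"},
}


def comment_out_tunnel_params(text: str) -> str:
    """Prefix tunnel-related options in openvswitch_agent.ini text with '# '."""
    section = None
    out = []
    for line in text.splitlines():
        stripped = line.strip()
        header = re.match(r"^\[(?P<name>[^\]]+)\]$", stripped)
        if header:
            section = header.group("name")
        elif "=" in stripped and not stripped.startswith("#"):
            key = stripped.split("=", 1)[0].strip()
            if key in TUNNEL_KEYS.get(section, set()):
                line = "# " + line
        out.append(line)
    return "\n".join(out) + ("\n" if text.endswith("\n") else "")
```

Options outside [agent] and [ovs], and options such as bridge_mappings, are left as they are.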

(Optional.) Installing and configuring plug-ins on the DHCP failover node

To use DHCP failover, use a separate node as the DHCP failover node and install the DHCP and Metadata components on that node.

IMPORTANT: The DHCP failover components support only the following operating systems:

· CentOS 7.2.1511 operating system with kernel version 3.10.0-327.el7.x86_64. If the kernel version does not match the S1020V version, install the kernel patch first. For the configuration procedure, see "CentOS 7.2.1511 operating system."

· OpenEuler 21.10 ARM operating system. For the configuration procedure, see "OpenEuler 21.10 ARM operating system."

CentOS 7.2.1511 operating system

Installing basic components

1. Install WebSocket Client on the DHCP failover node.

IMPORTANT: Make sure WebSocket Client is version 0.56 or later.

[root@localhost ~]# yum install -y python-websocket-client

2. Install an S1020V vSwitch on the DHCP failover node and configure bridge and controller settings. For the installation and configuration procedures, see H3C S1020V Installation Guide.

[root@localhost ~]# rpm -ivh --force s1020v-centos71-3.10.0-229.el7.x86_64-x86_64.rpm

Obtaining the installation package of the DHCP failover components

Two SeerEngine-DC OpenStack packages are available: one contains the DHCP failover components package and one does not. The SeerEngine-DC OpenStack package that contains the DHCP failover components package is named in the SeerEngine_DC_PLUGIN-DHCP_version1_version2.egg format. version1 represents the software package version number. version2 represents the OpenStack version number.

Obtain the required version of the SeerEngine-DC OpenStack package and then save the package to the target installation directory on the server or virtual machine. You can also transfer the installation package to the target installation directory through a file transfer protocol such as FTP, TFTP, or SCP. Use the binary transfer mode to prevent the software package from being corrupted during transit.
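To tell the DHCP failover package apart from the standard package in a script, the following Python sketch parses file names of the SeerEngine_DC_PLUGIN-DHCP_version1_version2 form. The function and type names are hypothetical. The examples in this section use both an underscore and a hyphen between version1 and version2, and they add a -py2.7 suffix. The pattern therefore accepts either separator and an optional Python suffix.

```python
import re
from typing import NamedTuple, Optional


class DhcpPackage(NamedTuple):
    plugin_version: str      # version1: software package version
    openstack_version: str   # version2: OpenStack version


_DHCP_EGG = re.compile(
    r"^SeerEngine_DC_PLUGIN-DHCP_(?P<v1>[^_-]+)[_-](?P<v2>[^_-]+?)"
    r"(?:-py\d+(?:\.\d+)*)?\.egg$"
)


def parse_dhcp_package(filename: str) -> Optional[DhcpPackage]:
    """Return the two versions, or None if this is not a DHCP failover package."""
    match = _DHCP_EGG.match(filename)
    if match is None:
        return None
    return DhcpPackage(match.group("v1"), match.group("v2"))
```

A standard package such as SeerEngine_DC_PLUGIN-E3608-py2.7.egg returns None.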

Installing the DHCP component on the DHCP failover node

Installing the DHCP component

1. Access the directory where the SeerEngine-DC OpenStack package (an .egg file) is saved and then install the package.

In the following example, the SeerEngine-DC OpenStack package is in the /root directory.

[root@localhost ~]# easy_install SeerEngine_DC_PLUGIN-DHCP_E3608_2017.10-py2.7.egg

2. Install the DHCP component.

[root@localhost ~]# h3c-sdnplugin dhcp install

Install Environment dependent packages

Preparing… ########## [100%]

Updating / installing…

python2-six-1.10.0-9.el7 ########## [ 1%]

………

Install config files

Install services

Installation complete

Please do not remove the *.h3c_bak files.

3. Edit the DHCP component configuration file.

a. Use the vi editor to open the h3c_dhcp_agent.ini file on the DHCP failover node.

[root@localhost ~]# vi /etc/neutron/h3c_dhcp_agent.ini

b. Press I to switch to insert mode and edit the configuration file as follows:

[DEFAULT]

interface_driver = openvswitch

dhcp_driver = networking_h3c.agent.dhcp.driver.dhcp.Dnsmasq

enable_isolated_metadata = true

force_metadata = true

ovs_integration_bridge = br0

[h3c]

transport_url = ws://127.0.0.1:8080

websocket_fragment_size = 102400

[ovs]

ovsdb_interface = vsctl

c. To enable certificate authentication, add the following configurations:

[h3c]

ca_file = /etc/neutron/ca.crt

cert_file = /etc/neutron/sna.pem

key_file = /etc/neutron/sna.key

key_password = KEY_PASSWORD

insecure = true

d. Press Esc to quit insert mode, and enter :wq to exit the vi editor and save the h3c_dhcp_agent.ini file.

4. Start the DHCP component.

[root@localhost ~]# systemctl enable h3c-dhcp-agent.service

[root@localhost ~]# systemctl start h3c-dhcp-agent.service
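As an alternative to editing h3c_dhcp_agent.ini by hand in steps 3b and 3c, the following Python sketch generates the same content with configparser. The function name and its parameters are hypothetical. The sketch adds the certificate options only when all three file paths are supplied, which mirrors step 3c.

```python
import configparser
import io
from typing import Optional


def build_dhcp_agent_ini(transport_url: str,
                         integration_bridge: str = "br0",
                         fragment_size: int = 102400,
                         ca_file: Optional[str] = None,
                         cert_file: Optional[str] = None,
                         key_file: Optional[str] = None,
                         key_password: str = "KEY_PASSWORD") -> str:
    """Return h3c_dhcp_agent.ini content matching steps 3b and 3c."""
    if fragment_size < 1024:
        raise ValueError("websocket_fragment_size must be 1024 or greater")
    parser = configparser.ConfigParser(interpolation=None)
    parser["DEFAULT"] = {
        "interface_driver": "openvswitch",
        "dhcp_driver": "networking_h3c.agent.dhcp.driver.dhcp.Dnsmasq",
        "enable_isolated_metadata": "true",
        "force_metadata": "true",
        "ovs_integration_bridge": integration_bridge,
    }
    h3c = {
        "transport_url": transport_url,
        "websocket_fragment_size": str(fragment_size),
    }
    if ca_file and cert_file and key_file:
        # Certificate authentication settings from step 3c.
        h3c.update({
            "ca_file": ca_file,
            "cert_file": cert_file,
            "key_file": key_file,
            "key_password": key_password,
            "insecure": "true",
        })
    parser["h3c"] = h3c
    parser["ovs"] = {"ovsdb_interface": "vsctl"}
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()
```

Write the returned text to /etc/neutron/h3c_dhcp_agent.ini only after you have reviewed it. Then start the service as shown in step 4.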

Installing the Metadata component

1. Access the directory where the SeerEngine-DC OpenStack package (an .egg or .rpm file) is saved and then install the package. The name of the SeerEngine-DC OpenStack package is SeerEngine_DC_PLUGIN-version1_version2-py2.7.egg or SeerEngine_DC_PLUGIN-version1_version2.noarch.rpm. version1 represents the version of the package. version2 represents the version of OpenStack.

In the following example, the SeerEngine-DC OpenStack package is in the /root directory.

[root@localhost ~]# easy_install SeerEngine_DC_PLUGIN-DHCP_E3608-2017.10-py2.7.egg

2. Install the Metadata component.

[root@localhost ~]# h3c-sdnplugin metadata install

Install config files

Install services

Installation complete

Please do not remove the *.h3c_bak files.

3. Edit the Metadata component configuration file.

a. Use the vi editor to open the h3c_metadata_agent.ini configuration file on the DHCP failover node.

[root@localhost ~]# vi /etc/neutron/h3c_metadata_agent.ini

b. Press I to switch to insert mode and edit the configuration file as follows:

[DEFAULT]

nova_metadata_host = controller

nova_metadata_port = 8775

nova_proxy_shared_secret = METADATA_SECRET

enable_keystone_authtoken = True

[cache]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASSWORD

[SDNCONTROLLER]

url = https://127.0.0.1:8443

username = admin

password = Pwd@12345

enable_https = False

neutron_plugin_ca_file =

neutron_plugin_cert_file =

neutron_plugin_key_file =

c. Press Esc to quit insert mode, and enter :wq to exit the vi editor and save the h3c_metadata_agent.ini file.

4. Start the Metadata component.

[root@localhost ~]# systemctl enable h3c-metadata-agent.service

[root@localhost ~]# systemctl start h3c-metadata-agent.service

Removing DHCP failover components

Remove the SeerEngine-DC OpenStack package after removing the DHCP and Metadata components.

To remove the DHCP failover components:

1. Remove the DHCP component.

[root@localhost ~]# h3c-sdnplugin dhcp uninstall

Remove services

Removed symlink /etc/systemd/system/multi-user.target.wants/h3c-dhcp-agent.service.

Backup config files

Uninstallation complete

2. Remove the Metadata component.

[root@localhost ~]# h3c-sdnplugin metadata uninstall

Remove services

Removed symlink /etc/systemd/system/multi-user.target.wants/h3c-metadata-agent.service.

Backup config files

Uninstallation complete

3. Remove the SeerEngine-DC OpenStack package.

[root@localhost ~]# pip uninstall SeerEngine-DC-PLUGIN

Uninstalling SeerEngine-DC-PLUGIN-DHCP_E3608-2017.10:

/usr/bin/h3c-sdnplugin

/usr/lib/python2.7/site-packages/SeerEngine_DC_PLUGIN-DHCP_E3608-2017.10-py2.7.egg

Proceed (y/n)? y

Successfully uninstalled SeerEngine-DC-PLUGIN-DHCP_E3608-2017.10

Upgrading DHCP failover components

To upgrade DHCP failover components, first remove the old version and then install the new version.

CAUTION: Service might be interrupted during the upgrade. Before performing an upgrade, be sure you fully understand its impact on the services.

Parameters and fields

This section describes the parameters in the configuration files and the fields included in those parameters.

Table 5 DHCP component configuration file

| Parameter | Description |
|---|---|
| ovs_integration_bridge | vSwitch bridge where the DHCP port resides. |
| websocket_fragment_size | Size of a WebSocket message fragment sent to the controller, in bytes. The value is an integer equal to or larger than 1024. The default value is 102400. When the value is 1024, WebSocket messages are not fragmented. |
| key_password | Password used for certificate authentication. Replace KEY_PASSWORD with the real password used for certificate authentication. |
| insecure | Whether to enable WebSocket certificate authentication. The default value is False. |
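The following Python 3 sketch shows how the websocket_fragment_size rules in Table 5 combine. It validates a value and splits a message into fragments of that size. It is not the plug-in's implementation. The special case for 1024 follows the table's statement that this value disables fragmentation.

```python
DEFAULT_FRAGMENT_SIZE = 102400
MIN_FRAGMENT_SIZE = 1024


def validate_fragment_size(value=DEFAULT_FRAGMENT_SIZE):
    """Accept an integer (or INI string) equal to or larger than 1024."""
    size = int(value)
    if size < MIN_FRAGMENT_SIZE:
        raise ValueError("websocket_fragment_size must be an integer >= 1024")
    return size


def fragment(payload, size):
    """Split payload (bytes) into chunks of at most size bytes.

    Per Table 5, a value of 1024 means the message is sent unfragmented.
    """
    size = validate_fragment_size(size)
    if size == MIN_FRAGMENT_SIZE or len(payload) <= size:
        return [payload]
    return [payload[i:i + size] for i in range(0, len(payload), size)]
```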

Table 6 Metadata component configuration file

| Parameter | Description |
|---|---|
| enable_keystone_authtoken | Whether to enable the Neutron API. When the value is True, you must configure the parameters in the [keystone_authtoken] section. When the value is False, you must configure the parameters in the [SDNCONTROLLER] section. |
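The rule in Table 6 can be checked automatically. The following Python 3 sketch is not shipped with the plug-in. It parses a Metadata component configuration and reports which section is missing for the chosen enable_keystone_authtoken value. It also uses configparser's strict mode, which rejects an option that appears twice in the same section. Treating a missing enable_keystone_authtoken as False is an assumption, because Table 6 does not state a default.

```python
import configparser


def check_metadata_config(text):
    """Return a list of problems found in a Metadata component config."""
    # strict=True rejects duplicate sections and duplicate options in a section
    parser = configparser.ConfigParser(interpolation=None, strict=True)
    try:
        parser.read_string(text)
    except (configparser.DuplicateOptionError,
            configparser.DuplicateSectionError) as exc:
        return [str(exc)]
    # Assumption: a missing enable_keystone_authtoken is treated as False
    use_keystone = parser["DEFAULT"].getboolean(
        "enable_keystone_authtoken", fallback=False)
    required = "keystone_authtoken" if use_keystone else "SDNCONTROLLER"
    problems = []
    if not parser.has_section(required):
        problems.append("missing [%s] section" % required)
    return problems
```

A misspelled section name, such as keysone_authtoken, is reported as a missing [keystone_authtoken] section.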

OpenEuler 21.10 ARM operating system

Installing basic components

1. Install WebSocket Client on the DHCP failover node.

[root@localhost ~]# yum install -y python-websocket-client

NOTE: Make sure the WebSocket Client version is 0.56 or later.

2. Install an S1020V vSwitch on the DHCP failover node and configure bridge and controller settings. For the installation and configuration procedures, see H3C S1020V Installation Guide.
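The following Python sketch checks the version requirement in the note above. It compares the installed websocket-client version against 0.56. It assumes the package exposes its version as websocket.__version__. If the module or the attribute is missing, the check reports False instead of raising an error. Run it with the Python interpreter that the plug-in uses.

```python
def parse_version(text):
    """Turn a version string such as '0.56.0' into a tuple of integers."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break  # ignore suffixes such as 'rc1'
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def at_least(installed, minimum):
    """Compare two version strings, padding the shorter one with zeros."""
    a, b = parse_version(installed), parse_version(minimum)
    n = max(len(a), len(b))
    a += (0,) * (n - len(a))
    b += (0,) * (n - len(b))
    return a >= b


def websocket_client_ok(minimum="0.56"):
    """Return True if the installed websocket-client is at least minimum."""
    try:
        import websocket  # module provided by the websocket-client package
    except ImportError:
        return False
    return at_least(getattr(websocket, "__version__", "0"), minimum)
```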

Obtaining the installation package of the DHCP failover components

Two SeerEngine-DC OpenStack packages are available: one contains the DHCP failover components package and the other does not. The SeerEngine-DC OpenStack package that contains the DHCP failover components package is named in the SeerEngine_DC_PLUGIN-DHCP_version1_version2.egg format. The version1 parameter represents the software package version number. The version2 parameter represents the OpenStack version number.

Obtain the required version of the SeerEngine-DC OpenStack package and then save the package to the target installation directory on the server or virtual machine. You can also transfer the installation package to the target installation directory through a file transfer protocol such as FTP, TFTP, or SCP. Use the binary transfer mode to prevent the software package from being corrupted during transit.
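If several packages are saved in the same directory, a script can select the correct one by its file name. The following Python 3 sketch parses file names in the SeerEngine_DC_PLUGIN-DHCP_version1_version2.egg format described above. It is a hypothetical helper. For the ARM package used later in this guide, version2 captures the ARM-py2.7 suffix rather than an OpenStack version.

```python
import re

# Documented name format: SeerEngine_DC_PLUGIN-DHCP_version1_version2.egg
EGG_NAME = re.compile(
    r"^SeerEngine_DC_PLUGIN-DHCP_(?P<version1>[^_]+)_(?P<version2>.+)\.egg$"
)


def parse_egg_name(filename):
    """Return {'version1': ..., 'version2': ...}, or None if the name does not match."""
    match = EGG_NAME.match(filename)
    return match.groupdict() if match else None
```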

Installing the DHCP component on the DHCP failover node

1. Access the directory where the SeerEngine-DC OpenStack package (an .egg file) is saved and then install the package.

The package name format is SeerEngine_DC_PLUGIN-DHCP_version1_ARM-py2.7.egg, where the version1 parameter represents the software version number.

[root@localhost ~]# easy_install SeerEngine_DC_PLUGIN-DHCP_E6602_ARM-py2.7.egg

2. Install the DHCP component.

a. Install the required RPM files. Obtain the folder path as follows:

[root@controller ~]# python

>>> import networking_h3c

>>> networking_h3c.__path__

['/usr/lib/python2.7/site-packages/SeerEngine_DC_PLUGIN-DHCP_E6602_ARM-py2.7.egg/networking_h3c']

>>> exit()

[root@controller ~]# cd /usr/lib/python2.7/site-packages/SeerEngine_DC_PLUGIN-DHCP_E6602_ARM-py2.7.egg/networking_h3c/rpms

[root@controller ~]# rpm -ivh * --nodeps --force

warning: c-ares-1.16.1-3.oe1.aarch64.rpm: Header V3 RSA/SHA1 Signature, key ID b25e7f66: NOKEY

Verifying... ################################# [100%]

Preparing... ################################# [100%]

Updating / installing...

1:python2-six-1.15.0-2.oe1 ################################# [ 1%]

2:……

b. Install the DHCP components.

[root@controller ~]# h3c-sdnplugin dhcp install

Install config files

Install services

Installation complete.

Please do not remove the *.h3c_bak files.

3. Edit the DHCP component configuration file.

a. Use the vi editor to open the h3c_dhcp_agent.ini file on the DHCP failover node.

[root@localhost ~]# vi /etc/neutron/h3c_dhcp_agent.ini

b. Press I to switch to insert mode and edit the configuration file as follows:

[DEFAULT]

interface_driver = openvswitch

dhcp_driver = networking_h3c.agent.dhcp.driver.dhcp.Dnsmasq

enable_isolated_metadata = true

force_metadata = true

ovs_integration_bridge = br0

[h3c]

transport_url = ws://127.0.0.1:30000

[ovs]

ovsdb_interface = vsctl

[SDNCONTROLLER]

url = http://127.0.0.1:30000

username = admin

password = Pwd@12345

enable_encrypted_authentication = False

timeout = 1800

retry = 10

enable_https = False

neutron_plugin_ca_file =

neutron_plugin_cert_file =

neutron_plugin_key_file =

c. Press Esc to quit insert mode, and enter :wq to save the h3c_dhcp_agent.ini file and exit the vi editor.

Table 7 Parameters

| Parameter | Description |
|---|---|
| interface_driver | Driver for managing vPorts. As a best practice, use the default settings. |
| dhcp_driver | Driver for managing the DHCP service. As a best practice, use the default settings. |
| enable_isolated_metadata | Whether the Nova compute service enables a separate metadata service proxy for each instance. As a best practice, use the default settings. |
| force_metadata | Whether to force an instance to use the metadata service to obtain metadata information. |
| ovs_integration_bridge | vSwitch bridge where the DHCP port comes online. |
| transport_url | RPC interface URL for the controller. In the current software version, only WebSocket interfaces are supported, for example, ws://127.0.0.1:30000. When Unified Platform is configured to use HTTPS, set this parameter to a WSS URL. For more information, see the guidelines for filling in URL parameters. For example, if the Unified Platform URL is http://127.0.0.1:30000, set the transport_url parameter to ws://127.0.0.1:30000. |
| url | URL for logging in to Unified Platform, in the format http://ip_address:30000 or https://ip_address:30000, for example, http://127.0.0.1:30000. |
| username | Username for logging in to Unified Platform, for example, admin. When the use_neutron_credential parameter is set to True, you do not need to configure this parameter. |
| password | Password for logging in to Unified Platform, for example, Pwd@12345. When the use_neutron_credential parameter is set to True, you do not need to configure this parameter. Use the escape character "\" before the character "$" in a password. |
| timeout | Amount of time, in seconds, that the Neutron server waits for a response from the controller, for example, 1800. As a best practice, set this value to 1800 or greater. |
| retry | Number of connection request attempts, for example, 10. |
| enable_encrypted_authentication | Whether to perform base64 decoding on the Unified Platform username and password. When this parameter is set to False, the username and password are used as entered. When this parameter is set to True, enter the username and password as base64-encoded values, which are decoded before use. To encode a value, run echo -n 'value' \| base64. For example, the username admin is encoded as YWRtaW4= and the password Pwd@12345 is encoded as UHdkQDEyMzQ1. |
| enable_https | Whether to enable HTTPS bidirectional authentication. The default value is False. |
| neutron_plugin_ca_file | Save location for the CA certificate of the controller, for example, /etc/neutron/ca.crt. As a best practice, save the CA certificate in the /usr/share/neutron directory. |
| neutron_plugin_cert_file | Save location for the certificate file of the controller, for example, /etc/neutron/sna.pem. As a best practice, save the certificate file in the /usr/share/neutron directory. |
| neutron_plugin_key_file | Save location for the private key file of the controller, for example, /etc/neutron/sna.key. As a best practice, save the key file in the /usr/share/neutron directory. |

4. Start the DHCP component.

[root@localhost ~]# systemctl enable h3c-dhcp-agent.service

[root@localhost ~]# systemctl start h3c-dhcp-agent.service
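Two values in Table 7 are derived from other values. The transport_url parameter uses the same host and port as the Unified Platform url, with ws (or wss for HTTPS) as the scheme. When enable_encrypted_authentication = True, the username and password are entered as base64-encoded values. The following Python 3 sketch computes both. The helper names are hypothetical. The sketch assumes that the transport URL carries no path, as in the examples in this guide.

```python
import base64
from urllib.parse import urlsplit, urlunsplit


def transport_url_for(platform_url):
    """Derive transport_url from the Unified Platform url.

    http -> ws and https -> wss; host and port are kept, any path is dropped.
    """
    parts = urlsplit(platform_url)
    schemes = {"http": "ws", "https": "wss"}
    if parts.scheme not in schemes:
        raise ValueError("expected an http:// or https:// URL")
    return urlunsplit((schemes[parts.scheme], parts.netloc, "", "", ""))


def encode_credential(value):
    """Base64-encode a value, like: echo -n 'value' | base64"""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")
```

For example, encode_credential("admin") returns YWRtaW4=, which matches the value given for enable_encrypted_authentication in Table 7.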

Installing the Metadata component on the DHCP failover node

1. Access the directory where the SeerEngine-DC OpenStack package (an .egg file) is saved and then install the package.

The package name format is SeerEngine_DC_PLUGIN-DHCP_version1_ARM-py2.7.egg, where the version1 parameter represents the software version number.

[root@localhost ~]# easy_install SeerEngine_DC_PLUGIN-DHCP_E6602_ARM-py2.7.egg

2. Install the Metadata component for the failover service.

[root@localhost ~]# h3c-sdnplugin metadata install

Install config files

Install services

Installation complete

Please do not remove the *.h3c_bak files.

3. Edit the Metadata component configuration file.

a. Use the vi editor to open the h3c_dhcp_agent.ini file on the DHCP failover node.

[root@localhost ~]# vi /etc/neutron/h3c_dhcp_agent.ini

b. Press I to switch to insert mode and edit the configuration file as follows:

[DEFAULT]

nova_metadata_host = controller

nova_metadata_port = 8775

nova_proxy_shared_secret = METADATA_SECRET

enable_keystone_authtoken = True

[cache]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASSWORD

[SDNCONTROLLER]

url = http://127.0.0.1:30000

username = admin

password = Pwd@12345

enable_encrypted_authentication = False

timeout = 1800

retry = 10

enable_https = False

neutron_plugin_ca_file =

neutron_plugin_cert_file =

neutron_plugin_key_file =

c. Press Esc to quit insert mode, and enter :wq to save the file and exit the vi editor.

Table 8 Parameters

|

Parameter |

Description |

|