Product overview

About the vSCN5100

H3C vSCN5100 is a core network product based on H3C's proprietary network function virtualization (NFV) architecture, aimed at the enterprise market. It addresses the requirement for large-scale 5G mobile communication deployments in industries such as rail transport, electric power, and campuses. The vSCN5100 runs on x86 architecture servers, such as the H3C UniServer R4900 G3, and is deployed as a container. It offers a large user capacity, high availability, high throughput, and a rich set of service features, and supports the IMS voice service. Together with H3C's mobile communication base stations and endpoints, the vSCN5100 can provide a complete 5G network solution. Unless otherwise specified, H3C vSCN5100 is referred to as vSCN in this document.

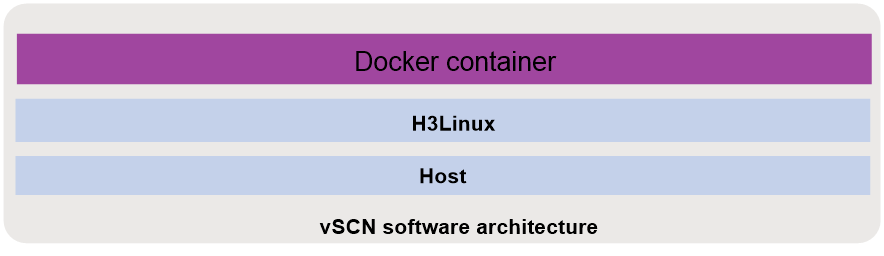

System operating architecture

The vSCN runs in a Docker container on H3Linux. The installation and maintenance engineers must have a basic knowledge of Linux.

Figure 1 shows the software architecture for deploying the vSCN based on Docker containers.

· The host is the underlying device that runs the vSCN, such as H3C UniServer R4900 G3.

· H3Linux is the operating system running on the underlying host.

· The Docker container is the container where the vSCN network element nodes are located.

Figure 1 Software architecture

vSCN software package

Software package type

The vSCN runs in a Docker container based on the H3Linux operating system. To support the installation and operation of the vSCN, H3C provides a software package compatible with the software version of the product. The software package contains the operating system installation package, vSCN installation package, and tool package.

· H3Linux operating system image

The H3Linux operating system image is an H3Linux system image file in .iso format. Install H3Linux on the host before you install the vSCN package.

· vSCN installation package

The vSCN installation package is in .tar.gz format.

· Toolkit

The toolkit contains script files for installing, upgrading, or maintaining the vSCN.

Software package naming conventions

H3C provides the operating system installation package, core network installation package, and toolkit when it releases a software version.

· The operating system installation package is named using the H3Linux-H3Linux version number-vSCN format, for example, H3Linux-V202-vSCN.iso.

· The core network package is named using the vSCN5100-CMW910-version number format, for example, vSCN5100-CMW910-A5118.tar.gz.

· The toolkit is named using the vSCN5100_Tools format, for example, vSCN5100_Tools.tar.

CAUTION: As a best practice to avoid installation failure, use the vSCN installation package and toolkit with the same software version number when you install the software.
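The core network package naming convention can be checked mechanically before installation. The following shell function is a minimal sketch and is not part of the vSCN toolkit. The function name vscn_pkg_version is hypothetical, and the pattern is derived only from the example file name above.

```shell
# Print the version field of a core network package name such as
# vSCN5100-CMW910-A5118.tar.gz. Returns 1 and prints nothing if the name
# does not match the vSCN5100-CMW910-<version>.tar.gz pattern.
vscn_pkg_version() (
    name=$(basename "$1")
    case "$name" in
        vSCN5100-CMW910-*.tar.gz)
            version=${name#vSCN5100-CMW910-}
            version=${version%.tar.gz}
            # Reject names with an empty version field.
            [ -n "$version" ] || exit 1
            printf '%s\n' "$version"
            ;;
        *)
            exit 1
            ;;
    esac
)
```

For example, vscn_pkg_version /root/vSCN5100-CMW910-A5118.tar.gz prints A5118. The toolkit file name carries no version field, so its version must still be confirmed with the release information.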

Preparing for installation

Preparing a server

The vSCN software supports flexible deployment of the 5GC or IMS container. You can deploy both containers on a server. The server hardware requirements of the 5GC container vary by service model. Select a service model based on deployment as shown in Table 1, Table 2, and Table 3.

Table 1 5G service traffic configuration requirements

| Resource object | CPU | Memory (GB) | 10G network interface | 1G copper interface | Disk (GB) | CPU main frequency |
|---|---|---|---|---|---|---|
| Host | 2 | 4 | N/A | 1 | 60 | N/A |
| 5GC | 8 | 14 | 1 | N/A | 60 | N/A |
| IMS | 8 | 14 | 1 | N/A | 60 | N/A |
| Server | 20 | 32 | 1 | 1 | 180 | ≥ 2.1 GHz |

NOTE: The CPUs in the table refer to logical CPUs. If a server supports hyperthreading, you can virtualize a physical CPU to multiple logical CPUs. If the server is deployed with only the 5GC or IMS container, adjust the specifications based on the actual situation.

Table 2 10G service traffic configuration requirements

| Resource object | CPU core | Memory (GB) | 10G network interface | 1G copper interface | Disk (GB) | CPU main frequency |
|---|---|---|---|---|---|---|
| Host | 2 | 4 | N/A | 1 | 60 | N/A |
| 5GC | 20 | 32 | 2 | N/A | 60 | N/A |
| IMS | 8 | 14 | 1 | N/A | 60 | N/A |
| Server | 30 | 64 | 3 | 1 | 180 | ≥ 2.1 GHz |

Table 3 20G service traffic configuration requirements

| Resource object | CPU core | Memory (GB) | 10G network interface | 1G copper interface | Disk (GB) | CPU main frequency |
|---|---|---|---|---|---|---|
| Host | 2 | 4 | N/A | 1 | 60 | N/A |
| 5GC | 32 | 56 | 3 | N/A | 60 | N/A |
| IMS | 8 | 14 | 1 | N/A | 60 | N/A |
| Server | 42 | 128 | 4 | 1 | 180 | ≥ 2.3 GHz |
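As a rough pre-check, the Server rows of Table 1, Table 2, and Table 3 can be encoded in a short shell helper. This is an illustrative sketch only. The function names are hypothetical, the model labels 5G, 10G, and 20G follow the table titles, and the check covers only logical CPU count and memory, not network interfaces, disk, or CPU frequency.

```shell
# Print "<min logical CPUs> <min memory GB>" for a service model,
# taken from the Server rows of Tables 1 through 3.
server_minimum() {
    case "$1" in
        5G)  echo "20 32" ;;
        10G) echo "30 64" ;;
        20G) echo "42 128" ;;
        *)   return 1 ;;
    esac
}

# Usage: meets_server_minimum <model> <logical_cpus> <memory_gb>
# Returns 0 if both values reach the minimum, 1 if not, 2 for an unknown model.
meets_server_minimum() (
    min=$(server_minimum "$1") || exit 2
    min_cpu=${min% *}
    min_mem=${min#* }
    [ "$2" -ge "$min_cpu" ] && [ "$3" -ge "$min_mem" ]
)
```

For example, meets_server_minimum 10G 32 64 succeeds, while meets_server_minimum 20G 40 128 fails because 40 logical CPUs is below the 42 required. On the host, the logical CPU count can be read from the lscpu output.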

The vSCN can be installed on x86 or ARM servers. This document uses an x86 server as an example.

IMPORTANT:

· Unless otherwise specified, this guide uses H3C UniServer R4900 G3 as an example to describe the vSCN installation procedure.

· If you use a different server model as a vSCN host, see the user guide provided with that server for installation methods of the hardware and operating system.

· During server operation, anomalies such as power outages or hot-swapping disks or network adapters can damage the server hardware or vSCN software. For restrictions and guidelines on how to use a server, see the documentation for that server.

Table 4 shows the physical network adapter models supported by the vSCN. For information about checking the physical network adapter model of a network interface, see "Planning service network interfaces."

Table 4 Physical network adapter models supported by the vSCN

| Sequence number | Physical network adapter model | Network adapter rate |
|---|---|---|
| 1 | 82599 | 10G |
| 2 | I350 | 1G |
| 3 | XL710 | 40G |
| 4 | X710 | 10G |
| 5 | X722 | 1G or 10G |
| 6 | E810 | 25G or 100G |
| 7 | BCM57412 | 10G |

This document introduces the vSCN installation procedure by using the network interface configuration listed in Table 5.

Table 5 Network interface configuration example

| Item | Configuration requirements |
|---|---|
| Deploy 5GC | Management interface on the server: enp61s0f0; service network interfaces: ens1f0 and ens1f1 |
| Deploy IMS | Management interface on the server: enp61s0f0; service network interface: ens2f0 |

Installing the server in the target location

For information about how to install an H3C UniServer R4900 G3 server, see H3C UniServer R4900 G3 Server User Guide.

Obtaining the vSCN software package

Before you install the vSCN, obtain the vSCN installation package, the toolkit, and the specified version of the H3Linux operating system image from Technical Support.

Installing H3Linux on the server

Multiple methods are available for installing an operating system on the UniServer R4900 G3 server. This document uses HDM as an example. For more information about how to install an operating system on the UniServer R4900 G3 server, see H3C Servers Operating System Installation Guide.

NOTE: The screenshots in this document are for illustration only and might slightly differ from your HDM webpages.

Logging in to the HDM of UniServer R4900 G3

About HDM

As shown in Table 6, UniServer R4900 G3 comes with the default HDM login information. You can log in to the HDM of the device by using this default information.

Table 6 Default login information

| Item | Default |
|---|---|
| Dedicated HDM interface IP address | 192.168.1.2/24 |
| Username and password | Username: admin; Password: Password@_ |

Logging in to the HDM of UniServer R4900 G3

1. Connect the UniServer R4900 G3 dedicated HDM interface to a local PC by using an Ethernet twisted pair cable.

CAUTION: As a best practice, directly connect the UniServer R4900 G3's dedicated HDM interface to a local PC by using an Ethernet twisted pair cable and ensure that the PC will not enter sleep mode. If you fail to do so, the installation might fail because network packet loss occurs or the PC enters sleep mode.

2. Configure an IP address for the local PC.

Change the IP address of the local PC to any address in the 192.168.1.0/24 network segment other than the default IP address of the dedicated HDM interface (192.168.1.2). For example, use 192.168.1.1. This ensures that the local PC can reach the UniServer R4900 G3 dedicated HDM interface.

3. Log in to HDM.

The procedure for logging in to HDM is similar across browsers. The following uses Internet Explorer 11 as an example.

a. Launch Internet Explorer on your PC, enter the dedicated HDM interface's IP address in the browser, and then press Enter. As shown in Figure 2, a security alert appears in the browser.

b. Click Continue to this website (not recommended).

c. Enter your username and password, and then click Login.
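The address rule for the local PC in step 2 can be expressed as a small shell check. This is a minimal sketch with a hypothetical function name. It additionally excludes the network address (.0) and the broadcast address (.255), which are not usable host addresses in a /24 network segment.

```shell
# Returns 0 if the address is a usable host address in 192.168.1.0/24
# and is not the default dedicated HDM interface address 192.168.1.2.
valid_pc_address() (
    case "$1" in
        192.168.1.*) last=${1#192.168.1.} ;;
        *) exit 1 ;;
    esac
    # The last octet must be one to three decimal digits.
    case "$last" in
        ''|*[!0-9]*) exit 1 ;;
    esac
    [ "${#last}" -le 3 ] || exit 1
    [ "$last" -ge 1 ] && [ "$last" -le 254 ] && [ "$last" -ne 2 ]
)
```

For example, valid_pc_address 192.168.1.1 succeeds, and valid_pc_address 192.168.1.2 fails because that address belongs to the HDM interface.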

Editing the power configuration for UniServer R4900 G3

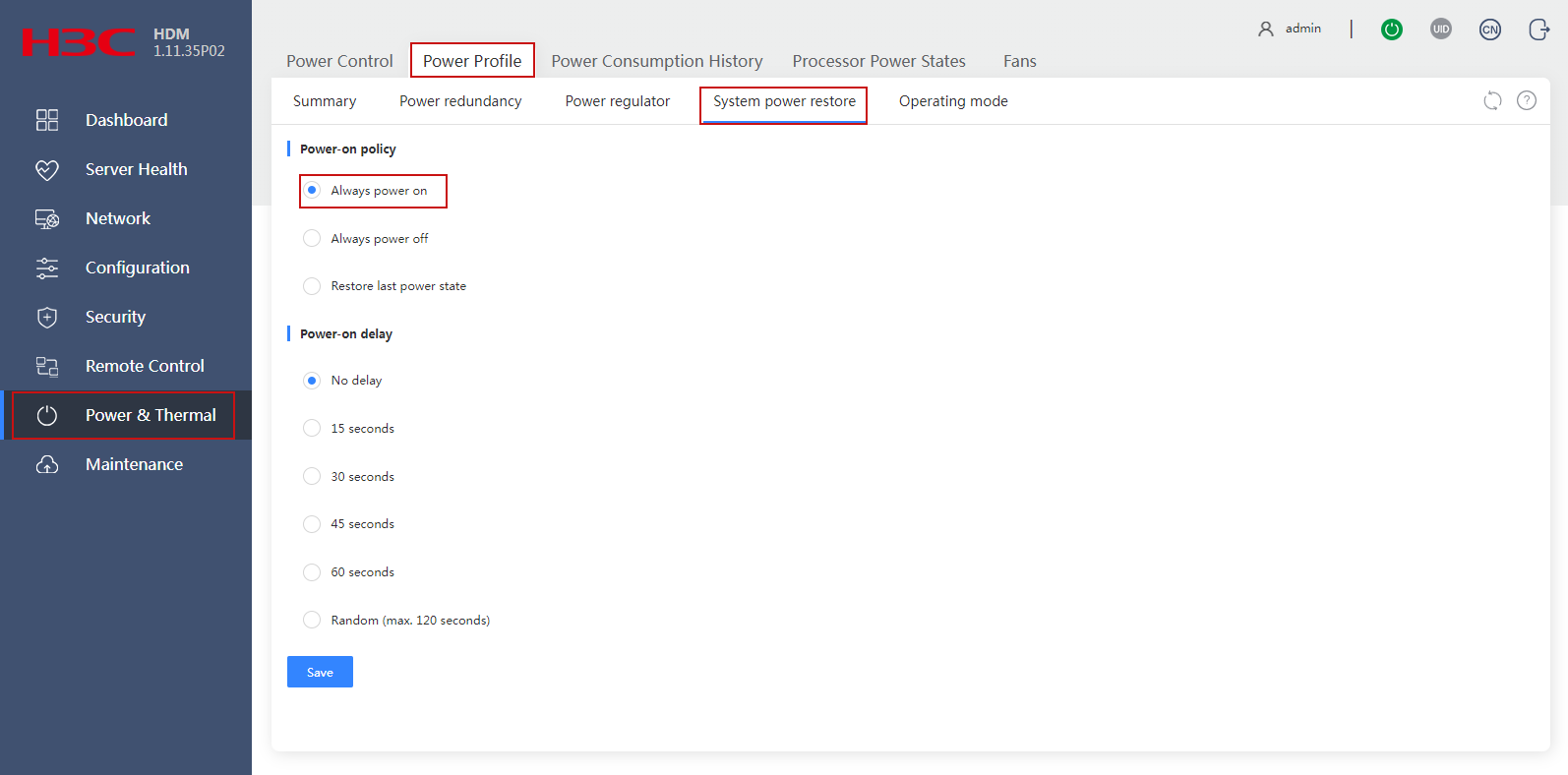

To ensure that the UniServer R4900 G3 server can start up automatically after a power cycle, edit the server power settings as follows:

1. As shown in Figure 3, select Power & Thermal from the left navigation pane. Click the Power Profile tab and System power restore tab in sequence.

2. Select Always power on.

3. Click Save.

Figure 3 Editing the power configuration

Logging in to the remote console of UniServer R4900 G3

About remote console

Remote consoles are available in three types: keyboard, video, and mouse (KVM), H5 KVM, and Virtual Network Computing (VNC). With a remote console, you can manage the server remotely and install an operating system on it.

IMPORTANT:

· Due to the firmware version of H5 KVM, you might experience keyboard input errors (such as lowercase letters displayed as uppercase) or abnormal software uploads. As a best practice, use a non-H5 KVM to access the remote console of UniServer R4900 G3.

· In some remote console versions, when you use the Backspace key to delete characters, garbled characters will occur. For these consoles, you can press Ctrl+Backspace or Shift+Backspace to delete characters. For other types of remote consoles, if garbled characters occur, see the console's user guide to resolve the issue.

· Unless otherwise specified, this document will use the KVM remote console as an example to describe how to log in to the UniServer R4900 G3 remote console.

Preparing the login environment for the KVM remote console

Before you use the KVM remote console, prepare the login environment on your local PC. As a best practice, install the Zulu OpenJDK and IcedTea-Web software on your local PC. You can download and install the appropriate software for your platform using the following links. For a Windows system, select the Windows version. For a Linux system, select the Linux version.

· Zulu OpenJDK: https://www.azul.com/downloads/zulu/

· IcedTea-Web: http://icedtea.wildebeest.org/download/icedtea-web-binaries/

As a best practice, use the default settings to install Zulu OpenJDK and IcedTea-Web. For more information about preparing the KVM remote console login environment, see H3C Servers HDM User Guide and the HDM online help.

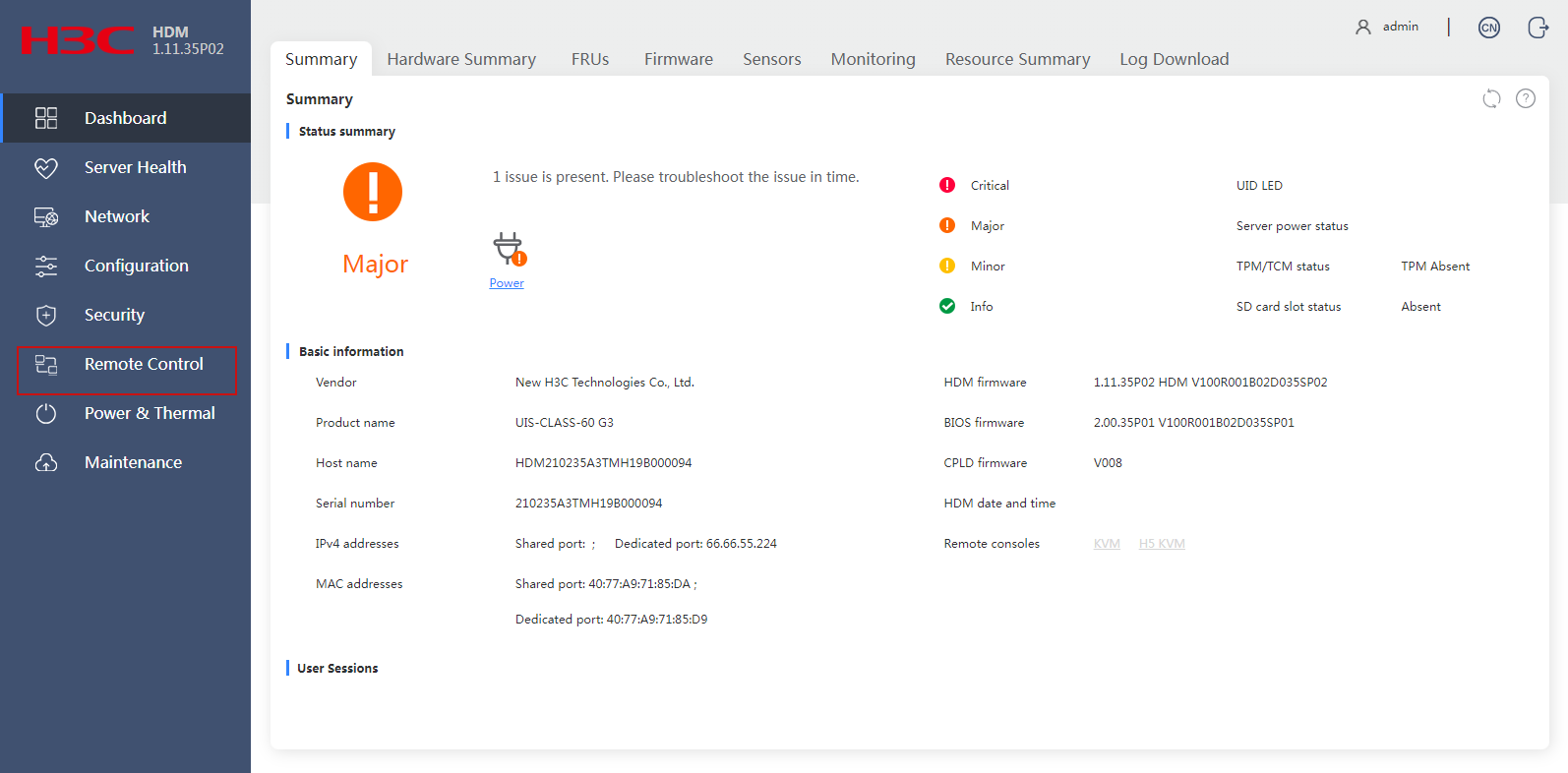

Logging in to the remote console of UniServer R4900 G3

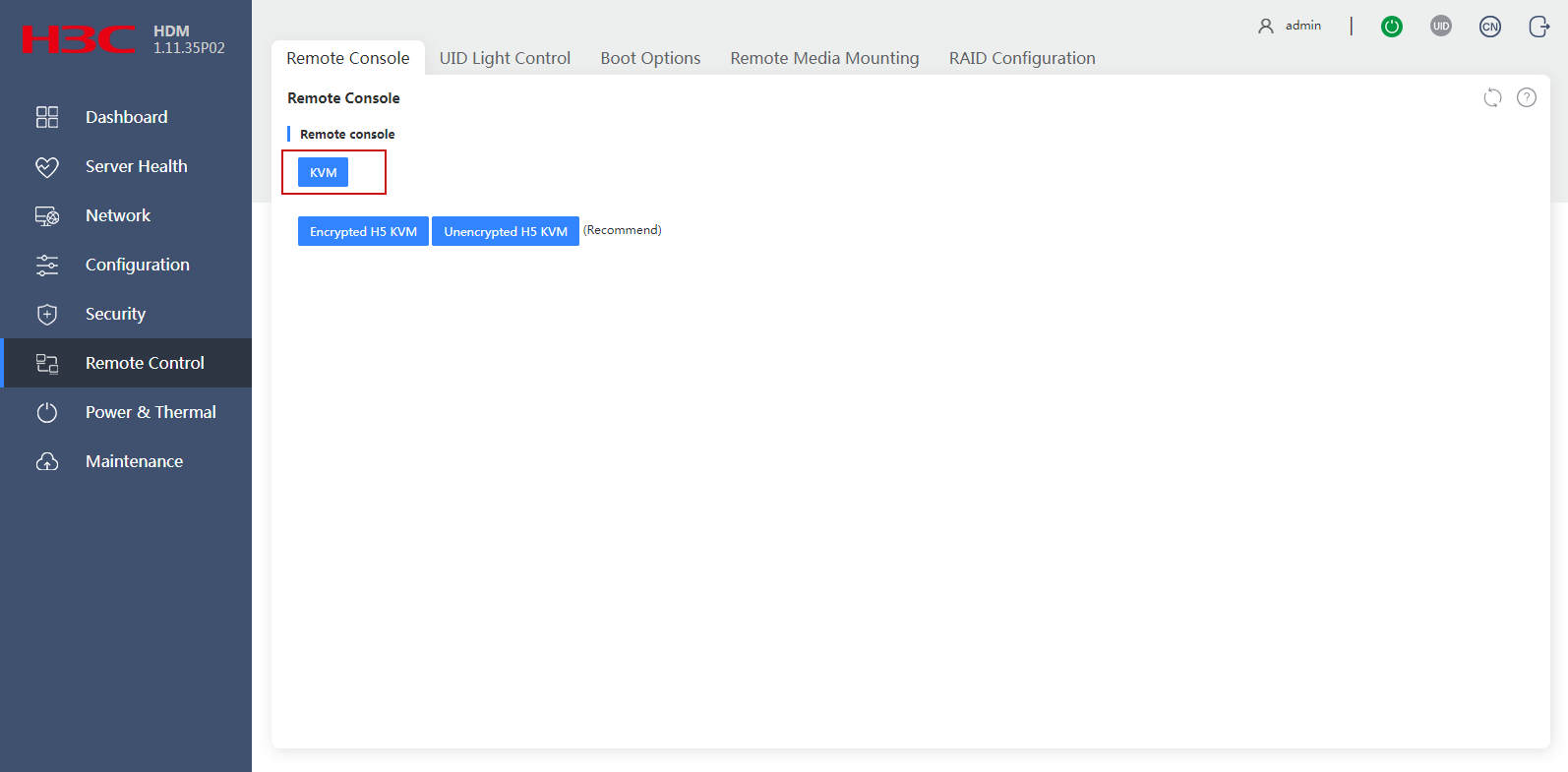

1. Select Remote Control from the left navigation pane.

Figure 4 Accessing the remote control

2. On the Remote Console tab, click KVM.

Figure 5 Logging in to the remote console

3. If the Security Warning dialog box appears, click Continue. Then, in the Do you want to run this application? dialog box, select I accept the risk and want to run this application (I), and then click Run to log in to the remote console of UniServer R4900 G3.

Configuring RAID

For data redundancy protection, configure RAID settings in the server's BIOS via the remote console before you install the operating system on UniServer R4900 G3. Depending on the number and size of disks in UniServer R4900 G3, you can select different RAID configurations. For more information about configuring RAID on the UniServer R4900 G3 server, see H3C G3G5 Servers Storage Controller User Guide.

CAUTION: RAID 0 does not have any fault tolerance mechanism, and it cannot prevent data loss in the event of a disk fault. Do not use RAID 0. As a best practice, use RAID 1 or RAID 5.

Installing H3Linux on the server

CAUTION:

· During the installation process, record the BIOS boot mode of H3Linux.

· For the system to start up successfully, make sure the BIOS boot mode is consistent in installation and startup of H3Linux. For example, if you select UEFI mode when you install H3Linux, you must also boot H3Linux in UEFI mode. For more information about setting the server's BIOS boot mode, see the BIOS user guide or HDM user guide for your server.

· If you install the vSCN on a physical server, enable hyperthreading in the BIOS. For more information, see H3C Servers Operating System Installation Guide.

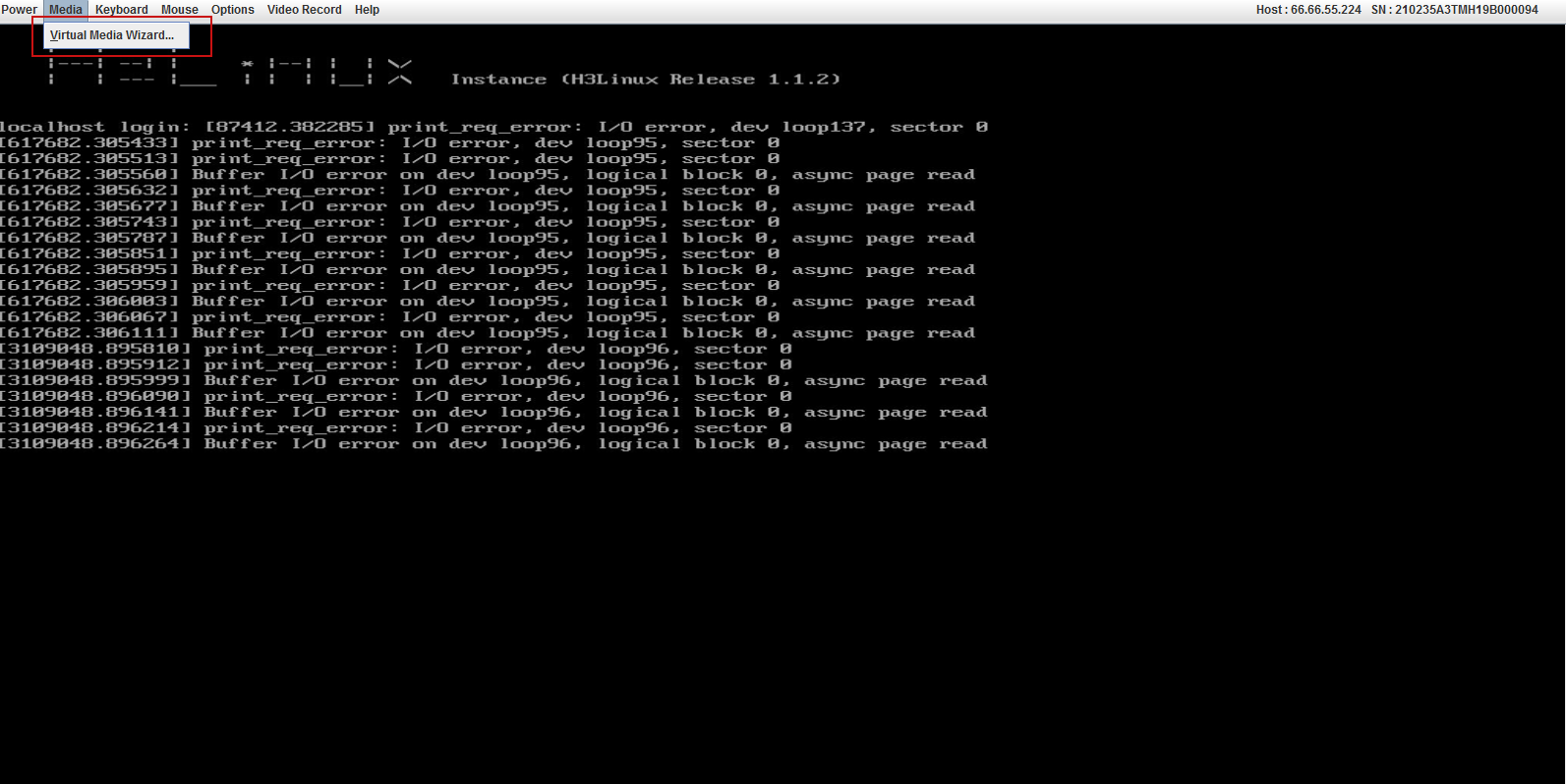

To install H3Linux on the server:

1. Log in to the remote console of the server.

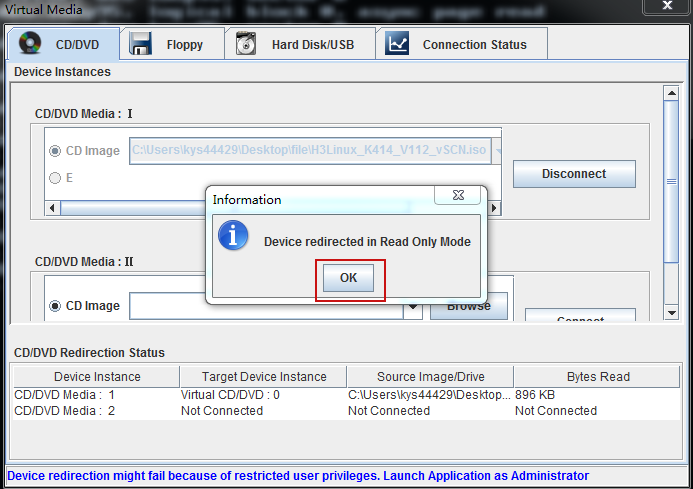

2. Redirect the H3Linux image file to virtual media.

a. Select Media > Virtual Media Wizard in the remote console menu bar.

Figure 6 Opening virtual media

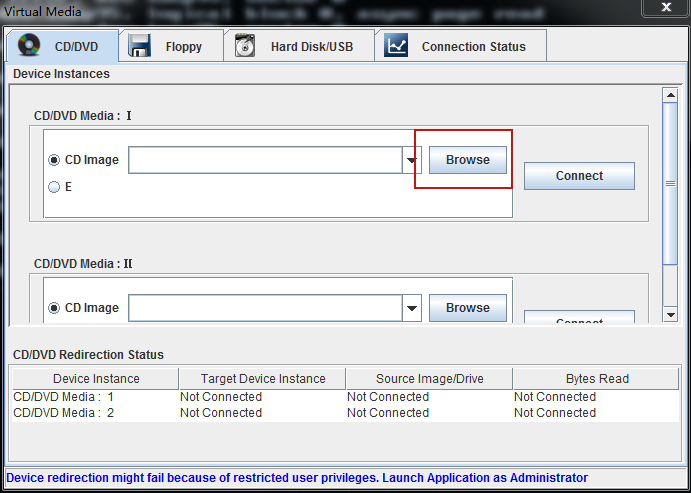

b. Click Browse to select the H3Linux operating system image file from your local PC.

Figure 7 Selecting the H3Linux operating system image

IMPORTANT: The image file for the H3Linux operating system must be saved in a local PC path that does not contain Chinese characters.

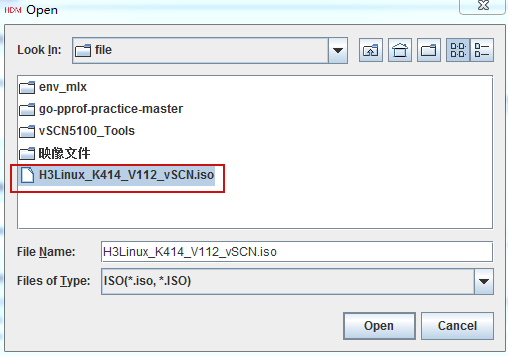

c. Select the H3Linux operating system image file in the local path and click Open to redirect the H3Linux operating system image file to the virtual media.

Figure 8 Redirecting the H3Linux image file to virtual media

d. Click OK to complete redirection of the H3Linux operating system image file to the virtual media in read-only mode.

Figure 9 Redirecting the H3Linux image file to virtual media

3. Install H3Linux on the server.

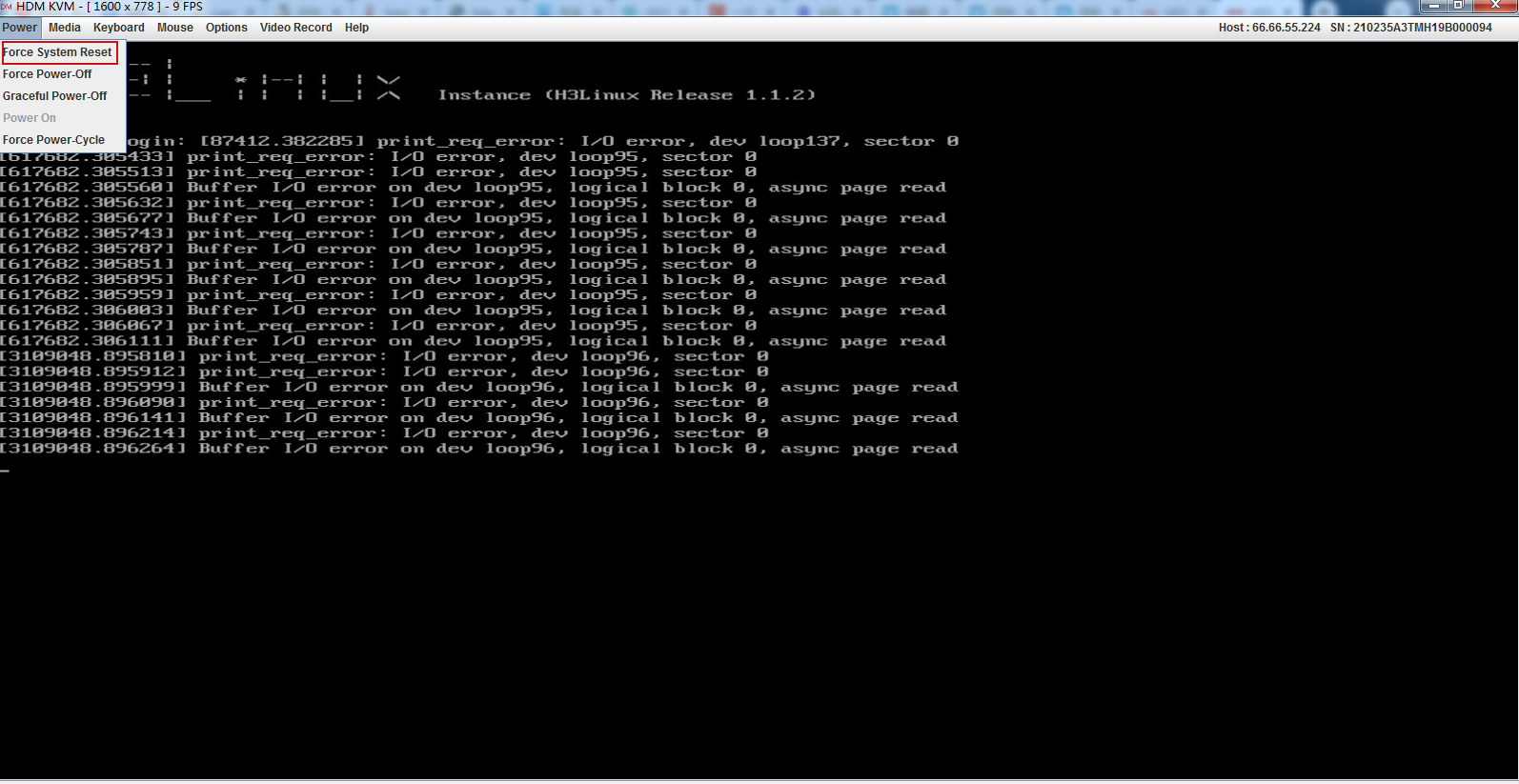

a. Select Power > Force System Reset to boot up the server from the virtual media.

Figure 10 Rebooting UniServer R4900 G3

NOTE:

· When you install H3Linux for the first time, you do not need to configure the server BIOS boot options.

· If it is not the first installation, set the server BIOS to boot from the virtual optical drive. For more information about configuring the server BIOS boot options, see H3C Servers Operating System Installation Guide.

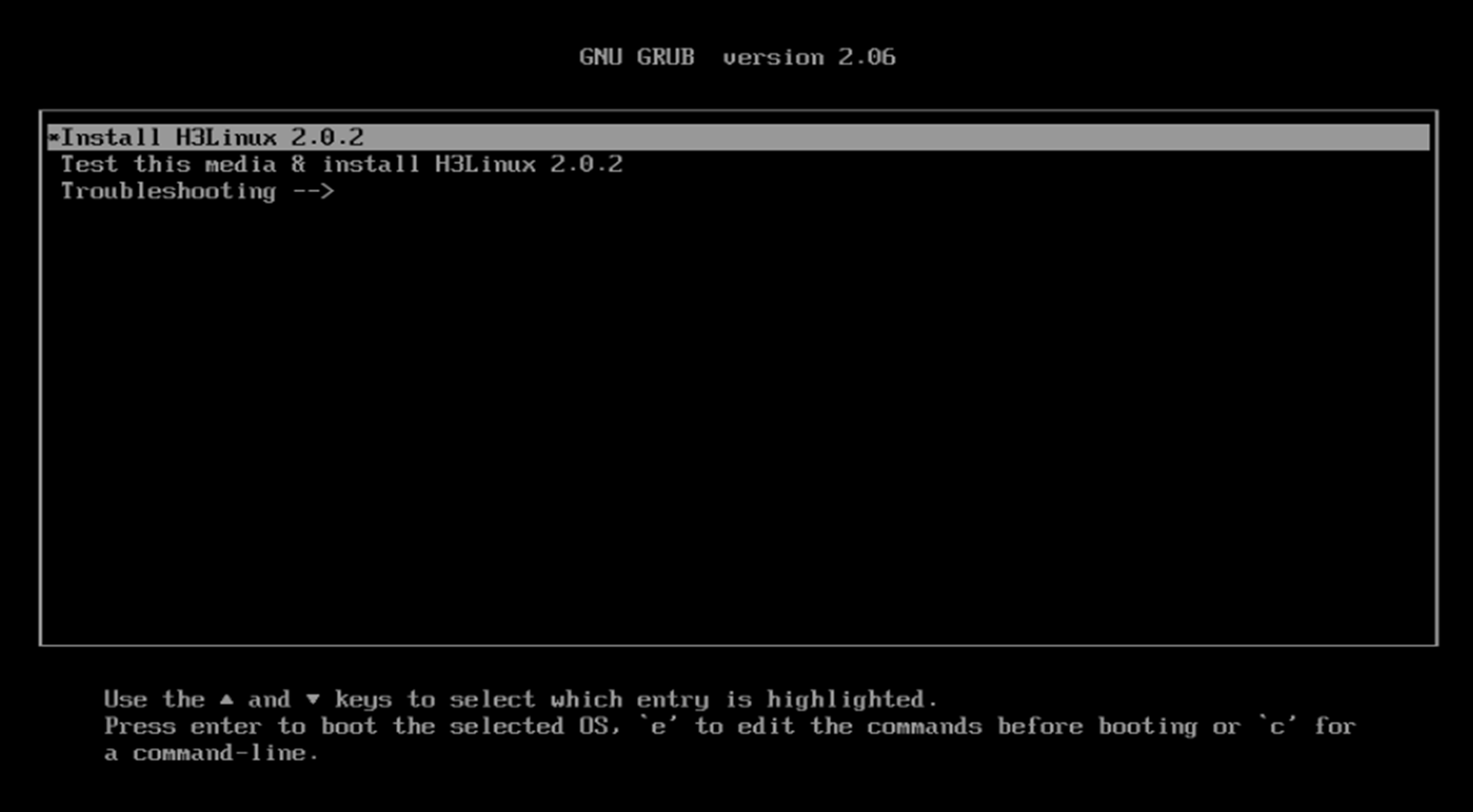

b. Press Enter to start the H3Linux installation.

NOTE:

· Figure 11 shows an example of the installation. The installation process might vary depending on the version of the H3Linux operating system.

· H3Linux will be automatically installed on UniServer R4900 G3 without manual intervention.

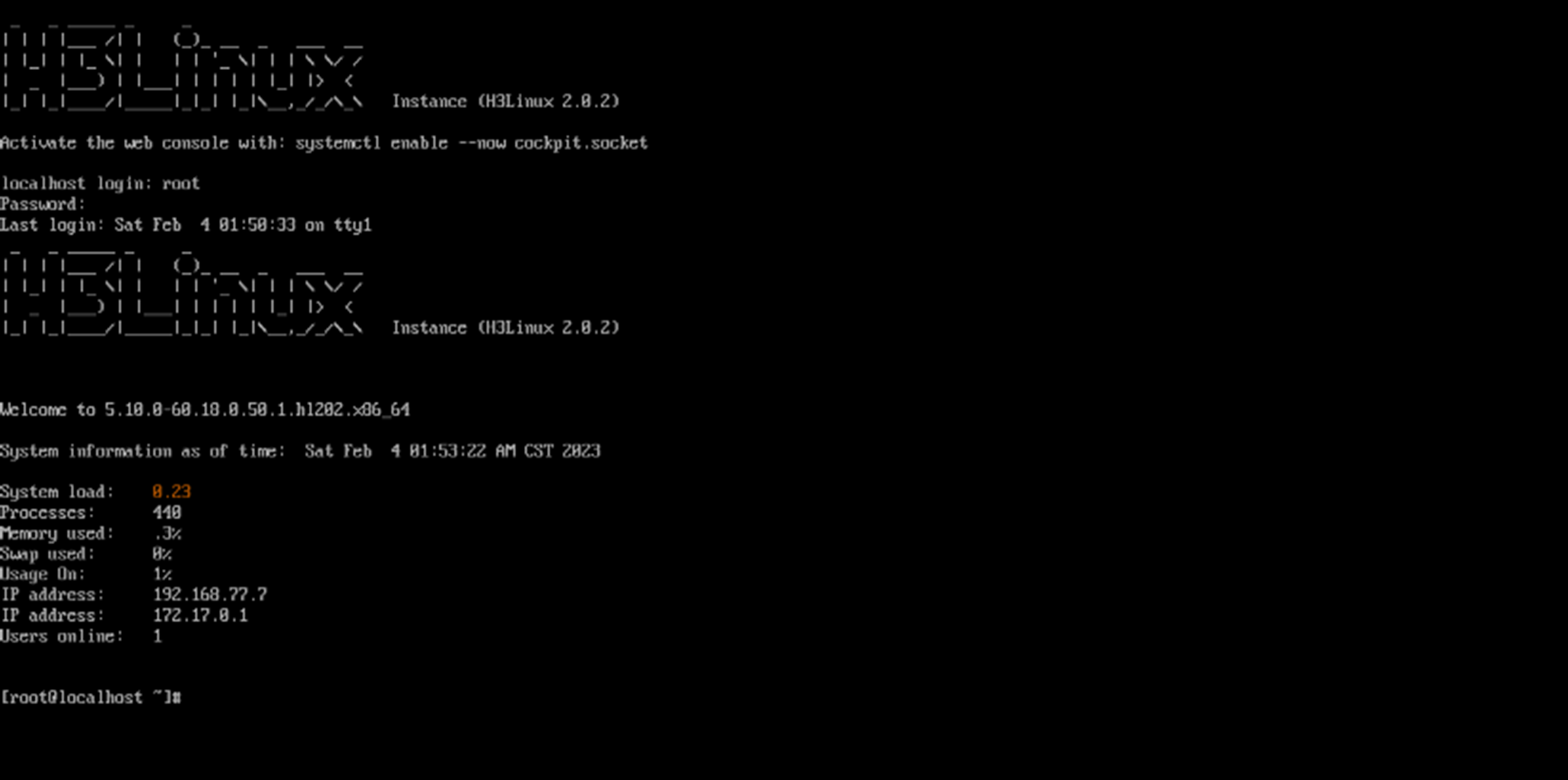

Logging in to H3Linux for the first time

After you install H3Linux, the system will create a root user by default. Use this root user to log in to H3Linux.

Table 7 Default username and password

| User type | Username | Password |
|---|---|---|
| Root user | root | root@vSCN5100 |

NOTE:

· As a best practice to ensure system security, change the default password upon first login to H3Linux.

· You can change the password by using the passwd command in H3Linux. For more information about the passwd command, execute the man passwd command in H3Linux.

Enter the default username and the password, and then press Enter.

Figure 12 Logging in to H3Linux for the first time

Configuring the server's clock

Restrictions and guidelines

You can change the vSCN's system time by using the following methods:

· Change the system time of the Docker container that hosts vSCN.

· Change the system time of the server.

The following uses the second method as an example.

Procedure

1. Log in to H3Linux as a root user via the remote console. Details not shown.

2. Set the time zone.

Set the time zone based on the location of the server.

In this example, the time zone is set to Asia/Shanghai.

[root@localhost ~]# timedatectl set-timezone Asia/Shanghai

3. Use the date -s command to set the system time. For example, set the current time to December 16, 2020, at 20:16:40.

[root@localhost ~]# date -s '2020-12-16 20:16:40'

4. Commit the system time to the device firmware.

[root@localhost ~]# clock -w

NOTE:

· If you do not execute the clock -w command and the server restarts, the server's system time might be reset.

· If you have executed the clock -w command and the server restarts, the system time set by the date command remains effective.

· As a best practice, after you set the system time, execute the reboot command to restart the server and verify that the system time set by the date command has been committed to the device firmware.

Configuring an IP address for a network interface on the server

After you configure an IP address for a network interface on the server, you can log in to the server via SSH to manage it. No specific chip model is required for this network interface. The following uses enp61s0f0 as an example.

1. Execute the vim command to open the configuration file for the server's enp61s0f0 network interface.

[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-enp61s0f0

2. Press Shift+I to enter edit mode, and edit the configuration file as follows:

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=enp61s0f0

UUID=f4355dc9-db84-40d4-8e9f-c5814e660d21

DEVICE=enp61s0f0

IPADDR=10.20.1.20

NETMASK=255.255.255.0

GATEWAY=10.20.1.1

ONBOOT=yes

3. Press ESC to exit edit mode, then enter :wq to save and exit the VIM editor.

4. Restart the network service for the configuration file to take effect.

[root@localhost ~]# systemctl restart NetworkManager

NOTE: After you configure an IP address for the network interface, you can use this IP address to log in to H3Linux via SSH. This document uses 10.20.1.20 as an example.
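The static IPv4 part of the interface configuration file can also be generated instead of typed by hand. The following function is a hedged sketch with a hypothetical name. It prints only the keys that define the static address and leaves out the other keys shown in the example above (such as UUID and the IPv6 settings), so review the output against that example before you use it.

```shell
# Print a minimal static IPv4 ifcfg file to standard output.
# Usage: render_ifcfg <ifname> <ipaddr> <netmask> <gateway>
render_ifcfg() {
    cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=$1
DEVICE=$1
IPADDR=$2
NETMASK=$3
GATEWAY=$4
ONBOOT=yes
EOF
}
```

For example, render_ifcfg enp61s0f0 10.20.1.20 255.255.255.0 10.20.1.1 prints the address settings used in this document. The function writes nothing to disk, so the result can be inspected first.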

Uploading vSCN image files and the toolkit

Enabling the SFTP service

By default, the SFTP service on the server is disabled. To enable it, edit the sshd configuration file and restart the sshd service.

To enable the SFTP service on the server:

1. Execute the vim command to open the configuration file.

[root@localhost ~]# vim /etc/ssh/sshd_config

2. Press Shift+I to enter edit mode and edit the file as follows:

AcceptEnv LANG LC_CTYPE LC_NUMERIC LC_TIME LC_COLLATE LC_MONETARY LC_MESSAGES

AcceptEnv LC_PAPER LC_NAME LC_ADDRESS LC_TELEPHONE LC_MEASUREMENT

AcceptEnv LC_IDENTIFICATION LC_ALL LANGUAGE

AcceptEnv XMODIFIERS

# override default of no subsystems

Subsystem sftp internal-sftp

# Example of overriding settings on a per-user basis

#Match User anoncvs

# X11Forwarding no

# AllowTcpForwarding no

# PermitTTY no

# ForceCommand cvs server

#CheckUserSplash yes

3. Press ESC to exit edit mode, then enter :wq to save and exit the VIM editor.

4. Restart the sshd service for the configuration file to take effect.

[root@localhost ~]# systemctl restart sshd
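Before you restart sshd, you can confirm that the edited file really contains an active SFTP subsystem line. The following check is a minimal sketch with a hypothetical function name. It reads the configuration text from standard input and looks only for an uncommented Subsystem sftp line, without validating the rest of the file.

```shell
# Reads sshd_config text on standard input.
# Returns 0 if an uncommented "Subsystem sftp <command>" line is present.
sftp_subsystem_enabled() {
    grep -Eq '^[[:space:]]*Subsystem[[:space:]]+sftp[[:space:]]+[^[:space:]]'
}
```

For example, sftp_subsystem_enabled < /etc/ssh/sshd_config succeeds once the Subsystem sftp internal-sftp line is present and not commented out.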

Uploading the software package

Upload the prepared software package (vSCN image files and toolkit) directly to the server. After the package upload is complete, execute the ls command to identify whether the upload was successful.

As a best practice, copy all prepared software packages to the same directory. The following uses the /root directory as an example.

Adding execute permissions to the script files in the package

If the script files in the package do not have execute permissions, use the chmod command to add them.

1. Execute the tar command in the /root directory to decompress the package.

[root@localhost ~]# tar -xvf vSCN5100_Tools.tar

2. In the /root/env directory, execute the chmod command to add execute permissions for all script files.

[root@localhost ~]# cd env

[root@localhost env]# chmod +x *

[root@localhost env]# cd

Planning service network interfaces

To check the chip model and name of a network interface:

1. Switch to the /root directory.

[root@localhost env]# cd /root/

2. Execute the ./env/dpdk-devbind-py3.py --status command in the /root directory to check the network adapter state.

[root@localhost ~]# ./env/dpdk-devbind-py3.py --status

Network devices using DPDK-compatible driver

============================================

Network devices using kernel driver

===================================

0000:3d:00.0 'Ethernet Connection X722 for 10GbE SFP+ 37d3' if=enp61s0f0 drv=i40e unused=igb_uio

0000:5f:00.1 '82599ES 10-Gigabit SFI/SFP+ Network Connection 10fb' if=ens1f0 drv=ixgbe unused=igb_uio

0000:5f:00.2 '82599ES 10-Gigabit SFI/SFP+ Network Connection 10fb' if=ens1f1 drv=ixgbe unused=igb_uio

Table 8 Output from the ./env/dpdk-devbind-py3.py --status command

| Field | Description |
|---|---|
| Network devices using DPDK-compatible driver | Network adapters supported by the DPDK driver, including the chip model and interface type. |
| Network devices using kernel driver | Network devices that use the kernel driver, including the network adapter's chip model, interface type, and interface name. |
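When you plan service network interfaces, it can help to reduce the status output to one line per interface. The following filter is an illustrative sketch with a hypothetical function name. It assumes the line layout shown in the sample output above (PCI address first, followed by if= and drv= fields) and is not part of the vSCN toolkit.

```shell
# Reads the status output on standard input and prints
# "<interface name> <kernel driver> <PCI address>" for every device line
# that carries an if= field.
list_kernel_interfaces() {
    awk '
        /if=/ {
            pci = $1; ifname = ""; drv = ""
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^if=/)  ifname = substr($i, 4)
                if ($i ~ /^drv=/) drv = substr($i, 5)
            }
            print ifname, drv, pci
        }'
}
```

For example, piping the output of ./env/dpdk-devbind-py3.py --status into list_kernel_interfaces yields lines such as enp61s0f0 i40e 0000:3d:00.0, which can then be matched against the drivers of the adapter models in Table 4.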

Configuring service network interfaces

The following uses the ens1f0 network interface as an example.

To configure a service network interface:

1. Switch to the /etc/sysconfig/network-scripts directory.

[root@localhost ~]# cd /etc/sysconfig/network-scripts/

[root@localhost network-scripts]#

2. Execute the vim command in the /etc/sysconfig/network-scripts directory to open the configuration file for the ens1f0 network interface.

[root@localhost network-scripts]# vim ifcfg-ens1f0

3. Press Shift+I to enter edit mode, and edit the configuration file as follows:

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens1f0

UUID=84c225c5-b555-41da-af41-796f5f52a0ea

DEVICE=ens1f0

ONBOOT=no

4. Press ESC to exit edit mode, then enter :wq to save and exit the VIM editor.

5. If multiple network interfaces are used, follow the previous steps to edit the configuration files for the other network interfaces.

6. Restart the network service for the configuration file to take effect.

[root@localhost network-scripts]# systemctl restart NetworkManager

Installing the vSCN

Restrictions and guidelines

· You can open multiple remote login sessions to H3Linux through the remote console or SSH.

○ Log in to H3Linux via the remote console. For more information, see "Logging in to H3Linux for the first time."

○ Log in to H3Linux via SSH: Launch a terminal emulation program (such as MobaXterm or PuTTY) on the configuration terminal, select SSH, and enter the IP address of the host management interface and the username and password for logging in to H3Linux. To facilitate login, H3Linux has the SSH service enabled.

· Unless otherwise specified, this document uses the root user to log in to H3Linux, and the default directory after login is /root.

· For the default user and password used to log in to the device, see "Logging in to H3Linux for the first time."

When H3Linux is running correctly and you no longer need the vSCN, you can delete the vSCN container by executing the ./env/rmdocker.sh remove container-name command in the /root directory, where container-name is the name of the container. Then, delete the vSCN image by executing the docker rmi image-tag command, where image-tag identifies the image. To use the vSCN again, recreate a Docker container on H3Linux and install the vSCN in the new container.

The output from the commands depends on the server or software version.

Disabling SELinux

IMPORTANT:

· To support some vSCN features, you must disable SELinux.

· For the HugePages configuration to take effect, you must also restart the server. To save time, as a best practice, restart the server after you complete the HugePages configuration.

To disable SELinux:

1. Edit the SELinux configuration file.

a. Open the /etc/selinux/config file by using the VIM editor.

[root@localhost ~]# vim /etc/selinux/config

b. Press Shift+I to enter edit mode, and edit the configuration file as follows:

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

c. Press ESC to exit edit mode, then enter :wq to save and exit the VIM editor.

2. Restart the server for the configuration to take effect.

[root@localhost ~]# reboot
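The edit to the SELinux configuration file can also be expressed as a stream filter, which avoids manual typing errors. The following is a minimal sketch with a hypothetical function name. It rewrites only the active SELINUX= line and leaves comments and the SELINUXTYPE= line unchanged.

```shell
# Filter: reads an SELinux config file on standard input and prints it with
# the active SELINUX= line set to "disabled". Comment lines start with "#"
# and SELINUXTYPE= does not match the pattern, so both pass through as-is.
disable_selinux_filter() {
    sed 's/^SELINUX=.*/SELINUX=disabled/'
}
```

For example, disable_selinux_filter < /etc/selinux/config prints the modified file so that it can be reviewed before it replaces the original. After the restart, the getenforce command reports the resulting SELinux state.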

Configuring HugePages

For a successful installation of the vSCN, first complete HugePages configuration.

Table 9 shows the HugePages requirements of different service models.

Table 9 HugePages configuration parameters

| Service model | default_hugepagesz | hugepagesz | hugepages |
|---|---|---|---|
| 5G | 1G | 1G | 4 |
| 10G | 1G | 1G | 8 |
| 20G | 1G | 1G | 16 |

To configure HugePages:

1. Edit the huge page configuration file.

a. Open the /etc/default/grub file by using the VIM editor.

[root@localhost ~]# vim /etc/default/grub

b. Press Shift+I to enter edit mode and edit the file as follows:

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="resume=/dev/mapper/h3linux-swap rd.lvm.lv=h3linux/root rd.lvm.lv=h3linux/swap crashkernel=512M default_hugepagesz=1G hugepagesz=1G hugepages=16"

GRUB_DISABLE_RECOVERY="true"

For information about the configuration parameters, see Table 9.

c. Press ESC to exit edit mode, then enter :wq to save and exit the VIM editor.

2. Select an option based on the server's BIOS boot mode to generate a startup configuration file from the /etc/default/grub file.

○ If the system boots in UEFI mode, enter the following command:

[root@localhost ~]# grub2-mkconfig -o /boot/efi/EFI/H3Linux/grub.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-5.10.0-60.18.0.50.1.hl202.x86_64

Found initrd image: /boot/initramfs-5.10.0-60.18.0.50.1.hl202.x86_64.img

Adding boot menu entry for UEFI Firmware Settings ...

done

○ If the system boots in Legacy mode, enter the following command:

[root@node1 ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-5.10.0-60.18.0.50.3.hl202.x86_64

Found initrd image: /boot/initramfs-5.10.0-60.18.0.50.3.hl202.x86_64.img

Adding boot menu entry for UEFI Firmware Settings ...

done

3. Restart the server for the configuration to take effect.

[root@localhost ~]# reboot

4. Identify whether huge pages are configured successfully.

The output from the cat /proc/cmdline command depends on the H3Linux version. Check only the HugePages parameters (default_hugepagesz, hugepagesz, and hugepages).

[root@localhost ~]# cat /proc/cmdline

BOOT_IMAGE=/vmlinuz-5.10.0-60.18.0.50.1.hl202.x86_64 root=/dev/mapper/h3linux-root ro resume=/dev/mapper/h3linux-swap rd.lvm.lv=h3linux/root rd.lvm.lv=h3linux/swap crashkernel=512M default_hugepagesz=1G hugepagesz=1G hugepages=16
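The comparison between the kernel command line and Table 9 can be scripted. The following functions are a hedged sketch with hypothetical names. They take the command line as a string argument, so they can be tried on the sample output above as well as on the content of /proc/cmdline.

```shell
# Print the value of one key=value parameter from a kernel command line.
# Usage: cmdline_param <key> <cmdline>
cmdline_param() (
    set -f    # do not expand wildcard characters while splitting words
    for word in $2; do
        case "$word" in
            "$1"=*) printf '%s\n' "${word#*=}" ;;
        esac
    done | tail -n 1
)

# Print the hugepages value that Table 9 lists for a service model.
expected_hugepages() {
    case "$1" in
        5G)  echo 4 ;;
        10G) echo 8 ;;
        20G) echo 16 ;;
        *)   return 1 ;;
    esac
}

# Usage: hugepages_ok <model> <cmdline>
# Returns 0 if all three HugePages parameters match Table 9.
hugepages_ok() (
    want=$(expected_hugepages "$1") || exit 2
    [ "$(cmdline_param default_hugepagesz "$2")" = "1G" ] &&
    [ "$(cmdline_param hugepagesz "$2")" = "1G" ] &&
    [ "$(cmdline_param hugepages "$2")" = "$want" ]
)
```

For example, hugepages_ok 20G "$(cat /proc/cmdline)" succeeds on a server that was configured with the GRUB settings shown in this section.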

Creating the container for the vSCN and running the vSCN

Select a container creation procedure based on deployment. To deploy 5GC, follow the procedure in "Deploying 5GC." To deploy IMS, follow the procedure in "Deploying IMS."

To deploy both the 5GC and IMS containers on the server, make sure the CPUs used by both containers are on the same NUMA node and not in use by another container. For more information, see Table 11.

Deploying 5GC

1. Execute the docker import command in the /root directory to import the vSCN software package into the Docker image space.

[root@localhost ~]# docker import vSCN5100-CMW910-A5118.tar.gz vscn:v01

sha256:a579e957acdd46263fa65e986e7dab4c85f926ecddc8ed33650d5b821b73f032

v01 represents the image tag, which can contain only letters (case-sensitive) and digits. vscn represents the name of the image repository, which can contain only lowercase letters and digits.

2. Run the dockerrun.sh script in the /root/env directory to create a vSCN container. As shown in Table 10, the script varies by service model.

| Service model | Script |
|---|---|
| 5G | ./dockerrun.sh v01 vscn --cgroup ControlPlane=3 -c 0-7 -m 12288 -h 61440 --ifname ens1f0 |
| 10G | ./dockerrun.sh v01 vscn --cgroup ControlPlane=10 -c 0-9,20-29 -m 28672 -h 61440 --ifname ens1f0,ens1f1 --hugepages 4096 |
| 20G | ./dockerrun.sh v01 vscn --cgroup ControlPlane=16 -c 0-31 -m 49152 -h 61440 --ifname ens2f0,ens2f1,ens3f0 --hugepages 8192 |

The following uses the 10G service model as an example. Table 11 shows the description for dockerrun.sh script parameters.

[root@localhost ~]# cd env

[root@localhost env]# ./dockerrun.sh v01 vscn --cgroup ControlPlane=10 -c 0-9,20-29 -m 28672 -h 61440 --ifname ens1f0,ens1f1 --hugepages 4096

bind vfio-pci to interfaces... ok

kernel.core_pattern = |/root/coredump.sh %p %P %i %I %u %g %d %s %t %h %e %E %c

starting docker container vscn

/dev/tty /dev/ttyS0 /dev/ttyS1 /dev/ttyS2 /dev/ttyS3

harddisk init...

ok

fsck.fat 4.1 (2017-01-24)

/disk/flash.img: 0 files, 1/2080259 clusters

about to fork child process, waiting until server is ready for connections.

forked process: 436

child process started successfully, parent exiting

scm init

Phase 0 finished!

Line con0 is available.

Press ENTER to get started.

Table 11 dockerrun.sh parameters

| Parameter | Description |
|---|---|
| imageTag | Image tag, v01 in this example. |
| dockerName | Container name, vscn in this example. |
| -c { corelist \| coremask } | Specifies the CPUs to allocate, in either corelist or coremask format. This example uses the corelist format to allocate CPUs 0 through 9 and 20 through 29. You must allocate a minimum of two CPU cores. As a best practice, make sure the allocated CPUs are on the same NUMA node as the bound network adapter and are not in use by another container. To view CPU NUMA node information, execute the lscpu command. To identify the NUMA node to which the network adapter belongs, execute the cat /sys/class/net/ens1f0/device/numa_node command. |
| --cgroup ControlPlane | Specifies the number of CPUs for the control plane. If multiple CPUs are allocated and the number for the control plane is not specified, only one CPU is used for the control plane by default and the other CPUs are used for the user plane. To improve user login performance, use the --cgroup ControlPlane parameter to allocate multiple CPUs for the control plane. For example, when you run the ./dockerrun.sh v01 vscn --cgroup ControlPlane=10 -c 0-9,20-29 -m 28672 -h 61440 --ifname ens1f0,ens1f1 --hugepages 4096 script, the system uses 10 CPUs for the control plane. You can specify the number of CPUs for the control plane only when you create a container. |
| -m memsize | Limits the memory size used by the container. If you do not specify this parameter, the default memory size is 2048M. In this example, the memory size is set to 28672M, and you can edit it as needed. To create a vSCN container, set the memory size to 16384M or more. |
| -h hdisksize | Limits the disk size used by the container. If you do not specify this parameter, the default disk size is 2048M. In this example, the disk size is set to 61440M, and you can edit it as needed. To create a vSCN container, set the disk size to 16384M or more. |
| --ifname ifname-list | Network interface names for the container. In this example, ens1f0 and ens1f1 are used. |
| --hugepages | HugePages memory size for the container. If you do not specify this parameter, the default memory size is 2048M. In this example, the memory size is set to 4096M, and you can edit it as needed. |
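Before running dockerrun.sh, it can help to confirm that a corelist names at least the two CPU cores that the -c parameter requires. The following is a minimal sketch in POSIX shell. The count_cores helper is hypothetical and is not part of the vSCN toolkit.

```shell
# count_cores: hypothetical helper (not shipped with the vSCN toolkit).
# Prints the number of CPUs named by a corelist such as "0-9,20-29".
count_cores() (
    total=0
    IFS=','
    for part in $1; do
        case "$part" in
            *-*)
                # A range such as 20-29 contributes end - start + 1 CPUs.
                start=${part%-*}
                end=${part#*-}
                total=$(( $total + $end - $start + 1 ))
                ;;
            *)
                # A single CPU number contributes one CPU.
                total=$(( $total + 1 ))
                ;;
        esac
    done
    echo "$total"
)

cores=$(count_cores "0-9,20-29")
if [ "$cores" -lt 2 ]; then
    echo "corelist must contain at least two CPU cores" >&2
else
    echo "corelist 0-9,20-29 names $cores CPUs"
fi
```

For the corelist used in this example, the sketch reports 20 CPUs.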

Deploying IMS

1. Execute the docker import command in the /root directory to import the vSCN software package into the Docker image space.

[root@localhost ~]# docker import vSCN5100-CMW910-A5118.tar.gz vims:v01

sha256:a579e957acdd46263fa65e986e7dab4c85f926ecddc8ed33650d5b821b73f032

2. Run the dockerrun.sh script in the /root/env directory to create a vSCN container. For the parameter description, see Table 12.

[root@localhost env]# ./dockerrun.sh v01 vims -c 10-17 --cgroup ControlPlane=2 -m 12228 -h 65536 --ifname ens2f0

bind vfio-pci to interfaces... ok

kernel.core_pattern = |/root/coredump.sh %p %P %i %I %u %g %d %s %t %h %e %E %c

starting docker container vims

/dev/tty /dev/ttyS0 /dev/ttyS1 /dev/ttyS2 /dev/ttyS3

harddisk init...

ok

fsck.fat 4.1 (2017-01-24)

/disk/flash.img: 0 files, 1/2080259 clusters

about to fork child process, waiting until server is ready for connections.

forked process: 436

child process started successfully, parent exiting

scm init

Phase 0 finished!

Line con0 is available.

Press ENTER to get started.

Table 12 dockerrun.sh parameters

| Parameter | Description |
|---|---|
| imageTag | Image tag, v01 in this example. |
| dockerName | Container name, vims in this example. |
| -c { corelist \| coremask } | Specifies the CPUs to allocate, in either corelist or coremask format. This example uses the corelist format to allocate CPUs 10 through 17. You must allocate a minimum of two CPU cores. As a best practice, make sure the allocated CPUs are on the same NUMA node as the bound network adapter and are not in use by another container. To view CPU NUMA node information, execute the lscpu command. To identify the NUMA node to which the network adapter belongs, execute the cat /sys/class/net/ens2f0/device/numa_node command. |
| --cgroup ControlPlane | Specifies the number of CPUs for the control plane. If multiple CPUs are allocated and the number for the control plane is not specified, only one CPU is used for the control plane by default and the other CPUs are used for the user plane. To improve user login performance, use the --cgroup ControlPlane parameter to allocate multiple CPUs for the control plane. For example, when you run the ./dockerrun.sh v01 vims -c 10-17 --cgroup ControlPlane=2 -m 12228 -h 65536 --ifname ens2f0 script, the system uses two CPUs for the control plane. You can specify the number of CPUs for the control plane only when you create a container. |
| -m memsize | Limits the memory size used by the container. If you do not specify this parameter, the default memory size is 2048M. In this example, the memory size is set to 12228M, and you can edit it as needed. To create a vSCN container, set the memory size to 16384M or more. |
| -h hdisksize | Limits the disk size used by the container. If you do not specify this parameter, the default disk size is 2048M. In this example, the disk size is set to 65536M, and you can edit it as needed. To create a vSCN container, set the disk size to 16384M or more. |
| --ifname ifname-list | Network interface name for the container. In this example, ens2f0 is used. |
| --hugepages | HugePages memory size for the container. If you do not specify this parameter, the default memory size is 2048M. In this example, the default memory size is used, and you can edit it as needed. |
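The NUMA check recommended for the -c parameter can be scripted by reading the standard Linux sysfs files. The following is a minimal sketch. The nic_numa_node and node_cpus helpers are hypothetical and are not part of the vSCN toolkit, and the optional second argument (an alternate sysfs root) exists only so that the functions can be exercised off the server.

```shell
# nic_numa_node: hypothetical helper (not part of the vSCN toolkit).
# Prints the NUMA node of a network interface by reading sysfs.
# $1 = interface name, $2 = sysfs root (defaults to /sys; overridable for testing).
nic_numa_node() (
    cat "${2:-/sys}/class/net/$1/device/numa_node"
)

# node_cpus: hypothetical helper.
# Prints the CPU list that belongs to a NUMA node.
# $1 = node number, $2 = sysfs root (defaults to /sys).
node_cpus() (
    cat "${2:-/sys}/devices/system/node/node$1/cpulist"
)

# Example for the interface used in this section (uncomment on the server):
# node=$(nic_numa_node ens2f0)
# echo "ens2f0 is on NUMA node $node, CPUs: $(node_cpus "$node")"
```

The CPUs passed to -c would then be chosen from the list that node_cpus prints. A numa_node value of -1 means that the kernel reports no NUMA affinity for the adapter, in which case node_cpus has no matching node to read.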

Logging in to the CLI of the vSCN

After the vSCN is installed, press Enter to log in to the vSCN CLI. To exit the vSCN CLI, press Ctrl+P and then Ctrl+Q.

To log in to the vSCN CLI again, execute the docker attach container_name command as the root user from any directory, and then press Enter.
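The reattach step can be wrapped in a small function that first checks whether the container is running. The following is a minimal sketch. The attach_vscn function and its DRY_RUN variable are hypothetical and are not part of the vSCN toolkit; the docker ps and docker attach commands are standard Docker CLI commands.

```shell
# attach_vscn: hypothetical wrapper (not part of the vSCN toolkit).
# $1 = container name, for example vscn or vims.
# Set DRY_RUN=1 to print the command instead of running it.
attach_vscn() {
    name=$1
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "docker attach $name"
        return 0
    fi
    # Attach only if a running container has exactly this name.
    if docker ps --format '{{.Names}}' | grep -qx "$name"; then
        docker attach "$name"
    else
        echo "container $name is not running" >&2
        return 1
    fi
}

# Example (run as the root user on the server):
# attach_vscn vscn
```

After the function attaches to the container, press Enter to reach the CLI prompt, and press Ctrl+P and then Ctrl+Q to detach without stopping the container.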

Configuring the vSCN at the CLI

IMPORTANT: The document uses the vSCN interface Ten-GigabitEthernet1/0/0 with IP address 10.20.1.30/24 as an example to describe how to configure the IP address for logging in to the vSCN Web interface. To access the vSCN Web interface, select an appropriate interface based on your actual network setup and assign the planned IP address to it.

1. Configure secondary IP address 10.20.1.30 on Ten-GigabitEthernet1/0/0 for logging in to the vSCN Web interface.

<H3C> system-view

[H3C] interface Ten-GigabitEthernet 1/0/0

[H3C-Ten-GigabitEthernet1/0/0] ip address 10.20.1.30 24 sub

[H3C-Ten-GigabitEthernet1/0/0] quit

2. Execute the save command to save the current configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

flash:/startup.cfg exists, overwrite? [Y/N]:y

Validating file. Please wait...

Saved the current configuration to mainboard device successfully.

Deploying network elements

The vSCN supports installing and removing network elements. It contains network elements related to 5GC and IMS, and supports the following deployment methods:

· Deploying 5GC—The vSCN container contains only network elements related to 5GC, and can independently provide the data service or work in conjunction with an external IMS to provide the voice service.

· Deploying IMS—The vSCN container contains only network elements related to IMS, and can provide the voice service.

Deploying 5GC

After you install the vSCN, the network elements of 5GC are installed by default. No additional operations are required.

Deploying IMS

To use the vSCN container to deploy IMS independently, first uninstall network elements related to 5GC, and then install network elements related to IMS.

To install network elements related to IMS:

1. Uninstall network elements related to 5GC.

<H3C> system-view

[H3C] undo om install amf smf udm udr ausf nssf nrf iwf pcf upf lmf gmlc

This operate may operate database, Do you want to continue? [Y/N]:y

This operate need reboot, Do you want to reboot force without saving now? [Y/N]:n

2. Install network elements related to IMS.

[H3C] om install ims imshss

This operate may operate database, Do you want to continue? [Y/N]:y

This operate need reboot, Do you want to reboot force without saving now? [Y/N]:y

%May 11 09:11:01:166 2024 H3C DEV/5/SYSTEM_REBOOT: System is rebooting now.

Logging in to the Web interface

Web browser requirements

· As a best practice, use the following Web browsers:

¡ Google Chrome 57.0 or higher.

¡ Mozilla Firefox 35.0 or higher.

· To access the Web interface, you must use the following browser settings:

¡ Accept the first-party cookies (cookies from the site you are accessing).

¡ Enable active scripting or JavaScript, depending on the Web browser.

· After you enable the proxy feature in your browser, you might not be able to access the Web interface correctly. You can disable the browser's proxy feature or set your device's IP address as an exception to the proxy settings, depending on the Web browser you are using.

· To ensure correct display of webpage contents after software upgrade or downgrade, clear data cached by the browser before you log in.

Restrictions and guidelines

· Enter the correct username and password when you log in to the Web interface. If you enter an incorrect username or password, the device reports a login failure. After two failed password attempts, you must also enter the verification code provided by the device.

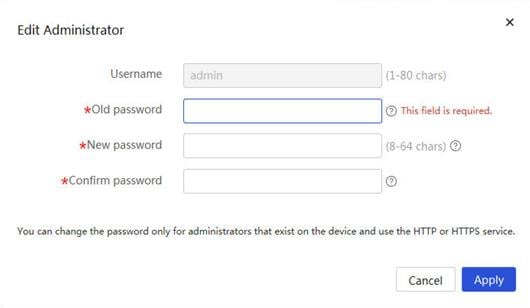

· The device comes with an administrator account named admin with password admin@SCN5100. To enhance security, the system will prompt for a password change at your first login. To log in to the system, you must change the password as prompted.

· The Web interface allows a maximum of 32 concurrent accesses.

Logging in to the Web interface for the first time

Use the following default settings for the first login.

· Username: admin

· Password: admin@SCN5100

To log in to the vSCN Web interface by using the factory default information:

1. Connect the vSCN to the local terminal.

Use an Ethernet cable to connect the configuration terminal to an interface configured with an IP address on the vSCN. The following uses Ten-GigabitEthernet 1/0/0 as an example.

2. Configure an IP address for the configuration terminal.

Make sure the local configuration terminal can communicate with Ten-GigabitEthernet 1/0/0. In this document, the IP address of Ten-GigabitEthernet 1/0/0 is set to 10.20.1.30/24, and the IP address of the local configuration terminal is set to 10.20.1.2/24.

3. Launch the browser.

a. Open the browser.

b. In the address bar, enter information based on the Web access method.

- HTTP access—Enter http://10.20.1.30:80.

- HTTPS access—Enter https://10.20.1.30:443.

4. Log in to the Web interface of vSCN.

a. On the login page, enter the default username (admin) and the default password (admin@SCN5100), and then click Login.

Figure 13 Entering login information

b. Enter the new password as required, and then click Apply.

Figure 14 Changing the password

NOTE: As a best practice, set a password that contains the following categories of characters: letters (case insensitive), digits, and special characters ~`!@#$%^&*()_+-={}|[]\:";'<>,./. Spaces are allowed.

c. Enter the username and new password. Click Login.
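When the configuration terminal is connected directly to the interface, as in step 1, both addresses should fall in the same subnet. The following is a minimal sketch of that check in POSIX shell. The ip_to_int and same_subnet helpers are hypothetical and are not part of the vSCN toolkit.

```shell
# ip_to_int: hypothetical helper. Converts a dotted IPv4 address to an integer.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# same_subnet: hypothetical helper.
# Succeeds if addresses $1 and $2 share the subnet with prefix length $3.
same_subnet() (
    mask=$(( (4294967295 << (32 - $3)) & 4294967295 ))
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    [ $(( $a & $mask )) -eq $(( $b & $mask )) ]
)

# Addresses used in this document: terminal 10.20.1.2, interface 10.20.1.30, prefix 24.
if same_subnet 10.20.1.2 10.20.1.30 24; then
    echo "10.20.1.2 and 10.20.1.30 share a /24 subnet"
else
    echo "addresses are in different subnets" >&2
fi
```

From a Linux terminal, a command such as curl -k -s -o /dev/null -w '%{http_code}\n' https://10.20.1.30:443 can then confirm that the Web service answers. The -k option skips certificate verification, on the assumption that the device presents a certificate the terminal does not trust.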

Logging out of the Web interface

For security purposes, log out of the Web interface immediately after you finish your tasks.

Use either of the following methods to log out of the Web interface:

· Click admin/Exit in the upper right corner of the Web interface.

· Click ![]() in the upper right corner of the page.

The device does not automatically save the configuration when you log out of the Web interface. To prevent the loss of configuration when the device reboots, you must save the configuration. For how to save the configuration, see H3C vSCN5100 Virtual Smart Core Network Web-Based Configuration Guide.