3SFF drive backplane (1SAS/SATA+2UniBay)

B/D/F information about the server

Component installation guidelines

Storage controller and its power fail safeguard module

Installing or removing the blade server

Connecting the blade server to a network

Connecting through the management module

Verifying the blade server status

Modifying the default user password of OM

Modifying the default IP address of the OM module

Modifying the default user password of HDM

Modifying the default IP address of HDM

Logging into the blade server operating system

Configuring basic BIOS settings

Installing the operating system and hardware drivers

Replaceable parts and their videos

Replacing the riser card and PCIe card

Removing the riser card and PCIe card

Installing the riser card and PCIe card

Replacing the storage controller and its power fail safeguard module

Removing the storage controller and its power fail safeguard module

Installing a storage controller and a power fail safeguard module

Replacing the straight-through card

Removing the straight-through card

Installing the straight-through card

Replacing the standard PCIe NIC

Removing a mezzanine network adapter

Installing a mezzanine network adapter

Removing a SATA M.2 SSD module

Installing a SATA M.2 SSD module

Replacing a SATA M.2 SSD adapter

Removing a SATA M.2 SSD adapter

Installing a SATA M.2 SSD adapter

Replacing the NVMe VROC module

Installing the NVMe VROC module

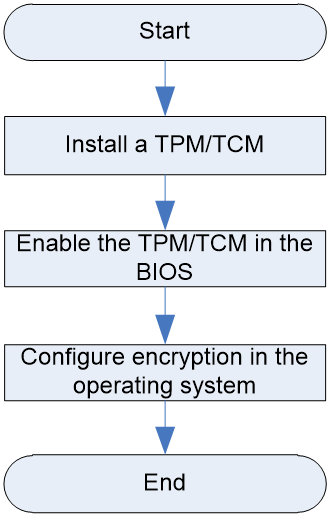

Installing and setting up a TCM or TPM

Installing and setting up a TPM or TCM

Installation and setup flowchart

Enabling the TCM or TPM in the BIOS

Configuring encryption in the operating system

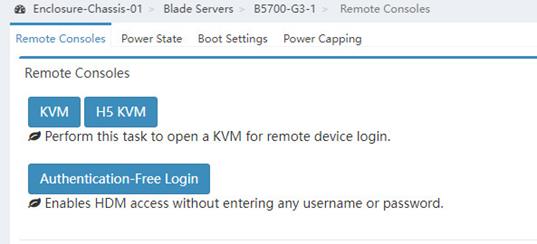

Logging in to the blade server operating system

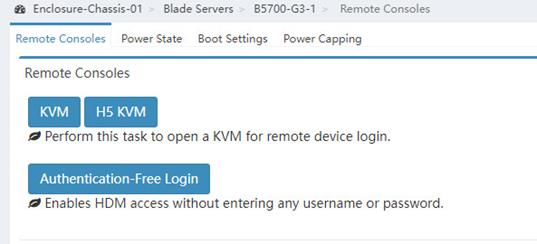

Accessing the blade server HDM interface

Monitoring the temperature and humidity in the equipment room

Updating firmware for the server

Safety information

To avoid bodily injury or damage to the server, read the following information carefully before you operate the server. The safety information in this document is not exhaustive, and other precautions might also apply in practice.

General operating safety

· Only H3C-authorized personnel or professional engineers can run the device.

· Place the device on a clean, stable workbench or floor for servicing.

· Before running the server, make sure that all cables are correctly connected.

· To cool the server adequately, follow the guidelines below:

¡ Do not block the ventilation holes on the server.

¡ Filler panels must be installed in idle slots of the server, such as drive slots.

¡ Do not run the server if no chassis cover, air duct, or filler panel for idle slots is installed.

· Make sure that you move or place the server evenly and slowly.

· To avoid being burnt, allow the server and its internal modules to cool before touching them.

Electrical safety

To avoid bodily injury or damage to the server, follow these guidelines:

· Carefully check the work area for potential hazards, such as an ungrounded chassis, unreliable grounding, or a wet floor.

· Before you perform an operation that requires the server to be powered off, make sure the power is disconnected.

· Power off the server when installing or removing any components that are not hot swappable.

Battery safety

The server's system board contains a system battery, which is designed with a lifespan of 3 to 5 years.

If the BIOS no longer automatically displays the correct date and time, you might need to replace the battery. When you replace the battery, follow these safety guidelines:

· Do not attempt to recharge the battery.

· Do not expose the battery to a temperature higher than 60°C (140°F).

· Do not disassemble, crush, puncture, short external contacts, or dispose of the battery in fire or water.

· Dispose of the battery at a designated facility. Do not throw the battery away together with other waste.

ESD prevention

Preventing electrostatic discharge

To prevent electrostatic damage, follow these guidelines:

· Transport or store the server with the components in antistatic bags.

· Keep the electrostatic-sensitive components in separate antistatic bags until they arrive at an ESD-protected area.

· Place the components on a grounded surface before removing them from their antistatic bags.

· Avoid touching pins, leads, or circuitry.

· Take ESD prevention measures before touching electrostatic-sensitive components.

Grounding methods to prevent electrostatic discharge

The following are grounding methods that you can use to prevent electrostatic discharge:

· Wear an ESD wrist strap and make sure it makes good skin contact and is reliably grounded.

· Take adequate personal grounding measures, including wearing antistatic clothing and static dissipative shoes.

· Use conductive field service tools.

· Use a portable field service kit with a folding static-dissipating work mat.

About the Blade Server

NOTE:

· This manual is a general document for the product. For customized products, the specifications may vary.

· In this manual, component model names are simplified (for example, the prefix or suffix is omitted). For example, memory model DDR4-3200-16G-2Rx8-R represents the following models: UN-DDR4-3200-16G-2Rx8-R, UN-DDR4-3200-16G-2Rx8-R-F, and UN-DDR4-3200-16G-2Rx8-R-S.

· Figures in this document are for illustration only. The actual product may vary.

Product Overview

H3C UniServer B5700 G5 server (hereinafter referred to as the blade server) is an H3C-proprietary blade server with a maximum of two Intel Whitley Ice Lake series processors or Montage Jintide C3 series processors. The server provides strong computing performance and flexible expansion capability. The blade server can be installed in an H3C UniServer B16000 blade chassis (hereinafter referred to as the chassis) and managed through the OM module in a centralized way.

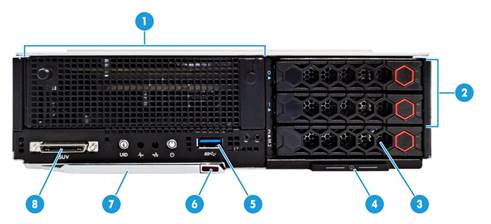

Figure 1 shows the appearance of the blade server.

Figure 1 Appearance of the blade server

Technical parameters

This section introduces the product specifications and technical parameters of the blade server.

Product specifications

Table 1 Product specifications

| Feature | Description |
|---|---|
| Processor | Supports up to 2 Intel Whitley Ice Lake series processors or Montage Jintide C3 series processors · Up to 235 W power consumption per processor · Base frequency up to 3.1 GHz · Up to 48 MB cache per processor |
| Memory | Up to 32 memory modules, including DDR4 and PMem 200 memory modules |
| Storage controller | · Embedded VROC array controller · High-performance storage controller · NVMe VROC module |
| Chipset | Intel C621A Lewisburg chipset |
| Network interface | 2 × embedded 1 Gb/s Ethernet interfaces, connected to the chassis backplane for interconnection with the active and standby OM modules |
| Graphics card | The graphics card chip (model: AST2500) is integrated into the BMC chip. With 64 MB of video memory, it supports a maximum resolution of 1920 x 1080@60 Hz (32 bpp). Resolution description: · 1920 x 1080: 1920 pixels horizontally and 1080 pixels vertically. · 60 Hz: The screen is refreshed 60 times per second. · 32 bpp: The number of color bits. The larger the number of color bits, the more colors can be displayed. |
| I/O port | · 1 × SUV connector (front panel, for SUV cable connection) · Up to 3 USB ports: ¡ 1 × USB 3.0 port (on the front panel) ¡ 2 × USB 2.0 ports (expanded by using the SUV cable) · 1 × VGA port (expanded by using the SUV cable) · 1 × serial port connector (expanded by using the SUV cable) |
| Expansion slot | Up to 5 PCIe 3.0 connectors (1 × storage controller connector, 1 × standard connector, 3 × Mezz card connectors) |
| Certification | CCC, CE, and FCC |
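The resolution figures in Table 1 can be related to the 64 MB of video memory with a little arithmetic. The following is a minimal sketch, assuming a single uncompressed frame and treating 64 MB as 64 MiB. The helper name `framebuffer_bytes` is hypothetical and is not part of any H3C tool.

```python
# Hypothetical helper: memory needed for one uncompressed frame.
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Return the size in bytes of a single uncompressed frame."""
    return width * height * bits_per_pixel // 8


# Maximum resolution and color depth listed in Table 1.
frame = framebuffer_bytes(1920, 1080, 32)

# Assumption: the 64 MB of video memory is treated as 64 MiB.
video_memory = 64 * 1024 * 1024

print(f"One frame: {frame} bytes ({frame / 2**20:.2f} MiB)")
print(f"Share of video memory: {frame / video_memory:.1%}")
```

Under these assumptions, one frame at the maximum resolution occupies only a fraction of the video memory.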

Technical parameters

Table 2 Technical parameters

| Category | Item | Description |
|---|---|---|
| Physical specifications | Dimensions | · Height × width × depth: 59.5 × 215 × 613.4 mm · Form factor (height × width): half height and half width · Maximum number of blade servers in a chassis: 16 |
| Physical specifications | Max. weight | 8.8 kg |
| Power consumption | Max. power | 710 W |
| Environmental specifications | Temperature | · Operating temperature: 5°C to 45°C · Storage temperature: –40°C to 70°C NOTE: The maximum operating temperature supported by the server may be reduced under some configurations. For details, see "Operating temperature requirements." |
| Environmental specifications | Humidity | · Operating humidity: 8% to 90% (non-condensing) · Storage humidity: 5% to 95% (non-condensing) |
| Environmental specifications | Altitude | · Operating altitude: –60 m to +5000 m. Above 900 m, the maximum allowed temperature decreases by 0.33°C for every 100 m of additional altitude. HDDs are not supported above 3000 m. · Storage altitude: –60 m to +5000 m |
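The altitude derating rule in Table 2 can be expressed as a small calculation. The sketch below assumes that the derating is linear (continuous rather than stepped per 100 m) and that the starting point is the 45°C maximum from Table 2, ignoring any configuration-specific reductions. The function names are hypothetical.

```python
BASE_MAX_TEMP_C = 45.0        # maximum operating temperature (Table 2)
DERATING_START_M = 900.0      # derating applies above this altitude
DERATING_C_PER_100M = 0.33    # reduction per 100 m of additional altitude
MIN_ALTITUDE_M = -60.0        # lowest supported operating altitude
MAX_ALTITUDE_M = 5000.0       # highest supported operating altitude
HDD_ALTITUDE_LIMIT_M = 3000.0 # HDDs are not supported above this altitude


def max_operating_temp_c(altitude_m: float) -> float:
    """Return the maximum allowed operating temperature at an altitude.

    Assumption: the derating is applied linearly above 900 m.
    """
    if not MIN_ALTITUDE_M <= altitude_m <= MAX_ALTITUDE_M:
        raise ValueError("altitude is outside the supported operating range")
    excess_m = max(0.0, altitude_m - DERATING_START_M)
    return BASE_MAX_TEMP_C - DERATING_C_PER_100M * excess_m / 100.0


def hdds_supported(altitude_m: float) -> bool:
    """Return True if HDDs are supported at the given altitude."""
    return altitude_m <= HDD_ALTITUDE_LIMIT_M


for altitude in (0, 900, 3000, 5000):
    print(altitude, round(max_operating_temp_c(altitude), 2), hdds_supported(altitude))
```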

Components

This section describes the components of the blade server.

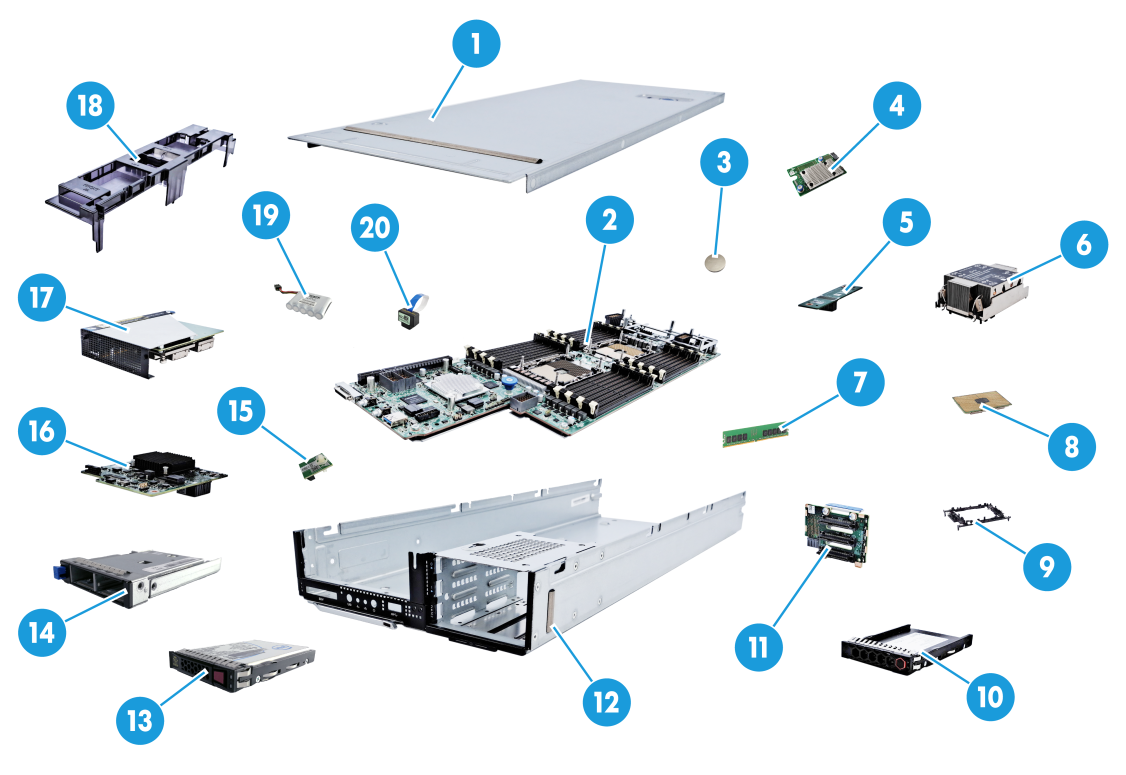

Figure 2 Components of the blade server

Table 3 Description of blade server components

| No. | Name | Description |
|---|---|---|
| 1 | Chassis cover | - |
| 2 | System board | One of the most important parts of a blade server, on which multiple components are installed, such as the CPU, memory, and PCIe modules. It integrates basic server components, including the BIOS chip, BMC chip, and PCIe connectors. |
| 3 | System battery | Powers the system clock to ensure a correct system date and time. |
| 4 | Mezzanine module | A module connected through a mezzanine connector. Mezzanine modules communicate with the processor through the PCIe protocol. One type of mezzanine module is the mezzanine NIC, which connects to the interconnect module (ICM) at the rear of the chassis to enable communication between the blade server and clients. |
| 5 | Straight-through card | Connects the embedded VROC array controller (soft RAID) to SATA drives. |
| 6 | Processor heatsink | Cools the CPU. |
| 7 | Memory | Temporarily stores operational data for the CPU and data exchanged with external storage devices such as drives. |
| 8 | Processor | Integrates the memory controller and PCIe controller to provide powerful data processing capabilities for the blade server. |
| 9 | Processor retaining bracket | Attaches a CPU to the heatsink. |
| 10 | Drive filler panel | Ensures adequate cooling of the blade server. Install a filler panel in any drive slot that has no drive installed. |
| 11 | Drive backplane | Powers the drives and provides a data transfer channel. |
| 12 | Blade server chassis | Houses the blade server parts and components. |
| 13 | Drive | Provides data storage media for the blade server and supports hot swapping. |
| 14 | SATA M.2 SSD adapter module | Provides expansion for two SATA M.2 SSD modules and supports hot swapping. |
| 15 | Encryption module (TCM/TPM module) | Provides encryption services for the server to improve data security. |
| 16 | Storage controller | Provides RAID support for SAS/SATA drives, and supports RAID expansion, RAID configuration memory, online upgrade, and remote setup. |
| 17 | Riser card | Allows standard PCIe cards to be installed in the blade server. |
| 18 | Air duct | Provides cooling ducts inside the blade server. |
| 19 | Supercapacitor | Powers the flash card integrated in or installed on the storage controller if the system loses power unexpectedly, to protect the data on the storage controller. |
| 20 | NVMe VROC module | Activates the NVMe drive array feature based on the VMD technology. |

Front panel

This section introduces the components, LEDs, and interfaces on the front panel.

Front panel components

Figure 3 Front panel of the blade server

Table 4 Description of front panel components

| No. | Description |
|---|---|
| 1 | Optional riser card (for processor 2) |
| 2 | Optional SAS/SATA drive or NVMe drive |
| 3 | Optional SAS/SATA drive or SATA M.2 SSD module (via the adapter module) |
| 4 | Pull-out asset label |
| 5 | USB 3.0 port |
| 6 | Buttons for releasing the locking levers |
| 7 | Locking levers |
| 8 | SUV connector |

The USB 3.0 port can be used to connect a USB flash drive, a USB keyboard or mouse, or a USB optical drive (for OS installation).

LEDs and buttons

Figure 4 Front panel LEDs and buttons

| No. | Description | Status |
|---|---|---|
| 1 | UID button/LED | · Steady blue: The UID LED is activated. The UID LED can be activated by using the following methods: ¡ Press the UID button/LED. ¡ Activate the UID LED from HDM or OM. · Flashing blue: ¡ 1 Hz: The firmware is being upgraded or the system is being managed from HDM. Do not power off the server. ¡ 4 Hz: HDM is restarting. To restart HDM, press the UID button/LED for ten seconds. · Off: The UID LED is not activated. |
| 2 | Health LED | · Steady green: The system is operating correctly. · Flashing green (4 Hz): HDM is initializing. · Flashing amber (0.5 Hz): A predictive alarm is present. · Flashing amber (1 Hz): A minor alarm is present. · Flashing red (1 Hz): A critical alarm is present. |
| 3 | Embedded GE network adapter LED | · Steady green: A link is present on the port. · Flashing green: The port is receiving or sending data. · Off: No link is present on the port. |
| 4 | Power on/standby button and system power LED | · Steady green: The system has started. · Flashing green (1 Hz): The system is starting. · Steady amber: The system is in standby state. · Off: No power is present. |

· If the Health LED shows that the system is faulty, check the system operating state in HDM.

· If the system power LED is off, the possible causes include:

¡ No power supplies are present.

¡ The installed power supplies are faulty.

¡ The chassis is not powered.

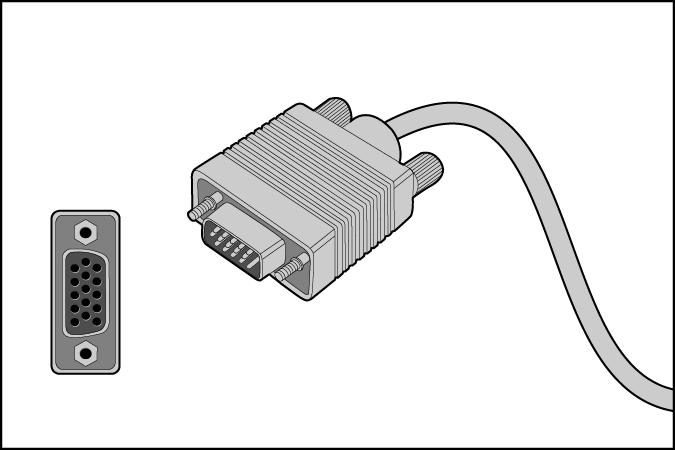

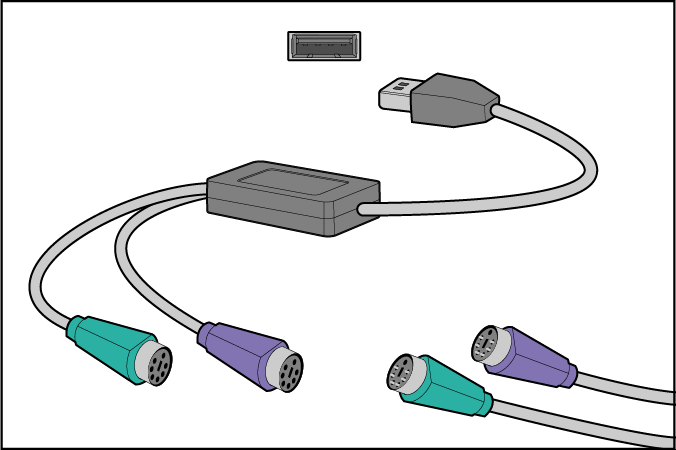

SUV cable

The SUV cable connects to the SUV connector on the front panel of the blade server. The SUV cable allows for the expansion of two USB 2.0 ports, one serial port and one VGA port for the blade server.

Figure 5 SUV cable

Table 6 SUV cable description

| Item | Description | Application |
|---|---|---|
| 1 | Serial port | Used to diagnose faults and debug devices. |
| 2 | VGA connector | Connects terminal displays such as monitors or KVM devices. |
| 3 | 2 × USB 2.0 connectors | The extended USB 2.0 connectors and the default USB 3.0 connector all support the following USB devices: · USB drives · USB keyboards or mice · USB optical drives for installing operating systems |

Serial asset label pull tab

The serial label pull tab is on the front panel, as shown in "Front panel components." It provides the following information about the blade server:

· Product QR code. Users can scan the QR code to access the product document center, and view documents for the blade server.

· HDM default information.

· Product serial number.

· Server model.

System board

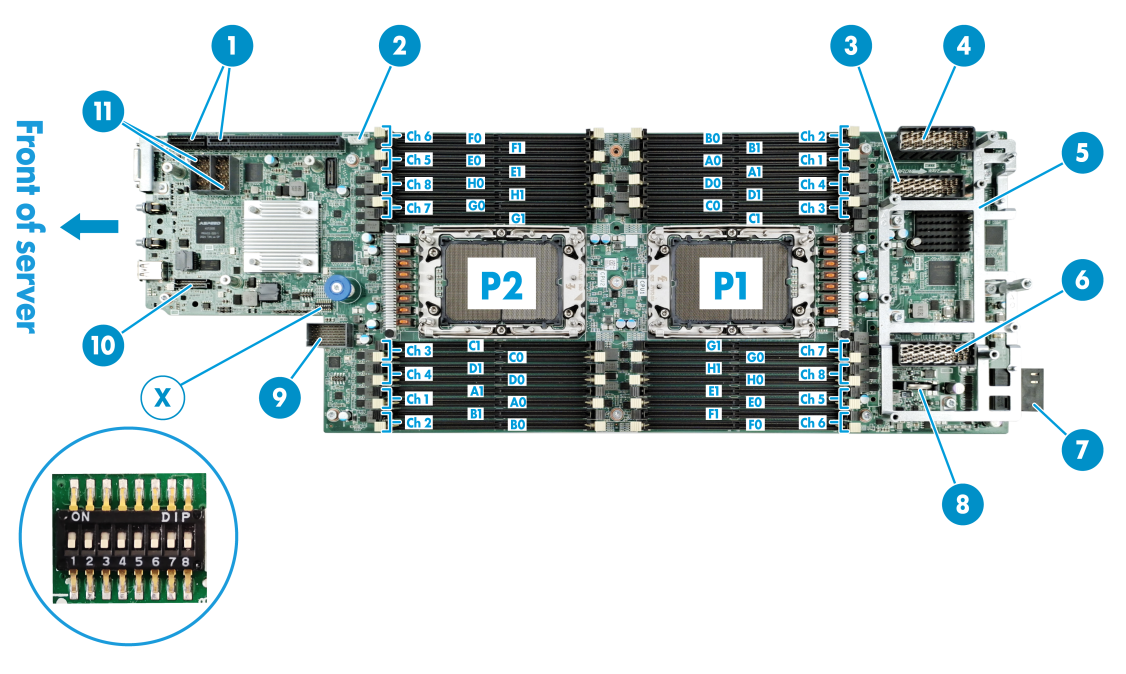

System board layout

Table 7 Layout description

| No. | Description |
|---|---|
| 1 | PCIe riser card connector (for CPU 2) |
| 2 | NVMe VROC module connector |
| 3 | Mezzanine module connector 2 (for processor 2, ICM 2/5) |
| 4 | Mezzanine module connector 3 (for processor 1, ICM 3/6) |
| 5 | Mezzanine module supporting bracket |
| 6 | Mezzanine module connector 1 (for processor 1, ICM 1/4) |
| 7 | Backplane connector |
| 8 | System battery |
| 9 | Drive backplane connector |
| 10 | TPM/TCM connector |
| 11 | Storage controller connector |
| X | System maintenance switch |

DIMM slots

Figure 7 shows the DIMM slot layout. The labels A0, B0, ..., H0, and H1 are DIMM slot numbers. For DIMM installation guidelines, see "DIMMs."

System maintenance switch

The system maintenance switch has 8 pins, as shown in Figure 8.

Figure 8 System maintenance switch

You can use the system maintenance switch to resolve the following problems. Table 8 describes the switch positions, and "System board layout" shows the location of the switch.

· Users forget the HDM login user name or password and cannot log in to HDM.

· Users forget the BIOS password and cannot enter BIOS.

· The default BIOS settings need to be restored.

Table 8 System maintenance switch description

| Position | Description (default value: Off) | Remarks |
|---|---|---|
| 1 | · Off: HDM login requires the username and password of a valid HDM user account. · On: HDM login requires the default username and password. | When pin 1 is On, users can always log in to HDM with the default username and password. As a best practice, change pin 1 to Off after completing the operation. |
| 5 | · Off: Normal server startup. · On: Restores the default BIOS settings. | To restore the default BIOS settings, power off the server, change pin 5 to On and then back to Off, and then start the server. The server cannot start while pin 5 is On. Before you change pin 5, stop the running services and make sure the server is powered off. Otherwise, service data might be lost. |
| 6 | · Off: Normal server startup. · On: Clears all passwords from the BIOS at server startup. | When pin 6 is On, all passwords are cleared from the BIOS every time the server starts. As a best practice, change pin 6 to Off before setting a new BIOS password. |
| 2, 3, 4, 7, 8 | Reserved | None |
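The switch positions in Table 8 can be summarized as a simple lookup. The following is a minimal sketch for documentation or checklist tooling. The function `describe_switch` and its input format (a collection of positions that are set to On) are hypothetical and do not correspond to any HDM or BIOS interface.

```python
from typing import Iterable, List

# Effect of each non-reserved position when it is set to On (from Table 8).
SWITCH_FUNCTIONS = {
    1: "HDM login requires the default username and password",
    5: "Restores the default BIOS settings",
    6: "Clears all passwords from the BIOS at server startup",
}
RESERVED_POSITIONS = {2, 3, 4, 7, 8}


def describe_switch(on_positions: Iterable[int]) -> List[str]:
    """Describe the effect of every position that is set to On."""
    messages = []
    for position in sorted(set(on_positions)):
        if position in SWITCH_FUNCTIONS:
            messages.append(f"Position {position}: {SWITCH_FUNCTIONS[position]}")
        elif position in RESERVED_POSITIONS:
            messages.append(f"Position {position}: reserved (default Off)")
        else:
            raise ValueError(f"invalid switch position: {position}")
    return messages


# Example: positions 1 and 6 are On, all others are Off.
for line in describe_switch([6, 1]):
    print(line)
```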

|

PCIe connector

NOTE: If a CPU is absent, the corresponding PCIe devices are not available.

Table 9 CPUs to which the PCIe devices correspond

| PCIe device | CPU | PCIe standard | PCIe connector physical bandwidth | PCIe connector bus bandwidth | PCIe device form factor |
|---|---|---|---|---|---|
| NVMe drive 0 | CPU 1 | PCIe 3.0 | x4 | x4 | 2.5 inches |
| NVMe drive 1 | CPU 2 | PCIe 3.0 | x4 | x4 | 2.5 inches |
| PCIe riser card | CPU 2 | PCIe 3.0 | x16 | x16 | Supports installation of the LP card |
| Mezzanine module 1 | CPU 1 | PCIe 3.0 | x16 | x16 | Non-standard component |
| Mezzanine module 2 | CPU 2 | PCIe 3.0 | x16 | x16 | Non-standard component |
| Mezzanine module 3 | CPU 1 | PCIe 3.0 | x16 | x16 | Non-standard component |

· NVMe drive 0 and NVMe drive 1 represent NVMe drives numbered 0 and 1. For more information about drive numbering, see "Drive numbering."

· A PCIe riser card is the riser card installed in the PCIe riser card connector on the system board. For the location of the PCIe riser card connector, see Figure 6.

HDDs and SSDs

Drive numbering

Drive numbering is the physical slot number of the drives. It is used to indicate the location of the drives and is identical to the silkscreen on the front and rear panels of the server.

For the correspondence between the physical number of the drives and the number displayed on the software (HDM, BIOS), see the correspondence table of the slot numbers in Appendix B.

Drive LEDs

The blade server supports SAS, SATA, and NVMe drives, of which SAS and SATA drives support hot swapping. You can use the LEDs on a drive to identify its status. Figure 10 shows the location of the LEDs on a drive.

(1) Drive fault/UID LED

(2) Drive present/active LED

To identify the status of a SAS or SATA drive, use Table 10. To identify the status of an NVMe drive, use Table 11.

Table 10 SAS/SATA drive LED description

| Drive fault/UID LED status | Drive present/active LED status | Description |
|---|---|---|
| Flashing amber (0.5 Hz) | Steady/Flashing (4.0 Hz) | A drive failure is predicted. As a best practice, replace the drive before it fails. |
| Steady amber | Steady/Flashing (4.0 Hz) | The drive is faulty. Replace the drive immediately. |
| Steady blue | Steady/Flashing (4.0 Hz) | The drive is operating correctly and is selected by the RAID controller. |
| Off | Flashing (4.0 Hz) | The drive is performing a RAID migration or rebuilding, or the system is reading or writing data to the drive. |
| Off | Steady on | The drive is present but no data is being read or written to the drive. |
| Off | Off | The drive is not securely installed. |

Table 11 NVMe drive LED description

| Drive fault/UID LED status | Drive present/active LED status | Description |
|---|---|---|
| Flashing amber (0.5 Hz) | Off | The managed hot removal is complete, and you are allowed to remove the drive. |
| Flashing amber (4 Hz) | Off | The drive is in hot insertion. |
| Steady amber | Steady/Flashing (4.0 Hz) | The drive is faulty. Replace the drive immediately. |
| Steady blue | Steady/Flashing (4.0 Hz) | The drive is operating correctly and is selected by the RAID controller. |
| Off | Flashing (4.0 Hz) | The drive is performing a RAID migration or rebuilding, or the system is reading or writing data to the drive. |
| Off | Steady on | The drive is present but no data is being read or written to the drive. |
| Off | Off | The drive is not securely installed. |
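The NVMe drive LED combinations in Table 11 can be captured as a lookup table, which might be useful in a monitoring script or a support checklist. This is a minimal sketch. The state labels (such as `"steady amber"`) and the function `describe_nvme_leds` are hypothetical names chosen for illustration and are not read from HDM or the hardware.

```python
# Each entry: (fault/UID LED state, accepted present/active LED states, meaning).
# The meanings are taken from Table 11. The state labels are hypothetical.
NVME_LED_STATES = [
    ("flashing amber 0.5 Hz", {"off"},
     "The managed hot removal is complete, and you are allowed to remove the drive."),
    ("flashing amber 4 Hz", {"off"},
     "The drive is in hot insertion."),
    ("steady amber", {"steady", "flashing 4 Hz"},
     "The drive is faulty. Replace the drive immediately."),
    ("steady blue", {"steady", "flashing 4 Hz"},
     "The drive is operating correctly and is selected by the RAID controller."),
    ("off", {"flashing 4 Hz"},
     "The drive is performing a RAID migration or rebuilding, or data is being read or written."),
    ("off", {"steady"},
     "The drive is present but no data is being read or written to the drive."),
    ("off", {"off"},
     "The drive is not securely installed."),
]


def describe_nvme_leds(fault_led: str, present_led: str) -> str:
    """Return the Table 11 description for a pair of LED states."""
    for fault_state, present_states, meaning in NVME_LED_STATES:
        if fault_led == fault_state and present_led in present_states:
            return meaning
    return "Unknown LED combination"


print(describe_nvme_leds("steady amber", "steady"))
print(describe_nvme_leds("off", "off"))
```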

SATA M.2 SSD module

With the SATA M.2 SSD adapter module, the blade server supports up to two SATA M.2 SSD modules. Use the LEDs on the front panel of the SATA M.2 SSD module to identify its status. Table 12 describes the meaning of the LEDs.

Figure 11 SATA M.2 SSD module LEDs

(1) SATA M.2 SSD module fault/UID LED

(2) SATA M.2 SSD module present/active LED

Table 12 SATA M.2 SSD module LED description

| Fault/UID LED status | Present/Active LED status | Description |
|---|---|---|
| Flashing amber (0.5 Hz) | Steady/Flashing (4.0 Hz) | An M.2 SSD module failure is predicted. As a best practice, replace the module before it fails. |
| Steady amber | Steady/Flashing (4.0 Hz) | The M.2 SSD module is faulty. Replace the module immediately. |
| Steady blue | Steady/Flashing (4.0 Hz) | The M.2 SSD module is operating correctly and is selected by the RAID controller. |
| Off | Flashing (4.0 Hz) | The M.2 SSD module is performing a RAID migration or rebuilding, or the system is reading or writing data to the module. |
| Off | Steady on | The M.2 SSD module is present but no data is being read or written to the module. |
| Off | Off | The M.2 SSD module is not securely installed. |

Drive backplane

This section introduces the drive backplanes supported by the server, including the backplane components and the type and number of drives that each backplane supports.

· Drive backplanes are classified by the type of drives supported, including the SAS/SATA drive backplane, UniBay drive backplane, and drive backplane (X SAS/SATA + Y UniBay).

¡ SAS/SATA drive backplane: All drive slots support SAS/SATA drives only.

¡ UniBay drive backplane: All drive slots support both SAS/SATA drives and NVMe drives.

¡ Drive backplane (X SAS/SATA+Y UniBay): All drive slots support SAS/SATA drives and some drive slots support NVMe drives.

- X: Number of slots that support SAS/SATA drives only.

- Y: Number of slots that support both SAS/SATA drives and NVMe drives.

NOTE:

· The UniBay drive backplane and drive backplane (X SAS/SATA+Y UniBay) support both drive types only if both the SAS/SATA data cable and the NVMe data cable are connected.

· The actual number of SAS/SATA drives and NVMe drives supported by the UniBay drive backplane and drive backplane (X SAS/SATA+Y UniBay) depends on the cabling scheme.

3SFF drive backplane (1SAS/SATA+2UniBay)

The 3SFF drive backplane is installed on the front of the chassis and supports up to three 2.5-inch drives, including one SAS/SATA drive and two SAS/SATA/NVMe drives. Drive slot 2 can be replaced with a SATA M.2 adapter module. For the drive slot numbering, see Figure 9.

Figure 12 3SFF drive backplane

Table 13 3SFF drive backplane components

| No. | Description | Silkscreen |
|---|---|---|
| 1 | Data, AUX, and power 3-in-1 connector | - |

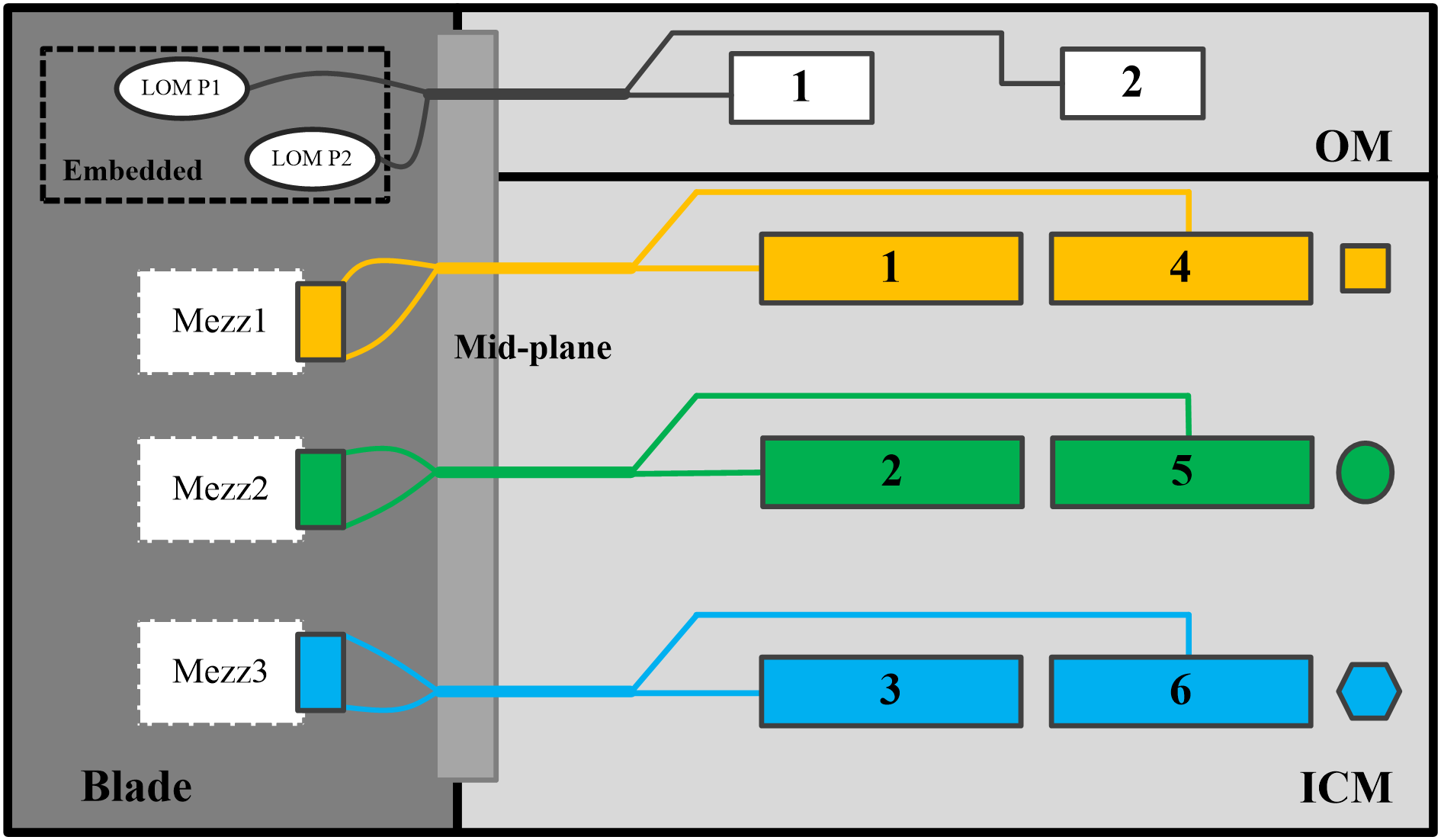

Internal networking

The blade server accesses the network through an interconnection module. The interconnection module connects to the blade servers internally through the midplane, and provides uplink interfaces for the blade servers through its panel interfaces. The chassis can contain up to 6 interconnection modules. The interconnection modules in slots 1 and 4, 2 and 5, and 3 and 6 are interconnected through the internal ports of the chassis to form three pairs. Each pair can be used as a switching plane. You can configure the modules in a pair as active or standby based on service needs.

Figure 13 shows the internal wiring of the blade server chassis, where:

· The onboard NIC is connected to the active and standby OM modules.

· Mezzanine module 1 is connected to interconnect modules in slot 1 and slot 4.

· Mezzanine module 2 is connected to interconnect modules in slot 2 and slot 5.

· Mezzanine module 3 is connected to interconnect modules in slot 3 and slot 6.

Figure 13 Internal wiring method of the blade server chassis
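The wiring rules listed above can be written as a lookup from mezzanine module to interconnection module slots. This is a minimal sketch for planning purposes. The names `MEZZ_TO_ICM_SLOTS`, `mezz_for_icm_slot`, and `paired_icm_slot` are hypothetical.

```python
# Mezzanine module number -> the pair of interconnection module slots it connects to.
MEZZ_TO_ICM_SLOTS = {
    1: (1, 4),
    2: (2, 5),
    3: (3, 6),
}


def mezz_for_icm_slot(slot: int) -> int:
    """Return the mezzanine module that connects to the given ICM slot."""
    for mezz, slots in MEZZ_TO_ICM_SLOTS.items():
        if slot in slots:
            return mezz
    raise ValueError(f"invalid interconnection module slot: {slot}")


def paired_icm_slot(slot: int) -> int:
    """Return the other ICM slot in the same switching plane."""
    first, second = MEZZ_TO_ICM_SLOTS[mezz_for_icm_slot(slot)]
    return second if slot == first else first


print(mezz_for_icm_slot(5))  # mezzanine module serving ICM slot 5
print(paired_icm_slot(3))    # slot paired with ICM slot 3
```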

B/D/F information about the server

The B/D/F (bus/device/function) information of the server may change with the PCIe card configuration. You can obtain the B/D/F information of the server in the following ways:

· BIOS serial port logs: If serial port logs have been collected, search for the keyword "dumpiio" to find the B/D/F information of the server.

· UEFI Shell: Use the pci command to obtain the B/D/F information of the server. For details on how to use the pci command, execute the help pci command.

· Operating system: The method varies by operating system:

¡ Linux OS: Execute the lspci -vvv command to obtain the B/D/F information of the server.

NOTE: If the operating system does not provide the lspci command by default, install the pciutils package from the yum source.

¡ Windows OS: After installing the pciutils package, execute the lspci command to obtain the B/D/F information of the server.

¡ VMware OS: The VMware OS supports the lspci command by default. Execute the lspci command to obtain the B/D/F information of the server.
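In lspci output, each device line starts with the B/D/F address in the form bus:device.function, optionally preceded by a PCI domain. The following is a minimal sketch of extracting these values from captured output. The sample text is made up for illustration and does not come from a B5700 G5 server, and the helper `parse_lspci` is a hypothetical name.

```python
import re
from typing import List, NamedTuple


class PciAddress(NamedTuple):
    domain: int
    bus: int
    device: int
    function: int
    description: str


# Matches device lines such as "3b:00.0 ..." or "0000:3b:00.0 ...".
# Indented detail lines printed by "lspci -vvv" do not match and are skipped.
_BDF_LINE = re.compile(
    r"^(?:(?P<domain>[0-9a-fA-F]{4}):)?"
    r"(?P<bus>[0-9a-fA-F]{2}):(?P<device>[0-9a-fA-F]{2})\.(?P<function>[0-7])"
    r"\s+(?P<description>.*)$"
)


def parse_lspci(text: str) -> List[PciAddress]:
    """Extract B/D/F addresses from lspci output (values are hexadecimal)."""
    devices = []
    for line in text.splitlines():
        match = _BDF_LINE.match(line)
        if match:
            devices.append(PciAddress(
                domain=int(match.group("domain") or "0", 16),
                bus=int(match.group("bus"), 16),
                device=int(match.group("device"), 16),
                function=int(match.group("function"), 16),
                description=match.group("description"),
            ))
    return devices


# Hypothetical sample output, for illustration only.
SAMPLE = """\
3b:00.0 Ethernet controller: Example Vendor Example NIC
\tSubsystem: Example Vendor Device 0001
0000:5e:00.1 Non-Volatile memory controller: Example Vendor Example SSD
"""

for dev in parse_lspci(SAMPLE):
    print(f"bus={dev.bus:#04x} device={dev.device:#04x} function={dev.function}")
```

To use the sketch on a real system, capture the command output to a file and pass its contents to `parse_lspci`.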

Component installation guidelines

SAS/SATA drive

· The drives are hot swappable.

· As a best practice, install drives that do not contain RAID information.

· If you are using the drives to create a RAID, follow these restrictions and guidelines:

¡ To avoid degraded RAID performance or RAID creation failures, make sure all drives in the RAID are the same type (HDDs or SSDs) and have the same connector type (SAS or SATA).

¡ For efficient use of storage, use drives that have the same capacity to build a RAID. If the drives have different capacities, the lowest capacity is used across all drives in the RAID.

¡ If one drive is used by several logical drives, RAID performance might be affected and maintenance complexity will increase.

· If HDDs are frequently inserted and removed with intervals of less than 30 seconds, the HDDs may fail to be identified by the system.
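The capacity guideline above (the lowest capacity is used across all drives in a RAID) can be illustrated with a short calculation. This sketch only computes the per-drive capacity that the RAID uses and the capacity left unused. It does not model RAID-level overhead, and the function names and example capacities are hypothetical.

```python
from typing import Sequence


def raid_member_capacity_gb(drive_capacities_gb: Sequence[float]) -> float:
    """Return the capacity used on each drive: the smallest member capacity."""
    if not drive_capacities_gb:
        raise ValueError("at least one drive is required")
    return min(drive_capacities_gb)


def unused_capacity_gb(drive_capacities_gb: Sequence[float]) -> float:
    """Return the total capacity left unused because of mismatched drive sizes."""
    used_per_drive = raid_member_capacity_gb(drive_capacities_gb)
    return sum(capacity - used_per_drive for capacity in drive_capacities_gb)


# Example: two 960 GB drives and one 1920 GB drive in the same RAID.
drives = [960, 960, 1920]
print(raid_member_capacity_gb(drives))  # capacity used on every drive
print(unused_capacity_gb(drives))       # capacity wasted on the larger drive
```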

NVMe drive

· Support for hot swapping and managed hot removal of NVMe drives depends on the operating system. To check compatibility between NVMe drives and operating systems, use the OS compatibility query tool.

· As a best practice, install drives that do not contain RAID information.

· As a best practice, use NVMe drives of the same capacity to form a RAID. If the NVMe drive capacities are different, the system treats every NVMe drive as having the smallest capacity among them. The excess capacity of larger NVMe drives cannot be used to configure the current RAID or other RAIDs.

· To hot insert NVMe drives, insert the drives steadily without pauses to prevent the operating system from being stuck or restarted.

· Do not hot swap multiple NVMe drives at the same time. As a best practice, hot swap NVMe drives one after another at intervals longer than 30 seconds for the operating system to identify the installed or removed NVMe drive. If you insert multiple NVMe drives in a short period of time, the system might fail to identify the drives.

NVMe VROC module

Table 14 describes the NVMe VROC modules supported by the server and their specifications.

Table 14 NVMe VROC module specifications

| Model | Description | Supported RAID level |
|---|---|---|
| NVMe-VROC-Key-i | NVMe VROC module Intel edition, supports Intel NVMe drives only. | RAID 0/1/5/10 |
| NVMe-VROC-Key-P | NVMe VROC module advanced edition, supports any brand of NVMe drives. | RAID 0/1/5/10 |
| NVMe-VROC-Key-S | NVMe VROC module standard edition, supports any brand of NVMe drives. | RAID 0/1/10 |
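Table 14 can be used to select a module for a given RAID level and drive brand. The following is a minimal sketch of that selection. The data is copied from Table 14, while the function `keys_supporting` and its parameters are hypothetical.

```python
from typing import List

# Data from Table 14: whether each module is limited to Intel NVMe drives,
# and the RAID levels it supports.
VROC_KEYS = {
    "NVMe-VROC-Key-i": {"intel_only": True, "raid_levels": {0, 1, 5, 10}},
    "NVMe-VROC-Key-P": {"intel_only": False, "raid_levels": {0, 1, 5, 10}},
    "NVMe-VROC-Key-S": {"intel_only": False, "raid_levels": {0, 1, 10}},
}


def keys_supporting(raid_level: int, all_drives_intel: bool) -> List[str]:
    """Return the module models that support the RAID level and drive brand."""
    matches = []
    for model, spec in VROC_KEYS.items():
        if raid_level not in spec["raid_levels"]:
            continue
        if spec["intel_only"] and not all_drives_intel:
            continue
        matches.append(model)
    return matches


# Example: RAID 5 with NVMe drives from a vendor other than Intel.
print(keys_supporting(5, all_drives_intel=False))
```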

Riser card and PCIe card

Compatibility between the riser card and PCIe card

Table 15 PCIe card form factor

| Short name | Full name |
|---|---|
| LP card | Low Profile card |
| FHHL card | Full Height, Half Length card |
| FHFL card | Full Height, Full Length card |
| HHHL card | Half Height, Half Length card |
| HHFL card | Half Height, Full Length card |

Table 16 shows the compatibility between the riser card and PCIe card.

Table 16 Compatibility between the riser card and PCIe card

| Riser card model | Installation location supported by the riser card | PCIe slot or interface description | PCIe device supported by the PCIe connector | CPU |
|---|---|---|---|---|
| RC-LP | PCIe riser card connector | PCIe 3.0 x16 (16, 8, 4, 2, 1) | LP card | CPU 2 |

· For the specific location of the PCIe riser card connector on the system board, see "System board layout."

· The PCIe connector on the riser card is not available when the corresponding CPU is not present.

· A PCIe connector designed for a larger card form factor also accepts smaller cards. For example, an LP card can be installed in a PCIe connector designed for FHFL cards.

· PCIe 3.0 x16 (16, 8, 4, 2, 1):

¡ PCIe 3.0: Third-generation signal rate.

¡ x16: Connector bandwidth.

¡ (16, 8, 4, 2, 1): Compatible bus bandwidths, including x16, x8, x4, x2, and x1.
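The link widths listed for the riser card connector translate into theoretical throughput as follows. This is a minimal sketch that relies on two figures from the PCIe 3.0 specification rather than from this manual: a raw rate of 8 GT/s per lane and 128b/130b encoding. The result is a theoretical per-direction maximum that ignores protocol overhead, and the function name is hypothetical.

```python
# Figures from the PCIe 3.0 specification (not from this manual).
PCIE3_GT_PER_S = 8.0                # raw transfer rate per lane, in GT/s
PCIE3_ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding
SUPPORTED_WIDTHS = (1, 2, 4, 8, 16)    # bus widths listed in Table 16


def pcie3_bandwidth_gbytes_per_s(lanes: int) -> float:
    """Return the theoretical per-direction bandwidth in GB/s for a PCIe 3.0 link."""
    if lanes not in SUPPORTED_WIDTHS:
        raise ValueError(f"unsupported link width: x{lanes}")
    # Divide by 8 to convert gigabits per second to gigabytes per second.
    return PCIE3_GT_PER_S * PCIE3_ENCODING_EFFICIENCY / 8 * lanes


for width in SUPPORTED_WIDTHS:
    print(f"x{width}: {pcie3_bandwidth_gbytes_per_s(width):.2f} GB/s")
```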

Installation guidelines

When a storage controller is installed in the storage controller connector on the system board, neither a riser card nor a PCIe card can be installed on the blade server. For the specific location of the storage controller connector, see "System board layout."

Storage controller and its power fail safeguard module

Storage controller

Table 17 shows the front mezzanine storage controller and the onboard VROC array controller supported by the blade server.

Table 17 Storage controller description

| Storage controller model | Installation position | Supported drive type | Power fail safeguard module | Installation method |
|---|---|---|---|---|
| Embedded VROC array controller | Embedded on the system board, does not need to be installed by users | SATA HDD/SSD | Not supported | N/A |
| RAID-P5408-Mf-8i-4GB | Storage controller connector on the system board | SAS/SATA HDD/SSD | Supported, SCAP-LSI-G3 supercapacitor required (flash embedded in the storage controller) | See "Installing a storage controller and a power fail safeguard module." |
| RAID-P2404-Mf-4i-2GB | Storage controller connector on the system board | SAS/SATA HDD/SSD | Supported, SCAP-PMC-G3 supercapacitor required (flash embedded in the storage controller) | See "Installing a storage controller and a power fail safeguard module." |
| RAID-P4408-Mf-8i-2GB | Storage controller connector on the system board | SAS/SATA HDD/SSD | Supported, SCAP-PMC-G3 supercapacitor required (flash embedded in the storage controller) | See "Installing a storage controller and a power fail safeguard module." |
| HBA-H5408-Mf-8i | Storage controller connector on the system board | SAS/SATA HDD/SSD | Not supported | N/A |

Table 18 shows the embedded VROC array controller specifications. For the specifications of other storage controllers, use the server-compatible parts query tool on the official website.

Table 18 Embedded VROC array controller

| Item | Embedded VROC array controller |
|---|---|
| Type | |
| Number of internal ports | 8 internal SATA ports |
| Drive interface | 6 Gbps SATA 3.0, supports drive hot swapping |
| PCIe connector | PCIe 3.0 x4 |
| RAID level | RAID 0/1/5 |
| Location/Size | Location: Embedded on the PCH of the system board |
| Built-in cache memory | N/A |
| Flash | N/A |
| Power fail safeguard module | Not supported |
| Battery connector | N/A |
| Firmware upgrade | Upgrade with the BIOS |

NOTE: To use the embedded VROC array controller, you must choose a straight-through module that supports up to 4 ports (model: PTH-PT104-Mf-4L).

Power fail safeguard module

A power fail safeguard module provides a flash card and a supercapacitor. There are two types of flash cards: One needs to be installed to the storage controller; the other is embedded in the storage controller and does not need to be installed by users.

In the event of an unexpected power failure of the server system, the supercapacitor can power the flash card for more than 20 seconds, during which the cached data is transferred from the DDR memory of the storage controller to the flash card. Because the flash card is a non-volatile storage medium, the cached data is retained permanently, or until the server system is powered on and the storage controller retrieves the data.

|

|

NOTE: After the supercapacitor is installed, the power may be low. No action is required at this time. The internal circuitry will automatically charge and enable the supercapacitor when the server is powered on. You can query the supercapacitor status through HDM or OM. |

Note on the expiration of the supercapacitor:

· A supercapacitor has a lifespan of 3 to 5 years.

· If the lifespan of a supercapacitor expires, a supercapacitor exception might occur. The system notifies users of supercapacitor exceptions by using the following methods:

¡ For a PMC storage controller, the status of the flash card becomes Abnormal_status code. You can check the status code to identify the exception. For more information, see H3C Servers HDM Online Help.

¡ For an LSI supercapacitor, the status of the flash card displayed by HDM becomes Abnormal.

¡ HDM will generate SDS log records. For details on how to query the SDS log, see H3C Servers HDM Online Help.

· When the supercapacitor expires, it needs to be replaced in time. Otherwise, the power fail data safeguard function of the storage controller will fail.

|

|

NOTE: After replacing the expired supercapacitor, check the logical drive cache status of the storage controller. If the logical drive cache of the storage controller is turned off, re-enable the cache related settings to enable the power fail safeguard function. For details, see H3C Servers HDM Online Help. |

GPU

Due to structural limitations, the GPU and the storage controller cannot be installed at the same time. The GPU is installed on the PCIe riser card connector, and the storage controller is installed on the storage controller connector. For the specific location of both connectors, see "System board layout."

NIC

Standard PCIe NIC installation guidelines

Due to structural limitations, the standard PCIe NIC and the storage controller cannot be installed at the same time. The standard PCIe NIC is connected via the riser card, which is installed on the PCIe riser card connector, and the storage controller is installed on the storage controller connector. For the specific location of both connectors, see "System board layout."

Mezzanine NIC installation guidelines

· The blade server supports installing a maximum of three mezzanine NICs.

· When installing the mezzanine NIC, make sure the corresponding processor is present. See "PCIe connector" for the specific relations.

· The mezzanine NIC and the interconnect module are interconnected through the chassis backplane. There is a slot correspondence between them. When installing the mezzanine NIC, ensure that the interconnect module in the corresponding slot is present. See "Internal networking" for the specific relations.

SATA M.2 SSD module

· A SATA M.2 SSD module must be used together with a SATA M.2 SSD adapter, and the server can install a maximum of two SATA M.2 SSD modules.

· The SATA M.2 SSD modules are hot swappable.

· As a best practice, install SATA M.2 SSD modules that do not contain any RAID information.

· For efficient use of storage, use SATA M.2 SSD modules that have the same capacity to build a RAID. If the SATA M.2 SSD modules have different capacities, the lowest capacity is used across all SATA M.2 SSD modules in the RAID.
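The capacity rule above can be illustrated with a short calculation. This is a minimal sketch, not vendor tooling: the function names and the 240 GB and 480 GB module sizes are hypothetical and are used only for illustration.

```python
def raid_capacity_used_per_module_gb(capacities_gb):
    """Return the capacity (in GB) that the RAID uses on each module.

    Per the guideline, the lowest capacity is used across all modules.
    """
    if not capacities_gb:
        raise ValueError("at least one SATA M.2 SSD module is required")
    return min(capacities_gb)


def unused_capacity_gb(capacities_gb):
    """Return the total capacity (in GB) left unused by the RAID."""
    used = raid_capacity_used_per_module_gb(capacities_gb)
    return sum(capacity - used for capacity in capacities_gb)


# Hypothetical example: one 240 GB module and one 480 GB module.
print(raid_capacity_used_per_module_gb([240, 480]))  # 240
print(unused_capacity_gb([240, 480]))                # 240
```

With two modules of the same capacity, the unused capacity is 0, which is why same-capacity modules are recommended.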

SATA M.2 SSD adapter module

The SATA M.2 SSD adapter module supports hot swapping.

DIMMs

This section introduces basic DIMM concepts, DIMM modes, and DIMM installation guidelines.

About DIMMs

DIMMs include DDR4 and PMem 200 DIMMs. DDR4 DIMMs include LRDIMM and RDIMM.

1. DDR4 and PMem 200

· DDR4 is the most common type of DIMM. The data in DDR4 will be lost in the event of an unexpected power failure of the server system.

· PMem 200 has the following two features:

¡ Compared with DDR4, PMem 200 has a larger capacity for a single memory module.

¡ PMem 200 (such as Barlow Pass) has data protection in case of power failure. Data in PMem 200 will not be lost in the event of unexpected power failure of the server system.

2. RDIMM and LRDIMM

· RDIMM provides address parity protection.

· LRDIMM provides a larger capacity and bandwidth for the system.

3. Rank

The number of ranks is usually 1, 2, 4, or 8, generally abbreviated as 1R/SR, 2R, 4R, 8R, or single-rank, dual-rank, quad-rank, or 8-rank.

· A 1R DIMM has a set of DIMM chips that will be accessed when data is written to or read from the DIMM.

· A 2R DIMM is equivalent to a module containing two 1R DIMMs, but only one rank can be accessed at a time.

· A 4R DIMM is equivalent to a module containing two 2R DIMMs, but only one rank can be accessed at a time.

· An 8R DIMM is equivalent to a module containing two 4R DIMMs, but only one rank can be accessed at a time.

When writing or reading data in a DIMM, the server memory control subsystem will select the correct rank from the DIMM.

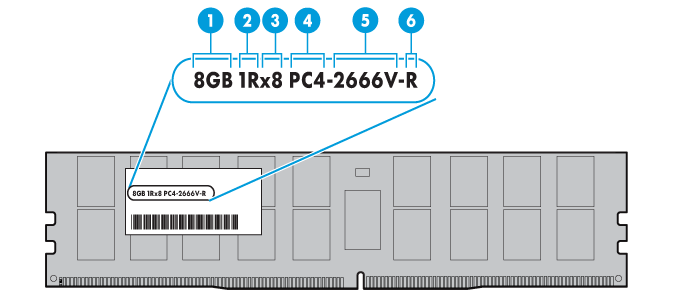

4. DIMM specifications

You can identify the specifications of a DIMM from the label on it.

Figure 14 DIMM identification

Table 19 Description of DIMM identification

|

SN |

Description |

Definition |

|

1 |

Capacity |

· 8 GB · 16 GB · 32 GB |

|

2 |

Number of ranks |

· 1R = The number of ranks is 1. · 2R = The number of ranks is 2. · 4R = The number of ranks is 4. · 8R = The number of ranks is 8. |

|

3 |

Data width |

· x4 = 4-bit · x8 = 8-bit |

|

4 |

DIMM generation |

DDR4 |

|

5 |

DIMM equivalent speed |

· 2133P: 2133 MHz · 2400T: 2400 MHz · 2666V: 2666 MHz · 2933Y: 2933 MHz |

|

6 |

DIMM type |

· R = RDIMM · L = LRDIMM |
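The field definitions in Table 19 can be expressed as simple lookups. The following is a minimal sketch that decodes the six fields after they have been read from the label individually. It makes no assumption about how the fields are laid out on the physical label, and the function name `decode_dimm_fields` is hypothetical.

```python
# Speed codes and DIMM type codes as listed in Table 19.
SPEED_CODES_MHZ = {"2133P": 2133, "2400T": 2400, "2666V": 2666, "2933Y": 2933}
DIMM_TYPES = {"R": "RDIMM", "L": "LRDIMM"}


def decode_dimm_fields(capacity, ranks, width, generation, speed, dimm_type):
    """Decode the six label fields of Table 19 into plain values.

    A speed or type code that is not listed in Table 19 raises KeyError.
    """
    return {
        "capacity_gb": int(capacity.upper().replace("GB", "").strip()),
        "ranks": int(ranks.upper().rstrip("R")),          # "2R" -> 2
        "data_width_bits": int(width.lower().lstrip("x")),  # "x4" -> 4
        "generation": generation,
        "speed_mhz": SPEED_CODES_MHZ[speed],
        "type": DIMM_TYPES[dimm_type],
    }


print(decode_dimm_fields("16 GB", "2R", "x4", "DDR4", "2933Y", "R"))
```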

DIMM mode

The blade server supports the following DIMM modes to protect the data in the DIMM.

|

|

NOTE: Independent Mode is the default mode and is not available on the BIOS interface. |

· Independent Mode (default)

· Mirror Mode

· Memory Rank Sparing

Independent Mode

Standard ECC can correct 1-bit memory errors and detect multi-bit memory errors. When standard ECC detects a multi-bit error, it notifies the server and the server stops. Independent mode can prevent multi-bit errors from occurring on the server, and correct 1-bit or 4-bit memory errors (when the errors are located on the same DDR4 on the DIMM). Independent mode therefore provides stronger protection: it can correct memory errors that standard ECC cannot correct and that would otherwise cause the server to shut down.

Mirror Mode

Mirror mode uses a portion of the system memory for mirroring, to improve system stability and prevent uncorrectable memory errors that cause server downtime. When an uncorrectable error is detected in a memory channel, the blade server will fetch data from the mirrored memory. The mirror mode is a channel-level memory mode, for example, CH2 is the mirror for CH1, CH3 for CH2, and CH1 for CH3.

Memory Rank Sparing

Memory Rank Sparing uses a portion of the system memory ranks as backup ranks to improve system stability. When this feature is enabled, if the number of correctable errors that occur in a non-backup rank exceeds a specific threshold, the blade server enables the backup rank to replace the failed rank and disables the failed rank.

DIMM installation guidelines

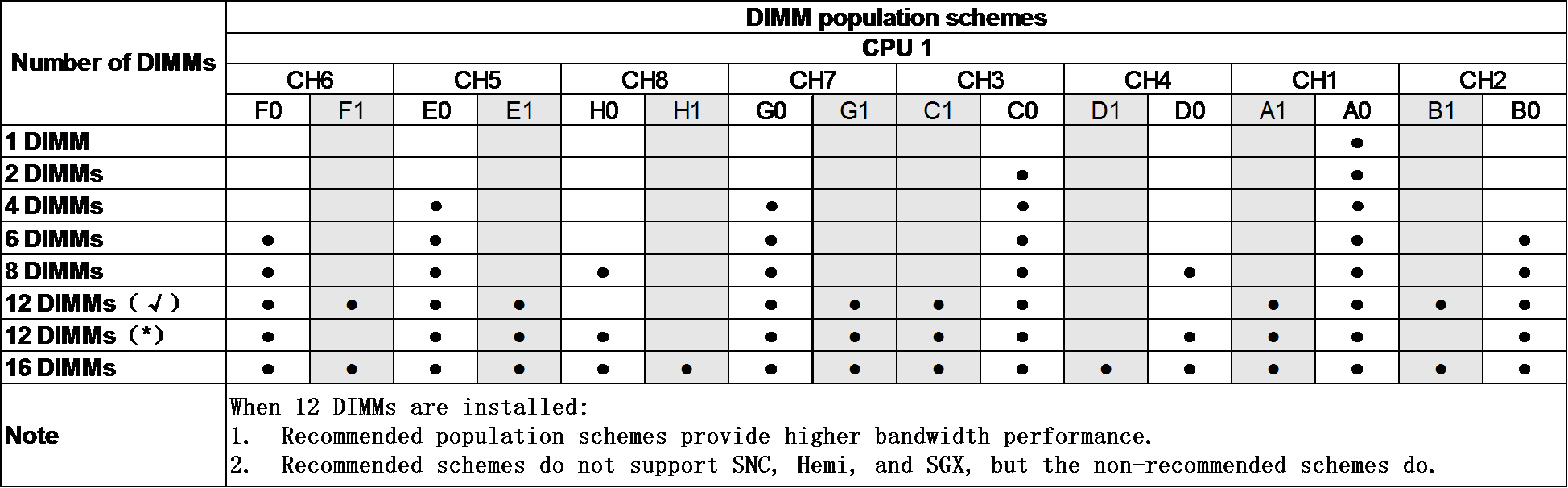

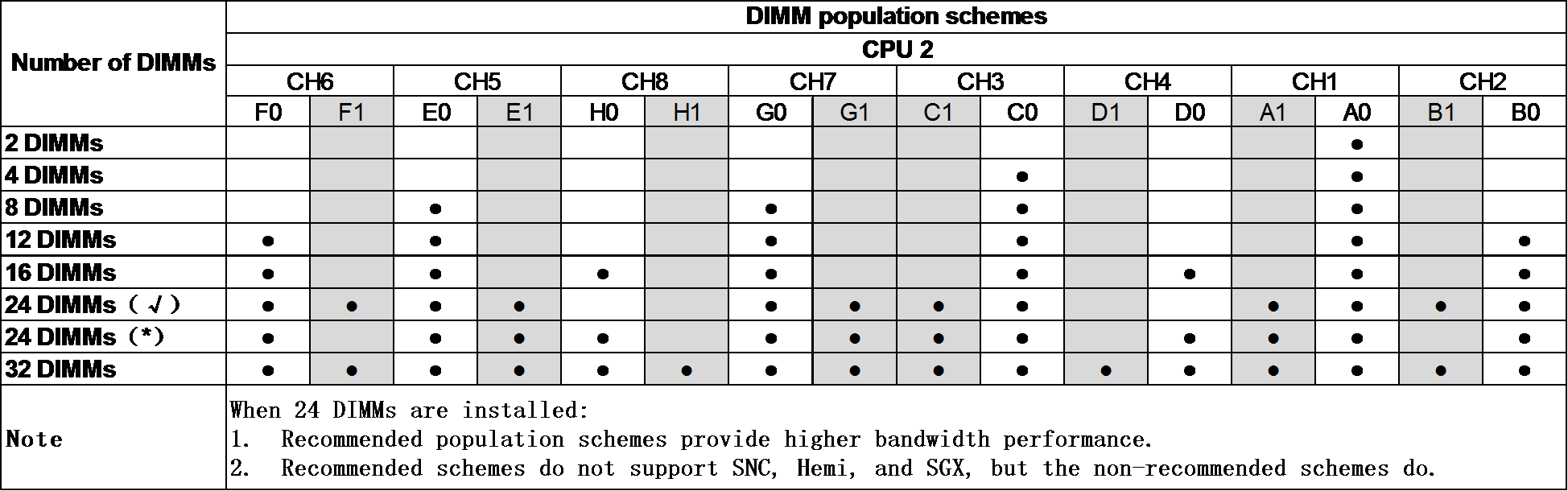

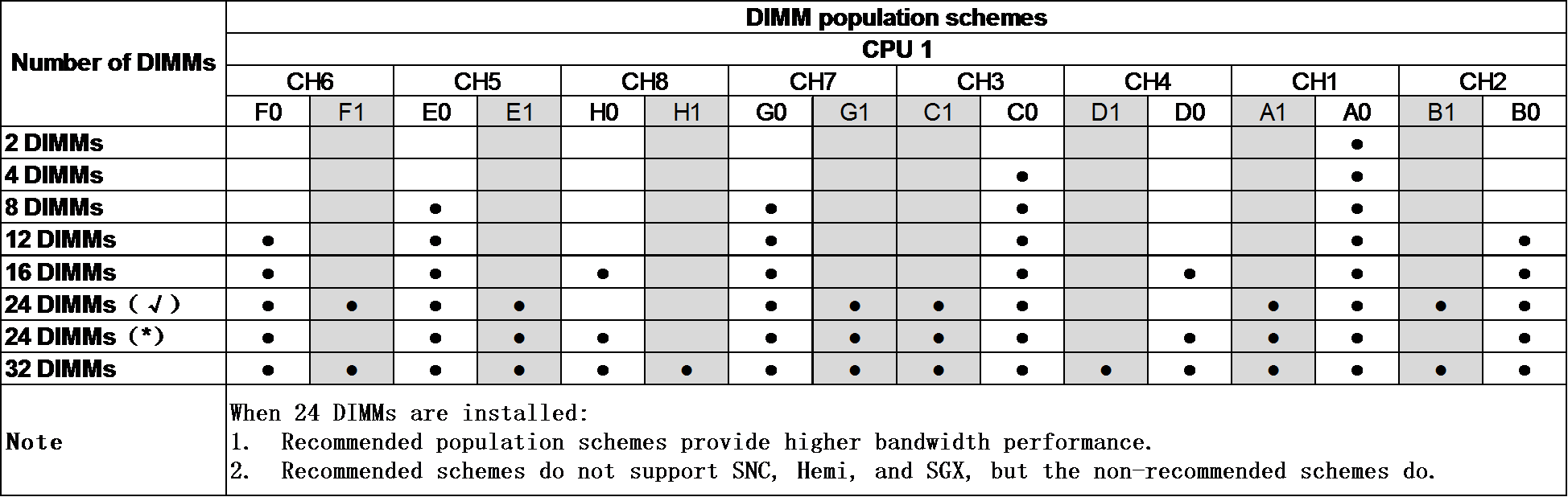

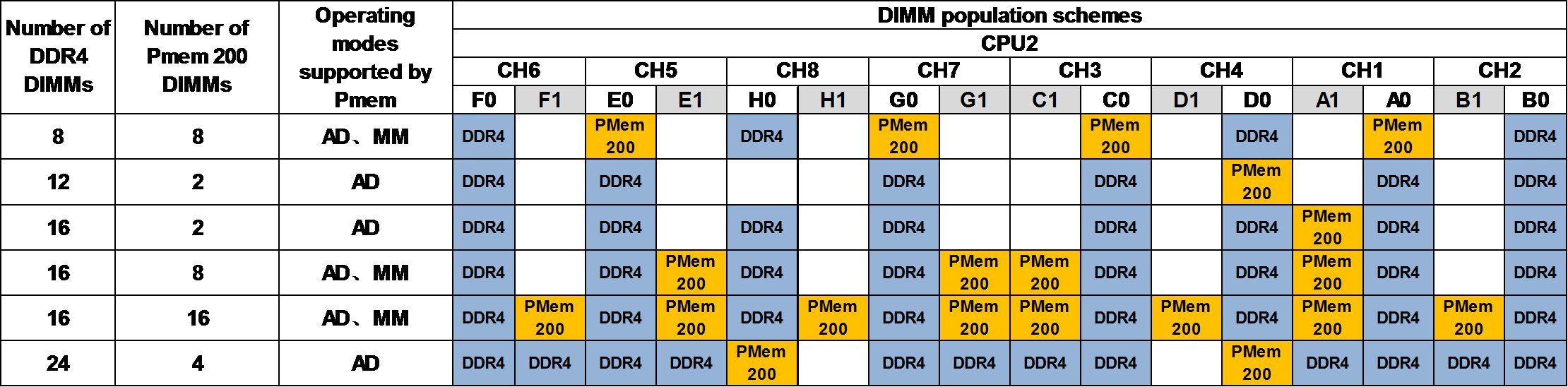

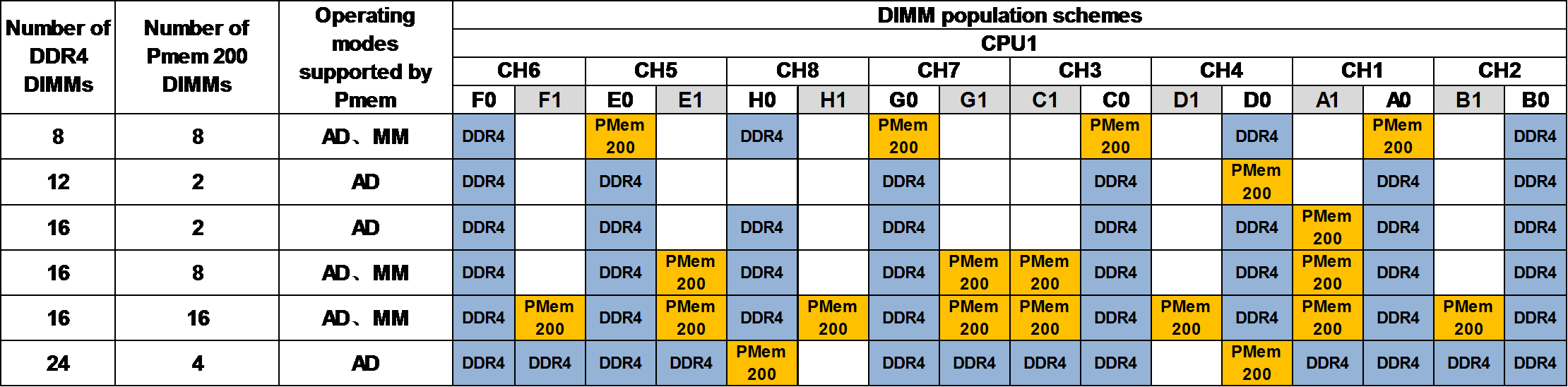

The blade server supports 1 or 2 CPUs, each CPU supports 8 channels, and each channel supports 2 DIMMs, that is, one CPU supports 16 DIMMs and 2 CPUs support 32 DIMMs. The server supports DDR4-only configuration and also a mixture of PMem 200 and DDR4.
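The slot arithmetic above can be written out as follows. This is a minimal sketch; the constant and function names are illustrative only.

```python
CHANNELS_PER_CPU = 8     # each CPU supports 8 channels
DIMMS_PER_CHANNEL = 2    # each channel supports 2 DIMMs


def max_dimm_slots(cpu_count):
    """Return the maximum number of DIMMs for the given number of CPUs."""
    if cpu_count not in (1, 2):
        raise ValueError("the blade server supports 1 or 2 CPUs")
    return cpu_count * CHANNELS_PER_CPU * DIMMS_PER_CHANNEL


print(max_dimm_slots(1))  # 16
print(max_dimm_slots(2))  # 32
```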

|

|

NOTE: The operating frequency of the DIMM can be up to 3200 MHz only when all of the following conditions are met: · Use a CPU with a maximum DIMM frequency of 3200 MHz. · Use a DIMM with a maximum frequency of 3200 MHz. · Only one DIMM is installed in each populated channel. |

DIMM and CPU compatibility

Table 20 describes the DIMM and CPU compatibility.

Table 20 DIMM and CPU compatibility

|

CPU type |

CPU-compatible DIMM type @ frequency |

Maximum DIMM capacity supported by a single CPU (DDR4 and PMem included) |

|

Intel Ice Lake |

· DDR4 @3200 MHz · PMem 200 @2666 MHz |

6 TB |

|

Montage Jintide C3 series |

DDR4 @3200 MHz |

6 TB |

DIMM operating frequency

|

|

NOTE: You can query the DIMM frequency and the maximum DIMM frequency supported by the CPU with the server-compatible part query tool. In the query tool, query the DIMM frequency by the part name of "memory module". Query the maximum DIMM frequency supported by the CPU by the part name of "processor". |

· The operating frequency of DIMM in the server is equal to the lower of the DIMM frequency or the maximum DIMM frequency supported by the CPU. For example, if the DIMM frequency is 2666 MHz and the maximum DIMM frequency supported by the CPU is 3200 MHz, then the DIMM will run at 2666 MHz.
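The rule above amounts to taking the lower of two values. A minimal sketch, with a hypothetical function name, that reproduces the example from the text:

```python
def dimm_operating_frequency_mhz(dimm_mhz, cpu_max_dimm_mhz):
    """Return the frequency at which the DIMM runs in the server.

    The result is the lower of the DIMM frequency and the maximum
    DIMM frequency supported by the CPU.
    """
    return min(dimm_mhz, cpu_max_dimm_mhz)


# Example from the text: a 2666 MHz DIMM with a CPU that supports up to 3200 MHz.
print(dimm_operating_frequency_mhz(2666, 3200))  # 2666
```

This sketch does not model the additional one-DIMM-per-channel condition that applies to 3200 MHz operation.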

Guidelines for installing only DDR4 DIMMs

· Make sure the corresponding CPU is installed.

· DIMMs of different specifications (type, capacity, rank, data width, rate) cannot be mixed, that is, all DIMMs installed on the server have the same product code. Query the product code information with the server-compatible part query tool.

· In addition to the above guidelines, different DIMM modes have their own specific guidelines, as described in Table 21. Note that when the actual DIMM installation does not meet these specific guidelines, the system will use the default Independent mode regardless of the DIMM mode configured by the user.

Table 21 Specific installation guidelines for different DIMM modes

|

Memory mode |

DIMM population requirements |

|

Independent Mode (default) |

· Strictly follow the DIMM population schemes: · If one processor is present, see Figure 15. · If two processors are present, see Figure 16 and Figure 17. |

|

Mirror Mode |

· A minimum of two DIMMs for a processor. · This mode does not support DIMM population schemes that are not recommended. ¡ If one processor is present, see Figure 15. ¡ If two processors are present, see Figure 16 and Figure 17. |

|

Memory Rank Sparing |

· Make sure no less than two ranks are configured for each channel. · Strictly follow the DIMM population schemes: ¡ If one processor is present, see Figure 15 ¡ If two processors are present, see Figure 16 and Figure 17. |
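The fallback behavior described before Table 21 can be sketched as a simple check. This is an illustration under stated assumptions, not the BIOS logic: the function name and its inputs are hypothetical, "each channel" is read as each populated channel, and the population schemes in Figure 15 through Figure 17 are not checked.

```python
def effective_memory_mode(configured_mode, dimms_per_cpu, ranks_per_channel):
    """Return the memory mode the system would use.

    dimms_per_cpu: DIMM counts, one entry per installed processor.
    ranks_per_channel: total rank counts, one entry per populated channel.
    Only the numeric requirements of Table 21 are checked here.
    """
    if configured_mode == "Mirror Mode":
        # A minimum of two DIMMs for a processor.
        requirements_met = all(count >= 2 for count in dimms_per_cpu)
    elif configured_mode == "Memory Rank Sparing":
        # No less than two ranks configured for each channel.
        requirements_met = all(ranks >= 2 for ranks in ranks_per_channel)
    else:
        requirements_met = True
    # If the requirements are not met, the default mode is used.
    return configured_mode if requirements_met else "Independent Mode"


print(effective_memory_mode("Mirror Mode", [1], [2]))             # Independent Mode
print(effective_memory_mode("Memory Rank Sparing", [2], [2, 2]))  # Memory Rank Sparing
```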

Figure 15 1-CPU DIMM configuration guidelines

Figure 16 2-CPU DIMM configuration guidelines (1)

Figure 17 2-CPU DIMM configuration guidelines (2)

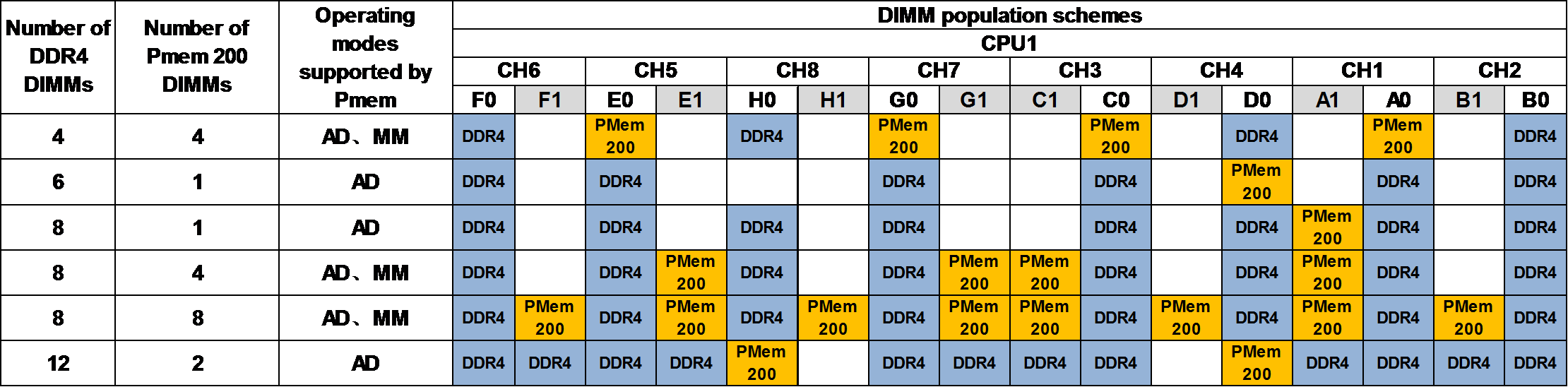

Guidelines for installing a mixture of PMem 200 and DDR4 DIMMs

· Make sure the corresponding CPU is installed.

· Make sure that the installed PMem has not been used in other products. Otherwise it may not work after installation.

¡ DDR4 DIMMs of different specifications (type, capacity, rank, data width, rate) cannot be mixed, that is, all DDR4 DIMMs installed on the server have the same product code. PMem 200 DIMMs of different specifications cannot be mixed, that is, the product codes of all PMem 200 DIMMs installed on the server must be the same. For details on the product code, see the server-compatible part query tool.

¡ The frequency of the DIMM installed for each CPU must not exceed the maximum DIMM frequency supported by the CPU. For the frequency of the DIMM and the maximum DIMM frequency supported by the CPU, see the server-compatible part query tool.

· PMem supports the corresponding operating modes. The corresponding guidelines should be met:

¡ For AD operating mode, the following requirement must be met: the DIMM capacity configured for a single CPU (total capacity of DDR4 and PMem) must not exceed the maximum DIMM capacity supported by a single CPU (total capacity of DDR4 and PMem), as shown in Table 20.

¡ For MM operating mode, the following requirements must be met:

- The DIMM capacity configured for a single CPU (total capacity of DDR4 and PMem) must not exceed the maximum DIMM capacity supported by a single CPU (total capacity of DDR4 and PMem).

- The capacity ratio of DDR4 to PMem configured for each CPU must be in the range of 1:4 to 1:16.

¡ For the operating modes supported by different capacity ratios of PMem and DDR and how to configure the operating modes, see PMem 200 User Guide and Appendix.
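The capacity requirements for the AD and MM operating modes can be checked with a short calculation. The following is a minimal sketch under stated assumptions: the function name is hypothetical, the 6 TB per-CPU maximum from Table 20 is taken as 6 × 1024 GB, and the module sizes in the example are illustrative only.

```python
from fractions import Fraction

# Assumption: 6 TB (Table 20) is interpreted as 6 * 1024 GB.
MAX_CAPACITY_PER_CPU_GB = 6 * 1024


def check_pmem_capacity(mode, ddr4_gb, pmem_gb):
    """Return a list of violated capacity requirements for one CPU.

    mode: "AD" or "MM". An empty list means both checks passed.
    """
    problems = []
    if ddr4_gb + pmem_gb > MAX_CAPACITY_PER_CPU_GB:
        problems.append("total capacity exceeds the per-CPU maximum")
    if mode == "MM":
        # DDR4:PMem must be from 1:4 to 1:16, so PMem/DDR4 is from 4 to 16.
        if ddr4_gb <= 0 or not 4 <= Fraction(pmem_gb, ddr4_gb) <= 16:
            problems.append("DDR4:PMem ratio is outside 1:4 to 1:16")
    return problems


# Hypothetical example: 256 GB of DDR4 and 1024 GB of PMem on one CPU (1:4).
print(check_pmem_capacity("MM", 256, 1024))  # []
```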

The DIMM installation guidelines for mixing PMem 200 and DDR4 are shown in Figure 18, Figure 19, and Figure 20.

Figure 18 PMem 200 and DDR4 DIMM configuration guidelines (1-CPU)

Figure 19 PMem 200 and DDR4 DIMM configuration guidelines (2-CPU) (1)

Figure 20 PMem 200 and DDR4 DIMM configuration guidelines (2-CPU) (2)

CPU

Guidelines

You can install one or two processors.

· To avoid damage to a processor or the system board, only H3C authorized or professional server engineers can install, replace, or remove a processor.

· Make sure the processors on the server are the same model.

· A CPU model suffixed with U supports single-socket operation only. See "Meaning of CPU product model suffix" to identify processor model suffixes.

· CPUs of the same model support two types of heatsinks: one with sparse fins and one with dense fins. Heatsinks with sparse fins labeled with Front must be installed on CPU 2 and the heatsinks with dense fins labeled with Rear must be installed on CPU 1. For more information about the position of CPUs, see "System board layout."

· For the server to operate correctly, make sure processor 1 is in position. For more information about processor locations, see "System board layout."

· The pins in the processor sockets are very fragile and prone to damage. Install a protective cover if a processor socket is empty.

· To prevent static electricity from damaging the electronic components, wear an ESD wrist strap before operation and ground the other end of the ESD wrist strap.

Meaning of CPU product model suffix

If the CPU model is UN-CPU-INTEL-8360Y-S, then it is suffixed with "Y" (abbreviated as CPU model suffix). You can query the CPU model supported by the server with the server-compatible part query tool.

Table 22 describes meaning of the Intel Ice Lake CPU model suffix.

Table 22 Intel Ice Lake CPU model suffix description

|

CPU model suffix |

Suffix meaning |

Suffix description |

|

N |

NFV Optimized |

Supports NFV scenario optimization. |

|

T |

High Tcase |

Supports high temperature specifications. |

|

U |

Single Socket |

Supports single-socket operation only. |

|

V |

SaaS optimized SKU for orchestration efficiency targeting high density, lower power VM environment (70% CPU utilization) |

SaaS scenario optimized for VM applications with high density and lower power. |

|

P |

IaaS optimized SKU for orchestration efficiency targeting higher frequency for VM Markets (70% CPU utilization) |

IaaS scenario optimization for VM applications with higher frequency. |

|

Y |

Speed Select Technology – Performance Profile |

Supports Intel SST technology, with configurable number of cores and core frequency. |

|

S |

Max SGX enclave size SKUs (512 GB) |

Maximum SGX enclave security container (512 GB) |

|

Q |

Liquid cooling (Temperature Inlet to cold plate = 40℃, ICX TTV Ψca (case-to-fluid inlet resistance)=0.06℃/W) |

Liquid cooling dedicated CPU model |

|

M |

Media Processing Optimized |

Media processing scenario optimization. |

|

NOTE: The list is for reference only. For detailed information, see the official website of Intel. |

||
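The suffix lookup described above can be sketched in a few lines. The pattern below is an assumption generalized from the single example UN-CPU-INTEL-8360Y-S (a four-digit number optionally followed by one uppercase letter), and the function name is hypothetical. The short meanings are abbreviated from Table 22.

```python
import re

# Abbreviated suffix meanings from Table 22.
SUFFIX_MEANINGS = {
    "N": "NFV Optimized",
    "T": "High Tcase",
    "U": "Single Socket",
    "V": "SaaS optimized",
    "P": "IaaS optimized",
    "Y": "Speed Select Technology - Performance Profile",
    "S": "Max SGX enclave size (512 GB)",
    "Q": "Liquid cooling",
    "M": "Media Processing Optimized",
}


def cpu_model_suffix(product_model):
    """Return the CPU model suffix letter, or None if the model has none.

    Assumes the model number is a four-digit field between hyphens,
    as in the example UN-CPU-INTEL-8360Y-S.
    """
    match = re.search(r"-(\d{4})([A-Z])?(?:-|$)", product_model)
    if match is None:
        raise ValueError(f"unrecognized product model: {product_model}")
    return match.group(2)


suffix = cpu_model_suffix("UN-CPU-INTEL-8360Y-S")
print(suffix, "=", SUFFIX_MEANINGS[suffix])
```

For actual compatibility decisions, use the server-compatible part query tool rather than a pattern match.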

Installing or removing the blade server

This section describes the specific steps for installing or removing the blade server.

|

|

NOTE: For specific steps, see the "parts installation & replacement video." |

Prerequisites

Take the following ESD prevention measures:

· Wear antistatic clothing.

· Wear an ESD wrist strap and make sure it makes good skin contact and is reliably grounded.

· Do not wear any conductive objects, such as jewelry or watches.

· Before removing the blade server, make sure you back up the data, stop all services, and power off the server.

· When you replace a component, examine the slot and connector for damages. Make sure the pins are not damaged (bent for example) and do not contain any foreign objects.

· You might also remove other components. For the removed components to be reinstalled correctly, record their positions and connections before removal, for example, taking pictures of cable connection and drive installation positions, or labeling cables.

· For more information about installation principles of blade servers, see the user guide for the server.

Installing the blade server

1. Remove the blade server blank. Press the two latches at both sides at the same time and pull the blank out.

2. Take the half-width blade server out of the antistatic bag.

3. Press the buttons to release the locking levers at two sides.

4. Insert the server into the enclosure slowly and horizontally, and then close the locking levers.

Removing the blade server

1. Remove the half-width blade server. Press the buttons to release the locking levers at two sides, and pull the server out of the enclosure slowly and horizontally.

2. Put the server into an antistatic bag.

Powering on and powering off the blade server

Powering on the blade server

This section describes how to power on the blade server.

|

|

NOTE: If the server is connected to external storage devices, make sure the server is the first device to power off and then the last device to power on. This restriction prevents the server from mistakenly identifying the external storage devices as faulty devices. |

Supported power-on methods

Table 23 describes power-on methods supported by the blade server.

Table 23 Blade server power-on methods

|

Power-on method |

Application scenario |

|

Powering on the blade server together with the enclosure |

The blade server is installed and the chassis is not powered on. |

|

Powering on the blade server by pressing the power on/standby button |

The chassis is powered on, the blade server is installed but is in a down state. The blade server system power LED is steady amber. |

|

Powering on the blade server from the OM Web interface |

|

|

Powering on the blade server by using an OM command |

|

|

Powering on the blade server through the blade server HDM Web UI. |

Prerequisites

Before you power on the server, you must complete the following tasks:

· Install the server and internal components correctly.

· Connect the server to a power source.

· As a best practice for the internal components to operate correctly, do not power on the server immediately after powering it off. Wait more than 30 seconds for the HDDs to stop rotating and for the electronic components to power off completely.

Guidelines

If the blade server is successfully powered on, the system power LED turns steady green. For more information about the position of the LEDs, see “LEDs and buttons.”

Procedure

Powering on the blade server together with the enclosure

If you want to power on the server together with the enclosure, configure the power-on delay for slots first. After configuration, power on the enclosure, and the blade server is powered on automatically based on previous settings. No extra action is required.

For more information about the power-on delay for slots, see the OM online help.

Powering on the blade server by pressing the power on/standby button

Press the power on/standby button to power on the server. For information about the position of the power on/standby button, see “LEDs and buttons.”

Powering on the blade server from the OM Web interface

Access the server management page from the OM Web interface and power on the server. For more information, see the OM online help.

Powering on the blade server by using an OM command

Execute the psu-blade command to power on the server. For more information, see the OM command reference.

Powering on the blade server through the blade server HDM Web UI

The blade server can be powered on through the power management function of the blade server HDM Web UI. For the specific operations, see the H3C Servers HDM Online Help.

Powering off the blade server

This section describes how to power off the blade server.

|

|

NOTE: The blade server will be powered off when the chassis is powered off. |

Supported power-off methods

Table 24 describes power-off methods supported by the blade server.

Table 24 Blade server power-off methods

|

Power-off method |

Application scenario |

|

Powering off the server from its operating system |

Both the chassis and the blade server are powered on and the blade server is working correctly. |

|

Powering off the server from the OM Web interface |

|

|

Powering off the server by using an OM command |

|

|

Powering off the server from the HDM Web interface |

|

|

Powering off the server forcedly by pressing the power on/standby button |

Both the chassis and the blade server are powered on, but the blade server is abnormal. |

Prerequisites

Before powering off the server, you must complete the following tasks:

· Back up all critical data.

· Make sure all services have stopped or have been migrated to other servers.

Guidelines

If the blade server is successfully powered off, the system power LED turns steady amber. For more information about the position of the system power LED, see "LEDs and buttons."

Powering off the server from its operating system

Use the SUV cables to connect a monitor, mouse, and keyboard to the blade server, and then shut down the operating system to power off the server.

Powering off the server from the OM Web interface

Access the server management page from the OM Web interface and power off the server. For more information, see OM online help.

Powering off the server by using an OM command

Execute the psu-blade command to power off the server. For more information, see the OM online help.

Powering off the server from the HDM Web interface

The blade server can be powered off through the power management function of the blade server HDM Web UI. For the specific operations, see the H3C Servers HDM Online Help.

Powering off the server forcedly by pressing the power on/standby button

|

|

NOTE: This method forces the server to enter standby mode without properly exiting applications and the operating system. Use this method only when the server system crashes, for example, when a process gets stuck. |

Press and hold the power on/standby button for more than five seconds to power off the server.

Configuring the blade server

The following information describes the procedures to configure the server after the server installation is complete.

Configuration flowchart

Figure 21 Configuration flowchart

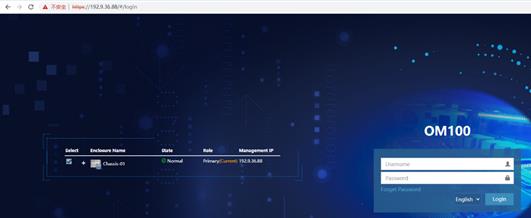

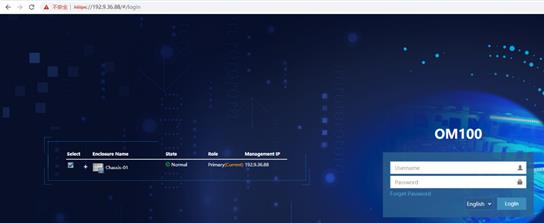

Default login parameters

The default IP address, default username and password for the chassis OM management interface are listed in Table 25.

Table 25 Default OM parameters

|

Item |

Default value |

|

Username |

Admin |

|

Password |

Password@_ |

|

IP address of the management port |

192.168.100.100/24 |
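When a management workstation is connected directly to the OM management port without a router in between, the workstation generally needs an address in the same subnet as the default OM address. The following is a minimal sketch that checks this with the Python standard library. The workstation addresses are hypothetical examples.

```python
import ipaddress

# Default OM management port address from Table 25.
OM_DEFAULT = ipaddress.ip_interface("192.168.100.100/24")


def in_om_default_subnet(workstation_ip):
    """Return True if the workstation address is in the OM default subnet."""
    return ipaddress.ip_address(workstation_ip) in OM_DEFAULT.network


print(OM_DEFAULT.network)                      # 192.168.100.0/24
print(in_om_default_subnet("192.168.100.10"))  # True
print(in_om_default_subnet("192.168.1.10"))    # False
```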

The default IP address, default username and password for the blade server HDM management port are shown in Table 26.

Table 26 Default HDM parameters

|

Item |

Default value |

|

Username |

Admin |

|

Password |

Password@_ |

|

IP address of the management interface |

Obtained from the DHCP server |

Connecting the blade server to a network

Connecting through the management module

Use either of the following methods to connect the blade server to the network through the management module:

· Connect the network cable to one of the four service ports on the management module.

Figure 22 Service ports on the management module

· Connect the network cable to the management port (MGMT) on the management module. Through this port, you can log in to the chassis OM and the HDM of the blade server to monitor the operating status of the chassis and the blade server and set basic information.

Connecting through ICMs

The mezzanine network adapter on each blade server has a corresponding ICM. To connect a blade server to the network through an ICM, make sure the corresponding ICM is present. For more information about the mapping relations between mezzanine network adapters and ICMs, see "Internal networking."

Verifying the blade server status

Perform the following tasks to make sure that the server is working correctly:

· Power on the blade server. For more information, see "Powering on the blade server."

· Verify that the states of the four LEDs on the front panel of the blade server are as expected. For more information about the LEDs, see "LEDs and buttons."

· Log in to OM and verify that the firmware versions are as expected. If not, upgrade the firmware. For more information, see the OM online help.

· Log in to OM and verify that the server is operating correctly. If not, troubleshoot the server.

Modifying default parameters

Modifying the default user password of OM

1. Log in to OM. For more information, see the OM user guide.

2. Modify the OM default user password. For more information, see the OM online help.

Modifying the default IP address of the OM module

1. Log in to OM. For more information, see the OM user guide.

2. Modify the default IP address of the OM module. For more information, see the OM online help.

Modifying default parameters

Modifying the default user password of HDM

You cannot modify the HDM password from OM.

To modify the default password of HDM:

1. Log in to HDM. For more information, see "Accessing the blade server HDM."

2. Modify the HDM default user password. For more information about the password requirements and password setting method, see H3C Servers HDM Online Help.

Modifying the default IP address of HDM

Modifying IP through OM

1. Log in to OM. For more information, see the OM user guide.

2. Modify the HDM default IP address. For more information, see the OM online help.

Modifying IP through HDM

1. Log in to HDM. For more information, see "Accessing the blade server HDM."

2. Modify the HDM default IP address. For more information, see H3C Servers HDM Online Help.

Logging into the blade server operating system

For more information, see "Logging in to the blade server operating system."

Configuring basic BIOS settings

Setting the server boot order

The server has a default boot order. You can modify the boot order of the blade server as needed. For the default boot order and the procedure for changing the server boot order, see the BIOS user guide for the server.

Setting the BIOS passwords

BIOS passwords include a boot password as well as an administrator password and a user password for the BIOS setup utility. By default, no passwords are set.

To prevent unauthorized access and changes to the BIOS settings, set both the administrator and user passwords for accessing the BIOS setup utility. Make sure the two passwords are different.

After setting the administrator password and user password for the BIOS setup utility, you must enter the administrator password or user password each time you access the BIOS setup utility.

· To obtain administrator privileges, enter the administrator password.

· To obtain the user privileges, enter the user password.

For the difference between the administrator and user privileges and guidelines for setting the BIOS passwords, see the BIOS user guide for the server.

Configuring RAID

Configure physical and logical drives (RAID arrays) for the server.

The supported RAID levels and RAID configuration methods vary by storage controller model. For more information, see the storage controller user guide for the server.

Installing the operating system and hardware drivers

This section introduces how to install the operating system and drivers.

Installing an OS

The blade server is compatible with many types of operating systems such as Windows and Linux. For details, see the OS compatibility query tool.

For details on how to install the OS, see the OS installation guide.

Installing hardware drivers

For newly installed hardware to operate correctly, the operating system must have the required hardware drivers.

To install a hardware driver, see the operating system installation guide for the server.

|

|

NOTE: To avoid hardware unavailability caused by an update failure, always back up the drivers before you update them. |

Replacing hardware options

This section describes replaceable parts of the blade server and the detailed procedures for part replacement.

|

|

NOTE: If you are replacing multiple hardware options, read their replacement procedures and identify similar steps to streamline the entire replacement procedure. |

Replaceable parts and their videos

The replaceable parts of the server and the corresponding replacement procedures are as follows:

· SAS/SATA drive (Replacing a SAS/SATA drive)

· NVMe drive (Replacing an NVMe drive)

· Riser card and PCIe card (Replacing the riser card and PCIe card)

· Storage controller and its power fail safeguard module (Replacing the storage controller and its power fail safeguard module)

· Straight-through card (Replacing the straight-through card)

· GPU card (Replacing the GPU card)

· NIC (Replacing the standard PCIe NIC)

· SATA M.2 SSD module (Replacing a SATA M.2 SSD)

· SATA M.2 SSD adapter module (Replacing a SATA M.2 SSD adapter)

· NVMe VROC module (Replacing the NVMe VROC module)

· DIMMs (Replacing the DIMM)

· CPU (Replacing the GPU card and Expanding the processor)

· TPM/TCM (Installing and setting up a TCM or TPM)

· System battery (Replacing the system battery)

· System board (Replacing the system board)

· Drive backplane (Replacing a drive backplane)

Replacing a SAS/SATA drive

To configure RAID settings after the drive is replaced, see the storage controller user guide for the server.

Operation scenario

· The drive fails.

· Expand the drive.

· Replace the drive with full space.

· Replace the drive with another model.

· The drive hinders maintenance of other parts.

Prerequisites

Take the following ESD prevention measures:

· Wear antistatic clothing.

· Wear an ESD wrist strap and make sure it makes good skin contact and is reliably grounded.

· Do not wear any conductive objects, such as jewelry or watches.

· Identify the position of the drive to be replaced.

· If the drive to be replaced holds an operating system and is either not configured with RAID or is part of a non-redundant RAID array, back up data, stop all services, and power off the blade server. For more information, see "Powering off the blade server."

· When you replace a component, examine the slot and connector for damage. Make sure the pins are not damaged (bent, for example) and do not contain any foreign objects.

· You might also need to remove other components. To reinstall the removed components correctly, record their positions and connections before removal, for example, by taking pictures of cable connections and drive installation positions, or by labeling cables.

· Identify the RAID array information of the drive to be replaced. To replace a drive that is not configured with RAID, back up all data if the old drive is full or the new drive is of a different model.

· Understand the guidelines for installing a SAS/SATA drive. For more information, see "SAS/SATA drive."
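The two data-protection prerequisites above can be expressed as a small decision helper. The following Python sketch is illustrative only: the `DriveInfo` fields, the function names, and the assumption that only RAID 0 counts as a non-redundant array are hypothetical and do not come from any H3C tool.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption for this sketch: RAID 0 is the only non-redundant level considered.
NON_REDUNDANT_LEVELS = {"RAID 0"}


@dataclass
class DriveInfo:
    """Hypothetical description of the drive to be replaced."""
    has_os: bool                # an operating system is installed on the drive
    raid_level: Optional[str]   # None means the drive is not configured with RAID
    is_full: bool = False       # the old drive has no free space left


def requires_power_off(drive: DriveInfo) -> bool:
    """OS drive without RAID or in a non-redundant array: back up, stop services, power off."""
    unprotected = drive.raid_level is None or drive.raid_level in NON_REDUNDANT_LEVELS
    return drive.has_os and unprotected


def requires_full_backup(drive: DriveInfo, new_model_differs: bool) -> bool:
    """Non-RAID drive that is full, or replaced by a different model: back up all data."""
    return drive.raid_level is None and (drive.is_full or new_model_differs)


if __name__ == "__main__":
    boot_drive = DriveInfo(has_os=True, raid_level=None)
    print("Power off before replacement:", requires_power_off(boot_drive))
    print("Full backup needed:", requires_full_backup(boot_drive, new_model_differs=True))
```

A helper like this can serve as a pre-maintenance checklist item. It does not replace checking the actual RAID configuration in the storage controller.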

Removing a SAS/SATA drive

1. Remove the drive:

¡ To remove an SSD, press the button on the drive panel to release the locking lever, and then hold the locking lever and pull the drive out of the slot.

¡ To remove an HDD, press the button on the drive panel to release the locking lever. Pull the drive 3 cm (1.18 in) out of the slot. Wait for a minimum of 30 seconds for the drive to stop rotating, and then pull the drive out of the slot.

2. Remove the drive from the drive carrier. Remove the screws that secure the drive, and then lift the drive out of the carrier.

Installing a SAS/SATA drive

1. (Optional) Check whether the new SAS/SATA drive contains RAID information. If it does, delete the RAID information.

2. (Optional) Attach the drive to the drive carrier. Place the drive in the carrier and then use four screws to secure the drive into place.

3. (Optional) Remove the blank from the drive slot, if any. Press the red button on the blank to the right, and then pull the blank out of the slot.

4. Install the drive. Press the button on the drive panel to release the locking lever, and then insert the drive into the drive slot.

5. After the storage controller detects the new SAS/SATA drive, configure RAID as needed. For more information about configuring RAID, see the storage controller user guide for the server.

Verifying the replacement

Use one of the following methods to verify that the drive has been replaced correctly:

· Verify the drive properties (including capacity) by using one of the following methods:

¡ Log in to OM. For more information, see the OM online help.

¡ Log in to HDM. For more information, see H3C Servers HDM online help.

¡ Access the BIOS. For more information, see the storage controller user guide for the server.

¡ Access the CLI or GUI of the server.

· Observe the drive LEDs to verify that the drive is operating correctly. For more information about drive LEDs, see "LEDs and buttons."
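When the server runs Linux, the capacity check from the CLI can be scripted. The sketch below is one possible approach, not an H3C-provided procedure: it assumes the `lsblk` utility from util-linux is available, and the function names and the 2% capacity tolerance are illustrative choices.

```python
import json
import subprocess


def parse_lsblk(json_text):
    """Return {device name: (size in bytes, model)} for whole disks in lsblk JSON output."""
    data = json.loads(json_text)
    disks = {}
    for dev in data.get("blockdevices", []):
        if dev.get("type") == "disk":
            # Older lsblk versions report sizes as strings, newer ones as numbers.
            size = int(dev["size"])
            model = (dev.get("model") or "").strip()
            disks[dev["name"]] = (size, model)
    return disks


def capacity_matches(actual_bytes, expected_bytes, tolerance=0.02):
    """True if the reported capacity is within the tolerance of the expected capacity."""
    return abs(actual_bytes - expected_bytes) <= expected_bytes * tolerance


def list_disks():
    """Run lsblk on the local system and parse its JSON output."""
    result = subprocess.run(
        ["lsblk", "--json", "--bytes", "--output", "NAME,SIZE,MODEL,TYPE"],
        check=True, capture_output=True, text=True,
    )
    return parse_lsblk(result.stdout)


if __name__ == "__main__":
    for name, (size, model) in list_disks().items():
        print(f"{name}: {size / 1e9:.1f} GB, model={model or 'unknown'}")
```

Drives behind a storage controller in RAID mode usually appear to the operating system as logical drives, so in that case use the storage controller tools to check the physical drive properties.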

Replacing an NVMe drive

To configure RAID settings after the drive is replaced, see the storage controller user guide for the server.

Operation scenario

· The drive fails.

· Storage capacity needs to be expanded.

· The drive is full and needs to be replaced.

· The drive needs to be replaced with another model.

· The drive hinders maintenance of other parts.

Prerequisites

Take the following ESD prevention measures:

· Wear antistatic clothing.

· Wear an ESD wrist strap and make sure it makes good skin contact and is reliably grounded.

· Do not wear any conductive objects, such as jewelry or watches.

· Identify the position of the drive to be replaced.

· If the drive to be replaced holds an operating system and is either not configured with RAID or is part of a non-redundant RAID array, back up data, stop all services, and power off the blade server. For more information, see "Powering off the blade server."

· When you replace a component, examine the slot and connector for damage. Make sure the pins are not damaged (bent, for example) and do not contain any foreign objects.

· You might also need to remove other components. To reinstall the removed components correctly, record their positions and connections before removal, for example, by taking pictures of cable connections and drive installation positions, or by labeling cables.

· Identify the RAID array information of the drive to be replaced. To replace a drive that is not configured with RAID, back up all data if the old drive is full or the new drive is of a different model.

· Understand the guidelines for installing an NVMe drive. For more information, see "NVMe drive."

· For the operating systems that support NVMe drive hot swapping and managed hot removal, see "NVMe drive."

· Perform the predictive hot-swap operation. For the specific steps, see the NVMe drive online replacement guide.

Removing an NVMe drive

NOTE: When you remove multiple NVMe drives, remove the drives one after another at intervals of more than five seconds.

1. Remove the drive. Press the button on the drive panel to release the locking lever, and then pull the drive out of the slot.

2. Remove the drive carrier, if any. Remove all screws that secure the drive, and then remove the drive from the carrier.

Installing an NVMe drive

1. Read the installation guidelines. See "NVMe drive."

2. Attach the drive to the drive carrier. Place the new drive in the carrier and then use screws to secure the drive into place.

3. Remove the drive blank from the drive slot, if any. Press the red button on the blank to the right, and then pull the blank out of the slot.

4. Install the drive. Press the button on the drive panel to release the locking lever, and then insert the drive into the slot.

5. Clear the RAID information in the newly installed NVMe drive, if any.

6. Configure RAID as needed. For more information, see the BIOS user guide for the server.

Verifying the replacement

Use the following methods to verify that the drive is installed correctly:

· Verify the drive properties (including capacity) by using one of the following methods:

¡ Log in to HDM. For more information, see H3C Servers HDM Online Help.

¡ Log in to OM. For more information, see the OM online help.

¡ Access the BIOS. For more information, see the BIOS user guide for the server.

¡ Access the CLI or GUI of the server.

· Observe the drive LEDs to verify that the drive is operating correctly.

· After entering the operating system, check whether the NVMe drive capacity and other information are correct.
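On a Linux operating system, the NVMe capacity check can be done by reading sysfs. The following sketch assumes the common Linux layout in which each NVMe namespace appears as `/sys/block/nvmeXnY`, with its size in 512-byte units and the model string under `device/model`. The function name is hypothetical, and systems that use NVMe multipath might expose a different layout.

```python
from pathlib import Path

# The Linux kernel reports /sys/block/<dev>/size in 512-byte units.
SECTOR_BYTES = 512


def nvme_namespaces(sys_block="/sys/block"):
    """Return {namespace name: (capacity in bytes, model string)} for NVMe namespaces."""
    result = {}
    for dev in sorted(Path(sys_block).glob("nvme*n*")):
        size_file = dev / "size"
        if not size_file.is_file():
            continue
        sectors = int(size_file.read_text().strip())
        # The model file might be missing on some layouts; fall back to an empty string.
        model_file = dev / "device" / "model"
        model = model_file.read_text().strip() if model_file.is_file() else ""
        result[dev.name] = (sectors * SECTOR_BYTES, model)
    return result


if __name__ == "__main__":
    for name, (capacity, model) in nvme_namespaces().items():
        print(f"{name}: {capacity / 1e9:.1f} GB, model={model or 'unknown'}")
```

Compare the printed capacity and model with the label of the newly installed drive.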

Replacing the riser card and PCIe card

Operation scenario

· The riser card fails.

· The PCIe card fails.

· Another model of riser card needs to be installed.

· Another model of PCIe card needs to be installed.

· A riser card needs to be added for expansion.

· A PCIe card needs to be added for expansion.

· The riser card or PCIe card hinders maintenance of other parts.

Prerequisites

Take the following ESD prevention measures:

· Wear antistatic clothing.

· Wear an ESD wrist strap and make sure it makes good skin contact and is reliably grounded.

· Do not wear any conductive objects, such as jewelry or watches.

· Before the replacement, back up data, stop all services, and power off the blade server. For more information, see "Powering off the blade server."

· Remove the blade server. For more information, see "Installing or removing the blade server."

· When you replace a component, examine the slot and connector for damage. Make sure the pins are not damaged (bent, for example) and do not contain any foreign objects.

· You might also need to remove other components. To reinstall the removed components correctly, record their positions and connections before removal, for example, by taking pictures of cable connections and drive installation positions, or by labeling cables.

· For the installation guidelines for the riser card and PCIe card, see "Riser card and PCIe card."

Removing the riser card and PCIe card

1. Remove the chassis cover.

a. Press the chassis cover unlock button and slide the cover toward the rear of the blade server.

b. Lift the chassis cover up to remove it from the blade server.

2. Remove the riser card with PCIe card. Lift the riser card up to remove it from the server chassis.

3. Remove the PCIe card from the riser card. Press and open the fixing buckle on the riser card, and then lift the PCIe card up to remove it from the riser card.

Installing the riser card and PCIe card

1. Install the PCIe card to the riser card.

a. Open the fixing buckle on the riser card. Press the locking button on the fixing buckle and open the fixing buckle.

b. (Optional) Remove the PCIe card filler panel. Lift the filler panel up and remove it from the riser card.

c. Install the PCIe card on the riser card. Insert the PCIe card into the PCIe connector on the riser card, and then close the fixing buckle.

2. Install the riser card with the PCIe card in the blade server.

a. (Optional) Remove the filler panel. Lift the filler panel up.

b. Install the riser card with the PCIe card in the blade server. Align the two mushroom-shaped heads on the riser card with the two notches on the blade server, and then insert the riser card downward.

3. Install the chassis cover. Align the mushroom-shaped head on the chassis cover with the groove on the chassis, place the cover down horizontally, and then slide it toward the front of the blade server until it locks into place.

4. Install the blade server.

5. Power on the blade server. For the specific steps, see "Powering on the blade server."

Replacing the storage controller and its power fail safeguard module

This section describes the detailed operating steps for replacing the storage controller and its power fail safeguard module.

Operation scenario

· The storage controller fails.

· The storage controller needs to be replaced with another model.