02-Appendix

Contents

Appendix A Server specifications

Server models and chassis view

Front panel view of the server

Front panel view of a compute module

Main board of a compute module

Appendix B Component specifications

DRAM DIMM rank classification label

Drive configurations and numbering

Riser card and system board port mapping relationship

800 W high-voltage power supply

850 W high-efficiency Platinum power supply

Appendix C Managed hot removal of NVMe drives

Performing a managed hot removal in Windows

Performing a managed hot removal in Linux

Performing a managed hot removal from the CLI

Performing a managed hot removal from the Intel® ASM Web interface

Appendix D Environment requirements

About environment requirements

General environment requirements

Operating temperature requirements

Appendix A Server specifications

The information in this document might differ from your product if it contains custom configuration options or features.

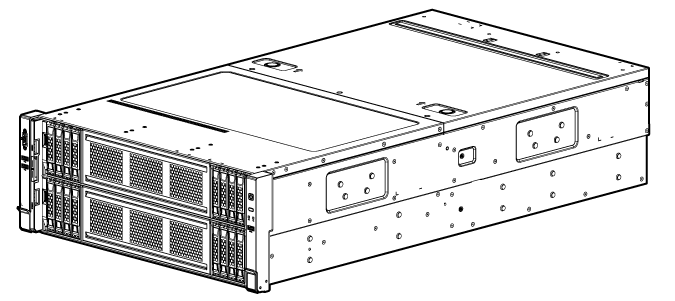

Server models and chassis view

H3C UniServer R6900 G3 servers are 4U rack servers with two dual-processor compute modules communicating through the midplane. The servers are suitable for cloud computing, distributed storage, and video storage, as well as enterprise infrastructure and telecommunications applications. The servers feature high computing performance, large storage capacity, low power consumption, strong expandability, and high availability, allowing for simple deployment and management.

Figure 1 Chassis view

The servers come in the models listed in Table 1. These models support different drive configurations.

Table 1 R6900 G3 server models

| Model | Maximum drive configuration |
|---|---|
| 48SFF | 48 SFF SAS/SATA drives. |
| 32SFF | · 24 SFF SAS/SATA drives and 8 SFF NVMe drives. · 28 SFF SAS/SATA drives and 4 SFF NVMe drives. · 32 SFF SAS/SATA drives. |
| 16SFF | · 4 SFF SAS/SATA drives and 12 SFF NVMe drives. · 8 SFF SAS/SATA drives and 8 SFF NVMe drives. · 12 SFF SAS/SATA drives and 4 SFF NVMe drives. · 16 SFF SAS/SATA drives. · 16 SFF NVMe drives. |

Technical specifications

| Item | 48SFF, 32SFF, and 16SFF |
|---|---|
| Dimensions (H × W × D) | · Without a security bezel and chassis ears: 174.8 × 444 × 807.4 mm (6.88 × 17.48 × 31.79 in) · With a security bezel: 174.8 × 444 × 829.7 mm (6.88 × 17.48 × 32.67 in) |
| Max. weight | 75.77 kg (167.04 lb) |
| Processors | 4 × Intel Purley processors or Jintide-C series processors (up to 3.8 GHz base frequency, maximum 205 W power consumption, 38.5 MB L3 cache, and a maximum of 28 cores per processor) |
| Memory | · A maximum of 48 DIMMs · Supports a mixture of DCPMMs and DRAM DIMMs |
| Storage controllers | · High-performance storage controllers · NVMe VROC module · Dual SD card extended module |
| Chipset | Intel C622 Lewisburg chipset |
| Connectors | · 6 × USB connectors: ◦ 4 × USB 3.0 connectors (one on the right chassis ear, two at the server rear, and one in the management module) ◦ 2 × USB 2.0 connectors (provided by the left chassis ear) · 8 × SATA connectors (4 connectors on the main board of each compute module; the connectors must be used with storage controllers) · 1 × RJ-45 HDM dedicated port at the server rear · 2 × VGA connectors (one at the server rear and one at the server front) · 1 × BIOS serial port at the server rear |
| Expansion slots | · 20 × PCIe 3.0 slots (including one mLOM network adapter connector) · 1 × dual SD card extended module connector |
| Optical drives | External USB optical drives |
| Power supplies | 4 × hot-swappable power supplies, N + N redundancy |
| Standards | CE EMC, CE RoHS, CCC, FCC, ICES-003, VCCI |
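The metric and imperial figures in the technical specifications can be cross-checked with a short conversion. The following is a minimal sketch, not part of the product documentation; the function names and the rounding to two decimals are assumptions made for illustration.

```python
# Conversion factors: the inch is exactly 25.4 mm; the pound factor is rounded.
MM_PER_INCH = 25.4
LB_PER_KG = 2.20462262


def mm_to_in(mm: float) -> float:
    """Convert millimetres to inches, rounded to two decimals."""
    return round(mm / MM_PER_INCH, 2)


def kg_to_lb(kg: float) -> float:
    """Convert kilograms to pounds, rounded to two decimals."""
    return round(kg * LB_PER_KG, 2)


# Values copied from the technical specifications (H, W, D in mm).
without_bezel_mm = (174.8, 444.0, 807.4)
with_bezel_mm = (174.8, 444.0, 829.7)

print([mm_to_in(v) for v in without_bezel_mm])
print([mm_to_in(v) for v in with_bezel_mm])
print(kg_to_lb(75.77))
```

The converted values agree with the inch and pound figures given in the table.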

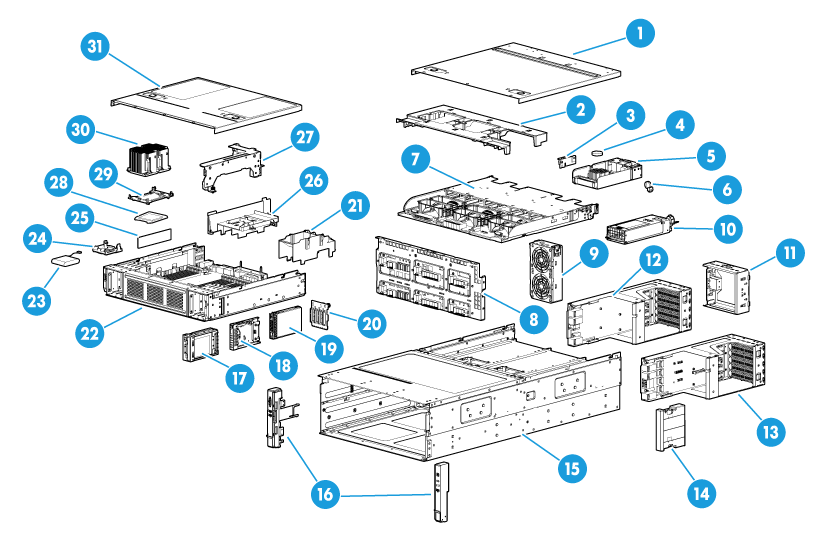

Components

Figure 2 R6900 G3 server components

Table 2 R6900 G3 server components

| Item | Description |
|---|---|
| (1) Chassis access panel | N/A |
| (2) Power supply air baffle | Provides ventilation aisles for power supplies. |
| (3) Dual SD card extended module | Provides two SD card slots. |
| (4) System battery | Supplies power to the system clock. |
| (5) Management module | Provides system management and monitoring features, a management network port, a VGA connector, and USB connectors. |
| (6) NVMe VROC module | Works with Intel VMD to provide RAID capability for the server to virtualize storage resources of NVMe drives. |
| (7) PDB | Used for installing power supplies and the management module. Provides cable connectors for the front I/O component and for the VGA and USB 2.0 connectors. |
| (8) Midplane | Provides data and power channels in the server. |
| (9) Fan module | Supports hot swapping. Fans in the fan modules support N+1 redundancy. |
| (10) Power supply | Supplies power to the server and supports hot swapping and N+N redundancy. |
| (11) Riser card blank | Installed in an empty PCIe riser bay to ensure good ventilation. |
| (12) Rear riser card 2 | Installed on PCIe riser bay 2 at the server rear. |
| (13) Rear riser cards 1 or 3 | Installed on PCIe riser bay 1 or 3 at the server rear. |
| (14) Riser card air baffle | Provides ventilation aisles for PCIe modules in riser cards at the server rear. |
| (15) Chassis | N/A |
| (16) Chassis ears | Attach the server to the rack. The right ear is integrated with the front I/O component, and the left ear is integrated with VGA and USB 2.0 connectors. The serial label pull tab on the left ear provides the HDM default login settings and document QR code. |
| (17) LCD smart management module | Displays basic server information and operating status, and allows users to perform basic server settings. |
| (18) Diagnostic panel | Displays information about faulty components for quick diagnosis. |
| (19) Drive | Drive for data storage. |
| (20) Drive backplane | Provides power and data channels for drives. |
| (21) Right air baffle | Provides ventilation aisles for processor heatsinks and DIMMs, and an installation location for a supercapacitor. |
| (22) Compute module and its main board | Integrates all compute module parts and components. |
| (23) Supercapacitor | Supplies power to the flash card of the power fail safeguard module, which enables the storage controller to back up data to the flash card for protection when a power outage occurs. |
| (24) Supercapacitor holder | Secures a supercapacitor in the chassis. |
| (25) Memory | Stores computing data and data exchanged with external storage. |
| (26) Low mid air baffle | Used in a compute module when the RS-FHHL-G3 riser card is installed to provide ventilation aisles for DIMMs. |
| (27) Riser card 0 | Installed on the PCIe riser connector in a compute module. |
| (28) Processor | Integrates a memory processing unit and a PCIe controller to provide data processing capabilities for the server. |
| (29) Processor retaining bracket | Attaches a processor to the heatsink. |
| (30) Processor heatsink | Cools the processor. |
| (31) Compute module access panel | N/A |

Front panel

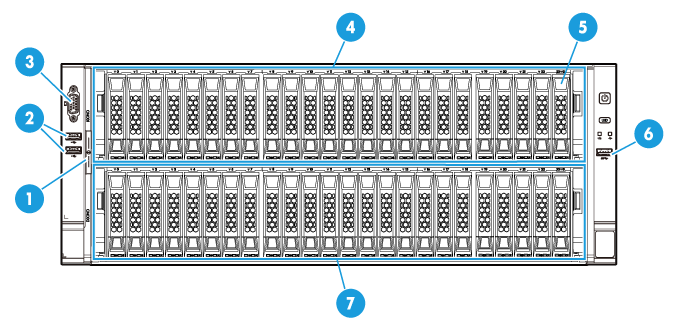

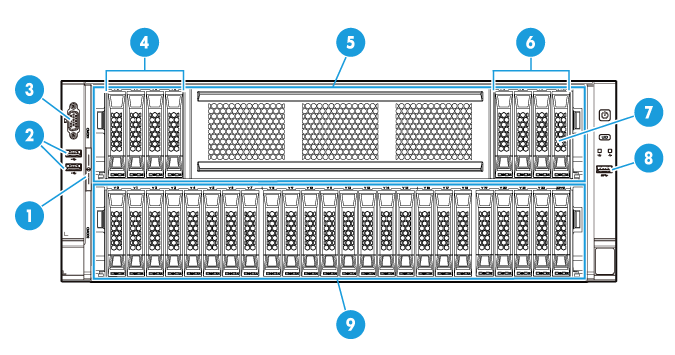

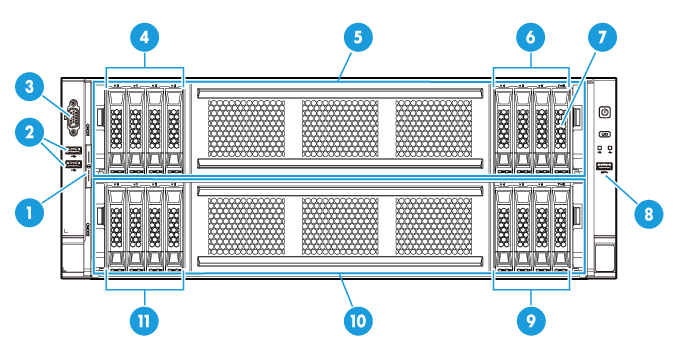

Front panel view of the server

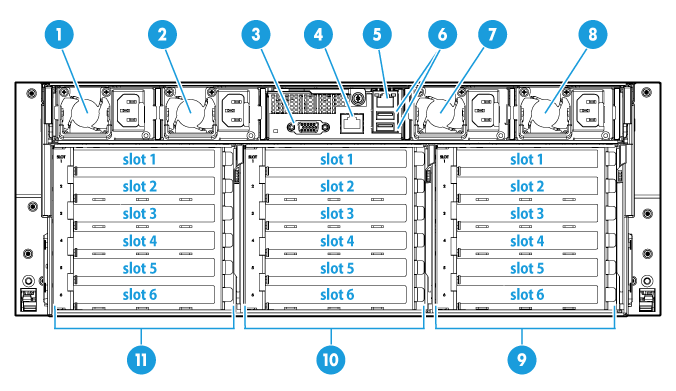

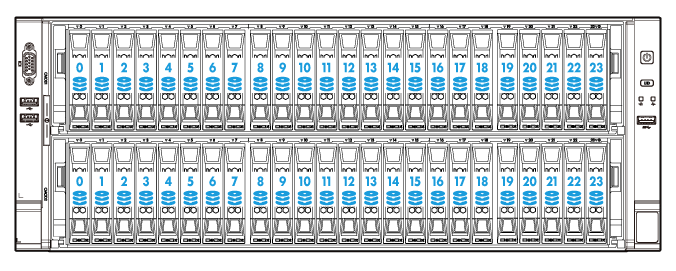

Figure 3, Figure 4, and Figure 5 show the front panel views of 48SFF, 32SFF, and 16SFF servers, respectively.

Figure 3 48SFF front panel

|

(1) Serial label pull tab module |

(2) USB 2.0 connectors |

|

|

(3) VGA connector |

(4) Compute module bay 1 |

|

|

(5) SAS/SATA drive, LCD smart management module, or diagnostic panel (optional) |

||

|

(6) USB 3.0 connector |

(7) Compute module bay 2 |

|

Figure 4 32SFF front panel

|

(1) Serial label pull tab module |

(2) USB 2.0 connectors |

|

(3) VGA connector |

(4) Drive cage bay 1 |

|

(5) Compute module bay 1 |

(6) Drive cage bay 2 |

|

(7) SAS/SATA drive, NVMe drive, LCD smart management module, or diagnostic panel (optional) |

|

|

(8) USB 3.0 connector |

(9) Compute module bay 2 |

Figure 5 16SFF front panel

|

(1) Serial label pull tab module |

(2) USB 2.0 connectors |

|

(3) VGA connector |

(4) Drive cage bay 1 |

|

(5) Compute module bay 1 |

(6) Drive cage bay 2 |

|

(7) SAS/SATA drive, NVMe drive, LCD smart management module, or diagnostic panel (optional) |

|

|

(8) USB 3.0 connector |

(9) Drive cage bay 4 |

|

(10) Compute module bay 2 |

(11) Drive cage bay 3 |

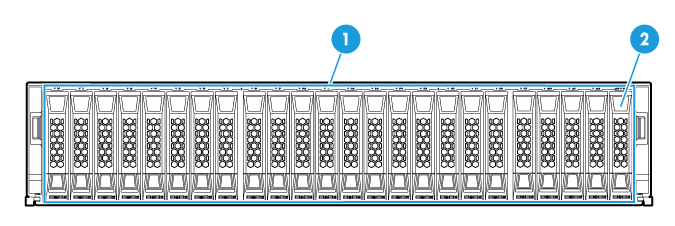

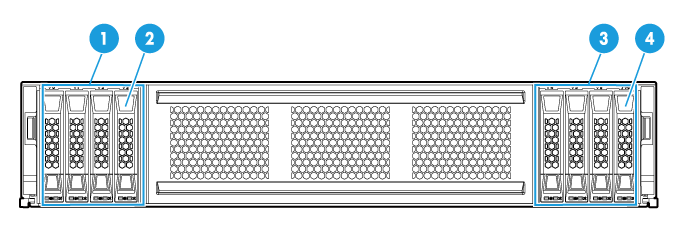

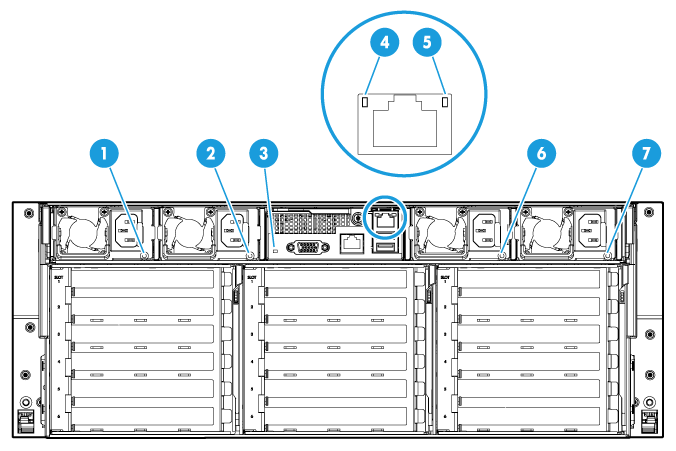

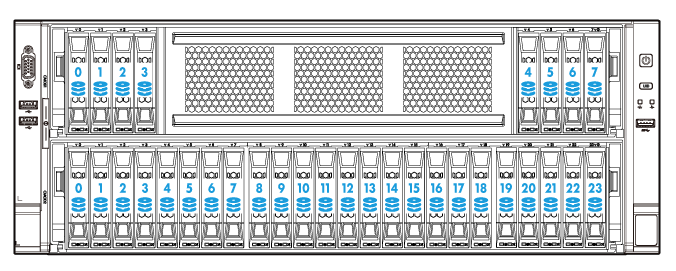

Front panel view of a compute module

Figure 6 and Figure 7 show the front panel views of 24SFF and 8SFF compute modules, respectively. You can install a compute module in compute module bay 1 or 2. Modules installed in compute module bays 1 and 2 are referred to as compute module 1 and compute module 2, respectively. For compute module bay locations, see "Front panel view of the server."

Figure 6 24SFF compute module front panel

|

(1) 24SFF SAS/SATA drives |

|

(2) LCD smart management module or diagnostic panel if installed in compute module bay 1 (optional) |

Figure 7 8SFF compute module front panel

|

(1) Drive cage bay 1/3 for 4SFF SAS/SATA or NVMe drives (optional) |

|

(2) SAS/SATA or NVMe drives (optional) |

|

(3) Drive cage bay 2/4 for 4SFF SAS/SATA or NVMe drives (optional) |

|

(4) LCD smart management module or diagnostic panel if installed in compute module bay 1 (optional) |

|

|

NOTE: Drive cage bays 1 and 2 are for compute module 1, and drive cage bays 3 and 4 are for compute module 2. |

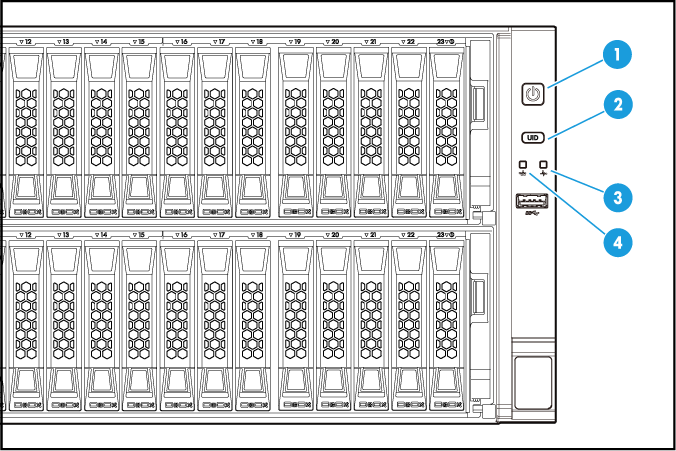

LEDs and buttons

The LEDs and buttons are the same on all server models. Figure 8 shows the front panel LEDs and buttons. Table 3 describes the status of the front panel LEDs.

Figure 8 Front panel LEDs and buttons

|

(1) Power on/standby button and system power LED |

(2) UID button LED |

|

(3) Health LED |

(4) mLOM network adapter Ethernet port LED |

Table 3 LEDs and buttons on the front panel

| Button/LED | Status |
|---|---|
| Power on/standby button and system power LED | · Steady green: The system has started. · Flashing green (1 Hz): The system is starting. · Steady amber: The system is in standby state. · Off: No power is present. Possible reasons: ◦ No power source is connected. ◦ No power supplies are present. ◦ The installed power supplies are faulty. ◦ The system power LED is not connected correctly. |
| UID button LED | · Steady blue: The UID LED is activated. The UID LED can be activated by using the following methods: ◦ Press the UID button LED. ◦ Activate the UID LED from HDM. · Flashing blue: ◦ 1 Hz: The firmware is being upgraded or the system is being managed from HDM. ◦ 4 Hz: HDM is restarting. To restart HDM, press the UID button LED for a minimum of eight seconds. · Off: The UID LED is not activated. |
| Health LED | · Steady green: The system is operating correctly or a minor alarm has occurred. · Flashing green (4 Hz): HDM is initializing. · Flashing amber (1 Hz): A major alarm has occurred. · Flashing red (1 Hz): A critical alarm has occurred. If a system alarm is present, log in to HDM to obtain more information about the system running status. |
| mLOM network adapter Ethernet port LED | · Steady green: A link is present on the port. · Flashing green (1 Hz): The port is receiving or sending data. · Off: No link is present on the port. |
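The LED states in Table 3 lend themselves to a simple lookup, for example in a monitoring script that records what an operator observes on the front panel. The sketch below is illustrative only: the dictionary layout and the `describe_led` helper are assumptions, and only the power and health LED rows are encoded.

```python
from typing import Dict, Optional, Tuple

# (color, flash rate in Hz, or None for a steady LED) -> meaning, from Table 3.
LedTable = Dict[Tuple[str, Optional[int]], str]

HEALTH_LED: LedTable = {
    ("green", None): "Operating correctly, or a minor alarm has occurred",
    ("green", 4): "HDM is initializing",
    ("amber", 1): "A major alarm has occurred",
    ("red", 1): "A critical alarm has occurred",
}

POWER_LED: LedTable = {
    ("green", None): "The system has started",
    ("green", 1): "The system is starting",
    ("amber", None): "The system is in standby state",
    ("off", None): "No power is present",
}


def describe_led(table: LedTable, color: str, flash_hz: Optional[int] = None) -> str:
    """Return the Table 3 meaning for an observed LED state."""
    return table.get((color.lower(), flash_hz), "State not listed in Table 3")


print(describe_led(HEALTH_LED, "amber", 1))
print(describe_led(POWER_LED, "amber"))
```

Keeping the flash rate in the key separates steady green (system started) from flashing green at 1 Hz (system starting), which differ only in the blink pattern.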

Ports

Table 4 Ports on the front panel

| Port | Type | Description |
|---|---|---|
| USB connector | USB 3.0/2.0 | Connects the following devices: · USB flash drive. · USB keyboard or mouse. · USB optical drive for operating system installation. For a DVD-RW-Mobile-USB-A optical drive to operate correctly, you must connect it to a USB 3.0 connector. |
| VGA connector | DB-15 | Connects a display terminal, such as a monitor or KVM device. |

Rear panel

Rear panel view

Figure 9 shows the rear panel view.

Figure 9 Rear panel components

|

(1) Power supply 1 |

(2) Power supply 2 |

|

(3) VGA connector |

(4) BIOS serial port |

|

(5) HDM dedicated network port (1 Gbps, RJ-45, default IP address 192.168.1.2/24) |

|

|

(6) USB 3.0 connectors |

(7) Power supply 3 |

|

(8) Power supply 4 |

|

|

(9) PCIe riser bay 3: · PCIe slots 1 through 3 (processor 2 in compute module 1) · PCIe slots 4 through 6 (processor 2 in compute module 2) |

|

|

(10) PCIe riser bay 2: · PCIe slot 1 (processor 2 in compute module 1) · PCIe slot 2 (processor 1 in compute module 1) · PCIe slots 3, 4, and 6 (processor 1 in compute module 2) · PCIe slot 5 (processor 2 in compute module 2) |

|

|

(11) PCIe riser bay 1: · PCIe slots 1 through 3 (processor 1 in compute module 1) · PCIe slots 4 through 6 (processor 1 in compute module 2) NOTE: If you install an RS-4*FHHL-G3 riser card in this bay, you can install a PCIe module or mLOM network adapter in PCIe slot 4. With an mLOM network adapter installed, the PCIe slot number changes to 14. |

|

|

|

NOTE: · If a processor is not present, the corresponding PCIe slots are unavailable. · Some PCIe modules require PCIe I/O resources. Make sure the number of PCIe modules requiring PCIe I/O resources does not exceed eleven. For more information, see "PCIe modules." |
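Because each rear PCIe slot is wired to a specific processor, the set of usable slots follows directly from which processors are installed. The following sketch encodes the Figure 9 callouts as a lookup; the `SLOT_OWNER` layout and the `available_slots` helper are hypothetical names introduced here, and the slot renumbering that applies when an mLOM network adapter is installed is not modeled.

```python
from typing import Dict, List, Set, Tuple

# (riser bay, PCIe slot) -> (compute module, processor), per the Figure 9 callouts.
SLOT_OWNER: Dict[Tuple[int, int], Tuple[int, int]] = {}
for slot in (1, 2, 3):
    SLOT_OWNER[(1, slot)] = (1, 1)  # bay 1, slots 1-3: processor 1, compute module 1
    SLOT_OWNER[(3, slot)] = (1, 2)  # bay 3, slots 1-3: processor 2, compute module 1
for slot in (4, 5, 6):
    SLOT_OWNER[(1, slot)] = (2, 1)  # bay 1, slots 4-6: processor 1, compute module 2
    SLOT_OWNER[(3, slot)] = (2, 2)  # bay 3, slots 4-6: processor 2, compute module 2
SLOT_OWNER.update({
    (2, 1): (1, 2),
    (2, 2): (1, 1),
    (2, 3): (2, 1),
    (2, 4): (2, 1),
    (2, 5): (2, 2),
    (2, 6): (2, 1),
})


def available_slots(present: Set[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Return the (bay, slot) pairs whose owning processor is installed."""
    return sorted(key for key, owner in SLOT_OWNER.items() if owner in present)


# Only processor 1 of compute module 1 installed.
print(available_slots({(1, 1)}))
```

With all four processors present the lookup yields the 18 rear slots; an empty set yields none, matching the note that slots are unavailable when their processor is absent.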

LEDs

Figure 10 shows the rear panel LEDs. Table 5 describes the status of the rear panel LEDs.

Figure 10 Rear panel LEDs

|

(1) Power supply LED for power supply 1 |

(2) Power supply LED for power supply 2 |

|

(3) UID LED |

(4) Link LED of the Ethernet port |

|

(5) Activity LED of the Ethernet port |

(6) Power supply LED for power supply 3 |

|

(7) Power supply LED for power supply 4 |

|

Table 5 LEDs on the rear panel

| LED | Status |
|---|---|
| Power supply LED | · Steady green: The power supply is operating correctly. · Flashing green (1 Hz): Power is being input correctly but the system is not powered on. · Flashing green (0.33 Hz): The power supply is in standby state and does not output power. · Flashing green (2 Hz): The power supply is updating its firmware. · Steady amber: Either of the following conditions exists: ◦ The power supply is faulty. ◦ The power supply does not have power input, but another power supply has correct power input. · Flashing amber (1 Hz): An alarm has occurred on the power supply. · Off: No power supplies have power input, which can be caused by an incorrect power cord connection or power source shutdown. |
| UID LED | · Steady blue: The UID LED is activated. The UID LED can be activated by using the following methods: ◦ Press the UID button LED. ◦ Enable the UID LED from HDM. · Flashing blue: ◦ 1 Hz: The firmware is being updated or the system is being managed by HDM. ◦ 4 Hz: HDM is restarting. To restart HDM, press the UID button LED for a minimum of eight seconds. · Off: The UID LED is not activated. |
| Link LED of the Ethernet port | · Steady green: A link is present on the port. · Off: No link is present on the port. |
| Activity LED of the Ethernet port | · Flashing green (1 Hz): The port is receiving or sending data. · Off: The port is not receiving or sending data. |

Ports

Table 6 Ports on the rear panel

| Port | Type | Description |
|---|---|---|
| HDM dedicated network port | RJ-45 | Establishes a network connection to manage HDM from its Web interface. |
| USB connector | USB 3.0 | Connects the following devices: · USB flash drive. · USB keyboard or mouse. · USB optical drive for operating system installation. |
| VGA connector | DB-15 | Connects a display terminal, such as a monitor or KVM device. |
| BIOS serial port | RJ-45 | The BIOS serial port is used for the following purposes: · Log in to the server when the remote network connection to the server has failed. · Establish a GSM modem or encryption lock connection. |
| Power receptacle | Standard single-phase | Connects the power supply to the power source. |
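Figure 9 lists 192.168.1.2/24 as the default address of the HDM dedicated network port. When a workstation is cabled directly to that port, it typically needs an address in the same subnet. The sketch below uses Python's standard `ipaddress` module to check a candidate address; the `same_subnet` helper and the example addresses are assumptions for illustration only.

```python
import ipaddress

# Default HDM dedicated network port address, as given in Figure 9.
HDM_DEFAULT = ipaddress.ip_interface("192.168.1.2/24")


def same_subnet(candidate: str) -> bool:
    """Return True if candidate is in the HDM default subnet and is not the HDM address."""
    address = ipaddress.ip_address(candidate)
    return address in HDM_DEFAULT.network and address != HDM_DEFAULT.ip


print(HDM_DEFAULT.network)
print(same_subnet("192.168.1.10"))
print(same_subnet("192.168.2.10"))
```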

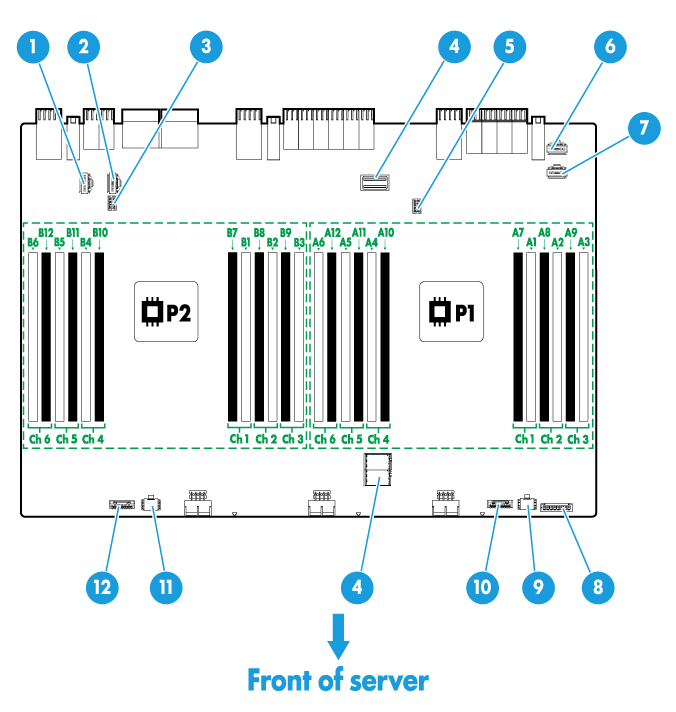

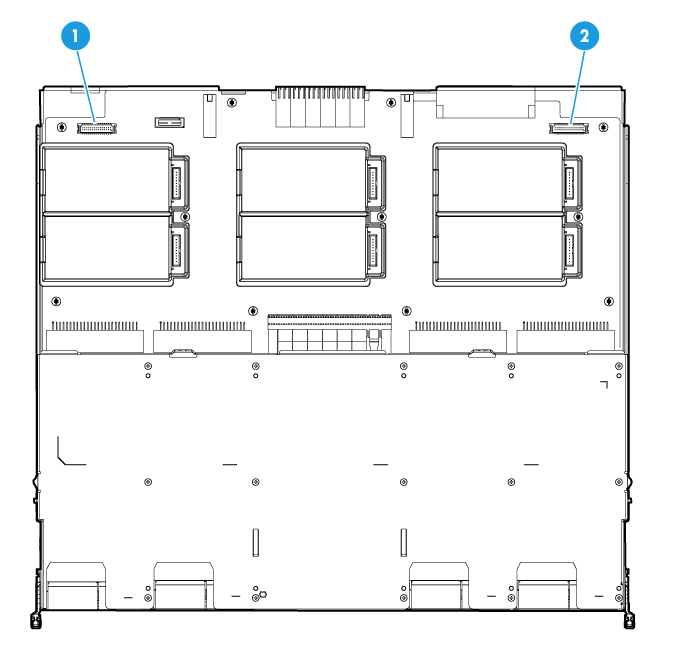

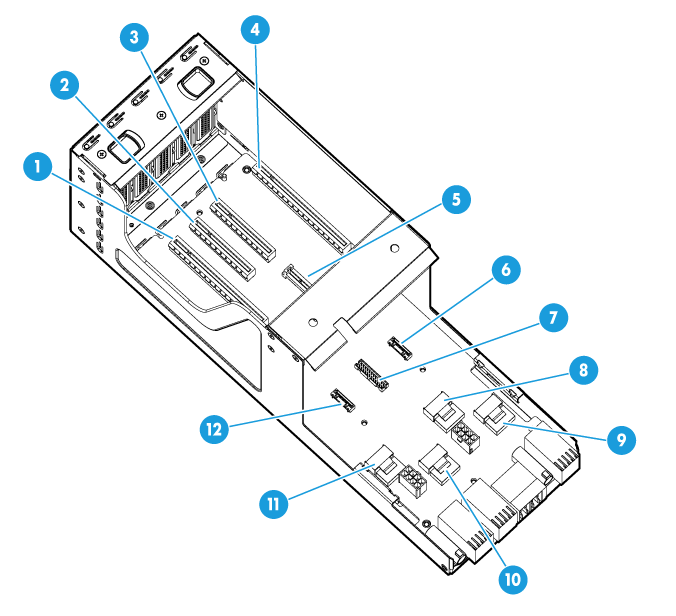

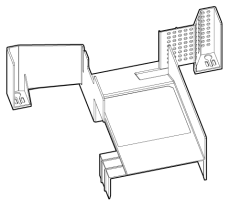

Main board of a compute module

Main board components

8SFF and 24SFF compute modules have the same main board layout.

Figure 11 Main board components

|

(1) SAS port B2 (×4 SAS ports) for PCIe riser bay 3 |

(2) SAS port B1 (×4 SAS ports) for PCIe riser bay 3 |

|

(3) Supercapacitor connector 2 for PCIe riser bay 3 |

(4) PCIe riser connector 0 for processor 2 |

|

(5) Supercapacitor connector 1 for PCIe riser bay 1 |

(6) SAS port A1 (×4 SAS ports) for PCIe riser bay 1 |

|

(7) SAS port A2 (×4 SAS ports) for PCIe riser bay 1 |

(8) LCD smart management module connector |

|

(10) Drive backplane AUX connector 1 |

|

|

(11) Drive backplane power connector 2 |

(12) Drive backplane AUX connector 2 |

For information about the supported PCIe riser cards and their installation locations, see "Riser cards."

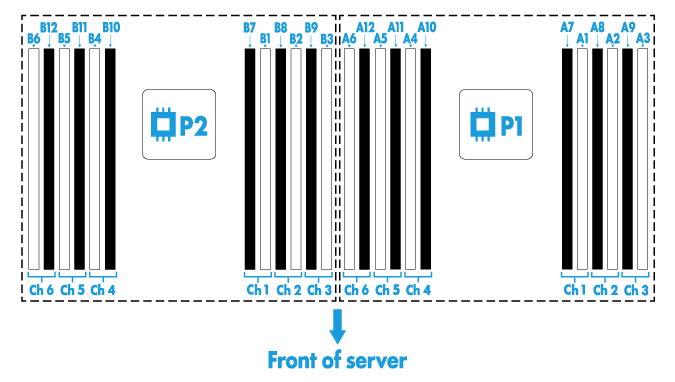

DIMM slots

Each compute module provides six DIMM channels per processor, 12 channels in total (24 channels across the two compute modules). Each channel contains one white-coded slot and one black-coded slot, as shown in Table 7.

Table 7 DIMM slot numbering and color-coding scheme

| Processor | DIMM slots |
|---|---|
| Processor 1 | · A1 through A6 (white coded) · A7 through A12 (black coded) |
| Processor 2 | · B1 through B6 (white coded) · B7 through B12 (black coded) |

8SFF and 24SFF compute modules have the same physical layout of the DIMM slots on the main board, as shown in Figure 12. For more information about the DIMM slot population rules, see the guidelines in "Installing DIMMs."

Figure 12 DIMM physical layout

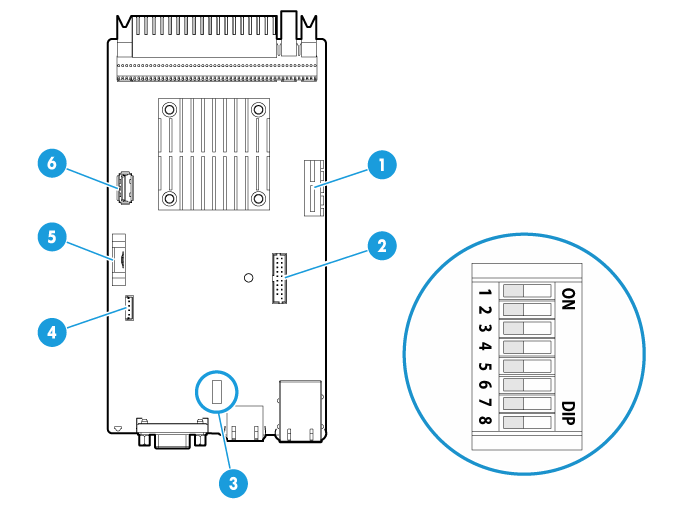
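The numbering scheme in Table 7 can be generated programmatically, which is convenient when building an inventory or a population checklist. This is a minimal sketch under the assumption that only the slot label and its color code are needed; the pairing of white and black slots into channels is not stated in Table 7 and is not modeled. The `dimm_slots` name is hypothetical.

```python
from typing import Dict

PROCESSOR_PREFIX = {1: "A", 2: "B"}  # slot letter per processor, from Table 7


def dimm_slots(processor: int) -> Dict[str, str]:
    """Map each DIMM slot label of a processor to its color code."""
    prefix = PROCESSOR_PREFIX[processor]
    return {
        f"{prefix}{number}": ("white" if number <= 6 else "black")
        for number in range(1, 13)
    }


slots_per_module = len(dimm_slots(1)) + len(dimm_slots(2))
print(slots_per_module)      # slots in one compute module
print(slots_per_module * 2)  # slots in a server with two compute modules
```

Two compute modules with 24 slots each give 48 slots, which agrees with the maximum of 48 DIMMs stated in the technical specifications.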

Management module

Management module components

Figure 13 Management module components

|

(1) Dual SD card extended module slot |

(2) TPM/TCM connector |

|

(3) System maintenance switches |

(4) NVMe VROC module connector |

|

(5) System battery |

(6) Internal USB 3.0 connector |

System maintenance switches

Use the system maintenance switches if you forget the HDM username, HDM password, or BIOS password, or if you need to restore the default BIOS settings, as described in Table 8. To identify the location of the switches, see Figure 13.

Table 8 System maintenance switch

| Item | Description | Remarks |
|---|---|---|
| Switch 1 | · OFF (default): HDM login requires the username and password of a valid HDM user account. · ON: HDM login requires the default username and password. | For security purposes, turn off the switch after you complete tasks with the default username and password as a best practice. |
| Switch 5 | · OFF (default): Normal server startup. · ON: Restores the default BIOS settings. | To restore the default BIOS settings, turn on the switch and then start the server. The default BIOS settings will be restored. Before the next server startup, power off the server and then turn off the switch to perform a normal server startup. |
| Switch 6 | · OFF (default): Normal server startup. · ON: Clears all passwords from the BIOS at server startup. | To clear all passwords from the BIOS, turn on the switch and then start the server. All the passwords will be cleared from the BIOS. Before the next server startup, turn off the switch to perform a normal server startup. |
| Switches 2, 3, 4, 7, and 8 | Reserved. | N/A |

PDB

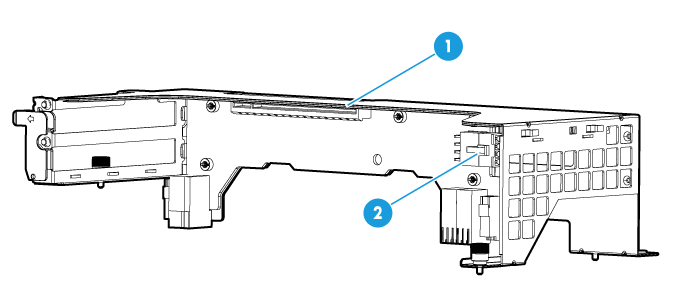

Figure 14 PDB components

|

(1) Front I/O connector |

(2) Front VGA and USB 2.0 connector |

Appendix B Component specifications

For components compatible with the server and detailed component information, visit the query tool at http://www.h3c.com/cn/Service/Document_Software/Document_Center/Server/.

About component model names

The model name of a hardware option in this document might differ slightly from its model name label.

A model name label might add a prefix or suffix to the hardware-coded model name for purposes such as identifying the matching server brand or applicable region. For example, the DDR4-2666-8G-1Rx8-R memory model represents memory module labels including DDR4-2666-8G-1Rx8-R, DDR4-2666-8G-1Rx8-R-F, and DDR4-2666-8G-1Rx8-R-S, which have different suffixes.

DIMMs

The server provides 6 DIMM channels per processor, 24 channels in total. Each DIMM channel has two DIMM slots and supports a maximum of eight ranks. For the physical layout of DIMM slots, see "DIMM slots."

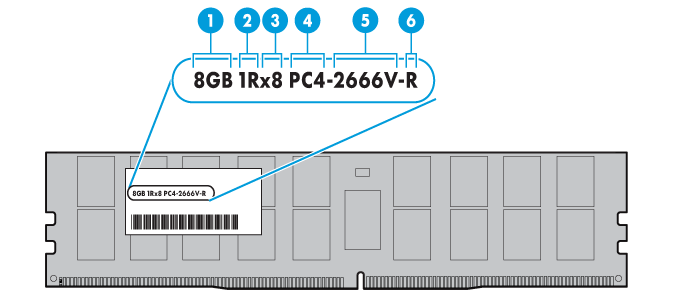

DRAM DIMM rank classification label

A DIMM rank is a set of memory chips that the system accesses while writing to or reading from the memory. On a multi-rank DIMM, only one rank is accessible at a time.

To determine the rank classification of a DRAM DIMM, use the label attached to the DIMM, as shown in Figure 15.

Figure 15 DRAM DIMM rank classification label

Table 9 DIMM rank classification label description

| Callout | Description | Remarks |
|---|---|---|
| 1 | Capacity | · 8GB. · 16GB. · 32GB. |
| 2 | Number of ranks | · 1R: One rank. · 2R: Two ranks. · 4R: Four ranks. · 8R: Eight ranks. |
| 3 | Data width | · ×4: 4 bits. · ×8: 8 bits. |
| 4 | DIMM generation | Only DDR4 is supported. |
| 5 | Data rate | · 2133P: 2133 MHz. · 2400T: 2400 MHz. · 2666V: 2666 MHz. · 2933Y: 2933 MHz. |
| 6 | DIMM type | · L: LRDIMM. · R: RDIMM. |
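The same attributes that Table 9 identifies on the label also appear in the option model names used in this document, such as DDR4-2666-8G-1Rx8-R. The sketch below splits such a name into its fields. The regular expression is an assumption derived from that single example and its suffixed variants in "About component model names"; it is not a documented naming grammar, and `parse_dimm_model` is a hypothetical helper.

```python
import re
from typing import Dict, Optional, Union

# Pattern inferred from the example "DDR4-2666-8G-1Rx8-R" with an optional suffix.
MODEL_RE = re.compile(
    r"^(?P<generation>DDR\d)-(?P<rate>\d+)-(?P<capacity>\d+)G-"
    r"(?P<ranks>\d)Rx(?P<width>\d)-(?P<type>[LR])(?:-(?P<suffix>\w+))?$"
)
DIMM_TYPES = {"L": "LRDIMM", "R": "RDIMM"}  # from Table 9, callout 6


def parse_dimm_model(name: str) -> Dict[str, Union[str, int, None]]:
    """Split a DIMM model name into the attributes described in Table 9."""
    match = MODEL_RE.match(name)
    if match is None:
        raise ValueError(f"unrecognized DIMM model name: {name!r}")
    suffix: Optional[str] = match["suffix"]
    return {
        "generation": match["generation"],
        "data_rate_mhz": int(match["rate"]),
        "capacity_gb": int(match["capacity"]),
        "ranks": int(match["ranks"]),
        "data_width_bits": int(match["width"]),
        "dimm_type": DIMM_TYPES[match["type"]],
        "suffix": suffix,
    }


print(parse_dimm_model("DDR4-2666-8G-1Rx8-R"))
```

Names that do not follow the assumed pattern raise `ValueError` rather than being guessed at.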

HDDs and SSDs

Drive LEDs

The server supports SAS, SATA, and NVMe drives, of which SAS and SATA drives support hot swapping. You can use the LEDs on a drive to identify its status after it is connected to a storage controller.

Figure 16 shows the location of the LEDs on a drive.

Figure 16 Drive LEDs

|

(1) Fault/UID LED |

(2) Present/Active LED |

To identify the status of a SAS or SATA drive, use Table 10. To identify the status of an NVMe drive, use Table 11.

Table 10 SAS/SATA drive LED description

| Fault/UID LED status | Present/Active LED status | Description |
|---|---|---|
| Flashing amber (0.5 Hz) | Steady green/Flashing green (4.0 Hz) | A drive failure is predicted. As a best practice, replace the drive before it fails. |
| Steady amber | Steady green/Flashing green (4.0 Hz) | The drive is faulty. Replace the drive immediately. |
| Steady blue | Steady green/Flashing green (4.0 Hz) | The drive is operating correctly and is selected by the RAID controller. |
| Off | Flashing green (4.0 Hz) | The drive is performing a RAID migration or rebuilding, or the system is reading or writing data to the drive. |
| Off | Steady green | The drive is present but no data is being read from or written to the drive. |
| Off | Off | The drive is not securely installed. |

Table 11 NVMe drive LED description

| Fault/UID LED status | Present/Active LED status | Description |
|---|---|---|
| Flashing amber (0.5 Hz) | Off | The managed hot removal process is completed and the drive is ready for removal. |
| Flashing amber (4 Hz) | Off | The drive is in the hot insertion process. |
| Steady amber | Steady green/Flashing green (4.0 Hz) | The drive is faulty. Replace the drive immediately. |
| Steady blue | Steady green/Flashing green (4.0 Hz) | The drive is operating correctly and is selected by the RAID controller. |
| Off | Flashing green (4.0 Hz) | The drive is performing a RAID migration or rebuilding, or the system is reading or writing data to the drive. |
| Off | Steady green | The drive is present but no data is being read from or written to the drive. |
| Off | Off | The drive is not securely installed. |
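Table 10 and Table 11 share most rows and differ only in how flashing amber is interpreted, so the two can be expressed as one common lookup plus drive-specific entries. The following sketch is illustrative; the state strings and the `describe_drive` helper are assumptions, not identifiers from HDM or any drive tool.

```python
from typing import Dict, Tuple

GREEN_STATES = ("steady green", "flashing green 4 Hz")
LedMap = Dict[Tuple[str, str], str]

# Rows common to Table 10 and Table 11: (Fault/UID LED, Present/Active LED) -> meaning.
COMMON: LedMap = {
    ("off", "flashing green 4 Hz"): "RAID migration or rebuild in progress, or drive I/O",
    ("off", "steady green"): "Drive present, no I/O",
    ("off", "off"): "Drive is not securely installed",
}
for green in GREEN_STATES:
    COMMON[("steady amber", green)] = "Drive is faulty; replace it immediately"
    COMMON[("steady blue", green)] = "Drive is operating correctly and is selected by the RAID controller"

# SAS/SATA only (Table 10): slow flashing amber predicts a failure.
SAS_SATA: LedMap = dict(COMMON)
for green in GREEN_STATES:
    SAS_SATA[("flashing amber 0.5 Hz", green)] = "Drive failure predicted; replace before it fails"

# NVMe only (Table 11): flashing amber reports hot removal or insertion progress.
NVME: LedMap = dict(COMMON)
NVME[("flashing amber 0.5 Hz", "off")] = "Managed hot removal completed; ready for removal"
NVME[("flashing amber 4 Hz", "off")] = "Drive is in the hot insertion process"


def describe_drive(drive_type: str, fault_uid: str, present_active: str) -> str:
    """Look up the meaning of a drive's LED combination."""
    table = NVME if drive_type.lower() == "nvme" else SAS_SATA
    return table.get((fault_uid, present_active), "Combination not listed")


print(describe_drive("nvme", "flashing amber 0.5 Hz", "off"))
print(describe_drive("sas", "flashing amber 0.5 Hz", "steady green"))
```

The two example calls show why the drive type matters: the same 0.5 Hz amber flash means "ready for removal" on an NVMe drive and "failure predicted" on a SAS or SATA drive.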

Drive configurations and numbering

The numbers of storage controllers in Table 12, Table 14, and Table 16 apply to all storage controllers except the RAID-LSI-9460-16i(4G). If you install the RAID-LSI-9460-16i(4G), only one storage controller is required.

48SFF server

Table 12 presents the drive configurations available for the 48SFF server and their compatible types of storage controllers and NVMe SSD expander modules. Table 13 shows drive population for the 48SFF server.

These drive configurations use the same drive numbering scheme, and drives with the same number are distinguished by the compute module they reside in, as shown in Figure 17.

Table 12 Drive, storage controller, and NVMe SSD expander configurations (48SFF server)

| Drive configuration | Storage controller | NVMe SSD expander |
|---|---|---|
| 48SFF (48 SFF SAS/SATA drives) | 2 × storage controllers | N/A |
| 24SFF (24 SFF SAS/SATA drives) | 1 × storage controller | N/A |

Table 13 Drive population (48SFF server)

| Drive configuration | Compute module 1 | Compute module 2 |
|---|---|---|
| 48SFF (48 SFF SAS/SATA drives) | 24 SFF SAS/SATA drives | 24 SFF SAS/SATA drives |
| 24SFF (24 SFF SAS/SATA drives) | 24 SFF SAS/SATA drives | N/A |

NOTE: "N/A" indicates that the compute module is not required but a compute module blank must be installed.

Figure 17 Drive numbering for 48SFF drive configurations (48SFF server)

|

|

NOTE: For the location of the compute modules, see "Front panel view of the server." |

32SFF server

Table 14 presents the drive configurations available for the 32SFF server and their compatible types of storage controllers and NVMe SSD expander modules. Table 15 shows drive population for the 32SFF server.

These drive configurations use the same drive numbering scheme, and drives with the same number are distinguished by the compute module they reside in, as shown in Figure 18.

Table 14 Drive, storage controller, and NVMe SSD expander configurations (32SFF server)

| Drive configuration | Storage controller | NVMe SSD expander |
|---|---|---|
| 32SFF (32 SFF SAS/SATA drives) | 2 × storage controllers | N/A |
| 32SFF (28 SFF SAS/SATA drives and 4 SFF NVMe drives) | 2 × storage controllers | 1 × 4-port NVMe SSD expander module |
| 32SFF (24 SFF SAS/SATA drives and 8 SFF NVMe drives) | 1 × storage controller | 1 × 8-port NVMe SSD expander module |
| 28SFF (28 SFF SAS/SATA drives) | 2 × storage controllers | N/A |
| 28SFF (24 SFF SAS/SATA drives and 4 SFF NVMe drives) | 1 × storage controller | 1 × 4-port NVMe SSD expander module |

Table 15 Drive population (32SFF server)

| Drive configuration | Drive cage bay 1 in compute module 1 | Drive cage bay 2 in compute module 1 | Compute module 2 |
|---|---|---|---|
| 32SFF (32 SFF SAS/SATA drives) | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives | 24 SFF SAS/SATA drives |
| 32SFF (28 SFF SAS/SATA drives and 4 SFF NVMe drives) | 4 SFF SAS/SATA drives | 4 SFF NVMe drives | 24 SFF SAS/SATA drives |
| 32SFF (24 SFF SAS/SATA drives and 8 SFF NVMe drives) | 4 SFF NVMe drives | 4 SFF NVMe drives | 24 SFF SAS/SATA drives |
| 28SFF (28 SFF SAS/SATA drives) | 4 SFF SAS/SATA drives | / | 24 SFF SAS/SATA drives |
| 28SFF (24 SFF SAS/SATA drives and 4 SFF NVMe drives) | / | 4 SFF NVMe drives | 24 SFF SAS/SATA drives |

NOTE:

· To install 4 SFF SAS/SATA drives, a 4SFF SAS/SATA drive backplane is required. To install 4 SFF NVMe drives, a 4SFF NVMe drive backplane is required.

· "/" indicates no drives are required but drive blanks must be installed.

Figure 18 Drive numbering for the 32SFF configuration (32SFF server)

|

|

NOTE: For the location of the compute modules, see "Front panel view of the server." For the location of the drive cage bays, see "Front panel view of a compute module." |

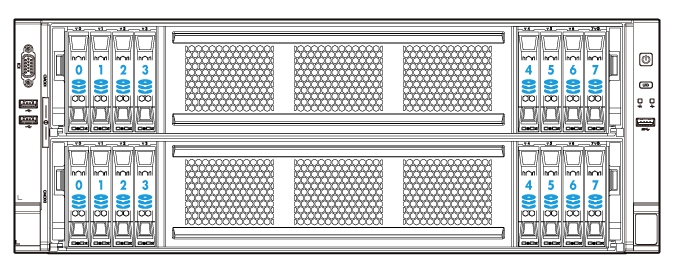

16SFF server

Table 16 presents the drive configurations available for the 16SFF server and their compatible types of storage controllers and NVMe SSD expander modules. Table 17 shows drive population for the 16SFF server.

These drive configurations use the same drive numbering scheme, and drives with the same number are distinguished by the compute module they reside in, as shown in Figure 19.

Table 16 Drive, storage controller, and NVMe SSD expander configurations (16SFF server)

| Drive configuration | Storage controller | NVMe SSD expander |
|---|---|---|
| 16SFF (16 SFF SAS/SATA drives) | 2 × storage controllers | N/A |
| 16SFF (12 SFF SAS/SATA drives and 4 SFF NVMe drives) | 2 × storage controllers | 1 × 4-port NVMe SSD expander module |
| 16SFF (8 SFF SAS/SATA drives and 8 SFF NVMe drives) | 1 × storage controller | 1 × 8-port NVMe SSD expander module |
| 16SFF (4 SFF SAS/SATA drives and 12 SFF NVMe drives) | 1 × storage controller | · 1 × 4-port NVMe SSD expander module · 1 × 8-port NVMe SSD expander module |
| 16SFF (16 SFF NVMe drives) | N/A | 2 × 8-port NVMe SSD expander modules |
| 12SFF (12 SFF SAS/SATA drives) | 2 × storage controllers | N/A |
| 12SFF (8 SFF SAS/SATA drives and 4 SFF NVMe drives) | 1 × storage controller | 1 × 4-port NVMe SSD expander module |
| 12SFF (4 SFF SAS/SATA drives and 8 SFF NVMe drives) | 1 × storage controller | 2 × 4-port NVMe SSD expander modules |
| 12SFF (4 SFF SAS/SATA drives and 8 SFF NVMe drives) | 1 × storage controller | 1 × 8-port NVMe SSD expander module |
| 12SFF (12 SFF NVMe drives) | N/A | · 1 × 4-port NVMe SSD expander module · 1 × 8-port NVMe SSD expander module |
| 8SFF (8 SFF SAS/SATA drives) | 1 × storage controller | N/A |
| 8SFF (4 SFF SAS/SATA drives and 4 SFF NVMe drives) | 1 × storage controller | 1 × 4-port NVMe SSD expander module |
| 8SFF (8 SFF NVMe drives) | N/A | 2 × 4-port NVMe SSD expander modules |
| 8SFF (8 SFF NVMe drives) | N/A | 1 × 8-port NVMe SSD expander module |
| 4SFF (4 SFF SAS/SATA drives) | 1 × storage controller | N/A |
| 4SFF (4 SFF NVMe drives) | N/A | 1 × 4-port NVMe SSD expander module |

Table 17 Drive population (16SFF server)

| Drive configuration | Drive cage bay 1 in compute module 1 | Drive cage bay 2 in compute module 1 | Drive cage bay 3 in compute module 2 | Drive cage bay 4 in compute module 2 |
|---|---|---|---|---|
| 16SFF (16 SFF SAS/SATA drives) | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives |
| 16SFF (12 SFF SAS/SATA drives and 4 SFF NVMe drives) | 4 SFF SAS/SATA drives | 4 SFF NVMe drives | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives |
| 16SFF (8 SFF SAS/SATA drives and 8 SFF NVMe drives) | 4 SFF NVMe drives | 4 SFF NVMe drives | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives |
| 16SFF (4 SFF SAS/SATA drives and 12 SFF NVMe drives) | 4 SFF SAS/SATA drives | 4 SFF NVMe drives | 4 SFF NVMe drives | 4 SFF NVMe drives |
| 16SFF (16 SFF NVMe drives) | 4 SFF NVMe drives | 4 SFF NVMe drives | 4 SFF NVMe drives | 4 SFF NVMe drives |
| 12SFF (12 SFF SAS/SATA drives) | 4 SFF SAS/SATA drives | / | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives |
| 12SFF (8 SFF SAS/SATA drives and 4 SFF NVMe drives) | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives | 4 SFF NVMe drives | / |
| 12SFF (4 SFF SAS/SATA drives and 8 SFF NVMe drives) | / | 4 SFF NVMe drives | 4 SFF SAS/SATA drives | 4 SFF NVMe drives |
| 12SFF (4 SFF SAS/SATA drives and 8 SFF NVMe drives) | 4 SFF NVMe drives | 4 SFF NVMe drives | 4 SFF SAS/SATA drives | / |
| 12SFF (12 SFF NVMe drives) | / | 4 SFF NVMe drives | 4 SFF NVMe drives | 4 SFF NVMe drives |
| 8SFF (8 SFF SAS/SATA drives) | 4 SFF SAS/SATA drives | 4 SFF SAS/SATA drives | N/A | N/A |
| 8SFF (4 SFF SAS/SATA drives and 4 SFF NVMe drives) | 4 SFF SAS/SATA drives | 4 SFF NVMe drives | N/A | N/A |
| 8SFF (8 SFF NVMe drives) | / | 4 SFF NVMe drives | / | 4 SFF NVMe drives |
| 8SFF (8 SFF NVMe drives) | 4 SFF NVMe drives | 4 SFF NVMe drives | N/A | N/A |
| 4SFF (4 SFF SAS/SATA drives) | 4 SFF SAS/SATA drives | / | N/A | N/A |
| 4SFF (4 SFF NVMe drives) | / | 4 SFF NVMe drives | N/A | N/A |

NOTE:

· To install 4 SFF SAS/SATA drives, a 4SFF SAS/SATA drive backplane is required. To install 4 SFF NVMe drives, a 4SFF NVMe drive backplane is required.

· "/" indicates no drives are required but drive blanks must be installed.

· "N/A" indicates that the compute module is not required but a compute module blank must be installed.

Figure 19 Drive numbering for the 16SFF drive configuration (16SFF server)

|

|

NOTE: For the location of the compute modules, see "Front panel view of the server." For the location of the drive cage bays, see "Front panel view of a compute module." |
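Each drive cage bay holds four SFF drives, so the per-bay population in Table 17 must add up to the drive counts named in the configuration. The sketch below checks that for a handful of rows copied from Table 17. The data layout and the helper names are hypothetical, and only a subset of the table is encoded.

```python
from typing import List, Tuple

DRIVES_PER_CAGE = 4  # each drive cage bay takes 4 SFF drives

# ((SAS/SATA total, NVMe total), contents of drive cage bays 1 through 4).
# "/" means drive blanks are installed; "N/A" means compute module 2 is not required.
POPULATION: List[Tuple[Tuple[int, int], List[str]]] = [
    ((16, 0), ["SAS/SATA", "SAS/SATA", "SAS/SATA", "SAS/SATA"]),
    ((4, 12), ["SAS/SATA", "NVMe", "NVMe", "NVMe"]),
    ((8, 4), ["SAS/SATA", "SAS/SATA", "NVMe", "/"]),
    ((0, 8), ["/", "NVMe", "/", "NVMe"]),
    ((0, 4), ["/", "NVMe", "N/A", "N/A"]),
]


def totals(bays: List[str]) -> Tuple[int, int]:
    """Return (SAS/SATA drives, NVMe drives) implied by the bay contents."""
    sas_sata = bays.count("SAS/SATA") * DRIVES_PER_CAGE
    nvme = bays.count("NVMe") * DRIVES_PER_CAGE
    return sas_sata, nvme


def needs_compute_module_2(bays: List[str]) -> bool:
    """Return False when bays 3 and 4 are both marked N/A."""
    return bays[2:] != ["N/A", "N/A"]


for expected, bays in POPULATION:
    print(expected, totals(bays), needs_compute_module_2(bays))
```

The same check distinguishes the two 8 SFF NVMe layouts: spreading the drives across bays 2 and 4 requires both compute modules, whereas filling bays 1 and 2 leaves compute module 2 replaceable by a blank.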

PCIe modules

Typically, the PCIe modules are available in the following standard form factors:

· LP—Low profile.

· FHHL—Full height and half length.

· FHFL—Full height and full length.

· HHHL—Half height and half length.

· HHFL—Half height and full length.

The PCIe modules in Table 18 require PCIe I/O resources. Make sure the number of such installed PCIe modules does not exceed eleven.

Table 18 PCIe modules requiring PCIe I/O resources

|

Type |

Model |

|

Storage controllers |

RAID-LSI-9361-8i(1G)-A1-X |

|

RAID-LSI-9361-8i(2G)-1-X |

|

|

RAID-LSI-9460-8i(2G) |

|

|

RAID-LSI-9460-8i(4G) |

|

|

RAID-P460-B4 |

|

|

HBA-H460-B1 |

|

|

HBA-LSI-9300-8i-A1-X |

|

|

HBA-LSI-9311-8i |

|

|

HBA-LSI-9440-8i |

|

|

RAID-LSI-9460-16i(4G) |

|

|

GPU modules |

GPU-V100 |

|

GPU-V100-32G |

|

|

Network adapters |

NIC-GE-4P-360T-B2-1-X |

|

CNA-10GE-2P-560F-B2-1-X |

|

|

NIC-X540-T2-T-10Gb-2P |

|

|

NIC-XXV710-F-B-25Gb-2P |

|

|

NIC-957454A4540C-B-100G-1P |

|

|

FC HBAs |

FC-HBA-QLE2560-8Gb-1P-1-X |

|

FC-HBA-QLE2562-8Gb-2P-1-X |

|

|

FC-HBA-QLE2690-16Gb-1P-1-X |

|

|

FC-HBA-QLE2692-16Gb-2P-1-X |
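The limit on PCIe modules that require PCIe I/O resources can be checked with a short script. The following Python sketch is illustrative only: the model names are copied from Table 18, while the function names and the idea of passing the installed modules as a list are assumptions made for this example.

```python
# Models that Table 18 lists as requiring PCIe I/O resources.
IO_RESOURCE_MODELS = {
    # Storage controllers
    "RAID-LSI-9361-8i(1G)-A1-X", "RAID-LSI-9361-8i(2G)-1-X",
    "RAID-LSI-9460-8i(2G)", "RAID-LSI-9460-8i(4G)", "RAID-P460-B4",
    "HBA-H460-B1", "HBA-LSI-9300-8i-A1-X", "HBA-LSI-9311-8i",
    "HBA-LSI-9440-8i", "RAID-LSI-9460-16i(4G)",
    # GPU modules
    "GPU-V100", "GPU-V100-32G",
    # Network adapters
    "NIC-GE-4P-360T-B2-1-X", "CNA-10GE-2P-560F-B2-1-X",
    "NIC-X540-T2-T-10Gb-2P", "NIC-XXV710-F-B-25Gb-2P",
    "NIC-957454A4540C-B-100G-1P",
    # FC HBAs
    "FC-HBA-QLE2560-8Gb-1P-1-X", "FC-HBA-QLE2562-8Gb-2P-1-X",
    "FC-HBA-QLE2690-16Gb-1P-1-X", "FC-HBA-QLE2692-16Gb-2P-1-X",
}

# Maximum number of such modules allowed in one server.
MAX_IO_RESOURCE_MODULES = 11


def count_io_resource_modules(installed):
    """Count installed modules whose model appears in Table 18."""
    return sum(1 for model in installed if model in IO_RESOURCE_MODELS)


def within_io_resource_limit(installed):
    """Return True if the installed set respects the limit of 11."""
    return count_io_resource_modules(installed) <= MAX_IO_RESOURCE_MODULES
```

Modules that are not in Table 18 (for example, GPU-T4) are ignored by the count.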

Storage controllers

Table 19 lists storage controllers compatible with the server. For detailed storage controller specifications, visit the query tool at http://www.h3c.com/cn/Service/Document_Software/Document_Center/Server/.

Table 19 Storage controllers available for the server

|

Storage controller model |

Supported drives |

Maximum drives |

Compatible supercapacitor |

|

HBA-H460-B1 |

SAS/SATA HDD/SSD |

24 |

Not supported |

|

HBA-LSI-9300-8i-A1-X |

SAS/SATA HDD/SSD |

24 |

Not supported |

|

HBA-LSI-9311-8i |

SAS/SATA HDD/SSD |

24 |

Not supported |

|

HBA-LSI-9440-8i |

SAS/SATA HDD/SSD |

24 |

Not supported |

|

RAID-LSI-9361-8i(1G)-A1-X |

SAS/SATA HDD/SSD |

24 |

Supercapacitor of the BAT-LSI-G2-4U-B-X power fail safeguard module |

|

RAID-LSI-9361-8i(2G)-1-X |

SAS/SATA HDD/SSD |

24 |

|

|

RAID-LSI-9460-8i(2G) |

SAS/SATA HDD/SSD |

24 |

Supercapacitor BAT-LSI-G3-4U-B |

|

RAID-LSI-9460-8i(4G) |

SAS/SATA HDD/SSD |

24 |

|

|

RAID-LSI-9460-16i(4G) |

SAS/SATA HDD/SSD |

48 |

|

|

RAID-P460-B4 |

SAS/SATA HDD/SSD |

24 |

Supercapacitor BAT-PMC-G3-4U-B |

NVMe SSD expander modules

|

Model |

Specifications |

|

EX-4NVMe-B |

4-port NVMe SSD expander module, which supports a maximum of 4 NVMe SSD drives. |

|

EX-8NVMe-B |

8-port NVMe SSD expander module, which supports a maximum of 8 NVMe SSD drives. |

GPU modules

GPU module form factor

|

GPU module model |

Form factor |

|

GPU-P4-X |

LP, single-slot wide |

|

GPU-T4 |

LP, single-slot wide |

|

GPU-P40-X |

FH3/4FL, dual-slot wide |

|

GPU-P100 |

FH3/4FL, dual-slot wide |

|

GPU-V100 |

FH3/4FL, dual-slot wide |

|

GPU-V100-32G |

FH3/4FL, dual-slot wide |

|

GPU-V100S-32G |

FH3/4FL, dual-slot wide |

GPU module and riser card compatibility

|

Riser card |

PCIe riser connector or bay |

PCIe slot |

Available GPU modules |

|

RS-GPU-R6900-G3 |

Connector 0 in a compute module |

Slot 1 |

· GPU-P4-X · GPU-P40-X · GPU-P100 · GPU-T4 · GPU-V100 · GPU-V100-32G · GPU-V100S-32G |

|

RS-4*FHHL-G3 |

Bay 1 or 3 at the server rear |

Slot 2 |

· GPU-P4-X · GPU-T4 |

|

Slot 3 |

Not supported |

||

|

Slot 4 |

Not supported |

||

|

Slot 6 |

· GPU-P4-X · GPU-T4 |
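The compatibility table above can be expressed as a lookup keyed by riser card and PCIe slot. The following Python sketch is a minimal illustration: the data is taken from the table, and the names GPU_COMPATIBILITY and gpu_supported are hypothetical.

```python
# (riser card model, PCIe slot) -> GPU modules the table lists as available.
GPU_COMPATIBILITY = {
    ("RS-GPU-R6900-G3", 1): {
        "GPU-P4-X", "GPU-P40-X", "GPU-P100", "GPU-T4",
        "GPU-V100", "GPU-V100-32G", "GPU-V100S-32G",
    },
    ("RS-4*FHHL-G3", 2): {"GPU-P4-X", "GPU-T4"},
    ("RS-4*FHHL-G3", 3): set(),  # Not supported
    ("RS-4*FHHL-G3", 4): set(),  # Not supported
    ("RS-4*FHHL-G3", 6): {"GPU-P4-X", "GPU-T4"},
}


def gpu_supported(riser, slot, gpu):
    """Return True if the table lists the GPU for this riser card and slot."""
    return gpu in GPU_COMPATIBILITY.get((riser, slot), set())
```

Riser cards and slots that do not appear in the table are treated as not supporting any GPU module.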

Riser cards

The PCIe slots in a riser card are numbered differently depending on the riser card model and the PCIe riser connector or bay that holds the riser card.

Riser card guidelines

Each PCIe slot in a riser card can supply a maximum of 75 W power to the PCIe module. You must connect a separate power cord to the PCIe module if it requires more than 75 W power.

If a processor is faulty or absent, the corresponding PCIe slots are unavailable.
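The 75 W guideline reduces to a single comparison. The following Python sketch assumes that the power requirement of the PCIe module is known in watts; the function name is hypothetical.

```python
# Maximum power a riser card PCIe slot can supply to a PCIe module.
SLOT_POWER_LIMIT_W = 75


def needs_separate_power_cord(module_power_w):
    """Return True if the module draws more than the slot can supply."""
    return module_power_w > SLOT_POWER_LIMIT_W
```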

RS-FHHL-G3

|

Item |

Specifications |

|

PCIe riser connector |

Connector 0 in a compute module |

|

PCIe slots |

Slot 1: PCIe3.0 ×16 (16, 8, 4, 2, 1) for processor 2 of the compute module NOTE: The numbers in parentheses represent link widths. |

|

Form factors of PCIe modules |

FHHL |

|

Maximum power supplied per PCIe slot |

75 W |

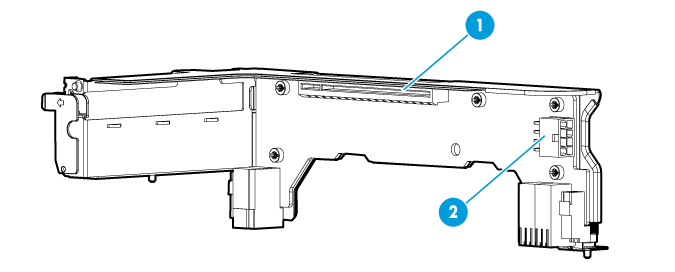

Figure 20 RS-FHHL-G3 riser card

|

(1) PCIe slot 1 |

(2) GPU module power connector |

RS-GPU-R6900-G3

|

Item |

Specifications |

|

PCIe riser connector |

Connector 0 in a compute module |

|

PCIe slots |

Slot 1: PCIe3.0 ×16 (16, 8, 4, 2, 1) for processor 2 of the compute module NOTE: The numbers in parentheses represent link widths. |

|

Form factors of PCIe modules |

FHFL NOTE: The riser card supports double-wide GPU modules. |

|

Maximum power supplied per PCIe slot |

75 W |

Figure 21 RS-GPU-R6900-G3 riser card

|

(1) PCIe slot 1 |

(2) GPU module power connector |

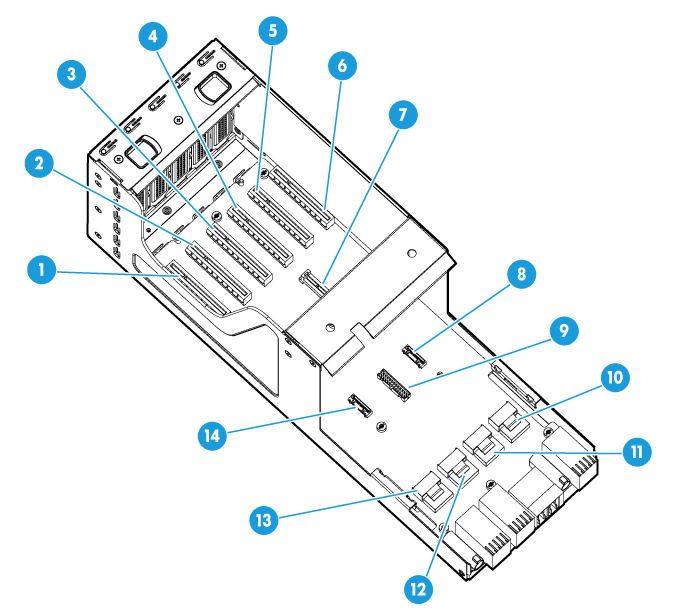

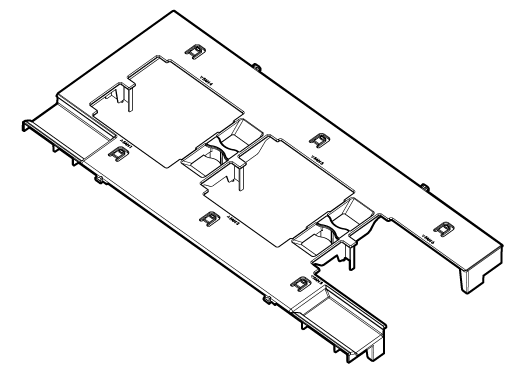

RS-4*FHHL-G3

|

Item |

Specifications |

|

PCIe riser bay |

Bay 1 or 3 at the server rear |

|

PCIe slots |

· PCIe riser bay 1: ¡ Slot 2: PCIe3.0 ×16 (8, 4, 2, 1) for processor 1 in compute module 1 ¡ Slot 3: PCIe3.0 ×8 (8, 4, 2, 1) for processor 1 in compute module 1 ¡ Slot 4: PCIe3.0 ×8 (8, 4, 2, 1) for processor 1 in compute module 2 ¡ Slot 6: PCIe3.0 ×16 (8, 4, 2, 1) for processor 1 in compute module 2 · PCIe riser bay 3: ¡ Slot 2: PCIe3.0 ×16 (8, 4, 2, 1) for processor 2 in compute module 1 ¡ Slot 3: PCIe3.0 ×8 (8, 4, 2, 1) for processor 2 in compute module 1 ¡ Slot 4: PCIe3.0 ×8 (8, 4, 2, 1) for processor 2 in compute module 2 ¡ Slot 6: PCIe3.0 ×16 (8, 4, 2, 1) for processor 2 in compute module 2 NOTE: The numbers in parentheses represent link widths. |

|

Form factors of PCIe modules |

FHHL NOTE: Slots 2 and 6 of the riser card support single-wide GPU modules. All slots of the riser card support mLOM network adapters. |

|

Maximum power supplied per PCIe slot |

75 W |

Figure 22 RS-4*FHHL-G3 riser card

|

(1) PCIe slot 2 |

(2) PCIe slot 3 |

(3) PCIe slot 4 |

|

(4) PCIe slot 6 |

(5) mLOM network adapter connector |

(6) Supercapacitor connector 2 |

|

(7) NCSI connector |

(8) SAS port B2 (×4 SAS ports) |

(9) SAS port B1 (×4 SAS ports) |

|

(10) SAS port A2 (×4 SAS ports) |

(11) SAS port A1 (×4 SAS ports) |

(12) Supercapacitor connector 1 |

|

|

NOTE: · PCIe slot 4 is unavailable if an mLOM network adapter is installed. · If you install the RS-4*FHHL-G3 riser card in riser bay 1 and install an mLOM network adapter in PCIe slot 4, the PCIe slot number changes to 14. |

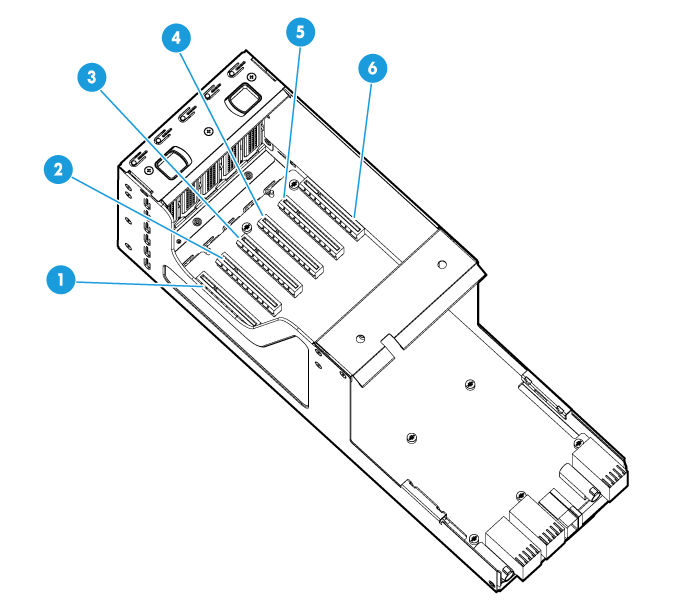

RS-6*FHHL-G3-1

|

Item |

Specifications |

|

PCIe riser bay |

Bay 1 or 3 at the server rear |

|

PCIe slots |

· PCIe riser bay 1: ¡ Slots 1 through 3: PCIe3.0 ×8 (8, 4, 2, 1) for processor 1 in compute module 1 ¡ Slots 4 through 6: PCIe3.0 ×8 (8, 4, 2, 1) for processor 1 in compute module 2 · PCIe riser bay 3: ¡ Slots 1 through 3: PCIe3.0 ×8 (8, 4, 2, 1) for processor 2 in compute module 1 ¡ Slots 4 through 6: PCIe3.0 ×8 (8, 4, 2, 1) for processor 2 in compute module 2 NOTE: The numbers in parentheses represent link widths. |

|

Form factors of PCIe modules |

FHHL NOTE: All slots of the riser card support mLOM network adapters. |

|

Maximum power supplied per PCIe slot |

75 W |

Figure 23 RS-6*FHHL-G3-1 riser card

|

(1) PCIe slot 1 |

(2) PCIe slot 2 |

(3) PCIe slot 3 |

|

(4) PCIe slot 4 |

(5) PCIe slot 5 |

(6) PCIe slot 6 |

|

(7) mLOM network adapter connector |

(8) Supercapacitor connector 2 |

(9) NCSI connector |

|

(10) SAS port B2 (×4 SAS ports) |

(11) SAS port B1 (×4 SAS ports) |

(12) SAS port A2 (×4 SAS ports) |

|

(13) SAS port A1 (×4 SAS ports) |

(14) Supercapacitor connector 1 |

|

|

|

NOTE: PCIe slot 4 is unavailable if an mLOM network adapter is installed. |

RS-6*FHHL-G3-2

|

Item |

Specifications |

|

PCIe riser bay |

Bay 2 at the server rear |

|

PCIe slots |

· Slot 1: PCIe3.0 ×8 (8, 4, 2, 1) for processor 2 in compute module 1 · Slot 2: PCIe3.0 ×8 (8, 4, 2, 1) for processor 1 in compute module 1 · Slots 3, 4, and 6: PCIe3.0 ×8 (8, 4, 2, 1) for processor 1 in compute module 2 · Slot 5: PCIe3.0 ×8 (8, 4, 2, 1) for processor 2 in compute module 2 NOTE: The numbers in parentheses represent link widths. |

|

Form factors of PCIe modules |

FHHL |

|

Maximum power supplied per PCIe slot |

75 W |

Figure 24 RS-6*FHHL-G3-2 riser card

|

(1) PCIe slot 1 |

(2) PCIe slot 2 |

(3) PCIe slot 3 |

|

(4) PCIe slot 4 |

(5) PCIe slot 5 |

(6) PCIe slot 6 |

Riser card and system board port mapping relationship

|

Riser card name |

Ports on the riser card |

Corresponding ports on the system board |

|

|

Riser card 0 |

N/A |

N/A |

|

|

Riser card 1 |

SAS port A1 |

SAS port A1 on compute module 1 |

|

|

SAS port A2 |

SAS port A2 on compute module 1 |

||

|

SAS port B1 |

SAS port A1 on compute module 2 |

||

|

SAS port B2 |

SAS port A2 on compute module 2 |

||

|

Supercapacitor port 1 |

Supercapacitor port 1 on compute module 1 |

||

|

Supercapacitor port 2 |

Supercapacitor port 1 on compute module 2 |

||

|

Riser card 2 |

N/A |

N/A |

|

|

Riser card 3 |

SAS port A1 |

SAS port B1 on compute module 1 |

|

|

SAS port A2 |

SAS port B2 on compute module 1 |

||

|

SAS port B1 |

SAS port B1 on compute module 2 |

||

|

SAS port B2 |

SAS port B2 on compute module 2 |

||

|

Supercapacitor port 1 |

Supercapacitor port 2 on compute module 1 |

||

|

Supercapacitor port 2 |

Supercapacitor port 2 on compute module 2 |

|

|

NOTE: · For more information about the SAS and supercapacitor ports on a riser card, see "Riser cards." · For more information about the SAS and supercapacitor ports on the system board, see "Main board of a compute module." |

B/D/F information

Viewing B/D/F information

Table 20 lists the default Bus/Device/Function numbers (B/D/F) used by the server when the following conditions are all met:

· All compute module bays are installed with compute modules.

· All processor sockets are installed with processors.

· All PCIe riser connectors are installed with riser cards.

· All PCIe slots in riser cards are installed with PCIe modules.

B/D/F information in Table 20 might change if any of the above conditions is not met or a PCIe module with a PCIe bridge is installed.

For more information about riser cards, see "Riser cards." For more information about the location of slot 19, see "Rear panel view."

For information about how to obtain B/D/F information, see "Obtaining B/D/F information."

Table 20 PCIe modules and the corresponding Bus/Device/Function numbers

|

Riser card model |

PCIe riser connector or bay |

PCIe slot |

Processor |

Port number |

Root port (B/D/F) |

Endpoint (B/D/F) |

|

· RS-FHHL-G3 · RS-GPU-R6900-G3 |

Connector 0 of compute module 1 |

Slot 111 |

Compute module 1 processor 2 |

Port 2A |

58:00.00 |

59:00.00 |

|

Connector 0 of compute module 2 |

Slot 121 |

Compute module 2 processor 2 |

Port 2A |

d8:00.00 |

d9:00.00 |

|

|

RS-6*FHHL-G3-1 |

Bay 1 |

Slot 11 |

Compute module 1 processor 1 |

Port 2A |

24:00.00 |

25:00.00 |

|

Slot 12 |

Compute module 1 processor 1 |

Port 3C |

32:02.00 |

34:00.00 |

||

|

Slot 13 |

Compute module 1 processor 1 |

Port 2C |

24:02.00 |

26:00.00 |

||

|

Slot 14 |

Compute module 2 processor 1 |

Port 2A |

98:00.00 |

99:00.00 |

||

|

Slot 15 |

Compute module 2 processor 1 |

Port 2C |

98:02.00 |

9a:00.00 |

||

|

Slot 16 |

Compute module 2 processor 1 |

Port 3C |

ac:02.00 |

ae:00.00 |

||

|

RS-4*FHHL-G3 |

Bay 1 |

Slot 12 |

Compute module 1 processor 1 |

Port 2A |

24:00.00 |

25:00.00 |

|

Slot 13 |

Compute module 1 processor 1 |

Port 3C |

32:02.00 |

34:00.00 |

||

|

Slot 14 |

Compute module 2 processor 1 |

Port 3C |

ac:02.00 |

ae:00.00 |

||

|

Slot 16 |

Compute module 2 processor 1 |

Port 2A |

98:00.00 |

99:00.00 |

||

|

RS-6*FHHL-G3-2 |

Bay 2 |

Slot 21 |

Compute module 1 processor 2 |

Port 3A |

6c:00.00 |

6d:00.00 |

|

Slot 22 |

Compute module 1 processor 1 |

Port 3A |

32:00.00 |

33:00.00 |

||

|

Slot 23 |

Compute module 2 processor 1 |

Port 1A |

84:00.00 |

85:00.00 |

||

|

Slot 24 |

Compute module 2 processor 1 |

Port 1C |

84:02.00 |

86:00.00 |

||

|

Slot 25 |

Compute module 2 processor 2 |

Port 3A |

ec:00.00 |

ed:00.00 |

||

|

Slot 26 |

Compute module 2 processor 1 |

Port 3A |

ac:00.00 |

ad:00.00 |

||

|

RS-6*FHHL-G3-1 |

Bay 3 |

Slot 31 |

Compute module 1 processor 2 |

Port 1C |

44:02.00 |

46:00.00 |

|

Slot 32 |

Compute module 1 processor 2 |

Port 3C |

6c:02.00 |

6e:00.00 |

||

|

Slot 33 |

Compute module 1 processor 2 |

Port 1A |

44:00.00 |

45:00.00 |

||

|

Slot 34 |

Compute module 2 processor 2 |

Port 1C |

c4:02.00 |

c6:00.00 |

||

|

Slot 35 |

Compute module 2 processor 2 |

Port 1A |

c4:00.00 |

c5:00.00 |

||

|

Slot 36 |

Compute module 2 processor 2 |

Port 3C |

ec:02.00 |

ee:00.00 |

||

|

RS-4*FHHL-G3 |

Bay 3 |

Slot 32 |

Compute module 1 processor 2 |

Port 1A |

44:00.00 |

45:00.00 |

|

Slot 33 |

Compute module 1 processor 2 |

Port 3C |

6c:02.00 |

6e:00.00 |

||

|

Slot 34 |

Compute module 2 processor 2 |

Port 3C |

ec:02.00 |

ee:00.00 |

||

|

Slot 36 |

Compute module 2 processor 2 |

Port 1A |

c4:00.00 |

c5:00.00 |

|

|

NOTE: · The root port (B/D/F) indicates the bus number of the PCIe root node in the processor. · The endpoint (B/D/F) indicates the bus number of a PCIe module in the operating system. · In this table, PCIe slots provided by a riser card in a compute module are numbered 1xy, where x represents the compute module number and y represents the PCIe slot number. PCIe slots provided by a riser card at the server rear are numbered xy, where x represents the riser connector number and y represents the PCIe slot number. |
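The slot numbering scheme described in the note can be decoded programmatically. The following Python sketch is illustrative only: it assumes that slot labels are passed as digit strings such as "111" or "32", and the function name and the returned dictionary layout are hypothetical.

```python
def decode_slot_label(label):
    """Decode a Table 20 slot label into its location.

    1xy -> riser card in compute module x, PCIe slot y
    xy  -> riser connector (bay) x at the server rear, PCIe slot y
    """
    if not label.isdigit():
        raise ValueError(f"slot label must be numeric: {label!r}")
    if len(label) == 3 and label[0] == "1":
        return {"location": "compute module",
                "number": int(label[1]), "slot": int(label[2])}
    if len(label) == 2:
        return {"location": "server rear",
                "number": int(label[0]), "slot": int(label[1])}
    raise ValueError(f"unrecognized slot label: {label!r}")
```

For example, label 121 decodes to PCIe slot 1 of the riser card in compute module 2, and label 32 decodes to PCIe slot 2 of the riser card in bay 3 at the server rear.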

Obtaining B/D/F information

You can obtain B/D/F information by using one of the following methods:

· BIOS log—Search the dumpiio keyword in the BIOS log.

· UEFI shell—Execute the pci command. For information about how to execute the command, execute the help pci command.

· Operating system—The obtaining method varies by OS.

¡ For Linux, execute the lspci command.

If Linux does not support the lspci command by default, you must execute the yum command to install the pciutils package.

¡ For Windows, install the pciutils package, and then execute the lspci command.

¡ For VMware, execute the lspci command.
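Once a B/D/F value has been obtained, it can be split into its bus, device, and function numbers for comparison with Table 20. The following Python sketch assumes the bus:device.function notation used in Table 20 (for example, 58:00.00) with hexadecimal fields. It also accepts a one-digit function field, because lspci typically prints the function as a single digit. The names BDF and parse_bdf are hypothetical.

```python
import re
from typing import NamedTuple


class BDF(NamedTuple):
    bus: int
    device: int
    function: int


# Two hex digits for bus and device, one or two for function.
_BDF_RE = re.compile(r"^([0-9a-fA-F]{2}):([0-9a-fA-F]{2})\.([0-9a-fA-F]{1,2})$")


def parse_bdf(text):
    """Parse a 'bb:dd.ff' string into integer bus/device/function numbers."""
    match = _BDF_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a B/D/F value: {text!r}")
    bus, device, function = (int(group, 16) for group in match.groups())
    return BDF(bus, device, function)
```

Parsing the values into integers makes comparisons independent of letter case and of the number of digits printed for the function field.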

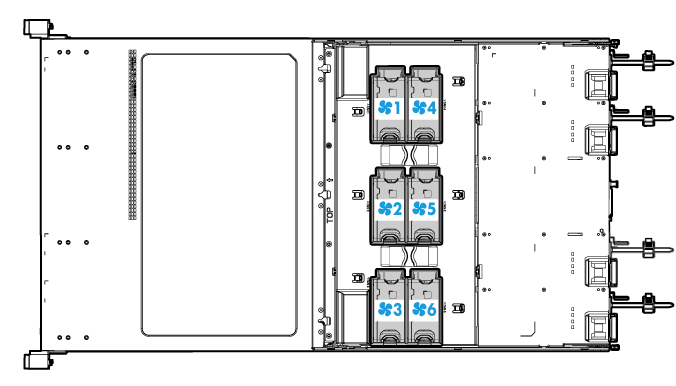

Fan modules

The server must be configured with six hot swappable fan modules, each of which includes two fans. Figure 25 shows the layout of the fan modules in the chassis.

The fans support N+1 redundancy.

During system POST and operation, the server will be powered off through HDM if the temperature detected by any sensor in the server reaches the critical threshold. The server will be powered off directly if the temperature of any key component, such as a processor, exceeds the upper threshold.

Air baffles

Compute module air baffles

Each compute module comes with two bilateral air baffles (a right air baffle and a left air baffle). You must install a low mid air baffle, high mid air baffle, or GPU module air baffle as required.

Table 21 lists air baffles available for a compute module and their installation locations and usage scenarios.

Table 21 Compute module air baffles

|

Name |

Picture |

Installation location |

Usage scenario |

|

High mid air baffle |

Above the DIMMs between the two processors. |

No riser card is installed in the compute module. |

|

|

Low mid air baffle |

Above the DIMMs between the two processors. |

A riser card is installed in the compute module. If the riser card carries a GPU module, install a GPU air baffle instead of a low mid air baffle. |

|

|

Bilateral air baffle |

Above the DIMMs at the right of processor 1, or the DIMMs at the left of processor 2. |

N/A |

|

|

GPU module air baffle |

Above the DIMMs between the two processors. |

A GPU module is installed in the compute module. |

|

|

NOTE: For more information about the air baffle locations, see "Main board components." |

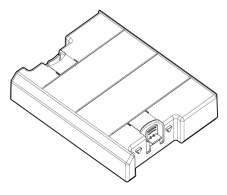

Power supply air baffle

The server comes with one power supply air baffle installed over the fan modules for heat dissipation of the power supplies. For more information about the air baffle location, see "Fan modules."

Figure 26 Power supply air baffle

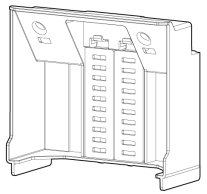

Rear riser card air baffles

Each riser card at the server rear comes with a riser card air baffle installed over the four SAS connectors. An RS-4*FHHL-G3 riser card also comes with a GPU module air baffle. For more information about the air baffle location, see "Riser cards."

Table 22 Rear riser card air baffles

|

Name |

Picture |

Installation location |

Functions |

|

Riser card air baffle |

At the left of the connectors on each server riser card. |

Provides ventilation aisles in rear riser cards. |

|

|

GPU module air baffle |

Between the NCSI connector and the mLOM network adapter connector. |

Provides ventilation aisles in an RS-4*FHHL-G3 riser card. |

Power supplies

The power supplies have an overtemperature protection mechanism. A power supply stops working when an overtemperature condition occurs and automatically recovers when the condition is removed.

800 W power supply

|

Item |

Specifications |

|

Model |

PSR800-12A |

|

Rated input voltage range |

· 100 VAC to 240 VAC @ 50/60 Hz · 192 VDC to 288 VDC (240 HVDC power source) |

|

Maximum rated input current |

· 10.0 A @ 100 VAC to 240 VAC · 4.0 A @ 240 VDC |

|

Maximum rated output power |

800 W |

|

Efficiency at 50 % load |

|

|

Temperature requirements |

· Operating temperature: 0°C to 50°C (32°F to 122°F) · Storage temperature: –40°C to +70°C (–40°F to +158°F) |

|

Operating humidity |

5% to 90% |

|

Maximum altitude |

5000 m (16404.20 ft) |

|

Redundancy |

N+N redundancy |

|

Hot swappable |

Yes |

|

Cold backup |

Yes |

800 W high-voltage power supply

|

Item |

Specifications |

|

Model |

PSR800-12AHD |

|

Rated input voltage range |

· 100 VAC to 240 VAC @ 50/60 Hz · 180 VDC to 400 VDC (240 to 380 HVDC power source) |

|

Maximum rated input current |

· 10.0 A @ 100 VAC to 240 VAC · 3.8 A @ 240 VDC |

|

Maximum rated output power |

800 W |

|

Efficiency at 50 % load |

94%, 80 Plus platinum level |

|

Temperature requirements |

· Operating temperature: 0°C to 50°C (32°F to 122°F) · Storage temperature: –40°C to +70°C (–40°F to +158°F) |

|

Operating humidity |

5% to 90% |

|

Maximum altitude |

5000 m (16404.20 ft) |

|

Redundancy |

N+N redundancy |

|

Hot swappable |

Yes |

|

Cold backup |

Yes |

1200 W power supply

|

Item |

Specifications |

|

Model |

PSR1200-12A |

|

Rated input voltage range |

· 100 VAC to 127 VAC @ 50/60 Hz (1000 W) · 200 VAC to 240 VAC @ 50/60 Hz (1200 W) · 192 VDC to 288 VDC (240 HVDC power source) (1200 W) |

|

Maximum rated input current |

· 12.0 A @ 100 VAC to 240 VAC · 6.0 A @ 240 VDC |

|

Maximum rated output power |

1200 W |

|

Efficiency at 50 % load |

94%, 80 Plus platinum level |

|

Temperature requirements |

· Operating temperature: 0°C to 50°C (32°F to 122°F) · Storage temperature: –40°C to +70°C (–40°F to +158°F) |

|

Operating humidity |

5% to 90% |

|

Maximum altitude |

5000 m (16404.20 ft) |

|

Redundancy |

N+N redundancy |

|

Hot swappable |

Yes |

|

Cold backup |

Yes |
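The rated output of the PSR1200-12A depends on the input voltage range. The following Python sketch encodes the three input ranges from the table above; the function name and the "AC"/"DC" argument are hypothetical, and the function returns None for inputs outside the rated ranges.

```python
def psr1200_rated_output_w(voltage, kind="AC"):
    """Return the rated output (W) of the PSR1200-12A for a given input.

    voltage: input voltage in volts
    kind:    "AC" or "DC"
    """
    if kind == "AC":
        if 100 <= voltage <= 127:
            return 1000
        if 200 <= voltage <= 240:
            return 1200
    elif kind == "DC":
        if 192 <= voltage <= 288:
            return 1200
    # Outside the rated input voltage ranges listed in the table.
    return None
```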

1600 W power supply

|

Item |

Specifications |

|

Model |

PSR1600-12A |

|

Rated input voltage range |

· 200 VAC to 240 VAC @ 50/60 Hz · 192 VDC to 288 VDC (240 HVDC power source) |

|

Maximum rated input current |

· 9.5 A @ 200 VAC to 240 VAC · 8.0 A @ 240 VDC |

|

Maximum rated output power |

1600 W |

|

Efficiency at 50 % load |

94%, 80 Plus platinum level |

|

Temperature requirements |

· Operating temperature: 0°C to 50°C (32°F to 122°F) · Storage temperature: –40°C to +70°C (–40°F to +158°F) |

|

Operating humidity |

5% to 90% |

|

Maximum altitude |

5000 m (16404.20 ft) |

|

Redundancy |

N+N redundancy |

|

Hot swappable |

Yes |

|

Cold backup |

Yes |

850 W high-efficiency Platinum power supply

|

Item |

Specifications |

|

Model |

DPS-850W-12A |

|

Rated input voltage range |

· 100 VAC to 240 VAC @ 50/60 Hz · 192 VDC to 288 VDC (240 HVDC power source) |

|

Maximum rated input current |

· 10.0 A @ 100 VAC to 240 VAC · 4.4 A @ 240 VDC |

|

Maximum rated output power |

850 W |

|

Efficiency at 50 % load |

94%, 80 Plus platinum level |

|

Temperature requirements |

· Operating temperature: 0°C to 55°C (32°F to 131°F) · Storage temperature: –40°C to +70°C (–40°F to +158°F) |

|

Operating humidity |

5% to 85% |

|

Maximum altitude |

5000 m (16404.20 ft) |

|

Redundancy |

N+N redundancy |

|

Hot swappable |

Yes |

|

Cold backup |

Yes |

800 W –48 VDC power supply

|

Item |

Specifications |

|

Model |

DPS-800W-12A-48V |

|

Rated input voltage range |

–48 VDC to –60 VDC |

|

Maximum rated input current |

20.0 A @ –48 VDC to –60 VDC |

|

Maximum rated output power |

800 W |

|

Efficiency at 50 % load |

92% |

|

Temperature requirements |

· Operating temperature: 0°C to 55°C (32°F to 131°F) · Storage temperature: –40°C to +70°C (–40°F to +158°F) |

|

Operating humidity |

5% to 90% |

|

Maximum altitude |

5000 m (16404.20 ft) |

|

Redundancy |

N+N redundancy |

|

Hot swappable |

Yes |

|

Cold backup |

Yes |

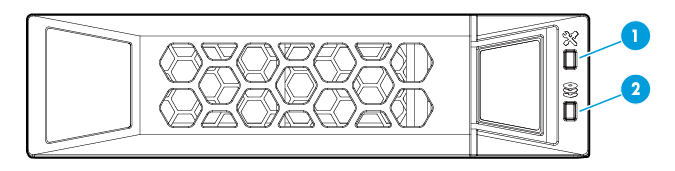

Diagnostic panels

Diagnostic panels provide diagnostics and troubleshooting capabilities. You can locate and troubleshoot component failures by using the diagnostic panels in conjunction with the event log generated in HDM.

|

|

NOTE: A diagnostic panel displays only one component failure at a time. When multiple component failures exist, the diagnostic panel displays all these failures one by one at intervals of 4 seconds. |
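The display behavior described in the note can be modeled as a simple rotation. The following Python sketch is an illustration only: it assumes that the panel returns to the first failure after showing the last one, and the function name and the (LED, error code) tuples are hypothetical.

```python
from itertools import cycle, islice

# Interval between displayed failures, in seconds.
DISPLAY_INTERVAL_S = 4


def display_schedule(failures, steps):
    """Return (start_time_s, failure) pairs for the first `steps` displays."""
    shown = islice(cycle(failures), steps)
    return [(index * DISPLAY_INTERVAL_S, failure)
            for index, failure in enumerate(shown)]
```

With two active failures, each failure is displayed again every 8 seconds under this assumption.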

Diagnostic panel view

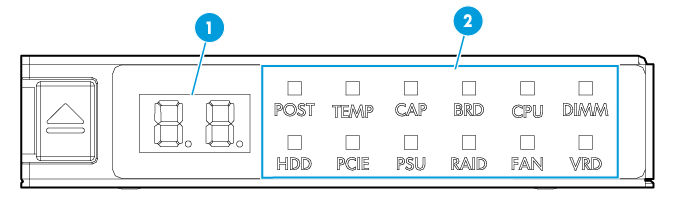

Figure 27 shows the error code and LEDs on a diagnostic panel.

Figure 27 Diagnostic panel view

|

(1) Error code |

(2) LEDs |

For more information about the LEDs and error codes, see "LEDs."

LEDs

The server is operating correctly when the error code is 00 and all LEDs on the diagnostic panel are off.

POST LED

|

LED status |

Error code |

Description |

|

Steady green |

Code for the current POST phase (in the range of 00 to 99) |

The server is performing POST without detecting any error. |

|

Flashing red (1 Hz) |

The POST process encountered an error and stopped in the displayed phase. |

TEMP LED

|

LED status |

Error code |

Description |

|

Flashing amber (1 Hz) |

Temperature sensor ID |

A major temperature warning is present on the component monitored by the sensor. This warning might occur because the temperature of the component has exceeded the upper major threshold or dropped below the lower major threshold. |

|

Flashing red (1 Hz) |

Temperature sensor ID |

A critical temperature warning is present on the component monitored by the sensor. This warning might occur because the temperature of the component has exceeded the upper critical threshold or dropped below the lower critical threshold. |

CAP LED

|

LED status |

Error code |

Description |

|

Flashing amber |

01 |

The system power consumption has exceeded the power cap value. |

Component LEDs

An alarm is present if a component LED has one of the following behaviors:

· Flashing amber (1 Hz)—A major alarm has occurred.

· Flashing red (1 Hz)—A critical alarm has occurred.

Use Table 23 to identify the faulty item if a component LED has one of those behaviors. To obtain records of component status changes, use the event log in HDM. For information about using the event log, see HDM online help.

Table 23 LED, error code and faulty item matrix

|

LED |

Error code |

Faulty item |

|

BRD |

01 |

PDB |

|

02 |

Management module |

|

|

03 |

Midplane |

|

|

11 |

Main board of compute module 1 |

|

|

12 |

Drive backplane for drive cage bay 2 of compute module 1 |

|

|

13 |

Drive backplane for drive cage bay 1 of compute module 1 |

|

|

21 |

Main board of compute module 2 |

|

|

22 |

Drive backplane for drive cage bay 4 of compute module 2 |

|

|

23 |

Drive backplane for drive cage bay 3 of compute module 2 |

|

|

91 |

mLOM network adapter |

|

|

NOTE: If the error code field alternates between 11 or 21 and any other code, first replace the faulty item indicated by the other code. If the issue persists, replace the main board. |

||

|

CPU (processor) |

01 |

Processor 1 in compute module 1 |

|

02 |

Processor 2 in compute module 1 |

|

|

03 |

Processor 1 in compute module 2 |

|

|

04 |

Processor 2 in compute module 2 |

|

|

DIMM |

A1 through A9, AA, Ab, or AC |

Compute module 1: · A1 through A9—DIMMs in slots A1 through A9 · AA—DIMM in slot A10 · Ab—DIMM in slot A11 · AC—DIMM in slot A12 |

|

b1 through b9, bA, bb, or bC |

Compute module 1: · b1 through b9—DIMMs in slots B1 through B9 · bA—DIMM in slot B10 · bb—DIMM in slot B11 · bC—DIMM in slot B12 |

|

|

C1 through C9, CA, Cb, or CC |

Compute module 2: · C1 through C9—DIMMs in slots A1 through A9 · CA—DIMM in slot A10 · Cb—DIMM in slot A11 · CC—DIMM in slot A12 |

|

|

d1 through d9, dA, db, or dC |

Compute module 2: · d1 through d9—DIMMs in slots B1 through B9 · dA—DIMM in slot B10 · db—DIMM in slot B11 · dC—DIMM in slot B12 |

|

|

HDD |

00 through 23 |

Relevant drive in compute module 1 (24SFF) |

|

30 through 53 |

Relevant drive in compute module 2 (24SFF) |

|

|

00 through 07 |

Relevant drive in compute module 1 (8SFF) |

|

|

10 through 17 |

Relevant drive in compute module 2 (8SFF) |

|

|

PCIE |

01 |

PCIe module in PCIe slot 1 of riser card 0 in compute module 1 |

|

02 |

PCIe module in PCIe slot 2 of riser card 0 in compute module 1 |

|

|

03 |

PCIe module in PCIe slot 1 of riser card 0 in compute module 2 |

|

|

04 |

PCIe module in PCIe slot 2 of riser card 0 in compute module 2 |

|

|

11 through 16 |

PCIe modules in PCIe slots 1 through 6 of riser card 1 at server rear |

|

|

21 through 26 |

PCIe modules in PCIe slots 1 through 6 of riser card 2 at server rear |

|

|

31 through 36 |

PCIe modules in PCIe slots 1 through 6 of riser card 3 at server rear |

|

|

09 |

PCIe uplink between processors and the PCH for the mLOM network adapter |

|

|

PSU |

01 |

Power supply 1 |

|

02 |

Power supply 2 |

|

|

03 |

Power supply 3 |

|

|

04 |

Power supply 4 |

|

|

FAN |

01 through 06 |

Fan module 1 through fan module 6 |

|

VRD |

01 |

PDB P5V voltage |

|

02 |

PDB P3V3_STBY voltage |

|

|

03 |

Management module P1V05_PCH_STBY voltage |

|

|

04 |

Management module PVNN_PCH_STBY voltage |

|

|

05 |

Management module P1V8_PCH_STBY voltage |

|

|

06 |

PDB overcurrent |

|

|

07 |

PDB P12V primary power supply |

|

|

21 |

Primary power supply of the riser card in riser bay 1 |

|

|

22 |

Secondary power supply of the riser card in riser bay 1 |

|

|

23 |

Primary power supply of the riser card in riser bay 2 |

|

|

25 |

Secondary power supply of the riser card in riser bay 2 |

|

|

26 |

Primary power supply of the riser card in riser bay 3 |

|

|

27 |

Secondary power supply of the riser card in riser bay 3 |

|

|

40 |

Primary power supply of compute module 1 |

|

|

41 |

Secondary power supply of compute module 1 |

|

|

42 |

Compute module 1 overcurrent |

|

|

43 |

Compute module 1 P5V fault |

|

|

44 |

Compute module 1 processor 1 FIVR FAULT |

|

|

45 |

Compute module 1 processor 2 FIVR FAULT |

|

|

46 |

Compute module 1 riser connector 0 power supply |

|

|

47 |

Compute module 1 bay 2 drive backplane power supply |

|

|

48 |

Compute module 1 bay 1 drive backplane power supply |

|

|

50 |

Compute module 2 primary power supply |

|

|

51 |

Compute module 2 secondary power supply |

|

|

52 |

Compute module 2 overcurrent |

|

|

54 |

Compute module 2 processor 1 FIVR FAULT |

|

|

55 |

Compute module 2 processor 2 FIVR FAULT |

|

|

56 |

Compute module 2 riser connector 0 power supply |

|

|

57 |

Compute module 2 bay 4 drive backplane power supply |

|

|

58 |

Compute module 2 bay 3 drive backplane power supply |

|

|

60 |

Compute module 1 HPMOS voltage |

|

|

61 |

Compute module 1 PVCCIO_CPU1 voltage |

|

|

62 |

Compute module 1 PVCCIN_CPU1 voltage |

|

|

63 |

Compute module 1 PVCCSA_CPU1 voltage |

|

|

64 |

Compute module 1 PVCCIO_CPU2 voltage |

|

|

65 |

Compute module 1 PVCCIN_CPU2 voltage |

|

|

66 |

Compute module 1 PVCCSA_CPU2 voltage |

|

|

67 |

Compute module 1 VDDQ/VPP_CPU1_ABC voltage |

|

|

68 |

Compute module 1 VDDQ/VPP_CPU1_DEF voltage |

|

|

69 |

Compute module 1 VTT_CPU1_ABC voltage |

|

|

6A |

Compute module 1 VTT_CPU1_DEF voltage |

|

|

6b |

Compute module 1 VDDQ/VPP_CPU2_ABC voltage |

|

|

6C |

Compute module 1 VDDQ/VPP_CPU2_DEF voltage |

|

|

6d |

Compute module 1 VTT_CPU2_ABC voltage |

|

|

6E |

Compute module 1 VTT_CPU2_DEF voltage |

|

|

70 |

Compute module 2 HPMOS voltage |

|

|

71 |

Compute module 2 PVCCIO_CPU1 voltage |

|

|

72 |

Compute module 2 PVCCIN_CPU1 voltage |

|

|

73 |

Compute module 2 PVCCSA_CPU1 voltage |

|

|

74 |

Compute module 2 PVCCIO_CPU2 voltage |

|

|

75 |

Compute module 2 PVCCIN_CPU2 voltage |

|

|

76 |

Compute module 2 PVCCSA_CPU2 voltage |

|

|

77 |

Compute module 2 VDDQ/VPP_CPU1_ABC voltage |

|

|

78 |

Compute module 2 VDDQ/VPP_CPU1_DEF voltage |

|

|

79 |

Compute module 2 VTT_CPU1_ABC voltage |

|

|

7A |

Compute module 2 VTT_CPU1_DEF voltage |

|

|

7b |

Compute module 2 VDDQ/VPP_CPU2_ABC voltage |

|

|

7C |

Compute module 2 VDDQ/VPP_CPU2_DEF voltage |

|

|

7d |

Compute module 2 VTT_CPU2_ABC voltage |

|

|

7E |

Compute module 2 VTT_CPU2_DEF voltage |

|

|

|

NOTE: · The term "CPU" in this table refers to processors. · For the location of riser cards at the server rear, see "Riser cards." |
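The DIMM error codes in Table 23 follow a regular pattern: the first character selects the compute module and DIMM slot group, and the second character is the slot number shown as a hexadecimal digit (A for 10, b for 11, C for 12). The following Python sketch decodes a DIMM error code under that reading of the table; the function name and the returned tuple are hypothetical.

```python
# First character of the code -> (compute module, DIMM slot group).
_DIMM_PREFIX = {
    "A": (1, "A"),
    "B": (1, "B"),
    "C": (2, "A"),
    "D": (2, "B"),
}


def decode_dimm_code(code):
    """Decode a DIMM error code into (compute module, DIMM slot)."""
    if len(code) != 2:
        raise ValueError(f"DIMM error code must have two characters: {code!r}")
    # The panel mixes upper and lower case (A, b, C, d), so normalize first.
    prefix, digit = code[0].upper(), code[1].upper()
    if prefix not in _DIMM_PREFIX or digit not in "123456789ABC":
        raise ValueError(f"unknown DIMM error code: {code!r}")
    module, group = _DIMM_PREFIX[prefix]
    return module, f"{group}{int(digit, 16)}"
```

For example, code Ab maps to the DIMM in slot A11 of compute module 1, and code d9 maps to the DIMM in slot B9 of compute module 2.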

NVMe VROC modules

|

Model |

Description |

RAID levels |

Compatible NVMe SSDs |

|

NVMe-VROC-Key-S |

NVMe VROC module standard edition |

0, 1, 10 |

All NVMe drives and PCIe M.2 SSDs |

|

NVMe-VROC-Key-P |

NVMe VROC module premium edition |

0, 1, 5, 10 |

All NVMe drives and PCIe M.2 SSDs |

|

NVMe-VROC-Key-I |

NVMe VROC module Intel edition |

0, 1, 5, 10 |

Intel NVMe drives and Intel PCIe M.2 SSDs |

Appendix C Managed hot removal of NVMe drives

Managed hot removal of NVMe drives enables you to remove NVMe drives safely while the server is operating.

For information about operating systems that support hot swapping and managed hot removal of NVMe drives, visit the query tool at http://www.h3c.com/cn/Service/Document_Software/Document_Center/Server/.

Use Table 24 to determine the managed hot removal method, which depends on the VMD status and the operating system type. By default, the VMD status is Auto. For more information about VMD, see the BIOS user guide for the server.

Table 24 Managed hot removal methods

|

VMD status |

Operating system |

Managed hot removal method |

|

Auto/Enabled |

Windows |

Performing a managed hot removal in Windows |

|

Linux |

Performing a managed hot removal in Linux |

|

Disabled |

N/A |

Contact Technical Support. |
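The selection logic in Table 24 can be sketched as a small function. This Python example assumes that the Windows and Linux rows refer to the sections of this appendix named "Performing a managed hot removal in Windows" and "Performing a managed hot removal in Linux"; the function name and the returned strings are hypothetical.

```python
def hot_removal_method(vmd_status, os_name):
    """Pick the managed hot removal method from VMD status and OS type."""
    status = vmd_status.strip().lower()
    if status == "disabled":
        # With VMD disabled, the table refers the reader to support.
        return "Contact support"
    if status in ("auto", "enabled"):
        os_key = os_name.strip().lower()
        if os_key == "windows":
            return "Performing a managed hot removal in Windows"
        if os_key == "linux":
            return "Performing a managed hot removal in Linux"
    raise ValueError(f"no method listed for {vmd_status!r} / {os_name!r}")
```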

Performing a managed hot removal in Windows

Prerequisites

Install Intel® Rapid Storage Technology enterprise (Intel® RSTe).

To obtain Intel® RSTe, use one of the following methods:

· Go to https://platformsw.intel.com/KitSearch.aspx to download the software.

· Contact Intel Support.

Procedure

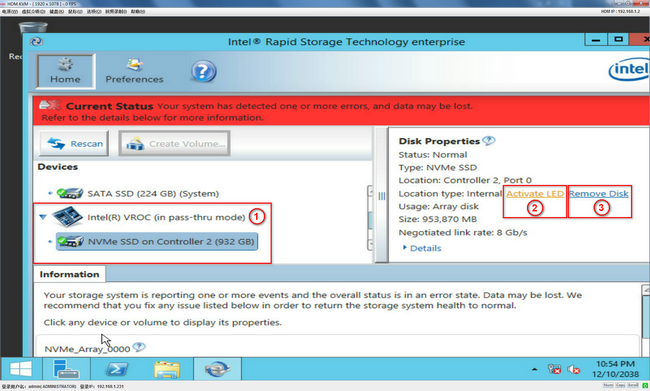

1. Stop reading data from or writing data to the NVMe drive to be removed.

2. Identify the location of the NVMe drive. For more information, see "Drive configurations and numbering."

3. Run Intel® RSTe.

4. Unmount the NVMe drive from the operating system, as shown in Figure 28:

¡ Select the NVMe drive to be removed from the Devices list.

¡ Click Activate LED to turn on the Fault/UID LED on the drive.

¡ Click Remove Disk.

Figure 28 Removing an NVMe drive

5. Observe the Fault/UID LED on the drive. If the Fault/UID LED turns steady blue and the drive is removed from the Devices list, remove the drive from the server.

For more information about the removal procedure, see "Replacing an NVMe drive."

Performing a managed hot removal in Linux

In Linux, you can perform a managed hot removal of NVMe drives from the CLI or by using Intel® Accelerated Storage Manager.

Prerequisites

· Verify that your operating system is a non-SLES Linux operating system. SLES operating systems do not support managed hot removal of NVMe drives.

· To perform a managed hot removal by using Intel® ASM, install Intel® ASM.

To obtain Intel® ASM, use one of the following methods:

¡ Go to https://platformsw.intel.com/KitSearch.aspx to download the software.

¡ Contact Intel Support.

Performing a managed hot removal from the CLI

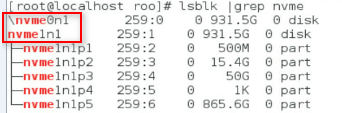

1. Stop reading data from or writing data to the NVMe drive to be removed.

2. Identify the location of the NVMe drive. For more information, see "Drive configurations and numbering."

3. Access the CLI of the server.

4. Execute the lsblk | grep nvme command to identify the drive letter of the NVMe drive, as shown in Figure 29.

Figure 29 Identifying the drive letter of the NVMe drive to be removed

5. Execute the ledctl locate=/dev/drive_letter command to turn on the Fault/UID LED on the drive. The drive_letter argument represents the drive letter, for example, nvme0n1.

6. Execute the echo 1 > /sys/block/drive_letter/device/device/remove command to unmount the drive from the operating system. The drive_letter argument represents the drive letter, for example, nvme0n1.

7. Observe the Fault/UID LED on the drive. If the Fault/UID LED turns steady blue, remove the drive from the server.

For more information about the removal procedure, see "Replacing an NVMe drive."
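Steps 4 through 6 of the CLI procedure can be scripted. The following is a minimal sketch, not a vendor-supplied tool: the function names (`list_nvme_drives`, `removal_commands`, `managed_hot_removal`) are hypothetical, and the sketch assumes that whole NVMe drives appear in the `lsblk` output with names of the form `nvme0n1`. It defaults to a dry run that only prints the commands. Running the commands for real requires root privileges and the `ledctl` utility.

```python
import re
import subprocess

# Assumption: whole NVMe drives are named nvme<controller>n<namespace>,
# for example nvme0n1. Partitions (nvme0n1p1) are deliberately not matched.
_NVME_NAME = re.compile(r"^nvme\d+n\d+$")


def list_nvme_drives(lsblk_output: str) -> list[str]:
    """Return whole-drive NVMe names found in `lsblk` output (step 4)."""
    names = []
    for line in lsblk_output.splitlines():
        fields = line.split()
        if fields and _NVME_NAME.match(fields[0]):
            names.append(fields[0])
    return names


def removal_commands(drive: str) -> list[list[str]]:
    """Build the commands for steps 5 and 6 for one drive."""
    if not _NVME_NAME.match(drive):
        raise ValueError(f"not an NVMe drive name: {drive!r}")
    remove_path = f"/sys/block/{drive}/device/device/remove"
    return [
        # Step 5: turn on the Fault/UID LED on the drive.
        ["ledctl", f"locate=/dev/{drive}"],
        # Step 6: remove the drive from the operating system.
        ["sh", "-c", f"echo 1 > {remove_path}"],
    ]


def managed_hot_removal(drive: str, dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them in order."""
    for cmd in removal_commands(drive):
        if dry_run:
            print("would run:", " ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
```

Calling `managed_hot_removal("nvme0n1")` prints the two commands without executing them. Pass `dry_run=False` only after I/O to the drive has stopped (step 1) and the physical location of the drive has been confirmed (step 2). The script does not replace step 7: still verify the Fault/UID LED state before pulling the drive.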

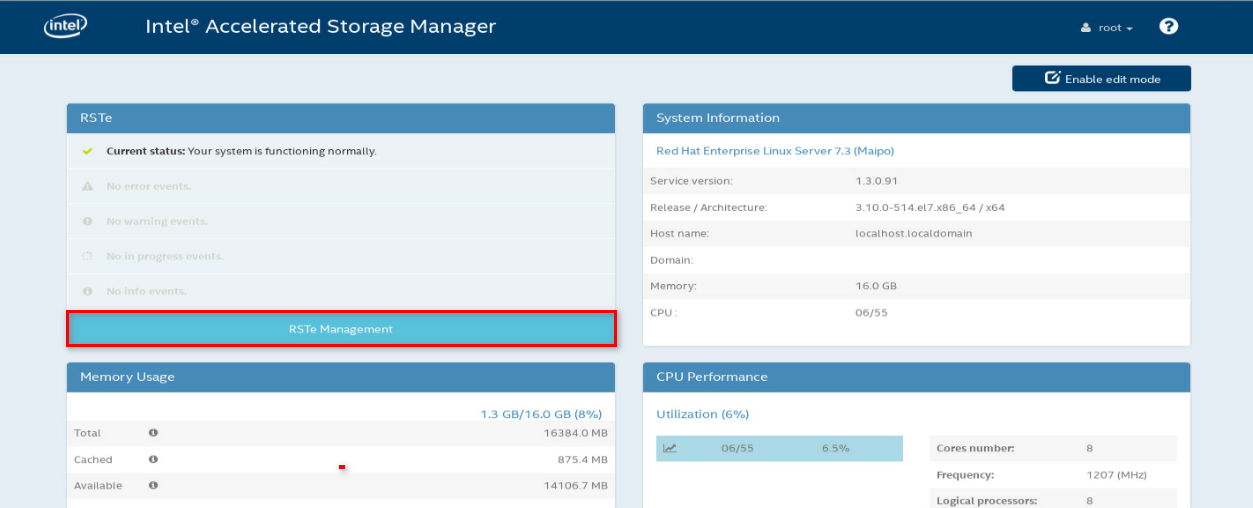

Performing a managed hot removal from the Intel® ASM Web interface

1. Stop reading data from or writing data to the NVMe drive to be removed.

2. Identify the location of the NVMe drive. For more information, see "Drive configurations and numbering."

3. Run Intel® ASM.

4. Click RSTe Management.

Figure 30 Accessing RSTe Management

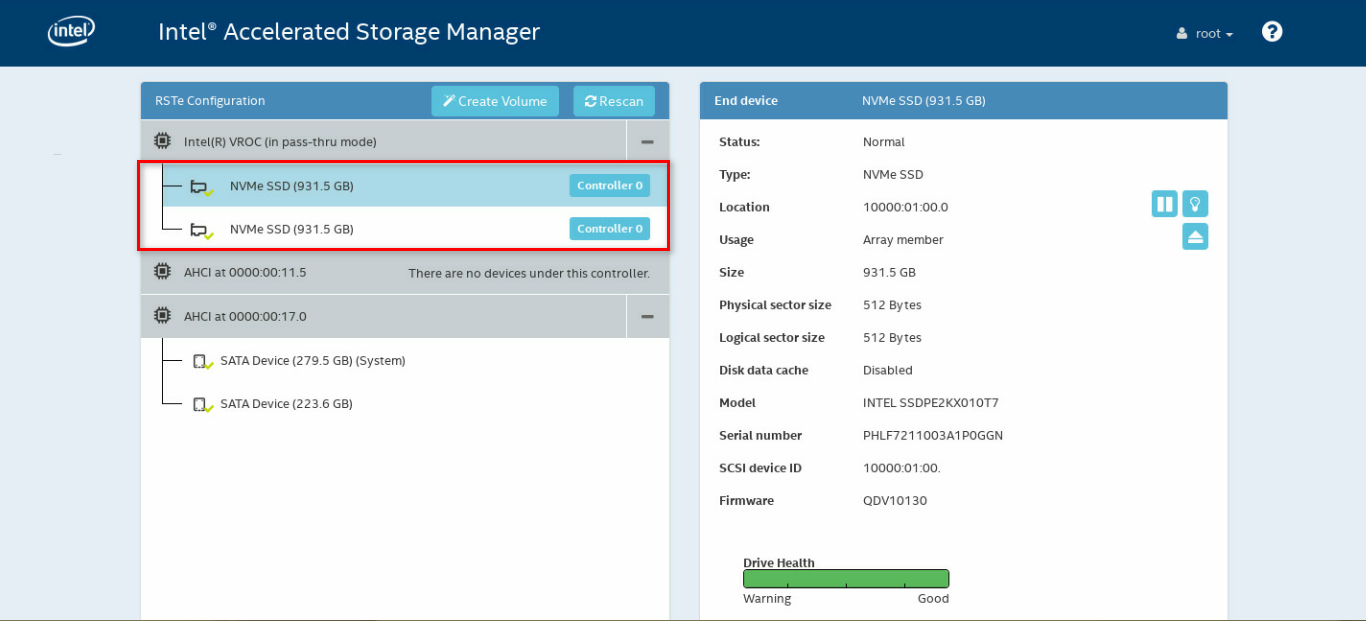

5. Expand the Intel(R) VROC(in pass-thru mode) menu to view operating NVMe drives, as shown in Figure 31.

Figure 31 Viewing operating NVMe drives

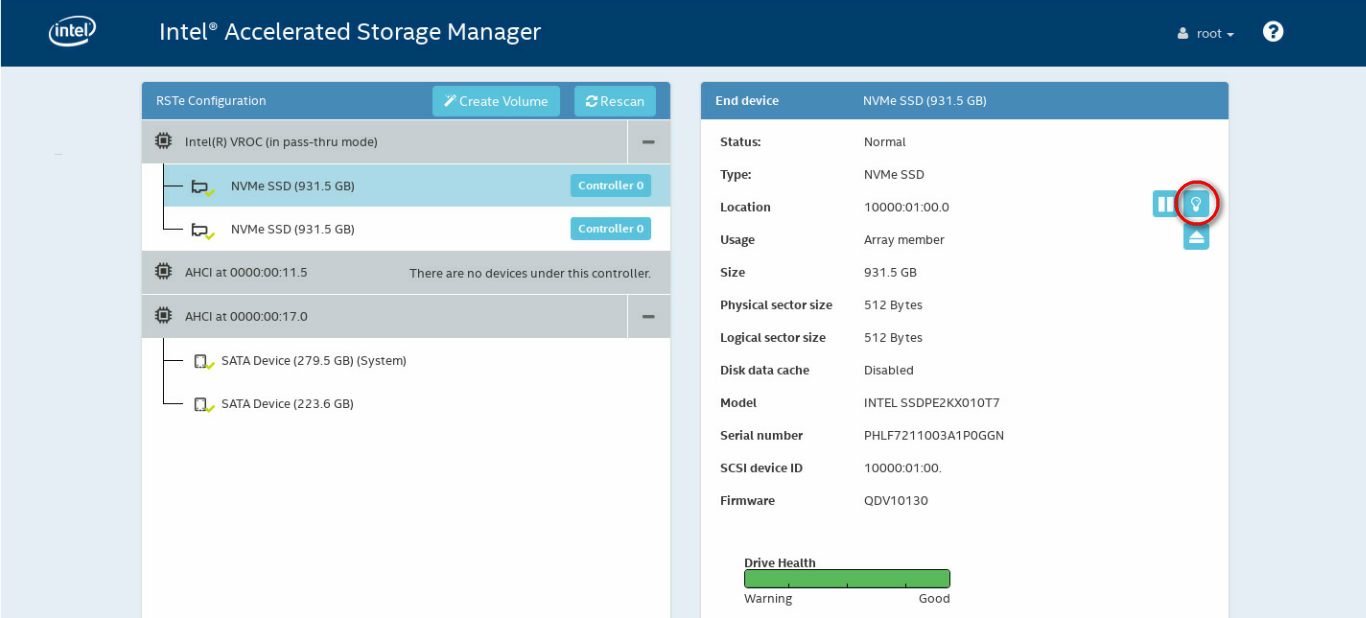

6. Click the light bulb icon to turn on the Fault/UID LED on the drive, as shown in Figure 32.

Figure 32 Turning on the drive Fault/UID LED

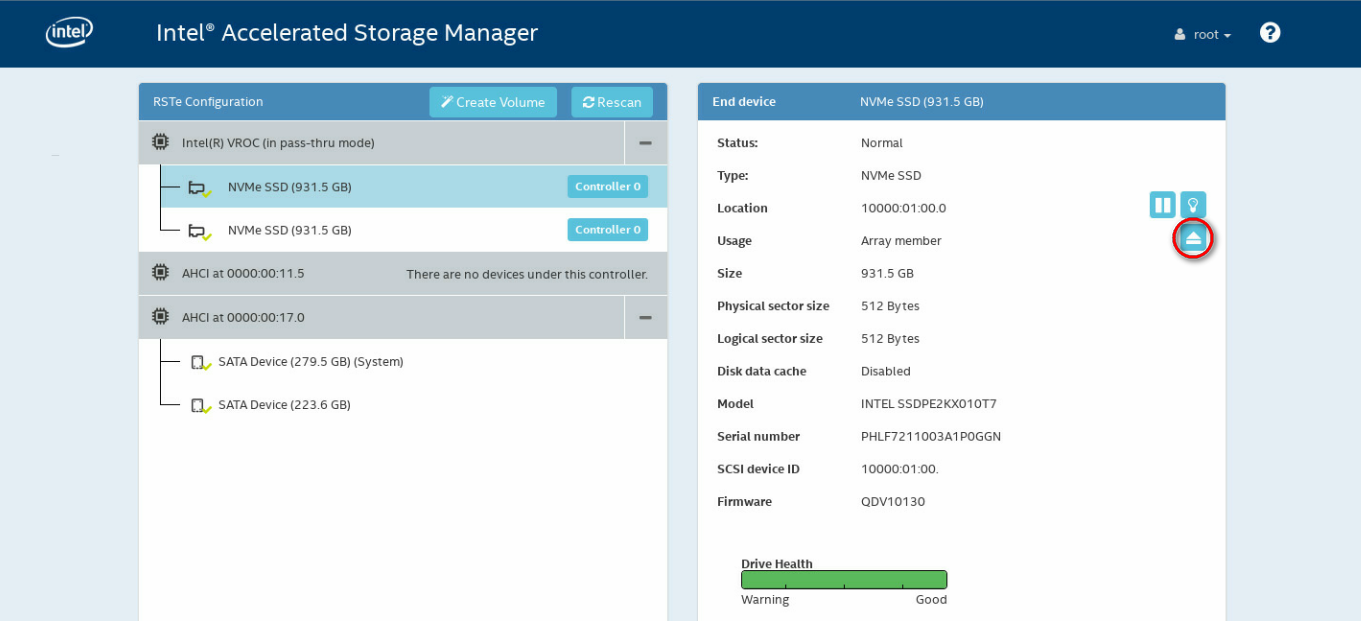

7. Click the removal icon, as shown in Figure 33.

Figure 33 Removing an NVMe drive

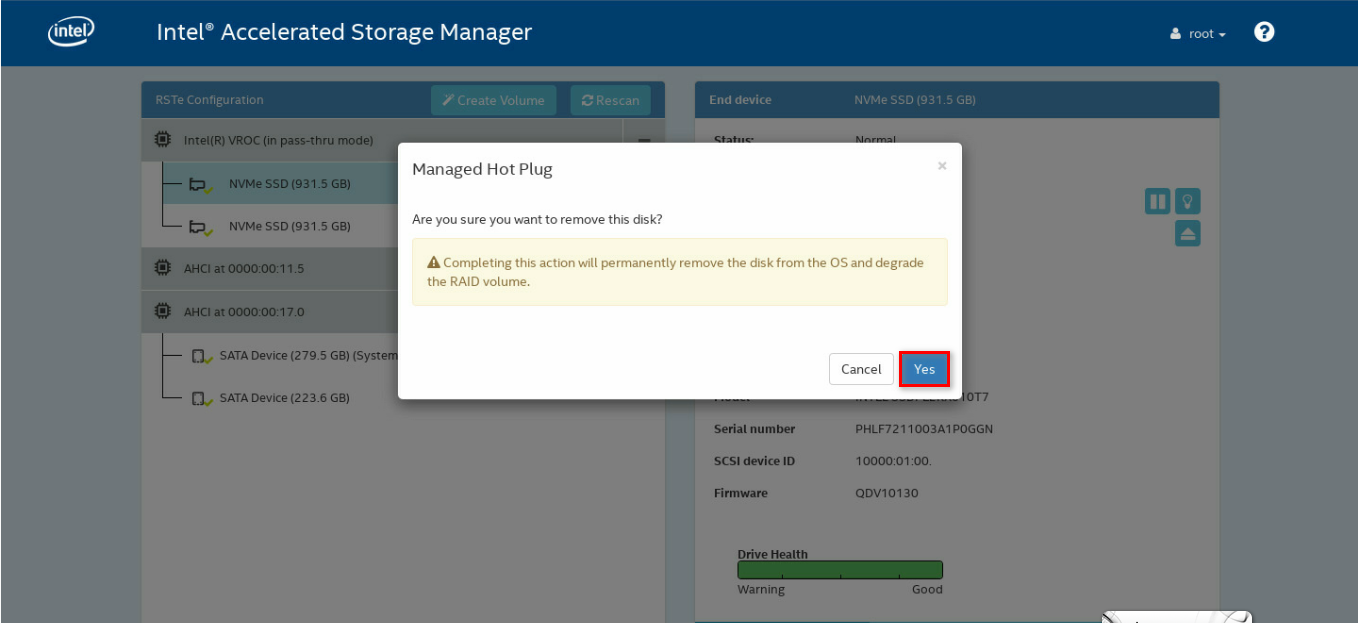

8. In the dialog box that opens, click Yes.

Figure 34 Confirming the removal

9. Remove the drive from the server. For more information about the removal procedure, see "Replacing an NVMe drive."

Appendix D Environment requirements

About environment requirements

The operating temperature requirements for the server vary depending on the server model and hardware configuration. When the general and component-based requirements conflict, use the component-based requirement.

Be aware that the actual maximum operating temperature of the server might be lower than what is stated because of poor site cooling performance. In a real data center, the server cooling performance might decrease because of adverse external factors, including poor cabinet cooling performance, high power density inside the cabinet, or insufficient spacing between devices.

General environment requirements

|

Item |

Specifications |

|

Operating temperature |

Minimum: 5°C (41°F) Maximum: · For 32SFF and 48SFF drive configurations: 40°C (104°F) · For 16SFF drive configuration: 45°C (113°F) The maximum temperature varies depending on the hardware options installed. For more information, see "Operating temperature requirements." |

|

Storage temperature |

–40°C to +70°C (–40°F to +158°F) |

|

Operating humidity |

8% to 90%, noncondensing |

|

Storage humidity |

5% to 95%, noncondensing |

|

Operating altitude |

–60 m to +3000 m (–196.85 ft to +9842.52 ft) The allowed maximum temperature decreases by 0.33°C (0.59°F) for every 100 m (328.08 ft) increase in altitude above 900 m (2952.76 ft). |

|

Storage altitude |