- Table of Contents

Feature and hardware compatibility

Configuration restrictions and guidelines

Interface backup configuration task list

Configuring strict active/standby interface backup

Explicitly specifying backup interfaces without traffic thresholds

Using interface backup with the Track module

Configuring load-shared interface backup

Displaying and maintaining interface backup

Interface backup configuration examples

Strict active/standby interface backup configuration example

Strict active/standby interface backup with the Track module configuration example

Load-shared interface backup configuration example

Collaboration between the Track module and a detection module

Collaboration between the Track module and an application module

Associating the Track module with a detection module object

Associating Track with interface management

Associating Track with route management

Associating Track with a tracked list

Associating Track with a Boolean list

Associating Track with a percentage threshold list

Associating Track with a weight threshold list

Associating the Track module with an application module

Associating Track with static routing

Associating Track with interface backup

Displaying and maintaining track entries

Static routing-Track-NQA collaboration configuration example

Restrictions: Hardware compatibility with load balancing

Configuring outbound link load balancing

About outbound link load balancing

Outbound link load balancing configuration task list

Link group configuration task list

Setting the availability criteria

Enabling the slow online feature

Specifying a fault processing method

Configuring the proximity feature

Creating a link and specifying a link group

Specifying an outbound next hop for a link

Configuring the bandwidth and connection parameters

Enabling the slow offline feature

Setting the link cost for proximity calculation

Setting the bandwidth ratio and maximum expected bandwidth

Virtual server configuration task list

Specifying the VSIP and port number

Specifying a parameter profile

Configuring the bandwidth and connection parameters

Enabling the link protection feature

Enabling bandwidth statistics collection by interfaces

LB class configuration task list

Creating a match rule that references an LB class

Creating a source IP address match rule

Creating a destination IP address match rule

Creating a domain name match rule

Creating an application group match rule

LB action configuration task list

Configuring a forwarding LB action

Configuring the ToS field in IP packets sent to the server

LB policy configuration task list

Specifying the default LB action

Sticky group configuration task list

Configuring the IP sticky method

Configuring the timeout time for sticky entries

Ignoring the limits for sessions that match sticky entries

Configuring a parameter profile

Configuring the ToS field in IP packets sent to the client

About configuring ISP information

Configuring ISP information manually

Performing a load balancing test

Displaying and maintaining outbound link load balancing

Outbound link load balancing configuration examples

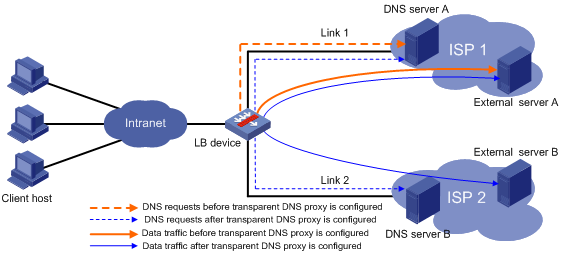

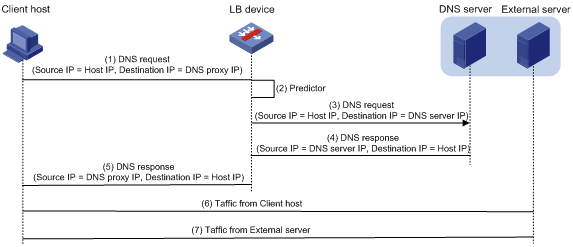

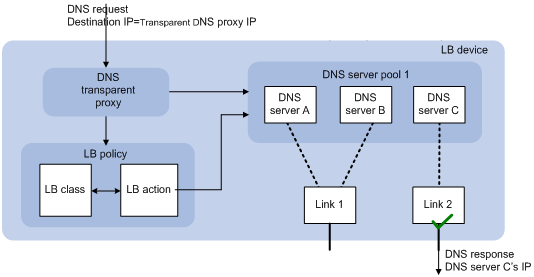

Configuring transparent DNS proxies

Transparent DNS proxy on the LB device

Transparent DNS proxy configuration task list

Configuring a transparent DNS proxy

Creating a transparent DNS proxy

Specifying an IP address and port number

Specifying the default DNS server pool

Enabling the link protection feature

Enabling the transparent DNS proxy

DNS server configuration task list

Creating a DNS server and specifying a DNS server pool

Specifying an IP address and port number

Associating a link with a DNS server

Specifying an outbound next hop for a link

Configuring the maximum bandwidth

Setting the bandwidth ratio and maximum expected bandwidth

LB class configuration task list

Creating a match rule that references an LB class

Creating a source IP address match rule

Creating a destination IP address match rule

Creating a domain name match rule

LB action configuration task list

Configuring a forwarding LB action

Configuring the ToS field in IP packets sent to the DNS server

LB policy configuration task list

Specifying the default LB action

Sticky group configuration task list

Configuring the IP sticky method

Configuring the timeout time for sticky entries

Displaying and maintaining transparent DNS proxy

Transparent DNS proxy configuration examples

Configuring interface backup

Overview

Interface backup enables you to configure multiple backup interfaces for a Layer 3 interface to increase link availability. When the primary interface fails or is overloaded, its backup interfaces can take over or participate in traffic forwarding.

Compatible interfaces

The interface backup feature is configurable for the interfaces in Table 1.

Table 1 Interfaces that support interface backup

| Category | Interfaces | Remarks |
|---|---|---|
| Ethernet | Layer 3 Ethernet interfaces/subinterfaces | N/A |
| Others | Dialer interfaces, tunnel interfaces | A dialer interface can be used as the primary interface only when it is a PPPoE client in permanent session mode. |

Backup modes

The primary interface and its backup interfaces can operate in strict active/standby mode or load sharing mode.

· Strict active/standby mode—Only one interface transmits traffic. All the other interfaces are in STANDBY state.

· Load sharing mode—Backup interfaces participate in traffic forwarding when the amount of traffic on the primary interface reaches the upper threshold. They are activated and deactivated depending on the amount of traffic.

In strict active/standby mode, traffic loss occurs when the active interface is overloaded. Load sharing mode improves link efficiency and reduces the risk of packet loss.

Strict active/standby mode

In strict active/standby mode, the primary interface always has higher priority than all backup interfaces.

· When the primary interface is operating correctly, all traffic is transmitted through the primary interface.

· When the primary interface fails, the highest-priority backup interface takes over. If the highest-priority backup interface also fails, the second highest-priority backup interface takes over, and so forth.

NOTE: If two backup interfaces have the same priority, the one configured first has preference.

An active backup interface is always preempted by the primary interface. However, a higher-priority backup interface cannot preempt a lower-priority backup interface that has taken over the primary interface.

· The primary interface takes over when it recovers from a failure condition.

· The higher-priority backup interface cannot take over when it recovers from a failure condition while the primary interface is still down.

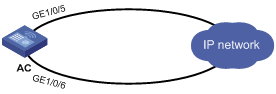

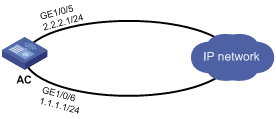

As shown in Figure 1, GigabitEthernet 1/0/5 on AC is the primary interface. GigabitEthernet 1/0/6 is its backup interface.

· When GigabitEthernet 1/0/5 is operating correctly, all traffic is transmitted through GigabitEthernet 1/0/5.

· When GigabitEthernet 1/0/5 fails, GigabitEthernet 1/0/6 takes over.

· When GigabitEthernet 1/0/5 recovers, it preempts the active backup interface because it is the primary interface.

Figure 1 Strict active/standby mode
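The takeover and preemption rules in this section can be sketched as a small selection function. This is an illustrative Python model, not device code. The function name `select_active`, the data layout, the second backup interface GE1/0/7, and the assumption that a larger number means a higher priority are hypothetical and are not taken from this guide.

```python
from typing import List, Optional, Set, Tuple

def select_active(current: Optional[str], primary: str, up: Set[str],
                  backups: List[Tuple[str, int]]) -> Optional[str]:
    """Pick the forwarding interface in strict active/standby mode.

    backups lists (name, priority) pairs in configuration order.
    Assumption: a larger number means a higher priority.
    """
    if primary in up:
        return primary   # the primary interface always preempts a backup
    if current != primary and current in up:
        return current   # an active backup is not preempted by another backup
    # sorted() is stable, so equal priorities keep configuration order
    for name, _priority in sorted(backups, key=lambda b: -b[1]):
        if name in up:
            return name
    return None          # no usable interface

# Hypothetical setup: GE1/0/5 is the primary, with two backups.
backups = [("GE1/0/6", 30), ("GE1/0/7", 20)]
after_primary_fails = select_active("GE1/0/5", "GE1/0/5",
                                    {"GE1/0/6", "GE1/0/7"}, backups)
```

In this sketch, a recovered GE1/0/6 does not displace an active GE1/0/7 while the primary is down, but the primary takes over again as soon as it is back in the `up` set.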

Load sharing mode

In load sharing mode, the backup interfaces are activated to transmit traffic depending on the traffic load on the primary interface.

· When the amount of traffic on the primary interface exceeds the upper threshold, the backup interfaces are activated in descending order of priority. This action continues until the traffic drops below the upper threshold.

· When the total amount of traffic on all load-shared interfaces decreases below the lower threshold, the backup interfaces are deactivated in ascending order of priority. This action continues until the total amount of traffic exceeds the lower threshold.

· When the primary interface fails (in DOWN state), the strict active/standby mode applies. Only one backup interface can forward traffic.

The upper and lower thresholds are user configurable.

NOTE:

· "Traffic" on an interface refers to the amount of incoming or outgoing traffic, whichever is higher.

· If two backup interfaces have the same priority, the one configured first has preference.

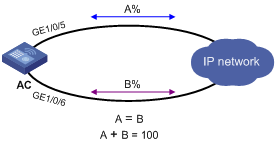

As shown in Figure 2, GigabitEthernet 1/0/5 on AC is the primary interface. GigabitEthernet 1/0/6 is its backup interface.

· When the amount of traffic on GigabitEthernet 1/0/5 exceeds the upper threshold, GigabitEthernet 1/0/6 is activated.

· When the total amount of traffic on all load-shared interfaces decreases below the lower threshold, GigabitEthernet 1/0/6 is deactivated.
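The threshold behavior in load sharing mode can be modeled as one decision per polling interval. The following Python sketch is a simplified illustration under stated assumptions: the function name `poll` is hypothetical, traffic is expressed as a percentage of the primary interface bandwidth, and at most one backup interface changes state per poll. The guide does not specify these details.

```python
def poll(active: int, backup_count: int, primary_pct: float,
         total_pct: float, upper: float, lower: float) -> int:
    """Return how many backup interfaces are active after one poll.

    Backups are assumed to be sorted by descending priority, so the
    first `active` entries of that order are the ones forwarding.
    """
    if primary_pct > upper and active < backup_count:
        return active + 1   # activate the next-highest-priority backup
    if total_pct < lower and active > 0:
        return active - 1   # deactivate the lowest-priority active backup
    return active           # between the thresholds: no change

# One backup, upper threshold 80, lower threshold 20.
state = poll(0, 1, primary_pct=85, total_pct=85, upper=80, lower=20)
```

The gap between the two thresholds acts as hysteresis: a backup that was activated at 85% stays active at 50% and is deactivated only when the total load falls below 20%.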

Feature and hardware compatibility

| Hardware series | Model | Interface backup compatibility |
|---|---|---|
| WX1800H series | WX1804H, WX1810H, WX1820H, WX1840H | Yes |
| WX3800H series | WX3820H, WX3840H | No |
| WX5800H series | WX5860H | No |

Configuration restrictions and guidelines

When you configure interface backup, follow these restrictions and guidelines:

· An interface can be configured as a backup for only one interface.

· An interface cannot be both a primary interface and a backup interface.

· For correct traffic forwarding, make sure the primary and backup interfaces have routes to the destination network.

Interface backup configuration task list

| Task | Remarks |
|---|---|
| Configuring strict active/standby interface backup: · (Method 1.) Explicitly specifying backup interfaces without traffic thresholds · (Method 2.) Using interface backup with the Track module | You cannot use these two methods at the same time for a primary interface and its backup interfaces. Use method 1 if a switchover is to be triggered by the interface state of the primary interface. Use method 2 if a switchover is to be triggered by any other state, such as the link state of the primary interface. |
| Configuring load-shared interface backup | A primary interface and its backup interfaces operate in load sharing mode after you specify the traffic thresholds on the primary interface. This method cannot be used with the other two methods at the same time for an interface. |

Configuring strict active/standby interface backup

You can use one of the following methods to configure strict active/standby interface backup:

· Explicitly specify backup interfaces for a primary interface. If this method is used, interface backup changes the state of the backup interface in response to the interface state change of the primary interface.

· Use interface backup with the Track module. If this method is used, interface backup uses a track entry to monitor the link state of the primary interface. Interface backup changes the state of a backup interface in response to the link state change of the primary interface.

Explicitly specifying backup interfaces without traffic thresholds

For the primary and backup interfaces to operate in strict active/standby mode, do not specify the traffic thresholds on the primary interface. If the traffic thresholds are configured, the interfaces will operate in load sharing mode.

You can assign priority to backup interfaces. When the primary interface fails, the backup interfaces are activated in descending order of priority, with the highest-priority interface activated first. If two backup interfaces have the same priority, the one configured first has preference.

To prevent link flapping from causing frequent interface switchovers, you can configure the following switchover delay timers:

· Up delay timer—Number of seconds that the primary or backup interface must wait before it can come up.

· Down delay timer—Number of seconds that the active primary or backup interface must wait before it is set to down state.

When the link of the active interface fails, the interface state does not change immediately. Instead, a down delay timer starts. If the link recovers before the timer expires, the interface state does not change. If the link is still down when the timer expires, the interface state changes to down.
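The effect of the down delay timer on a flapping link can be sketched as follows. This Python model is illustrative only. The function name `down_state_time` and the event format are hypothetical, and a recovery that arrives exactly when the timer expires is treated as too late, which is an assumption.

```python
from typing import List, Optional, Tuple

def down_state_time(link_events: List[Tuple[float, bool]],
                    down_delay: float) -> Optional[float]:
    """Return when the interface state is set to down, or None.

    link_events is a time-ordered list of (seconds, link_up) pairs.
    """
    failed_at = None
    for t, link_up in link_events:
        if failed_at is not None and t - failed_at >= down_delay:
            return failed_at + down_delay   # timer expired before this event
        if link_up:
            failed_at = None                # recovered in time: timer is cancelled
        elif failed_at is None:
            failed_at = t                   # link failed: down delay timer starts
    # A link that never recovers goes down when the timer expires.
    return None if failed_at is None else failed_at + down_delay

# A 3-second flap with a 5-second down delay does not change the state.
short_flap = down_state_time([(0, True), (10, False), (13, True)], 5)
```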

To configure strict active/standby interface backup for a primary interface:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter interface view. | interface interface-type interface-number | This interface must be the primary interface. |
| 3. Specify a backup interface. | backup interface interface-type interface-number [ priority ] | By default, an interface does not have any backup interfaces. Repeat this command to specify up to three backup interfaces for the interface. |
| 4. Set the switchover delay timers. | backup timer delay up-delay down-delay | By default, the up and down delay timers are both 5 seconds. |

Using interface backup with the Track module

To use interface backup with the Track module to provide strict active/standby backup for a primary interface:

· Configure a track entry to monitor state information of the primary interface. For example, monitor its link state.

· Associate the track entry with a backup interface.

Interface backup changes the state of the backup interface in response to the track entry state, as shown in Table 2.

Table 2 Action on the backup interface in response to the track entry state change

| Track entry state | State of the monitored primary link | Action on the backup interface |
|---|---|---|
| Positive | The primary link is operating correctly. | Places the backup interface in STANDBY state. |
| Negative | The primary link has failed. | Activates the backup interface to take over. |
| NotReady | The primary link is not monitored. This situation occurs when the Track module or the monitoring module is not ready, for example, because the Track module is restarting or the monitoring settings are incomplete. In this situation, interface backup cannot obtain information about the primary link from the Track module. | · If the track entry stays in NotReady state after it is created, interface backup does not change the state of the backup interface. · If the track entry state changes to NotReady from Positive or Negative, the backup interface changes back to the forwarding state before it was used for interface backup. |

For more information about configuring a track entry, see "Configuring Track."
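The mapping in Table 2 can be written as a lookup that also considers the previous track entry state. The following Python sketch is illustrative. The function name `backup_action` and the returned labels are hypothetical and are not device output.

```python
from typing import Optional

def backup_action(new_state: str, previous_state: Optional[str] = None) -> str:
    """Map a track entry state to the action on the backup interface."""
    if new_state == "Positive":
        return "standby"       # primary link is fine: backup goes to STANDBY
    if new_state == "Negative":
        return "activate"      # primary link failed: backup takes over
    if new_state == "NotReady":
        if previous_state in ("Positive", "Negative"):
            return "restore"   # back to the state before interface backup was used
        return "unchanged"     # entry has been NotReady since it was created
    raise ValueError(f"unknown track entry state: {new_state}")

action = backup_action("NotReady", previous_state="Negative")
```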

When you associate a backup interface with a track entry, follow these guidelines:

· You can associate an interface with only one track entry.

· You can create the associated track entry before or after the association. The association takes effect after the track entry is created.

To associate Track with an interface:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter interface view. | interface interface-type interface-number | This interface must be the interface you are using as a backup. |
| 3. Associate the interface with a track entry. | backup track track-entry-number | By default, an interface is not associated with a track entry. |

Configuring load-shared interface backup

To implement load-shared interface backup, you must configure the traffic thresholds on the primary interface. Interface backup regularly compares the amount of traffic with the thresholds to determine whether to activate or deactivate a backup interface. The traffic polling interval is user configurable.

You can assign priority to backup interfaces.

· When the amount of traffic on the primary interface exceeds the upper threshold, the backup interfaces are activated in descending order of priority.

· When the total amount of traffic on all load-shared interfaces decreases below the lower threshold, the backup interfaces are deactivated in ascending order of priority.

If two backup interfaces have the same priority, the one configured first has preference.

If a traffic flow has a fast forwarding entry, all packets of the flow will be forwarded out of the outgoing interface in the entry. The packets of the flow will not be distributed between interfaces when the upper threshold is reached. For more information about fast forwarding, see Layer 3—IP Services Configuration Guide.

To configure load-shared backup for an interface:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter interface view. | interface interface-type interface-number | You must enter the view of the primary interface. |
| 3. Configure a backup interface for the interface. | backup interface interface-type interface-number [ priority ] | By default, an interface does not have any backup interfaces. Repeat this command to specify up to three backup interfaces. |
| 4. Set backup load sharing thresholds. | backup threshold upper-threshold lower-threshold | By default, no traffic thresholds are configured. |
| 5. Set the traffic polling interval. | backup timer flow-check interval | The default interval is 30 seconds. |

Displaying and maintaining interface backup

Execute display commands in any view.

| Task | Command |
|---|---|
| Display traffic statistics for load-shared interfaces. | display interface-backup statistics |
| Display the status of primary and backup interfaces. | display interface-backup state |

Interface backup configuration examples

Strict active/standby interface backup configuration example

Network requirements

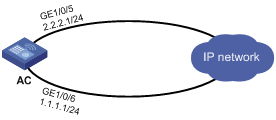

As shown in Figure 3:

· Specify GigabitEthernet 1/0/6 on AC to back up GigabitEthernet 1/0/5.

· Set the up and down delay timers to 10 seconds for the backup interfaces.

Configuration procedure

1. Assign IP addresses to interfaces, as shown in Figure 3. (Details not shown.)

2. On AC, configure backup interfaces and switchover delays:

# Specify GigabitEthernet 1/0/6 to back up GigabitEthernet 1/0/5.

[AC] interface gigabitethernet 1/0/5

[AC-GigabitEthernet1/0/5] backup interface gigabitethernet 1/0/6

# Set both up and down delay timers to 10 seconds.

[AC-GigabitEthernet1/0/5] backup timer delay 10 10

Verifying the configuration

# Display states of the primary and backup interfaces.

[AC-GigabitEthernet1/0/5] display interface-backup state

Interface: GE1/0/5

UpDelay: 10 s

DownDelay: 10 s

State: UP

Backup interfaces:

GE1/0/6 Priority: 0 State: STANDBY

The output shows that GigabitEthernet 1/0/5 is in UP state and the backup interface is in STANDBY state.

# Shut down the primary interface GigabitEthernet 1/0/5.

[AC-GigabitEthernet1/0/5] shutdown

[AC-GigabitEthernet1/0/5] display interface-backup state

Interface: GE1/0/5

UpDelay: 10 s

DownDelay: 10 s

State: DOWN

Backup interfaces:

GE1/0/6 Priority: 0 State: UP

Strict active/standby interface backup with the Track module configuration example

Network requirements

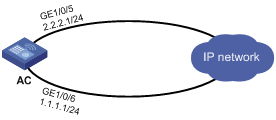

As shown in Figure 4, configure a track entry to monitor the link state of GigabitEthernet 1/0/5. When the link of GigabitEthernet 1/0/5 fails, the backup interface GigabitEthernet 1/0/6 comes up to take over.

Configuration procedure

1. Assign IP addresses to interfaces, as shown in Figure 4. (Details not shown.)

2. On AC, configure track settings:

# Configure track entry 1 to monitor the link state of GigabitEthernet 1/0/5.

[AC] track 1 interface gigabitethernet 1/0/5

# Associate track entry 1 with the backup interface GigabitEthernet 1/0/6.

[AC] interface gigabitethernet 1/0/6

[AC-GigabitEthernet1/0/6] backup track 1

[AC-GigabitEthernet1/0/6] quit

Verifying the configuration

# Verify that the backup interface GigabitEthernet 1/0/6 is in STANDBY state while the primary link is operating correctly.

[AC] display interface-backup state

IB Track Information:

GE1/0/6 Track: 1 State: STANDBY

# Shut down the primary interface GigabitEthernet 1/0/5.

[AC] interface gigabitethernet 1/0/5

[AC-GigabitEthernet1/0/5] shutdown

# Verify that the backup interface GigabitEthernet 1/0/6 comes up after the primary link goes down.

[AC-GigabitEthernet1/0/5] display interface-backup state

IB Track Information:

GE1/0/6 Track: 1 State: UP

Load-shared interface backup configuration example

Network requirements

As shown in Figure 5:

· Configure GigabitEthernet 1/0/6 on AC to back up the primary interface GigabitEthernet 1/0/5.

· On the primary interface:

○ Specify the interface bandwidth used for traffic load calculation.

○ Set the upper and lower thresholds to 80 and 20, respectively.

Configuration procedure

1. Assign IP addresses to interfaces, as shown in Figure 5. (Details not shown.)

2. On AC, configure backup interfaces and traffic thresholds:

# Specify GigabitEthernet 1/0/6 to back up GigabitEthernet 1/0/5.

[AC] interface gigabitethernet 1/0/5

[AC-GigabitEthernet1/0/5] backup interface gigabitethernet 1/0/6

# Set the expected bandwidth to 10000 kbps on the primary interface.

[AC-GigabitEthernet1/0/5] bandwidth 10000

# Set the upper and lower thresholds to 80 and 20, respectively.

[AC-GigabitEthernet1/0/5] backup threshold 80 20

Verifying the configuration

# Display traffic statistics for load-shared interfaces.

[AC-GigabitEthernet1/0/5] display interface-backup statistics

Interface: GigabitEthernet1/0/5

Statistics interval: 30 s

Bandwidth: 10000000 bps

PrimaryTotalIn: 102 bytes

PrimaryTotalOut: 108 bytes

PrimaryIntervalIn: 102 bytes

PrimaryIntervalOut: 108 bytes

Primary used bandwidth: 28 bps

TotalIn: 102 bytes

TotalOut: 108 bytes

TotalIntervalIn: 102 bytes

TotalIntervalOut: 108 bytes

Total used bandwidth: 28 bps

The output shows that the upper traffic threshold has not been exceeded. All traffic is transmitted through the primary interface GigabitEthernet 1/0/5.
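The figures in this output can be checked with simple arithmetic. The Python sketch below assumes that the used bandwidth is the larger of the incoming and outgoing byte counts for the interval, converted to bits per second and truncated to an integer. That formula is an inference that happens to reproduce the 28 bps shown above, not a documented algorithm, and the function names are hypothetical.

```python
def used_bandwidth_bps(interval_in_bytes: int, interval_out_bytes: int,
                       interval_s: int) -> int:
    """Used bandwidth for one interval: higher direction, in bits per second."""
    return max(interval_in_bytes, interval_out_bytes) * 8 // interval_s

def utilization_pct(used_bps: int, bandwidth_bps: int) -> float:
    """Used bandwidth as a percentage of the configured bandwidth."""
    return used_bps * 100 / bandwidth_bps

# Values from the display output: 102 bytes in, 108 bytes out, 30 s interval.
used = used_bandwidth_bps(102, 108, 30)
pct = utilization_pct(used, 10_000_000)
```

With these inputs, `used` is 28 bps, far below 80% of the 10000 kbps configured bandwidth, so the backup interface stays in STANDBY state.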

# Verify that the backup interface is in STANDBY state because the upper threshold has not been exceeded.

[AC-GigabitEthernet1/0/5] display interface-backup state

Interface: GE1/0/5

UpDelay: 5 s

DownDelay: 5 s

Upper threshold: 80

Lower threshold: 20

State: UP

Backup interfaces:

GE1/0/6 Priority: 0 State: STANDBY

# Increase the incoming or outgoing traffic rate to be higher than 8000 kbps (80% of the specified bandwidth) on the primary interface. (Details not shown.)

# Verify that the backup interface GigabitEthernet 1/0/6 comes up to participate in traffic forwarding.

[AC-GigabitEthernet1/0/5] display interface-backup state

Interface: GE1/0/5

UpDelay: 5 s

DownDelay: 5 s

Upper threshold: 80

Lower threshold: 20

State: UP

Backup interfaces:

GE1/0/6 Priority: 0 State: UP

Configuring Track

Overview

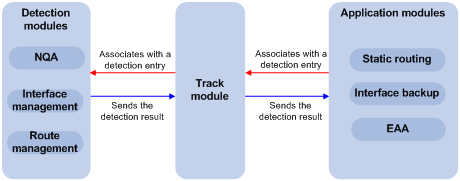

The Track module works between application modules and detection modules, as shown in Figure 6. It shields the differences between various detection modules from application modules.

Collaboration is enabled when you associate the Track module with a detection module and an application module, and it operates as follows:

1. The detection module probes specific objects such as interface status, link status, network reachability, and network performance, and informs the Track module of detection results.

2. The Track module sends the detection results to the application module.

3. When notified of changes to the tracked object, the application module can react to avoid communication interruption and network performance degradation.

Figure 6 Collaboration through the Track module

Collaboration fundamentals

The Track module collaborates with detection modules and application modules.

Collaboration between the Track module and a detection module

The detection module sends the detection result of the tracked object to the Track module. The Track module changes the status of the track entry as follows:

· If the tracked object operates correctly, the state of the track entry is Positive. For example, the track entry state is Positive in one of the following conditions:

○ The target interface is up.

○ The target network is reachable.

· If the tracked object does not operate correctly, the state of the track entry is Negative. For example, the track entry state is Negative in one of the following conditions:

○ The target interface is down.

○ The target network is unreachable.

· If the detection result is invalid, the state of the track entry is NotReady. For example, the track entry state is NotReady if its associated NQA operation does not exist.

The following detection modules can be associated with the Track module:

· NQA.

· Interface management.

· Route management.

You can associate a track entry with an object of a detection module, such as the state of an interface or reachability of an IP route. The state of the track entry is determined by the state of the tracked object.

You can also associate a track entry with a list of objects called a tracked list. The state of a tracked list is determined by the states of all objects in the list. The following types of tracked lists are supported:

· Boolean AND list—The state of a Boolean AND list is determined by the states of the tracked objects using the Boolean AND operation.

· Boolean OR list—The state of a Boolean OR list is determined by the states of the tracked objects using the Boolean OR operation.

· Percentage threshold list—The state of a percentage threshold list is determined by comparing the percentage of positive and negative objects in the list with the percentage thresholds configured for the list.

· Weight threshold list—The state of a weight threshold list is determined by comparing the weight of positive and negative objects in the list with the weight thresholds configured for the list.

Collaboration between the Track module and an application module

The following application modules can be associated with the Track module:

· Static routing.

· EAA.

When configuring a track entry for an application module, you can set a notification delay so that the application module is not notified immediately of status changes.

If no delay is configured and route convergence is slower than the link state change notification, communication failures might occur.

Track configuration task list

To implement the collaboration function, establish associations between the Track module and detection modules, and between the Track module and application modules.

To configure the Track module, perform the following tasks:

| Tasks at a glance | Remarks |
|---|---|
| Associating the Track module with a detection module object | Perform a minimum of one task. |
| Associating Track with a tracked list: · Associating Track with a Boolean list | |
| (Required.) Associating the Track module with an application module: · Associating Track with static routing | Perform a minimum of one task. |

Associating the Track module with a detection module object

Associating Track with NQA

NQA supports multiple operation types to analyze network performance and service quality. For example, an NQA operation can periodically detect whether a destination is reachable, or whether a TCP connection can be established.

When an NQA operation is associated with a track entry, the NQA module and the Track module work as follows:

· If the consecutive failures reach the specified threshold, the NQA module notifies the Track module that the tracked object has malfunctioned. The Track module then sets the track entry to Negative state.

· If the specified threshold is not reached, the NQA module notifies the Track module that the tracked object is operating correctly. The Track module then sets the track entry to Positive state.
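The consecutive-failure rule can be sketched as a counter over probe results. This Python model is a simplified illustration. The function name `nqa_track_state` is hypothetical, and resetting the counter on any successful probe is an assumption about how consecutive failures are counted.

```python
from typing import Iterable

def nqa_track_state(probe_results: Iterable[bool], threshold: int) -> str:
    """Return the track entry state after a series of NQA probes.

    probe_results holds True for a successful probe, False for a failure.
    """
    consecutive_failures = 0
    for ok in probe_results:
        # A success resets the run of failures (assumption).
        consecutive_failures = 0 if ok else consecutive_failures + 1
    return "Negative" if consecutive_failures >= threshold else "Positive"

# Three failures in a row with a threshold of 3 mark the object as failed.
state_after_failures = nqa_track_state([True, False, False, False], 3)
```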

For more information about NQA, see Network Management and Monitoring Configuration Guide.

To associate Track with NQA:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a track entry, associate it with an NQA reaction entry, and enter track entry view. | track track-entry-number nqa entry admin-name operation-tag reaction item-number | By default, no track entries exist. If the specified NQA operation or the reaction entry in the track entry does not exist, the status of the track entry is NotReady. |
| 3. Set the delay for notifying the application module of track entry state changes. | delay { negative negative-time \| positive positive-time } * | By default, the Track module notifies the application module immediately when the track entry state changes. |

Associating Track with interface management

The interface management module monitors the link status or network-layer protocol status of interfaces. When associated, the Track module and the interface management module operate as follows:

· When the link or network-layer protocol status of the interface changes to up, the interface management module informs the Track module of the change. The Track module sets the track entry to Positive state.

· When the link or network-layer protocol status of the interface changes to down, the interface management module informs the Track module of the change. The Track module sets the track entry to Negative state.

To associate Track with interface management:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a track entry, associate it with an interface, and enter track entry view. | · Create a track entry to monitor the link status of an interface: · Create a track entry to monitor the physical status of an interface: · Create a track entry to monitor the network-layer protocol status of an interface: | By default, no track entries exist. |
| 3. Set the delay for notifying the application module of track entry state changes. | delay { negative negative-time \| positive positive-time } * | By default, the Track module notifies the application module immediately when the track entry state changes. |

Associating Track with route management

The route management module monitors changes of route entries in the routing table. When associated, the Track module and the route management module operate as follows:

· When a monitored route entry is found in the routing table, the route management module informs the Track module. The Track module sets the track entry to Positive state.

· When a monitored route entry is removed from the routing table, the route management module informs the Track module of the change. The Track module sets the track entry to Negative state.
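The route-based rule amounts to a presence check against the routing table. The Python sketch below uses the standard `ipaddress` module and assumes an exact match on the monitored prefix. The function name `route_track_state` and the sample prefixes are hypothetical.

```python
import ipaddress
from typing import Iterable

def route_track_state(monitored: str, routing_table: Iterable[str]) -> str:
    """Return Positive if the monitored route entry is in the routing table.

    Prefixes can be written with a mask length or a dotted mask.
    """
    target = ipaddress.ip_network(monitored)
    present = any(ipaddress.ip_network(route) == target
                  for route in routing_table)
    return "Positive" if present else "Negative"

# The mask-length form and the dotted-mask form name the same route entry.
table = ["10.1.1.0/255.255.255.0", "10.2.0.0/16"]
state = route_track_state("10.1.1.0/24", table)
```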

To associate Track with route management:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a track entry to monitor the reachability of an IP route and enter track entry view. | track track-entry-number ip route ip-address { mask-length \| mask } reachability | By default, no track entries exist. |
| 3. Set the delay for notifying the application module of track entry state changes. | delay { negative negative-time \| positive positive-time } * | By default, the Track module notifies the application module immediately when the track entry state changes. |

Associating Track with a tracked list

Associating Track with a Boolean list

About Boolean list

A Boolean list is a list of tracked objects whose state is evaluated by using Boolean logic. It can be further divided into the following types:

· Boolean AND list—A Boolean AND list is set to the positive state only when all objects are in positive state. If one or more objects are in negative state, the list is set to the negative state.

· Boolean OR list—A Boolean OR list is set to the positive state if any object is in positive state. If all objects are in negative state, the list is set to the negative state.
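The two list types correspond to the all and any operations over the object states. The following Python sketch is illustrative. The function name `boolean_list_state` is hypothetical, and the `negate` flag models the optional `not` keyword of the `object` command as an inversion of the object state, which is an assumption. NotReady objects are not modeled.

```python
from typing import List, Tuple

def boolean_list_state(objects: List[Tuple[bool, bool]], op: str) -> str:
    """Evaluate a Boolean tracked list.

    objects holds (is_positive, negate) pairs, one per tracked object.
    op is "and" or "or".
    """
    if op not in ("and", "or"):
        raise ValueError("op must be 'and' or 'or'")
    # An object added with negation counts with its state inverted.
    values = [is_positive != negate for is_positive, negate in objects]
    result = all(values) if op == "and" else any(values)
    return "Positive" if result else "Negative"

# One Positive object and one Negative object, no negation.
objs = [(True, False), (False, False)]
and_state = boolean_list_state(objs, "and")
or_state = boolean_list_state(objs, "or")
```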

Procedure

To associate Track with a Boolean list:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a track entry. | See "Associating the Track module with a detection module object." | Create a track entry before you add it as a tracked object to a tracked list. A minimum of one track entry must be created. |
| 3. Create a Boolean tracked list and enter its view. | track track-entry-number list boolean { and \| or } | By default, no tracked lists exist. |
| 4. Add the track entry as an object to the tracked list. | object track-entry-number [ not ] | By default, a tracked list does not contain any objects. Repeat this step to add all objects of interest to the tracked list. |
| 5. (Optional.) Set the delay for notifying the application module of tracked list state changes. | delay { negative negative-time \| positive positive-time } * | By default, the Track module notifies the application module immediately when the tracked list state changes. |

Associating Track with a percentage threshold list

About percentage threshold list

A percentage threshold list uses a percentage threshold to determine the state of the list.

· If the percentage of positive objects is equal to or smaller than the negative state threshold, the list is set to the negative state.

· If the percentage of positive objects is equal to or greater than the positive state threshold, the list is set to the positive state.

· The state of the list remains unchanged when the percentage of positive objects is smaller than the positive state threshold and greater than the negative state threshold.
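The following Python sketch models this evaluation. It assumes that both thresholds are compared against the share of positive objects, which is the reading consistent with the default thresholds of 0% and 1%. The function name and arguments are hypothetical and do not correspond to any device API.

```python
def percentage_list_state(object_states, current_state,
                          negative_threshold=0, positive_threshold=1):
    """Return the new state of a percentage threshold list.

    object_states: non-empty list of "Positive"/"Negative" strings.
    current_state: state of the list before this evaluation.
    Thresholds are percentages; the defaults mirror the documented 0% and 1%.
    """
    if not object_states:
        raise ValueError("a tracked list needs at least one object")
    positive = sum(1 for state in object_states if state == "Positive")
    positive_pct = 100 * positive / len(object_states)
    if positive_pct >= positive_threshold:
        return "Positive"
    if positive_pct <= negative_threshold:
        return "Negative"
    # Between the two thresholds: keep the previous state (hysteresis).
    return current_state
```

With thresholds of 30% (negative) and 60% (positive), a list in which two of four objects are positive keeps whatever state it had before.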

Procedure

To associate Track with a percentage threshold list:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a track entry. | See "Associating the Track module with a detection module object." | Create a track entry before you add it as a tracked object to a tracked list. A minimum of one track entry must be created. |
| 3. Create a percentage threshold list and enter its view. | track track-entry-number list threshold percentage | By default, no tracked lists exist. |
| 4. Add the track entry as an object to the tracked list. | object track-entry-number [ not ] | By default, a tracked list does not contain any objects. Repeat this step to add each object of interest to the tracked list. |
| 5. Configure the threshold values used to determine the state of the percentage threshold list. | threshold percentage { negative negative-threshold \| positive positive-threshold } * | By default, the negative state threshold is 0% and the positive state threshold is 1%. |
| 6. (Optional.) Set the delay for notifying the application module of tracked list state changes. | delay { negative negative-time \| positive positive-time } * | By default, the Track module notifies the application module immediately when the tracked list state changes. |

Associating Track with a weight threshold list

About weight threshold list

A weight threshold list uses a weight threshold to determine the state of the list.

· If the total weight of positive objects is equal to or greater than the positive state threshold, the list is set to the positive state.

· If the total weight of positive objects is equal to or smaller than the negative state threshold, the list is set to the negative state.

· The state of the list remains unchanged when the total weight of positive objects is smaller than the positive state threshold and greater than the negative state threshold.
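A weight threshold list can be modeled the same way, with the summed weight of positive objects taking the place of a percentage. The sketch below is a hedged illustration: it assumes both thresholds are compared against the total weight of positive objects (consistent with the defaults of 0 and 1) and that each object carries its own weight. The `object` syntax shown in this section has no weight parameter, so the per-object weights here are hypothetical inputs.

```python
def weight_list_state(objects, current_state,
                      negative_threshold=0, positive_threshold=1):
    """Return the new state of a weight threshold list.

    objects: list of (weight, state) pairs; state is "Positive" or "Negative".
    current_state: state of the list before this evaluation.
    """
    # Only positive objects contribute weight (assumption, see lead-in).
    positive_weight = sum(weight for weight, state in objects
                          if state == "Positive")
    if positive_weight >= positive_threshold:
        return "Positive"
    if positive_weight <= negative_threshold:
        return "Negative"
    # Between the thresholds the list keeps its previous state.
    return current_state
```

For three objects weighted 10, 20, and 30 with thresholds of 10 (negative) and 40 (positive), the list turns positive only when positive objects contribute a weight of 40 or more, for example the 10 and 30 objects together.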

Procedure

To associate Track with a weight threshold list:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a track entry. | See "Associating the Track module with a detection module object." | Create a track entry before you add it as a tracked object to a tracked list. A minimum of one track entry must be created. |
| 3. Create a weight threshold list and enter its view. | track track-entry-number list threshold weight | By default, no tracked lists exist. |
| 4. Add the track entry as an object to the tracked list. | object track-entry-number [ not ] | By default, a tracked list does not contain any objects. Repeat this step to add each object of interest to the tracked list. |
| 5. Configure the threshold values used to determine the state of the weight threshold list. | threshold weight { negative negative-threshold \| positive positive-threshold } * | By default, the negative state threshold is 0 and the positive state threshold is 1. |
| 6. (Optional.) Set the delay for notifying the application module of tracked list state changes. | delay { negative negative-time \| positive positive-time } * | By default, the Track module notifies the application module immediately when the tracked list state changes. |

Associating the Track module with an application module

Before you associate the Track module with an application module, make sure the associated track entry has been created.

Associating Track with static routing

A static route is a manually configured route. For more information about static route configuration, see Layer 3—IP Routing Configuration Guide.

Static routes cannot adapt to network topology changes. Link failures or topology changes can make the routes unreachable and interrupt communication.

To resolve this problem, configure another route to back up the static route. When the static route is reachable, packets are forwarded through the static route. When the static route is unreachable, packets are forwarded through the backup route.

To check the accessibility of a static route in real time, associate the Track module with the static route.

If you specify the next hop but not the output interface when configuring a static route, you can configure the static routing-Track-detection module collaboration. This collaboration enables you to verify the accessibility of the static route based on the track entry state.

· If the track entry is in Positive state, the following conditions exist:

○ The next hop of the static route is reachable.

○ The configured static route is valid.

· If the track entry is in Negative state, the following conditions exist:

○ The next hop of the static route is not reachable.

○ The configured static route is invalid.

· If the track entry is in NotReady state, the following conditions exist:

○ The accessibility of the next hop of the static route is unknown.

○ The static route is valid.

If a static route needs route recursion, the associated track entry must monitor the next hop of the recursive route, not the next hop of the static route. Otherwise, a valid route might be considered invalid.
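The state mapping above, combined with a backup route, can be sketched in Python as follows. This is a simplified model under stated assumptions, not the routing implementation: `StaticRoute`, `route_is_valid`, and `select_route` are hypothetical names, a smaller preference value is treated as the preferred route, and a track entry with no recorded state is treated as NotReady.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StaticRoute:
    next_hop: str
    preference: int = 60            # smaller value is preferred
    track_entry: Optional[int] = None


def route_is_valid(route, track_states):
    """Positive and NotReady keep the route valid; Negative invalidates it."""
    if route.track_entry is None:
        return True
    # Assumption: an entry with no recorded state counts as NotReady.
    return track_states.get(route.track_entry, "NotReady") != "Negative"


def select_route(routes, track_states):
    """Pick the valid route with the smallest preference value, if any."""
    valid = [r for r in routes if route_is_valid(r, track_states)]
    return min(valid, key=lambda r: r.preference) if valid else None
```

With a tracked route of preference 60 and an untracked backup route of preference 80, the backup route is selected only while the track entry is Negative.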

To associate Track with static routing:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Associate a static route with a track entry to check the accessibility of the next hop. | ip route-static { dest-address { mask-length \| mask } \| group group-name } { interface-type interface-number [ next-hop-address ] \| next-hop-address } [ permanent \| track track-entry-number ] [ preference preference-value ] [ tag tag-value ] [ description description-text ] | By default, Track is not associated with static routing. |

Associating Track with interface backup

The following matrix shows the feature and hardware compatibility:

| Hardware series | Model | Feature compatibility |
|---|---|---|
| WX1800H series | WX1804H, WX1810H, WX1820H, WX1840H | Yes |
| WX3800H series | WX3820H, WX3840H | No |
| WX5800H series | WX5860H | No |

Interface backup allows interfaces on a device to back up each other, with the active interface transmitting data and the standby interfaces staying in backup state. When the active interface or the link where the active interface resides fails, a standby interface takes over to transmit data. This feature enhances the availability of the network. For more information, see "Configuring interface backup."

To enable a standby interface to detect the status of the active interface, you can associate the standby interface with a track entry.

· If the track entry is in Positive state, the following conditions exist:

○ The link where the active interface resides operates correctly.

○ The standby interfaces stay in backup state.

· If the track entry is in Negative state, the following conditions exist:

○ The link where the active interface resides has failed.

○ A standby interface changes to the active interface for data transmission.

· If the track entry is always in NotReady state, the following conditions exist:

○ The association does not take effect.

○ Each interface keeps its original forwarding state.

When the track entry changes to NotReady state from another state, a standby interface becomes the active interface.
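The difference between an entry that has always been NotReady and one that changes to NotReady from another state can be made explicit with a small Python sketch. The function name and the returned action strings are hypothetical labels chosen for illustration.

```python
def backup_action(previous_state, current_state):
    """Map a track entry state change to the behavior of the standby interface.

    previous_state: "Positive", "Negative", "NotReady", or None if the entry
                    has had no other state before.
    current_state:  "Positive", "Negative", or "NotReady".
    """
    if current_state == "Positive":
        return "standby_stays_backup"
    if current_state == "Negative":
        return "standby_takes_over"
    if current_state == "NotReady":
        # A change to NotReady from Positive or Negative triggers a takeover.
        if previous_state in ("Positive", "Negative"):
            return "standby_takes_over"
        # Always NotReady: the association has no effect.
        return "keep_forwarding_state"
    raise ValueError(f"unknown track state: {current_state}")
```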

To associate Track with interface backup:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter interface view. | interface interface-type interface-number | N/A |
| 3. Associate the interface with a track entry. | backup track track-entry-number | By default, no track entry is associated with an interface. You can associate an interface with only one track entry. If you use this command multiple times, the most recent configuration takes effect. |

Associating Track with EAA

About Track association with EAA

You can configure EAA track event monitor policies to monitor the positive-to-negative or negative-to-positive state changes of track entries.

· If you specify only one track entry for a policy, EAA triggers the policy when it detects the specified state change on the track entry.

· If you specify multiple track entries for a policy, EAA triggers the policy when it detects the specified state change on the last monitored track entry. For example, if you configure a policy to monitor the positive-to-negative state change of multiple track entries, EAA triggers the policy when the last positive track entry monitored by the policy is changed to the Negative state.

You can set a suppression time for a track event monitor policy. The timer starts when the policy is triggered. The system does not process messages that report the monitored track event until the timer times out.
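The triggering rule for multiple track entries and the suppression timer can be sketched in Python. This is a toy model under assumptions, not the EAA implementation: the class and method names are hypothetical, time is passed in as plain seconds, and the model keeps recording state changes during the suppression time while refusing to trigger.

```python
class TrackEventPolicy:
    """Toy model of one track event monitor policy."""

    def __init__(self, initial_states, target_state, suppress_time=0.0):
        self.states = dict(initial_states)    # entry number -> state
        self.target_state = target_state      # "Negative" or "Positive"
        self.suppress_time = suppress_time    # seconds
        self.suppressed_until = float("-inf")

    def on_state_change(self, entry, new_state, now):
        """Record a state change and return True if the policy triggers."""
        if entry not in self.states:
            return False
        old_state = self.states[entry]
        self.states[entry] = new_state
        if new_state == old_state or new_state != self.target_state:
            return False
        # Trigger only when this was the last entry to reach the target state.
        if any(s != self.target_state for s in self.states.values()):
            return False
        # The suppression timer started at the previous trigger.
        if now < self.suppressed_until:
            return False
        self.suppressed_until = now + self.suppress_time
        return True
```

For a policy that monitors the positive-to-negative change of entries 1 and 2, the first entry going Negative does not trigger the policy. The second one does, and a repeat of the same event inside the suppression time is ignored.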

For more information about EAA, see Network Management and Monitoring Configuration Guide.

Procedure

To associate Track with EAA:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a CLI-defined monitor policy and enter its view, or enter the view of an existing CLI-defined monitor policy. | rtm cli-policy policy-name | By default, no CLI-defined monitor policies exist. |
| 3. Configure a track event. | event track track-entry-number-list state { negative \| positive } [ suppress-time suppress-time ] | By default, a monitor policy does not contain any track event. |

Displaying and maintaining track entries

Execute display commands in any view.

| Task | Command |
|---|---|
| Display information about track entries. | display track { track-entry-number \| all [ negative \| positive ] } [ brief ] |

Static routing-Track-NQA collaboration configuration example

Network requirements

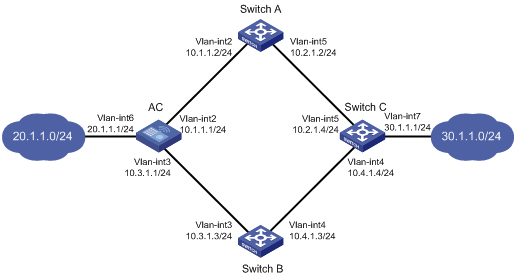

As shown in Figure 7:

· The AC is the default gateway of the hosts in network 20.1.1.0/24.

· Switch C is the default gateway of the hosts in network 30.1.1.0/24.

· Hosts in the two networks communicate with each other through static routes.

To ensure network availability, configure route backup and static routing-Track-NQA collaboration on the AC and Switch C as follows:

· On the AC, assign a higher priority to the static route to 30.1.1.0/24 with the next hop Switch A. This route is the master route. The static route to 30.1.1.0/24 with the next hop Switch B acts as the backup route. When the master route is unavailable, the backup route takes effect. The AC forwards packets to 30.1.1.0/24 through Switch B.

· On Switch C, assign a higher priority to the static route to 20.1.1.0/24 with the next hop Switch A. This route is the master route. The static route to 20.1.1.0/24 with the next hop Switch B acts as the backup route. When the master route is unavailable, the backup route takes effect. Switch C forwards packets to 20.1.1.0/24 through Switch B.

Configuration procedure

1. Create VLANs and assign ports to them. Configure the IP address of each VLAN interface, as shown in Figure 7. (Details not shown.)

2. Configure the AC:

# Configure a static route to 30.1.1.0/24 with the next hop 10.1.1.2 and the default priority 60. Associate this static route with track entry 1.

<AC> system-view

[AC] ip route-static 30.1.1.0 24 10.1.1.2 track 1

# Configure a static route to 30.1.1.0/24 with the next hop 10.3.1.3 and the priority 80.

[AC] ip route-static 30.1.1.0 24 10.3.1.3 preference 80

# Configure a static route to 10.2.1.4 with the next hop 10.1.1.2.

[AC] ip route-static 10.2.1.4 24 10.1.1.2

# Create an NQA operation with the administrator admin and the operation tag test.

[AC] nqa entry admin test

# Configure the operation type as ICMP echo.

[AC-nqa-admin-test] type icmp-echo

# Specify 10.2.1.4 as the destination address of the operation.

[AC-nqa-admin-test-icmp-echo] destination ip 10.2.1.4

# Specify 10.1.1.2 as the next hop of the operation.

[AC-nqa-admin-test-icmp-echo] next-hop ip 10.1.1.2

# Configure the ICMP echo operation to repeat every 100 milliseconds.

[AC-nqa-admin-test-icmp-echo] frequency 100

# Configure reaction entry 1, specifying that five consecutive probe failures trigger the Track module.

[AC-nqa-admin-test-icmp-echo] reaction 1 checked-element probe-fail threshold-type consecutive 5 action-type trigger-only

[AC-nqa-admin-test-icmp-echo] quit

# Start the NQA operation.

[AC] nqa schedule admin test start-time now lifetime forever

# Configure track entry 1, and associate it with reaction entry 1 of the NQA operation.

[AC] track 1 nqa entry admin test reaction 1

3. Configure Switch A:

# Configure a static route to 30.1.1.0/24 with the next hop 10.2.1.4.

<SwitchA> system-view

[SwitchA] ip route-static 30.1.1.0 24 10.2.1.4

# Configure a static route to 20.1.1.0/24 with the next hop 10.1.1.1.

[SwitchA] ip route-static 20.1.1.0 24 10.1.1.1

4. Configure Switch B:

# Configure a static route to 30.1.1.0/24 with the next hop 10.4.1.4.

<SwitchB> system-view

[SwitchB] ip route-static 30.1.1.0 24 10.4.1.4

# Configure a static route to 20.1.1.0/24 with the next hop 10.3.1.1.

[SwitchB] ip route-static 20.1.1.0 24 10.3.1.1

5. Configure Switch C:

# Configure a static route to 20.1.1.0/24 with the next hop 10.2.1.2 and the default priority 60. Associate this static route with track entry 1.

<SwitchC> system-view

[SwitchC] ip route-static 20.1.1.0 24 10.2.1.2 track 1

# Configure a static route to 20.1.1.0/24 with the next hop 10.4.1.3 and the priority 80.

[SwitchC] ip route-static 20.1.1.0 24 10.4.1.3 preference 80

# Configure a static route to 10.1.1.1 with the next hop 10.2.1.2.

[SwitchC] ip route-static 10.1.1.1 24 10.2.1.2

# Create an NQA operation with the administrator admin and the operation tag test.

[SwitchC] nqa entry admin test

# Specify the operation type as ICMP echo.

[SwitchC-nqa-admin-test] type icmp-echo

# Specify 10.1.1.1 as the destination address of the operation.

[SwitchC-nqa-admin-test-icmp-echo] destination ip 10.1.1.1

# Specify 10.2.1.2 as the next hop of the operation.

[SwitchC-nqa-admin-test-icmp-echo] next-hop ip 10.2.1.2

# Configure the ICMP echo operation to repeat every 100 milliseconds.

[SwitchC-nqa-admin-test-icmp-echo] frequency 100

# Configure reaction entry 1, specifying that five consecutive probe failures trigger the Track module.

[SwitchC-nqa-admin-test-icmp-echo] reaction 1 checked-element probe-fail threshold-type consecutive 5 action-type trigger-only

[SwitchC-nqa-admin-test-icmp-echo] quit

# Start the NQA operation.

[SwitchC] nqa schedule admin test start-time now lifetime forever

# Configure track entry 1, and associate it with reaction entry 1 of the NQA operation.

[SwitchC] track 1 nqa entry admin test reaction 1

Verifying the configuration

# Display information about the track entry on the AC.

[AC] display track all

Track ID: 1

State: Positive

Duration: 0 days 0 hours 0 minutes 32 seconds

Notification delay: Positive 0, Negative 0 (in seconds)

Tracked object:

NQA entry: admin test

Reaction: 1

Remote IP/URL:--

Local IP:--

Interface:--

The output shows that the status of the track entry is Positive, indicating that the NQA operation has succeeded and the master route is available.

# Display the routing table of the AC.

[AC] display ip routing-table

Destinations : 10 Routes : 10

Destination/Mask Proto Pre Cost NextHop Interface

10.1.1.0/24 Direct 0 0 10.1.1.1 Vlan2

10.1.1.1/32 Direct 0 0 127.0.0.1 InLoop0

10.2.1.0/24 Static 60 0 10.1.1.2 Vlan2

10.3.1.0/24 Direct 0 0 10.3.1.1 Vlan3

10.3.1.1/32 Direct 0 0 127.0.0.1 InLoop0

20.1.1.0/24 Direct 0 0 20.1.1.1 Vlan6

20.1.1.1/32 Direct 0 0 127.0.0.1 InLoop0

30.1.1.0/24 Static 60 0 10.1.1.2 Vlan2

127.0.0.0/8 Direct 0 0 127.0.0.1 InLoop0

127.0.0.1/32 Direct 0 0 127.0.0.1 InLoop0

The output shows that the AC forwards packets to 30.1.1.0/24 through Switch A.

# Remove the IP address of interface VLAN-interface 2 on Switch A.

<SwitchA> system-view

[SwitchA] interface vlan-interface 2

[SwitchA-Vlan-interface2] undo ip address

# Display information about the track entry on the AC.

[AC] display track all

Track ID: 1

State: Negative

Duration: 0 days 0 hours 0 minutes 32 seconds

Notification delay: Positive 0, Negative 0 (in seconds)

Tracked object:

NQA entry: admin test

Reaction: 1

Remote IP/URL:--

Local IP:--

Interface:--

The output shows that the status of the track entry is Negative, indicating that the NQA operation has failed and the master route is unavailable.

# Display the routing table of the AC.

[AC] display ip routing-table

Destinations : 10 Routes : 10

Destination/Mask Proto Pre Cost NextHop Interface

10.1.1.0/24 Direct 0 0 10.1.1.1 Vlan2

10.1.1.1/32 Direct 0 0 127.0.0.1 InLoop0

10.2.1.0/24 Static 60 0 10.1.1.2 Vlan2

10.3.1.0/24 Direct 0 0 10.3.1.1 Vlan3

10.3.1.1/32 Direct 0 0 127.0.0.1 InLoop0

20.1.1.0/24 Direct 0 0 20.1.1.1 Vlan6

20.1.1.1/32 Direct 0 0 127.0.0.1 InLoop0

30.1.1.0/24 Static 80 0 10.3.1.3 Vlan3

127.0.0.0/8 Direct 0 0 127.0.0.1 InLoop0

127.0.0.1/32 Direct 0 0 127.0.0.1 InLoop0

The output shows that the AC forwards packets to 30.1.1.0/24 through Switch B. The backup static route has taken effect.

# Verify that hosts in 20.1.1.0/24 can communicate with the hosts in 30.1.1.0/24 when the master route fails.

[AC] ping -a 20.1.1.1 30.1.1.1

Ping 30.1.1.1: 56 data bytes, press CTRL_C to break

Reply from 30.1.1.1: bytes=56 Sequence=1 ttl=254 time=2 ms

Reply from 30.1.1.1: bytes=56 Sequence=2 ttl=254 time=1 ms

Reply from 30.1.1.1: bytes=56 Sequence=3 ttl=254 time=1 ms

Reply from 30.1.1.1: bytes=56 Sequence=4 ttl=254 time=2 ms

Reply from 30.1.1.1: bytes=56 Sequence=5 ttl=254 time=1 ms

--- Ping statistics for 30.1.1.1 ---

5 packet(s) transmitted, 5 packet(s) received, 0.00% packet loss

round-trip min/avg/max/std-dev = 1/1/2/1 ms

# Verify that the hosts in 30.1.1.0/24 can communicate with the hosts in 20.1.1.0/24 when the master route fails.

[SwitchC] ping -a 30.1.1.1 20.1.1.1

Ping 20.1.1.1: 56 data bytes, press CTRL_C to break

Reply from 20.1.1.1: bytes=56 Sequence=1 ttl=254 time=2 ms

Reply from 20.1.1.1: bytes=56 Sequence=2 ttl=254 time=1 ms

Reply from 20.1.1.1: bytes=56 Sequence=3 ttl=254 time=1 ms

Reply from 20.1.1.1: bytes=56 Sequence=4 ttl=254 time=1 ms

Reply from 20.1.1.1: bytes=56 Sequence=5 ttl=254 time=1 ms

--- Ping statistics for 20.1.1.1 ---

5 packet(s) transmitted, 5 packet(s) received, 0.00% packet loss

round-trip min/avg/max/std-dev = 1/1/2/1 ms

Load balancing overview

Load balancing (LB) is a cluster technology that distributes services among multiple network devices or links.

Restrictions: Hardware compatibility with load balancing

| Hardware series | Model | Load balancing compatibility |
|---|---|---|
| WX1800H | WX1804H, WX1810H, WX1820H, WX1840H | Yes |
| WX3800H | WX3820H, WX3840H | No |
| WX5800H | WX5860H | No |

Advantages of load balancing

Load balancing has the following advantages:

· High performance—Improves overall system performance by distributing services to multiple devices or links.

· Scalability—Meets increasing service requirements without compromising service quality by easily adding devices or links.

· High availability—Improves overall availability by using backup devices or links.

· Manageability—Simplifies configuration and maintenance by centralizing management on the load balancing device.

· Transparency—Preserves the transparency of the network topology for end users. Adding or removing devices or links does not affect services.

Load balancing types

The device supports link load balancing. Link load balancing applies to network environments that have multiple carrier links and selects links dynamically to improve link utilization. It supports IPv4 and IPv6, but does not support IPv4-to-IPv6 packet translation. Link load balancing is classified into the following types:

· Outbound link load balancing—Load balances traffic among the links from the internal network to the external network.

· Transparent DNS proxy—Load balances DNS requests among the links from the internal network to the external network.

Configuring outbound link load balancing

About outbound link load balancing

Outbound link load balancing load balances traffic among the links from the internal network to the external network.

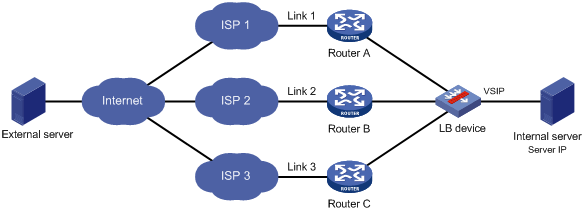

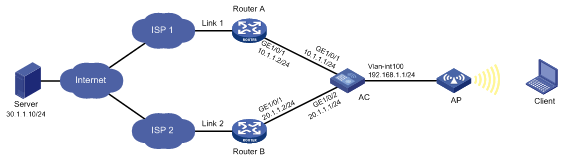

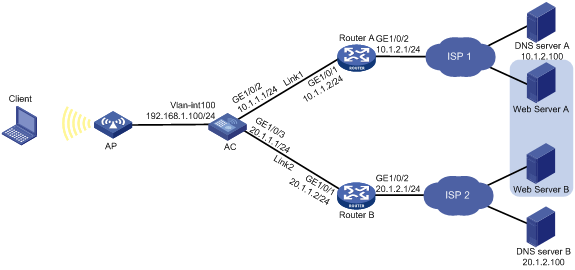

Typical network diagram

As shown in Figure 8, outbound link load balancing contains the following elements:

· LB device—Distributes outbound traffic among multiple links.

· Link—Physical links provided by ISPs.

· VSIP—Virtual service IP address of the cluster, which identifies the destination network for packets from the internal network.

· Server IP—IP address of a server.

Workflow

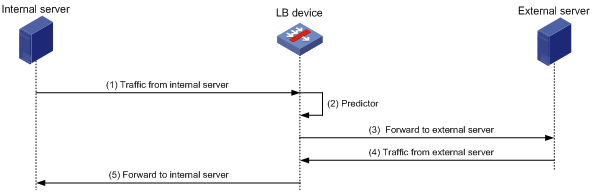

Figure 9 shows the outbound link load balancing workflow.

Figure 9 Outbound link load balancing workflow

The workflow for outbound link load balancing is as follows:

1. The LB device receives traffic from the internal server.

2. The LB device selects the optimal link based on the LB policy, sticky method, proximity algorithm, and scheduling algorithm (typically the bandwidth algorithm or maximum bandwidth algorithm) in turn.

3. The LB device forwards the traffic to the external server through the optimal link.

4. The LB device receives traffic from the external server.

5. The LB device forwards the traffic to the internal server.

Outbound link load balancing configuration task list

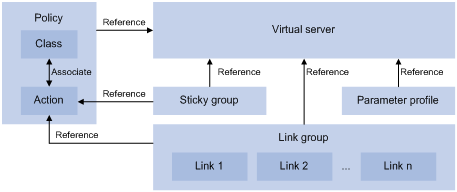

Figure 10 shows the relationship between the following configuration items:

· Link group—A collection of links that provide similar functions. A link group can be referenced by a virtual server or an LB action.

· Link—Physical links provided by ISPs.

· Virtual server—A virtual service provided by the LB device to determine whether to perform load balancing for packets received on the LB device. Only the packets that match a virtual server are load balanced.

· LB class—Classifies packets to implement load balancing based on packet type.

· LB action—Drops, forwards, or modifies packets.

· LB policy—Associates an LB class with an LB action. An LB policy can be referenced by a virtual server.

· Sticky group—Uses a sticky method to distribute similar sessions to the same link. A sticky group can be referenced by a virtual server or an LB action.

· Parameter profile—Defines advanced parameters to process packets. A parameter profile can be referenced by a virtual server.

Figure 10 Relationship between the main configuration items

To configure outbound link load balancing, perform the following tasks:

| Tasks at a glance |
|---|
| (Required.) Configuring a link group |
| (Required.) Configuring a link |
| (Required.) Configuring a virtual server |
| (Optional.) Configuring an LB class |
| (Optional.) Configuring an LB action |
| (Optional.) Configuring an LB policy |
| (Optional.) Configuring a sticky group |
| (Optional.) Configuring a parameter profile |
| (Optional.) Configuring ISP information |
| (Optional.) Configuring the ALG feature |
| (Optional.) Performing a load balancing test |
| (Optional.) Enabling SNMP notifications |

Configuring a link group

You can add links that provide similar functions to a link group to facilitate management.

Link group configuration task list

| Tasks at a glance |
|---|
| (Required.) Creating a link group |
| (Required.) Scheduling links |
| (Required.) Setting the availability criteria |
| (Required.) Disabling NAT |
| (Optional.) Configuring SNAT |
| (Optional.) Enabling the slow online feature |
| (Optional.) Configuring health monitoring |
| (Optional.) Specifying a fault processing method |
| (Optional.) Configuring the proximity feature |

Creating a link group

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a link group and enter link group view. | loadbalance link-group link-group-name | By default, no link groups exist. |
| 3. (Optional.) Set a description for the link group. | description text | By default, no description is set for a link group. |

Scheduling links

About scheduling links

Perform this task to specify a scheduling algorithm for a link group and specify the number of links to participate in scheduling. The LB device calculates the links to process user requests based on the specified scheduling algorithm.

The device provides the following scheduling algorithms for a link group:

· Weighted least connection algorithm (least-connection)—Always assigns user requests to the link with the fewest weighted active connections (the number of active connections divided by weight).

· Random algorithm (random)—Randomly assigns user requests to links.

· Round robin algorithm (round-robin)—Assigns user requests to links based on the weights of links. A higher weight indicates more user requests will be assigned.

· Bandwidth algorithm (bandwidth)—Distributes user requests to links according to the weights and remaining bandwidth of links.

· Maximum bandwidth algorithm (max-bandwidth)—Always distributes user requests to the idle link that has the largest remaining bandwidth.

· Source IP address hash algorithm (hash address source)—Hashes the source IP address of user requests and distributes user requests to different links according to the hash values.

· Source IP address and port hash algorithm (hash address source-ip-port)—Hashes the source IP address and port number of user requests and distributes user requests to different links according to the hash values.

· Destination IP address hash algorithm (hash address destination)—Hashes the destination IP address of user requests and distributes user requests to different links according to the hash values.
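Two of the algorithms above can be illustrated with a short Python sketch. It is a simplified model, not the device's scheduler: the `Link` class and function names are hypothetical, and the use of SHA-256 for the source IP address hash is an arbitrary choice made for the example because this section does not specify the hash function.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Link:
    name: str
    weight: int
    active_connections: int = 0


def pick_least_connection(links):
    """Weighted least connection: smallest active_connections / weight wins."""
    return min(links, key=lambda link: link.active_connections / link.weight)


def pick_by_source_hash(links, source_ip):
    """Source IP hash: the same source address always maps to the same link."""
    digest = hashlib.sha256(source_ip.encode("ascii")).digest()
    index = int.from_bytes(digest[:4], "big") % len(links)
    return links[index]
```

With a weight-2 link that carries 10 connections and a weight-1 link that carries 6, the weighted values are 5 and 6, so the weight-2 link receives the next request.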

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link group view. | loadbalance link-group link-group-name | N/A |
| 3. Specify a scheduling algorithm for the link group. | predictor hash address { destination \| source \| source-ip-port } [ mask mask-length ] [ prefix prefix-length ] | By default, the scheduling algorithm for a link group is weighted round robin. |
| 4. Specify the number of links to participate in scheduling. | selected-link min min-number max max-number | By default, the links with the highest priority participate in scheduling. |

Setting the availability criteria

About setting the availability criteria

Perform this task to set the criteria (lower percentage and upper percentage) that determine whether a link group is available. This helps implement traffic switchover between the master and backup link groups.

· When the percentage of available links among all links in the master link group is smaller than the lower percentage, traffic is switched to the backup link group.

· When the percentage of available links among all links in the master link group is greater than the upper percentage, traffic is switched back to the master link group.
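The switchover rule can be sketched in Python as follows. The function name and arguments are hypothetical. Keeping the current group when the percentage lies between the two values is an assumption inferred from the two rules above, which only describe what happens below the lower percentage and above the upper percentage.

```python
def use_master_group(available, total, lower_pct, upper_pct, currently_master):
    """Return True if traffic should use the master link group.

    available / total: available and total links in the master link group.
    lower_pct / upper_pct: the lower and upper percentages.
    currently_master: whether traffic is on the master group right now.
    """
    if total <= 0:
        raise ValueError("the link group must contain at least one link")
    available_pct = 100 * available / total
    if available_pct < lower_pct:
        return False              # switch to the backup link group
    if available_pct > upper_pct:
        return True               # switch back to the master link group
    # Assumption: between the two values, traffic stays where it is.
    return currently_master
```

With a lower percentage of 30 and an upper percentage of 70, a master group with 5 of 10 links available does not cause a switchover in either direction.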

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link group view. | loadbalance link-group link-group-name | N/A |
| 3. Set the criteria to determine whether the link group is available. | activate lower lower-percentage upper upper-percentage | By default, when a minimum of one link is available, the link group is available. |

Disabling NAT

Restrictions and guidelines

Typically, outbound link load balancing networking requires disabling NAT for a link group.

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link group view. | loadbalance link-group link-group-name | N/A |
| 3. Disable NAT for the link group. | transparent enable | By default, NAT is enabled for a link group. |

Configuring SNAT

About SNAT

After a link group references the SNAT address pool, the LB device replaces the source address of the packets it receives with an SNAT address before forwarding the packets.

Restrictions and guidelines

An SNAT address pool can have a maximum of 256 IPv4 addresses and 65536 IPv6 addresses. No overlapping IPv4 or IPv6 addresses are allowed in different SNAT address pools.

As a best practice, do not use SNAT because its application scope is limited for outbound link load balancing.

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create an SNAT address pool and enter SNAT address pool view. | loadbalance snat-pool pool-name | By default, no SNAT address pools exist. |
| 3. (Optional.) Set a description for the SNAT address pool. | description text | By default, no description is set for an SNAT address pool. |
| 4. Specify an address range for the SNAT address pool. | · Specify an IPv4 address range: · Specify an IPv6 address range: | By default, no address range is specified for an SNAT address pool. |
| 5. Return to system view. | quit | N/A |
| 6. Enter link group view. | loadbalance link-group link-group-name | N/A |
| 7. Specify the SNAT address pool to be referenced by the link group. | snat-pool pool-name | By default, no SNAT address pool is referenced by a link group. |

Enabling the slow online feature

About the slow online feature

Links newly added to a link group might be unable to immediately process large numbers of services assigned by the LB device. To resolve this issue, enable the slow online feature for the link group. The feature uses the standby timer and ramp-up timer. When the links are brought online, the LB device does not assign any services to the links until the standby timer expires.

When the standby timer expires, the ramp-up timer starts. During the ramp-up time, the LB device increases the service amount according to the processing capability of the links, until the ramp-up timer expires.
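The two timers can be pictured with a small Python sketch. The text above does not say how quickly the service amount grows during the ramp-up time, so the linear ramp used here is purely an assumption, and the function name is hypothetical.

```python
def slow_online_share(elapsed, standby_time, ramp_up_time):
    """Return the fraction (0.0 to 1.0) of its normal load that a link
    receives `elapsed` seconds after it was brought online.

    Assumption: the load grows linearly during the ramp-up time.
    """
    if elapsed < standby_time:
        return 0.0                      # standby timer still running
    if ramp_up_time <= 0 or elapsed >= standby_time + ramp_up_time:
        return 1.0                      # ramp-up timer expired
    return (elapsed - standby_time) / ramp_up_time
```

With a standby time of 5 seconds and a ramp-up time of 10 seconds, the link receives no services for the first 5 seconds, half of its normal share at 10 seconds under the linear assumption, and its full share from 15 seconds on.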

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link group view. | loadbalance link-group link-group-name | N/A |
| 3. Enable the slow online feature for the link group. | slow-online [ standby-time standby-time ramp-up-time ramp-up-time ] | By default, the slow online feature is disabled for a link group. |

Configuring health monitoring

About configuring health monitoring

Perform this task to enable health monitoring to detect the availability of links.

Restrictions and guidelines

The health monitoring configuration in link view takes precedence over the configuration in link group view.

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link group view. | loadbalance link-group link-group-name | N/A |
| 3. Specify a health monitoring method for the link group. | probe template-name | By default, no health monitoring method is specified for a link group. |
| 4. Specify the health monitoring success criteria for the link group. | success-criteria { all \| at-least min-number } | By default, health monitoring succeeds only when all the specified health monitoring methods succeed. |
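
The steps above might be applied as in the following sketch. The link group name lg1 and the template names icmp-t1 and tcp-t1 are hypothetical, and the templates are assumed to exist already. The sketch also assumes that the probe command can be repeated to specify more than one method, which the default remark implies but this section does not state outright. CLI prompts are omitted, and lines that start with # are annotations.

```text
# Enter the view of a hypothetical link group named lg1.
system-view
loadbalance link-group lg1
# Specify two health monitoring methods (hypothetical, preconfigured templates).
probe icmp-t1
probe tcp-t1
# Treat health monitoring as successful if at least one method succeeds.
success-criteria at-least 1
```

Using at-least 1 instead of the default all keeps the links available when only one of the two methods fails.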

Specifying a fault processing method

About fault processing methods

Perform this task to specify one of the following fault processing methods for a link group:

· Keep—Does not actively terminate the connection with the failed link. Keeping or terminating the connection depends on the timeout mechanism of the protocol.

· Reschedule—Redirects the connection to another available link in the link group.

· Reset—Terminates the connection with the failed link by sending RST packets (for TCP packets) or ICMP unreachable packets (for other types of packets).

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link group view. | loadbalance link-group link-group-name | N/A |
| 3. Specify a fault processing method for the link group. | fail-action { keep \| reschedule \| reset } | By default, the fault processing method is keep. All available connections are kept. |
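
For example, the following sketch changes the fault processing method from the default keep to reschedule. The link group name lg1 is hypothetical. CLI prompts are omitted, and lines that start with # are annotations.

```text
# Enter the view of a hypothetical link group named lg1.
system-view
loadbalance link-group lg1
# Redirect connections on a failed link to another available link in the group.
fail-action reschedule
```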

Configuring the proximity feature

About the proximity feature

The proximity feature performs link detection to select the optimal link to a destination. If no proximity information for a destination is available, the load balancing module selects a link based on the scheduling algorithm. It then performs proximity detection to generate proximity entries for forwarding subsequent traffic.

You can specify an NQA template or load-balancing probe template to perform link detection. The device generates proximity entries according to the detection results and proximity parameter settings. For information about NQA templates, see NQA configuration in Network Management and Monitoring Configuration Guide.

Restrictions and guidelines

To configure the proximity feature, first configure proximity parameters in proximity view, and then enable the proximity feature in link group view.

Configuring proximity parameters

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter proximity view. | | N/A |
| 3. Specify the proximity probe method for packets. | match [ match-id ] tcp probe nqa-template | By default, no proximity probe method is specified. |
| 4. Specify the default proximity probe method. | match default probe nqa-template | By default, the default proximity probe method is not specified. |
| 5. Set the mask length for IPv4 proximity entries. | | By default, the mask length for IPv4 proximity entries is 24. |
| 6. Set the prefix length for IPv6 proximity entries. | | By default, the prefix length for IPv6 proximity entries is 96. |
| 7. Set the network delay weight for proximity calculation. | | By default, the network delay weight for proximity calculation is 100. |
| 8. Set the TTL weight for proximity calculation. | | By default, the TTL weight for proximity calculation is 100. |
| 9. Set the bandwidth weight for proximity calculation. | | By default, the inbound or outbound bandwidth weight for proximity calculation is 100. |
| 10. Set the cost weight for proximity calculation. | | By default, the cost weight for proximity calculation is 100. |
| 11. Set the aging timer for proximity entries. | | By default, the aging timer for proximity entries is 60 seconds. |
| 12. Set the maximum number of proximity entries. | | By default, the maximum number of proximity entries is not set. |
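
The two probe commands that this table does list could be used as in the following sketch. The template names tcp-t1 and icmp-t1 and the match ID 1 are hypothetical. The command that enters proximity view is not shown in this section, so the sketch starts inside that view. Lines that start with # are annotations, not commands.

```text
# Run these commands in proximity view.
# Probe TCP packets with a hypothetical NQA template named tcp-t1.
match 1 tcp probe tcp-t1
# Default probe method; assumed to apply when no other match rule fits.
match default probe icmp-t1
```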

Enabling the proximity feature

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link group view. | | N/A |
| 3. Enable the proximity feature. | | By default, the proximity feature is disabled for a link group. |

Configuring a link

A link is a physical link provided by an ISP. A link can belong to only one link group. A link group can have multiple links.

Link configuration task list

| Tasks at a glance |
|---|
| (Required.) Creating a link and specifying a link group |
| (Required.) Specifying an outbound next hop for a link |
| (Required.) Setting a weight and priority |
| (Optional.) Configuring the bandwidth and connection parameters |
| (Optional.) Configuring health monitoring |
| (Optional.) Enabling the slow offline feature |
| (Optional.) Setting the link cost for proximity calculation |
| (Optional.) Setting the bandwidth ratio and maximum expected bandwidth |

Creating a link and specifying a link group

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Create a link and enter link view. | loadbalance link link-name | By default, no links exist. |
| 3. (Optional.) Set a description for the link. | description text | By default, no description is set for a link. |
| 4. Specify a link group for the link. | link-group link-group-name | By default, a link does not belong to any link group. |
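
A minimal sketch of these steps follows. The link name lk1, the description text, and the link group name lg1 are hypothetical, and lg1 is assumed to have been created already. CLI prompts are omitted, and lines that start with # are annotations.

```text
# Create a hypothetical link named lk1 and enter its view.
system-view
loadbalance link lk1
# Optional description (illustrative text).
description Uplink to ISP 1
# Add the link to the hypothetical link group lg1.
link-group lg1
```

Because a link can belong to only one link group, the sketch specifies a single link group for lk1.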

Specifying an outbound next hop for a link

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link view. | loadbalance link link-name | N/A |
| 3. Specify an outbound next hop for the link. | · Specify the IPv4 address of the outbound next hop: · Specify the IPv6 address of the outbound next hop: | By default, no outbound next hop is specified for a link. |

Setting a weight and priority

About setting a weight and priority

Perform this task to set the weight that the weighted round robin and weighted least connection algorithms use for a link, and to set the scheduling priority of the link in its link group.

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link view. | loadbalance link link-name | N/A |
| 3. Set a weight for the link. | weight weight-value | By default, the weight of a link is 100. |
| 4. Set a priority for the link. | priority priority | By default, the priority of a link is 4. |
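
The following sketch shows the two commands with non-default values. The link name lk1 and the values 150 and 6 are assumptions for illustration only. This section does not give the valid ranges or say how the priority value is ordered, so confirm both in the command reference. CLI prompts are omitted, and lines that start with # are annotations.

```text
# Enter the view of a hypothetical link named lk1.
system-view
loadbalance link lk1
# Set a weight other than the default of 100 (illustrative value).
weight 150
# Set a priority other than the default of 4 (illustrative value).
priority 6
```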

Configuring the bandwidth and connection parameters

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link view. | loadbalance link link-name | N/A |
| 3. Set the maximum bandwidth for the link. | rate-limit bandwidth [ inbound \| outbound ] bandwidth-value | By default, the maximum bandwidth, inbound bandwidth, and outbound bandwidth are 0 KBps for a link. The bandwidths are not limited. |
| 4. Set the maximum number of connections for the link. | connection-limit max max-number | By default, the maximum number of connections is 0 for a link. The number is not limited. |
| 5. Set the maximum number of connections per second for the link. | rate-limit connection connection-number | By default, the maximum number of connections per second is 0 for a link. The number is not limited. |
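
As an illustration, the following sketch limits the bandwidth and connections of one link. The link name lk1 and all numeric values are assumptions. The bandwidth values are assumed to be in KBps because the default remark uses that unit, and valid ranges are not given in this section. CLI prompts are omitted, and lines that start with # are annotations.

```text
# Enter the view of a hypothetical link named lk1.
system-view
loadbalance link lk1
# Limit inbound and outbound bandwidth separately (illustrative values).
rate-limit bandwidth inbound 10240
rate-limit bandwidth outbound 5120
# Limit the total number of connections (illustrative value).
connection-limit max 50000
# Limit the number of new connections per second (illustrative value).
rate-limit connection 2000
```

Setting any of these values back to 0 would, according to the default remarks, remove the corresponding limit.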

Configuring health monitoring

About configuring health monitoring

Perform this task to enable health monitoring to detect the availability of a link.

Restrictions and guidelines

The health monitoring configuration in link view takes precedence over the configuration in link group view.

Procedure

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter link view. | loadbalance link link-name | N/A |
| 3. Specify a health monitoring method for the link. | probe template-name | By default, no health monitoring method is specified for a link. |
| 4. Specify the health monitoring success criteria for the link. | success-criteria { all \| at-least min-number } | By default, the health monitoring succeeds only when all the specified health monitoring methods succeed. |
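
The following sketch configures health monitoring for a single link. The link name lk1 and the template name icmp-t1 are hypothetical, and the template is assumed to exist already. CLI prompts are omitted, and lines that start with # are annotations.

```text
# Enter the view of a hypothetical link named lk1.
system-view
loadbalance link lk1
# Use a hypothetical, preconfigured template for this link only.
probe icmp-t1
# Require every specified method to succeed (same as the default).
success-criteria all
```

Because the configuration in link view takes precedence, lk1 uses this setting even if its link group specifies a different health monitoring method.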

Enabling the slow offline feature

About the slow offline feature

The shutdown command immediately terminates the existing connections of a link. With the slow offline feature enabled, the existing connections are instead left to age out, and no new connections are established on the link.

Restrictions and guidelines

To enable the slow offline feature for a link, you must execute the slow-shutdown enable command and then the shutdown command. If you execute the shutdown command and then the slow-shutdown enable command, the slow offline feature does not take effect and the link is shut down.
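
The required command order can be sketched as follows. The link name lk1 is hypothetical, and both commands are assumed to be executed in link view, as the procedure for this task suggests. CLI prompts are omitted, and lines that start with # are annotations.

```text
# Enter the view of a hypothetical link named lk1.
system-view
loadbalance link lk1
# Enable slow offline first ...
slow-shutdown enable
# ... and only then shut down the link, so that existing connections age out.
shutdown
```

Reversing the last two commands would shut down the link immediately, and the slow offline feature would not take effect.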

Procedure

|

Step |

Command |

Remarks |

|

1. Enter system view. |

system-view |

N/A |

|

2. Enter link view. |