| Title | Size | Downloads |

|---|---|---|

| H3C HDM2 Technology White Paper-6W102-book.pdf | 7.08 MB |

- Table of Contents

- Related Documents

-

| Title | Size | Download |

|---|---|---|

| book | 7.08 MB |

|

|

|

|

|

H3C HDM2 |

|

Technology White Paper |

|

|

Copyright © 2024 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

The information in this document is subject to change without notice.

Access capability and management interface

Server deployment capabilities

Functions related to device migration and retirement

Server management capabilities

Server monitoring capabilities

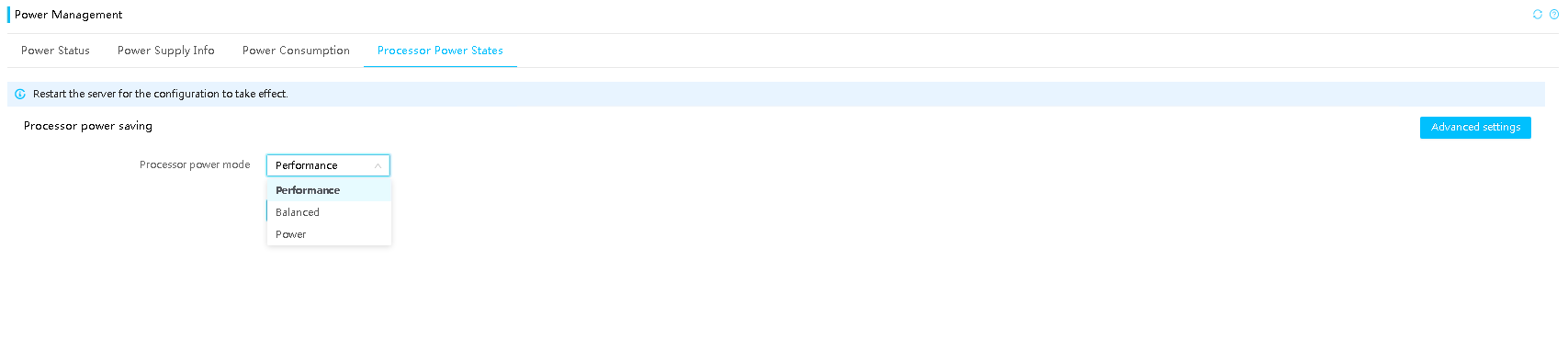

Optimization points of the host's operational capacity

FRU and asset information management

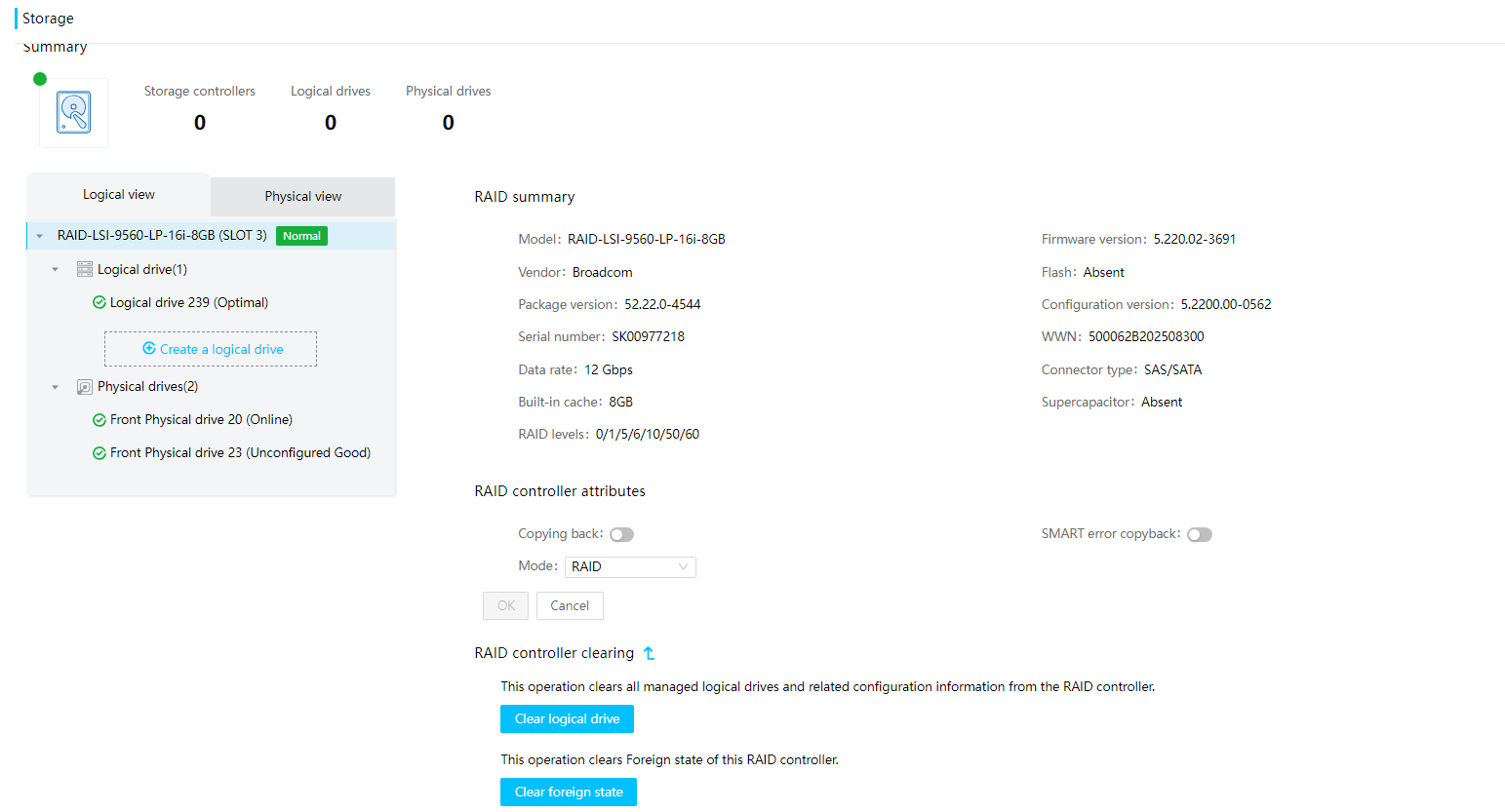

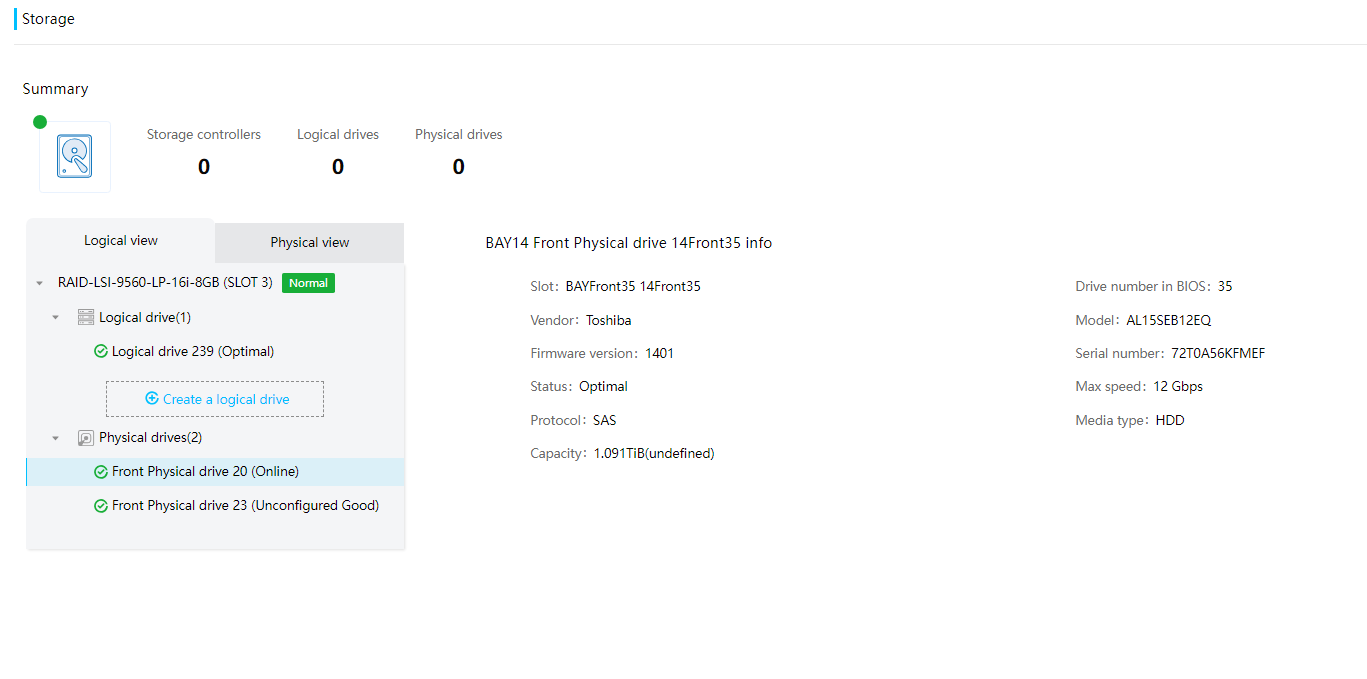

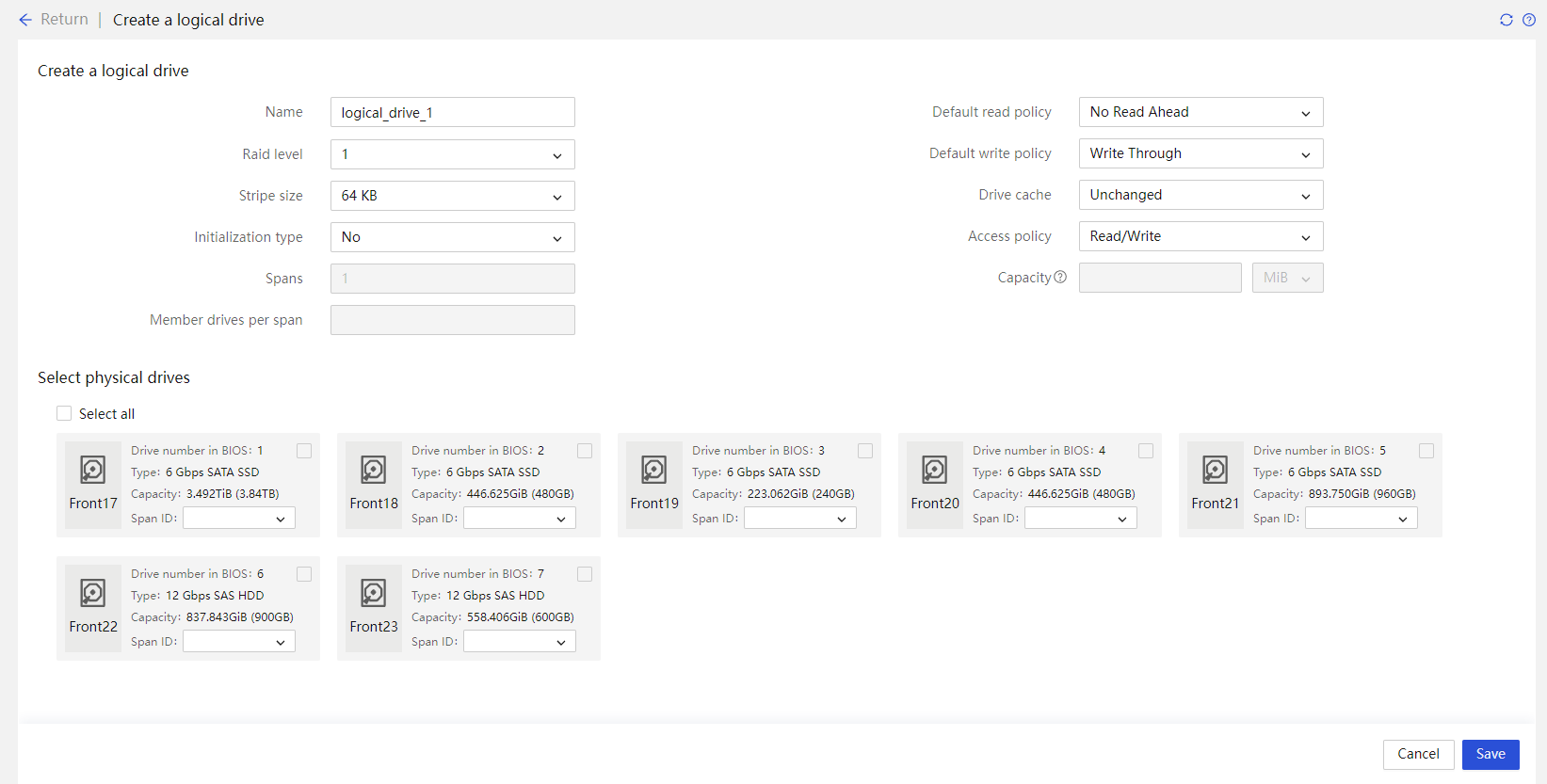

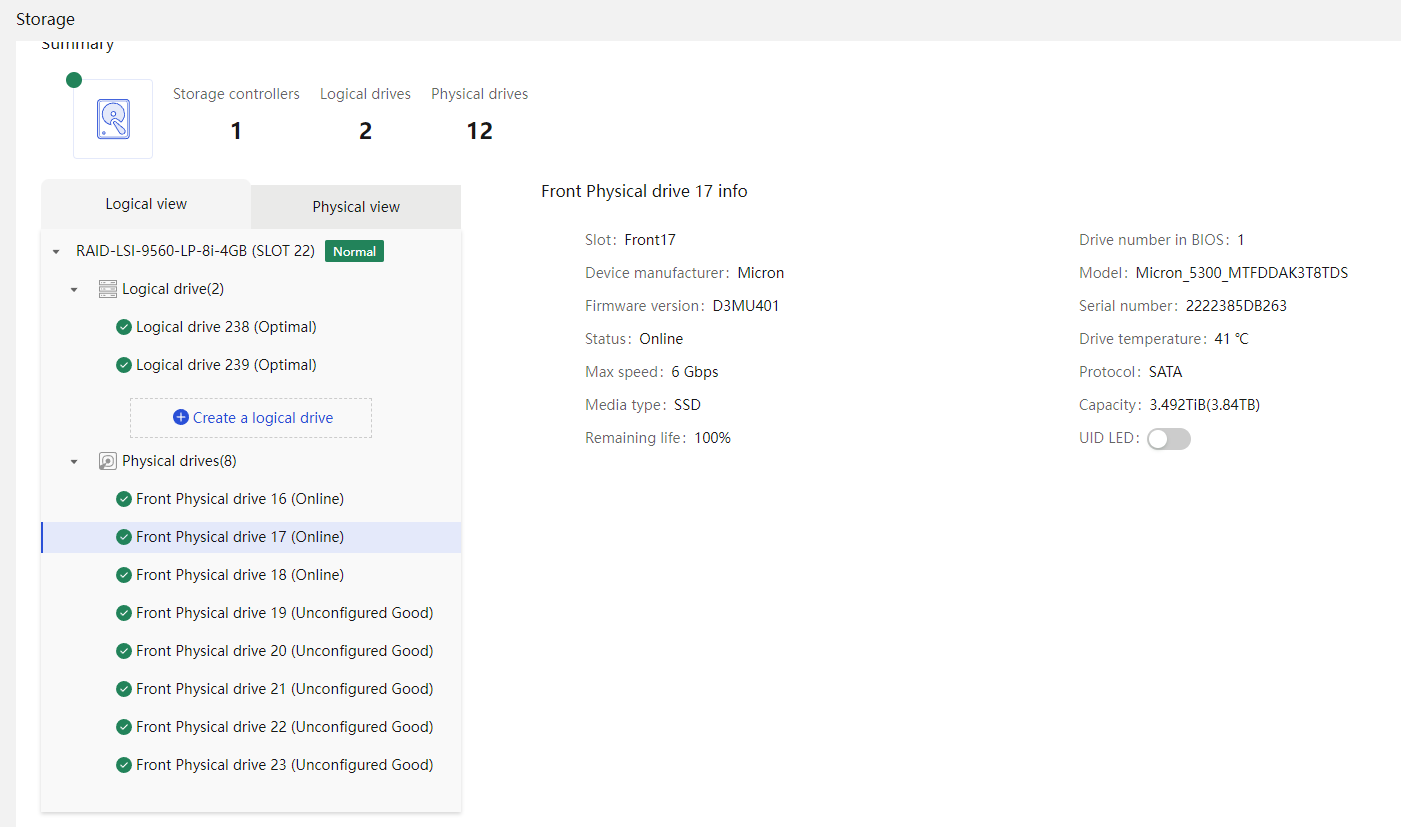

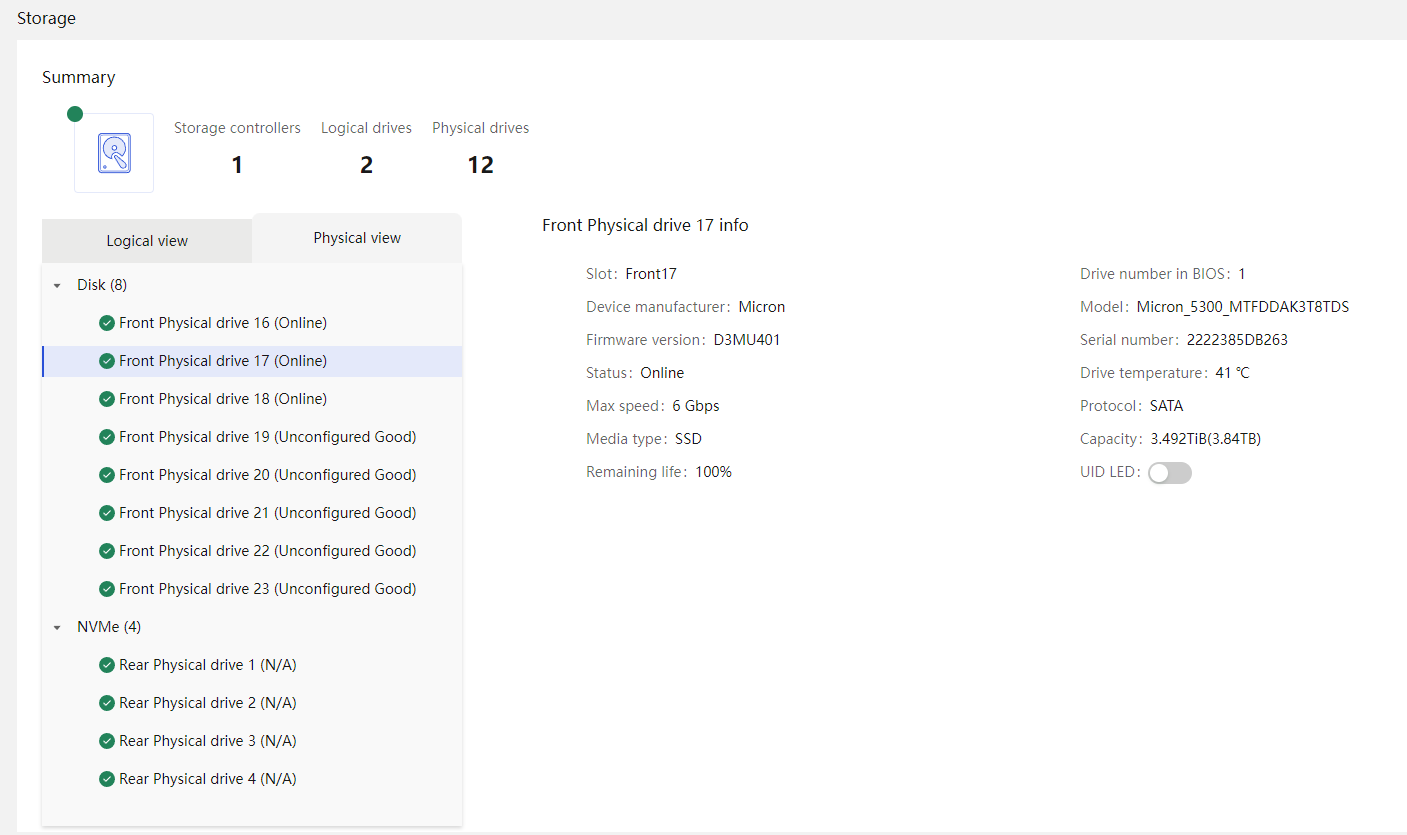

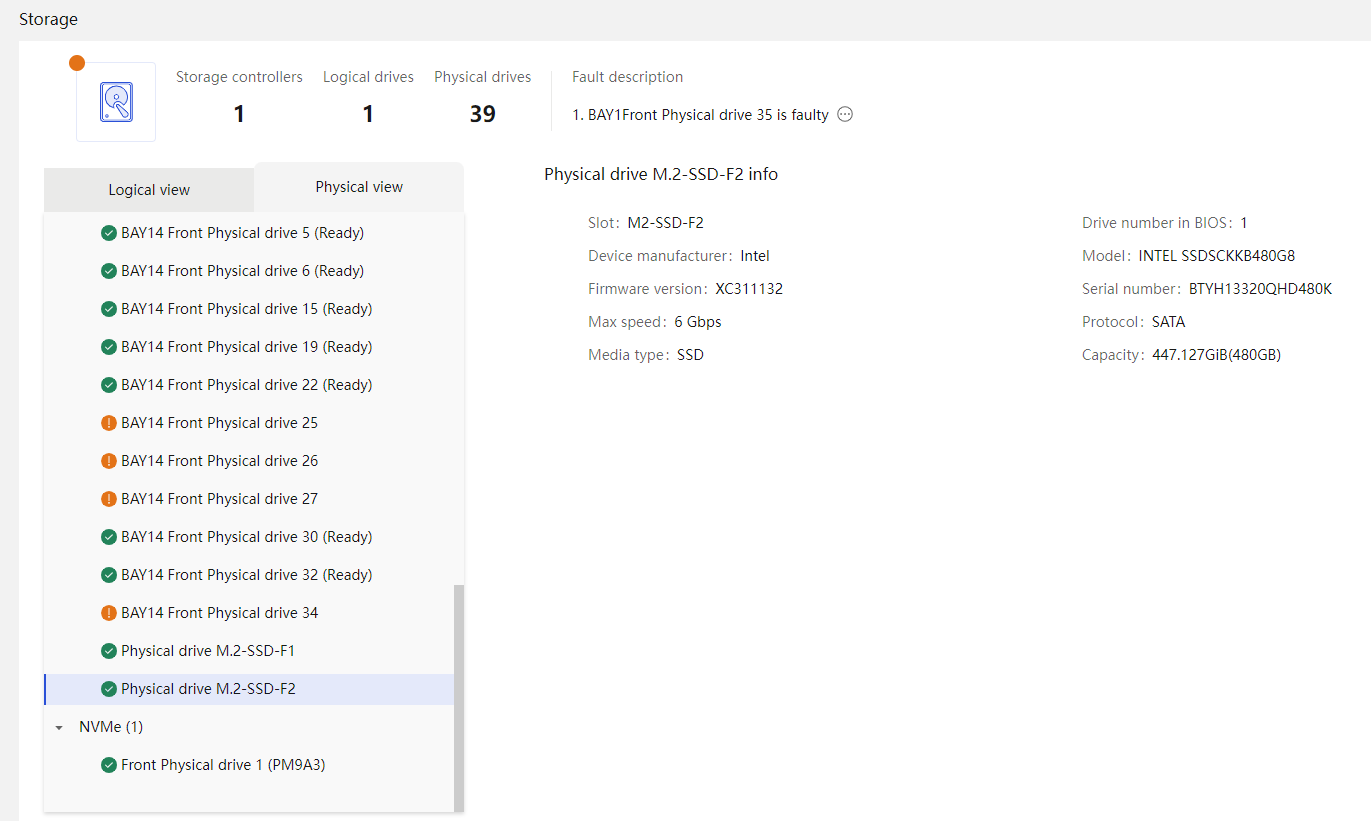

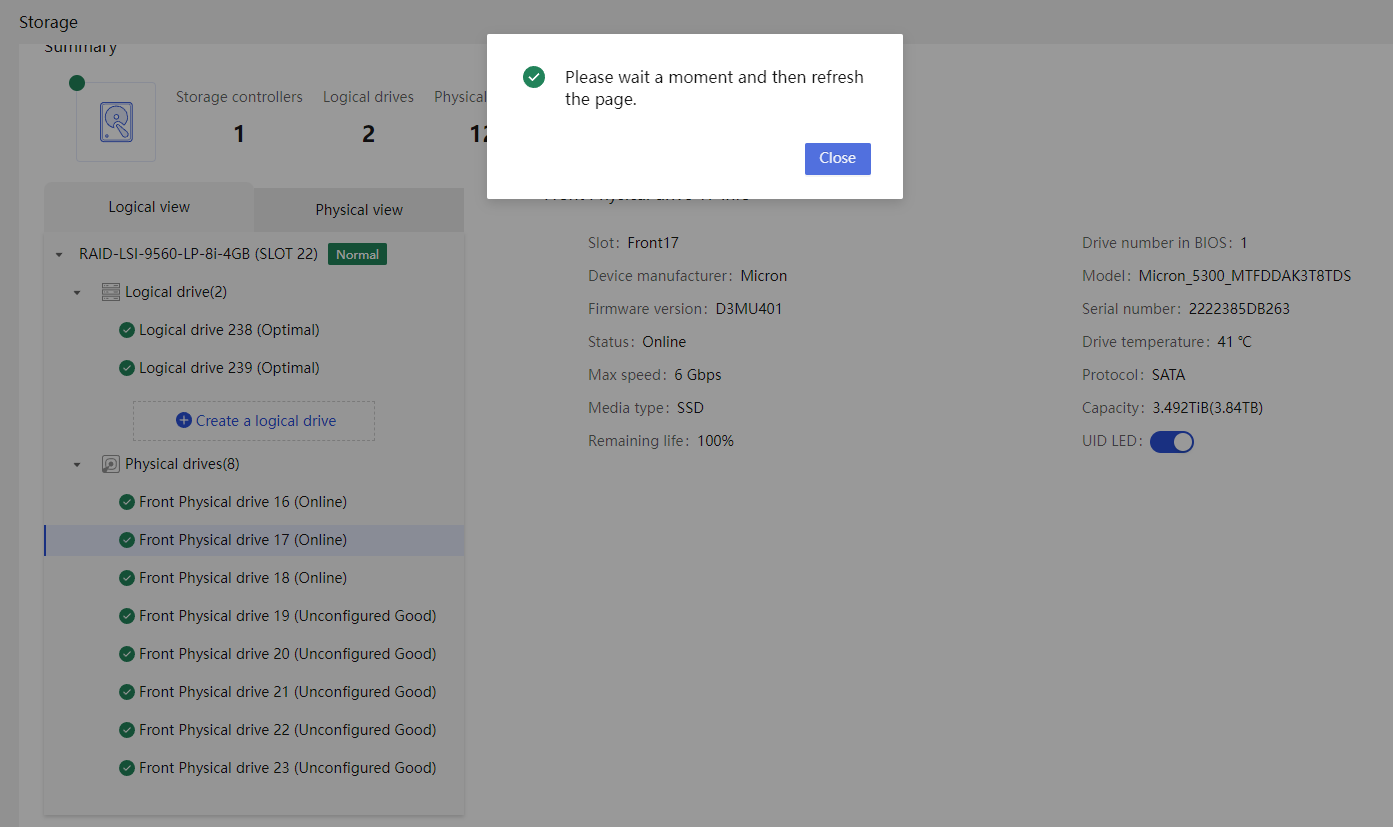

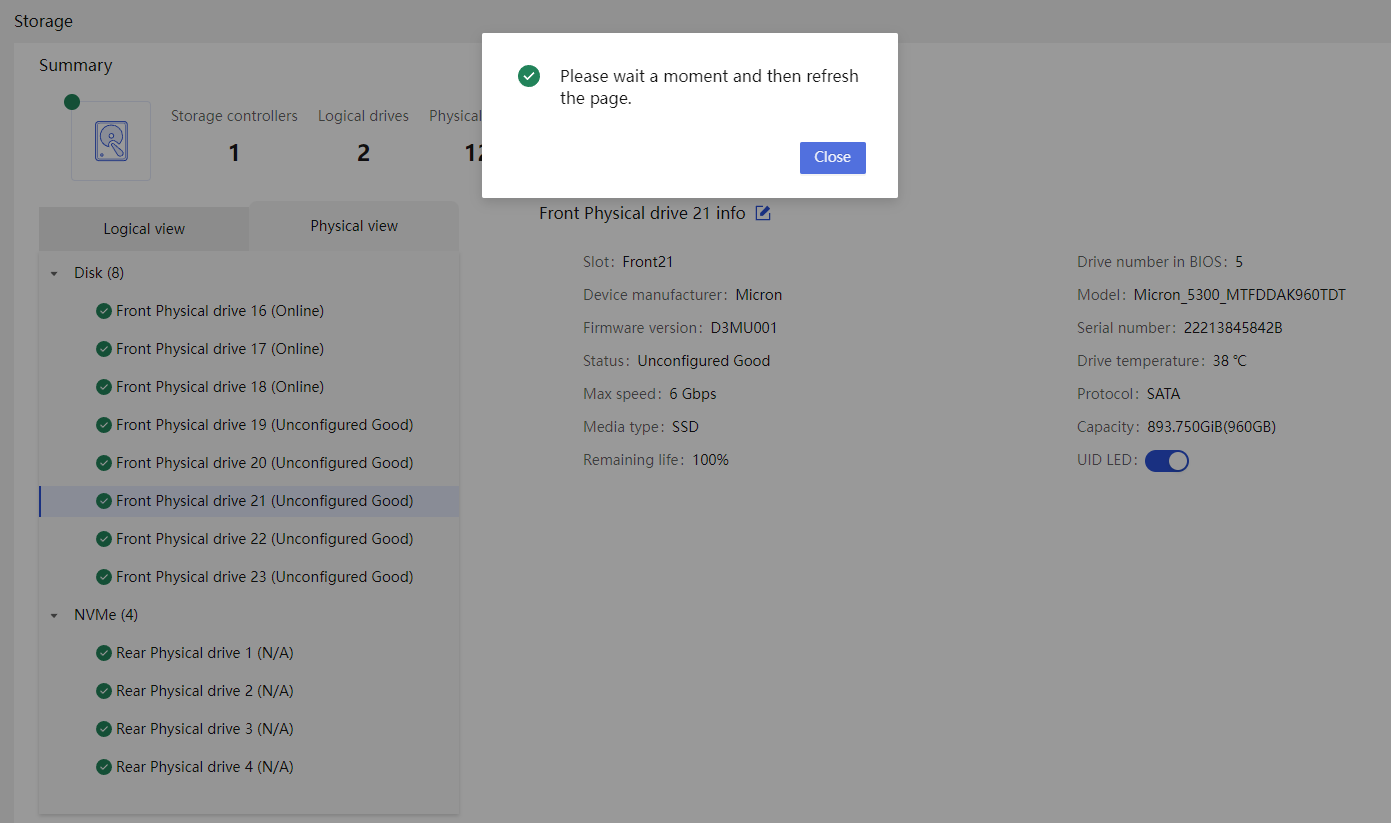

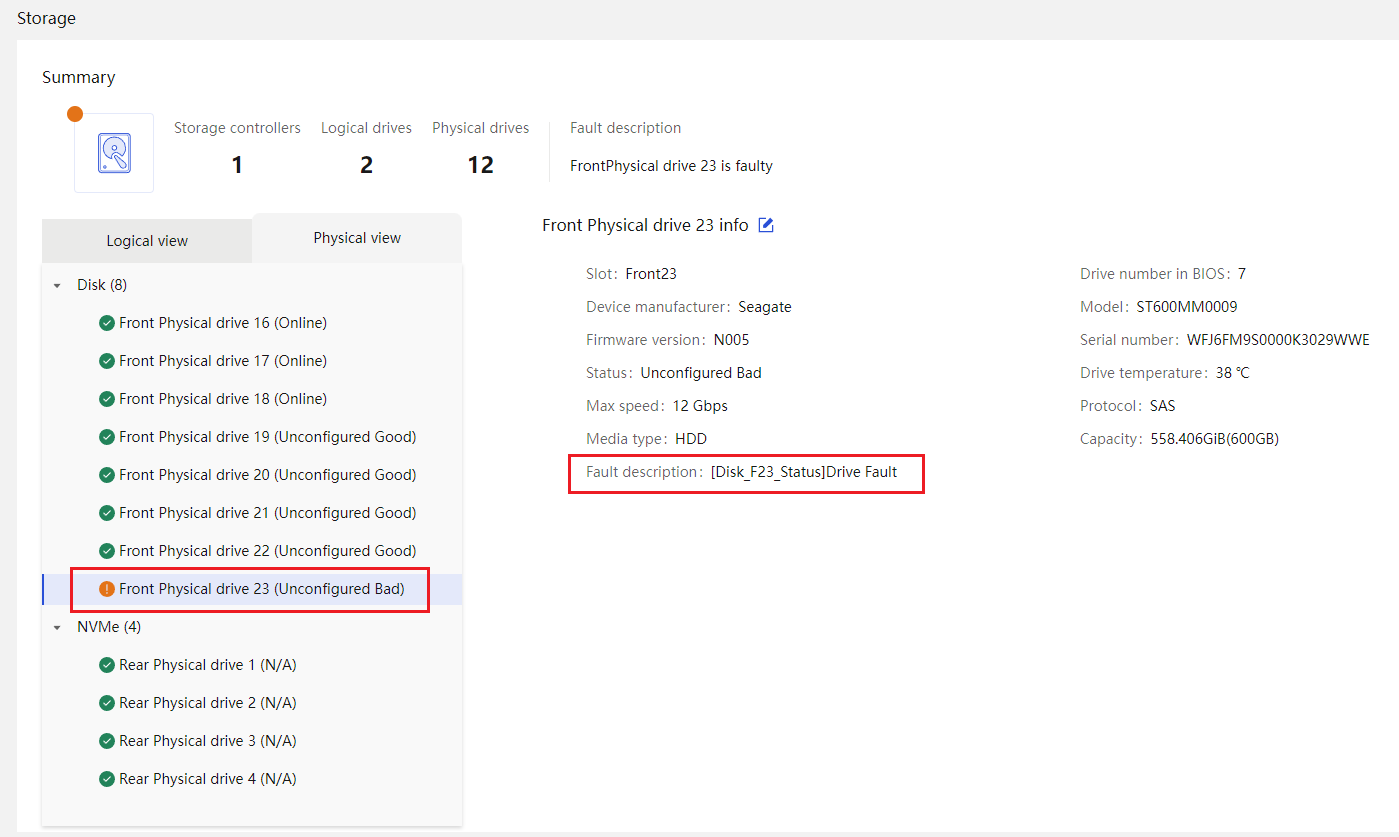

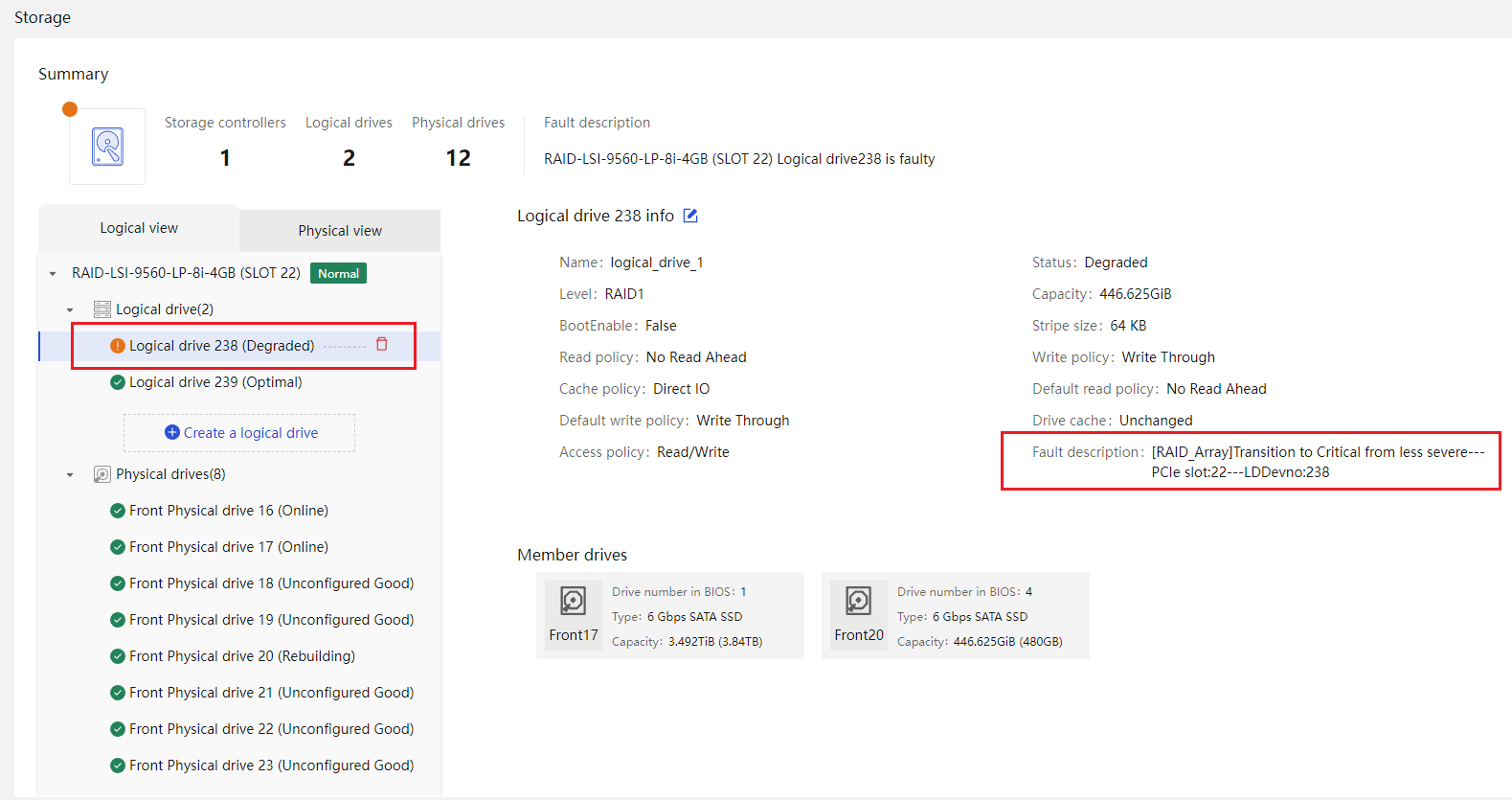

Physical drive management for a storage controller

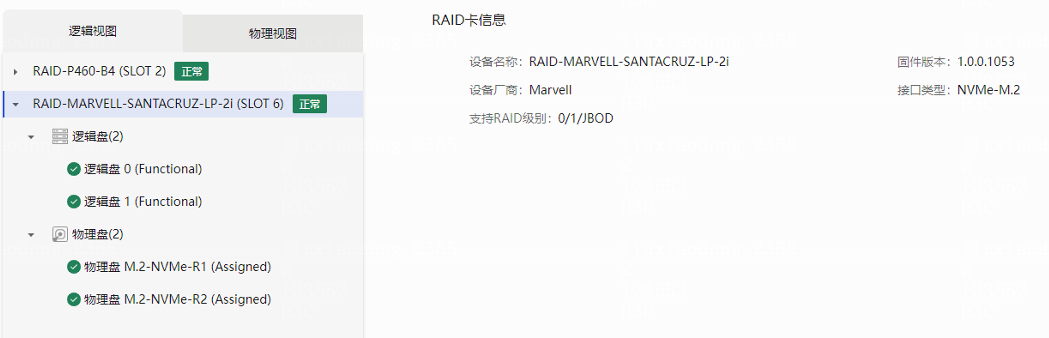

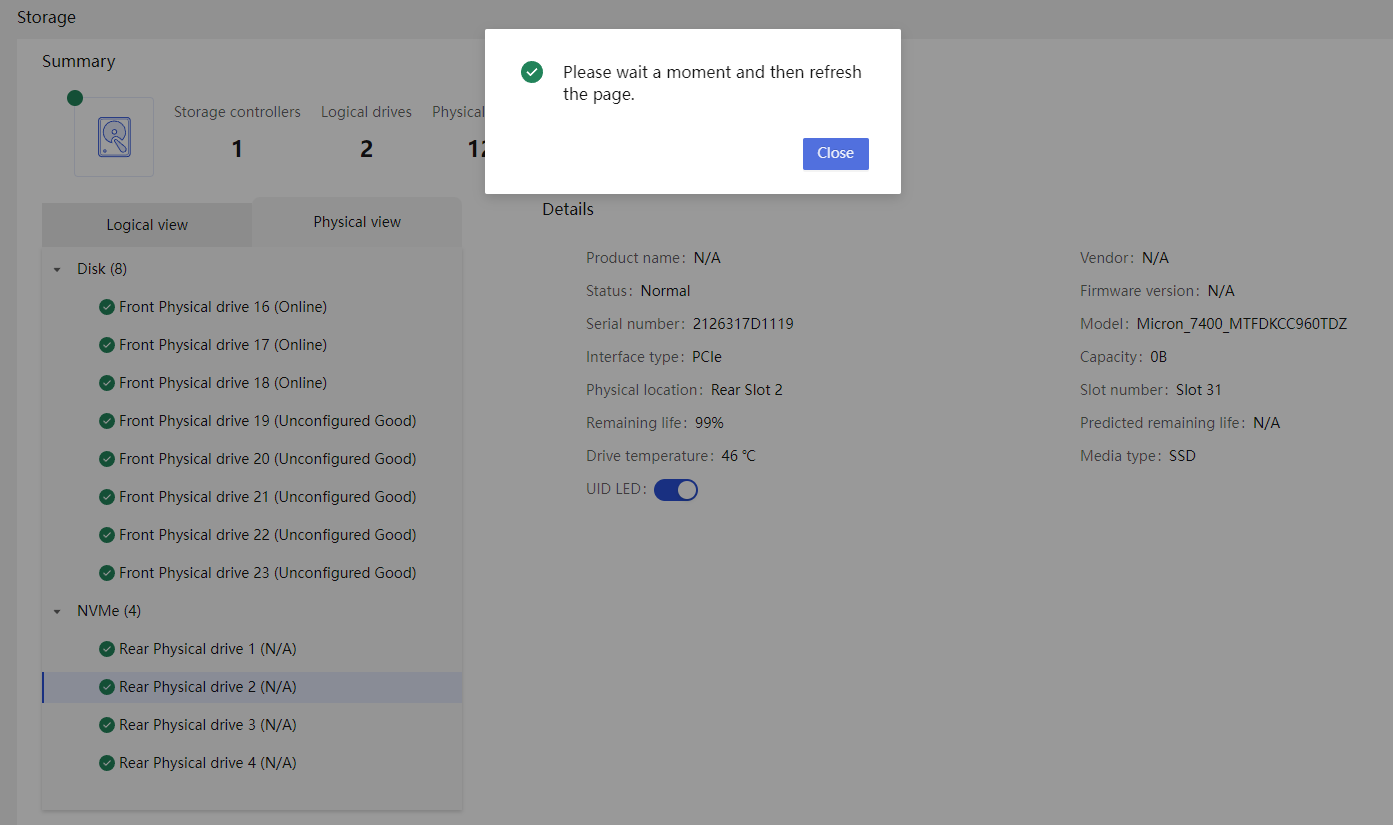

Marvell M.2 storage controller management

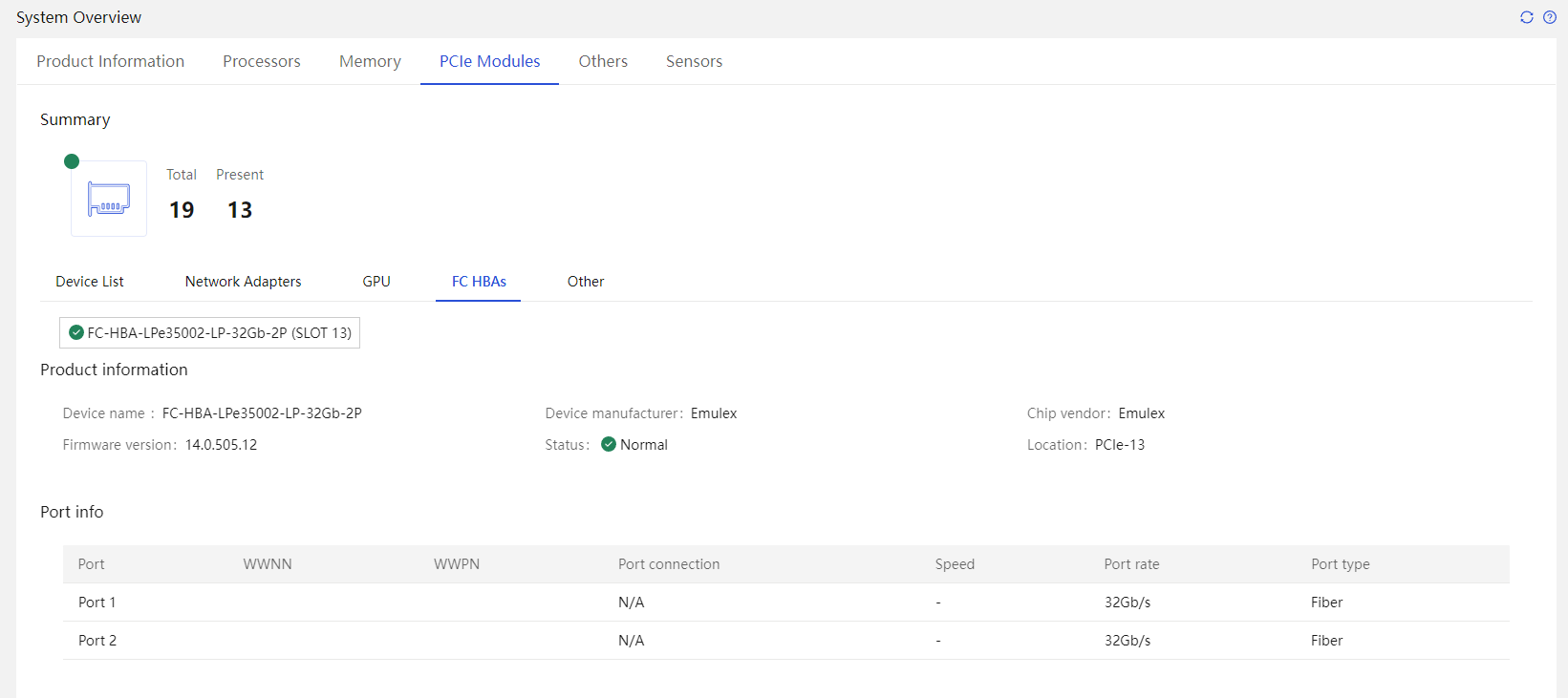

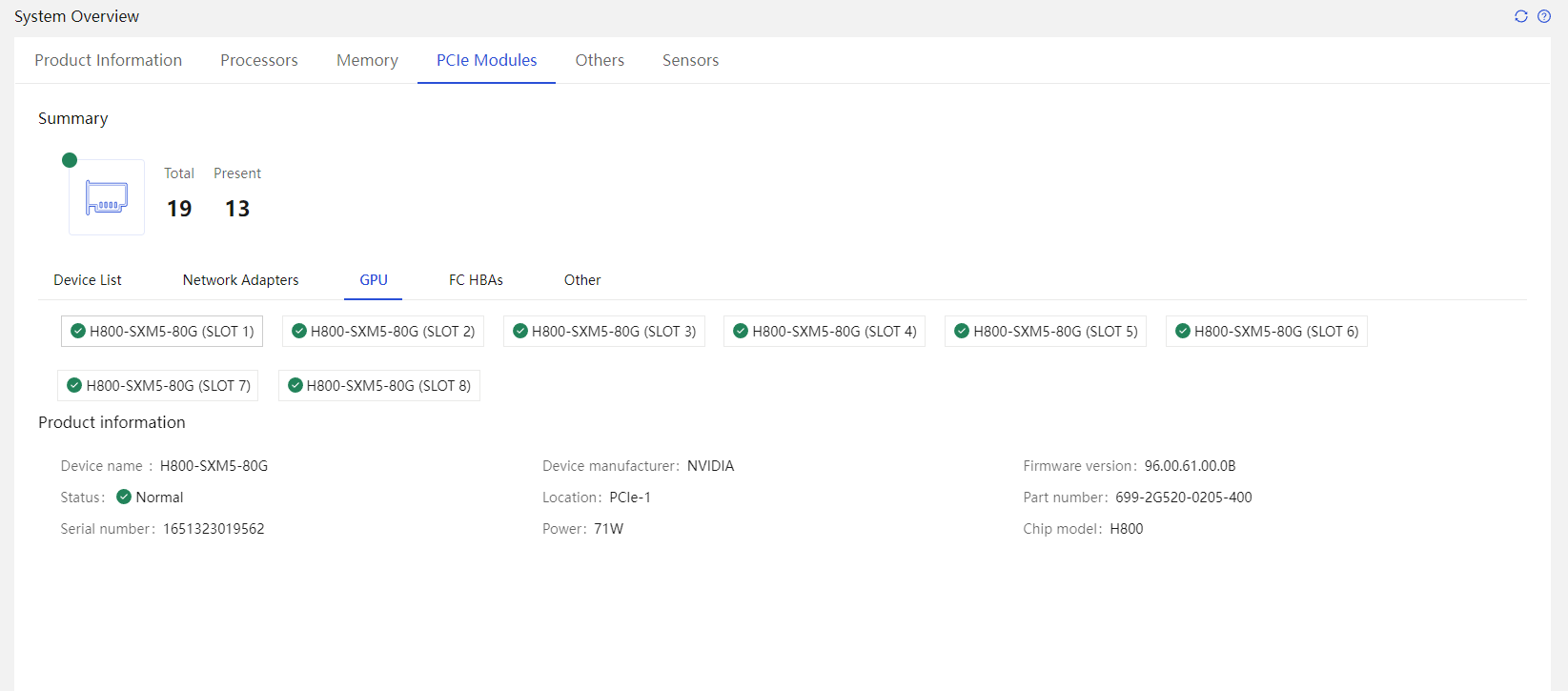

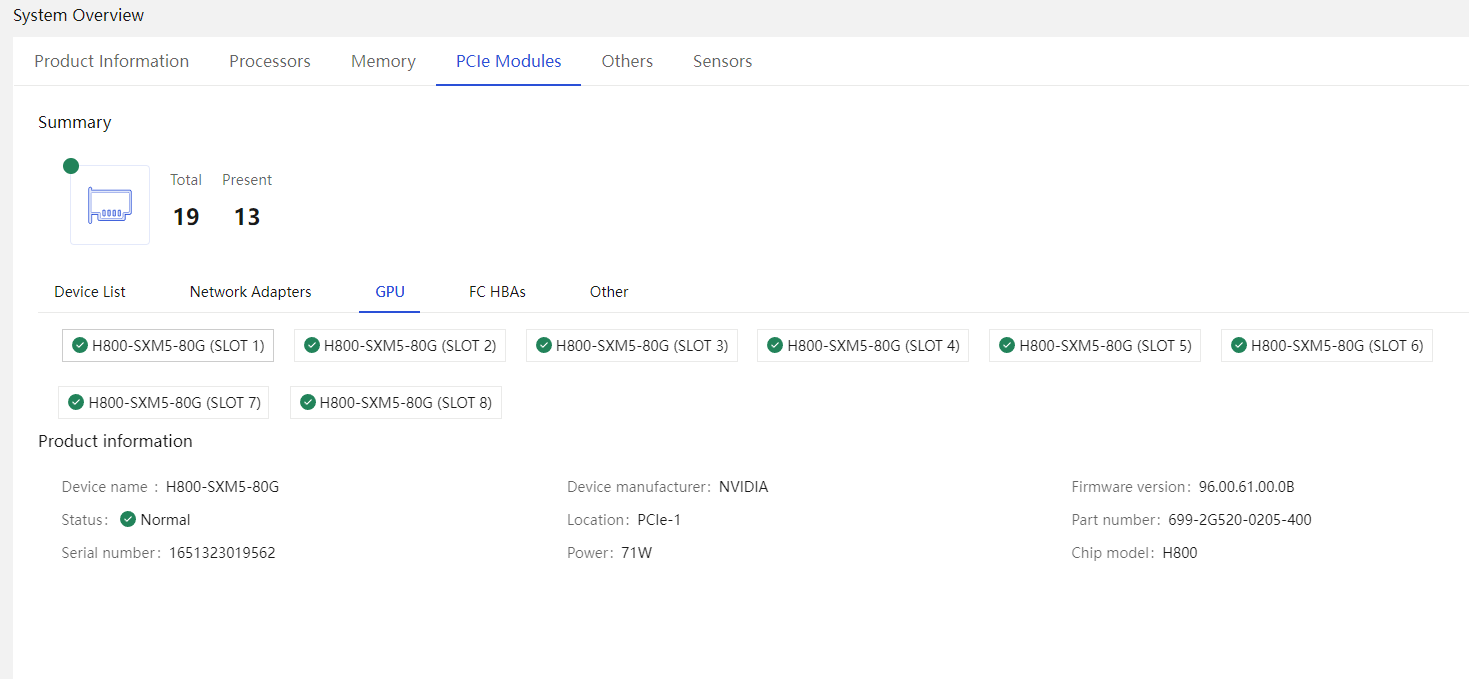

Displaying basic GPU information

GPU module out-of-band upgrade

Powering on or powering off the server

Configuring the server system startup policy after power-on

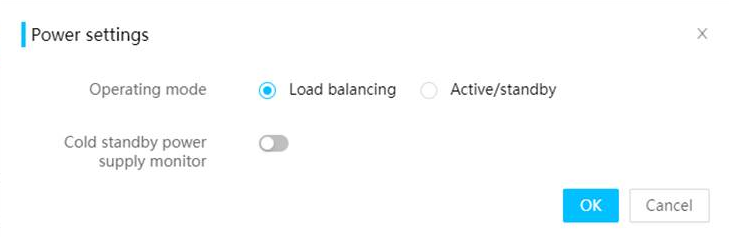

Cold standby power supply monitor

Dynamic active/standby power supply mode

Historical power consumption statistics

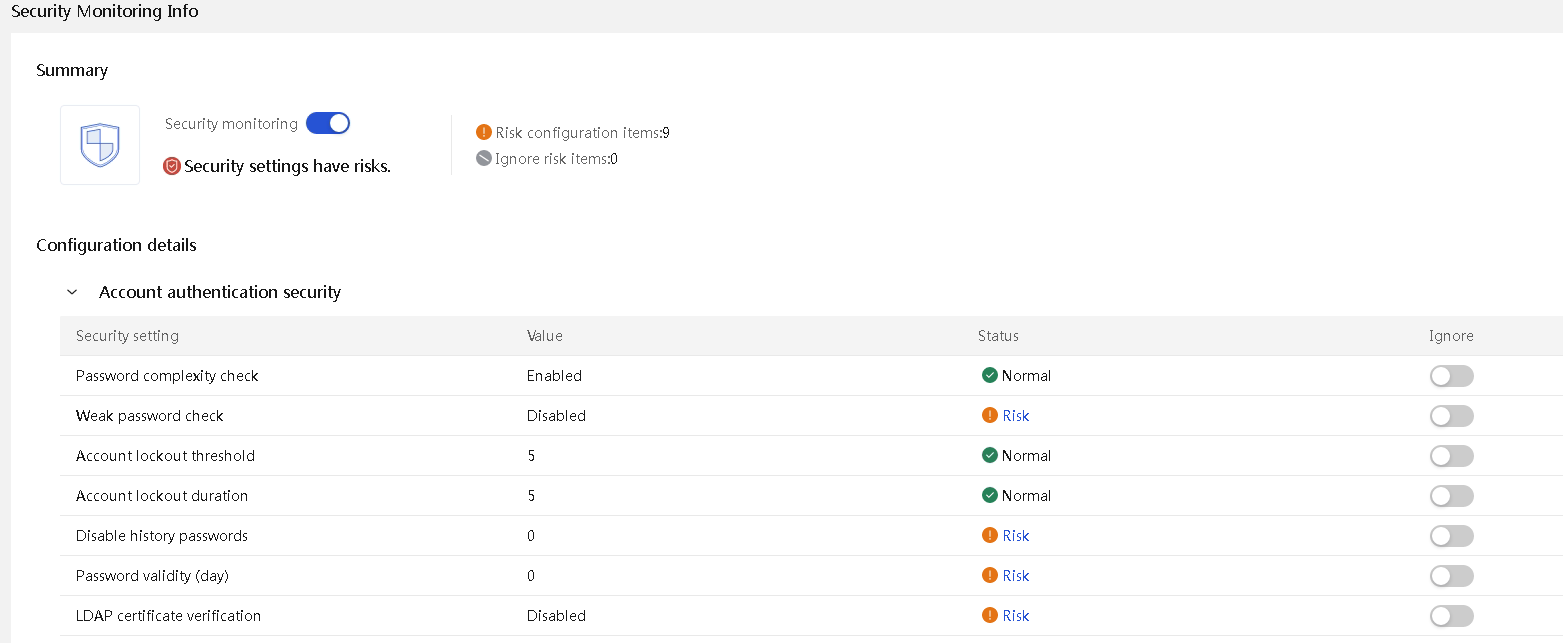

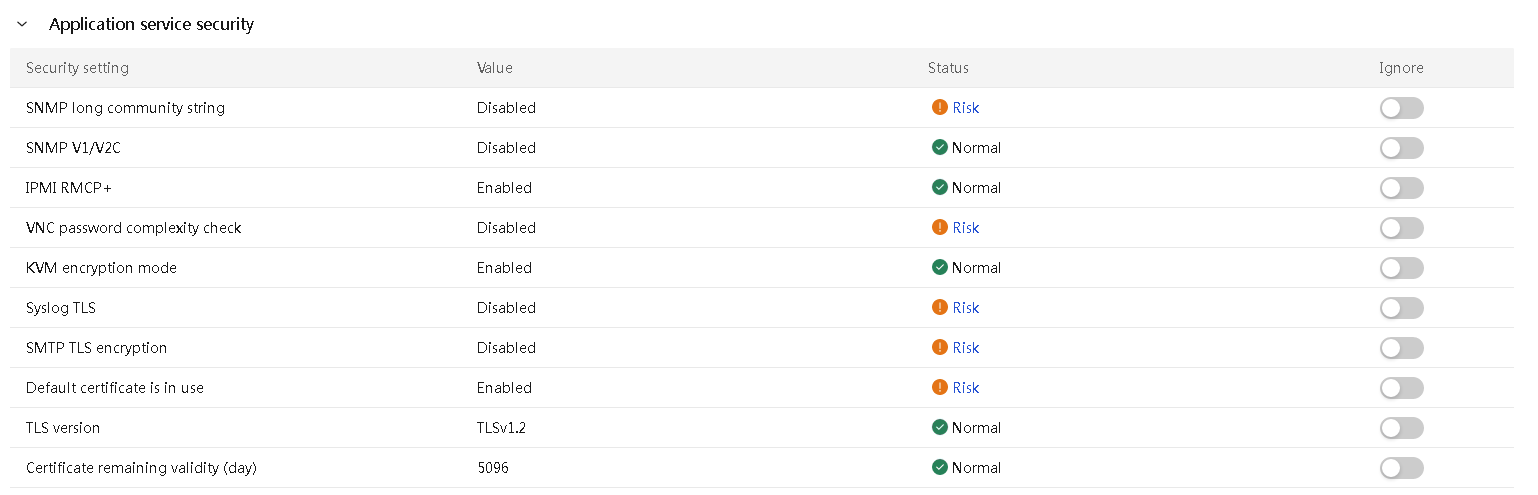

Security monitoring information

Preface

Audience

This document is applicable to the following audience:

· Pre-sales engineers for servers.

· Field technical support and servicing engineers.

· Network administrators.

Revision records

|

Date |

Revised version |

Description |

Revised by |

|

2024-2-22 |

V1.5 |

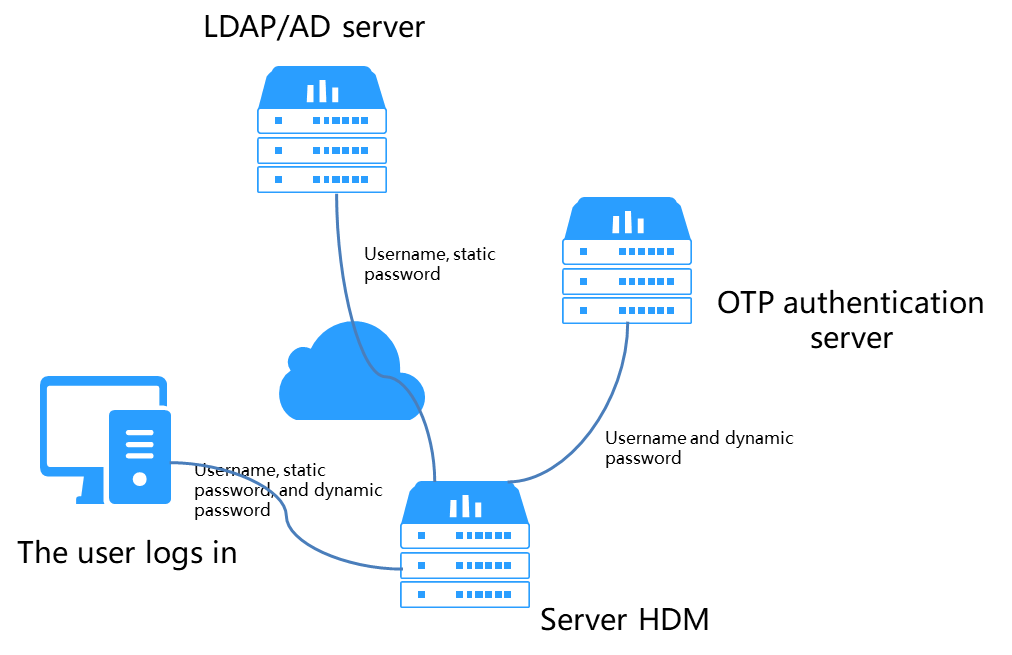

Added "OTP authentication." |

Platform software |

|

2024-1-12 |

V1.4 |

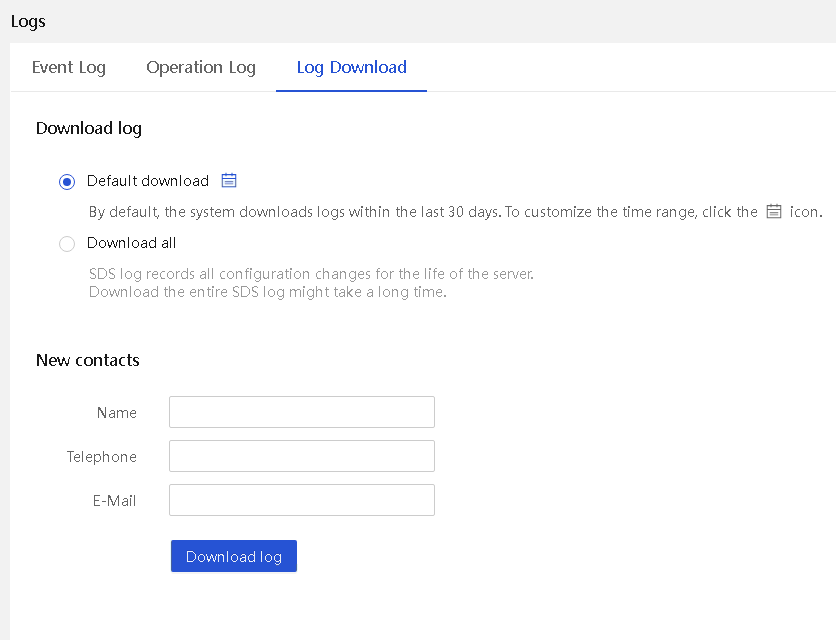

Added "Black box (log download)." |

Platform software |

|

2023-12-29 |

V1.3 |

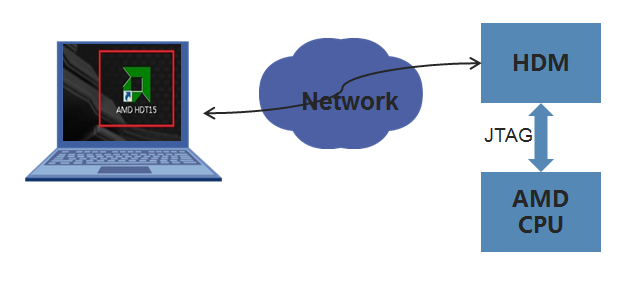

1. Added support for the following features: ¡ Support for HTTP 2.0 ¡ Switchover between two Web themes ¡ IPv4 and IPv6 static route configuration ¡ Setting BIOS boot suboptions ¡ Risk prompts for HDM and BIOS upgrade ¡ Support for Intel On Demand ¡ Power supply black box ¡ HDM system maintainability ¡ Memory anti-counterfeit ¡ LDAP certificate import ¡ Fast OS installation ¡ Added support of AMD for MCTP over PCIe out-of-band management ¡ Out-of-band management and remaining lifespan display of the NVMe-MI controller 2. Updated overall workflow 3. Edited fault diagnosis, MRT, and other features |

Platform software |

|

2023-10-24 |

V1.2 |

Cleared information related with old software versions |

Platform software |

|

2023-10-19 |

V1.1 |

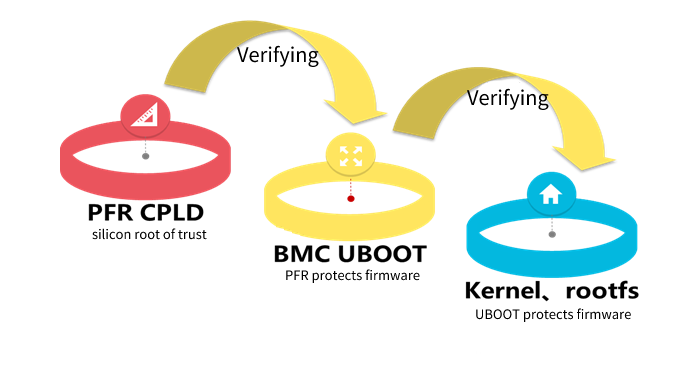

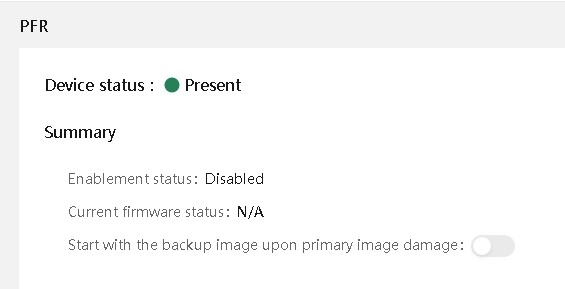

Added parameter information of the BMC chip in "HDM overall architecture" and "Silicon root of trust." |

Platform software |

|

2023-7-25 |

V1.0 |

First release |

Platform software |

Applicable products

This document is applicable to H3C G6 servers, including the following:

· H3C UniServer B5700 G6

· H3C UniServer R3950 G6

· H3C UniServer R4300 G6

· H3C UniServer R4700 G6

· H3C UniServer R4700LE G6

· H3C UniServer R4900 G6

· H3C UniServer R4900 G6 Ultra

· H3C UniServer R4900LE G6 Ultra

· H3C UniServer R4950 G6

· H3C UniServer R5350 G6

· H3C UniServer R5300 G6

· H3C UniServer R5500 G6

· H3C UniServer R6700 G6

· H3C UniServer R6900 G6

Overview

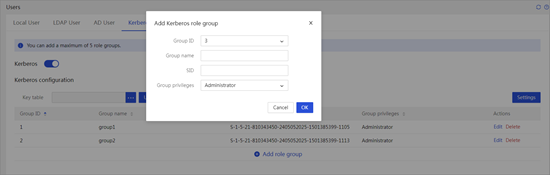

HDM2 is an upgraded version of HDM. In addition to inheriting existing functionalities, HDM2 is optimized for multiple application scenarios and provides corresponding compatible features. It adds intelligent-related attributes to support the strategy of the dual-core integration. It also offers enhanced license services, including memory intelligent repair, expansion of unified management for up to 200 devices, Kerberos authentication and single sign-on, one-click retirement, system configuration locking, intelligent tuning, and security monitoring information.

For the sake of convenience, HDM2 is referred to as HDM.

About HDM

Hardware Device Management (HDM) is a remote server management system. It complies with IPMI, SNMP, and Redfish standards and provides various functions, including keyboard, video, and mouse redirection, text console redirection, SOL connection, remote virtual media, and reliable hardware monitoring and management. HDM supports abundant features as described in Table 1.

|

Description |

|

|

Various management interfaces |

Provides abundant management interfaces, such as IPMI, HTTPS, SNMP, and Redfish, to meet various system integration requirements. |

|

Unified control |

Reduces O&M costs of servers in the small and medium-sized enterprises by implementing the unified control in a small scale. |

|

LCD display |

A touchable 3.5-inch or 2.5-inch LCD display is optional for some servers to facilitate on-site inspection or maintenance. |

|

Fault monitoring and diagnosis |

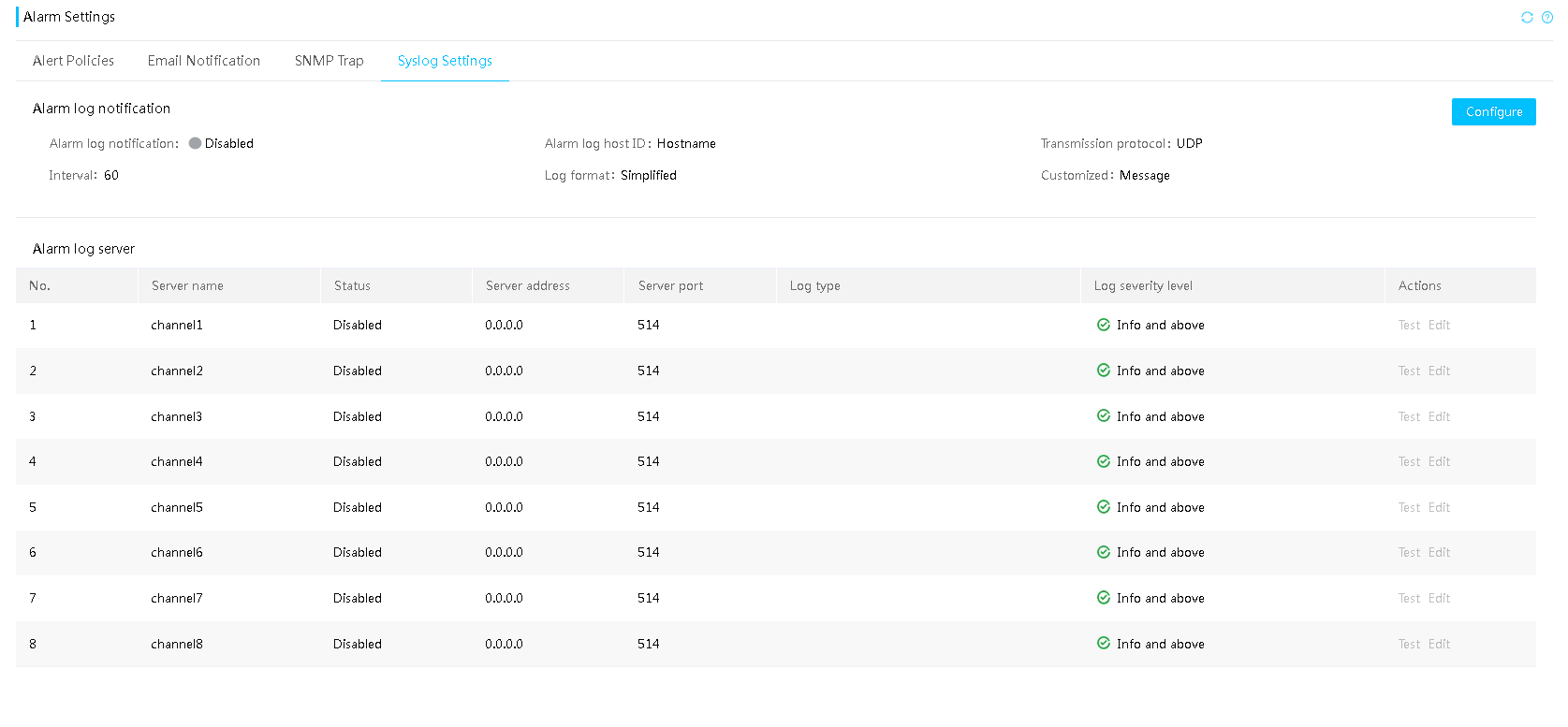

Provides fault location and diagnosis for maintenance to ensure correct 24×7 device operation. The fault logs can be reported proactively through SNMP traps, SMTP, Redfish event subscription, and syslog messages. |

|

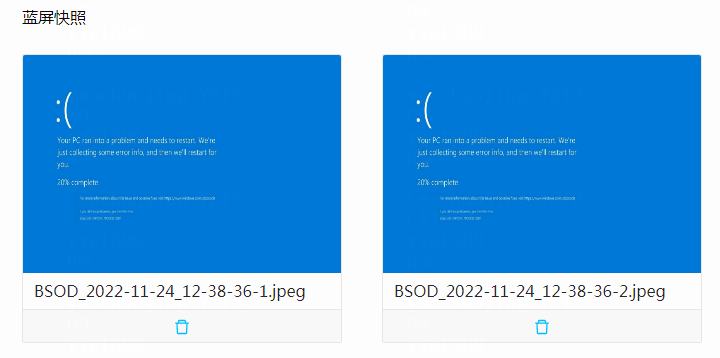

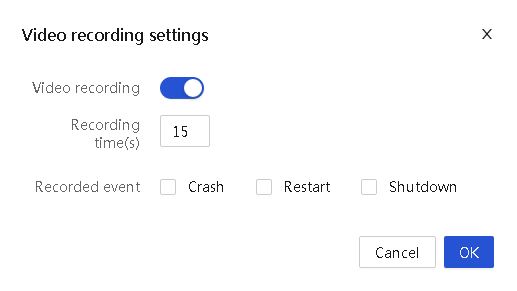

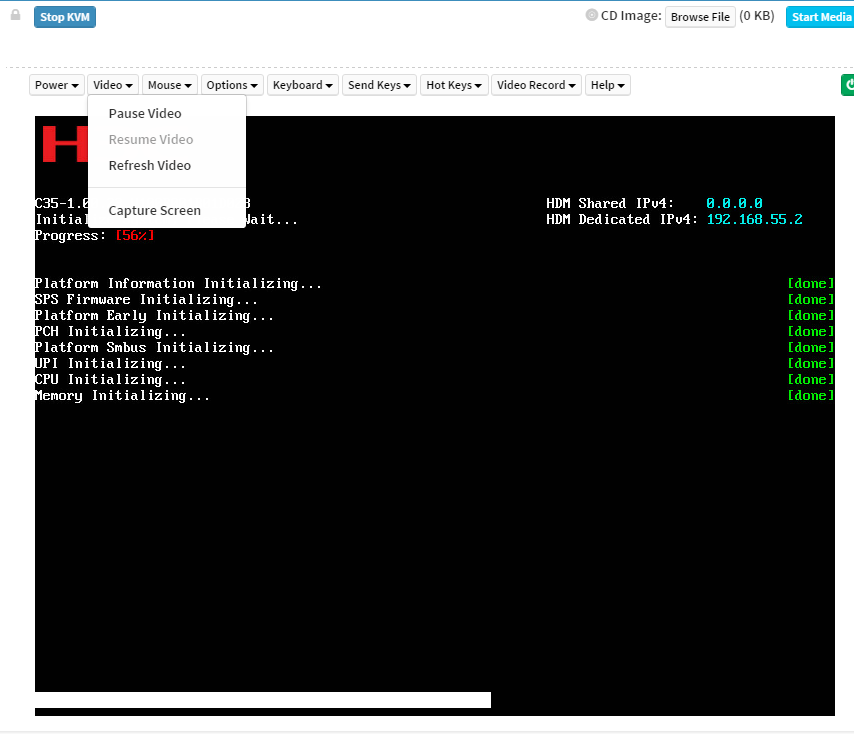

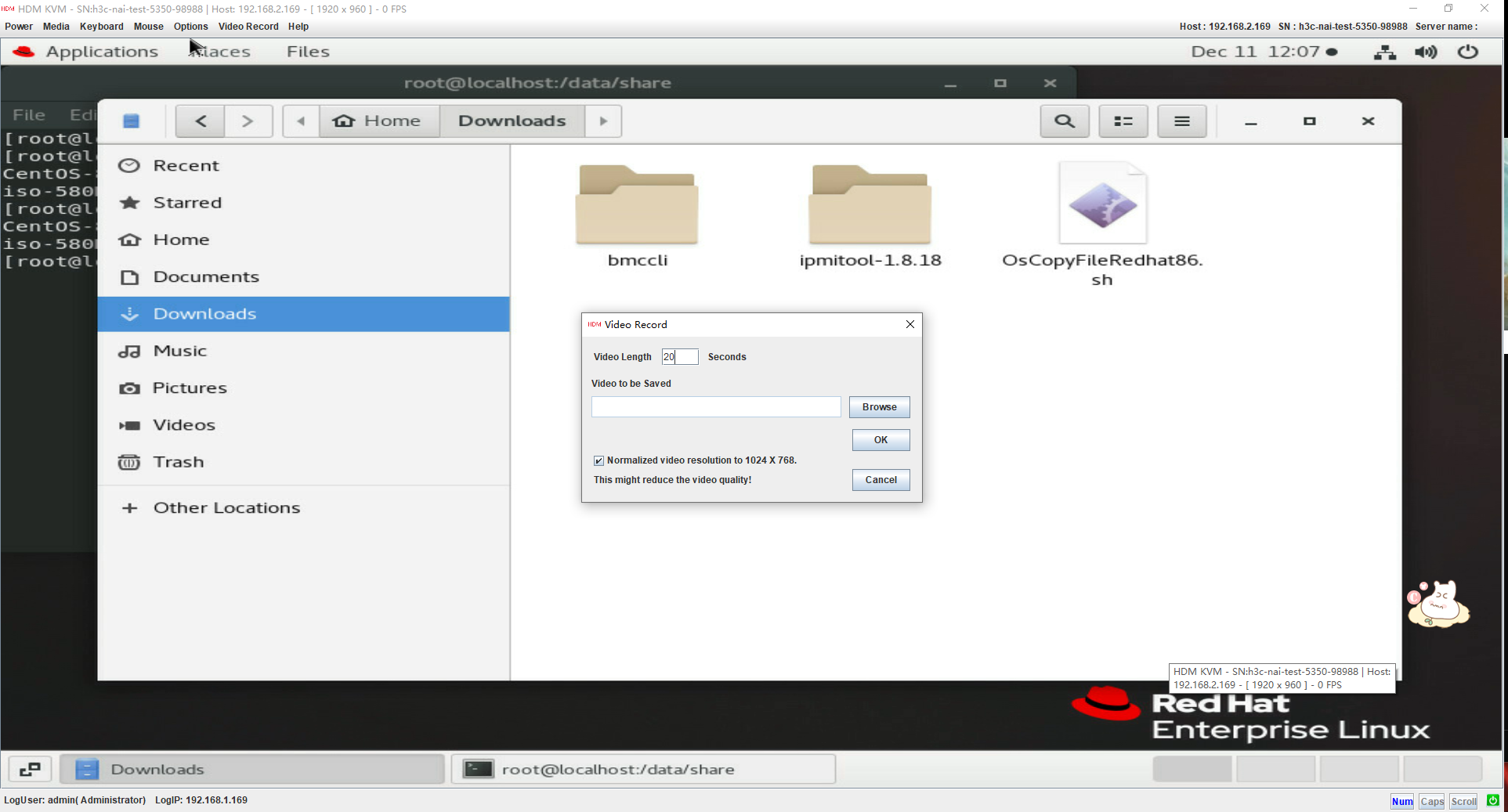

Critical OS event screenshot and video recording |

Takes a screenshot or records a video upon a critical OS event (for example, a crash) for future troubleshooting. |

|

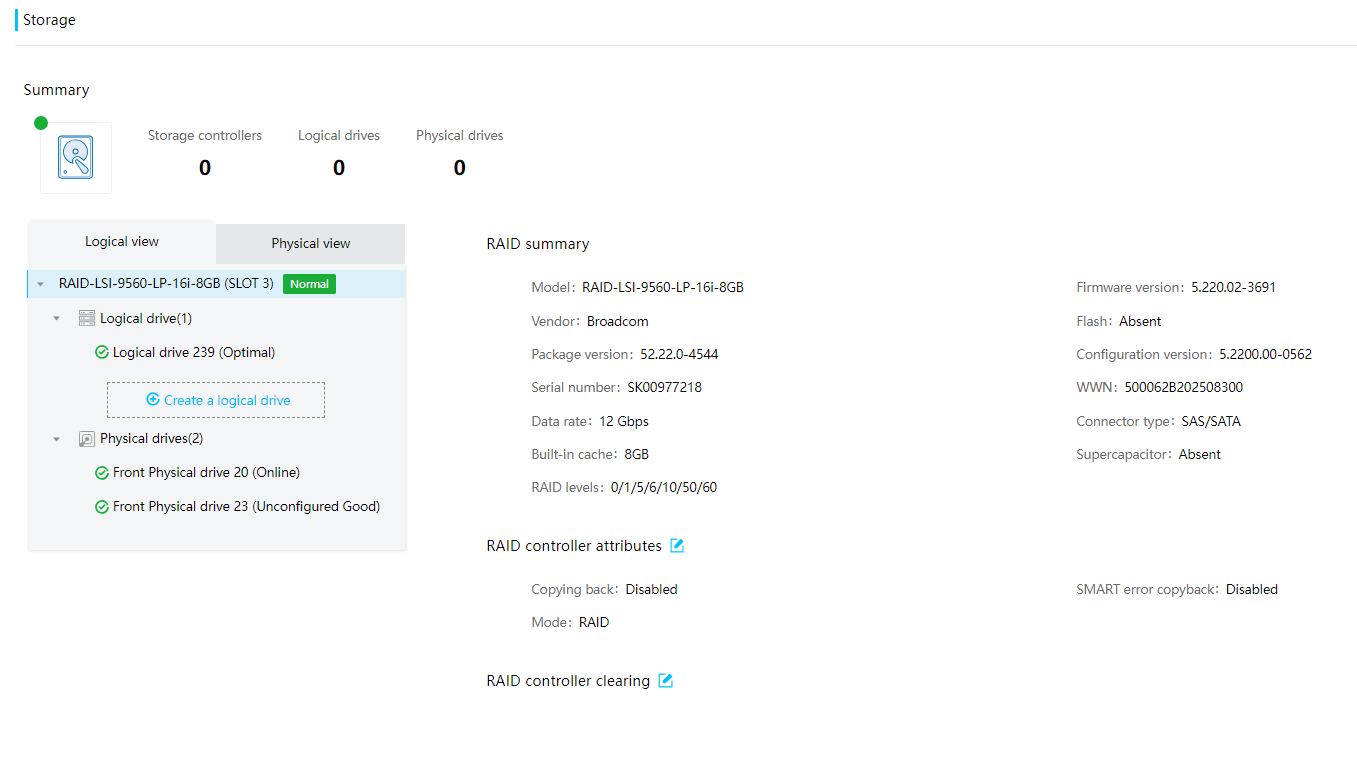

Out-of-band RAID management |

Supports out-of-band RAID monitoring and configuration to improve RAID configuration efficiency and management capability. |

|

Smart power supply management |

Supports power capping to increase deployment density, and provides power management to reduce operation costs. |

|

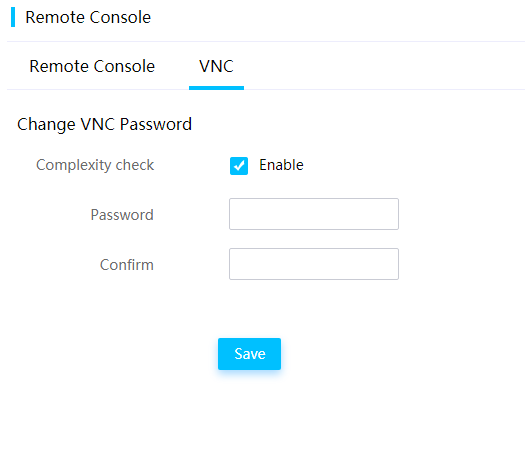

KVM, VNC, and virtual media |

Facilitates remote server maintenance. |

|

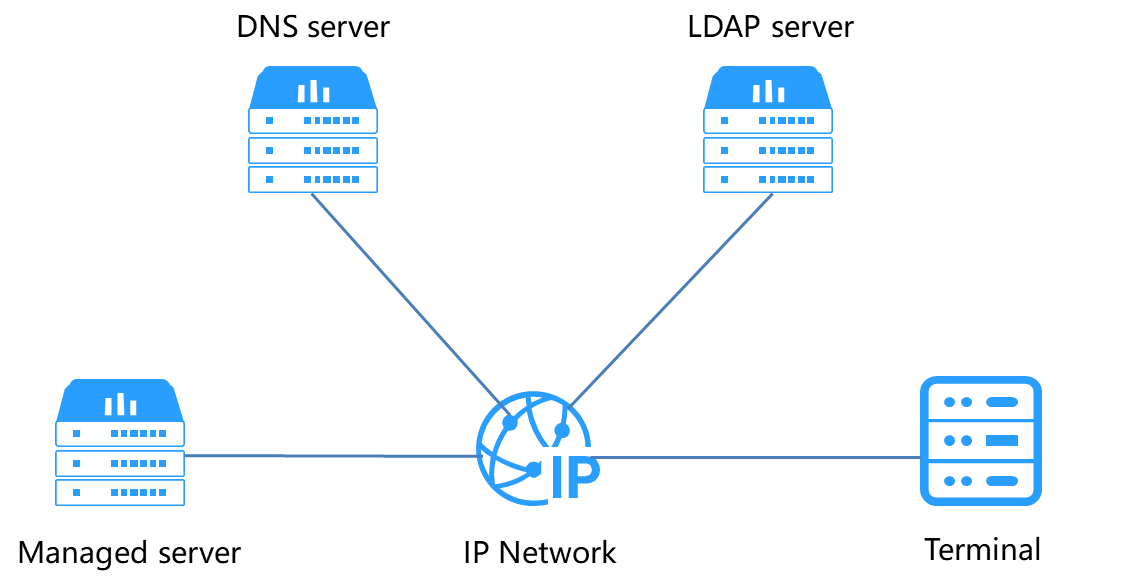

DNS, LDAP, and AD, Kerberos |

Supports domain management and directory services to simplify server network management and user management. Kerberos implements single sign-on to avoid repeated authentication and authorization. |

|

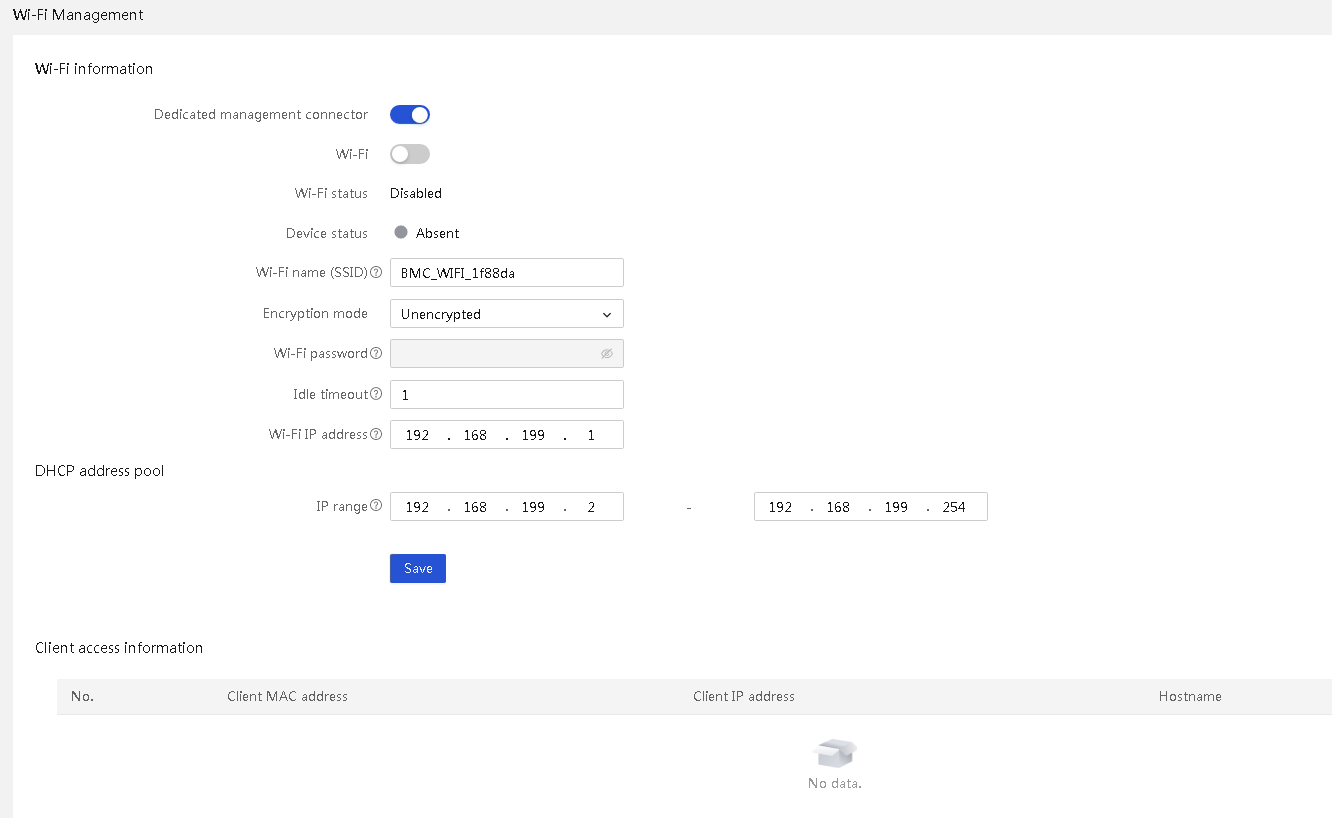

USB Wi-Fi adapter |

Supports an external Xiaomi or 360 portable Wi-Fi adapter to facilitate near-field maintenance and management of the server. |

|

Primary/backup image switchover |

Enables startup by using the backup image if the system crashes, which enhances system availability. |

|

Firmware update |

Supports various out-of-band firmware update for HDM, BIOS, CPLD, LCD (firmware package before 1.05), drives, network adapters, storage controllers, GPU modules, REPO packages, and so on. It also supports cold and hot patch updates for HDM, ensuring uninterrupted fault recovery services. Firmware update supports firmware libraries and queues, allowing operators to schedule firmware updates as needed, minimizing impact on service operations. |

|

Software inventory |

Supports obtaining out-of-band firmware version information and in-band operating system software and driver information, including location, name, version, and update time. |

|

Service USB device |

Supports log downloading to simplify on-site maintenance and management. |

|

Asset management |

Simplifies asset management. |

|

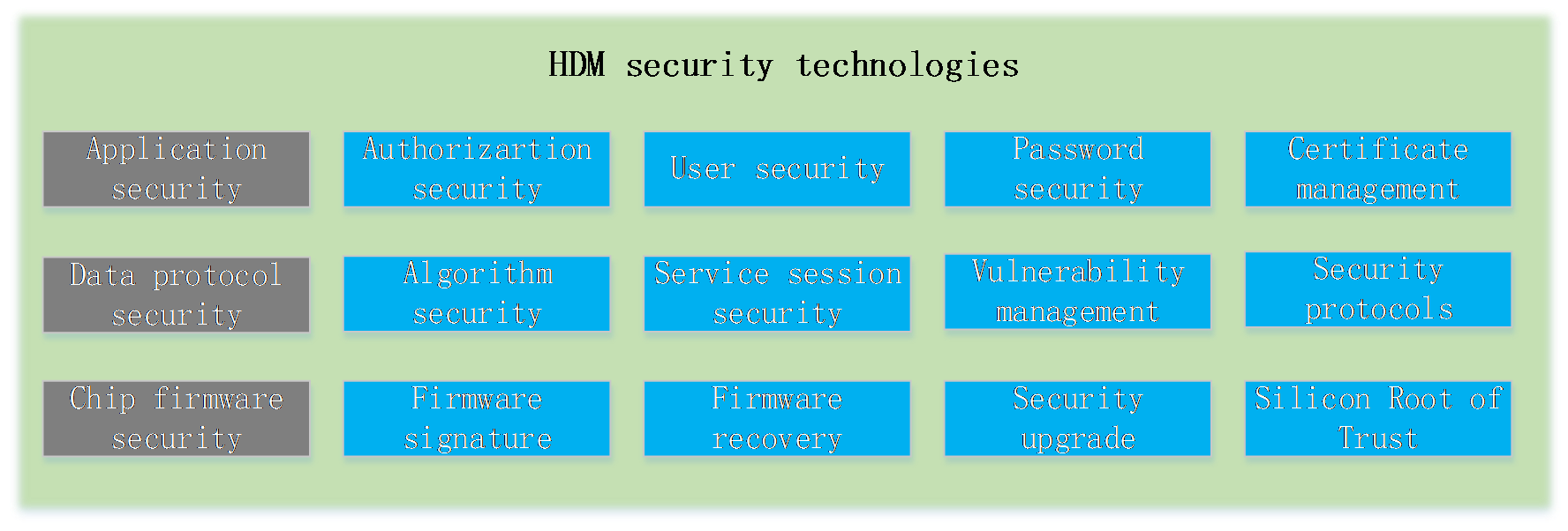

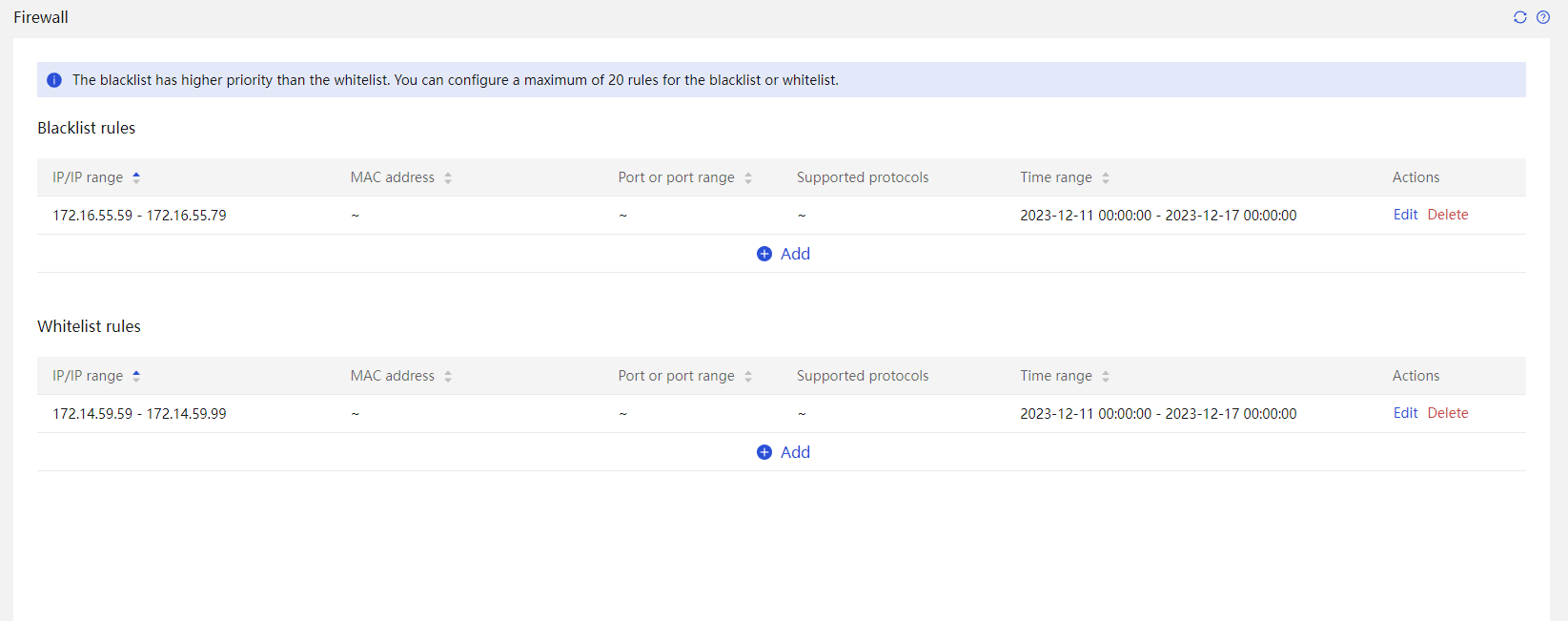

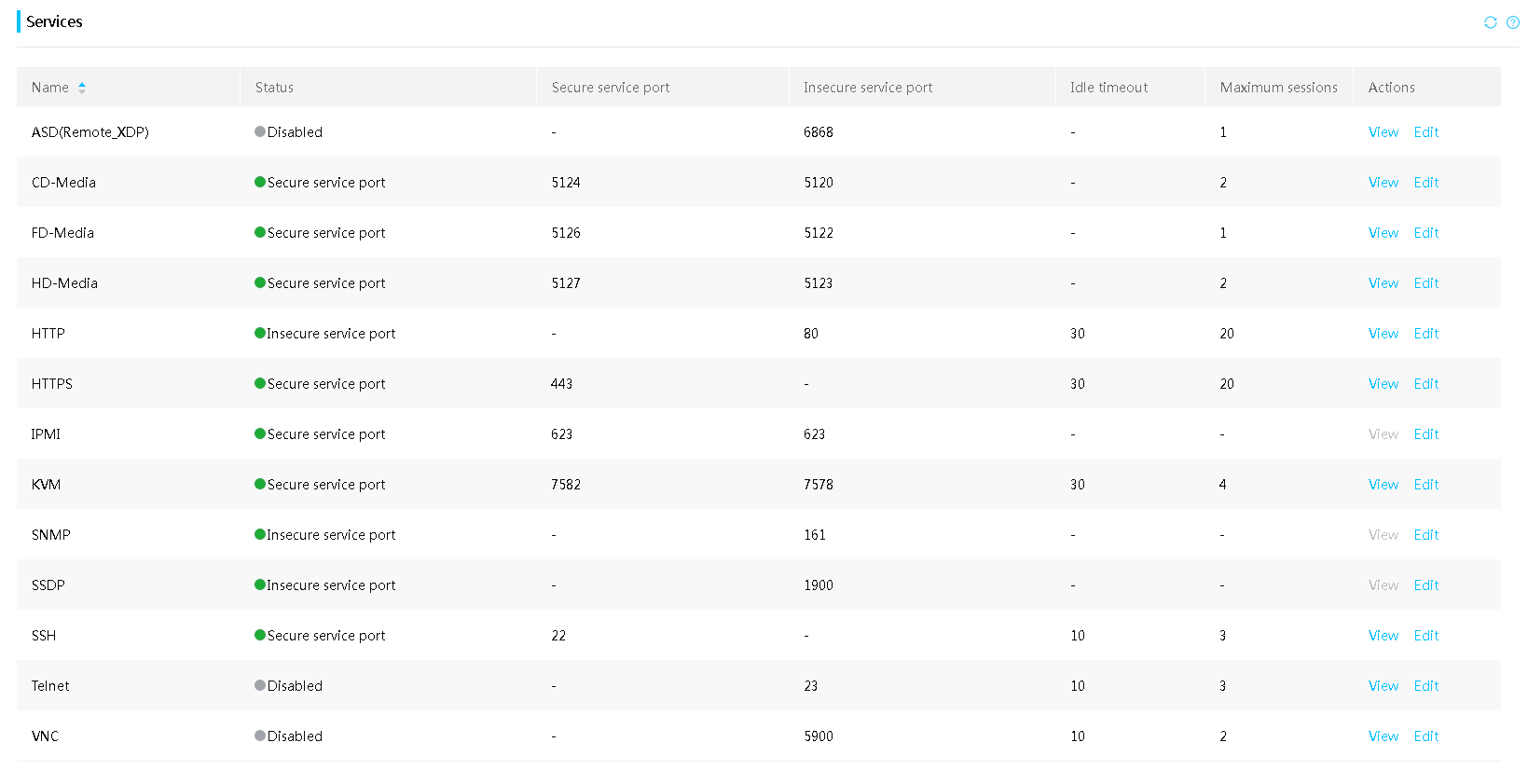

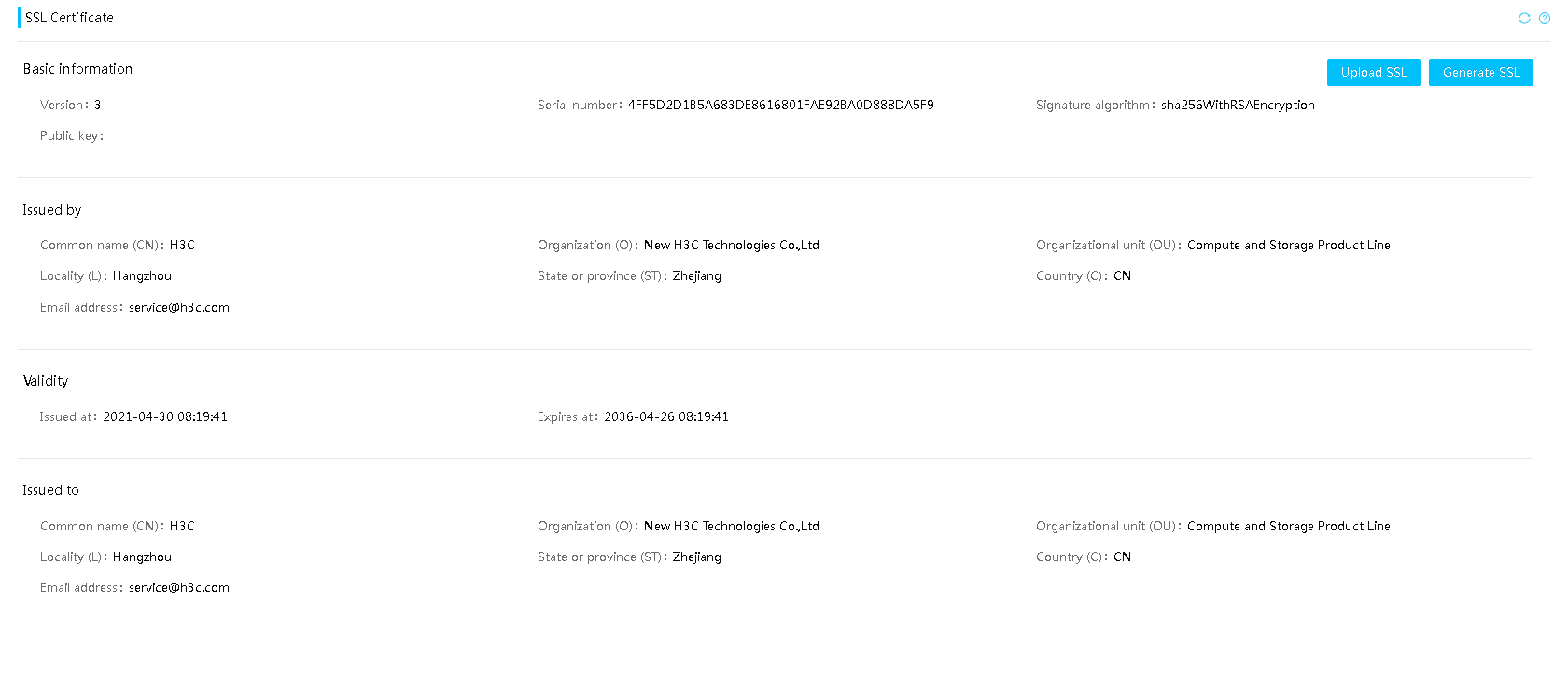

Security management |

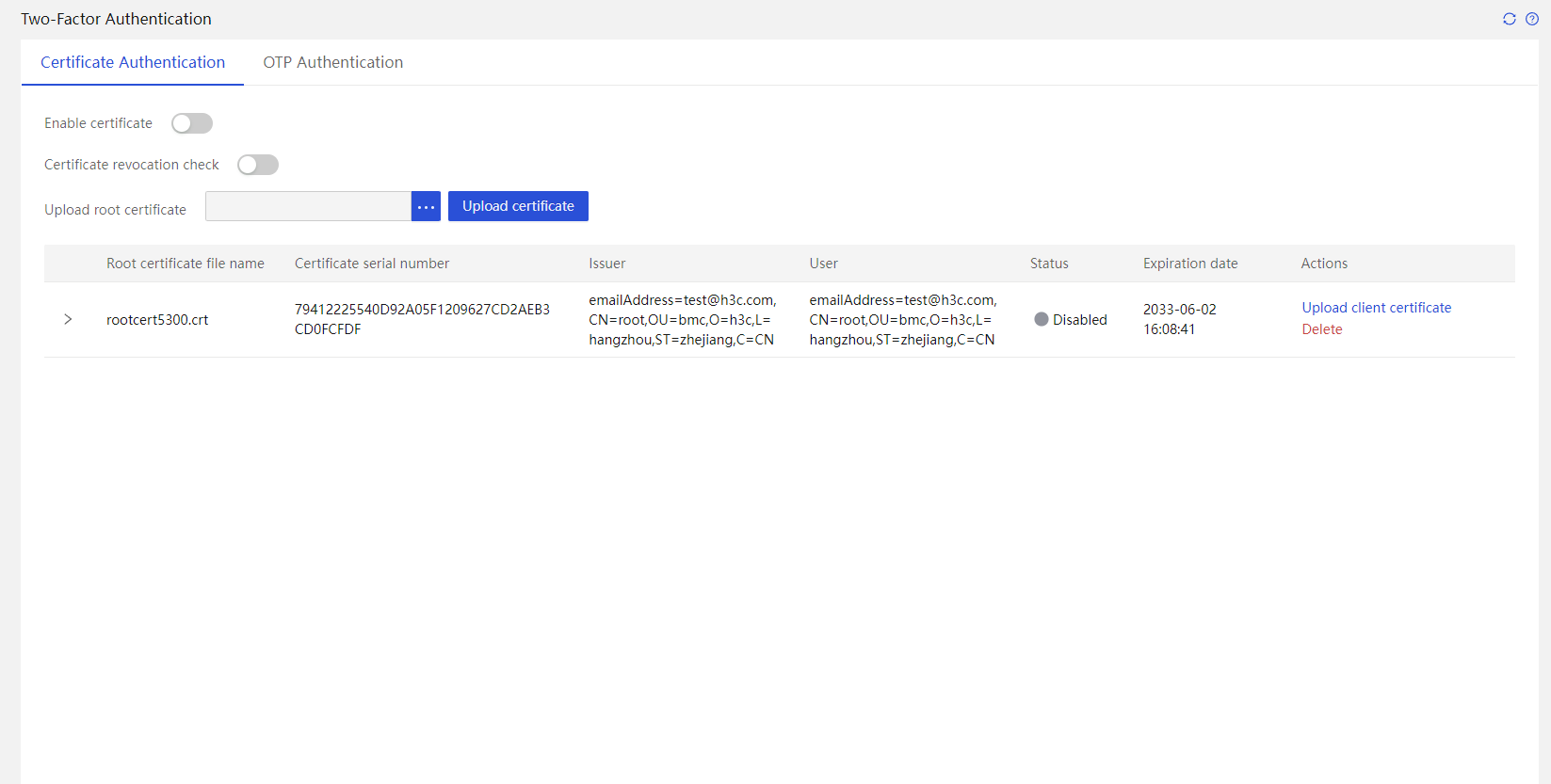

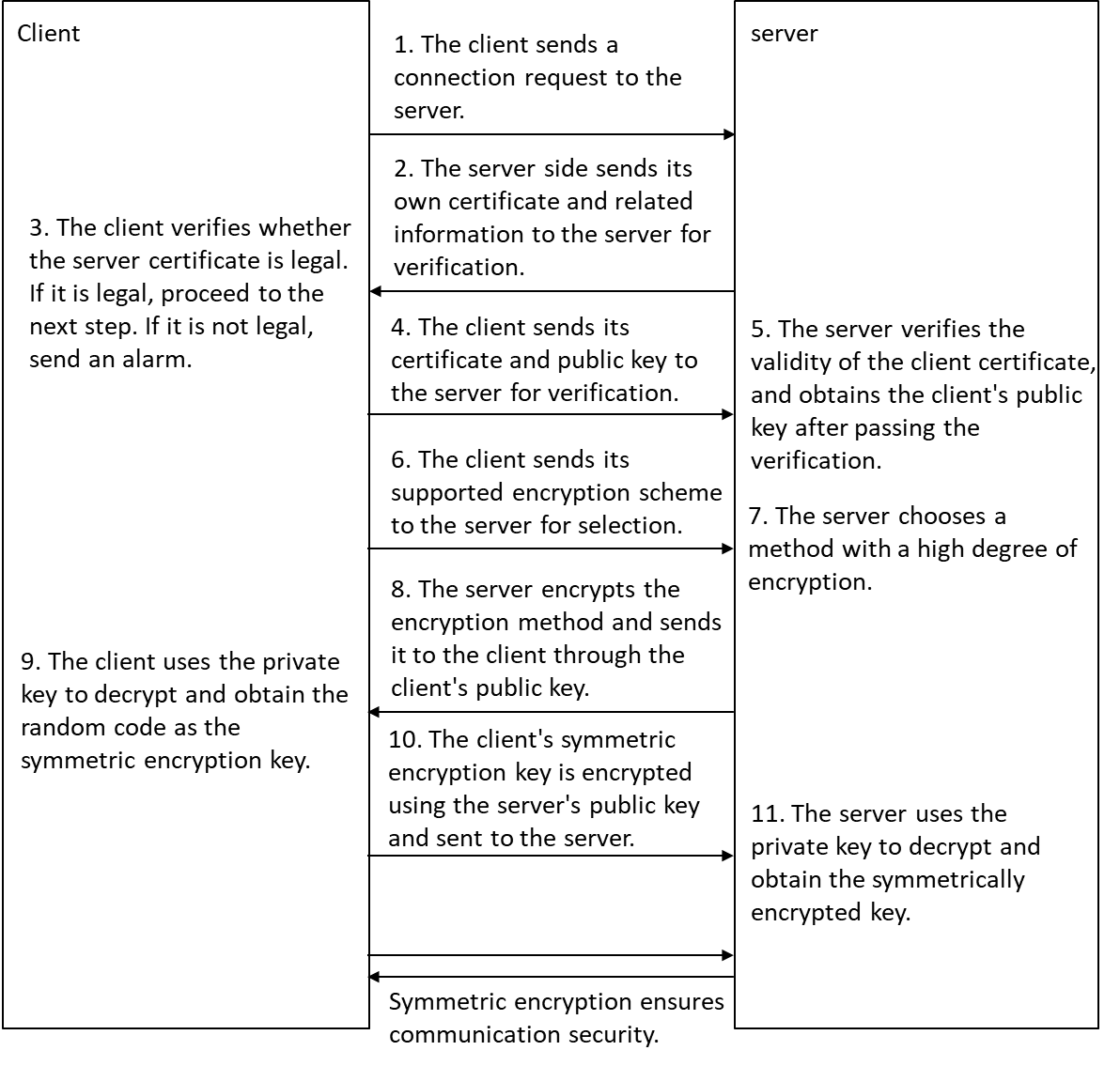

Ensures server security concerning service access, user accounts, data transmission, and storage, and supports two-factor authentication, whitelist and blacklist rules (firewall), management interfaces, SSL, silicon root of trust, and custom user privileges. |

|

One-click retirement |

Restores server components to the default by one click to implement secure retirement. |

HDM overall architecture

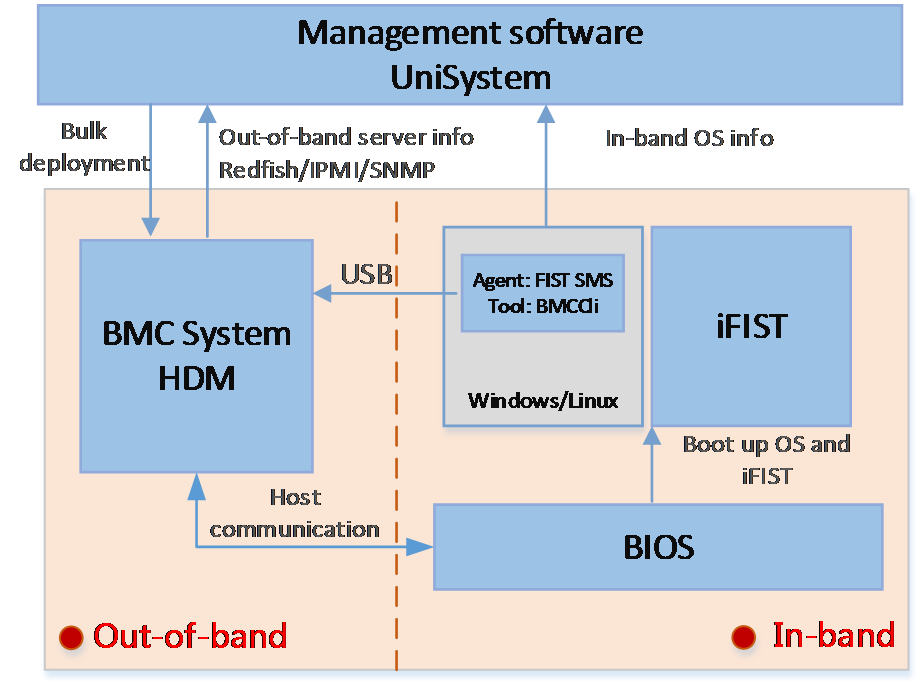

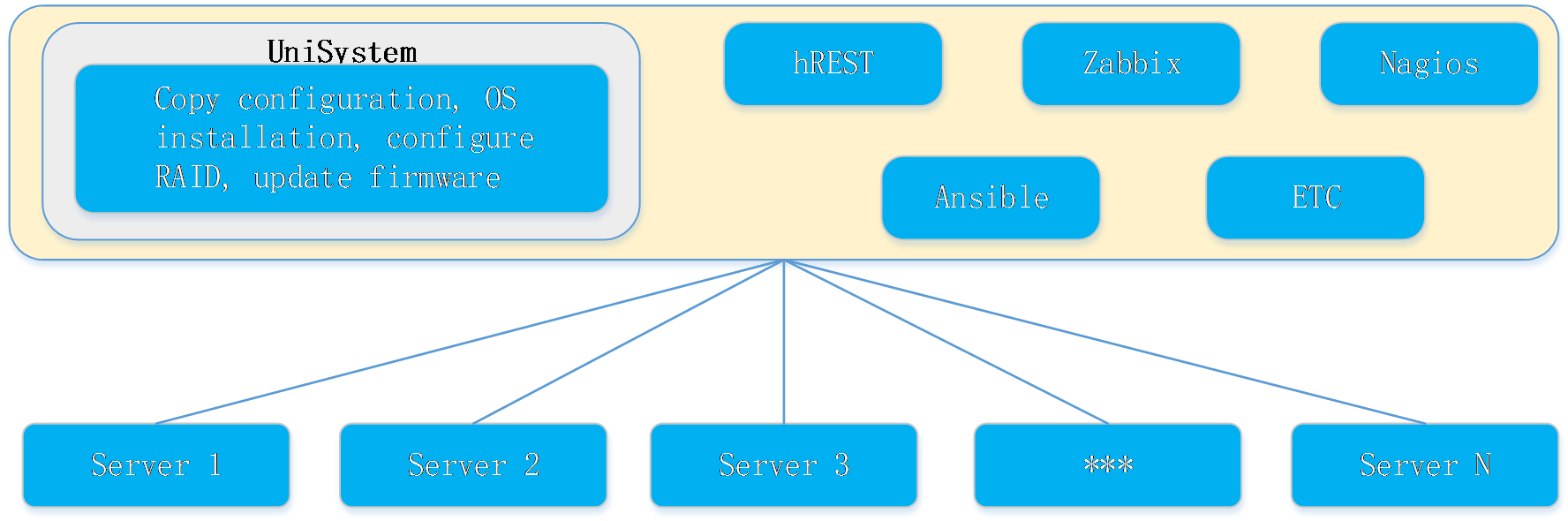

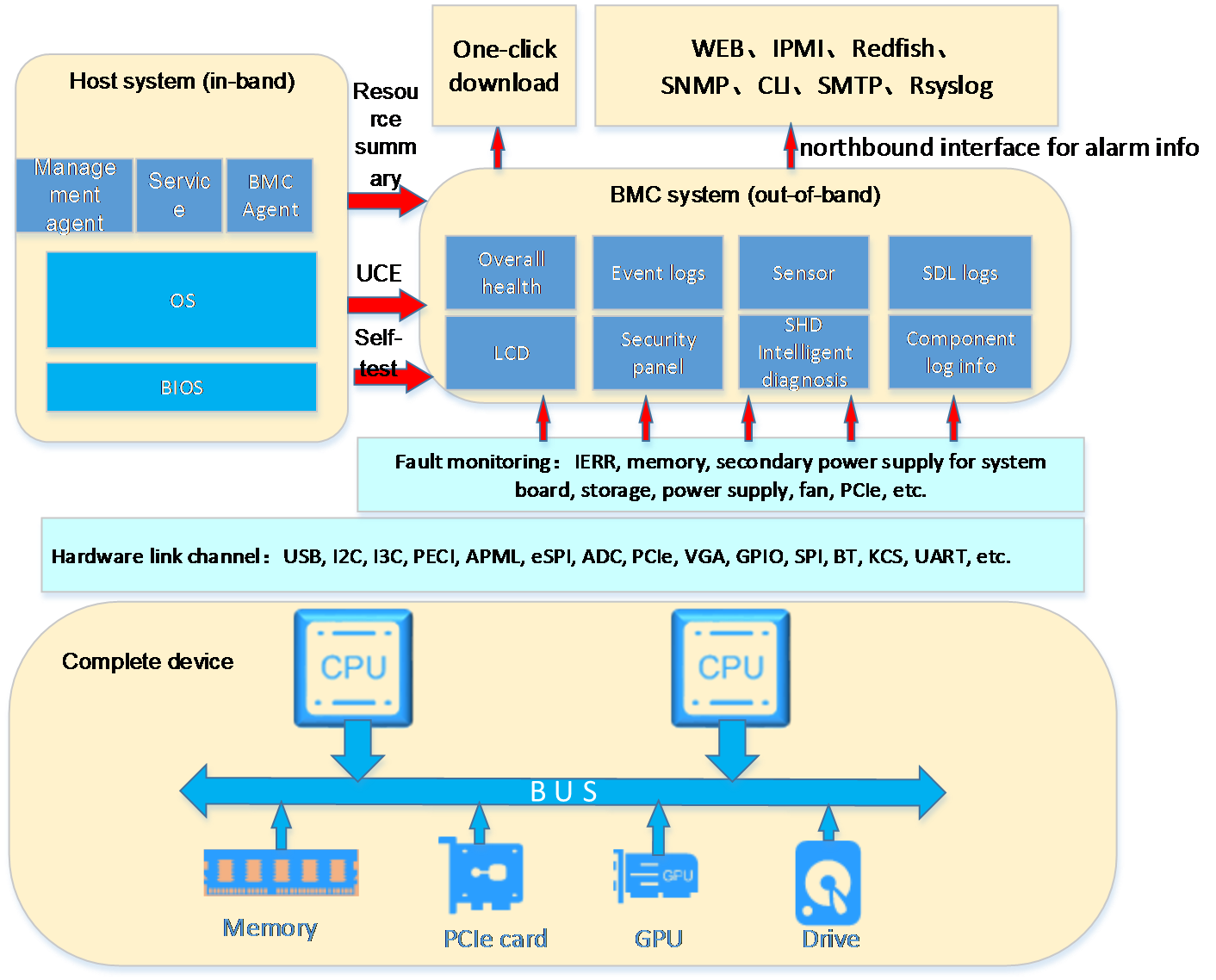

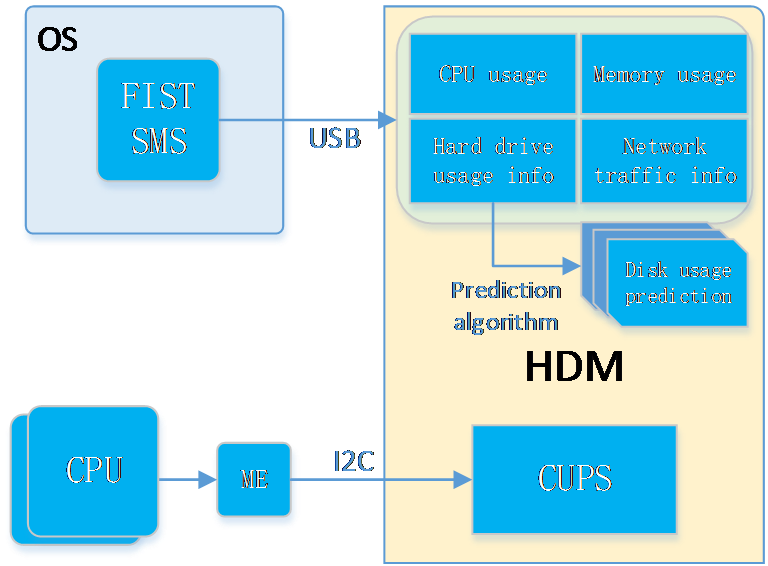

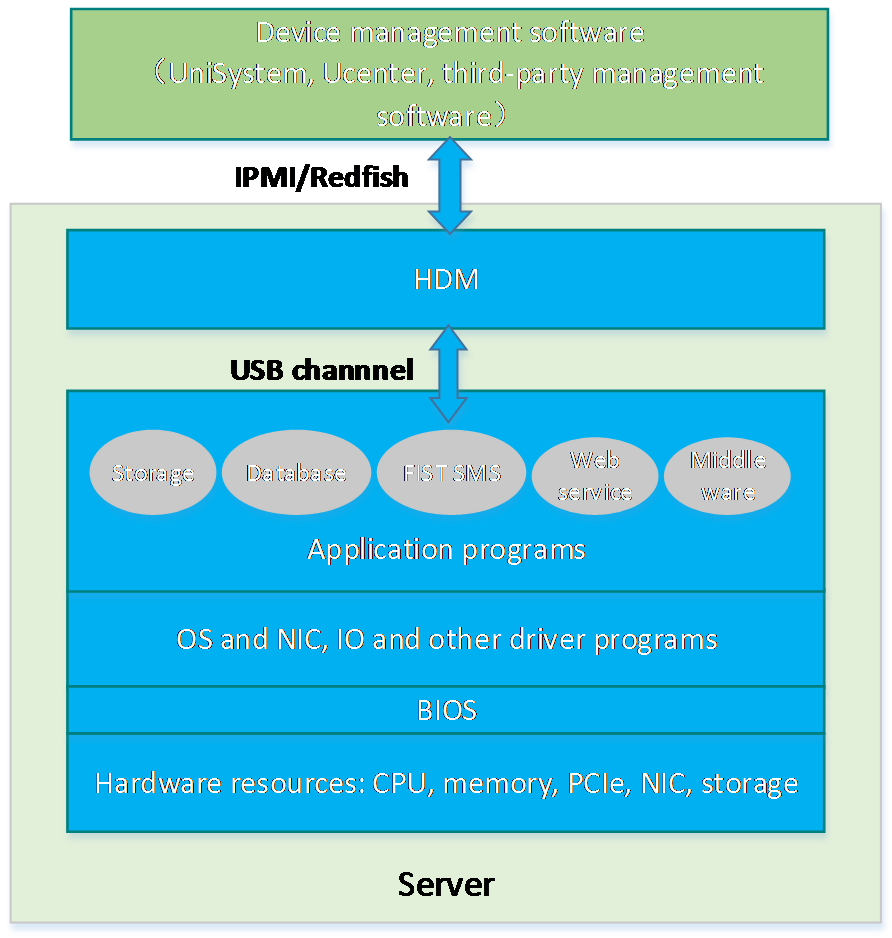

H3C server management software is as shown in Figure 1. HDM is an embedded system that operates independently from the host server. It is used for out-of-band management of the entire server, and cooperates with software such as BIOS, UniSystem, iFIST, FIST SMS, and hREST for server deployment, monitoring, and maintenance. HDM acts as the core and foundation of intelligent server O&M and lifecycle management.

Figure 1 H3C server management software

The system architecture of HDM is as shown in Figure 2. HDM adopts server-specific system on chip (SoC) with processor main frequency 1.2GHz and total memory size 1GB to effectively manage server hardware components. SoC supports KVM, 64MB local VGA display, dedicated and shared network ports, and various board-level management features and peripheral interfaces as follows:

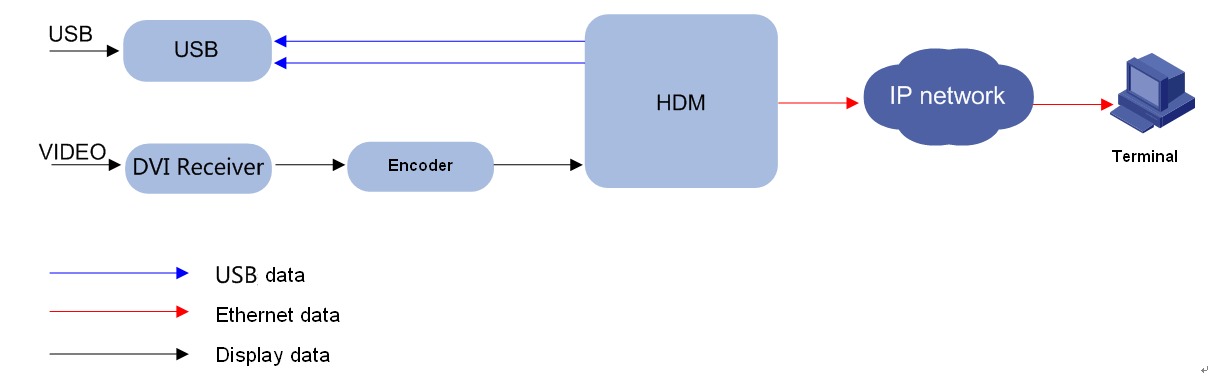

· KVM remote control—Uses the KVM module to process video data and keyboard and mouse data as follows:

a. The KVM module receives video data from the host system through the VGA connector, compresses the video data, and then sends the compressed data to a remote KVM client.

b. The KVM module receives keyboard and mouse data from the remote KVM client, and transmits the data to the host system by using a simulated USB keyboard and mouse device.

· LPC/eSPI communication and IPMI management—Provides the traditional LPC/eSPI system interface for communication with a server and supports the standard IPMI management.

· Remote access through GE interfaces—Provides dedicated GE interfaces through which remote management can be implemented by using IPMI, Redfish, or SNMP over the network.

· NCSI and VLAN support—Supports network controller sideband interface (NCSI) and VLANs, allowing for flexible network management.

· Remote consoles—Supports KVM redirection, text console redirection, remote virtual media (used to map optical drivers, drives, and folders of terminals to the server), and IPMI 2.0-based hardware monitoring and management.

· Sensor-based monitoring and management—Monitors the temperature and voltage in a server through sensors, and also manages fans and power supplies (PSUs) in an intelligent way.

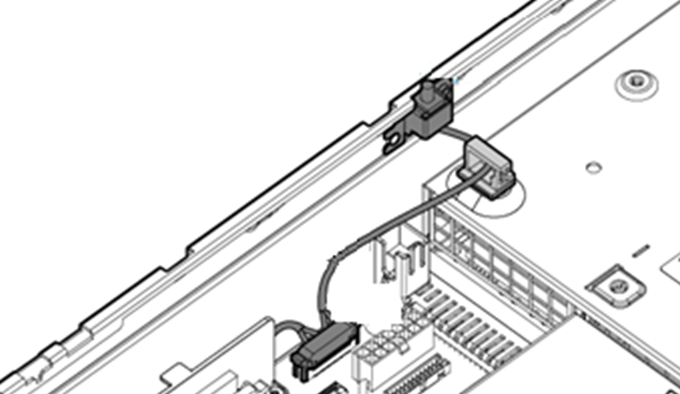

· Two SD cards (Nand flash) attached to HDM—SD card 1 is inserted as a card for saving iFIST images, which is not supported on some servers. SD card 2 is used for recording server operation and diagnosis log, including configuration information, operation log, and event log.

Figure 2 HDM system architecture

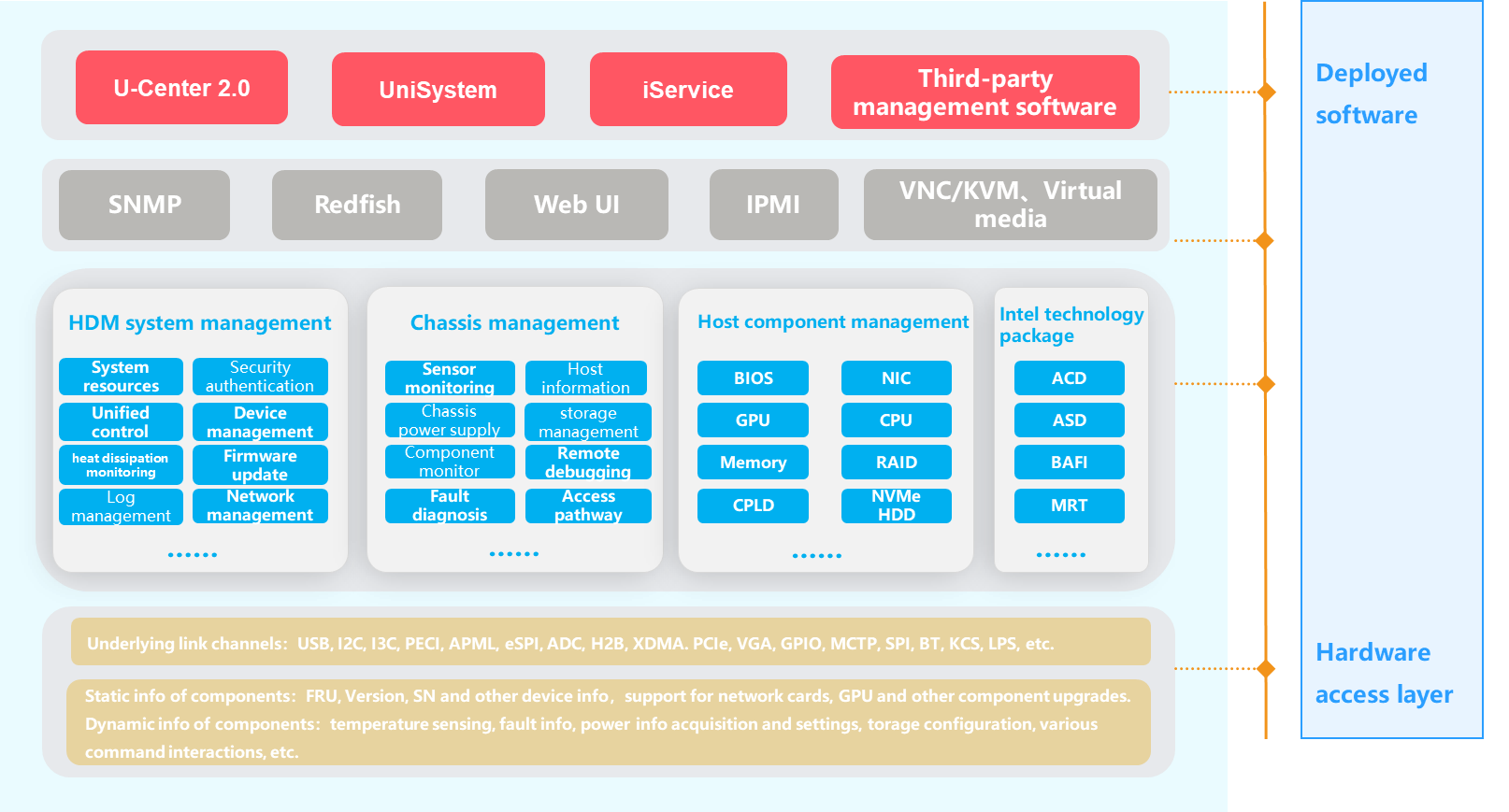

The main software architecture of HDM is as shown in Figure 3. The software capabilities of HDM primarily include the following:

· Chassis management—Monitors and manages sensors, power supplies, and other components.

· BMC system management—Provides security management, time management, and network management.

Figure 3 HDM software architecture

HDM provides abundant user interfaces, such as the Web-based user interface, CLI, IPMI interface, Redfish interface, and SNMP interface. All interfaces adopt authentication mechanisms and highly secure encryption algorithms to ensure access and transmission security.

HDM capabilities

The overall strategy of H3C servers is to implement built-in intelligence for empowering intelligent computing. To implement this strategy, three main underlay platforms are available: AI platform, multivariate computing system platform, and intelligent management platform. On these platforms, servers can sense the load, analyze computing power requirements, efficiently generate and aggregate computing power, and intelligently schedule computing power to fully deploy applications. HDM, from the perspective of servers, supports the implementation of the intelligent management platform by focusing on the various aspects of daily operation and maintenance of servers. It provides intelligent management of data center servers throughout their lifecycle, including intelligent deployment, tuning, energy saving, diagnosis, and retirement. This effectively helps enterprises improve operational efficiency and reduce operational costs. Customers can flexibly configure and deploy servers according to their specific business needs, creating their own dedicated operational environment and providing corresponding firmware support to maximize the capabilities of server hardware. The firmware on servers mainly includes the following types:

· Server host: BIOS on all server models, and OS, iFIST, and FIST SMS for some server models.

· Hardware: Firmware for cards and components.

· Out-of-band management firmware: HDM.

The firmware on the server acts as the management interface for hardware resources and the external access interface for components. From users' perspective, the firmware should possess several capabilities in addition to fulfilling service requirements:

· Access capabilities and management interfaces: Describe how users access servers and the interfaces exported externally by servers.

· Deployment: Covers services in the entire lifecycle of devices, including onboarding, management, migration, and retirement, and supporting features related with parameter configuration and firmware maintenance. The deployment and management functions primarily include device onboarding, configuration, system deployment, firmware update, device migration, and retirement.

· Management: Provides external management capabilities to servers and various components inside the servers, including asset management, inspection, device topology discovery, firmware information, device management, hardware parameter configuration, and BIOS and BMC configuration.

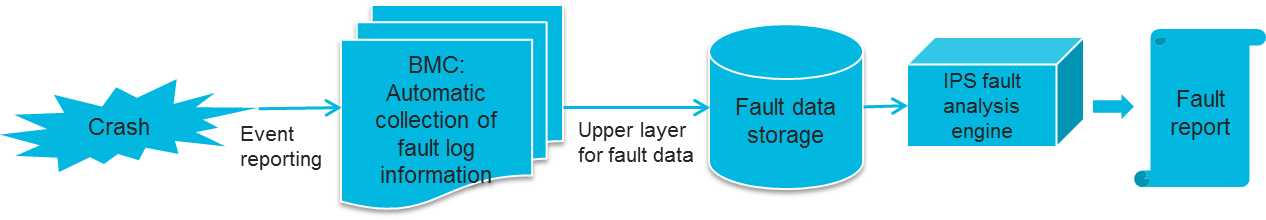

· Monitor: Provides external monitoring of the server operation status, including sensors, log subsystems, reporting channels for types of events especially alarm events, system monitoring status, channels for component alarming and early-warning alarming, and host monitoring capability.

· Diagnosis: Collects relevant contextual information of anomalies, utilizes multiple built-in rules for fault diagnosis, and automatically identifies fault points to locate faulty components in conjunction with server-embedded monitoring capability when anomalies occur. Key features include alarm source monitoring, fault handling mechanism, fault reporting, and maintainability-related features.

· Security: Provides security capabilities for servers.

· Performance: Improves out-of-band response capabilities of servers.

This document focuses on the following aspects:

· Introduces the corresponding solutions provided by H3C servers from the servers' usage scenario and requirements.

· Explains the principles behind each technology from the perspective of the server chassis and key technologies.

· Briefly provides application scenarios for each solution and technology.

Product features

Access capability and management interface

Server access

Considering the different focuses of different users in different scenarios, H3C servers provide a variety of management interfaces and access capabilities. Server access features the following:

· Ease of use: Convenient and easy-to-use configuration capabilities allow users to quickly get started.

· Security: Use various means to ensure the security of each link, which has been verified by multiple security laboratories.

· High performance: Continuously pursuing ultimate performance in terms of both startup and interface access.

· Multiple access methods: Multiple access methods meet the needs of various scenarios.

· Scenario-based optimization: Provides enhanced configurations for applications such as security and reliability, facilitating targeted optimization for users.

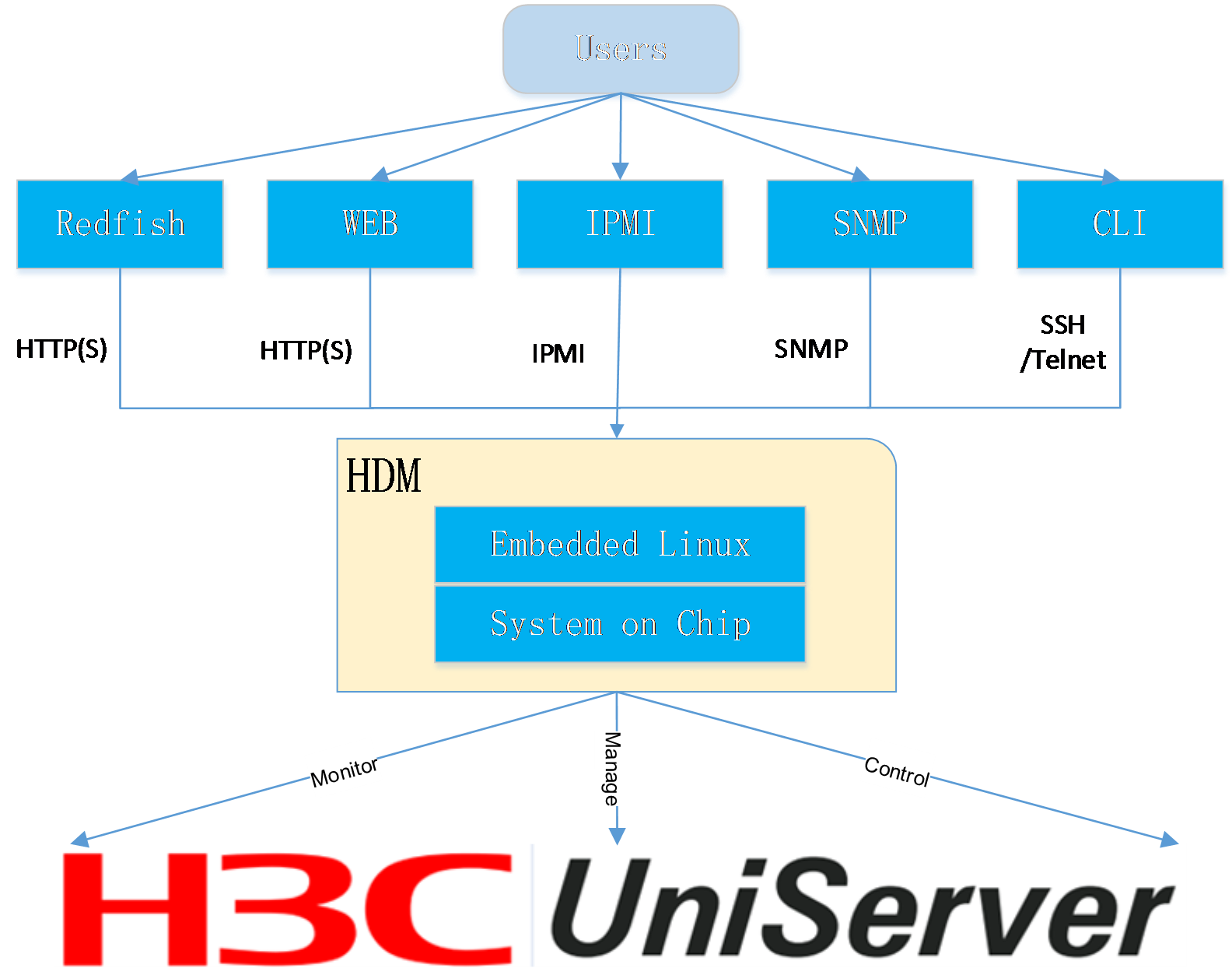

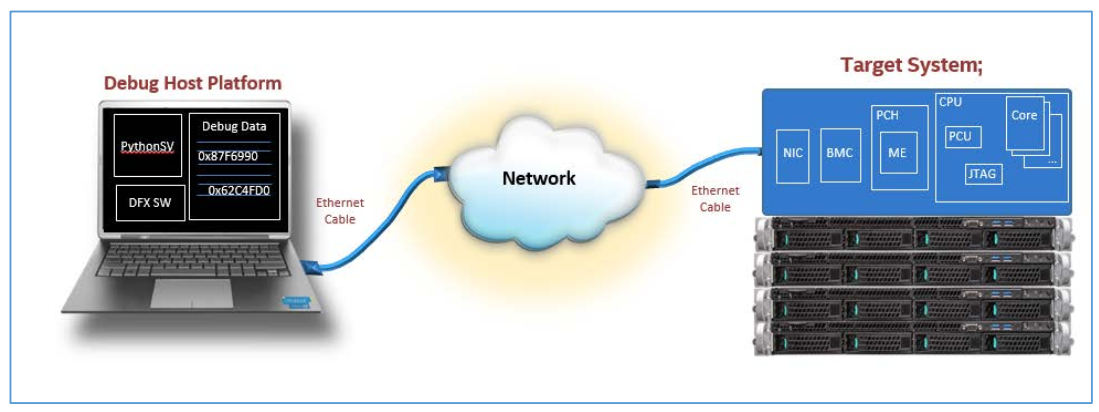

Out-of-band monitoring

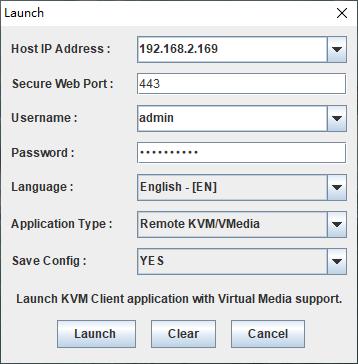

The methods to monitor a server include the following types: in-band and out-of-band. The out-of-band interfaces for accessing HDM mainly include Web-based HTTP/HTTPS, Redfish, IPMI, SNMP, and CLI. The HDM out-of-band interface method is shown in Figure 4.

Figure 4 HDM out-of-band interface

Ease of use

In terms of usability, the main features implemented by HDM are as follows:

· Automatic discovery of connected server devices if used together with UniSystem.

· Access based on the default IP address on the device.

Multiple access methods

In terms of diversity of access, the server provides the ability to directly access devices through manual means. The capabilities provided are as follows:

· Access of the HDM webpage through the shared port, dedicated port, or USB Wi-Fi.

· Access of the BIOS using VGA+keyboard.

· Access of the BIOS port through the device interface (serial port).

· Access of the HDM SHELL via device interface (serial port).

The server also provides the ability to access HDM and the BIOS through a management interface, providing the following capabilities:

· By accessing the BIOS and HDM through the interface of the OS, intelligent in-band deployment of servers can be achieved, including functions such as firmware upgrade, configuration management, and log download.

· Accessing HDM with IPMI tools through an out-of-band network enables integration with external management tools.

· Redfish interface can be used to access HDM through out-of-band network for external management tool integration.

· With the unified control feature, other devices' HDM can be accessed through the out-of-band network.

· The BIOS accesses corresponding resources using network services through the in-band network.

· Resources provided by the corresponding network service can be accessed through the KVM interface.

In addition, the device provides LEDs, an LCD display panel, and a security bezel for users to check the operating status of the equipment on site.

Note that the specific access methods may vary across different products. For more information, see the corresponding product manual.

Management interfaces

HDM further expands its interface on the basis of server hardware interfaces to meet the access needs of various scenarios.

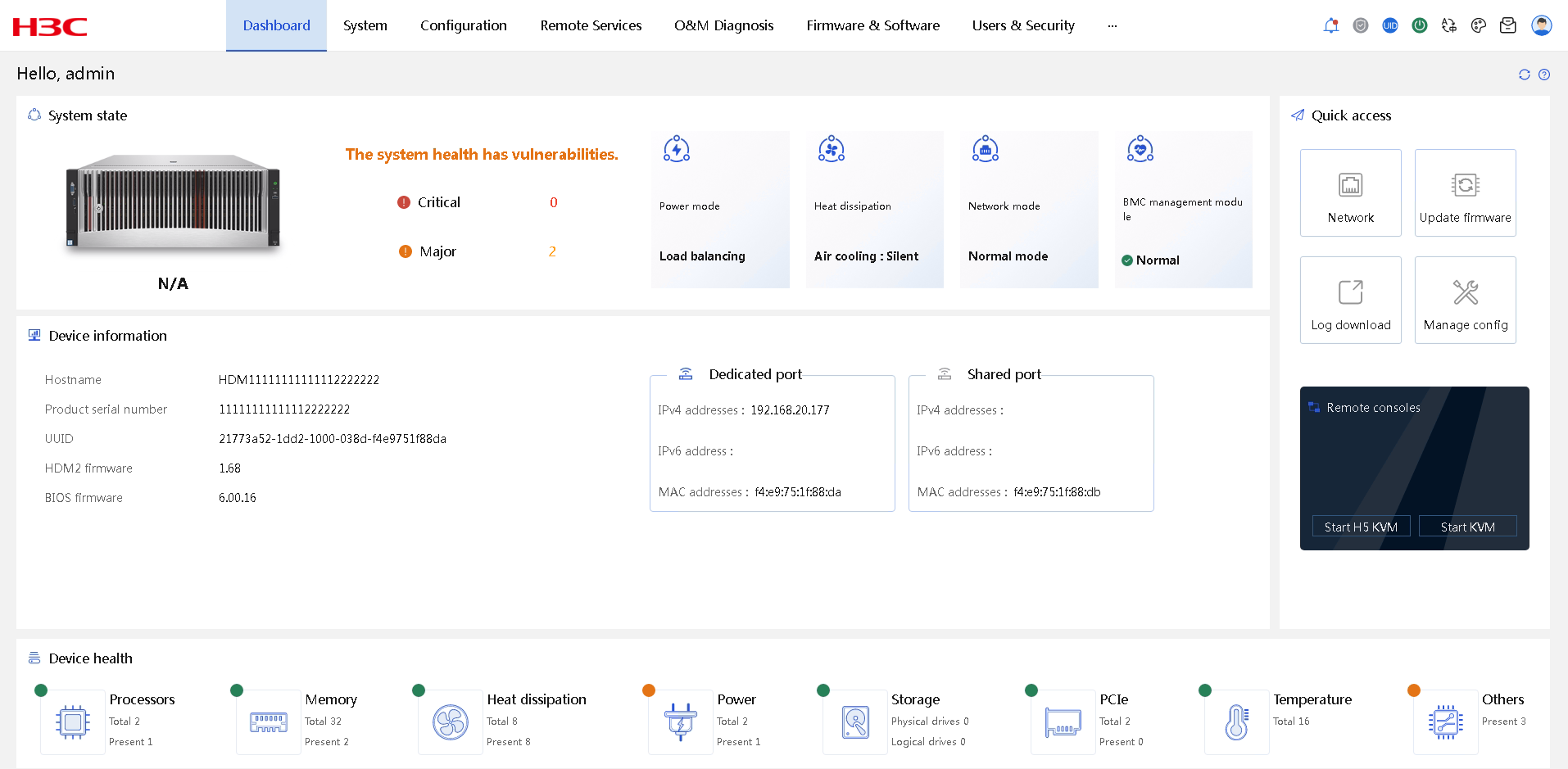

Web management interface

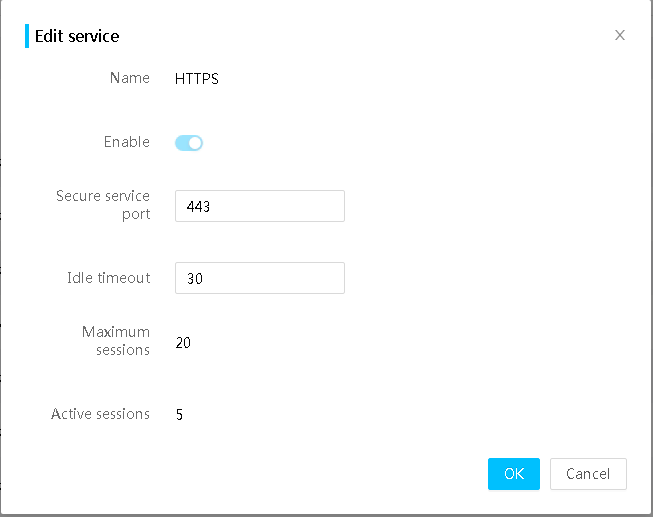

HDM provides a Web-based visual management interface based on HTTPS.

· It enables users to quickly complete setup and query tasks through a simple interface operation.

· Through the remote consoles, you can monitor the entire process of OS startup, perform OS tasks on the server, and map optical drives/floppy drives on the server.

· HDM also supports HTTP 2.0. Compared to HTTP 1.1, HTTP 2.0 significantly improves Web performance and reduces network latency, enhancing transmission reliability and security.

· You can toggle between English and Chinese. HDM supports two skin themes, minimalist white and starry blue.

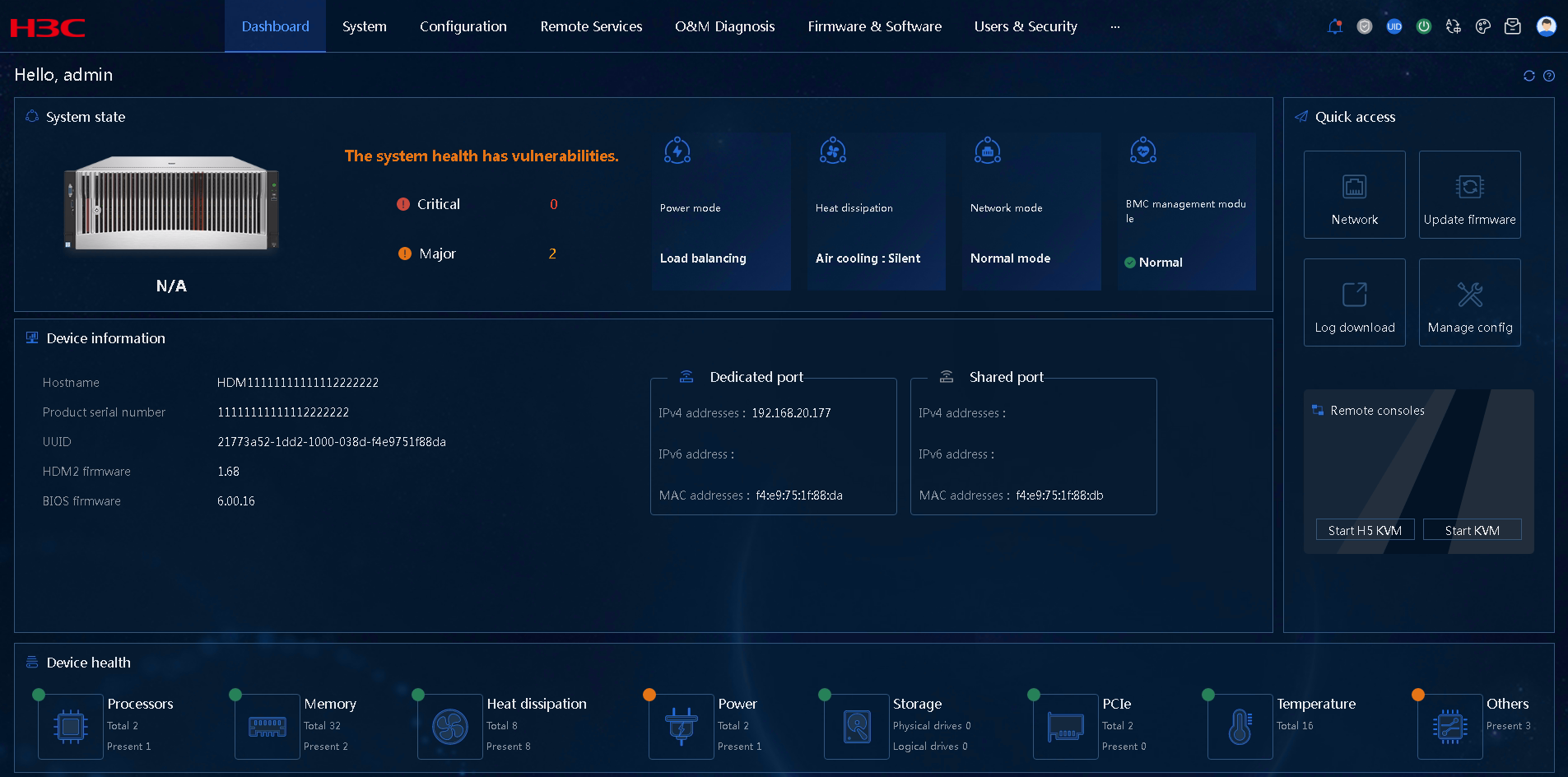

Figure 5 HDM homepage in minimalist white

Figure 6 HDM homepage in starry blue

You can open the login interface of HDM Web by entering the IP address (IPv4 or IPv6) or domain address of the HDM's network port into the browser's address bar, and then log in with your account.

Supported browsers include Firefox 90 and above, Chrome 80 and above, Edge 108 and above, and Safari 14 and above.

IPMI management interface

HDM is compatible with the IPMI 1.5/IPMI 2.0 specifications. The Data Center Management Interface (DCMI, a supported data center management interface) enables effective management of servers through third-party tools (such as ipmitool) based on the LPC/eSPI channel or LAN channel, or through the BMCCLI tool based on the USB channel.

· LPC/eSPI channel: Runs the KCS/BT protocol. The ipmitool and other tools must be run on the server's local operating system.

· LAN channel: Runs UDP/IP protocol. The ipmitool and other tools can remotely manage servers.

· USB channel: Runs BMCCLI protocol. BMCCLI tool must run on the server's local operating system.

BMCCLI tools and third-party tools support Windows and Linux systems.

For more information about supported IPMI functions in HDM2, see H3C HDM2 IPMI Basics Command Reference.

SNMP management interface

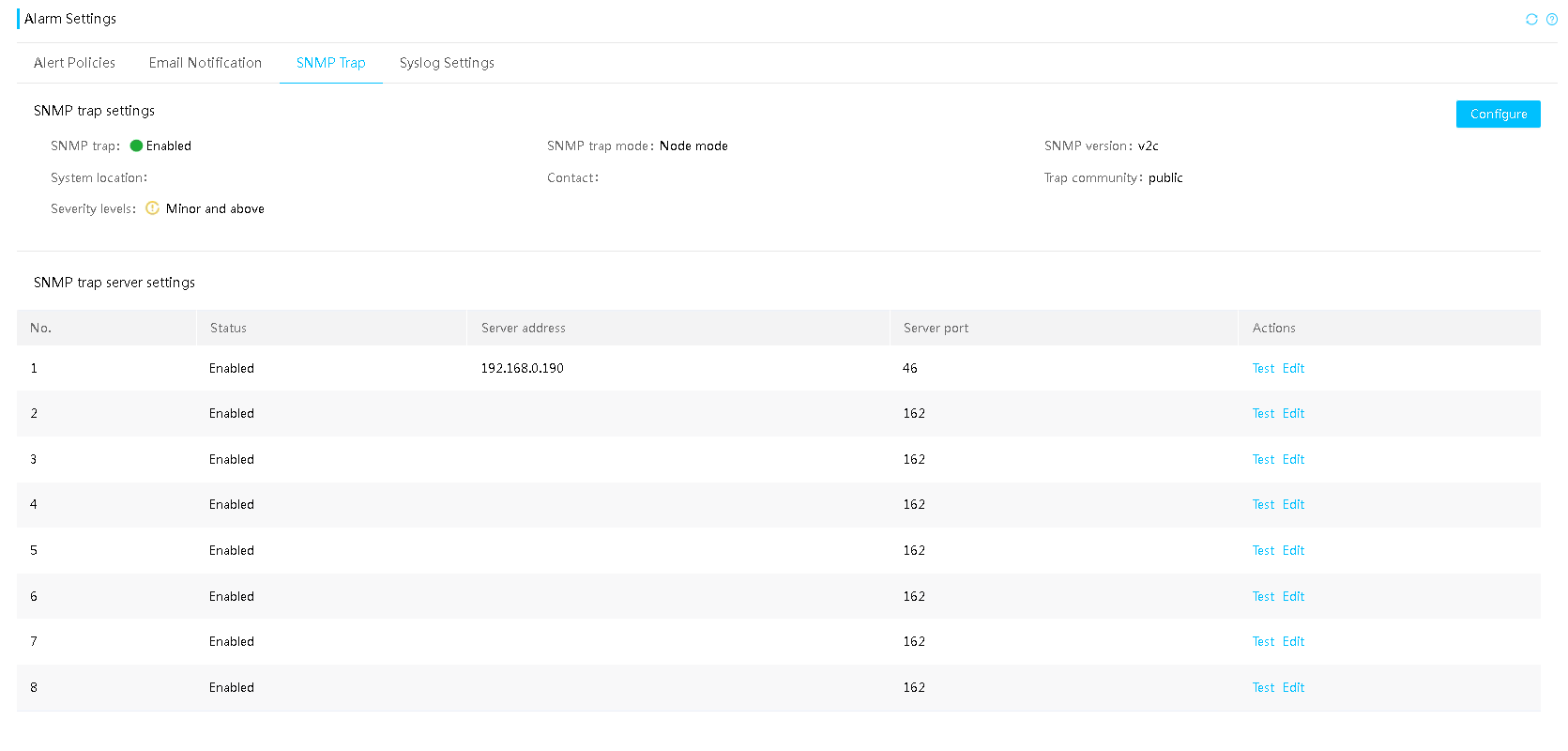

Simple Network Management Protocol (SNMP) is a communication protocol between management process (NMS) and agent process (Agent). It specifies the standardized management framework, common language of communication, corresponding security, and access control mechanisms for monitoring and managing devices in a network environment.

SNMP has the following technical advantages:

· TCP/IP-based standard protocol, with UDP as the transportation layer protocol.

· Automated network management. Allowing network administrators to use the SNMP platform to retrieve information, modify information, troubleshoot, diagnose faults, perform capacity planning, and generate reports on nodes on the network.

· Shield the physical differences of different devices to achieve automated management of products from different vendors. SNMP provides only the basic functionality set, enabling management tasks to be relatively independent of the physical characteristics and actual network types of the managed devices, thereby achieving management of devices from different vendors.

· Simple request-response mode combined with active notification mode, with timeout and retransmission mechanism.

· Few packet types and simple packet format, which facilitates resolution and implementation.

· SNMPv3 version provides authentication and encryption security mechanisms, as well as user-based access control functions, enhancing security.

¡ HDM provides an SNMP-based programming interface that supports SNMP Get/Set/Trap operations. Third-party management software can easily integrate and manage servers by calling the SNMP interface. SNMP agent supports the v1/v2c/v3 versions.

¡ SNMP agent provides interface queries for system health status, system health events, hardware information, alarm reporting configuration, power statistical data, asset information, heat management, firmware version information, and network management.

Redfish management interface

Redfish is a management standard based on HTTPS services that utilizes RESTful interfaces to achieve device management. Each HTTPS operation submits or returns a resource or result in JSON format encoded with UTF-8 (JSON is a data format consisting of key-value pairs). This technology has the advantage of reducing development complexity, being easy and implement and use, and providing scalability, while also allowing flexibility in design.

Redfish uses REST APIs and a software-defined server (data model), and is currently maintained by the standard organization DMTF (www.dmtf.org).

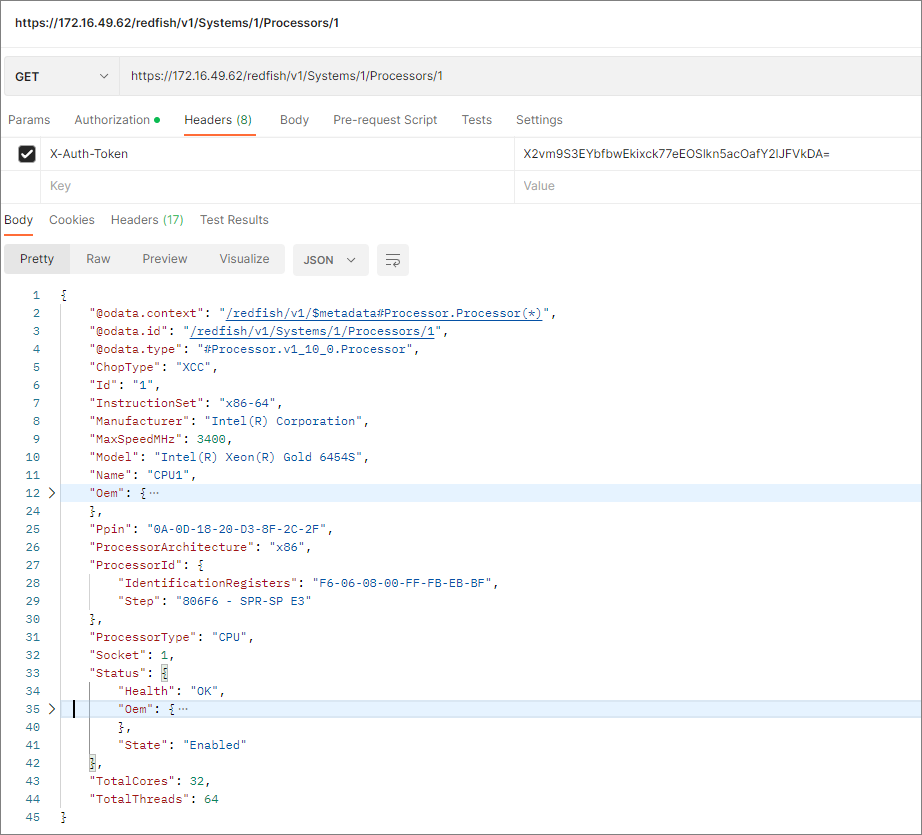

HDM supports Redfish 1.15.1 specifications, which can implement typical HDM and BIOS settings including user management and obtaining server information and management module information. The Redfish client sends HTTP(s) requests, authenticates through Token or Basic, retrieves data from the database, and returns the read results as shown in Figure 7. The database updates its content in real time through SEL and IPMI.

Figure 7 Redfish interface operation example (processor information query)

HDM unified control

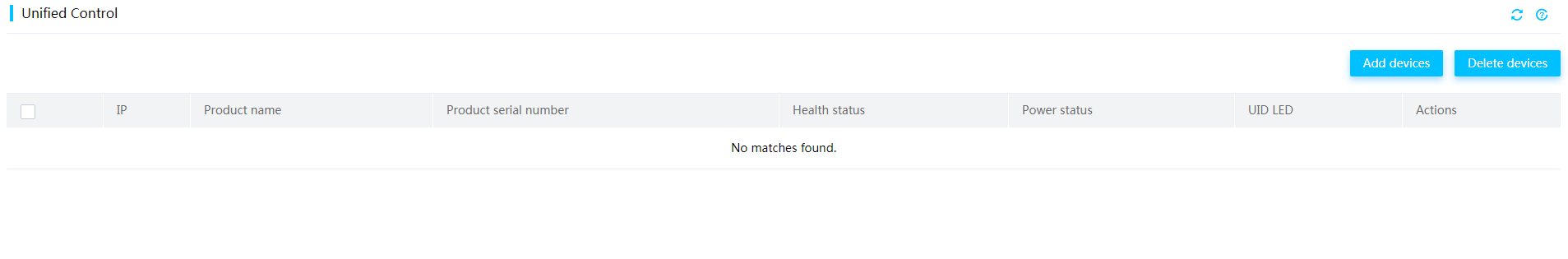

HDM unified control enables centralized management of small-scale servers, simplifying server operation and maintenance management for small and medium-sized enterprises. HDM unified control primarily provides the following functions, as shown in Figure 8:

· Device addition: Support adding single or batch servers, up to 10 devices (IPv6 addresses not supported). After acquiring license authorization, this feature support for up to 200 devices.

· Device deletion: Supports removing devices individually or in batches.

· Status query: Supports viewing basic status information of the device, including product name, product serial number, health status, power status, and UID status.

· Power management: Supports device power operations, including power on, power off, restart, and other operations.

· Remote access: Provides jump links for accessing HDM and H5 KVM consoles.

Figure 8 Unified control interface

LCD display screen

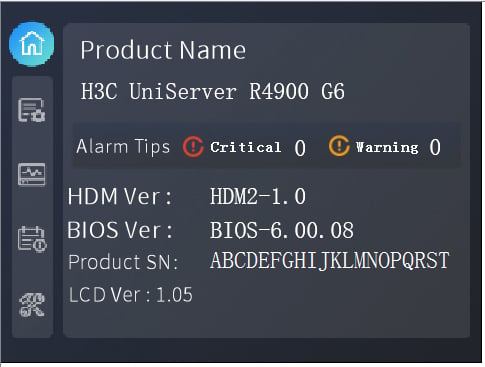

H3C rack servers can be configured with a 3.5-inch or 2.5-inch touchable LCD screen, used for displaying or configuring server information. It improved local maintenance simplicity and accelerated on-site fault location and repair. The LCD screen display supports switching between Chinese and English.

The LCD screen primarily provides the following functions:

· Information page: Displays product name, product serial number, and HDM/BIOS firmware version number, as shown in Figure 9.

· Status page: View the overall health status and log information of the server, as well as the components such as processor, memory, storage, fans, power supply, temperature sensors, and chassis power consumption.

· Monitoring page: Displays inlet temperature and CPU temperature in real time.

· Configuration page: Supports operations such as configuring HDM management interface network and restoring the administrator account.

Each component is displayed in different colors to indicate its status and health, following the same color display rules as the Web:

· Green: The device is normal.

· Orange: Critical alarms are present on the device.

· Red: Emergency alarms are present on the device.

· Gray: The device is not in position.

HDM network configuration

For centralized operation and remote operation of servers, HDM supports multiple network configuration modes, ensuring fast activation of network configurations, including:

· Provide network access through the shared port, dedicated port, and USB Wi-Fi.

· Configure the port active/standby mode and automatic shared port selection.

· Provide support for IPv4, IPv6, and domain names.

· After the device starts up, it automatically sends LLDP, SSDP, and free ARP messages to facilitate external identification and management of the device.

· Support for configuring IPv4 and IPv6 static routes to achieve more flexible network strategies for cross-segment access to meet the requirements in various application scenarios.

HDM provides the following features in terms of security:

· Support for shared port block.

· Support for enabling the firewall mechanism.

· Provide application-level security mechanisms.

Sideband management and NCSI technology

Sideband management (shared network port) is the use of Network Controller Sideband Interface (sideband NCSI) to share the physical NIC of the host system with the management system. This allows simultaneous management operations and service processing through a single NIC, simplifying network configuration and saving switch ports. For security reasons, it is necessary to use VLAN technology to separate management and service into different network segments.

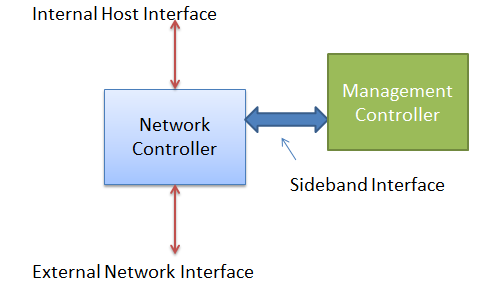

NCSI is an out-of-band management bus protocol based on the physical bus RMII. The NCSI bus is a network communication bus that multiplexes with the RMII bus and defines an NCSI protocol on top of the RMII bus. Network Controller (NC) is divided into internal host interface, external network interface, and sideband interface for external communication. The BMC, as the Management Controller, can both send NCSI control commands to the NC and achieve network data packet communication through the NC and external network interfaces, as shown in Figure 10.

Figure 10 Sideband management diagram

From a data flow perspective, HDM sends a network message to the physical bus RMII via MAC, and the NC receives the network message through RMII and then analyzes it. After analysis, if it is an NCSI message (determined by the ether type being 0x88F8), respond with NCSI. If it is a network message sending data externally (determined by the ether type being not 0x88F8), forward the data to the external interface.

Network port mode

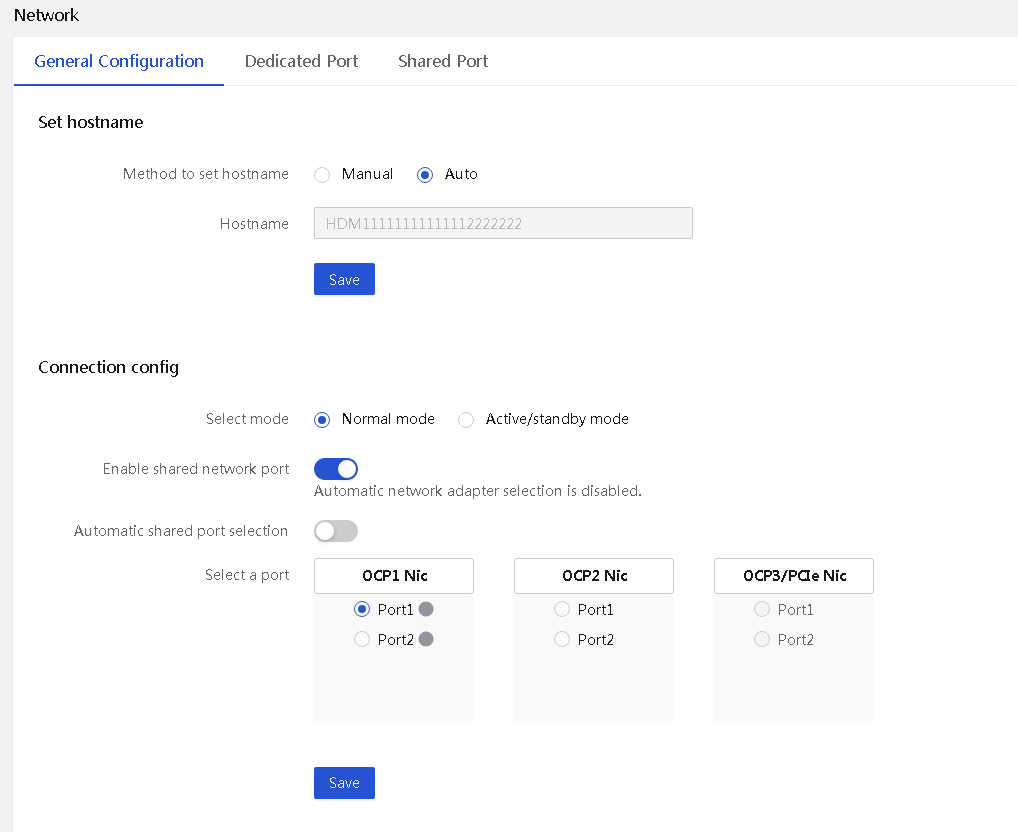

HDM can be configured with normal mode and port active/standby mode for the network interface, as shown in Figure 11.

Figure 11 Network general configuration information

· Normal mode: Users can access HDM through the shared network port or the dedicated network port. The IP addresses of the two ports must belong to different subnets.

· Port active/standby mode: HDM prioritizes the dedicated port as the communication port, and the shared port and dedicated port are in Active/Standby state. Only one type of port can be used to access HDM at a time, with the dedicated port having a higher priority.

¡ When the dedicated network port is connected to a network cable, regardless of whether the shared network port is connected to a network cable, select the dedicated network port as the communication port.

¡ When the shared network port is connected to a network cable and the dedicated port is not connected to a network cable, select the shared port as the communication port.

¡ In active/standby mode, the shared port and dedicated port use the same set of IP address and MAC address (dedicated port MAC).

· Automatic shared port selection: Automatically select a shared port based on the connection status of the network adapter ports. This feature offers the following advantages: HDM management can be done through a shared port as long as at least one network adapter port is connected.

¡ All OCP network adapters and PCIe network adapters that support NCSI support this function.

¡ Switching the shared port allows the administrator to connect to the new shared port and access HDM after the shared port is switched. This feature has the following advantages:

- No need to change the server's network information in the entire network to achieve smooth switching.

- No need to reconfigure the network information for the shared port after switching (including IP address and VLAN) to improve maintenance efficiency.

- Do not enable the active/standby mode and automatic shared port selection at the same time. If you do so, it may cause network failures.

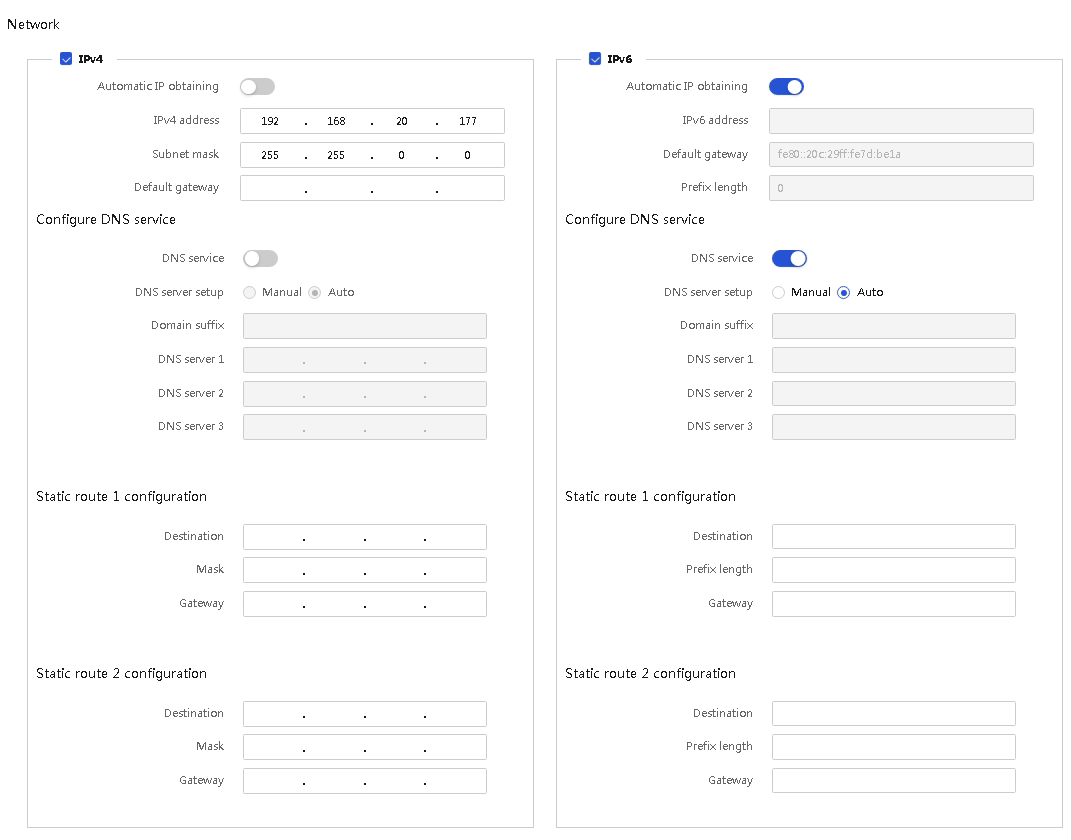

IPv6

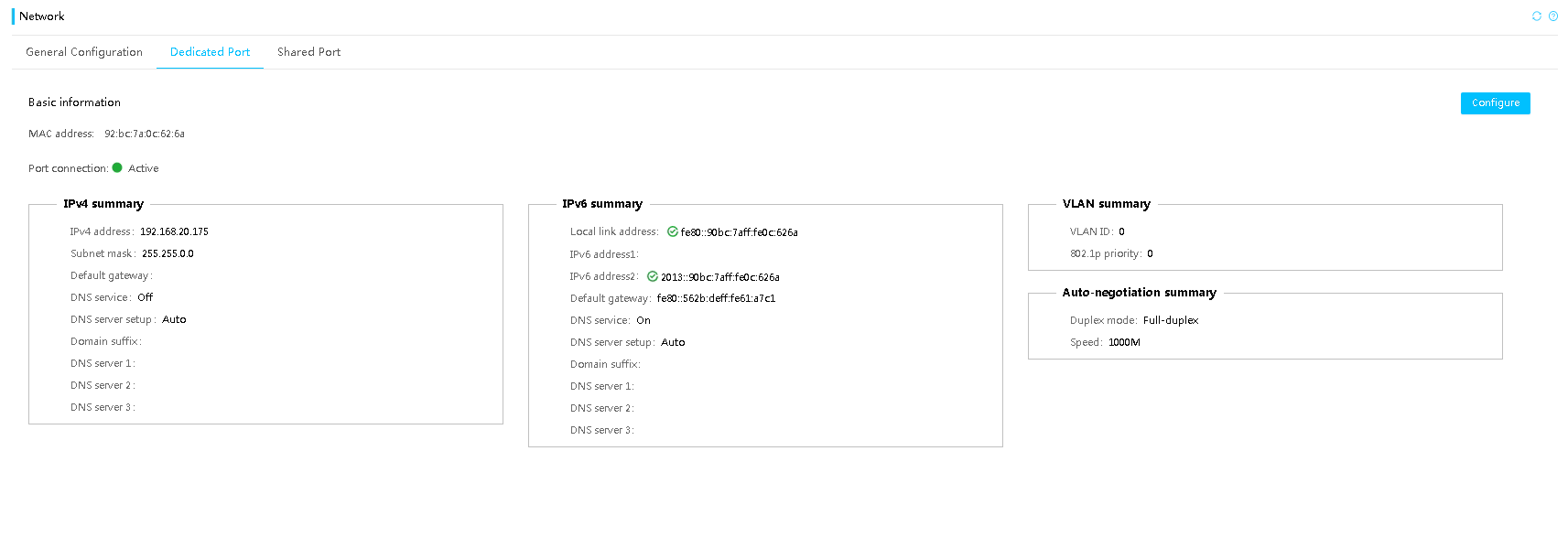

HDM fully supports IPv6. Both the dedicated network port and the shared network port (NCSI) support access through IPv6 addresses on their physical channels, as shown in Figure 12.

Figure 12 Dedicated network port configuration

LLDP

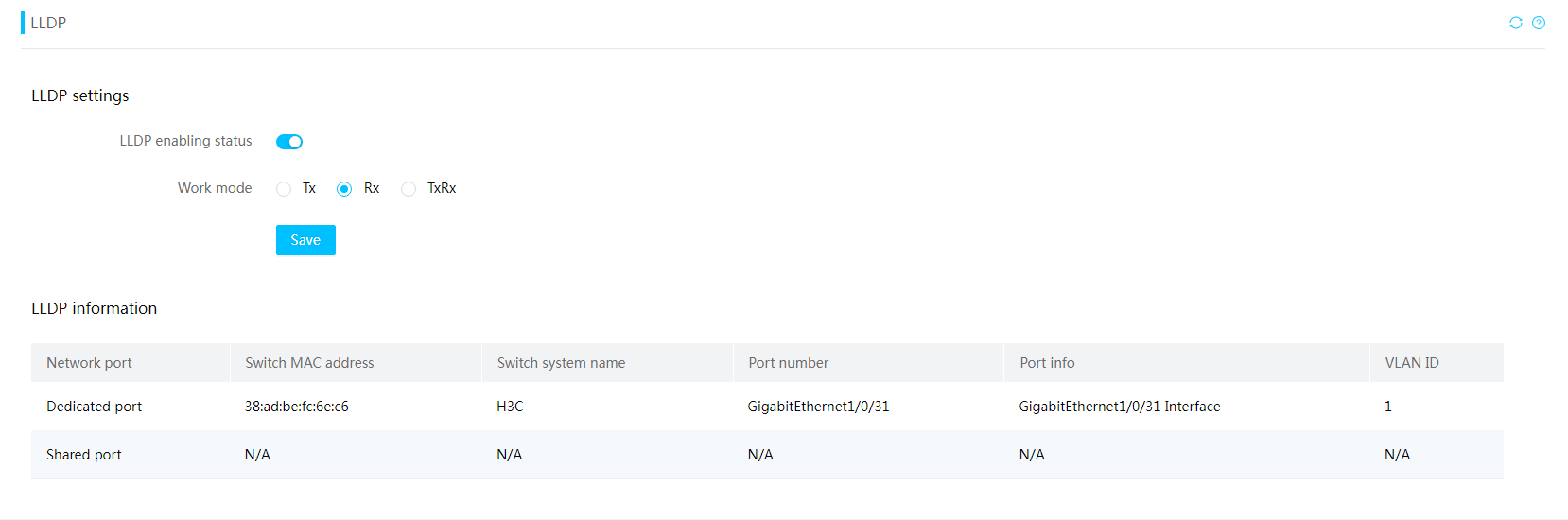

Link Layer Discovery Protocol (LLDP) provides a standardized way of discovering network devices and exchanging system and configuration information among different vendors. It allows network management systems to quickly gather information about the Layer 2 network topology and any changes that occur. The configuration information is as shown in Figure 13.

Figure 13 LLDP configuration information

HDM supports the LLDP protocol and can parse relevant information about the local network's switches.

· Network interface: The network interface through which the server receives LLDP information.

· Switch MAC address: MAC address of the uplink switch port.

· Switch system name: Uplink switch system name.

· Connection port number: Upstream switch port number.

· Port information: Upstream switch port name.

· Network interface speed: Speed of the network interface.

HDM supports actively sending LLDP messages to facilitate the discovery of neighboring devices.

SSDP automatic discovery

Simple Service Discovery Protocol (SSDP) is an application layer protocol and one of the core protocols that make up Universal Plug and Play (UPnP) technology.

HDM supports SSDP and regularly sends NOTIFY messages, enabling upper-level operation and maintenance software (such as UniSystem) to automatically discover all BMC devices, eliminating the pain point of individually configuring BMC devices in the initial setup process.

Static routes

HDM supports setting static routes. You can configure two static routes for dedicated and shared network ports, separately for both IPv4 and IPv6 configurations to control and direct network traffic accurately and implement cross-segment route forwarding.

Figure 14 Configuring static routes

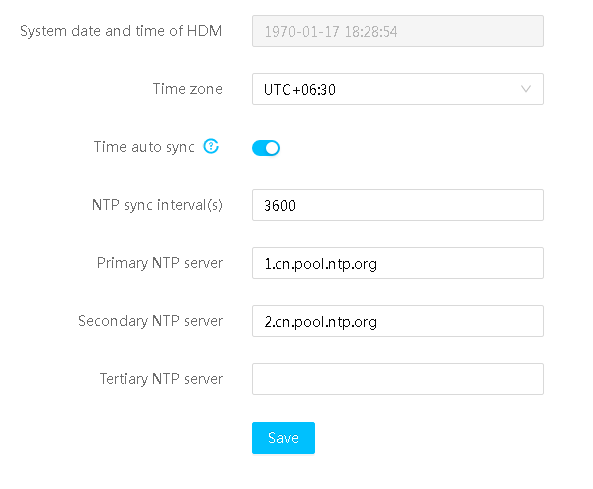

HDM time settings

HDM provides multiple levels of time sources and allows setting the synchronization priorities. The following time synchronization methods are supported and their default priorities are in descending order:

Primary NTP server > Secondary NTP server > Tertiary NTP server > DHCP server (acting as a NTP server) > Host ME (only for Intel products) > RTC on HDM.

Time management

HDM supports obtaining time from various sources to meet different time management scenarios. The following scenarios are available:

· Manually configure NTP synchronization: You can configure the same NTP server between different HDM and hosts to ensure that all systems obtain the correct time from this NTP server and remain consistent at all times.

· Use DHCP server to manage NTP synchronization: Specify the NTP server field on the DHCP server, enabling all systems in the equipment room to automatically obtain the same NTP server and thus have accurate and unified time.

· Synchronize host time: Pass host time to HDM through the BIOS and ME components to maintain accurate time and ensure consistency between host and HDM time.

NTP synchronization

The Network Time Protocol (NTP) is an application layer protocol in the TCP/IP protocol suite. It is used to synchronize clocks between a series of distributed time servers and clients.

HDM supports manual configuration of three NTP servers and also supports automatic retrieval of NTP server configuration from the DHCP server, as shown in Figure 15. All NTP servers are managed based on priority, and if a high priority server is unavailable, a low priority server is automatically used, ensuring periodic automatic synchronization.

The NTP server supports IPv4 addresses, IPv6 addresses, and FQDN (fully qualified domain name) addresses.

Figure 15 NTP configuration information

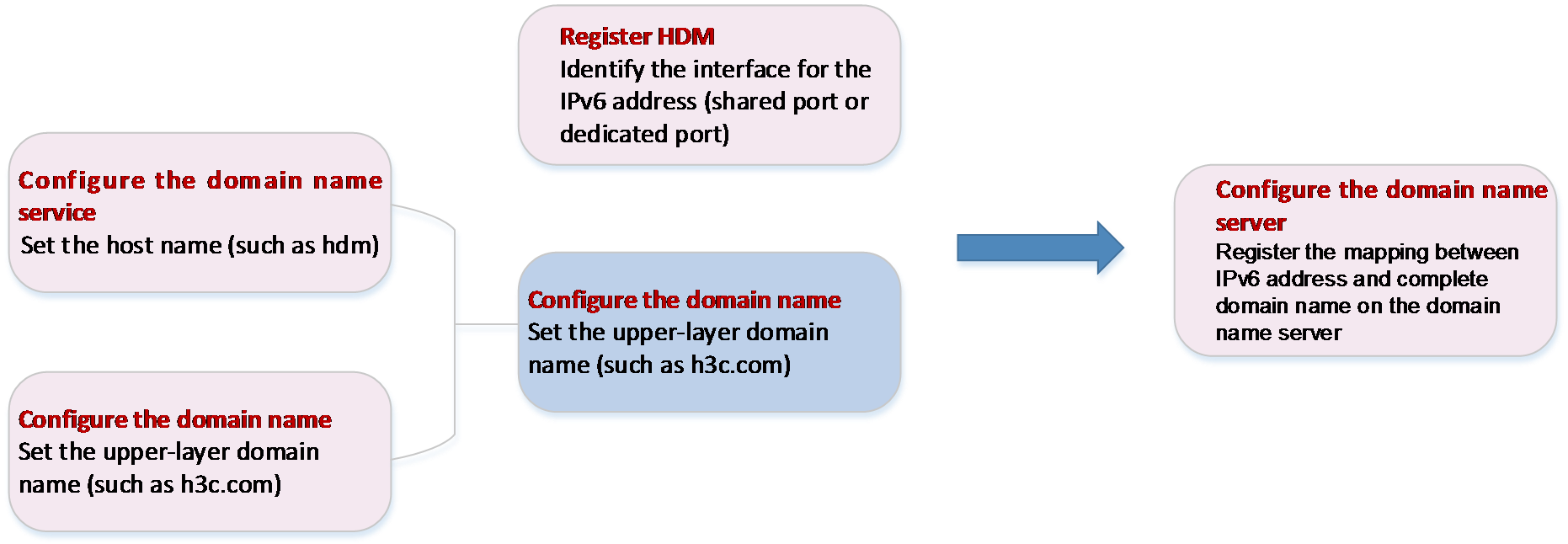

DNS

DNS is a distributed database used by TCP/IP applications to provide translation services between domain names and IP addresses. The complete domain name contains a host name and upper-level domain name. In HDM, the host name can be manually configured or automatically generated based on the server's SN number. The parent domain name can also be manually configured or obtained automatically through DHCP.

HDM supports mapping the IP address of the shared port and dedicated port to a domain name, and registers this mapping with the IP DNS servers in the network.

The registration methods include nsupdate and FQDN/host name: The nsupdate method allows the client to directly update the DNS server zone file through the nsupdate command. The FQDN/host name method allows the DHCP server to dynamically register client information with the DNS server after confirming the client's network address allocation.

The user can add all managed servers to a unified management domain and use a memorable domain name to access the managed servers' HDM.

Figure 16 DNS registration process

HDM supports DNS configuration. DNS information can be independently configured for IPv4 and IPv6 interfaces under dedicated and shared ports, as shown in Figure 17.

Figure 17 DNS configuration information

Server deployment capabilities

Background

H3C servers support general deployment capability, enabling quick access to customers' operational environments, such as PXE servers and DHCP servers. H3C has developed a large number of automation deployment software programs and tools for customers, providing comprehensive and versatile deployment capabilities. Automation software and tools can help customers save a significant amount of manual operation time, provide operational efficiency, and reduce operational costs. H3C can provide the following deployment software and capabilities:

· HDM: Server standalone out-of-band management tool. Customers can achieve some simple server configuration and deployment through HDM, including KVM installation of the operating system, HDM/BIOS/RAID configuration, and firmware updates. For more information, see the other chapters in this document.

· FIST SMS: Server standalone proxy software with in-band deployment capabilities including firmware and driver updates.

· iFIST: Server standalone embedded management software. iFIST is pre-installed on a storage chip in the server to provide in-band standalone deployment capability. Supported deployment functions include automatic installation of operating systems and drivers, HDM/BIOS/RAID configuration, firmware update, pre-mounting device diagnostics, and post-removal data clearance.

· UniSystem: Bulk server management software. If used together with HDM, FIST SMS, and iFIST, UniSystem can provide the ability for bulk deployment of servers, including IP configuration, automatic operating system installation, clone installation, firmware and driver updates, and batch functions such as pushing software installations and configuring HDM/BIOS/RAID. UniSystem supports automatic shelf-up of servers. Customers can plan server software and hardware configuration items in advance on the UniSystem page, and create corresponding server configuration templates and binding rules. When the server accesses the operation and maintenance network, UniSystem will automatically incorporate the server into management and issue corresponding server configuration templates, realizing the automation effect of plug-and-play and worry-free shelf-up.

The deployment of the server mainly involves the following tasks:

· Device rack mounting

Device management: Able to quickly identify new devices joining the network or offline devices.

· Perform batch identification and version management of server equipment and its components.

¡ Check if the firmware and driver versions on the device need updating.

¡ Firmware update: H3C supports multiple methods of firmware update, including in-band single/batch update, out-of-band single/batch update, and offline firmware update.

¡ Driver update: H3C supports multiple driver update methods, including in-band single/batch driver update, and automatic driver installation after operating system installation.

· Configuration operations

Perform centralized HDM configuration, BIOS configuration, batch import and export, and online configuration of components (such as storage controllers).

· Operating system installation

Provide automated batch installation of mainstream operating systems, supporting "split" transmission technology and image cloning technology for image files. Compared to traditional PXE installation methods, UniSystem can greatly improve the speed of operating system installation.

· Support other device operations, such as pre-mounting equipment diagnostics.

· Support security operations after device disassociation, such as data erasure.

Deployment capability

Multiple methods and tools are provided for server configuration and deployment. Along with the support of UniSystem server management center, batch management, deployment, and update capabilities for users are available. For more information, see the H3C UniSystem technical white paper.

Figure 18 Deployment capability diagram

Technical points

Device management capability

HDM supports the following features for easy device incorporation:

· Devices actively send ARP packets to quickly broadcast its MAC address to the network when they connect to the network.

· Devices actively send SSDP and LLDP messages when they connect to the network to facilitate external identification of the devices.

· DHCP supports SN transmission, facilitating unique identification of servers.

· HDM provides rich APIs for external management tools to obtain device information (such as model, version, and asset information).

Rich configuration capabilities

HDM supports the following features to facilitate the configuration of HDM/BIOS/component parameters:

· Support configuration using standard IPMI commands, Redfish commands, and SNMP commands.

· Support one-time effect, permanent effect, immediate effect, and effect upon next startup for the BIOS configuration.

· Support customizing alarm logs and configuring user permissions.

· Support presentation and configuration of BIOS settings through the HDM Web interface.

· Support batch export and import operations of the HDM, BIOS, and storage controller configurations, enabling convenient configuration migration.

· Support factory reset and one-click retirement operations to meet the requirements of equipment migration and retirement.

· Support remote configuration and service switching.

Firmware deployment

HDM supports the following features to facilitate firmware operations:

· Use one image for multiple products without distinguishing versions, making upgrades easier.

· Encapsulate images by firmware type to meet the requirements of security verification and user's unawareness of firmware type.

· Support out-of-band firmware management operations.

· Support immediate upgrade and scheduled asynchronous upgrade.

· Support installing feature packages and patch packages.

· Support REPO upgrade to update multiple types of firmware at the same time.

· Support firmware backup.

· Support automatic recovery of HDM and BIOS firmware anomalies.

· Support resuming upgrade tasks after the host and HDM restart from anomalies.

· Support CPLD restart without power-off.

OS and driver deployment

Except for the traditional method of OS installation and driver deployment through image mounting using KVM or H5 KVM, HDM also supports distributed bulk OS installation and fast OS installation. This can meet fast deployment requirements in various scenarios.

Distributed bulk OS installation: UniSystem, in conjunction with HDM, supports OS installation via distributed image transmission through file sharing, which supports peer-to-peer transmission and avoids bandwidth limitations from software deployment. This improves OS deployment capability by over 10 times compared to traditional installation methods.

Figure 19 Distributed bulk OS installation

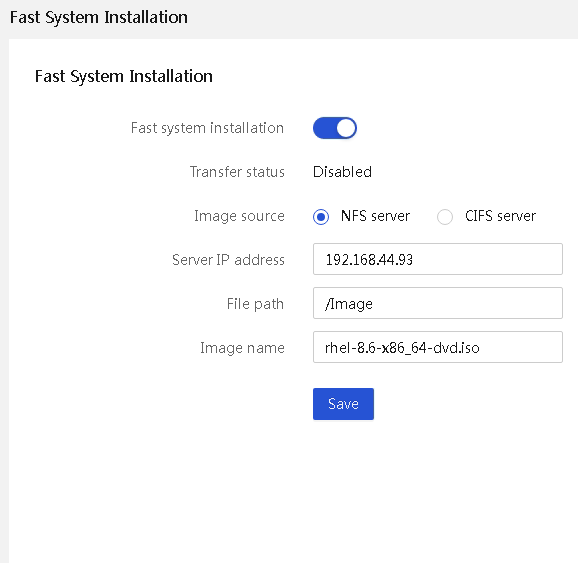

Fast OS installation: HDM supports selecting an image from the NFS server or CIFS (Samba) server for fast OS installation. This feature uses a high-performance, low-latency PCIe hardware link transmission channel to shorten the time for OS installation. This resolves the issue of slow image transmission rates from BMC to the host side during OS installation based on remote virtual media.

Figure 20 Fast OS installation

Peripheral tools

HDM provides a rich ecosystem of cooperative interfaces, constructing a diversified server management ecosystem. It supports Ansible orchestration scripts, Zabbix, and Nagios & Nagios XI for third-party incorporation, monitoring, and O&M management.

Table 2 Third-party platforms supported by HDM

|

Third-party platform |

Description |

|

VMWare vCenter |

Alarm monitoring, information query, OS installation, component update, server configuration |

|

Microsoft System Center Operation Manager (SCOM) |

Alarm monitoring |

|

Microsoft System Center Configuration Manager (SCCM) |

OS installation, OS upgrade, system configuration |

|

Ansible |

Information inquiry, RAID configuration, network configuration, BIOS configuration |

|

Zabbix |

Information inquiry, alarm monitoring |

|

Microsoft Admin Center |

Information query, resource usage monitoring |

|

Nagios & Nagios XI |

Information inquiry, alarm monitoring |

Configuration management

Configuration file import and export

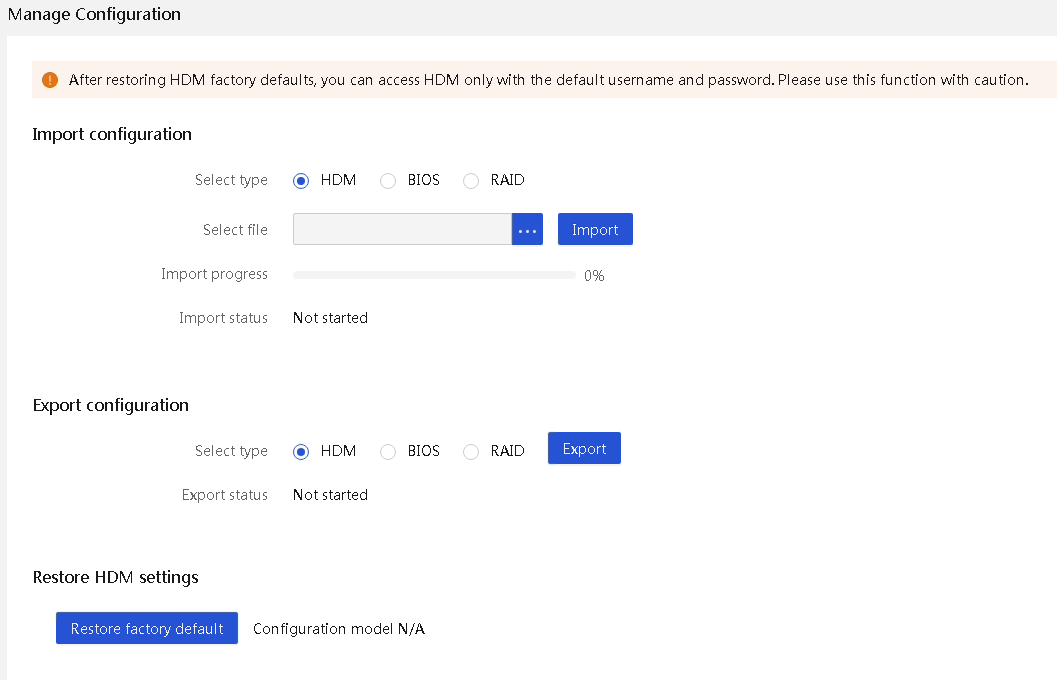

This feature allows exporting or importing the configuration of HDM, BIOS, and RAID as configuration files, allowing administrators to easily configure remote servers, as shown in Figure 21.

This feature has the following technical advantages:

· The configuration options of HDM, BIOS, and RAID modules can be batch configured out of band.

· The configurable parameters are numerous, including 500+ options for HDM and 1100+ options for the BIOS. The feature also supports modifying RAID levels.

· The exported configuration file structure can be read, edited, and saved.

· The exported HDM configuration items supports self-annotation, enhancing users' understanding of the configuration.

· It allows for batch deployment of multiple servers with the same configuration. The feature simplifies operation and greatly improves the O&M efficiency.

· This feature supports password export and import. The exported passwords are displayed in ciphertext format.

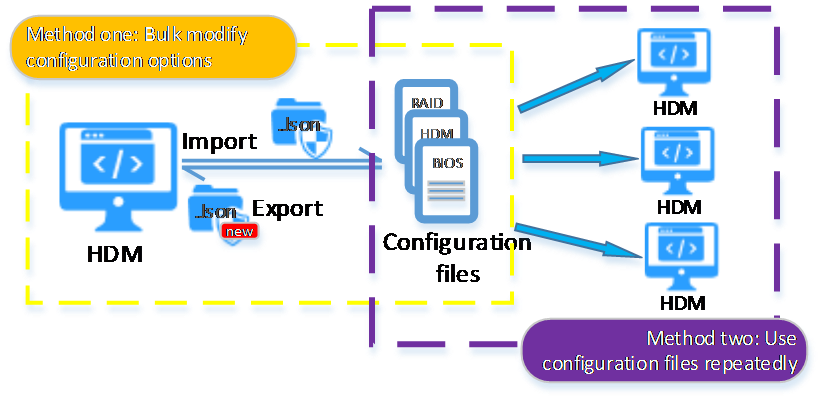

Configuration files support the following use cases, as shown in Figure 22:

· Export configuration file, make modifications, and then import it for bulk setting modification.

· Use the same configuration file for servers of the same model to achieve fast configuration and deployment of large-scale devices.

· After replacing the system board or restoring the factory default settings, you can use the exported configuration file to easily restore the custom settings.

Figure 21 Configuration file import and export

Figure 22 Use cases of configuration file import and export

Restoring HDM factory defaults

Restoring HDM settings can restore HDM to the factory defaults and can be used for HDM reconfiguration.

BIOS option configuration

HDM supports the following functions for convenient out-of-band setting of the BIOS options:

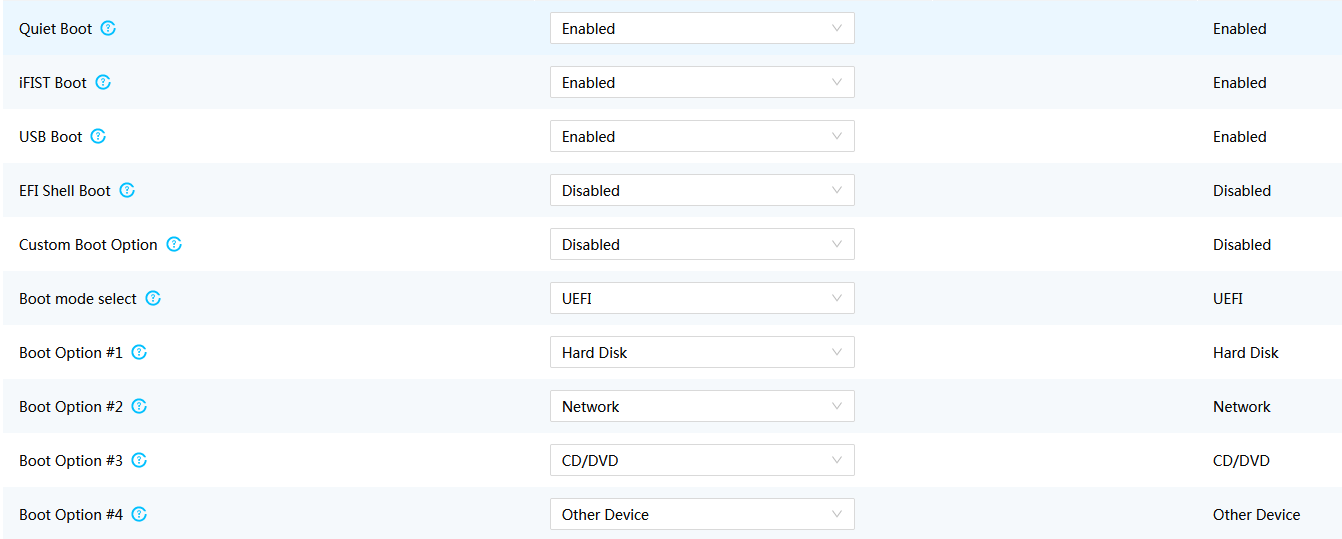

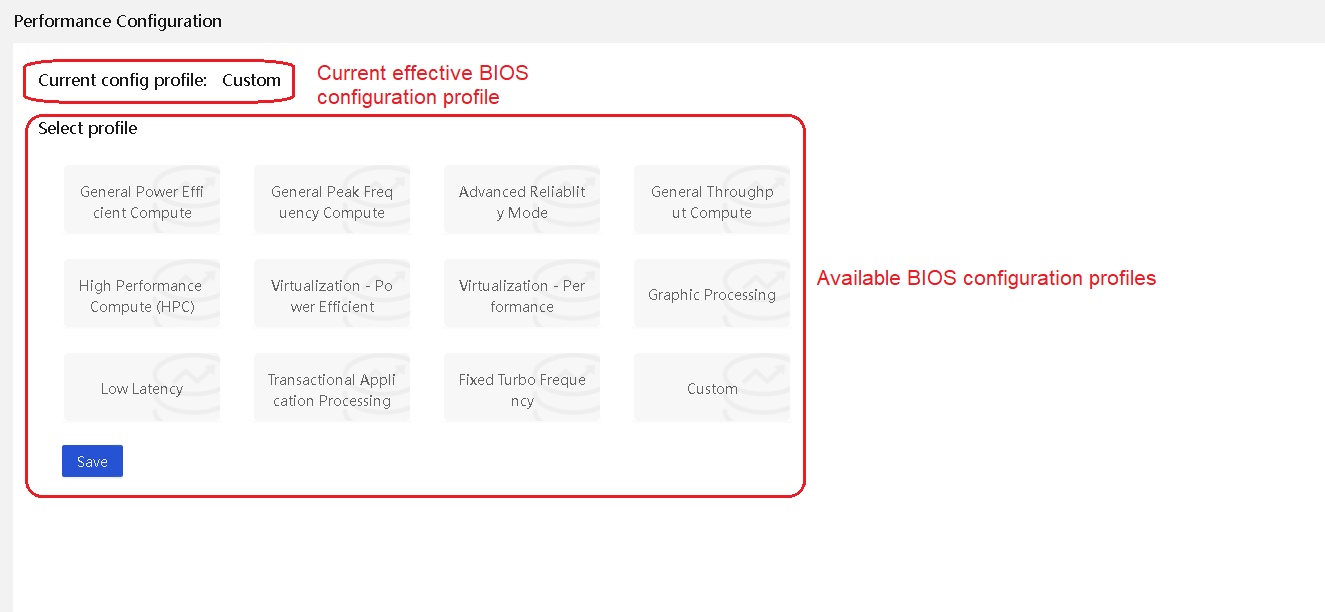

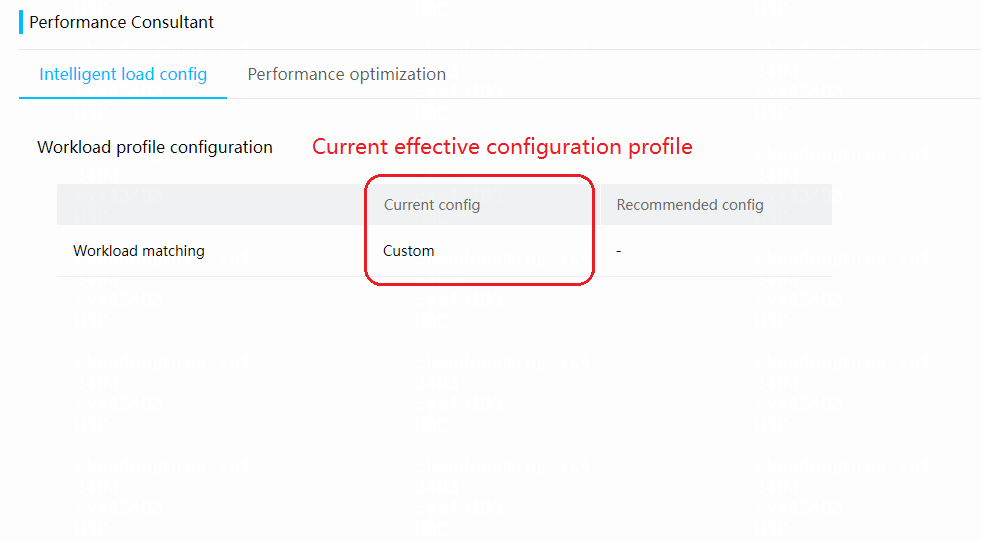

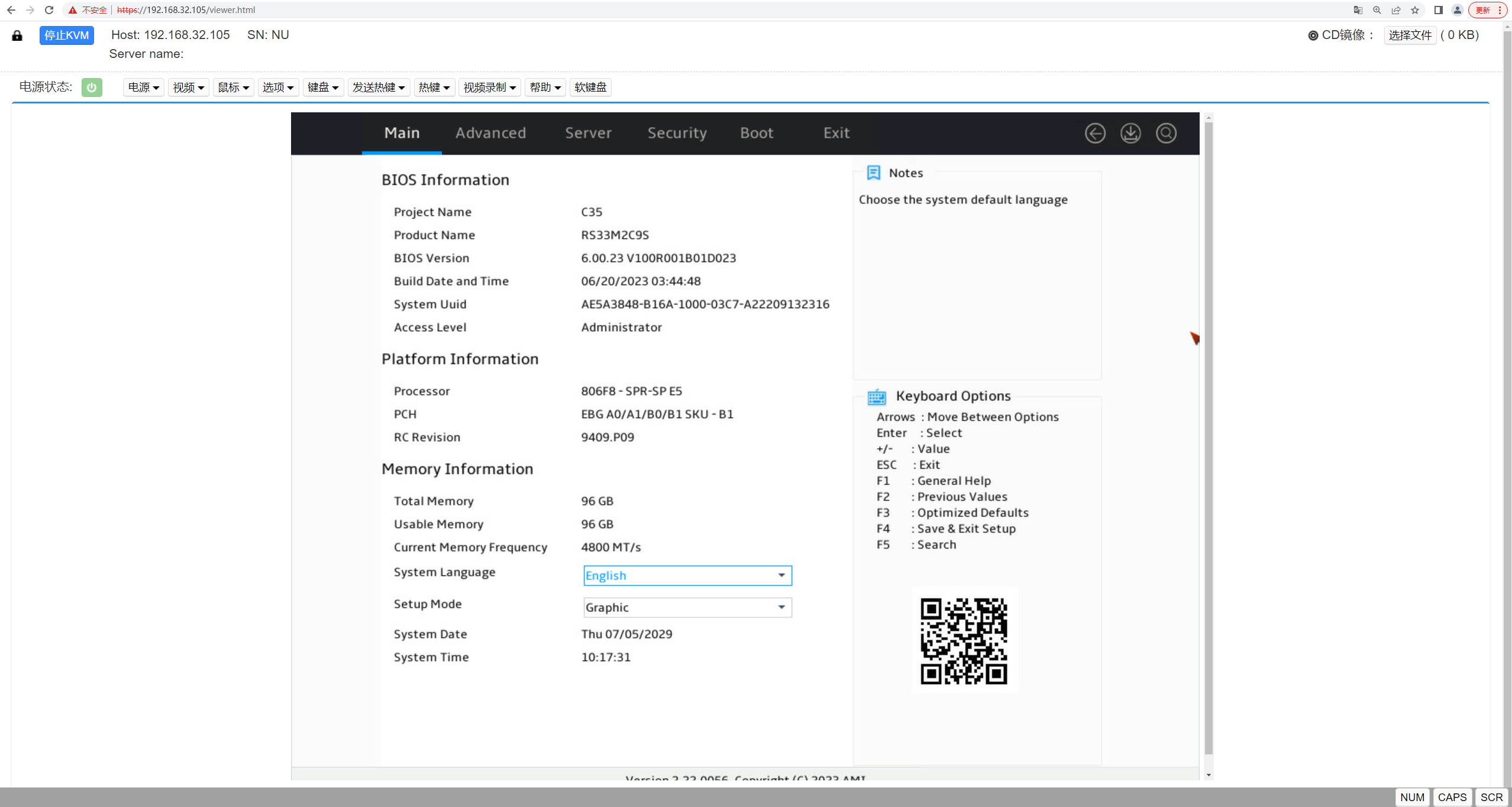

· The Web interface supports displaying BIOS options in a structured way, as shown in Figure 23 and Figure 24. The Web interface is consistent with the BIOS setup utility, which is user-friendly.

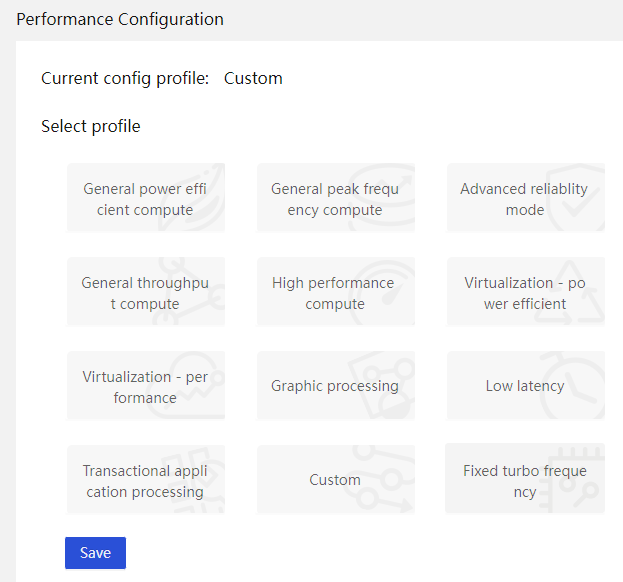

· HDM supports one-key modification of the BIOS configuration template for different server application scenarios, thereby automatically changing all related BIOS configurations. The supported application scenarios are as shown in Figure 25.

HDM supports multiple out-of-band settings methods, with powerful configuration capabilities:

· Support configuration using IPMI commands.

· Support Redfish command to configure BIOS options, and provide parameter descriptions for all supported options in the user manual.

· Support batch configuration import and export from webpage or Redfish interface for 1100+ options.

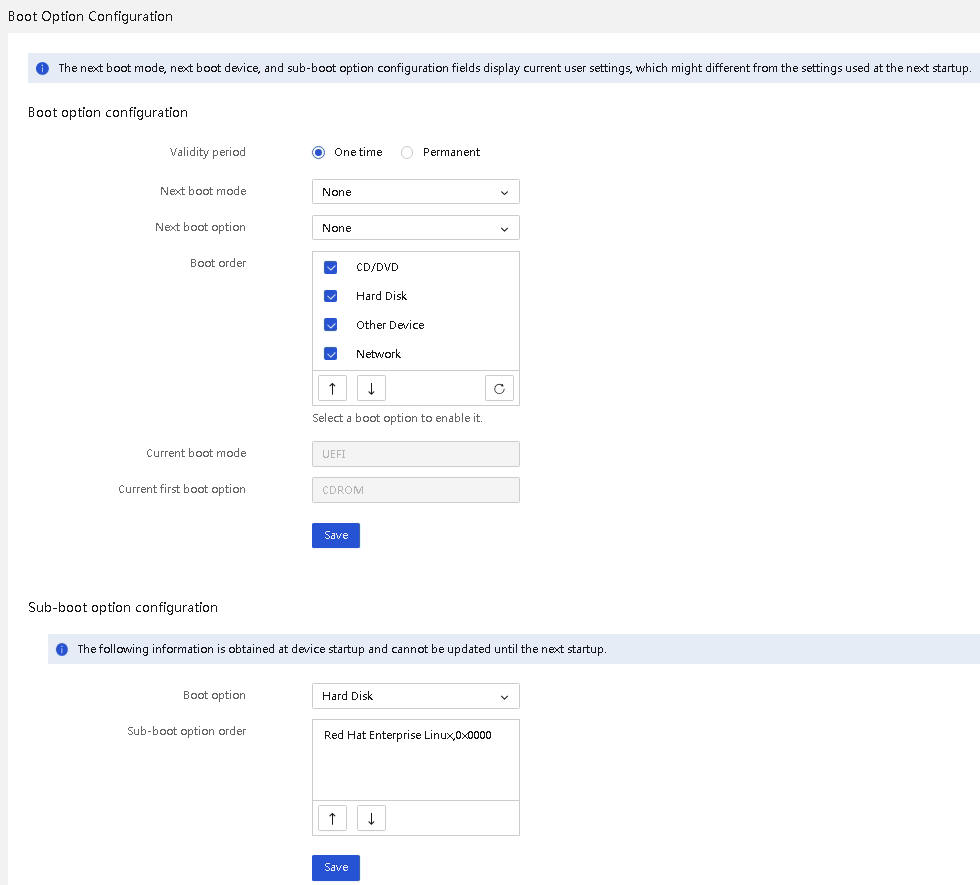

· Support configuring boot option settings, including configuring validity period, next boot mode, next boot device, boot order, and boot suboption order, as shown in Figure 26. This achieves flexible and easy-to-use boot control.

Figure 23 BIOS configuration interface

Figure 24 BIOS setup utility

Figure 25 BIOS configuration template

Figure 26 Configuring boot options

Firmware deployment

Service functions related to HDM upgrade include the following:

· Query firmware versions of HDM, BIOS, CPLD, PSU, LCD, NIC, RAID controllers, and storage drives.

· Upgrade of HDM firmware without service interruption or power-off.

· Same HDM firmware image for multiple products, without the need to differentiate versions, reducing the difficulty of upgrading.

· Signed bin file firmware upgrade to ensure that the image is intact.

· Uninterrupted upgrade by using a REPO package (iso format), realizing firmware upgrade for multiple components at once (PSU, LCD, NIC, RAID controller, storage drive, HDM, BIOS, and CPLD).

· Support feature package upgrade.

· Support patch package upgrade.

· Support scheduled upgrade.

· Support firmware backup to firmware repository.

· Support firmware in the firmware repository to take effect.

· Support abnormal firmware detection.

· Support firmware dump through HDM and using Agent tool to upgrade Option cards.

· Support automatic recovery of HDM and BIOS firmware anomalies.

· Support CPLD restart without power-off.

Golden image

To improve system reliability, HDM uses a Golden Image to implement BMC primary and backup partitions. Each time the HDM firmware is upgraded, only the primary partition image is upgraded, while the Golden Image remains at the factory version. When a flash misoperation or storage block damage occurs during the operation in the primary partition, HDM can automatically switch to the Golden Image to recover the abnormal image of the primary partition and automatically switch back to the primary partition.

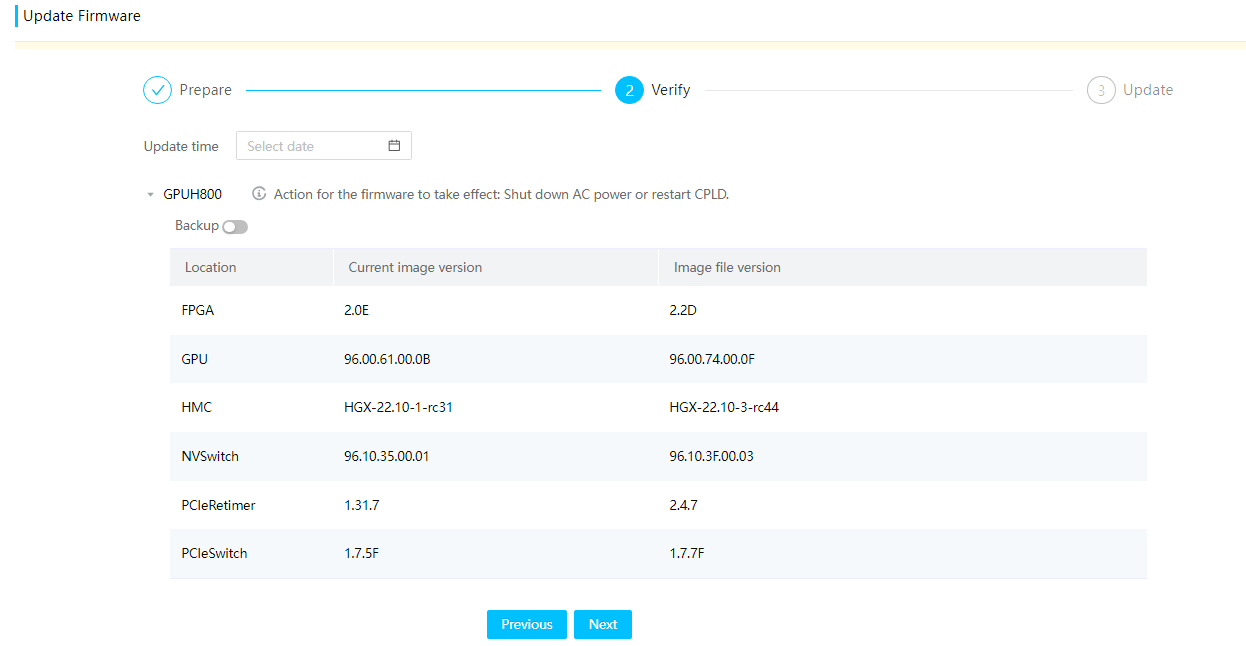

Upgrade firmware

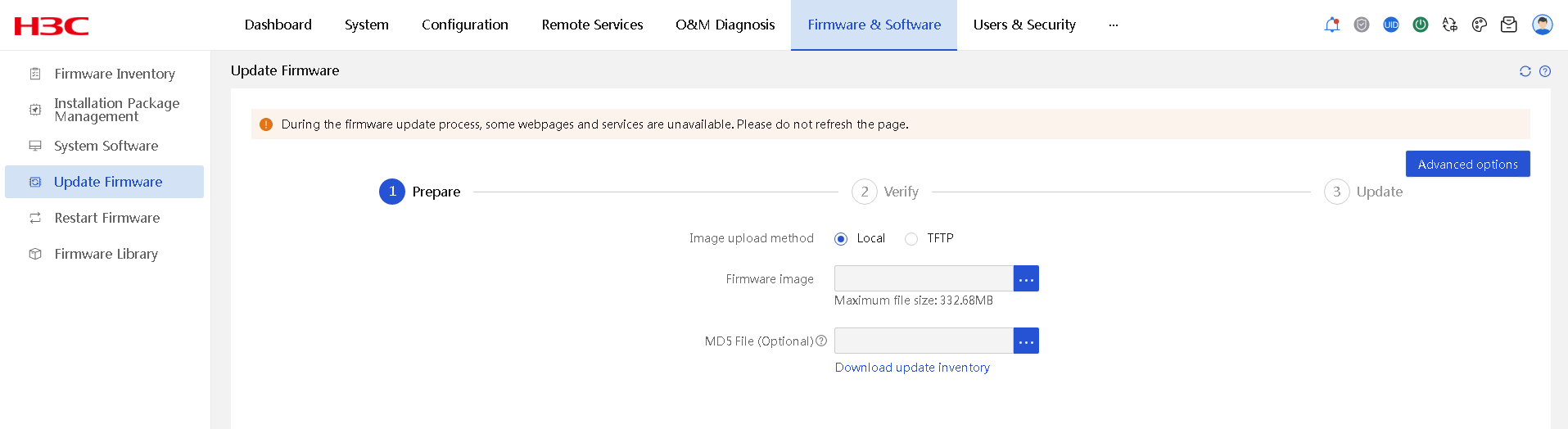

Support firmware upgrades for HDM, BIOS, CPLD, PSU, LCD, RAID controller, drive, network adapter, and GPU. Support feature package upgrades. Support patch package upgrades. Support upgrade by using bin, run, and iso files. Support REPO upgrade, which can simultaneously update firmware of various components such as HDM, BIOS, CPLD, PSU, storage controller, network adapter, and drive on the server. Support upgrading via Redfish URL and various firmware upload methods including HTTP, HTTPS, NFS, TFTP, CIFS, and SFTP.

The firmware information page that opens after verification supports selecting a backup and setting the upgrade execution time. HDM and BIOS firmware upgrades support the manual and auto activation methods and support configuration reservation or overwriting after HDM and BIOS upgrades.

1. Image backup

If you select to enable backup on the firmware information confirmation page, HDM will automatically back up the firmware image file in use to the firmware repository.

2. Scheduled tasks

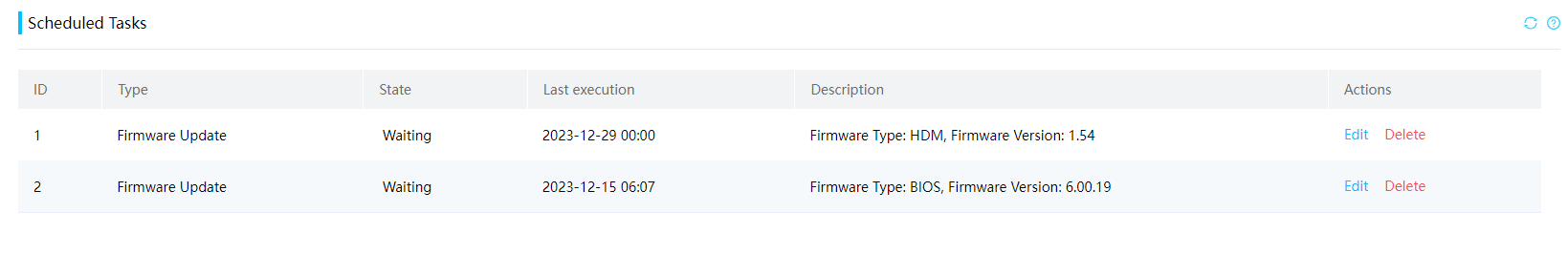

On the firmware information confirmation page, you can choose to set the upgrade time. HDM will save the task in the background and perform the upgrade task at the preset time. Scheduled upgrade tasks support simultaneous issuance of multiple tasks. During the period before the predetermined time, they do not affect the normal issuance of other upgrade tasks. Figure 27 shows successfully issued upgrade tasks.

3. BIOS self-upgrade

When you update the BIOS firmware, you can choose to reserve the user settings or overwrite the user settings. HDM only uploads the firmware file of the BIOS to eMMC. When the host restarts later, the BIOS will retrieve the firmware file from eMMC through HDM and upgrade the firmware itself to implement the relevant configuration strategy.

4. Incremental feature package, cold patches, and hot patches

The installation packages contain the following types: feature packages and patch packages (including cold patches and hot patches), as shown in Table 3.

Table 3 Installation package Introduction

|

Installation package type |

Application scenarios |

Advantages |

Limitations |

|

|

Feature packages |

An incremental service package of the HDM system can add enhanced service functions. |

· Incremental services are automatically enabled without interruption to basic services. · The amount of code modified incrementally can be very large. HDM supports incremental upgrades for large features. |

The release cycle is long, depending on product planning. |

|

|

Patch packages |

Hot patches |

A patch can be used to resolve on-site issues that require urgent troubleshooting. It can provide real-time fault repair without interrupting services. |

· Real-time fault modification without any service interruption. · The patch release cycle is short. It may take 3 to 10 days to deliver a hot patch to the target site. |

· Modification amount is limited, typically requiring no more than 500 lines of code modification. · Certain specific functions of the system do not support hot patching. |

|

Cold patches |

A patch can be used to resolve on-site issues that require urgent troubleshooting. The faulty service is a upper-layer service, and the service process can be restarted. |

· Faulty service recovers within seconds without impacting other services. · The patch release cycle is short. It may take 3 to 10 days to deliver a cold patch to the target site. |

Basic core service processes that do not support restart cannot support cold patches. |

|

Feature packages and patches can provide the following advantages:

¡ HDM firmware diversity. In addition to the basic firmware, delivery of feature packages and patch packages expand the application scenarios.

¡ Timeliness of HDM firmware delivery. The basic firmware delivery cycle is one calendar month at least. Patch packages can respond to on-site fault repairs in a timely manner.

¡ Customized rapid adaptation for customers can be achieved. Customized features can be fast implemented through feature or patch packages.

5. Upgrade risk notification

¡ If HDM has HDM or BIOS upgrade tasks to take effect, the Web interface will display the corresponding notification on these tasks.

¡ If HDM has scheduled tasks, the Web interface will display the corresponding notification on these tasks, including upgrade time, version, and the method of upgrade implementation.

¡ When multiple scheduled tasks exist, HDM will prioritize displaying the content of a task with the closest upgrade time. In the event of multiple tasks scheduled for the same time, priority will be given to the task that was registered earlier.

¡ When both HDM and BIOS upgrade tasks exist simultaneously, HDM will prioritize displaying the BIOS upgrade tasks.

¡ The upgrade risk notification bar is fixed on the webpage, and appears on any tab of the webpage when an upgrade task is to take effect.

Figure 28 Upgrade risk notification

6. Firmware security

¡ Firmware startup security: BMC firmware implements redundant backup mechanism of Golden Image. When a Flash misoperation or storage block damage occurs during operation, you can automatically switch HDM to the backup firmware for operation.

¡ Firmware update security: All externally released firmware versions, including HDM and BIOS, have a signing mechanism. When firmware is packaged, the SHA512 algorithm is used to digest it, and the digest is encrypted using RSA4096. During firmware upgrade, tampering is prevented by verifying the signature. Only firmware with a valid signature is allowed to be upgraded onto the device.

¡ Firmware operation security: During operation, the area where the image is located is write-protected and requires a special method to perform write operations. At the same time, the system verifies the integrity of the image file during each startup and restores the image if necessary.

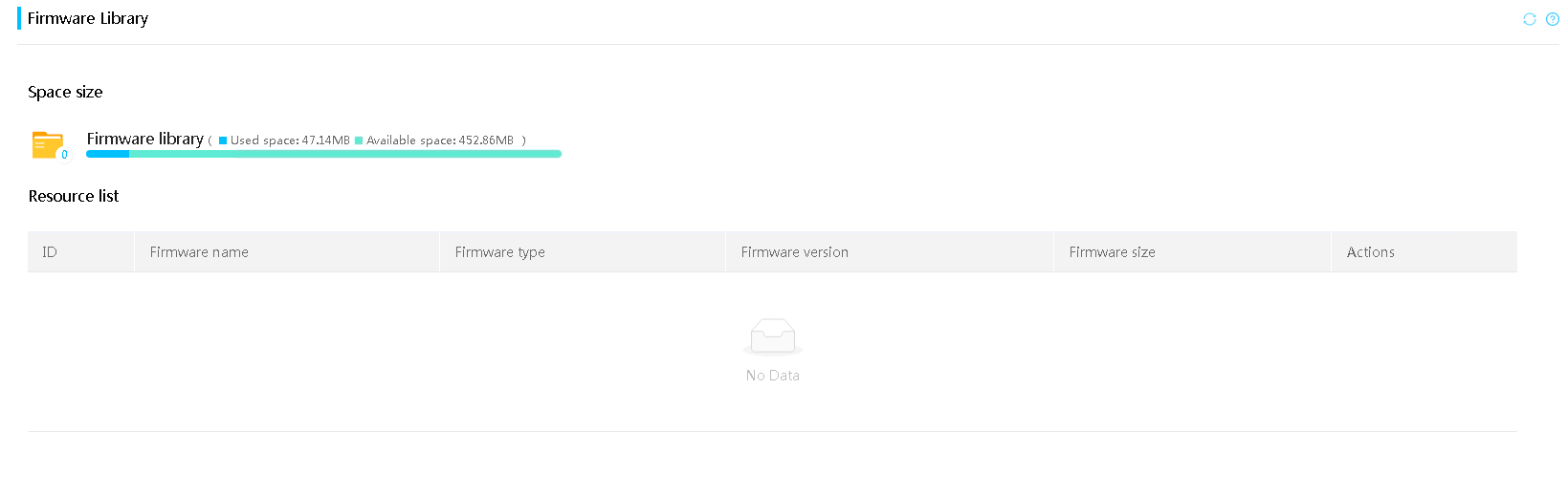

Firmware repository

At firmware upgrade, if the backup function is enabled, HDM will automatically back up the firmware image file to the firmware repository. After a successful backup, users can view information about the backup firmware image files on the firmware repository page. When the available capacity is insufficient, users cannot continue to back up new images. You can manually delete firmware image files to free up more available space. Users can choose to click Apply corresponding to the target image file to enable the image. Then, the system automatically accesses the firmware update page for firmware upgrade.

Figure 29 Firmware repository

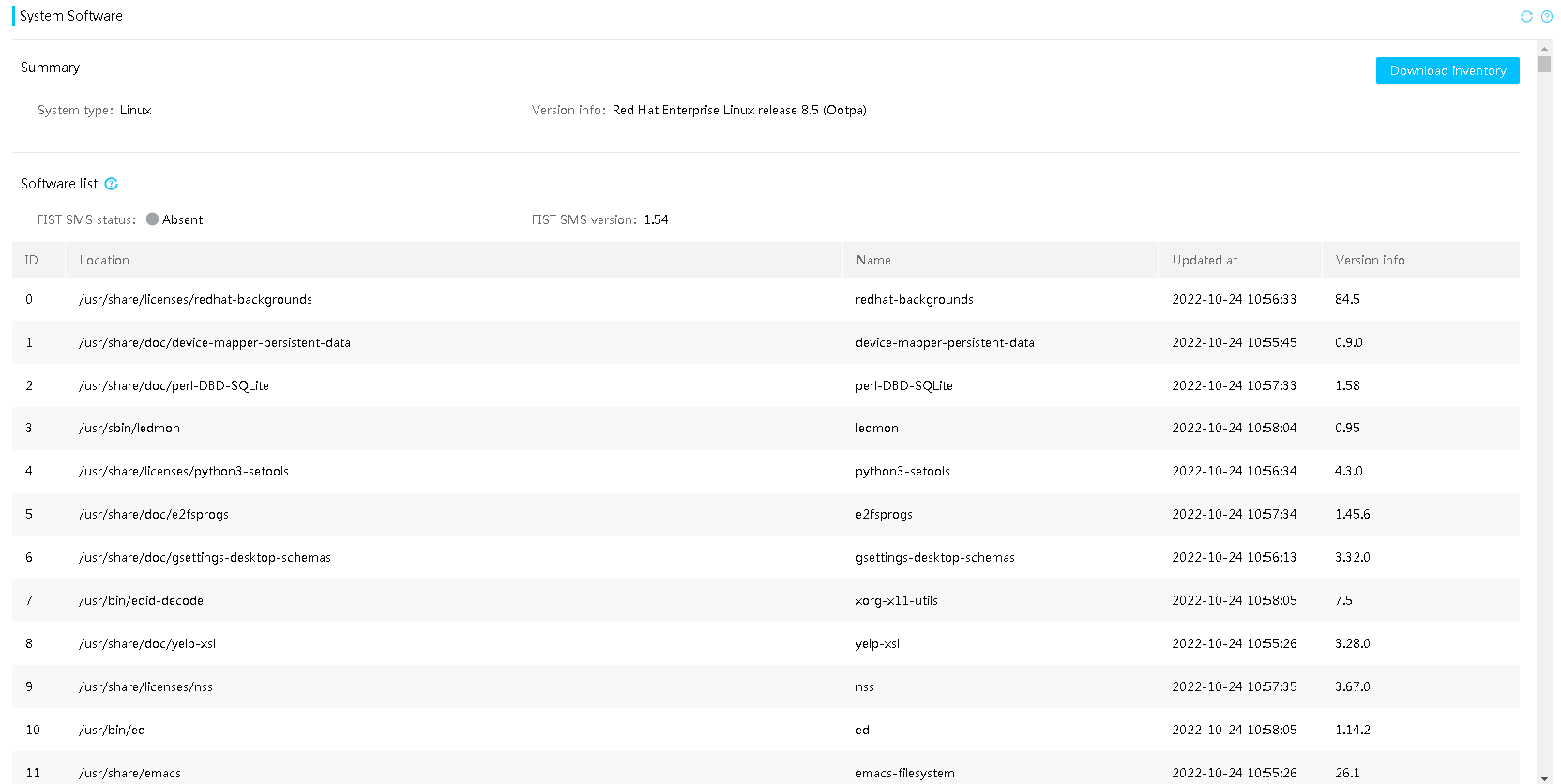

Software inventory

Users can view and download software information on the system software page of the current server operating system, including location, name, version, and update time (dependent on FIST SMS).

Figure 30 Software inventory page

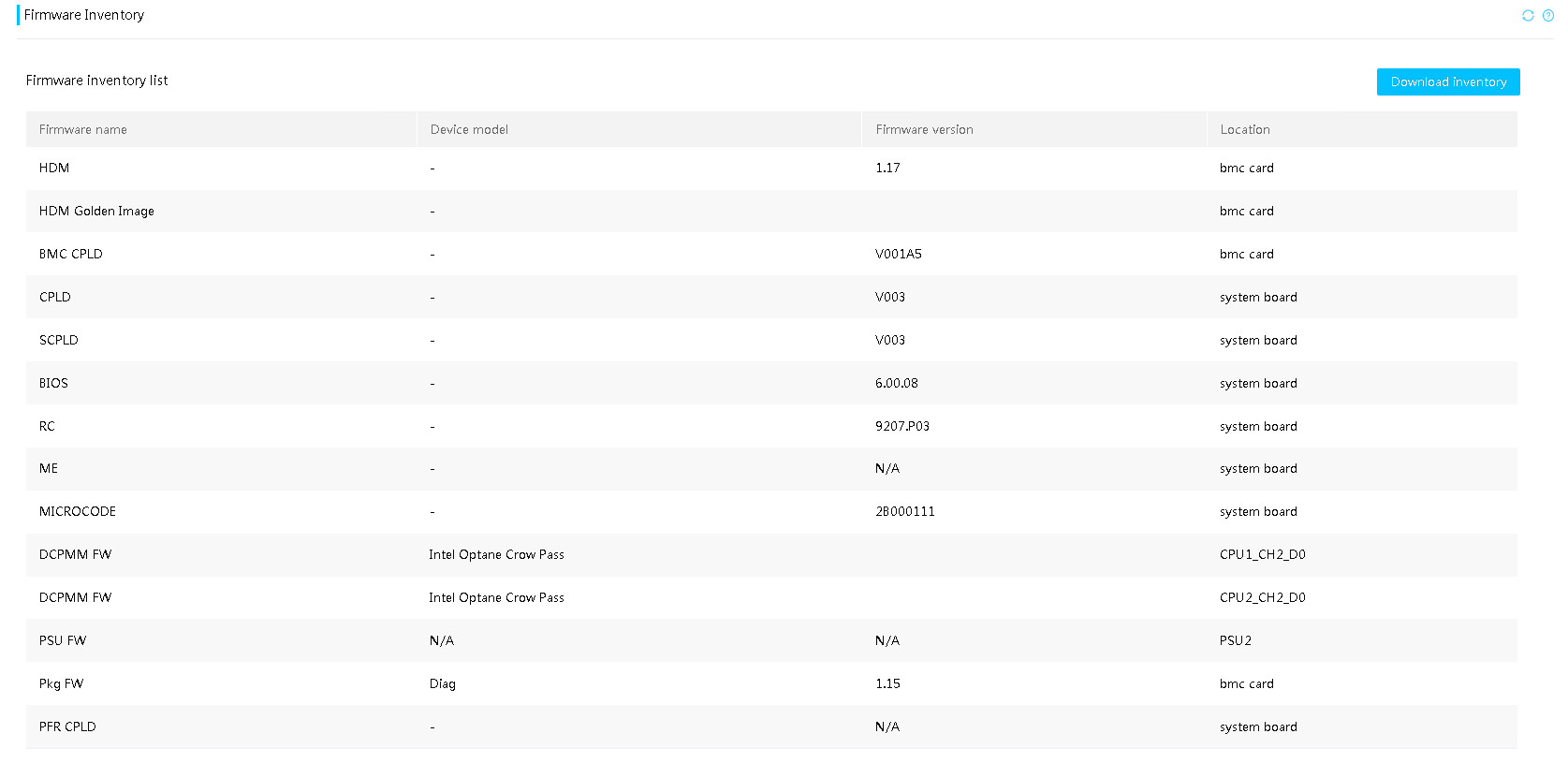

Firmware inventory

Users can view and download information about firmware installed on the current server, including firmware name, firmware version, and location, in the firmware inventory.

Figure 31 Firmware inventory page

Firmware restart

The HDM interface supports restarting BMC and CPLD.

· Users can restart BMC through this function when the BMC firmware requires to be updated or when the BMC firmware malfunctions. All sessions or connections will be interrupted during the restart, and will return to normal after relogin.

· To restart the CPLD due to CPLD firmware update or CPLD firmware exception, you can use this feature to restart the CPLD firmware, so that the new firmware takes effect immediately or the CPLD firmware returns to normal. The CPLD reset service will be affected.

Functions related to device migration and retirement

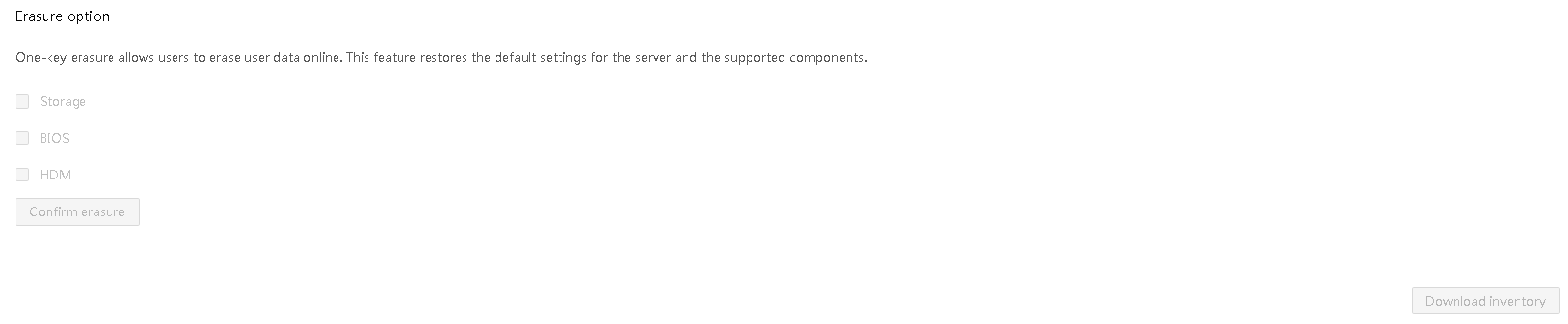

HDM supports one-click, secure, reliable, and convenient restoration of server components to the default state through out-of-band user data clearing. This achieves secure retirement of servers. For more information, see the secure erasure chapter in the security management section.

Application scenarios

Suggestions for first-time device mounting

After the device is mounted, check and confirm the following information as a best practice to see if any adjustments must be made based on the factory default configuration:

· Connection solution for the out-of-band network.

· Configuration of the time synchronization solution.

· Performance template and security consultant configuration according to the focus.

· BMC service, user, and permission configuration.

· Status of the device and whether the specifications meet the requirements after installation.

· Whether firmware upgrade is required.

Batch firmware upgrade with service interruption

At the beginning of the deployment phase, if the factory firmware version does not meet the requirements, a unified redeployment or large-scale upgrade is required. If the services have not been enabled or allow for long interruptions, use the REPO package to automatically upgrade all firmware through one click. Alternatively, use UniSystem to deploy all servers in bulk.

The REPO upgrade process takes a long time and will trigger the restart of components and hosts. However, it can be implemented automatically in the background with a single click and upgrade the firmware of the whole server to the designated compatible state.

Firmware upgrade with service guaranteed

If only certain firmware requires upgrade in on-site maintenance, use the firmware package of the designated component. Supported components include network adapters and RAID controllers. After updating the BMC firmware, restart the corresponding component to complete the process. Components such as CPLD and BIOS require a whole system restart after the firmware update.

In addition, you can use the scheduled upgrade function to set time with a low service volume (such as the early morning) to start the upgrade process.

Firmware upgrade without service interruption

In a critical service environment, the following strategies are offered to meet the expectation of firmware upgrade without affecting on-site operations:

· BMC firmware can be directly updated if BMC out-of-band monitoring can be temporarily interrupted. BMC restart only takes 1 minute to restore BMC services.

· To upgrade the BIOS firmware, if the new firmware is not required immediately, you can delay the time when the new firmware takes effect and allow the firmware to take effect at the next server restart.

Server management capabilities

Background

Most servers need to provide highly reliable services with stable processing capabilities. This indicates enhanced stability, reliability, security, and maintainability, as well as higher management capabilities.

Broadly speaking, deployment, monitoring, and diagnostics are all part of device management. Of course, identifying on-site materials from smart diagnosis system (SDS) logs during maintenance, access control for BMC, configurations for BIOS or BMC, and maintenance for various components also fall under device management.

HDM supports the following management functions for server devices:

· Asset management: Allows users to easily identify and manage their assets.

· Device management:

¡ HDM can manage server hardware, including but not limited to CPUs, memory, hard drives, PCIe modules, power supplies, and fans.

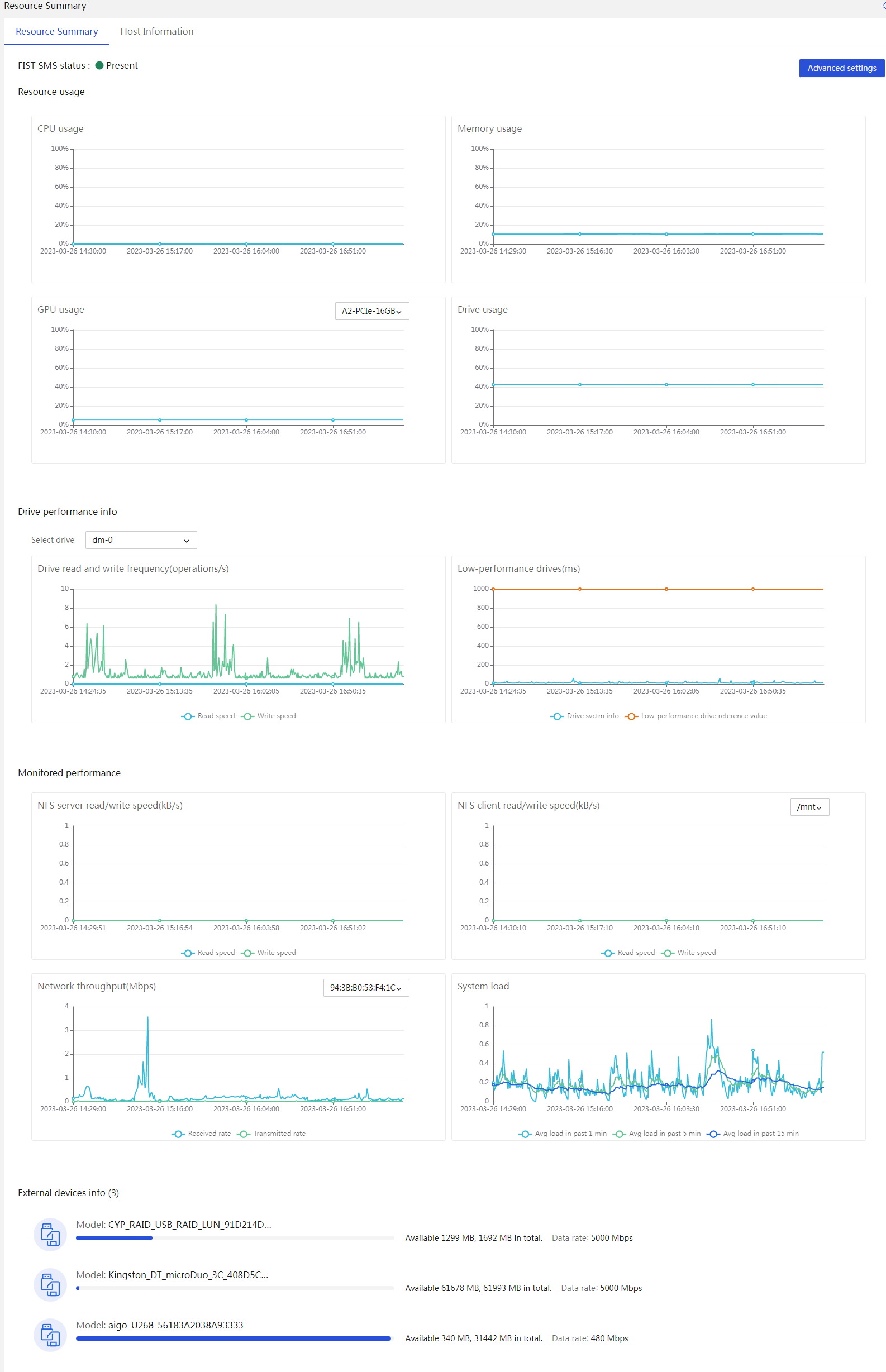

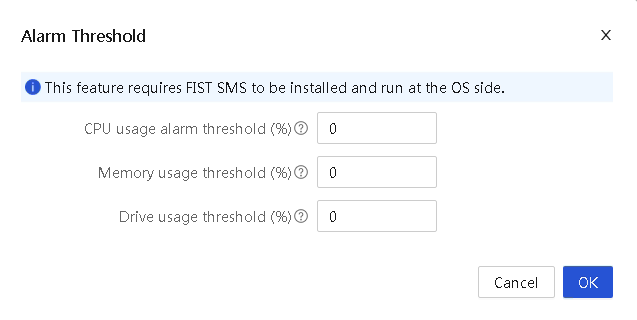

¡ HDM can monitor an operating system's status, for example, detecting the system running status through resource usage rates. To obtain more detailed resource usage rate information, collaboration between HDM and FIST SMS is required.

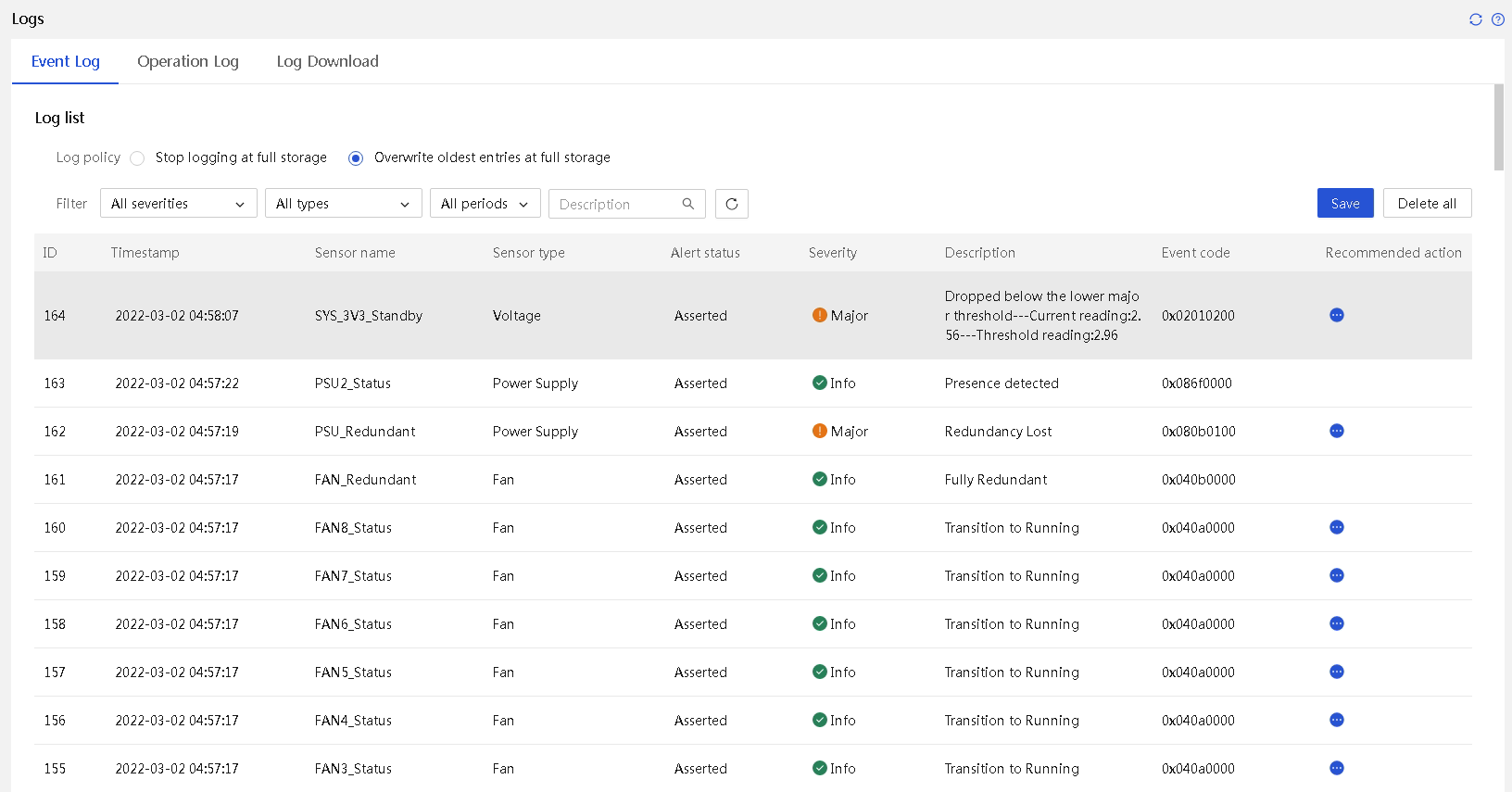

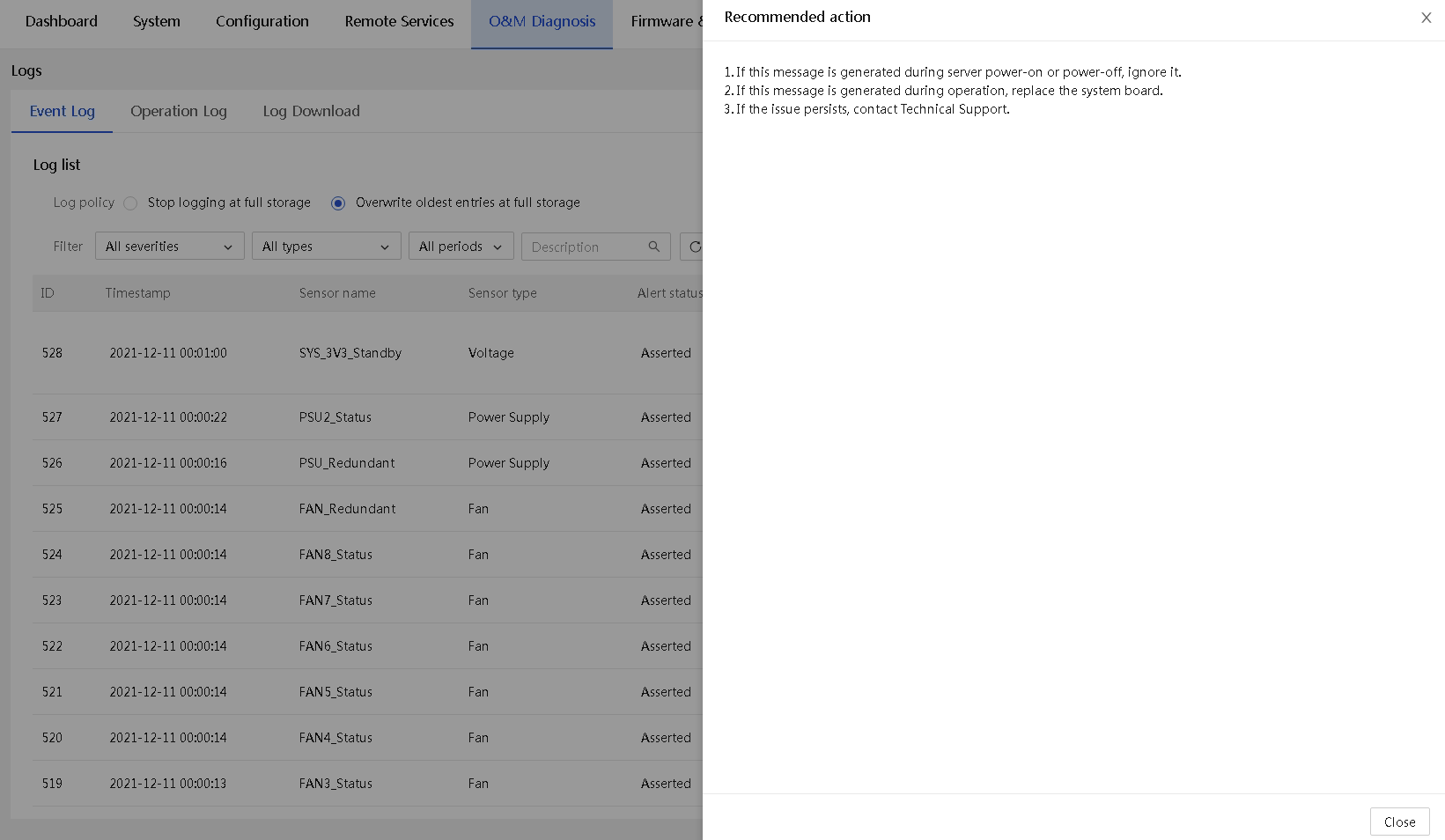

¡ Event log management: A server's operation should be stable. HDM provides event logs and early warnings, which help operation and maintenance personnel know abnormal events, identify issue root causes, and quickly resolve issues. Early warnings can help effectively reduce the probability of system downtime.

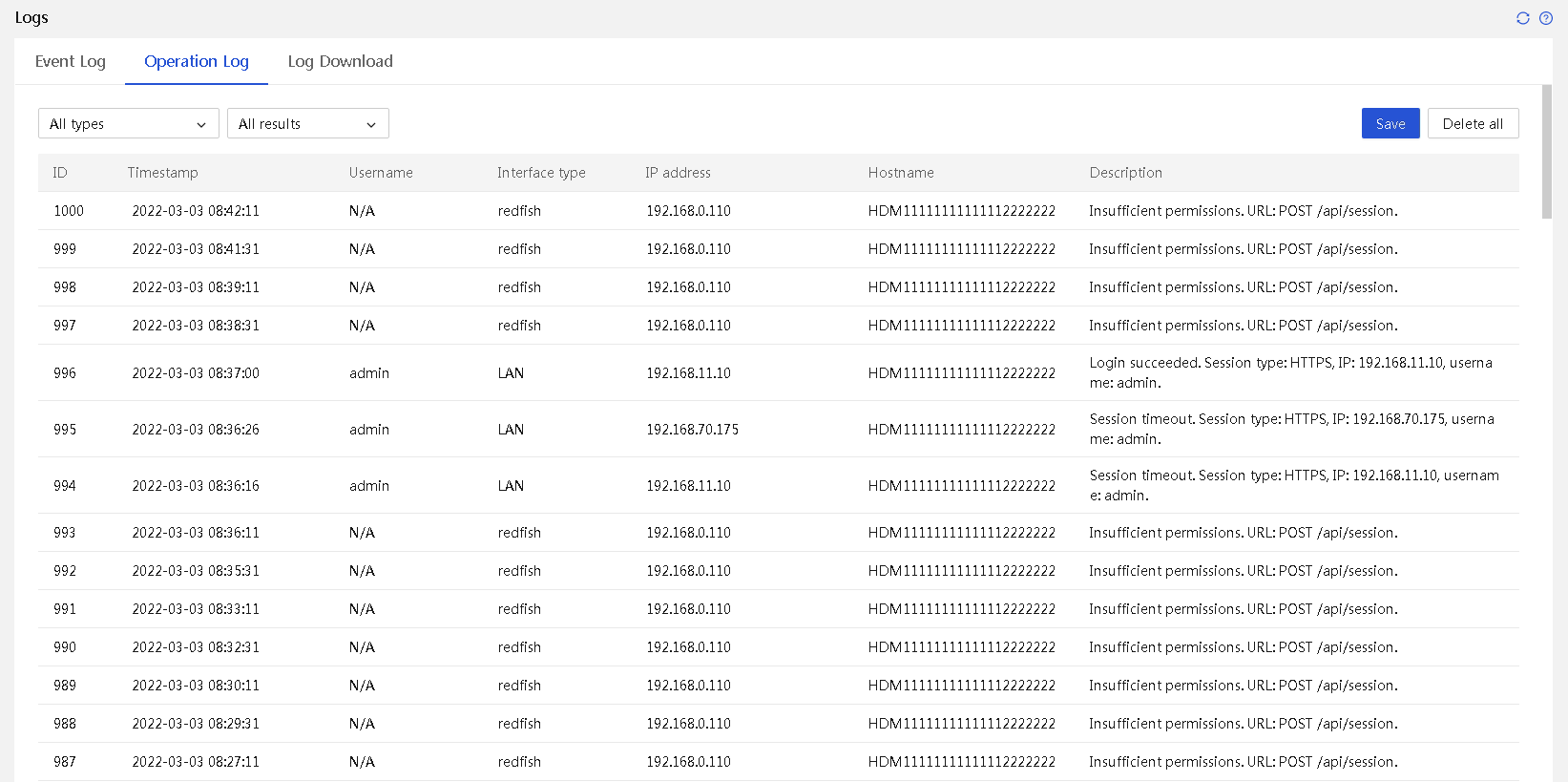

¡ Operation log management: HDM logs various operations, such as logins, logouts, firmware updates, component replacements (such as, CPU, memory, hard drive, and power supply), configuration import/export, and system locks. These logs directly display changes and reasons of changes in configurations, components, and versions during normal server use.

¡ Remote control: HDM provides convenient remote control, allowing users to view and control systems directly from the HDM page.

¡ Firmware inventory management: The firmware inventory summarizes firmware information for various server components. Through the Redfish interface, users can easily obtain firmware version information for all servers managed by HDM in the data center, facilitating unified query and upgrade.

· Component management:

¡ Component information management: HDM can obtain, display, and monitor both static and dynamic information about components. The information is displayed on the HDM page. Users can also actively monitor reporting of information or pushing of content through IPMI/Redfish/SNMP Agent, which includes various event log warnings. During the server's operating cycle, through periodic sampling data, HDM uses sensors as carriers, draws the dynamic change trends for various components. For example, the temperature sensor displays the trends of components in running status.

¡ Power supply and consumption management.

· Host management:

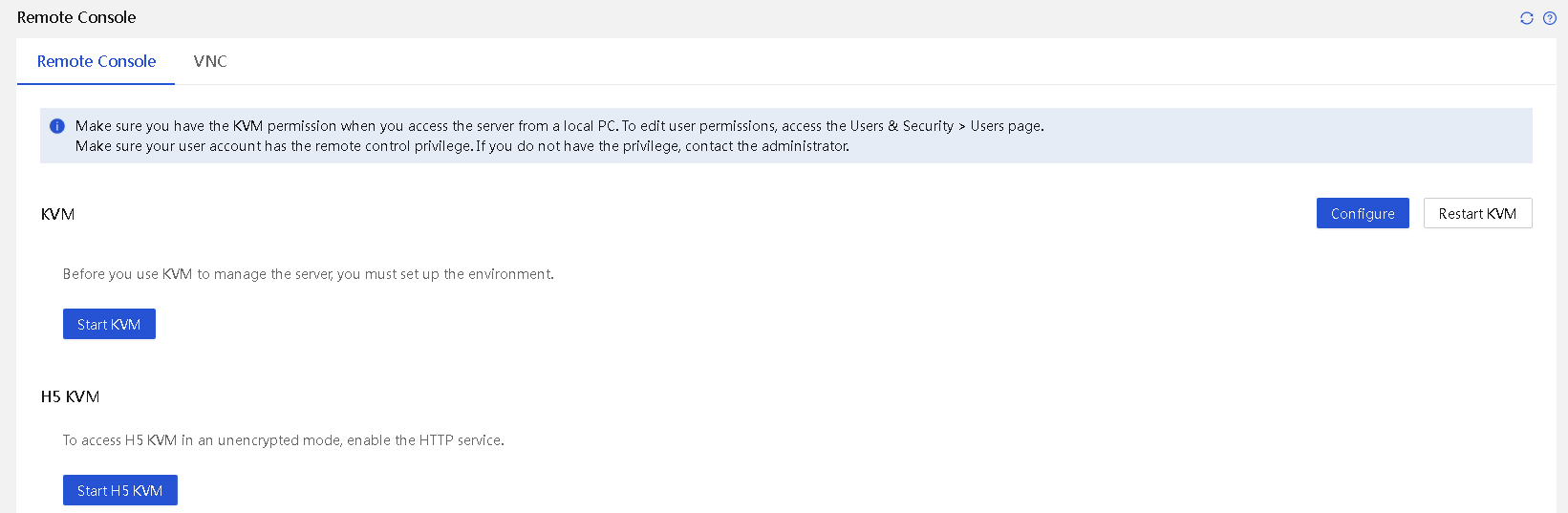

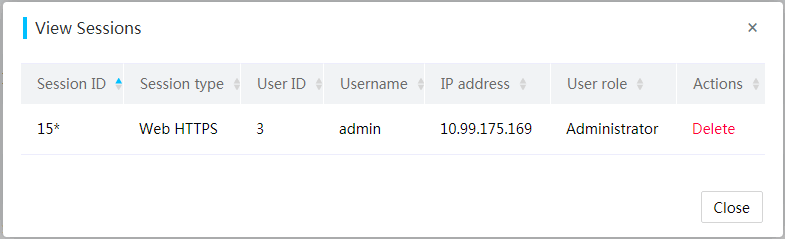

¡ HDM supports the KVM, H5 KVM, VNC, and JViewer remote consoles. It also supports operations such as power control, screen capture, and video recording.

¡ Virtual media: HDM supports mounting for floppy drive, CD/DVD, and hard drive/USB device virtual media, as well as folder mounting.

· Unified control:

¡ HDM supports managing servers in bulk. It supports the operations such as adding devices, deleting devices, viewing device information (IP, product name, serial number, health status, server power status, and UID status), accessing HDM, power operations, and accessing H5 KVM.

Technical key points

Asset management

Each proprietary component of H3C servers is assigned a unique material serial number during design. If a component has an EEPROM, FRU information is written into it at the factory. For a purchased component, we provide a unique material identification solution based on the basic information provided by the component, such as model, serial number, part number, and original vendor information.

We assign each server a device-level serial number (combining serial numbers for three items into one) for distinguishing between servers. Additionally, we provide a solution for writing asset information, facilitating writing user-defined information into asset tags for server asset management.

Device management

HDM's device management mainly covers the following aspects:

· Identification of the connection topology of various components on the device.

· Server power supply and power consumption management.

· Cooling solutions, including liquid cooling and fan control.

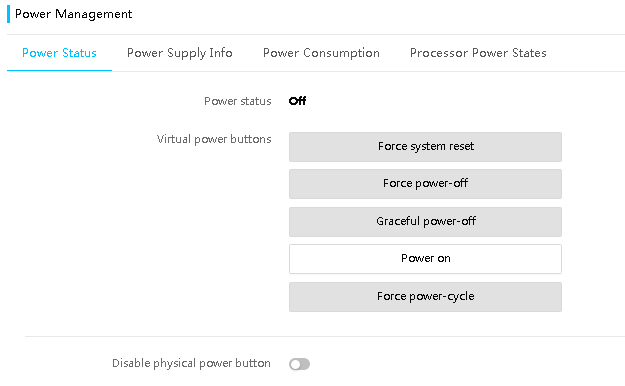

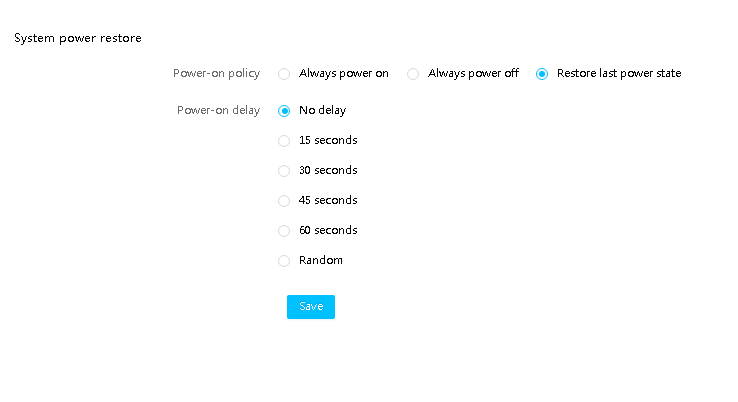

· Power on/off management:

¡ AC power control and customized power-on delay policy.

¡ Support for disabling physical power button.

¡ Based on hardware design, HDM can support a power supply policy for modules in S5 status located at specific PCIe slots. After you enable this policy, the smart Ethernet adapter installed in such a slot can be powered on, and the corresponding fans regulate their speeds to ensure the cooling needs of the smart Ethernet adapter.

¡ Support for power-on sequence control, for example, the power-on sequence control for the smart Ethernet adapter and system host.

Component management

To prevent operational mistakes, HDM supports displaying mark information for various components, including sensors, event logs, and mark information in component location information.

HDM-supported mark and location information meet the following requirements:

· The mark information displayed on HDM is consistent with that on the server.

· The component mark information displayed by various HDM APIs is consistent.

· Provides visual layout consistent with the installation layout of the server's CPUs and memory modules.

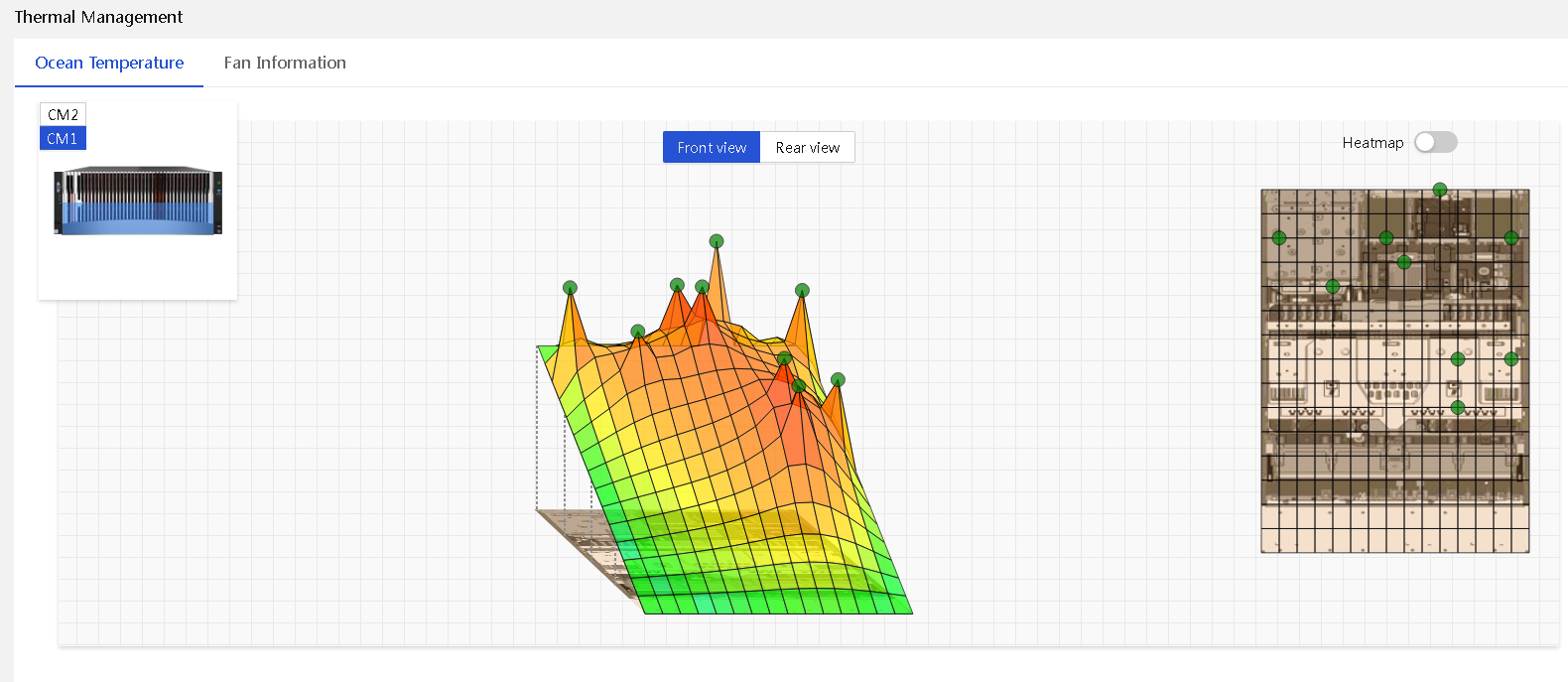

· Provides a visual temperature graph for temperature sensors whose layout locations are consistent with those on the server.

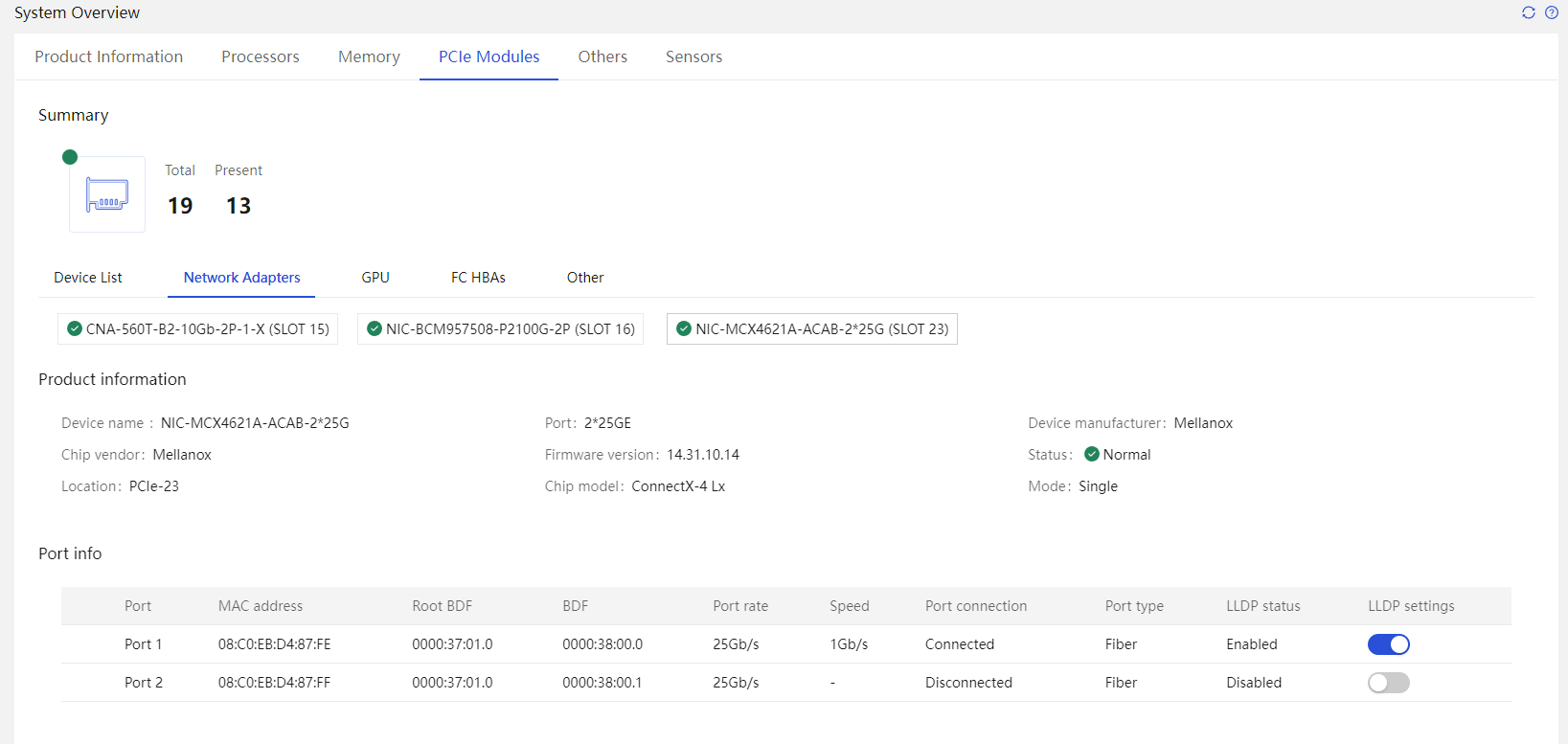

HDM supports management on various components, such as CPU, memory, storage cards, hard drives, power supplies, fans, network adapters, and GPU cards. The supported functions include:

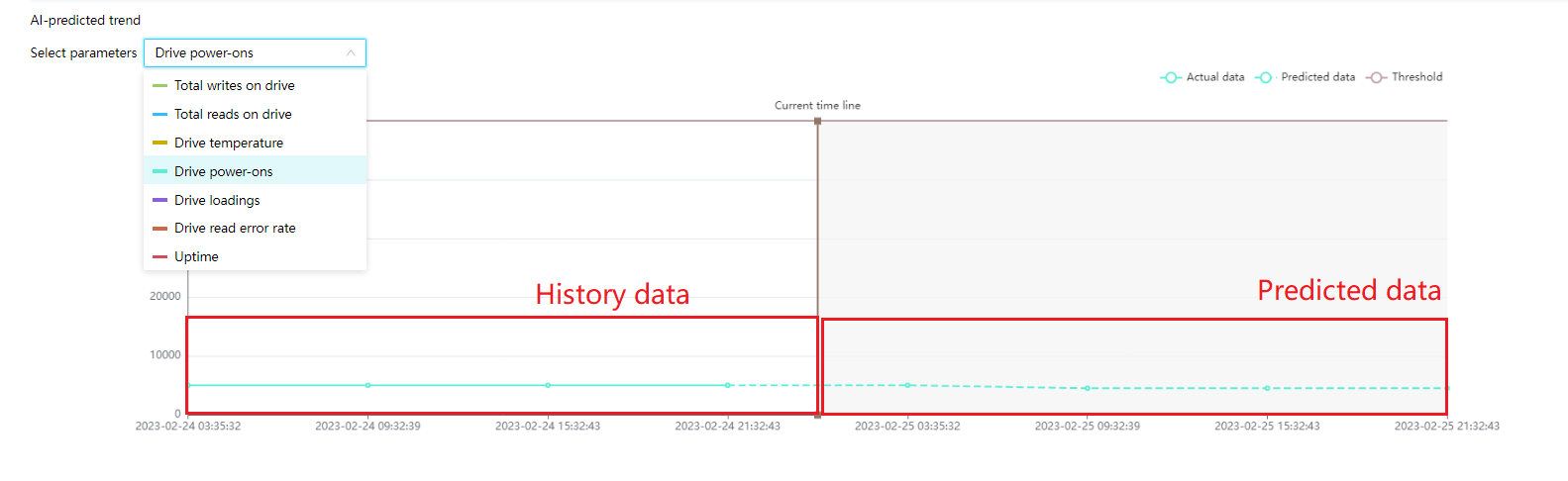

· Out-of-band information reading, historical information storage, and predictions based on historical data in specific scenarios.

· Out-of-band settings, such as out-of-band management of storage cards that support it, and setting the active or standby mode for power supplies.

· Event reporting and handling, especially maintainability events, which involve collecting contextual information.

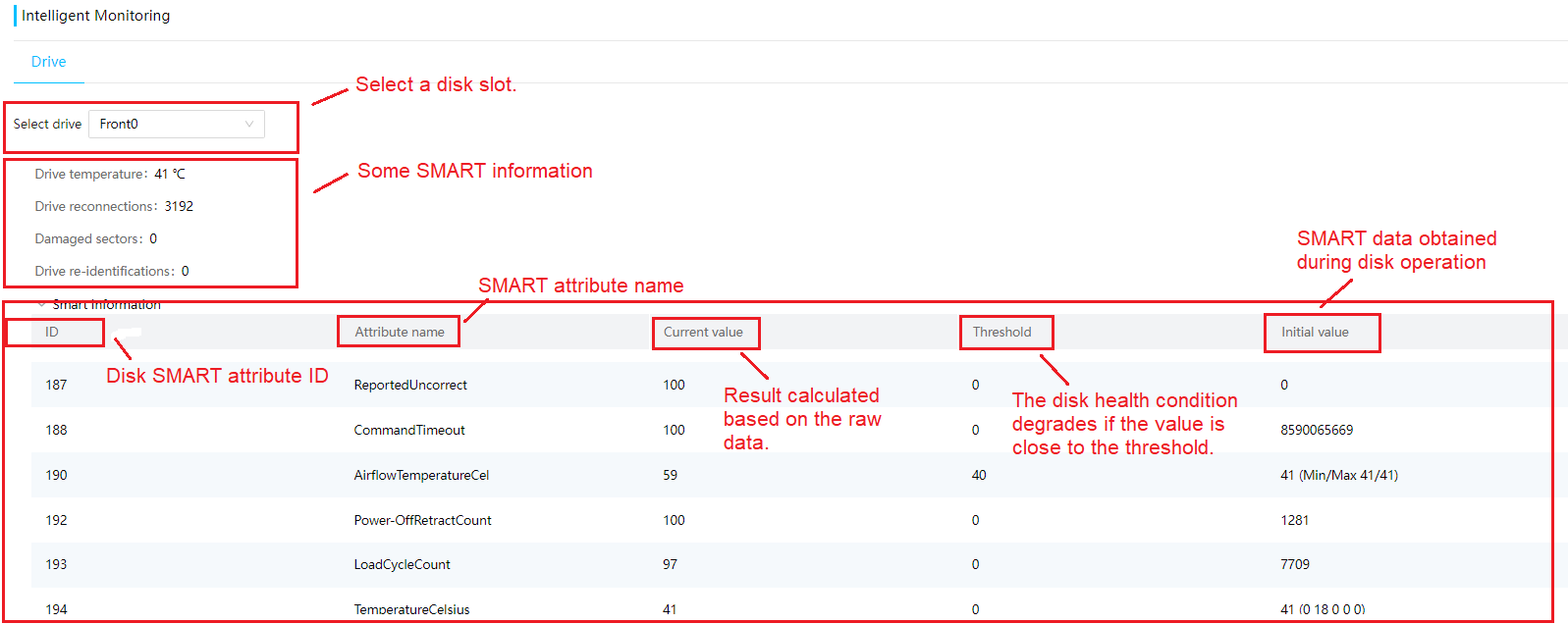

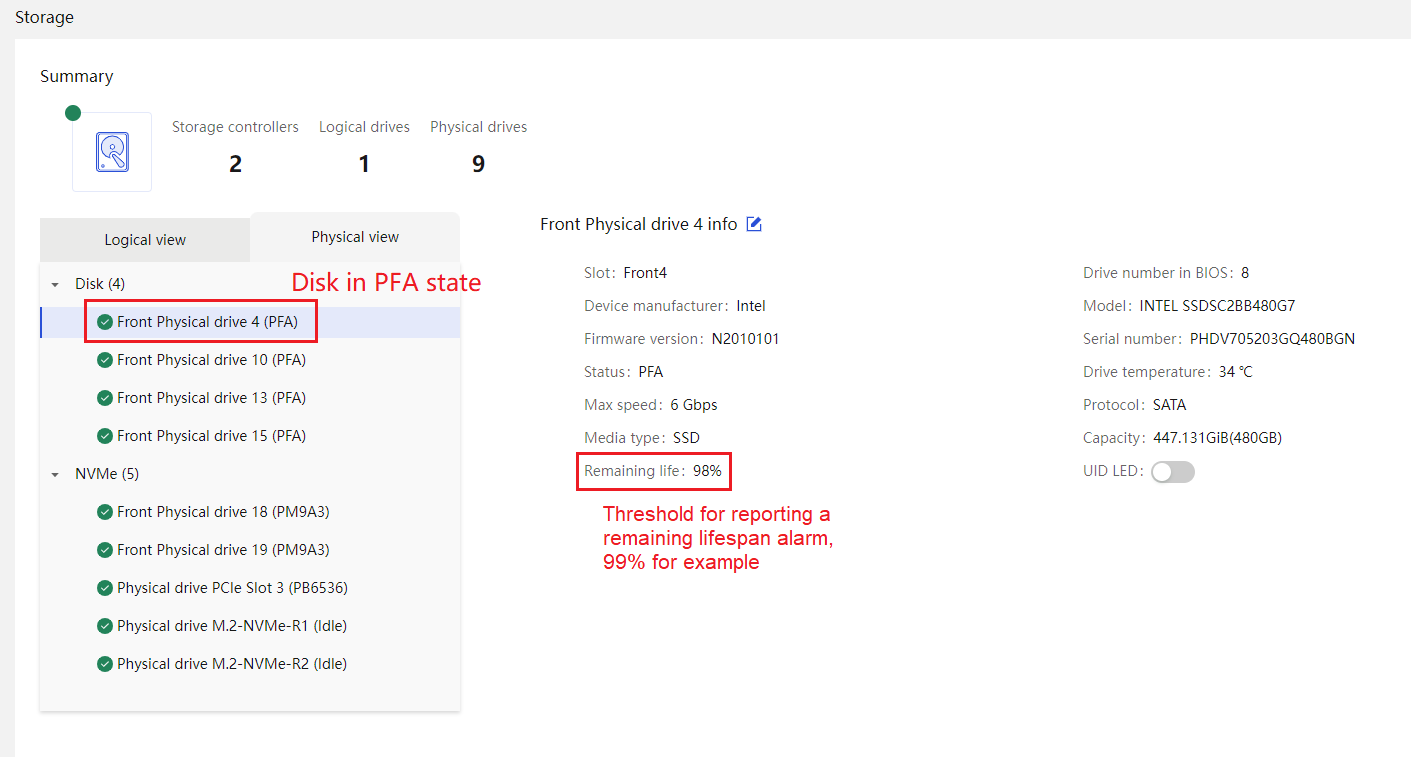

· Life expectancy prediction for some components, such as hard drives that support obtaining SMART information.

The server supports reading the following information about key server components through the out-of-band management interface.

Table 4 Key server component information

|

Component type |

Information provided by the out-of-band management interface |

Remarks |

|

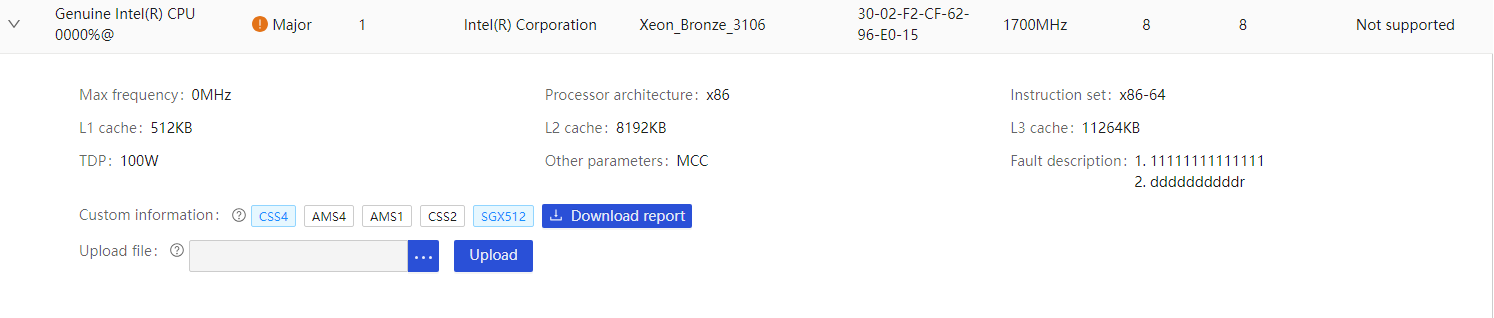

Processor (CPU) |

· Fully configured CPUs and present CPUs · Slot number · Vendor · Model · Clock speed and maximum frequency · Number of cores and number of threads · Health status · Multi-level cache size · Architecture · PPIN (Intel) |

N/A |

|

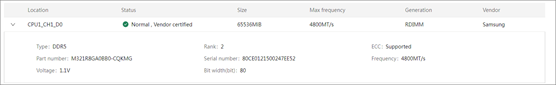

Memory |

· Fully configured memory modules and present memory modules · Location · Working frequency and maximum frequency · Capacity · Memory standard and memory Type · ECC state · Vendor name and part number · Health status |

N/A |

|

Drive |

· Fully configured CPUs and present CPUs · Hard drive location · Vendor (Manufacturer) · Model · Interface type and protocol · Capacity · Health status · Remaining life (for SSD hard drives) · Hard drive SMART information |

N/A |

|

PCIe module |

· Module manufacturer and chip vendor · Firmware version · Resource location (CPU and riser card where the module is located) · Basic information of the network adapter, including port name, port number, MAC address, and port type · Network port information, including MAC address, root BDF, BDF (with port numbers), maximum speed, connection speed, connection status, interface type, LLDP status, and LLDP configuration · Network adapter port traffic (when FIST SMS is installed) · Health status |

Common network adapters include: · Onboard network adapters · Mezz network adapters · PCIe standard form network adapters · OCP form network adapters · Intelligent network adapters The information that can be obtained depends on the actually installed PCIe modules. |

|

Heat dissipation device: fan |

· Fully configured fans and present fans · Fan location · Model · Speed and rate ratio · Health status |

Fan information is not available on the HDM page for immersion liquid cooling models and non-HDM controlled fan models. |

|

Cooling device: liquid cooling module |

· Full configured modules and present modules · Leak detection and disconnection detection |

Supported only for liquid cooling models. |

|

Optical transceiver module |

· Temperature information acquisition · Temperature involvement in speed regulation |

Requires that both network adapters and optical transceiver modules support information acquisition. |

|

Power supply |

· Fully configured power supplies and present power supplies · Power location · Vendor · Model · Rated power · Input voltage · Output voltage · Current power value and health status |

Not available for blade servers and chassis model servers. |

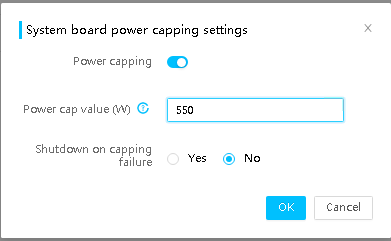

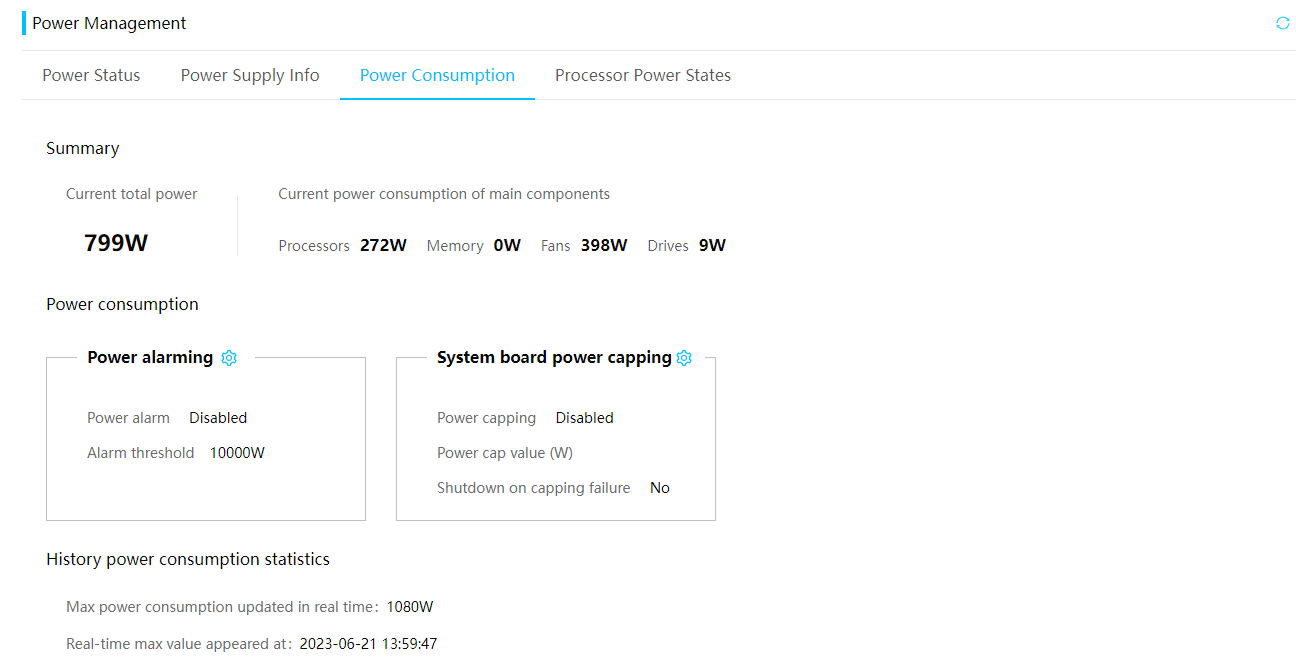

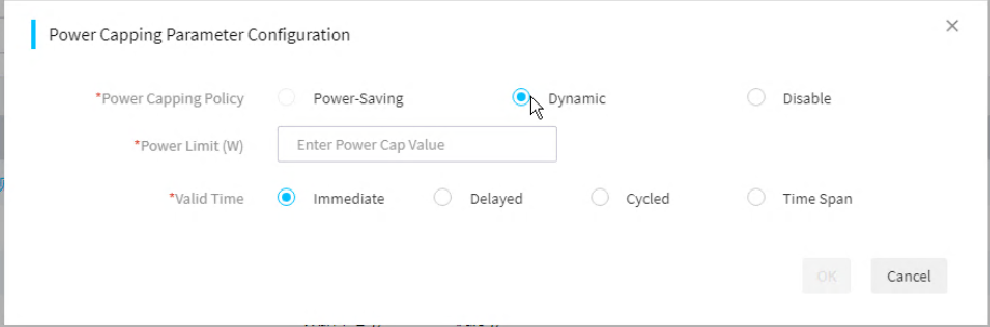

The server supports managing the power control policies through the out-of-band management interface, including:

· Supports obtaining the total power consumption of the server.

· Supports remote power-on, power-off, and reset.

· Supports obtaining the server power on/off status.

· Supports the power capping technology. You can enable and disable power capping, set the power cap value, and specify whether to enable shutdown on capping failure.

Host management

HDM supports the following host management functions:

· Identification of components: Obtaining TPM presence status and presence status of dual SD modules.

· In-band information management: Obtaining BIOS version, ME version, BKC version information, Post codes, host address space, SMBIOS information, operating system version, and host operating status.

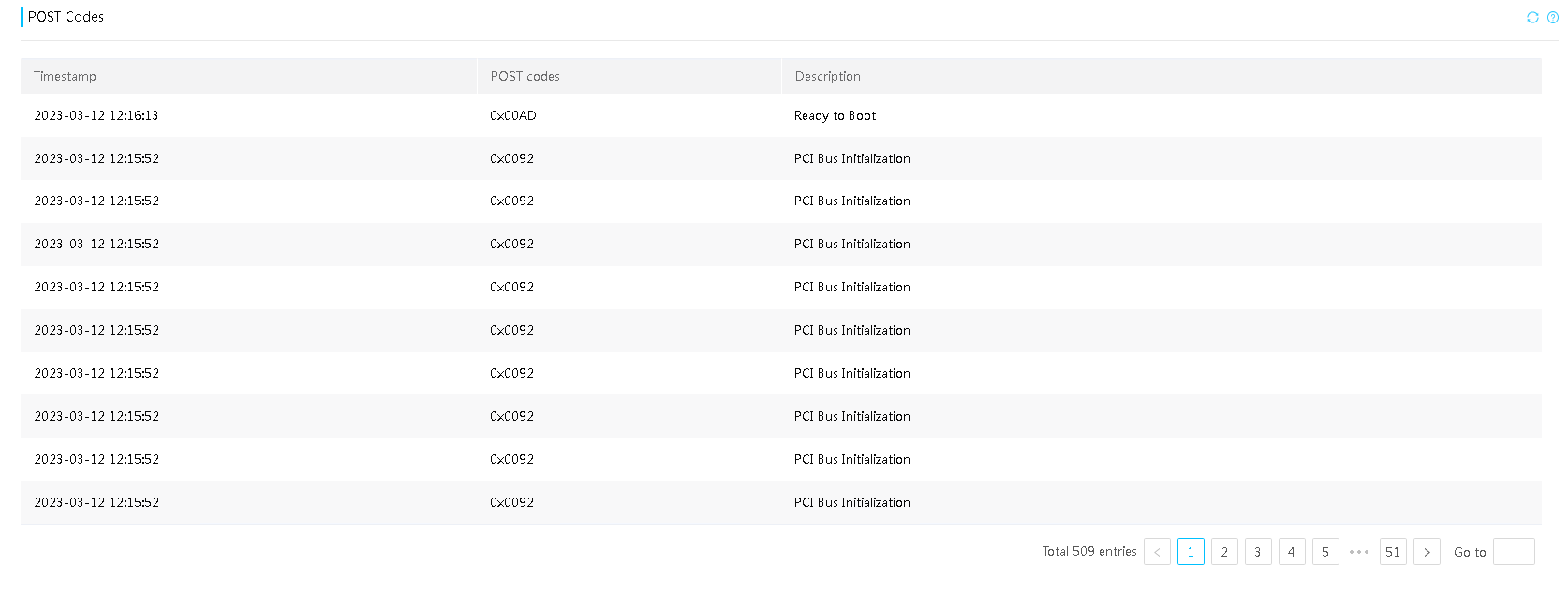

¡ HDM supports displaying POST codes in clear text, as shown in Figure 32. It directly displays the description of each POST code, allowing users to quickly identify the fault location and type. This facilitates targeted troubleshooting, saves time, and provides a user-friendly experience.

Figure 32 BIOS POST code in clear text

For in-band management features that require implementation through the OS Agent, the server need to provide relevant firmware, hardware drivers, and development interfaces to ensure that third-party management software can implement related in-band management features. HDM supports obtaining the following information through in-band management:

· Server CPU usage.

· Total memory capacity, used memory capacity, and memory usage rate of the server.

· Network port transmission rate and reception rate.

· Network adapter firmware version number.

· Optical transceiver module DDM information.

· IPv4 and IPv6 address lists of server network ports.

· Network MAC address and network port name.

Application scenarios

Recommendations for enhancing reliability performance

HDM supports the following configurations to enhance the overall server reliability:

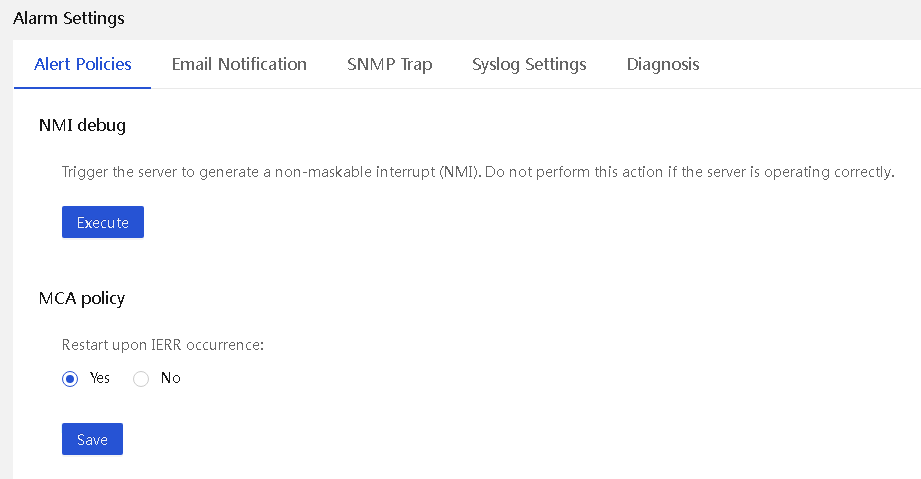

· Start policy upon IERR occurrences.

· Isolation policy upon memory failures.

· Customized power-on delay.

· Security monitoring information.

· Online detection of power supplies, fans, and mode switching.

· Fan cooling policy.

· HDM's reliability.

Server monitoring capabilities

Background

To meet daily operation and maintenance demands, servers need to provide interfaces for continuous monitoring by network management systems to ensure normal operation of devices. When a server device encounters an anomaly, it must actively report the fault to the external maintenance system for quick detection and prompt troubleshooting. The server also needs to monitor some key resources in itself for troubleshooting purposes.

Based on the monitoring requirements in various scenarios, HDM provides the following main features:

· Provides multiple interfaces for continuous monitoring of server hardware by external systems to realize these features:

¡ Allows viewing current and history statuses.

¡ Provides device status and server status.

¡ Supports monitoring of components, and in particular, provides features such as component lifetime prediction and fault warnings.

· Provides a comprehensive logging solution to meet requirements in various scenarios.

¡ Provides users with standardized and component-based event logs, operation logs logging the operations of all parties, audit logs for secure use, and component replacement logs.

¡ Full lifecycle level operation parameter records provided by SDS logs.

¡ Supports offline logging and remote diagnostic analysis.

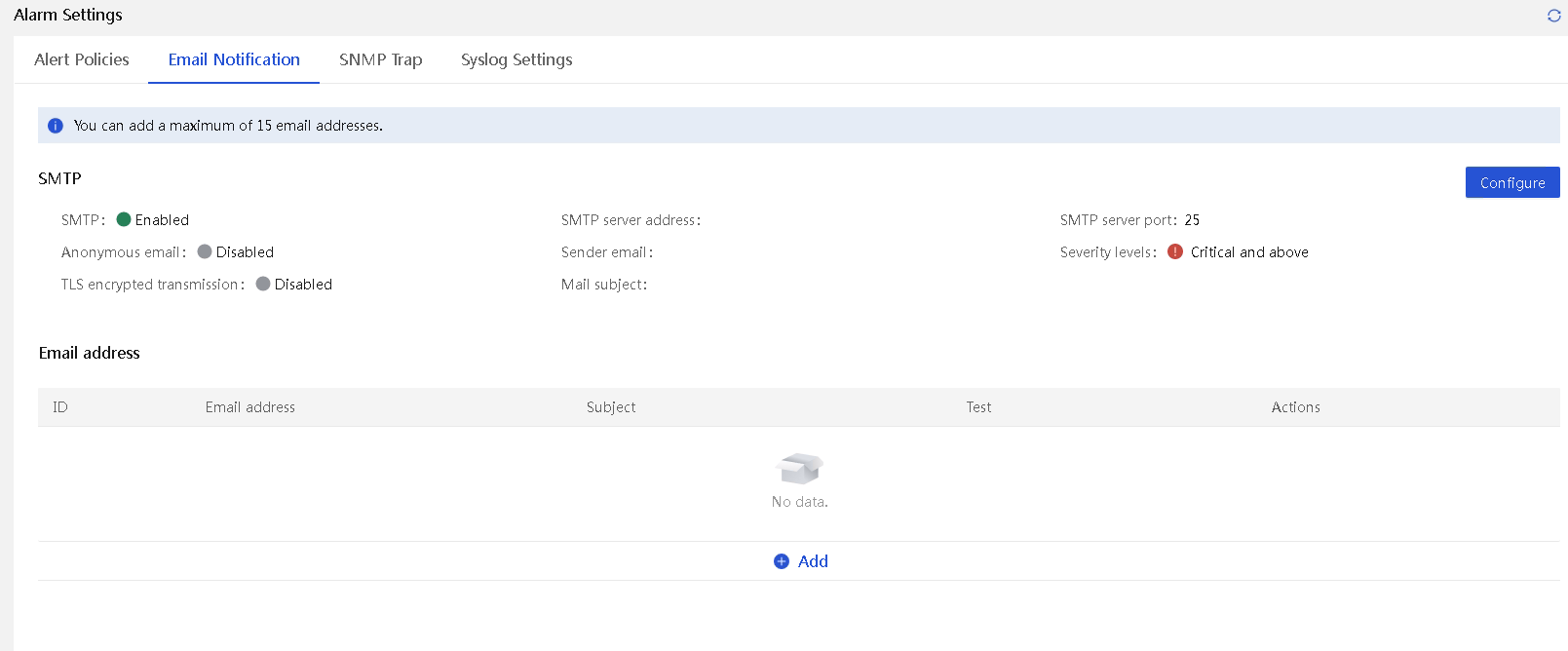

· Uniform, industry-standard, and comprehensive alarm channels across product lines:

¡ Standardized interfaces that meet the management requirements on IPMI, Redfish, and SNMP.

¡ A direct alarm mechanism on the server based on emails and logs.

· Compatible with mainstream network management systems for easy incorporation of server devices.

· Provide comprehensive host monitoring capabilities. For more information, see related descriptions.

Technical key points

System health status

On HDM, you can obtain the overall health status of the system and the health status of each component on the system. Health statuses are displayed through the Web, health LEDs, LCD panels, and the intelligent security bezel.

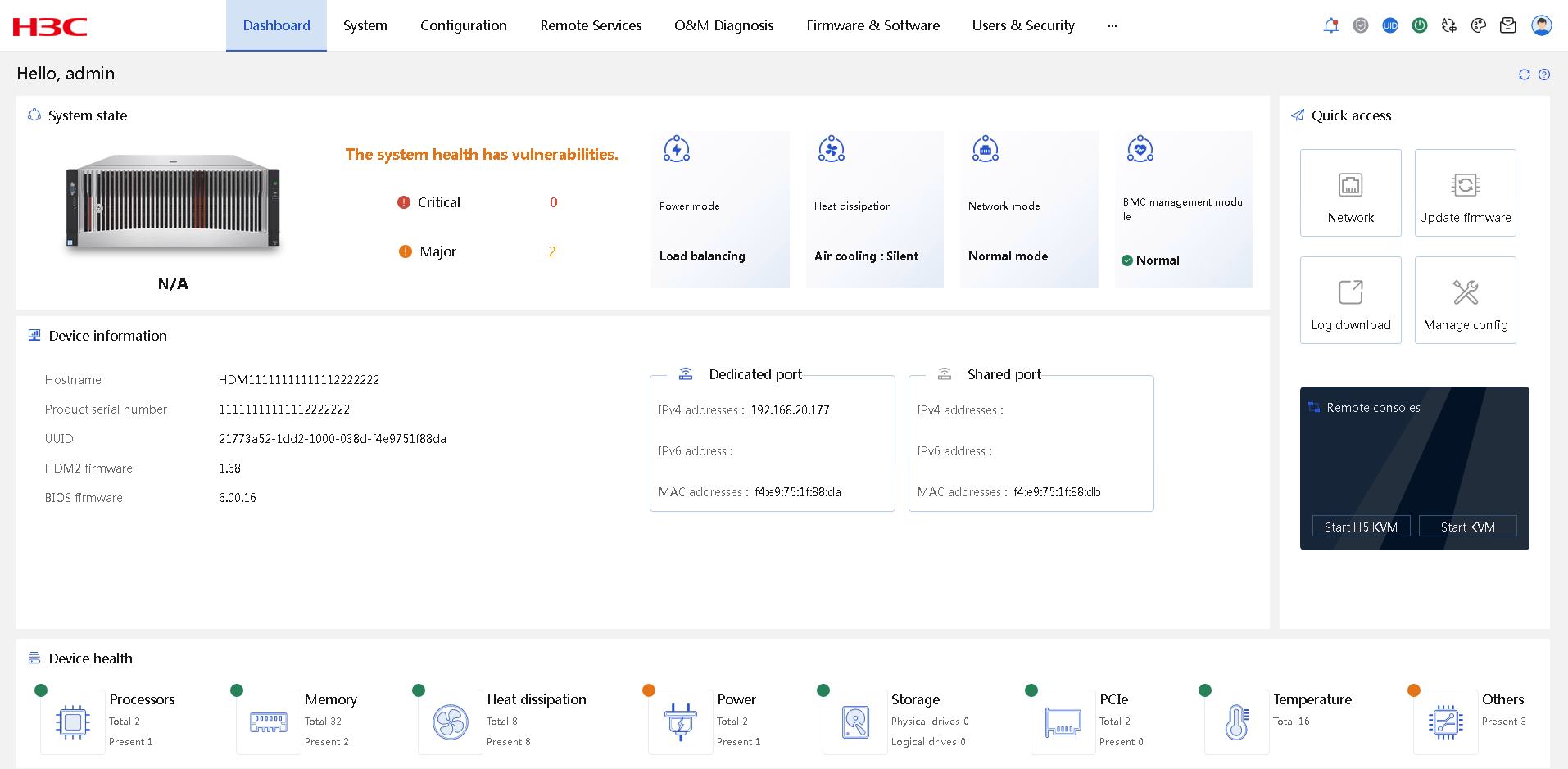

Figure 33 Summary information on the Web interface

On the Dashboard page, you can view the overall health status and summary alarm information of the server.

The overall health status of the server depends on the health status of the relevant components, such as the processor, memory, fan, power supply, storage, PCIe modules, temperature sensors, system board, backplane, and adapter cards.

Component monitoring

· Provides static data query interfaces for each component, continuously monitors dynamic data, senses abnormal events, and triggers corresponding diagnostic analysis based on event types.

· Provides a user-friendly Web page for easily viewing various dimensional information related to components.

· Supports multi-level monitoring of multi-role components (such as PCIe-based network and RAID devices), redundant components (such as power supplies and fans), combined components (such as RAID controllers, supercapacitors, OCP cards, and corresponding fans), multi-unit components (such as memory and hard drives).

· Monitoring information is summarized by level. The final system health status is determined based on the impact on the device.

Sensors

Temperature monitoring displays the distribution maps and values of temperature sensors for various components in the server chassis. Physical structure diagrams are also provided as references, as shown in Figure 34. The temperature graphs use colors between green and red to show the temperature distribution inside the server chassis and use circles to represent sensors. You can hover over a circle to view the target sensor's name, status, temperature reading, and threshold. Green indicates 0°C (32°F). As the temperature gets higher, the color gets warmer until it changes to red. The coordinates' meanings are as follows:

· X: Sensor's position on the X-axis.

· Y: Sensor's position on the Y-axis.

· Z: Node to which the sensor belongs. (Different Z values are displayed in models with multi-node and multi-layer designs.)

Figure 34 Temperature heatmaps

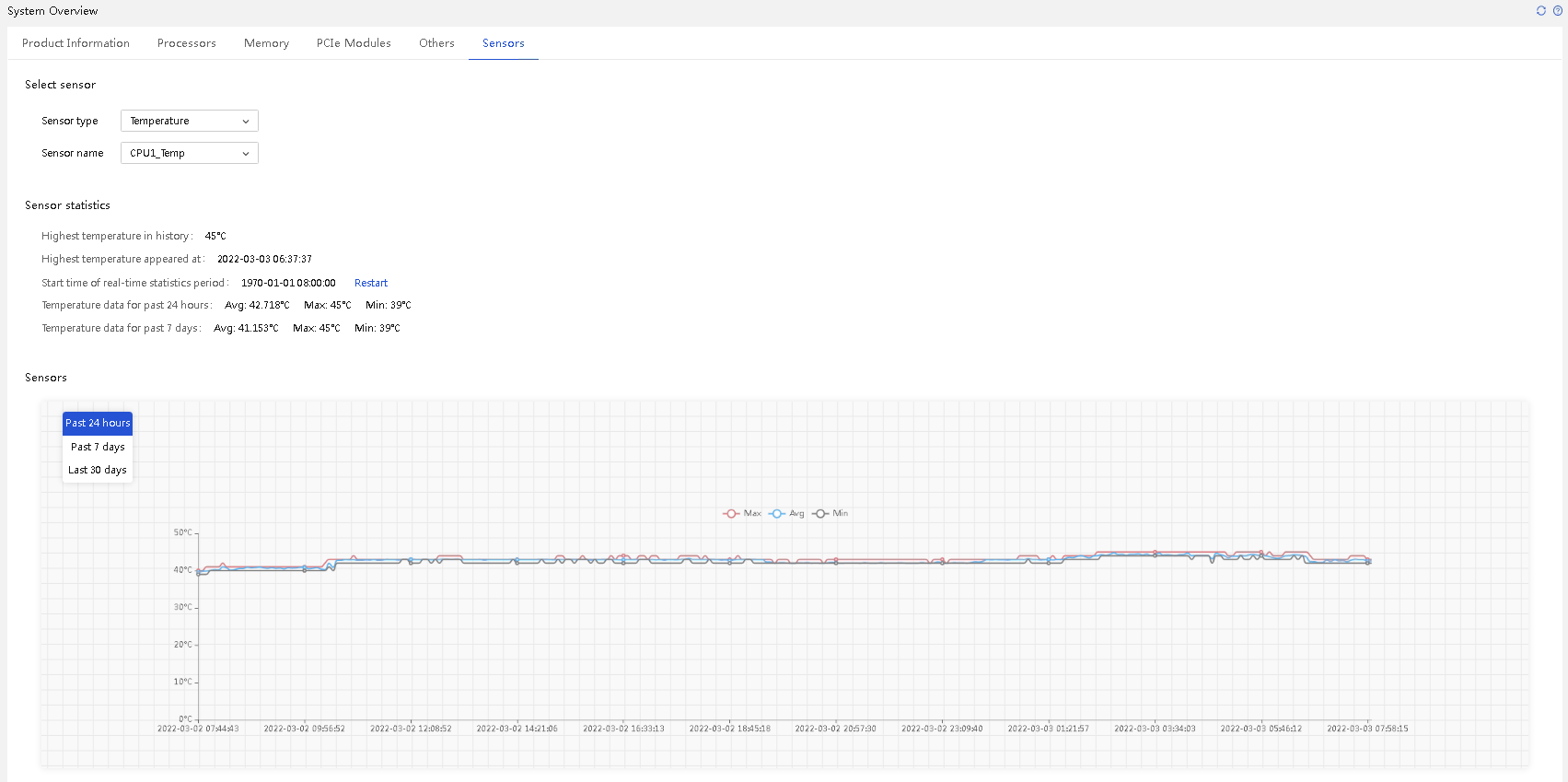

On HDM, you can view the readings of a sensor (temperature, current, voltage, fan speed, or power) in a line chart during the past 24 hours, past 7 days, or past 30 days. You can hover over the lines in the chart to view the maximum, average, and minimum readings during the statistics collection period.

Figure 35 Sensor reading chart

For sensors that have not sampled data or have encountered monitoring anomalies for a long time, the system assigns labels such as not sampled and unavailable. Not sampled is commonly seen in the first sampling point after HDM is started. Unavailable is commonly seen in known (unmonitored scenarios) or abnormal (monitoring failures caused by link anomalies) sensor unavailability situations.

HDM supports identifying the configuration of the server, dynamically generates sensors that need to be monitored based on the identification results, and monitors these sensors. For parts that can be physically installed at the reserved positions but have not been installed, you can view the sensors related to reserved status. For parts that cannot be physically installed (such as uninstalled Riser modules or backplanes), no extra sensors are generated, which saves monitoring resources to some extent.

Standard log output

H3C's event log implementation has the following characteristics:

· Compatibility: Uses standard IPMI logs to ensure that all logs can be detected through IPMI commands.

· Pairs each alarm trigger with the corresponding alarm clear event, facilitating external management operations.

· Clear text: All event logs can be read directly.

· Component-specific: Each alarm log is linked to its corresponding component, which can be used for quick identification and localization.

· Full lifecycle records: Records all event logs to meet the requirements of event log recording during normal warranty period.

· Flexible external interfaces and integration capabilities: Supports multiple interfaces for reporting fault information, facilitating integration with upper-level operation and maintenance systems.

Simultaneous monitoring through multiple channels

· Server alarm channels

Alarms can be reported through the following channels on the server:

¡ Hardware LEDs, mainly displayed on the front and rear panels of the server, including the LEDs on each component, intelligent security bezel LEDs, LCD panel LEDs, and system-level hardware LEDs.

¡ Software device health LEDs, mainly displayed on the Web page and can be queried through IPMI commands.

¡ Event log-based alarm information, including event logs in SEL, SDS logs and event logs in SDS for internal positioning, and information variants displayed through various monitoring channels (mainly including SNMP trap, SMTP, Redfish, and remote rsyslog), and internal log information.

¡ Sensor-based alarm information.

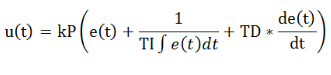

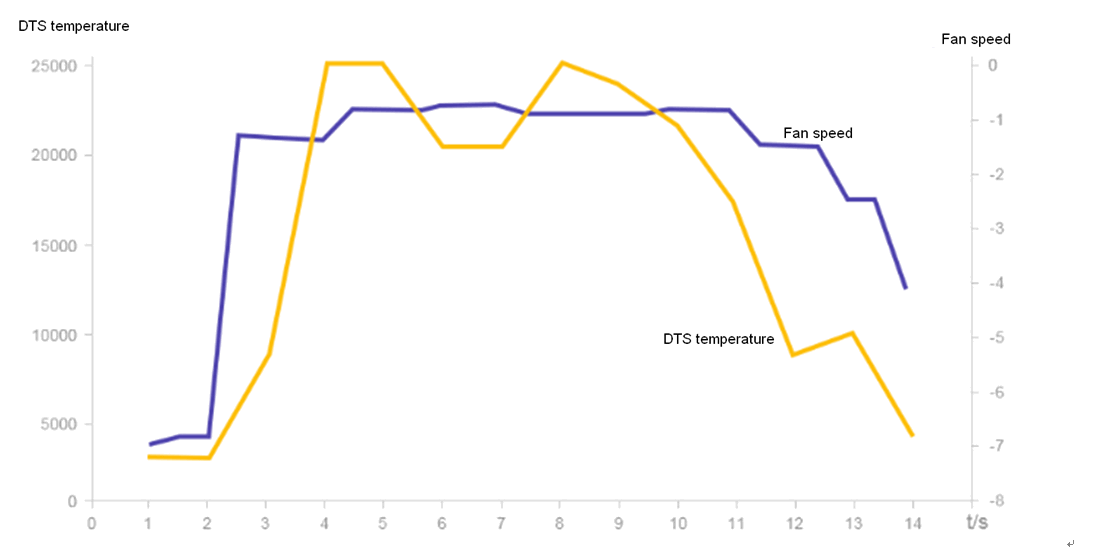

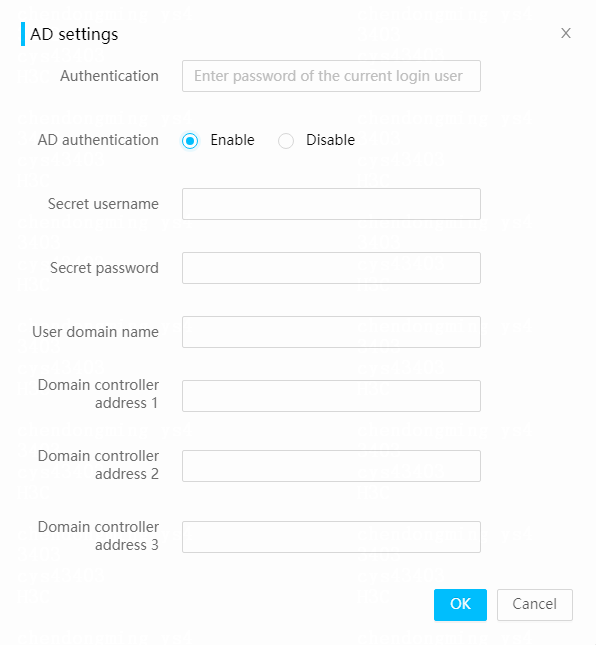

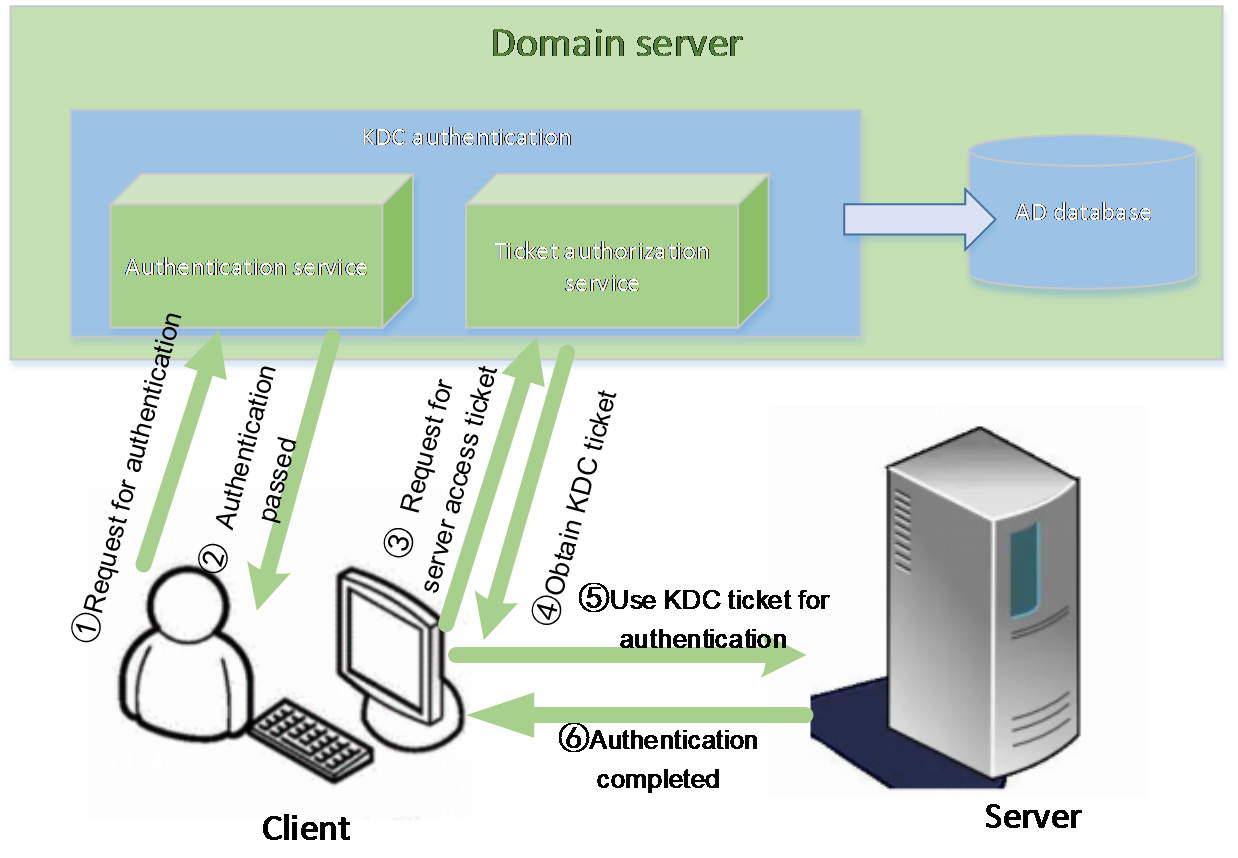

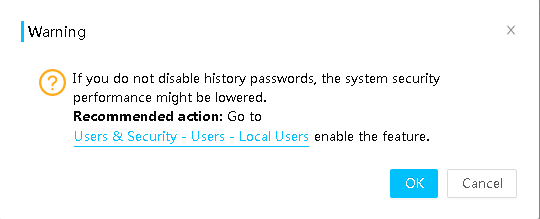

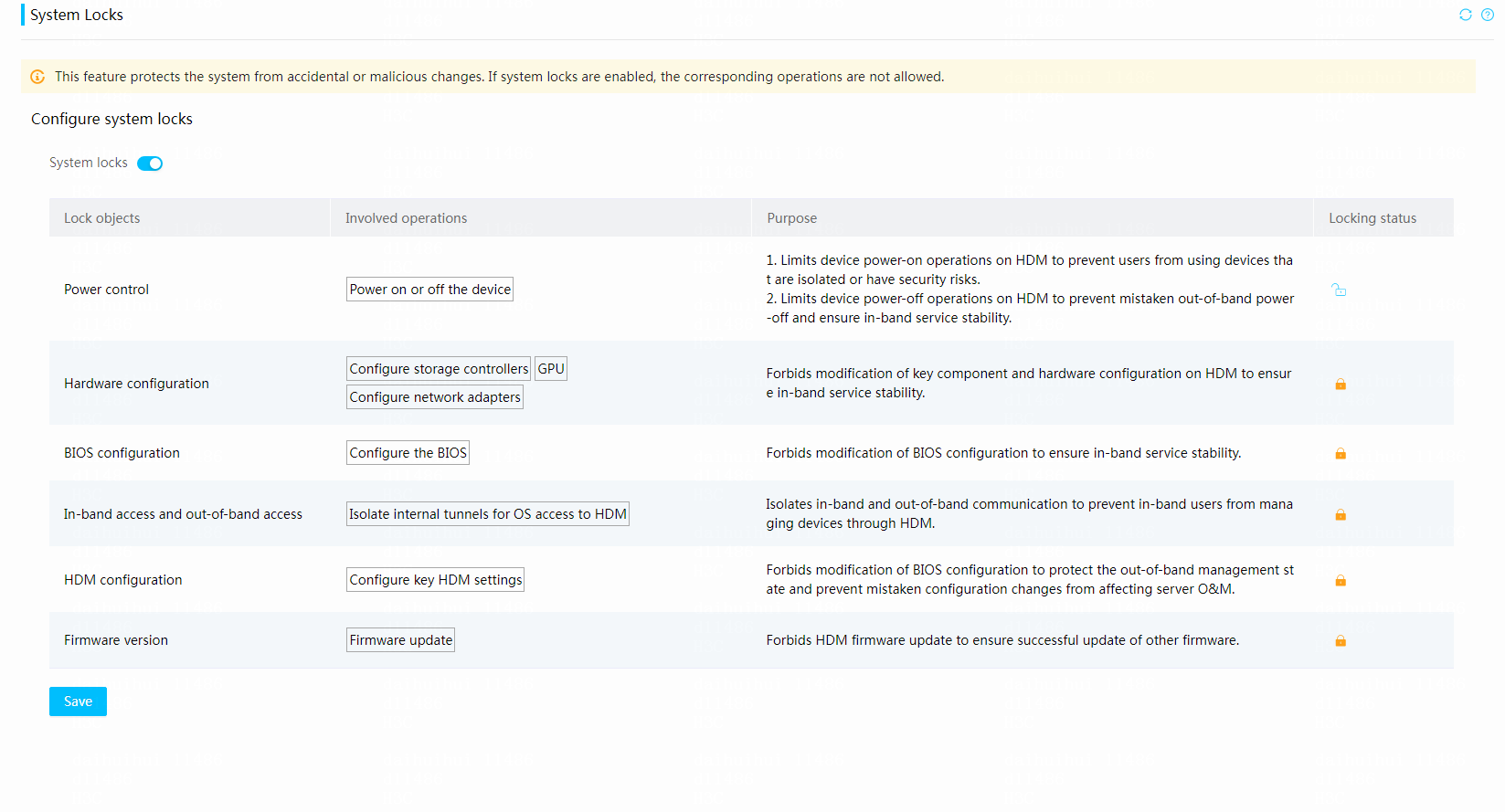

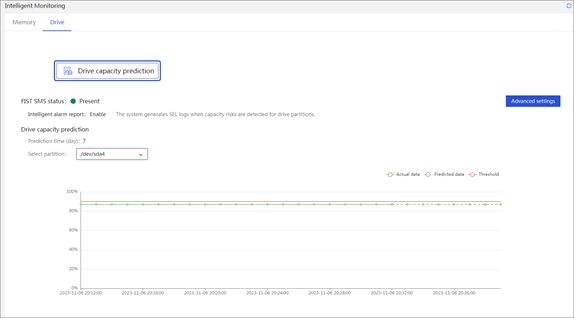

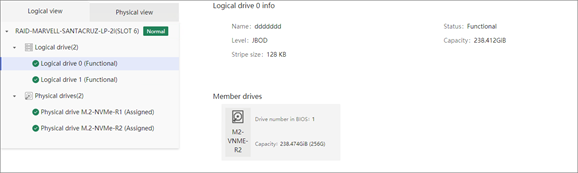

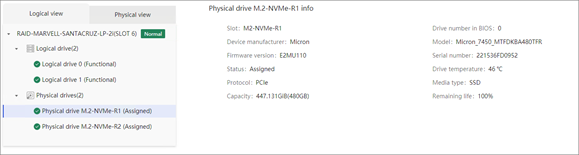

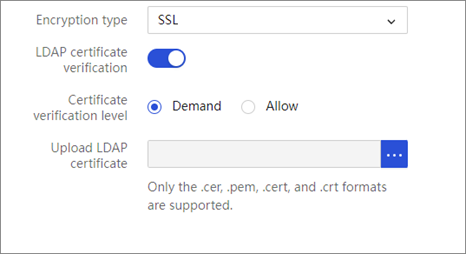

¡ Alarm information in the BIOS and OS.