- Table of Contents

- Related Documents

-

| Title | Size | Download |

|---|---|---|

| 01-Text | 4.08 MB |

Hardware requirements (physical server)

Server requirements for SeerCollector deployment

Software package authentication

Analyzer deployment tasks at a glance

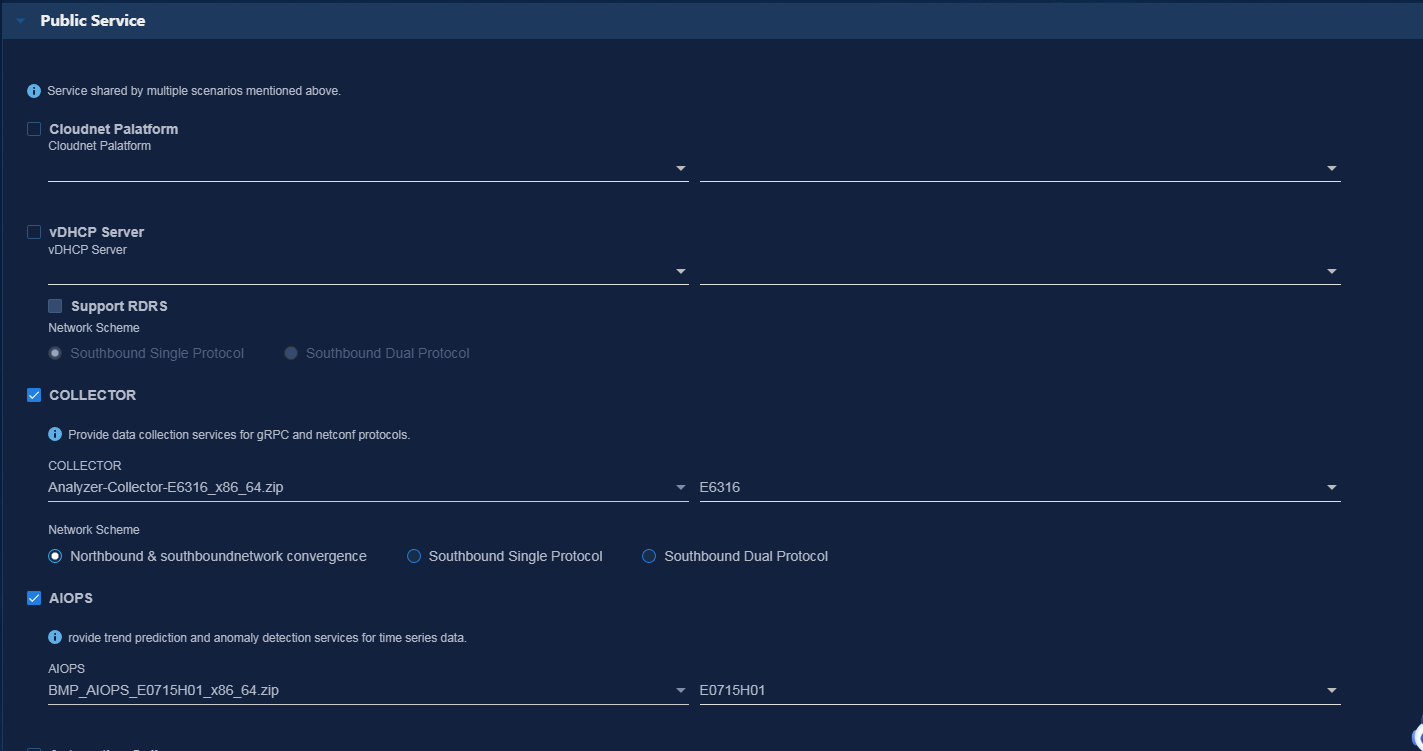

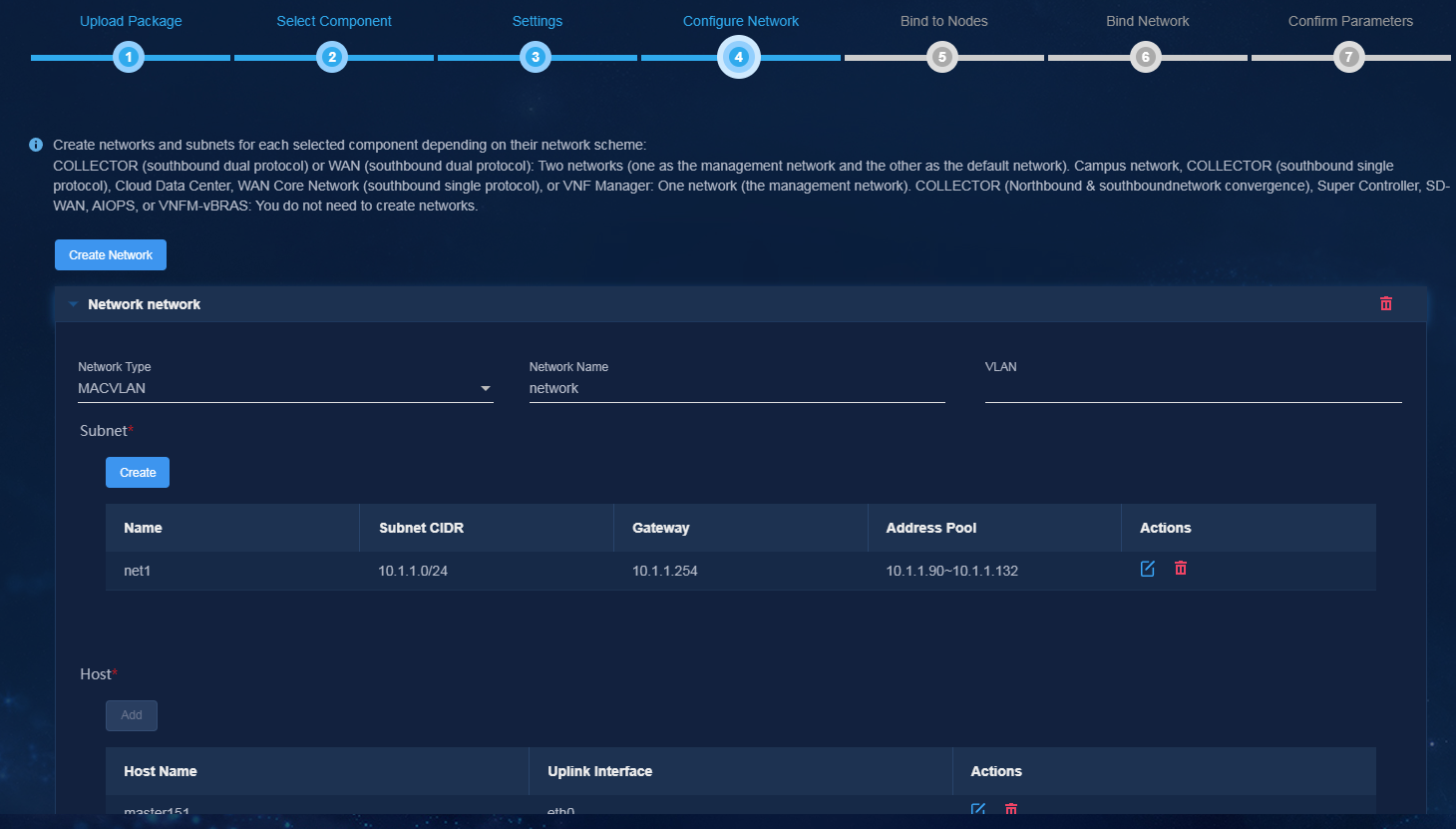

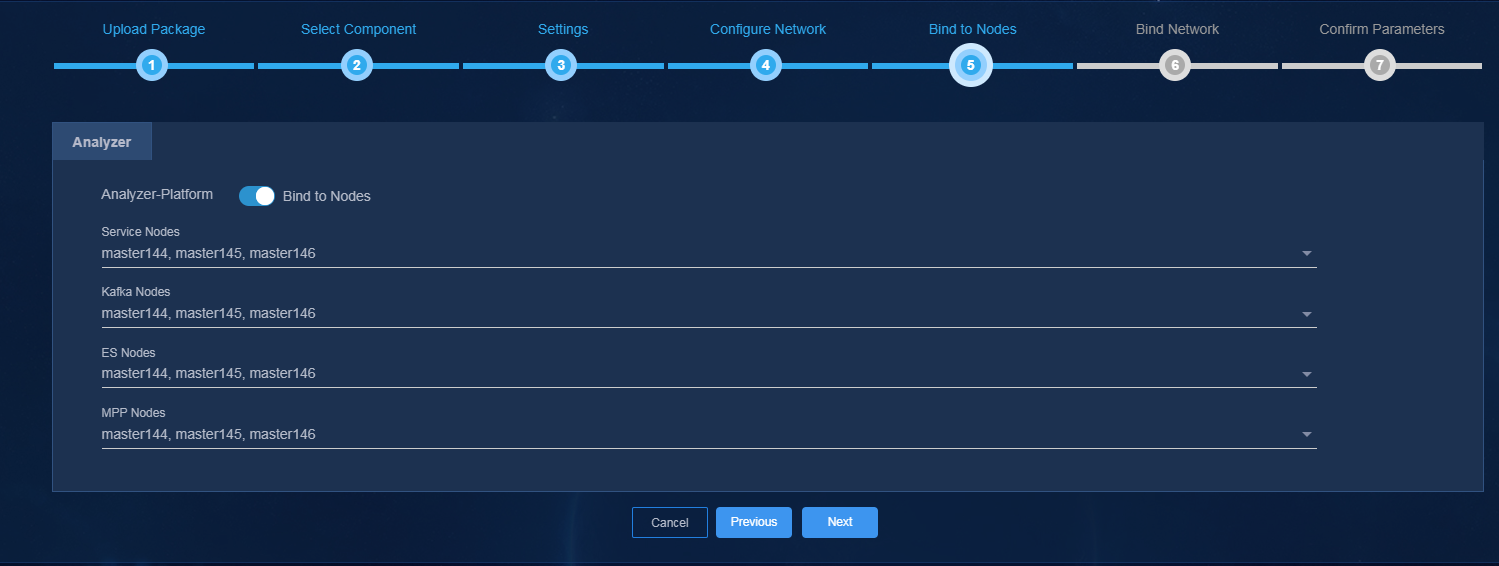

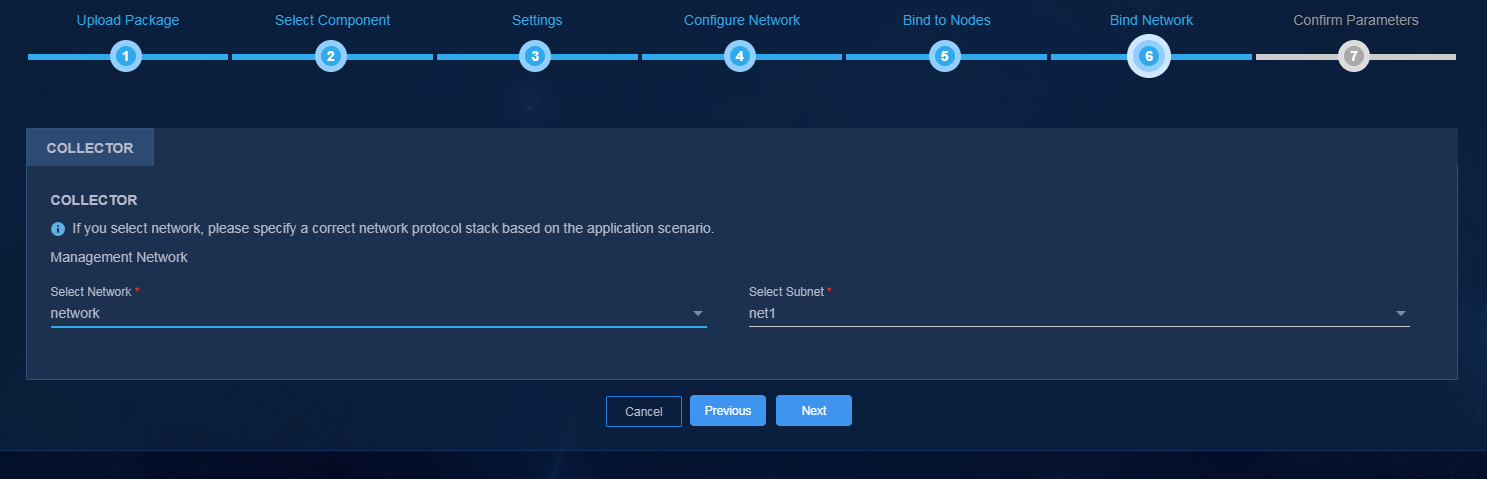

Deploying Unified Platform and optional components

System disk and ETCD disk planning

Manually creating data disk partitions

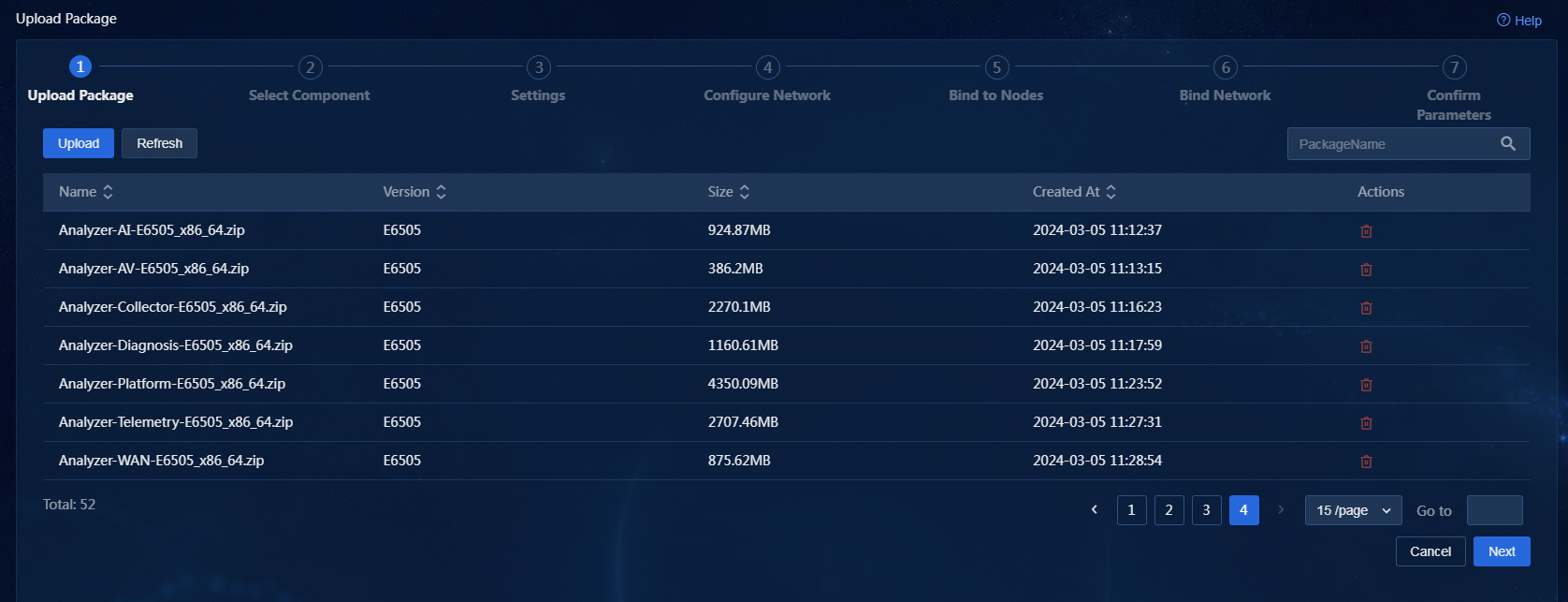

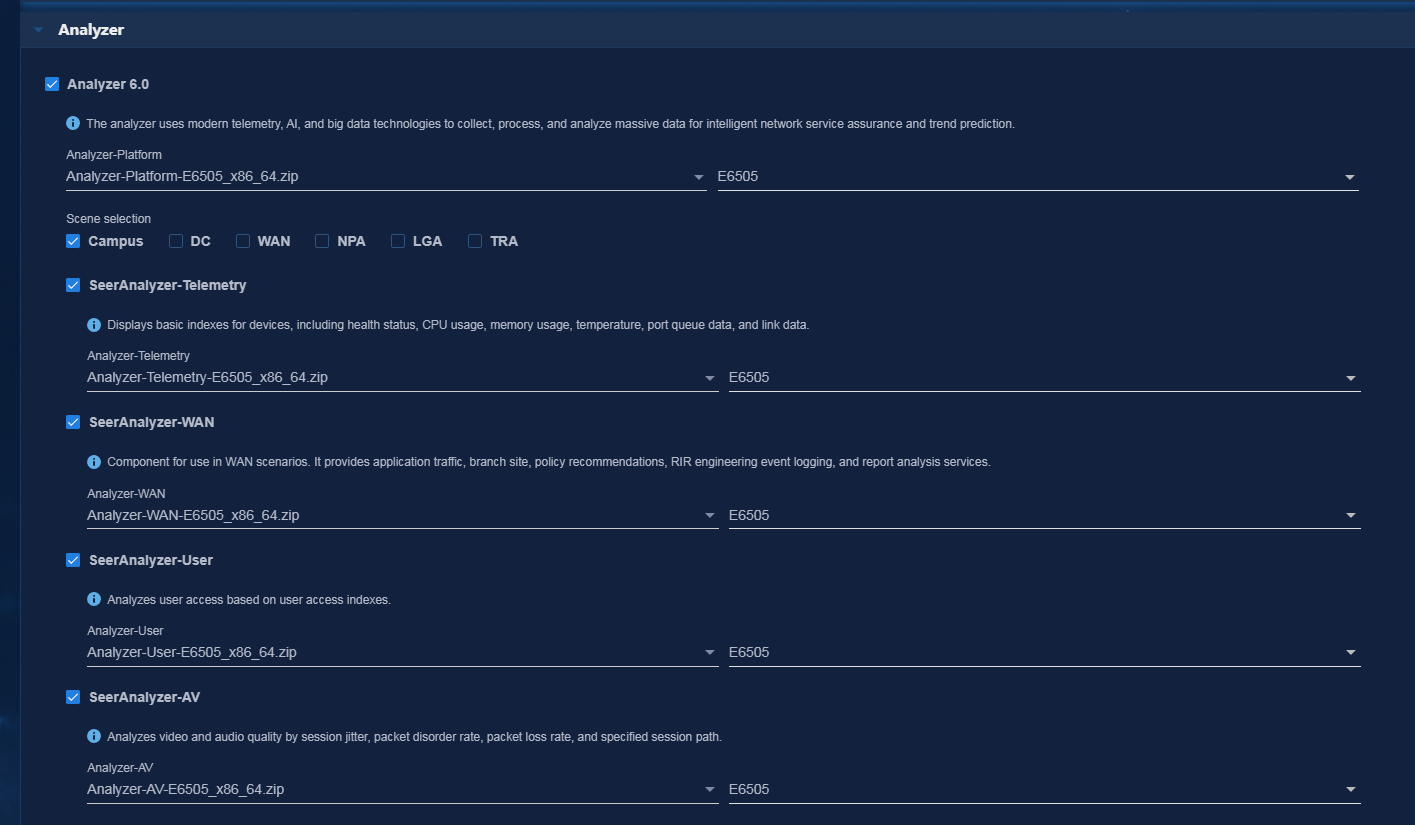

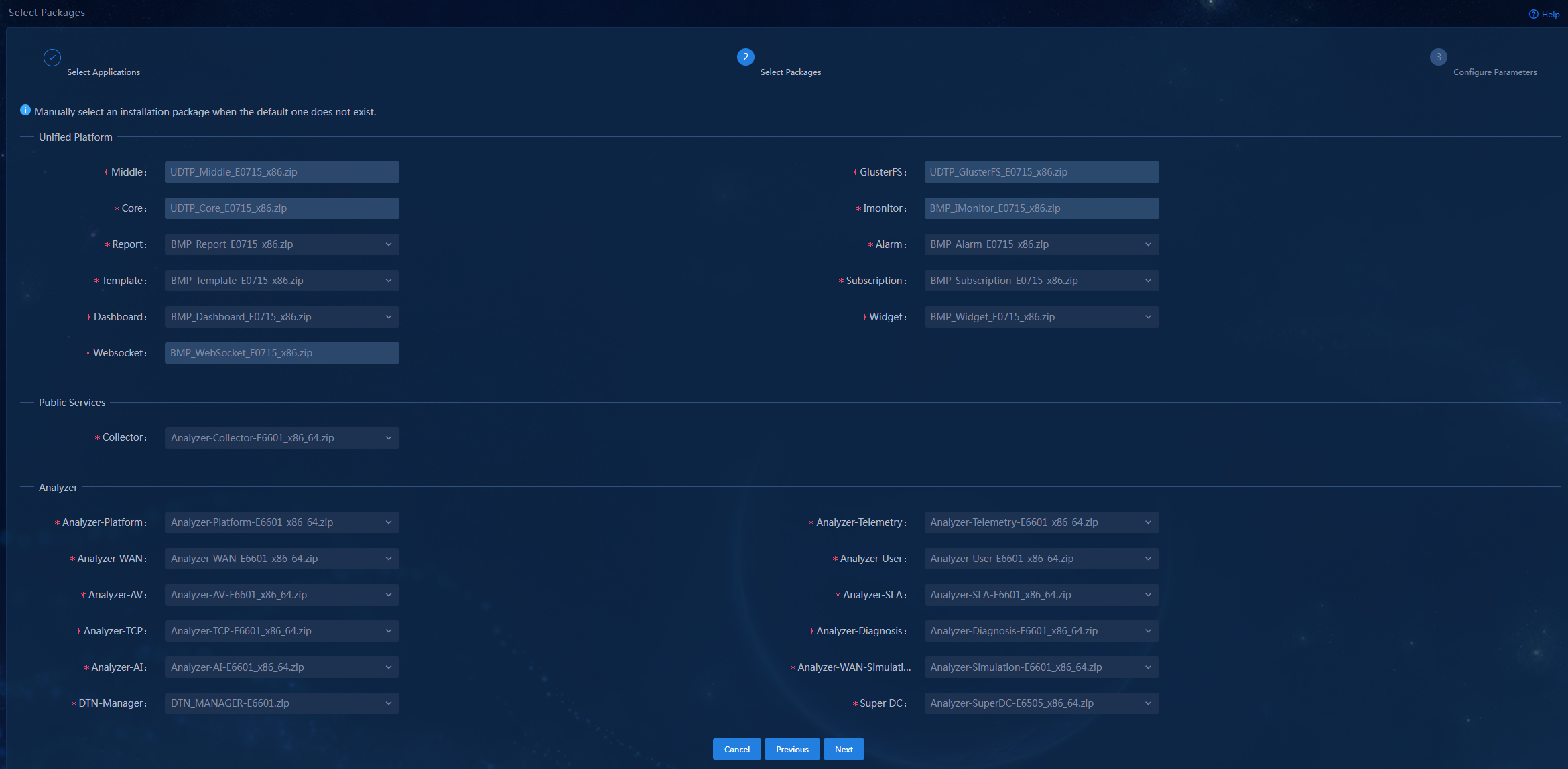

Application installation packages required for deploying Unified Platform

Analyzer application package instructions

(Optional.) Clearing known_hosts information recorded by each node

Deploying Analyzer for Unified Platform of versions earlier than E0713

Deploying Analyzer for Unified Platform of E0713 and later versions

Accessing the Analyzer interface

Installing a license on the license server

Obtaining the license information

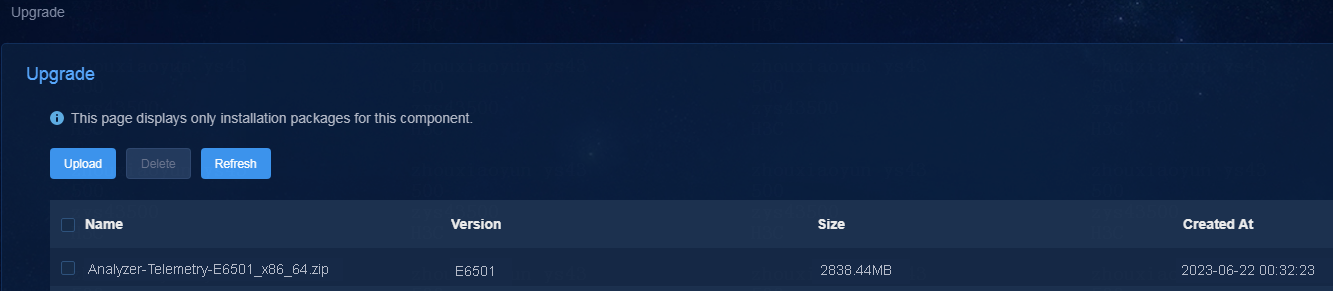

Upgrading Analyzer from E61xx to E65xx

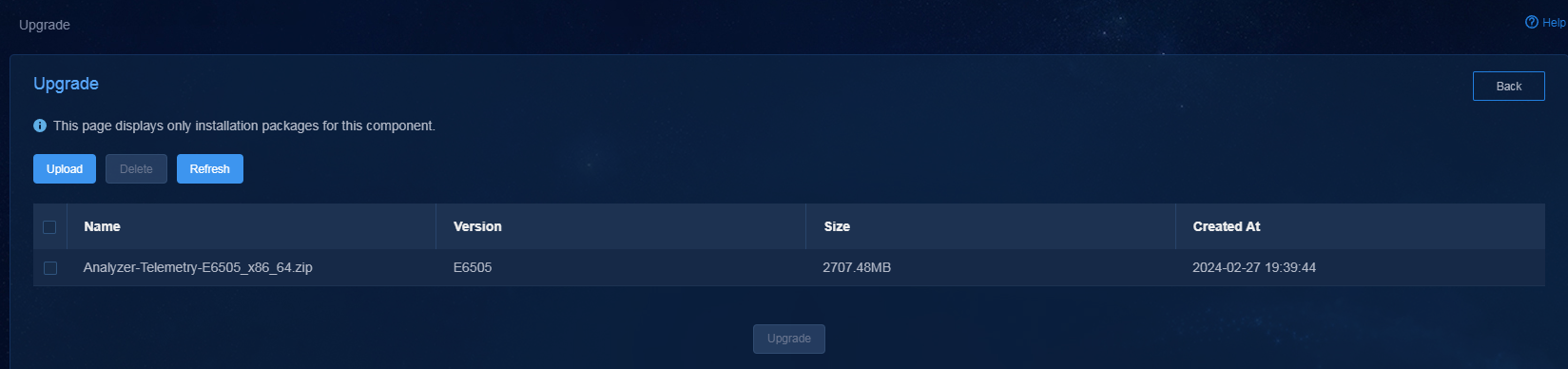

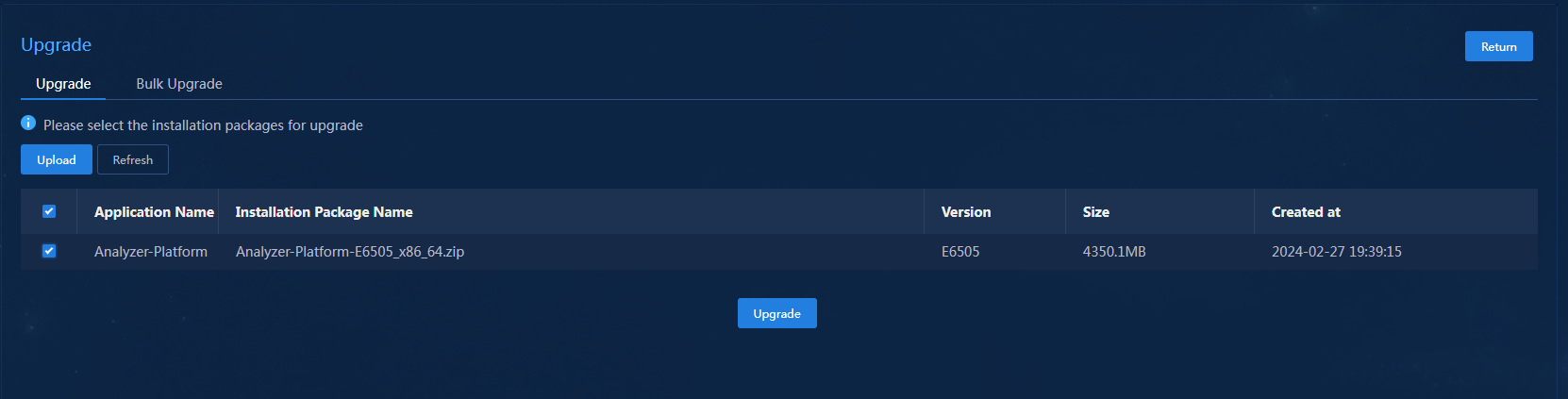

Upgrading Analyzer from E62xx or E63xx to E65xx or from E65xx to a later version

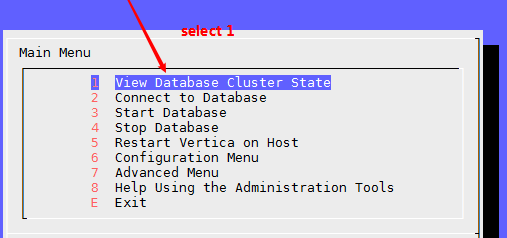

Checking the running status of each server

How can I configure security policies if multiple enabled NICs are configured with IP addresses?

How can I change the SSH port of a cluster node?

What should I do if the analyzer fails to be deployed or upgraded?

Why is Vertica unavailable after the node or cluster IP is changed?

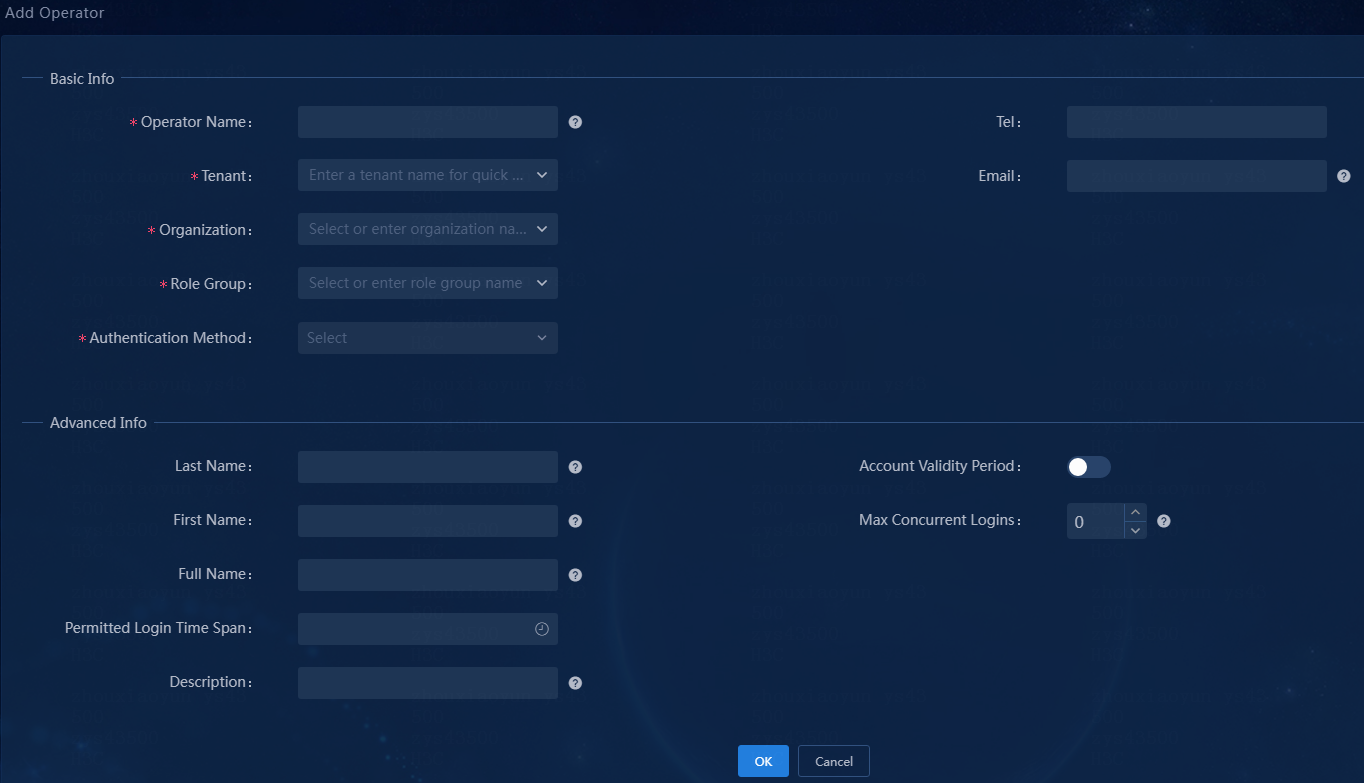

Why the Analyzer menu not visible after an operator logs in?

How to check if NUMA nodes are allocated memory?

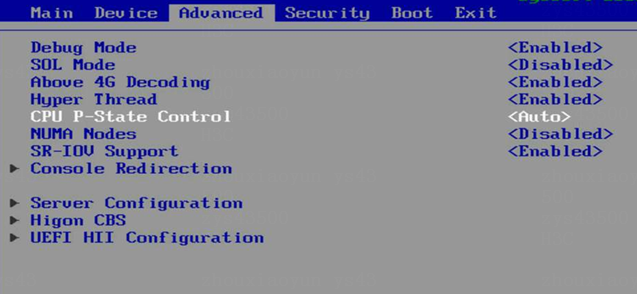

How to check if hyper-threading is enabled?

How to enable hardware virtualization function?

How to change the file system type of a data disk partition to ext4?

What should I do if the deployment of the Analyzer-Platfom component fails?

Introduction

Analyzer focuses on the value mining of machine data. Based on big data technologies, Analyzer finds out valuable information from massive data to help enterprises in networking, service O&M, and business decision making. Analyzer collects device performance, user access, and service traffic data in real time and visualizes network operation through big data analysis and artificial intelligence algorithms. It can predict potential network risks and generate notifications.

· Campus—Based on user access and network usage data collected by Telemetry, the campus analyzer uses Big Data and AI technologies to analyze network health issues, discovers the root causes for degraded experience, and provides optimization suggestions. This improves user experience.

· WAN—Acting as the core engine for smart O&M in a WAN, the WAN analyzer collects network state, log, and traffic data from multiple dimensions, uses Big Data and AI technologies to summarize and analyze the data, and thus provides health evaluation, traffic analysis, capacity forecast, and fault diagnosis functions for the entire network.

· DC—The DC analyzer collects full-time network device operation information and establishes a health evaluation system for the entire DC network. The system brings TCP/UDP session analysis, application visibility and analysis, chip-level cache monitoring, and packet loss analysis in the DC, providing full support for all-round DC O&M, high availability, and low latency.

Concepts

· SeerCollector—Required if you use TCP/UDP flow analysis and INT flow analysis features of Analyzer.

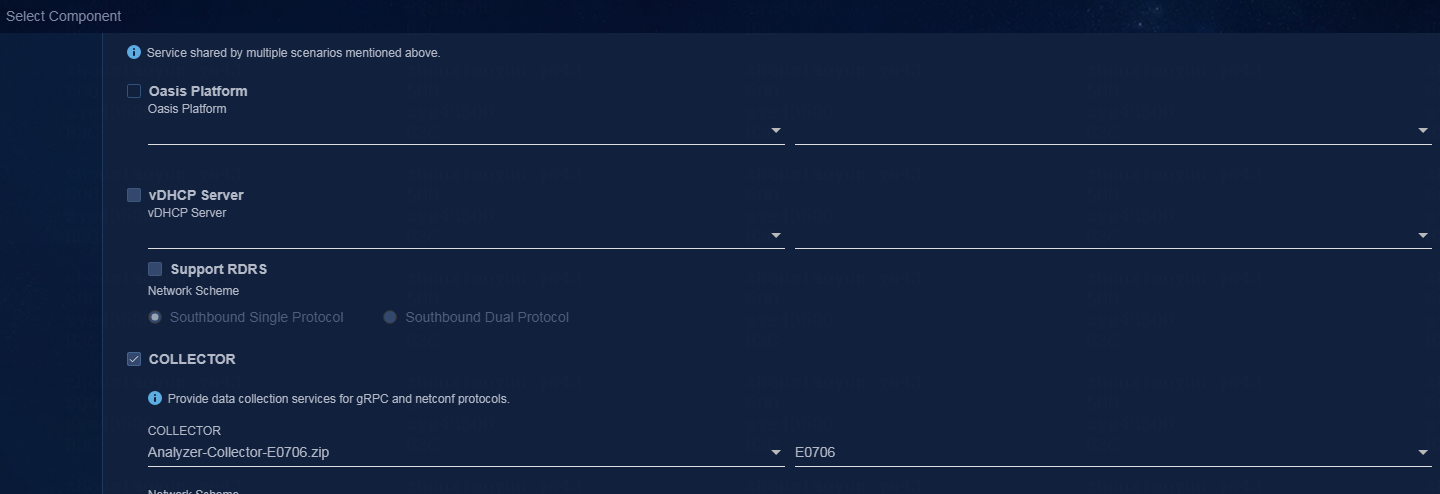

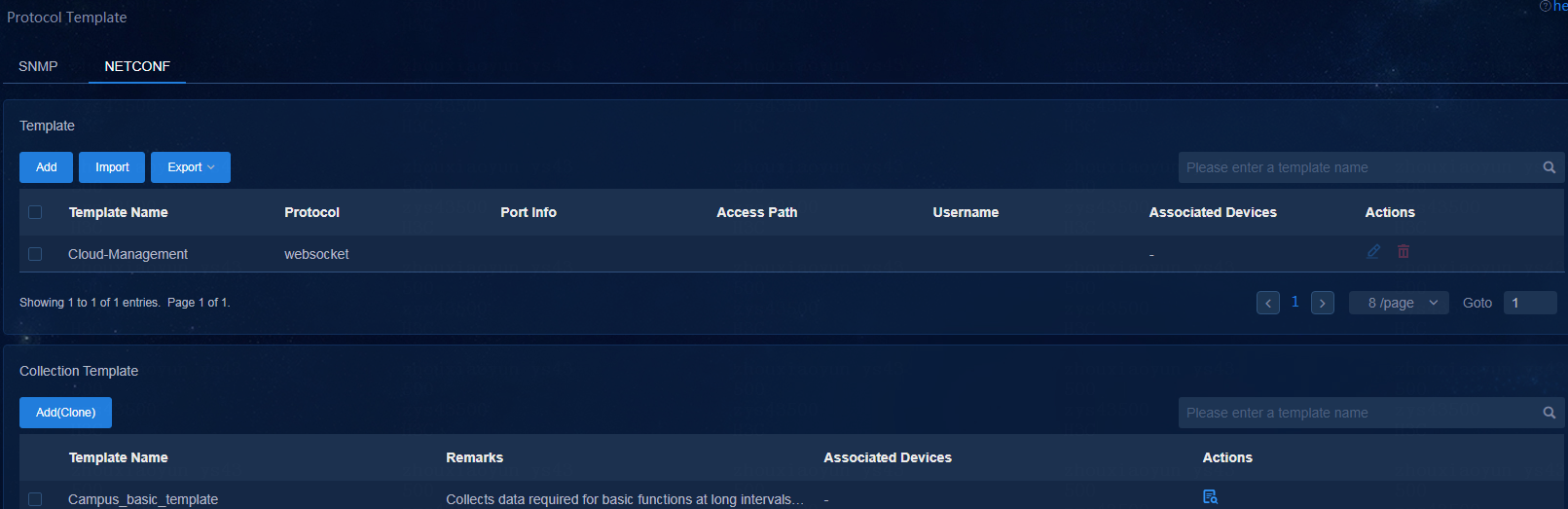

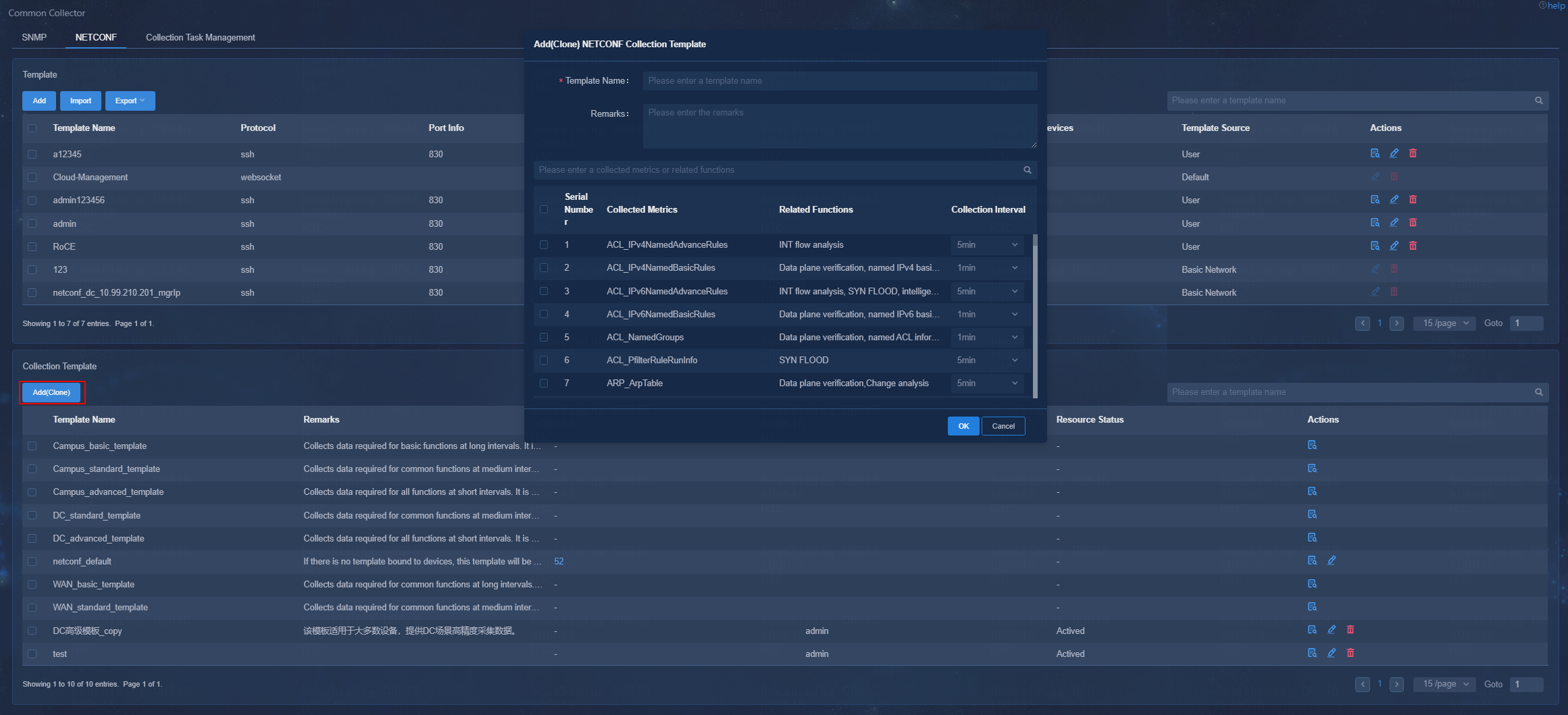

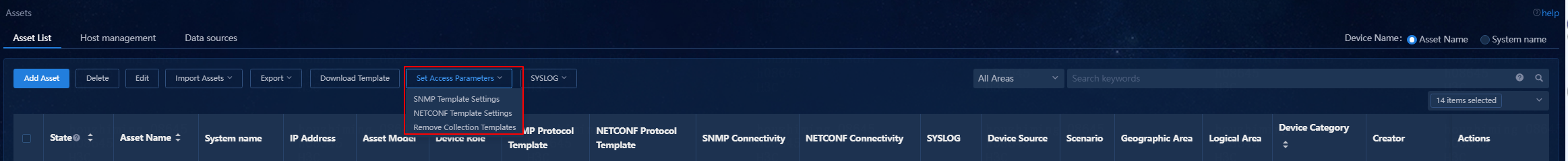

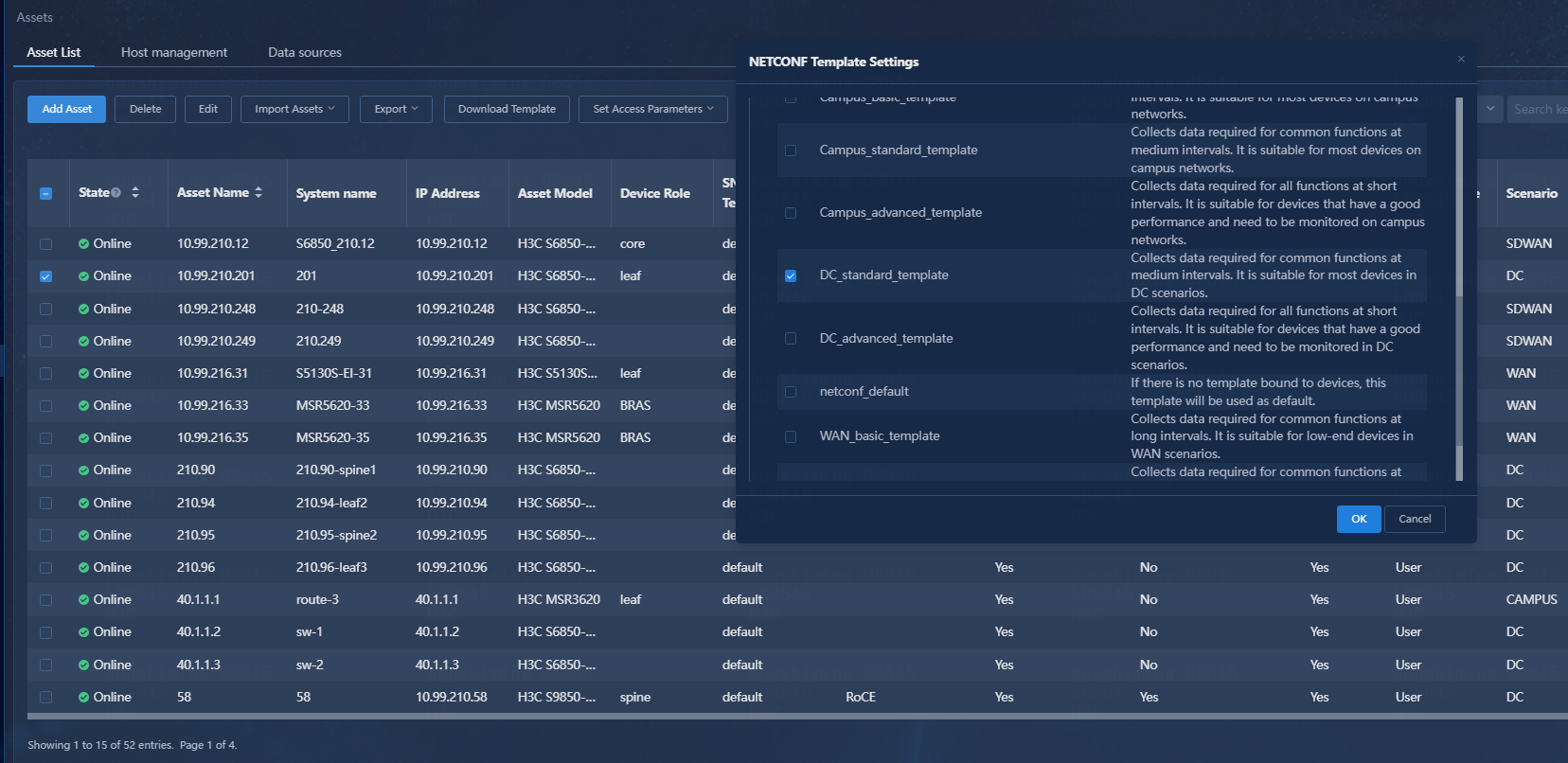

· COLLECTOR—Public collector component that provides collection services through protocols such as SNMP, GRPC, and NETCONF.

Pre-installation preparation

Server requirements

Analyzer is deployed on Unified Platform, which can be deployed on physical servers or VMs. As a best practice, deploy Unified Platform on physical servers. See Table 1 for the deployment modes.

|

Deployment mode |

Required servers |

Description |

|

Single-node deployment |

1 |

Unified Platform is deployed on one node, which is the master node. Analyzer is deployed on Unified Platform. Use single-node deployment only in small networks that do not require high availability. |

|

Three-master cluster deployment |

3+N |

· Unified Platform is deployed on three master nodes. · Analyzer-alone deployment ¡ 3+N (N ≥ 0) mode: Deploy Analyzer alone on one or multiple of the three master nodes and N worker nodes. · Controller+Analyzer converged deployment ¡ 3-master mode—Deploy Controller and Analyzer on the three master nodes of Unified Platform cluster. ¡ 3+1 mode—Deploy Unified Platform and Controller on the three master nodes, and deploy Analyzer on a worker node. ¡ 3+N mode (N ≥ 3)—Deploy Unified Platform and controller on the three master nodes, and deploy Analyzer on N worker nodes. |

To install Unified Platform on a server, make sure the server meets the following requirements:

· Uses the x86-64(Intel64/AMD64) CPU architecture.

· Uses HDDs (SATA/SAS) or SSDs as system and data disks. As a best practice, set up RAID 5 arrays if possible.

· Has a RAID controller with 1 GB or higher write cache and supports power fail protection.

· Supports operating system CentOS 7.6 or later.

Select hardware (physical server or VM) based on the network scale and service load. Application flows bring the most service load in the network.

|

IMPORTANT: · The compatible CPU architecture varies by analyzer version. For more information, see the corresponding release notes. · When the total disk capacity is fixed, the more disks, the better the read/write performance. For example, six 2 TB disks provide better read/write performance than three 4 TB disks. · To use the TCP stream analysis and INT stream analysis features, you must deploy SeerCollector. For more information, see "Server requirements for SeerCollector deployment." |

Hardware requirements (physical server)

Physical server requirements in the campus scenario

Different business scales have similar requirements for network ports in campus scenarios, which are as follows:

· As a best practice, use different network ports for the southbound collection network and the northboud network. If only one network port is available, you can configure the two networks to share the port.

· Normal mode: Use two 1Gbps or above network ports. As a best practice, use a 10Gbps or above northbound network port.

· Redundant mode: Use bonding mode2 or mode4. Use four 1Gbps or above network ports, each two forming a Linux Bonding group. As a best practice, use a 10Gbps or above northbound network port.

Table 2 Physical server requirements for Unified Platform+Analyzer deployment in the campus scenario (single-node deployment)

|

Node settings |

Maximum resources that can be managed |

||

|

Node name |

Node quantity |

Minimum node requirements |

|

|

Analyzer |

1 |

· CPU: 20 cores (total physical cores), 2.0 GHz. · Memory: 192 GB. · System disk: 2.4 TB (after RAID setup). · Data disk: 2 TB (after RAID setup). Two drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 2000 online users. · 400 switches, ACs and APs in total. |

|

Analyzer |

1 |

· CPU: 20 cores (total physical cores), 2.0 GHz. · Memory: 192 GB. · System disk: 2.4 TB (after RAID setup). · Data disk: 2 TB (after RAID setup). Two drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 5000 online users. · 1000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· CPU: 20 cores (total physical cores), 2.0GHz. · Memory: 192 GB. · System disk: 2.4 TB (after RAID setup). · Data disk: 3 TB (after RAID setup). Two drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 10000 online users. · 2000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· CPU: 24 cores (total physical cores), 2.0 GHz. · Memory: 224 GB. · System disk: 3 TB (after RAID setup). · Data disk: 4 TB (after RAID setup). Three drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 20000 online users. · 4000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· CPU: 28 cores (total physical cores), 2.0 GHz. · Memory: 256 GB. · System disk: 3 TB (after RAID setup). · Data disk: 5 TB (after RAID setup). Four drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 40000 online users. · 8000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· CPU: 32 cores (total physical cores), 2.0 GHz. · Memory: 288 GB. · System disk: 3 TB (after RAID setup). · Data disk: 7 TB (after RAID setup). Five drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 60000 online users. · 12000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· CPU: 40 cores (total physical cores), 2.0 GHz. · Memory: 384 GB. · System disk: 3 TB (after RAID setup). · Data disk: 11 TB (after RAID setup). Eight drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 100000 online users. · 20000 switches, ACs and APs in total. |

|

Node settings |

Maximum resources that can be managed |

||

|

Node name |

Node quantity |

Minimum requirements per node |

|

|

Analyzer |

3 |

· CPU: 20 cores (total physical cores), 2.0 GHz. · Memory: 128 GB · System disk: 2.4 TB (after RAID setup) · Data disk: 2 TB (after RAID setup). Two drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 2000 online users. · 400 switches, ACs and APs in total. |

|

Analyzer |

3 |

· CPU: 20 cores (total physical cores), 2.0 GHz. · Memory: 128 GB. · System disk: 2.4 TB (after RAID setup). · Data disk: 2 TB (after RAID setup). Two drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 5000 online users. · 1000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· CPU: 20 cores (total physical cores), 2.0 GHz. · Memory: 128 GB. · System disk: 2.4 TB (after RAID setup). · Data disk: 2 TB (after RAID setup). Two drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 10000 online users. · 2000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· CPU: 20 cores (total physical cores), 2.0 GHz. · Memory: 160 GB. · System disk: 3 TB (after RAID setup). · Data disk: 3 TB (after RAID setup). Three drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 20000 online users. · 4000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· CPU: 24 cores (total physical cores), 2.0 GHz. · Memory: 192 GB. · System disk: 3 TB (after RAID setup). · Data disk: 4 TB (after RAID setup). Three drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 40000 online users. · 8000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· CPU: 28 cores (total physical cores), 2.0 GHz. · Memory: 224 GB. · System disk: 3 TB (after RAID setup). · Data disk: 5 TB (after RAID setup). Four drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 60000 online users. · 12000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· CPU: 32 cores (total physical cores), 2.0 GHz. · Memory: 256 GB. · System disk: 3 TB (after RAID setup). · Data disk: 8 TB (after RAID setup). Six drives of the same type are required. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · Storage controller: 1 GB cache, powerfail safeguard supported with a supercapacitor installed. |

· 100000 online users. · 20000 switches, ACs and APs in total. |

Physical server requirements in the DC scenario

Table 4 Physical server requirements for Unified Platform+Analyzer deployment in the DC scenario (single-node deployment) (x86-64(Intel64/AMD64))

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

1 |

· CPU: 20 cores (total physical cores), 2.0 GHz. · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup).As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · Data disk: 8 TB (after RAID setup). Three drives of the same type are required. As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · ETCD disk: 50 GB SSDs (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. Installation path: /var/lib/etcd · Storage controller: 1GB cache, powerfail safeguard supported with a supercapacitor installed. · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two forming a bonding interface. |

50 |

1000 VMs, 2000 TCP streams/sec. |

2 TCP streams/sec per VM. |

Table 5 Physical server requirements for Unified Platform+Analyzer deployment in the DC scenario (single-node deployment) (Hygon x86-64 servers)

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

1 |

· CPU: 48 cores (total physical cores), 2 × Hygon C86 7265,24 cores,2.2 GHz · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · Data disk: 8 TB (after RAID setup). Three drives of the same type are required. As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · ETCD disk: 50 GB SSDs (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. Installation path: /var/lib/etcd · Storage controller: 1GB cache, powerfail safeguard supported with a supercapacitor installed. · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two forming a bonding interface. |

50 |

1000 VMs, 2000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

|

NOTE: If Kylin system is selected, the IP address must be configured for NIC Bonding, otherwise the NIC Bonding cannot be used. |

Table 6 Physical server requirements for Unified Platform+Analyzer deployment in the DC scenario (single-node deployment) (Kunpeng ARM server)

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

1 |

· CPU: 64 cores (total physical cores), 2 × Kunpeng 920 5232, 32 cores, 2.6 GHz · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · Data disk: 8 TB (after RAID setup). Three drives of the same type are required. As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · ETCD disk: 50 GB SSDs (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. Installation path: /var/lib/etcd · Storage controller: 1GB cache, powerfail safeguard supported with a supercapacitor installed. · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

50 |

1000 VMs, 2000 TCP streams/sec. |

2 TCP streams/sec per VM. |

Table 7 Physical server requirements for Unified Platform+Analyzer deployment in the DC scenario (single-node deployment) (Phytium ARM server)

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

1 |

· CPU: 128 cores (total physical cores), 2 × Phytium S2500, 64 cores, 2.1 GHz · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). · Data disk: 8 TB (after RAID setup). Three drives of the same type are required. · ETCD disk: 50 GB SSDs (after RAID setup). · Storage controller: 1GB cache, powerfail safeguard supported with a supercapacitor installed. · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

50 |

1000 VMs, 2000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

3 |

· CPU: 20 cores (total physical cores), 2.0 GHz. · Memory: 192 GB. · System disk: 1.92 TB (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · Data disk: 8 TB (after RAID setup). Three drives of the same type are required. As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · ETCD disk: 50 GB SSDs (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. Installation path: /var/lib/etcd · Storage controller: 1GB cache, powerfail safeguard supported with a supercapacitor installed. · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

50 |

1000 VMs, 2000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

3 |

· CPU: 24 cores (total physical cores), 2.0 GHz. · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · Data disk: 12 TB (after RAID setup). Five drives of the same type are required. As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · ETCD disk: 50 GB SSDs (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. Installation path: /var/lib/etcd · Storage controller: 1GB cache, powerfail safeguard supported with a supercapacitor installed. · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

100 |

3000 VMs, 6000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

|

3 |

· CPU: 32 cores (total physical cores), 2.0 GHz. · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · Data disk: 24 TB (after RAID setup). Seven drives of the same type are required. As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · ETCD disk: 50 GB (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

200 |

6000 VMs, 12000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

Table 9 Physical server requirements for Unified Platform+Analyzer deployment in the DC scenario (cluster deployment) (Hygon x86-64 server)

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

3 |

· CPU: 48 cores (total physical cores), 2 × Hygon C86 7265,24 cores,2.2 GHz · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · Data disk: 24 TB (after RAID setup). Seven drives of the same type are required. As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · ETCD disk: 50 GB SSDs (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

200 |

5000 VMs, 10000 TCP streams/sec. |

2 TCP streams/sec per VM. |

Table 10 Physical server requirements for Unified Platform+Analyzer deployment in the DC scenario (cluster deployment) (Kunpeng ARM server)

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

3 |

· CPU: 64 cores (total physical cores), 2 × Kunpeng 920 5232, 32 cores, 2.6 GHz · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · Data disk: 24 TB (after RAID setup). Seven drives of the same type are required. As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. · ETCD disk: 50 GB SSDs (after RAID setup). As a best practice, use HDDs with a rotation speed of 7.2K RPM or above or use SSDs. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

200 |

5000 VMs, 10000 TCP streams/sec. |

2 TCP streams/sec per VM. |

Table 11 Physical server requirements for Unified Platform+Analyzer deployment in the DC scenario cluster deployment (Phytium ARM server)

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

3 |

· CPU: 128 cores (total physical cores), 2 × Phytium S2500, 64 cores, 2.1 GHz · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). · Data disk: 12 TB (after RAID setup). Seven drives of the same type are required. · ETCD disk: 50 GB SSDs (after RAID setup). · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

100 |

2000 VMs, 4000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

Analyzer |

3 |

· CPU: 128 cores (total physical cores), 2 × Phytium S2500, 64 cores, 2.1 GHz · Memory: 256 GB. · System disk: 1.92 TB (after RAID setup). · Data disk: 24 TB (after RAID setup). Seven drives of the same type are required. · ETCD disk: 50 GB SSDs (after RAID setup). · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

200 |

5000 VMs, 10000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

|

NOTE: You can calculate the overall TCP streams per second based on the total number of VMs in the DC (2 streams/sec per VM) to determine the required hardware specifications. |

Physical server requirements in the WAN scenario

In the WAN scenario, Analyzer must be deployed together with Controller. You cannot deploy Analyzer alone and must first deploy the security controller. For converged deployment hardware requirements, see the hardware configuration guide for AD-NET Hardware Configuration Guide.

Hardware requirements (VM)

VM requirements in the campus scenario

Different business scales have similar requirements for network ports in campus scenarios, which are as follows:

· As a best practice, use different network ports for the southbound collection network and the northboud network. If only one network port is available, you can configure the two networks to share the port.

· Normal mode: Use two 1Gbps or above network ports. As a best practice, use a 10Gbps or above northbound network port.

· Redundant mode: Use bonding mode2 or mode4. Use four 1Gbps or above network ports, each two forming a Linux Bonding group. As a best practice, use a 10Gbps or above northbound network port.

|

Node settings |

Maximum resources that can be managed |

||

|

Node name |

Node quantity |

Minimum node requirements |

|

|

Analyzer |

1 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 192 GB. · System disk: 2.4 TB. · Data disk: 2 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 2000 online users. · 400 switches, ACs and APs in total. |

|

Analyzer |

1 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 192 GB. · System disk: 2.4 TB. · Data disk: 2 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 5000 online users. · 1000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 192 GB. · System disk: 2.4 TB. · Data disk: 3 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 10000 online users. · 2000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· vCPU: 24 × 2 cores, 2.0 GHz. · Memory: 224 GB. · System disk: 3 TB. · Data disk: 4 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 20000 online users. · 4000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· vCPU: 28 × 2 cores, 2.0 GHz. · Memory: 256 GB. · System disk: 3 TB. · Data disk: 5 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 40000 online users. · 8000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· vCPU: 32 × 2 cores, 2.0 GHz. · Memory: 288 GB. · System disk: 3 TB (after RAID setup). · Data disk: 7 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 60000 online users. · 12000 switches, ACs and APs in total. |

|

Analyzer |

1 |

· vCPU: 40 × 2 cores, 2.0 GHz. · Memory: 384 GB. · System disk: 3 TB. · Data disk: 11 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 100000 online users. · 20000 switches, ACs and APs in total. |

|

Node settings |

Maximum resources that can be managed |

||

|

Node name |

Node quantity |

Minimum requirements per node |

|

|

Analyzer |

3 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 128 GB. · System disk: 2.4 TB. · Data disk: 2 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface.. |

· 2000 online users. · 400 switches, ACs and APs in total. |

|

Analyzer |

3 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 128 GB. · System disk: 2.4 TB. · Data disk: 2 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 5000 online users. · 1000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 128 GB. · System disk: 2.4 TB. · Data disk: 2 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 10000 online users. · 2000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 160 GB. · System disk: 3 TB. · Data disk: 3 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB (after RAID setup). Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 20000 online users. · 4000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· vCPU: 24 × 2 cores, 2.0 GHz. · Memory: 192 GB. · System disk: 3 TB. · Data disk: 4 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 40000 online users. · 8000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· vCPU: 28 × 2 cores, 2.0 GHz. · Memory: 224 GB. · System disk: 3 TB. · Data disk: 5 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 60000 online users. · 12000 switches, ACs and APs in total. |

|

Analyzer |

3 |

· vCPU: 32 × 2 cores, 2.0 GHz. · Memory: 256 GB. · System disk: 3 TB. · Data disk: 8 TB. The random read/write speed cannot be lower than 100 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

· 100000 online users. · 20000 switches, ACs and APs in total. |

VM requirements in the DC scenario

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum node requirements |

|||

|

Analyzer |

1 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 256 GB. · System disk: 1.92 TB. · Data disk: 8 TB. The random read/write speed cannot be lower than 200 M/s and shared storage is not supported. · ETCD disk: 50 GB SSDs. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. |

50 |

1000 VMs, 2000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

Node settings |

Maximum number of devices |

Maximum number of TCP connections |

Remarks |

||

|

Node name |

Node quantity |

Minimum single-node requirements |

|||

|

Analyzer |

3 |

· vCPU: 20 × 2 cores, 2.0 GHz. · Memory: 192 GB. · System disk: 1.92 TB. · Data disk: 8 TB. The random read/write speed cannot be lower than 200 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. ¡ 10 GE bandwidth for inter-cluster communication |

50 |

1000 VMs, 2000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

3 |

· vCPU: 24 × 2 cores, 2.0 GHz. · Memory: 256 GB. · System disk: 1.92 TB. · Data disk: 12 TB. The random read/write speed cannot be lower than 200 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. ¡ 10 GE bandwidth for inter-cluster communication |

100 |

3000 VMs, 6000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

|

3 |

· vCPU: 32 × 2 cores, 2.0 GHz. · Memory: 256 GB. · System disk: 1.92 TB. · Data disk: 12 TB. The random read/write speed cannot be lower than 200 M/s and shared storage is not supported. · ETCD disk: 50 GB. Installation path: /var/lib/etcd · NICs: ¡ Non-bonding mode: 2 × 10 Gbps interfaces. ¡ Bonding mode (recommended mode: mode 2 or mode 4): 4 × 10 Gbps interfaces, each two form a bonding interface. ¡ 10 GE bandwidth for inter-cluster communication |

200 |

6000 VMs, 12000 TCP streams/sec. |

2 TCP streams/sec per VM. |

|

|

|

NOTE: · You can calculate the overall TCP streams per second based on the total number of VMs in the DC (2 streams/sec per VM) to determine the required hardware specifications. · Make sure the CPU, memory, and disk capacity meet the requirements and sufficient physical resources are reserved. Overcommitment is not supported. · Only H3C CAS virtualization is supported and CAS virtualization must use local storage. Make sure the physical drive capacity meet the disk size requirements after RAID setup. A minimum of three drives of the same type is required for RAID setup. · DC collectors do not support deployment on VMs. |

VM requirements in the WAN scenario

In the WAN scenario, Analyzer must be deployed together with Controller. Analyzer cannot be deployed alone and you must deploy the security controller first. For converged deployment hardware requirements, see AD-NET Hardware Configuration Guide.

Operating system requirements

The following operating systems are supported:

· Common_Linux-1.1.2_V9R1B07D014

· H3Linux 2.0.2

· Red Hat Enterprise Linux 8.4

· Red Hat Enterprise Linux 8.6

· Red Hat Enterprise Linux 8.8

· Kylin Linux Advanced Server release V10SP2

|

IMPORTANT: · All nodes in the cluster must be installed with the same operating system version. · Kylin V10SP2, UOS V20, Red Hat Enterprise Linux 8.4, Red Hat Enterprise Linux 8.6 and Red Hat Enterprise Linux 8.8 needs to be prepared by the user. |

Software requirements

Analyzer runs on Unified Platform. Before deploying Analyzer, deploy Unified Platform.

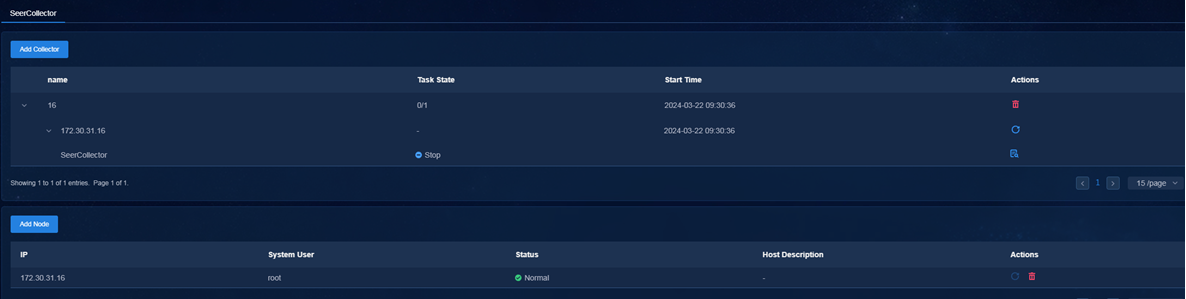

Server requirements for SeerCollector deployment

|

IMPORTANT: · To use the TCP/UDP and INT stream analysis functions provided by Analyzer, you must deploy SeerCollector. · The menu path for managing the SeerCollector collector in the Analyzer on the management page is [Analysis>Analysis Options>Collector>Collector Parameters>SeerCollector]. · If the SeerCollector collector uses an Intel brand CPU, please make sure that the model is E5-2690 v4 or higher (you can execute the lscpu command to check the specific CPU model), otherwise the SeerCollector collector will not function properly. |

Hardware requirements

SeerCollector must be installed on a physical server. Hardware configuration requirements are as follows.

Table 16 SeerCollector server hardware requirements (x86-64(Intel64))

|

Item |

Requirements |

|

CPU |

Intel(R) Xeon(R) CPU (as a best practice, use the Platinum or Gold series), 2.0 GHz, 20+ virtual cores. |

|

Memory |

128 GB. |

|

Disk |

System disk: 2 × 600 GB HDDs (SAS/SATA) or SSDs in RAID 1 mode. |

|

NIC |

1 × 10 Gbps collection interface + 1 × 10 Gbps management interface. · The collection interface must support the DPDK technology, and you cannot configure it in bonding mode. The management network interface can be configured in bonding mode. · As a best practice, use an Intel 82599 NIC as the collection NIC for an x86 server. Plan in advance which NIC is used for collection, record information of the NIC (name, MAC), and plan and set the IP address for it. After the configuration is deployed, the collection NIC is managed by DPDK and will not be displayed in the Linux kernel command output. · You can also use an Mellanox 4[ConnectX-3] NIC as the collection NIC. As a best practice, use one of the two Mellanox 4[ConnectX-3] models: Mellanox technologies MT27710 family,and Mellanox technologies MT27700. If an Mellanox 4[ConnectX-3] NIC is used for the collection NIC, you must use other types of NICs as the management NIC. An ARM server supports only Mellanox NICs currently. · Do not configure DPDK binding for the management network interface. |

|

|

NOTE: · The SeerCollector collector needs to ensure that the NUMA node corresponding to the collected NIC has been allocated memory, otherwise the NIC cannot be recognized, which will cause the SeerCollector to fail to run. Please refer to section How to check if NUMA nodes are allocated memory?. If memory is not allocated, please try to adjust the position of the memory module to ensure that the corresponding NUMA node is allocated memory. · "If SeerCollector collector is used on Hygon servers, hyper-threading cannot be manually turned off. Please refer to section How to check if hyper-threading is enabled?. · If SeerCollector is used on Hygon servers, after the first installation of the operating system, hardware virtualization needs to be enabled. Otherwise, DPDK NIC cannot receive packets normally. Please refer to section How to enable hardware virtualization function?. · For SeerCollector used on Phytium servers, it is necessary to ensure that the first 16 cores correspond to the NUMA nodes that are allocated memory (for example, if a NUMA node has 8 cores, node 1 is cores 0 to 7, and node 2 is cores 8 to 15, then node 1 and node 2 need to be allocated memory). Please refer to section How to check if NUMA nodes are allocated memory?. |

Table 17 SeerCollector server hardware requirements (Hygon x86-64 server)

|

Item |

Requirements |

|

CPU |

CPU: 48 cores (total physical cores), 2 × Hygon C86 7265,24 cores,2.2 GHz |

|

Memory |

The total capacity must be 128 GB or greater, and a minimum of eight memory modules are required (for example, eight 16-GB memory modules are required to provide a capacity of 128 GB). |

|

Disk |

System disk: 2 × 600 GB HDDs (SAS/SATA) or SSDs in RAID 1 mode. |

|

NIC |

1 × 10 Gbps collection interface + 1 × 10 Gbps management interface. · The collection interface must support the DPDK technology, and you cannot configure it in bonding mode. The management network interface can be configured in bonding mode. · As a best practice, use an Intel 82599 NIC as the collection NIC for an x86 server. Plan in advance which NIC is used for collection, record information of the NIC (name, MAC), and plan and set the IP address for it. After the configuration is deployed, the collection NIC is managed by DPDK and will not be displayed in the Linux kernel command output. · You can also use an Mellanox 4[ConnectX-3] NIC as the collection NIC. As a best practice, use one of the two Mellanox 4[ConnectX-3] models: Mellanox technologies MT27710 family,and Mellanox technologies MT27700. If an Mellanox 4[ConnectX-3] NIC is used for the collection NIC, you must use other types of NICs as the management NIC. An ARM server supports only Mellanox NICs currently. · Do not configure DPDK binding for the management network interface. |

Table 18 SeerCollector server hardware requirements(Kunpeng ARM server)

|

Item |

Requirements |

|

CPU |

CPU: 64 cores (total physical cores), 2 × Kunpeng 920 5232, 32 cores, 2.6 GHz |

|

Memory |

128 GB. |

|

Disk |

System disk: 2 × 600 GB HDDs (SAS/SATA) or SSDs in RAID 1 mode. |

|

NIC |

1 × 10 Gbps collection interface + 1 × 10 Gbps management interface. · The collection interface must support the DPDK technology, and you cannot configure it in bonding mode. The management network interface can be configured in bonding mode. · As a best practice, use an Intel 82599 NIC as the collection NIC for an x86 server. Plan in advance which NIC is used for collection, record information of the NIC (name, MAC), and plan and set the IP address for it. After the configuration is deployed, the collection NIC is managed by DPDK and will not be displayed in the Linux kernel command output. · You can also use an Mellanox 4[ConnectX-3] NIC as the collection NIC. As a best practice, use one of the two Mellanox 4[ConnectX-3] models: Mellanox technologies MT27710 family,and Mellanox technologies MT27700. If an Mellanox 4[ConnectX-3] NIC is used for the collection NIC, you must use other types of NICs as the management NIC. An ARM server supports only Mellanox NICs currently. · Do not configure DPDK binding for the management network interface. |

Table 19 SeerCollector server hardware requirements(Phytium ARM server)

|

Item |

Requirements |

|

CPU |

CPU: 128 cores (total physical cores), 2 × Phytium S2500, 64 cores, 2.1 GHz |

|

Memory |

128 GB. |

|

Disk |

System disk: 2 × 600 GB HDDs (SAS/SATA) or SSDs in RAID 1 mode. |

|

NIC |

1 × 10 Gbps collection interface + 1 × 10 Gbps management interface. · The collection interface must support the DPDK technology, and you cannot configure it in bonding mode. The management network interface can be configured in bonding mode. · As a best practice, use an Intel 82599 NIC as the collection NIC for an x86 server. Plan in advance which NIC is used for collection, record information of the NIC (name, MAC), and plan and set the IP address for it. After the configuration is deployed, the collection NIC is managed by DPDK and will not be displayed in the Linux kernel command output. · You can also use an Mellanox 4[ConnectX-3] NIC as the collection NIC. As a best practice, use one of the two Mellanox 4[ConnectX-3] models: Mellanox technologies MT27710 family,and Mellanox technologies MT27700. If an Mellanox 4[ConnectX-3] NIC is used for the collection NIC, you must use other types of NICs as the management NIC. An ARM server supports only Mellanox NICs currently. · Do not configure DPDK binding for the management network interface. |

|

|

NOTE: · The compatible CPU architecture varies by Analyzer version. For the compatible CPU architecture, see the release notes. · A SeerCollector server must provide two interfaces: one data collection interface to receive mirrored packets from the network devices and one management interface to exchange data with Analyzer. · If using the Kylin system, an IP address must be configured for the network card bond, otherwise the bond network card will be unavailable. |

Table 20 NICs available for SeerCollector (x86-64(Intel64))

|

Vendor |

Chip |

Model |

Series |

Applicable version |

|

Intel |

JL82599 |

H3C UIS CNA 1322 FB2-RS3NXP2D, 2-Port 10GE Optical Interface Ethernet Adapter (SFP+) |

CNA-10GE-2P-560F-B2 |

All versions |

|

JL82599 |

H3C UIS CNA 1322 FB2-RS3NXP2DBY, 2-Port 10GE Optical Interface Ethernet Adapter (SFP+) |

CNA-10GE-2P-560F-B2 |

All versions |

|

|

X550 |

H3C UNIC CNA 560T B2-RS33NXT2A, 2-Port 10GE Copper Interface Ethernet Adapter, 1*2 |

N/A |

All versions |

|

|

X540 |

UN-NIC-X540-T2-T-10Gb-2P (copper interface network adapter) |

N/A |

All versions |

|

|

X520 |

UN-NIC-X520DA2-F-B-10Gb-2P |

N/A |

All versions |

|

|

Mellanox |

MT27710 Family [ConnectX-4 Lx] |

NIC-ETH540F-LP-2P |

Mellanox Technologies MT27710 Family |

All versions |

|

MT27712A0-FDCF-AE[ConnectX-4Lx] |

NIC-620F-B2-25Gb-2P |

|

All versions |

|

|

Mellanox Technologies MT28908 Family [ConnectX-6] |

IB-MCX653105A-HDAT-200Gb-1P |

Mellanox Technologies MT28908 Family |

E6508 and later |

|

|

Broadcom |

BCM57414 |

NetXtreme-E 10Gb/25Gb RDMA Ethernet Controller(rev 01) |

|

All versions |

Table 21 System disk partition planning

|

RAID |

Partition name |

Mounting point |

Minimum capacity |

Remarks |

|

2*600GB, RAID1 |

/dev/sda1 |

/boot/efi |

200 MB |

EFI System Partition This partition is required only in UEFI mode. |

|

/dev/sda2 |

/boot |

1024 MB |

N/A |

|

|

/dev/sda3 |

/ |

590 GB |

N/A |

|

|

/dev/sda4 |

swap |

4 GB |

Swap partition |

|

IMPORTANT: · SeerCollector does not require storing data in data disks. · If the system disk is greater than 1.5 TB, you can use automatic partitioning for the disk. If the system disk is smaller than or equal to 1.5 TB, partition the disk manually as described in Table 21. |

Table 22 Operating systems and processors supported by SeerCollector

|

Processor |

Operating system |

Kernel version |

Remarks |

|

Hygon (x86) |

H3Linux 1.3.1 |

5.10.38-21.hl05.el7.x86_64 |

All versions |

|

H3Linux 1.1.2 |

3.10.0-957.27.2.el7.x86_64 |

All versions |

|

|

Kylin V10SP2 |

4.19.90-24.4.v2101.ky10.x86_64 |

All versions |

|

|

H3Linux 2.0.2 |

5.10.0-60.72.0.96.hl202.x86_64 |

E6505 and later |

|

|

5.10.0-136.12.0.86.4.nos1.x86_64 |

E6507 and later |

||

|

Intel (X86) |

Kylin V10SP2 |

4.19.90-24.4.v2101.ky10.x86_64 |

All versions |

|

H3Linux 1.1.2 |

3.10.0-957.27.2.el7.x86_64 |

All versions |

|

|

3.10.0-1160.31.1.hl09.el7.x86_64 |

E6505 and later |

||

|

H3Linux 2.0.2 |

5.10.0-60.18.0.50.1.hl202.x86_64 |

E6310 and later |

|

|

5.10.0-136.12.0.86.4.hl202.x86_64 |

E6505 and later |

||

|

5.10.0-136.12.0.86.4.nos1.x86_64 |

E6507 and later |

||

|

Red Hat Enterprise Linux 8.8 |

4.18.0-477.13.1.el8_8.x86_64 |

E6508 and later |

|

|

Kunpeng (ARM) |

Kylin V10 |

4.19.90-11.ky10.aarch64 |

All versions |

|

Kylin V10SP2 |

4.19.90-24.4.v2101.ky10.aarch64 |

All versions |

|

|

H3Linux 2.0.2 |

5.10.0-60.72.0.96.hl202.aarch64 |

E6310 and later |

|

|

Phytium (ARM) |

Kylin V10SP2 |

4.19.90-24.4.v2101.ky10.aarch64 |

All versions |

|

|

NOTE: To view the kernel version, use compression software (for example, WinRAR) to open the .iso file. Then, access the Packages directory, and identify the file named in the kernel-version.rpm format, where version indicates the kernel version. For example, file path Packages\kernel-3.10.0-957.27.2.el7.x86_64.rpm corresponds to kernel version 3.10.0-957.27.2.el7.x86_64. |

Operating system requirements

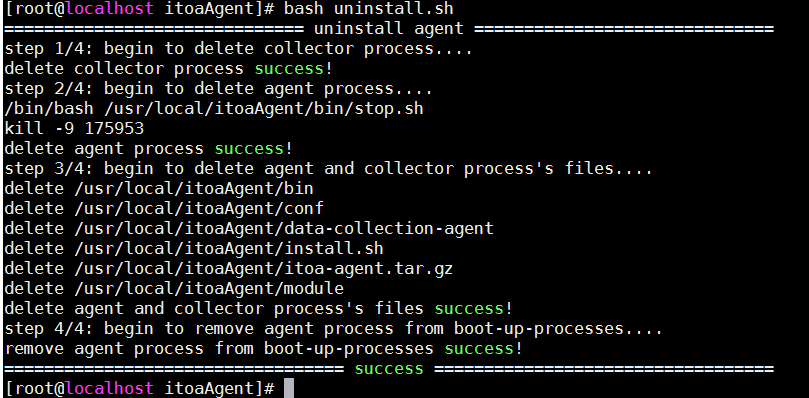

|

IMPORTANT: · To avoid configuration failures, make sure a SeerCollector server uses an H3Linux_K310_V112 operating system or later. · If SeerCollector needs to reinstall or update the operating system, it must be uninstalled first. |

As a best practice, use the operating system coming with Unified Platform.

Installing SeerCollector

Please install SeerCollector according to Hardware requirements and Operating system requirements for SeerCollector.

Other requirements

· Disable the firewall and disable auto firewall startup:

a. Execute the systemctl stop firewalld command to disable the firewall.

b. Execute the systemctl disable firewalld command to disable auto firewall startup.

c. Execute the systemctl status firewalld command to verify that the firewall is in inactive state.

The firewall is in inactive state if the output from the command displays Active: inactive (dead).

[root@localhost ~]# systemctl status firewalld

firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

· To avoid conflicts with the service routes, access the NIC configuration file whose name is prefixed ifcfg in the /etc/sysconfig/network-scripts/ directory, change the value of the DEFROUTE field to no, and then save the file.

Client requirements

You can access Analyzer from a Web browser without installing any client. As a best practice, use a Google Chrome 70 or later Web browser.

Pre-installation checklist

Table 23 Pre-installation checklist

|

Item |

Requirements |

|

|

Server |

Hardware |

· The hardware (including CPUs, memory, disks, and NICs) settings are as required. · The servers for analyzer and SeerCollector deployment support operating system CentOS 7.6 or its higher versions. |

|

Software |

RAID arrays have been set up on the disks of the servers. |

|

|

Client |

The Web browser version is as required. |

|

|

Server and OS compatibility |

To view the compatibility matrix between H3C servers and operating systems, click http://www.h3c.com/en/home/qr/default.htm?id=65 |

|

|

|

NOTE: For general H3Linux configuration, see CentOS 7.6 documents. |

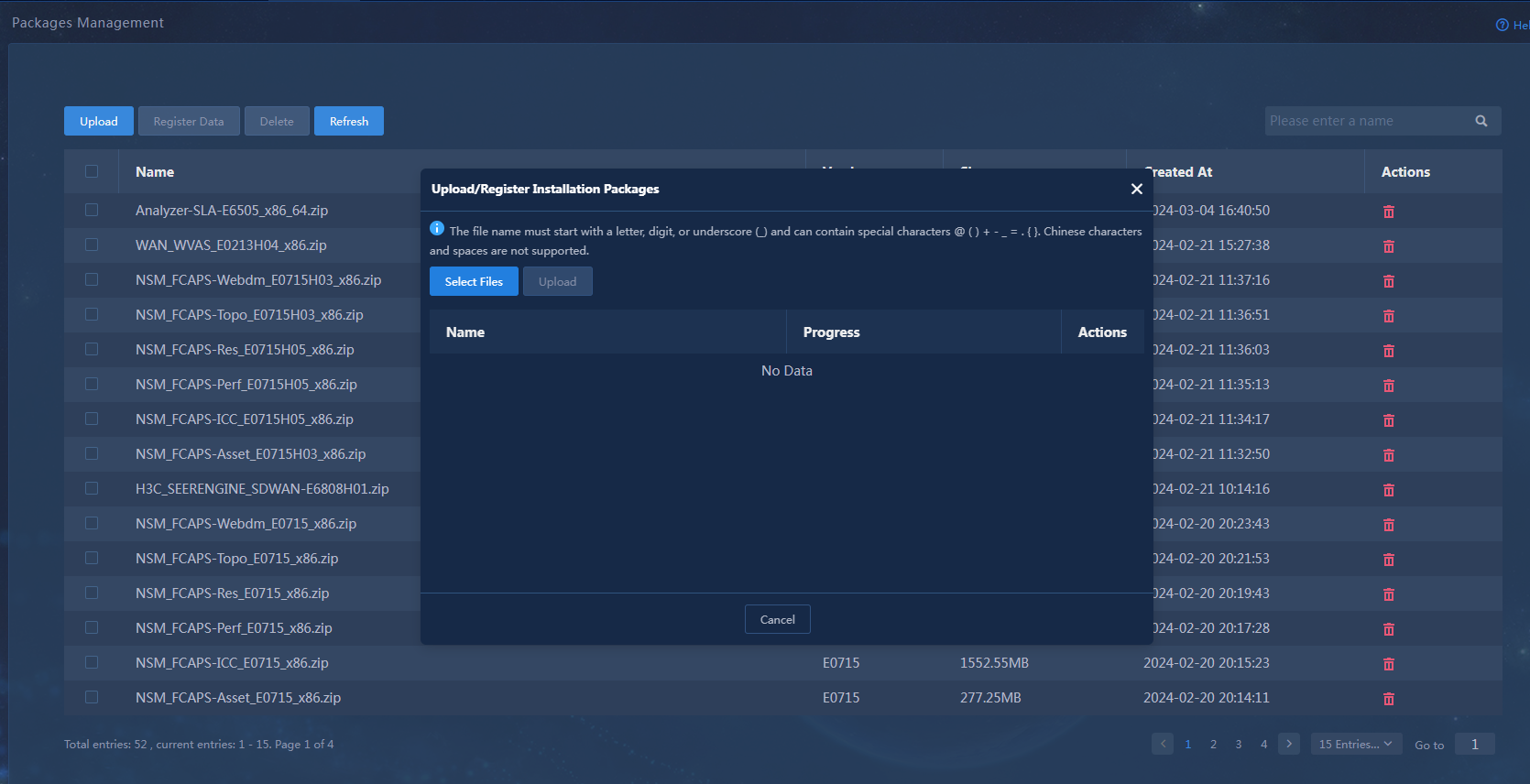

Software package authentication

After uploading installation packages, first perform MD5 verification on each software package to ensure its integrity and correctness.

1. Identify the uploaded installation packages.

[root@node1~]# cd /opt/matrix/app/install/packages/

[root@node1~]# ls

BMP_Report_E0722_x86.zip UDTP_Core_E0722_x86.zip

…

2. Obtain the MD5 value of an installation package, for example, UDTP_Core_E0722_x86.zip.

[root@node1~]# md5sum UDTP_Core_E0722_x86.zip

2b8daa20bfec12b199192e2f6e6fdeac UDTP_Core_E0722_x86.zip

3. Compare the obtained MD5 value with the MD5 value released with the software. If they are the same, the installation package is correct.

Analyzer network planning

Network overview

|

· The solution supports single-stack southbound networking. · Configure the network when you install the Analyzer-Collector component. For more information, see deploying components in "Deploying Analyzer." · To avoid address conflict, make sure the southbound network IP address pool does not contain the VIP address of the northbound service. · To use the device-based simulation feature of the WAN simulation analysis component, configure IP settings for WAN simulation analysis as described in "Network planning." |

· Northbound network—Northbound service VIP of Unified Platform. The cluster uses the network to provide services.

· Southbound network—Network that the COLLECTOR component and SeerCollector use to receive data from devices. Make sure the southbound network and a device from which data is collected are reachable to each other. The following southbound network schemes are available:

¡ Integrated southbound and northbound network—No independent southbound network is configured for analyzers. Cloud deployment supports only this southbound network scheme.

¡ Single-stack southbound network—Create one IPv4 or IPv6 network as the southbound network.

¡ Dual-stack southbound network—Create one IPv4 network and one IPv6 network as the southbound networks to collect information from both IPv4 and IPv6 devices.

· Simulation network—Network used to manage simulated virtual devices and the DTN server when the Analyzer-Simulation and DTN_MANAGER components are deployed in the WAN scenario.

|

|

NOTE: · Northbound network is for users to access the backend through the Web interface. As a network for communication between cluster nodes, it requires high bandwidth and the northbound network should be capable of achieving a bandwidth of 10Gbps. · Southbound network is for service data reporting. It is a service network and generally not exposed to external access. The network has a large amount of traffic and a high bandwidth requirement. The use of a southbound network isolates service data and management data. Subnet isolation is achieved if the northbound and southbound networks use different NICs and subnets. · If the same NIC and different subnets are used, only subnet isolation is achieved. If the same subnet and the same NIC (integrated northbound and southbound) are used, no isolation is provided. You can configure subnets and NICs as needed. For example, in a production environment, management network and service network use different subnets, and the management network use the fortress machine to monitor the service situation. |

|

|

NOTE: You can use the same NIC and same network segment for the southbound network and northbound network. As a best practice, use different NICs and network segments for the southbound network and northbound network when the NICs and network segment resources are sufficient. Use the single-stack southbound network or dual-stack southbound network solution as needed. |

Network planning

Plan the network for different scenarios as follows:

· DC—Deploy one SeerCollector and plan IP settings for SeerCollector.

· Campus—By default, SeerCollector is not required. To use TCP stream analysis, deploy one SeerCollector and plan IP settings for the SeerCollector.

· WAN—No SeerCollector is required.

· WAN—To deploy the simulation component application and use the device-based simulation feature, plan IP settings for the simulation network.

Integrated southbound and northbound network

In the integrated southbound and northbound network scheme, no independent network is created for the analyzer to collect data. The analyzer uses the network of Unified Platform.

In single-node mode, plan network settings for one analyzer and one SeerCollector, as shown in Table 24.

|

Network |

IP address type |

IP address quantity |

Description |

NIC requirements |

|

Network 1 |

Unified Platform cluster node IP address |

One IPv4 address |

IP address of the server where Unified Platform is deployed. |

See "Server requirements." |

|

Northbound service VIP of Unified Platform |

One IPv4 address |

IP address that Unified Platform uses to provide services. Determined during Unified Platform deployment. |

||

|

Data reporting IP address of SeerCollector |

One IPv4 address |

IP address that the SeerCollector uses to report collected data to the analyzer. |

NIC on SeerCollector. |

|

|

Network 2 |

Data collecting IP address of SeerCollector |

Two IPv4 addresses |

One IP address for receiving mirrored packets from network devices and one floating IP address (used only in cluster mode) of SeerCollector for device discovery. Make sure that you can use the mirrored packet receiving address to reach the device service port. |

Independent DPDK NIC on SeerCollector. |

In cluster mode, plan network settings for three analyzers and one SeerCollector, as shown in Table 25.

Table 25 Analyzer network planning in cluster mode (integrated southbound and northbound network)

|

Network |

IP address type |

IP address quantity |

Description |

NIC requirements |

|

Network 1 |

Unified Platform cluster node IP address |

Three IPv4 addresses |

IP addresses of the servers where Unified Platform is deployed. |

See "Server requirements " |

|

Northbound service VIP of Unified Platform |

One IPv4 address |

IP address that Unified Platform uses to provide services. Determined during Unified Platform deployment. |

||

|

Data reporting IP address of SeerCollector |

One IPv4 address |

IP address that SeerCollector uses to report collected data to the analyzer. |

NIC on SeerCollector. |

|

|

Network 2 |

Data collecting IP address of SeerCollector |

Two IPv4 addresses |

One IP address for receiving mirrored packets from network devices and one floating IP address (used only in cluster mode) of SeerCollector for device discovery. Make sure that you can use the mirrored packet receiving address to reach the device service port. |

Independent DPDK NIC on SeerCollector. |

Single-stack southbound network

In the single-stack southbound network scheme, configure an independent IPv4 or IPv6 network for data collection. The IP version of the southbound collecting IP address must be the same as that of the collector's data collecting IP address.

In single-node deployment mode, plan network settings for one analyzer and one SeerCollector, as shown in Table 26.

Table 26 Analyzer network planning in single-node deployment mode (single-stack southbound network)

|

Network |

IP address type |

IP address quantity |

Description |

NIC requirements |

|

Network 1 |

Unified Platform cluster node IP address |

One IPv4 address. |

IP address of the server where Unified Platform is deployed. |

See "Server requirements." |

|

Northbound service VIP of Unified Platform |

One IPv4 address. |

IP address that Unified Platform uses to provide services. Determined during Unified Platform deployment. |

||

|

Data reporting IP address of SeerCollector |

One IPv4 address. |

IP address that SeerCollector uses to report collected data to the analyzer. |

NIC on SeerCollector. |

|

|

Network 2 |

Data collecting IP address of SeerCollector |

Two IPv4 addresses. |

One IP address for receiving mirrored packets from network devices and one floating IP address (used only in cluster mode) of SeerCollector for device discovery. Make sure that you can use the mirrored packet receiving address to reach the device service port. |

Independent DPDK NIC on SeerCollector. |

|

Network 3 |

Southbound collecting IP address |

Four IPv4 or IPv6 addresses. |

Addresses of the container additional networks (one active collecting network and one passive collecting network). One container address and one cluster VIP for each network. |

See "Server requirements." |

In cluster mode, plan network settings for three analyzers and one SeerCollector, as shown in Table 27.

Table 27 Analyzer network planning in cluster mode (single-stack southbound network)

|

Network |

IP address type |

IP address quantity |

Description |

NIC requirements |

|

Network 1 |

Unified Platform cluster node IP address |

Three IPv4 addresses. |

IP addresses of the servers where Unified Platform is deployed. |

See "Server requirements." |

|

Northbound service VIP of Unified Platform |

One IPv4 address. |

IP address that Unified Platform uses to provide services. Determined during Unified Platform deployment. |

See "Server requirements." |

|

|

Data reporting IP address of SeerCollector. |

One IPv4 address. |

IP address that SeerCollector uses to report collected data to the analyzer. |

NIC on SeerCollector. |

|

|

Network 2 |

Data collecting IP address of SeerCollector |

Two IPv4 addresses. |

One IP address for receiving mirrored packets from network devices and one floating IP address (used only in cluster mode) of SeerCollector for device discovery. Make sure that you can use the mirrored packet receiving address to reach the device service port. |

Independent DPDK NIC on SeerCollector. |

|

Network 3 |

Southbound collecting IP address |

Eight IPv4 or IPv6 addresses. |

Addresses of the container additional networks (one active collecting network and one passive collecting network). Three container addresses and one cluster VIP for each network. |

See "Server requirements." |

|

|

NOTE: If SeerCollector is deployed, make sure the southbound collecting IP address and the data collecting IP address of SeerCollector are of the same IP version. |

Dual-stack southbound network

In the dual-stack southbound network scheme, configure an independent dual-stack network for data collection.

In single-node deployment mode, plan network settings for one analyzer and one SeerCollector, as shown in Table 28.

Table 28 Analyzer network planning in single-node deployment mode (dual-stack southbound network)

|

Network |

IP address type |

IP address quantity |

Description |

NIC requirements |

|

Network 1 |

Unified Platform cluster node IP address |

One IPv4 address. |

IP address of the server where Unified Platform is deployed. |

See "Server requirements." |

|

Northbound service VIP of Unified Platform |

One IPv4 address. |

IP address that Unified Platform uses to provide services. Determined during Unified Platform deployment. |

See "Server requirements." |

|

|

Data reporting IP address of SeerCollector. |

One IPv4 address. |

IP address that SeerCollector uses to report collected data to the analyzer. |

NIC on SeerCollector. |

|

|

Network 2 |

Data collecting IP address of SeerCollector |

Two IPv4 addresses. |

One IP address for receiving mirrored packets from network devices and one floating IP address (used only in cluster mode) of SeerCollector for device discovery. Make sure that you can use the mirrored packet receiving address to reach the device service port. |

Independent DPDK NIC on SeerCollector. |

|

Network 3 |

Southbound collecting IPv4 address |

Four IPv4 addresses. |

Addresses of the container additional networks (one active collecting network and one passive collecting network). One container address and one cluster VIP for each network. |

See "Server requirements." |

|

Network 4 |

Southbound collecting IPv6 address |

Four IPv6 addresses. |

Addresses of the container additional networks (one active collecting network and one passive collecting network). One container address and one cluster VIP for each network. |

See "Server requirements." |

In cluster mode, plan network settings for three analyzers and one SeerCollector, as shown in Table 29.

Table 29 Analyzer network planning in cluster mode (dual-stack southbound network)

|

Network |

IP address type |

IP address quantity |

Description |

NIC requirements |

|

Network 1 |

Unified Platform cluster node IP address |

Three IPv4 addresses. |

IP addresses of the servers where Unified Platform is deployed. |

See "Server requirements." |

|

Northbound service VIP of Unified Platform |

One IPv4 address. |

IP address that Unified Platform uses to provide services. Determined during Unified Platform deployment. |

See "Server requirements." |

|

|

Data reporting IP address of SeerCollector. |

One IPv4 address. |

IP address that SeerCollector uses to report collected data to the analyzer. |

NIC on SeerCollector. |

|

|

Network 2 |

Data collecting IPv4 address of SeerCollector |

Two IPv4 addresses. |

One IP address for receiving mirrored packets from network devices and one floating IP address (used only in cluster mode) of SeerCollector for device discovery. Make sure that you can use the mirrored packet receiving address to reach the device service port. |

Independent DPDK NIC on SeerCollector. |

|

Network 3 |

Southbound collecting IPv4 address |

Eight IPv4 addresses. |

Addresses of the container additional networks (one active collecting network and one passive collecting network). Three container addresses and one cluster VIP for each network. |

See "Server requirements." |

|

Network 4 |

Southbound collecting IPv6 address |

Eight IPv6 addresses. |

Addresses of the container additional networks (one active collecting network and one passive collecting network). Three container addresses and one cluster VIP for each network. |

See "Server requirements." |

Single-stack simulation network

In single-node deployment mode, only one simulation analyzer and one DTN_MANAGER node are deployed. Their network settings are planned as shown in Table 30.

Table 30 Analyzer network planning in single-node deployment mode (single-stack silumation network)

|

Network |

IP address type |

IP address quantity |

Description |

NIC requirements |

|

Network 1 |

WAN simulation analysis IP address |

Three IPv4 addresses. |

One IP address for connecting DTN_MANAGER and the DTN server. The other two IP addresses for device-based simulation network connection. |

WAN simulation analysis NICs. To use the device-based simulation feature, use independent DPDK NICs. |

Deployment workflow

Analyzer deployment tasks at a glance

1. (Required.) Prepare servers

Prepare one or three servers for Unified Platform deployment. For server requirements, see "Server requirements."

2. (Required.) Deploy Unified Platform

a. Install Unified Platform Matrix cluster.

For more information, see H3C Unified Platform Deployment Guide. For information about disk planning, see "Data disk planning."

b. Deploy Unified Platform cluster and applications in the following sequence:

- UDTP_Core

- UDTP_GlusterFS

- UDTP_Middle

- BMP_Alarm

- BMP_Dashboard

- BMP_Report

- BMP_Subscription

- BMP_Template

- BMP_Widget

- BMP_IMonitor (optional)

- BMP_WebSocket (optional)

- BMP_Syslog (optional)

- BMP_Region (optional)

3. (Optional.) Prepare configuration

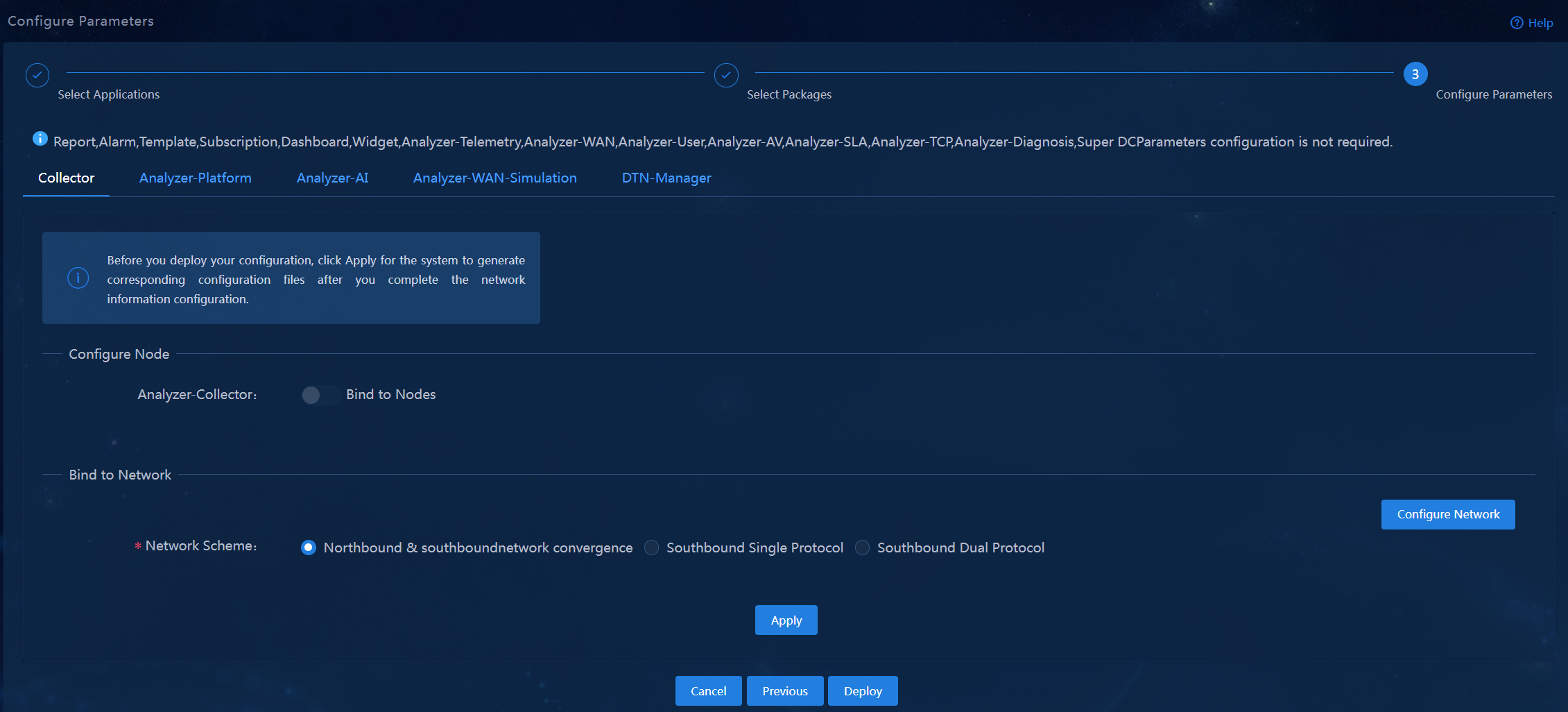

4. (Required.) Deploy Analyzer

Install Analyzer-Collector during Analyzer deployment.

|

IMPORTANT: · In converged deployment where the controller and analyzers are installed in the same cluster, install the controller first. · In campus single-node converged deployment where Unified Platform+vDHCP+SE+EIA+WSM+SA are all deployed, the microservice quantity might exceed the limit. To adjust the maximum number of microservices, see "How do I adjust the maximum microservice quantity in a campus single-node converged deployment scenario?." · In releases earlier than Unified Platform E0711H07, the application package of Analyzer-Collector was placed in Unified Platform release packages. As from SeerAnalyzer E6313, the application package of Analyzer-Collector was placed in SeerAnalyzer release packages. · When you deploy a release earlier than SeerAnalyzer E6313, use the application package of Analyzer-Collector inside Unified Platform release packages earlier than Unified Platform E0711 and E0711H07. In E0711H05, the application package of Analyzer-Collector is placed in package IA-collector-E0711H05_xx. To obtain the Analyzer-Collector application package, decompress package IA-collector-E0711H05_xx. · When you deploy SeerAnalyzer E6313, or later, use the application package of Analyzer-Collector inside the SeerAnalyzer release package. |

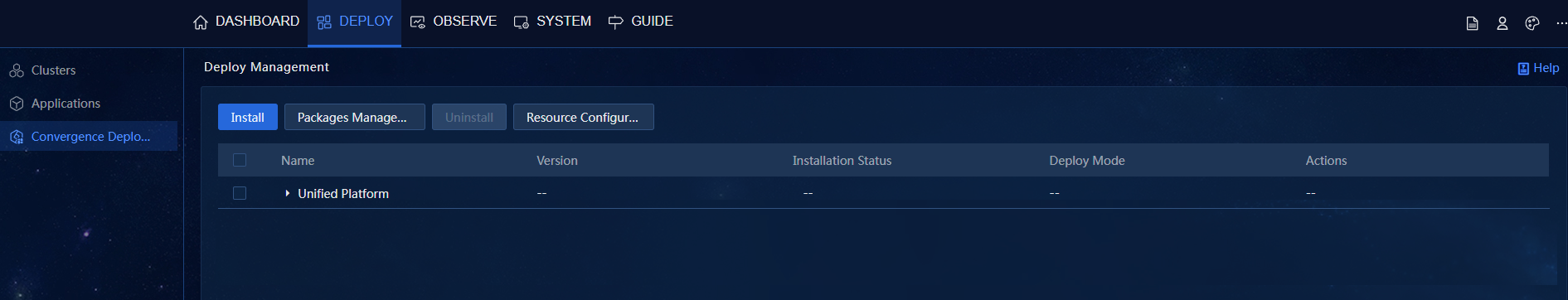

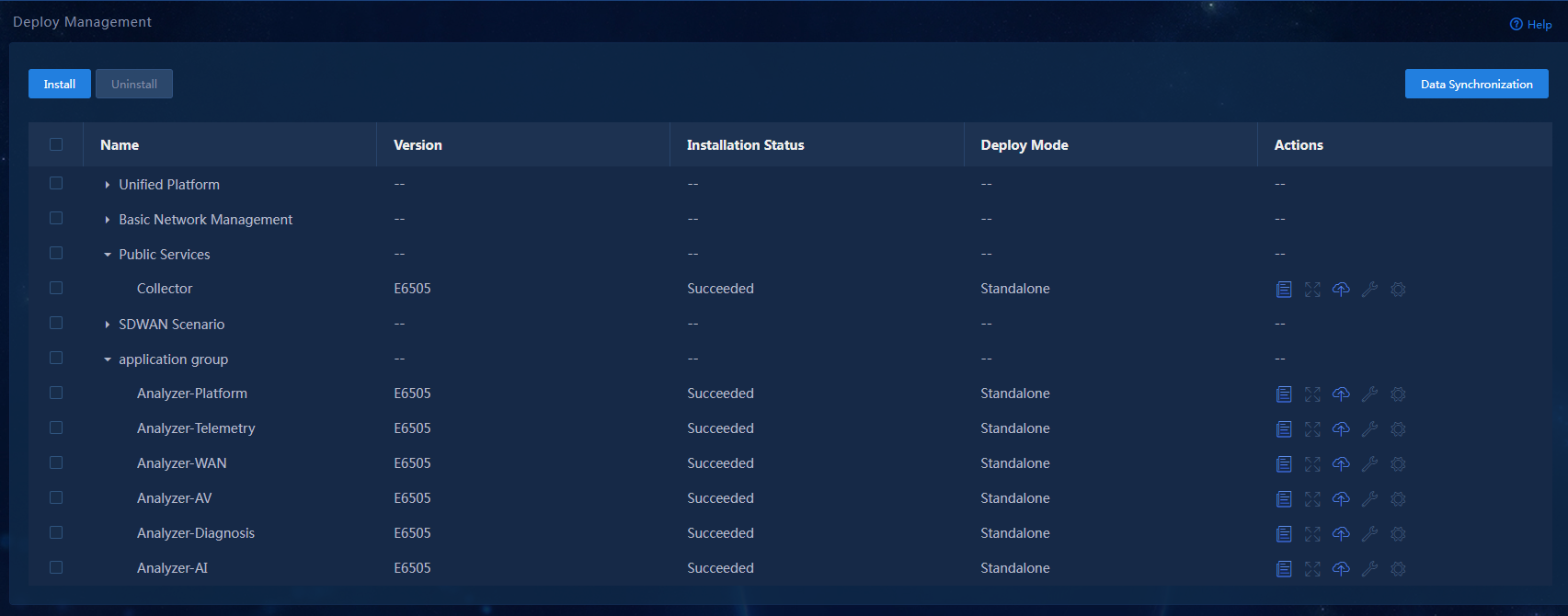

Deploying Unified Platform and optional components

Installation processes may differ when using different operating systems. Please refer to H3C Unified Platform Deployment Guide for details. For information on which Unified Platform version is compatible with the Analyzer please refer to the corresponding version manual.

If you need to run the Analyzer on Unified Platform you must partition the hard drive under the guidance of this chapter and deploy all application installation packages required by the Analyzer.

Restrictions and guidelines

|

IMPORTANT: After reinstalling and rebuilding a master node, run the following two scripts on any other master node that does not need to be reinstalled: · Navigate to /opt/matrix/app/install/metadata/UCENTER/collection/scripts/fault_migration and execute the sh -x faultMigration.sh $IP command. · Navigate to /opt/matrix/app/install/metadata/SA/scripts/fault_migration/ and execute the ./faultMigration.sh $IP command. The $IP argument is the management IP of the newly created node. If you do not run the above scripts, the itoa-collect-multi container will keep restarting endlessly. |

Please note the following considerations during installation:

· When setting the system date and time, please select the appropriate time zone based on your actual location.

· To ensure successful deployment of core components, do not select Beijing when you select a time zone.

· For disk and partition plans, see "Data disk planning."

· In cases where the built-in NTP server is used, it is necessary to ensure that the system time and current time on all nodes are consistent prior to deploying the cluster. For scenarios where an external NTP server is used as the clock synchronization source, there is no need to modify the system time on nodes.

· If the NTP server is not accessible from the southbound address, it can be added later after the cluster is created. When configuring network settings for interfacing cards, it can be added by modifying the cluster parameters.

· After the cluster deployment is completed, please do not modify the system time, as it may cause the cluster to become abnormal.

· The hostname can only consist of lowercase letters, digits, hyphens and dots, and cannot start or end with either a hyphen or a dot.

· During the establishment of the Matrix cluster, it is necessary to ensure that the hostnames of all nodes within the cluster are unique and comply with the naming rules of a hostname. Failure to comply may cause the cluster to fail to establish.

· After the completion of Matrix cluster deployment, please do not modify the hostname of the operating system.

· When multiple networks are displayed in the network list, do not select the network card that is marked with.

· When there are two or more network cards in the installation environment, the subnet used for the northbound business virtual IP must be consistent with the subnet of the first physical network card displayed by the 'ifconfig' command. Otherwise, the cluster deployment may fail or the Pod may not start.

· The network and hostname configuration page can configure network cards. Please make sure to complete the network card binding configuration before creating the cluster.

· When configuring IPv4 and IPv6 addresses, it is necessary to specify the gateway, otherwise there may be problems when creating the cluster.

· After the completion of the operating system installation, it is recommended not to use the 'ifconfig' command to shutdown or start network cards, otherwise it may cause environment exceptions.

· Matrix uses a separate network port and does not allow the configuration of sub-interfaces and sub-IPs on this network port.

· The IP addresses of other network ports on Matrix nodes cannot be in the same network segment as the IP used to establish the cluster.

· The set operating system password cannot contain the following symbols: $ (quotation mark), \ (escape symbol), ' (single quotation mark), " (double quotation mark).

· When working on Matrix, do not perform the following operations on Unified Platform:

¡ Upload or delete component installation packages.

¡ Deploy, upgrade, or scale components.

¡ Add, modify, or delete networks.

· If H3Linux 2.0.x (Euler operating system) is chosen for deployment, the network card for the southbound network needs to be configured in bond mode and its bond configuration needs to be modified. Otherwise, the use of collection components will be affected.

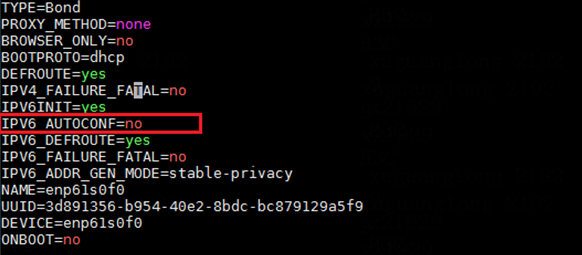

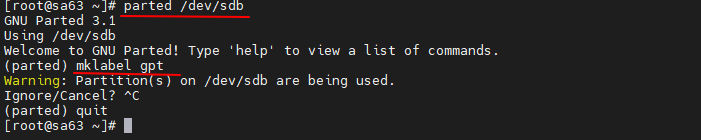

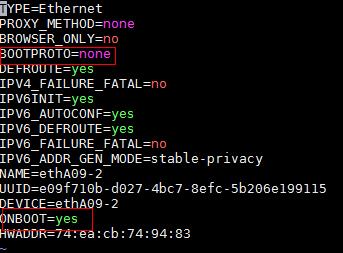

a. After logging in to the server background with administrator privileges, set the IPV6_AUTOCONF configuration to no in configuration file /etc/sysconfig/network-scripts/ifcfg-xxx (xxx is the name of the bond network card):

b. After the modification is complete, execute the command ‘nmcli c reload && nmcli c up xxx (where xxx is the name of the bond network card)’ in the background.

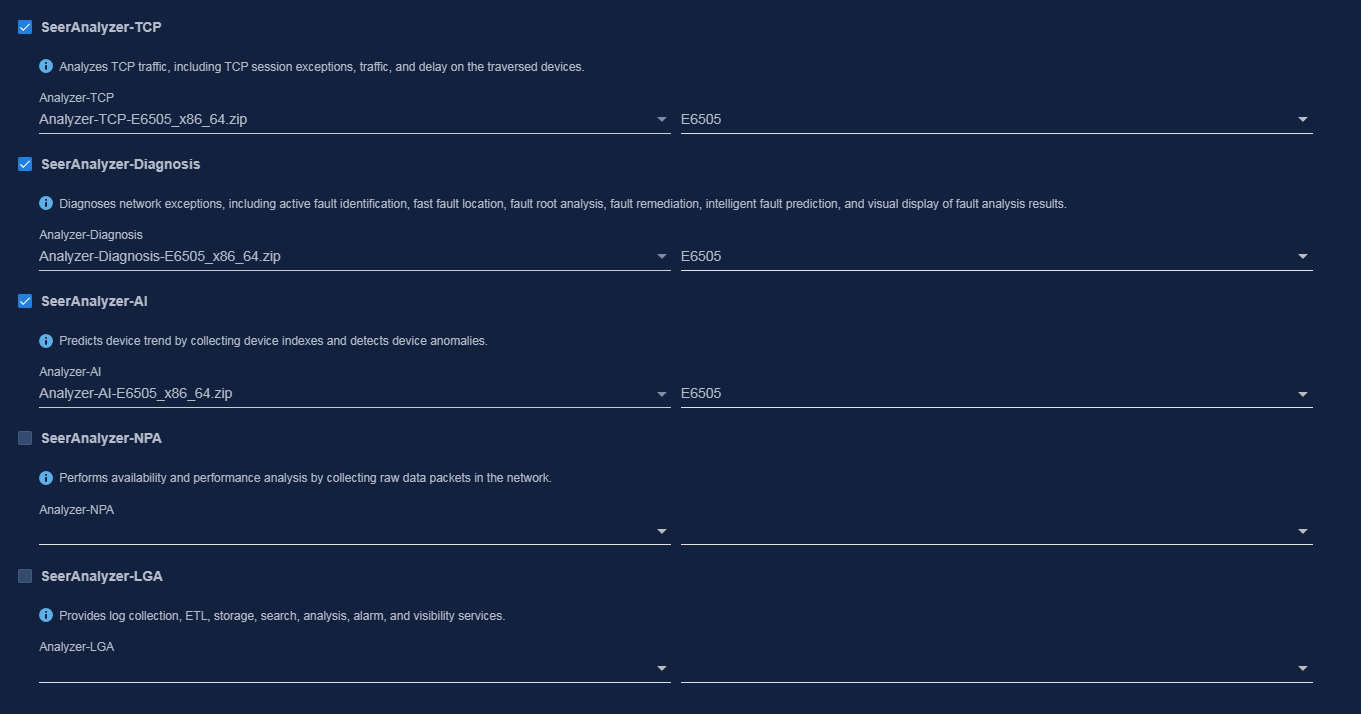

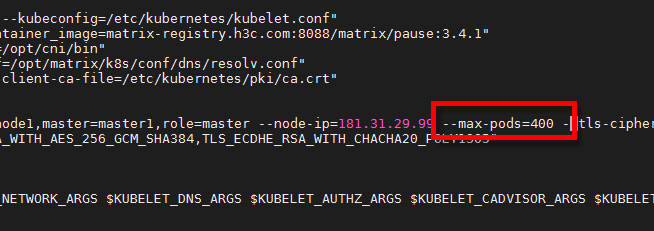

Figure 1 Configuration modification

· The considerations for modifying the node time are as follows:

¡ When modifying the time zone of all devices imported into SeerAnalyzer, it should be consistent with the time zone of the SeerAnalyzer server.

¡ When modifying the time zone of SeerCollector, it should be consistent with the time zone of the SeerAnalyzer server.

· The disk prepared for GlusterFS application cannot be formatted, otherwise the installation will fail. If the disk has been formatted, it can be repaired by using the 'wipefs -a /dev/disk name' command to clear the disk.

· If you need to use the HTTPS protocol, login to Unified Platform after the application and components are installed, click on the 'System > System Settings > Security Settings' menu item to enter the Security Configuration page, and enable the HTTPS protocol.

· Before deploying SeerAnalyzer and SeerCollector, please execute the command cat /proc/sys/vm/nr_hugepages on each node to check if HugePages is enabled. If the returned result is not 0, please record that value and execute the command echo 0 > /proc/sys/vm/nr_hugepages to temporarily disable HugePages. After the deployment of SeerAnalyzer and SeerCollector is completed, change the digit 0 to the recorded value in the command echo 0 > /proc/sys/vm/nr_hugepages, and execute it on each node to recover the HugePages configuration.

Disk partition planning

Make RAID and partition plans based on the service load and server configuration requirements. Edit the partition names as needed in the production environment.

|

|