- Table of Contents

- Related Documents

-

| Title | Size | Download |

|---|---|---|

| 01-Text | 1.01 MB |

Configuring basic cluster services

Management HA configuration workflow

Creating a management HA group

Logging in to the cluster at management HA VIP

Configuring cluster users and user groups

About cluster users and user groups

Cluster user and user group configuration workflow

(Optional) Editing user permissions

Logging in to the cluster as a common system user

Configuring email notifications

Email notification configuration workflow

Configuring email notification settings

Adding an alarm notification recipient

(Optional) Testing the email address of a recipient

SMS notification configuration workflow

Configuring SMS notification settings

Adding an alarm SMS notification recipient

(Optional) Testing the phone number of a recipient

Configuring SNMP notifications

SNMP notification configuration workflow

Configuring SNMP notification receivers

Adding the storage system to NMS and configuring SNMP parameters

Importing the MIB file of the storage system to NMS

Viewing SNMP notifications on NMS

About multi-cluster management

Multi-cluster management configuration workflow

Configuring a cluster as the primary cluster

Configuring a cluster as a managed cluster

Cluster resource configuration workflow

(Optional) Creating a disk pool

(Optional) Managing disks in a disk pool

(Optional) Creating a protection domain

File storage active-active configuration workflow

Creating an active-active domain

Binding hosts to active-active sites

Enable the active-active mode for a file system

Configuring encryption services

Encryption service configuration workflow

Enabling encryption for the cluster

Enabling encryption for a disk pool

Configuring basic block storage services

About basic block storage services

Basic block storage service configuration workflow

Configuring a block storage service network

Volume clone configuration workflow

Splitting a volume clone from its source volume

Volume copy pair configuration workflow

Starting/Stopping data copy for a copy pair

Configuring volume migration pairs

Volume migration pair configuration workflow

Volume snapshot configuration workflow

Creating a common volume snapshot

(Optional) Restoring a common volume snapshot

(Optional) Creating a writable volume snapshot

(Optional) Mapping writable volume snapshots to a client group

Configuring basic disaster recovery services

About basic disaster recovery services

Basic disaster recovery service configuration workflow

Adding replication nodes to an address pool

Creating an async remote replication pair

Synchronizing/Splitting async remote replication pairs

Configuring disaster recovery primary/secondary switchover

About disaster recovery primary/secondary switchover

Primary/secondary switchover configuration workflow

Splitting async remote replication pairs

Disabling secondary volume protection

Performing a primary/secondary switchover

Enabling secondary volume protection

Synchronizing async remote replication pairs

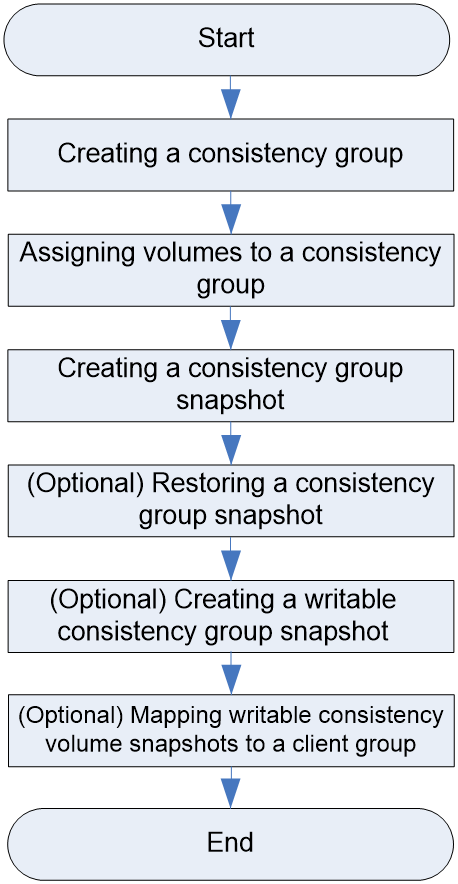

Configuring consistency groups

Consistency group configuration workflow

Assigning volumes to a consistency group

Creating a consistency group snapshot

(Optional) Restoring a consistency group snapshot

(Optional) Creating a writable consistency group snapshot

(Optional) Mapping writable consistency volume snapshots to a client group

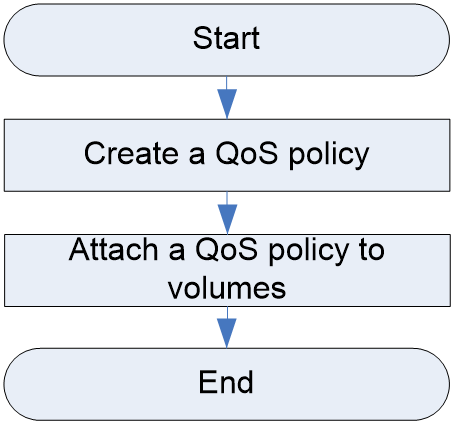

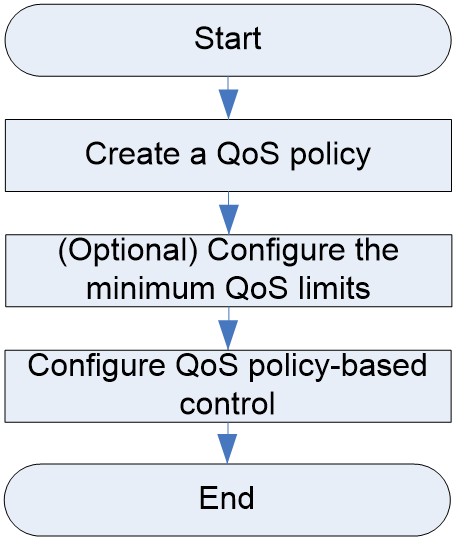

QoS policy configuration workflow

Attaching a QoS policy to volumes

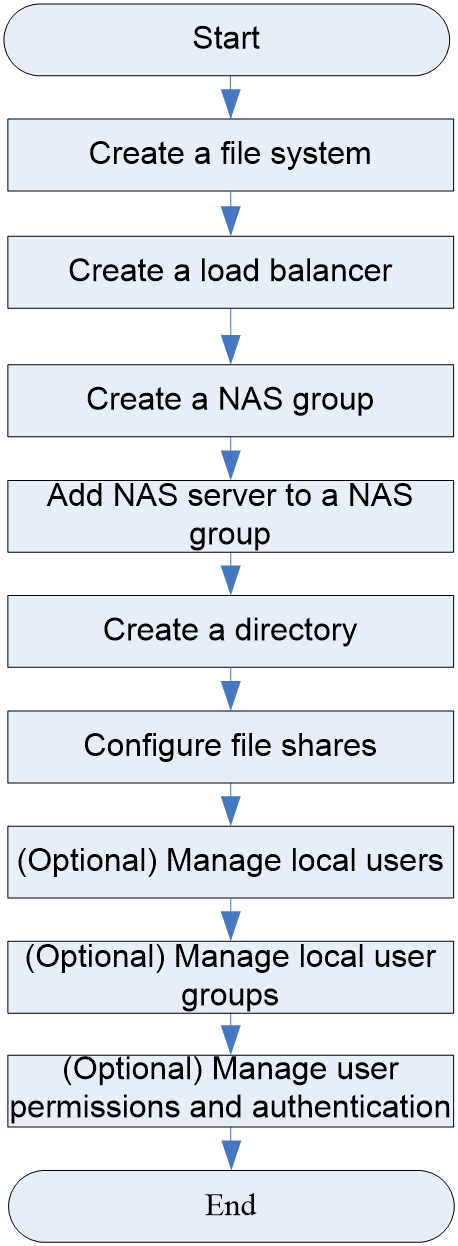

Configuring basic file storage services

About basic file storage services

Basic file storage service configuration workflow

Adding NAS servers to a NAS group

(Optional) Creating local user groups

(Optional) Creating local users

(Optional) Configuring NFS share permissions and authentication

(Optional) Configuring CIFS share permissions and authentication

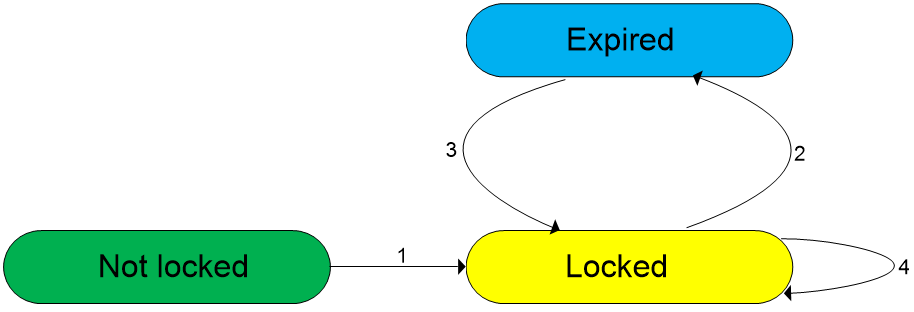

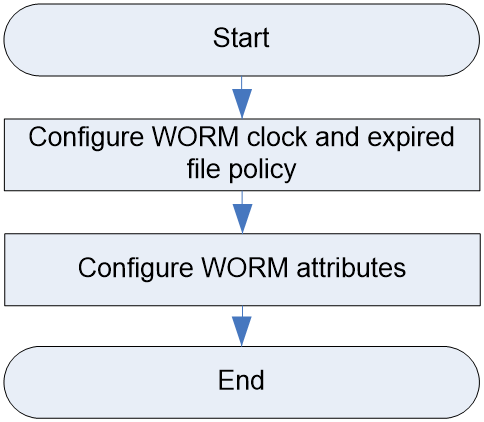

Configuring the WORM clock and expired file policy

Configuring the WORM attribute for a directory

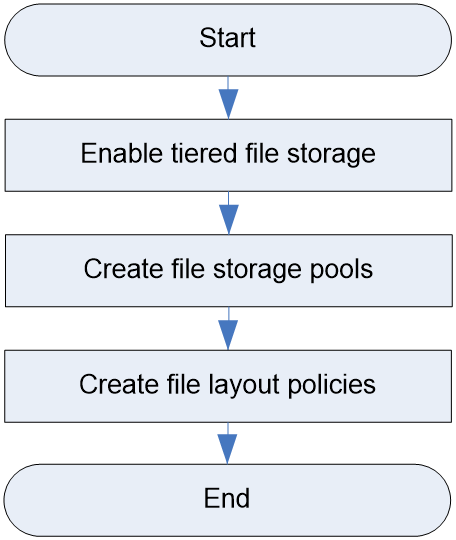

Configuring tiered file storage

File layout policy configuration workflow

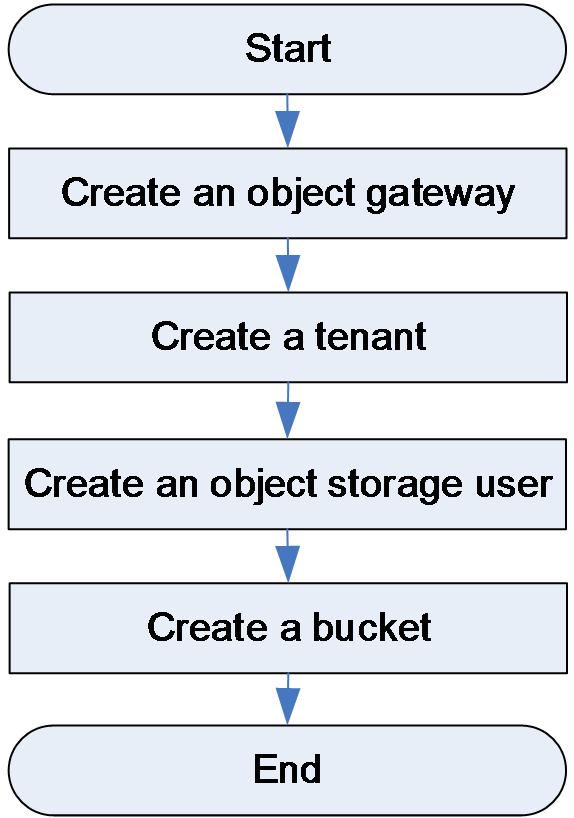

Configuring basic object storage services

About basic object storage services

Basic object storage service configuration workflow

Creating an object gateway HA group

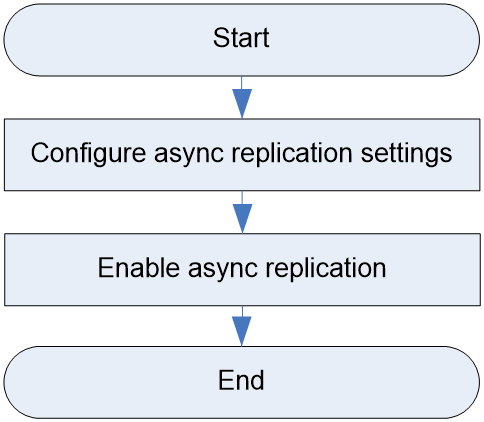

Async replication configuration workflow

Configuring async replication settings

Enabling async replication for a bucket

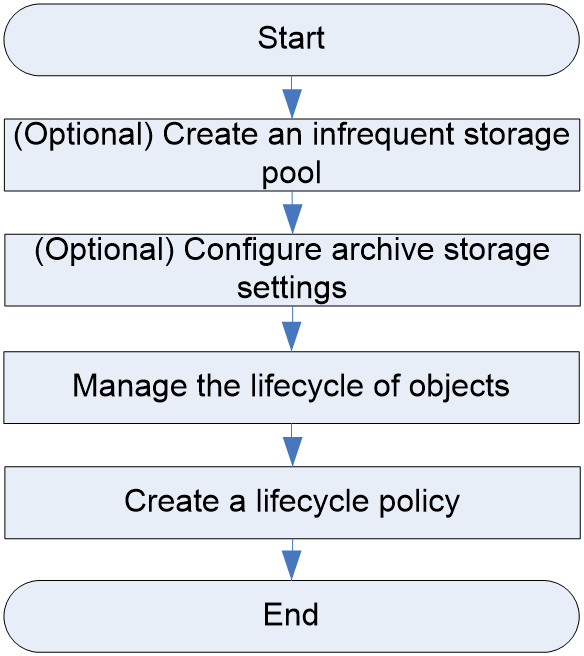

Configuring lifecycle policies

Lifecycle policy configuration workflow

(Optional) Creating an infrequent storage pool

(Optional) Configuring archive storage settings

Managing the lifecycle of objects

Deploying multiple sites for object storage

Multisite configuration workflow

(Optional) Switching site role to primary

Adding sync sites for a bucket

Adding a sync path for a bucket

(Optional) Configuring the minimum QoS limits

Configuring QoS policy-based control

ONEStor overview

About ONEStor

The product adopts the distributed architecture and unifies dispersed storage servers into a cluster to provide users with massive, undifferentiated, and unified distributed storage services.

The product helps address various challenges imposed on storage by explosive growth of cloud computing, mobile computing, social media, and big data.

Using this document

This document describes some common

features of the storage system and the configuration process and related

concepts of the storage service. For more information about features, configuration

methods, parameter description, and configuration restrictions and guidelines

not described in this document, see the online help. For quick access to online

help, click the ![]() icon on the left of a page title.

icon on the left of a page title.

The information in this document is subject to change over time.

Before using this document to configure the product, perform the following tasks:

· Install the storage software and register the required license. For more information, see the software installation guide for the product.

· Deploy the cluster based on the cluster setup wizard. For more information, see the online help.

|

|

NOTE: The information in this document is subject to change over time. The product interface is subject to change over time. |

Technical support

H3C is dedicated to providing user-friendly products with the best quality. To obtain assistance, contact H3C by email or phone or access documents at the H3C website.

· Email:

¡ China: [email protected]

¡ Hongkong, China: [email protected]

¡ Other countries or regions: [email protected]

· Technical support hotline:

To find contacts for your local area, access the H3C global contacts page at https://www.h3c.com/en/Support/Online_Help/Service_Hotlines/

· Website: http://www.h3c.com

Configuring basic cluster services

Configuring management HA

About management HA

The management HA feature ensures the connectivity of the cluster management. With management HA configured, the management HA group is accessible at a VIP. You can access that VIP to manage the cluster as long as the management HA group has one management node that is operating correctly. Typically, the primary node operates to offer management functionality. When the primary node fails, the backup node automatically takes over.

Management HA configuration workflow

Figure 1 shows the management HA configuration workflow, and Table 1 describes the configuration steps.

Figure 1 Management HA configuration workflow

Table 1 Management HA configuration workflow

|

Procedure |

Description |

|

Create a management HA group, and configure the management HA VIP and primary and backup nodes. |

|

|

N/A |

Creating a management HA group

Prerequisites

· You must install the backup node with the same version of the distributed storage software as the primary node. You can identify the version on the management page.

· Make sure the primary and backup nodes are added to the cluster management network, and the nodes are reachable to each other.

Procedure

1. From the left navigation pane, select Management HA.

2. Click Create.

3. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Logging in to the cluster at management HA VIP

1. Enter the management HA VIP in the address bar of the browser.

2. (Optional) From the left navigation pane, select Management HA.

The page displays the operating management node.

Configuring cluster users and user groups

About cluster users and user groups

The storage system provides management of administrators to improve the security of storage system access. User (group) management supports creating administrators, editing or resetting user passwords, and locking or unlocking users. You can also assign different permissions to administrators as needed.

Administrators

The storage system provides the following types of administrators:

· Admin—The admin user is the super user account that has the highest permission in the system, which can manage the storage system, and add or delete the permissions of users or user groups. The admin user is created automatically by the system and cannot be deleted.

· Common system user—A common system user is created by the admin user. The permissions of a system user are determined by the permissions of the user group to which the system user belongs.

User groups

The storage system manages users with user groups. The admin user can create user groups with different permissions as needed and add common system users to these user groups for user permission management.

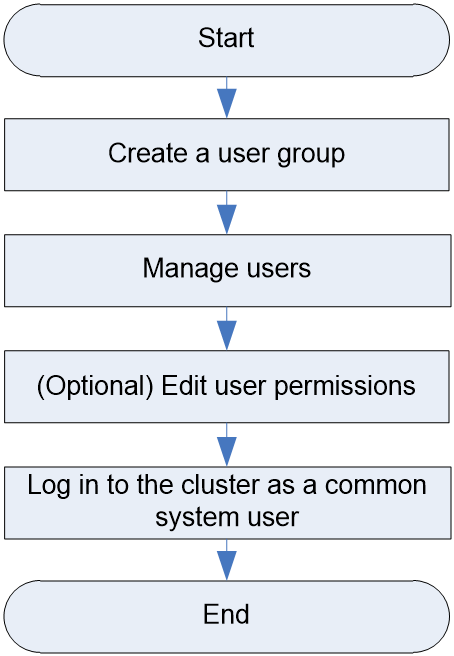

Cluster user and user group configuration workflow

Figure 2 shows the cluster user and user group configuration workflow, and Table 2 describes the configuration steps.

Figure 2 Cluster user and user group configuration workflow

Table 2 Cluster user and user group configuration workflow

|

Procedure |

Description |

|

|

Create a user group and assign permissions to the user group as needed. |

||

|

Create a common system user and add the user to a user group. The permissions of a user are the same as the permissions of the user group. |

||

|

Edit the login password of a common system user or reset the password of a common system user to the default. |

||

|

Lock or unlock a common system user. A locked user cannot log in to the cluster. |

||

|

Edit the permissions of all common system users in a user group in bulk by editing the permissions of the user group. |

||

|

Edit the permissions of a common system user by editing the permissions of the user group to which the user belongs. |

||

|

Log in to the cluster as a common system user. The user can view or configure the settings based on user permissions. |

||

Creating a user group

1. From the left navigation pane, select System.

2. Select User Groups.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Managing users

Creating and adding a user to a user group

1. From the left navigation pane, select System.

2. Select Users.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Edit or reset the password of a user

The default password of a common system user is User@123. You can reset the password of a user to the default when the password is lost.

To edit or reset the password:

1. From the left navigation pane, select System.

2. Select Users.

3. Click Change Password in the Actions column for a user.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Locking and unlocking a user

After you lock a user, the user information will not be deleted, but the user cannot log in to the cluster.

To lock or unlock a user:

1. From the left navigation pane, select System.

2. Select Users.

3. Click Lock or Unlock in the Actions column for a user.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Editing user permissions

The permissions of a common system depend on the permissions of the user group to which the user belongs. You can edit the permissions of a common system user with the following methods:

· Edit the permissions of member users in a user group by editing the permissions of the user group in bulk.

· Edit the permissions of a user by changing the user group of the user.

Editing permissions of a user group

1. From the left navigation pane, select System.

2. Select User Groups.

3. Click Edit Permissions in the Actions column for a user group.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Changing the user group of a user

1. From the left navigation pane, select System.

2. Select Users.

3. Click Change User Groups in the Actions column for a user. Select a new user group for the user.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Logging in to the cluster as a common system user

Log in to the cluster by entering the username and password of a common system user on the system login page.

After login, the system user can view or configure the settings allowed by the user permissions.

Configuring email notifications

About email notifications

The system can send alarm notifications through emails to the specified email recipients for administrators to obtain alarm information to perform troubleshooting.

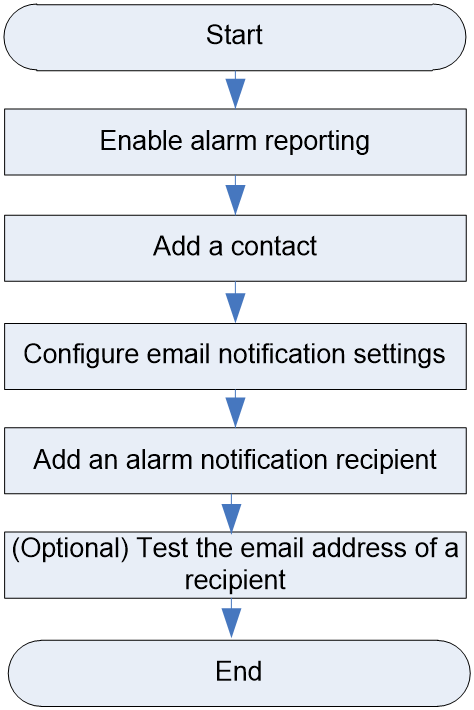

Email notification configuration workflow

Figure 3 shows the email notification configuration workflow, and Table 3 describes the configuration steps.

Figure 3 Email notification configuration workflow

Table 3 Email notification configuration workflow

|

Procedure |

Description |

|

Enable alarm reporting for the cluster to report real-time alarms. |

|

|

An added contact will be displayed in the alarm notification emails. The email recipients can contact to obtain support in case of any questions about the alarms. |

|

|

Enable email notification and configure email notification parameters. |

|

|

After an email recipient is added, the system will send alarms to the specified email address through emails. |

|

|

Test whether a recipient email address can receive alarm emails. |

Enabling alarm reporting

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > Basic Settings.

3. Click Edit in the Alarm Control pane.

4. In the dialog box that opens, select On, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Adding a contact

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > Contacts.

3. Click Add.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring email notification settings

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > Basic Settings.

3. Click Edit in the Email Notification Settings pane.

4. Enable email notification and configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Adding an alarm notification recipient

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > Email Recipients.

3. Click Add.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Testing the email address of a recipient

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > Email Recipients.

3. Select an email address, and then click Test. In the dialog box that opens, click OK.

Configuring SMS notifications

About SMS notifications

The system can send alarm SMS notifications to the specified phone numbers for administrators to obtain alarm information to perform troubleshooting.

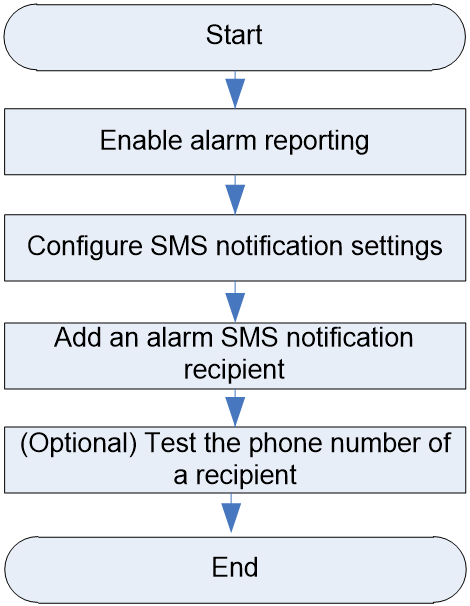

SMS notification configuration workflow

Figure 4 shows the email notifications configuration workflow, and Table 4 describes the configuration steps.

Figure 4 SMS notification configuration workflow

Table 4 SMS notification configuration workflow

|

Procedure |

Description |

|

Enable alarm reporting for the cluster to report real-time alarms. |

|

|

Enable SMS notification and configure SMS notification parameters. |

|

|

After an SMS recipient is added, the system will send alarms to the specified phone number through SMS messages. |

|

|

Test whether a phone number can receive alarm messages. |

Enabling alarm reporting

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > Basic Settings.

3. Click Edit in the Alarm Control pane.

4. In the dialog box that opens, select On, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring SMS notification settings

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > Basic Settings.

3. Click Edit in the SMS Notification Settings pane.

4. Enable email notification and configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Adding an alarm SMS notification recipient

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > SMS Recipients.

3. Click Add.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Testing the phone number of a recipient

1. From the left navigation pane, select Alarms.

2. Select Alarm Settings > SMS Recipients.

3. Select a phone number, and then click Test.

Configuring SNMP notifications

About SNMP notifications

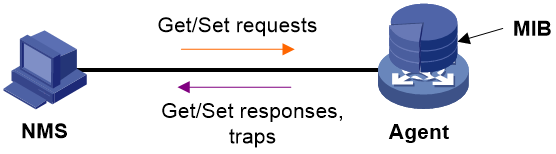

Simple Network Management Protocol (SNMP) enables network administrators to read and set the variables on managed devices for state monitoring, troubleshooting, statistics collection, and other management purposes.

SNMP framework

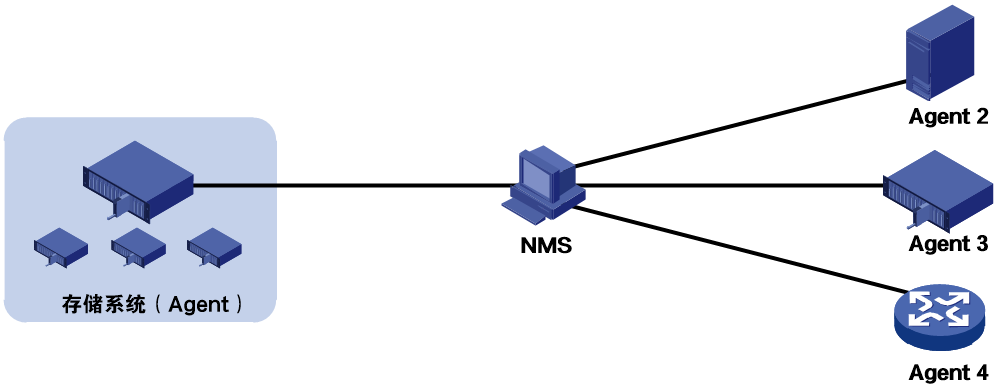

As shown in Figure 5, the SNMP framework contains the following elements:

· SNMP manager—Works on a network management system (NMS) to monitor and manage the SNMP-capable devices on the network. It can get and set values of MIB objects on the agent.

· SNMP agent—Works on a managed device to receive and handle requests from the NMS. It sends notifications to the NMS when specific events occur, such as an interface state change.

· Management Information Base (MIB)—Specifies the variables (for example, interface status and CPU usage) maintained by the SNMP agent for the SNMP manager to read and set. Each SNMP has a MIB file. The SNMP manager can generate a MIB for a SNMP agent by compiling the MIB file of that agent. The SNMP manager can then manage the agent by reading and setting the MIB objects of that agent.

Figure 5 Relationship between NMS, agent, and MIB

SNMP versions

OneStor supports SNMPv1, SNMPv2c, and SNMPv3.

· SNMPv1—Uses community names for authentication. To access an SNMP agent, an NMS must use the same community name as the SNMP agent. If the community name used by the NMS differs from the community name set on the agent, the NMS cannot establish an SNMP session to access the agent and will discard the traps from the agent.

· SNMPv2c—Uses community names for authentication. SNMPv2c is an extended version of SNMPv1. It supports more operation types, data types, and error codes.

· SNMPv3—Uses a user-based security model (USM) to secure SNMP communication. You can configure authentication and privacy mechanisms to authenticate and encrypt SNMP packets. This can block illegal users and enhance communication security.

SNMP topology

As shown in Figure 6, after you configure the storage system as an SNMP agent, the NMS can manage the storage system.

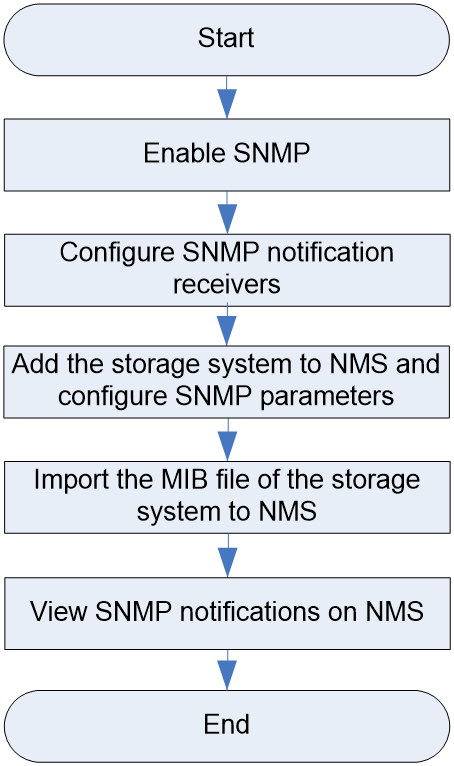

SNMP notification configuration workflow

Figure 7 shows the SNMP notification configuration workflow, and Table 5 describes the configuration steps.

Figure 7 SNMP notification configuration workflow

Table 5 SNMP notification configuration workflow

|

Procedure |

Description |

|

|

To configure SNMP settings for the storage system, enable SNMP first. |

||

|

Configure the NMS (SNMP manager) as a notification receiver. The system sends alarms to the notification receiver through SNMP notifications. The notification receiver configuration varies by SNMP version. |

||

|

Adding the storage system to NMS and configuring SNMP parameters |

Add the storage system to the NMS as an SNMP agent. The NMS can then receive SNMP notifications from the storage system. |

|

|

Import the MIB file of the storage system to the NMS. The NMS can then obtain the notification receiver settings of the storage system. |

||

|

After you configure SNMP notification settings, you can view the alarm notifications reported by the storage system to the NMS. |

||

Enabling SNMP

Prerequisites

Plan and configure the SNMP network to ensure that the storage system (SNMP agent) can correctly communicate with the NMS (SNMP manager).

Procedure

1. Log in to the management page of the storage system.

2. From the left navigation pane, select Alarms > SNMP Settings.

3. Enter the management page of a random feature, for example, the USM user management page.

4. Click the SNMP button at the top right corner of the page.

5. In the dialog box that opens, select On, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring SNMP notification receivers

Prerequisites

Complete SNMP version planning and SNMP parameter planning. An NMS and an SNMP agent must use the same SNMP version to communicate with each other.

Configuring an SNMPv1 or SNMPv2c notification receiver

1. From the left navigation pane, select Alarms > SNMP Settings > Notification Receivers.

2. Click Create.

3. In the dialog box that opens, select SNMPv1 or SNMPv2c as the SNMP version, and then configure the remaining parameters as needed.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring an SNMPv3 notification receiver

1. From the left navigation pane, select Alarms > SNMP Settings > USM Users.

2. To create a USM user, click Create, and then configure the parameters as needed.

For the parameter description and configuration restrictions and guidelines, see the online help.

You can repeat this step to create multiple USM users as needed.

3. From the left navigation pane, select Alarms > SNMP Settings > Notification Receivers.

4. Click Create.

5. In the dialog box that opens, select SNMPv3 as the SNMP version, and then configure the remaining parameters as needed.

For the parameter description and configuration restrictions and guidelines, see the online help.

Adding the storage system to NMS and configuring SNMP parameters

Add the storage system to NMS and configure related SNMP parameters.

For the detailed procedure of this task, see the NMS document or contact the technical support.

For information about the NMSs supported by the storage system, contact the technical support.

Importing the MIB file of the storage system to NMS

1. Contact the technical support to obtain the MIB file of the storage system.

2. Import the MIB file of the storage system to NMS.

For more information about this step, see the NMS document or contact the technical support.

Viewing SNMP notifications on NMS

After you configure SNMP notification settings, you can view the alarm notifications reported by the storage system to the NMS.

For the detailed procedure of this task, see the NMS document or contact the technical support.

Manage multiple clusters

About multi-cluster management

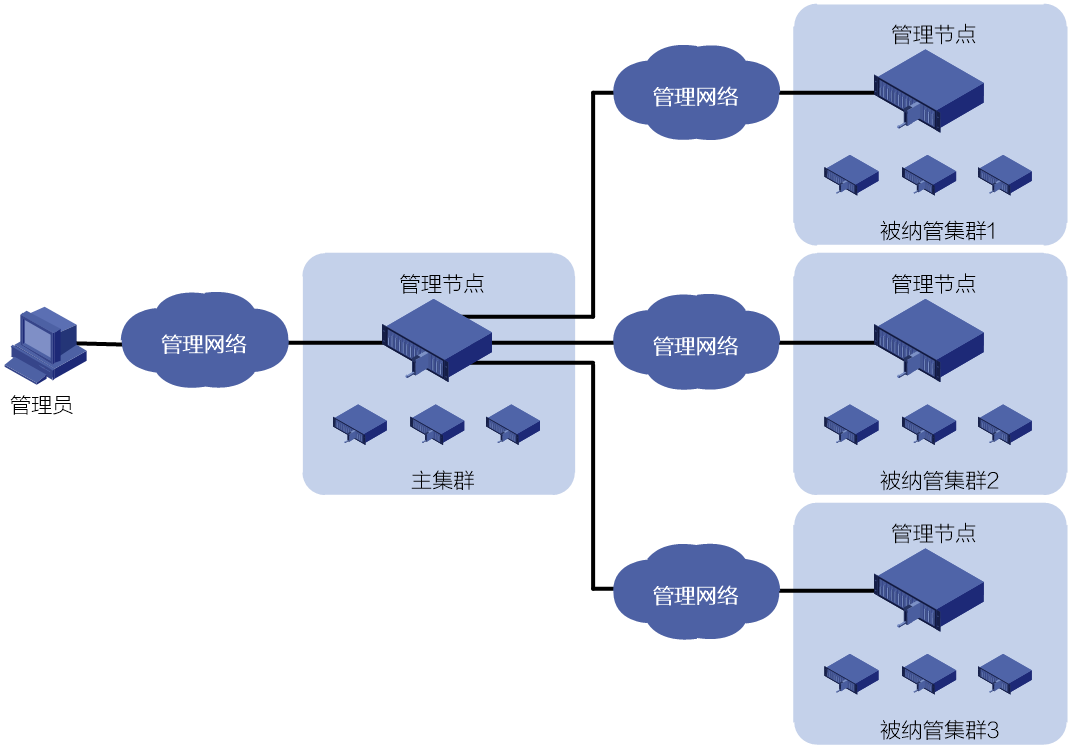

With this feature, the administrator can deploy and manage multiple clusters on one cluster in a unified manner. As shown in Figure 8, when some remote clusters are managed by the primary cluster, the management node of the primary cluster can directly access and manage those clusters. To manage one of the clusters managed by the primary cluster, you can just log in to the management page of the primary cluster.

Figure 8 Multi-cluster management

Primary cluster and managed cluster

A multi-cluster management system consists of a primary cluster and managed clusters.

· Primary cluster—The primary cluster acts as the manager in the multi-cluster management system. It provides an access to unified cluster management. After logging in to the management page of the primary cluster, you can manage both the primary cluster and the managed clusters.

· Managed cluster—After a remote cluster is managed by the primary cluster, you can directly access and manage that cluster on the management page of the primary cluster.

Cluster management account

When you create a cluster management account, follow these guidelines:

· After you create a cluster management account for a cluster, the multi-cluster management capability is enabled for that cluster and you can manage that cluster.

· If you create a cluster management account for a cluster on its management page, the cluster becomes the primary cluster in the multi-cluster management system.

· If you create a remote cluster management account for a cluster on the management page of the primary cluster, the cluster is managed by the primary cluster.

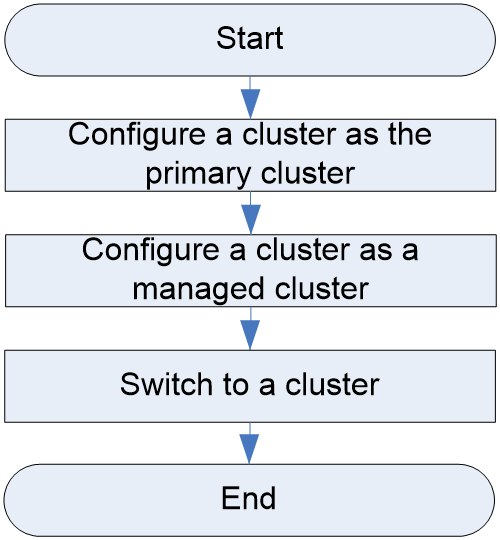

Multi-cluster management configuration workflow

Figure 9 shows the multi-cluster management configuration workflow, and Table 6 describes the configuration steps.

Figure 9 Multi-cluster management configuration workflow

Table 6 Multi-cluster management configuration workflow

|

Procedure |

Description |

|

Configure a cluster management account for the current cluster. This task enables the multi-cluster management capability of the current cluster and configures the cluster as the primary cluster in the multi-cluster management system. |

|

|

Create a cluster management account for a remote cluster on the primary cluster's management page. The cluster is then managed by the primary cluster. You can either add an existing cluster as a managed cluster, or deploy a new cluster and add it as a managed cluster. |

|

|

Switch to the management page of a managed cluster from that of the primary cluster. |

Configuring a cluster as the primary cluster

Prerequisites

Complete network planning and configuration for all clusters. Make sure the management network of the primary cluster can communicate with the management networks of the managed clusters.

Procedure

1. Log in to the management page of the target cluster.

2. From the left navigation pane, select System > Multicluster.

3. Click Create, and then configure the parameters as needed.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring a cluster as a managed cluster

Prerequisites

· When you add an existing cluster as a managed cluster for the primary cluster, make sure the target cluster meets the following requirements:

¡ The target cluster is not managed by any other cluster. To manage a cluster that is already managed by another primary cluster, release that cluster from the primary cluster first.

¡ The target cluster uses the same storage software version as the primary cluster.

· When you deploy a new cluster and add it as a managed cluster for the primary cluster, make sure the following conditions exist:

¡ You have completed the basic configuration of the hosts in the new cluster, including RAID controller configuration and operation system installation.

¡ The hosts in the new cluster meet the requirements of cluster deployment.

Procedure

1. Log in to the management page of the primary cluster.

2. From the left navigation pane, select System > Multicluster.

3. Click Create, select a cluster management option, and then perform one of the following tasks:

¡ If you select Manage Current Cluster, enter information about the target cluster.

For the parameter description and configuration restrictions and guidelines, see the online help.

¡ If you select Deploy and Manage New Cluster, deploy a new cluster under the guidance of the setup wizard.

For the parameter description and configuration restrictions and guidelines, see the online help.

Switching to a cluster

Prerequisites

The primary cluster has managed a minimum of one cluster.

Procedure

1. Log in to the management page of the primary cluster.

2. Select the target cluster management account from the cluster list at the top of the page.

Configuring cluster resources

Configuring cluster resource

About cluster resource

The storage system manages storage resources hierarchically through resource management for service data isolation and system reliability to meet the following requirements of users:

· Isolation integration—Provides block storage service, object storage service, and file storage service in a cluster.

· Data isolation—Improves flexibility and diversity of service deployment by isolating different storage services.

· Performance division—Provides different storage features for different services by combination of different disk types.

Node pool

A node pool is a storage area unit. Node pools are isolated to each other and do not affect each other. You can create multiple disk pools in a node pool.

Active-active domain

An active-active domain is a disaster recovery solution that uses the sites in that domain to ensure storage continuity. You can divide hosts in a node pool into two different sites based on their physical location, establishing a disaster recovery relationship between the two sites. During normal operations, the storage system distributes workloads to hosts in both sites according to the specified load balancing policies. When a site fails, the other site takes the place of the faulty site to continue to provide services.

Disk pool

A disk pool is a collection of disks for resource management. The disks in a disk pool might be installed on multiple hosts in a cluster.

Disk pools in a node pool do not affect each other, realizing data isolation between different service data in the node pool.

With different types of disks added, a disk pool can provide different storage performance, realizing performance division between different storage services.

Rack

Racks in the storage system are logical racks and correspond to real racks. You can add hosts to racks or divide fault domains based on racks.

Host

Hosts (nodes) in the cluster are logical hosts in the storage system. They correspond to real hosts. You can manage disks on the hosts, divide fault domains based on the hosts, and assign roles to the hosts for them to provide storage services. The following are types of nodes in the storage system:

· Management node—A host to deploy and manage storage resources in the cluster. You can access the Web management interface of the storage system by entering the IP address of the management node in a browser.

· Storage node—A host that provides services and service data storage in the cluster.

· Monitor node—A host on which the monitor process runs to monitor the cluster and maintain and distribute various information vital to cluster operations.

Protection domain

The cluster stores the replicas or fragments of data in a protection domain. Dividing a protection domain in a node pool can further improve data reliability of the node pool. A protection domain can contain multiple fault domains.

Fault domain

The cluster stores the replicas or fragments of data to different fault domains. The storage system supports the following fault domain levels:

· Rack level—Each rack is a fault domain. The system preferentially distributes replicas or fragments of data across multiple racks in a node pool. The fault domains can cooperate with redundancy policies to avoid data loss when some racks fail.

· Host level—Each host is a fault domain. The system preferentially distributes replicas or fragments of data across multiple hosts in a node pool. The fault domains can cooperate with redundancy policies to avoid data loss when some storage nodes fail.

Cluster resource configuration workflow

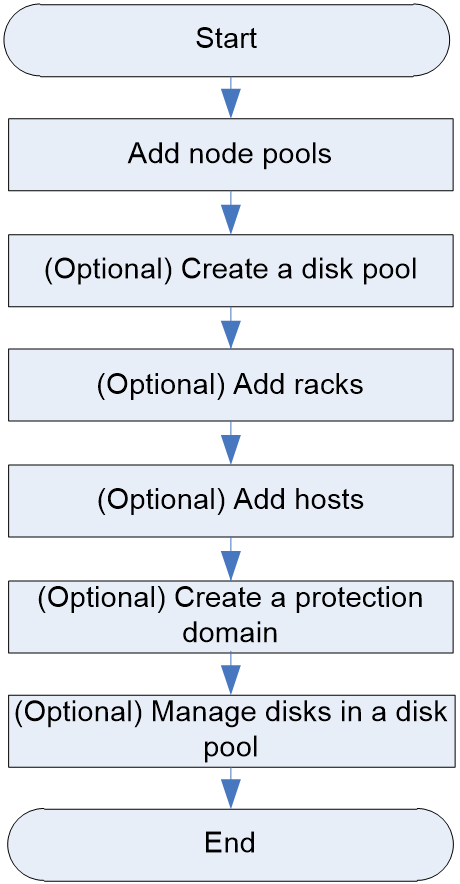

Figure 10 shows the cluster resource configuration workflow, and Table 7 describes the configuration steps.

Figure 10 Cluster resource configuration workflow

Table 7 Cluster resource configuration workflow

|

Procedure |

Description |

|

|

Add node pools and configure the resources according to the setup wizard, including disk pools, racks, hosts, and disks. |

||

|

Create disk pools for a node pool. |

||

|

Add racks in the cluster. |

||

|

Add storage nodes to a node pool. |

||

|

Add monitor nodes to a node pool. |

||

|

Add disks to or remove disks from a disk pool. The disks might belong to one or multiple storage nodes. |

||

|

Add or remove disks of a storage node to or from a disk pool. |

||

|

Create protection domains in a node pool. |

||

Adding node pools

Prerequisites

Plan services and resources in a node pool in advance, including protection domains, fault domains, disk pool number, service types, host number, disk number and disk types based on service requirements.

Procedure

1. From the left navigation pane, select Resources.

2. Select Node Pools.

3. Click Create.

4. Configure the node pool and its resources according to the setup wizard.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Creating a disk pool

1. From the left navigation pane, select Resources.

2. Select Disk Pools.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Adding racks

1. From the left navigation pane, select Resources.

2. Select Racks.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Adding hosts

Adding storage nodes

You can add storage nodes to a node pool one by one or in bulk.

· To add storage nodes one by one:

a. From the left navigation pane, select Hosts.

b. Select Storage Nodes.

c. Click Deploy One Node.

d. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

· To add storage nodes in bulk:

a. From the left navigation pane, select Hosts.

b. Select Storage Nodes.

c. Click Bulk Deploy, and scan the management network for hosts.

d. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Adding monitor nodes

1. From the left navigation pane, select Hosts.

2. Select Monitor Nodes.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Managing disks in a disk pool

The storage system can add disks to or remove disks from a disk pool through one of the following methods:

· Add or remove disks of one or multiple storage nodes to or from the disk pool on the disk pool management page.

· Add or remove disks of a storage node to or from the disk pool on the storage node management page.

Managing disks in a disk pool

The procedure is similar for adding or removing disks. This section adds disks as an example.

To add disks in a disk pool:

1. From the left navigation pane, select Resources.

2. Select Disk Pools.

3. Click Add Disks in the Actions column for the disk pool.

For the parameter description and configuration restrictions and guidelines, see the online help.

Managing disks on a storage node

1. From the left navigation pane, select Hosts.

2. Select Storage Nodes.

3. Click the hostname link to enter the storage node management page.

To add disks to a disk pool:

a. Click Create.

b. Add disks on the storage node to the disk pool.

For the parameter description and configuration restrictions and guidelines, see the online help.

To remove a disk from a disk pool:

c. Click Delete in the Actions column for that disk.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Creating a protection domain

Prerequisites

Make sure the protection domain feature is enabled on the target node pool. You can configure protection domains only during node pool deployment. For more information, see the online help.

Procedure

1. From the left navigation pane, select Resources.

2. Select Protection Domains.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring active-active

About active-active

You can enable the active-active mode for a node pool and divide hosts in the node pool into two different sites based on their physical location. This establishes disaster recovery between the two sites. During normal operations, the storage system distributes workloads to hosts in both sites according to the specified load balancing policies. When a site fails, the other site takes the place of the faulty site to continue to provide services.

Active-active site

An active-active site consists of hosts at the same physical location in the same node pool. A node pool supports two active-active sites, which form a disaster recovery relationship. During normal operations, the storage system distributes workloads to hosts in both sites according to the specified load balancing policies. When a site fails, the other site takes the place of the faulty site to continue to provide services.

Quorum site

The storage system automatically elects a monitor node from the active-active node pool to act as the quorum site. When a site fails, the quorum site automatically adjusts the cluster data to ensure that the other site can take the place of the faulty site.

File storage active-active configuration workflow

Table 8 describes the configuration steps.

Table 8 File storage active-active configuration workflow

|

Procedure |

Description |

|

Enable the active-active mode and configure active-active settings. You can perform this task in the cluster or node pool setup wizard when deploying a cluster for the first time or creating a node pool. The created active-active domain is automatically bound to the node pool. |

|

|

Bind hosts at different physical locations to different sites of the created active-active domain. You can perform this task when deploying a cluster for the first time or creating a node pool. |

|

|

Enable the active-active mode for a file system. |

Creating an active-active domain

Prerequisites

Determine the associated site for each host based on their physical locations and the service requirements.

Creating an active-active domain

1. Enable the active-active mode and configure active-active settings in the basic information configuration step of the cluster or node pool setup wizard. You can perform this task when deploying a cluster for the first time or creating a node pool. For the parameter description and configuration restrictions and guidelines, see the online help.

2. Enable the active-active mode and configure active-active settings in the active-active configuration step of the cluster or node pool setup wizard. For the parameter description and configuration restrictions and guidelines, see the online help.

Binding hosts to active-active sites

Prerequisites

Determine the associated site for each host in the node pool based on service requirements.

Add hosts to active-active sites

1. Select active-active sites for hosts in the host selection step of the cluster or node pool setup wizard. You can perform this task when deploying a cluster for the first time or creating a node pool. For the parameter description and configuration restrictions and guidelines, see the online help.

2. Configure other parameters as prompted in the cluster or node pool setup wizard. For the parameter description and configuration restrictions and guidelines, see the online help.

Enable the active-active mode for a file system

Prerequisites

· In the file storage node pool setup wizard, enable the active-active mode for the node pool and configure active-active settings.

· Make sure all NAS servers within the same NAS group belong to the same active-active site.

· Make sure all NAS servers in the NAS groups bound to the same load balancer belong to the same active-active site.

Enabling the active-active mode for the file system

1. From the left navigation pane, select File Storage > File System.

2. Click Enable Active-Active in the Actions column for the file system. In the dialog box that opens, click OK. For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring encryption services

About encryption services

The storage system supports encryption services to encrypt data in the cluster to improve data security.

The encryption services in the cluster are configured on a disk pool basis. You can enable or disable data encryption for a disk pool as needed. If encryption is enabled, the data will be encrypted by the encryption server before being stored in a disk in cipher text to ensure data security.

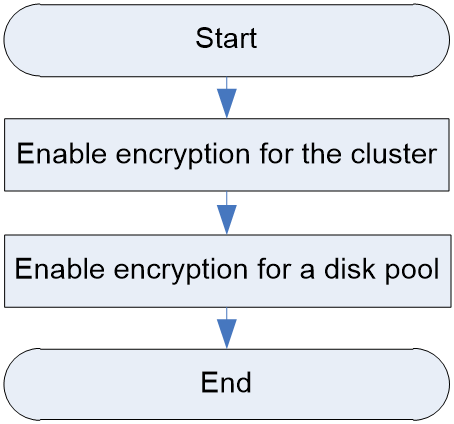

Encryption service configuration workflow

Figure 3 shows the encryption service configuration workflow, and Table 9 describes the configuration steps.

Figure 11 Encryption service configuration workflow

Table 9 Encryption service configuration workflow

|

Procedure |

Description |

|

|

Enable encryption for the cluster and configure the encryption settings in the setup wizard when you deploy the cluster for the first time. |

||

|

Enable encryption for the cluster and configure the encryption settings on the encryption service page. |

||

|

Enabling encryption in the cluster or node pool setup wizard |

Enable encryption during disk pool adding in the cluster or node pool setup wizard. |

|

|

Enable encryption when you create a disk pool. |

||

|

Enable encryption by editing the encryption configuration after a disk pool is created. |

||

Enabling encryption for the cluster

You can enable encryption for the cluster through one of the following methods:

· Enable encryption for the cluster in the setup wizard.

· Enable encryption for the cluster on the encryption service page.

Prerequisites

· Obtain the encryption server-related information in advance, such as IP address and port number.

· Make sure that the cluster and the encryption servers can reach each other.

· Obtain the client certificate file from the encryption service website.

Enable encryption for the cluster on first-time deployment

When you deploy the cluster for the first time, enable encryption for the cluster during basic information setting in the setup wizard.

For the parameter description and configuration restrictions and guidelines, see the online help.

Enable encryption on the encryption service page

1. From the left navigation pane, select Resources.

2. Select Encryption.

3. Click Edit, and then edit the encryption configuration.

For the parameter description and configuration restrictions and guidelines, see the online help.

Enabling encryption for a disk pool

You can enable encryption for a disk pool in one of the following situations:

· Enable encryption when you add disk pools in the cluster or node pool setup wizard.

· Enable encryption when you create a disk pool.

· Enable encryption when you edit the disk pool configuration after the disk pool is created.

Enabling encryption in the cluster or node pool setup wizard

When you deploy the cluster for the first time or add node pools, enable encryption during disk pool adding in the setup wizard.

For the parameter description and configuration restrictions and guidelines, see the online help.

Enabling encryption during disk pool creation

1. From the left navigation pane, select Resources.

2. Select Disk Pools.

3. Click Create.

4. Configure disk pool parameters and enable encryption for the cluster.

For the parameter description and configuration restrictions and guidelines, see the online help.

Editing the encryption configuration of the disk pool

1. From the left navigation pane, select Resources.

2. Select Disk Pools.

3. Click Edit in the Actions column for the disk pool, and then edit the encryption configuration to enable encryption.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring block storage

Configuring basic block storage services

About basic block storage services

Block storage virtualizes storage resources (RAID, LVM, or bare disks) into storage volumes and assign the volumes to clients by performing volume mapping. Clients can then identify, mount, and use the volumes as if they were using their native disks.

Storage pool

A disk pool is a logical concept used for storage resource management. To provide storage services by using the storage resources in a disk pool, you must create storage pools in the disk pool.

Storage volume

Storage volumes are virtualized storage resources in storage pools. They enable the system to dynamically adjust storage resources without concerning the performance of the underlying physical storage devices. After creating a storage volume and configuring volume mapping, you can mount the volume on a client as a logical disk.

Block storage service network

Clients can access a cluster for block storage resources via block storage service networks.

Client

For a client to access block storage resources, you must create a logical client in the storage system for it.

Client group

The storage system uses client groups to manage mappings of accessible volumes, writable volume snapshots, and access ports to clients. You can control access to storage resources by mapping volumes, writable volume snapshots, and access ports to different client groups.

iSCSI HA group

To improve block storage service availability, assign the storage nodes to an iSCSI HA group, which is accessible at a virtual IP address (VIP). Each iSCSI HA group contains one primary node and multiple backup nodes. When the primary node fails, one of the backup nodes will take over to provide block storage network access.

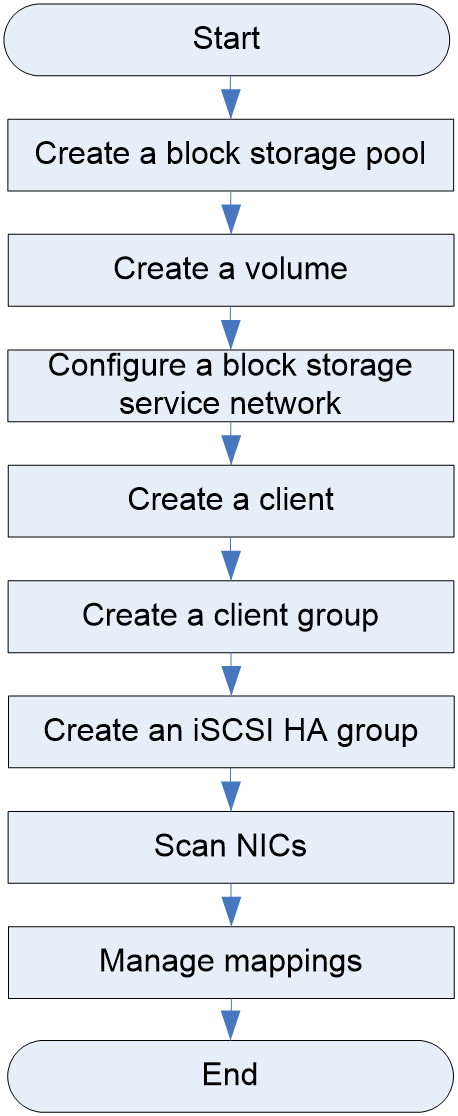

Basic block storage service configuration workflow

You can configure basic block storage services by using either of the following methods:

· Configure basic block storage services through the setup wizard. For detailed configuration and restrictions and guidelines, see the online help.

· Configure basic block storage services on a per-function basis.

Figure 4 shows the basic block storage service configuration workflow, and Table 10 describes the configuration steps.

Figure 12 Basic block storage service configuration workflow

Table 10 Basic block storage service configuration workflow

|

Procedure |

Description |

|

|

Perform this task to create a block storage pool for users to access the block storage resources in the cluster. |

||

|

Perform this task to create a volume and assign storage capacity to it. |

||

|

Perform this task before managing volume mappings. |

||

|

Perform this task to create a logical client that has the same operating system and initiator settings as the physical client. |

||

|

Perform this task to create a client group for mapping management. |

||

|

Perform this task to create an iSCSI HA group. Each iSCSI HA group contains one primary node and multiple backup nodes. When the primary node fails, one of the backup nodes will take over to provide block storage network access. |

||

|

Perform this task to scan accessible ports in a block storage service network. |

||

|

Perform this task to assign clients to client groups so they can access volumes and writable snapshots. |

||

|

Perform this task to assign storage volumes to a client group, so clients in the group can access them. |

||

|

Perform this task to assign writable snapshots to a client group, so clients in the group can access them. |

||

|

Perform this task to assign ports to a client group, so clients in the group can access volumes and writable snapshots through these ports. |

||

Creating a storage pool

Prerequisites

You must deploy a disk pool for block storage before performing this task. For more information about block storage disk pool configuration, see "Configuring cluster resources."

Procedure

1. From the left navigation pane, select Storage Pools.

2. Select Block Storage.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Creating a volume

1. From the left navigation pane, select Block Storage.

2. Select Volume Management > Volumes.

3. Select a node pool, a disk pool, and a storage pool.

4. Click Create.

5. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring a block storage service network

Restrictions and guidelines

Perform this task based on cluster configuration and service demands.

Procedure

1. From the left navigation pane, select Storage Pools.

2. Select Volume Mappings.

3. Configure the parameters in the dialog box that opens, and then click OK.

This dialog box opens only if volume mappings have never been configured.

For the parameter description and configuration restrictions and guidelines, see the online help.

Creating a client

Prerequisites

Before performing this task, you must perform the following operations:

· Obtain the physical client information, including IP address and the OS and initiator settings.

· Make sure the physical client can access the block storage service network correctly.

· Plan a CHAP account for client authentication to ensure data access security.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Volume Mappings > Clients.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Creating a client group

1. From the left navigation pane, select Block Storage.

2. Select Volume Mappings > Client Groups.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Creating an iSCSI HA group

Prerequisites

· To avoid client access failures caused by primary-backup switchover, make sure all nodes in an iSCSI HA group are configured with the same reachable routes.

· Make a service-oriented configuration plan for the iSCSI HA group. Items of the plan include primary node, backup nodes, HA VIP, and VRID.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Volume Mappings > iSCSI HA.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Scanning NICs

1. From the left navigation pane, select Block Storage.

2. Select Volume Mappings > Ports.

3. Select a node pool from the Node Pool list.

4. Select a node from the Node Name list.

5. Click Scan for NICs.

For the parameter description and configuration restrictions and guidelines, see the online help.

Managing mappings

Assigning clients to a client group

1. From the left navigation pane, select Block Storage.

2. Select Volume Mappings > Client Groups.

3. Select a node pool, and then click the name of a client group.

4. Click Add.

5. Select one or multiple available clients.

6. Click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Assigning volumes to a client group

1. From the left navigation pane, select Block Storage.

2. Select Volume Mappings > Mappings.

3. Select a node pool, and then click the name of a client group.

4. Click Add.

5. Select one or multiple available volumes.

6. Click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Assigning snapshots to a client group

1. From the left navigation pane, select Block Storage.

2. Select Volume Mappings > Mappings.

3. Select a node pool, and then click the name of a client group.

4. Click the Snapshots tab.

5. Click Add.

6. Select one or multiple writable snapshots of the volume.

7. Click OK.

For more information about creating a writable volume snapshot, see "Configuring volume snapshots."

For more information about creating a writable consistency group snapshot, see "Configuring consistency groups."

For the parameter description and configuration restrictions and guidelines, see the online help.

Assigning ports to a client group

1. From the left navigation pane, select Block Storage.

2. Select Volume Mappings > Mappings.

3. Select a node pool, and then click the name of a client group.

4. Click the Ports tab.

5. Click Add.

6. Select one or multiple ports.

7. Click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring volume clones

About volume clones

A volume clone is an identical but independent physical replica of the source volume. You can create a volume clone from a volume or a common volume snapshot. The volume clone service is applicable to storage services, tests and analysis, or data backup.

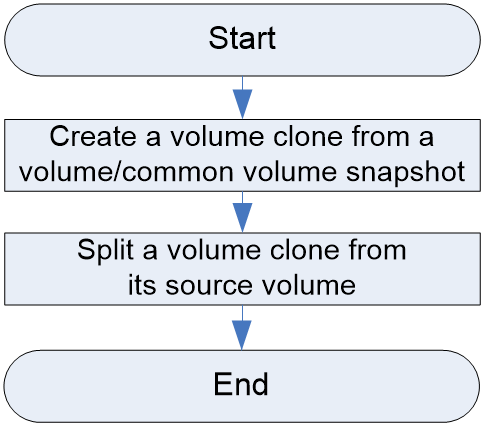

Volume clone configuration workflow

Figure 5 shows the volume clone configuration workflow, and Table 11 describes the configuration steps.

Figure 13 Volume clone configuration workflow

Table 11 Basic block storage service configuration workflow

|

Procedure |

Description |

|

|

Perform this task to create a volume clone from a volume. |

||

|

Perform this task to create a volume clone from a common volume snapshot. |

||

|

Perform this task to split a volume clone from its source volume. Then, the volume clone can operate as a normal storage volume. |

||

Creating a volume clone

Prerequisites

To avoid affecting the cluster services, plan a window time for volume clone creation (a period when the storage volume service and the volume snapshot service are idle).

Creating a volume clone from a volume

1. From the left navigation pane, select Block Storage.

2. Select Volume Management > Volumes.

3. Click More in the Actions column of a volume, and then select Create Volume Clone. In the dialog box that opens, configure parameters for the volume clone, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Creating a volume clone from a common volume snapshot

1. From the left navigation pane, select Block Storage > Local Backup > Snapshots.

2. Click More in the Actions column of a snapshot, and then select Create Volume Clone. In the dialog box that opens, configure parameters for the volume clone, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Splitting a volume clone from its source volume

Prerequisites

Make sure the services running on the volume clone are stopped.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Volume Management > Volumes.

3. Click the name of the parent volume.

4. Click Details for the target volume clone. In the dialog box that opens, click Split. In the dialog box that opens, click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring volume copy pairs

About volume copy pairs

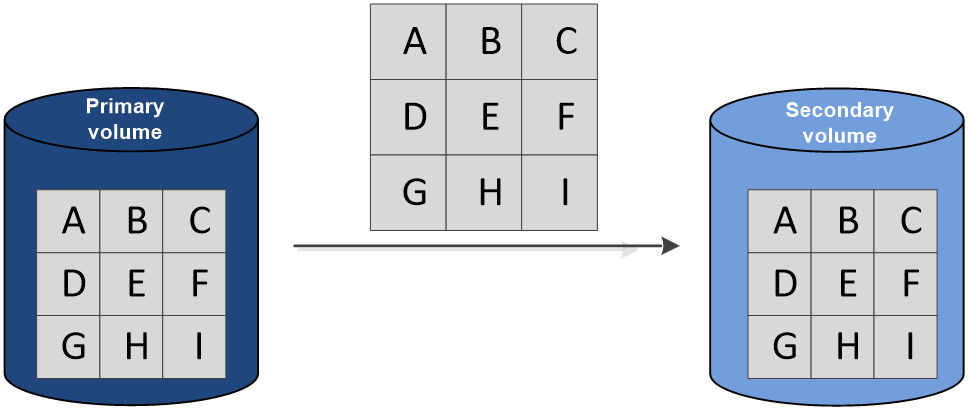

As shown in Figure 6, data is transmitted directly from the primary volume to the secondary volume in a copy pair without the participation of any application servers.

Copy pair

Each copy pair includes a primary volume and a secondary volume. Data is copied from the primary volume to the secondary volume.

Copy pair state

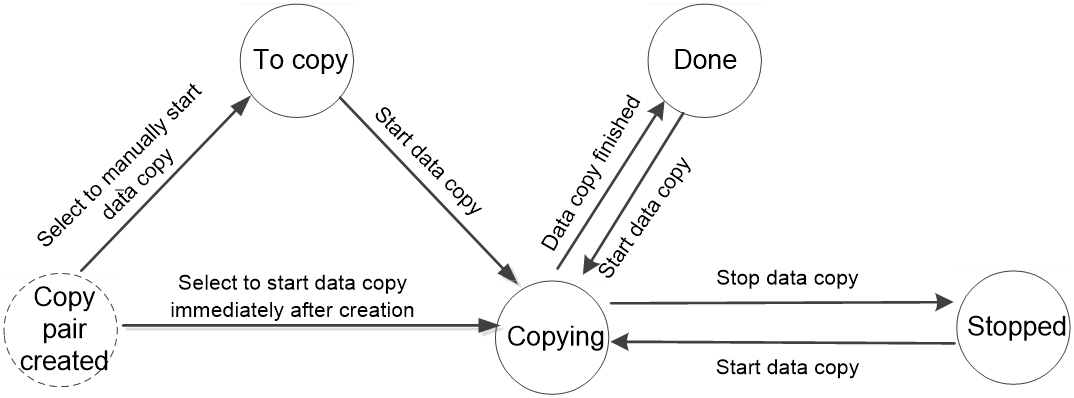

Figure 7 shows different states of a copy pair during the data copy.

Figure 15 Copy pair states

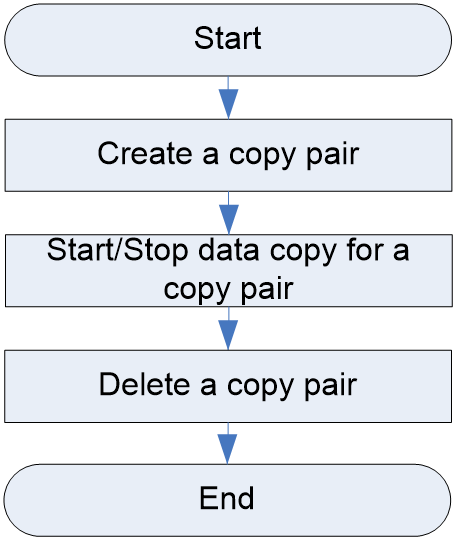

Volume copy pair configuration workflow

Figure 8 shows the volume copy pair configuration workflow, and Table 12 describes the configuration steps.

Figure 16 Volume copy pair configuration workflow

Table 12 Volume copy pair configuration workflow

|

Procedure |

Description |

|

N/A |

|

|

N/A |

|

|

Perform this task to delete a copy pair if data copy is finished. |

Creating a copy pair

Prerequisites

Before you perform this task, perform the following operations in advance:

· Plan an appropriate copy rate for the copy pair.

· Make sure data in the prepared secondary volume is overwritable.

· Make sure no storage service is running on the primary and secondary volumes if you want to start data copy immediately after pair creation.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Copy Pairs.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Starting/Stopping data copy for a copy pair

Prerequisites

Make sure no storage service is running on the primary and secondary volumes before performing this task.

Starting data copy for a copy pair

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Copy Pairs.

3. Click More in the Actions column for a copy pair.

4. Select Start.

5. In the dialog box that opens, click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Stopping data copy for a copy pair

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Copy Pairs.

3. Click More in the Actions column for a copy pair.

4. Select Stop.

5. In the dialog box that opens, click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Deleting a copy pair

Prerequisites

Make sure all data has been copied from the primary volume to the secondary volume.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Copy Pairs.

3. To delete copy pairs, perform one of the following tasks:

¡ To delete a copy pair, click Delete in the Actions column for the copy pair.

¡ To delete multiple copy pairs, select copy pairs to be deleted, and click Delete above the list.

4. In the dialog box that opens, click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring volume migration pairs

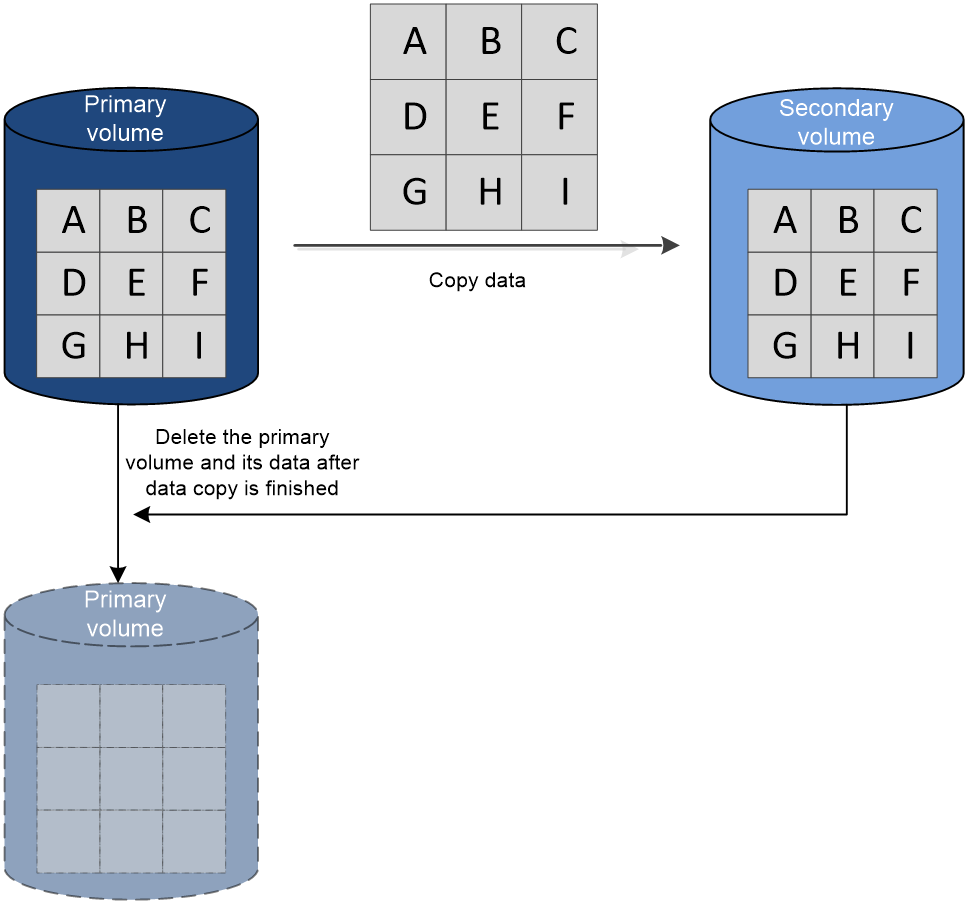

About volume migration pairs

As shown in Figure 9, the volume migration feature copies all data from the primary volume to the secondary volume, and then deletes the primary volume and its data.

Figure 17 Data migration process

Migration pair

Each migration pair includes a primary volume and a secondary volume. Data is migrated from the primary volume to the secondary volume.

Migration pair state

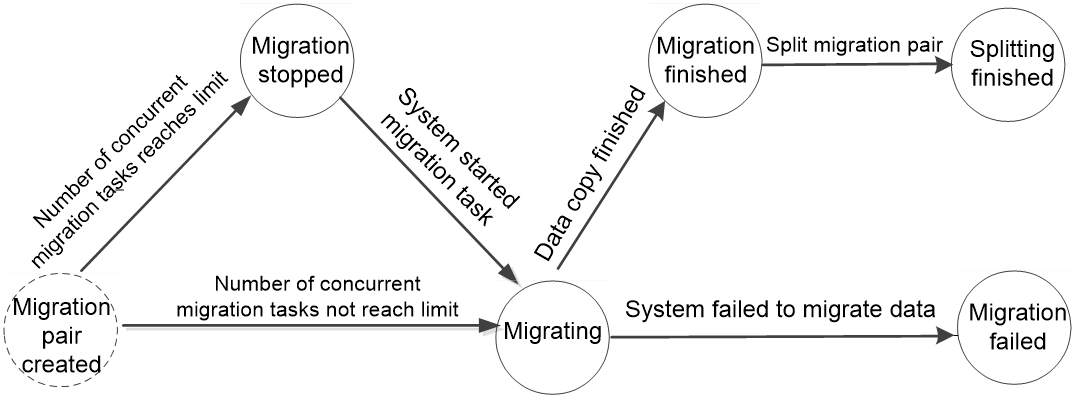

Figure 10 shows different states of a migration pair during the data migration.

Figure 18 Migration pair states

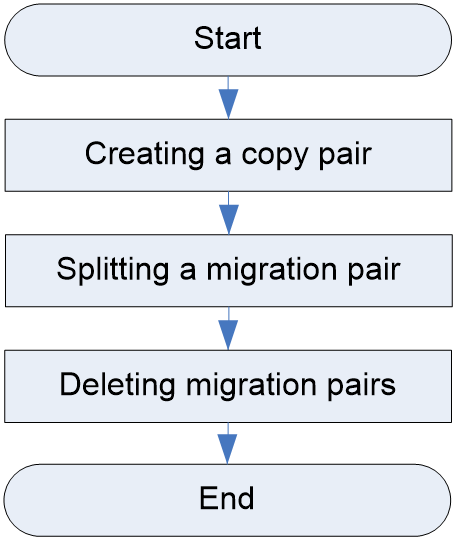

Volume migration pair configuration workflow

Figure 11 shows the volume migration pair configuration workflow, and Table 13 describes the configuration steps.

Figure 19 Volume migration pair configuration workflow

Table 13 Volume migration pair configuration workflow

|

Procedure |

Description |

|

N/A |

|

|

Perform this task after all data has been migrated from the primary volume to the secondary volume, so the secondary volume can be mapped to a client. |

|

|

Perform this task for migration pairs in split state to finish the volume migration. The primary volume and its data will be deleted. |

Creating a migration pair

Prerequisites

Before you perform this task, perform the following operations in advance:

· Plan an appropriate migration rate for the migration pair.

· Make sure data in the prepared secondary volume is overwritable.

· Make sure no storage service is running on the primary and secondary volumes.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Migration Pairs.

3. Click Create.

4. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Splitting a migration pair

Prerequisites

All data has been migrated from the primary volume to the secondary volume.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Migration Pairs.

3. Select a migration pair, and then click Split.

4. In the dialog box that opens, click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Deleting migration pairs

Restrictions and guidelines

Perform this task only for migration pairs in split state.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Migration Pairs.

3. To delete migration pairs, perform one of the following tasks:

¡ To delete a migration pair, click Delete in the Actions column for the migration pair.

¡ To delete multiple migration pairs, select copy pairs to be deleted, and click Delete above the list.

4. In the dialog box that opens, click OK.

5. In the dialog box that opens, enter YES (case sensitive) and then click Yes.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring volume snapshots

About volume snapshots

You can take a snapshot of a volume to back up its state at a time point. When a non-physical damage such as application failure or file corruption occurs, you can revert the volume to the state when the snapshot was taken. You can also directly access a snapshot to obtain data without affecting the original data.

A volume snapshot can be one of the following types depending on its creation method:

· Common volume snapshot—Common volume snapshots are read-only snapshots and mainly used for data recovery. Each of them is manually created from a single volume.

· Writable volume snapshot—A writable volume snapshot is created from a common volume snapshot. This type of snapshot is writable to users and mainly used for testing without affecting the original data on the volume.

· Automatic snapshot—An automatic snapshot is automatically created from a volume as scheduled. Such a feature provides constant data protection.

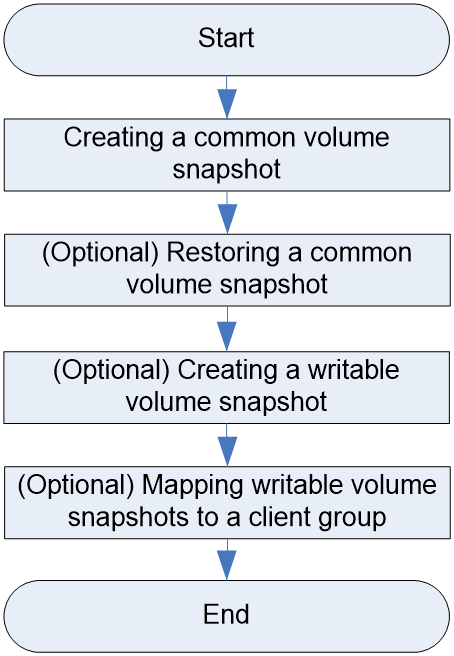

Volume snapshot configuration workflow

Figure 12 shows the volume copy pair configuration workflow, and Table 14 describes the configuration steps.

Figure 20 Volume snapshot configuration workflow

Table 14 Volume snapshot configuration workflow

|

Procedure |

Description |

|

N/A |

|

|

Perform this task to use a common snapshot of a volume to revert the volume to the state when the snapshot was taken. |

|

|

N/A |

|

|

(Optional) Mapping writable volume snapshots to a client group |

Perform this task to make a writable volume snapshot accessible to the clients in a client group. |

Creating a common volume snapshot

Restrictions and guidelines

Perform this task when services on the volume are not heavy, because the volume performance might be degraded during snapshot creation.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Snapshots.

3. Select a node pool, a disk pool, and a storage pool.

4. Click Create.

5. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Restoring a common volume snapshot

Prerequisites

Make sure you have disconnected all services from that volume and removed the volume from the client group. For more information about these operations, see the online help.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Snapshots.

3. Select a node pool, a disk pool, and a storage pool.

4. Click Restore in the Actions column of a snapshot.

5. In the warning dialog box that opens, enter YES (case sensitive), and then click Yes.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Creating a writable volume snapshot

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Snapshots.

3. Select a node pool, a disk pool, and a storage pool as needed.

4. Click the name of a snapshot.

5. Click Create.

6. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

(Optional) Mapping writable volume snapshots to a client group

1. From the left navigation pane, select Block Storage.

2. Select Local Backup > Snapshots.

3. Select a node pool, a disk pool, and a storage pool as needed.

4. Click the name of a snapshot.

5. Click Map in the Actions column of a writable volume snapshot.

6. In the dialog box that opens, select a client group.

7. Click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring basic disaster recovery services

About basic disaster recovery services

The storage system provides disaster recovery (DR) management for block storage. When the local cluster fails, users can quickly restore the business through the data backed up in the remote DR cluster. DR management includes two parts: link management and remote replication.

· Link management—Administrators create and manage the remote replication links between the local cluster and the remote cluster, which is the prerequisite for asynchronous remote replication.

· Remote replication—As the core of the DR system, remote replication enables remote data synchronization and disaster recovery between two geographically dispersed clusters. The remote cluster maintains a set of the data replicas through the links with the local cluster. Once the production cluster fails, the other cluster takes over with the backed up data (unaffected by the outage of the production cluster) to enable disaster recovery.

Replication node

Replication nodes can be used to establish links with remote clusters for data synchronization between local and remote clusters.

Remote device

A remote device is a logical device abstracted from a set of links. It is used to manage links between local and remote clusters.

Asynchronous remote replication pair

An async remote replication pair provides disaster recovery by synchronizing data from the primary volume to the secondary volume.

· Primary Volume—Storage volume that provides the source data in the async remote replication pair.

· Secondary Volume—Storage volume used for disaster recovery and backup of the source data in the async remote replication pair.

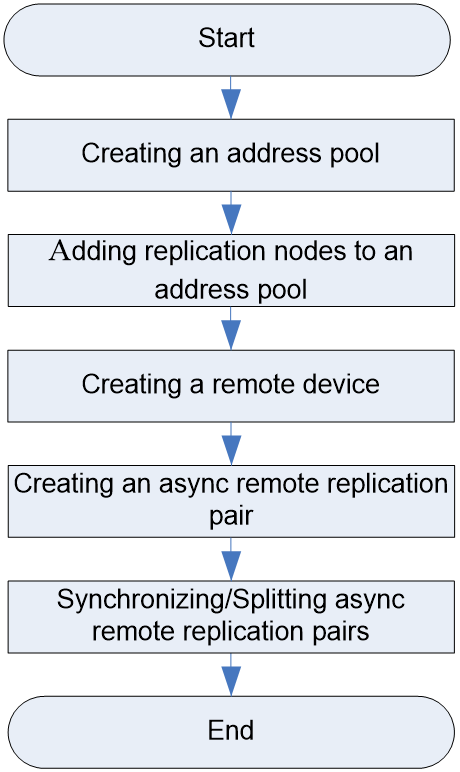

Basic disaster recovery service configuration workflow

Figure 13 shows the basic disaster recovery service configuration workflow, and Table 15 describes the configuration steps.

Figure 21 Basic disaster recovery service configuration workflow

Table 15 Basic disaster recovery service configuration workflow

|

Procedure |

Description |

|

N/A |

|

|

N/A |

|

|

N/A |

|

|

N/A |

|

|

N/A |

Creating an address pool

Restrictions and guidelines

Perform this task based on cluster configuration and service demands.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Links.

3. Select a node pool.

4. Click Create.

5. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Adding replication nodes to an address pool

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Links.

3. Select a node pool.

4. Click the name of the target address pool.

5. Click Add.

6. In the dialog box that opens, select the nodes to add to the address pool.

7. Click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Creating a remote device

Prerequisites

Before you perform this task, perform the following operations:

· Obtain information about the remote cluster, including remote IP pool ID, IP address of the remote replication node, remote monitoring port number, remote shared key, and remote shared key tag.

· Make sure the local replication node can communicate correctly with the remote replication node.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Links.

3. Click the Remote Devices tab.

4. Select a node pool.

5. Click Create.

6. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Creating an async remote replication pair

Prerequisites

Make sure the data on the secondary volume is overwritable.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Async Remote Replication.

3. Select a node pool.

4. Click Create.

5. Configure the parameters, and then click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Synchronizing/Splitting async remote replication pairs

Synchronizing async remote replication pairs

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Async Remote Replication.

3. Select a node pool.

4. Click More > Sync in the Actions column for the target replication pair.

5. In the dialog box that opens, click OK.

6. In the warning dialog box that opens, click Yes.

For the parameter description and configuration restrictions and guidelines, see the online help.

Splitting async remote replication pairs

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Async Remote Replication.

3. Select a node pool.

4. To split async remote replication pairs, perform one of the following tasks:

¡ To split an async remote replication pair, Click More > Split in the Actions column for the replication pair.

¡ To split multiple async remote replication pairs, select async remote replication pairs to be split, and click Split above the list.

5. In the dialog box that opens, click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Configuring disaster recovery primary/secondary switchover

About disaster recovery primary/secondary switchover

This operation creates a new replication pair by switching the roles of the primary and secondary volumes in a remote replication pair.

Primary/secondary switchover configuration workflow

Figure 14 shows the primary/secondary switchover configuration workflow, and Table 16 describes the configuration steps.

Figure 22 Primary/secondary switchover configuration workflow

Table 16 Primary/secondary switchover configuration workflow

|

Procedure |

Description |

|

N/A |

|

|

Perform this task to set the secondary volume writable in an async remote replication pair. |

|

|

Perform this task to switch the roles of the primary and secondary volumes in a remote replication pair. |

|

|

Perform this task to set the secondary volume read-only in an async remote replication pair. |

|

|

N/A |

Splitting async remote replication pairs

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Async Remote Replication.

3. Select a node pool.

4. To split async remote replication pairs, perform one of the following tasks:

¡ To split an async remote replication pair, Click More > Split in the Actions column for the replication pair.

¡ To split multiple async remote replication pairs, select async remote replication pairs to be split, and click Split above the list.

5. In the dialog box that opens, click OK.

For the parameter description and configuration restrictions and guidelines, see the online help.

Disabling secondary volume protection

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Async Remote Replication.

3. Select a node pool.

4. Click More > Disable Secondary Volume Protection in the Actions column for the target replication pair.

5. In the dialog box that opens, click OK.

6. In the warning dialog box that opens, click Yes.

For the parameter description and configuration restrictions and guidelines, see the online help.

Performing a primary/secondary switchover

Prerequisites

Before you perform a primary/secondary switchover, perform the following operations:

· Disconnect all services from the primary volume.

· Make sure data in the primary volume is overwritable.

Procedure

1. From the left navigation pane, select Block Storage.

2. Select Disaster Recovery > Async Remote Replication.

3. Select a node pool.