
Contents

Installing the H3C NFV1000 series products on a VM

Installing the H3C NFV1000 series products on VMware ESXi

Installing vFW1000 from an ISO file

Installing vFW1000 via unattended PXE

Installing vFW1000 from an OVA file

Installing vFW1000 from an OVA file by using the auto-deploy tool

Installing the H3C NFV1000 series products on Linux KVM

Installing vFW1000 from an ISO file

Installing vFW1000 via unattended PXE

Installing the H3C NFV1000 series products on H3C CAS

Installing vFW1000 from an ISO file

Deploying the H3C NFV1000 series products

vFW1000 interface and vNIC mappings

Adding or deleting a vFW1000 interface

vSwitch interface or host physical interface mappings

Installing H3C NFV2000 on a physical server

Installing H3C NFV2000 on a bare metal server

Installing vFW2000 from an ISO image

Installing vFW2000 via unattended PXE

Upgrading vFW1000 startup images through TFTP

Upgrading vFW1000 startup images through FTP

Appendix A Installing Linux KVM

Configuring network parameters

Configuring Linux bridges on KVM

Configuring the MTU for an OVS NIC

Appendix C Loading Intel 82599 VFs

Configuration from the hypervisor

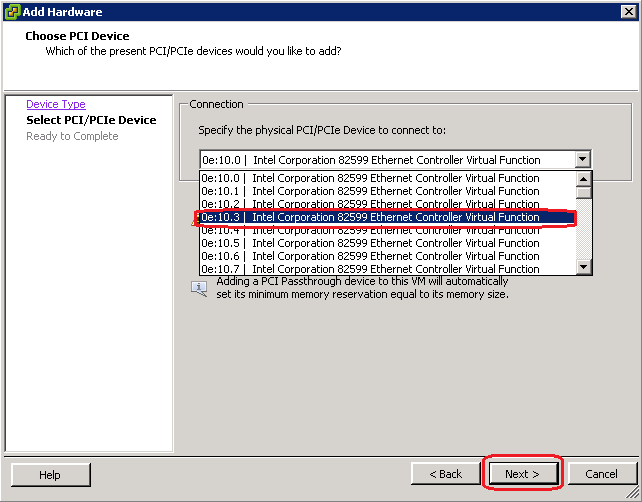

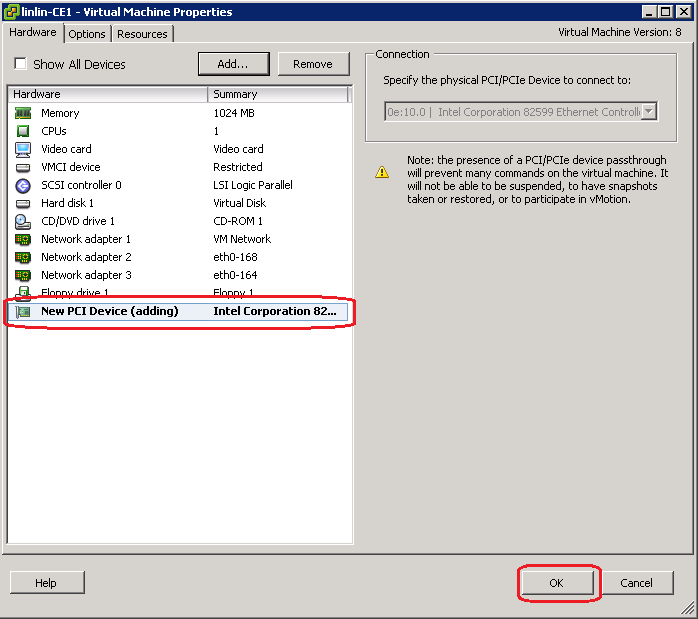

Loading Intel 82599 VFs from VMware ESXi

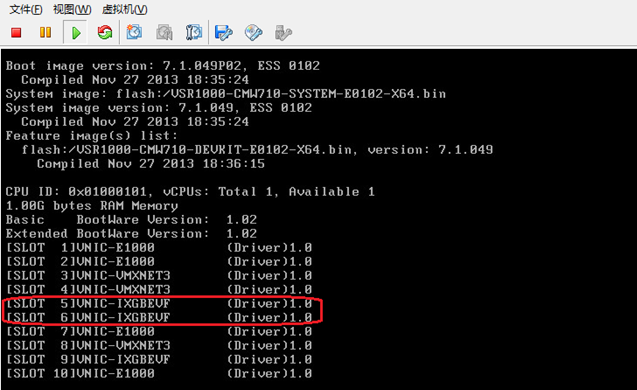

Loading Intel 82599 VFs from KVM

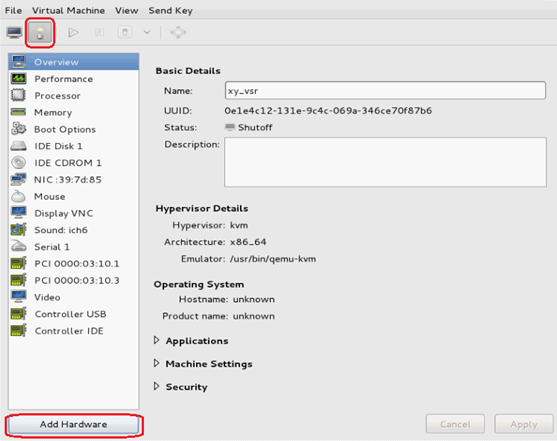

Loading Intel 82599 VFs from CAS

Appendix D Setting up a PXE server

Setting up a PXE server in CentOS

Installing and configuring DHCP

Installing and configuring TFTP

Installing and configuring HTTP

Installing and configuring NFS

Installing and configuring Syslinux

Setting up a PXE server in Ubuntu

Installing and configuring DHCP

Installing and configuring TFTP

Installing and configuring HTTP

Installing and configuring NFS

About H3C NFV products

Network Functions Virtualization (NFV) technology separates the network service plane from the forwarding plane through software virtualization and standardization of network devices. H3C NFV products are developed based on Comware 7 and deliver the same functionality and user experience as physical devices.

Depending on the operating platform, H3C NFV products include the NFV1000 series and the NFV2000 series.

· In the NFV1000 series, VSR1000 can be installed on standard server-based virtual machines (VMs) and also on Kunpeng servers that work with the H3C CAS virtualization platform. The other NFV1000 products can be installed only on standard server-based VMs.

· The NFV2000 series products are directly installed on bare-metal servers.

Table 1 shows the products included in the H3C NFV1000 series and H3C NFV2000 series.

Table 1 H3C NFV products classified by operating platform

| NFV1000 series | NFV2000 series |
|---|---|
| VSR1000 | VSR2000 |
| vBRAS1000 | vBRAS2000 |
| vLNS1000 | vLNS2000 |
| SecPath vFW1000 | SecPath vFW2000 |
| SecPath vLB1000 | SecPath vLB2000 |

Depending on functions, H3C NFV products include the following types:

· Virtual Services Router (VSR)—The VSR1000/VSR2000 virtual routers provide the same functionality and experience as physical routers, including routing, firewall, Virtual Private Network (VPN), Quality of Service (QoS), and configuration management. They help enterprises build secure, unified, and scalable intelligent branches while reducing the number of branch devices and the investment in branch infrastructure.

· Virtual Broadband Remote Access Server (vBRAS)—The vBRAS1000/vBRAS2000 virtual BRASs provide the same functionality and experience as physical BRASs, including Point-to-Point Protocol over Ethernet (PPPoE), Internet Protocol over Ethernet (IPoE), portal, Layer 2 Tunneling Protocol (L2TP), Multiprotocol Label Switching (MPLS), NAT444, Authentication, Authorization and Accounting (AAA), Dynamic Host Configuration Protocol (DHCP), and QoS. By using Virtual Extensible LAN (VXLAN) technology, they help service providers virtualize Points of Presence (POPs).

· Virtual L2TP Network Server (vLNS)—The vLNS1000/vLNS2000 virtual LNSs provide the same functionality and experience as physical LNSs. They terminate PPPoE sessions and complete user authentication and access through AAA.

· SecPath Virtual Load Balancer (vLB)—SecPath vLB1000/SecPath vLB2000 is a powerful software-based security product that offers comprehensive server and link load balancing functions. It enhances the reliability of enterprise applications and helps build robust data center and cloud computing network solutions.

· SecPath Virtual Firewall (vFW)—SecPath vFW1000/SecPath vFW2000 is a powerful software-based security product. It monitors and protects virtual environments, provides comprehensive security protection for virtualized data centers and cloud computing networks, and helps enterprises build robust data center and cloud computing network security solutions.

Installing the H3C NFV1000 series products on a VM

The H3C NFV1000 series products run on VMs hosted on servers and can be installed on multiple hypervisors.

Installation environment

Hardware environment

Table 2 describes the minimum hardware configuration requirements for a VM to host the H3C NFV1000 series products.

Table 2 Minimum hardware configuration requirements for a VM to host the H3C NFV1000 series products

| Item | Minimum requirement |
|---|---|
| Processor | · To install VSR1000/vLNS1000/SecPath vLB1000/SecPath vFW1000 on a VM, assign a minimum of one vCPU to the VM. · To install vBRAS1000 on a VM, assign a minimum of four vCPUs to the VM. |
| Memory | · 1 × vCPU (clock speed ≥ 2.0 GHz): 2 GB or above · 4 × vCPUs (clock speed ≥ 2.0 GHz): 4 GB or above (8 GB or above for vBRAS1000) · 8 × vCPUs (clock speed ≥ 2.0 GHz): 8 GB or above |
| Hard disk | 1 × vHD, 8 GB |
| NIC | 2 to 16 vNICs |
| vNIC type | · E1000 (VMware ESXi, Linux KVM) · VMXNET3 (VMware ESXi) · VirtIO (Linux KVM, H3C CAS) · Intel 82599 VF (VMware ESXi, Linux KVM) |
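You can check planned VM settings against the Table 2 minimums before you create the VM. The following POSIX shell function is a minimal sketch, not an H3C tool. The name check_vm_minimums and the lowercase product keywords are hypothetical. Table 2 lists memory only for 1, 4, and 8 vCPUs, so treating other vCPU counts like the next lower tier is an assumption.

```shell
#!/bin/sh
# Hypothetical pre-flight check against the Table 2 minimums.
# Usage: check_vm_minimums PRODUCT VCPUS MEM_GB DISK_GB NICS
# PRODUCT: vsr1000, vlns1000, vlb1000, vfw1000, or vbras1000.
# Prints "OK" or the first failed requirement and returns 0 or 1.
check_vm_minimums() {
    product=$1; vcpus=$2; mem_gb=$3; disk_gb=$4; nics=$5

    # vBRAS1000 needs at least four vCPUs. The other products need one.
    case $product in
        vbras1000) min_cpu=4 ;;
        *)         min_cpu=1 ;;
    esac
    if [ "$vcpus" -lt "$min_cpu" ]; then
        echo "FAIL: at least $min_cpu vCPU(s) required"
        return 1
    fi

    # Memory tiers from Table 2. Counts of 2-3 and 5-7 vCPUs use the
    # next lower tier (assumption: Table 2 does not list them).
    if [ "$vcpus" -ge 8 ]; then
        min_mem=8
    elif [ "$vcpus" -ge 4 ]; then
        min_mem=4
        if [ "$product" = vbras1000 ]; then min_mem=8; fi
    else
        min_mem=2
    fi
    if [ "$mem_gb" -lt "$min_mem" ]; then
        echo "FAIL: at least $min_mem GB of memory required"
        return 1
    fi

    # One 8 GB vHD and 2 to 16 vNICs.
    if [ "$disk_gb" -lt 8 ]; then
        echo "FAIL: at least 8 GB of disk required"
        return 1
    fi
    if [ "$nics" -lt 2 ] || [ "$nics" -gt 16 ]; then
        echo "FAIL: 2 to 16 vNICs required"
        return 1
    fi
    echo "OK"
}
```

For example, check_vm_minimums vbras1000 4 4 8 2 reports that at least 8 GB of memory is required.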

Software environment

Table 3 describes the software environment requirements for installing the H3C NFV1000 series products.

Table 3 Software environment requirements for installing the H3C NFV1000 series products

| Hypervisor | Version |
|---|---|
| VMware ESXi | VMware ESXi 4.1, 5.0, 5.1, 5.5 |
| Linux KVM | Linux kernel 2.6.25 or higher. Recommended Linux distributions: · CentOS 7 · Ubuntu 12.10 · Red Hat Enterprise Linux (RHEL) 6.3 · SUSE Linux Enterprise Server 11 SP2 |
| H3C CAS | H3C CAS 2.0 |

The hypervisor versions provided in Table 3 are only for your reference. For the compatible hypervisor versions, see the release notes.
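On a Linux KVM host, you can check the kernel requirement in Table 3 with a small version comparison. This is a sketch under stated assumptions. version_ge and kvm_kernel_ok are hypothetical helper names, and the comparison uses only the leading number of each dot-separated field, so distribution suffixes such as -1160.el7 are ignored.

```shell
#!/bin/sh
# version_ge A B: succeeds if dotted version A >= B. Compares the numeric
# prefix of each dot-separated field. Missing fields count as 0.
version_ge() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        na = split(a, x, "."); nb = split(b, y, ".")
        n = (na > nb) ? na : nb
        for (i = 1; i <= n; i++) {
            xi = (i <= na) ? x[i] + 0 : 0
            yi = (i <= nb) ? y[i] + 0 : 0
            if (xi > yi) exit 0
            if (xi < yi) exit 1
        }
        exit 0
    }'
}

# kvm_kernel_ok [RELEASE]: checks RELEASE (default: output of uname -r)
# against the minimum kernel version 2.6.25 from Table 3.
kvm_kernel_ok() {
    release=${1:-$(uname -r)}
    if version_ge "$release" 2.6.25; then
        echo "OK: kernel $release"
    else
        echo "FAIL: kernel $release is older than 2.6.25"
        return 1
    fi
}
```

Run kvm_kernel_ok without arguments to check the running kernel. The fields must be compared as numbers, because a plain string comparison would rank 2.6.9 above 2.6.25.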

Multiple Linux distributions can be used for Linux KVM installation. This document uses CentOS 7 as an example to describe KVM installation in "Appendix A Installing Linux KVM."

For information about installing other hypervisors, see the hypervisor documentation.

Installing the H3C NFV1000 series products on VMware ESXi

The installation procedure on VMware ESXi is the same for all H3C NFV1000 series products. This section uses H3C vFW1000 as an example.

On VMware ESXi, you can install H3C vFW1000 by using one of the following five methods as needed:

· Installing vFW1000 from an ISO file

· Installing vFW1000 via PXE

· Installing vFW1000 via unattended PXE

· Installing vFW1000 from an OVA file

· Installing vFW1000 from an OVA file by using the auto-deploy tool

Installing vFW1000 from an ISO file

Creating a VM

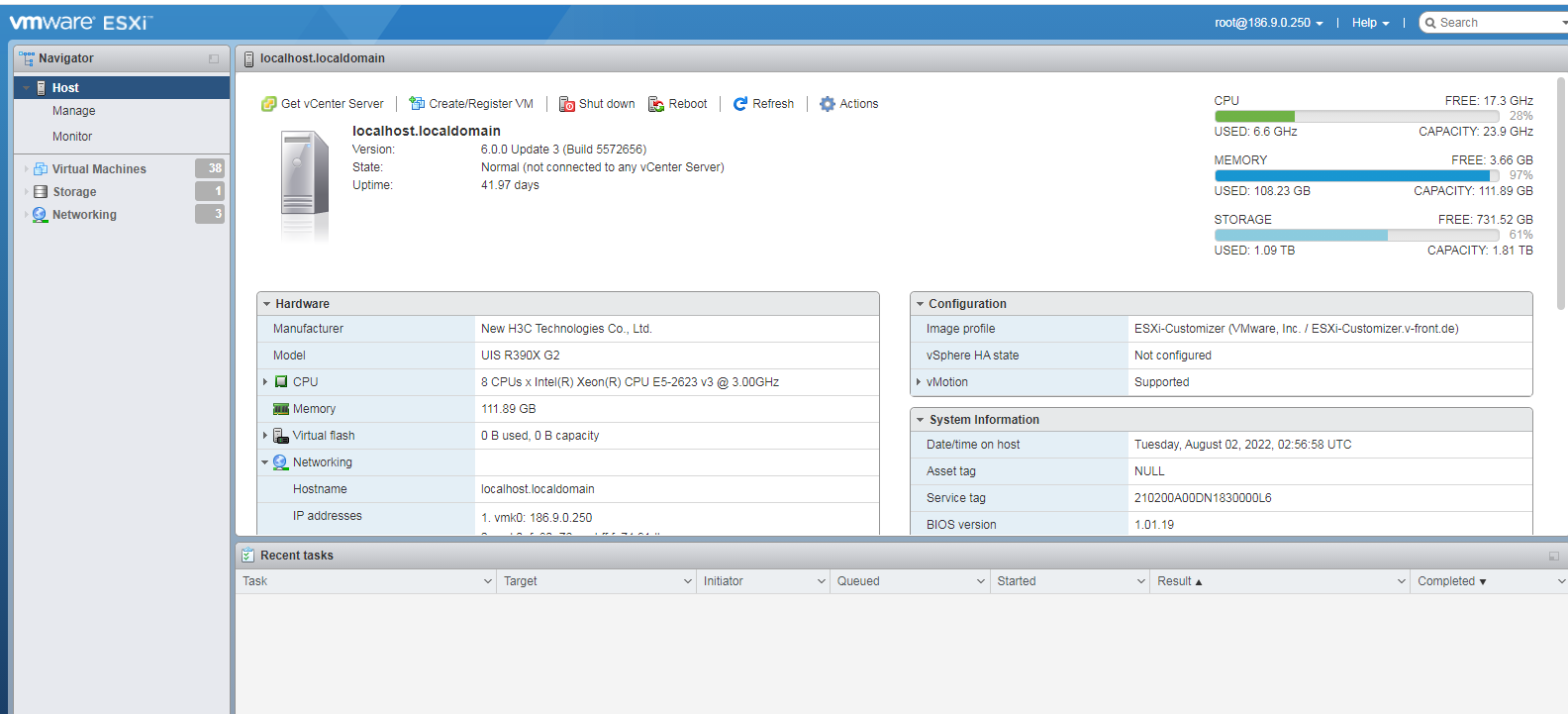

1. Open VMware vSphere Client, enter the VMware ESXi address, username, and password, and then click Log in.

Figure 1 Logging in to VMware ESXi

To obtain the username and password for logging in to VMware ESXi, contact the server administrator.

A security certificate warning might be displayed during login. You can ignore it.

2. After a successful login, the VMware ESXi page is displayed, as shown in Figure 2.

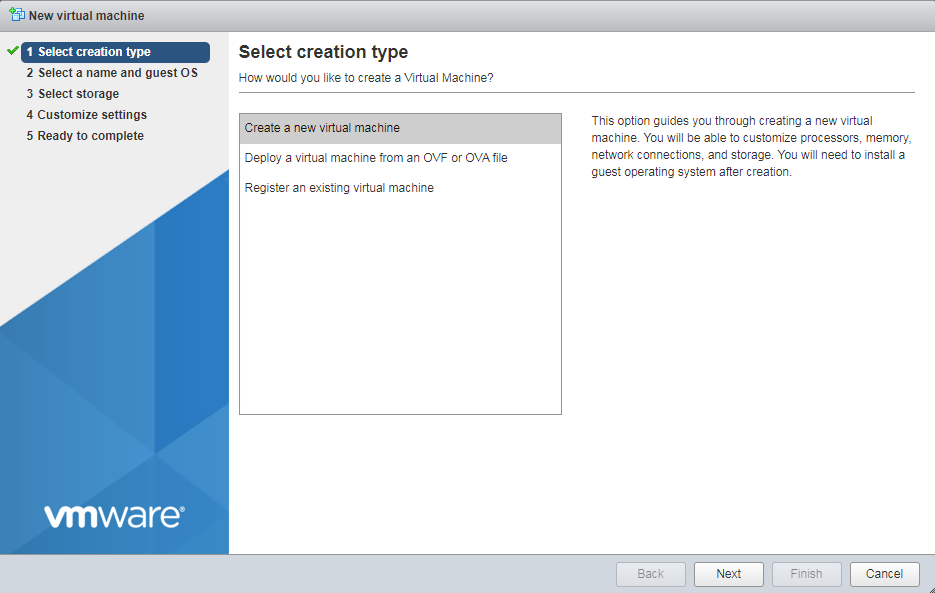

3. Click the Create/Register VM tab to start creating a new VM. On the page as shown in Figure 3, select Create a new virtual machine, and then click Next.

Figure 3 Selecting a creation type

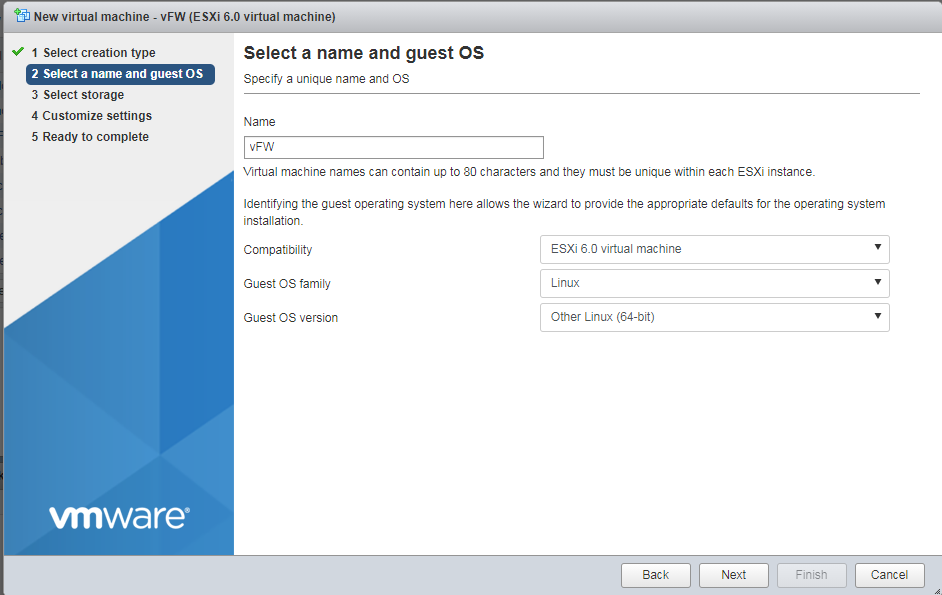

4. Specify a name for the VM, select ESXi 6.0 virtual machine for the Compatibility field, Linux for the Guest OS family field, and Other Linux (64-bit) for the Guest OS version field, and then click Next.

Figure 4 Entering a name for the VM

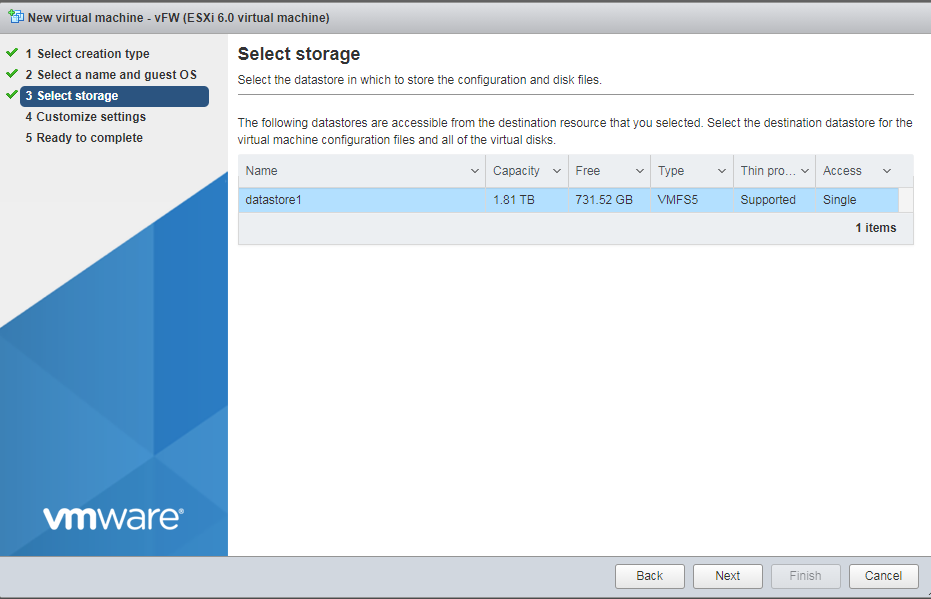

5. Select the destination storage for the VM files, and then click Next.

Figure 5 Selecting the destination storage for the VM files

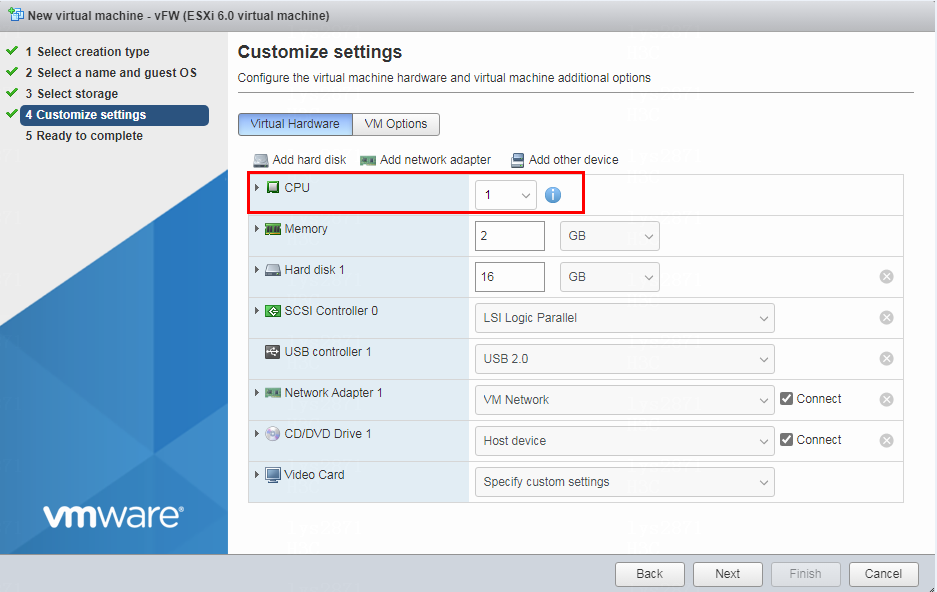

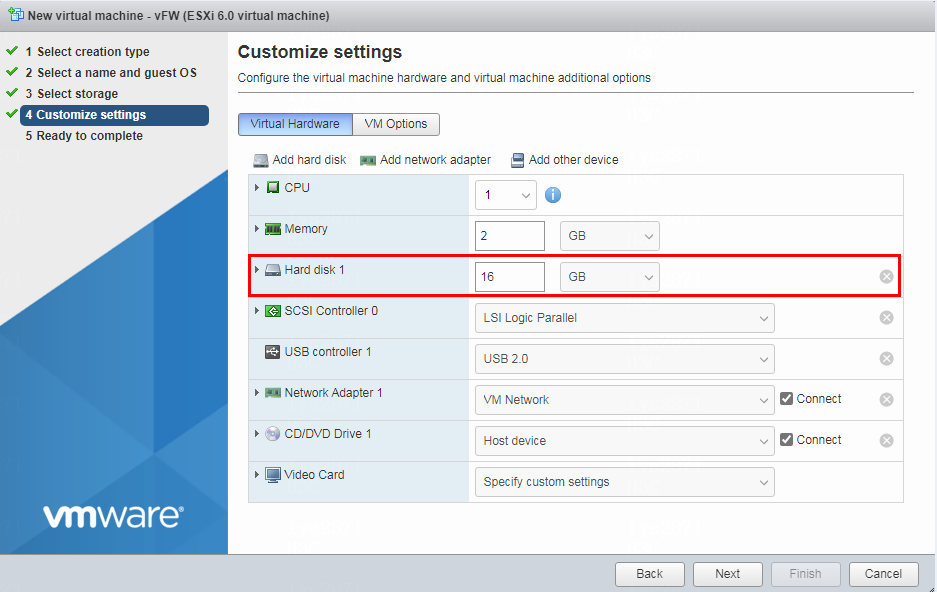

6. Specify the CPU quantity for the VM, and then click Next.

Assign a minimum of one vCPU (2.0 GHz or higher) to the VM. vBRAS1000 requires a minimum of four vCPUs. When you assign multiple cores, for example, 4 cores, a 2 × 2 setting (sockets × cores per socket) is equivalent to a 1 × 4 setting.

Figure 6 Specifying the CPU quantity for the VM

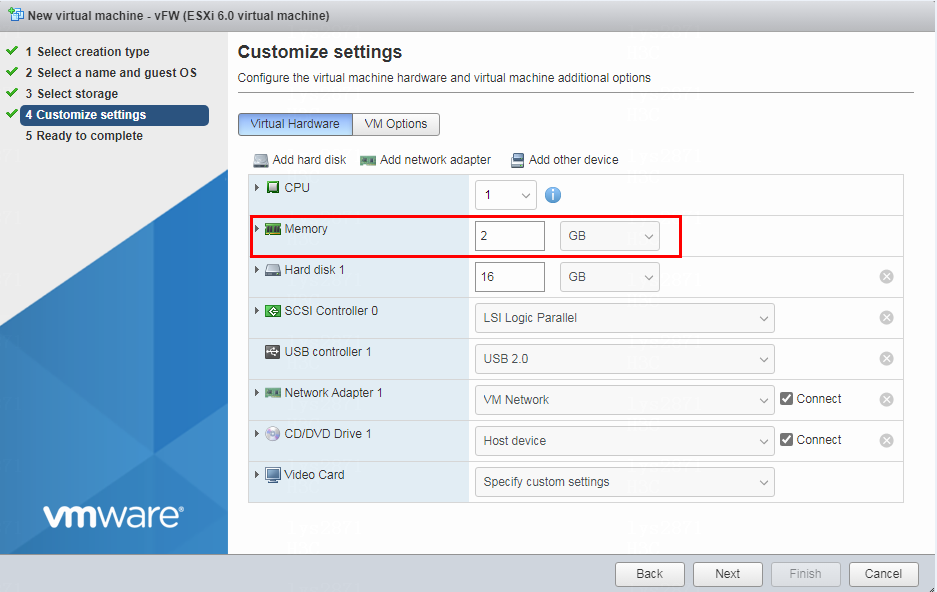

7. Set the memory capacity for the VM, and then click Next.

Assign memory that meets the minimum requirements in Table 2, for example, a minimum of 2 GB for a VM with one vCPU.

For vBRAS1000, which requires a minimum of four vCPUs, assign a minimum of 8 GB of memory.

Figure 7 Setting the memory size for the VM

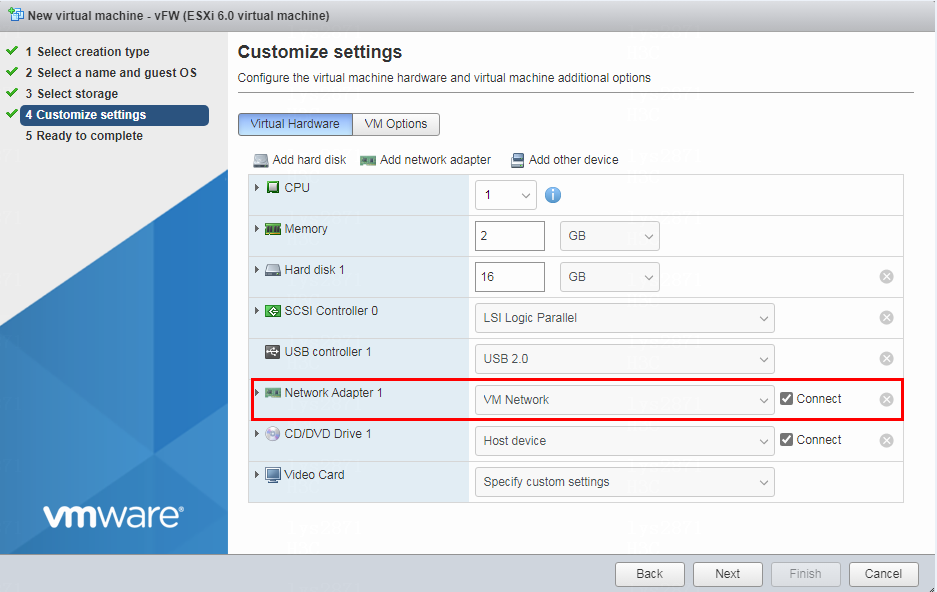

8. Specify the vNIC quantity and select the vNICs for the VM, and then click Next.

Add 2 to 16 vNICs to the VM.

Figure 8 Specifying the vNIC quantity

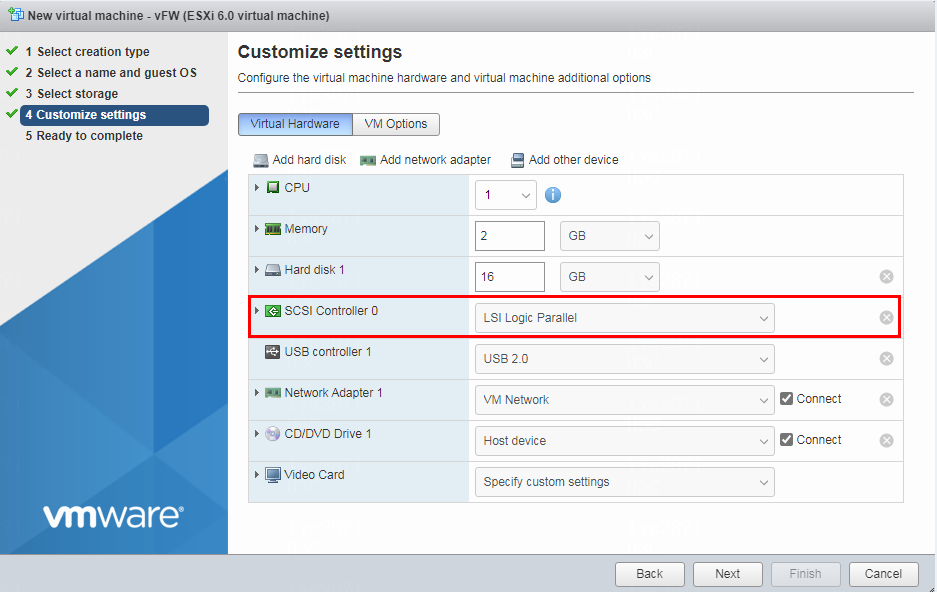

9. Select a Small Computer System Interface (SCSI) controller type and then click Next, as shown in Figure 9.

Figure 9 Specifying the SCSI controller type

10. Select the hard disk space assigned to the VM and then click Next, as shown in Figure 10. Specify a minimum of one vHD with 8 GB space.

Figure 10 Specifying the hard disk space assigned to the VM

11. As shown in Figure 11, click Finish. After the VM is created, it is displayed in the left device navigation pane.

Figure 11 Finishing VM creation

Configuring the VM to boot from CD-ROM

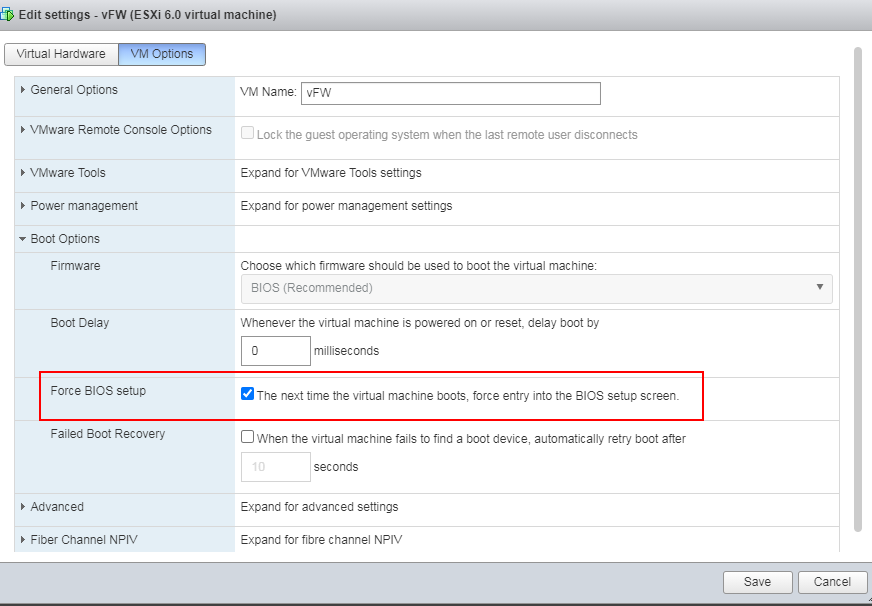

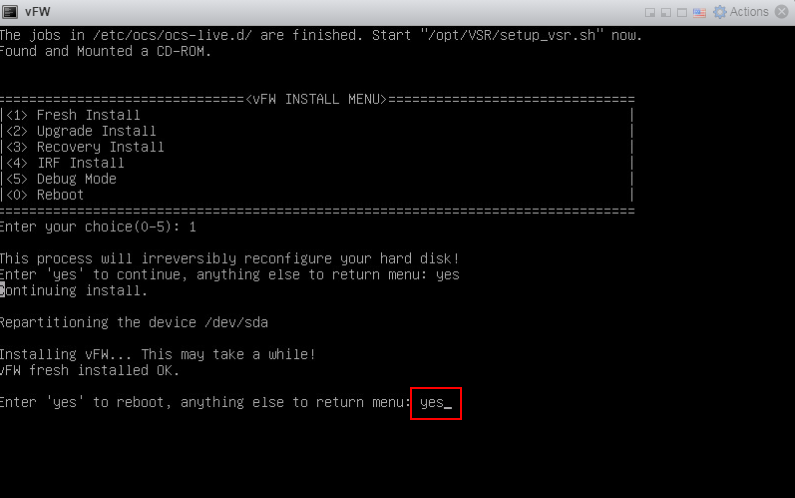

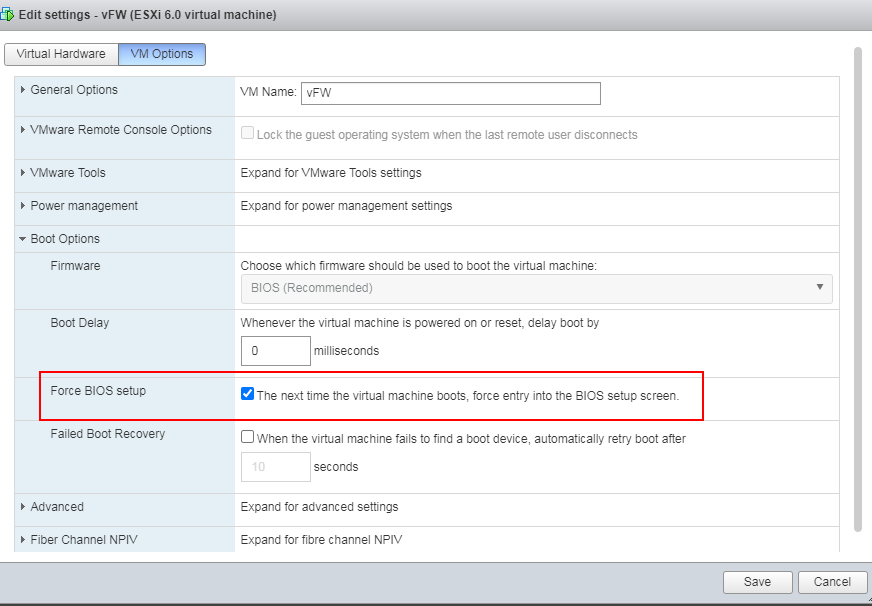

1. Right-click the newly created VM from the left device navigation pane and select Edit VM Settings from the shortcut menu. Then, click the VM Options tab, as shown in Figure 12. Select Force BIOS setup, and click OK.

Figure 12 Selecting Force BIOS setup

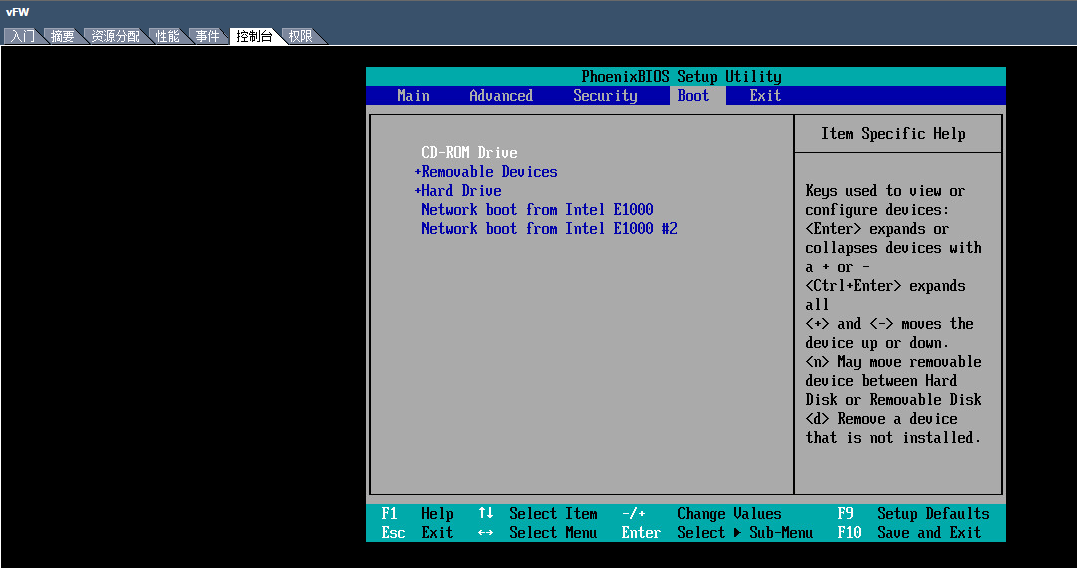

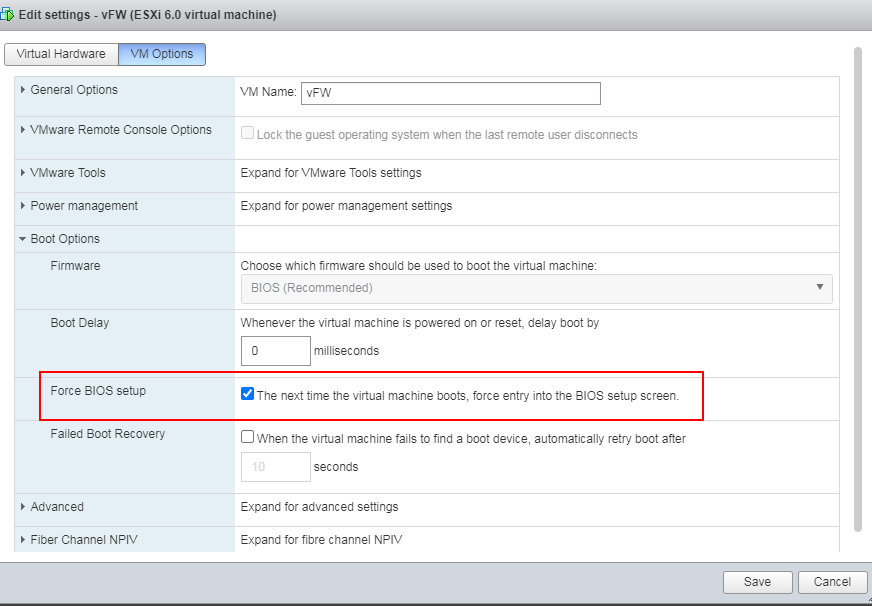

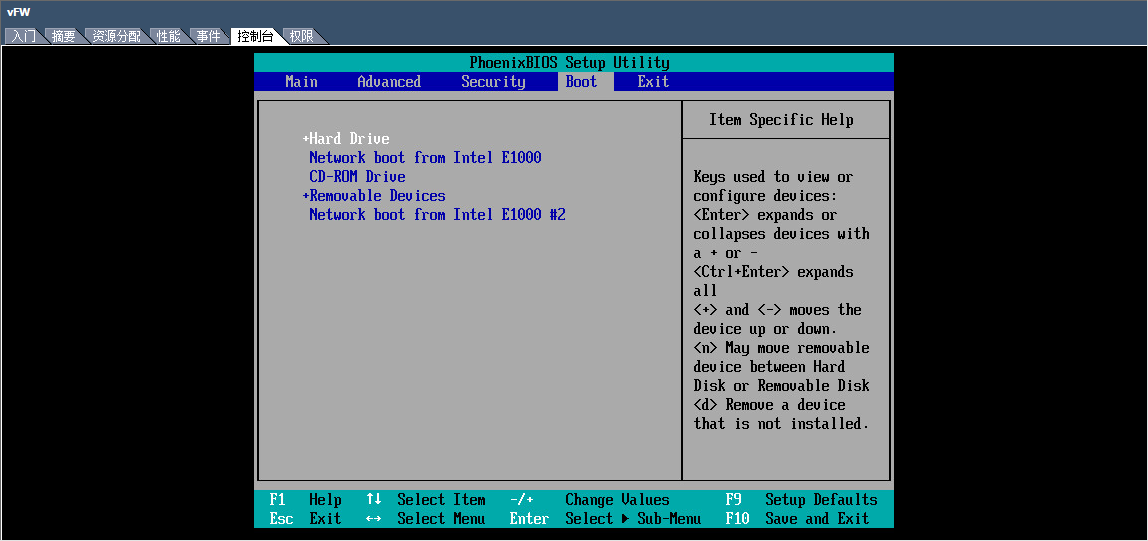

2. From the left device navigation pane, select the newly created VM. Click the ![]() icon to start the VM. From the console, click the Boot tab and select CD-ROM Drive as the first boot option, as shown in Figure 13. Then save the configuration and exit the console.

Figure 13 Selecting CD-ROM drive as the first boot option
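The Force BIOS setup option and the BIOS boot order are stored as options in the VM's .vmx file, so you can also set them by editing that file. The following sketch assumes the VMware option names bios.forceSetupOnce and bios.bootOrder (for example, the value cdrom,hdd) and a powered-off VM. Verify these names against the VMware documentation for your ESXi version. The helper name set_vmx_option is hypothetical.

```shell
#!/bin/sh
# set_vmx_option FILE KEY VALUE: sets KEY = "VALUE" in a .vmx file.
# Replaces an existing line for KEY, or appends one if KEY is absent.
set_vmx_option() {
    file=$1; key=$2; value=$3
    # Escape dots so that "bios.bootOrder" is matched literally.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    tmp="$file.tmp.$$"
    if grep -q "^$key_re *=" "$file"; then
        sed "s|^$key_re *=.*|$key = \"$value\"|" "$file" > "$tmp" &&
            mv "$tmp" "$file"
    else
        printf '%s = "%s"\n' "$key" "$value" >> "$file"
    fi
}
```

For example: set_vmx_option /vmfs/volumes/datastore1/vFW/vFW.vmx bios.bootOrder cdrom,hdd. The datastore path is only an example.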

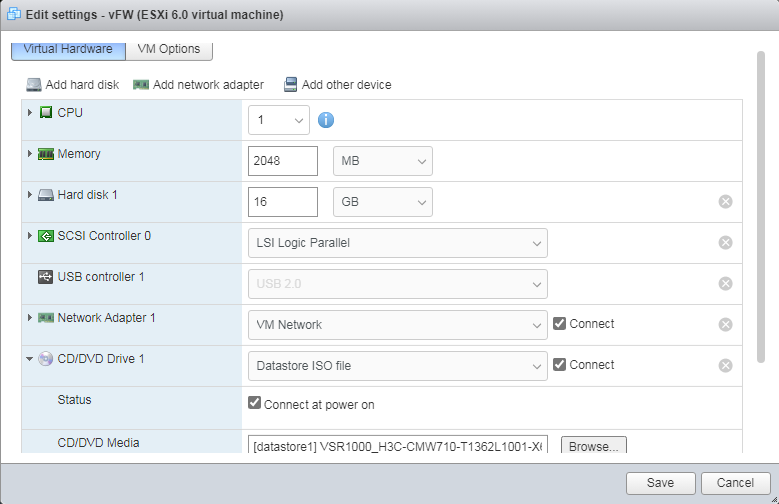

Connecting to the vFW1000 installation image

Click the ![]() icon to connect the CD device of the VM to the vFW1000 installation ISO file. Wait for the VM to automatically read the installation image, as shown in Figure 14.

Figure 14 Connecting the CD device of the VM to the vFW1000 installation ISO file

Installing vFW1000

1. Select the newly created VM from the navigation pane and then click Power On. In the window that opens, select Yes.

2. Select the Console tab and then click Web to start the VM console.

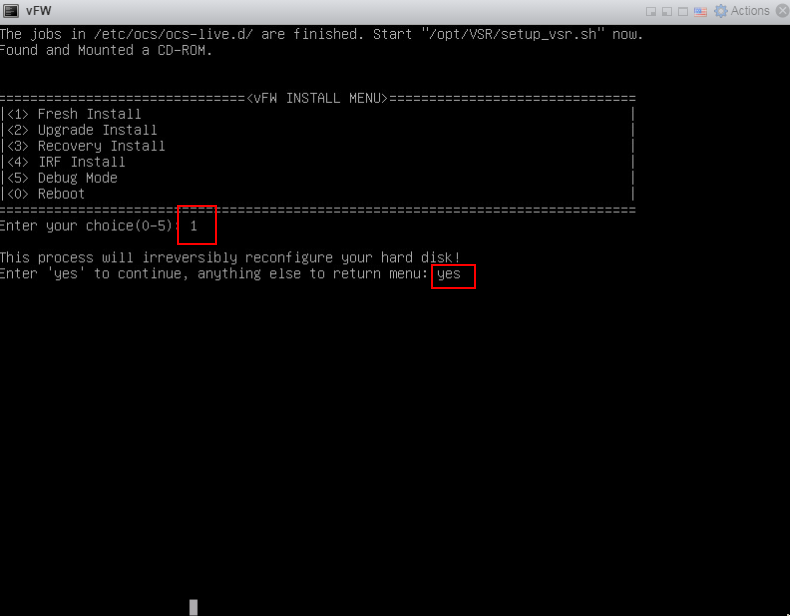

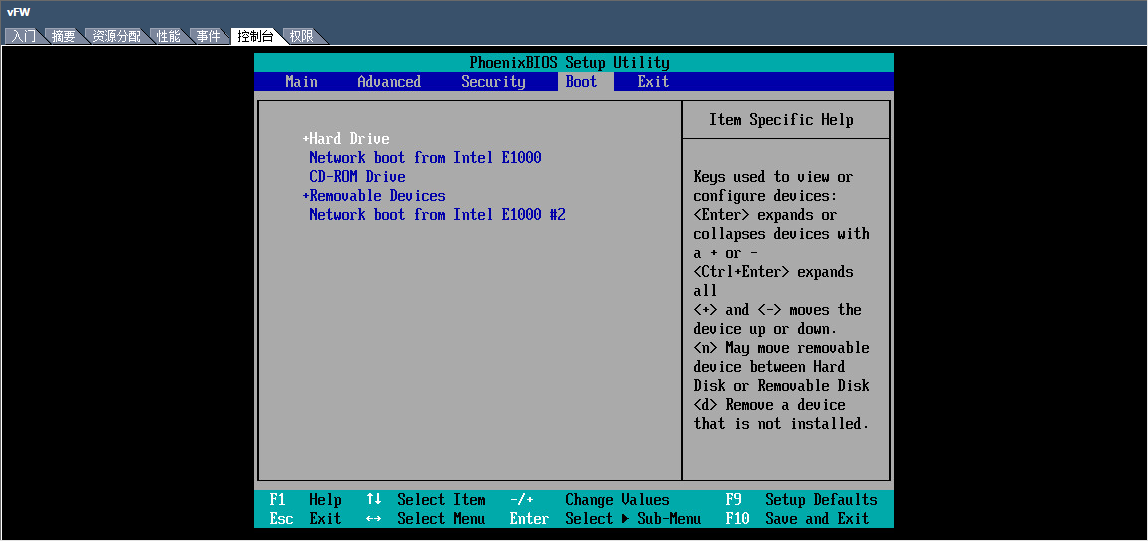

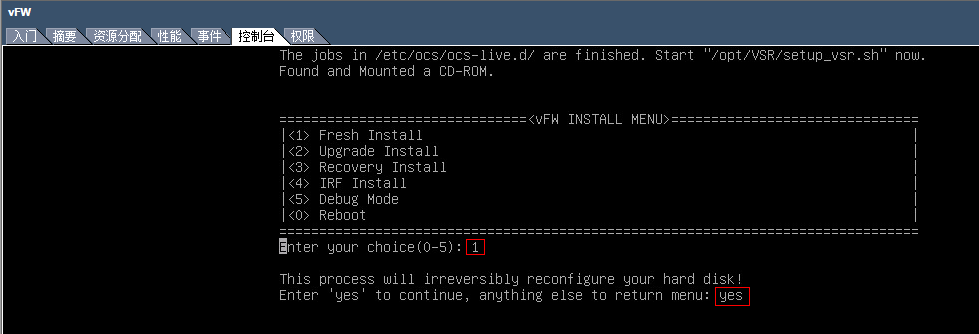

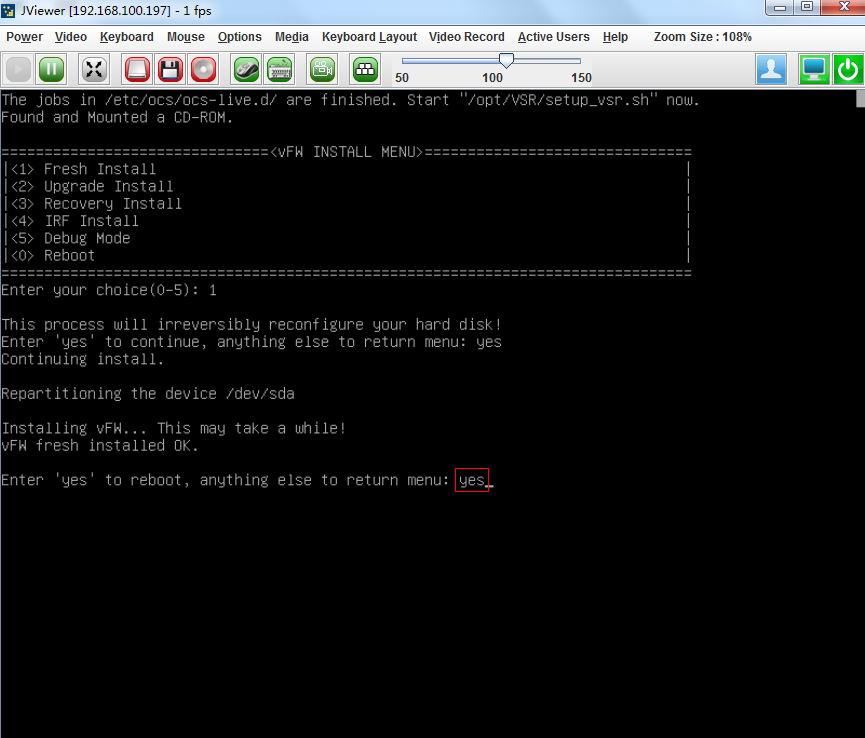

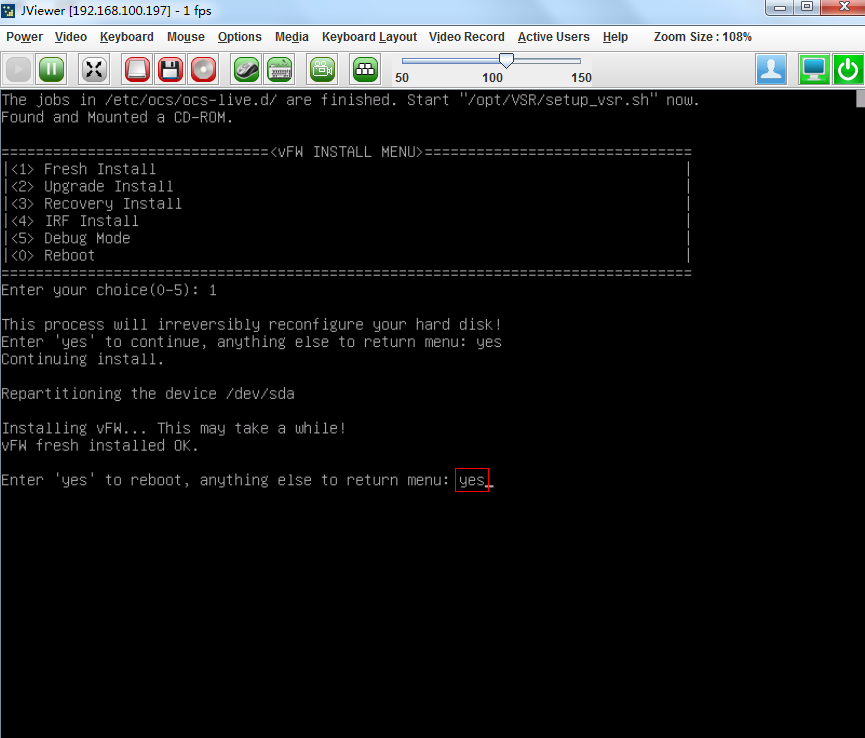

3. The VM automatically loads and installs the ISO file. On the installation screen, enter 1 to select <1> Fresh Install, and then enter yes. The system will automatically complete installation.

Figure 15 Starting installation

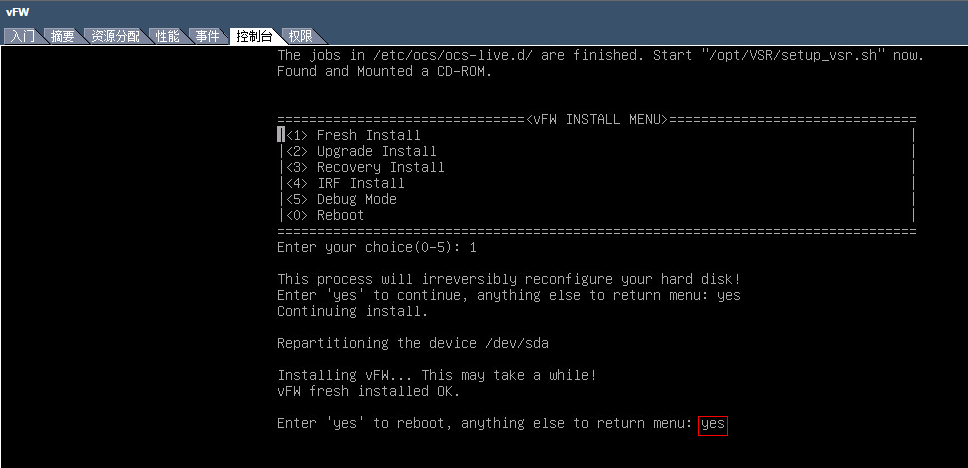

4. Enter yes to reboot the system, and press Enter at the subsequent screens to finish vFW1000 installation.

Figure 16 Completing vFW1000 installation

Installing vFW1000 via PXE

This section describes only the installation procedure on the PXE client side. For the PXE server setup procedure, see "Appendix D Setting up a PXE server."

Creating a VM

For information about creating a VM, see "Creating a VM."

Configuring the VM to boot from the network

1. Right-click the newly created VM in the left device navigation pane and select Edit VM Settings. On the page shown in Figure 17, click the VM Options tab, select Force BIOS setup, and click Save.

Figure 17 Selecting force BIOS setup

2. From the left device navigation pane, select the newly created VM. Click the ![]() icon to start the VM. From the console, click the Boot tab and select Network boot from xx as the second boot option, as shown in Figure 18. Then save the configuration and exit the console.

Figure 18 Selecting boot from a network interface as the second boot option

Installing vFW1000

1. The VM automatically loads the required files from the PXE server. On the installation screen, enter 1 to select <1> Fresh Install, and then enter yes. The system automatically completes the installation.

Figure 19 Starting installation

2. Enter yes to reboot the system to finish the installation of vFW1000.

Figure 20 Rebooting the system

Installing vFW1000 via unattended PXE

This section describes only the unattended installation procedure on the PXE client side. For information about setting up the PXE server, see "Appendix D Setting up a PXE server."

When setting up the PXE server, change the value of the Syslinux parameter ocs_live_run to /opt/VSR/setup_vsr_pxe.sh unmanned fresh.
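You can script the ocs_live_run change. This sketch assumes that the Syslinux menu file on the PXE server passes the parameter on its append line in quoted form (ocs_live_run="..."). The function name set_ocs_live_run is hypothetical, and the location of the menu file depends on how you set up the PXE server.

```shell
#!/bin/sh
# set_ocs_live_run CFG: rewrites every quoted ocs_live_run="..." value in
# the Syslinux menu file CFG for unattended installation.
set_ocs_live_run() {
    cfg=$1
    new='/opt/VSR/setup_vsr_pxe.sh unmanned fresh'
    tmp="$cfg.tmp.$$"
    # "|" is the sed delimiter because the new value contains slashes.
    sed "s|ocs_live_run=\"[^\"]*\"|ocs_live_run=\"$new\"|g" "$cfg" > "$tmp" &&
        mv "$tmp" "$cfg"
}
```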

Creating a VM

For information about creating a VM, see "Creating a VM."

Configuring the VM to boot from the network

1. Right-click the newly created VM in the left device navigation pane and select Edit VM Settings. On the page shown in Figure 21, click the VM Options tab, select Force BIOS setup, and click Save.

Figure 21 Selecting force BIOS setup

2. From the left device navigation pane, select the newly created VM. Click the ![]() icon to start the VM. From the console, click the Boot tab and select Network boot from xx as the second boot option, as shown in Figure 22. Then save the configuration and exit the console.

Figure 22 Selecting boot from a network interface as the second boot option

Installing vFW1000

The VM automatically downloads the required files from the PXE server, and the system will complete the installation automatically.

Installing vFW1000 from an OVA file

The vFW1000 OVA template is created based on VMware VM version 8. This VM version is compatible with ESXi 5.0 and higher hosts. To install vFW1000 by using the OVA template, use a host running VMware ESXi 5.0 or higher.
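To confirm the VM version of an OVA template before deployment, you can read it from the OVF descriptor inside the OVA. This sketch assumes that the descriptor uses the standard vssd:VirtualSystemType element with a value such as vmx-08. The helper names are hypothetical, and the lookup table covers only virtual hardware versions 7 to 11.

```shell
#!/bin/sh
# ovf_hw_version: reads an OVF descriptor on stdin and prints the first
# VMware virtual hardware version found, for example "8" for vmx-08.
ovf_hw_version() {
    sed -n 's/.*<vssd:VirtualSystemType>vmx-0*\([0-9][0-9]*\).*/\1/p' |
        head -n 1
}

# min_esxi_for_hw VERSION: prints the earliest ESXi release that runs
# the given virtual hardware version.
min_esxi_for_hw() {
    case $1 in
        7)  echo "ESXi 4.0" ;;
        8)  echo "ESXi 5.0" ;;
        9)  echo "ESXi 5.1" ;;
        10) echo "ESXi 5.5" ;;
        11) echo "ESXi 6.0" ;;
        *)  echo "unknown"; return 1 ;;
    esac
}
```

An OVA file is a tar archive, so GNU tar can stream the descriptor directly, for example: tar -xOf vFW1000.ova --wildcards '*.ovf' | ovf_hw_version.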

Connecting to VMware ESXi

Open VMware vSphere Client and connect to VMware ESXi. For information about how to connect to VMware ESXi, see "Creating a VM."

Installing vFW1000

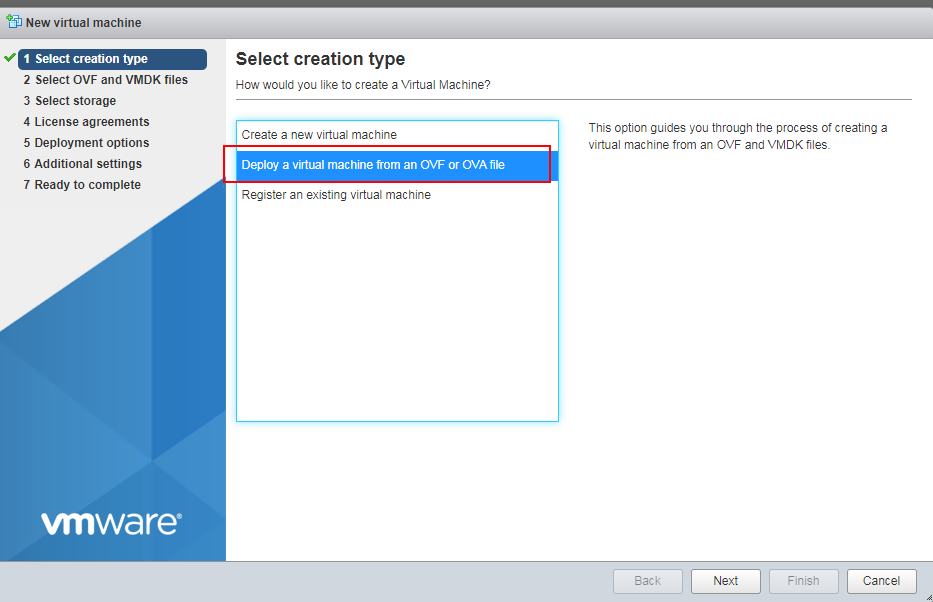

1. Click the Create/Register VM tab.

The New virtual machine wizard opens.

2. As shown in Figure 23, select Deploy a virtual machine from an OVF or OVA file, and then click Next.

Figure 23 Selecting VM deployment from an OVA file

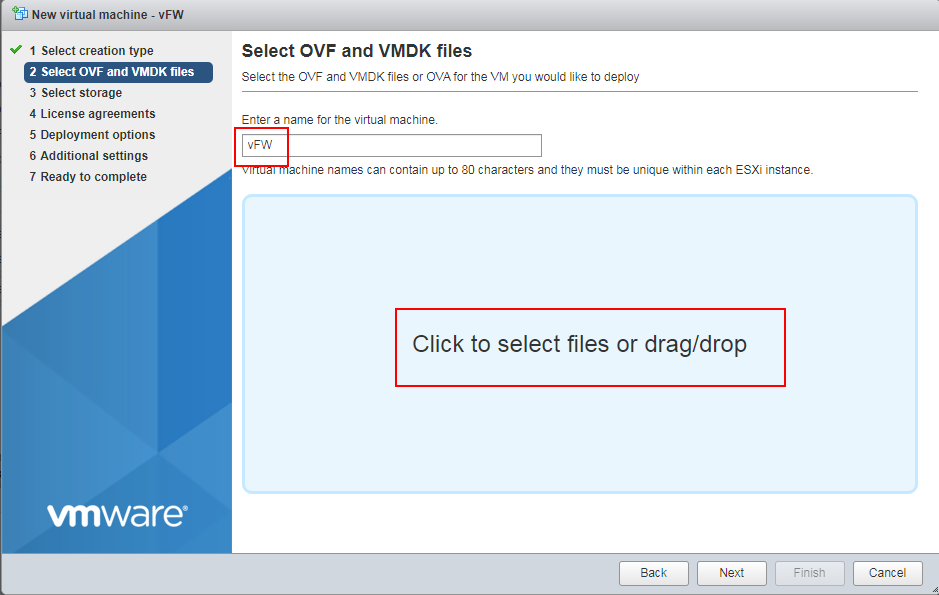

3. Enter a name for the VM, select an OVA file, and then click Next.

Figure 24 Entering a name for the VM

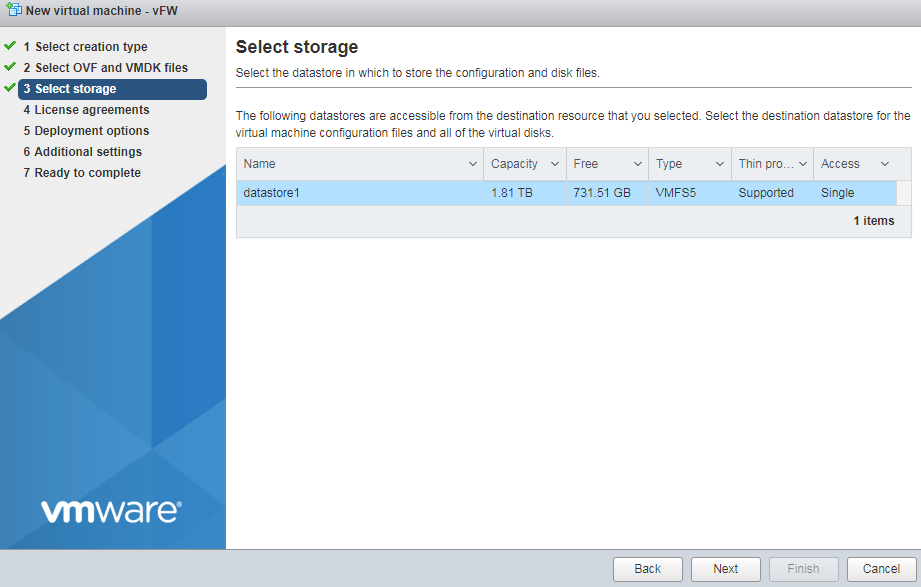

4. Configure the destination storage for the VM, and then click Next.

Figure 25 Configuring the destination storage

5. Select the VM Network mapping option, and then click Next.

Figure 26 Selecting the VM network mapping option

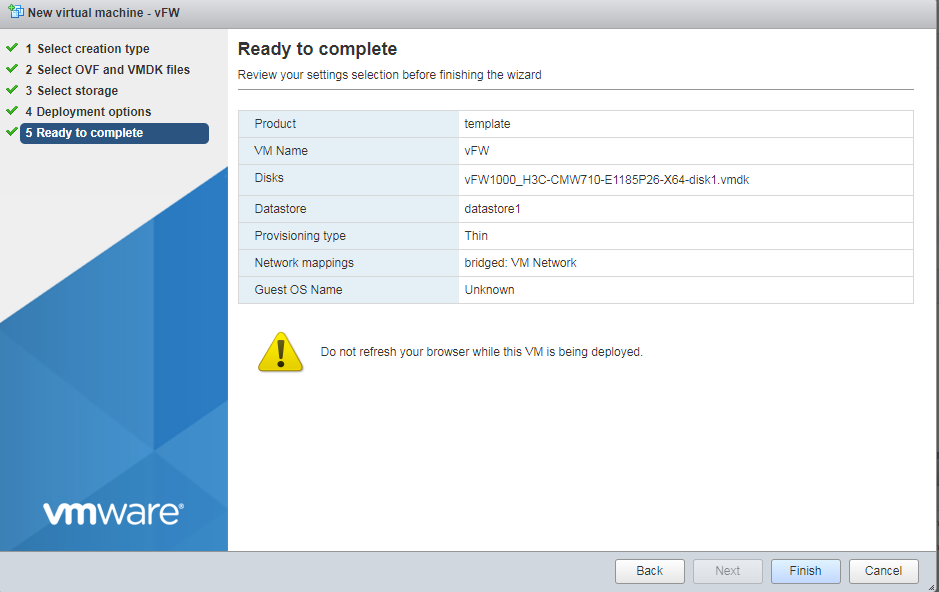

6. Click Finish to finish VM creation.

Figure 27 Finishing VM creation

7. After the VM is created, it is displayed in the left device navigation pane.
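As an alternative to the New virtual machine wizard, you can deploy the same OVA file to a standalone ESXi host with VMware OVF Tool (ovftool). The sketch below prints the command and runs it only when DRY_RUN=0 is set. The function names are hypothetical. The --diskMode=thick and --powerOn options are the same ones that vd_deploy.sh passes to ovftool.

```shell
#!/bin/sh
# run: prints the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# deploy_ova OVA NAME DATASTORE NETWORK USER HOST
# Deploys OVA as VM NAME on the standalone ESXi host HOST.
deploy_ova() {
    run ovftool --name="$2" --datastore="$3" --network="$4" \
        --diskMode=thick --powerOn "$1" "vi://$5@$6/"
}
```

The locator does not include a password, so ovftool prompts for the ESXi password, and the password stays out of the command line and shell history. The dry-run output is for review only, because arguments that contain spaces, such as VM Network, are printed without quotes.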

Installing vFW1000 from an OVA file by using the auto-deploy tool

Configuration procedure

Use the auto-deploy tool vd_deploy.sh to deploy the vFW1000 OVA template to a target server managed by vCenter and to configure basic settings for vFW1000.

IMPORTANT: The auto-deploy tool vd_deploy.sh runs only in a Linux environment where OVFTOOL 3.01 or later is installed.

NOTE:

· You can download OVFTOOL from www.vmware.com.

· To obtain the username and password for logging in to vCenter, contact the server administrator.

To install vFW1000 from an OVA file by using the auto-deploy tool:

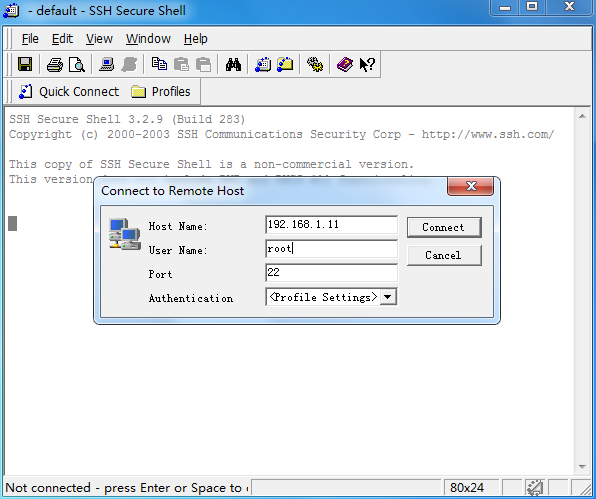

1. Log in to the Linux server. In this example, the SSH login method is used.

Figure 28 Logging in to the Linux server

# Access the /opt directory, where the OVA template resides.

Figure 29 Accessing the /opt directory

2. Use the auto-deploy tool to customize the OVA template parameters as needed. Skip this step if no customization is required.

# Use the OVA template named vFW1000_H3C-CMW710-E1184-X64.ova in the current directory to create a new template with 4 CPUs, 2048 MB memory, and 2 NICs, and save the template to the /opt/results directory.

[root@localhost opt]# ./vd_deploy.sh -s vFW1000_H3C-CMW710-E1184-X64.ova -o /opt/results -c 4 -m 2048 -ns 2

Generating OVF file with user params

------------------------------------

No ovftool found in your environment, please install 'ovftool' first

ovftool not available; unable to perform validity of OVF check. Continuing.

Generating Manifest

---------------------

Creating OVA package

--------------------

Copying OVA package to output directory

--------------------

'/opt/results/vFW1000_H3C-CMW710-E1184-X64.ova'

Success

3. Use the auto-deploy tool to install vFW1000 from the OVA template and configure the following basic settings for vFW1000:

· vFW1000 name: vFW.

· Target host: Server 192.168.1.25 managed by vCenter at 192.168.1.26.

· vCenter login username: root.

· vCenter login password: vmware.

· Destination datastore: datastore1.

· Connected network: VM Network.

· Power-on after deployment: Enabled.

· IP address of vFW1000: 172.31.2.222/24.

· Default gateway of vFW1000: 172.31.2.254.

· vFW1000 login username: vfw-user.

· vFW1000 login password: 123456.

· SSH: Enabled.

[root@localhost opt]# ./vd_deploy.sh -s vFW1000_H3C-CMW710-E1184-X64.ova -n vFW -po -ov -d '192.168.1.26/Datacenter-1/host/192.168.1.25' -u root -pw vmware -ds datastore1 -nw 'VM Network' -ip '172.31.2.222/24' -gw '172.31.2.254' -lu 'vfw-user' -lpw '123456' -ssh

/usr/bin/ovftool found...

Generating OVF file with user params

------------------------------------

Validating OVF descriptor

----------------

Generating Manifest

---------------------

Creating OVA package

--------------------

Deploying OVA package to '192.168.1.26/Datacenter-1/host/192.168.1.25'

------------------------------------------

/usr/bin/ovftool --powerOffTarget --diskMode=thick --datastore=datastore1 --overwrite --powerOn --name=vFW vFW.ova vi://root:********@192.168.1.26/Datacenter-1/host/192.168.1.25

Opening OVA source: vFW.ova

The manifest validates

Accept SSL fingerprint (D1:FB:DC:C1:E0:41:89:22:6E:48:F8:D6:03:A7:8B:36:21:E1:55:CF) for host 192.168.1.26 as target type.

Fingerprint will be added to the known host file

Write 'yes' or 'no'

yes

Opening VI target: vi://root@192.168.1.26:443/Datacenter-1/host/192.168.1.25

Deploying to VI: vi://root@192.168.1.26:443/Datacenter-1/host/192.168.1.25

Transfer Completed

Powering on VM: vFW

Completed successfully

Success

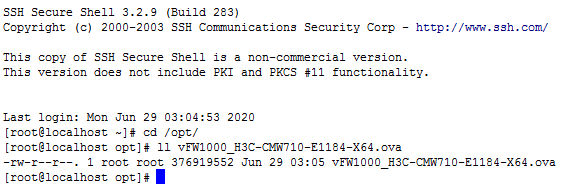

# A vFW1000 named vFW is deployed on server 192.168.1.25.

Figure 30 vFW1000 deployed
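You can check a customized OVA saved by the -o option before you reuse it. An OVA is a tar archive, and its manifest (.mf) file lists a digest for each packaged file in lines such as SHA256(vFW.ovf)= followed by the hex digest. The following sketch verifies those digests after you extract the OVA, for example with tar -xf vFW1000_H3C-CMW710-E1184-X64.ova -C /tmp/vfw. It assumes SHA1 or SHA256 manifest entries. The function name verify_manifest is hypothetical.

```shell
#!/bin/sh
# verify_manifest DIR: checks each "ALG(file)= digest" line of the first
# *.mf file in DIR. Prints "OK: file" or "FAIL: file" for each entry and
# returns 1 if any entry fails.
verify_manifest() {
    dir=$1; status=0; mf=""
    for f in "$dir"/*.mf; do
        if [ -f "$f" ]; then mf=$f; break; fi
    done
    if [ -z "$mf" ]; then
        echo "FAIL: no manifest in $dir"
        return 1
    fi
    while IFS= read -r line || [ -n "$line" ]; do
        # Split "SHA256(vFW.ovf)= 1a2b..." into algorithm, file, and digest.
        alg=$(printf '%s\n' "$line" | sed -n 's/^\([A-Z0-9]*\)(.*/\1/p')
        file=$(printf '%s\n' "$line" | sed -n 's/^[^(]*(\(.*\))=.*$/\1/p')
        want=$(printf '%s\n' "$line" |
            sed -n 's/^.*)= *\([0-9A-Fa-f]*\)$/\1/p' | tr 'A-F' 'a-f')
        case $alg in
            SHA1)   tool=sha1sum ;;
            SHA256) tool=sha256sum ;;
            *)      continue ;;  # skip blank or unsupported lines
        esac
        got=$("$tool" "$dir/$file" 2>/dev/null | awk '{print $1}')
        if [ -n "$want" ] && [ "$got" = "$want" ]; then
            echo "OK: $file"
        else
            echo "FAIL: $file"
            status=1
        fi
    done < "$mf"
    return "$status"
}
```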

Configuration options

Table 4 describes the configuration options of the auto-deploy tool vd_deploy.sh. The options can be specified in any order.

Table 4 Configuration options of the auto-deploy tool vd_deploy.sh

| Configuration option | Format | Description | Remarks |
|---|---|---|---|
| Virtual device configuration options (vFW1000 basic settings) | | | |
| -sn \| -sysname | <string> | Enters the sysname, a string of 1 to 64 characters. | vFW1000 system name. |
| -ip \| -ip_address | <address/mask> | Enters the IPv4 address and mask of the first interface, such as '10.1.1.100 255.255.255.0' or '10.1.1.100/24'. To obtain an address through DHCP, specify 'dhcp'. | vFW1000 IP address. |
| -gw \| -gateway | <address> | Enters the default IPv4 gateway, such as '1.1.1.1'. | vFW1000 gateway address. |
| -lu \| -login_username | <string> | Enters the login username, a string of 1 to 55 characters. The username cannot contain \\, \|, /, :, *, ?, <, >, or @, and cannot be a, al, or all. This option must be used together with the -lpw option. | vFW1000 login username. |
| -lpw \| -login_password | <string> | Enters the login password, a string of 1 to 63 characters. This option must be used together with the -lu option. | vFW1000 login password. |
| -ssh | N/A | If set, enables SSH. This option requires the -lu and -lpw options. | Enable SSH. |
| -telnet | N/A | If set, enables Telnet. This option requires the -lu and -lpw options. | Enable Telnet. |
| -netconf_http | N/A | If set, enables NETCONF over HTTP. This option requires the -lu and -lpw options. | Enable NETCONF over HTTP. |
| -netconf_https | N/A | If set, enables NETCONF over HTTPS. This option requires the -lu and -lpw options. | Enable NETCONF over HTTPS. |
| -snmpv2 | N/A | If set, enables SNMPv2. This option requires the -rc and -wc options. | Enable SNMPv2. |
| -rc \| -read_community | <string> | Enters the SNMPv2 read-only community name, a string of 1 to 32 characters. | SNMPv2 read-only community name. |
| -wc \| -write_community | <string> | Enters the SNMPv2 read-write community name, a string of 1 to 32 characters. | SNMPv2 read-write community name. |
| Help | | | |
| -h \| -help | N/A | Displays this help and exits. | Displays help information. |
| Input/output options | | | |
| -s \| -sourcefile | <file> | The OVA file used to deploy the virtual device. | Original OVA template path. |
| -o \| -output | <directory> | Enters the output directory for the customized OVA file. If you do not specify an output directory, the file is deleted after deployment. | Path to save the customized OVA file. |
| -n \| -name | <string> | Enters the name of the VM to deploy. If you do not specify a name, the source OVA file name is used. | vFW1000 name or OVA template name. |
| Virtual machine hardware options | | | |
| -c \| -cpus | <number> | Enters the number of vCPUs. | vCPU quantity. |
| -m \| -memory | <MB> | Enters the memory size. The minimum memory size is 1024 MB. | Memory size. |
| -ns \| -nics | <number> | Enters the number of vNICs. | vNIC quantity. |
| -nt \| -nic_type | <string> | Enters the vNIC type. Valid values are E1000 and VMXNET3. | vNIC type. |
| -nw \| -network | <string> | Enters the network label for all vNICs, or a comma-separated list of labels, one per vNIC. Each network label must exist on the ESXi host. | vNIC settings. |
| ESXi/vSphere deploy options | | | |
| -d \| -deploy | <URL> | Deploys the OVA to the specified ESXi host. | Destination to install vFW1000. |
| -u \| -username | <string> | Enters the ESXi login username. | vCenter username. |
| -pw \| -password | <string> | Enters the ESXi login password. | vCenter password. |
| -ds \| -datastore | <string> | Enters the name of the datastore to deploy the OVA to. The datastore must exist on the ESXi host. | Destination datastore for vFW1000. |
| -ov \| -overwrite | N/A | Overwrites an existing VM with the same name. | Overwrites the vFW1000 of the same name. |
| -po \| -poweron | N/A | Automatically powers on the VM after deployment. | Enable startup after installation. |
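You can check some Table 4 rules before you run vd_deploy.sh, so that a long deployment does not fail on a simple input error. This sketch encodes the login username rules and the option dependencies from Table 4: -lu with -lpw, -ssh, -telnet, -netconf_http, and -netconf_https with a login account, and -snmpv2 with -rc and -wc. The function names are hypothetical, and vd_deploy.sh might enforce additional rules that are not listed here.

```shell
#!/bin/sh
# valid_login_username NAME: succeeds if NAME satisfies the -lu rules in
# Table 4 (1 to 55 characters, none of \ | / : * ? < > @, not a/al/all).
valid_login_username() {
    name=$1
    if [ ${#name} -lt 1 ] || [ ${#name} -gt 55 ]; then
        return 1
    fi
    case $name in
        a|al|all) return 1 ;;
    esac
    # Inside a bracket expression, all of these characters are literal.
    if printf '%s\n' "$name" | grep -q '[\\|/:*?<>@]'; then
        return 1
    fi
    return 0
}

# check_vd_args ARGS...: checks the Table 4 option dependencies in a
# vd_deploy.sh argument list. Prints "OK" or the first problem found.
check_vd_args() {
    user=""; have_lu=0; have_lpw=0; have_rc=0; have_wc=0
    snmp=0; needs_login=""
    while [ $# -gt 0 ]; do
        opt=$1; shift
        case $opt in
            -lu|-login_username)  have_lu=1; user=${1-} ;;
            -lpw|-login_password) have_lpw=1 ;;
            -rc|-read_community)  have_rc=1 ;;
            -wc|-write_community) have_wc=1 ;;
            -ssh|-telnet|-netconf_http|-netconf_https)
                needs_login="$needs_login $opt"; continue ;;
            -snmpv2) snmp=1; continue ;;
            *) continue ;;
        esac
        # The four options above take a value. Skip over it.
        if [ $# -gt 0 ]; then shift; fi
    done

    if [ "$have_lu" != "$have_lpw" ]; then
        echo "FAIL: -lu and -lpw must be used together"; return 1
    fi
    if [ -n "$needs_login" ] && [ "$have_lu" = 0 ]; then
        echo "FAIL:$needs_login requires -lu and -lpw"; return 1
    fi
    if [ "$snmp" = 1 ] && { [ "$have_rc" = 0 ] || [ "$have_wc" = 0 ]; }; then
        echo "FAIL: -snmpv2 requires -rc and -wc"; return 1
    fi
    if [ "$have_lu" = 1 ] && ! valid_login_username "$user"; then
        echo "FAIL: invalid login username '$user'"; return 1
    fi
    echo "OK"
}
```

For example, check_vd_args -s vFW1000_H3C-CMW710-E1184-X64.ova -ssh -lu vfw-user -lpw 123456 prints OK.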

Installing the H3C NFV1000 series products on Linux KVM

The installation procedure on Linux KVM is the same for H3C NFV1000 series products. This section uses vFW1000 as an example.

Installing vFW1000 from an ISO file

Prerequisites

The installation requires Virtual Machine Manager, an optional graphical management tool for Linux. Make sure you enabled the graphical interface and installed Virtual Machine Manager when you installed the Linux OS.

Creating a VM

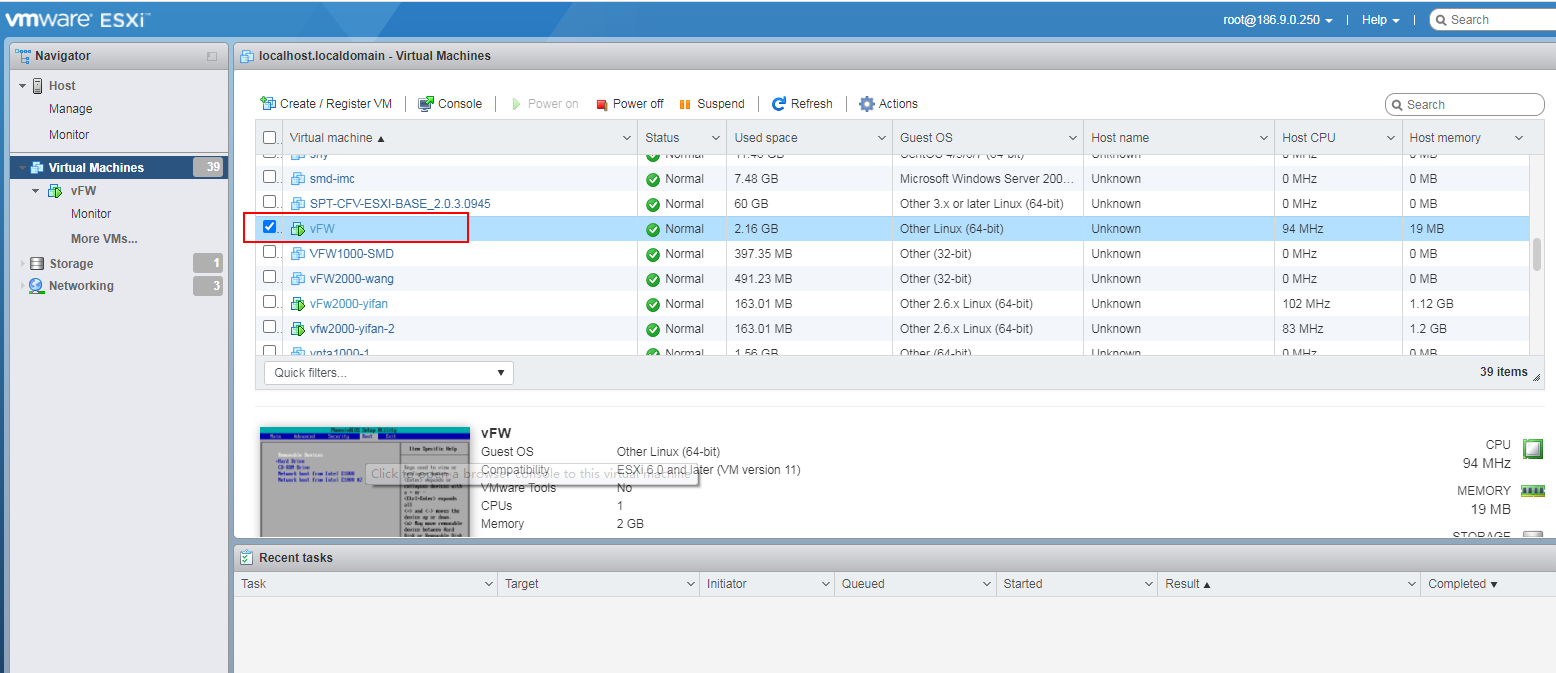

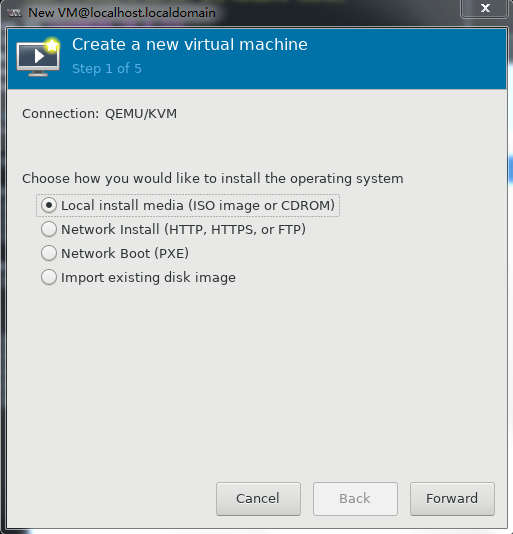

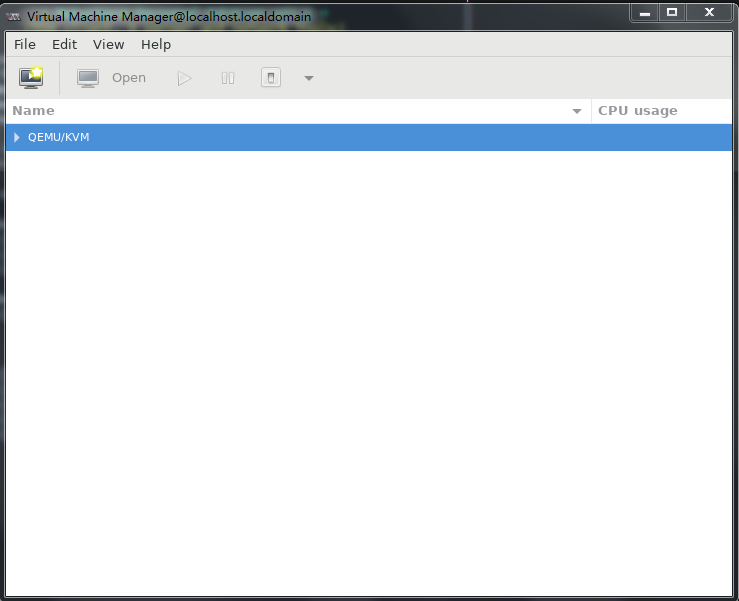

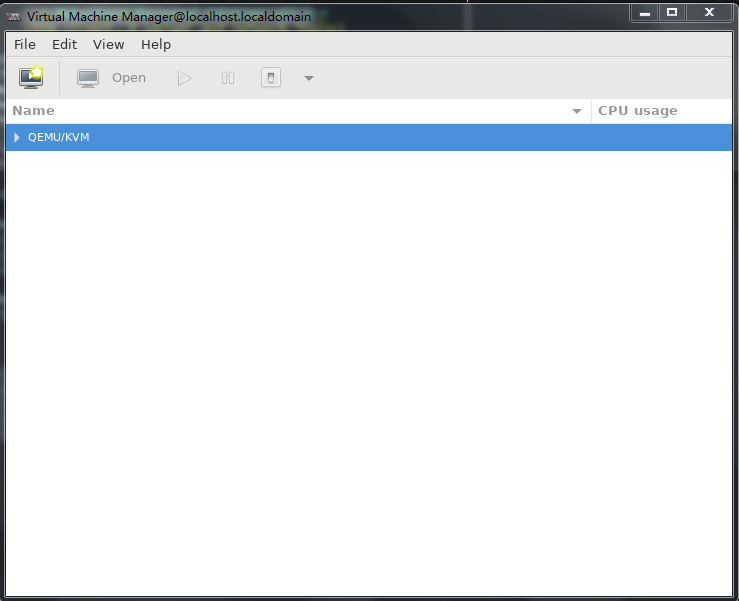

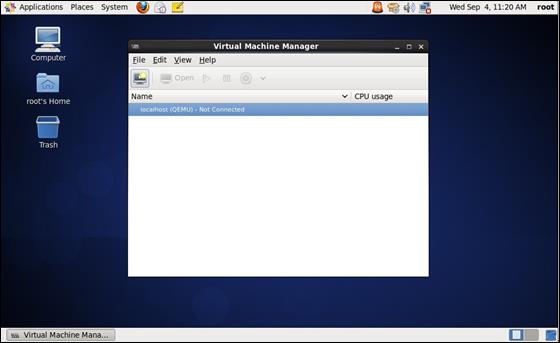

1. Run Virtual Machine Manager.

Figure 31 Virtual Machine Manager

2. Click the ![]() icon to create a VM. Specify a name for the VM, select local installation, and then click Forward.

Figure 32 Creating a VM

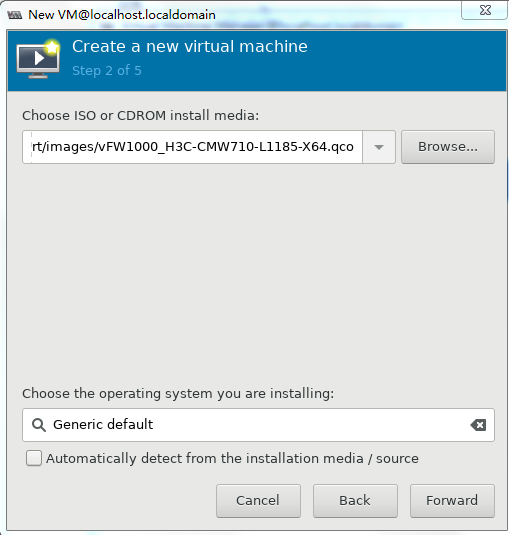

3. As shown in Figure 33, click Browse, select the ISO file for installing vFW1000, and then click Forward.

Figure 33 Selecting the ISO file for installing vFW1000

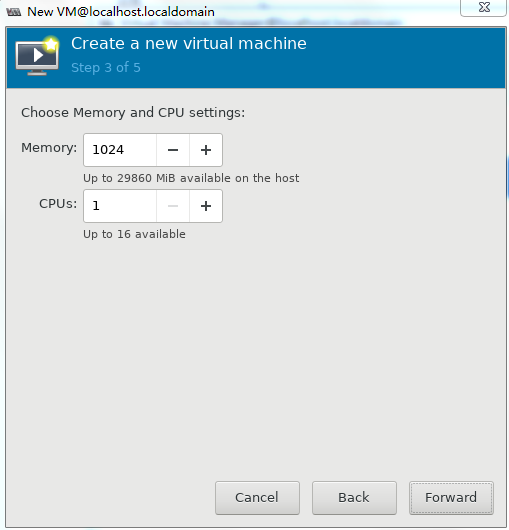

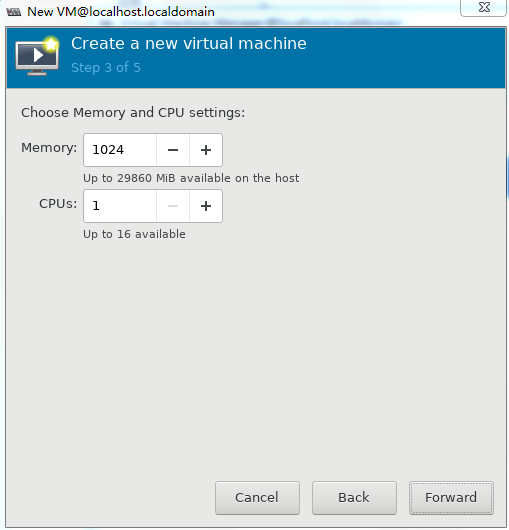

4. Set the memory capacity and CPU quantity for the VM, and then click Forward, as shown in Figure 34.

Specify a minimum of one vCPU (2.0 GHz or higher) and memory that meets the minimum requirements in Table 2, for example, a minimum of 2 GB for a VM with one vCPU.

For vBRAS1000, specify a minimum of four vCPUs and 8 GB of memory, as required by Table 2.

Figure 34 Specifying the vCPU quantity and memory capacity

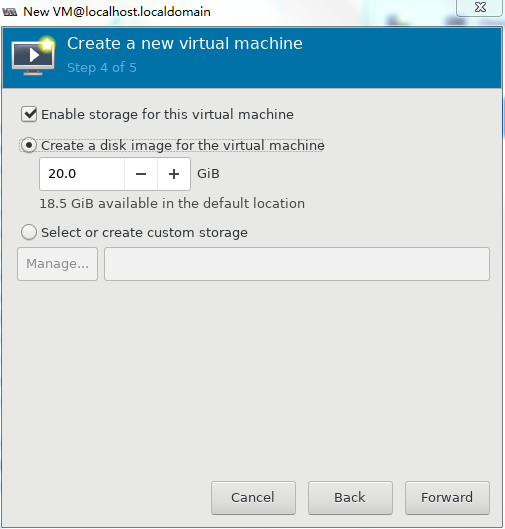

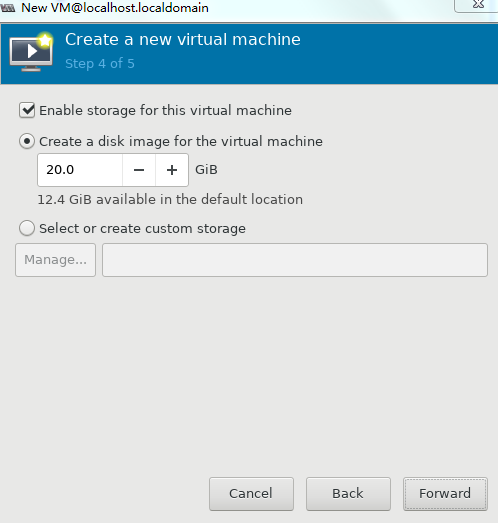

5. Set the disk quantity and capacity for the VM and then click Forward, as shown in Figure 35.

Assign a minimum of one vHD and a minimum disk capacity of 8 GB to the VM.

Figure 35 Setting the disk quantity and capacity

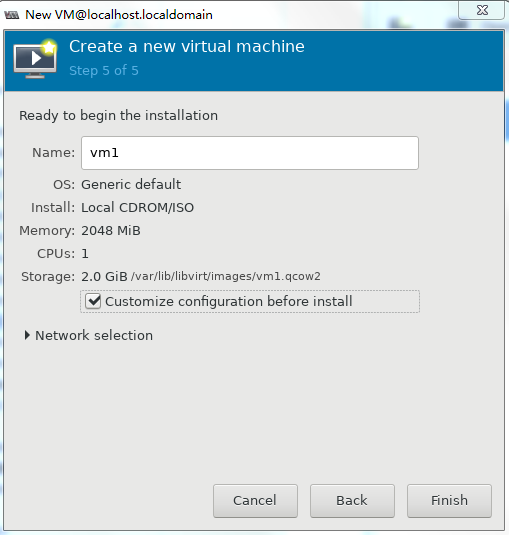

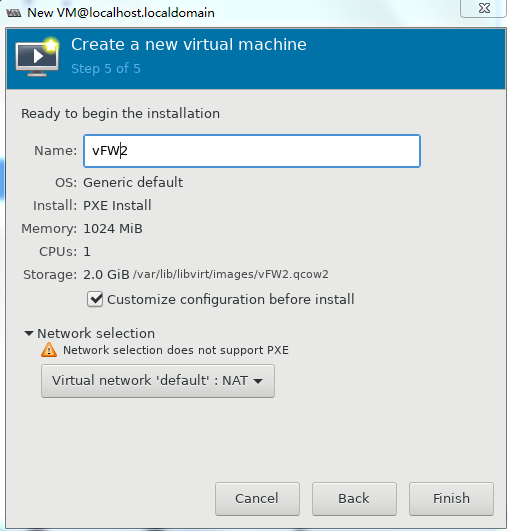

6. To configure other advanced options, select Customize configuration before install and then click Finish, as shown in Figure 36.

Figure 36 Configuring other advanced options

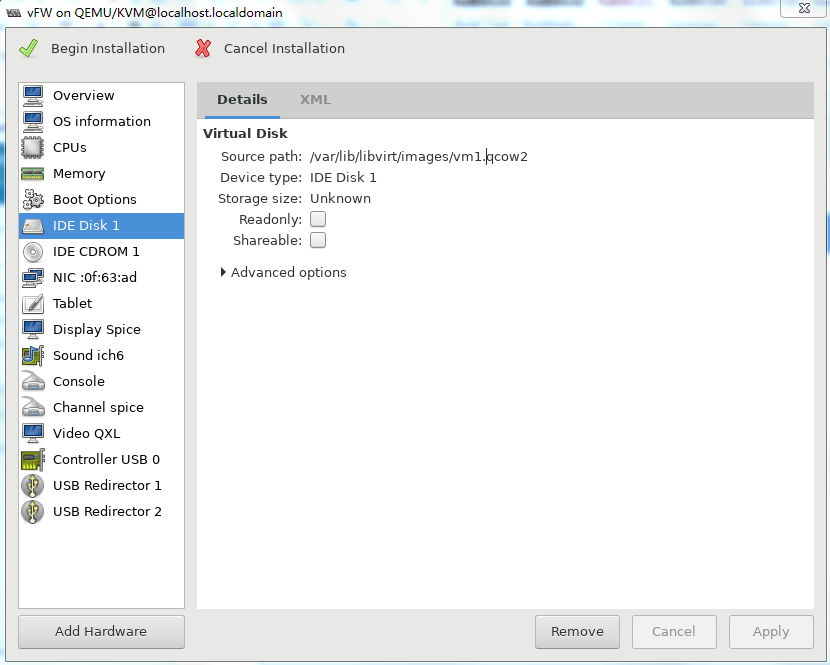

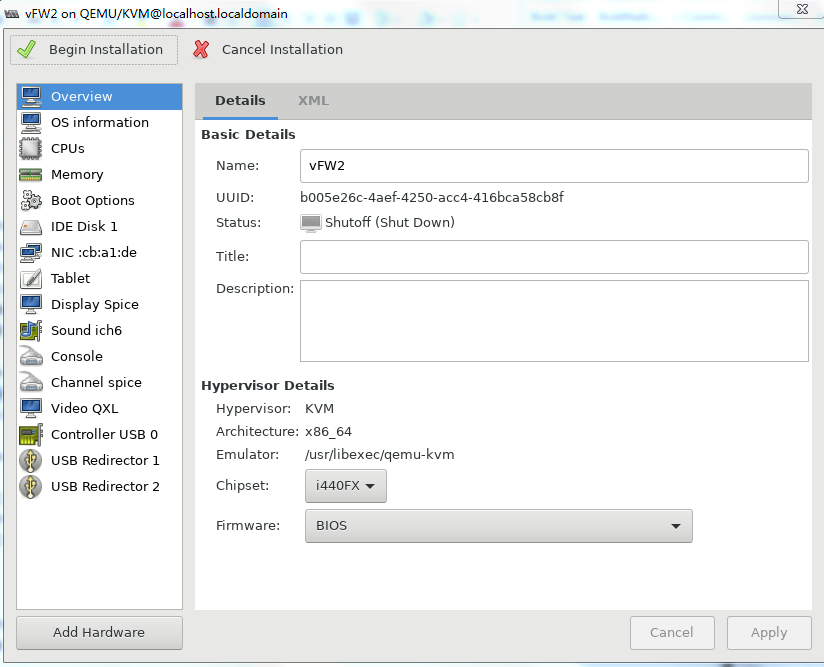

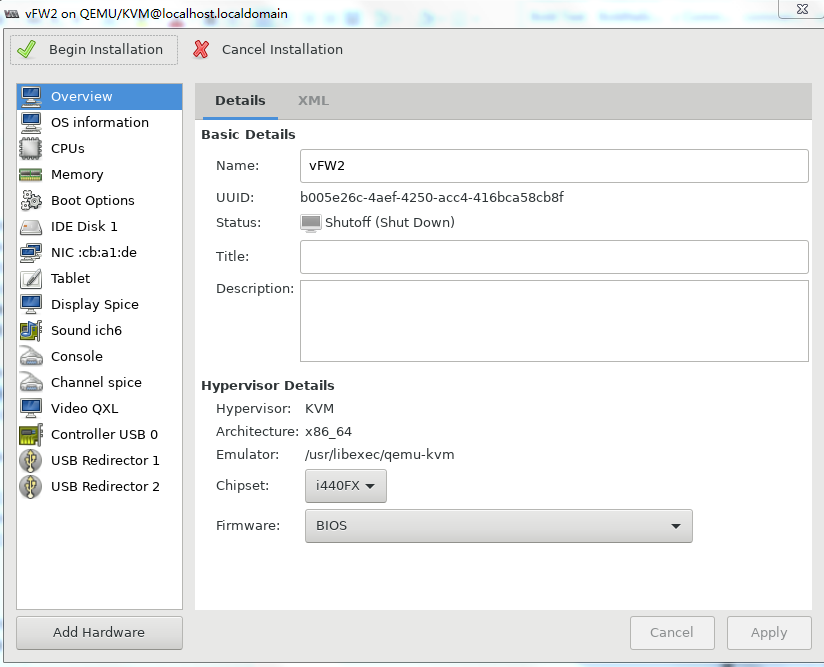

7. If you select Customize configuration before install, the configuration customization page is displayed after you complete the basic VM settings.

Figure 37 Configuration customization page

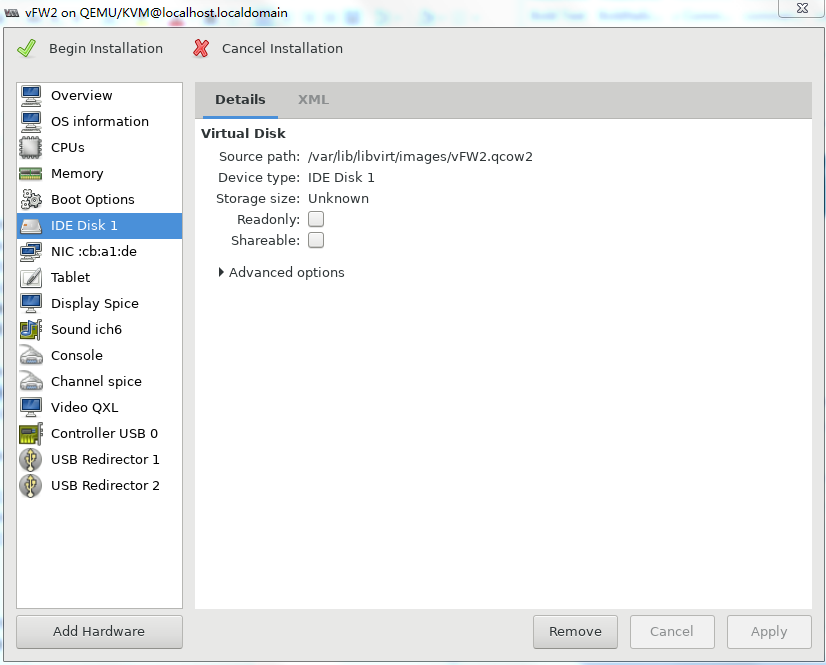

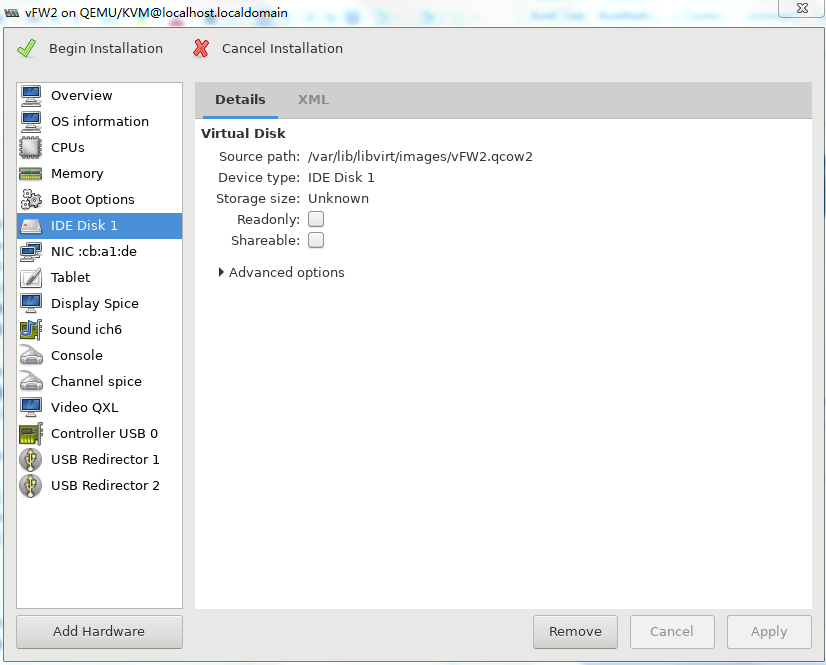

8. Select IDE Disk 1 from the left pane, select IDE from the Bus type field, and then click Apply.

Figure 38 Specifying the disk bus type

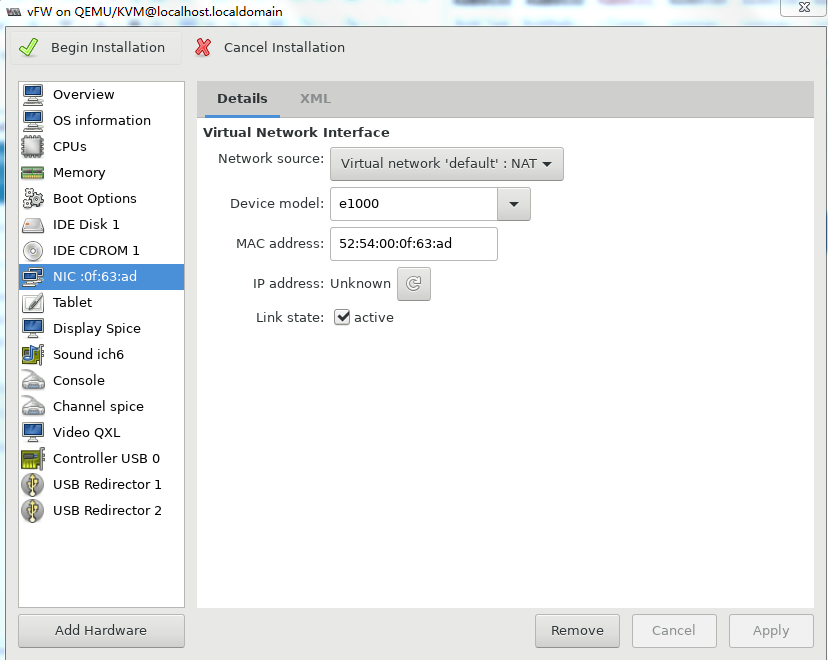

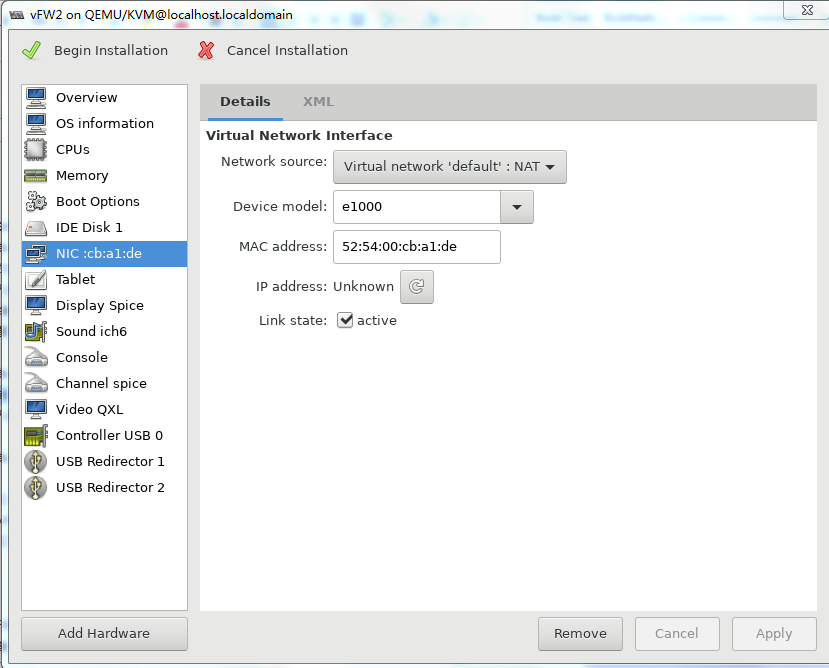

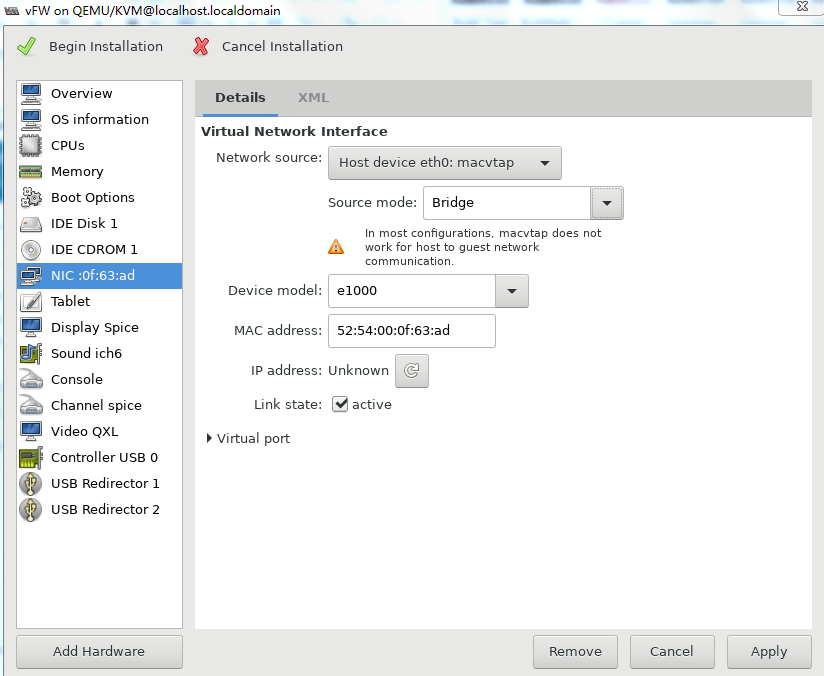

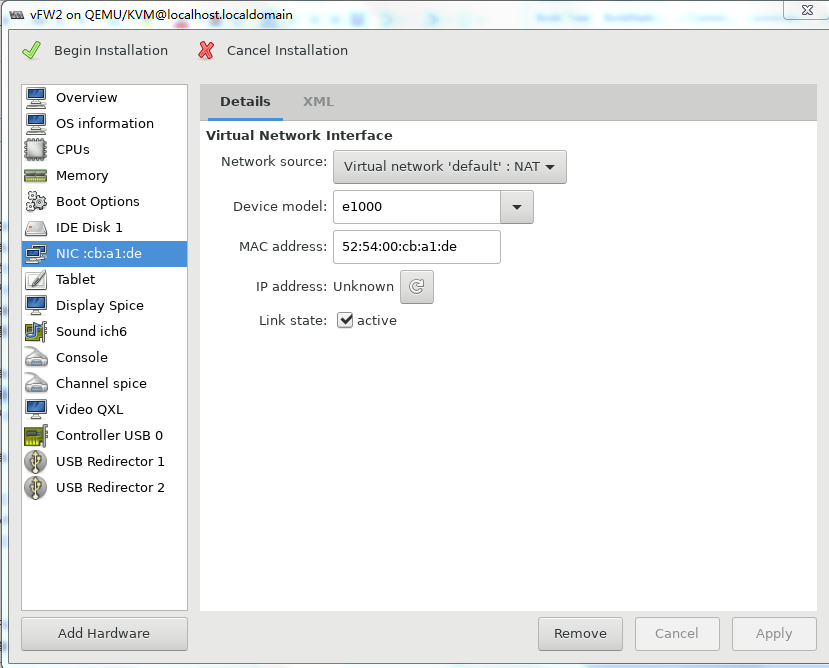

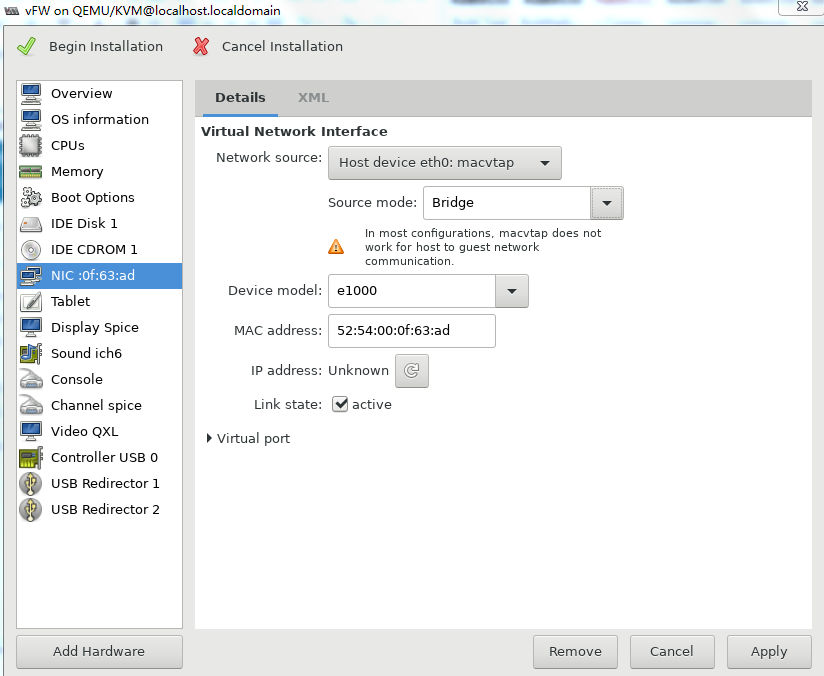

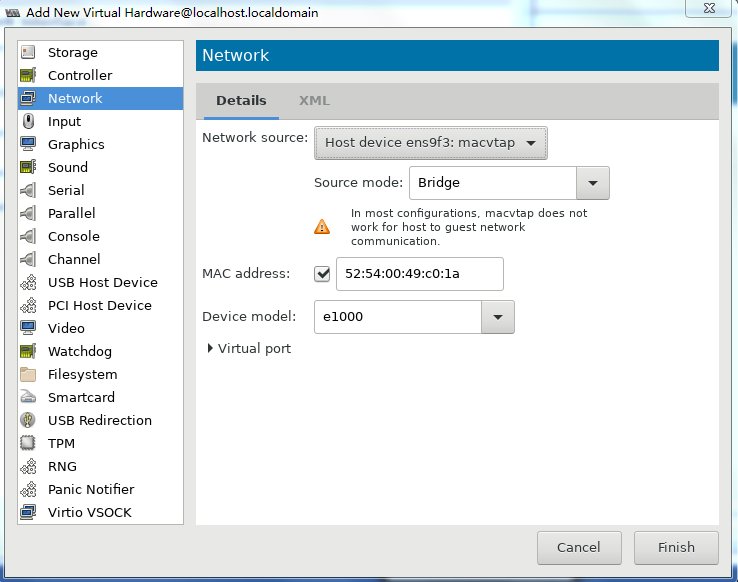

9. Select NIC from the left pane. Two NIC configuration methods are available, as shown in Figure 39 and Figure 40. As a best practice, use method 2.

For information about creating a bridge, see "Configuring Linux bridges on KVM."

Figure 39 Configuring the virtual network interface (method 1)

Figure 40 Configuring the virtual network interface (method 2)

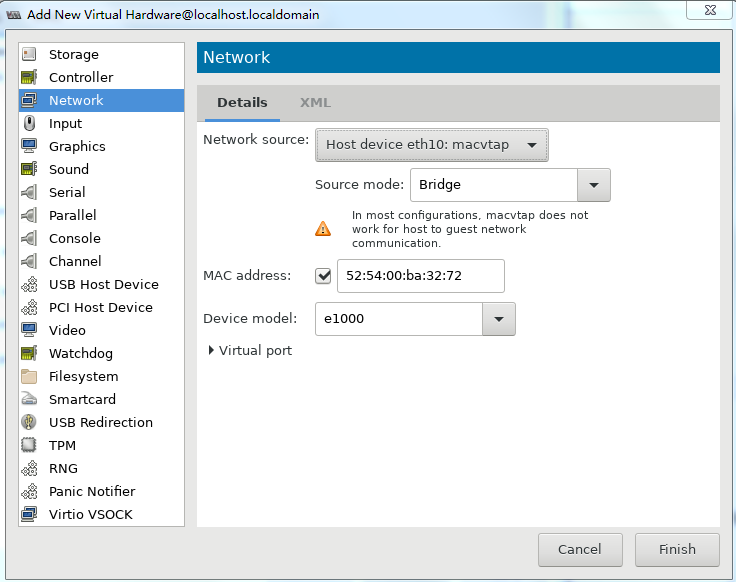

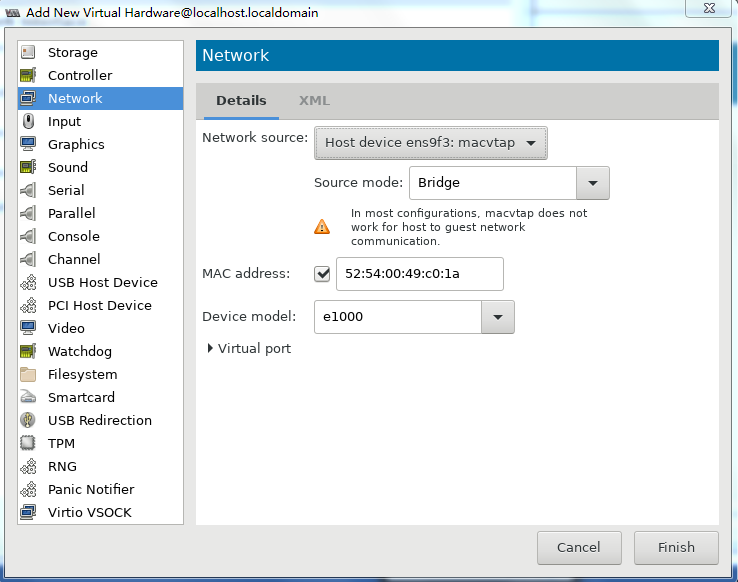

10. vFW1000 requires two NICs to run correctly, but the VM has only one NIC by default. To add a NIC, click Add Hardware in the lower left corner of the configuration customization page, select the new NIC, and then configure the NIC properties, as shown in Figure 41.

11. Click the ![]() icon to complete VM creation.

The VM will start up automatically and then start vFW1000 installation.
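You can also perform the Virtual Machine Manager steps above from the command line with virt-install. This is a sketch, not a validated H3C procedure. It creates a VM with one vCPU, 2048 MB of memory, an 8 GB IDE disk, and two VirtIO vNICs on existing Linux bridges. It prints the command and runs it only when DRY_RUN=0 is set. The function name create_vfw_vm and the bridge names are hypothetical. On hosts where new VMs default to the q35 machine type, IDE disks might not be available, so check the behavior of your virt-install version.

```shell
#!/bin/sh
# run: prints the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# create_vfw_vm NAME SOURCE BRIDGE1 BRIDGE2
# SOURCE is the path to the vFW1000 ISO file, or "pxe" to boot from the
# network. Both vNICs use the VirtIO model on existing Linux bridges.
create_vfw_vm() {
    if [ "$2" = pxe ]; then
        boot_opt="--pxe"
    else
        boot_opt="--cdrom=$2"
    fi
    run virt-install --name="$1" --memory=2048 --vcpus=1 \
        --disk size=8,bus=ide "$boot_opt" \
        --network bridge="$3",model=virtio \
        --network bridge="$4",model=virtio \
        --os-variant=generic --graphics vnc --noautoconsole
}
```

Passing pxe instead of an ISO path creates the network-boot VM used in the PXE procedures. For vBRAS1000, raise --vcpus and --memory to the Table 2 minimums.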

Installing vFW1000

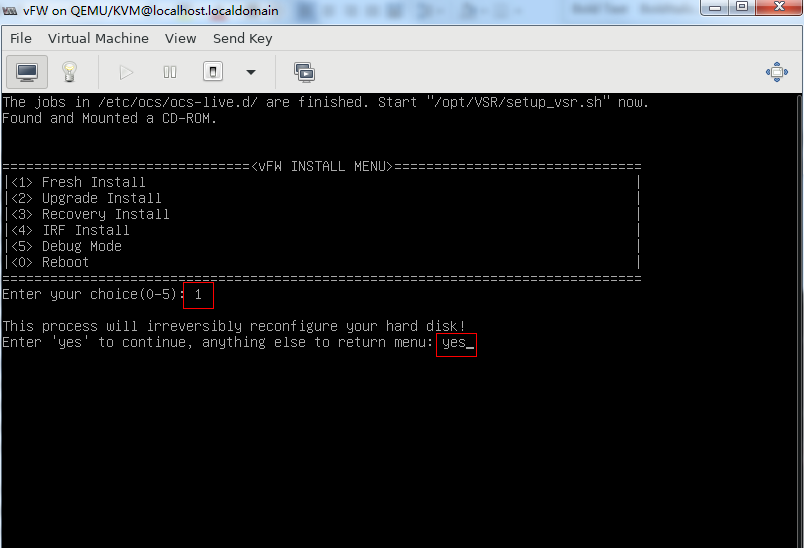

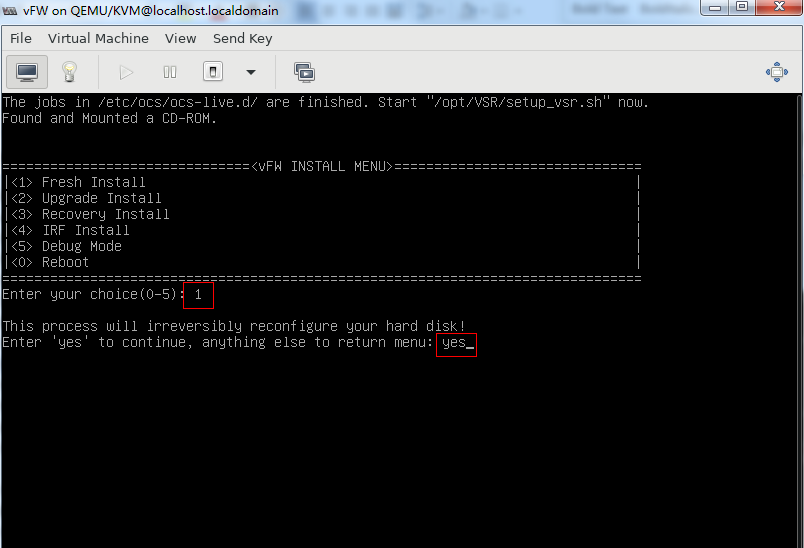

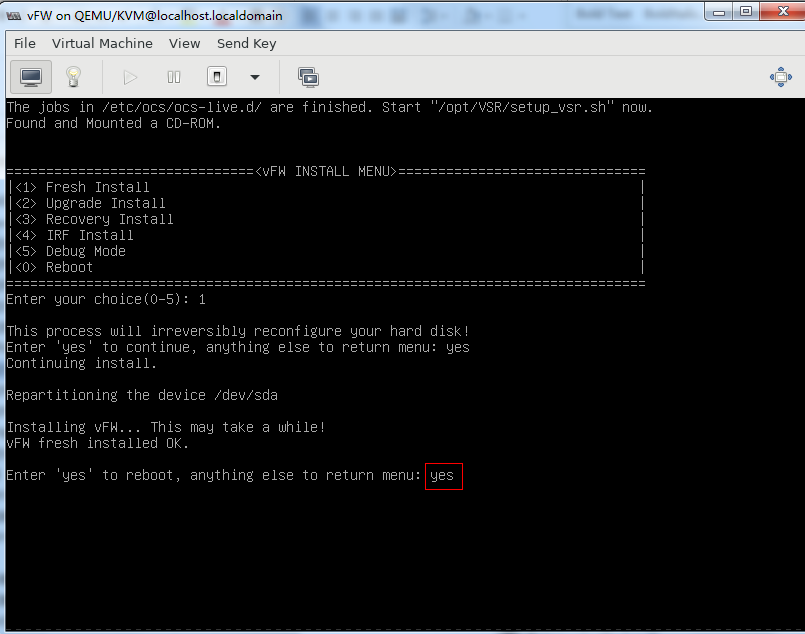

1. Enter 1 to select <1> Fresh Install, and then enter yes.

Figure 42 Selecting the installation method

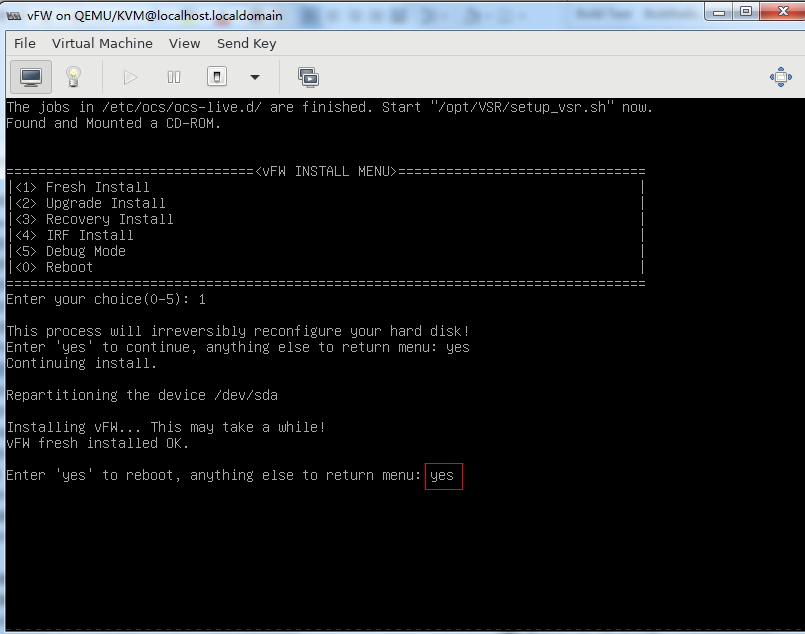

2. As shown in Figure 43, enter yes to restart the system to finish vFW1000 installation.

Figure 43 Rebooting the system

Installing vFW1000 via PXE

This section describes only the installation procedure on the PXE client side. For the PXE server setup procedure, see "Appendix D Setting up a PXE server."

Prerequisites

The installation requires Virtual Machine Manager, an optional graphical management tool for Linux. Make sure you enabled the graphical interface and installed Virtual Machine Manager when you installed the Linux OS.

Creating a VM

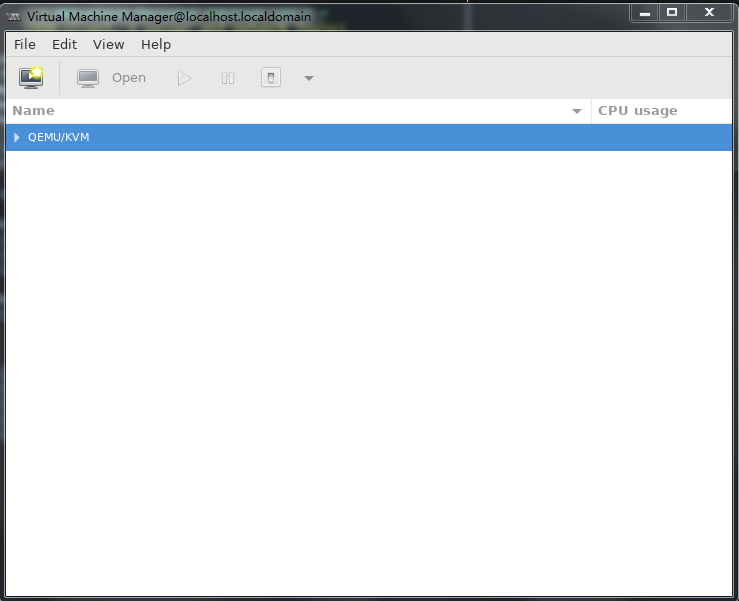

1. Run Virtual Machine Manager.

Figure 44 Virtual Machine Manager

2. Click the ![]() icon to create a VM.

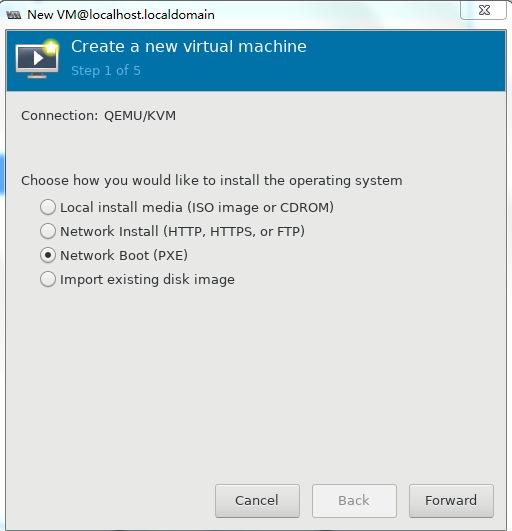

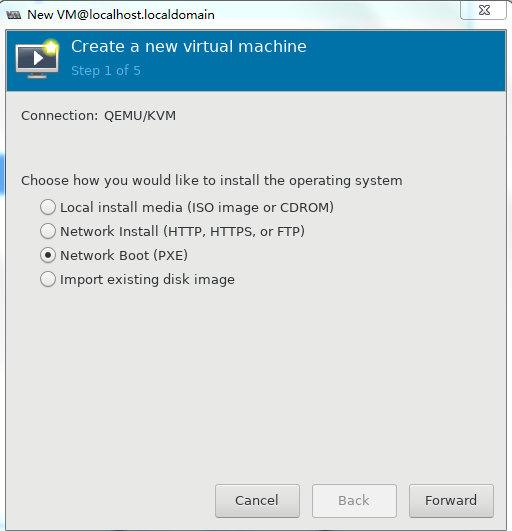

Specify a name for the VM and select Network Boot (PXE), and then click Forward.

Figure 45 Creating a VM

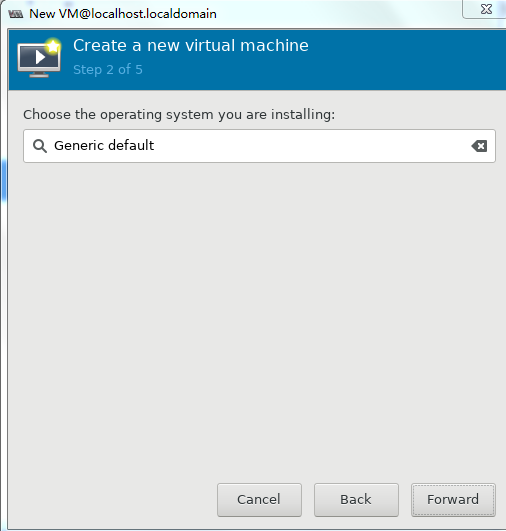

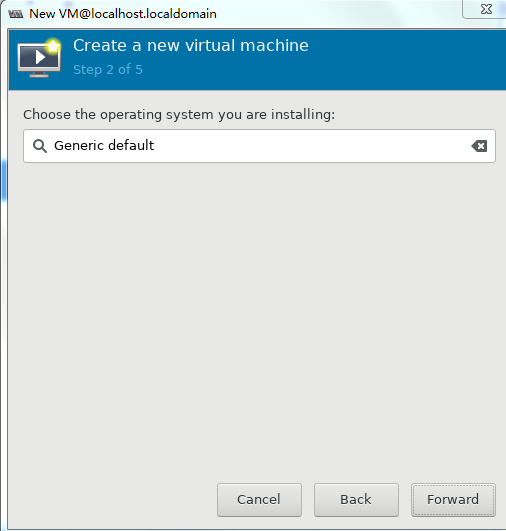

3. Select an operating system and its version and then click Forward.

Figure 46 Selecting an operating system and its version

4. Set the memory capacity and CPU quantity for the VM, and then click Forward, as shown in Figure 47.

Specify a minimum of one vCPU (2.0 GHz or higher) and memory that meets the minimum requirements in Table 2, for example, a minimum of 2 GB for a VM with one vCPU.

IMPORTANT: For vBRAS1000, specify a minimum of four vCPUs and 8 GB of memory, as required by Table 2.

Figure 47 Specifying the CPU quantity and memory capacity for the VM

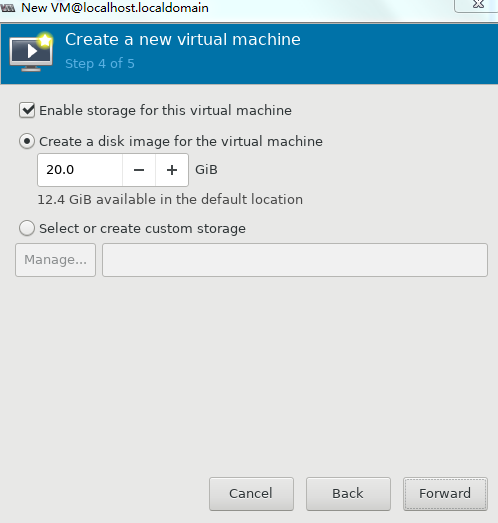

5. Set the disk quantity and capacity for the VM and then click Forward, as shown in Figure 48.

Assign a minimum of one vHD and a minimum disk capacity of 8 GB to the VM.

Figure 48 Setting the disk quantity and capacity

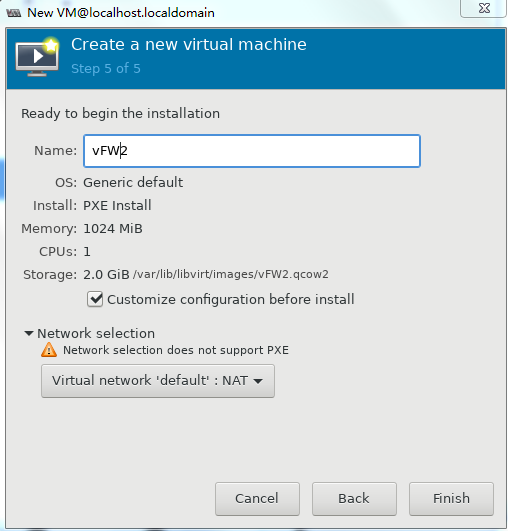

6. To configure other advanced options, select Customize configuration before install and then click Finish, as shown in Figure 49.

Figure 49 Configuring other advanced options

7. If you select Customize configuration before install, the configuration customization page is displayed after you complete the basic VM settings.

Figure 50 Configuration customization page

8. Select IDE Disk 1 from the left pane, select IDE from the Bus type field, and then click Apply.

Figure 51 Specifying the disk bus type

9. Select NIC from the left pane. Two NIC configuration methods are available, as shown in Figure 52 and Figure 53. As a best practice, use method 2.

For information about creating a bridge, see "Configuring Linux bridges on KVM."

Figure 52 Configuring the virtual network interface (method 1)

Figure 53 Configuring the virtual network interface (method 2)

10. vFW1000 requires two NICs to run correctly, but the VM with basic settings has only one NIC. To add a NIC, click Add Hardware in the lower left corner of the configuration customization page, select the new NIC, and then configure the NIC properties, as shown in Figure 54.

Figure 54 Adding a new NIC

11. Click the ![]() icon to complete VM creation.

The VM will start up automatically and then start vFW1000 installation.
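If you prefer the command line to Add Hardware, you can add the second vNIC with virsh. This sketch adds a VirtIO vNIC attached to a Linux bridge to the persistent configuration of the VM (--config), so the change takes effect the next time the VM starts. It prints the command and runs it only when DRY_RUN=0 is set. The function name add_vfw_nic and the bridge name br1 are hypothetical. To list the vNICs afterward, run virsh domiflist with the VM name.

```shell
#!/bin/sh
# run: prints the command instead of executing it unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# add_vfw_nic DOMAIN BRIDGE: adds a VirtIO vNIC attached to BRIDGE to the
# persistent configuration of DOMAIN (applied at the next VM start).
add_vfw_nic() {
    run virsh attach-interface --domain "$1" --type bridge \
        --source "$2" --model virtio --config
}
```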

Installing vFW1000

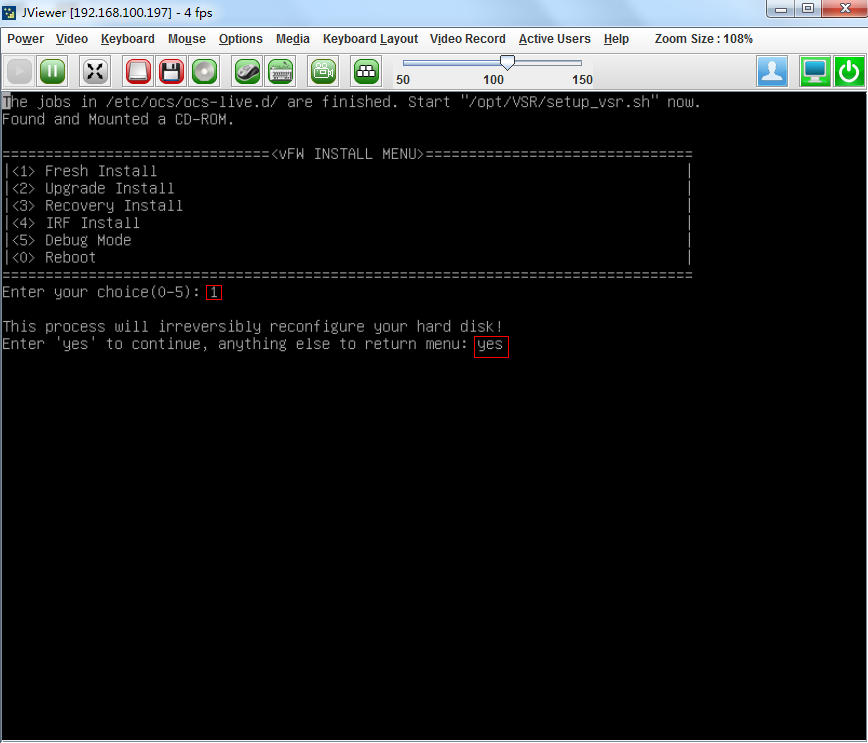

1. The VM automatically loads the required files from the PXE server and enters the installation screen. Enter 1 to select <1> Fresh Install, and then enter yes. The system will automatically complete the installation.

Figure 55 Selecting the installation method

2. Enter yes to reboot the system to complete installation of vFW1000.

Figure 56 Rebooting the system

Installing vFW1000 via unattended PXE

This section describes only the unattended installation procedure on the PXE client side. For the PXE server setup procedure, see "Appendix D Setting up a PXE server."

When setting up the PXE server, change the value of the Syslinux parameter ocs_live_run to /opt/VSR/setup_vsr_pxe.sh unmanned fresh.

Prerequisites

The installation requires Virtual Machine Manager, an optional graphical management tool for Linux. Make sure you enabled the graphical management interface and installed Virtual Machine Manager when you installed the Linux OS.

Creating a VM

1. Run Virtual Machine Manager.

Figure 57 Virtual Machine Manager

2. Click the Create a new virtual machine icon. On the configuration page shown in Figure 58, enter a name for the VM, select Network Boot (PXE), and then click Forward.

3. Select the operating system type and version, and then click Forward.

Figure 59 Selecting the operating system type and version

4. Set the memory capacity and CPU quantity for the VM, and then click Forward, as shown in Figure 60.

Specify a minimum of one vCPU (2.0 GHz or higher) and a minimum of 1 GB memory for the VM.

IMPORTANT: For the minimum memory requirements for creating vBRAS1000 on a VM, see Table 2. vBRAS1000 requires a minimum of 2 GB memory.

Figure 60 Specifying the vCPU quantity and memory capacity

5. Set the disk quantity and capacity for the VM and then click Forward, as shown in Figure 61.

Assign a minimum of one vHD and a minimum disk capacity of 8 GB to the VM.

Figure 61 Setting the disk quantity and capacity

6. To configure other advanced options, select Customize configuration before install and then click Finish, as shown in Figure 62.

Figure 62 Configuring other advanced options

7. If you selected Customize configuration before install, the configuration customization page opens after you complete the basic VM settings.

Figure 63 Configuration customization page

8. Select IDE Disk 1 from the left pane, select IDE from the Bus type field, and then click Apply.

Figure 64 Specifying the disk bus type

9. Select NIC from the left pane. Two NIC configuration methods as shown in Figure 65 and Figure 66 are available. As a best practice, configure the NIC by using method 2. Click Apply after the configuration is complete.

For information about creating a bridge, see "Configuring Linux bridges on KVM".

Figure 65 Configuring the NIC (method 1)

Figure 66 Configuring the NIC (method 2)

10. Two NICs are required for vFW1000 to run correctly. However, the VM has only one NIC. To add a NIC, click Add Hardware in the lower left corner of the configuration customization page, select the new NIC, and then configure the NIC properties, as shown in Figure 67.

11. Click Begin Installation to complete VM creation.

The VM starts automatically and begins the vFW1000 installation.
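You can also express the GUI steps above as a libvirt domain definition. The following Python sketch builds a minimal domain XML with the settings this section calls for:

· Network (PXE) boot.

· One disk on the IDE bus.

· At least two NICs attached to a Linux bridge (method 2).

The VM name, disk image path, bridge name br0, and e1000 NIC model are assumptions. Adjust them for your host.

```python
import xml.etree.ElementTree as ET


def build_domain_xml(name="vfw1000", memory_mib=1024, vcpus=1,
                     disk_path="/var/lib/libvirt/images/vfw1000.qcow2",
                     bridge="br0", nic_count=2, nic_model="e1000") -> str:
    """Build a minimal libvirt domain XML for a vFW1000 PXE installation.

    All default names and paths are illustrative assumptions.
    """
    # Minimums from this guide: 1 GB memory, 1 vCPU, 2 NICs.
    if memory_mib < 1024 or vcpus < 1 or nic_count < 2:
        raise ValueError("below the vFW1000 minimums: 1 GB memory, 1 vCPU, 2 NICs")

    domain = ET.Element("domain", type="kvm")
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory", unit="MiB").text = str(memory_mib)
    ET.SubElement(domain, "vcpu").text = str(vcpus)

    # Boot from the network (PXE) first, then from the installed disk.
    os_el = ET.SubElement(domain, "os")
    ET.SubElement(os_el, "type", arch="x86_64").text = "hvm"
    ET.SubElement(os_el, "boot", dev="network")
    ET.SubElement(os_el, "boot", dev="hd")

    devices = ET.SubElement(domain, "devices")

    # One disk on the IDE bus, matching the Bus type setting in step 8.
    disk = ET.SubElement(devices, "disk", type="file", device="disk")
    ET.SubElement(disk, "driver", name="qemu", type="qcow2")
    ET.SubElement(disk, "source", file=disk_path)
    ET.SubElement(disk, "target", dev="hda", bus="ide")

    # Bridged NICs (method 2).
    for _ in range(nic_count):
        iface = ET.SubElement(devices, "interface", type="bridge")
        ET.SubElement(iface, "source", bridge=bridge)
        ET.SubElement(iface, "model", type=nic_model)

    return ET.tostring(domain, encoding="unicode")


if __name__ == "__main__":
    print(build_domain_xml())
```

To use the output:

1. Create the disk image first (8 GB minimum), for example with qemu-img create -f qcow2 <path> 8G.

2. Save the XML to a file and load it with virsh define.

3. Start the VM with virsh start.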

Installing vFW1000

The VM downloads required files from the PXE server and completes vFW1000 installation automatically.

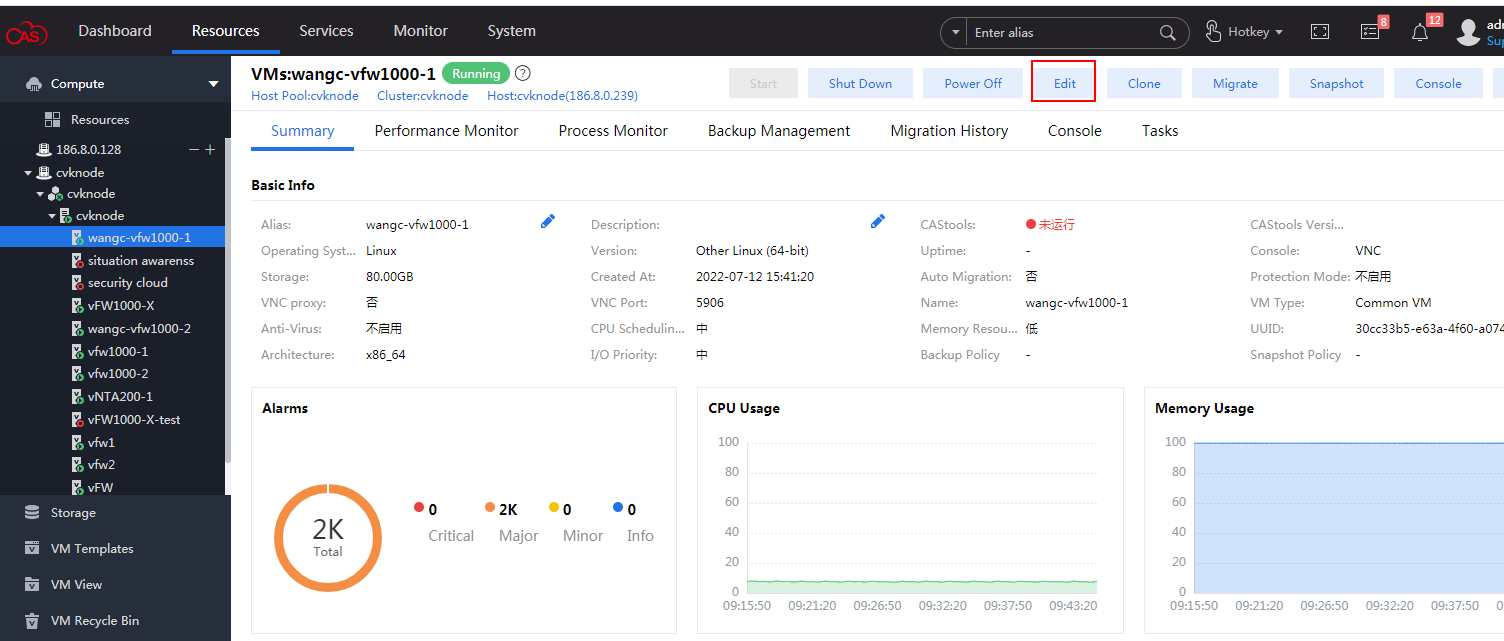

Installing the H3C NFV1000 series products on H3C CAS

The installation procedure on H3C CAS is the same for the H3C NFV1000 series products. This section uses vFW1000 as an example.

On H3C CAS, you can install H3C vFW1000 only from an ISO file.

Installing vFW1000 from an ISO file

Creating a host

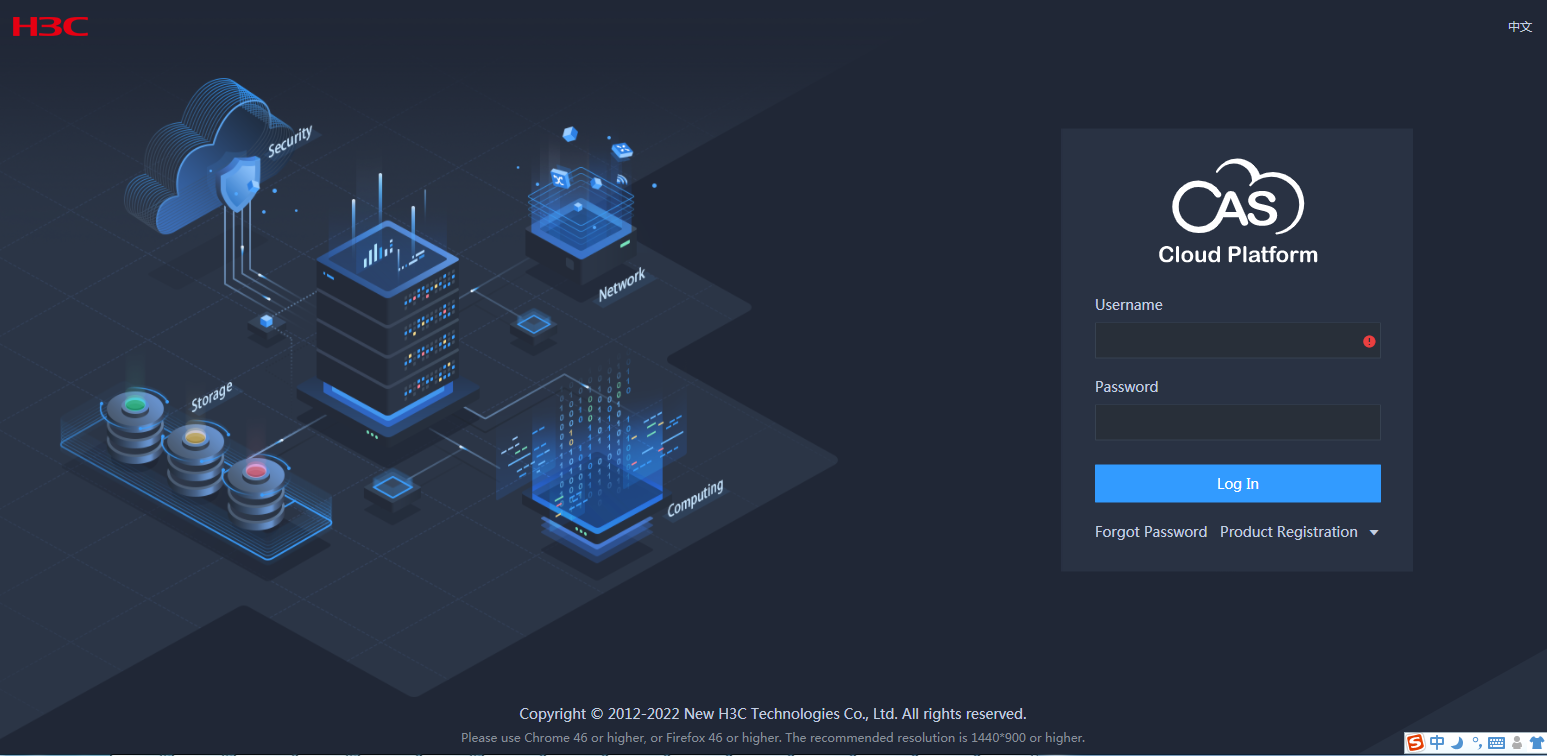

1. Log in to the H3C CAS cloud platform from your browser. As shown in Figure 68, enter the username and password, and then click Log In.

Figure 68 Logging in to the CAS cloud platform

To obtain the username and password for logging in to the CAS cloud platform, contact the administrator of the platform.

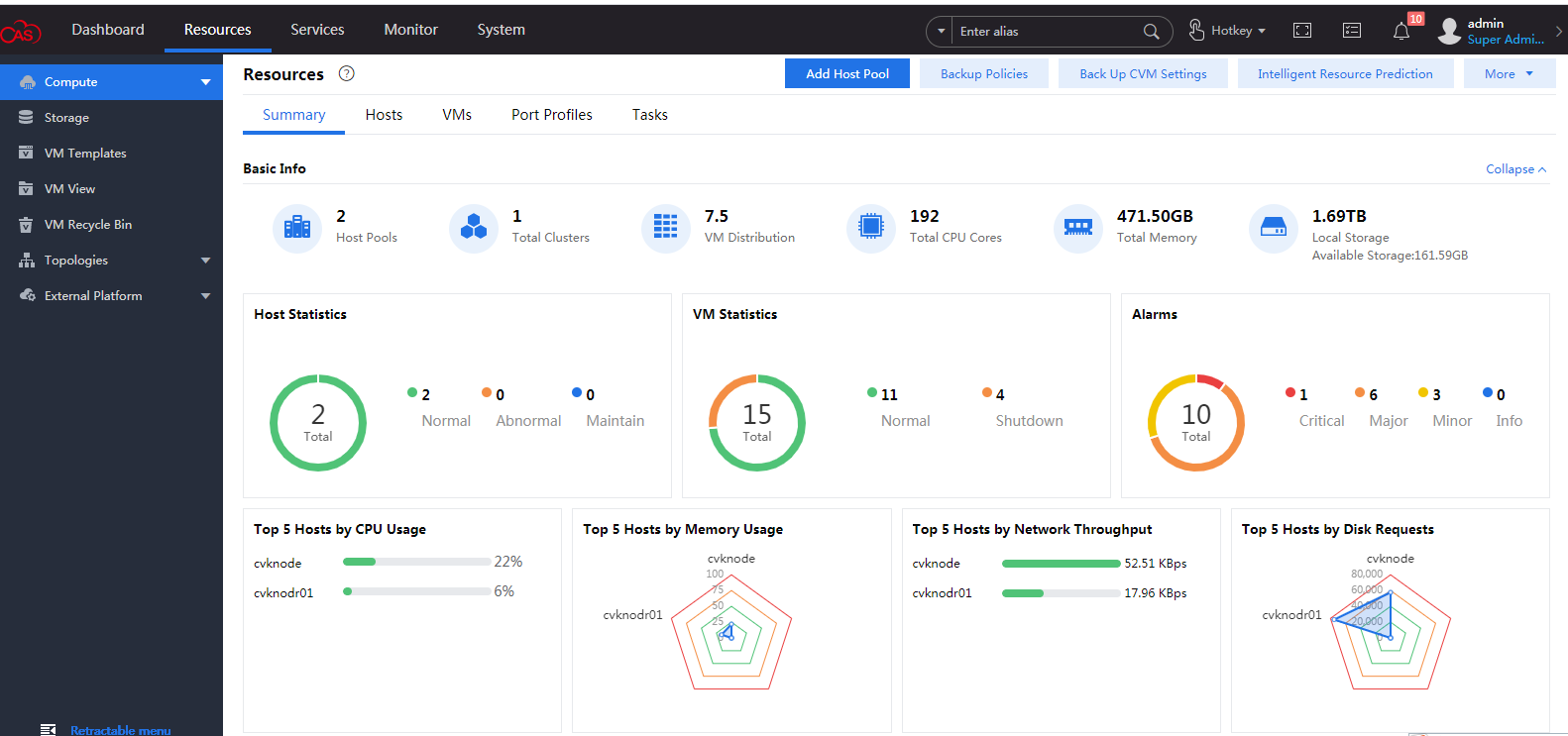

After login, the CAS cloud platform home page is displayed.

Figure 69 CAS cloud platform home page

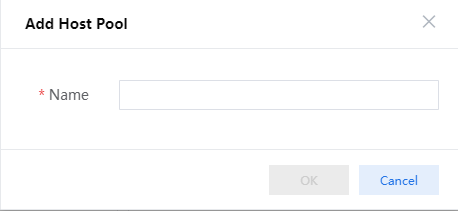

2. Select Resources from the top navigation bar, and then click Add Host Pool. In the dialog box that opens as shown in Figure 70, enter the host pool name and then click OK.

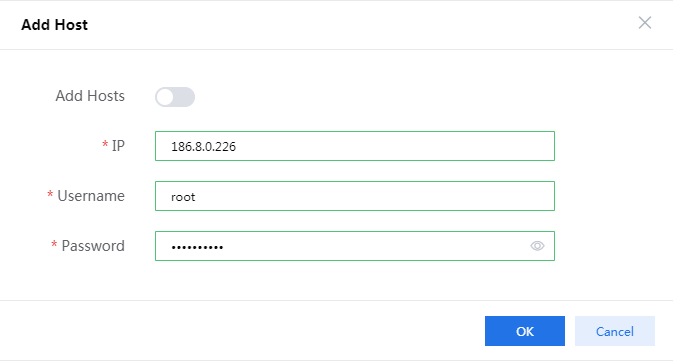

3. Select the newly created host pool and then click Add Host. In the dialog box that opens as shown in Figure 71, enter the CVK host IP, username, and password, and then click OK.

To obtain the username and password for logging in to the CVK host, contact the server administrator.

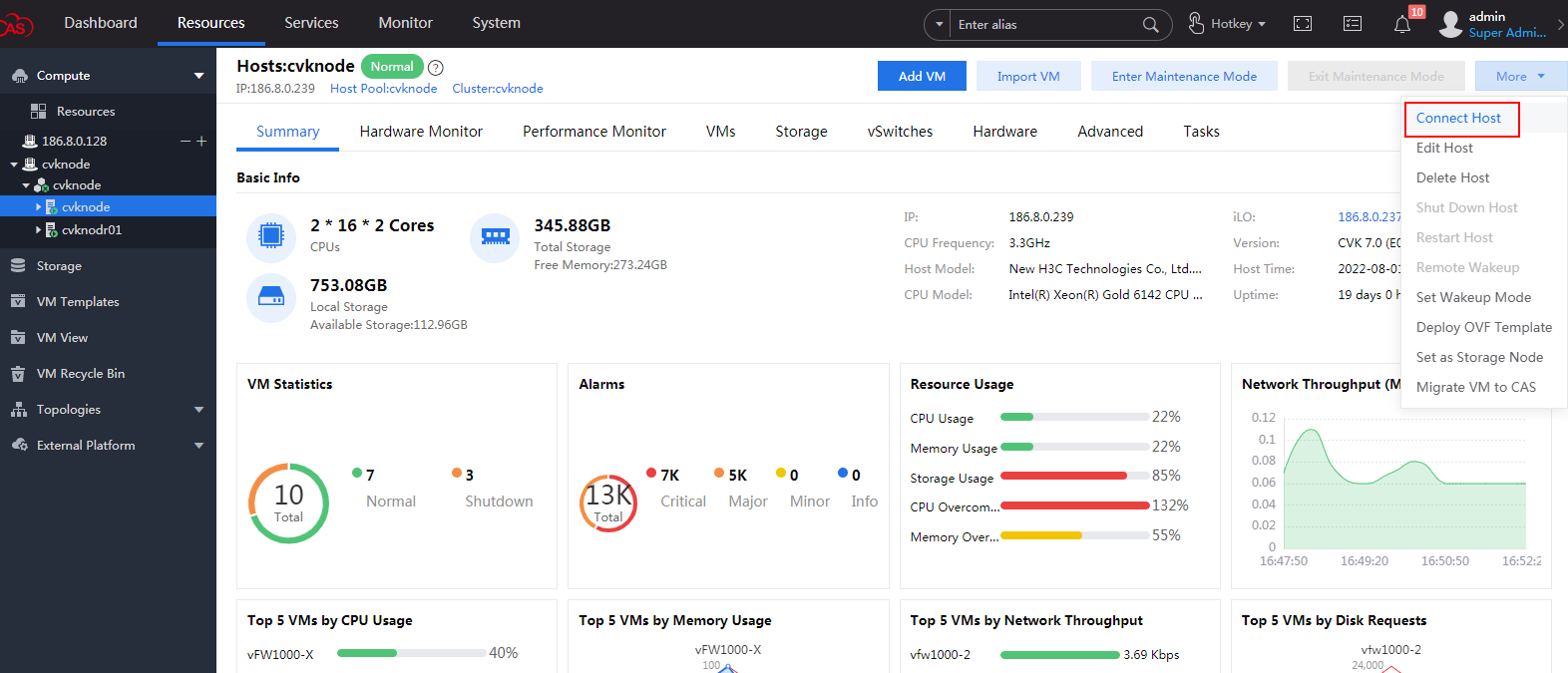

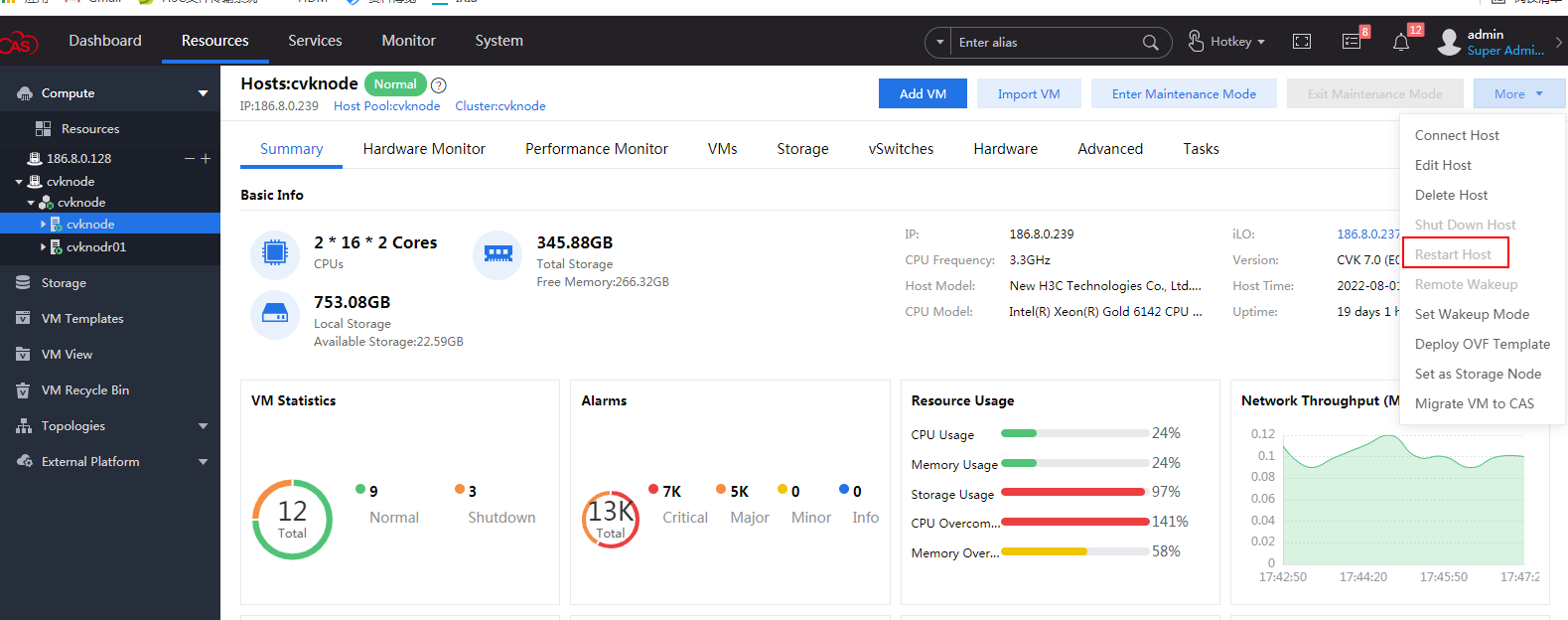

4. After the host is created, select More > Connect Host. In the dialog box that opens, click OK to connect the CVM to the host.

Figure 72 Connecting the host

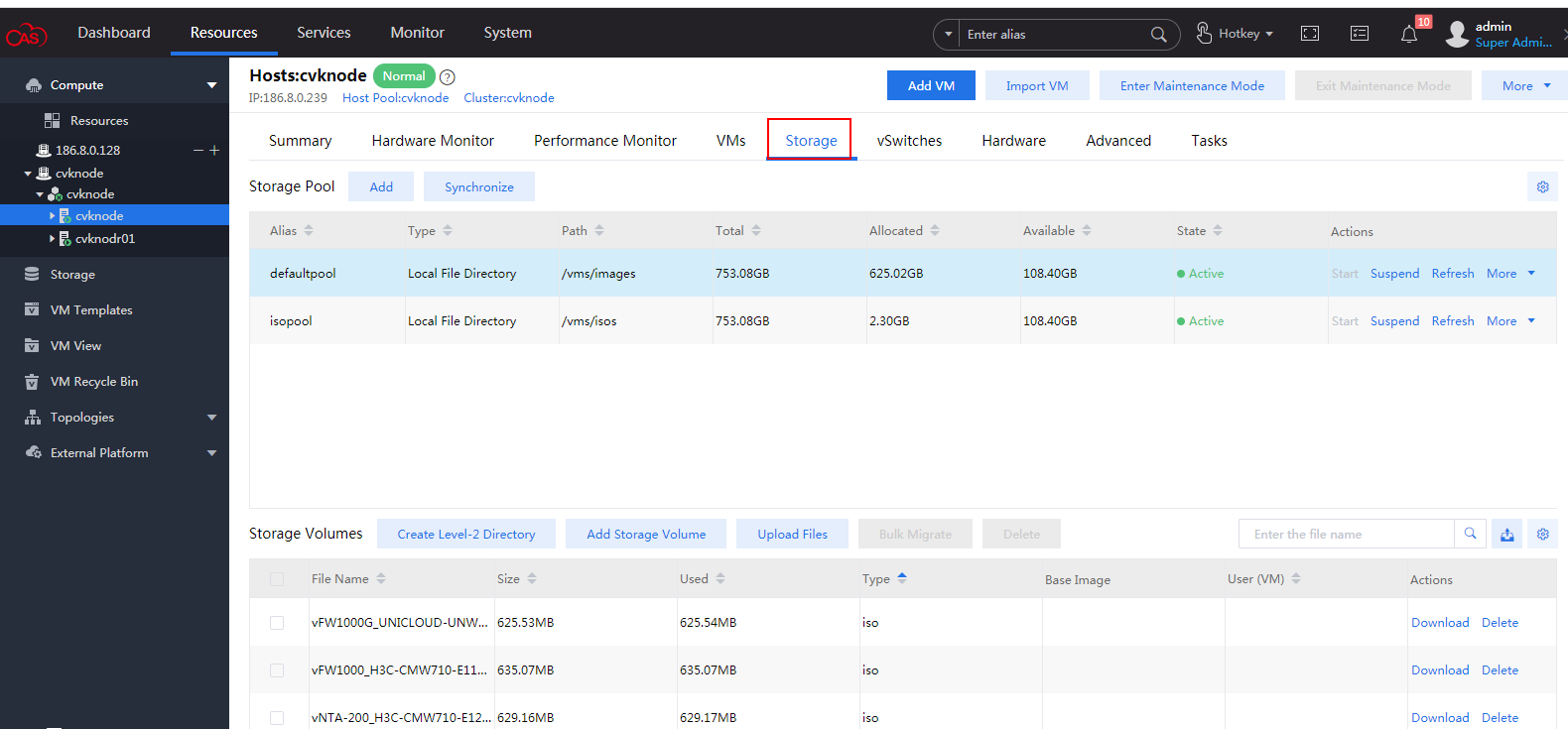

5. Select the newly created host from the left navigation pane, and then click the Storage tab. The storage management page as shown in Figure 73 is displayed.

Figure 73 Storage management page

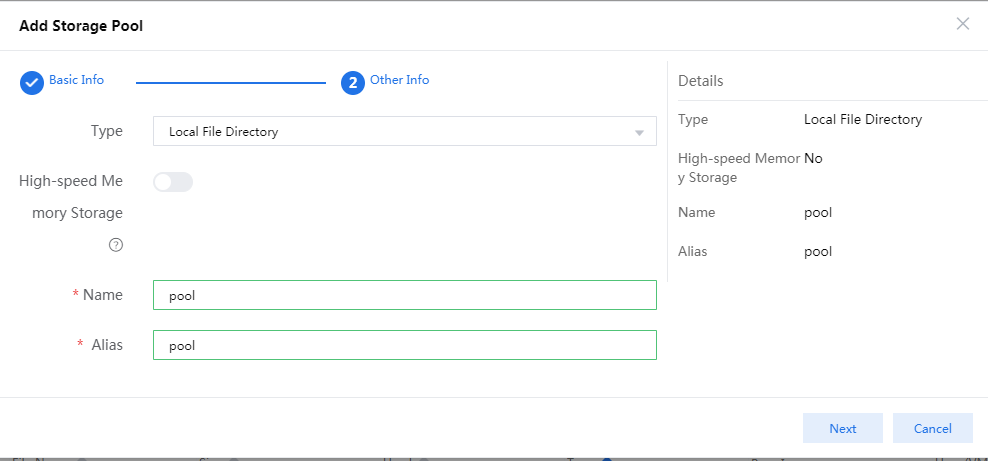

6. Click Add to create a storage pool for the vFW1000 ISO file.

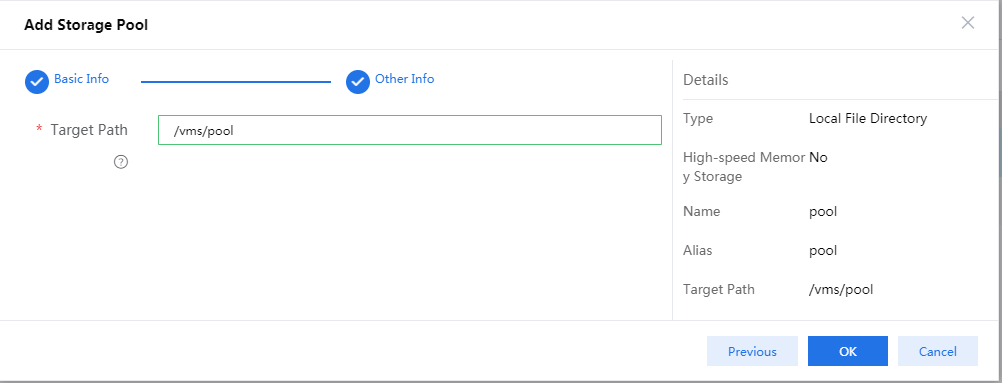

In the configuration page that opens as shown in Figure 74, enter the storage pool name and then click Next.

Figure 74 Adding a storage pool

7. Click OK and then start the storage pool.

Figure 75 Completing storage pool creation

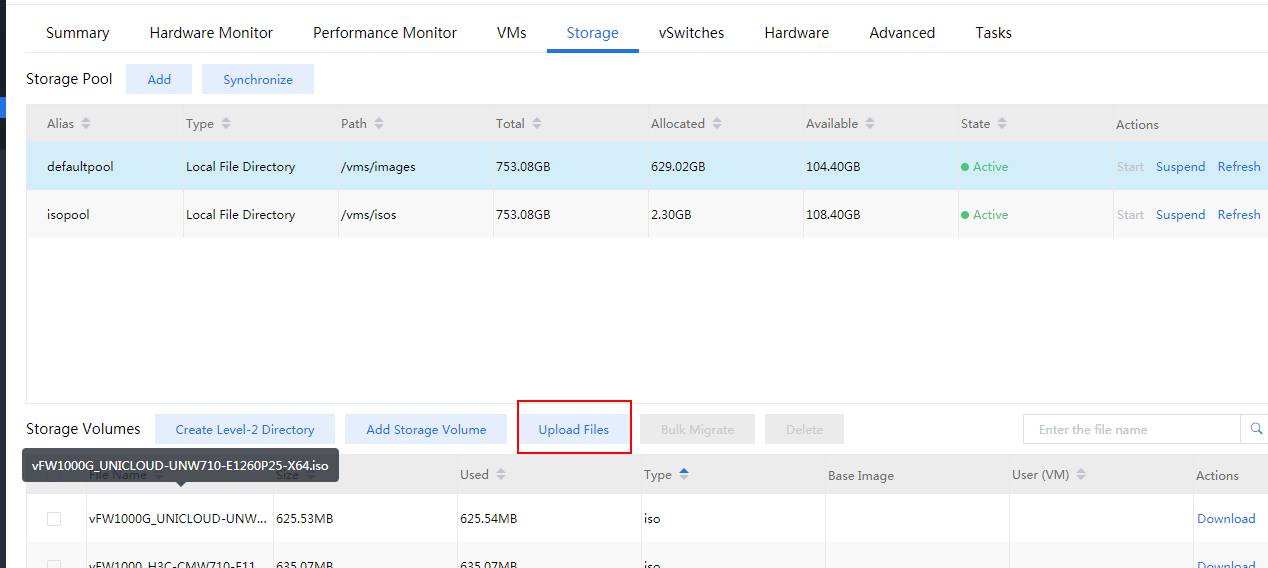

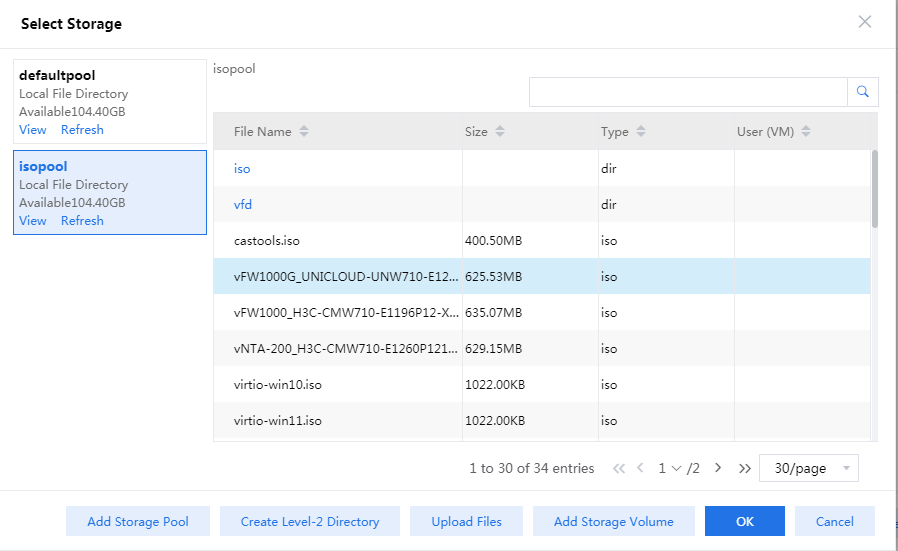

8. Select the newly created storage pool, and then click Upload Files to upload the vFW1000 ISO file to the CVK host, as shown in Figure 76.

Figure 76 Uploading the vFW1000 ISO file (1)

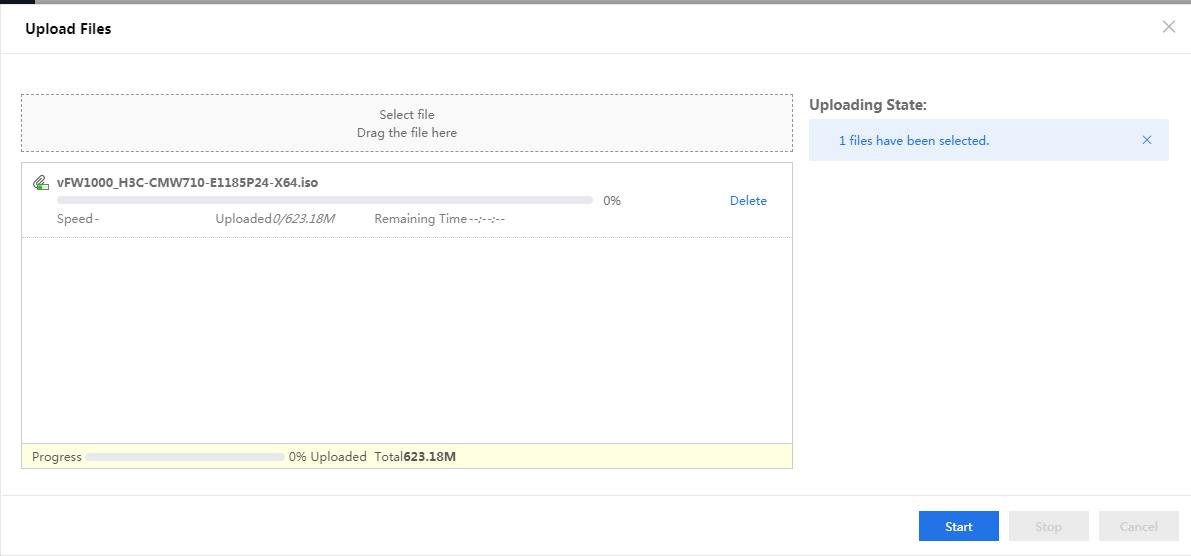

9. Drag the file to the upload area, and then click Start, as shown in Figure 77.

Figure 77 Uploading the vFW1000 ISO file (2)

After the file is uploaded, close this window to return to the CAS cloud platform home page.

Creating a VM

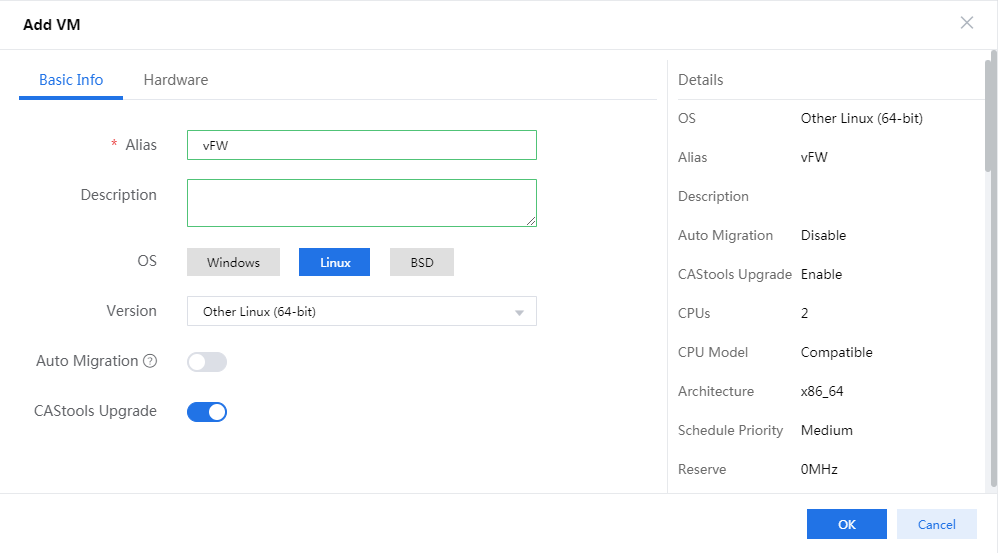

1. Select the newly created host and click Add VM. On the page that opens, as shown in Figure 78, configure basic information for the VM as follows, and then click Next:

· Enter a name and description for the VM.

· Select the Linux operating system.

· Select the Other Linux(64bit) version.

Figure 78 Configuring basic information for the VM

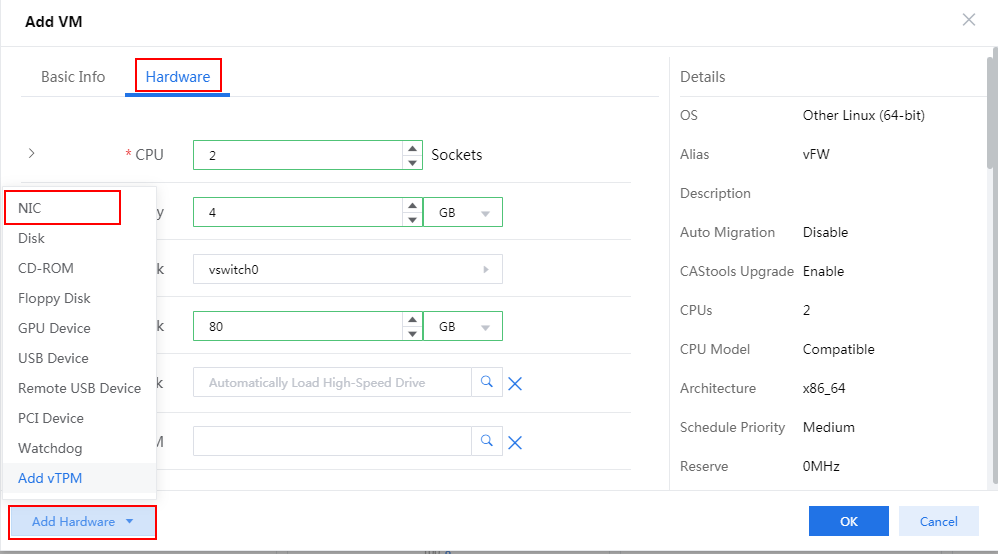

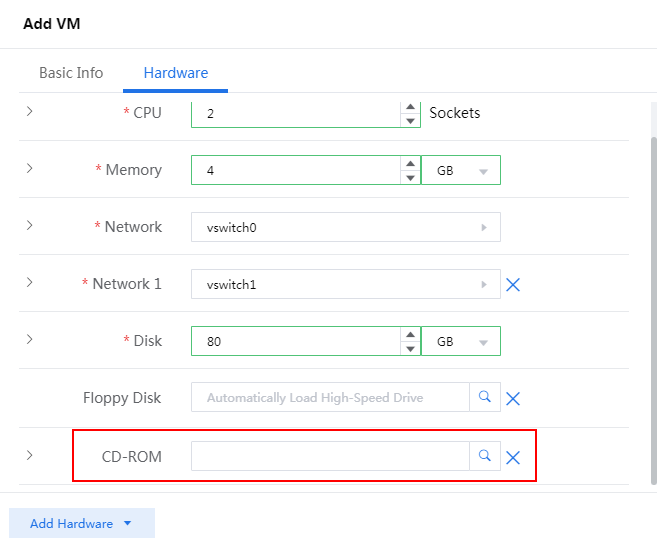

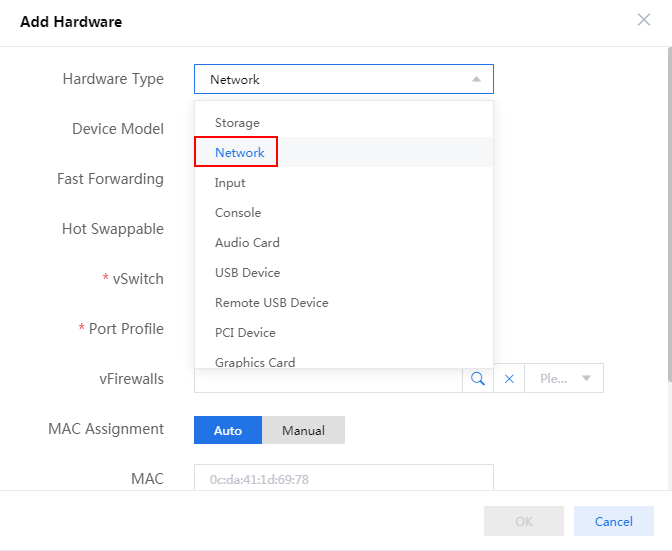

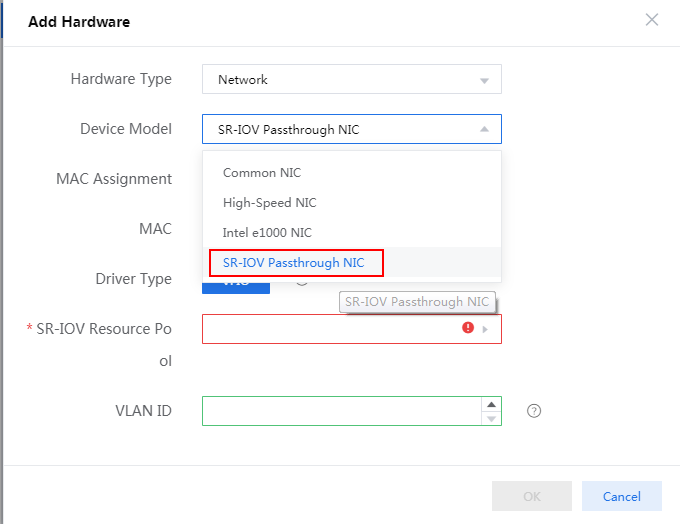

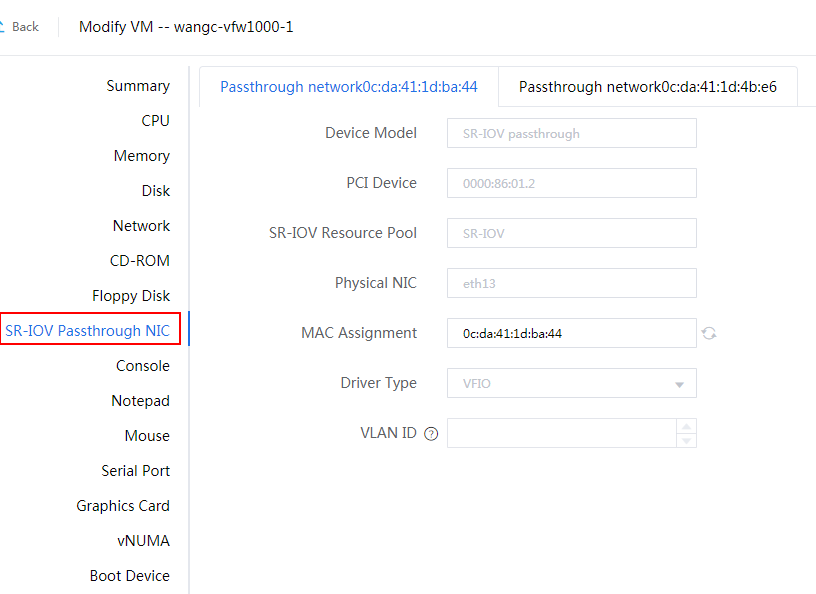

2. Configure hardware information for the VM and make sure the hardware settings meet the minimum requirements as described in Figure 79. To add a NIC, click Add Hardware and then select NIC.

For the minimum requirements of installing vBRAS1000 on a VM, see Table 2. Assign a minimum of four vCPUs (2.0 GHz or higher) and a minimum of 2 GB memory to the VM.

Figure 79 Configuring hardware information

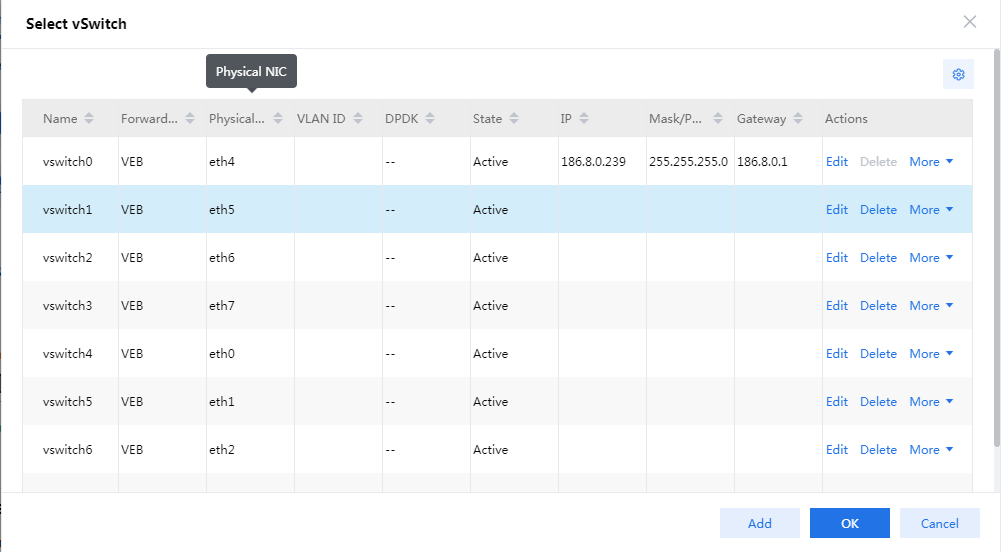

3. Click the selection icon in the Network field, select a vSwitch for vFW1000 on the page that opens, and then click OK.

For information about creating a vSwitch and configuring its parameters, see the CAS cloud platform online help.

Figure 80 Selecting a vSwitch

4. Click the icon for the disk to display its advanced settings, as shown in Figure 81.

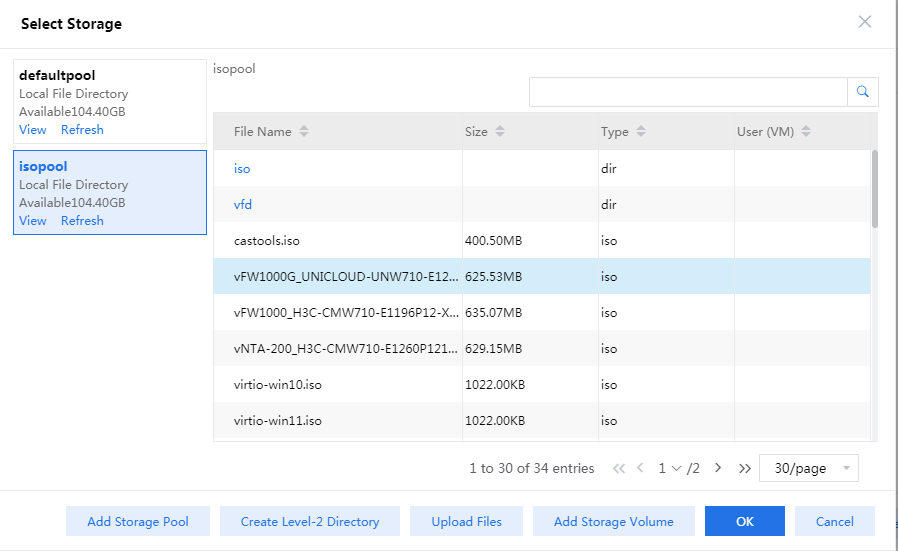

5. Click the storage pool selection icon, select the storage pool you created when creating the host, and then click OK.

Figure 82 Selecting the storage pool

6. Click the selection icon for the CD-ROM field, select the vFW1000 ISO file that you uploaded to the CVK host when creating the host, and then click OK.

Figure 83 Selecting vFW1000 ISO file

7. Click Finish to complete VM creation.

The newly created VM will be listed in the navigation pane.

Figure 84 VM created successfully

Installing vFW1000

1. Select the newly created VM from the navigation pane and then click Power On. In the screen that opens, select Yes.

2. Click the Console tab and then select the Java console or Web console to start the VM console.

IMPORTANT:

· The Java console on CAS requires the Java Runtime Environment (JRE). Install the JRE before you open the console.

· After vFW1000 is installed, open the VM editing page on the CAS cloud platform and disconnect IDE optical drive hdc so that vFW1000 does not start from the optical drive.

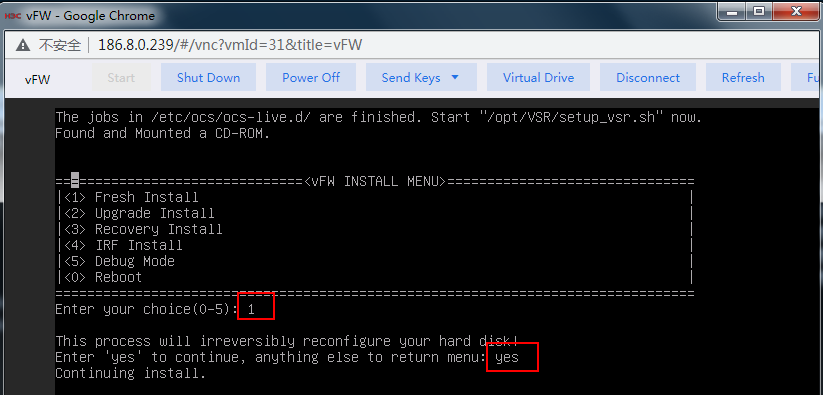

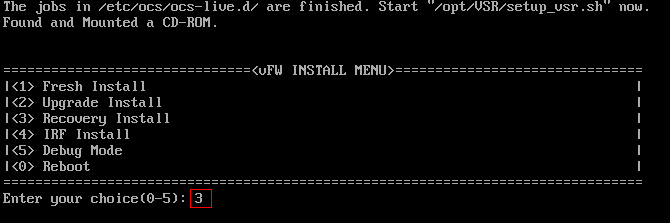

3. The VM loads the ISO file automatically and enters the installation screen. As shown in Figure 85, enter 1 to select <1> Fresh Install, and then enter yes. The system is installed automatically.

Figure 85 Starting the installation

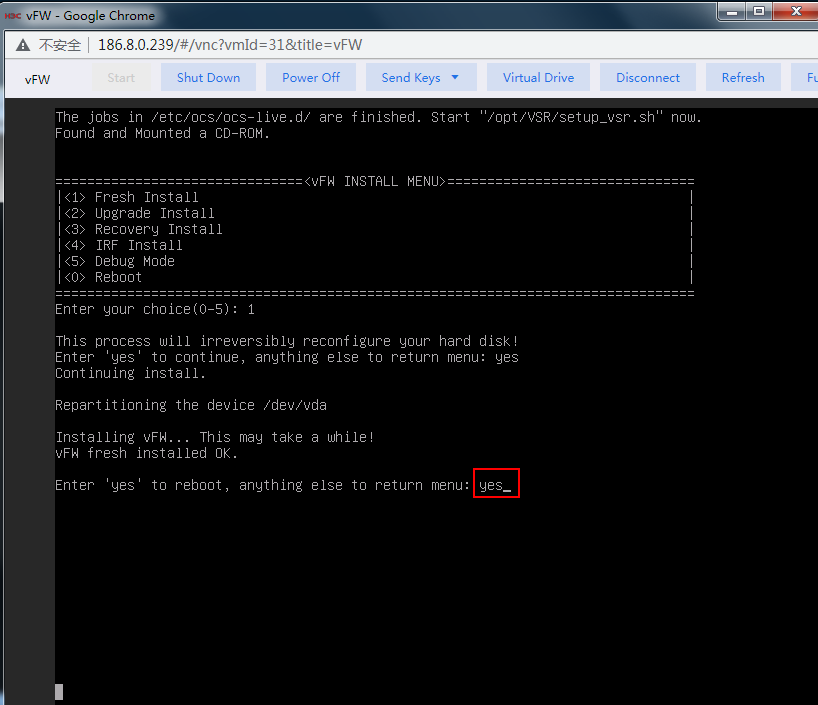

4. Enter yes to restart the system to complete vFW1000 installation, as shown in Figure 86.

Figure 86 Rebooting the system

Deploying the H3C NFV1000 series products

The deployment method is the same for H3C NFV1000 series products. This section uses vFW1000 as an example.

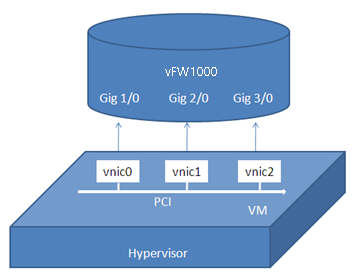

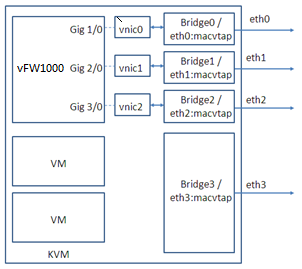

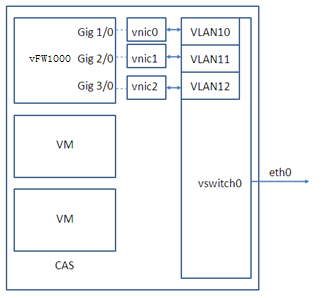

vFW1000 interface and vNIC mappings

At first startup, vFW1000 scans PCI devices, initializes detected vNICs, records vNICs' MAC addresses, and maps vNICs to empty virtual NIC slots in the order in which the MAC addresses are obtained. The vNIC and slot mappings remain unchanged unless you add or delete vNICs. Figure 87 shows the mapping relations between vFW1000 network interfaces and vNICs.

Figure 87 vFW1000 interface and vNIC mappings

After starting vFW1000, you can use the display interface gigabitethernet brief command to view the vNIC-slot mappings.

<Sysname> display interface gigabitethernet brief

Brief information on interface(s) under route mode:

Link: ADM - administratively down; Stby - standby

Protocol: (s) - spoofing

Interface Link Protocol Main IP Description

GE1/0 UP UP --

GE2/0 UP UP 172.16.0.112

GE3/0 UP UP --

TIP: Before configuring a network interface for vFW1000, confirm the vNIC-slot mappings to make sure the configuration is applied to the correct vNIC.

Adding or deleting a vFW1000 interface

To add or delete an Ethernet interface on vFW1000, add or delete a vNIC on the VM. For information about adding or deleting a vNIC, see the VMware documentation.

CAUTION:

· vNICs cannot be hot swapped on vFW1000. Stop vFW1000 before adding or deleting a vNIC.

· If you remove a vNIC, its slot becomes empty. If you add a vNIC, the system maps it to the empty slot with the smallest slot number. Adding or removing a vNIC does not change the mappings between slots and the other vNICs.
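The mapping rules above can be modeled in a few lines. This Python sketch illustrates the documented behavior. It is not vFW1000 code, and the class name SlotTable and the MAC addresses are hypothetical. The sketch follows three rules:

· At first startup, vNICs fill slots 1, 2, 3, and so on, in the order their MAC addresses are obtained.

· Removing a vNIC empties its slot.

· Adding a vNIC takes the empty slot with the smallest number.

```python
class SlotTable:
    """Illustrative model of the documented vNIC-to-slot rules (slot n -> GEn/0).

    Hypothetical class; it mirrors the rules in this section, not vFW1000 internals.
    """

    def __init__(self, macs_in_discovery_order):
        # First startup: vNICs fill slots 1, 2, 3, ... in MAC discovery order.
        self.slots = dict(enumerate(macs_in_discovery_order, start=1))

    def remove(self, mac):
        """Delete a vNIC; its slot becomes empty and other mappings are unchanged."""
        for slot, owner in list(self.slots.items()):
            if owner == mac:
                del self.slots[slot]
                return slot
        raise KeyError(mac)

    def add(self, mac):
        """Add a vNIC; it takes the empty slot with the smallest number."""
        slot = 1
        while slot in self.slots:
            slot += 1
        self.slots[slot] = mac
        return slot

    def interfaces(self):
        """Current mappings as interface name -> MAC, ordered by slot."""
        return {f"GE{slot}/0": mac for slot, mac in sorted(self.slots.items())}


if __name__ == "__main__":
    # Hypothetical MAC addresses for three vNICs found at first startup.
    table = SlotTable(["52:54:00:00:00:01", "52:54:00:00:00:02", "52:54:00:00:00:03"])
    print(table.add("52:54:00:00:00:04"))     # 4: the new vNIC becomes GE4/0
    print(table.remove("52:54:00:00:00:02"))  # 2: GE2/0 is now empty
    print(table.add("52:54:00:00:00:05"))     # 2: the freed slot is reused
    print(table.interfaces())
```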

Before adding or removing vNICs, first use the display interface gigabitethernet brief command to confirm the vNIC-slot mappings.

<Sysname> display interface gigabitethernet brief

Brief information on interface(s) under route mode:

Link: ADM - administratively down; Stby - standby

Protocol: (s) - spoofing

Interface Link Protocol Main IP Description

GE1/0 UP UP --

GE2/0 UP UP 172.16.0.112

GE3/0 UP UP --

As a best practice, after adding or removing a vNIC, use the display interface gigabitethernet brief command to confirm the new vNIC-slot mappings before you proceed with network configuration. For example, after a vNIC is added, the new vNIC-slot mappings are as follows:

<Sysname> display interface gigabitethernet brief

Brief information on interface(s) under route mode:

Link: ADM - administratively down; Stby - standby

Protocol: (s) - spoofing

Interface Link Protocol Main IP Description

GE1/0 UP UP --

GE2/0 UP UP 172.16.0.112

GE3/0 UP UP --

GE4/0 UP UP --

The newly added vNIC is mapped to interface GigabitEthernet 4/0 of vFW1000.
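To find the interface assigned to a new vNIC, you can compare the command output captured before and after the change. This Python sketch has the following assumptions:

· The output was copied as text, one interface per line.

· Interface names use the abbreviated GEn/0 form shown above.

· The function names are hypothetical.

```python
import re

# Interface rows start with an abbreviated name such as GE4/0.
_ROW_RE = re.compile(r"^[ \t]*(GE\d+/\d+)\b", re.MULTILINE)


def _slot(name):
    # "GE4/0" -> 4, so that GE10/0 sorts after GE9/0.
    return int(name[2:].split("/")[0])


def interfaces(brief_output):
    """Set of GE interface names in 'display interface gigabitethernet brief' output."""
    return set(_ROW_RE.findall(brief_output))


def mapping_changes(before, after):
    """Return (added, removed) interface names between two captures, sorted by slot."""
    old, new = interfaces(before), interfaces(after)
    return sorted(new - old, key=_slot), sorted(old - new, key=_slot)


if __name__ == "__main__":
    before = """Interface Link Protocol Main IP Description
GE1/0 UP UP --
GE2/0 UP UP 172.16.0.112
GE3/0 UP UP --
"""
    after = before + "GE4/0 UP UP --\n"
    print(mapping_changes(before, after))  # (['GE4/0'], [])
```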

vSwitch interface or host physical interface mappings

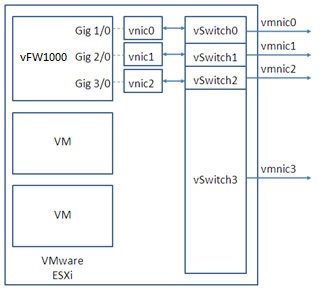

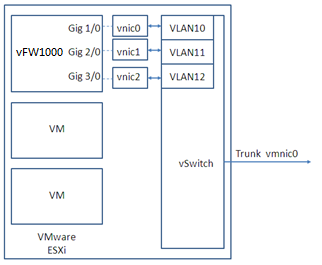

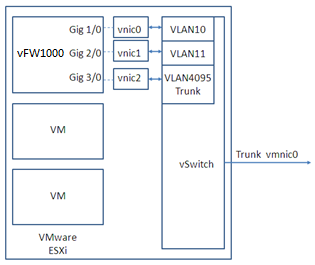

Mappings on VMware ESXi

On VMware ESXi, vFW1000 interfaces must connect to vSwitch interfaces to receive or transmit traffic. Each vSwitch provides only one interface. You can create a vSwitch for each vFW1000 interface or configure interfaces on a vFW1000 to share one vSwitch interface.

Figure 88, Figure 89, and Figure 90 describe three mapping relations.

Figure 88 One vSwitch for each vFW1000 interface

Figure 89 One vSwitch for all vFW1000 interfaces

Mappings on KVM

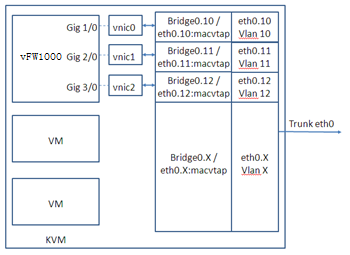

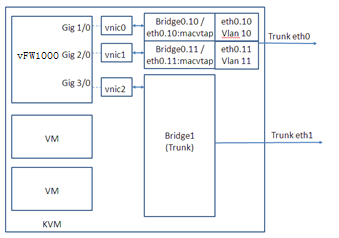

On KVM, vFW1000 interfaces must connect to physical interfaces to receive or transmit traffic. You can map vFW1000 interfaces to different physical interfaces or configure interfaces on a vFW1000 to share one physical interface.

Figure 91, Figure 92, and Figure 93 describe three mapping relations.

Figure 91 One physical interface for each vFW1000 interface

Figure 92 One physical interface for all vFW1000 interfaces

NOTE: To map a VirtIO vNIC to a trunk port, make sure the KVM platform supports vhost.
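On a Linux KVM host, one way to check for vhost support is to look for two things: the vhost-net device node and the loaded vhost_net kernel module. This Python sketch checks the standard Linux paths /dev/vhost-net and /sys/module/vhost_net. It only reports status, and the function name is hypothetical. Run it on the KVM host, not inside the VM.

```python
import os


def vhost_net_status(dev_path="/dev/vhost-net", module_path="/sys/module/vhost_net"):
    """Report whether the vhost-net device node and kernel module are present.

    Hypothetical helper. Both values True suggest the host can back VirtIO
    vNICs with vhost.
    """
    return {
        "device_node": os.path.exists(dev_path),
        "module_loaded": os.path.isdir(module_path),
    }


if __name__ == "__main__":
    status = vhost_net_status()
    print(status)
    if not all(status.values()):
        print("vhost-net not available; try 'modprobe vhost_net' as root")
```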

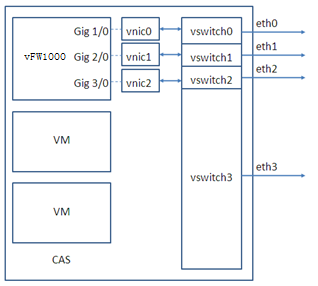

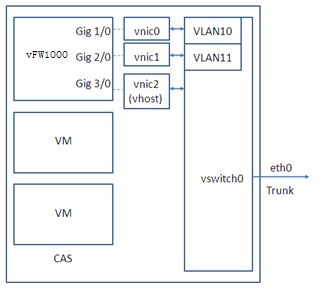

Mappings on CAS

On CAS, vFW1000 interfaces must connect to physical interfaces to receive or transmit traffic. You can map vFW1000 interfaces to different physical interfaces or configure interfaces on a vFW1000 to share one physical interface.

Figure 94, Figure 95, and Figure 96 describe three mapping relations.

Figure 94 One physical interface for each vFW1000 interface

Figure 95 One physical interface for all vFW1000 interfaces

Figure 96 One physical interface for all vFW1000 interfaces (mapping to a trunk port for vFW1000 to receive packets with VLAN tags)

IMPORTANT: For this configuration to take effect, you must load the vhost module on CAS and configure vhost properties for the vNIC.

Installing H3C NFV2000 on a physical server

H3C NFV2000 can be installed only on a physical server.

Installation environment

Hardware environment

Table 5 describes the minimum hardware configuration requirements for a server to host H3C NFV2000.

Table 5 Minimum hardware configuration requirements for a server to host H3C NFV2000

| Item | Minimum requirement |
|---|---|
| Processor | 1 CPU (clock speed ≥ 2.0 GHz) |
| Memory | 16 GB or above |
| Hard disk | 1 × HD, 32 GB |
| NIC | 2 to 16 NICs |
| NIC model | Intel 82598/82599/X540/I350, or BCM TG3 5719 |

Installing H3C NFV2000 on a bare metal server

The installation method is the same for all NFV2000 products. This section uses vFW2000 as an example.

This section uses an H3C UIS R390X G2 server to describe the installation process of vFW2000 on a bare metal server.

Installing vFW2000 from an ISO image

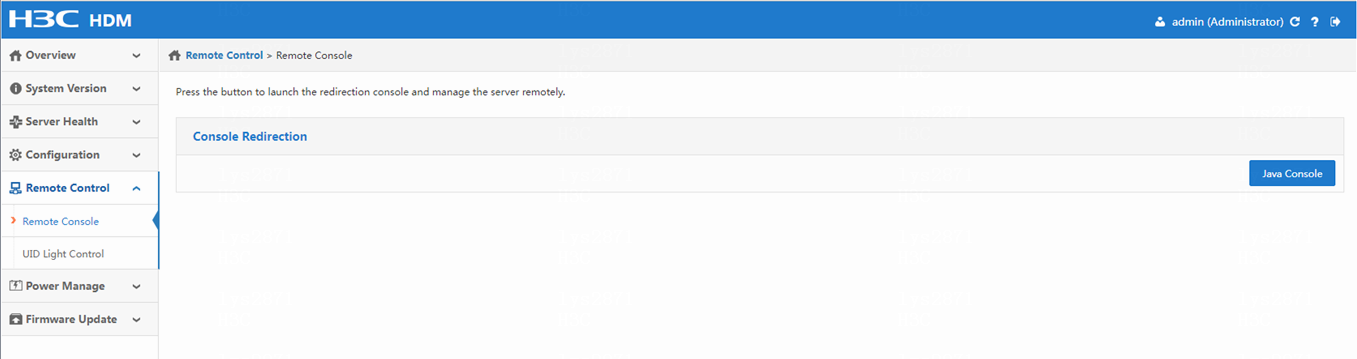

1. Access the bare metal server through iLO:

a. Enter the iLO address of the server in the IE browser, for example: https://192.168.100.179/index.html.

b. On the page that opens, select Remote Console > Remote Console, and then click Java Console to log in to the bare metal server through the console.

Figure 97 Logging in to the server

NOTE: You must enter a username and password to log in. To obtain them, contact the server administrator.

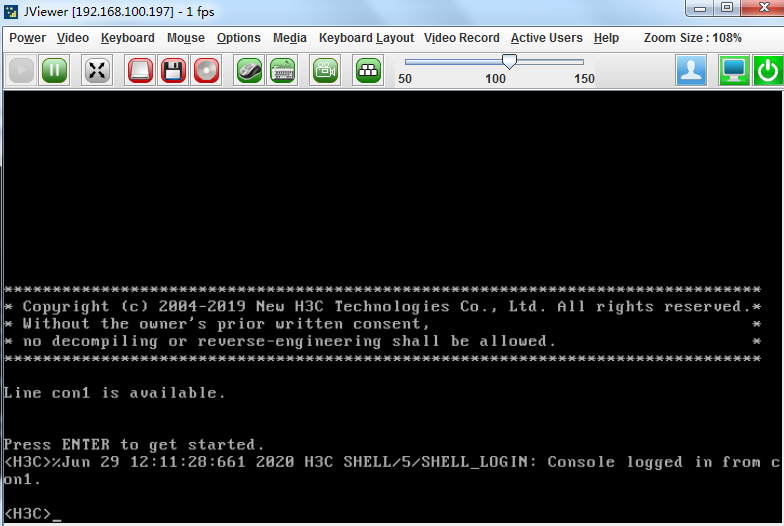

c. After you log in, the page shown in Figure 98 opens.

Figure 98 Successful login to the server

2. Load the vFW2000 ISO image:

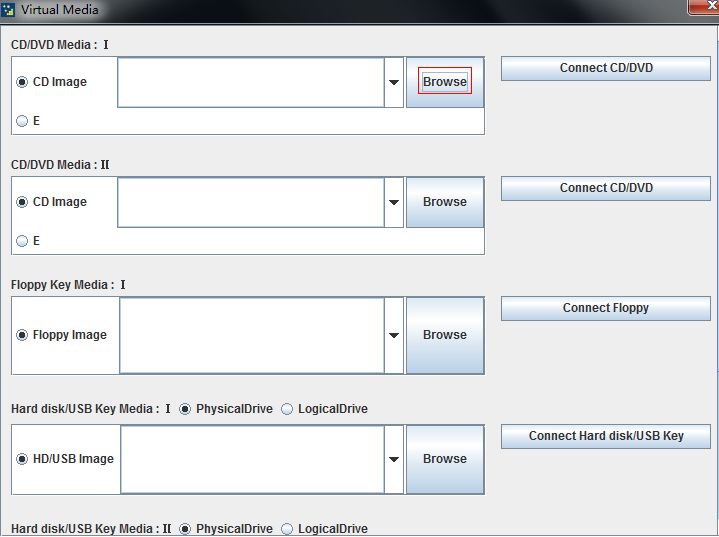

a. Select Media > Virtual Media Wizard…, and then click Browse on the page that opens.

Figure 99 Selecting the ISO image (1)

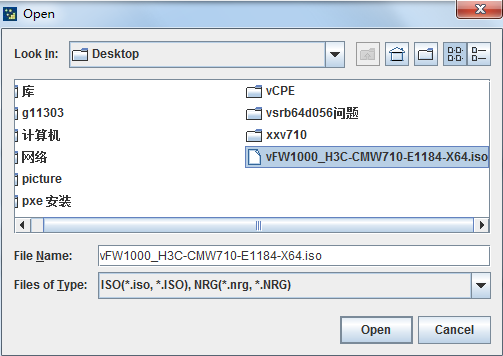

b. In the file selection window, select the ISO image to load, and then click Open. The image is set to start from the CD-ROM/DVD at the next startup.

Figure 100 Selecting the ISO image (2)

c. Click Connect CD/DVD and then select Power > Force System Restart to restart the server.

NOTE: Some servers must be configured to boot from the CD/DVD at the next reboot. Configure this setting as needed.

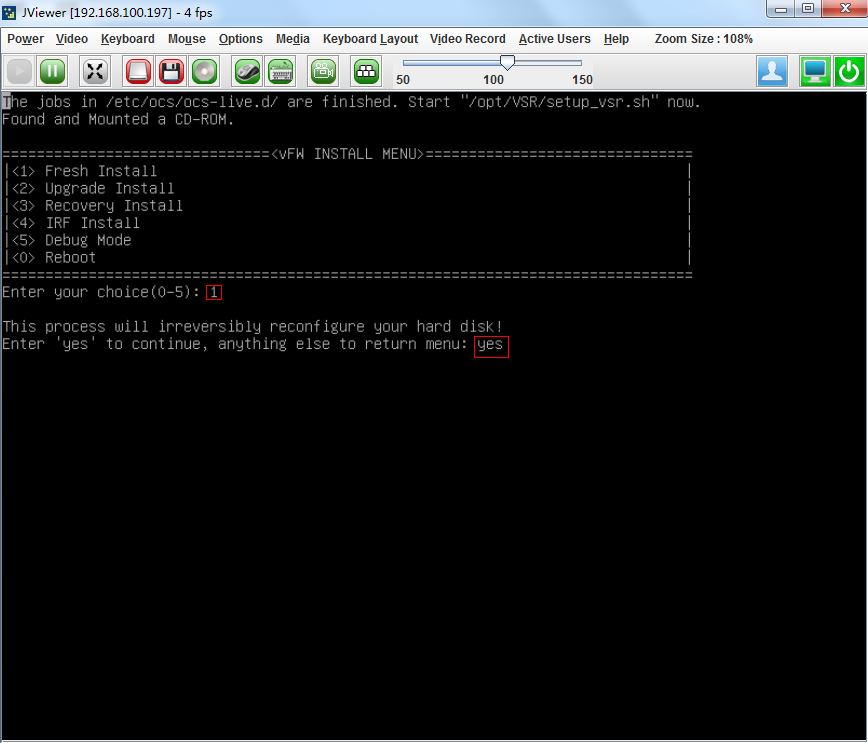

3. Install vFW2000:

a. After the server restarts, the system loads the ISO file and enters the installation interface. Select <1> to install, and then enter yes for confirmation. The system will automatically complete the installation.

Figure 101 Installation startup interface

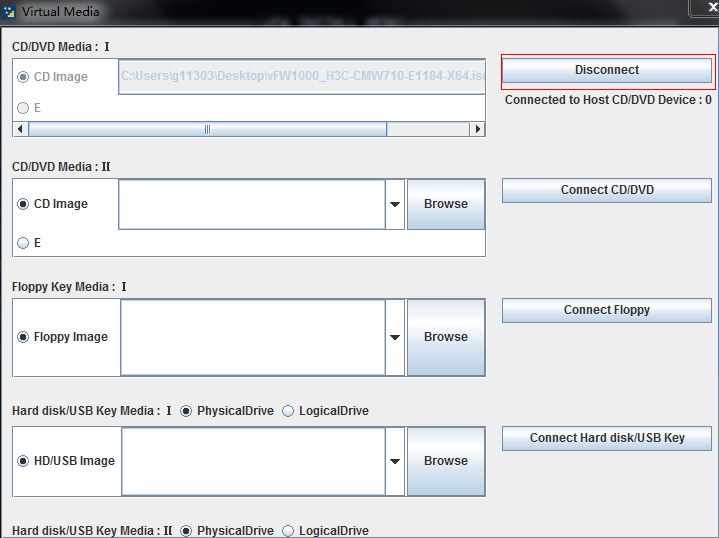

b. After the installation is complete, disconnect the CD-ROM so that the server does not load the ISO file again at the next restart. To disconnect it, select Media > Virtual Media Wizard… and then click Disconnect.

Figure 102 Disconnecting the CD-ROM connection

c. Enter yes to restart the system to complete the installation of vFW2000.

Figure 103 Restarting the system

|

|

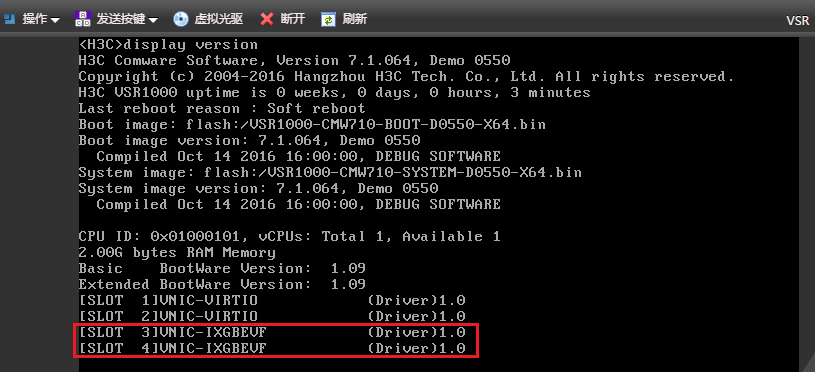

NOTE: If you install an NFV2000 with the 3.14 kernel version, after the installation is complete and you execute the display version command on the device, the number of available CPUs displayed might be 1. For correct display of the number of available CPUs, navigate to the Advanced > CPU Configuration > x2APIC page in the BIOS interface to disable x2apic. |

Installing vFW2000 via PXE

This section describes only the installation procedure on the PXE client side. For the PXE server setup procedure, see "Appendix D Setting up a PXE server."

To install vFW2000 via PXE:

1. Access the bare metal server through iLO.

For information about how to access the bare metal server through iLO, see "Installing H3C NFV2000 on a bare metal server."

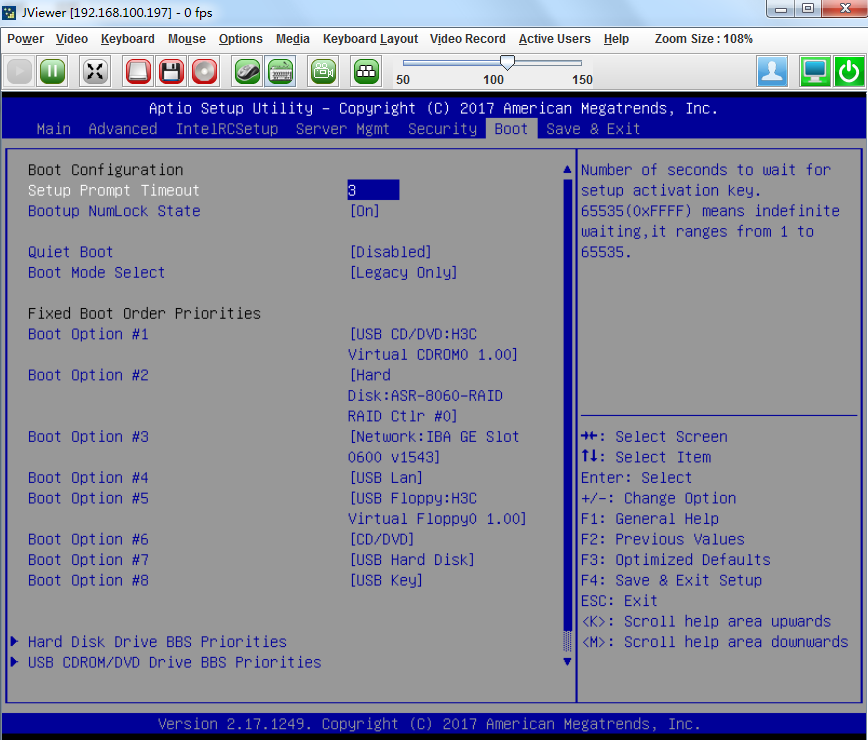

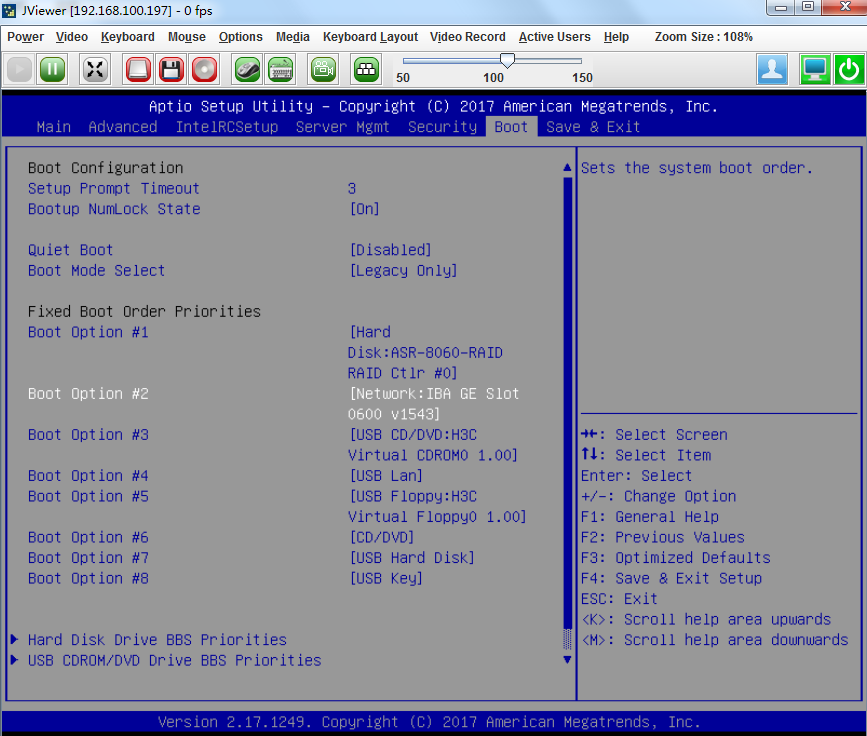

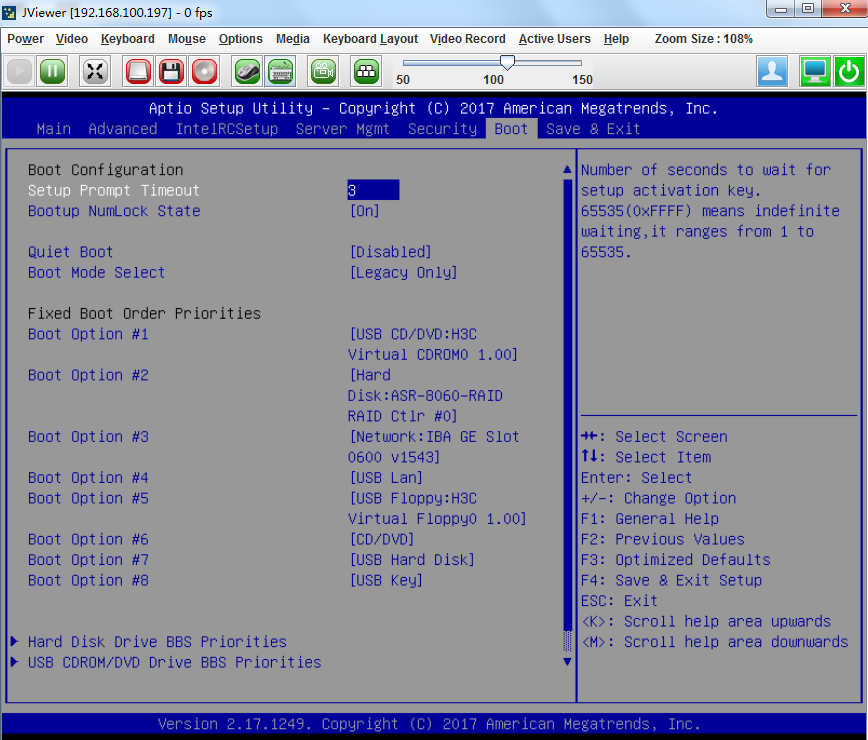

2. Set the BIOS boot order.

a. Access BIOS and select the Boot tab.

Figure 104 Boot tab

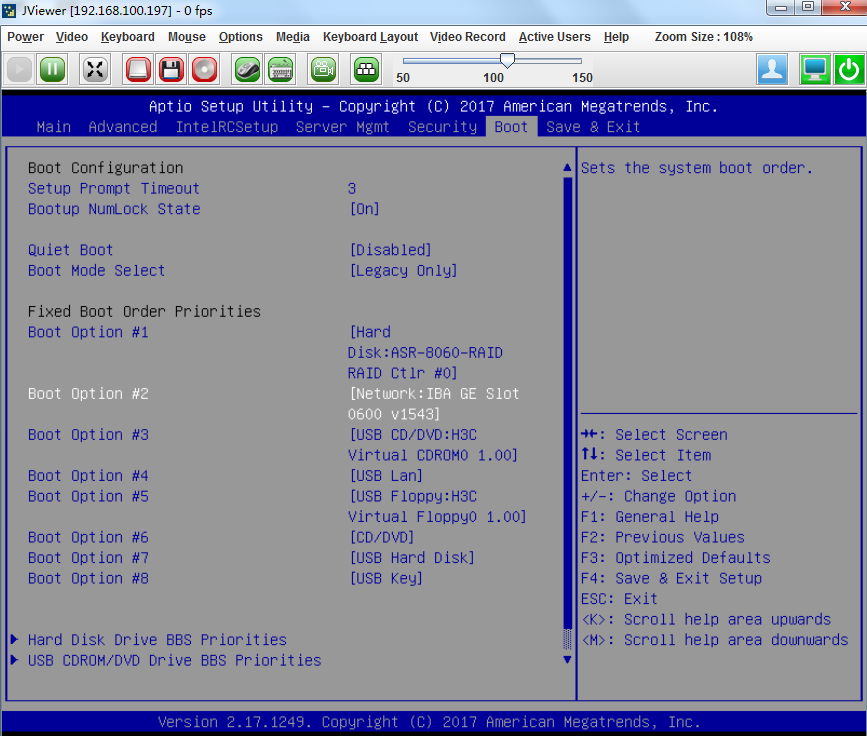

b. Set booting from a network interface as the second boot option.

Figure 105 Setting network interface boot as the second boot option

IMPORTANT:

· BIOS screens vary by server.

· Make sure the selected interface can reach the PXE server over a physical link.

· If another system is already installed on the server, set PXE boot as the first boot option. After the installation is complete, change the first boot option back to the hard drive.

3. Restart the server.

Installing vFW2000

1. After the server restarts, the system loads the required files from the PXE server and enters the installation interface. Select <1> to install, and then enter yes for confirmation. The system will automatically complete the installation.

Figure 106 Starting installation

2. Enter yes to reboot the system to finish vFW2000 installation.

Figure 107 Rebooting the system

NOTE: After you install an NFV2000 with kernel version 3.14, the display version command output might show only one available CPU. To display the correct number of available CPUs, disable x2APIC on the Advanced > CPU Configuration > x2APIC page of the BIOS.

Installing vFW2000 via unattended PXE

This section describes only the installation procedure on the PXE client side. For the PXE server setup procedure, see "Appendix D Setting up a PXE server."

When setting up the PXE server, change the value of the Syslinux parameter ocs_live_run to /opt/VSR/setup_vsr_pxe.sh unmanned fresh.

To install vFW2000 via unattended PXE:

1. Access the bare metal server through iLO.

For information about how to access the bare metal server through iLO, see "Installing H3C NFV2000 on a bare metal server."

2. Set the BIOS boot order.

a. Access BIOS and select the Boot tab.

Figure 108 Boot tab

b. Set booting from a network interface as the second boot option.

Figure 109 Setting network interface boot as the second boot option

IMPORTANT:

· BIOS screens vary by server.

· Make sure the selected interface can reach the PXE server over a physical link.

· If another system is already installed on the server, set PXE boot as the first boot option. After the installation is complete, change the first boot option back to the hard drive.

3. Restart the server.

Installing vFW2000

After the server restarts, the system loads the required files from the PXE server and completes the installation automatically.

NOTE: After you install an NFV2000 with kernel version 3.14, the display version command output might show only one available CPU. To display the correct number of available CPUs, disable x2APIC on the Advanced > CPU Configuration > x2APIC page of the BIOS.

Upgrading H3C NFV products

About startup software images

Startup software images are program files used to boot the device. They fall into four categories: boot image, system image, feature image, and patch image. The device must have a boot image and a system image to run. Feature images are optional and can be installed as required. Patch images fix software defects.

Table 6 Software images

| Software image | Description |
|---|---|
| Boot image | Contains the Linux operating system kernel and provides process management, memory management, file system management, and the emergency shell. |
| System image | Contains the Comware kernel and standard features, including device management, interface management, configuration management, and routing. |
| Feature image | Contains advanced or customized software features. Whether to support feature images and which feature images to support depend on the device model. |
| Patch image | Released for fixing software defects and bugs. A patch image does not add or remove features. |

The software images of NFV products can be released in one of the following forms:

· Separate .bin files. You must verify compatibility between the software images.

· One .ipe package file that contains all images. The images in an .ipe package file are compatible. The system automatically decompresses the file, loads the .bin images, and sets them as startup software images.

Typically, the startup file is an .ipe package file.
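When images are released as separate .bin files, a quick first check is that all of them carry the same release tag. This Python sketch relies only on the naming pattern visible in this guide, for example vFW1000-CMW710-BOOT-E1185-X64.bin. The function names are hypothetical. A matching tag is a heuristic. It does not replace the compatibility information in the release notes.

```python
import re

# Naming pattern seen in this guide: <product>-CMW710-<KIND>-<release>-<arch>.bin
_BIN_RE = re.compile(
    r"^(?P<product>.+?)-CMW\d+-(?P<kind>[A-Z0-9]+)-(?P<release>[A-Z0-9]+)-(?P<arch>[A-Z0-9]+)\.bin$"
)


def release_tags(paths):
    """Map each image path to its release tag, for example 'E1185'."""
    tags = {}
    for path in paths:
        name = path.rsplit("/", 1)[-1]  # drop a "flash:/" style prefix
        match = _BIN_RE.match(name)
        if match is None:
            raise ValueError(f"unexpected image file name: {path}")
        tags[path] = match.group("release")
    return tags


def same_release(paths):
    """True if all images share one release tag. A name-based heuristic only."""
    return len(set(release_tags(paths).values())) == 1


if __name__ == "__main__":
    print(same_release(["vFW1000-CMW710-BOOT-E1185-X64.bin",
                        "vFW1000-CMW710-SYSTEM-E1185-X64.bin"]))  # True
    print(same_release(["vFW1000-CMW710-BOOT-E1183-X64.bin",
                        "vFW1000-CMW710-SYSTEM-E1185-X64.bin"]))  # False
```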

Upgrade methods

Table 7 Upgrade methods

| Upgrade method | Description |
|---|---|
| Upgrade from the CLI | This method is disruptive. You must reboot the entire device to complete the upgrade. |
| Upgrade from an ISO file | Configure the vFW to boot using an ISO file and perform a software upgrade. You must reboot the entire device to complete the upgrade. |

Upgrade from the CLI

The upgrade method is the same for NFV products. This section uses vFW1000 as an example.

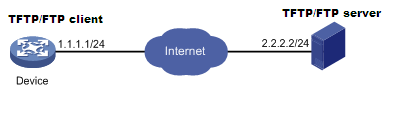

Preparing for the upgrade

Before upgrading the vFW startup images, set up the upgrade environment as shown in Figure 110.

· Make sure the vFW can reach the file server.

· Enable the TFTP or FTP server on the file server.

· Log in to the CLI of the vFW from a configuration terminal.

· Copy the startup images to the file server and configure the TFTP/FTP server access path correctly.

Figure 110 Setting up the upgrade environment for the NFV product

Upgrading vFW1000 startup images through TFTP

The vFW acts as a TFTP client to access the specified path on the TFTP server, back up the current startup images, and download the new startup images.
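For reference, TFTP (RFC 1350) starts each transfer with a single request packet sent to UDP port 69. A put sends a write request (WRQ), and a get sends a read request (RRQ). The "binary mode" in the command output corresponds to the RFC's octet transfer mode. This Python sketch only builds those request packets to show their layout. It is not a TFTP client and does not describe the vFW's internal implementation. The function name is hypothetical.

```python
import struct

# Opcodes defined in RFC 1350.
OP_RRQ = 1  # read request, as sent for "tftp ... get"
OP_WRQ = 2  # write request, as sent for "tftp ... put"


def tftp_request(opcode: int, filename: str, mode: str = "octet") -> bytes:
    """Build an RRQ/WRQ packet: 2-byte opcode, filename, 0, mode, 0."""
    if opcode not in (OP_RRQ, OP_WRQ):
        raise ValueError("opcode must be RRQ (1) or WRQ (2)")
    return (struct.pack("!H", opcode)
            + filename.encode("ascii") + b"\x00"
            + mode.encode("ascii") + b"\x00")


if __name__ == "__main__":
    # The request that starts a backup of startup.cfg to the TFTP server.
    print(tftp_request(OP_WRQ, "startup.cfg"))  # b'\x00\x02startup.cfg\x00octet\x00'
```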

Backing up the current startup image and configuration file

1. Execute the save command to save current configuration information.

<Sysname> save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

flash:/startup.cfg exists, overwrite? [Y/N]:y

Validating file. Please wait....

Configuration is saved to device successfully.

<Sysname>

2. Execute the dir command to verify that the storage space is sufficient for the new startup images.

<Sysname> dir

Directory of flash: (VFAT)

0 drw- - Jun 30 2020 05:39:20 diagfile

1 -rw- 47 Jun 30 2020 06:32:46 ifindex.dat

2 drw- - Jun 30 2020 05:47:24 license

3 drw- - Jun 30 2020 06:32:46 logfile

4 -rw- 768 Jun 30 2020 06:33:27 reboot.log

5 drw- - Jun 30 2020 05:39:20 seclog

6 -rw- 2268 Jun 30 2020 06:32:46 startup.cfg

7 -rw- 31526 Jun 30 2020 06:32:46 startup.mdb

8 -rw- 8772608 Jun 30 2020 06:32:30 vFW1000-CMW710-BOOT-E1183-X64.bin

9 -rw- 163973120 Jun 30 2020 06:32:32 vFW1000-CMW710-SYSTEM-E1183-X64.bin

10 -rw- 172752896 Jun 30 2020 06:31:14 vFW1000_H3C-CMW710-E1184-X64.ipe

11 -rw- 21016 Jun 30 2020 06:33:33 version.log

7325704 KB total (6988168 KB free)

<Sysname>
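You can do the check in step 2 by reading the summary line at the end of the dir output. The Python sketch below parses that line and compares the free space with the space the upgrade needs. The function names are hypothetical.

The sketch estimates the required space at about twice the .ipe size, to hold the package plus the .bin files extracted from it. In the listing above, the two .bin files (172745728 bytes) are close to the size of the .ipe (172752896 bytes). This is a rough heuristic, so confirm the actual requirements in the release notes. The package size used below, 181030912 bytes, is the size of vFW1000_H3C-CMW710-E1185-X64.ipe shown in the FTP download example in this chapter.

```python
import re

_SUMMARY_RE = re.compile(r"(\d+)\s*KB total\s*\((\d+)\s*KB free\)")


def flash_space_kb(dir_output: str):
    """Return (total_kb, free_kb) from the summary line of Comware 'dir' output."""
    m = _SUMMARY_RE.search(dir_output)
    if m is None:
        raise ValueError("no 'KB total (... KB free)' summary found")
    return int(m.group(1)), int(m.group(2))


def enough_space(dir_output: str, ipe_size_bytes: int, factor: float = 2.0) -> bool:
    """Heuristic: the .ipe plus its extracted .bin files need about factor x the .ipe size."""
    _, free_kb = flash_space_kb(dir_output)
    return free_kb * 1024 >= ipe_size_bytes * factor


if __name__ == "__main__":
    summary = "7325704 KB total (6988168 KB free)"
    print(flash_space_kb(summary))           # (7325704, 6988168)
    print(enough_space(summary, 181030912))  # True
```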

3. Execute the tftp put command to back up the startup images to the TFTP file server.

<Sysname> tftp 2.2.2.2 put vFW1000_H3C-CMW710-R0001-X64.ipe

File will be transferred in binary mode

Sending file to remote TFTP server. Please wait... \

TFTP: 31131648 bytes sent in 70 second(s).

File uploaded successfully.

<Sysname>

4. Execute the tftp put command to back up the startup.cfg file to the TFTP file server.

<Sysname> tftp 2.2.2.2 put startup.cfg

File will be transferred in binary mode

Sending file to remote TFTP server. Please wait... \

TFTP: 1694 bytes sent in 0 second(s).

File uploaded successfully.

<Sysname>

Upgrading the startup images

1. Execute the tftp get command to import the startup images to the vFW.

<Sysname> tftp 2.2.2.2 get vFW1000_H3C-CMW710-E1185-X64.ipe

File will be transferred in binary mode

Downloading file from remote TFTP server, please wait...|

TFTP: 31131648 bytes received in 70 second(s)

File downloaded successfully.

<Sysname>

2. Execute the boot-loader command to specify the main startup images for the vFW.

<Sysname> boot-loader file flash:/vFW1000_H3C-CMW710-E1185-X64.ipe main

Verifying the file flash:/vFW1000_H3C-CMW710-E1185-X64.ipe on the device...Done.

H3C SecPath vFW1000 images in IPE:

vFW1000-CMW710-BOOT-E1185-X64.bin

vFW1000-CMW710-SYSTEM-E1185-X64.bin

This command will set the main startup software images. Please do not reboot the device during the upgrade. Continue? [Y/N]:y

Add images to the device.

Decompressing file vFW1000-CMW710-BOOT-E1185-X64.bin to flash:/vFW1000-CMW710-BOOT-E1185-X64.bin...Done.

Decompressing file vFW1000-CMW710-SYSTEM-E1185-X64.bin to flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin.....Done.

Verifying the file flash:/vFW1000-CMW710-BOOT-E1185-X64.bin on the device...Done.

Verifying the file flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin on the device...Done.

The images that have passed all examinations will be used as the main startup software images at the next reboot on the device.

Decompression completed.

You are recommended to delete the .ipe file after you set startup software images for all slots.

Do you want to delete flash:/vFW1000_H3C-CMW710-E1185-X64.ipe now? [Y/N]:n

<Sysname>

3. Execute the display boot-loader command to view information about startup software images.

<Sysname> display boot-loader

Software images on the device:

Current software images:

flash:/vFW1000-CMW710-BOOT-E1183-X64.bin

flash:/vFW1000-CMW710-SYSTEM-E1183-X64.bin

Main startup software images:

flash:/vFW1000-CMW710-BOOT-E1185-X64.bin

flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin

Backup startup software images:

None

<Sysname>

As shown in the command output, the main startup software images are vFW1000-CMW710-BOOT-E1185-X64.bin and vFW1000-CMW710-SYSTEM-E1185-X64.bin, extracted from the vFW1000_H3C-CMW710-E1185-X64.ipe package.
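If you save the display boot-loader output, a short script can confirm that every main startup image carries the target release before you reboot. This Python sketch has the following assumptions:

· The output uses the section headings shown above: Current software images:, Main startup software images:, and Backup startup software images:.

· Image entries are flash: paths.

· The target release E1185 comes from this example.

The function names are hypothetical.

```python
SECTIONS = {
    "Current software images:": "current",
    "Main startup software images:": "main",
    "Backup startup software images:": "backup",
}


def parse_boot_loader(output: str) -> dict:
    """Group image paths from 'display boot-loader' output by section."""
    images = {key: [] for key in SECTIONS.values()}
    section = None
    for raw in output.splitlines():
        line = raw.strip()
        if line in SECTIONS:
            section = SECTIONS[line]
        elif section and line.startswith("flash:/"):
            images[section].append(line)
        # Other lines ("None", prompts, blank lines) are ignored.
    return images


def main_images_at(output: str, release: str) -> bool:
    """True if main startup images exist and all of them contain the release tag."""
    main = parse_boot_loader(output)["main"]
    return bool(main) and all(f"-{release}-" in path for path in main)
```

For the output in step 3, main_images_at(output, "E1185") returns True. The current images, which are still E1183, do not affect the result.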

Upgrading vFW1000 startup images through FTP

The vFW acts as an FTP client to access the specified path on the FTP server, back up the current startup images, and download the new startup images.

Backing up the current startup images and configuration file

1. Execute the save command to save current configuration information.

<Sysname> save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

flash:/startup.cfg exists, overwrite? [Y/N]:y

Validating file. Please wait....

Configuration is saved to device successfully.

<Sysname>

2. Execute the dir command to verify that the storage space is sufficient for the new startup image files.

<Sysname> dir

Directory of flash: (VFAT)

0 drw- - Jun 30 2020 05:39:20 diagfile

1 -rw- 47 Jun 30 2020 06:32:46 ifindex.dat

2 drw- - Jun 30 2020 05:47:24 license

3 drw- - Jun 30 2020 06:32:46 logfile

4 -rw- 768 Jun 30 2020 06:33:27 reboot.log

5 drw- - Jun 30 2020 05:39:20 seclog

6 -rw- 2268 Jun 30 2020 06:32:46 startup.cfg

7 -rw- 31526 Jun 30 2020 06:32:46 startup.mdb

8 -rw- 8772608 Jun 30 2020 06:32:30 vFW1000-CMW710-BOOT-E1183-X64.bin

9 -rw- 163973120 Jun 30 2020 06:32:32 vFW1000-CMW710-SYSTEM-E1183-X64.bin

10 -rw- 172752896 Jun 30 2020 06:31:14 vFW1000_H3C-CMW710-E1184-X64.ipe

11 -rw- 21016 Jun 30 2020 06:33:33 version.log

7325704 KB total (6988168 KB free)

<Sysname>

3. Execute the ftp command to log in to the FTP server and enter the login username and password as prompted.

<Sysname> ftp 2.2.2.2

Press CTRL+C to abort.

Connected to 2.2.2.2 (2.2.2.2).

220 WFTPD 2.0 service (by Texas Imperial Software) ready for new user

User (2.2.2.2:(none)): user001

331 Give me your password, please

Password:

230 Logged in successfully

Remote system type is MSDOS

ftp>

4. Execute the put command to back up the startup images to the FTP file server.

ftp> put vFW1000_H3C-CMW710-E1184-X64.ipe

227 Entering passive mode (2,2,2,2,209,112)

125 Using existing data connection

................................................................................

................................................................................

................................................................................

................................................................................

..........

226 Closing data connection; File transfer successful.

172752896 bytes sent in 3.508 seconds (46.96 Mbytes/s)

ftp>

5. Execute the put command to back up the configuration file startup.cfg to the FTP file server.

ftp> put startup.cfg

227 Entering passive mode (2,2,2,2,209,126)

125 Using existing data connection

.

226 Closing data connection; File transfer successful.

2268 bytes sent in 0.010 seconds (214.22 Kbytes/s)

ftp>

Upgrading the startup images

1. In FTP client view, execute the get command to import the startup images to the vFW.

ftp> get vFW1000_H3C-CMW710-E1185-X64.ipe

227 Entering passive mode (2,2,2,2,209,150)

125 Using existing data connection

................................................................................

................................................................................

................................................................................

................................................................................

.........................

226 Closing data connection; File transfer successful.

181030912 bytes received in 13.071 seconds (13.21 Mbytes/s)

ftp>

2. Execute the quit command to return to user view.

ftp> quit

221 Service closing control connection

<Sysname>

3. Execute the boot-loader command to set the next main startup images.

<Sysname> boot-loader file flash:/vFW1000_H3C-CMW710-E1185-X64.ipe main

Verifying the file flash:/vFW1000_H3C-CMW710-E1185-X64.ipe on the device...Done.

H3C SecPath vFW1000 images in IPE:

vFW1000-CMW710-BOOT-E1185-X64.bin

vFW1000-CMW710-SYSTEM-E1185-X64.bin

This command will set the main startup software images. Please do not reboot the device during the upgrade. Continue? [Y/N]:y

Add images to the device.

Decompressing file vFW1000-CMW710-BOOT-E1185-X64.bin to flash:/vFW1000-CMW710-BOOT-E1185-X64.bin...Done.

Decompressing file vFW1000-CMW710-SYSTEM-E1185-X64.bin to flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin.....Done.

Verifying the file flash:/vFW1000-CMW710-BOOT-E1185-X64.bin on the device...Done.

Verifying the file flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin on the device...Done.

The images that have passed all examinations will be used as the main startup software images at the next reboot on the device.

Decompression completed.

You are recommended to delete the .ipe file after you set startup software images for all slots.

Do you want to delete flash:/vFW1000_H3C-CMW710-E1185-X64.ipe now? [Y/N]:n

<Sysname>

4. Execute the display boot-loader command to view information about the startup software images.

<Sysname> display boot-loader

Software images on the device:

Current software images:

flash:/vFW1000-CMW710-BOOT-E1183-X64.bin

flash:/vFW1000-CMW710-SYSTEM-E1183-X64.bin

Main startup software images:

flash:/vFW1000-CMW710-BOOT-E1185-X64.bin

flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin

Backup startup software images:

None

<Sysname>

As shown in the command output, the main startup software images are now vFW1000-CMW710-BOOT-E1185-X64.bin and vFW1000-CMW710-SYSTEM-E1185-X64.bin, which were extracted from the vFW1000_H3C-CMW710-E1185-X64.ipe package. The current software images remain E1183 until the vFW is rebooted.
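If you upgrade many vFWs, you might want to check the display boot-loader output with a script instead of by eye. The following Python sketch makes two assumptions. First, the output is captured as plain text, for example from a console log. Second, the .bin names inside an IPE package follow the pattern shown in this example. The helpers expected_images and main_startup_images are hypothetical and are not part of Comware.

```python
import re
from typing import List

# Assumption: .bin names inside an IPE package follow the pattern in this guide,
# e.g. vFW1000_H3C-CMW710-E1185-X64.ipe ->
#   vFW1000-CMW710-BOOT-E1185-X64.bin and vFW1000-CMW710-SYSTEM-E1185-X64.bin
IPE_PATTERN = re.compile(
    r"([A-Za-z0-9]+)_H3C-(CMW\d+)-([A-Za-z0-9]+)-([A-Za-z0-9]+)\.ipe"
)


def expected_images(ipe_name: str) -> List[str]:
    """Derive the expected BOOT and SYSTEM image paths from an IPE file name."""
    match = IPE_PATTERN.fullmatch(ipe_name)
    if match is None:
        raise ValueError(f"unrecognized IPE file name: {ipe_name}")
    product, platform, release, arch = match.groups()
    return [
        f"flash:/{product}-{platform}-{kind}-{release}-{arch}.bin"
        for kind in ("BOOT", "SYSTEM")
    ]


def main_startup_images(output: str) -> List[str]:
    """Return the image paths listed under 'Main startup software images:'."""
    images: List[str] = []
    in_main = False
    for raw in output.splitlines():
        line = raw.strip()
        if line.endswith("software images:"):
            # Each section header turns collection on (main) or off (current/backup).
            in_main = line == "Main startup software images:"
        elif in_main and line.startswith("flash:/"):
            images.append(line)
    return images


if __name__ == "__main__":
    sample = """\
Software images on the device:
Current software images:
flash:/vFW1000-CMW710-BOOT-E1183-X64.bin
flash:/vFW1000-CMW710-SYSTEM-E1183-X64.bin
Main startup software images:
flash:/vFW1000-CMW710-BOOT-E1185-X64.bin
flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin
Backup startup software images:
None"""
    ok = main_startup_images(sample) == expected_images(
        "vFW1000_H3C-CMW710-E1185-X64.ipe"
    )
    print("main startup images match the IPE package:", ok)
```

The parser keys on section headers rather than line positions, so extra blank lines in a captured log do not affect the result.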

Restarting the vFW

After the startup images are upgraded, reboot the device to complete the software upgrade.

To restart the vFW:

1. Execute the reboot command to restart the vFW.

<Sysname> reboot

Start to check configuration with next startup configuration file, please wait.........DONE!

This command will reboot the device. Continue? [Y/N]:y

Now rebooting, please wait...

2. After the vFW restarts, execute the display version command to verify that the vFW has started up with the correct startup software image versions.

<Sysname> display version

H3C Comware Software, Version 7.1.064, ESS 1185

Copyright (c) 2004-2020 New H3C Technologies Co., Ltd. All rights reserved.

H3C SecPath vFW1000 uptime is 0 weeks, 0 days, 0 hours, 1 minute

Last reboot reason : User reboot

Boot image: flash:/vFW1000-CMW710-BOOT-E1185-X64.bin

Boot image version: 7.1.064, ESS 1185

Compiled May 27 2020 15:00:00

System image: flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin

System image version: 7.1.064, ESS 1185

Compiled May 27 2020 15:00:00

CPU ID: 0x01000101, vCPUs: Total 1, Available 1

2.00G bytes RAM Memory

Basic BootWare Version: 1.11

Extended BootWare Version: 1.11

[SLOT 1]VNIC-E1000 (Driver)1.0

<Sysname>
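The check in step 2 compares the Boot image version and System image version lines with the target release. The following Python sketch assumes that the display version output has been captured as text. The function names are illustrative, and the default target version (7.1.064, ESS 1185) is taken from the example above.

```python
import re
from typing import Dict

# Matches lines such as "Boot image version: 7.1.064, ESS 1185".
VERSION_LINE = re.compile(
    r"^\s*(Boot|System) image version:\s*(.+?)\s*$", re.MULTILINE
)


def image_versions(output: str) -> Dict[str, str]:
    """Map 'Boot' and 'System' to the versions reported by display version."""
    return {kind: version for kind, version in VERSION_LINE.findall(output)}


def running_expected_version(output: str, expected: str = "7.1.064, ESS 1185") -> bool:
    """True only if both the boot and the system image report the expected version."""
    versions = image_versions(output)
    return versions.get("Boot") == expected and versions.get("System") == expected


if __name__ == "__main__":
    sample = """\
Boot image: flash:/vFW1000-CMW710-BOOT-E1185-X64.bin
Boot image version: 7.1.064, ESS 1185
System image: flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin
System image version: 7.1.064, ESS 1185
"""
    print(image_versions(sample))
    print("upgrade verified:", running_expected_version(sample))
```

The sketch requires both lines to match. This catches the case where only one of the two images was updated.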

Upgrade from an ISO file

The upgrade method is the same for all NFV products. This section uses vFW1000 as an example.

The upgrade procedure is the same as the procedure for installing vFW1000 from an ISO file. For more information, see "Installing the H3C NFV1000 series products on VMware ESXi", "Installing the H3C NFV1000 series products on Linux KVM", and "Installing the H3C NFV1000 series products on H3C CAS."

1. Access the INSTALL MENU and enter 2 to select <2> Upgrade Install. The vFW is upgraded to the version of the ISO image in the CD-ROM.

Figure 111 Upgrading vFW1000 from the ISO file

2. After the installation, disconnect the CD-ROM from the system and then reboot the system.

3. After the vFW restarts, verify that the vFW starts up with upgraded startup software image versions.

<Sysname> display version

H3C Comware Software, Version 7.1.064, ESS 1185

Copyright (c) 2004-2020 New H3C Technologies Co., Ltd. All rights reserved.

H3C SecPath vFW1000 uptime is 0 weeks, 0 days, 0 hours, 1 minute

Last reboot reason : User reboot

Boot image: flash:/vFW1000-CMW710-BOOT-E1185-X64.bin

Boot image version: 7.1.064, ESS 1185

Compiled May 27 2020 15:00:00

System image: flash:/vFW1000-CMW710-SYSTEM-E1185-X64.bin

System image version: 7.1.064, ESS 1185

Compiled May 27 2020 15:00:00

CPU ID: 0x01000101, vCPUs: Total 1, Available 1

2.00G bytes RAM Memory

Basic BootWare Version: 1.11

Extended BootWare Version: 1.11

[SLOT 1]VNIC-E1000 (Driver)1.0

<Sysname>

Restoring NFV products

The restoration method is the same for all NFV products. This section uses vFW1000 as an example.

To restore vFW1000 by using the ISO image:

1. The restoration procedure is the same as the installation procedure. For more information, see "Installing the H3C NFV1000 series products on VMware ESXi", "Installing the H3C NFV1000 series products on Linux KVM", and "Installing the H3C NFV1000 series products on H3C CAS."

2. From the INSTALL MENU, enter 3 to select <3> Recovery Install. vFW1000 is restored to the version of the ISO image in the CD-ROM.

Figure 112 Restoring vFW1000 by using the ISO image

3. After the installation, disconnect the CD-ROM from the system and then restart the system.

Appendix A Installing Linux KVM

About Linux KVM

Kernel-based Virtual Machine (KVM) is an open-source full virtualization solution for Linux on x86 hardware. It has been part of the mainline Linux kernel since Linux 2.6.20 and has become a mainstream Virtual Machine Monitor (VMM).

This chapter uses CentOS7 as an example to describe KVM installation.

Restrictions and guidelines

Before installing KVM, make sure your PC or server supports hardware virtualization, such as Intel VT or AMD-V, and that hardware virtualization is enabled in the BIOS.
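On a Linux host, one common way to confirm CPU support is to look for the vmx flag (Intel VT-x) or the svm flag (AMD-V) in /proc/cpuinfo. The following Python sketch does this. The helper name virtualization_extension is hypothetical. The flag shows CPU support only. On some kernels the flag is still listed when the feature is disabled in firmware, so also check the BIOS/UEFI setting.

```python
from pathlib import Path
from typing import Optional


def virtualization_extension(cpuinfo: str) -> Optional[str]:
    """Return the hardware virtualization extension advertised in /proc/cpuinfo text."""
    for line in cpuinfo.splitlines():
        # On x86, CPU feature flags are listed on lines that start with "flags".
        if line.startswith("flags") and ":" in line:
            flags = set(line.split(":", 1)[1].split())
            if "vmx" in flags:
                return "Intel VT-x (vmx)"
            if "svm" in flags:
                return "AMD-V (svm)"
    return None


if __name__ == "__main__":
    cpuinfo_path = Path("/proc/cpuinfo")
    if cpuinfo_path.exists():
        found = virtualization_extension(cpuinfo_path.read_text())
        print(found or "no vmx/svm flag: check CPU support and BIOS/UEFI settings")
```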

Prerequisites

Prepare the bootable drive or the network boot environment.

· To boot the system from a CD/DVD, insert the CentOS7 optical disk into the optical drive, and configure the server to boot from CD/DVD.

· To boot the system from the network, prepare the network boot environment, and configure the server to boot from the network.

Procedure

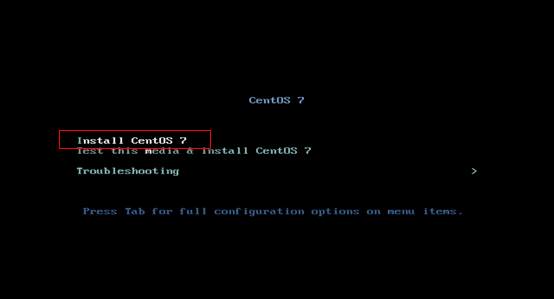

1. On the CentOS7 installation welcome screen, as shown in Figure 113, select Install CentOS7 and press Enter.

Figure 113 CentOS7 installation welcome screen

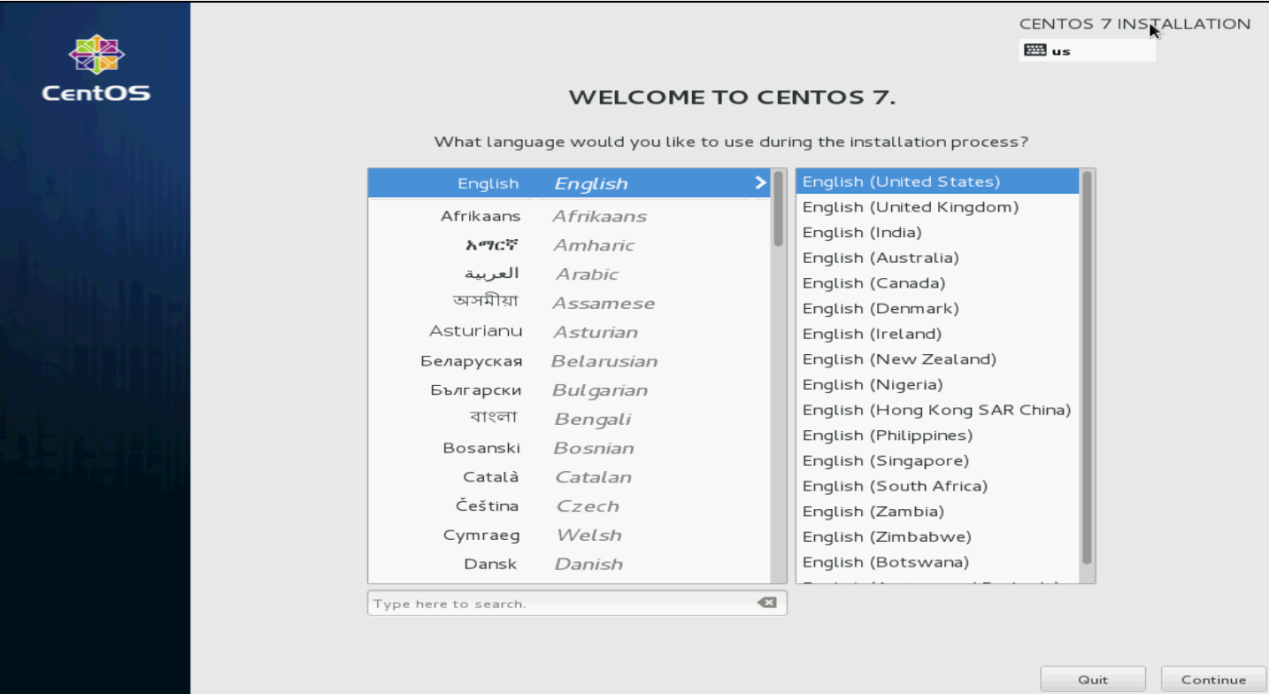

2. Select a language and then click Continue, as shown in Figure 114.

Figure 114 Selecting a language

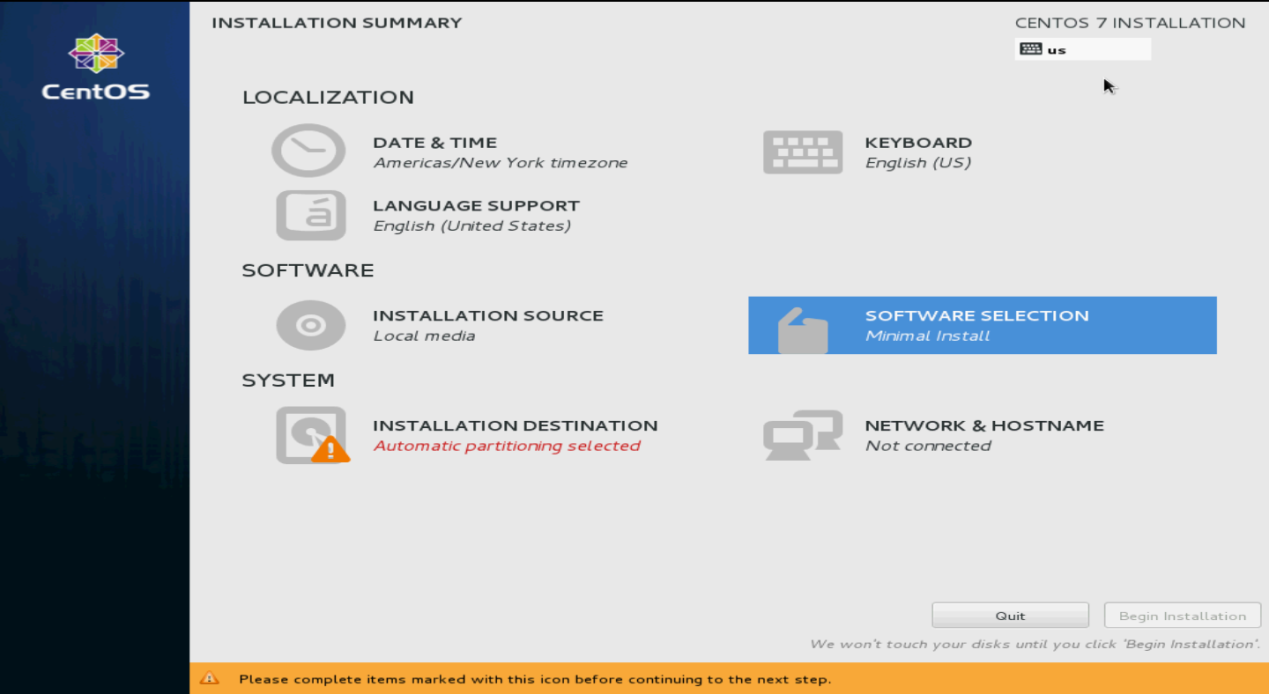

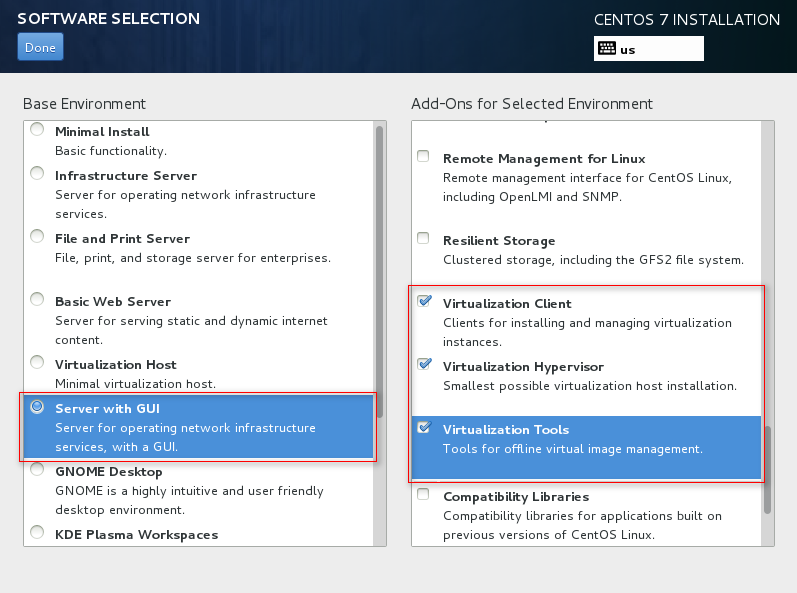

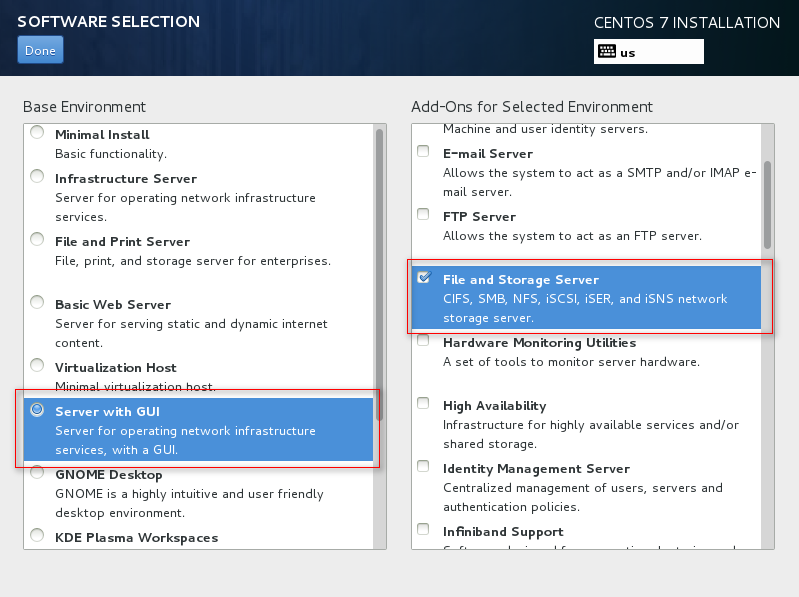

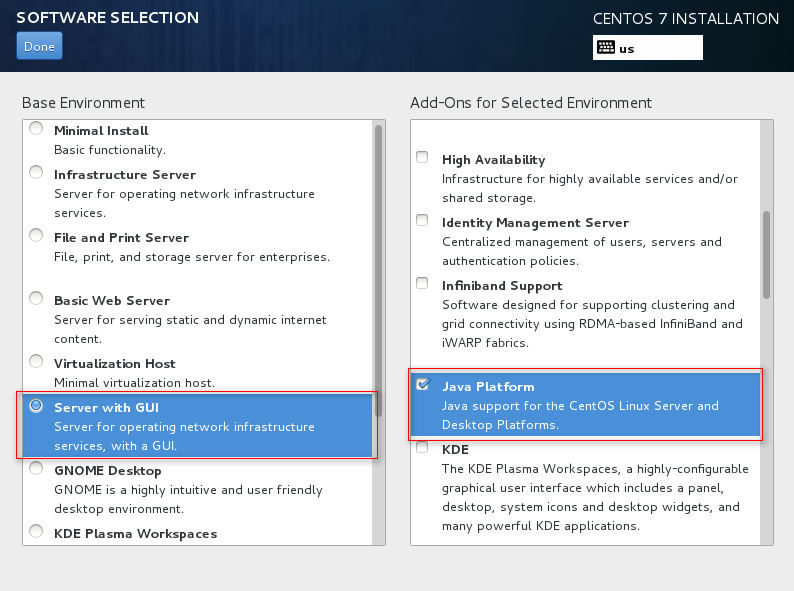

3. Select SOFTWARE SELECTION in the SOFTWARE section.

Figure 115 INSTALLATION SUMMARY screen

4. Select the virtualization components to install.

To facilitate management of VMs and ensure correct installation of the virtualization components, select the components marked in the red boxes in Figure 116 to Figure 118 and then click Done.

Figure 116 Installing virtualization components (1)

Figure 117 Installing virtualization components (2)

Figure 118 Installing virtualization components (3)

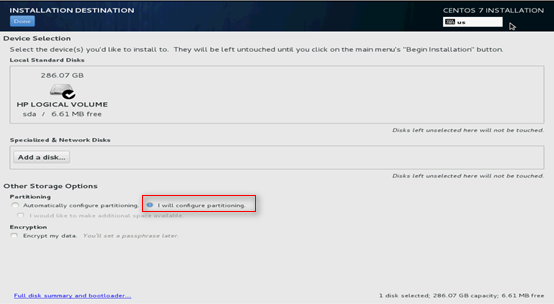

5. Click INSTALLATION DESTINATION. Select the installation destination, select I will configure partitioning as shown in Figure 119, and then click Done.

Figure 119 Selecting the installation destination

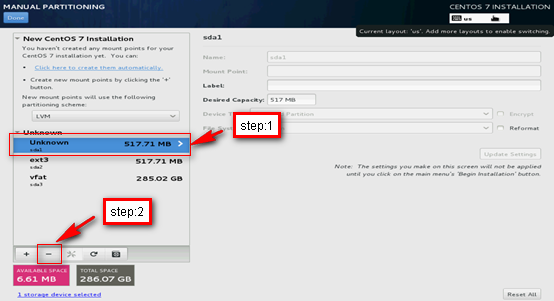

6. As shown in Figure 120, select the Unknown space and click the – button to remove the unknown partition. In the confirmation dialog box that opens, select the options shown in Figure 121 to confirm the deletion.

Figure 120 Removing the unknown partition

Figure 121 Confirming the deletion

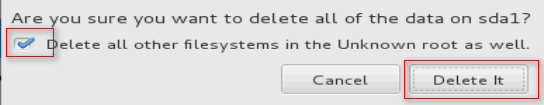

7. Select Click here to create them automatically.

Figure 122 Selecting automatic creation method for mount points

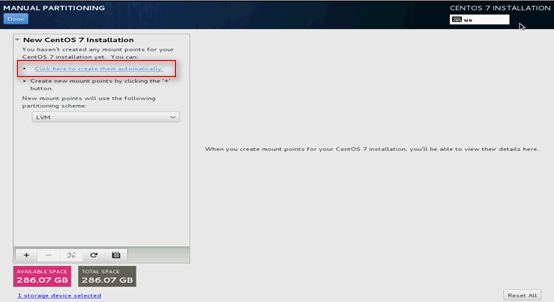

8. VM images are stored in the /var or /opt directory, which resides on the / partition, so the / partition must be large. However, automatic partitioning allocates a large amount of space to the /home partition. As a best practice, delete the /home partition, add its space to the / partition, and keep the other partitions, which store system files, unchanged.

◦ To delete the /home partition, perform the steps as shown in Figure 123.

◦ To add space to the / partition, perform the steps as shown in Figure 124.

Figure 123 Deleting the /home partition

Figure 124 Adding space to the / partition

IMPORTANT: The system can start up in legacy BIOS mode or UEFI mode. If the system starts up in UEFI mode, do not delete the /boot/efi partition, because the system boots from this partition.

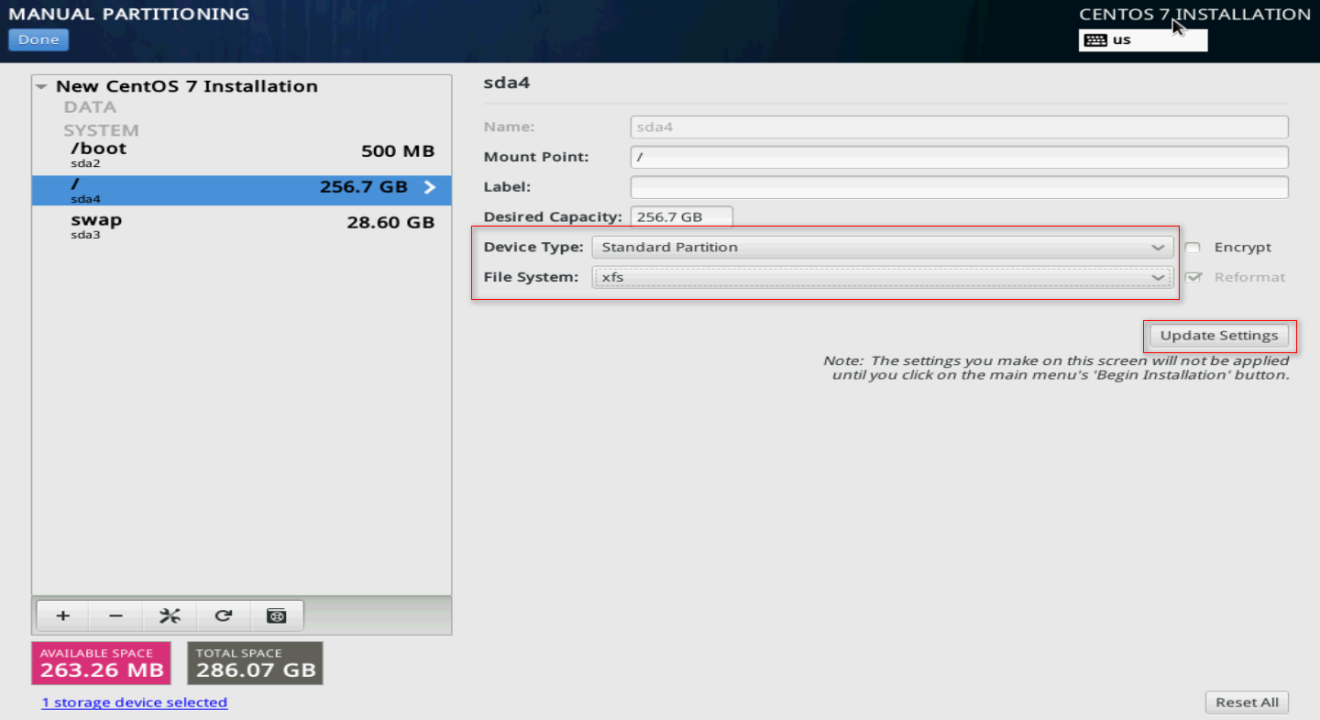

9. As a best practice, modify the Device Type and File System settings of the partitions. This enhances system stability and reduces the risk of VM image corruption if the server loses power. Figure 125 shows the changed settings for the / partition. Change the settings of the other partitions as shown in Table 8.

Figure 125 Modifying the / partition settings

Table 8 Changing the partition settings

| Partition | Device Type | File System |
|---|---|---|
| /boot | Standard Partition | xfs |
| /boot/efi (available only in UEFI mode) | Standard Partition | EFI System Partition |
| / | Standard Partition | xfs |
| swap | Standard Partition | swap |

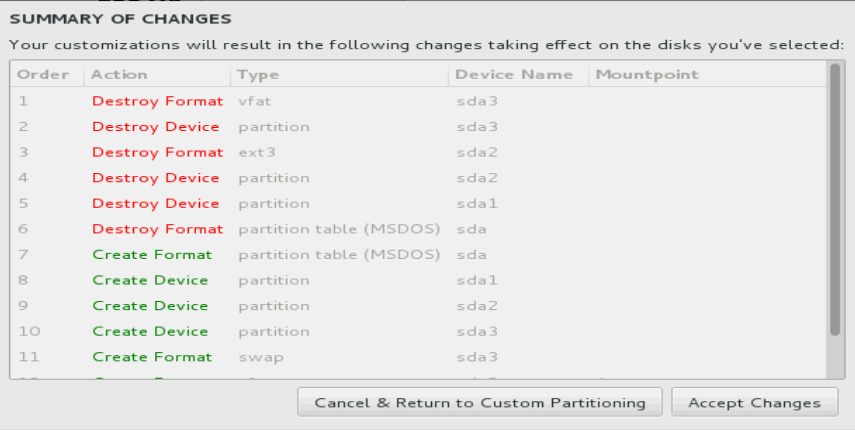

10. Click Done. In the dialog box that opens, click Accept Changes.

Figure 126 Saving the settings

IMPORTANT: When you add a partition, set Device Type to Standard Partition and File System to xfs. This reduces the probability of file corruption if the server powers down unexpectedly.

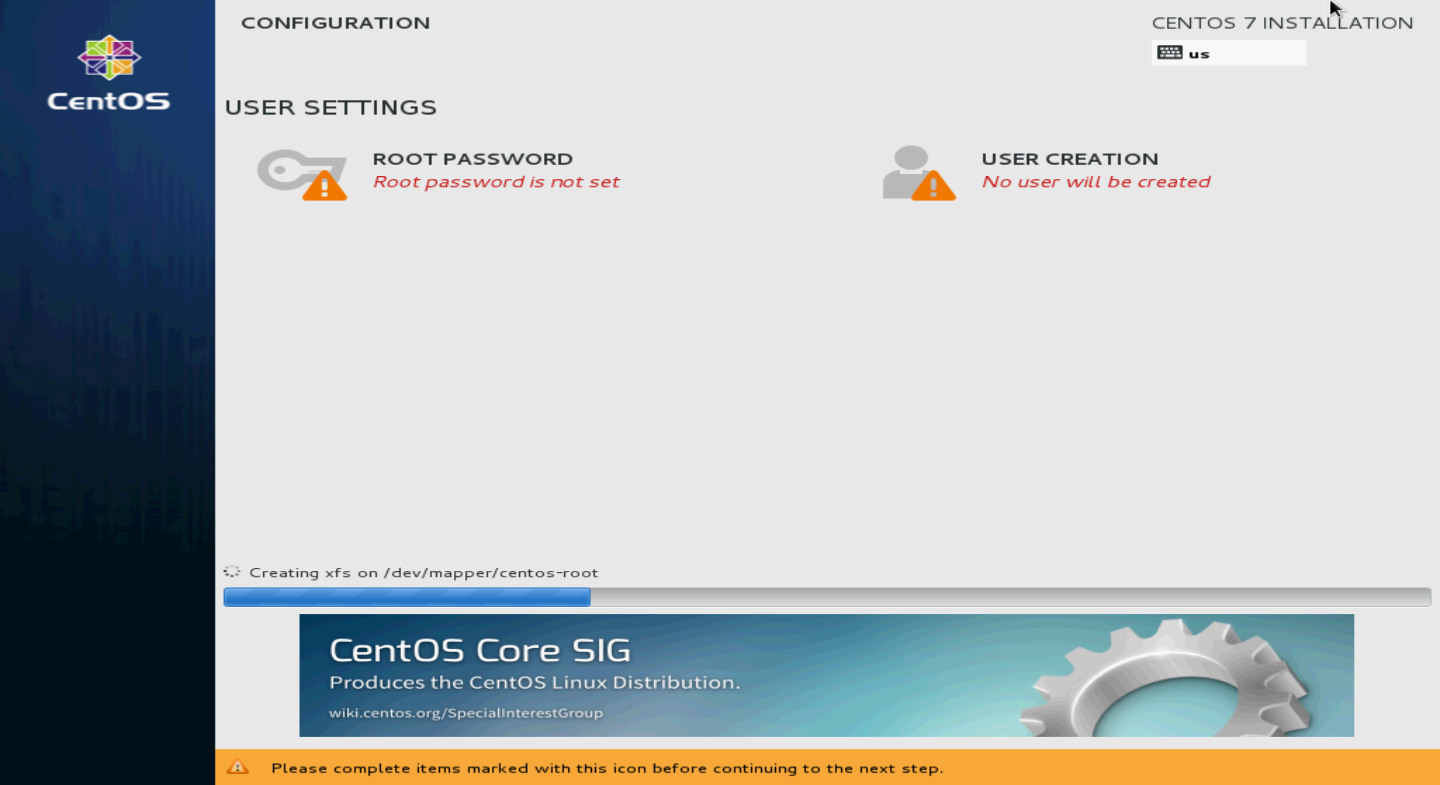

11. Click Begin Installation.

12. Click ROOT PASSWORD.

Figure 127 CONFIGURATION screen

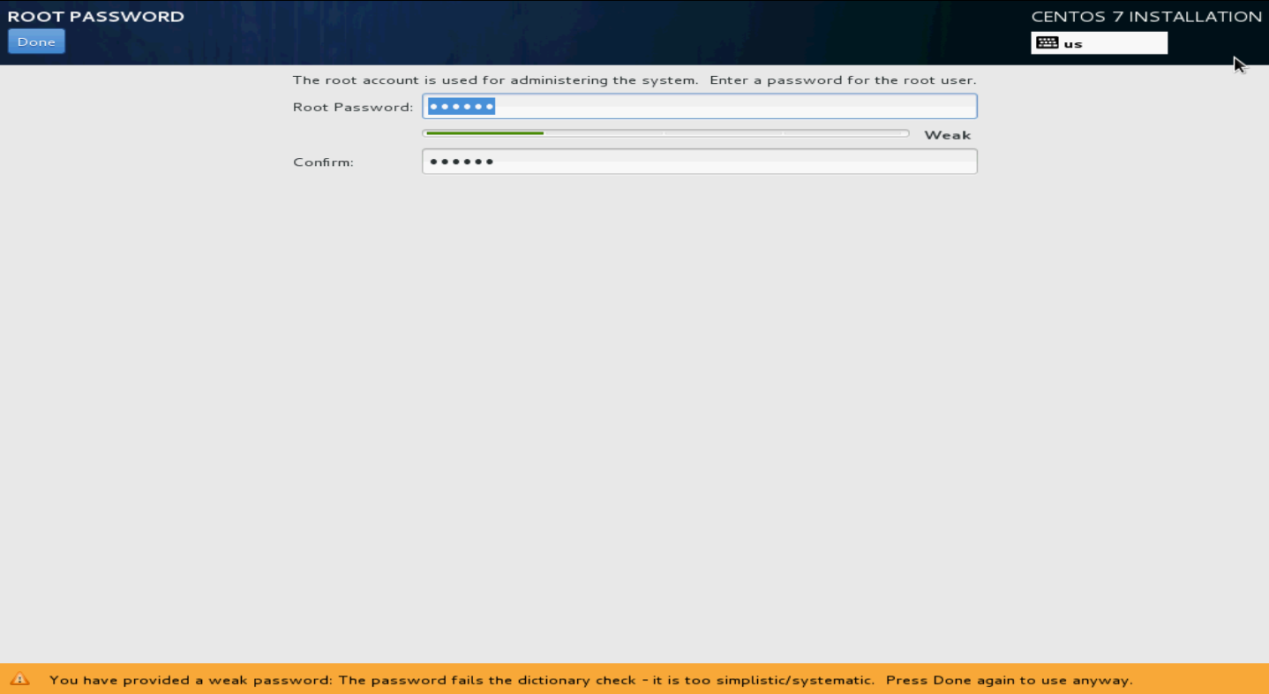

13. Specify the root password, confirm the password, and then click Done.

If the system indicates that the password is too weak, click Done again to confirm the password.

The system returns to the Configuration screen.

Figure 128 Specifying the root password

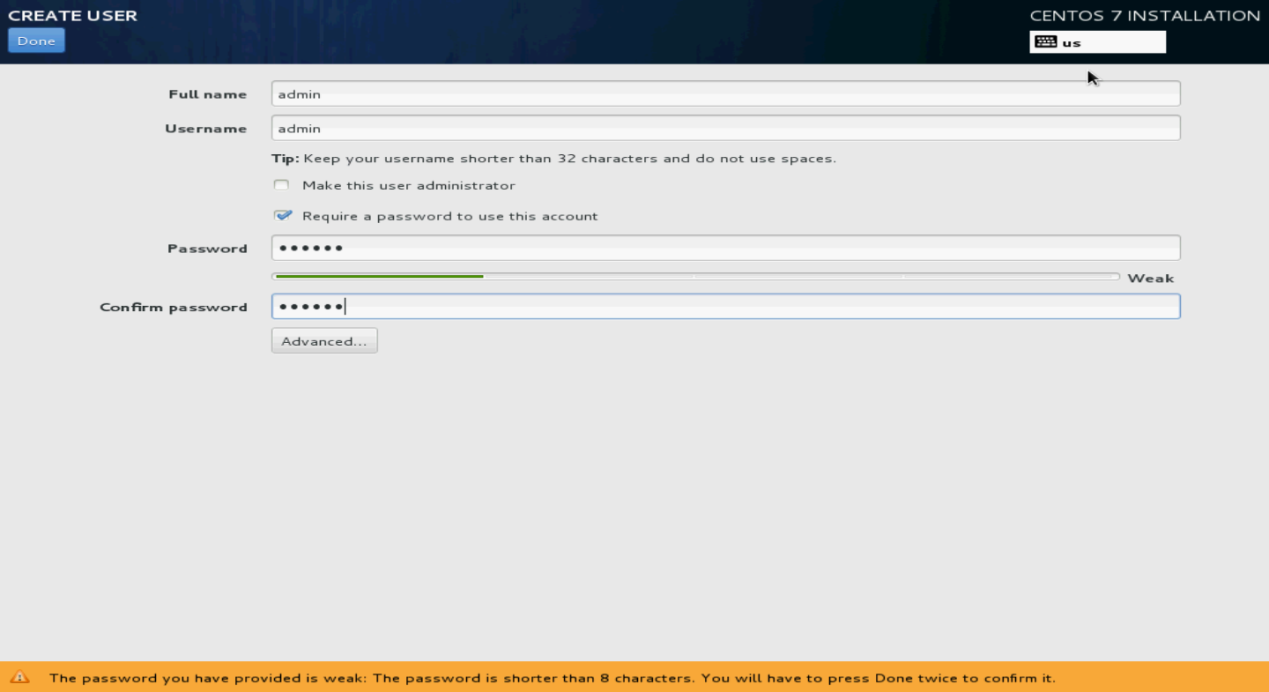

14. Click USER CREATION. Specify the username and password, and then click Done.

You can also use the non-root account created here to log in to the Linux OS.

Figure 129 Creating a user account

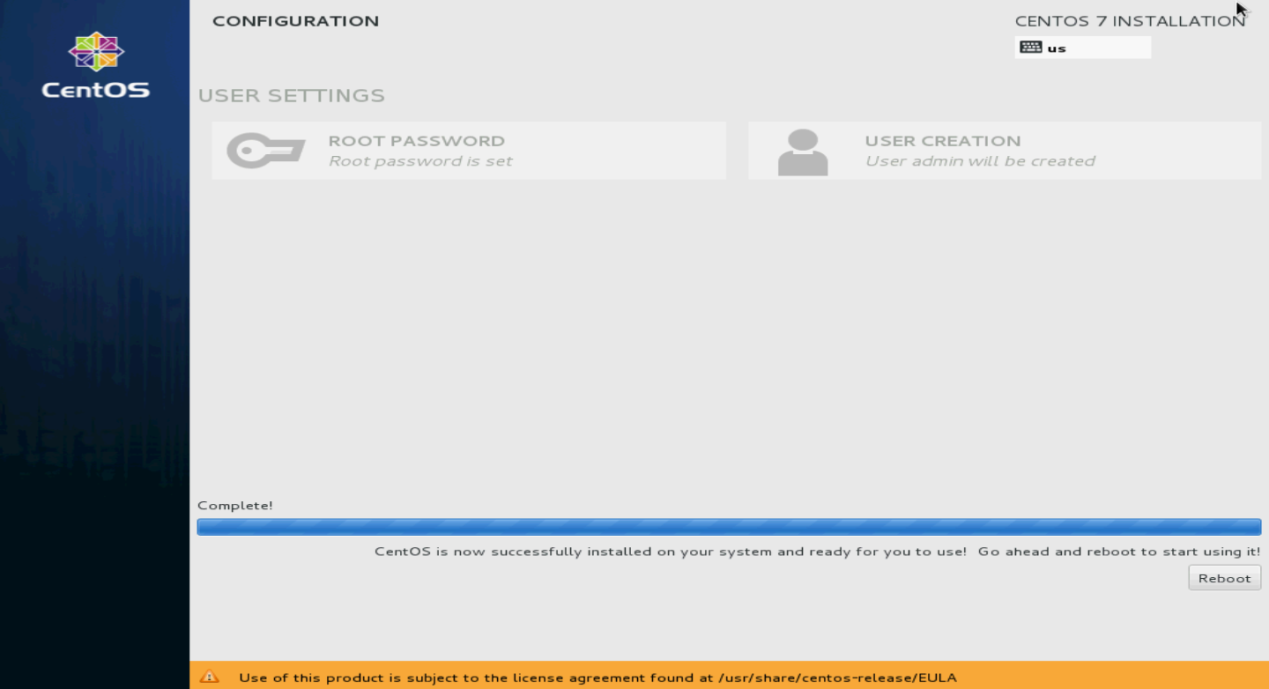

15. Click Finish configuration.

The system starts automatic installation.

16. After the installation, click Reboot to reboot the system.

If you use a CD/DVD as the bootable drive, remove the CD/DVD before rebooting the system.

The system enters the INITIAL SETUP screen.

Figure 130 Rebooting the system

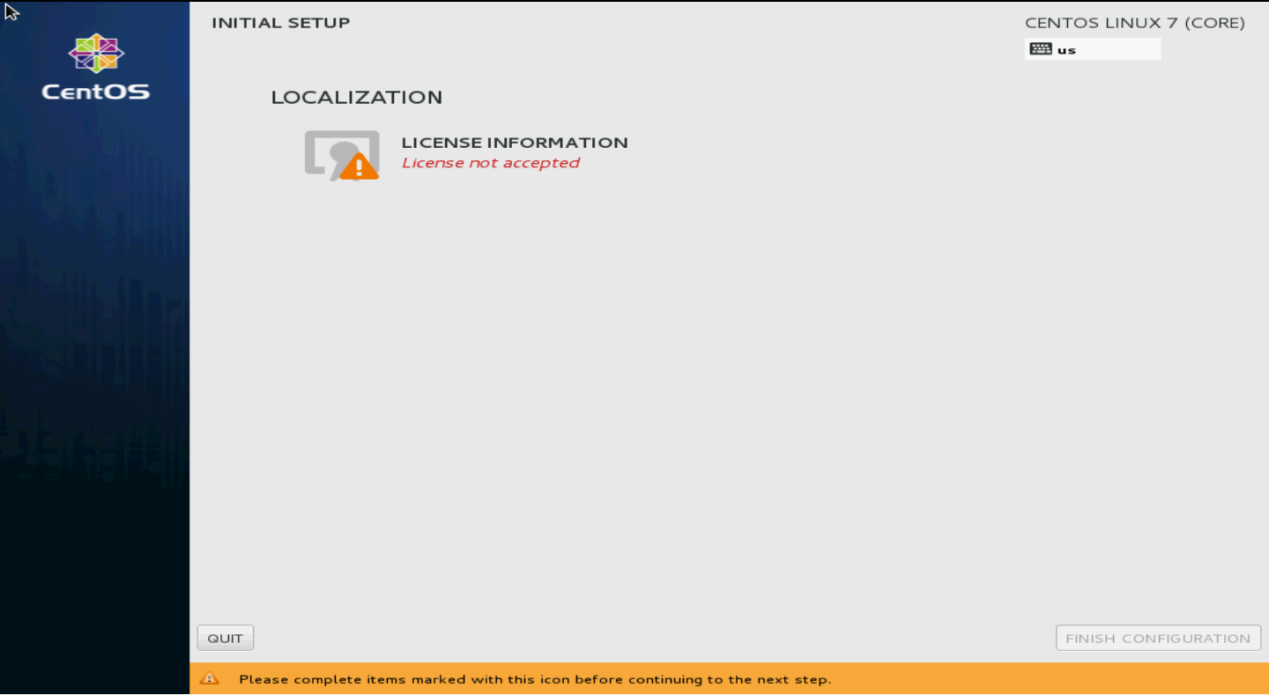

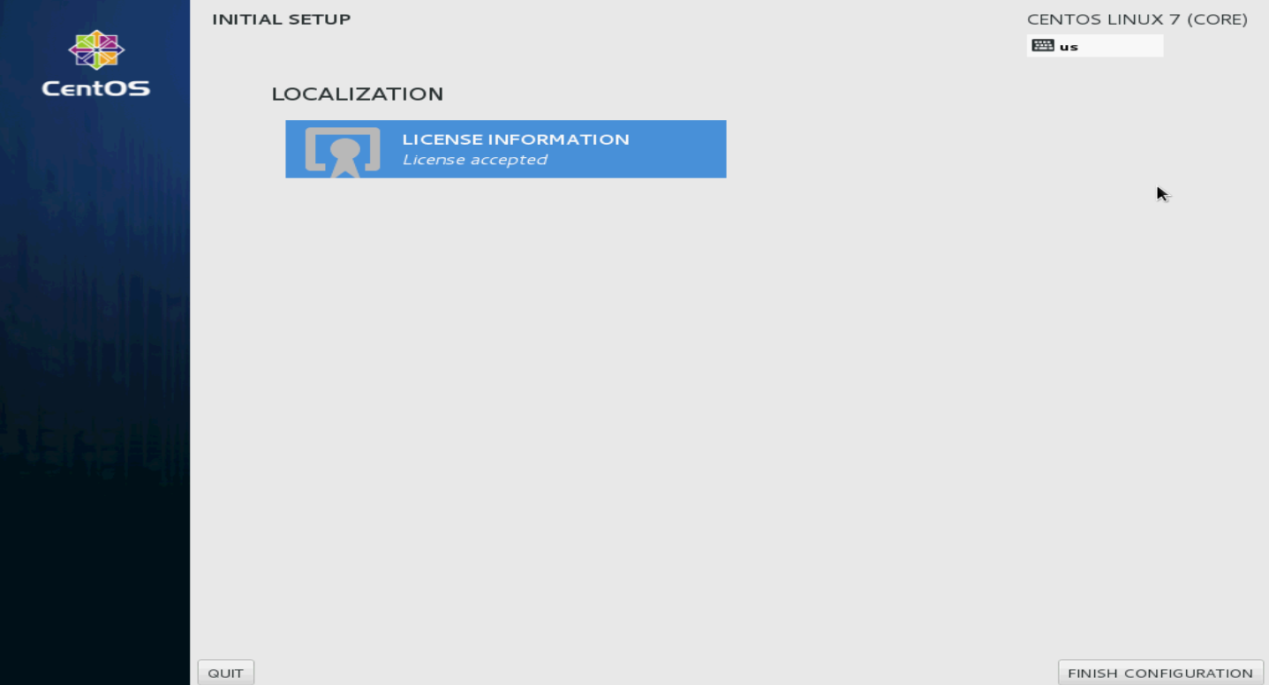

17. On the INITIAL SETUP screen shown in Figure 131, click LICENSE INFORMATION to view the license agreement.

Figure 131 INITIAL SETUP screen

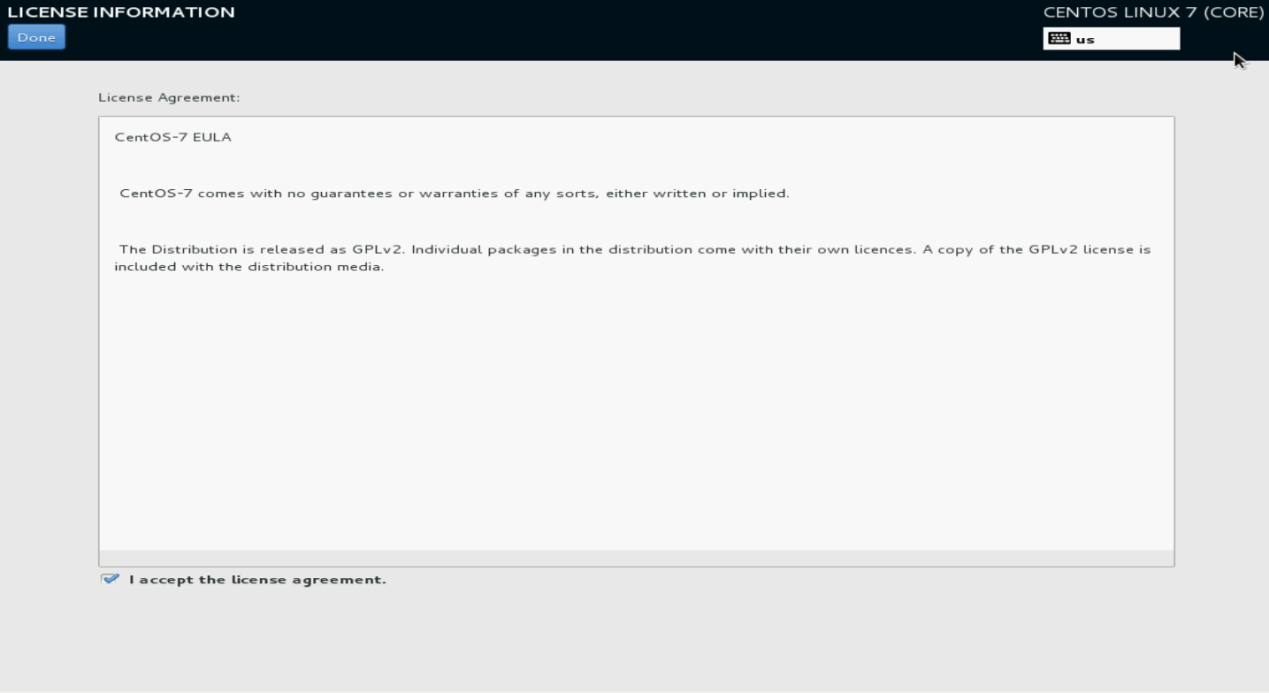

18. Select I accept the license agreement, and then click Done.

Figure 132 License agreement

19. Click FINISH CONFIGURATION.

The user login screen opens.

Figure 133 Finishing configuration

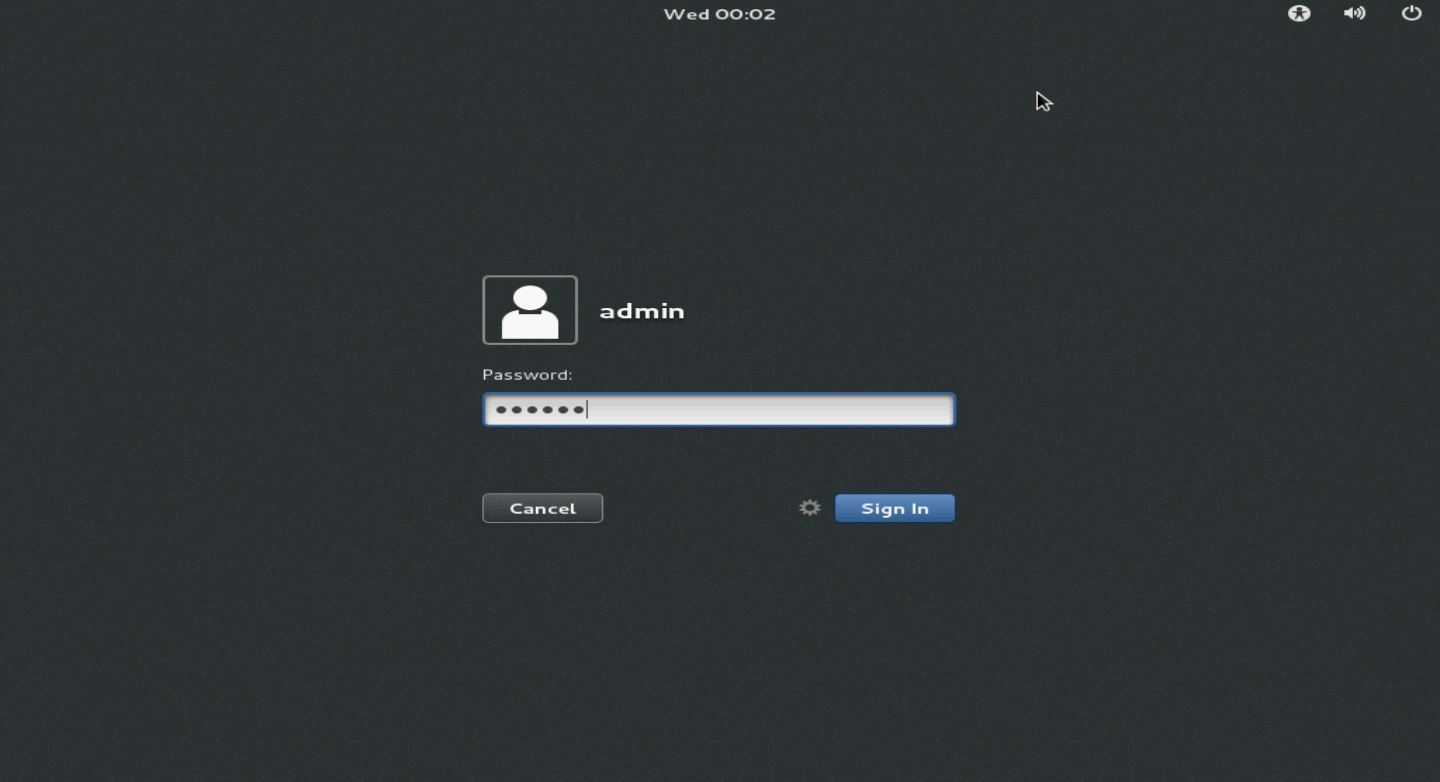

20. Enter the username and password, and then click Sign In.

Figure 134 Login screen

21. Select the language and then click Next.

Figure 135 Selecting the language

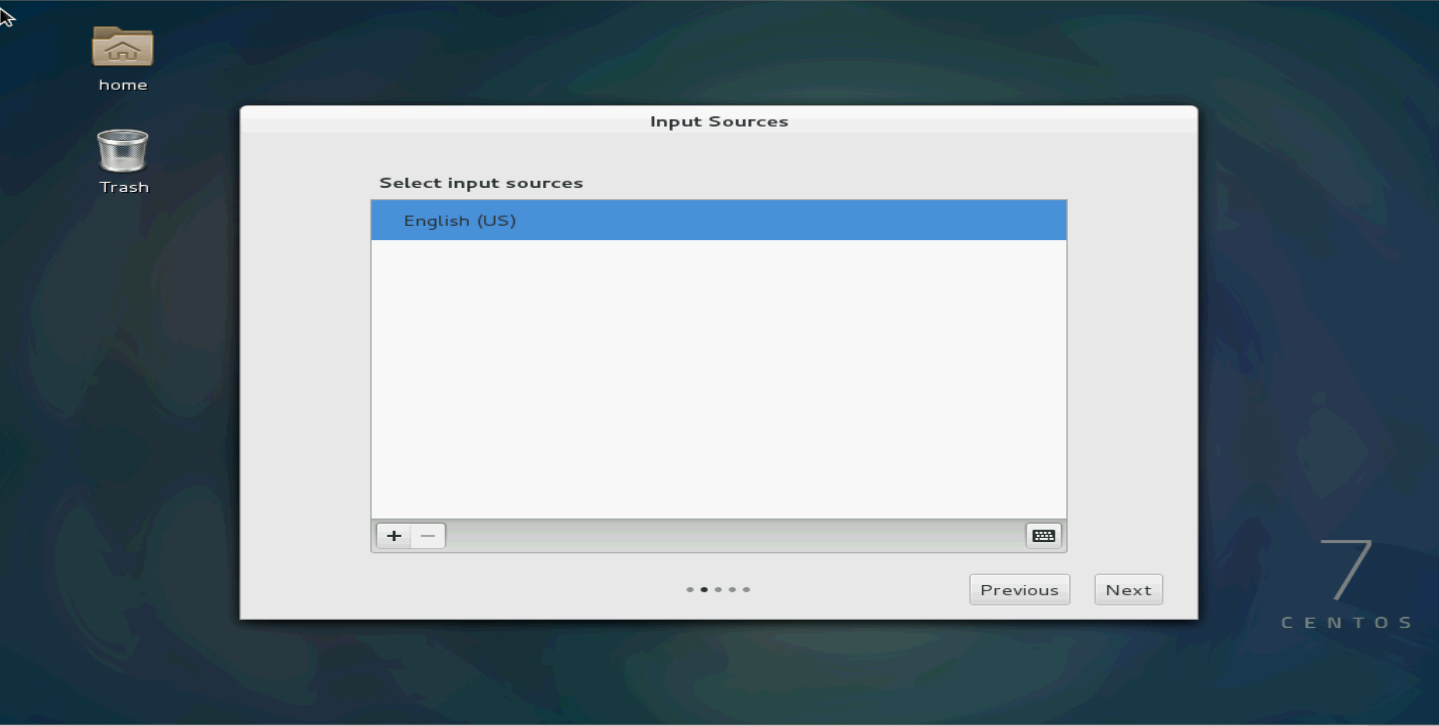

22. Select the input sources.

Figure 136 Selecting the input sources

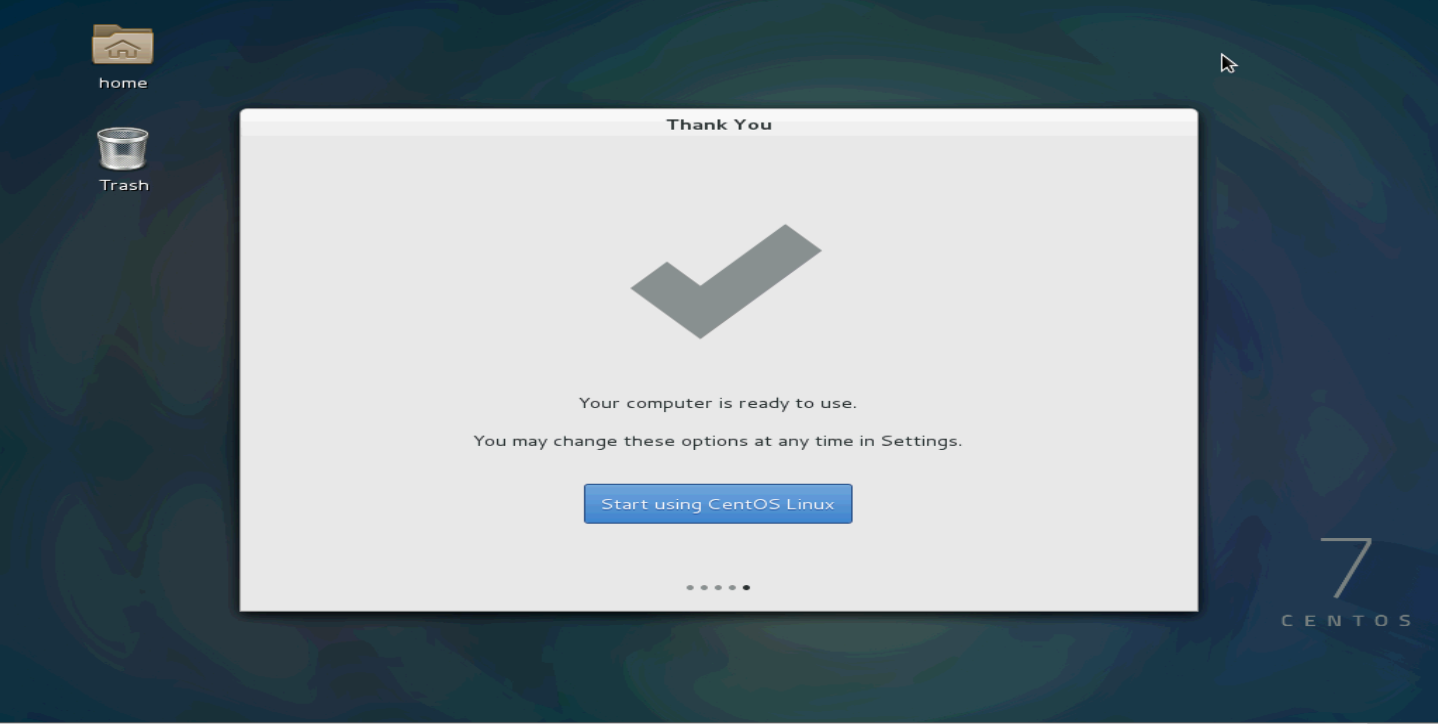

23. Click Start using CentOS Linux.

Figure 137 Starting to use CentOS Linux

24. Select Applications > System Tools > Virtual Machine Manager to open Virtual Machine Manager, the graphical tool for managing KVM VMs.

Root permissions are required for VM-related operations. If you logged in to the Linux OS as a non-root user, Virtual Machine Manager prompts you to enter the root password.

Figure 138 Virtual Machine Manager
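The partition decisions in steps 8 and 9 come down to three rules. Fold the /home space into /. Keep /boot/efi in UEFI mode. Use the Device Type and File System values from Table 8. The following Python sketch encodes these rules as a planning check. The helper names are illustrative, the sizes in the example are hypothetical, and the sketch does not interact with the installer.

```python
from typing import Dict, List, Tuple

# Device Type and File System per mount point, as listed in Table 8.
TABLE_8: Dict[str, Tuple[str, str]] = {
    "/boot": ("Standard Partition", "xfs"),
    "/boot/efi": ("Standard Partition", "EFI System Partition"),  # UEFI mode only
    "/": ("Standard Partition", "xfs"),
    "swap": ("Standard Partition", "swap"),
}


def fold_home_into_root(sizes: Dict[str, int]) -> Dict[str, int]:
    """Step 8: delete /home and add its space to the / partition."""
    plan = dict(sizes)
    plan["/"] = plan.get("/", 0) + plan.pop("/home", 0)
    return plan


def layout_problems(settings: Dict[str, Tuple[str, str]], uefi: bool) -> List[str]:
    """Compare planned (Device Type, File System) settings with Table 8."""
    problems: List[str] = []
    if "/home" in settings:
        problems.append("/home still exists; add its space to / instead")
    if uefi and "/boot/efi" not in settings:
        problems.append("/boot/efi is missing; keep it when booting in UEFI mode")
    for mount, actual in settings.items():
        wanted = TABLE_8.get(mount)
        if wanted is not None and actual != wanted:
            problems.append(f"{mount}: expected {wanted}, found {actual}")
    return problems


if __name__ == "__main__":
    # Hypothetical automatic-partitioning sizes in MiB.
    auto = {"/boot": 1024, "/": 51200, "/home": 409600, "swap": 16384}
    print(fold_home_into_root(auto))  # / grows to 460800 MiB and /home is gone
    print(layout_problems({"/": ("LVM", "xfs")}, uefi=False))
```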

Configuring network parameters

After installing CentOS7, do not configure network parameters by clicking the network settings icon in the upper right corner of the GUI. Network parameters configured from the GUI are managed by the NetworkManager service. You must stop the NetworkManager service later when you create a bridge, and those parameters then become invalid. As a best practice, configure the network parameters manually.

Figure 139 Network parameter configuration from the GUI not allowed

You can configure an IP address manually in either of the following ways: temporary configuration or permanent configuration.

Temporary IP address configuration

The IP address configured with this method will be lost after a system reboot.

# Configure IP address 192.168.16.33 with a 16-bit subnet mask for network interface eno1.

ifconfig eno1 192.168.16.33/16
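The /16 prefix in the command above is shorthand for the subnet mask 255.255.0.0. Python's standard ipaddress module shows how the prefix expands into the mask, network, and broadcast address that ifconfig reports. The describe helper is an illustrative name.

```python
import ipaddress
from typing import Dict


def describe(cidr: str) -> Dict[str, str]:
    """Expand an address/prefix string into the fields ifconfig reports."""
    iface = ipaddress.ip_interface(cidr)
    return {
        "inet": str(iface.ip),
        "netmask": str(iface.netmask),
        "network": str(iface.network),
        "broadcast": str(iface.network.broadcast_address),
    }


if __name__ == "__main__":
    for key, value in describe("192.168.16.33/16").items():
        print(f"{key}: {value}")
    # inet: 192.168.16.33
    # netmask: 255.255.0.0
    # network: 192.168.0.0/16
    # broadcast: 192.168.255.255
```

The same mask appears as NETMASK=255.255.0.0 in the permanent configuration below.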

Permanent IP address configuration

The /etc/sysconfig/network-scripts/ directory contains a configuration file for each NIC, for example, the ifcfg-eno1 file for NIC eno1. By editing the configuration file of a NIC, you can change its network settings permanently.
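Before you restart the network service, you can sanity-check an edited file. The following Python sketch reads simple KEY=VALUE lines and checks the values that the procedure below changes: BOOTPROTO, ONBOOT, IPADDR, and NETMASK. It is a simplified reader that assumes files like the one in this guide. ifcfg files are read as shell variable assignments, and this sketch does not handle full shell syntax. The function names are hypothetical.

```python
import ipaddress
from typing import Dict, List


def parse_ifcfg(text: str) -> Dict[str, str]:
    """Read simple KEY=VALUE lines (a simplification of shell syntax)."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Drop a trailing " # note" and optional quotes around the value.
        value = value.split(" #", 1)[0].strip().strip("\"'")
        settings[key.strip()] = value
    return settings


def static_ip_problems(settings: Dict[str, str]) -> List[str]:
    """List settings that differ from the static-address setup in this guide."""
    problems: List[str] = []
    if settings.get("BOOTPROTO") != "static":
        problems.append("BOOTPROTO should be static")
    if settings.get("ONBOOT") != "yes":
        problems.append("ONBOOT should be yes")
    try:
        # Accepts dotted masks such as 255.255.0.0; rejects non-contiguous masks.
        ipaddress.IPv4Interface(f"{settings['IPADDR']}/{settings['NETMASK']}")
    except KeyError as missing:
        problems.append(f"missing {missing.args[0]}")
    except ValueError as bad:
        problems.append(f"invalid IPADDR/NETMASK: {bad}")
    return problems


if __name__ == "__main__":
    # Path taken from this guide; change the NIC name as needed.
    path = "/etc/sysconfig/network-scripts/ifcfg-eno1"
    try:
        with open(path) as handle:
            print(static_ip_problems(parse_ifcfg(handle.read())) or "looks OK")
    except FileNotFoundError:
        print(f"{path} not found")
```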

To configure a permanent IP address:

1. Open the configuration file of the NIC and modify the parameters as follows. If a parameter does not exist in the file, add it at the end of the file. The text after # on a line is an explanatory note.

[root@localhost ~]# cd /etc/sysconfig/network-scripts/

[root@localhost network-scripts]# vim ifcfg-eno1

HWADDR=EC:B1:D7:80:50:54

TYPE=Ethernet

BOOTPROTO=static # Change the value from dhcp to static.

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_PEERDNS=yes

IPV6_PEERROUTES=yes

IPV6_FAILURE_FATAL=no

NAME=eno1

UUID=cbb80618-065f-4272-9fde-39ff9b06e47

ONBOOT=yes # Change the value from no to yes to activate the device when the system starts up.

IPADDR=192.168.16.33 # IP address of the NIC

NETMASK=255.255.0.0 # Subnet mask of the NIC

2. Save the configuration and restart the network service.

[root@localhost network-scripts]# systemctl restart network.service

3. Verify that the NIC configuration has been updated.

[root@localhost network-scripts]# ifconfig eno1

eno1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.16.33 netmask 255.255.0.0 broadcast 192.168.255.255

inet6 2002:6f01:102:5:eeb1:d7ff:fe80:5054 prefixlen 64 scopeid 0x0<global>

inet6 fec0::5:eeb1:d7ff:fe80:5054 prefixlen 64 scopeid 0x40<site>

inet6 2002:8302:101:5:eeb1:d7ff:fe80:5054 prefixlen 64 scopeid 0x0<global>

inet6 fe80::eeb1:d7ff:fe80:5054 prefixlen 64 scopeid 0x20<link>

inet6 2002:aca8:284d:5:eeb1:d7ff:fe80:5054 prefixlen 64 scopeid 0x0<global>

ether ec:b1:d7:80:50:54 txqueuelen 1000 (Ethernet)

RX packets 291341 bytes 126617361 (120.7 MiB)

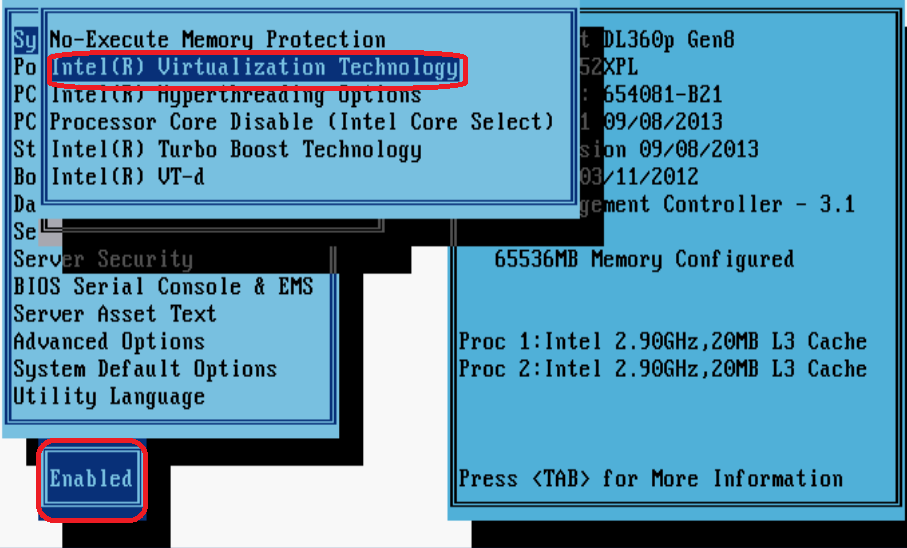

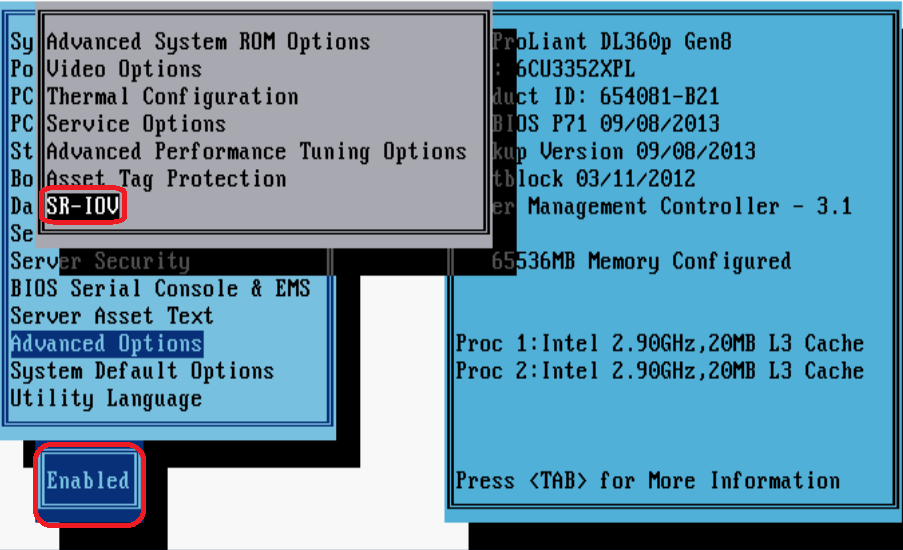

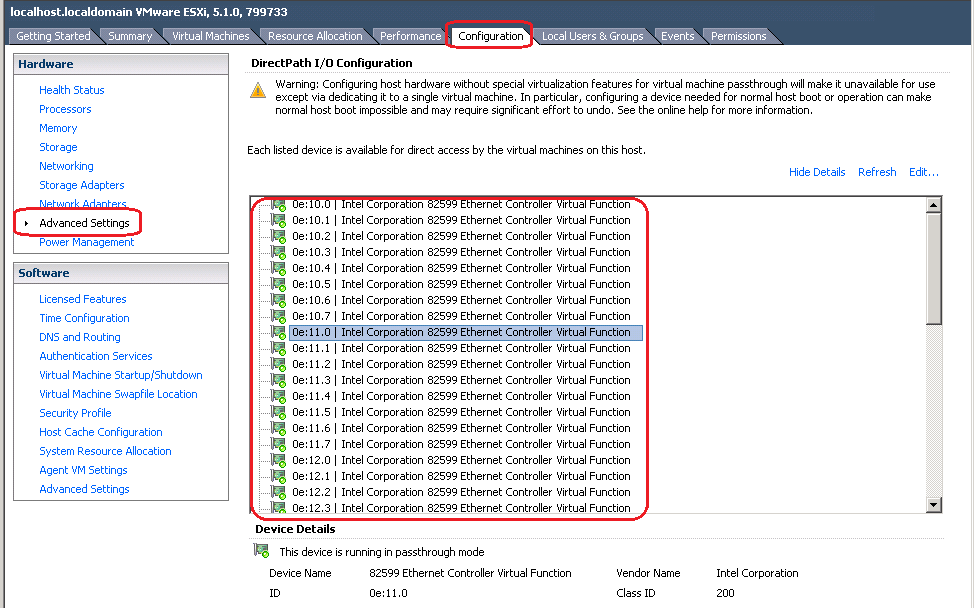

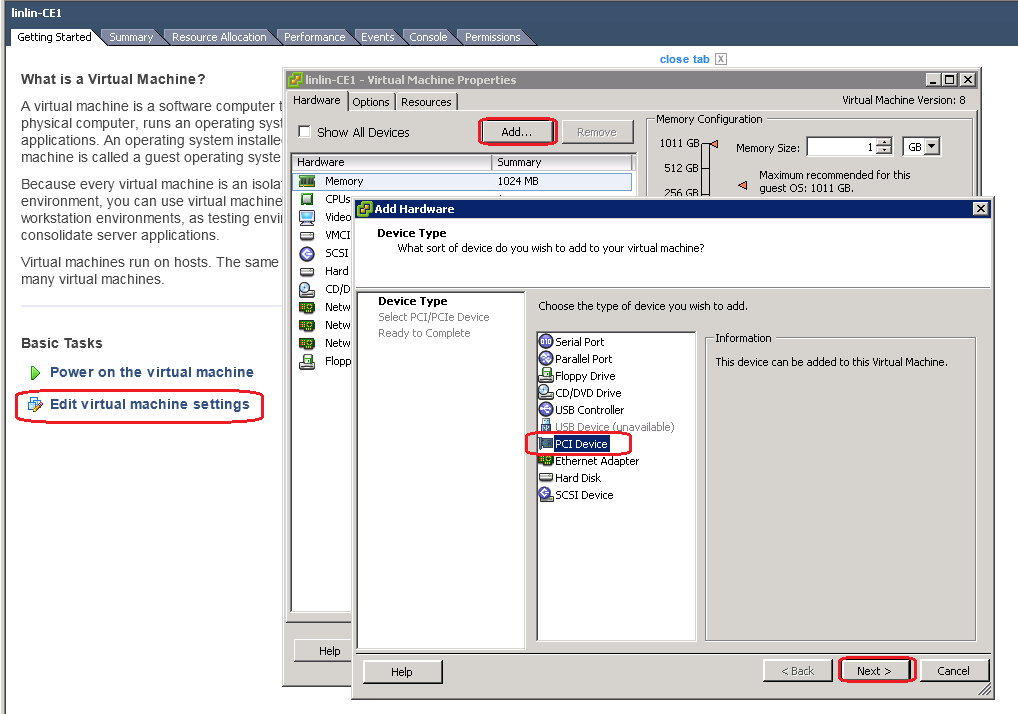

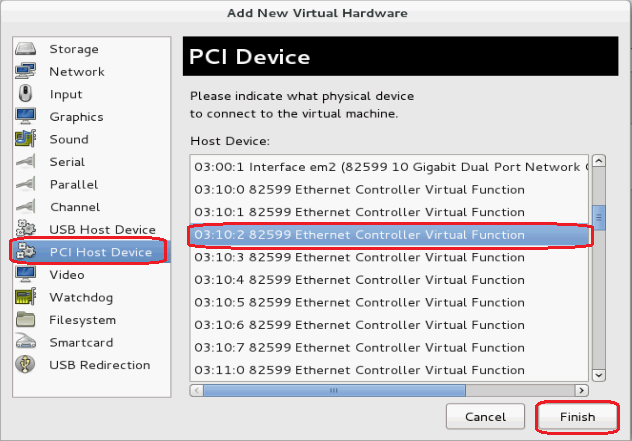

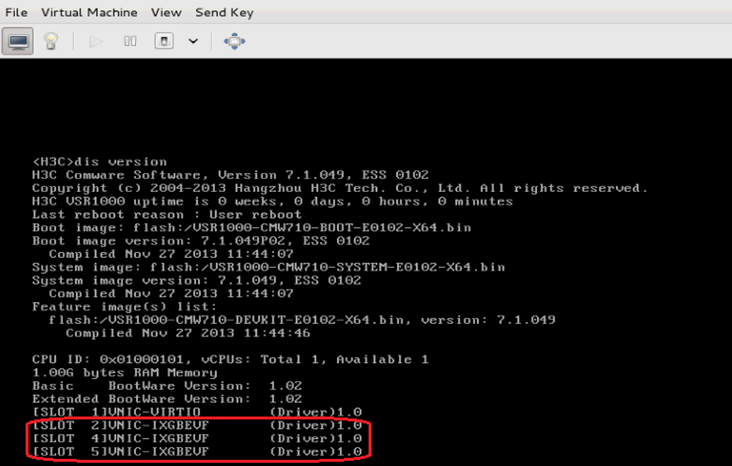

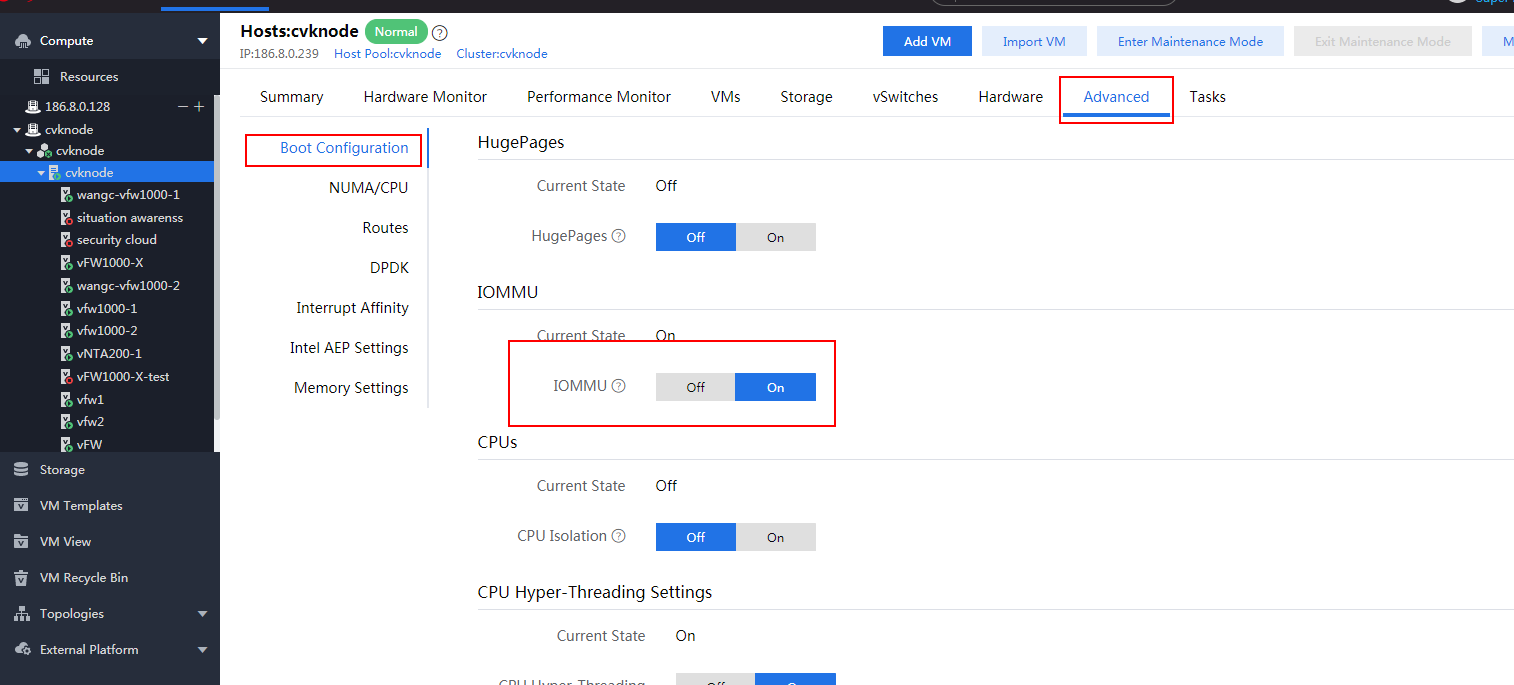

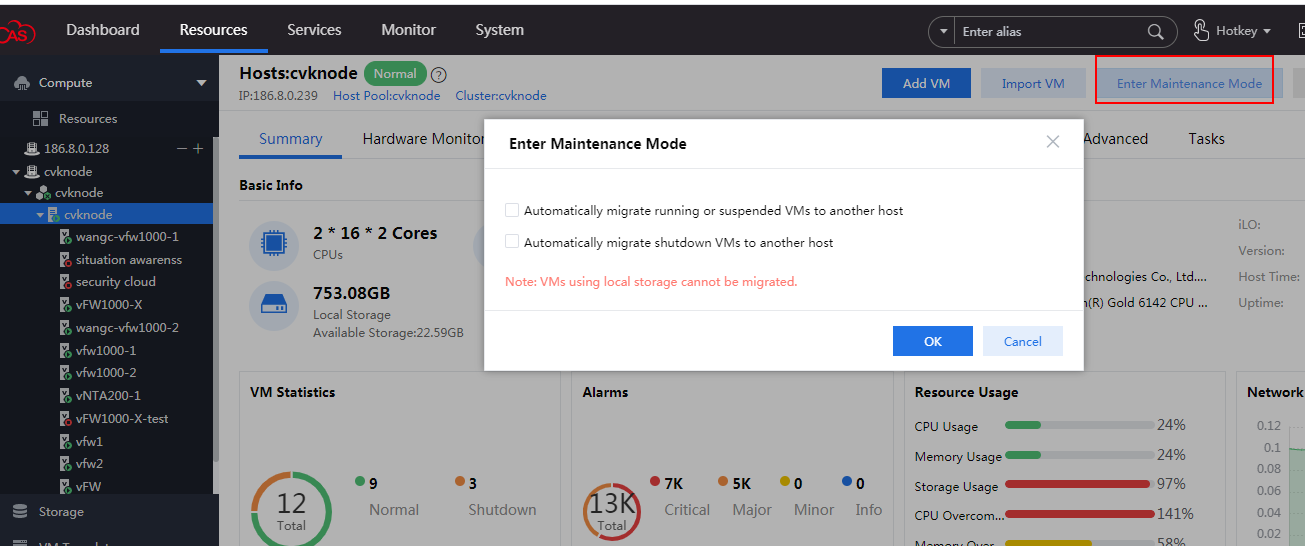

RX errors 0 dropped 178991 overruns 0 frame 0