Contents

About the SeerEngine-DC controller

Deployment procedure at a glance

Installing the Unified Platform

(Optional.) Configuring HugePages

Deploying the Unified Platform

Registering and installing licenses

Registering and installing licenses for the Unified Platform

Registering and installing licenses for the controller

Installing the activation file on the license server

Backing up and restoring the controller configuration

Deploying the primary and backup sites and a third-party site

About the SeerEngine-DC controller

SeerEngine-DC (also called VCFC-DataCenter) is a data center controller. Similar to a network operating system, the SeerEngine-DC controller drives SDN application development and allows operation of various SDN applications. It can control various resources on the network and provide interfaces for applications to enable specific network forwarding.

The controller has the following features:

· It supports OpenFlow 1.3 and provides built-in services and a device driver framework.

· It is a distributed platform with high availability and scalability.

· It provides extensible REST APIs, a GUI, and an H3C IMC management interface.

· It can operate in standalone or cluster mode.

Preparing for installation

Server requirements

Hardware requirements

CAUTION: To avoid unrecoverable system failures caused by unexpected power failures, use a RAID controller that supports power fail protection on the servers and make sure a supercapacitor is in place.

As a best practice, deploy the controller on a physical server.

Node to host the controller (x86 server)

Table 1 Hardware requirements for the node to host the controller (high-end configuration)

| Item | Requirements |
|---|---|
| CPU | x86-64 (Intel 64/AMD 64), 20 cores, 2.2 GHz or above |
| Memory size | 128 GB or above, or 256 GB or above for a master node on which simulation services are deployed |
| Drive | The drives must be configured in RAID 1 or 10. The storage controller must have a 1 GB cache, support power fail protection, and have a supercapacitor in place.<br>· Drive configuration option 1:<br>¡ System drive: 4 × 960 GB SSDs or 8 × 480 GB SSDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 480 GB SSDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.)<br>· Drive configuration option 2:<br>¡ System drive: 4 × 1200 GB or 8 × 600 GB 7.2K RPM or above HDDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 600 GB 7.2K RPM or above HDDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.) |
| NIC | vBGP not configured:<br>· Non-bonding mode: 1 × 10 Gbps or above Ethernet port<br>· Bonding mode (recommended mode: mode 2 or mode 4): 2 × 10 Gbps Linux bonding interfaces<br>vBGP configured:<br>· Non-bonding mode: 2 × 10 Gbps or above Ethernet ports<br>· Bonding mode (recommended mode: mode 4): 4 × 10 Gbps Linux bonding interfaces<br>For the controller to support the remote disaster recovery system (RDRS), add a 10 Gbps or above Ethernet NIC.<br>For the controller to support simulation services, add a NIC that meets the following requirements:<br>· Non-bonding mode: 1 × 10 Gbps or above Ethernet port<br>· Bonding mode (recommended mode: mode 2 or mode 4): 2 × 10 Gbps Linux bonding interfaces |

Table 2 Hardware requirements for the node to host the controller (standard configuration)

| Item | Requirements |
|---|---|
| CPU | x86-64 (Intel 64/AMD 64), 16 cores, 2.0 GHz or above |
| Memory size | 128 GB or above, or 256 GB or above for a master node on which simulation services are deployed |
| Drive | The drives must be configured in RAID 1 or 10. The storage controller must have a 1 GB cache, support power fail protection, and have a supercapacitor in place.<br>· Drive configuration option 1:<br>¡ System drive: 4 × 960 GB SSDs or 8 × 480 GB SSDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 480 GB SSDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.)<br>· Drive configuration option 2:<br>¡ System drive: 4 × 1200 GB or 8 × 600 GB 7.2K RPM or above HDDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 600 GB 7.2K RPM or above HDDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.) |
| NIC | vBGP not configured:<br>· Non-bonding mode: 1 × 10 Gbps or above Ethernet port<br>· Bonding mode (recommended mode: mode 2 or mode 4): 2 × 10 Gbps Linux bonding interfaces<br>vBGP configured:<br>· Non-bonding mode: 2 × 10 Gbps or above Ethernet ports<br>· Bonding mode (recommended mode: mode 4): 4 × 10 Gbps Linux bonding interfaces |

Node to host the controller (Haiguang x86 server)

Table 3 Hardware requirements for the node to host the controller (high-end configuration)

| Item | Requirements |
|---|---|
| CPU | x86-64 (Haiguang Hygon), 2 × Hygon C86 7280s, 32 cores, 2.0 GHz |
| Memory size | 128 GB or above (256 GB or above for a master node on which simulation services are deployed) |
| Drive | The drives must be configured in RAID 1 or 10. The storage controller must have a 1 GB cache, support power fail protection, and have a supercapacitor in place.<br>· Drive configuration option 1:<br>¡ System drive: 4 × 960 GB SSDs or 8 × 480 GB SSDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 480 GB SSDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.)<br>· Drive configuration option 2:<br>¡ System drive: 4 × 1200 GB or 8 × 600 GB 7.2K RPM or above HDDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 600 GB 7.2K RPM or above HDDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.) |
| NIC | vBGP not configured:<br>· Non-bonding mode: 1 × 10 Gbps or above Ethernet port<br>· Bonding mode (recommended mode: mode 2 or mode 4): 2 × 10 Gbps Linux bonding interfaces<br>vBGP configured:<br>· Non-bonding mode: 2 × 10 Gbps or above Ethernet ports<br>· Bonding mode (recommended mode: mode 4): 4 × 10 Gbps Linux bonding interfaces<br>For the controller to support the remote disaster recovery system (RDRS), add a 10 Gbps or above Ethernet NIC. |

Table 4 Hardware requirements for the node to host the controller (standard configuration)

| Item | Requirements |
|---|---|
| CPU | x86-64 (Haiguang Hygon), 2 × Hygon C86 7265s, 24 cores, 2.2 GHz or above |
| Memory size | 128 GB or above (256 GB or above for a master node on which simulation services are deployed) |
| Drive | The drives must be configured in RAID 1 or 10. The storage controller must have a 1 GB cache, support power fail protection, and have a supercapacitor in place.<br>· Drive configuration option 1:<br>¡ System drive: 4 × 960 GB SSDs or 8 × 480 GB SSDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 480 GB SSDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.)<br>· Drive configuration option 2:<br>¡ System drive: 4 × 1200 GB or 8 × 600 GB 7.2K RPM or above HDDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 600 GB 7.2K RPM or above HDDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.) |
| NIC | vBGP not configured:<br>· Non-bonding mode: 1 × 10 Gbps or above Ethernet port<br>· Bonding mode (recommended mode: mode 2 or mode 4): 2 × 10 Gbps Linux bonding interfaces<br>vBGP configured:<br>· Non-bonding mode: 2 × 10 Gbps or above Ethernet ports<br>· Bonding mode (recommended mode: mode 4): 4 × 10 Gbps Linux bonding interfaces |

Node to host the controller (ARM server)

An ARM server does not support simulation service deployment.

Table 5 Hardware requirements for the node to host the controller (high-end configuration)

| Item | Requirements |
|---|---|
| CPU | ARM (Huawei Kunpeng), 2 × Kunpeng 920s, 48 cores, 2.6 GHz |
| Memory size | 384 GB (12 × 32 GB) |
| Drive | The drives must be configured in RAID 1 or 10. The storage controller must have a 1 GB cache, support power fail protection, and have a supercapacitor in place.<br>· Drive configuration option 1:<br>¡ System drive: 4 × 960 GB SSDs or 8 × 480 GB SSDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 480 GB SSDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.)<br>· Drive configuration option 2:<br>¡ System drive: 4 × 1200 GB or 8 × 600 GB 7.2K RPM or above HDDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 600 GB 7.2K RPM or above HDDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.) |
| NIC | vBGP not configured:<br>· Non-bonding mode: 1 × 10 Gbps or above Ethernet port<br>· Bonding mode (recommended mode: mode 2 or mode 4): 2 × 10 Gbps Linux bonding interfaces<br>vBGP configured:<br>· Non-bonding mode: 2 × 10 Gbps or above Ethernet ports<br>· Bonding mode (recommended mode: mode 4): 4 × 10 Gbps Linux bonding interfaces<br>For the controller to support the remote disaster recovery system (RDRS), add a 10 Gbps or above Ethernet NIC. |

Table 6 Hardware requirements for the node to host the controller (standard configuration)

| Item | Requirements |
|---|---|
| CPU | ARM (Huawei Kunpeng), 2 × Kunpeng 920s, 24 cores, 2.6 GHz |
| Memory size | 128 GB (4 × 32 GB) |
| Drive | The drives must be configured in RAID 1 or 10. The storage controller must have a 1 GB cache, support power fail protection, and have a supercapacitor in place.<br>· Drive configuration option 1:<br>¡ System drive: 4 × 960 GB SSDs or 8 × 480 GB SSDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 480 GB SSDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.)<br>· Drive configuration option 2:<br>¡ System drive: 4 × 1200 GB or 8 × 600 GB 7.2K RPM or above HDDs configured in RAID 10 that provides a minimum total drive size of 1920 GB.<br>¡ etcd drive: 2 × 600 GB 7.2K RPM or above HDDs configured in RAID 1 that provides a minimum total drive size of 50 GB. (Installation path: /var/lib/etcd.) |
| NIC | vBGP not configured:<br>· Non-bonding mode: 1 × 10 Gbps or above Ethernet port<br>· Bonding mode (recommended mode: mode 2 or mode 4): 2 × 10 Gbps Linux bonding interfaces<br>vBGP configured:<br>· Non-bonding mode: 2 × 10 Gbps or above Ethernet ports<br>· Bonding mode (recommended mode: mode 4): 4 × 10 Gbps Linux bonding interfaces |

RDRS third-party site

You can deploy the RDRS third-party site on the same server as the primary or backup site. However, automatic RDRS switchover will fail when the server fails. As a best practice, deploy the RDRS third-party site on a separate server.

The RDRS third-party site can be deployed on a physical server. Table 7 describes the hardware requirements for a physical server to host the RDRS third-party site.

Table 7 Hardware requirements for a physical server to host the RDRS third-party site

| Item | Requirements |
|---|---|
| CPU | x86-64 architecture: Intel 64/AMD 64, 2 cores, 2.0 GHz or above |
| Memory size | 16 GB or above |
| Drive | The drives must be configured in RAID 1. The storage controller must have a 1 GB cache, support power fail protection, and have a supercapacitor in place.<br>· Drive configuration option 1:<br>¡ System drive: 2 × 480 GB SSDs configured in RAID 1 that provides a minimum total drive size of 256 GB.<br>¡ etcd drive: 2 × 480 GB SSDs configured in RAID 1 that provides a minimum total drive size of 20 GB. (Installation path: /var/lib/etcd.)<br>· Drive configuration option 2:<br>¡ System drive: 2 × 600 GB 7.2K RPM or above HDDs configured in RAID 1 that provides a minimum total drive size of 256 GB.<br>¡ etcd drive: 2 × 600 GB 7.2K RPM or above HDDs configured in RAID 1 that provides a minimum total drive size of 20 GB. (Installation path: /var/lib/etcd.) |
| NIC | 1 × 10 Gbps or above Ethernet port |

Node to host simulation services

Table 8 Hardware requirements for a node to host simulation services (recommended configuration)

| CPU | Memory | Drive space | NIC |
|---|---|---|---|
| x86-64 (Intel 64/AMD 64) that supports the VT-x/VT-d technology, 24 cores, 2.0 GHz or above | 256 GB or above | 2 TB or above (system partition where the root directory resides) | 2, with a bandwidth of 1 to 10 Gbps |

Software requirements

SeerEngine-DC runs on the Unified Platform as a component. Before deploying SeerEngine-DC, first install the Unified Platform.

The Unified Platform supports multiple operating systems. Table 9 shows compatibility between CPUs and operating systems.

Table 9 Compatibility between CPUs and operating systems

| CPU | Operating system |
|---|---|
| x86-64 (Intel 64/AMD 64) | H3Linux |
| x86-64 (Haiguang Hygon) | H3Linux, Galaxy Kylin V10 |
| ARM (Huawei Kunpeng) | Galaxy Kylin V10 |

Client requirements

You can access the Unified Platform from a Web browser without installing any client. As a best practice, use Google Chrome 70 or a later version.

Pre-installation checklist

Table 10 Pre-installation checklist

| Item | Requirements |
|---|---|
| Server (hardware) | · The CPUs, memory, drives, and NICs meet the requirements.<br>· The server supports the Unified Platform. |
| Server (software) | The system time settings are configured correctly. As a best practice, configure NTP for time synchronization and make sure the devices synchronize to the same clock source. |
| Client | You can access the Unified Platform from a Web browser without installing any client. As a best practice, use Google Chrome 70 or a later version. |

Deployment procedure at a glance

Use the following procedure to deploy the controller:

1. Prepare for installation.

Prepare a minimum of three physical servers. Make sure the physical servers meet the hardware and software requirements as described in "Server requirements."

2. Deploy the Unified Platform.

For the deployment procedure, see H3C Unified Platform Deployment Guide.

3. Deploy the controller.

Installing the Unified Platform

SeerEngine-DC runs on the Unified Platform as a component. Before deploying SeerEngine-DC, first install the Unified Platform. For the detailed procedure, see H3C Unified Platform Deployment Guide.

To run SeerEngine-DC on the Unified Platform, you must partition the system drive, configure HugePages (required only if the vBGP component is to be deployed), and deploy the application packages required by SeerEngine-DC.

Partitioning the system drive

Before installing the Unified Platform, partition the system drive as described in Table 11.

Table 11 Drive partition settings

| Mount point | 2400 GB RAID drive capacity | 1920 GB RAID drive capacity |
|---|---|---|
| /var/lib/docker | 500 GiB | 450 GiB |
| /boot | 1024 MiB | 1024 MiB |
| swap | 1024 MiB | 1024 MiB |
| /var/lib/ssdata | 550 GiB | 500 GiB |
| / | 1000 GiB | 700 GiB |
| /boot/efi | 200 MiB | 200 MiB |
| /var/lib/etcd | 48 GiB | 48 GiB |
| GFS | 300 GiB | 220 GiB |

(Optional.) Configuring HugePages

To use the vBGP component, you must enable and configure HugePages on the operating system. You are not required to configure HugePages if the vBGP component is not to be deployed. After you enable or disable HugePages, restart the server for the configuration to take effect. HugePages are enabled on a server by default.

H3Linux operating system

To configure HugePages on the H3Linux operating system, see H3C Unified Platform Deployment Guide.

Galaxy Kylin operating system

1. Open the grub file.

vim /etc/default/grub

2. Edit the values of the default_hugepagesz, hugepagesz, and hugepages parameters at the line starting with GRUB_CMDLINE_LINUX.

GRUB_CMDLINE_LINUX="default_hugepagesz=2M hugepagesz=2M hugepages=4096"

3. Update the configuration file.

¡ If the system boots in UEFI mode, execute the following command:

grub2-mkconfig -o /boot/efi/EFI/neokylin/grub.cfg

¡ If the system boots in Legacy mode, execute the following command:

grub2-mkconfig -o /boot/grub2/grub.cfg

4. Restart the server for the configuration to take effect.

5. Execute the cat /proc/cmdline command to view the configuration result. If the output contains the parameters that you configured, the configuration has succeeded.

default_hugepagesz=2M hugepagesz=2M hugepages=4096
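The check in step 5 can also be scripted. The following is a minimal sketch in POSIX shell, not part of the product tooling: the function name has_hugepage_params is hypothetical, and the values 2M and 4096 are simply the ones used in the example in step 2.

```shell
# Hypothetical helper: checks that a kernel command line (passed as $1)
# contains the three HugePages parameters from step 2 as whole words.
has_hugepage_params() {
    cmdline=$1
    for param in default_hugepagesz=2M hugepagesz=2M hugepages=4096; do
        case " $cmdline " in
            *" $param "*) ;;                       # parameter found
            *) echo "missing: $param"; return 1 ;; # report the first gap
        esac
    done
    echo "ok"
}

# On the server, after the restart:
# has_hugepage_params "$(cat /proc/cmdline)"
# grep '^HugePages_Total' /proc/meminfo
```

If the parameters took effect, the HugePages_Total field in /proc/meminfo is expected to report the configured number of pages, provided that the server has enough free memory to reserve them.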

Deploying the Unified Platform

The Unified Platform can be installed on x86 or ARM servers. Select the installation packages specific to the server type and install the selected packages in sequence as described in Table 12.

For the installation procedures of the packages, see H3C Unified Platform Deployment Guide.

Table 12 Installation packages required by the controller

| Installation package | Description |
|---|---|
| · x86: common_PLAT_GlusterFS_2.0_version.zip<br>· ARM: common_PLAT_GlusterFS_2.0_version_arm.zip | Provides local shared storage functionalities. |
| · x86: general_PLAT_portal_2.0_version.zip<br>· ARM: general_PLAT_portal_2.0_version_arm.zip | Provides portal, unified authentication, user management, service gateway, and help center functionalities. |
| · x86: general_PLAT_kernel_2.0_version.zip<br>· ARM: general_PLAT_kernel_2.0_version_arm.zip | Provides access control, resource identification, license, configuration center, resource group, and log functionalities. |
| · x86: general_PLAT_kernel-base_2.0_version.zip<br>· ARM: general_PLAT_kernel-base_2.0_version_arm.zip | Provides alarm, access parameter template, monitoring template, report, email, and SMS forwarding functionalities. |
| · x86: general_PLAT_network_2.0_version.zip<br>· ARM: general_PLAT_network_2.0_version_arm.zip | Provides basic management of network resources, network performance, network topology, and iCC. |
| · x86: general_PLAT_widget_2.0_version.zip<br>· ARM: general_PLAT_widget_2.0_version_arm.zip | Provides the dashboard framework. |
| · x86: common_PLAT_GlusterFS_2.0_version.zip<br>· ARM: common_PLAT_GlusterFS_2.0_version_arm.zip | Provides dashboard widget management. |
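The x86 and ARM package names in Table 12 differ only by the _arm suffix, so the file to pick can be derived from the server architecture. The sketch below is illustrative only: the function name platform_package is hypothetical, and it assumes that uname -m reports x86_64 on Intel, AMD, and Hygon servers and aarch64 on Kunpeng servers.

```shell
# Hypothetical helper: prints the package file name for a base name
# from Table 12 ($1) and an architecture string ($2, as from `uname -m`).
platform_package() {
    case $2 in
        x86_64)  echo "$1.zip" ;;      # x86 packages carry no suffix
        aarch64) echo "$1_arm.zip" ;;  # ARM packages end in _arm
        *)       echo "unsupported architecture: $2" >&2; return 1 ;;
    esac
}

# Example on the server:
# platform_package general_PLAT_kernel_2.0_version "$(uname -m)"
```

The word version in the base name is the placeholder used in Table 12. Replace it with the version string of the packages that you obtained.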

Deploying the controller

IMPORTANT:

· The controller runs on the Unified Platform. You can deploy, upgrade, and uninstall it only on the Unified Platform.

· Before deploying the controller, make sure the required applications have been deployed.

Preparing for deployment

Enabling NICs

If the server uses multiple NICs for connecting to the network, enable the NICs before deployment.

The procedure is the same for all NICs. The following procedure enables NIC ens34.

To enable a NIC:

1. Access the server that hosts the Unified Platform.

2. Access the NIC configuration file.

[root@node1 /]# vi /etc/sysconfig/network-scripts/ifcfg-ens34

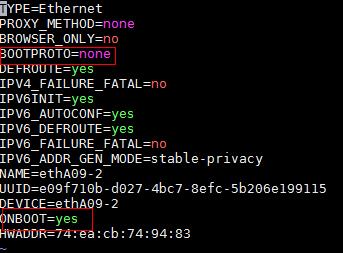

3. Set the BOOTPROTO field to none so that no boot-up protocol is specified, and set the ONBOOT field to yes so that the NIC is activated at system startup.

Figure 1 Modifying the configuration file of a NIC

4. Execute the ifdown and ifup commands in sequence to restart the NIC.

[root@node1 /]# ifdown ens34

[root@node1 /]# ifup ens34

5. Execute the ifconfig command to verify that the NIC is in up state.
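When several NICs must be enabled, the two fields set in step 3 can be checked with a short script. This is a minimal sketch under assumptions: the function name nic_cfg_ready is hypothetical, and the pattern accepts values written with or without double quotes.

```shell
# Hypothetical helper: succeeds if the ifcfg file passed as $1 sets
# BOOTPROTO to none and ONBOOT to yes.
nic_cfg_ready() {
    grep -Eq '^BOOTPROTO="?none"?[[:space:]]*$' "$1" &&
    grep -Eq '^ONBOOT="?yes"?[[:space:]]*$' "$1"
}

# Example on the server (ens34 as in the procedure above):
# nic_cfg_ready /etc/sysconfig/network-scripts/ifcfg-ens34 && echo "ens34 configuration OK"
# cat /sys/class/net/ens34/operstate   # typically prints "up" once the link is up
```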

Planning the networks

Network planning

Three types of networks can be created: Calico, MACVLAN, and OVS-DPDK.

The Calico network is an internal network used for container interactions. The network segment of the Calico network is the IP address pool set for containers when the cluster is deployed. The default network segment is 177.177.0.0. You do not need to configure an address pool for the Calico network when installing and deploying the controller. The Calico network and MACVLAN network can use the same NIC.

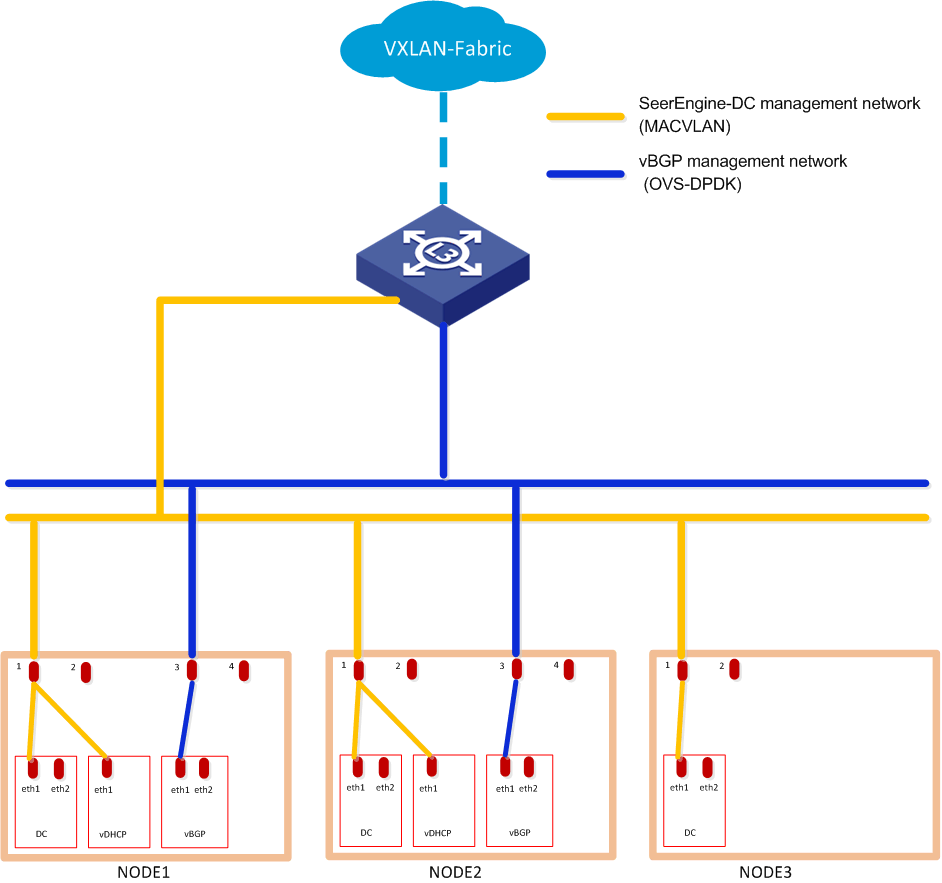

Before deploying the controller, plan the address pools for the MACVLAN and OVS-DPDK networks in advance. Both networks are used as management networks. The SeerEngine-DC and vDHCP components can use the same MACVLAN network as the management network. The vBGP component uses the OVS-DPDK network as the management network.

vBGP is an optional component. If you do not deploy vBGP, configure only the MACVLAN network. To deploy vBGP, configure both the MACVLAN and OVS-DPDK networks as shown in Figure 2.

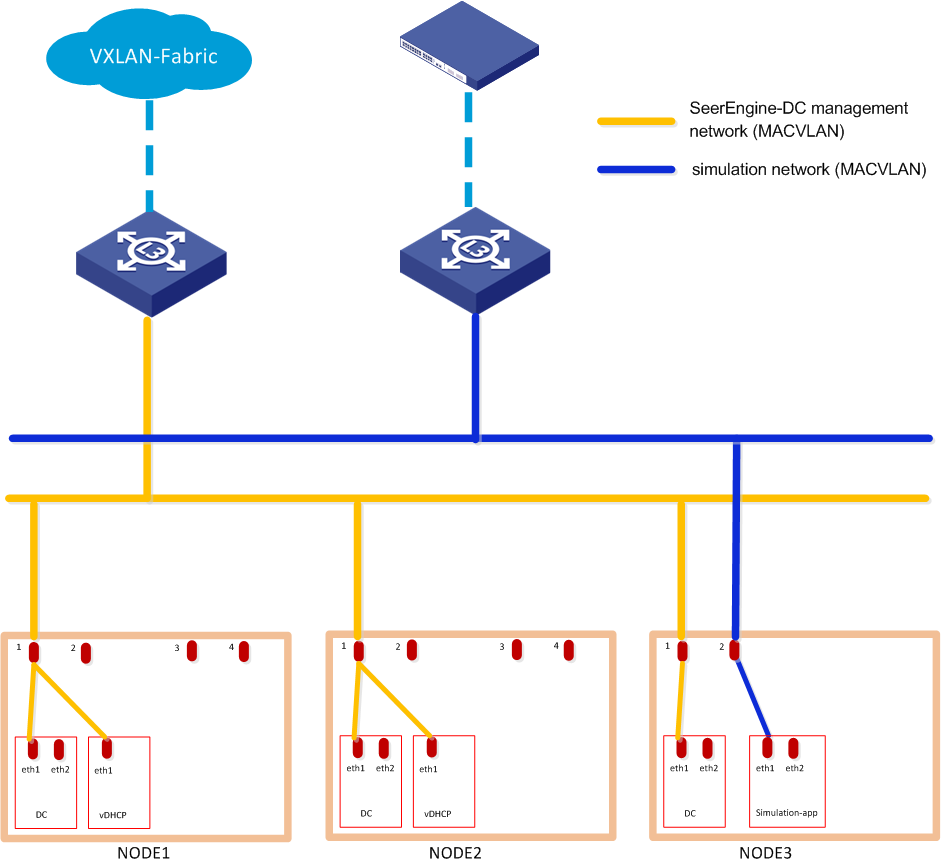

simulation-app is an optional component. To use simulation services, configure a separate MACVLAN network for this component and reserve a NIC for its exclusive use.

Figure 2 Network planning for the cloud data center scenario (to deploy vBGP only)

Figure 3 Network planning for the cloud data center scenario (to deploy simulation-app only)

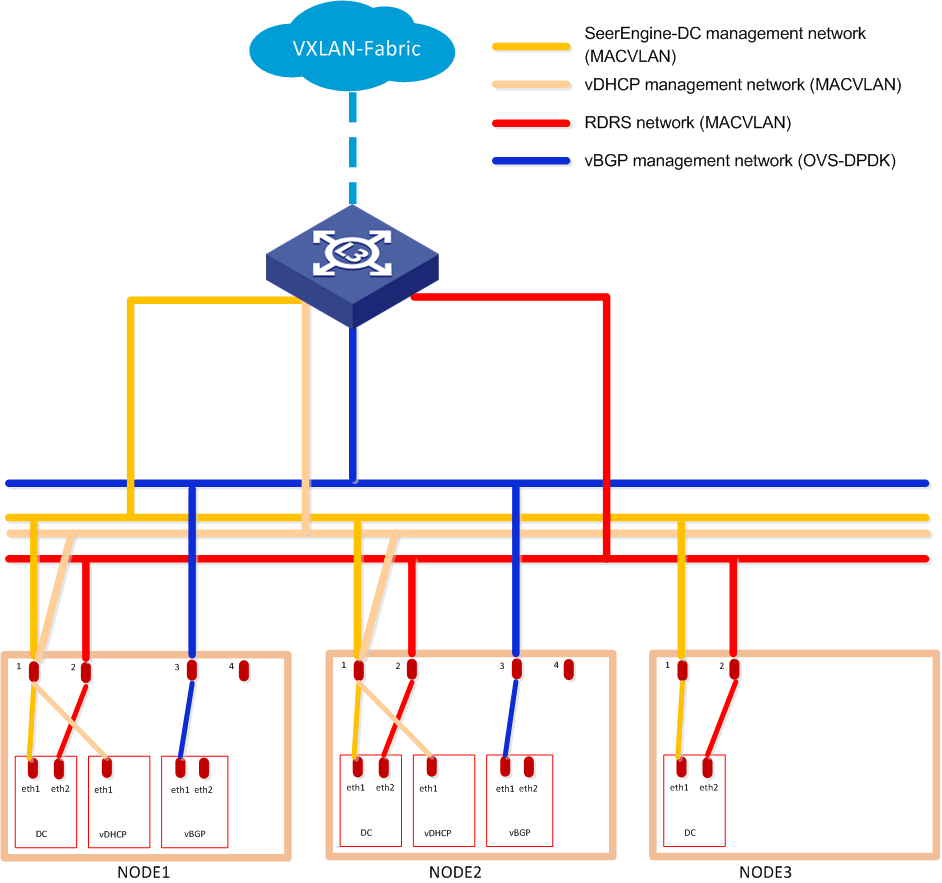

To deploy RDRS, follow these guidelines to plan the networks:

· Use the same IP address for the vDHCP components at the primary and backup sites.

· As a best practice, configure separate MACVLAN-type management networks for the SeerEngine-DC and vDHCP components. If the two MACVLAN networks share a NIC, configure VLANs to isolate the networks.

· Configure a separate MACVLAN network as the RDRS network. The RDRS network is used to synchronize data between the primary and backup sites. Ensure connectivity between the RDRS networks at the primary site and backup site. If the RDRS and management networks use the same NIC, configure VLANs to isolate the networks. As a best practice, use a separate NIC for the RDRS network as shown in Figure 4.

Figure 4 Network planning for the cloud data center scenario (to deploy vBGP and RDRS)

IP address planning

To calculate the number of IP addresses required for a MACVLAN or OVS-DPDK subnet:

1. Use Table 13 as a best practice to calculate the number of IP addresses required for each component team.

Table 13 Number of IP addresses required for each component team

| Component | Maximum team members | Default team members | Number of IP addresses |
|---|---|---|---|
| SeerEngine-DC | 32 | 3 | Number of team members + 1 (team IP) |
| vDHCP | 2 | 2 | Number of team members + 1 (team IP) |
| vBGP | 2 | 2 | Number of team members + 1 (team IP) |
| simulation-app | 1 | 1 | 1 (team IP) |

2. Calculate the number of IP addresses required for the subnet. The following calculations assume that each component team has a default number of team members.

¡ MACVLAN subnet

- Without RDRS deployed: (1*3+1) + (1*2+1) + 1 = 8.

- With RDRS deployed: (2*3+1) + (1*2+1) = 10.

¡ OVS-DPDK subnet: (1*2+1) = 3.
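The arithmetic above can be reproduced for other team sizes. The following POSIX shell sketch mirrors the three formulas as written; the function names are hypothetical, and the factor of 2 per SeerEngine-DC member in the RDRS case is taken directly from the formula above rather than from any further explanation in this guide.

```shell
# Usage: macvlan_ip_count DC_MEMBERS VDHCP_MEMBERS RDRS   (RDRS is 0 or 1)
macvlan_ip_count() {
    dc=$1; vdhcp=$2; rdrs=$3
    if [ "$rdrs" -eq 1 ]; then
        # With RDRS: (2 * DC members + 1) + (vDHCP members + 1)
        echo $(( (2 * $dc + 1) + ($vdhcp + 1) ))
    else
        # Without RDRS: (DC members + 1) + (vDHCP members + 1) + 1 for simulation-app
        echo $(( ($dc + 1) + ($vdhcp + 1) + 1 ))
    fi
}

# Usage: ovs_dpdk_ip_count VBGP_MEMBERS
ovs_dpdk_ip_count() {
    echo $(( $1 + 1 ))   # vBGP members + 1 team IP
}

macvlan_ip_count 3 2 0   # prints 8
macvlan_ip_count 3 2 1   # prints 10
ovs_dpdk_ip_count 2      # prints 3
```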

Table 14 shows an example for IP address planning.

Table 14 IP address planning for the non-RDRS scenario

| Item | IP addresses |
|---|---|
| MACVLAN subnet | 10.0.234.0/24 (gateway 10.0.234.254) |
| MACVLAN network address pool | 10.0.234.6 to 10.0.234.38 |
| MACVLAN subnet (simulation-app component) | 172.20.0.0/16 (gateway 172.20.1.1) |
| MACVLAN network address pool (simulation-app component) | 172.20.100.100 to 172.20.100.100 |
| OVS-DPDK subnet | 11.0.234.0/24 (gateway 11.0.234.254) |
| OVS-DPDK network address pool | 11.0.234.1 to 11.0.234.32 |
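To confirm that an address pool is at least as large as the number of addresses calculated in step 2, the pool size can be computed from its first and last address. This is a minimal POSIX shell sketch with hypothetical function names, applied to the example pools in Table 14.

```shell
# Converts a dotted IPv4 address ($1) to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Usage: pool_size FIRST_ADDRESS LAST_ADDRESS (both inclusive)
pool_size() {
    first=$(ip_to_int "$1")
    last=$(ip_to_int "$2")
    echo $(( $last - $first + 1 ))
}

pool_size 10.0.234.6 10.0.234.38          # MACVLAN pool: prints 33
pool_size 172.20.100.100 172.20.100.100   # simulation-app pool: prints 1
pool_size 11.0.234.1 11.0.234.32          # OVS-DPDK pool: prints 32
```

With the default team sizes, the MACVLAN pool of 33 addresses covers the 8 addresses required without RDRS, and the OVS-DPDK pool of 32 addresses covers the 3 addresses required for vBGP.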

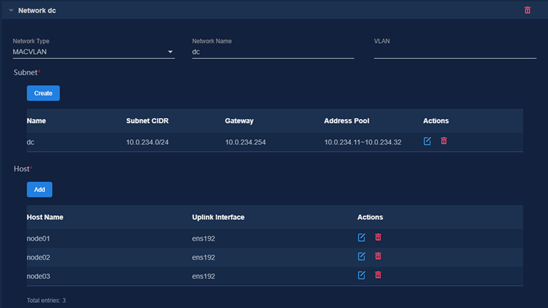

Table 15 IP address planning for the RDRS scenario

|

Item |

IP addresses |

|

MACVLAN subnet (SeerEngine-DC) |

10.0.234.0/24 (gateway 10.0.234.254) |

|

MACVLAN network address pool (SeerEngine-DC) |

10.0.234.6 to 10.0.234.38 |

|

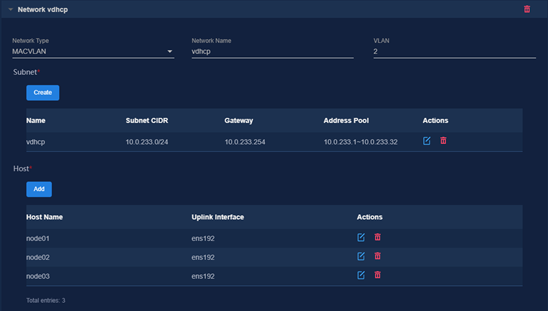

MACVLAN subnet (vDHCP) |

10.0.233.0/24 (gateway 10.0.233.254) |

|

MACVLAN network address pool (vDHCP) |

10.0.233.6 to 10.0.233.38 |

|

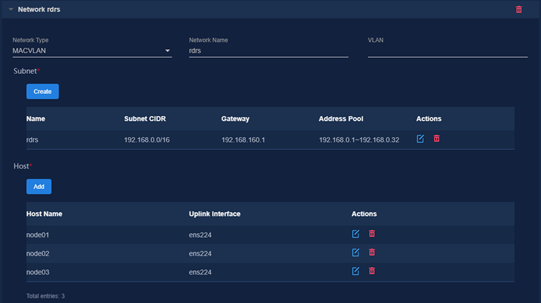

MACVLAN subnet (RDRS network) |

192.168.0.0/16 (gateway 192.168.160.1) |

|

MACVLAN network address pool (RDRS network) |

192.168.160.1 to 192.168.160.32 |

|

OVS-DPDK subnet |

11.0.234.0/24 (gateway 11.0.234.254) |

|

OVS-DPDK network address pool |

11.0.234.1 to 11.0.234.32 |

IMPORTANT:

· The MACVLAN and OVS-DPDK subnets are on different network segments. You must configure routing entries on the connected switches to enable Layer 3 communication between the SeerEngine-DC management network and vBGP management network.

· If two MACVLAN networks share a NIC, configure the port on the switch that connects to the server as a trunk port, configure the port on the server that connects to the switch to work in hybrid mode, and configure VLAN and VLAN interface settings on the switch.

· For RDRS to operate correctly, make sure the IP addresses of RDRS networks at the primary and backup sites do not overlap with the IP address of the SeerEngine-DC component, and the vDHCP components at the primary and backup sites use the same IP address.

· Simulation services do not support RDRS.

Deploying the controller

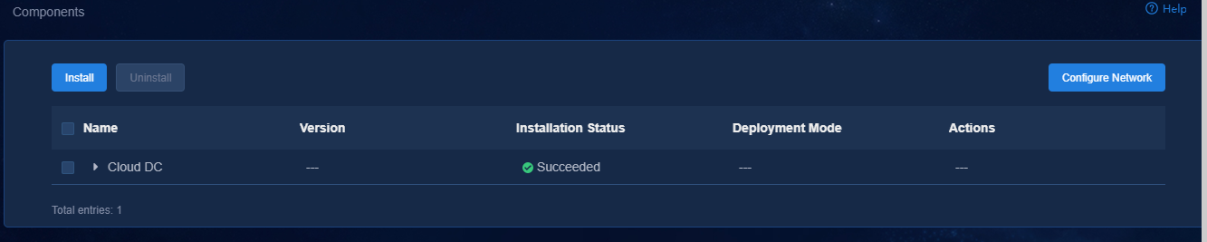

1. Log in to the Unified Platform. Click System > Deployment.

2. Obtain the SeerEngine-DC installation packages. Table 16 provides the names of the installation packages. Make sure you select installation packages specific to your server type, x86 or ARM.

Table 16 Installation packages

| Component | Installation package name |
|---|---|
| SeerEngine-DC | · x86: SeerEngine_DC-version-MATRIX.zip<br>· ARM: SeerEngine_DC-version-ARM64.zip |
| vBGP (optional) | · x86: vBGP-version.zip<br>· ARM: vBGP-version-ARM64.zip |

3. Click Upload to upload the installation package and then click Next.

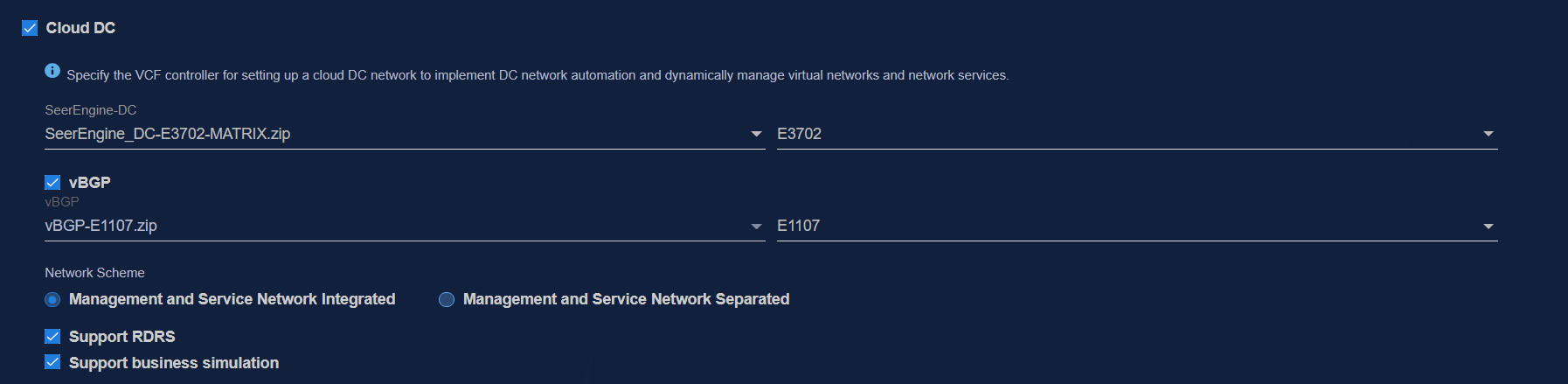

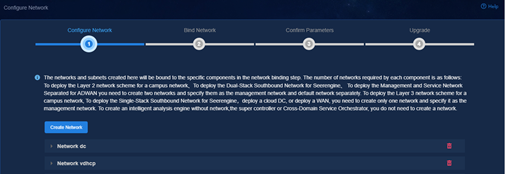

4. Select Cloud DC. To deploy the vBGP component simultaneously, select vBGP and select a network scheme for vBGP deployment. To deploy the simulation-app component simultaneously, select Service Simulation. For the controller to support RDRS, select Support RDRS. Then click Next.

Figure 5 Selecting components

CAUTION: To avoid malfunction of simulation services, do not delete a worker node on which the simulation-app component is deployed from the cluster deployment page on Installer.

5. Configure the MACVLAN and OVS-DPDK networks and add the uplink interfaces according to the network plan in "Planning the networks." If you do not deploy vBGP, you need to configure only MACVLAN networks.

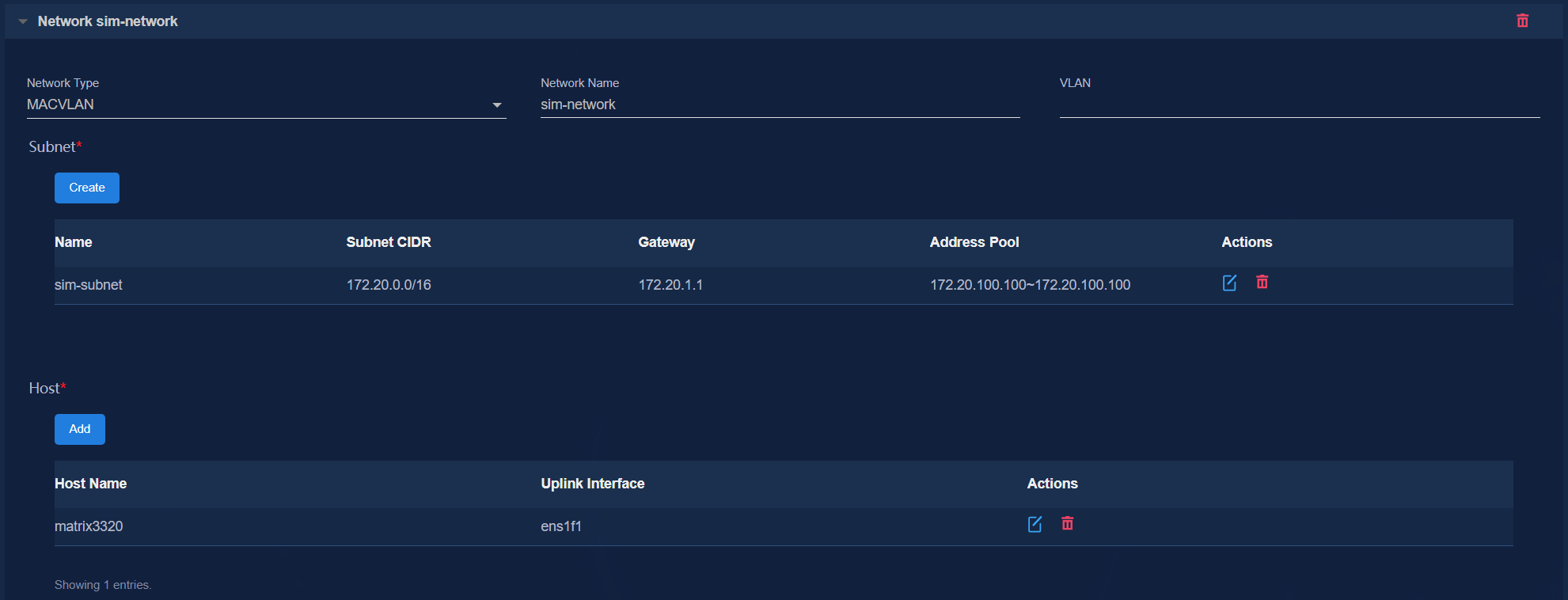

To use simulation services, configure the network settings as follows:

¡ Configure a separate MACVLAN network for the simulation-app component.

¡ Assign a minimum of one IP address to the subnet.

¡ Select a worker node, or a master node that has 256 GB of memory, for the component. Specify an uplink interface and delete the other nodes.

To deploy RDRS, configure the network settings as follows:

¡ Configure a MACVLAN management network separately for the SeerEngine-DC and vDHCP components.

¡ Specify a VLAN for the MACVLAN network configured for the vDHCP component, and make sure the VLAN ID is different from the PVID.

¡ Add the same uplink interface to the two MACVLAN networks.

¡ Configure a separate MACVLAN network as the RDRS network.

Figure 6 Configuring a MACVLAN management network for the SeerEngine-DC component

Figure 7 Configuring a MACVLAN management network for the vDHCP component

Figure 8 Configuring a MACVLAN network for the simulation-app component

Figure 9 Configuring an RDRS network

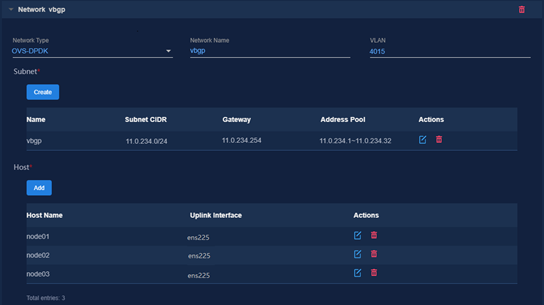

Figure 10 Configuring an OVS-DPDK network

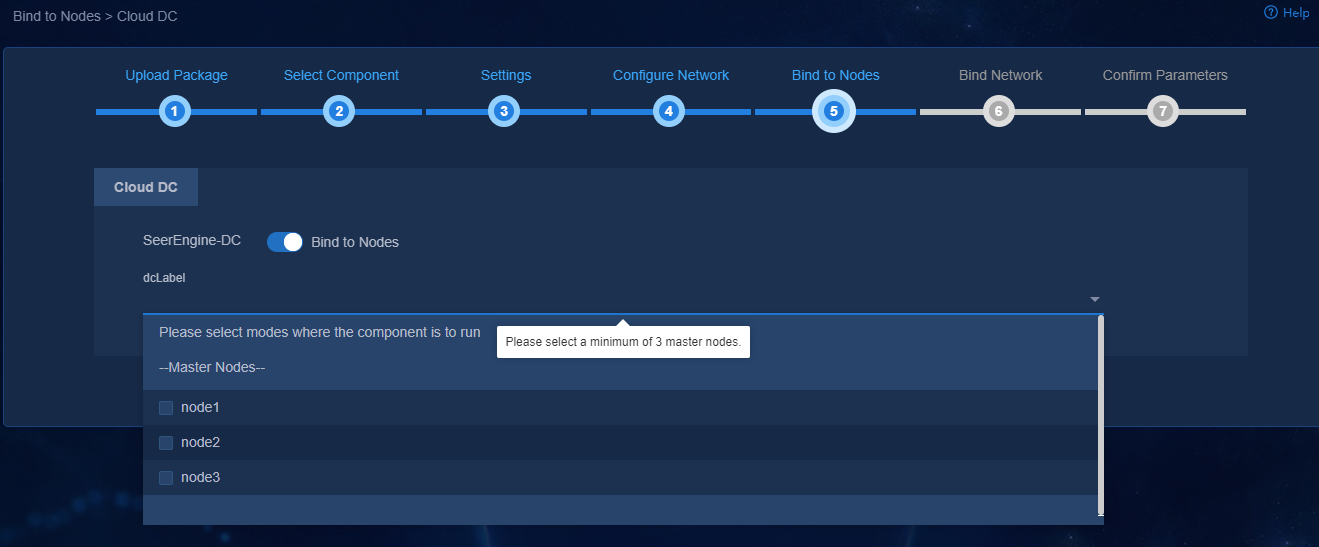

6. On the Bind to Nodes page, select whether to enable node binding. If you enable Bind to Nodes, you must bind the controller to a minimum of three master nodes on which microservice pods can run.

Figure 11 Binding to Nodes

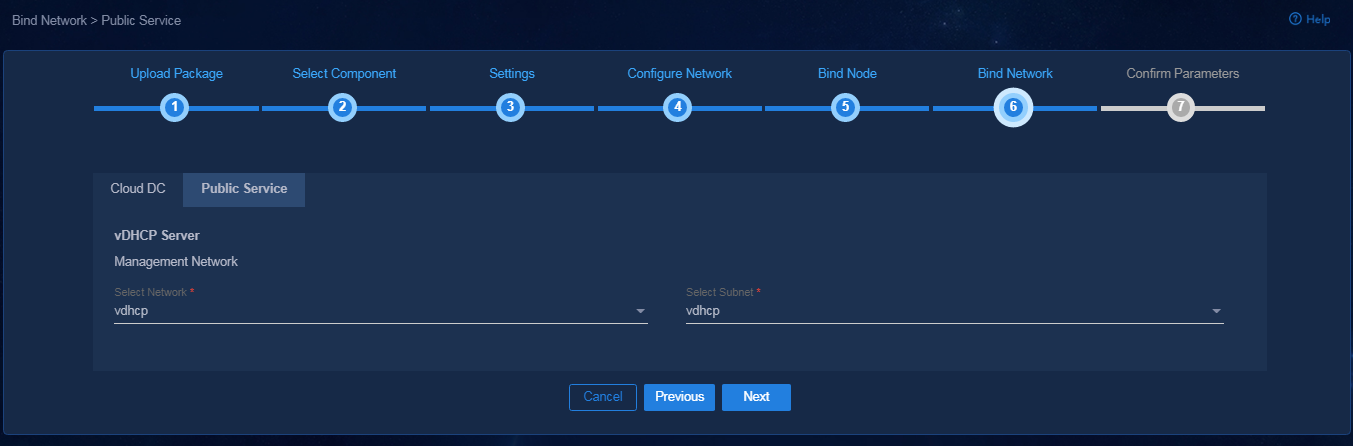

7. Bind networks to the components, assign IP addresses to the components, specify a network node for the service simulation network, and then click Next.

Figure 12 Binding networks

8. On the Confirm Parameters tab, verify network information, configure the RDRS status, and specify a VRRP group ID for the components.

A component automatically obtains an IP address from the IP address pool of the subnet bound to it. To modify the IP address, click Modify and then specify another IP address for the component. The IP address specified must be in the IP address range of the subnet bound to the component.

You are required to configure the RDRS status for the controller if you have selected the Support RDRS option for it:

¡ Select Primary from the Status in RDRS list for a controller at the primary site.

¡ Select Backup from the Status in RDRS list for a controller at the backup site.

If vDHCP and vBGP components are to be deployed, you are required to specify a VRRP group ID in the range of 1 to 255 for the components. The VRRP group ID must be unique within the same network.

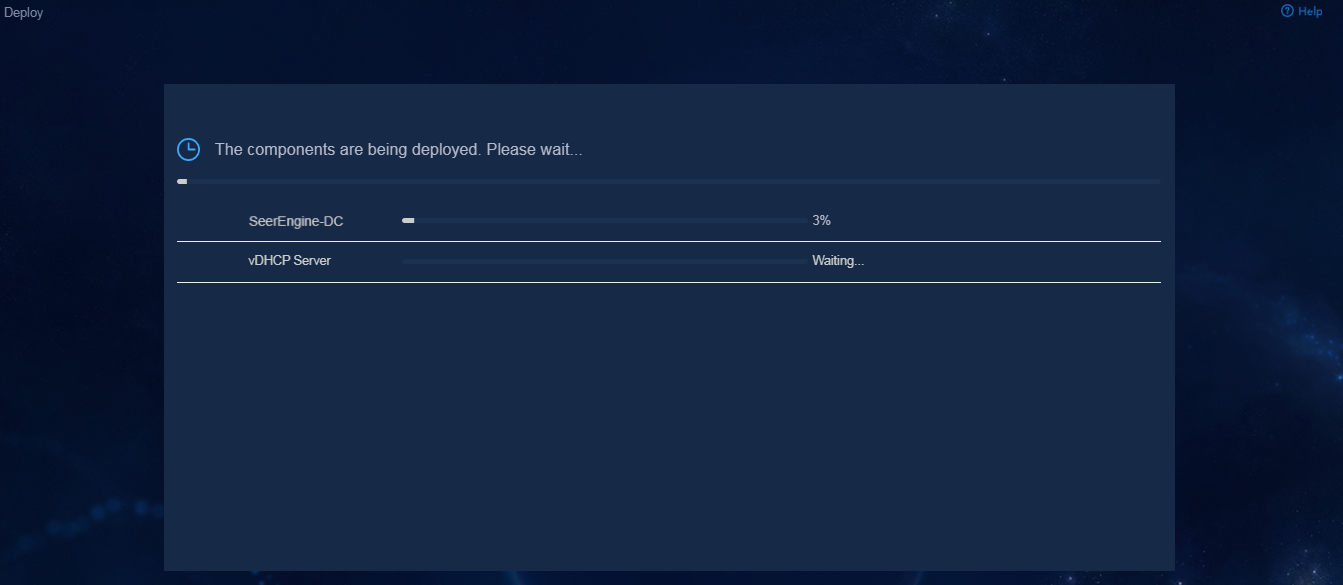

9. Click Deploy.

Figure 13 Deployment in progress

Accessing the controller

After the controller is deployed on the Unified Platform, the controller menu items will be loaded on the Unified Platform. Then you can access the Unified Platform to control and manage the controller.

To access the controller:

1. Enter the address for accessing the Unified Platform in the address bar and then press Enter.

By default, the login address is https://ucenter_ip_address:30000/central/index.html.

¡ ucenter_ip_address represents the northbound virtual IP address of the Unified Platform.

¡ 30000 is the port number.

Figure 14 Unified Platform login page

2. Enter the username and password, and then click Log in.

The default username is admin and the default password is Pwd@12345.
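Before opening a browser, the login address can be assembled and probed from a shell. The sketch below is illustrative only: the function name login_url is hypothetical, 192.0.2.10 is a documentation address standing in for the northbound virtual IP address, and the curl -k option assumes that the platform presents a certificate the client does not trust.

```shell
# Hypothetical helper: builds the default login address from the
# northbound virtual IP address of the Unified Platform ($1).
login_url() {
    printf 'https://%s:30000/central/index.html\n' "$1"
}

# Example probe (prints only the HTTP status code returned by the server):
# curl -k -s -o /dev/null -w '%{http_code}\n' "$(login_url 192.0.2.10)"
```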

Table 17 Unified Platform dashboard

Registering and installing licenses

Registering and installing licenses for the Unified Platform

For the Unified Platform license registration and installation procedure, see H3C Unified Platform Deployment Guide.

Registering and installing licenses for the controller

After you install the controller, you can use all of its features and functions for a 180-day trial period. After the trial period expires, you must license the controller.

Installing the activation file on the license server

For the activation file request and installation procedure, see H3C Software Products Remote Licensing Guide.

Obtaining licenses

1. Log in to the Unified Platform and then click System > License Management > DC license.

2. Configure the parameters for the license server as described in Table 18.

Table 18 License server parameters

| Item | Description |
|---|---|
| IP address | Specify the IP address configured on the license server used for internal communication in the cluster. |
| Port number | Specify the service port number of the license server. The default value is 5555. |
| Username | Specify the client username configured on the license server. |
| Password | Specify the client password configured on the license server. |

3. Click Connect to connect the controller to the license server.

The controller will automatically obtain licensing information after connecting to the license server.

Backing up and restoring the controller configuration

You can back up and restore the controller configuration on the Unified Platform. For the procedures, see H3C Unified Platform Deployment Guide.

Upgrading the controller

CAUTION:

· The upgrade might cause service interruption. Be cautious when you perform this operation.

· Before upgrading or scaling out the Unified Platform or the controller, specify the manual switchover mode for the RDRS if the RDRS has been created.

· Do not upgrade the controllers on the primary and backup sites simultaneously if the RDRS has been created. Upgrade the controller on a site first, and upgrade the controller on another site after data is synchronized between the two sites.

· In an RDRS system, the IP addresses of the vDHCP components at the primary and backup sites must be the same. As a best practice, remove and reinstall the vDHCP component after upgrading the controller to support RDRS in an environment where the vDHCP component has been deployed.

This section describes the procedure for upgrading and uninstalling the controller. For the upgrading and uninstallation procedure for the Unified Platform, see H3C Unified Platform Deployment Guide.

The controller can be upgraded on the Unified Platform with the configuration retained.

To upgrade the controller:

1. Log in to the Unified Platform. Click System > Deployment.

Figure 15 Deployment page

2. Click the right chevron button ![]() for the controller to expand controller information, and then click the upgrade icon ![]().

3. Continue the upgrade procedure as guided by the system.

¡ If the controller already supports RDRS, the upgrade page is displayed.

To upgrade the component, perform the following steps:

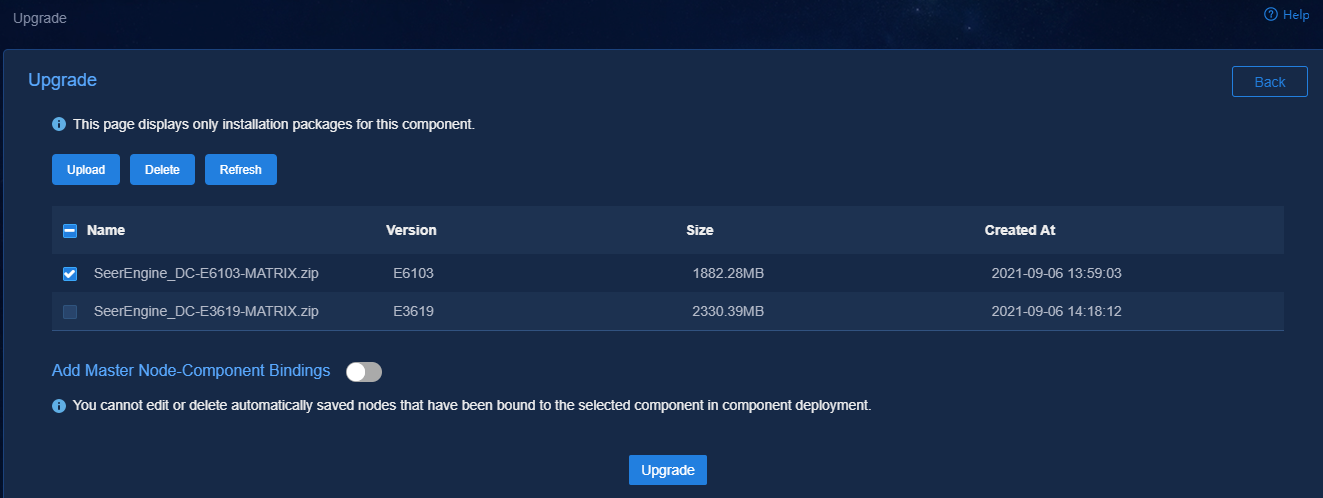

# Upload and select the installation package.

# Select whether to enable Add Master Node-Component Bindings. The nodes to which the controller has been bound during deployment cannot be modified or deleted.

# Click Deploy.

Figure 16 Adding node binding

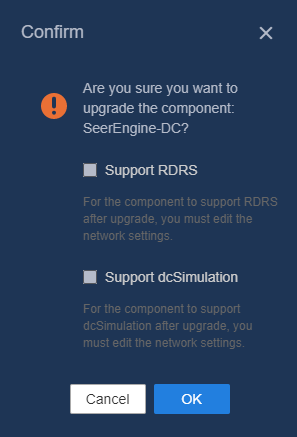

¡ If the controller does not support RDRS, the system displays a confirmation dialog box with a Support RDRS option.

Figure 17 Support RDRS option

- If you leave the Support RDRS option unselected, the upgrade page is displayed. Upload and select the installation package and then click Upgrade.

- If you select the Support RDRS option, perform the following steps:

# On the Configure Network tab, create a MACVLAN network as the RDRS network. Make sure the RDRS network and the management network are on different network segments.

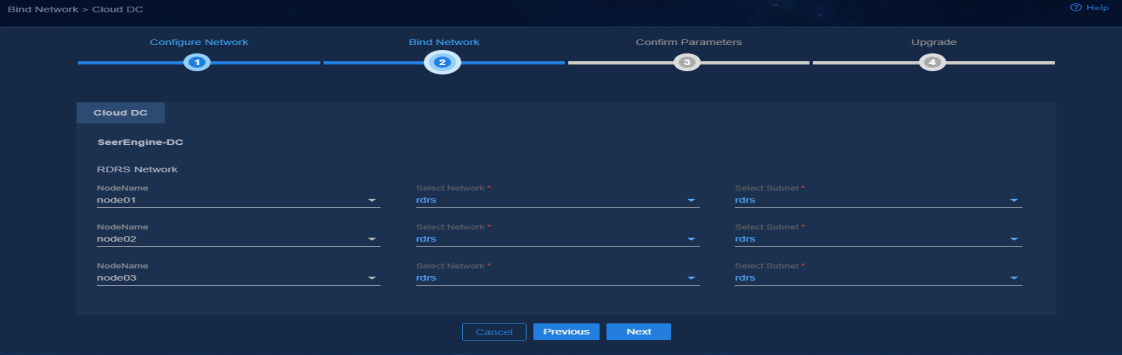

# On the Bind Network tab, bind the controller to the corresponding RDRS network and subnet, and then click Next.

# On the Confirm Parameters tab, verify that the IP addresses assigned to the RDRS network are correct, and then click Next.

# On the Upgrade tab, upload and select the installation package or patch package, and then click Upgrade.

4. If the upgrade fails, click Roll Back to roll back to the previous version.
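
Step 3 requires the RDRS network and the management network to be on different network segments. A minimal Python sketch of that check is shown here, using the standard `ipaddress` module. The function name and the subnets in the usage note are hypothetical examples, not values from the product.

```python
import ipaddress


def on_different_segments(rdrs_cidr: str, mgmt_cidr: str) -> bool:
    """Return True when the RDRS subnet and the management subnet do not overlap."""
    # strict=False accepts a host address with a prefix, such as 192.168.10.5/24.
    rdrs = ipaddress.ip_network(rdrs_cidr, strict=False)
    mgmt = ipaddress.ip_network(mgmt_cidr, strict=False)
    if rdrs.version != mgmt.version:
        return True  # an IPv4 subnet and an IPv6 subnet cannot overlap
    return not rdrs.overlaps(mgmt)
```

For example, `on_different_segments("192.168.10.0/24", "192.168.20.0/24")` returns `True`, whereas `on_different_segments("10.0.0.0/16", "10.0.1.0/24")` returns `False` because the second subnet lies inside the first.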

Uninstalling the controller

1. Log in to the Unified Platform. Click System > Deployment.

2. Click the ![]() icon to the left of the controller name and then click Uninstall.

Figure 18 Uninstalling the controller

RDRS

About RDRS

A remote disaster recovery system (RDRS) provides disaster recovery services between the primary and backup sites. The controllers at the primary and backup sites back up each other. When the RDRS is operating correctly, data is synchronized between the site providing services and the peer site in real time. When the service-providing site becomes faulty because of power, network, or external link failure, the peer site immediately takes over to ensure service continuity.

The RDRS supports the following switchover modes:

· Manual switchover: In this mode, the RDRS does not automatically monitor the state of the controllers on the primary or backup site. You must manually control the controller state on the primary and backup sites by specifying the Switch to Primary or Switch to Backup actions. This mode requires deploying the Unified Platform of the same version on the primary and backup sites.

· Auto switchover with arbitration: In this mode, the RDRS automatically monitors the state of the controllers. Upon detecting a controller or Unified Platform failure (because of site power or network failure), the RDRS automatically switches controller state at both sites by using the third-party arbitration service. This mode also supports manual switchover. To use this mode, you must deploy the Unified Platform of the same version at the primary and backup sites and the third-party arbitration service.

The third-party arbitration service can be deployed on the same server as the primary or backup site. However, when the server is faulty, the third-party arbitration service might stop working. As a result, RDRS auto switchover will fail. As a best practice, configure the third-party arbitration service on a separate server.
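
The relationship between the two modes and the arbitration service can be summarized in a small model. The following Python sketch is a simplified illustration of the behavior described above, not the controller's implementation. The names `SwitchoverMode` and `available_actions` are hypothetical.

```python
from enum import Enum


class SwitchoverMode(Enum):
    MANUAL = "manual switchover"
    AUTO_WITH_ARBITRATION = "auto switchover with arbitration"


def available_actions(mode: SwitchoverMode, arbitration_up: bool) -> set:
    """Return which kinds of switchover can take place in the given situation."""
    # Both modes support manual switchover.
    actions = {"manual"}
    # Auto switchover depends on the third-party arbitration service,
    # so it is unavailable while that service is down.
    if mode is SwitchoverMode.AUTO_WITH_ARBITRATION and arbitration_up:
        actions.add("auto")
    return actions
```

The model makes the best practice concrete: if the arbitration service shares a server with a site and that server fails, `arbitration_up` is false exactly when auto switchover is needed.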

Creating an RDRS

1. Deploy the primary and backup sites and a third-party site.

2. Deploy RDRS on the controllers.

3. Create an RDRS.

Deploying the primary and backup sites and a third-party site

Restrictions and guidelines

Follow these restrictions and guidelines when you deploy the primary and backup sites and a third-party site:

· The Unified Platform version, transfer protocol, username and password, and IP version of the primary and backup sites must be the same.

· The arbitration service package on the third-party site must match the Unified Platform version on the primary and backup sites.

· To use the auto switchover with arbitration mode, you must deploy a standalone Unified Platform as the third-party site, and deploy arbitration services on the site.

· To use the allowlist feature in an RDRS scenario, you must add the IP addresses of all nodes on the backup site to the allowlist on the primary site, and add the IP addresses of all nodes on the primary site to the allowlist on the backup site.

· To avoid service failure during a primary/backup switchover, you must configure the same IP address for the vDHCP components at the primary and backup sites.
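
The consistency rules in this list lend themselves to a pre-deployment checklist. The following Python sketch shows one way to express them, assuming you have collected the site settings yourself. The `Site` class, its field names, and `check_sites` are hypothetical and do not correspond to any product API.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Site:
    """Hypothetical description of one RDRS site, filled in by hand."""
    platform_version: str
    transfer_protocol: str
    username: str
    password: str
    ip_version: int
    vdhcp_ip: str
    node_ips: Set[str] = field(default_factory=set)
    allowlist: Set[str] = field(default_factory=set)
    allowlist_enabled: bool = False


def check_sites(primary: Site, backup: Site) -> List[str]:
    """Return the guidelines that the two sites violate (empty list if none)."""
    problems = []
    # Settings that must be identical on the primary and backup sites.
    for name in ("platform_version", "transfer_protocol",
                 "username", "password", "ip_version"):
        if getattr(primary, name) != getattr(backup, name):
            problems.append(f"{name} differs between primary and backup sites")
    # The vDHCP components must use the same IP address at both sites.
    if primary.vdhcp_ip != backup.vdhcp_ip:
        problems.append("vDHCP IP address differs between primary and backup sites")
    # With the allowlist feature, each site must list all nodes of the peer site.
    if primary.allowlist_enabled and not backup.node_ips <= primary.allowlist:
        problems.append("primary allowlist is missing backup node IPs")
    if backup.allowlist_enabled and not primary.node_ips <= backup.allowlist:
        problems.append("backup allowlist is missing primary node IPs")
    return problems
```

The sketch does not cover the arbitration service package, which must match the Unified Platform version as stated in the release notes for your software.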

Procedure

This procedure uses a separate server as the third-party site and deploys the Unified Platform in standalone mode on this site.

To deploy the primary and backup sites and a third-party site:

1. Deploy Installer on the primary and backup sites and the third-party site. For the deployment procedure, see H3C Unified Platform Deployment Guide.

2. Deploy the Unified Platform on the primary and backup sites. Specify the same NTP server for the primary and backup sites. For the deployment procedure, see H3C Unified Platform Deployment Guide.

3. Deploy arbitration services on the third-party site.

a. Log in to Installer.

b. Select Deploy from the top navigation bar and then select Application from the left navigation pane.

c. Click Upload to upload the arbitration service package SeerEngine_DC_ARBITRATOR-version.zip (for an x86 server) or SeerEngine_DC_ARBITRATOR-version-ARM64.zip (for an ARM server).

For some controllers, only one arbitration service package is available, either for an x86 server or for an ARM server. See the release notes for the service packages available for a controller.

d. Click Next and then configure the parameters.

e. Click Deploy.
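
The package naming in step c can be expressed as a small helper. The following Python sketch assumes only the two file name patterns given above. The function name and the architecture aliases it accepts are hypothetical, and the version string must come from your release notes.

```python
def arbitration_package(version: str, arch: str) -> str:
    """Build the arbitration service package name for a server architecture."""
    arch = arch.lower()
    if arch in ("x86", "x86_64", "amd64"):
        return f"SeerEngine_DC_ARBITRATOR-{version}.zip"
    if arch in ("arm", "arm64", "aarch64"):
        return f"SeerEngine_DC_ARBITRATOR-{version}-ARM64.zip"
    raise ValueError(f"unsupported architecture: {arch!r}")
```

A name built this way is not guaranteed to exist, because some controllers ship only one of the two packages.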

Deploying RDRS on the controllers

Restrictions and guidelines

If the controller installed on the primary site does not support RDRS, click the ![]() icon on the deployment management page to upgrade it to support RDRS. For the upgrade procedure, see "Upgrading the controller."

If the controller installed on the specified backup site does not support disaster recovery or is not in backup state, remove the controller and install it again.

The SeerEngine-DC installation package name and SeerEngine-DC version must be the same on the primary and backup sites.

Procedure

To deploy RDRS on the controller, select the Support RDRS option when deploying the controller and configure the primary and backup RDRS state for it. For the controller deployment procedure, see "Deploying the controller."

Creating an RDRS

Restrictions and guidelines

Do not create an RDRS at the primary and backup sites simultaneously.

You cannot back up or restore data on the RDRS configuration page, including the primary or backup site name, primary or backup site IP address, backup site username and password, and third-party site IP address.

After an RDRS is created, you cannot change the node IP addresses or the internal virtual IP address of the cluster at the primary or backup site.

Procedure

1. Click System on the top navigation bar and then select RDRS from the navigation pane.

2. In the Site Settings area, configure the primary, backup, and third-party site settings, and specify the switchover mode.

3. Click Connect.

If the heartbeat link is successfully set up, the RDRS site settings have been configured successfully.

After the sites are built successfully, the backup site will automatically synchronize its user, log, and backup and restore settings to the primary site, with the exception of the log content.

4. In the Disaster Recovery Components area, click Add to configure disaster recovery components.