

Configuring VCF fabric

About VCF fabric

Based on OpenStack Networking, the Virtual Converged Framework (VCF) solution provides virtual network services from Layer 2 to Layer 7 for cloud tenants. This solution breaks the boundaries between the network, cloud management, and terminal platforms and transforms the IT infrastructure into a converged framework to accommodate all applications. It also implements automated topology discovery and automated deployment of underlay networks and overlay networks to reduce the administrators' workload and speed up network deployment and upgrades.

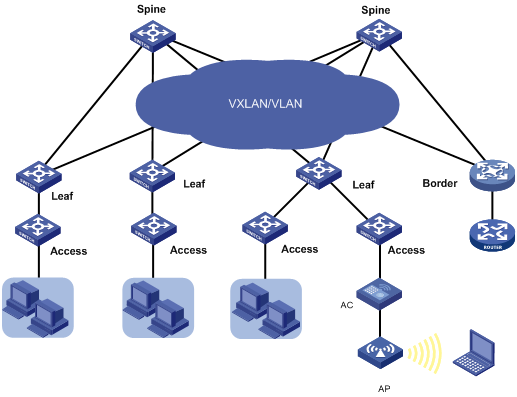

VCF fabric topology

In a campus VCF fabric, a device has one of the following roles:

· Spine node—Connects to leaf nodes.

· Leaf node—Connects to access nodes.

· Access node—Connects to an upstream leaf node and downstream terminal devices. Cascading of access nodes is supported.

· Border node—Located at the border of a VCF fabric to provide access to the external network.

Spine nodes and leaf nodes form a large Layer 2 network, which can be a VLAN, a VXLAN with a centralized IP gateway, or a VXLAN with distributed IP gateways. For more information about centralized IP gateways and distributed IP gateways, see VXLAN Configuration Guide.

Figure 1 VCF fabric topology for a campus network

Neutron overview

Neutron concepts and components

Neutron is a component in the OpenStack architecture. It provides networking services for VMs, manages virtual network resources (including networks, subnets, DHCP, and virtual routers), and creates an isolated virtual network for each tenant. Neutron provides a unified network resource model, based on which VCF fabric is implemented.

The following are basic concepts in Neutron:

· Network—A virtual object that can be created. It provides an independent network for each tenant in a multitenant environment. A network is equivalent to a switch with virtual ports which can be dynamically created and deleted.

· Subnet—An address pool that contains a group of IP addresses. Two different subnets communicate with each other through a router.

· Port—A connection port. A router or a VM connects to a network through a port.

· Router—A virtual router that can be created and deleted. It performs routing selection and data forwarding.

Neutron has the following components:

· Neutron server—Includes the daemon process neutron-server and multiple plug-ins (neutron-*-plugin). The Neutron server provides an API and forwards the API calls to the configured plug-in. The plug-in maintains configuration data and relationships between routers, networks, subnets, and ports in the Neutron database.

· Plug-in agent (neutron-*-agent)—Processes data packets on virtual networks. The choice of plug-in agents depends on Neutron plug-ins. A plug-in agent interacts with the Neutron server and the configured Neutron plug-in through a message queue.

· DHCP agent (neutron-dhcp-agent)—Provides DHCP services for tenant networks.

· L3 agent (neutron-l3-agent)—Provides Layer 3 forwarding services to enable inter-tenant communication and external network access.

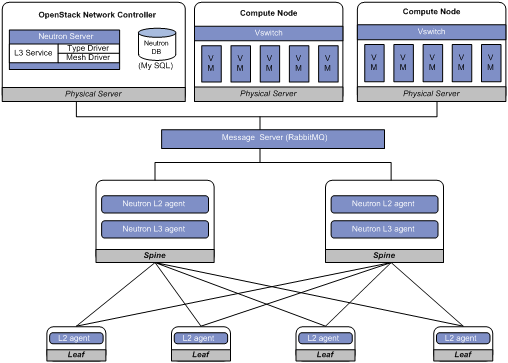

Neutron deployment

Neutron needs to be deployed on servers and network devices.

Table 1 shows Neutron deployment on a server.

Table 1 Neutron deployment on a server

| Node | Neutron components |
|---|---|
| Controller node | · Neutron server · Neutron DB · Message server (such as RabbitMQ server) · H3C ML2 Driver (For more information about H3C ML2 Driver, see H3C Neutron ML2 Driver Installation Guide.) |
| Network node | · neutron-openvswitch-agent · neutron-dhcp-agent |
| Compute node | · neutron-openvswitch-agent · LLDP |

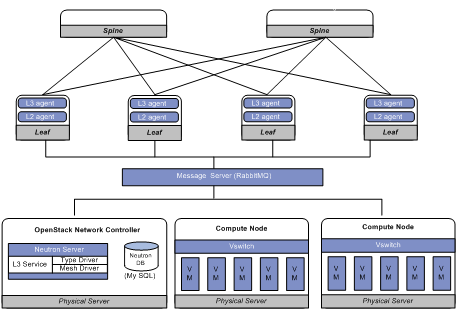

Table 2 shows Neutron deployments on a network device.

Table 2 Neutron deployments on a network device

| Network type | Network device | Neutron components |
|---|---|---|
| Centralized VXLAN IP gateway deployment | Spine | · neutron-l2-agent · neutron-l3-agent |
| Centralized VXLAN IP gateway deployment | Leaf | neutron-l2-agent |
| Distributed VXLAN IP gateway deployment | Spine | N/A |
| Distributed VXLAN IP gateway deployment | Leaf | · neutron-l2-agent · neutron-l3-agent |

Figure 2 Example of Neutron deployment for centralized gateway deployment

Figure 3 Example of Neutron deployment for distributed gateway deployment

Automated VCF fabric deployment

VCF provides the following features to ease deployment:

· Automated topology discovery.

In a VCF fabric, each device uses LLDP to collect local topology information from directly-connected peer devices. The local topology information includes connection interfaces, roles, MAC addresses, and management interface addresses of the peer devices.

If multiple spine nodes exist in a VCF fabric, the master spine node collects the topology for the entire network.

· Automated underlay network deployment.

Automated underlay network deployment sets up a Layer 3 underlay network (a physical Layer 3 network) for users. It is implemented by automatically executing configurations (such as IRF configuration and Layer 3 reachability configurations) in user-defined template files.

· Automated overlay network deployment.

Automated overlay network deployment sets up an on-demand and application-oriented overlay network (a virtual network built on top of the underlay network). It is implemented by automatically obtaining the overlay network configuration (including VXLAN and EVPN configuration) from the Neutron server.

Process of automated VCF fabric deployment

The device finishes automated VCF fabric deployment as follows:

1. Starts up without loading configuration and then obtains an IP address, the IP address of the TFTP server, and a template file name from the DHCP server.

2. Determines the name of the template file to be downloaded based on the device role and the template file name obtained from the DHCP server. For example, 1_leaf.template represents a template file for leaf nodes.

3. Downloads the template file from the TFTP server.

4. Parses the template file and performs the following operations:

◦ Deploys static configurations that are independent from the VCF fabric topology.

◦ Deploys dynamic configurations according to the VCF fabric topology.

The topology process notifies the automation process of creation, deletion, and status change of neighbors. Based on the topology information, the automation process completes role discovery, automatic aggregation, and IRF fabric setup.
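Step 2 of the process can be illustrated with a minimal Python sketch. It assumes that the role name is inserted before the file extension with an underscore, which matches the 1_leaf.template example but is not a documented rule. The function name `template_name_for_role` and the base name `1.template` are hypothetical.

```python
from pathlib import PurePosixPath

# Roles accepted by the vcf-fabric role command described in this guide.
VALID_ROLES = {"access", "leaf", "spine"}


def template_name_for_role(dhcp_file_name: str, role: str) -> str:
    """Derive a role-specific template file name.

    Assumption: the role is appended to the base name obtained from the
    DHCP server, for example 1.template + leaf -> 1_leaf.template.
    """
    if role not in VALID_ROLES:
        raise ValueError(f"unknown VCF fabric role: {role}")
    path = PurePosixPath(dhcp_file_name)
    return f"{path.stem}_{role}{path.suffix}"


print(template_name_for_role("1.template", "leaf"))
```

The role check rejects an unknown role before a file name is built, so a typo fails early instead of producing a download request for a file that does not exist.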

Template file

A template file contains the following contents:

· System-predefined variables—The variable names cannot be edited, and the variable values are set by the VCF topology discovery feature.

· User-defined variables—The variable names and values are defined by the user. These variables include the username and password used to establish a connection with the RabbitMQ server, network type, and so on. The following are examples of user-defined variables:

#USERDEF

_underlayIPRange = 10.100.0.0/16

_master_spine_mac = 1122-3344-5566

_backup_spine_mac = aabb-ccdd-eeff

_username = aaa

_password = aaa

_rbacUserRole = network-admin

_neutron_username = openstack

_neutron_password = 12345678

_neutron_ip = 172.16.1.136

_loghost_ip = 172.16.1.136

_network_type = centralized-vxlan

…

· Static configurations—Static configurations are independent from the VCF fabric topology and can be directly executed. The following are examples of static configurations:

#STATICCFG

#

clock timezone beijing add 08:00:00

#

lldp global enable

#

stp global enable

#

…

· Dynamic configurations—Dynamic configurations are dependent on the VCF fabric topology. The device first obtains the topology information through LLDP and then executes dynamic configurations. The following are examples of dynamic configurations:

#

interface $$_underlayIntfDown

port link-mode route

ip address unnumbered interface LoopBack0

ospf 1 area 0.0.0.0

ospf network-type p2p

lldp management-address arp-learning

lldp tlv-enable basic-tlv management-address-tlv interface LoopBack0

#
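The user-defined variables in a template file are simple name and value pairs, so they can be checked before the file is placed on the TFTP server. The following Python sketch reads the #USERDEF section into a dictionary. It assumes that the section ends at the next line that starts with #, as in the examples in this section. The function name `parse_userdef` is hypothetical and is not part of the device software.

```python
from typing import Dict


def parse_userdef(template_text: str) -> Dict[str, str]:
    """Collect name = value pairs from the #USERDEF section of a template."""
    variables: Dict[str, str] = {}
    in_userdef = False
    for raw_line in template_text.splitlines():
        line = raw_line.strip()
        if line.startswith("#"):
            # Assumption: any line starting with # opens a new section.
            in_userdef = line == "#USERDEF"
            continue
        if not in_userdef or "=" not in line:
            continue
        name, value = line.split("=", 1)
        variables[name.strip()] = value.strip()
    return variables


SAMPLE = """#USERDEF
_underlayIPRange = 10.100.0.0/16
_network_type = centralized-vxlan
#STATICCFG
#
lldp global enable
"""

print(parse_userdef(SAMPLE))
```

A check such as `"_network_type" in parse_userdef(text)` can then catch a missing variable before a device downloads the file.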

VCF fabric tasks at a glance

To configure a VCF fabric, perform the following tasks:

· Configuring automated VCF fabric deployment

No configuration is required on the device for automated VCF fabric deployment. However, you must configure the DHCP server and the TFTP server so that the device can download and parse a template file to complete automated VCF fabric deployment.

· (Optional.) Adjust VCF fabric deployment

If the device cannot obtain or parse the template file to complete automated VCF fabric deployment, choose the following tasks as needed:

◦ Enabling VCF fabric topology discovery

◦ Configuring automated underlay network deployment

◦ Configuring automated overlay network deployment

Configuring automated VCF fabric deployment

Restrictions and guidelines

On a campus network, links between two access nodes cascaded through GigabitEthernet interfaces and links between leaf nodes and access nodes are automatically aggregated. For links between spine nodes and leaf nodes, the trunk permit vlan command is automatically executed.

Do not perform link migration when devices in the VCF fabric are in the process of coming online or powering down after the automated VCF fabric deployment finishes. Otherwise, link-related configuration might fail to update.

The version format of a template file for automated VCF fabric deployment is x.y. Only the x part is examined during a version compatibility check. For successful automated deployment, make sure x in the version of the template file to be used is not greater than x in the supported version. To display the supported version of the template file for automated VCF fabric deployment, use the display vcf-fabric underlay template-version command.
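The compatibility rule above can be written as a short check. This is a minimal Python sketch under the stated rule only (compare the x parts and ignore y). The function name `template_version_compatible` is hypothetical, and the version strings are sample values.

```python
def template_version_compatible(template_version: str, supported_version: str) -> bool:
    """Return True if the template's x part is not greater than the supported x part.

    Versions use the x.y format. Only x is compared, as described in the
    restrictions above.
    """
    template_major = int(template_version.split(".")[0])
    supported_major = int(supported_version.split(".")[0])
    return template_major <= supported_major


print(template_version_compatible("1.5", "1.0"))
print(template_version_compatible("2.0", "1.9"))
```

The first call passes because only the x part (1 and 1) is compared. The second fails because 2 is greater than 1.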

If the template file does not include IRF configurations, the device does not save the configurations after executing all configurations in the template file. To save the configurations, use the save command.

Two devices with the same role can automatically set up an IRF fabric only when the IRF physical interfaces on the devices are connected.

Two IRF member devices in an IRF fabric use the following rules to elect the IRF master during automated VCF fabric deployment:

· If the uptime of both devices is shorter than two hours, the device with the higher bridge MAC address becomes the IRF master.

· If the uptime of one device is equal to or longer than two hours, that device becomes the IRF master.

· If the uptime of both devices is equal to or longer than two hours, the IRF fabric cannot be set up. You must manually reboot one of the member devices. The rebooted device will become the IRF subordinate.
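The election rules above can be summarized in a small decision function. The following Python sketch is an illustration only, not the device implementation. The function names and the sample MAC addresses are hypothetical, and MAC addresses are assumed to use the xxxx-xxxx-xxxx notation that appears in the template file examples.

```python
from typing import Optional

TWO_HOURS_SECONDS = 2 * 60 * 60


def mac_to_int(mac: str) -> int:
    """Convert a MAC address in xxxx-xxxx-xxxx notation to an integer."""
    return int(mac.replace("-", ""), 16)


def elect_irf_master(uptime_a: int, mac_a: str, uptime_b: int, mac_b: str) -> Optional[str]:
    """Return "a" or "b" for the elected IRF master, or None if no fabric can form."""
    a_long = uptime_a >= TWO_HOURS_SECONDS
    b_long = uptime_b >= TWO_HOURS_SECONDS
    if a_long and b_long:
        # Both devices have been up for two hours or longer: manual reboot required.
        return None
    if a_long:
        return "a"
    if b_long:
        return "b"
    # Both uptimes are shorter than two hours: higher bridge MAC address wins.
    return "a" if mac_to_int(mac_a) > mac_to_int(mac_b) else "b"


print(elect_irf_master(600, "1122-3344-5566", 900, "aabb-ccdd-eeff"))
```

In the sample call both devices have been up for less than two hours, so the device with the higher bridge MAC address (device b) is elected.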

If the IRF member ID of a device is not 1, the IRF master might reboot during automatic IRF fabric setup.

Procedure

1. Finish the underlay network planning (such as IP address assignment, reliability design, and routing deployment) based on user requirements.

2. Configure the DHCP server.

Configure the IP address of the device, the IP address of the TFTP server, and names of template files saved on the TFTP server. For more information, see the user manual of the DHCP server.

3. Configure the TFTP server.

Create template files and save the template files to the TFTP server.

The H3C DR1000 ADCAM network management software can automatically create template files and save the files to the TFTP server. If no H3C DR1000 ADDC or ADCAM is available on the network, you must manually create template files and save the files to the TFTP server. For more information about template files, see "Template file."

4. (Optional.) Configure the NTP server.

5. Connect the device to the VCF fabric and start the device.

After startup, the device uses a management Ethernet interface or VLAN-interface 4094 to connect to the fabric management network. Then, it downloads the template file corresponding to its device role and parses the template file to complete automated VCF fabric deployment.

6. (Optional.) Save the deployed configuration.

If the template file does not include IRF configurations, the device will not save the configurations after executing all configurations in the template file. To save the configurations, use the save command. For more information about this command, see configuration file management commands in Fundamentals Command Reference.

Enabling VCF fabric topology discovery

1. Enter system view.

system-view

2. Enable LLDP globally.

lldp global enable

By default, LLDP is globally disabled.

You must enable LLDP globally before you enable VCF fabric topology discovery, because the device needs LLDP to collect topology data of directly-connected devices.

3. Enable VCF fabric topology discovery.

vcf-fabric topology enable

By default, VCF fabric topology discovery is disabled.

Configuring automated underlay network deployment

Specifying the template file for automated underlay network deployment

1. Enter system view.

system-view

2. Specify the template file for automated underlay network deployment.

vcf-fabric underlay autoconfigure template

By default, no template file is specified for automated underlay network deployment.

Specifying the role of the device in the VCF fabric

About specifying the role of the device in the VCF fabric

Perform this task to change the role of the device in the VCF fabric.

Restrictions and guidelines

If the device completes automated underlay network deployment by automatically downloading and parsing a template file, reboot the device after you change the device role. In this way, the device can obtain the template file corresponding to the new role and complete the automated underlay network deployment.

Procedure

1. Enter system view.

system-view

2. Specify the role of the device in the VCF fabric.

vcf-fabric role { access | leaf | spine }

By default, the device is a leaf node.

3. Return to user view.

quit

4. Reboot the device.

reboot

For the new role to take effect, you must reboot the device.

Configuring the device as a master spine node

About the master spine node

If multiple spine nodes exist on a VCF fabric, you must configure a device as the master spine node to collect the topology for the entire VCF fabric network.

Procedure

1. Enter system view.

system-view

2. Configure the device as a master spine node.

vcf-fabric spine-role master

By default, the device is not a master spine node.

Pausing automated underlay network deployment

About pausing automated underlay network deployment

If you pause automated underlay network deployment, the VCF fabric will save the current status of the device. It will not respond to new LLDP events, set up the IRF fabric, aggregate links, or discover uplink or downlink interfaces.

Perform this task if all devices in the VCF fabric complete automated deployment and new devices are to be added to the VCF fabric.

Procedure

1. Enter system view.

system-view

2. Pause automated underlay network deployment.

vcf-fabric underlay pause

By default, automated underlay network deployment is not paused.

Configuring automated overlay network deployment

Restrictions and guidelines for automated overlay network deployment

If the network type is VLAN or VXLAN with a centralized IP gateway, perform this task on both the spine node and the leaf nodes.

If the network type is VXLAN with distributed IP gateways, perform this task on leaf nodes.

As a best practice, do not perform any of the following tasks while the device is communicating with a RabbitMQ server:

· Change the source IPv4 address for the device to communicate with RabbitMQ servers.

· Bring up or shut down a port connected to the RabbitMQ server.

If you do so, it will take the CLI a long time to respond to the l2agent enable, undo l2agent enable, l3agent enable, or undo l3agent enable command.

Automated overlay network deployment tasks at a glance

To configure automated overlay network deployment, perform the following tasks:

1. Configuring parameters for the device to communicate with RabbitMQ servers

2. Specifying the network type

3. Enabling L2 agent

On a VLAN network or a VXLAN network with a centralized IP gateway, perform this task on both spine nodes and leaf nodes.

On a VXLAN network with distributed IP gateways, perform this task only on leaf nodes.

4. Enabling L3 agent

On a VLAN network or a VXLAN network with a centralized IP gateway, perform this task only on spine nodes.

On a VXLAN network with distributed IP gateways, perform this task only on leaf nodes.

5. Configuring the border node

Perform this task only when the device is the border node.

6. (Optional.) Enabling local proxy ARP

7. (Optional.) Configuring the MAC address of VSI interfaces

Prerequisites for automated overlay network deployment

Before you configure automated overlay network deployment, you must complete the following tasks:

1. Install OpenStack Neutron components and plugins on the controller node in the VCF fabric.

2. Install OpenStack Nova components, openvswitch, and neutron-ovs-agent on compute nodes in the VCF fabric.

3. Make sure LLDP and automated VCF fabric topology discovery are enabled.

Configuring parameters for the device to communicate with RabbitMQ servers

About parameters for the device to communicate with RabbitMQ servers

In the VCF fabric, the device communicates with the Neutron server through RabbitMQ servers. You must specify the IP address, login username, login password, and listening port for the device to communicate with RabbitMQ servers.

Restrictions and guidelines

Make sure the RabbitMQ server settings on the device are the same as those on the controller node. If the durable attribute of RabbitMQ queues is set on the Neutron server, you must enable creation of RabbitMQ durable queues on the device so that RabbitMQ queues can be correctly created.

When you set the RabbitMQ server parameters or remove the settings, make sure the device has a reachable route to the RabbitMQ server. Otherwise, the CLI does not respond until the TCP connection between the device and the RabbitMQ server is terminated.

Multiple virtual hosts might exist on the RabbitMQ server. Each virtual host can independently provide RabbitMQ services for the device. For the device to correctly communicate with the Neutron server, specify the same virtual host on the device and the Neutron server.

Procedure

1. Enter system view.

system-view

2. Enable Neutron and enter Neutron view.

neutron

By default, Neutron is disabled.

3. Specify the IPv4 address, port number, and MPLS L3VPN instance of a RabbitMQ server.

rabbit host ip ipv4-address [ port port-number ] [ vpn-instance vpn-instance-name ]

By default, no IPv4 address or MPLS L3VPN instance of a RabbitMQ server is specified, and the port number of a RabbitMQ server is 5672.

4. Specify the source IPv4 address for the device to communicate with RabbitMQ servers.

rabbit source-ip ipv4-address [ vpn-instance vpn-instance-name ]

By default, no source IPv4 address is specified for the device to communicate with RabbitMQ servers. The device automatically selects a source IPv4 address through the routing protocol to communicate with RabbitMQ servers.

5. (Optional.) Enable creation of RabbitMQ durable queues.

rabbit durable-queue enable

By default, RabbitMQ non-durable queues are created.

6. Configure the username for the device to establish a connection with a RabbitMQ server.

rabbit user username

By default, the device uses username guest to establish a connection with a RabbitMQ server.

7. Configure the password for the device to establish a connection with a RabbitMQ server.

rabbit password { cipher | plain } string

By default, the device uses plaintext password guest to establish a connection with a RabbitMQ server.

8. Specify a virtual host to provide RabbitMQ services.

rabbit virtual-host hostname

By default, the virtual host / provides RabbitMQ services for the device.

9. Specify the username and password for the device to deploy configurations through RESTful.

restful user username password { cipher | plain } password

By default, no username or password is configured for the device to deploy configurations through RESTful.
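The procedure above can be turned into a command list for review before the commands are entered on a device. The following Python sketch only assembles commands that appear in this procedure. The helper name `rabbitmq_cli_commands` is hypothetical, the sample values are taken from the template file example earlier in this guide, and the plaintext password form is used only for illustration. The RESTful user step is omitted.

```python
from typing import List, Optional

DEFAULT_PORT = 5672       # Default RabbitMQ port stated in this procedure.
DEFAULT_VHOST = "/"       # Default virtual host stated in this procedure.


def rabbitmq_cli_commands(
    host_ip: str,
    username: str,
    password: str,
    port: int = DEFAULT_PORT,
    source_ip: Optional[str] = None,
    virtual_host: str = DEFAULT_VHOST,
    durable_queues: bool = False,
) -> List[str]:
    """Build the command sequence for steps 1 through 8 of the procedure."""
    host_command = f"rabbit host ip {host_ip}"
    if port != DEFAULT_PORT:
        host_command += f" port {port}"
    commands = ["system-view", "neutron", host_command]
    if source_ip is not None:
        commands.append(f"rabbit source-ip {source_ip}")
    if durable_queues:
        commands.append("rabbit durable-queue enable")
    commands.append(f"rabbit user {username}")
    commands.append(f"rabbit password plain {password}")
    if virtual_host != DEFAULT_VHOST:
        commands.append(f"rabbit virtual-host {virtual_host}")
    return commands


for command in rabbitmq_cli_commands("172.16.1.136", "openstack", "12345678"):
    print(command)
```

Optional commands are emitted only when a value differs from the default, so the generated list stays close to what an administrator would type.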

Specifying the network type

About network types

After you change the network type of the VCF fabric where the device resides, Neutron deploys new configuration to all devices according to the new network type.

Procedure

1. Enter system view.

system-view

2. Enter Neutron view.

neutron

3. Specify the network type.

network-type { centralized-vxlan | distributed-vxlan | vlan }

By default, the network type is VLAN.

Enabling L2 agent

About L2 agent

Layer 2 agent (L2 agent) responds to OpenStack events such as network creation, subnet creation, and port creation. It deploys Layer 2 networking to provide Layer 2 connectivity within a virtual network and Layer 2 isolation between different virtual networks.

Restrictions and guidelines

On a VLAN network or a VXLAN network with a centralized IP gateway, perform this task on both spine nodes and leaf nodes.

On a VXLAN network with distributed IP gateways, perform this task only on leaf nodes.

Procedure

1. Enter system view.

system-view

2. Enter Neutron view.

neutron

3. Enable the L2 agent.

l2agent enable

By default, the L2 agent is disabled.

Enabling L3 agent

About L3 agent

Layer 3 agent (L3 agent) responds to OpenStack events such as virtual router creation, interface creation, and gateway configuration. It deploys the IP gateways to provide Layer 3 forwarding services for VMs.

Restrictions and guidelines

On a VLAN network or a VXLAN network with a centralized IP gateway, perform this task only on spine nodes.

On a VXLAN network with distributed IP gateways, perform this task only on leaf nodes.

Procedure

1. Enter system view.

system-view

2. Enter Neutron view.

neutron

3. Enable the L3 agent.

l3agent enable

By default, the L3 agent is disabled.
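The placement rules for the L2 agent and the L3 agent can be condensed into a lookup table. The following Python sketch encodes only the restrictions stated in the two agent sections. The names `AGENT_PLACEMENT` and `agents_to_enable` are hypothetical. Access nodes are not covered because the restrictions do not mention them.

```python
from typing import Dict, FrozenSet, List, Tuple

# (network type, device role) -> agents to enable, per the restrictions in this guide.
AGENT_PLACEMENT: Dict[Tuple[str, str], FrozenSet[str]] = {
    ("vlan", "spine"): frozenset({"l2agent", "l3agent"}),
    ("vlan", "leaf"): frozenset({"l2agent"}),
    ("centralized-vxlan", "spine"): frozenset({"l2agent", "l3agent"}),
    ("centralized-vxlan", "leaf"): frozenset({"l2agent"}),
    ("distributed-vxlan", "spine"): frozenset(),
    ("distributed-vxlan", "leaf"): frozenset({"l2agent", "l3agent"}),
}


def agents_to_enable(network_type: str, role: str) -> List[str]:
    """Return the sorted agent names to enable, or raise KeyError for an unknown pair."""
    return sorted(AGENT_PLACEMENT[(network_type, role)])


print(agents_to_enable("centralized-vxlan", "spine"))
print(agents_to_enable("distributed-vxlan", "spine"))
```

The network type keys reuse the keywords of the network-type command, so a value read from a template file can be passed in directly.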

Configuring the border node

About the border node

On a VXLAN network with a centralized IP gateway or on a VLAN network, configure a spine node as the border node. On a VXLAN network with distributed IP gateways, configure a leaf node as the border node.

You can use the following methods to configure the IP address of the border gateway:

· Manually specify the IP address of the border gateway.

· Enable the border node service on the border gateway and create the external network and routers on the OpenStack Dashboard. Then, VCF fabric automatically deploys the routing configuration to the device to implement connectivity between tenant networks and the external network.

If the manually specified IP address is different from the IP address assigned by VCF fabric, the IP address assigned by VCF fabric takes effect.

The border node connects to the external network through an interface which belongs to the global VPN instance. For the traffic from the external network to reach a tenant network, the border node needs to add the routes of the tenant VPN instance into the routing table of the global VPN instance. You must configure export route targets of the tenant VPN instance as import route targets of the global VPN instance. This setting enables the global VPN instance to import routes of the tenant VPN instance.

Procedure

1. Enter system view.

system-view

2. Enter Neutron view.

neutron

3. Enable the border node service.

border enable

By default, the device is not a border node.

4. (Optional.) Specify the IPv4 address of the border gateway.

gateway ip ipv4-address

By default, the IPv4 address of the border gateway is not specified.

5. Configure export route targets for a tenant VPN instance.

vpn-target target export-extcommunity

By default, no export route targets are configured for a tenant VPN instance.

6. (Optional.) Configure import route targets for a tenant VPN instance.

vpn-target target import-extcommunity

By default, no import route targets are configured for a tenant VPN instance.

Enabling local proxy ARP

About local proxy ARP

This feature enables the device to use the MAC address of VSI interfaces to answer ARP requests for MAC addresses of VMs on a different site from the requesting VMs.

Restrictions and guidelines

Perform this task only on leaf nodes on a VXLAN network with distributed IP gateways.

This configuration takes effect on VSI interfaces that are created after the proxy-arp enable command is executed. It does not take effect on existing VSI interfaces.

Procedure

1. Enter system view.

system-view

2. Enter Neutron view.

neutron

3. Enable local proxy ARP.

proxy-arp enable

By default, local proxy ARP is disabled.

Configuring the MAC address of VSI interfaces

About configuring the MAC address of VSI interfaces

After you perform this task, VCF fabric assigns the MAC address to all VSI interfaces newly created by automated overlay network deployment on the device.

Restrictions and guidelines

Perform this task only on leaf nodes on a VXLAN network with distributed IP gateways.

This configuration takes effect only on VSI interfaces newly created after this command is executed.

Procedure

1. Enter system view.

system-view

2. Enter Neutron view.

neutron

3. Configure the MAC address of VSI interfaces.

vsi-mac mac-address

By default, no MAC address is configured for VSI interfaces.

Display and maintenance commands for VCF fabric

Execute display commands in any view.

| Task | Command |
|---|---|
| Display the role of the device in the VCF fabric. | display vcf-fabric role |
| Display VCF fabric topology information. | display vcf-fabric topology |
| Display information about automated underlay network deployment. | display vcf-fabric underlay autoconfigure |
| Display the supported version and the current version of the template file for automated VCF fabric provisioning. | display vcf-fabric underlay template-version |

Automated VCF fabric deployment configuration examples

Example: Configuring automated VCF fabric deployment

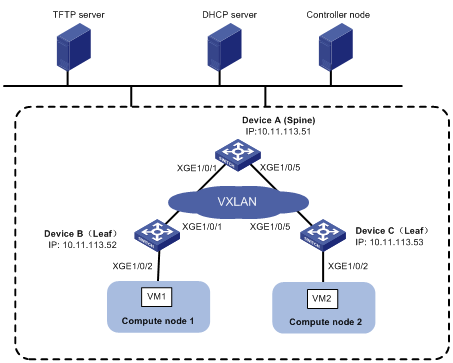

Network configuration

As shown in Figure 4, Devices A, B, and C all connect to the TFTP server and the DHCP server through management Ethernet interfaces. VM 1 resides on Compute node 1. VM 2 resides on Compute node 2. The controller node runs OpenStack Kilo on the Ubuntu 14.04 LTS operating system.

Configure a VCF fabric to meet the following requirements:

· The VCF fabric is a VXLAN network deployed on spine node Device A and leaf nodes Device B and Device C to provide connectivity between VM 1 and VM 2. Device A acts as a centralized VXLAN IP gateway.

· Devices A, B, and C complete automated underlay network deployment by using template files after they start up.

· Devices A, B, and C complete automated overlay network deployment after the controller is configured.

· The DHCP server dynamically assigns IP addresses on subnet 10.11.113.0/24.

Configuring the DHCP server

Perform the following tasks on the DHCP server:

1. Configure a DHCP address pool to dynamically assign IP addresses on subnet 10.11.113.0/24 to the devices.

2. Specify 10.11.113.19/24 as the IP address of the TFTP server.

3. Specify a template file (a file with the file extension .template) as the boot file.

Creating template files

Create template files and upload them to the TFTP server.

Typically, a template file includes the following contents:

· System-predefined variables—Internally used by the system. User-defined variables cannot be the same as system-predefined variables.

· User-defined variables—Defined by the user. User-defined variables include the following:

◦ Basic settings: Local username and password, user role, and so on.

◦ Neutron server settings: IP address of the Neutron server, the username and password for establishing a connection with the Neutron server, and so on.

· Software images for upgrade and the URL to download the software images.

· Configuration commands—Include commands independent from the topology (such as LLDP, NTP, and SNMP) and commands dependent on the topology (such as interfaces and Neutron settings).

Configuring the TFTP server

Place the template files on the TFTP server. In this example, both a spine node and leaf nodes exist on the VXLAN network, so two template files (vxlan_spine.template and vxlan_leaf.template) are required.

Powering up Device A, Device B, and Device C

After starting up without loading configuration, Device A, Device B, and Device C each automatically downloads a template file to finish automated underlay network deployment. In this example, Device A downloads the template file vxlan_spine.template, and Device B and Device C download the template file vxlan_leaf.template.

Configuring the controller node

1. Install OpenStack Neutron related components:

a. Install Neutron, Image, Dashboard, Networking, and RabbitMQ.

b. Install H3C ML2 Driver. For more information, see H3C Neutron ML2 Driver Installation Guide.

c. Configure LLDP.

2. Configure the network as a VXLAN network:

Edit the /etc/neutron/plugins/ml2/ml2_conf.ini file as follows:

a. Add the h3c_vxlan type driver to the type driver list.

type_drivers = h3c_vxlan

b. Add h3c to the mechanism driver list.

mechanism_drivers = openvswitch, h3c

c. Specify h3c_vxlan as the default tenant network type.

tenant_network_types=h3c_vxlan

d. Add the [ml2_type_h3c_vxlan] section, and specify a VXLAN ID range in the format of vxlan-id1:vxlan-id2. The value range for VXLAN IDs is 0 to 16777215.

[ml2_type_h3c_vxlan]

vni_ranges = 10000:60000

3. Configure the database:

[openstack@localhost ~]$ sudo h3c_config db_sync

4. Restart the Neutron server:

[root@localhost ~]# service neutron-server restart
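Before restarting the Neutron server, the VXLAN ID range in the [ml2_type_h3c_vxlan] section can be checked against the 0 to 16777215 value range. The following Python sketch uses the standard configparser module. The function name `parse_vni_ranges` is hypothetical, and support for a comma-separated list of ranges is an assumption that this guide does not confirm.

```python
import configparser
from typing import List, Tuple

MAX_VXLAN_ID = 16777215  # Upper bound of the VXLAN ID value range stated in this guide.


def parse_vni_ranges(ini_text: str) -> List[Tuple[int, int]]:
    """Read vni_ranges from the [ml2_type_h3c_vxlan] section and validate each range."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    raw_value = parser.get("ml2_type_h3c_vxlan", "vni_ranges")
    ranges: List[Tuple[int, int]] = []
    # Assumption: multiple ranges, if any, are separated by commas.
    for item in raw_value.split(","):
        low_text, high_text = item.strip().split(":")
        low, high = int(low_text), int(high_text)
        if not 0 <= low <= high <= MAX_VXLAN_ID:
            raise ValueError(f"invalid VXLAN ID range: {item.strip()}")
        ranges.append((low, high))
    return ranges


SAMPLE_INI = """[ml2_type_h3c_vxlan]
vni_ranges = 10000:60000
"""

print(parse_vni_ranges(SAMPLE_INI))
```

A range that is reversed or that exceeds the upper bound raises ValueError, which makes a typo visible before the Neutron server is restarted.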

Configuring the compute nodes

Perform the following tasks on both Compute node 1 and Compute node 2.

1. Install the OpenStack Nova related components, openvswitch, and neutron-ovs-agent.

2. Create an OVS bridge named br-vlan and add interface Ethernet 0 (connected to the VCF fabric) to the bridge.

# ovs-vsctl add-br br-vlan

# ovs-vsctl add-port br-vlan eth0

3. Modify the bridge mapping on the OVS agent:

# Edit the /etc/neutron/plugins/ml2/openvswitch_agent.ini file as follows:

[ovs]

bridge_mappings = vlanphy:br-vlan

4. Restart the neutron-openvswitch-agent.

# service neutron-openvswitch-agent restart

Verifying the OpenStack environment

Perform the following tasks on the OpenStack dashboard.

1. Create a network named Network.

2. Create subnets:

# Create a subnet named subnet-1, and assign network address range 10.10.1.0/24 to the subnet. (Details not shown.)

# Create a subnet named subnet-2, and assign network address range 10.1.1.0/24 to the subnet. (Details not shown.)

In this example, VM 1 and VM 2 obtain IP addresses from the DHCP server. You must enable DHCP for the subnets.

3. Create a router named router. Bind a port on the router with subnet subnet-1 and then bind another port with subnet subnet-2. (Details not shown.)

4. Create VMs:

# Create VM 1 and VM 2 on Compute node 1 and Compute node 2, respectively. (Details not shown.)

In this example, VM 1 and VM 2 obtain IP addresses 10.10.1.3/24 and 10.1.1.3/24 from the DHCP server, respectively.
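The addressing in this example can be checked with Python's standard ipaddress module. The sketch below uses only the subnets and VM addresses given above. The dictionary layout and the helper name subnet_of are illustrative assumptions.

```python
import ipaddress

# Subnets and VM addresses taken from this example.
SUBNETS = {
    "subnet-1": ipaddress.ip_network("10.10.1.0/24"),
    "subnet-2": ipaddress.ip_network("10.1.1.0/24"),
}
VMS = {
    "VM 1": ipaddress.ip_interface("10.10.1.3/24"),
    "VM 2": ipaddress.ip_interface("10.1.1.3/24"),
}


def subnet_of(interface):
    """Return the name of the subnet that an interface address belongs to."""
    for name, network in SUBNETS.items():
        if interface.network == network:
            return name
    return None


for vm, interface in VMS.items():
    print(vm, "is in", subnet_of(interface))

# The two subnets do not overlap, so traffic between the VMs must be routed.
print(SUBNETS["subnet-1"].overlaps(SUBNETS["subnet-2"]))
```

Because VM 1 and VM 2 sit in different, non-overlapping subnets, they can reach each other only through the router created in step 3.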

Verifying the configuration

1. Verifying the collected topology of the underlay network

# Display VCF fabric topology information on Device A.

[DeviceA] display vcf-fabric topology

Topology Information

----------------------------------------------------------------------------------

* indicates the master spine role among all spines

SpineIP          Interface                   Link   LeafIP          Status

*10.11.113.51    Ten-GigabitEthernet1/0/1    Up     10.11.113.52    Deploying

                 Ten-GigabitEthernet1/0/2    Down   --              --

                 Ten-GigabitEthernet1/0/3    Down   --              --

                 Ten-GigabitEthernet1/0/4    Down   --              --

                 Ten-GigabitEthernet1/0/5    Up     10.11.113.53    Deploying
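If you collect this output in a script, the table can be turned into records. The following is a minimal sketch that assumes the column layout shown in the sample output above (an optional spine IP, then interface, link state, leaf IP, and status). The function name parse_topology and the record keys are hypothetical.

```python
LINK_STATES = ("Up", "Down")

SAMPLE = """\
SpineIP Interface Link LeafIP Status
*10.11.113.51 Ten-GigabitEthernet1/0/1 Up 10.11.113.52 Deploying
Ten-GigabitEthernet1/0/2 Down -- --
Ten-GigabitEthernet1/0/3 Down -- --
Ten-GigabitEthernet1/0/4 Down -- --
Ten-GigabitEthernet1/0/5 Up 10.11.113.53 Deploying
"""


def parse_topology(text):
    """Return (master_spine_ip, links) parsed from the topology table."""
    master = None
    spine = None
    links = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 5 and fields[2] in LINK_STATES:
            # Row that starts a new spine; '*' marks the master spine.
            spine_field = fields.pop(0)
            spine = spine_field.lstrip("*")
            if spine_field.startswith("*"):
                master = spine
        elif not (len(fields) == 4 and fields[1] in LINK_STATES):
            continue  # header, separator, or legend line
        interface, link, leaf, status = fields
        links.append({
            "spine": spine,
            "interface": interface,
            "link": link,
            "leaf": None if leaf == "--" else leaf,
            "status": None if status == "--" else status,
        })
    return master, links


master, links = parse_topology(SAMPLE)
print("master spine:", master)
for entry in links:
    if entry["link"] == "Up":
        print(entry["interface"], "->", entry["leaf"], entry["status"])
```

In this example, the two interfaces that are up lead to the leaf nodes 10.11.113.52 and 10.11.113.53.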

2. Verifying the automated configuration for the underlay network

# Display information about automated underlay network deployment on Device A.

[DeviceA] display vcf-fabric underlay autoconfigure

success command:

#

system

clock timezone beijing add 08:00:00

#

system

lldp global enable

#

system

stp global enable

#

system

ospf 1

graceful-restart ietf

area 0.0.0.0

#

system

interface LoopBack0

#

system

ip vpn-instance global

route-distinguisher 1:1

vpn-target 1:1 import-extcommunity

#

system

l2vpn enable

#

system

vxlan tunnel mac-learning disable

vxlan tunnel arp-learning disable

#

system

ntp-service enable

ntp-service unicast-peer 10.11.113.136

#

system

netconf soap http enable

netconf soap https enable

restful http enable

restful https enable

#

system

ip http enable

ip https enable

#

system

telnet server enable

#

system

info-center loghost 10.11.113.136

#

system

local-user aaa

password ******

service-type telnet http https

service-type ssh

authorization-attribute user-role network-admin

#

system

line vty 0 63

authentication-mode scheme

user-role network-admin

#

system

bgp 100

graceful-restart

address-family l2vpn evpn

undo policy vpn-target

#

system

vcf-fabric topology enable

#

system

neutron

rabbit user openstack

rabbit password ******

rabbit host ip 10.11.113.136

restful user aaa password ******

network-type centralized-vxlan

vpn-target 1:1 export-extcommunity

l2agent enable

l3agent enable

#

system

snmp-agent

snmp-agent community read public

snmp-agent community write private

snmp-agent sys-info version all

#interface up-down:

Ten-GigabitEthernet1/0/1

Ten-GigabitEthernet1/0/5

Loopback0 IP Allocation:

DEV_MAC           LOOPBACK_IP     MANAGE_IP       STATE

a43c-adae-0400    10.100.16.17    10.11.113.53    up

a43c-9aa7-0100    10.100.16.15    10.11.113.51    up

a43c-a469-0300    10.100.16.16    10.11.113.52    up

IRF Allocation:

Self Bridge Mac: a43c-9aa7-0100

IRF Status: No

Member List: [1]

bgp configure peer:

10.100.16.17

10.100.16.16
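In the output above, the BGP peers configured on Device A are the Loopback0 addresses allocated to the other two devices. The following sketch cross-checks the two parts of the output. Treat that relationship as an inference from this sample rather than a documented rule. The function name expected_peers is hypothetical.

```python
# Rows copied from the "Loopback0 IP Allocation" part of the output.
ALLOCATION = """\
a43c-adae-0400 10.100.16.17 10.11.113.53 up
a43c-9aa7-0100 10.100.16.15 10.11.113.51 up
a43c-a469-0300 10.100.16.16 10.11.113.52 up
"""
SELF_BRIDGE_MAC = "a43c-9aa7-0100"              # "Self Bridge Mac" in the output
BGP_PEERS = ["10.100.16.17", "10.100.16.16"]    # "bgp configure peer" in the output


def expected_peers(allocation_text, self_mac):
    """Return the Loopback0 addresses of all other devices in state 'up'."""
    peers = set()
    for line in allocation_text.splitlines():
        fields = line.split()
        if len(fields) != 4:
            continue  # skip blank or unexpected lines
        mac, loopback_ip, _manage_ip, state = fields
        if mac != self_mac and state == "up":
            peers.add(loopback_ip)
    return peers


print(expected_peers(ALLOCATION, SELF_BRIDGE_MAC) == set(BGP_PEERS))
```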

3. Verifying the automated deployment for the overlay network

# Display the running configuration for the current VSI on Device A.

[DeviceA] display current-configuration configuration vsi

#

vsi vxlan10071

gateway vsi-interface 8190

vxlan 10071

evpn encapsulation vxlan

route-distinguisher auto

vpn-target auto export-extcommunity

vpn-target auto import-extcommunity

#

return

[DeviceA] display current-configuration interface Vsi-interface

#

interface Vsi-interface8190

ip binding vpn-instance neutron-1024

ip address 10.1.1.1 255.255.255.0 sub

ip address 10.10.1.1 255.255.255.0 sub

#

return

[DeviceA] display ip vpn-instance

Total VPN-Instances configured : 1

VPN-Instance Name    RD           Create time

neutron-1024         1024:1024    2016/03/12 00:25:59
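The overlay output can be tied back to the controller and dashboard settings. The sketch below checks that VXLAN ID 10071 falls within the vni_ranges value configured on the controller node, and that the addresses on Vsi-interface8190 cover the two subnets created on the dashboard. The helper names are hypothetical, and the input values are copied from this example.

```python
import ipaddress


def vni_in_ranges(vni, vni_ranges):
    """Return True if vni falls in a 'low:high[,low:high...]' range list."""
    for item in vni_ranges.split(","):
        low, high = (int(part) for part in item.strip().split(":"))
        if low <= vni <= high:
            return True
    return False


def interface_networks(config_lines):
    """Collect the networks of all 'ip address <ip> <mask> ...' lines."""
    networks = set()
    for line in config_lines:
        fields = line.split()
        if fields[:2] == ["ip", "address"] and len(fields) >= 4:
            interface = ipaddress.ip_interface(f"{fields[2]}/{fields[3]}")
            networks.add(interface.network)
    return networks


VSI_INTERFACE = [
    "interface Vsi-interface8190",
    "ip binding vpn-instance neutron-1024",
    "ip address 10.1.1.1 255.255.255.0 sub",
    "ip address 10.10.1.1 255.255.255.0 sub",
]
SUBNETS = {
    ipaddress.ip_network("10.10.1.0/24"),  # subnet-1
    ipaddress.ip_network("10.1.1.0/24"),   # subnet-2
}

print(vni_in_ranges(10071, "10000:60000"))
print(interface_networks(VSI_INTERFACE) == SUBNETS)
```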

4. Verifying the connectivity between VM 1 and VM 2

# Ping VM 2 on Compute node 2 from VM 1 on Compute node 1.

$ ping 10.1.1.3

Ping 10.1.1.3 (10.1.1.3): 56 data bytes, press CTRL_C to break

56 bytes from 10.1.1.3: icmp_seq=0 ttl=254 time=10.000 ms

56 bytes from 10.1.1.3: icmp_seq=1 ttl=254 time=4.000 ms

56 bytes from 10.1.1.3: icmp_seq=2 ttl=254 time=4.000 ms

56 bytes from 10.1.1.3: icmp_seq=3 ttl=254 time=3.000 ms

56 bytes from 10.1.1.3: icmp_seq=4 ttl=254 time=3.000 ms

--- Ping statistics for 10.1.1.3 ---

5 packet(s) transmitted, 5 packet(s) received, 0.0% packet loss

round-trip min/avg/max/std-dev = 3.000/4.800/10.000/2.638 ms
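The statistics line can be reproduced from the five replies above. The following sketch extracts the round-trip times and recomputes the summary. It uses the population standard deviation, which matches the std-dev value in this sample. That the device computes it this way is an inference from the numbers, not a documented fact. The function name rtt_summary is hypothetical.

```python
import re
import statistics

PING_OUTPUT = """\
56 bytes from 10.1.1.3: icmp_seq=0 ttl=254 time=10.000 ms
56 bytes from 10.1.1.3: icmp_seq=1 ttl=254 time=4.000 ms
56 bytes from 10.1.1.3: icmp_seq=2 ttl=254 time=4.000 ms
56 bytes from 10.1.1.3: icmp_seq=3 ttl=254 time=3.000 ms
56 bytes from 10.1.1.3: icmp_seq=4 ttl=254 time=3.000 ms
"""


def rtt_summary(ping_output):
    """Return (min, avg, max, std_dev) in ms, each rounded to 3 decimals."""
    times = [float(t) for t in re.findall(r"time=([\d.]+) ms", ping_output)]
    if not times:
        raise ValueError("no replies found in ping output")
    summary = (
        min(times),
        statistics.mean(times),
        max(times),
        statistics.pstdev(times),  # population standard deviation
    )
    return tuple(round(value, 3) for value in summary)


print(rtt_summary(PING_OUTPUT))  # (3.0, 4.8, 10.0, 2.638)
```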