Feature and software version compatibility

Specifying the label type advertised by the egress

Enabling sending of MPLS TTL-expired messages

Enabling SNMP notifications for MPLS

Displaying and maintaining MPLS

Feature and software version compatibility

Static LSP configuration example

Configuration restrictions and guidelines

Label distribution and control

Feature and software version compatibility

Configuring LDP session parameters

Configuring LDP MD5 authentication

Configuring an LSP generation policy

Configuring the LDP label distribution control mode

Configuring a label advertisement policy·

Configuring a label acceptance policy

Configuring LDP loop detection

Configuring LDP-IGP synchronization

Configuring LDP-OSPF synchronization

Configuring LDP-ISIS synchronization

Enabling SNMP notifications for LDP

Displaying and maintaining LDP

Label acceptance control configuration example

Label advertisement control configuration example

Automatic bandwidth adjustment

Feature and software version compatibility

MPLS TE configuration task list

Configuring a tunnel interface

Configuring an MPLS TE tunnel to use a static CRLSP

Configuring an MPLS TE tunnel to use a dynamic CRLSP

Configuring MPLS TE attributes for a link

Advertising link TE attributes by using IGP TE extension

Configuring MPLS TE tunnel constraints

Establishing an MPLS TE tunnel by using RSVP-TE

Controlling CRLSP path selection

Controlling MPLS TE tunnel setup

Configuring traffic forwarding

Configuring static routing to direct traffic to an MPLS TE tunnel

Configuring automatic route advertisement to direct traffic to an MPLS TE tunnel

Configuring a bidirectional MPLS TE tunnel

Configuring a bypass tunnel on the PLR

Configuring node fault detection

Configuring the optimal bypass tunnel selection interval

Displaying and maintaining MPLS TE·

MPLS TE configuration examples

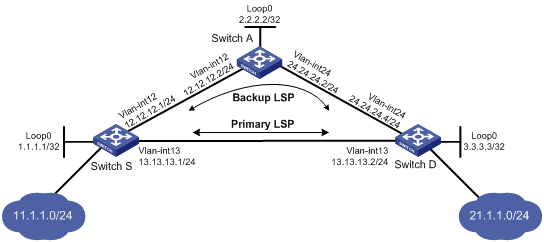

Establishing an MPLS TE tunnel over a static CRLSP

Establishing an MPLS TE tunnel with RSVP-TE

Establishing an inter-AS MPLS TE tunnel with RSVP-TE

Bidirectional MPLS TE tunnel configuration example

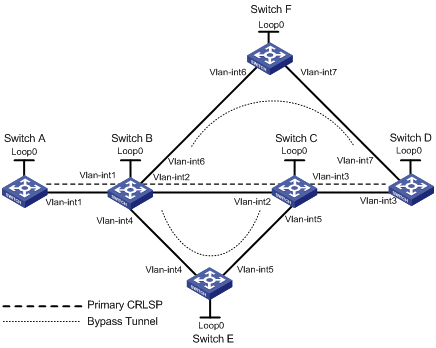

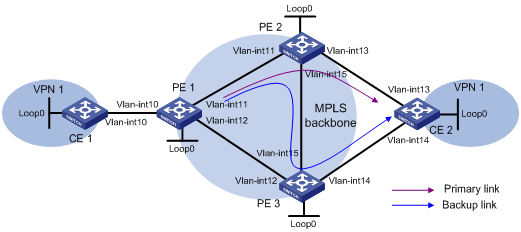

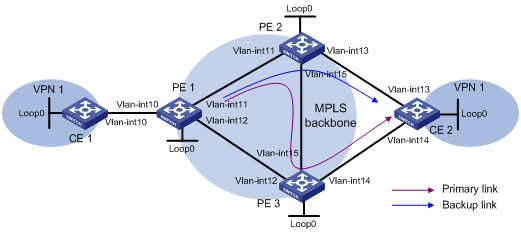

CRLSP backup configuration example

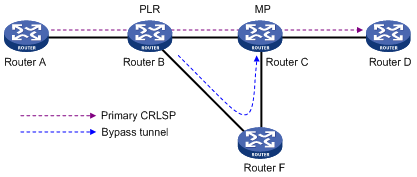

Manual bypass tunnel for FRR configuration example

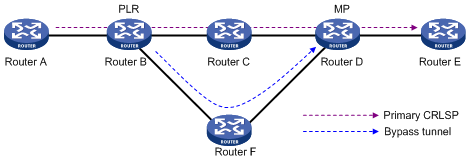

Auto FRR configuration example

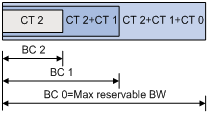

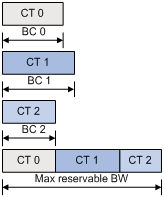

IETF DS-TE configuration example

Feature and software version compatibility

Static CRLSP configuration example

Feature and software version compatibility

Configuring RSVP Srefresh and reliable RSVP message delivery

Configuring RSVP hello extension

Configuring RSVP authentication

Specifying a DSCP value for outgoing RSVP packets

Displaying and maintaining RSVP

Establishing an MPLS TE tunnel with RSVP-TE

Feature and software version compatibility

Tunnel policy configuration examples

Preferred tunnel configuration example

Exclusive tunnel configuration example

Tunnel selection order configuration example

Preferred tunnel and tunnel selection order configuration example

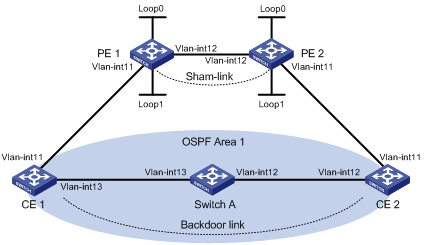

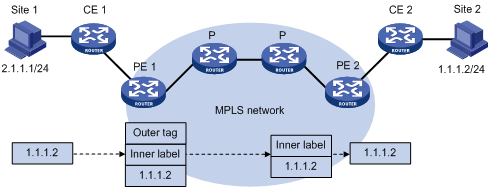

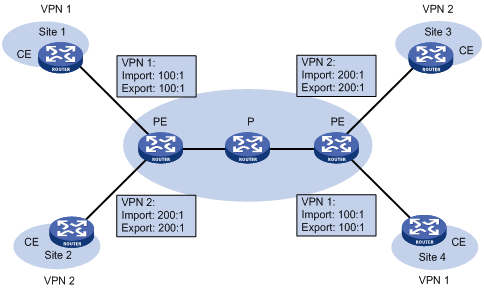

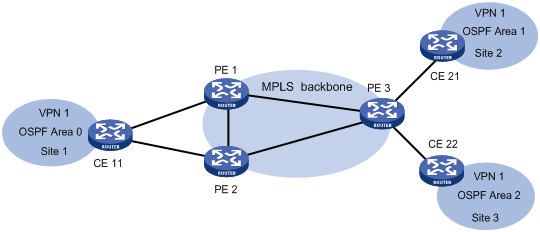

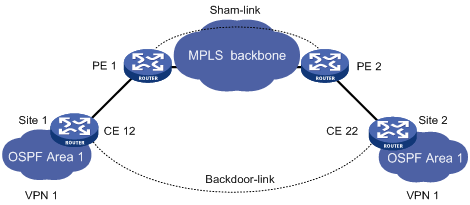

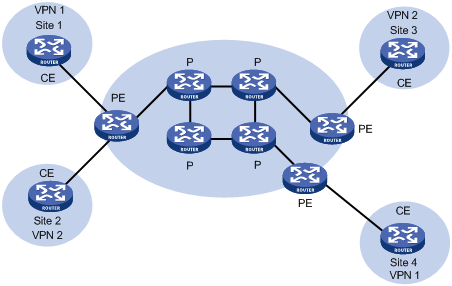

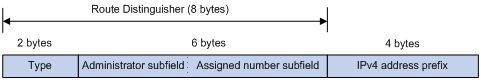

MPLS L3VPN route advertisement

Feature and software version compatibility

MPLS L3VPN configuration task list

Configuring routing between a PE and a CE

Configuring routing between PEs

Configuring BGP VPNv4 route control

Configuring a loopback interface

Redistributing the loopback interface route

Specifying the VPN label processing mode on the egress PE

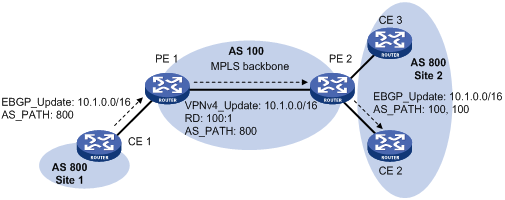

Configuring BGP AS number substitution·

Enabling SNMP notifications for MPLS L3VPN

Enabling logging for BGP route flapping

Displaying and maintaining MPLS L3VPN

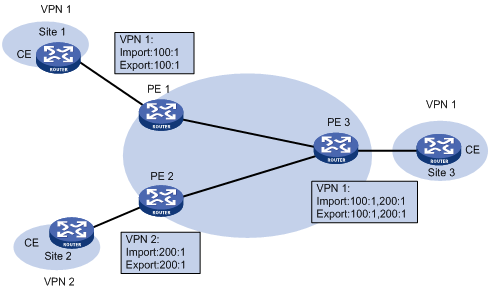

MPLS L3VPN configuration examples

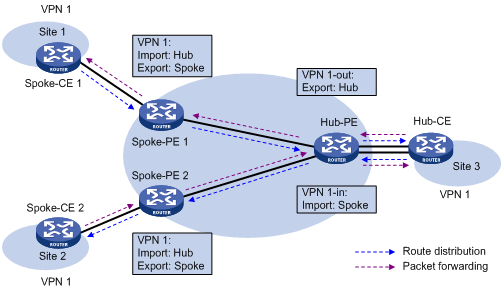

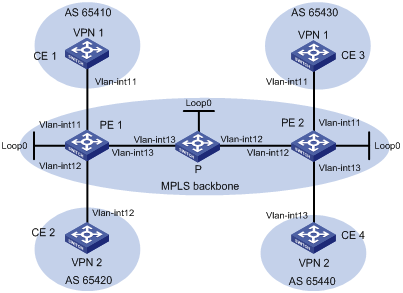

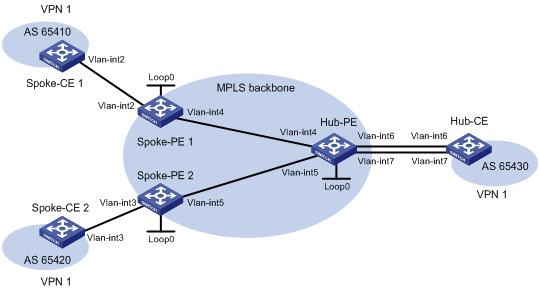

Configuring a hub-spoke network

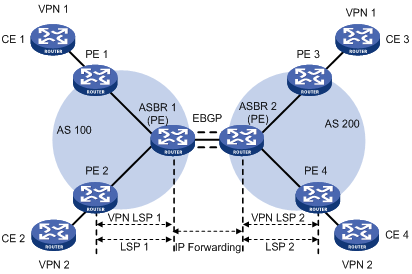

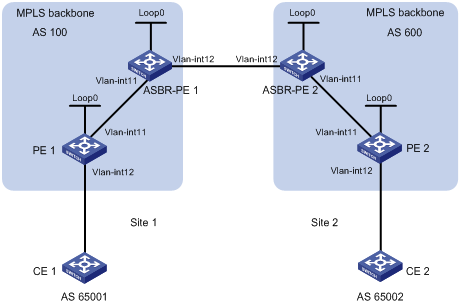

Configuring MPLS L3VPN inter-AS option A

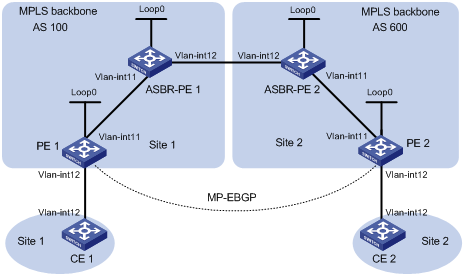

Configuring MPLS L3VPN inter-AS option B

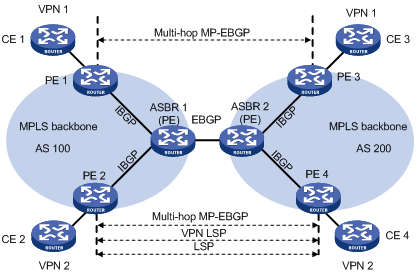

Configuring MPLS L3VPN inter-AS option C

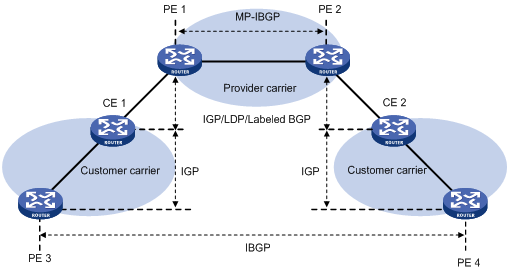

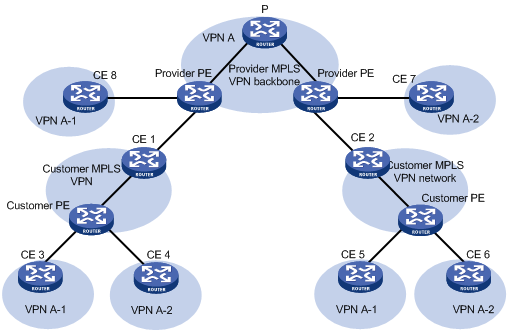

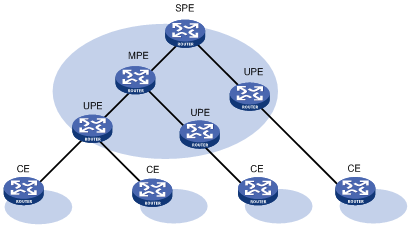

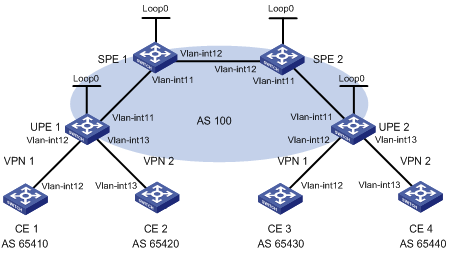

Configuring MPLS L3VPN carrier's carrier

Configuring BGP AS number substitution·

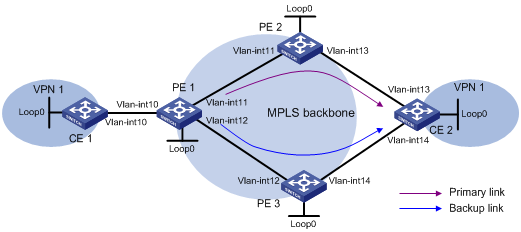

Configuring MPLS L3VPN FRR through VPNv4 route backup for a VPNv4 route

Configuring MPLS L3VPN FRR through VPNv4 route backup for an IPv4 route

Configuring MPLS L3VPN FRR through IPv4 route backup for a VPNv4 route

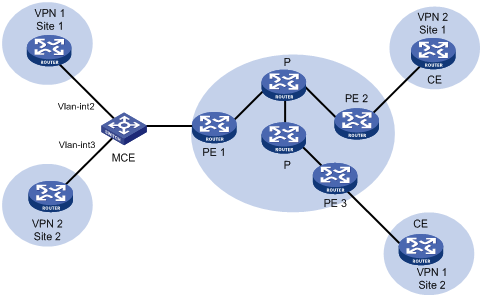

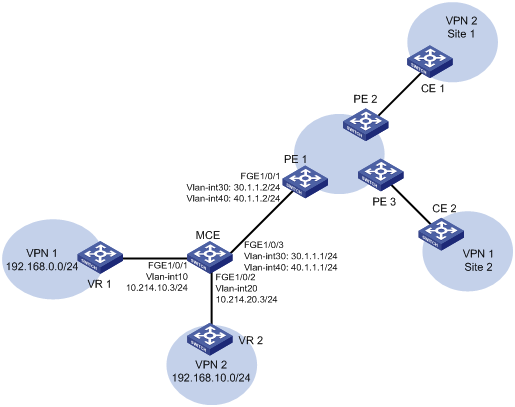

Associating a VPN instance with an interface

Configuring route related attributes for a VPN instance

Configuring routing between an MCE and a VPN site

Configuring routing between an MCE and a PE

Displaying and maintaining MCE

Configuring the MCE that uses OSPF to advertise VPN routes to the PE

Configuring the MCE that uses EBGP to advertise VPN routes to the PE

Configuring basic MPLS

Multiprotocol Label Switching (MPLS) provides connection-oriented label switching over connectionless IP backbone networks. It integrates both the flexibility of IP routing and the simplicity of Layer 2 switching.

Unless otherwise specified, the term "interface" in this chapter refers to a Layer 3 interface. It can be a VLAN interface or a Layer 3 Ethernet interface. Layer 3 Ethernet interfaces refer to the Ethernet interfaces that operate in Layer 3 mode. For information about switching the Ethernet interface operating mode, see Layer 2—LAN Switching Configuration Guide.

Overview

MPLS has the following advantages:

· High speed and efficiency—MPLS uses short- and fixed-length labels to forward packets, avoiding complicated routing table lookups.

· Multiprotocol support—MPLS resides between the link layer and the network layer. It can work over various link layer protocols (for example, PPP, ATM, frame relay, and Ethernet) to provide connection-oriented services for various network layer protocols (for example, IPv4 and IPX).

· Good scalability—The connection-oriented switching and multilayer label stack features enable MPLS to deliver various extended services, such as VPN, traffic engineering, and QoS.

Basic concepts

FEC

MPLS groups packets with the same characteristics (such as packets with the same destination or service class) into a forwarding equivalence class (FEC). Packets of the same FEC are handled in the same way on an MPLS network.

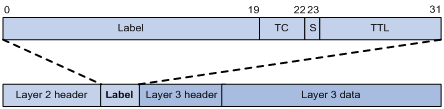

Label

A label uniquely identifies an FEC and has local significance.

A label is encapsulated between the Layer 2 header and Layer 3 header of a packet. It is four bytes long and consists of the following fields:

· Label—20-bit label value.

· TC—3-bit traffic class, used for QoS. It is also called Exp.

· S—1-bit bottom of stack flag. A label stack can have multiple labels. The label nearest to the Layer 2 header is called the top label, and the label nearest to the Layer 3 header is called the bottom label. The S field is set to 1 if the label is the bottom label and set to 0 if not.

· TTL—8-bit time to live field used for routing loop prevention.
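
The field layout above can be illustrated with a short Python sketch that packs and unpacks one 4-byte label stack entry. The function names are hypothetical and chosen only for this illustration; the bit order (Label, TC, S, TTL from the most significant bit) follows RFC 3032.

```python
import struct


def encode_label_entry(label: int, tc: int, s: int, ttl: int) -> bytes:
    """Pack one label stack entry: Label (20 bits) | TC (3) | S (1) | TTL (8)."""
    if not 0 <= label < 2 ** 20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= tc < 8:
        raise ValueError("TC must fit in 3 bits")
    if s not in (0, 1):
        raise ValueError("S must be 0 or 1")
    if not 0 <= ttl < 256:
        raise ValueError("TTL must fit in 8 bits")
    word = (label << 12) | (tc << 9) | (s << 8) | ttl
    return struct.pack("!I", word)  # network byte order, 4 bytes


def decode_label_entry(data: bytes) -> dict:
    """Unpack the first 4 bytes of data into the four label fields."""
    (word,) = struct.unpack("!I", data[:4])
    return {
        "label": word >> 12,
        "tc": (word >> 9) & 0x7,
        "s": (word >> 8) & 0x1,
        "ttl": word & 0xFF,
    }


# Label 40 at the bottom of the stack (S = 1) with a TTL of 255.
entry = encode_label_entry(label=40, tc=0, s=1, ttl=255)
print(entry.hex())                # 000281ff
print(decode_label_entry(entry))
```

In a stack with more than one label, only the entry nearest to the Layer 3 header would be encoded with `s=1`.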

LSR

A router that performs MPLS forwarding is a label switching router (LSR).

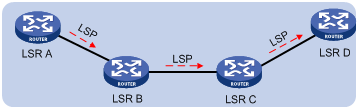

LSP

A label switched path (LSP) is the path along which packets of an FEC travel through an MPLS network.

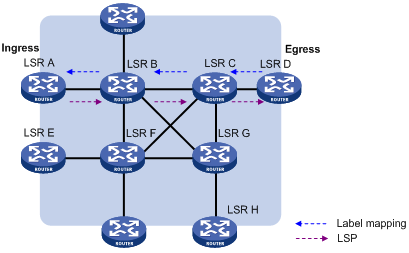

An LSP is a unidirectional packet forwarding path. Two neighboring LSRs are called the upstream LSR and downstream LSR along the direction of an LSP. As shown in Figure 2, LSR B is the downstream LSR of LSR A, and LSR A is the upstream LSR of LSR B.

LFIB

The Label Forwarding Information Base (LFIB) on an MPLS network functions like the Forwarding Information Base (FIB) on an IP network. When an LSR receives a labeled packet, it searches the LFIB to obtain information for forwarding the packet, such as the label operation type, the outgoing label value, and the next hop.

Control plane and forwarding plane

An MPLS node consists of a control plane and a forwarding plane.

· Control plane—Assigns labels, distributes FEC-label mappings to neighbor LSRs, creates the LFIB, and establishes and removes LSPs.

· Forwarding plane—Forwards packets according to the LFIB.

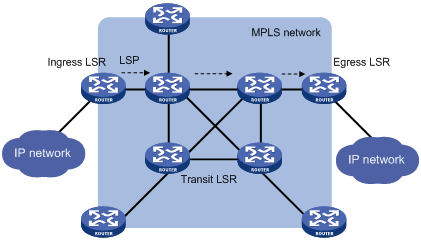

MPLS network architecture

Figure 3 MPLS network architecture

An MPLS network has the following types of LSRs:

· Ingress LSR—The LSR through which packets enter the MPLS network. It adds labels to the incoming packets.

· Transit LSR—An intermediate LSR in the MPLS network. The transit LSRs on an LSP forward packets to the egress LSR according to labels.

· Egress LSR—The LSR through which packets leave the MPLS network. It removes labels from packets and forwards the packets to their destination networks.

LSP establishment

LSPs include static and dynamic LSPs.

· Static LSP—To establish a static LSP, you must configure an LFIB entry on each LSR along the LSP. Establishing static LSPs consumes fewer resources than establishing dynamic LSPs, but static LSPs cannot automatically adapt to network topology changes. Therefore, static LSPs are suitable for small-scale networks with simple, stable topologies.

· Dynamic LSP—Established by a label distribution protocol (also called an MPLS signaling protocol). A label distribution protocol classifies FECs, distributes FEC-label mappings, and establishes and maintains LSPs. Label distribution protocols include protocols designed specifically for label distribution, such as the Label Distribution Protocol (LDP), and protocols extended to support label distribution, such as MP-BGP and RSVP-TE.

In this document, the term "label distribution protocols" refers to all protocols for label distribution. The term "LDP" refers to the RFC 5036 LDP.

A dynamic LSP is established in the following steps:

1. A downstream LSR classifies FECs according to destination addresses.

2. The downstream LSR assigns a label for each FEC, and distributes the FEC-label binding to its upstream LSR.

3. The upstream LSR establishes an LFIB entry for the FEC according to the binding information.

After all LSRs along the LSP establish an LFIB entry for the FEC, a dynamic LSP is established for the packets of this FEC.

Figure 4 Dynamic LSP establishment
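
The three steps can be sketched as a small Python simulation. It is a simplified model, not the behavior of any particular label distribution protocol: the function name, the single shared label counter, and the LSR names are assumptions made for the illustration. Each downstream LSR assigns a label for the FEC, and its upstream neighbor records that label as the outgoing label of its LFIB entry.

```python
from itertools import count


def establish_dynamic_lsp(path, fec, first_label=16):
    """Return one LFIB entry per LSR for a single FEC.

    path lists the LSRs from ingress to egress. Labels are handed out from one
    counter for brevity; a real LSR allocates from its own label space.
    """
    if len(path) < 2:
        raise ValueError("an LSP needs at least an ingress and an egress")
    labels = count(first_label)
    lfib = {}
    label_from_downstream = None  # the egress has no outgoing label
    # Walk from the egress back to the ingress: bindings travel upstream.
    for index in range(len(path) - 1, -1, -1):
        lsr = path[index]
        # The ingress receives unlabeled packets, so it assigns no incoming label.
        in_label = None if index == 0 else next(labels)
        next_hop = path[index + 1] if index + 1 < len(path) else None
        lfib[lsr] = {
            "fec": fec,
            "in_label": in_label,
            "out_label": label_from_downstream,
            "next_hop": next_hop,
        }
        label_from_downstream = in_label  # advertised to the upstream LSR
    return lfib


path = ["LSR A", "LSR B", "LSR C"]
tables = establish_dynamic_lsp(path, "21.1.1.0/24")
for lsr in path:
    print(lsr, tables[lsr])
```

After the loop finishes, the outgoing label on every LSR equals the incoming label on its downstream neighbor, which is the condition for the LSP to be usable.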

MPLS forwarding

As shown in Figure 5, a packet is forwarded over the MPLS network in the following steps:

1. Router B (the ingress LSR) receives a packet with no label. Then, it performs the following operations:

a. Identifies the FIB entry that matches the destination address of the packet.

b. Adds the outgoing label (40, in this example) to the packet.

c. Forwards the labeled packet out of the interface VLAN-interface 20 to the next hop LSR Router C.

2. When receiving the labeled packet, Router C processes the packet as follows:

a. Identifies the LFIB entry that has an incoming label of 40.

b. Uses the outgoing label 50 of the entry to replace label 40 in the packet.

c. Forwards the labeled packet out of the outgoing interface VLAN-interface 30 to the next hop LSR Router D.

3. When receiving the labeled packet, Router D (the egress) processes the packet as follows:

a. Identifies the LFIB entry that has an incoming label of 50.

b. Removes the label from the packet.

c. Forwards the packet out of the outgoing interface VLAN-interface 40 to the next hop LSR Router E.

If the LFIB entry records no outgoing interface or next hop information, Router D performs the following operations:

a. Identifies the FIB entry by the IP header.

b. Forwards the packet according to the FIB entry.
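
The push, swap, and pop operations in this walk-through can be traced with a minimal Python sketch. The labels (40 and 50), interface names, and router names come from the steps above; the table layout, the function names, and the destination address 192.0.2.1 are assumptions made only for the illustration.

```python
DEST = "192.0.2.1"  # illustrative destination, not taken from the guide

# Hypothetical forwarding tables keyed by destination (FIB) or incoming label (LFIB).
ROUTER_B_FIB = {DEST: {"out_label": 40, "out_if": "VLAN-interface 20", "next_hop": "Router C"}}
ROUTER_C_LFIB = {40: {"out_label": 50, "out_if": "VLAN-interface 30", "next_hop": "Router D"}}
ROUTER_D_LFIB = {50: {"out_label": None, "out_if": "VLAN-interface 40", "next_hop": "Router E"}}
ROUTER_D_FIB = {DEST: {"out_if": "VLAN-interface 40", "next_hop": "Router E"}}


def ingress_push(packet, fib):
    """Match the FIB entry by destination and push its outgoing label."""
    entry = fib[packet["dest"]]
    packet["labels"].insert(0, entry["out_label"])
    return entry["out_if"], entry["next_hop"]


def transit_swap(packet, lfib):
    """Match the LFIB entry by incoming label and swap in the outgoing label."""
    entry = lfib[packet["labels"][0]]
    packet["labels"][0] = entry["out_label"]
    return entry["out_if"], entry["next_hop"]


def egress_pop(packet, lfib, fib):
    """Pop the label; fall back to the FIB if the LFIB entry has no forwarding data."""
    entry = lfib[packet["labels"].pop(0)]
    if entry["out_if"] is None or entry["next_hop"] is None:
        entry = fib[packet["dest"]]
    return entry["out_if"], entry["next_hop"]


packet = {"dest": DEST, "labels": []}
trace = []
hop = ingress_push(packet, ROUTER_B_FIB)
trace.append(("Router B", list(packet["labels"]), hop))
hop = transit_swap(packet, ROUTER_C_LFIB)
trace.append(("Router C", list(packet["labels"]), hop))
hop = egress_pop(packet, ROUTER_D_LFIB, ROUTER_D_FIB)
trace.append(("Router D", list(packet["labels"]), hop))

for row in trace:
    print(row)
```

Each printed row shows the router, the label stack after that router has processed the packet, and the outgoing interface and next hop it selected.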

PHP

An egress node must perform two forwarding table lookups to forward a packet:

· Two LFIB lookups (if the packet has more than one label).

· One LFIB lookup and one FIB lookup (if the packet has only one label).

The penultimate hop popping (PHP) feature can pop the label at the penultimate node, so the egress node only performs one table lookup.

A PHP-capable egress node sends the penultimate node an implicit null label of 3. This label never appears in the label stack of packets. If an incoming packet matches an LFIB entry comprising the implicit null label, the penultimate node pops the top label of the packet and forwards the packet to the egress LSR. The egress LSR directly forwards the packet.

Sometimes, the egress node must use the TC field in the label to perform QoS. To keep the TC information, you can configure the egress node to send the penultimate node an explicit null label of 0. If an incoming packet matches an LFIB entry comprising the explicit null label, the penultimate hop replaces the value of the top label with value 0, and forwards the packet to the egress node. The egress node gets the TC information, pops the label of the packet, and forwards the packet.
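
The difference between the two null labels can be sketched in Python as the action taken by the penultimate node. The function name and the representation of a label stack as a list of (label, TC) tuples are assumptions made for this illustration.

```python
IMPLICIT_NULL = 3  # advertised by a PHP-capable egress; never appears in a packet
EXPLICIT_NULL = 0  # keeps a label on the packet so the egress can read the TC field


def penultimate_hop_action(stack, advertised_label):
    """Return the label stack that the penultimate node sends to the egress.

    stack is a list of (label, tc) tuples with the top label first.
    advertised_label is the label that the egress advertised for the FEC.
    """
    if not stack:
        raise ValueError("the packet carries no label")
    _, top_tc = stack[0]
    if advertised_label == IMPLICIT_NULL:
        return stack[1:]  # pop: the TC value of the top label is lost
    # Explicit null or a normal label: swap the label value and keep the TC value.
    return [(advertised_label, top_tc)] + stack[1:]


print(penultimate_hop_action([(50, 5)], IMPLICIT_NULL))  # []
print(penultimate_hop_action([(50, 5)], EXPLICIT_NULL))  # [(0, 5)]
```

With the implicit null label, the egress receives an unlabeled packet and needs only one lookup. With the explicit null label, the egress still receives the TC value 5 and can apply QoS before it pops the label.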

Protocols and standards

· RFC 3031, Multiprotocol Label Switching Architecture

· RFC 3032, MPLS Label Stack Encoding

· RFC 5462, Multiprotocol Label Switching (MPLS) Label Stack Entry: "EXP" Field Renamed to "Traffic Class" Field

Feature and software version compatibility

The basic MPLS feature is available in Release 1138P01 and later versions.

MPLS configuration task list

| Tasks at a glance |
|---|
| (Required.) Enabling MPLS |
| (Optional.) Configuring MPLS MTU |
| (Optional.) Specifying the label type advertised by the egress |
| (Optional.) Configuring TTL propagation |
| (Optional.) Enabling sending of MPLS TTL-expired messages |
| (Optional.) Enabling SNMP notifications for MPLS |

Enabling MPLS

Before you enable MPLS, perform the following tasks:

· Configure link layer protocols to ensure connectivity at the link layer.

· Configure IP addresses for interfaces to ensure IP connectivity between neighboring nodes.

· Configure static routes or an IGP protocol to ensure IP connectivity among LSRs.

To enable MPLS:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Configure an LSR ID for the local node. | mpls lsr-id lsr-id | By default, no LSR ID is configured. An LSR ID must be unique in an MPLS network and in IP address format. As a best practice, use the IP address of a loopback interface as an LSR ID. |
| 3. Enter the view of the interface that needs to perform MPLS forwarding. | interface interface-type interface-number | N/A |
| 4. Enable MPLS for the interface. | mpls enable | By default, MPLS is disabled on an interface. |

Configuring MPLS MTU

MPLS inserts the label stack between the link layer header and network layer header of each packet. To make sure the size of MPLS labeled packets is smaller than the MTU of an interface, configure an MPLS MTU on the interface.

MPLS compares each MPLS packet against the interface MPLS MTU. When the packet exceeds the MPLS MTU:

· If fragmentation is allowed, MPLS does the following:

a. Removes the label stack from the packet.

b. Fragments the IP packet. The length of a fragment is the MPLS MTU minus the length of the label stack.

c. Adds the label stack to each fragment, and forwards the fragments.

· If fragmentation is not allowed, the LSR drops the packet.
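
The fragment length rule can be checked with a short arithmetic sketch in Python. It assumes IPv4 packets without IP options (a 20-byte header) and the usual IPv4 requirement that every fragment except the last carries a multiple of 8 payload bytes; the function name and the sample sizes are hypothetical.

```python
LABEL_ENTRY_BYTES = 4   # each label in the stack is four bytes long
IPV4_HEADER_BYTES = 20  # assumption: no IP options


def plan_mpls_fragments(ip_packet_len, label_count, mpls_mtu, fragmentation_allowed=True):
    """Return the sizes of the labeled packets that leave the interface.

    An empty list means the packet is dropped.
    """
    stack_len = LABEL_ENTRY_BYTES * label_count
    if ip_packet_len + stack_len <= mpls_mtu:
        return [ip_packet_len + stack_len]  # fits, no fragmentation needed
    if not fragmentation_allowed:
        return []
    # Step b: an IP fragment can be at most the MPLS MTU minus the label stack.
    max_ip_fragment = mpls_mtu - stack_len
    payload_per_fragment = (max_ip_fragment - IPV4_HEADER_BYTES) // 8 * 8
    if payload_per_fragment <= 0:
        raise ValueError("MPLS MTU is too small to carry any payload")
    remaining = ip_packet_len - IPV4_HEADER_BYTES
    sizes = []
    while remaining > 0:
        chunk = min(remaining, payload_per_fragment)
        # Step c: the label stack is added back to every fragment.
        sizes.append(IPV4_HEADER_BYTES + chunk + stack_len)
        remaining -= chunk
    return sizes


# A 1500-byte IP packet with two labels on an interface whose MPLS MTU is 1500.
print(plan_mpls_fragments(1500, 2, 1500))                               # [1500, 36]
print(plan_mpls_fragments(1500, 2, 1500, fragmentation_allowed=False))  # []
```

In the first call, the labeled packet would be 1508 bytes, so it is split into a 1500-byte and a 36-byte labeled fragment, neither of which exceeds the MPLS MTU.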

To configure an MPLS MTU for an interface:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter interface view. | interface interface-type interface-number | N/A |
| 3. Configure an MPLS MTU for the interface. | mpls mtu value | By default, no MPLS MTU is configured on an interface. |

The following applies when an interface handles MPLS packets:

· If the MPLS MTU of an interface is greater than the MTU of the interface, data forwarding might fail on the interface.

· If you do not configure the MPLS MTU of an interface, fragmentation of MPLS packets is based on the MTU of the interface without considering MPLS labels. An MPLS fragment might be larger than the interface MTU and be dropped.

Specifying the label type advertised by the egress

In an MPLS network, an egress can advertise the following types of labels:

· Implicit null label with a value of 3.

· Explicit null label with a value of 0.

· Non-null label. The value range for a non-null label is 16 to 1048575.

For LSPs established by a label distribution protocol, the label advertised by the egress determines how the penultimate hop processes a labeled packet.

· If the egress advertises an implicit null label, the penultimate hop directly pops the top label of a matching packet.

· If the egress advertises an explicit null label, the penultimate hop swaps the top label value of a matching packet with the explicit null label.

· If the egress advertises a non-null label (normal label), the penultimate hop swaps the top label of a matching packet with the specific label assigned by the egress.

Configuration guidelines

As a best practice, configure the egress to advertise an implicit null label to the penultimate hop if the penultimate hop supports PHP. If you want to simplify packet forwarding on the egress but keep labels to determine QoS policies, configure the egress to advertise an explicit null label to the penultimate hop. Use non-null labels only in particular scenarios. For example, when OAM is configured on the egress, the egress can get the OAM function entity status only through non-null labels.

As a penultimate hop, the device accepts the implicit null label, explicit null label, or normal label advertised by the egress device.

For LDP LSPs, the mpls label advertise command triggers LDP to delete the LSPs established before the command is executed and to re-establish new LSPs.

For BGP LSPs, the mpls label advertise command takes effect only for the BGP LSPs established after the command is executed. To apply the new setting to BGP LSPs established before the command is executed, delete the routes corresponding to the BGP LSPs, and then redistribute the routes.

Configuration procedure

To specify the type of label that the egress node will advertise to the penultimate hop:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Specify the label type advertised by the egress to the penultimate hop. | mpls label advertise { explicit-null \| implicit-null \| non-null } | By default, an egress advertises an implicit null label to the penultimate hop. |

Configuring TTL propagation

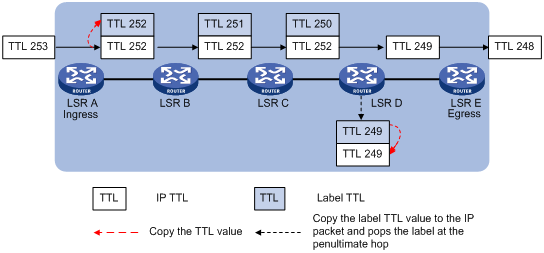

When TTL propagation is enabled, the ingress node copies the TTL value of an IP packet to the TTL field of the label. Each LSR on the LSP decreases the label TTL value by 1. The LSR that pops the label copies the remaining label TTL value back to the IP TTL of the packet, so the IP TTL value can reflect how many hops the packet has traversed in the MPLS network. The IP tracert facility can show the real path along which the packet has traveled.

Figure 6 TTL propagation

When TTL propagation is disabled, the ingress node sets the label TTL to 255. Each LSR on the LSP decreases the label TTL value by 1. The LSR that pops the label does not change the IP TTL value when popping the label. Therefore, the MPLS backbone nodes are invisible to user networks, and the IP tracert facility cannot show the real path in the MPLS network.

Figure 7 Without TTL propagation
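
The two modes can be compared with a minimal Python sketch that follows only the rules stated above. The function name and the `decrements` parameter (how many times the label TTL is decreased before the label is popped) are assumptions; any additional IP TTL decrement that a device applies during normal IP forwarding is not modeled.

```python
def ip_ttl_after_mpls(ip_ttl, decrements, propagate):
    """Return the IP TTL of a packet after its label is popped.

    ip_ttl:     IP TTL when the ingress node labels the packet.
    decrements: number of times the label TTL is decreased by 1 on the LSP.
    propagate:  True if TTL propagation is enabled.
    """
    label_ttl = ip_ttl if propagate else 255  # ingress sets the label TTL
    label_ttl -= decrements
    if label_ttl <= 0:
        raise ValueError("the label TTL expired inside the MPLS network")
    # With propagation, the remaining label TTL is copied back to the IP TTL.
    # Without propagation, the IP TTL is left unchanged.
    return label_ttl if propagate else ip_ttl


print(ip_ttl_after_mpls(64, 3, propagate=True))   # 61: the MPLS hops are visible
print(ip_ttl_after_mpls(64, 3, propagate=False))  # 64: the MPLS backbone is hidden
```

The difference between the two results is what makes the backbone LSRs appear in, or disappear from, a tracert output.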

Follow these guidelines when you configure TTL propagation:

· As a best practice, set the same TTL processing mode on all LSRs of an LSP.

· To enable TTL propagation for a VPN, you must enable it on all PE devices in the VPN, so that you can get the same traceroute result (hop count) from those PEs.

· After TTL propagation is disabled, the device cannot perform correct DSCP-to-EXP mapping for IP packets entering the MPLS network.

· After TTL propagation is enabled or disabled, execute the reset mpls ldp command to make the configuration take effect. For more information about the reset mpls ldp command, see MPLS Command Reference.

To enable TTL propagation:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enable TTL propagation. | mpls ttl propagate { public \| vpn } | By default, TTL propagation is enabled only for public-network packets. This command affects only the propagation between IP TTL and label TTL. Within an MPLS network, TTL is always copied between the labels of an MPLS packet. |

Enabling sending of MPLS TTL-expired messages

This feature enables an LSR to generate an ICMP TTL-expired message upon receiving an MPLS packet with a TTL of 1. If the MPLS packet has only one label, the LSR sends the ICMP TTL-expired message back to the source through IP routing. If the MPLS packet has multiple labels, the LSR sends the ICMP TTL-expired message along the LSP to the egress, which then sends the message back to the source.
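
The decision described above can be summarized in a small Python sketch. The function name and the returned strings are hypothetical. The "drop" result for a disabled feature is an assumption about the behavior when no ICMP message is generated, not a statement from this guide.

```python
def handle_received_mpls_packet(label_ttls, ttl_expired_enabled=True):
    """Decide what an LSR does with a received MPLS packet.

    label_ttls lists the TTL of each label in the stack, top label first.
    """
    if not label_ttls:
        raise ValueError("the packet carries no label")
    if label_ttls[0] > 1:
        return "forward the packet"
    if not ttl_expired_enabled:
        return "drop"  # assumption: the packet is discarded without an ICMP message
    if len(label_ttls) == 1:
        return "send ICMP TTL-expired to the source through IP routing"
    return "send ICMP TTL-expired along the LSP to the egress"


print(handle_received_mpls_packet([1]))
print(handle_received_mpls_packet([1, 255]))
```

The second call models a packet with two labels, for which the message first travels along the LSP to the egress before it is returned to the source.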

To enable sending of MPLS TTL-expired messages:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enable sending of MPLS TTL-expired messages. | mpls ttl expiration enable | By default, this feature is enabled. |

Enabling SNMP notifications for MPLS

This feature enables MPLS to generate SNMP notifications. The generated SNMP notifications are sent to the SNMP module.

For more information about SNMP notifications, see Network Management and Monitoring Configuration Guide.

To enable SNMP notifications for MPLS:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enable SNMP notifications for MPLS. | snmp-agent trap enable mpls | By default, SNMP notifications for MPLS are enabled. |

Displaying and maintaining MPLS

Execute display commands in any view.

| Task | Command |
|---|---|
| Display MPLS interface information. | display mpls interface [ interface-type interface-number ] |
| Display usage information about MPLS labels. | display mpls label { label-value1 [ to label-value2 ] \| all } |
| Display LSP information. | display mpls lsp [ egress \| in-label label-value \| ingress \| outgoing-interface interface-type interface-number \| protocol { bgp \| ldp \| local \| rsvp-te \| static \| static-cr } \| transit ] [ vpn-instance vpn-instance-name ] [ ipv4-dest mask-length ] [ verbose ] |
| Display MPLS Nexthop Information Base (NIB) information. | display mpls nib [ nib-id ] |
| Display usage information about NIDs. | display mpls nid [ nid-value1 [ to nid-value2 ] ] |
| Display LSP statistics. | display mpls lsp statistics |
| Display MPLS summary information. | display mpls summary |
| Display ILM entries (in standalone mode). | display mpls forwarding ilm [ label ] [ slot slot-number ] |
| Display ILM entries (in IRF mode). | display mpls forwarding ilm [ label ] [ chassis chassis-number slot slot-number ] |
| Display NHLFE entries (in standalone mode). | display mpls forwarding nhlfe [ nid ] [ slot slot-number ] |
| Display NHLFE entries (in IRF mode). | display mpls forwarding nhlfe [ nid ] [ chassis chassis-number slot slot-number ] |

Configuring a static LSP

Overview

A static label switched path (LSP) is established by manually specifying the incoming label and outgoing label on each node (ingress, transit, or egress node) of the forwarding path.

Static LSPs consume fewer resources, but they cannot automatically adapt to network topology changes. Therefore, static LSPs are suitable for small and stable networks with simple topologies.

Follow these guidelines to establish a static LSP:

· The ingress node performs the following operations:

a. Determines an FEC for a packet according to the destination address.

b. Adds the label for that FEC into the packet.

c. Forwards the packet to the next hop or out of the outgoing interface.

Therefore, on the ingress node, you must specify the outgoing label for the destination address (the FEC) and the next hop or the outgoing interface.

· A transit node swaps the label carried in a received packet with a specific label, and forwards the packet to the next hop or out of the outgoing interface. Therefore, on each transit node, you must specify the incoming label, the outgoing label, and the next hop or the outgoing interface.

· If the penultimate hop popping function is not configured, an egress node pops the incoming label of a packet, and performs label forwarding according to the inner label or IP forwarding. Therefore, on the egress node, you only need to specify the incoming label.

· The outgoing label specified on an LSR must be the same as the incoming label specified on the directly connected downstream LSR.
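
The last guideline is easy to verify before the labels are entered on the devices. The following Python sketch is a hypothetical planning aid, not a device feature: it checks that each outgoing label matches the incoming label on the next LSR. The sample labels are the ones used for the AtoC LSP in the configuration example later in this chapter.

```python
def check_static_lsp(hops):
    """Return a list of label mismatches along a planned static LSP.

    hops is ordered from ingress to egress. Each hop is a dict with the keys
    "node", "in_label", and "out_label" (None where a label does not apply).
    """
    problems = []
    for upstream, downstream in zip(hops, hops[1:]):
        if upstream["out_label"] != downstream["in_label"]:
            problems.append(
                f'{upstream["node"]} sends label {upstream["out_label"]} '
                f'but {downstream["node"]} expects {downstream["in_label"]}'
            )
    return problems


a_to_c = [
    {"node": "Switch A", "in_label": None, "out_label": 30},  # ingress
    {"node": "Switch B", "in_label": 30, "out_label": 50},    # transit
    {"node": "Switch C", "in_label": 50, "out_label": None},  # egress
]
print(check_static_lsp(a_to_c))  # [] means the label chain is consistent
```

Because LSPs are unidirectional, the check would be run once for each direction of the forwarding path.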

Feature and software version compatibility

The static LSP feature is available in Release 1138P01 and later versions.

Configuration prerequisites

Before you configure a static LSP, perform the following tasks:

· Identify the ingress node, transit nodes, and egress node of the LSP.

· Enable MPLS on all interfaces that participate in MPLS forwarding. For more information, see "Configuring basic MPLS."

· Make sure the ingress node has a route to the destination address of the LSP. This is not required on transit and egress nodes.

Configuration procedure

To configure a static LSP:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Configure the ingress node of the static LSP. | static-lsp ingress lsp-name destination dest-addr { mask \| mask-length } { nexthop next-hop-addr \| outgoing-interface interface-type interface-number } out-label out-label | If you specify a next hop for the static LSP, make sure the ingress node has an active route to the specified next hop address. |
| 3. Configure the transit node of the static LSP. | static-lsp transit lsp-name in-label in-label { nexthop next-hop-addr \| outgoing-interface interface-type interface-number } out-label out-label | If you specify a next hop for the static LSP, make sure the transit node has an active route to the specified next hop address. |
| 4. Configure the egress node of the static LSP. | static-lsp egress lsp-name in-label in-label | You do not need to configure this command if the outgoing label configured on the penultimate hop of the static LSP is 0 or 3. |

Displaying static LSPs

Execute display commands in any view.

| Task | Command |
|---|---|
| Display static LSP information. | display mpls static-lsp [ lsp-name lsp-name ] |

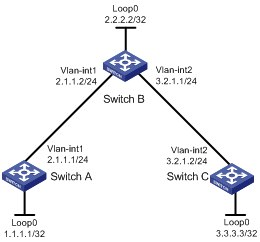

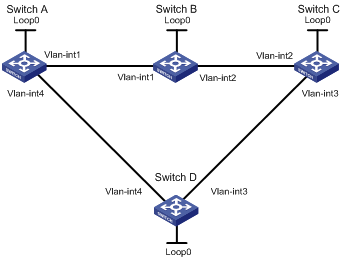

Static LSP configuration example

Network requirements

Switch A, Switch B, and Switch C all support MPLS.

Establish static LSPs between Switch A and Switch C, so that subnets 11.1.1.0/24 and 21.1.1.0/24 can access each other over MPLS.

Configuration restrictions and guidelines

· For an LSP, the outgoing label specified on an LSR must be identical with the incoming label specified on the downstream LSR.

· LSPs are unidirectional. You must configure an LSP for each direction of the data forwarding path.

· A route to the destination address of the LSP must be available on the ingress node and the egress node, but it is not needed on transit nodes. Therefore, you do not need to configure a routing protocol to ensure IP connectivity among all switches.

Configuration procedure

1. Create VLANs and configure IP addresses for all interfaces, including the loopback interfaces, as shown in Figure 8. (Details not shown.)

2. Configure a static route to the destination address of each LSP:

# On Switch A, configure a static route to network 21.1.1.0/24.

<SwitchA> system-view

[SwitchA] ip route-static 21.1.1.0 24 10.1.1.2

# On Switch C, configure a static route to network 11.1.1.0/24.

<SwitchC> system-view

[SwitchC] ip route-static 11.1.1.0 255.255.255.0 20.1.1.1

3. Configure basic MPLS on the switches:

# Configure Switch A.

[SwitchA] mpls lsr-id 1.1.1.9

[SwitchA] interface vlan-interface 2

[SwitchA-Vlan-interface2] mpls enable

[SwitchA-Vlan-interface2] quit

# Configure Switch B.

<SwitchB> system-view

[SwitchB] mpls lsr-id 2.2.2.9

[SwitchB] interface vlan-interface 2

[SwitchB-Vlan-interface2] mpls enable

[SwitchB-Vlan-interface2] quit

[SwitchB] interface vlan-interface 3

[SwitchB-Vlan-interface3] mpls enable

[SwitchB-Vlan-interface3] quit

# Configure Switch C.

[SwitchC] mpls lsr-id 3.3.3.9

[SwitchC] interface vlan-interface 3

[SwitchC-Vlan-interface3] mpls enable

[SwitchC-Vlan-interface3] quit

4. Configure a static LSP from Switch A to Switch C:

# Configure the LSP ingress node, Switch A.

[SwitchA] static-lsp ingress AtoC destination 21.1.1.0 24 nexthop 10.1.1.2 out-label 30

# Configure the LSP transit node, Switch B.

[SwitchB] static-lsp transit AtoC in-label 30 nexthop 20.1.1.2 out-label 50

# Configure the LSP egress node, Switch C.

[SwitchC] static-lsp egress AtoC in-label 50

5. Configure a static LSP from Switch C to Switch A:

# Configure the LSP ingress node, Switch C.

[SwitchC] static-lsp ingress CtoA destination 11.1.1.0 24 nexthop 20.1.1.1 out-label 40

# Configure the LSP transit node, Switch B.

[SwitchB] static-lsp transit CtoA in-label 40 nexthop 10.1.1.1 out-label 70

# Configure the LSP egress node, Switch A.

[SwitchA] static-lsp egress CtoA in-label 70

Verifying the configuration

# Display static LSP information on switches. This example uses Switch A.

[SwitchA] display mpls static-lsp

Total: 2

Name FEC In/Out Label Nexthop/Out Interface State

AtoC 21.1.1.0/24 NULL/30 10.1.1.2 Up

CtoA -/- 70/NULL - Up

# Test the connectivity of the LSP from Switch A to Switch C.

[SwitchA] ping mpls -a 11.1.1.1 ipv4 21.1.1.0 24

MPLS Ping FEC: 21.1.1.0/24 : 100 data bytes

100 bytes from 20.1.1.2: Sequence=1 time=4 ms

100 bytes from 20.1.1.2: Sequence=2 time=1 ms

100 bytes from 20.1.1.2: Sequence=3 time=1 ms

100 bytes from 20.1.1.2: Sequence=4 time=1 ms

100 bytes from 20.1.1.2: Sequence=5 time=1 ms

--- FEC: 21.1.1.0/24 ping statistics ---

5 packets transmitted, 5 packets received, 0.0% packet loss

round-trip min/avg/max = 1/1/4 ms

# Test the connectivity of the LSP from Switch C to Switch A.

[SwitchC] ping mpls -a 21.1.1.1 ipv4 11.1.1.0 24

MPLS Ping FEC: 11.1.1.0/24 : 100 data bytes

100 bytes from 10.1.1.1: Sequence=1 time=5 ms

100 bytes from 10.1.1.1: Sequence=2 time=1 ms

100 bytes from 10.1.1.1: Sequence=3 time=1 ms

100 bytes from 10.1.1.1: Sequence=4 time=1 ms

100 bytes from 10.1.1.1: Sequence=5 time=1 ms

--- FEC: 11.1.1.0/24 ping statistics ---

5 packets transmitted, 5 packets received, 0.0% packet loss

round-trip min/avg/max = 1/1/5 ms

Configuring LDP

Overview

The Label Distribution Protocol (LDP) dynamically distributes FEC-label mapping information between LSRs to establish LSPs.

Terminology

LDP session

Two LSRs establish a TCP-based LDP session to exchange FEC-label mappings.

LDP peer

Two LSRs that use LDP to exchange FEC-label mappings are LDP peers.

Label spaces and LDP identifiers

Label spaces include the following types:

· Per-interface label space—Each interface uses a single, independent label space. Different interfaces can use the same label values.

· Per-platform label space—Each LSR uses a single label space. The device only supports the per-platform label space.

A six-byte LDP Identifier (LDP ID) identifies a label space on an LSR. It is in the format of <LSR ID>:<label space number>, where:

· The LSR ID takes four bytes to identify the LSR.

· The label space number takes two bytes to identify a label space within the LSR.

A label space number of 0 indicates that the label space is a per-platform label space. A label space number other than 0 indicates a per-interface label space.
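
The LDP ID layout can be illustrated with a short Python sketch that converts between the six-byte form and the <LSR ID>:<label space number> notation. The function names are hypothetical, and the LSR ID 1.1.1.9 is borrowed from the static LSP configuration example in this document.

```python
import ipaddress
import struct


def pack_ldp_id(lsr_id: str, label_space: int) -> bytes:
    """Build the six-byte LDP ID: four-byte LSR ID plus two-byte label space number."""
    return ipaddress.IPv4Address(lsr_id).packed + struct.pack("!H", label_space)


def parse_ldp_id(data: bytes):
    """Split a six-byte LDP ID into the LSR ID, label space number, and space type."""
    if len(data) != 6:
        raise ValueError("an LDP ID is exactly six bytes long")
    lsr_id = str(ipaddress.IPv4Address(data[:4]))
    (label_space,) = struct.unpack("!H", data[4:])
    space_type = "per-platform" if label_space == 0 else "per-interface"
    return lsr_id, label_space, space_type


def format_ldp_id(data: bytes) -> str:
    """Render an LDP ID in the <LSR ID>:<label space number> notation."""
    lsr_id, label_space, _ = parse_ldp_id(data)
    return f"{lsr_id}:{label_space}"


ldp_id = pack_ldp_id("1.1.1.9", 0)
print(ldp_id.hex())           # 010101090000
print(format_ldp_id(ldp_id))  # 1.1.1.9:0
print(parse_ldp_id(ldp_id))   # ('1.1.1.9', 0, 'per-platform')
```

Because the device supports only the per-platform label space, the label space number in its own LDP IDs is expected to be 0.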

FECs and FEC-label mappings

MPLS groups packets with the same characteristics (such as the same destination or service class) into a class, called an "FEC." The packets of the same FEC are handled in the same way on an MPLS network.

LDP can classify FECs by destination IP address.

An LSR assigns a label for an FEC and advertises the FEC-label mapping, or FEC-label binding, to its peers in a Label Mapping message.

LDP messages

LDP mainly uses the following types of messages:

· Discovery messages—Declare and maintain the presence of LSRs, such as Hello messages.

· Session messages—Establish, maintain, and terminate sessions between LDP peers, such as Initialization messages used for parameter negotiation and Keepalive messages used to maintain sessions.

· Advertisement messages—Create, alter, and remove FEC-label mappings, such as Label Mapping messages used to advertise FEC-label mappings.

· Notification messages—Provide advisory information and notify errors, such as Notification messages.

LDP uses UDP to transport discovery messages for efficiency, and uses TCP to transport session, advertisement, and notification messages for reliability.

LDP operation

LDP operates in the following phases:

Discovering and maintaining LDP peers

The device supports only the Basic Discovery mechanism in the current software release. Using Basic Discovery, an LDP-enabled LSR sends Link Hello messages to multicast address 224.0.0.2, which identifies all routers on the subnet. All directly connected LSRs can then discover the LSR and establish a hello adjacency with it.

LDP peers send Hello messages at the hello interval to maintain a hello adjacency. If LDP receives no Hello message from a hello adjacency before the hello hold timer expires, it removes the hello adjacency.

Establishing and maintaining LDP sessions

LDP establishes a session with a peer in the following steps:

1. Establishes a TCP connection with the neighbor.

2. Negotiates session parameters such as LDP version, label distribution method, and Keepalive timer, and establishes an LDP session with the neighbor if the negotiation succeeds.

After a session is established, LDP sends LDP PDUs (an LDP PDU carries one or more LDP messages) to maintain the session. If no information is exchanged between the LDP peers within the Keepalive interval, LDP sends Keepalive messages at the Keepalive interval to maintain the session. If LDP receives no LDP PDU from a neighbor before the keepalive hold timer expires, or the last hello adjacency with the neighbor is removed, LDP terminates the session.

LDP can also send a Shutdown message to a neighbor to terminate the LDP session.
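The session maintenance rules above can be summarized as a small decision function. This is a simplified sketch, not the device's implementation: the function and parameter names are hypothetical, times are in seconds, and the defaults are the Keepalive interval (15 seconds) and Keepalive hold time (45 seconds) listed later in this chapter.

```python
def session_action(since_last_sent, since_last_received,
                   hello_adjacencies=1,
                   keepalive_interval=15, keepalive_hold=45):
    """Decide what LDP does for one session at a given moment.

    since_last_sent:     seconds since the local LSR last sent any LDP PDU
    since_last_received: seconds since the last LDP PDU arrived from the neighbor
    hello_adjacencies:   number of hello adjacencies remaining with the neighbor
    """
    # The session ends if the hold timer expires or the last adjacency is gone.
    if since_last_received >= keepalive_hold or hello_adjacencies == 0:
        return "terminate"
    # With nothing else to send, a Keepalive message keeps the session alive.
    if since_last_sent >= keepalive_interval:
        return "send-keepalive"
    return "idle"
```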

Establishing LSPs

LDP classifies FECs according to destination IP addresses in IP routing entries, creates FEC-label mappings, and advertises the mappings to LDP peers through LDP sessions. After an LDP peer receives a FEC-label mapping, it uses the received label and the label locally assigned to that FEC to create an LFIB entry for that FEC. When all LSRs (from the Ingress to the Egress) establish an LFIB entry for the FEC, an LSP is established exclusively for the FEC.

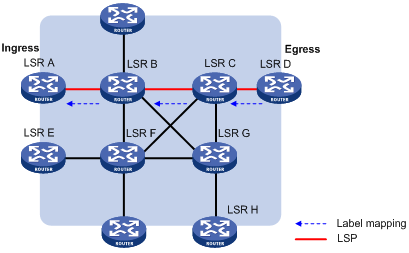

Figure 9 Dynamically establishing an LSP

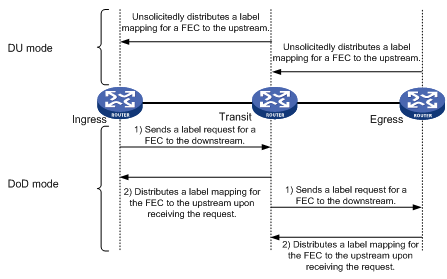

Label distribution and control

Label advertisement modes

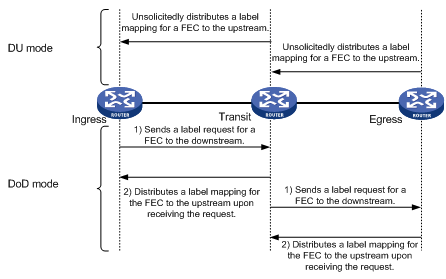

Figure 10 Label advertisement modes

LDP advertises FEC-label mappings in one of the following ways:

· Downstream Unsolicited (DU) mode—Distributes FEC-label mappings to the upstream LSR, without waiting for label requests. The device supports only the DU mode.

· Downstream on Demand (DoD) mode—Sends a label request for a FEC to the downstream LSR. After receiving the label request, the downstream LSR distributes the FEC-label mapping for that FEC to the upstream LSR.

NOTE: A pair of upstream and downstream LSRs must use the same label advertisement mode. Otherwise, the LSP cannot be established.

Label distribution control

LDP controls label distribution in one of the following ways:

· Independent label distribution—Distributes a FEC-label mapping to an upstream LSR at any time. An LSR might distribute a mapping for a FEC to its upstream LSR before it receives a label mapping for that FEC from its downstream LSR. As shown in Figure 11, in DU mode, each LSR distributes a label mapping for a FEC to its upstream LSR whenever it is ready to label-switch the FEC, without waiting for a label mapping for the FEC from its downstream LSR. In DoD mode, an LSR distributes a label mapping for a FEC to its upstream LSR after it receives a label request for the FEC, without waiting for a label mapping for the FEC from its downstream LSR.

Figure 11 Independent label distribution control mode

· Ordered label distribution—Distributes a label mapping for a FEC to its upstream LSR only after it receives a label mapping for that FEC from its downstream LSR unless the local node is the egress node of the FEC. As shown in Figure 10, in DU mode, an LSR distributes a label mapping for a FEC to its upstream LSR only if it receives a label mapping for the FEC from its downstream LSR. In DoD mode, when an LSR (Transit) receives a label request for a FEC from its upstream LSR (Ingress), it continues to send a label request for the FEC to its downstream LSR (Egress). After the transit LSR receives a label mapping for the FEC from the egress LSR, it distributes a label mapping for the FEC to the ingress.
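The difference between the two control modes in DU mode comes down to one condition. The following sketch expresses it as a predicate; the function name and the string values for the mode are hypothetical and chosen only for illustration.

```python
def may_advertise_upstream(mode, is_egress, has_downstream_mapping):
    """Return True if an LSR in DU mode may advertise a label mapping
    for a FEC to its upstream LSR."""
    if mode == "independent":
        # Independent control: advertise as soon as the LSR is ready,
        # regardless of what the downstream LSR has sent.
        return True
    if mode == "ordered":
        # Ordered control: wait for the downstream mapping unless this
        # LSR is the egress node of the FEC.
        return is_egress or has_downstream_mapping
    raise ValueError("unknown label distribution control mode: " + mode)
```

In Ordered mode, a transit LSR that has not yet heard from its downstream LSR returns `False`, which is why LSP setup proceeds hop by hop from the egress toward the ingress.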

Label retention mode

The label retention mode specifies whether an LSR maintains a label mapping for a FEC learned from a neighbor that is not its next hop.

· Liberal label retention—Retains a received label mapping for a FEC regardless of whether the advertising LSR is the next hop of the FEC. This mechanism allows for quicker adaptation to topology changes, but it wastes system resources because LDP has to keep useless labels. The device only supports liberal label retention.

· Conservative label retention—Retains a received label mapping for a FEC only when the advertising LSR is the next hop of the FEC. This mechanism saves label resources, but it cannot quickly adapt to topology changes.
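The two retention modes can be compared with a short sketch. It assumes, for illustration only, that the mappings received for one FEC are held in a dictionary keyed by the advertising peer; the function name and data layout are hypothetical.

```python
def retain_mappings(received, next_hop, mode="liberal"):
    """Return the label mappings an LSR keeps for one FEC.

    received: dict mapping the advertising peer to the label it advertised
    next_hop: the peer that is the current next hop of the FEC
    """
    if mode == "liberal":
        # Keep every mapping, so a label is already available if the
        # next hop changes to another peer.
        return dict(received)
    if mode == "conservative":
        # Keep only the mapping learned from the current next hop.
        return {peer: label for peer, label in received.items()
                if peer == next_hop}
    raise ValueError("unknown label retention mode: " + mode)
```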

LDP GR

LDP GR overview

LDP Graceful Restart enables an LSR to retain MPLS forwarding entries during an LDP restart, ensuring continuous MPLS forwarding.

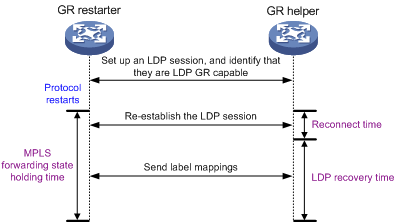

As shown in Figure 12, GR defines the following roles:

· GR restarter—An LSR that performs GR. It must be GR-capable.

· GR helper—A neighbor LSR that helps the GR restarter to complete GR.

The device can act as a GR restarter or a GR helper.

As shown in Figure 13, LDP GR works in the following steps:

1. LSRs establish an LDP session. The L flag of the Fault Tolerance TLV in their Initialization messages is set to 1 to indicate that they support LDP GR.

2. When LDP restarts, the GR restarter starts the MPLS Forwarding State Holding timer, and marks the MPLS forwarding entries as stale. When the GR helper detects that the LDP session with the GR restarter goes down, it marks the FEC-label mappings learned from the session as stale and starts the Reconnect timer received from the GR restarter.

3. After LDP completes restart, the GR restarter re-establishes an LDP session with the GR helper. If the LDP session is not set up before the Reconnect timer expires, the GR helper deletes the stale FEC-label mappings and the corresponding MPLS forwarding entries. If the LDP session is successfully set up before the Reconnect timer expires, the GR restarter sends the remaining time of the MPLS Forwarding State Holding timer as the LDP Recovery time to the GR helper.

4. After the LDP session is re-established, the GR helper starts the LDP Recovery timer.

5. The GR restarter and the GR helper exchange label mappings and update their MPLS forwarding tables.

The GR restarter compares each received label mapping against stale MPLS forwarding entries. If a match is found, the restarter deletes the stale mark for the matching entry. Otherwise, it adds a new entry for the label mapping.

The GR helper compares each received label mapping against stale FEC-label mappings. If a match is found, the helper deletes the stale mark for the matching mapping. Otherwise, it adds the received FEC-label mapping and a new MPLS forwarding entry for the mapping.

6. When the MPLS Forwarding State Holding timer expires, the GR restarter deletes all stale MPLS forwarding entries.

7. When the LDP Recovery timer expires, the GR helper deletes all stale FEC-label mappings.
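The stale-entry handling in steps 5 through 7 follows the same pattern on both the GR restarter and the GR helper. The sketch below models it for the GR helper, under the assumption that a mapping can be represented as a (FEC, label) pair; the function name and the data layout are hypothetical.

```python
def reconcile_stale(stale, received):
    """Compare mappings received after the session is re-established
    against the mappings that were marked stale.

    stale:    iterable of (fec, label) pairs marked stale
    received: iterable of (fec, label) pairs received from the peer
    Returns (refreshed, added, still_stale). The still_stale set is what
    the helper deletes when the LDP Recovery timer expires.
    """
    still_stale = set(stale)
    refreshed, added = set(), set()
    for mapping in received:
        if mapping in still_stale:
            # A match: remove the stale mark and keep the mapping.
            still_stale.discard(mapping)
            refreshed.add(mapping)
        else:
            # No match: treat it as a new mapping.
            added.add(mapping)
    return refreshed, added, still_stale
```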

LDP NSR

LDP nonstop routing (NSR) backs up protocol states and data (including LDP session and LSP information) from the active process to the standby process. When the LDP active process fails, the standby process becomes active and takes over processing seamlessly. The LDP peers are not notified of the LDP interruption. The LDP session stays in Operational state, and the forwarding is not interrupted.

The LDP active process fails when one of the following events occurs:

· The active process restarts.

· The MPU where the active process resides fails.

· The MPU where the active process resides performs an ISSU.

Choose either LDP NSR or LDP GR to ensure continuous traffic forwarding.

· Device requirements

○ To use LDP NSR, the device must have two or more MPUs, and the active and standby processes for LDP must reside on different MPUs.

○ To use LDP GR, the device needs only one MPU.

· LDP peer requirements

○ With LDP NSR, LDP peers of the local device are not notified of any switchover event on the local device. The local device does not require help from a peer to restore the MPLS forwarding information.

○ With LDP GR, the LDP peer must be able to identify the GR capability flag (in the Initialization message) of the GR restarter. The LDP peer acts as a GR helper to help the GR restarter to restore MPLS forwarding information.

LDP-IGP synchronization

Basic operating mechanism

LDP establishes LSPs based on the IGP optimal route. If LDP is not synchronized with IGP, MPLS traffic forwarding might be interrupted.

LDP is not synchronized with IGP when one of the following occurs:

· A link is up, and IGP advertises and uses this link. However, LDP LSPs on this link have not been established.

· An LDP session on a link is down, and LDP LSPs on the link have been removed. However, IGP still uses this link.

· The Ordered label distribution control mode is used. IGP used the link before the local device received the label mappings from the downstream LSR to establish LDP LSPs.

After LDP-IGP synchronization is enabled, IGP advertises the actual cost of a link only when LDP convergence on the link is completed. Before LDP convergence is completed, IGP advertises the maximum cost of the link. In this way, the link is visible on the IGP topology, but IGP does not select this link as the optimal route when other links are available. Therefore, the device can avoid discarding MPLS packets when there is not an LDP LSP established on the optimal route.

LDP convergence on a link is completed when all of the following conditions are met:

· The local device establishes an LDP session to at least one peer, and the LDP session is already in Operational state.

· The local device has distributed the label mappings to at least one peer.
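The convergence conditions and the resulting cost advertisement can be sketched as two small functions. The names are hypothetical, and the value 65535 used for the maximum cost is an assumption for illustration; the actual maximum depends on the IGP.

```python
def ldp_converged(operational_sessions, peers_sent_mappings):
    """LDP convergence on a link is completed when at least one session is
    in Operational state and label mappings have gone to at least one peer."""
    return operational_sessions >= 1 and peers_sent_mappings >= 1


def advertised_cost(configured_cost, converged, max_cost=65535):
    """Cost that IGP advertises for the link when LDP-IGP synchronization
    is enabled. max_cost is an assumed placeholder value."""
    # Before convergence the link stays visible in the topology, but the
    # maximum cost keeps IGP from preferring it when other links exist.
    return configured_cost if converged else max_cost
```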

Notification delay for LDP convergence completion

By default, LDP immediately sends a notification to IGP that LDP convergence has completed. However, immediate notifications might cause MPLS traffic forwarding interruptions in one of the following scenarios:

· LDP peers use the Ordered label distribution control mode. The device has not received a label mapping from downstream at the time LDP notifies IGP that LDP convergence has completed.

· A large number of label mappings are distributed from downstream. Label advertisement is not completed when LDP notifies IGP that LDP convergence has completed.

To avoid traffic forwarding interruptions in these scenarios, configure the notification delay. When LDP convergence on a link is completed, LDP waits before notifying IGP.

Notification delay for LDP restart or active/standby switchover

When an LDP restart or an active/standby switchover occurs, LDP takes time to converge, and LDP notifies IGP of the LDP-IGP synchronization status as follows:

· If a notification delay is not configured, LDP immediately notifies IGP of the current synchronization states during convergence, and then updates the states after LDP convergence. This could impact IGP processing.

· If a notification delay is configured, LDP notifies IGP of the LDP-IGP synchronization states in bulk when one of the following events occurs:

○ LDP recovers to the state before the restart or switchover.

○ The maximum delay timer expires.

LDP FRR

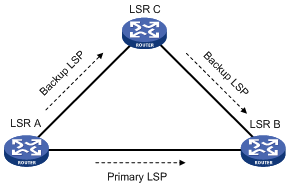

A link or router failure on a path can cause packet loss until LDP completes LSP establishment on the new path. LDP FRR enables fast rerouting to minimize the failover time. LDP FRR is based on IP FRR and is enabled automatically after IP FRR is enabled.

You can use one of the following methods to enable IP FRR:

· Configure an IGP to automatically calculate a backup next hop.

· Configure an IGP to specify a backup next hop by using a routing policy.

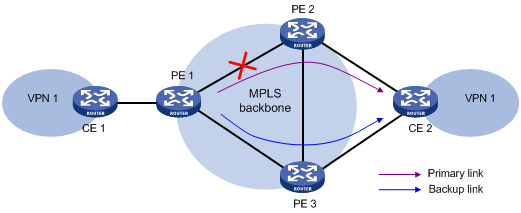

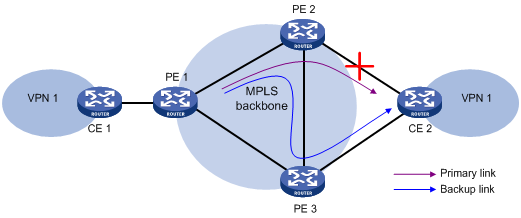

Figure 14 Network diagram for LDP FRR

As shown in Figure 14, configure IP FRR on LSR A. The IGP automatically calculates a backup next hop or it specifies a backup next hop through a routing policy. LDP creates a primary LSP and a backup LSP according to the primary route and the backup route calculated by IGP. When the primary LSP operates correctly, it forwards the MPLS packets. When the primary LSP fails, LDP directs packets to the backup LSP.

When packets are forwarded through the backup LSP, IGP calculates the optimal path based on the new network topology. When IGP route convergence occurs, LDP establishes a new LSP according to the optimal path. If a new LSP is not established after IGP route convergence, traffic forwarding might be interrupted. As a best practice, enable LDP-IGP synchronization to work with LDP FRR to reduce the traffic interruption time.

Protocols

RFC 5036, LDP Specification

Feature and software version compatibility

The LDP feature is available in Release 1138P01 and later versions.

LDP configuration task list

| Tasks at a glance |
|---|
| Enable LDP: 1. (Required.) Enabling LDP globally 2. (Required.) Enabling LDP on an interface |
| (Optional.) Configuring Hello parameters |
| (Optional.) Configuring LDP session parameters |
| (Optional.) Configuring LDP backoff |
| (Optional.) Configuring LDP MD5 authentication |
| (Optional.) Configuring an LSP generation policy |
| (Optional.) Configuring the LDP label distribution control mode |
| (Optional.) Configuring a label advertisement policy |
| (Optional.) Configuring a label acceptance policy |
| (Optional.) Configuring LDP loop detection |
| (Optional.) Configuring LDP GR |
| (Optional.) Configuring LDP NSR |
| (Optional.) Configuring LDP-IGP synchronization |
| (Optional.) Configuring LDP FRR |
| (Optional.) Resetting LDP sessions |
| (Optional.) Enabling SNMP notifications for LDP |

Enabling LDP

To enable LDP, you must first enable LDP globally. Then, enable LDP on relevant interfaces or configure IGP to automatically enable LDP on those interfaces.

Enabling LDP globally

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enable LDP for the local node or for a VPN. | · Enable LDP for the local node and enter LDP view: mpls ldp · Enable LDP for a VPN and enter LDP-VPN instance view: a. mpls ldp b. vpn-instance vpn-instance-name | By default, LDP is disabled. |
| 3. Configure an LDP LSR ID. | lsr-id lsr-id | By default, the LDP LSR ID is the same as the MPLS LSR ID. |

Enabling LDP on an interface

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter interface view. | interface interface-type interface-number | If the interface is bound to a VPN instance, you must enable LDP for the VPN instance by using the vpn-instance command in LDP view. |
| 3. Enable LDP on the interface. | mpls ldp enable | By default, LDP is disabled on an interface. |

Configuring Hello parameters

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter the view of the interface where you want to establish an LDP session. | interface interface-type interface-number | N/A |
| 3. Configure the Link Hello hold time. | mpls ldp timer hello-hold timeout | By default, the Link Hello hold time is 15 seconds. |
| 4. Configure the Link Hello interval. | mpls ldp timer hello-interval interval | By default, the Link Hello interval is 5 seconds. |

Configuring LDP session parameters

This task configures the following LDP session parameters:

· Keepalive hold time and Keepalive interval.

· LDP transport address—IP address for establishing TCP connections.

When you configure LDP session parameters, follow these guidelines:

· The configured LDP transport address must be the IP address of an up interface on the device. Otherwise, no LDP session can be established.

· Make sure the LDP transport addresses of the local and peer LSRs can reach each other. Otherwise, no TCP connection can be established.

To configure LDP session parameters:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter interface view. | interface interface-type interface-number | N/A |
| 3. Configure the Keepalive hold time. | mpls ldp timer keepalive-hold timeout | By default, the Keepalive hold time is 45 seconds. |
| 4. Configure the Keepalive interval. | mpls ldp timer keepalive-interval interval | By default, the Keepalive interval is 15 seconds. |
| 5. Configure the LDP transport address. | mpls ldp transport-address { ip-address \| interface } | By default, the LDP transport address is the LSR ID of the local device if the interface where you want to establish an LDP session belongs to the public network. If the interface belongs to a VPN, the LDP transport address is the primary IP address of the interface. If the interface where you want to establish an LDP session is bound to a VPN instance, the interface with the IP address specified with this command must be bound to the same VPN instance. |

Configuring LDP backoff

If LDP session parameters (for example, the label advertisement mode) are incompatible, two LDP peers cannot establish a session, and they will keep negotiating with each other.

The LDP backoff mechanism can mitigate this problem by using an initial delay timer and a maximum delay timer. After LDP fails to establish a session with a peer LSR for the first time, LDP does not start an attempt until the initial delay timer expires. If the session setup fails again, LDP waits for two times the initial delay before the next attempt, and so forth until the maximum delay time is reached. After that, the maximum delay time will always take effect.
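The delay schedule described above can be sketched as follows. The sketch assumes that "and so forth" means the delay doubles after every failed attempt until it is capped at the maximum delay; the function name is hypothetical, and the defaults are the 15-second initial delay and 120-second maximum delay listed in the table below.

```python
def backoff_delays(initial=15, maximum=120, failures=6):
    """Return the delay (in seconds) LDP waits after each consecutive
    session setup failure."""
    delays = []
    delay = initial
    for _ in range(failures):
        delays.append(min(delay, maximum))
        # Assumption: the delay doubles each time, capped at the maximum.
        delay = min(delay * 2, maximum)
    return delays
```

With the default timers, six consecutive failures produce waits of 15, 30, 60, 120, 120, and 120 seconds.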

To configure LDP backoff:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view or enter LDP-VPN instance view. | · Enter LDP view: mpls ldp · Enter LDP-VPN instance view: a. mpls ldp b. vpn-instance vpn-instance-name | N/A |
| 3. Configure the initial delay time and maximum delay time. | backoff initial initial-time maximum maximum-time | By default, the initial delay time is 15 seconds and the maximum delay time is 120 seconds. |

Configuring LDP MD5 authentication

To improve security for LDP sessions, you can configure MD5 authentication for the underlying TCP connections to check the integrity of LDP messages.

To configure LDP MD5 authentication:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view or enter LDP-VPN instance view. | · Enter LDP view: mpls ldp · Enter LDP-VPN instance view: a. mpls ldp b. vpn-instance vpn-instance-name | N/A |
| 3. Enable LDP MD5 authentication. | md5-authentication peer-lsr-id { cipher \| plain } password | By default, LDP MD5 authentication is disabled. |

Configuring an LSP generation policy

An LSP generation policy controls the number of LSPs generated by LDP in one of the following ways:

· Use all routes to establish LSPs.

· Use the routes permitted by an IP prefix list to establish LSPs. For information about IP prefix list configuration, see Layer 3—IP Routing Configuration Guide.

· Use only host routes with a 32-bit mask to establish LSPs.
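The three policies can be modeled as a filter over the routing table. This is a simplified sketch: the function name and policy strings are hypothetical, and the prefix list is reduced to exact-match entries, whereas a real IP prefix list can also match ranges of mask lengths.

```python
import ipaddress


def lsp_trigger(routes, policy="host", prefix_list=None):
    """Return the routes (as prefix strings) that LDP uses to establish LSPs.

    routes:      iterable of IPv4 prefixes such as "10.1.1.0/24"
    policy:      "all", "prefix-list", or "host" (the default behavior)
    prefix_list: permitted prefixes, used only with "prefix-list"
    """
    nets = [ipaddress.ip_network(r) for r in routes]
    if policy == "all":
        chosen = nets
    elif policy == "prefix-list":
        # Simplification: exact prefix match only.
        permitted = [ipaddress.ip_network(p) for p in (prefix_list or [])]
        chosen = [n for n in nets if n in permitted]
    elif policy == "host":
        # Only host routes with a 32-bit mask.
        chosen = [n for n in nets if n.prefixlen == 32]
    else:
        raise ValueError("unknown LSP generation policy: " + policy)
    return [str(n) for n in chosen]
```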

To configure an LSP generation policy:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view or enter LDP-VPN instance view. | · Enter LDP view: mpls ldp · Enter LDP-VPN instance view: a. mpls ldp b. vpn-instance vpn-instance-name | N/A |
| 3. Configure an LSP generation policy. | lsp-trigger { all \| prefix-list prefix-list-name } | By default, LDP uses only host routes with a 32-bit mask to establish LSPs. |

Configuring the LDP label distribution control mode

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view or enter LDP-VPN instance view. | · Enter LDP view: mpls ldp · Enter LDP-VPN instance view: a. mpls ldp b. vpn-instance vpn-instance-name | N/A |
| 3. Configure the label distribution control mode. | label-distribution { independent \| ordered } | By default, the Ordered label distribution mode is used. To apply the new setting to LDP sessions established before the command is configured, you must reset the LDP sessions. |

Configuring a label advertisement policy

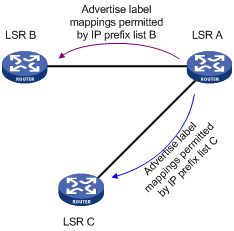

A label advertisement policy uses IP prefix lists to control the FEC-label mappings advertised to peers.

As shown in Figure 15, LSR A advertises label mappings for FECs permitted by IP prefix list B to LSR B and advertises label mappings for FECs permitted by IP prefix list C to LSR C.

Figure 15 Label advertisement control diagram

A label advertisement policy on an LSR and a label acceptance policy on its upstream LSR can achieve the same purpose. As a best practice, use label advertisement policies to reduce network load if downstream LSRs support label advertisement control.

Before you configure an LDP label advertisement policy, create an IP prefix list. For information about IP prefix list configuration, see Layer 3—IP Routing Configuration Guide.

To configure a label advertisement policy:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view or enter LDP-VPN instance view. | · Enter LDP view: mpls ldp · Enter LDP-VPN instance view: a. mpls ldp b. vpn-instance vpn-instance-name | N/A |
| 3. Configure a label advertisement policy. | advertise-label prefix-list prefix-list-name [ peer peer-prefix-list-name ] | By default, LDP advertises all label mappings permitted by the LSP generation policy to all peers. |

Configuring a label acceptance policy

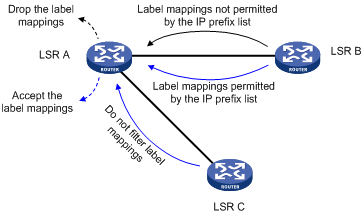

A label acceptance policy uses an IP prefix list to control the label mappings received from a peer.

As shown in Figure 16, LSR A uses an IP prefix list to filter label mappings from LSR B, and it does not filter label mappings from LSR C.

Figure 16 Label acceptance control diagram

A label advertisement policy on an LSR and a label acceptance policy on its upstream LSR can achieve the same purpose. As a best practice, use the label advertisement policy to reduce network load.

You must create an IP prefix list before you configure a label acceptance policy. For information about IP prefix list configuration, see Layer 3—IP Routing Configuration Guide.

To configure a label acceptance policy:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view or enter LDP-VPN instance view. | · Enter LDP view: mpls ldp · Enter LDP-VPN instance view: a. mpls ldp b. vpn-instance vpn-instance-name | N/A |
| 3. Configure a label acceptance policy. | accept-label peer peer-lsr-id prefix-list prefix-list-name | By default, LDP accepts all label mappings. |

Configuring LDP loop detection

LDP detects and terminates LSP loops in the following ways:

· Maximum hop count—LDP adds a hop count in a label request or label mapping message. The hop count value increments by 1 on each LSR. When the maximum hop count is reached, LDP considers that a loop has occurred and terminates the establishment of the LSP.

· Path vector—LDP adds LSR ID information in a label request or label mapping message. Each LSR checks whether its LSR ID is contained in the message. If it is not, the LSR adds its own LSR ID into the message. If it is, the LSR considers that a loop has occurred and terminates LSP establishment. In addition, when the number of LSR IDs in the message reaches the path vector limit, LDP also considers that a loop has occurred and terminates LSP establishment.
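The two checks can be combined into a single per-LSR function, sketched below. The function name and return layout are hypothetical, and treating "reached" as greater-than-or-equal is an assumption about the exact boundary. The defaults of 32 are the maximum hop count and path vector limit listed in the table below.

```python
def check_loop(local_lsr_id, hop_count, path_vector,
               max_hops=32, pv_limit=32):
    """Process the loop detection fields of a label request or label
    mapping message on one LSR.

    Returns (loop_detected, new_hop_count, new_path_vector).
    """
    # Maximum hop count method: the hop count increments by 1 on each LSR.
    new_hops = hop_count + 1
    if new_hops >= max_hops:
        return True, new_hops, list(path_vector)
    # Path vector method: finding the local LSR ID means a loop.
    if local_lsr_id in path_vector:
        return True, new_hops, list(path_vector)
    new_pv = list(path_vector) + [local_lsr_id]
    # Reaching the path vector limit is also treated as a loop.
    if len(new_pv) >= pv_limit:
        return True, new_hops, new_pv
    return False, new_hops, new_pv
```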

To configure LDP loop detection:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view or enter LDP-VPN instance view. | · Enter LDP view: mpls ldp · Enter LDP-VPN instance view: a. mpls ldp b. vpn-instance vpn-instance-name | N/A |
| 3. Enable loop detection. | loop-detect | By default, loop detection is disabled. After loop detection is enabled, the device uses both the maximum hop count and the path vector methods to detect loops. |
| 4. Specify the maximum hop count. | maxhops hop-number | By default, the maximum hop count is 32. |
| 5. Specify the path vector limit. | pv-limit pv-number | By default, the path vector limit is 32. |

NOTE: LDP loop detection applies only to networks composed of devices that do not support the TTL mechanism, such as ATM switches. Do not use LDP loop detection on other networks, because it only adds LDP overhead.

Configuring LDP GR

Before you configure LDP GR, enable LDP on the GR restarter and GR helpers.

To configure LDP GR:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view. | mpls ldp | N/A |
| 3. Enable LDP GR. | graceful-restart | By default, LDP GR is disabled. |
| 4. Configure the Reconnect timer for LDP GR. | graceful-restart timer reconnect reconnect-time | By default, the Reconnect time is 120 seconds. |
| 5. Configure the MPLS Forwarding State Holding timer for LDP GR. | graceful-restart timer forwarding-hold hold-time | By default, the MPLS Forwarding State Holding time is 180 seconds. |

Configuring LDP NSR

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter LDP view. | mpls ldp | N/A |
| 3. Enable LDP NSR. | non-stop-routing | By default, LDP NSR is disabled. |

Configuring LDP-IGP synchronization

After you enable LDP-IGP synchronization for an OSPF process, OSPF area, or an IS-IS process, LDP-IGP synchronization is enabled on the OSPF process interfaces or the IS-IS process interfaces.

You can execute the mpls ldp igp sync disable command to disable LDP-IGP synchronization on interfaces where LDP-IGP synchronization is not required.

Configuring LDP-OSPF synchronization

LDP-IGP synchronization is not supported for an OSPF process and its OSPF areas if the OSPF process belongs to a VPN instance.

To configure LDP-OSPF synchronization for an OSPF process:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter OSPF view. | ospf [ process-id \| router-id router-id ] * | N/A |
| 3. Enable LDP-OSPF synchronization. | mpls ldp sync | By default, LDP-OSPF synchronization is disabled. |
| 4. Return to system view. | quit | N/A |
| 5. Enter interface view. | interface interface-type interface-number | N/A |
| 6. (Optional.) Disable LDP-IGP synchronization on the interface. | mpls ldp igp sync disable | By default, LDP-IGP synchronization is not disabled on an interface. |
| 7. Return to system view. | quit | N/A |
| 8. Enter LDP view. | mpls ldp | N/A |
| 9. (Optional.) Set the delay for LDP to notify IGP of the LDP convergence. | igp sync delay time | By default, LDP immediately notifies IGP of the LDP convergence completion. |
| 10. (Optional.) Set the maximum delay for LDP to notify IGP of the LDP-IGP synchronization status after an LDP restart or active/standby switchover. | igp sync delay on-restart time | By default, the maximum notification delay is 90 seconds. |

To configure LDP-OSPF synchronization for an OSPF area:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter OSPF view. | ospf [ process-id \| router-id router-id ] * | N/A |
| 3. Enter area view. | area area-id | N/A |
| 4. Enable LDP-OSPF synchronization. | mpls ldp sync | By default, LDP-OSPF synchronization is disabled. |
| 5. Return to system view. | quit | N/A |
| 6. Enter interface view. | interface interface-type interface-number | N/A |
| 7. (Optional.) Disable LDP-IGP synchronization on the interface. | mpls ldp igp sync disable | By default, LDP-IGP synchronization is not disabled on an interface. |
| 8. Return to system view. | quit | N/A |
| 9. Enter LDP view. | mpls ldp | N/A |
| 10. (Optional.) Set the delay for LDP to notify IGP of the LDP convergence. | igp sync delay time | By default, LDP immediately notifies IGP of the LDP convergence completion. |
| 11. (Optional.) Set the maximum delay for LDP to notify IGP of the LDP-IGP synchronization status after an LDP restart or active/standby switchover. | igp sync delay on-restart time | By default, the maximum notification delay is 90 seconds. |

Configuring LDP-ISIS synchronization

LDP-IGP synchronization is not supported for an IS-IS process that belongs to a VPN instance.

To configure LDP-ISIS synchronization for an IS-IS process:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter IS-IS view. | isis [ process-id ] | N/A |
| 3. Enable LDP-ISIS synchronization. | mpls ldp sync [ level-1 \| level-2 ] | By default, LDP-ISIS synchronization is disabled. |
| 4. Return to system view. | quit | N/A |
| 5. Enter interface view. | interface interface-type interface-number | N/A |
| 6. (Optional.) Disable LDP-IGP synchronization on the interface. | mpls ldp igp sync disable | By default, LDP-IGP synchronization is not disabled on an interface. |
| 7. Return to system view. | quit | N/A |
| 8. Enter LDP view. | mpls ldp | N/A |
| 9. (Optional.) Set the delay for LDP to notify IGP of the LDP convergence completion. | igp sync delay time | By default, LDP immediately notifies IGP of the LDP convergence completion. |
| 10. (Optional.) Set the maximum delay for LDP to notify IGP of the LDP-IGP synchronization status after an LDP restart or an active/standby switchover occurs. | igp sync delay on-restart time | By default, the maximum notification delay is 90 seconds. |

Configuring LDP FRR

LDP FRR is based on IP FRR, and is enabled automatically after IP FRR is enabled. For information about configuring IP FRR, see Layer 3—IP Routing Configuration Guide.

Resetting LDP sessions

Changes to LDP session parameters take effect only on new LDP sessions. To apply the changes to an existing LDP session, you must reset all LDP sessions by executing the reset mpls ldp command.

Execute the reset mpls ldp command in user view.

| Task | Command | Remarks |
|---|---|---|
| Reset LDP sessions. | reset mpls ldp [ vpn-instance vpn-instance-name ] [ peer peer-id ] | If you specify the peer keyword, this command resets the LDP session to the specified peer without validating the session parameter changes. |

Enabling SNMP notifications for LDP

This feature enables the device to generate SNMP notifications for LDP when LDP sessions change, as defined in RFC 3815. The generated SNMP notifications are sent to the SNMP module.

To enable SNMP notifications for LDP:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enable SNMP notifications for LDP. | snmp-agent trap enable ldp | By default, SNMP notifications for LDP are enabled. |

For more information about SNMP notifications, see Network Management and Monitoring Configuration Guide.

Displaying and maintaining LDP

Execute display commands in any view.

| Task | Command |
|---|---|
| Display LDP discovery information (in standalone mode). | display mpls ldp discovery [ vpn-instance vpn-instance-name ] [ interface interface-type interface-number \| peer peer-lsr-id ] [ verbose ] [ standby slot slot-number ] |
| Display LDP discovery information (in IRF mode). | display mpls ldp discovery [ vpn-instance vpn-instance-name ] [ interface interface-type interface-number \| peer peer-lsr-id ] [ verbose ] [ standby chassis chassis-number slot slot-number ] |
| Display LDP FEC-label mapping information (in standalone mode). | display mpls ldp fec [ vpn-instance vpn-instance-name ] [ destination-address mask-length \| summary ] [ standby slot slot-number ] |
| Display LDP FEC-label mapping information (in IRF mode). | display mpls ldp fec [ vpn-instance vpn-instance-name ] [ destination-address mask-length \| summary ] [ standby chassis chassis-number slot slot-number ] |
| Display LDP interface information. | display mpls ldp interface [ interface-type interface-number ] |
| Display LDP-IGP synchronization information. | display mpls ldp igp sync [ interface interface-type interface-number ] |
| Display LDP LSP information. | display mpls ldp lsp [ vpn-instance vpn-instance-name ] [ destination-address mask-length ] |
| Display LDP running parameters. | display mpls ldp parameter [ vpn-instance vpn-instance-name ] |
| Display LDP peer and session information (in standalone mode). | display mpls ldp peer [ vpn-instance vpn-instance-name ] [ peer-lsr-id ] [ verbose ] [ standby slot slot-number ] |
| Display LDP peer and session information (in IRF mode). | display mpls ldp peer [ vpn-instance vpn-instance-name ] [ peer-lsr-id ] [ verbose ] [ standby chassis chassis-number slot slot-number ] |
| Display LDP summary information (in standalone mode). | display mpls ldp summary [ all \| vpn-instance vpn-instance-name ] [ standby slot slot-number ] |
| Display LDP summary information (in IRF mode). | display mpls ldp summary [ all \| vpn-instance vpn-instance-name ] [ standby chassis chassis-number slot slot-number ] |
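
One possible order for using these commands after a configuration change is to check sessions first, then label bindings, then synchronization state. In the sketch below, the device name Sysname is a placeholder, and the FEC 3.3.3.9/32 and VLAN-interface 2 are borrowed from the LDP LSP configuration example purely for illustration. Command output is omitted because it depends on the device.

```
# Check that LDP sessions to the expected peers exist, with details.
<Sysname> display mpls ldp peer verbose
# Check the LDP LSP information for a single FEC.
<Sysname> display mpls ldp lsp 3.3.3.9 32
# Check LDP-IGP synchronization information for a single interface.
<Sysname> display mpls ldp igp sync interface vlan-interface 2
```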

LDP configuration examples

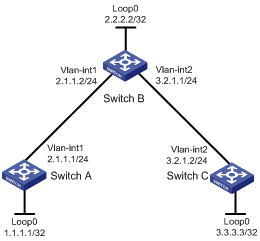

LDP LSP configuration example

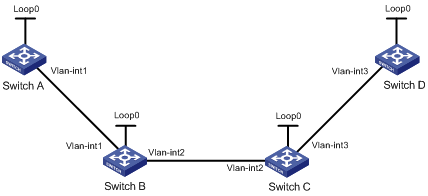

Network requirements

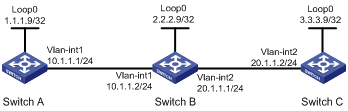

Switch A, Switch B, and Switch C all support MPLS.

Configure LDP to establish LSPs between Switch A and Switch C, so subnets 11.1.1.0/24 and 21.1.1.0/24 can reach each other over MPLS.

Configure LDP to establish LSPs only for destinations 1.1.1.9/32, 2.2.2.9/32, 3.3.3.9/32, 11.1.1.0/24, and 21.1.1.0/24 on Switch A, Switch B, and Switch C.

Requirements analysis

· To ensure that the LSRs establish LSPs automatically, enable LDP on each LSR.

· To establish LDP LSPs, configure a routing protocol to ensure IP connectivity between the LSRs. This example uses OSPF.

· To control the number of LSPs, configure an LSP generation policy on each LSR.

Configuration procedure

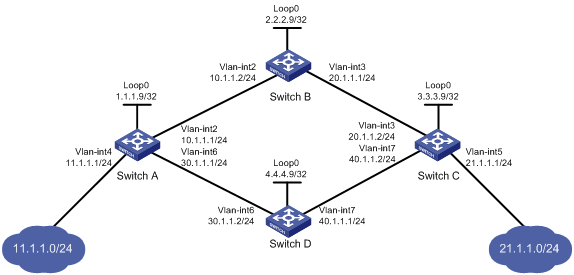

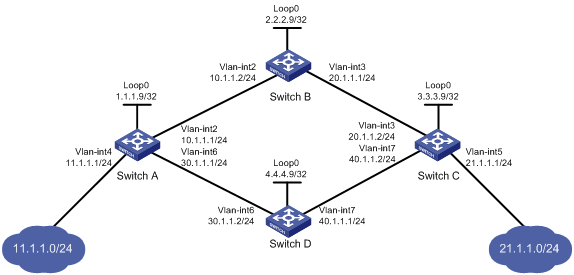

1. Configure IP addresses and masks for interfaces, including the loopback interfaces, as shown in Figure 17. (Details not shown.)

2. Configure OSPF on each switch to ensure IP connectivity between them:

# Configure Switch A.

<SwitchA> system-view

[SwitchA] ospf

[SwitchA-ospf-1] area 0

[SwitchA-ospf-1-area-0.0.0.0] network 1.1.1.9 0.0.0.0

[SwitchA-ospf-1-area-0.0.0.0] network 10.1.1.0 0.0.0.255

[SwitchA-ospf-1-area-0.0.0.0] network 11.1.1.0 0.0.0.255

[SwitchA-ospf-1-area-0.0.0.0] quit

[SwitchA-ospf-1] quit

# Configure Switch B.

<SwitchB> system-view

[SwitchB] ospf

[SwitchB-ospf-1] area 0

[SwitchB-ospf-1-area-0.0.0.0] network 2.2.2.9 0.0.0.0

[SwitchB-ospf-1-area-0.0.0.0] network 10.1.1.0 0.0.0.255

[SwitchB-ospf-1-area-0.0.0.0] network 20.1.1.0 0.0.0.255

[SwitchB-ospf-1-area-0.0.0.0] quit

[SwitchB-ospf-1] quit

# Configure Switch C.

<SwitchC> system-view

[SwitchC] ospf

[SwitchC-ospf-1] area 0

[SwitchC-ospf-1-area-0.0.0.0] network 3.3.3.9 0.0.0.0

[SwitchC-ospf-1-area-0.0.0.0] network 20.1.1.0 0.0.0.255

[SwitchC-ospf-1-area-0.0.0.0] network 21.1.1.0 0.0.0.255

[SwitchC-ospf-1-area-0.0.0.0] quit

[SwitchC-ospf-1] quit

# Display routing tables on the switches, for example, on Switch A, to verify that the switches have learned the routes to each other.

[SwitchA] display ip routing-table

Destinations : 21 Routes : 21

Destination/Mask Proto Pre Cost NextHop Interface

0.0.0.0/32 Direct 0 0 127.0.0.1 InLoop0

1.1.1.9/32 Direct 0 0 127.0.0.1 InLoop0

2.2.2.9/32 OSPF 10 1 10.1.1.2 Vlan2

3.3.3.9/32 OSPF 10 2 10.1.1.2 Vlan2

10.1.1.0/24 Direct 0 0 10.1.1.1 Vlan2

10.1.1.0/32 Direct 0 0 10.1.1.1 Vlan2

10.1.1.1/32 Direct 0 0 127.0.0.1 InLoop0

10.1.1.255/32 Direct 0 0 10.1.1.1 Vlan2

11.1.1.0/24 Direct 0 0 11.1.1.1 Vlan4

11.1.1.0/32 Direct 0 0 11.1.1.1 Vlan4

11.1.1.1/32 Direct 0 0 127.0.0.1 InLoop0

11.1.1.255/32 Direct 0 0 11.1.1.1 Vlan4

20.1.1.0/24 OSPF 10 2 10.1.1.2 Vlan2

21.1.1.0/24 OSPF 10 3 10.1.1.2 Vlan2

127.0.0.0/8 Direct 0 0 127.0.0.1 InLoop0

127.0.0.0/32 Direct 0 0 127.0.0.1 InLoop0

127.0.0.1/32 Direct 0 0 127.0.0.1 InLoop0

127.255.255.255/32 Direct 0 0 127.0.0.1 InLoop0

224.0.0.0/4 Direct 0 0 0.0.0.0 NULL0

224.0.0.0/24 Direct 0 0 0.0.0.0 NULL0

255.255.255.255/32 Direct 0 0 127.0.0.1 InLoop0

3. Enable MPLS and LDP:

# Configure Switch A.

[SwitchA] mpls lsr-id 1.1.1.9

[SwitchA] mpls ldp

[SwitchA-ldp] quit

[SwitchA] interface vlan-interface 2

[SwitchA-Vlan-interface2] mpls enable

[SwitchA-Vlan-interface2] mpls ldp enable

[SwitchA-Vlan-interface2] quit

# Configure Switch B.

[SwitchB] mpls lsr-id 2.2.2.9

[SwitchB] mpls ldp

[SwitchB-ldp] quit

[SwitchB] interface vlan-interface 2

[SwitchB-Vlan-interface2] mpls enable

[SwitchB-Vlan-interface2] mpls ldp enable

[SwitchB-Vlan-interface2] quit

[SwitchB] interface vlan-interface 3

[SwitchB-Vlan-interface3] mpls enable

[SwitchB-Vlan-interface3] mpls ldp enable

[SwitchB-Vlan-interface3] quit

# Configure Switch C.

[SwitchC] mpls lsr-id 3.3.3.9

[SwitchC] mpls ldp

[SwitchC-ldp] quit

[SwitchC] interface vlan-interface 3

[SwitchC-Vlan-interface3] mpls enable

[SwitchC-Vlan-interface3] mpls ldp enable

[SwitchC-Vlan-interface3] quit

4. Configure LSP generation policies:

# On Switch A, create IP prefix list switcha, and configure LDP to use only the routes permitted by the prefix list to establish LSPs.

[SwitchA] ip prefix-list switcha index 10 permit 1.1.1.9 32

[SwitchA] ip prefix-list switcha index 20 permit 2.2.2.9 32

[SwitchA] ip prefix-list switcha index 30 permit 3.3.3.9 32

[SwitchA] ip prefix-list switcha index 40 permit 11.1.1.0 24

[SwitchA] ip prefix-list switcha index 50 permit 21.1.1.0 24

[SwitchA] mpls ldp

[SwitchA-ldp] lsp-trigger prefix-list switcha

[SwitchA-ldp] quit

# On Switch B, create IP prefix list switchb, and configure LDP to use only the routes permitted by the prefix list to establish LSPs.

[SwitchB] ip prefix-list switchb index 10 permit 1.1.1.9 32

[SwitchB] ip prefix-list switchb index 20 permit 2.2.2.9 32

[SwitchB] ip prefix-list switchb index 30 permit 3.3.3.9 32

[SwitchB] ip prefix-list switchb index 40 permit 11.1.1.0 24

[SwitchB] ip prefix-list switchb index 50 permit 21.1.1.0 24

[SwitchB] mpls ldp

[SwitchB-ldp] lsp-trigger prefix-list switchb

[SwitchB-ldp] quit

# On Switch C, create IP prefix list switchc, and configure LDP to use only the routes permitted by the prefix list to establish LSPs.

[SwitchC] ip prefix-list switchc index 10 permit 1.1.1.9 32

[SwitchC] ip prefix-list switchc index 20 permit 2.2.2.9 32

[SwitchC] ip prefix-list switchc index 30 permit 3.3.3.9 32

[SwitchC] ip prefix-list switchc index 40 permit 11.1.1.0 24

[SwitchC] ip prefix-list switchc index 50 permit 21.1.1.0 24

[SwitchC] mpls ldp

[SwitchC-ldp] lsp-trigger prefix-list switchc

[SwitchC-ldp] quit

Verifying the configuration

# Display LDP LSP information on switches, for example, on Switch A.

[SwitchA] display mpls ldp lsp

Status Flags: * - stale, L - liberal, B - backup

Statistics:

FECs: 5 Ingress LSPs: 3 Transit LSPs: 3 Egress LSPs: 2

FEC In/Out Label Nexthop OutInterface

1.1.1.9/32 3/-

-/1279(L)

2.2.2.9/32 -/3 10.1.1.2 Vlan-int2

1279/3 10.1.1.2 Vlan-int2

3.3.3.9/32 -/1278 10.1.1.2 Vlan-int2

1278/1278 10.1.1.2 Vlan-int2

11.1.1.0/24 1277/-

-/1277(L)

21.1.1.0/24 -/1276 10.1.1.2 Vlan-int2

1276/1276 10.1.1.2 Vlan-int2

# Test the connectivity of the LDP LSP from Switch A to Switch C.

[SwitchA] ping mpls -a 11.1.1.1 ipv4 21.1.1.0 24

MPLS Ping FEC: 21.1.1.0/24 : 100 data bytes

100 bytes from 20.1.1.2: Sequence=1 time=1 ms

100 bytes from 20.1.1.2: Sequence=2 time=1 ms

100 bytes from 20.1.1.2: Sequence=3 time=8 ms

100 bytes from 20.1.1.2: Sequence=4 time=2 ms

100 bytes from 20.1.1.2: Sequence=5 time=1 ms

--- FEC: 21.1.1.0/24 ping statistics ---

5 packets transmitted, 5 packets received, 0.0% packet loss

round-trip min/avg/max = 1/2/8 ms

# Test the connectivity of the LDP LSP from Switch C to Switch A.

[SwitchC] ping mpls -a 21.1.1.1 ipv4 11.1.1.0 24

MPLS Ping FEC: 11.1.1.0/24 : 100 data bytes

100 bytes from 10.1.1.1: Sequence=1 time=1 ms

100 bytes from 10.1.1.1: Sequence=2 time=1 ms

100 bytes from 10.1.1.1: Sequence=3 time=1 ms

100 bytes from 10.1.1.1: Sequence=4 time=1 ms

100 bytes from 10.1.1.1: Sequence=5 time=1 ms

--- FEC: 11.1.1.0/24 ping statistics ---

5 packets transmitted, 5 packets received, 0.0% packet loss

round-trip min/avg/max = 1/1/1 ms

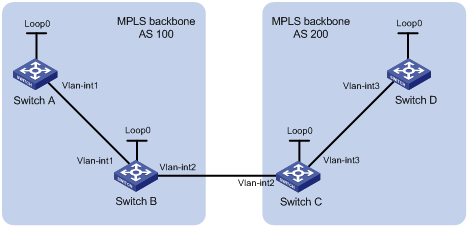

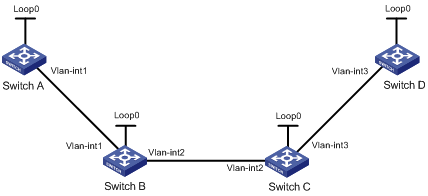

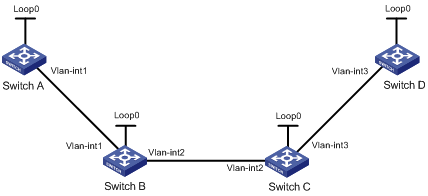

Label acceptance control configuration example

Network requirements

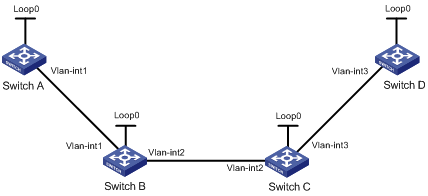

Two links, Switch A—Switch B—Switch C and Switch A—Switch D—Switch C, exist between subnets 11.1.1.0/24 and 21.1.1.0/24.

Configure LDP to establish LSPs only for routes to subnets 11.1.1.0/24 and 21.1.1.0/24.

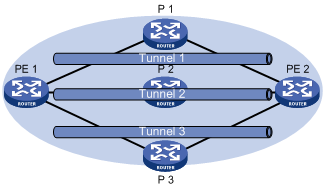

Configure LDP to establish LSPs only on the link Switch A—Switch B—Switch C to forward traffic between subnets 11.1.1.0/24 and 21.1.1.0/24.

Requirements analysis

· To ensure that the LSRs establish LSPs automatically, enable LDP on each LSR.

· To establish LDP LSPs, configure a routing protocol to ensure IP connectivity between the LSRs. This example uses OSPF.

· To ensure that LDP establishes LSPs only for the routes 11.1.1.0/24 and 21.1.1.0/24, configure LSP generation policies on each LSR.