| Title | Size | Downloads |

|---|---|---|

| IPv6 Technology White Paper-6W100-book.pdf | 2.28 MB |

- Table of Contents

- Related Documents

-

| Title | Size | Download |

|---|---|---|

| book | 2.28 MB |

IPv6 Technology White Paper

Copyright © 2024 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

This document provides generic technical information, some of which might not be applicable to your products.

The information in this document is subject to change without notice.

Contents

Hierarchical address structure

Format of IPv6 packets with extension headers

Enhanced neighbor discovery mechanism

Configuring IPv6 global unicast addresses

Stateless address autoconfiguration

Stateful address autoconfiguration (DHCPv6)

IPv6 upgrade and transformation plan

Deploying dual-stack on specific devices

Performing address translation at network boundaries

Comparison of upgrade and transformation plans

Comprehensive IPv6 deployment plan for campus networks

IPv6 transformation plan for financial networks

E-government external network IPv6+ applications

External link access failure prevention

Similarities and differences between IPv4 and IPv6 routing protocols

IPv6-based VXLAN and EVPN VXLAN

Overview

Internet Protocol Version 6 (IPv6) is the second generation of the standard network layer protocol, also known as IP Next Generation (IPng). IPv6 not only solves the problem of insufficient IPv4 address space, but also makes some improvements based on IPv4, such as introducing extension headers to increase the protocol scalability and providing built-in security to address network security issues.

IPv6 can provide wider connectivity for the Internet and IoT to realize the Internet of Everything, create digital infrastructure, and promote innovation in new applications and fields such as IoT, industrial Internet, and artificial intelligence. With the rapid development of emerging fields such as 5G and IoT, IPv6 has gained a broader space for development.

This document describes IPv6 advantages, extension of IPv6-based application protocols, and direction of IPv6 development (IPv6+), and provides common IPv6 deployment solutions to help users understand and deploy IPv6.

Benefits

Larger address space

IPv6 increases the IP address size from 32 bits to 128 bits. It can provide 3.4 x 1038 addresses to meet the requirements of hierarchical address assignment for both public and private networks.

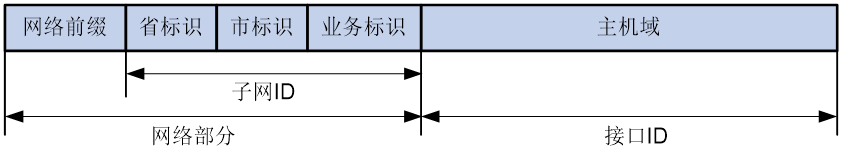

Hierarchical address structure

IPv6 uses a hierarchical address structure to speed up route lookup, simplify address management, and reduce the IPv6 routing table size through route aggregation.

IPv6 addresses use a multi-layer hierarchy. After the address registry assigns an IPv6 address range, service providers and organizations can divide the address range in a hierarchical and more granular manner to control address distribution within their scope of management. As shown in Figure 1, an IPv6 address contains the following parts:

· Network prefix—Assigned by the China Internet Network Information Center (CNNIC) and ISP.

· Subnet ID—Organizations divide address ranges in a hierarchical manner as needed. For example, address ranges are first assigned to provinces and cities respectively based on geography, and then by serivce type.

· Interface ID—Host identified in the network.

Figure 1 IPv6 address structure

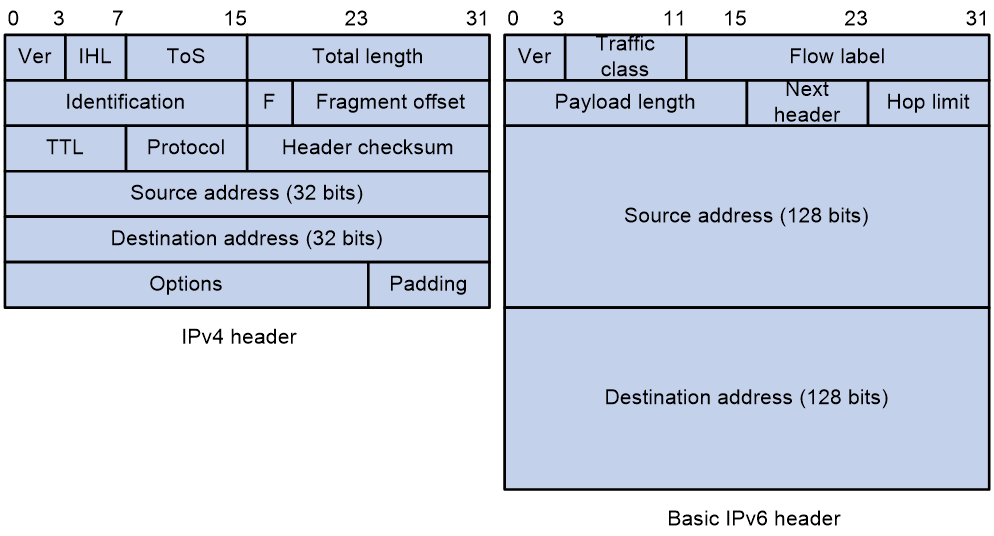

Simplified header format

IPv6 removes several IPv4 header fields or moves them to the IPv6 extension headers to reduce the length of the basic IPv6 packet header. The basic IPv6 packet header has a fixed length of 40 bytes to simplify IPv6 packet handling and improve forwarding efficiency. Although the IPv6 address size is four times the IPv4 address size, the basic IPv6 packet header size is only twice the size of the option-less IPv4 packet header.

Figure 2 IPv4 packet header format and basic IPv6 packet header format

Flexible extension headers

IPv6 eliminates the Options field in the header and introduces optional extension headers to provide scalability and improve efficiency. The Options field in the IPv4 packet header contains a maximum of 40 bytes, whereas the IPv6 extension headers are restricted to the maximum size of IPv6 packets.

IPv6 extension header types

Table 1 displays extension headers supported by IPv6. Extension headers allow IPv6 to provide good scalability. According to service needs, you can define new IPv6 extension headers, or define new sub-extension headers for existing extension headers.

Table 1 IPv6 extension headers

|

Extension header name |

Type value |

Node for header processing |

Description |

|

Hop-by-Hop Options Header |

0 |

All nodes along the packet forwarding path |

Used for jumbo load alerts, router alerts, and Resource Reservation Protocol (RSVP). |

|

Routing Header |

43 |

Destination node and intermediate nodes through which packets must pass |

Used to specify intermediate nodes through which packets must pass. |

|

Fragment Header |

44 |

Destionation node |

When the length of an IPv6 packet exceeds the Path MTU (PMTU) of the packet forwarding path, the source node fragments the IPv6 packet and fills in fragment information in the fragment header. In an IPv6 network, only the source node can fragment packets. The intermediate nodes cannot perform packet fragmentation. NOTE: The PMTU is the minimum MTU on the packet forwarding path from the source node to the destination node. |

|

Encapsulating Security Payload Header (ESP Header) |

50 |

Destionation node |

Used to provide data encryption, data source authentication, data integrity verification, and anti-replay. |

|

Authentication Header |

51 |

Destionation node |

Used to provide data source authentication, data integrity verification, and anti-replay. It can protect packets from tampering but cannot prevent packet eavesdropping. It is suitable for transmitting non-confidential data. AH provides better authentication service than ESP. |

|

Destination Options Header |

60 |

Destination node and intermediate nodes specified in the routing header |

Used to carry information sent to the destination node and intermediate nodes specified in the routing header. For example, in mobility IPv6, the destination options header can be used to exchange registration information between mobile node and home agent. |

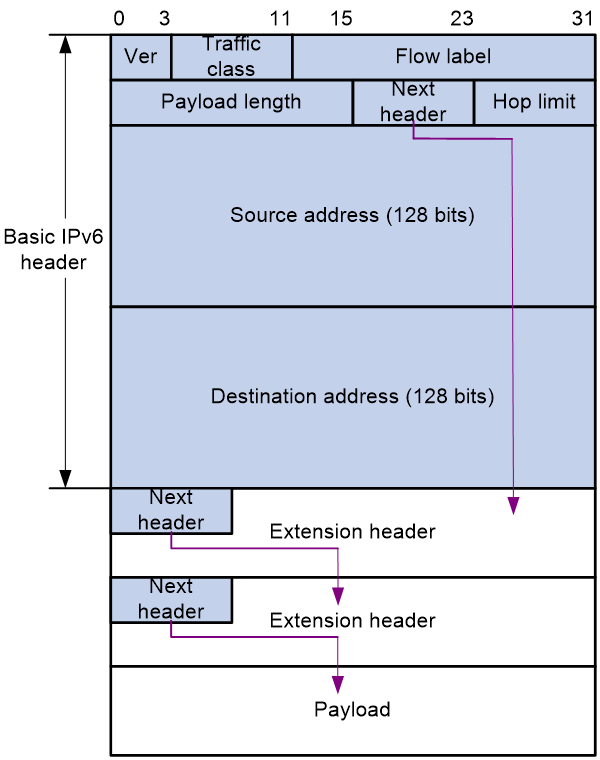

Format of IPv6 packets with extension headers

An IPv6 packet can carry zero, one or more extension headers. As shown in Figure 3, IPv6 uses the Next header field to identify the type of the next extension header. For example, a field value of 43 in the IPv6 basic packet header indicates that the next extension header is the routing header. A field value of 44 in the routing header indicates that the next extension header is the fragment header.

|

|

NOTE: The Next header field in the last extension header is used to identify the payload type. For example, a field value of 6 indicates that the payload is a TCP packet, and a field value of 17 indicates that the payload is a UDP packet. |

Figure 3 Format of IPv6 packets with extension headers

Enhanced neighbor discovery mechanism

The IPv6 neighbor discovery protocol uses a group of Internet Control Message Protocol for IPv6 (ICMPv6) messages to manage information exchange among neighboring nodes on the same link. The group of ICMPv6 messages replaces ARP messages, ICMPv4 router discovery messages, and ICMPv4 redirect messages and provides a series of other functions:

· Address resolution—Obtains the link layer addresses of neighbor nodes on the same link. It provides the same functions as IPv4 ARP.

· Neighbor reachability detection—Detects the state of a neighbor node after obtaining the neighbor's link layer address to verify if the neighbor is reachable.

· Duplicate address detection—Verifies if an IPv6 address has been used by another node after obtaining an IPv6 address. It provides similar functions to IPv4 gratutious ARP.

Built-in security

IPv4 itself does not provide security functions such as encryption and authentication, and must be used with security protocols (such as IPsec) or application protocols to provide security, which increases the complexity of application protocol design. IPv6 is designed with built-in security, which defines an ESP header and an authentication header to provide end-to-end security.

IPv6-based applications can directly inherit the security functions of the IPv6 protocol, providing a standard for solving network security issues and enhancing interoperability among different IPv6 applications.

IPv6-based protocol extension

Protocols (such as Telnet, SSH, and SNMP) used for device management, high reliability mechanisms (such as VRRP and M-LAG), and security protocols (such as 802.1X and port security) can support IPv6 without modification or with minor modifications. However, some application layer protocols, routing protocols, multicast protocols, and security protocols running in IPv4 networks require extensions to adapt to the IPv6 protocol. This section describes the extension methods of these protocols in the IPv6 network.

Configuring IPv6 global unicast addresses

Nodes can obtain global unicast IPv6 addresses through the following ways:

· Manual configuration.

· Stateless autoconfiguration: After obtaining the network prefix through the neighbor discovery protocol, nodes generate IPv6 unicast addresses automatically based on the obtained prefix.

· Stateful autoconfiguration: Nodes obtain IPv6 unicast addresses from DHCPv6 servers through the DHCPv6 protocol.

Table 2 displays scenarios suitable for different configuration methods of IPv6 global unicast addresses.

Table 2 Scenarios suitable for different configuration methods of IPv6 global unicast addresses

|

Address configuration method |

Advantages and disadvantages |

Applicable scenarios |

Prefix length requirement |

|

Manual configuration |

Advantages: Does not require protocol packet exchanges Disadvantages: Requires manual configuration and does not support dynamic adjustment |

Link local address or Loopback interface address |

No requirement (Custom supported) |

|

Stateless autoconfiguration |

Advantages: Extra server deployment is not required. Deployment is simple Disadvantages: Does not support precise control over IPv6 address allocation to nodes |

Strict control over terminal access behavior is not required, for example, IoT devices (video surveillance cameras or streetlights) |

Fixed to 64 bits |

|

Stateful autoconfiguration |

Advantages: Can perform precise control over IPv6 address allocation to nodes and record address allocation information Disadvantages: A DHCPv6 server must be deployed in the network, which is complicated |

Strict control over terminal access behavior is required, such as campus networks or office area networks |

No requirement (Custom supported) |

Stateless address autoconfiguration and stateful address autoconfiguration can be used together. For example, after a device obtains an IPv6 address through stateless autoconfiguration, it can use stateful autoconfiguration to obtain other network configuration parameters, such as DNS server address.

Stateless address autoconfiguration

Work mechanism of stateless address autoconfiguration

Stateless address autoconfiguration is achieved through the Neighbor Discovery Protocol (NDP) of IPv6. Its working process is as follows:

1. The router announces prefix information in the following ways:

¡ The router periodically sends Router Advertisement (RA) messages to the multicast address (FF02::1) of all nodes. These messages contain information such as the IPv6 prefix, prefix lifetime, and hop limit.

¡ When a node starts, it sends Router Solicitation (RS) messages to the multicast address (FF02::2) of all routers. Upon receiving the RS message, the router responds with RA messages to the multicast address (FF02::1) of all nodes.

|

|

NOTE: The prefix lifetime includes the following types: · Valid lifetime: Indicates the duration for which the prefix is considered valid. During the valid lifetime, addresses generated using this prefix can be used normally. Once the valid lifetime expires, the addresses generated using this prefix become invalid and will be removed. · Preferred lifetime: Represents the duration during which the addresses generated using this prefix are preferred for stateless address autoconfiguration. Once the preferred lifetime expires, the addresses generated using this prefix will be deprecated. Nodes cannot establish new connections using deprecated addresses but can still receive packets with deprecated addresses as the destination. The preferred lifetime must be smaller than or equal to the valid lifetime. |

2. The node combines the address prefix received from the router's RA message with its local interface ID to generate an IPv6 unicast address. The node also automatically configures itself based on the configuration information provided in the RA message. For example, the hop limit in the RA message is set as the maximum hop limit for locally sent IPv6 packets.

3. The node performs duplicate address detection (DAD) on the generated IPv6 unicast address. The DAD process involves the node sending a Neighbor Solicitation (NS) message on the local link, with the destination address being the multicast address of the requested node derived from the automatically generated IPv6 unicast address. If the node does not receive a Neighbor Advertisement (NA) message in response, it considers the address to be available and not conflicting, and hence can be used. If a NA message is received in response, the node considers the address to be conflicting and does not use it.

|

|

NOTE: The solicited-node multicast address is primarily used to obtain the link-layer addresses of neighboring nodes on the same link and to perform duplicate address detection. Each unicast or anycast IPv6 address has a corresponding solicited-node multicast address. Its format is as follows: FF02:0:0:0:0:1:FFXX:XXXX Where, · FF02:0:0:0:0:1:FF is a fixed format of 104 bits. · XX:XXXX represents the last 24 bits of the unicast or anycast IPv6 address. |

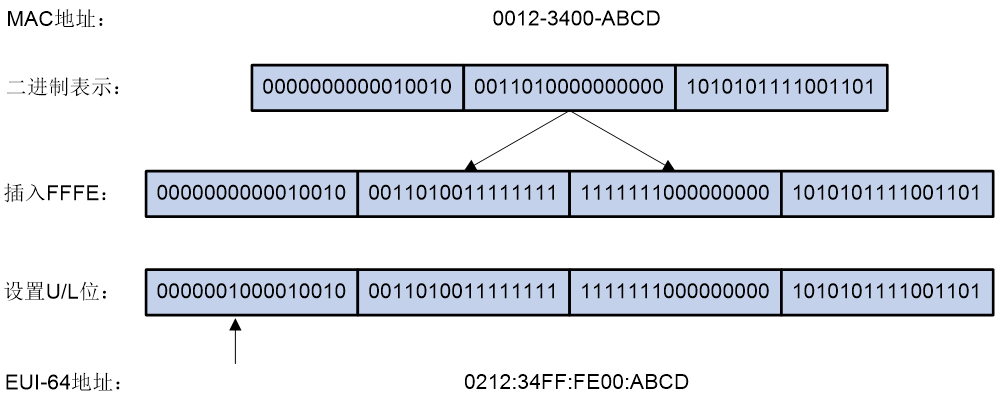

IEEE EUI-64 format interface ID

The node automatically generates an interface ID based on local information, and the method used to generate the IEEE EUI-64 format interface ID varies by interface type:

· For all IEEE 802 interface types (for example, Ethernet interfaces and VLAN interfaces): The IEEE EUI-64 format interface ID is derived from the interface's link-layer address (MAC address). The interface ID in an IPv6 address is 64-bit long, while the MAC address is 48-bit long. To create the interface ID, the hexadecimal value FFFE (1111111111111110) is inserted in the middle of the MAC address, starting from the 24th bit (counting from the highest bit). For the interface ID to have the same scope as the original MAC address, the Universal/Local (U/L) bit, which is the 7th bit counting from the highest bit, is inverted. The resulting value is then used as the EUI-64 format interface ID.

Figure 4 Process of converting a MAC address to a EUI-64 format interface ID

· Tunnel interfaces: The low 32 bits of the IEEE EUI-64 format interface ID are the source IPv4 address of the tunnel interface. For ISATAP tunnels, the high 32 bits of the interface ID are set to 0000:5EFE. For other tunnel interfaces, the high 32 bits of the interface ID are set to all zeros.

· Other interface types (such as serial interfaces): The IEEE EUI-64 format interface ID for these interfaces is randomly generated by the device.

Auto generation of IPv6 addresses with constantly changing interface IDs

When an interface of an IEEE 802 type (Ethernet or VLAN) generates an IPv6 global unicast address based on stateless address autoconfiguration, the interface ID is generated from the interface MAC address following certain rules. This interface ID is globally unique. However, the interface ID portion remains unchanged for different prefixes. Attackers can easily identify the device responsible for generating the communication traffic by analyzing the interface ID and its patterns. This can potentially create security vulnerabilities.

In stateless address autoconfiguration, to enhance the difficulty of attacks and protect the network, devices offer a temporary address feature. With this feature, devices can generate IPv6 addresses with constantly changing interface IDs. For IEEE 802 type interfaces, the following types of addresses can be simultaneously generated:

· Public address: The address prefix is obtained from the prefix carried in RA messages, and the interface ID is derived from the MAC address. The interface ID remains unchanged.

· Temporary address: The address prefix is obtained from the prefix carried in RA messages, and the interface ID is calculated by the system using the MD5 algorithm. The interface ID changes constantly.

After you specify the preference for temporary addresses, the system prioritizes selecting a temporary address as the source address for packets. When the valid lifetime of a temporary address expires, that temporary address is removed, and the system generates a new temporary address with a different interface ID using the MD5 algorithm. As a result, the interface ID of the source address for packets sent from that interface consistently changes. If a generated temporary address is unavailable due to a DAD conflict, the public address is used as the source address for the packets.

Prefix readdressing

Stateless address autoconfiguration allows users to easily and transparently switch to a new network prefix to achieve prefix readdressing. The steps for prefix readdressing are:

1. The router publishes the old IPv6 prefix on the local link through RA messages and sets the valid lifetime and preferred lifetime of the old prefix to near-zero values.

2. The router publishes the new IPv6 prefix on the local link through RA messages.

3. The node generates two IPv6 addresses based on both the old and new prefixes, and both the old and new IPv6 addresses exist simultaneously. Connections using the old IPv6 address can still be processed, while new connections use the new IPv6 address. After the valid lifetime of the old IPv6 address ends, the address is no longer used, and the node only uses the new IPv6 address to communicate.

Stateful address autoconfiguration (DHCPv6)

Dynamic Host Configuration Protocol for IPv6 (DHCPv6) is a protocol designed for IPv6 addressing scheme. It is used to allocate IPv6 prefixes, IPv6 addresses, and other network configuration parameters to hosts.

DHCPv6 advantages

Compared to other IPv6 address allocation methods such as manual configuration and stateless address autoconfiguration, DHCPv6 offers the following advantages:

· Better control over address allocation: DHCPv6 allows for recording and management of assigned addresses, including the ability to assign specific addresses to specific hosts, enhancing network management capabilities.

· Prefix delegation to DHCPv6 clients: DHCPv6 clients can be assigned a prefix, which they can then advertise to hosts, enabling stateless autoconfiguration of IPv6 addresses. This approach reduces the number of IPv6 addresses managed by the DHCPv6 server and enables automatic configuration and management across the entire network.

· In addition to IPv6 prefixes and addresses, DHCPv6 can also allocate network configuration parameters such as DNS servers and domain suffixes to hosts.

DHCPv6 address/prefix allocation process

The process of DHCPv6 server allocating addresses/prefixes to clients can be divided into the following categories:

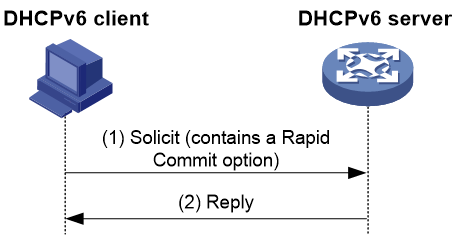

· Rapid allocation process: This process involves the exchange of two messages to quickly allocate addresses/prefixes to clients.

Figure 5 Rapid allocation of addresses/prefixes

As shown in Figure 5, the rapid allocation process for addresses/prefixes is as follows:

a. The DHCPv6 client includes the Rapid Commit option in the Solicit message sent to the DHCPv6 server, indicating that the client wants the server to quickly allocate addresses/prefixes and other network configuration parameters.

b. If the DHCPv6 server supports the rapid allocation process, it directly responds with a Reply message, allocating IPv6 addresses/prefixes and other network configuration parameters to the client. If the DHCPv6 server does not support the rapid allocation process, it uses the normal allocation process involving four message exchanges to allocate IPv6 addresses/prefixes and other network configuration parameters to the client.

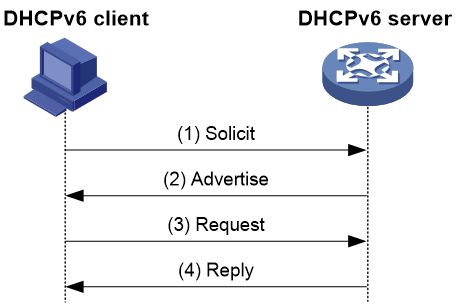

· Normal allocation process: This process involves the exchange of four messages to allocate addresses/prefixes to clients.

Figure 6 Normal allocation of addresses/prefixes

The four-message exchange process is described in Table 3.

Table 3 Normal allocation of addresses/prefixes

|

Step |

Sent message |

Description |

|

1 |

Solicit |

The DHCPv6 client sends this message to request the DHCPv6 server to assign an IPv6 address/prefix and network configuration parameters to it. |

|

2 |

Advertise |

If the Solicit message does not include the Rapid Commit option, or if the server does not support rapid allocation, the DHCPv6 server responds to notify the client of the allocable addresses/prefixes and network configuration parameters. |

|

3 |

Request |

If the DHCPv6 client receives multiple Advertise messages from servers, it selects one server based on factors such as the order of message reception and server priority. The client then sends a Request message to that server, requesting confirmation for the allocation of addresses/prefixes and network configuration parameters. |

|

4 |

Reply |

The DHCPv6 server responds to the message by confirming that it will allocate the addresses/prefixes and network configuration parameters to the client for use. |

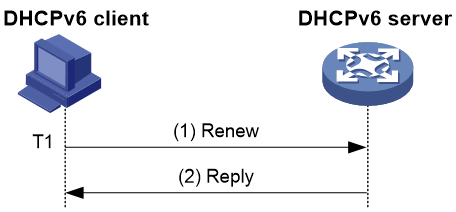

Address/prefix lease renewal process

The IPv6 address/prefix assigned to the client by the DHCPv6 server has a lease, which is a specific time period for usage. The lease term is determined by the effective service life. After the lease time of the address/prefix reaches its valid lifetime, the DHCPv6 client can no longer use that address/prefix. Before the valid lifetime expires, if the DHCPv6 client intends to continue using the address/prefix, you must request an extension of the address/prefix lease.

Figure 7 Updating the address/prefix lease through Renew

As shown in Figure 7, when the address/prefix lease time reaches time T1 (recommended value is half of the preferred lifetime), the DHCPv6 client sends a Renew message to the DHCPv6 server that assigned the address/prefix for lease renewal. If the client can continue to use the address/prefix, the DHCPv6 server responds with a Renewal Reply message to notify the client that the address/prefix lease has been successfully updated. If the address/prefix cannot be reassigned to the client, the DHCPv6 server responds with a Renewal Failure Reply message to inform the client that it cannot obtain a new lease.

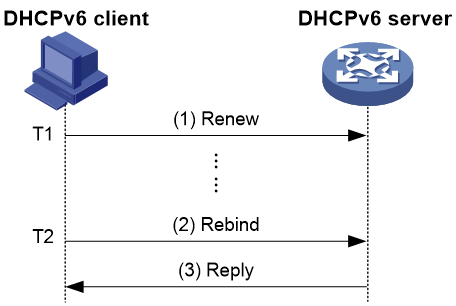

Figure 8 Updating the address/prefix lease through Rebind

As shown in Figure 8, if a Renew request to update the lease is sent at T1, but no response message is received from the DHCPv6 server, the DHCPv6 client sends a Rebind message to all DHCPv6 servers via multicast at T2 (recommended value is 0.8 times the preferred lifetime) to request updating the lease. If the client can continue to use the address/prefix, the DHCPv6 server responds with a Renew Success Reply message, notifying the DHCPv6 client that the address/prefix lease has been successfully updated. If the address/prefix cannot be reassigned to the client, the DHCPv6 server responds with a Renew Failure Reply message, notifying the client that it cannot obtain a new lease. If the DHCPv6 client does not receive a response from the server, it stops using the address/prefix after the valid lifetime expires.

DHCPv6 option introduction

Option 17 is called Vendor-specific Information, a reserved option specified in RFC.

When the device acts as a DHCPv6 server, it can use this option to carry additional network parameters (such as TFTP server name, address, or device configuration file name) and send them to DHCPv6 clients in order to provide corresponding services to the clients.

To provide scalability and allocate more information to clients through Option 17, Option 17 uses sub-options to assign different network configuration parameters to users. Currently, users can configure up to 16 sub-options under each vendor custom option.

· Option 18

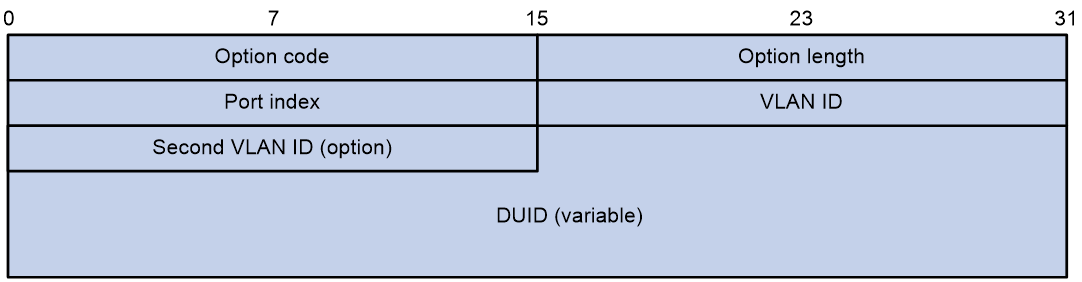

Option 18 is called Interface ID option. After receiving a DHCPv6 request message from a DHCPv6 client, the device adds Option 18 (DHCPv6 relay will add Option 18 in the Relay-forward message) in the message and forwards it to the DHCPv6 server. The server can assign IPv6 addresses to DHCPv6 clients from the appropriate address pool based on client information in Option 18. Figure 9 shows the Option 18 format.

The fields are described as follows:

¡ Option code: Option number, with a value of 18.

¡ Option length: Length of the Option field.

¡ Port index: Port index where the DHCPv6 device receives the client request message.

¡ VLAN ID: First layer VLAN information.

¡ Second VLAN ID: Layer 2 VLAN information. The Second VLAN ID field in the option format is optional. If the DHCPv6 message does not contain a Second VLAN, the Option 18 also does not include the Second VLAN ID content.

¡ DUID: By default, it is the DUID information of the device itself. It can be configured as other DUID information through the CLI.

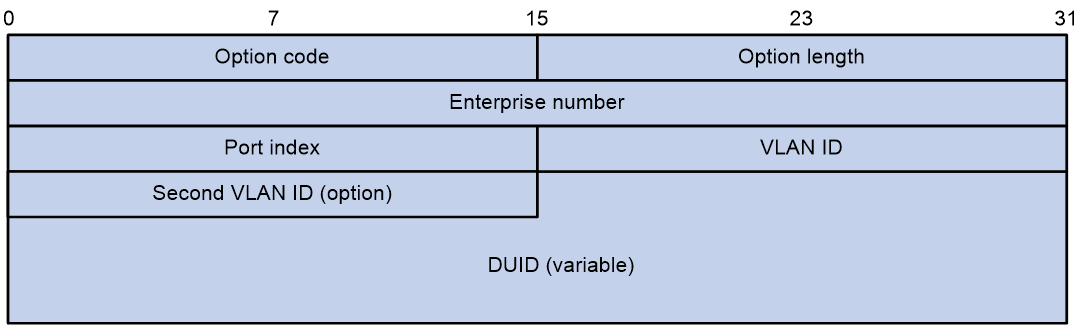

Option 37 is known as the Remote ID option. After receiving a DHCPv6 request message from a DHCPv6 client, the device adds the Option 37 option to the message (the DHCPv6 relay will add the Option 37 option to the Relay-forward message) and forwards it to the DHCPv6 server. The server can locate DHCPv6 clients and assist in assigning IPv6 addresses based on the information in Option 37. Figure 10 shows the Option 37 format.

The fields are described as follows:

¡ Option code: Option number, with a value of 37.

¡ Option length: Length of the Option field.

¡ Enterprise number: Enterprise identifier.

¡ Port index: Port index where the DHCPv6 device receives the client request message.

¡ VLAN ID: First layer VLAN information.

¡ Second VLAN ID: Layer 2 VLAN information. The Second VLAN ID field in the option format is optional. If the DHCPv6 message does not contain a Second VLAN, the Option 37 also does not include the Second VLAN ID content.

¡ DUID: By default, it is the DUID information of the device itself. It can be configured as other DUID information through the CLI.

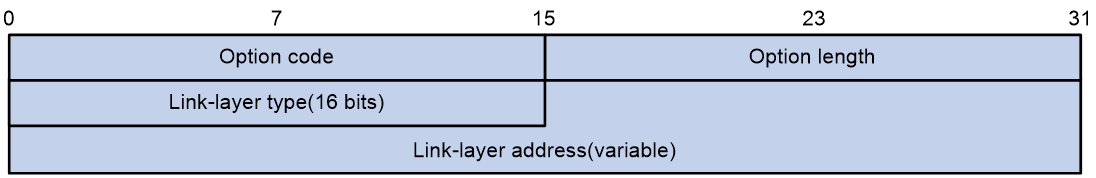

Option 79 is called the Client Link Layer Address option. When the DHCPv6 request message passes through the first DHCPv6 relay, the relay learns the source MAC address of the message, which is the MAC address of the DHCPv6 client. When the DHCPv6 relay generates a Relay-Forward message corresponding to the request, it adds the learned MAC address to Option 79 and then forwards the message to the DHCPv6 server. The DHCPv6 server can learn the MAC address of the DHCPv6 client based on the information in Option 79, which helps with IPv6 address/prefix allocation or client legitimacy authentication. Figure 11 shows the Option 79 format.

The fields are described as follows:

¡ Option code: Option number, with a value of 79.

¡ Option length: Length of the Option field.

¡ Link-layer type: Client link layer address type.

¡ Link-layer address: Client Link-layer address.

IPv6 deployment plan

IPv6 upgrade and transformation plan

IPv6 upgrade and transformation plan includes the following options:

· Create an IPv6 network.

· Deploy dual-stack for specific devices

· Perform address translation at network boundaries.

Creating an IPv6 network

IPv6 network creation refers to the formation of a completely new IPv6 network according to the existing network construction model. All devices in the network support IPv4 and IPv6 dual-stack, and this network is only used to handle IPv6 traffic. In a brand new IPv6 network, new services can be deployed to achieve service innovation.

Figure 12 IPv6 network creation

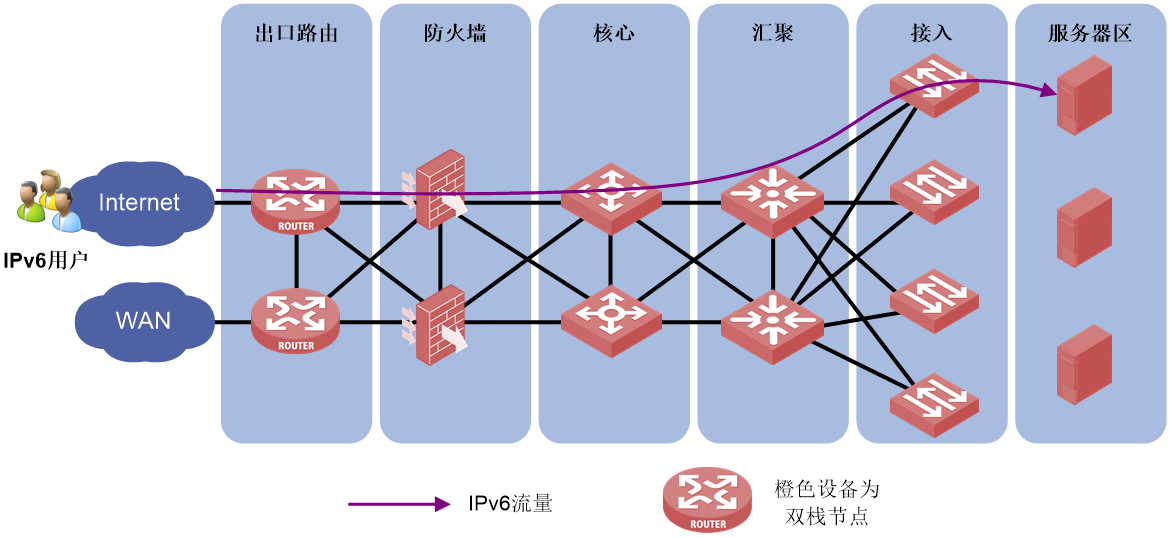

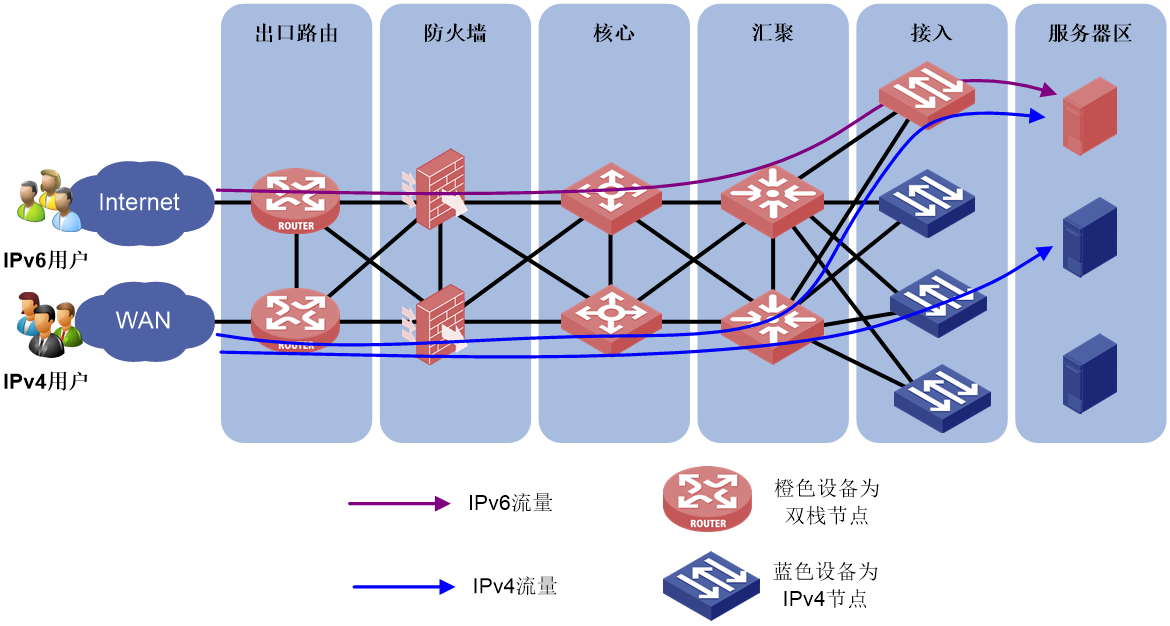

Deploying dual-stack on specific devices

Deploying dual-stack on specific devices refers to the support of dual stack in only the network egress, firewall, core devices, and some aggregation/access devices. IPv6 users communicate through these dual stack nodes.

Figure 13 Deploying dual-stack on specific devices

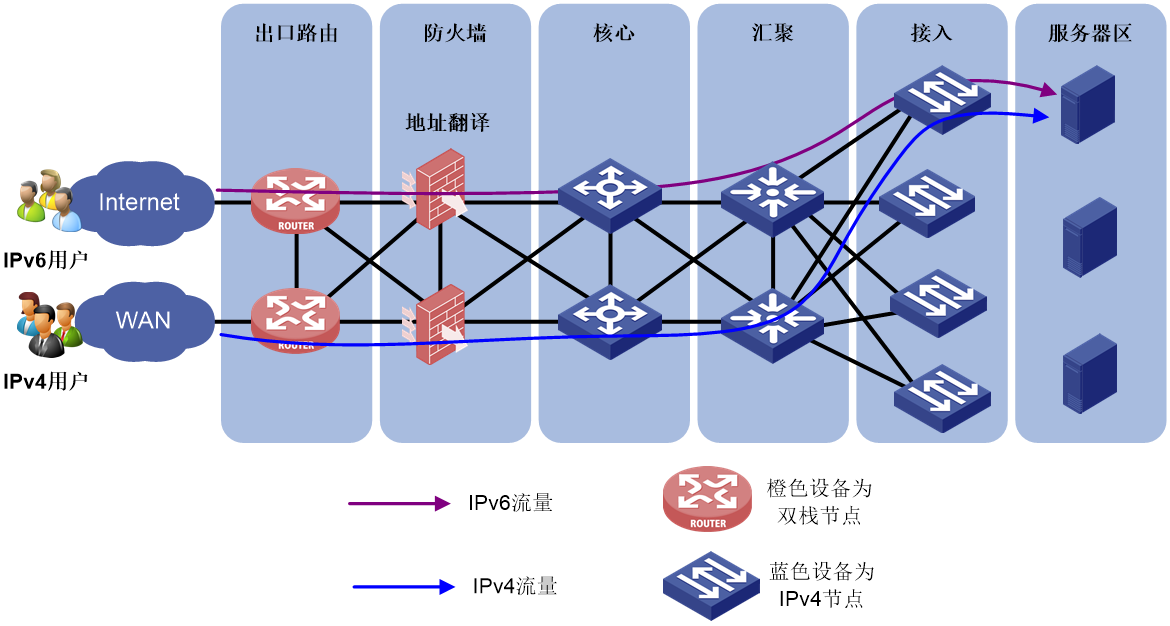

Performing address translation at network boundaries

Address translation for network boundaries refers to enabling address translation protocols such as AFT on firewalls located at the boundary between IPv4 and IPv6 networks to convert IPv4 and IPv6 addresses to each other. This enables IPv6 users to access IPv4 networks.

Figure 14 Performing address translation at network boundaries

Comparison of upgrade and transformation plans

The comparison of the three plans for upgrading and transforming IPv6 networks are as shown in the following table.

Table 4 Comparison of upgrade and transformation plans

|

Upgrade and transformation plan |

Create an IPv6 network |

Deploy dual-stack on specific devices |

Perform address translation at network boundaries |

|

Transformation cost |

High |

Medium |

Low |

|

Difficulty of transformation |

Low |

High |

Low |

|

Transformation workload |

Medium |

Medium |

Low |

|

Transformation risks |

Low |

High |

Medium |

|

Impact on existing services |

No impact. This plan redeploys IPv6 services completely. |

IPv4 and IPv6 applications reuse dual-stack networks. |

No impact. The original IPv4 services do not require address translation. |

|

Single device pressure |

Low IPv4 and IPv6 networks are handled by different devices for IPv4 and IPv6 traffic. |

Medium Network, firewall, and other nodes must be deployed with dual-stack protocols and share device resource table entries. |

High The node of address translation becomes a network bottleneck. |

|

Operation complexity |

Separated operation and maintenance interfaces for IPv4 and IPv6 to provide simplified operation and maintenance. |

Mixed operation and maintenance interface for IPv4 and Ipv6 with complex operation and maintenance. |

Continue with IPv4 operation and maintenance, which is simple to operate. |

|

Application scenarios |

Important service systems with complex architectures and high remodeling difficulties, such as financial online banking services Networks with service innovation requirements. |

Clear and simple network structure. |

Tight budget or desire to minimize the impact of IPv6 on the existing network. |

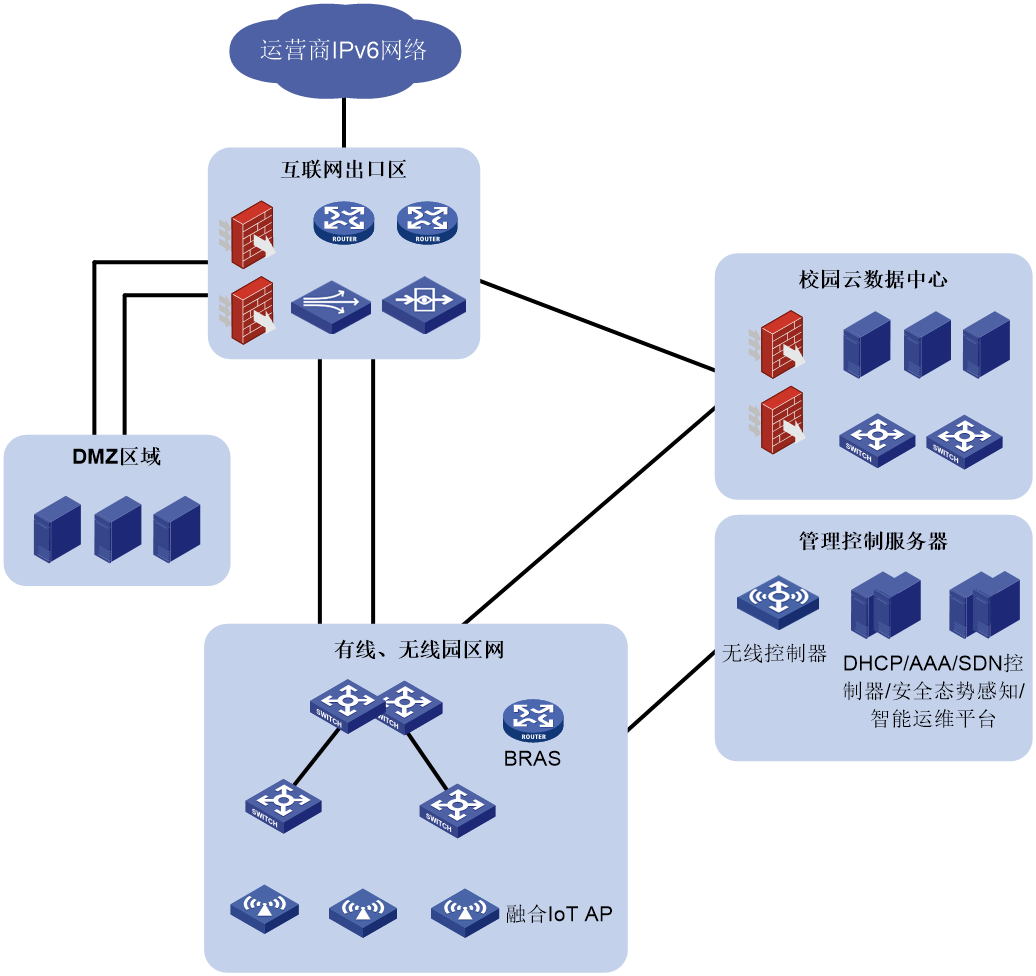

Comprehensive IPv6 deployment plan for campus networks

The typical deployment scheme for the full IPv6 transformation of the campus network is shown in Figure 15. The specific deployment method is:

· Internet egress zone:

¡ Firewalls, IPS, LB, application control, and traffic analysis devices fully support IPv6.

¡ Fully support address translation protocols such as AFT.

· Campus cloud data center:

¡ Underlay and Overlay networks support IPv6.

¡ Virtualization and cloud platform fully support IPv6.

¡ Deploy AFT and other address translation protocols.

· Management control server zone:

¡ Security situation awareness supports IPv6.

¡ Authentication, authorization, and network management servers support IPv6.

· Wired and wireless campus networks:

¡ Support IPv6 address allocation and IPv6 routing protocols.

¡ Support IPv6 access (BRAS or ADCampus).

¡ Support wired and wireless access for SAVA and intra-domain SAVA to prevent source address spoofing attacks.

· DMZ zone: Set up DNS64, and make external websites IPv6-enabled.

Figure 15 Comprehensive IPv6 deployment for campus networks

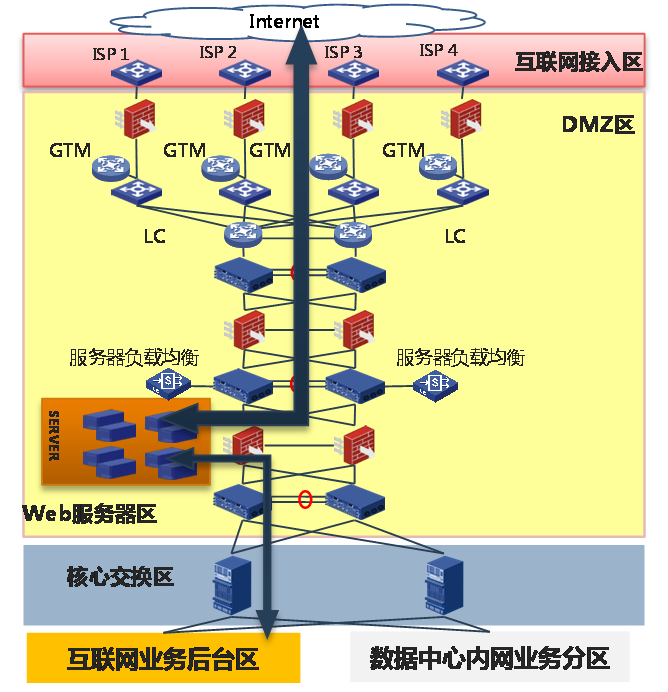

IPv6 transformation plan for financial networks

As shown in Figure 16, a financial network contains the following components:

· Internet access zone: User network access is completed through the ISP's Internet line. WAN access routers are deployed in this zone to achieve multiple ISP's access to multiple Internet lines.

· Demilitarized Zone (DMZ): The server that provides web services for users is located in the DMZ zone. Use firewalls and IPS to achieve Internet and DMZ isolation, deploy routers and switches within the DMZ to achieve interconnectivity of all devices in this zone, and use load balancing devices to optimize the service response speed and ensure high availability of web services.

· Core switch zone: Connect DMZ zone and Internet service backend zone, as well as the data center intranet server zone.

· Internet service backend zone: Mainly provides application processing for portals, online banking, and Internet financial services.

· Data center intranet server zone: Includes multiple service zones, mainly providing data services for APP servers or DB.

Figure 16 Financial network architecture

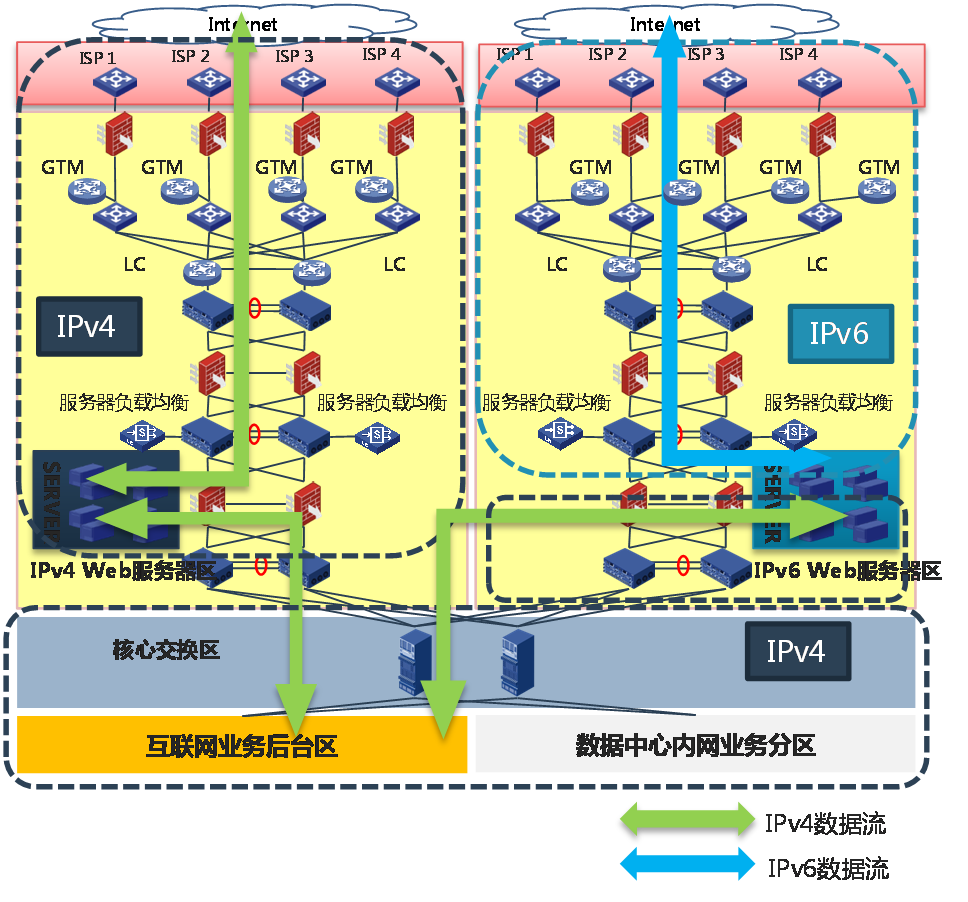

Financial networks can be upgraded from IPv4 to IPv6 using the following solutions:

· Create a new pure IPv6 zone: Establish a pure IPv6 Internet access zone and DMZ zone. The Internet access zone and DMZ zone provide Web services externally through IPv6 and connect data via IPv4 to the backend service backend zone and internal service zone.

Figure 17 Completely newly built pure IPv6 zone

· Build an IPv6 web server cluster: Upgrade the Internet access zone and DMZ zone to support dual stack. The existing IPv4 web server cluster and the newly built IPv6 web server cluster share the dual stack network.

Figure 18 Creating an IPv6 web server cluster

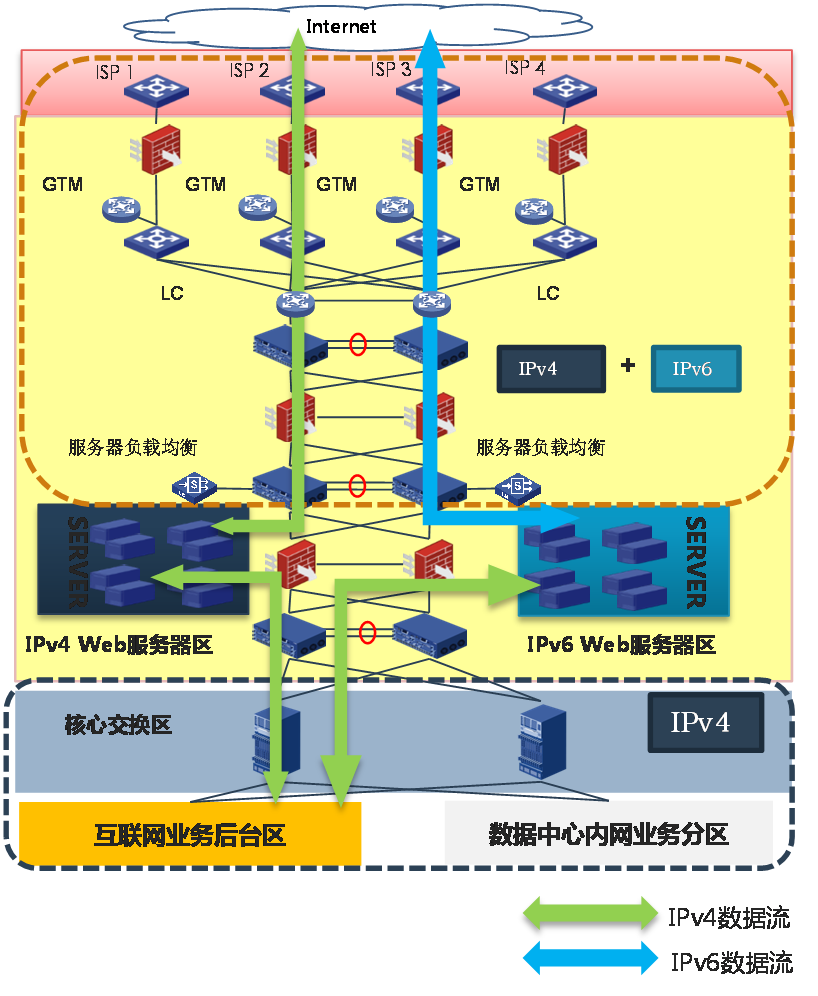

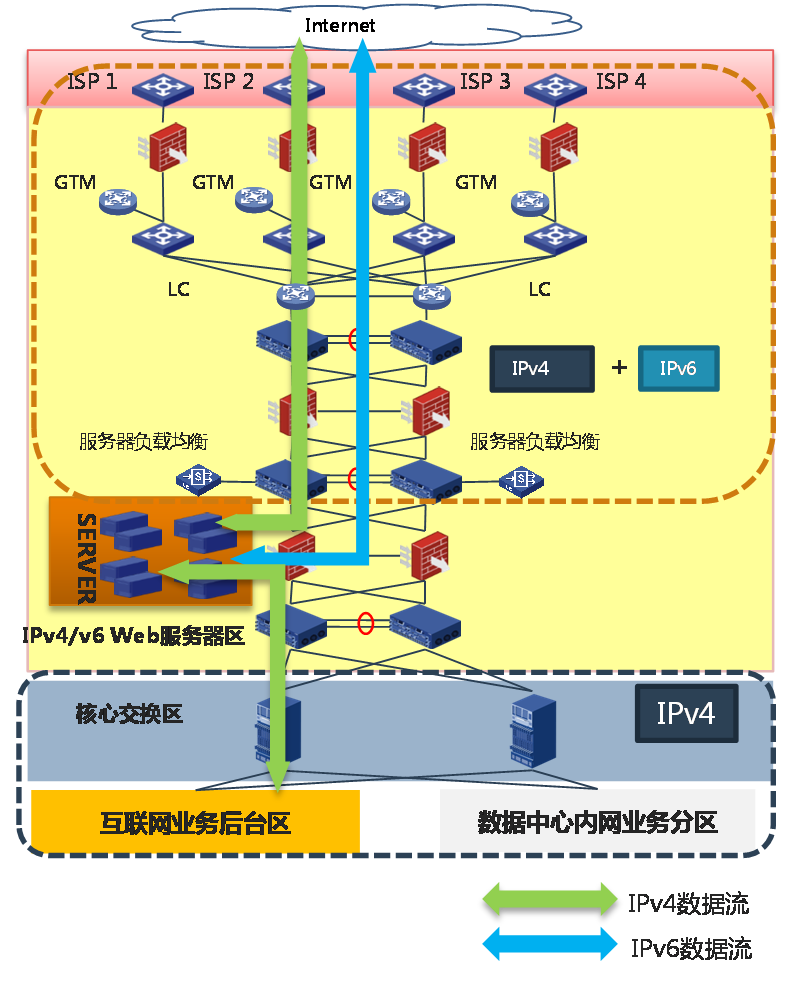

· Upgrade the original web server cluster network to a dual-stack network: Modify the Internet access zone, DMZ zone, and web server cluster network to support dual-stack.

Figure 19 Upgrading the original web server cluster network to a dual-stack network

E-government external network IPv6+ applications

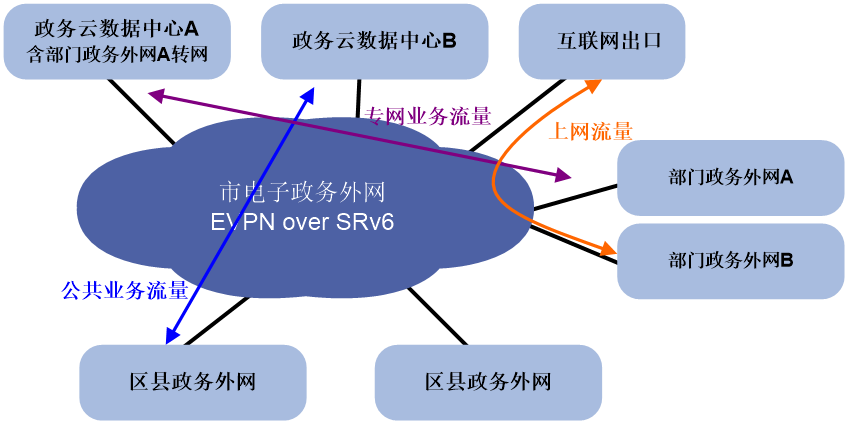

SRv6 application

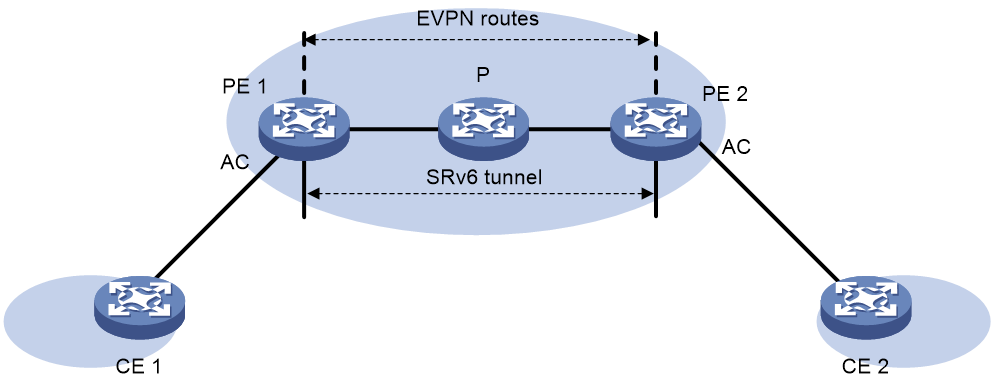

The deployment scheme for SRv6 in the external e-government network is shown as Figure 20.

· Internet access traffic is carried through EVPN over SRv6, assigning corresponding VPN permissions to Internet users, and carrying traffic through SRv6 tunnels.

· Public service traffic can use public addresses and be carried directly through SRv6.

· Special network traffic is divided into corresponding EVPN to ensure the network is dedicated and service logic is isolated.

· All paths can be dynamically adjusted by the SDN controller to ensure optimal resource usage.

Figure 20 SRv6 application in the external network of e-government

Network slicing application

The deployment scheme for network slicing in the e-government extranet is shown in Figure 21.

· By using various network slicing technologies, the backbone network bandwidth is refined to form multiple channels.

· According to different service needs (latency/jitter/packet loss), different strategies are distributed through the controller to achieve diversified utilization of channels.

· Different bandwidths can be allocated to different private networks (such as emergency command networks, video conference networks, document downloading networks) according to their requirements, ensuring that the traffic of one network will not be dropped due to congestion from another network in the same topology.

· According to customer's service requirements, the SRv6 forwarding path can be flexibly arranged to enhance network intelligence.

Figure 21 Network slicing application of the e-government external network

Visualization application

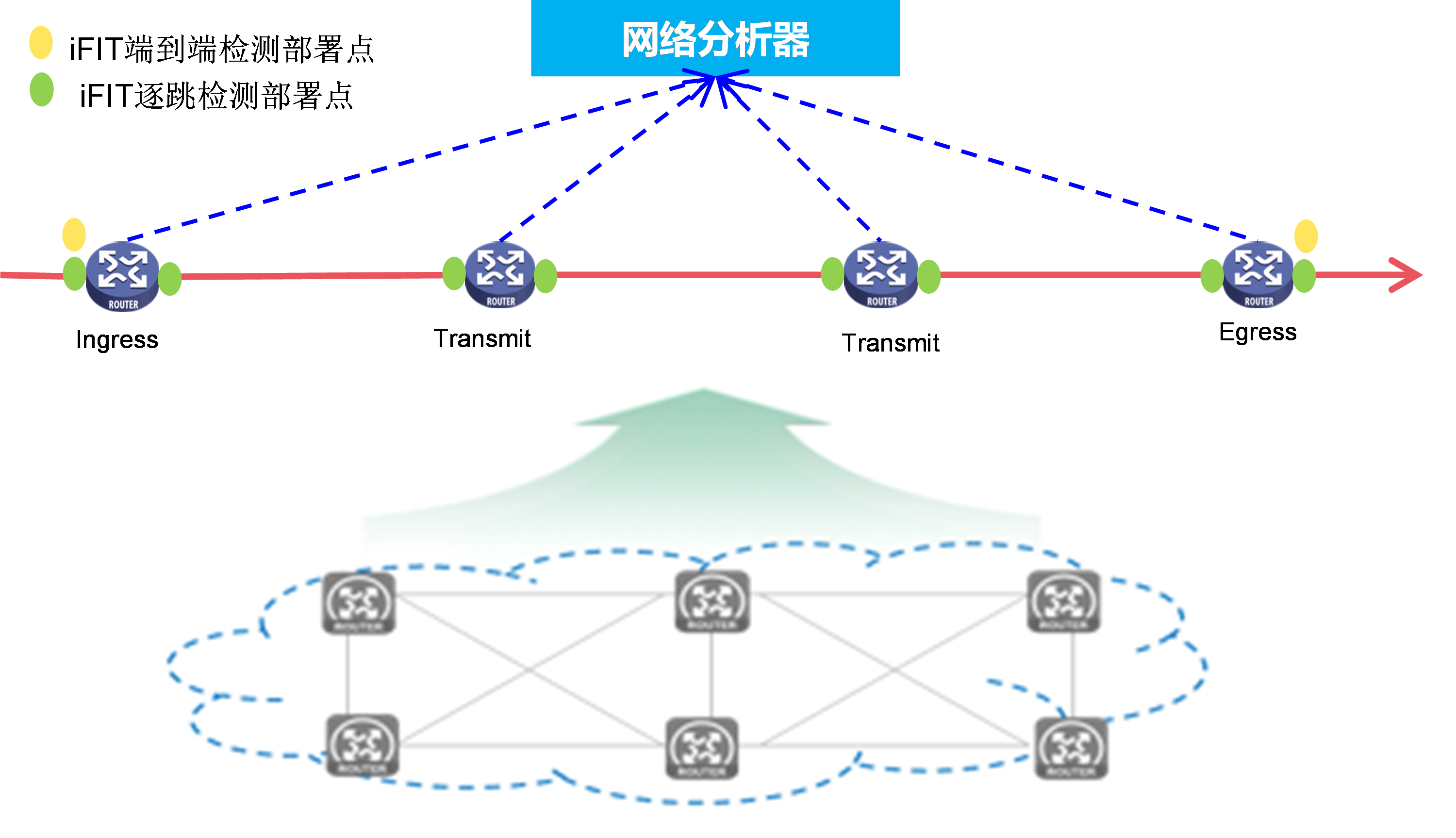

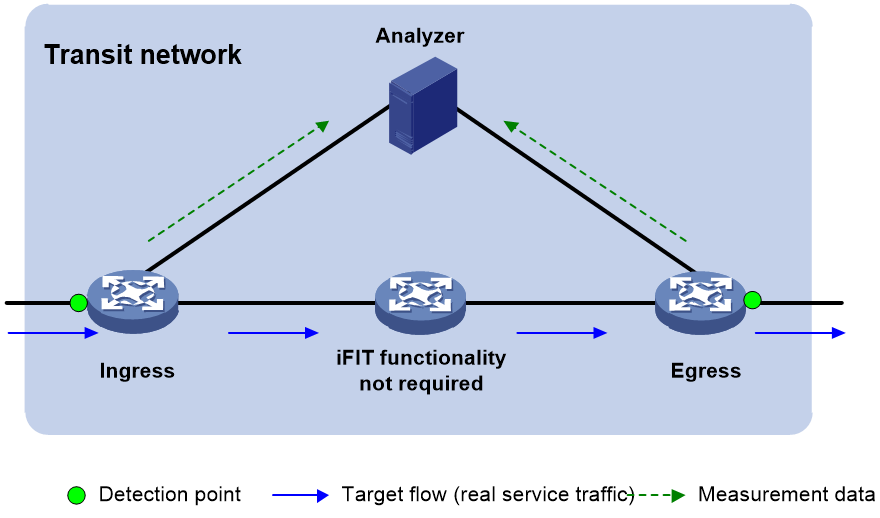

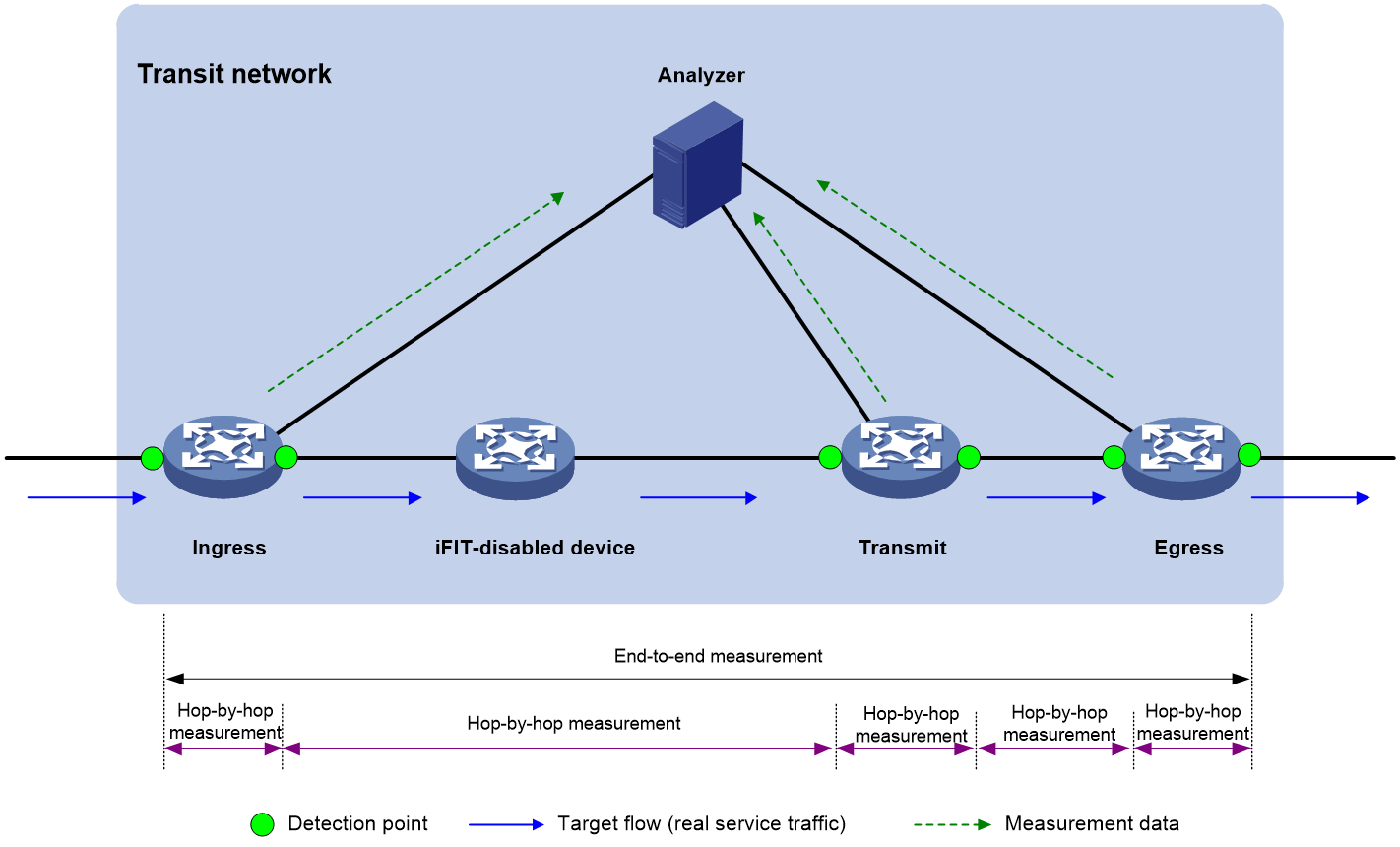

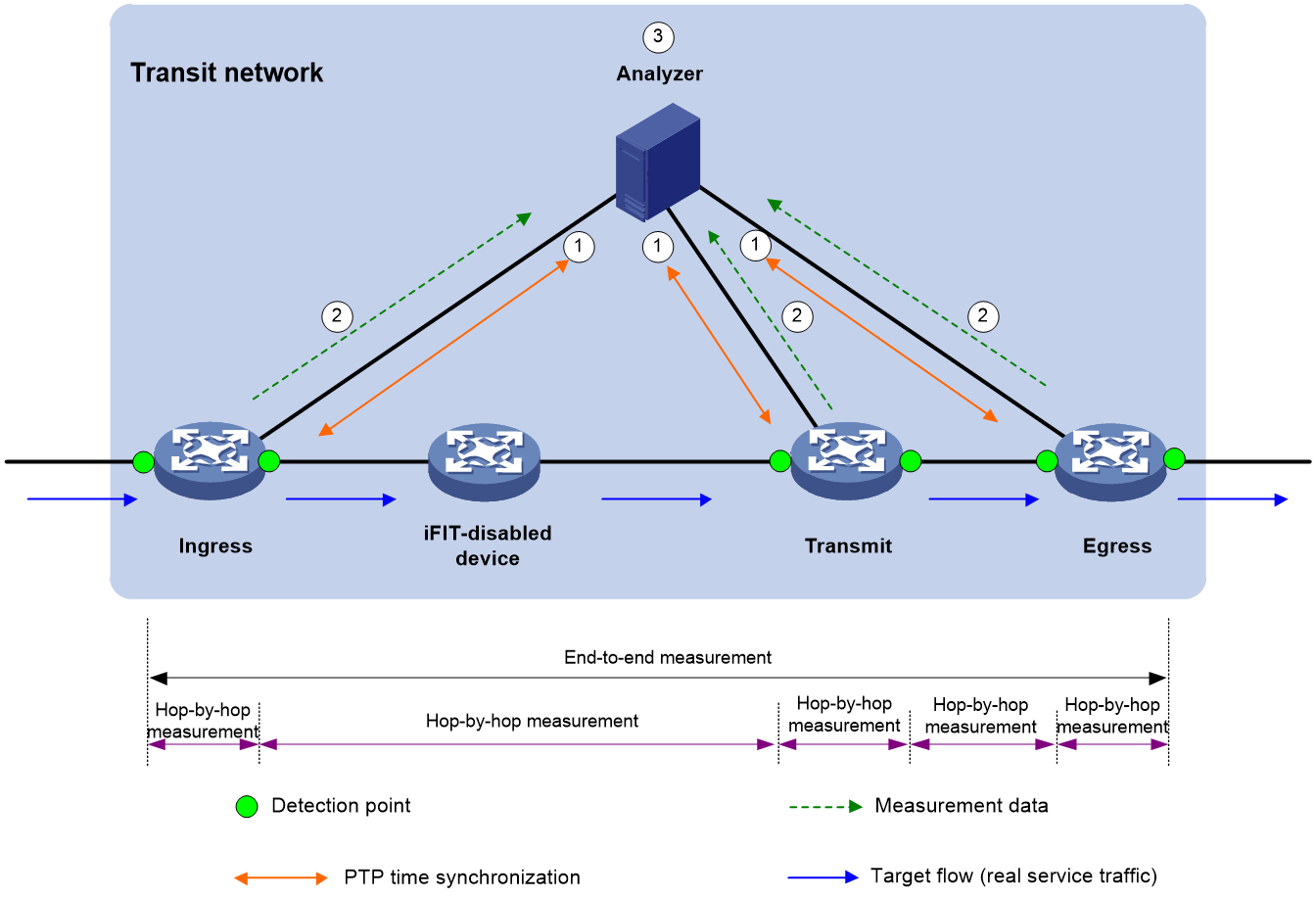

The deployment plan for the visualized e-government extranet is shown as follows in Figure 22. The visualization solution based on iFIT and Telemetry technology enables the following:

· Quality visibility and planning support: Periodic data collection generates real-time report data, providing data support for capacity expansion and subsequent planning.

· Move with demand and intelligent O&M: Achieve intelligent optimization of network paths based on current network service status, ensuring the quality of critical service operations.

· Accurate positioning and fast troubleshooting: When service issues occur, problems can be quickly identified and resolved through the graphical display on the network analyzer.

|

|

NOTE: For more information about Telemetry, see Telemetry Technology White Paper. |

Figure 22 Visualization application of e-government external network

IPv6 DNS

About IPv6 DNS

A domain name system (DNS) is a distributed database system that provides domain name-to-IP address mappings for TCP/IP applications. With DNS, users using IP applications can directly use meaningful easy-to-remember domain names, which will be resolved and mapped to corresponding IP addresses by DNS servers.

On an IPv6 network, DNS uses AAAA and PTR records to realize translation between domain names and IPv6 addresses.

· AAAA record—Maps a domain name to an IPv6 address, which is used for forward resolution.

· PTR record—Maps an IPv6 address to a domain name, which is used for reverse resolution.

External link access failure prevention

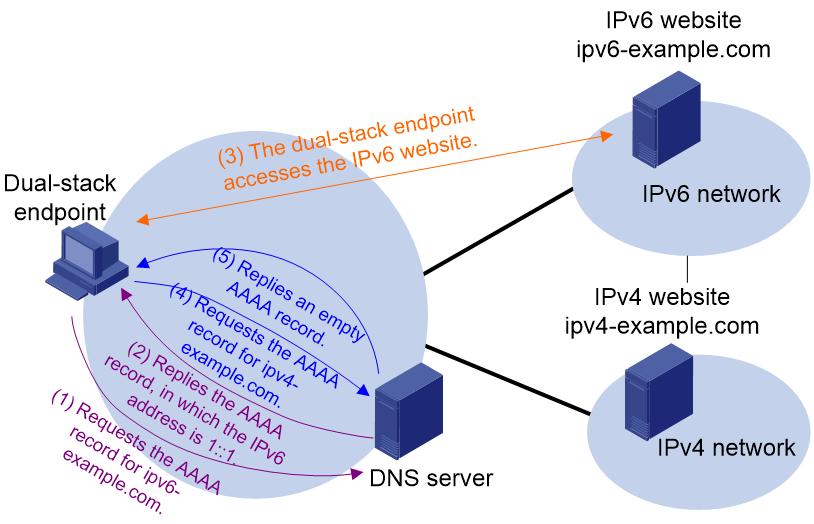

During IPv4-to-IPv6 network transition, both kinds of networks will coexist for a period of time. When a user accesses an IPv6 website containing links to other websites (external links) that do not support IPv6, the user might experience such issues as slow response, incomplete contents, and feature usage failure.

External link access failure prevention for a dual-stack endpoint

As shown in Figure 23, the dual-stack endpoint regards the IPv4 website link as an IPv6 website link when it accesses the IPv6 website. The endpoint requests the AAAA record from the DNS server, but the DNS server does not have a corresponding AAAA record for the IPv4 website. As a result, domain name resolution fails.

Figure 23 External link access failure

To solve such an issue:

1. The dual-stack endpoint sends an IPv6 DNS request to the DNS server for the AAAA record of the external link domain name.

2. The dual-stack endpoint sends an IPv4 DNS request to the DNS server for the A record of the external link domain name.

3. If the dual-stack endpoint receives the AAAA record, it sends a connection request to the IPv6 address in the AAAA record and establishes an IPv6 connection.

4. If the dual-stack endpoint fails to receive the AAAA record or establish an IPv6 connection, it sends a connection request to the IPv4 address in the A record and establishes an IPv4 connection.

External link access failure prevention for an IPv6-only client

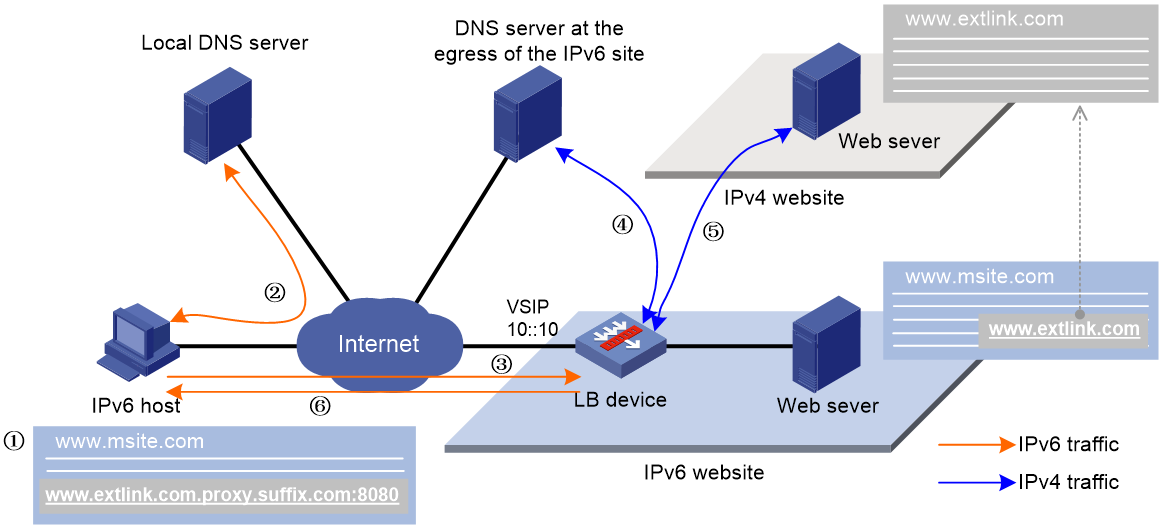

To access a website containing an IPv4 external link, an IPv6-only client sends a DNS request to the local DNS server for resolving the associated domain name. Without external link proxy configured, the domain name resolution will fail, and the IPv6 client cannot access the resource associated with the external link.

With external link proxy configured, the LB device returns an external link rewrite script file when responding to the access request from the IPv6 client. The client executes the script file, modifies the external link domain name, and then sends another DNS request containing the modified domain name. The local DNS server queries the modified domain name and redirects the request to the LB device. The LB device will request the external link resource on behalf of the IPv6 client and return the resource to the client.

Figure 24 External link proxy working mechanism

As shown in Figure 24, the working mechanism of external link proxy is as follows:

1. The IPv6 host executes the script file, and modifies the external link domain name as http://www.extlink.com.proxy.suffix.com.

2. The IPv6 host sends a DNS request to the local DNS server to query the domain name http://www.extlink.com.proxy.suffix.com. Based on the query result, the local DNS server notifies the IPv6 host of the LB device as the authoritative DNS server.

3. The IPv6 host sends a DNS request to the LB device to query domain name http://www.extlink.com.proxy.suffix.com.

4. Upon receiving the DNS request, the LB device sends a DNS request to the DNS server to query the IPv4 address associated with the original domain name http://www.extlink.com.

5. The LB device obtains the resource associated with the external link based on the IPv4 address.

6. The LB device sends the obtained resource to the IPv6 host.

7. The IPv6 host resolves the resource and displays a normal website.

IPv6 routing

RIP, OSPF, IS-IS, and BGP are important routing protocols for IPv4 networks. These routing protocols must be extended and upgraded for compatibility with IPv6 networks. Their extended versions are called RIPng, OSPFv3, IPv6 IS-IS, and IPv6 BGP, respectively. Unlike IPv4 routing protocols, IPv6 routing protocols must adjust their routing processes to accommodate to IPv6 addresses and IPv6 networks. Despite this difference, there is no essential difference between IPv4 routing protocols and IPv6 routing protocols in terms of application scenarios, routing mechanisms, pros and cons.

RIPng

RIP has two versions: RIPv1 and RIPv2.

· RIPv1 is a classful routing protocol. It advertises messages only through broadcast. Because RIPv1 messages do not carry mask information, RIPv1 can only recognize natural networks such as Classes A, B, and C, and it does not support non-contiguous subnets.

· RIPv2 is a classless routing protocol. It has the following advantages over RIPv1:

¡ Supports route tags for flexible route control through routing policies.

¡ Supports masks, route summarization, and Classless Inter-Domain Routing (CIDR).

¡ Supports designated next hops on broadcast networks.

¡ Supports multicasting route updates to reduce resource consumption.

¡ Supports plaintext authentication and MD5 authentication by adding an authentication route entry (RTE) into updates to enhance data security.

RIPng operates in the same way as RIPv2 except that RIPng contains some modifications to support IPv6. RIPng is different from RIPv2 in terms of the following aspects:

IP address length

RIPng routes use 128-bit IPv6 addresses. RIPv2 routes use 32-bit IPv4 addresses.

Packet length

A RIPv2 packet can carry a maximum of 25 RTEs. The maximum number of RTEs in a RIPng packet depends on the IPv6 MTU of the sending interface.

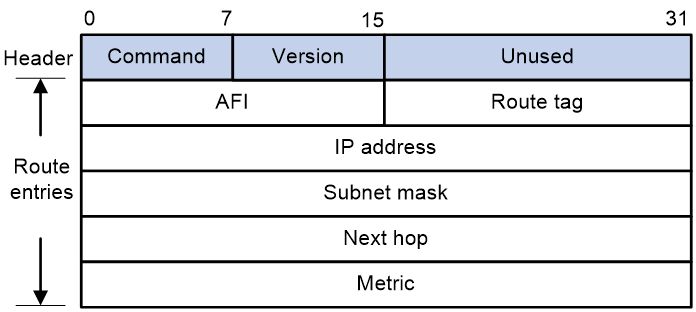

Packet format

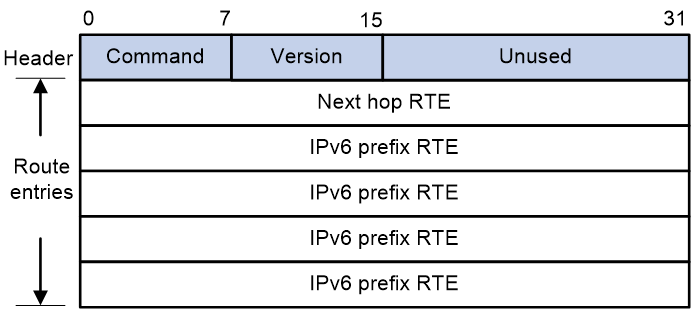

As shown in 0, a RIPv2 packet contains a header and multiple RTEs.

As shown in 0, a RIPng packet contains a header and the following types of RTEs:

· Next hop RTE—Defines the IPv6 address of a next hop.

· IPv6 prefix RTE—A next hop RTE can be followed by multiple IPv6 prefix RTEs. An IPv6 prefix RTE describes the destination IPv6 address, route tag, prefix length, and metric in a RIPng route entry.

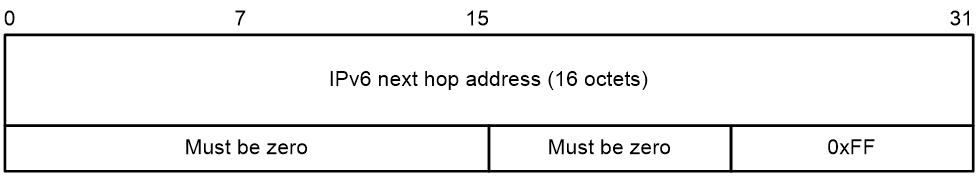

0 shows the format of a next hop RTE.

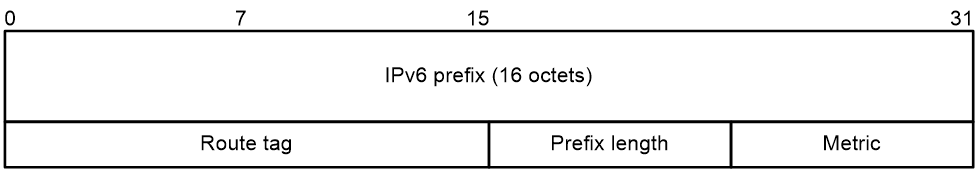

0 shows the format of an IPv6 prefix RTE:

· IPv6 prefix—Destination IPv6 address prefix.

· Route tag—Route tag.

· Prefix length—Length of the IPv6 address prefix.

· Metric—Cost of the route.

Packet sending mode

RIPv2 can periodically send routing information through broadcast or multicast as configured. RIPng periodically sends routing information only through multicast.

Packet authentication

RIPv2 uses authentication RTEs for packet authentication. RIPng uses the authentication mechanism of IPv6 for packet authentication.

Compatibility with network layer protocols

RIP can run in IP networks and IPX networks. RIPng can run in IPv6 networks only.

OSPFv3

OSPFv3 works basically in the same way as OSPFv2 but has some differences from OSPFv2 to support IPv6 address format.

Similarities between OSPFv3 and OSPFv2

OSPFv3 and OSPFv2 are similar in terms of the following aspects:

· Packet types: hello, DD, LSR, LSU, and LSAck.

· Area partition.

· LSA flooding and synchronization mechanisms: Both OSPFv3 and OSPFv2 use reliable flooding and synchronization to ensure correct LSDB contents.

· Routing calculation method: SPF algorithm

· Network types: broadcast, NBMA, P2MP, and P2P.

· Neighbor discovery and adjacency establishment mechanisms: When a router starts, it sends a hello packet via an OSPF interface, and the peer that receives the hello packet checks parameters carried in the packet. If parameters of the two routers match, they become neighbors. Not every pair of neighboring routers become adjacent, which depends on network types. Two OSPF neighbors can form an adjacency only after they successfully exchange DD packets and LSAs and complete LSDB synchronization.

· DR election: It is required to elect the DR and BDR on NBMA and broadcast networks.

Differences between OSPFv3 and OSPFv2

Some necessary modifications are made to OSPFv2 so that OSPFv3 can support IPv6. This makes OSPFv3 independent of network layer protocols and compatible with different protocols.

OSPFv3 is different from OSPFv2 as follows:

· Protocol running per-link, not per-subnet

OSPFv2 runs on a per-IP subnet basis. With OSPFv2 enabled, two routers can establish a neighbor relationship only when they run on the same subnet.

OSPFv3 runs on a per-link basis. A single link can have multiple IPv6 subnets (IPv6 prefixes). Two nodes can directly communicate with each other over a single link, even if their IPv6 prefixes are different.

· Use of link-local addresses

When an OSPFv3 router sends packets on an interface, the source address of these packets is the link-local address of that interface. The router learns the link-local addresses of other routers that are attached to the same link as the router, and uses these addresses as next hops for packet forwarding. For virtual links, the source IP addresses of OSPFv3 packets must be IPv6 global unicast addresses.

Link-local addresses are meaningful only for local links and can be flooded on the local links only. Therefore, Link-LSAs can carry link-local addresses.

· Support for multiple instances per link

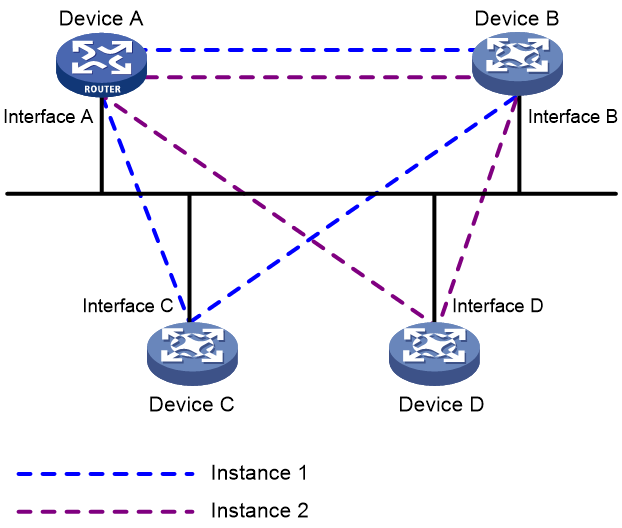

As shown in Figure 25, multiple OSPFv3 instances can run on a single link, which reduces network deployment costs.

Device A, Device B, Device C, and Device D are attached to the same broadcast network and share the same link. OSPFv3 instance 1 is enabled on Interface A, Interface B, and Interface C, so that Device A, Device B, and Device C can establish neighbor relationships with each other. OSPFv3 instance 2 is enabled on Interface A, Interface B, and Interface D, so that Device A, Device B, and Device D can establish neighbor relationships with each other.

For a link to support multiple OSPFv3 instances, OSPFv3 adds the Instance ID field into packets headers. On receipt of an OSPFv3 packet, an interface must match the instance ID carried by the packet against its own instance ID. If the two instance IDs are different, the interface discards the packet and does not establish a neighbor relationship with the source address of the packet.

Figure 25 Running of multiple instances on a link

· Identifying neighbors by router ID

Unlike OSPFv2, OSPFv3 identifies neighbors only by router ID.

· Authentication changes

OSPFv3 supports packet authentication and can use the security mechanism of IPv6 to ensure packet validity.

· Stub area support for unknown LSAs

OSPFv3 supports flooding unknown LSAs. To control the number of unknown LSAs in the stub area, OSPFv3 floods an unknown LSA to the stub area only when the LSA meets the following requirements:

¡ The LSA is flooded within a link or area.

¡ The U bit of the LSA is not set.

· Packet formats

OSPFv3 packets are encapsulated into IPv6 packets. Each OSPFv3 packet begins with a 16-byte header. The header format varies by OSPFv3 packet type.

OSPFv3 and OSPFv2 use the same LSU and LSAck packet formats, but their packet header formats and hello packet formats are different:

¡ The version number is 2 for OSPFv2 and is 3 for OSPFv3.

¡ Compared with OSPFv2 packet headers, the length of OSPFv3 packet headers is 16 bytes with the Authentication field eliminated and the Instance ID field added. The Instance ID field enables a link to support multiple OSPFv3 instances and takes effect on only local links.

¡ Compared with OSPFv2 hello packets, OSPFv3 hello packets do not carry the Network Mask field. The Interface ID field is added to OSPFv3 hello packets to identify source interfaces.

· Options field

The Options field is supported only by OSPFv2 hello packets, DD packets, and LSAs.

The Options field is supported only by OSPFv3 hello packets, DD packets, Router LSAs, Network LSAs, Inter-Area-Router LSAs, and Link LSAs.

Figure 26 shows the Options field format of OSPFv2 and Figure 27 shows the Options field format of OSPFv3.

Figure 26 OSPFv2 Options field format

Figure 27 OSPFv3 Options field format

OSPFv3 adds the R and V bits into the Options field:

¡ R bit—This bit indicates whether the originator is an active router. If the R bit is clear, the originator's routes do not participate in route calculation. Clearing the R bit would be appropriate for a device that wants to participate in routing, but does not want to forward non-locally addressed packets.

¡ V bit—If this bit is clear, the router/link should be excluded from IPv6 routing calculation.

OSPFv3 uses seven types of LSAs. The following table compares OSPFv3 LSAs and OSPFv2 LSAs.

Table 5 Comparisons between OSPFv3 and OSPFv2 LSAs

|

OSPFv2 LSA |

OSPFv3 LSA |

Comparison |

|

Router LSA |

Router LSA |

OSPFv3 Router LSAs and Network LSAs describe routing domain topology information only. |

|

Network LSA |

Network LSA |

|

|

Network Summary LSA |

Inter Area Prefix LSA |

They function similarly. |

|

ASBR Summary LSA |

Inter Area Router LSA |

|

|

AS External LSA |

AS External LSA |

Exactly the same. |

|

N/A |

Link LSA |

Available in OSPFv3 only |

|

Intra Area Prefix LSA |

Available in OSPFv3 only |

OSPFv3 has two new LSA types: Link LSA and Intra Area Prefix LSA.

¡ OSPFv3 Router LSAs does not contain address information. An OSPFv3 router originates a separate Link LSA for each attached link. A Link LSA advertises the router's link-local address and a list of IPv6 prefixes to all other routers attached to the link.

¡ Router LSAs and Network LSAs do not carry route information, which is carried by Intra-Area-Prefix LSAs. An Intra-Area-Prefix LSA can advertise one or more IPv6 address prefixes.

· Expansion of LSA flooding scopes

OSPFv3 has expanded LSA flooding scopes. The flooding scope of an LSA is defined in its LS Type field. OSPFv3 LSAs support the following flooding scopes:

¡ Link-local scope—The LSA is flooded only on the local link. It is used for new Link LSAs.

¡ Area scope—The LSA is flooded throughout the single OSPF area only. It is used for Router LSAs, Network LSAs, Inter-Area-Prefix LSAs, Inter-Area-Router LSAs, and Intra-Area-Prefix LSAs.

¡ AS scope—The LSA is flooded throughout the routing domain. It is used for AS-external LSAs.

When an OSPFv2 interface receives an unknown LSA, it directly discards the LSA.

OSPFv3 uses the U bit in the LS Type field of LSAs to indicate the mode of processing an unknown LSA:

¡ If the U bit is set to 1, OSPFv3 will flood the unknown LSA within the flooding scope defined in the LS Type field.

¡ If the U bit is set to 0, OSPFv3 will flood the unknown LSA on the link.

To accommodate to IPv6 addresses, OSPFv3 has adjusted the LSA header format and LSA formats. For more information, see OSPFv3 Technology White Paper.

IPv6 IS-IS

To route packets in IPv6 networks, IS-IS was extended to IPv6 IS-IS.

New TLVs for IPv6 IS-IS

Type-Length-Value (TLV) is a variable part of LSPs and allows LSPs to carry different information.

IPv6 IS-IS uses two new TLVs and a Network Layer Protocol Identifier (NLPID) field with a value of 0x8E for IPv6 packet forwarding.

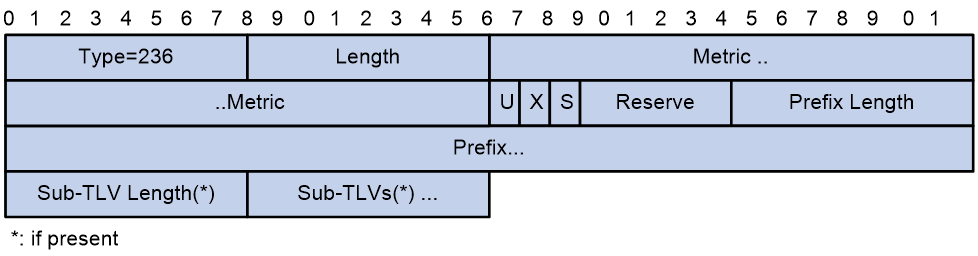

The type value for IPv6 Reachability TLV is 236 (0xEC). This TLV corresponds directly to Common Reachability TLV and Extended Reachability TLV in IPv4 IS-IS. Figure 28 shows the format of IPv6 Reachability TLV.

Figure 28 IPv6 Reachability TLV format

IPv6 Reachability TLV contains the following fields:

¡ Type—A value of 236 indicates that this TLV is an IPv6 reachability TLV.

¡ Length—TLV length.

¡ Metric—Extended metric, in the range 0 to 4261412864. If the metric value is greater than 4261412864, the IPv6 reachability information will be ignored.

¡ U—Up/Down bit. This bit prevents routing loops. When a route is advertised from a Level-2 router to a Level-1 router, this field is set to 1 to prevent the route from being looped back.

¡ X—Route redistribution bit. A value of 1 means the route is redistributed from another protocol.

¡ S—If a TLV does not carry any sub-TLVs, this field is set to 0. When the TLV carries sub-TLV information, this field is set to 1.

¡ Reserve—This field is reserved.

¡ Prefix Length—IPv6 route prefix length.

¡ Prefix—IPv6 prefix that the originator can reach.

¡ Sub-TLV/Sub-TLV Length—Sub-TLV and its length. These fields are optional.

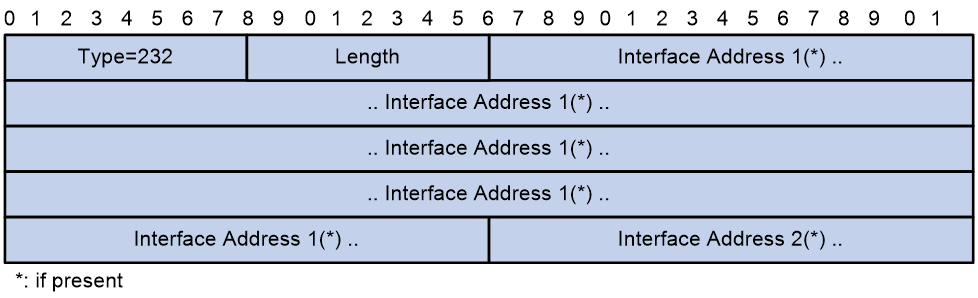

The type value for IPv6 Interface Address TLV is 232 (0xE8). This TLV is similar to IPv4 Interface Address TLV except that the IPv6 TLV uses 128-bit IPv6 addresses. Figure 29 shows the format of IPv6 Interface Address TLV:

Figure 29 Format of IPv6 Interface Address TLV

IPv6 Interface Address TLV contains the following fields:

¡ Type—A value of 236 indicates that this TLV is an IPv6 interface address TLV.

¡ Length—TLV length.

¡ Interface Address: IPv6 address of the interface enabled with IPv6 IS-IS. The interface address TLV of a hello packet carries the IPv6 link-local addresses of the interface that sent the packet. The interface address TLV of an LSP carries the IPv6 global unicast addresses assigned to the interface that sent the LSP.

If a field is asterisked, it is optional.

IS-ISv6 Adjacency

IS-IS uses hello packets to discover neighbor routers on the same link and establish adjacencies with them. After two routers become adjacent, they periodically send hello packets to each other to maintain the adjacency. To support IPv6, IPv6 IS-IS added the NLPID field and IPv6 Interface Address TLV to hello packets:

· NLPID is an 8-bit field that identifies the network protocol supported by IS-IS. For IPv6 IS-IS, the value for this field is 142 (0x8E). If a device supports IPv6 IS-IS, its neighbors can obtain this support information by reading the NLPID field in the hello packets received from the device.

· When a device receives a hello packet from a neighbor, it can obtain the IPv6 link-local addresses of the interface that sent the hello packet by reading the Interface Address field of IPv6 Interface Address TLV.

IPv6 BGP (also called BGP4+)

To support multiple network layer protocols, IETF upgraded BGP-4 to Multiprotocol BGP (MP-BGP). IPv6 BGP implements inter-AS IPv6 route transmission based on MP-BGP.

BGP-4 carries IPv4 route information in UPDATE messages, including the NLRI attribute and the NEXT_HOP attribute. To support IPv6, MP-BGP extended the NLRI and NEXT_HOP attributes:

· Two new route attributes, MP_REACH_NLRI and MP_UNREACH_NLRI, are introduced to replace the NLRI field of BGP-4 and provide IPv6 support.

¡ MP_REACH_NLRI—Carries feasible route prefixes and next hops for multiple network layer protocols.

¡ MP_UNREACH_NLRI—Carries unfeasible route prefixes for multiple network layer protocols.

· BGP next hop information supports IPv6 addresses. It can be IPv6 unicast addresses or IPv6 local link addresses. This information is carried by the MP_REACH_NLRI attribute instead of the NEXT_HOP attribute.

On a developing IPv4/IPv6 hybrid network, IPv4 peers can exchange IPv6 BGP routes over IPv4 BGP sessions to support IPv6 traffic forwarding. Likewise, IPv6 peers can exchange IPv4 BGP routes over IPv6 BGP sessions to support IPv4 traffic forwarding.

Similarities and differences between IPv4 and IPv6 routing protocols

Table 6 Comparisons between IPv4 and IPv6 routing protocols

|

Routing protocols |

Similarities |

Differences |

|

Route calculation and basic operation mechanism |

· RIP and RIPng use different packet formats in terms of multicast address, UDP port, and packet content. · RIP and RIPng process next hop information differently. · RIPng ensures data security by using IPv6 headers. |

|

|

OSPFv2/OSPFv3 |

Route calculation and basic operation mechanism |

· OSPFv3 added new LSA types and adjusted LSA formats to support IPv6 route advertisement. · OSPFv3 has greater extensibility than OSPF because it is independent of network protocols. · OSPFv3 supports processing unknown LSAs. |

|

IS-IS/IPv6 IS-IS |

· IPv6 IS-IS added the NLPID field to hello packets for IPv6 support. · IPv6 IS-IS introduced IPv6 Reachability TLV and IPv6 Interface Address TLV. |

|

|

BGP-4/IPv6 BGP |

Protocol architecture |

· IPv6 BGP upgraded the format of OPEN messages for IPv6 support. · IPv6 BGP introduced the MP_REACH_NLRI and MP_UNREACH_NLRI attributes and extended the NEXT_HOP attribute. |

Dual-stack PBR

Dual-stack policy-based routing (dual-stack PBR) uses user-defined policies to route packets. A policy can specify parameters for IPv4 or IPv6 packets that match specific criteria such as ACLs. The parameters include the next hop, output interface, default next hop, and default output interface.

Dual-stack PBR routes packets in the same way as IPv4 PBR and IPv6 PBR. However, dual-stack PBR can process both IPv4 packets and IPv6 packets.

To simplify routing configuration and save drive resources, configure dual-stack PBR on IPv4/IPv6 dual-stack nodes.

IPv6 multicast

About IPv6 multicast

Multicast enables a host to send packets to a group of hosts (a multicast group) in an IP network. The destination address in the packet is a multicast group address, and all the hosts in the multicast group can receive the packet.

As a technique that coexists with unicast and broadcast, the multicast technique effectively addresses the issue of point-to-multipoint data transmission. By enabling high-efficiency point-to-multipoint data transmission over a network, multicast greatly saves network bandwidth and reduces network load.

By using multicast technology, a network operator can easily provide bandwidth-critical and time-critical information services. These services include live webcasting, Web TV, distance learning, telemedicine, Web radio, and real-time video conferencing.

The most significant advantage of IPv6 multicast over IPv4 multicast is the greatly expanded addressing capability. Other techniques in IPv6 multicast, such as the group membership management, multicast packet forwarding, and multicast route establishment, are more or less that same as in IPv4 multicast. This document mainly describes IPv6 multicast addresses, and gives a general introduction to the differences between IPv4 multicast protocols and IPv6 multicast protocols.

IPv6 multicast address

IPv6 addresses are 128 bits long. An IPv6 address is divided into eight groups, and each 16-bit group is represented by four hexadecimal numbers, for example, FEDC:BA98:7654:3210:FEDC:BA98:7654:3210.

IPv6 multicast address format

An IPv6 multicast address identifies a group of interfaces that typically belong to different nodes. A packet sent to a multicast address will be received by all interfaces identified by the multicast address. A node can belong to one or more multicast groups.

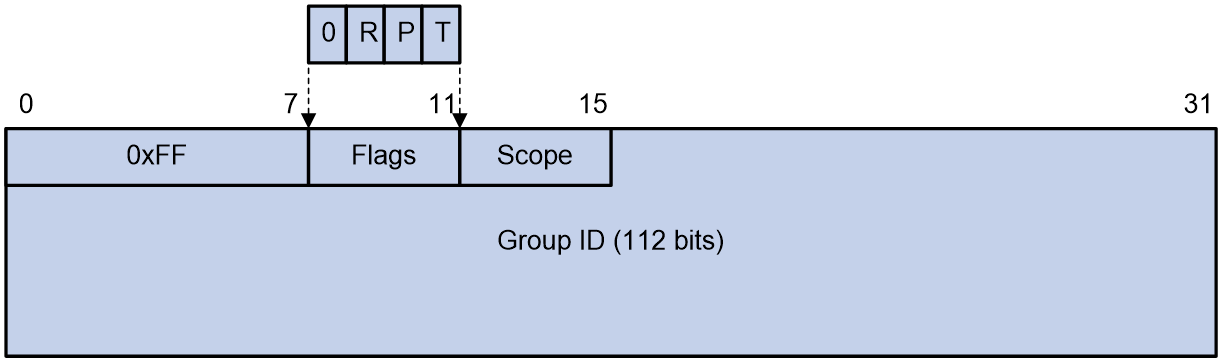

Figure 30 shows the format of an IPv6 multicast address.

Figure 30 IPv6 multicast address format

The following describes the fields of an IPv6 multicast address:

· 0xFF—Identifies an IPv6 multicast address. The most significant eight bits are 11111111.

· Flags—The Flags field contains four bits.

Table 7 Flags field description

|

Bit |

Value and meaning |

|

0 |

Reserved, must be set to 0. |

|

R |

· When set to 0, this address is an IPv6 multicast address without an embedded RP address. · When set to 1, this address is an IPv6 multicast address with an embedded RP address. (The P and T bits must also be set to 1.) |

|

P |

· When set to 0, this address is an IPv6 multicast address not based on a unicast prefix. · When set to 1, this address is an IPv6 multicast address based on a unicast prefix. (The T bit must also be set to 1.) |

|

T |

· When set to 0, this address is an IPv6 multicast address permanently-assigned by IANA. · When set to 1, this address is a transient or dynamically assigned IPv6 multicast address. |

· Scope—The Scope field contains four bits, which represent the scope of the IPv6 internetwork for which the multicast traffic is intended.

Table 8 Values of the Scope field

|

Meaning |

|

|

0, F |

Reserved. |

|

1 |

Interface-local scope. |

|

2 |

Link-local scope. |

|

3 |

Subnet-local scope. |

|

4 |

Admin-local scope. |

|

5 |

Site-local scope. |

|

6, 7, 9 through D |

Unassigned. |

|

8 |

Organization-local scope. |

|

E |

Global scope. |

· Group ID—The Group ID field contains 112 bits. It uniquely identifies an IPv6 multicast group in the scope that the Scope field defines. This group ID is permanent or dynamic, depending on the P bit setting.

Predefined IPv6 multicast addresses

_Ref112419362 shows the predefined IPv6 multicast addresses as stated in RFC 4291.

Table 9 Predefined IPv6 multicast addresses

|

Type |

Address |

Description |

|

Reserved Multicast Addresses |

FF0X:: |

These multicast addresses cannot be assigned to any multicast group. |

|

All Nodes Addresses |

FF01::1 (link-local) FF02::1 (node-local) |

N/A |

|

All Routers Addresses |

FF01::2 (node-local) FF02::2 (link-local) FF05::2 (site-local) |

N/A |

|

Solicited-Node Addresses |

FF02::1:FFXX:XXXX |

A Solicited-Node multicast address is formed by taking the low-order 24 bits of an address (unicast or anycast) and appending those bits to the prefix FF02::1:FF00::/104. For example, the Solicited-Node multicast address corresponding to the IPv6 address 4037::01:800:200E:8C6C is FF02::1:FF0E:8C6C. |

|

|

NOTE: X in _Ref112419362 represents any hexadecimal number in the range of 0 to F. |

Unicast-prefix-based IPv6 multicast addresses

RFC 3306 allows for dynamic, unicast-prefix-based allocation of IPv6 multicast addresses. Such a IPv6 multicast address contains the unicast network prefix.

Figure 31 Format of a unicast-prefix-based IPv6 multicast address

The following describes the fields of a unicast-prefix-based IPv6 multicast address:

· Flags—Identifies a unicast-prefix-based IPv6 multicast address. The R bit is set to 0, and both the P and T bits are set to 1.

· Scope—See Table 8.

· Reserved—This field is 8 bits long and must be 0.

· Plen—Indicates the actual number of bits in the network prefix field.

· Network prefix—Identifies the network prefix of the unicast subnet owning the multicast address. The valid length of the prefix is determined by the Plen field.

· Group ID—Identifies the multicast group.

For example, a network with a unicast prefix of 3FFE:FFFF:1::/48 will have a unicast prefix-based multicast prefix of FF3x:0030:3FFE:FFFF:0001::/96 (where x is any valid scope).

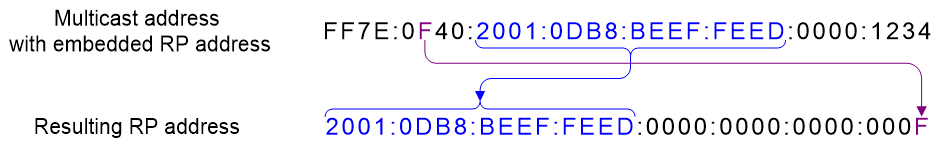

IPv6 multicast addresses embedded with an RP address

· Address format

The embedded RP mechanism enables a device to resolve the RP address from an IPv6 multicast group address to map the IPv6 multicast group to an RP.

Figure 32 shows the format of an IPv6 multicast address with an embedded RP address.

Figure 32 IPv6 multicast address with an embedded RP address

The following describes the fields of an IPv6 multicast address with an embedded RP address:

¡ Flags—The R, P, and, T bits must be set to 1.

¡ Reserved—Contains 4 bits and must be set to 0.

¡ RIID—Contains 4 bits and indicates the RP interface ID.

¡ Plen—Contains 8 bits and indicates the valid length (in bits) of the RP address prefix.

¡ Network prefix—Contains 64 bits and indicates the prefix of the RP address. The valid length of the prefix is determined by the Plen field.

¡ Group ID—Contains 32 bits and indicates the ID of the IPv6 multicast group.

· Calculating the RP address

You can obtain the RP address from a multicast address by performing the following steps:

a. Copy the first "plen" bits of the "network prefix" to a zeroed 128-bit address structure.

b. Replace the last 4 bits with the contents of "RIID".

c. Set all other bits to 0.

Figure 33 shows an example for calculating the RP address. In this example, the RP address is embedded in FF7E:F40:2001:DB8:BEEF:FEED::1234.

Figure 33 Calculating the RP address

· Example

The network administrator wants to set up an RP on subnet 2001:DB8:BEEF:FEED::/64. The group addresses would be something like FF7x:y40:2001:DB8:BEEF:FEED::/96, and then their RP address would be 2001:DB8:BEEF:FEED::y. There are still 32 bits of multicast group IDs to assign.

If the network administrator wants to have more multicast group IDs that can be assigned, he can select a shorter unicast prefix, for example, Plen = 0x20 = 32 bits. In this case, the group addresses will be FF7X:Y20:2001:DB8::/64, and there are 64 bits for group IDs to be assigned. The RP address embedded is 2001:DB8::Y/32.

|

|

NOTE: X is any valid scope, and Y is any number from 1 to F. |

IPv6 SSM addresses

IPv6 Source-Specific Multicast (SSM) addresses use the format of Unicast-prefix-based IPv6 multicast addresses. The Plen and Network prefix fields are both set to 0. The IPv6 SSM address range is FF3X::/32 (X is any valid scope value).

IPv6 multicast MAC addresses

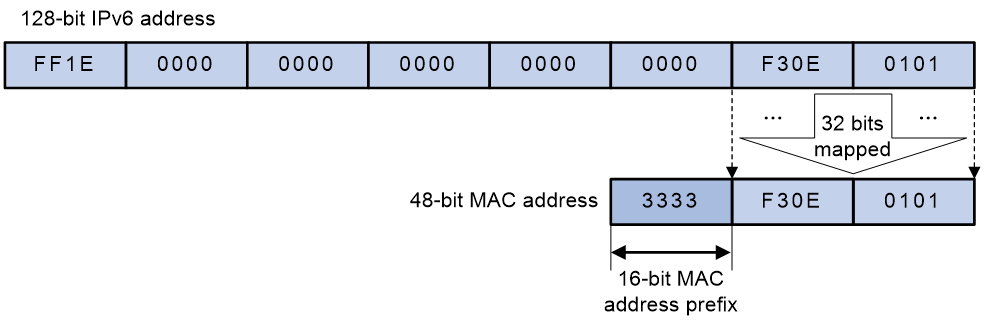

The most significant 16 bits of an IPv6 multicast MAC address are 0x3333. The least significant 32 bits are mapped from the least significant 32 bits of an IPv6 multicast address. As shown in Figure 34, FF1E::F30E:101 is mapped to 33-33-F3-0E-01-01.

Figure 34 IPv6-to-MAC address mapping

IPv6 multicast protocols

IPv6 supports the following multicast protocols:

· Multicast Listener Discovery (MLD)

· MLD snooping.

· IPv6 Protocol Independent Multicast (IPv6 PIM)

· IPv6 Multicast BGP (IPv6 MBGP)

Multicast group management protocol

MLD is based on the Internet Group Management Protocol (IGMP). MLD has two versions: MLDv1 (derived from IGMPv2) and MLDv2 (derived from IGMPv3).

IGMP uses messages with the IP protocol number as 2. MLD uses the following ICMPv6 messages with the IP protocol number as 58:

· MLD query messages (type 130)

· MLDv1 listener report messages (type 131)

· MLDv1 done messages(type 132)

· MLDv2 listener report messages (type 143)

MLD behaves exactly the same as IGMP except that it uses different message formats.

Multicast routing protocols

IPv6 PIM supports the following modes:

· IPv6 Protocol Independent Multicast-Dense Mode (IPv6 PIM-DM)

· IPv6 Protocol Independent Multicast-Sparse Mode (IPv6 PIM-SM)

· IPv6 Protocol Independent Multicast Source-Specific Multicast (IPv6 PIM-SSM)

· IPv6 Bidirectional Protocol Independent Multicast (IPv6 BIDIR-PIM)

When sending the following protocol messages, IPv6 PIM uses the link local address of the sending interface as the source IPv6 address:

· Hello messages

· Join or prune messages

· Graft-ack messages

· State refresh messages

· Assert messages

· Bootstrap messages

· Graft messages

When sending the following protocol messages, IPv6 PIM uses the global unicast address of the sending interface as the source IPv6 address:

· Register messages

· Register-stop messages

· C-RP advertisement messages

IPv6 multicast does not support MSDP. To receive multicast packets from other IPv6 PIM domains, use either of the following methods:

· Obtain multicast source addresses of other IPv6 PIM domains by using other methods (such as advertisements) and use IPv6 PIM-SSM join the related multicast groups.

· Use the embedded RP mechanism to obtain multicast source addresses of other IPv6 PIM domains and join the RPs of other IPv6 PIM domains.

To deliver IPv6 multicast information between two ASs, use MBGP.

Layer 2 multicast protocols

· MLD snooping

MLD snooping is similar to IGMP snooping.

· IPv6 PIM snooping

IPv6 PIM snooping is similar to PIM snooping.

· Multicast VLAN

Multicast VLAN for IPv4 is the same as that for IPv6.

Network security

Dual-Stack authentication

Overview

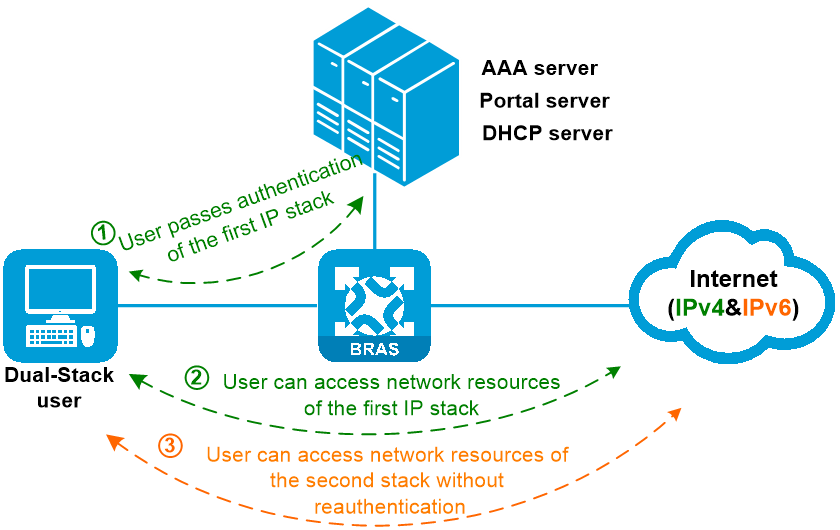

Dual-stack users exist before the network completely transits from IPv4 to IPv6. If the network is configured with IPoE Web or portal authentication only for one IP stack, dual-stack users can access network resources only of this IP stack, which fails to satisfy the needs of the users. If the network is configured with IPoE Web or portal authentication for both the IP stacks, dual-stack users must pass IPoE Web or portal authentication of both IP stacks before they can access the network resources, which complicates the login process of the users.

To resolve this issue, IPoE Web and portal dual-stack authentication are introduced. With the IPoE Web or portal dual-stack feature enabled, dual-stack portal users can access both IPv4 and IPv6 network resources after they pass IPv4 or IPv6 IPoE Web or portal authentication and come online.

Figure 35 Dual-Stack authentication

Benefits of IPoE Web or portal dual-stack authentication for IPv6 networks:

· Enhancing the user experience for dual-stack users

· Reducing the authentication pressure of the AAA server and Portal server.

· Simplifying network management and maintenance for the administrator.

Support of IPoE Web authentication for dual stack

The following describes the mechanism of IPoE Web dual-stack authentication:

1. After an IPoE Web user passes portal authentication of one IP stack (the first stack, for example, IPv4) after entering the username and password on the authentication page, the user can access network resources of this IP stack.

2. The device records the user's MAC address, username, authentication state, and other information.

3. Upon receiving a packet of the other IP stack (the second stack, for example, IPv6) from the user, the device compares the source MAC address with that for the user recorded by the device.

¡ If the MAC addresses are the same, the device allows the user to access network resources of the second stack without reauthentication.

¡ If the MAC addresses are different, the user needs to perform IPoE Web authentication of the second stack.

IPoE Web dual-stack authentication can be applied to the following scenarios:

· Dynamic dual-stack user authentication—Dual-stack users can come online after they pass authentication dynamically initiated by unclassified-IPv4 packets, unclassified-IPv6 packets, DHCPv4 packets, DHCPv6 packets, or ND RS packets. This type of authentication can be applied to mobile terminals with non-fixed IP addresses. For example, students access the campus network from mobile smart terminals.

· Static dual-stack user authentication—Dual-stack users can come online after they pass authentication statically initiated by IPv4 packets, IPv6 packets, ARP packets, NS packets, or NA packets. This type of authentication can be applied to terminals with static IP addresses. For example, students access the campus network from fixed network ports in the dormitory.

· Hybrid dual-stack user authentication—Dual-stack users can come online as both static users of one protocol stack and dynamic users of the other protocol stack. This type of authentication can be applied to the scenario when both terminals with fixed IP addresses and terminals with non-fixed IP addresses exist in the network. For example, if a campus is upgrading the campus network from IPv4 to IPv6, the static IPv4 and DHCPv6 address assignment method can be used, so that the IPv4 users can still access the network with static addresses and the IPv6 users can obtain dynamically assigned IPv6 addresses to access the network.

Support of portal authentication for dual stack

In a portal dual-stack authentication network, you can enable the portal dual-stack feature on an interface with portal authentication enabled as needed. Portal users on the interface can access both IPv4 and IPv6 network resources after passing one type (IPv4 or IPv6) of portal authentication

The following describes the mechanism of portal dual-stack authentication:

1. After a portal user passes portal authentication of one IP stack (the first stack, IPv4 or IPv6) after entering the username and password on the authentication page, the user can access network resources of this IP stack.

2. The device records the user's MAC address and IP address in the portal user entry for the user.

3. Upon receiving a packet of the other IP stack (the second stack, IPv6 or IPv4) from the user, the device compares the source MAC address with that in the portal user entry for the user.

¡ If the MAC addresses are the same, the device allows the user to access network resources of the second stack without reauthentication.

¡ If the MAC addresses are different, the user needs to perform portal authentication of the second stack.

|

IMPORTANT: The portal dual-stack feature takes effect on an interface only when both direct IPv4 portal authentication and direct IPv6 portal authentication are enabled on the interface. |

SAVI, SAVA, and SMA

Overview

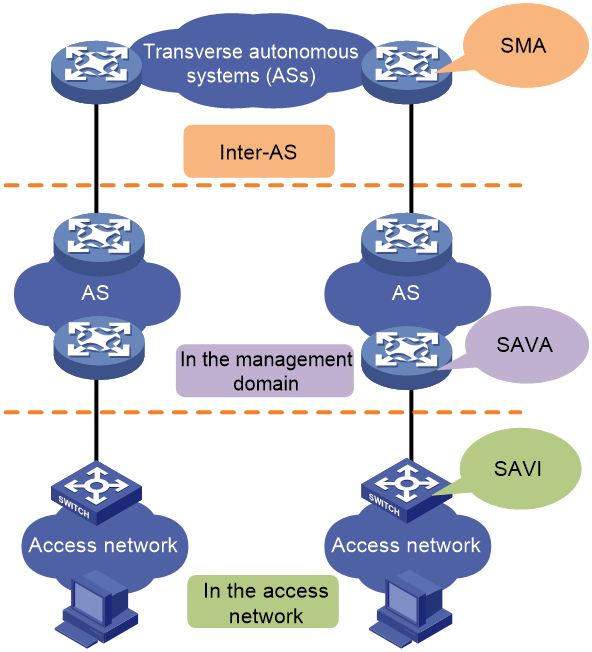

Source Address Validation checks the validity of the source IPv6 address of packets and discards packets with spoofed source IP addresses, thus improving the security of IPv6 networks. Source Address Validation Improvement (SAVI), Source Address Validation Architecture (SAVA), and State Machine Based Anti-Spoofing (SMA) are all technologies for source address validation. They can be deployed in specific network locations to meet different granularity of security needs.

· SAVI—Deployed in the access network. SAVI performs source address validation at the access level and provides host granularity source address validation, ensuring that hosts use only assigned valid IPv6 addresses.

· SAVA—Deployed on the border devices of the backbone network connecting to an access network. SAVA provides IPv6 prefix granularity protection in the management domain, so as to prevent core devices from source IPv6 address spoofing attacks initiated by illegal hosts.

· SMA—Deployed at the inter-AS level. SMA provides inter-AS granularity source address validation to prevent hosts and servers in the AS from source IPv6 address spoofing attacks initiated by illegal hosts.

Figure 36 Deployment locations of SAVI, SAVA, and SMA in networks

SAVI

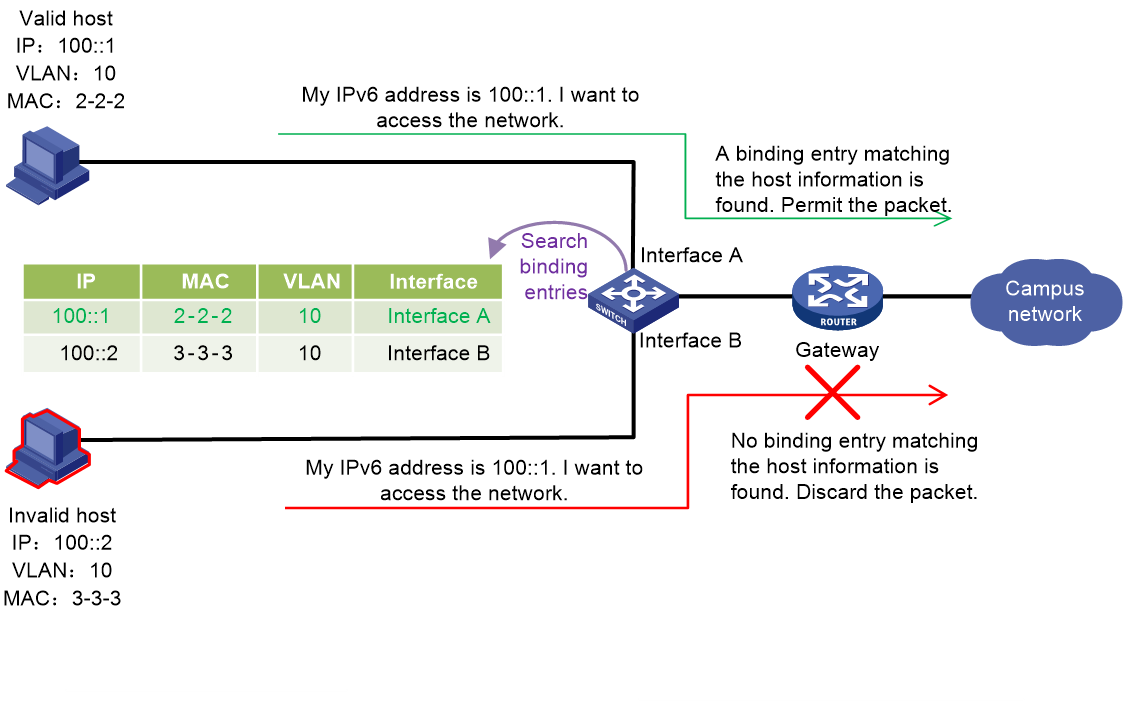

To prevent illegal attacks from DHCPv6 messages, ND messages, or IPv6 data packets with spoofed source addresses, you can enable SAVI on the device. The device creates binding entries that bind IP addresses and other information, and performs IPv6 source address validity check on packets based on the binding entries. Packets passing the validity check are forwarded. Packets sourced from an invalid address are dropped.

SAVI implements the validity check in combination with the DHCPv6 snooping, ND snooping, and IP source guard features.

Figure 37 SAVI mechanism

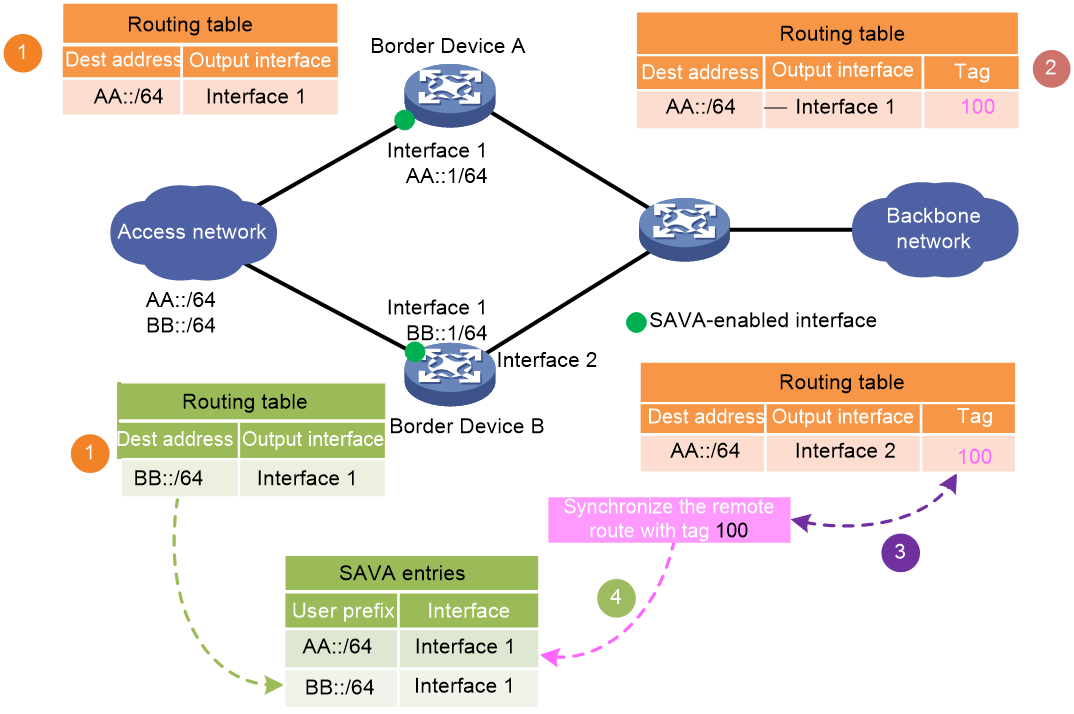

SAVA

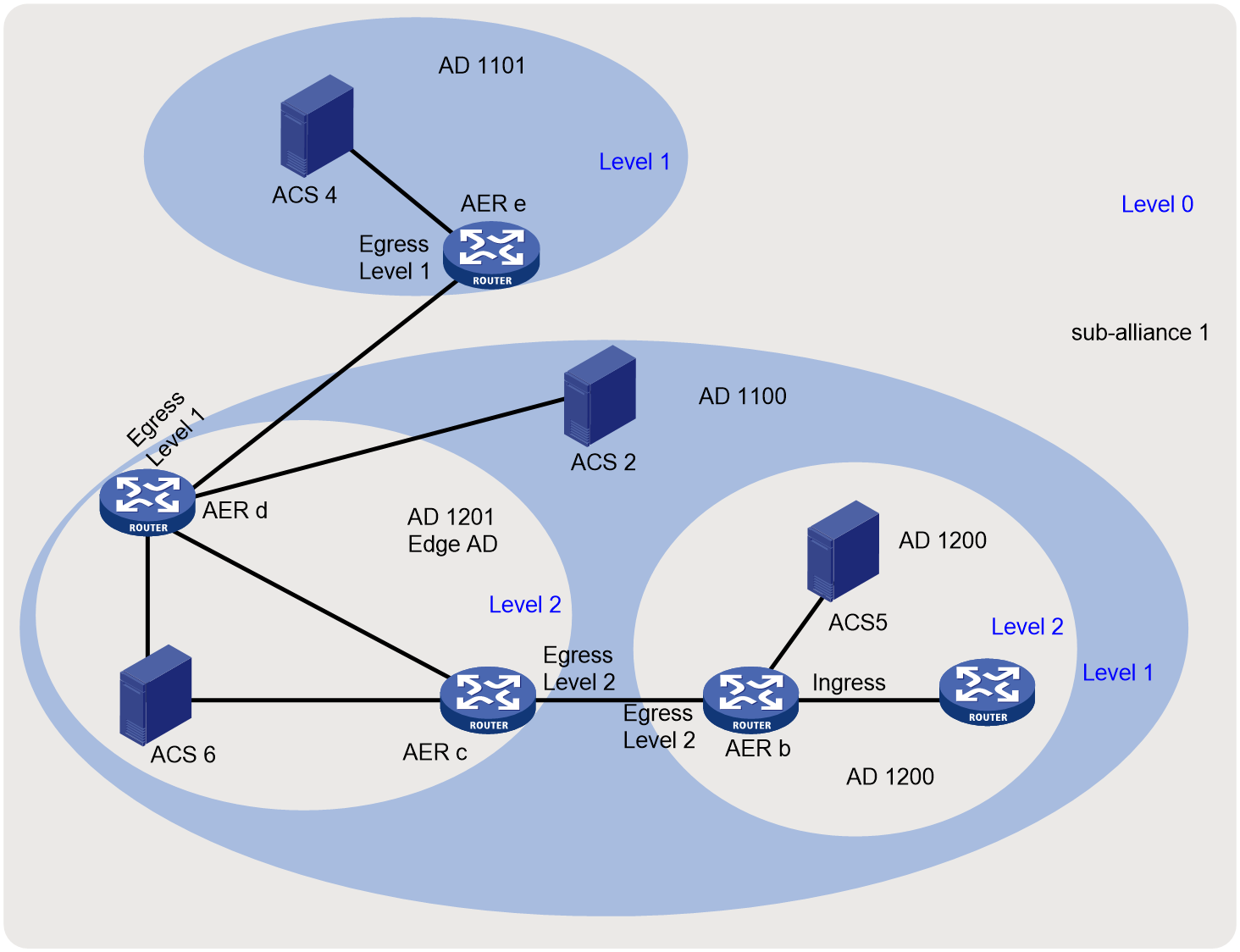

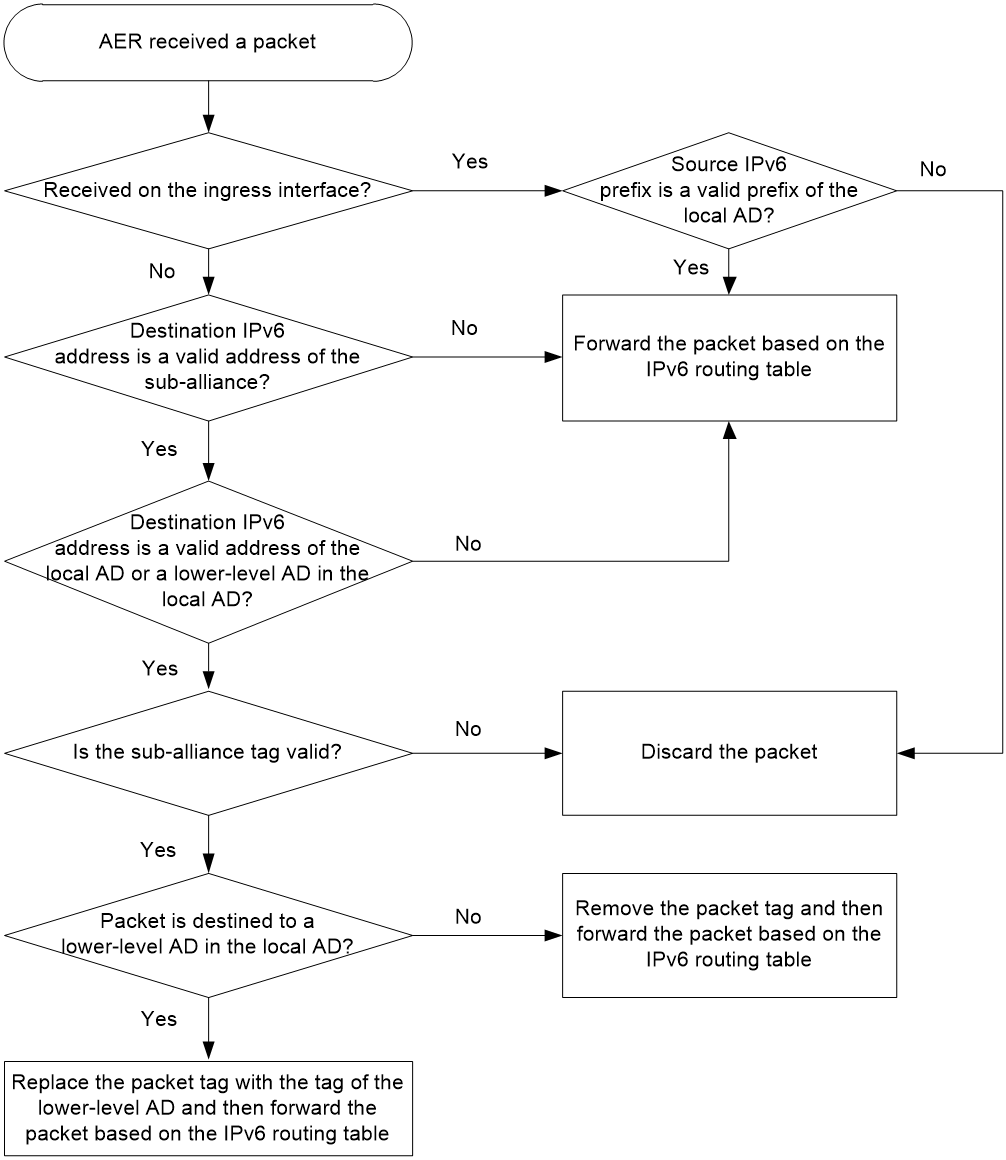

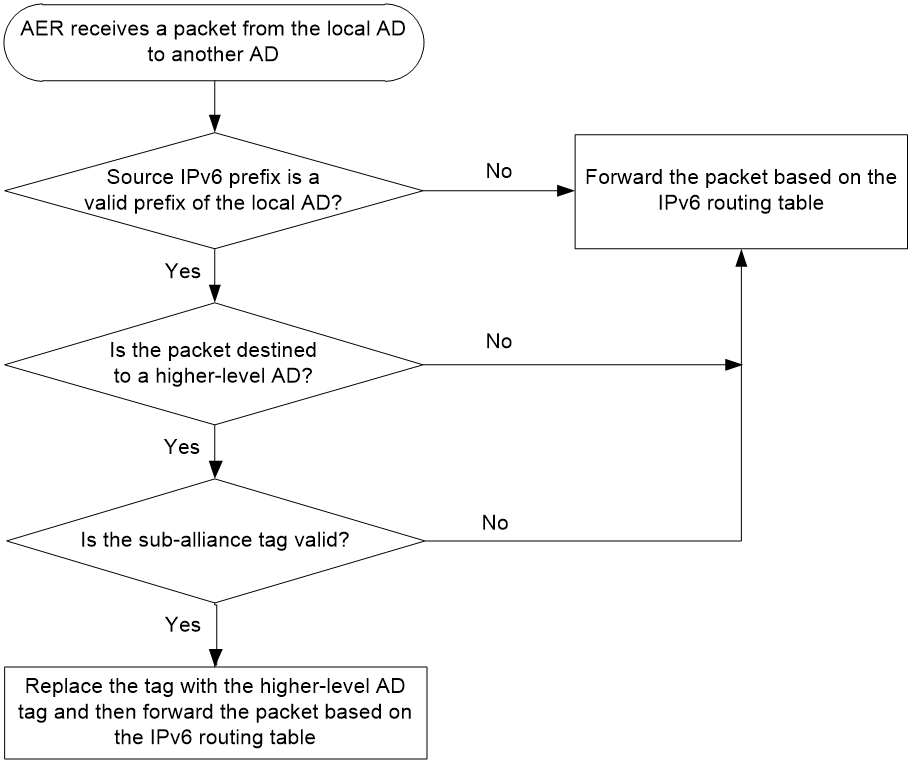

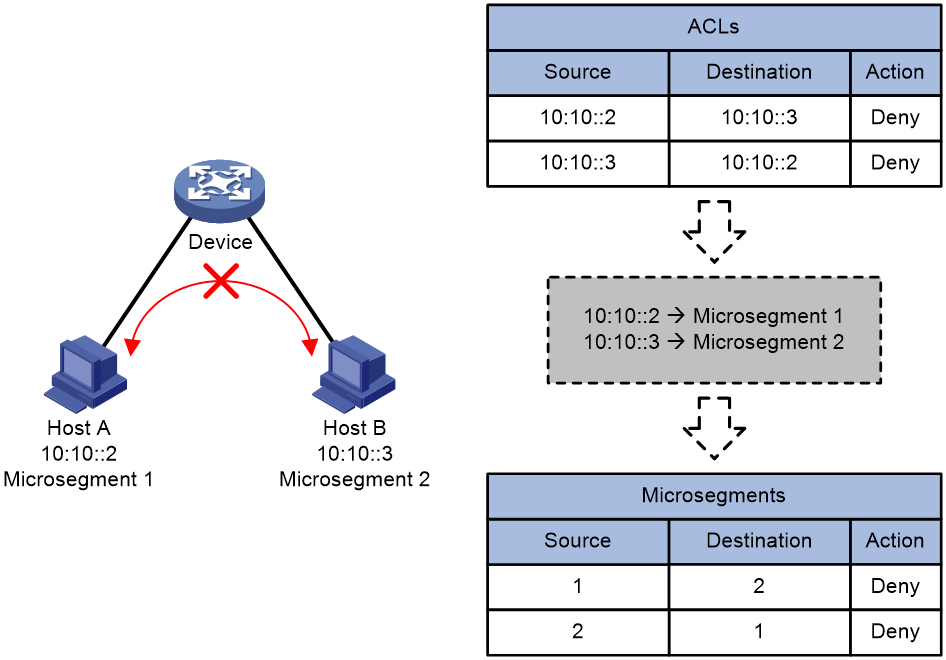

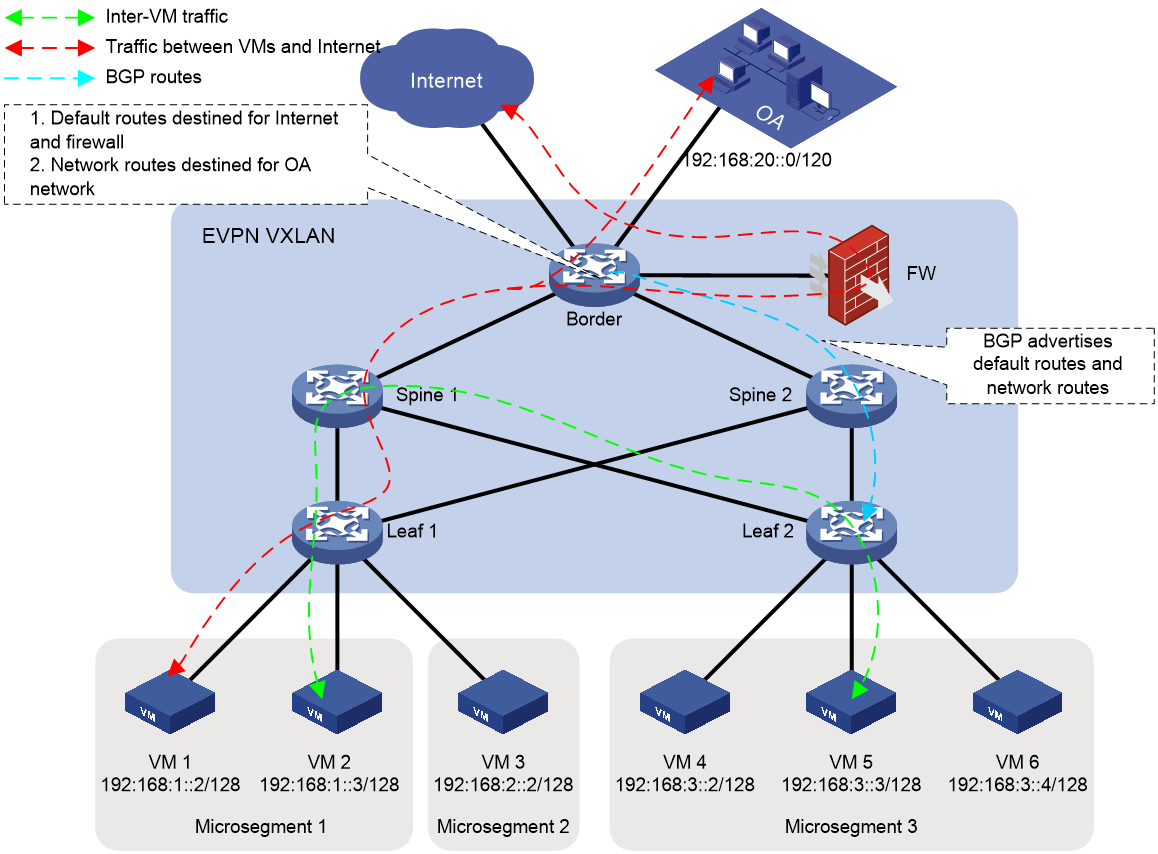

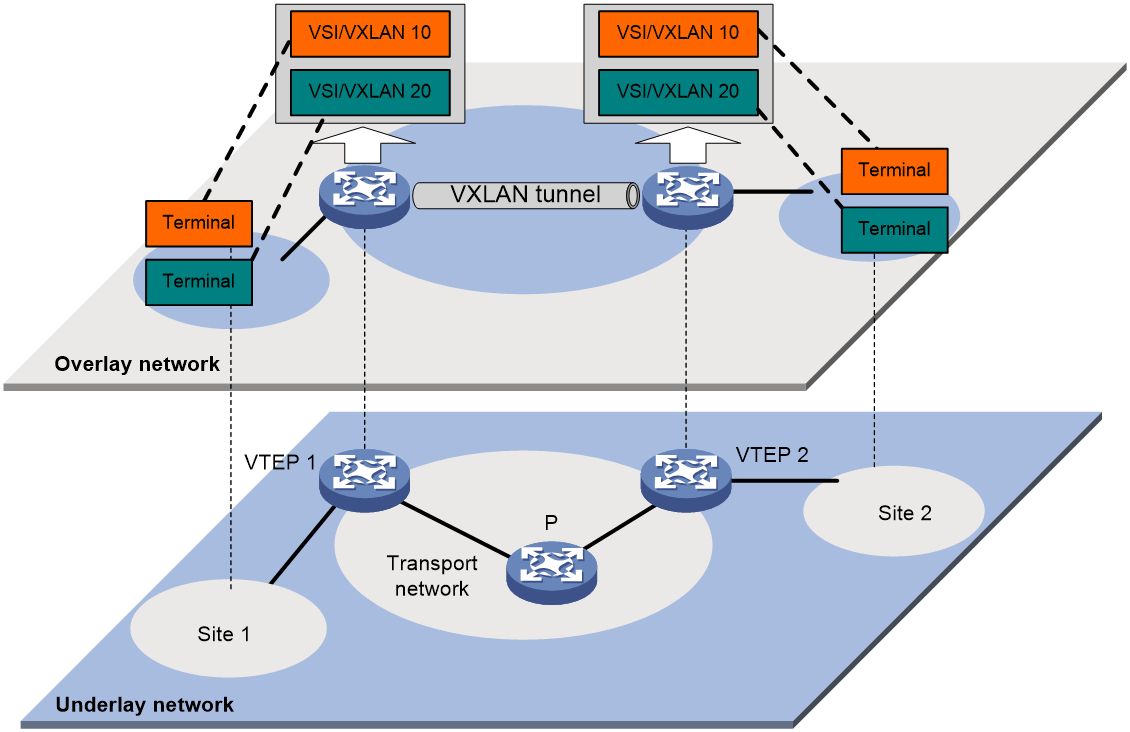

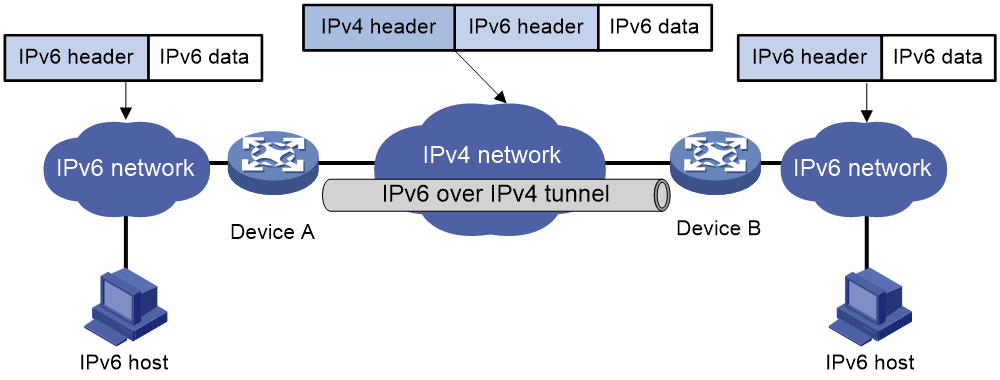

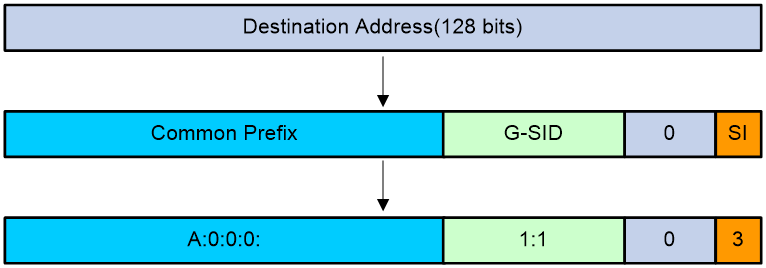

SAVA checks the validity of the source IPv6 address of packets based on routing information to prevent source IPv6 address spoofing attacks. SAVA is typically deployed on the border devices of the backbone network connecting to an access network. With SAVA enabled on an interface connecting to the access network, the device creates SAVA entries for all user prefixes in the access network. Upon receiving an IPv6 packet on the interface, the device searches for a SAVA entry that contains the source IPv6 address and the receiving interface. If a match is found, the device considers the source IPv6 address as valid and forwards the packet. If no match is found, the device considers the source IPv6 address as invalid and discards the packet.