
Comware 9 Open Programmability Technology White Paper

Copyright © 2024 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

The content in this article is general technical information, some of which may not apply to the product you purchased.

Overview

With the widespread use of networks and the emergence of new technologies, network scale keeps growing, and deployment and maintenance become increasingly complex. Traditional manual and semi-automatic O&M methods can no longer meet user requirements, mainly in the following respects:

· Slow service provisioning. If users have new service requirements, they must go through stages such as requirement analysis, function development, testing and verification, and release to complete service provisioning. The inability to timely introduce new services might cause users to miss out on new service markets.

· When there are a large number of network devices, the workload for manual configuration is huge and prone to errors.

· The interface standards vary by device manufacturer. Typically, a manufacturer's SDN controller can control and manage only its own devices, which prevents end-to-end automated network deployment.

Clearly, network O&M urgently requires automation and intelligence. However, automated and intelligent O&M depend on the openness of network devices.

Comware 9 exhibits excellent openness.

· Comware 9 runs on a native Linux system that uses an unmodified kernel and provides a standard Linux environment for third-party software. User-developed Linux software and standard open-source Linux software can run seamlessly on the Comware 9 system, which accelerates new service provisioning. Comware 9 achieves full modularity and fault isolation. If third-party software running on the Comware 9 system fails, the built-in modules of Comware 9 can still operate normally.

· Comware 9 supports containerization and allows the deployment of containers to run third-party applications. Users can deploy the Guest Shell container and Docker containers on Comware 9 devices to run applications based on these containers. These applications can run on the devices as long as they comply with the packaging, orchestration, and running requirements of Docker containers.

· Comware 9 is programmable. Administrators can operate services on devices, manage devices, and deploy services through third-party systems such as RESTful clients and NETCONF clients. For example, users can use RESTful or NETCONF to manage devices through standard application programming interfaces (APIs). The programmable framework of Comware 9 offers various configuration methods that adapt to different automated configuration models, which improves network deployment efficiency. Comware 9 also provides a publicly released programming environment and interfaces, which administrators can use to develop custom functions.

· Comware 9 supports visualization, which allows for monitoring device performance and network operation state through telemetry. Telemetry is a remote data collection technology for monitoring device performance and faults. It collects rich monitoring data in a timely manner, which helps quickly locate and resolve network issues and enables visual network O&M. Common telemetry technologies include gRPC and INT.

This document lists and briefly introduces the key open technologies supported by Comware 9.

Open technologies

RPM

Comware 9 supports installing software that is packaged with Red Hat Package Manager (RPM). It provides an RPM API for you to easily install, run, and manage RPM applications, which simplifies application management and maintenance.

RPM applications run in Comware and share system resources and network parameters with the Comware-native applications.

Containers

About containers

Containerization is a lightweight operating-system-level virtualization technology. A container is a standard software unit that can correspond to a single application (providing a service independently) or a group of applications (providing services together). It includes everything required for an application to run -- the code, a runtime, libraries, environment variables, and configuration files. Multiple containers can be deployed on a single host, with all containers sharing the host kernel, but isolated from each other. Common container management applications include Docker and LXC.

Comware 9 adopts a containerized architecture. Users can independently develop and deploy new applications through containers, which conveniently and quickly supplement the functions provided by the Comware 9 system. This significantly enhances the openness of Comware.

Containers supported by Comware 9

Comware 9 supports three types of containers: Comware container, Guest Shell container, and third-party application containers based on Docker. Different containers run independently and are isolated from each other. A fault in one container will not affect the operation of other containers.

Comware container

The Comware system is encapsulated within the Comware container, providing fundamental functions such as switching and routing. The configuration management and core functions of the Comware system operate within the Comware container.

Guest Shell container

To help users install and run third-party software in the Comware system without affecting the operation of the Comware container, H3C provides another official container, the Guest Shell container. The Guest Shell container embeds the CentOS 7 system. Through the Guest Shell container, users can install applications developed for CentOS 7 on Comware 9 devices. For example, users can install the agent application of a third-party network management system or controller in the Guest Shell container. The agent collects information such as the state of the device and network and reports it to the third-party network management system or controller. Through the agent, the device can also receive instructions issued by the third-party network management system or controller, which allows that system to manage and control the device.

The Guest Shell container supports all commands that the official Docker image version of CentOS 7 supports.

The Guest Shell container, host, Comware container, and third-party application containers are completely isolated. Users can install and operate applications in the Guest Shell container without affecting the running of other containers.

Compared with running a CentOS 7 container directly from the CentOS 7 image file, using the Guest Shell container has the following advantages:

· You can run the Guest Shell container after installing the image file provided by H3C.

· The Comware system encapsulates the commands for the Guest Shell container. After you log in to the Comware system, you can manage and maintain the Guest Shell container by executing the guestshell commands.

Third-party application containers

Some applications are released in the form of containers. To support such applications, the Comware 9 system integrates Docker container management function. By using simple command lines, users can deploy third-party application containers based on Docker on the Comware 9 system to achieve custom functions such as information collection and device state monitoring. For example, each device runs a resource collection agent container to collect real-time information such as the CPU, memory, and traffic, and reports it to the monitoring device for unified management.

Comware 9 supports Kubernetes and can serve as a worker node to accept scheduling and management from the Kubernetes Master, enabling large-scale and cluster-based deployment of third-party applications.

RESTful

About RESTful

Representational State Transfer (REST) is a lightweight, resource-oriented, cross-platform, and cross-language architectural style that features a clear structure and is easy to manage and extend. An application that adheres to the REST style is called RESTful. Comware devices provide a RESTful API. Users can call the RESTful API to configure and maintain Comware devices.

Operating mechanism

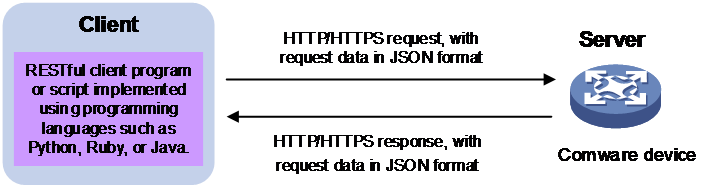

As shown in Figure 1, RESTful uses a C/S model. The RESTful client is a RESTful client program or script developed using programming languages such as Python, Ruby, or Java. The RESTful server is a network device. The process of configuring and maintaining devices through RESTful is as follows:

1. The client sends an HTTP/HTTPS request to the server to operate the RESTful API by using HTTP methods. The HTTP methods supported by RESTful include GET, PUT, POST, and DELETE.

2. The server completes the RESTful API operation based on the HTTP/HTTPS request. Then, it sends an HTTP/HTTPS response that contains the operation result to the client.

In the HTTP/HTTPS request and response, both the request and response data are encoded in JSON format.

Figure 1 RESTful operating mechanism
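The request and response exchange described above can be sketched from the client side as follows. This is a minimal illustration that uses only the Python 3 standard library on a management host. The device address, the resource path `/api/v1/example/resource`, and the payload field are hypothetical placeholders rather than the actual Comware RESTful API. For real resource URLs and the authentication procedure, see the RESTful API reference for the device.

```python
import json
import urllib.request

# HTTP methods that the text above lists as supported by RESTful.
SUPPORTED_METHODS = {"GET", "PUT", "POST", "DELETE"}


def build_restful_request(host, resource, method, payload=None):
    """Build (but do not send) an HTTPS request with a JSON-encoded body.

    `host` and `resource` are placeholders supplied by the caller.
    """
    method = method.upper()
    if method not in SUPPORTED_METHODS:
        raise ValueError("unsupported HTTP method: " + method)
    body = None if payload is None else json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url="https://{}{}".format(host, resource),
        data=body,
        method=method,
    )
    request.add_header("Accept", "application/json")
    if body is not None:
        request.add_header("Content-Type", "application/json")
    return request


def parse_restful_response(raw_body):
    """Decode the JSON body of a response into Python objects."""
    return json.loads(raw_body.decode("utf-8")) if raw_body else None


# Hypothetical host, resource path, and payload, for illustration only.
request = build_restful_request(
    "192.0.2.1", "/api/v1/example/resource", "put", {"Description": "uplink"}
)
```

To actually send the request, pass it to `urllib.request.urlopen()` and feed the bytes returned by the response's `read()` method to `parse_restful_response()`. The sketch omits authentication and certificate handling, which a real client needs.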

Tcl

Comware 9 provides a built-in Tool Command Language (Tcl) interpreter. From user view, you can use the tclsh command to enter Tcl configuration view and execute the following commands:

· Tcl 8.5 commands.

· Comware commands.

The Tcl configuration view is equivalent to the user view. You can use Comware commands in Tcl configuration view in the same way they are used in user view.

Python

Python is a simple and powerful programming language with efficient high-level data structures. It provides a simple and effective approach to object-oriented programming. Its concise syntax, dynamic typing, and interpreted nature make it an ideal scripting language for rapid application development on many platforms and in many areas.

On the Comware 9 system, you can use Python to perform the following tasks:

· Execute the python command to execute Python scripts to implement automatic device configuration.

· Enter Python shell to configure the device by using the following items:

¡ Python 2.7 commands.

¡ Python 2.7 standard API.

¡ Extended API. Extended API is Comware's extension to Python, aiming to facilitate users in system configuration.

NETCONF

About NETCONF

Network Configuration Protocol (NETCONF) is an XML-based network management protocol. It provides programmable mechanisms to manage and configure network devices. Through NETCONF, you can configure device parameters, retrieve parameter values, and collect statistics. For a network that has devices from multiple vendors, you can develop a NETCONF-based NMS to configure and manage devices in a simple and effective way.

NETCONF structure

NETCONF has the following layers: content layer, operations layer, RPC layer, and transport protocol layer.

Table 1 NETCONF layer and XML layer mappings

| NETCONF layer | XML layer | Description |
|---|---|---|
| Content | Configuration data, operational state data, and statistics | Contains a set of managed objects, which can be configuration data, operational state data, and statistics. For information about access permissions for the data nodes, see the NETCONF XML API references for the device. |
| Operations | <get>, <get-config>, <edit-config>… | Defines a set of base operations invoked as RPC methods with XML-encoded parameters. NETCONF defines a comprehensive set of base operations for managed devices. |
| RPC | <rpc> and <rpc-reply> | Provides a simple, transport-independent mechanism for encoding RPCs. The <rpc> and <rpc-reply> elements encapsulate NETCONF requests and responses (that is, the contents of the operations layer and content layer). |
| Transport protocol | Non-FIPS mode: Console, Telnet, SSH, HTTP, HTTPS, and TLS. FIPS mode: Console, SSH, HTTPS, and TLS. | Provides a connection-oriented, reliable, and sequenced data link for NETCONF. In non-FIPS mode, NETCONF supports the following: CLI login methods and protocols such as Telnet, SSH, and Console (NETCONF over SSH, NETCONF over Telnet, and NETCONF over Console); HTTP and HTTPS (NETCONF over HTTP and NETCONF over HTTPS); encapsulation as Simple Object Access Protocol (SOAP) messages transmitted over HTTP or HTTPS (NETCONF over SOAP over HTTP and NETCONF over SOAP over HTTPS). In FIPS mode, NETCONF supports the following: CLI login methods and protocols such as SSH and Console (NETCONF over SSH and NETCONF over Console); NETCONF over HTTPS sessions; NETCONF over SOAP over HTTPS sessions. |
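To make the layering in Table 1 concrete, the following sketch builds a NETCONF request with the Python 3 standard library. It wraps a `<get-config>` operation (operations layer) in an `<rpc>` element (RPC layer) and serializes it to a string that a client would hand to the transport layer. The namespace is the base NETCONF namespace from RFC 6241. The function name and the message ID are illustrative choices, and the sketch does not use any Comware-specific data model.

```python
import xml.etree.ElementTree as ET

# Base NETCONF namespace defined by RFC 6241.
NETCONF_BASE_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"


def build_get_config_rpc(message_id, datastore="running"):
    """Return a serialized <rpc> that wraps a <get-config> operation."""
    # Serialize the base namespace as the default namespace (no prefix).
    ET.register_namespace("", NETCONF_BASE_NS)
    # RPC layer: the <rpc> envelope carries a message-id attribute.
    rpc = ET.Element(
        "{%s}rpc" % NETCONF_BASE_NS, {"message-id": str(message_id)}
    )
    # Operations layer: the base operation being invoked.
    get_config = ET.SubElement(rpc, "{%s}get-config" % NETCONF_BASE_NS)
    # The <source> element names the datastore to read from.
    source = ET.SubElement(get_config, "{%s}source" % NETCONF_BASE_NS)
    ET.SubElement(source, "{%s}%s" % (NETCONF_BASE_NS, datastore))
    return ET.tostring(rpc, encoding="unicode")


rpc_xml = build_get_config_rpc(101)
```

The content layer appears in the device's `<rpc-reply>`, which carries the requested configuration data. A client library such as ncclient performs this framing internally, so hand-building the XML is mainly useful for understanding the protocol or for SOAP-based scripts.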

Basic NETCONF architecture

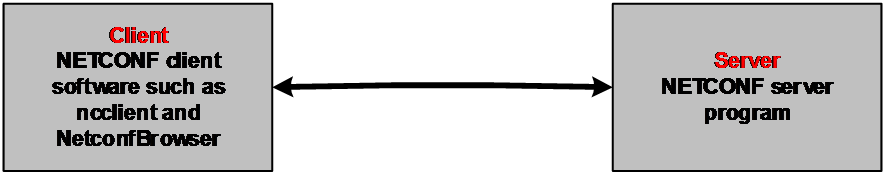

Figure 2 Basic NETCONF architecture

NETCONF architecture is based on the client-server model.

· The client needs to install NETCONF client software (such as ncclient and NetconfBrowser), or run a script or program based on SOAP requests. The main functions of the client are as follows:

¡ Configure and manage network devices through NETCONF.

¡ Actively query the state of the network device.

¡ Receive alarms and events actively transmitted by network devices to learn their current state.

· The server (the network device to be managed) needs to run the NETCONF server program, that is, support NETCONF. The server's main tasks are to respond to client requests and to proactively notify the client when a fault or event occurs.

Ansible

About Ansible

Ansible is an automated O&M tool developed in Python. It combines the advantages of many O&M tools to implement batch system configuration, program deployment, and command execution. Ansible uses Secure Shell (SSH) to connect to network devices for centralized configuration management.

Network architecture

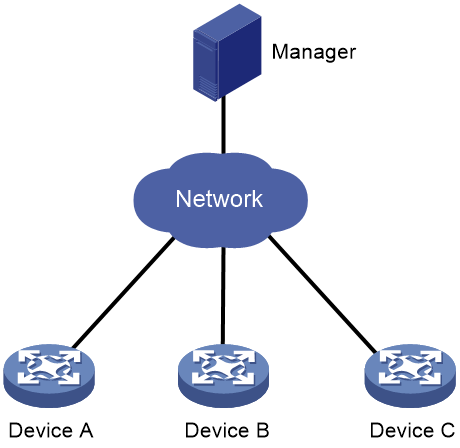

As shown in Figure 3, an Ansible system consists of the following elements:

· Manager—A host installed with the Ansible environment. For more information about the Ansible environment, see Ansible documentation.

· Managed devices—Devices to be managed. These devices do not need to install any agent software. They only need to be able to act as an SSH server. The manager communicates with managed devices through SSH to deploy configuration files.

Comware devices can act as managed devices.

Figure 3 Ansible network architecture

How Ansible works

The following steps describe how Ansible works:

1. Edit the configuration file on the manager.

2. The manager (SSH client) initiates an SSH connection to the device (SSH server).

3. The manager deploys the configuration file to the device.

4. The device automatically executes the configuration issued by the manager.

Features

Ansible provides the following features:

· The manager establishes a connection and exchanges data with the devices based on SSH, without the need to install client software on the devices, making deployment easy.

· Ansible is based on Python and is easy to learn and understand.

· Ansible supports bulk deployment.

· Ansible works in push mode. The manager pushes configuration to the devices to configure and manage them.

· Ansible focuses on simplicity and speed, making it suitable for scenarios where devices need to be quickly deployed and run.

gRPC

About gRPC

gRPC is an open source remote procedure call (RPC) system initially developed at Google. It uses HTTP 2.0 and provides network device configuration and management methods that support multiple programming languages.

Currently, the most widely used telemetry technology is gRPC-based telemetry. It is a model-driven telemetry technology that provides protobuf definition files. Third-party software can directly use gRPC to communicate with Comware, or use the H3C SDK interface encapsulated based on gRPC to communicate with Comware.

gRPC supports both dial-in and dial-out modes. With gRPC-based telemetry configured, a device automatically collects various statistics (such as CPU, memory, and interface statistics). It then encodes the collected data in Protocol Buffers format according to the subscription requirements of the collector and reports the data to the collector through gRPC. This enables more timely and efficient data collection.

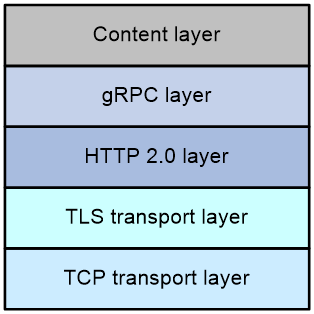

gRPC protocol stack

Table 2 describes the gRPC protocol stack layers from the bottom up.

Table 2 gRPC protocol stack layers

| Layer | Description |
|---|---|
| TCP transport layer | Provides connection-oriented reliable data links. |
| TLS transport layer | (Optional.) Provides channel encryption and mutual certificate authentication. |
| HTTP 2.0 layer | The transport protocol for gRPC. HTTP 2.0 provides enhanced features such as header field compression, multiplexing requests on a single connection, and flow control. |
| gRPC layer | Defines the interaction format for RPC calls. Public proto definition files such as the grpc_dialout.proto file define the public RPC methods. |
| Content layer | Carries encoded service data. This layer supports the following encoding formats: Google Protocol Buffer (GPB), a highly efficient binary encoding format that uses proto definition files to describe the structure of the data to be serialized and is more efficient in data transmission than text formats such as JSON; and JavaScript Object Notation (JSON), a lightweight, language-neutral text format for storing and representing data that is easy to read and write. If service data is in JSON format, you can use the public proto files to decode the data without having to use the proto file specific to the service module. |
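The size difference between the two content-layer encodings can be illustrated with a small sketch. The code below hand-encodes two integer fields using the Protocol Buffers wire format for varint fields and compares the result with compact JSON for the same values. The message layout (field 1 for CPU usage, field 2 for memory usage) and the field names are hypothetical and are not taken from the H3C proto files. Real collectors would use code generated from the published proto files rather than hand-written encoders.

```python
import json


def encode_varint(value):
    """Encode a non-negative integer as a Protocol Buffers varint."""
    if value < 0:
        raise ValueError("this sketch handles non-negative integers only")
    out = bytearray()
    while True:
        low_bits = value & 0x7F
        value >>= 7
        if value:
            out.append(low_bits | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low_bits)
            return bytes(out)


def encode_uint_field(field_number, value):
    """Encode one varint field: key = (field_number << 3) | wire type 0."""
    return encode_varint(field_number << 3) + encode_varint(value)


# Hypothetical sample: field 1 = CPU usage (%), field 2 = memory usage (%).
sample = {"cpu_usage": 37, "mem_usage": 62}
gpb_bytes = (
    encode_uint_field(1, sample["cpu_usage"])
    + encode_uint_field(2, sample["mem_usage"])
)
json_bytes = json.dumps(sample, separators=(",", ":")).encode("utf-8")
```

For this sample, the binary encoding is 4 bytes and the compact JSON text is 31 bytes, because the binary form carries field numbers instead of field names. The trade-off is that the receiver needs the matching proto definition to interpret the binary data.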

Network architecture

As shown in Figure 5, the gRPC network uses the client/server model. It uses HTTP 2.0 for packet transport.

Figure 5 gRPC network architecture

The gRPC network uses the following mechanism:

1. The gRPC server listens to connection requests from clients at the gRPC service port.

2. A user runs the gRPC client application to log in to the gRPC server, and uses methods provided in the .proto file to send requests.

3. The gRPC server responds to requests from the gRPC client.

H3C devices can act as gRPC servers or clients.

NOTE: The .proto files are written in the Protocol Buffers language, a data description language developed by Google. It is used to define custom data structures and generate code for various programming languages. It simplifies serialization and data structuring, and Protocol Buffers data can be parsed faster than XML.

Telemetry modes

The device supports the following telemetry modes:

· Dial-in mode—The device acts as a gRPC server and the collectors act as gRPC clients. A collector initiates a gRPC connection to the device to subscribe to device data.

Dial-in mode supports the following operations:

¡ Get—Obtains device status and settings.

¡ CLI—Executes commands on the device.

¡ gNMI—gRPC Network Management Interface operations, which include the following subtypes of operations:

- gNMI Capabilities—Obtains the capabilities of the device.

- gNMI Get—Obtains the status and settings of the device.

- gNMI Set—Deploys settings to the device.

- gNMI Subscribe—Subscribes to data push services provided by the device. The data might be generated by periodical data collection or event-triggered data collection.

· Dial-out mode—The device acts as a gRPC client and the collectors act as gRPC servers. The device initiates gRPC connections to the collectors and pushes device data to the collectors as configured.

INT

About INT

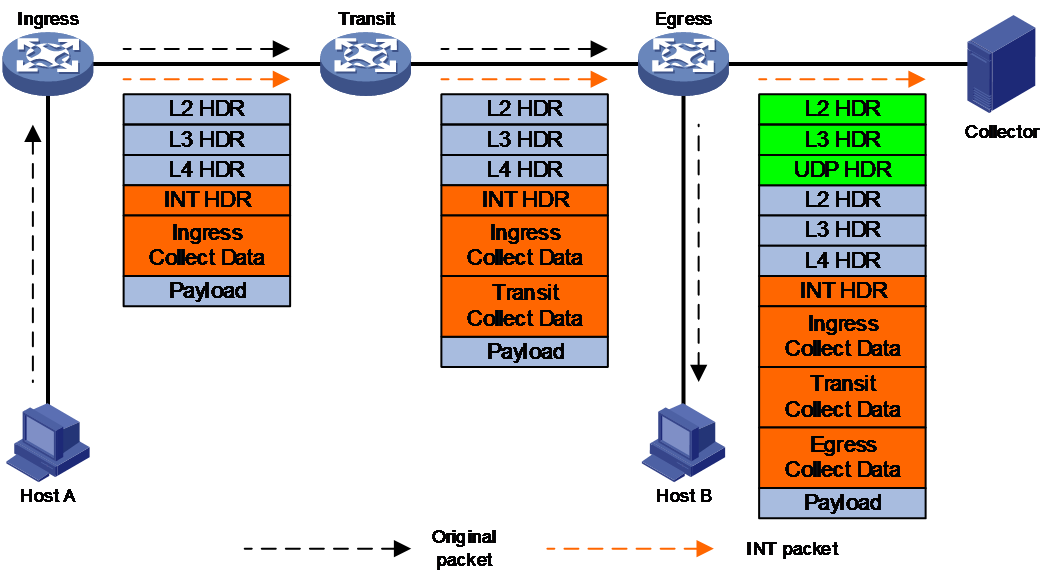

In-band Telemetry (INT) is a network monitoring technology that collects data from network devices. As shown in Figure 6, the ingress and transit nodes on the transmission path add the collected data to the packet. The egress node passes the collected data to the collector through the packet. The network management software deployed on the collector analyzes and extracts useful information from the monitoring data, aiming to monitor the network device performance and network operation.

Figure 6 INT operating mechanism

Data collected by INT

INT is mainly used to collect data plane information such as the path that packets pass through and their transmission delay. The monitoring granularity of INT is per packet, which can achieve complete real-time network state monitoring. Through INT technology, it is possible to monitor the ingress port, egress port, and queue information of each device on the packet forwarding path, the ingress timestamp and egress timestamp, and queue congestion information.
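The per-packet collection model can be sketched as a small simulation. The sketch below is not device code. It only models the roles described above: ingress and transit nodes append their data to the packet, the egress node hands the accumulated data to the collector, and the collector derives per-device information from it. All class, function, and field names are assumptions made for illustration, and the metadata is limited to ports and timestamps.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HopMetadata:
    """Per-hop data of the kinds the text lists (ports and timestamps)."""
    device: str
    ingress_port: str
    egress_port: str
    ingress_ts_ns: int
    egress_ts_ns: int


@dataclass
class Packet:
    payload: bytes
    int_metadata: List[HopMetadata] = field(default_factory=list)


def add_hop_metadata(packet: Packet, hop: HopMetadata) -> Packet:
    """Ingress or transit node: append this node's data to the packet."""
    packet.int_metadata.append(hop)
    return packet


def export_at_egress(packet: Packet) -> List[HopMetadata]:
    """Egress node: hand the accumulated per-hop data to the collector."""
    return list(packet.int_metadata)


def per_hop_delay_ns(report: List[HopMetadata]) -> Dict[str, int]:
    """Collector side: time the packet spent inside each device."""
    return {hop.device: hop.egress_ts_ns - hop.ingress_ts_ns for hop in report}


# Illustrative values only.
packet = Packet(payload=b"data")
add_hop_metadata(packet, HopMetadata("ingress", "p1", "p2", 100, 350))
add_hop_metadata(packet, HopMetadata("transit", "p3", "p4", 900, 1000))
delays = per_hop_delay_ns(export_at_egress(packet))
```

Because the data travels with each packet, the collector sees the exact path and per-device forwarding time of that packet, which is what gives INT its per-packet granularity.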

Telemetry stream

About telemetry stream

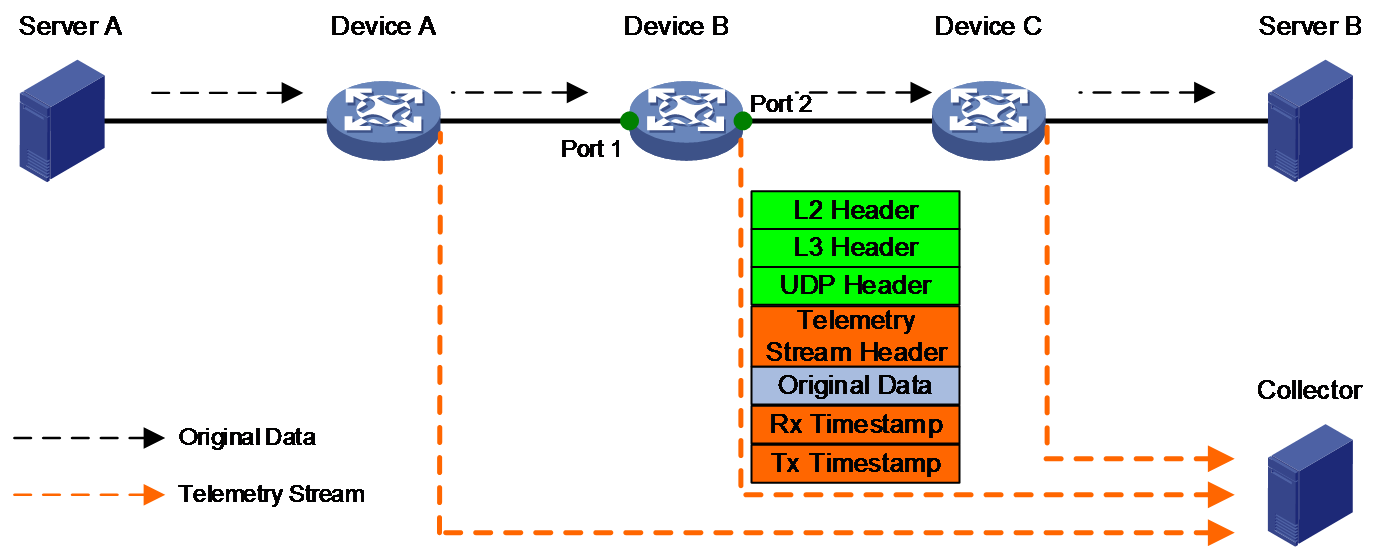

Telemetry stream is a network traffic monitoring technology based on packet sampling. It is mainly used to accurately determine traffic transmission paths and transmission delay. In networks with strict real-time requirements, it is necessary to accurately identify the device port that takes the longest time to forward packets. A network device equipped with a chip that supports telemetry stream can collect the input and output interfaces of packets and the timestamps at which packets enter and exit the device, and proactively report the information to the collector. This enables network management software to monitor the transmission paths and delay of packets in the network, so that administrators can deploy targeted configuration to optimize the network architecture and reduce network delay.

Operating mechanism

Taking Device B in Figure 7 as an example, telemetry stream works as follows:

1. All devices involved in the measurement use PTP to achieve nanosecond-level time synchronization.

2. The device samples matching packets on the input interface based on the specified sampling rate and copies the packets.

3. The device encapsulates the replicated packets with the following information:

¡ Telemetry stream padding header (records the input and output interfaces of original packets).

¡ UDP header and Layer 2 and Layer 3 headers (record the port number and MAC/IP address of the collector).

¡ Rx timestamp.

¡ Tx timestamp.

4. The device sends the sampled packets to the collector. The Rx and Tx timestamps of the sampled packets include the information of the devices they belong to (Device IDs).

Figure 7 Telemetry stream operating mechanism

Multiple nodes each send collected data to the collector. The collector can calculate the path and delay based on the collected information.

· Transmission delay of traffic passing through the specified device = Tx timestamp – Rx timestamp.

· Transmission delay of traffic passing through multiple devices = Tx timestamp for the device on which the output interface resides – Rx timestamp for the device on which the input interface resides.
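The two delay formulas above translate directly into code on the collector side. The following sketch assumes a hypothetical report structure. The field names and the nanosecond unit are illustrative and do not reflect the actual telemetry stream packet format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamReport:
    """One sampled-packet report as seen by the collector (hypothetical)."""
    device_id: int
    input_interface: str
    output_interface: str
    rx_timestamp_ns: int
    tx_timestamp_ns: int


def device_delay_ns(report):
    """Delay through one device = Tx timestamp - Rx timestamp."""
    return report.tx_timestamp_ns - report.rx_timestamp_ns


def path_delay_ns(first, last):
    """Delay across devices = Tx of the last device - Rx of the first."""
    return last.tx_timestamp_ns - first.rx_timestamp_ns


# Illustrative values only.
report_a = StreamReport(1, "if1", "if2", 1000, 1400)
report_b = StreamReport(2, "if1", "if3", 1900, 2600)
delay_b = device_delay_ns(report_b)
end_to_end = path_delay_ns(report_a, report_b)
```

With these sample values, the delay through the second device is 700 ns and the delay across both devices is 1600 ns. The cross-device subtraction is meaningful only because all devices share a synchronized clock through PTP, as required in step 1.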

Data that can be monitored by telemetry stream

The data that can be monitored by telemetry stream includes: device ID, traffic input interface and its timestamp, and traffic output interface and its timestamp. The device ID, specified when configuring telemetry stream, is used to uniquely identify a device on the transmission path.

ERSPAN

Encapsulated Remote Switch Port Analyzer (ERSPAN) is a Layer 3 remote port mirroring technology that copies packets passing through a port, VLAN, or CPU and routes the packets to the remote monitoring device for monitoring and troubleshooting.

Users can define the packets to be mirrored as required. For example, they can mirror TCP three-way handshake packets to monitor the state of TCP connection establishment or mirror RDMA signaling packets to monitor the state of RDMA sessions.

ERSPAN supports port mirroring and flow mirroring.

Flow groups and MOD

About flow groups and MOD

Mirror On Drop (MOD) is a technology that monitors packet loss occurring during forwarding inside a device. As soon as MOD detects a dropped packet, it collects the drop time, the drop reason, and the characteristics of the dropped packet, and reports the information to the remote collector. This allows administrators to promptly learn about packet loss inside the device.

MOD uses the flow entries generated based on a flow group to detect dropped packets. A flow group allows you to identify flows based on flow generation rules. The device extracts traffic characteristics (for example, 5-tuples in the packet header) and generates flow entries according to the header fields specified in a flow generation rule.

Operating mechanism

MOD uses the flow entries generated based on a flow group to detect dropped packets as follows:

1. The device generates flow entries based on a flow group.

2. Based on the packet drop reason list of MOD, the device monitors packet drops for packets matching the flow entries.

3. If a packet is dropped for a reason on the packet drop reason list, the device sends the packet drop reason and the characteristics of the dropped packet (flow entry matching the packet) to the collector.
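The three steps above can be sketched as a small simulation. This is a conceptual model only, not device code. The flow generation rule is assumed to be the 5-tuple mentioned earlier, and the class name, method names, and drop reason strings are hypothetical placeholders.

```python
from collections import namedtuple

# Flow generation rule assumed here: the 5-tuple from the packet header.
FlowKey = namedtuple(
    "FlowKey", ["src_ip", "dst_ip", "protocol", "src_port", "dst_port"]
)


def extract_flow_key(packet):
    """Extract the header fields named by the flow generation rule."""
    return FlowKey(*(packet[name] for name in FlowKey._fields))


class ModMonitor:
    def __init__(self, drop_reason_list):
        self.drop_reason_list = set(drop_reason_list)
        self.flow_entries = set()
        self.reports = []  # stands in for messages sent to the collector

    def generate_flow_entry(self, packet):
        """Step 1: generate a flow entry based on the flow group."""
        key = extract_flow_key(packet)
        self.flow_entries.add(key)
        return key

    def on_packet_drop(self, packet, reason):
        """Steps 2 and 3: report drops that match an entry and a listed reason."""
        key = extract_flow_key(packet)
        if key not in self.flow_entries or reason not in self.drop_reason_list:
            return None
        report = {"reason": reason, "flow": key}
        self.reports.append(report)
        return report


# Hypothetical drop reasons and packet fields, for illustration only.
monitor = ModMonitor(drop_reason_list=["example-reason-a"])
pkt = {
    "src_ip": "192.0.2.1", "dst_ip": "198.51.100.7",
    "protocol": 6, "src_port": 49152, "dst_port": 443,
}
monitor.generate_flow_entry(pkt)
reported = monitor.on_packet_drop(pkt, "example-reason-a")
ignored = monitor.on_packet_drop(pkt, "example-reason-b")
```

In this model, only the first drop is reported, because its reason is on the configured list. The second drop is ignored even though the packet matches a flow entry.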

Related documentation

· Comware 9 Architecture Technology White Paper

· Container Technology White Paper

· Telemetry Technology White Paper