- Released At: 29-10-2024


Zero Packet Loss Technical Topics

Copyright © 2024 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

This guide describes only the most common information for zero packet loss. Some information might not be applicable to your products.

Overview

Introduction

Packet loss in network communication affects the integrity and accuracy of data transmission. Common causes for packet loss include network congestion, transmission device failure, network latency, and link failure. Zero packet loss technology uses various methods to ensure network transmission quality, maintaining high availability and integrity of data within the network.

Technical background

As the Internet rapidly develops, more applications demand higher network stability and reliability, especially in fields requiring precise data transmission, such as financial transactions, medical image transfers, and remote education. In these fields, packet loss severely impacts the integrity and accuracy of data, making zero packet loss technology crucial for network stability and reliability.

To achieve zero packet loss, developers of network devices and protocols continuously improve their technologies. They enhance device processing capabilities and develop traffic control, congestion control, link backup, route backup, and SRv6 egress protection technologies. Zero packet loss technology is becoming increasingly crucial in scenarios that require highly reliable network transmission.

Benefits

Zero packet loss delivers the following benefits:

· Reduces traffic forwarding failures to enhance network reliability.

· Supports multi-dimensional, multi-method zero packet loss to meet the zero packet loss requirements of different network environments.

Application scenarios

Using Layer 2 or Layer 3 link-aggregation traffic redirection to achieve zero packet loss for aggregate traffic

Feature overview

About this feature

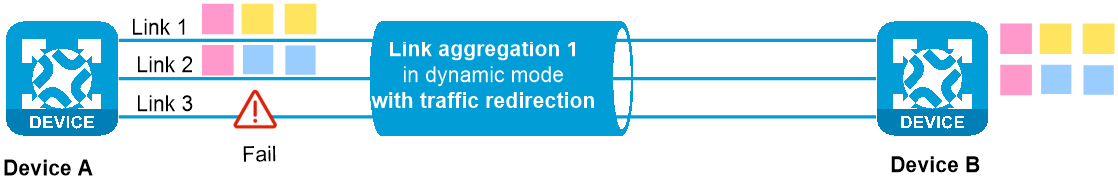

Configure a Layer 2 or Layer 3 dynamic link aggregation group on multiple links between devices, and enable traffic redirection on both ends of the aggregate link to achieve uninterrupted traffic on the aggregate link.

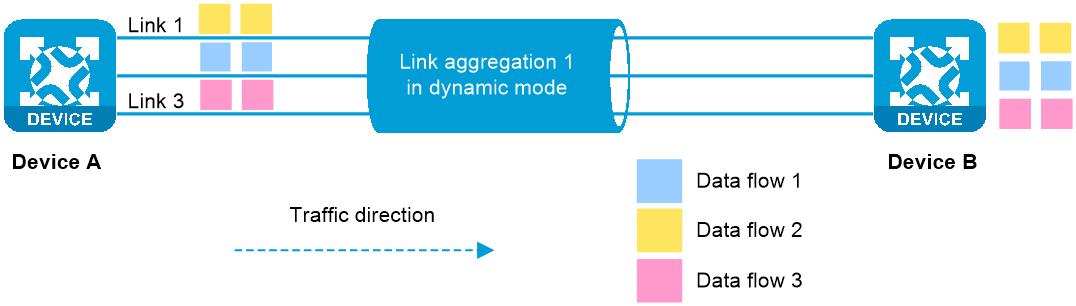

Operating mechanism

When link-aggregation traffic redirection is enabled on both ends, traffic on a Selected port will be redirected to the remaining available Selected ports of the aggregation group if one of the following events occurs:

· The port is shut down by using the shutdown command.

· The slot that hosts the port reboots, and the aggregation group spans multiple slots.

· A link in the dynamic aggregation group fails.

Zero packet loss is guaranteed only for known unicast packets, not for other types of packets.
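A toy Python model shows why the sending end must learn about the failure in advance. This is a sketch under stated assumptions and does not describe how the device is implemented. It uses a CRC32 hash of a flow identifier as a stand-in for the hardware load-sharing hash. It also reduces the LACP redirection notification to a single flag, which tells the sending end to exclude the failing member before that member goes down. The helper names `pick_member` and `count_misdirected` are hypothetical.

```python
import zlib


def pick_member(flow_id: str, members: list[str]) -> str:
    """Choose a member port for a flow.

    Assumption: CRC32 of a flow ID stands in for the hardware hash that
    real devices compute over packet header fields.
    """
    return members[zlib.crc32(flow_id.encode()) % len(members)]


def count_misdirected(flows: list[str], members: list[str], failed: str,
                      peer_notified: bool) -> int:
    """Count flows the sending end still places on the failed member.

    peer_notified=True models traffic redirection: the sender was told
    about the failure in advance and removed the port from its member set.
    peer_notified=False models the window before the sender detects the
    failure itself, when it still hashes flows onto the failed port.
    """
    usable = [m for m in members if not (peer_notified and m == failed)]
    return sum(1 for flow in flows if pick_member(flow, usable) == failed)


if __name__ == "__main__":
    ports = ["HGE1/0/1", "HGE1/0/2", "HGE1/0/3"]
    flows = [f"flow-{i}" for i in range(300)]
    print("without redirection:", count_misdirected(flows, ports, "HGE1/0/1", False))
    print("with redirection:   ", count_misdirected(flows, ports, "HGE1/0/1", True))
```

With redirection, no flow is hashed to HGE1/0/1. Without it, about one third of the flows are still sent to the failed port until the failure is detected. The exact count depends on the hash.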

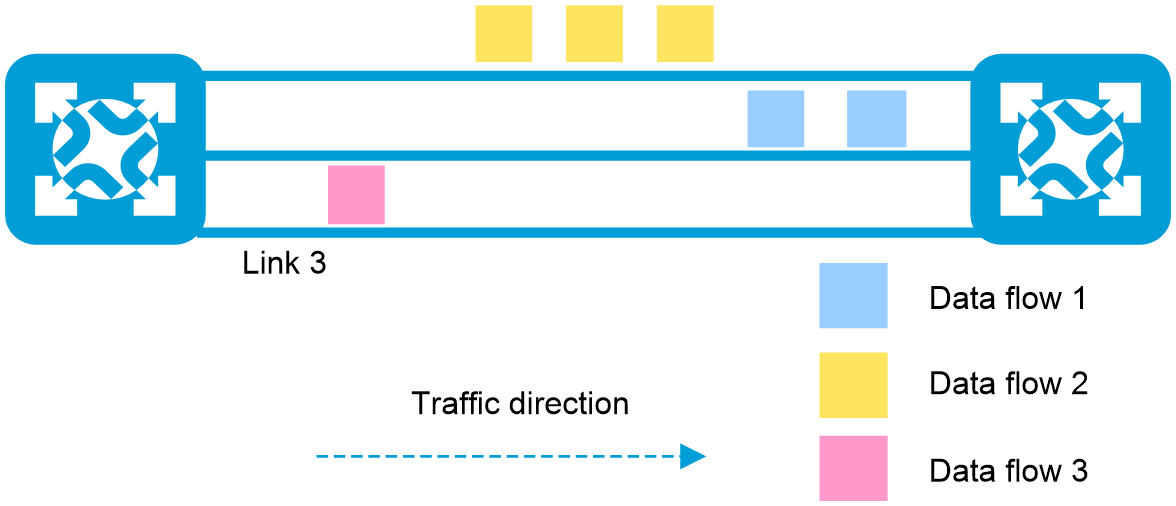

Figure 1 Traffic forwarded correctly when no link fails

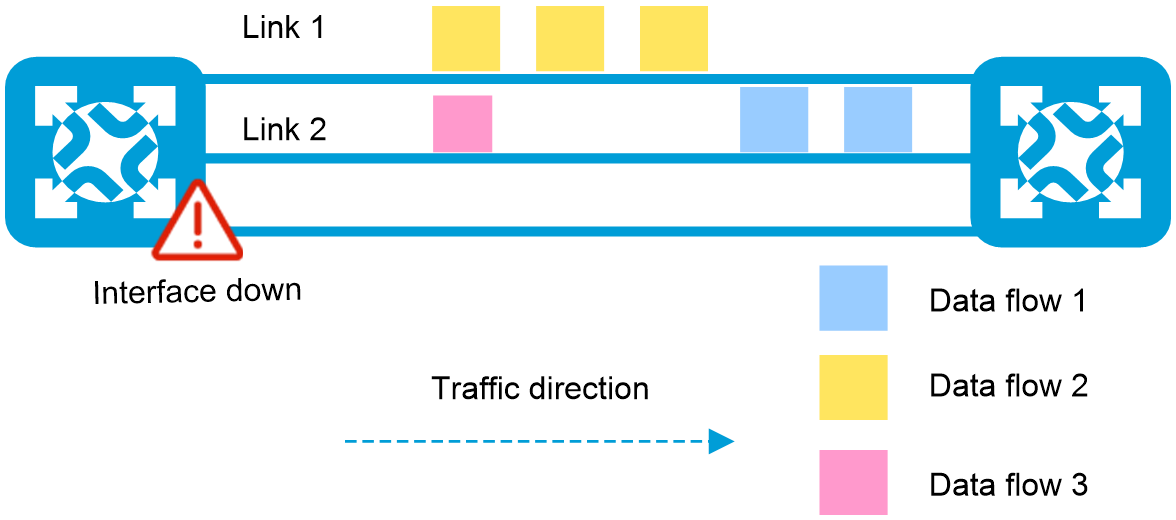

Figure 2 A link fails without link-aggregation traffic redirection configured

Figure 3 A link fails with link-aggregation traffic redirection configured

Restrictions and guidelines

Enable link-aggregation traffic redirection on both ends of an aggregate link to achieve uninterrupted traffic on the aggregate link. Whether link-aggregation traffic redirection is enabled globally and for all aggregate interfaces by default varies by device model.

If you enable both link-aggregation traffic redirection and the spanning tree feature, packet loss might occur when a slot is restarted. As a best practice, do not enable both features.

Link-aggregation traffic redirection cannot operate correctly on an edge aggregate interface.

Only dynamic aggregation groups support link-aggregation traffic redirection.

As a best practice, enable link-aggregation traffic redirection on individual aggregate interfaces rather than globally. If some aggregate interfaces are connected to third-party devices, enabling the feature globally might disrupt communication in those aggregation groups.

After the link-aggregation lacp traffic-redirect-notification enable command is executed, the device adds a proprietary field to the end of its LACP packets. A peer device that cannot recognize this field might fail to verify the LACP packets, and the aggregation member ports then cannot become Selected. To prevent this issue, disable link-aggregation traffic redirection on the H3C device when it connects to a third-party device.
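The restrictions above can be summarized as a per-interface pre-check, for example in a planning script. The following Python sketch is hypothetical. The `AggLink` fields and the `check_redirect` function are illustrative names, and the checks encode only the rules stated in this section.

```python
from dataclasses import dataclass


@dataclass
class AggLink:
    """Facts about one aggregate link (field names are hypothetical)."""
    name: str
    dynamic: bool       # aggregation group operates in dynamic (LACP) mode
    edge: bool          # interface is an edge aggregate interface
    peer_is_h3c: bool   # False if the peer is a third-party device
    stp_enabled: bool   # spanning tree is enabled on the link


def check_redirect(link: AggLink) -> tuple[bool, list[str]]:
    """Return (can_enable, notes) based on the restrictions above."""
    blockers = []
    if not link.dynamic:
        blockers.append("only dynamic aggregation groups support redirection")
    if link.edge:
        blockers.append("redirection cannot operate correctly on an edge aggregate interface")
    if not link.peer_is_h3c:
        blockers.append("third-party peer might fail to verify the proprietary LACP field")
    if blockers:
        return False, blockers
    warnings = []
    if link.stp_enabled:
        warnings.append("packet loss might occur on slot restart when spanning tree is also enabled")
    return True, warnings
```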

Feature control commands

The following table shows the control commands for link-aggregation traffic redirection. A command executed in the system view takes effect on all aggregation groups on the device. A command executed in aggregate interface view takes effect only on the current aggregation group.

| Task | Command |
|---|---|
| Enable link-aggregation traffic redirection globally. | link-aggregation lacp traffic-redirect-notification enable (system view) |
| Enable link-aggregation traffic redirection on an aggregate interface. | link-aggregation lacp traffic-redirect-notification enable (Layer 2/Layer 3 aggregate interface view) |

Application scenarios

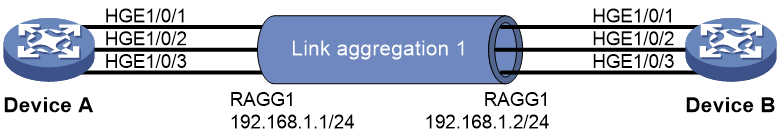

Example: Configuring zero packet loss for a Layer 2 dynamic aggregation group

Network configuration

Configure a Layer 2 dynamic aggregation group on multiple links between devices and enable link-aggregation traffic redirection on both ends of the aggregate link. When a link in the aggregation group fails, the system can redirect the traffic from the corresponding port to the other Selected ports, ensuring uninterrupted traffic on the aggregate link.

Figure 4 Network diagram

Major configuration steps

1. Configure VLAN interfaces. (Details not shown.)

2. Configure link-aggregation traffic redirection for a Layer 2 dynamic aggregation group on Device A:

# Create Layer 2 aggregate interface Bridge-Aggregation 1 and configure the interface to operate in dynamic mode. Enable link-aggregation traffic redirection on the aggregate interface.

<DeviceA> system-view

[DeviceA] interface bridge-aggregation 1

[DeviceA-Bridge-Aggregation1] link-aggregation mode dynamic

[DeviceA-Bridge-Aggregation1] link-aggregation lacp traffic-redirect-notification enable

[DeviceA-Bridge-Aggregation1] quit

# Assign HundredGigE 1/0/1 through HundredGigE 1/0/3 to aggregation group 1.

[DeviceA] interface hundredgige 1/0/1

[DeviceA-HundredGigE1/0/1] port link-aggregation group 1

[DeviceA-HundredGigE1/0/1] quit

[DeviceA] interface hundredgige 1/0/2

[DeviceA-HundredGigE1/0/2] port link-aggregation group 1

[DeviceA-HundredGigE1/0/2] quit

[DeviceA] interface hundredgige 1/0/3

[DeviceA-HundredGigE1/0/3] port link-aggregation group 1

[DeviceA-HundredGigE1/0/3] quit

# Set the link type of Bridge-Aggregation 1 to trunk, and assign it to VLANs 10 and 20.

[DeviceA] interface bridge-aggregation 1

[DeviceA-Bridge-Aggregation1] port link-type trunk

[DeviceA-Bridge-Aggregation1] port trunk permit vlan 10 20

[DeviceA-Bridge-Aggregation1] quit

3. Configure Device B.

Configure Device B in the same way Device A is configured. (Details not shown.)

4. Configure VLAN interfaces and IP addresses.

If only Layer 2 devices exist in the network, skip this step.

If Layer 3 devices exist in the network and a Layer 2 aggregate interface needs to forward traffic, modify the PVID for the Layer 2 aggregate interface and configure the IP address for the VLAN interface of the PVID. When Layer 3 packets are forwarded through an aggregate link, they are load-shared on that link.

# Create VLAN 100 and make it the PVID of the Layer 2 aggregate interface. The trunk port must also permit its PVID. Then assign an IP address to VLAN-interface 100.

[DeviceA] vlan 100

[DeviceA-vlan100] quit

[DeviceA] interface bridge-aggregation 1

[DeviceA-Bridge-Aggregation1] port trunk permit vlan 100

[DeviceA-Bridge-Aggregation1] port trunk pvid vlan 100

[DeviceA-Bridge-Aggregation1] quit

[DeviceA] interface vlan-interface 100

[DeviceA-Vlan-interface100] ip address 2.2.2.2 24

# Configure routes.

Configure routes on Device A and Device B. (Details not shown.)

· If you configure a static route, specify the VLAN interface of the VLAN to which the Layer 2 aggregate interface belongs (VLAN-interface 100 in this example) as the outgoing interface of the route.

· If you configure a dynamic routing protocol, establish neighbor relationships over that VLAN interface.

After configuration, Layer 2 and Layer 3 packets between Device A and Device B will be forwarded through the aggregate interface, and the packets will be load-shared across multiple member links of the aggregate link.
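Device B must be configured in the same way as Device A. Generating both command lists from one template helps avoid mismatched settings on the two ends. The following Python sketch is a hypothetical helper, and `l2_agg_commands` is not an H3C tool. It emits only the commands used in steps 2 and 3 above, preceded by `system-view`. Review its output before use.

```python
def l2_agg_commands(group: int, members: list[str], vlans: list[int]) -> list[str]:
    """Build the Layer 2 dynamic aggregation commands used in this example."""
    cmds = [
        "system-view",
        f"interface bridge-aggregation {group}",
        "link-aggregation mode dynamic",
        "link-aggregation lacp traffic-redirect-notification enable",
        "quit",
    ]
    # Assign each member port to the aggregation group.
    for port in members:
        cmds += [
            f"interface hundredgige {port}",
            f"port link-aggregation group {group}",
            "quit",
        ]
    # Trunk settings are applied on the aggregate interface, not on the members.
    cmds += [
        f"interface bridge-aggregation {group}",
        "port link-type trunk",
        "port trunk permit vlan " + " ".join(str(v) for v in vlans),
        "quit",
    ]
    return cmds


if __name__ == "__main__":
    # Same parameters on Device A and Device B.
    print("\n".join(l2_agg_commands(1, ["1/0/1", "1/0/2", "1/0/3"], [10, 20])))
```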

Displaying the configuration

1. Display the configuration.

# On Device A, display detailed information about the aggregation groups.

[DeviceA] display link-aggregation verbose

Loadsharing Type: Shar -- Loadsharing, NonS -- Non-Loadsharing

Port Status: S -- Selected, U -- Unselected, I -- Individual

Port: A -- Auto port, M -- Management port, R -- Reference port

Flags: A -- LACP_Activity, B -- LACP_Timeout, C -- Aggregation,

D -- Synchronization, E -- Collecting, F -- Distributing,

G -- Defaulted, H -- Expired

Aggregate Interface: Bridge-Aggregation1

Creation Mode: Manual

Aggregation Mode: Dynamic

Loadsharing Type: Shar

Management VLANs: None

System ID: 0x8000, 000f-e267-6c6a

Local:

Port Status Priority Index Oper-Key Flag

HGE1/0/1(R) S 32768 11 1 {ACDEF}

HGE1/0/2 S 32768 12 1 {ACDEF}

HGE1/0/3 S 32768 13 1 {ACDEF}

Remote:

Actor Priority Index Oper-Key SystemID Flag

HGE1/0/1 32768 81 1 0x8000, 000f-e267-57ad {ACDEF}

HGE1/0/2 32768 82 1 0x8000, 000f-e267-57ad {ACDEF}

HGE1/0/3 32768 83 1 0x8000, 000f-e267-57ad {ACDEF}

The output shows that aggregation group 1 is a load-sharing Layer 2 dynamic aggregation group containing three Selected ports.
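When many devices must be checked, a script can parse the Local table of the `display link-aggregation verbose` output. The following Python sketch assumes the column layout shown above (port, status, priority, index, Oper-Key, flags). The `selected_members` helper is hypothetical, and other software versions might format the output differently.

```python
import re

# A Local-table row, for example: "HGE1/0/1(R) S 32768 11 1 {ACDEF}".
# "(R)" marks the reference port and is stripped from the port name.
ROW = re.compile(r"^(\S+?)(?:\(R\))?\s+([SUI])\s+\d+\s+\d+\s+\d+\s+\{([A-H]*)\}")


def selected_members(output: str) -> dict[str, str]:
    """Map each Selected port in the Local table to its LACP flag string."""
    result = {}
    in_local = False
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("Local:"):
            in_local = True
            continue
        if line.startswith("Remote:"):
            break  # stop before the peer's table
        match = ROW.match(line) if in_local else None
        if match and match.group(2) == "S":
            result[match.group(1)] = match.group(3)
    return result
```

For the output above, the function returns three ports, each with the flags `ACDEF`. According to the legend, flags D, E, and F (Synchronization, Collecting, Distributing) indicate that the member is in sync and forwarding in both directions.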

Verifying the configuration (zero packet loss during traffic switchover upon aggregation member port failure)

1. When a Selected member port in the aggregation group fails (three Selected member ports change to two), verify that traffic switchover occurs with zero packet loss.

# After interface HGE 1/0/1 fails, display the packet receiving rate statistics of the interfaces.

<DeviceA> display counters rate inbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

BAGG1 1 8445651 -- --

HGE1/0/1 0 0 -- --

HGE1/0/2 1 4222825 -- --

HGE1/0/3 1 4222826 -- --

Overflow: More than 14 digits.

--: Not supported.

# After interface HGE 1/0/1 recovers, display the packet receiving rate statistics of the interfaces and verify that the traffic has switched back to HGE 1/0/1.

<DeviceA> display counters rate inbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

BAGG1 1 84445651 -- --

HGE1/0/1 1 28148550 -- --

HGE1/0/2 1 28148551 -- --

HGE1/0/3 1 28148550 -- --

Overflow: More than 14 digits.

--: Not supported.

2. When only one Selected member port in the aggregation group is operating correctly and the others fail (three Selected member ports change to one), verify that traffic switchover occurs with zero packet loss.

# After interfaces HGE 1/0/1 and HGE 1/0/2 fail, display the packet receiving rate statistics of the interfaces. Verify that traffic has switched to HGE 1/0/3 with zero packet loss.

<DeviceA> display counters rate inbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

BAGG1 1 1327644 -- --

HGE1/0/1 0 0 -- --

HGE1/0/2 0 0 -- --

HGE1/0/3 1 1327644 -- --

Overflow: More than 14 digits.

--: Not supported.

# After interfaces HGE 1/0/1 and HGE 1/0/2 recover, display the packet receiving rate statistics of the interfaces. Verify that traffic has switched back to HGE 1/0/1 and HGE 1/0/2 with zero packet loss.

<DeviceA> display counters rate inbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

BAGG1 1 1327644 -- --

HGE1/0/1 1 442548 -- --

HGE1/0/2 1 442546 -- --

HGE1/0/3 1 442550 -- --

Overflow: More than 14 digits.

--: Not supported.

Test result

This scenario achieves Layer 2 aggregation with zero packet loss. Three interfaces form a Layer 2 aggregate interface. If one or two of these interfaces fail, traffic switches to the remaining interfaces with zero packet loss.
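A quick sanity check for these snapshots is that the receive rate of the aggregate interface equals the sum of the member port rates. The following Python sketch parses the `display counters rate inbound interface` layout shown above. The names `parse_rates` and `members_account_for_aggregate` are hypothetical. The tolerance parameter is an assumption, because rates sampled on a live device can differ slightly between interfaces.

```python
def parse_rates(output: str) -> dict[str, int]:
    """Return {interface: total pps} from 'display counters rate' output."""
    rates = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows have five columns: name, usage (%), total, broadcast, multicast.
        if len(fields) == 5 and fields[1].isdigit() and fields[2].isdigit():
            rates[fields[0]] = int(fields[2])
    return rates


def members_account_for_aggregate(rates: dict[str, int], aggregate: str,
                                  tolerance: float = 0.0) -> bool:
    """True if member rates add up to the aggregate rate within tolerance (a fraction)."""
    member_total = sum(pps for name, pps in rates.items() if name != aggregate)
    return abs(rates[aggregate] - member_total) <= tolerance * rates[aggregate]
```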

Example: Configuring zero packet loss for Layer 3 dynamic aggregation groups

Network configuration

Configure a Layer 3 dynamic aggregation group on multiple links between devices and enable link-aggregation traffic redirection on both ends of the aggregate link. When a link in the aggregation group fails, the system can redirect the traffic from the corresponding port to the other Selected ports, ensuring uninterrupted traffic on the aggregate link.

Figure 5 Network diagram

Major configuration steps

1. Configure link-aggregation traffic redirection for a Layer 3 dynamic aggregation group on Device A:

# Create Layer 3 aggregate interface Route-Aggregation 1, set the link aggregation mode to dynamic, and then assign an IP address and subnet mask to the interface.

<DeviceA> system-view

[DeviceA] interface route-aggregation 1

[DeviceA-Route-Aggregation1] link-aggregation mode dynamic

[DeviceA-Route-Aggregation1] link-aggregation lacp traffic-redirect-notification enable

[DeviceA-Route-Aggregation1] ip address 192.168.1.1 24

[DeviceA-Route-Aggregation1] quit

# Assign HundredGigE 1/0/1 through HundredGigE 1/0/3 to aggregation group 1.

[DeviceA] interface hundredgige 1/0/1

[DeviceA-HundredGigE1/0/1] port link-aggregation group 1

[DeviceA-HundredGigE1/0/1] quit

[DeviceA] interface hundredgige 1/0/2

[DeviceA-HundredGigE1/0/2] port link-aggregation group 1

[DeviceA-HundredGigE1/0/2] quit

[DeviceA] interface hundredgige 1/0/3

[DeviceA-HundredGigE1/0/3] port link-aggregation group 1

[DeviceA-HundredGigE1/0/3] quit

2. Configure Device B.

Configure Device B in the same way Device A is configured. (Details not shown.)

3. Assign an IP address and subnet mask to the Layer 3 aggregate interface. (Details not shown.)

4. If other devices exist in the network, you must configure the interfaces, IP addresses, and routing protocols on each device to achieve Layer 3 communication.

· When configuring a static route on Device A, specify the local Layer 3 aggregate interface (Route-Aggregation 1) as the outgoing interface, or specify the IP address of the Layer 3 aggregate interface on Device B as the next hop.

· For dynamic routing protocols, establish neighbor relationships over the Layer 3 aggregate interfaces.

Then, Layer 3 packets between Device A and Device B will be forwarded through the aggregate interface, and the packets will be load-shared across multiple member links of the Layer 3 aggregate link.

Displaying the configuration

# On Device A, display detailed information about the aggregation groups.

[DeviceA] display link-aggregation verbose

Loadsharing Type: Shar -- Loadsharing, NonS -- Non-Loadsharing

Port Status: S -- Selected, U -- Unselected, I -- Individual

Port: A -- Auto port, M -- Management port, R -- Reference port

Flags: A -- LACP_Activity, B -- LACP_Timeout, C -- Aggregation,

D -- Synchronization, E -- Collecting, F -- Distributing,

G -- Defaulted, H -- Expired

Aggregate Interface: Route-Aggregation1

Creation Mode: Manual

Aggregation Mode: Dynamic

Loadsharing Type: Shar

Management VLANs: None

System ID: 0x8000, 000f-e267-6c6a

Local:

Port Status Priority Index Oper-Key Flag

HGE1/0/1(R) S 32768 11 1 {ACDEF}

HGE1/0/2 S 32768 12 1 {ACDEF}

HGE1/0/3 S 32768 13 1 {ACDEF}

Remote:

Actor Priority Index Oper-Key SystemID Flag

HGE1/0/1 32768 81 1 0x8000, 000f-e267-57ad {ACDEF}

HGE1/0/2 32768 82 1 0x8000, 000f-e267-57ad {ACDEF}

HGE1/0/3 32768 83 1 0x8000, 000f-e267-57ad {ACDEF}

The output shows that aggregation group 1 is a load-sharing Layer 3 dynamic aggregation group containing three Selected ports.

Scenario 1 (three Selected member ports change to two): Traffic switchover with zero packet loss upon the failure of a Selected member port in the aggregation group

# After interface HGE 1/0/1 fails, display the packet receiving rate statistics of the interfaces.

<DeviceA> display counters rate inbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 0 0 -- --

HGE1/0/2 1 1206646 -- --

HGE1/0/3 1 1206978 -- --

RAGG1 1 2413624 -- --

Overflow: More than 14 digits.

--: Not supported.

# After HGE 1/0/1 recovers, display the packet receiving rate statistics of the interfaces and verify that the traffic has switched back to HGE 1/0/1.

<DeviceA> display counters rate inbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 1 804541 -- --

HGE1/0/2 1 804542 -- --

HGE1/0/3 1 804541 -- --

RAGG1 1 2413624 -- --

Overflow: More than 14 digits.

--: Not supported.

Scenario 2 (three Selected member ports change to one): Traffic switchover with zero packet loss when only one interface in the aggregation group is operating correctly and the others fail

# After interfaces HGE 1/0/1 and HGE 1/0/2 fail, display the packet receiving rate statistics of the interfaces. Verify that traffic has switched to HGE 1/0/3 with zero packet loss.

<DeviceA> display counters rate inbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 0 0 -- --

HGE1/0/2 0 0 -- --

HGE1/0/3 1 2413624 -- --

RAGG1 1 2413624 -- --

Overflow: More than 14 digits.

--: Not supported.

# After interfaces HGE 1/0/1 and HGE 1/0/2 recover, display the packet receiving rate statistics of the interfaces. Verify that traffic has switched back to HGE 1/0/1 and HGE 1/0/2 with zero packet loss.

<DeviceA> display counters rate inbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 1 804541 -- --

HGE1/0/2 1 804542 -- --

HGE1/0/3 1 804541 -- --

RAGG1 1 2413624 -- --

Overflow: More than 14 digits.

--: Not supported.

Test result

This scenario achieves Layer 3 aggregation with zero packet loss. Three interfaces form a Layer 3 aggregate interface. If one or two of these interfaces fail, traffic switches to the remaining interfaces with zero packet loss.

Example: Configuring zero packet loss during card restart (aggregation group members located on multiple cards)

Network configuration

Multiple links exist between the two devices, and these links terminate on several cards of Device B. To prevent traffic interruption caused by a card restart or card failure on Device B, aggregate the links between Device A and Device B into a single dynamic aggregation group, and enable traffic redirection for this group.

If you restart a card on Device B, Device A will switch the traffic destined for that card to other cards, with zero packet loss during the switchover process.

Figure 6 Network diagram

Major configuration steps

Configure a Layer 2 or Layer 3 dynamic aggregation group based on actual conditions. Add interfaces from different interface cards to the same dynamic aggregation group, and configure traffic redirection. For the specific configuration procedures, see the zero packet loss configuration examples for Layer 2 and Layer 3 dynamic aggregation groups.
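The design rule behind this example is that no single card should host all members of the group. The following Python sketch checks that rule for a list of member ports. It assumes the interface naming `<type><slot>/<subslot>/<port>`, for example HGE2/0/1 on slot 2, as on a standalone distributed device. IRF fabrics might add a member ID to the name, so the parsing would need adjusting. The function names are hypothetical.

```python
import re

# Assumed naming: <type><slot>/<subslot>/<port>, for example HGE2/0/1 (slot 2).
PORT = re.compile(r"^[A-Za-z]+(\d+)/\d+/\d+$")


def slot_of(port: str) -> int:
    match = PORT.match(port)
    if match is None:
        raise ValueError(f"unexpected interface name: {port}")
    return int(match.group(1))


def survivors(members: list[str], restarting_slot: int) -> list[str]:
    """Members that stay up while the given slot restarts."""
    return [p for p in members if slot_of(p) != restarting_slot]


def risky_slots(members: list[str]) -> list[int]:
    """Slots whose restart would leave the aggregation group with no members."""
    slots = {slot_of(p) for p in members}
    return sorted(s for s in slots if not survivors(members, s))
```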

Zero packet loss technology in IGP/BGP routing

Graceful restart

Feature overview

About this feature

Graceful Restart (GR) ensures forwarding continuity when a protocol restarts or an active/standby switchover occurs. Currently, it is supported by multiple protocols such as RIP, RIPng, OSPF, OSPFv3, IS-IS, BGP, and LDP.

Take OSPF as an example. Without the GR feature enabled, if the OSPF protocol restarts or an active/standby switchover occurs, the device will re-establish OSPF neighbor relationships with neighboring routers, synchronize all route data, and recalculate routes. In this situation, network flapping will occur and traffic forwarding will be interrupted. After the GR feature is enabled, during an OSPF restart or active/standby switchover, surrounding devices can retain their neighbor relationships with the device, keeping the routes and the FIB unchanged. After the device restarts, surrounding devices can help the device complete route synchronization for fast route restoration. With this mechanism, GR ensures network topology stability and achieves zero packet loss.

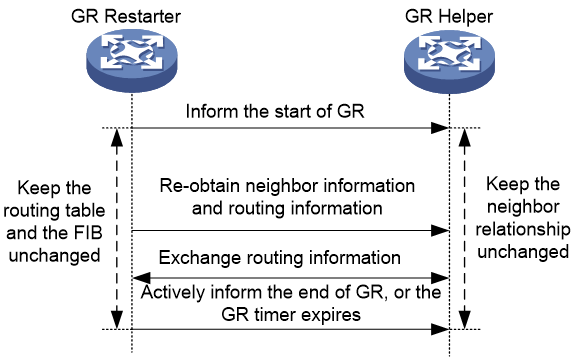

Operating mechanism

The following roles are required to complete a GR process:

· GR restarter: The router that performs the graceful restart. It must be GR-capable.

· GR helper: A neighbor of the GR restarter that helps the GR restarter complete the GR process. The GR helper must also be GR-capable.

A device can act as either a GR restarter or a GR helper. The GR role of a device is determined by its function during the GR process.

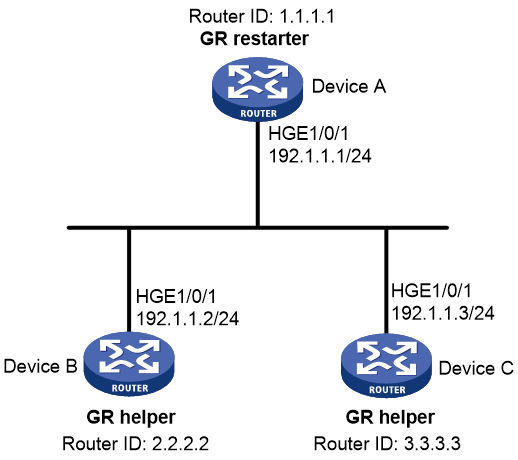

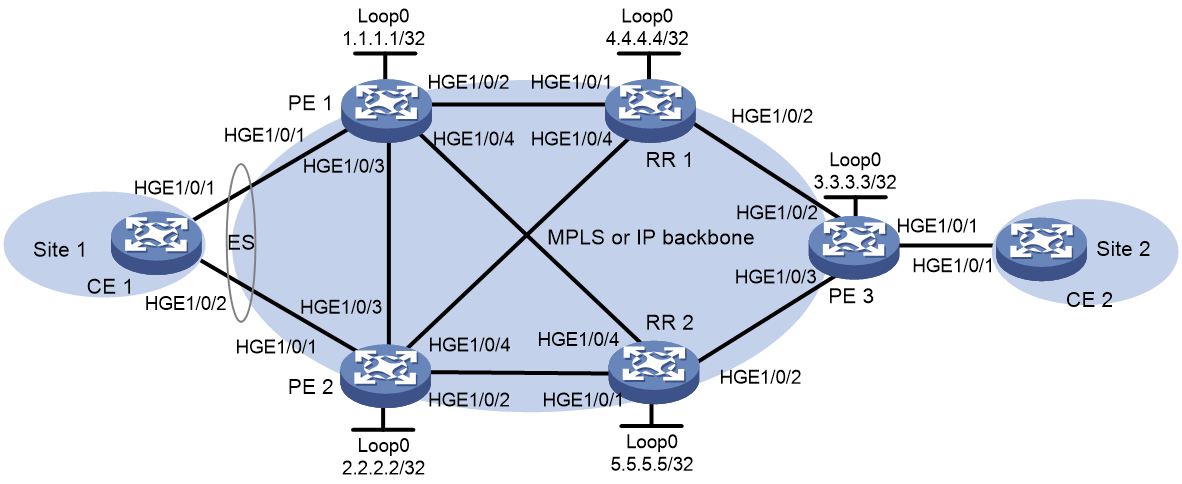

Figure 7 BGP operating mechanism

The detailed workflow is as follows:

1. GR is enabled on both the local device and the neighboring device.

2. When an active/standby switchover or protocol restart occurs, the GR restarter informs the GR helper of this event.

3. During the GR process, the GR restarter keeps its Routing Information Base (RIB) and Forwarding Information Base (FIB) unchanged and continues to forward packets by using the original routes. The neighbor relationship between the GR restarter and the GR helper is retained.

4. After the active/standby switchover or protocol restart completes, the GR restarter re-establishes a neighbor relationship with the GR helper. The GR restarter then exchanges routing information with the GR helper for routing information restoration.

5. After completing the GR process, the GR restarter notifies the GR helper to exit the GR process. If the GR timer expires before the process completes, both the GR restarter and the GR helper exit the GR process.

6. After the local device returns to normal operation, it continues to learn routing information and maintain its routing table.
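The helper's side of steps 2 through 5 can be sketched as a small state machine. This Python model is illustrative only. The class `GrHelperView`, its method names, and the choice to discard stale routes when the timer expires are assumptions. Real protocols differ in their timers and exit conditions.

```python
from dataclasses import dataclass, field


@dataclass
class GrHelperView:
    """Toy model of how a GR helper treats one restarting neighbor."""
    gr_timer: float                                 # seconds to wait for the restarter
    routes: set[str] = field(default_factory=set)   # routes learned from the restarter
    in_gr: bool = False
    started: float = 0.0

    def restart_notified(self, now: float) -> None:
        # Step 2: keep the neighbor and its routes instead of tearing them down.
        self.in_gr = True
        self.started = now

    def restarter_finished(self) -> None:
        # Step 5, normal exit: the restarter reports that GR is complete.
        self.in_gr = False

    def tick(self, now: float) -> None:
        # Step 5, timeout exit. Assumption: stale routes are then discarded.
        if self.in_gr and now - self.started >= self.gr_timer:
            self.in_gr = False
            self.routes.clear()
```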

Feature control commands

The following table shows the control commands for GR. To enable GR for a routing protocol, execute the related command in the view of that routing protocol.

| Task | Command |
|---|---|
| Enable GR for RIP. | graceful-restart (RIP view) |
| Enable GR for RIPng. | graceful-restart (RIPng view) |
| Enable GR for OSPF. | graceful-restart (OSPF view) |
| Enable GR for OSPFv3. | graceful-restart (OSPFv3 view) |
| Enable GR for IS-IS. | graceful-restart (IS-IS view) |
| Enable GR for BGP. | graceful-restart (BGP instance view) |

Example: Configuring GR for OSPF

Network configuration

Configure the GR restarter and GR helpers, ensuring zero packet loss when a protocol restart or active/standby switchover occurs on the GR restarter.

· Device A, Device B, and Device C belong to the same autonomous system and the same OSPF routing domain. All of them are GR capable and are connected through OSPF.

· Device A acts as the non-IETF GR restarter. Device B and Device C are the GR helpers, and synchronize their LSDBs with Device A through the out-of-band re-synchronization capability of GR.

Figure 8 Network diagram

Major configuration steps

1. Configure IP addresses for interfaces and configure basic OSPF settings. (Details not shown.)

2. Configure OSPF GR.

# Configure Device A as the non-IETF OSPF GR restarter.

Enable the link-local signaling capability, the out-of-band re-synchronization capability, and non-IETF GR for OSPF process 100.

<DeviceA> system-view

[DeviceA] ospf 100

[DeviceA-ospf-100] enable link-local-signaling

[DeviceA-ospf-100] enable out-of-band-resynchronization

[DeviceA-ospf-100] graceful-restart

[DeviceA-ospf-100] quit

# Configure Device B as the GR helper.

Enable the GR helper capability, the link-local signaling capability, and the out-of-band re-synchronization capability for OSPF process 100.

<DeviceB> system-view

[DeviceB] ospf 100

[DeviceB-ospf-100] graceful-restart helper enable

[DeviceB-ospf-100] enable link-local-signaling

[DeviceB-ospf-100] enable out-of-band-resynchronization

# Configure Device C as the GR helper.

Enable the GR helper capability, the link-local signaling capability, and the out-of-band re-synchronization capability for OSPF process 100.

<DeviceC> system-view

[DeviceC] ospf 100

[DeviceC-ospf-100] graceful-restart helper enable

[DeviceC-ospf-100] enable link-local-signaling

[DeviceC-ospf-100] enable out-of-band-resynchronization

Verifying the configuration

# Enable OSPF GR event debugging and restart the OSPF process by using GR on Device A.

<DeviceA> debugging ospf event graceful-restart

<DeviceA> terminal monitor

<DeviceA> terminal logging level 7

<DeviceA> reset ospf 100 process graceful-restart

Reset OSPF process? [Y/N]:y

%Oct 21 15:29:28:727 2019 DeviceA OSPF/5/OSPF_NBR_CHG: OSPF 100 Neighbor 192.1.1.2(HundredGigE1/0/1) from Full to Down.

%Oct 21 15:29:28:729 2019 DeviceA OSPF/5/OSPF_NBR_CHG: OSPF 100 Neighbor 192.1.1.3(HundredGigE1/0/1) from Full to Down.

*Oct 21 15:29:28:735 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 nonstandard GR Started for OSPF Router

*Oct 21 15:29:28:735 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 created GR wait timer,timeout interval is 40(s).

*Oct 21 15:29:28:735 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 created GR Interval timer,timeout interval is 120(s).

*Oct 21 15:29:28:758 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 created OOB Progress timer for neighbor 192.1.1.3.

*Oct 21 15:29:28:766 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 created OOB Progress timer for neighbor 192.1.1.2.

%Oct 21 15:29:29:902 2019 DeviceA OSPF/5/OSPF_NBR_CHG: OSPF 100 Neighbor 192.1.1.2(HundredGigE1/0/1) from Loading to Full.

*Oct 21 15:29:29:902 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 deleted OOB Progress timer for neighbor 192.1.1.2.

%Oct 21 15:29:30:897 2019 DeviceA OSPF/5/OSPF_NBR_CHG: OSPF 100 Neighbor 192.1.1.3(HundredGigE1/0/1) from Loading to Full.

*Oct 21 15:29:30:897 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 deleted OOB Progress timer for neighbor 192.1.1.3.

*Oct 21 15:29:30:911 2019 DeviceA OSPF/7/DEBUG:

OSPF GR: Process 100 Exit Restart,Reason : DR or BDR change,for neighbor : 192.1.1.3.

*Oct 21 15:29:30:911 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 deleted GR Interval timer.

*Oct 21 15:29:30:912 2019 DeviceA OSPF/7/DEBUG:

OSPF 100 deleted GR wait timer.

%Oct 21 15:29:30:920 2019 DeviceA OSPF/5/OSPF_NBR_CHG: OSPF 100 Neighbor 192.1.1.2(HundredGigE1/0/1) from Full to Down.

%Oct 21 15:29:30:921 2019 DeviceA OSPF/5/OSPF_NBR_CHG: OSPF 100 Neighbor 192.1.1.3(HundredGigE1/0/1) from Full to Down.

%Oct 21 15:29:33:815 2019 DeviceA OSPF/5/OSPF_NBR_CHG: OSPF 100 Neighbor 192.1.1.3(HundredGigE1/0/1) from Loading to Full.

%Oct 21 15:29:35:578 2019 DeviceA OSPF/5/OSPF_NBR_CHG: OSPF 100 Neighbor 192.1.1.2(HundredGigE1/0/1) from Loading to Full.

The output shows that Device A completes GR.
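A script can extract the timestamps in such a log to measure how long the restart took. The following Python sketch assumes the log format shown above: a timestamp that ends with a millisecond field, followed by the debug text on the next line. The `gr_summary` helper is hypothetical. For the output above, 2.176 seconds elapse between "GR Started" (15:29:28:735) and "Exit Restart" (15:29:30:911). The log also shows a 40-second GR wait timer and a 120-second GR Interval timer.

```python
import re
from datetime import datetime

# Header lines start with % (log) or * (debug), e.g. "*Oct 21 15:29:28:735 2019 ...".
HEADER = re.compile(r"^[%*](\w{3} \d{1,2} \d{2}:\d{2}:\d{2}:\d{3} \d{4})")
TIMER = re.compile(r"created (GR \w+ timer),timeout interval is (\d+)\(s\)")


def gr_summary(log: str) -> tuple[float, dict[str, int]]:
    """Return (seconds from GR start to GR exit, {timer name: interval in s})."""
    last_ts = start = end = None
    timers = {}
    for line in log.splitlines():
        line = line.strip()
        header = HEADER.match(line)
        if header:
            # The last time field is milliseconds; %f right-pads "735" to 735000 us.
            last_ts = datetime.strptime(header.group(1), "%b %d %H:%M:%S:%f %Y")
        if "GR Started" in line:
            start = last_ts
        elif "Exit Restart" in line:
            end = last_ts
        timer = TIMER.search(line)
        if timer:
            timers[timer.group(1)] = int(timer.group(2))
    if start is None or end is None:
        raise ValueError("GR start or exit not found in log")
    return (end - start).total_seconds(), timers
```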

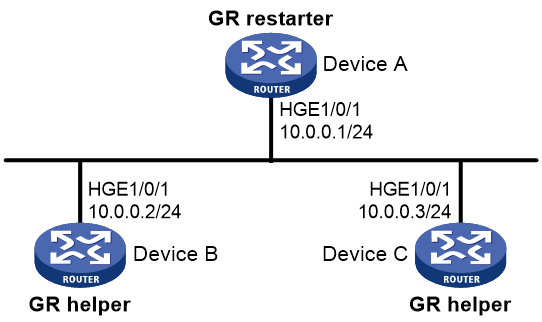

Example: Configuring GR for IS-IS

Network configuration

Configure the GR restarter and GR helpers, ensuring zero packet loss when a protocol restart or active/standby switchover occurs on the GR restarter.

Figure 9 Network diagram

Major configuration steps

1. Configure IP addresses for interfaces and configure basic IS-IS settings. (Details not shown.)

2. Configure IS-IS GR.

# Enable IS-IS GR on Device A.

<DeviceA> system-view

[DeviceA] isis 1

[DeviceA-isis-1] graceful-restart

[DeviceA-isis-1] quit

[DeviceA] quit

Verifying the configuration

# Restart the IS-IS process on Device A.

<DeviceA> reset isis all 1 graceful-restart

Reset IS-IS process? [Y/N]:y

# Check the GR state of the IS-IS process on Device A.

<DeviceA> display isis graceful-restart status

Restart information for IS-IS(1)

--------------------------------

Restart status: COMPLETE

Restart phase: Finish

Restart t1: 3, count 10; Restart t2: 60; Restart t3: 300

SA Bit: supported

Level-1 restart information

---------------------------

Total number of interfaces: 1

Number of waiting LSPs: 0

Level-2 restart information

---------------------------

Total number of interfaces: 1

Number of waiting LSPs: 0
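The t1, t2, and t3 values in this output appear to correspond to the IS-IS restart timers T1, T2, and T3 defined in RFC 5306. Roughly, T1 paces retransmission of restart requests, T2 limits LSDB synchronization for each level, and T3 limits the whole restart. A script can confirm that GR finished. The following Python sketch assumes exactly the layout shown above, and the `isis_gr_status` helper is hypothetical.

```python
import re


def isis_gr_status(output: str) -> dict:
    """Extract GR state, timers, and waiting-LSP counts from the output above."""
    status = re.search(r"Restart status:\s*(\S+)", output).group(1)
    timers = re.search(
        r"Restart t1:\s*(\d+), count (\d+); Restart t2:\s*(\d+); Restart t3:\s*(\d+)",
        output,
    )
    waiting = [int(n) for n in re.findall(r"Number of waiting LSPs:\s*(\d+)", output)]
    return {
        "status": status,
        "t1": int(timers.group(1)),
        "t1_count": int(timers.group(2)),
        "t2": int(timers.group(3)),
        "t3": int(timers.group(4)),
        "waiting_lsps": waiting,  # one entry per level
        # Treat GR as finished only if complete and no level still waits for LSPs.
        "finished": status == "COMPLETE" and not any(waiting),
    }
```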

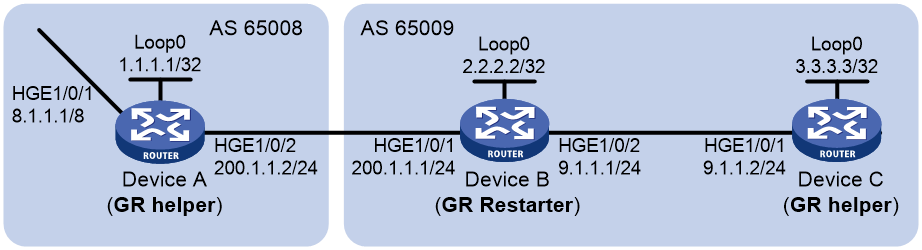

Example: Configuring GR for BGP

Network configuration

As shown in Figure 10, all devices run BGP. Device A and Device B have an EBGP peer session, and Device B and Device C have an IBGP peer session. Configure BGP GR so that data transmission between Device A and Device C is not affected when an active/standby switchover occurs on Device B.

Major configuration steps

1. Configure Device A.

# Configure IP addresses for interfaces. (Details not shown.)

# Configure an EBGP connection between Device A and Device B.

<DeviceA> system-view

[DeviceA] bgp 65008

[DeviceA-bgp-default] router-id 1.1.1.1

[DeviceA-bgp-default] peer 200.1.1.1 as-number 65009

# Enable BGP GR.

[DeviceA-bgp-default] graceful-restart

# Inject network 8.0.0.0/8 into the IPv4 BGP routing table.

[DeviceA-bgp-default] address-family ipv4

[DeviceA-bgp-default-ipv4] network 8.0.0.0

# Enable Device A to exchange IPv4 unicast routing information with Device B.

[DeviceA-bgp-default-ipv4] peer 200.1.1.1 enable

2. Configure Device B.

# Configure IP addresses for interfaces. (Details not shown.)

# Configure an EBGP connection between Device B and Device A.

<DeviceB> system-view

[DeviceB] bgp 65009

[DeviceB-bgp-default] router-id 2.2.2.2

[DeviceB-bgp-default] peer 200.1.1.2 as-number 65008

# Configure an IBGP connection between Device B and Device C.

[DeviceB-bgp-default] peer 9.1.1.2 as-number 65009

# Enable BGP GR.

[DeviceB-bgp-default] graceful-restart

# Inject networks 200.1.1.0/24 and 9.1.1.0/24 into the IPv4 BGP routing table.

[DeviceB-bgp-default] address-family ipv4

[DeviceB-bgp-default-ipv4] network 200.1.1.0 24

[DeviceB-bgp-default-ipv4] network 9.1.1.0 24

# Enable Device B to exchange IPv4 unicast routing information with Device A and Device C.

[DeviceB-bgp-default-ipv4] peer 200.1.1.2 enable

[DeviceB-bgp-default-ipv4] peer 9.1.1.2 enable

3. Configure Device C.

# Configure IP addresses for interfaces. (Details not shown.)

# Configure an IBGP connection between Device C and Device B.

<DeviceC> system-view

[DeviceC] bgp 65009

[DeviceC-bgp-default] router-id 3.3.3.3

[DeviceC-bgp-default] peer 9.1.1.1 as-number 65009

# Enable BGP GR.

[DeviceC-bgp-default] graceful-restart

# Enable Device C to exchange IPv4 unicast routing information with Device B.

[DeviceC-bgp-default] address-family ipv4

[DeviceC-bgp-default-ipv4] peer 9.1.1.1 enable
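Whether each session is EBGP or IBGP follows from the AS numbers in the `bgp` and `peer ... as-number` commands. The following Python sketch classifies each configured peer, using hypothetical helper names and data copied from the commands above. It gives a quick way to confirm that the configuration matches the intended topology: EBGP between Device A and Device B, and IBGP between Device B and Device C.

```python
# Peer statements from the configuration above:
# device -> (local AS, [(peer address, peer AS)]).
BGP_CONFIG = {
    "Device A": (65008, [("200.1.1.1", 65009)]),
    "Device B": (65009, [("200.1.1.2", 65008), ("9.1.1.2", 65009)]),
    "Device C": (65009, [("9.1.1.1", 65009)]),
}


def session_type(local_as: int, peer_as: int) -> str:
    """A BGP session is IBGP when both ends are in the same AS, EBGP otherwise."""
    return "IBGP" if local_as == peer_as else "EBGP"


def classify(config: dict) -> dict[tuple[str, str], str]:
    return {
        (device, peer): session_type(local_as, peer_as)
        for device, (local_as, peers) in config.items()
        for peer, peer_as in peers
    }


if __name__ == "__main__":
    for (device, peer), kind in sorted(classify(BGP_CONFIG).items()):
        print(f"{device} -> {peer}: {kind}")
```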

Verifying the configuration

Ping Device C from Device A. While the ping is running, trigger an active/standby switchover on Device B. The ping succeeds throughout the switchover. (Details not shown.)
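To express the result of this test as a number, send enough echo requests to span the whole switchover, then compute the loss from the ping summary. The following Python sketch assumes a summary line of the form `N packet(s) transmitted, M packet(s) received`, so check the exact wording on your device. The `ping_loss` helper is hypothetical.

```python
import re

SUMMARY = re.compile(r"(\d+) packet\(s\) transmitted, (\d+) packet\(s\) received")


def ping_loss(output: str) -> tuple[int, int, float]:
    """Return (sent, received, loss percentage) from a ping summary."""
    match = SUMMARY.search(output)
    if match is None:
        raise ValueError("ping summary not found")
    sent, received = int(match.group(1)), int(match.group(2))
    return sent, received, 100.0 * (sent - received) / sent
```

A loss of 0.0 with the switchover inside the ping window indicates zero packet loss for that flow.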

Nonstop routing

Feature overview

About this feature

Nonstop Routing (NSR) ensures nonstop forwarding services by backing up routing-related information from the active routing protocol process to the standby process. This allows the standby process to seamlessly take over the work of the active process in case of a process failure or active/standby switchover. During NSR configuration, make sure the active and standby processes run on different MPUs or IRF member devices.

Currently, NSR is supported by the RIB (also called routing management) module and multiple protocols such as RIP, RIPng, OSPF, OSPFv3, IS-IS, BGP, and LDP.

Table 1 Differences between NSR and GR

| Feature | Advantages | Disadvantages |
|---|---|---|
| Graceful Restart (GR) | When the system is running correctly, GR consumes fewer system resources than NSR. | · Neighboring devices require GR configuration.<br>· Data restoration takes time after the device recovers.<br>· GR cannot function when multiple nodes fail simultaneously. |
| Nonstop Routing (NSR) | · Loose requirements on neighboring devices: Neighbor nodes do not need to support NSR or be aware of routing information changes. When a protocol process restarts unexpectedly or an active/standby switchover occurs on the local device, the local device does not require support from its neighbors.<br>· Limited impact upon node failure: When the local device fails, the standby process can seamlessly take over the work of the active process without affecting other devices. When multiple nodes fail simultaneously, the impact on the system remains under control. | · When the system is running correctly, NSR continuously backs up routing information between the active and standby processes, which places an extra load on the system.<br>· To configure NSR on a device, make sure one of the following requirements is met:<br>- Distributed devices: The active and standby processes of the routing protocol must run on different MPUs, so the device must have a minimum of two MPUs.<br>- Centralized IRF devices: The active and standby processes of the routing protocol must run on different IRF member devices, so the IRF fabric must contain a minimum of two member devices. |

When both GR and NSR are configured for the same routing protocol on a device, the following rules apply:

· NSR has a higher priority than GR. When the active process goes down, the device does not act as the GR restarter. Instead, it uses NSR to ensure nonstop forwarding.

· The device can still act as a GR helper to help a GR restarter complete the GR process for the routing protocol. If the GR helper performs an active/standby switchover before the GR process finishes, the GR process might fail even if NSR is enabled on the GR helper.

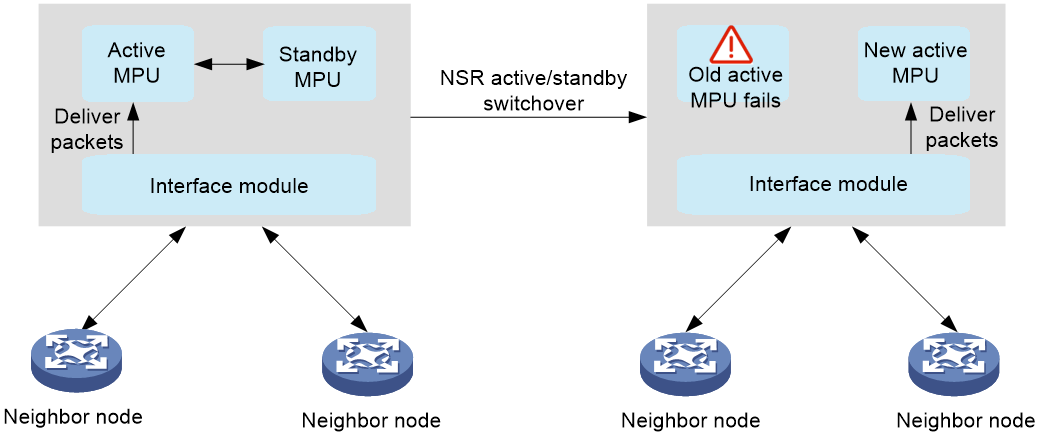

Operating mechanism

Take a distributed device as an example. NSR includes the following phases:

1. Bulk backup: After NSR is enabled, the active MPU backs up routing information and forwarding information in bulk to the standby MPU.

2. Real-time backup: After the bulk backup finishes, the system enters the real-time backup phase. Any change in the routing protocol is backed up from the active MPU to the standby MPU in real time. During this phase, the standby MPU can take over from the active MPU at any time.

3. Active/standby switchover: When the active MPU fails in the NSR real-time backup state, the standby MPU immediately detects the failure through hardware status and becomes active. The interface modules then deliver packets to the new active MPU. The routing protocol does not terminate its neighbor connections, because the whole active/standby switchover finishes within a very short time. Consequently, no traffic loss occurs throughout the switchover.

Figure 11 NSR active/standby switchover
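The three NSR phases can be modeled as a small state machine. This Python sketch is a toy model under stated assumptions. The `Mpu` and `NsrPair` classes, their fields, and the sample prefixes and next hops are hypothetical. They only show why a fully synchronized standby lets neighbors stay up, and they do not reflect how the device actually stores or synchronizes routing state. The slot names mirror the 0/0 and 1/0 locations in the placement output later in this section.

```python
from dataclasses import dataclass, field


@dataclass
class Mpu:
    """Hypothetical model of the routing state held by one MPU."""
    slot: str
    routes: dict = field(default_factory=dict)   # prefix -> next hop
    neighbors: set = field(default_factory=set)  # neighbor router IDs


class NsrPair:
    """Toy model of the NSR phases: bulk backup, real-time backup, switchover."""

    def __init__(self, active: Mpu, standby: Mpu) -> None:
        self.active = active
        self.standby = standby
        self.in_realtime_backup = False

    def bulk_backup(self) -> None:
        # Phase 1: copy the complete state to the standby MPU in one pass.
        self.standby.routes = dict(self.active.routes)
        self.standby.neighbors = set(self.active.neighbors)
        # Phase 2 begins once the bulk copy is complete.
        self.in_realtime_backup = True

    def update_route(self, prefix: str, next_hop: str) -> None:
        # Phase 2: each change on the active MPU is mirrored immediately.
        self.active.routes[prefix] = next_hop
        if self.in_realtime_backup:
            self.standby.routes[prefix] = next_hop

    def switchover(self) -> Mpu:
        # Phase 3: the standby MPU becomes active. It already holds identical
        # routes and neighbors, so no neighbor session needs to be rebuilt.
        if not self.in_realtime_backup:
            raise RuntimeError("standby MPU is not synchronized")
        self.active, self.standby = self.standby, self.active
        return self.active


# Hypothetical sample data.
pair = NsrPair(Mpu("0/0", {"12.12.12.0/24": "direct"}, {"2.2.2.1"}), Mpu("1/0"))
pair.bulk_backup()
pair.update_route("44.44.44.44/32", "14.14.14.1")
new_active = pair.switchover()
print(new_active.slot, sorted(new_active.routes), sorted(new_active.neighbors))
```

The guard in `switchover` reflects the text's condition that the standby can take over only once real-time backup is in progress.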

Feature control commands

The following table shows the control commands for NSR. To enable NSR for a routing protocol, execute the related command in the view of that routing protocol.

| Task | Command |
|---|---|
| Enable NSR for RIP. | non-stop-routing (RIP view) |
| Enable NSR for RIPng. | non-stop-routing (RIPng view) |
| Enable NSR for OSPF. | non-stop-routing (OSPF view) |
| Enable NSR for OSPFv3. | non-stop-routing (OSPFv3 view) |
| Enable NSR for IS-IS. | non-stop-routing (IS-IS view) |
| Enable NSR for BGP. | non-stop-routing (BGP instance view) |

Example: Configuring NSR for OSPF

Network configuration

NSR is enabled for Device S to ensure forwarding continuity between Device A and Device B when an active/standby switchover occurs on Device S.

Before enabling NSR for Device S, make sure it meets one of the following requirements:

· If Device S is a distributed device, it must have a minimum of two MPUs.

· If Device S is a centralized IRF device, it must be in an IRF fabric containing a minimum of two member devices.

Figure 12 Network diagram

Major configuration steps

1. Configure IP addresses and OSPF settings for interfaces on the devices.

¡ Configure IP addresses and subnet masks for interfaces on the devices according to the above network diagram. The details are not shown.

¡ Configure OSPF on the devices to ensure the following: (Details not shown.)

- Device S, Device A, and Device B can communicate with each other at Layer 3.

- The devices can dynamically update routes through OSPF.

2. Configure OSPF NSR.

# Enable OSPF NSR for Device S.

<DeviceS> system-view

[DeviceS] ospf 100

[DeviceS-ospf-100] non-stop-routing

[DeviceS-ospf-100] quit

Verifying the configuration

# Trigger an active/standby switchover on Device S.

[DeviceS] placement reoptimize

Predicted changes to the placement

Program Current location New location

---------------------------------------------------------------------

staticroute 0/0 0/0

rib 0/0 0/0

rib6 0/0 0/0

staticroute6 0/0 0/0

ospf 0/0 1/0

Continue? [y/n]:y

Re-optimization of the placement start. You will be notified on completion.

Re-optimization of the placement complete. Use 'display placement' to view the new placement.

# View OSPF neighbors and routes on Device A.

<DeviceA> display ospf peer

OSPF Process 1 with Router ID 2.2.2.1

Neighbor Brief Information

Area: 0.0.0.0

Router ID Address Pri Dead-Time State Interface

3.3.3.1 12.12.12.2 1 37 Full/BDR Vlan100

<DeviceA> display ospf routing

OSPF Process 1 with Router ID 2.2.2.1

Routing Table

Topology base (MTID 0)

Routing for network

Destination Cost Type NextHop AdvRouter Area

44.44.44.44/32 2 Stub 12.12.12.2 4.4.4.1 0.0.0.0

14.14.14.0/24 2 Transit 12.12.12.2 4.4.4.1 0.0.0.0

22.22.22.22/32 0 Stub 22.22.22.22 2.2.2.1 0.0.0.0

12.12.12.0/24 1 Transit 12.12.12.1 2.2.2.1 0.0.0.0

Total nets: 4

Intra area: 4 Inter area: 0 ASE: 0 NSSA: 0

# View OSPF neighbors and routes on Device B.

<DeviceB> display ospf peer

OSPF Process 1 with Router ID 4.4.4.1

Neighbor Brief Information

Area: 0.0.0.0

Router ID Address Pri Dead-Time State Interface

3.3.3.1 14.14.14.2 1 39 Full/BDR Vlan200

<DeviceB> display ospf routing

OSPF Process 1 with Router ID 4.4.4.1

Routing Table

Topology base (MTID 0)

Routing for network

Destination Cost Type NextHop AdvRouter Area

44.44.44.44/32 0 Stub 44.44.44.44 4.4.4.1 0.0.0.0

14.14.14.0/24 1 Transit 14.14.14.1 4.4.4.1 0.0.0.0

22.22.22.22/32 2 Stub 14.14.14.2 2.2.2.1 0.0.0.0

12.12.12.0/24 2 Transit 14.14.14.2 2.2.2.1 0.0.0.0

Total nets: 4

Intra area: 4 Inter area: 0 ASE: 0 NSSA: 0

According to the command outputs, when an active/standby switchover occurs on Device S:

· The neighbor relationships and routing information on Device A and Device B do not change.

· The traffic from Device A to Device B is not impacted.
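To make this check less manual, you could capture `display ospf peer` output on Device A before and after the switchover and compare only the fields that should stay constant. This Python sketch assumes the brief output layout shown above. The parser and the BEFORE/AFTER strings are hypothetical, and the BEFORE Dead-Time value is made up. Dead-Time is deliberately ignored: it counts down between hello packets, so it differs between any two captures even when nothing has changed.

```python
def parse_ospf_peers(output: str) -> set:
    """Return (router_id, address, state, interface) tuples from the
    'Neighbor Brief Information' rows of 'display ospf peer' output."""
    peers = set()
    for line in output.splitlines():
        fields = line.split()
        # Neighbor rows have six columns: Router ID, Address, Pri,
        # Dead-Time, State (for example, Full/BDR), and Interface.
        if len(fields) == 6 and "/" in fields[4] and fields[0].count(".") == 3:
            router_id, address, _pri, _dead_time, state, interface = fields
            peers.add((router_id, address, state, interface))
    return peers


# Hypothetical captures taken before and after the switchover on Device S.
BEFORE = """
 Router ID       Address         Pri Dead-Time  State             Interface
 3.3.3.1         12.12.12.2      1   33         Full/BDR          Vlan100
"""
AFTER = """
 Router ID       Address         Pri Dead-Time  State             Interface
 3.3.3.1         12.12.12.2      1   37         Full/BDR          Vlan100
"""

unchanged = parse_ospf_peers(BEFORE) == parse_ospf_peers(AFTER)
print("OSPF neighbors unchanged:", unchanged)
```

The same approach could be applied to `display ospf routing` rows, comparing destination, cost, and next hop.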

Test results

In scenarios where an active/standby switchover occurs or the active MPU fails, NSR keeps OSPF neighbor relationships and routing information unchanged, achieving zero packet loss.

Example: Configuring NSR for IS-IS

Network configuration

NSR is enabled for Device S to ensure forwarding continuity between Device A and Device B when an active/standby switchover occurs on Device S.

Before enabling NSR for Device S, make sure it meets one of the following requirements:

· If Device S is a distributed device, it must have a minimum of two MPUs.

· If Device S is a centralized IRF device, it must be in an IRF fabric containing a minimum of two member devices.

Figure 13 Network diagram

Major configuration steps

1. Configure IP addresses and IS-IS settings for interfaces on the devices.

¡ Configure IP addresses and subnet masks for interfaces on the devices according to the above network diagram. The details are not shown.

¡ Configure IS-IS on the devices to ensure the following: (Details not shown.)

- Device S, Device A, and Device B can communicate with each other at Layer 3.

- The devices can dynamically update routes through IS-IS.

2. Configure IS-IS NSR.

# Enable IS-IS NSR for Device S.

<DeviceS> system-view

[DeviceS] isis 1

[DeviceS-isis-1] non-stop-routing

[DeviceS-isis-1] quit

Verifying the configuration

# Trigger an active/standby switchover on Device S.

[DeviceS] placement reoptimize

Predicted changes to the placement

Program Current location New location

---------------------------------------------------------------------

staticroute 0/0 0/0

rib 0/0 0/0

rib6 0/0 0/0

staticroute6 0/0 0/0

isis 0/0 1/0

Continue? [y/n]:y

Re-optimization of the placement start. You will be notified on completion.

Re-optimization of the placement complete. Use 'display placement' to view the new placement.

# View IS-IS neighbors and routes on Device A.

<DeviceA> display isis peer

Peer information for IS-IS(1)

----------------------------

System ID: 0000.0000.0001

Interface: HGE1/0/1 Circuit Id: 0000.0000.0001.01

State: Up HoldTime: 23s Type: L1(L1L2) PRI: 64

System ID: 0000.0000.0001

Interface: HGE1/0/1 Circuit Id: 0000.0000.0001.01

State: Up HoldTime: 28s Type: L2(L1L2) PRI: 64

<DeviceA> display isis route

Route information for IS-IS(1)

-----------------------------

Level-1 IPv4 Forwarding Table

-----------------------------

IPv4 Destination IntCost ExtCost ExitInterface NextHop Flags

-------------------------------------------------------------------------------

12.12.12.0/24 10 NULL HGE1/0/1 Direct D/L/-

22.22.22.22/32 10 NULL Loop0 Direct D/-/-

14.14.14.0/32 10 NULL HGE1/0/1 12.12.12.2 R/L/-

44.44.44.44/32 10 NULL HGE1/0/1 12.12.12.2 R/L/-

Flags: D-Direct, R-Added to Rib, L-Advertised in LSPs, U-Up/Down Bit Set

Level-2 IPv4 Forwarding Table

-----------------------------

IPv4 Destination IntCost ExtCost ExitInterface NextHop Flags

-------------------------------------------------------------------------------

12.12.12.0/24 10 NULL HGE1/0/1 Direct D/L/-

22.22.22.22/32 10 NULL Loop0 Direct D/-/-

14.14.14.0/32 10 NULL

44.44.44.44/32 10 NULL

Flags: D-Direct, R-Added to Rib, L-Advertised in LSPs, U-Up/Down Bit Set

# View IS-IS neighbors and routes on Device B.

<DeviceB> display isis peer

Peer information for IS-IS(1)

----------------------------

System ID: 0000.0000.0001

Interface: HGE1/0/1 Circuit Id: 0000.0000.0001.01

State: Up HoldTime: 23s Type: L1(L1L2) PRI: 64

System ID: 0000.0000.0001

Interface: HGE1/0/1 Circuit Id: 0000.0000.0001.01

State: Up HoldTime: 28s Type: L2(L1L2) PRI: 64

<DeviceB> display isis route

Route information for IS-IS(1)

-----------------------------

Level-1 IPv4 Forwarding Table

-----------------------------

IPv4 Destination IntCost ExtCost ExitInterface NextHop Flags

-------------------------------------------------------------------------------

14.14.14.0/24 10 NULL HGE1/0/1 Direct D/L/-

44.44.44.44/32 10 NULL Loop0 Direct D/-/-

12.12.12.0/32 10 NULL HGE1/0/1 14.14.14.4 R/L/-

22.22.22.22/32 10 NULL HGE1/0/1 14.14.14.4 R/L/-

Flags: D-Direct, R-Added to Rib, L-Advertised in LSPs, U-Up/Down Bit Set

Level-2 IPv4 Forwarding Table

-----------------------------

IPv4 Destination IntCost ExtCost ExitInterface NextHop Flags

-------------------------------------------------------------------------------

14.14.14.0/24 10 NULL HGE1/0/1 Direct D/L/-

44.44.44.44/32 10 NULL Loop0 Direct D/-/-

12.12.12.0/32 10 NULL

22.22.22.22/32 10 NULL

Flags: D-Direct, R-Added to Rib, L-Advertised in LSPs, U-Up/Down Bit Set

The output shows that the neighbor information and routing information on Device A and Device B do not change during the active/standby switchover on Device S. The neighbors (Device A and Device B) are unaware of the switchover.

Test results

In scenarios where an active/standby switchover occurs or the active MPU fails, NSR keeps IS-IS neighbor relationships and routing information unchanged, achieving zero packet loss.

ECMP routes

Feature overview

About ECMP routes

When the next hop of a route becomes unreachable due to a network failure, the routing management module and the routing protocol on the device must reselect the optimal route. Packet forwarding might be interrupted until the new route takes effect.

By configuring equal-cost multi-path (ECMP) routes, you can enable multiple next-hop links to back up each other, minimizing the impact of next hop failures. Packets are load shared across the next hops of ECMP routes. If the output interface of one path fails, zero packet loss can be achieved. In the current software version, the routing protocols that support load sharing include static routing, IPv6 static routing, RIP, RIPng, OSPF, OSPFv3, IS-IS, IPv6 IS-IS, BGP, and IPv6 BGP.

Operating mechanism

For routes of the same protocol, if multiple optimal routes have the same destination address and cost, these routes form ECMP routes. Packets are load shared across the next hops of the ECMP routes, and these next hops back up each other. If one or multiple paths fail, traffic is reassigned among the remaining paths.

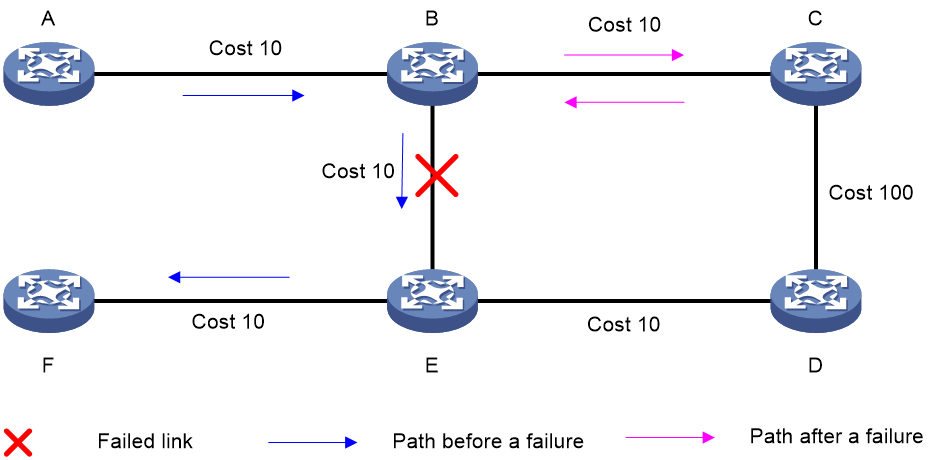

· If the path fails because the output interface goes down, the packets are forwarded through another output interface, achieving zero packet loss. As shown in the figure below, after the local interface goes down, the packets quickly switch over to another path, achieving zero packet loss.

· If the path fails because an intermediate link or the next hop interface fails, a small number of packets might be lost. In this case, you can configure BFD to monitor the next hop link for fast failure detection, achieving zero packet loss.

Figure 14 Packet load sharing

Figure 15 Packet switchover to another path upon local interface failure for zero packet loss

Feature control commands

This feature does not provide control commands. It only requires multiple next hops to the same destination address in the network.

Example: Implementing zero packet loss upon local interface failure of ECMP routes

Network configuration

In an ECMP network, packets are load shared based on a hash value generated from the source IP address, destination IP address, and protocol number. When the local interface fails or is shut down, the associated next hop in the ECMP group becomes unreachable. The device does not need to learn of the unreachability from the peer. Instead, it directly notifies the routing table and forwarding table to switch the traffic of the failed next hop to another next hop. Because the failure is handled locally, traffic switches over quickly, enhancing device stability and reliability.

For example, two equal-cost static routes are available between Device A and Device B. ECMP load sharing is performed for packets destined for IP address 1.2.3.4/24 through Device B. When a link fails, traffic can immediately switch over to another link.

Figure 16 Network diagram
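This Python sketch illustrates the selection logic described above under stated assumptions. The CRC32 hash over source IP, destination IP, and protocol number, the modulo mapping onto live next hops, and the sample source addresses are all hypothetical; this document does not describe the hardware hash the device actually uses. The two next hops match the static routes configured in the following steps.

```python
import zlib


def flow_hash(src_ip: str, dst_ip: str, protocol: int) -> int:
    # Hypothetical hash over the fields named in the text; real devices
    # use their own hardware hash functions.
    return zlib.crc32(f"{src_ip}|{dst_ip}|{protocol}".encode())


def select_next_hop(flow: tuple, next_hops: list, link_up: dict):
    """Pick a next hop for a flow from the ECMP next hops whose link is up."""
    live = [nh for nh in next_hops if link_up[nh]]
    if not live:
        return None  # No usable path left in the ECMP group.
    return live[flow_hash(*flow) % len(live)]


next_hops = ["10.1.1.2", "20.1.1.2"]
flows = [(f"192.168.0.{i}", "1.2.3.4", 6 if i % 2 else 17) for i in range(1, 101)]

# Both links up: flows are spread across both next hops.
link_up = {"10.1.1.2": True, "20.1.1.2": True}
before = {nh: 0 for nh in next_hops}
for flow in flows:
    before[select_next_hop(flow, next_hops, link_up)] += 1

# The local interface toward 20.1.1.2 goes down. The next hop is removed
# locally, without waiting for any notification from the peer.
link_up["20.1.1.2"] = False
after = {nh: 0 for nh in next_hops}
for flow in flows:
    after[select_next_hop(flow, next_hops, link_up)] += 1

print("before:", before)
print("after:", after)
```

With plain modulo mapping, flows already on the surviving link stay there when one of two paths fails. In larger groups, however, a change in the number of live next hops can also remap surviving flows. Whether a given device uses resilient hashing to avoid that is not covered here.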

Major configuration steps

1. Configure IP addresses for the interfaces.

2. Configure equal-cost static routes.

# Configure static routes on Device A.

<DeviceA> system-view

[DeviceA] ip route-static 1.2.3.4 24 10.1.1.2 bfd echo-packet

[DeviceA] ip route-static 1.2.3.4 24 20.1.1.2 bfd echo-packet

[DeviceA] quit

Displaying the configuration

1. After configuration, execute the following command to view traffic load sharing across two links.

# Display the packet sending rate statistics of the interfaces.

<DeviceA> display counters rate outbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 2 1174779 -- --

HGE1/0/2 2 1174779 -- --

Overflow: More than 14 digits.

--: Not supported.

Scenario 1: Zero packet loss upon traffic switchover between two physical links

1. After interface HGE 1/0/2 fails, traffic switches over to interface HGE 1/0/1 for forwarding without any packet loss.

# Display the packet sending rate statistics of the interfaces.

<DeviceA> display counters rate outbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 4 2349621 -- --

HGE1/0/2 0 0 -- --

Overflow: More than 14 digits.

--: Not supported.

2. After interface HGE 1/0/2 recovers, traffic is load shared between interfaces HGE 1/0/1 and HGE 1/0/2 without any packet loss.

# Display the packet sending rate statistics of the interfaces.

<DeviceA> display counters rate outbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 2 1174642 -- --

HGE1/0/2 2 1174642 -- --

Overflow: More than 14 digits.

--: Not supported.
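The rate values above can be cross-checked with simple arithmetic. If no traffic is lost, the total outbound rate across both interfaces should stay roughly constant before, during, and after the failure. This Python sketch copies the pps values from the three outputs. The 0.1% tolerance is an assumption. Because `display counters rate` reports sampled averages, this is a plausibility check rather than proof of zero loss.

```python
# pps values copied from the three "display counters rate outbound interface"
# outputs above (Total column).
samples = {
    "both links up": {"HGE1/0/1": 1174779, "HGE1/0/2": 1174779},
    "HGE1/0/2 failed": {"HGE1/0/1": 2349621, "HGE1/0/2": 0},
    "HGE1/0/2 recovered": {"HGE1/0/1": 1174642, "HGE1/0/2": 1174642},
}

TOLERANCE = 0.001  # Assumed: allow 0.1% deviation for rate sampling jitter.

baseline = sum(samples["both links up"].values())
totals = {}
for label, rates in samples.items():
    total = sum(rates.values())
    totals[label] = total
    deviation = abs(total - baseline) / baseline
    shares = {intf: round(100 * pps / total, 1) for intf, pps in rates.items()}
    status = "OK" if deviation <= TOLERANCE else "CHECK"
    print(f"{label:20} total={total:>8} pps  share(%)={shares}  "
          f"deviation={deviation:.4%}  {status}")
```

The totals are 2349558, 2349621, and 2349284 pps, all within about 0.012% of each other. This is consistent with the traffic being shifted rather than dropped.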

Scenario 2: Zero packet loss upon traffic switchover if all subinterfaces of a main Ethernet interface are shut down

1. Create 128 subinterfaces on HGE 1/0/1 and HGE 1/0/2, and assign them IP addresses on different subnets. Configure a dynamic routing protocol between Device A and Device B to enable load sharing on the subinterfaces.

2. Shut down all subinterfaces of one physical interface. Traffic switches over to the subinterfaces of the other physical interface for load sharing without packet loss. After the subinterfaces are brought back up, traffic is load shared across them again without any packet loss.

Scenario 3: Zero packet loss upon traffic switchover if half of the subinterfaces of the main Ethernet interface are shut down

1. Create 128 subinterfaces on HGE 1/0/1 and HGE 1/0/2, and assign them IP addresses on different subnets. Configure a dynamic routing protocol between Device A and Device B to enable load sharing on the subinterfaces.

2. Shut down half of the subinterfaces on each of the two physical interfaces. Traffic switches over to the remaining available subinterfaces for load sharing without packet loss. After the subinterfaces are brought back up, traffic is load shared across them again without any packet loss.

Scenario 4: Zero packet loss upon traffic switchback and temporary packet loss upon traffic switchover if a main Ethernet interface is shut down

1. Create 128 subinterfaces on HGE 1/0/1 and HGE 1/0/2, and assign them IP addresses on different subnets. Configure a dynamic routing protocol between Device A and Device B to enable load sharing on the subinterfaces.

2. Shut down one main interface. Traffic on its subinterfaces switches over to the subinterfaces of the other physical interface for load sharing, with millisecond-level packet loss during the switchover. After the main interface recovers, traffic is load shared across its subinterfaces again without packet loss.

Test result

Shutting down a main Ethernet interface causes millisecond-level packet loss on its subinterfaces. In the other scenarios, ECMP routes achieve zero packet loss when a local interface is shut down. During a network failure, as long as one or more forwarding paths in the ECMP route group remain available, traffic on the failed link switches over to the remaining paths.

FRR

Feature overview

About FRR

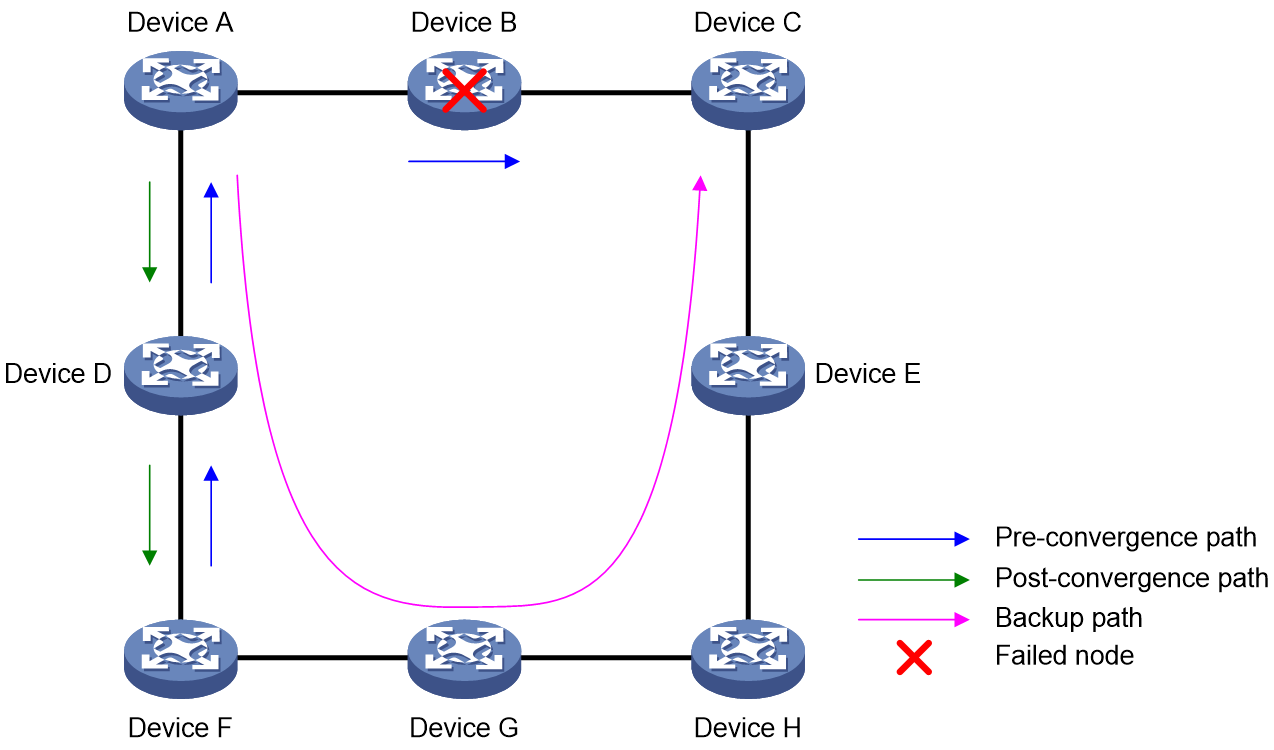

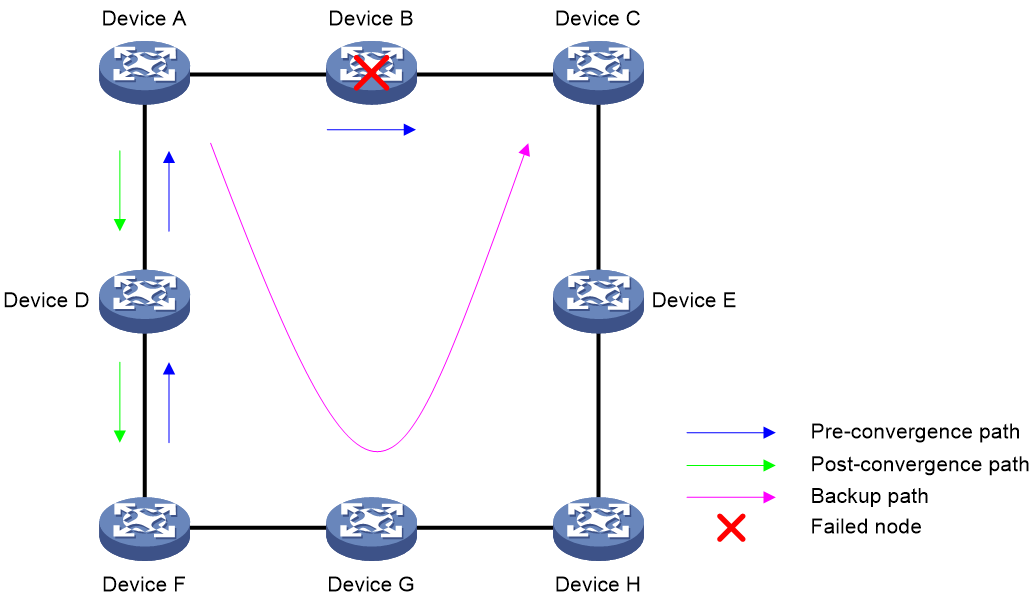

When a link or network node fails, packets passing through the faulty link or node are discarded, and data traffic is disrupted. Upon detecting the failure, the routing protocol must reselect the optimal route. For example, IS-IS failure recovery involves failure detection, LSP update, LSP flooding, route calculation, and forwarding information base (FIB) entry deployment before traffic can switch over to a new link. For services that require high real-time performance, configure the fast reroute (FRR) feature to install lower-priority routes in the forwarding table as backup routes. When a device detects a primary route failure, it immediately uses the backup route to forward packets, avoiding traffic disruption. If the device detects the failure quickly enough, it can achieve zero packet loss. As a best practice, use BFD to detect the next hop of the primary route for faster link failure detection and higher network reliability.

Figure 17 FRR

You can configure protocol-specific FRR or inter-protocol FRR as needed. If you configure both features, protocol-specific FRR takes effect. The working mechanism of FRR is as follows:

1. Primary and backup next hop selection. The protocol automatically selects the primary and backup next hops based on its current state, or you configure them manually.

¡ If only one optimal route is available, you can proceed to the next step.

¡ If multiple equal-cost routes have the same preference and cost, FRR cannot set backup routes for them.

2. Optimal route selection. Inter-protocol FRR preferentially selects routes from the routing information base (RIB). Protocol-specific FRR first selects routes from the protocol, and then issues the optimal routes to the RIB for further selection.

3. RIB issues routes to the FIB. If the RIB determines that a route is the optimal route and the route has a backup next hop, it issues both the primary and backup next hops to the FIB. The primary next hop guides packet forwarding, while the backup next hop is not active.

4. Primary/Backup next hop switchover. When the device detects a failure in the primary next hop, it immediately uses the backup next hop to guide packet forwarding.

5. Route reconvergence. The device performs optimal route selection based on the changed network topology, and uses the new optimal route to guide packet forwarding.

The primary route in FRR requires a backup route with the same destination address. FRR protection cannot take effect in any of the following conditions:

· Inter-protocol FRR cannot find a backup next hop in the RIB.

· Protocol-specific FRR cannot find a backup next hop in the routing table of the protocol.

· Multiple ECMP routes with equal-cost next hops exist.
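The primary/backup logic in steps 1 through 4 and the ECMP restriction above can be sketched as follows. This Python sketch is a simplified, hypothetical model. Candidate routes are (cost, next hop) pairs for one destination, and lower cost wins. The first sample pair borrows Device A's interface costs and neighbor addresses from the IS-IS remote LFA example (12.12.12.2 at cost 10, 13.13.13.2 at cost 20). The ECMP sample addresses are made up. The model ignores route preference, BFD, and protocol-specific options such as a shared ECMP backup.

```python
from typing import List, Optional, Tuple

Candidate = Tuple[int, str]  # (cost, next hop) for one destination


def select_frr_next_hops(candidates: List[Candidate]) -> Tuple[List[str], Optional[str]]:
    """Return (primary next hops, backup next hop) for one destination."""
    ranked = sorted(candidates)
    best_cost = ranked[0][0]
    primaries = [nh for cost, nh in ranked if cost == best_cost]
    if len(primaries) > 1:
        # ECMP: equal-cost next hops exist, so no FRR backup is assigned.
        return primaries, None
    # Only one backup next hop is issued to the FIB: the best of the rest.
    others = [nh for cost, nh in ranked if cost > best_cost]
    return primaries, (others[0] if others else None)


def forwarding_next_hop(primaries: List[str], backup: Optional[str],
                        failed: set) -> Optional[str]:
    """Use a live primary next hop; otherwise switch to the backup at once."""
    for nh in primaries:
        if nh not in failed:
            return nh
    if backup is not None and backup not in failed:
        return backup
    return None  # Traffic waits for route reconvergence (step 5).


primaries, backup = select_frr_next_hops([(10, "12.12.12.2"), (20, "13.13.13.2")])
print(primaries, backup)
print(forwarding_next_hop(primaries, backup, failed={"12.12.12.2"}))
print(select_frr_next_hops([(10, "10.1.1.2"), (10, "20.1.1.2"), (20, "30.1.1.2")]))
```

The backup is pre-installed, so switching to it needs only a lookup after the failure is detected. This is why FRR can avoid the delay of full reconvergence.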

Inter-protocol FRR

Inter-protocol FRR can specify backup next hops for routes in the RIB. For multiple routes with the same destination address, the next hop of the optimal route is used as the primary next hop, and the next hop of the suboptimal route is used as the backup next hop. The protocols for the optimal and suboptimal routes are different.

Static route FRR

You can specify a backup output interface and backup next hop for static routes, or configure the device to automatically use a suboptimal next hop as a backup next hop.

RIP/RIPng FRR

RIP/RIPng FRR automatically calculates a suboptimal next hop from RIP/RIPng as a backup next hop.

OSPFv3/IPv6 IS-IS FRR

OSPFv3/IPv6 IS-IS FRR can set the backup next hop in the following ways:

· Automatically calculate the backup next hop through the Loop Free Alternate (LFA) algorithm. LFA uses the existing SPF algorithm to complete all calculations and backups locally. The calculation process is relatively simple and does not require extensions to the OSPF/IS-IS protocol.

· You can manually specify a backup next hop for matching routes by using a routing policy.

The LFA algorithm uses a neighbor that can provide a backup link as the root node, and calculates the shortest distance from that neighbor to the destination node by using the Shortest Path First (SPF) algorithm. Then, it calculates a set of loop-free backup links with the lowest cost by using the following LFA inequality, where S is the source node, N is the neighbor, and D is the destination node: Distance_opt(N,D) < Distance_opt(N,S) + Distance_opt(S,D)

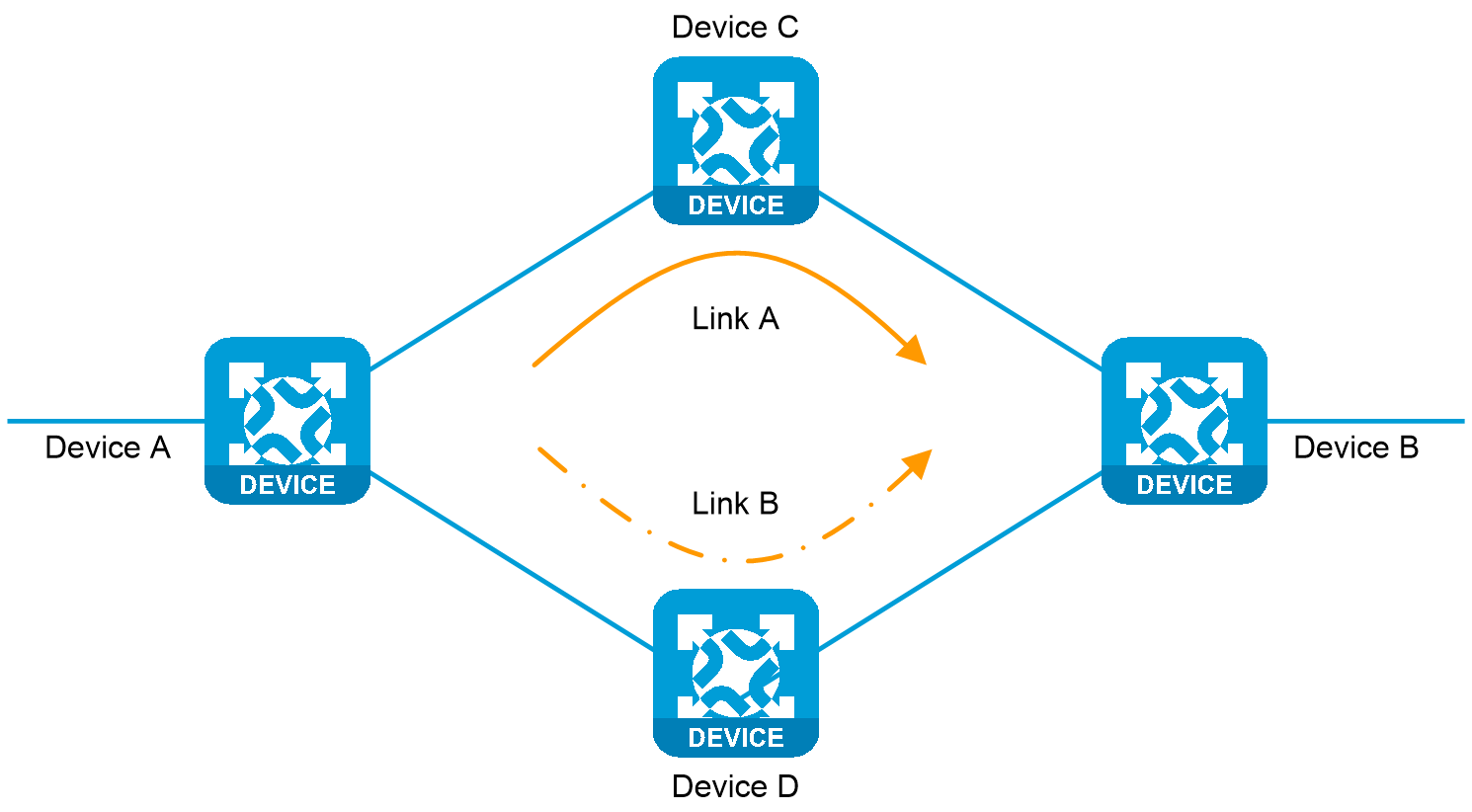

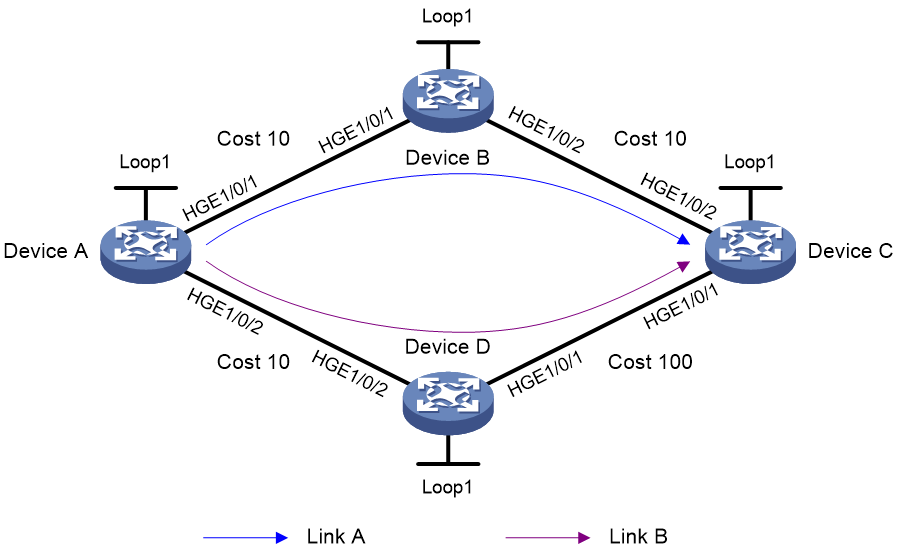

For example, as shown in the figure below, Device A is the source node for traffic forwarding, Device B is the destination node, and Device D is a neighbor that can provide a backup link. The LFA algorithm performs calculation as follows:

1. Selects neighbor node Device D that can provide a backup link, that is, the node of the backup next hop.

2. Uses the SPF algorithm to calculate the shortest path distance d from Device D to the traffic destination node Device B.

3. Calculates the shortest path distance c between backup link node Device D and the source node Device A.

4. Calculates the shortest path distance a + b from traffic source node Device A to traffic destination node Device B.

5. Uses this link as a backup link for FRR if d < a + b + c.

6. Selects the link with the lowest cost if multiple backup links exist.

Figure 18 Backup link calculation with the LFA algorithm
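The LFA inequality and the steps above can be sketched in Python. This is a minimal illustration under stated assumptions:

· The topology and link costs are hypothetical. Device C is an assumed primary next hop, and Device E is an assumed stub neighbor.
· Costs are symmetric.
· Dijkstra's algorithm stands in for SPF.

Here a + b = 2, c = 1, and d = 2, so d < a + b + c holds for Device D, which becomes the backup next hop. Device E fails the inequality because its shortest path to Device B loops back through Device A.

```python
import heapq


def spf(graph: dict, root: str) -> dict:
    """Dijkstra shortest-path distances from root (stands in for SPF)."""
    dist = {root: 0}
    heap = [(0, root)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue
        for neighbor, cost in graph[node].items():
            if d + cost < dist.get(neighbor, float("inf")):
                dist[neighbor] = d + cost
                heapq.heappush(heap, (d + cost, neighbor))
    return dist


def lfa_backups(graph: dict, s: str, d: str) -> list:
    """Return (neighbor, backup path cost) pairs that satisfy
    Distance(N,D) < Distance(N,S) + Distance(S,D), cheapest first."""
    dist_s = spf(graph, s)
    backups = []
    for n, link_cost in graph[s].items():
        dist_n = spf(graph, n)
        if link_cost + dist_n[d] == dist_s[d]:
            continue  # N is a primary next hop, not a backup.
        if dist_n[d] < dist_n[s] + dist_s[d]:  # LFA inequality (loop-free)
            backups.append((n, link_cost + dist_n[d]))
    # Step 6: the lowest-cost backup link comes first.
    return sorted(backups, key=lambda item: item[1])


# Hypothetical topology: S = Device A, D = Device B, N = Device D.
graph = {
    "DeviceA": {"DeviceC": 1, "DeviceD": 1, "DeviceE": 1},
    "DeviceB": {"DeviceC": 1, "DeviceD": 2},
    "DeviceC": {"DeviceA": 1, "DeviceB": 1},
    "DeviceD": {"DeviceA": 1, "DeviceB": 2},
    "DeviceE": {"DeviceA": 1},
}
print(lfa_backups(graph, "DeviceA", "DeviceB"))
```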

IS-IS/OSPF FRR

IS-IS/OSPF FRR can set the backup next hop in the following ways:

· Use the LFA algorithm to automatically calculate the backup next hop.

· You can manually specify the backup next hop through a routing policy.

· Use remote LFA to automatically calculate the backup next hop.

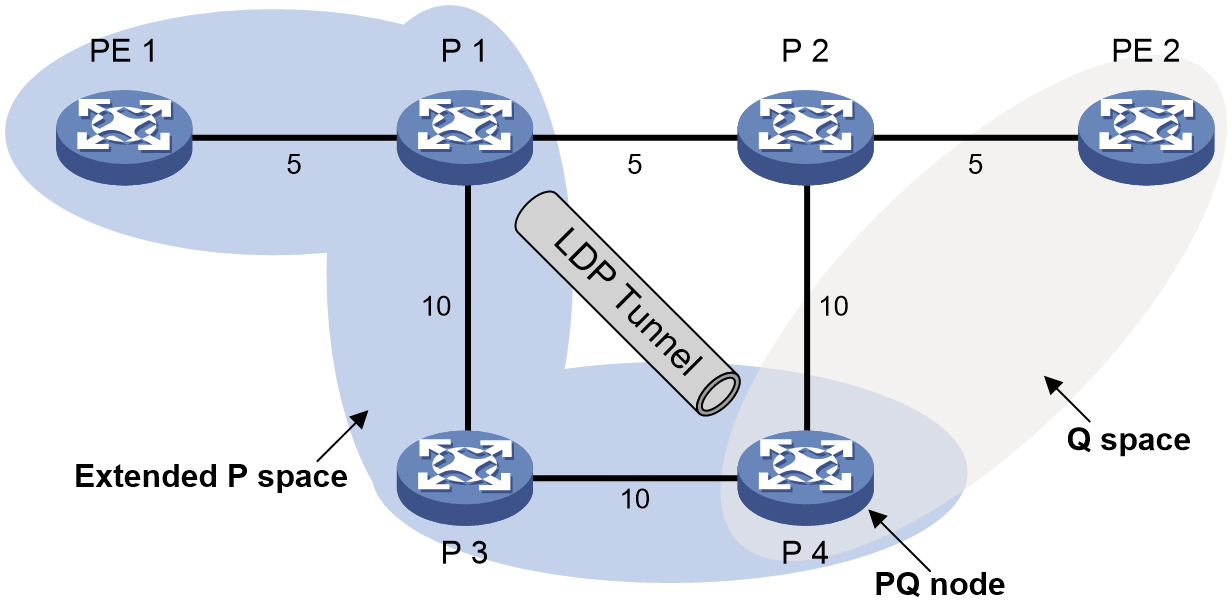

IS-IS/OSPF supports using remote LFA to automatically calculate the backup next hop. The LFA algorithm selects backup paths only among the direct neighbors of the local node. In some large-scale networks, especially ring networks, LFA might fail to calculate a backup path and thus cannot meet reliability requirements. The remote LFA algorithm calculates a PQ node across the entire network based on the protected link, and establishes an LDP tunnel between the source node and the PQ node for backup next hop protection. When the protected link fails, traffic automatically switches to the backup tunnel path, enhancing network reliability.

Remote LFA typically uses the following concepts:

· P space—Use the source node of the protected link as the root to establish a shortest path tree. All nodes that are reachable from the source node without passing the protected link form the P space. Nodes in the P space are called P nodes.

· Extended P space—Use the source node of the protected link and its neighbors as the roots to establish shortest path trees. All nodes that are reachable from the source node or one of its neighbors without passing the protected link form the extended P space.

· Q space—Use the destination node of the protected link as the root to establish a reverse shortest path tree. All nodes that can reach the root node without passing the protected link form the Q space. Nodes in the Q space are called Q nodes.

· PQ node—A PQ node refers to a node that resides in both the extended P space and the Q space. Remote LFA uses a PQ node as the destination node of a protected link.

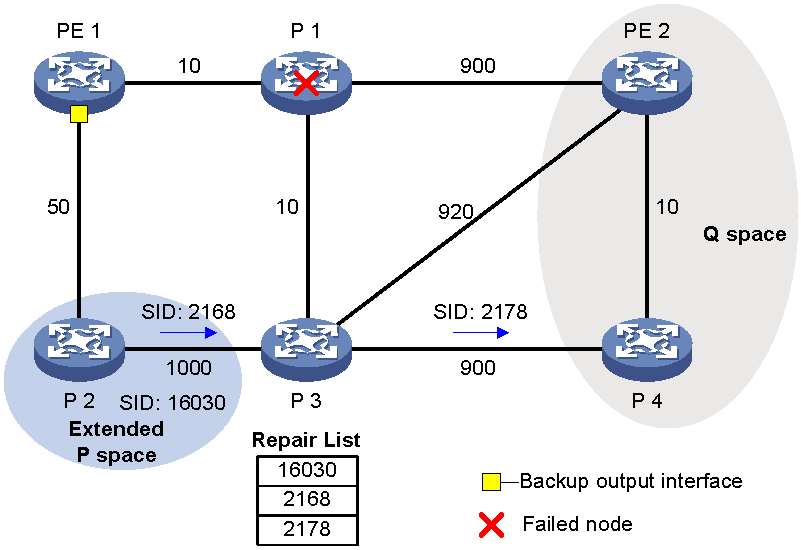

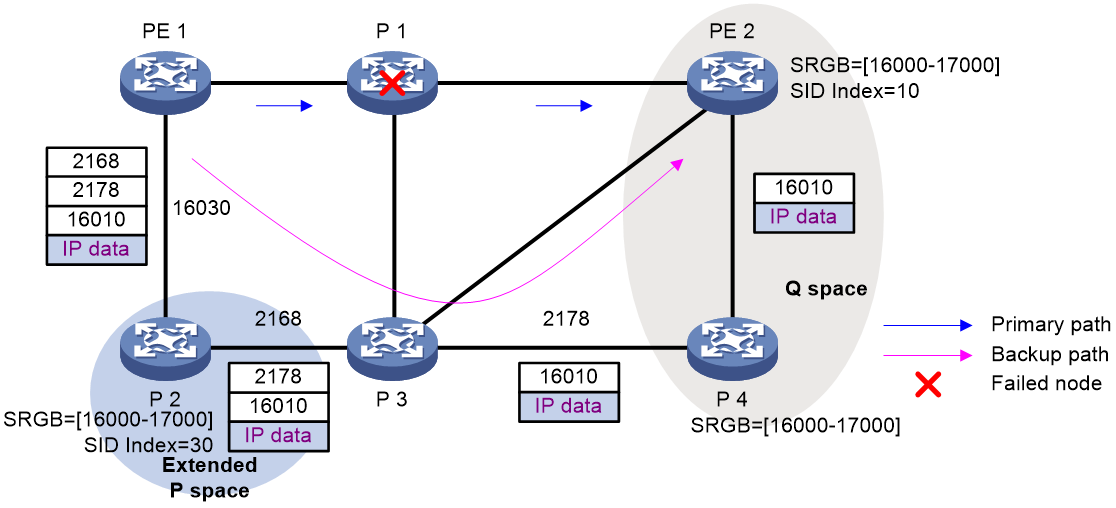

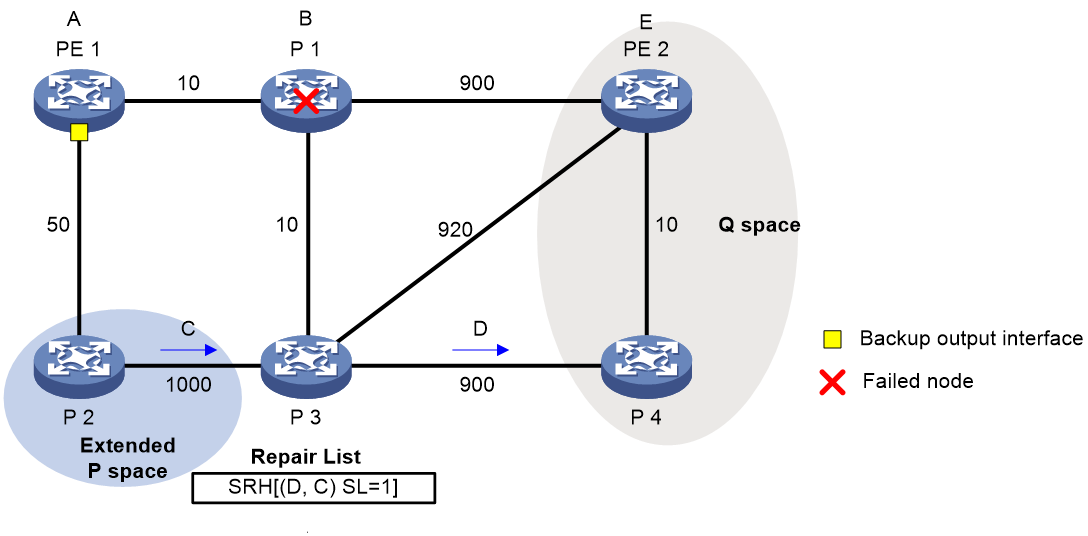

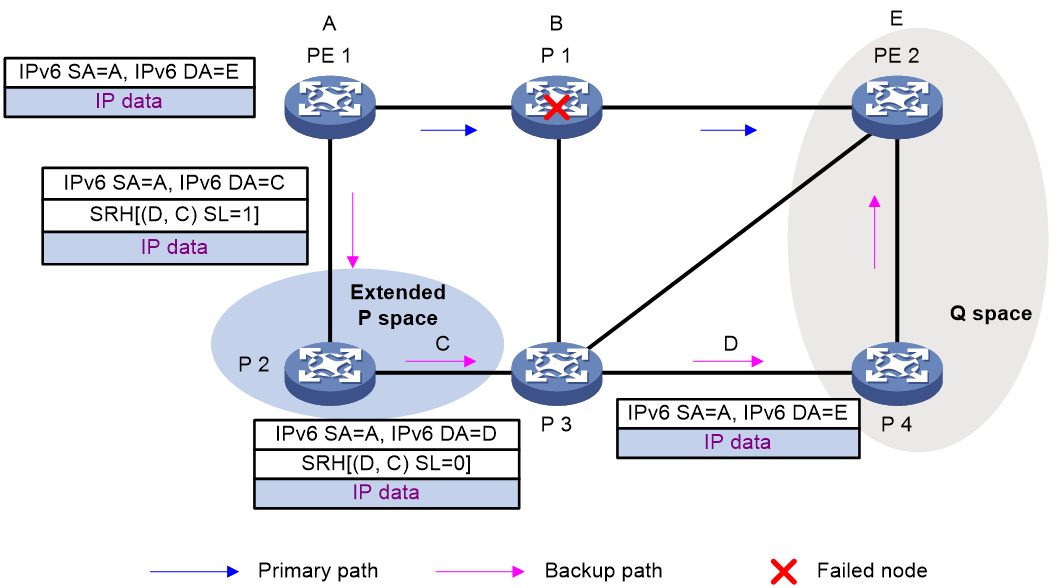

As shown in Figure 19, the traffic forwarding path is PE 1—P 1—P 2—PE 2. To avoid traffic loss caused by link failures between P 1 and P 2, the system establishes an LDP tunnel between P 1 and P 4, which is the PQ node. When the link between P 1 and P 2 fails, P 1 encapsulates IP packets in MPLS packets and sends the MPLS packets to P 4 through the LDP tunnel. After receiving the MPLS packets, P 4 removes the MPLS labels of the packets and then forwards the packets to the next hop based on the IP routing table. This ensures rapid protection and prevents traffic loss.

In Figure 19, the system calculates the PQ node as follows:

1. Uses P 1 (source node of the protected link) and its neighbors except P 2 (the other end of the protected link) as the roots to establish shortest path trees.

2. Finds out all nodes that are reachable from P 1 or one of its neighbors without passing the protected link, which are PE 1, P 1, P 3, and P 4. These nodes form the extended P space.

3. Uses P 2 (destination node of the protected link) as the root to establish a reverse shortest path tree.

4. Finds out all nodes that can reach P 2 without passing the protected link, which are PE 2 and P 4. These nodes form the Q space.

5. Finds out all nodes that reside in both the extended P space and the Q space. Only P 4 resides in both, so P 4 is the PQ node of the protected link.

Figure 19 Network diagram for remote LFA
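The PQ node calculation above can be sketched in Python. The topology is an assumption, because Figure 19 is not available as text. It is a ring of P 1, P 2, P 4, and P 3, with PE 1 attached to P 1, PE 2 attached to P 2, and all link costs 1. The membership tests follow the remote LFA definitions: a node belongs to a P or Q space only if no shortest path toward it (P) or from it (Q) crosses the protected link. LDP tunnel setup is out of scope. With this topology, the sketch reproduces the extended P space {PE 1, P 1, P 3, P 4} and the PQ node P 4. Its Q space also contains the root P 2 itself, which the steps above leave out. This does not change the result.

```python
import heapq


def spf(graph: dict, root: str) -> dict:
    """Dijkstra shortest-path distances from root."""
    dist = {root: 0}
    heap = [(0, root)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue
        for neighbor, cost in graph[node].items():
            if d + cost < dist.get(neighbor, float("inf")):
                dist[neighbor] = d + cost
                heapq.heappush(heap, (d + cost, neighbor))
    return dist


def p_space(graph: dict, root: str, s: str, e: str) -> set:
    """Nodes that no shortest path from root reaches through link s-e."""
    d_root, d_s, d_e = spf(graph, root), spf(graph, s), spf(graph, e)
    c = graph[s][e]
    return {y for y in graph
            if d_root[y] < d_root[s] + c + d_e[y]     # not via s -> e
            and d_root[y] < d_root[e] + c + d_s[y]}   # not via e -> s


def extended_p_space(graph: dict, s: str, e: str) -> set:
    """Union of the P spaces rooted at s and at its neighbors other than e."""
    roots = [s] + [n for n in graph[s] if n != e]
    return set().union(*(p_space(graph, r, s, e) for r in roots))


def q_space(graph: dict, s: str, e: str) -> set:
    """Nodes whose shortest paths to e never cross link s-e (symmetric costs)."""
    d_s, d_e = spf(graph, s), spf(graph, e)
    return {y for y in graph if d_e[y] < d_s[y] + graph[s][e]}


# Hypothetical topology for Figure 19; the protected link is P1-P2.
graph = {
    "PE1": {"P1": 1},
    "P1": {"PE1": 1, "P2": 1, "P3": 1},
    "P2": {"P1": 1, "PE2": 1, "P4": 1},
    "PE2": {"P2": 1},
    "P3": {"P1": 1, "P4": 1},
    "P4": {"P3": 1, "P2": 1},
}
ext_p = extended_p_space(graph, "P1", "P2")
q = q_space(graph, "P1", "P2")
print("extended P space:", sorted(ext_p))
print("Q space:", sorted(q))
print("PQ nodes:", sorted(ext_p & q))
```

The strict inequalities matter. P 2 is excluded from the P space rooted at P 3 because one of its two equal-cost paths to P 2 crosses the protected link.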

BGP FRR

BGP FRR can set the backup next hop as follows:

· BGP automatically selects a backup route. If the routes to the same destination network are learned from different BGP peers and they have different costs, a primary route and a backup route are generated.

· You can manually specify a backup next hop for matching routes by using a routing policy.

IGP/BGP FRR support for primary and backup next hops in a many-to-one, many-to-many, and one-to-many relationship

In the current software version, IGP/BGP FRR does not support primary and backup next hops in a many-to-many or one-to-many relationship. That is, FRR does not support setting multiple backup next hops for a route. Only one backup next hop is issued to the FIB along with the primary next hop. If multiple low-priority links exist in the network, only the next hop of the highest-priority backup link becomes the backup next hop for FRR.

Only OSPF and IS-IS support primary and backup next hops in a many-to-one relationship

For OSPF and IS-IS, some devices support multiple primary next hops with a single backup next hop. On these devices, the LFA algorithm can select a shared backup next hop for ECMP routes. The configuration methods are as follows:

· Specify the ecmp-shared keyword when you execute the fast-reroute command in IS-IS IPv4 unicast address family view or IS-IS IPv4 unicast topology view to configure IS-IS FRR.

· Specify the ecmp-shared keyword when you execute the fast-reroute command in OSPF view or OSPF IPv4 unicast topology view to configure OSPF FRR.

After configuration, if a device has ECMP routes and a suboptimal route, the LFA algorithm will specify the suboptimal route as the backup route.

Feature control commands

The following table shows the control commands for FRR.

| Task | Command |
|---|---|
| Enable IPv4 or IPv6 RIB inter-protocol FRR. | inter-protocol fast-reroute (RIB IPv4 address family view or RIB IPv6 address family view) |
| Configure static route FRR to automatically select a backup next hop. | ip route-static fast-reroute auto (system view) |
| Configure RIP FRR. | fast-reroute (RIP view) |
| Configure RIPng FRR. | fast-reroute (RIPng view) |
| Configure OSPF FRR. | fast-reroute (OSPF view or OSPF IPv4 unicast topology view) |
| Configure OSPFv3 FRR. | fast-reroute (OSPFv3 view) |
| Configure IS-IS FRR. | fast-reroute (IS-IS IPv4 unicast address family view, IS-IS IPv4 unicast topology view, or IS-IS IPv6 unicast address family view) |
| Enable BGP FRR for a BGP address family. | pic (BGP IPv4 unicast address family view, BGP-VPN IPv4 unicast address family view, BGP IPv6 unicast address family view, or BGP-VPN IPv6 unicast address family view) |
| Apply a routing policy to FRR for a BGP address family. | · fast-reroute route-policy (BGP IPv4 unicast address family view, BGP-VPN IPv4 unicast address family view, BGP IPv6 unicast address family view, or BGP-VPN IPv6 unicast address family view) · apply [ ipv6 ] fast-reroute backup-nexthop (routing policy node view) |

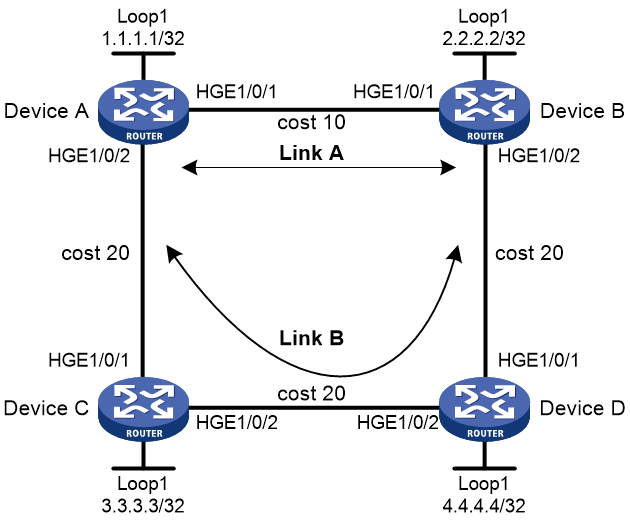

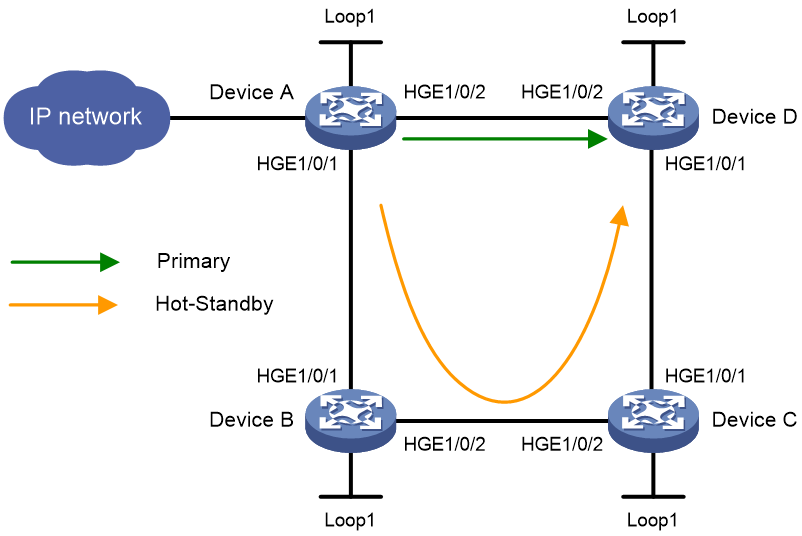

Example: Configuring IS-IS remote LFA FRR

Network configuration

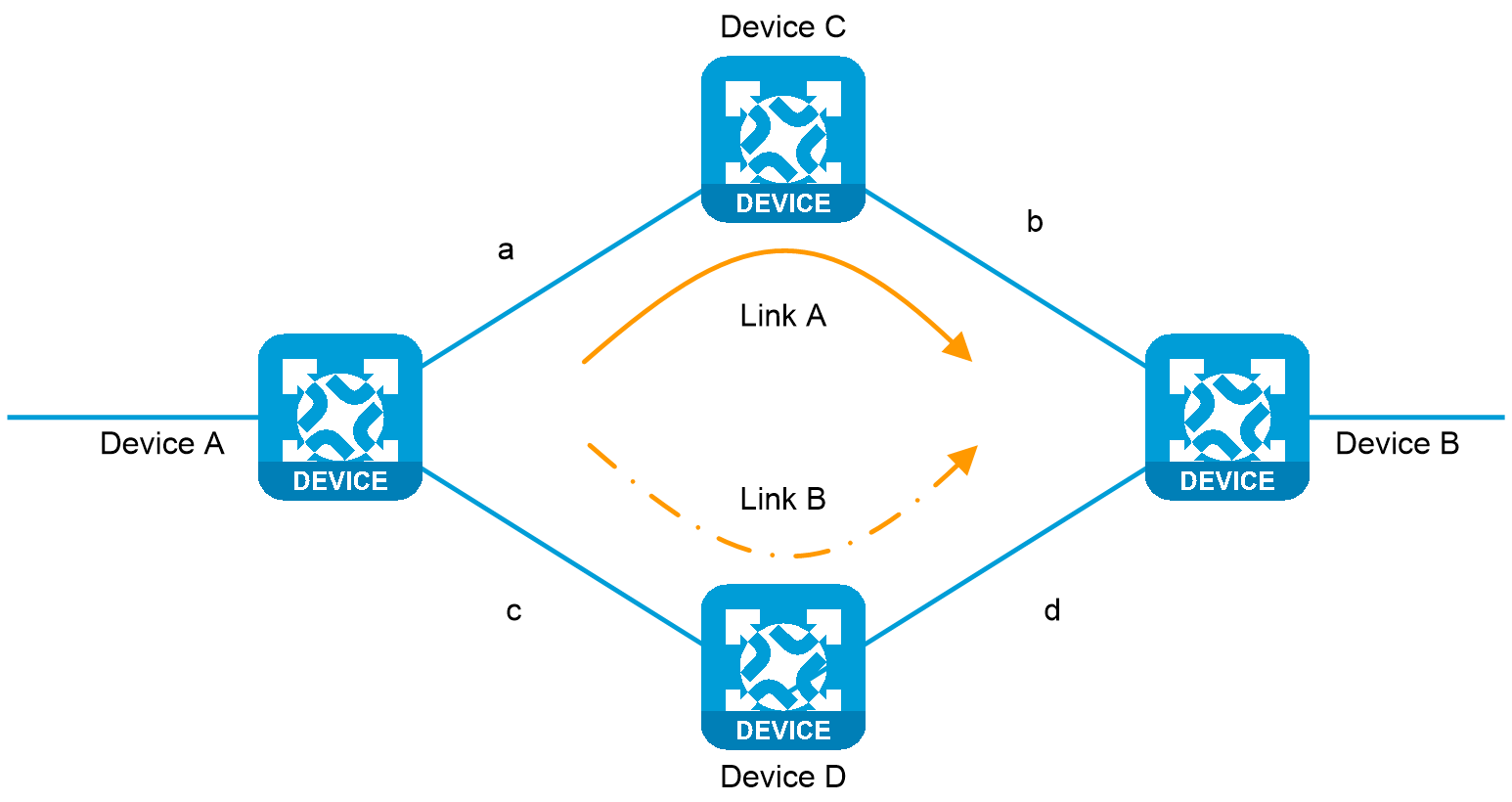

As shown in Figure 20, Device A, Device B, Device C, and Device D reside in the same IS-IS routing domain.

· Run IS-IS on all the routers to interconnect them with each other.

· Configure MPLS LDP on all the devices.

· Configure IS-IS remote LFA FRR so that when Link A fails, traffic can be switched to Link B immediately.

| Device | Interface | IP address | Device | Interface | IP address |
|---|---|---|---|---|---|
| Device A | HGE1/0/1 | 12.12.12.1/24 | Device B | HGE1/0/1 | 12.12.12.2/24 |
| | HGE1/0/2 | 13.13.13.1/24 | | HGE1/0/2 | 15.15.15.1/24 |
| | Loop1 | 1.1.1.1/32 | | Loop1 | 2.2.2.2/32 |
| Device C | HGE1/0/1 | 13.13.13.2/24 | Device D | HGE1/0/1 | 15.15.15.2/24 |
| | HGE1/0/2 | 14.14.14.1/24 | | HGE1/0/2 | 14.14.14.2/24 |
| | Loop1 | 3.3.3.3/32 | | Loop1 | 4.4.4.4/32 |

Prerequisites

Configure IP addresses for the interfaces on the devices according to the network diagram.

Procedure

1. Configure IS-IS and MPLS LDP on all the devices:

# Configure Device A.

<DeviceA> system-view

[DeviceA] mpls lsr-id 1.1.1.1

[DeviceA] mpls ldp

[DeviceA-ldp] accept target-hello all

[DeviceA-ldp] quit

[DeviceA] isis 1

[DeviceA-isis-1] network-entity 00.0000.0000.0001.00

[DeviceA-isis-1] quit

[DeviceA] interface hundredgige 1/0/1

[DeviceA-HundredGigE1/0/1] isis enable 1

[DeviceA-HundredGigE1/0/1] isis cost 10

[DeviceA-HundredGigE1/0/1] mpls enable

[DeviceA-HundredGigE1/0/1] mpls ldp enable

[DeviceA-HundredGigE1/0/1] quit

[DeviceA] interface hundredgige 1/0/2

[DeviceA-HundredGigE1/0/2] isis enable 1

[DeviceA-HundredGigE1/0/2] isis cost 20

[DeviceA-HundredGigE1/0/2] mpls enable

[DeviceA-HundredGigE1/0/2] mpls ldp enable

[DeviceA-HundredGigE1/0/2] quit

[DeviceA] interface loopback 1

[DeviceA-LoopBack1] isis enable 1

[DeviceA-LoopBack1] quit

# Configure Device B.

<DeviceB> system-view

[DeviceB] mpls lsr-id 2.2.2.2

[DeviceB] mpls ldp

[DeviceB-ldp] accept target-hello all

[DeviceB-ldp] quit

[DeviceB] isis 1

[DeviceB-isis-1] network-entity 00.0000.0000.0002.00

[DeviceB-isis-1] quit

[DeviceB] interface hundredgige 1/0/1

[DeviceB-HundredGigE1/0/1] isis enable 1

[DeviceB-HundredGigE1/0/1] isis cost 10

[DeviceB-HundredGigE1/0/1] mpls enable

[DeviceB-HundredGigE1/0/1] mpls ldp enable

[DeviceB-HundredGigE1/0/1] quit

[DeviceB] interface hundredgige 1/0/2

[DeviceB-HundredGigE1/0/2] isis enable 1

[DeviceB-HundredGigE1/0/2] isis cost 20

[DeviceB-HundredGigE1/0/2] mpls enable

[DeviceB-HundredGigE1/0/2] mpls ldp enable

[DeviceB-HundredGigE1/0/2] quit

[DeviceB] interface loopback 1

[DeviceB-LoopBack1] isis enable 1

[DeviceB-LoopBack1] quit

# Configure Device C.

<DeviceC> system-view

[DeviceC] mpls lsr-id 3.3.3.3

[DeviceC] mpls ldp

[DeviceC-ldp] accept target-hello all

[DeviceC-ldp] quit

[DeviceC] isis 1

[DeviceC-isis-1] network-entity 00.0000.0000.0003.00

[DeviceC-isis-1] quit

[DeviceC] interface hundredgige 1/0/1

[DeviceC-HundredGigE1/0/1] isis enable 1

[DeviceC-HundredGigE1/0/1] isis cost 20

[DeviceC-HundredGigE1/0/1] mpls enable

[DeviceC-HundredGigE1/0/1] mpls ldp enable

[DeviceC-HundredGigE1/0/1] quit

[DeviceC] interface hundredgige 1/0/2

[DeviceC-HundredGigE1/0/2] isis enable 1

[DeviceC-HundredGigE1/0/2] isis cost 20

[DeviceC-HundredGigE1/0/2] mpls enable

[DeviceC-HundredGigE1/0/2] mpls ldp enable

[DeviceC-HundredGigE1/0/2] quit

[DeviceC] interface loopback 1

[DeviceC-LoopBack1] isis enable 1

[DeviceC-LoopBack1] quit

# Configure Device D.

<DeviceD> system-view

[DeviceD] mpls lsr-id 4.4.4.4

[DeviceD] mpls ldp

[DeviceD-ldp] accept target-hello all

[DeviceD-ldp] quit

[DeviceD] isis 1

[DeviceD-isis-1] network-entity 00.0000.0000.0004.00

[DeviceD-isis-1] quit

[DeviceD] interface hundredgige 1/0/1

[DeviceD-HundredGigE1/0/1] isis enable 1

[DeviceD-HundredGigE1/0/1] isis cost 20

[DeviceD-HundredGigE1/0/1] mpls enable

[DeviceD-HundredGigE1/0/1] mpls ldp enable

[DeviceD-HundredGigE1/0/1] quit

[DeviceD] interface hundredgige 1/0/2

[DeviceD-HundredGigE1/0/2] isis enable 1

[DeviceD-HundredGigE1/0/2] isis cost 20

[DeviceD-HundredGigE1/0/2] mpls enable

[DeviceD-HundredGigE1/0/2] mpls ldp enable

[DeviceD-HundredGigE1/0/2] quit

[DeviceD] interface loopback 1

[DeviceD-LoopBack1] isis enable 1

[DeviceD-LoopBack1] quit
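The isis cost values configured above determine which next hop IS-IS selects. The following Python sketch runs a plain Dijkstra SPF from Device A over those costs. It is an illustrative model, not the device's implementation, and it assumes that each device is a single node and that loopback prefixes add no extra cost. Under those assumptions, the result for Device B (cost 10, first hop Device B) is consistent with the Int. Cost and next hop that Device A reports for 2.2.2.2/32 in the verification step.

```python
import heapq

# Directed IS-IS link costs taken from the "isis cost" commands above.
# IS-IS applies the cost on the outgoing interface, so each direction is listed.
COSTS = {
    "A": {"B": 10, "C": 20},  # HGE1/0/1 -> Device B, HGE1/0/2 -> Device C
    "B": {"A": 10, "D": 20},
    "C": {"A": 20, "D": 20},
    "D": {"B": 20, "C": 20},
}


def spf(graph, source):
    """Dijkstra SPF from source. Returns {node: (cost, first_hop)}."""
    dist = {source: (0, None)}
    heap = [(0, source)]
    done = set()
    while heap:
        cost, node = heapq.heappop(heap)
        if node in done:
            continue  # stale heap entry
        done.add(node)
        for nbr, link_cost in graph[node].items():
            # The first hop is the neighbor itself when leaving the source;
            # otherwise it is inherited from the node being expanded.
            hop = nbr if node == source else dist[node][1]
            new_cost = cost + link_cost
            if nbr not in dist or new_cost < dist[nbr][0]:
                dist[nbr] = (new_cost, hop)
                heapq.heappush(heap, (new_cost, nbr))
    return dist


if __name__ == "__main__":
    for node, (cost, hop) in sorted(spf(COSTS, "A").items()):
        print(f"Device {node}: cost {cost}, first hop {hop}")
```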

2. Configure IS-IS remote LFA FRR:

# Configure Device A.

[DeviceA] isis 1

[DeviceA-isis-1] address-family ipv4

[DeviceA-isis-1-ipv4] fast-reroute lfa

[DeviceA-isis-1-ipv4] fast-reroute remote-lfa tunnel ldp

[DeviceA-isis-1-ipv4] quit

[DeviceA-isis-1] quit
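A plain LFA cannot protect 2.2.2.2/32 in this topology. Device C's own shortest path to Device B runs back through Device A: dist(C, B) = 30 is not less than dist(C, A) + dist(A, B) = 20 + 10. Remote LFA therefore tunnels traffic over LDP to a PQ node. The following Python sketch applies the RFC 5286 loop-free inequality and the RFC 7490 P-space and Q-space definitions to the costs from step 1. It is a simplified model under stated assumptions (a single IS-IS level, zero-cost loopbacks, and protection of only the Device A to Device B link), not the device's actual algorithm. Its result, PQ node Device D reached through Device C, is consistent with the tunnel destination 4.4.4.4 and backup next hop 13.13.13.2 shown in the verification output.

```python
import heapq

# Directed IS-IS costs from step 1 (cost applies to the outgoing interface).
COSTS = {
    "A": {"B": 10, "C": 20},
    "B": {"A": 10, "D": 20},
    "C": {"A": 20, "D": 20},
    "D": {"B": 20, "C": 20},
}
# LSR IDs (loopback addresses), used as remote LFA tunnel destinations.
LSR_ID = {"A": "1.1.1.1", "B": "2.2.2.2", "C": "3.3.3.3", "D": "4.4.4.4"}


def spf(graph, source, skip_link=None):
    """Dijkstra SPF returning {node: (cost, first_hop)}.

    skip_link=(u, v) removes that link in both directions (models a failure).
    """
    dist = {source: (0, None)}
    heap = [(0, source)]
    done = set()
    while heap:
        cost, node = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        for nbr, c in graph[node].items():
            if skip_link and {node, nbr} == set(skip_link):
                continue
            hop = nbr if node == source else dist[node][1]
            if nbr not in dist or cost + c < dist[nbr][0]:
                dist[nbr] = (cost + c, hop)
                heapq.heappush(heap, (cost + c, nbr))
    return dist


def d(u, v):
    """Shortest-path cost from u to v with all links up."""
    return spf(COSTS, u)[v][0]


S, E = "A", "B"  # protected link: Device A <-> Device B
C_SE, C_ES = COSTS[S][E], COSTS[E][S]


def is_lfa(n, dest):
    # RFC 5286 loop-free condition: dist(N, D) < dist(N, S) + dist(S, D).
    return d(n, dest) < d(n, S) + d(S, dest)


def in_p_space(root, y):
    # No shortest path from root to y (ECMP ties included) crosses the S-E link.
    return (d(root, y) < d(root, S) + C_SE + d(E, y)
            and d(root, y) < d(root, E) + C_ES + d(S, y))


def in_q_space(y):
    # y reaches E without crossing the S-E link.
    return d(y, E) < d(y, S) + C_SE


nodes = set(COSTS)
roots = [S] + [n for n in COSTS[S] if n != E]  # S plus its other neighbors
ext_p = {y for r in roots for y in nodes if in_p_space(r, y)} - {S, E}
q = {y for y in nodes if in_q_space(y)} - {S, E}
pq_nodes = ext_p & q

if __name__ == "__main__":
    print("Device C is a plain LFA for 2.2.2.2:", is_lfa("C", "B"))
    for pq in sorted(pq_nodes):
        # First hop toward the PQ node once the protected link is removed.
        hop = spf(COSTS, S, skip_link=(S, E))[pq][1]
        print("PQ node", pq, "tunnel destination", LSR_ID[pq], "via Device", hop)
```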

Verifying the configuration

1. Display the IS-IS route information:

# Display route 2.2.2.2/32 on Device A to view the backup next hop information.

[DeviceA] display isis route ipv4 2.2.2.2 32 verbose

Route information for IS-IS(1)

------------------------------

Level-1 IPv4 Forwarding Table

-----------------------------

IPv4 Dest : 2.2.2.2/32 Int. Cost : 10 Ext. Cost : NULL

Admin Tag : - Src Count : 1 Flag : R/L/-

InLabel : 4294967295 InLabel Flag: -/-/-/-/-/-

NextHop : Interface : ExitIndex :

12.12.12.2 HGE1/0/1 0x00000002

Nib ID : 0x14000008 OutLabel : 4294967295 OutLabelFlag: -

LabelSrc : N/A Delay Flag : N/A

Remote-LFA:

Interface : HGE1/0/2

BkNextHop : 13.13.13.2 LsIndex : 0x01000002

Tunnel destination address: 4.4.4.4

Backup label: {1149}

Flags: D-Direct, R-Added to Rib, L-Advertised in LSPs, U-Up/Down Bit Set

InLabel flags: R-Readvertisement, N-Node SID, P-no PHP

E-Explicit null, V-Value, L-Local

OutLabelFlags: E-Explicit null, I-Implicit null, N-Nomal, P-SR label prefer

Level-2 IPv4 Forwarding Table

-----------------------------

IPv4 Dest : 2.2.2.2/32 Int. Cost : 10 Ext. Cost : NULL

Admin Tag : - Src Count : 3 Flag : -/-/-

InLabel : 4294967295 InLabel Flag: -/-/-/-/-/-

Flags: D-Direct, R-Added to Rib, L-Advertised in LSPs, U-Up/Down Bit Set

InLabel flags: R-Readvertisement, N-Node SID, P-no PHP

E-Explicit null, V-Value, L-Local

OutLabelFlags: E-Explicit null, I-Implicit null, N-Nomal, P-SR label prefer

2. Verify traffic switchover:

# Display the outbound traffic rates of the interfaces on Device A. Traffic is forwarded through primary output interface HGE 1/0/1.

[DeviceA] display counters rate outbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 6 3905728 -- --

HGE1/0/2 0 0 -- --

Overflow: More than 14 digits.

--: Not supported.

# After primary output interface HGE 1/0/1 fails, display the outbound traffic rates again. Traffic has switched over to backup output interface HGE 1/0/2 with zero packet loss during the switchover.

[DeviceA] display counters rate outbound interface

Usage: Bandwidth utilization in percentage

Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)

HGE1/0/1 0 0 -- --

HGE1/0/2 6 3905728 -- --

Overflow: More than 14 digits.

--: Not supported.

# After primary output interface HGE 1/0/1 recovers, traffic switches back to HGE 1/0/1 with zero packet loss during the switchback.
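The two display outputs above can also be compared programmatically. This Python sketch assumes the row layout shown in this example (interface, usage, total pps, broadcast, multicast). It extracts the Total (pps) column and checks that the aggregate outbound rate is unchanged while traffic moves from HGE1/0/1 to HGE1/0/2. Matching rates are consistent with the zero-loss result. However, they are sampled averages, so they do not by themselves prove that no packet was dropped. A tester that counts sent and received packets gives a stronger check.

```python
# Outputs copied from the two "display counters rate outbound interface" runs above
# (informational header and footer lines omitted).
BEFORE = """\
Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)
HGE1/0/1 6 3905728 -- --
HGE1/0/2 0 0 -- --
"""

AFTER = """\
Interface Usage (%) Total (pps) Broadcast (pps) Multicast (pps)
HGE1/0/1 0 0 -- --
HGE1/0/2 6 3905728 -- --
"""


def total_pps(output):
    """Map interface name -> value of the Total (pps) column."""
    rates = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows have five columns and start with a name like "HGE1/0/1".
        if len(fields) == 5 and "/" in fields[0] and fields[2].isdigit():
            rates[fields[0]] = int(fields[2])
    return rates


before, after = total_pps(BEFORE), total_pps(AFTER)
print("before:", before, "sum", sum(before.values()))
print("after: ", after, "sum", sum(after.values()))
print("aggregate rate unchanged:", sum(before.values()) == sum(after.values()))
```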

Test result

In this scenario, no packet loss occurs while IS-IS forwards traffic through the primary next hop. When the primary next hop fails, traffic immediately switches over to the backup next hop.

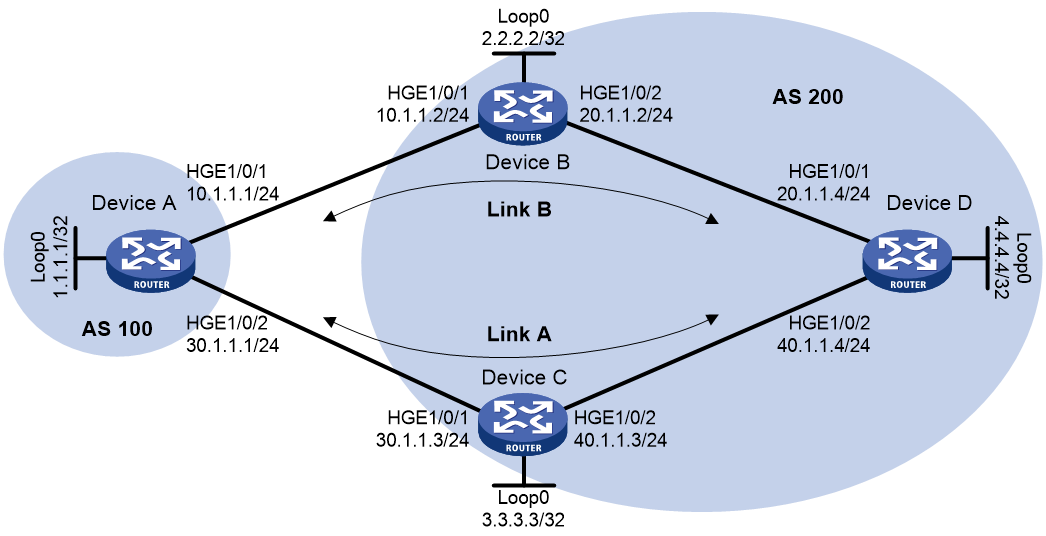

Example: Configuring BGP FRR

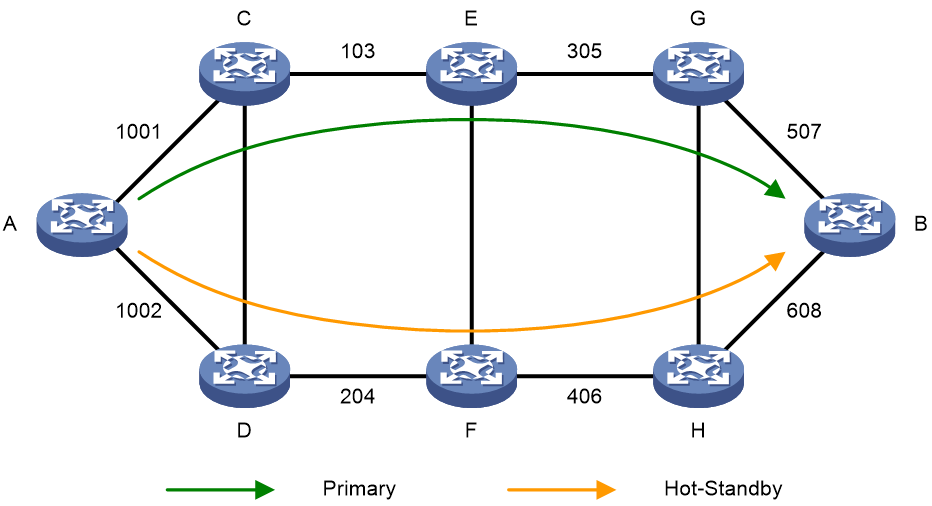

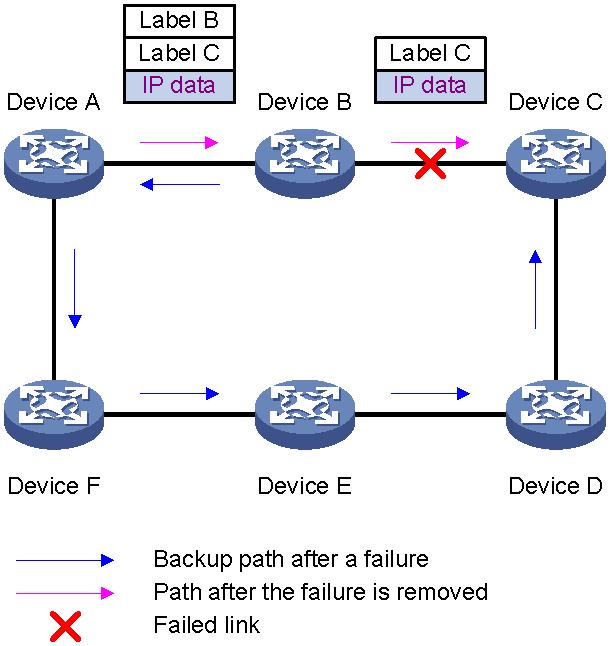

Network configuration

As shown in Figure 21, two links exist between Device A and Device D: Link B, which passes through Device B, and Link A, which passes through Device C. Typically, traffic between Device A and Device D is forwarded through Link B. To achieve zero packet loss when a link failure occurs, configure BGP FRR and specify Link A, which has a lower priority, as the backup link. If Link B fails, traffic immediately switches over to Link A.
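The behavior described above can be modeled as a forwarding entry that already holds a backup next hop before any failure occurs. The following Python sketch is a simplified illustration, not the device's data structure. The class and method names are hypothetical. The next hop addresses come from the configuration in this example: 10.1.1.2 (Device B, Link B) and 30.1.1.3 (Device C, Link A).

```python
from dataclasses import dataclass


@dataclass
class FrrEntry:
    """Hypothetical forwarding entry with a precomputed backup next hop."""
    prefix: str
    primary: str
    backup: str
    primary_up: bool = True

    def next_hop(self) -> str:
        # Forwarding uses the backup only while the primary path is down.
        return self.primary if self.primary_up else self.backup

    def on_primary_down(self) -> None:
        # Called by a fast detection mechanism such as BFD. The backup is
        # already installed, so no route recomputation is needed here.
        self.primary_up = False

    def on_primary_up(self) -> None:
        # Assumption: traffic returns to the primary path once it recovers.
        self.primary_up = True


entry = FrrEntry("4.4.4.4/32", primary="10.1.1.2", backup="30.1.1.3")
print(entry.next_hop())  # Link B through Device B
entry.on_primary_down()
print(entry.next_hop())  # Link A through Device C
entry.on_primary_up()
print(entry.next_hop())  # back to Link B
```

Keeping the backup in the entry itself is what lets the switchover happen as soon as the failure is detected, instead of waiting for BGP to withdraw and reselect routes.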

Major configuration steps

1. Configure IP addresses for interfaces and configure OSPF settings. (Details not shown.)

2. Configure OSPF in AS 200 to advertise subnet routes associated with the interfaces (including loopback interfaces) and ensure connectivity among Device B, Device C, and Device D. (Details not shown.)

3. Configure BGP connections.

# Configure Device A to establish EBGP sessions to Device B and Device C, and advertise network 1.1.1.1/32.

<DeviceA> system-view

[DeviceA] bgp 100

[DeviceA-bgp-default] router-id 1.1.1.1

[DeviceA-bgp-default] peer 10.1.1.2 as-number 200

[DeviceA-bgp-default] peer 30.1.1.3 as-number 200

[DeviceA-bgp-default] address-family ipv4 unicast

[DeviceA-bgp-default-ipv4] peer 10.1.1.2 enable

[DeviceA-bgp-default-ipv4] peer 30.1.1.3 enable

[DeviceA-bgp-default-ipv4] network 1.1.1.1 32

# Configure Device B to establish an EBGP session to Device A, and an IBGP session to Device D.

<DeviceB> system-view

[DeviceB] bgp 200

[DeviceB-bgp-default] router-id 2.2.2.2

[DeviceB-bgp-default] peer 10.1.1.1 as-number 100

[DeviceB-bgp-default] peer 4.4.4.4 as-number 200

[DeviceB-bgp-default] peer 4.4.4.4 connect-interface loopback 0

[DeviceB-bgp-default] address-family ipv4 unicast

[DeviceB-bgp-default-ipv4] peer 10.1.1.1 enable

[DeviceB-bgp-default-ipv4] peer 4.4.4.4 enable

[DeviceB-bgp-default-ipv4] peer 4.4.4.4 next-hop-local

[DeviceB-bgp-default-ipv4] quit

[DeviceB-bgp-default] quit

# Configure Device C to establish an EBGP session to Device A, and an IBGP session to Device D.

<DeviceC> system-view

[DeviceC] bgp 200

[DeviceC-bgp-default] router-id 3.3.3.3

[DeviceC-bgp-default] peer 30.1.1.1 as-number 100

[DeviceC-bgp-default] peer 4.4.4.4 as-number 200

[DeviceC-bgp-default] peer 4.4.4.4 connect-interface loopback 0

[DeviceC-bgp-default] address-family ipv4 unicast

[DeviceC-bgp-default-ipv4] peer 30.1.1.1 enable

[DeviceC-bgp-default-ipv4] peer 4.4.4.4 enable

[DeviceC-bgp-default-ipv4] peer 4.4.4.4 next-hop-local

[DeviceC-bgp-default-ipv4] quit

[DeviceC-bgp-default] quit

# Configure Device D to establish IBGP sessions to Device B and Device C, and advertise network 4.4.4.4/32.

<DeviceD> system-view

[DeviceD] bgp 200

[DeviceD-bgp-default] router-id 4.4.4.4

[DeviceD-bgp-default] peer 2.2.2.2 as-number 200

[DeviceD-bgp-default] peer 2.2.2.2 connect-interface loopback 0

[DeviceD-bgp-default] peer 3.3.3.3 as-number 200

[DeviceD-bgp-default] peer 3.3.3.3 connect-interface loopback 0

[DeviceD-bgp-default] address-family ipv4 unicast

[DeviceD-bgp-default-ipv4] peer 2.2.2.2 enable

[DeviceD-bgp-default-ipv4] peer 3.3.3.3 enable

[DeviceD-bgp-default-ipv4] network 4.4.4.4 32

4. Configure preferred values so Link B is used to forward traffic between Device A and Device D:

# Configure Device A to set the preferred value to 100 for routes received from Device B.

[DeviceA-bgp-default-ipv4] peer 10.1.1.2 preferred-value 100

[DeviceA-bgp-default-ipv4] quit

[DeviceA-bgp-default] quit

# Configure Device D to set the preferred value to 100 for routes received from Device B.

[DeviceD-bgp-default-ipv4] peer 2.2.2.2 preferred-value 100

[DeviceD-bgp-default-ipv4] quit

[DeviceD-bgp-default] quit
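Preferred value is one of the first attributes compared during BGP route selection on H3C devices, and a higher value is preferred. The following Python sketch models only that comparison for the two copies of 4.4.4.4/32 that Device A learns in this example. It assumes a default preferred value of 0 for the route from Device C and omits all later selection criteria, so treat it as an illustration rather than a full best-path algorithm.

```python
from dataclasses import dataclass


@dataclass
class BgpRoute:
    prefix: str
    next_hop: str
    preferred_value: int = 0  # assumed default when no preferred-value applies


def rank_by_preferred_value(routes):
    """Order candidate routes, highest preferred value first.

    Only this one criterion is modeled; real BGP selection continues with
    further tie-breakers that are omitted here.
    """
    return sorted(routes, key=lambda r: r.preferred_value, reverse=True)


# Copies of 4.4.4.4/32 that Device A learns in this example.
candidates = [
    BgpRoute("4.4.4.4/32", next_hop="30.1.1.3"),                       # from Device C
    BgpRoute("4.4.4.4/32", next_hop="10.1.1.2", preferred_value=100),  # from Device B
]
best, runner_up = rank_by_preferred_value(candidates)
print("optimal next hop:", best.next_hop)
print("backup candidate:", runner_up.next_hop)
```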

5. Configure BGP FRR:

# On Device A, set the source address of BFD echo packets to 11.1.1.1.

[DeviceA] bfd echo-source-ip 11.1.1.1

# Create routing policy frr to set a backup next hop 30.1.1.3 (Device C) for the route destined for 4.4.4.4/32.

[DeviceA] ip prefix-list abc index 10 permit 4.4.4.4 32

[DeviceA] route-policy frr permit node 10

[DeviceA-route-policy] if-match ip address prefix-list abc

[DeviceA-route-policy] apply fast-reroute backup-nexthop 30.1.1.3

[DeviceA-route-policy] quit
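The prefix list and routing policy above act as a filter that attaches a backup next hop only to 4.4.4.4/32. The following Python sketch is a hypothetical model of that matching logic, not the device's implementation. It assumes that a prefix list entry without greater-equal or less-equal options matches only the exact prefix and mask length, and that a route matching no permit entry receives no backup next hop from this policy.

```python
import ipaddress

# Hypothetical model of: ip prefix-list abc index 10 permit 4.4.4.4 32
# Each entry is (index, action, network). With no greater-equal/less-equal
# option, the entry is assumed to match only this exact prefix and mask.
PREFIX_LIST_ABC = [(10, "permit", ipaddress.ip_network("4.4.4.4/32"))]


def prefix_list_permits(entries, prefix):
    """Return True if the first matching entry (lowest index) permits prefix."""
    net = ipaddress.ip_network(prefix)
    for _index, action, match in sorted(entries, key=lambda e: e[0]):
        if net == match:
            return action == "permit"
    return False  # assumption: no match means the route is not permitted


def backup_nexthop_from_policy_frr(prefix):
    """Model of route-policy frr node 10: matching routes get backup next hop 30.1.1.3."""
    if prefix_list_permits(PREFIX_LIST_ABC, prefix):
        return "30.1.1.3"
    return None  # no backup next hop is assigned by this policy


for p in ["4.4.4.4/32", "4.4.4.0/24", "1.1.1.1/32"]:
    print(p, "->", backup_nexthop_from_policy_frr(p))
```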

# Use BFD echo packet mode to detect the connectivity to Device D.

[DeviceA] bgp 100

[DeviceA-bgp-default] primary-path-detect bfd echo

# Apply the routing policy to BGP FRR for the BGP IPv4 unicast address family.

[DeviceA-bgp-default] address-family ipv4 unicast

[DeviceA-bgp-default-ipv4] fast-reroute route-policy frr

[DeviceA-bgp-default-ipv4] quit

[DeviceA-bgp-default] quit
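With primary-path-detect bfd echo, how quickly the failed primary path is noticed depends on the BFD timers. As a general BFD rule, a session is declared down after a number of consecutive intervals (the detection multiplier) pass without the expected packet. The timer values in this Python sketch are hypothetical illustrations, not H3C defaults. Check the BFD settings on your device for the actual values.

```python
def bfd_detection_time_ms(interval_ms: int, detect_multiplier: int) -> int:
    """Time after the last received packet before the session is declared down.

    Modeled as detect_multiplier consecutive intervals without the expected
    packet (the usual BFD detection-time rule).
    """
    if interval_ms <= 0 or detect_multiplier <= 0:
        raise ValueError("interval and multiplier must be positive")
    return interval_ms * detect_multiplier


# Hypothetical timer values for illustration only, not device defaults.
for interval, mult in [(10, 3), (50, 3), (100, 5)]:
    print(f"{interval} ms x {mult} -> {bfd_detection_time_ms(interval, mult)} ms")
```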

# On Device D, set the source address of BFD echo packets to 44.1.1.1.

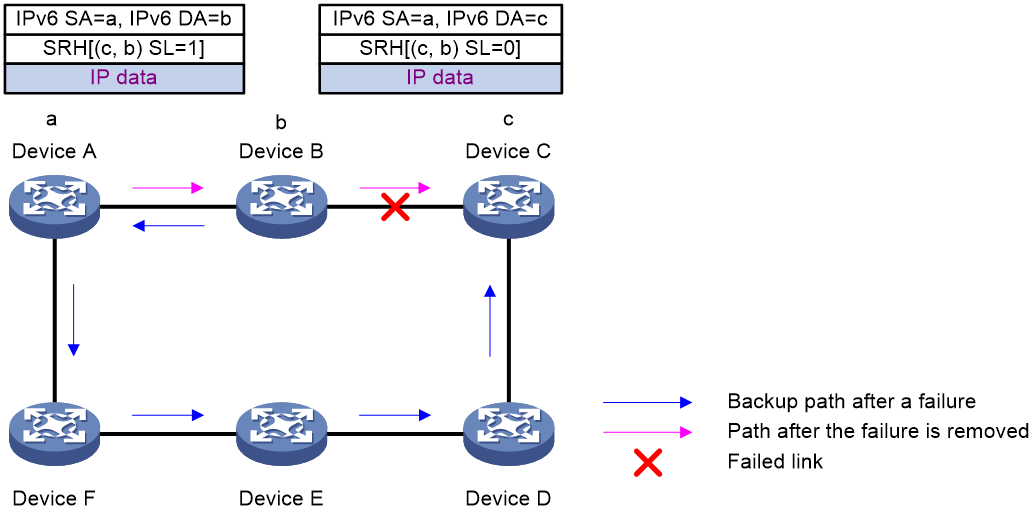

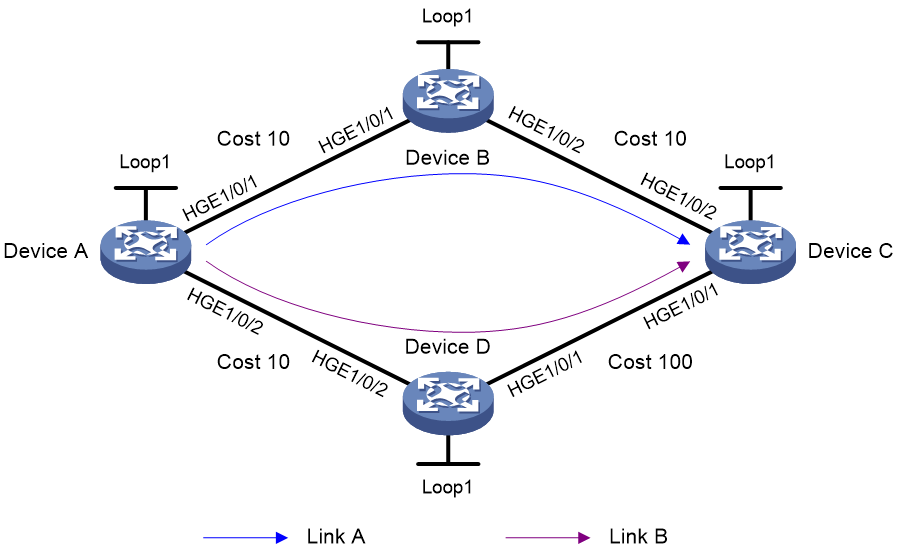

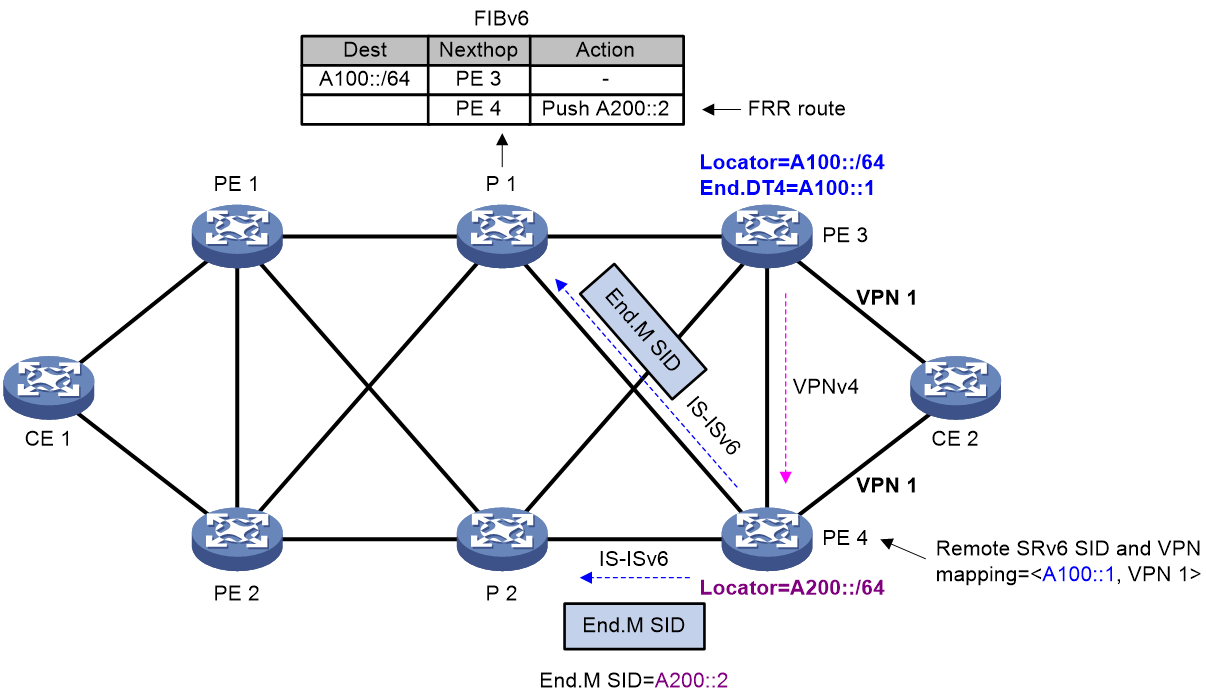

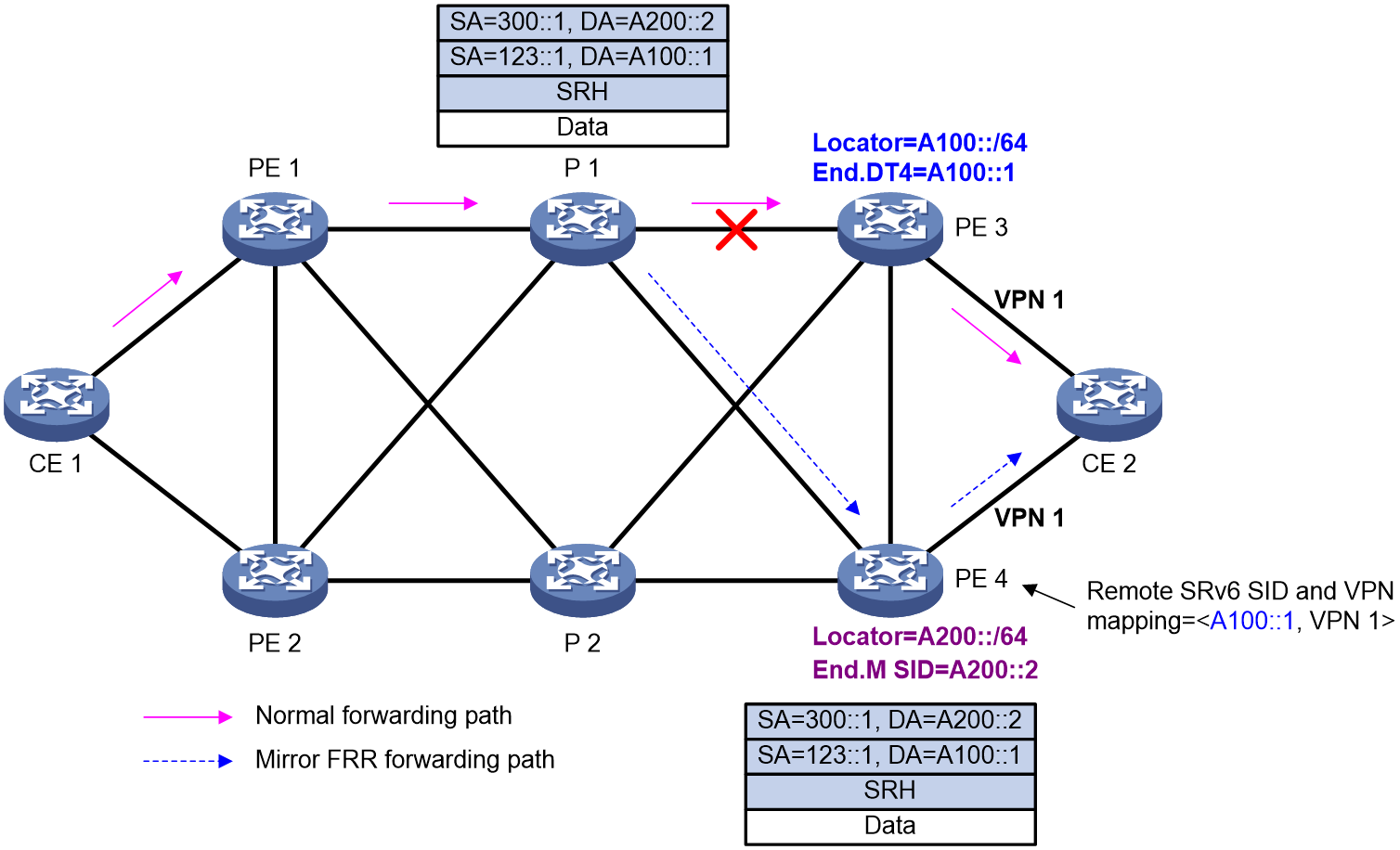

[DeviceD] bfd echo-source-ip 44.1.1.1