- Released At: 17-07-2024


Best Practices for Deploying VMware on an H3C UniServer B16000 Chassis

Document version: 6W100-20240712

Copyright © 2024 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

The information in this document is subject to change without notice.


Overview

Product overview

The H3C UniServer B16000 blade chassis (hereafter referred to as B16000 chassis) is a new-generation converged IT infrastructure platform developed independently by H3C. It integrates computing, storage, switching, management, and multi-service extension capabilities, and is designed for data center, cloud computing, virtualization, and high-performance computing (HPC) scenarios.

Figure 1 H3C UniServer B16000 chassis view

Combining the converged architecture of the H3C B16000 with VMware® vSphere delivers an optimal virtualization experience.

· Converged architecture

The H3C B16000 integrates the management of computing, network, storage, power supply, and fan resources. It provides simplified management, powerful processing, high network bandwidth, and efficient power supply and heat dissipation.

· Industry-leading specifications

The H3C B16000 supports pooling of multiple resources, multiple switching planes, various switching modules, and abundant service applications.

· Abundant management features

The H3C B16000 supports centralized device management. The management module can manage and monitor all system components in a unified manner. Compared to traditional rack servers, the H3C B16000 greatly reduces the management complexity and costs. It also supports centralized management of multiple cascaded chassis, simplifying operations and maintenance.

· High reliability

○ All core modules support N+1 or N+N backup and seamless failover.

○ The passive backplane design reduces wiring density and enhances backplane reliability and signal quality, improving overall backplane performance.

○ The H3C B16000 uses highly efficient power modules and supports intelligent power supply.

○ The H3C B16000 supports link aggregation and system-level device stacking, providing multiple reliability guarantee modes and application mode options.

· Fast deployment and AIOps

The H3C B16000 supports various fast deployment features and AIOps.

Compatible hardware

The H3C UniServer B16000 chassis supports components of various functions, shapes, and sizes. These options meet diverse workload requirements, from general-purpose to mission-critical. The following sections introduce the core components of the H3C UniServer B16000 chassis.

Blade servers

Table 1 Blade server specifications

| Model | Maximum number per chassis | Server type | Description |
|---|---|---|---|
| H3C UniServer B5700 G5 | 16 | 2-U half-width single-height | · An application-oriented blade server suitable for general enterprise workloads such as high-density computing, cloud computing, and virtualization. · Supports two Intel Whitley platform Ice Lake CPUs or Montage Jintide C3 CPUs. · Supports a maximum of thirty-two 3200 MT/s DDR4 or PMem 200 DIMMs. · Provides three drive slots at the front and supports a maximum of four storage devices, including SATA/SAS/NVMe drives and M.2 adapter boxes. · Provides one PCIe 3.0 x16 riser expansion slot. · Supports a maximum of three Mezz (PCIe 3.0 x16) expansion slots. · Provides one storage controller expansion slot, supporting RAID controllers, HBAs, and 4-port SATA pass-through modules. |
| H3C UniServer B5700 G3 | 16 | 2-U half-width single-height | · An application-oriented blade server suitable for general enterprise workloads such as high-density computing, cloud computing, and virtualization. · Supports two Intel Xeon Skylake or Cascade Lake processors, and supports single-CPU configuration. · Supports 24 DDR4 DIMMs at 2666, 2933, or 3200 MT/s. · Provides three drive slots at the front and supports a maximum of four storage devices, including SATA/SAS/NVMe drives and M.2 adapter boxes. · Provides one PCIe 3.0 x16 riser expansion slot. · Supports a maximum of three Mezz (PCIe 3.0 x16) expansion slots. · Provides one storage controller expansion slot, supporting RAID controllers, HBAs, and 4-port SATA pass-through modules. · Provides two embedded microSD card slots. |
| H3C UniServer B5800 G3 | 8 | 2-U full-width single-height | · An application-oriented blade server suitable for general enterprise workloads such as high-density computing, storage optimization, cloud computing, and virtualization. · Supports two Intel Xeon Skylake or Cascade Lake processors, and supports dual-CPU configuration. · Supports 24 DDR4 DIMMs at 2666, 2933, or 3200 MT/s. · Provides 14 drive slots and supports a maximum of 15 storage devices (slot 0 supports dual M.2 adapter boxes). · Supports a maximum of three Mezz (PCIe 3.0 x16) expansion slots. · Provides one storage controller expansion connector. · Provides two embedded SATA M.2 slots. |
| H3C UniServer B7800 G3 | 8 | 4-U full-width single-height | · A high-performance blade server suitable for enterprise workloads such as high-density computing, critical services, and OLTP. · Supports a maximum of four Intel Xeon Skylake or Cascade Lake processors (Series 5 and above), and supports dual-CPU configuration. · Supports 48 DDR4 DIMMs at 2666, 2933, or 3200 MT/s. · Provides five drive slots at the front and supports a maximum of six storage devices, including SATA/SAS/NVMe/M.2. · Provides two PCIe 3.0 x16 riser expansion slots. · Supports a maximum of six Mezz (PCIe 3.0 x16) expansion slots. · Provides one storage controller expansion connector. · Provides two embedded SATA M.2 slots (supporting pass-through modules, RAID controllers, and HBAs). · Provides two embedded microSD card slots. |

Management modules

The B16000 chassis supports a maximum of two H3C UniServer OM100 management modules for redundancy. A management module provides the following features:

· Centralized device management (management plane)

The management module provides two GE management ports for centralized management and monitoring of all devices in the chassis, including blade servers, interconnect modules, AE engine modules, LCD modules, power supply modules, and fan modules. It also supports intelligent power management and system-level intelligent fan speed control for the entire chassis. At least one management module is required. You can install two management modules to provide redundancy and improve reliability.

· Integrated GE switch (service plane)

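Each management module integrates a GE switch that provides switching services, so the two management modules act as two additional GE interconnect modules. Each blade server provides an embedded dual-port GE adapter that connects to these integrated switches. As a result, the chassis provides 3 + 1 = 4 switching planes (three formed by the interconnect module pairs in the rear slots and one formed by the management modules) and 6 + 2 = 8 interconnect modules (six in the rear slots and two integrated in the management modules).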

The service ports (2 × 10GE + 2 × GE) and management ports (2 × GE) on the management module panel are isolated.

Interconnect modules

Interconnect module specifications

Table 2 Interconnect module specifications

| Model | Maximum number per chassis | Description |
|---|---|---|
| H3C UniServer BX720E | 6 | Ethernet switch: 16 × 10GE fiber ports + 4 × 40GE fiber ports |
| H3C UniServer BX720EF | 6 | Converged network switch: 8 × 10GE/FC converged ports + 8 × 10GE fiber ports + 4 × 40GE fiber ports |
| H3C UniServer BX608FE | 6 | FC switch: 8 × 16G FC ports |
| H3C UniServer BX1010E | 6 | Ethernet switch: 8 × 25GE fiber ports + 2 × 100GE fiber ports. Each 100GE port can be split into four 25GE or 10GE ports. |
| H3C UniServer BX1020EF | 6 | Converged network switch: 8 × converged ports + 8 × 25GE fiber ports + 4 × 100GE fiber ports |
| H3C UniServer BT616E | 6 | Ethernet pass-through module: 16 × 10GE/25GE fiber ports |
| H3C UniServer BT1004E | 6 | Ethernet cascading module: 4 × (4 × 25GE) fiber ports |
| H3C UniServer BT716F | 6 | FC pass-through module: 16 × 16G/32G FC fiber ports |
| H3C UniServer BX1020B | 6 | IB switch: 20 × 100G EDR IB ports |

· H3C UniServer BX720E and H3C UniServer BX1010E

A BX720E or BX1010E switching module can be installed in interconnect module slots 1 through 6 at the chassis rear. It provides data exchange services for the modules within the chassis and provides ports for connecting external devices. The BX1010E provides higher port speeds than the BX720E.

· H3C UniServer BX720EF

A BX720EF converged switching module can be installed in interconnect module slots 1 through 6 at the chassis rear. It provides data exchange services for the modules within the chassis and provides interfaces for connecting external devices. A converged port can switch between Ethernet and FC modes. The module provides sixteen 10G ports and four 40G ports, and eight of the sixteen 10G ports support FC.

· H3C UniServer BX608FE

A BX608FE FC switching module can be installed in interconnect module slots 1 through 6 at the chassis rear. It provides data exchange services for the modules within the chassis and provides interfaces for connecting external devices.

· H3C UniServer BX1020EF

A BX1020EF switching module acts as the converged switching control unit within the chassis, offering data exchange services for blade servers in the chassis and providing ports for connecting blade servers to the external network. A converged port can switch between Ethernet and FC modes. The module provides sixteen 25G ports and four 100G ports. Eight out of the sixteen 25G ports support FC.

· H3C UniServer BT616E

As a 10GE/25GE Ethernet pass-through module of the H3C UniServer B16000 chassis, the BT616E pass-through module provides external data ports for the blade servers at the front, passing through the network adapter ports on the servers.

· H3C UniServer BT1004E

As a 4 × (4 × 25GE) Ethernet cascading module of the H3C UniServer B16000 chassis, the BT1004E Ethernet cascading module provides external data ports for the blade servers, passing through the Ethernet Mezz adapter ports on the servers.

· H3C UniServer BT716F

As a 16G/32G FC pass-through module of the H3C UniServer B16000 chassis, the BT716F pass-through module provides external data ports for the blade servers at the front, passing through the FC Mezz network adapter ports on the servers.

· H3C UniServer BX1020B

The BX1020B IB switching module provides InfiniBand (IB) ports for the blade servers at the front. The BX1020B provides 16 internal 100G HDR IB ports to connect the IB Mezz network adapters on blade servers, and provides 20 external 100G HDR IB ports to connect external devices.
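The port counts in Table 2 can be turned into a rough comparison of external capacity. The following Python sketch sums the nominal external bandwidth of several modules. It assumes that every port runs at its highest listed rate and counts the BX1020EF converged ports as 25GE Ethernet ports. It also ignores 100GE breakout. Real throughput depends on optics, configuration, and traffic patterns.

```python
# Nominal external port configuration per module, taken from Table 2.
# Each entry is a list of (port_count, speed_gbps) tuples.
# Assumptions: every port runs at its highest listed speed, and the
# BX1020EF converged ports are counted as 25GE Ethernet ports.
MODULE_PORTS = {
    "BX720E": [(16, 10), (4, 40)],
    "BX1010E": [(8, 25), (2, 100)],
    "BX1020EF": [(8, 25), (8, 25), (4, 100)],
    "BT616E": [(16, 25)],
}


def aggregate_gbps(ports):
    """Sum port_count * speed over all port groups (nominal, one direction)."""
    return sum(count * speed for count, speed in ports)


if __name__ == "__main__":
    for model, ports in MODULE_PORTS.items():
        print(f"{model}: {aggregate_gbps(ports)} Gbps")
```

Under these assumptions, the sketch reports 320 Gbps for the BX720E and 400 Gbps for the BX1010E. This is consistent with the BX1010E being the higher-speed Ethernet option.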

Interconnect module high availability

The chassis supports a maximum of six interconnect modules. Interconnect modules 1 and 4, 2 and 5, and 3 and 6 are interconnected through internal ports. Each of the three interconnect module pairs can act as a switching plane. You can configure the interconnect module pairs to operate in active/standby mode as needed.
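The pairing rule is regular: each slot from 1 through 3 is paired with the slot three positions higher. The following Python sketch encodes that rule. You could use it, for example, to check that a planned active/standby pair actually shares internal ports. The function names are illustrative only and are not part of any H3C tool.

```python
def partner_slot(slot):
    """Return the interconnect module slot paired with `slot`: 1<->4, 2<->5, 3<->6."""
    if not 1 <= slot <= 6:
        raise ValueError(f"interconnect module slot must be 1-6, got {slot}")
    return slot + 3 if slot <= 3 else slot - 3


def switching_planes():
    """List the three rear switching planes as (slot, partner slot) pairs."""
    return [(slot, partner_slot(slot)) for slot in (1, 2, 3)]


if __name__ == "__main__":
    print(switching_planes())  # [(1, 4), (2, 5), (3, 6)]
```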

Figure 2 Interconnect module slots

Interconnect module connections

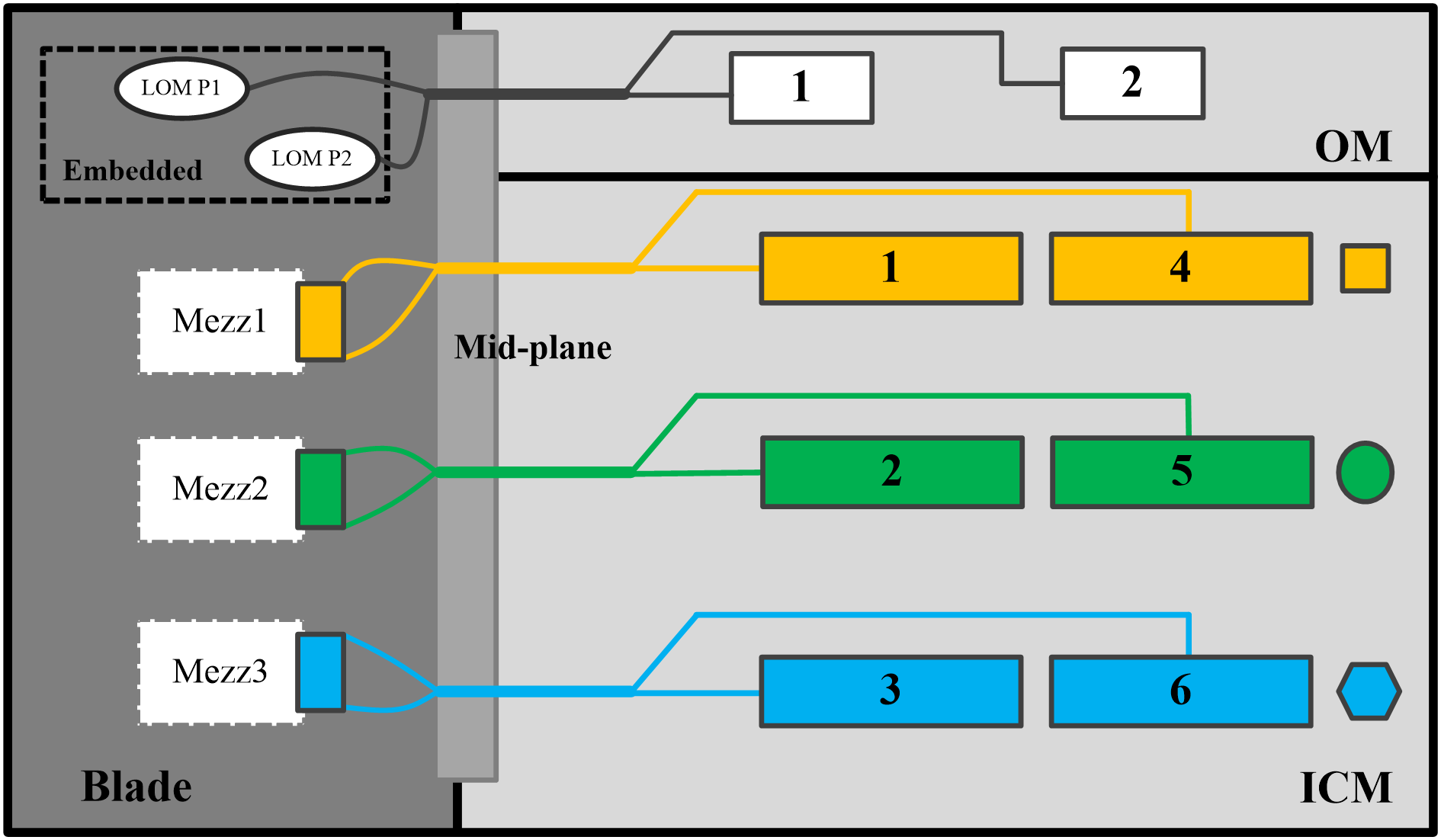

As shown in Figure 3, the interconnect modules and Mezz adapters can be connected as follows:

· The embedded network adapter connects to the primary and backup OM modules.

· Mezz adapter 1 connects to interconnect modules 1 and 4.

· Mezz adapter 2 connects to interconnect modules 2 and 5.

· Mezz adapter 3 connects to interconnect modules 3 and 6.

Figure 3 Connection mode 1 for interconnect modules and Mezz adapters

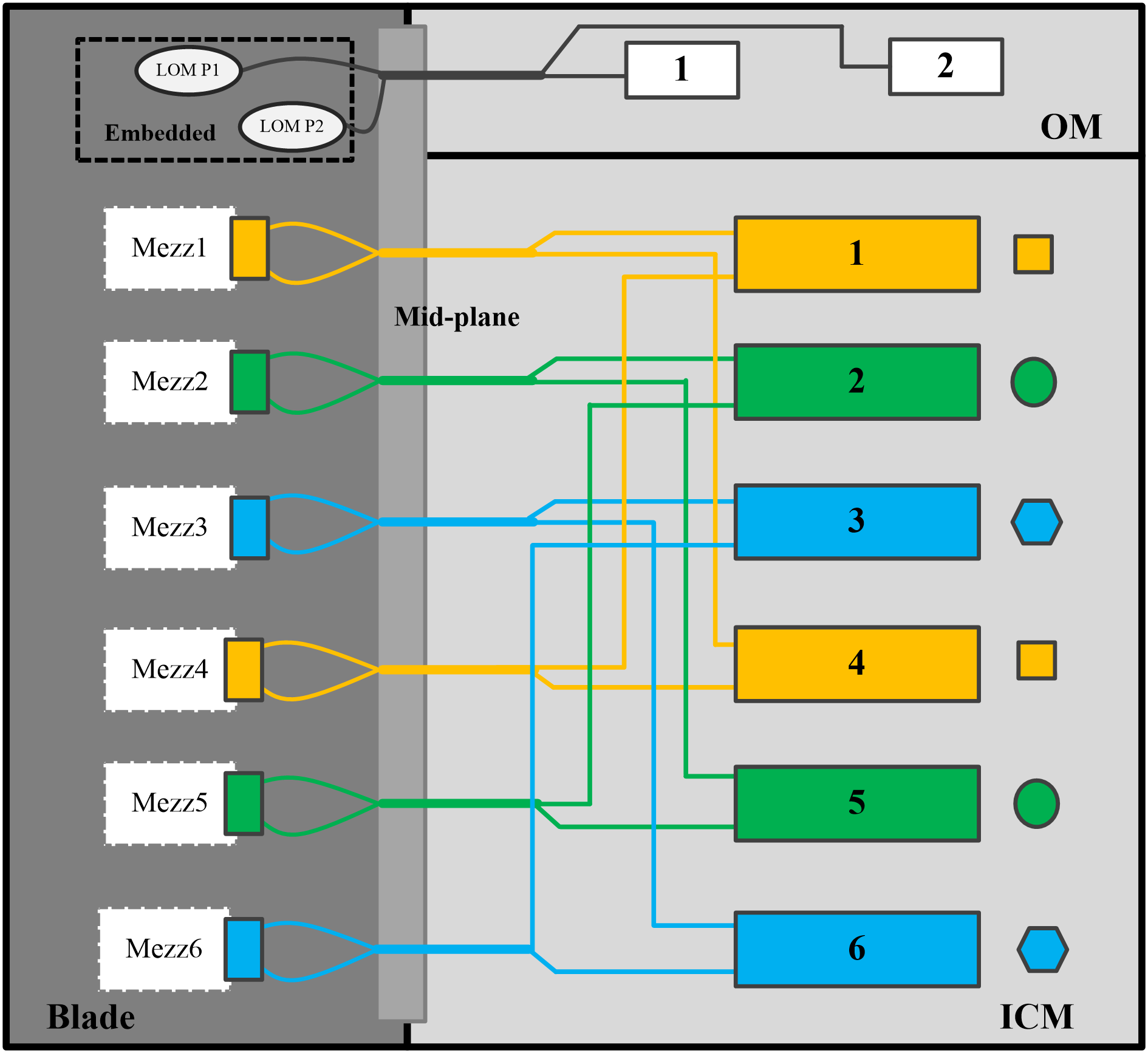

As shown in Figure 4, the interconnect modules and Mezz adapters can be connected as follows:

· The embedded network adapter connects to the primary and backup OM modules.

· Mezz adapters 1 and 4 connect to interconnect modules 1 and 4.

· Mezz adapters 2 and 5 connect to interconnect modules 2 and 5.

· Mezz adapters 3 and 6 connect to interconnect modules 3 and 6.

Figure 4 Connection mode 2 for interconnect modules and Mezz adapters
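Both connection modes follow the same slot arithmetic, so a planning script can derive the interconnect modules behind any Mezz adapter. The following Python sketch assumes that Mezz adapters are numbered 1 through 3 in mode 1 and 1 through 6 in mode 2, as listed above. It leaves out the embedded GE adapter, which always connects to the two OM modules. The function name is hypothetical.

```python
def mezz_to_modules(mezz, mode):
    """Return the (slot, partner slot) interconnect modules behind a Mezz adapter.

    Mode 1: Mezz adapters 1-3; adapter n connects to modules n and n + 3.
    Mode 2: Mezz adapters 1-6; adapters n and n + 3 both connect to modules n and n + 3.
    """
    if mode == 1:
        if not 1 <= mezz <= 3:
            raise ValueError("connection mode 1 uses Mezz adapters 1-3")
        base = mezz
    elif mode == 2:
        if not 1 <= mezz <= 6:
            raise ValueError("connection mode 2 uses Mezz adapters 1-6")
        base = (mezz - 1) % 3 + 1  # folds adapters 4-6 onto 1-3
    else:
        raise ValueError(f"unknown connection mode: {mode}")
    return (base, base + 3)


if __name__ == "__main__":
    for mezz in range(1, 7):
        print(f"Mode 2, Mezz adapter {mezz} -> interconnect modules {mezz_to_modules(mezz, 2)}")
```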

Recommended VMware solution

VMware vSphere

VMware vSphere is a software suite that includes components such as ESXi and vCenter. ESXi is a bare-metal hypervisor built on the VMkernel that runs independently of any general-purpose operating system, and it is the hypervisor of VMware vSphere 8.x. VMware® vCenter Server™ is a centralized management application for VMs and ESXi hosts.

You can use either an FC-based or an FCoE-based storage connection to implement the following configuration:

· On each blade server, use the storage controller to set up a RAID 1 array from two drives, and install the VMware ESXi 8.x operating system on it.

· Create several VMs on each blade server. Install VMware vCenter Server Appliance on one blade server to manage the VMs on all blade servers in a unified manner.

· Configure redundancy for the management, service, and storage networks, and make sure the networks are accessible.

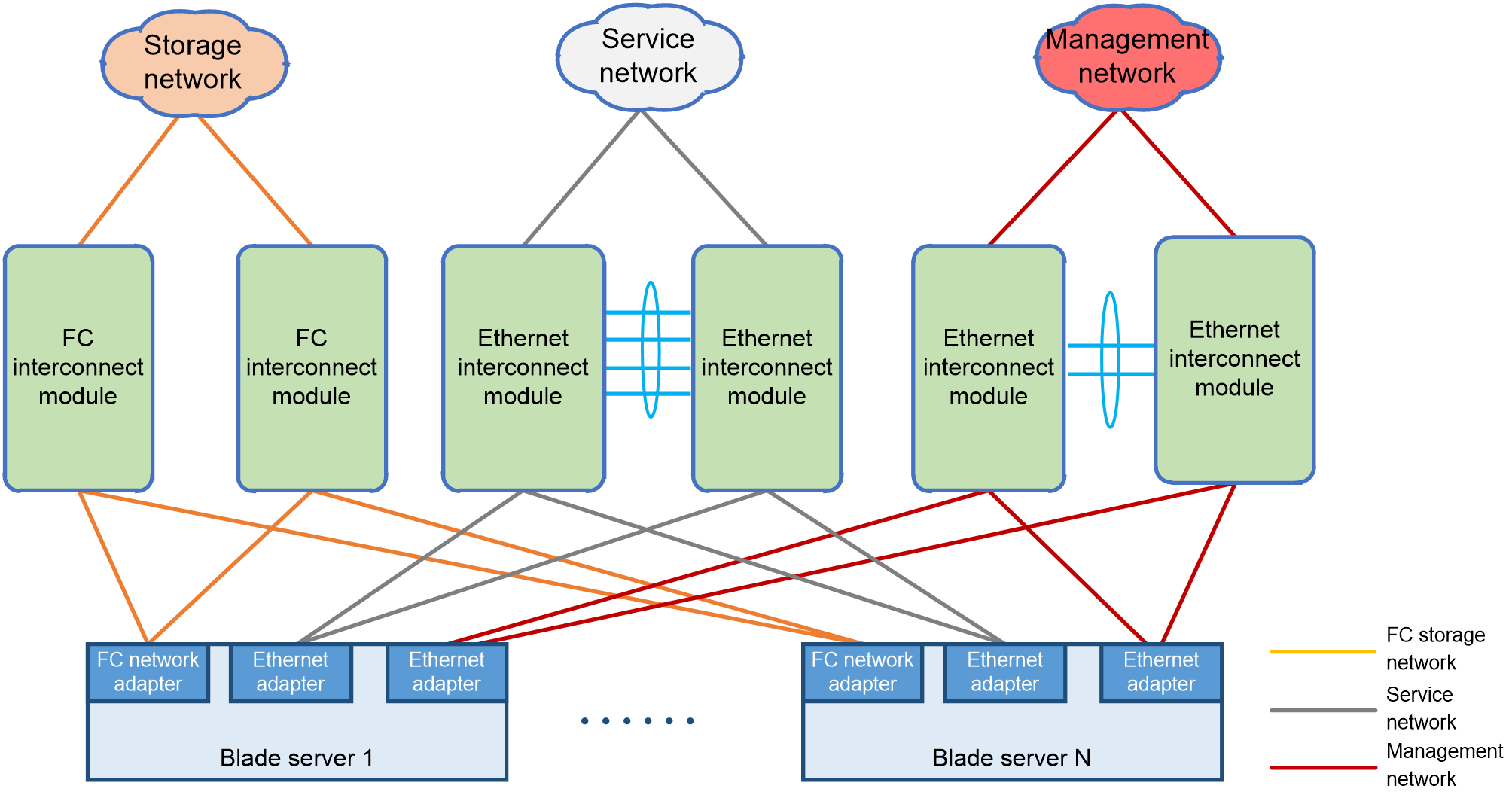

FC-based storage connection

As shown in Figure 5, install multiple blade servers in the H3C B16000 chassis to act as compute nodes. Install multiple drives and one storage controller on each blade server. Use two drives to set up a RAID 1 array as the system drive, and use the other drives as data drives.

Each compute node connects to two Ethernet interconnect modules through the two ports of one Ethernet adapter. These redundant links connect the node to the management network and provide high availability.

Each compute node connects to two FC interconnect modules through the two ports of the FC network adapter. These redundant links connect the node to the storage network and provide high availability.

Each compute node connects to two Ethernet interconnect modules through the two ports of the other Ethernet adapter. These redundant links connect the node to the service network and provide high availability.

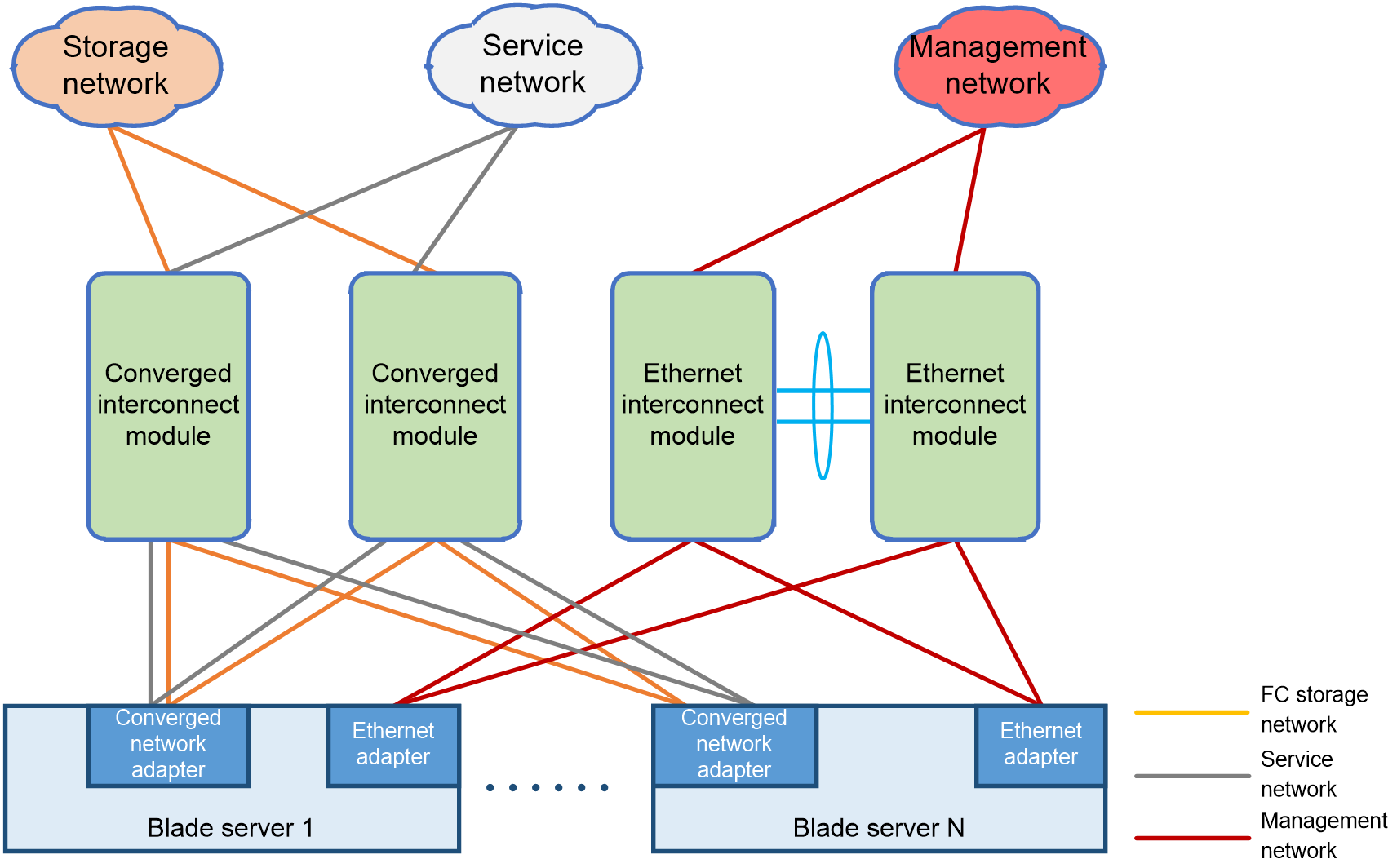

FCoE-based storage connection

As shown in Figure 6, install multiple blade servers in the H3C B16000 chassis to act as compute nodes. Install multiple drives and one storage controller on each blade server. Use two drives to set up a RAID 1 array as the system drive, and use the other drives as data drives.

Each compute node connects to two Ethernet interconnect modules through the two ports of the Ethernet adapter. These redundant links connect the node to the management network and provide high availability.

Each compute node connects to two converged interconnect modules through two ports of the converged network adapter. These redundant links carry FCoE traffic to the storage network and provide high availability.

Each compute node connects to the same two converged interconnect modules through the other two ports of the converged network adapter. These redundant links connect the node to the service network and provide high availability.
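To sanity-check a cabling plan against the FC-based and FCoE-based designs, the following Python sketch records each link as (network, adapter, port, interconnect slot). It then reports any network that does not reach two different interconnect modules. The adapter labels and slot numbers are hypothetical examples chosen to match connection mode 1 (Mezz adapter n to modules n and n + 3). Substitute your actual plan.

```python
from collections import defaultdict

# Hypothetical cabling plans: (network, adapter, port, interconnect slot).
# Adapter labels and slot numbers are illustrative, not H3C defaults.
FC_PLAN = [
    ("management", "eth-mezz-a", 1, 1), ("management", "eth-mezz-a", 2, 4),
    ("storage", "fc-mezz", 1, 2), ("storage", "fc-mezz", 2, 5),
    ("service", "eth-mezz-b", 1, 3), ("service", "eth-mezz-b", 2, 6),
]
FCOE_PLAN = [
    ("management", "eth-mezz", 1, 1), ("management", "eth-mezz", 2, 4),
    ("storage", "cna-mezz", 1, 2), ("storage", "cna-mezz", 2, 5),  # FCoE
    ("service", "cna-mezz", 3, 2), ("service", "cna-mezz", 4, 5),
]


def non_redundant_networks(plan):
    """Return, sorted, the networks that reach fewer than two distinct interconnect modules."""
    modules = defaultdict(set)
    for network, _adapter, _port, slot in plan:
        modules[network].add(slot)
    return sorted(net for net, slots in modules.items() if len(slots) < 2)


if __name__ == "__main__":
    print("FC plan gaps:", non_redundant_networks(FC_PLAN))
    print("FCoE plan gaps:", non_redundant_networks(FCOE_PLAN))
```

The check counts distinct modules rather than links. Two links that land on the same interconnect module still leave that module as a single point of failure.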

Virtualization deployment

Optional hardware

Table 3 Optional hardware

| Item | Model |
|---|---|
| Blade server | · H3C UniServer B5700 G5 · H3C UniServer B5700 G3 · H3C UniServer B5800 G3 · H3C UniServer B7800 G3 |
| OM module | OM100 |
| Interconnect module | Ethernet/converged interconnect module: · H3C UniServer BX720E · H3C UniServer BX720EF · H3C UniServer BX1010E · H3C UniServer BX1020EF |
| | FC interconnect module: · H3C UniServer BX608FE |
| Mezz network adapter | CNA/Ethernet (converged) adapter: · NIC-ETH521i-Mb-4 × 10G (10GE converged network adapter) · NIC-ETH522i-Mb-2 × 10G (10GE converged network adapter) · NIC-ETH561i-Mb-4 × 10G (10GE Ethernet adapter) · NIC-ETH640i-Mb-2 × 25G (25GE Ethernet adapter) · NIC-ETH641i-Mb-2 × 25G (25GE Ethernet adapter) · NIC-ETH681i-Mb-2 × 25G (25GE Ethernet adapter) · NIC-ETH682i-Mb-2 × 25G (25GE converged network adapter) |
| | FC network adapter: · NIC-FC680i-Mb-2*16G · NIC-FC730i-Mb-2P |
| Storage controller | · RAID-P5408-Mf-8i-4GB · RAID-P2404-Mf-4i (applicable only to B5700 G5 and B5700 G3) · RAID-P4408-Mf-8i (applicable only to B5800 G3 and B7800 G3) |
| Drives | Any drives compatible with the product. |
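Table 3 restricts two of the storage controllers to specific blade servers. The following minimal Python sketch expresses that rule as a lookup. It encodes only what Table 3 states, so treat it as a planning aid rather than a complete compatibility matrix.

```python
# Blade server models and storage controller restrictions from Table 3.
# A controller absent from RESTRICTED is treated as supported on every
# listed blade server (Table 3 lists RAID-P5408-Mf-8i-4GB without a restriction).
BLADE_SERVERS = {"B5700 G5", "B5700 G3", "B5800 G3", "B7800 G3"}
RESTRICTED = {
    "RAID-P2404-Mf-4i": {"B5700 G5", "B5700 G3"},
    "RAID-P4408-Mf-8i": {"B5800 G3", "B7800 G3"},
}
CONTROLLERS = {"RAID-P5408-Mf-8i-4GB", *RESTRICTED}


def controller_supported(server, controller):
    """Return True if Table 3 allows `controller` in `server`."""
    if server not in BLADE_SERVERS:
        raise ValueError(f"unknown blade server model: {server}")
    if controller not in CONTROLLERS:
        raise ValueError(f"unknown storage controller: {controller}")
    return server in RESTRICTED.get(controller, BLADE_SERVERS)


if __name__ == "__main__":
    print(controller_supported("B7800 G3", "RAID-P2404-Mf-4i"))  # False
```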

Configuring BIOS

As a best practice, use the factory default BIOS settings (optimal settings) of an H3C server.

If you have changed the BIOS settings, or if you are not sure whether the BIOS uses its default settings, press Esc or Delete during POST to enter the BIOS SETUP menu, and then press F3 to load the default settings.

Table 4 shows the recommended BIOS settings for VMware. You can check the settings on the BIOS SETUP menu.

Table 4 Recommended values for BIOS options

| BIOS option | Recommended value |
|---|---|
| Hyper-Threading[ALL] | Enabled |
| Monitor Mwait | Enabled |
| VMX | Enabled |
| Intel VT for Direct I/O (VT-d) | Enabled |
| Interrupt Remapping | Enabled |
| Hardware Prefetcher | Enabled |
| Adjacent Cache Prefetch | Enabled |
| DCU Streamer Prefetcher | Enabled |
| DCU IP Prefetcher | Enabled |
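If you record the current BIOS values, for example by reading them from the BIOS SETUP menu, a short script can compare them against the recommended values for VMware. The following Python sketch performs only that comparison. How you obtain the current values is up to you, and the shape of the `current` dictionary is an assumption.

```python
# Recommended values for VMware, keyed by BIOS SETUP menu option label.
RECOMMENDED_BIOS = {
    "Hyper-Threading[ALL]": "Enabled",
    "Monitor Mwait": "Enabled",
    "VMX": "Enabled",
    "Intel VT for Direct I/O (VT-d)": "Enabled",
    "Interrupt Remapping": "Enabled",
    "Hardware Prefetcher": "Enabled",
    "Adjacent Cache Prefetch": "Enabled",
    "DCU Streamer Prefetcher": "Enabled",
    "DCU IP Prefetcher": "Enabled",
}


def bios_deviations(current):
    """Return {option: (current value, recommended value)} for every mismatch.

    Options missing from `current` are reported with a current value of None.
    """
    return {
        option: (current.get(option), wanted)
        for option, wanted in RECOMMENDED_BIOS.items()
        if current.get(option) != wanted
    }


if __name__ == "__main__":
    observed = dict(RECOMMENDED_BIOS, VMX="Disabled")  # hypothetical reading
    print(bios_deviations(observed))  # {'VMX': ('Disabled', 'Enabled')}
```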

Installing an operating system

See H3C Servers Operating System Installation Guide.

Optimizing operating system settings

As a best practice to obtain the optimal performance and stability in an ESXi operating system, perform the following tasks:

Configuring the power management policy

To improve system performance and stability, configure the high-performance power management policy as follows:

1. Log in to the ESXi web management interface.

2. Select Management > Hardware > Power Supply Management, and then click Edit Policy.

3. Select High Performance, and then click OK.

NOTE: You can edit the power management policy only when the Power Performance Tuning option is set to OS Control in the BIOS.
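If you prefer the ESXi Shell to the web interface, you can also set the power policy through an advanced option. The following Python sketch builds the corresponding `esxcli` command and runs it only when `dry_run` is false. It assumes that the policy is stored in the advanced option `/Power/CpuPolicy` and accepts the policy names listed in the code. Confirm both against the VMware documentation for your ESXi build. The BIOS requirement in the note above still applies.

```python
import shlex
import subprocess

# Assumption: ESXi stores the host power policy in the advanced option
# /Power/CpuPolicy and accepts these names. Verify on your ESXi build.
POLICIES = {"High Performance", "Balanced", "Low Power", "Custom"}


def power_policy_command(policy="High Performance"):
    """Build the esxcli argument list that sets the host power policy."""
    if policy not in POLICIES:
        raise ValueError(f"unsupported power policy: {policy!r}")
    return ["esxcli", "system", "settings", "advanced", "set",
            "-o", "/Power/CpuPolicy", "-s", policy]


def run_or_print(argv, dry_run=True):
    """Return the shell-quoted command line; execute it only if dry_run is False."""
    command_line = " ".join(shlex.quote(arg) for arg in argv)
    if not dry_run:
        # Runs the command locally; raises CalledProcessError on a non-zero exit.
        subprocess.run(argv, check=True)
    return command_line


if __name__ == "__main__":
    print(run_or_print(power_policy_command()))
```

With the default arguments, the sketch prints `esxcli system settings advanced set -o /Power/CpuPolicy -s 'High Performance'` and changes nothing.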

Disabling C-states

To improve system performance, disable CPU C-states in ESXi as follows:

1. Log in to the ESXi web management interface.

2. Select Management > System > Advanced Settings, and then enter Power in the search box to find the Power.CStateMaxLatency option.

3. Right-click Power.CStateMaxLatency, and then select Edit Option.

4. Set the new value to 0, and then click Save.
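The same change can be scripted from the ESXi Shell. The following Python sketch produces two `esxcli` command lines: one that sets the option and one that reads it back for verification. It assumes that the Host Client option Power.CStateMaxLatency corresponds to the advanced-settings path `/Power/CStateMaxLatency`. Check this on your host before use.

```python
import shlex

# Assumption: Power.CStateMaxLatency in the Host Client maps to this
# integer advanced option path in esxcli.
OPTION_PATH = "/Power/CStateMaxLatency"


def cstate_commands(max_latency=0):
    """Return command lines that set, then read back, Power.CStateMaxLatency."""
    if not isinstance(max_latency, int) or max_latency < 0:
        raise ValueError("max_latency must be a non-negative integer")
    set_cmd = ["esxcli", "system", "settings", "advanced", "set",
               "-o", OPTION_PATH, "-i", str(max_latency)]
    list_cmd = ["esxcli", "system", "settings", "advanced", "list",
                "-o", OPTION_PATH]
    return [" ".join(shlex.quote(arg) for arg in cmd) for cmd in (set_cmd, list_cmd)]


if __name__ == "__main__":
    for line in cstate_commands():
        print(line)
```

With the default value of 0, the first line is `esxcli system settings advanced set -o /Power/CStateMaxLatency -i 0`. This matches the value set in step 4.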

Troubleshooting and information collection

If you encounter any issues while using the VMware system, collect the VMware system logs and server hardware logs.

Collecting VMware system logs

You can collect VMware system logs through one of the following methods:

· Using the vSphere web client

For more information, see Collecting diagnostic information for ESX/ESXi hosts and vCenter Server using the vSphere Web Client (2032892) on the VMware official website.

· Using the vm-support command

For more information, see "vm-support" command in ESX/ESXi to collect diagnostic information (1010705) on the VMware official website.

· Using the PowerCLI

For more information, see Collecting diagnostic information for VMware vCenter Server and ESX/ESXi using the vSphere PowerCLI (1027932) on the VMware official website.

Collecting server hardware logs

You can troubleshoot the blade server hardware based on event logs, HDM logs, and SDS logs. For detailed information about log collection, see the HDM online help.

Collecting interconnect module logs

You can troubleshoot the interconnect modules based on system logs, diagnostic logs, and security logs. You can collect interconnect module logs through FTP.
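The following Python sketch shows one way to fetch interconnect module logs over FTP with the standard `ftplib` module. It assumes that an FTP server is enabled on the interconnect module and reachable from your workstation. The host address, credentials, and remote file names are placeholders, because the actual log file names depend on the module software. You can list the available files with `ftp.nlst()` after logging in.

```python
import ftplib
import os


def download_logs(ftp, remote_names, dest_dir="."):
    """Download each file in `remote_names` from a logged-in FTP session.

    `ftp` is an ftplib.FTP instance (or any object with a compatible
    retrbinary method). Returns the list of local paths written.
    """
    os.makedirs(dest_dir, exist_ok=True)
    saved = []
    for name in remote_names:
        local_path = os.path.join(dest_dir, os.path.basename(name))
        with open(local_path, "wb") as fh:
            # RETR transfers the file in binary mode; each chunk is written as received.
            ftp.retrbinary(f"RETR {name}", fh.write)
        saved.append(local_path)
    return saved


def collect_from_module(host, user, password, remote_names, dest_dir="."):
    """Connect to an interconnect module over FTP and fetch the named log files."""
    with ftplib.FTP(host, timeout=30) as ftp:
        ftp.login(user, password)
        return download_logs(ftp, remote_names, dest_dir)
```

For example, `collect_from_module("192.0.2.10", "admin", "password", ["example.log"], "im1-logs")` would fetch one file into a local `im1-logs` directory. All of these values are hypothetical. Keeping the download loop separate from the connection makes it easy to reuse the same session for several files.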

Summary

As an industry-leading hypervisor, VMware vSphere ESXi enables server virtualization on the B16000 for workload consolidation, delivering optimal performance, availability, and security. The B16000 can combine physical and virtual computing, storage, and fabric resource pools into configurations suited to a wide range of applications.

Because the B16000 supports high VM density and large memory capacity, fewer hosts can run the same number of VMs, which can reduce licensing costs. It is suitable for workloads ranging from general service applications to mission-critical ones.