- Released At: 24-12-2024


H3C Intelligent Management Center

Deployment Guide

New H3C Technologies Co., Ltd.

http://www.h3c.com

Document version: 5W100-20241218

Software version: IMC PLAT 7.3 (E0710)

Copyright © 2024 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

The information in this document is subject to change without notice.

Preface

This guide describes how to install, deploy, upgrade, access, and uninstall H3C Intelligent Management Center (IMC).

This preface includes the following topics about the documentation:

· Audience.

· Conventions.

· Documentation feedback.

Audience

This documentation is intended for:

· Network planners.

· Field technical support and servicing engineers.

· Network administrators working with H3C IMC.

Conventions

The following information describes the conventions used in the documentation.

GUI conventions

| Convention | Description |
|---|---|
| Boldface | Window names, button names, field names, and menu items are in Boldface. For example, the New User window opens; click OK. |
| > | Multi-level menus are separated by angle brackets. For example, File > Create > Folder. |

Symbols

| Convention | Description |
|---|---|
| CAUTION: | An alert that calls attention to important information that if not understood or followed can result in data loss, data corruption, or damage to hardware or software. |
| IMPORTANT: | An alert that calls attention to essential information. |
| NOTE: | An alert that contains additional or supplementary information. |

Documentation feedback

You can e-mail your comments about product documentation to [email protected].

We appreciate your comments.

Contents

Restrictions for using the embedded database

Deployment restrictions and guidelines

Obtaining IMC installation and deployment methods

Hardware requirements of the IMC platform

Hardware requirements of the EIA component

Hardware requirements of the WSM component

Installation requirements of the embedded database

Preparing the installation environment

Uninstalling previous versions of IMC

Checking the database configuration

(Optional.) Checking the installation environment

Installing and deploying the IMC platform

Selecting the installation type

Installing the IMC platform in typical mode

Installing the IMC platform in custom mode

Deploying the IMC platform component

Deploying IMC on a member server (distributed deployment)

Starting the remote installation wizard

Installing the Intelligent Deployment Monitoring Agent

Deploying the IMC platform subcomponents

Managing IMC by using the Intelligent Deployment Monitoring Agent

Starting the Intelligent Deployment Monitoring Agent

Installing and deploying IMC service components

Installing and deploying IMC BIMS

Deploying IMC BIMS on the conductor server

Deploying BIMS subcomponents on a member server

Installing and deploying IMC UAM

Deploying UAM subcomponents on the conductor server

Deploying UAM subcomponents on a member server

Installing and deploying IMC MVM

Installing a DHCP plug-in on an MS DHCP server

Installing a DHCP plug-in on a Linux DHCP server

Installing an LLDP Windows agent

Installing an LLDP Linux agent

Hardware, software, and browser requirements

Accessing the UAM self-service center

Accessing IMC from a mobile device

Uninstalling all IMC components at one time

Uninstalling IMC components from each member server

Uninstalling IMC components from the conductor server

Backing up and restoring the database

Starting DBMan on the database server (for remote databases only)

Installing DBMan on the database server

Backing up and restoring databases for a single IMC system

Backing up and restoring databases in stateless failover scenarios

Backing up and restoring databases

Configuration restrictions and guidelines

Overview

The following information describes the IMC deployment schemes.

· Centralized deployment

¡ Local database—This deployment scheme scales to networks from 50 to 500 devices.

¡ Remote database—This deployment scheme scales to networks from 200 to 10000 devices.

¡ Embedded database—This deployment scheme scales to small networks.

· Distributed deployment

¡ Local database—This deployment scheme scales to networks from 200 to 15000 devices.

¡ Remote database—This deployment scheme scales to networks from 500 to 150000 devices.
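The device ranges above overlap, so a planned network size often fits more than one scheme. The following Python sketch lists every scheme whose documented range covers a given device count. It treats the bounds as inclusive. The embedded database is omitted because this section gives no numeric range for it. The name `candidate_schemes` is hypothetical.

```python
from typing import List, Tuple

# (deployment mode, database location, minimum devices, maximum devices),
# copied from the deployment scheme list; bounds are treated as inclusive.
SCHEMES: List[Tuple[str, str, int, int]] = [
    ("Centralized", "local database", 50, 500),
    ("Centralized", "remote database", 200, 10000),
    ("Distributed", "local database", 200, 15000),
    ("Distributed", "remote database", 500, 150000),
]


def candidate_schemes(devices: int) -> List[str]:
    """Return every scheme whose documented device range covers `devices`."""
    return [
        f"{mode} deployment, {database}"
        for mode, database, low, high in SCHEMES
        if low <= devices <= high
    ]


if __name__ == "__main__":
    # A 300-device network falls inside three of the four ranges.
    for scheme in candidate_schemes(300):
        print(scheme)
```

The final choice still depends on the edition, database, and hardware restrictions described later in this guide.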

IMC components

IMC includes the IMC platform and service components.

IMC platform

The IMC platform is the base component that provides IMC services. It includes the following subcomponents:

· Resource Management

· Alarm Management

· User Self Service Management

· Guest Access Management

· Intelligent Configuration Center

· Report Management

· Network Element (NE) Management

· Performance Management

· ACL Management

· Network Asset Management

· Security Control Center

· General Search Service Management

· Syslog Management

· VLAN Management

· WeChat Server

Service components

Service components are optional and purchased separately from the IMC platform. The IMC platform is the basis for implementing various services and must be installed before service component deployment.

IMC includes the following service components:

· Endpoint Intelligent Access (EIA)—Includes User Access Manager (UAM) and TACACS+ Authentication Manager (TAM).

¡ User Access Manager (UAM)—Provides policy-based Authentication, Authorization, and Accounting (AAA) services. UAM extends management to wired, wireless, and remote network users, and integrates network device, user, guest, and terminal management on a single unified platform.

¡ TACACS+ Authentication Manager (TAM)—Provides basic AAA functions for network devices or IT users to secure network device management. TAM can assign different privileges to users, monitor login and command execution operations, and simplify user management.

· Endpoint Admission Defense (EAD) Security Policy—Endpoint Admission Defense integrates security policy management and endpoint posture assessment to identify and isolate risks at the network edge. The security policy component allows administrators to control endpoint admission based on an endpoint's identity and posture.

· MPLS VPN Manager (MVM)—Provides functions such as VPN autodiscovery, topology, monitoring, fault location, auditing, and performance evaluation, as well as VPN and service deployment. MVM also contains a traffic engineering component that helps operators monitor an entire network and deliver service quality by distributing suitable network resources as needed.

· IPsec VPN Manager (IVM)—Provides features for all aspects of IPsec VPN management. IVM allows administrators to construct an IPsec VPN network, effectively monitor the operation and performance of the VPN network, and quickly locate device faults for full IPsec VPN lifecycle management.

· Wireless Service Manager (WSM)—Provides unified management of wired and wireless networks, adding network management functions into existing wired network management systems. WSM software offers wireless LAN (WLAN) device configuration, topology, performance monitoring, RF heat mapping, and WLAN service reports.

· User Behavior Auditor (UBA)—Provides comprehensive log collection and audit functions supporting log formats such as NAT, flow, NetStreamV5, and DIG. UBA provides DIG logs to audit security-sensitive operations and digest information from HTTP, FTP, and SMTP packets.

· QoS Manager (QoSM)—Enhances visibility and control over QoS configurations and helps administrators focus on QoS service planning by providing a robust set of QoS device and configuration management functions. It allows administrators to organize traffic into different classes based on the configured matching criteria to provide differentiated services, committed access rate (CAR), generic traffic shaping (GTS), priority marking, queue scheduling, and congestion avoidance.

· Branch Intelligent Management System (BIMS)—Provides support for service operations, delivering high reliability, scalability, flexibility, and IP investment returns. Based on the TR-069 protocol, IMC BIMS offers resource, configuration, service, alarm, group, and privilege management. It allows the remote management of customer premises equipment (CPE) in WANs.

· VAN Fabric Manager (VFM)—Provides an integrated solution for managing both the LANs and SANs in data centers by working with HP devices. VFM depends on VRM to obtain virtual machine (VM) migration information.

· Intelligent Analysis Reporter (iAR)—Extends the reporting capabilities within IMC to include customized reporting. iAR includes a report designer, which can save designs into report templates. Report formats include charts. Reports can be automatically generated at specified intervals and distributed to key stakeholders.

· Endpoint Mobile Office (EMO)—Provides mobile office services based on virtualization technologies and the cloud service platform. EMO allows remote access to Windows applications and desktops, provides local resources in the apps store, and manages mobile devices.

· Security Service Manager (SSM)—Contains SSM and LBM. SSM provides centralized network security management on security devices. LBM deploys configurations to LB devices to implement load balancing through virtual services, real servers, and server farms.

· Intelligent Portal Management (IPM)—Management platform that provides Wi-Fi marketing for enterprises and organizations. IPM supports site-based authentication policy customization, monitors and analyzes customer flow data, and flexibly pushes advertisements to customers. IPM meets the management and marketing requirements of portal sites, upgrades service quality, and improves customers' online experiences.

· Endpoints Profiling System (EPS)—IMC service component developed for endpoint identification and monitoring. EPS can immediately identify new or abnormal endpoints by executing periodic or one-time tasks that scan endpoints in areas of the network.

· U-Center O&M Platform—As a new-generation intelligent O&M management platform, U-Center O&M Platform provides powerful Infrastructure Operations Management (IOM), including Application Manager (APM) and Server & Storage Automation (SSA), Service Health Manager (SHM), Configuration Management Database (CMDB), Business Service Manager (BSM), and IT Service Manager (ITSM).

IMC editions

The following editions of IMC are available:

· Professional

· Standard

· SNS

Table 1 Differences between IMC editions

| Item | SNS | Standard | Professional |
|---|---|---|---|
| Number of nodes | 40 | Extensible | Extensible |
| Hierarchical Network Management | Not supported | Lower-level NMS only | Supported |
| Distributed deployment | Not supported | Supported | Supported |
| Operating system | Windows | Windows and Linux | Windows and Linux |
| Embedded database | Supported | Supported | Linux |
| Separate database | Supported | Supported | Supported |
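Table 1 can be read as a set of filters when choosing an edition. The following Python sketch lists the editions that meet a set of needs. It assumes that "Extensible" means no fixed node limit. It also assumes that only an edition marked "Supported" for hierarchical management can act as an upper-level NMS. The dictionary layout and the `editions_for` name are illustrative only.

```python
from typing import Dict, List, Optional, Set, TypedDict


class Edition(TypedDict):
    max_nodes: Optional[int]   # None stands for "Extensible"
    distributed: bool
    upper_level_nms: bool      # assumption: only "Supported" allows the upper-level role
    operating_systems: Set[str]


# Values transcribed from Table 1.
EDITIONS: Dict[str, Edition] = {
    "SNS": {"max_nodes": 40, "distributed": False,
            "upper_level_nms": False, "operating_systems": {"Windows"}},
    "Standard": {"max_nodes": None, "distributed": True,
                 "upper_level_nms": False, "operating_systems": {"Windows", "Linux"}},
    "Professional": {"max_nodes": None, "distributed": True,
                     "upper_level_nms": True, "operating_systems": {"Windows", "Linux"}},
}


def editions_for(nodes: int, distributed: bool, os_name: str,
                 upper_level_nms: bool = False) -> List[str]:
    """Return the editions whose Table 1 capabilities cover the stated needs."""
    matches = []
    for name, caps in EDITIONS.items():
        limit = caps["max_nodes"]
        if limit is not None and nodes > limit:
            continue
        if distributed and not caps["distributed"]:
            continue
        if upper_level_nms and not caps["upper_level_nms"]:
            continue
        if os_name not in caps["operating_systems"]:
            continue
        matches.append(name)
    return matches
```

For example, `editions_for(100, True, "Windows")` excludes SNS because SNS supports neither 100 nodes nor distributed deployment.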

For information about installing a separate database for IMC on Windows, see the following documents:

· SQL Server 2016 Installation and Configuration Guide

· SQL Server 2017 Installation and Configuration Guide

· SQL Server 2019 Installation and Configuration Guide

· SQL Server 2022 Installation and Configuration Guide

· MySQL 8.0.xx Installation and Configuration Guide (for Windows)

For information about installing a separate database for IMC on Linux, see the following documents:

· Oracle 11g Installation and Configuration Guide

· Oracle 11g R2 Installation and Configuration Guide

· Oracle 12c Installation and Configuration Guide

· Oracle 12c R2 Installation and Configuration Guide

· Oracle 18c Installation and Configuration Guide

· MySQL 8.0.xx Installation and Configuration Guide (for Linux)

Installation and deployment

IMC uses an install-and-deploy model:

· Install—The installation package of the IMC component is copied to the server and loaded to the Intelligent Deployment Monitoring Agent.

· Deploy—The installation package is decompressed on the server and database scripts are created for the component.

The IMC components are operational only after they are deployed. In centralized deployment, all IMC components are installed and deployed on the same server.

IMC automatically creates a database user for each component when the component is deployed. As a best practice, do not modify the database user configuration, including the database user password and password policy.

If the deployment or upgrade process is interrupted, IMC automatically stores logs as a compressed file in the \tmp directory of the IMC installation path. You can use the logs to quickly locate the issue or error.
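When an interrupted deployment or upgrade leaves a log archive behind, the most recently modified file in the tmp directory is a reasonable place to start. The following Python sketch finds that file. It assumes the archive is a regular file directly under tmp. This guide does not document the archive's name or format, so the sketch does not filter by file extension.

```python
from pathlib import Path
from typing import Optional


def newest_file(tmp_dir: Path) -> Optional[Path]:
    """Return the most recently modified regular file in tmp_dir.

    Returns None when the directory does not exist or contains no files.
    """
    if not tmp_dir.is_dir():
        return None
    files = [p for p in tmp_dir.iterdir() if p.is_file()]
    if not files:
        return None
    return max(files, key=lambda p: p.stat().st_mtime)
```

For example, `newest_file(Path(r"D:\iMC") / "tmp")`, where `D:\iMC` is a hypothetical stand-in for your IMC installation path.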

Restrictions for using the embedded database

The IMC Standard and SNS edition installation packages contain embedded database software. The Windows version of IMC embeds the SQL Server 2017 Express database, and the Linux version embeds the MariaDB 10.5.12 database. In a Windows environment, if no database software is installed on the server where IMC is to be installed, deploy IMC with its embedded database to store IMC business data. The password for the embedded database is IMC-Install2008 on Windows operating systems (iMC123 on Linux operating systems).

To use the embedded database on these IMC editions, follow these restrictions:

· IMC must run on a Windows server on which no IMC-supported SQL server database is installed.

· The number of nodes to be managed by IMC cannot exceed 1000. If the number exceeds 1000, install a separate database.

During the database installation, IMC selects an embedded database version that is compatible with the Windows operating system version.

IMPORTANT:

For restrictions about using the embedded database, see the release notes for the specific IMC version. For example, the E0710 release notes specify the following restrictions:

· Use the default data retention period setting for IMC.

· Make sure the total number of collectors for the platform component is less than 20000.

· Make sure the total number of alarms stored in the database is less than 100000.

· Make sure the number of managed device nodes is less than 1000.
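The E0710 limits quoted above can serve as a pre-deployment check. The following Python sketch assumes those E0710 values. Other versions might set different limits, so confirm them in the release notes for your version. The `EmbeddedDbPlan` type and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EmbeddedDbPlan:
    managed_nodes: int
    platform_collectors: int
    stored_alarms: int
    default_retention: bool = True  # True if the default data retention period is kept


def embedded_db_violations(plan: EmbeddedDbPlan) -> List[str]:
    """List the E0710 embedded-database restrictions that the plan breaks."""
    problems = []
    if not plan.default_retention:
        problems.append("Use the default data retention period.")
    if plan.platform_collectors >= 20000:
        problems.append("Platform collectors must total fewer than 20000.")
    if plan.stored_alarms >= 100000:
        problems.append("Stored alarms must total fewer than 100000.")
    if plan.managed_nodes >= 1000:
        problems.append("Managed device nodes must be fewer than 1000.")
    return problems
```

An empty list means the plan stays within the quoted limits. Any entry suggests installing a separate database instead.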

Deployment restrictions and guidelines

In the distributed deployment scheme, IMC servers include conductor and member servers. The conductor server is the management center of the IMC system, responsible for coordinating with all member servers to collectively complete management tasks. A member server is responsible for specific management tasks, such as traffic analysis services for NTA and portal services for UAM.

In the distributed deployment scheme, install all components on the conductor server, and then deploy the components on both the conductor and member servers as needed. The conductor server provides a unified web portal, allowing users to access all IMC management functions by simply accessing the conductor server.

To deploy IMC in distributed mode, follow these restrictions and guidelines:

· The conductor and member servers must use the same operating system.

· Make sure the operating systems and databases are compatible.

· You can use SQL Server and MySQL databases for Windows. You can use Oracle and MySQL databases for Linux.

· When deploying components with reports in MySQL and MariaDB database environments, use the database that the conductor server uses.

· When you use Oracle, make sure all databases used by the conductor and member servers have different network service names.

· The following subcomponents must be deployed on the conductor server:

¡ Resource Management

¡ NE Management

¡ Report Management

¡ Network Asset Management

¡ Security Control Center

¡ Intelligent Configuration Center

For more information about deploying other subcomponents, see Table 13. For more information about deploying service components, see Table 15.

· If the IMC Intelligent Deployment Monitoring Agent is already installed on member servers, uninstall it before you deploy IMC components in distributed mode. For more information about how to uninstall the Intelligent Deployment Monitoring Agent, see "Uninstalling IMC."
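Several of the distributed deployment rules above can be checked mechanically before installation. The following Python sketch covers four of them:

· The conductor and member servers use the same operating system.

· Each server uses a database family listed for its operating system.

· The required subcomponents are deployed on the conductor server.

· Oracle network service names are unique.

The sketch does not model the MySQL and MariaDB report rule or the Intelligent Deployment Monitoring Agent rule. The `Server` type and `check_distributed_plan` function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Subcomponents that this section requires on the conductor server.
REQUIRED_ON_CONDUCTOR = {
    "Resource Management",
    "NE Management",
    "Report Management",
    "Network Asset Management",
    "Security Control Center",
    "Intelligent Configuration Center",
}

# Database families this section lists for each operating system.
DATABASES_BY_OS = {
    "Windows": {"SQL Server", "MySQL"},
    "Linux": {"Oracle", "MySQL"},
}


@dataclass
class Server:
    name: str
    os: str                        # "Windows" or "Linux"
    database: str                  # database family, for example "Oracle"
    subcomponents: Set[str] = field(default_factory=set)
    oracle_service_name: str = ""  # used only when database == "Oracle"


def check_distributed_plan(conductor: Server, members: List[Server]) -> List[str]:
    """Return human-readable issues found in a distributed deployment plan."""
    issues = []
    servers = [conductor] + members
    for server in servers:
        if server.os != conductor.os:
            issues.append(f"{server.name}: OS {server.os} differs from the conductor OS {conductor.os}.")
        if server.database not in DATABASES_BY_OS.get(server.os, set()):
            issues.append(f"{server.name}: {server.database} is not listed for {server.os}.")
    for name in sorted(REQUIRED_ON_CONDUCTOR - conductor.subcomponents):
        issues.append(f"{conductor.name}: {name} must be deployed on the conductor server.")
    oracle_names = [s.oracle_service_name for s in servers if s.database == "Oracle"]
    if len(oracle_names) != len(set(oracle_names)):
        issues.append("Oracle databases must use different network service names.")
    return issues
```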

Obtaining IMC installation and deployment methods

You can use the following methods to obtain the IMC installation and deployment procedure:

· View the installation videos on the H3C website at https://www.h3c.com/en/Support/Resource_Center/EN/Network_Management/Catalog/H3C_IMC/IMC/.

You can also perform the following steps to access the videos:

a. Access https://www.h3c.com/en/.

b. Select Support > Technical Documents > Network Operations & Management > Intelligent Management Center 7.

c. Select Videos > Installation Videos, download the video to your computer, and decompress it.

· Read this document.

This document describes how to install and deploy IMC on Windows Server 2012 R2. The installation and deployment procedure on Linux is the same as on Windows.

The IMC software is included in the DVD delivered with the product.

Preparing for installation

Hardware requirements

If service components are added to the IMC platform, read the release notes of each component. When multiple components are deployed, add up the resource requirements of all components. Suppose the CPU, memory, and disk requirements of component n are A(n), B(n), and C(n), respectively. For example, when four components (n = 0 to 3) are deployed, the required hardware resources are as follows:

· CPU = A(0) + A(1) + A(2) + A(3)

· Memory = B(0) + B(1) + B(2) + B(3)

· Disk = C(0) + C(1) + C(2) + C(3)
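The CPU, memory, and disk summation described above is a per-resource sum over all deployed components. The following Python sketch shows that sum with illustrative inputs from this guide's tables. The platform memory value is only the "memory used by IMC" column, and disk is only the imcDataDir column. A real estimate should also add the database and operating system memory described in the note under Table 2.

```python
from typing import Iterable, NamedTuple


class Resources(NamedTuple):
    cpu_cores: int
    memory_gb: int
    disk_gb: int


def combined_requirements(components: Iterable[Resources]) -> Resources:
    """Add CPU, memory, and disk requirements across all deployed components."""
    cpu = memory = disk = 0
    for component in components:
        cpu += component.cpu_cores
        memory += component.memory_gb
        disk += component.disk_gb
    return Resources(cpu, memory, disk)


if __name__ == "__main__":
    # Illustrative inputs taken from tables in this guide:
    platform = Resources(cpu_cores=2, memory_gb=12, disk_gb=100)  # Table 2, 0 to 200 nodes
    tam = Resources(cpu_cores=4, memory_gb=8, disk_gb=160)        # Table 6, <=5000 devices
    print(combined_requirements([platform, tam]))
    # Resources(cpu_cores=6, memory_gb=20, disk_gb=260)
```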

Hardware requirements of the IMC platform

Table 2 Hardware requirements for a 64-bit Windows/Linux operating system

In this table, columns 1 to 3 describe the management scale and columns 4 to 9 list the minimum hardware requirements.

| Node quantity | Collectors (0 to 5K: no or few performance monitors enabled) | Online operators | CPU (frequency ≥ 2.5 GHz) | Memory used by IMC | Memory used by database | Java heap size | Disk space for software installation (imcInstallDir) | Disk space for running IMC (imcDataDir) |
|---|---|---|---|---|---|---|---|---|
| 0 to 200 | 0 to 5K | 20 | 2-core CPU | 12GB | 6GB | 4GB | 3GB | 100GB |
| 0 to 200 | 5K to 50K | 10 | 2-core CPU | 12GB | 6GB | 4GB | 3GB | 200GB |
| 200 to 1K | 0 to 10K | 30 | 4-core CPU | 16GB | 8GB | 6GB | 3GB | 100GB |
| 200 to 1K | 10K to 100K | 10 | 4-core CPU | 16GB | 8GB | 6GB | 3GB | 200GB |
| 1K to 2K | 0 to 20K | 30 | 6-core CPU | 24GB | 12GB | 8GB | 4GB | 100GB |
| 1K to 2K | 20K to 200K | 10 | 6-core CPU | 24GB | 12GB | 8GB | 4GB | 200GB |
| 2K to 5K | 0 to 30K | 40 | 8-core CPU | 32GB | 16GB | 12GB | 5GB | 120GB |
| 2K to 5K | 30K to 300K | 20 | 8-core CPU | 32GB | 16GB | 12GB | 5GB | 250GB |
| 5K to 10K | 0 to 40K | 50 | 16-core CPU | 64GB | 32GB | 16GB | 7GB | 150GB |
| 5K to 10K | 40K to 400K | 20 | 16-core CPU | 64GB | 32GB | 16GB | 7GB | 300GB |
| 10K to 15K | 0 to 40K | 50 | 24-core CPU | 80GB | 40GB | 24GB | 10GB | 200GB |
| 10K to 15K | 40K to 400K | 20 | 24-core CPU | 80GB | 40GB | 24GB | 10GB | 600GB |
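Table 2 can be turned into a lookup that returns the first row covering both the node count and the collector count. The following Python sketch makes two assumptions. First, range bounds are inclusive, so 200 nodes falls in the "0 to 200" row. Second, when the collector count exceeds the ranges for a node tier, the next larger tier applies. Table 2 does not say this explicitly. The `PlatformRow` type and field names are hypothetical.

```python
from typing import NamedTuple, Optional


class PlatformRow(NamedTuple):
    max_nodes: int
    max_collectors: int
    online_operators: int
    cpu_cores: int
    imc_memory_gb: int
    db_memory_gb: int
    java_heap_gb: int
    install_disk_gb: int
    data_disk_gb: int


# One row per line of Table 2, smallest scale first.
ROWS = [PlatformRow(*values) for values in [
    (200, 5_000, 20, 2, 12, 6, 4, 3, 100),
    (200, 50_000, 10, 2, 12, 6, 4, 3, 200),
    (1_000, 10_000, 30, 4, 16, 8, 6, 3, 100),
    (1_000, 100_000, 10, 4, 16, 8, 6, 3, 200),
    (2_000, 20_000, 30, 6, 24, 12, 8, 4, 100),
    (2_000, 200_000, 10, 6, 24, 12, 8, 4, 200),
    (5_000, 30_000, 40, 8, 32, 16, 12, 5, 120),
    (5_000, 300_000, 20, 8, 32, 16, 12, 5, 250),
    (10_000, 40_000, 50, 16, 64, 32, 16, 7, 150),
    (10_000, 400_000, 20, 16, 64, 32, 16, 7, 300),
    (15_000, 40_000, 50, 24, 80, 40, 24, 10, 200),
    (15_000, 400_000, 20, 24, 80, 40, 24, 10, 600),
]]


def minimum_platform_row(nodes: int, collectors: int) -> Optional[PlatformRow]:
    """Return the first Table 2 row whose node and collector bounds both cover the input."""
    for row in ROWS:
        if nodes <= row.max_nodes and collectors <= row.max_collectors:
            return row
    return None  # beyond 15K nodes or 400K collectors: consult H3C Support
```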

NOTE:

· If the database is deployed on the same server as IMC, the IMC server memory is the sum of the memory used by IMC, the memory used by the database, and the memory used by the operating system.

· If the database is deployed on a different server than IMC, the IMC server memory is the sum of the memory used by IMC and the memory used by the operating system. The database server memory is the sum of the memory used by the database and the memory used by the operating system.

· Prepare the operating system memory based on your operational requirements. If there are no specific requirements, allocate at least 4 GB of memory to the operating system.
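The memory rules in the note above reduce to two sums. The following Python sketch applies them. It uses the 4 GB operating system minimum as the default. It assumes the same operating system allowance on a remote database server. The function name is hypothetical.

```python
from typing import Dict


def server_memory_gb(imc_gb: float, db_gb: float, os_gb: float = 4.0,
                     local_database: bool = True) -> Dict[str, float]:
    """Return the memory each server needs under the local or remote database rule.

    os_gb defaults to the 4 GB operating system minimum; the same value is
    assumed for the database server when the database is remote.
    """
    if local_database:
        return {"imc_server": imc_gb + db_gb + os_gb}
    return {"imc_server": imc_gb + os_gb, "database_server": db_gb + os_gb}
```

Consider the 0 to 200 node row of Table 2, which lists 12 GB for IMC and 6 GB for the database. For that row, `server_memory_gb(12, 6)` gives 22 GB on a single server. `server_memory_gb(12, 6, local_database=False)` gives 16 GB for the IMC server and 10 GB for the database server.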

The tables in this section use the following terminology:

· Node—IMC servers, database servers, and devices managed by IMC are called nodes.

· Collector—The number of collectors equals the total number of performance instances collected at 5-minute intervals. If the collection interval is greater than 5 minutes, the number of collectors decreases. If the collection interval is smaller than 5 minutes, the number of collectors increases.

For example, if the performance instances listed in the following table are collected every 5 minutes, the number of collectors equals the number of performance instances, which is 24. If the collection interval doubles to 10 minutes, the number of collectors is half the number of performance instances, which is 12.

| Monitored item | Number | Performance index | Performance instances |
|---|---|---|---|
| CPU | 1 | CPU usage | 1 |
| Memory | 1 | Memory usage | 1 |
| Interface | 10 | Receiving rate | 10 |
| Interface | 10 | Sending rate | 10 |
| Device | 1 | Unreachability rate | 1 |
| Device | 1 | Response time | 1 |
| Total | | | 24 |

· Java heap size—Java heap size that can be used by the IMC Web server.

To set the Java heap size for IMC:

¡ On Windows, run the setmem.bat script with the heap size. The script is in the \client\bin directory of the IMC installation path.

¡ On Linux, run the setmem.sh script with the heap size. The script is in the /client/bin directory of the IMC installation path.

Set the heap size to a value in the range of 256 to 32768 for a 64-bit OS. The Java heap size cannot exceed the physical memory size.
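The collector definition and the heap size limits above can both be checked with simple arithmetic. The following Python sketch makes two assumptions:

· The collector count scales inversely with the collection interval, which matches the 5-minute and 10-minute example.

· The heap size is in MB. This guide does not state the unit that setmem.bat or setmem.sh accepts.

The function names are hypothetical.

```python
def collectors(instances: int, interval_minutes: float) -> float:
    """Convert performance instances to collectors (5-minute equivalents)."""
    if interval_minutes <= 0:
        raise ValueError("interval_minutes must be positive")
    return instances * 5 / interval_minutes


# Performance instances from the example table: CPU, memory, interface
# receiving and sending rates, device unreachability rate, and response time.
EXAMPLE_INSTANCES = 1 + 1 + 10 + 10 + 1 + 1


def valid_heap_size(heap_mb: int, physical_memory_mb: int) -> bool:
    """Check the 64-bit range of 256 to 32768 (assumed MB) and the physical memory limit."""
    return 256 <= heap_mb <= 32768 and heap_mb <= physical_memory_mb


if __name__ == "__main__":
    print(collectors(EXAMPLE_INSTANCES, 5))   # 24.0
    print(collectors(EXAMPLE_INSTANCES, 10))  # 12.0
    print(valid_heap_size(4096, 16384))       # True
```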

To improve the I/O performance, follow these guidelines:

· When the number of collectors is from 100 K to 200 K, install two or more disks and a RAID card with a cache of at least 256 MB.

· When the number of collectors is from 200 K to 300 K, install two or more disks and a RAID card with a cache of at least 512 MB.

· When the number of collectors is from 300 K to 400 K, install four or more disks and a RAID card with a cache of at least 1 GB.

· Install three disks for RAID 5, and four or more disks for RAID 0+1.
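The collector bands above map directly to a disk count and a RAID cache size. The following Python sketch places a boundary value such as 200K or 300K in the higher, stricter band. It treats 1 GB as 1024 MB. It returns None outside the 100K to 400K range, because the guidelines do not cover those counts. The names are hypothetical.

```python
from typing import NamedTuple, Optional


class DiskGuideline(NamedTuple):
    min_disks: int
    min_raid_cache_mb: int


def io_guideline(collectors: int) -> Optional[DiskGuideline]:
    """Map a collector count to the disk and RAID cache guideline.

    Boundary values (200K, 300K) fall into the higher, stricter band.
    Returns None when the guidelines do not cover the count.
    """
    if 300_000 <= collectors <= 400_000:
        return DiskGuideline(min_disks=4, min_raid_cache_mb=1024)
    if 200_000 <= collectors < 300_000:
        return DiskGuideline(min_disks=2, min_raid_cache_mb=512)
    if 100_000 <= collectors < 200_000:
        return DiskGuideline(min_disks=2, min_raid_cache_mb=256)
    return None
```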

Optimal hardware requirements vary with the management scale and other factors, and are specific to each installation. Consult H3C Support or your local account team for exact requirements.

Hardware requirements of the EIA component

UAM

You can deploy the portal component on multiple servers in distributed mode. When portal access requirements are high, deploy the portal component in distributed mode as a best practice, so that dedicated portal servers can support more users. A dedicated portal server must have at least the configuration one level lower than the current configuration.

If the number of managed access users exceeds 5K and the self-service center is needed, you must deploy the self-service center in distributed mode. A dedicated self-service center server must have at least the configuration one level lower than the current configuration.

The following deployment schemes are based on these assumptions:

· In the following tables, the 802.1X access method represents any access method, except portal access, that does not require collaboration with UAM.

· The EIA CPU requirements listed here apply to Intel CPUs. For Kunpeng and Feiteng ARM CPUs, the CPU requirements are twice those for Intel CPUs.

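Table 4 64-bit Windows

In this table, columns 1 to 6 describe the management scale and columns 7 to 12 list the system minimum requirements.

| Managed access users | Online operators | Access method | Authentication method | Online users | Concurrent online users | CPU (2.0 GHz or above) | Memory | Java heap size | Disk size for installing IMC (imcInstallDir) | Disk size for running IMC (imcDataDir) | Maximum IOPS for running disks |
|---|---|---|---|---|---|---|---|---|---|---|---|
| <=20K | 5 | 802.1X | PAP/CHAP/EAP-MD5 | 10000 | 100 | 4-core CPU | 16G | 4G | 150GB | 100GB | 300 (best practice: RAID controller cache larger than 192 MB) |
| <=20K | 5 | 802.1X | EAP-PEAP/TLS/TTLS | 3000 | 10 | 4-core CPU | 16G | 4G | 150GB | 100GB | 300 (best practice: RAID controller cache larger than 192 MB) |
| <=20K | 5 | Portal | PAP/CHAP | 6000 | 50 | 4-core CPU | 16G | 4G | 150GB | 100GB | 300 (best practice: RAID controller cache larger than 192 MB) |
| <=20K | 5 | Portal | EAP-PEAP/TLS/TTLS | 3000 | 10 | 4-core CPU | 16G | 4G | 150GB | 100GB | 300 (best practice: RAID controller cache larger than 192 MB) |
| <=100K | 10 | 802.1X | PAP/CHAP/EAP-MD5 | 50000 | 200 | 8-core CPU | 32G | 8G | 300GB | 150GB | 600 (best practice: RAID controller cache larger than 256 MB) |
| <=100K | 10 | 802.1X | EAP-PEAP/TLS/TTLS | 15000 | 20 | 8-core CPU | 32G | 8G | 300GB | 150GB | 600 (best practice: RAID controller cache larger than 256 MB) |
| <=100K | 10 | Portal | PAP/CHAP | 20000 | 150 | 8-core CPU | 32G | 8G | 300GB | 150GB | 600 (best practice: RAID controller cache larger than 256 MB) |
| <=100K | 10 | Portal | EAP-PEAP/TLS/TTLS | 15000 | 20 | 8-core CPU | 32G | 8G | 300GB | 150GB | 600 (best practice: RAID controller cache larger than 256 MB) |
| <=500K | 15 | 802.1X | PAP/CHAP/EAP-MD5 | 100000 | 500 | 16-core CPU | 64G | 12G | 600GB | 300GB | 1000 (best practice: RAID controller cache larger than 1 GB) |
| <=500K | 15 | 802.1X | EAP-PEAP/TLS/TTLS | 30000 | 50 | 16-core CPU | 64G | 12G | 600GB | 300GB | 1000 (best practice: RAID controller cache larger than 1 GB) |
| <=500K | 15 | Portal | PAP/CHAP | 40000 | 300 | 16-core CPU | 64G | 12G | 600GB | 300GB | 1000 (best practice: RAID controller cache larger than 1 GB) |
| <=500K | 15 | Portal | EAP-PEAP/TLS/TTLS | 20000 | 40 | 16-core CPU | 64G | 12G | 600GB | 300GB | 1000 (best practice: RAID controller cache larger than 1 GB) |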

Table 5 64-bit Linux

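In this table, columns 1 to 6 describe the management scale and columns 7 to 12 list the system minimum requirements.

| Managed access users | Online operators | Access method | Authentication method | Online users | Concurrent online users | CPU (2.0 GHz or above) | Memory | Java heap size | Disk size for installing IMC (imcInstallDir) | Disk size for running IMC (imcDataDir) | Maximum IOPS for running disks |
|---|---|---|---|---|---|---|---|---|---|---|---|
| <=20K | 5 | 802.1X | PAP/CHAP/EAP-MD5 | 10000 | 100 | 4-core CPU | 16G | 4G | 150GB | 100GB | 800 (best practice: RAID controller cache larger than 192 MB) |
| <=20K | 5 | 802.1X | EAP-PEAP/TLS/TTLS | 3000 | 10 | 4-core CPU | 16G | 4G | 150GB | 100GB | 800 (best practice: RAID controller cache larger than 192 MB) |
| <=20K | 5 | Portal | PAP/CHAP | 6000 | 50 | 4-core CPU | 16G | 4G | 150GB | 100GB | 800 (best practice: RAID controller cache larger than 192 MB) |
| <=20K | 5 | Portal | EAP-PEAP/TLS/TTLS | 3000 | 10 | 4-core CPU | 16G | 4G | 150GB | 100GB | 800 (best practice: RAID controller cache larger than 192 MB) |
| <=100K | 10 | 802.1X | PAP/CHAP/EAP-MD5 | 50000 | 200 | 8-core CPU | 32G | 8G | 300GB | 150GB | 1800 (best practice: RAID controller cache larger than 256 MB) |
| <=100K | 10 | 802.1X | EAP-PEAP/TLS/TTLS | 15000 | 20 | 8-core CPU | 32G | 8G | 300GB | 150GB | 1800 (best practice: RAID controller cache larger than 256 MB) |
| <=100K | 10 | Portal | PAP/CHAP | 20000 | 150 | 8-core CPU | 32G | 8G | 300GB | 150GB | 1800 (best practice: RAID controller cache larger than 256 MB) |
| <=100K | 10 | Portal | EAP-PEAP/TLS/TTLS | 15000 | 20 | 8-core CPU | 32G | 8G | 300GB | 150GB | 1800 (best practice: RAID controller cache larger than 256 MB) |
| <=500K | 15 | 802.1X | PAP/CHAP/EAP-MD5 | 100000 | 500 | 16-core CPU | 64G | 12G | 600GB | 300GB | 2400 (best practice: RAID controller cache larger than 1 GB) |
| <=500K | 15 | 802.1X | EAP-PEAP/TLS/TTLS | 30000 | 50 | 16-core CPU | 64G | 12G | 600GB | 300GB | 2400 (best practice: RAID controller cache larger than 1 GB) |
| <=500K | 15 | Portal | PAP/CHAP | 40000 | 300 | 16-core CPU | 64G | 12G | 600GB | 300GB | 2400 (best practice: RAID controller cache larger than 1 GB) |
| <=500K | 15 | Portal | EAP-PEAP/TLS/TTLS | 20000 | 40 | 16-core CPU | 64G | 12G | 600GB | 300GB | 2400 (best practice: RAID controller cache larger than 1 GB) |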
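The Windows and Linux UAM tables above share the same CPU, memory, and disk tiers and differ only in maximum IOPS, so one lookup can serve both. The following Python sketch makes three assumptions:

· It reads "twice the requirements" for Kunpeng and Feiteng CPUs as twice the core count.

· It does not model the online-user limits for each access and authentication method.

· The `uam_minimum` name and its arguments are hypothetical.

```python
from typing import Dict, Optional

# (max managed access users, CPU cores, memory GB, Java heap GB,
#  imcInstallDir GB, imcDataDir GB, Windows max IOPS, Linux max IOPS)
UAM_TIERS = [
    (20_000, 4, 16, 4, 150, 100, 300, 800),
    (100_000, 8, 32, 8, 300, 150, 600, 1800),
    (500_000, 16, 64, 12, 600, 300, 1000, 2400),
]

ARM_CPUS = {"kunpeng", "feiteng"}


def uam_minimum(managed_users: int, os_name: str,
                cpu_vendor: str = "intel") -> Optional[Dict[str, int]]:
    """Return the smallest UAM tier that covers managed_users, or None."""
    if os_name not in ("Windows", "Linux"):
        raise ValueError("os_name must be 'Windows' or 'Linux'")
    for max_users, cores, memory, heap, install, data, win_iops, linux_iops in UAM_TIERS:
        if managed_users <= max_users:
            if cpu_vendor.lower() in ARM_CPUS:
                cores *= 2  # assumption: "twice the requirements" read as twice the cores
            return {
                "cpu_cores": cores,
                "memory_gb": memory,
                "java_heap_gb": heap,
                "install_disk_gb": install,
                "data_disk_gb": data,
                "max_iops": win_iops if os_name == "Windows" else linux_iops,
            }
    return None
```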

TAM

Managed devices are the devices added to the device list of the device authentication service.

Table 6 64-bit Windows/Linux

In this table, column 1 describes the management scale and columns 2 to 6 list the system minimum requirements.

| Managed devices | CPU (2.5 GHz or above) | Memory | Java heap size | Disk size for installing IMC (imcInstallDir) | Disk size for running IMC (imcDataDir) |
|---|---|---|---|---|---|
| <=5000 | 4-core CPU | 8G | 2G | 3GB | 160GB |
| <=20K | 8-core CPU | 16G | 4G | 3GB | 320GB |

Hardware requirements of the WSM component

Table 7 Hardware requirements for 64-bit Windows/Linux operating systems

In this table, columns 1 to 3 describe the management scale and columns 4 to 9 list the system minimum requirements.

| Nodes | Collectors | Online operators | CPU (2.0 GHz or above) | Memory | Java heap size | Disk size for installing IMC (imcInstallDir) | Disk size for running IMC (imcDataDir) | Maximum IOPS for running disks |
|---|---|---|---|---|---|---|---|---|
| Fit APs: 0 to 500; Fat APs: 0 to 300 | 0 to 50K | 10 | 2-core CPU | 4G | 2G | 3GB | 60GB | Windows: 120; Linux: 990 |
| Fit APs: 500 to 1000; Fat APs: 300 to 700 | 16K to 90K | 10 | 4-core CPU | 8G | 4G | 3GB | 100GB | Windows: 160; Linux: 1210 |
| Fit APs: 1000 to 3000; Fat APs: 700 to 2000 | 32K to 150K | 10 | 6-core CPU | 16G | 6G | 4GB | 200GB | Windows: 300; Linux: 2530 |
| Fit APs: 3000 to 5000; Fat APs: 2000 to 3000 | 100K to 500K | 10 | 8-core CPU | 24G | 8G | 5GB | 250GB | Windows: 330; Linux: 3910 |
| Enterprise network: Fit APs: 5000 to 10000; Fat APs: 3000 to 5000 | 320K to 800K | 10 | 12-core CPU | 32G | 12G | 7GB | 300GB | Windows: 360; Linux: 4760 |
| Service provider: Fit APs: 5000 to 8000; Fat APs: 3000 to 5000 | 320K to 800K | 10 | 12-core CPU | 32G | 12G | 7GB | 300GB | Windows: 360; Linux: 4760 |

To improve the I/O performance, follow these guidelines:

· When the number of collectors is from 100 K to 200 K, install two or more disks and a RAID card with a cache of at least 256 MB.

· When the number of collectors is from 200 K to 300 K, install two or more disks and a RAID card with a cache of at least 512 MB.

· When the number of collectors is from 300 K to 400 K, install four or more disks and a RAID card with a cache of at least 1 GB.

· As a best practice, install three disks for RAID 5, and four or more disks for RAID 0+1.

Software requirements

Table 8 and Table 9 list the software requirements for centralized deployment, and Table 10 lists the software requirements for distributed deployment. As a best practice, install the latest patches for the corresponding software.

Table 8 Software requirements (centralized deployment with local/remote database)

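| OS family | Item | Requirement | Remarks |
|---|---|---|---|
| Windows | Operating system | Windows Server 2016 (64-bit) | N/A |
| Windows | Operating system | Windows Server 2019 (64-bit) | KB5005112, KB5022840, and KB5026362 |
| Windows | Operating system | Windows Server 2022 (64-bit) | KB5026370 |
| Windows | Database | SQL Server 2016 Enterprise SP2 (64-bit) | N/A |
| Windows | Database | SQL Server 2017 Enterprise (64-bit) | N/A |
| Windows | Database | SQL Server 2019 Enterprise (64-bit) | N/A |
| Windows | Database | SQL Server 2022 Enterprise (64-bit) | N/A |
| Windows | Database | MySQL Enterprise Server 8.0 (64-bit) | A maximum of 2000 devices are supported. |
| Windows | Database | MySQL Community Server 8.0 (64-bit) | A maximum of 2000 devices are supported. |
| Windows | Database | MariaDB 10.3.x (64-bit) | A maximum of 2000 devices are supported. |
| Windows | Database | MariaDB 10.5.x (64-bit) | A maximum of 2000 devices are supported. |
| Windows | Database | MariaDB 10.6.9 (64-bit) and later minor versions | A maximum of 2000 devices are supported. |
| Linux | Operating system | Red Hat Enterprise Linux Server 8.x (64-bit) | N/A |
| Linux | Operating system | Kylin Advanced Server Operating System V10 (AMD64 Edition) | N/A |
| Linux | Operating system | NingOS V3 1.0.2403 | N/A |
| Linux | Database | Oracle 11g Release 1 (64-bit) | N/A |
| Linux | Database | Oracle 11g Release 2 (64-bit) | N/A |
| Linux | Database | Oracle 12c Release 1 (64-bit) | N/A |
| Linux | Database | Oracle 12c Release 2 (64-bit) | N/A |
| Linux | Database | Oracle 18c (64-bit) | N/A |
| Linux | Database | SQL Server 2016 Enterprise SP2 (64-bit) | N/A |
| Linux | Database | SQL Server 2017 Enterprise (64-bit) | N/A |
| Linux | Database | SQL Server 2019 Enterprise (64-bit) | N/A |
| Linux | Database | SQL Server 2022 Enterprise (64-bit) | N/A |
| Linux | Database | MySQL Enterprise Server 8.0 | A maximum of 2000 devices are supported. |
| Linux | Database | MySQL Community Server 8.0 | A maximum of 2000 devices are supported. |
| Linux | Database | MariaDB 10.3.x | A maximum of 2000 devices are supported. |
| Linux | Database | MariaDB 10.5.x | A maximum of 2000 devices are supported. |
| Linux | Database | MariaDB 10.6.9 and later minor versions | A maximum of 2000 devices are supported. |
| Linux | Database | DM Database Management System V7.6.1.112 | Available only on Kylin V10 |
| Linux | Database | DM Database Management System V8.1.1.126 | Available only on Kylin V10 |
| Linux | Database | DM Database Management System V8.1.2.114 | Available only on Kylin V10 |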

Table 9 Software requirements (centralized deployment with embedded database)

| OS family | Item | Requirements | Remarks |
|---|---|---|---|
| Windows | Operating system | Windows Server 2016 (64-bit) | N/A |
| Windows | Operating system | Windows Server 2019 (64-bit) | KB5005112, KB5022840, and KB5026362 |
| Windows | Operating system | Windows Server 2022 (64-bit) | KB5026370 |
| Windows | Database | SQL Server 2017 Express | Used as the embedded database for SNS and Standard editions only. |
| Linux | Operating system | Red Hat Enterprise Linux Server 8.x (64-bit) | N/A |
| Linux | Operating system | Kylin Advanced Server Operating System V10 (AMD64 Edition) | N/A |
| Linux | Operating system | NingOS V3 1.0.2403 | N/A |
| Linux | Database | MariaDB 10.5.12 | N/A |

Table 10 Software requirements (distributed deployment)

| OS family | Item | Requirements | Remarks |
|---|---|---|---|
| Windows | Operating system | Windows Server 2016 (64-bit) | N/A |
| Windows | Operating system | Windows Server 2019 (64-bit) | KB5005112, KB5022840, and KB5026362 |
| Windows | Operating system | Windows Server 2022 (64-bit) | KB5026370 |
| Windows | Database | SQL Server 2016 Enterprise SP2 (64-bit) | N/A |
| Windows | Database | SQL Server 2017 Enterprise (64-bit) | N/A |
| Windows | Database | SQL Server 2019 Enterprise (64-bit) | N/A |
| Windows | Database | SQL Server 2022 Enterprise (64-bit) | N/A |
| Linux | Operating system | Red Hat Enterprise Linux Server 8.x (64-bit) | N/A |
| Linux | Operating system | Kylin Advanced Server Operating System V10 (AMD64 Edition) | N/A |
| Linux | Operating system | NingOS V3 1.0.2403 | N/A |
| Linux | Database | Oracle 11g Release 1 (64-bit) | N/A |
| Linux | Database | Oracle 11g Release 2 (64-bit) | N/A |
| Linux | Database | Oracle 12c Release 1 (64-bit) | N/A |
| Linux | Database | Oracle 12c Release 2 (64-bit) | N/A |
| Linux | Database | Oracle 18c (64-bit) | N/A |
| Linux | Database | SQL Server 2016 Enterprise SP2 (64-bit) | N/A |
| Linux | Database | SQL Server 2017 Enterprise (64-bit) | N/A |
| Linux | Database | SQL Server 2019 Enterprise (64-bit) | N/A |
| Linux | Database | SQL Server 2022 Enterprise (64-bit) | N/A |
| Linux | Database | Dameng Database Management System V7.6.1.112 | Available only on Kylin V10 |
| Linux | Database | Dameng Database Management System V8.1.1.126 | Available only on Kylin V10 |
| Linux | Database | Dameng Database Management System V8.1.2.114 | Available only on Kylin V10 |

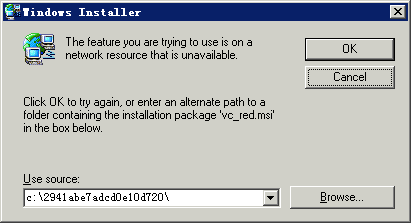

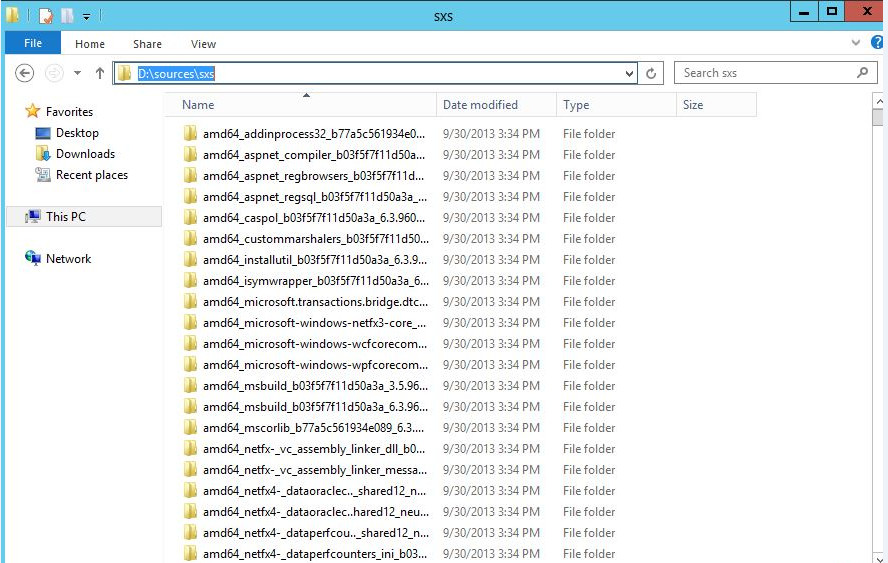

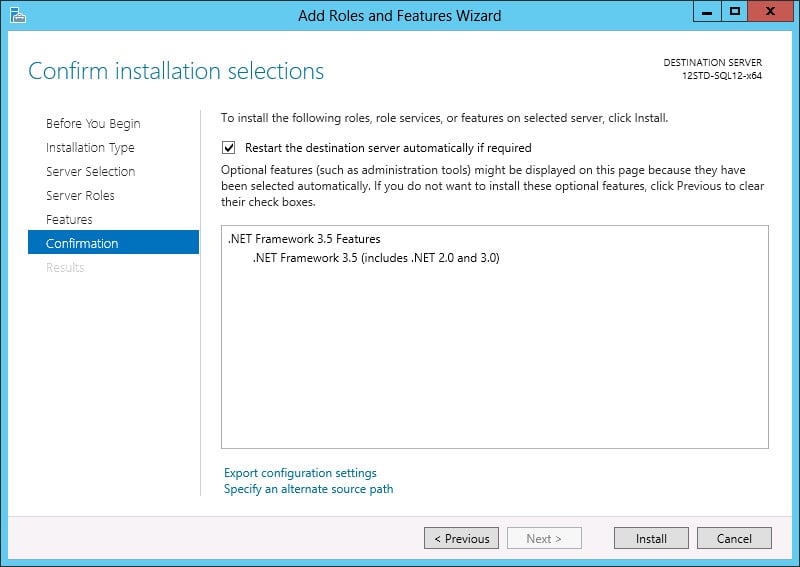

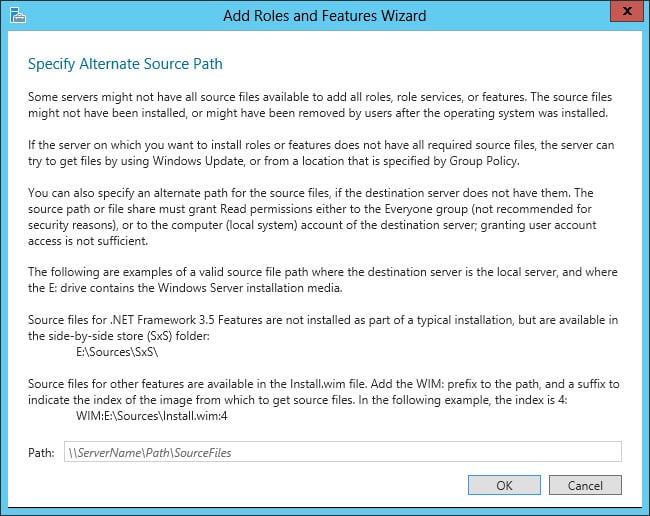

Installation requirements of the embedded database

In a Windows environment, IMC integrates SQL Server 2017 Express as its embedded database so that it can accommodate the different Windows operating system versions that customers use. Before you install SQL Server 2017 Express as the embedded database, you must install .NET Framework 4.6 or .NET Framework 4.7. The embedded database installer does not install .NET Framework automatically, so you must install it manually.

Download the software products from the following website:

http://www.microsoft.com/downloads

IMPORTANT: These requirements do not apply to the embedded database on Linux.
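Before you install the embedded database, you can confirm the .NET Framework prerequisite by reading the Release value that .NET Framework 4.5 and later write under the registry key HKLM\SOFTWARE\Microsoft\NET Framework Setup\NDP\v4\Full. The following Python script is a minimal sketch, not an IMC tool. It maps the Release value to a version using the minimum Release numbers that Microsoft publishes. The function names are hypothetical. The script also treats any version from 4.6 onward as acceptable, which is an assumption, because this guide names only 4.6 and 4.7.

```python
import sys

# Minimum "Release" DWORD values published by Microsoft for each
# .NET Framework 4.x version, highest version first.
RELEASE_THRESHOLDS = [
    (528040, "4.8"),
    (461808, "4.7.2"),
    (461308, "4.7.1"),
    (460798, "4.7"),
    (394802, "4.6.2"),
    (394254, "4.6.1"),
    (393295, "4.6"),
    (379893, "4.5.2"),
    (378675, "4.5.1"),
    (378389, "4.5"),
]
NET_46_RELEASE = 393295  # minimum Release value for .NET Framework 4.6


def dotnet_version(release):
    """Return the highest .NET Framework 4.x version implied by a Release value."""
    for minimum, version in RELEASE_THRESHOLDS:
        if release >= minimum:
            return version
    return None  # older than 4.5, or not a 4.x Release value


def meets_embedded_db_requirement(release):
    """True if .NET Framework 4.6 or later is present (assumption: later is OK)."""
    return release >= NET_46_RELEASE


def read_release_value():
    """Read the Release value from the registry. Windows only."""
    import winreg  # standard-library module that exists only on Windows

    key_path = r"SOFTWARE\Microsoft\NET Framework Setup\NDP\v4\Full"
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path) as key:
        value, _value_type = winreg.QueryValueEx(key, "Release")
    return value


if __name__ == "__main__" and sys.platform == "win32":
    release = read_release_value()
    print(dotnet_version(release), meets_embedded_db_requirement(release))
```

If the registry key does not exist, `read_release_value()` raises `OSError`. This usually means that no .NET Framework 4.5 or later release is installed.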

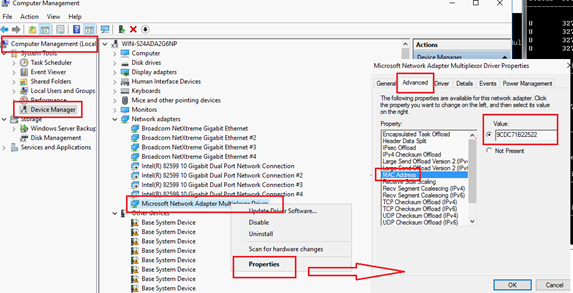

VM requirements

As a best practice, install IMC on a physical server.

If IMC is installed on a VM, do not change the following VM configuration settings:

· CPU cores

· Number, model, and MAC addresses of network adapters

· Number of disk drives

· Storage paths

· Assignment of storage

If the settings are changed, IMC might not operate correctly.

Preparing the installation environment

To ensure the correct installation and operation of IMC, do not install IMC with other network management products on the same server.

Do not install IMC in an IPv6 environment. However, IMC allows users to manage IPv6 devices.

When you install or upgrade IMC, restart the IMC server if a socket issue exists in the IMC installation environment. If no socket issue exists, you do not need to restart the IMC server.

Before you install IMC on a Linux operating system, make sure the /etc/hosts file contains the mapping from 127.0.0.1 to localhost and that this mapping is not commented out with a number sign (#). If the mapping is missing or commented out, IMC cannot start correctly after installation.
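You can verify the hosts file requirement before installation with a small script. The following Python function is a hypothetical helper and is not shipped with IMC. It ignores comments and blank lines and reports whether an active line maps 127.0.0.1 to localhost.

```python
def has_localhost_mapping(hosts_text):
    """Return True if an active (uncommented) line maps 127.0.0.1 to localhost."""
    for raw_line in hosts_text.splitlines():
        # Drop everything after '#', so commented-out entries are ignored.
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        if fields[0] == "127.0.0.1" and "localhost" in fields[1:]:
            return True
    return False


if __name__ == "__main__":
    with open("/etc/hosts", encoding="utf-8") as hosts_file:
        print("127.0.0.1 -> localhost present:", has_localhost_mapping(hosts_file.read()))
```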

Uninstalling previous versions of IMC

If IMC was previously installed on the system, completely uninstall it first. For information about uninstalling IMC, see "Uninstalling IMC."

After you uninstall IMC:

· On Windows, delete the iMC-Reserved folder from the WINDOWS folder of the system disk.

· On Linux, delete the iMC-Reserved folder from the /etc directory.

Checking ports and firewalls

Make sure the IMC Web service ports and database listening ports are open in the firewall. Table 11 lists the default IMC Web service ports and database listening ports.

Table 11 IMC port requirements

| Server | Usage: protocol/default port | Direction |
|---|---|---|
| Web | HTTP: TCP/8080; HTTPS: TCP/8443 | Browser to IMC |
| Database | SQL Server database: TCP/1433; Oracle database: TCP/1521; MySQL database: TCP/3306 | IMC and components to the database |

NOTE: Other IMC components might have additional port requirements. For more information, see "Security settings."

Make sure the javaw.exe and java.exe programs are not blocked by the firewall. On Windows, these programs are located in the \common\jre\bin directory of the IMC installation path. On Linux, the java program is located in the /common/jre/bin directory of the IMC installation path.

Use tools such as netstat -a and telnet hostname port to verify access between systems.
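The telnet test can also be scripted when telnet is not installed. The following Python sketch opens a TCP connection to each port in the same way as `telnet hostname port` and reports whether the connection succeeds. It uses the default ports from Table 11. If you changed any ports during installation, adjust the table in the script. The helper names are hypothetical.

```python
import socket

# Default ports from Table 11; adjust them if you changed ports during installation.
DEFAULT_PORTS = {
    "HTTP": 8080,
    "HTTPS": 8443,
    "SQL Server": 1433,
    "Oracle": 1521,
    "MySQL": 3306,
}


def port_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds (like telnet host port)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For example, run `port_reachable("192.0.2.10", DEFAULT_PORTS["SQL Server"])` on the IMC server, replacing the example address with the address of your database server. A result of False usually means that the port is blocked by a firewall or that the service is not listening.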

Checking the database configuration

Before installing non-SNS editions of IMC, first install the database server and configure the database services to automatically start with the operating system.

For example, to use a SQL Server database for IMC, install the database before IMC installation and set the startup type of the SQL Server and SQL Server Agent services to Automatic. To view the startup type of the database services, click Start, and then select Administrative Tools > Services.

Before you install IMC, make sure the database server and client are correctly installed and configured.

IMC uses a local database client to communicate with a remote database server. The client version must match the version of the database server.

On the remote database server, you must create a data file folder for storing IMC data. You will need to specify the path to the folder during IMC installation.

Additional database requirements vary by the database type: SQL Server or Oracle.

For a SQL Server database, the following requirements must be met:

· Set the startup type of the SQL Server and SQL Server Agent services to Automatic.

To view the service startup type, click Start, and then select Administrative Tools > Services.

· The startup account of the SQL Server service must have write permissions to all disks on the database server. As a best practice, use the Local System account.
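You can also check both SQL Server service requirements from the command line with the Windows `sc qc <service>` command. This command prints the START_TYPE and SERVICE_START_NAME fields. The following Python sketch parses that output. It assumes English-language `sc` output. The function names are hypothetical. The default instance services are named MSSQLSERVER and SQLSERVERAGENT. Named instances use MSSQL$<instance> and SQLAgent$<instance>.

```python
import subprocess


def parse_sc_qc(output):
    """Parse the text printed by 'sc qc <service>' into a FIELD -> value dict."""
    fields = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        # Field names are upper-case tokens such as START_TYPE; skip other lines.
        if sep and key.replace("_", "").isalpha() and key.isupper():
            fields[key] = value.strip()
    return fields


def check_sql_service(output):
    """Check the Automatic startup type and the Local System startup account."""
    fields = parse_sc_qc(output)
    return {
        "automatic": "AUTO_START" in fields.get("START_TYPE", ""),
        "local_system": fields.get("SERVICE_START_NAME", "").lower() == "localsystem",
    }


def query_service(service_name):
    """Run 'sc qc' and return its output (Windows only)."""
    result = subprocess.run(
        ["sc", "qc", service_name], capture_output=True, text=True, check=False
    )
    return result.stdout
```

For example, `check_sql_service(query_service("MSSQLSERVER"))` returns `{"automatic": True, "local_system": True}` when the default instance meets both recommendations.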

For an Oracle database, the following requirements must be met:

· Configure the Oracle database service to start automatically with the operating system.

· The database server and client must use the same network service name, and that network service name must use the IP address of the database server as the host name.
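On the Oracle client, network service names are typically defined in the tnsnames.ora file under $ORACLE_HOME/network/admin. The following Python sketch renders a standard tnsnames.ora entry whose HOST is an IP address instead of a host name. The alias IMCDB and the service name orcl are placeholders. The script also accepts only IPv4 addresses, which is an assumption based on the rule that IMC must not be installed in an IPv6 environment.

```python
import ipaddress


def tns_entry(alias, db_ip, service_name, port=1521):
    """Render a tnsnames.ora entry that uses the database server IP as HOST.

    alias and service_name are placeholders; use the network service name
    and Oracle service name of your own database.
    """
    # IPv4Address() rejects host names, enforcing the IP-as-host requirement.
    host = ipaddress.IPv4Address(db_ip)
    return (
        f"{alias} =\n"
        f"  (DESCRIPTION =\n"
        f"    (ADDRESS = (PROTOCOL = TCP)(HOST = {host})(PORT = {port}))\n"
        f"    (CONNECT_DATA =\n"
        f"      (SERVER = DEDICATED)\n"
        f"      (SERVICE_NAME = {service_name})\n"
        f"    )\n"
        f"  )\n"
    )


print(tns_entry("IMCDB", "192.0.2.10", "orcl"))
```

To satisfy the same-name requirement, use the same alias on both the database server and the client.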

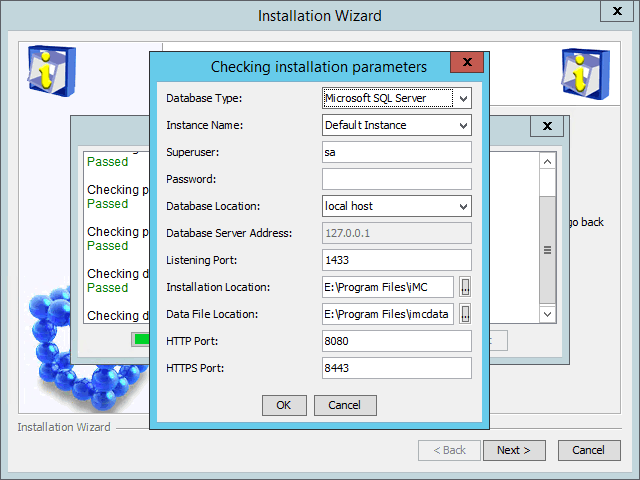

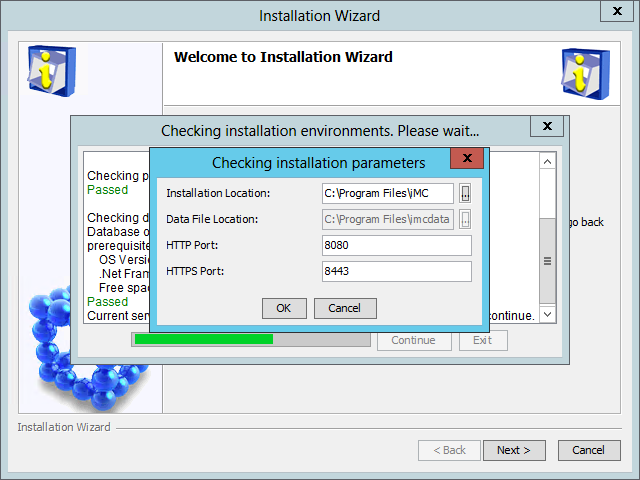

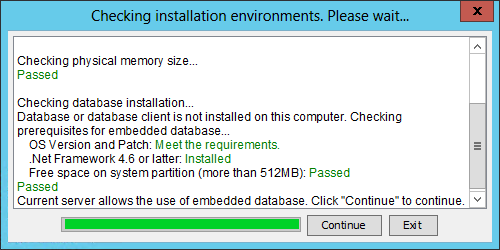

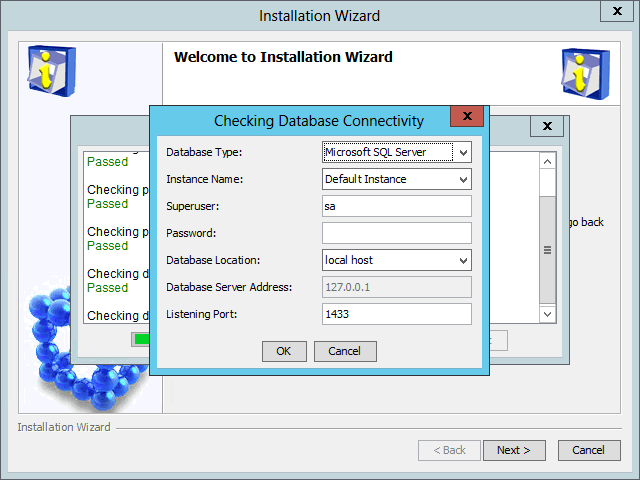

(Optional.) Checking the installation environment

The IMC installation package provides a tool (envcheck) to check the system environment and database connectivity.

To use the envcheck tool:

1. Copy the envcheck tool (envcheck.bat for Windows or envcheck.sh for Linux) from the tools folder to the install folder of the IMC installation package.

2. Run the tool.

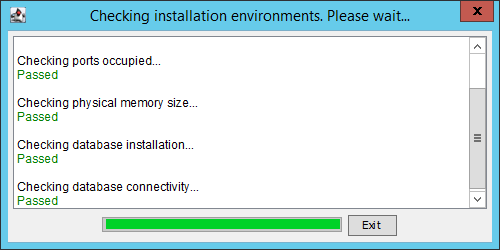

The Checking installation environments dialog box opens.

The system checks the port availability, free physical memory, and legacy database server or client.

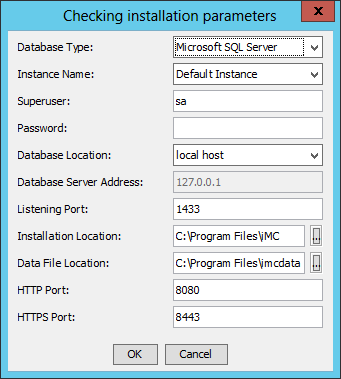

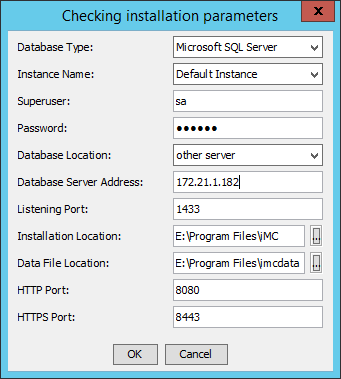

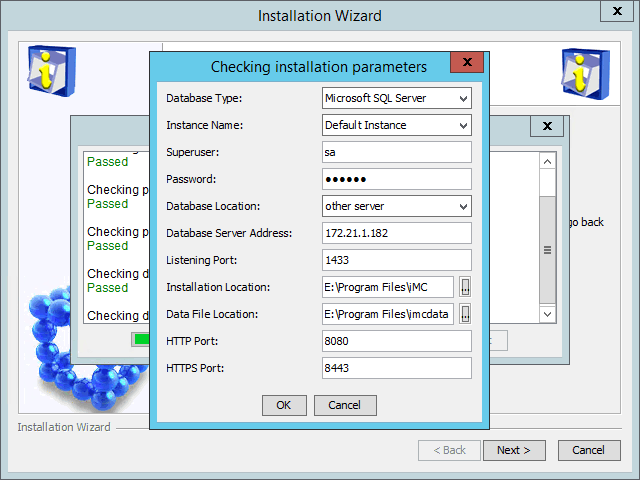

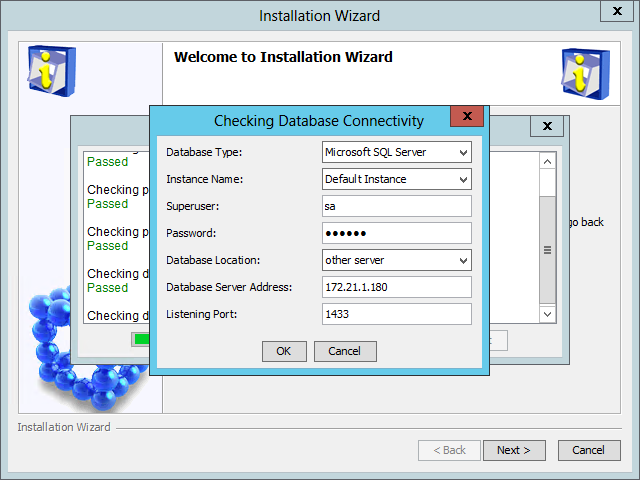

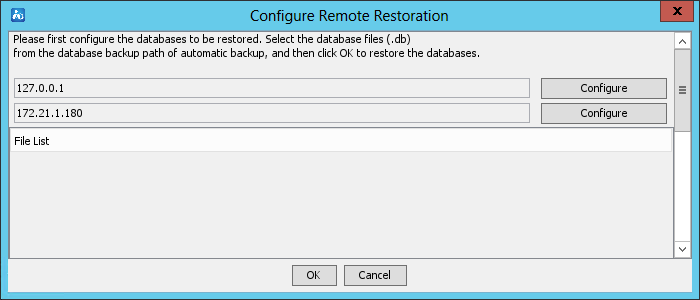

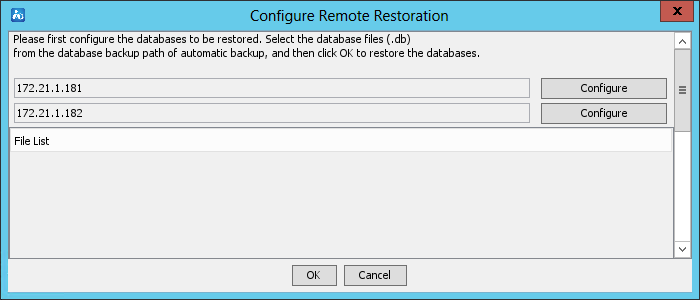

After the checks are complete, the Checking installation parameters dialog box opens, as shown in Figure 1, Figure 2, and Figure 3. The following information uses Windows and Microsoft SQL Server as an example.

Figure 1 Checking installation parameters (local database)

Figure 2 Checking installation parameters (remote database)

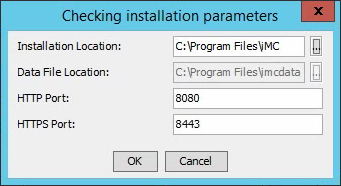

Figure 3 Checking installation parameters (embedded database)

3. Configure the parameters for checking database connectivity:

IMPORTANT: For centralized deployment with an embedded database, you need to configure only the installation location, data file location, and HTTP/HTTPS ports.

¡ Database Type—Select the database type. Options are Microsoft SQL Server, MySQL, and Oracle. The default is Microsoft SQL Server.

¡ Instance Name—To connect to the default instance of the database, select Default Instance. To connect to a named instance, select Other Instance, and then enter the instance name.

If you install IMC on Linux and use an Oracle database, configure the network service name and the tablespace name.

- You can select a network service name or click the Add Network Service Name icon to add a network service name. For more information about configuring the network service name, see Oracle 11g Installation and Configuration Guide or Oracle 11g R2 Installation and Configuration Guide.

- To connect to the default tablespace of the database, select Default Tablespace. To connect to a named tablespace, select Other Tablespace, and then enter the tablespace name.

¡ Superuser—Enter the database superuser name. The default is sa.

¡ Password—Enter the password of the superuser.

¡ Database Location—Select local host from the list when you use a local database, and select other server from the list when you use a remote database.

¡ Database Server Address—You do not need to configure this field when you use a local database. Enter the database server IP address when you use a remote database.

¡ Listening Port—Enter the listening port of the database server. The default is 1433, which is the default SQL Server port. For the default ports of other database types, see Table 11.

¡ Installation Location—Specify the local directory in which to install IMC.

¡ Data File Location—Specify the local directory for storing the data files.

¡ HTTP Port—Enter the HTTP port number for the IMC Web server. The default is 8080.

¡ HTTPS Port—Enter the HTTPS port number for the IMC Web server. The default is 8443.

4. Click OK.

The Checking installation environments dialog box displays the check results, as shown in Figure 4.

5. Click Exit.

Fix any failed check items according to the check results.
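One of the envcheck checks is port availability, and the HTTP and HTTPS ports that you enter must not be in use by other services. If you want to pre-check a port before you run the tool, the following Python sketch offers a rough substitute. It tries to bind each port locally and treats a bind failure as "in use." This is an approximation, not the logic envcheck uses. For example, Windows socket-sharing rules can let some bindings succeed even when another service is listening. The helper names are hypothetical.

```python
import socket


def port_in_use(port, host="0.0.0.0"):
    """Return True if the TCP port cannot be bound on this host (likely in use)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:
            return True
    return False


def busy_web_ports(http_port=8080, https_port=8443):
    """Return the IMC Web service ports (defaults from this guide) that are taken."""
    return [p for p in (http_port, https_port) if port_in_use(p)]


print("Busy Web service ports:", busy_web_ports())
```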

Superuser account

During the IMC platform installation, IMC uses the superuser account and password for database access, and then creates database files and user accounts for each deployed component. The deployed IMC platform subcomponents and service components use their own user accounts for database access.

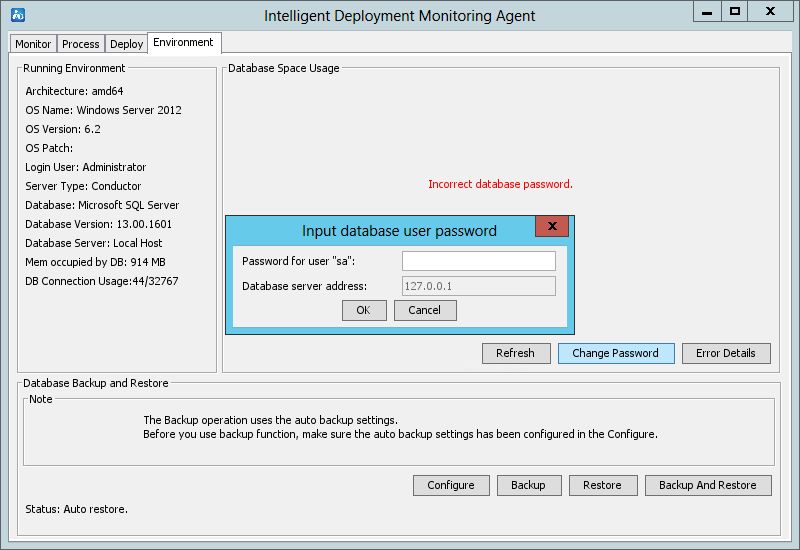

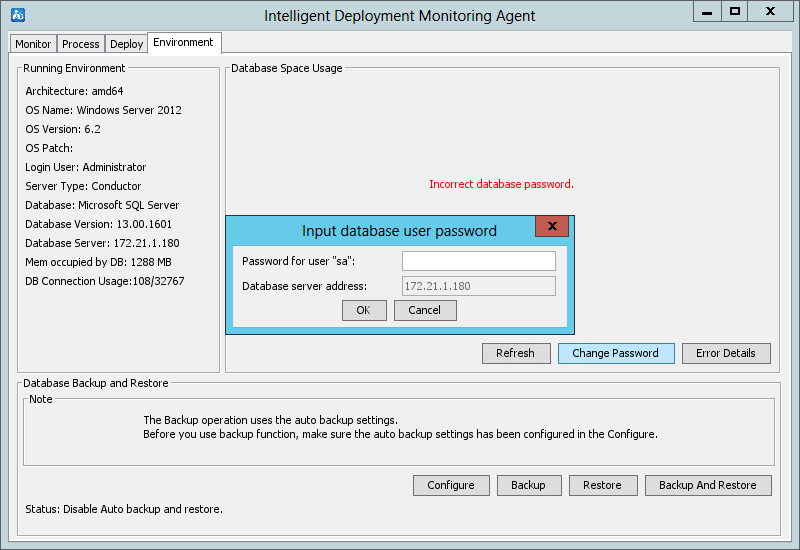

If the password of the superuser account is changed after IMC deployment, be sure to update the password in IMC. If the password is not promptly updated, you cannot view database information on the Environment tab, deploy new components, or update existing components for IMC.

To update the database user password in IMC:

1. Start the Intelligent Deployment Monitoring Agent, and then click the Environment tab.

2. Click Change Password.

The Change Password button is displayed only when the Intelligent Deployment Monitoring Agent detects that the database user password is incorrect.

3. Enter the new database password, and then click OK, as shown in Figure 5 and Figure 6.

Figure 5 Changing the superuser password (local database)

Figure 6 Changing the superuser password (remote database)

Table 12 lists the default superuser accounts of SQL Server, MySQL, and Oracle databases.

Table 12 Database superuser accounts

| Database | Superuser |
|---|---|
| SQL Server | sa |
| Oracle | system, sys |
| MySQL | root |

Setting the system time

As a best practice, configure the following settings:

· Do not enable seasonal time adjustments such as daylight saving time.

· Before installing IMC, verify that the system time, date, and time zone settings on the server are correct.

Do not modify the system time on the server after IMC is started. If you modify the system time, the following issues might occur:

· If you set the system time forward, the system might become so busy processing the sudden burst of expired data that real-time data sampling is delayed. The delay clears automatically after the expired data has been processed.

· If you set the system time backward, data with overlapping timestamps is generated, and data processing might become abnormal. Data processing returns to normal after the overlapping period has passed.
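The following Python sketch shows the clock settings to review before installation: the local time, UTC offset, time zone name, and whether daylight saving time is currently in effect. It also shows why a manual time change affects time-based processing. The wall clock can jump when someone changes the system time, but a monotonic clock cannot, so the difference between the two clocks exposes the jump. The function names are hypothetical, and the script is not part of IMC.

```python
import time
from datetime import datetime


def clock_summary():
    """Summarize the local clock settings worth reviewing before installation."""
    now = datetime.now().astimezone()  # timezone-aware local time
    return {
        "local_time": now.isoformat(timespec="seconds"),
        "utc_offset_hours": now.utcoffset().total_seconds() / 3600,
        "tz_name": now.tzname(),
        "dst_in_effect": time.localtime().tm_isdst > 0,
    }


def wall_clock_jump(interval=1.0):
    """Seconds the wall clock moved relative to the monotonic clock during interval.

    The result is close to 0 normally. A large positive or negative value means
    the system time was set forward or backward while the function was waiting.
    """
    wall_start, mono_start = time.time(), time.monotonic()
    time.sleep(interval)
    return (time.time() - wall_start) - (time.monotonic() - mono_start)


print(clock_summary())
```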

Installing and deploying the IMC platform

The following information describes how to install and deploy the IMC platform on a Windows host on which a SQL Server database is already installed.

In the distributed deployment scheme, once the database client is installed, you can install and deploy the IMC platform on the conductor server. Only some subcomponents of the IMC platform can be deployed on a member server. For more information, see Table 13.

Table 13 IMC platform subcomponents and deployment requirements

| Component | Subcomponent | Deployment server |
|---|---|---|
| IMC platform | Resource Management | Conductor |
| IMC platform | Alarm Management | Conductor or member |
| IMC platform | User Selfservice Management | Conductor or member |
| IMC platform | Guest Access Management | Conductor or member |
| IMC platform | Intelligent Configuration Center | Conductor |
| IMC platform | Report Management | Conductor |
| IMC platform | NE Management | Conductor |
| IMC platform | Performance Management | Conductor or member |
| IMC platform | ACL Management | Conductor or member |
| IMC platform | Network Asset Management | Conductor |
| IMC platform | Security Control Center | Conductor |
| IMC platform | General Search Service Management | Conductor or member |
| IMC platform | Syslog Management | Conductor or member |
| IMC platform | VLAN Management | Conductor or member |
| IMC platform | WeChat Server | Conductor or member |

NOTE: The IMC platform supports multiple member servers, but each subcomponent can be deployed on only one server.

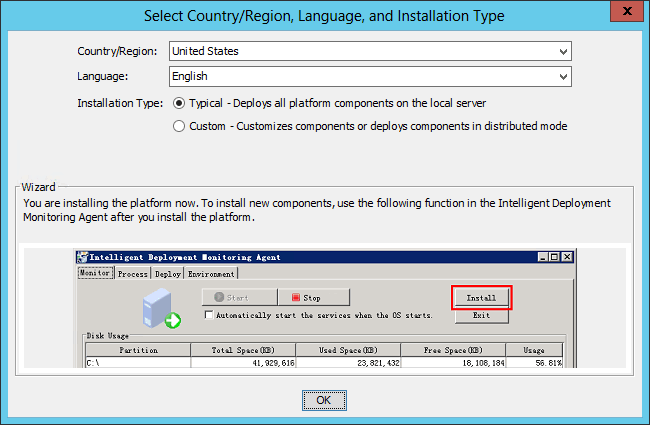

Selecting the installation type

1. Log on to Windows as an administrator.

2. Run the install.bat script in the install directory of the IMC installation package.

The Select Country/Region, Language, and Installation Type dialog box appears, as shown in Figure 7.

Figure 7 Select Country/Region, Language, and Installation Type dialog box

3. Select the country/region, language, and installation type.

IMC supports typical and custom installations.

¡ Typical—All platform subcomponents are automatically installed and deployed on the local host without manual intervention.

¡ Custom—You can select desired platform subcomponents to install on the local host. After installation is complete, manually deploy the platform subcomponents.

In distributed deployment mode, you must select the Custom installation type so that you can install platform subcomponents as needed.

4. Click OK.

The IMC installation file does not have any special requirements for the transfer directory. Copy the installation file to the local server and then decompress it. You can decompress the file by using decompression software on Windows systems and the unzip command on Linux systems.
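If you prepare servers with scripts, you can also decompress the package programmatically. The following Python sketch assumes that the installation package is a .zip archive, which the reference to the unzip command suggests. It checks the archive for corrupt members before it extracts anything. The function name is hypothetical.

```python
import zipfile
from pathlib import Path


def extract_package(archive, destination):
    """Extract an IMC installation archive and return its top-level entries."""
    destination = Path(destination)
    destination.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        corrupt = zf.testzip()  # name of the first bad member, or None
        if corrupt is not None:
            raise zipfile.BadZipFile(f"corrupt member in archive: {corrupt}")
        zf.extractall(destination)
        return sorted({name.split("/", 1)[0] for name in zf.namelist()})
```

Python's `zipfile` module does not restore Unix permission bits. On Linux, the extracted install.sh script therefore still needs the chmod step in the guidelines that follow.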

To install the IMC platform on a Linux host, use the following guidelines:

· Run the install.sh script in the install directory of the IMC installation package as the root user.

· Copy the IMC installation package to a local directory before you run the install.sh script.

· If the IMC installation package was transferred through FTP, grant execute permission to the install.sh script by executing the chmod -R 775 install.sh command in the directory of the script.

When you install or upgrade IMC, restart the IMC server if a socket issue exists in the IMC installation environment. If no socket issue exists, you do not need to restart the IMC server.

The installation packages of the following components are located in the tools\components directory: ACL, EUPLAT, GAM, RestPlugin, VLAN, and WeChat. Before you install and deploy the IMC platform, copy the installation packages of the components you want to install to the install\components directory.

Installing the IMC platform in typical mode

1. In the Select Country/Region, Language, and Installation Type dialog box, select the Typical installation type, and then click OK.

The Checking installation parameters dialog box opens, as shown in Figure 8 (local database), Figure 9 (remote database), and Figure 10 (embedded database).

Figure 8 Checking installation environment (local database)

Figure 9 Checking installation environment (remote database)

Figure 10 Checking installation parameters (embedded database)

2. Configure the parameters as needed, as described in "(Optional.) Checking the installation environment."

3. Click OK.

The system checks the installation environment and database connectivity, and then displays the check results.

Fix any failed check items according to the check results.

After the checks are passed, the system installs and deploys all IMC platform subcomponents.

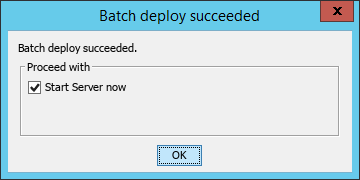

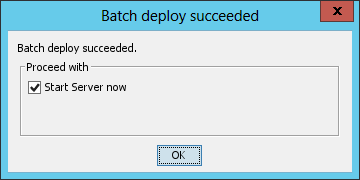

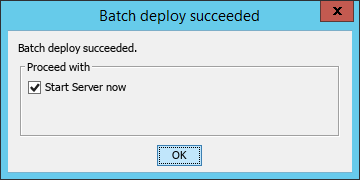

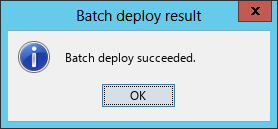

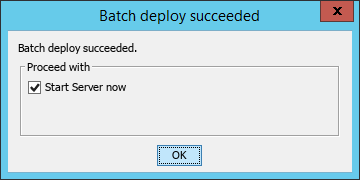

4. After IMC installation and deployment is complete, the Batch deploy succeeded dialog box opens, as shown in Figure 11.

Figure 11 Batch deploy succeeded

5. Click OK.

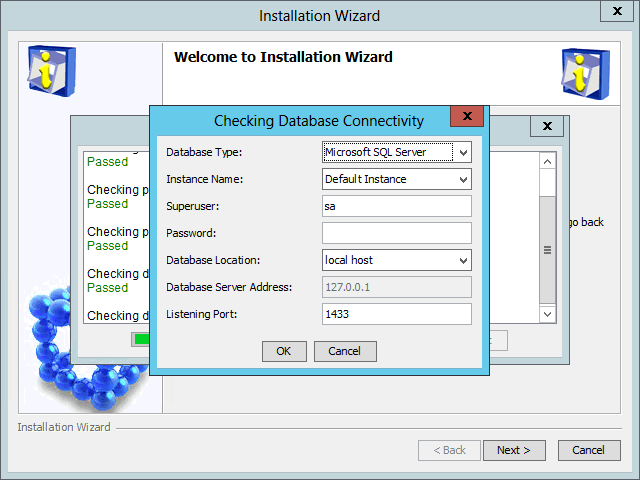

Installing the IMC platform in custom mode

Installing the IMC platform

1. In the Select Country/Region, Language, and Installation Type dialog box, select the Custom installation type, and then click OK.

¡ For the local database scheme and remote database scheme, the Checking Database Connectivity dialog box opens, as shown in Figure 12 and Figure 13.

Figure 12 Checking Database Connectivity dialog box (local database)

Figure 13 Checking Database Connectivity dialog box (remote database)

¡ For the embedded database scheme, the Checking installation environments dialog box opens, as shown in Figure 14.

Figure 14 Checking installation environments (embedded database)

2. Configure the parameters as needed, as described in "(Optional.) Checking the installation environment."

3. Click OK.

The system checks the installation environment and database connectivity, and then displays the check results.

Fix any failed check items according to the check results.

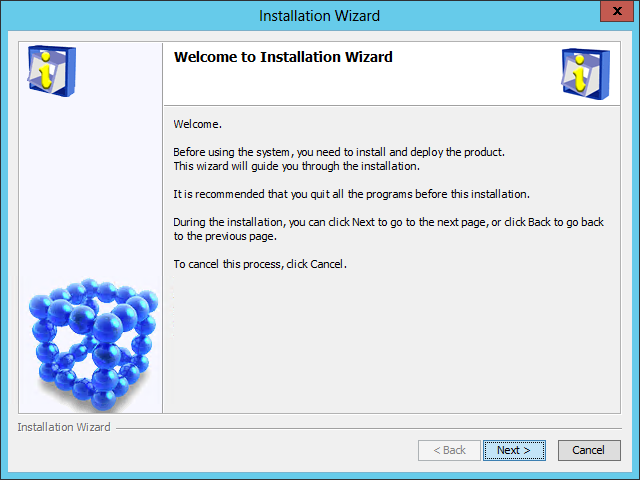

After the checks are passed, the IMC installation wizard opens, as shown in Figure 15.

Figure 15 IMC installation wizard

4. Click Next.

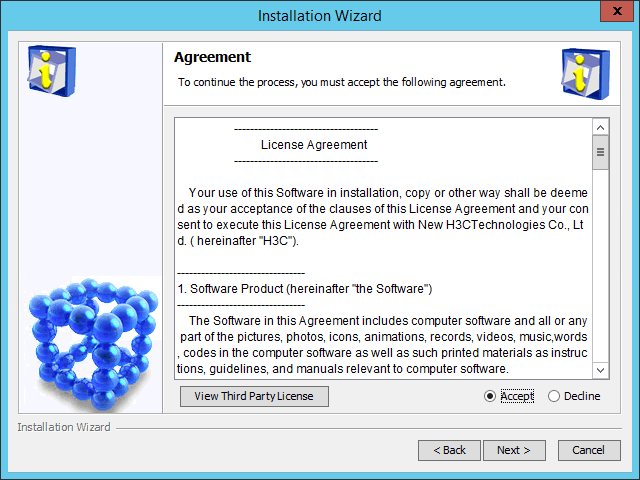

The Agreement page opens, as shown in Figure 16.

Figure 16 Agreement page

5. Read the license agreement, select Accept, and then click Next.

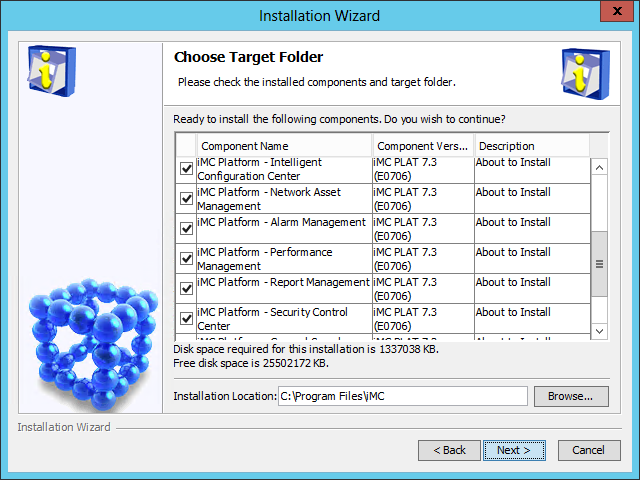

The Choose Target Folder page opens, as shown in Figure 17.

Figure 17 Choose Target Folder page

6. Select the components you want to install and specify a local path as the installation location.

The installation program checks whether the specified installation path contains any files. If the path contains files, a message is displayed. Click OK to delete the files.

The default installation location is X:\Program Files\iMC, where X is the drive letter of the disk with the largest amount of free space.

NOTE:

· If you install the IMC platform on a Linux host, do not use a symlink path as the installation location.

· In Linux, the default installation location is /opt/iMC.

7. Click Next.

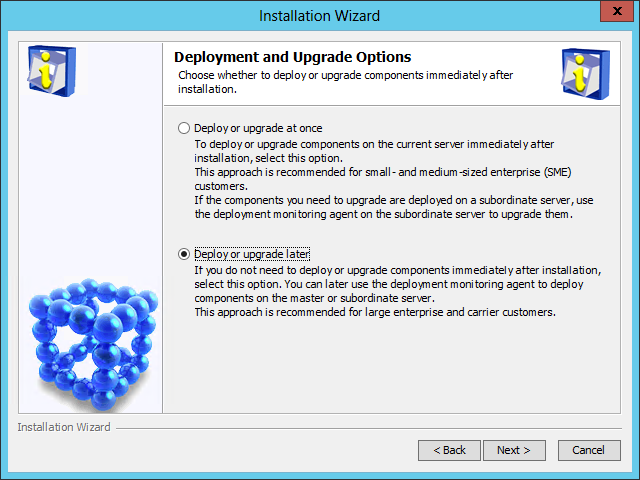

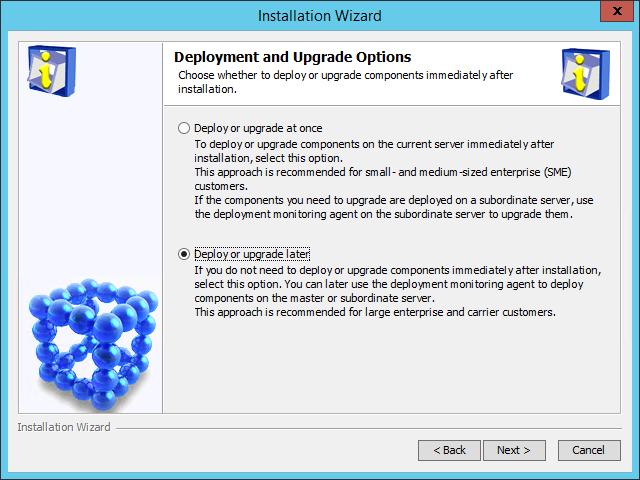

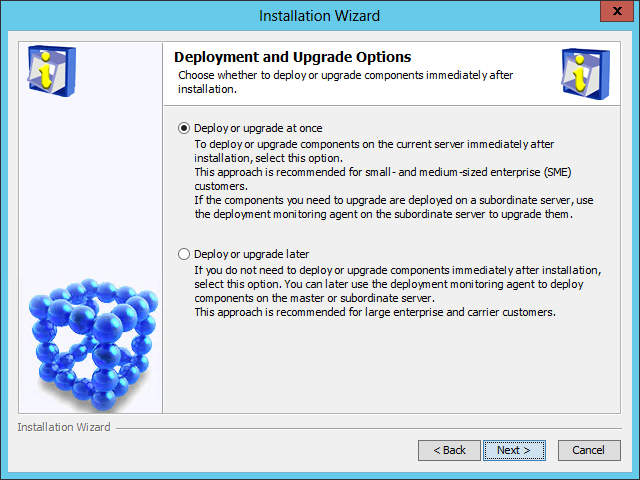

The Deployment and Upgrade Options page opens, as shown in Figure 18.

Figure 18 Deployment and Upgrade Options page

8. Select Deploy or upgrade later.

9. Click Next.

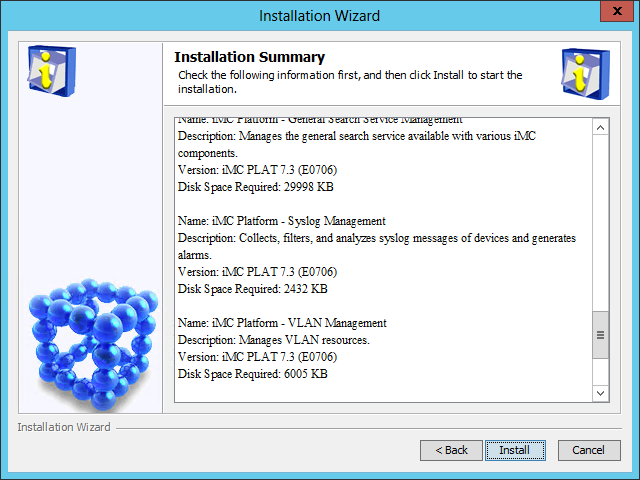

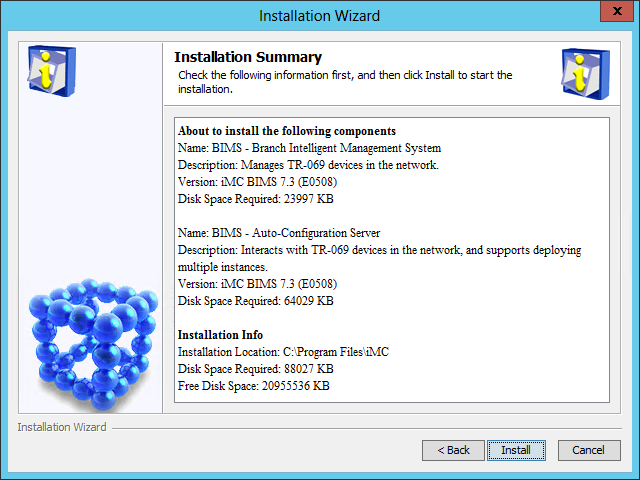

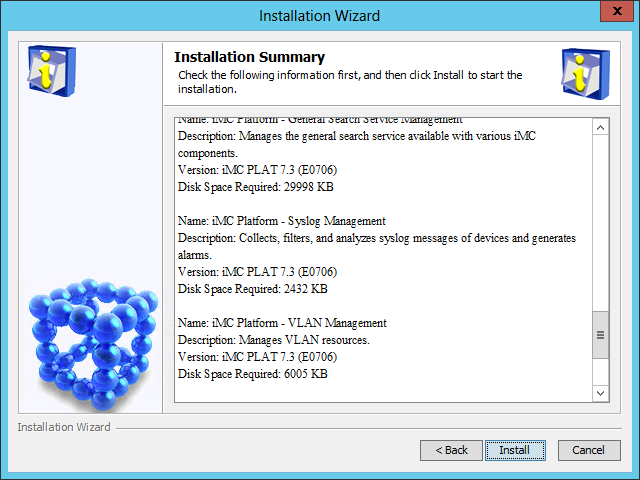

The Installation Summary page opens, as shown in Figure 19.

Figure 19 Installation Summary page

10. Verify the installation summary, and then click Install.

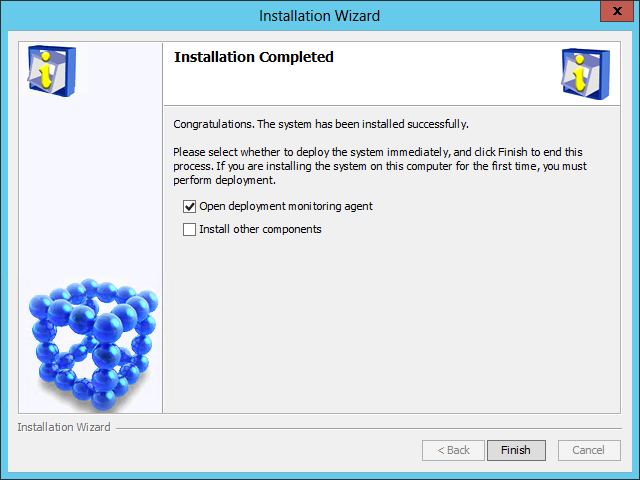

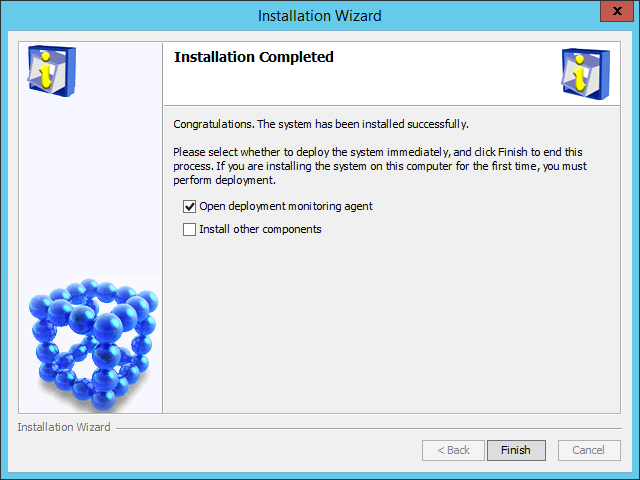

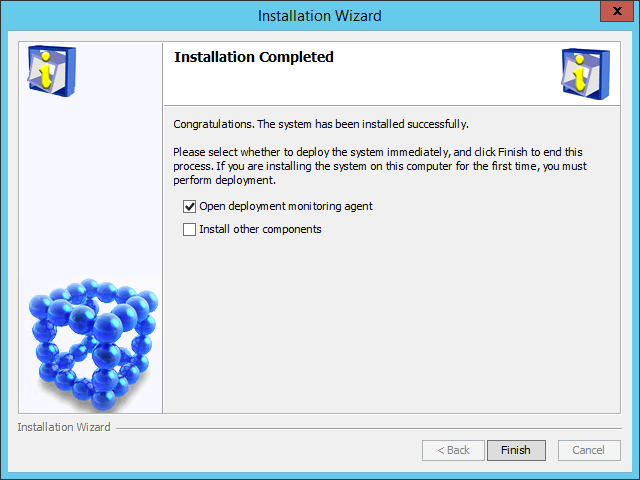

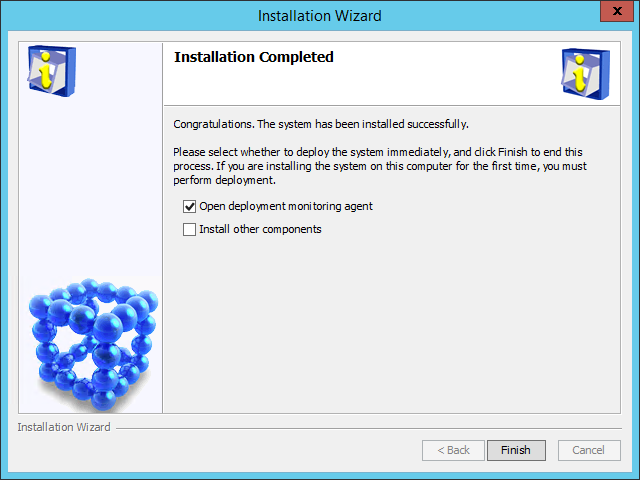

After the installation is complete, the Installation Completed page opens, as shown in Figure 20.

Figure 20 Installation Completed page

11. Select Open deployment monitoring agent, and then click Finish.
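In step 6, the default installation location is X:\Program Files\iMC, where X is the drive with the most free space, or /opt/iMC on Linux. The following Python sketch approximates that rule, which can help when you plan disk usage. It is not the installer's actual selection logic. For example, it does not know whether the installer ignores network or removable drives. The function names are hypothetical.

```python
import os
import shutil
import string
from pathlib import PureWindowsPath


def drive_with_most_free_space(roots):
    """Return the root with the most free bytes, skipping roots that are absent."""
    best, best_free = None, -1
    for root in roots:
        try:
            free = shutil.disk_usage(root).free
        except OSError:  # drive letter not present or device not ready
            continue
        if free > best_free:
            best, best_free = root, free
    return best


def default_install_location():
    """Approximate the documented default installation location."""
    if os.name == "nt":
        roots = [f"{letter}:\\" for letter in string.ascii_uppercase]
        drive = drive_with_most_free_space(roots)
        return str(PureWindowsPath(drive, "Program Files", "iMC"))
    return "/opt/iMC"


print(default_install_location())
```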

Deploying the IMC platform component

IMPORTANT: When IMC uses the distributed deployment scheme, you must deploy the IMC platform component on the conductor server.

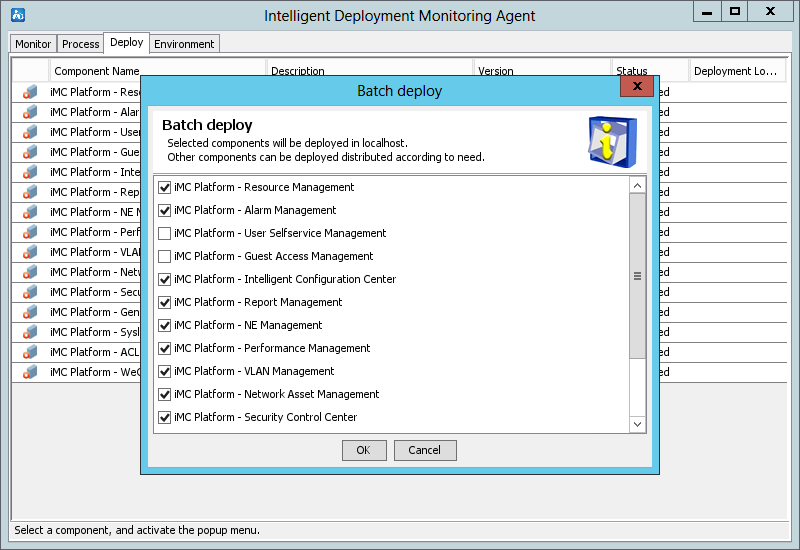

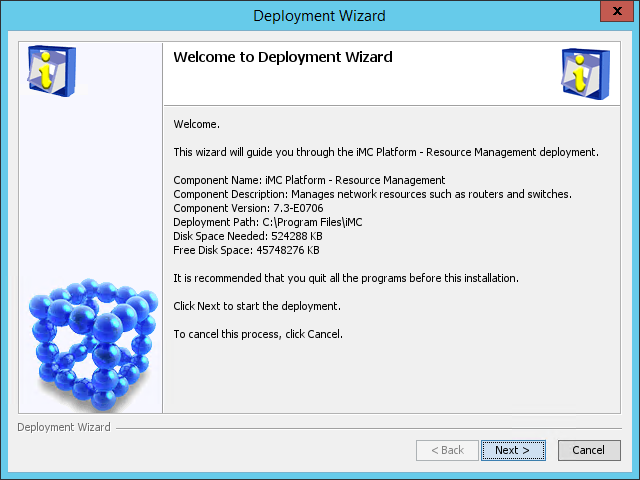

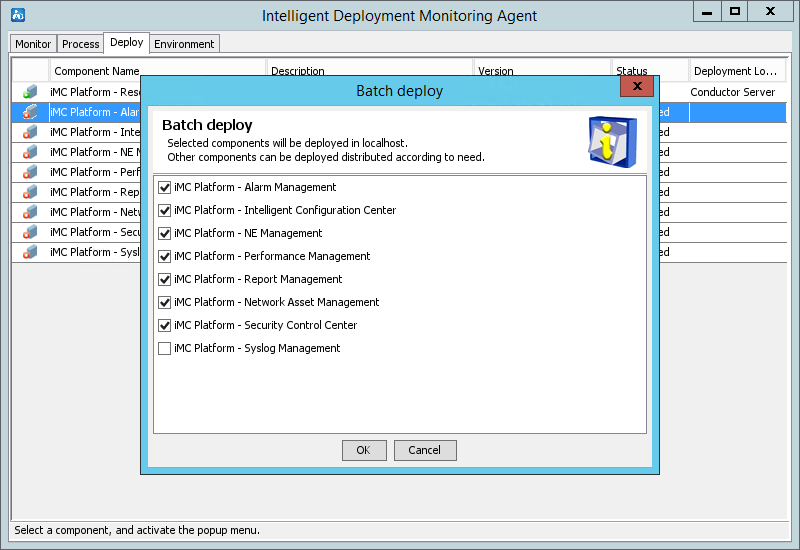

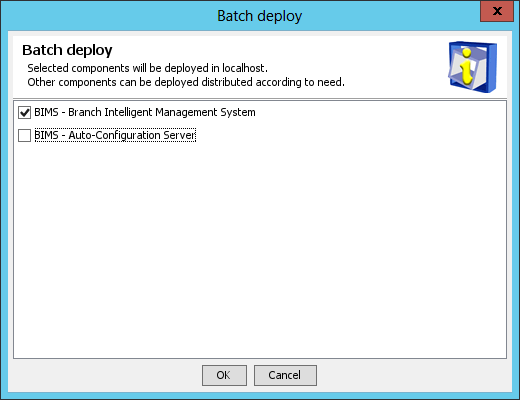

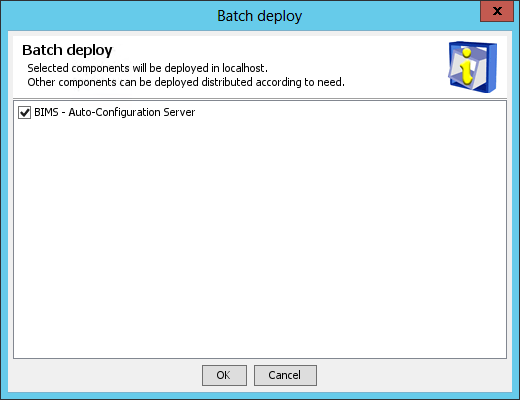

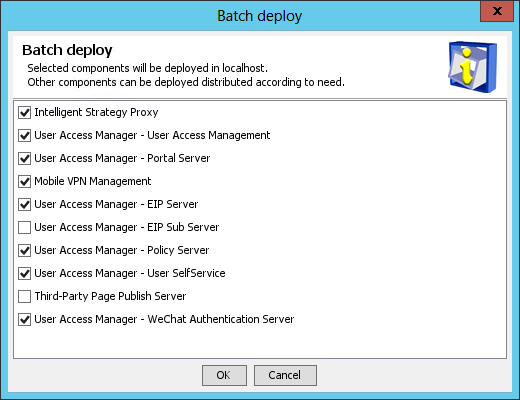

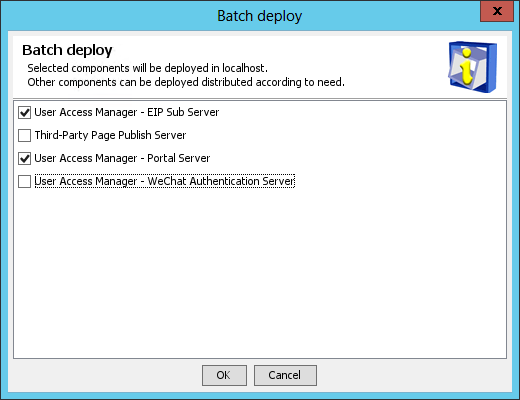

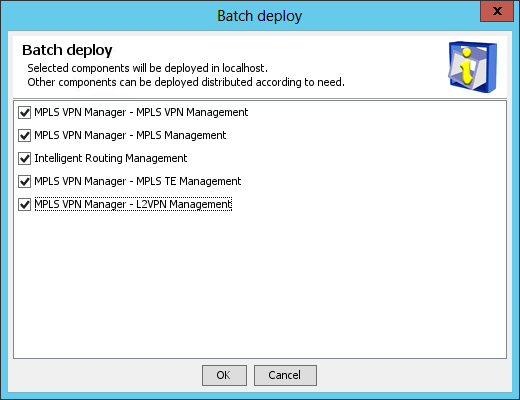

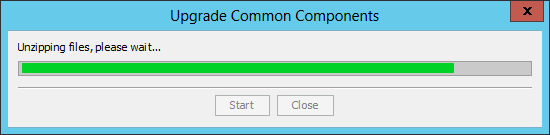

1. After the IMC platform is installed, the system automatically starts the Intelligent Deployment Monitoring Agent and displays the Batch deploy dialog box, as shown in Figure 21.

Figure 21 Batch deploy dialog box

2. Select the components to be deployed (select the default components in this example), and then click OK.

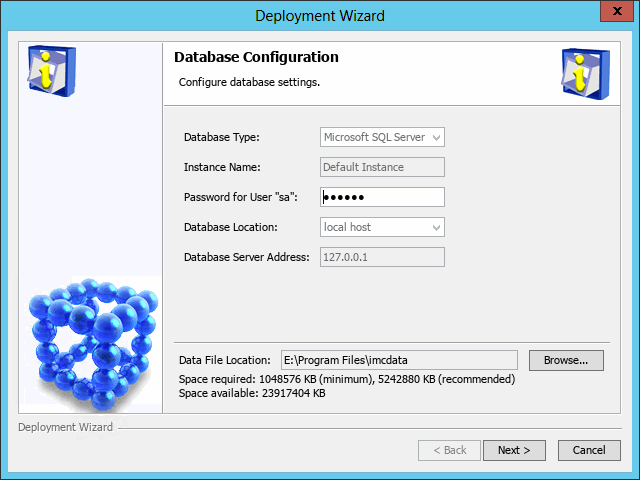

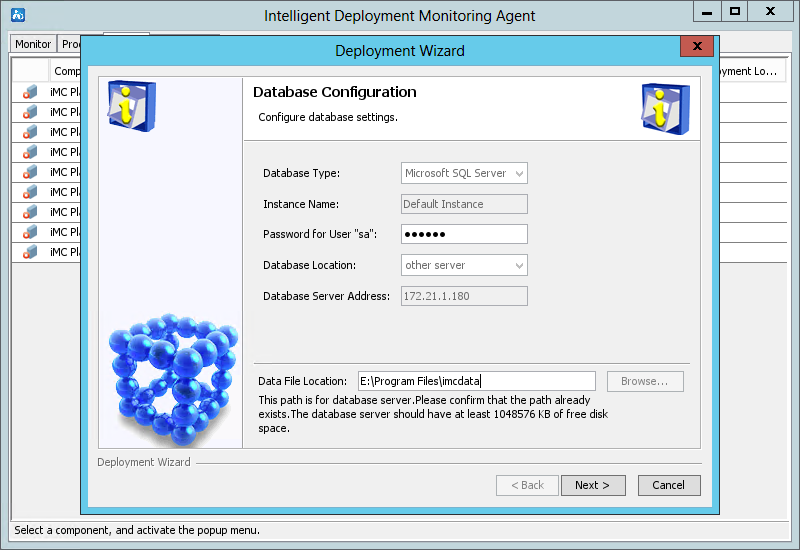

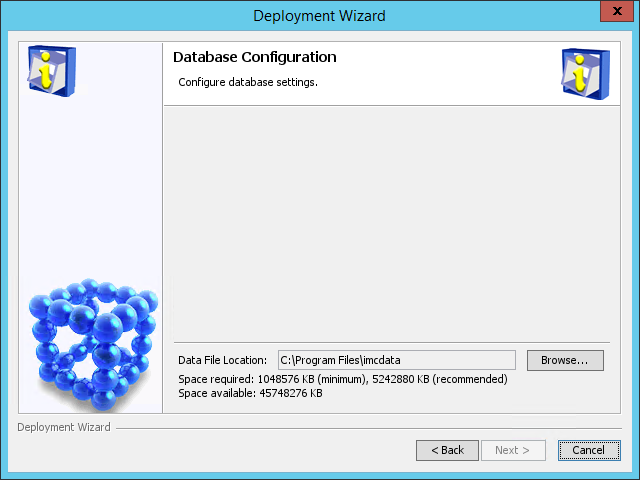

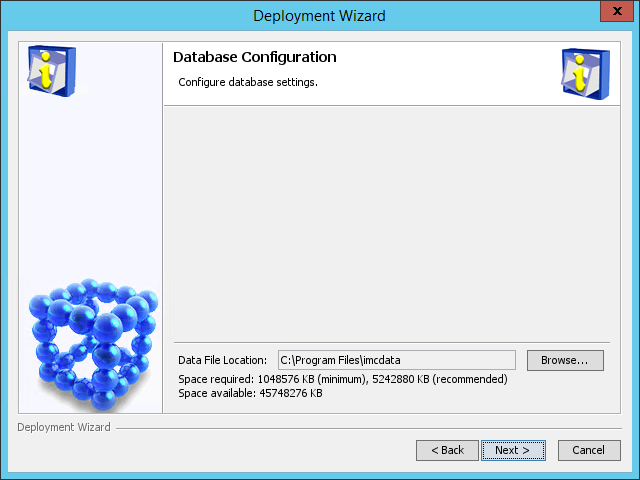

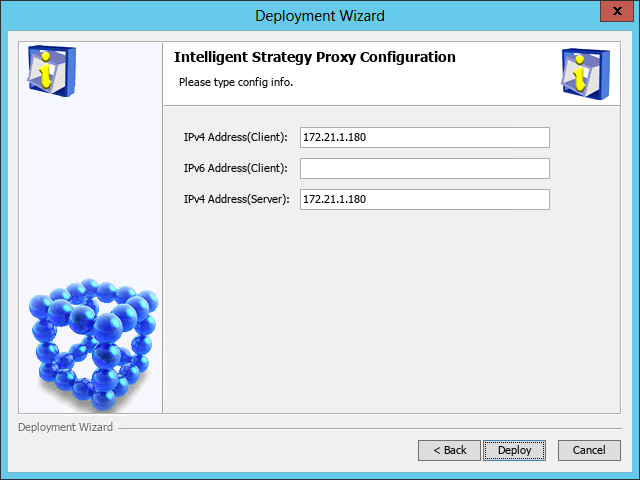

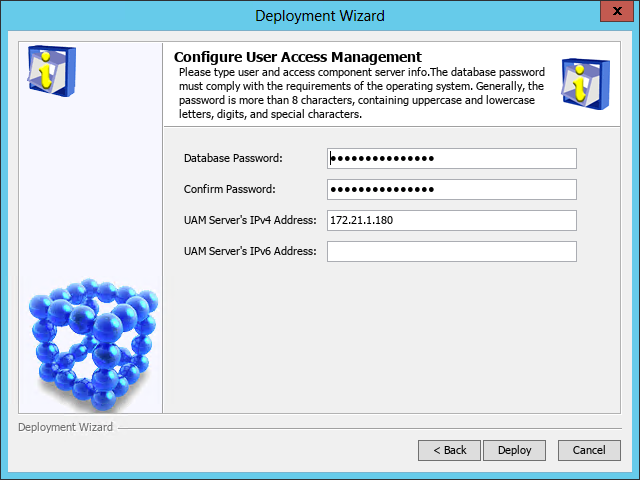

The Database Configuration page opens, as shown in Figure 22 and Figure 23.

Figure 22 Database Configuration page (local database)

Figure 23 Database Configuration page (remote database)

3. Enter the password of the superuser.

4. (Applicable only to IMC centralized deployment with an embedded database.) Perform the following tasks:

a. Click Cancel to exit the deployment wizard, and then click OK in the window that opens.

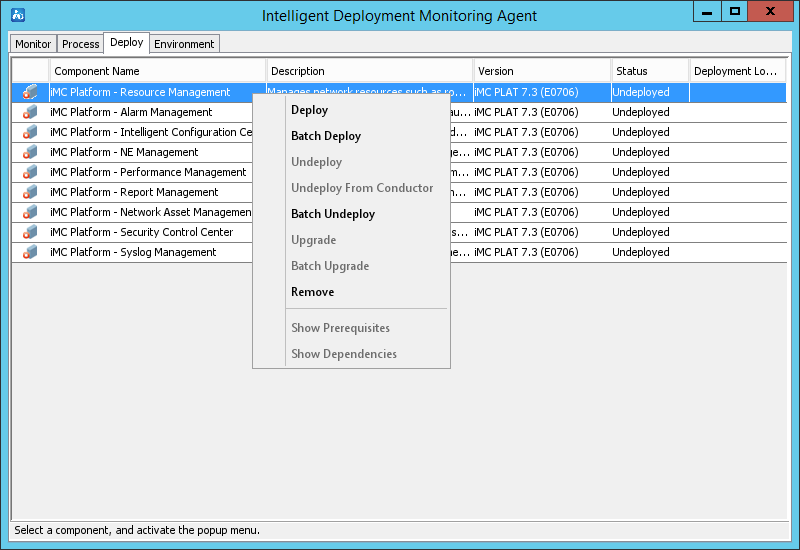

b. On the Deploy tab of the Intelligent Deployment Monitoring Agent window, right-click the resource management component, and then select Deploy, as shown in Figure 24.

Figure 24 Deploying the resource management component

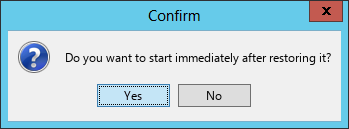

c. In the confirmation dialog box that opens, click Yes.

The deployment wizard opens, as shown in Figure 25.

d. Click Next. The Database Configuration page opens, as shown in Figure 26.

Figure 26 Database Configuration page

e. Click Back to return to the Deployment Wizard page, as shown in Figure 25, and then click Next to enter the Database Configuration page, as shown in Figure 27.

Figure 27 Database Configuration page

5. Set the data file location.

¡ Local database:

Make sure the specified data file location is on a readable, writable, and uncompressed disk drive and does not include any files.

The default data file location is X:\Program Files\imcdata, where X is the drive letter of the disk that has the largest amount of free space.

¡ Remote database:

Specify the directory on the database server for storing IMC data files. Make sure the specified data file location exists on the database server and does not include any files.

NOTE: On Linux, the default data file location is /opt/imcdata.

6. Click Next. In the confirmation dialog box that opens, click OK.

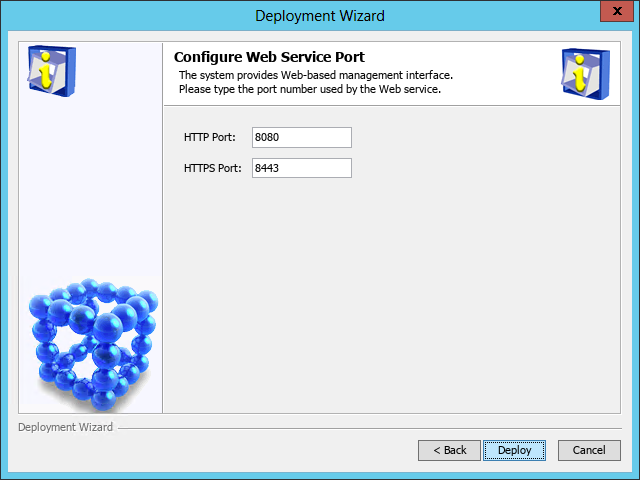

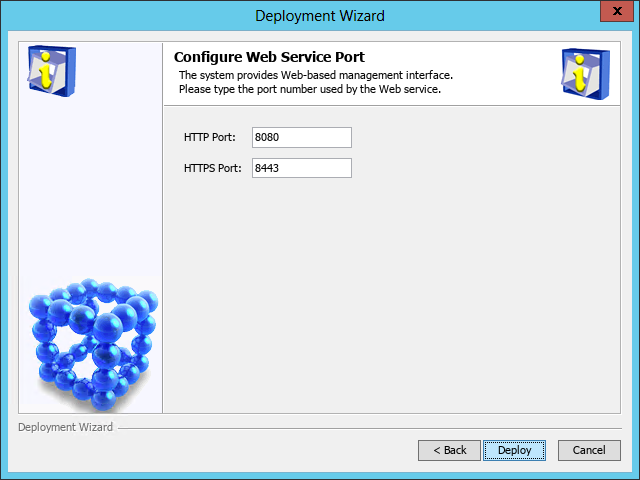

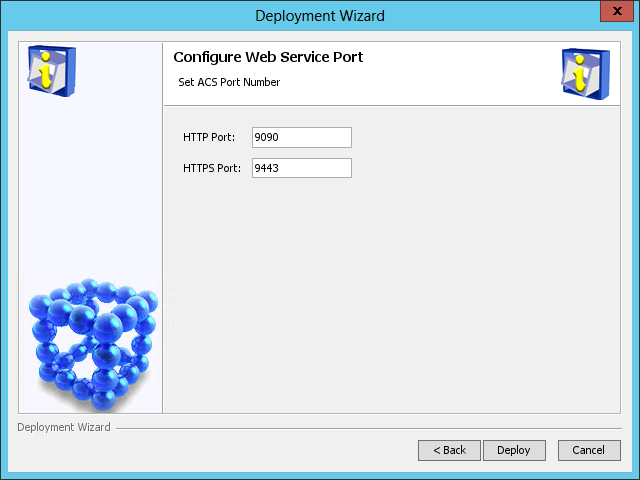

The Configure Web Service Port page opens, as shown in Figure 28.

Figure 28 Configure Web Service Port page

7. Enter the HTTP and HTTPS port numbers. This example uses the default port numbers 8080 and 8443.

If you specify other port numbers, make sure the specified ports are not used by other services.

8. (Applicable only to IMC centralized deployment with an embedded database.) Perform the following tasks:

a. After the resource management component deployment is complete, click Finish to close the deployment wizard.

b. On the Deploy tab of the Intelligent Deployment Monitoring Agent window, right-click the component you want to deploy, and then select Batch Deploy. In the Batch deploy window that opens, select the target components, as shown in Figure 29.

9. Click Deploy. After the deployment is complete, the Batch deploy succeeded dialog box opens, as shown in Figure 30.

Figure 30 Batch deploy succeeded dialog box

10. Click OK.
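Step 5 requires that a local data file location be on a readable, writable, uncompressed drive and that it contain no files. The following Python sketch checks a candidate folder against those requirements before deployment. If the folder does not exist yet, the script tests its nearest existing parent instead. The compression test relies on `st_file_attributes`, which Python provides only on Windows. This check does not apply to a remote database, whose data file location is on the database server. The function name is hypothetical.

```python
import os
import stat
from pathlib import Path


def check_data_location(path):
    """Return a list of problems with a local data file location (empty = OK)."""
    problems = []
    target = Path(path)
    # If the folder does not exist yet, test the nearest existing ancestor.
    probe = target
    while not probe.exists() and probe != probe.parent:
        probe = probe.parent
    if target.exists() and not target.is_dir():
        problems.append("path exists but is not a directory")
    elif target.is_dir() and any(target.iterdir()):
        problems.append("directory already contains files")
    if not os.access(probe, os.R_OK | os.W_OK):
        problems.append(f"{probe} is not readable and writable")
    # st_file_attributes exists only on Windows; elsewhere treat it as 0.
    attributes = getattr(probe.stat(), "st_file_attributes", 0)
    if attributes & stat.FILE_ATTRIBUTE_COMPRESSED:
        problems.append(f"{probe} is compressed")
    return problems
```

For example, `check_data_location(r"E:\Program Files\imcdata")` returns an empty list when the folder meets all requirements.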

Deploying IMC on a member server (distributed deployment)

Before you deploy IMC subcomponents on a member server for the first time, install the Intelligent Deployment Monitoring Agent on the member server.

Make sure you have started IMC on the conductor server.

Starting the remote installation wizard

To start the remote installation wizard:

1. On the member server, right-click the installslave.bat script in the install directory of the installation package and select Run as Administrator.

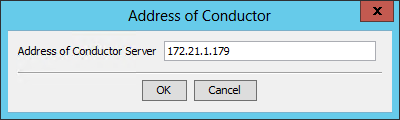

The Address of Conductor page opens, as shown in Figure 31.

To start the remote installation wizard on Linux, run the installslave.sh script in the install directory of the installation package as the root user. If the installation package was transferred through FTP, first grant execute permission to the installslave.sh script by executing the chmod -R 775 installslave.sh command in the directory of the script.

Figure 31 Address of Conductor

2. Enter the IP address of the conductor server, and then click OK.

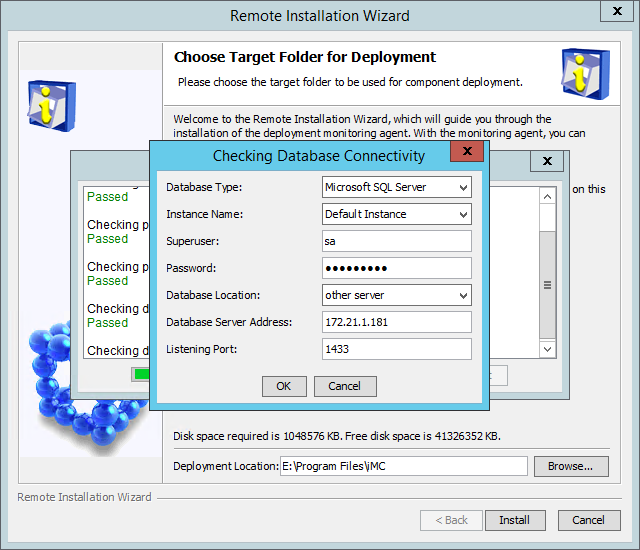

The Checking Database Connectivity dialog box opens, as shown in Figure 32 and Figure 33.

Figure 32 Checking installation environment (local database)

Figure 33 Checking Database Connectivity (remote database)

3. Configure the parameters as needed. For descriptions of the parameters, see "(Optional.) Checking the installation environment."

4. Click OK to start checking the database connectivity.

After the installation environment check is passed, the Remote Installation Wizard opens, which means that you have successfully started the remote installation wizard.

Installing the Intelligent Deployment Monitoring Agent

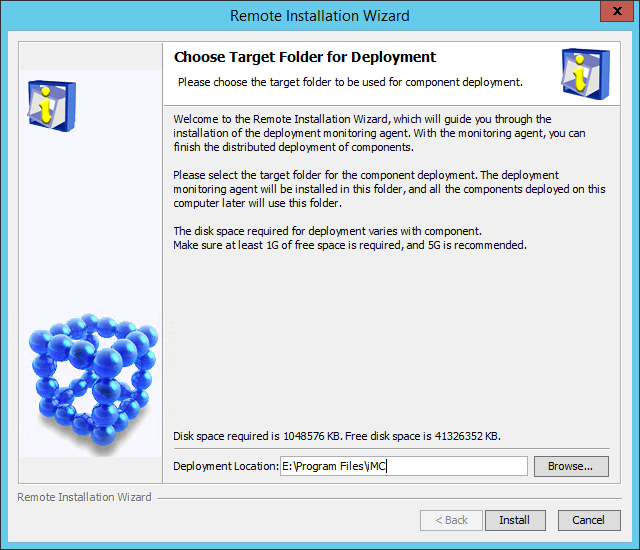

1. In the Choose Target Folder for Deployment dialog box shown in Figure 34, specify the deployment location for the Intelligent Deployment Monitoring Agent.

On Windows, the default deployment location is the \Program Files\iMC directory on the disk with the most free space. On Linux, the default deployment location is /opt/iMC. This example uses E:\Program Files\iMC.

The installation program checks whether the specified installation path contains files. If the path contains files, a message is displayed. Click OK to delete the files.

Figure 34 Choose Target Folder for Deployment

2. Click Install.

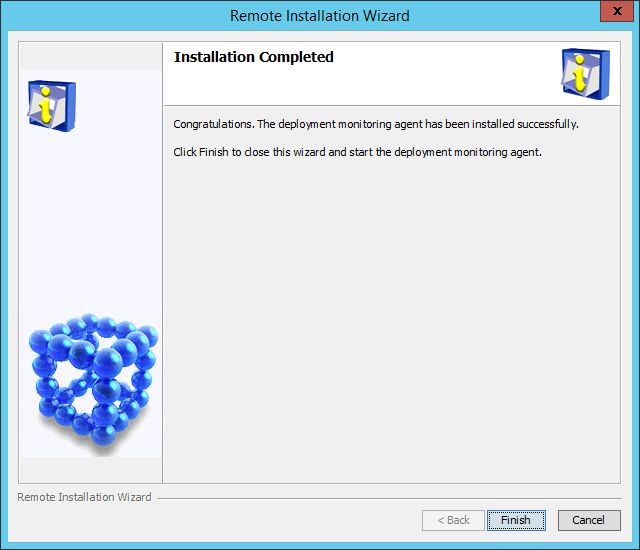

The system starts to download files. After the download, the Installation Completed dialog box opens, as shown in Figure 35.

Figure 35 Installation Completed

3. Click Finish.

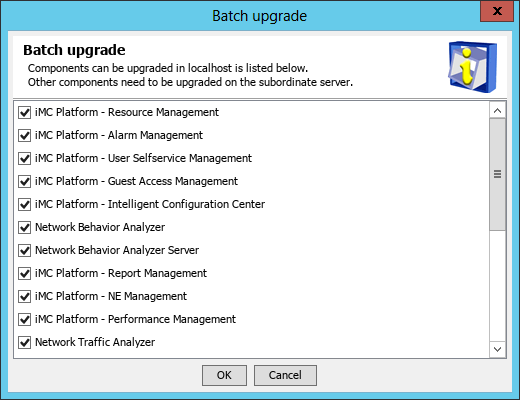

Deploying the IMC platform subcomponents

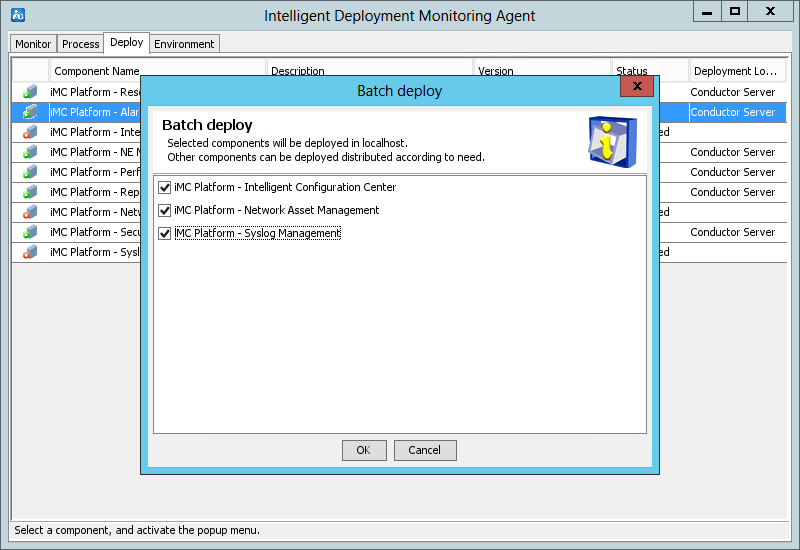

1. Click the Deploy tab.

The Deploy tab displays information about all IMC components that have been installed.

2. Right-click a platform subcomponent that has not been deployed, and then select Batch Deploy from the shortcut menu.

The Batch deploy dialog box opens.

Figure 36 Batch deploy

3. Select the subcomponents you want to deploy, and then click OK.

The system starts downloading the files.

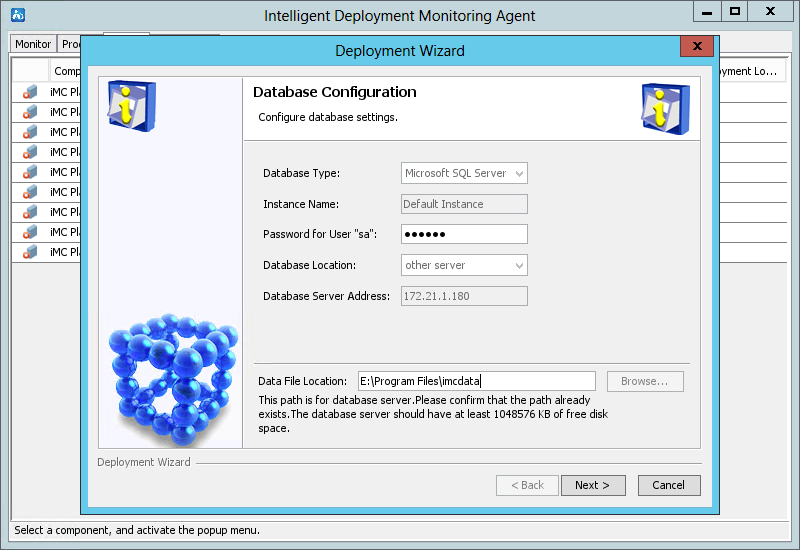

4. After the download is complete, perform the following tasks on the Database Configuration page:

a. Enter the password of the superuser specified during IMC installation (sa in this example).

b. Set the data file location.

- Local database:

Make sure the specified data file location is on a readable, writable, and uncompressed disk drive and does not include any files.

The default data file location is X:\Program Files\imcdata, where X is the drive letter of the disk that has the largest amount of free space.

- Remote database:

Specify the directory on the database server for storing IMC data files. Make sure the specified data file location exists on the database server and does not include any files.

NOTE: On Linux, the default data file location is /opt/imcdata.

Figure 37 Database Configuration (local database)

Figure 38 Database Configuration page (remote database)

5. Click Next. On the Configure Web Service Port page that opens, set HTTP Port (8080 by default) and HTTPS Port (8443 by default) as needed.

Figure 39 Configure Web Service Port

6. Click Deploy to start the deployment.

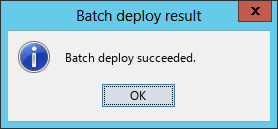

After the deployment is finished, the Batch deploy result dialog box opens.

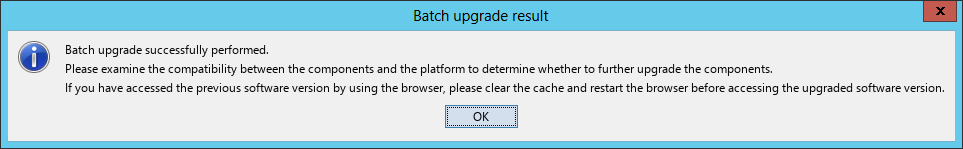

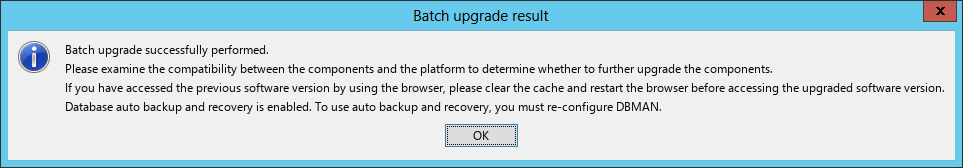

Figure 40 Batch deploy result

7. Click OK.

Managing IMC by using the Intelligent Deployment Monitoring Agent

The Intelligent Deployment Monitoring Agent is automatically installed after the IMC platform is installed.

As the IMC management and maintenance tool, the Intelligent Deployment Monitoring Agent provides IMC operation information as well as a variety of management options, such as:

· Starting and stopping IMC.

· Installing new components.

· Upgrading IMC components.

· Deploying and removing components.

Starting the Intelligent Deployment Monitoring Agent

To start the Intelligent Deployment Monitoring Agent, click Start, access the all applications page, and then select iMC > Deployment Monitoring Agent.

To start the Intelligent Deployment Monitoring Agent on Linux, run the dma.sh script in the /deploy directory of the IMC installation path.

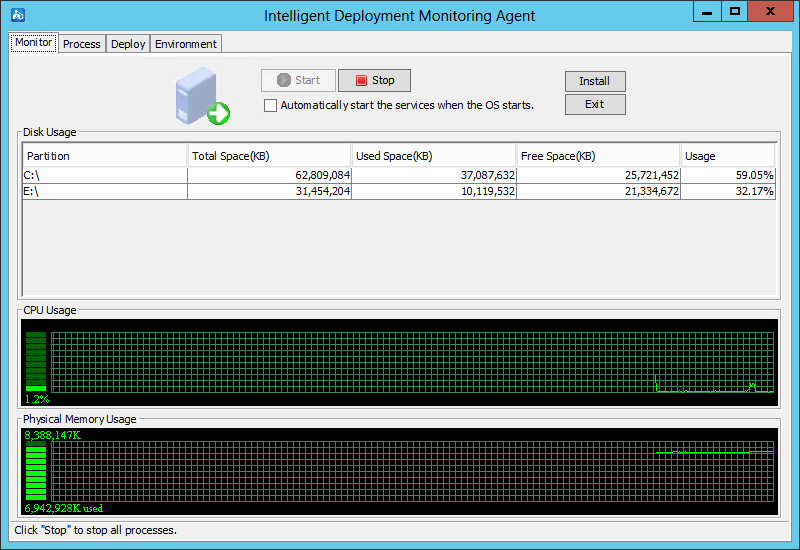

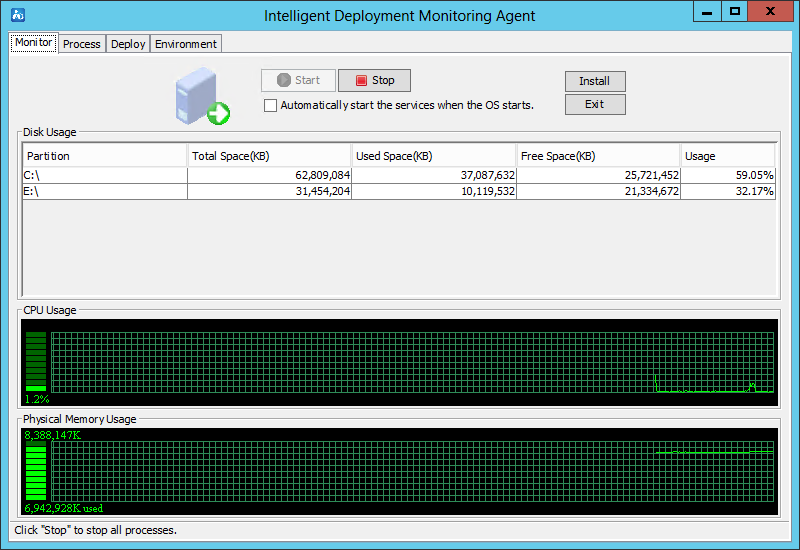

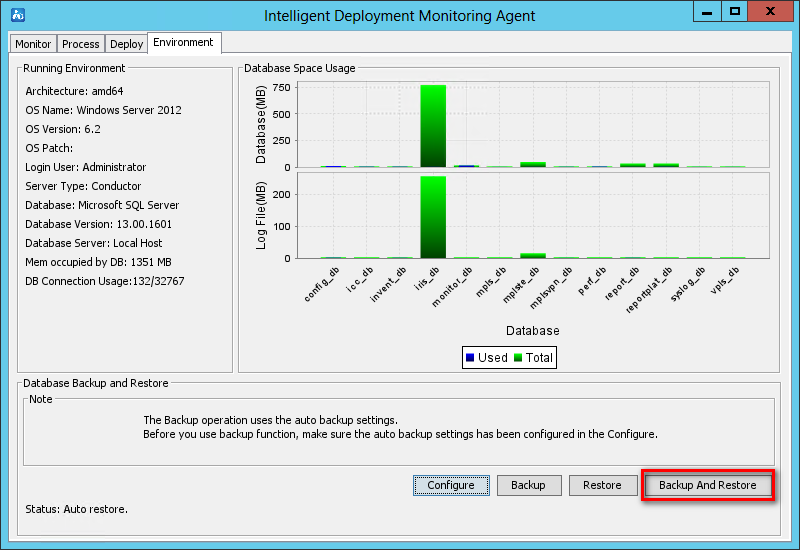

As shown in Figure 41, the agent contains the following tabs: Monitor, Process, Deploy, and Environment. By default, the Monitor tab is displayed.

The following information describes the functionality of each tab.

Figure 41 Intelligent Deployment Monitoring Agent


Monitor tab

As shown in Figure 42, the Monitor tab displays the performance information of the IMC server, including the disk, CPU, and physical memory usage information.

The tab also provides the following options:

· Start—Click this button to start IMC. This button is available when IMC is stopped.

IMPORTANT: For correct operation, start the Intelligent Management Server service with an account that has read/write permissions on the IMC installation folder. By default, the Intelligent Management Server service starts with the Local System account.

· Stop—Click this button to stop IMC. This button is available when IMC is already started.

· Automatically start the services when the OS starts—Select this option to automatically start IMC when the operating system starts.

· Install—Click this button to install new components or upgrade existing components.

· Exit—Click this button to exit the Intelligent Deployment Monitoring Agent.

Figure 42 Monitor tab of the Intelligent Deployment Monitoring Agent

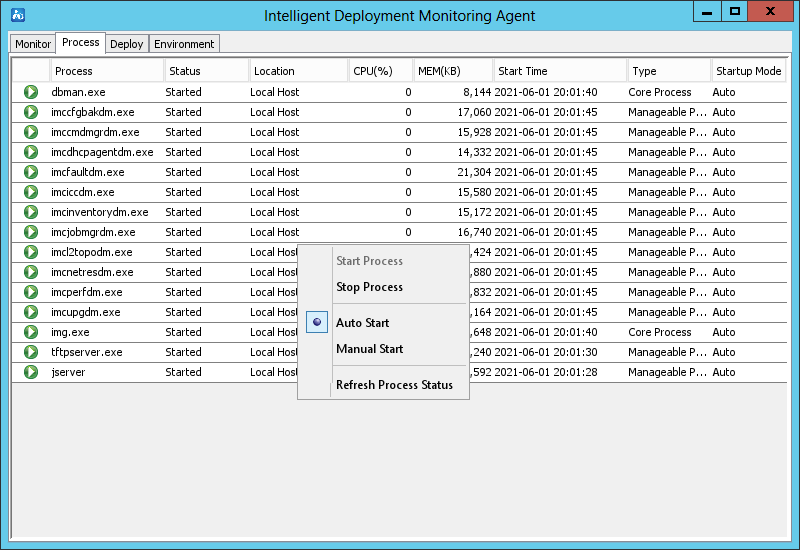

Process tab

As shown in Figure 43, the Process tab displays IMC process information.

Figure 43 Process tab of the Intelligent Deployment Monitoring Agent

The right-click menu of a manageable process provides the following options:

· Start Process—Select this option to start the process. This option is available when the process is stopped.

· Stop Process—Select this option to stop the process. This option is available when the process is started.

· Auto Start—Select this option to enable automatic startup of the process when IMC is started.

· Manual Start—Select this option to require manual startup of the process.

· Refresh Process Status—Select this option to refresh the status of the process.

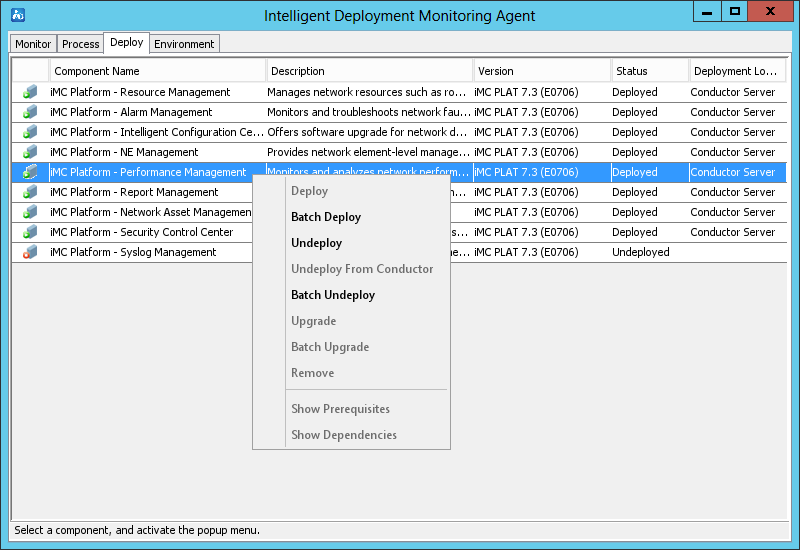

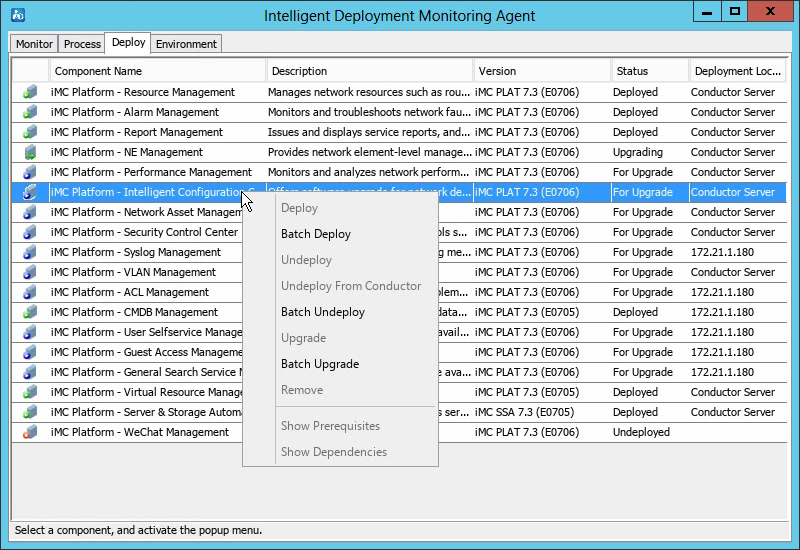

Deploy tab

As shown in Figure 44, the Deploy tab displays information about all deployed components.

Figure 44 Deploy tab of the Intelligent Deployment Monitoring Agent

The right-click menu of a component provides the following options:

· Deploy—Select this option to deploy the component on the local host.

This option is available only when the selected component is in Undeployed state.

· Batch Deploy—Select this option to batch deploy components on the local host.

Only components that have been installed and are in Undeployed state can be deployed.

· Undeploy—Select this option to undeploy the component.

This option is available only when the selected component is in Deployed state.

· Undeploy From Conductor—Select this option to delete component deployment information from the conductor server.

This option is available only when the member server where the component is deployed cannot operate correctly.

· Batch Undeploy—Select this option to undeploy multiple components.

· Upgrade—Select this option to upgrade the component.

· Batch Upgrade—Select this option to upgrade components in batches.

· Remove—Select this option to remove the component from the host.

This option is available only when the selected component is in Undeployed state.

· Show Prerequisites—Select this option to view all components that the selected component depends on. The selected component can be deployed only after all of these prerequisite components have been deployed.

This option is unavailable if the component does not depend on any other components.

· Show Dependencies—Select this option to view all components that depend on the selected component.

This option is unavailable if no other components depend on the selected component.
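The state conditions and prerequisite rules in the Deploy tab menu can be modeled as follows. This Python sketch is illustrative only. `DeployState`, `available_actions`, `deploy_order`, and `dependents` are hypothetical names rather than an IMC interface, and the sketch covers only the options whose availability the list above ties to a component state. Prerequisites are treated as a directed graph, and a topological sort from Python's standard `graphlib` module (Python 3.9 or later) yields an order in which every component follows the components it depends on.

```python
from enum import Enum
from graphlib import TopologicalSorter


class DeployState(Enum):
    UNDEPLOYED = "Undeployed"
    DEPLOYED = "Deployed"


def available_actions(state: DeployState) -> set[str]:
    """State-dependent options only; options with no stated condition are omitted."""
    if state is DeployState.UNDEPLOYED:
        # Deploy and Remove are offered only for an undeployed component.
        return {"Deploy", "Remove"}
    # Undeploy is offered only for a deployed component.
    return {"Undeploy"}


def deploy_order(prerequisites: dict[str, set[str]]) -> list[str]:
    """Order components so that each prerequisite is deployed before the components that need it."""
    # TopologicalSorter expects a mapping of node -> predecessors;
    # a circular dependency raises graphlib.CycleError.
    return list(TopologicalSorter(prerequisites).static_order())


def dependents(prerequisites: dict[str, set[str]], component: str) -> set[str]:
    """Components that directly list `component` as a prerequisite."""
    return {name for name, prereqs in prerequisites.items() if component in prereqs}
```

For example, with placeholder components where `c` requires `b` and `b` requires `a`, `deploy_order` returns `['a', 'b', 'c']`, and `dependents(prereqs, 'a')` returns `{'b'}`, which corresponds to the direct dependents that Show Dependencies would list for `a`.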

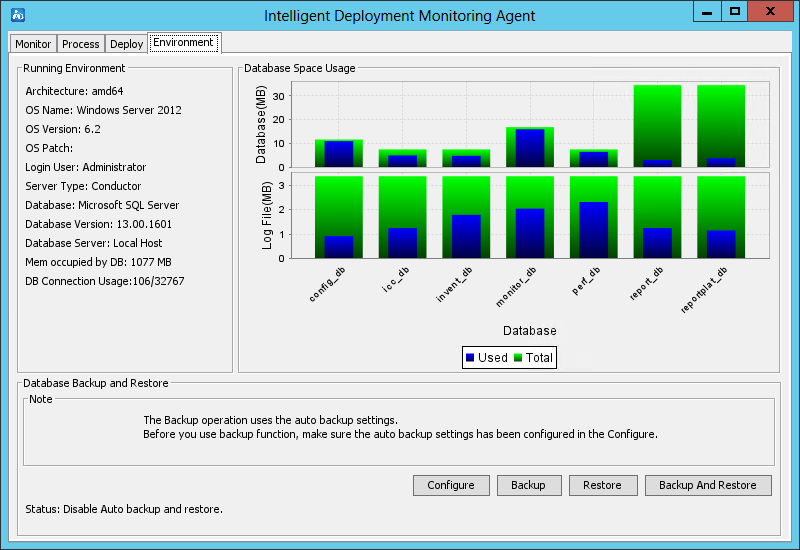

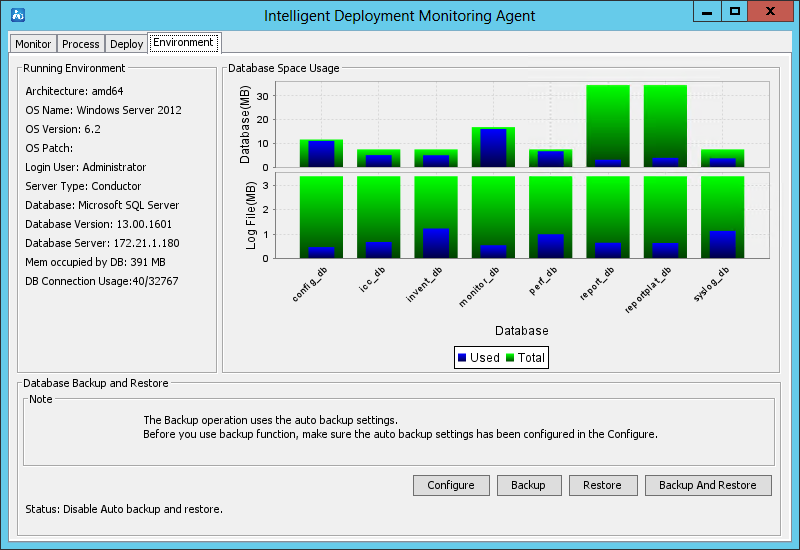

Environment tab

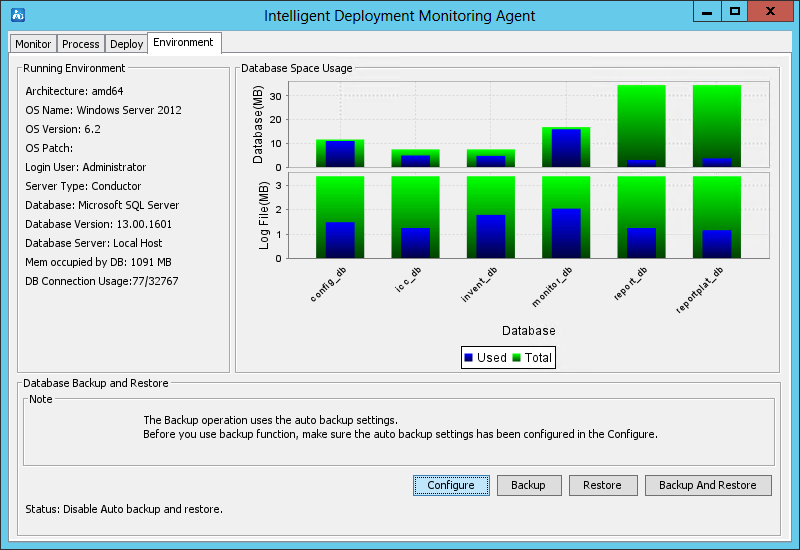

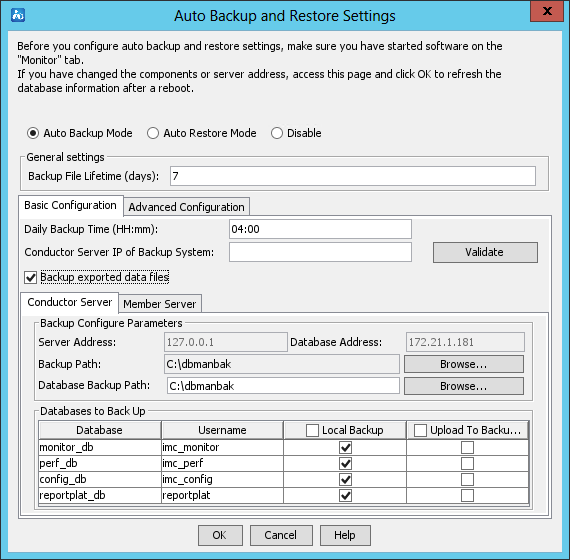

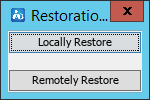

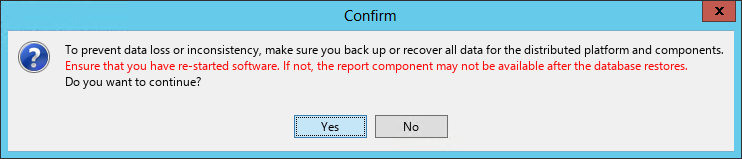

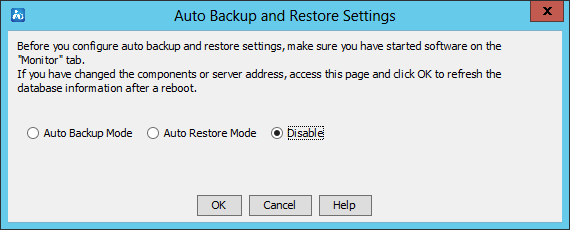

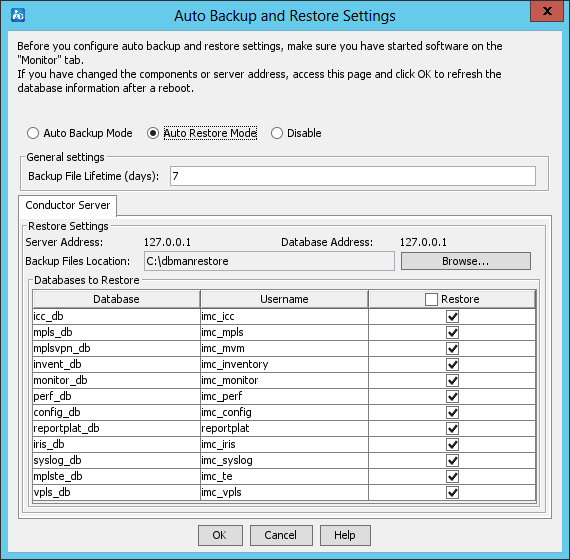

As shown in Figure 45 and Figure 46, the Environment tab displays the software, hardware, and database information for the current IMC server.

The tab also provides database backup and restoration options in the Database Backup and Restore area.

For more information about the Environment tab, see "Backing up and restoring the database."

Figure 45 Environment tab of the Intelligent Deployment Monitoring Agent (local database)

Figure 46 Environment tab of the Intelligent Deployment Monitoring Agent (remote database)

Installing and deploying IMC service components

The following information describes how to install and deploy the service components.

Deployment guidelines

Table 14 and Table 15 list the service components and subcomponents in IMC for centralized and distributed deployment, respectively.

NOTE: The subcomponents included vary by component version.

Table 14 Service components and subcomponents (centralized deployment)

| Component | Subcomponent |
|---|---|
| Endpoint Intelligent Access | User Access Manager (Intelligent Strategy Proxy, User Access Management, User Access Management Sub Server, Portal Server, EIP Server, EIP Sub Server, Policy Server, User SelfService, Third-Party Page Publish Server); TACACS+ Authentication Manager |
| EAD Security Policy | Security Policy Configuration; Desktop Asset Manager; Desktop Asset Manager Proxy Server |
| MPLS VPN Manager | MPLS Management; MPLS VPN Management; Intelligent Routing Management; MPLS TE Management; L2VPN Management |
| IPsec VPN Manager | IPsec VPN Manager |
| Wireless Service Manager | Wireless Service Manager; Wireless Intrusion Prevention System; Wireless Location Manager; Wireless Location Engine |
| User Behavior Auditor | User Behavior Auditor; User Behavior Auditor Server; Network Behavior Analyzer; Network Behavior Analyzer Server |
| Application Manager | Application Management; Application Management Service |
| Server & Storage Automation | Server & Storage Automation |
| Resource Configuration Management | Configuration Management Database (CMDB) |
| QoS Manager | QoS Management |
| Service Health Manager | Service Health Manager; NQA Collector Manager |
| Branch Intelligent Management System | Branch Intelligent Management System; Auto-Configuration Server; Mobile Branch Manager |
| VAN Fabric Manager | VAN Fabric Manager |
| Endpoint Mobile Office | Mobile Office Manager; Mobile Office MDM Proxy; Intelligent Strategy Proxy |
| Security Service Manager | Security Service Manager; Load Balancing Manager |
| Business Service Manager | Business Service Manager |
| IT Service Manager | Self-Service Desk |
| Intelligent Portal Manager | Intelligent Portal Manager; Intelligent Portal Authentication Manager; Intelligent Portal Authentication Backend |
| Endpoints Profiling System | Endpoint Management; Scanner Engine |

Table 15 Service components and subcomponents (distributed deployment)

|

Component |

Subcomponent |

Optional server |

Quantity |

|||

|

Endpoint Intelligent Access |

User Access Manager |

Intelligent Strategy Proxy |

Conductor or member |

1 |

||

|

User Access Management |

Conductor or member |

1 |

||||

|

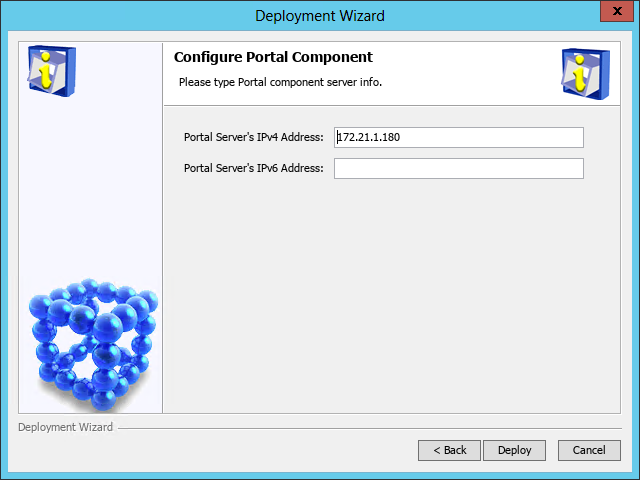

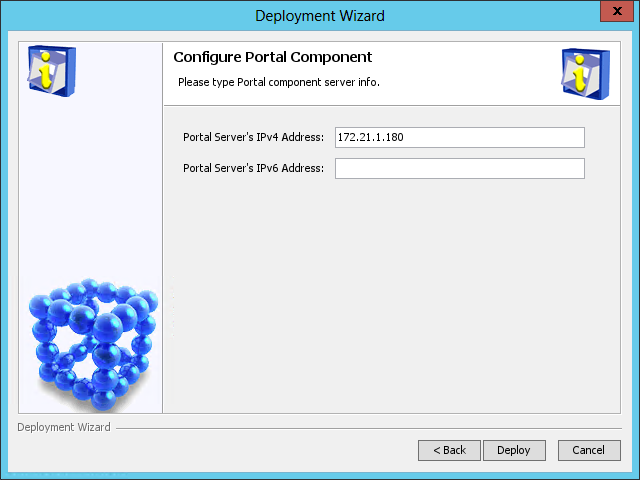

Portal Server |

Conductor or member |

10 |

||||

|

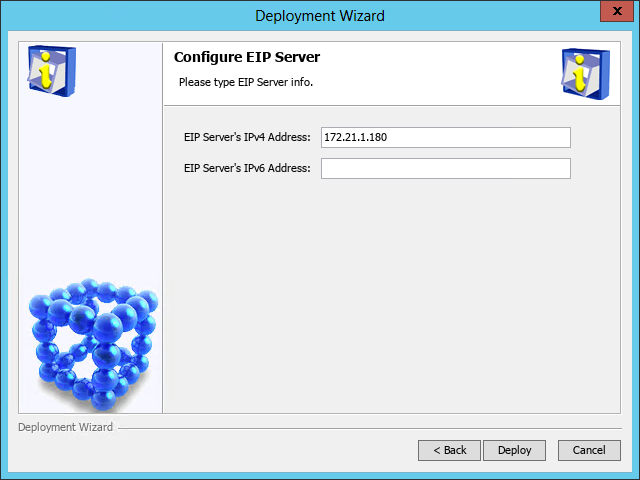

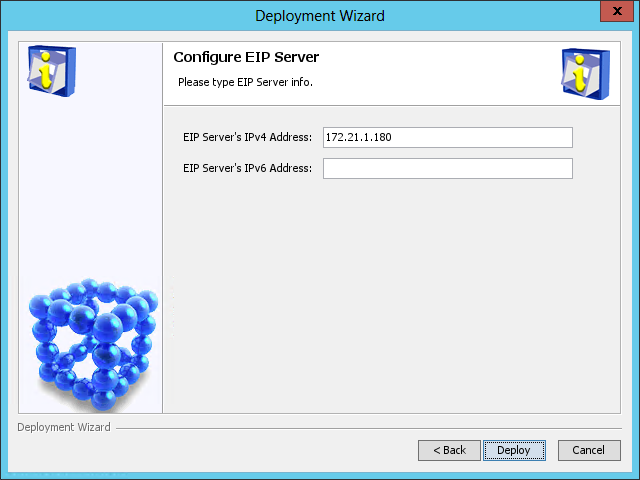

EIP Server |

Conductor or member |

1 |

||||

|

EIP Sub Server |

Member |

5 |

||||

|

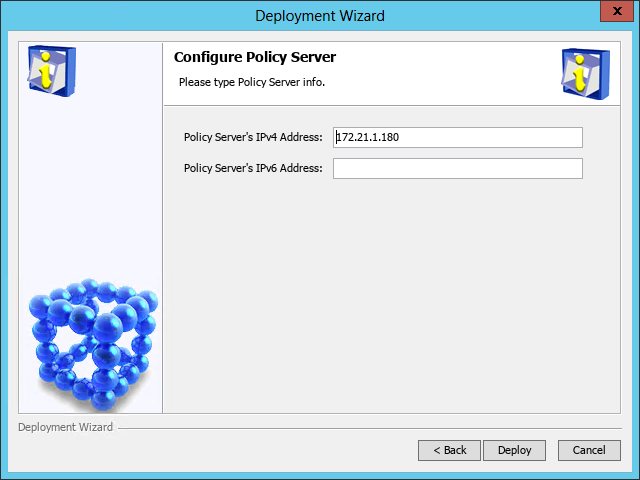

Policy Server |

Conductor or member |

1 |

||||

|

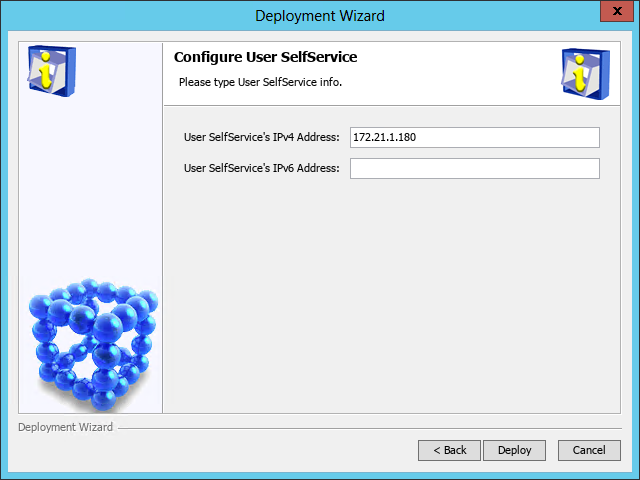

User SelfService |

Conductor or member |

1 |

||||

|

Third-Party Page Publish Server |

Conductor or member |

10 |

||||

|

TACACS+ Authentication Manager |

TACACS+ Authentication Manager |

Conductor or member |

1 |

|||

|

EAD Security Policy |

Security Policy Configuration |

Conductor or member |

1 |

|||

|

Desktop Asset Manager |

Conductor or member |

1 |

||||

|

Desktop Asset Manager Proxy Server |

Conductor or member |

N |

||||

|

MPLS VPN Manager |

MPLS VPN Management |

Conductor or member |

1 |

|||

|

MPLS TE management |

Conductor or member |

1 |

||||

|

L2VPN Management |

Conductor or member |

1 |

||||

|

IPsec VPN Manager |

IPsec VPN Manager |

Conductor or member |

1 |

|||

|

Wireless Service Manager |

Wireless Service Manager |

Conductor or member |

1 |

|||

|

Wireless Intrusion Prevention System |

Conductor or member |

1 |

||||

|

Wireless Location Manager |

Conductor or member |

1 |

||||

|

Wireless Location Engine |

Conductor or member |

20 |

||||

|

Network Traffic Analyzer |

Network Traffic Analyzer |

Conductor |

1 |

|||

|

Network Traffic Analyzer Server |

Conductor or member |

10 |

||||

|

Network Behavior Analyzer |

Conductor |

1 |

||||

|

Network Behavior Analyzer Server |

Conductor or member |

10 |

||||

|

User Behavior Auditor |

User Behavior Auditor |

Conductor |

1 |

|||

|

User Behavior Auditor Server |

Conductor or member |

10 |

||||

|

Network Behavior Analyzer |

Conductor |

1 |

||||

|

Network Behavior Analyzer Server |

Conductor or member |

10 |

||||

|

Application Manager |

Application Management |

Conductor |

1 |

|||

|

Application Management Service |

Conductor or member |

500 |

||||

|

Server & Storage Automation |

Server & Storage Automation |

Conductor |

1 |

|||

|

Resource Configuration Management |

Configuration Management Database (CMDB) |

Conductor or member |

1 |

|||

|

QoS Manager |

QoS Management |

Conductor or member |

1 |

|||

|

Branch Intelligent Management System |

Branch Intelligent Management System |

Conductor or member |

1 |

|||

|

Auto-Configuration Server |

Conductor or member |

15 |

||||

|

Mobile Branch Manager |

Conductor or member |

1 |

||||

|

VAN Fabric Manager |

VAN Fabric Manager |

Conductor or member |

1 |

|||

|

Endpoint Mobile Office |

Mobile Office Manager |

Conductor or member |

5 |

|||

|

Mobile Office MDM Proxy |

Conductor or member |

5 |

||||

|

Intelligent Strategy Proxy |

Conductor or member |

1 |

||||

|

Security Service Manager |

Security Service Manager |

Conductor or member |

1 |

|||

|

Load Balancing Manager |

Conductor or member |

1 |

||||

|

Business Service Manager |

Business Service Manager |

Conductor |

1 |

|||

|

IT Service Manager |

Self-Service Desk |

Conductor or member |

1 |

|||

|

Intelligent Portal Manager |

Intelligent Portal Manager |

Conductor |

1 |

|||

|

Intelligent Portal Authentication Manager |

Conductor or member |

1 |

||||

|

Intelligent Portal Authentication Backend |

Conductor or member |

10 |

||||

|

Endpoints Profiling System |

Endpoint Management |

Conductor or member |

1 |

|||

|

Scanner Engine |

Conductor or member |

1 |

||||

All service components are installed in the same way, but their deployment procedures might differ. Based on the deployment procedure, the service components fall into three categories, as listed in Table 16.

Table 16 Service components classified by deployment procedure

| Example component | Similar components |
|---|---|
| BIMS | IVM, WSM, EPON, QoSM, VFM, SSM, BSM, ITSM, U-Center |
| UAM | EMO, EAD, TAM, IPM, EPS |
| MVM | N/A |

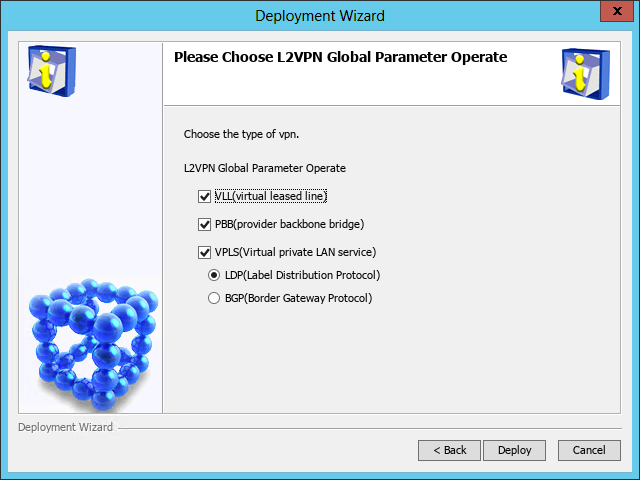

The following information describes how to install and deploy BIMS, UAM, and MVM.

IMPORTANT: U-Center must be deployed on IMC PLAT 7.3 (E0706P09). Before deploying U-Center, upgrade the platform to this version.

Installing and deploying IMC BIMS

Installing IMC BIMS

1. Start the Intelligent Deployment Monitoring Agent, and then click Install on the Monitor tab.

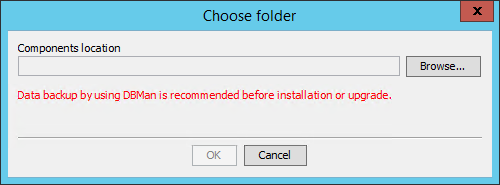

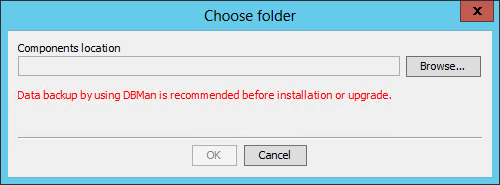

The Choose folder dialog box opens, as shown in Figure 47.

Figure 47 Choose folder dialog box

2. Click Browse, and then select the install\components folder in the BIMS installation package.

3. Click OK.

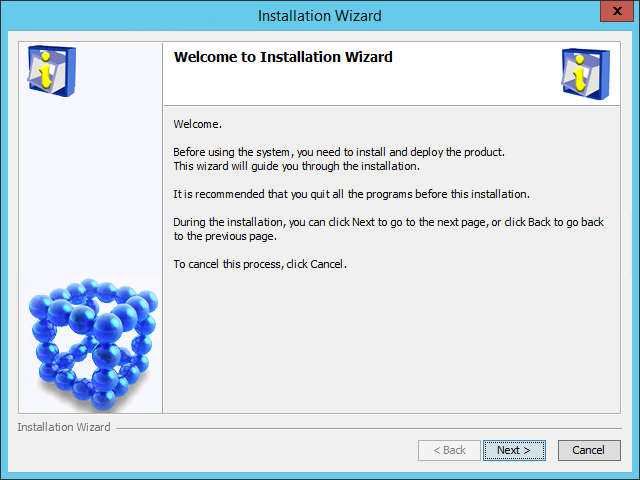

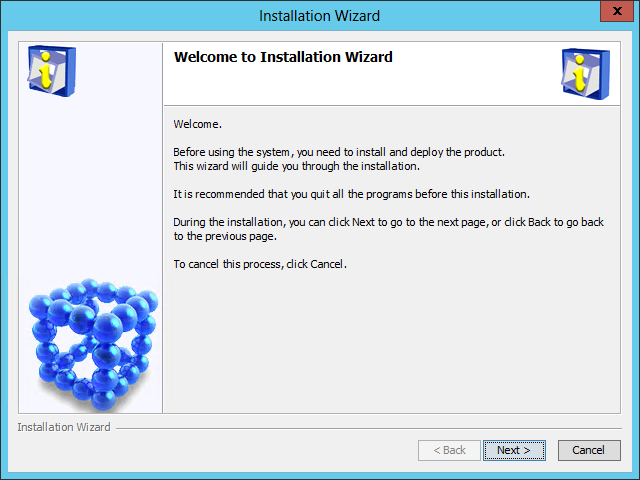

The IMC installation wizard opens, as shown in Figure 48.

Figure 48 IMC installation wizard

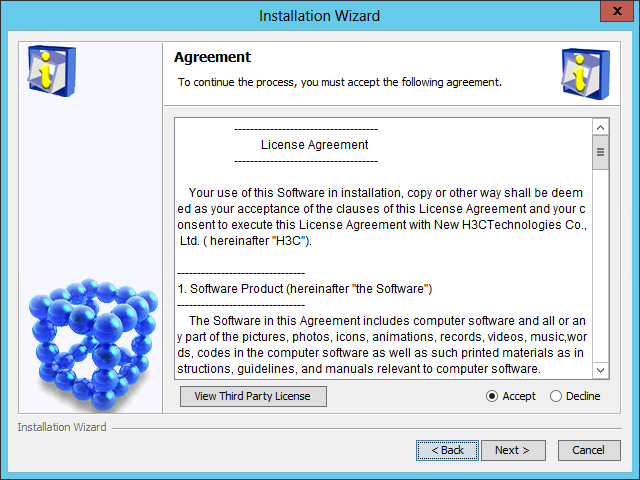

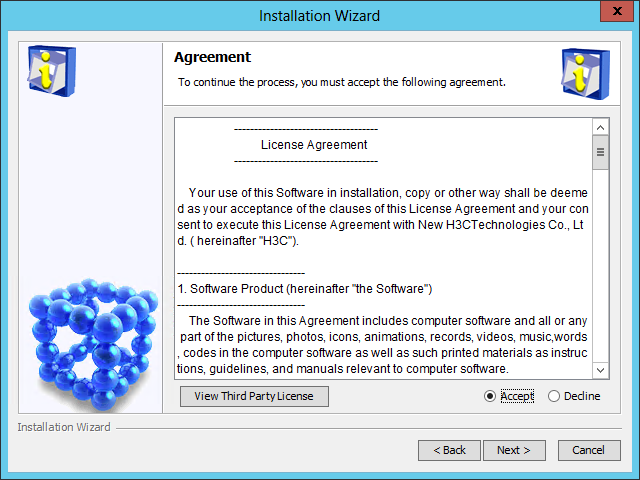

4. Click Next.

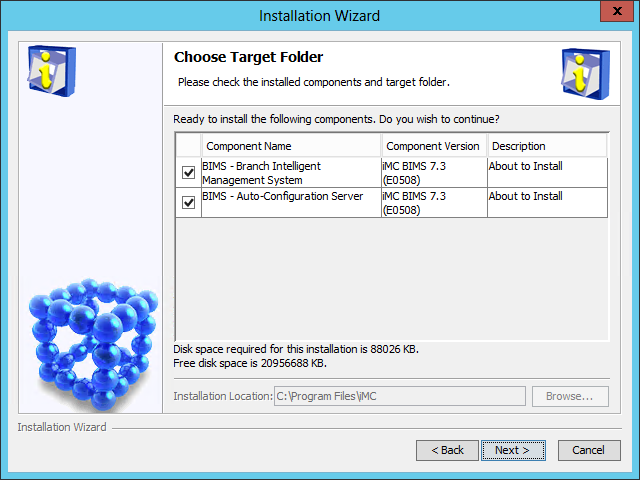

The Agreement page opens, as shown in Figure 49.