- Released At: 18-04-2024


Comware 9 Container Technology White Paper

Copyright © 2024 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

The content in this article is general technical information, some of which may not be applicable to the product you purchased.

Contents

Resource isolation (namespace) and grouping (cgroup)

Comware 9 container implementation

Containers supported by Comware 9

Third-party application containers

Saving and restoring a container

Container network implementation

Network communication of third-party containers that share the network namespace of Comware

Network communication of third-party containers that do not share the network namespace of Comware

Network communication of hosts that do not share the network namespace of Comware

Managing containers through K8s

Containerized deployment of third-party applications that share the network namespace

Containerized deployment of third-party applications (separate network namespace and no NAT)

Containerized deployment of third-party applications (separate network namespace and NAT)

Overview

Technical background

With the proliferation of the Internet, a wide variety of Internet services have emerged to meet the needs of work and daily life. As a result, the data centers operated by service providers keep growing, and so does the demand for servers. For an individual service provider, deploying large numbers of servers drives up service deployment costs and makes network maintenance harder. For society as a whole, repeated investments by service providers lead to low resource usage and significant waste. Server virtualization helps users deploy new services rapidly and cost-effectively, and has therefore won favor in the market.

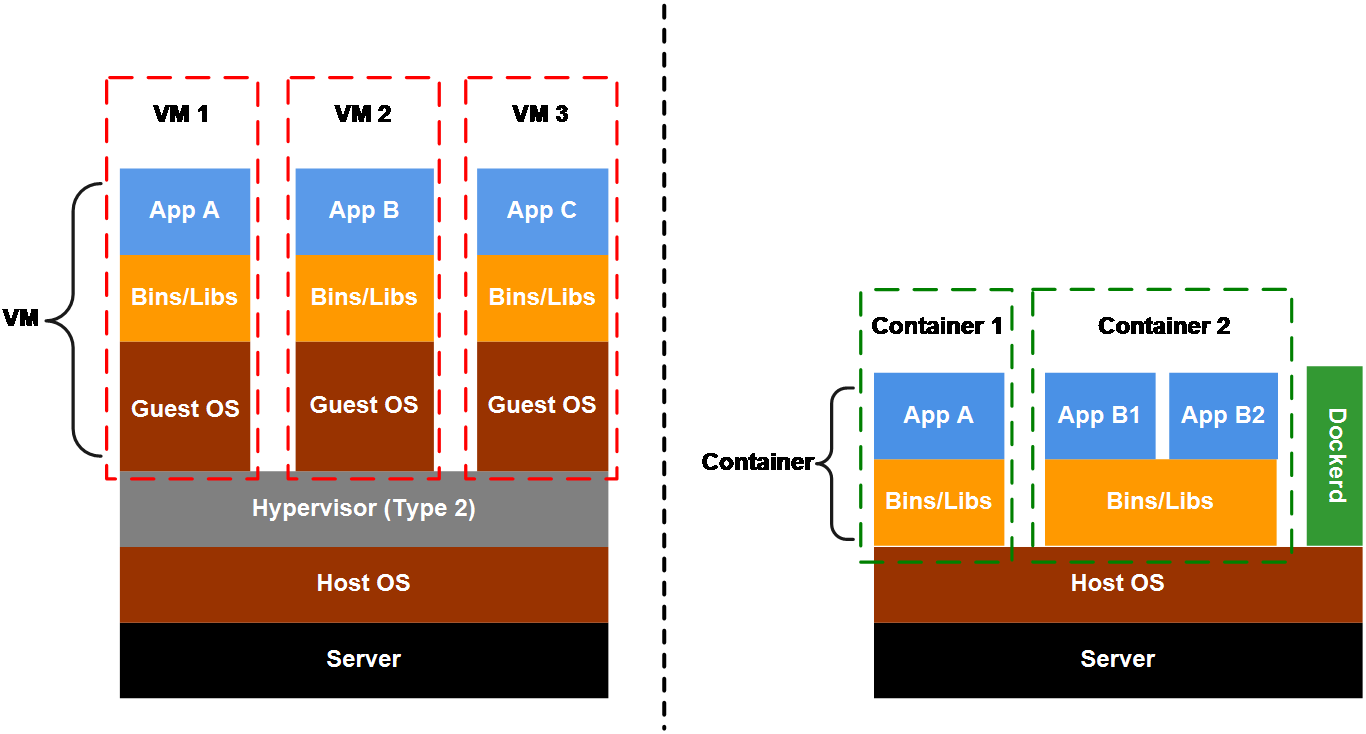

Server virtualization branches into two main categories: traditional virtual machine (VM) technologies and container technology.

As shown in Figure 1, traditional VM technologies such as KVM and Xen abstract the underlying physical resources through a virtualization layer and provide isolated VM environments. Each VM must install and run its own guest operating system. Changes in a guest do not affect the host, and differences in the underlying hardware are hidden, which gives applications a consistent operating environment. However, the additional virtualization layer and the separate guest operating systems inevitably cause performance losses in CPU, disk, network, and other resources.

Container technology is a lightweight virtualization technology. Containers share the host kernel and do not require separate guest operating systems, so they occupy less disk space and boot faster. As a result, container technology has become increasingly popular in the industry.

Figure 1 Comparison between the VM and container architecture

About containers

Containerization is a lightweight, operating-system-level virtualization technology. A container is a standard software unit that can correspond to a single application (providing a service independently) or a group of applications (providing services together). It includes everything an application needs to run: the code, a runtime, libraries, environment variables, and configuration files. Multiple containers can be deployed on a single host. All of them share the host kernel but are isolated from each other.

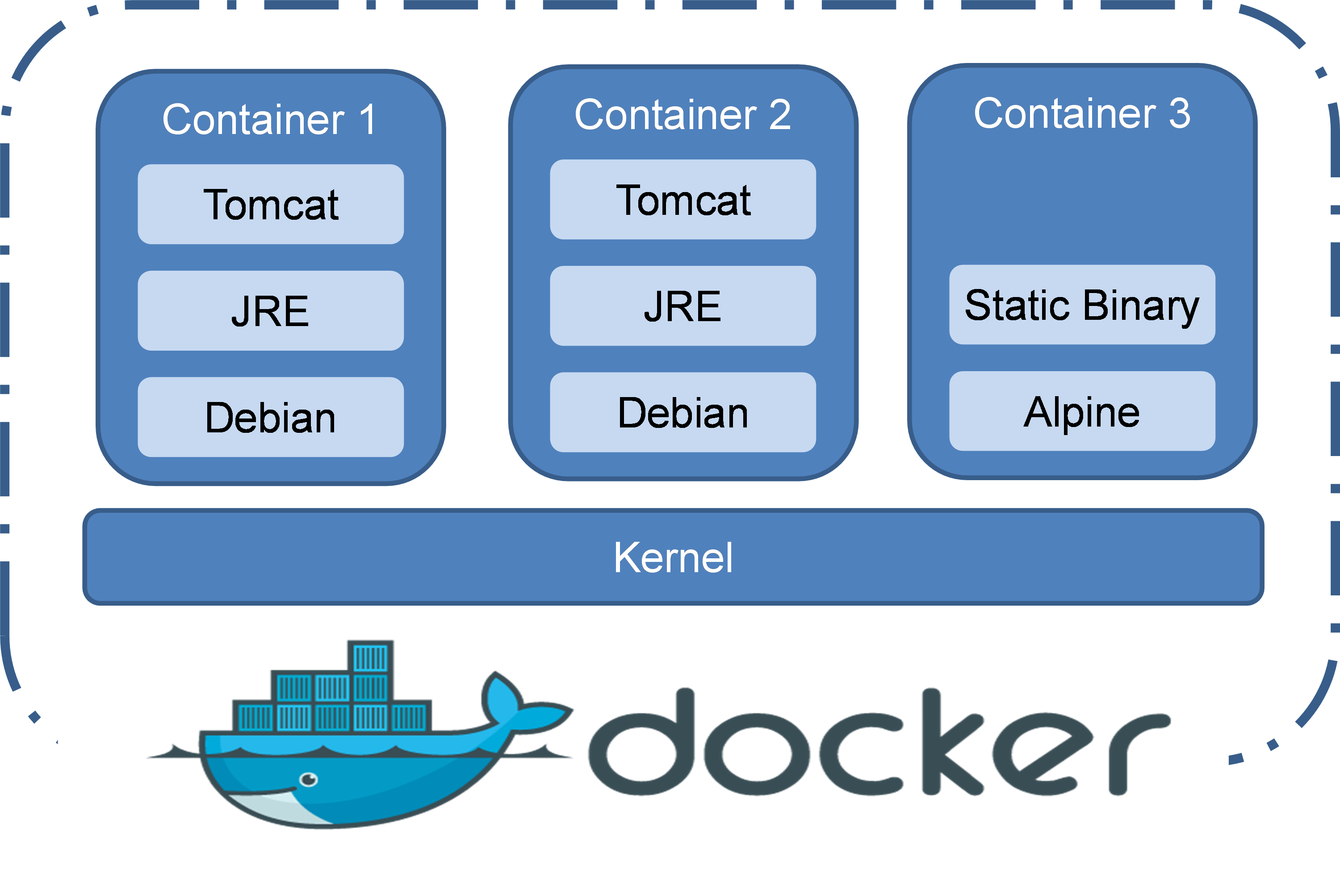

Typical container management tools include Docker and LXC. Docker is built on Linux kernel facilities such as namespaces and cgroups, and it uses images to package container deliverables. This greatly simplifies the packaging and distribution of containerized applications and has promoted the wide use of containers.

Figure 2 Docker containers

Comware 9 integrates Docker container management, so users can run containerized third-party applications on the Comware 9 system. These applications can be developed and deployed independently to extend the functions of the Comware 9 system quickly and conveniently. Because the third-party applications and the Comware 9 system operate independently, faults or restarts of third-party applications do not affect the Comware 9 system.

Technical benefits

Containers leverage and share the host kernel. Compared with traditional virtual machines, containers use fewer resources and are more lightweight and quicker to start, allowing the host to support more applications. Linux namespaces are used in combination with cgroups to isolate containers and control resource allocation and usage.

Docker packages application software and its dependencies into container images for release and management. Containers can therefore be deployed in many environments, such as physical machines, virtual machines, public clouds, and private clouds, regardless of the differences between those environments. The result is high flexibility and rapid deployment.

Container images have a unified packaging and release standard, providing strong consistency and replicability. Once a container image is packaged and set up with a standard operating environment, it can run on any machine, making operations and maintenance more efficient and reliable.

Containers eliminate inconsistencies between the development, testing, and production environments, so each role can focus on the services themselves.

Core container technologies

Resource isolation (namespace) and grouping (cgroup)

The underlying core technologies of containers are Linux's namespace and cgroup technologies.

About namespaces

Linux namespaces provide kernel-level resource isolation. Different types of namespaces isolate different types of resources. By default, when an administrator creates a container, the Linux kernel creates new namespace instances for that container. Each container creates and modifies resources only within its own namespace instances. These instances do not affect each other, and this is how resource isolation is achieved. If two containers need to share a namespace, you can specify that in the container's run parameters. For example, execute the docker run --name tftpd --network container:comware tftpd command to run a TFTP server container that shares the network namespace of the Comware container.

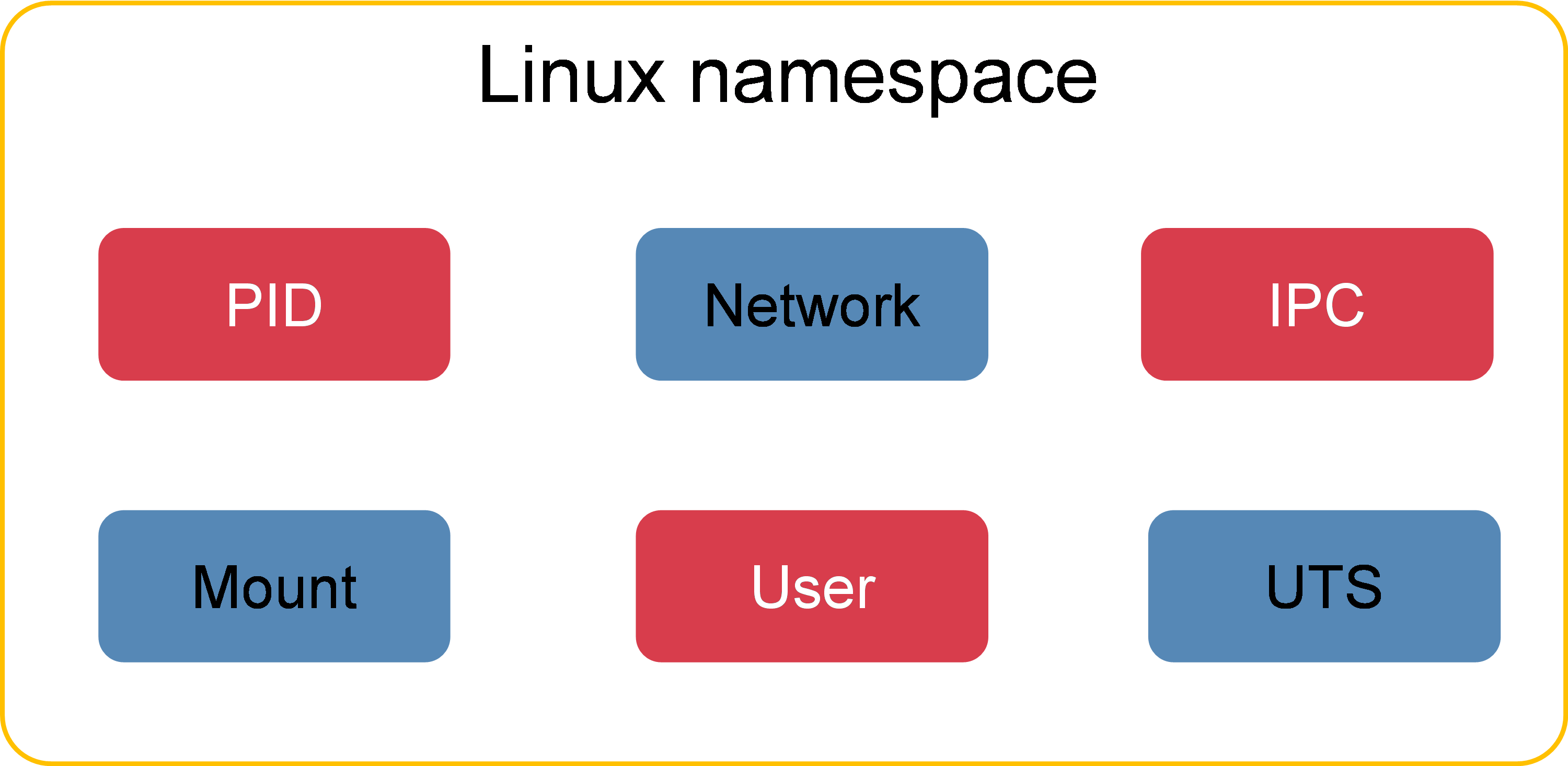

Figure 3 Linux namespaces supported by the Comware 9 system

As shown in Figure 3, the Comware 9 system achieves resource isolation for the following resources through namespaces:

· PID (Process ID)—The PID namespace isolates process IDs. Processes in different containers can therefore use the same ID, because each container numbers its processes independently. Inside a container, you can see only that container's processes and their child processes. You cannot see the processes of the host or of other containers. On the host, you can see all processes of all containers, including their child processes.

· Network—The network namespace is used to isolate network resources such as network devices, ARP tables, routing tables, firewall rules, and IP addresses.

· IPC (Inter-Process Communication)—System V IPC objects and POSIX message queues. The IPC namespace isolates semaphores, message queues, and shared memory. As a result, processes in different IPC namespaces cannot communicate with each other through these IPC mechanisms.

· Mount—Mount point of a file system. The mount namespace isolates file systems of different containers by isolating the file system mount points. File systems in different mount namespaces do not affect each other.

· User—Username and user group name. The user namespace isolates users and user groups of containers.

· UTS (UNIX Time-sharing System)—The UTS namespace isolates host names and domain names, enabling each container to have an independent host name and domain name.
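On Linux, each namespace type listed above appears as an entry under /proc/<pid>/ns/. Each entry is a symbolic link whose target names the namespace instance by inode number, for example net:[4026531992]. Two processes belong to the same namespace instance when their links show the same inode. The following Python sketch reads and compares these links. It is a general Linux illustration rather than a Comware tool, and the function names are hypothetical.

```python
import os
import re

# /proc/<pid>/ns/ entry names for the namespace types listed above
# (mnt is the mount namespace).
NS_TYPES = ("pid", "net", "ipc", "mnt", "user", "uts")

_NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(link):
    """Split a link target such as 'net:[4026531992]' into ('net', 4026531992)."""
    match = _NS_LINK.match(link)
    if match is None:
        raise ValueError(f"unexpected namespace link: {link!r}")
    return match.group(1), int(match.group(2))


def read_namespaces(pid):
    """Return {namespace type: inode number} for a process (Linux only).

    Reading another process's links requires sufficient privileges.
    """
    namespaces = {}
    for ns in NS_TYPES:
        kind, inode = parse_ns_link(os.readlink(f"/proc/{pid}/ns/{ns}"))
        namespaces[kind] = inode
    return namespaces


def shared_namespaces(a, b):
    """Namespace types in which two processes use the same instance."""
    return sorted(ns for ns in a if ns in b and a[ns] == b[ns])
```

Take a process in a container started with --network container:comware and compare its result with that of a process in the Comware container. The "net" type would be expected to appear in the list returned by shared_namespaces().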

About cgroups

Linux cgroups (control groups) provide a mechanism for organizing applications (processes) into groups and distributing system resources among them. Resource usage is controlled through the cgroup file system (cgroupfs). Cgroups also measure resource usage and limit the amount of resources each application can use, which prevents a single application from consuming excessive system resources.

Cgroups mainly offer the following key functions:

· Resource limitation—Restricts the system resources that containers can use, such as CPU cores, CPU time, memory nodes, memory size, and I/O devices.

· Resource statistics—Collects data about system resource usage.

· Task grouping—Categorizes processes with different resource requirements into groups, and assigns processes with the same resource requirements to the same group. This allows the processes within a group to share the same set of resources, simplifying configuration and management.

In cgroups, each type of resource is grouped and controlled by a separate subsystem. Common subsystems include:

· cpu subsystem—Sets a CPU weight value for each task group. The operating system allocates CPU time among task groups based on their weights. The subsystem can also cap the CPU time that a task group can use within each scheduling period.

· cpuset subsystem—For multi-core CPUs, this subsystem allows setting a process group to run only on specified cores.

· cpuacct subsystem—Used to generate reports on the CPU usage of processes within a process group.

· memory subsystem—Used to limit the process's use of memory on a per-page basis, such as setting the upper limit of memory usage for a process group. It can also generate reports on memory usage.

· blkio subsystem—Used to restrict the reading and writing of each block device.

· devices subsystem—Restricts the access of process groups to devices by permitting or denying access to each device.

· freezer subsystem—Suspends all processes in a process group.

· net_cls subsystem—Tags network packets sent by a process group with a class identifier (classid). Traffic control (tc) rules can then use the tag to limit the bandwidth of that group, for example its transmit (Tx) bandwidth.

For example, execute the docker run -it --name test --cpu-period=100000 --cpu-quota=20000 ubuntu /bin/bash command to create a container named test from the ubuntu image. The cpu subsystem limits this container to 20000 microseconds of CPU time in every 100000-microsecond period, which is 20% of one CPU.
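The --cpu-period and --cpu-quota options map to the cpu subsystem's CFS bandwidth control. In each period, the group can use at most quota microseconds of CPU time, so the limit expressed in CPUs is quota divided by period. The Python sketch below shows this arithmetic, the equivalent docker run --cpus value (Docker uses a 100000-microsecond period by default), and the cgroup v2 cpu.max line format. The helper names are hypothetical.

```python
def cpu_limit(period_us, quota_us):
    """Return a cgroup's CPU cap in units of one CPU.

    In every period of period_us microseconds, the group may run for at most
    quota_us microseconds of CPU time in total.
    """
    if period_us <= 0 or quota_us <= 0:
        raise ValueError("period and quota must be positive")
    return quota_us / period_us


def quota_for_cpus(cpus, period_us=100_000):
    """Convert a CPU count (as given to docker run --cpus) into a quota."""
    return round(cpus * period_us)


def cpu_max_line(period_us, quota_us):
    """Format the same limit as a cgroup v2 cpu.max entry: '<quota> <period>'."""
    return f"{quota_us} {period_us}"
```

With the values from the example, cpu_limit(100000, 20000) returns 0.2, which corresponds to docker run --cpus=0.2.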

Container images

About container images

Containers are described through images, which are the core technology of Docker containers. An image is a lightweight, executable, and self-contained software package. It includes the programs, libraries, resources, configuration, and other files that the container needs, as well as the configuration parameters required at run time (such as anonymous volumes, environment variables, and users). Running an image creates and starts a container.

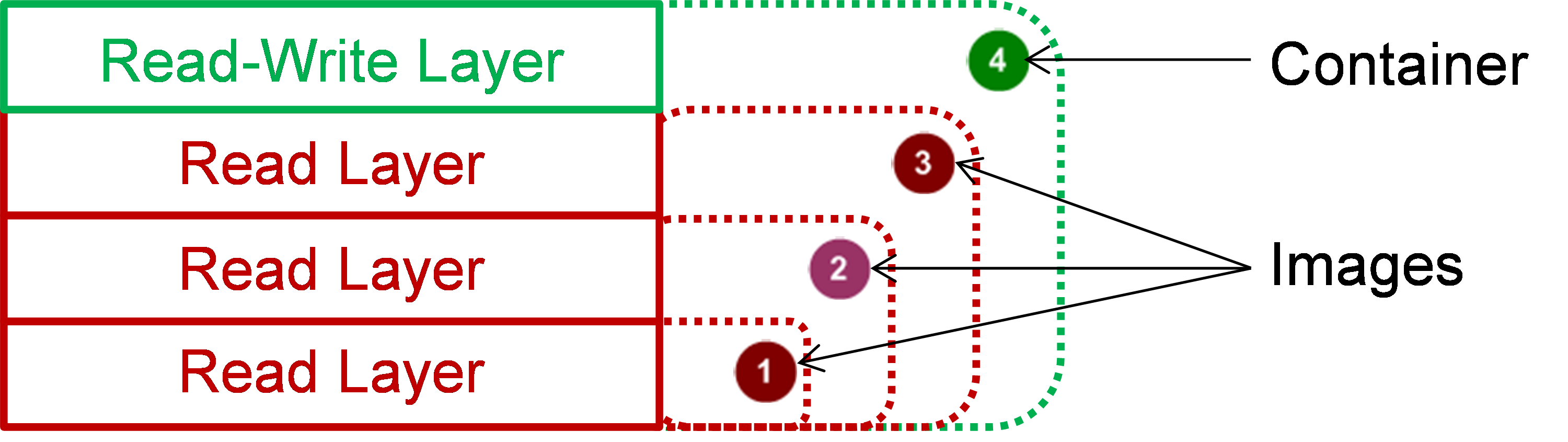

Docker uses a layered file system (aufs/unionfs/overlay2) to organize and manage the root file system of the containers. As shown in Figure 4, a Docker image is composed of multiple read-only image layers. A running container adds a readable and writable layer to the Docker image. Any modifications made to the files during container operation are saved in the container layer only. All image layers and container layers are united to form a unified file system. What users ultimately see is a superimposed file system.

If you reboot the container, the data in the container layer is automatically deleted. Nothing done to the container while it runs affects the images. If you save the container, the container layer is converted into a read-only image layer that preserves the current runtime data. After the container reboots, it keeps the state it had before the reboot.

Common file operations in a layered file system include:

· Add a file.

When a new file is created in a container, it is added to the container layer.

· Read a file.

When you read a specific file in a container, Docker searches for the file in each layer from top to bottom and returns the first copy it finds.

· Edit a file.

When you modify an existing file in a container, Docker searches for the file in the image layers from top to bottom. Once it finds the file, it copies the file to the container layer and then modifies the copy (copy-on-write).

· Delete a file.

When you delete a file from a container, Docker searches for the file in the image layers from top to bottom. Once it finds the file, it marks the file as deleted in the container layer, which hides the file from the container. The file itself is not removed from the image layer.
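The four operations above can be modeled with a small union file system in Python. This is a simplified sketch. Files are strings held in dictionaries, and a sentinel object stands in for the whiteout marker that real drivers such as overlay2 use to hide deleted files. The class and method names are hypothetical and are not part of Docker. The commit() method mirrors saving a container, which turns the container layer into a new read-only image layer.

```python
WHITEOUT = object()  # marker in an upper layer that hides a file below it


class LayeredFS:
    """Toy union file system: read-only image layers plus a writable layer."""

    def __init__(self, image_layers):
        # image_layers[0] is the bottom layer; the last entry is the topmost.
        self.image_layers = [dict(layer) for layer in image_layers]
        self.container_layer = {}

    def _layers_top_down(self):
        yield self.container_layer
        yield from reversed(self.image_layers)

    def read(self, name):
        # Search from top to bottom; the first copy found wins.
        for layer in self._layers_top_down():
            if name in layer:
                if layer[name] is WHITEOUT:
                    break  # deleted in an upper layer
                return layer[name]
        raise FileNotFoundError(name)

    def add(self, name, content):
        # New files always go to the container layer.
        self.container_layer[name] = content

    def edit(self, name, extra):
        # Copy-on-write: copy the topmost visible version up, then modify it.
        self.container_layer[name] = self.read(name) + extra

    def delete(self, name):
        self.read(name)  # raises FileNotFoundError if the file is not visible
        self.container_layer[name] = WHITEOUT  # image layers stay untouched

    def commit(self):
        # Saving a container: the container layer becomes a read-only layer.
        self.image_layers.append(self.container_layer)
        self.container_layer = {}
```

Editing /app/run in a container built from layers that contain it leaves the original content intact in its image layer. Only the modified copy in the container layer is visible to the container.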

Container image building

A Dockerfile is a text file that contains all the instructions needed to build a Docker image. When you execute the docker build command, Docker reads the Dockerfile and runs its instructions to compile, package, and generate the image.

In a Dockerfile, you can add new deliverables on top of an existing image and modify the container's entry point. You can therefore extend existing images to meet new service requirements, which facilitates reuse and reduces duplicated effort.

Comware 9 container implementation

Containerized architecture

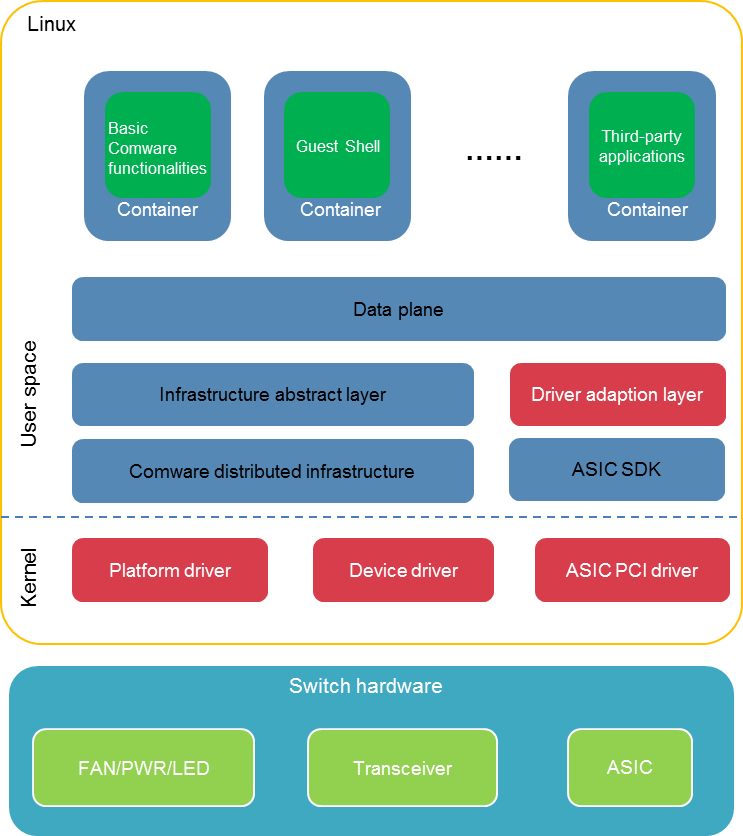

Figure 5 Containerized architecture

As shown in Figure 5, Comware 9 adopts a containerized architecture, providing independent operating environments for Comware as well as third-party applications.

· The system configuration management and core services run in the Comware container.

· The Guest Shell container integrates the CentOS 7 system. Users can install applications based on the CentOS 7 system in the Guest Shell container.

· Comware 9 supports third-party containers. Users can execute simple commands to install third-party containers on H3C devices and so extend Comware functions through containerization. On Comware 7, deploying third-party software requires compiling its source code in the Comware 7 cross-compilation environment, and the software can run on Comware only after the compilation succeeds. Compared with this integration method, Comware 9 deploys third-party applications more flexibly and conveniently through containers, which significantly enhances the openness of Comware.

· The Comware container and the containers created by the user run independently and do not affect each other. They all run on the Linux kernel and directly use the hardware resources of the physical device. The Linux namespaces and cgroups are used to isolate containers and control resource allocation.

· Comware 9 encapsulates Docker's container management function and can cap the container resources available to third-party applications according to product requirements. This guarantees sufficient resources for the system's forwarding plane and control plane. Comware 9 also encapsulates the command interfaces of Docker and kubelet, so Docker and kubelet commands are subject to the Comware user authentication system and RBAC. Only authorized users can use them.

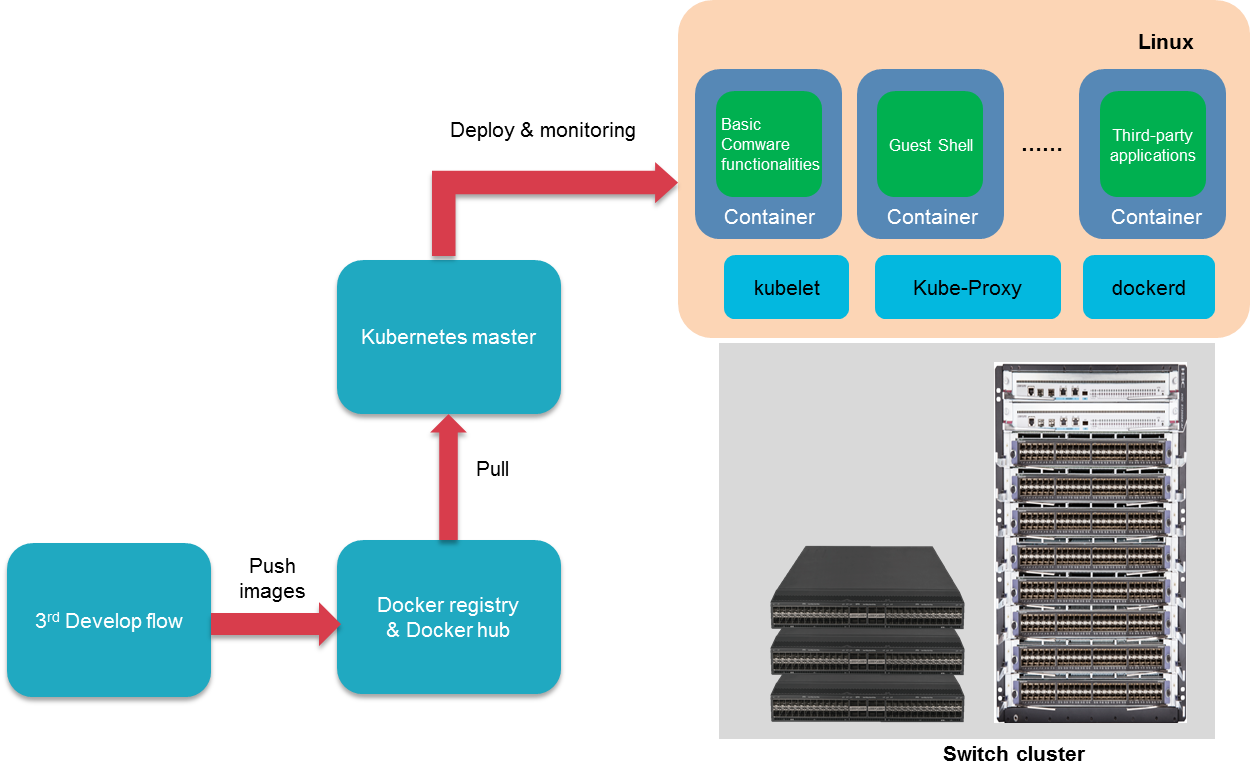

· Comware 9 supports Kubernetes and can serve as a worker node to accept scheduling and management from the Kubernetes Master, enabling large-scale and cluster-based deployment of third-party applications.

Containers supported by Comware 9

Comware 9 supports the following types of containers:

· Comware container—Encapsulates the Comware system and provides basic functions such as switching and routing.

· Guest Shell container—An official container provided by H3C with an embedded CentOS 7 system. Users can install third-party applications based on CentOS 7 in this container.

· Third-party application containers—Docker-based containers developed and released by third parties, used for the expansion of Comware system functions.

Comware container

The Comware system deliverables and the basic runtime environment are packaged into a container image, and the Comware system is operated in the form of a container. This container is referred to as the Comware container.

When a device is powered on and starts up, the system automatically creates and runs the Comware container. The Comware container is the system's management container and manages all hardware resources on the device. Users can access the CLI of the Comware container from the console. From there, they can use Comware commands to create, run, stop, or destroy the Guest Shell container and third-party application containers. Users are not allowed to operate the Comware container by using docker commands.

Guest Shell container

H3C offers the Guest Shell container so that users can install and run third-party software on the Comware system without affecting the operation of the Comware container. The Guest Shell container has an embedded CentOS 7 system, through which users can install applications developed for CentOS 7 on H3C Comware 9 devices. For example, users can install network server software, databases, and Web development tools such as Apache, Sendmail, vsftpd, SSH, MySQL, PHP, and JSP in the Guest Shell container to deploy email and Web services. Users can also carry out secondary development of Comware applications in the Guest Shell container. For this they can use programming languages and development tools such as gcc, cc, C++, Tcl/Tk, and pip (Python's package management tool).

The Guest Shell container supports all commands available in the official CentOS 7 Docker image.

The Guest Shell container is completely isolated from the host, the Comware container, and third-party application containers. Installing and running applications in the Guest Shell container does not affect the running of other containers.

Compared with running a CentOS 7 container directly from the CentOS 7 image file, using the Guest Shell container has the following advantages:

· You can run the Guest Shell container after installing the image file provided by H3C.

· The Comware system encapsulates the commands for the Guest Shell container. After you log in to the Comware system, you can manage and maintain the Guest Shell container by executing the guestshell commands.

Third-party application containers

Some applications are released as containers. To support them, the Comware system supports the deployment of Docker-based third-party application containers. Users can create and use Docker-based applications on devices to implement custom functions such as information collection and device state monitoring. For example, each device can run a resource collection agent container. The agent collects real-time CPU, memory, and traffic information from the device and reports it to a monitoring device for unified management.
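As an illustration of the resource collection agent described above, the Python sketch below parses the Linux /proc/meminfo and /proc/net/dev formats and builds a JSON report. It is a hypothetical agent, not an H3C component. The function names and report fields are invented. CPU counters (for example, from /proc/stat) are left out for brevity, and the transport to the monitoring device is not shown. Keep in mind that /proc/net/dev lists only the interfaces visible in the agent's own network namespace.

```python
import json


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: numeric value}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])  # values are in kB where a unit is given
    return info


def parse_net_dev(text):
    """Parse /proc/net/dev-style text into {interface: (rx_bytes, tx_bytes)}."""
    counters = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        name, _, rest = line.partition(":")
        fields = rest.split()
        if len(fields) >= 9:
            # Eight receive columns come first; transmit bytes is the ninth field.
            counters[name.strip()] = (int(fields[0]), int(fields[8]))
    return counters


def build_report(device, meminfo, net):
    """Build the JSON document the agent would send to the monitoring device."""
    report = {
        "device": device,
        "mem_total_kb": meminfo.get("MemTotal"),
        "mem_available_kb": meminfo.get("MemAvailable"),
        "interfaces": {name: {"rx_bytes": rx, "tx_bytes": tx}
                       for name, (rx, tx) in net.items()},
    }
    return json.dumps(report, sort_keys=True)
```

On a Linux host, the agent would pass the contents of /proc/meminfo and /proc/net/dev to the two parsers and send the result of build_report() at a fixed interval.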

Third-party application containers are isolated from the Comware container and Guest Shell container. Containerization technology confines the impact of third-party applications within the container.

Starting a container

To start a container in the Comware 9 system:

1. Power on the device to start the basic system service management process.

2. Start the Docker service.

3. Docker service startup triggers building a Comware container image, creating the Comware container, and establishing the directory mapping required in the Comware root file system.

4. After the basic system finishes startup, it automatically calls the docker command to start the Comware container and transfers control to it. The service management process of Comware then continues with initialization in the container.

5. After the Comware container has fully started, it automatically logs in to the CLI within the container, and transfers control to the user.

6. The user logs in to the Comware system and uses the guestshell start and docker commands to start the Guest Shell container and third-party application containers, respectively.

Comware 9 creates the following Docker instances for the Docker service.

· Comware Docker instance—Used for managing the Comware container.

· User Docker instance—Used for managing the Guest Shell container and third-party application containers.

These two Docker instances separate the management of the Comware container from the management of the Guest Shell container and third-party application containers. Docker instructions are delivered to the appropriate instance through dedicated communication channels. Docker commands issued by users go only to the user Docker instance, which prevents users from operating the Comware container by mistake.
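The separation between the two Docker instances can be pictured with a toy Python model. Each instance knows only its own containers, and the CLI forwards every user docker command to the user instance. This is an illustration only. The class, the instance names, and the container name tpa-agent are hypothetical, and the actual communication channels used by Comware 9 are not described here.

```python
class DockerInstance:
    """Toy stand-in for one Docker daemon and the containers it manages."""

    def __init__(self, name, containers):
        self.name = name
        self.containers = set(containers)

    def stop(self, container):
        if container not in self.containers:
            # A daemon can only act on containers it manages itself.
            raise LookupError(f"{self.name}: no such container: {container}")
        return f"{self.name}: stopped {container}"


# Hypothetical instances mirroring the two roles described above.
COMWARE_INSTANCE = DockerInstance("comware-docker", {"comware"})
USER_INSTANCE = DockerInstance("user-docker", {"guestshell", "tpa-agent"})


def user_docker_stop(container):
    """Every docker command issued by a user is sent to the user instance only."""
    return USER_INSTANCE.stop(container)
```

The Comware container is not registered with the user instance. An attempt to stop it through the user path therefore fails instead of reaching the Comware container.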

NOTE: To keep the IP address that a container uses to provide services unchanged, use the --ip or --ip6 parameter to specify the container IP address when the container starts. If you do not specify the container IP address, the system might assign a different IP address each time the container starts. In that case, the container must re-announce its service address after startup so that its services can be discovered.

Saving and restoring a container

Because Docker uses a layered file system, any changes made while a container is running are saved only in temporary storage (the container layer of the layered file system). When a container restarts, it starts from the image file and returns to the state defined in that image file when the container was released.

To let a container inherit its state, Comware 9 provides a container saving feature integrated in the save command. When you execute the save command and choose to save the container files, the device automatically packages and compresses the runtime data of all running containers. The result is a file on the permanent storage medium, such as flash:/third-party/dockerfs.cpio.gz. When the containers restart, this file is first decompressed from the storage medium to /var/lib/docker, and then the containers start. After starting, each container returns to the state recorded in the saved file.
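The save-and-restore cycle described above can be sketched with Python's standard tarfile module. The standard library has no cpio writer, so this sketch substitutes a gzip-compressed tar archive for the cpio.gz format. The function names and paths are hypothetical. The sketch handles only ordinary files and directories. A real /var/lib/docker tree also contains storage-driver metadata that needs privileged tooling.

```python
import os
import tarfile


def save_container_data(data_dir, archive_path):
    """Pack everything under data_dir into a gzip-compressed archive."""
    parent = os.path.dirname(archive_path)
    if parent:
        os.makedirs(parent, exist_ok=True)
    with tarfile.open(archive_path, "w:gz") as tar:
        # Store entries relative to data_dir so they can be unpacked anywhere.
        for entry in sorted(os.listdir(data_dir)):
            tar.add(os.path.join(data_dir, entry), arcname=entry)


def restore_container_data(archive_path, data_dir):
    """Unpack a saved archive into data_dir before the containers start.

    Returns False if nothing was saved, in which case the containers start
    from their images only.
    """
    if not os.path.exists(archive_path):
        return False
    os.makedirs(data_dir, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions: refuse members that are unsafe to extract.
            tar.extractall(data_dir, filter="data")
        else:
            tar.extractall(data_dir)
    return True
```

Restoring before startup matters. Unpacking into the data directory only after the containers are running would not change what they already loaded from their images.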

NOTE: If you want a container to automatically run when the device restarts, specify the --restart=always parameter when creating the container.

Container network implementation

Comware provides network communication services for open applications. Open applications running on the device can communicate with both applications running on Comware and entities on networks. The traffic between applications in third-party containers and Comware is called east-west traffic, and the traffic from applications in third-party containers communicating with entities on networks through Comware is called south-north traffic.

Docker uses namespaces to isolate containers. Network namespaces isolate network resources, such as IP addresses, IP routing tables, /proc/net directories, and port numbers. When you create a container on the device, you can configure the container to share or not to share the network namespace of Comware. This choice determines how the container communicates with entities on networks and which network configuration it requires.

NOTE:

· To create the Guest Shell container, you must use the Guest Shell image. The Guest Shell container created by using the guestshell start command does not share the network namespace of the Comware container. If you use the docker run command to create the Guest Shell container, you can configure it to share or not to share the network namespace of the Comware container.

· The Guest Shell container communicates with destination nodes in the same way as a third-party container. The following uses third-party containers as an example to describe the container network implementation.

Network communication of third-party containers that share the network namespace of Comware

Fundamentals

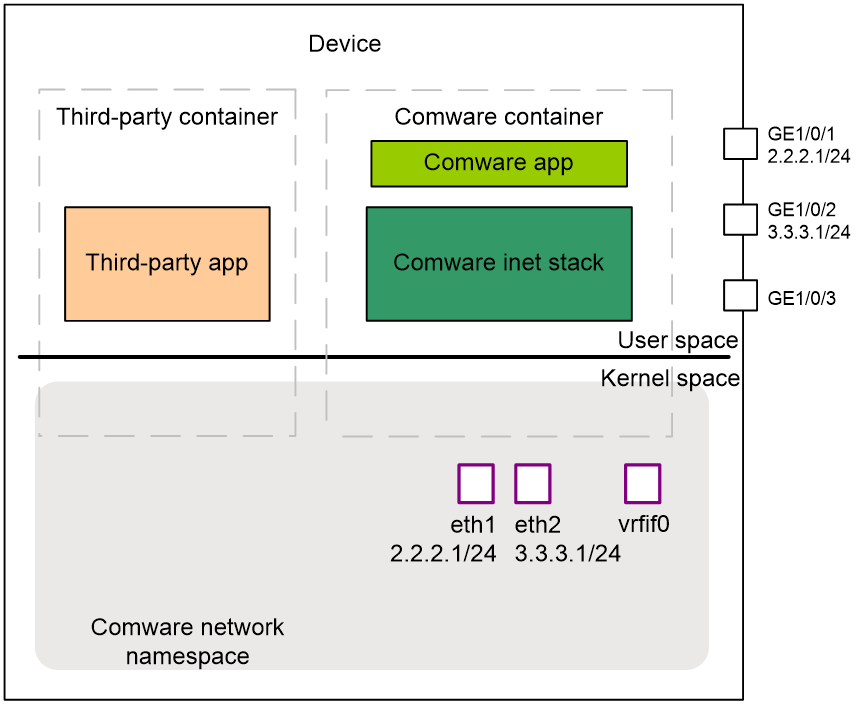

An application in a third-party container that shares the network namespace of Comware behaves like a Comware application. It uses the network parameters of Comware, including the interfaces, IP addresses, IP routing table, and port numbers.

· For the application to communicate with a host on the same network as Comware, the administrator does not need to configure additional network parameters. The application uses the Comware IP address to communicate with the host. Comware delivers each incoming packet to the corresponding application based on the protocol number or destination port number. If a Comware application and the third-party application listen on the same protocol number or destination port number, Comware delivers the packet to the Comware application first.

After the administrator configures an IP address for a Comware Layer 3 interface, Comware creates a corresponding eth interface in the Linux kernel for communication between the kernel and Comware. Comware also assigns the IP address of the Layer 3 interface to the eth interface. This triggers the kernel to generate a direct route for the subnet of that IP address. When the Linux kernel forwards a packet from the application, it performs a route lookup and sends the packet to Comware through the eth interface. In the reverse direction, Comware uses the eth interface to send packets to the application in the third-party container.

· For the application to communicate with a host that resides on a different network than Comware, the administrator must configure a source IP address for the application. The administrator must also make sure that a connection is available between the source IP address and the remote host. The application uses the source IP address to communicate with the remote host.

Comware creates virtual interface vrfif0 in the kernel to serve as the default output interface for the container, and deploys a default route within the container. The output interface of the default route is vrfif0, and its source address is the address of a Layer 3 interface in Comware. By default, this is the IP address of interface LoopBack 0, which you can change by using commands. The kernel's forwarding module uses this default route to send packets to Comware through interface vrfif0, and Comware then sends the packets to the host. In the reverse direction, the host sends packets to Comware, and Comware sends them to the application in the third-party container through interface vrfif0.
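The delivery rule for a shared network namespace can be expressed as a small lookup: when Comware and a third-party application listen on the same protocol and port, Comware receives the packet first. This Python sketch is an illustrative model only. The listener tables and application names are hypothetical, and the real dispatch happens inside Comware and the kernel, not in user code.

```python
def deliver(protocol, dst_port, comware_listeners, container_listeners):
    """Pick the receiving application for a packet addressed to a Comware IP.

    Both listener tables map (protocol, destination port) to an application
    name. Comware applications take precedence when both sides listen on the
    same key. Returns None when no application listens on the key.
    """
    key = (protocol, dst_port)
    if key in comware_listeners:
        return comware_listeners[key]
    return container_listeners.get(key)
```

If a third-party SSH server and the Comware SSH service both listen on TCP port 22, the Comware service receives the connection. A third-party service therefore needs a port that no Comware application uses.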

As shown in Figure 6, assign IP address 2.2.2.1/24 to GE1/0/1, 3.3.3.1/24 to GE1/0/2, and 10.10.3.3/24 to interface LoopBack 0. You can then view the following routes in the routing table of the third-party container.

· A default route: Packets destined for subnets that are not directly connected are sent to Comware for processing through interface vrfif0, with source address 10.10.3.3.

· A direct route corresponding to interface LoopBack 0: The output interface of packets destined for 10.10.3.0/24 is Loop0.

· A direct route corresponding to GE1/0/1: Packets destined for 2.2.2.0/24 are transmitted to Comware for processing via eth1.

· A direct route corresponding to GE1/0/2: Packets destined for 3.3.3.0/24 are transmitted to Comware for processing via eth2.

· A local route: Packets destined for 127.0.0.0/8 are transmitted to the local host via InLoop0.
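The kernel chooses among these routes by longest-prefix match. The most specific matching prefix wins, and the default route (0.0.0.0/0) catches everything else. Packets that take the default route also carry source address 10.10.3.3. The Python sketch below uses the standard ipaddress module to model the lookup for the default, GE1/0/1, GE1/0/2, and local routes listed above, leaving out the LoopBack 0 route. It illustrates the selection rule only and is not how the kernel stores its table.

```python
import ipaddress

# (destination prefix, output interface) pairs taken from the list above.
ROUTES = [
    ("0.0.0.0/0", "vrfif0"),     # default route toward Comware
    ("2.2.2.0/24", "eth1"),      # direct route for GE1/0/1
    ("3.3.3.0/24", "eth2"),      # direct route for GE1/0/2
    ("127.0.0.0/8", "InLoop0"),  # local route
]


def lookup(dst, routes=ROUTES):
    """Return the output interface of the longest prefix that matches dst."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, iface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, iface)
    if best is None:
        raise LookupError(f"no route to {dst}")
    return best[1]
```

A packet to 2.2.2.9 therefore leaves through eth1, while a packet to a remote host such as 8.8.8.8 matches only the default route and is handed to Comware through vrfif0.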

Figure 6 Network diagram for third-party containers that share the network namespace of Comware

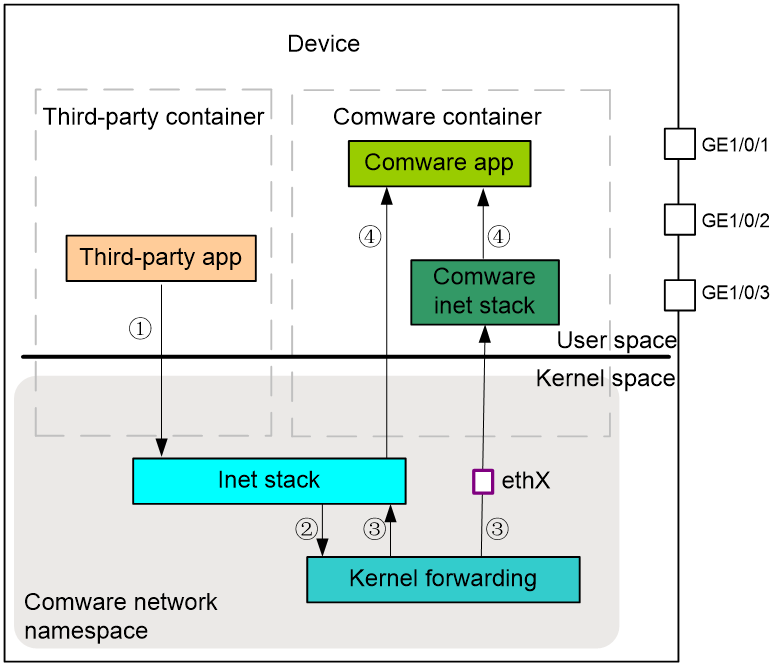

East-west traffic

The communication traffic between applications in third-party containers and Comware is referred to as east-west traffic.

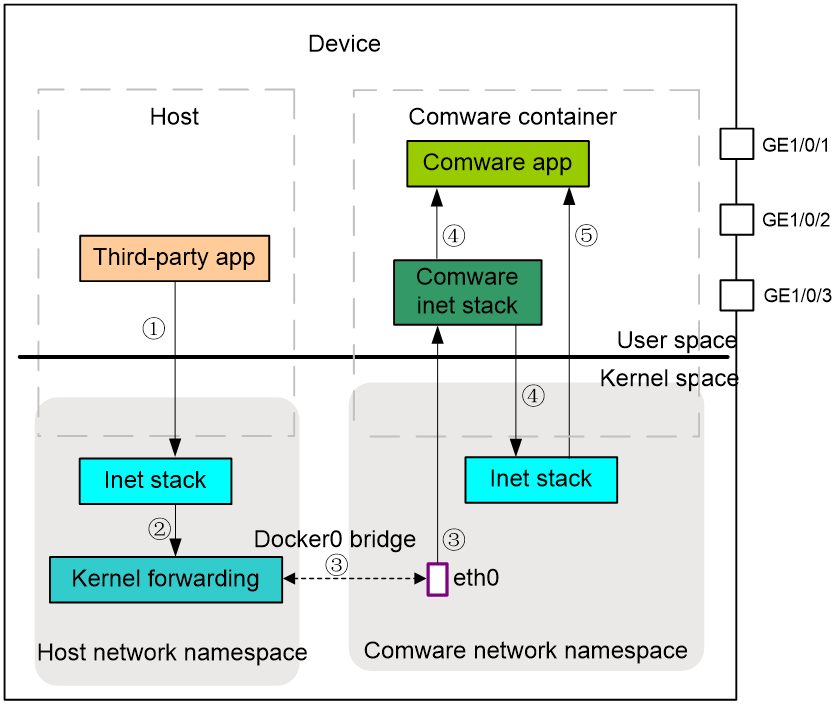

As shown in Figure 7, an application in a third-party container sends a packet to a Comware application as follows:

1. The third-party container transmits the packet to the kernel protocol stack (Inet stack).

2. The kernel protocol stack transmits the packet to the kernel forwarding module (Kernel forwarding) for processing.

3. Because the destination IP address is a local address, the kernel forwarding module must forward the packet to the Comware application. Based on the forwarding rules issued by Comware, the kernel forwarding module transmits the packet to the kernel protocol stack or the Comware protocol stack through interface ethX.

4. Based on the application layer port number, the kernel protocol stack or Comware protocol stack transmits the packet to the Comware application.

The process of handling packets sent from Comware applications to applications in third-party containers is similar.

NOTE: Communication between different third-party container applications on the same device is similar to communication between third-party container applications and Comware applications. The third-party container applications can interact directly through the kernel protocol stack and the kernel forwarding module.

Figure 7 Flowchart for handling packets sent from a third-party application to a Comware application

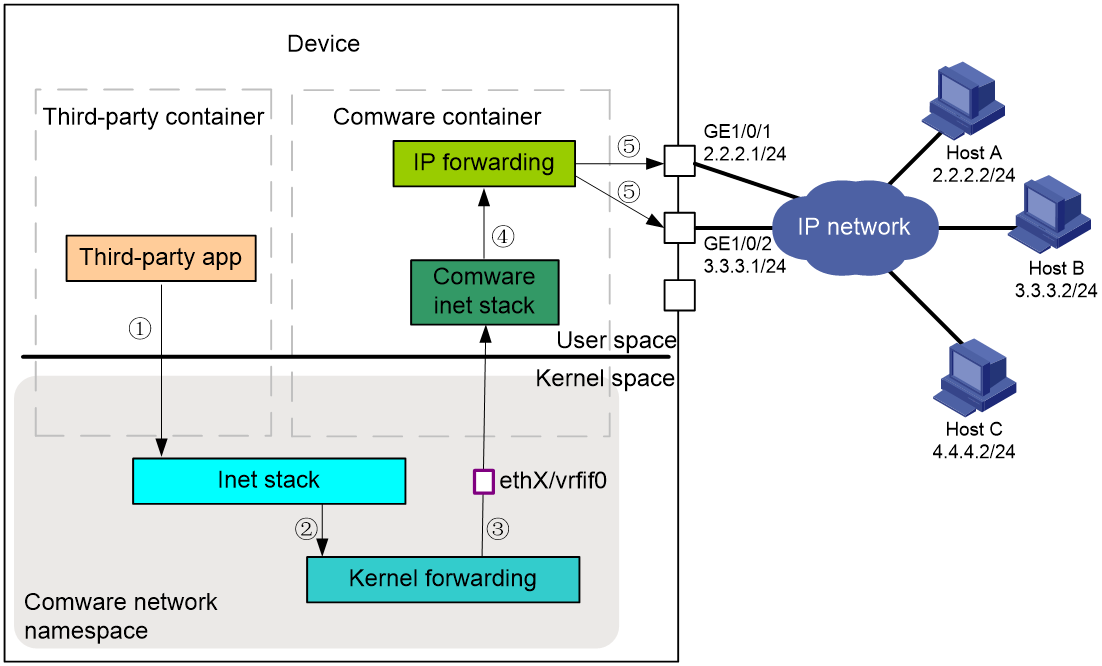

South-north traffic

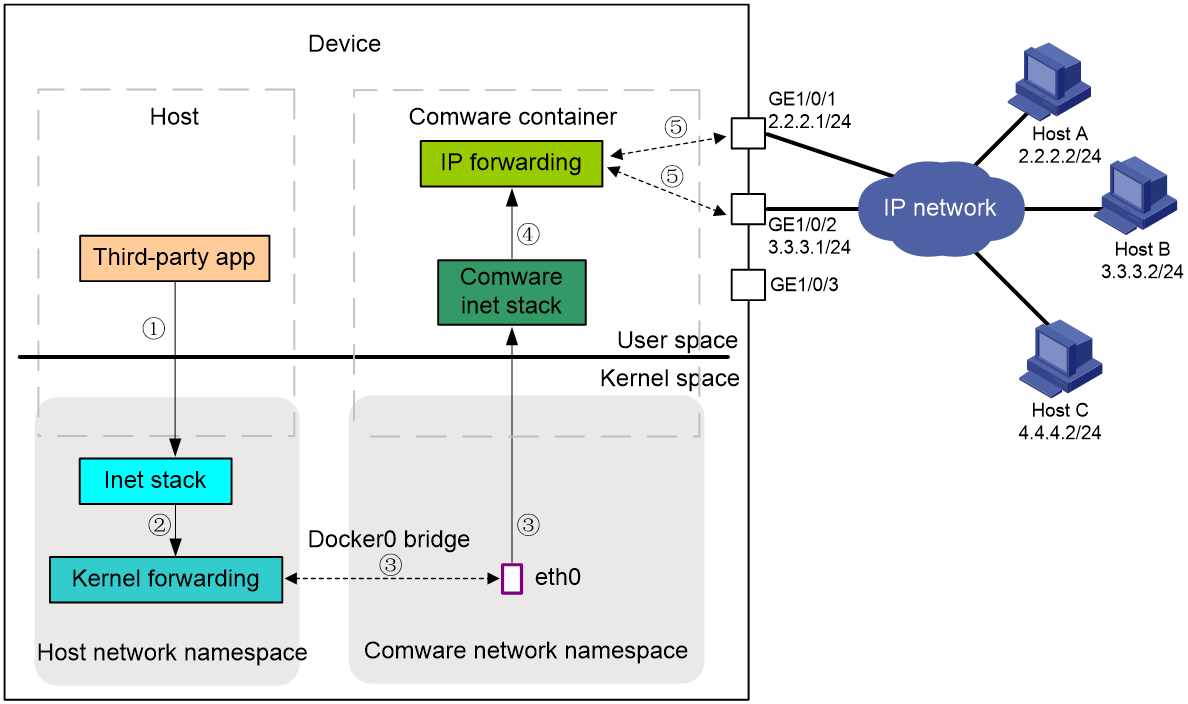

As shown in Figure 8, the process of an application in a third-party container transmitting a packet to a remote host is as follows:

1. The third-party application transmits the packet to the Linux kernel protocol stack (Inet stack) for processing.

2. The Linux kernel protocol stack forwards the packet to the kernel forwarding module for processing.

3. The kernel forwarding module looks up a matching route and transmits the packet to the Comware protocol stack through interface ethX or vrfif0.

4. The Comware protocol stack transmits the packet to the Comware forwarding module.

5. The Comware forwarding module performs a table lookup and forwards the packet from the physical interface.

Packets sent from a remote host to an application in a third-party container pass in sequence through the Comware protocol stack, the kernel forwarding module, and the kernel protocol stack.

Figure 8 Flowchart for handling packets sent from a third-party application to a remote host

Network communication of third-party containers that do not share the network namespace of Comware

Fundamentals

You might want a third-party container to communicate with other containers or devices by using an IP address independent of Comware, or you might not want third-party applications to see the addresses and interfaces of Comware. In either case, you can choose not to share the network namespace of Comware when creating the third-party container.

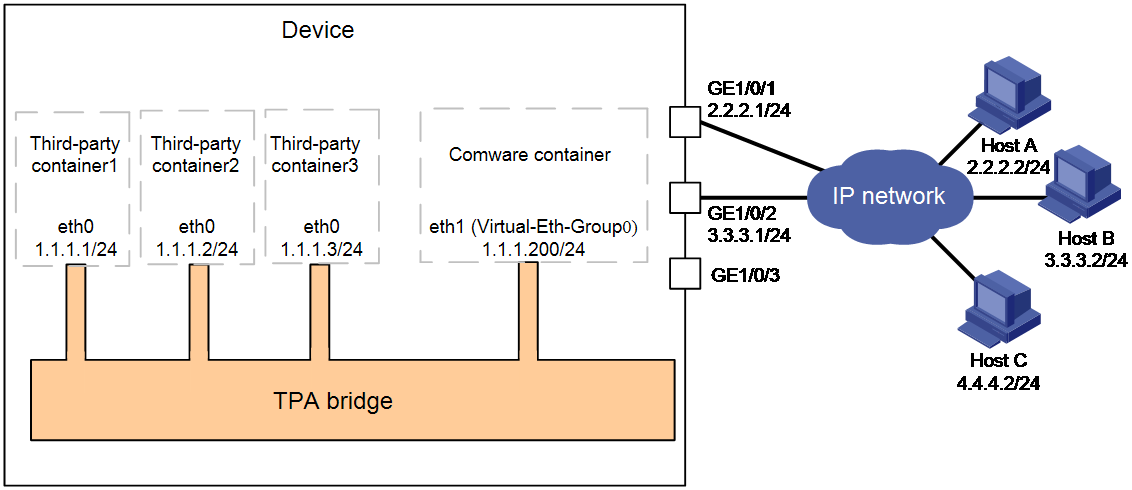

Third-party applications in containers that use a different network namespace than Comware might need to communicate with each other or with hosts on networks that are not directly connected. For this, you must create interface Virtual-Eth-Group 0. When you create interface Virtual-Eth-Group 0, Comware also creates a Third-party Application (TPA) bridge. The third-party applications use this bridge to communicate with each other or with those hosts.

As shown in Figure 9, after you create interface Virtual-Eth-Group 0 and specify IP address 1.1.1.200/24 for it, a TPA bridge and virtual network interface eth1 are created. When you create a Docker container that uses a different network namespace than Comware, Comware performs the following operations:

· Creates virtual interface eth0 for the container.

· Assigns the interface a free IP address that is on the subnet where Virtual-Eth-Group 0 resides, for example, 1.1.1.1/24, 1.1.1.2/24, and 1.1.1.3/24.

· Sets the default gateway IP address to the IP address of interface Virtual-Eth-Group 0.
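The three steps above amount to a simple allocation. Derive the subnet from the Virtual-Eth-Group 0 address, hand out a free host address on it, and use the Virtual-Eth-Group 0 address as the gateway. The Python sketch below models this with the standard ipaddress module. Handing out the lowest free address first is an assumption that happens to reproduce the example addresses 1.1.1.1 through 1.1.1.3. The actual Comware allocation policy is not documented here, and the function name is hypothetical.

```python
import ipaddress


def allocate_container_ip(group_if, in_use):
    """Pick a free address on the subnet of Virtual-Eth-Group 0.

    group_if is the interface address with its prefix, for example
    '1.1.1.200/24'. in_use is a set of addresses already assigned; the new
    address is added to it. Returns (container address/prefix, gateway).
    """
    iface = ipaddress.ip_interface(group_if)
    gateway = str(iface.ip)
    for host in iface.network.hosts():  # skips network and broadcast addresses
        candidate = str(host)
        if candidate != gateway and candidate not in in_use:
            in_use.add(candidate)
            return f"{candidate}/{iface.network.prefixlen}", gateway
    raise RuntimeError(f"no free address left in {iface.network}")
```

Under these assumptions, three successive calls with '1.1.1.200/24' return 1.1.1.1/24, 1.1.1.2/24, and 1.1.1.3/24, each with gateway 1.1.1.200, which matches the addresses in Figure 9.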

The routing table of third-party container 3 contains the following routes:

· A default route: Packets not destined for 1.1.1.0/24 are sent out of interface eth0 to next hop 1.1.1.200 (interface Virtual-Eth-Group 0) for Comware to process.

· A direct route: The output interface of packets destined for 1.1.1.0/24 is eth0.
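The two routes interact through standard longest-prefix matching. A destination inside 1.1.1.0/24 matches both routes, and the more specific direct route wins. Any other destination matches only the default route. The following Python sketch models this lookup for third-party container 3. The `Route` type and `lookup` function are illustrative, not a Comware or Linux API.

```python
import ipaddress
from typing import NamedTuple, Optional


class Route(NamedTuple):
    prefix: ipaddress.IPv4Network
    next_hop: Optional[ipaddress.IPv4Address]  # None means a direct route
    out_if: str


# The two routes described above for third-party container 3.
CONTAINER3_ROUTES = [
    Route(ipaddress.ip_network("0.0.0.0/0"), ipaddress.ip_address("1.1.1.200"), "eth0"),
    Route(ipaddress.ip_network("1.1.1.0/24"), None, "eth0"),
]


def lookup(routes, dst):
    """Longest-prefix match: the most specific route covering dst wins."""
    addr = ipaddress.ip_address(dst)
    matches = [r for r in routes if addr in r.prefix]
    if not matches:
        raise LookupError(f"no route to {dst}")
    return max(matches, key=lambda r: r.prefix.prefixlen)


for dst in ("1.1.1.2", "10.10.10.10"):
    r = lookup(CONTAINER3_ROUTES, dst)
    via = f"via {r.next_hop}" if r.next_hop is not None else "direct"
    print(dst, "->", r.out_if, via)
```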

Figure 9 Network diagram for third-party containers that do not share the network namespace of Comware

East-west traffic

On the same device, third-party applications in different containers are on the same network segment. They can communicate directly with each other through the TPA bridge and their eth0 interfaces.
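The document does not say how the TPA bridge is implemented. Assuming it behaves like an ordinary MAC-learning bridge such as a Linux bridge, direct container-to-container delivery works as follows. The bridge learns the port behind each source MAC address. It forwards frames for known destinations to that port only, and it floods broadcast or unknown destinations to every other port. The class and port names in this Python sketch are hypothetical.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningBridge:
    """Minimal model of a MAC-learning bridge (assumed TPA bridge behavior)."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.fdb = {}  # forwarding database: MAC address -> port last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the ports a frame arriving on in_port is sent out of."""
        self.fdb[src_mac] = in_port  # learn the sender's location
        out_port = self.fdb.get(dst_mac)
        if dst_mac == BROADCAST or out_port is None:
            # Broadcast (e.g. ARP request) or unknown destination: flood.
            return [p for p in self.ports if p != in_port]
        # Known destination: forward to its port only (nothing if it is the ingress port).
        return [] if out_port == in_port else [out_port]


# Hypothetical port names: the eth0 peer of each container, plus Comware's eth1.
bridge = LearningBridge(["c1-eth0", "c2-eth0", "c3-eth0", "eth1"])
mac1, mac2 = "02:00:00:00:00:01", "02:00:00:00:00:02"
print(bridge.receive("c1-eth0", mac1, BROADCAST))  # ARP request from container 1: flooded
print(bridge.receive("c2-eth0", mac2, mac1))       # ARP reply: sent to c1-eth0 only
```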

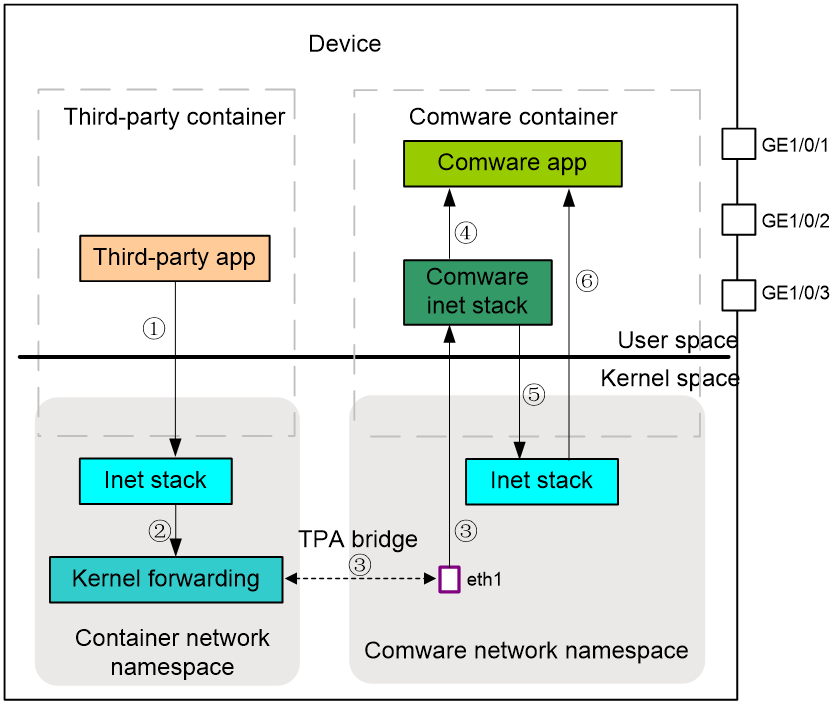

Third-party container applications transmit packets to Comware applications through the internal interface, as shown in Figure 10.

1. The application in the third-party container transmits a packet to the Linux kernel protocol stack.

2. The kernel protocol stack transmits the packet to the kernel forwarding module for processing.

3. The kernel forwarding module transmits the packet from the network namespace of the third-party container to the Comware container via eth0, TPA bridge, and eth1 in sequence. The Comware container matches the kernel route and sends the packet via interface vrfif0 to the Comware protocol stack.

4. If the Comware protocol stack can process the application's packet, it delivers the packet to the Comware application. Otherwise, it passes the packet to the Linux kernel protocol stack.

5. The kernel protocol stack transmits the packet to the Comware application.
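The steps above can be condensed into a hop list with one branch at step 4. The branch decides whether the Linux kernel protocol stack handles the packet before it reaches the Comware application. The following Python sketch is illustrative only. The hop labels are taken from the steps, and `east_west_path` is a hypothetical name.

```python
def east_west_path(stack_handles_app):
    """List the hops of a packet from a third-party container to a Comware application.

    stack_handles_app: whether the Comware protocol stack can process this
    application's packets itself (step 4).
    """
    hops = [
        "third-party application",
        "Linux kernel protocol stack",       # step 1 (container namespace)
        "kernel forwarding module",          # step 2
        "eth0", "TPA bridge", "eth1",        # step 3: leave the container namespace
        "vrfif0",                            # step 3: kernel route in the Comware container
        "Comware protocol stack",            # step 4
    ]
    if not stack_handles_app:
        hops.append("Linux kernel protocol stack")  # step 4 fallback, then step 5
    hops.append("Comware application")
    return hops


print(" -> ".join(east_west_path(stack_handles_app=False)))
```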

The process for handling packets sent from Comware applications to applications in third-party containers is similar.

South-north traffic

South-north traffic passes through Comware between the physical interface and the destination host. The destination host communicates with an application in a third-party container by using the IP address of the third-party container.

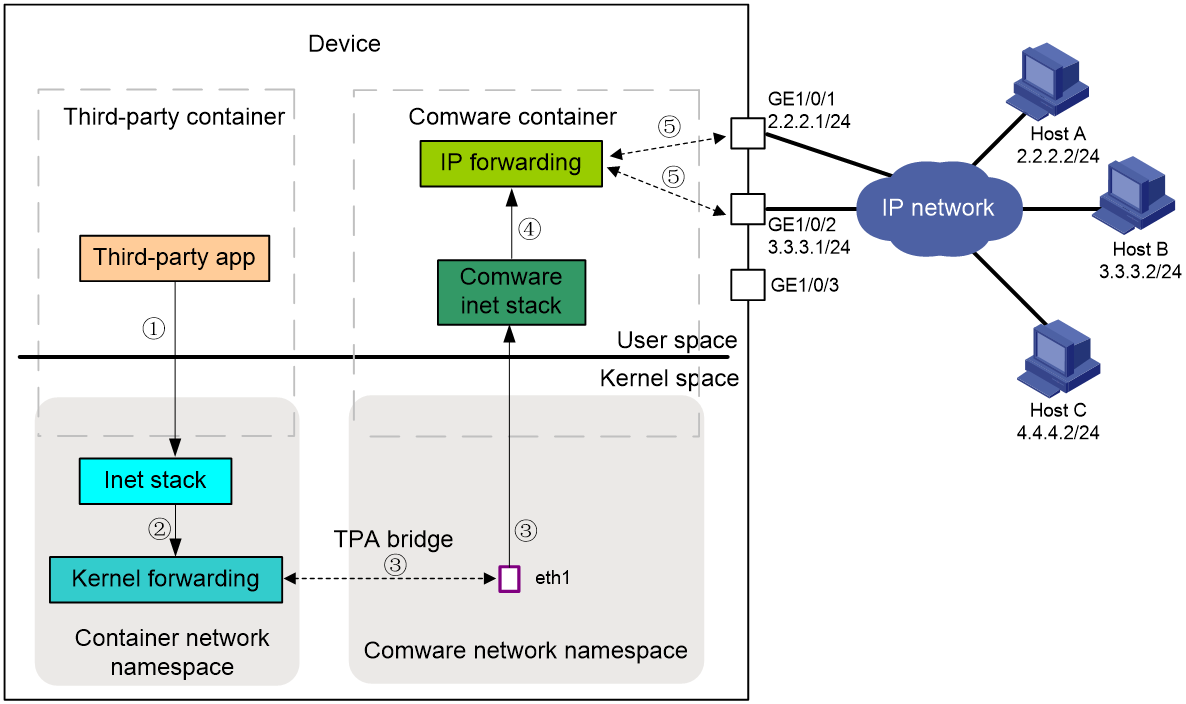

As shown in Figure 11, an application in a third-party container transmits packets to a destination host as follows:

1. The third-party application transmits the packet to the Linux kernel protocol stack for processing.

2. The Linux kernel protocol stack forwards the packet to the kernel forwarding module for processing.

3. The kernel forwarding module transmits the packet from the network namespace of the third-party container to the Comware protocol stack via eth0, the TPA bridge, and eth1 in sequence.

4. The Comware protocol stack transmits the packet to the Comware forwarding module.

NOTE: The administrator can choose whether to perform NAT on third-party application traffic before Comware transmits the packets. If the IP address of the third-party container can be advertised directly to external networks, NAT is not required. Otherwise, NAT translates the IP address of the third-party container into a Comware address. This enables communication with external networks and hides the third-party container from the outside world.

5. The Comware forwarding module performs a table lookup and forwards the packet out of the physical interface.

Packets sent from external destination nodes to applications in third-party containers are handled in a similar way, in the reverse direction.
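When NAT is enabled, replies from external nodes must be translated back to the container that originated the connection. The document does not specify the NAT variant. The following Python sketch assumes source NAT with port translation, so several containers can share one Comware address. It is simplified to track only the source address and port, whereas real NAT also tracks protocol and destination. When the container address is advertised directly, this step is skipped. The class name and the address 192.0.2.1 are hypothetical.

```python
import itertools


class SourceNat:
    """Sketch of source NAT with port translation for third-party container traffic.

    Outbound packets get the Comware address as their source. The mapping is
    remembered so that replies can be translated back to the container.
    """

    def __init__(self, comware_ip, first_port=40000):
        self.comware_ip = comware_ip
        self._next_port = itertools.count(first_port)
        self._out = {}   # (container IP, container port) -> translated port
        self._back = {}  # translated port -> (container IP, container port)

    def outbound(self, src_ip, src_port):
        """Translate the source of a packet leaving a third-party container."""
        key = (src_ip, src_port)
        if key not in self._out:
            port = next(self._next_port)  # no port-exhaustion handling in this sketch
            self._out[key] = port
            self._back[port] = key
        return self.comware_ip, self._out[key]

    def inbound(self, dst_port):
        """Map a reply sent to the Comware address back to the container, if known."""
        return self._back.get(dst_port)


nat = SourceNat("192.0.2.1")            # hypothetical Comware address
print(nat.outbound("1.1.1.3", 5000))    # container 3
print(nat.outbound("1.1.1.1", 5000))    # container 1, same source port
print(nat.inbound(40001))               # reply goes back to container 1
```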

Figure 11 Flowchart for handling packets sent from a third-party application to a remote host

Network communication of hosts that do not share the network namespace of Comware

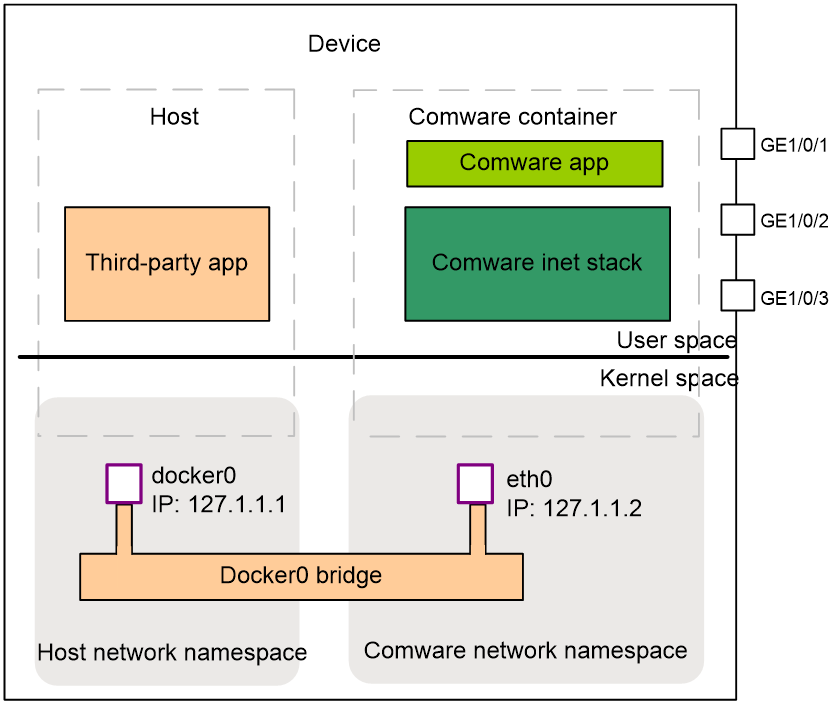

Fundamentals

The host and Comware do not share a network namespace. Interfaces on the host are driven and managed by Comware, so applications running directly on the host must communicate with the outside world through Comware's interfaces. For example, although Docker and Kubelet are functions integrated in Comware, they run directly on the host and do not share a network namespace with Comware. When Docker pulls an image from or uploads an image to Docker Hub, Comware must provide network support. When Kubelet accepts scheduling and management from the Kubernetes master, Comware must also provide network support.

As shown in Figure 12, to enable Comware to provide network support for applications on the host, the device automatically performs the following operations at startup:

1. Create a default Docker0 bridge in the host's Linux kernel, which is used to connect the network namespace of the host and the network namespace of Comware.

2. On the host network namespace side of the Docker0 bridge, create interface docker0 and set its IP address to 127.1.1.1.

3. On the Comware network namespace side of the Docker0 bridge, create interface eth0 and set its IP address to 127.1.1.2.

4. Generate two routes in the host's network namespace:

  · A default route: Packets destined for nodes other than Comware are sent to next hop 127.1.1.2 and passed to Comware through eth0 for processing.

  · A direct route: Packets destined for Comware applications use docker0 as the output interface.

Figure 12 Network communication between Comware and a host

East-west traffic

As shown in Figure 13, an application on the host transmits a packet to a Comware application through the internal interface as follows:

1. The application on the host transmits the packet to the Linux kernel protocol stack.

2. The kernel protocol stack transmits the packet to the kernel forwarding module.

3. The kernel forwarding module transmits the packet from the host's network namespace to the Comware protocol stack through docker0, the Docker0 bridge, and eth0 in sequence.

4. If the Comware protocol stack can process the application's packet, it delivers the packet to the Comware application. Otherwise, it passes the packet to the Linux kernel protocol stack.

5. The kernel protocol stack transmits the packet to the Comware application.

Packets sent from Comware applications to applications on the host are handled in a similar way, in the reverse direction.

Figure 13 Flowchart for handling packets sent from a host to a Comware application

South-north traffic

South-north traffic passes through Comware between the physical interface and the destination node. The destination node uses the IP address of the Comware Loopback0 interface to communicate with the host.

As shown in Figure 14, an application on the host transmits a packet to the destination node as follows:

1. The application in the host transmits the packet to the Linux kernel protocol stack for processing.

2. The Linux kernel protocol stack forwards the packet to the kernel forwarding module for processing.

3. The kernel forwarding module performs NAT on the packet, translating its source address into the IP address of Comware's Loopback0 interface. The packet then passes through docker0, the Docker0 bridge, and eth0 in sequence, from the host's network namespace to the Comware protocol stack.

4. The Comware protocol stack transmits the packet to the Comware forwarding module.

5. The Comware forwarding module performs a table lookup and forwards the packet out of the physical interface.

Packets sent from an external destination node to an application on the host are handled in a similar way, in the reverse direction.

Figure 14 Flowchart for handling packets sent from a host to a destination node

Managing containers through K8s

Figure 15 Comware 9 support for K8s management

As shown in Figure 15, Comware 9 integrates Kubelet, enabling the device to serve as a worker node in a K8s cluster. The device accepts scheduling and management (Mgmt) from the Kubernetes master. You can therefore use K8s to deploy the third-party containers running on the device at scale, in clusters, and to manage them centrally. Administrators deploy and manage containers on Comware 9 devices in essentially the same way as on servers, so they can keep their existing configuration habits.

Container usage guidelines

If a third-party application conflicts with a built-in Comware application, the device gives priority to the Comware application. For example, suppose a user enables both the built-in FTP server in Comware and an FTP server in a Docker container, and both use the same IP address and port number. When an FTP client accesses the device over FTP, the device responds to the request with the built-in Comware FTP server. The device uses the FTP server in the Docker container to respond only after the built-in Comware FTP server is disabled.
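The priority rule amounts to checking Comware's built-in servers before container servers for the same IP address and port. The following Python sketch illustrates only the rule, not how the device dispatches packets internally. The owner labels, `select_server`, and the address 192.0.2.1 are hypothetical.

```python
def select_server(listeners, ip, port):
    """Return which application answers a request to (ip, port), or None.

    listeners maps an owner name to the set of (ip, port) pairs on which that
    owner's server is currently enabled. Owner names are illustrative.
    """
    # Built-in Comware applications are checked first, so they win any conflict.
    for owner in ("comware", "container"):
        if (ip, port) in listeners.get(owner, set()):
            return owner
    return None


listeners = {
    "comware": {("192.0.2.1", 21)},    # built-in FTP server enabled
    "container": {("192.0.2.1", 21)},  # FTP server in a Docker container
}
print(select_server(listeners, "192.0.2.1", 21))
listeners["comware"].clear()           # disable the built-in FTP server
print(select_server(listeners, "192.0.2.1", 21))
```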

Do not perform container operations on Comware containers, either through Docker commands or through commands issued by Kubelet.

On Comware devices without a dedicated hard disk (HD) or flash memory, the running state of containers cannot be saved. Use stateless containers on such devices.

To prevent third-party containers from consuming excessive resources and affecting Comware containers and other key components of the device, the CPU and memory resources that each third-party container can use are limited at the factory. If you use the 'docker run' command to start a third-party container and specify CPU and memory resources that exceed the factory limits through the '--cpuset-cpus', '--cpuset-shared', and '--memory' parameters, the factory limits take precedence.
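The document does not define precisely what "exceeding" the factory limit means for a CPU set. The following Python sketch assumes two rules. A requested CPU set that is not within the factory CPU set is replaced by the factory set. Requested memory is capped at the factory value. The parsers accept the list format of '--cpuset-cpus' (for example, "0-2,5") and the b/k/m/g suffixes of '--memory'. The factory values and function names are hypothetical, and '--cpuset-shared' is not modeled.

```python
UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_cpuset(spec):
    """Parse a --cpuset-cpus style list such as "0-2,5" into a set of CPU IDs."""
    cpus = set()
    for part in spec.split(","):
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        elif part:
            cpus.add(int(part))
    return cpus


def parse_memory(spec):
    """Parse a --memory style value such as "512m" into bytes."""
    spec = spec.strip().lower()
    if spec[-1] in UNITS:
        return int(spec[:-1]) * UNITS[spec[-1]]
    return int(spec)


def effective_limits(cpuset_spec, memory_spec, factory_cpus, factory_mem):
    """Apply the rule that the factory limit takes precedence (sketch).

    Assumption: a CPU set that is not within the factory CPU set counts as
    exceeding the limit and is replaced by the factory CPU set.
    """
    cpus = parse_cpuset(cpuset_spec) if cpuset_spec else set(factory_cpus)
    if not cpus <= set(factory_cpus):
        cpus = set(factory_cpus)
    mem = parse_memory(memory_spec) if memory_spec else factory_mem
    return cpus, min(mem, factory_mem)


# Hypothetical factory limit: CPUs 2 and 3, 1 GiB of memory.
print(effective_limits("0-3", "4g", {2, 3}, 1024 ** 3))
print(effective_limits("3", "256m", {2, 3}, 1024 ** 3))
```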

Typical network applications

Containerized deployment of third-party applications that share the network namespace

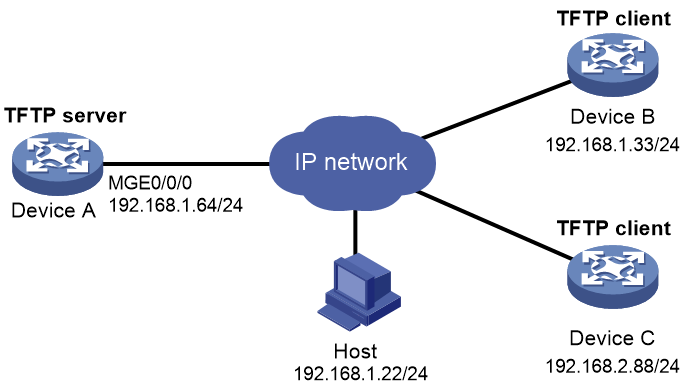

The Comware system supports the TFTP client function, but not the TFTP server function. With the container function, you do not need to modify the Comware system software or even access the Comware programming interface. Simply install a TFTP server container on Device A to deploy a TFTP server directly and enable the device to provide TFTP server services.

If IP addresses are scarce, the TFTP server can share IP address 192.168.1.64 with Device A. In this way, Device A, Device B, and Device C can all access the TFTP server at 192.168.1.64 and upload their respective configuration files to Device A.

Figure 16 Network diagram

Containerized deployment of third-party applications (separate network namespace and no NAT)

The administrator requires management software that monitors the current resource usage of the device, including CPU, memory, network, and file system. Developing this capability incrementally on the Comware system would take time and considerable manpower and resources. With the container function, you can immediately pull the cAdvisor image from a public image repository and run a cAdvisor container on the device from this image to meet the monitoring requirements.

Suppose the administrator wants to grant only some lower-level users access to cAdvisor. To avoid exposing the device's IP address (10.10.10.10), cAdvisor uses fixed public IP address 135.1.1.6. When creating the container, the administrator configures it not to share the network namespace of Comware and not to perform NAT.

Figure 17 Network diagram

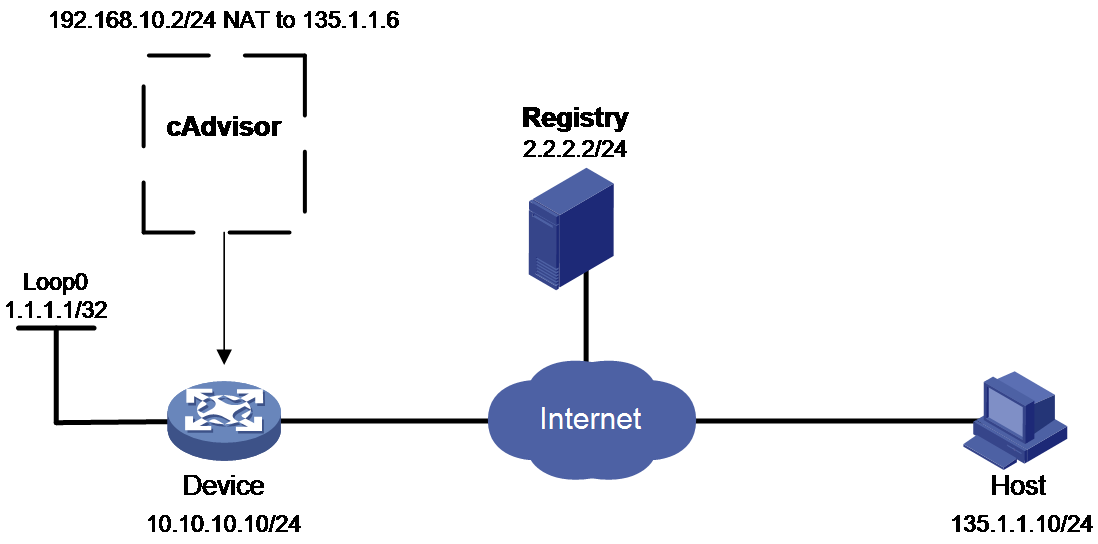

Containerized deployment of third-party applications (separate network namespace and NAT)

The administrator pulls an image from the image repository at 2.2.2.2 and installs a third-party application, cAdvisor, on the device to monitor the device's current resource usage, including CPU, memory, network, and file system. The device's IP address is 10.10.10.10, and cAdvisor uses private IP address 192.168.10.2/24. The container is configured not to share the network namespace of Comware, and NAT is deployed. Users can then access cAdvisor from the Host at fixed IP address 135.1.1.6 and monitor device resource usage from the web page provided by cAdvisor.
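The scenario implies a fixed mapping between the public address 135.1.1.6 and the private cAdvisor address 192.168.10.2. The following Python sketch assumes a one-to-one static NAT. Inbound requests have their destination rewritten, and replies have their source rewritten back. The Host address 203.0.113.7 and port 8080 (cAdvisor's usual default web port) are illustrative assumptions, and the function names are hypothetical.

```python
# One-to-one static NAT between the public address users reach and the
# private address of the cAdvisor container (addresses from the scenario above).
STATIC_NAT = {"135.1.1.6": "192.168.10.2"}  # public -> private
REVERSE_NAT = {private: public for public, private in STATIC_NAT.items()}


def translate_inbound(packet):
    """Rewrite the destination of a packet arriving from outside (e.g. the Host)."""
    return {**packet, "dst": STATIC_NAT.get(packet["dst"], packet["dst"])}


def translate_outbound(packet):
    """Rewrite the source of a reply leaving the cAdvisor container."""
    return {**packet, "src": REVERSE_NAT.get(packet["src"], packet["src"])}


# Hypothetical Host address and assumed cAdvisor port.
request = {"src": "203.0.113.7", "dst": "135.1.1.6", "dport": 8080}
inside = translate_inbound(request)
reply = translate_outbound({"src": inside["dst"], "dst": inside["src"], "sport": 8080})
print(inside["dst"], reply["src"])
```

Addresses that are not in the mapping pass through unchanged. In this sketch, traffic to the device address 10.10.10.10 is therefore not affected.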

Figure 18 Network diagram

Related documentation

· OCI official website: https://opencontainers.org/

· OCI runtime specifications: https://github.com/opencontainers/runtime-spec

· OCI image specifications: https://github.com/opencontainers/image-spec

· Kubernetes documentation: https://kubernetes.io/docs

· Docker documentation: https://docs.docker.com