- Released At: 27-01-2025

- Page Views:

- Downloads:

- Table of Contents

- Related Documents

-

H3C UIS Manager Maintenance Guide

Document version: 5W100-20250126

Copyright © 2025 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

The information in this document is subject to change without notice

Contents

Identifying the cluster HA feature· 4

Identifying the shared storage in the cluster 4

Identifying host information· 4

Identifying the uptime of a host 5

Identifying host performance monitoring information· 5

Identifying vSwitch information· 9

Identifying physical NIC status· 9

Identifying the running status of CAStools· 10

Verifying disk and NIC types· 10

Identifying VM performance monitoring statistics· 11

Identifying VM backup information· 14

Identifying license information· 15

Configuration cautions and guidelines· 17

Starting or shutting down a UIS host 18

IP address and host name change· 18

Managing physical interfaces bound to a vSwitch· 19

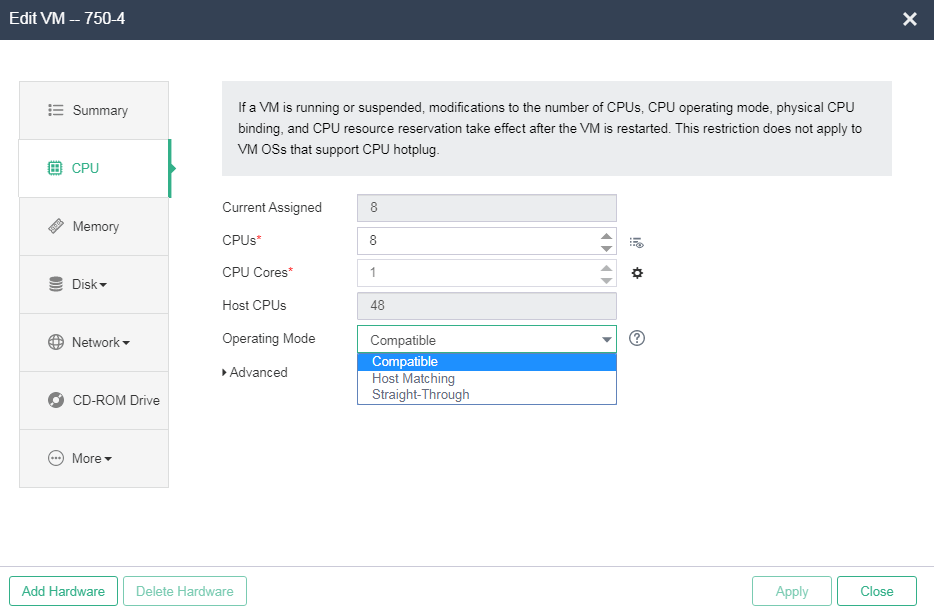

Replacing a disk on a CVK host 27

Changing the password for accessing UIS Manager 27

Changing the root password of a host from the Web interface· 28

Changing the admin password· 28

Scaling out and scaling in a cluster 29

Performing a heterogeneous or homogeneous migration· 29

Obtaining the XML file of the VM·· 29

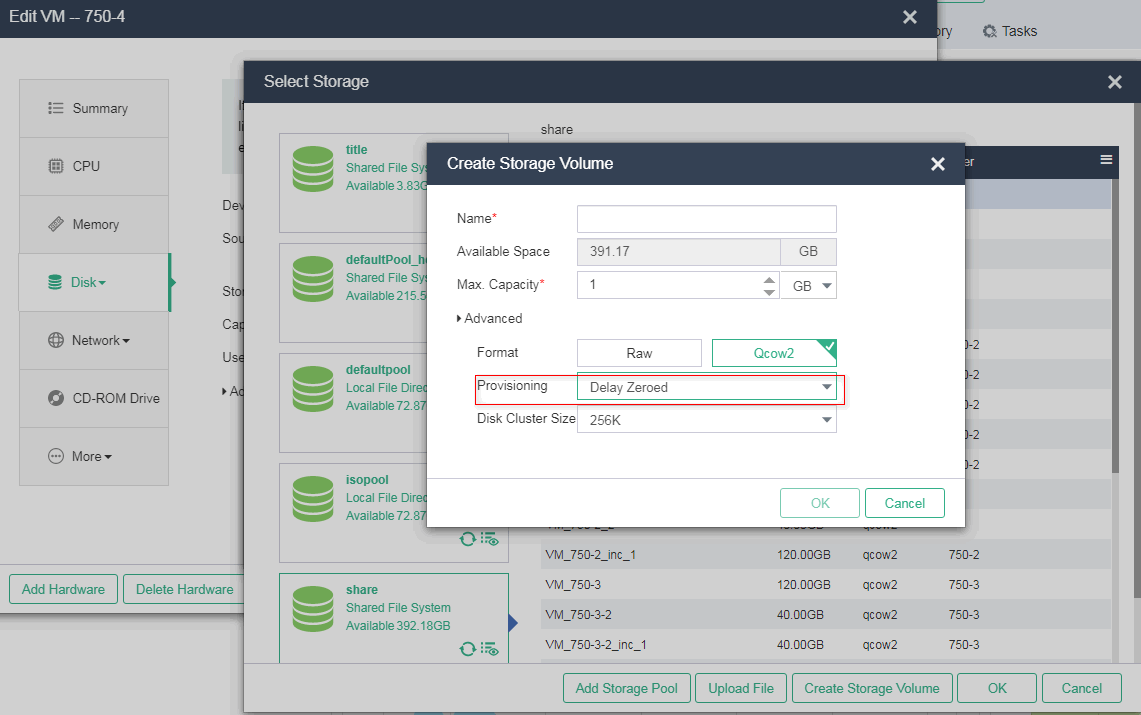

Identifying the storage volume for VM disk files· 32

Copying the XML file of the VM to the target host 32

Defining the VM through XML· 32

Clearing VM data on the original host 33

Configuring stateful failover 33

Replacing SSDs with NVMe drives· 34

Configuring storage disaster recovery· 34

Collecting logs of the UIS Manager 35

Collecting logs from the Web interface· 35

Collecting logs at the CLI of a CVK host 35

Collecting logs of CAStools· 40

Collecting logs of a VM operating system·· 40

Collecting logs of a Windows operating system·· 40

Viewing logs of a Windows operating system·· 42

Collecting logs of a Linux operating system·· 43

Troubleshooting tools and utilities· 44

Analysis with the Kdump file· 44

/var/log/ceph/ceph-osd.*.log· 49

/var/log/ceph/ceph-disk.log· 49

/var/log/ceph/ceph-mon.*.log· 50

/var/log/calamari/calamari.log· 50

/var/log/onestor_cli/ onestor_cli.log· 50

Distributed storage maintenance· 52

Rebalancing data placement when data imbalance occurs· 52

Resolving host issues caused by a full system disk· 53

Issues caused by network failure· 54

Handling failures to add or delete hosts· 54

Deleting a monitor node offline and restoring the node· 55

Deleting a storage node offline and restoring the node· 55

Missing or changing sdX device names due to host restart 55

Failure to display O&M and monitoring data· 58

Failure to display O&M and monitoring data (1) 58

Failure to display O&M and monitoring data (2) 59

Cluster initialization issues· 61

Compute cluster creation failure· 61

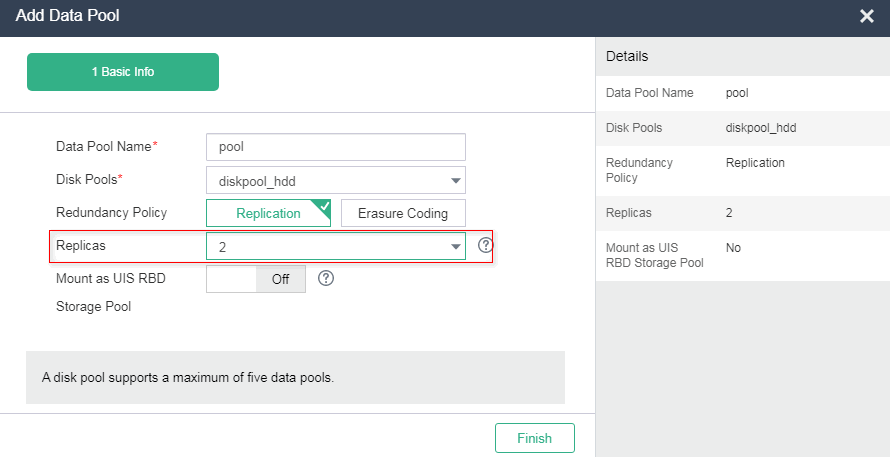

Storage configuration failure· 61

Health index lower than 100%·· 62

Deletion failure prompt for successful host deletion· 63

OSD process terminated unexpectedly· 67

UIS management node failure· 71

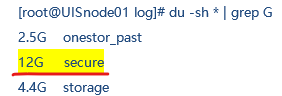

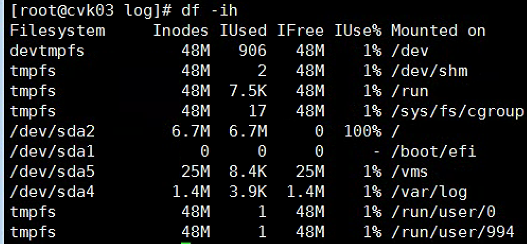

Down monitoring node due to high system disk usage· 73

Down monitoring node due to network error 74

Extent backup file decompression· 75

Script for data restoration· 75

Shared storage space reclamation· 76

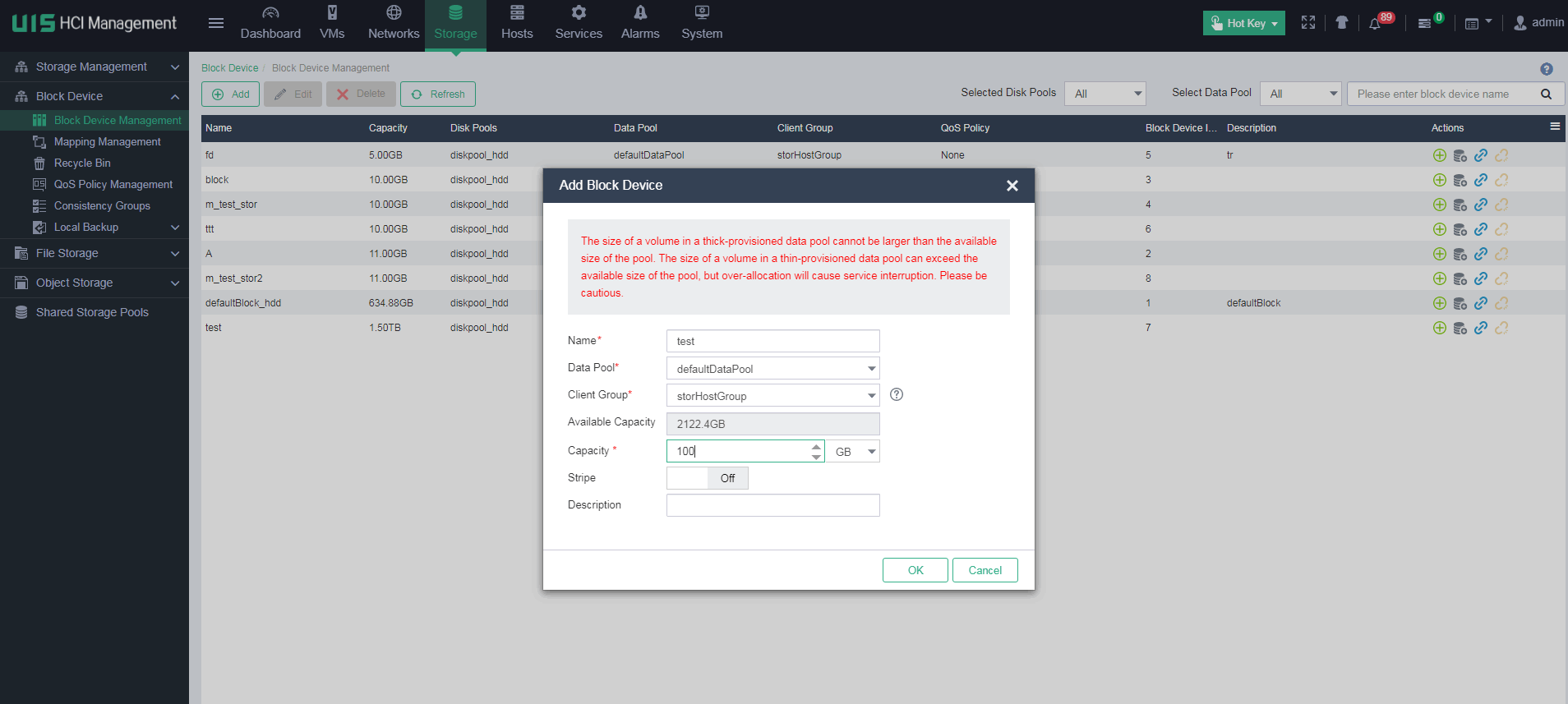

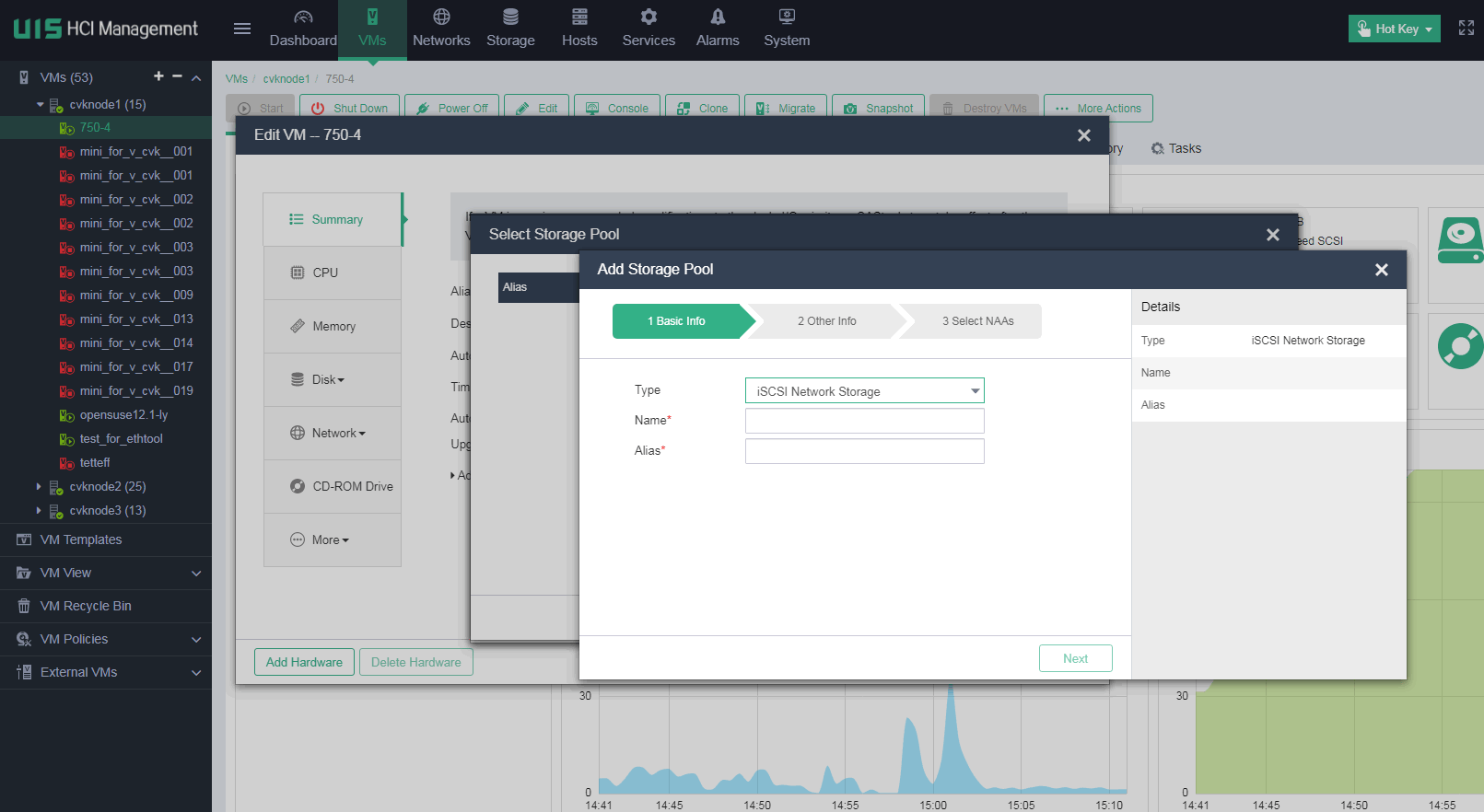

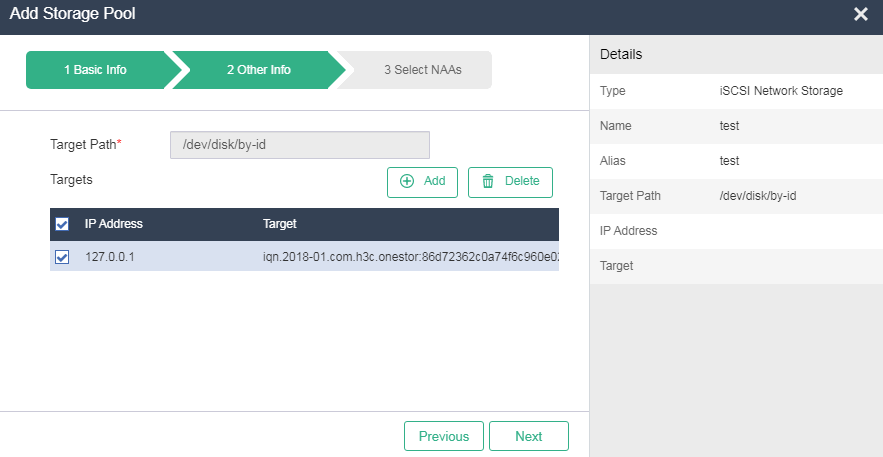

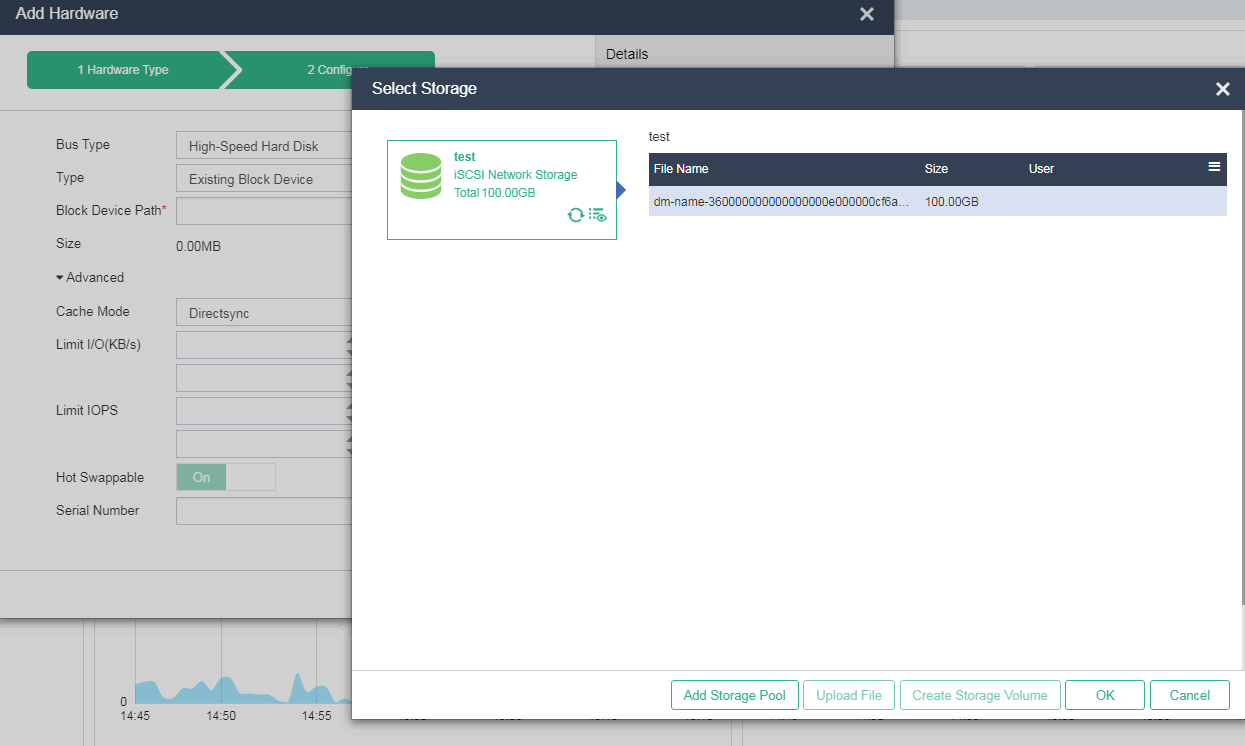

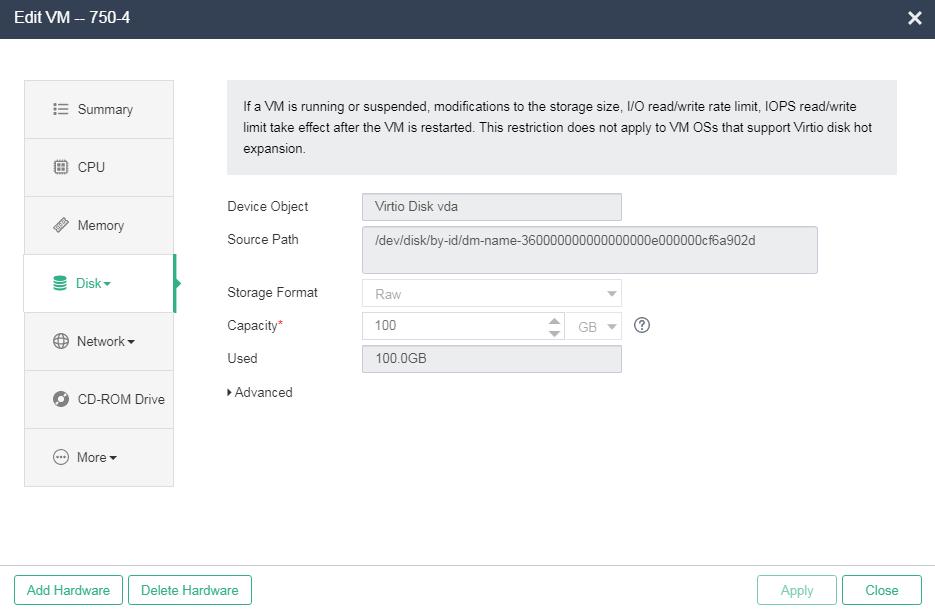

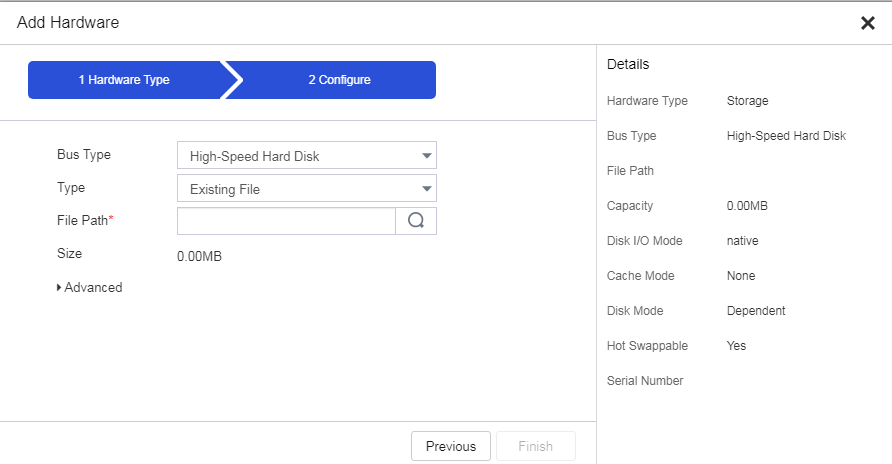

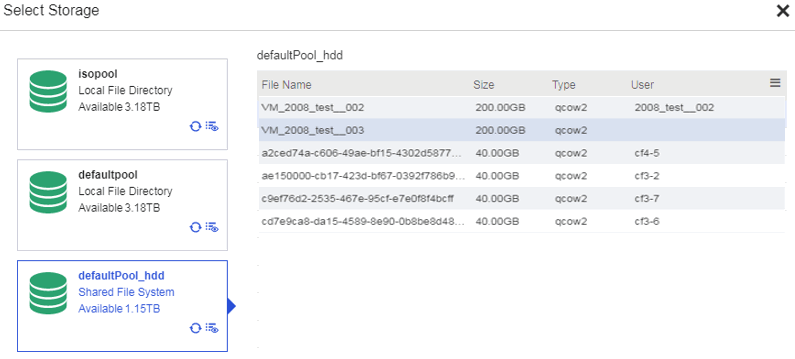

Releasing space of a shared volume by editing the VM bus type· 76

Releasing space of a shared volume by deleting files· 78

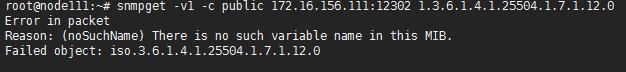

Get responses not received by an NMS· 78

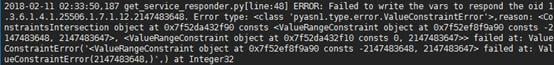

Data of a value-added service in the memory is different from that in the database· 80

Data inconsistency occurs if you mount a volume on a Windows client and create a snapshot online 80

The state of a snapshot is Creating, Deleting, or Restoring· 82

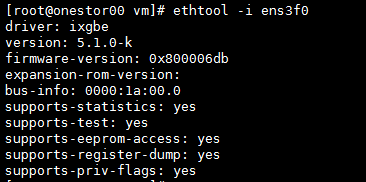

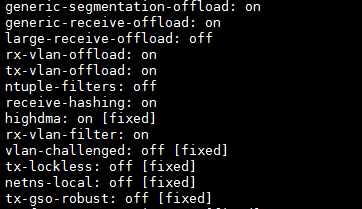

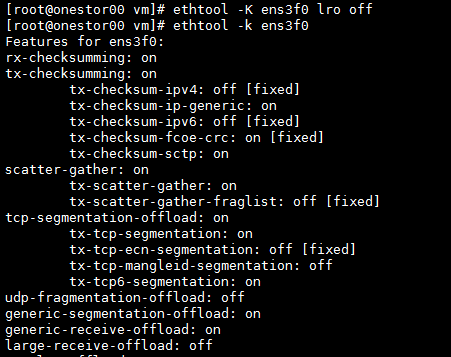

When the intel ixgbe network adapter is enabled with load balancing, storage access gets slow· 82

Using a QoS policy with low bandwidth and IOPS limits makes the storage disks of a client slow· 83

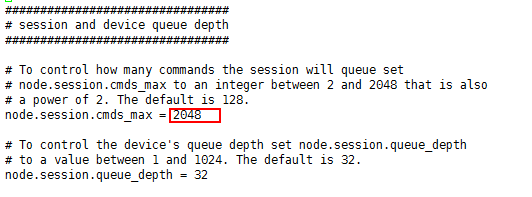

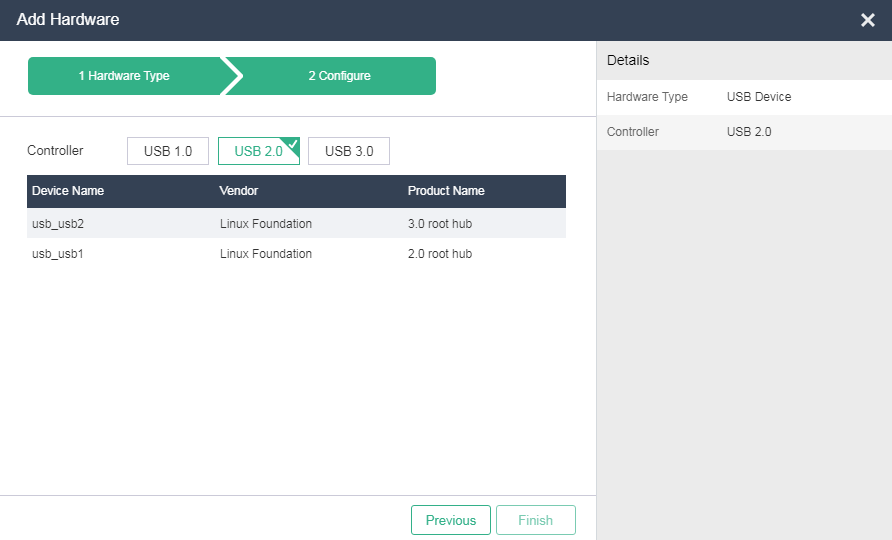

Failure to recognize an encryption dongle by VMs· 84

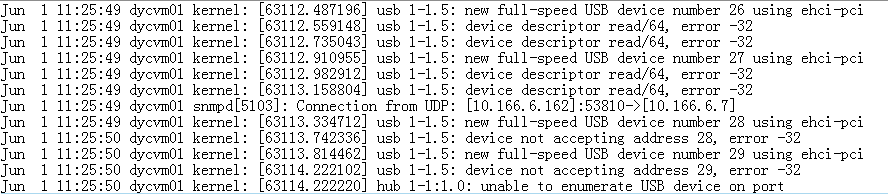

After a USB device is plugged into a CVK host, the host cannot recognize the USB device· 84

Use of USB-to-serial devices· 89

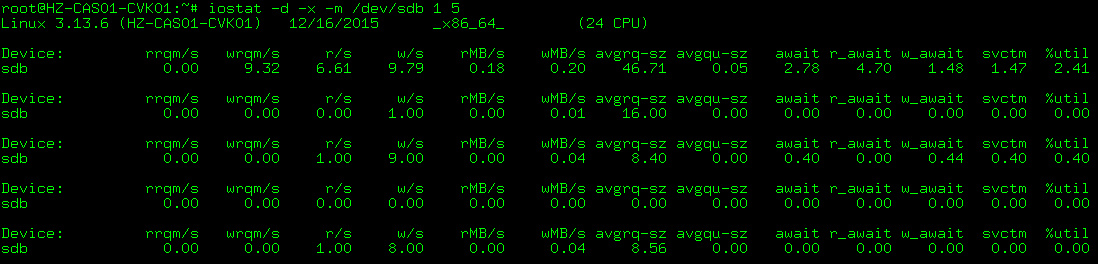

Disk performance optimization· 90

Guest OS and VM restoration· 96

Restrictions and guidelines· 96

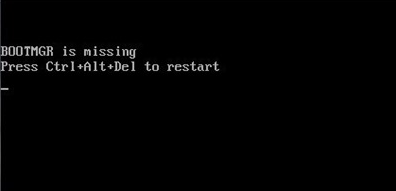

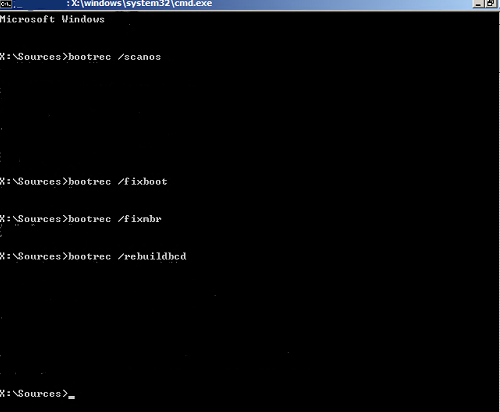

Windows repair operations and steps· 101

Space occupation issue due to manual operations· 106

Space occupation issue due to software issues· 107

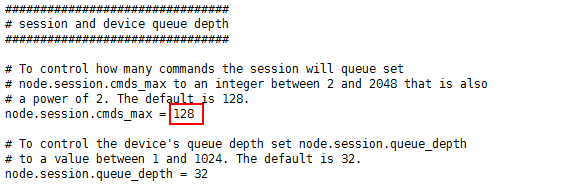

Message The maximum number of pending replies per connection has been reached generated· 108

Unified authentication issue· 109

CAS authentication service exception· 109

Cloud-native engine container service commands· 134

Process management commands· 151

Routine maintenance

Stable operation of the UIS system requires maintenance works that typically include reviewing alarms, identifying cluster status, host information, virtual machine (VM) status, license information, and reviewing logs.

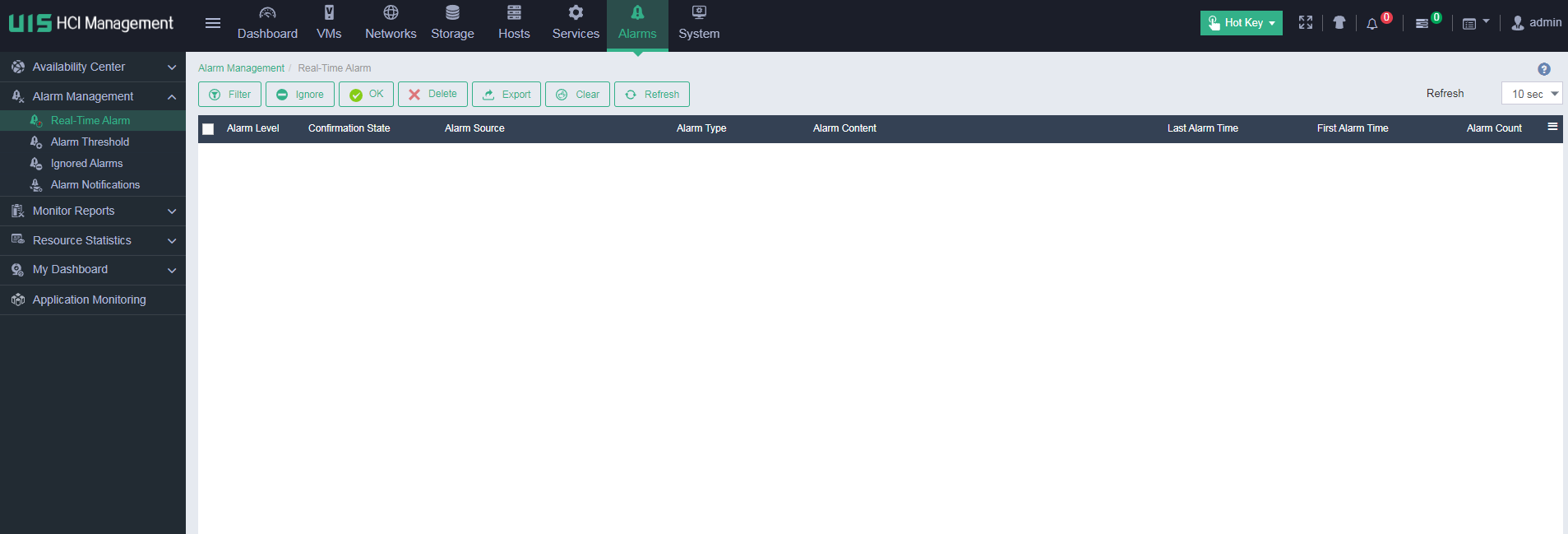

Reviewing alarms

The UIS platform main page displays indicators for critical alarms, major alarms, minor alarms, and information alarms generated during UIS system operation in the top right corner.

If critical or major alarms are displayed, the UIS system operation might contain anomalies that require immediate troubleshooting.

By clicking the corresponding alarm indicator, you can access the associated real-time alarm page. Alternatively, you can navigate to the Alarm Management > Real-Time Alarm page.

You can perform troubleshooting based on the alarm source, type, content, and the last alarm time on the real-time alarm page.

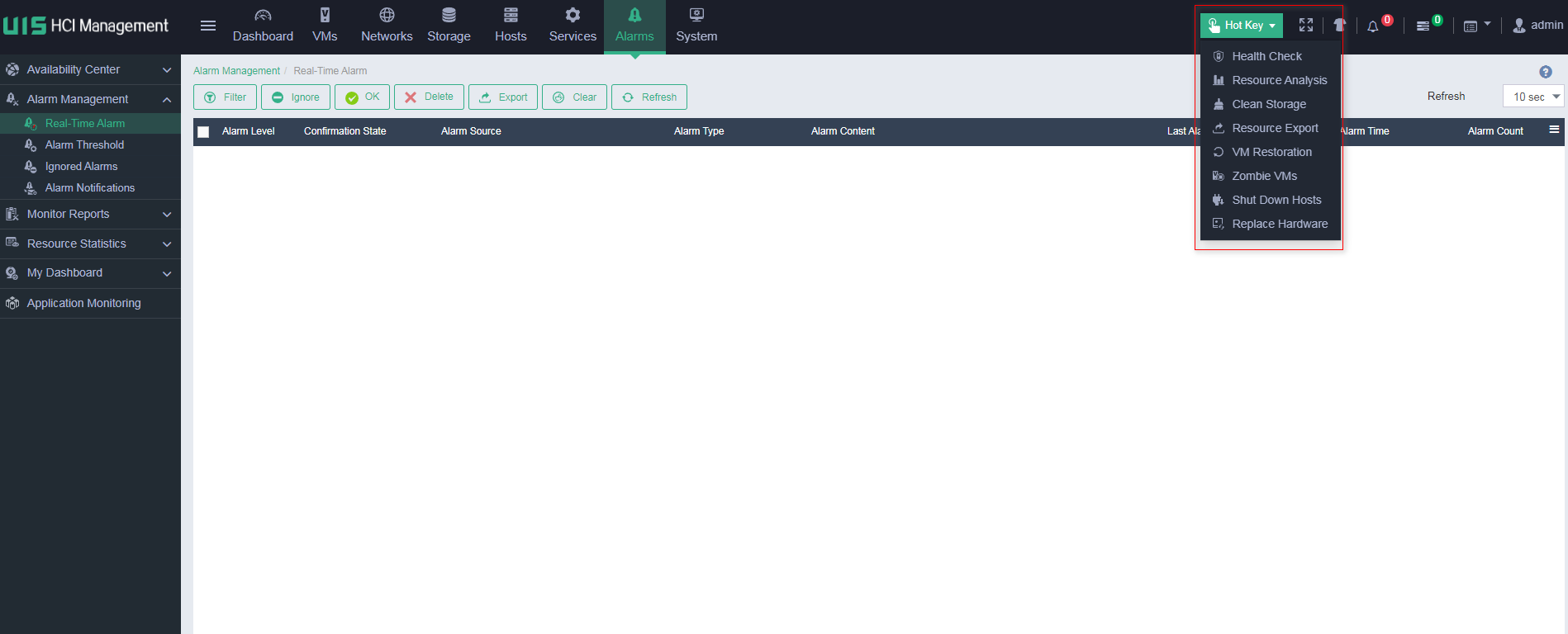

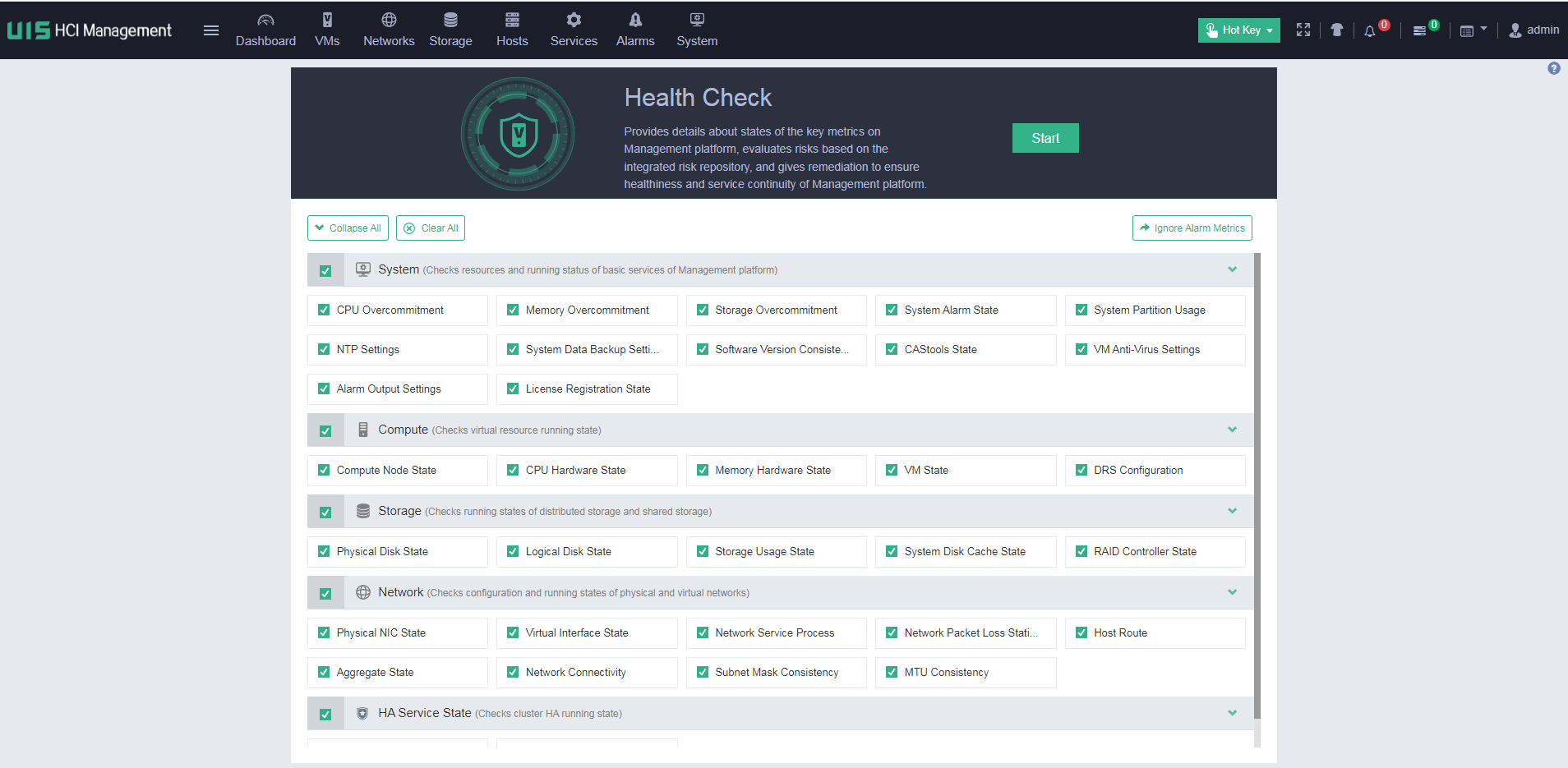

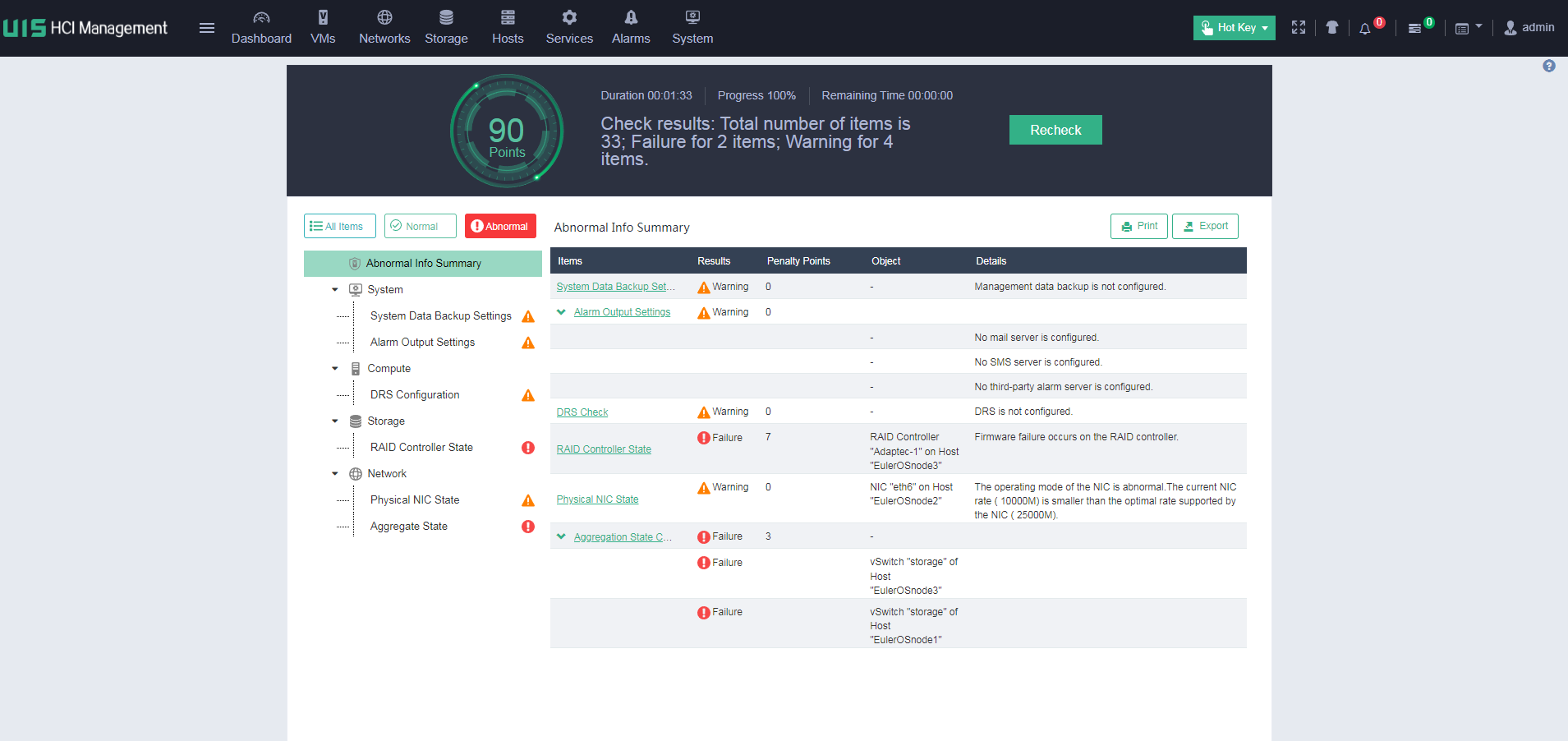

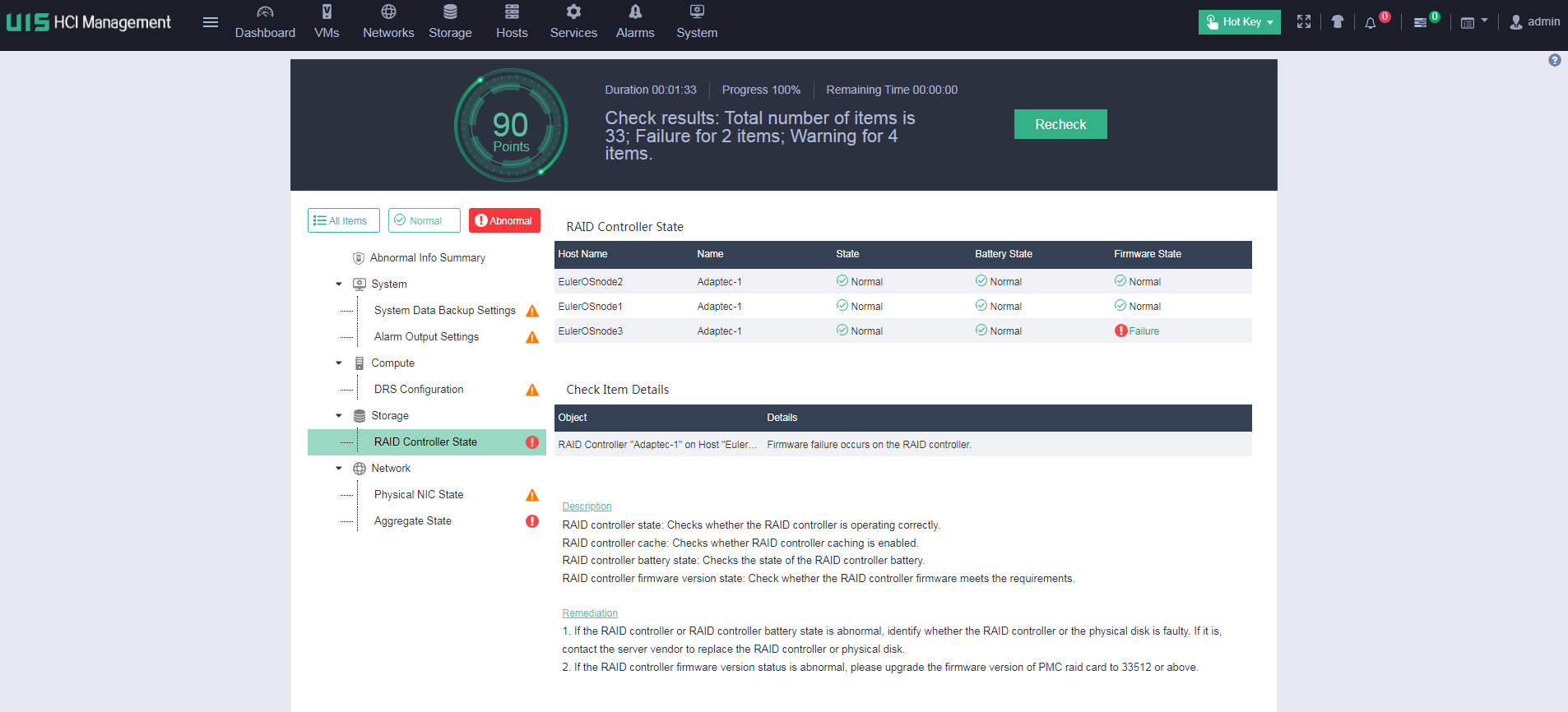

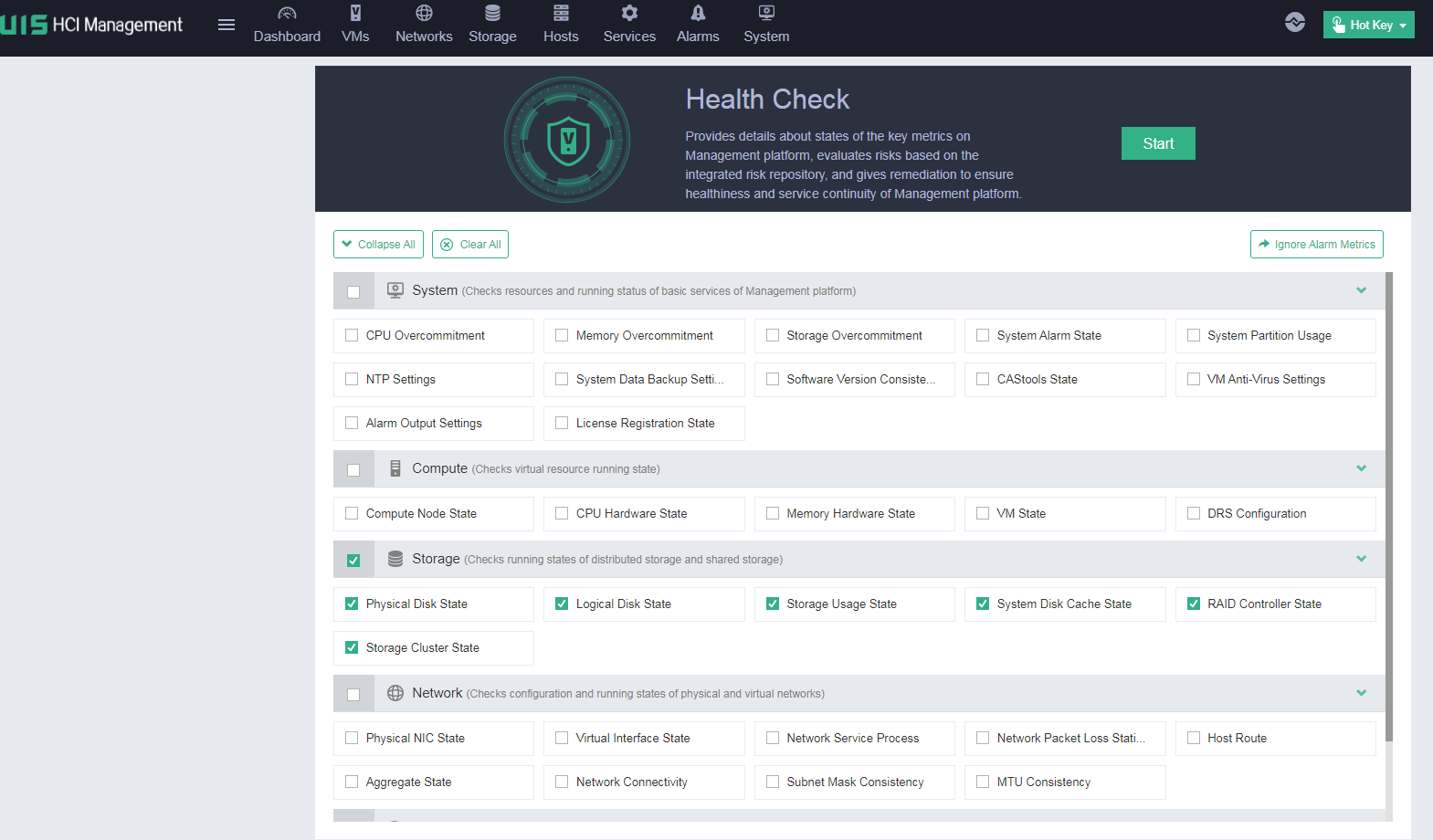

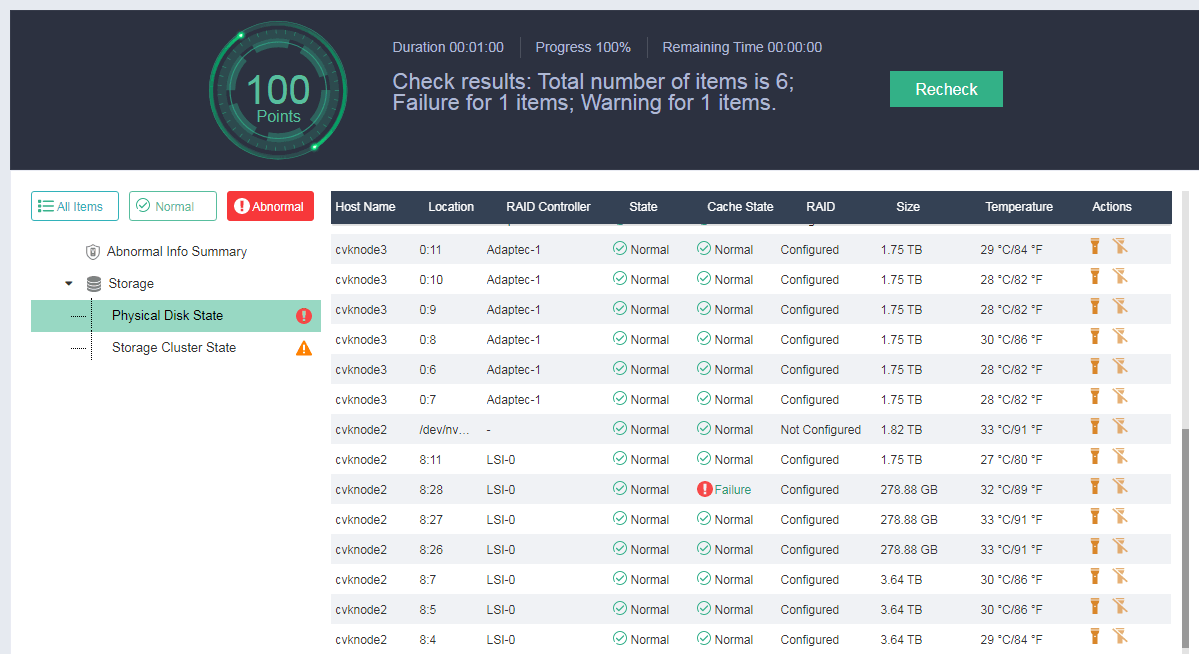

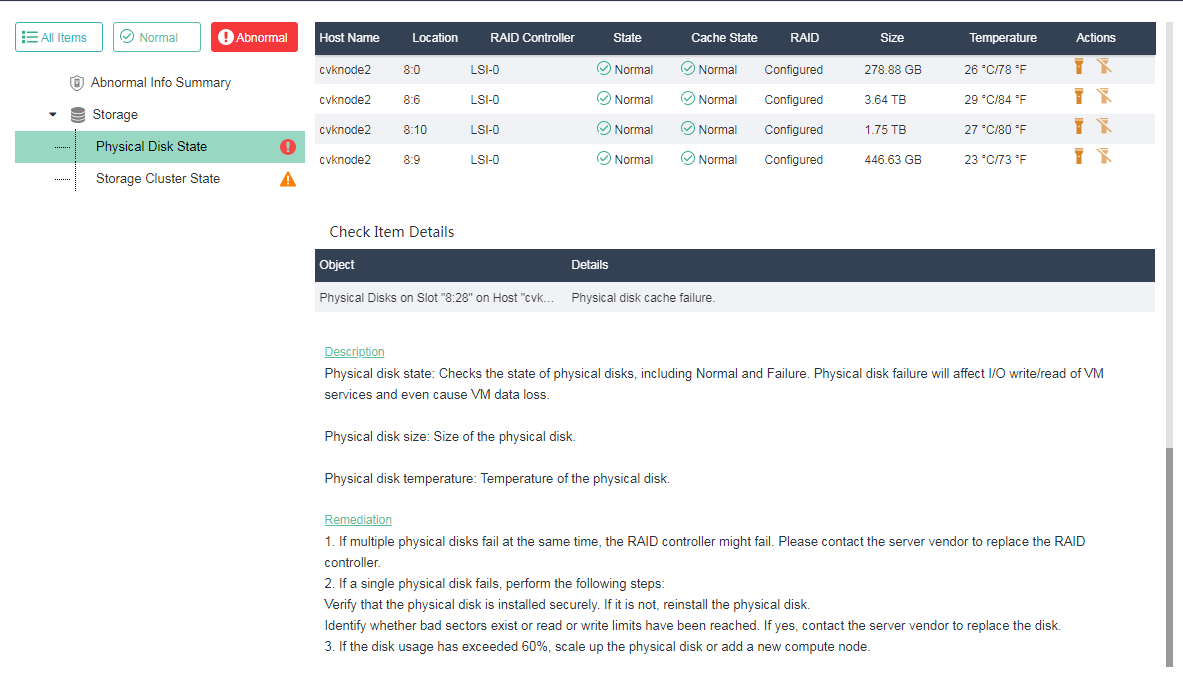

Performing health check

The UIS platform provides a hot key in the top right corner that allows you to perform health check, resource analysis, storage cleanup, resource export, VM restoration, and zombie VM operations.

Select Health Check to enter the health check page. You can perform health check for the specified modules.

You can print and export the health check results.

If a failure is detected in the health check, for example, a RAID controller or hard drive cache failure, you can click Remediation to resolve the issue.

Reviewing operation logs

The Operation Logs page records history operations in the UIS system, including front-end manual user operations and back-end automatic system operations.

The system provides important information about operation logs including` the operator name, finish time, login address, operation description, and failure result reason.

If an operation log message result is failed, you need to troubleshoot the failure based on the failure reason. If a large number of operation logs exist, you can download them for troubleshooting and analysis.

The following figure shows the UIS Manager operation logs.

Identifying cluster status

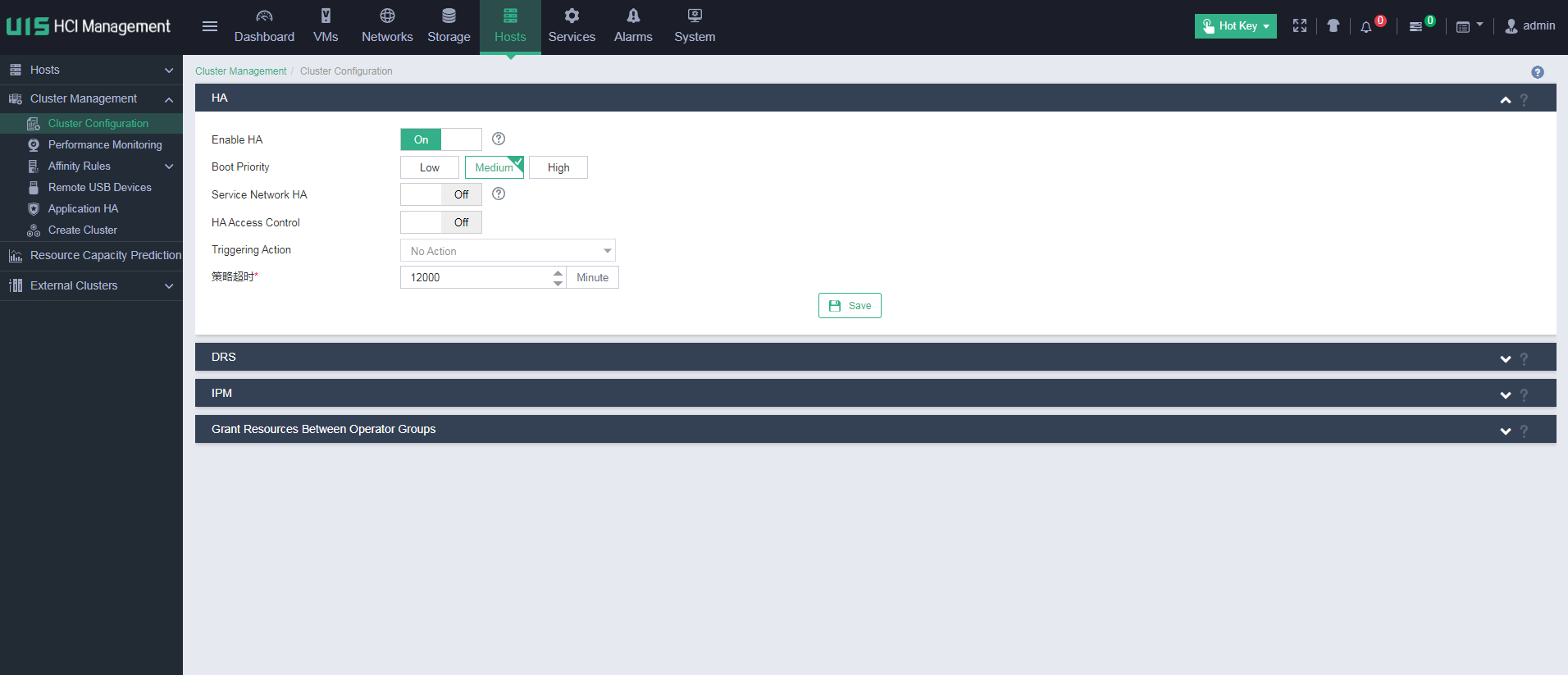

Identifying the cluster HA feature

Verify that the HA feature is enabled for the cluster. If HA is not enabled, and the next CVK host anomaly occurs in the cluster, the VMs on the CVK host cannot correctly migrate to other CVK hosts in the cluster.

After enabling HA for the cluster, you can enable service area HA. When the service area HA becomes faulty or a connectivity issue occurs for a VM, the VM can migrate to another host.

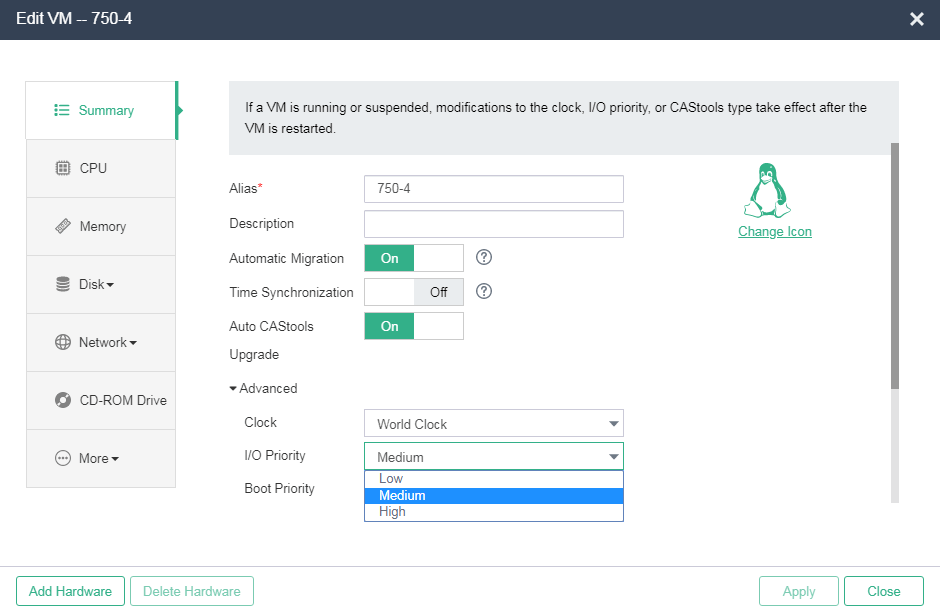

You can specify the boot priority for the VMs in the cluster. Options include Low, Medium, and High. The default boot priority is Medium. The VM boot priority is set upon adding or editing VMs. The boot priority specifies the startup order of VMs after a host failure occurs. The VMs restart on the new host according to the specified boot priorities. The VMs with the high, medium, and low boot priorities start up in descending order until all VMs restart or no more cluster resources are available.

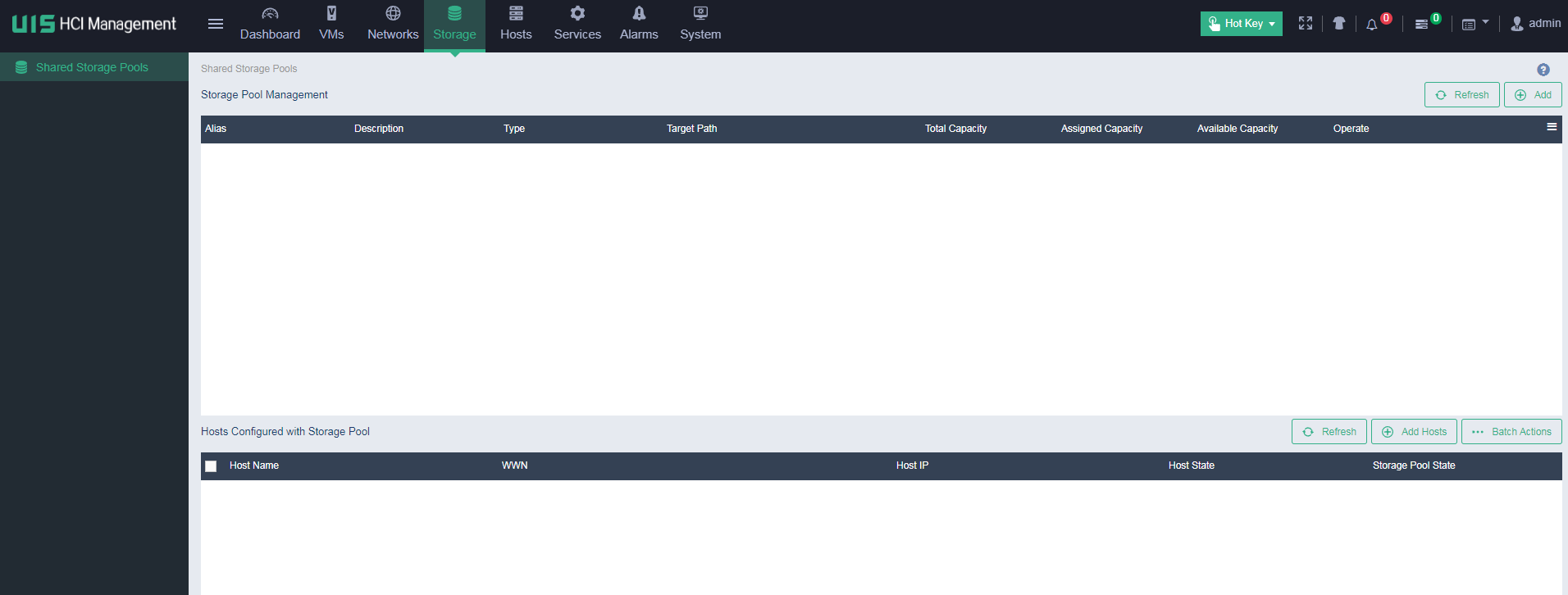

Identifying the shared storage in the cluster

During VM migration, if the target host has no shared storage mounted for VMs, the migration will fail.

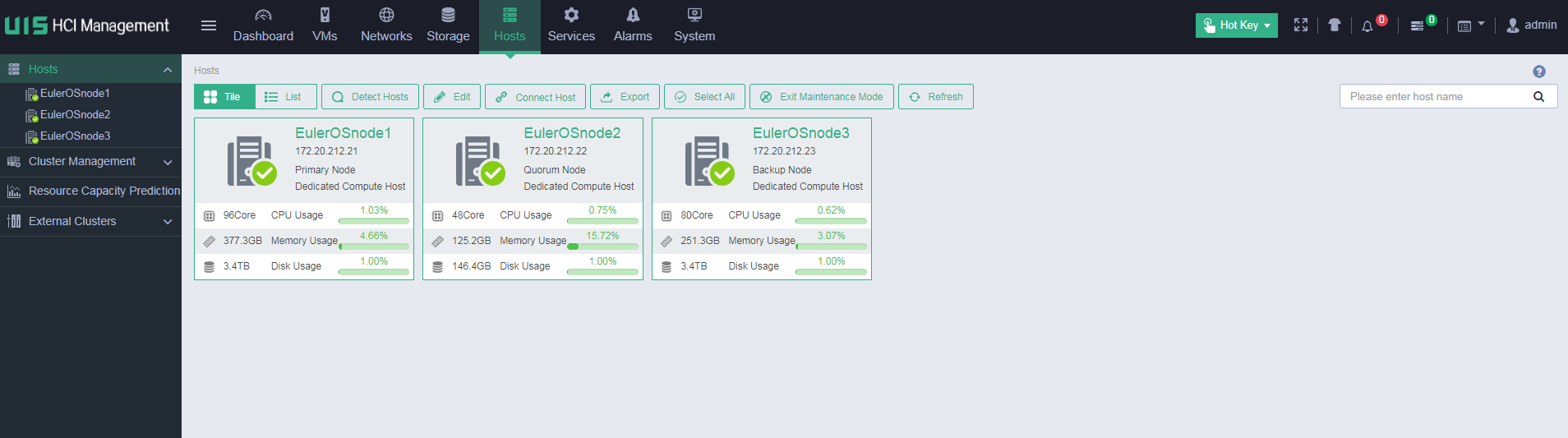

Identifying host information

Identifying host status

View host status on the Hosts page to identify whether abnormal hosts exist.

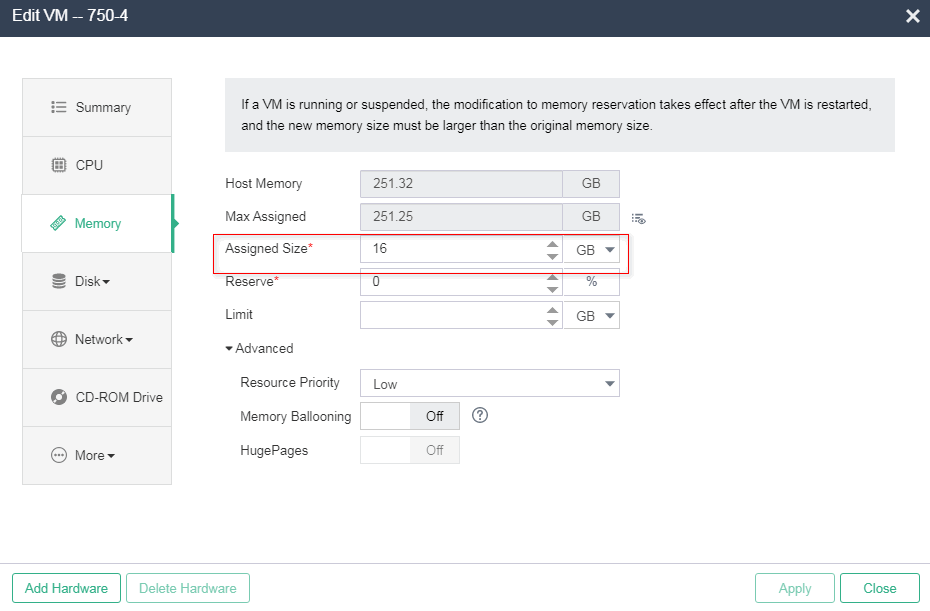

Check the CPU and memory usage of each host, and pay special attention to the hosts with usage exceeding 80%.

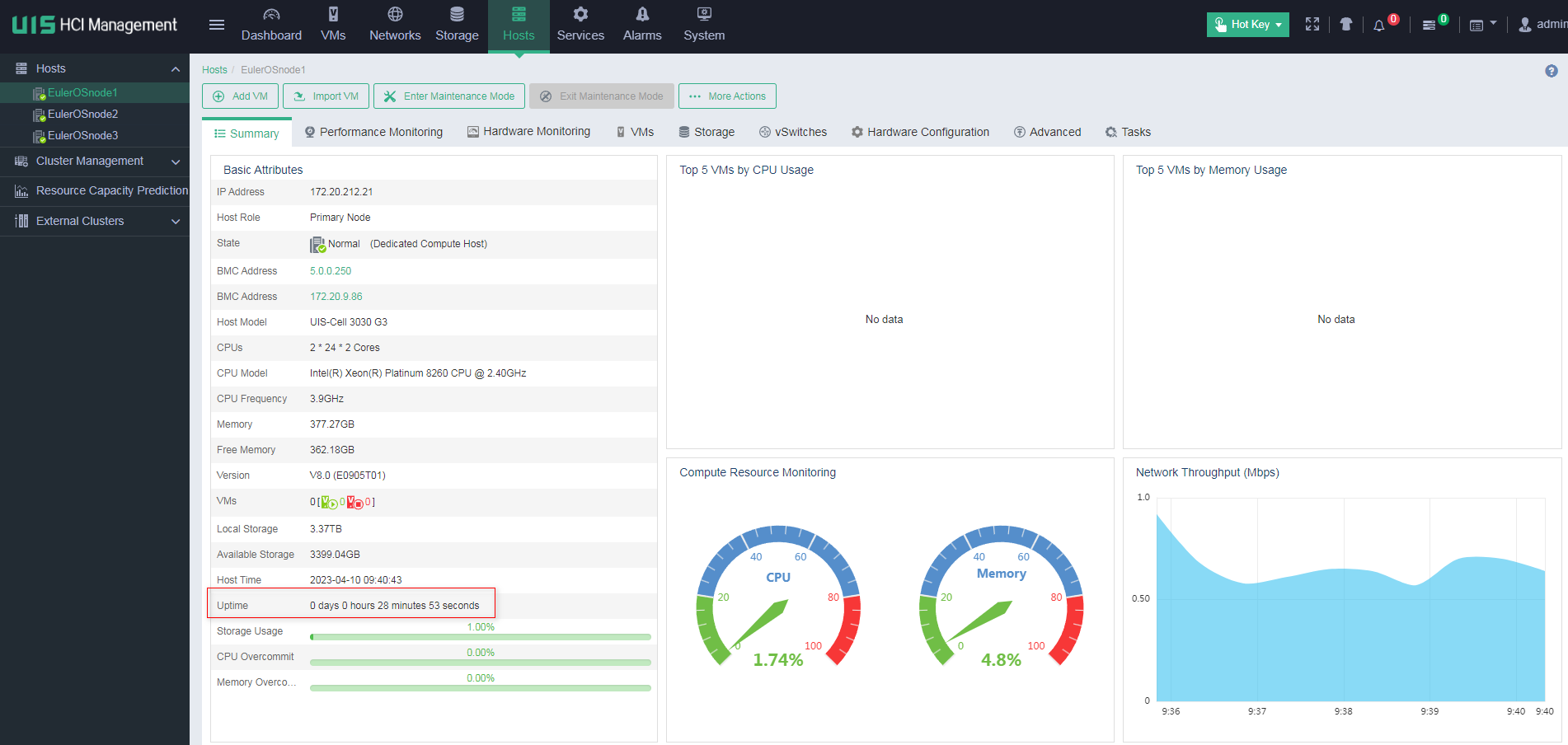

Identifying the uptime of a host

On the Summary page of a CVK host, you can see the detailed host configuration information. From the Uptime field, you can identify whether the host has been rebooted recently.

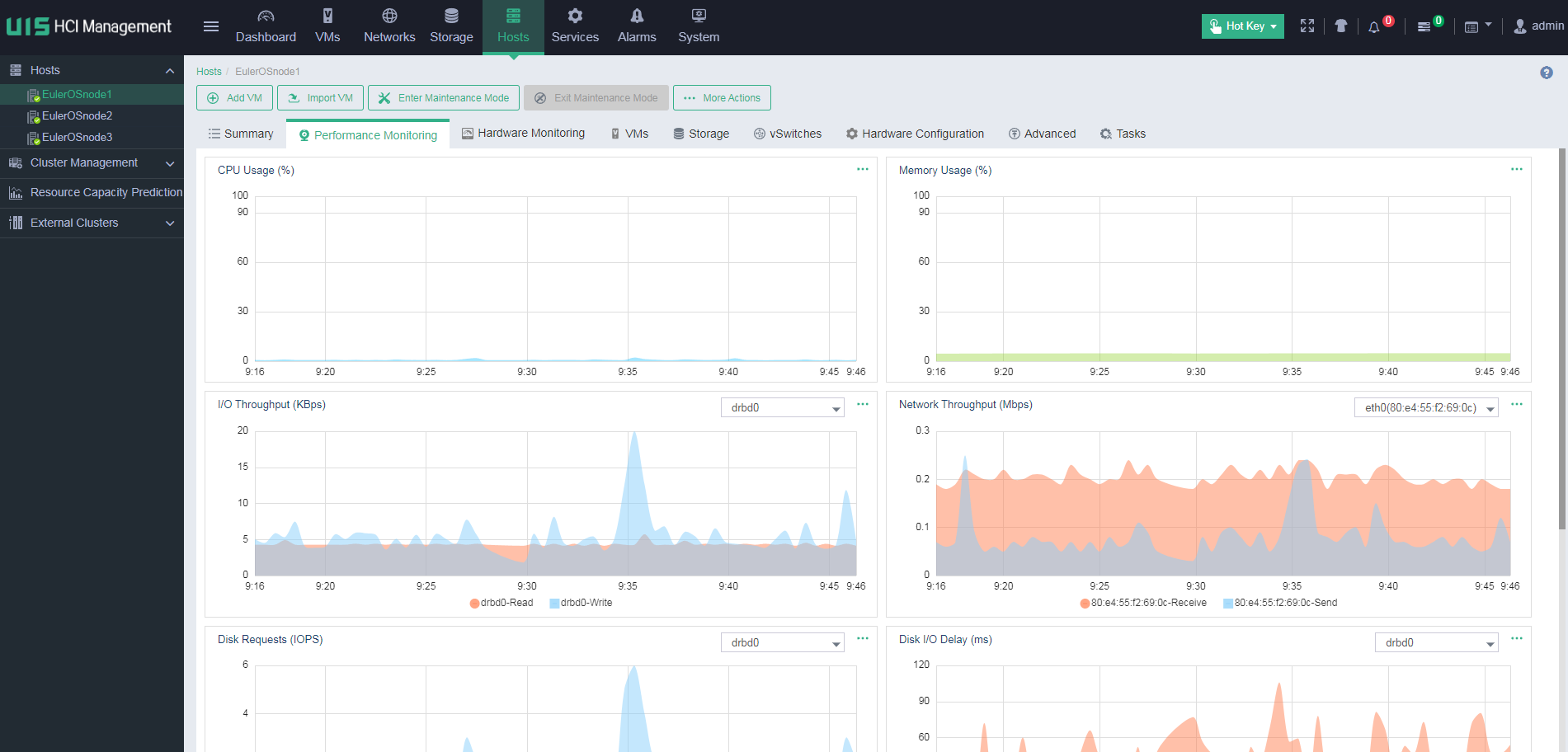

Identifying host performance monitoring information

On the Performance Monitoring page of the CVK host, you can see the CPU usage, memory usage, I/O throughput, network throughput, disk usage, and partition usage of the host.

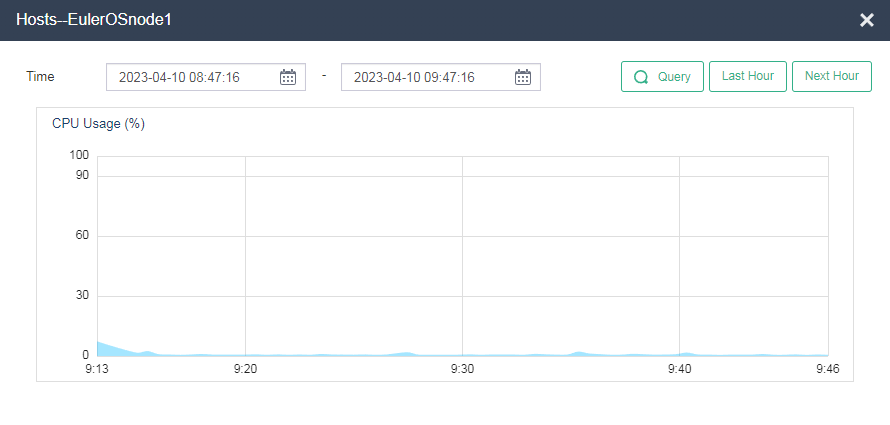

Identifying host CPU usage

On the Performance Monitoring > CPU Usage (%) page, click … to view CPU usage in a longer time range.

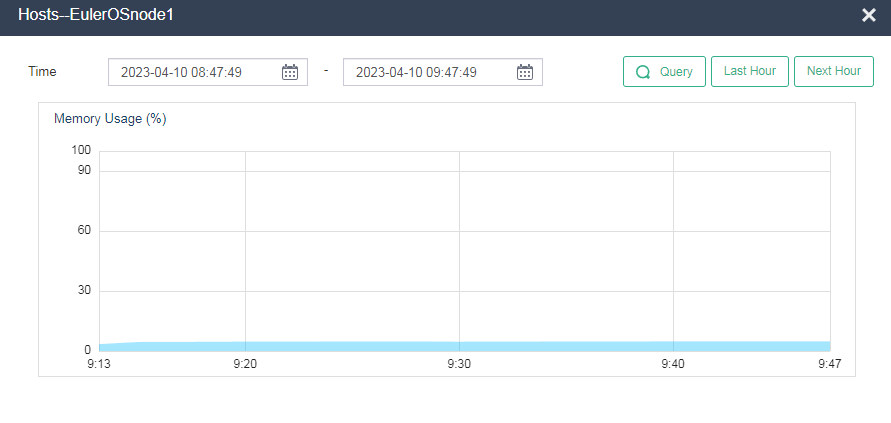

Identifying host memory usage

On the Performance Monitoring > Memory Usage (%) page, click … to view memory usage in a longer time range.

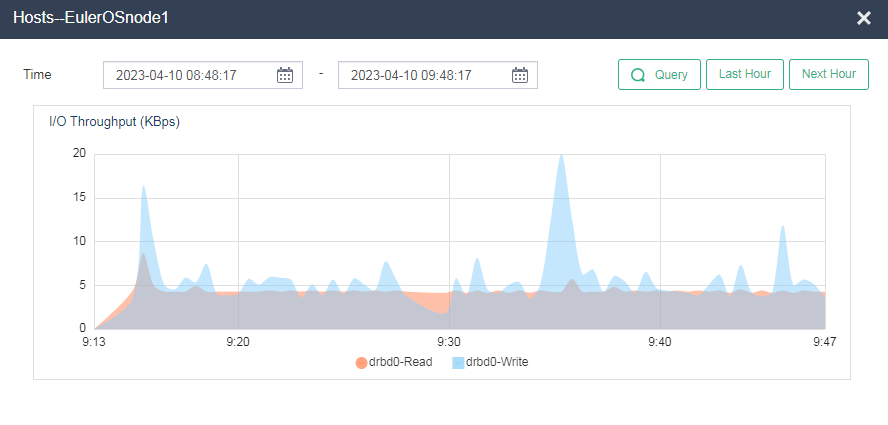

Identifying host I/O throughput

On the Performance Monitoring > I/O Throughput (KBps) page, click … to view I/O throughput in a longer time range.

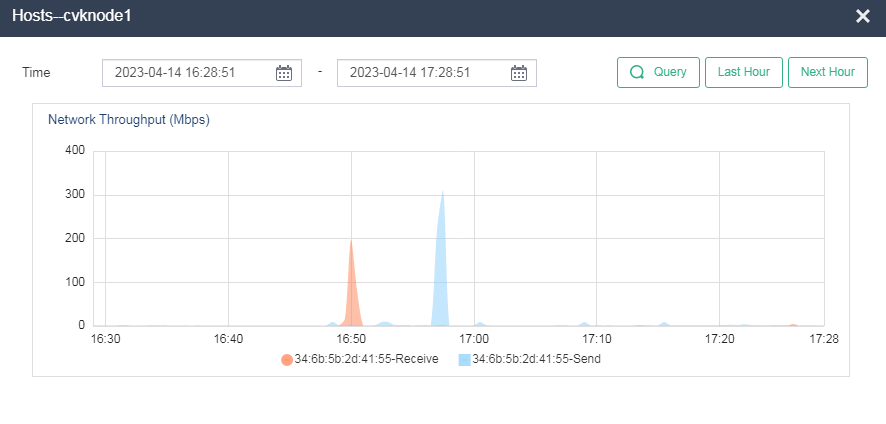

Identifying host network throughput

On the Performance Monitoring > Network Throughput (Mbps) page, click ... to view the network throughput of each physical NIC in a longer time range.

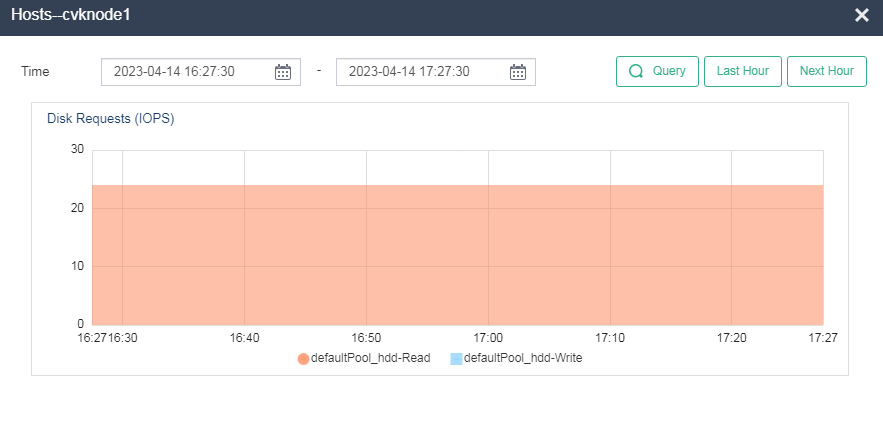

Identifying host disk usage

On the Performance Monitoring > Disk Requests (IOPS) page, you can see the host disk usage information.

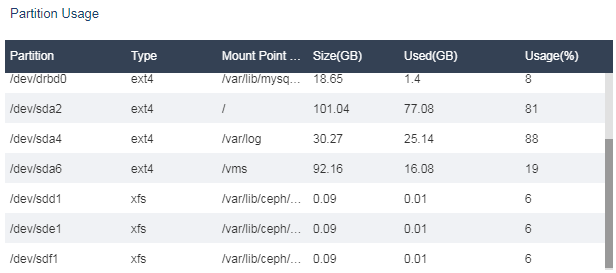

Identifying host partition usage

On the Performance Monitoring > Partition Usage page, you can see the host disk usage information.

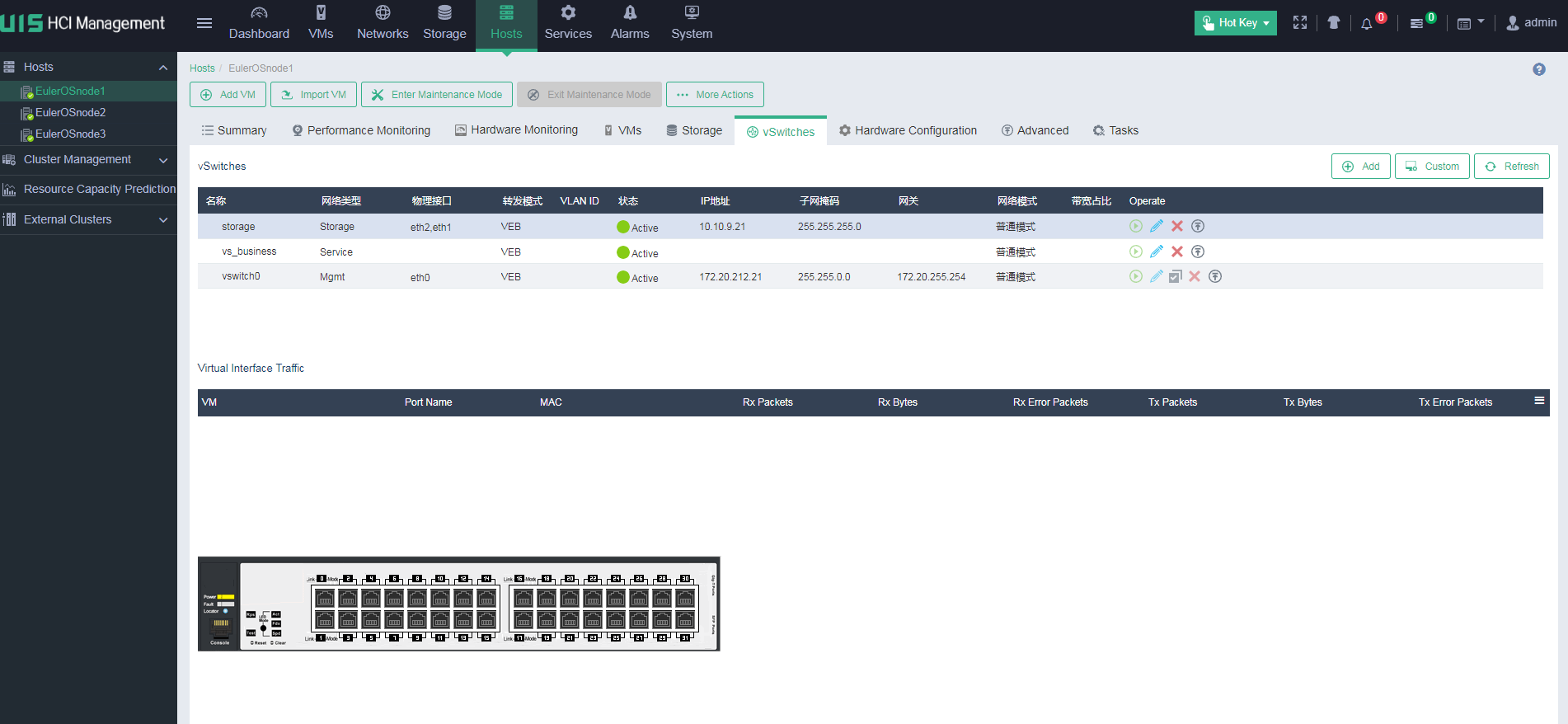

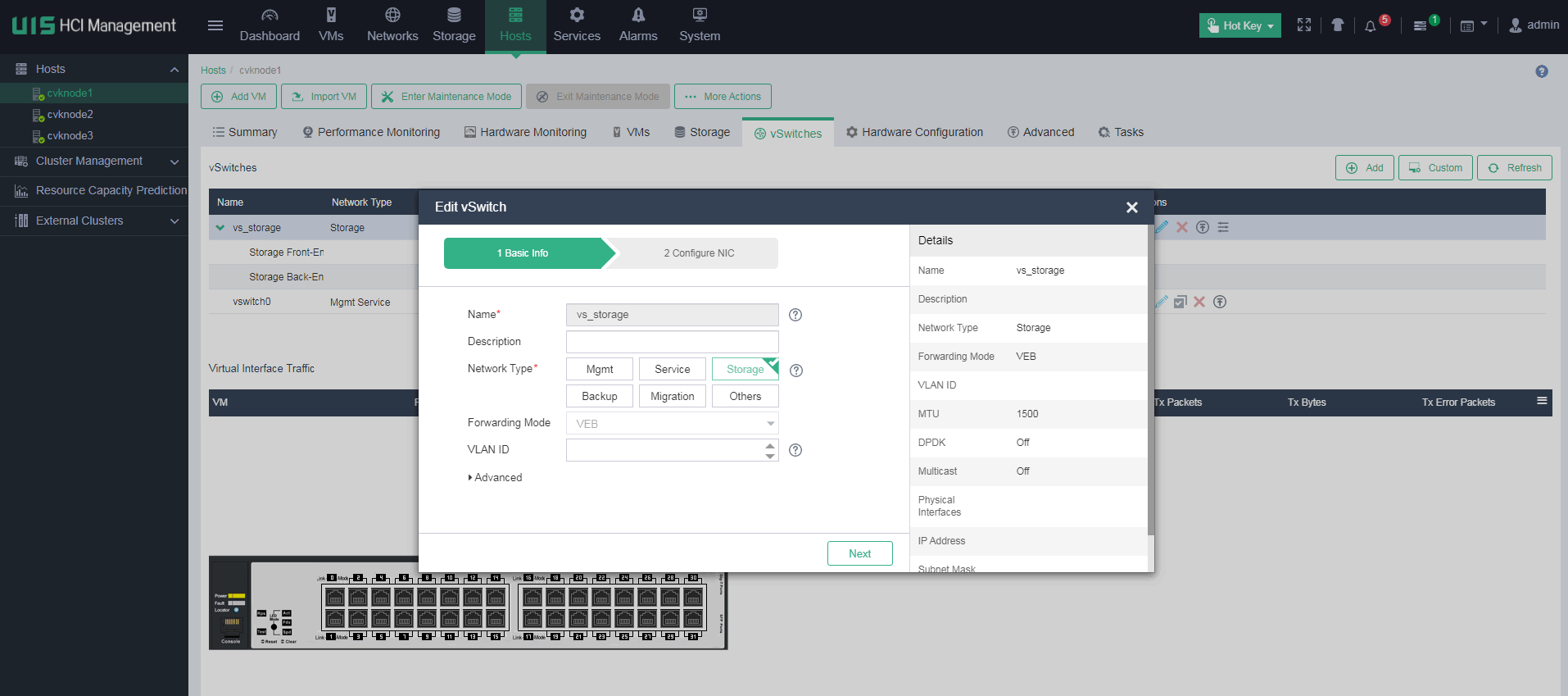

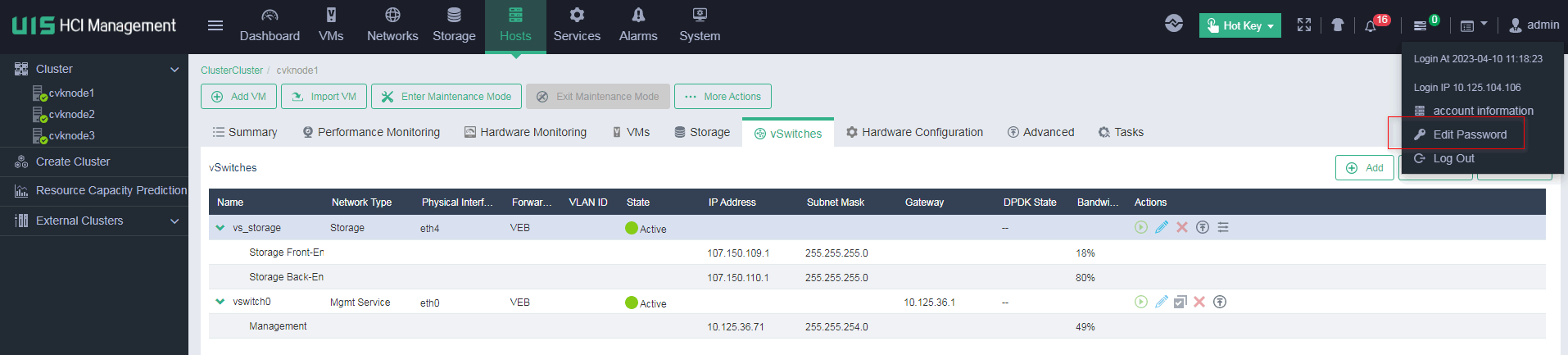

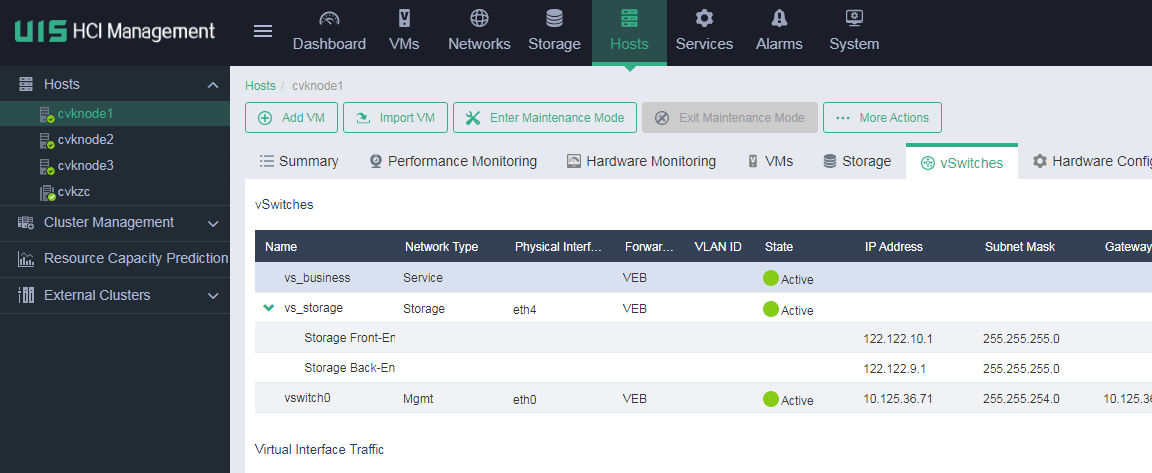

Identifying vSwitch information

Identify whether the names of vSwitches between hosts in the cluster are consistent.

On the vSwitches page of a host, identify whether the vSwitches are active. If a vSwitch is in abnormal state, identify whether the physical NIC is normal.

Make sure only one gateway is configured for all vSwitches of the host.

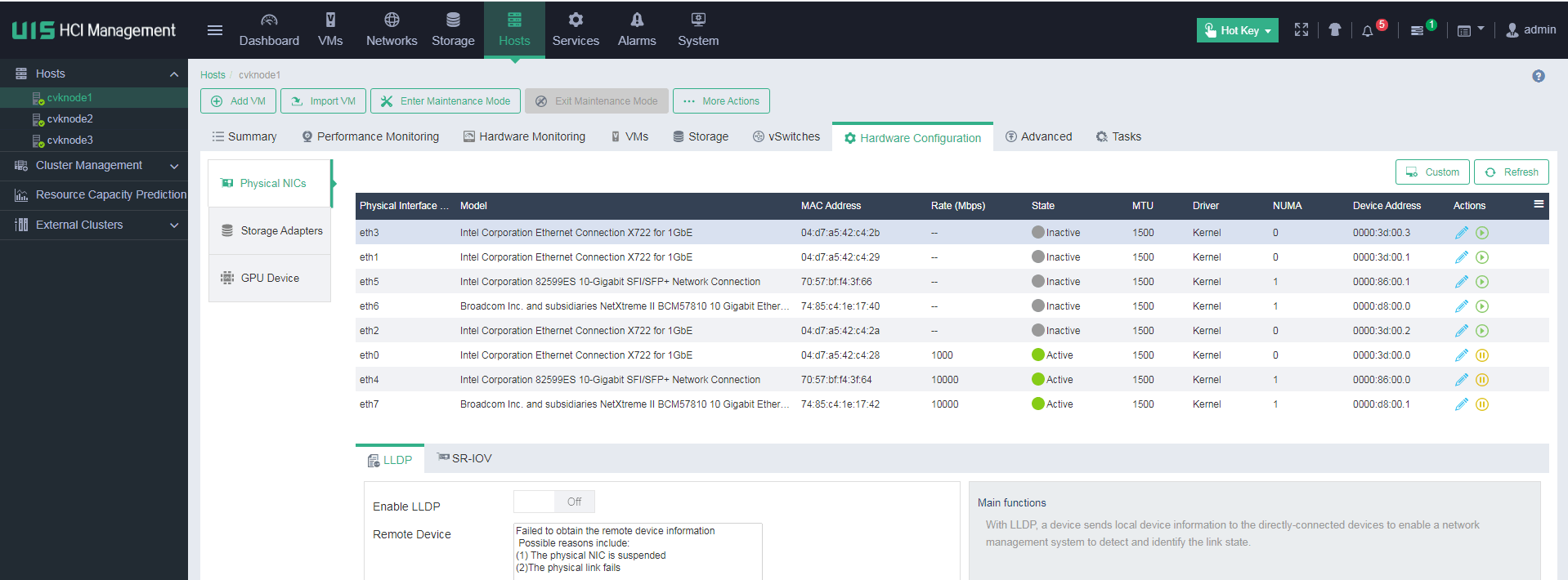

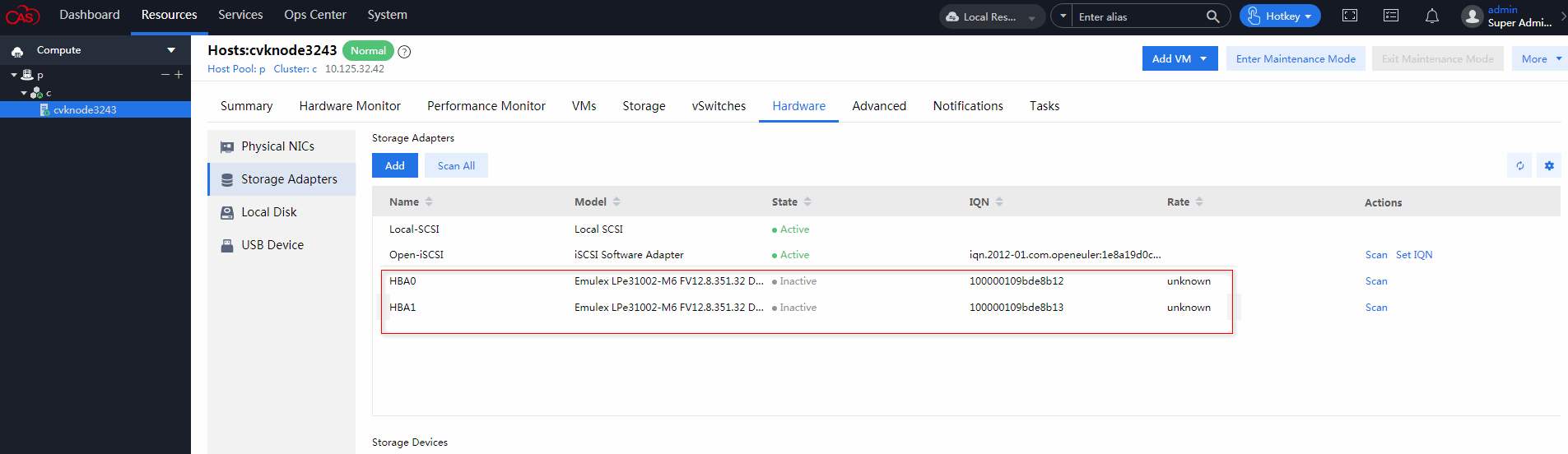

Identifying physical NIC status

On the Physical NICs page, identify whether the physical NICs of the host, such as the rate and state, are normal.

Abnormal physical NICs will affect vSwitch performance.

Identifying VM status

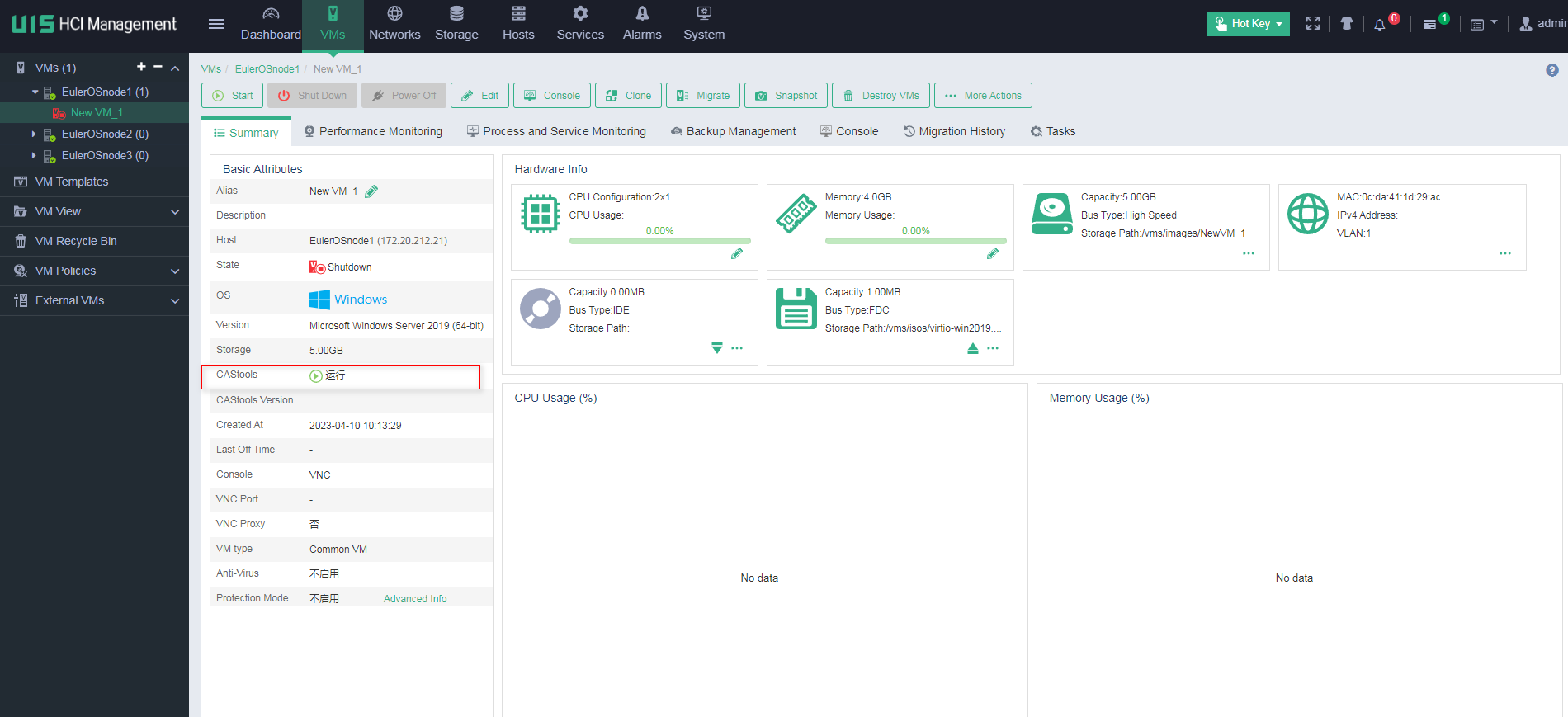

Identifying the running status of CAStools

On the Summary page of the VM, identify whether CAStools are installed to the VM and running correctly.

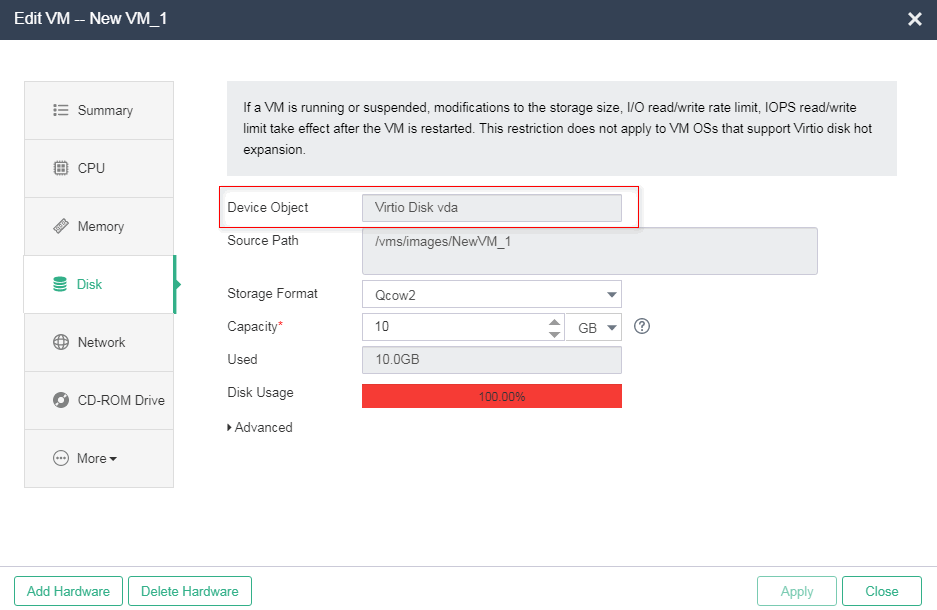

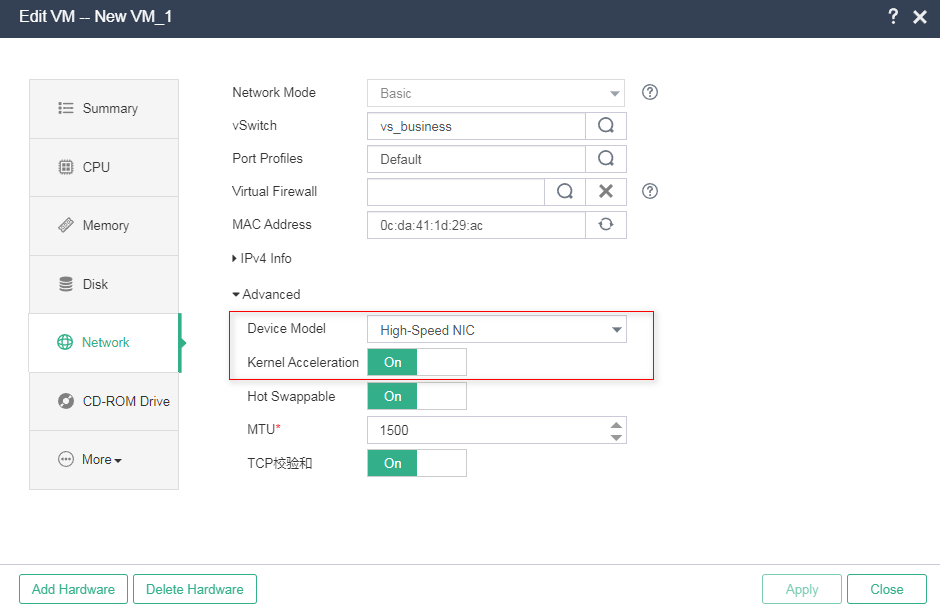

Verifying disk and NIC types

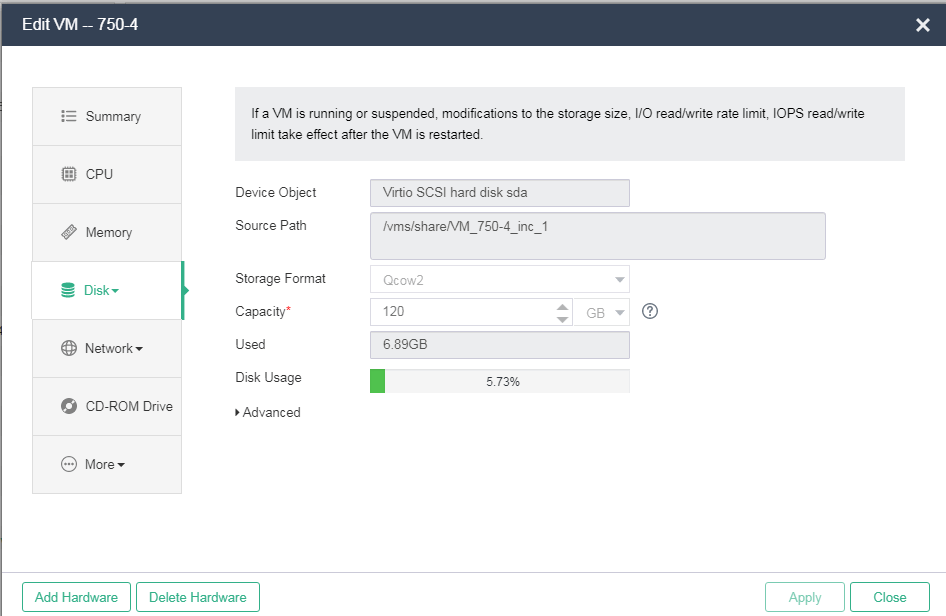

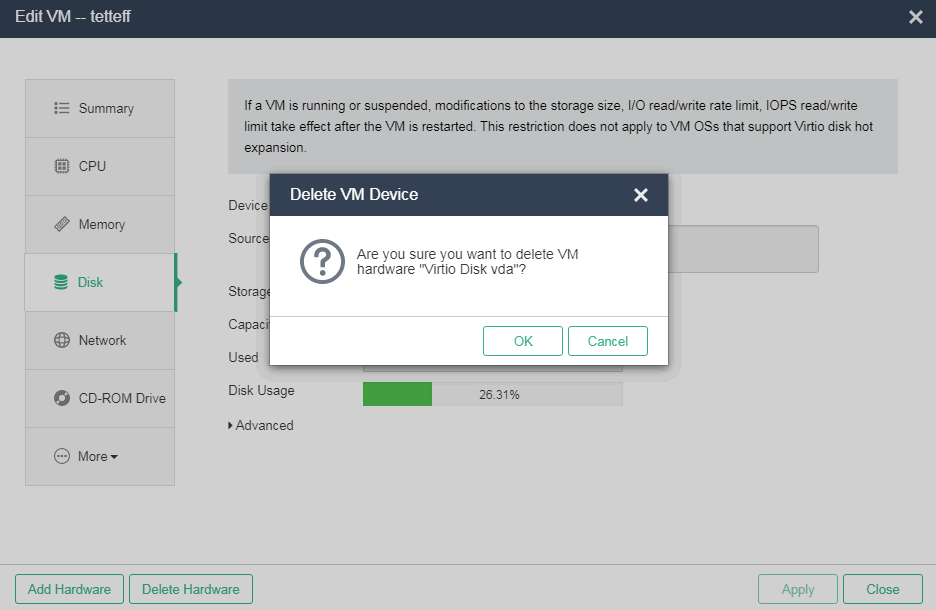

Verifying the disk type

On the Disk tab of the VM modification page, verify that the device object is Virtio disk (that significantly improves disk performance), the source path is a shared storage path, and the cache mode is directsync (recommended setting).

Verifying the NIC type

On the Network tab of the VM modification page, verify that the device model is high-speed NIC and kernel acceleration is enabled (that significantly improves NIC performance).

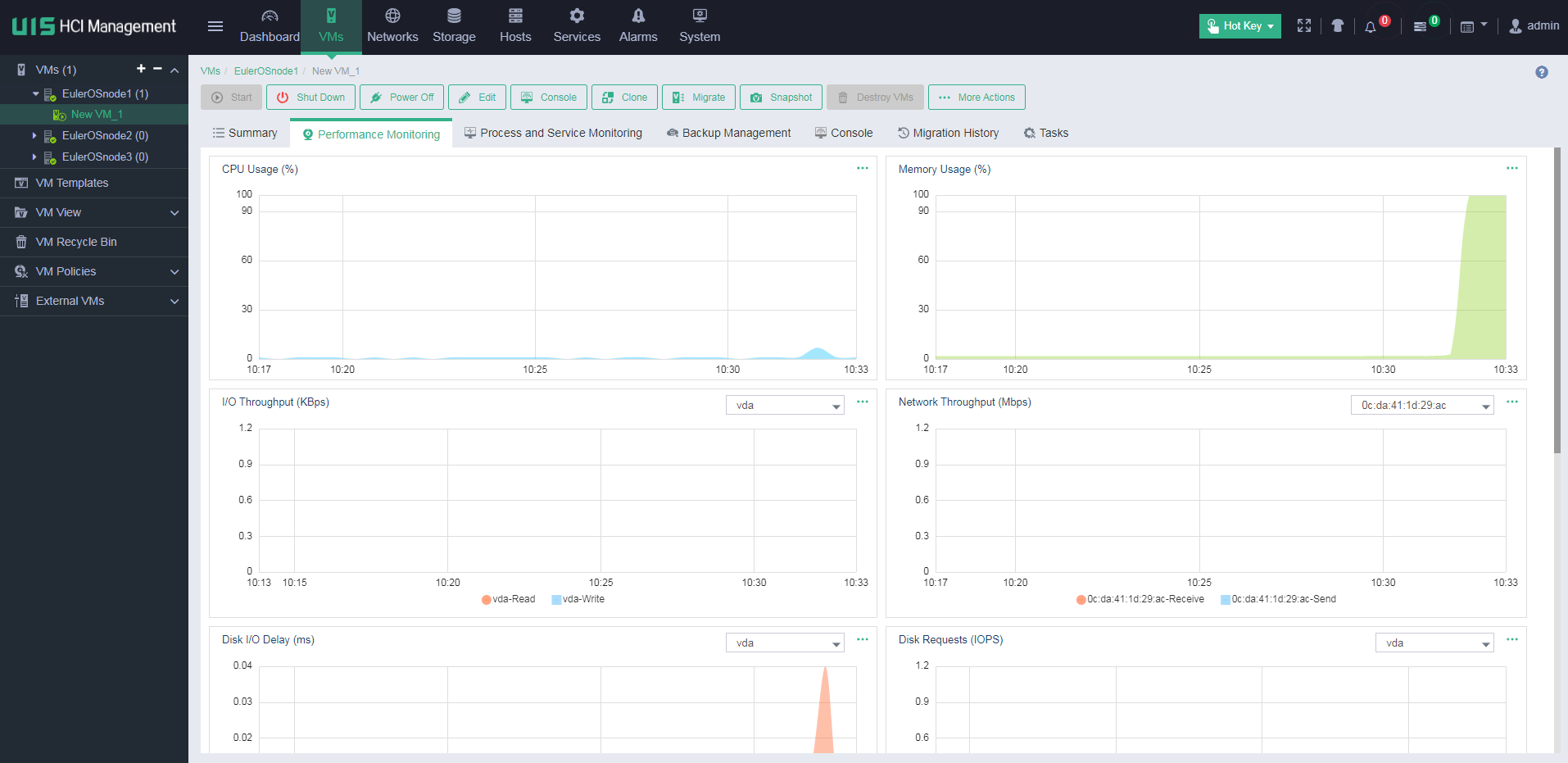

Identifying VM performance monitoring statistics

On the Performance Monitoring page of the VM, you can see the CPU usage, memory usage, I/O throughput, network throughput, disk usage, and partition usage of the VM.

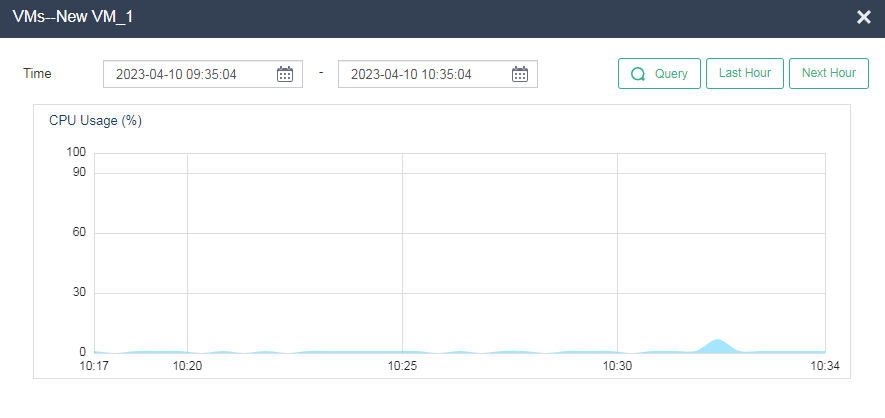

Identifying VM CPU usage

On the Performance Monitoring > CPU Usage (%) page, click … to view CPU usage in a longer time range.

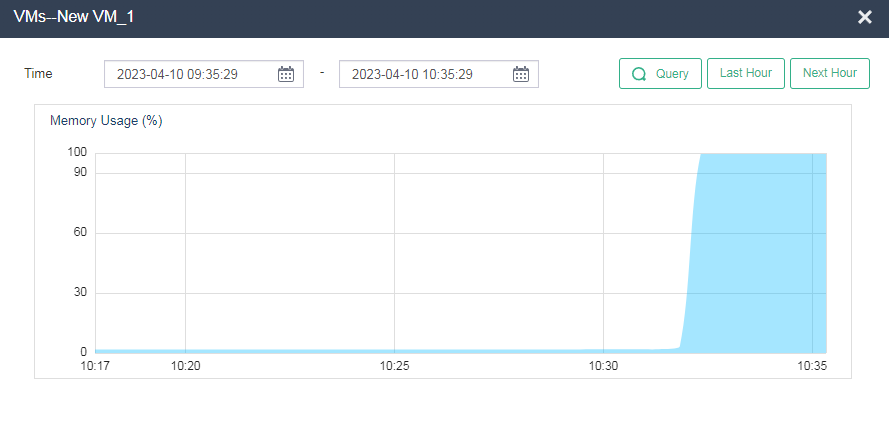

Identifying VM memory usage

On the Performance Monitoring > Memory Usage (%) page, click … to view memory usage in a longer time range.

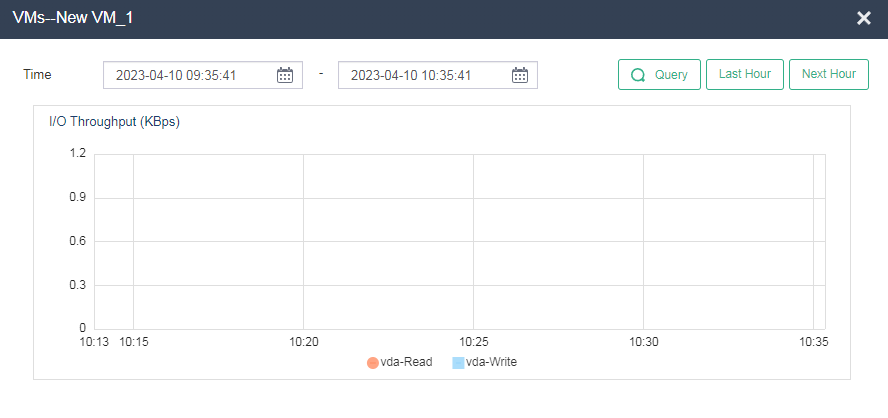

Identifying VM I/O throughput

On the Performance Monitoring > I/O Throughput (KBps) page, click … to view I/O throughput in a longer time range.

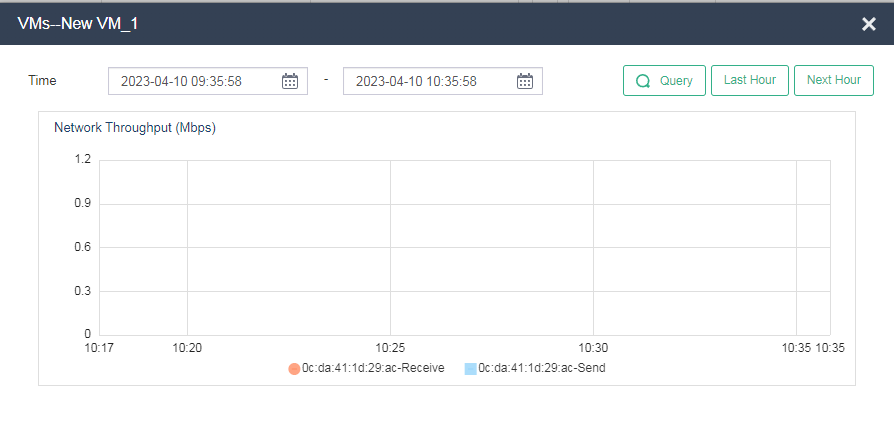

Identifying VM network throughput

On the Performance Monitoring > Network Throughput (Mbps) page, click … to view the network throughput of each physical NIC in a longer time range.

Identifying VM disk usage

On the Performance Monitoring > Disk Requests (IOPS) page, you can see the VM disk usage information.

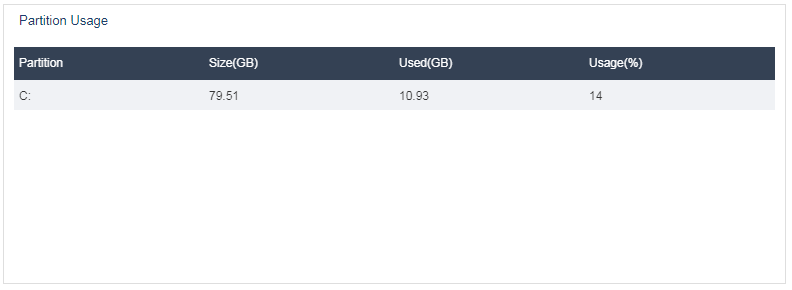

Identifying VM partition usage

On the Performance Monitoring > Partition Usage page, you can see VM partition usage information.

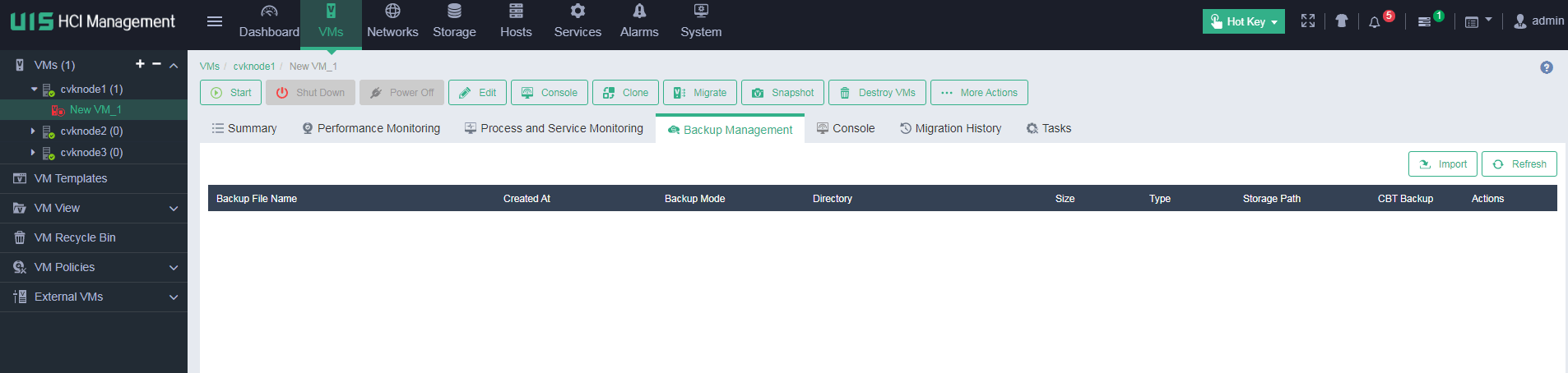

Identifying VM backup information

On the Backup Management page of a VM, you can see the backup history of the VM. As a best practice, back up all core VMs on the UIS platform.

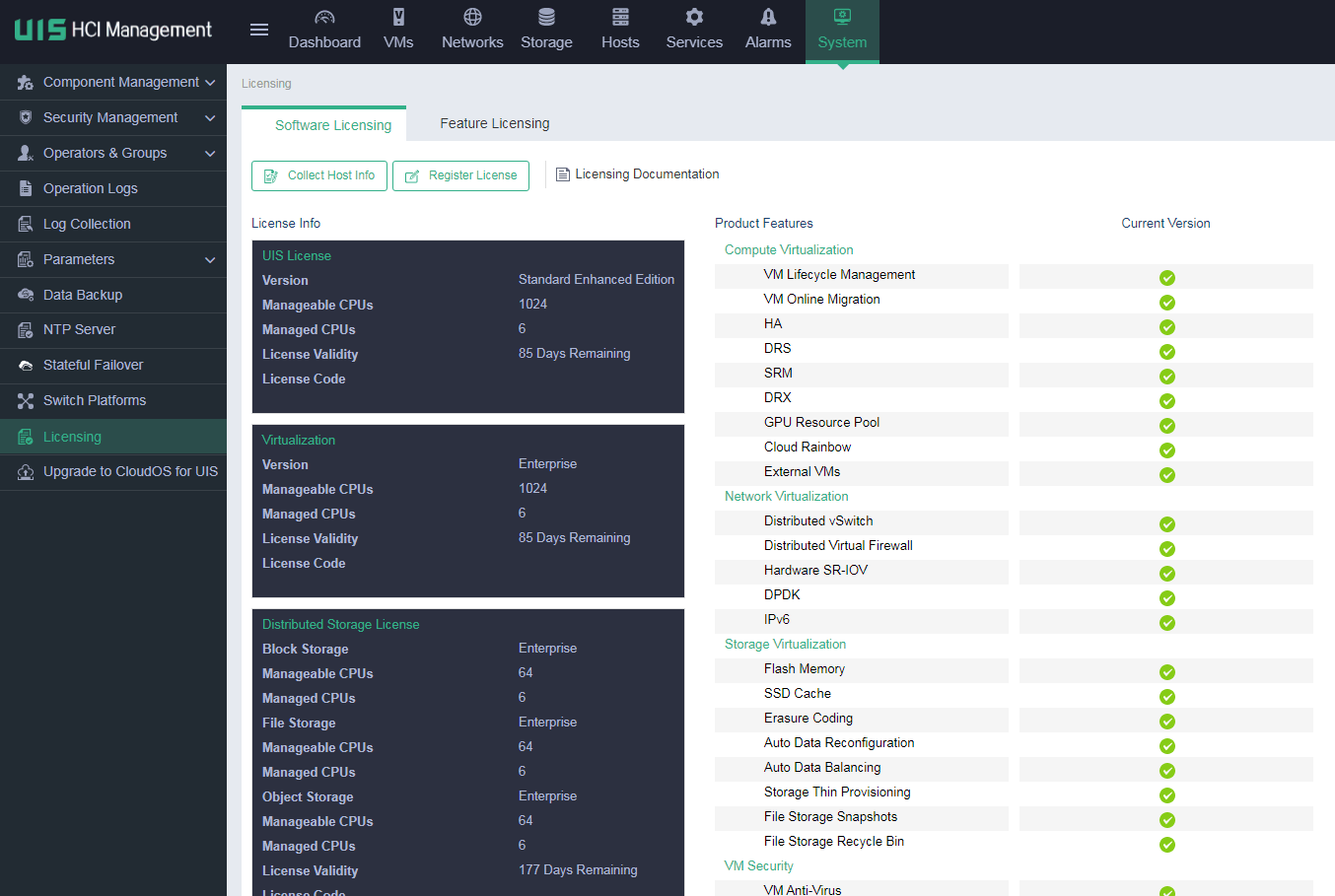

Identifying license information

The UIS system typically contains UIS Manager license, CAS license, and distributed storage license. You need to use official licenses at official deployment sites. You can use temporary licenses at test or temporary deployment sites. To avoid impacts on correct UIS system usage upon expiration of the temporary licenses, you need to update the temporary licenses in advance.

The following figure shows the licensing page of the UIS Manager component.

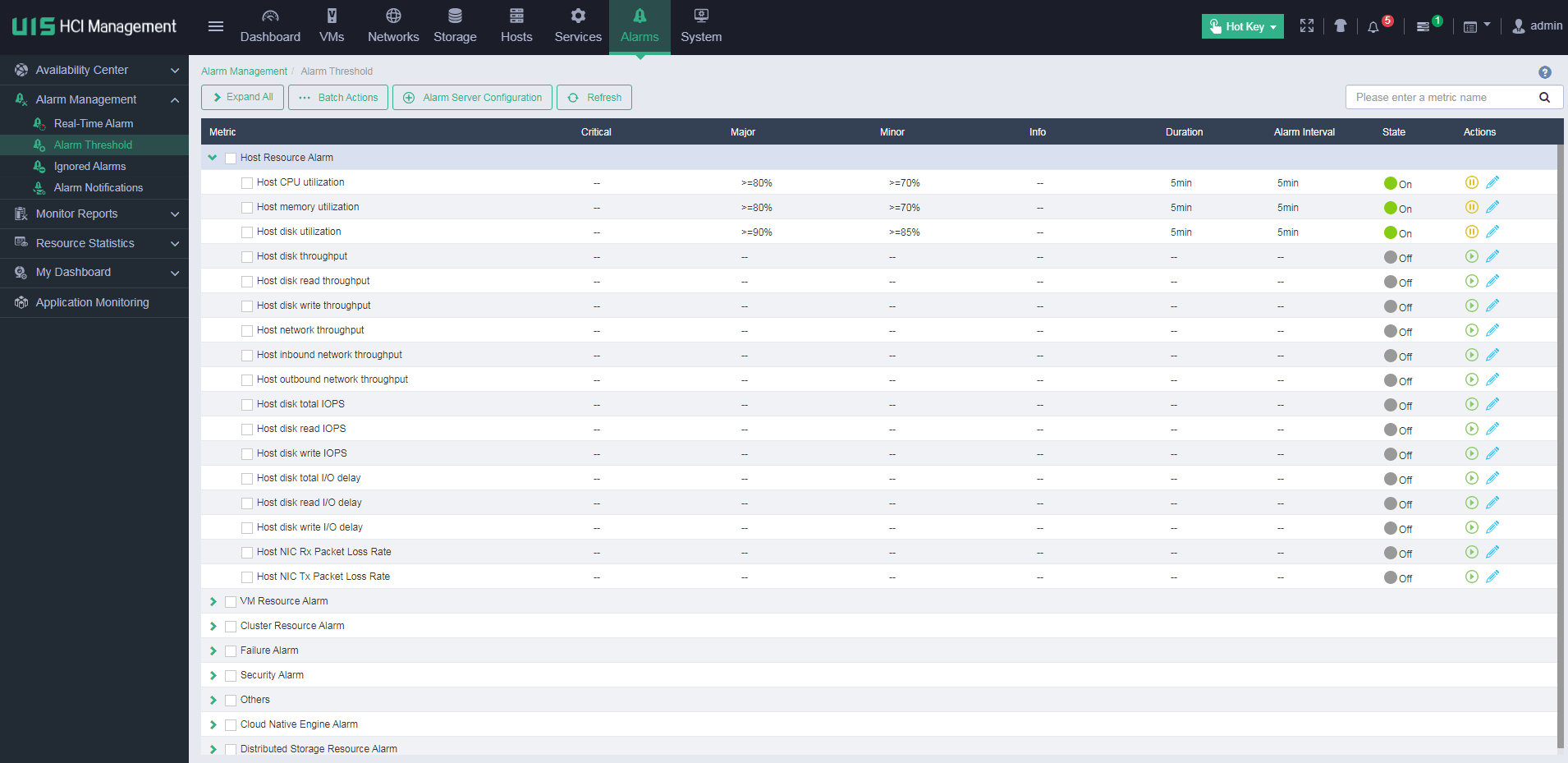

Managing alarms

The alarm management feature collects and displays statistics of concerned alarms for operators. In the current software version, UIS collects statistics of host resource alarms, VM resource alarms, cluster resource alarms, failure alarms, security alarms, other alarms, and distributed storage resource alarms.

Users can configure alarm threshold settings for the indexes such as CPU usage and memory usage of hosts or VMs. When an index value reaches the alarm threshold, an alarm is generated and reported. Users can view the reported alarms in the real-time alarm list. The alarm filtering configuration allows users to filter the alarms that are not concerned. Such alarms will not be reported. In addition, the system supports sending alarms to users through Emails or SMS messages.

Configuration cautions and guidelines

See H3C UIS Manager Configuration Cautions and Guidelines.

See H3C UIS Manager Data Loss Prevention Best Practices.

Change operations

If issues occur during the UIS system running process, you must follow certain rules to resolve the issues. If you cannot do that, normal operation of services on the live network will be affected.

Upgrading UIS software

See H3C UIS Upgrade Guide.

Handling hardware failure

See H3C UIS Hyper-Converged Infrastructure Component Replacement Configuration Guide.

Starting or shutting down a UIS host

When you perform comprehensive maintenance for the UIS system, you must follow a certain order to power on or power off the device. If you cannot do that, the service system will be destroyed. Before powering on the device, make sure the health is 100%.

For more information, see H3C UIS Hyper-Converged Infrastructure Node Shutdown Configuration Guide.

IP address and host name change

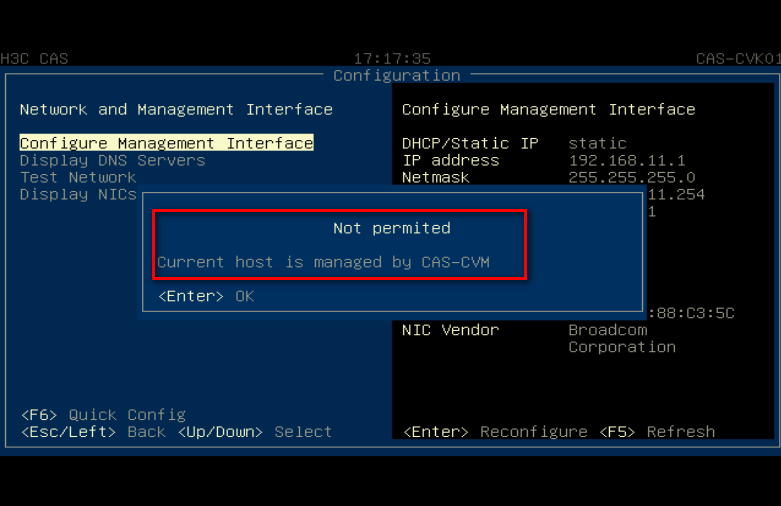

CAUTION: · To change the root password for a CVK the system, access the Web interface of UIS Manager. You cannot change the root password for a CVK host from its command shell. · If you delete a CVK host when the shared storage of the CVK host is suspended, the shared storage will be automatically deleted. Therefore, you must mount the shared storage to the CVK host again after the CVK host is added again. · When the number of nodes is equal to or less than four hosts, primary nodes, backup nodes, and quorum nodes, you cannot modify IP addresses through directly deleting hosts. For more information, contact Technical Support. |

After the UIS system is deployed, you might need to modify the UIS system IP address or hosts.

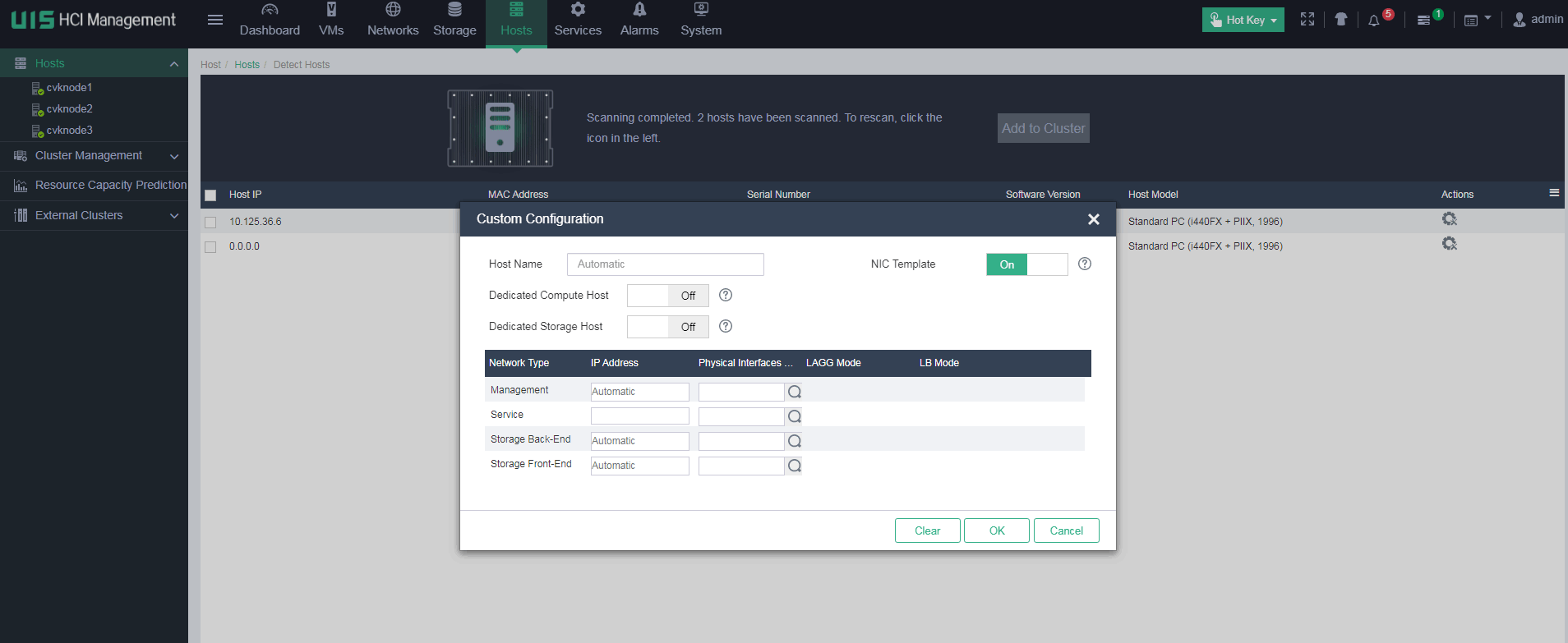

After a CVK host is added to the UIS cluster, you can modify the IP address or host name through the method provided by the Xconsole interface, as shown in the figure below. To do that, you must first delete the CVK host from the UIS system.

If the CVK host has shared storage enabled or runs VMs, it cannot be deleted. To delete the host in this case, you must first stop or migrate VMs and pause or delete the shared file system.

After the host is deleted, you can add the host through host expansion. During the host expansion process, you can manually configure an IP address for the host and select the corresponding NIC interface, and then add the host back to the cluster. Then, you can migrate the VMs back to the host.

CAUTION: · Make sure the IP address you enter can communicate with the management network and internal/external storage networks of the original cluster. If you cannot do that, you will fail to add the host. · The IP address settings are planned in the deployment phase. You must determine the IP address settings at the beginning, because you cannot modify the IP address settings later. |

Managing physical interfaces bound to a vSwitch

When the live plan is improper, you must adjust the physical interfaces bound to the vSwitch. If you want to change the network settings after the deployment is finished, you must do that with caution and make sure you are familiar with the network topology and change requirements.

In version E0750P06 and later, you can do that in the Web interface as follows. First, configure the host to operate in maintenance mode. Then, access the Hosts > vSwitches page and edit the network settings. At last, confirm the connectivity and exit maintenance mode.

In versions earlier than E0750P06, you cannot modify the physical interfaces bound to a vSwitch and modify the mode in the Web interface. Instead, you must do that in the backend. By assigning multiple interfaces to an aggregation group, you can load-share the traffic among the member ports and provide higher connection availability for traffic.

Link aggregation delivers the following benefits:

· Increases the network bandwidth—Link aggregation binds multiple links into a logical link, whose bandwidth is the sum of the bandwidth of each single link.

· Improves the network connection availability—Multiple links in a link aggregation back up each other. When a link is disconnected, the traffic will be automatically load-shared again among the remaining links.

Based on whether LACP is enabled on the bond interfaces, link aggregation includes static aggregation and dynamic aggregation.

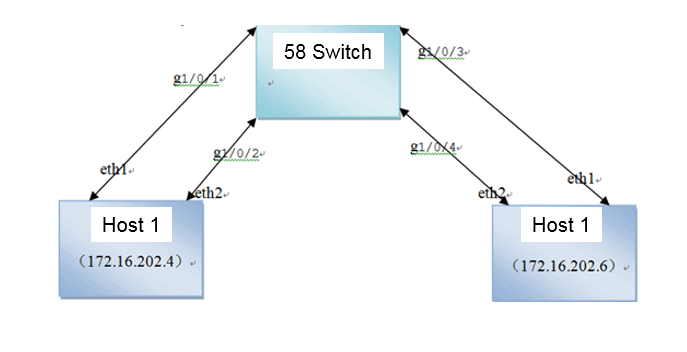

Dynamic aggregation on an OVS

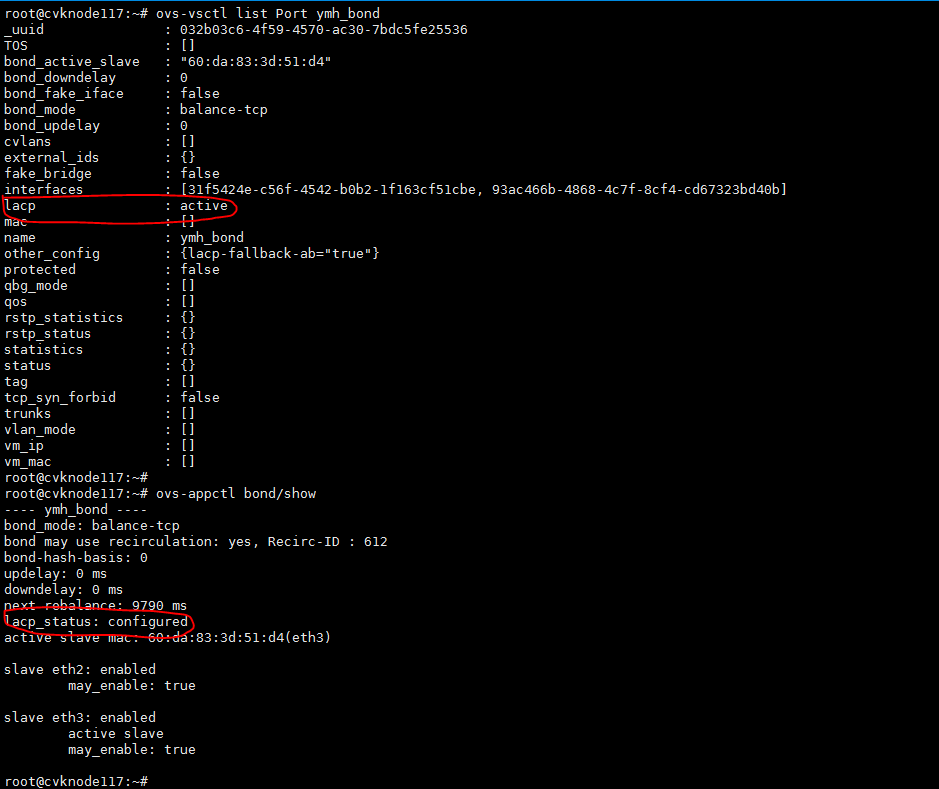

LACP is enabled on both the OVS side and switch side. On the bond interfaces of an OVS, the value for the lacp parameter can be active (enable LACP) or off (disable LACP).

The lacp_status parameter represents dynamic aggregation status. Options include negotiated (LACP negotiation succeeds), configured (LACP is enabled on the OVS side but LACP negotiation fails), and disabled (LACP is not enabled on the OVS side).

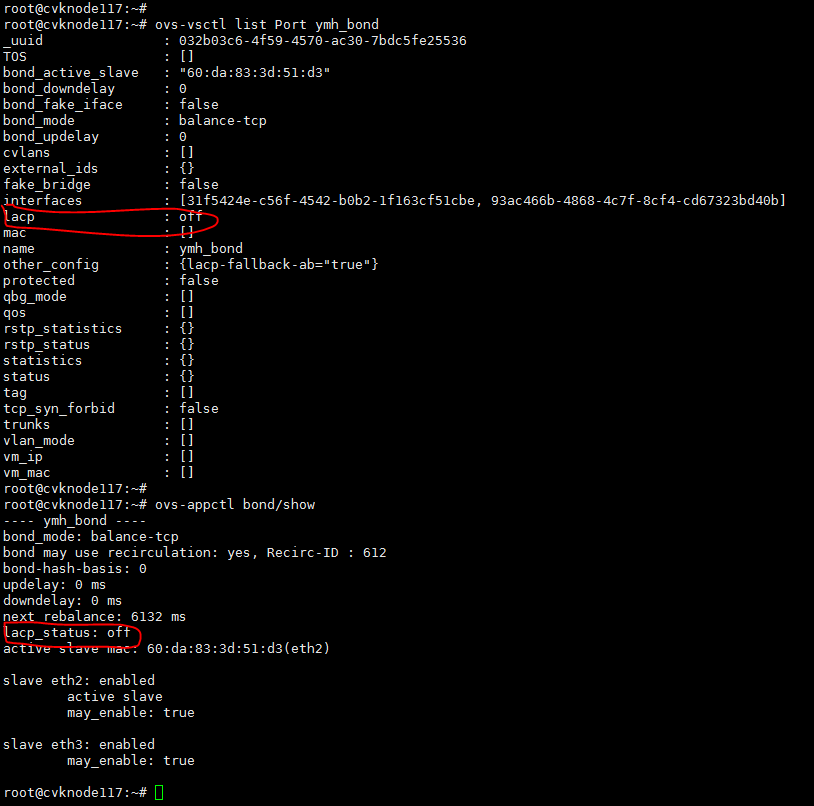

As shown in Figure 1, the lacp parameter is set to active on a bond interface to enable LACP on the bond interface of the OVS. However, the lacp_status parameter is configured on the bond interface. A possible reason is that LACP is not enabled on the peer device.

Figure 1 Dynamic aggregation autonegotiation fails

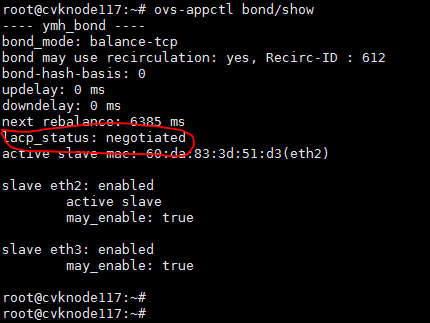

In normal conditions, LACP negotiation succeeds. In this case, the bond interface status is as shown in Figure 2.

Figure 2 Dynamic aggregation autonegotiation succeeds

On the OVS, dynamic aggregation supports advanced (balance-tcp mode) load sharing and basic (balance-slb mode) load sharing. The difference lies in the dimensions during the link entry hashing process.

· balance-tcp mode—Obtains the packet forwarding interface through hashing the Ethernet type, source/destination MAC address, VLAN ID, IP packet protocol, source/destination IP/IPv6 address, and source/destination Layer 4 port number fields of packets.

· balance-slb mode—Obtains the packet forwarding interface through hashing the source MAC and VLAN fields of packets. This bond_mode is deployed on the current Web interface.

Static aggregation on an OVS

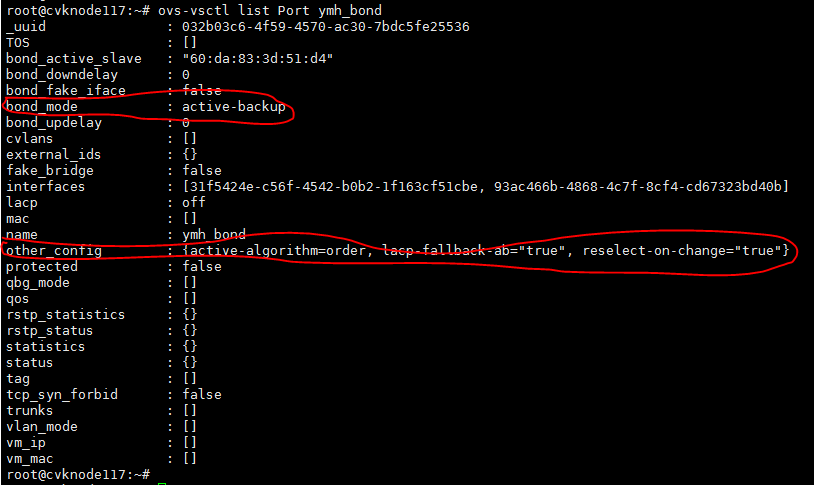

LACP is disabled on both the OVS side and switch side. When the configuration succeeds, the state is as follows:

Figure 3 Static aggregation configuration state

In the bond interface configuration, the lacp parameter is set to off, and the lacp_status parameter is off for aggregation.

On the OVS, static aggregation supports advanced load sharing, basic load sharing, and active/backup load sharing. The difference between advanced load sharing and basic load sharing is the same as that in dynamic aggregation. The following information describes basic load sharing.

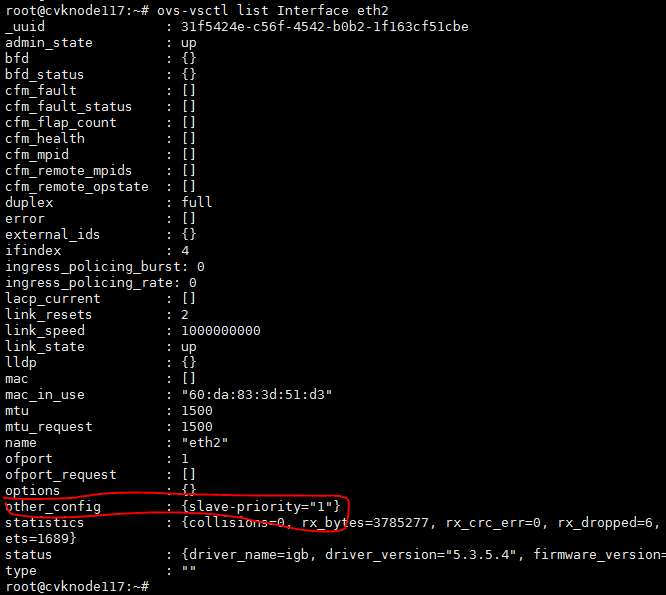

In the OVSDB, the bond interface configuration saves the active link selection method, and the interface configuration saves the physical NIC priority. Configure the following settings:

1. ovs-vsctl set Port bond-name other_config: active-algorithm=”speed|order”

The speed option means to select the active link by NIC speed. The order option means to select the active link in the NIC configuration order. If this command is not executed, the active link is selected by NIC speed by default.

2. ovs-vsctl set Port bond-name other_config:active-algorithm=”true|false”

The true option means the traffic will be switched back to the selected active link NIC when the NIC goes down and then comes up. The false option means the traffic will not be switched back. If this command is not executed, the traffic will not be switched back by default.

3. ovs-vsctl set Interface ethx other_config:slave-priority=”n”,

The n argument represents the ID assigned by the back end according to the configuration order, for example, 1, 2, 3... A smaller ID means a higher priority.

Figure 4 Active/backup aggregation group configuration

Figure 5 Member interface configuration for an active/backup aggregation group on an OVS

Changing single NIC interfaces to a dynamic aggregation group on an OVS

The following information describes how to change single NIC interface eth7 into a dynamic aggregation group with member interfaces eth5 and eth7 for advanced/basic load sharing on vswitch0 on the management network.

· If the peer switch of eth5 and eth7 has been configured with a dynamic aggregation group and the two interfaces have been assigned to the aggregation group, you only need to configure the dynamic aggregation group with advanced (bond_mode=balance-tcp) or basic (bond_mode= balance-slb) load sharing on the OVS.

ovs-vsctl del-port vswitch0 eth7; ovs-vsctl -- add-bond vswitch0 vswitch0_bond eth5 eth7 bond_mode=[balance-tcp | balance-slb] -- set port vswitch0_bond lacp=active

CAUTION: You must enter the commands before and after the semicolon (;) at the same time. In this way, when the management interface is disconnected (eth7 is removed from vswitch0), vswitch0 is immediately configured with the dynamic aggregation group containing eth5+eth7. |

· If the peer switch of eth5 and eth7 is not configured with a dynamic aggregation group, you can configure a static active/backup aggregation and then switch the aggregation mode.

a. Create a static active/backup aggregation group with members eth5 and eth7 on the OVS:

ovs-vsctl del-port vswitch0 eth7;ovs-vsctl add-bond vswitch0 vswitch0_bond eth5 eth7 bond_mode=active-backup

b. Configure a dynamic aggregation group on the peer switch of eth5 and eth7, and assign the two interfaces to the aggregation group.

Without loss of generality, suppose eth5 is connected to GigabitEthernet 1/0/5 on the peer switch and eth7 is connected to GigabitEthernet1/0/7 on the peer switch.

[H3C]interface Bridge-Aggregation 8 //Create aggregation group 8

[H3C-Bridge-Aggregation8]link-aggregation mode dynamic //Specify the aggregation group as a dynamic aggregation group

[H3C]interface GigabitEthernet 1/0/5

[H3C-GigabitEthernet1/0/5]port link-aggregation group 8 //Assign GigabitEthernet 1/0/5 to aggregation group 8

[H3C]interface GigabitEthernet 1/0/7

[H3C-GigabitEthernet1/0/7]port link-aggregation group 8 //Assign GigabitEthernet 1/0/7 to aggregation group 8

CAUTION: Make sure the configuration (especially the VLAN configuration) of aggregation group Bridge-Aggregation 8 is the same as the configuration of member interfaces (GigabitEthernet 1/0/5 and GigabitEthernet 1/0/7 in this example). If you cannot do that, dynamic aggregation and static advanced/basic load sharing will fail. |

c. Execute the following command to configure the static active/backup aggregation group to operate in dynamic advanced (bond_mode=balance-tcp) or basic (bond_mode= balance-slb) load sharing mode:

ovs-vsctl set port vswitch0_bond bond_mode=[balance-tcp | balance-slb] lacp=active

Changing single NIC interfaces to a static aggregation group on an OVS

The following information describes how to change single NIC interface eth7 into a dynamic advanced/basic load sharing aggregation group with member interfaces eth5 and eth7 on vswitch0 on the management network.

· If the peer switch of eth5 and eth7 has been configured with a dynamic aggregation group and the two interfaces have been assigned to the aggregation group, you only need to configure the dynamic aggregation group with advanced (bond_mode=balance-tcp) or basic (bond_mode= balance-slb) load sharing on the OVS.

ovs-vsctl del-port vswitch0 eth7; ovs-vsctl -- add-bond vswitch0 vswitch0_bond eth5 eth7 bond_mode=[balance-tcp | balance-slb] -- set port vswitch0_bond lacp=active

CAUTION: You must enter the commands before and after the semicolon (;) at the same time. In this way, when the management interface is disconnected (eth7 is removed from vswitch0), vswitch0 is immediately configured with the dynamic aggregation group containing eth5+eth7. |

· If the peer switch of eth5 and eth7 is not configured with a dynamic aggregation group, you can configure a static active/backup aggregation and then switch the aggregation mode.

a. Create a static active/backup aggregation group with members eth5 and eth7 on the OVS:

ovs-vsctl del-port vswitch0 eth7;ovs-vsctl add-bond vswitch0 vswitch0_bond eth5 eth7 bond_mode=active-backup

b. Configure a dynamic aggregation group on the peer switch of eth5 and eth7, and assign the two interfaces to the aggregation group.

Without loss of generality, suppose eth5 is connected to GigabitEthernet 1/0/5 on the peer switch and eth7 is connected to GigabitEthernet1/0/7 on the peer switch.

[H3C]interface Bridge-Aggregation 8 //Create aggregation group 8

[H3C]interface GigabitEthernet 1/0/5

[H3C-GigabitEthernet1/0/5]port link-aggregation group 8 //Assign GigabitEthernet 1/0/5 to aggregation group 8

[H3C]interface GigabitEthernet 1/0/7

[H3C-GigabitEthernet1/0/7]port link-aggregation group 8 //Assign GigabitEthernet 1/0/7 to aggregation group 8

CAUTION: Make sure the configuration (especially the VLAN configuration) of aggregation group Bridge-Aggregation 8 is the same as the configuration of member interfaces (GigabitEthernet 1/0/5 and GigabitEthernet 1/0/7 in this example). If you cannot do that, dynamic aggregation and static advanced/basic load sharing will fail. |

c. Execute the following command to configure the static active/backup aggregation group to operate in static advanced (bond_mode=balance-tcp) or basic (bond_mode= balance-slb) load sharing mode:

ovs-vsctl set port vswitch0_bond bond_mode=[balance-tcp | balance-slb]

Changing a dynamic aggregation group to a static aggregation group on an OVS

The following information changes the dynamic aggregation group with eth5 and eth7 to a static aggregation group on vswitch0.

To smoothly change a dynamic aggregation group to a static aggregation group (try to avoid packet loss as possible), you must configure a static active/backup aggregation group in between.

1. Change a dynamic aggregation group to a static active/backup aggregation group on the OVS.

ovs-vsctl set port vswitch0_bond bond_mode=active-backup lacp=off

2. Disable LACP for the aggregation group (Bridge-Aggregation 8 in this example) on the peer switch of eth5 and eth7.

[H3C]interface Bridge-Aggregation 8

[H3C-Bridge-Aggregation8]undo link-aggregation mode dynamic

3. Change the static active/backup aggregation group to a static aggregation group with advanced/basic load sharing on the OVS.

ovs-vsctl set port vswitch0_bond bond_mode=[balance-tcp | balance-slb]

Changing a static aggregation group to a dynamic aggregation group on an OVS

The following information switches the static aggregation group with eth5 and eth7 to a dynamic aggregation group on vswitch0.

1. Change a static aggregation group to a static active/backup aggregation group on the OVS.

Skip this step if the aggregation group on the OVS is a static active/backup aggregation group.

ovs-vsctl set port vswitch0_bond bond_mode=active-backup

2. Enable LACP for the aggregation group (Bridge-Aggregation 8 in this example) on the peer switch of eth5 and eth7.

[H3C]interface Bridge-Aggregation 8

[H3C-Bridge-Aggregation8]link-aggregation mode dynamic

3. Change the static active/backup aggregation group to a dynamic aggregation group with advanced/basic load sharing on the OVS.

ovs-vsctl set port vswitch0_bond bond_mode=[balance-tcp | balance-slb] lacp=active

Deleting an aggregation group on an OVS

The following information describes how to change a dynamic advanced load-sharing aggregation group with member interfaces eth5 and eth7 to single interface eth7 on vswitch0.

1. Change the aggregation mode to static active/backup aggregation on vswitch0.

ovs-vsctl set port vswitch0_bond bond_mode=active-backup lacp=off

2. Remove eth5 and eth7 from the aggregation group on vswitch0.

Suppose eth5 is connected to GigabitEthernet 1/0/5 on the peer switch and eth7 is connected to GigabitEthernet 1/0/7 on the peer switch.

[H3C]interface GigabitEthernet 1/0/5

[H3C-GigabitEthernet1/0/5]undo port link-aggregation group

[H3C]interface GigabitEthernet 1/0/7

[H3C-GigabitEthernet1/0/7]undo port link-aggregation group

3. Delete the static active/backup aggregation group on vswitch0, and assign eth7 to switch0.

ovs-vsctl del-port vswitch0_bond;ovs-vsctl add-port vswitch0 eth7

The way of switching a static advanced/basic load-sharing aggregation group to a single link is similar to the way of switching a dynamic advanced/basic load-sharing aggregation group to a single link. The difference is that the following command is executed in the first step:

ovs-vsctl set port vswitch0_bond bond_mode=active-backup

CAUTION: Because of various objective restrictions (for example, restrictions on the peer physical switch), the CAS OVS cannot absolutely perform the aggregation mode switchover smoothly, and few packets will be dropped. As a best practice, perform aggregation mode switchover when the traffic is light. |

Replacing a disk on a CVK host

When a disk in the cluster fails, it cannot be directly replaced. Software operations and configurations are required for a successful disk replacement on UIS Manager. For more information, see H3C UIS Hyper-Converged Infrastructure Component Replacement Configuration Guide.

Changing the password for accessing UIS Manager

CAUTION: · To change the root password for a CVK, access the Web interface of UIS Manager. You cannot change the root password for a CVK host from its command shell. · As a best practice, configure the same password for all hosts in the cluster. · Regularly change your password and avoid using simple or common passwords. |

To meet security requirements, user passwords need to be changed periodically. The following changes the password of the UIS root user as an example.

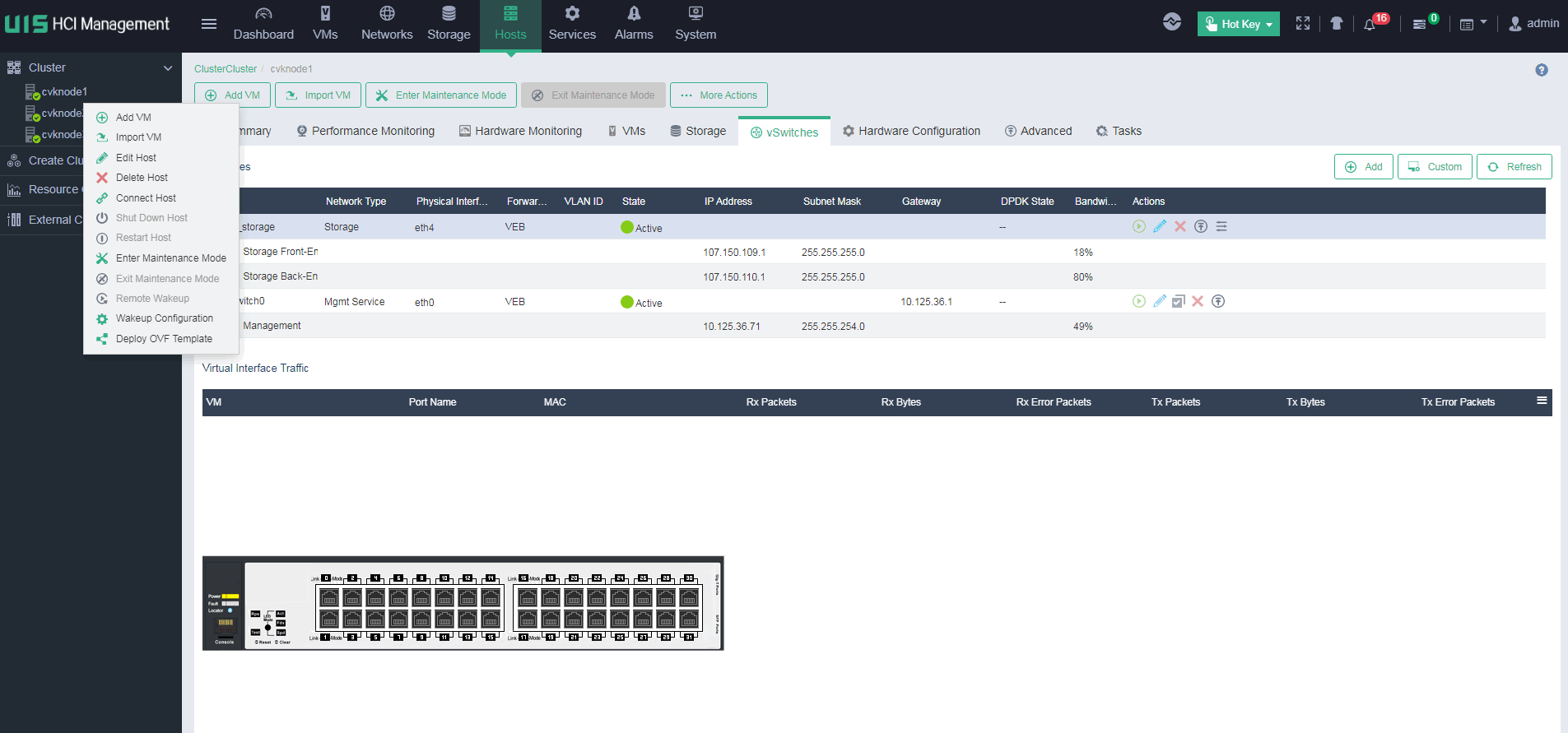

Changing the root password of a host from the Web interface

1. Right-click a host, and then select Edit Host.

2. In the dialog box that opens, enter a new password, and then click OK.

If you forget the root password, see H3C UIS&CAS Host Password Retrieval Configuration Guide.

Changing the admin password

UIS Manager has a default password. To change this password, access UIS Manager and click admin in the upper-right corner, and then change the password as needed.

As a best practice, change the root password and admin password in time at the first login to UIS Manager.

Scaling out and scaling in a cluster

See H3C UIS Manager Resource Scale-Out and Scale-In Configuration Guide.

Changing the system time

See H3C UIS Manager System Time Modification Configuration Guide.

Performing a heterogeneous or homogeneous migration

See H3C UIS HCI Cloud Migration Guide.

Redefining a VM

In some cases, such as when a VM fails to start up due to host operation issues, it might be necessary to redefine and restore a VM on a different host from the original location. However, VMs that use raw blocks and encrypted disks and have multi-level images do not support VM redefinition.

Obtaining the XML file of the VM

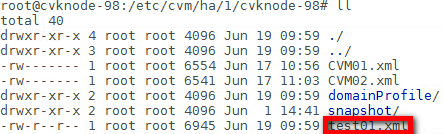

Obtaining the XML file of the VM when HA is enabled and the CVM node is normal

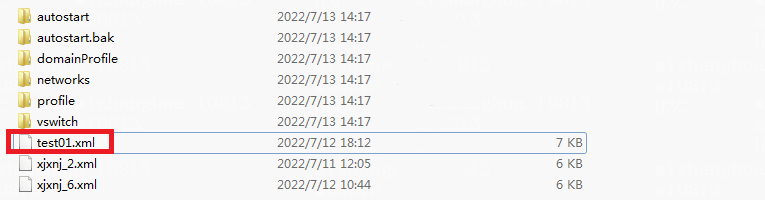

When HA is enabled and the CVM node is normal, the XML file of a VM is saved in the HA directory on the CVM node by default. Typically, the HA directory is /etc/cvm/ha/clust_id/cvk_name, for example, /etc/cvm/ha/2/cvknode191. In the corresponding HA directory, enter the CVK directory for the VM to find the XML file of the VM, for example, test01.

Obtaining the XML file of the VM when HA is disabled and the CVM node is normal

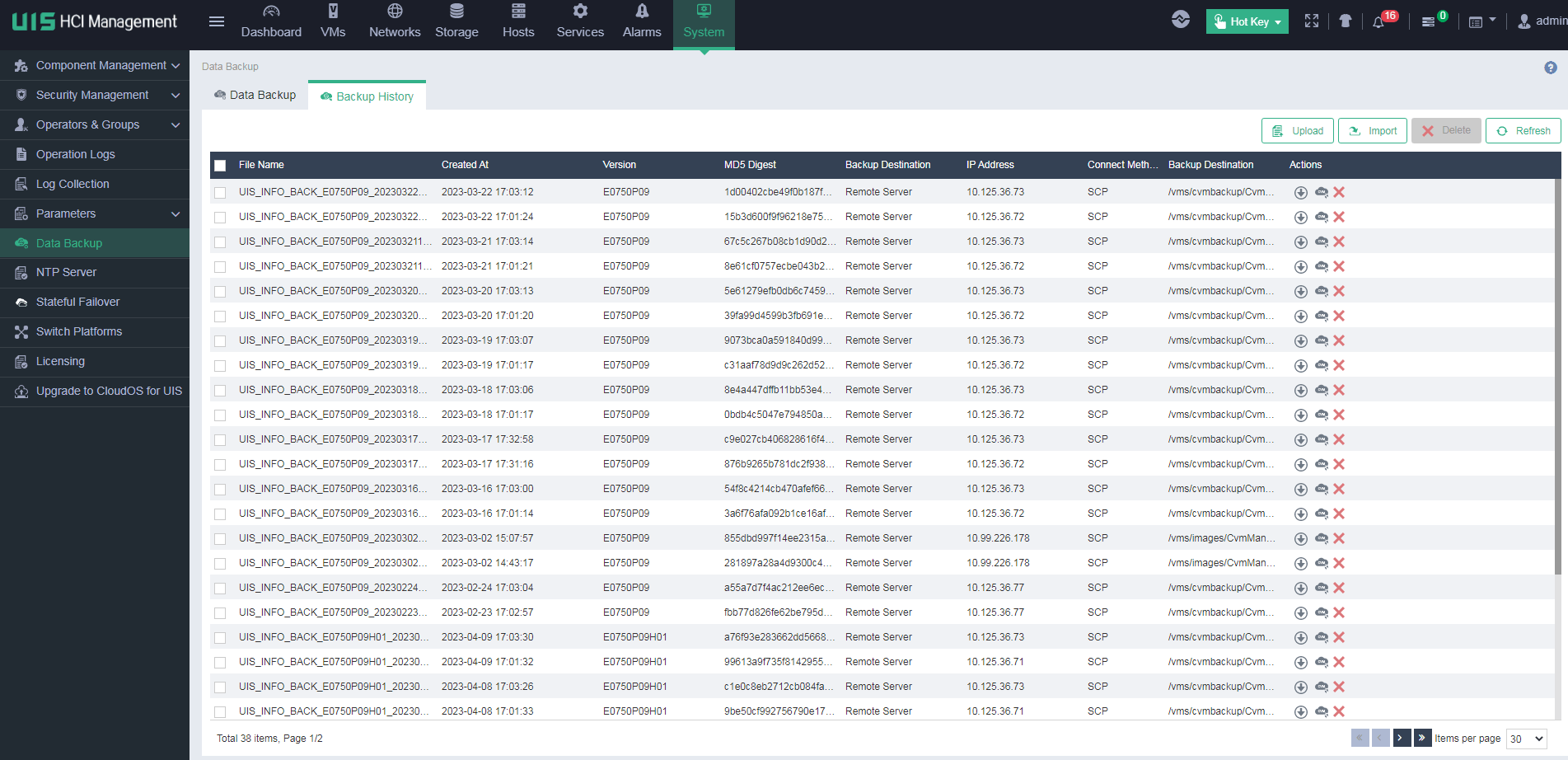

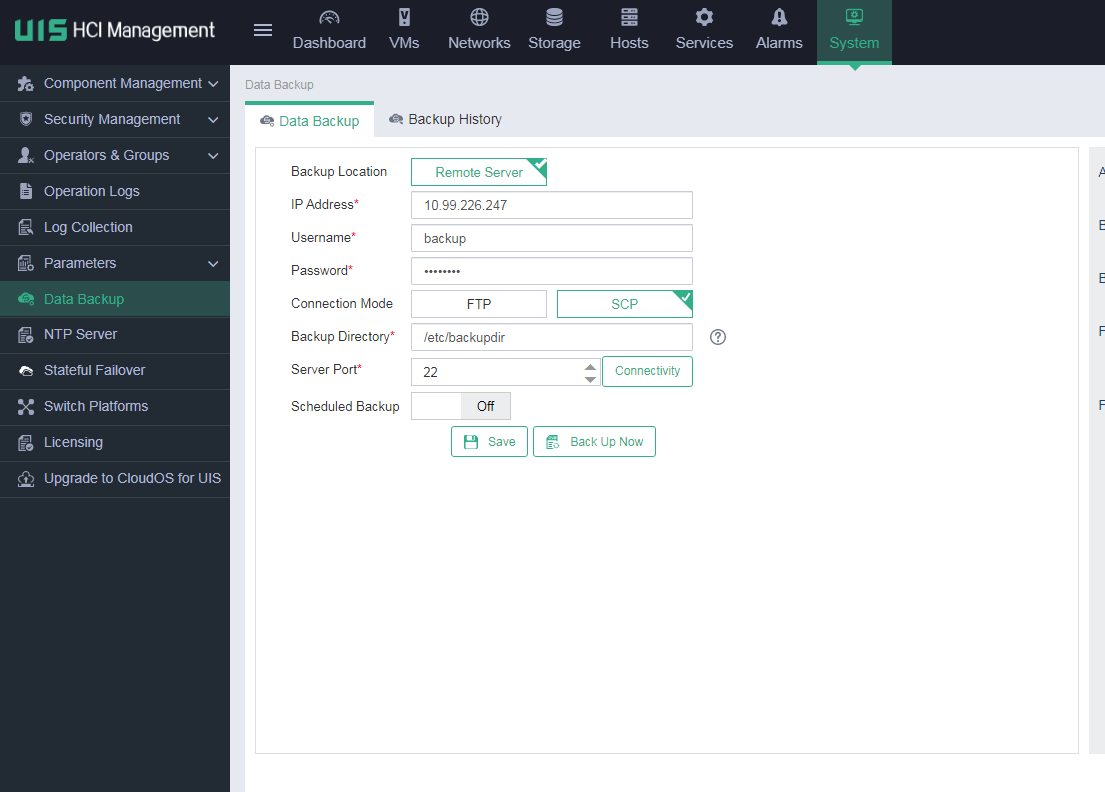

1. On the top navigation bar, click System, and then select Data Backup > Backup History from the left navigation pane. Then, download the most recent backup file.

This example downloads backup file UIS_INFO_BACK_E0750P07_20220713123106.tar.gz.

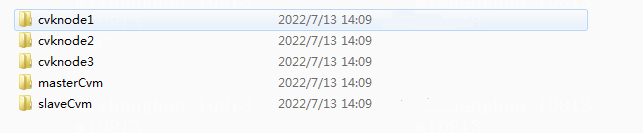

2. Decompress the downloaded backup file and enter directory UIS_INFO_BACK_E0750P07_20220713123106\cvknode1_crm_cvknode2\CVM_INFO_BACK_E0710P21_20220713123125\front\cvks.

3. Select the directory for the host where the VM is located, and then decompress the libvirt.tar.gz file in the directory. Then, enter the qemu subdirectory to obtain the XML file of the VM.

| NOTE: Directory cvknode1_crm_cvknode2 is named in the format of primary CVM node name_crm_secondary CVM node name. In a single host environment, this directory is named in the format of CVM node name. |

Obtaining the XML file of the VM when HA is disabled and the CVM node is faulty

If HA is disabled and the CVM node is faulty, you cannot access UIS Manager. To obtain the XML file of a VM in this case, perform the following steps:

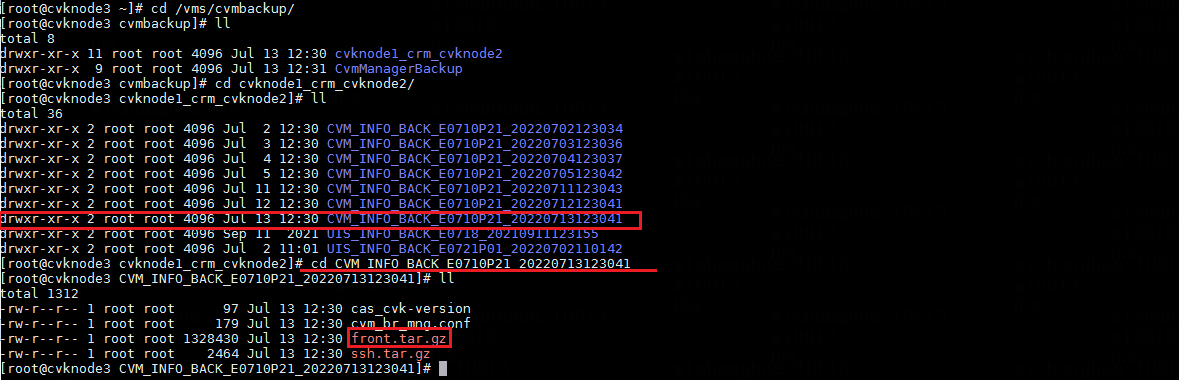

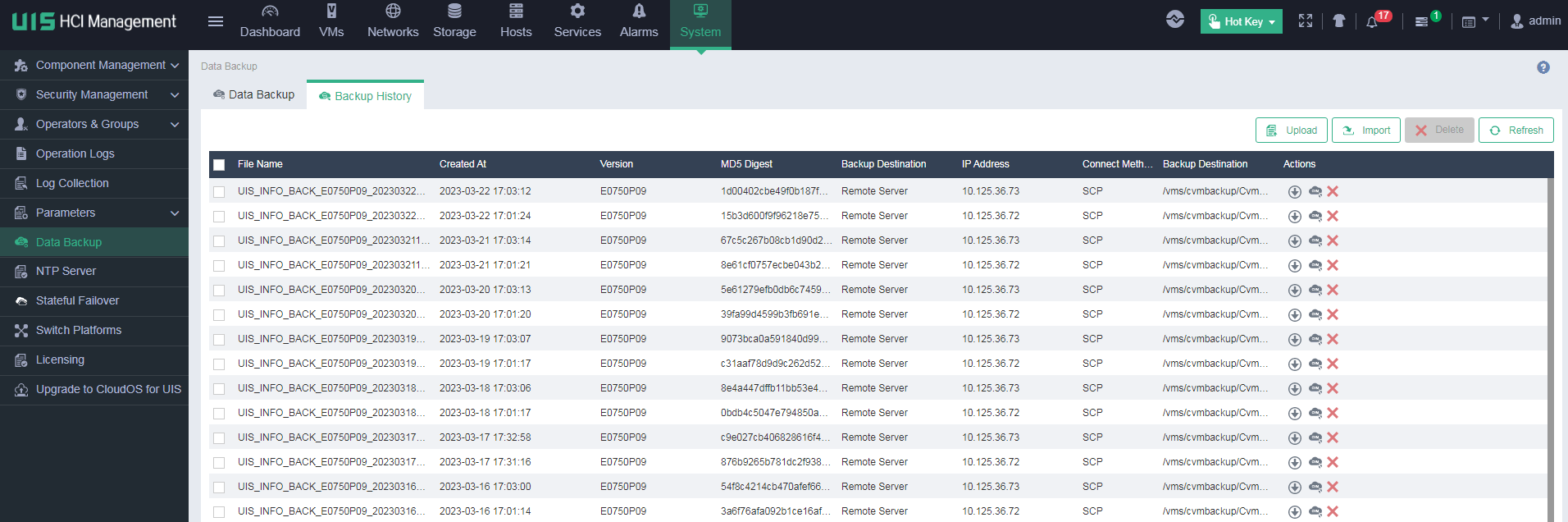

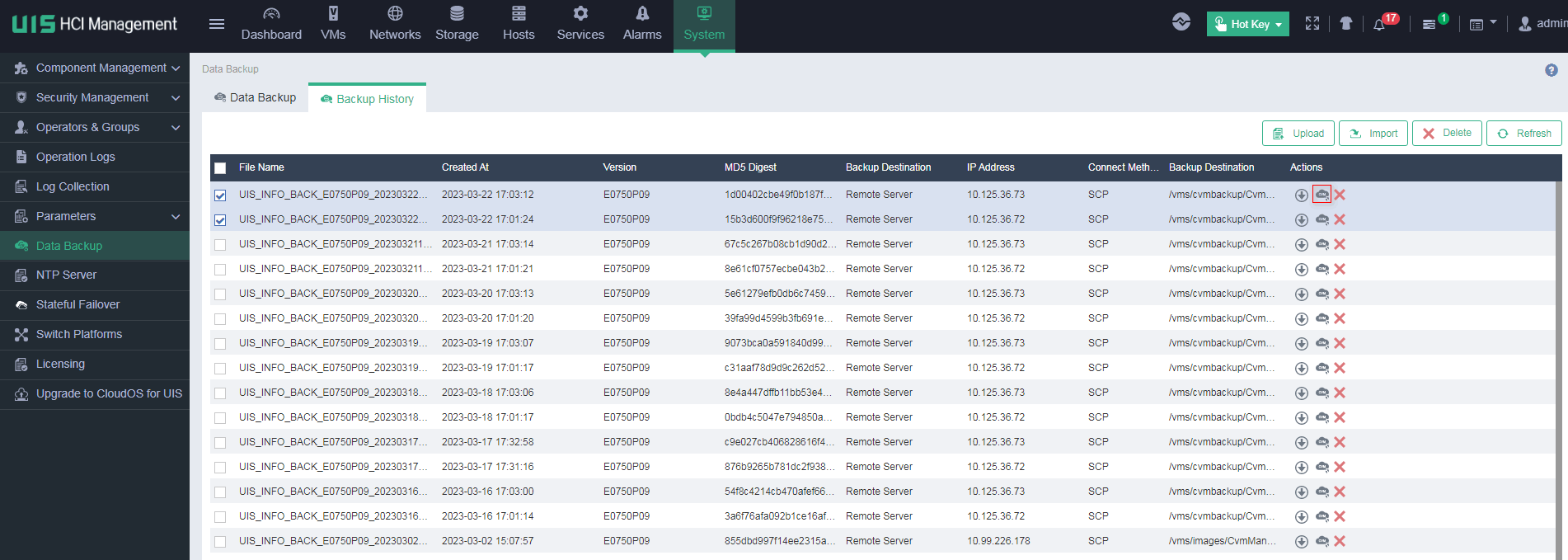

1. Use an SSH client to access each node in the cluster to find a node that has the /vms/cvmbackup directory.

The backup data is saved on three random hosts managed by the system.

2. Enter the /vms/cvmbackup directory on the node, and then enter the cvknode1_crm_cvknode2 directory to identify the most recent backup record. Then, enter the corresponding directory to locate the front.tar.gz file.

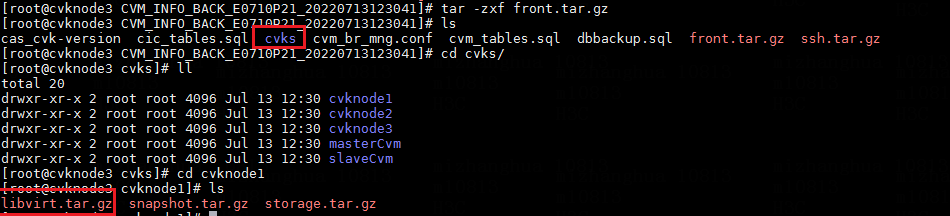

3. Decompress the front.tar.gz file, and then enter the cvks directory. Then, enter the directory for the host where the VM is located, and then decompress the libvirt.tar.gz file in the directory.

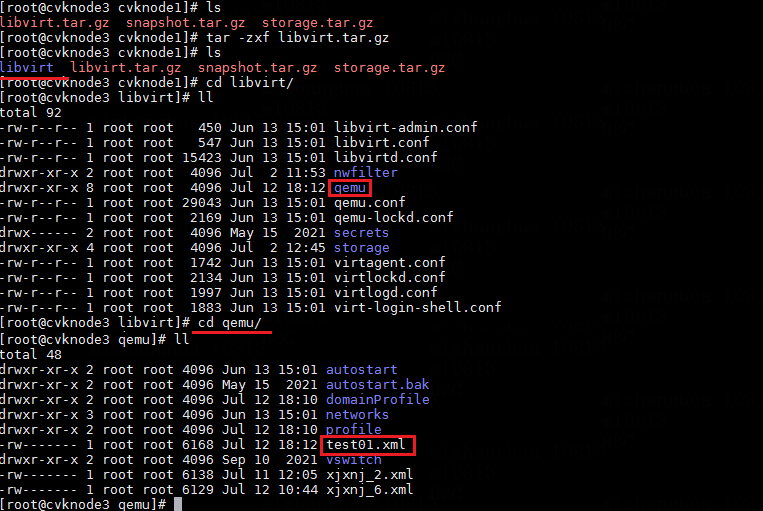

4. Enter the libvirt/qemu directory after decompression to find the XML file of the VM.

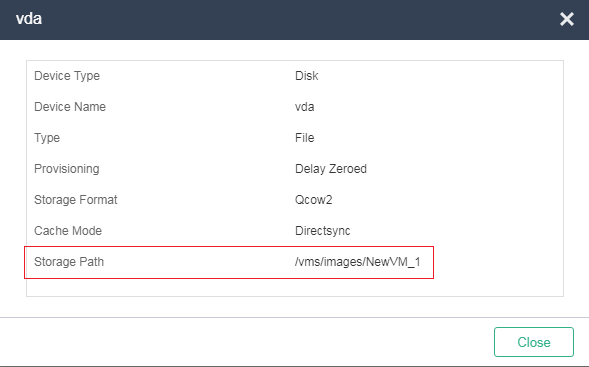

Identifying the storage volume for VM disk files

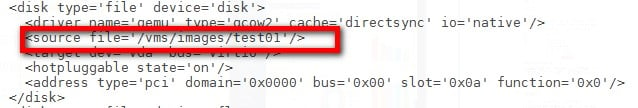

If you already know the storage volume for VM disk files, verify that the corresponding storage volume on another host that has mounted it is normal from the CLI of the host. If you do not know the storage volume for VM disk files, execute the vim or cat command to obtain the disk file location of the VM from the XML file obtained in "Obtaining the XML file of the VM." For example:

The source file field displays the location of the VM disk files.

Copying the XML file of the VM to the target host

Use SCP to copy the XML file of the VM to the /etc/libvirt/qemu directory on the host where the storage volume location has been identified in "Identifying the storage volume for VM disk files."

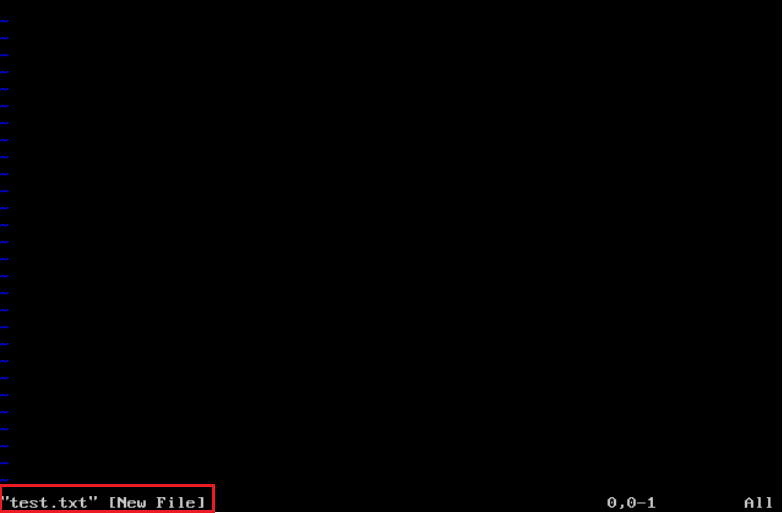

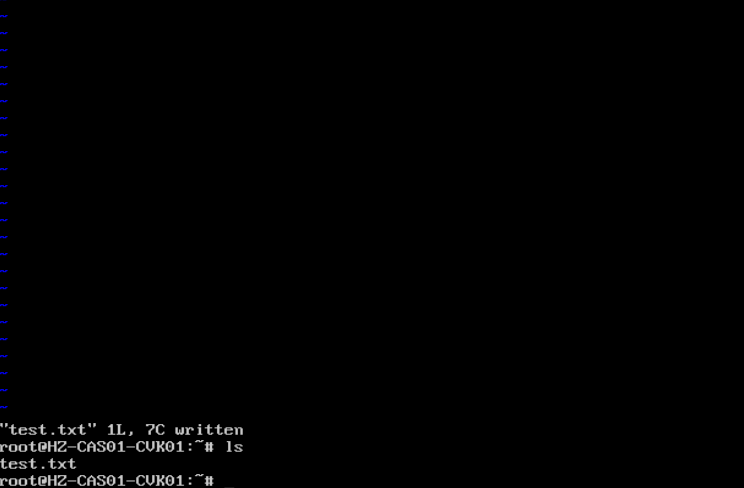

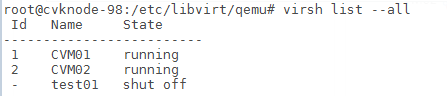

Defining the VM through XML

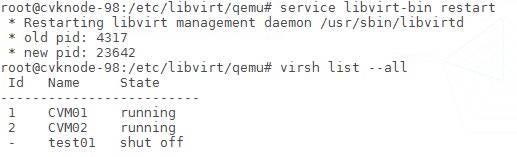

1. Execute the virsh define vm.xml command in the /etc/libvirt/qemu directory.

![]()

The VM is defined through XML.

2. Verify that the VM is also displayed in the output from the virsh list –all command at the CLI of the new host.

3. Connect the host from the Web interface. Then, you can view and start up the VM on from the Web interface.

To define many VMs, you can also reboot libvirt to automatically define these VMs if the system does not have any VMs with their names in Chinese characters. Then, start up these VMs after successful definition, as shown in the following figure:

Clearing VM data on the original host

If the original host has been completely damaged due to some hardware issues, resolve the hardware issues, and then re-install the same UIS version as the original system.

If the original host does not have hardware issues, perform the following steps to clear VM data on the host:

1. Disconnect the network cable from the original host before the host starts up.

2. Log in to the CLI of the original host to remove the XML file of the VM to avoid dual writes that occur when HA brings up the VM on the original host after the server restarts.

Configuring stateful failover

See H3C UIS Manager Stateful Failover Configuration Guide.

Configure a stateful failover system before a version upgrade.

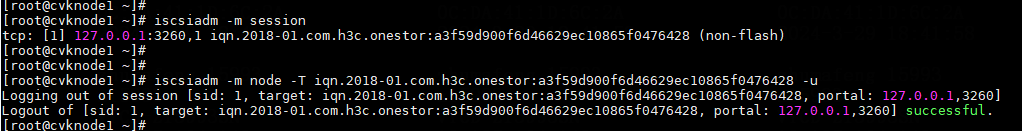

If you cannot access the ONEStor Web interface, access the CVM node to execute the following commands:

Then, execute the following command:

Replacing SSDs with NVMe drives

See H3C UIS Manager Configuration Guide for Replacing SSDs with NVMe Disks.

Migrating VMware VMs

See H3C UIS HCI Cloud Migration Guide.

Configuring GPUs

See H3C UIS Manager GPU Passthrough Configuration Guide.

Configuring vGPUs

See H3C UIS Manager vGPU Configuration Guide.

Configuring anti-virus

Contact Technical Support.

Configuring AISHU backup

See H3C UIS AISHU Solution Configuration Guide.

Configuring storage disaster recovery

See H3C UIS Manager Site Recovery Management Configuration Guide.

Collecting logs

Collecting logs of the UIS Manager

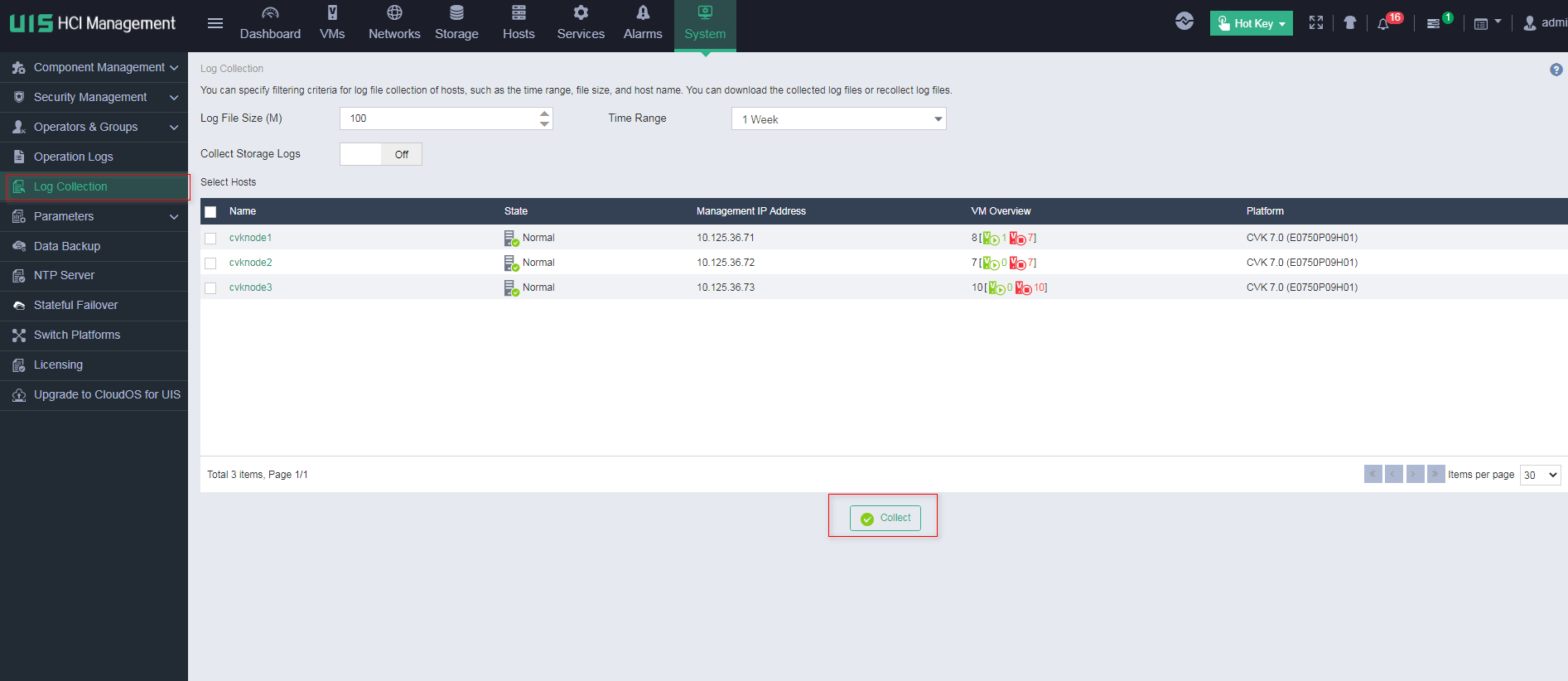

Collecting logs from the Web interface

1. On the top navigation bar, click System, and then select Log Collection from the left navigation pane.

2. Select the CVK hosts for which the system collects logs, and then click Collect to save the log files locally.

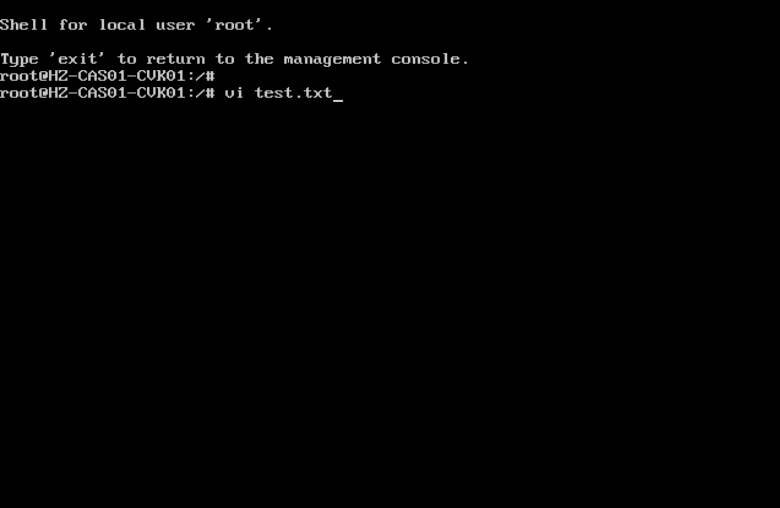

Collecting logs at the CLI of a CVK host

If you cannot collect logs from the Web interface of the UIS Manager due to CVK failure, access the CLI of the CVK host to collect logs manually.

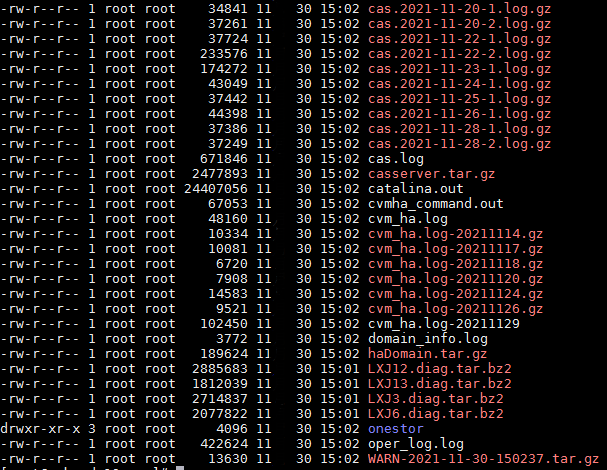

To collect logs at the CLI of a CVK host, access the CLI of the CVK host, and then execute the cas_collect_log.sh command. A compressed file is generated in the /vms directory as shown in the figure.

To analyze the logs, download the file to your local computer by using SSH client software.

For ONEStor-related hosts, you cannot collect logs for them by executing the script. To collect logs for a ONEStor-related host, manually copy the logs in the /var/log/storage and /var/log/ceph directories. If the time range for log collection is short or the log size is too large, you can collect part of the logs archived in the /var/log/storage/backup directory.

Introduction to logs

Logs collected from the Web interface

UIS log files downloaded from the Web interface are named in the UIS_×××_×××.tar.gz format. A decompressed log file includes the following types of files:

· catalina.out—Contains logs of Web functions on the UIS Manager.

· oper_log.log—Contains user operation logs.

· *.diag.tar.bz2—Contains logs of each CVK host.

· onestor—Contains operation logs and system logs of ONEStor.

· WARN*.tar.gz—Contains alarm messages.

Logs collected at the CLI

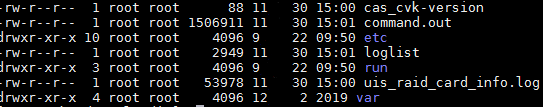

CVK host log files obtained at the CLI are named in the XXX.tar.bz2 format. A decompressed CVK host log file includes the following types of directory files:

· etc—Contains UIS configuration files, which are mainly VM configuration files. The VM configuration files are in the libvirt/qemu/VM.xml directory.

· var—Contains logs of each UIS feature module.

· command.out—Contains output information about frequently used commands at the CLI.

· cas _cvk-version—Contains UIS version information.

· loglist—Contains UIS log file names.

· uis_raid_card_info.log—Contains basic information about RAID controllers on the host.

The var directory mainly contains the following logs:

· messages—Host system logs, which record the system running information.

· fsm—Shared file system logs.

· cas_ha—HA logs.

· Ha_shell_XX.log—HA logs.

· libvirt—VM logs.

· openvswitch—Logs generated by the OVS running process.

· Ovs_shell_XX.log—Logs generated by calling the ovs_bridge.sh script.

· tomcat8—UIS Web logs.

· operation—Logs for manual operations at the CLI of UIS Manager.

The following provides descriptions for CVK host logs:

· Messages logs

Messages logs record critical information during operating system operation. The following introduces the records for an abnormal reboot of a CVK host.

Feb 3 13:58:01 XJYZ-CVK01 CRON【64458】: (root) CMD (ump-node-sync )

Feb 3 13:58:01 XJYZ-CVK01 CRON【64459】: (root) CMD (ump-sync -p ALL)

Feb 3 13:58:01 XJYZ-CVK01 CRON【64460】: (root) CMD ( /opt/bin/ocfs2_iscsi_conf_chg_timer.sh)

Feb 3 13:58:01 XJYZ-CVK01 CRON【64443】: (CRON) info (No MTA installed, discarding output)

Feb 3 14:06:35 XJYZ-CVK01 kernel: imklog 5.8.6, log source = /proc/kmsg started.

Feb 3 14:06:35 XJYZ-CVK01 rsyslogd: 【origin software="rsyslogd" swVersion="5.8.6" x-pid="2747" x-info="http://www.rsyslog.com"】 start

Feb 3 14:06:35 XJYZ-CVK01 rsyslogd: rsyslogd's groupid changed to 103

Feb 3 14:06:35 XJYZ-CVK01 rsyslogd: rsyslogd's userid changed to 101

Feb 3 14:06:35 XJYZ-CVK01 rsyslogd-2039: Could not open output pipe '/dev/xconsole' 【try http://www.rsyslog.com/e/2039 】

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 Initializing cgroup subsys cpuset

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 Initializing cgroup subsys cpu

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 Initializing cgroup subsys cpuacct

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 Linux version 3.13.6 (root@cvknode22) (gcc version 4.6.3 (Ubuntu/Linaro 4.6.3-1ubuntu5) ) #5 SMP Mon Jul 21 10:07:26 CST 2014

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 Command line: BOOT_IMAGE=/boot/vmlinuz-3.13.6 root=UUID=4beeb503-6e10-4836-93a4-0836a9a1571e ro nomodeset elevator=deadline transparent_hugepage=always crashkernel=256M quiet

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 KERNEL supported cpus:

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 Intel GenuineIntel

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 AMD AuthenticAMD

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 Centaur CentaurHauls

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 e820: BIOS-provided physical RAM map:

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 BIOS-e820: 【mem 0x0000000000000000-0x000000000009cbff】 usable

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 BIOS-e820: 【mem 0x000000000009cc00-0x000000000009ffff】 reserved

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 BIOS-e820: 【mem 0x00000000000f0000-0x00000000000fffff】 reserved

Feb 3 14:06:35 XJYZ-CVK01 kernel: 【0.000000】 BIOS-e820: 【mem 0x0000000000100000-0x00000000bf60ffff】 usable

As shown in the example, the messages log file does not have any records from 13:58:01 to 14:06:35, indicating that the CVK host failed in the time range.

The kernel-level logs record information about the CVK host after it restarted.

· Libvirt logs

In the /var/log/libvirt/libvirtd.log log file, an alarm that the CVK host lacks memory resources exists and the current memory usage has reached 97%. (The alarm message prompted when the CPU resources are insufficient is similar to that in the example.)

2014-10-24 09:15:52.792+0000: 2994: warning : virIsLackOfResource:1106 : Lack of Memory resource! only 374164 free 64068 cached and vm locked memory(4194304*0%) of 16129760 total, max:85; now:97

2014-10-24 09:15:52.792+0000: 2994: error : qemuProcessStart:3419 : Lack of system resources, out of memory or cpu is too busy, please check it.

The /var/log/libvirt/qemu directory saves the log files of VMs running on the CVK host.

root@UIS-CVK01:/var/log/libvirt/qemu# ls -l

total 44

-rw------- 1 root root 7067 Jan 9 19:08 RedHat5.9.log

-rw------- 1 root root 1969 Jan 18 15:41 win7.log

-rw------- 1 root root 26574 Feb 11 16:15 windows2008.log

VM logs files record VM running information, including the time when the VM started up and was closed and disk files of the VM.

2015-02-11 15:50:18.349+0000: starting up

LC_ALL=C PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin QEMU_AUDIO_DRV=none /usr/bin/kvm -name windows2008 -S -machine pc-i440fx-1.5,accel=kvm,usb=off,system=windows -cpu qemu64,hv_relaxed,hv_spinlocks=0x2000 -m 1024 -smp 1,maxcpus=12,sockets=12,cores=1,threads=1 -uuid 43741f06-166d-4155-b47e-4137df68e91c -no-user-config -nodefaults -chardev file=/vms/sharefile/windows2008,if=none,id=drive-virtio-disk0,format=qcow2,cache=directsync –device

…

char device redirected to /dev/pts/0 (label charserial0)

qemu: terminating on signal 15 from pid 4530

2015-02-11 16:15:28.825+0000: shutting down

· OCFS2 logs

The /var/log/fsm/fsm_core*.log log file records information about processing triggered by OCFS2 Fence of the CVK host.

2021-11-04 06:40:35,882 manager:233 INFO Received an event: {'index': 7, 'type': 'fence_umount', 'uuid': u'851D36905AB74AFD93E1ABA8259DA3A2', 'seq': 11538, 'dev_name': u'dm-7'}

2021-11-04 06:40:35,923 manager:204 INFO Remain 0 events to be handling

2021-11-04 06:40:35,923 manager:131 INFO Manager received an event: Pool sharefile06 was fence_umount

2021-11-04 06:40:35,923 fspool:141 INFO Pool sharefile06 received a event fence_umount

· Operation logs

Operation logs record information about the commands executed at the CLI of the CVK host. The following contains commands executed from Apr 19th to Apr 21st.

root@cvknode1:~/cas# ll /var/log/operation/

total 32

drwxrwxrwx 2 root root 4096 Apr 21 10:06 ./

drwxr-xr-x 40 root root 4096 Apr 21 11:01 ../

-rwxrwxrwx 1 root root 5162 Apr 19 17:49 18-04-19.log*

-rwxrwxrwx 1 root root 829 Apr 20 19:11 18-04-20.log*

-rwxrwxrwx 1 root root 8505 Apr 21 11:00 18-04-21.log*

The following example shows the content of an operation log file, including the following information:

¡ Time when a command was executed.

¡ Login user.

¡ Login address.

¡ Login method.

¡ Executed commands.

¡ Directory where a command was executed.

2018/04/19 16:56:50##root pts/6 (172.16.130.3)##/root## vi /var/log/tomcat8/cas.log

2018/04/19 16:57:05##root pts/6 (172.16.130.3)##/root## service tomcat8 restart

2018/04/19 17:02:21##root pts/5 (172.16.130.3)##/root## cat /etc/cvk/system_alarm.xml

2018/04/19 17:02:23##root pts/5 (172.16.130.3)##/root## lsblk

2018/04/19 17:49:04##root pts/6 (172.16.130.3)##/root## ceph osd tree

2018/04/19 17:49:19##root pts/6 (172.16.130.3)##/root## stop ceph-osd id=3

Collecting logs of CAStools

The UIS system and VMs are separated. To monitor and manage VMs on the UIS Manager, you must install CAStools in the operating system of the VMs.

The log collection method for CAStools varies by the operating system installed on the VM:

· Windows operating system—Obtain the qemu-ga.log file in the C:\Program Files\castools\ directory of the VM.

· Linux operating system—Obtain the qemu-ga.log and set-ip.log files in the /var/log/ directory of the VM.

Collecting logs of a VM operating system

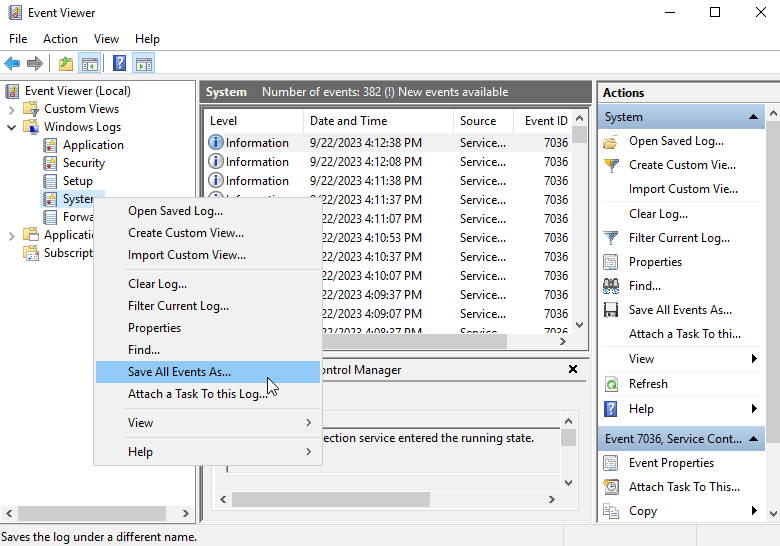

Collecting logs of a Windows operating system

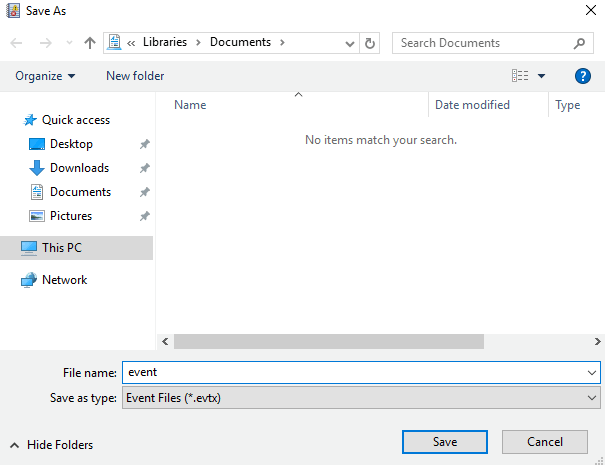

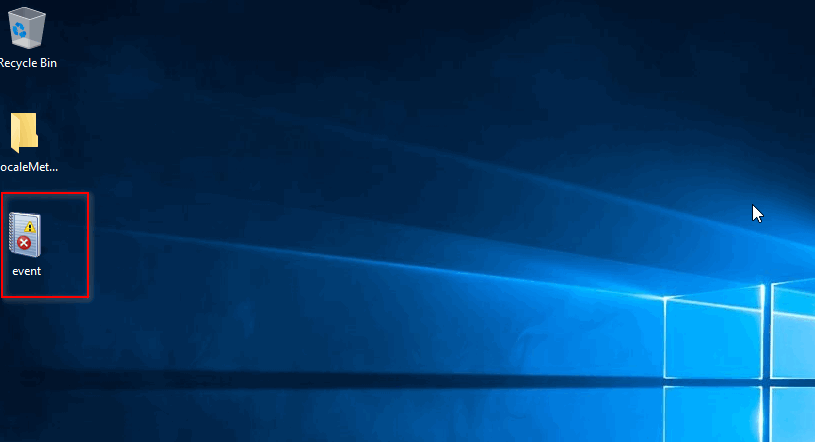

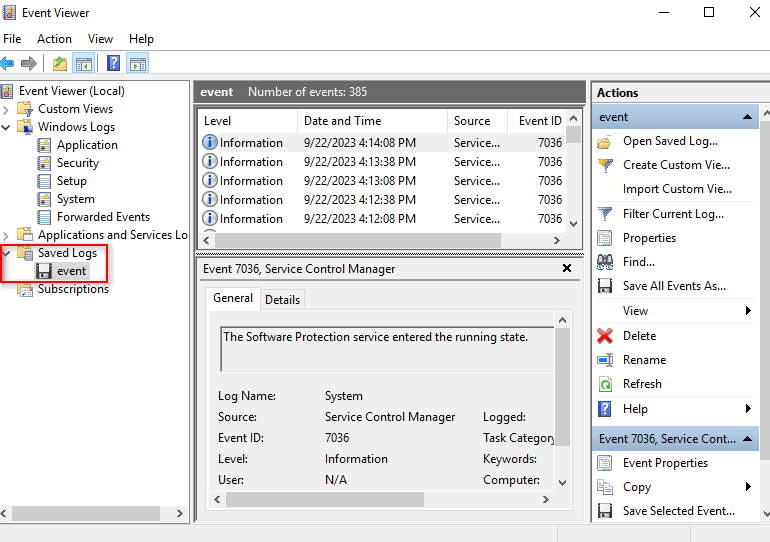

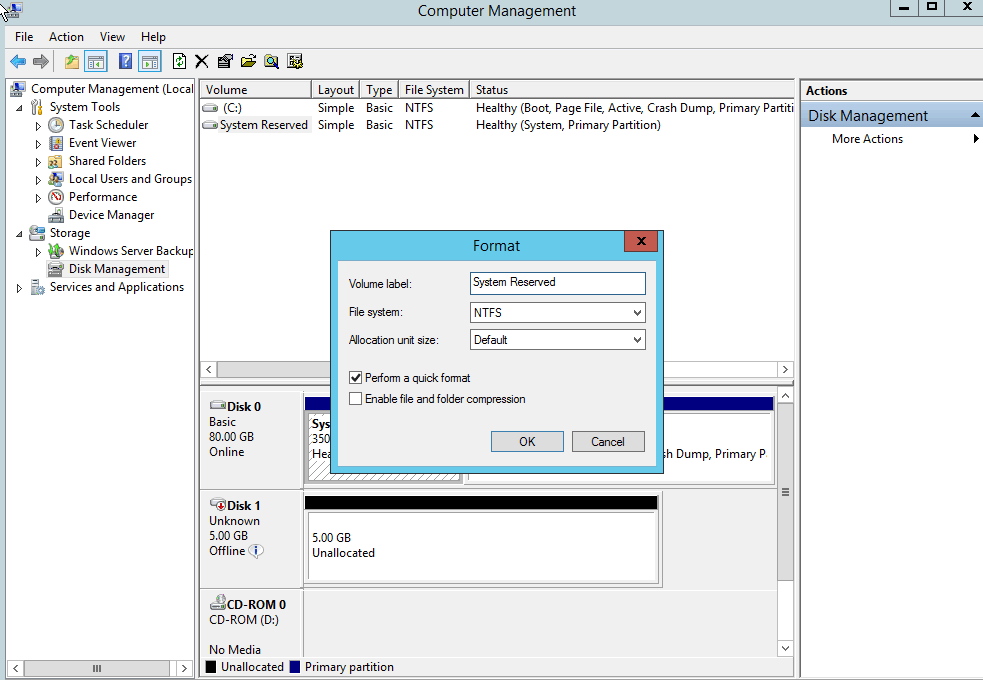

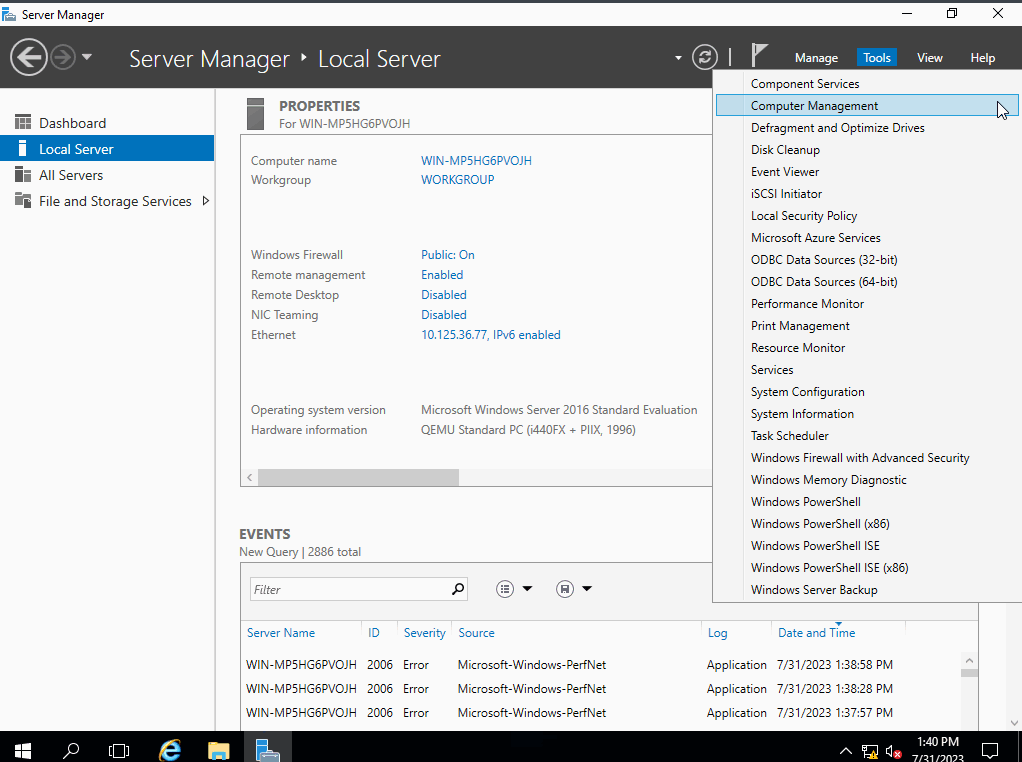

1. Open the Event Viewer window, and then select Windows Logs from the left navigation pane. Right click System, and then select Save All Events As.

2. Save the logs.

3. The downloaded log file is as shown in the figure.

Viewing logs of a Windows operating system

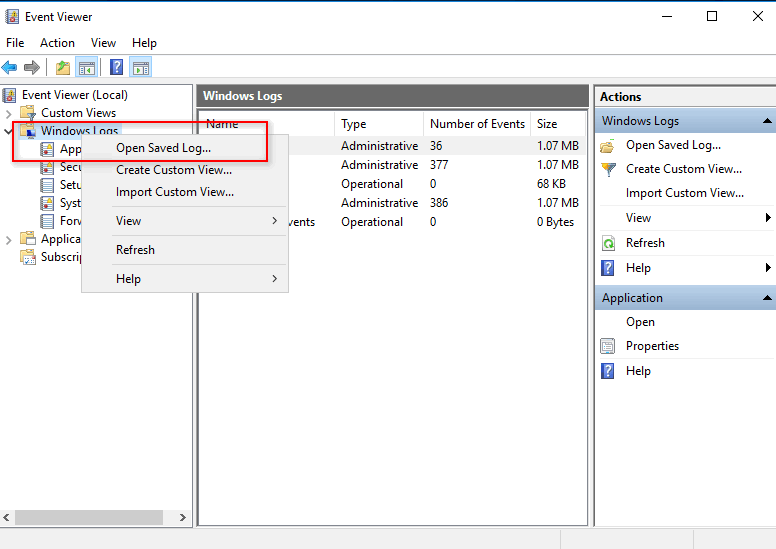

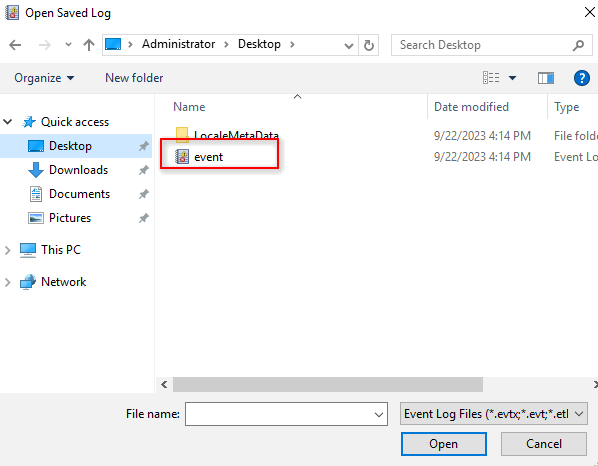

1. On the local computer (installed with the Windows 7 operating system), open the Event Viewer window. From the left navigation pane, right click Windows Logs, and then select Open Saved Log.

2. On the dialog box that opens, select the saved log file.

3. The logs are displayed on the Saved Logs > event page.

Collecting logs of a Linux operating system

To collect logs for a VM installed with a Linux operating system, collect logs in the /var/log directory. If the log size is large, first compress the logs and then copy the compressed file and save it locally.

For example, to collect logs generated on Sep 17th, 2019 for VM vm_test, execute the tar -cvf vm_test_20190917.tar.gz /var/log command.

Troubleshooting tools and utilities

Introduction to kdump

Kdump is a dump tool of the Linux kernel. It saves part of the memory to store the capture kernel. Once the current kernel crashes, kdump uses kexec to run the capture kernel. The capture kernel dumps complete information of the crashed kernel (for example, CPU register and stack statistics) to a file in a local disk or on the network.

By default, the UIS system supports kdump. When the kernel of a CVK host fails, the system generates a crash file in the /vms/crash directory for troubleshooting as shown in the example.

root@cvk29:/vms/crash# ls -lt

drwxr-sr-x 2 root whoopsie 4096 Jul 22 17:34 2014-07-22-09:34

The file named in the dump-*** format in the 2014-07-22-09:34 directory contains the output of kdump.

Analysis with the Kdump file

You can use the crash tool to analyze the Kdump file. The vmlinux file for the kernel version is needed for the analysis. You can find that file at /usr/src/linux-4.1.0-generic/vmlinux-kernelversion (the kernel version name might vary).

The following information describes how to use the Kdump file to locate typical online issues.

CPU error

Node cvknode1 at a site reboots repeatedly. After all virtual machines (VMs) are migrated and the shared storage settings are deleted from the node, the node still reboots repeatedly. The syslogs at reboots do not show occurrence of any anomalies before the reboot, while a vmcore file is present in the /vms/crash directory.

1. View abnormal call stack information in the vmcore file:

root@cvk21:/vms/tmp# crach vmlinux vmcore

No command 'crach' found, did you mean:

Command 'crash' from package 'crash' (main)

crach: command not found

root@cvk21:/vms/tmp# crash vmlinux vmcore

crash 7.0.5

Copyright (C) 2002-2014 Red Hat, Inc.

Copyright (C) 2004, 2005, 2006, 2010 IBM Corporation

Copyright (C) 1999-2006 Hewlett-Packard Co

Copyright (C) 2005, 2006, 2011, 2012 Fujitsu Limited

Copyright (C) 2006, 2007 VA Linux Systems Japan K.K.

Copyright (C) 2005, 2011 NEC Corporation

Copyright (C) 1999, 2002, 2007 Silicon Graphics, Inc.

Copyright (C) 1999, 2000, 2001, 2002 Mission Critical Linux, Inc.

This program is free software, covered by the GNU General Public License,

and you are welcome to change it and/or distribute copies of it under

certain conditions. Enter "help copying" to see the conditions.

This program has absolutely no warranty. Enter "help warranty" for details.

GNU gdb (GDB) 7.6

Copyright (C) 2013 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later [http://gnu.org/licenses/gpl.html]

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law. Type "show copying"

and "show warranty" for details.

This GDB was configured as "x86_64-unknown-linux-gnu"...

KERNEL: vmlinux

DUMPFILE: vmcore [PARTIAL DUMP]

CPUS: 8

DATE: Wed Nov 5 12:25:19 2014

UPTIME: 00:02:19

LOAD AVERAGE: 0.06, 0.05, 0.02

TASKS: 324

NODENAME: cvknode-1

RELEASE: 3.13.6

VERSION: #5 SMP Mon Jul 21 10:07:26 CST 2014

MACHINE: x86_64 (2132 Mhz)

MEMORY: 64 GB

PANIC: "Kernel panic - not syncing: Fatal Machine check"

PID: 0

COMMAND: "swapper/6"

TASK: ffff8807f4618000 (1 of 8) [THREAD_INFO: ffff8807f4620000]

CPU: 6

STATE: TASK_RUNNING (PANIC)

crash] bt

PID: 0 TASK: ffff8807f4618000 CPU: 6 COMMAND: "swapper/6"

#0 [ffff8807ffc6ac50] machine_kexec at ffffffff8104c991

#1 [ffff8807ffc6acc0] crash_kexec at ffffffff810e97e8

#2 [ffff8807ffc6ad90] panic at ffffffff8174ac9d

#3 [ffff8807ffc6ae10] mce_panic at ffffffff81038b2f

#4 [ffff8807ffc6ae60] do_machine_check at ffffffff810399d8

#5 [ffff8807ffc6af50] machine_check at ffffffff817589df

[exception RIP: intel_idle+204]

RIP: ffffffff8141006c RSP: ffff8807f4621db8 RFLAGS: 00000046

RAX: 0000000000000010 RBX: 0000000000000004 RCX: 0000000000000001

RDX: 0000000000000000 RSI: ffff8807f4621fd8 RDI: 0000000001c0d000

RBP: ffff8807f4621de8 R8: 0000000000000009 R9: 0000000000000004

R10: 0000000000000001 R11: 0000000000000001 R12: 0000000000000003

R13: 0000000000000010 R14: 0000000000000002 R15: 0000000000000003

ORIG_RAX: ffffffffffffffff CS: 0010 SS: 0018

--- [MCE exception stack] ---

#6 [ffff8807f4621db8] intel_idle at ffffffff8141006c

#7 [ffff8807f4621df0] cpuidle_enter_state at ffffffff81602a8f

#8 [ffff8807f4621e50] cpuidle_idle_call at ffffffff81602be0

#9 [ffff8807f4621ea0] arch_cpu_idle at ffffffff8101e2ce

#10 [ffff8807f4621eb0] cpu_startup_entry at ffffffff810c1818

#11 [ffff8807f4621f20] start_secondary at ffffffff8104306b

crash]

Abnormal call stack information shows that a machine check error (MCE) exception occurs. This exception is typically caused by hardware issues.

2. Execute the crash-dmesg command to view information printed before the unexpected reboots:

[ 15.707981] 8021q: 802.1Q VLAN Support v1.8

[ 16.416569] drbd: initialized. Version: 8.4.3 (api:1/proto:86-101)

[ 16.416573] drbd: srcversion: F97798065516C94BE0F27DC

[ 16.416575] drbd: registered as block device major 147

[ 17.142281] Ebtables v2.0 registered

[ 17.203400] ip_tables: (C) 2000-2006 Netfilter Core Team

[ 17.247387] ip6_tables: (C) 2000-2006 Netfilter Core Team

[ 139.114172] Disabling lock debugging due to kernel taint

[ 139.114185] mce: [Hardware Error]: CPU 2: Machine Check Exception: 4 Bank 5: be00000000800400

[ 139.114192] mce: [Hardware Error]: TSC 10ba0482e78 ADDR 3fff81760d32 MISC 7fff

[ 139.114199] mce: [Hardware Error]: PROCESSOR 0:206c2 TIME 1415161519 SOCKET 0 APIC 14 microcode 13

[ 139.114203] mce: [Hardware Error]: Run the above through 'mcelog --ascii'

[ 139.114208] mce: [Hardware Error]: Machine check: Processor context corrupt

[ 139.114211] Kernel panic - not syncing: Fatal Machine check

crash]

It can be determined from preceding information that an error has occurred on CPU 2.

Memory error

A CVK node at a site reboots unexpectedly. No abnormal records are found in the syslogs before and after the reboot. Kdump records are generated at the reboots.

1. View call stack information from the Kdump records.

If information as follows is output, a hardware error might occur.

crash] bt

PID: 0 TASK: ffffffff81c144a0 CPU: 0 COMMAND: "swapper/0"

#0 [ffff880c0fa07c60] machine_kexec at ffffffff8104c991

#1 [ffff880c0fa07cd0] crash_kexec at ffffffff810e97e8

#2 [ffff880c0fa07da0] panic at ffffffff8174ac9d

#3 [ffff880c0fa07e20] asminline_call at ffffffffa014c895 [hpwdt]

#4 [ffff880c0fa07e40] nmi_handle at ffffffff817598da

#5 [ffff880c0fa07ec0] do_nmi at ffffffff81759b7d

#6 [ffff880c0fa07ef0] end_repeat_nmi at ffffffff81758cf1

[exception RIP: intel_idle+204]

RIP: ffffffff8141006c RSP: ffffffff81c01da8 RFLAGS: 00000046

RAX: 0000000000000010 RBX: 0000000000000010 RCX: 0000000000000046

RDX: ffffffff81c01da8 RSI: 0000000000000018 RDI: 0000000000000001

RBP: ffffffff8141006c R8: ffffffff8141006c R9: 0000000000000018

R10: ffffffff81c01da8 R11: 0000000000000046 R12: ffffffffffffffff

R13: 0000000000000000 R14: ffffffff81c01fd8 R15: 0000000000000000

ORIG_RAX: 0000000000000000 CS: 0010 SS: 0018

--- [NMI exception stack] ---

#7 [ffffffff81c01da8] intel_idle at ffffffff8141006c

#8 [ffffffff81c01de0] cpuidle_enter_state at ffffffff81602a8f

#9 [ffffffff81c01e40] cpuidle_idle_call at ffffffff81602be0

#10 [ffffffff81c01e90] arch_cpu_idle at ffffffff8101e2ce

#11 [ffffffff81c01ea0] cpu_startup_entry at ffffffff810c1818

#12 [ffffffff81c01f10] rest_init at ffffffff8173fc97

#13 [ffffffff81c01f20] start_kernel at ffffffff81d37f7b

#14 [ffffffff81c01f70] x86_64_start_reservations at ffffffff81d375f8

#15 [ffffffff81c01f80] x86_64_start_kernel at ffffffff81d3773e

crash]

2. Execute the dmesg command to view information before the anomaly.

crash]dmesg

…

[10753.155822] sd 3:0:0:1: [sdd] Very big device. Trying to use READ CAPACITY(16).

[10804.115376] sbridge: HANDLING MCE MEMORY ERROR

[10804.115386] CPU 23: Machine Check Exception: 0 Bank 9: cc1bc010000800c0

[10804.115387] TSC 0 ADDR 12422f7000 MISC 90868002800208c PROCESSOR 0:306e4 TIME 1417366012 SOCKET 1 APIC 2b

…

[10804.283467] sbridge: HANDLING MCE MEMORY ERROR

[10804.283473] CPU 9: Machine Check Exception: 0 Bank 9: cc003010000800c0

[10804.283475] TSC 0 ADDR 1242ef7000 MISC 90868000800208c PROCESSOR 0:306e4 TIME 1417366012 SOCKET 1 APIC 26

[10804.303482] EDAC MC1: 28416 CE memory scrubbing error on CPU_SrcID#1_Channel#0_DIMM#0 (channel:0 slot:0 page:0x12422f7 offset:0x0 grain:32 syndrome:0x0 - OVERFLOW area:DRAM err_code:0008:00c0 socket:1 channel_mask:1 rank:0)

[10804.303489] EDAC MC1: 192 CE memory scrubbing error on CPU_SrcID#1_Channel#0_DIMM#0 (channel:0 slot:0 page:0x12424a7 offset:0x0 grain:32

…

[10804.319474] sbridge: HANDLING MCE MEMORY ERROR

[10804.319481] CPU 6: Machine Check Exception: 0 Bank 9: cc001010000800c0

[10804.319482] TSC 0 ADDR 1243087000 MISC 90868002800208c PROCESSOR 0:306e4 TIME 1417366012 SOCKET 1 APIC 20

[10805.303772] EDAC MC1: 64 CE memory scrubbing error on CPU_SrcID#1_Channel#0_DIMM#0 (channel:0 slot:0 page:0x1243087 offset:0x0 grain:32 syndrome:0x0 - OVERFLOW area:DRAM err_code:0008:00c0 socket:1 channel_mask:1 rank:0)

[10813.602696] sd 3:0:0:0: [sdc] Very big device. Trying to use READ CAPACITY(16).

[10813.603219] sd 3:0:0:1: [sdd] Very big device. Trying to use READ CAPACITY(16).

[10840.833238] Kernel panic - not syncing: An NMI occurred, please see the Integrated Management Log for details.

crash]

3. View information in the kern.log file.

Nov 30 07:05:01 HBND-UIS-E-CVK09 kernel: [229821.496666] sd 11:0:0:1: [sdd] Very big device. Trying to use READ CAPACITY(16).

Nov 30 07:05:55 HBND-UIS-E-CVK09 kernel: [229875.188854] sbridge: HANDLING MCE MEMORY ERROR

Nov 30 07:05:55 HBND-UIS-E-CVK09 kernel: [229875.188873] CPU 23: Machine Check Exception: 0 Bank 9: cc1e0010000800c0

Nov 30 07:05:55 HBND-UIS-E-CVK09 kernel: [229875.188874] TSC 0 ADDR 10638f7000 MISC 90868002800208c PROCESSOR 0:306e4 TIME 1417302355 SOCKET 1 APIC 2b

…

Nov 30 07:05:55 HBND-UIS-E-CVK09 kernel: [229875.244902] EDAC MC1: 30720 CE memory scrubbing error on CPU_SrcID#1_Channel#0_DIMM#0 (channel:0 slot:0 page:0x10638f7 offset:0x0 grain:32 syndrome:0x0 - OVERFLOW area:DRAM err_code:0008:00c0 socket:1 channel_mask:1 rank:0)

…

root@gzh-139:/vms/issue_logs/hebeinongda/20141201/HBND-UIS-E-CVK09/logdir/var/log# grep OVERFLOW kern* | wc

225 6341 60264

root@gzh-139:/vms/issue_logs/hebeinongda/20141201/HBND-UIS-E-CVK09/logdir/var/log#

It can be determined from preceding information that the issue is caused by a memory error. The issue is resolved after the memory is replaced.

Storage cluster logs

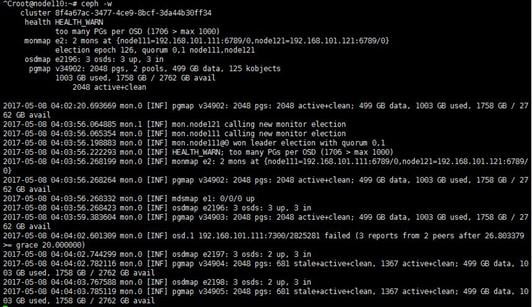

/var/log/ceph/ceph.log

The ceph.log file mainly records the health status and traffic of the cluster. It is available only on monitor nodes and has the same content as that output from the ceph –w command.

· If logs as follows are in the ceph.log file, the service network of the primary monitor node of the cluster has been disconnected.

2017-05-09 19:44:03.400143 mon.2 172.16.105.84:6789/0 2009 : cluster [INF] mon.cvknode84 calling new monitor election

2017-05-09 19:44:03.404362 mon.1 172.16.105.83:6789/0 2023 : cluster [INF] mon.cvknode83 calling new monitor election

2017-05-09 19:44:05.419510 mon.1 172.16.105.83:6789/0 2024 : cluster [INF] mon.cvknode83@1 won leader election with quorum 1,2

2017-05-09 19:44:05.428131 mon.1 172.16.105.83:6789/0 2025 : cluster [INF] HEALTH_WARN; 1 mons down, quorum 1,2 cvknode83,cvknode84

2017-05-09 19:44:14.383590 mon.1 172.16.105.83:6789/0 2057 : cluster [INF] osdmap e1397: 18 osds: 12 up, 18 in

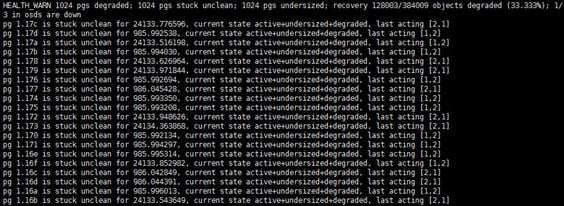

· If logs as follows are in the ceph.log file, the health of the cluster is not 100%, and the cluster is in the process of recovery.

2017-06-06 19:31:41.319993 mon.0 192.168.93.21:6789/0 86387 : cluster [INF] pgmap v73931: 4096 pgs: 2561 active+clean, 1532 active+remapped+wait_backfill, 3 active+remapped+backfilling; 3362 GB data, 6730 GB used, 21941 GB / 28672 GB avail; 0 B/s rd, 127 kB/s wr, 256 op/s rd, 63 op/s wr; 5/2608637 objects degraded (0.000%); 1765938/2608637 objects misplaced (67.696%); 62992 kB/s, 15 objects/s recovering

· If logs as follows are in the ceph.log file, the storage network of a non-Handy or non-primary monitor node in the cluster has been disconnected.

2017-05-12 16:05:14.585496 mon.0 172.31.1.31:6789/0 106035 : cluster [INF] osd.31 marked itself down

2017-05-12 16:05:15.095824 mon.0 172.31.1.31:6789/0 106038 : cluster [INF] osd.33 marked itself down

2017-05-12 16:05:15.195542 mon.0 172.31.1.31:6789/0 106040 : cluster [INF] osdmap e286: 36 osds: 25 up, 36 in

2017-05-12 16:05:15.287350 mon.0 172.31.1.31:6789/0 106042 : cluster [INF] osd.27 marked itself down

2017-05-12 16:05:16.186527 mon.0 172.31.1.31:6789/0 106043 : cluster [INF] osdmap e287: 36 osds: 24 up, 36 in

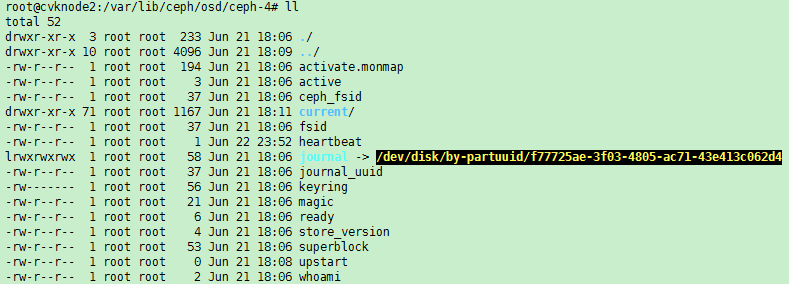

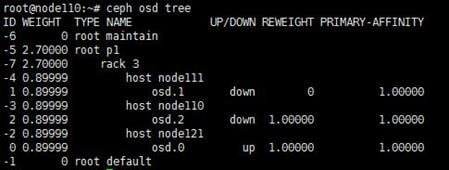

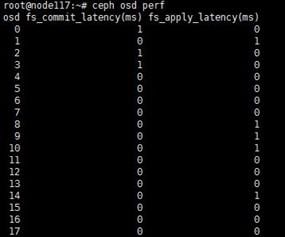

/var/log/ceph/ceph-osd.*.log

The ceph-osd.*.log file mainly records information about an OSD in the cluster. If an error occurs on a cluster OSD, the error reasons will be recorded in the ceph-osd.*.log file for that OSD, which can be used for troubleshooting.

The following is an example about how to troubleshoot by using a ceph-osd.*.log file when an OSD is abnormal (the UI reports an OSD error):

1. Use the ceph osd tree command in the CLI to identify the identifier of the abnormal OSD.

2. Access the /var/log/ceph/ceph-osd.*.log file for the OSD and identify the reason for the OSD exception.

¡ If a log as follows is in the ceph-osd log file, the storage controller is damaged, causing the journal to be interrupted.

2017-04-25 14:34:08.807146 7f5bf690a780 -1 journal Unable to read past sequence 301115833 but header indicates the journal has committed up through 301115842, journal is corrupt

¡ If logs as follows are in the ceph-osd log file, the OSD has committed suicide because of is excessive pressure.

2017-03-09 11:46:01.576034 7f0878364700 1 heartbeat_map is_healthy 'FileStore::op_tp thread 0x7f086fa6c700' had suicide timed out after 180

2017-03-09 11:46:01.576049 common/HeartbeatMap.cc: 81: FAILED assert(0 == "hit suicide timeout")

¡ If a log as follows is in the ceph-osd log file, the OSD has not been mounted.

2017-04-27 19:46:18.280510 7fcfb954c700 5 filestore(/var/lib/ceph/osd/ceph-85) umount /var/lib/ceph/osd/ceph-85

¡ If logs as follows are in the ceph-osd log file, the data copies are inconsistent.

2016-10-22 06:49:23.854201 7fd2e860f700- 1 log_channel(cluster)log [ERR]:1.ad shard 1:soid 819850ad/rbd_date.3b7055757a07.0000000000000ab1/7//1 date_digest 0xd7ac1812 != best guess date_digest 0x43d61c5d from auth shard 0

2016-10-22 06:49:23.854253 osd/osd_types.cc:4148:FAILED assert(clone_size.count(clone))

/var/log/ceph/ceph-disk.log

The ceph-disk.log file mainly records information about OSD deployment and startup and is typically used in conjunction with the ceph-osd.*.log file to locate OSD related issues.

· If logs as follows are in the ceph-disk log file, the system stops OSD mounting and exits the OSD mounting process because files exist in the /var/lib/ceph/osd/ceph-* directory. This issue typically occurs at the restart of the host. When the host restarts, all OSDs must be reactivated and mounted and the mounting process will check whether other files than the heartbeat, osd_disk_info.ini, and osd_should_be_restart_flag files exist in the OSD directory. If other files exist in the directory, the OSD mounting process stops.

ceph-disk: Error: another ceph osd.71 already mounted in position(old/different cluster instance?);unmounting ours.

· If logs as follows are in the ceph-disk log file, the OSD has not been activated and cannot be mounted.

Fri. 07 Apr 2017 10:24:48 ceph-disk[line:2438] ERROR Failed to activate

Fri. 07 Apr 2017 10:24:48 ceph-disk[line:976] DEBUG Unmounting /var/lib/ceph/tmp/mnt.hD_6nh

/var/log/ceph/ceph-mon.*.log

The ceph-mon.*.log file mainly records information of a monitor node in the Ceph cluster. Monitor nodes are responsible for monitoring the cluster. If an error occurs on a monitor node, the error reason will be recorded in the ceph-mon.*.log file for that node, which can be used for troubleshooting.

To troubleshoot for a monitor node exception (the UI reports a monitor node anomaly):

1. Check the hostname of the abnormal monitor node on the host management page.

2. Access the /var/log/ceph/ceph-mon.*.log file for the host to check for the cause of the monitor node exception. If the following logs are found in the ceph-mon log file, the primary monitor node is abnormal (possible reason is an exception occurs on the service network of the primary monitor node or the ceph-mon process on the primary master node is stopped), and the backup monitor nodes trigger the election mechanism.

2017-05-08 19:24:58.017935 7fb173765700 1 mon.cvknode84@2(peon).paxos(paxos active c 24348..24883) lease_timeout -- calling new election

2017-05-08 19:24:58.024456 7fb172f64700 0 log_channel(cluster) log [INF] : mon.cvknode84 calling new monitor election

/var/log/calamari/calamari.log

The calamari.log file mainly records the operations on Handy.

If logs as follows are in the calamari.log file, the Handy node does not have network connectivity with the other nodes.

2017-05-08 15:08:29,060 - ERROR - onestor_common.py[network_check][line:494] - django.request <network_check> Host "172.16.105.84" is unreachable, retry again...

2017-05-08 15:08:29,060 - ERROR - onestor_common.py[execute][line:622] - django.request [ONEStor] onestor_request_all_node cvknode84:Host is unreachable

/var/log/onestor_cli/ onestor_cli.log

The onestor_cli.log file records information about the process of collecting real-time logs on a node. It can be used to diagnose and troubleshoot any issues related to log collection.

· If a log as follows is in the onestor_cli.log file, the size of the collected logs has exceeded 5 GB.

[2017-05-10 10:47:01,980][WARNING][monitor.py][line:157] We detect the current collecting log size is up to 5GB, ending collecting automatically!

· If the onestor_cli.log file disappears from a node, the log disk space on the node might be full.

Bimodal HCI logs

Bimodal HCI provides VMware VM lifecycle management and VMware VM agentless migration features.

1. The vmware-api-server service on the CVM host provides VMware VM lifecycle management. It stores related logs in the /var/log/vmware-api-server directory. If an exception occurs when you operate VMware VMs on the UIS, a log is generated in that directory to record the causes for the exception, which can be used for issue diagnosis.

For example, if a log as follows is generated, you can determine that the reason for failure to generate a snapshot is that the snapshot directory is too deep (which is limited by VMware):

[Vmware VM Request Processor Manager1] Trace[] UID[] c.h.h.u.s.v.handler.VmwareHandler – vmware vm “hdm2-snapshot” to generate a snapshot fail, cause:Snapshot hierarchy is too deep.

2. The vmware-agent service on the CVK host is responsible for migrating data from VMware. It stores related logs in the /var/log/vmware-agent directory. If a migration task fails or is interrupted unexpectedly on the UIS, you can view the logs in that directory.

¡ vmware-agent.log—Migration process logs. When an exception occurs during the migration process, the vmware-agent.log file will record the causes for the exception, which can be used for future issue diagnosis.

If a log as follows is output, a known VMware issue https://kb.vmware.com/s/article/2035976 has been triggered

2022-01-19 16:03:06 [ERROR] service.go:149 migrate failed, vcenter key: 172.20.67.6:443 vmref: vm-64 task 1955534340610146293 reason: {"code": 12002, "message": "Get QueryChangedDiskAreas failed. ", "error": "ServerFaultCode: Error caused by file /vmfs/volumes/61dd4ded-84b7a178-07ce-98f181b81b1c/ubuntu18041desktop/ubuntu18041desktop.vmdk"}

¡ vmware_vddk.log—VDDK operation logs. These logs record the operations related to connecting to vSphere and can assist in locating data transmission interruption during migration.

3. If an error of failed driver injection is reported on the UI during the VM migration process, you can check the relevant error logs to preliminarily locate the cause of the failure. The relevant error logs are saved in the /var/log/caslog/cas_xc_virtio_driver.log file.

4. If the VM still reports that castools is not running on the UI a period of time after the injection is completed, remount the ISO and install castools again.

5. If no errors are reported on the UI after the VM is migrated but you cannot access the desktop after the VM is powered on, a VM driver injection compatibility issue might exist. If this VM is in the compatible migrated VM list, contact Technical Support to locate the issue on site.

Distributed storage maintenance

Cluster issues

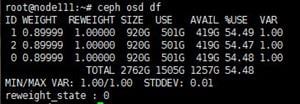

Rebalancing data placement when data imbalance occurs

ONEStor uses the CRUSH algorithm to automatically balance data across the object-based storage daemons (OSDs) in the cluster. Each OSD maps to a disk.

To rebalance data when occasional data imbalance occurs:

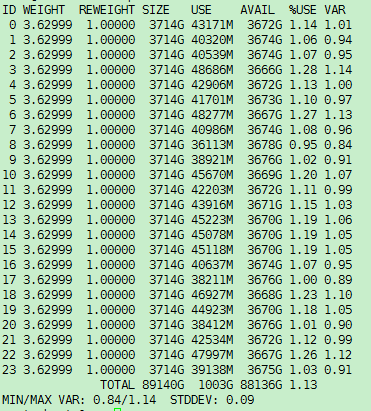

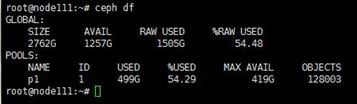

1. Execute the ceph osd df command and then identify the disk utilization of each OSD in the %USE field.

Figure 6 Identifying the disk utilization of each OSD

2. If the disk utilization of some OSDs is unusually higher than other OSDs, execute the ceph osd reweight-by-utilization command to rebalance data.

IMPORTANT: Data rebalancing is read and write intensive and might cause cluster performance to degrade. To minimize its impact on storage services, perform this operation at off-peak hours. |

3. Verify that the system has finished the rebalancing operation successfully.

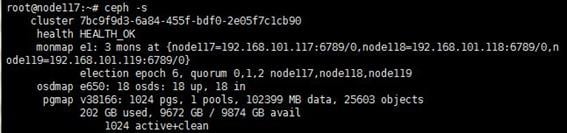

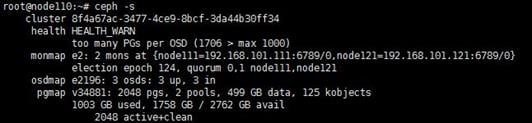

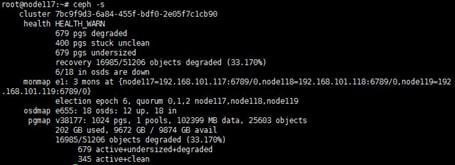

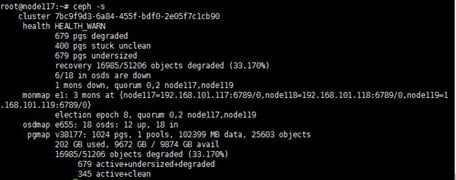

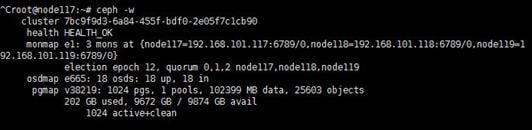

Execute the ceph -s command to monitor the cluster health state. When the cluster state changes to HEALTH_OK, you can determine that the system has finished the rebalancing operation.

Method to accelerate data rebalancing when the cluster is in an idle state

When the cluster is in an idle state, you can accelerate data rebalancing, as follows:

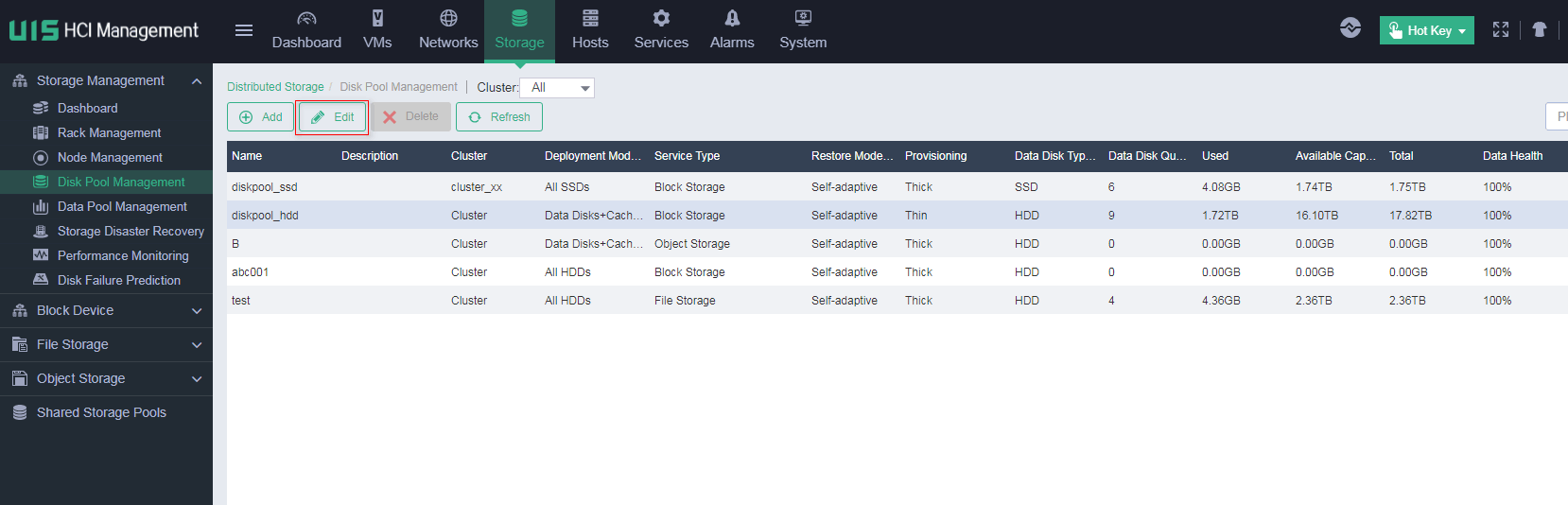

1. Log in to UIS Manager.

2. On the top navigation bar, click Storage, and then select Disk Pool Management from the left navigation pane.

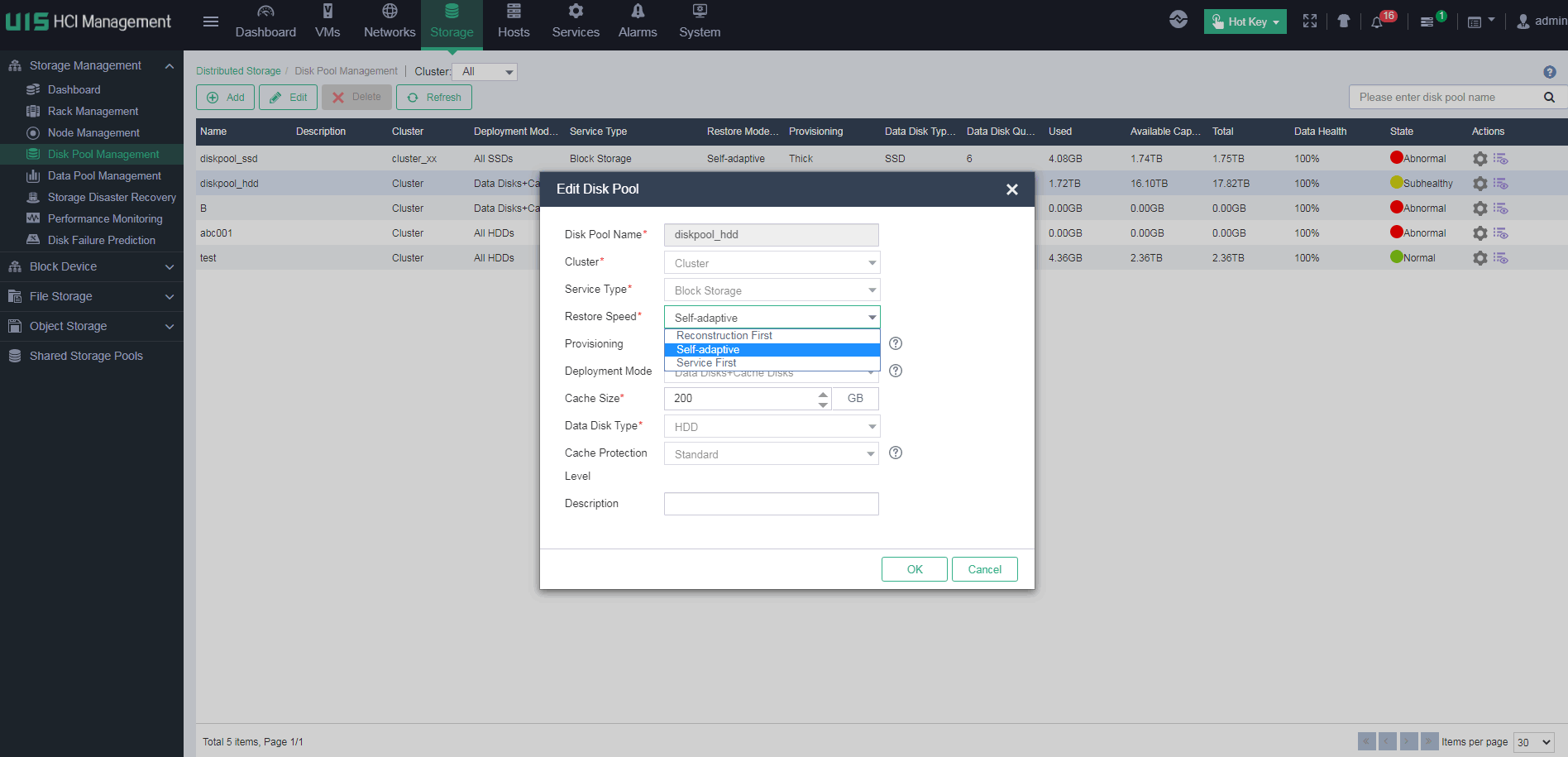

3. Select the disk pool on which data rebalancing is to be performed, and then click Edit.

4. In the dialog box that opens, change the restore speed from self-adaptive to reconstruction first.

Node issues

Resolving host issues caused by a full system disk

A host might malfunction when the usage of its system disk reaches 100%. For example, Apache processes and the ceph-mon daemon might fail to start, resulting in issues such as the mon down error and inability to log in to the management node.

System disk might get full for the following reasons:

· Too many large files and log files are present.

· The fio tester stores a large test0.0 file on the system disk. This issue occurs if you run fio without specifying the --filename option.

To free up disk space:

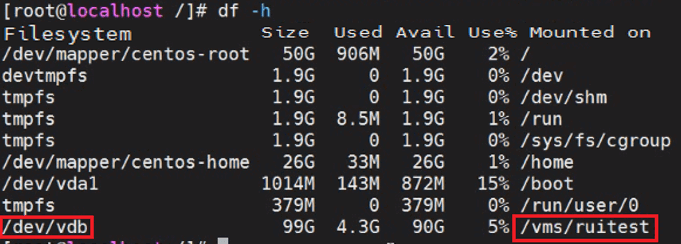

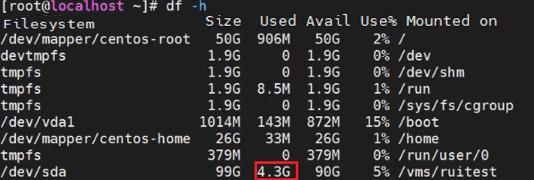

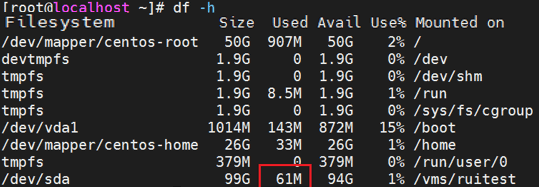

1. Execute the df –h command on the host to identify its system disk usage. The following is sample output:

root@cvknode86:~# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda1 28G 4.0G 23G 16% /

If the Use field displays that the disk usage has reached 100%, proceed to remove unused files.

2. Remove unused large files or log files:

a. Access the /var/log directory and other directories that might contain large files or unused files.

b. Execute the du –h --max-depth=1 command to view the size of each folder in the directory.

c. Delete unused files.

3. Remove the test data file generated by fio:

a. Execute the echo ""> filename command.

b. Execute the rm –rf filename command to delete the test data file.

Issues caused by network failure

Handling failures to add or delete hosts

You will fail to add or delete a host or disks on the host if network failure occurs before the system finishes the operation. The system will then display a failure message indicating that the system failed to delete a host because of management network failure.

The solution to these issues differs depending on the timing of the network failure.

Network failure occurs before the system starts deleting disks

If network failure occurs before the system starts deleting disks, you only need to select the target host from the webpage and perform the operation again after the system regains network connectivity to the host.

If the connectivity to the host cannot be restored in extreme cases, for example, because the host's operating system is damaged, select the host from the webpage to delete it offline. However, data on the host's disks will remain. You must take action to handle residual data.

Network failure occurs before the system deleting all disks

See "Network failure occurs before the system starts deleting disks."

Network failure occurs during disk formatting after all the disks are deleted from the cluster

The host will be invisible on the management webpages after the system deletes all its disks from the cluster and proceeds to disk formatting. If network failure occurs before the system finishes formatting all the disks, the data and Ceph partitions on the unformatted disks will remain. After the host restarts, the unformatted disks will be automatically mounted to the operating system. UIS Manager will be unable to discover these disks when the host is re-added to the cluster.

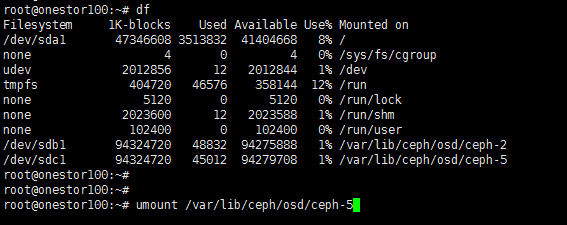

To resolve these issues, execute the umount command to manually unmount the residual disks before you add the host back to the cluster.

Deleting a monitor node offline and restoring the node

You delete a monitor node offline from the cluster on the webpage only if the network connectivity to its host cannot be restored. This operation directly removes the node from the cluster.

To minimize the impact of the operation on the cluster:

1. Remove all roles of the host in the cluster.

2. Destroy the cluster data on the host.

CAUTION: Destroying the cluster data on a host will result in loss of all cluster data on that host. Be sure that the node is no longer in use when you perform the operation. |

These operations ensure that you can add the host back to the cluster as a storage, monitor, or backup management node for management high availability.

Deleting a storage node offline and restoring the node

You delete a storage node offline from the cluster on the webpage only if the network connectivity to its host cannot be restored. This operation directly removes the node from the cluster.

Before you delete a storage node offline, verify the following items:

1. No abnormal placement groups (PGs) are present for the disk pool that contains the storage node.

CAUTION: If abnormal PGs are present, data rebalancing might be in progress. To avoid loss of data, do not delete the node at this time. |

2. Verify that the disk pool is in healthy state.

Then, you can safely delete the node.

To minimize the impact of the operation on the cluster:

3. Remove all roles of the host in the cluster.

4. Destroy the cluster data on the host.

CAUTION: Destroying the cluster data on a host will result in loss of all cluster data on that host. Be sure that the node is no longer in use when you perform the operation. |

These operations ensure that you can add the host back to the cluster as a storage, monitor, or backup management node for management high availability.

Disk issues

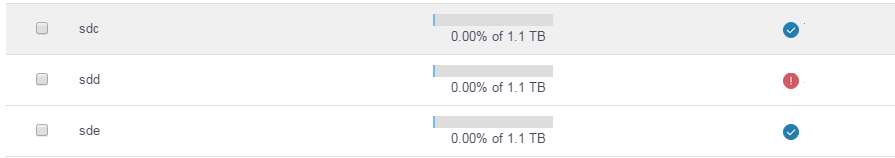

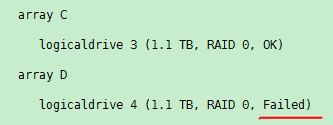

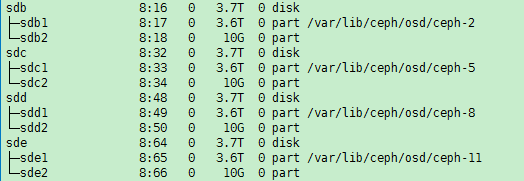

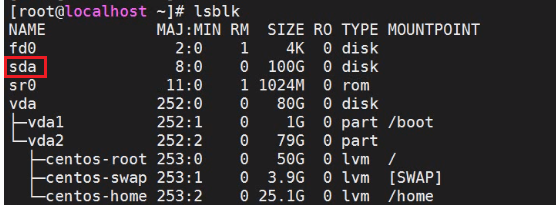

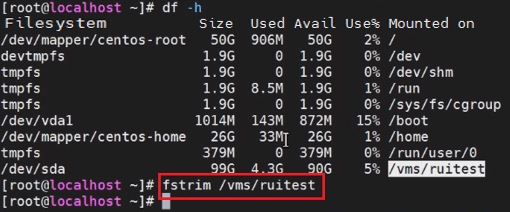

Missing or changing sdX device names due to host restart

When you remove a disk, the state of its corresponding logical drive on the RAID controller changes from OK to Failed. Normally, the sdX drive letters will stay unchanged after you re-insert or replace the disk and restore its logical drive back to the OK state. However, if the host restarts while the logical drive is in FAIL state, the disk will not be visible in the operating system. If you execute the lsblk or fdisk command to view disks on the host, you will notice that the disk is missing.

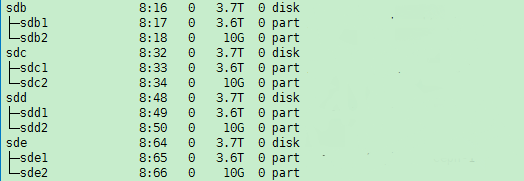

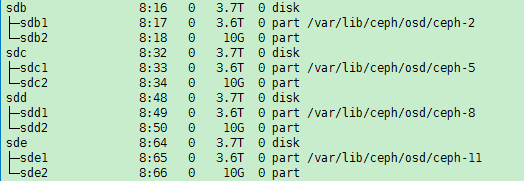

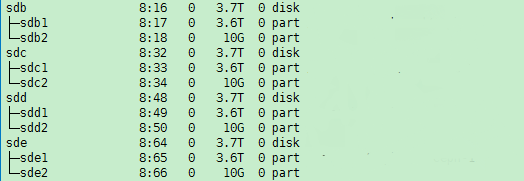

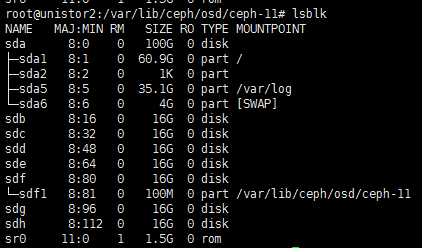

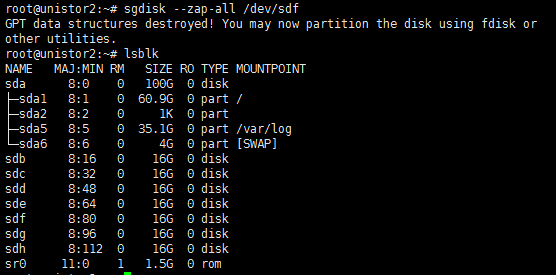

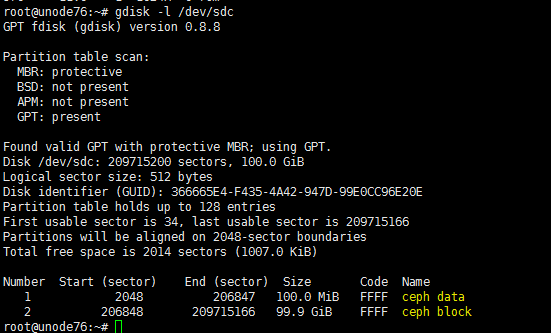

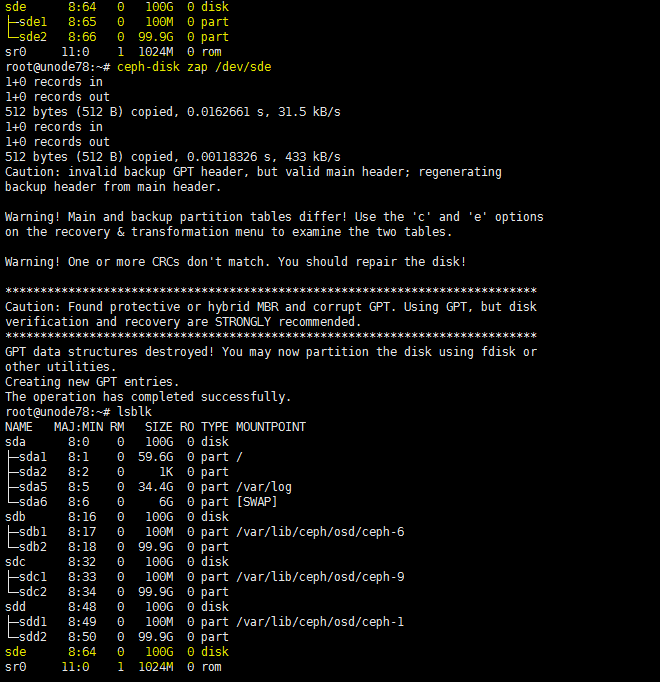

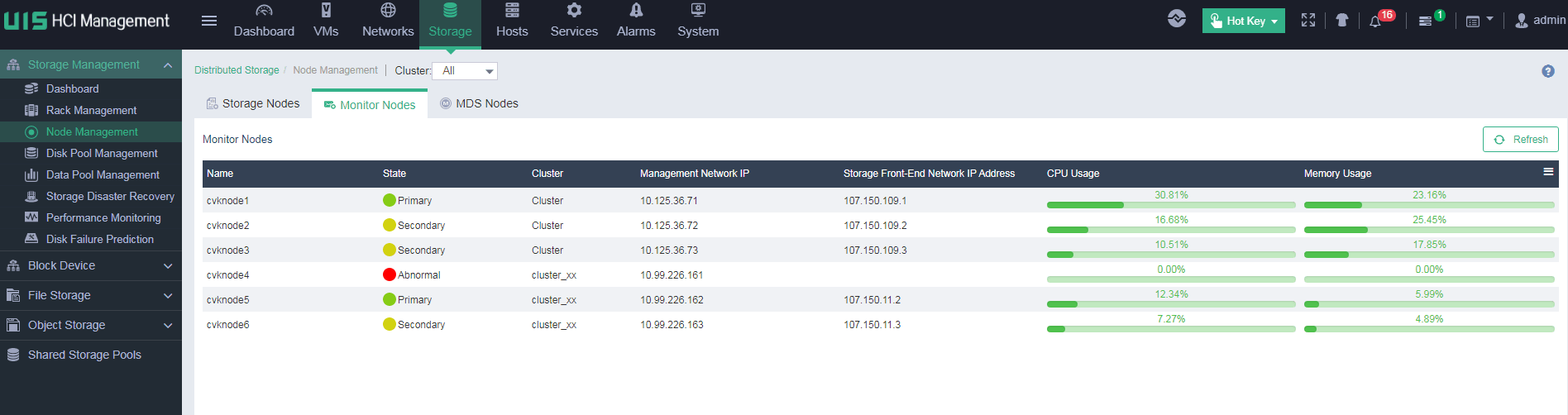

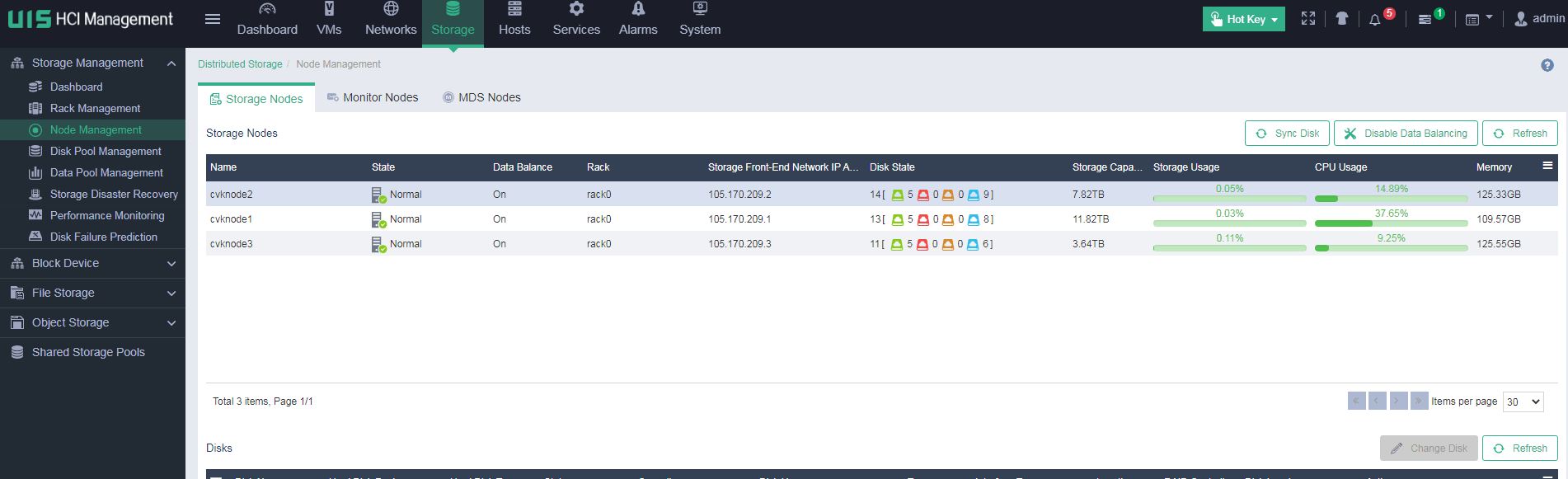

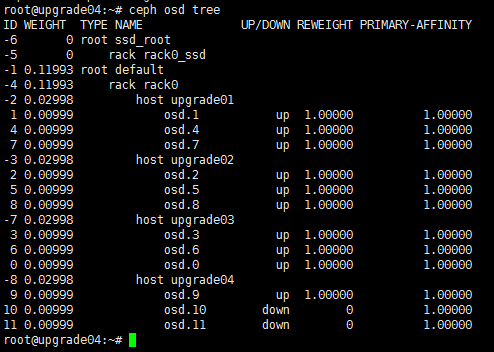

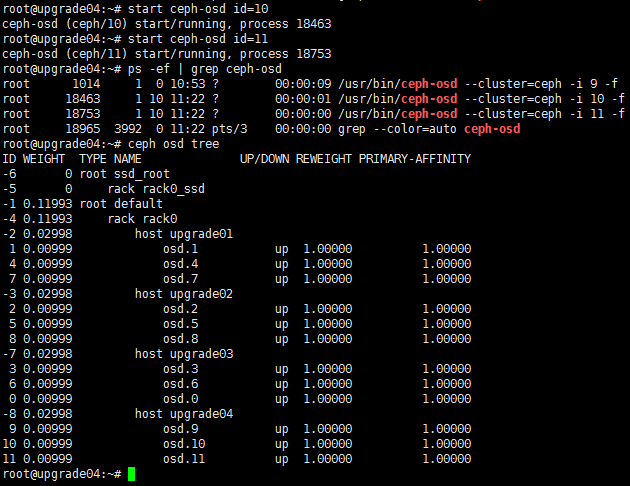

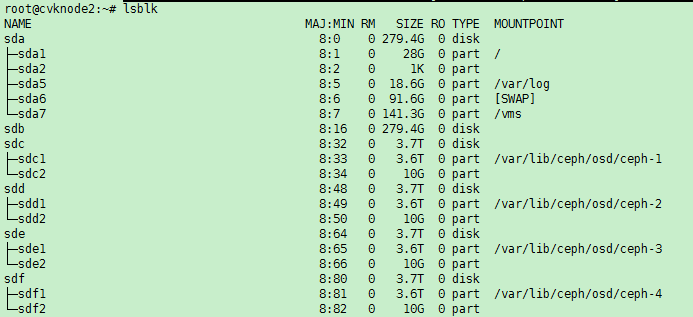

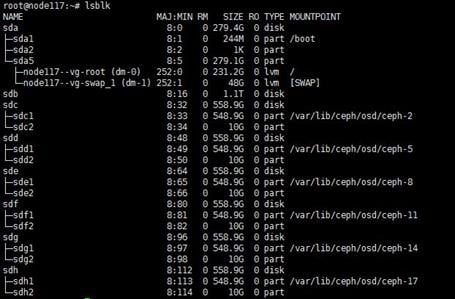

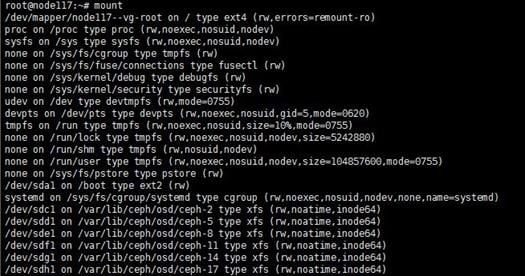

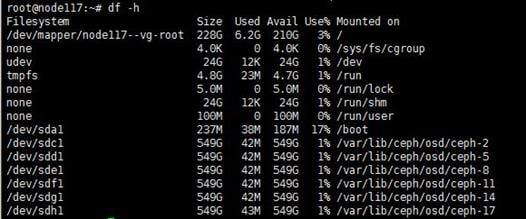

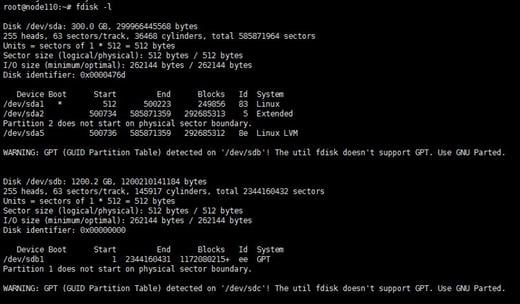

For example, the lsblk command displays that the host has disks sda, sdb, sdc, sdd, and sde when it is executed before a disk removal operation. ONEStor shows that the sdd disk is abnormal. The output from the hpssacli controller all show config command shows that the logical drive for sdd is in Failed state, as shown in the following figures: