- Released At: 09-09-2024

- Page Views:

- Downloads:

- Table of Contents

- Related Documents

-

|

|

|

H3C Workspace Cloud Desktop |

|

Maintenance Guide |

|

|

|

|

Document version: 5W101-20240905

Copyright © 2024 New H3C Technologies Co., Ltd. All rights reserved.

No part of this manual may be reproduced or transmitted in any form or by any means without prior written consent of New H3C Technologies Co., Ltd.

Except for the trademarks of New H3C Technologies Co., Ltd., any trademarks that may be mentioned in this document are the property of their respective owners.

The information in this document is subject to change without notice.

Restoring and rebuilding the system disk

Powering on and powering off Workspace

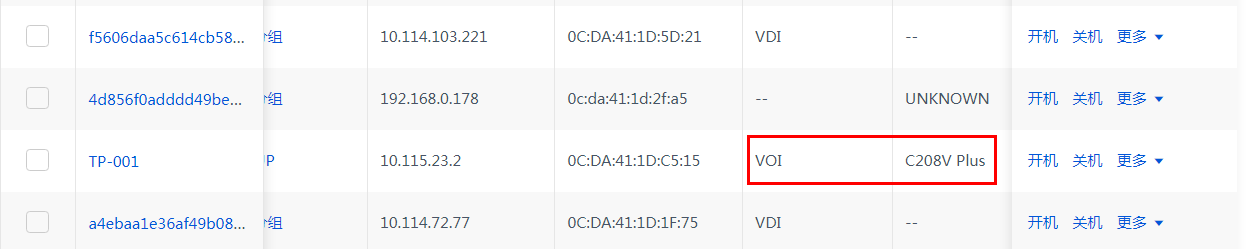

Using UPM policies in desktop pools for IDV/VOI client anonymous users

Enabling HugePages memory for the UCC endpoint in IDV mode

Multimedia, vGPU, protocol, peripheral, and agent logs

Storage locations for course VMs

Important components and maintenance methods

Versions earlier than E1012P10

Configuration and debugging methods

Component running status check

Troubleshooting common display issues

Troubleshooting peripheral issues

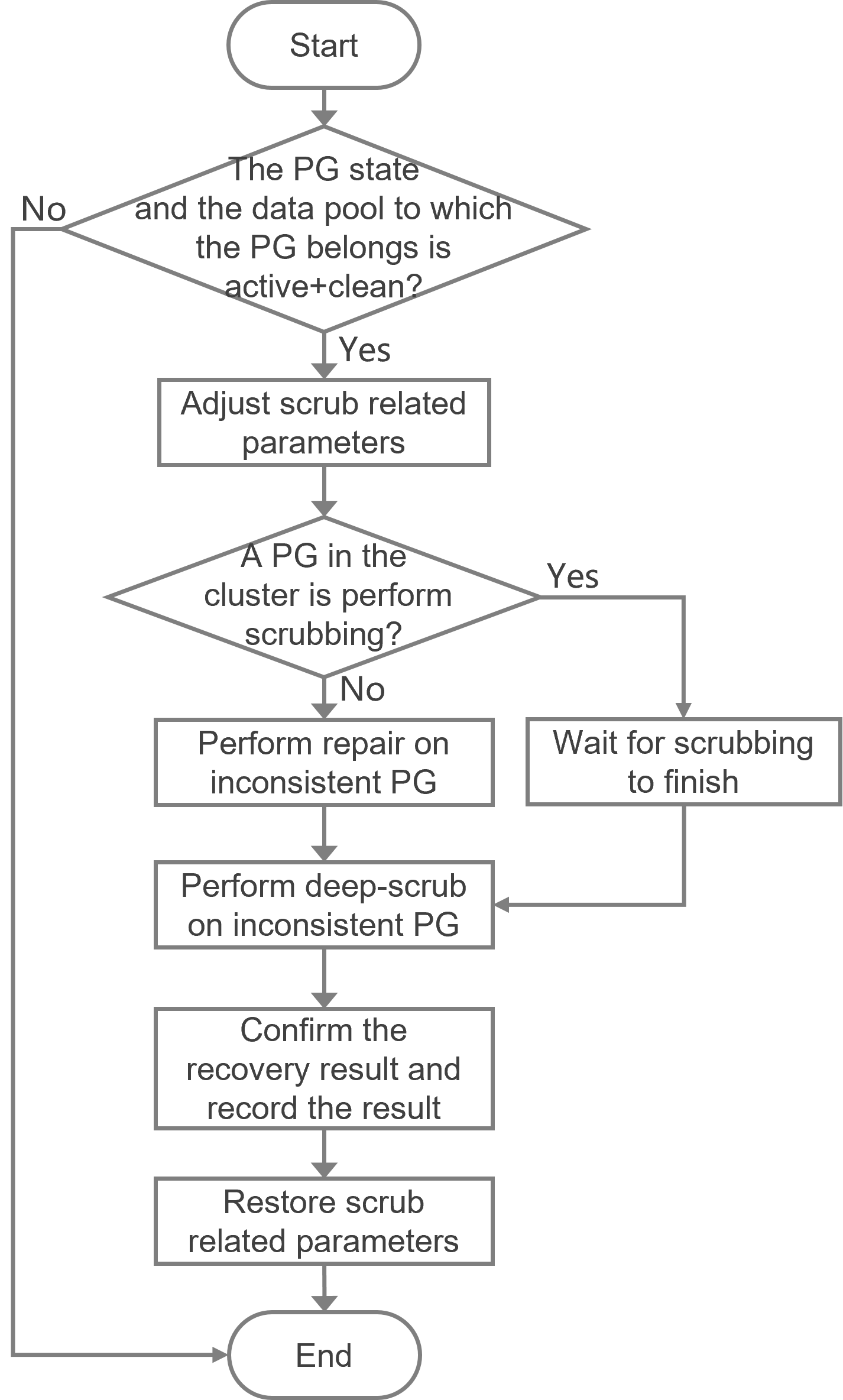

ONEStor PG inconsistent recovery method

Restrictions and guidelines for PG inconsistent recovery

GlusterFS split-brain clearance method

Detecting GlusterFS split-brain issues

Using GlusterFS built-in commands to resolve split-brain issues

Resolving split-brain through mounting

Guidelines for data backup before system re-installation

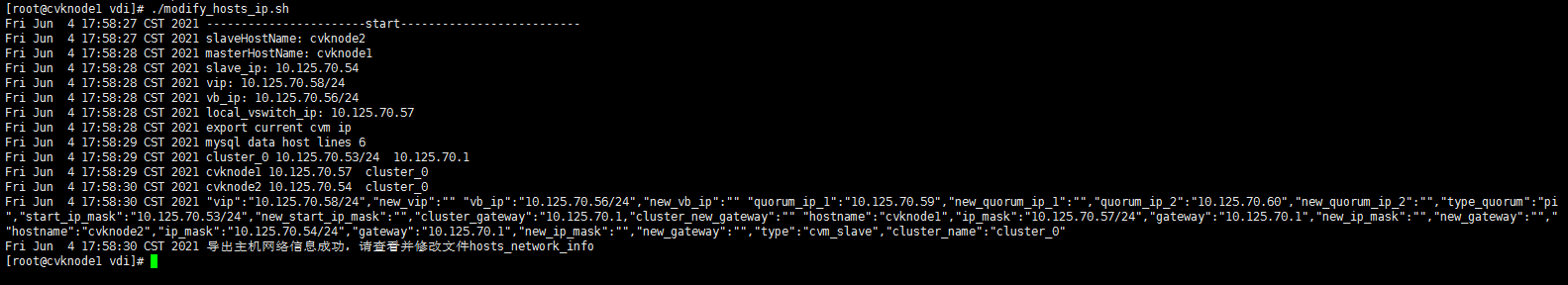

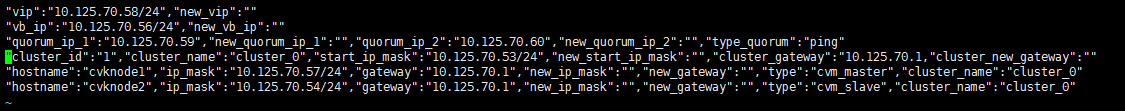

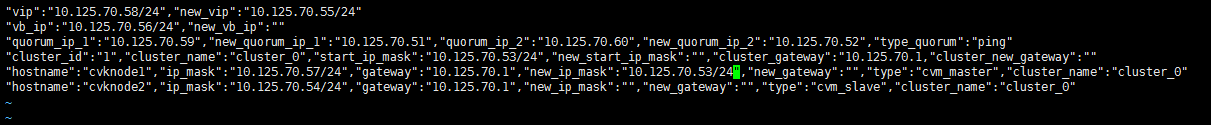

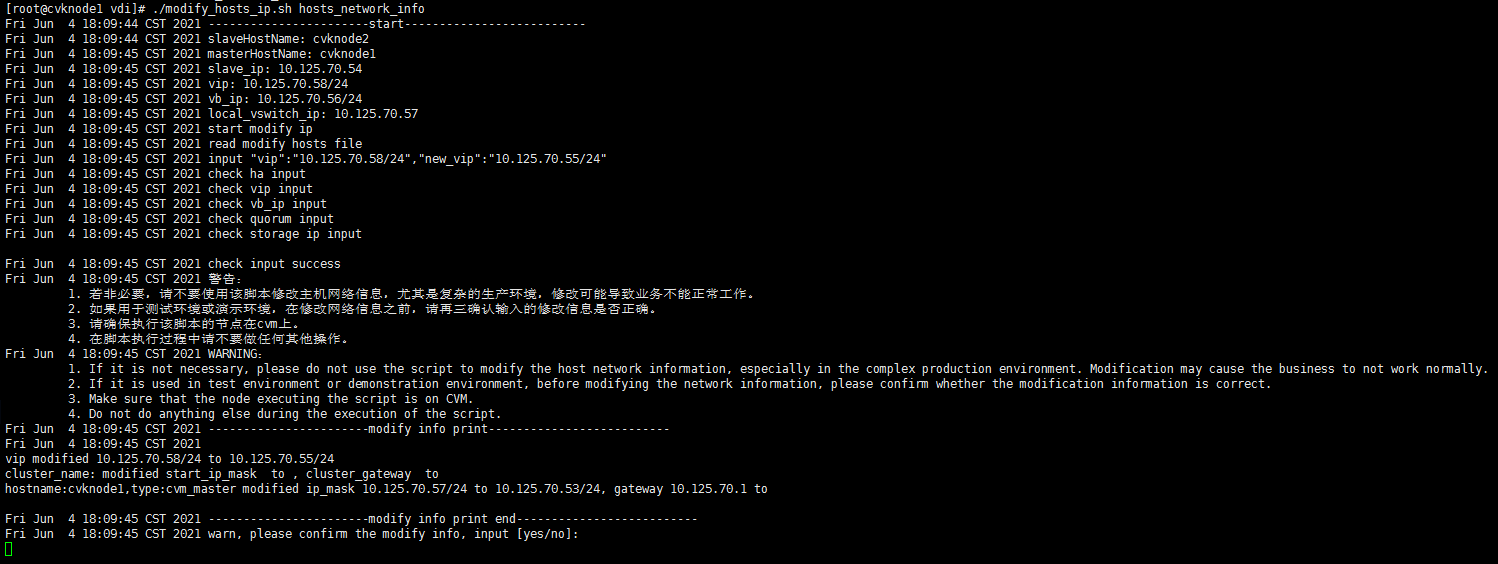

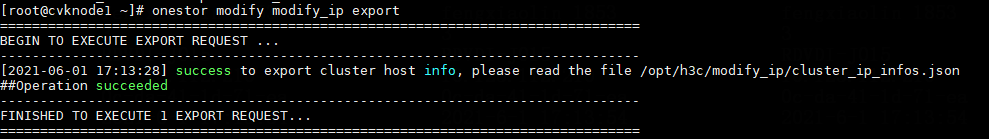

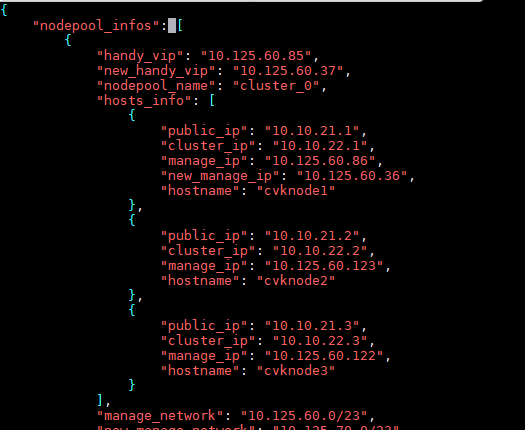

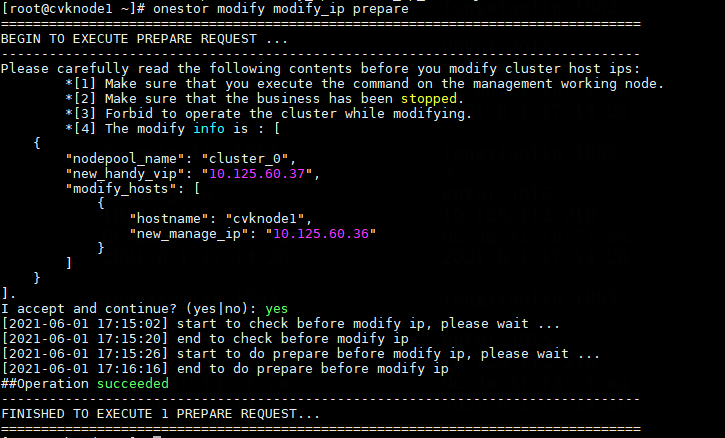

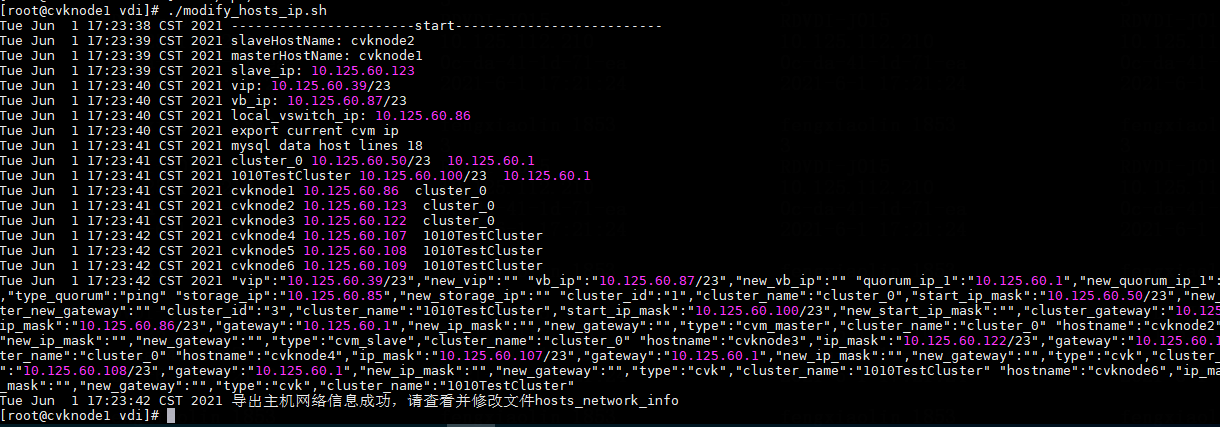

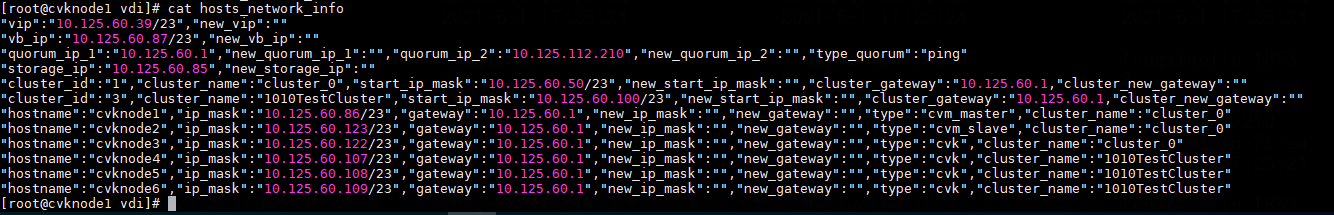

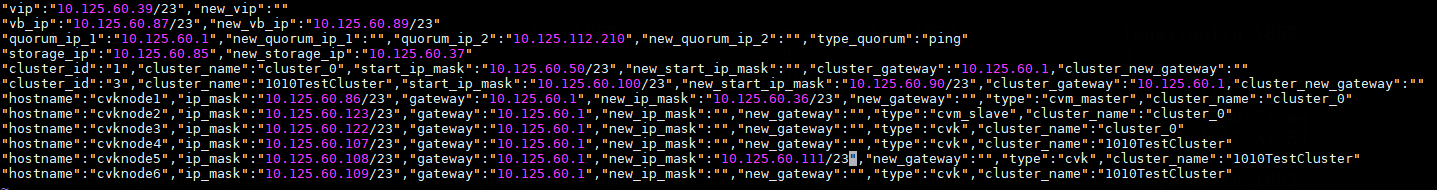

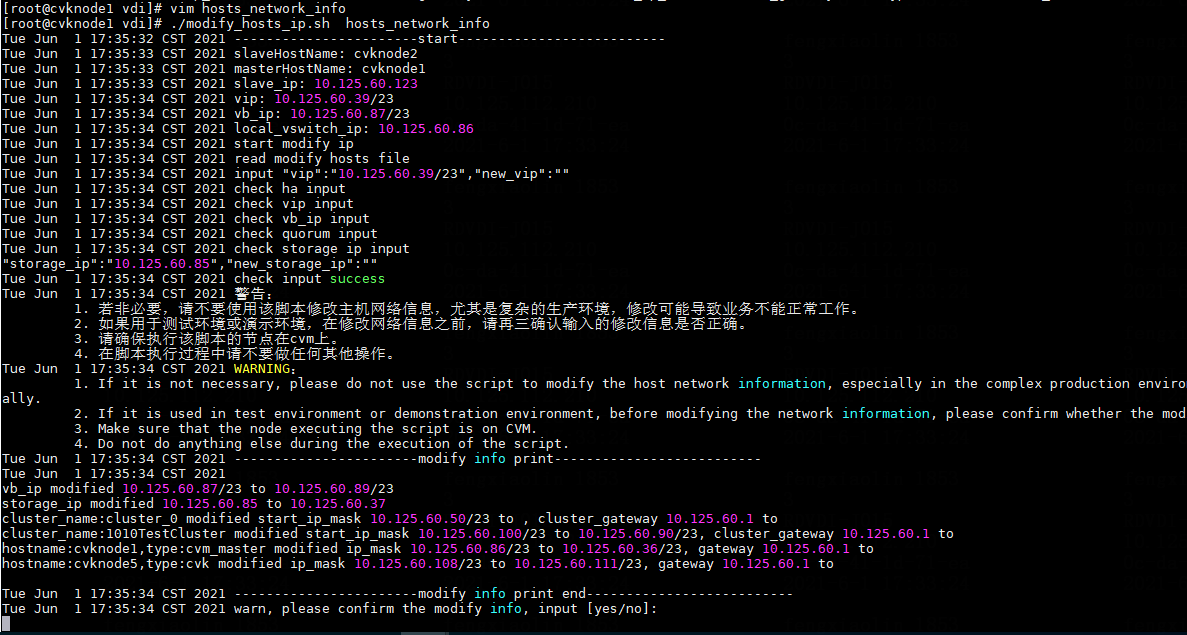

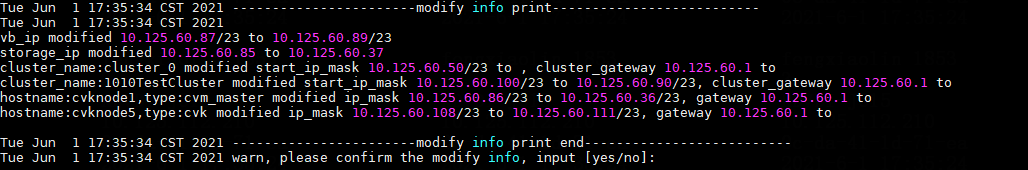

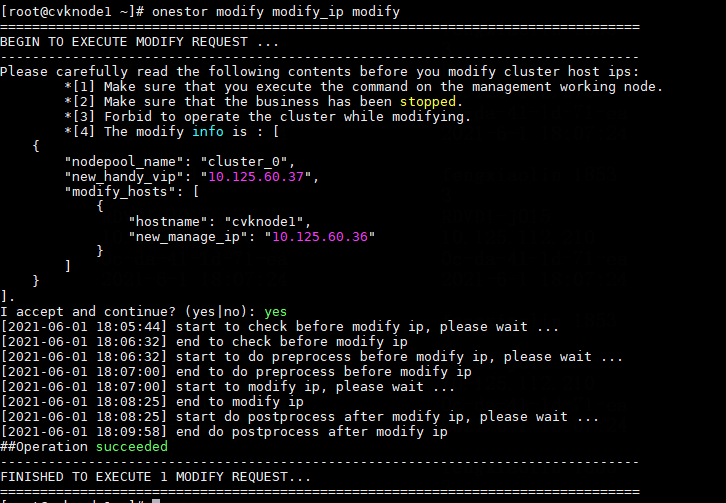

Modifying host network configuration (after initial deployment)

Changing the Space Console IP address (before initial deployment)

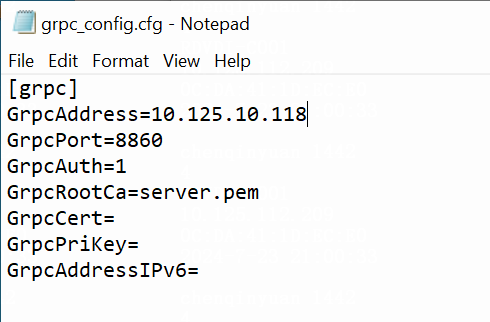

Changing the Controller IP for client connection

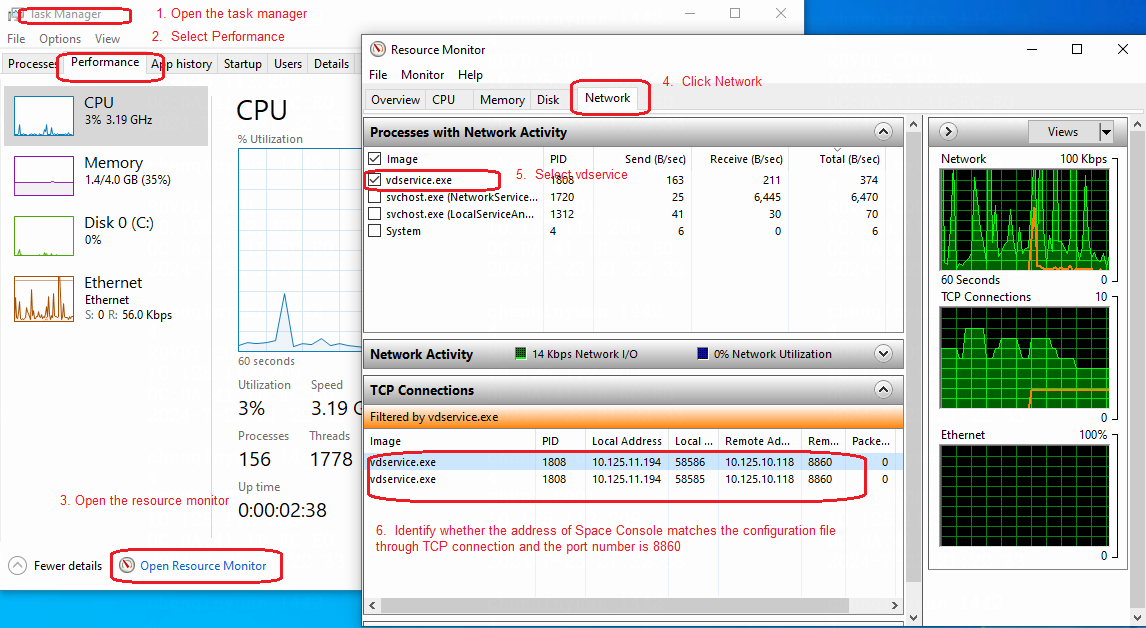

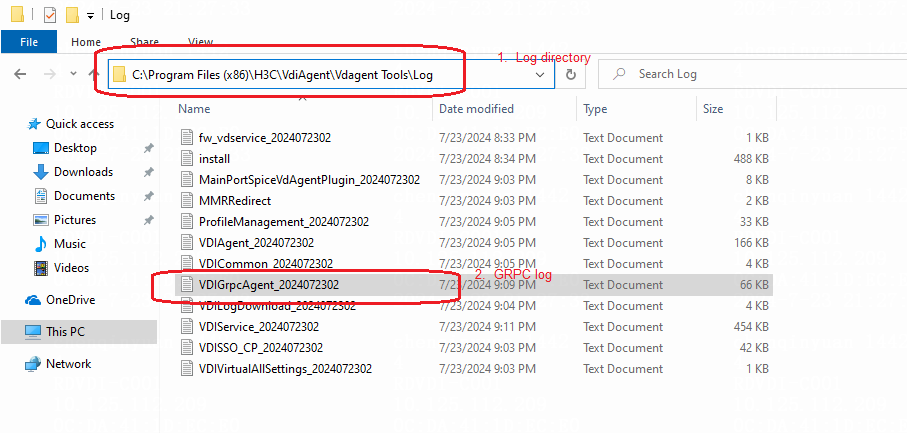

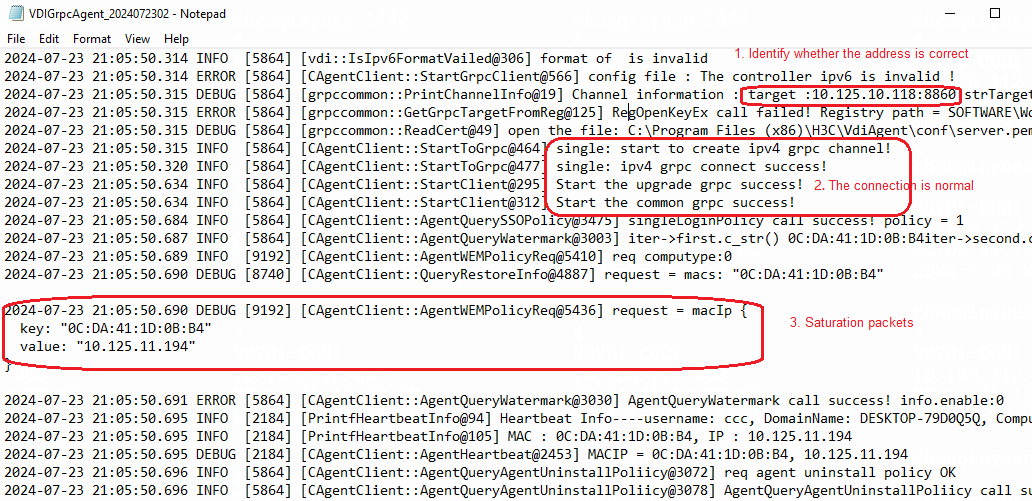

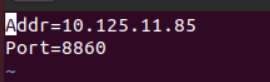

Changing the Controller IP address on the Agent

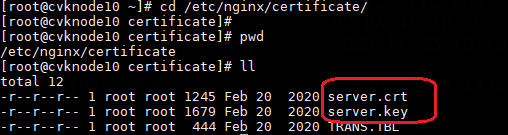

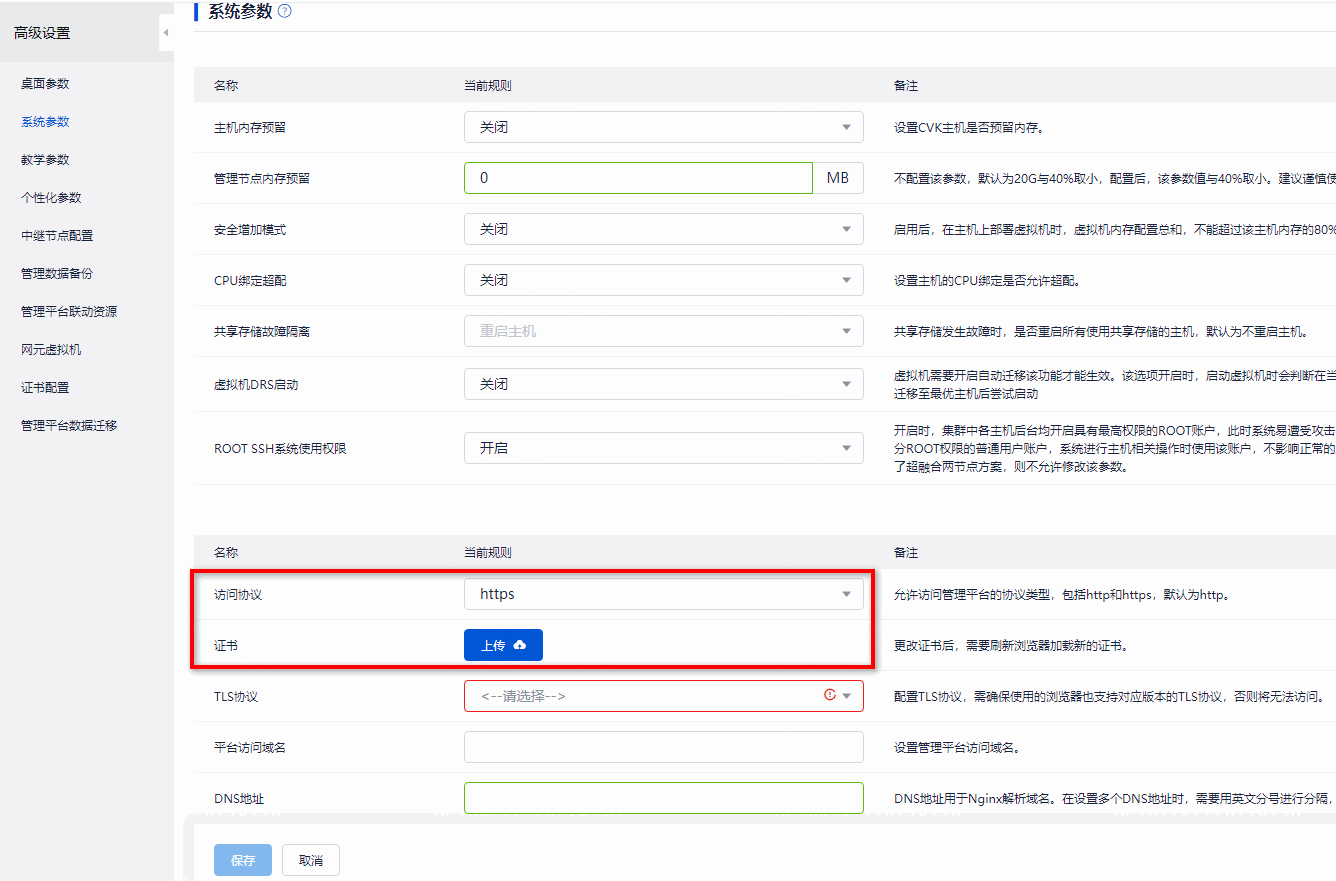

Replacing the Space Console certificate

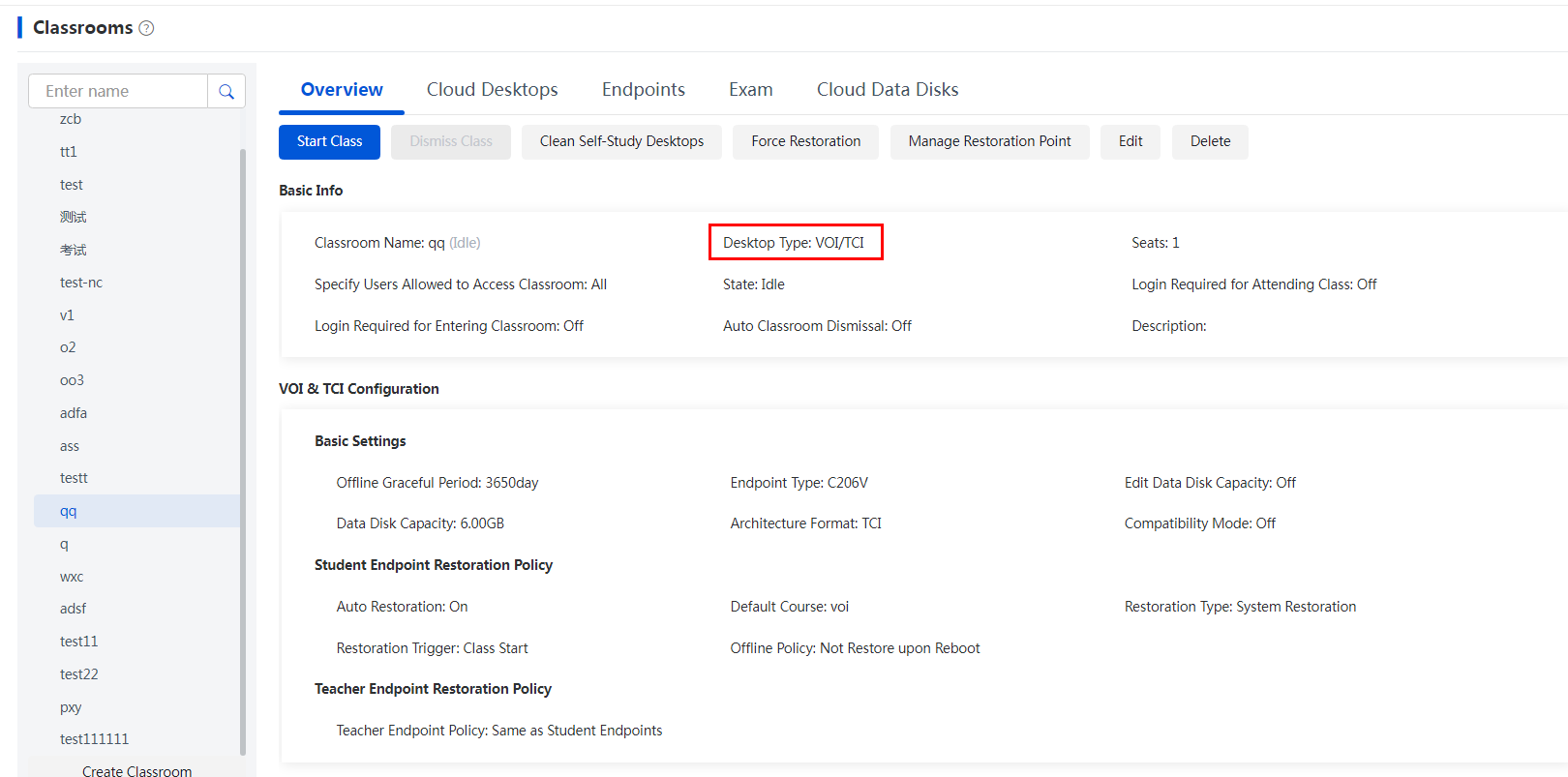

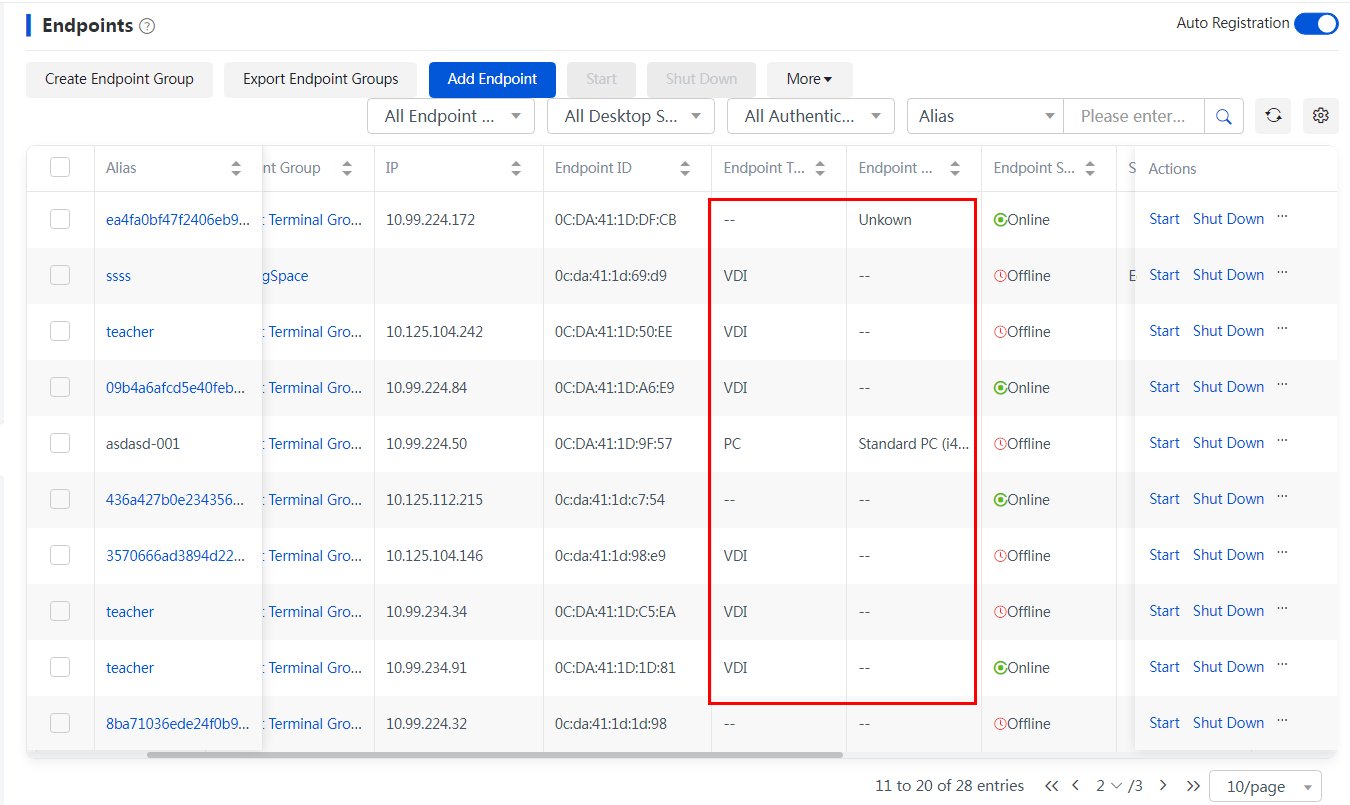

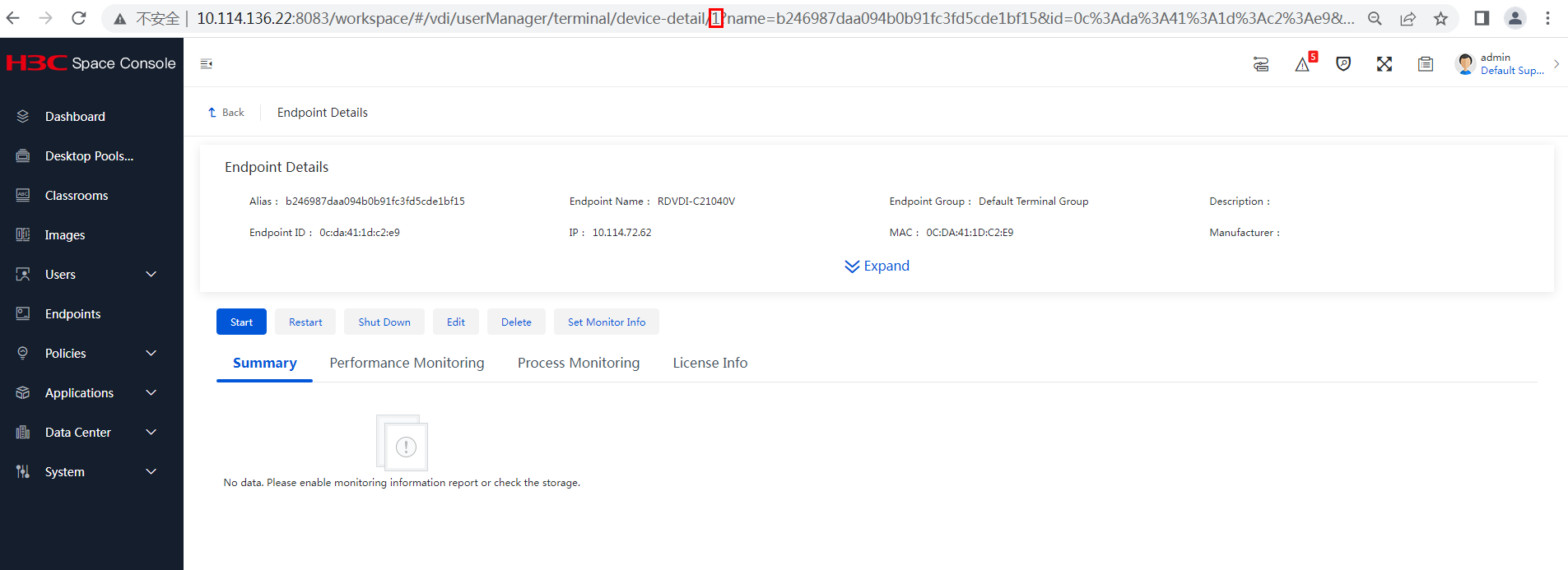

Replacing the teacher endpoint and changing an endpoint classroom (in education scenario)

Changing an endpoint classroom

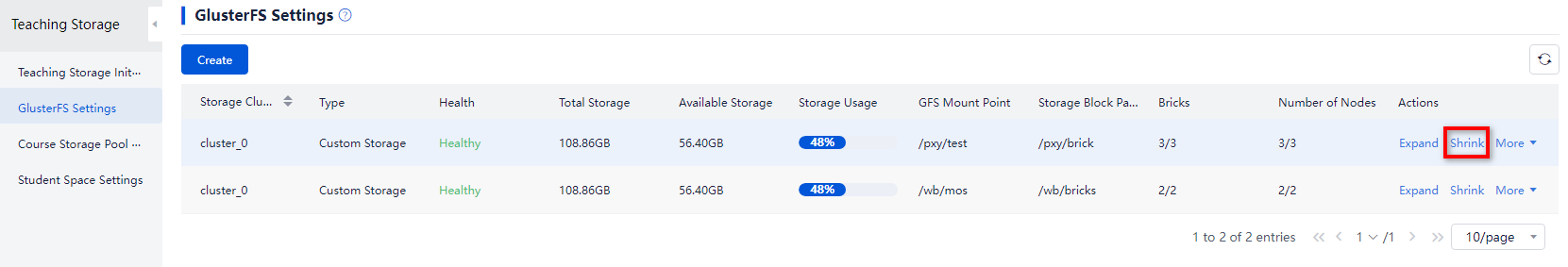

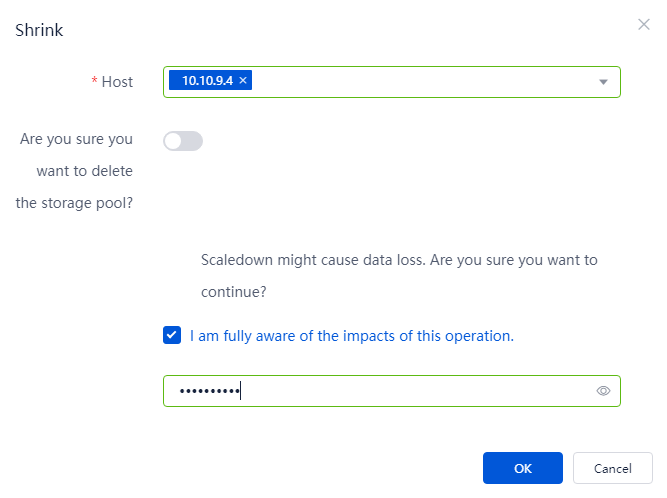

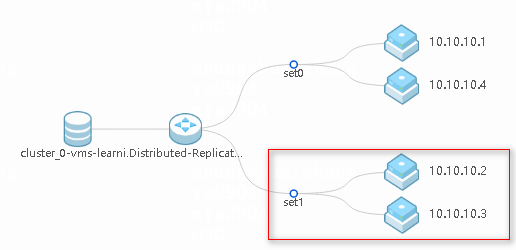

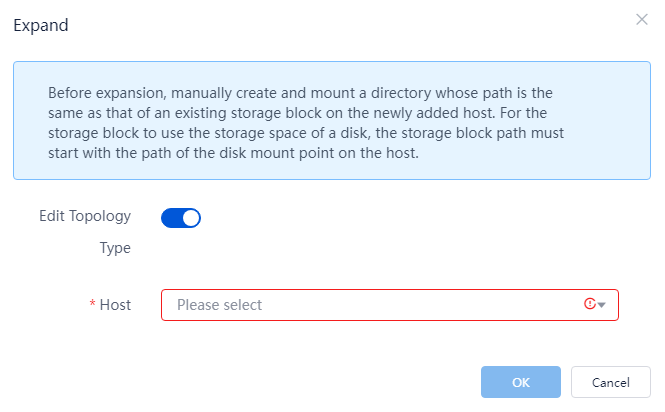

Replacing a GlusterFS drive (in education scenario)

Changing the topology type for GlusterFS storage

Changing the topology type by deleting the existing GlusterFS storage and creating a new one

Changing the topology type by scaling down and then scaling up the existing GlusterFS storage

Overview

H3C Space Console provides basic and advanced O&M tasks to reduce workload and cost, ease routine maintenance, and simplify the IT environment.

· Basic O&M tasks include dashboard, health check, virtualization information, report, and ONEStor monitoring report.

· Advanced O&M tasks include log management, alarm management, and routine maintenance functions such as host discovery, file distribution, message delivery, command issuing, and task configuration.

For detailed information about O&M procedures, see H3C Workspace Space Console User Manual.

O&M best practices

· Monitor the health status and resource usage of Space Console and perform health check regularly to detect software and hardware issues in the virtualization environment.

· Monitor compute, network, and storage resource distribution and host, VM, IP, VLAN, and storage resource utilization.

· Enable alarm notifications and follow the recommendations to resolve the reported issues. Add maintenance experiences for alarms to ease future maintenance work.

· Check and analyze logs regularly to detect potential risks. Download the log files and send them to the technical support team for troubleshooting.

Basic O&M

Dashboard

The dashboard displays the system health, service status, online desktops, desktop state, resource usage, and alarms. In addition, the dashboard provides quick access to common operations.

For more information, see the dashboard section in H3C Workspace Space Console User Manual.

Health check

Perform health check to check the resources in the system. This feature helps the administrator to learn the operating state and performance issues and any other risks that might negatively impact VM services. On detection of any exceptions, the system automatically provides recommended actions to help you maintain or restore system health. The system provides fast health check configuration in different scenarios, and allows you to customize health check items.

For more information, see the health check section in H3C Workspace Space Console User Manual.

Virtualization information

The system displays detailed virtualization information, including various parameters for clusters, hosts, and VMs in the system.

For more information, see the virtualization section in H3C Workspace Space Console User Manual.

Cluster information

You can view the following cluster information:

· Cluster summary information: Detailed cluster configuration (number of hosts, total CPU cores, total memory, local storage, VMs and VM density, and state of high availability, compute DRS, and storage DRS), resource usage (CPU, memory, and shared storage usage and allocation rate), top 10 hosts by CPU or memory usage, and top 10 VMs by CPU or memory usage.

· Cluster performance monitoring information: Average CPU usage, average memory usage, I/O throughput, and disk requests of hosts in the cluster.

· Information about hosts in the cluster: State, management IP, number of running VMs, total CPU cores, memory size, disk size, and CPU and memory usage of hosts in the cluster.

· Information about VMs in the cluster: Information about all VMs in the cluster, including the corresponding host, VM state, total CPU cores, memory space, CPU and memory usage, IP address, and operating system information.

For more information, see the cluster management section in H3C Workspace Space Console User Manual.

Host information

You can view the following host information:

· Host summary information: Detailed configuration of the host (host IP address, host state, host iLO interface address, host model, version, memory), resource usage (CPU allocation ratio, memory allocation ratio, memory usage), compute resource monitoring information, network throughput, Top 5 VMs by CPU or memory usage.

· Host hardware monitoring information: Host hardware monitoring information, including host summary information, processor information, memory information, temperature information, power supply information, and fan information.

· Host performance monitoring information: CPU usage, memory usage, I/O throughput, network throughput, disk requests, disk I/O latency, disk usage, and partition usage for a host.

· Information about VMs running on a host: Information about all VMs on a host, including VM state, total CPU cores, memory space, CPU usage, memory address, IP address, and operating system.

For more information, see the host management section in H3C Workspace Space Console User Manual.

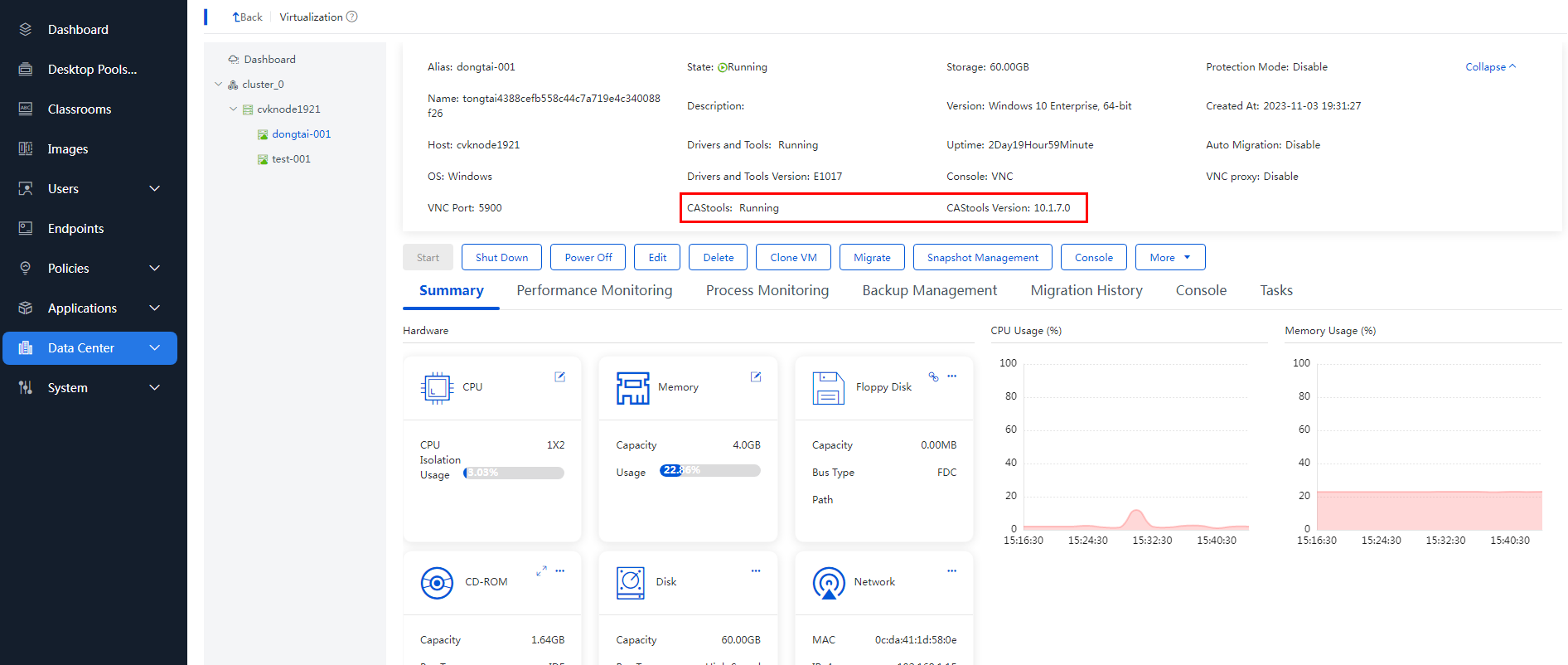

VM information

You can view the following VM information:

· VM summary information: Basic VM information (operating system, version, disk capacity, host, VM state, VNC port number for remote VM access, creation time, most recent shutdown time), hardware information (CPU, memory, disk, network, optical drive, and floppy information), and CPU usage and memory usage.

· VM performance monitoring information: CPU usage, memory usage, I/O throughput, network throughput, disk I/O latency, disk requests, CPU usage, disk usage, connection quantity, and partition usage for a VM.

|

IMPORTANT: · As a best practice to accurately monitor the CPU and memory usage of a VM, install CAStools on VMs. Space Console can use the tool to obtain the accurate CPU usage and memory usage of VMs. · If CAStools is not installed, the system cannot obtain information about a VM in shutdown or suspended state. The disk usage and partition usage fields will be empty on the page. · During VM online migration, the CPU usage, memory usage, I/O throughput statistics, and network throughput statistics might be inaccurate. |

· VM process monitoring information: Information about running programs, processes, and services on a VM, including the process username, PID, process name, CPU usage, memory usage, and used memory size.

|

IMPORTANT: During VM online migration, the CPU usage and memory usage statistics of the VM process might be inaccurate. |

· VM backup history: Information about VM backup, including the creation time, backup mode, backup location, file size, and backup type of a VM backup file as well as the storage path of backup files.

· VM migration history: Detailed information about VM migration, including the source and destination hosts and storage pools, migration method, administrator, migration start time, and time consumption.

· VM console information: VM console information, including VM UUID, VM state, VNC port, MAC address, and IP address.

For more information, see the VM management section in H3C Workspace Space Console User Manual.

Reports

Monitoring statistics

This feature is available on the System > Reports > Monitor page. It typically includes desktop monitoring, process monitoring, host reports, VM reports, and TopN reports.

· Desktop monitoring—Display the statistics such as desktop usage, number of online desktops, desktop online duration, and desktop idle time.

· Process monitoring—Display the system process state, CPU usage, memory usage, and start/stop time.

· Host reports—Display the statistics such as CPU usage and memory usage statistics of host for a specified time period. You can set criteria to filter the statistics and save the results to the local disk.

· VM reports—Display the statistics such as CPU usage and memory usage statistics of a VM for a specified time period. You can set criteria to filter the statistics and save the results to the local disk.

· Top N reports—Display hosts or VMs with the highest resource usage such as CPU or memory usage for a specified time period. You can set criteria to filter the statistics and save the results to the local disk.

For more information, see the monitoring section of report management in H3C Workspace Space Console User Manual.

Connection statistics

This feature is available on the System > Reports > Users page. It typically includes connection statistics, login statistics, online statistics, and static desktop statistics.

· Connection statistics—Display the statistics about user connection records and desktop connection duration.

· Login statistics—Display the statistics about login records and authentication duration.

· Online statistics—Display the statistics about the number of online users and the online durations of users. For the online users, you can view the number of online users and online gateway users during each time period of the day. For the online durations, you can view online durations of users (including gateway users) matching certain criteria within the specified time span.

· Static desktop statistics—Display information about all static desktops assigned to users, including desktop information statistics and desktop resource statistics.

For more information, see the users section of report management in H3C Workspace Space Console User Manual.

Usage statistics

This feature is available on the System > Reports > Statistics page. It typically collects statistics for host resource usage, VM resource usage, storage resource usage, IP address assignment, VLAN assignment, peripheral accesses, virtual app usage, and software download from the app store. You can customize the report information, print the report data, and export the reports as a .pdf file or .excel file.

· Host resource usage statistics: Host information and resource usage information, including uptime, host model, CPU model, CPU usage, memory usage, and local disk size.

· VM resource usage statistics: VM host information and resource usage information, including host name, VM state, CPU usage, memory usage, total CPU cores, VM disk, and OS.

· Storage resource usage statistics: Storage resource statistics for host pools, clusters, and hosts, including the VM disk, network storage, and shared file system.

· IP address assignment statistics: IP addresses assigned to host pools, clusters, and hosts.

· VLAN assignment statistics: VLANs assigned to VMs.

· Peripheral monitoring statistics: Such as the desktop name, desktop IP address, endpoint type, endpoint IP address, mapping state, and running state. You can also configure the retention period for peripheral monitoring statistics.

· Virtual app statistics: Such as the login name, endpoint type, application name, endpoint IP address, and endpoint MAC address. You can also configure the retention period for virtual app statistics.

· App upload statistics: App installation and usage statistics in the app store, such as the number of download times and desktop information for each application.

For more information, see the statistics section of report management in H3C Workspace Space Console User Manual.

ONEStor monitoring reports

This feature is available on the ONEStor page. It typically includes cluster summary information, cluster monitoring report, host monitoring report, and disk monitoring report.

· Cluster summary information: Summary information about the cluster, including cluster capacity, health state, and cluster IOPS/OPS.

· Cluster monitoring report: Displays running information about the cluster, including IOPS, OPS, bandwidth, traffic, capacity, CPU usage, memory usage, disk latency, and load.

· Disk pool monitoring report: Displays running information about the disk pool, including IOPS, bandwidth, and, capacity

· Storage pool monitoring report: Displays running information about the storage pool, including IOPS and bandwidth.

· Storage volume monitoring report: Displays running information about the volume, including IOPS, bandwidth, latency, and average I/O size.

· Host monitoring report: Displays running information about a host, including data disk IOPS, read/write bandwidth, capacity, read/write latency, file OPS, read/write bandwidth, CPU usage, memory usage, and Tx/Rx traffic.

· Disk monitoring report: Displays disk rank by usage, and disk usage statistics and charts.

For more information, see the cluster summary and monitoring charts sections of ONEStor management in H3C Workspace Space Console User Manual.

Advanced O&M

Managing logs

This feature is available on the Monitor Reports > Logs page. It typically includes system logs, administrator operation logs, user operation logs, and screen monitoring logs.

· System logs: Include host logs, desktop logs, and endpoint logs.

¡ Host logs: Allow you to collect and download log files of the management and service nodes (such as cloud desktop, visualization, and storage) on Space Console. By default, logs of management nodes are collected. To collect logs of service nodes, select hosts in the service node list. You can download the collected log files to a local file.

¡ Cloud desktop logs: Record logs related to VMs and collected by the agent. You can view, regularly clear, delete, or download cloud desktop logs.

¡ Endpoint logs: Record the operations performed by the user on the endpoint. You can view, regularly clear, delete, or download endpoint logs

· Administrator operation logs: Include host logs, desktop logs, and endpoint logs.

¡ Cloud desktop logs: Record the operations performed by the administrator on the cloud desktops, including desktop authorization, user management, system settings, and course management. You can filter, clear, and collect cloud desktop logs.

¡ Virtualization logs: Record the operations performed by the administrator on the VMs, including VM actions, host actions, and storage actions. You can filter, clear, and download virtualization logs.

¡ Cluster host logs: Record the operation performed by the administrator on the cluster hosts, including host action, storage action, platform action, and system settings. You can filter, clear, and download cluster host logs.

¡ Storage logs: Record the actions performed by the administrator on the ONEStor in the hyper-converged deployment scenario.

· User operation logs: Record operations performed by the user on the cloud desktop, including logging in to the client, disconnecting, rebooting, shutting down, snapshotting, and cloud desktop renaming in the office scenario and class taking and dismissing, self-study, endpoint deployment in the education scenario. You can filter, clear, and export user operation logs.

· Screen monitoring logs: If you enable screen monitoring policies, the recording operation by a cloud desktop user will be monitored and recorded. You can configure the screen recording server, and view and download screen monitoring log entries.

For more information, see the log management section in H3C Workspace Space Console User Manual.

Managing alarms

This feature is available on the Monitor Reports > Alarms page. It typically collects statistics of real-time alarms, VIP desktop alarms, gateway alarms, and endpoint alarms. In addition, it allows you to configure alarm threshold, ignored alarm, and alarm notification settings. To ensure the stable operation of the system, you can use alarms to detect and resolve issues that affect the system running state.

· Real-time alarms: View, acknowledge, or delete the real-time alarms. The real-time alarms page displays real-time alarms in chronological order and refreshes every 10 seconds by default.

· Real-time alarms: View or delete VIP desktop alarms, or configure VIP desktop policies.

· Gateway alarms: View and delete gateway alarms, and configure gateway alarm policies. Gateway alarms only support detecting connectivity between Space Console and internal ports of a gateway.

· Endpoint alarms: Configure the indexes that can trigger endpoint alarms. When the indexes of endpoints exceed the specified thresholds, the system generates corresponding endpoint alarms. You can view the endpoint alarms in the alarm list.

· Alarm threshold configuration: An alarm threshold defines the minimum value of the specified indicator that triggers an alarm. You can set CPU usage, memory usage, and disk usage alarm thresholds for hosts, VMs, and clusters, and set disk throughput and network throughput alarm thresholds for hosts and VMs. Alarm threshold management enables you to view the predefined alarm thresholds and configure the alarm server, alarm thresholds, and alarming state.

· Alarm notification configuration: Enable the system to send email notifications or SMS notifications for alarms to the users.

For more information, see the alarm management section in H3C Workspace Space Console User Manual.

Common operations

Discovering hosts

This feature allows you to discover the hosts installed with Space Console and add them to the system. This function is applicable to scenarios for system capacity expansion. Space Console automatically scans hosts that are reachable at Layer 2 network, and any extra network settings and management settings are not required.

For more information, see the host discovery section in H3C Workspace Space Console User Manual.

File distribution

This feature allows you to bulk distribute files to cloud desktops. If the user is online on a cloud host that is operating correctly, the cloud host receives files after a file distribution task is created. If the user is offline on a cloud host that is operating correctly or a cloud host is shut down, the cloud host receives the files after the user comes online and the operating system is loaded. The recipient cloud desktops automatically create directories to save the received files.

For more information, see the file distribution section (office scenario) in H3C Workspace Space Console User Manual.

Troubleshooting file distribution

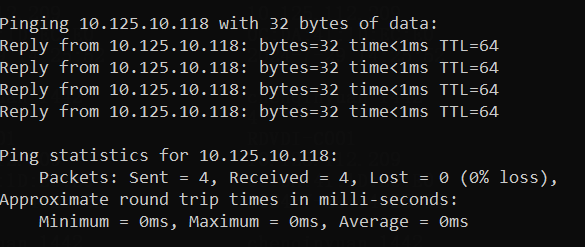

1. Perform ping operations to verify the network connectivity between the VMs and Space Console.

2. Check the Space Console service state by executing the systemctl status smb.service command at the management node backend.

If the system prompts that the Space Console service runs correctly, the network connectivity might be lost.

3. Check the connectivity to port 445 on Space Console by using the tcping program.

If port 445 is disabled, enable that port.

Software deployment

This feature allows you to bulk install and upgrade software on VDI desktops without deleting desktop data.

For more information, see H3C Workspace Cloud Desktop Software Deployment Configuration Guide.

Message delivery

This feature allows you to send messages to cloud desktops. Administrators can use this feature to notify cloud desktops of user O&M information.

For more information, see the message delivery (office scenario) section in H3C Workspace Space Console User Manual.

Issuing commands

This feature allows you to issue a command or command attachment to desktops or endpoints for remote management and maintenance.

For more information, see the command issuing section in H3C Workspace Space Console User Manual.

Configuring tasks

This feature allows you to configure scheduled tasks for desktop pools VMs, endpoints, and classrooms, which is suitable for services that do not need to run all the time. This feature not only ensures the availability of services in a specified time period, but also timely releases resources to improve the system resource usage. The following types of tasks are supported:

· Desktop pool tasks—Include shutting down VMs, starting VMs, restarting VMs, restoring VMs in a manual desktop pool, creating snapshots, restoring snapshots, and deploying desktops.

· VM tasks—Include shutting down, starting, and restarting VMs, creating snapshots, and restoring snapshots.

· Endpoint tasks—Starting and shutting down endpoints, and clearing user data disks.

· Classroom tasks—Restoring classrooms, clearing user data disks, and clearing self-study VMs.

For more information, see the scheduled task section in H3C Workspace Space Console User Manual.

Restoring and rebuilding the system disk

System disk restoration and rebuilding mechanism

System disk rebuilding is to update or restore the system disk of a cloud desktop. The main process of rebuilding a desktop system disk is as follows:

1. Deploy a temporary desktop based on the selected image.

2. After the deployment is completed, uninstall the system disk of the temporary desktop.

3. Uninstall the system disk of the original desktop.

4. Mount the uninstalled system disk of the temporary desktop onto the original desktop.

5. Restore the desktop network configuration.

System disk restoration and rebuilding have no distinctions in essence. System disk restoration can be understood as a special type of system disk update. The difference between system disk restoration and rebuilding lies in the selection of the desktop image, which is associated with the desktop pool where the desktop is currently located.

Troubleshooting operation failures

1. After understanding the process of system disk rebuilding, if the task fails, you can identify the failure reason by checking the operation logs. You can identify which specific process has failed based on the rebuilding process.

2. The most common failure reason is operating system version inconsistency, which leads to the failure of system disk update.

3. The selected desktop image for update is incorrectly created, resulting in various abnormalities after the update.

4. During the system disk update process, the desktop will be set to maintenance mode. At this time, do not disable the maintenance mode on the management platform.

Powering on and powering off Workspace

For information about powering on and powering off Workspace, see H3C Workspace Cloud Desktop Shutdown and Startup Configuration Guide.

Using UPM policies in desktop pools for IDV/VOI client anonymous users

When you configure data management policies for IDV and VOI anonymous user desktop pools, the following restrictions apply:

· If you do not set a fixed account and password, you must create a local user Anonymous on Space Console and authorize the data management policy group to that user.

· If you set a non-special fixed account and password, you must create a local user with the same name on Space Console and authorize the data management policy group to that local user.

· If you set a special fixed account and password (such as Administrator), contact Technical Support to create local user Administrator in the database and authorize the data management policy group to that user. Modifying the database is a high-risk operation, so perform it under the guidance of a professional technician. The following describes how to create local user Administrator:

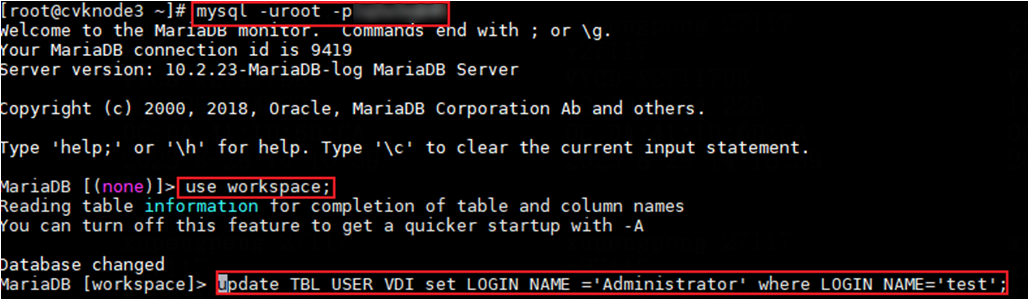

E1xxx versions

1. On Space Console, create a local user. This example creates user test.

2. Connect to the database server and use the root user to perform user authentication by using the following command where XXXX represents the database password and can be edited as needed:

mysql -uroot -pXXXX

3. Change the login name of user test to Administrator. Make sure the username of user test is the same as that in step 1.

update TBL_USER_VDI set LOGIN_NAME ='Administrator' where LOGIN_NAME='test';

Figure 1 Changing the login name of user test to Administrator

E2xxx versions

1. On Space Console, create a local user. This example creates user test.

2. Connect to the database server by using the following command where XXXX represents the database password and can be edited as needed:

su - omm -c "gsql -d workspace -p 15400 –r -W XXXX"

3. Change the login name of user test to Administrator. Make sure the username of user test is the same as that in step 1.

update TBL_USER_VDI set LOGIN_NAME ='Administrator' where LOGIN_NAME='test';

Figure 2 Changing the login name of user test to Administrator

Enabling HugePages memory for the UCC endpoint in IDV mode

1. Keep the endpoint in management machine state and use the SSH tool to access the CLI of the endpoint.

2. Set the number of pages: idvctl set-hugepages HP_TOTAL.

|

CAUTION: The HP_TOTAL argument specifies the number pages to be applied for. Make sure the number of pages multiplied by 2M is less than the current free memory. If the free memory space is insufficient, use sync & echo 3 > /proc/sys/vm/drop_caches to free page table caches. |

3. Enable HugePages on the VM: idvctl create-vm VM name System image full path --hp_flag on.

4. Stop the user interface service on the management machine: service ui stop.

5. Start the VM: idvctl start-vm VM name.

6. Start VdSession: service Workspace start.

7. View HugePages usage information: idvctl get-hugepages.

Logs

Endpoint logs

This chapter introduces the basic ideas of troubleshooting, log collection methods, and searching logs by issue. When sharing logs, provide the specific deployment location to facilitate analysis by R & D engineers.

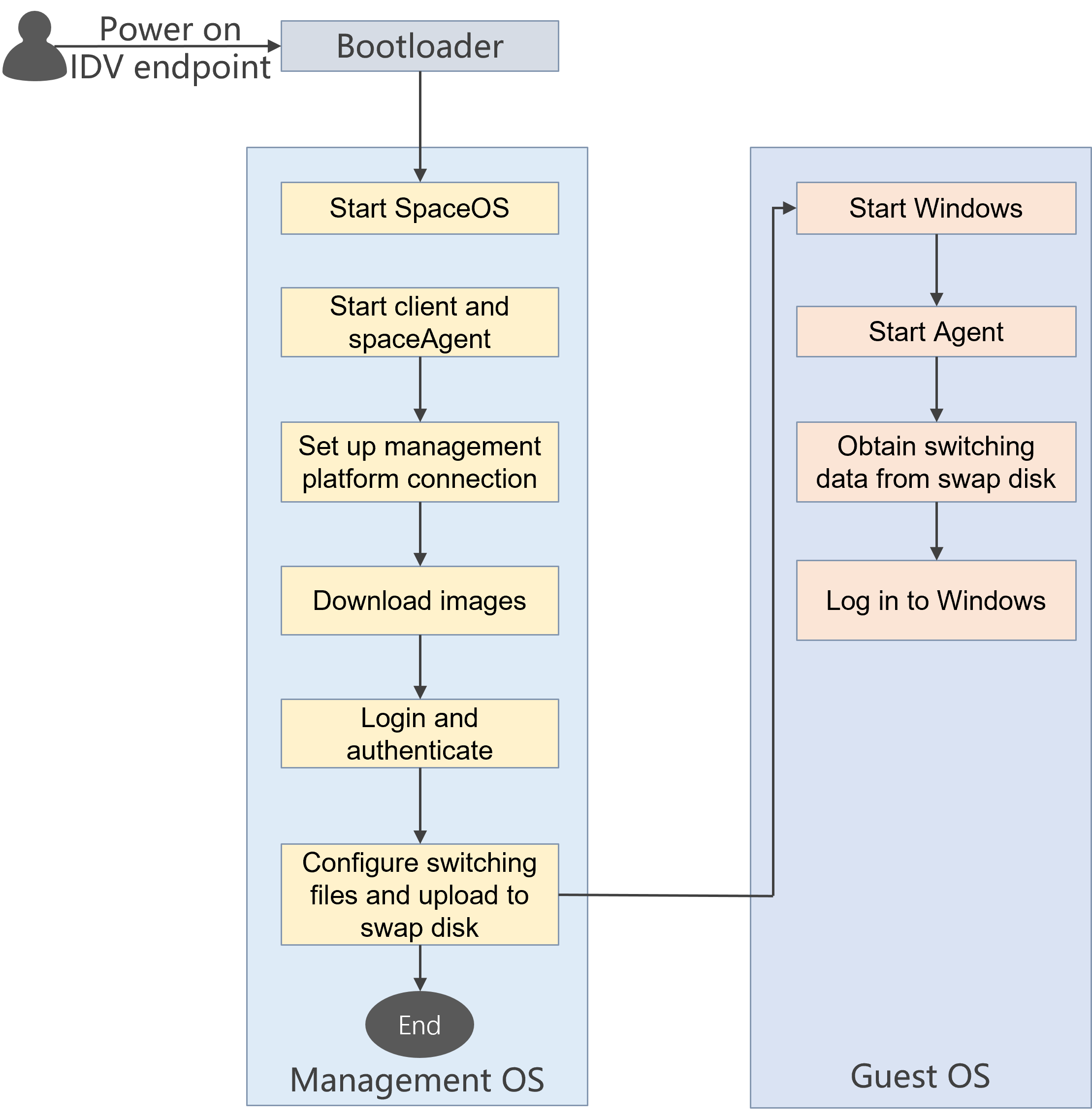

An endpoint can be in management machine state or client machine state. During log collection, the endpoint must be in the corresponding state. When you collect endpoint management machine logs, the endpoint must be in the management machine state. When you collect endpoint client machine logs, the endpoint must be in the client machine state.

· Management machine state: Only the Workspace or Learningspace login programs are running on the endpoint, and the user's actual desktop system has not been accessed.

· Client machine state: The endpoint has entered the user's actual desktop system and can use the desktop correctly.

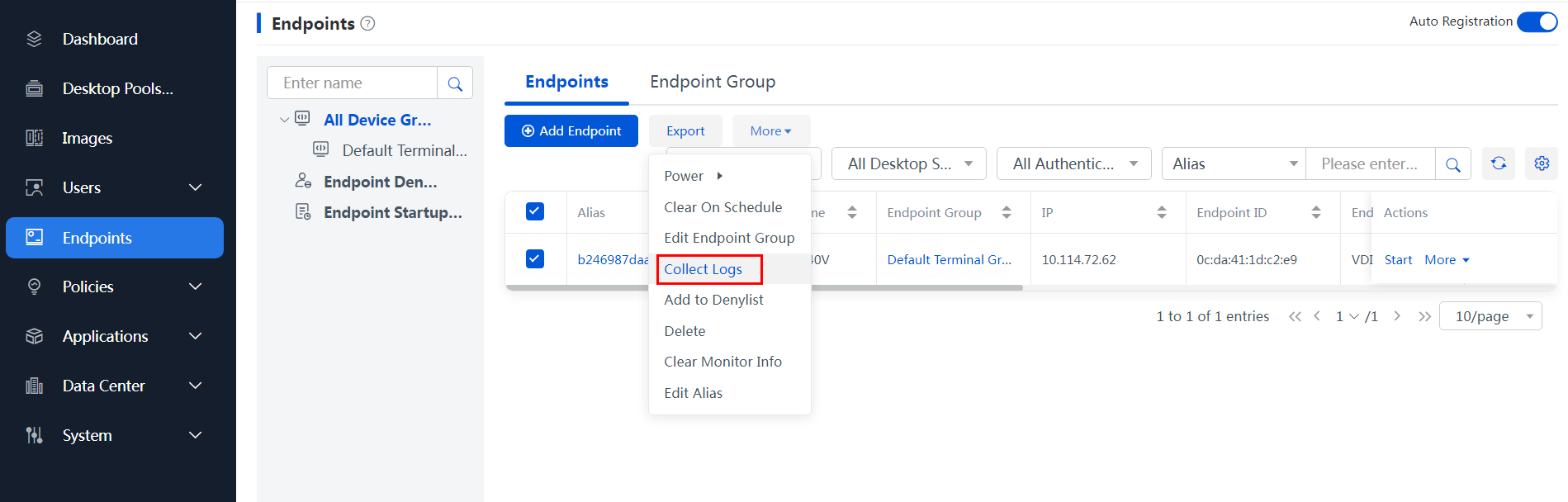

To collect endpoint logs:

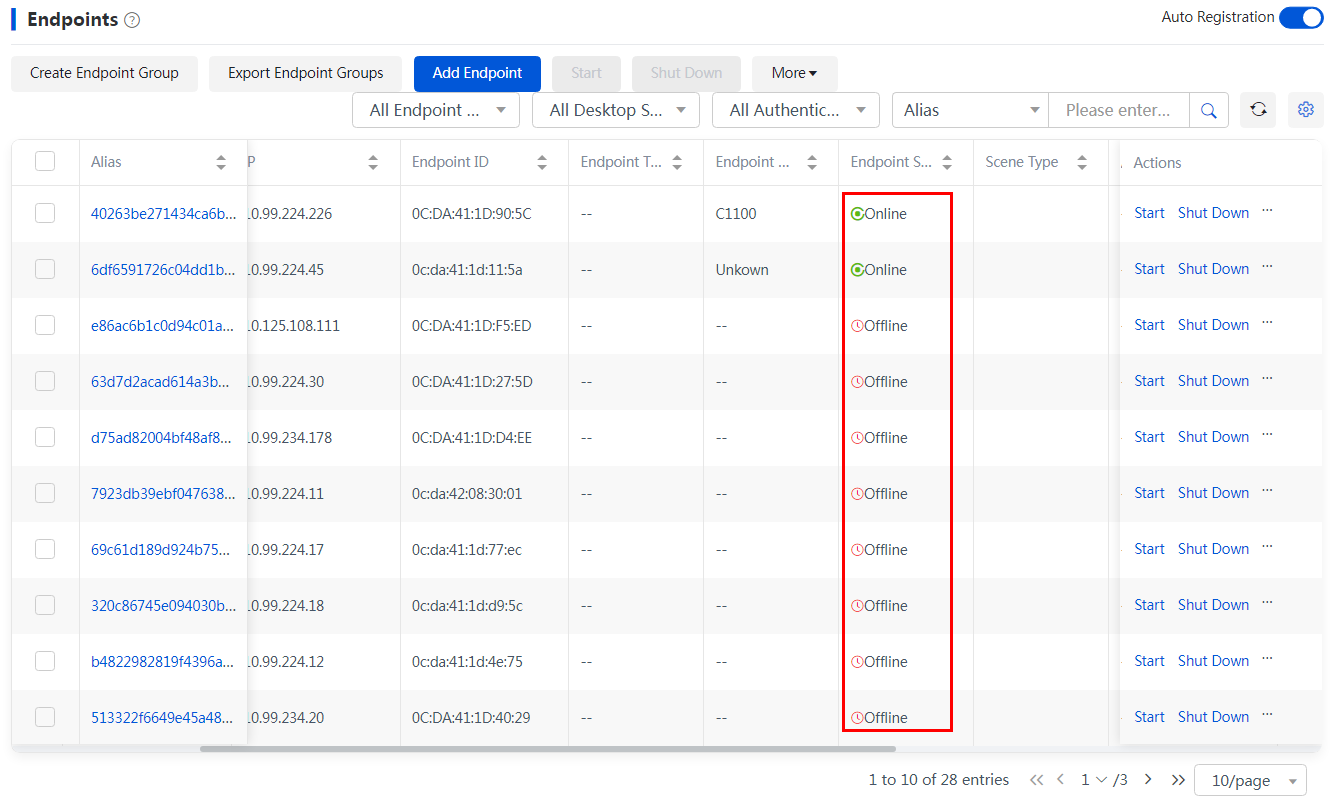

1. Navigate to the Endpoints page, and use the log collection function to collect endpoint logs with one key.

Figure 3 Collecting endpoint logs

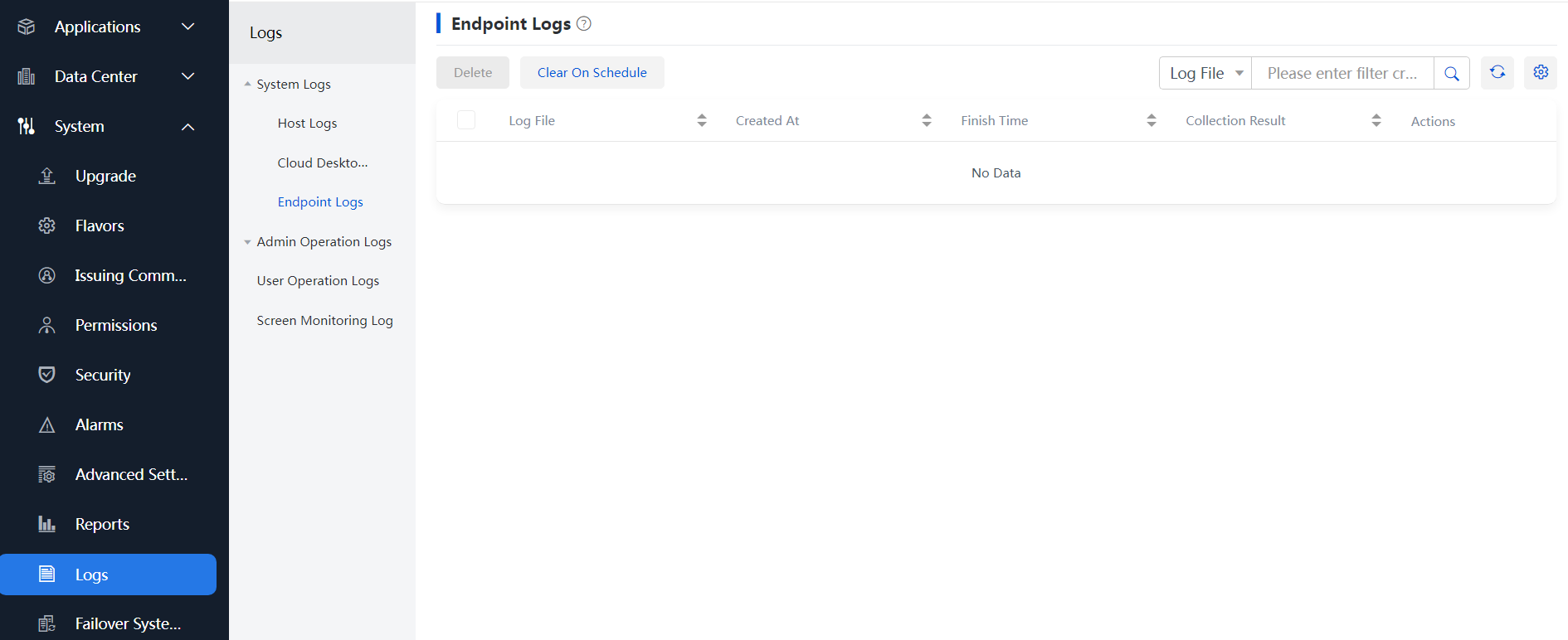

2. To download and view collected endpoint logs, navigate to the Monitor Reports > Logs > System Logs > Endpoint Logs page.

Figure 4 Viewing collected endpoint logs

VDI

Table 1 VDI endpoint logs

|

System |

Component |

Log directory |

Log file name |

Log contents |

|

Windows |

Workspace |

User file directory\Workspace\log |

Workspace.date.log |

Workspace software logs |

|

VdSession |

User file directory\VdSession\log |

VdSession-server address-desktop pool-desktop ID-date.log |

Logs for remote desktop connection program |

|

|

U2ec |

Workspace installation directory\log |

u2ec_date.log |

Logs for peripheral redirection program |

|

|

Installation logs |

Workspace installation directory\log |

Workspace_NSIS_log.txt |

Logs for Workspace client installation |

|

|

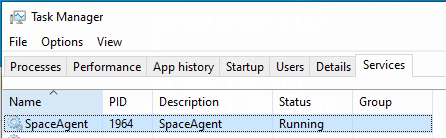

SpaceAgent |

SpaceAgent installation directory\log |

SpaceAgent.date.log |

Logs for SpaceAgent endpoint service proxy program |

|

|

SpaceConfig |

SpaceAgent installation directory\log |

SpaceConfig.date.log |

Logs for operation of client endpoint configuration program |

|

|

upgrader |

Liveupdate installation directory\log |

Upgrader.date.log |

Logs for client online upgrade |

|

|

popview.date.log |

||||

|

SpaceOS |

Workspace |

/home/spaceos/Documents/Workspace/log |

Workspace.date.log |

Workspace software logs |

|

VdSession |

/home/spaceos/Documents/VdSession/log |

VdSession-server address-desktop pool-desktop ID-date.log |

Logs for remote desktop connection program |

|

|

SpaceAgent |

/userdata/H3C/SpaceAgent/log |

SpaceAgent.date.log |

Logs for SpaceAgent endpoint service proxy program |

|

|

SpaceConfig |

/userdata/H3C/SpaceAgent/log |

SpaceConfig.date.log |

Logs for client endpoint configuration program |

|

|

upgrader |

/userdata/H3C/Liveupdate/log |

Upgrader.date.log |

Logs for client online upgrade |

|

|

popview.date.log |

IDV/VOI/UCC

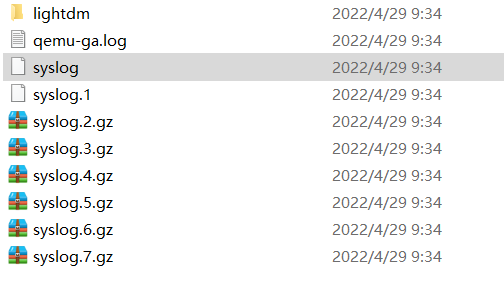

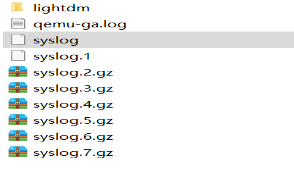

Figure 5 IDV/VOI/UCC endpoint logs

|

Component |

Log directory |

Remarks |

|

VOI management machine client |

· E1004P04 and earlier versions: /var/log/voiclient/* · E1004P05 and later versions: /voisys/var/log/voiclient/* |

· The client of E1004P04 and earlier versions only records the current startup log. · The logs for the client of E1004P05 and later versions can retained for three days. |

|

VOI management machine Agent |

/home/spaceos/idvclient/log/* |

Only records the current startup log. |

|

Management machine system log |

/var/log/* |

Mainly syslogs. Only the current startup log is recorded. |

|

Client machine critical log |

· C:\Users\Administrator\Documents\Workspace\log · VOIAgent: C:\Program Files (x86)\H3C\VoiAgent\Vdagent Tools\Log · Peripheral control: C:\Program Files (x86)\H3C\VoiAgent\Device Redirect\log |

N/A |

|

IDV |

/var/log/idvclient/* |

N/A |

|

UCC |

/voisys/var/log/voiclient/* or /var/log/voi/* or /var/log/u3client/ |

N/A |

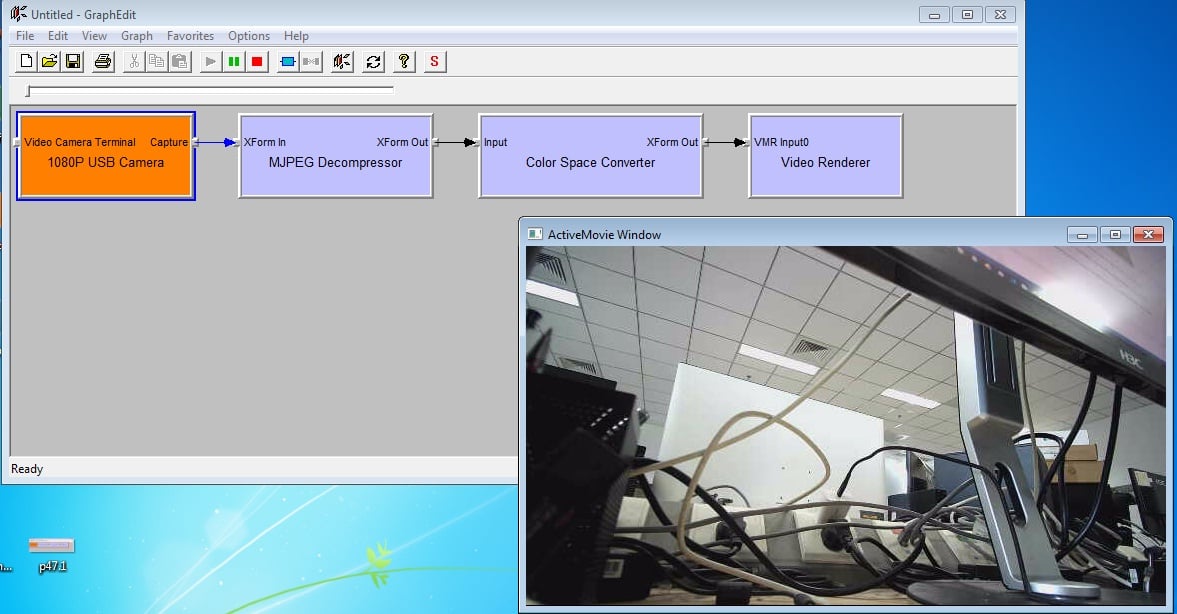

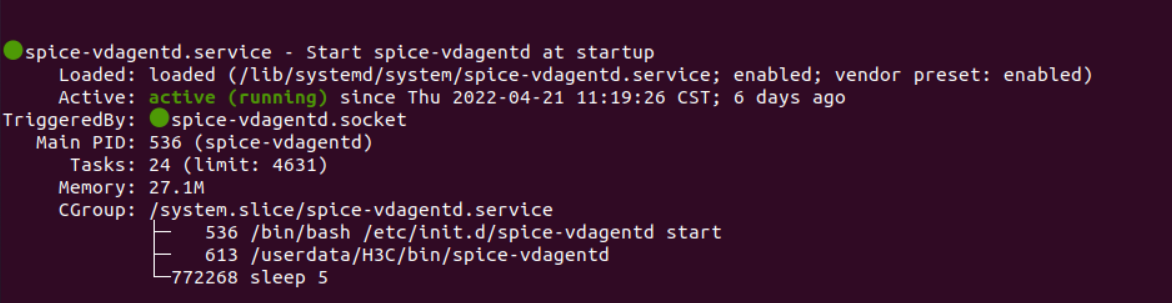

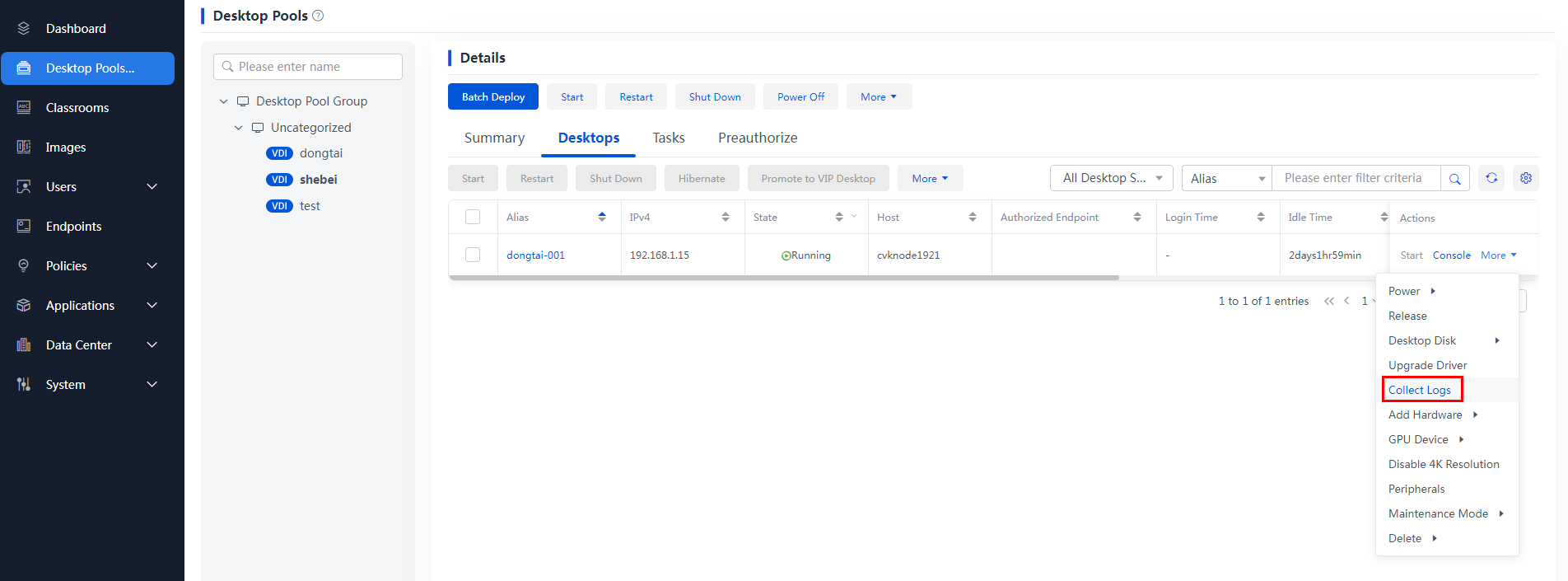

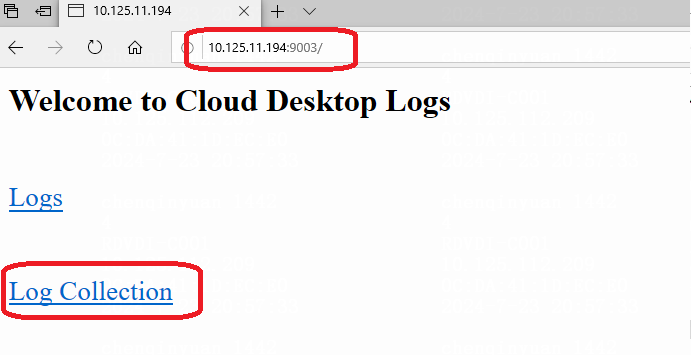

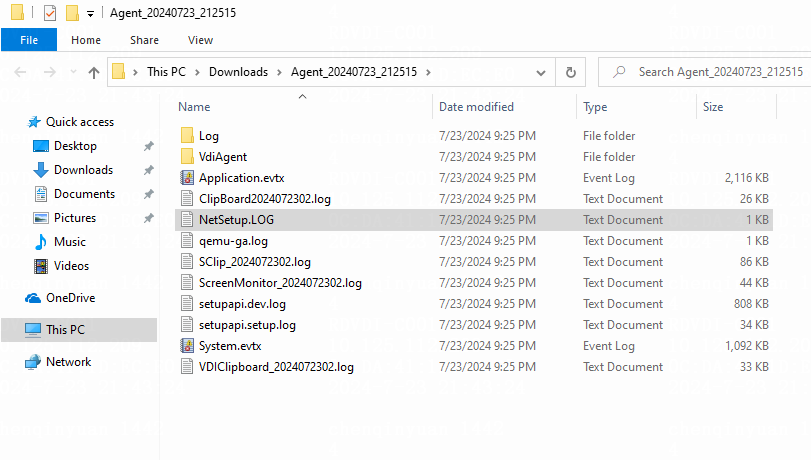

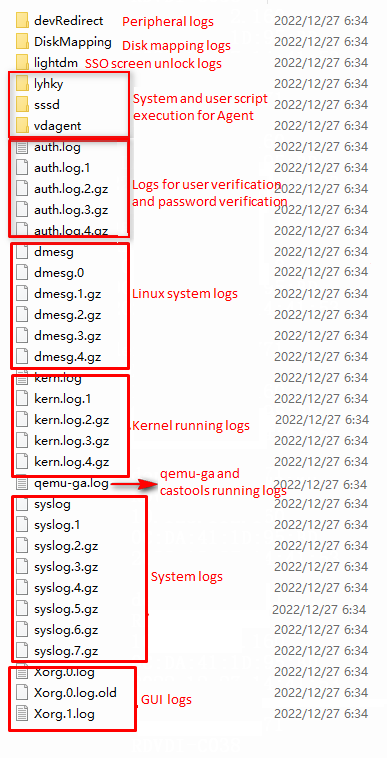

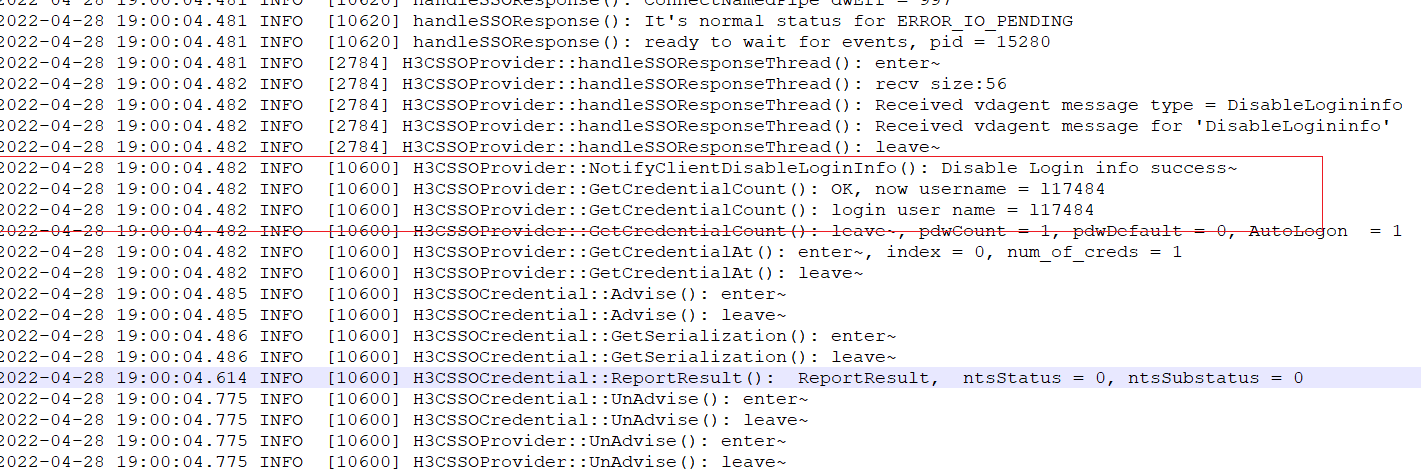

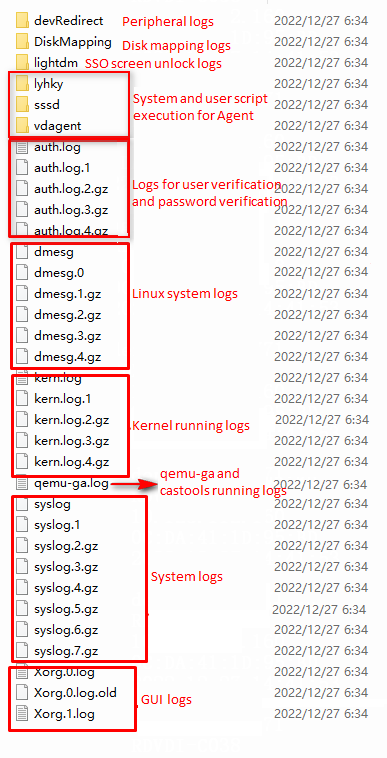

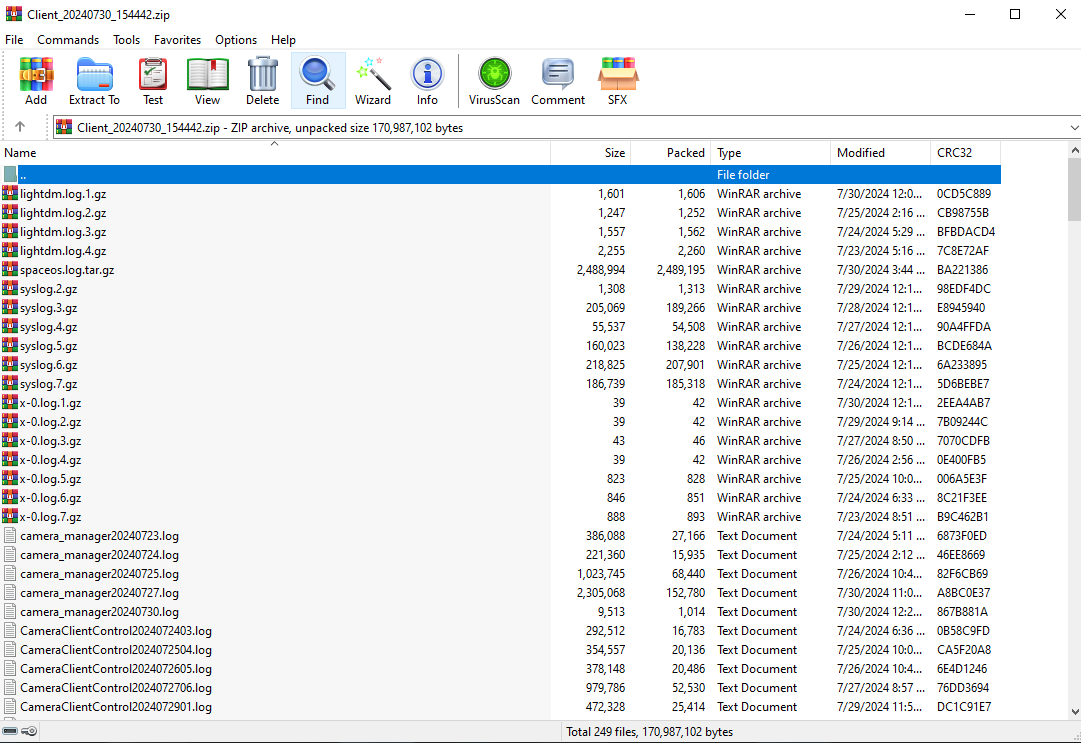

Multimedia, vGPU, protocol, peripheral, and agent logs

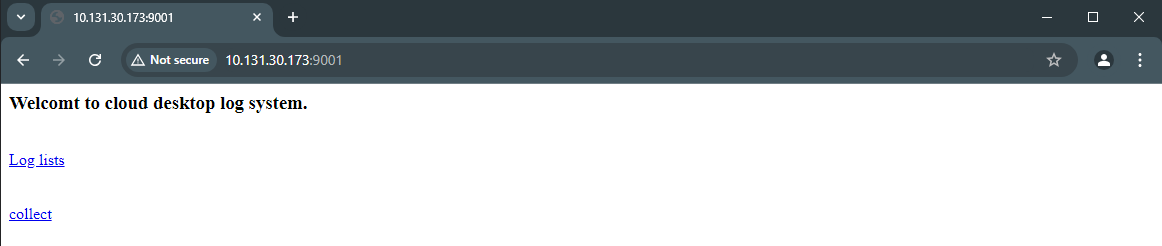

VM logs can be downloaded from a browser to a client by entering VM IP:9003 in the browser of the client.

Table 2 Multimedia, vGPU, protocol, peripheral, and agent-related logs

|

VM/Endpoint |

System |

Component |

Log directory |

Log file name |

Log contents |

|

Endpoint |

Windows |

Peripheral |

H3C installation path\workspace\Device Redirect\log\ |

VDIUsbServicedateday.log |

Port redirection logs |

|

UsbSetupdateday.log |

Driver installation logs for port redirection |

||||

|

VDIComServicedateday.log |

Serial redirection logs |

||||

|

ComSetupdateday.log |

Driver installation logs for serial redirection |

||||

|

devredirectdate.log |

Camera redirection logs |

||||

|

virtualCameradateday.log (dedicated for VM side) |

Virtual camera redirection logs (dedicated for VMs) |

||||

|

C:\Users\Currrent login user\H3CDClient\ |

CDriverMappdateday.log (dedicated for physical device side) |

Disk mapping logs |

|||

|

SDriverMappdateday.log (dedicated for VM side) |

Disk mapping logs |

||||

|

Video redirection |

User file directory\VdSession\log |

VdSession-server address-desktop pool-desktop ID-date.log |

Video redirection logs |

||

|

vGPU |

User file directory\VdSession\log |

VdSession-server address-desktop pool-desktop ID-date.log |

GPU logs |

||

|

HTML5 |

User file directory\VdSession\log |

VdSession-server address-desktop pool-desktop ID-date.log |

H5 redirection logs |

||

|

Spiceclient |

User file directory\VdSession\log |

protocol-server address-desktop pool-desktop ID-date.log |

Logs for operation of remote desktop connection protocol operation |

||

|

SpaceOS |

Spiceclient |

/home/spaceos/Documents/VdSession/log |

protocol-server address-desktop pool-desktop ID-date.log |

Logs for operation of remote desktop connection protocol |

|

|

Video redirection |

User file directory\VdSession\log |

VdSession-server address-desktop pool-desktop ID-date.log |

Video redirection logs |

||

|

H5 |

User file directory\VdSession\log |

VdSession-server address-desktop pool-desktop ID-date.log |

H5 redirection logs |

||

|

vGPU |

User file directory\VdSession\log |

VdSession-server address-desktop pool-desktop ID-date.log |

vGPU logs |

||

|

Peripheral |

/var/log/ comredirect/ |

Comredirdateday.log |

Serial redirection logs |

||

|

/var/log/camera_redirect/ |

devredirectdate.log |

Camera redirection logs |

|||

|

/var/log/eveusb/ |

Eveusbdateday.log |

Port redirection logs |

|||

|

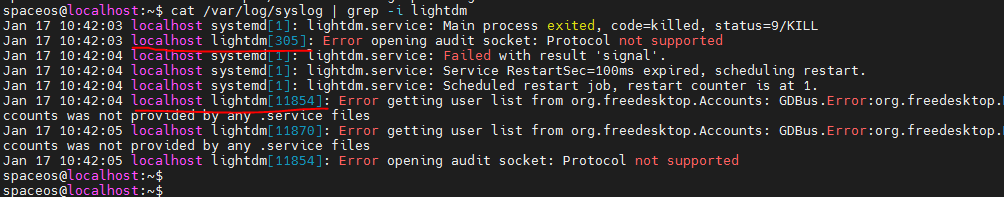

/var/log/ |

syslog |

System logs and port redirection logs |

|||

|

/home/Current login user/document/DiskMapping/ |

CDriverMappdateday.log |

Disk mapping logs |

|||

|

/home/Current login user/Documents/DiskMapping/ |

|||||

|

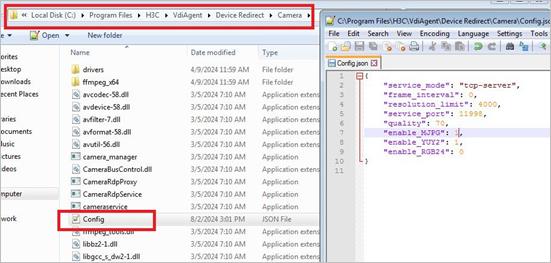

VM |

Windows |

Peripheral |

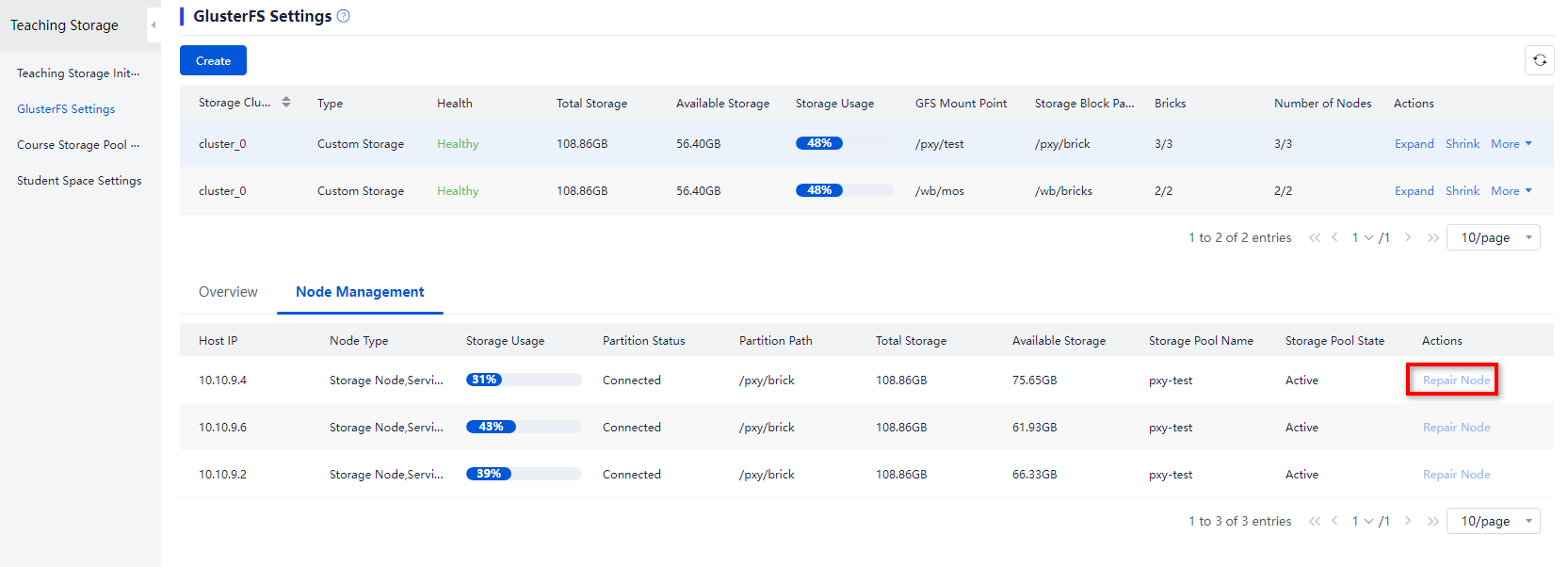

Agent installation path\ VdiAgent \Device Redirect\log\ |

VDIUsbServicedateday.log |

Port redirection logs |

|

UsbSetupdateday.log |

Driver installation logs for port redirection |

||||

|

VDIComServicedateday.log |

Serial redirection logs |

||||

|

ComSetupdateday.log |

Driver installation logs for serial redirection |

||||

|

devredirectdate.log |

Camera redirection logs |

||||

|

virtualCameradateday.log (dedicated for VM side) |

Virtual camera redirection logs (dedicated for VMs) |

||||

|

H3C installation path\VdiAgent\Vdagent Tools\log\ |

CDriverMappdateday.log (dedicated for physical device side) |

Disk mapping logs |

|||

|

SDriverMappdateday.log (dedicated for VM side) |

Disk mapping logs |

||||

|

vGPU |

H3C installation path\VdiAgent\Vgpu\log |

VGPUCapturer_dateday.log (dedicated for VM side) |

Capturer logs |

||

|

Video redirection |

Agent installation path\VdiAgent\Vdagent Tools\log\ |

MMRRedirect+SN.log |

Video redirection logs |

||

|

Agent installation path\ VdiAgent \Device Redirect\log\ |

MMRPlayerHookExe+SN.log |

Video redirection logs |

|||

|

MMRPlayerHookDll+SN.log |

|||||

|

MMRVChannel+SN.log |

|||||

|

MMRTransfer+SN.log |

|||||

|

MMRDShowHook+SN.log |

|||||

|

MMRVRender+SN.log |

|||||

|

H5 |

H3C installation path\VdiAgent\Device Redirect\log |

H3CcivetwebServerdateday.log |

H5 redirection logs |

||

|

Optimization tool |

C:\ |

optToolsCopyProfileSevdateday.log (dedicated for VM side) |

Optimization logs |

||

|

agent |

C:\Users\Userxx\VDtray\ |

VDTray_date.log |

VDTray logs |

||

|

Notificition_date.log |

Notification component logs |

||||

|

C:\Users\Userxx\clipboard |

SClip_date.log/VDIClipboard_date.log |

Clipboard logs |

|||

|

Agent installation path\ VdiAgent\Vdagent Tools\log\ |

Vdagent_date.log |

Agent-related logs |

|||

|

ProfileManagement_date.log |

UPM logs |

||||

|

VDIGrpcAgent_date.log |

GRPC logs |

||||

|

VDILogDownload_date.log |

Log download module logs |

||||

|

VDIService_date.log |

Vdservice service logs |

||||

|

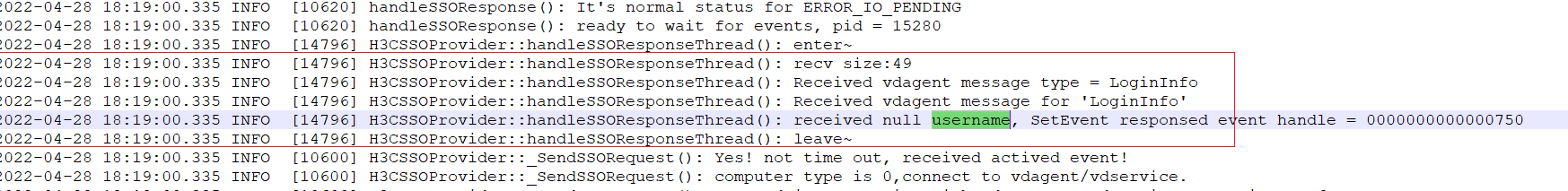

VDISSO_CP_date.log |

SSO component logs |

||||

|

VDIWaterMark_date.log |

Blind watermarking logs |

||||

|

VDICommon_date.log |

Common component logs |

||||

|

fw_vdservice_date.log |

Firewall exception component logs |

||||

|

MainPortSpiceVdAgentPlugin_date.log |

Student communication interface logs |

||||

|

Server |

Linux |

hostagent |

/var/log/vdi/hostagent/ |

hostagent+date.log |

Recording hostagent logs |

|

Linux |

Spiceserver |

/var/log/libvirt/qemu/ |

VM storage name.log |

Logs for spiceserver and kvm/qemu-based VM process |

Space Console logs

Space Console logs are saved under the /var/log/vdi/ directory, mainly including the following modules.

Table 3 Space Console component log directories

|

Component |

Log directory |

Remarks |

|

Workspace-server |

/var/log/vdi/workspace-sever/workspace.log |

Space Console backend log |

|

Controller |

· /var/log/vdi/controller/controller.log (platform, client, CAS, UIS communication services) · /var/log/vdi/controller/grpc.log (GRPC communication logs between the controller and agent) · /var/log/vdi/controller/grpc-client.log (GRPC communication logs between the controller and client. Detailed request/response packets are printed for both ends) |

All controller logs, including GRPC communication logs |

|

ssv |

/var/log/vdi/ssv |

Log of the ssv user log module |

|

hostagent |

/var/log/vdi/hostagent |

Log of hostagent |

|

vdi-install.log |

/var/log/vdi |

VDI installation log |

|

vdi-upgrade.log |

/var/log/vdi |

VDI update log |

Data storage locations

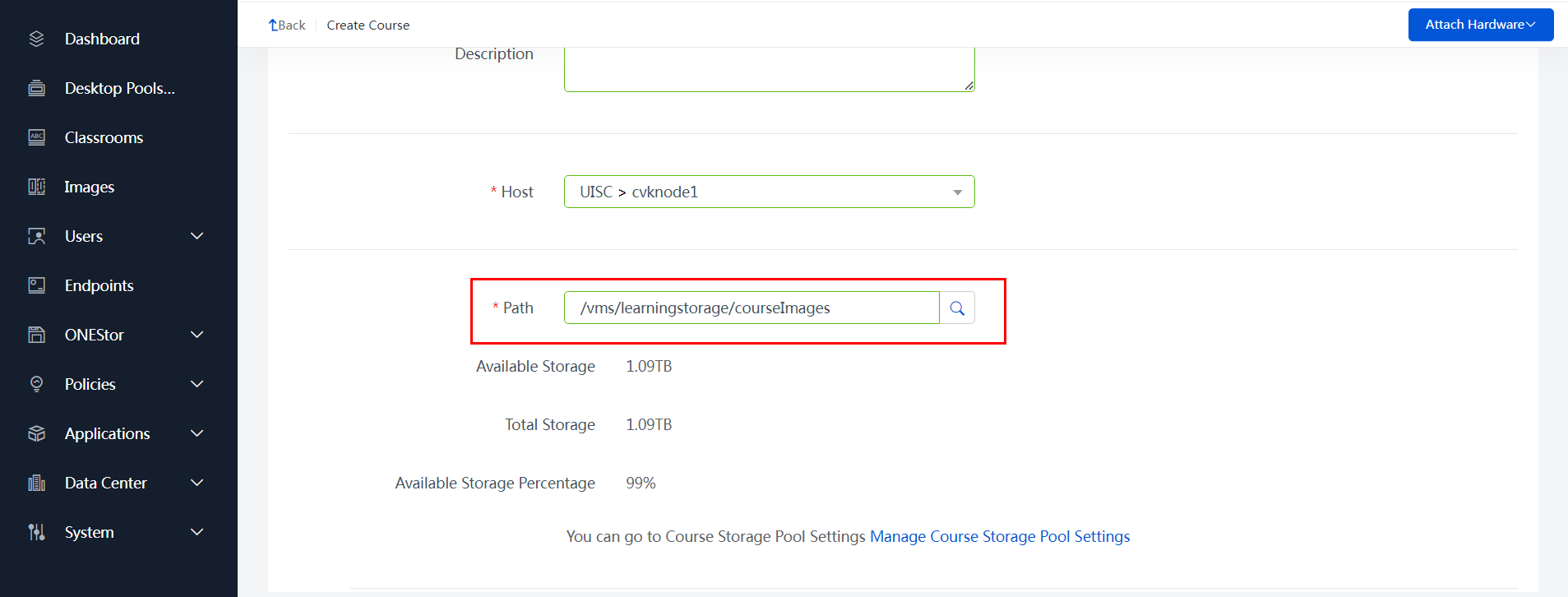

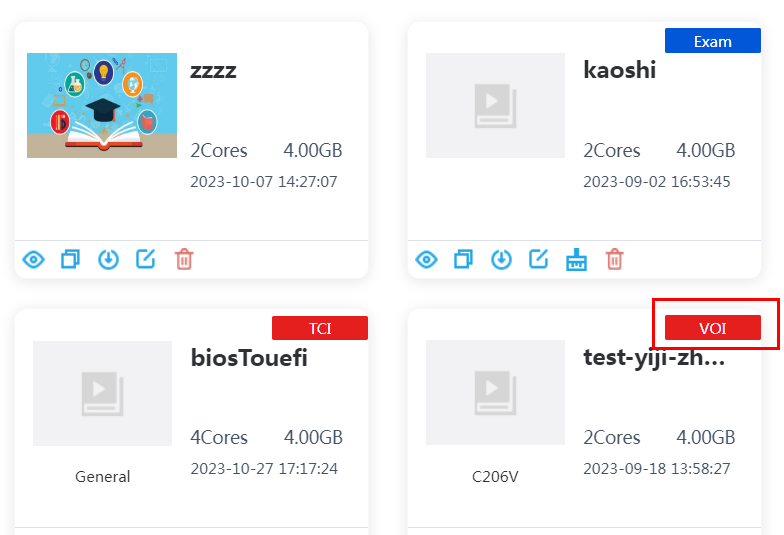

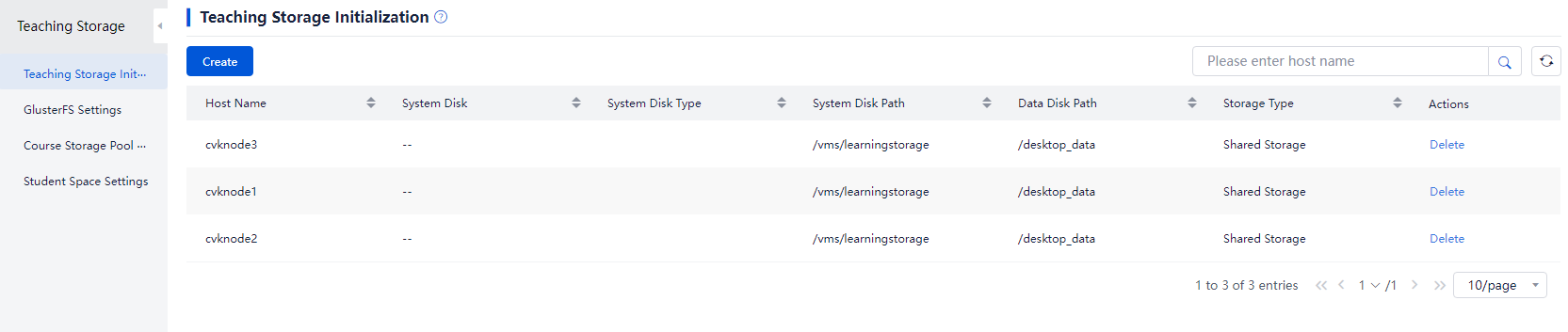

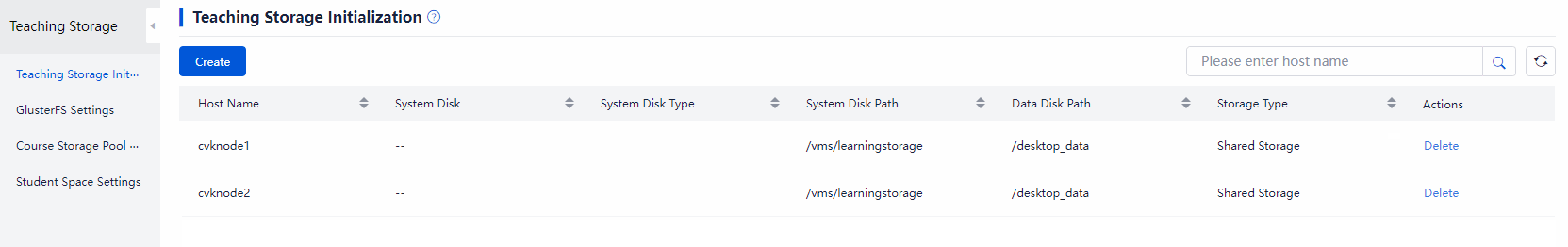

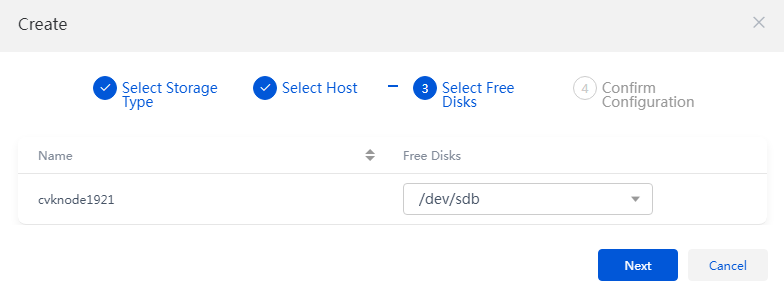

Storage locations for course VMs

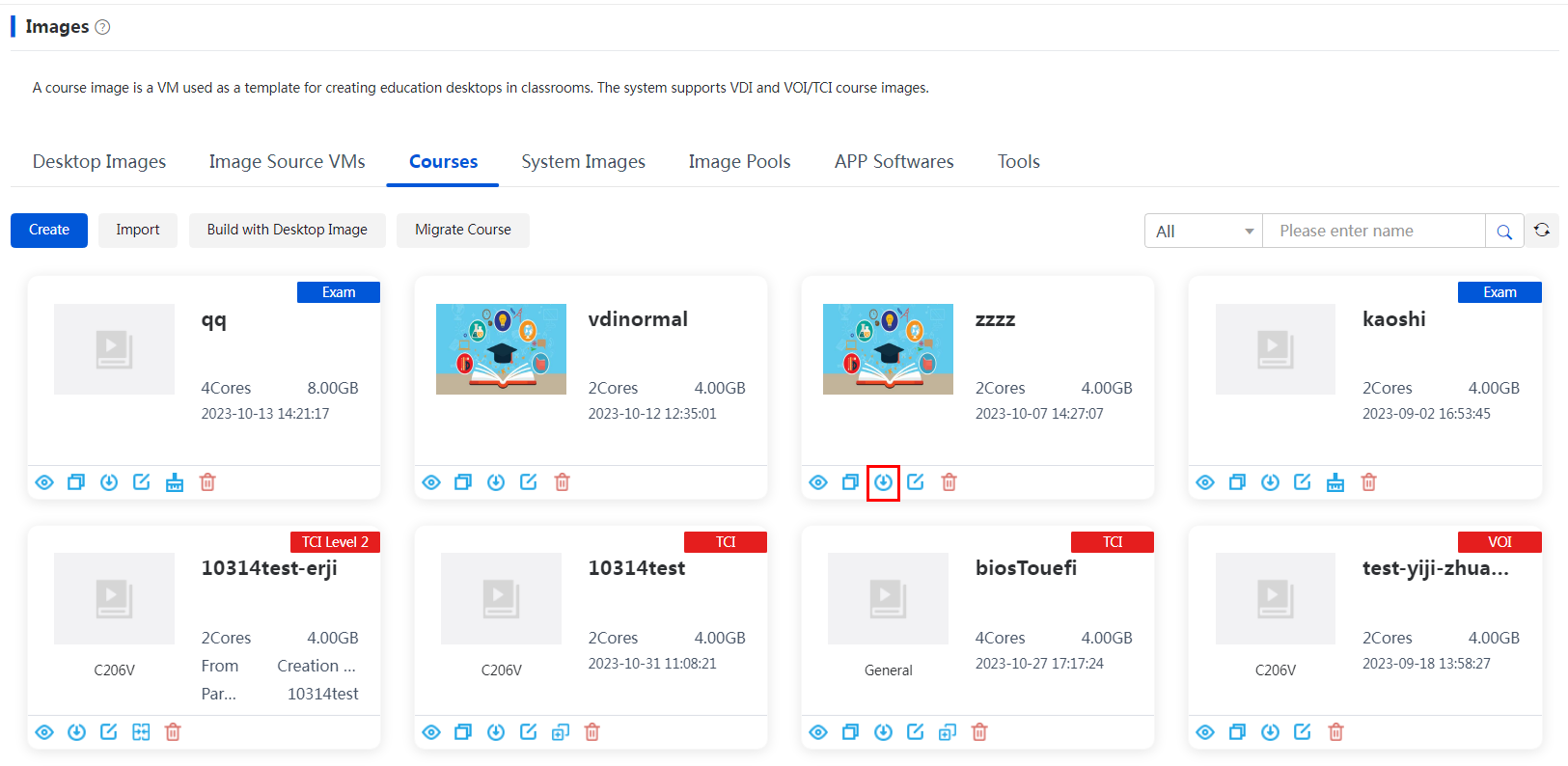

The system and data disk files of each course VM are placed in the same path, which depends on the selected path upon course creation:

Figure 6 Course storage path

The available storage paths for creating a new course include the following:

· /vms/learningspace/courseImages (earlier than E1015) or /vms/learningstorage/courseImages (E1015 and later): Local storage, added on the Data Center > Teaching Storage page of Space Console.

· Local or shared storage that is newly added in the course storage pool.

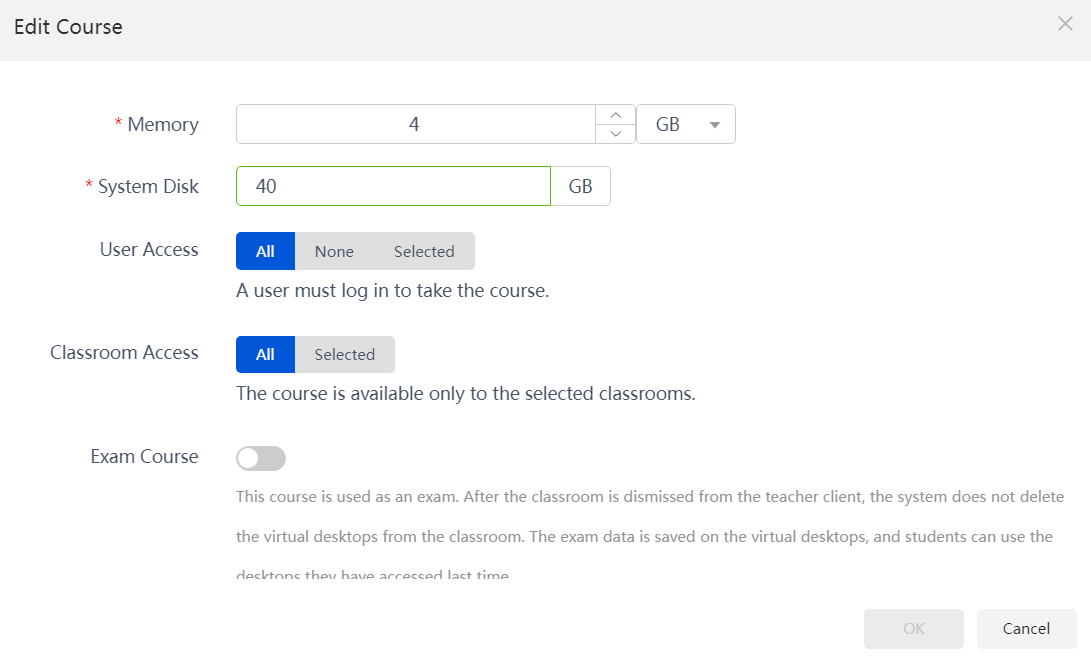

Course VMs

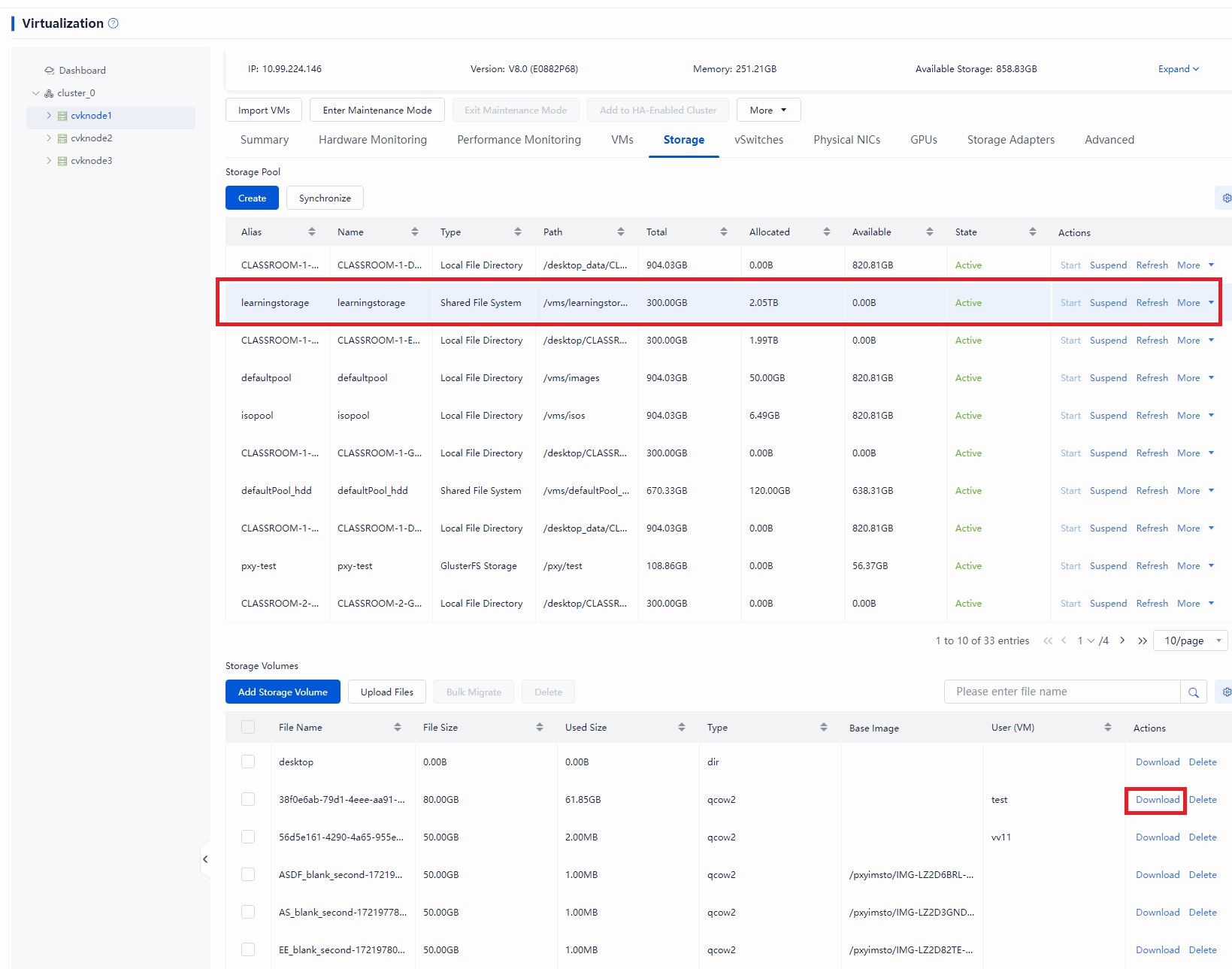

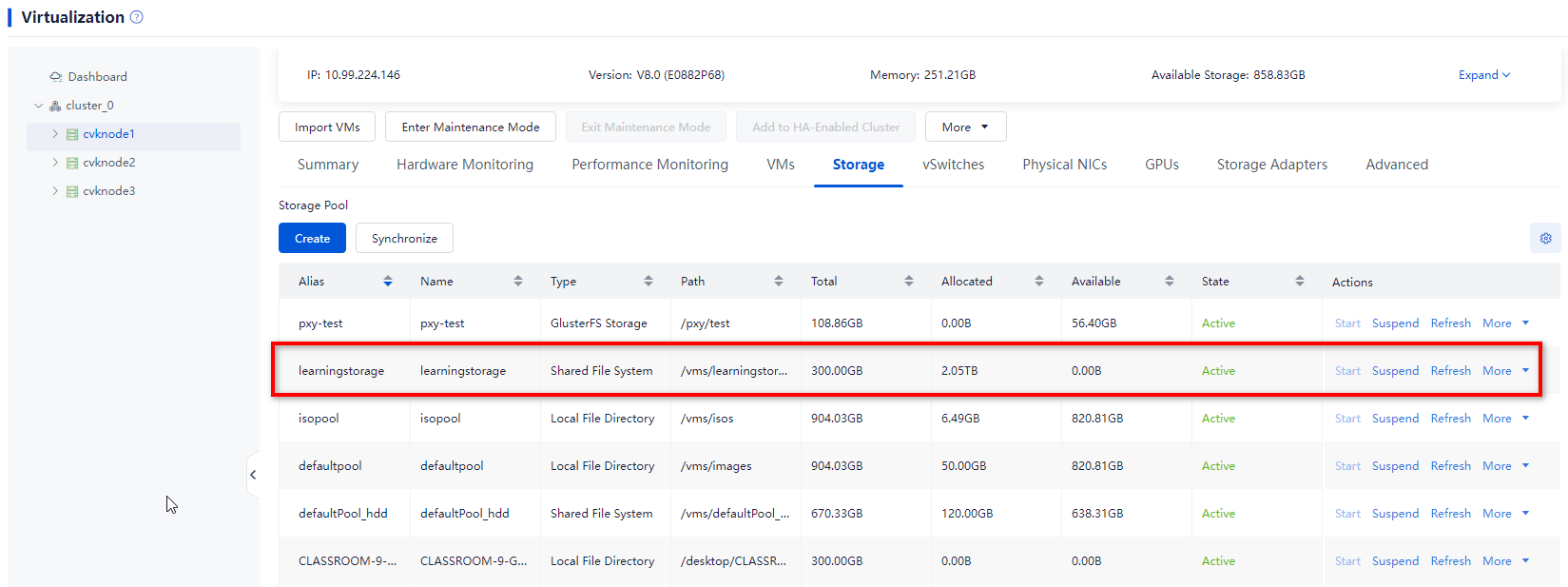

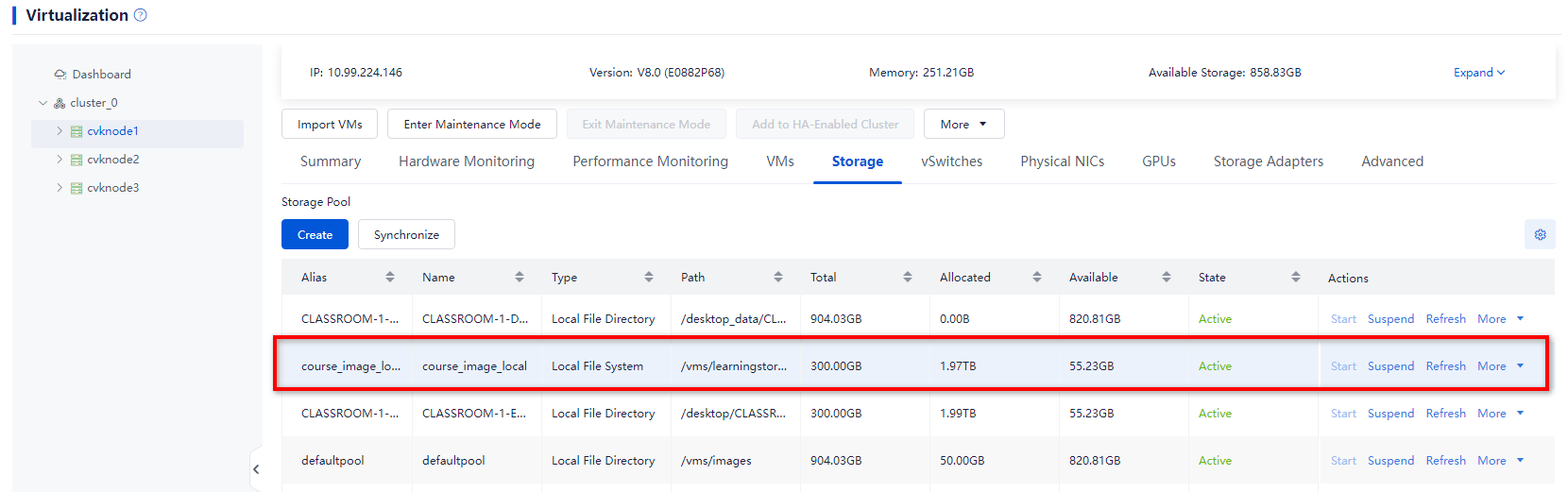

The system and data disk files for course VMs are stored separately:

· System disk: Each classroom has its own subdirectory in the main directory, and a separate subdirectory is available for regular and exam courses in each classroom. The main directory is located at /vms/learningspace/desktop/ in versions earlier than E1015 and /vms/learningstorage/desktop/ in E1015 and later.

· Data disk: Each classroom has its own subdirectory in the main directory, and a separate subdirectory is available for regular and exam courses in each classroom. The main directory is located at /vms/desktop_data/ in versions earlier than E1015 and /desktop_data/ in E1015 and later.

Each classroom has four paths (storage pools) for storing the files of course VMs. These paths (storage pools) are automatically created when the classroom is created:

· In versions earlier than E1015

¡ /vms/learningspace/desktop/CLASSROOM-ID-GENERAL: System disk path for regular course VMs.

¡ /vms/learningspace/desktop/CLASSROOM-ID-EXAM: System disk path for exam course VMs.

¡ /vms/desktop_data/CLASSROOM-ID-DATA-GENERAL: Data disk path for regular course VMs.

¡ /vms/desktop_data/CLASSROOM-ID-DATA-EXAM: Data disk path for exam course VMs.

· In E1015 and later

¡ /vms/learningstorage/desktop/CLASSROOM-ID-GENERAL: System disk path for regular course VMs.

¡ /vms/learningstorage/desktop/CLASSROOM-ID-EXAM: System disk path for exam course VMs.

¡ /desktop_data/CLASSROOM-ID-DATA-GENERAL: Data disk path for regular course VMs.

¡ /desktop_data/CLASSROOM-ID-DATA-EXAM: Data disk path for exam course VMs.

Important components and maintenance methods

Space Console

System advanced command mode

Some commands at the CLI of the host in an E2xxx version can be executed only in advanced mode. To enter the advanced mode:

/opt/bin/command_config

Default password: Cloud@4321. The advanced mode is available after you enter the password.

workspace-server

About workspace-server

The workspace-server component is the core management component of the cloud desktop, providing backend service support for Space Console functions.

Service management

· To restart the workspace-server service, execute the service workspace-server restart command.

· To start the workspace-server service, executing the service workspace-server start command.

· To check the status of the workspace-server service, execute the service workspace-server status command.

· To stop the workspace-server service, execute the service workspace-server stop command.

Commonly used commands

· jps: Used to obtain the process ID of workspace-server.jar. Command syntax: jps -l.

· jstack: Used to obtain information about the Java stack and native stack for running Java programs. Command syntax: jstack -l <pid> <dstFileName>.

· jmap: Used to obtain detailed information about memory allocation for running Java programs, such as instance count and size. Command syntax: jmap -dump:format=b,file=<destFileName> <pid>. For example, the value for the destFileName parameter can be heap.bin.

· jstat: Used to obtain information related to class loader, compiler, and garbage collection. You can use this command to monitor resources and performance in real time. Command syntax: jstat –gcutil <pid> <interval>. The unit for the interval parameter is milliseconds.

|

CAUTION: As a best practice, do not execute the jmap command during busy service periods or under high pressure of the environment. Execute other commands when necessary to collect information. |

Commonly used logs

The workspace-server logs are stored in the /var/log/vdi/workspace-server/ log directory.

· workspace.log—This log file stores the internal running logs of workspace-server. If errors are prompted during interface operations, you can view this log file.

· ws-gc.log.0.current—You can view this log file when the memory usage of workspace-server is too high.

· jstack-generated logs—You can view specific stuck or blocked stacks from the logs when an interface task takes a long time to complete.

· jmap-generated logs—You can analyze and view jmap logs with the Eclipse MemoryAnalyzer tool. These logs are typically provided directly to developers after collection.

|

|

NOTE: When viewing the workspace.log file and jstack-generated logs, you can use fuzzy search based on the service operations performed on the interface. For example, you can use deploy as a keyword to search for VM deployment operation logs. |

Perform the following tasks to view specific information in the workspace.log file:

1. To filter and view exception information, execute the cat workspace.log | grep -i Exception command.

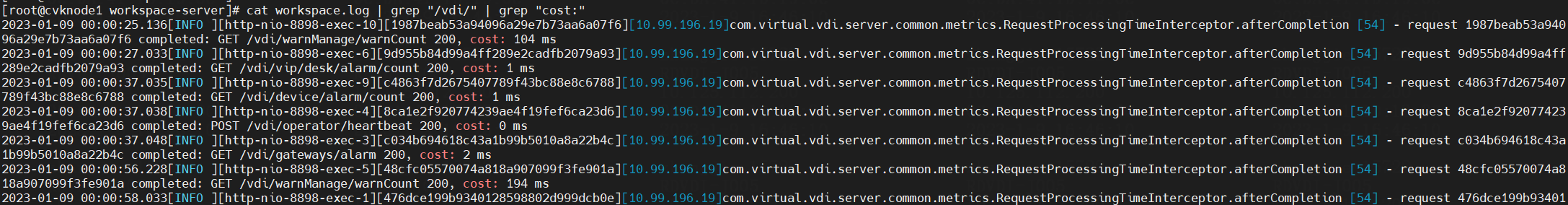

2. To view the return time of the VDI API, execute the cat workspace.log | grep "/vdi/" | grep "cost:" command. If the return time is long (for example, 20 s), it indicates an exception might have occurred on the API.

Figure 7 Viewing the return time of the VDI API

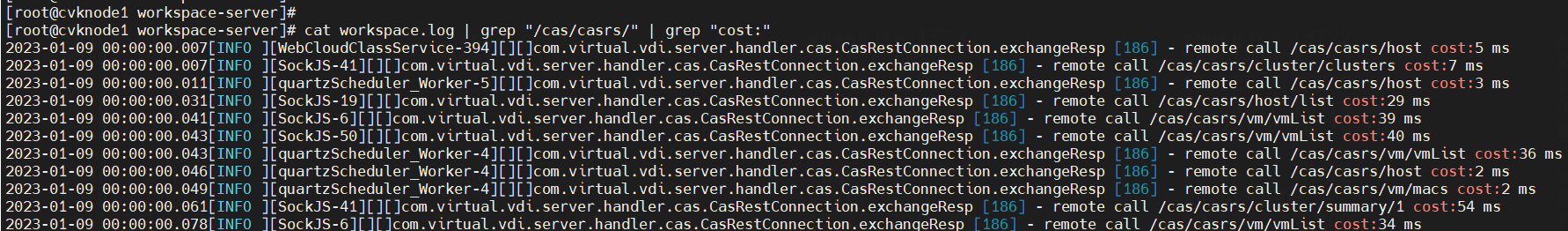

3. To view the return time of the CAS API, execute the cat workspace.log | grep "/cas/casrs/" | grep "cost:" command. If the return time is long (for example, 20 s), it indicates an exception might have occurred on the API.

Figure 8 Viewing the return time of the CAS API

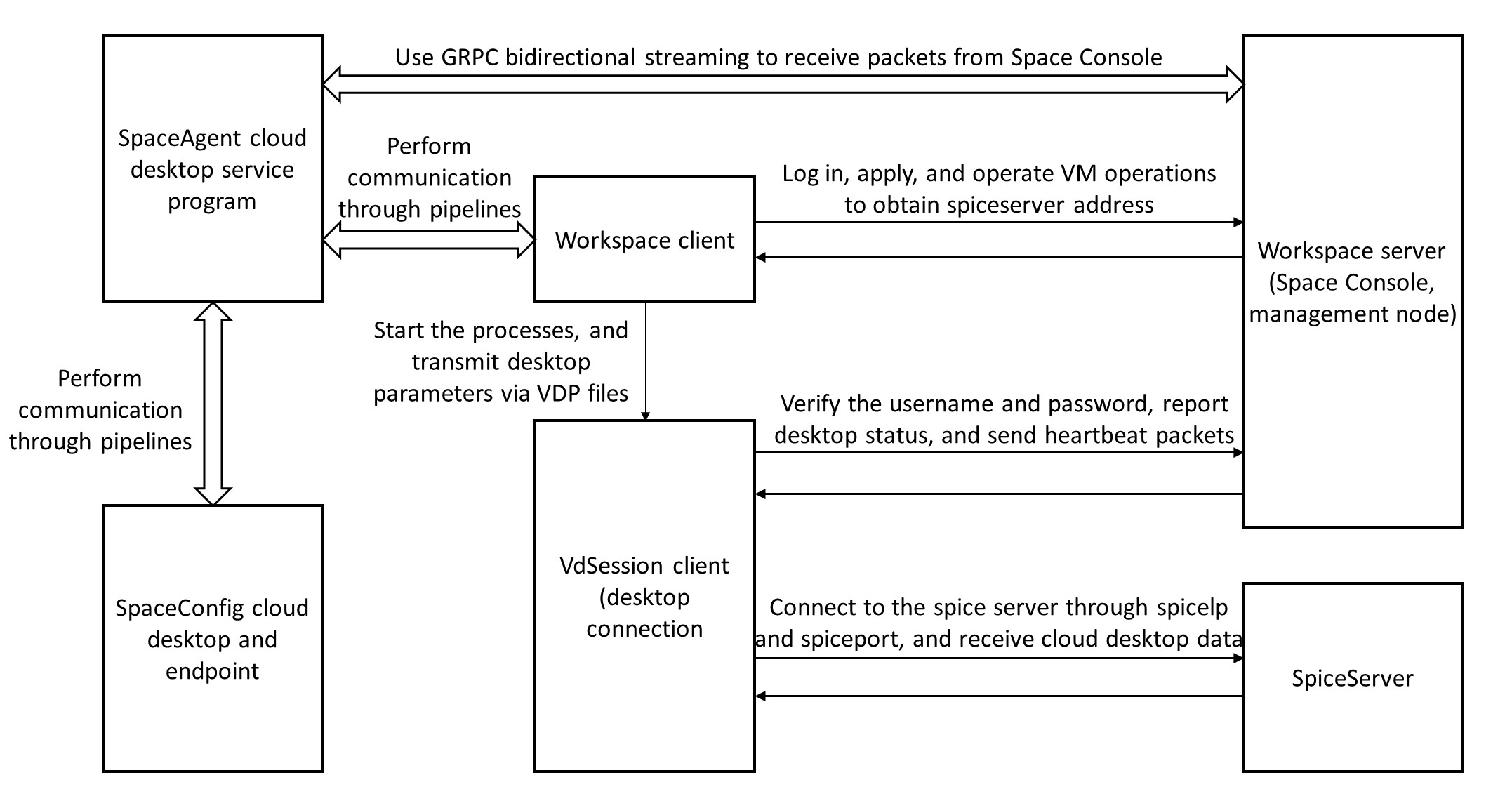

Controller

About Controller

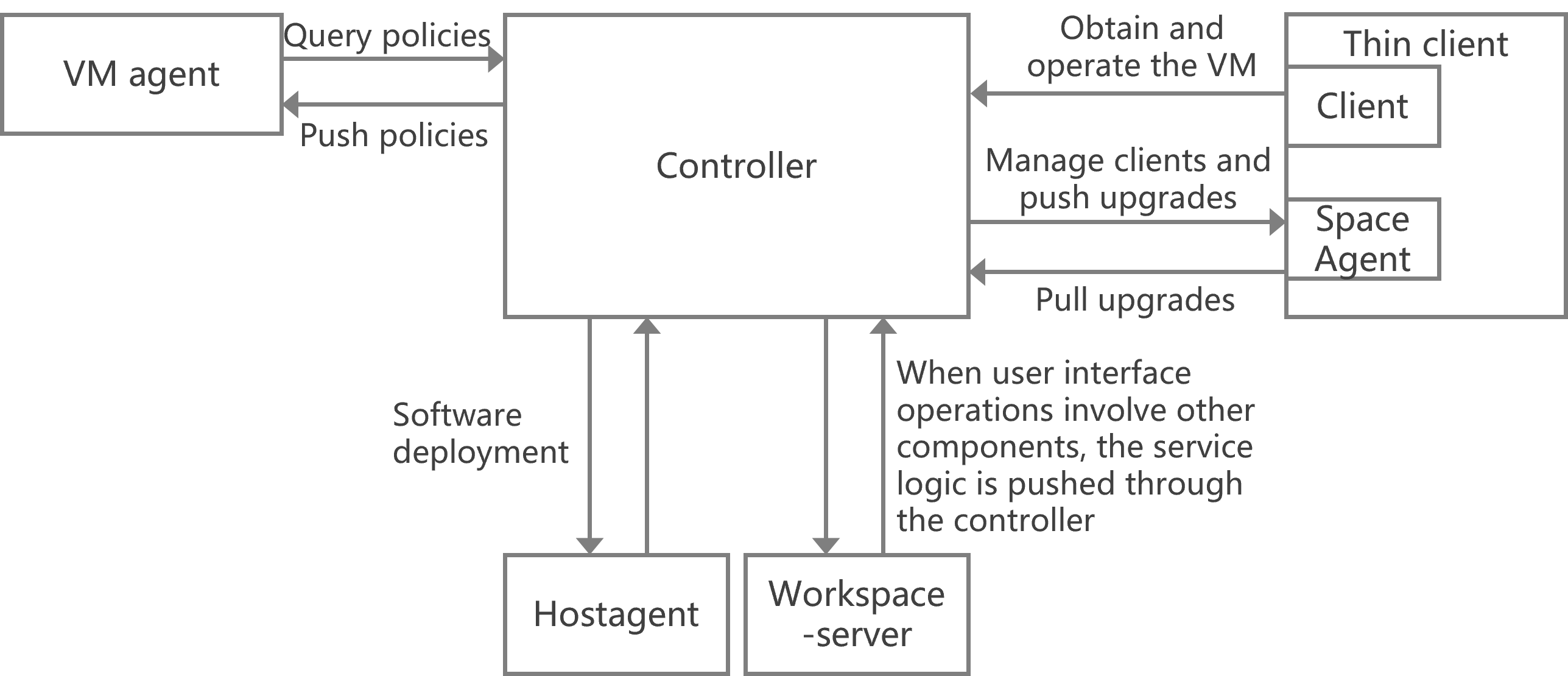

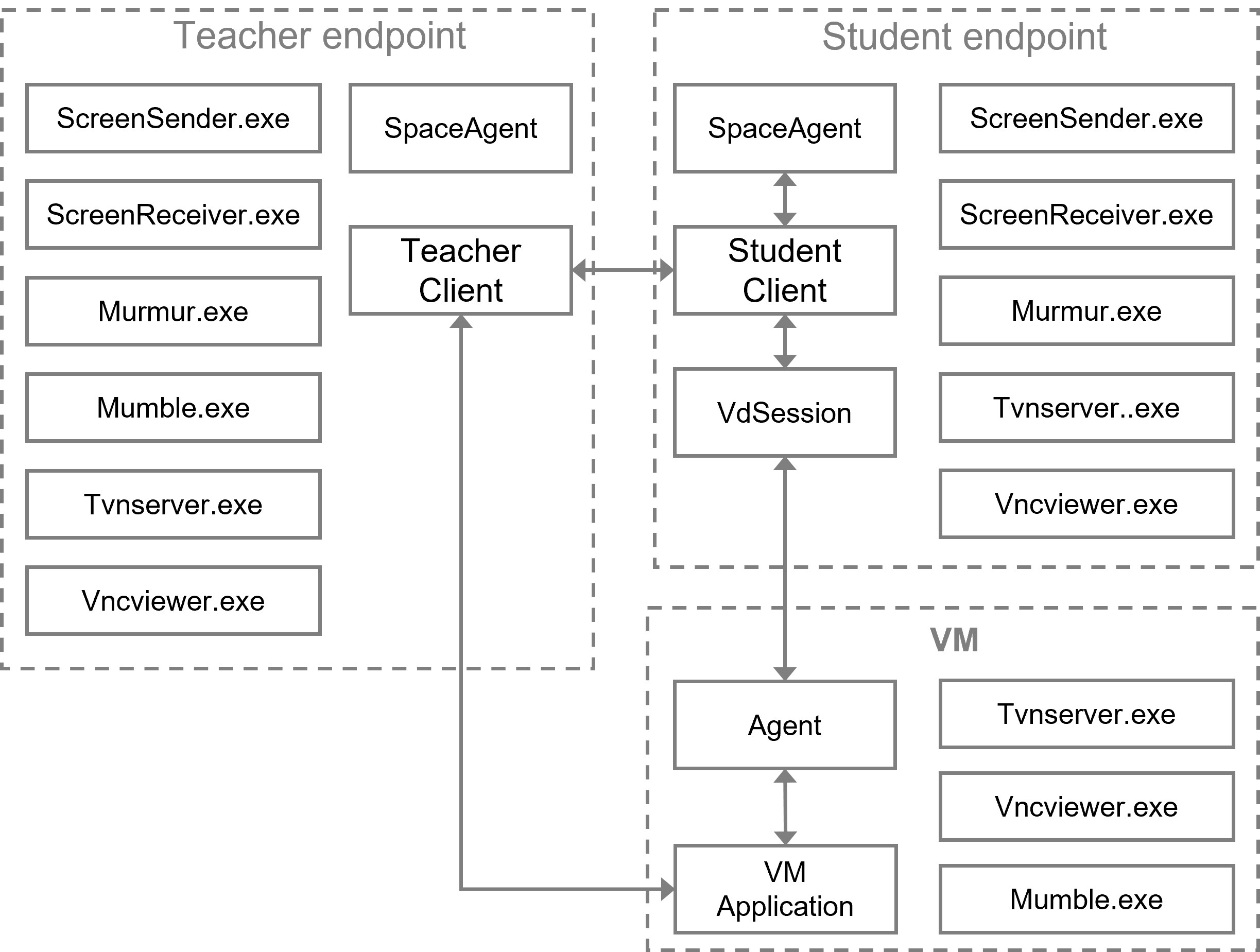

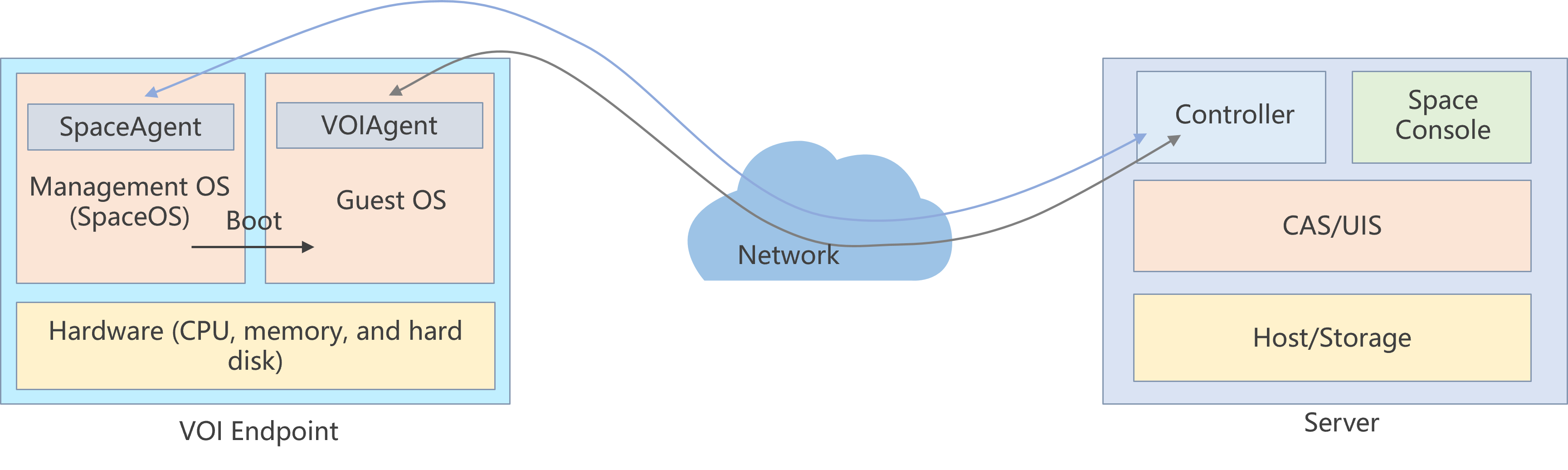

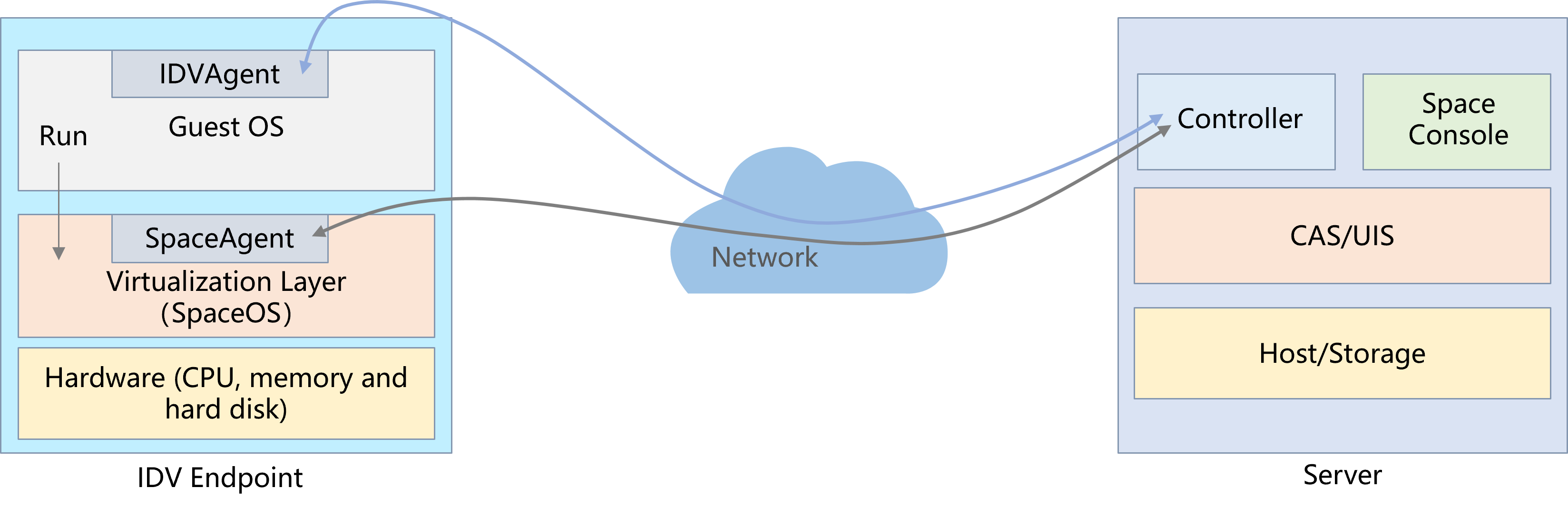

The Controller component, as the basic messaging component of the cloud desktop, acts as a message hub in cloud desktop services. It interacts with multiple other components and uses the gRPC and Protobuf protocols to carry messages. The Controller component interacts with other objects as follows:

· Communicates with the client on the thin client (for example, when the user logs in to the VM).

· Communicates with SpaceAgent on the thin client and maintains a long connection state (for realizing functions such as client management and service upgrades on the thin client).

· Communicates with the VM's internal agent and maintains a long connection state (for pulling and pushing policies to the agent).

· Communicates with HostAgent (which is used in only the software deployment function) on the host and maintains a long connection state.

· Communicates with workspace-server.java and maintains a long connection state. For example, user operations on the interface trigger the Controller component to push messages to other components.

Figure 9 Controller's interactions with other components

Service management

To restart, start, check the status of, and stop the service controller, execute the service controller restart, service controller start, service controller status, and service controller stop commands, respectively.

Commonly used commands

· jps: Used to obtain the process ID of workspace-server.jar. Command syntax: jps -l.

· jstack: Used to obtain information about the Java stack and native stack for running Java programs. Command syntax: jstack -l <pid> > <dstFileName>.

· jmap: Used to obtain detailed information about memory allocation for running Java programs, such as instance count and size. Command syntax: jmap -dump:format=b,file=<destFileName> <pid>. For example, the value for the destFileName parameter can be heap.bin.

· jstat: Used to obtain information related to class loader, compiler, and garbage collection. You can use this command to monitor resources and performance in real time. Command syntax: jstat –gcutil <pid> <interval>. The unit for the interval parameter is milliseconds.

|

CAUTION: As a best practice, do not execute the jmap command during busy service periods or under high pressure of the environment. Execute other commands when necessary to collect information. |

Commonly used logs

The following log files are stored in the /var/log/vdi/controller/ log directory:

· controller.log—This log file stores the internal running logs of the Controller. All calls (such as URLs) to the CAS API are logged. You can view this log file when service logic exceptions occur.

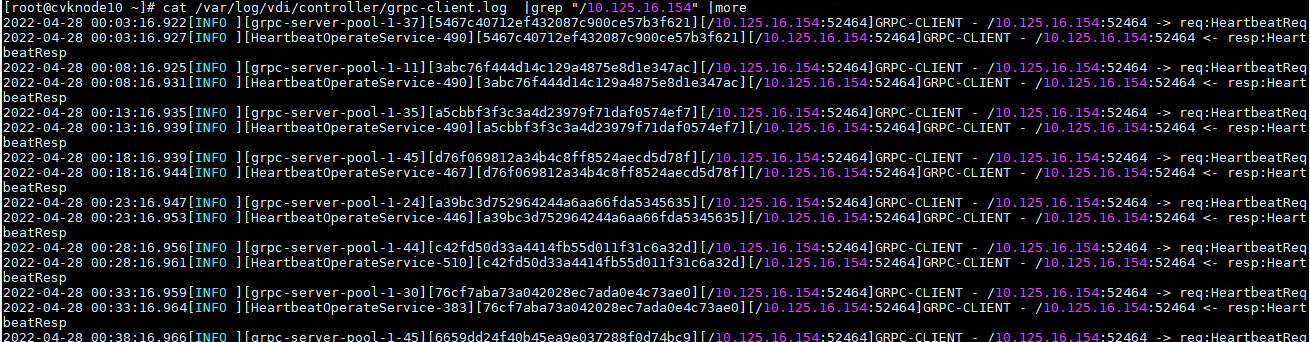

· grpc-client.log—This log file stores the logs for the communication between the Controller component and the client.

· grpc.log—This log file stores the logs for communication between the Controller component and non-client components, such as Agent, HostAgent, workspace-server, and SpaceAgent.

· jstack-generated logs—You can view specific stuck or blocked stacks from the logs when an interface task takes a long time to complete.

· jmap-generated logs—You can analyze and view jmap logs with the Eclipse MemoryAnalyzer tool. These logs are typically provided directly to developers after collection.

|

|

NOTE: When you view the grpc-client.log and grpc.log log files, you can filter the logs by the following information: · The communication peer's IP (such as the client's IP and the Agent's IP) in a non-gateway access environment. · The VM's IP or MAC address in a gateway access environment. You can determine whether the VM and client are connected to Space Console based on whether related logs exist. |

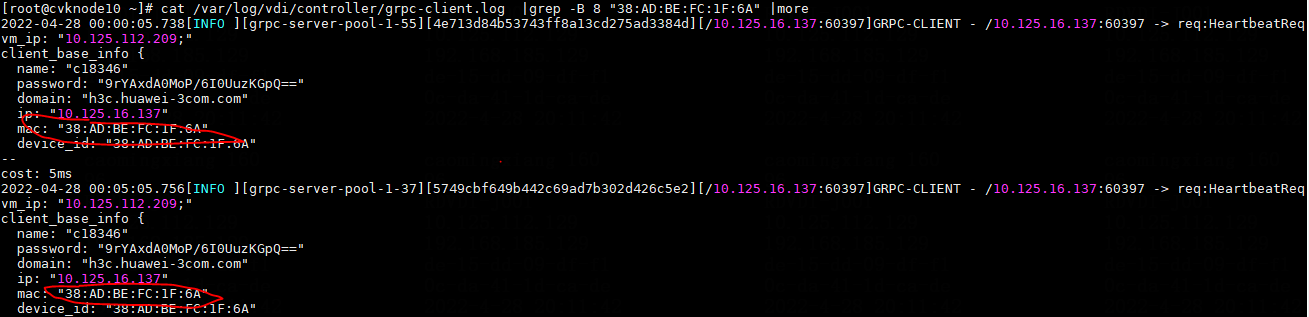

Figure 10 Viewing grpc-client.log in a non-gateway access environment

Figure 11 Viewing grpc-client.log in a gateway access environment

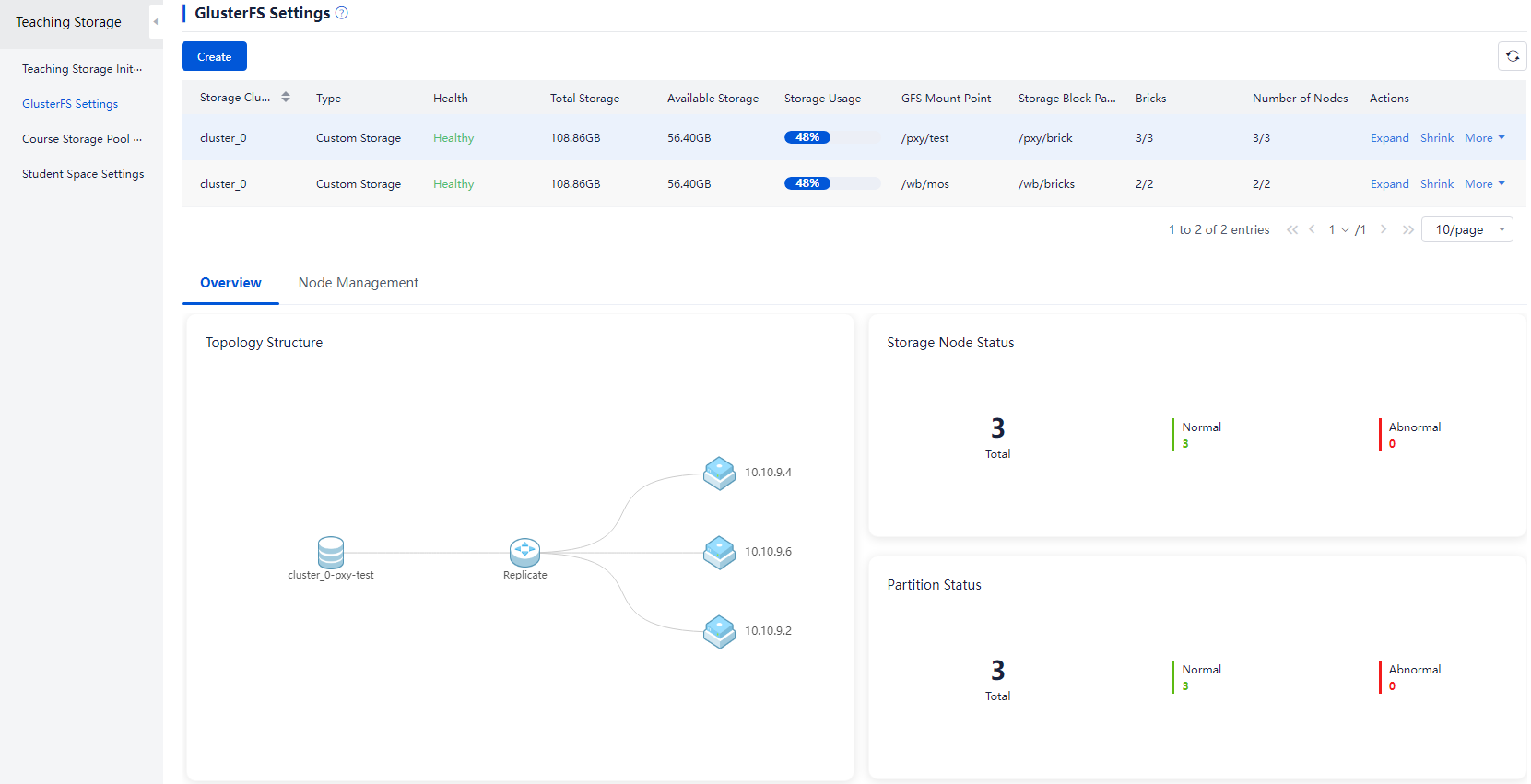

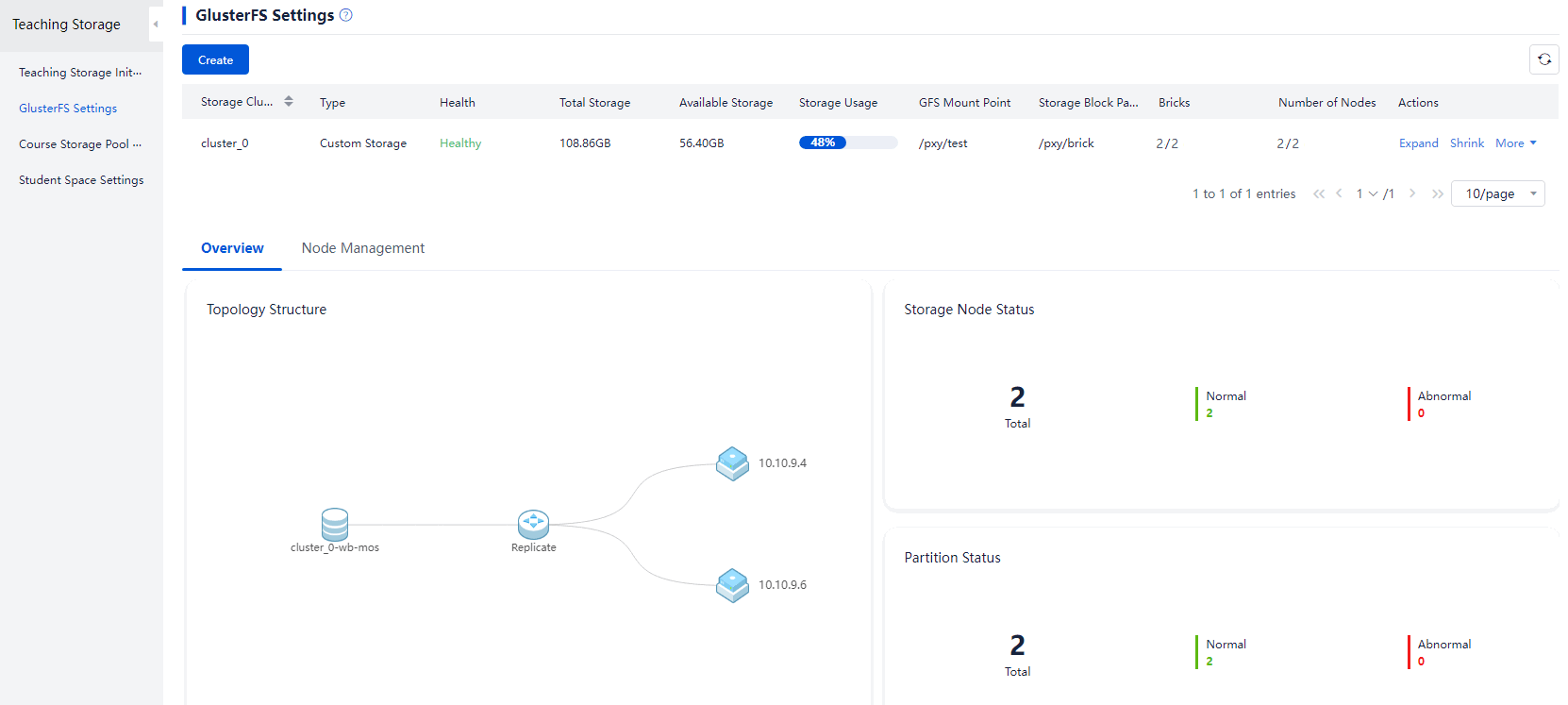

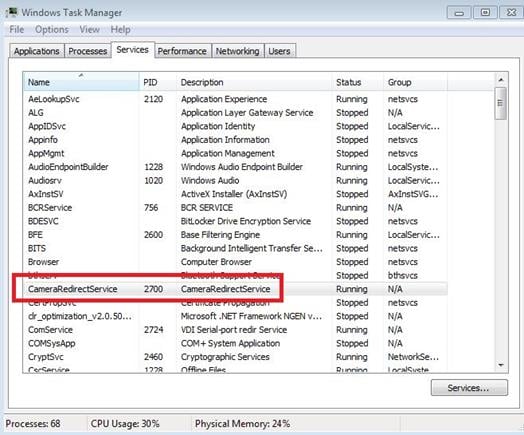

GlusterFS

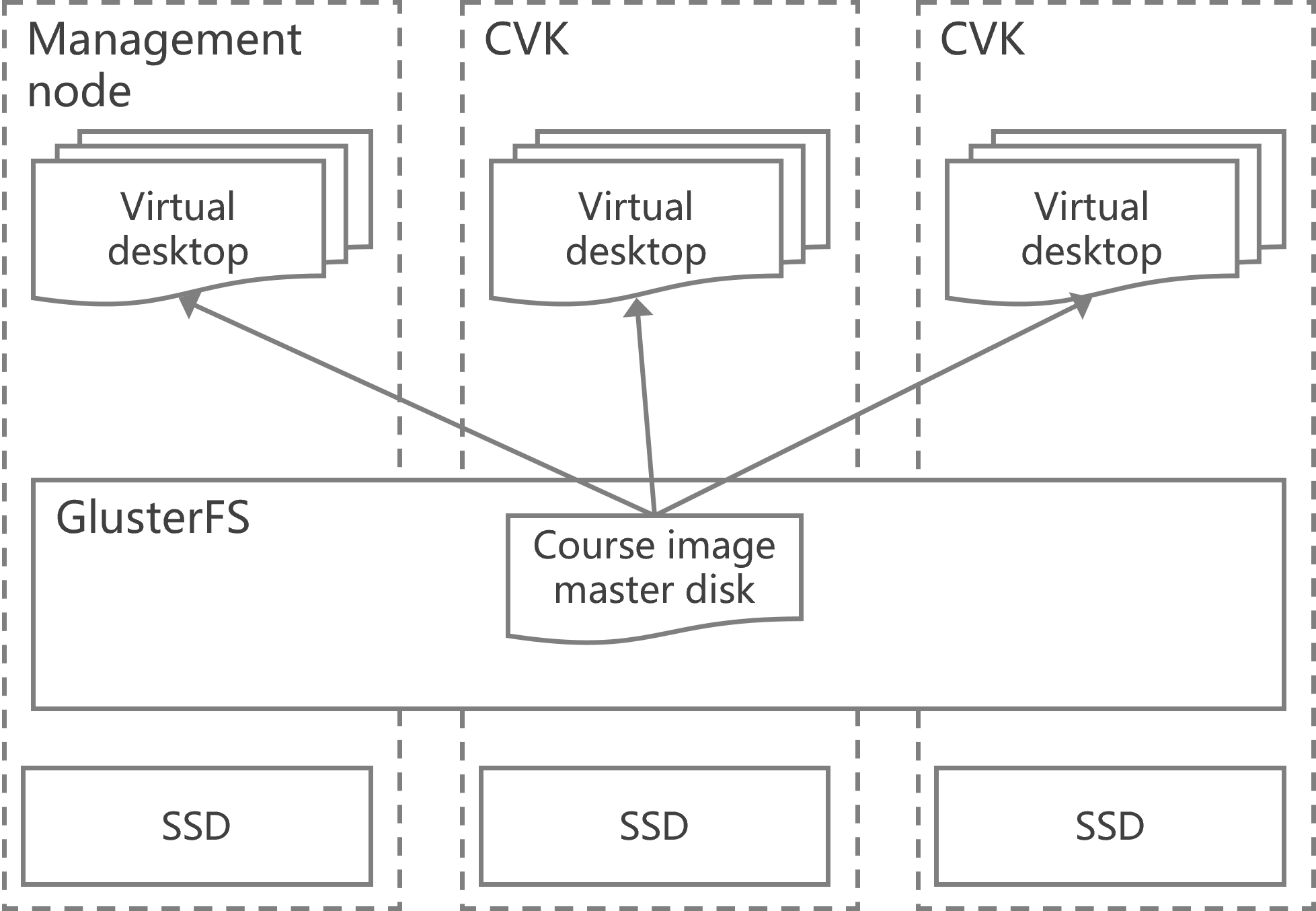

About GlusterFS

The essential principle of GlusterFS is to logically integrate the local disks on servers into a shared file system through the GlusterFS open source software. This allows the shared course image master disk to be hosted, realizing rapid distribution of student VMs during a class.

Figure 12 GlusterFS shared network topology

Service management

· To restart, stop, check the status for, and stop the uisfs-http service, execute the service uisfs-http restart, service uisfs-http start, service uisfs-http status, and service uisfs-http stop commands, respectively.

· To restart, start, check the status of, and stop the uisfs-cannikin service, execute the service uisfs-cannikin restart, service uisfs-cannikin start, service uisfs-cannikin status, and service uisfs-cannikin stop commands, respectively.

· To restart, start, check the status of, and stop the glusterd service, execute the service glusterd restart, service glusterd start, service glusterd status, and service glusterd stop commands, respectively.

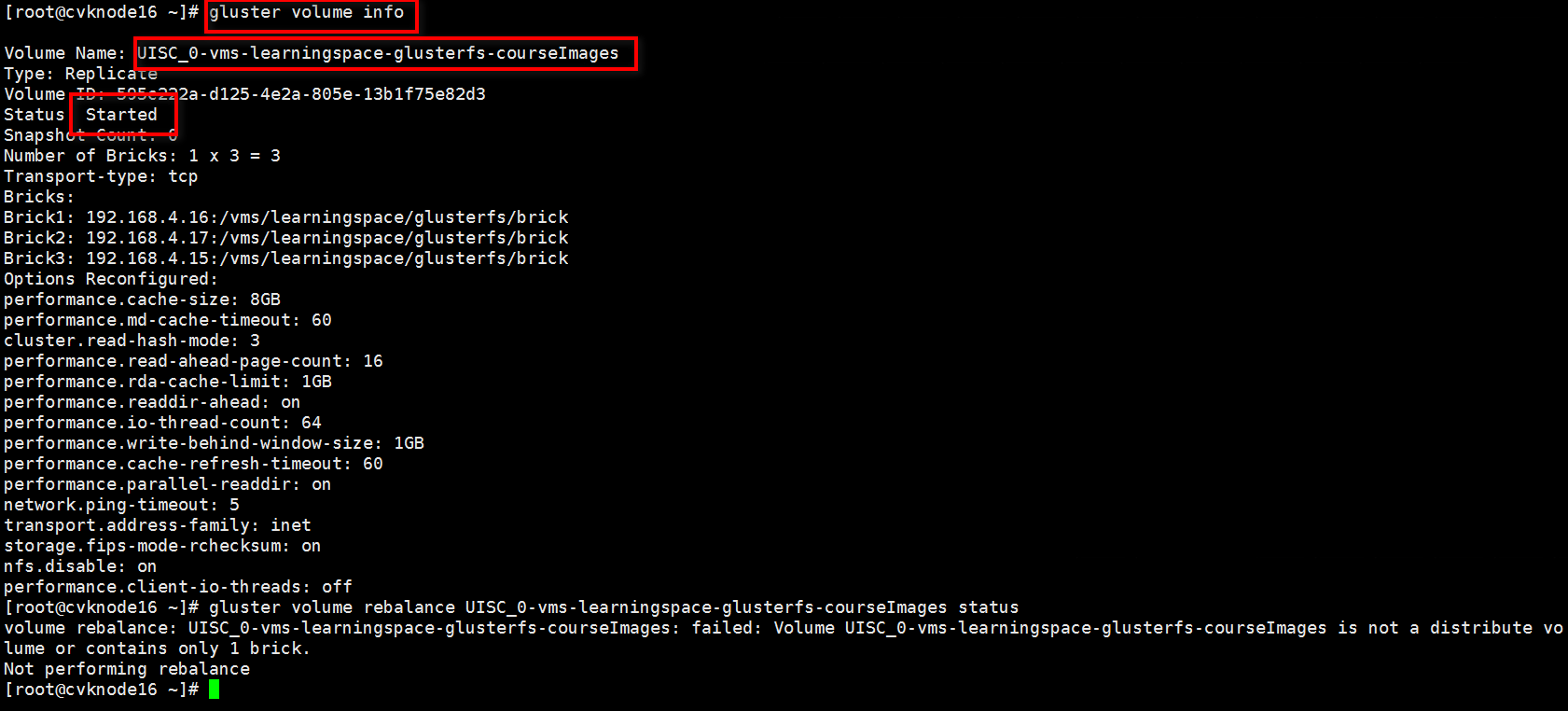

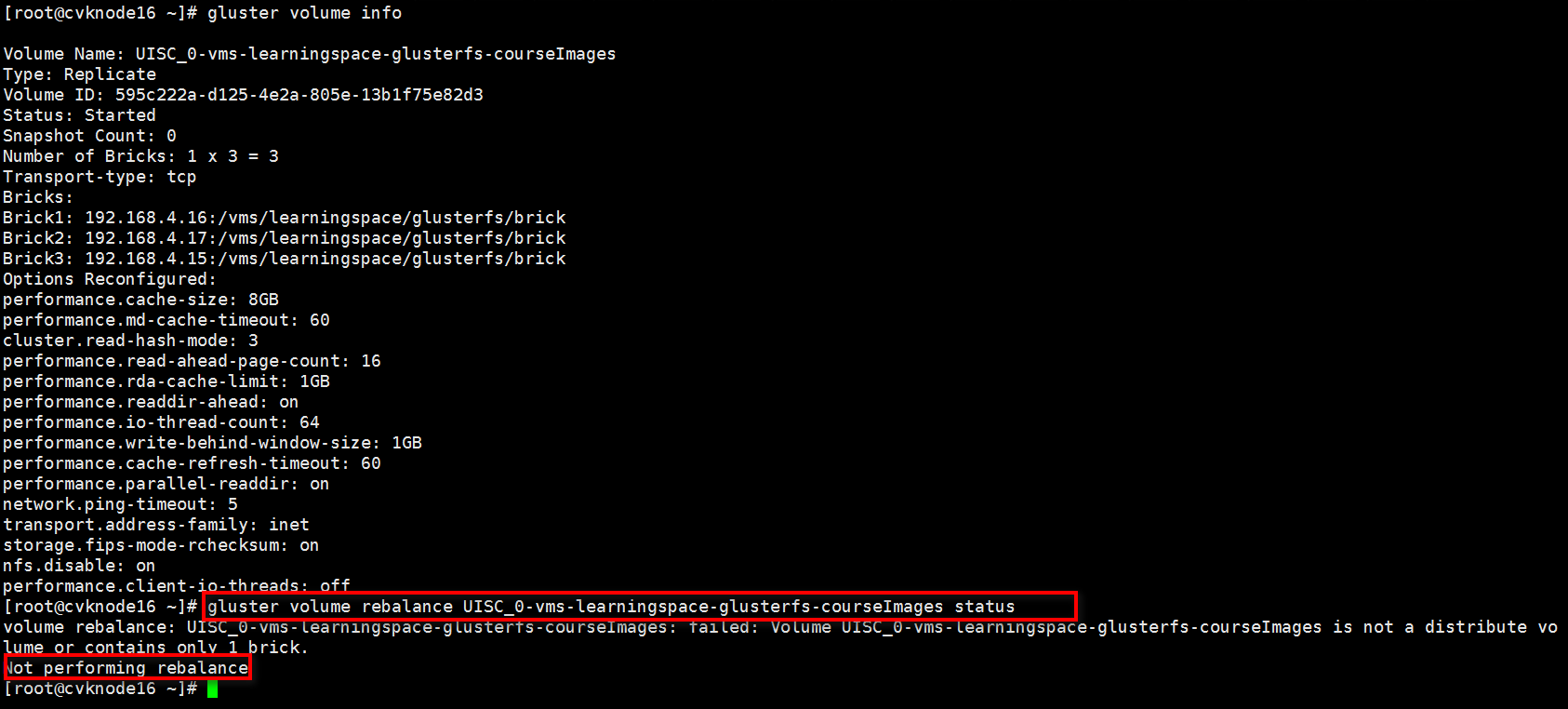

Commonly used commands

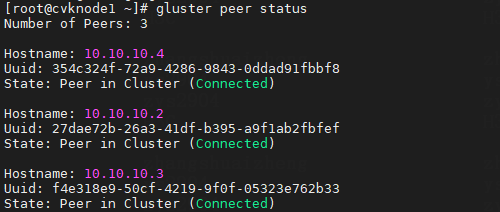

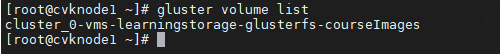

· peer status: Used to obtain the node status information. Command syntax: gluster peer status.

· volume list: Used to obtain information about storage volumes. Command syntax: gluster volume list.

· volume info: Used to obtain information about a specified storage volume. Command syntax: gluster volume info <name>.

· volume status: Used to obtain status information about a specified storage volume. Command syntax: gluster volume status <name>.

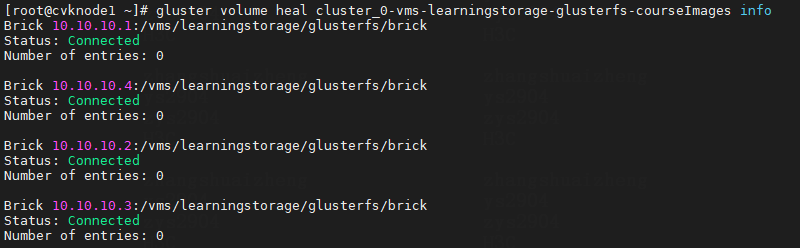

· volume heal: Used to identify whether split-brain files exist in a specified storage volume. Command syntax: gluster volume heal <name> info.

· mount -t glsuterfs: Used to mount a GlusterFS storage volume. Command syntax: mount -t glusterfs ip:/name /vms/learningspace/glusterfs/courseImages.

Commonly used logs

GlusterFS logs are stored in the /var/log/uisfs/ and /var/log/glusterfs/ directories.

· cistern.log—This log file stores the logs for the communication between Space Console and the uisfs-http service.

· cannikin.log—This log file stores the logs for the communication between the uisfs-cannikin service and the GlusterFS component.

· glusterd.log—This log file stores the running logs for the management process of the GlusterFS open source component.

Troubleshooting common issues

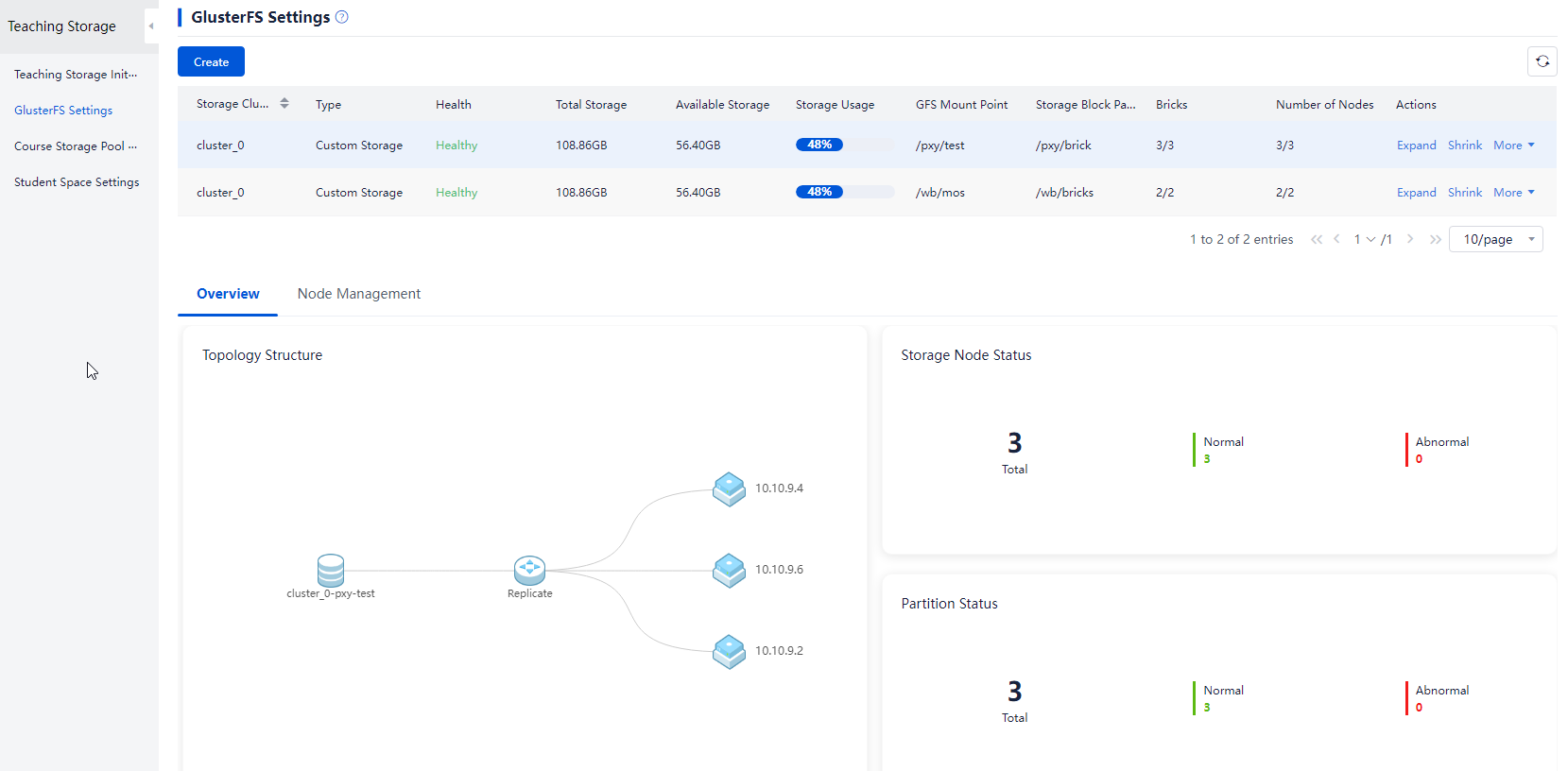

Poor health statuses of GlusterFS storage nodes

1. On the GlusterFS configuration node management page, identify whether the statuses of the storage node partitions, storage pool, and service node storage pool are all normal.

2. If any nodes are abnormal, repair the nodes.

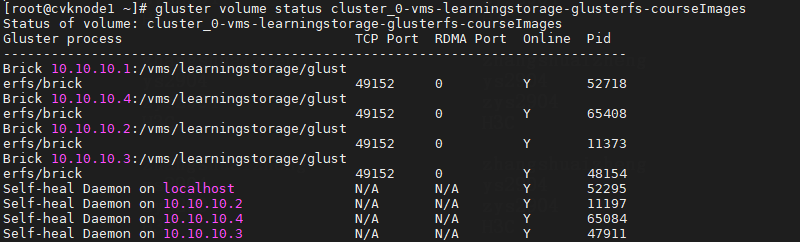

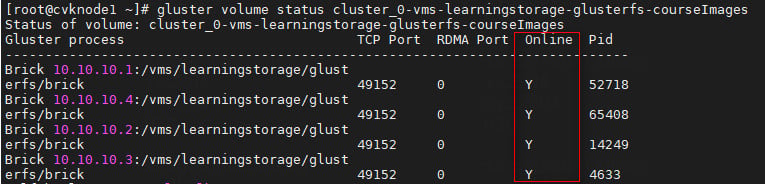

3. To check the online status of the GlusterFS storage node partitions, execute the gluster volume status <name> command (which is used to obtain status information about a specified storage volume).

Inactive GlusterFS storage pool and update status failure in manual start

1. Check the connectivity of the storage network.

2. Mount a GlusterFS storage volume to a temporary directory, and identify whether duplicate files exist in the GlusterFS storage volume. If yes, delete the duplicate files. The specific method is as follows:

a. Execute the mkdir -p /vms/temp command to create temporary directory /vms/temp.

b. Execute the mount -t glusterfs IP:/VolumeName /vms/temp command to mount the GlusterFS storage volume. The IP parameter indicates the IP address of a normal node of the GlusterFS storage volume, and the VolumeName parameter indicates the volume name.

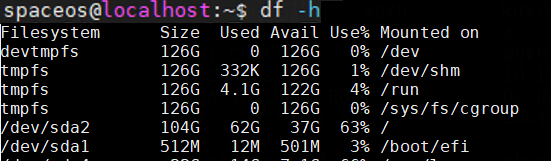

c. Execute the df -h command to identify whether the mounting is successful.

d. Enter the /vms/temp directory, execute the ls -a command to view all files, and delete any duplicate files if found.

3. Unmount /vms/temp.

4. Start the storage pool

Inactive storage pool caused by Split-brain GlusterFS storage files

1. Execute the gluster volume heal VolumeName info (VolumeName is the name of the storage volume) command to identify whether split-brain files displayed as ls in split brain exist. If yes, delete the split-brain files.

If you want to keep the split-brain files, copy them from the block storage directory and copy them back to the original directory after the storage pool has been repaired. Backup the split-brain files in the corresponding nodes' brick directories.

2. Execute the mkdir -p /vms/temp command to create temporary directory /vms/temp.

3. Execute the mount -t glusterfs IP:/VolumeName /vms/temp command to mount the GlusterFS storage volume. The IP parameter indicates the IP address of a normal node of the GlusterFS storage volume, and the VolumeName parameter indicates the volume name.

4. Enter the /vms/temp directory and delete the split-brain files.

5. Unmount /vms/temp.

6. Start the storage pool.

7. Copy the backup files to the corresponding mount point directory.

Inactive GlusterFS storage pool and invalid mount point in manual start

1. Execute the df -h command to identify whether the corresponding GlusterFS storage mount point exists on the corresponding host.

2. If the mount point does not exist, delete the data in the GlusterFS mount point directory on the host. If the mount point directory is not mounted to GlusterFS storage, the mount point directory cannot have any data.

3. Start the storage pool.

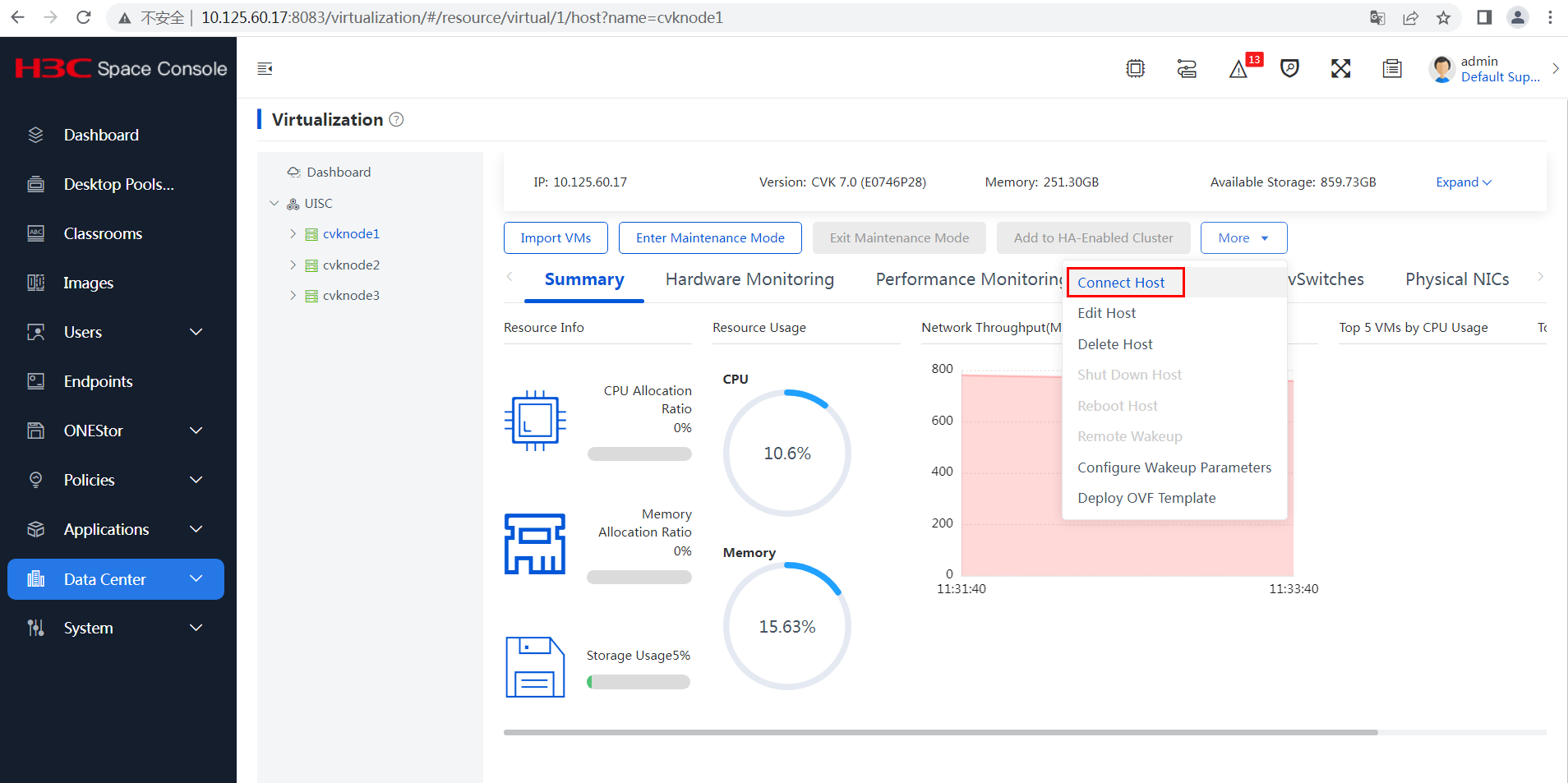

Domain not found in creation of a GlusterFS teaching storage

For example, if the message Domain not found: no domain with matching name 'IMG_LCVEPXDE_1673668009778_1 appears when you create a GlusterFS teaching storage, troubleshoot the issue as follows:

1. Identify whether the vservice table TBL_DOMAIN in the CAS database contains residual data of the domain named IMG_LCVEPXDE_1673668009778_1. If a residual data entry exists, record the corresponding HOST_ID and delete the data entry.

Figure 13 Troubleshooting the TBL_DOMAIN table

2. Locate the corresponding host on Space Console based on the host ID obtained in the previous step, and then connect to the host to perform data synchronization.

Figure 14 Connecting the host ID

3. Recreate the GlusterFS teaching storage.

No data available when you query GlusterFS configuration after switching to the backup device in the stateful failover scenario

1. Identify whether the backup device has a storage virtual switch with the same name required by GlusterFS.

2. If no such storage virtual switch exists, add the storage virtual switch with the same name.

3. Execute the following command on the primary node in the stateful failover system: gluster peer probe storage IP of the backup node. For example, gluster peer probe 10.10.10.1.

4. Execute the following command on the backup node in the stateful failover system: gluster peer probe storage IP of the primary node. For example, gluster peer probe 10.10.10.2.

5. Query GlusterFS configuration again.

openGauss

About openGauss

As from E2001, openGauss has been the database system used by the workspace-server service.

Service management

· To restart the openGauss service, execute su - omm -c "gs_om -t restart".

· To start the openGauss service, execute su - omm -c "gs_om -t start".

· To view the openGauss service status, execute su - omm -c "gs_om -t status --detail".

· To stop the openGauss service, execute su - omm -c "gs_om -t stop".

Commonly used commands

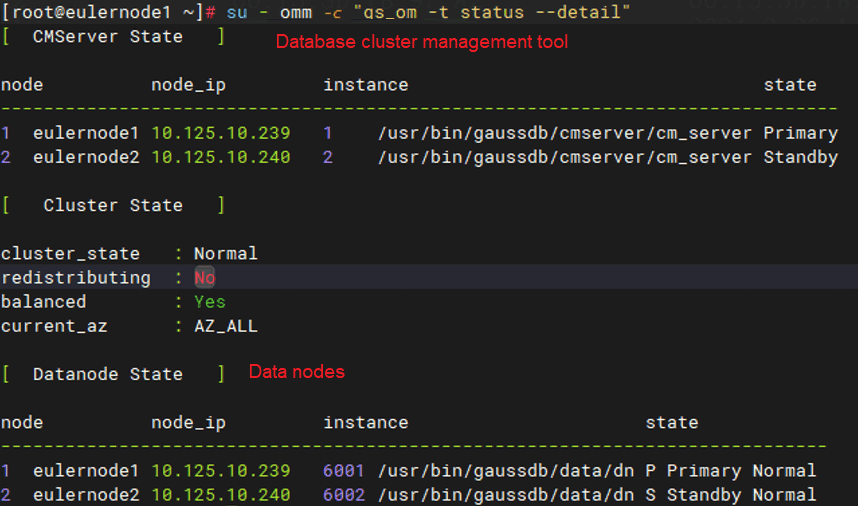

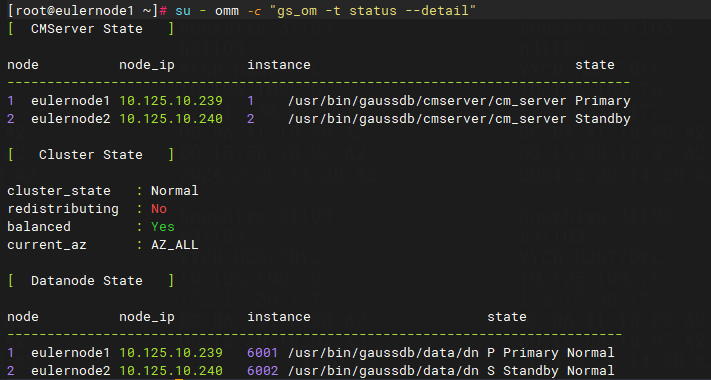

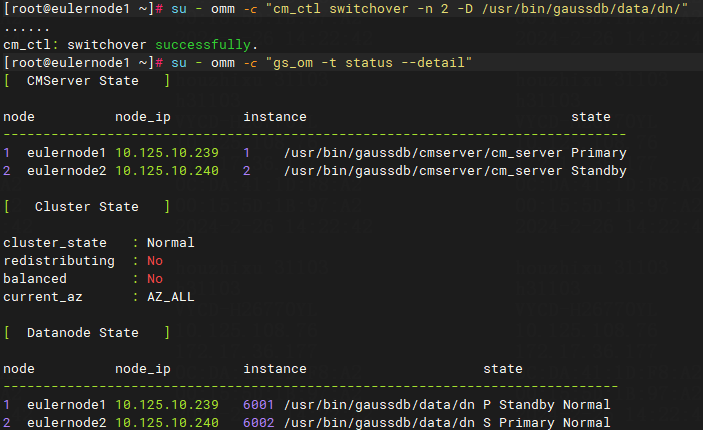

· To view the openGauss service status, execute su - omm -c "gs_om -t status --detail". In a dual-node environment, this command displays information about both primary and backup nodes. The information under [Datanode State] represents the states of data nodes. The information under [CMServer State] represents the states of nodes in the openGauss cluster management tool. The primary node under [Datanode State] represents the actual primary node of the database. The Cluster_state field displays the cluster status, which can be Unavailable, Normal, and Degraded. The Degraded option indicates that the database can still provide services but has some anomalies.

Figure 15 Viewing the openGauss service status

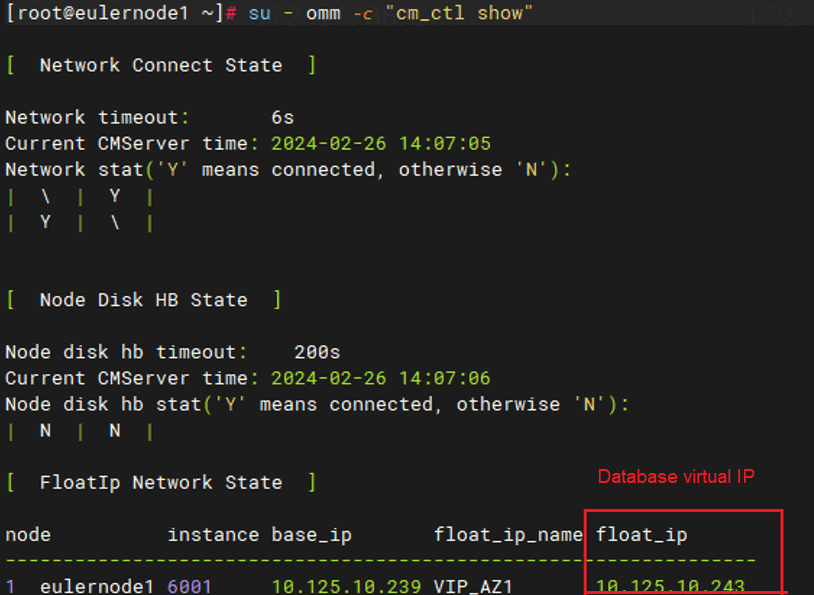

· To view the virtual IP of openGauss, execute su - omm -c "cm_ctl show". In the stateful failover scenario, the value for the float_ip field is the virtual IP of the database system.

Figure 16 Viewing the virtual IP of openGauss

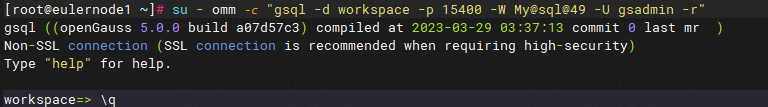

· To log in a database, execute su - omm -c "gsql -d database name -p 15400 -W database password -U database user -r". For example, execute su - omm -c "gsql -d workspace -p 15400 -W My@sql@49 -U gsadmin -r” to log in to the workspace database. After login, enter \q to exit the database and enter \c database name to switch to a new database. The other commands for adding, deleting, modifying, and querying databases are the same as those in MySQL.

Figure 17 Logging in to a database

· To perform a primary/backup database switchover, execute su - omm -c "cm_ctl switchover -n node ID -D service data path". The node ID and service data path are obtained from the command for querying the openGauss service status. For example:

Execute su - omm -c "cm_ctl switchover -n 2 -D /usr/bin/gaussdb/data/dn/" to promote the original backup node to the primary node. This command changes only information displayed in the [Datanode State] section.

Figure 18 Before the switchover

Figure 19 After the switchover

Common logs

The log messages are saved to the /usr/bin/gaussdb/log/omm directory, including the following subdirectories:

· /usr/bin/gaussdb/log/omm/om—In standalone mode, record log messages about common commands such as status checks and startups and log messages about installation and scaling.

· /usr/bin/gaussdb/log/omm/cm—In stateful failover mode, record common commands depending on components and logic log messages, such as switchover log messages.

· /usr/bin/gaussdb/log/omm/pg_log—Record data node log messages, including internal logic log messages containing actual data services.

· The remaining folders contain openGauss built-in component logs, which do not require attention.

BT component

HTTP and BT are two commonly used methods for downloading files. Their main differences are as follows:

· HTTP uses a client-server model where files are stored on the server, and all clients request files from the server. If a file is too large, each client might take a long time to download it, and will maintain a connection with the server. If many clients need to download the file simultaneously, the connections significantly burden the server.

Figure 20 HTTP download model

· BT uses a P2P model. When a client attempts to download a file, it first locates a host on the network that has the file, and then establishes a connection with the host to obtain the file. Each host acts as both a server and a client. As a server, a host can provide the files for other clients to download, and as a client, it can download files from other hosts. For BT, compared with HTTP, the more clients downloading, the faster the download speed.

Figure 21 BT download model

Versions earlier than E1012P10

Workspace BT is a file download service based on the open source BitTorrent component, providing download services for large file such as images, client installation packages, and agents in Workspace and Learningspace scenarios.

Workspace BT includes the following components:

· BT management service named bttorrent. For more information, see "About bttorrent."

· BitTorrent service, an open source component that provides the actual file download backend service. It is an application developed in Python based on the Bit Torrent protocol.

About bttorrent

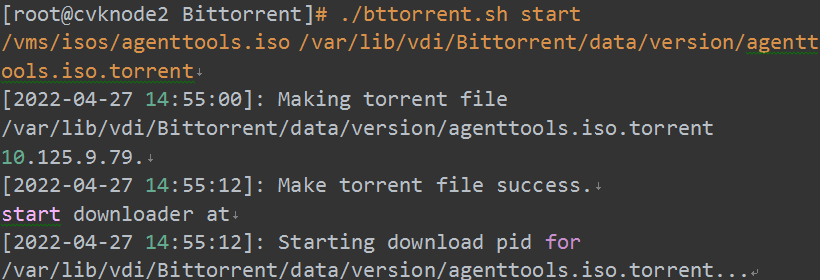

The bttorrent service is a secondary development of the BitTorrent service, making it easier and more convenient to call BitTorrent during the development process. All core codes are in the bttorrent.sh script, located in the /var/lib/vdi/Bittorrent/bttorrent.sh directory of the main management node. The script supports using the following command parameters:

Figure 22 bttorrent.sh script parameters

· start: Start the BT download process. This script is mainly used by developers. Command syntax: ./bttorrent.sh start original_file_path torrent_file_path. The main process includes two steps: generating a torrent file and using the torrent file to start the download process.

Figure 23 Output for the start parameter

· stop: Stop the BT download process.

Figure 24 Stop parameter

· restart: Restart the BT download process.

Figure 25 Output for the restart parameter

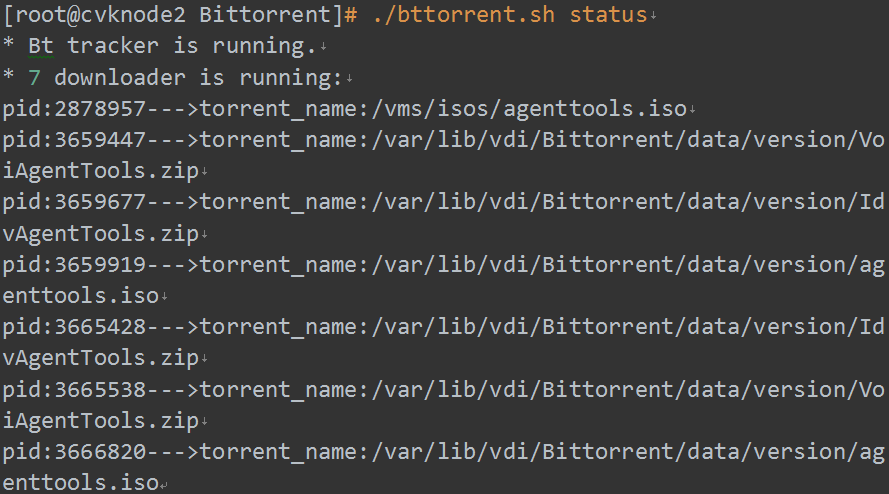

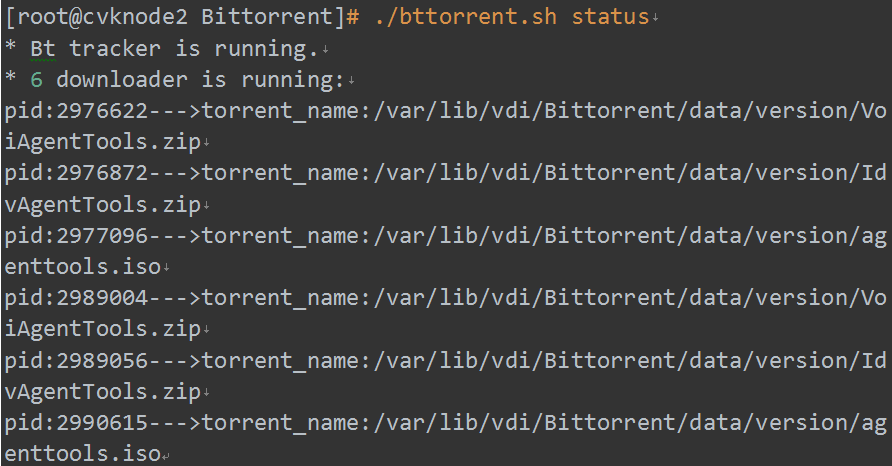

· status: Check the BT running status. You can view the running status of the BT tracker service and the current running download processes.

Figure 26 Output for the status parameter (running status)

Figure 27 Output for the status parameter (not running status)

· refresh: This parameter is currently deprecated. Please do not use.

Troubleshooting common issues

1. Identify whether the status of the bttorrent service is normal.

Figure 28 Checking the status of bttorrent

2. Identify whether the BT downloader process is normal and whether the downloader list contains files to be downloaded.

Figure 29 Checking the BT downloader process

3. Identify whether the source file and image file exist.

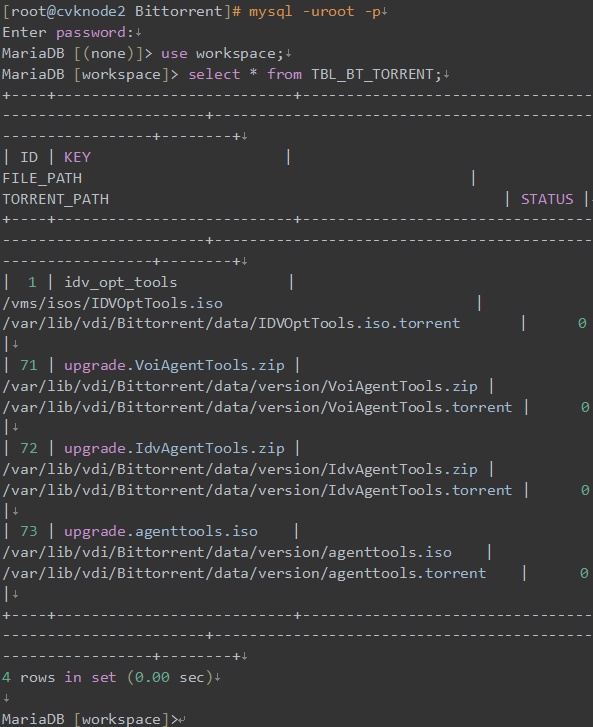

4. If the server has been restarted, the BT service will read the BT list from the database and start the downloader download process one by one. If you cannot find a file in the downloader list, check the database table for corresponding record. The FILE_PATH and TORRENT_PATH columns in the command output table displays the original file and the torrent file of each entry, respectively. You can identify whether the files exist based on the file directories.

Figure 30 Viewing the database table

5. Identify whether the communication from the client to the server on port 6969 is normal.

Figure 31 Viewing port 6969

|

|

NOTE: You can use the telnet command or access http://Space_Console_IP:6969/ on a browser to identify whether the communication from the client to the server on port 6969 is normal. |

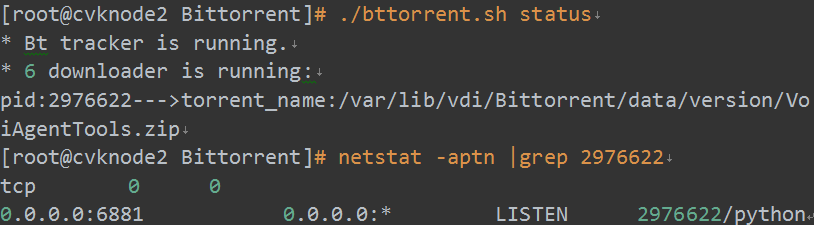

6. Each downloader occupies a port exclusively. When starting the port service, a downloader will find an available port, starting from 6881 and increasing. Figure 32 shows the command to check a specific downloader port. After querying the port, you can use related commands (such as telnet) on the client to identify whether the port communication is normal.

Figure 32 Querying a downloader port

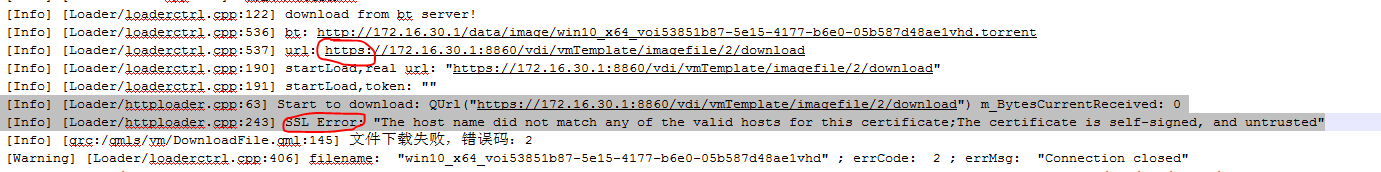

E1012P10 and later versions

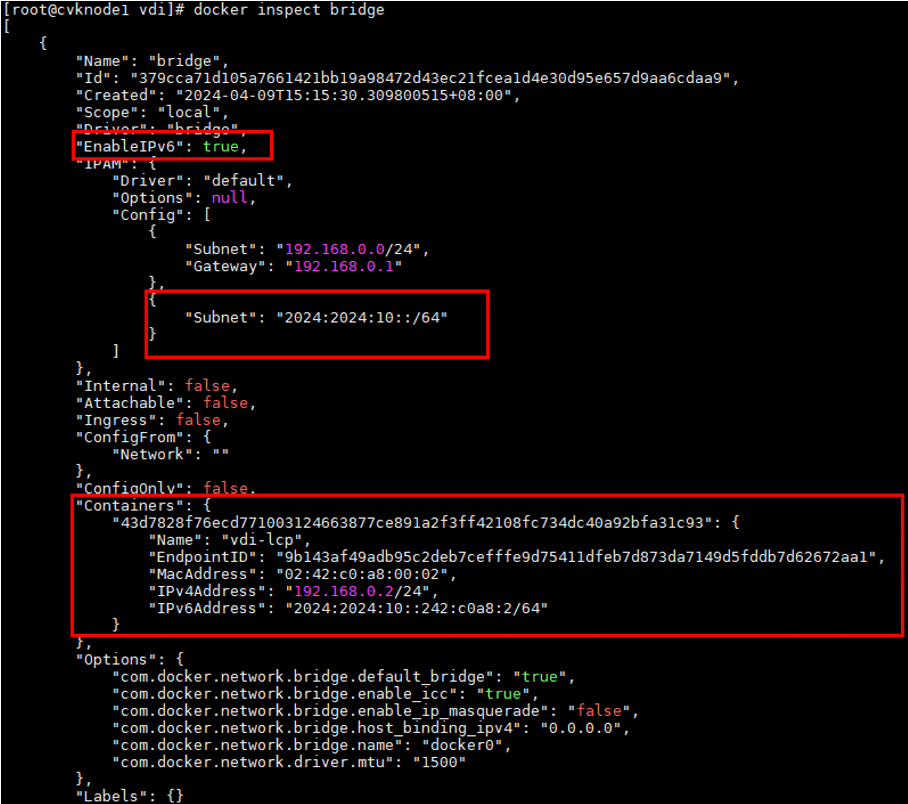

The BT component mainly includes the bittorrent-tracker and webtorrent-cli modules. The tracker module supports both IPv4 and IPv6 addresses and supports using multiple addresses. You do not need to configure the BT server address on Space Console because its address is the BT server address. If Space Console has multiple addresses, all can be used for BT download.

About the component script

The loopstart.sh script is used for periodic calls in scheduled tasks. It checks whether the torrent process exists and whether the server's address changes. A change in the server's address means the tracker address in the torrent file needs to be updated.

Collecting logs

Logs are stored on the management node, and located at the /var/lib/vdi/nodejs/logs directory. The structures of the log files are as follows:

· -rw-r--r-- 1 root root 8062 Aug 9 19:01 bt_log.log—Stores the bttorrent.sh script execution logs.

· -rw-r--r-- 1 root root 7297 Aug 9 19:01 loopstart.log—Stores the loopstart.sh script execution logs.

· -rw-r--r-- 1 root root 328 Aug 9 19:01 torrent_port_pid—Stores the torrent process records, including process ID, port, image or installation package path, and the path of the generated torrent.

· -rw-r--r-- 1 root root 47 Aug 11 13:47 tracker_ip—The tracker IP is the tracker address used to create torrent files.

Viewing processes

1. The tracker process uses port 6969, corresponding to the node.js process. To view the tracker process, execute the following command:

[root@cvknode1 logs]# ps -ef |grep 6969

root 4962 1 0 Jun14 ? 00:00:51 node /var/lib/vdi/nodejs/node-linux-x64/bin/bittorrent-tracker -p 6969 --http

2. Execute the following command to view the port number and process ID used by the WebTorrent process, as well as the process ID and port number of each torrent file's torrent process. The starting port number for a torrent process is 6881, which remains consistent with the versions earlier than E1012P10. Seeding one torrent file occupies two port numbers, a torrent port and a DHT port.

[root@cvknode1 logs]# netstat -anp |grep WebTorrent

tcp6 0 0 :::6881 :::* LISTEN 46610/WebTorrent

tcp6 0 0 :::6883 :::* LISTEN 42602/WebTorrent

udp 0 0 0.0.0.0:6771 0.0.0.0:* 42602/WebTorrent

udp 0 0 0.0.0.0:6771 0.0.0.0:* 46610/WebTorrent

udp 0 0 0.0.0.0:6882 0.0.0.0:* 46610/WebTorrent

udp 0 0 0.0.0.0:6884 0.0.0.0:* 42602/WebTorrent

|

|

NOTE: Identify whether the client can access the torrent port and DHT port. You can use the telnet command on the client for check. |

Accessing the tracker statistics page

The tracker statistics page displays the usage of the torrents. To access the statistics page, type http://Console_Space_IP:6969/stats on a browser.

Troubleshooting common issues

1. Identify whether the corresponding source file record exists in a torrent_port_pid log file. If the record does not exist, the torrent process will not exist either, and BT downloads cannot be performed. If the record exists, verify whether the process is running correctly.

[root@cvknode1 logs]# cat /var/lib/vdi/nodejs/logs/torrent_port_pid |grep Workspace_App //Check the source file records

46610 6881 /var/lib/vdi/Bittorrent/data/version/H3C_Workspace_App-E1013-win32.zip /var/lib/vdi/Bittorrent/data/version/H3C_Workspace_App-E1013-win32.torrent

[root@cvknode1 logs]#

[root@cvknode1 logs]# ps -ef |grep 46610 //Identify whether the process is running normally

root 46610 1 0 Jun17 ? 00:41:43 WebTorrent

root 67292 64960 0 14:22 pts/10 00:00:00 grep --color=auto 46610

2. If the process exists, identify whether the torrent file information is correct. The red text below containing the announce word is the tracker addresses. As long as the client can connect to any of these addresses, it can perform a BT download.

[root@cvknode1 logs]# webtorrent info /var/lib/vdi/Bittorrent/data/version/H3C_Workspace_App-E1013-win32.torrent //View torrent file information

WebTorrent: uTP not supported

{

"name": "H3C_Workspace_App-E1013-win32.zip",

"announce": [

"http://10.114.103.174:6969/announce",

"http://192.168.3.174:6969/announce",

"http://[2022:2022::3:174]:6969/announce"

],

"infoHash": "3d48aac756cfa080595e9dbac2e05dbbc3902b40",

"created": "2022-06-17T07:28:03.000Z",

"createdBy": "WebTorrent <https://webtorrent.io>",

"urlList": [],

"files": [

{

"path": "H3C_Workspace_App-E1013-win32.zip",

"name": "H3C_Workspace_App-E1013-win32.zip",

"length": 260873190,

"offset": 0

}

],

"length": 260873190,

"pieceLength": 262144,

"lastPieceLength": 39910,

"pieces": [

3. If all the above steps are completed, you can use a BT client to download the torrent file for test. If the test is successful, identify whether the issue is on the client side.

4. Collect upgrade download logs from the C:\Program Files (x86)\H3C\SpaceAgent\log directory on the client to check for relevant information.

VDP protocol

About the VDP protocol

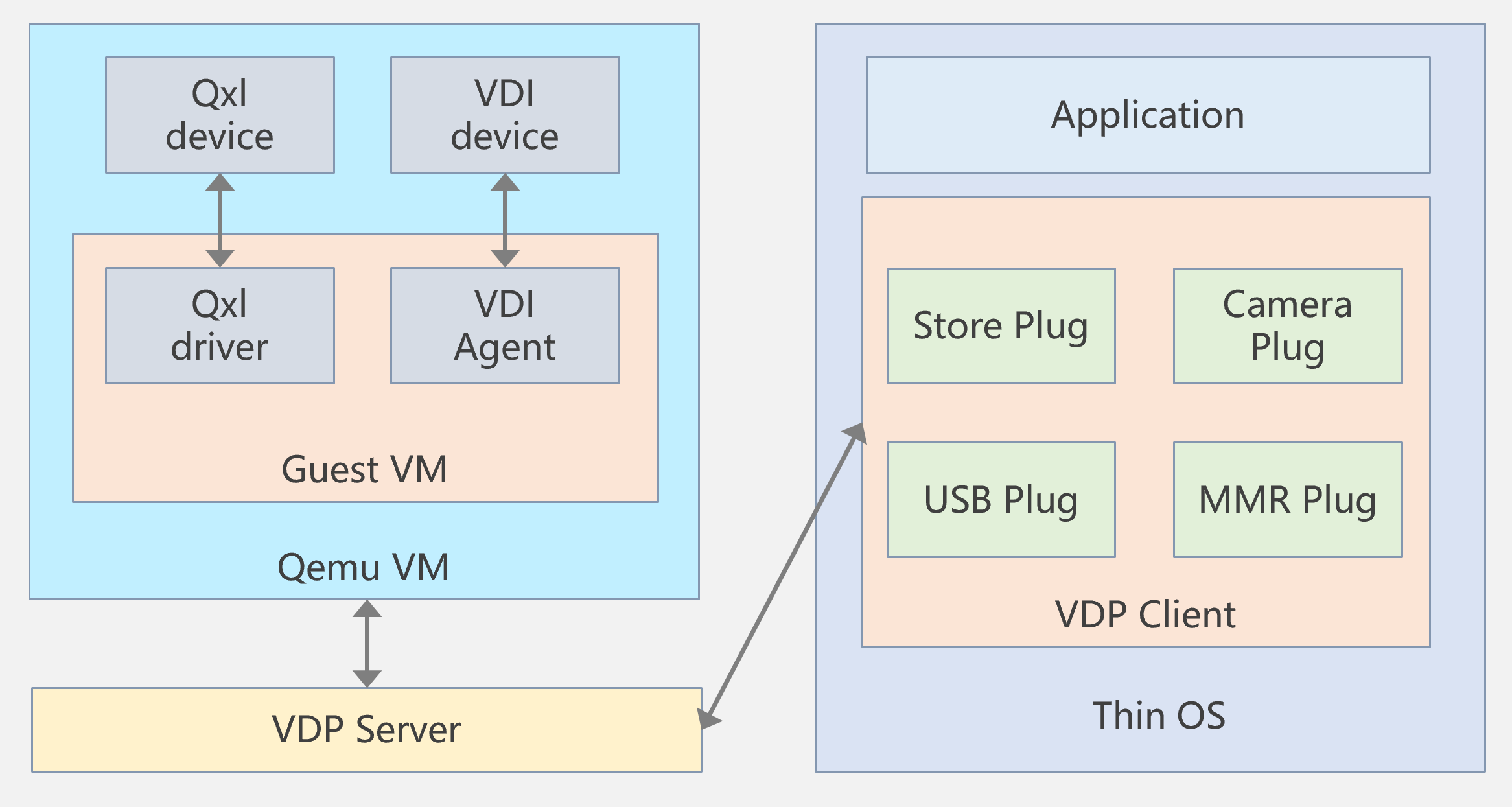

Virtual Desktop Protocol (VDP) is the fundamental protocol that connects the VDP server and the VDP client.

The VDP server obtains rendering commands and cursor commands from the VM through the Qxl device, and communicates internally with the VM through the VDI device.

The VDP client runs on devices such as a thin client or PC. The VDP client communicates with the VDP server and processes the commands sent from the VDP server. The VDP client provides basic functions of displaying the VM desktop and interacting with the VM. The VDP client uses plugins to load extended services, providing a data transfer channel. The mechanism of the virtual channel service is similar to that of the RDP protocol virtual channel. Plugins are added as dynamic libraries, and the VDP protocol automatically loads the corresponding plugins to enable the respective services. Service data communicates with programs in the VM through the VDP protocol channel. The service data communicates with programs in the VM through the VDP protocol channel.

Figure 33 VDP protocol module

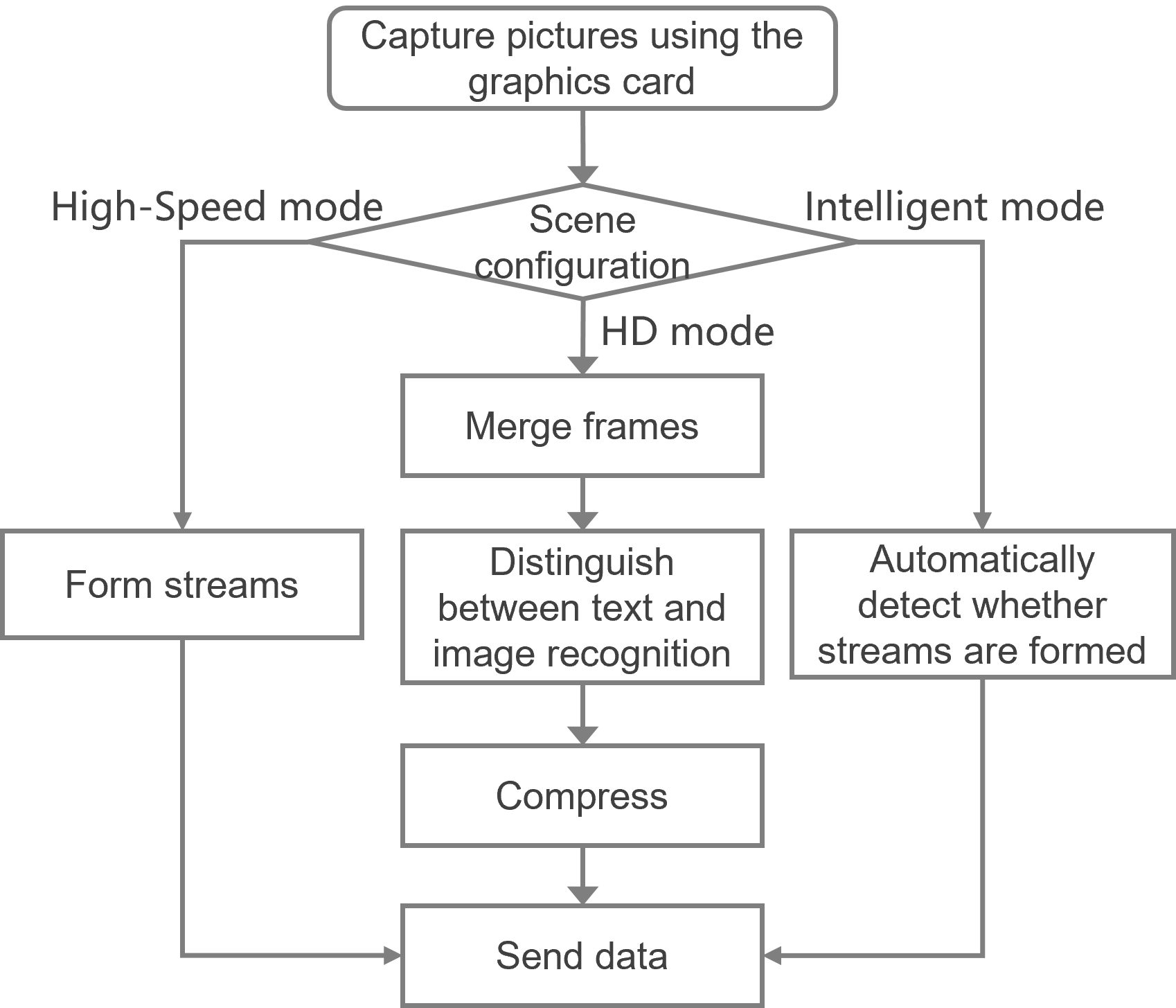

About the VDP mode

The VDP protocol supports using the following modes in different scenarios:

· HD mode—Mainly used in common office scenarios, and supports HD lossy and HD lossless.

· High-speed mode—Mainly used for video scenarios where the bandwidth is relatively low.

· Intelligent mode—Automatically detects the current scenario and switches the image compression algorithm accordingly.

Figure 34 Processing flows for different modes

Troubleshooting common issues

Client failed to connect the VM

1. Identify whether the client can access Space Console and the IP used for client access on the host normally.

2. Use the telnet or SSH command, or tcping tool to troubleshoot whether the host has opened the SPICE console port for the VM.

3. For the gateway access scenario, identify whether the client is connected to the gateway IP and whether the gateway's external port is open.

4. If the client cannot connect to the VM after login, try clearing the files in the following directory and restarting the client to connect to the VM.

¡ Windows client: C:\Users\current_user\Documents\Workspace\vdps.

¡ SpaceOS client: /home/spaceos/Documents/Workspace/vdps.

5. If the client system is Windows 10, identify whether the controlled folder access feature is disabled on the Windows Security > Virus & threat protection > Ransomware protection > Controlled folder access page. If the feature is enabled, disable it and then try again.

|

|

NOTE: The SPICE console port number on the VM is not fixed. To obtain the SPICE console port number, navigate to the Edit page of the VM on Space Console and then click the Console > Console SPICE tab. |

Sudden disconnection during VM use

The client pops up a message indicating that an exception occurred in the communication between the client and the server where the VM is located, or that the client is disconnected from the cloud desktop.

1. Identify whether the network between the client and the host is normal, and whether the TCP connection between the client and the VM SPICE console port on the host is normal. You can continuously ping the host IP from the client and check the ping results when the issue reoccurs. If the client is a Windows client, use the tcping tool as a best practice.

2. For a gateway access scenario, identify whether the connection from the client to the gateway IP and gateway port is stable.

3. Identify whether the TCP aging time of network devices (such as firewalls, routers, and Layer 3 switches) between the client and the host is too short.

VM lags

1. Identify whether the network bandwidth between the client and the host is too small, and whether the latency is too high. If so, optimize the network.

2. On Space Console, identify whether the VM's disk IO latency and disk request IOPS have peaks during the lags. If so, troubleshoot the storage or CAS virtualization component.

3. Identify whether the CPU usage and memory usage are too high inside the VM. If the usages are high, identify the processes consuming the most resources.

4. For VMs without vGPU cards, try changing the advanced settings of the client: set the image compression to lossy, or set the scene to the high-speed mode and set the decoding mode to hardware decoding. For VMs with vGPU cards, try changing the decoding mode to hardware decoding in the client's advanced settings. Disconnect and reconnect the VM for the changes to take effect.

5. Identify whether software that consumes a lot of GPU (such as AutoCAD) is running on the VM. If so, use vGPU cards as a best practice.

6. Try shutting down the VM normally and then restarting it (not rebooting).

7. Try connecting the VM to a client with higher specifications.

No response from the mouse and keyboard

1. Disconnect the client from the VM and identify whether the mouse and keyboard work normally on the local end. If not, try replacing them.

2. If you use a USB keyboard or mouse, troubleshoot the USB redirection policy to identify whether the device has been redirected to the VM.

3. If the mouse is unresponsive but the keyboard works normally, identify whether the vdservice.exe and vdagent.exe processes are running normally inside the VM. In addition, identify whether the Spice Agent service is running normally and try restarting it.

4. If the keyboard is unresponsive but the mouse works normally, connect the client to a different VM and identify whether the same issue occurs.

5. Try shutting down the VM normally and then restarting it (not rebooting).

VM cannot play sound

1. Identify whether the client can play sound normally.

2. Identify whether the VM and client system are muted and whether the playing volume is set to 0.

3. Identify whether the VM has a sound card device and whether the sound card driver is working normally, and whether the VM has been restarted after installation of the sound card and driver.

4. If you use a USB speaker or headphone, troubleshoot the USB redirection policy to identify whether the device has been redirected to the VM.

5. If choppy or noisy sounds exist during playing, identify whether identify whether the same issue occurs during normal operation of the VM. Try connecting the VM to a different client or connecting the client to a different VM to identify whether the same issue occurs.

6. Try shutting down the VM normally and then restarting it (not rebooting).

VM cannot record sound

1. Identify whether the client can record sound normally.

2. Identify whether the microphone on the VM or client system is muted and whether the microphone volume is set to 0.

3. Identify whether the VM has a sound card device and whether the sound card driver is working normally, and whether the VM has been restarted after installation of the sound card and driver.

4. If you use a USB microphone, troubleshoot the USB redirection policy to identify whether the device has been redirected to the VM.

5. If choppy or noisy sounds exist during playback of the recorded sounds, identify whether identify whether the same issue occurs during normal operation of the VM. Try connecting the VM to a different client or connecting the client to a different VM to identify whether the same issue occurs.

6. Try shutting down the VM normally and then restarting it (not rebooting).

Video playing lags on VM

1. First identify whether the video source itself is choppy or has a low frame rate. Then troubleshoot according to "VM lags."

2. Identify whether there is any lag when you play the video directly on a physical device.

3. Check the video player and related advanced settings on the client:

¡ For VMs without vGPU, as a best practice, use PureCode or SPlayer rather than the built-in Windows Media Player for video playing. Identify whether you have set the mode to the intelligent mode or high-speed mode in the client's advanced settings. The HD mode is currently not suitable for video playing.

¡ For VMs with vGPU cards, try changing the decoding mode to hardware decoding in the client's advanced settings.

4. Try shutting down the VM normally and then restarting it (not rebooting).

5. Use a client with higher specifications

VM gets stuck and becomes unresponsive during use

1. Identify whether the client tool bar is displayed normally and can be clicked, and whether it allows returning to the client system. If not, exceptions might occur on the client or client system. Try disconnecting and reconnecting the client or restarting the client system.

2. Identify whether the mouse or keyboard is unresponsive by viewing the VM screen for any updates (such as changes in system time or the interface activities when you operating the VM connected to the client on the console). If the mouse or keyboard is unresponsive, see "No response from the mouse and keyboard" for troubleshooting.

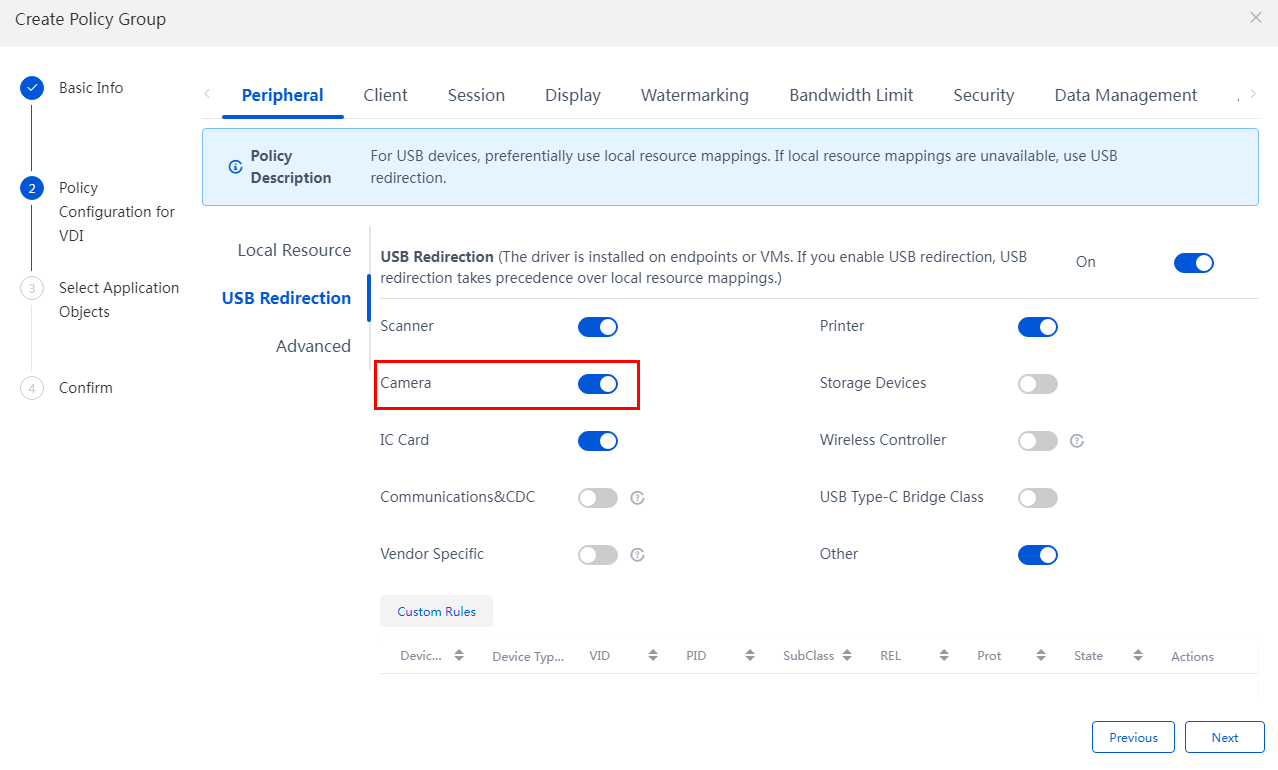

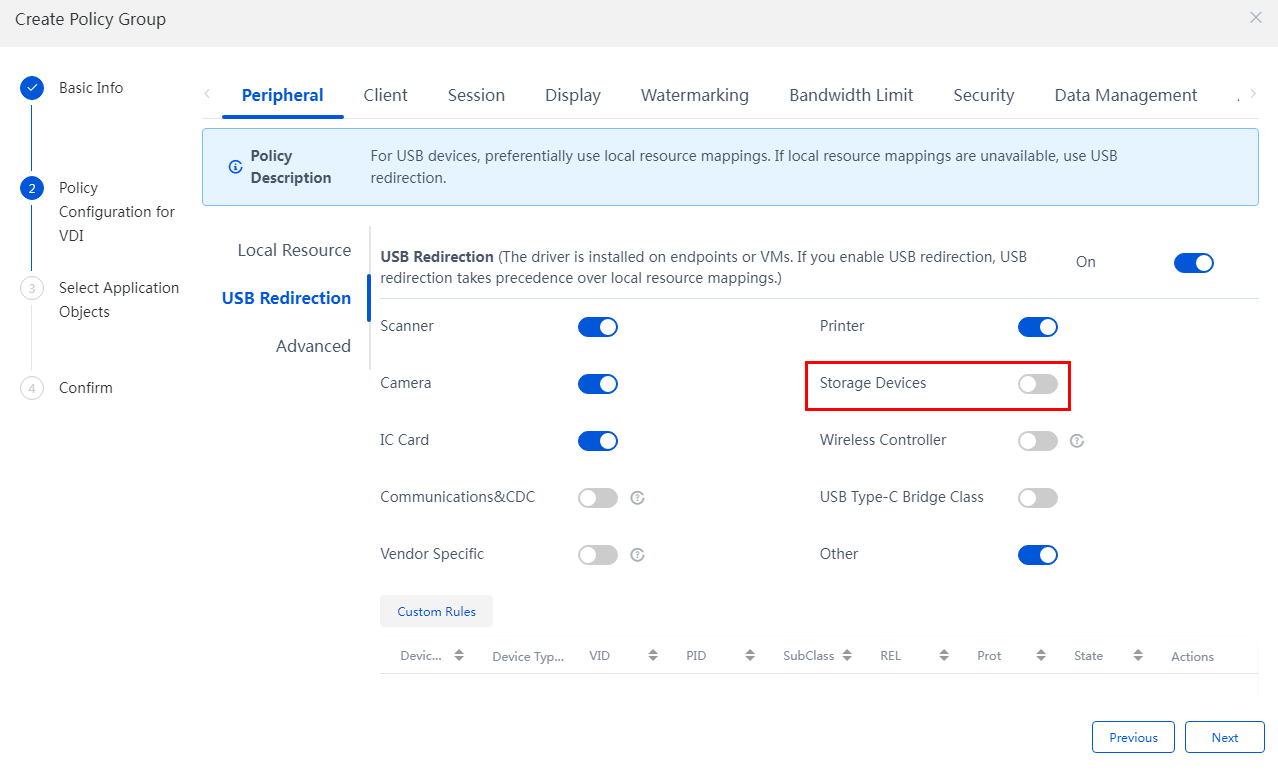

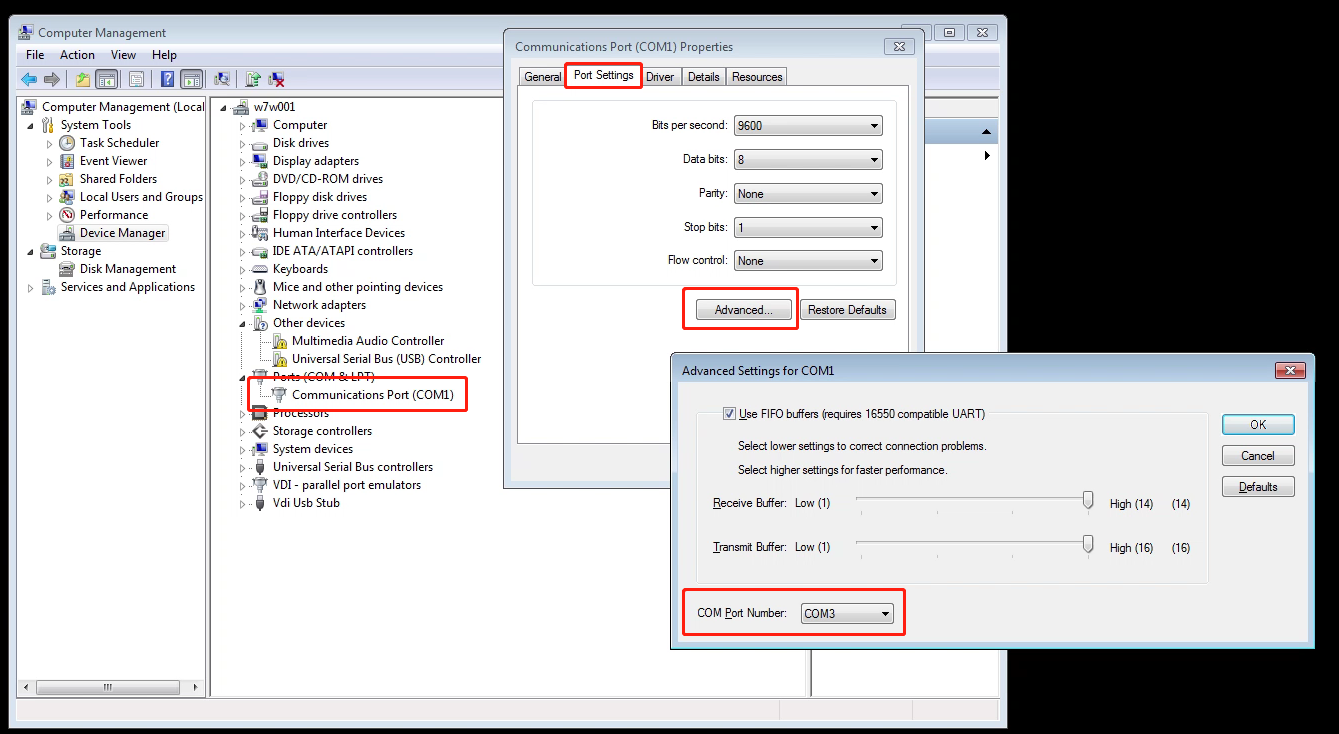

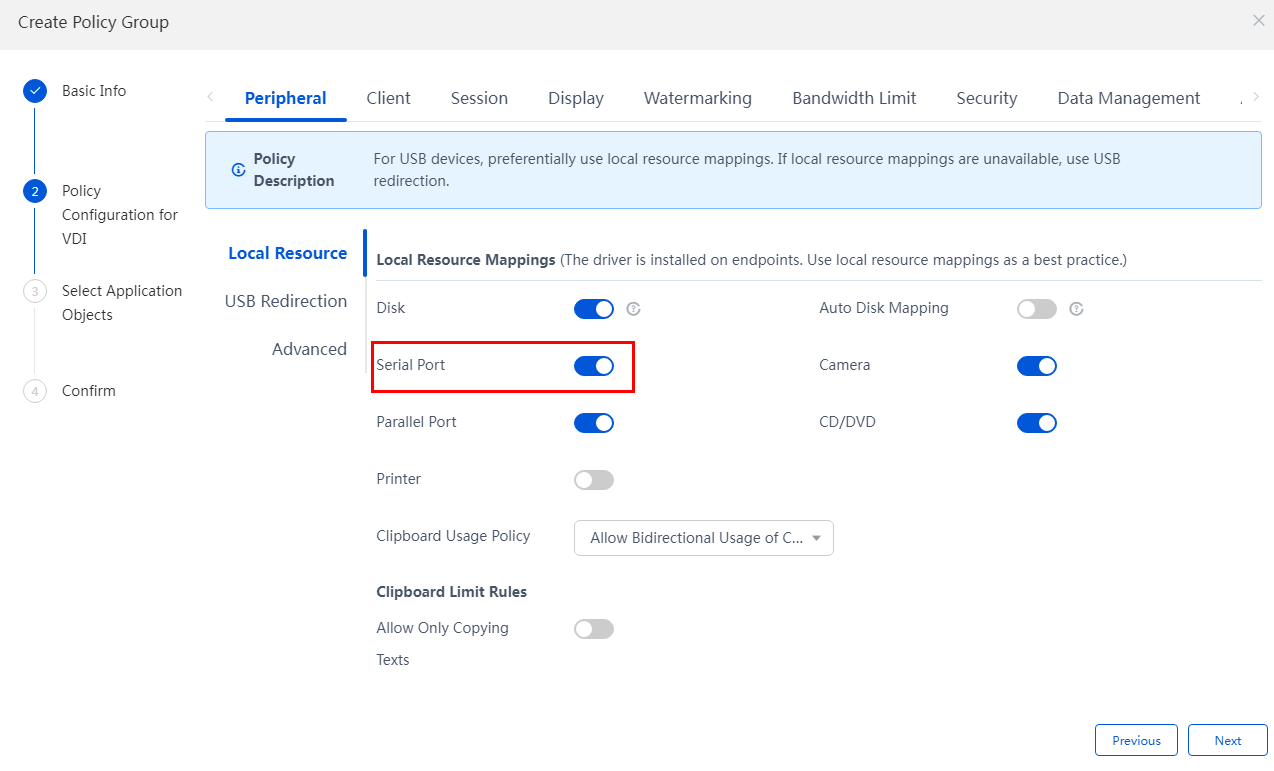

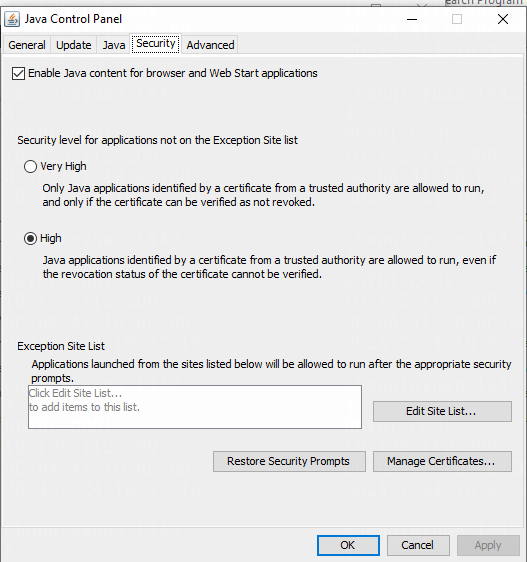

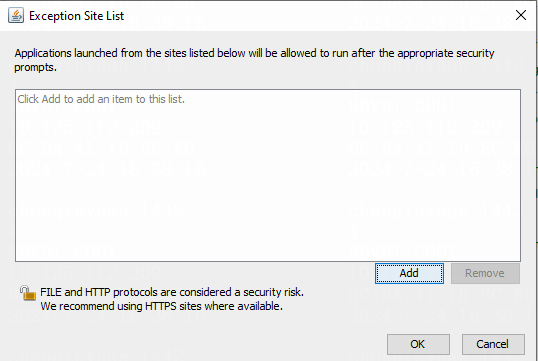

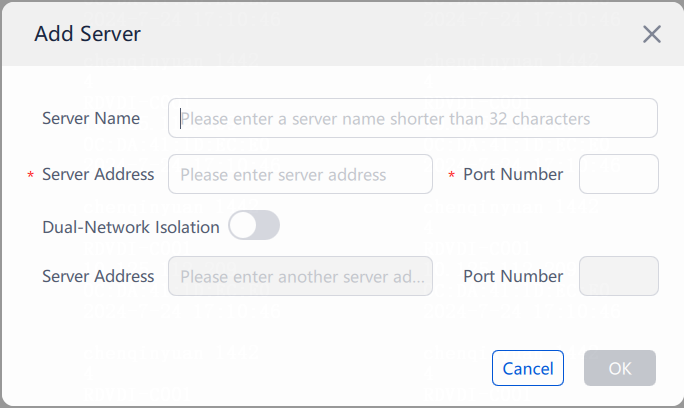

3. If the VM console on Space Console operates normally or can send keystrokes normally, try disconnecting and reconnecting the client or restarting the client system.