- Table of Contents

Installation safety recommendations

Installation site requirements

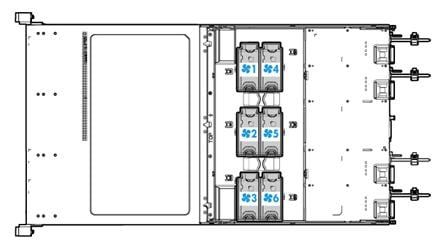

Space and airflow requirements

Temperature, humidity, and altitude requirements

Installing or removing the server

Installing the chassis rails and slide rails

Connecting a mouse, keyboard, and monitor

Removing the server from a rack

Powering on and powering off the server

Deploying and registering UIS Manager

Installing the low mid air baffle or GPU module air baffle to a compute module

Installing the GPU module air baffle to a rear riser card

Installing riser cards and PCIe modules

Installing a riser card and a PCIe module in a compute module

Installing riser cards and PCIe modules at the server rear

Installing storage controllers and power fail safeguard modules

Installing a GPU module in a compute module

Installing a GPU module to a rear riser card

Installing an mLOM Ethernet adapter

Installing a PCIe Ethernet adapter

Installing a PCIe M.2 SSD in a compute module

Installing a PCIe M.2 SSD at the server rear

Installing an NVMe SSD expander module

Installing the NVMe VROC module

Installing and setting up a TCM or TPM

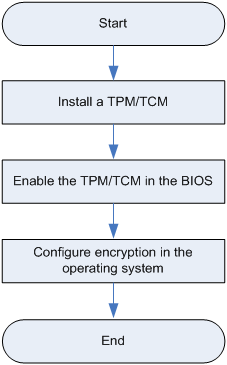

Installation and setup flowchart

Enabling the TCM or TPM in the BIOS

Configuring encryption in the operating system

Replacing a compute module and its main board

Removing the main board of a compute module

Installing a compute module and its main board

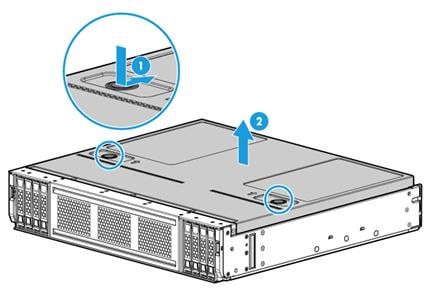

Replacing a compute module access panel

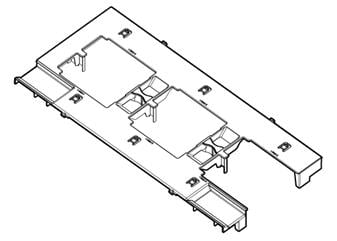

Replacing the chassis access panel

Replacing air baffles in a compute module

Replacing the power supply air baffle

Replacing a riser card air baffle

Replacing a riser card and a PCIe module

Replacing the riser card and PCIe module in a compute module

Replacing a riser card and PCIe module at the server rear

Replacing a storage controller

Replacing the power fail safeguard module for a storage controller

Replacing the GPU module in a compute module

Replacing a GPU module at the server rear

Replacing an mLOM Ethernet adapter

Replacing a PCIe Ethernet adapter

Replacing an M.2 transfer module and a PCIe M.2 SSD

Replacing the M.2 transfer module and a PCIe M.2 SSD in a compute module

Replacing an M.2 transfer module and a PCIe M.2 SSD at the server rear

Replacing the dual SD card extended module

Replacing an NVMe SSD expander module

Replacing the NVMe VROC module

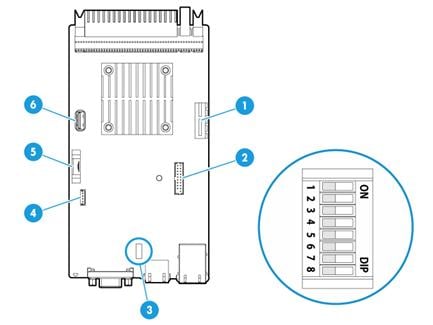

Replacing the management module

Removing the management module

Installing the management module

Replacing the diagnostic panel

Connecting drive cables in compute modules

Storage controller cabling in riser cards at the server rear

Connecting the flash card on a storage controller

Connecting the NCSI cable for a PCIe Ethernet adapter

Connecting the front I/O component cable from the right chassis ear

Connecting the cable for the front VGA and USB 2.0 connectors on the left chassis ear

Monitoring the temperature and humidity in the equipment room

Appendix A Server specifications

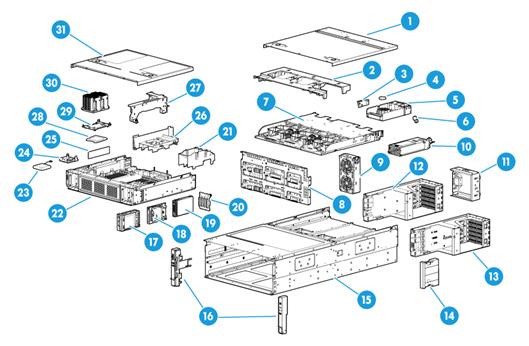

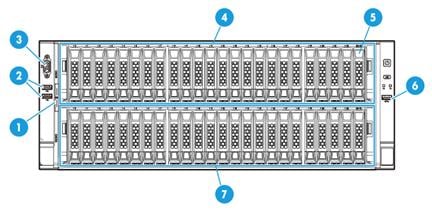

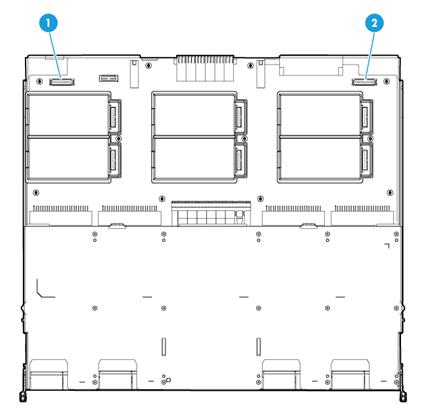

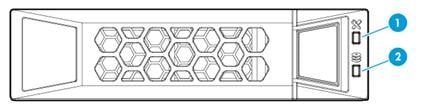

Front panel view of the server

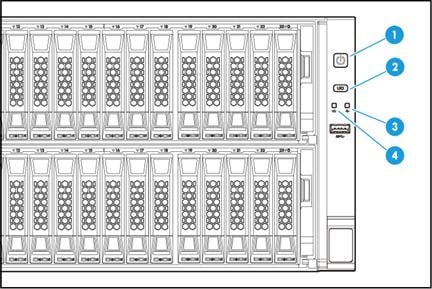

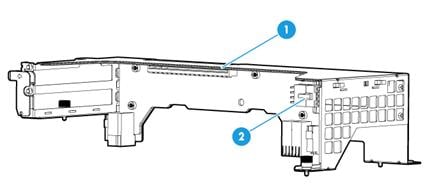

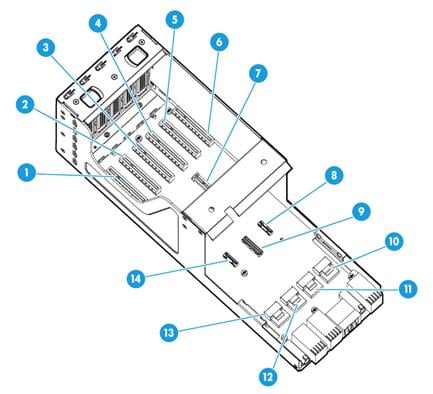

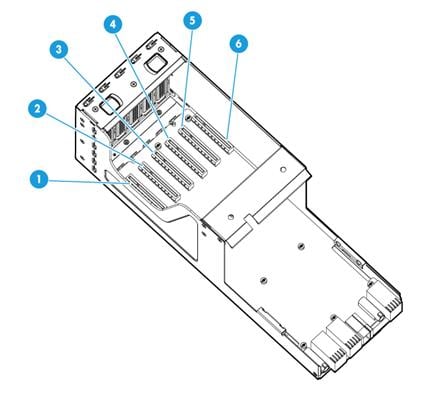

Front panel view of a compute module

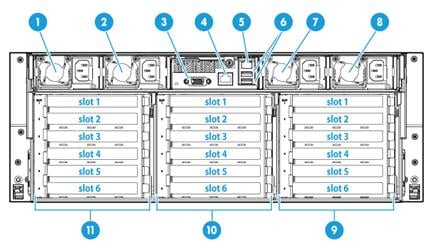

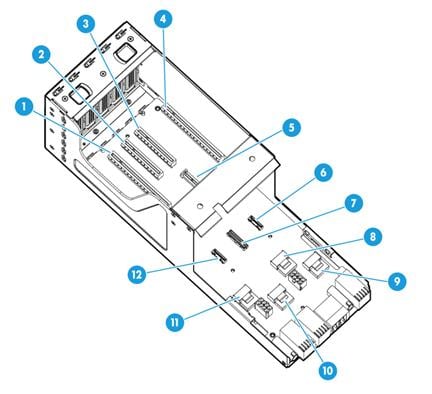

Main board of a compute module

Appendix B Component specifications

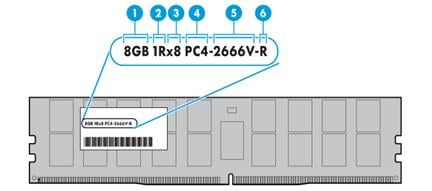

DRAM DIMM rank classification label

Drive configurations and numbering

Riser card and system board port mapping relationship

800 W high-voltage power supply

850 W high-efficiency Platinum power supply

Diagnostic panel specifications

Storage options other than HDDs and SSDs

Security bezels, slide rail kits, and CMA

Appendix C Managed hot removal of NVMe drives

Performing a managed hot removal in Windows

Performing a managed hot removal in Linux

Performing a managed hot removal from the CLI

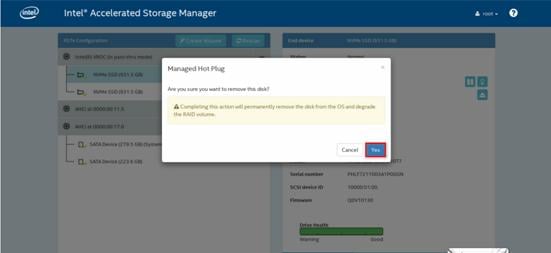

Performing a managed hot removal from the Intel® ASM Web interface

Appendix D Environment requirements

About environment requirements

General environment requirements

Operating temperature requirements

Safety information

Safety sign conventions

To avoid bodily injury or damage to the server or its components, make sure you are familiar with the safety signs on the server chassis or its components.

Table 1 Safety signs

| Sign | Description |
|---|---|
| | Circuit or electricity hazards are present. Only H3C authorized or professional server engineers are allowed to service, repair, or upgrade the server. To avoid bodily injury or damage to circuits, do not open any components marked with the electrical hazard sign unless you have authorization to do so. |
| | Electrical hazards are present. Field servicing or repair is not allowed. To avoid bodily injury, do not open any components with the field-servicing forbidden sign in any circumstances. |
| | The surface or component might be hot and present burn hazards. To avoid being burnt, allow hot surfaces or components to cool before touching them. |
| | The server or component is heavy and requires more than one person to carry or move. To avoid bodily injury or damage to hardware, do not move a heavy component alone. In addition, observe local occupational health and safety requirements and guidelines for manual material handling. |
| | The server is powered by multiple power supplies. To avoid bodily injury from electrical shocks, make sure you disconnect all power supplies if you are performing offline servicing. |

Power source recommendations

Power instability or outage might cause data loss, service disruption, or damage to the server in the worst case.

To protect the server from unstable power or power outage, use uninterruptible power supplies (UPSs) to provide power for the server.

Installation safety recommendations

To avoid bodily injury or damage to the server, read the following information carefully before you operate the server.

General operating safety

To avoid bodily injury or damage to the server, follow these guidelines when you operate the server:

· Only H3C authorized or professional server engineers are allowed to install, service, repair, operate, or upgrade the server.

· Make sure all cables are correctly connected before you power on the server.

· Place the server on a clean, stable table or floor for servicing.

· To avoid being burnt, allow the server and its internal modules to cool before touching them.

Electrical safety

WARNING! If you put the server in standby mode (system LED in amber) with the power on/standby button on the front panel, the power supplies continue to supply power to some circuits in the server. To remove all power for servicing safety, you must first press the button until the system LED turns steady amber, and then remove all power cords from the server.

To avoid bodily injury or damage to the server, follow these guidelines:

· Always use the power cords that came with the server.

· Do not use the power cords that came with the server for any other devices.

· Power off the server when installing or removing any components that are not hot swappable.

Rack mounting recommendations

To avoid bodily injury or damage to the equipment, follow these guidelines when you rack mount a server:

· Mount the server in a standard 19-inch rack.

· Make sure the leveling jacks are extended to the floor and the full weight of the rack rests on the leveling jacks.

· Couple the racks together in multi-rack installations.

· Load the rack from the bottom to the top, with the heaviest hardware unit at the bottom of the rack.

· Get help to lift and stabilize the server during installation or removal, especially when the server is not fastened to the rails. As a best practice, a minimum of four people are required to safely load or unload a rack. A fifth person might be required to help align the server if the server is installed higher than chest level.

· For rack stability, make sure only one unit is extended at a time. A rack might get unstable if more than one server unit is extended.

· Make sure the rack is stable when you operate a server in the rack.

· To maintain correct airflow and avoid thermal damage to the server, use blanks to fill empty rack units.
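The loading and extension guidelines above can be sketched in code. This is a minimal illustration and not an H3C tool: the `RackUnit` class, the unit names, and the weights are hypothetical values chosen only for the example.

```python
from dataclasses import dataclass


@dataclass
class RackUnit:
    name: str         # hypothetical label for a hardware unit
    weight_kg: float  # hypothetical weight; use the value from the unit's datasheet


def plan_rack_loading(units):
    """Return the units in bottom-to-top loading order (heaviest unit first)."""
    return sorted(units, key=lambda unit: unit.weight_kg, reverse=True)


def can_extend(unit_name, extended):
    """Allow extending a unit only if no other unit is currently extended."""
    return len(extended) == 0 or extended == {unit_name}


# Example data (hypothetical).
units = [RackUnit("switch", 8.0), RackUnit("server", 60.0), RackUnit("ups", 35.0)]
order = [unit.name for unit in plan_rack_loading(units)]
```

Here `order` lists the units from the bottom of the rack upward, and `can_extend` returns `False` whenever a different unit is already extended.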

ESD prevention

Preventing electrostatic discharge

To prevent electrostatic damage, follow these guidelines:

· Transport or store the server with the components in antistatic bags.

· Keep the electrostatic-sensitive components in the antistatic bags until they arrive at an ESD-protected area.

· Place the components on a grounded surface before removing them from their antistatic bags.

· Avoid touching pins, leads, or circuitry.

· Make sure you are reliably grounded when touching an electrostatic-sensitive component or assembly.

Grounding methods to prevent electrostatic discharge

The following are grounding methods that you can use to prevent electrostatic discharge:

· Wear an ESD wrist strap and make sure it makes good skin contact and is reliably grounded.

· Take adequate personal grounding measures, including wearing antistatic clothing, static dissipative shoes, and antistatic gloves.

· Use conductive field service tools.

· Use a portable field service kit with a folding static-dissipating work mat.

Cooling performance

Poor cooling performance might result from improper airflow and poor ventilation and might cause damage to the server.

To ensure good ventilation and proper airflow, follow these guidelines:

· Install blanks if the following module slots are empty:

¡ Compute module slots.

¡ Drive bays.

¡ Rear riser card bays.

¡ PCIe slots.

¡ Power supply slots.

· Do not block the ventilation openings in the server chassis.

· To avoid thermal damage to the server, do not operate the server for long periods in any of the following conditions:

¡ Access panel open or uninstalled.

¡ Air baffles uninstalled.

¡ PCIe slots, drive bays, or power supply slots empty.

· If the server is stacked in a rack with other devices, make sure there is a minimum clearance of 2 mm (0.08 in) below and above the server.

Battery safety

The server's management module contains a system battery, which is designed with a lifespan of 5 to 10 years.

If the server no longer automatically displays the correct date and time, you might need to replace the battery. When you replace the battery, follow these safety guidelines:

· Do not attempt to recharge the battery.

· Do not expose the battery to a temperature higher than 60°C (140°F).

· Do not disassemble, crush, puncture, short external contacts, or dispose of the battery in fire or water.

· Dispose of the battery at a designated facility. Do not throw the battery away together with other wastes.

Preparing for installation

Prepare a rack that meets the rack requirements and plan an installation site that meets the requirements of space and airflow, temperature, humidity, equipment room height, cleanliness, and grounding.

Rack requirements

IMPORTANT: As a best practice to avoid affecting the server chassis, install power distribution units (PDUs) with the outputs facing backwards. If you install PDUs with the outputs facing the inside of the server, perform an onsite survey to make sure the cables will not interfere with the server rear.

The server is 4U high. The rack for installing the server must meet the following requirements:

· A standard 19-inch rack.

· A clearance of more than 50 mm (1.97 in) between the rack front posts and the front rack door.

· A minimum of 1200 mm (47.24 in) in depth as a best practice. For installation limits for different rack depths, see Table 2.

Table 2 Installation limits for different rack depths

| Rack depth | Installation limits |
|---|---|
| 1000 mm (39.37 in) | The H3C cable management arm (CMA) is not supported. A clearance of 60 mm (2.36 in) is reserved from the server rear to the rear rack door for cabling. The slide rails and PDUs might hinder each other. Perform an onsite survey to determine the PDU installation location and the proper PDUs. If the PDUs still hinder the installation and movement of the slide rails, use another method to support the server, for example, a tray. |
| 1100 mm (43.31 in) | Before installing the CMA, make sure it does not hinder PDU installation at the server rear. If the CMA hinders PDU installation, use a deeper rack or change the installation locations of the PDUs. |
| 1200 mm (47.24 in) | Make sure the CMA does not hinder PDU installation or cabling. If it does, change the installation locations of the PDUs. For detailed installation suggestions, see Figure 1. |

Figure 1 Installation suggestions for a 1200 mm deep rack (top view)

(1) 1200 mm (47.24 in) rack depth

(2) A minimum of 50 mm (1.97 in) between the rack front posts and the front rack door

(3) 830 mm (32.68 in) between the rack front posts and the rear of the chassis, including power supply handles at the server rear (not shown in the figure)

(4) 830 mm (32.68 in) server depth, including chassis ears

(5) 950 mm (37.40 in) between the front rack posts and the CMA

(6) 860 mm (33.86 in) between the front rack posts and the rear ends of the slide rails
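As a planning aid, the installation limits in Table 2 can be expressed as a lookup. The sketch below is illustrative only. The table lists three depths; applying the nearest listed depth that does not exceed the actual rack depth is an assumption made here to stay on the conservative side, and the names `TABLE_2` and `rack_limits` are hypothetical.

```python
# Installation limits from Table 2, keyed by rack depth in millimetres.
TABLE_2 = {
    1000: {
        "cma_supported": False,
        "note": "Reserve 60 mm behind the server for cabling; "
                "survey PDU placement because slide rails and PDUs might hinder each other.",
    },
    1100: {
        "cma_supported": True,
        "note": "Make sure the CMA does not hinder PDU installation at the server rear.",
    },
    1200: {
        "cma_supported": True,
        "note": "Make sure the CMA does not hinder PDU installation or cabling.",
    },
}


def rack_limits(depth_mm):
    """Return the Table 2 entry for the deepest listed depth not exceeding depth_mm.

    Returns None for racks shallower than 1000 mm, which Table 2 does not cover.
    Treating in-between or deeper racks like the next shallower listed depth is an
    assumption of this sketch, not a statement from the guide.
    """
    eligible = [depth for depth in TABLE_2 if depth <= depth_mm]
    if not eligible:
        return None
    return TABLE_2[max(eligible)]
```

For example, `rack_limits(1000)` reports that the CMA is not supported, and `rack_limits(950)` returns `None` because that depth is outside the table.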

Installation site requirements

Space and airflow requirements

For convenient maintenance and heat dissipation, make sure the following requirements are met:

· The passage for server transport is a minimum of 1500 mm (59.06 in) wide.

· A minimum clearance of 1200 mm (47.24 in) is reserved from the front of the rack to the front of another rack or row of racks.

· A minimum clearance of 800 mm (31.50 in) is reserved from the back of the rack to the rear of another rack or row of racks.

· A minimum clearance of 1000 mm (39.37 in) is reserved from the rack to any wall.

· The air intake and outlet vents of the server are not blocked.

· The front and rear rack doors are adequately ventilated to allow ambient room air to enter the cabinet and allow the warm air to escape from the cabinet.

· The air conditioner in the equipment room provides sufficient air flow for heat dissipation of devices in the room.

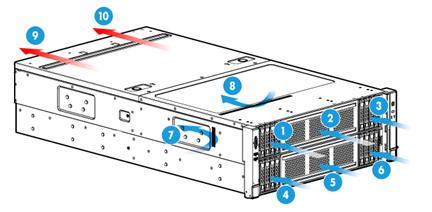

Figure 2 Airflow through the server

(1) to (8) Directions of the airflow into the chassis and power supplies

(9) to (10) Directions of the airflow out of the chassis
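The minimum clearances in the space and airflow requirements can be compared against site survey measurements. The following sketch assumes the measurements are collected in millimetres; the dictionary keys and the `check_site` function are hypothetical names introduced for this example.

```python
# Minimum clearances from the space and airflow requirements, in millimetres.
MIN_CLEARANCE_MM = {
    "transport_passage_width": 1500,
    "rack_front_to_next_rack": 1200,
    "rack_rear_to_next_rack": 800,
    "rack_to_wall": 1000,
}


def check_site(measured_mm):
    """Return (name, measured, required) for every requirement that is not met.

    A missing measurement is reported as unmet, with measured set to None.
    """
    failures = []
    for name, required in MIN_CLEARANCE_MM.items():
        measured = measured_mm.get(name)
        if measured is None or measured < required:
            failures.append((name, measured, required))
    return failures


# Example survey (hypothetical values): the rear clearance is too small.
survey = {
    "transport_passage_width": 1600,
    "rack_front_to_next_rack": 1200,
    "rack_rear_to_next_rack": 700,
    "rack_to_wall": 1000,
}
problems = check_site(survey)
```

An empty result means every listed clearance is satisfied. Vent blockage and air conditioning capacity still need to be verified on site.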

Temperature, humidity, and altitude requirements

To ensure correct operation of the server, make sure the room temperature, humidity, and altitude meet the requirements as described in "Appendix D Environment requirements."

Cleanliness requirements

Buildup of mechanically active substances on the chassis might result in electrostatic adsorption, which causes poor contact of metal components and contact points. In the worst case, electrostatic adsorption can cause communication failure.

Table 3 Mechanically active substance concentration limit in the equipment room

| Substance | Particle diameter | Concentration limit |
|---|---|---|
| Dust particles | ≥ 5 µm | ≤ 3 × 10⁴ particles/m³ (no visible dust on desk in three days) |
| Dust (suspension) | ≤ 75 µm | ≤ 0.2 mg/m³ |
| Dust (sedimentation) | 75 µm to 150 µm | ≤ 1.5 mg/(m²·h) |
| Sand | ≥ 150 µm | ≤ 30 mg/m³ |

The equipment room must also meet limits on salts, acids, and sulfides to eliminate corrosion and premature aging of components, as shown in Table 4.

Table 4 Harmful gas limits in an equipment room

| Gas | Maximum concentration (mg/m³) |
|---|---|
| SO₂ | 0.2 |
| H₂S | 0.006 |
| NO₂ | 0.04 |
| NH₃ | 0.05 |
| Cl₂ | 0.01 |
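If gas concentrations in the equipment room are logged, the Table 4 limits can be applied programmatically. The sketch below assumes readings are already expressed in mg/m³; the function name and the sample readings are hypothetical.

```python
# Maximum concentrations from Table 4, in mg/m3.
GAS_LIMITS_MG_M3 = {
    "SO2": 0.2,
    "H2S": 0.006,
    "NO2": 0.04,
    "NH3": 0.05,
    "Cl2": 0.01,
}


def gases_over_limit(readings_mg_m3):
    """Return the sorted names of gases whose reading exceeds the Table 4 limit.

    Gases that Table 4 does not list are ignored.
    """
    return sorted(
        gas
        for gas, value in readings_mg_m3.items()
        if gas in GAS_LIMITS_MG_M3 and value > GAS_LIMITS_MG_M3[gas]
    )


# Example readings (hypothetical values).
readings = {"SO2": 0.05, "H2S": 0.01, "NO2": 0.04, "Cl2": 0.02}
exceeded = gases_over_limit(readings)
```

A reading equal to the limit is treated as acceptable, because Table 4 gives maximum concentrations.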

Grounding requirements

Correctly connecting the server grounding cable is crucial to lightning protection, anti-interference, and ESD prevention. The server can be grounded through the grounding wire of the power supply system and no external grounding cable is required.

Installation tools

Table 5 lists the tools that you might use during installation.

| Name | Description |
|---|---|
| T25 Torx screwdriver | For captive screws inside chassis ears. |
| T30 Torx screwdriver (electric screwdriver) | For captive screws on processor heatsinks. |
| T15 Torx screwdriver (shipped with the server) | For replacing the management module. |
| T10 Torx screwdriver (shipped with the server) | For screws on PCIe modules. |
| Flat-head screwdriver | For replacing processors or the management module. |
| Phillips screwdriver | For screws on PCIe M.2 SSDs. |
| Cage nut insertion/extraction tool | For insertion and extraction of cage nuts in rack posts. |
| Diagonal pliers | For clipping insulating sleeves. |
| Tape measure | For distance measurement. |
| Multimeter | For resistance and voltage measurement. |
| ESD wrist strap | For ESD prevention when you operate the server. |
| Antistatic gloves | For ESD prevention when you operate the server. |
| Antistatic clothing | For ESD prevention when you operate the server. |
| Ladder | For high-place operations. |
| Interface cable (such as an Ethernet cable or optical fiber) | For connecting the server to an external network. |
| Monitor (such as a PC) | For displaying the output from the server. |

Installing or removing the server

Installing the server

As a best practice, install hardware options to the server (if needed) before installing the server in the rack. For more information about how to install hardware options, see "Installing hardware options."

Installing the chassis rails and slide rails

Install the chassis rails to the server and the slide rails to the rack. For information about installing the rails, see the installation guide shipped with the rails.

Rack-mounting the server

WARNING! To avoid bodily injury, slide the server into the rack with caution, because the slide rails might pinch your fingers.

1. Remove the screws from both sides of the server, as shown in Figure 3.

Figure 3 Removing the screws from both sides of the server

2. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

3. (Optional.) Remove the compute modules if the server is too heavy for mounting. For more information, see "Removing a compute module."

4. Install the chassis handles. For more information, see the labels on the handles.

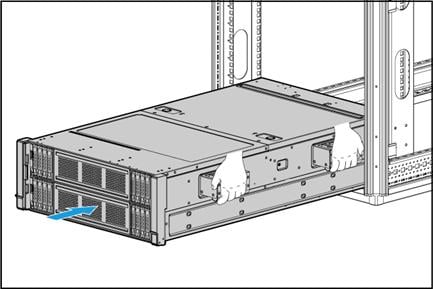

5. Lift the server and slide the server into the rack along the slide rails, as shown in Figure 4.

Remove the chassis handles as you slide the server into the rack. For more information, see the labels on the handles.

Figure 4 Rack-mounting the server

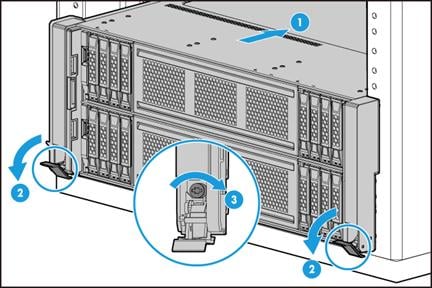

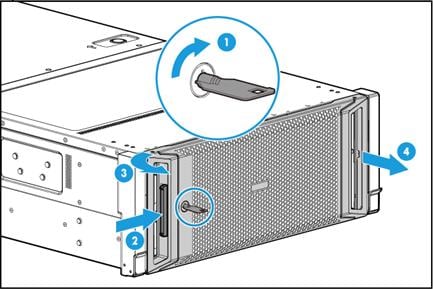

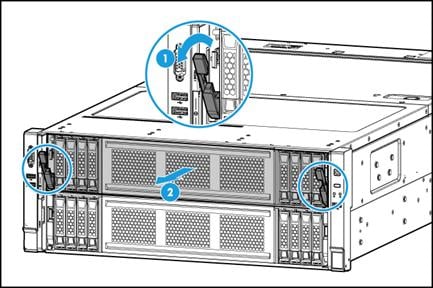

6. Secure the server, as shown in Figure 5:

a. Push the server until the chassis ears are flush against the rack front posts, as shown by callout 1.

b. Unlock the latches of the chassis ears, as shown by callout 2.

c. Fasten the captive screws inside the chassis ears and lock the latches, as shown by callout 3.

7. Install the removed compute modules. For more information, see "Installing a compute module."

CAUTION: To avoid errors such as drive identification failure, make sure each compute module is installed into the correct slot. You can view the compute module label pasted on the module's package to determine the correct slot.

8. Install the removed security bezel. For more information, see "Installing the security bezel."

(Optional) Installing the CMA

Install the CMA if the server is shipped with the CMA. For information about how to install the CMA, see the installation guide shipped with the CMA.

Connecting external cables

Cabling guidelines

WARNING! To avoid electric shock, fire, or damage to the equipment, do not connect communication equipment to RJ-45 Ethernet ports on the server.

· For heat dissipation, make sure no cables block the inlet or outlet air vents of the server.

· To easily identify ports and connect/disconnect cables, make sure the cables do not cross.

· Label the cables for easy identification.

· Wrap unused cables onto an appropriate position on the rack.

· To avoid damage to cables when extending the server out of the rack, do not route the cables too tight if you use the CMA.

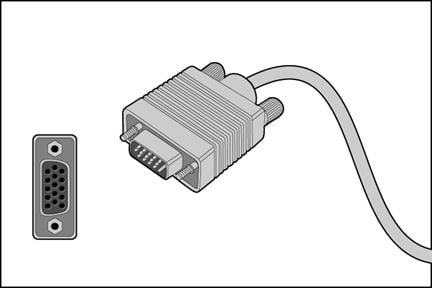

Connecting a mouse, keyboard, and monitor

About this task

The server provides a maximum of two VGA connectors for connecting a monitor.

· One on the front panel provided by the left chassis ear.

· One on the rear panel.

IMPORTANT: The two VGA connectors on the server cannot be used at the same time.

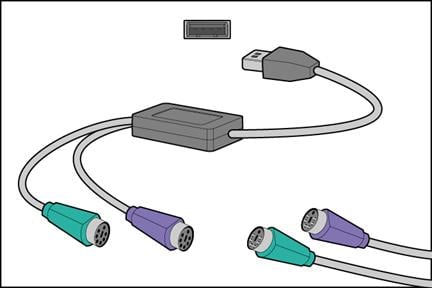

The server does not provide ports for a standard PS/2 mouse and keyboard. To connect a PS/2 mouse and keyboard, you must prepare a USB-to-PS/2 adapter.

Procedure

1. Connect one plug of a VGA cable to a VGA connector on the server, and fasten the screws on the plug.

Figure 6 Connecting a VGA cable

2. Connect the other plug of the VGA cable to the VGA connector on the monitor, and fasten the screws on the plug.

3. Connect the mouse and keyboard.

¡ For a USB mouse and keyboard, directly connect the USB connectors of the mouse and keyboard to the USB connectors on the server.

¡ For a PS/2 mouse and keyboard, insert the USB connector of the USB-to-PS/2 adapter into a USB connector on the server. Then, insert the PS/2 connectors of the mouse and keyboard into the PS/2 receptacles of the adapter.

Figure 7 Connecting a PS/2 mouse and keyboard by using a USB-to-PS/2 adapter

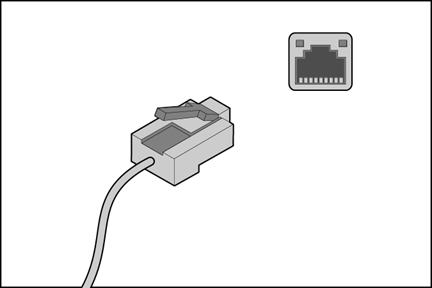

Connecting an Ethernet cable

About this task

Perform this task before you set up a network environment or log in to the HDM management interface through the HDM network port to manage the server.

Prerequisites

Install an mLOM or PCIe Ethernet adapter. For more information, see "Installing Ethernet adapters."

Procedure

1. Determine the network port on the server.

¡ To connect the server to the external network, use an Ethernet port on an Ethernet adapter.

¡ To log in to the HDM management interface, use the HDM dedicated network port or shared network port. For the position of the HDM network port, see "Rear panel view."

2. Determine the type of the Ethernet cable.

Verify the connectivity of the cable by using a link tester.

If you are replacing the Ethernet cable, make sure the new cable is the same type as the old cable or is compatible with it.

3. Label the Ethernet cable by filling in the names and numbers of the server and the peer device on the label.

As a best practice, use labels of the same kind for all cables.

If you are replacing the Ethernet cable, label the new cable with the same number as the number of the old cable.

4. Connect one end of the Ethernet cable to the network port on the server and the other end to the peer device.

Figure 8 Connecting an Ethernet cable

5. Verify network connectivity.

After powering on the server, use the ping command to test the network connectivity. If the connection between the server and the peer device fails, make sure the Ethernet cable is correctly connected.

6. Secure the Ethernet cable. For information about how to secure cables, see "Securing cables."
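The connectivity check in step 5 can be scripted. The following is a minimal sketch that wraps the operating system's `ping` command. It assumes `ping` is available on the path, and the peer address used in the usage note is a placeholder from the documentation address range, not a real device.

```python
import platform
import subprocess


def build_ping_command(host, count=4, system=None):
    """Build the ping command line for the given host.

    Windows ping takes the echo count with -n; Linux and macOS use -c.
    """
    system = system or platform.system()
    count_flag = "-n" if system == "Windows" else "-c"
    return ["ping", count_flag, str(count), host]


def is_reachable(host, count=4):
    """Return True if ping exits with status 0 for the host."""
    result = subprocess.run(
        build_ping_command(host, count),
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0
```

For example, `is_reachable("192.0.2.10")` could be run from the server after power-on. If it returns `False`, check that the Ethernet cable is correctly connected, as described in step 5.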

Connecting a USB device

About this task

Perform this task before you install the operating system of the server or transmit data through a USB device.

The server provides six USB connectors.

· Five external USB connectors on the front and rear panels for connecting USB devices that are designed to be installed and removed very often:

¡ Two USB 2.0 connectors provided by the left chassis ear on the front panel.

¡ One USB 3.0 connector provided by the right chassis ear on the front panel.

¡ Two USB 3.0 connectors on the rear panel.

· One internal USB 3.0 connector for connecting USB devices that are not designed to be installed and removed very often.

Guidelines

Before connecting a USB device, make sure the USB device can operate correctly and then copy data to the USB device.

USB devices are hot swappable. However, to connect a USB device to the internal USB connector or remove a USB device from the internal USB connector, power off the server first.

As a best practice for compatibility, purchase H3C certified USB devices.

Connecting a USB device to the internal USB connector

1. Power off the server. For more information, see "Powering off the server."

2. Disconnect all the cables from the management module.

3. Remove the management module. For more information, see "Removing the management module."

4. Connect the USB device. For the location of the internal USB connector, see "Management module components."

5. Install the management module. For more information, see "Installing the management module."

6. Reconnect the removed cables to the management module.

7. Power on the server. For more information, see "Powering on the server."

8. Verify that the server can identify the USB device.

If the server fails to identify the USB device, download and install the driver of the USB device. If the server still fails to identify the USB device after the driver is installed, replace the USB device.

Connecting a USB device to an external USB connector

1. Connect the USB device.

2. Verify that the server can identify the USB device.

If the server fails to identify the USB device, download and install the driver of the USB device. If the server still fails to identify the USB device after the driver is installed, replace the USB device.
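One way to confirm that a USB device was identified is to list the devices the operating system has enumerated. The sketch below assumes a Linux operating system, where the kernel exposes enumerated USB devices under `/sys/bus/usb/devices`. The guide does not prescribe this method, and the function name is hypothetical.

```python
from pathlib import Path


def list_usb_products(sysfs_root="/sys/bus/usb/devices"):
    """Return the product strings of enumerated USB devices (Linux sysfs).

    Devices that do not expose a product string are skipped. An empty list is
    returned if the sysfs directory does not exist (for example, on a
    non-Linux system).
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    products = []
    for product_file in sorted(root.glob("*/product")):
        products.append(product_file.read_text().strip())
    return products
```

Compare the output before and after connecting the device. If the new device does not appear, continue with the driver and replacement steps described above.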

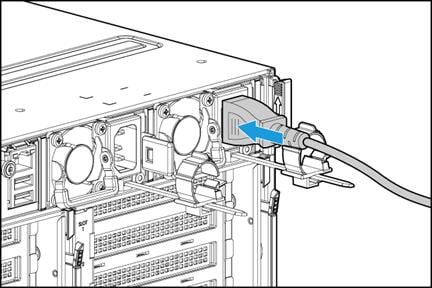

Connecting the power cord

Guidelines

WARNING! To avoid damage to the equipment or even bodily injury, use the power cord that ships with the server.

Before connecting the power cord, make sure the server and components are installed correctly.

Procedure

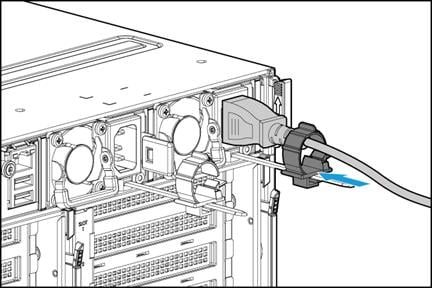

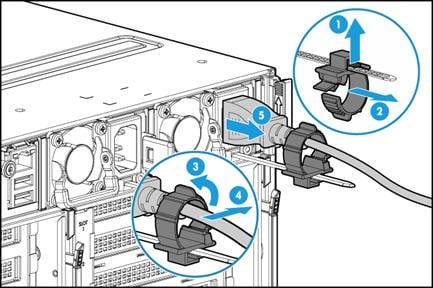

1. Insert the power cord plug into the power receptacle of a power supply at the rear panel, as shown in Figure 9.

Figure 9 Connecting the power cord

2. Connect the other end of the power cord to the power source, for example, the power strip on the rack.

3. Secure the power cord to avoid unexpected disconnection of the power cord.

a. (Optional.) If the cable clamp is positioned too near the power cord and blocks the power cord plug connection, press down the tab on the cable mount and slide the clamp backward.

Figure 10 Sliding the cable clamp backward

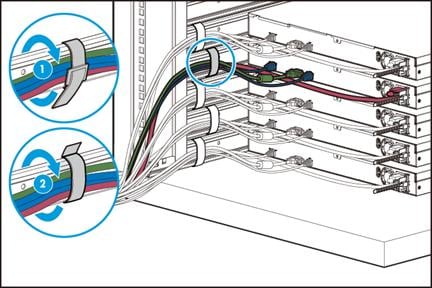

b. Open the cable clamp, place the power cord through the opening in the cable clamp, and then close the cable clamp, as shown by callouts 1, 2, 3, and 4 in Figure 11.

Figure 11 Securing the power cord

c. Slide the cable clamp forward until it is flush against the edge of the power cord plug, as shown in Figure 12.

Figure 12 Sliding the cable clamp forward

Securing cables

Securing cables to the CMA

For information about how to secure cables to the CMA, see the installation guide shipped with the CMA.

Securing cables to slide rails by using cable straps

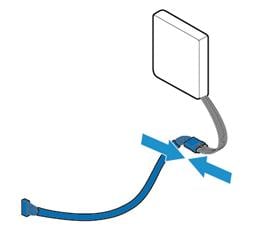

You can secure cables to either left slide rails or right slide rails by using the cable straps provided with the server. As a best practice for cable management, secure cables to left slide rails.

When multiple cable straps are used in the same rack, stagger the strap locations so that the straps are adjacent to each other when viewed from top to bottom. This positioning enables the slide rails to slide easily in and out of the rack.

To secure cables to slide rails by using cable straps:

1. Hold the cables against a slide rail.

2. Wrap the strap around the slide rail and loop the end of the cable strap through the buckle.

3. Dress the cable strap to ensure that the extra length and buckle part of the strap are facing outside of the slide rail.

Figure 13 Securing cables to a slide rail

Removing the server from a rack

1. Power down the server. For more information, see "Powering off the server."

2. Disconnect all peripheral cables from the server.

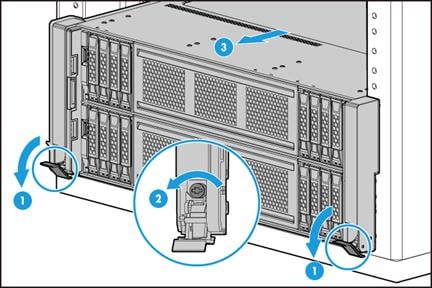

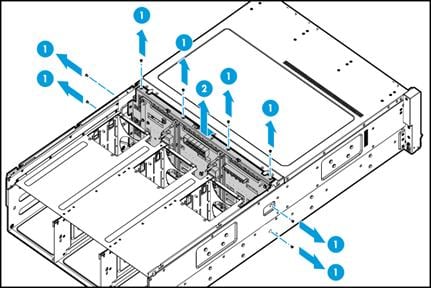

3. Extend the server from the rack, as shown in Figure 14.

a. Open the latches of the chassis ears.

b. Loosen the captive screws.

c. Slide the server out of the rack, installing the chassis handles in sequence as you do so. For information about installing the chassis handles, see the labels on the handles.

Figure 14 Extending the server from the rack

4. Place the server on a clean, stable surface.

Powering on and powering off the server

Important information

If the server is connected to external storage devices, make sure the server is the first device to power off and the last device to power on. This restriction prevents the server from mistakenly identifying the external storage devices as faulty devices.
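The ordering rule can be captured in a short helper for maintenance scripts or runbooks. This is an illustrative sketch; the function names and device names are hypothetical.

```python
def power_on_order(server, storage_devices):
    """External storage devices power on first; the server powers on last."""
    return list(storage_devices) + [server]


def power_off_order(server, storage_devices):
    """The server powers off first; external storage devices follow."""
    return [server] + list(storage_devices)


# Example with hypothetical device names.
storage = ["disk-enclosure-1", "disk-enclosure-2"]
on_sequence = power_on_order("server", storage)
off_sequence = power_off_order("server", storage)
```

The rule stated above constrains only the position of the server, so the relative order of the storage devices in this sketch is simply the order in which they are listed.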

Powering on the server

Prerequisites

Before you power on the server, you must complete the following tasks:

· Install the server and internal components correctly.

· Connect the server to a power source.

Procedure

Powering on the server by pressing the power on/standby button

Press the power on/standby button to power on the server.

The server exits standby mode and supplies power to the system. The system power LED changes from steady amber to flashing green and then to steady green. For information about the position of the system power LED, see "LEDs and buttons."
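The LED transition described above can be modeled as an ordered sequence, which is useful if LED states are recorded during commissioning. The sketch below is illustrative: the `PowerLed` enum and the `is_valid_power_on` function are hypothetical names, and how the LED state is read is outside its scope.

```python
from enum import Enum


class PowerLed(Enum):
    STEADY_AMBER = "steady amber"      # standby mode
    FLASHING_GREEN = "flashing green"  # intermediate state during power-on
    STEADY_GREEN = "steady green"      # final state after power-on


# Expected order of states after the power on/standby button is pressed.
POWER_ON_SEQUENCE = [
    PowerLed.STEADY_AMBER,
    PowerLed.FLASHING_GREEN,
    PowerLed.STEADY_GREEN,
]


def is_valid_power_on(observed):
    """Return True if the observed states match the expected power-on order.

    Consecutive repeated observations of the same state are collapsed first.
    """
    collapsed = []
    for state in observed:
        if not collapsed or collapsed[-1] != state:
            collapsed.append(state)
    return collapsed == POWER_ON_SEQUENCE
```

A recording that stays at steady amber, or that never reaches steady green, does not match the sequence and indicates that the power-on did not complete as described.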

Powering on the server from the HDM Web interface

1. Log in to HDM.

For information about how to log in to HDM, see the firmware update guide for the server.

2. In the navigation pane, select Power Manager > Power Control, and then power on the server.

For more information, see HDM online help.

Powering on the server from the remote console interface

1. Log in to HDM.

For information about how to log in to HDM, see the firmware update guide for the server.

2. Log in to a remote console and then power on the server.

For information about how to log in to a remote console, see HDM online help.

Configuring automatic power-on

You can configure automatic power-on from HDM or the BIOS.

To configure automatic power-on from HDM:

1. Log in to HDM.

For information about how to log in to HDM, see the firmware update guide for the server.

2. In the navigation pane, select Power Manager > Meter Power.

The meter power configuration page opens.

3. Click the Automatic power-on tab and then select Always power on.

4. Click Save.

To configure automatic power-on from the BIOS, set AC Restore Settings to Always Power On. For more information, see the BIOS user guide for the server.

Powering off the server

Guidelines

Before powering off the server, you must complete the following tasks:

· Install the server and internal components correctly.

· Back up all critical data.

· Make sure all services have stopped or have been migrated to other servers.

Procedure

Powering off the server from its operating system

1. Connect a monitor, mouse, and keyboard to the server.

2. Shut down the operating system of the server.

3. Disconnect all power cords from the server.

Powering off the server by pressing the power on/standby button

IMPORTANT: This method forces the server to enter standby mode without properly exiting applications and the operating system. If an application stops responding, you can use this method to force a shutdown. As a best practice, do not use this method when the applications and the operating system are operating correctly.

1. Press and hold the power on/standby button until the system power LED turns into steady amber.

2. Disconnect all power cords from the server.

Powering off the server from the HDM Web interface

1. Log in to HDM.

For information about how to log in to HDM, see the firmware update guide for the server.

2. In the navigation pane, select Power Manager > Power Control, and then power off the server.

For more information, see HDM online help.

3. Disconnect all power cords from the server.

Powering off the server from the remote console interface

1. Log in to HDM.

For information about how to log in to HDM, see the firmware update guide for the server.

2. Log in to a remote console and then power off the server.

For information about how to log in to a remote console, see HDM online help.

Configuring the server

The following information describes the procedures to configure the server after the server installation is complete.

Powering on the server

1. Power on the server. For information about the procedures, see "Powering on the server."

2. Verify that the health LED on the front panel is steady green, which indicates that the system is operating correctly. For more information about the health LED status, see "LEDs and buttons."

Updating firmware

IMPORTANT: Verify hardware and software compatibility before you update the firmware. For information about hardware and software compatibility, see the software release notes.

You can update the following firmware from FIST or HDM:

· HDM.

· BIOS.

· CPLD.

· PDBCPLD.

· NDCPLD.

For information about the update procedures, see the firmware update guide for the server.

Deploying and registering UIS Manager

For information about deploying UIS Manager, see H3C UIS Manager Installation Guide.

For information about registering the licenses of UIS Manager, see H3C UIS Manager 6.5 License Registration Guide.

Installing hardware options

If you are installing multiple hardware options, read their installation procedures and identify similar steps to streamline the entire installation procedure.

Installing the security bezel

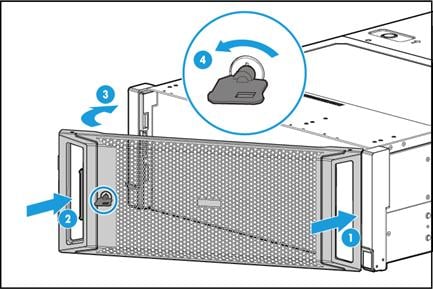

1. Press the right edge of the security bezel into the groove in the right chassis ear on the server, as shown by callout 1 in Figure 15.

2. Press the latch at the other end, close the security bezel, and then release the latch to secure the security bezel into place. See callouts 2 and 3 in Figure 15.

3. Insert the key provided with the bezel into the lock on the bezel and lock the security bezel, as shown by callout 4 in Figure 15. Then, pull out the key and keep it safe.

CAUTION: To avoid damage to the lock, hold down the key while you are turning the key.

Figure 15 Installing the security bezel

Installing SAS/SATA drives

Guidelines

The drives are hot swappable.

If you are using the drives to create a RAID, follow these restrictions and guidelines:

· To build a RAID (or logical drive) successfully, make sure all drives in the RAID are the same type (HDDs or SSDs) and have the same connector type (SAS or SATA).

· For efficient use of storage, use drives that have the same capacity to build a RAID. If the drives have different capacities, the lowest capacity is used across all drives in the RAID. Whether a drive with extra capacity can be used to build other RAIDs depends on the storage controller model. The following storage controllers do not allow the use of a drive for multiple RAIDs:

¡ RAID-LSI-9361-8i(1G)-A1-X.

¡ RAID-LSI-9361-8i(2G)-1-X.

¡ RAID-LSI-9460-8i(2G).

¡ RAID-LSI-9460-8i(4G).

¡ RAID-P460-B4.

¡ HBA-H460-B1.

· If the installed drive contains RAID information, you must clear the information before configuring RAIDs. For more information, see the storage controller user guide for the server.
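
The RAID membership guidelines above can be sketched as a small pre-check. This is an illustrative sketch only: the `Drive` class and `check_raid_members` function are hypothetical names that do not belong to any H3C tool, and the capacities are in whatever unit you choose (GB is assumed here).

```python
from dataclasses import dataclass

# Storage controllers that the guidelines list as not allowing
# one drive to be used for multiple RAIDs.
NO_MULTI_RAID_CONTROLLERS = {
    "RAID-LSI-9361-8i(1G)-A1-X",
    "RAID-LSI-9361-8i(2G)-1-X",
    "RAID-LSI-9460-8i(2G)",
    "RAID-LSI-9460-8i(4G)",
    "RAID-P460-B4",
    "HBA-H460-B1",
}


@dataclass(frozen=True)
class Drive:
    """Hypothetical drive record used only for this example."""
    media: str        # "HDD" or "SSD"
    connector: str    # "SAS" or "SATA"
    capacity_gb: int


def check_raid_members(drives, controller):
    """Return (problems, usable_per_drive_gb, extra_gb, reuse_blocked).

    usable_per_drive_gb is the lowest capacity, which is what every
    member contributes. extra_gb is the capacity left over on larger
    drives. reuse_blocked is True when the controller is one of the
    models listed as not allowing a drive in multiple RAIDs.
    """
    if not drives:
        raise ValueError("at least one drive is required")
    problems = []
    if len({d.media for d in drives}) > 1:
        problems.append("mixed drive types (HDD and SSD)")
    if len({d.connector for d in drives}) > 1:
        problems.append("mixed connector types (SAS and SATA)")
    usable = min(d.capacity_gb for d in drives)
    extra = sum(d.capacity_gb - usable for d in drives)
    return problems, usable, extra, controller in NO_MULTI_RAID_CONTROLLERS
```

For controllers that are not in the list, the guide states only that reuse of the extra capacity depends on the controller model, so the sketch reports the extra capacity without deciding whether it can be reused.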

Procedure

1. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

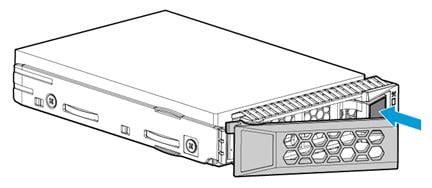

2. Press the latch on the drive blank inward with one hand, and pull the drive blank out of the slot, as shown in Figure 16.

Figure 16 Removing the drive blank

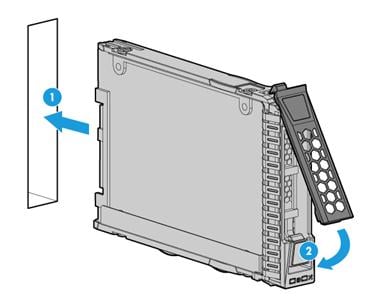

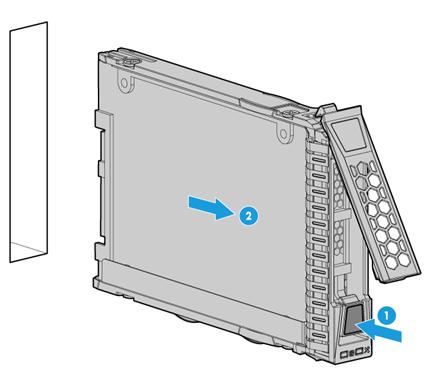

3. Install the drive:

a. Press the button on the drive panel to release the locking lever.

Figure 17 Releasing the locking lever

b. Insert the drive into the slot and push it gently until you cannot push it further.

c. Close the locking lever until it snaps into place.

Figure 18 Installing a drive

4. Install the security bezel. For more information, see "Installing the security bezel."

Verifying the installation

Use the following methods to verify that the drive is installed correctly:

· Verify the drive properties (including capacity) and state by using one of the following methods:

¡ Log in to HDM. For more information, see HDM online help.

¡ Access the BIOS. For more information, see the storage controller user guide for the server.

¡ Access the CLI or GUI of the server.

· Observe the drive LEDs to verify that the drive is operating correctly. For more information, see "Drive LEDs."

Installing NVMe drives

Guidelines

NVMe drives support hot insertion and managed hot removal.

Only one drive can be hot inserted at a time. To hot insert multiple NVMe drives, wait a minimum of 60 seconds for the previously installed NVMe drive to be identified before hot inserting another NVMe drive.
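
As a rough illustration of the pacing rule above, the following sketch computes the earliest insertion times for a batch of NVMe drives and checks whether the next drive may be inserted. The function names are hypothetical, and the 60-second value is the minimum stated in this guide; the previous drive must also have been identified.

```python
MIN_WAIT_SECONDS = 60  # minimum wait between hot insertions, per the guideline


def earliest_insert_offsets(drive_count, min_wait=MIN_WAIT_SECONDS):
    """Earliest time offsets (in seconds) at which each drive may be inserted.

    These are lower bounds only: a drive that takes longer than
    min_wait to be identified delays every drive after it.
    """
    if drive_count < 0:
        raise ValueError("drive_count must not be negative")
    return [index * min_wait for index in range(drive_count)]


def may_insert_next(seconds_since_last_insert, last_drive_identified):
    """True only if the wait has elapsed and the previous drive is identified."""
    return seconds_since_last_insert >= MIN_WAIT_SECONDS and last_drive_identified
```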

If you are using the drives to create a RAID, follow these restrictions and guidelines:

· Make sure the NVMe VROC module is installed before you create a RAID.

· For efficient use of storage, use drives that have the same capacity to build a RAID. If the drives have different capacities, the lowest capacity is used across all drives in the RAID. A drive with extra capacity cannot be used to build other RAIDs.

· If the installed drive contains RAID information, you must clear the information before configuring RAIDs. For more information, see the storage controller user guide for the server.

Procedure

1. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

2. Remove the drive blank. For more information, see "Installing SAS/SATA drives."

3. Install the drive. For more information, see "Installing SAS/SATA drives."

4. Install the removed security bezel. For more information, see "Installing the security bezel."

Verifying the installation

Use the following methods to verify that the drive is installed correctly:

· Observe the drive LEDs to verify that the drive is operating correctly. For more information, see "Drive LEDs."

· Access the CLI or GUI of the server to verify the drive properties (including capacity) and state.

Installing power supplies

Guidelines

CAUTION: To avoid hardware damage, do not use third-party power supplies.

· The power supplies are hot swappable.

· The server provides four power supply slots. Before powering on the server, install two power supplies to achieve 1+1 redundancy, or install four power supplies to achieve N+N redundancy.

· Make sure the installed power supplies are the same model. HDM performs a power supply consistency check and generates an alarm if the power supply models are different.

· Install power supplies in power supply slots 1 through 4 in sequence.
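
The power supply guidelines above can be expressed as a simple planning check. This is a sketch under stated assumptions: `check_power_supplies` is a hypothetical helper, the model names in the usage are placeholders, and "in sequence" is interpreted here as filling the lowest-numbered slots first.

```python
def check_power_supplies(slots):
    """Check a planned power supply layout against the guidelines.

    slots maps a slot number (1 through 4) to the model name of the
    power supply planned for that slot. Returns a list of problems;
    an empty list means the layout matches the guidelines as read here.
    """
    problems = []
    count = len(slots)
    if count not in (2, 4):
        problems.append("install two (1+1) or four (N+N) power supplies")
    # Assumption: "in sequence" means slots 1..count are the ones filled.
    if sorted(slots) != list(range(1, count + 1)):
        problems.append("fill power supply slots 1 through 4 in sequence")
    if len(set(slots.values())) > 1:
        problems.append("installed power supplies are not the same model")
    return problems
```

For example, `check_power_supplies({1: "PSU-A", 2: "PSU-A"})` returns an empty list, while a layout that uses slots 1 and 3 is reported as out of sequence.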

Procedure

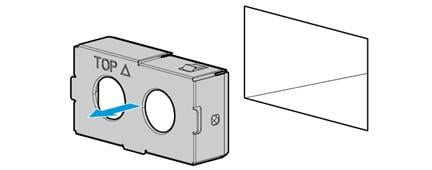

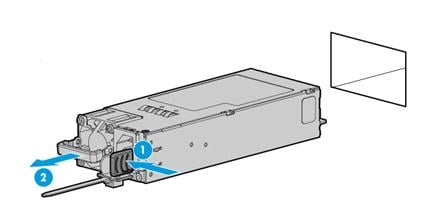

1. As shown in Figure 19, remove the power supply blank from the target power supply slot.

Figure 19 Removing the power supply blank

2. Align the power supply with the slot, making sure its fan is on the left.

3. Push the power supply into the slot until it snaps into place.

Figure 20 Installing a power supply

4. Connect the power cord. For more information, see "Connecting the power cord."

Verifying the installation

Use one of the following methods to verify that the power supply is installed correctly:

· Observe the power supply LED to verify that the power supply is operating correctly. For more information about the power supply LED, see LEDs in "Rear panel."

· Log in to HDM to verify that the power supply is operating correctly. For more information, see HDM online help.

Installing a compute module

Guidelines

· The server supports two compute modules: compute module 1 and compute module 2. For more information, see "Front panel."

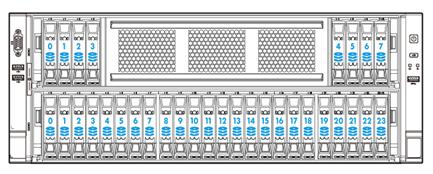

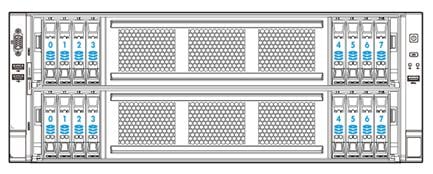

· The server supports 24SFF and 8SFF compute modules. For more information, see "Front panel of a compute module."

Procedure

The installation procedure is the same for 24SFF and 8SFF compute modules. This section uses an 8SFF compute module as an example.

To install a compute module:

1. Identify the installation location of the compute module. For more information about the installation location, see "Drive configurations and numbering."

2. Power off the server. For more information, see "Powering off the server."

3. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

4. Press the latches at both ends of the compute module blank, and pull the blank outward, as shown in Figure 21.

Figure 21 Removing the compute module blank

5. Install the compute module:

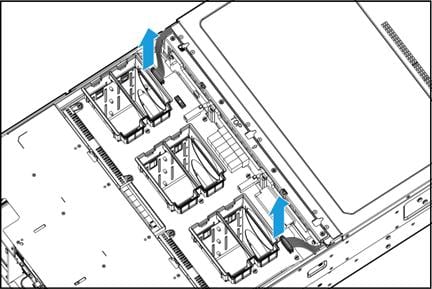

a. Press the clips at both ends of the compute module inward to release the locking levers, as shown in Figure 22.

Figure 22 Releasing the locking levers

b. Push the module gently into the slot until you cannot push it further. Then, close the locking levers at both ends to secure the module in place, as shown in Figure 23.

CAUTION: To avoid module connector damage, do not use excessive force when pushing the module into the slot.

Figure 23 Installing the compute module

6. Install the removed security bezel. For more information, see "Installing the security bezel."

7. Connect the power cord. For more information, see "Connecting the power cord."

8. Power on the server. For more information, see "Powering on the server."

Verifying the installation

Log in to HDM to verify that the compute module is operating correctly. For more information, see HDM online help.

Installing air baffles

For more information about air baffles available for the server, see "Air baffles."

Installing the low mid air baffle or GPU module air baffle to a compute module

1. Power off the server. For more information, see "Powering off the server."

2. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

3. Remove the compute module. For more information, see "Removing a compute module."

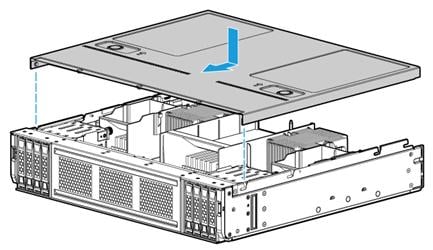

4. Remove the compute module access panel. For more information, see "Replacing a compute module access panel."

5. Remove the high mid air baffle. For more information, see "Replacing air baffles in a compute module."

6. Install the low mid air baffle or GPU module air baffle.

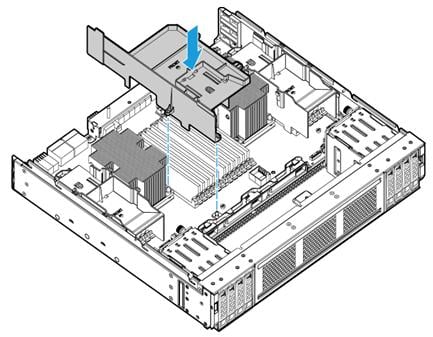

¡ To install the low mid air baffle, align the two pin holes with the guide pins on the processor socket and the cable clamp. Then, gently press down the air baffle onto the main board of the compute module, as shown in Figure 24.

Figure 24 Installing the low mid air baffle

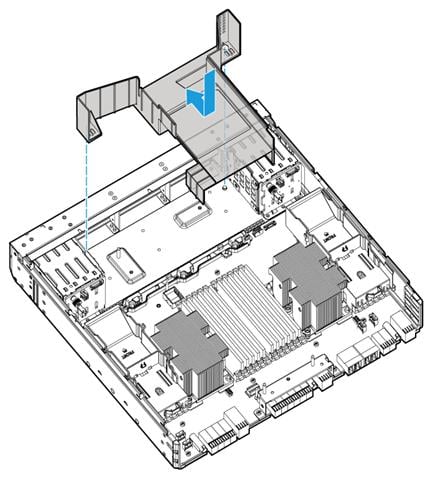

¡ To install the GPU module air baffle, align the two pin holes with the guide pins (near the compute module front panel) in the compute module. Then, gently press down the air baffle onto the main board and push it forward until you cannot push it any further, as shown in Figure 25.

Figure 25 Installing the GPU module air baffle

7. Install a riser card and a PCIe module in the compute module. For more information, see "Installing a riser card and a PCIe module in a compute module."

8. Install the compute module access panel. For more information, see "Replacing a compute module access panel."

9. Install the compute module. For more information, see "Installing a compute module."

10. Install the removed security bezel. For more information, see "Installing the security bezel."

11. Connect the power cord. For more information, see "Connecting the power cord."

12. Power on the server. For more information, see "Powering on the server."

Installing the GPU module air baffle to a rear riser card

The GPU module air baffle hinders GPU module installation. Make sure GPU modules have been installed to the rear riser card before you install the GPU module air baffle.

To install the GPU module air baffle to a rear riser card:

1. Power off the server. For more information, see "Powering off the server."

2. Disconnect external cables from the riser card, if the cables hinder air baffle installation.

3. Remove the riser card. For more information, see "Replacing a riser card and PCIe module at the server rear."

4. Remove the riser card air baffle.

5. Install PCIe modules to the riser card. For more information, see "Installing riser cards and PCIe modules at the server rear."

6. If an installed PCIe module requires external cables, remove the air baffle panel closer to the PCIe module installation slot for the cables to pass through.

Figure 26 Removing an air baffle panel from the GPU module air baffle

7. Install the GPU module air baffle to the rear riser card. Tilt and insert the air baffle into the riser card, and then push the riser card until it snaps into place, as shown in Figure 27.

Figure 27 Installing the GPU module air baffle to the rear riser card

8. Install the rear riser card. For more information, see "Installing riser cards and PCIe modules at the server rear."

9. (Optional.) Connect external cables to the riser card.

10. Connect the power cord. For more information, see "Connecting the power cord."

11. Power on the server. For more information, see "Powering on the server."

Installing riser cards and PCIe modules

The server provides two PCIe riser connectors and three PCIe riser bays. The three riser bays are at the server rear and each compute module provides one riser connector. For more information about the locations of the bays and connectors, see "Rear panel view" and "Main board components", respectively.

Guidelines

· You can install a small-sized PCIe module in a large-sized PCIe slot. For example, an LP PCIe module can be installed in an FHFL PCIe slot.

· A PCIe slot can supply power to the installed PCIe module if the maximum power consumption of the module does not exceed 75 W. If the maximum power consumption exceeds 75 W, a power cord is required.

· Make sure the number of installed PCIe modules requiring PCIe I/O resources does not exceed eleven. For more information about PCIe modules requiring PCIe I/O resources, see "PCIe modules."

· If a processor is faulty or absent, the corresponding PCIe slots are unavailable. For more information about the mapping between processors and PCIe slots, see "Riser cards."

· For more information about riser card installation location, see Table 6.

Table 6 Riser card installation location

| PCIe riser connector or bay | Riser card name | Available riser cards |
|---|---|---|
| PCIe riser connector 0 (in a compute module) | Riser card 0 | RS-FHHL-G3, RS-GPU-R6900-G3 (available only for 8SFF compute modules) |
| PCIe riser bay 1 (at the server rear) | Riser card 1 | RS-4*FHHL-G3, RS-6*FHHL-G3-1 |
| PCIe riser bay 2 (at the server rear) | Riser card 2 | RS-6*FHHL-G3-2 |
| PCIe riser bay 3 (at the server rear) | Riser card 3 | RS-4*FHHL-G3, RS-6*FHHL-G3-1 |
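
The guidelines and Table 6 can be encoded as a lookup for planning purposes. The sketch below is illustrative: the location keys ("connector 0", "bay 1", and so on) and the function names are hypothetical labels chosen for this example, while the riser card models, the 75 W limit, and the limit of eleven modules come from this section.

```python
# Riser cards allowed at each location, transcribed from Table 6.
ALLOWED_RISER_CARDS = {
    "connector 0": {"RS-FHHL-G3", "RS-GPU-R6900-G3"},
    "bay 1": {"RS-4*FHHL-G3", "RS-6*FHHL-G3-1"},
    "bay 2": {"RS-6*FHHL-G3-2"},
    "bay 3": {"RS-4*FHHL-G3", "RS-6*FHHL-G3-1"},
}

SLOT_POWER_LIMIT_W = 75        # maximum power a PCIe slot can supply
MAX_IO_RESOURCE_MODULES = 11   # limit on modules requiring PCIe I/O resources


def riser_allowed(location, riser_card, compute_module=None):
    """True if the riser card may be installed at the given location.

    compute_module ("8SFF" or "24SFF") matters only for the
    RS-GPU-R6900-G3, which is available only for 8SFF compute modules.
    """
    if riser_card not in ALLOWED_RISER_CARDS.get(location, set()):
        return False
    if riser_card == "RS-GPU-R6900-G3":
        return compute_module == "8SFF"
    return True


def needs_power_cord(max_power_w):
    """True if the module draws more than the slot can supply."""
    return max_power_w > SLOT_POWER_LIMIT_W


def io_resource_count_ok(module_count):
    """True if the number of modules requiring PCIe I/O resources is within the limit."""
    return module_count <= MAX_IO_RESOURCE_MODULES
```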

Installing a riser card and a PCIe module in a compute module

You can install an RS-GPU-R6900-G3 riser card only in an 8SFF compute module.

The installation method is the same for the RS-FHHL-G3 and RS-GPU-R6900-G3. This section uses the RS-FHHL-G3 as an example.

Procedure

1. Power off the server. For more information, see "Powering off the server."

2. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

3. Remove the compute module. For more information, see "Removing a compute module."

4. Remove the compute module access panel. For more information, see "Replacing a compute module access panel."

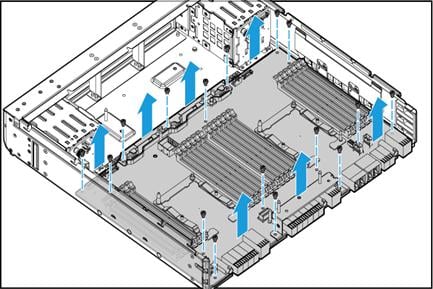

5. Remove the high mid air baffle. For more information, see "Replacing air baffles in a compute module."

6. Install the low mid air baffle. For more information, see "Installing the low mid air baffle or GPU module air baffle to a compute module."

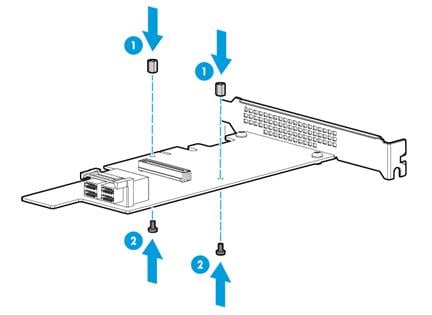

7. Install a PCIe module to the riser card:

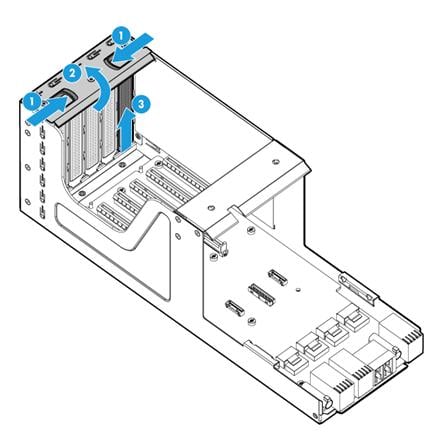

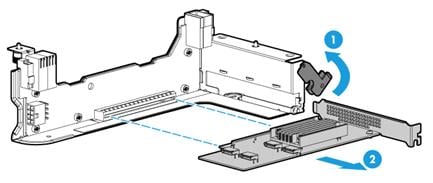

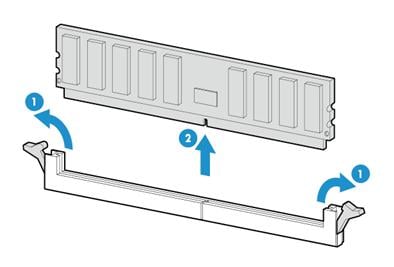

a. Hold and rotate the latch upward to open it, as shown in Figure 28.

Figure 28 Opening the latch on the riser card

b. Insert the PCIe module into the slot along the guide rails and close the latch on the riser card, as shown in Figure 29.

Figure 29 Installing the PCIe module

c. Connect PCIe module cables, if any, to the PCIe module.

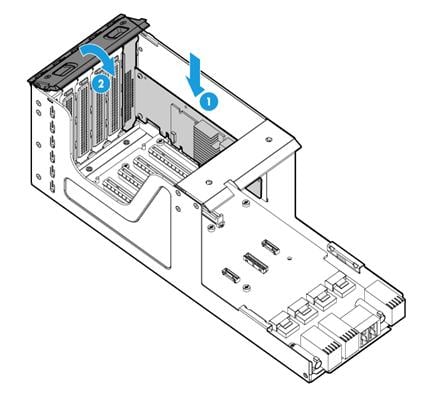

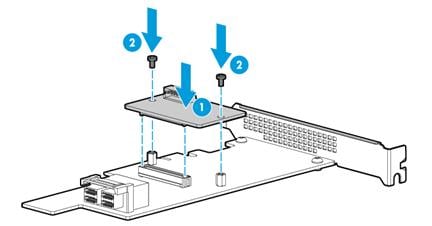

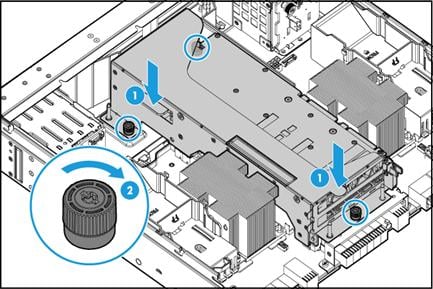

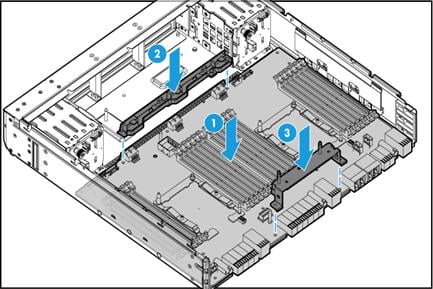

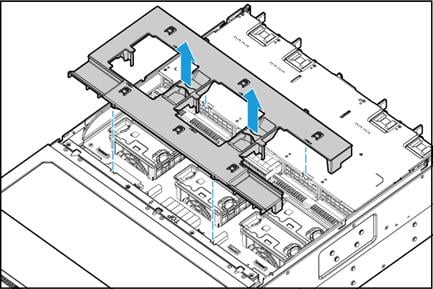

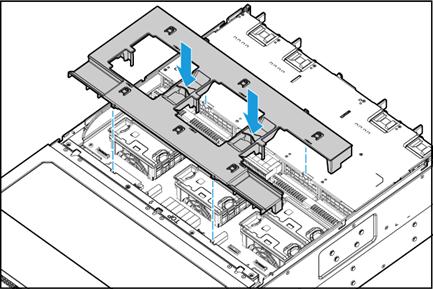

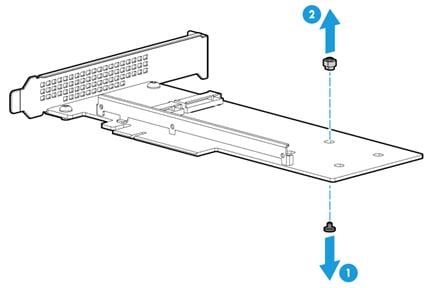

8. Install the riser card to the compute module, as shown in Figure 30:

a. Align the two pin holes on the riser card with the guide pins on the main board, and then insert the riser card in the PCIe riser connector.

b. Fasten the captive screws to secure the riser card to the main board.

Figure 30 Installing the riser card to the compute module

c. Connect PCIe module cables, if any, to the drive backplane.

9. Install the compute module access panel. For more information, see "Replacing a compute module access panel."

10. Install the compute module. For more information, see "Installing a compute module."

11. Install the removed security bezel. For more information, see "Installing the security bezel."

12. Connect the power cord. For more information, see "Connecting the power cord."

13. Power on the server. For more information, see "Powering on the server."

Verifying the installation

Log in to HDM to verify that the PCIe module on the riser card is operating correctly. For more information, see HDM online help.

Installing riser cards and PCIe modules at the server rear

The procedure is the same for installing RS-6*FHHL-G3-1 and RS-6*FHHL-G3-2 riser cards. This section uses the RS-6*FHHL-G3-1 riser card as an example.

Procedure

1. Power off the server. For more information, see "Powering off the server."

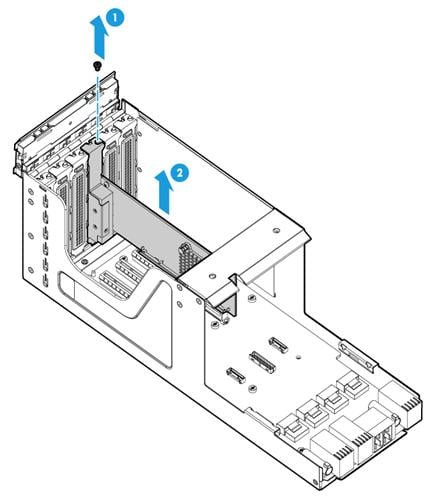

2. Remove the riser card blank. As shown in Figure 31, hold the riser card blank with your fingers reaching into the holes on the blank and pull the blank out.

Figure 31 Removing the rear riser card blank

3. Install the PCIe module to the riser card:

a. Remove the riser card air baffle, if the PCIe module to be installed needs to connect cables. For more information, see "Replacing a riser card air baffle."

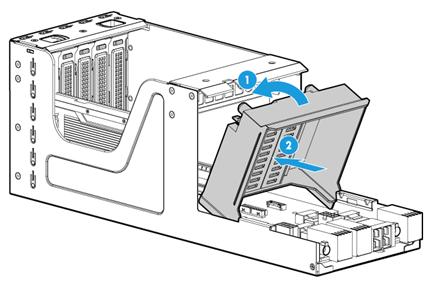

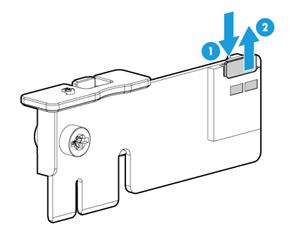

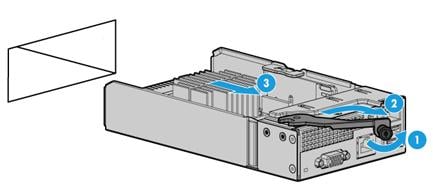

b. Open the riser card cover. As shown by callouts 1 and 2 in Figure 32, press the two locking tabs on the riser card cover and rotate the cover outward.

c. Pull the PCIe module blank out of the slot, as shown by callout 3.

Figure 32 Removing the PCIe module blank

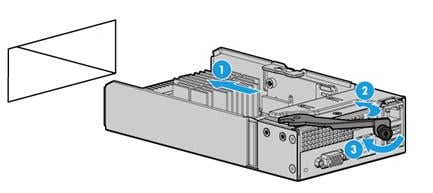

d. Insert the PCIe module into the PCIe slot along the guide rails, and then close the riser card cover, as shown in Figure 33.

Figure 33 Installing a PCIe module to the riser card

e. Connect PCIe module cables, if any, to the PCIe module.

f. Install the removed riser card air baffle. For more information, see "Replacing a riser card air baffle."

4. Install the riser card to the server.

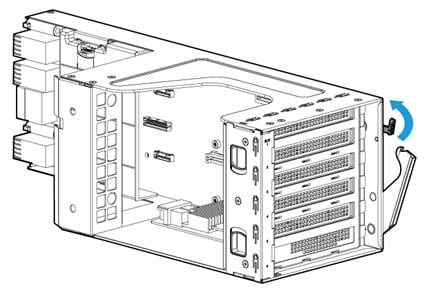

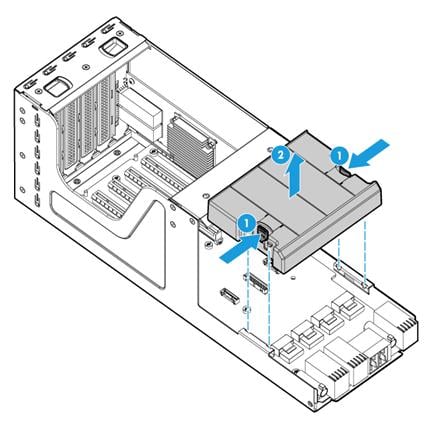

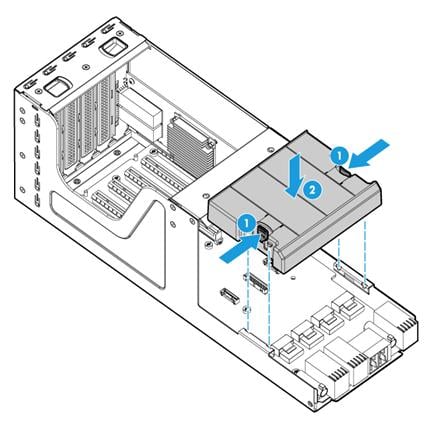

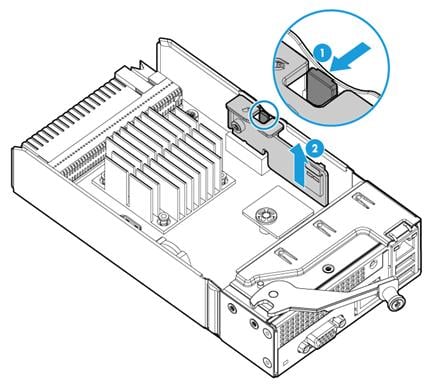

a. Unlock the riser card. As shown in Figure 34, rotate the latch on the riser card upward to release the ejector lever.

Figure 34 Unlocking the riser card

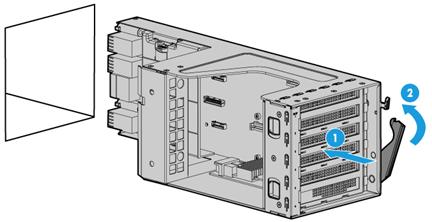

b. Install the riser card to the server. As shown in Figure 35, gently push the riser card into the bay until you cannot push it further, and then close the ejector lever to secure the riser card.

Figure 35 Installing the riser card to the server

5. Connect PCIe module cables, if any.

6. Connect the power cord. For more information, see "Connecting the power cord."

7. Power on the server. For more information, see "Powering on the server."

Verifying the installation

Log in to HDM to verify that the PCIe module on the riser card is operating correctly. For more information, see HDM online help.

Installing storage controllers and power fail safeguard modules

A power fail safeguard module provides a flash card and a supercapacitor. When a system power failure occurs, this supercapacitor can provide power for a minimum of 20 seconds. During this interval, the storage controller transfers data from DDR memory to the flash card, where the data remains indefinitely or until the controller retrieves the data.

Guidelines

Make sure the power fail safeguard module is compatible with its connected storage controller. For the compatibility matrix, see "Storage controllers."

The supercapacitor might have a low charge after the power fail safeguard module is installed or after the server is powered on. If the system displays that the supercapacitor has low charge, no action is required. The system will charge the supercapacitor automatically.

Install storage controllers only in PCIe slots on riser card 1.

You cannot install the HBA-H460-B1 or RAID-P460-B4 storage controller on a server installed with one of the following storage controllers:

· HBA-LSI-9300-8i-A1-X.

· HBA-LSI-9311-8i.

· HBA-LSI-9440-8i.

· RAID-LSI-9361-8i(1G)-A1-X.

· RAID-LSI-9361-8i(2G)-1-X.

· RAID-LSI-9460-8i(2G).

· RAID-LSI-9460-8i(4G).

· RAID-LSI-9460-16i(4G).
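
The coexistence restriction above can be checked with a short set comparison. This is a minimal sketch: `controller_conflicts` is a hypothetical helper, and the two sets simply transcribe the model names from this section.

```python
# Controllers that cannot share a server with the models in LSI_GROUP.
RESTRICTED_GROUP = {"HBA-H460-B1", "RAID-P460-B4"}

# Controllers listed in the restriction above.
LSI_GROUP = {
    "HBA-LSI-9300-8i-A1-X",
    "HBA-LSI-9311-8i",
    "HBA-LSI-9440-8i",
    "RAID-LSI-9361-8i(1G)-A1-X",
    "RAID-LSI-9361-8i(2G)-1-X",
    "RAID-LSI-9460-8i(2G)",
    "RAID-LSI-9460-8i(4G)",
    "RAID-LSI-9460-16i(4G)",
}


def controller_conflicts(installed_models):
    """Return sorted (restricted, lsi) pairs that must not be installed together."""
    models = set(installed_models)
    restricted = models & RESTRICTED_GROUP
    lsi = models & LSI_GROUP
    return sorted((r, l) for r in restricted for l in lsi)
```

An empty result means the planned set of controllers does not violate this particular restriction. It does not check the other guidelines, such as installing storage controllers only on riser card 1.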

Procedure

The procedure is the same for installing storage controllers of different models. This section uses the RAID-LSI-9361-8i(1G)-A1-X storage controller as an example.

To install a storage controller:

1. Power off the server. For more information, see "Powering off the server."

2. Disconnect external cables from the rear riser card if the cables hinder storage controller installation.

3. Remove riser card 1. For more information, see "Replacing a riser card and PCIe module at the server rear."

4. (Optional.) Install the flash card of the power fail safeguard module to the storage controller:

IMPORTANT: Skip this step if no power fail safeguard module is required or the storage controller has a built-in flash card. For information about storage controllers with a built-in flash card, see "Storage controllers."

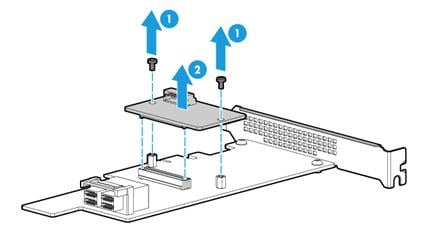

a. Install the two internal threaded studs supplied with the power fail safeguard module on the storage controller.

Figure 36 Installing the internal threaded studs

b. Use screws to secure the flash card on the storage controller.

Figure 37 Installing the flash card

5. Install the storage controller to the riser card:

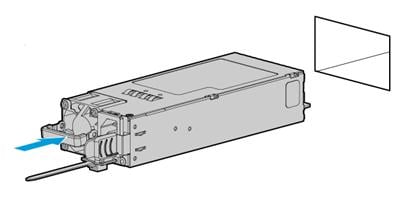

a. (Optional.) Connect the flash card cable (P/N 0404A0VU) to the flash card.

Figure 38 Connecting the flash card cable to the flash card

b. Install the storage controller to the riser card. For more information, see "Installing riser cards and PCIe modules at the server rear."

c. (Optional.) Connect the flash card cable to the riser card. For more information, see "Connecting the flash card on a storage controller."

d. Connect the storage controller cable to the riser card. For more information, see "Storage controller cabling in riser cards at the server rear."

e. Install the riser card air baffle. For more information, see "Replacing a riser card air baffle."

6. Install the riser card to the server. For more information, see "Installing riser cards and PCIe modules at the server rear."

7. (Optional.) Install the supercapacitor:

a. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

b. Remove the corresponding compute module. For more information, see "Removing a compute module." For more information about the mapping relationship between PCIe slot and compute module, see "Riser cards."

c. Remove the compute module access panel. For more information, see "Replacing a compute module access panel."

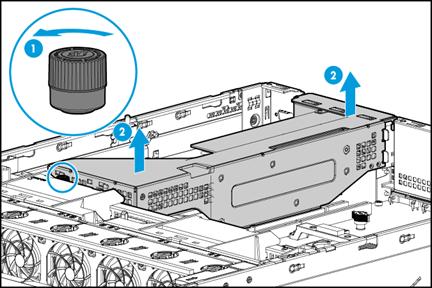

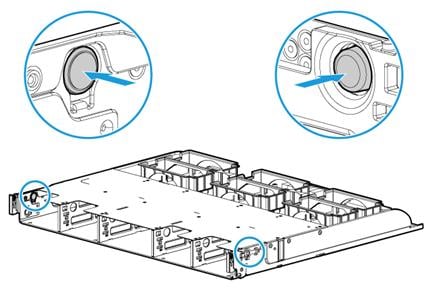

d. Install the supercapacitor holder to the right air baffle. As shown in Figure 39, slide the holder gently until it snaps into place.

Figure 39 Installing the supercapacitor holder

e. Connect one end of the supercapacitor cable (P/N 0404A0VT) provided with the flash card to the supercapacitor cable, as shown in Figure 40.

Figure 40 Connecting the supercapacitor cable

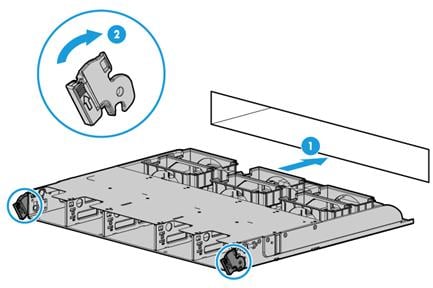

f. Insert the cableless end of the supercapacitor into the holder. Pull a clip on the holder, insert the other end of the supercapacitor into the holder, and then release the clip, as shown by callouts 1, 2, and 3 in Figure 41.

g. Connect the other end of the supercapacitor cable to supercapacitor connector 1 on the compute module main board, as shown by callout 4 in Figure 41.

Figure 41 Installing the supercapacitor and connecting the supercapacitor cable

h. Install the compute module access panel. For more information, see "Replacing a compute module access panel."

i. Install the compute module. For more information, see "Installing a compute module."

j. Install the removed security bezel. For more information, see "Installing the security bezel."

8. Connect the power cord. For more information, see "Connecting the power cord."

9. Power on the server. For more information, see "Powering on the server."

Verifying the installation

Log in to HDM to verify that the storage controller, flash card, and supercapacitor are operating correctly. For more information, see HDM online help.

Installing GPU modules

Guidelines

· A riser card is required when you install a GPU module.

· Make sure the number of installed GPU modules requiring PCIe I/O resources does not exceed eleven. For more information about GPU modules requiring PCIe I/O resources, see "GPU modules."

· The available GPU modules and installation positions vary by riser card model and position. For more information, see "GPU module and riser card compatibility."

· For heat dissipation purposes, if a 24SFF compute module and a GPU-P4-X or GPU-T4 GPU module are installed, do not install processors whose power exceeds 165 W. For more information about processor power, see "Processors."
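
The heat dissipation guideline above amounts to a conditional limit on processor power. The following sketch shows one way to express it; `processor_power_ok` is a hypothetical name, and the model names and the 165 W limit are taken from the guideline.

```python
THERMALLY_LIMITED_GPUS = {"GPU-P4-X", "GPU-T4"}
MAX_PROCESSOR_POWER_W = 165


def processor_power_ok(compute_module, gpu_models, processor_power_w):
    """True if the processor power is acceptable for the given configuration.

    The 165 W limit applies only when a 24SFF compute module is
    combined with a GPU-P4-X or GPU-T4 GPU module.
    """
    limited = compute_module == "24SFF" and any(
        gpu in THERMALLY_LIMITED_GPUS for gpu in gpu_models
    )
    return not limited or processor_power_w <= MAX_PROCESSOR_POWER_W
```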

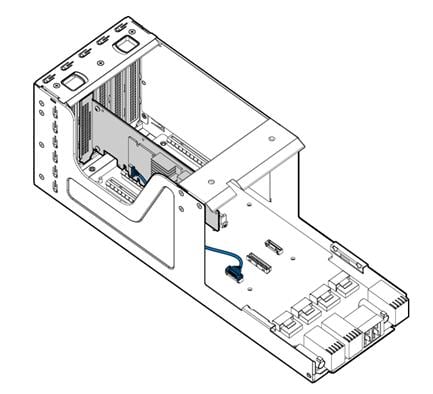

Installing a GPU module in a compute module

Guidelines

You can install a GPU module only in an 8SFF compute module. An RS-GPU-R6900-G3 riser card is required for the installation.

For the GPU module to take effect, make sure processor 2 of the compute module is in position.

Procedure

The procedure is the same for installing GPU modules GPU-P4-X, GPU-P40-X, GPU-T4, GPU-P100, GPU-V100, and GPU-V100-32G. This section uses the GPU-P100 as an example.

To install a GPU module in a compute module:

1. Power off the server. For more information, see "Powering off the server."

2. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

3. Remove the compute module. For more information, see "Removing a compute module."

4. Remove the compute module access panel. For more information, see "Replacing a compute module access panel."

5. Remove the high mid air baffle. For more information, see "Replacing air baffles in a compute module."

6. Install the GPU module air baffle to the compute module. For more information, see "Installing the low mid air baffle or GPU module air baffle to a compute module."

7. If the GPU module is dual-slot wide, attach the support bracket provided with the GPU module to the GPU module. As shown in Figure 42, align screw holes in the support bracket with the installation holes in the GPU module. Then, use screws to attach the support bracket to the GPU module.

Figure 42 Installing the GPU module support bracket

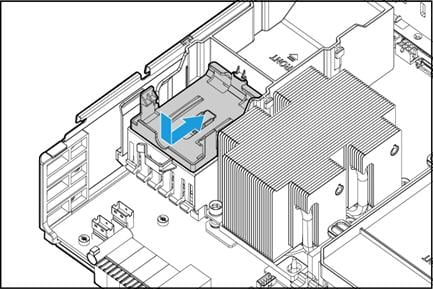

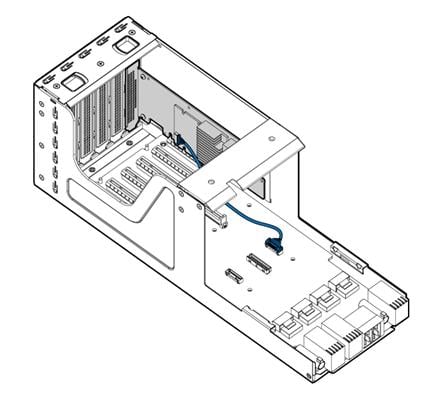

8. Install the GPU module and connect the GPU power cord:

a. Hold and rotate the latch upward to open it. For more information, see "Installing a riser card and a PCIe module in a compute module."

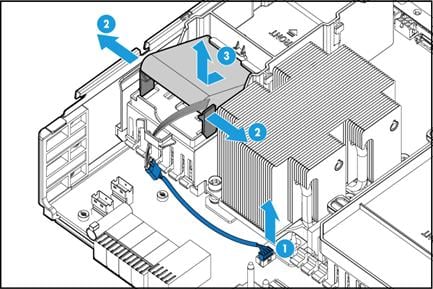

b. Connect the riser power end of the power cord (P/N 0404A0UC) to the riser card, as shown by callout 1 in Figure 43.

c. Insert the GPU module into PCIe slot 1 along the guide rails, as shown by callout 2 in Figure 43.

d. Connect the other end of the power cord to the GPU module and close the latch on the riser card, as shown by callouts 3 and 4 in Figure 43.

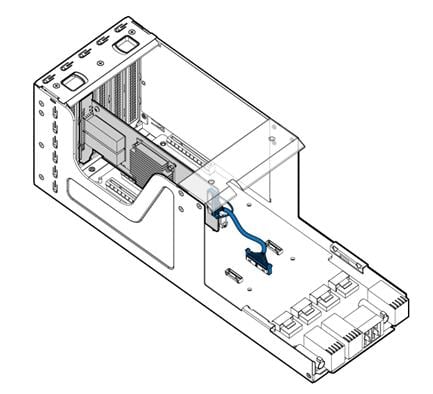

Figure 43 Installing a GPU module

9. Install the riser card on PCIe riser connector 0. Align the pin holes on the riser card with the guide pins on the main board, and place the riser card on the main board. Then, fasten the captive screws to secure the riser card into place, as shown in Figure 44.

Figure 44 Installing the riser card

10. Install the compute module access panel. For more information, see "Replacing a compute module access panel."

11. Install the compute module. For more information, see "Installing a compute module."

12. Install the removed security bezel. For more information, see "Installing the security bezel."

13. Connect the power cord. For more information, see "Connecting the power cord."

14. Power on the server. For more information, see "Powering on the server."

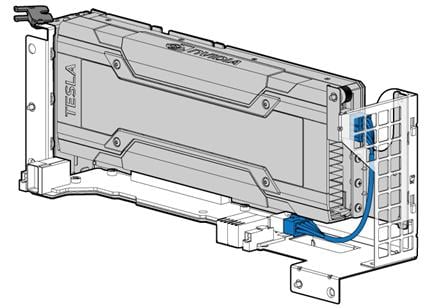

Installing a GPU module to a rear riser card

Guidelines

You can install GPU modules only to the riser card in riser bay 1 or 3.

To install only one GPU module to a rear riser card, install the GPU module in PCIe slot 2. To install two GPU modules to a rear riser card, install the GPU modules in PCIe slots 2 and 6.

The procedure is the same for GPU modules GPU-P4-X and GPU-T4. This section uses GPU module GPU-P4-X as an example.
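
The slot selection rule in these guidelines can be written as a small lookup. This is an illustrative sketch with a hypothetical function name; it covers only the one-GPU and two-GPU cases that the guidelines describe.

```python
GPU_RISER_BAYS = (1, 3)  # rear riser bays that accept GPU modules


def rear_gpu_slots(riser_bay, gpu_count):
    """Return the PCIe slots to use for GPU modules on a rear riser card."""
    if riser_bay not in GPU_RISER_BAYS:
        raise ValueError("GPU modules can be installed only in riser bay 1 or 3")
    if gpu_count == 1:
        return [2]
    if gpu_count == 2:
        return [2, 6]
    raise ValueError("the guidelines describe only one or two GPU modules per rear riser card")
```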

Procedure

1. Power off the server. For more information, see "Powering off the server."

2. Disconnect external cables from the riser card, if the cables hinder GPU module installation.

3. Remove the riser card. For more information, see "Replacing a riser card and PCIe module at the server rear."

4. Install the GPU module to the riser card. For more information, see "Installing riser cards and PCIe modules at the server rear."

5. Install the GPU module air baffle to the riser card. For more information, see "Installing the GPU module air baffle to a rear riser card."

6. Install the riser card to the server. For more information, see "Installing riser cards and PCIe modules at the server rear."

7. Connect the power cord. For more information, see "Connecting the power cord."

8. Power on the server. For more information, see "Powering on the server."

Installing Ethernet adapters

Guidelines

You can install an mLOM Ethernet adapter only in the mLOM Ethernet adapter connector on riser card 1. For more information about the connector location, see "Riser cards." When the mLOM Ethernet adapter is installed, PCIe slot 4 on riser card 1 becomes unavailable.

To install a PCIe Ethernet adapter that supports NCSI, install it in PCIe slot 3 on riser card 1. If you install the Ethernet adapter in another slot, NCSI does not take effect.

By default, port 1 on the mLOM Ethernet adapter acts as the HDM shared network port. If only a PCIe Ethernet adapter exists and the PCIe Ethernet adapter supports NCSI, port 1 on the PCIe Ethernet adapter acts as the HDM shared network port. You can configure another port on the PCIe Ethernet adapter as the HDM shared network port from the HDM Web interface. For more information, see HDM online help.
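
The NCSI placement rule and the default HDM shared network port can be summarized as follows. This is a sketch under stated assumptions: the function names and the returned labels are hypothetical, and the logic covers only the defaults described above, not a port that you reconfigure from the HDM Web interface.

```python
NCSI_RISER_CARD = 1  # riser card on which NCSI takes effect
NCSI_PCIE_SLOT = 3   # PCIe slot on that riser card


def ncsi_effective(supports_ncsi, riser_card, pcie_slot):
    """True if NCSI takes effect for a PCIe Ethernet adapter in this position."""
    return (
        supports_ncsi
        and riser_card == NCSI_RISER_CARD
        and pcie_slot == NCSI_PCIE_SLOT
    )


def default_shared_port(mlom_installed, pcie_ncsi_effective):
    """Return a label for the default HDM shared network port, or None."""
    if mlom_installed:
        return "mLOM port 1"
    if pcie_ncsi_effective:
        return "PCIe port 1"
    return None
```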

Installing an mLOM Ethernet adapter

The procedure is the same for all mLOM Ethernet adapters. This section uses the NIC-GE-4P-360T-L3-M mLOM Ethernet adapter as an example.

Procedure

1. Power off the server. For more information, see "Powering off the server."

2. Disconnect external cables from riser card 1, if the cables hinder mLOM Ethernet adapter installation.

3. Remove riser card 1. For more information, see "Replacing a riser card and PCIe module at the server rear."

4. Install the mLOM Ethernet adapter to the riser card:

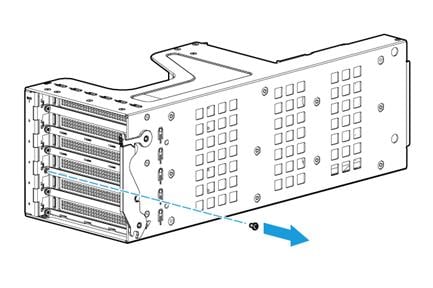

a. Remove the screw from the mLOM Ethernet adapter slot and then remove the blank, as shown in Figure 45.

b. Open the riser card cover. For more information, see "Installing riser cards and PCIe modules at the server rear."

c. Remove the PCIe module in PCIe slot 4 on the riser card, if a PCIe module is installed. For more information, see "Replacing a riser card and PCIe module at the server rear."

Remove the PCIe module blank, if no PCIe module is installed. For more information, see "Installing riser cards and PCIe modules at the server rear."

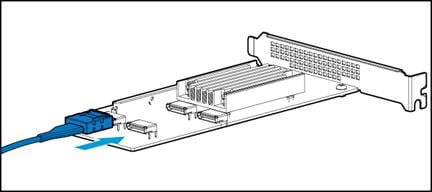

d. Insert the mLOM Ethernet adapter into the slot along the guide rails, and fasten the screw to secure the Ethernet adapter in place, as shown in Figure 46. Then, close the riser card cover.

Figure 46 Installing an mLOM Ethernet adapter to the riser card

5. If you have removed the riser card air baffle, install the removed riser card air baffle. For more information, see "Replacing a riser card air baffle."

6. Install the riser card to the server. For more information, see "Installing riser cards and PCIe modules at the server rear."

7. Connect network cables to the mLOM Ethernet adapter.

8. Connect the power cord. For more information, see "Connecting the power cord."

9. Power on the server. For more information, see "Powering on the server."

Verifying the installation

Log in to HDM to verify that the mLOM Ethernet adapter is operating correctly. For more information, see HDM online help.

Installing a PCIe Ethernet adapter

Procedure

1. Power off the server. For more information, see "Powering off the server."

2. Disconnect external cables from the target riser card if the cables hinder PCIe Ethernet adapter installation.

3. Remove the riser card. For more information, see "Replacing a riser card and PCIe module at the server rear."

4. Install the PCIe Ethernet adapter to the riser card. For more information, see "Installing riser cards and PCIe modules."

5. (Optional.) If the PCIe Ethernet adapter supports NCSI, connect the NCSI cable from the PCIe Ethernet adapter to the NCSI connector on the riser card. For more information about the NCSI connector location, see "Riser cards." For more information about the cable connection method, see "Connecting the NCSI cable for a PCIe Ethernet adapter."

6. If you have removed the riser card air baffle, install the removed riser card air baffle. For more information, see "Replacing a riser card air baffle."

7. Install the riser card to the server. For more information, see "Installing riser cards and PCIe modules at the server rear."

8. Connect network cables to the PCIe Ethernet adapter.

9. Connect the power cord. For more information, see "Connecting the power cord."

10. Power on the server. For more information, see "Powering on the server."

Verifying the installation

Log in to HDM to verify that the PCIe Ethernet adapter is operating correctly. For more information, see HDM online help.

Installing PCIe M.2 SSDs

Guidelines

CAUTION: To avoid thermal damage to processors, do not install PCIe M.2 SSDs in a 24SFF compute module if an 8180, 8180M, 8168, 6154, 6146, 6144, or 6244 processor is installed in the compute module.

An M.2 transfer module and a riser card are required to install PCIe M.2 SSDs.

To ensure high availability, install two PCIe M.2 SSDs of the same model.

You can install a maximum of two PCIe M.2 SSDs on an M.2 transfer module. The installation procedure is the same for the two SSDs.
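The following sketch shows one way to check a planned PCIe M.2 SSD installation against these guidelines before opening the server. It is an illustration only: the function name, its parameters, and the message strings are hypothetical, and the processor list is copied from the caution above.

```python
from typing import Iterable, List, Optional, Tuple

# Processor models named in the caution for 24SFF compute modules.
RESTRICTED_PROCESSORS = frozenset(
    {"8180", "8180M", "8168", "6154", "6146", "6144", "6244"}
)
MAX_SSDS_PER_TRANSFER_MODULE = 2


def check_m2_plan(ssd_models: List[str],
                  compute_module: Optional[str] = None,
                  processors: Iterable[str] = ()) -> Tuple[List[str], List[str]]:
    """Return (errors, warnings) for one M.2 transfer module.

    Pass compute_module=None when the SSDs go on a rear riser card.
    """
    errors: List[str] = []
    warnings: List[str] = []

    if len(ssd_models) > MAX_SSDS_PER_TRANSFER_MODULE:
        errors.append("too many SSDs for one M.2 transfer module")

    if compute_module == "24SFF":
        blocked = sorted(RESTRICTED_PROCESSORS & set(processors))
        if blocked:
            errors.append("thermal risk: " + ", ".join(blocked))

    # High availability calls for two SSDs of the same model.
    if len(ssd_models) != 2 or len(set(ssd_models)) != 1:
        warnings.append("for high availability, install two SSDs of the same model")

    return errors, warnings
```

Errors correspond to the caution and the hard limit of two SSDs per transfer module. The same-model rule is reported as a warning because the guidelines present it as a high-availability recommendation rather than a requirement.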

Installing a PCIe M.2 SSD in a compute module

1. Power off the server. For more information, see "Powering off the server."

2. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

3. Remove the compute module. For more information, see "Removing a compute module."

4. Remove the compute module access panel. For more information, see "Replacing a compute module access panel."

5. Remove the high mid air baffle. For more information, see "Replacing air baffles in a compute module."

6. Install the low mid air baffle. For more information, see "Installing the low mid air baffle or GPU module air baffle to a compute module."

7. Install the PCIe M.2 SSD to the M.2 transfer module:

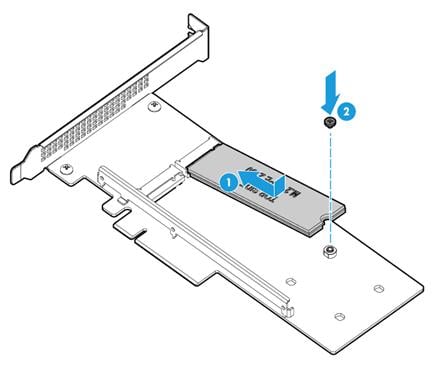

a. Install the internal threaded stud supplied with the transfer module onto the transfer module, as shown in Figure 47.

Figure 47 Installing the internal threaded stud

b. Insert the connector of the SSD into the socket, and push down the other end of the SSD. Then, fasten the screw provided with the transfer module to secure the SSD in place, as shown in Figure 48.

Figure 48 Installing a PCIe M.2 SSD to the M.2 transfer module

8. Install the M.2 transfer module to the riser card. For more information, see "Installing a riser card and a PCIe module in a compute module."

9. Install the riser card to the compute module. For more information, see "Installing a riser card and a PCIe module in a compute module."

10. Install the compute module access panel. For more information, see "Replacing a compute module access panel."

11. Install the compute module. For more information, see "Installing a compute module."

12. Install the removed security bezel. For more information, see "Installing the security bezel."

13. Connect the power cord. For more information, see "Connecting the power cord."

14. Power on the server. For more information, see "Powering on the server."

Installing a PCIe M.2 SSD at the server rear

1. Power off the server. For more information, see "Powering off the server."

2. Disconnect external cables from the riser card, if any.

3. Remove the riser card. For more information, see "Replacing a riser card and PCIe module at the server rear."

4. Install the PCIe M.2 SSD to the M.2 transfer module. For more information, see "Installing a PCIe M.2 SSD in a compute module."

5. Install the M.2 transfer module to the riser card. For more information, see "Installing riser cards and PCIe modules at the server rear."

6. Install the riser card to the server. For more information, see "Installing riser cards and PCIe modules at the server rear."

7. Reconnect the external cables to the riser card.

8. Connect the power cord. For more information, see "Connecting the power cord."

9. Power on the server. For more information, see "Powering on the server."

Installing SD cards

Guidelines

As a best practice, install two SD cards of the same capacity to achieve 1+1 redundancy and avoid wasting storage space.
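The arithmetic behind this recommendation can be sketched as follows. The sketch assumes that 1+1 redundancy mirrors the two cards, so the usable capacity equals the smaller card and any extra capacity on the larger card goes unused. That mirroring behavior is an assumption, not something this guide states, and the function name is hypothetical.

```python
from typing import Dict, List


def sd_card_summary(capacities_gb: List[int]) -> Dict[str, object]:
    """Summarize redundancy and capacity for one or two SD cards."""
    if not 1 <= len(capacities_gb) <= 2:
        raise ValueError("the dual SD card extended module holds one or two cards")
    # Assumption: with two cards, data is mirrored, so the smaller card
    # sets the usable capacity.
    usable = min(capacities_gb)
    return {
        "redundant": len(capacities_gb) == 2,
        "usable_gb": usable,
        "unused_gb": sum(c - usable for c in capacities_gb),
    }
```

Under that assumption, pairing a 32 GB card with a 64 GB card leaves 32 GB unused, while two 32 GB cards leave none.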

Procedure

1. Power off the server. For more information, see "Powering off the server."

2. Disconnect all the cables from the management module.

3. Remove the management module. For more information, see "Removing the management module."

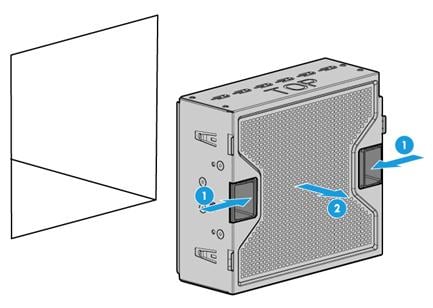

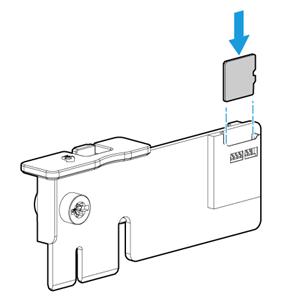

4. Orient the SD card with its gold contacts facing the dual SD card extended module and insert the SD card into the slot, as shown in Figure 49.

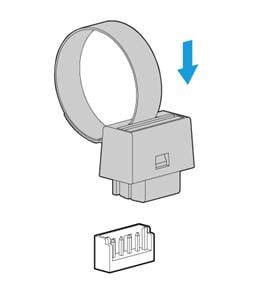

Figure 49 Installing an SD card

5. Install the extended module to the management module. Align the two blue clips on the extended module with the bracket on the management module, and slowly push the extended module downwards until it snaps into place, as shown in Figure 50.

Figure 50 Installing the dual SD card extended module

6. Install the management module. For more information, see "Installing the management module."

7. Reconnect the removed cables to the management module.

8. Connect the power cord. For more information, see "Connecting the power cord."

9. Power on the server. For more information, see "Powering on the server."

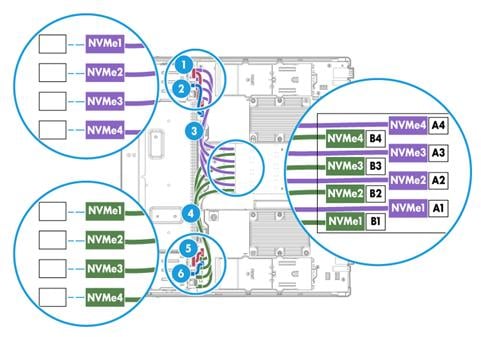

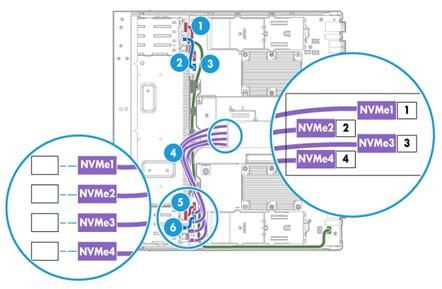

Installing an NVMe SSD expander module

Guidelines

A riser card in a compute module is required when you install an NVMe SSD expander module.

An NVMe SSD expander module is required only when NVMe drives are installed. For configurations that require an NVMe expander module, see "Drive configurations and numbering."

Procedure

The procedure is the same for installing a 4-port NVMe SSD expander module and an 8-port NVMe SSD expander module. This section uses a 4-port NVMe SSD expander module as an example.

To install an NVMe SSD expander module:

1. Power off the server. For more information, see "Powering off the server."

2. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

3. Remove the compute module. For more information, see "Removing a compute module."

4. Remove the compute module access panel. For more information, see "Replacing a compute module access panel."

5. Remove the high mid air baffle. For more information, see "Replacing air baffles in a compute module."

6. Install the low mid air baffle. For more information, see "Installing the low mid air baffle or GPU module air baffle to a compute module."

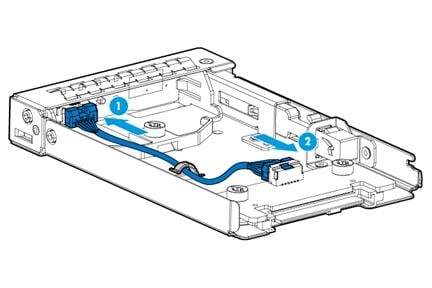

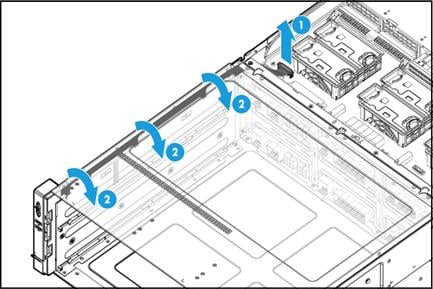

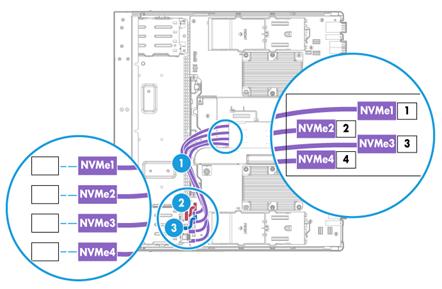

7. Connect the four NVMe data cables to the NVMe SSD expander module, as shown in Figure 51.

Make sure you connect each port on the module to the correct NVMe data cable. For more information, see "Connecting drive cables."

Figure 51 Connecting an NVMe data cable to the NVMe SSD expander module

8. Install the NVMe SSD expander module to the compute module by using a riser card. For more information, see "Installing riser cards and PCIe modules."

9. Connect the NVMe data cables to the drive backplane. For more information, see "Connecting drive cables in compute modules."

Make sure you connect each port on the drive backplane to the correct NVMe data cable. For more information, see "Connecting drive cables."

10. Install the compute module access panel. For more information, see "Replacing a compute module access panel."

11. Install the compute module. For more information, see "Installing a compute module."

12. Install the removed security bezel. For more information, see "Installing the security bezel."

13. Connect the power cord. For more information, see "Connecting the power cord."

14. Power on the server. For more information, see "Powering on the server."

Installing the NVMe VROC module

1. Identify the NVMe VROC module connector on the management module. For more information, see "Management module components."

2. Power off the server. For more information, see "Powering off the server."

3. Disconnect all the cables from the management module.

4. Remove the management module. For more information, see "Removing the management module."

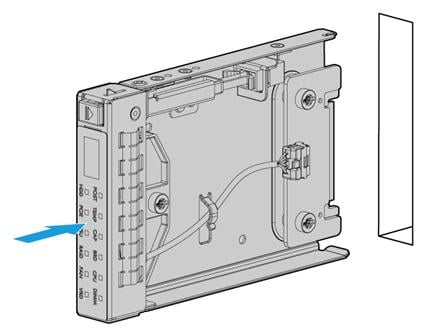

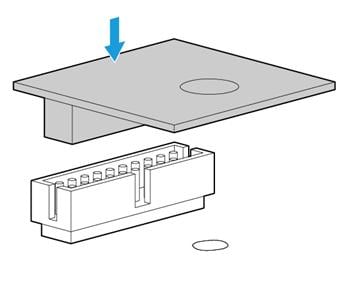

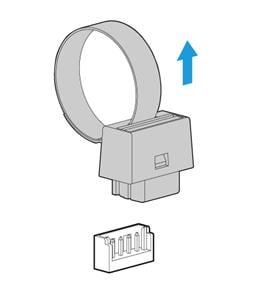

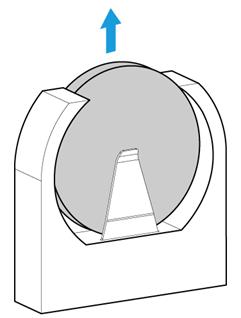

5. Install the NVMe VROC module on the NVMe VROC module connector on the management module, as shown in Figure 52.

Figure 52 Installing the NVMe VROC module

6. Install the management module. For more information, see "Installing the management module."

7. Connect the power cord. For more information, see "Connecting the power cord."

8. Power on the server. For more information, see "Powering on the server."

Installing a drive backplane

Guidelines

The installation locations of drive backplanes vary by drive configuration. For more information, see "Drive configurations and numbering."

When installing a 4SFF NVMe drive backplane, paste the stickers provided with the backplane over the drive number marks on the front panel of the corresponding compute module. This helps users identify NVMe drive bays. Make sure the numbers on the stickers correspond to the numbers on the front panel of the compute module. For more information about drive numbers, see "Drive configurations and numbering."

Procedure

The procedure is the same for installing a 4SFF drive backplane and a 4SFF NVMe drive backplane. This section uses a 4SFF drive backplane as an example.

To install a 4SFF drive backplane:

1. Power off the server. For more information, see "Powering off the server."

2. Remove the security bezel, if any. For more information, see "Replacing the security bezel."

3. Remove the compute module. For more information, see "Removing a compute module."

4. Remove the compute module access panel. For more information, see "Replacing a compute module access panel."

5. Remove the air baffles that might hinder the installation in the compute module. For more information, see "Replacing air baffles in a compute module."

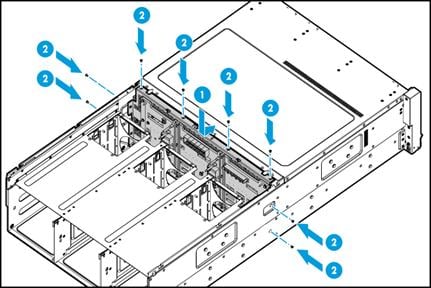

6. Install the drive backplane. Place the backplane against the slot and fasten the captive screw on the backplane, as shown in Figure 53.

Figure 53 Installing the 4SFF drive backplane

7. Connect cables to the drive backplane. For more information, see "Connecting drive cables in compute modules."

8. Install the removed air baffles. For more information, see "Replacing air baffles in a compute module."

9. Install the compute module access panel. For more information, see "Replacing a compute module access panel."

10. Install drives. For more information, see "Installing SAS/SATA drives."

11. Install the compute module. For more information, see "Installing a compute module."

12. Install the removed security bezel. For more information, see "Installing the security bezel."

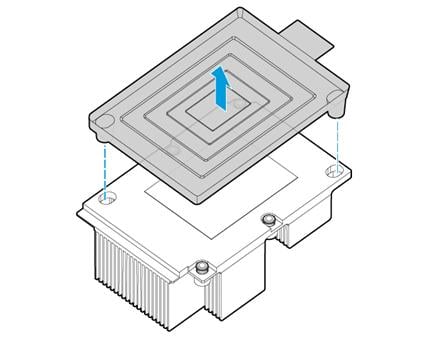

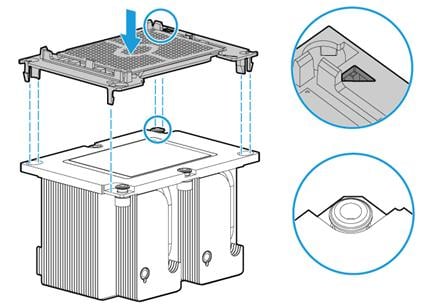

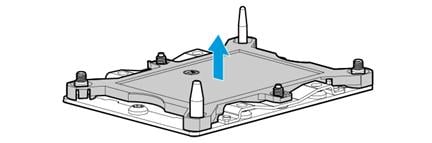

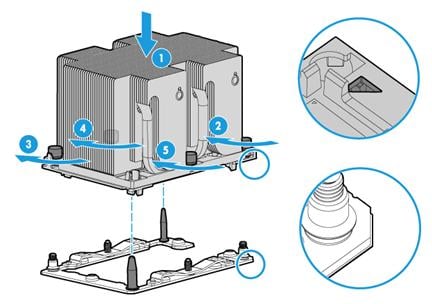

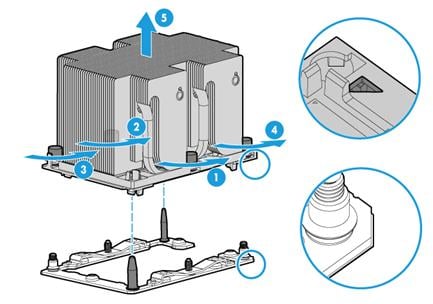

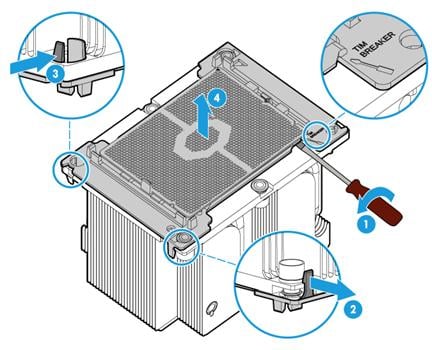

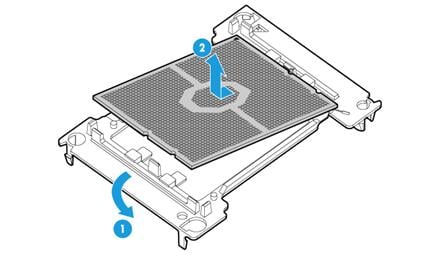

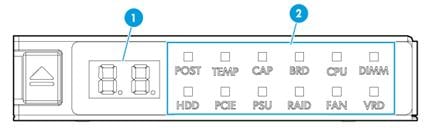

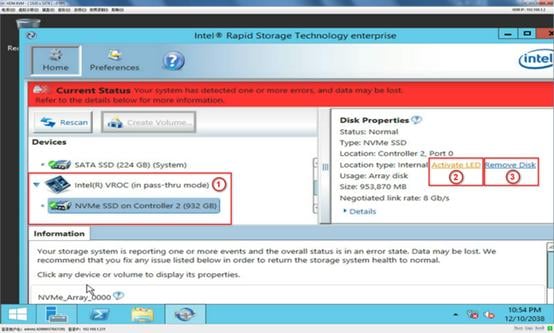

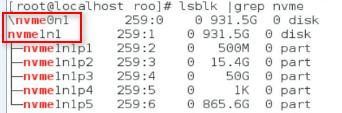

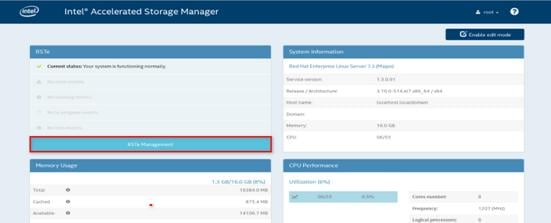

13. Connect the power cord. For more information, see "Connecting the power cord."