
Keepalive and failover mechanism

DRNI MAD DOWN state persistence

Configuration consistency check

DRNI failure handling mechanisms

Mechanisms to handle concurrent IPL and keepalive link failures

Restrictions and guidelines: DRNI configuration

Compatibility with other features

Configuring DR system settings

Configuring the DR system MAC address

Setting the DR system priority

Setting the DR role priority of the device

Enabling DRNI standalone mode on a DR member device

Configuring DR keepalive settings

Restrictions and guidelines for configuring DR keepalive settings

Configuring DR keepalive packet parameters

Setting the DR keepalive interval and timeout timer

Configuring the default DRNI MAD action on network interfaces

Excluding an interface from the shutdown action by DRNI MAD

Excluding all logical interfaces from the shutdown action by DRNI MAD

Specifying interfaces to be shut down by DRNI MAD when the DR system splits

Enabling DRNI MAD DOWN state persistence

Specifying a Layer 2 aggregate interface as the IPP

Enabling the IPP to retain MAC address entries for down single-homed devices

Assigning a DRNI virtual IP address to an interface

Setting the mode of configuration consistency check

Disabling configuration consistency check

Enabling the short DRCP timeout timer on the IPP or a DR interface

Setting the keepalive hold timer for identifying the cause of IPL down events

Configuring DR system auto-recovery

Setting the data restoration interval

Enabling DRNI sequence number check

Enabling DRNI packet authentication

Displaying and maintaining DRNI

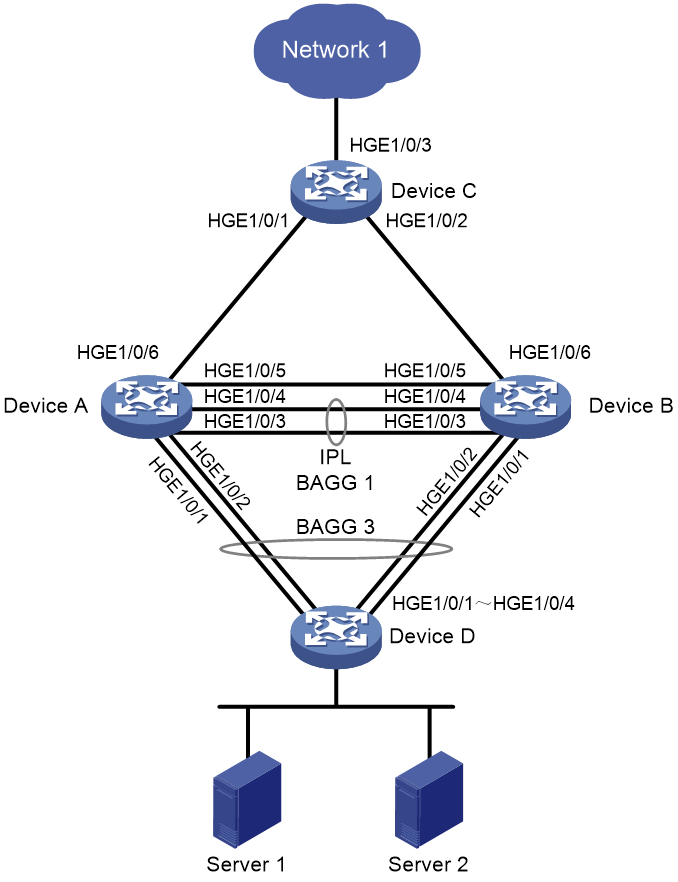

Example: Configuring basic DRNI functions

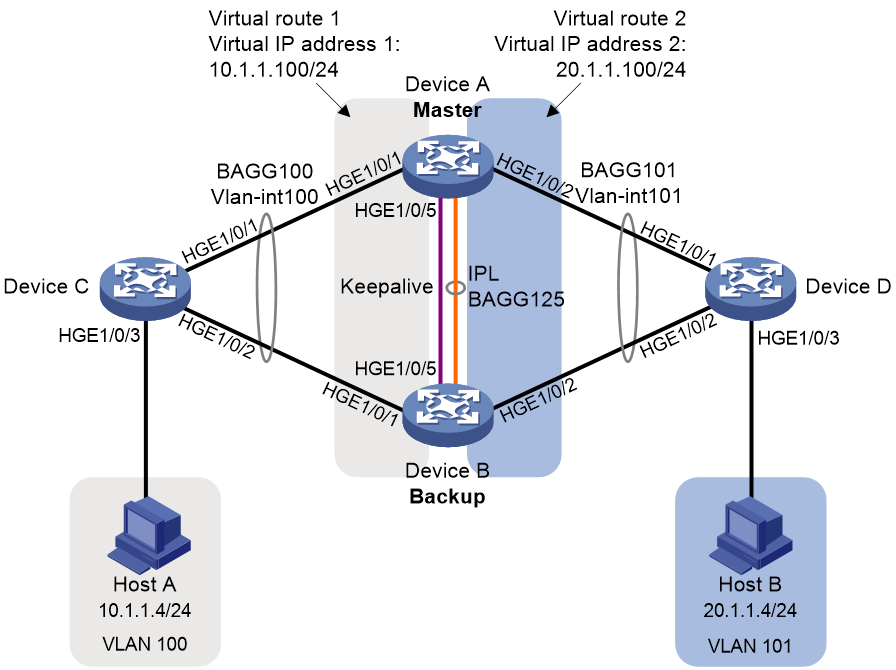

Example: Configuring Layer 3 gateways on a DR system

Example: Configuring IPv4 and IPv6 VLAN gateways on a DR system

Configuring DRNI

About DRNI

Distributed Resilient Network Interconnect (DRNI) virtualizes two physical devices into one system through multichassis link aggregation.

DRNI network model

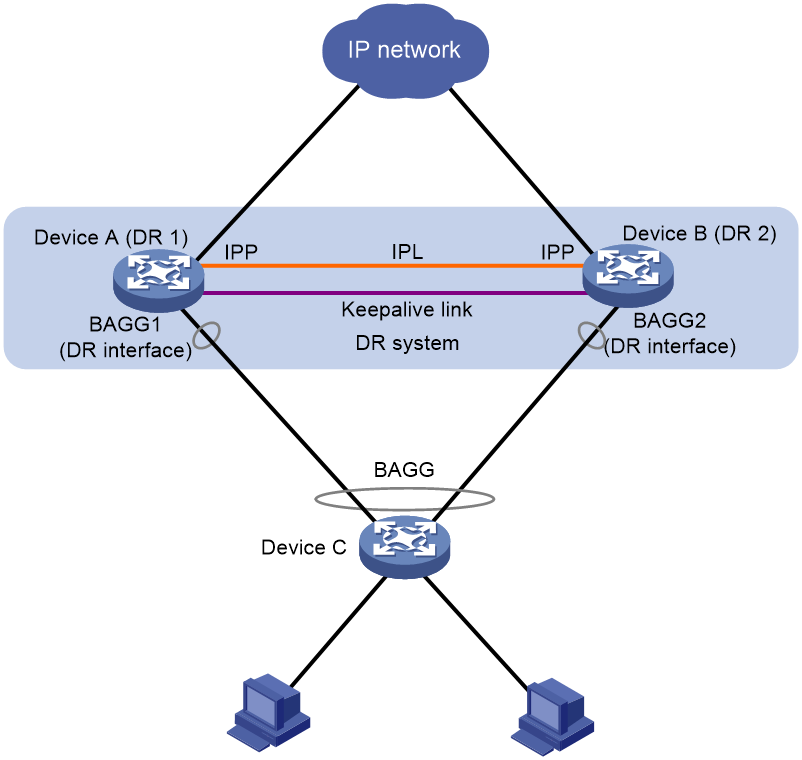

As shown in Figure 1, DRNI virtualizes two devices into a distributed-relay (DR) system, which connects to the remote aggregation system through a multichassis aggregate link. To the remote aggregation system, the DR system is one device.

Figure 1 DRNI network model

The DR member devices are DR peers to each other. For features that require centralized traffic processing (for example, spanning tree), a DR member device is assigned the primary or secondary role based on its DR role priority. The secondary DR member device passes the traffic of those features to the primary DR member device for processing. If the DR member devices in a DR system have the same DR role priority, the device with the lower bridge MAC address is assigned the primary role.

DRNI defines the following interface roles for each DR member device:

· DR interface—Layer 2 aggregate interface connected to the remote aggregation system. DR interfaces connected to the same remote aggregation system belong to one DR group. In Figure 1, Bridge-Aggregation 1 on Device A and Bridge-Aggregation 2 on Device B belong to the same DR group. DR interfaces in a DR group form a multichassis aggregate link.

· Intra-portal port (IPP)—Interface connected to the DR peer for internal control. Each DR member device has only one IPP. The IPPs of the DR member devices transmit DRNI protocol packets and data packets through the intra-portal link (IPL) established between them. A DR system has only one IPL.

DR member devices use a keepalive link to monitor each other's state. For more information about the keepalive mechanism, see "Keepalive and failover mechanism."

If a device is attached to only one of the DR member devices in a DR system, that device is a single-homed device.

DRCP

DRNI uses IEEE P802.1AX Distributed Relay Control Protocol (DRCP) for multichassis link aggregation. DRCP runs on the IPL and uses distributed relay control protocol data units (DRCPDUs) to advertise the DRNI configuration out of IPPs and DR interfaces.

DRCP operating mechanism

DRNI-enabled devices use DRCPDUs for the following purposes:

· Exchange DRCPDUs through DR interfaces to determine whether they can form a DR system.

· Exchange DRCPDUs through IPPs to negotiate the IPL state.

DRCP timeout timers

DRCP uses a timeout mechanism to specify the amount of time that an IPP or DR interface must wait to receive DRCPDUs before it determines that the peer interface is down. This timeout mechanism provides the following timer options:

· Short DRCP timeout timer, which is fixed at 3 seconds. If this timer is used, the peer interface sends one DRCPDU every second.

· Long DRCP timeout timer, which is fixed at 90 seconds. If this timer is used, the peer interface sends one DRCPDU every 30 seconds.

The short DRCP timeout timer lets the DR member devices detect a peer interface down event faster than the long DRCP timeout timer. However, this benefit comes at the cost of bandwidth and system resources.
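The following Python sketch is a rough illustration of the timeout decision on one IPP or DR interface. The class and function names are hypothetical, and only the timer values come from this section. The sketch also shows that both timer options declare the peer interface down after three consecutive DRCPDUs are missed (3/1 and 90/30).

```python
from dataclasses import dataclass

# (timeout, peer send interval) in seconds, as stated in this section.
SHORT_TIMER = (3, 1)
LONG_TIMER = (90, 30)


@dataclass
class DrcpPeerMonitor:
    """Hypothetical model of DRCP timeout detection on one IPP or DR interface."""

    short_timeout: bool = False
    last_rx: float = 0.0  # time (seconds) when the last DRCPDU arrived

    @property
    def timeout(self):
        return (SHORT_TIMER if self.short_timeout else LONG_TIMER)[0]

    def on_drcpdu(self, now):
        # Each received DRCPDU restarts the timeout period.
        self.last_rx = now

    def peer_down(self, now):
        # The peer interface is considered down after a full timeout
        # period passes without a DRCPDU.
        return now - self.last_rx >= self.timeout


def missed_drcpdus_before_down(timer):
    timeout, interval = timer
    return timeout // interval


monitor = DrcpPeerMonitor(short_timeout=True)
monitor.on_drcpdu(now=10.0)
print(monitor.peer_down(now=12.5))  # False
print(monitor.peer_down(now=13.0))  # True
```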

Keepalive and failover mechanism

For the secondary DR member device to monitor the state of the primary device, you must establish a Layer 3 keepalive link between the DR member devices.

The DR member devices periodically send keepalive packets over the keepalive link. If a DR member device has not received keepalive packets from the peer when the keepalive timeout timer expires, it determines that the keepalive link is down. When both the keepalive link and the IPL are down, a DR member device acts depending on its role.

· If its role is primary, the device retains its role as long as it has up DR interfaces. If all its DR interfaces are down, its role becomes None.

· If its role is secondary, the device takes over the primary role and retains the role as long as it has up DR interfaces. If all its DR interfaces are down, its role becomes None.

A device with the None role cannot send or receive keepalive packets. Its keepalive link stays in the down state.

If the keepalive link is down while the IPL is up, the DR member devices prompt you to check for keepalive link issues.

If the keepalive link is up while the IPL is down, the DR member devices elect a primary device based on the information in the keepalive packets.

MAD mechanism

A multi-active collision occurs if the IPL goes down while the keepalive link is up. To avoid network issues, DRNI MAD shuts down all network interfaces on the secondary DR member device except those manually or automatically excluded.

When the IPL comes up, the secondary DR member device starts a delay timer and begins to restore table entries (including MAC address entries and ARP entries) from the primary DR member device. When the delay timer expires, the secondary DR member device brings up all network interfaces placed in DRNI MAD DOWN state.
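The following Python sketch illustrates the MAD behavior described above. It is a simplified model and not the device implementation. The function names, interface names, and delay value are hypothetical, and the exclusion list is passed in directly instead of being derived from manual or automatic exclusion rules.

```python
def mad_shutdown(role, ipl_up, keepalive_up, interfaces, excluded):
    """Return the interfaces that DRNI MAD places in DRNI MAD DOWN state.

    Only the secondary device acts, and only in the multi-active case
    (IPL down, keepalive link up). Excluded interfaces stay up.
    """
    if role == "secondary" and not ipl_up and keepalive_up:
        return {name for name in interfaces if name not in excluded}
    return set()


def interfaces_to_bring_up(mad_down, ipl_up, seconds_since_ipl_up, delay_s):
    """After the IPL recovers, restore interfaces only when the delay timer expires."""
    if ipl_up and seconds_since_ipl_up >= delay_s:
        return set(mad_down)
    return set()


# Hypothetical interface names.
interfaces = {"Bridge-Aggregation2", "Vlan-interface100", "GigabitEthernet1/0/5"}
excluded = {"GigabitEthernet1/0/5"}  # for example, the keepalive interface
down = mad_shutdown("secondary", ipl_up=False, keepalive_up=True,
                    interfaces=interfaces, excluded=excluded)
print(sorted(down))  # ['Bridge-Aggregation2', 'Vlan-interface100']
```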

Device role calculation

The role of a DR member device can be primary, secondary, or none.

DRNI uses the following process to determine the role of each DR member device:

1. Initially, each DR member device is assigned the none role when it joins a DR system or reboots with DRNI configuration.

2. If the IPL is up, the DR member devices exchange DRCPDUs over the IPL to determine which of them takes the primary role.

a. Device roles before calculation. If one device already has the primary role, the primary device retains its role.

b. DRNI MAD DOWN state. If one device has not placed any network interfaces in DRNI MAD DOWN state, it becomes the primary device.

c. Health state. The healthier device takes the primary role.

d. DR role priority. The device with higher DR role priority takes the primary role.

e. Bridge MAC address. The device with a lower bridge MAC address takes the primary role.

The device that has failed the election takes the secondary role if it has DR interfaces in up state. If the device does not have DR interfaces in up state, its role is none.

3. If the IPL is down, each DR member device examines the availability of its local DR interfaces.

○ A DR member device changes its role to none if all its local DR interfaces are down.

○ A DR member device does not change its role if it has at least one DR interface in up state.

4. If the keepalive link is up, the DR member devices exchange keepalive packets over the link to determine their roles.

○ If the role of one DR member device is none, the other DR member device retains its primary role or changes its role from secondary to primary.

○ If neither of them has the none role, the DR member devices negotiate their roles as they do on the IPL.

5. If both the IPL and the keepalive link are down, a DR member device takes the primary role if it has available DR interfaces.
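The role calculation steps can be sketched in Python as follows. This is a simplified model that depends on two assumptions. First, the health score is a hypothetical integer, because the document does not define how health is measured. Second, a smaller DR role priority value is treated as a higher priority, which mirrors the convention this document states for the DR system and LACP system priorities. All names are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Member:
    name: str
    role: str            # current role: "primary", "secondary", or "none"
    mad_down: bool       # has interfaces in DRNI MAD DOWN state
    health: int          # hypothetical health score; higher is healthier
    role_priority: int   # assumption: smaller value = higher priority
    bridge_mac: str      # for example, "00e0-fc00-0001"
    dr_if_up: bool       # at least one local DR interface is up


def mac_value(mac):
    return int(mac.replace("-", "").replace(":", ""), 16)


def elect(a, b):
    """Steps 2a to 2e: return the member that takes the primary role."""
    if (a.role == "primary") != (b.role == "primary"):   # a. keep existing primary
        return a if a.role == "primary" else b
    if a.mad_down != b.mad_down:                         # b. no MAD DOWN interfaces
        return b if a.mad_down else a
    if a.health != b.health:                             # c. healthier device
        return a if a.health > b.health else b
    if a.role_priority != b.role_priority:               # d. higher DR role priority
        return a if a.role_priority < b.role_priority else b
    return a if mac_value(a.bridge_mac) < mac_value(b.bridge_mac) else b  # e. lower MAC


def negotiate(a, b):
    winner = elect(a, b)
    loser = b if winner is a else a
    # The loser is secondary only if it has an up DR interface.
    return {winner.name: "primary",
            loser.name: "secondary" if loser.dr_if_up else "none"}


def assign_roles(a, b, ipl_up, keepalive_up):
    if ipl_up:                                        # Step 2: negotiate over the IPL
        return negotiate(a, b)
    # Step 3: with the IPL down, a member with no up DR interface becomes none.
    a = replace(a, role=a.role if a.dr_if_up else "none")
    b = replace(b, role=b.role if b.dr_if_up else "none")
    if keepalive_up:                                  # Step 4: use the keepalive link
        if "none" in (a.role, b.role):
            return {m.name: "none" if m.role == "none" else "primary" for m in (a, b)}
        return negotiate(a, b)
    # Step 5: both links down; any member with an up DR interface is primary.
    return {m.name: "primary" if m.dr_if_up else "none" for m in (a, b)}


a = Member("DeviceA", "none", False, 100, 32768, "00e0-fc00-0002", True)
b = Member("DeviceB", "none", False, 100, 32768, "00e0-fc00-0001", True)
print(assign_roles(a, b, ipl_up=True, keepalive_up=True))
# {'DeviceB': 'primary', 'DeviceA': 'secondary'}
```

Step 5 is where both members can end up primary. The DRNI MAD DOWN state persistence and DRNI standalone mode features described below address this multi-active outcome.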

DRNI MAD DOWN state persistence

Both of the DR member devices might take the primary role if both of them have DR interfaces in up state after the following series of events occur:

1. The IPL goes down while the keepalive link is up. Then, DRNI MAD shuts down all network interfaces on the secondary DR member device except those excluded from the shutdown action by DRNI MAD.

2. The keepalive link also goes down. Then, the secondary DR member device brings up the network interfaces in DRNI MAD DOWN state and sets its role to primary.

DRNI MAD DOWN state persistence helps avoid the forwarding issues that might occur in this multi-active situation, which arises when the keepalive link goes down while the IPL is already down.

DR system setup process

As shown in Figure 2, two devices perform the following operations to form a DR system:

1. Send DRCPDUs over the IPL to each other and compare the DRCPDUs to determine whether they can form a DR system and which roles they take:

a. Compare the DR system settings. The devices can form a DR system if they have consistent DR system settings.

b. Determine the device roles as described in "Device role calculation."

c. Perform configuration consistency check. For more information, see "Configuration consistency check."

2. Send keepalive packets over the keepalive link after primary DR member election to verify that the peer system is operating correctly.

3. Synchronize configuration data by sending DRCPDUs over the IPL. The configuration data includes MAC address entries and ARP entries.

Figure 2 DR system setup process

DRNI standalone mode

The DR member devices might both operate with the primary role to forward traffic if they have DR interfaces in up state after the DR system splits. DRNI standalone mode helps avoid traffic forwarding issues in this multi-active situation by allowing only the member ports in the DR interfaces on one member device to forward traffic.

The following information describes the operating mechanism of this feature.

The DR member devices change to DRNI standalone mode when they detect that both the IPL and the keepalive link are down. In addition, the secondary DR member device changes its role to primary.

In DRNI standalone mode, the LACPDUs sent out of a DR interface by each DR member device contain the interface-specific LACP system MAC address and LACP system priority.

The Selected state of the member ports in the DR interfaces of a DR group depends on their LACP system MAC address and LACP system priority. The member ports in the DR interface that has the lower LACP system priority value become Selected to forward traffic. If the priority values are equal, the member ports in the DR interface with the lower LACP system MAC address become Selected. If those Selected ports fail, the member ports in the DR interface on the other DR member device become Selected to forward traffic.

NOTE: A DR member device changes to DRNI standalone mode only when it detects that both the IPL and the keepalive link are down. It does not change to DRNI standalone mode when the peer DR member device reboots.
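The following minimal Python sketch shows the Selected-state decision in DRNI standalone mode. It assumes the usual LACP system ID ordering, in which the priority value is compared first and the MAC address breaks ties. The function names and sample values are hypothetical.

```python
def lacp_system_id(priority, mac):
    """Comparable LACP system ID: priority value first, then the MAC address."""
    return (priority, int(mac.replace("-", ""), 16))


def selected_dr_interface(dr_group):
    """Return the DR interface whose member ports become Selected.

    dr_group maps a DR interface label to a tuple of
    (LACP system priority, LACP system MAC, member ports available).
    """
    available = {name: cfg for name, cfg in dr_group.items() if cfg[2]}
    if not available:
        return None
    return min(available, key=lambda name: lacp_system_id(*available[name][:2]))


group = {
    "DeviceA/Bridge-Aggregation1": (100, "0001-0001-0002", True),
    "DeviceB/Bridge-Aggregation2": (200, "0001-0001-0001", True),
}
print(selected_dr_interface(group))  # DeviceA/Bridge-Aggregation1

# If the Selected ports on Device A fail, Device B's ports take over.
group["DeviceA/Bridge-Aggregation1"] = (100, "0001-0001-0002", False)
print(selected_dr_interface(group))  # DeviceB/Bridge-Aggregation2
```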

Configuration consistency check

During DR system setup, the DR member devices exchange their configuration and perform a configuration consistency check to verify that the following types of configuration are consistent:

· Type 1 configuration—Settings that affect traffic forwarding of the DR system. If an inconsistency in type 1 configuration is detected, the secondary DR member device shuts down its DR interfaces.

· Type 2 configuration—Settings that affect only service features. If an inconsistency in type 2 configuration is detected, the secondary DR member device disables the affected service features, but it does not shut down its DR interfaces.

To prevent interface flapping, the DR system performs the configuration consistency check when half of the data restoration interval has elapsed.

NOTE: The data restoration interval specifies the maximum amount of time for the secondary DR member device to synchronize data with the primary DR member device during DR system setup. For more information, see "Setting the data restoration interval."
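The following Python sketch shows how the two configuration types lead to different actions on the secondary DR member device. The setting names are a small subset of the rows in Table 1 through Table 4, and the function names are hypothetical.

```python
# A small, illustrative subset of the settings in Tables 1 through 4.
TYPE1_SETTINGS = {"IPP link type", "PVID on the IPP", "Spanning tree mode", "Aggregation mode"}
TYPE2_SETTINGS = {"VLANs permitted by the IPP", "MAC aging timer", "Global BPDU guard"}


def inconsistent(primary, secondary, settings):
    """Return the settings whose values differ between the two devices."""
    return sorted(s for s in settings if primary.get(s) != secondary.get(s))


def secondary_actions(primary, secondary):
    """Actions the secondary DR member device takes after the check."""
    actions = []
    if inconsistent(primary, secondary, TYPE1_SETTINGS):
        # Type 1 affects forwarding: shut down the DR interfaces.
        actions.append("shut down DR interfaces")
    for setting in inconsistent(primary, secondary, TYPE2_SETTINGS):
        # Type 2 affects only service features: disable the affected feature.
        actions.append(f"disable feature affected by: {setting}")
    return actions


def check_delay(data_restoration_interval_s):
    """The check runs when half of the data restoration interval has elapsed."""
    return data_restoration_interval_s / 2


primary = {"Spanning tree mode": "MSTP", "MAC aging timer": 300}
secondary = {"Spanning tree mode": "MSTP", "MAC aging timer": 600}
print(secondary_actions(primary, secondary))
# ['disable feature affected by: MAC aging timer']
```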

Type 1 configuration

Type 1 configuration consistency check is performed both globally and on DR interfaces. Table 1 and Table 2 show settings that type 1 configuration contains.

Table 1 Global type 1 configuration

| Setting | Details |
|---|---|
| IPP link type | IPP link type, including access, hybrid, and trunk. |
| PVID on the IPP | PVID on the IPP. |
| Spanning tree state | Global spanning tree state. VLAN-specific spanning tree state, which DRNI checks only when PVST is enabled. |
| Spanning tree mode | Spanning tree mode, including STP, RSTP, PVST, and MSTP. |
| MST region settings | MST region name, MST region revision level, and VLAN-to-MSTI mappings. |

Table 2 DR interface type 1 configuration

| Setting | Details |
|---|---|
| Aggregation mode | Aggregation mode, including static and dynamic. |
| Spanning tree state | Interface-specific spanning tree state. |
| Link type | Interface link type, including access, hybrid, and trunk. |
| PVID | Interface PVID. |

Type 2 configuration

Type 2 configuration consistency check is performed both globally and on DR interfaces. Table 3 and Table 4 show settings that type 2 configuration contains.

Table 3 Global type 2 configuration

| Setting | Details |
|---|---|
| VLANs permitted by the IPP | VLANs permitted by the IPP. The DR system compares tagged VLANs before untagged VLANs. |
| VLAN interfaces | VLAN interfaces in up state whose VLANs contain the IPP. |
| VLAN interface status | Whether a VLAN interface is in administratively down state. |
| IPv4 address of a VLAN interface | IPv4 address assigned to a VLAN interface. |
| IPv6 address of a VLAN interface | IPv6 address assigned to a VLAN interface. |
| Virtual IPv4 address of the VRRP group on a VLAN interface | Virtual IPv4 address of the VRRP group configured on a VLAN interface. |
| Global BPDU guard | Global status of BPDU guard. |
| MAC aging timer | Aging timer for dynamic MAC address entries. |
| VSI name | Name of a VSI that has ACs on a DR interface. |
| VXLAN ID | VXLAN ID of a VSI. |
| Gateway interface | VSI interface associated with a VSI. |
| VSI interface number | Number of a VSI interface. |
| MAC address of a VSI interface | MAC address assigned to a VSI interface. |
| IPv4 address of a VSI interface | IPv4 address assigned to a VSI interface. |
| IPv6 address of a VSI interface | IPv6 address assigned to a VSI interface. |
| Physical state of a VSI interface | Physical link state of a VSI interface. |
| Protocol state of a VSI interface | Data link layer state of a VSI interface. |
| Mode of RoCEv2 traffic analysis | Mode used by NetAnalysis to analyze RoCEv2 traffic. |
| RoCEv2 traffic statistics collection | State of RoCEv2 traffic statistics collection. |
| ACL used for RoCEv2 traffic statistics collection | ACL used to match RoCEv2 traffic for RoCEv2 traffic statistics collection. |
| Global RoCEv2 packet loss analysis | State of global RoCEv2 packet loss analysis. |
| Interval for reporting RoCEv2 traffic statistics to the NDA | Whether the interval is set for reporting RoCEv2 traffic statistics to the NDA. |
| Aging timer for inactive RoCEv2 flows | Whether the aging timer is set for inactive RoCEv2 flows. |

The device displays the following global type 2 settings only when VLAN or VLAN interface configuration inconsistency exists:

· VLAN interface status.

· IPv4 address of a VLAN interface.

· IPv6 address of a VLAN interface.

· Virtual IPv4 address of the VRRP group on a VLAN interface.

Table 4 DR interface type 2 configuration

| Setting | Details |
|---|---|
| VLANs permitted by a DR interface | VLANs permitted by a DR interface. The DR system compares tagged VLANs prior to untagged VLANs. |
| Using port speed as the prioritized criterion for reference port selection | Whether a DR interface uses port speed as the prioritized criterion for reference port selection. |
| Ignoring port speed in setting the aggregation states of member ports | Whether a DR interface ignores port speed in setting the aggregation states of member ports. |
| Root guard status | Status of root guard. |

DRNI sequence number check

DRNI sequence number check protects DR member devices from replay attacks.

With this feature enabled, the DR member devices insert a sequence number into each outgoing DRCPDU or keepalive packet and the sequence number increases by 1 for each sent packet. When receiving a DRCPDU or keepalive packet, the DR member devices check its sequence number and drop the packet if the check result is either of the following:

· The sequence number of the packet is the same as that of a previously received packet.

· The sequence number of the packet is smaller than that of the most recently received packet.
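The two drop rules above can be sketched in Python as follows. Accepted sequence numbers only increase, so a single "less than or equal to the last accepted number" test covers both rules. The class name is hypothetical, and the sketch ignores sequence number wraparound, which the document does not describe.

```python
class DrniSequenceCheck:
    """Hypothetical sender and receiver state for DRNI sequence number check."""

    def __init__(self):
        self.last_tx = 0       # sender: last sequence number inserted
        self.last_rx = None    # receiver: sequence number of the last accepted packet

    def next_seq(self):
        # The sequence number increases by 1 for each sent packet.
        self.last_tx += 1
        return self.last_tx

    def accept(self, seq):
        # Drop a repeated number or one smaller than the most recent packet's.
        if self.last_rx is not None and seq <= self.last_rx:
            return False
        self.last_rx = seq
        return True


sender, receiver = DrniSequenceCheck(), DrniSequenceCheck()
first = sender.next_seq()
print(receiver.accept(first))  # True
print(receiver.accept(first))  # False: replayed packet
```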

DRNI packet authentication

DRNI packet authentication prevents DRCPDU and keepalive packet tampering from causing link flapping.

With this feature enabled, the DR member devices compute a message digest by using an authentication key for each outgoing DRCPDU or keepalive packet and insert the message digest into the packet. When receiving a DRCPDU or keepalive packet, a DR member device computes a message digest and compares it with the message digest in the packet. If the message digests match, the packet passes authentication. If the message digests do not match, the device drops the packet.
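The following minimal Python sketch shows the digest exchange. The document does not name the digest algorithm or specify where the digest is placed in the packet. This sketch assumes HMAC-SHA256 with the digest appended to the payload, purely for illustration.

```python
import hashlib
import hmac

DIGEST_LEN = hashlib.sha256().digest_size  # 32 bytes


def add_digest(key: bytes, payload: bytes) -> bytes:
    """Sender: compute a keyed digest and append it to the packet."""
    return payload + hmac.new(key, payload, hashlib.sha256).digest()


def authenticate(key: bytes, packet: bytes):
    """Receiver: return the payload if the digests match, else None (drop)."""
    if len(packet) < DIGEST_LEN:
        return None
    payload, received = packet[:-DIGEST_LEN], packet[-DIGEST_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # Constant-time comparison of the computed and received digests.
    return payload if hmac.compare_digest(expected, received) else None


key = b"shared-key"  # hypothetical authentication key
packet = add_digest(key, b"DRCPDU")
print(authenticate(key, packet))  # b'DRCPDU'
```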

DRNI failure handling mechanisms

DR interface failure handling mechanism

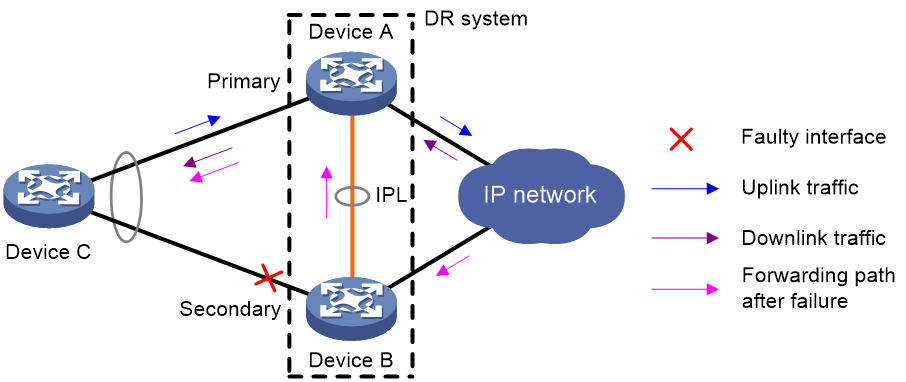

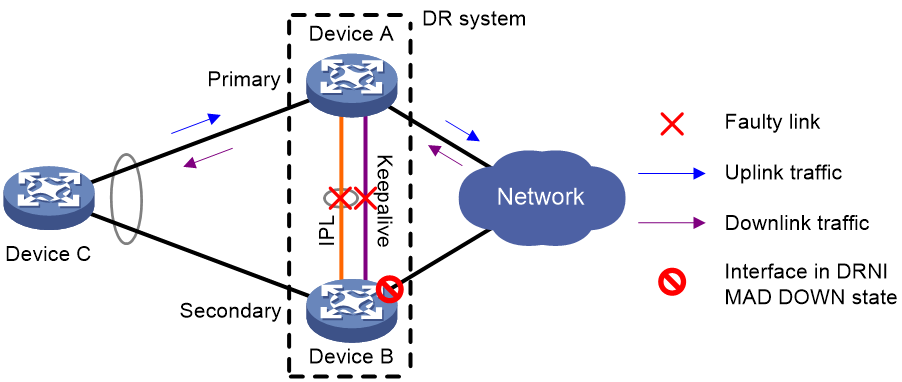

As shown in Figure 3, Device A and Device B form a DR system, to which Device C is attached through a multichassis aggregate link. If traffic destined for Device C arrives at Device B after the DR interface that connects Device B to Device C has failed, the DR system forwards the traffic as follows:

1. Device B sends the traffic to Device A over the IPL.

2. Device A forwards the downlink traffic received from the IPL to Device C.

After the faulty DR interface comes up, Device B forwards traffic to Device C through the DR interface.

Figure 3 DR interface failure handling mechanism
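The forwarding decision above can be sketched in Python as follows. The function name and device labels are hypothetical. The case in which neither DR interface is up is an assumption added for completeness.

```python
def downlink_path(ingress, dr_if_up):
    """Return the hops taken by traffic destined for Device C.

    ingress: DR member device that received the traffic.
    dr_if_up: maps each DR member device to the state of its DR interface.
    """
    if dr_if_up[ingress]:
        return [ingress, "Device C"]
    peer = next(d for d in dr_if_up if d != ingress)
    if dr_if_up[peer]:
        # Local DR interface is down: relay over the IPL to the peer.
        return [ingress, "IPL", peer, "Device C"]
    return []  # assumption: no DR interface is up, so Device C is unreachable


print(downlink_path("Device B", {"Device A": True, "Device B": False}))
# ['Device B', 'IPL', 'Device A', 'Device C']
```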

IPL failure handling mechanism

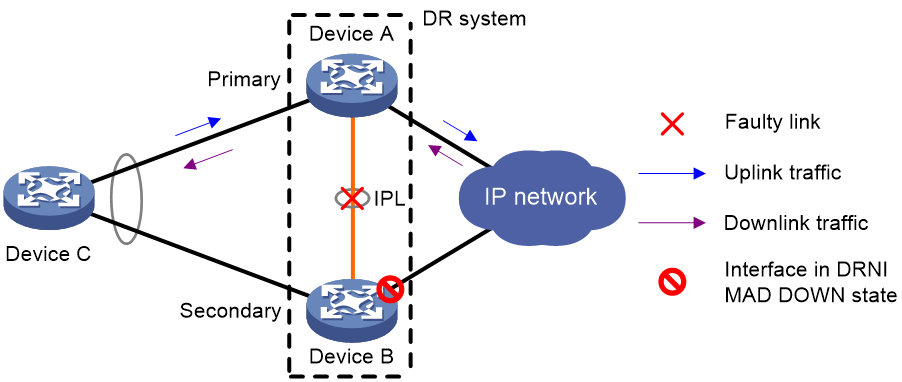

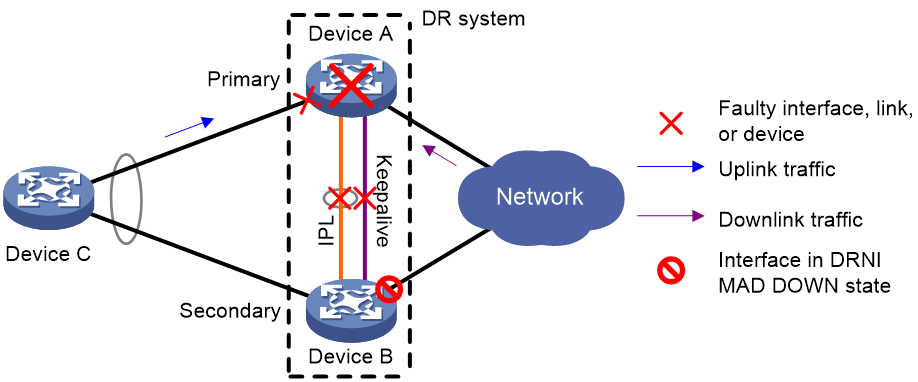

As shown in Figure 4, a multi-active collision occurs if the IPL goes down while the keepalive link is up. To avoid network issues, the secondary DR member device sets all network interfaces to DRNI MAD DOWN state, except for interfaces excluded from the shutdown action by DRNI MAD.

In this situation, the primary DR member device forwards all traffic for the DR system.

When the IPP comes up, the secondary DR member device does not bring up the network interfaces immediately. Instead, it starts a delay timer and begins to recover data from the primary DR member device. When the delay timer expires, the secondary DR member device brings up all network interfaces.

Figure 4 IPL failure handling mechanism

Device failure handling mechanism

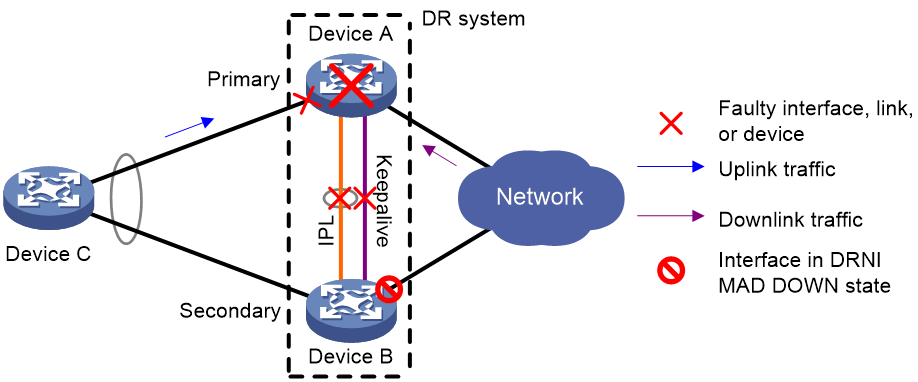

As shown in Figure 5, when the primary DR member device fails, the secondary DR member device takes over the primary role to forward all traffic for the DR system. When the faulty device recovers, it becomes the secondary DR member device.

When the secondary DR member device fails, the primary DR member device forwards all traffic for the DR system.

Figure 5 Device failure handling mechanism

Uplink failure handling mechanism

Uplink failure does not interrupt traffic forwarding of the DR system. As shown in Figure 6, when the uplink of Device A fails, Device A passes traffic destined for the IP network to Device B for forwarding.

To enable faster traffic switchover in response to an uplink failure and minimize traffic loss, configure Monitor Link to associate the DR interfaces with the uplink interfaces. When the uplink interface of a DR member device fails, that device shuts down its DR interface so that the other DR member device forwards all traffic of Device C. For more information about Monitor Link, see High Availability Configuration Guide.

Figure 6 Uplink failure handling mechanism
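The following Python sketch contrasts the two uplink failure behaviors for upstream traffic from Device C. It assumes one uplink per DR member device and reduces Monitor Link to the single rule stated above, which is that the DR interface goes down when the uplink fails. All names are hypothetical.

```python
def dr_interface_up(device, uplink_up, monitor_link):
    # With Monitor Link, the DR interface follows the uplink state.
    return uplink_up[device] or not monitor_link


def upstream_path(ingress, uplink_up, monitor_link):
    """Hops for traffic from Device C to the IP network.

    ingress: DR member device that Device C's aggregation would choose.
    uplink_up: maps each DR member device to the state of its uplink.
    """
    peer = next(d for d in uplink_up if d != ingress)
    if not dr_interface_up(ingress, uplink_up, monitor_link):
        # The DR interface was shut down, so Device C can use only the peer.
        ingress, peer = peer, ingress
    if uplink_up[ingress]:
        return ["Device C", ingress, "IP network"]
    return ["Device C", ingress, "IPL", peer, "IP network"]


uplinks = {"Device A": False, "Device B": True}
print(upstream_path("Device A", uplinks, monitor_link=False))
# ['Device C', 'Device A', 'IPL', 'Device B', 'IP network']
print(upstream_path("Device A", uplinks, monitor_link=True))
# ['Device C', 'Device B', 'IP network']
```

Without Monitor Link, traffic still reaches the IP network, but it takes an extra hop over the IPL. With Monitor Link, the failed device leaves the aggregation, and traffic goes directly to the device whose uplink is still up.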

Mechanisms to handle concurrent IPL and keepalive link failures

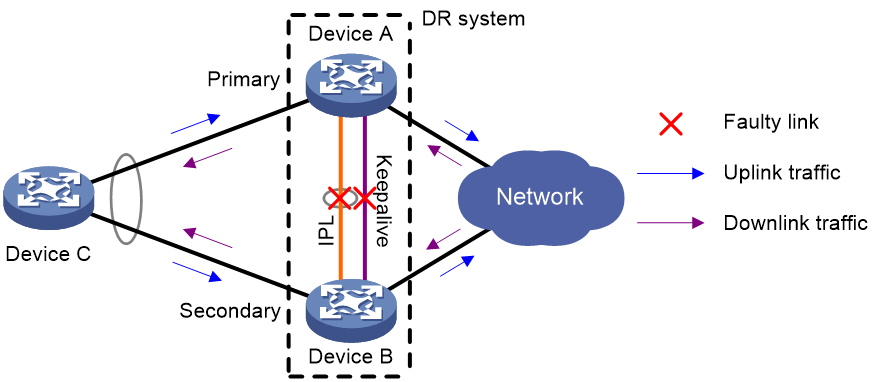

When both the IPL and the keepalive link are down, the DR member devices handle this situation depending on your configuration.

Default failure handling mechanism

Figure 7 shows the default mechanism to handle IPL and keepalive link failures when neither the DRNI standalone mode feature nor the DRNI MAD DOWN state persistence feature is configured.

· If the IPL goes down while the keepalive link is up, the DR member devices negotiate their roles over the keepalive link. DRNI MAD shuts down all network interfaces on the secondary DR member device except those excluded from the shutdown action by DRNI MAD.

· If the keepalive link goes down while the IPL is down, the secondary DR member device sets its role to primary and brings up the network interfaces in DRNI MAD DOWN state to forward traffic. In this situation, both of the DR member devices might operate with the primary role to forward traffic. Forwarding errors might occur because the DR member devices cannot synchronize MAC address entries over the IPL.

· If the keepalive link is down before the IPL goes down, DRNI MAD will not place network interfaces in DRNI MAD DOWN state. Both DR member devices can operate with the primary role to forward traffic.

Figure 7 Default failure handling mechanism

Failure handling mechanism with DRNI MAD DOWN state persistence

Figure 8 shows the mechanism to handle IPL and keepalive link failures when the DRNI MAD DOWN state persistence feature is configured.

· If the IPL goes down while the keepalive link is up, the DR member devices negotiate their roles over the keepalive link. DRNI MAD shuts down all network interfaces on the secondary DR member device except those excluded from the shutdown action by DRNI MAD.

· If the keepalive link goes down while the IPL is down, the secondary DR member device sets its role to primary, but it does not bring up the network interfaces in DRNI MAD DOWN state. Only the original primary member device can forward traffic.

· If the keepalive link is down before the IPL goes down, DRNI MAD will not place network interfaces in DRNI MAD DOWN state. Both DR member devices can operate with the primary role to forward traffic.

Figure 8 Failure handling mechanism with DRNI MAD DOWN state persistence

As shown in Figure 9, you can bring up the interfaces in DRNI MAD DOWN state on the secondary DR member device for it to forward traffic if the following conditions exist:

· Both the IPL and the keepalive link are down.

· The primary DR member device fails or its DR interface fails.

Figure 9 Bringing up the interfaces in DRNI MAD DOWN state

Failure handling mechanism with DRNI standalone mode

Figure 10 shows the mechanism to handle IPL and keepalive link failures when the DRNI standalone mode feature is configured.

· If the IPL goes down while the keepalive link is up, the DR member devices negotiate their roles over the keepalive link. DRNI MAD shuts down all network interfaces on the secondary DR member device except those excluded from the shutdown action by DRNI MAD.

· If the keepalive link goes down while the IPL is down, both DR member devices change to DRNI standalone mode. The secondary DR member device sets its role to primary and brings up its network interfaces in DRNI MAD DOWN state. In DRNI standalone mode, only the aggregation member ports on one DR member device can become Selected to forward traffic. For more information about how DRNI standalone mode operates, see "DRNI standalone mode."

· If the keepalive link is down before the IPL goes down, both DR member devices change to DRNI standalone mode.

Figure 10 Failure handling mechanism with DRNI standalone mode
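The following short Python sketch summarizes the outcomes of the three mechanisms. The mode labels ("default", "mad-persistence", "standalone") are hypothetical names for the configurations described above. They are not CLI keywords.

```python
def forwarders_after_split(mode, failed_first):
    """Who forwards traffic once both the IPL and the keepalive link are down.

    mode: "default", "mad-persistence", or "standalone" (hypothetical labels).
    failed_first: "ipl" or "keepalive", the link that went down first.
    """
    if mode == "standalone":
        # Only the Selected member ports on one DR member device forward.
        return "one device (LACP selection)"
    if mode == "mad-persistence" and failed_first == "ipl":
        # The secondary keeps its interfaces in DRNI MAD DOWN state.
        return "original primary only"
    # Default mode, or the keepalive link failed first so DRNI MAD never
    # placed interfaces in DRNI MAD DOWN state: both act as primary.
    return "both devices"


for mode in ("default", "mad-persistence", "standalone"):
    for first in ("ipl", "keepalive"):
        print(f"{mode:16} {first:10} {forwarders_after_split(mode, first)}")
```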

Protocols and standards

IEEE P802.1AX-REV™/D4.4c, Draft Standard for Local and Metropolitan Area Networks

Restrictions and guidelines: DRNI configuration

Software version requirements

The DR member devices in a DR system must use the same software version.

DRNI configuration

DR system configuration

DRNI is an H3C proprietary protocol. You cannot use DR interfaces for communicating with third-party devices.

You can assign two member devices to a DR system. For the DR member devices to be identified as one DR system by the upstream or downstream devices, you must configure the same DR system MAC address and DR system priority on the DR member devices. You must assign different DR system numbers to the DR member devices.

Make sure each DR system uses a unique DR system MAC address.
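The DR system setting rules above can be expressed as a simple Python check. The class and function names, and the sample MAC addresses and numbers, are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class DrSystemSettings:
    system_mac: str       # DR system MAC address
    system_priority: int  # DR system priority
    system_number: int    # DR system number


def can_form_dr_system(a, b):
    """Both members need the same DR system MAC and priority but different numbers."""
    return (a.system_mac == b.system_mac
            and a.system_priority == b.system_priority
            and a.system_number != b.system_number)


def reused_system_macs(dr_systems):
    """dr_systems maps a DR system label to its DR system MAC address.

    Returns the MAC addresses used by more than one DR system.
    """
    counts = Counter(dr_systems.values())
    return {mac for mac, n in counts.items() if n > 1}


member1 = DrSystemSettings("0001-0001-0001", 123, 1)
member2 = DrSystemSettings("0001-0001-0001", 123, 2)
print(can_form_dr_system(member1, member2))  # True
```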

To ensure correct forwarding, delete DRNI configuration from a DR member device if it leaves its DR system.

When you bulk shut down physical interfaces on a DR member device for service changes or hardware replacement, shut down the physical interfaces used for keepalive detection prior to the physical member ports of the IPP. If you fail to do so, link flapping will occur on the member ports of DR interfaces.

IPL

In addition to protocol packets, the IPL also transmits data packets between the DR member devices when an uplink fails.

If a DR member device is a fixed-port device, assign at least two physical interfaces to the aggregation group of the IPP.

Make sure the member ports in the aggregation group of the IPP have the same speed.

If a leaf-tier DR system is attached to a large number of servers whose NICs operate in active/standby mode, take the size of the traffic sent among those servers into account when you determine the bandwidth of the IPL.

As a best practice to reduce the impact of interface flapping on upper-layer services, use the link-delay command to configure the same link delay settings on the IPPs. Do not set the link delay to 0.

In a DR system, the two IPPs must have the same maximum jumbo frame length setting.

For the DR system to correctly forward traffic for single-homed devices, set the link type to trunk for the IPPs and the interfaces attached to the single-homed devices. If you fail to do so, the ARP and ND protocol packets sent to or from the single-homed devices cannot be forwarded over the IPL.

Keepalive link

The DR member devices exchange keepalive packets over the keepalive link to detect multi-active collisions when the IPL is down.

As a best practice, establish a dedicated direct link between two DR member devices as a keepalive link. Do not use the keepalive link for any other purposes. Make sure the DR member devices have Layer 2 and Layer 3 connectivity to each other over the keepalive link.

You can use management Ethernet interfaces, Layer 3 Ethernet interfaces, Layer 3 aggregate interfaces, or interfaces with a bound VPN instance to set up the keepalive link. As a best practice, do not use VLAN interfaces for keepalive link setup. If you have to use VLAN interfaces, remove the IPPs from the related VLANs to avoid loops.

For correct keepalive detection, you must exclude the physical and logical interfaces used for keepalive detection from the shutdown action by DRNI MAD.

DR interface

DR interfaces in the same DR group must use different LACP system MAC addresses.

As a best practice, use the undo lacp period command to enable the long LACP timeout timer (90 seconds) on a DR system.

You must execute the lacp edge-port command on the DR interfaces attached to bare metal servers.

Interfaces excluded from the shutdown action by DRNI MAD

When you configure DRNI on an underlay network, follow these restrictions and guidelines:

· Set the default DRNI MAD action to DRNI MAD DOWN by using the drni mad default-action down command. This is also the default setting.

· Exclude the VLAN interfaces of the VLANs to which the DR interfaces and IPPs belong from the shutdown action by DRNI MAD. These interfaces will not be shut down by DRNI MAD.

· Exclude the interfaces used for keepalive detection. These interfaces will not be shut down by DRNI MAD.

· Do not exclude the uplink Layer 3 interfaces, VLAN interfaces, or physical interfaces. These interfaces will be shut down by DRNI MAD.

DRNI standalone mode


To configure the DR system priority, use the drni system-priority command in system view. To configure the LACP system MAC address and LACP system priority, use one of the following methods:

· Execute the lacp system-mac and lacp system-priority commands in system view.

· Execute the port lacp system-mac and port lacp system-priority commands in DR interface view.

The DR interface-specific configuration takes precedence over the global configuration.

When you configure the DR system priority and LACP system priority, follow these guidelines:

· For a single tier of DR systems at the leaf layer, set the DR system priority value to be larger than the LACP system priority values of the DR interfaces. The smaller the value, the higher the priority. For a DR group, configure different LACP system priority values for the member DR interfaces.

· For two or more tiers of DR systems, configure the same LACP system priority for the devices with the same DR role. This ensures traffic is forwarded along the correct path when a DR system splits.
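The precedence rule and the single-tier guidelines can be checked with a short Python sketch. The function names and sample values are hypothetical. As stated above, the sketch treats a smaller priority value as a higher priority.

```python
def effective_lacp(global_cfg, if_cfg):
    """DR interface-specific settings take precedence over global ones."""
    return {key: if_cfg.get(key, global_cfg[key])
            for key in ("system_mac", "system_priority")}


def check_single_tier(dr_system_priority, dr_if_priorities):
    """Check the single-tier leaf guidelines and return a list of problems.

    dr_if_priorities maps each DR interface in one DR group to its
    effective LACP system priority value.
    """
    problems = []
    if any(dr_system_priority <= p for p in dr_if_priorities.values()):
        problems.append("DR system priority value must exceed every DR interface LACP priority value")
    if len(set(dr_if_priorities.values())) != len(dr_if_priorities):
        problems.append("DR interfaces in a DR group need different LACP priority values")
    return problems


global_cfg = {"system_mac": "0001-0001-0001", "system_priority": 32768}
print(effective_lacp(global_cfg, {"system_priority": 100}))
# {'system_mac': '0001-0001-0001', 'system_priority': 100}
print(check_single_tier(32768, {"DeviceA/BAGG1": 100, "DeviceB/BAGG2": 200}))  # []
```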

Compatibility with other features

GIR

Before you change a DR system back to normal mode by using the undo gir system-mode maintenance command, execute the display drni mad verbose command to verify that no network interfaces are in DRNI MAD DOWN state. For information about GIR, see Fundamentals Configuration Guide.

IRF

DRNI cannot work correctly on an IRF fabric. Do not configure DRNI on an IRF fabric. For more information about IRF, see Virtual Technologies Configuration Guide.

MAC address table

If the DR system has a large number of MAC address entries, set the MAC aging timer to a value higher than 20 minutes as a best practice. To set the MAC aging timer, use the mac-address timer command.

The MAC address learning feature is not configurable on the IPP.

For more information about the MAC address table, see "Configuring the MAC address table."

Ethernet link aggregation

Do not configure automatic link aggregation on a DR system.

The aggregate interfaces in an S-MLAG group cannot be used as DR interfaces or IPPs.

You cannot configure link aggregation management subnets on a DR system.

When you configure a DR interface, follow these restrictions and guidelines:

· The link-aggregation selected-port maximum and link-aggregation selected-port minimum commands do not take effect on a DR interface.

· If you execute the display link-aggregation verbose command for a DR interface, the displayed system ID contains the DR system MAC address and the DR system priority.

· If the reference port is a member port of a DR interface, the display link-aggregation verbose command displays the reference port on both DR member devices.

For more information about Ethernet link aggregation, see "Configuring Ethernet link aggregation."

Port isolation

Do not assign DR interfaces or IPPs to a port isolation group. For more information about port isolation, see "Configuring port isolation."

Loop detection

Member devices in a DR system must have the same loop detection configuration. For information about loop detection, see "Configuring loop detection."

Spanning tree

When the spanning tree protocol is enabled for a DR system, follow these restrictions and guidelines:

· Make sure the DR member devices have the same spanning tree configuration. Violation of this rule might cause network flapping. The configuration includes:

  o Global spanning tree configuration.

  o Spanning tree configuration on the IPP.

  o Spanning tree configuration on DR interfaces.

· IPPs of the DR system do not participate in spanning tree calculation.

· The DR member devices still use the DR system MAC address after the DR system splits, which will cause spanning tree calculation issues. To avoid the issues, enable DRNI standalone mode on the DR member devices before the DR system splits.

For more information about spanning tree, see "Configuring spanning tree."

Multicast

You can configure multicast on a DR system only with Release 6635.

Multicast VPN is not supported on a DR system. For more information about multicast VPN, see IP Multicast Configuration Guide.

CFD

Do not use the MAC address of a remote MEP for CFD tests on IPPs. These tests cannot work on IPPs. For more information about CFD, see High Availability Configuration Guide.

Smart Link

The DR member devices in a DR system must have the same Smart Link configuration.

For Smart Link to operate correctly on a DR interface, do not assign the DR interface and non-DR interfaces to the same smart link group.

Do not assign an IPP to a smart link group.

For more information about Smart Link configuration, see High Availability Configuration Guide.

VRRP

If you use DRNI and VRRP together, make sure the keepalive hold timer is shorter than the interval at which the VRRP master sends VRRP advertisements. Violation of this restriction might cause a VRRP master/backup switchover to occur before IPL failure is confirmed. To set the interval at which the VRRP master sends VRRP advertisements, use the vrrp vrid timer advertise or vrrp ipv6 vrid timer advertise command. For more information about the commands, see High Availability Command Reference.

Mirroring

For a mirroring group, do not assign the source port to an aggregation group other than the one that accommodates the destination port, egress port, or reflector port. If the source port is in a different aggregation group than the other ports, mirrored LACPDUs will be transmitted between the aggregation groups and cause aggregate interface flapping.

DRNI tasks at a glance

To configure DRNI, perform the following tasks:

1. Configuring DR system settings

  o Configuring the DR system MAC address

  o Setting the DR system number

  o Setting the DR system priority

2. Setting the DR role priority of the device

3. (Optional.) Enabling DRNI standalone mode on a DR member device

4. Configuring DR keepalive settings

  o Configuring DR keepalive packet parameters

  o Setting the DR keepalive interval and timeout timer

5. Configuring DRNI MAD

  o Configuring the default DRNI MAD action on network interfaces

  o Excluding an interface from the shutdown action by DRNI MAD

  o Excluding all logical interfaces from the shutdown action by DRNI MAD

  o Specifying interfaces to be shut down by DRNI MAD when the DR system splits

  o Enabling DRNI MAD DOWN state persistence

6. Configuring a DR interface

7. Specifying a Layer 2 aggregate interface as the IPP

8. (Optional.) Enabling the IPP to retain MAC address entries for down single-homed devices

9. (Optional.) Assigning a DRNI virtual IP address to an interface

Configure DRNI virtual IP addresses if devices need to communicate with the DR system by using dynamic routing protocols.

10. (Optional.) Configuring configuration consistency check

  o Setting the mode of configuration consistency check

  o (Optional.) Disabling configuration consistency check

Configuration consistency check might fail when you upgrade the DR member devices in a DR system. To prevent the DR system from falsely shutting down DR interfaces, temporarily disable configuration consistency check.

11. (Optional.) Enabling the short DRCP timeout timer on the IPP or a DR interface

12. Configuring DRNI timers

  o (Optional.) Setting the keepalive hold timer for identifying the cause of IPL down events

  o Configuring DR system auto-recovery

  o (Optional.) Setting the data restoration interval

13. (Optional.) Configuring DRNI security features

  o Enabling DRNI sequence number check

  o Enabling DRNI packet authentication

Configuring DR system settings

Configuring the DR system MAC address

Restrictions and guidelines

On a DR system, DR interfaces in the same DR group must use the same LACP system MAC address. As a best practice, use the bridge MAC address of one DR member device as the DR system MAC address.

Changing the DR system MAC address causes the DR system to split. When you perform this task on a live network, make sure you are fully aware of its impact.

You can configure the DR system MAC address on an aggregate interface only after it is configured as a DR interface.

You can configure the DR system MAC address globally and in aggregate interface view. The global DR system MAC address takes effect on all aggregation groups. On an aggregate interface, the interface-specific DR system MAC address takes precedence over the global DR system MAC address.

Procedure

1. Enter system view.

system-view

2. Configure the DR system MAC address.

drni system-mac mac-address

By default, the DR system MAC address is not configured.

3. Enter Layer 2 aggregate interface view.

interface bridge-aggregation interface-number

4. Set the DR system MAC address on the aggregate interface.

port drni system-mac mac-address

By default, the DR system MAC address is not configured.

This command is supported only in Release 6616 and later.

Setting the DR system number

Restrictions and guidelines

Changing the DR system number causes the DR system to split. When you perform this task on a live network, make sure you are fully aware of its impact.

You must assign different DR system numbers to the DR member devices in a DR system.

Procedure

1. Enter system view.

system-view

2. Set the DR system number.

drni system-number system-number

By default, the DR system number is not set.

Setting the DR system priority

About this task

A DR system uses its DR system priority as the LACP system priority to communicate with the remote aggregation system.

Restrictions and guidelines

Changing the DR system priority in system view causes the DR system to split. When you perform this task on a live network, make sure you are fully aware of its impact.

You must configure the same DR system priority for the DR interfaces in the same DR group.

You can configure the DR system priority on an aggregate interface only after it is configured as a DR interface.

You can configure the DR system priority globally and in aggregate interface view. The global DR system priority takes effect on all aggregation groups. On an aggregate interface, the interface-specific DR system priority takes precedence over the global DR system priority.
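The precedence rule above can be sketched as a small lookup. This is a simplified model: None stands for "command not configured", and it assumes that an aggregate interface without port drni system-priority inherits the global value. The function name is hypothetical.

```python
from typing import Optional

# Default value documented for the drni system-priority command.
DEFAULT_DR_SYSTEM_PRIORITY = 32768


def effective_dr_system_priority(global_priority: Optional[int],
                                 interface_priority: Optional[int]) -> int:
    """Return the DR system priority that an aggregation group uses.

    The value set by "port drni system-priority" overrides the value set by
    "drni system-priority"; the default applies when neither is configured.
    Assumption: an interface without its own setting inherits the global one.
    """
    if interface_priority is not None:
        return interface_priority
    if global_priority is not None:
        return global_priority
    return DEFAULT_DR_SYSTEM_PRIORITY
```

The same lookup pattern would apply to the DR system MAC address, except that no default MAC address is configured.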

Procedure

1. Enter system view.

system-view

2. Set the DR system priority.

drni system-priority system-priority

By default, the DR system priority is 32768.

3. Enter Layer 2 aggregate interface view.

interface bridge-aggregation interface-number

4. Set the DR system priority on the aggregate interface.

port drni system-priority priority

By default, the DR system priority is 32768.

This command is supported only in Release 6616 and later.

Setting the DR role priority of the device

About this task

DRNI assigns the primary or secondary role to a DR member device based on its DR role priority. The smaller the priority value, the higher the priority. If the DR member devices in a DR system use the same DR role priority, the device with the lower bridge MAC address is assigned the primary role.
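The role election described above can be sketched as picking the member with the smallest (DR role priority value, bridge MAC address) pair. This is an illustrative model only. The tuple layout, the function names, and the device names are hypothetical.

```python
from typing import List, Tuple


def mac_to_int(mac: str) -> int:
    """Convert an H-H-H bridge MAC such as 0001-0001-0001 to an integer."""
    return int(mac.replace("-", ""), 16)


def elect_primary(members: List[Tuple[str, int, str]]) -> str:
    """Return the name of the DR member device that gets the primary role.

    Each member is (name, DR role priority value, bridge MAC address).
    A smaller priority value wins; equal priorities fall back to the
    lower bridge MAC address.
    """
    name, _, _ = min(members, key=lambda m: (m[1], mac_to_int(m[2])))
    return name
```

With both devices left at the default priority of 32768, the device with the lower bridge MAC address becomes primary.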

Restrictions and guidelines

To prevent a primary/secondary role switchover from causing network flapping, avoid changing the DR role priority settings after the DR system is established.

Procedure

1. Enter system view.

system-view

2. Set the DR role priority of the device.

drni role priority priority-value

By default, the DR role priority of the device is 32768.

Enabling DRNI standalone mode on a DR member device

About this task

Perform this task to avoid forwarding issues in the multi-active situation that might occur after both the IPL and the keepalive link are down.

DRNI standalone mode helps avoid traffic forwarding issues in this multi-active situation by allowing only the member ports in the DR interfaces on one member device to forward traffic. For more information about this mode, see "DRNI standalone mode."

When you configure this feature, you can configure a delay to prevent an unnecessary mode change because of transient link down issues.

If the keepalive link fails before the IPL fails, DRNI MAD does not shut down the interfaces on the DR member devices. After the DR member devices enter DRNI standalone mode, they use different LACP system IDs for link aggregation. As a result, only the aggregation member ports on one of the DR member devices become Selected to forward traffic.

If the IPL fails, DRNI MAD shuts down the interfaces on the secondary DR member device. When the keepalive link also fails, DRNI MAD brings up the interfaces in DRNI MAD DOWN state, and then the secondary DR member device enters DRNI standalone mode.

Software version and feature compatibility

This feature is supported only in Release 6616 and later.

Restrictions and guidelines

A DR member device changes to DRNI standalone mode only when it detects that both the IPL and the keepalive link are down. It does not change to DRNI standalone mode when the peer DR member device reboots, because the peer notifies the DR member device of the reboot event.

As a best practice, enable DRNI standalone mode on both DR member devices.

Before you enable DRNI standalone mode on a DR member device, make sure its LACP system priority is higher than that of the remote aggregation system. This restriction ensures that the reference port is on the remote aggregation system and prevents the interfaces attached to the DR system from flapping. For more information about the LACP system priority, see "Configuring Ethernet link aggregation."
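The entry condition for DRNI standalone mode can be sketched as a pure function. This is a simplified model: the parameter names are hypothetical, the optional delay configured with drni standalone enable delay is not modeled, and a reboot announced by the peer is represented by a single flag.

```python
from typing import Tuple


def standalone_transition(role: str, standalone_enabled: bool, ipl_up: bool,
                          keepalive_up: bool,
                          peer_reboot_notified: bool = False) -> Tuple[bool, str]:
    """Return (enters_standalone_mode, resulting_role) for one DR member device.

    The device enters DRNI standalone mode only if the mode is enabled and
    both the IPL and the keepalive link are down. A reboot announced by the
    peer does not trigger the mode. When the mode is entered, a secondary
    device changes its role to primary. The optional delay is not modeled.
    """
    enters = (standalone_enabled and not ipl_up and not keepalive_up
              and not peer_reboot_notified)
    return enters, ("primary" if enters else role)
```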

Procedure

1. Enter system view.

system-view

2. Enable DRNI standalone mode.

drni standalone enable [ delay delay-time ]

By default, DRNI standalone mode is disabled.

Configuring DR keepalive settings

Restrictions and guidelines for configuring DR keepalive settings

As a best practice, establish a dedicated direct link between DR member devices as a keepalive link. Do not use the keepalive link for any other purposes. Make sure the DR member devices have Layer 2 and Layer 3 connectivity to each other over the keepalive link.

Configuring DR keepalive packet parameters

About this task

Perform this task to specify the parameters for sending DR keepalive packets, such as their source and destination IP addresses.

The device accepts only keepalive packets that are sourced from the specified destination IP address. The keepalive link goes down if the device receives keepalive packets sourced from any other IP address.

Restrictions and guidelines

Make sure the keepalive source and destination IP addresses have Layer 3 reachability.

Make sure the DR member devices in a DR system use the same keepalive destination UDP port.

Procedure

1. Enter system view.

system-view

2. Configure DR keepalive packet parameters.

drni keepalive { ip | ipv6 } destination { ipv4-address | ipv6-address } [ source { ipv4-address | ipv6-address } | udp-port udp-number | vpn-instance vpn-instance-name ] *

By default, the DR keepalive packet parameters are not configured. If you do not specify a source IP address or destination UDP port when you execute this command, the IP address of the outgoing interface and UDP port 6400 are used, respectively.
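The default handling and the source check described in this section can be sketched as follows. This is an illustrative model, not the device implementation. The outgoing_interface_ip parameter is a hypothetical stand-in for the route lookup the device performs, and the class and function names are also hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Union

DEFAULT_KEEPALIVE_UDP_PORT = 6400  # documented default destination UDP port

IPAddress = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


@dataclass(frozen=True)
class KeepaliveParams:
    destination: IPAddress
    source: IPAddress
    udp_port: int


def resolve_keepalive(destination: str, outgoing_interface_ip: str,
                      source: Optional[str] = None,
                      udp_port: Optional[int] = None) -> KeepaliveParams:
    """Fill in the documented defaults for omitted keepalive parameters.

    Without a source address, the outgoing interface's IP address is used;
    without a UDP port, port 6400 is used.
    """
    return KeepaliveParams(
        destination=ipaddress.ip_address(destination),
        source=ipaddress.ip_address(source or outgoing_interface_ip),
        udp_port=udp_port if udp_port is not None else DEFAULT_KEEPALIVE_UDP_PORT,
    )


def accept_keepalive(params: KeepaliveParams, packet_source: str) -> bool:
    """Accept a keepalive packet only if it is sourced from the configured destination."""
    return ipaddress.ip_address(packet_source) == params.destination
```

Comparing ipaddress objects instead of raw strings makes equivalent IPv6 notations, such as 2001:db8::2 and 2001:DB8:0::2, match.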

Setting the DR keepalive interval and timeout timer

About this task

The device sends keepalive packets at the specified interval to its DR peer. If the device has not received a keepalive packet from the DR peer before the keepalive timeout timer expires, the device determines that the keepalive link is down.

Restrictions and guidelines

The local DR keepalive timeout timer must be at least twice the DR keepalive interval of the peer.

Configure the same DR keepalive interval on the DR member devices in the DR system.
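The two timer guidelines above can be checked with a short function. This sketch assumes the units given by the documented defaults: the interval is in milliseconds (default 1000) and the timeout timer is in seconds (default 5). The function name and messages are hypothetical.

```python
from typing import List

DEFAULT_INTERVAL_MS = 1000  # default DR keepalive interval (milliseconds)
DEFAULT_TIMEOUT_S = 5       # default DR keepalive timeout timer (seconds)


def check_keepalive_timers(local_interval_ms: int, local_timeout_s: int,
                           peer_interval_ms: int) -> List[str]:
    """Return the guideline violations for the local keepalive timer settings."""
    problems = []
    if local_interval_ms != peer_interval_ms:
        problems.append("keepalive interval differs from the peer")
    # Compare in milliseconds: the timeout must be at least twice the peer interval.
    if local_timeout_s * 1000 < 2 * peer_interval_ms:
        problems.append("timeout is shorter than twice the peer interval")
    return problems
```

For example, a 3000-millisecond interval on both devices with the default 5-second timeout violates the second guideline, because 5000 ms is less than 6000 ms.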

Procedure

1. Enter system view.

system-view

2. Set the DR keepalive interval and timeout timer.

drni keepalive interval interval [ timeout timeout ]

By default, the DR keepalive interval is 1000 milliseconds, and the DR keepalive timeout timer is 5 seconds.

Configuring DRNI MAD

About this task

DRNI MAD configuration methods

When you configure DRNI MAD, use either of the following methods:

· To shut down all network interfaces on the secondary DR member device except a few special-purpose interfaces that must be retained in up state:

  o Set the default DRNI MAD action to DRNI MAD DOWN. For more information, see "Configuring the default DRNI MAD action on network interfaces."

  o Exclude interfaces from being shut down by DRNI MAD. For more information, see "Excluding an interface from the shutdown action by DRNI MAD."

This method is applicable to most network environments.

· To have the secondary DR member device retain a large number of interfaces in up state and shut down the remaining interfaces:

  o Set the default DRNI MAD action to NONE. For more information, see "Configuring the default DRNI MAD action on network interfaces."

  o Specify network interfaces that must be shut down by DRNI MAD. For more information, see "Specifying interfaces to be shut down by DRNI MAD when the DR system splits."

For a DR member device to operate correctly after the DR system splits, you must retain a large number of logical interfaces (for example, VLAN, aggregate, and loopback interfaces) in up state.

List of automatically included interfaces

DRNI MAD will always shut down the ports in the system-configured included port list if the device acts as the secondary DR member device when the DR system splits.

This list contains aggregation member ports of DR interfaces. To identify system-configured included ports, execute the display drni mad verbose command.

List of automatically excluded interfaces

DRNI MAD will not shut down the ports in the following list when the DR system splits:

· System-configured excluded port list in DRNI MAD:

  o IPP.

  o Aggregation member interfaces if a Layer 2 aggregate interface is used as the IPP.

  o DR interfaces.

  o Management interfaces.

To identify these interfaces, execute the display drni mad verbose command.

· Network interfaces used for special purposes, including:

  o Interfaces placed in a loopback test by using the loopback command.

  o Interfaces assigned to a service loopback group by using the port service-loopback group command.

  o Mirroring reflector ports configured by using the mirroring-group reflector-port command.

  o Interfaces forced to stay up by using the port up-mode command.

Configuring the default DRNI MAD action on network interfaces

About this task

You can configure DRNI MAD to take either of the following default actions on network interfaces if the device acts as the secondary DR member device when the DR system splits:

· DRNI MAD DOWN—DRNI MAD will shut down all network interfaces on the secondary DR member device when the DR system splits, except the interfaces excluded manually or by the system.

· NONE—DRNI MAD will not shut down any network interfaces when the DR system splits, except the interfaces configured manually or by the system to be shut down by DRNI MAD.

Restrictions and guidelines

The DRNI MAD DOWN action will not take effect on the interfaces listed in "List of automatically excluded interfaces."

The DRNI MAD DOWN action always takes effect on the interfaces listed in "List of automatically included interfaces," even if the default DRNI MAD action is NONE.
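How the default action interacts with the automatic and user-configured lists can be sketched as one decision function. This is a simplified model: the function name and interface names are hypothetical, the automatic lists are passed in rather than computed, and the drni mad exclude logical-interfaces setting is not modeled.

```python
from typing import AbstractSet


def mad_shuts_down(port: str, role: str, default_action: str,
                   auto_included: AbstractSet[str], auto_excluded: AbstractSet[str],
                   user_excluded: AbstractSet[str] = frozenset(),
                   user_included: AbstractSet[str] = frozenset()) -> bool:
    """Return True if DRNI MAD places the port in DRNI MAD DOWN state on a split."""
    if default_action not in ("down", "none"):
        raise ValueError("default_action must be 'down' or 'none'")
    if role != "secondary":
        return False                      # only the secondary device takes the action
    if port in auto_excluded:
        return False                      # never shut down (IPP, DR interfaces, ...)
    if port in auto_included:
        return True                       # always shut down (DR interface member ports)
    if default_action == "down":
        return port not in user_excluded  # drni mad exclude interface
    return port in user_included          # drni mad include interface
```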

Procedure

1. Enter system view.

system-view

2. Configure the default DRNI MAD action to take on network interfaces on the secondary DR member device when the DR system splits.

drni mad default-action { down | none }

By default, DRNI MAD shuts down network interfaces on the secondary DR member device.

Excluding an interface from the shutdown action by DRNI MAD

About this task

By default, DRNI MAD automatically excludes the interfaces listed in "List of automatically excluded interfaces" when it shuts down network interfaces on the secondary DR member device.

To specify additional interfaces that cannot be shut down, perform this task.

You typically perform this task when the default DRNI MAD action is set to DRNI MAD DOWN.

Software version and feature compatibility

This feature is supported only in Release 6616 and later.

Restrictions and guidelines

You must always exclude the following interfaces from being shut down by DRNI MAD:

· For correct keepalive detection, you must exclude the interfaces used for keepalive detection.

· For DR member devices to synchronize ARP entries, you must exclude the VLAN interfaces of the VLANs to which the DR interfaces and IPPs belong.

The DRNI MAD DOWN action is always taken on interfaces listed in "List of automatically included interfaces." You cannot disable the action by excluding those interfaces.

To view interfaces excluded from the MAD shutdown action, see the Excluded ports (user-configured) field in the output from the display drni mad verbose command.

If you exclude an interface that is already in DRNI MAD DOWN state from the MAD shutdown action, the interface stays in that state. It will not come up automatically.

Procedure

1. Enter system view.

system-view

2. Exclude an interface from the shutdown action by DRNI MAD.

drni mad exclude interface interface-type interface-number

By default, DRNI MAD shuts down all network interfaces when detecting a multi-active collision, except for the network interfaces set by the system to not shut down.

Excluding all logical interfaces from the shutdown action by DRNI MAD

About this task

For a DR member device to operate correctly after the DR system splits, you must retain a large number of logical interfaces (for example, VLAN, aggregate, and loopback interfaces) in up state. To simplify configuration, you can exclude all logical interfaces from the shutdown action by DRNI MAD.

Restrictions and guidelines

The drni mad exclude interface and drni mad include interface commands take precedence over the drni mad exclude logical-interfaces command.
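The precedence rule above can be sketched as a function that checks the per-interface settings before the logical-interface setting. This is an illustrative model: the function name and return strings are hypothetical, "default" means the default DRNI MAD action decides, the automatic lists are not modeled, and an interface is assumed not to appear in both user-configured lists.

```python
from typing import AbstractSet


def logical_exclusion_decision(port: str, is_logical: bool, exclude_logical: bool,
                               user_excluded: AbstractSet[str],
                               user_included: AbstractSet[str]) -> str:
    """Return 'keep-up', 'shut-down', or 'default' for one interface.

    Per-interface settings (drni mad exclude interface and drni mad include
    interface) are checked first because they take precedence over
    drni mad exclude logical-interfaces.
    """
    if port in user_excluded:
        return "keep-up"
    if port in user_included:
        return "shut-down"
    if exclude_logical and is_logical:
        return "keep-up"
    return "default"
```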

Procedure

1. Enter system view.

system-view

2. Exclude all logical interfaces from the shutdown action by DRNI MAD.

drni mad exclude logical-interfaces

By default, DRNI MAD shuts down all network interfaces when it detects a multi-active collision, except for the network interfaces set by the system to not shut down.

Specifying interfaces to be shut down by DRNI MAD when the DR system splits

About this task

By default, DRNI MAD automatically shuts down the interfaces listed in "List of automatically included interfaces" if the device is the secondary DR member device when the DR system splits.

To specify additional interfaces to be shut down by DRNI MAD, perform this task.

You typically perform this task when the default DRNI MAD action is set to NONE.

Restrictions and guidelines

The DRNI MAD DOWN action will not take effect on the interfaces listed in "List of automatically excluded interfaces."

Procedure

1. Enter system view.

system-view

2. Specify interfaces to be shut down by DRNI MAD when the DR system splits.

drni mad include interface interface-type interface-number

By default, the user-configured included port list does not contain any ports.

Enabling DRNI MAD DOWN state persistence

About this task

DRNI MAD DOWN state persistence helps avoid the multi-active situation by preventing the secondary DR member device from bringing up the network interfaces in DRNI MAD DOWN state. For more information about this feature, see "DRNI MAD DOWN state persistence" and "Failure handling mechanism with DRNI MAD DOWN state persistence."

You can bring up the interfaces in DRNI MAD DOWN state on the secondary DR member device for it to forward traffic if the following conditions exist:

· The primary DR member device fails while the IPL is down.

· The DRNI MAD DOWN state persists on the secondary DR member device.

Software version and feature compatibility

This feature is supported only in Release 6616 and later.

Procedure

1. Enter system view.

system-view

2. Enable DRNI MAD DOWN state persistence.

drni mad persistent

By default, the secondary DR member device brings up interfaces in DRNI MAD DOWN state when its role changes to primary.

3. (Optional.) Bring up the interfaces in DRNI MAD DOWN state.

drni mad restore

Execute this command only when both the IPL and the keepalive link are down.
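The effect of DRNI MAD DOWN state persistence and of the drni mad restore command can be sketched as a small state holder. This is a simplified model with a hypothetical class name. It treats the usage guideline for drni mad restore as a hard check, which the device itself might not enforce.

```python
from typing import Set


class MadDownPorts:
    """Hypothetical model of interfaces held in DRNI MAD DOWN state."""

    def __init__(self, persistent: bool) -> None:
        self.persistent = persistent  # whether drni mad persistent is configured
        self.down: Set[str] = set()

    def on_become_primary(self) -> Set[str]:
        """Secondary device changes to primary; return the interfaces brought up."""
        if self.persistent:
            return set()              # the DRNI MAD DOWN state persists
        restored, self.down = self.down, set()
        return restored

    def restore(self, ipl_up: bool, keepalive_up: bool) -> Set[str]:
        """Model of drni mad restore, checking the documented usage guideline."""
        if ipl_up or keepalive_up:
            raise RuntimeError("use drni mad restore only when the IPL and "
                               "the keepalive link are both down")
        restored, self.down = self.down, set()
        return restored
```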

Configuring a DR interface

About this task

If a DR group contains only one DR interface, that interface is called a single-homed DR interface. By default, DRNI does not allow access through single-homed DR interfaces, which means DRNI MAD shuts down a DR interface if it is the only member in its DR group.

To ensure traffic forwarding for a device single-homed to a DR interface, allow the DR interface to be the single member in its DR group. DRNI MAD will not shut down the single-homed DR interface, and the device will not perform configuration consistency check on the interface.

Restrictions and guidelines

The device can have multiple DR interfaces. However, you can assign a Layer 2 aggregate interface to only one DR group.

A Layer 2 aggregate interface cannot operate as both IPP and DR interface.

To improve forwarding efficiency, exclude the DR interface on the secondary DR member device from the shutdown action by DRNI MAD. This action enables the DR interface to forward traffic immediately after a multi-active collision is removed without having to wait for the secondary DR member device to complete entry restoration.

To use resilient load sharing on a DR interface, you must configure the resilient load sharing mode by using the link-aggregation load-sharing mode command before you assign member ports to the DR interface.

If a DR interface or its peer DR interface already has member ports, use the following procedure to configure the resilient load sharing mode on that DR interface:

1. Delete the DR interface.

2. Recreate the DR interface.

3. Configure the resilient load sharing mode.

4. Assign member ports to the DR interface.

For more information about the resilient load sharing mode, see "Configuring Ethernet link aggregation."

To change the allow-single-member setting for a single-homed DR interface, first execute the undo port drni group command to remove it from its DR group.

To prevent loops when you assign a single-homed aggregate interface to a DR group, use the following procedure:

1. Assign the aggregate interface to the DR group.

2. Assign ports to the aggregation group of the aggregate interface.

When you remove a single-homed DR interface from its DR group, use the following procedure:

1. Remove the member ports from the aggregation group of the DR interface.

2. Remove the DR interface from the DR group.

Procedure

1. Enter system view.

system-view

2. Enter Layer 2 aggregate interface view.

interface bridge-aggregation interface-number

3. Assign the aggregate interface to a DR group.

port drni group group-id [ allow-single-member ]

As a best practice, specify the allow-single-member keyword for a dynamic aggregate interface.

The allow-single-member keyword is supported in Release 6635 and later.

Specifying a Layer 2 aggregate interface as the IPP

Restrictions and guidelines

A DR member device can have only one IPP. A Layer 2 aggregate interface cannot operate as both IPP and DR interface.

If you specify an aggregate interface as an IPP and the interface uses the default VLAN settings, the device assigns the interface to all VLANs as a trunk port. If the interface does not use the default VLAN settings, the device does not change them.

To ensure correct Layer 3 forwarding over the IPL, you must use the undo mac-address static source-check enable command to disable static source check on the Layer 2 aggregate interface assigned the IPP role.

The device does not change the VLAN settings of an aggregate interface when you remove its IPP role.

Do not use the MAC address of a remote MEP for CFD tests on IPPs. These tests cannot work on IPPs. For more information about CFD, see High Availability Configuration Guide.

Procedure

1. Enter system view.

system-view

2. Enter Layer 2 aggregate interface view.

interface bridge-aggregation interface-number

3. Specify the interface as the IPP.

port drni intra-portal-port port-id

Enabling the IPP to retain MAC address entries for down single-homed devices

About this task

When a DR member device detects that the link to a single-homed device goes down, the IPP takes the following actions:

· Deletes the MAC address entries for the single-homed device.

· Sends a message to the peer IPP for it to delete the affected MAC address entries.

If the link to a single-homed device flaps constantly, the IPP repeatedly deletes and relearns the MAC address entries for the device. As a result, more unicast traffic destined for the single-homed device is flooded.

To reduce flood traffic, enable the IPP to retain MAC address entries for single-homed devices. After the links to single-homed devices go down, the affected MAC address entries age out on expiration of the MAC aging timer instead of being deleted immediately. The timer is set by using the mac-address timer command. For more information about this command, see MAC address table commands in Layer 2—LAN Switching Command Reference.
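The difference between immediate deletion and retention until aging can be sketched with a toy MAC table. This is a simplified model with hypothetical names. Time is an integer number of seconds, aging is exact rather than cycle-based as on real hardware, and the message sent to the peer IPP is not modeled.

```python
from typing import Dict, Tuple


class IppMacTable:
    """Hypothetical model of MAC entries learned for single-homed devices."""

    def __init__(self, hold: bool, aging_s: int) -> None:
        self.hold = hold        # whether drni ipp mac-address hold is configured
        self.aging_s = aging_s  # MAC aging timer set by mac-address timer
        self.entries: Dict[str, Tuple[str, int]] = {}  # MAC -> (port, last seen)

    def learn(self, mac: str, port: str, now: int) -> None:
        self.entries[mac] = (port, now)

    def link_down(self, port: str) -> None:
        """Without hold, entries learned on the failed port are deleted at once."""
        if not self.hold:
            self.entries = {m: e for m, e in self.entries.items() if e[0] != port}

    def age_out(self, now: int) -> None:
        """Remove entries that were not refreshed within the aging time."""
        self.entries = {m: e for m, e in self.entries.items()
                        if now - e[1] < self.aging_s}
```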

Software version and feature compatibility

This feature is supported only in Release 6616 and later.

Procedure

1. Enter system view.

system-view

2. Enable the IPP to retain MAC address entries for single-homed devices.

drni ipp mac-address hold

By default, the IPP does not retain MAC address entries for single-homed devices when the devices go down.

Assigning a DRNI virtual IP address to an interface

About this task

DRNI virtual IP addresses allow devices to communicate with the DR system by using dynamic routing protocols.

To ensure correct traffic forwarding, assign DRNI virtual IP addresses to the following interfaces on the DR system:

· VLAN interfaces that act as dual-active gateways for the same VLAN.

· Loopback interfaces that offer AAA and 802.1X authentication services. For more information, see AAA configuration in Security Configuration Guide.

When both DR member devices act as gateways for dual-homed user-side devices, the gateway interfaces (VLAN interfaces) on the DR member devices use the same IP address and MAC address. In this scenario, the DR member devices cannot set up neighbor relationships with the user-side devices. To resolve this issue, assign virtual IP addresses to the gateway interfaces and configure routing protocols such as BGP, OSPF, and OSPFv3 to use the virtual IP addresses for neighbor relationship setup.

When dual-active gateways exist on the DR system, you must assign unique virtual IP addresses to the gateway interfaces on the DR member devices and configure both virtual IP addresses to be active. When you assign a virtual MAC address to a VLAN interface, make sure the virtual MAC address is identical to the MAC address assigned to the VLAN interface by using the mac-address command.

Restrictions and guidelines

The feature is supported only in Release 6635 and later.

When you assign multiple DRNI virtual IP addresses to an interface, follow these restrictions and guidelines:

· You can assign a maximum of two virtual IPv4 or IPv6 addresses to an interface.

· If you configure different virtual MAC addresses for a virtual IPv4 or IPv6 address, the most recent configuration takes effect.

· You cannot configure the same virtual MAC address for multiple virtual IPv4 or IPv6 addresses.

If you assign both virtual IPv4 and IPv6 addresses to VLAN interfaces, make sure the virtual IPv4 and IPv6 addresses that use the same virtual MAC address are in the same state on the DR member devices.
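The per-interface restrictions above can be sketched as a validating table. This is a simplified model with hypothetical names. It assumes that the two-address limit and the virtual MAC uniqueness rule both apply within one IP family, because the preceding guideline allows a virtual IPv4 address and a virtual IPv6 address to share a virtual MAC address.

```python
import ipaddress


class VirtualIpTable:
    """Hypothetical per-interface store of DRNI virtual IP -> virtual MAC."""

    MAX_PER_FAMILY = 2  # assumption: the two-address limit applies per IP family

    def __init__(self) -> None:
        self._addrs = {}  # ipaddress object -> lowercase virtual MAC string

    def assign(self, ip: str, virtual_mac: str) -> None:
        addr = ipaddress.ip_address(ip)
        mac = virtual_mac.lower()
        same_family = {a: m for a, m in self._addrs.items() if a.version == addr.version}
        if addr not in same_family and len(same_family) >= self.MAX_PER_FAMILY:
            raise ValueError("too many virtual addresses for this IP family")
        for other, other_mac in same_family.items():
            if other != addr and other_mac == mac:
                raise ValueError("virtual MAC already used by another virtual address")
        self._addrs[addr] = mac  # reassigning an address: the most recent MAC wins

    def mac_of(self, ip: str) -> str:
        return self._addrs[ipaddress.ip_address(ip)]
```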

Assigning DRNI virtual IP addresses to a VLAN interface

1. Enter system view.

system-view

2. Enter VLAN interface view.

interface vlan-interface interface-number

3. Assign a virtual IPv4 address to the VLAN interface.

port drni virtual-ip ipv4-address { mask-length | mask } [ active | standby ] virtual-mac mac-address

By default, no virtual IPv4 addresses are assigned to interfaces.

4. Assign a virtual IPv6 address to the VLAN interface.

port drni ipv6 virtual-ip ipv6-address { prefix-length [ active | standby ] [ virtual-mac mac-address ] | link-local }

By default, no virtual IPv6 addresses are assigned to interfaces.

Assigning DRNI virtual IP addresses to a loopback interface

1. Enter system view.

system-view

2. Enter loopback interface view.

interface loopback interface-number

3. Assign a virtual IPv4 address to the loopback interface.

port drni virtual-ip ipv4-address { mask-length | mask } [ active | standby ]

By default, no virtual IPv4 addresses are assigned to interfaces.

4. Assign a virtual IPv6 address to the loopback interface.

port drni ipv6 virtual-ip ipv6-address { prefix-length [ active | standby ] | link-local }

By default, no virtual IPv6 addresses are assigned to interfaces.

Setting the mode of configuration consistency check

About this task

How the device handles a configuration inconsistency depends on the configuration consistency check mode:

· For type 1 configuration inconsistencies:

  ◦ In loose mode, the device generates log messages.

  ◦ In strict mode, the device shuts down DR interfaces and generates log messages.

· For type 2 configuration inconsistencies, the device only generates log messages, in both strict and loose mode.
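The behavior above reduces to a small lookup on inconsistency type and check mode. The Python function below is a hypothetical restatement for illustration only; the function name and the action strings are not device output.

```python
def inconsistency_actions(inconsistency_type: int, mode: str) -> list:
    """Return the actions taken for a configuration inconsistency.

    Hypothetical helper that restates the documented behavior.
    """
    if mode not in ("loose", "strict"):
        raise ValueError("mode must be 'loose' or 'strict'")
    if inconsistency_type == 1:
        if mode == "strict":
            return ["shut down DR interfaces", "generate log messages"]
        return ["generate log messages"]
    if inconsistency_type == 2:
        # Type 2 inconsistencies are only logged, regardless of mode.
        return ["generate log messages"]
    raise ValueError("inconsistency_type must be 1 or 2")


for t in (1, 2):
    for m in ("loose", "strict"):
        print(t, m, inconsistency_actions(t, m))
```

Only the type 1 and strict combination shuts down DR interfaces, which is why strict mode is the safer default when both DR member devices must agree on settings.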

Procedure

1. Enter system view.

system-view

2. Set the mode of configuration consistency check.

drni consistency-check mode { loose | strict }

By default, configuration consistency check uses strict mode.

Disabling configuration consistency check

About this task

By default, DRNI performs a configuration consistency check when the DR system is set up, to ensure that the DR system can operate correctly.

The configuration consistency check might fail when you upgrade the DR member devices in a DR system. To prevent the DR system from shutting down DR interfaces because of such a failure, you can temporarily disable configuration consistency check.

Restrictions and guidelines

Make sure the DR member devices use the same setting for configuration consistency check.

Procedure

1. Enter system view.

system-view

2. Disable configuration consistency check.

drni consistency-check disable

By default, configuration consistency check is enabled.

Enabling the short DRCP timeout timer on the IPP or a DR interface

About this task

By default, the IPP or a DR interface uses the 90-second long DRCP timeout timer. To detect peer interface down events more quickly, enable the 3-second short DRCP timeout timer on the interface.

Restrictions and guidelines

To avoid traffic interruption during an ISSU or DRNI process restart, disable the short DRCP timeout timer before you perform an ISSU or DRNI process restart. For more information about ISSU, see Fundamentals Configuration Guide.

Procedure

1. Enter system view.

system-view

2. Enter Layer 2 aggregate interface view.

interface bridge-aggregation interface-number

3. Enable the short DRCP timeout timer.

drni drcp period short

By default, an interface uses the long DRCP timeout timer (90 seconds).
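As a rough illustration of why the short timer speeds up detection, the Python sketch below assumes that an interface declares its peer interface down when no DRCPDU has been received for the length of the timeout timer. The helper names and this timing model are assumptions for illustration; only the 90-second and 3-second values come from this section.

```python
# Timeout values from this section; the detection model below is an assumption.
DRCP_TIMEOUT_SECONDS = {"long": 90, "short": 3}


def peer_considered_down(last_drcpdu_at: float, now: float, period: str = "long") -> bool:
    """Hypothetical check: True if no DRCPDU arrived within the timeout."""
    return now - last_drcpdu_at >= DRCP_TIMEOUT_SECONDS[period]


def ready_for_issu(period: str) -> bool:
    """Per the guideline above, use the long timer before an ISSU or DRNI process restart."""
    return period == "long"


# 10 seconds after the last DRCPDU, only the short timer has expired.
print(peer_considered_down(0, 10, "short"))  # True
print(peer_considered_down(0, 10, "long"))   # False
```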

Setting the keepalive hold timer for identifying the cause of IPL down events

About this task

The keepalive hold timer starts when the IPL goes down. It specifies the amount of time the device waits to identify the cause of the IPL down event:

· If the device receives keepalive packets from the DR peer before the timer expires, the device determines that the IPL went down because of an IPL failure.

· If the device does not receive keepalive packets from the DR peer before the timer expires, the device determines that the IPL went down because the peer DR member device failed.
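The decision above can be sketched in Python as follows. This is a hypothetical model, not the device implementation: the function name is an assumption, keepalive arrivals are given as timestamps in seconds, and the 3-second default matches the documented default hold time.

```python
from typing import Iterable


def ipl_down_cause(ipl_down_at: float, keepalive_arrivals: Iterable[float],
                   hold_time: float = 3.0) -> str:
    """Classify an IPL down event (hypothetical model of the logic above).

    keepalive_arrivals: times (seconds) at which keepalive packets from
    the DR peer were received.
    """
    deadline = ipl_down_at + hold_time
    # Any keepalive packet received while the hold timer runs shows the peer is alive.
    if any(ipl_down_at <= t < deadline for t in keepalive_arrivals):
        return "IPL failure"
    return "peer DR member device failure"


print(ipl_down_cause(100.0, [99.0, 101.0]))  # IPL failure
print(ipl_down_cause(100.0, [99.0]))         # peer DR member device failure
```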

Procedure

1. Enter system view.

system-view

2. Set the keepalive hold timer.

drni keepalive hold-time value

By default, the keepalive hold timer is 3 seconds.

Configuring DR system auto-recovery

About this task

If only one DR member device recovers after the entire DR system reboots, auto-recovery enables that member device to remove its DR interfaces from the DRNI DOWN interface list.

· If that member device has up DR interfaces, it takes over the primary role when the reload delay timer expires and forwards traffic.

· If that member device does not have up DR interfaces, it is stuck in the None role and does not forward traffic.

If auto-recovery is disabled, that DR member device stays in the None role after it recovers, and all its DR interfaces remain in DRNI DOWN state.
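The outcomes above for the only DR member device that recovers can be summarized with the hypothetical Python function below. It assumes that the device stays in the None role until the reload delay timer expires; that intermediate state is an assumption, not a documented detail.

```python
def lone_member_after_reboot(auto_recovery: bool, reload_delay_expired: bool,
                             has_up_dr_interfaces: bool) -> tuple:
    """Return (role, forwards_traffic) for the only recovered DR member device.

    Hypothetical model. Assumption: before the reload delay timer expires,
    the device stays in the None role.
    """
    if not auto_recovery:
        # DR interfaces remain DRNI DOWN and the device stays in the None role.
        return ("None", False)
    if not reload_delay_expired:
        # Assumption: still waiting for the reload delay timer.
        return ("None", False)
    if has_up_dr_interfaces:
        # Takes over the primary role and forwards traffic.
        return ("Primary", True)
    # No up DR interfaces: stays in the None role.
    return ("None", False)


print(lone_member_after_reboot(True, True, True))   # ('Primary', True)
print(lone_member_after_reboot(False, True, True))  # ('None', False)
```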

Restrictions and guidelines

If both DR member devices recover and have up DR interfaces after the entire DR system reboots, an active-active situation might occur if both the IPL and the keepalive link are down when the reload delay timer expires. If this rare situation occurs, examine and restore the IPL and keepalive links.

To avoid incorrect role preemption, make sure the reload delay timer is longer than the amount of time required for the device to restart.

Procedure

1. Enter system view.

system-view

2. Configure DR system auto-recovery.

drni auto-recovery reload-delay delay-value

By default, DR system auto-recovery is not configured. The reload delay timer is not set.

Setting the data restoration interval

About this task

The data restoration interval specifies the maximum amount of time for the secondary DR member device to synchronize data with the primary DR member device during DR system setup. Within the data restoration interval, the secondary DR member device sets all network interfaces to DRNI MAD DOWN state, except for interfaces excluded from the shutdown action by DRNI MAD.

When the data restoration interval expires, the secondary DR member device brings up all network interfaces.
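The Python sketch below models the state of a network interface on the secondary DR member device during DR system setup. It is a hypothetical illustration: the function name and the returned strings are assumptions, and the 30-second default matches the documented default data restoration interval.

```python
def secondary_interface_state(seconds_since_setup: float, excluded: bool,
                              restore_delay: float = 30.0) -> str:
    """Hypothetical model of a secondary member interface during DR system setup."""
    if excluded:
        # Interfaces excluded from the DRNI MAD shutdown action are not shut down.
        return "not shut down by DRNI MAD"
    if seconds_since_setup < restore_delay:
        # Still within the data restoration interval.
        return "DRNI MAD DOWN"
    # The interval has expired and the interface is brought up.
    return "up"


for t in (10, 45):
    print(t, secondary_interface_state(t, excluded=False))
```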

Restrictions and guidelines

Make sure the data restoration interval is long enough for the device to reboot and restore forwarding entries after a failure.

Adjust the data restoration interval based on the size of forwarding tables. If the DR member devices have small forwarding tables, reduce this interval. If the forwarding tables are large, increase this interval. Typically, set the data restoration interval to 300 seconds.

Increase the data restoration interval as needed for the following purposes:

· Avoid packet loss and forwarding failure that might occur when the amount of data is large or when you perform an ISSU between the DR member devices.

· Avoid DR interface flapping that might occur if type 1 configuration consistency check fails after the DR interfaces come up upon expiration of the data restoration interval.

Procedure

1. Enter system view.

system-view

2. Set the data restoration interval.

drni restore-delay value

By default, the data restoration interval is 30 seconds.

Enabling DRNI sequence number check

Restrictions and guidelines

As a best practice to improve security, use DRNI sequence number check together with DRNI packet authentication.

After one DR member device reboots, the other DR member device might accept replayed packets that an attacker captured before the reboot. As a best practice, change the authentication key after a DR member device reboots.
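To illustrate why a reboot weakens sequence number check, the Python sketch below uses a generic replay filter that rejects any sequence number that is not higher than the last one seen. This is not the DRNI algorithm. The class name, and the assumption that a rebooted peer restarts its numbering so the receiver must reset its state, are both hypothetical.

```python
from typing import Optional


class SequenceCheck:
    """Hypothetical receiver-side sequence number check (not the DRNI algorithm).

    Assumption: a rebooted peer restarts its sequence numbers, so the
    receiver must forget the last value it saw from that peer.
    """

    def __init__(self) -> None:
        self.last_seen: Optional[int] = None

    def accept(self, seq: int) -> bool:
        # Reject duplicates and out-of-date (replayed) sequence numbers.
        if self.last_seen is not None and seq <= self.last_seen:
            return False
        self.last_seen = seq
        return True

    def peer_rebooted(self) -> None:
        self.last_seen = None


check = SequenceCheck()
print([check.accept(s) for s in (1, 2, 3)])  # [True, True, True]
print(check.accept(2))  # False: replay rejected
check.peer_rebooted()
print(check.accept(1))  # True: packet from the rebooted peer
print(check.accept(2))  # True: a packet captured before the reboot is now accepted
```

Changing the authentication key after the reboot closes this gap, because packets captured before the reboot were authenticated with the old key.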

Procedure

1. Enter system view.

system-view

2. Enable DRNI sequence number check.

drni sequence enable

By default, DRNI sequence number check is disabled.

Enabling DRNI packet authentication

Restrictions and guidelines

For successful authentication, configure the same authentication key for the DR member devices.
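The Python sketch below shows why both DR member devices need the same key for keyed packet authentication. HMAC-SHA256 is a stand-in chosen for illustration only; this section does not specify the algorithm DRNI uses, and the key and packet values are hypothetical.

```python
import hashlib
import hmac


def sign(key: bytes, packet: bytes) -> bytes:
    # HMAC-SHA256 is a stand-in; the algorithm DRNI uses is not specified here.
    return hmac.new(key, packet, hashlib.sha256).digest()


def verify(key: bytes, packet: bytes, tag: bytes) -> bool:
    # Constant-time comparison of the expected and received tags.
    return hmac.compare_digest(sign(key, packet), tag)


packet = b"example DRNI packet"
tag = sign(b"key-on-device-a", packet)
print(verify(b"key-on-device-a", packet, tag))  # True: same key on both devices
print(verify(b"key-on-device-b", packet, tag))  # False: mismatched keys
```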

Procedure

1. Enter system view.

system-view

2. Enable DRNI packet authentication and configure an authentication key.

drni authentication key { simple | cipher } string

By default, DRNI packet authentication is disabled.

Displaying and maintaining DRNI

Execute display commands in any view and reset commands in user view.

| Task | Command |
|---|---|
| Display information about the configuration consistency check done by DRNI. | display drni consistency { type1 \| type2 } { global \| interface interface-type interface-number } |
| Display the configuration consistency check status. | display drni consistency-check status |
| Display DRCPDU statistics. | display drni drcp statistics [ interface interface-type interface-number ] |
| Display DR keepalive packet statistics. | display drni keepalive |
| Display detailed DRNI MAD information. | display drni mad verbose |
| Display DR role information. | display drni role |
| Display brief information about the IPP and DR interfaces. | display drni summary |
| Display the DR system settings. | display drni system |
| Display DRNI troubleshooting information. (Release 6616 and later.) | display drni troubleshooting [ dr \| ipp \| keepalive ] [ history ] [ count ] |
| Display detailed information about the IPP and DR interfaces. | display drni verbose [ interface bridge-aggregation interface-number ] |
| Display DRNI virtual IP addresses. | display drni virtual-ip [ interface interface-type interface-number ] |
| Clear DRCPDU statistics. | reset drni drcp statistics [ interface interface-list ] |
| Clear DRNI troubleshooting records. (Release 6616 and later.) | reset drni troubleshooting history |

DRNI configuration examples

Example: Configuring basic DRNI functions

Network configuration

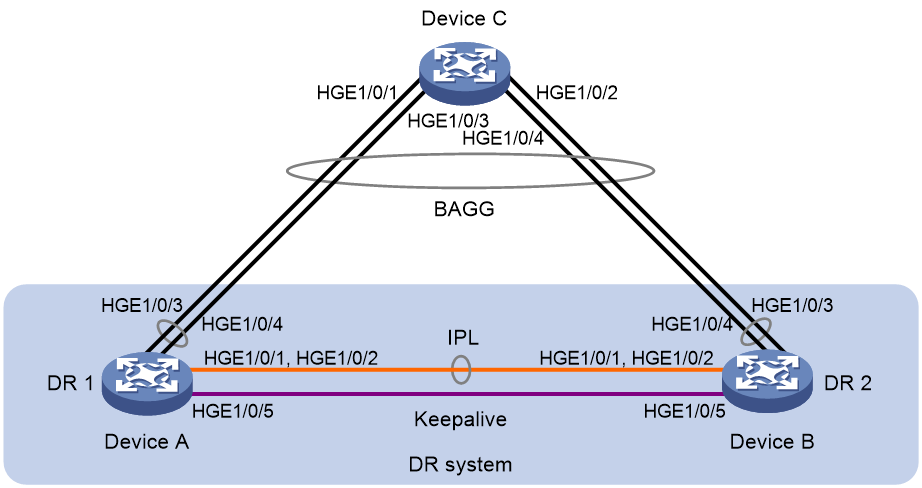

As shown in Figure 11, configure DRNI on Device A and Device B to establish a multichassis aggregate link with Device C.

Procedure

1. Configure Device A:

# Configure DR system settings.

<DeviceA> system-view

[DeviceA] drni system-mac 1-1-1

[DeviceA] drni system-number 1

[DeviceA] drni system-priority 123

# Configure DR keepalive packet parameters.

[DeviceA] drni keepalive ip destination 1.1.1.1 source 1.1.1.2

# Set the link mode of HundredGigE 1/0/5 to Layer 3, and assign the interface an IP address. The IP address will be used as the source IP address of keepalive packets.

[DeviceA] interface hundredgige 1/0/5

[DeviceA-HundredGigE1/0/5] port link-mode route

[DeviceA-HundredGigE1/0/5] ip address 1.1.1.2 24

[DeviceA-HundredGigE1/0/5] quit

# Exclude the interface used for DR keepalive detection (HundredGigE 1/0/5) from the shutdown action by DRNI MAD.

[DeviceA] drni mad exclude interface hundredgige 1/0/5

# Create Layer 2 dynamic aggregate interface Bridge-Aggregation 3.

[DeviceA] interface bridge-aggregation 3

[DeviceA-Bridge-Aggregation3] link-aggregation mode dynamic

[DeviceA-Bridge-Aggregation3] quit

# Assign HundredGigE 1/0/1 and HundredGigE 1/0/2 to aggregation group 3.

[DeviceA] interface hundredgige 1/0/1

[DeviceA-HundredGigE1/0/1] port link-aggregation group 3

[DeviceA-HundredGigE1/0/1] quit

[DeviceA] interface hundredgige 1/0/2

[DeviceA-HundredGigE1/0/2] port link-aggregation group 3

[DeviceA-HundredGigE1/0/2] quit

# Specify Bridge-Aggregation 3 as the IPP, and disable static source check on it.

[DeviceA] interface bridge-aggregation 3

[DeviceA-Bridge-Aggregation3] port drni intra-portal-port 1

[DeviceA-Bridge-Aggregation3] undo mac-address static source-check enable

[DeviceA-Bridge-Aggregation3] quit

# Create Layer 2 dynamic aggregate interface Bridge-Aggregation 4.

[DeviceA] interface bridge-aggregation 4

[DeviceA-Bridge-Aggregation4] link-aggregation mode dynamic

[DeviceA-Bridge-Aggregation4] quit

# Assign HundredGigE 1/0/3 and HundredGigE 1/0/4 to aggregation group 4.

[DeviceA] interface hundredgige 1/0/3

[DeviceA-HundredGigE1/0/3] port link-aggregation group 4

[DeviceA-HundredGigE1/0/3] quit

[DeviceA] interface hundredgige 1/0/4

[DeviceA-HundredGigE1/0/4] port link-aggregation group 4

[DeviceA-HundredGigE1/0/4] quit

# Assign Bridge-Aggregation 4 to DR group 4.

[DeviceA] interface bridge-aggregation 4

[DeviceA-Bridge-Aggregation4] port drni group 4

[DeviceA-Bridge-Aggregation4] quit

2. Configure Device B:

# Configure DR system settings.

<DeviceB> system-view

[DeviceB] drni system-mac 1-1-1

[DeviceB] drni system-number 2

[DeviceB] drni system-priority 123

# Configure DR keepalive packet parameters.

[DeviceB] drni keepalive ip destination 1.1.1.2 source 1.1.1.1

# Set the link mode of HundredGigE 1/0/5 to Layer 3, and assign the interface an IP address. The IP address will be used as the source IP address of keepalive packets.

[DeviceB] interface hundredgige 1/0/5

[DeviceB-HundredGigE1/0/5] port link-mode route

[DeviceB-HundredGigE1/0/5] ip address 1.1.1.1 24

[DeviceB-HundredGigE1/0/5] quit

# Exclude the interface used for DR keepalive detection (HundredGigE 1/0/5) from the shutdown action by DRNI MAD.