- Released At: 12-10-2023

- Page Views:

- Downloads:

- Table of Contents

- Related Documents

-

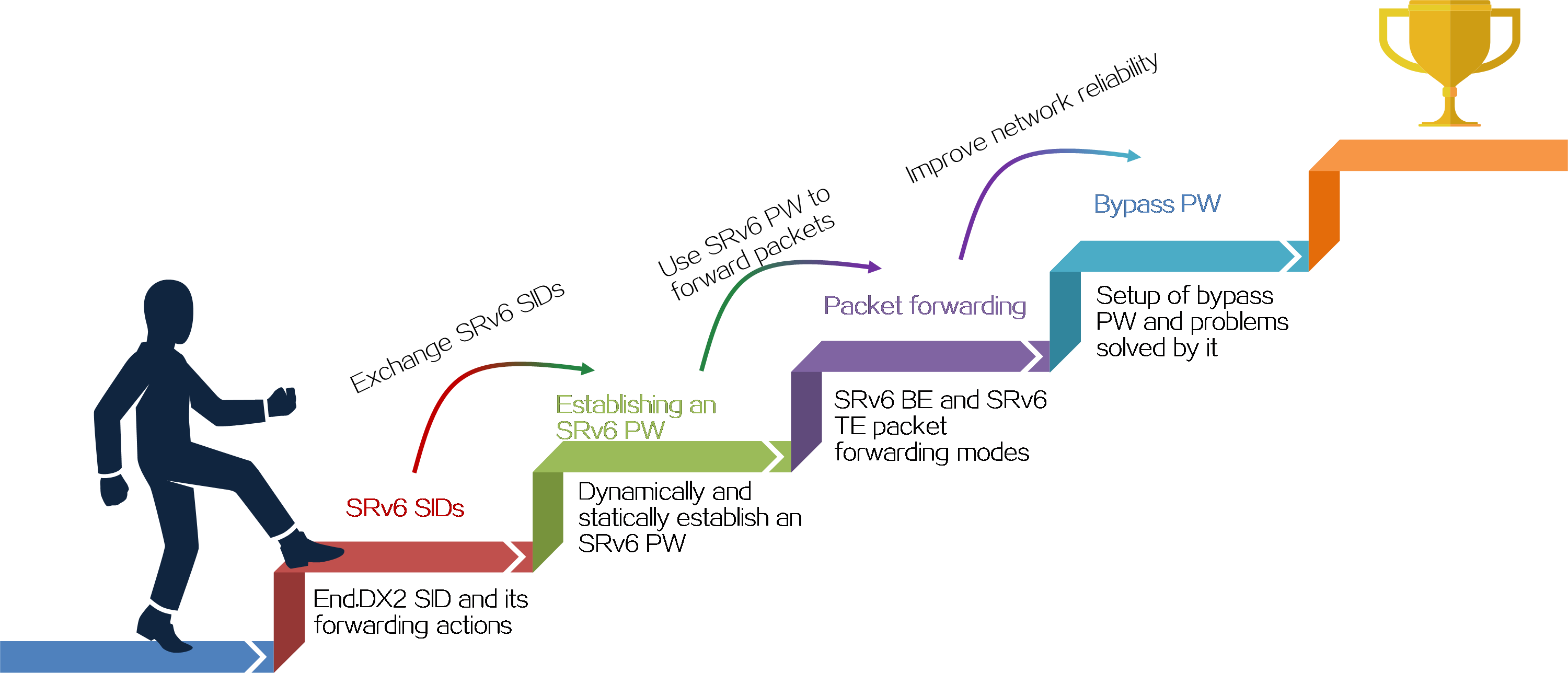

3 Technical advancement roadmap

4.8 SRv6 and MPLS interworking

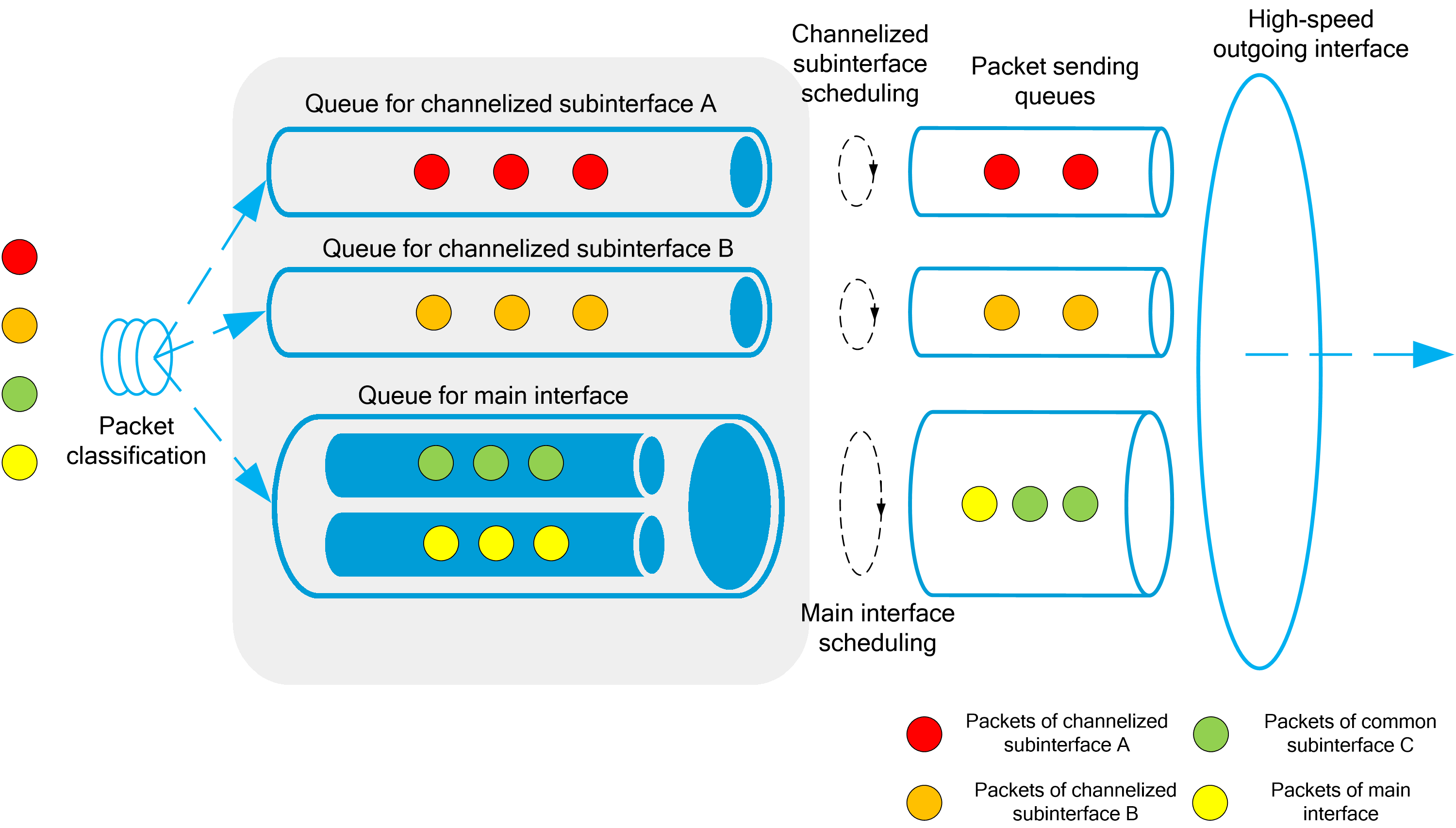

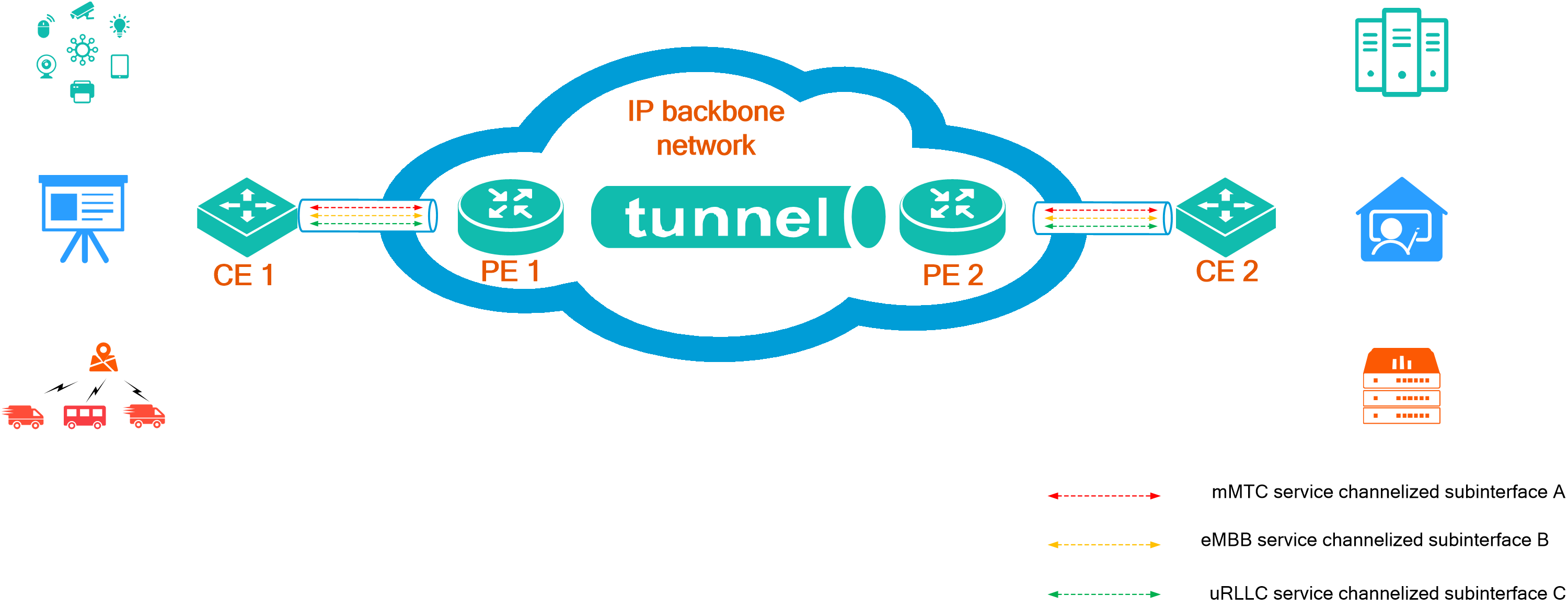

4.12 Subinterface channelization

4.15 Slice ID-based network slicing

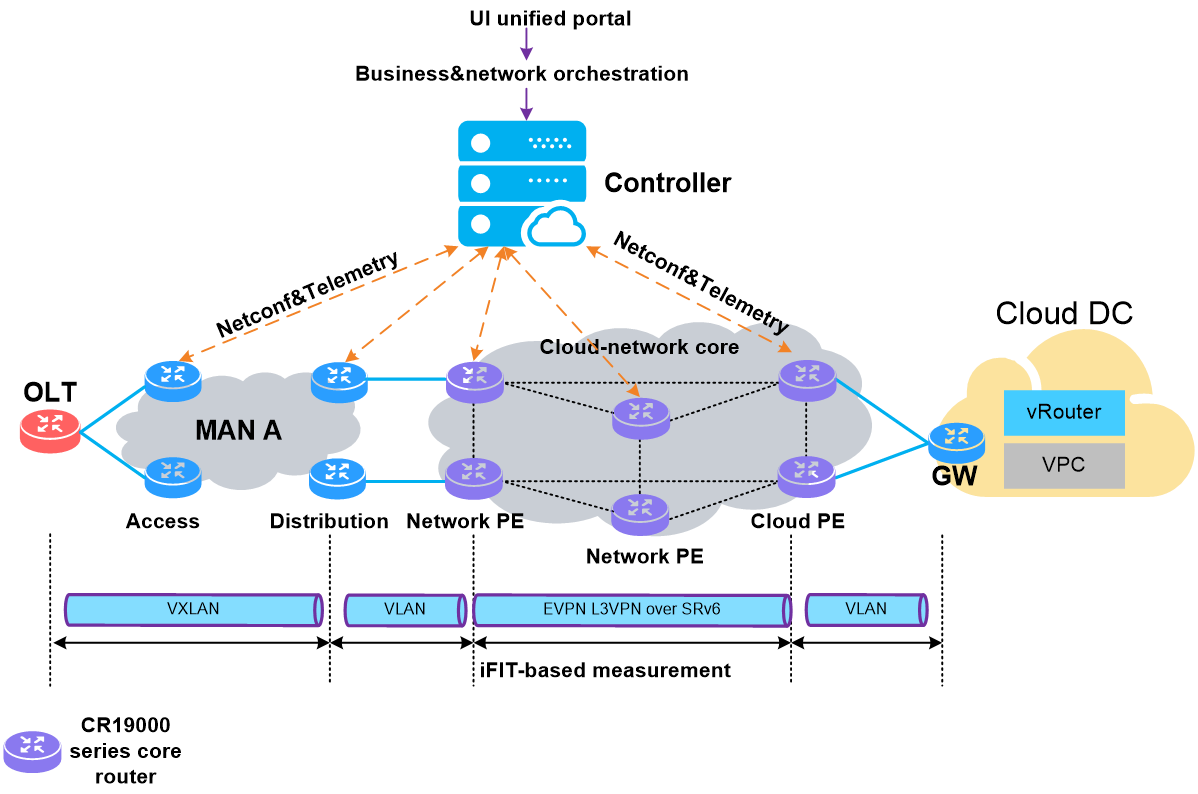

5.1 IPv6+ carrier cloud-network convergence solution

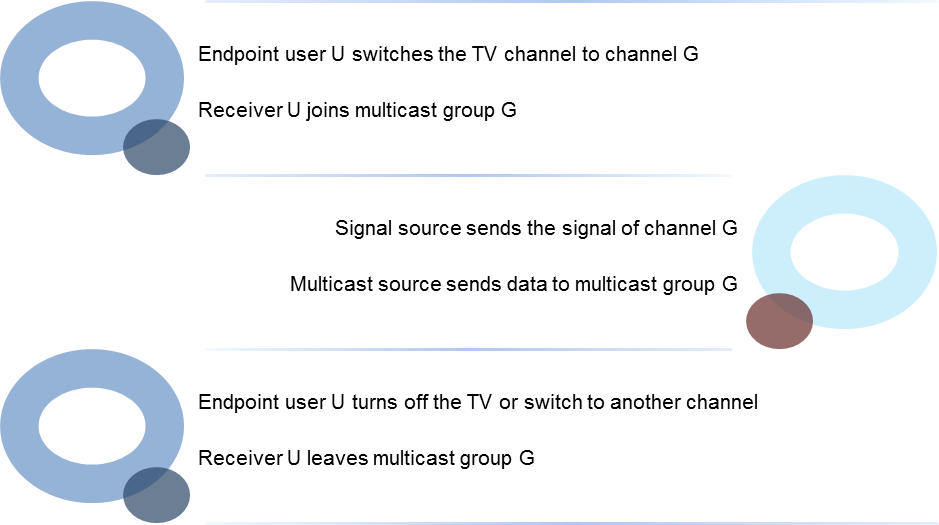

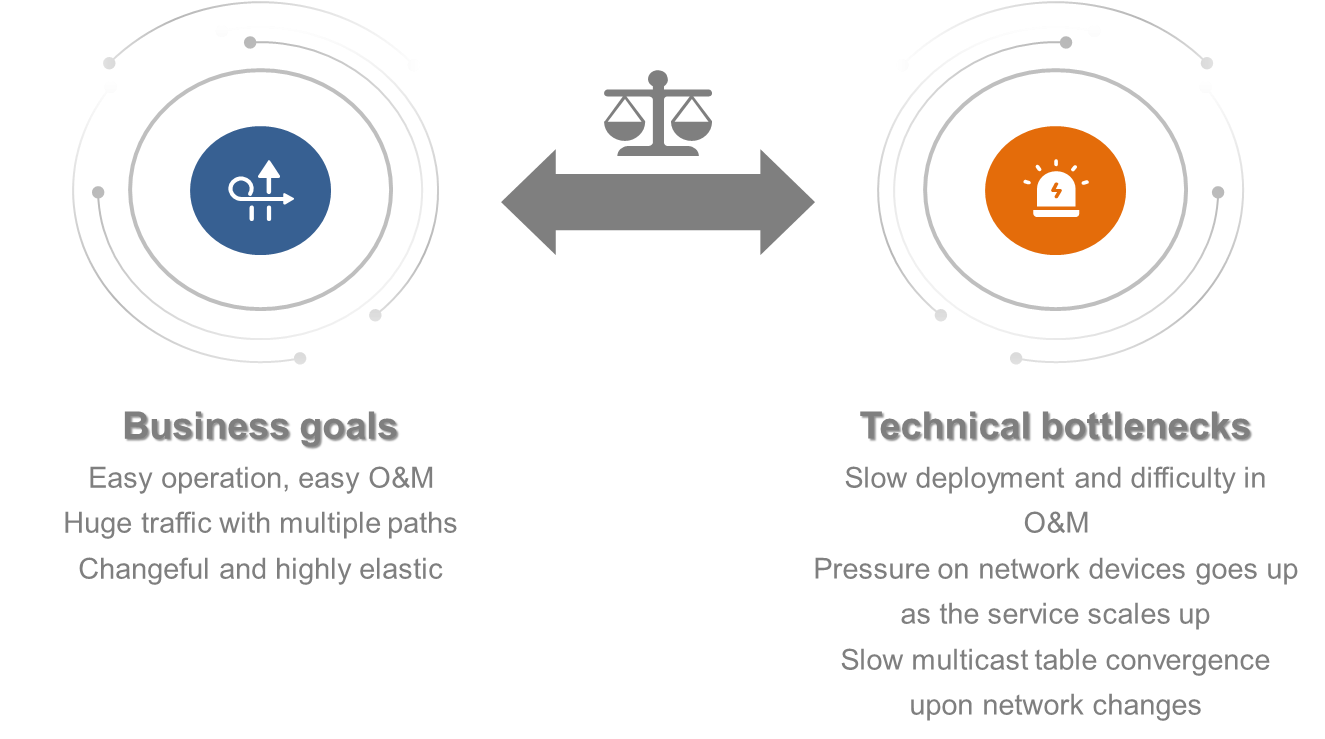

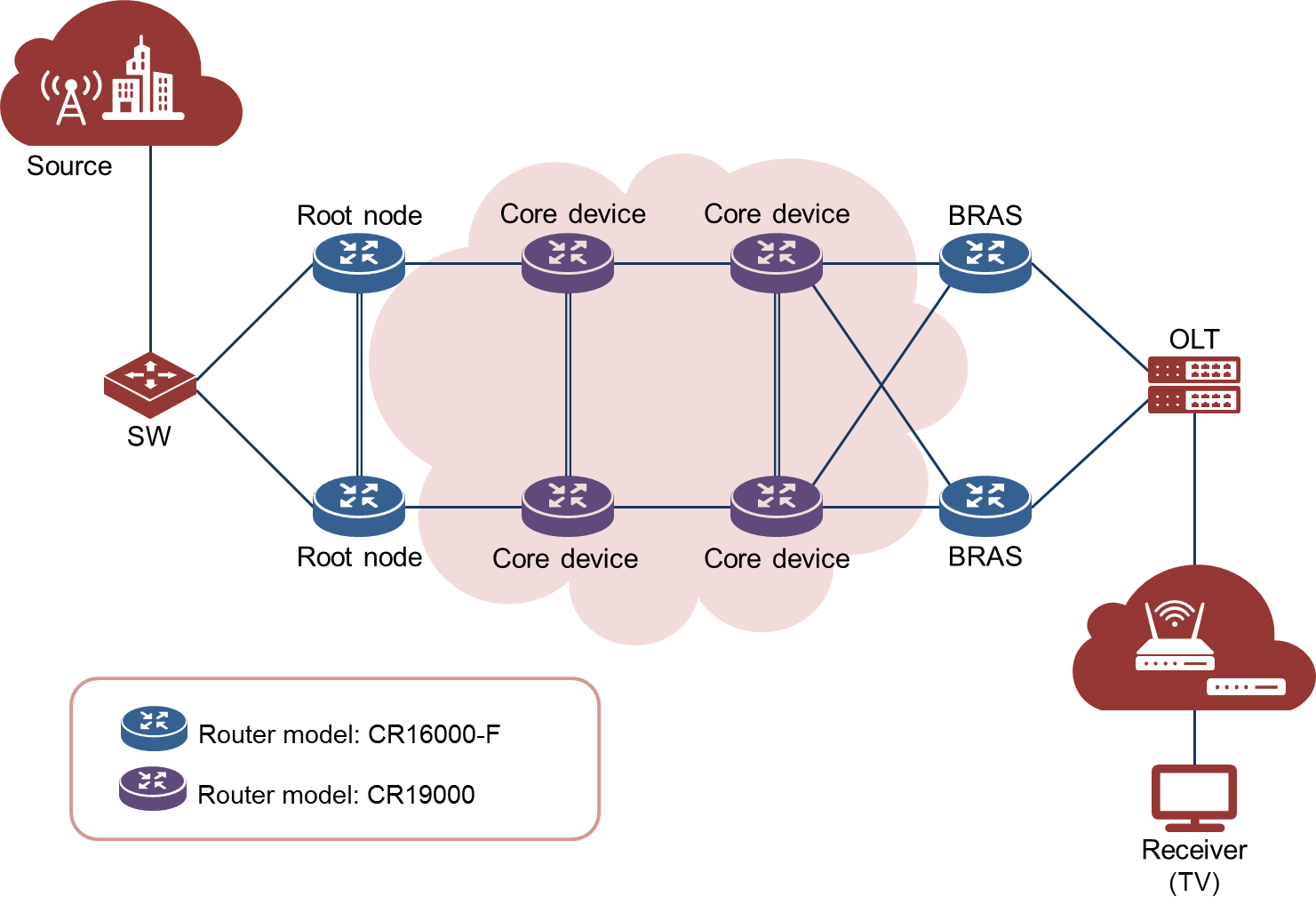

5.2 IPv6+ carrier cloud IPTV solution

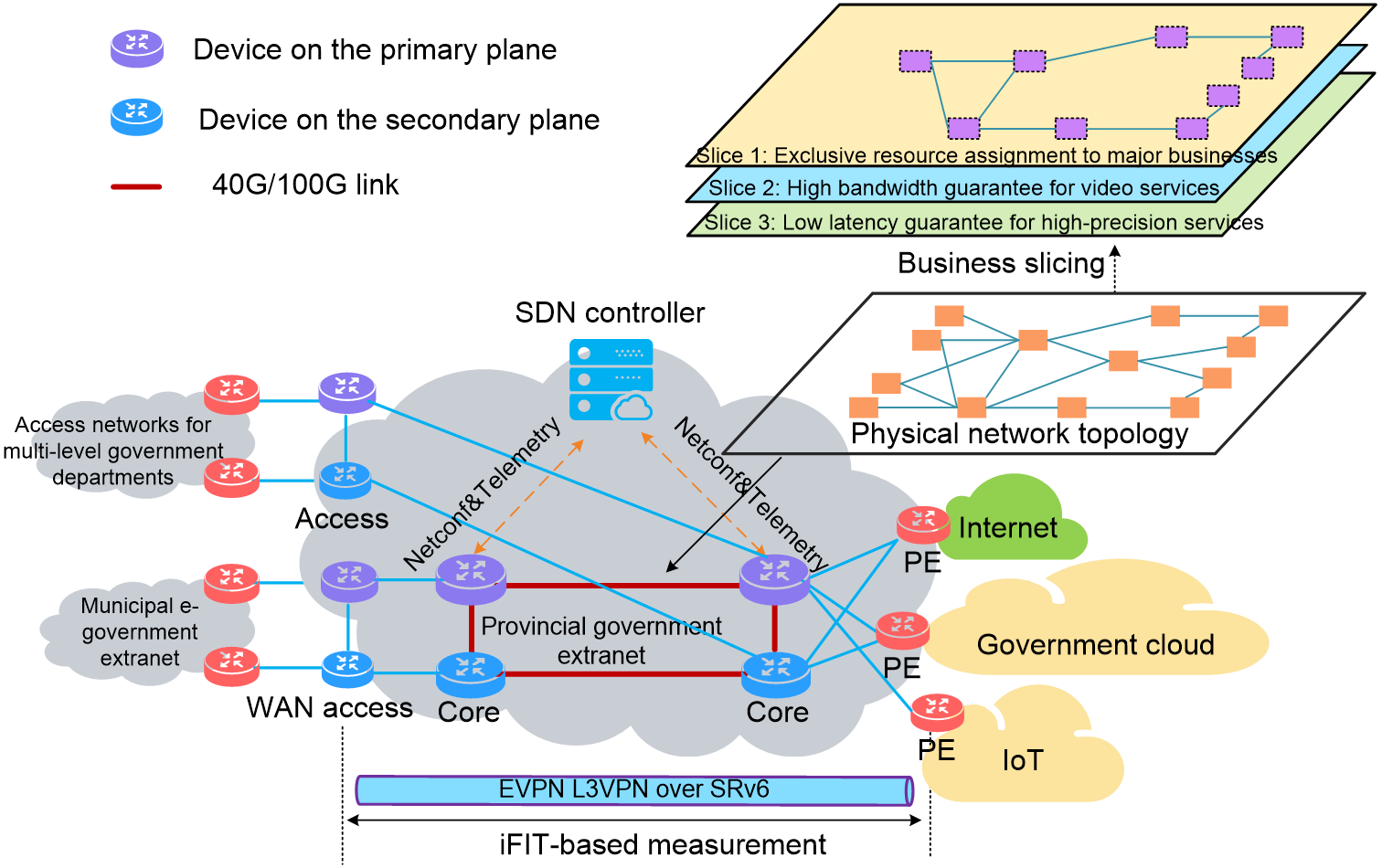

5.3 IPv6+ e-government extranet solution

1 Technical background

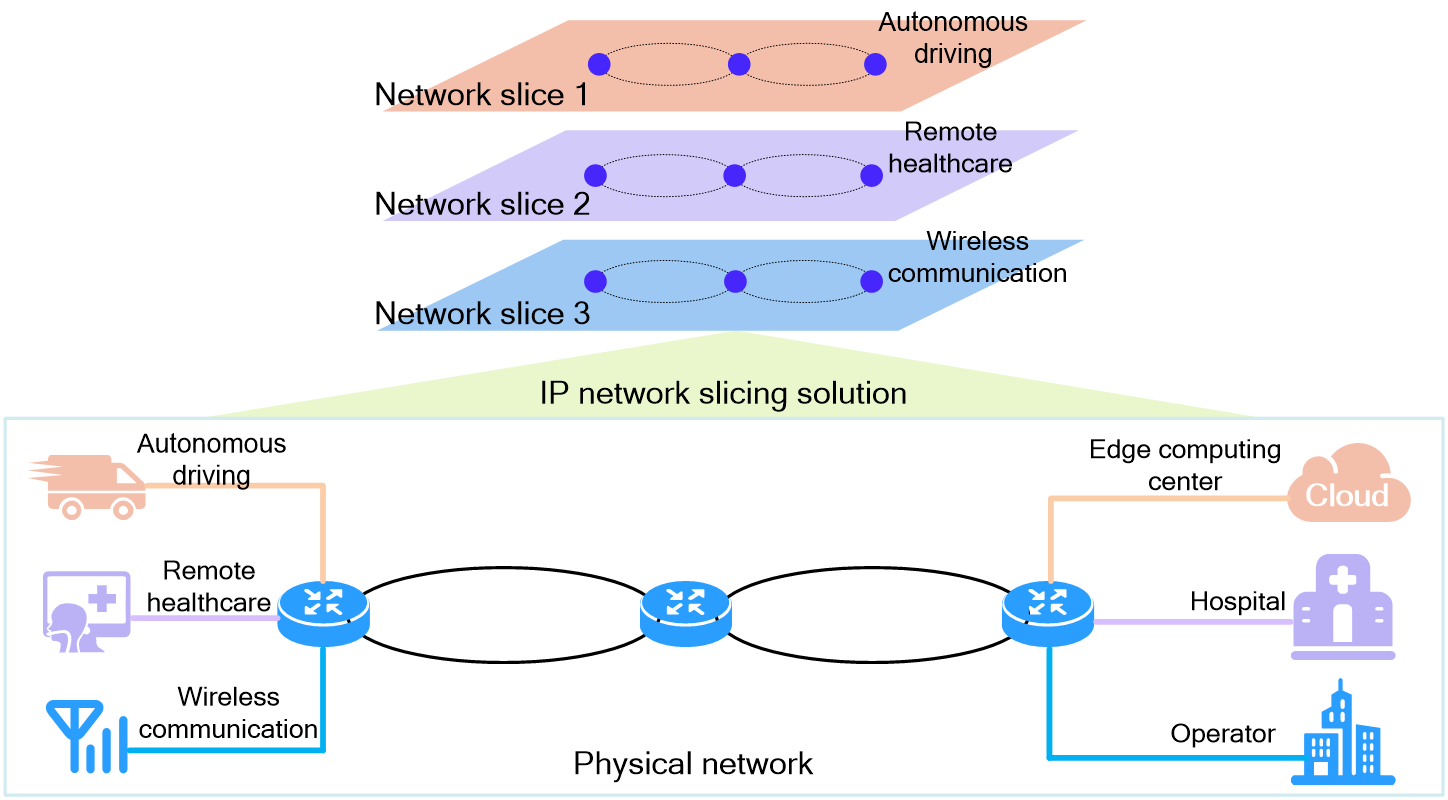

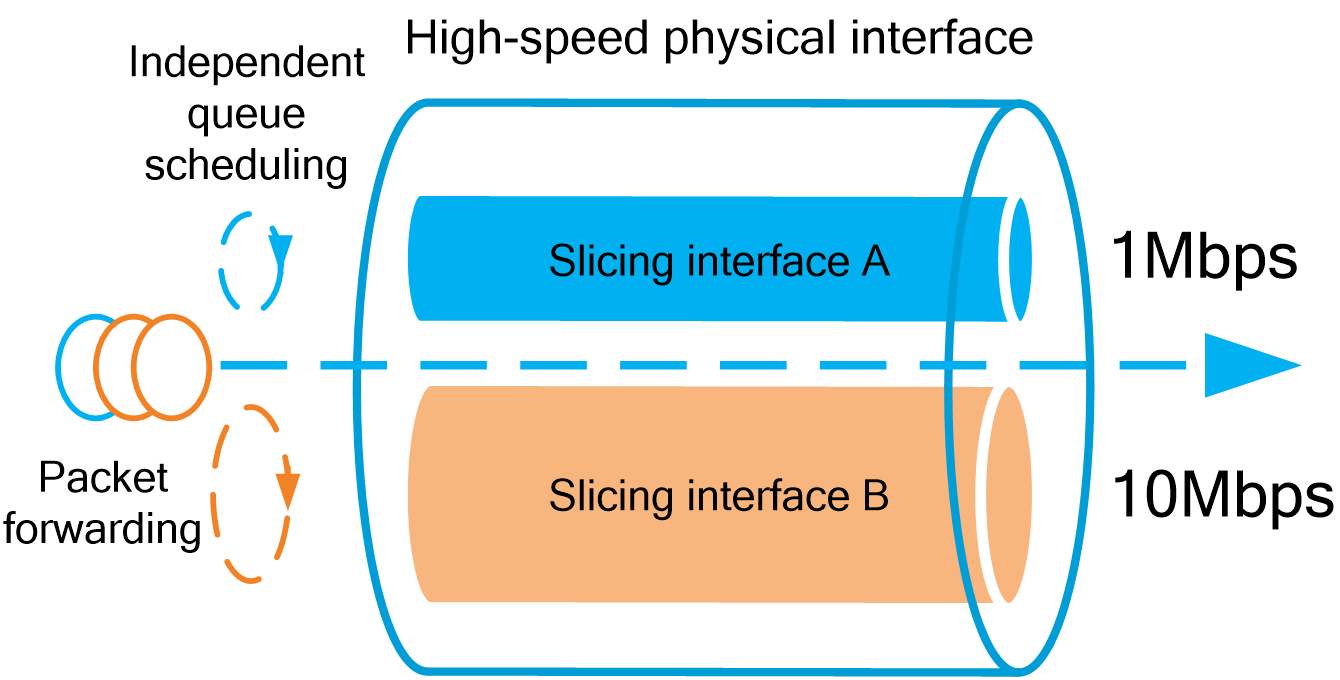

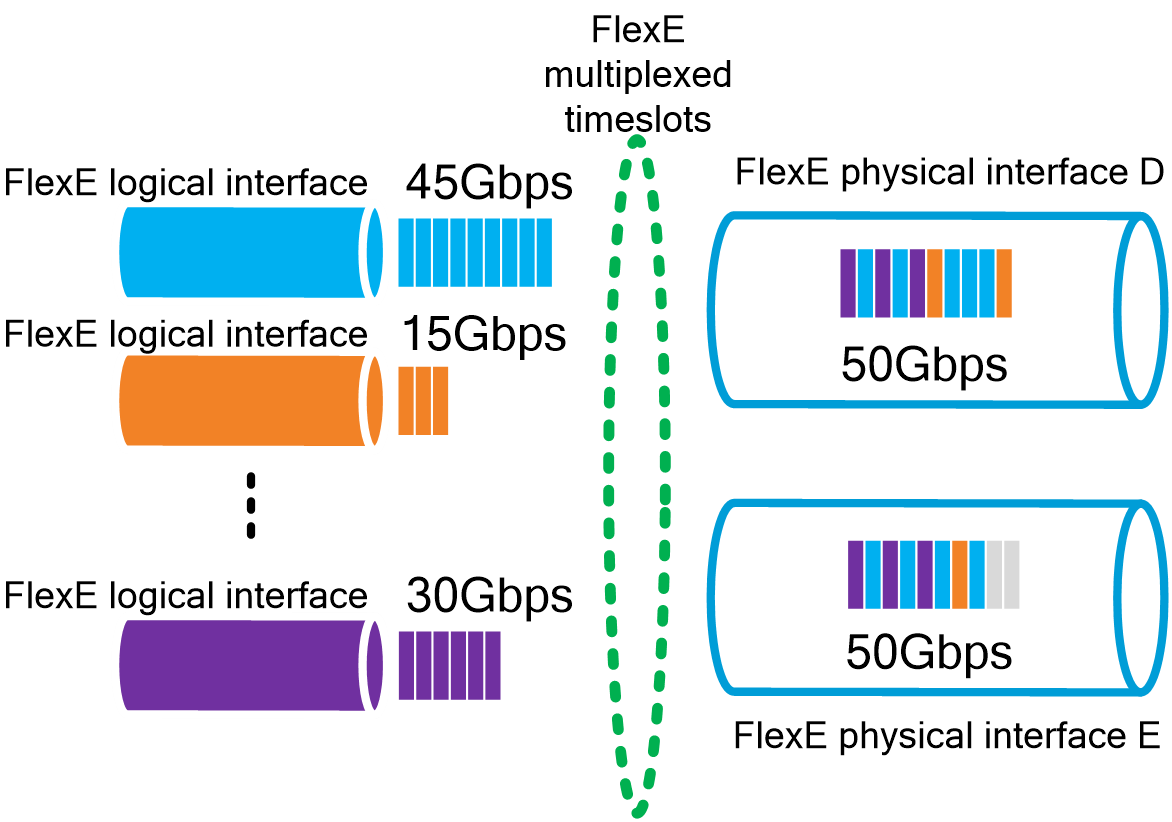

The rapid development of emerging fields such as 5G, Internet of Things (IoT), and cloud computing has led to a significant increase in network scale, complexity, business diversity, and demand for intelligence, posing new challenges to network technologies.

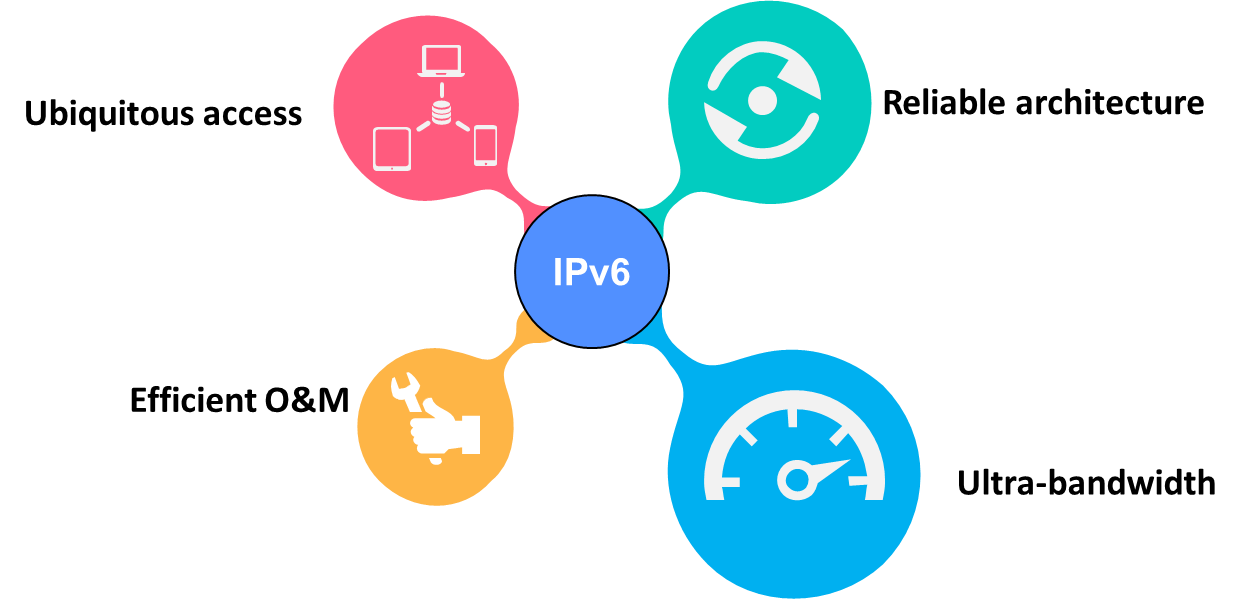

The first-generation network layer protocol, IPv4, has a limited address space, which cannot meet the requirements of IoT. The second-generation network layer protocol, IPv6, uses 128-bit addresses and can provide 3.4×10^38 addresses, enabling broader connections for the Internet and IoT, serving as the foundation for the Internet of Everything (IoE). However, IoE is far from enough in the context of booming new businesses. Business differentiation and AIOps must also be considered.

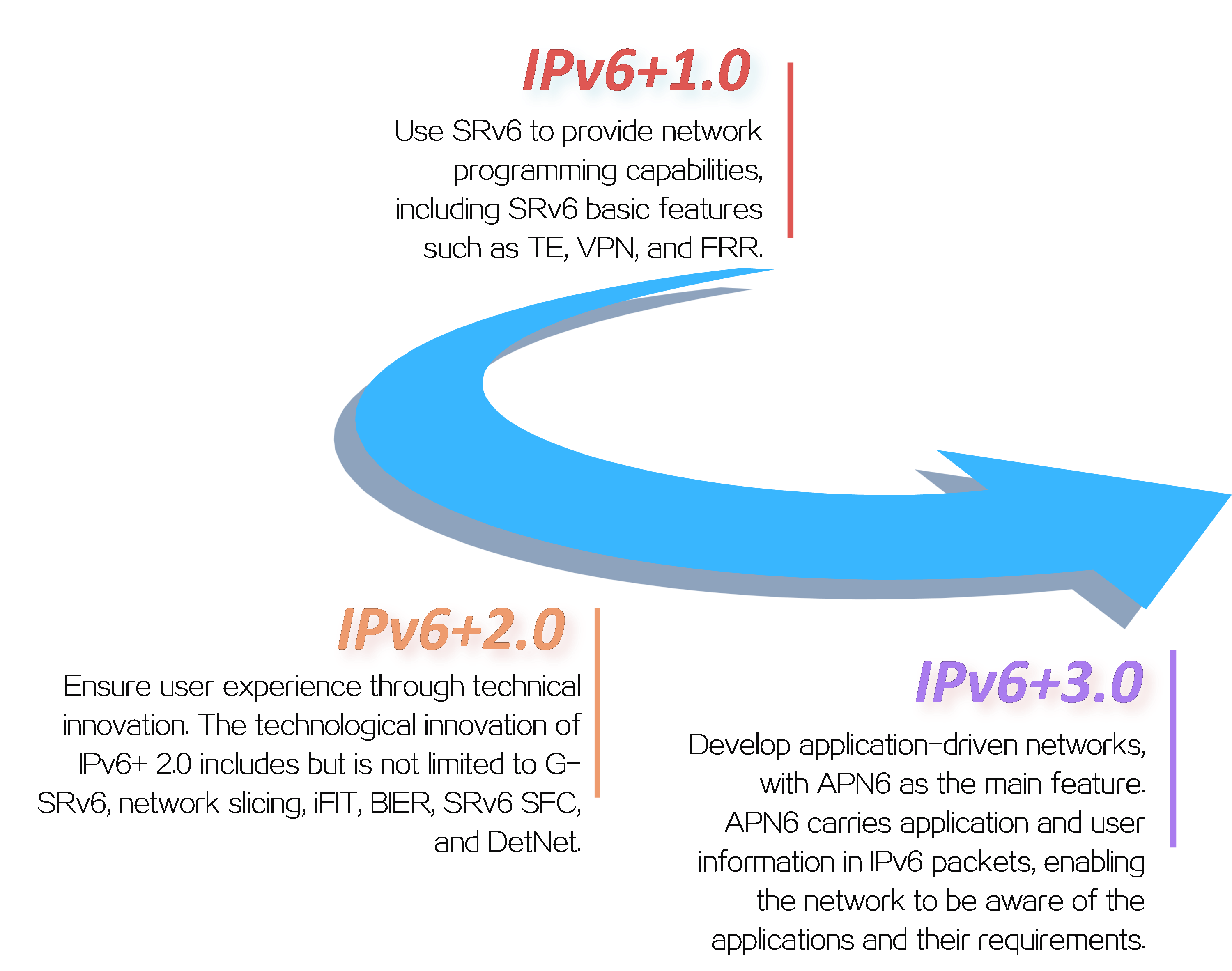

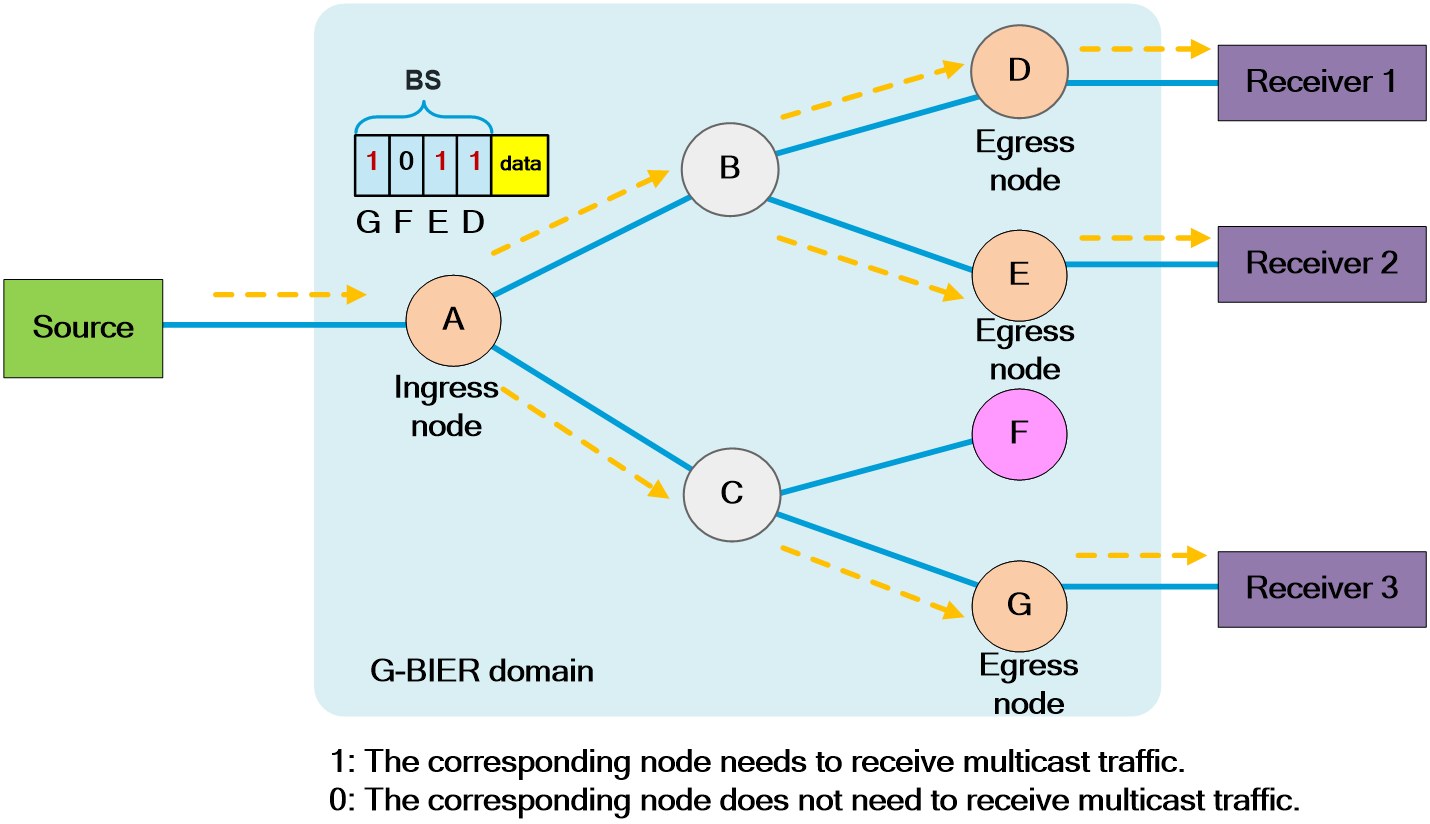

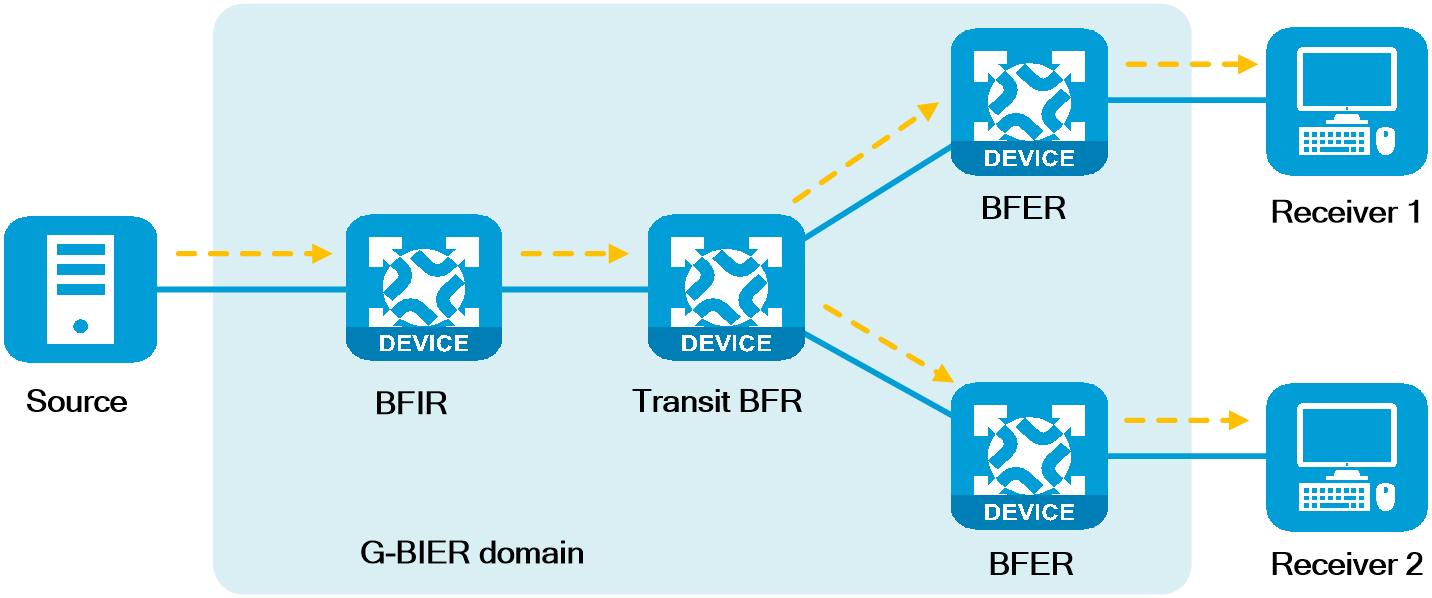

IPv6+ has made significant innovations based on the IPv6 protocol, such as SRv6, network slicing, in-band network telemetry (iFIT), new multicast (BIER), service chaining (SRv6 SFC), deterministic networking (DetNet), and application-aware networking (APN6). It has also added new features such as intelligent identification and control.

IPv6+ is an intelligent IP technology for the 5G and cloud era, featuring programmable paths, rapid service provisioning, automated Ops, quality visualization, SLA guarantee, and application awareness. IPv6+ enables transformation from IoE to the Internet of Intelligent Things (IIoT), driving digital transformation across industries.

Figure1 IPv6+ development

2 Technical benefits

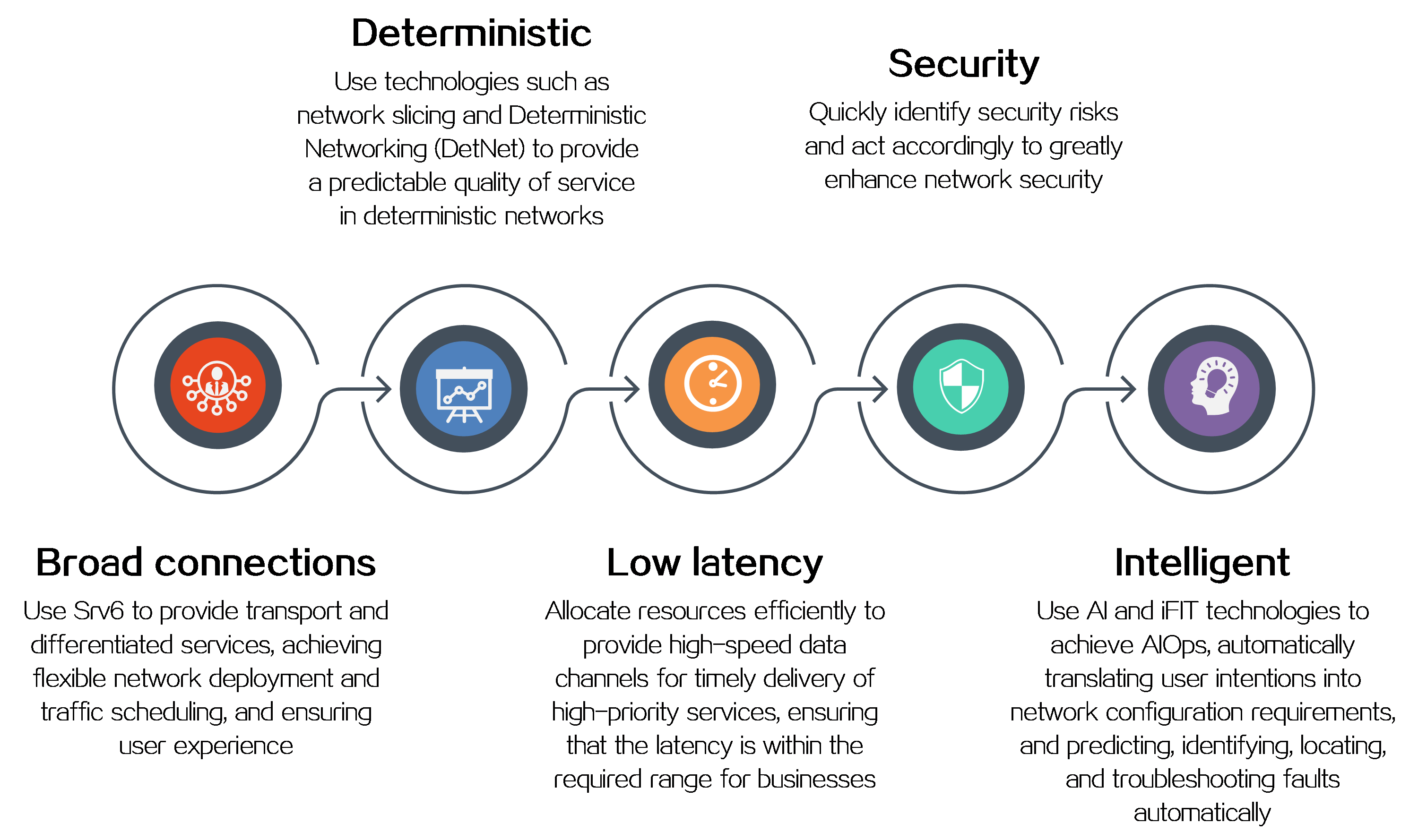

IPv6+ not only provides a large address space and flexible expansion but also enhances the capabilities of IP networks in the following aspects as shown in Figure2 .

Figure2 IPv6+ technical benefits

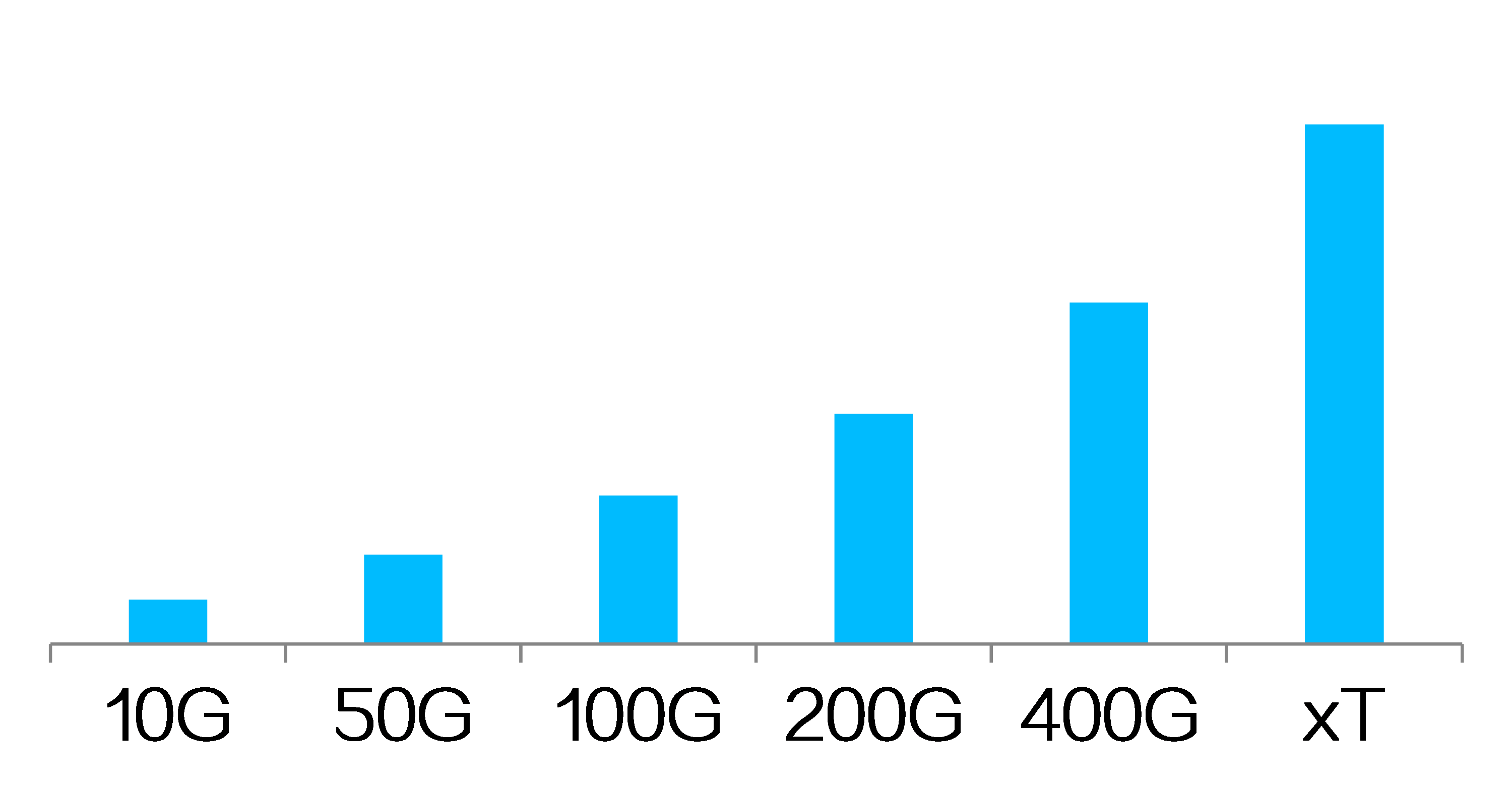

3 Technical advancement roadmap

Figure3 IPv6+ technical advancement roadmap

H3C has basically implemented IPv6+ 1.0, IPv6+ 2.0, and IPv6+ 3.0, and is closely following the development trend of IPv6+ technology for continuous evolution. With IPv6+ technology, H3C can help customers address the challenges of future network technologies, ensuring efficient, secure, and smooth network communication. At the same time, H3C will continue to be committed to the development and popularization of IPv6+ technology to meet the customers’ changing needs and help drive digital transformation of enterprises.

4 Key technologies

|

|

4.1 SRv6 overview

About SRv6

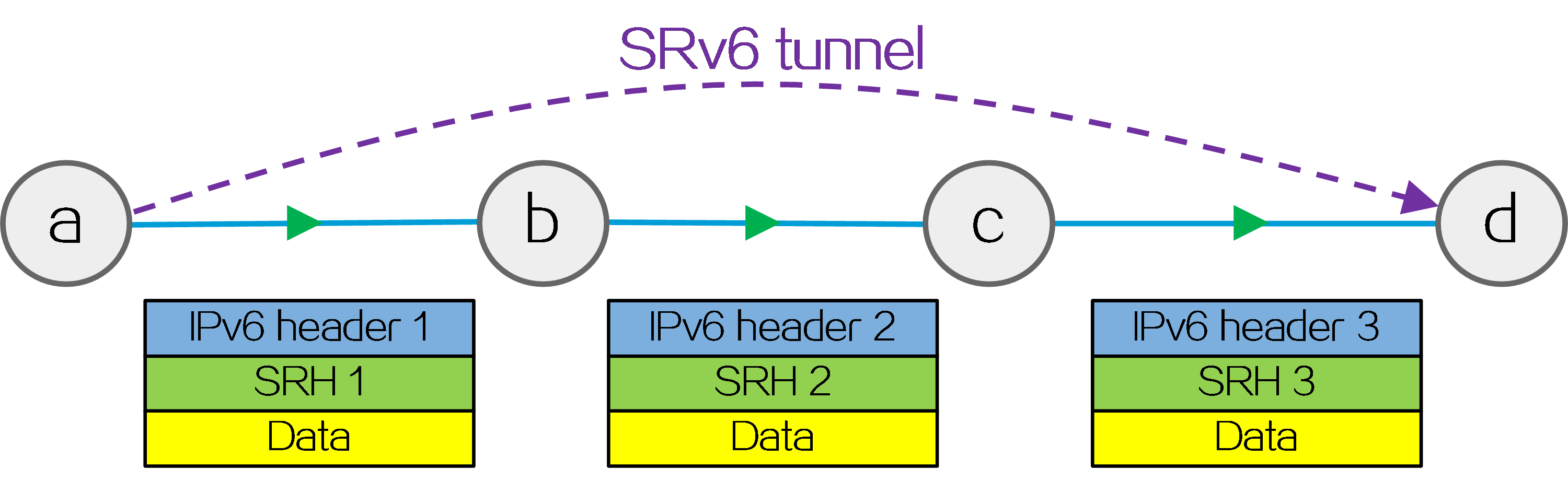

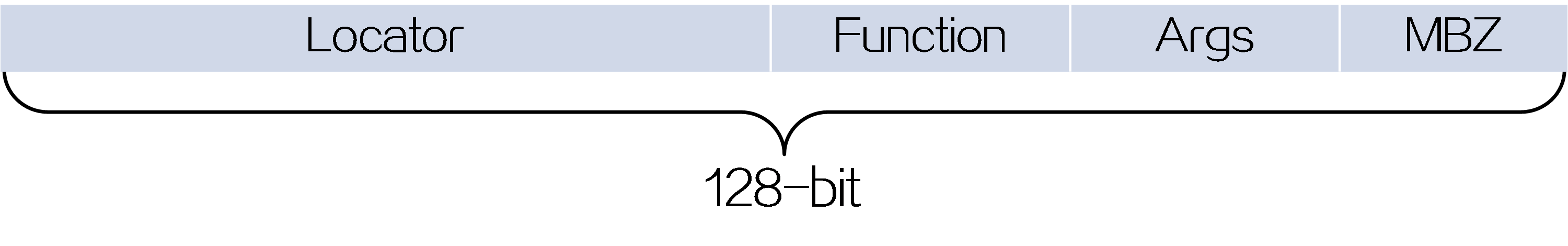

Segment Routing (SR) is a source routing technology. The source node selects a path for packet forwarding, and then encodes the path in the packet header as an ordered list of segments. Each segment is identified by the Segment Identifier (SID). The SR nodes along the path forward the packets based on the SIDs in the packets. Only the source node needs to maintain the path status.

IPv6 SR (SRv6) uses IPv6 addresses as SIDs to forward packets.

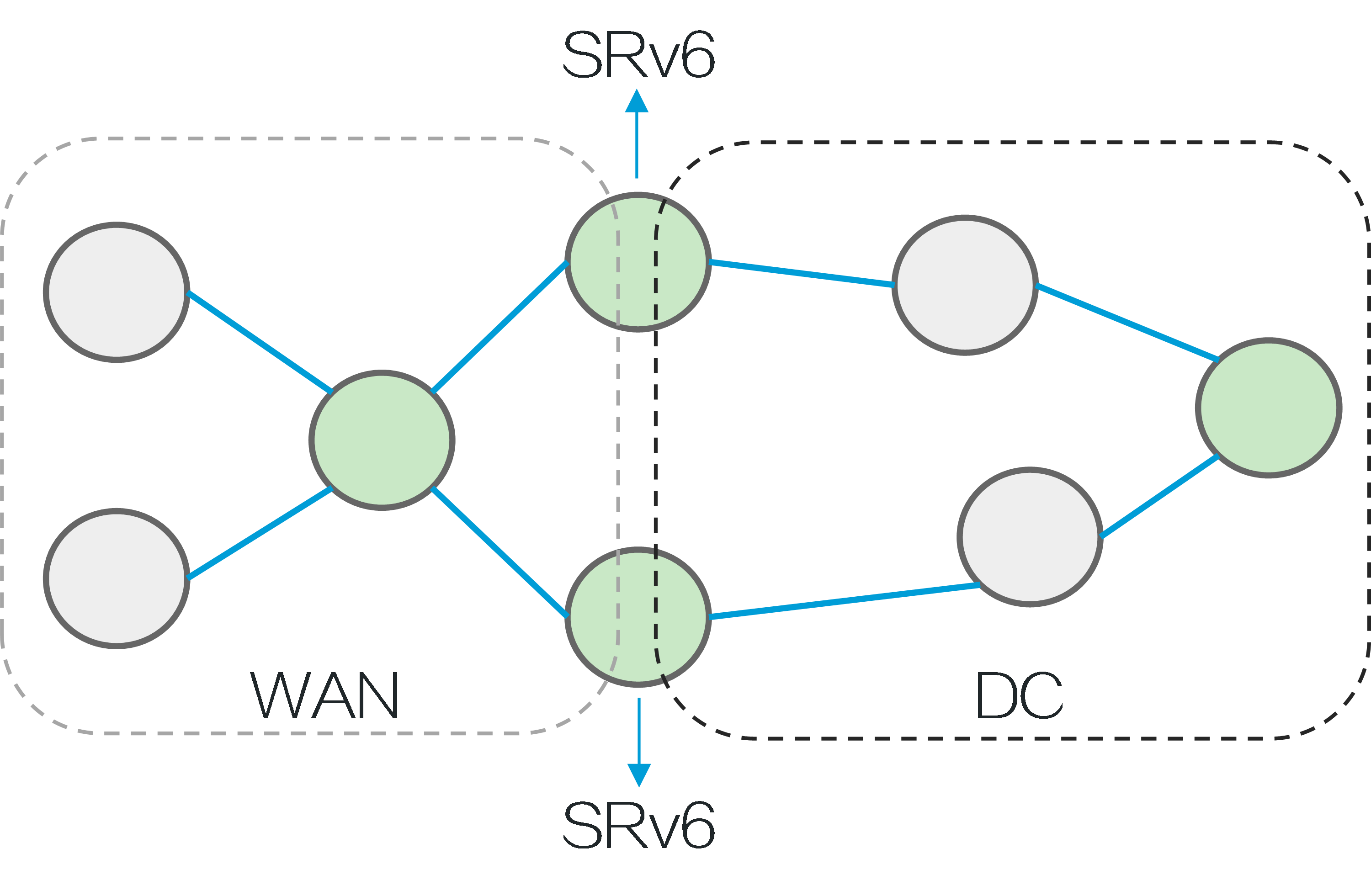

Figure4 SRv6 tunnel

Benefits

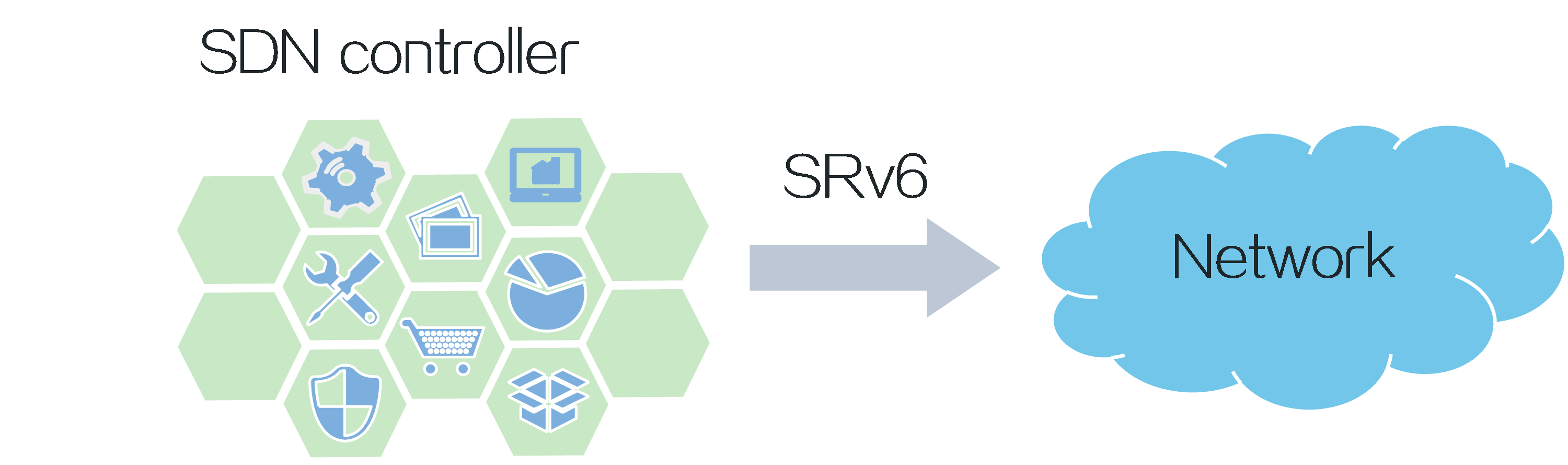

Smart control

SRv6, designed based on the SDN architecture, bridges the gap between applications and networks, enabling application-driven networking. In SRv6, forwarding paths, forwarding behaviors, and service types are all controllable.

Figure5 SRv6 architecture

Easy deployment

SRv6 is implemented based on IGP and BGP extensions, eliminating the need for MPLS labels and label distribution protocols, simplifying configuration.

In an SRv6 network, new services can be deployed without the need to upgrade a large number of network devices. For example, in data centers and WANs, new services can be deployed as long as the edge devices and specific network nodes support SRv6 and the other devices support IPv6.

Figure6 SRv6 deployment

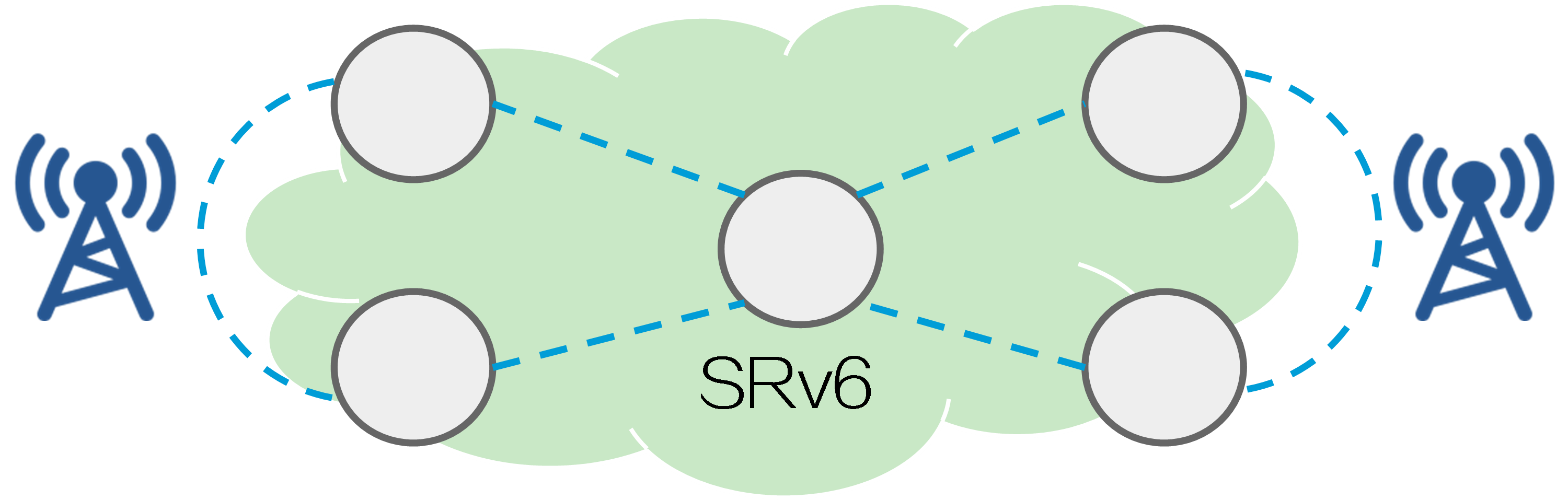

Adaptive to 5G services

With the development of 5G services, IPv4 addresses are no longer sufficient to meet the network requirements of service providers. You can deploy SRv6 in service provider networks to enable all devices to forward traffic through IPv6 addresses, addressing the requirements of 5G services.

Figure7 Deploying SRv6 on service provider networks

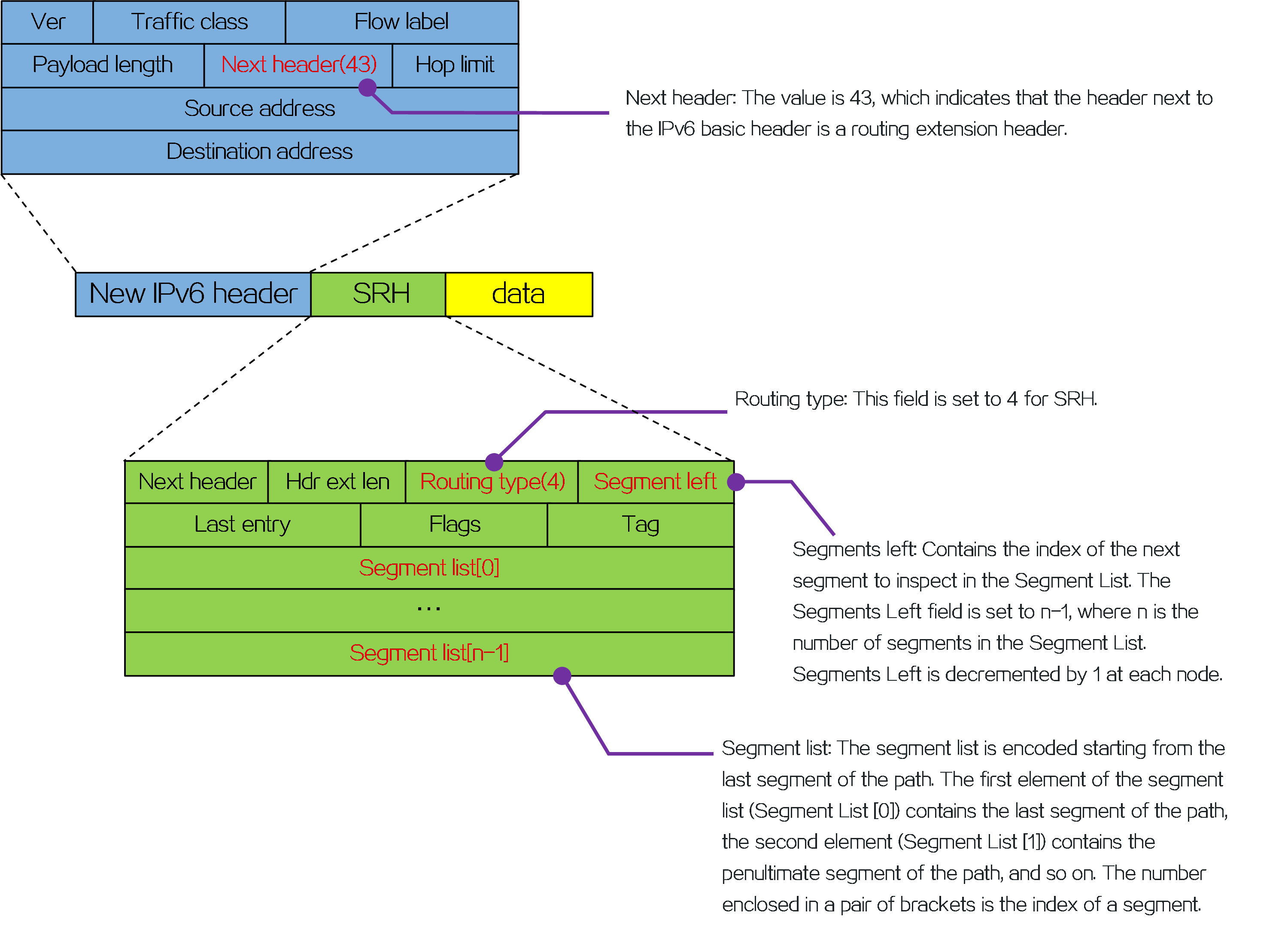

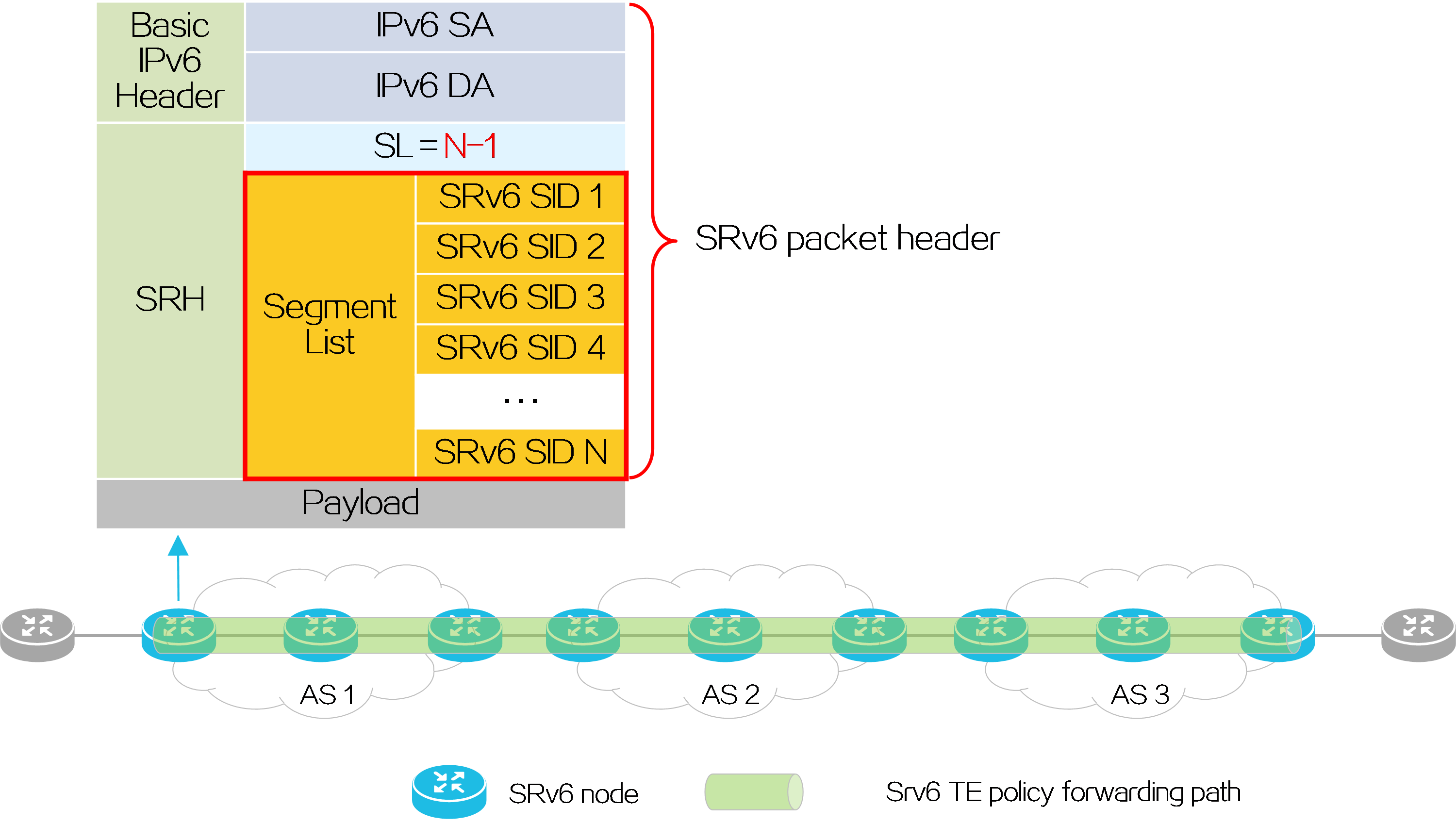

Packet encapsulation

An outer IPv6 header and a Segment Routing Header (SRH) are added to the original data packet to form an SRv6 packet.

Figure8 SRv6 packet encapsulation

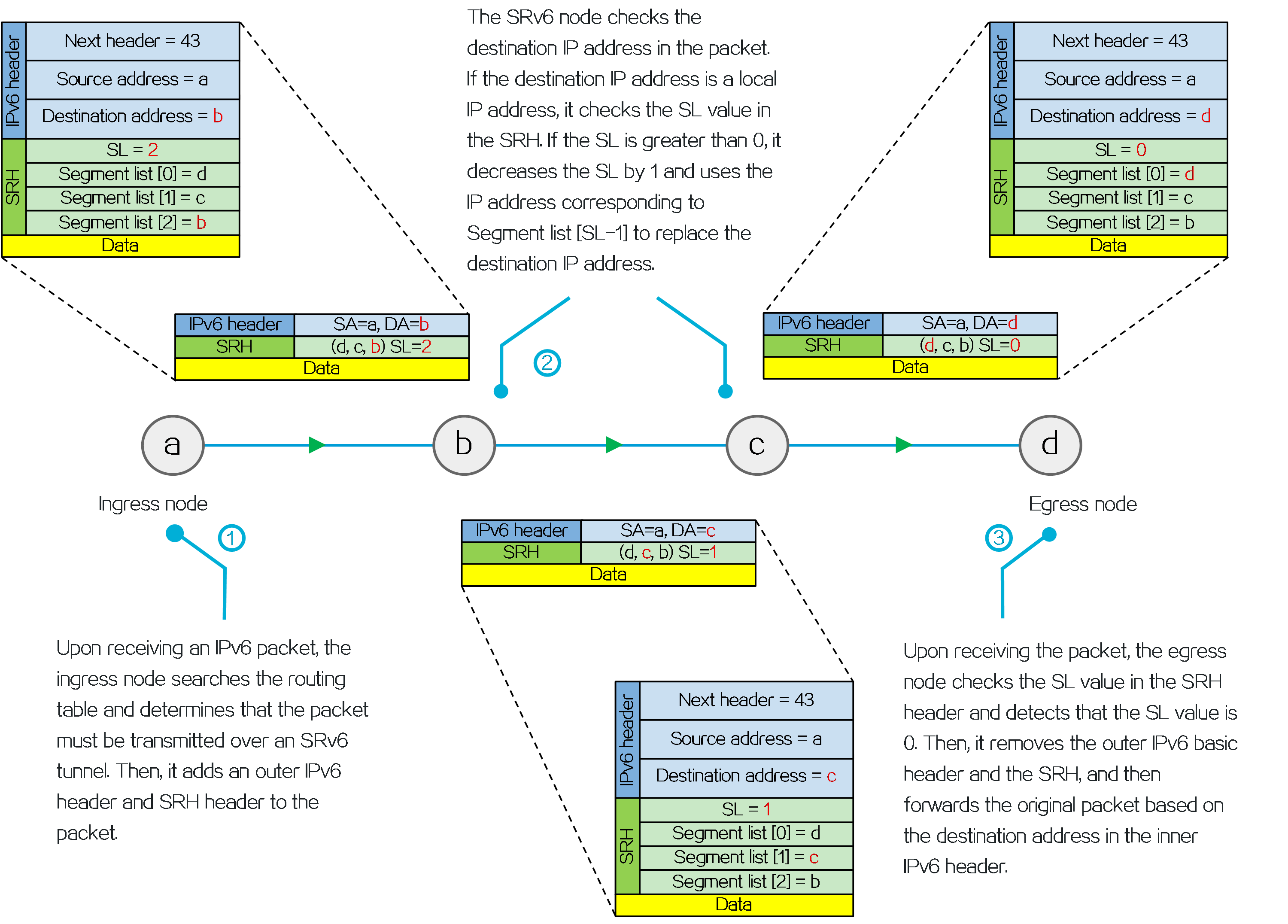

Operating mechanism

Figure9 SRv6 operating mechanism

4.2 SRv6 TE Policy

About SRv6 TE policy

An SRv6 TE policy is a flexible forwarding policy that allows for the selection of appropriate forwarding paths based on service requirements. An SRv6 TE policy consists of multiple forwarding paths, which can be used for load balancing and backup between the paths.

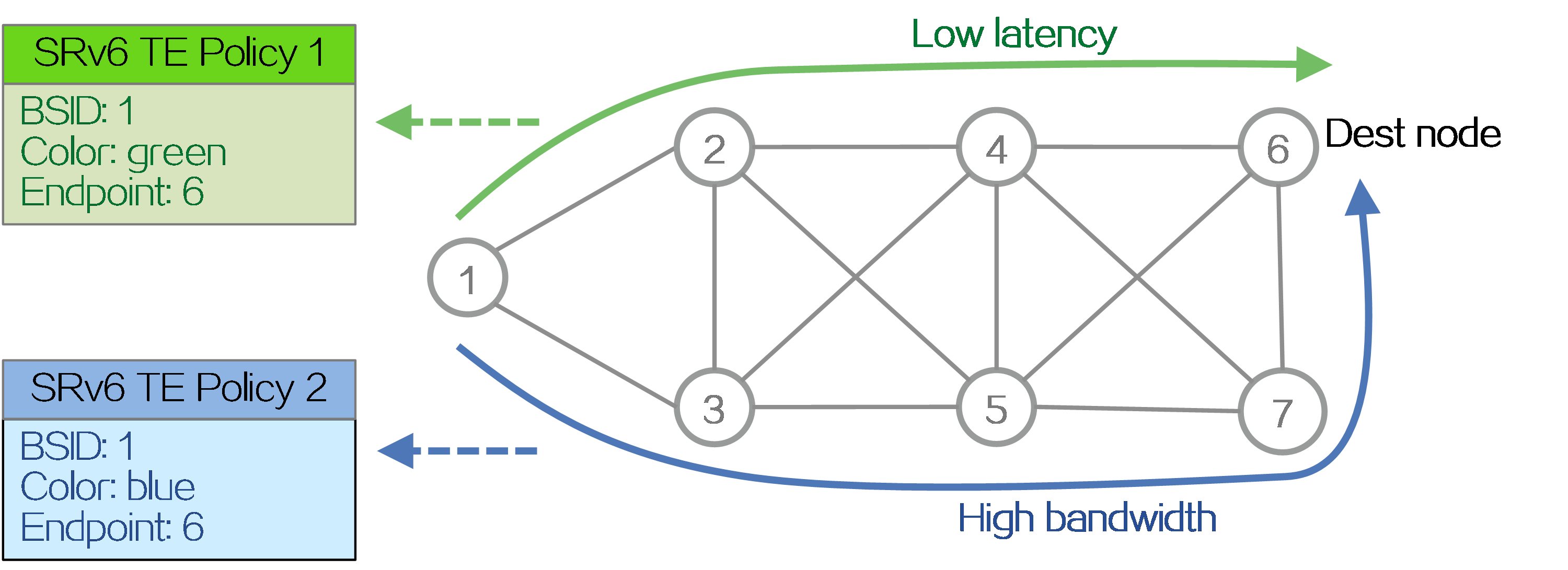

An SRv6 TE policy can be identified through the following sections:

· BSID—SID of the ingress node (source node), used to steer the traffic to the forwarding path in the SRv6 TE policy.

· Color—Color attribute for the SRv6 TE policy, used to distinguish an SRv6 TE policy from other SRv6 TE policies that are configured for the same source and destination nodes. Color attributes can represent different quality of service requirements, such as low latency, high bandwidth, etc. Administrators can assign different color attributes to different SRv6 TE policies based on service types.

· Endpoint—IPv6 address of the destination node.

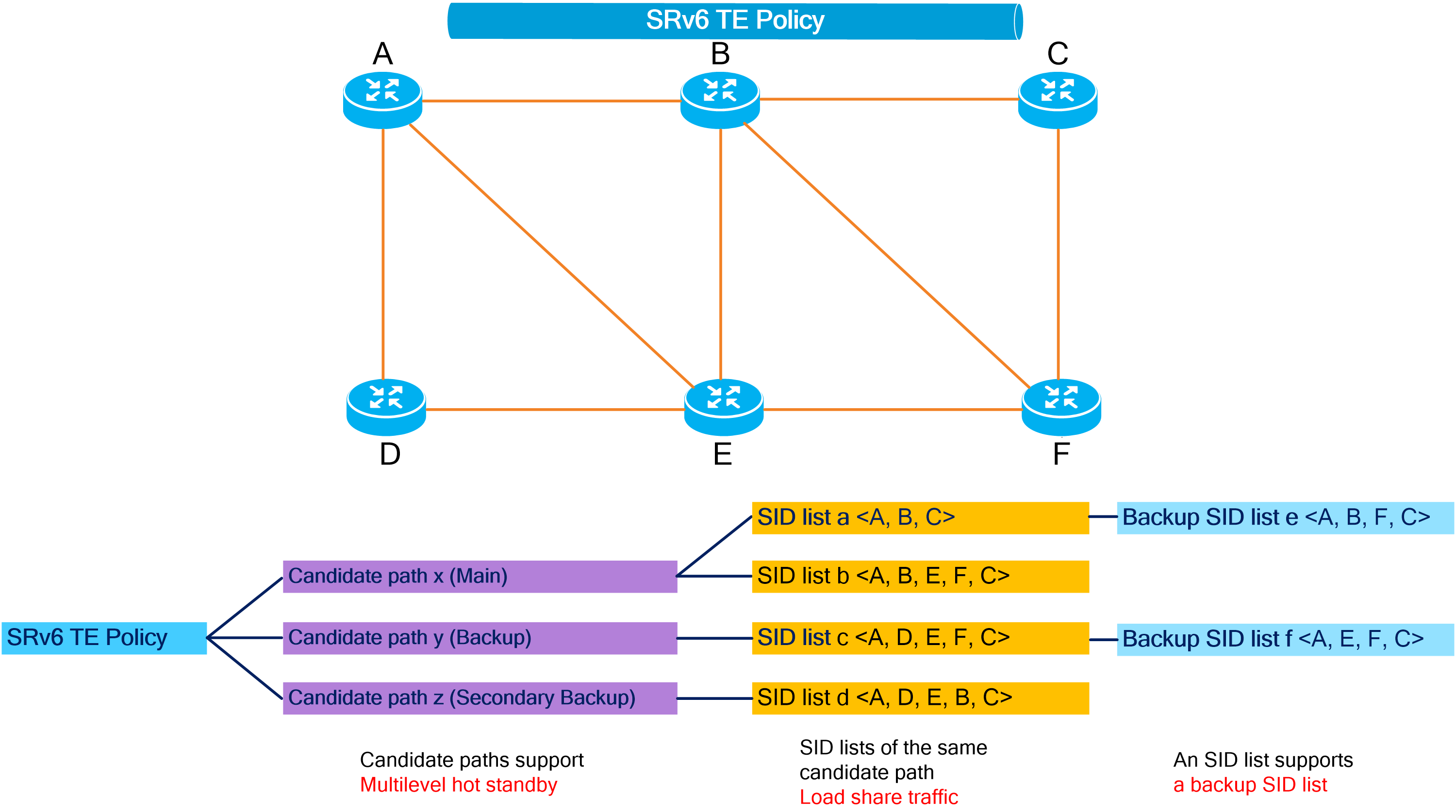

Figure10 SRv6 TE policy forwarding paths

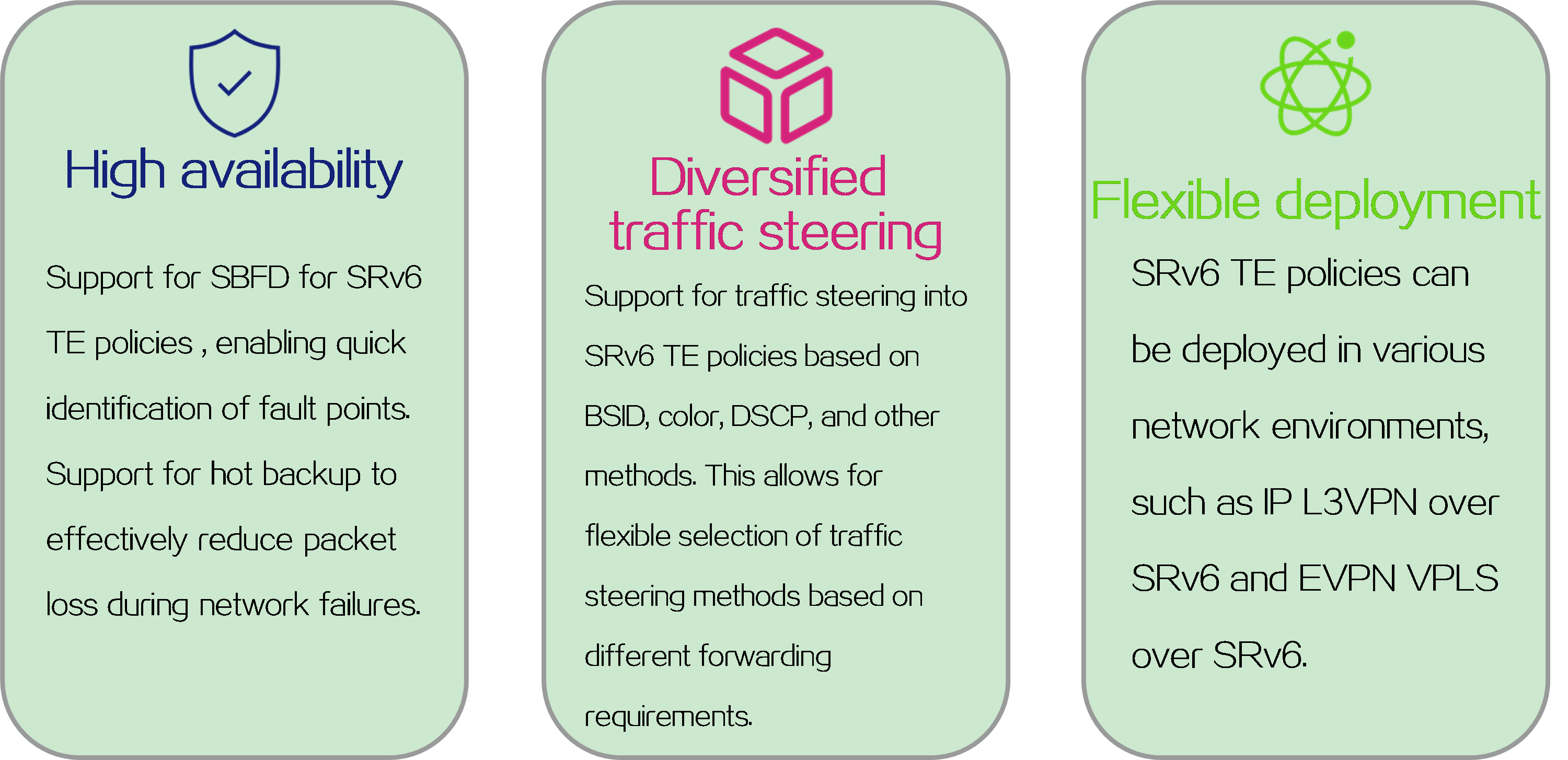

Technical benefits

Figure11 Technical benefits of SRv6 TE policy

Network model

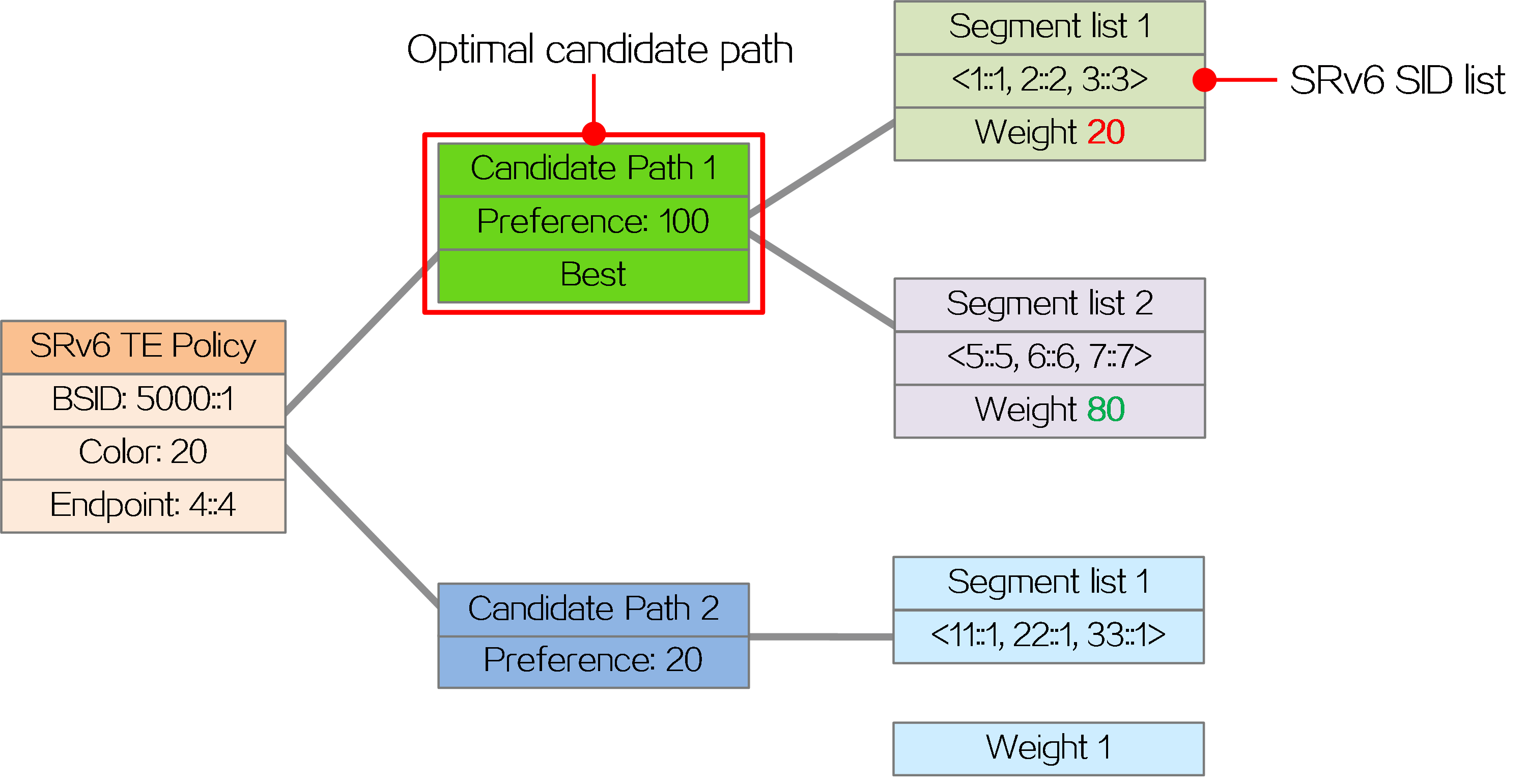

An SRv6 TE policy can contain multiple candidate paths. A candidate path can contain multiple segment lists (SID lists), and each segment list carries a weight attribute.

· Candidate paths

Candidate paths carry preference attributes, and the preferences of different candidate paths are different. When forwarding traffic through an SRv6 TE policy, the device selects the candidate path with the highest preference (called the optimal candidate path) from multiple valid candidate paths for packet forwarding.

· SID lists

A SID list is a list of SIDs that indicates a packet forwarding path.

As shown in the figure below, the SRv6 TE policy includes two candidate paths, Candidate Path 1 and Candidate Path 2. Candidate Path 1 has the highest preference, so the SRv6 TE policy uses candidate path 1 to forward packets. Candidate Path 1 contains two SID lists, Segment List 1 and Segment List 2. Segment List 1 contains SRv6 SIDs <1::1, 2::2, 3::3> with a weight of 20. Segment List 2 contains SRv6 SIDs <5::5, 6::6, 7::7> with a weight of 80.

Figure12 Network model of SRv6 TE policy

Steering methods

SRv6 TE policy steering refers to the process of matching certain packet characteristics or routing rules to direct packets to an SRv6 TE policy for forwarding.

Table1 SRv6 TE policy steering methods and rules

|

Steering method |

Steering rule |

|

BSID-based traffic steering |

If the destination IPv6 address of a received packet is the BSID of an SRv6 TE policy, the device uses the SRv6 TE policy to forward the packet. |

|

Color-based traffic steering |

If the matching BGP route of a packet carries a color extended community attribute and nexthop address that match the color attribute and endpoint address of an SRv6 TE policy, the device forwards the packet through that SRv6 TE policy. |

|

DSCP-based traffic steering |

The device identifies the color value mapped to the DSCP value of a packet, and searches for the SRv6 TE policy containing that color value. If a matching SRv6 TE policy is found, the device forwards the packet through that SRv6 TE policy. |

|

Tunnel policy-based traffic steering |

In L2VPN and L3VPN networks, tunnel policies are deployed to specify SRv6 TE policies as the public network tunnels to forward private network packets. |

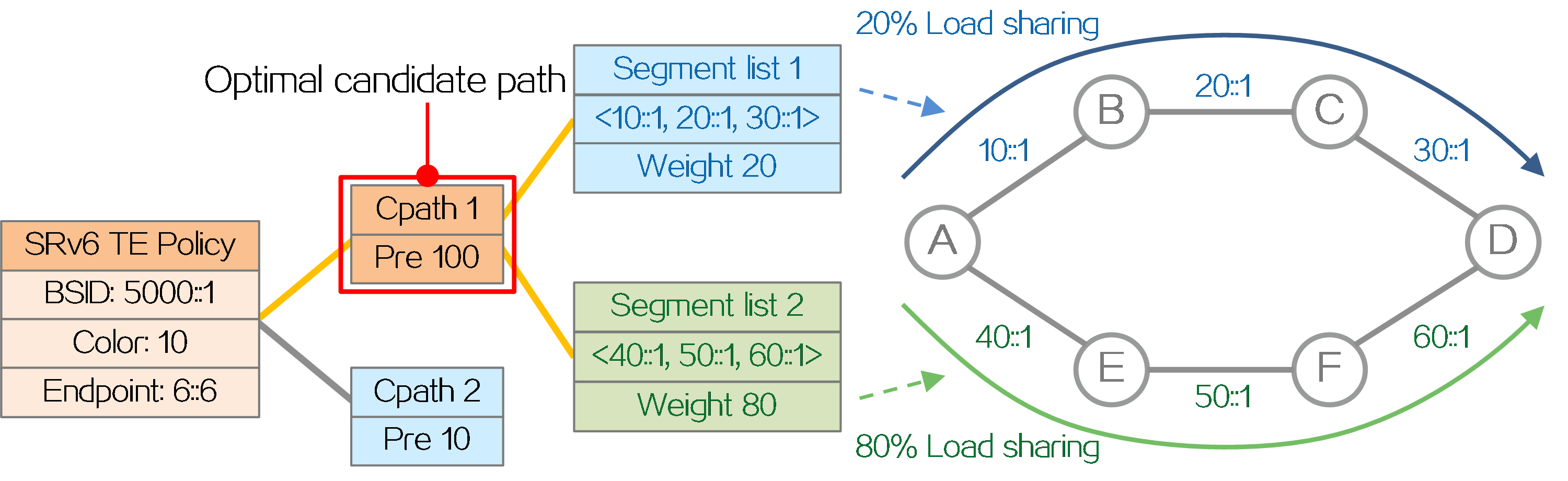

Path selection

The path selection principles for different steering methods are the same. After data packets are steered into an SRv6 TE policy, the SRv6 TE policy selects a forwarding path for the packets as follows:

(1) Selects the candidate path that has the highest preference among all valid candidate paths.

(2) Performs Weighted ECMP (WECMP) load sharing among the SID lists of the selected candidate path. Assume there are n valid SID lists in the candidate path, and the weight of SID list x is Wight x. The load of SID list x is equal to Weight x/(Weight 1 + Weight 2 + … + Weight n).

As shown in the figure below, the SRv6 TE policy selects the candidate path with the highest preference, Candidate Path 1, to forward traffic. Candidate Path 1 contains two SID lists, Segment List 1 and Segment List 2. Segment List 1 contains SRv6 SIDs <10::1, 20::1, 30::1> with a weight of 20. Segment List 2 contains SRv6 SIDs <40::1, 50::1, 60::1> with a weight of 80. The traffic is load balanced between Segment List 1 and Segment List 2 based on weight. The total weight of Segment List 1 and Segment List 2 is 100, so the traffic proportion shared by Segment List 1 is 20÷100=20%, and the traffic proportion shared by Segment List 2 is 80÷100=80%.

Figure13 SRv6 TE policy path selection

BSID stitching

SRv6 over SRv6

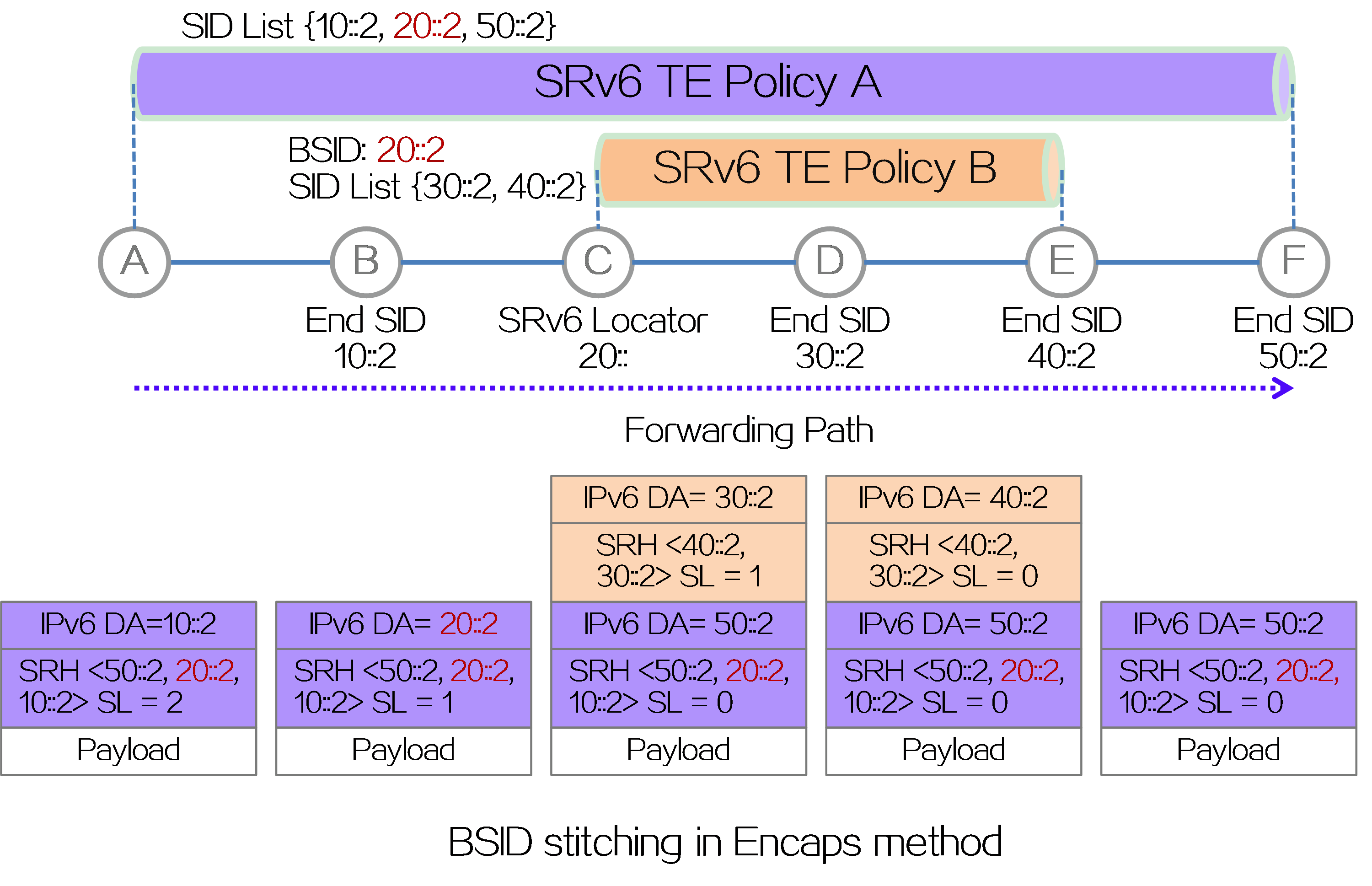

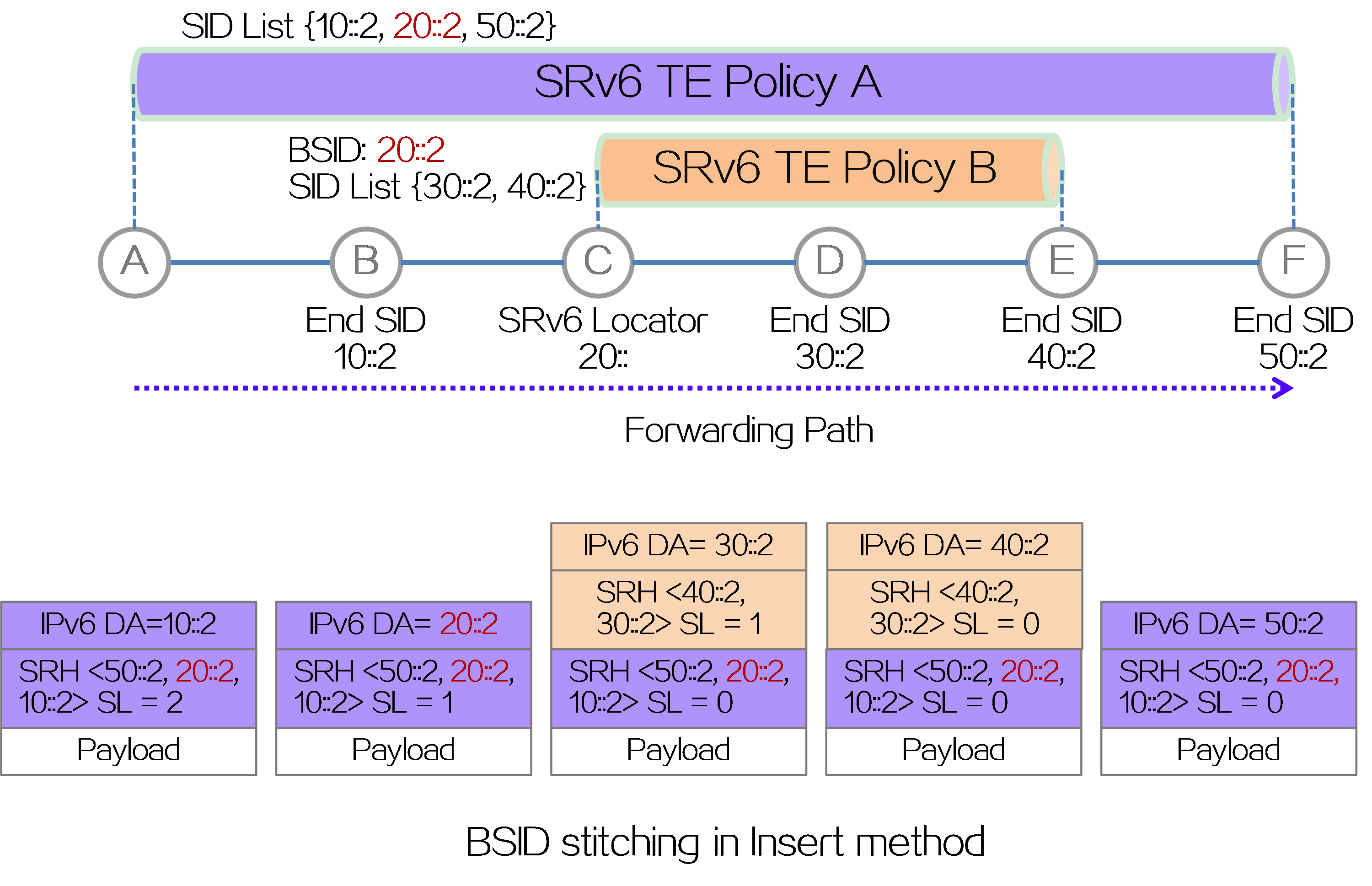

The supported SID depth of the device is limited. When the source node of an SRv6 TE policy encapsulates the packet with SRH, the number of SIDs cannot exceed the limit supported by the device. In order to reduce the number of SIDs in the SID list of an SRv6 TE policy, the SID list supports inserting a BSID, which represents the SID list of a candidate path for another SRv6 TE policy. Based on the BSID, the traffic is redirected to another candidate path of an SRv6 TE policy. This capability is called BSID stitching.

As shown in the figure below, BSID stitching supports the Encaps method of encapsulating an IPv6 basic header and SRH extension header outside the original packet, and also supports the Insert method of inserting a new SRH extension header after the IPv6 basic header of the original packet.

· Encaps method: The BSID of SRv6 TE Policy B is inserted into the SID list of SRv6 TE Policy A. When a packet is forwarded to node C through SRv6 TE Policy A, the source node C of SRv6 TE Policy B discovers that the BSID is a local SID of End.B6ENCAPS type. Therefore, it executes the forwarding instruction of the BSID, which is to add an IPv6 header and SRH outside the packet, with SRH containing the SID list of SRv6 TE Policy B.

Figure14 BSID stitching in Encaps method

· Insert method: The BSID of SRv6 TE policy B is inserted into the SID list of SRv6 TE Policy A. When the packet is forwarded to node C through SRv6 TE Policy A, the source node C of SRv6 TE Policy B discovers that the BSID is a local SID of End.B6INSERT type. Therefore, it executes the forwarding instruction of the BSID, which is to insert the SRH after the original IPv6 packet header, with SRH containing the SID list of SRv6 TE Policy B.

Figure15 BSID splicing in Insert method

SRv6 over SR-MPLS

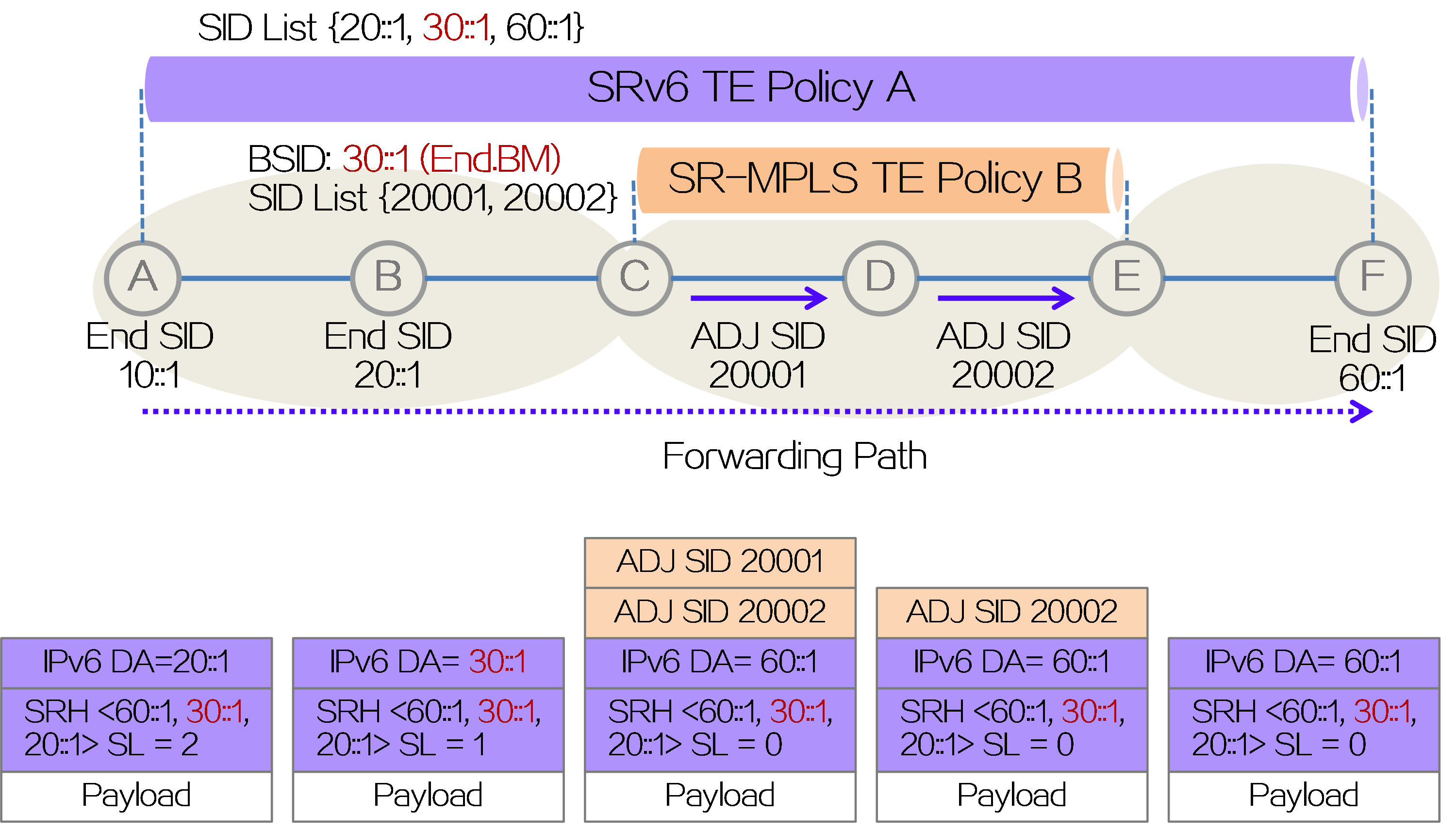

The BSID stitching feature can not only reduce the number of SIDs in the SID list, but also achieve SRv6 and SR-MPLS interworking. In the SRv6 and SR-MPLS interworking scenario, packets traverse multiple SRv6 and SR-MPLS domains via SRv6 TE policy tunnels to achieve end-to-end service interoperability. This scenario is referred to as SRv6 over SR-MPLS.

As shown in the figure below, a new type of SRv6 SID called End.BM is defined in the SRv6 over SR-MPLS scenario as the BSID for SR-MPLS TE Policy B. When configuring SRv6 TE Policy A, insert BSID 30::1 of the End.BM type into the SID list of SRv6 TE Policy A. BSID 30::1 represents the SID list of the optimal candidate path for SR-MPLS TE Policy B, which is the MPLS label stack {20001, 20002}. When an IPv6 packet is forwarded to the source node C of SR-MPLS TE Policy B, the node performs the function of End.BM SID, that is, encapsulating the MPLS label stack {20001, 20002} of SR-MPLS TE Policy B outside the IPv6 packet. Node C forwards the packet in the SR-MPLS domain based on the MPLS label stack encapsulated in the packet. After popping all MPLS labels at the tail node E of SR-MPLS TE Policy B, it continues to forward the packet as an SRv6 packet. By stitching the BSID of type End.BM into the SID list of the SRv6 TE policy, the interworking between SRv6 and SR-MPLS is achieved.

Figure16 BSID stitching in SRv6 over SR-MPLS scenario

Configuration examples

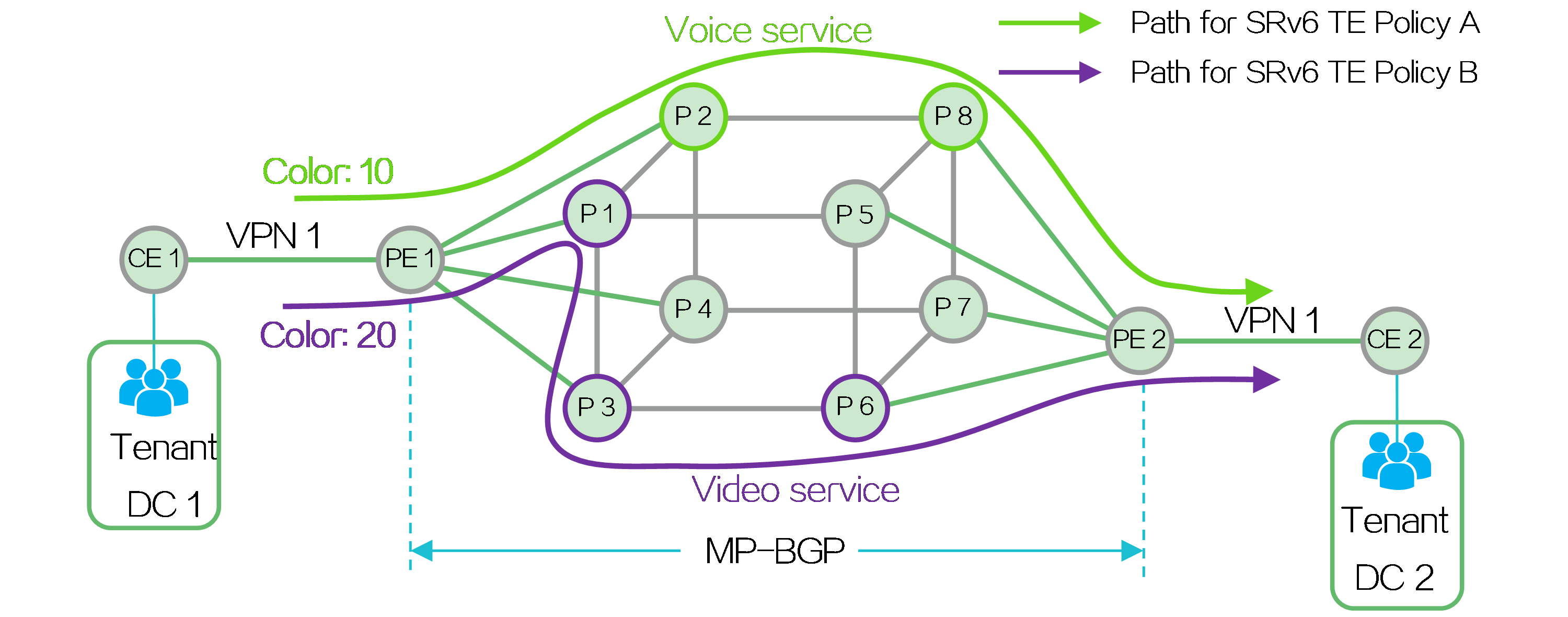

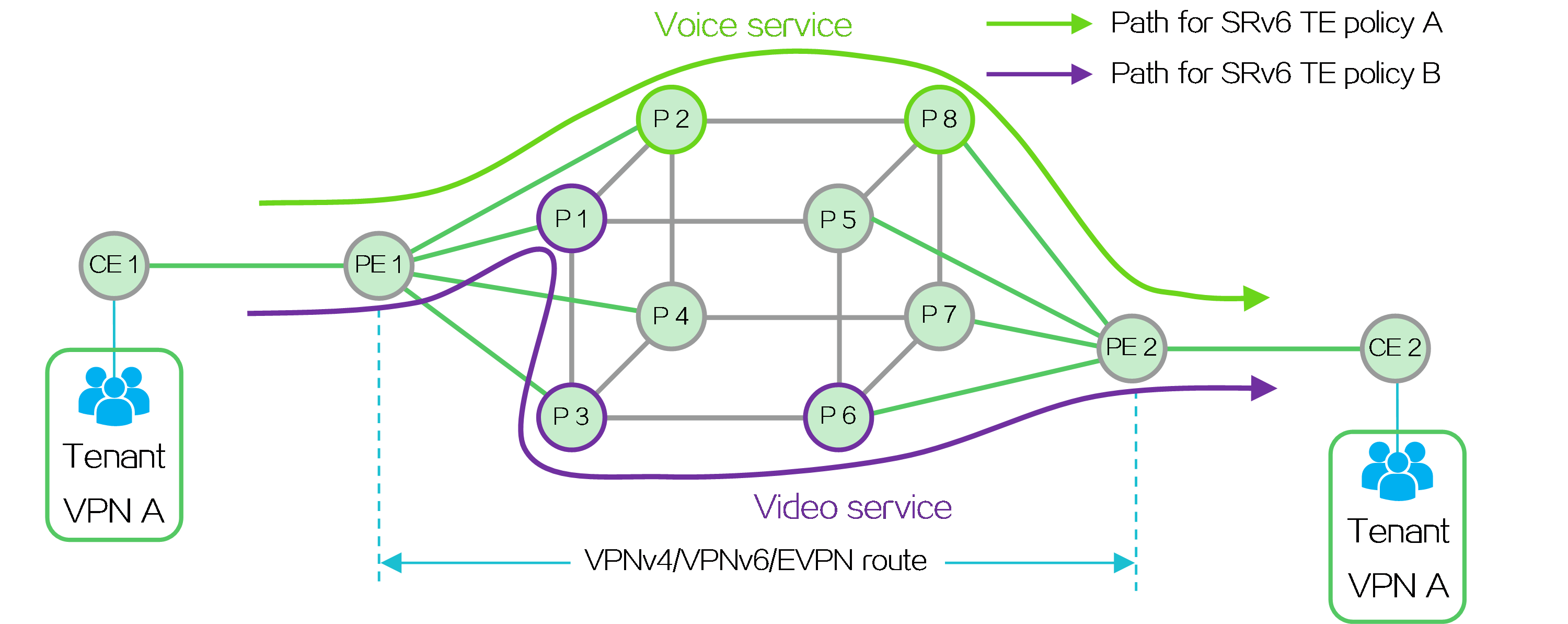

By deploying L2VPN/L3VPN over SRv6 TE policy, tenants within the same VPN located in different data centers (DCs) can communicate with each other. The source node can flexibly plan and allocate the forwarding paths for different service traffic, so as to meet the various service requirements of the tenants. Taking IP L3VPN over SRv6 TE policy as an example, the deployment mechanism of this solution is implemented as follows:

· Create VPN 1 on PE 1 and PE 2 to allow the users of the same tenant located in different data centers to join the same VPN. Deploy MP-BGP between PEs to transmit private network routing information for achieving Layer 3 connectivity.

· Deploy SRv6 TE policies with different color attributes between PE 1 and PE 2, select forwarding paths based on the tenant's service requirements, and steer the traffic of a service into the corresponding SRv6 TE policy.

Figure17 Typical application of SRv6 TE policies

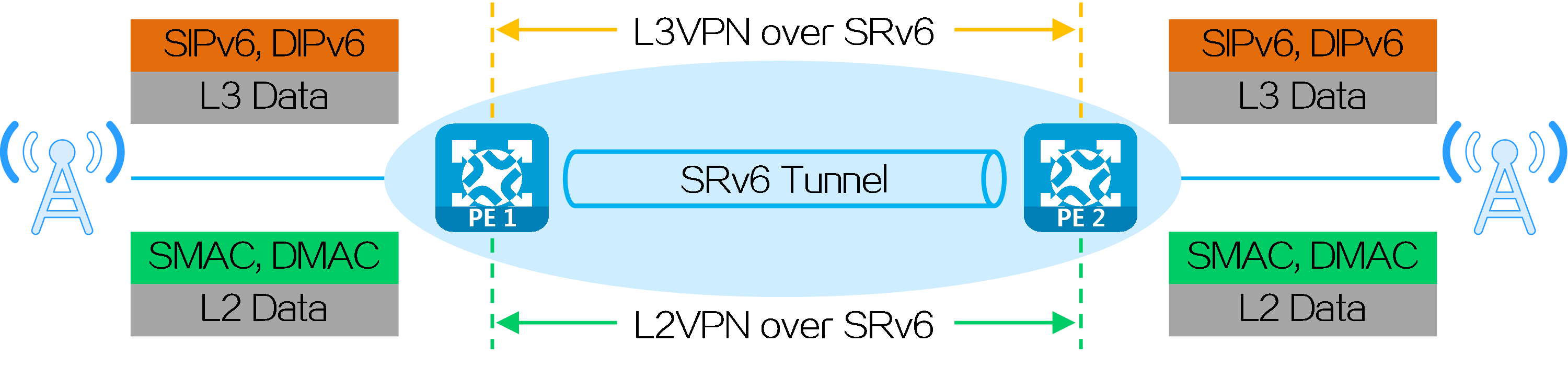

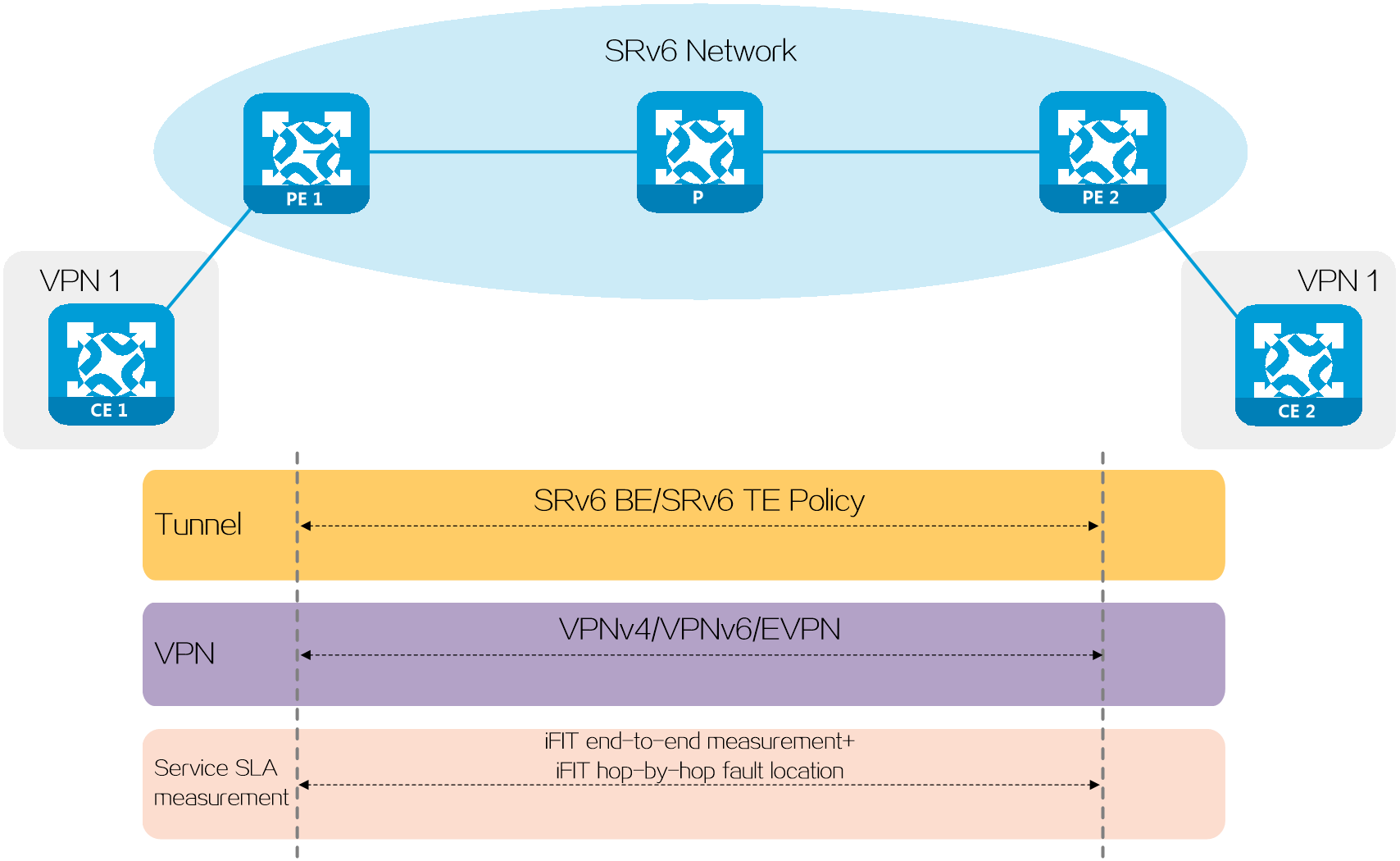

4.3 SRv6 VPN overview

About SRv6 VPN

With the development of 5G services, IPv4 addresses can no longer meet the network needs of operators, and the construction of IPv6 networks has been put on the agenda by operators. Deploying SRv6 VPN in the operator's IPv6 network and using SRv6 to support VPN services in the network enable better fulfillment of 5G service requirements.

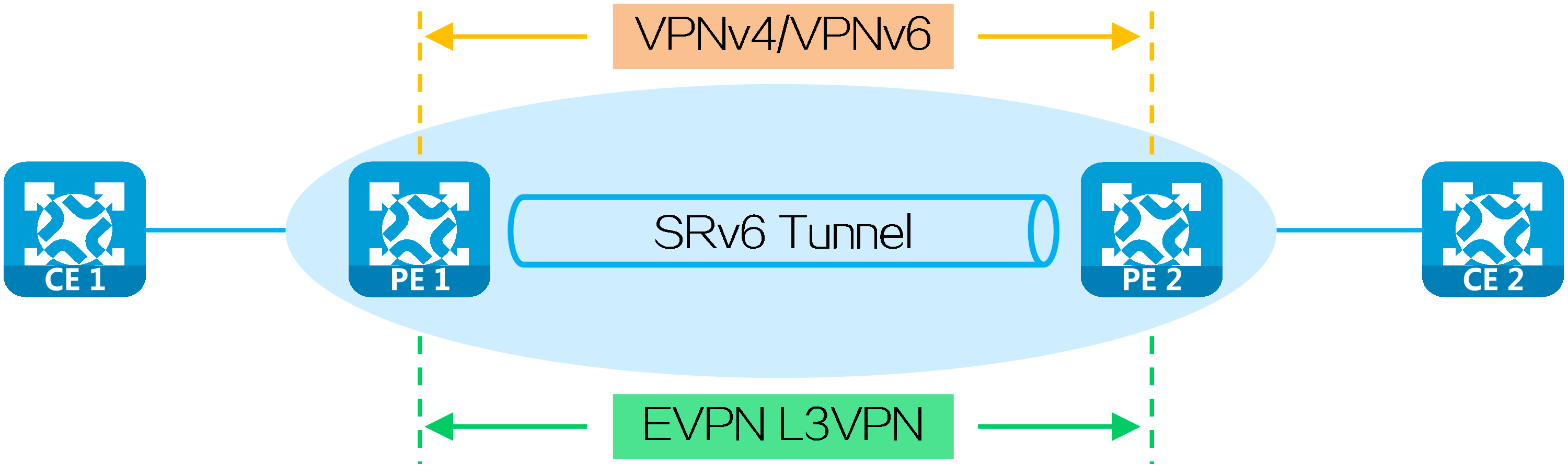

According to the types of VPN services, SRv6 VPN services can be divided into the following types:

· L3VPN services—Transparently transmit user Layer 3 service packets through the IPv6 backbone network. L3VPN services include IP L3VPN over SRv6 and EVPN L3VPN over SRv6.

· L2VPN services—Transparently transmit user Layer 2 service packets through the IPv6 backbone network. L2VPN services include EVPN VPWS over SRv6 and EVPN VPLS over SRv6.

Figure18 SRv6 VPN service classification

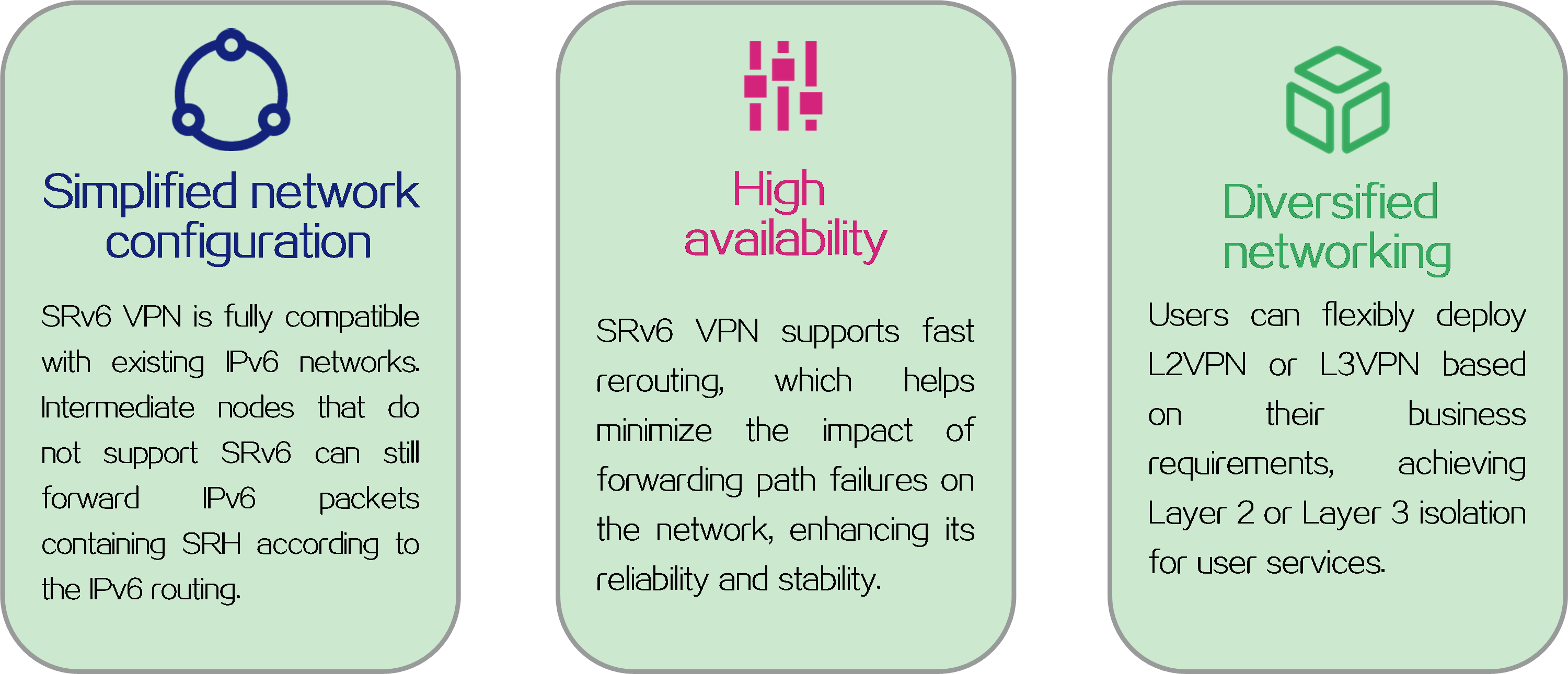

SRv6 VPN advantages

Figure19 SRv6 VPN advantages

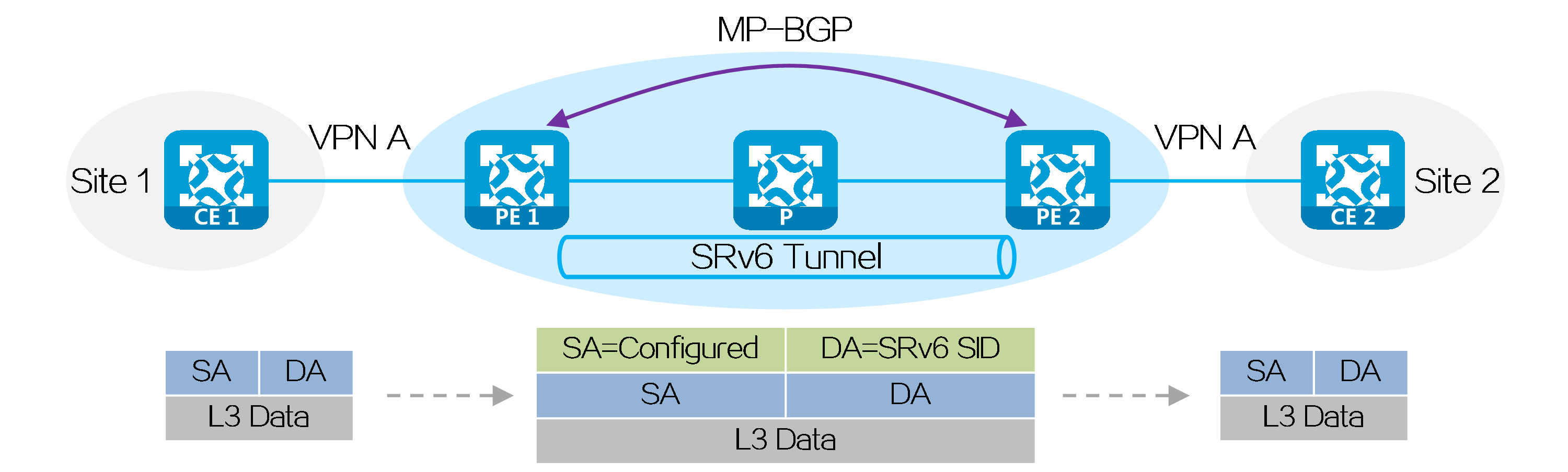

L3VPN over SRv6 operating mechanism

Networking model

In IP L3VPN over SRv6 or EVPN L3VPN over SRv6 networking environment, private network route information is advertised between PEs through MP-BGP, and packets are forwarded through SRv6 encapsulation. When the physical sites of users are dispersed in different locations, the existing service providers or enterprise IPv6 networks can be used to provide Layer 3 interconnection for users of the same VPN in different sites, while ensuring isolation between users of different VPNs.

Figure20 L3VPN over SRv6 networking model

Route advertisement

Taking CE 1 as an example, a private network route of CE 1 is advertised to CE 2 through MP-BGP as follows:

(1) CE 1 uses IGP or BGP to advertise the private network route of the local site to PE 1.

(2) After learning private network routing information from CE 1, PE 1 assigns an SRv6 SID to the private network route and forms a VPN route. PE 1 uses MP-BGP to advertise the VPN route with the SRv6 SID to PE 2.

(3) After PE 2 receives the VPN route, it adds the VPN route to the VPN routing table, converts the VPN route into a private network route, and then advertises the private network route to CE 2.

(4) After CE 2 receives the route, it learns the route into the routing table. In this way, CE 2 has learned the route of CE 1.

Packet forwarding

After route advertisement is completed, a packet can be sent from CE 2 to CE 1 as follows:

(1) CE 2 sends the packet to PE 2.

(2) After receiving the private network packet, PE 2 looks up the matching route for the destination address in the VPN routing table. It finds the SRv6 SID assigned by PE 1 to the private network route.

(3) PE 2 encapsulates an outer IPv6 header for the packet, with the destination IPv6 address being the identified SRv6 SID, and the source IPv6 address can be configured as needed.

(4) According to the SRv6 SID, PE 2 looks up the IPv6 routing table and forwards the packet to P via the optimal IGP route.

(5) After receiving the packet, P looks up the IPv6 routing table according to the SRv6 SID and forwards the packet to PE 1 via the optimal IGP route.

(6) After receiving the packet, PE 1 performs the function of the SRv6 SID, that is, it decapsulates the packet to remove the outer IPv6 header and looks up the private network route in the routing table of the specified VPN based on the SRv6 SID, and then sends the packet to CE 1.

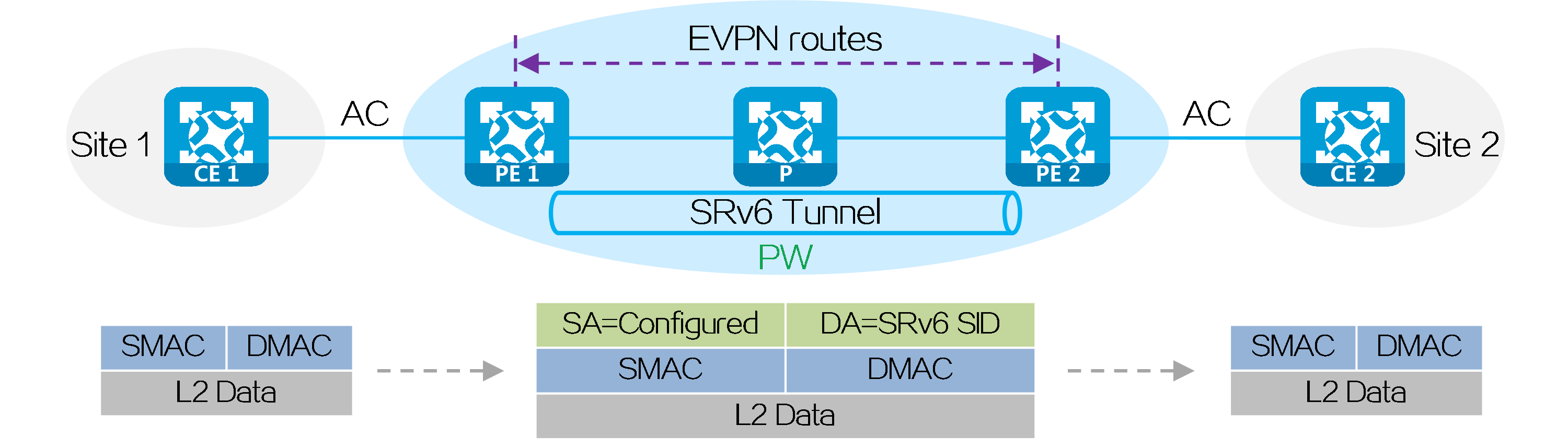

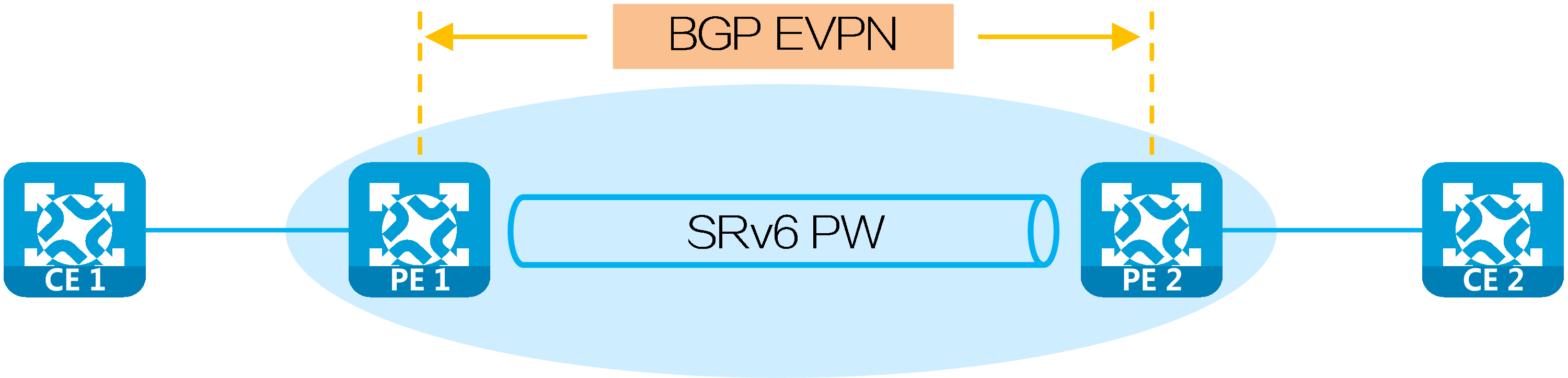

L2VPN over SRv6 operating mechanism

Networking model

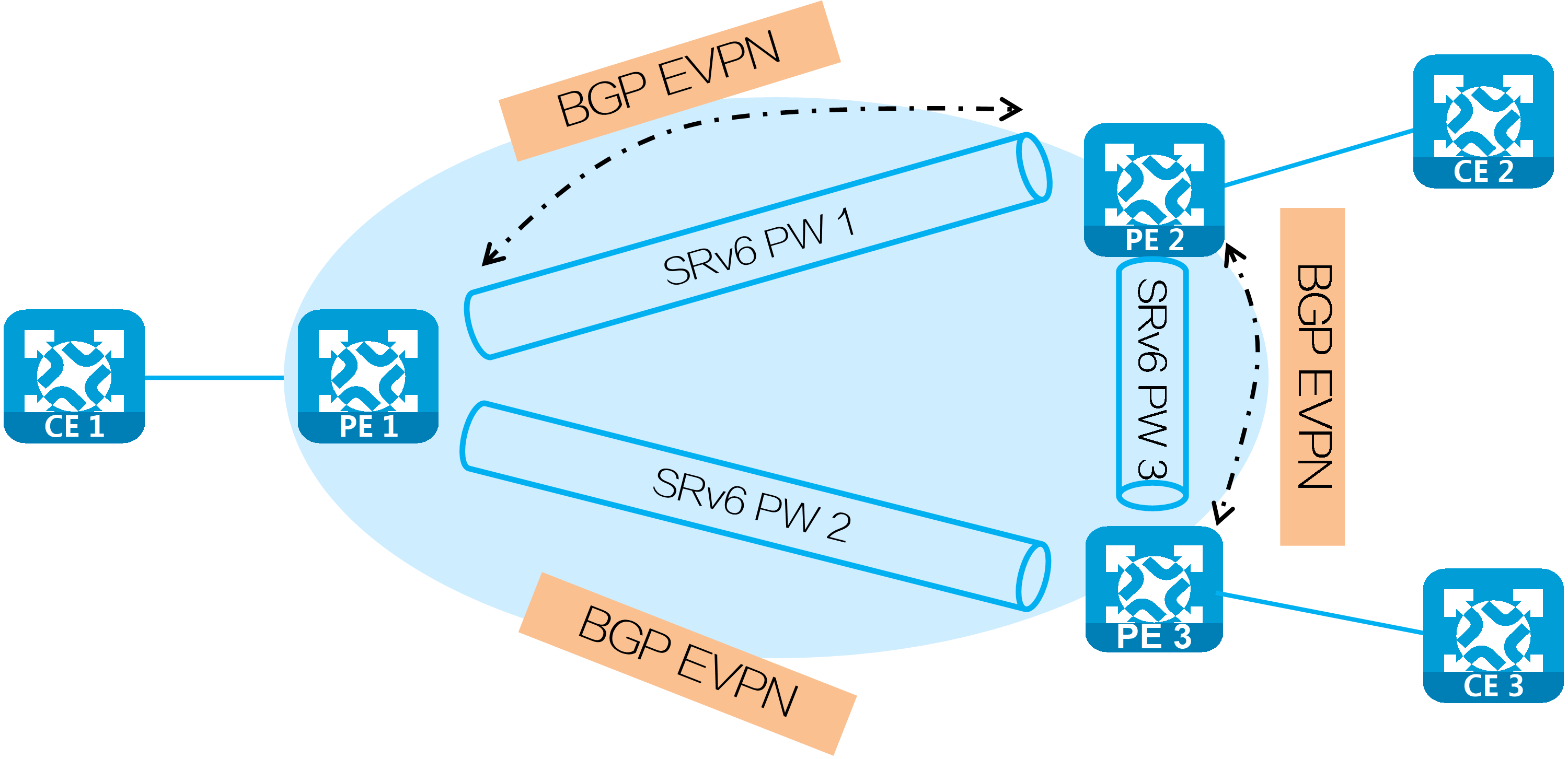

In the networking environment of EVPN VPWS over SRv6 and EVPN VPLS over SRv6, PEs establish SRv6 tunnels by advertising SRv6 SIDs in BGP EVPN routes. Two SRv6 tunnels in opposite directions between PEs form a PW, which encapsulates and forwards Layer 2 data packets between site networks, enabling transparent transmission of Layer 2 data for users over the IPv6 backbone network.

Figure21 L2VPN over SRv6 networking model

An Attachment Circuit (AC) is a physical or virtual circuit that connects CE and PE.

A pseudowire (PW) is a virtual bidirectional connection between two PE devices.

Establishing a PW

The process of establishing a PW between PEs through BGP EVPN routing is as follows:

(1) PE 1 and PE 2 mutually advertise BGP EVPN routes, carrying the SRv6 SID assigned to the local end as a cross-connect or VSI in the BGP EVPN route.

(2) PE 1 and PE 2 establish a single-hop SRv6 tunnel from the local PE to the remote PE after receiving the BGP EVPN route. The SID identifier of this tunnel is the SRv6 SID in the route.

(3) After establishing a single-hop SRv6 tunnel between PE 1 and PE 2 in both directions, the two SRv6 tunnels form a PW to carry user Layer 2 data. The PW is called an SRv6 PW.

Packet forwarding

After the BGP EVPN route advertisement is completed, a Layer 2 packet is sent from CE 1 to CE 2 as follows:

(1) CE 1 sends a Layer 2 packet to PE 1.

(2) After receiving the Layer 2 packet from the AC connected to CE 1, PE 1 performs the following:

· In EVPN VPWS over SRv6 networking, PE 1 identifies the SRv6 SID corresponding to the cross-connection associated with this AC.

· In the EVPN VPLS over SRv6 networking, PE 1 looks up the MAC address table within the VSI associated with this AC to find the corresponding SRv6 SID.

(3) PE 1 encapsulates an outer IPv6 header for the packet, with the destination IPv6 address being the identified SRv6 SID and the source IPv6 address being user-configured.

(4) After receiving the packet, PE 1 searches for the IPv6 routing table according to the SRv6 SID and forwards the packet to P through the optimal IGP route.

(5) After receiving the packet, P looks up the IPv6 routing table according to the SRv6 SID and forwards the packet to PE 2 via the optimal IGP route.

(6) PE 2 looks up the local SID table based on the SRv6 SID and performs the function of the SRv6 SID, which is to decapsulate the packet by removing the outer IPv6 header and forward it based on the SRv6 SID.

· In EVPN VPWS over SRv6 networking, PE 2 identifies the AC associated with the SRv6 SID and then forwards the packet through that AC to CE 2.

· In the EVPN VPLS over SRv6 networking, PE 2 identifies the VSI associated with the SRv6 SID, and then searches the MAC address table within that VSI to forward the packet to CE 2.

4.4 L3VPN over SRv6 BE

Introduction

L3VPN over segment routing IPv6 best effort (SRv6 BE) carries IP L3VPN data in the public network over optimal SRv6 paths calculated with IGP, providing Layer 3 interconnections between users in the same VPN at different sites and implementing user isolation among different VPNs.

Based on the carried services in the network, L3VPN over SRv6 BE is classified into the following types:

· IP L3VPN over SRv6 BE—Transmits private network routes through VPNv4/VPNv6 routes between PEs to carry Layer 3 VPN services.

· EVPN L3VPN over SRv6 BE—Transmits private network routes through EVPN IP prefix routes between PEs to carry both Layer 2 and Layer 3 VPN services.

Figure22 L3VPN over SRv6 BE types

SRv6 SIDs

An L3VPN over SRv6 network uses the following types of SRv6 SIDs to identify private network packets.

VPN instance-based SRv6 SID allocation

With VPN instance-based SRv6 SID allocation, a PE assigns an SRv6 SID to each VPN instance, and uses the SRv6 SID to identify the IPv4/IPv6 VPN in the network. Such SRv6 SIDs include End.DT4 SID/End.DT6 SID/End.DT46 SID:

· End.DT4 SIDs apply to the scenarios accessed by only IPv4 private network users.

· End.DT6 SIDs apply to the scenarios accessed by only IPv6 private network users.

· End.DT46 SIDs apply to the scenarios accessed by both IPv4 and IPv6 private network users.

Upon receiving a packet with an End.DT4 SID/End.DT6 SID/End.DT46 SID as the destination IPv6 address, a PE decapsulates the packet by removing its IPv6 packet header, obtains the VPN instance associated with the SID, and forwards the packet according to routing table lookup in the VPN instance.

Figure23 VPN instance-based SRv6 SID allocation

Next hop-based SRv6 SID allocation

With next hop-based SRv6 SID allocation, a PE assigns an SRv6 SID to each next hop, and uses the SRv6 SID to identify the IPv4/IPv6 next hop in the network. Such SRv6 SIDs include End.DX4 SID/End.DX6 SID:

· End.DX4 SIDs apply to the scenarios accessed by only IPv4 private network users.

· End.DX6 SIDs apply to the scenarios accessed by only IPv6 private network users.

Upon receiving a packet with an End.DX4 SID/End.DX6 SID as the destination IPv6 address, the device decapsulates the packet by removing its IPv6 packet header, and forwards the packet based on the SID-associated next hop and output interface to the specified device in the private network.

Figure24 Next hop-based SRv6 SID allocation

Operating mechanism

The route advertisement and packet forwarding processes of IP L3VPN over SRv6 is similar to those of EVPN L3VPN over SRv6. This section uses IPv4 L3VPN over SRv6 BE to illustrate the process.

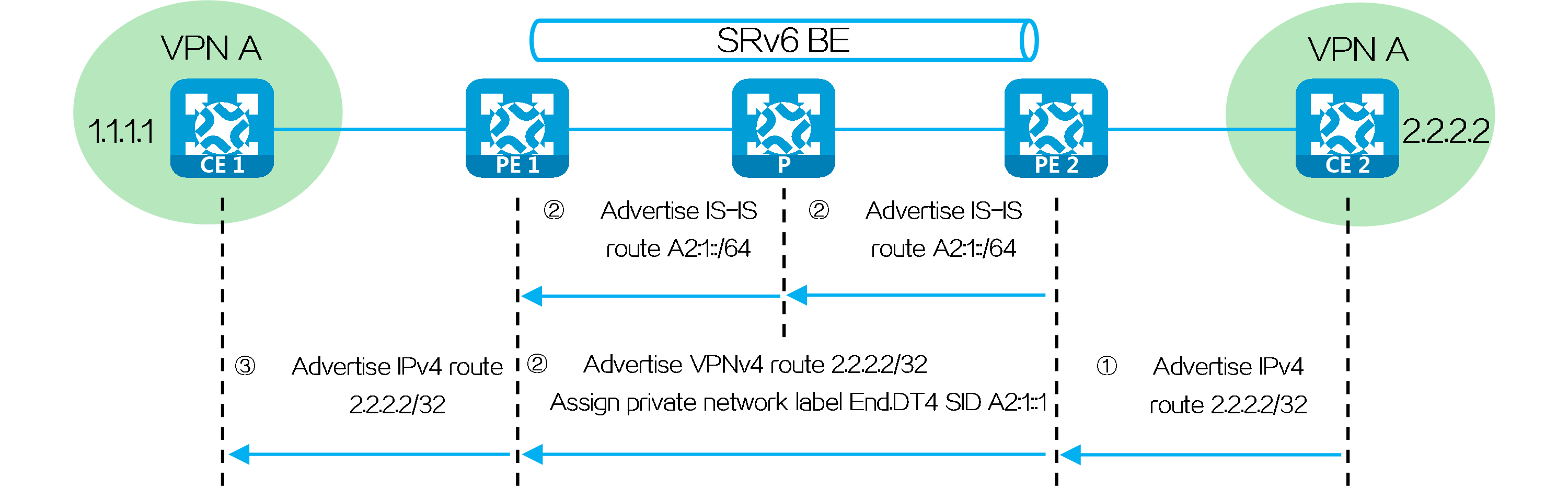

Route advertisement

The route advertisement process based on End.DT4 SID/End.DT6 SID/End.DT46 SID is similar to that based on End.DX4 SID/End.DX6 SID. This section uses End.DT4 SID to illustrate the process.

PE 2 advertises locator route A2:1::/64 associated with the End.DT4 SID to P and PE 1 through IGP (IS-IS for example). Upon receiving the IS-IS route, PE 1 and P learn it to their routing tables.

For example, CE 2 advertises a private network route to CE 1 through MP-BGP as follows:

(1) CE 2 advertises private network route 2.2.2.2/32 from the local site to PE 2 through IGP or BGP.

(2) Upon learning the route, PE 2 adds the route to the routing table of VPN instance A. PE 2 adds the RD and RT attributes to the private network route, and assigns End.DT4 SID A2:1::1 to it to form a VPNv4 route. PE 2 advertises the VPNv4 route to PE 1 through MP-BGP.

(3) Upon receiving the VPNv4 route, PE 1 adds it to the routing table of VPN instance A, converts the VPNv4 route to an IPv4 route, and advertises the route to CE 1.

(4) Upon receiving the route, CE 1 learns it to its routing table.

Figure25 Route advertisement for IPv4 L3VPN over SRv6 BE

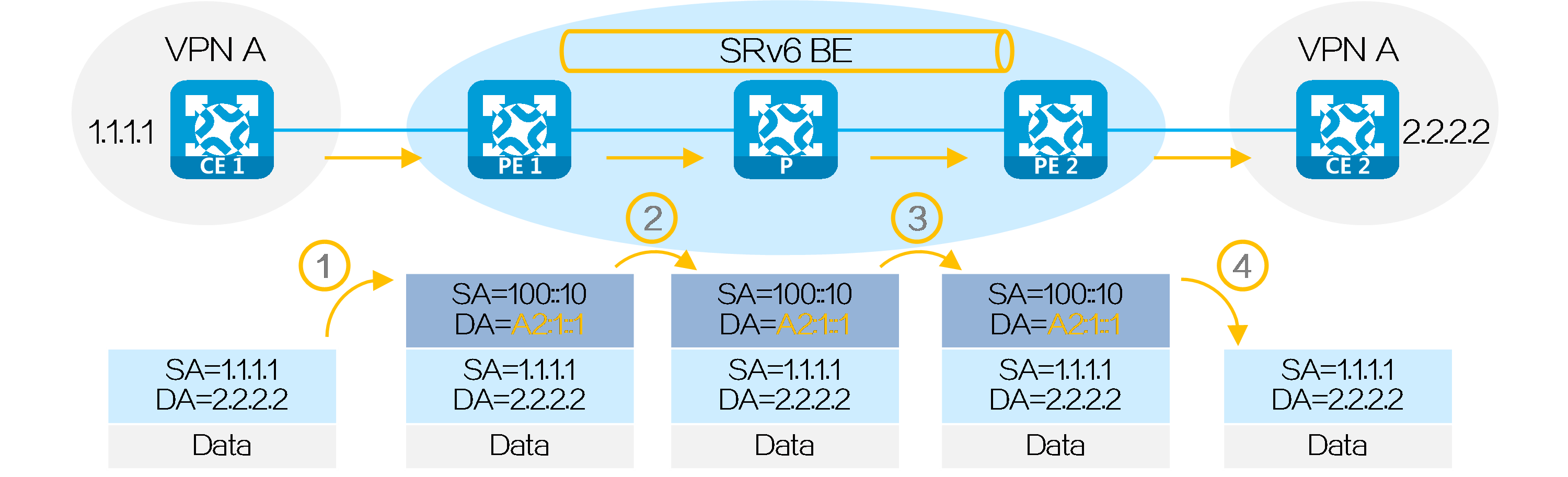

Packet forwarding

Packet forwarding based on End.DT4 SID/End.DT6 SID/End.DT46 SID

After route advertisement is completed, CE 1 forwards a packet with destination address 2.2.2.2 to CE 2 as follows:

(1) CE 1 sends an IPv4 packet with destination address 2.2.2.2 to PE 1.

(2) Upon receiving the packet from the interface bound to VPN instance A, PE 1 searches the route matching 2.2.2.2 in VPN instance A’s routing table. It obtains the route-associated End.DT4 SID A2:1::1, and encapsulates an IPv6 packet header for the packet. The source address in the IPv6 packet header is configured by the administrator, and the destination address is End.DT4 SID A2:1::1.

(3) PE 1 searches the IPv6 routing table based on End.DT4 SID A2:1::1, and forwards the packet to P through the optimal IGP route.

(4) P searches the IPv6 routing table based on End.DT4 SID A2:1::1, and forwards the packet to PE 2 through the optimal IGP route.

(5) Upon receiving the packet with End.DT4 SID A2:1::1 as the destination IPv6 address, PE 2 decapsulates the packet by removing is IPv6 packet header, searches the routing table of VPN instance A matching the End.DT4 SID, and forwards the packet to CE 2.

Figure26 Packet forwarding based on End.DT4 SID/End.DT6 SID/End.DT46 SID

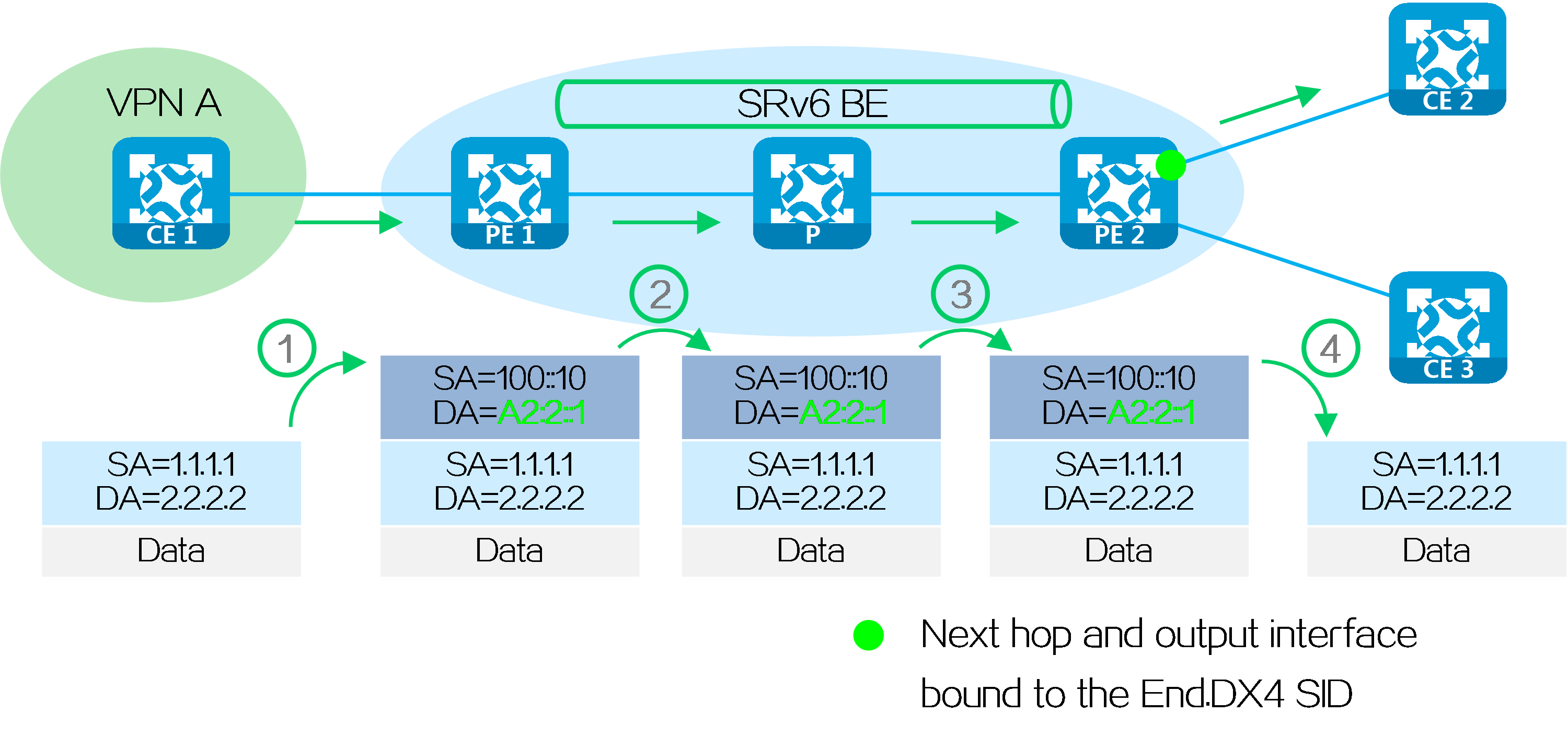

Packet forwarding based on End.DX4 SID/End.DX6 SID

After route advertisement is completed, CE 1 forwards a packet with destination address 2.2.2.2 to CE 2 as follows:

(1) CE 1 sends an IPv4 packet with destination address 2.2.2.2 to PE 1.

(2) Upon receiving the private network packet from the interface bound to VPN instance A, PE 1 searches the route matching 2.2.2.2 in VPN instance A’s routing table. It obtains the route-associated End.DX4 SID A2:2::1, and encapsulates an IPv6 packet header for the packet. The source address in the IPv6 packet header is configured by the administrator, and the destination address is End.DX4 SID A2:2::1.

(3) PE 1 searches the IPv6 routing table based on End.DX4 SID A2:2::1, and forwards the packet to P through the optimal IGP route.

(4) P searches the IPv6 routing table based on End.DX4 SID A2:2::1, and forwards the packet to PE 2 through the optimal IGP route.

(5) Upon receiving the packet with End.DX4 SID A2:2::1 as the destination IPv6 address, PE 2 decapsulates the packet by removing its IPv6 packet header, and forwards the packet based on the SID-associated next hop and output interface to CE 2.

Figure27 Packet forwarding based on End.DX4 SID/End.DX6 SID

4.5 L3VPN over SRv6 TE

Introduction

L3VPN over SRv6 TE adopts the forwarding paths corresponding to SRv6 TE policies (SRv6 TE policy tunnels) as public network tunnels to VPN service traffic, providing Layer 3 interconnections between users in the same VPN at different sites and implementing user isolation among different VPNs.

An L3VPN over SRv6 TE network can use various traffic steering methods to direct different service traffic in the VPN into different SRv6 TE tunnels to meet user demands. For example, it can forward low-delay voice service traffic based on the path of SRv6 TE policy A, and high-bandwidth video service traffic based on the path of SRv6 TE policy B.

Figure28 L3VPN over SRv6 TE network forwarding

Based on the carried VPN services, L3VPN over SRv6 TE is classified into the following types:

· IP L3VPN over SRv6 TE policy—Transmits private network routes through VPNv4/VPNv6 routes between PEs to carry Layer 3 VPN services.

· EVPN L3VPN over SRv6 TE policy—Transmits private network routes through EVPN routes between PEs to carry Layer 3 VPN services.

Technical benefits

Compared with L3VPN over SRv6 BE, L3VPN over SRv6 TE is more flexible and reliable for deployment.

Table2 Comparison between L3VPN over SRv6 TE and L3VPN over SRv6 BE

|

Technology |

L3VPN over SRv6 TE |

L3VPN over SRv6 BE |

|

Public network route advertisement |

All SRv6 nodes advertise their own locator routes in the public network. |

Only PEs advertise their own locator routes in the public network. |

|

Packet encapsulation at the source node |

SRH: Encapsulates the SID list of the SRv6 TE policy and the SRv6 SID to be assigned to the private network route. IPv6 basic header: The first SID in the SID list of the SRv6 TE policy is used as the destination address. |

The SRH is not encapsulated. IPv6 basic header: The SRv6 SID to be assigned to the private network route is used as the destination address. |

|

Packet forwarding on intermediate nodes |

The SRv6 nodes corresponding to the SID list forward packets based on the SRH. Other nodes forward packets according to routing table lookup. |

All nodes forward packets according to routing table lookup. |

Operating mechanism

In an L3VPN over SRv6 TE network, public network traffic is forwarded along the SID list of the SRv6 TE policy. The SID list contains the SRv6 SIDs corresponding to the SRv6 nodes through which the forwarding path must pass. Therefore, the intermediate nodes must support SRv6. To ensure reachability of the devices associated with the SRv6 SIDs in the SID list, each device must advertise the route of the locator network where the SRv6 SID resides, that is, the locator route. In an L3VPN over SRv6 BE network, public network traffic is forwarded according to routing table lookup based on IGP routes. Therefore, the intermediate nodes do not need to support SRv6.

The SRv6 SIDs used and private network route advertisement method are the same for L3VPN over SRv6 TE and L3VPN over SRv6 BE. The operating mechanism differences are as shown in the following table.

Table3 Operating mechanism comparison between L3VPN over SRv6 TE and L3VPN over SRv6 BE

|

Technology |

L3VPN over SRv6 TE |

L3VPN over SRv6 BE |

|

Public network route advertisement |

All SRv6 nodes advertise their own locator routes in the public network. |

Only PEs advertise their own locator routes in the public network. |

|

Packet encapsulation at the source node |

SRH: Encapsulates the SID list of the SRv6 TE policy and the SRv6 SID to be assigned to the private network route. IPv6 basic header: The first SID in the SID list of the SRv6 TE policy is used as the destination address. |

The SRH is not encapsulated. IPv6 basic header: The SRv6 SID to be assigned to the private network route is used as the destination address. |

|

Packet forwarding on intermediate nodes |

The SRv6 nodes corresponding to the SID list forward packets based on the SRH. Other nodes forward packets according to routing table lookup. |

All nodes forward packets according to routing table lookup. |

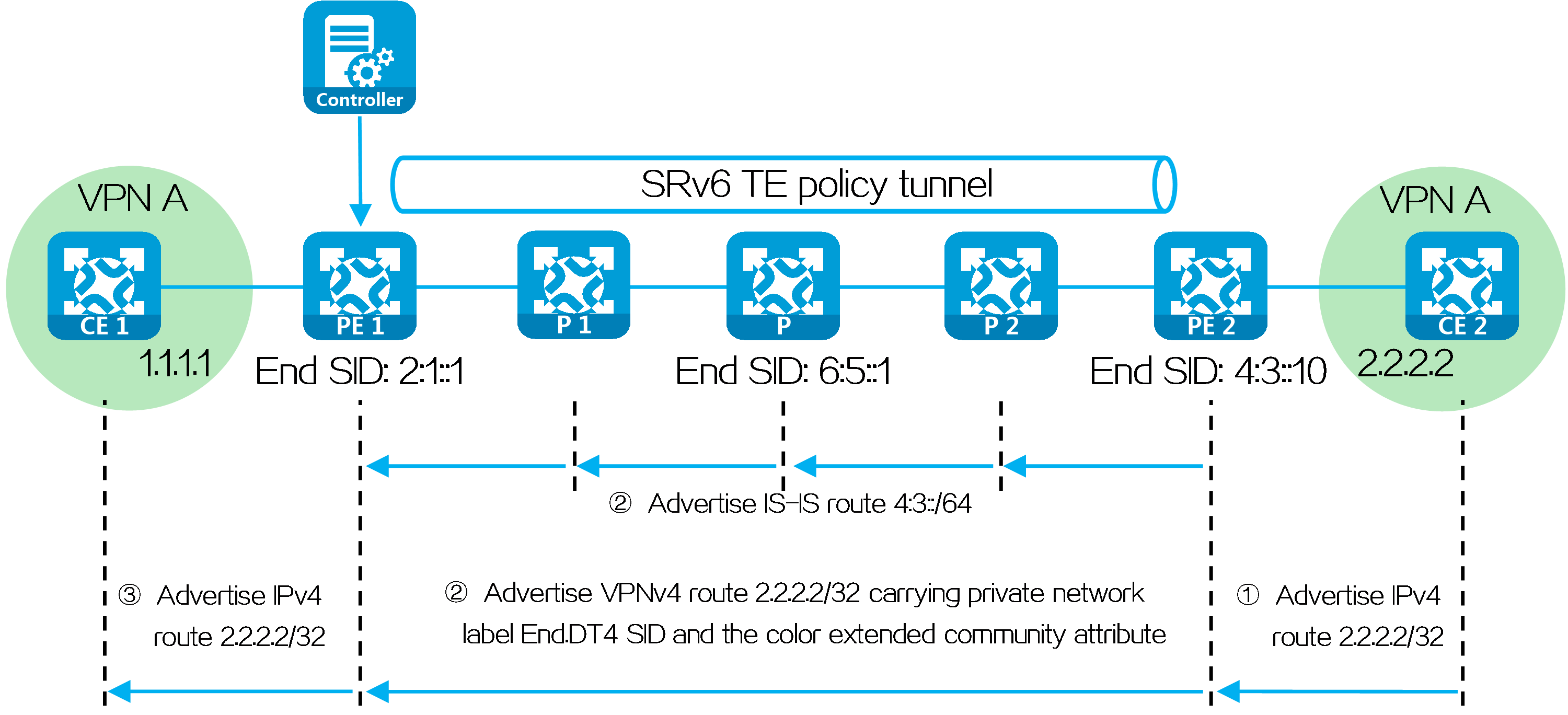

Route advertisement

In an L3VPN over SRv6 TE network, SRv6 TE policy settings are typically deployed by the controller to PE 1. In the following figure, the SID list of the deployed SRv6 TE policy is <6:5::1, 4:3::10>, corresponding to SRv6 nodes P and PE 2. PE 1, P, and PE 2 use IGP to advertise locator routes in the public network.

PE 2 advertises network route 4:3::/64 associated with the End.DT4 SID to P 2, P, P 1, and PE 1 through IGP (IS-IS for example). Upon receiving the IGP route from PE 2, P 2, P, P 1, and PE 1 learn it to their routing tables. Similarly, P 2, P, P 1, and PE 1 also advertise their own locator routes.

Taking color-based traffic steering as an example, CE 2 advertises a private network route to CE 1 as follows:

(1) CE 2 advertises private network route 2.2.2.2/32 from the local site to PE 2 through IGP or BGP.

(2) Upon learning the route, PE 2 adds the route to the routing table of VPN instance A. PE 2 adds the RD and RT attributes to the private network route, and assigns End.DT4 SID 4:3::1 to it to form a VPNv4 route. PE 2 advertises the VPNv4 route carrying the End.DT4 SID and color extended community attribute to PE 1 through MP-BGP.

(3) Upon receiving the VPNv4 route, PE 1 adds it to the routing table of VPN instance A, and steers the VPNv4 route to the SRv6 TE policy by using the color-based traffic steering method. PE 1 converts the VPNv4 route to an IPv4 route, and advertises the route to CE 1.

(4) Upon receiving the private network route, CE 1 learns it to its routing table.

Figure29 Route advertisement for L3VPN over SRv6 TE

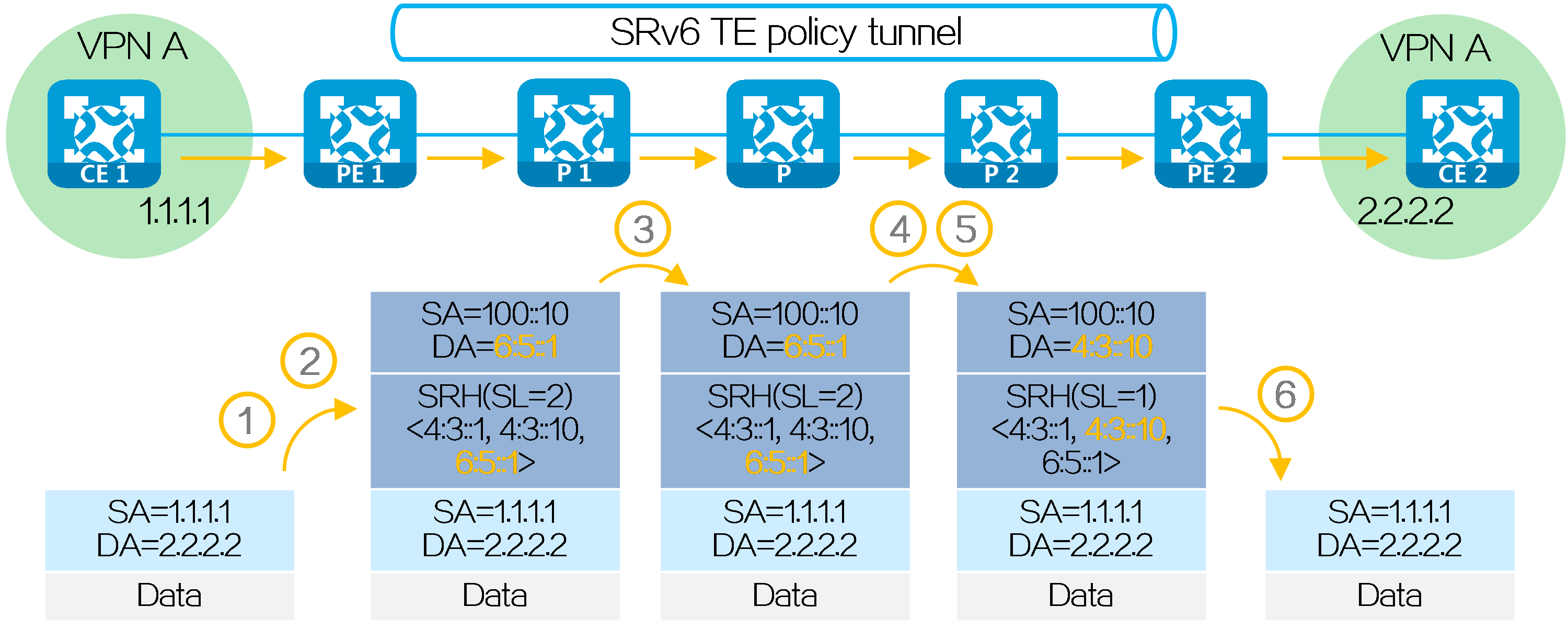

Packet forwarding

After route advertisement is completed, CE 1 forwards a packet with destination address 2.2.2.2 to CE 2 as follows:

(1) CE 1 sends an IPv4 packet with destination address 2.2.2.2 to PE 1.

(2) Upon receiving the private network packet from the interface bound to VPN instance A, PE 1 searches the route matching 2.2.2.2 in VPN instance A’s routing table. It obtains the route-associated End.DT4 SID 4:3::1. The next hop of the route is the SRv6 TE policy. Then PE 1 performs the following operations:

· Encapsulates SID list <6:5::1, 4:3::10> of the SRv6 TE policy and End.DT4 SID 4:3::1 in the SRH.

· Encapsulates the user-configured source address and destination address 6:5::1 (the first SID in the SID list of the SRv6 TE policy) to the IPv6 basic header.

· Searches the routing table based on the encapsulated destination address in the IPv6 header, and encapsulates and forwards the packet to P 1.

(3) P 1 searches the IPv6 routing table based on the destination address, and forwards the packet to P through the optimal IGP route.

(4) Upon receiving the packet, P performs the following operations:

· Checks the SL value in the SRH header. If the SL is greater than 0, it decreases the value by 1. The destination address is pointed by SL. Because the SL is 1, the destination address is IPv6 address 4:3::10 as pointed by Segment List [1].

· Searches the routing table based on the encapsulated destination address in the IPv6 header, and forwards the packet to P 2.

(5) P 2 searches the IPv6 routing table based on the destination address, and forwards the packet to PE 2 through the optimal IGP route.

(6) Upon receiving the packet, PE 2 uses the destination IPv6 address to search the local SID table, obtains the End SID, decreases the SL by 1, and updates the destination IPv6 address to End.DT4 SID 4:3::1. Then, PE 2 uses destination IPv6 address 4:3::1 to search the local SID table, obtains the End.DT4 SID, and executes the action associated with the End.DT4 SID. That is, it removes the IPv6 packet header, obtains VPN instance A matching the End.DT4 SID, searches the routing table of VPN instance A, and forwards the packet to CE 2.

Figure30 Packet forwarding for L3VPN over SRv6 TE

4.6 EVPN VPLS over SRv6

Introduction

EVPN VPLS over SRv6 uses SRv6 PW tunnels to carry EVPN VPLS services. PEs advertise SRv6 SIDs through BGP EVPN routes, and establish an SRv6 tunnel. The SRv6 tunnel is used as an SRv6 PW that encapsulates and forwards Layer 2 data packets between different sites, implementing point-to-multipoint connections for customer sites over the IPv6 backbone network.

Figure31 EVPN VPLS over SRv6 architecture

Operating mechanism

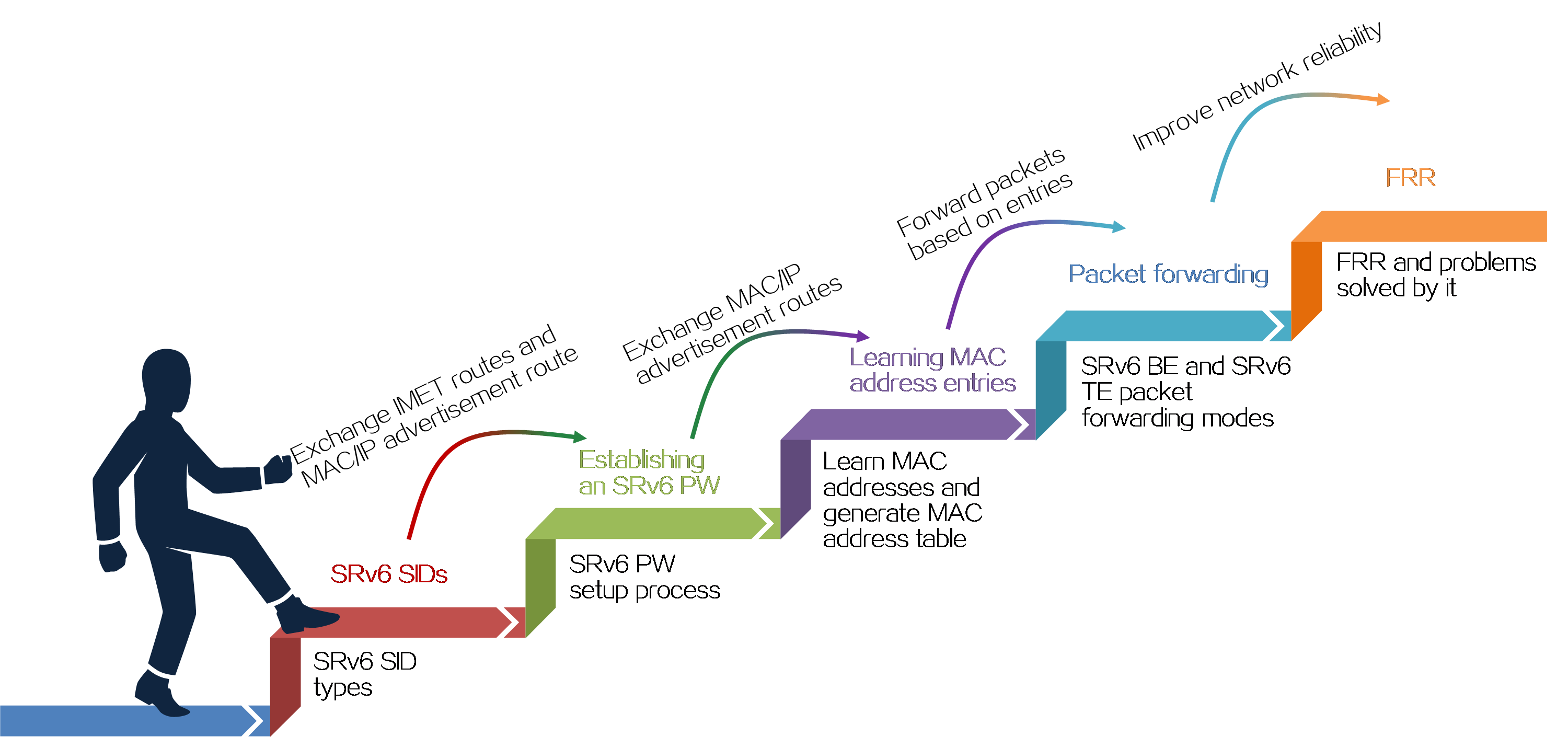

Figure32 Operating mechanism of EVPN VPLS over SRv6

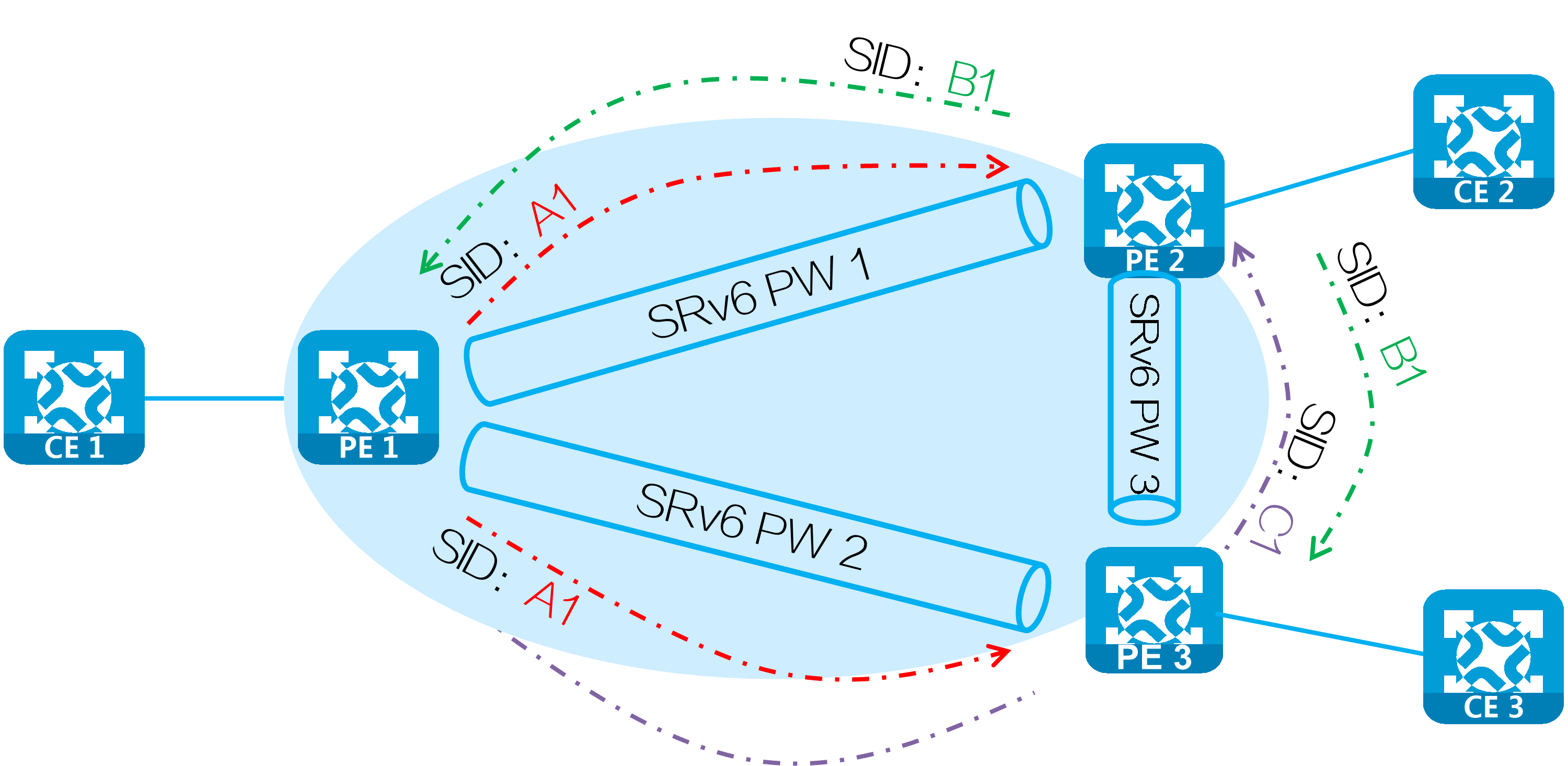

SRv6 SIDs

In an EVPN VPLS over SRv6 network, PEs exchange SRv6 SIDs through BGP EVPN routes. Typically the SRv6 SIDs include the following types:

· End.DX2 SIDs and End.DT2U SIDs used for forwarding unicast traffic (whether to use End.DX2 SIDs or End.DT2U SIDs to forward unicast traffic depends on the configuration).

· End.DT2M SIDs used for forwarding EVPN VPLS broadcast, unknown unicast, and multicast (BUM) traffic.

The following table shows the relationship between SRv6 SID types and BGP EVPN routes.

Table4 Relationship between SRv6 SIDs and BGP EVPN routes

|

SRv6 SID type |

BGP EVPN routes carrying the SIDs |

SID functions |

|

End.DX2 SID |

AD per EVI route MAC/IP advertisement route |

Identifies an AC. The associated forwarding actions are removing the IPv6 packet header and extension header, and then forwarding the packet through the specified AC. |

|

End.DT2U SID |

AD per EVI route MAC/IP advertisement route |

Identifies a VSI. The associated forwarding actions are removing the IPv6 packet header and extension header, and then searching the MAC address table to forward the packet to the specified output interface. |

|

End.DT2M SID |

IMET route |

Identifies an VSI. The associated forwarding actions are removing the IPv6 packet header and extension header, and then broadcasting the packet within the VSI. |

Establishing an SRv6 PW

PEs exchange SRv6 SIDs through BGP EVPN routes (IMET routes, AD per EVI routes, and MAC/IP advertisement routes) to establish an SRv6 PW. As shown in the following figure, PE 1 and PE 2 exchange IMET routes to establish an SRv6 PW as follows:

(1) PE 1 and PE 2 advertise IMET routes to each other, carrying the End.DT2M SID assigned to their VSIs.

(2) Upon receiving the IMET route, PE 1 and PE 2 each establishes a single-hop SRv6 tunnel to the remote PE. The SIDs of the tunnel are the End.DT2M SIDs in the routes.

(3) PE 1 establishes a single-hop SRv6 tunnel to the remote PE (PE 2). PE 2 establishes a single-hop SRv6 tunnel to the PE 1 in the same way. The two SRv6 tunnels form an SRv6 PW to carry Layer 2 data of users.

Figure33 Establishing an SRv6 PW

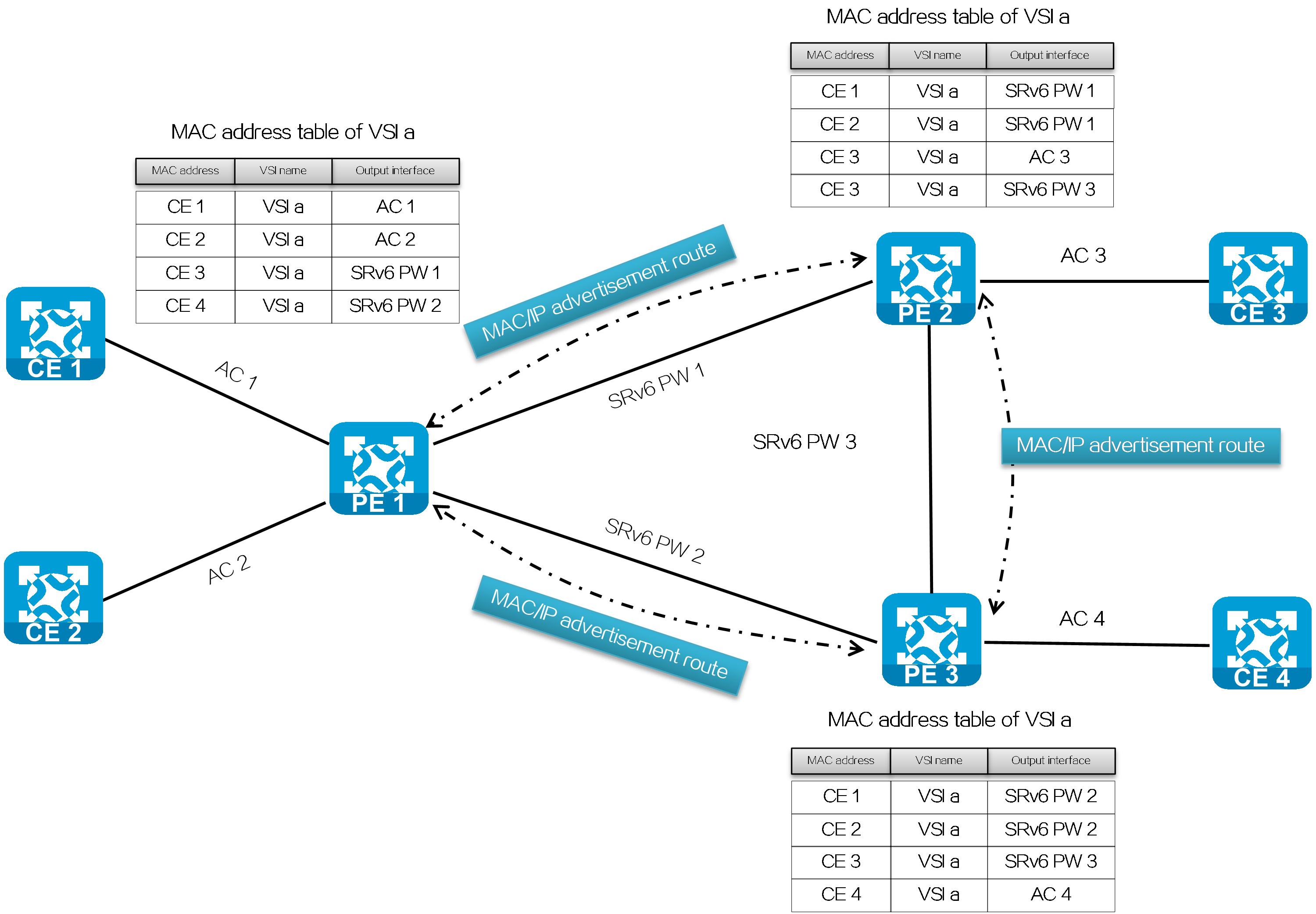

Learning MAC address entries

In the EVPN VPLS over SRv6 network, the PEs forward Layer 2 packets based on learned MAC address entries. The MAC address learning on the PEs includes the following parts:

· Local MAC address learning: Upon receiving a packet sent by the local CE, PE determines VSI to which the packet belongs, and adds the source MAC address (MAC address of the local CE) in the packet to the MAC address table of the VSI. The output interface of the MAC address entry is the AC that receives the packet.

· Remote MAC address learning: The PE advertises the MAC address learned through the MAC/IP advertisement route to the remote PE. Upon receiving the information, the remote PE adds the MAC address to the MAC address table of the associated VSI. The output interface of the MAC address entry is the SRv6 PW between the two PEs.

Figure34 MAC address learning for EVPN VPLS over SRv6

Packet forwarding

The packet forwarding modes supported by EVPN VPLS over SRv6 are SRv6-BE, SRv6-TE, and SRv6-TE and SRv6-BE hybrid modes. The packet forwarding modes have the following differences.

Table5 Differences between packet forwarding modes for EVPN VPLS over SRv6

|

Forwarding mode |

SRv6 TE mode |

SRv6 BE mode |

|

Forwarding principle |

Searches for a matching SRv6 TE policy based on packet attributes, adds the SRH containing the End.DX2 SID (or End.DT2M SID or End.DT2U SID) and SRv6 TE policy SID list to the packet, and forwards the packet through the SRv6 TE policy. |

Forwards the packet according to IPv6 routing table lookup based on the encapsulated End.DX2 SID, End.DT2M SID, or End.DT2U SID. |

|

Forwarding path |

Supports traffic steering methods based on color and tunnel policy. You can flexibly select traffic steering methods according to forwarding requirements. Implements forwarding path control by planning SID lists in SRv6 TE policies. You can select appropriate forwarding paths according to service requirements. |

Uses IGP to calculate forwarding paths. The forwarding paths cannot be planned. |

|

Reliability |

An SRv6 TE policy contains multiple candidate paths, and supports backup between primary and backup paths. |

The speed of forwarding path switchover upon network failures depends on the route convergence speed. |

|

Load sharing |

A candidate path contains multiple SID lists, and can implement load sharing based on the SID list weights. |

Implements load sharing based on locator routes. |

In SRv6 TE and SRv6 BE hybrid mode, the SRv6 TE mode is preferentially used to select forwarding paths. If no SRv6 TE policy is available, the SRv6 BE mode is used to select forwarding paths.

SRv6 BE

Known unicast packets

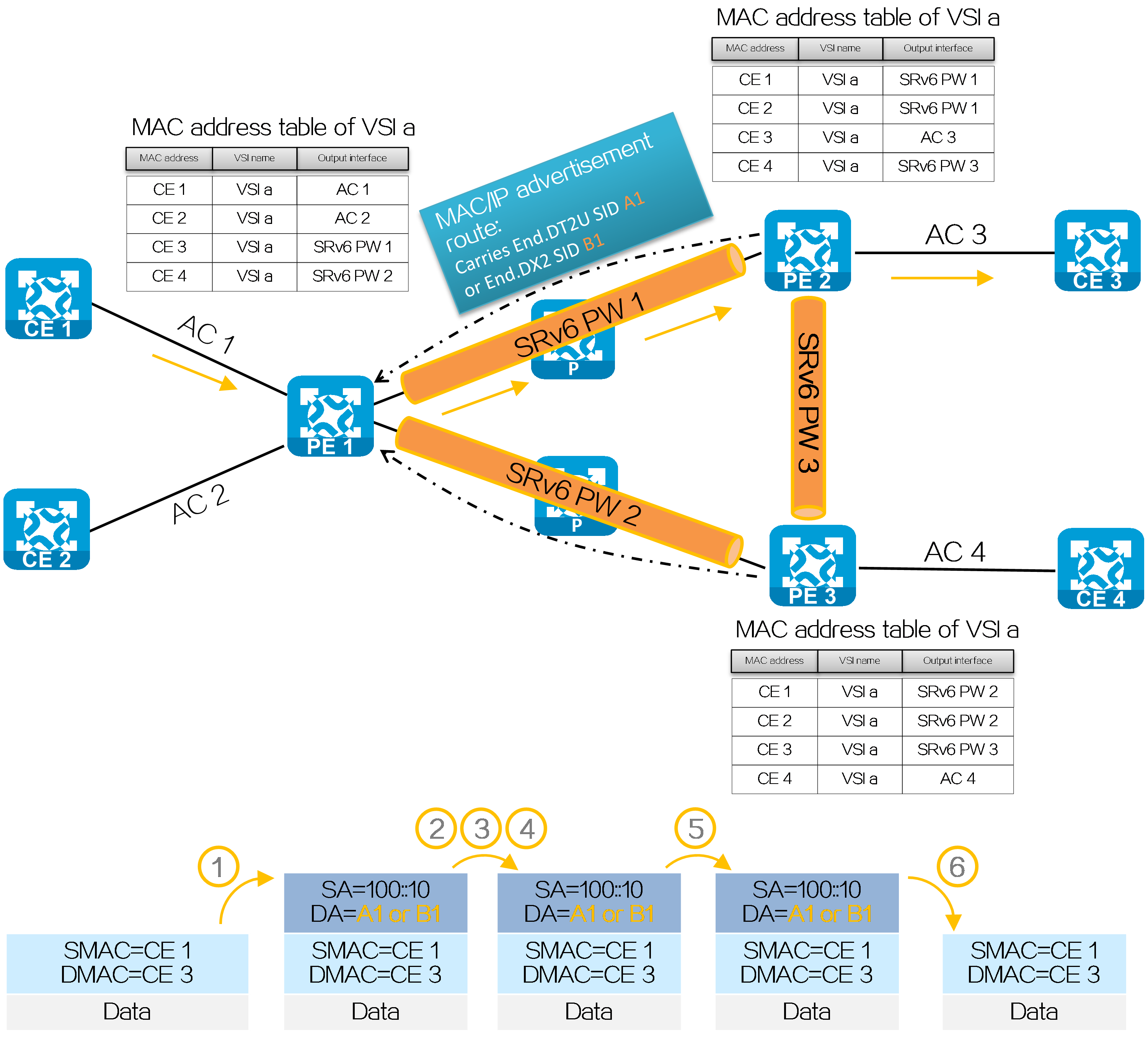

Figure35 Unicast packet forwarding process in the EVPN VPLS over SRv6 BE network

As shown in the figure above, after SRv6 PW establishment is completed, CE 1 forwards a known unicast packet to CE 3 process in the EVPN VPLS over SRv6 BE network as follows:

(1) CE 1 sends a Layer 2 packet with destination MAC address of CE 2 to PE 1.

(2) Upon receiving the Layer 2 packet on the AC connected to CE 1, PE 1 searches the MAC address table in the AC-associated VSI, finds the associated output interface SRv6 PW 1, and obtains the End.DT2U SID or End.DX2 SID of the tunnel (that is, End.DT2U SID A1 or End.DX2 SID B1 assigned by PE 2).

(3) PE 1 encapsulates the outer IPv6 packet header for the packet, with End.DT2U SID A1 or End.DX2 SID B1 as the destination IPv6 address and 100::10 (configured source address in the IPv6 packet header encapsulated for EVPN VPLS over SRv6) as the source IPv6 address.

(4) PE 1 searches the IPv6 routing table based on End.DT2U SID A1 or End.DX2 SID B1, and forwards the packet to P through the optimal IGP route.

(5) P searches the IPv6 routing table based on End.DT2U SID A1 or End.DX2 SID B1, and forwards the packet to PE 2 through the optimal IGP route.

(6) PE 2 searches the local SID table based on End.DT2U SID A1 or End.DX2 SID B1, and executes the SID-associated forwarding action.

· For End.DT2U SID, PE 2 decapsulates the packet by removing its IPv6 packet header, searches the MAC address table in the VSI to which the End.DT2U SID belongs, and forwards the packet to CE 2 according to the search result.

· For End.DX2 SID, PE 2 decapsulates the packet by removing its IPv6 packet header, and forwards the packet to the AC associated with the End.DX2 SID.

Broadcast, multicast, and unknown unicast packets

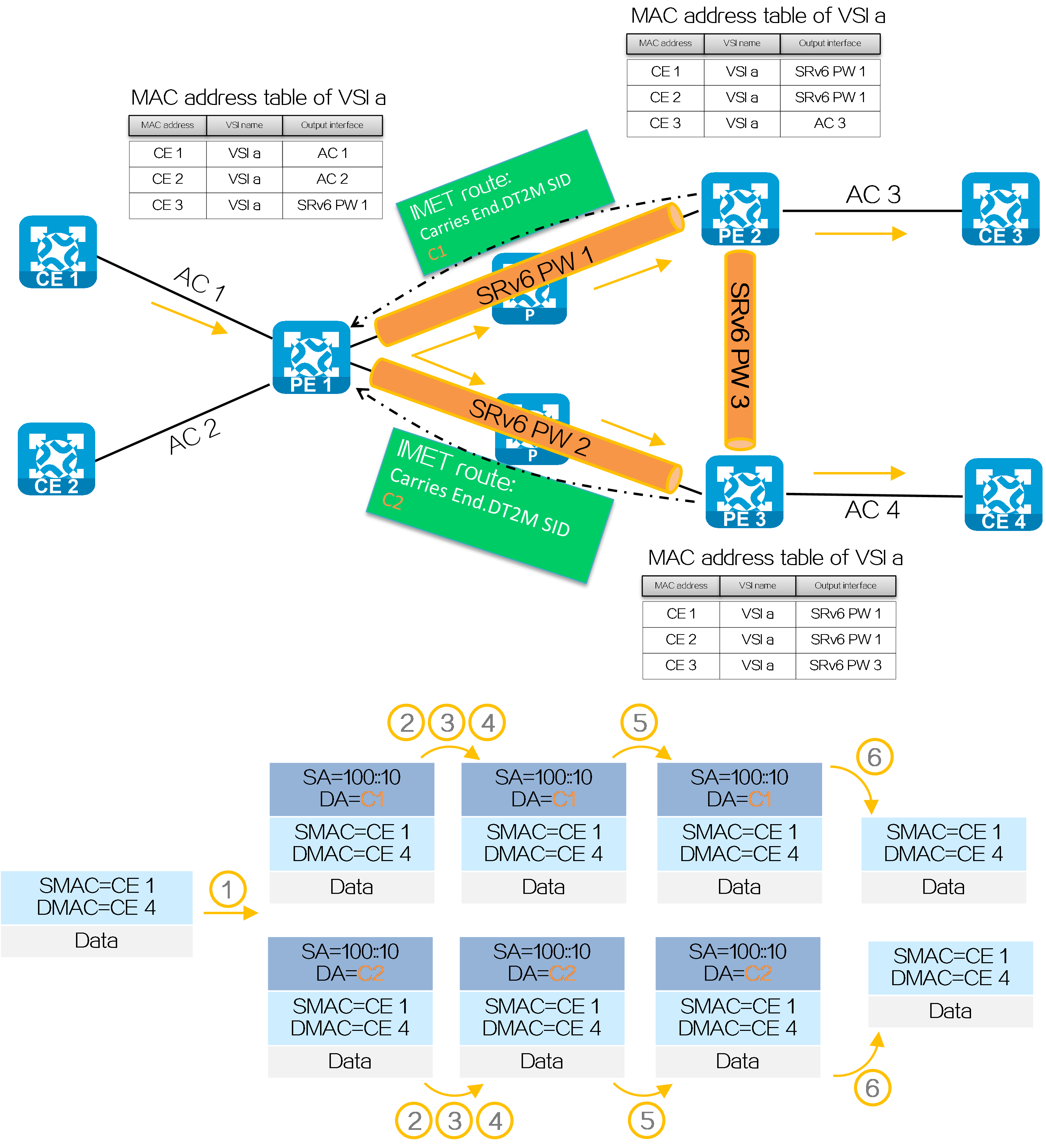

Figure36 Unknown unicast packet forwarding process in the EVPN VPLS over SRv6 BE network

As shown in the figure above, if SRv6 PW establishment is completed but PE 1 has not learned the MAC address of CE 4, CE 1 forwards an unknown unicast packet to CE 4 process in the EVPN VPLS over SRv6 BE network as follows:

(1) CE 1 sends a Layer 2 packet with destination MAC address of CE 4 to PE 1.

(2) After receiving the Layer 2 packet on the AC connected to CE 1, PE 1 fails to obtain a matching MAC address entry in the MAC address table of the AC-associated VSI, and then searches for the End.DT2M SID assigned by PE 2 and PE 3 in the VSI.

(3) PE 1 encapsulates the outer IPv6 packet header for the packet. The destination IPv6 address for the packet sent to PE 2 is End.DT2M SID C1. The destination IPv6 address for the packet sent to PE 3 is End.DT2M SID C2. The source IPv6 address for both packets is 100::10 (configured source address in the IPv6 packet header encapsulated for EVPN VPLS over SRv6).

(4) PE 1 searches the IPv6 routing table based on the End.DT2M SID, and forwards the packet to P through the optimal IGP route.

(5) P searches the IPv6 routing table based on the End.DT2M SID, and forwards the packet to PE 2 and PE 3 through the optimal IGP routes.

(6) PE 2 and PE 3 search the local SID tables based on the End.DT2M SID, and execute the SID-associated action, that is, removing the IPv6 packet headers and broadcasting the packets within the VSI to which the End.DT2M SID belongs.

|

|

SRv6 TE mode

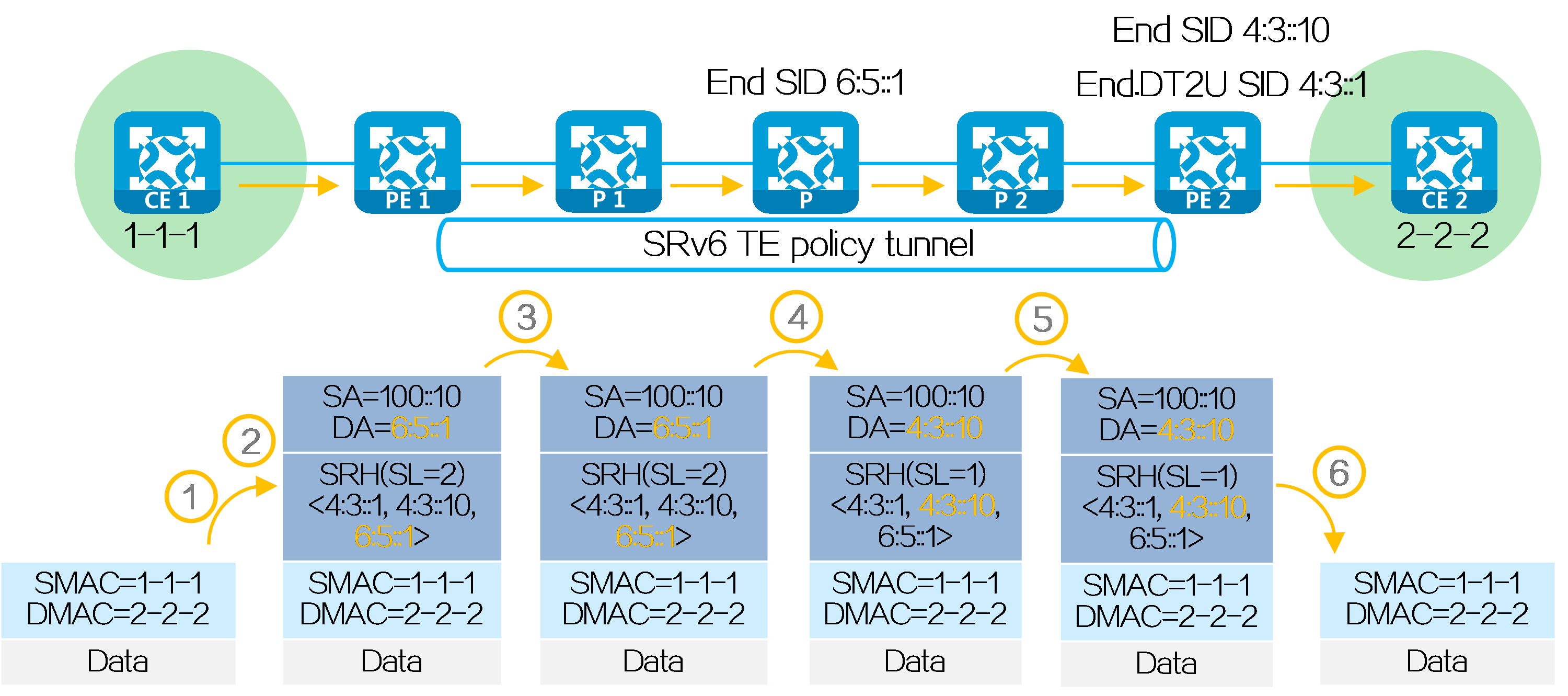

Figure37 Packet forwarding process for EVPN VPLS over SRv6 TE

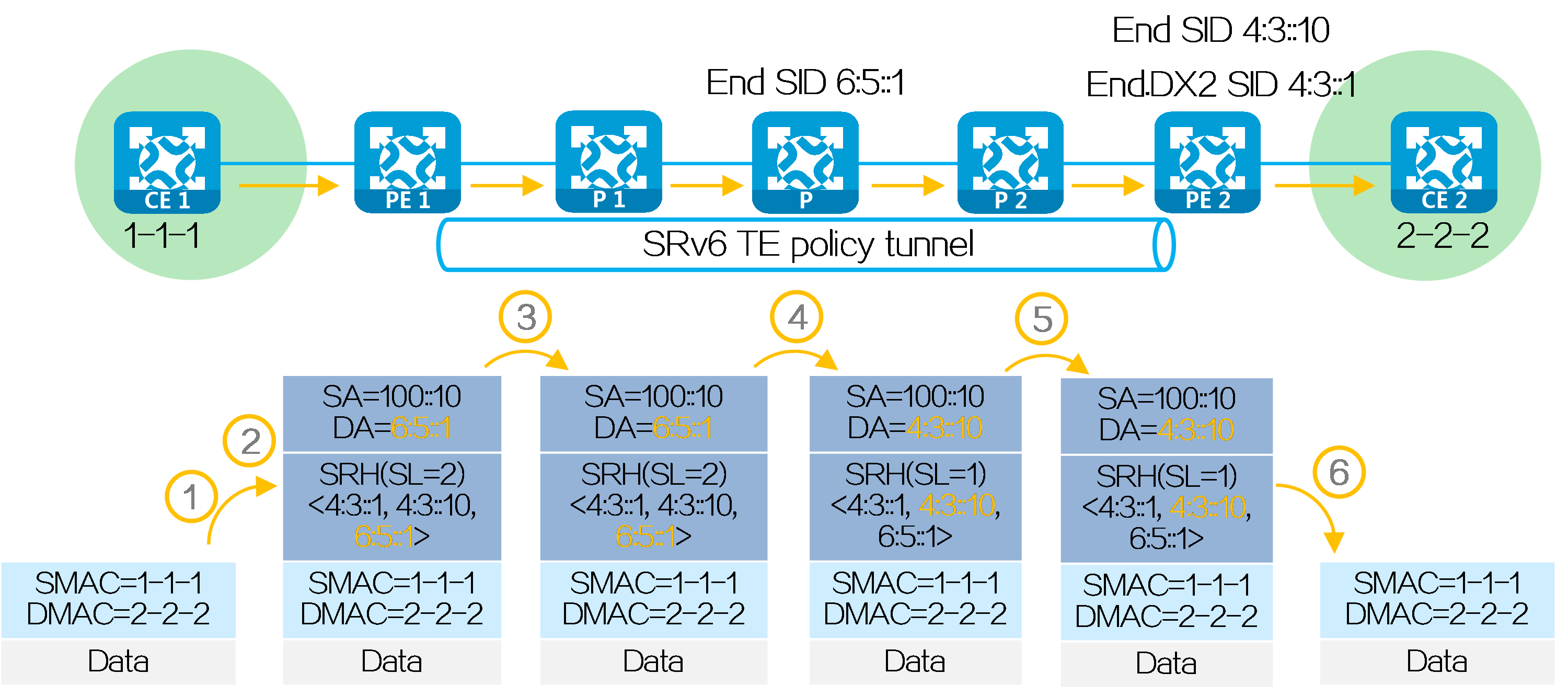

As shown in the figure above, after SRv6 PW establishment is completed, PE 1, P, and PE 2 are SRv6 nodes, and P 1 and P 2 are non-SRv6 nodes. CE 1 forwards a packet to CE 2 in the EVPN VPLS over SRv6 TE network as follows:

(1) CE 1 sends a Layer 2 packet with the destination MAC address of CE 2 to PE 1.

(2) After receiving the Layer 2 packet on the AC connected to CE 1, PE 1 searches the MAC address table in the AC-associated VSI.

· If a matching MAC address is found, PE 1 obtains the End.DT2U SID or End.DX2 SID of the SRv6 PW (output interface of the matching entry).

· If not matching MAC address is found, it obtains the End.DT2M SID assigned by PE 2. If PE 1 has End.DT2M SIDs received from multiple remote PEs, it encapsulates an End.DT2M SID for each Layer 2 packet, and forwards the packets to all the remote PEs.

¡ Upon obtaining the End.DT2U SID or End.DX2 SID, PE 1 obtains the SRv6 TE policy based on the next hop of the MAC/IP advertisement route carrying the SID. Upon obtaining the End.DT2M SID, PE 1 obtains the SRv6 TE policy based on the next hop of the IMET route carrying the SID.

¡ Encapsulates SID list <6:5::1, 4:3::10> of the SRv6 TE policy and SID 4:3::1 obtained in step 2 in the SRH. (Use the obtained End.DT2U SID 4:3::1 as an example.)

¡ Encapsulates the user-configured source address and destination address 6:5::1 (the first SID in the SID list of the SRv6 TE policy) to the IPv6 basic header.

¡ Searches the routing table based on the encapsulated destination address in the IPv6 header, and encapsulates and forwards the packet to P 1.

(3) P 1 searches the IPv6 routing table based on the destination address, and forwards the packet to P through the optimal IGP route.

(4) Upon receiving the packet, P performs the following operations:

· Checks the SL value in the SRH header. If the SL is greater than 0, it decreases the value by 1. The destination address is as pointed by SL. Because the SL is 1, the destination address is IPv6 address 4:3::10 as pointed by Segment List [1].

· Searches the routing table based on the encapsulated destination address in the IPv6 header, and forwards the packet to P 2.

(5) P 2 searches the IPv6 routing table based on the destination address, and forwards the packet to PE 2 through the optimal IGP route.

(6) PE 2 searches the local SID table based on the SIDs carried in the packet. It first removes the End SID, and then searches the local SID table based on the next SID. PE 2 processes SIDs differently by SID type as follows:

· For End.DT2U SID, PE 2 decapsulates the packet by removing its IPv6 packet header, searches the MAC address table in the VSI to which the End.DT2U SID belongs, and forwards the packet to CE 2 according to the search result.

· For End.DX2 SID, PE 2 decapsulates the packet by removing its IPv6 packet header, and forwards the packet to the AC associated with the End.DX2 SID.

· For End.DT2M SID, PE 2 decapsulates the packet by removing its IPv6 packet header, and then broadcasts the packet within the VSI to which the End.DT2M SID belongs.

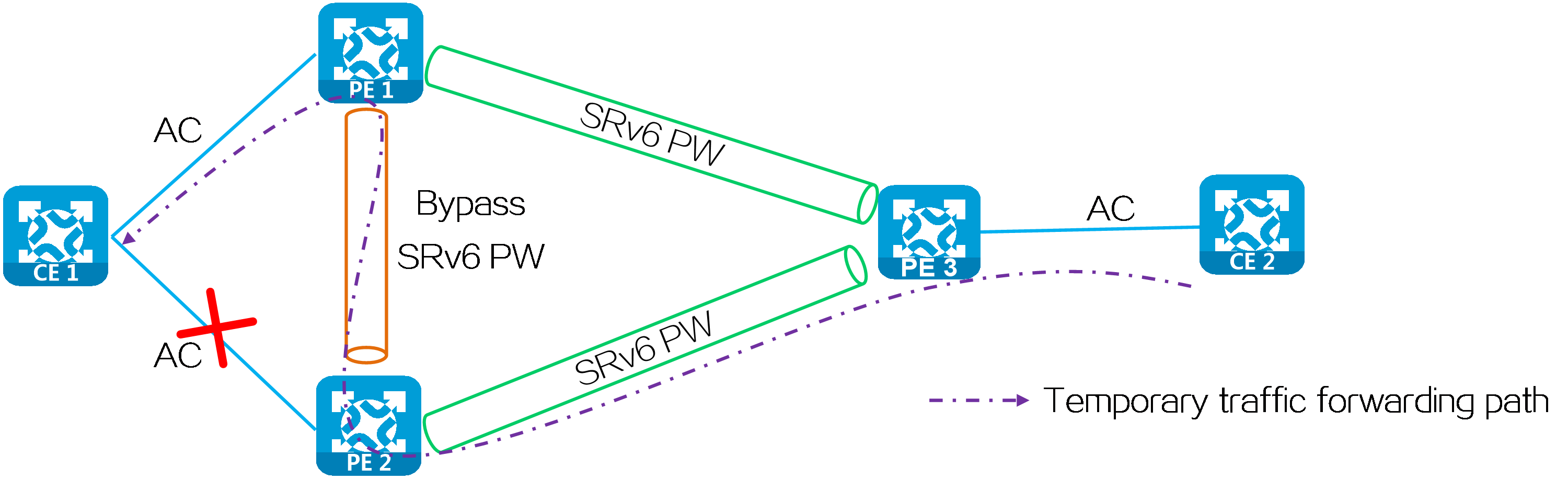

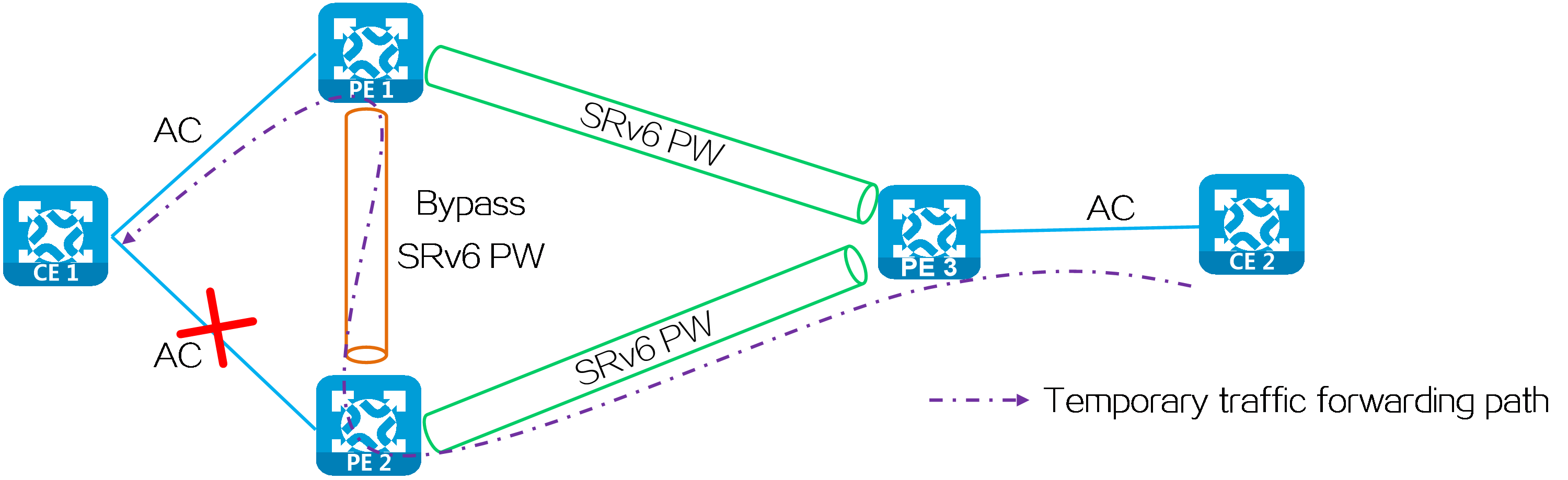

FRR

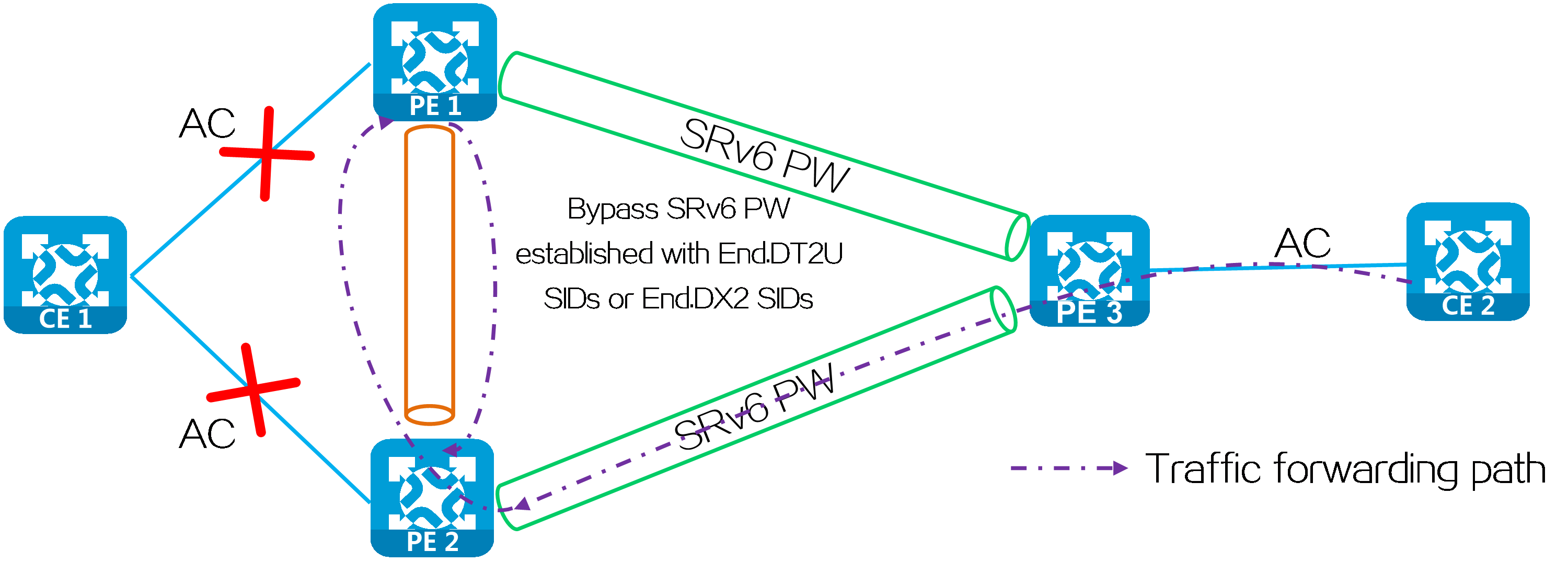

In a multi-homed network, when the AC attached to PE 2 fails, PE 2 deletes the corresponding MAC address entry and advertises the local unreachable event to PE 1 and remote PEs (PE 3 in this example). During this period of time, the packets sent by PE 3 to PE 2 will be discarded due to failure to find an output interface. To address this issue, you can configure FRR for EVPN VPLS over SRv6. With FRR enabled, PE 2 does not delete the corresponding MAC address entry when the attached AC fails. Instead, it forwards the packet matching the MAC address entry to PE 1 through the bypass SRv6 PW between PE 2 and PE 1. Upon receiving the packet, PE 1 forwards the packet to CE 1 to prevent packet loss due to the AC failure.

Figure38 FRR network

As shown in the following figure, a loop might exist if PE 1 and PE 2 establish a bypass SRv6 PW between them through End.DT2U or End.DX2 SIDs. When the ACs on PE 1 and PE 2 both fail, PE 1 or PE 2 forwards the packets received from its peer back to its peer through the bypass SRv6 PW.

Figure39 Bypass SRv6 PW established through End.DT2U or End.DX2 SIDs

To resolve this issue, use End.DT2UL or End.DX2L SIDs to establish a bypass SRv6 PW between PE 1 and PE 2. The packets from a bypass SRv6 PW carry an End.DT2UL or End.DX2L SID. A PE does not forward the packets back to the bypass SRv6 PW.

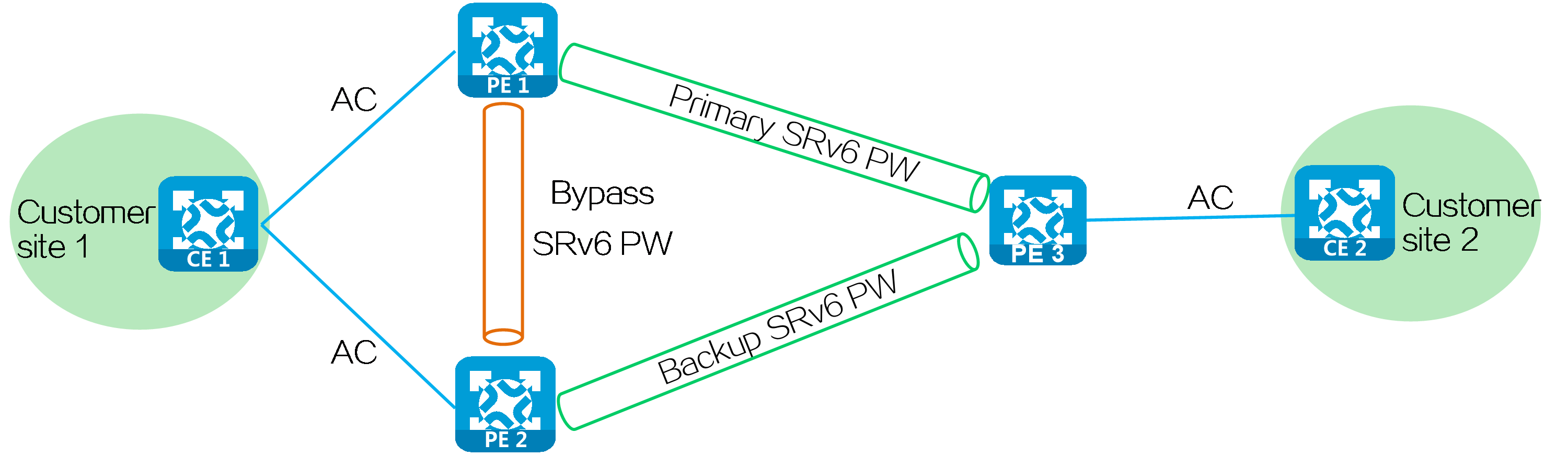

Typical networking

Multi-homed + FRR networking

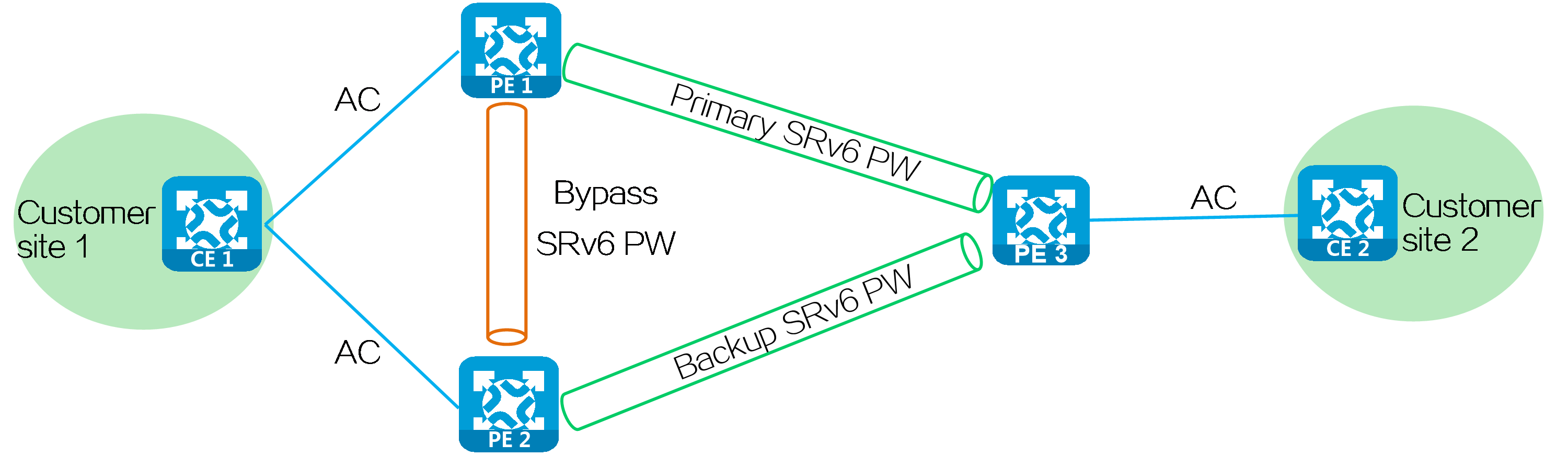

CE 1 is dual-homed to PE 1 and PE 2 through link aggregation or S-Trunk. CE 2 is single-homed to PE 3. You can establish SRv6 PWs in the network to implement interconnection between customer sites. You can deploy FRR to minimize impact on the network caused by AC or SRv6 PW failures, improving network reliability and stability.

Figure40 Multi-homed + FRR networking

4.7 EVPN VPWS over SRv6

Introduction

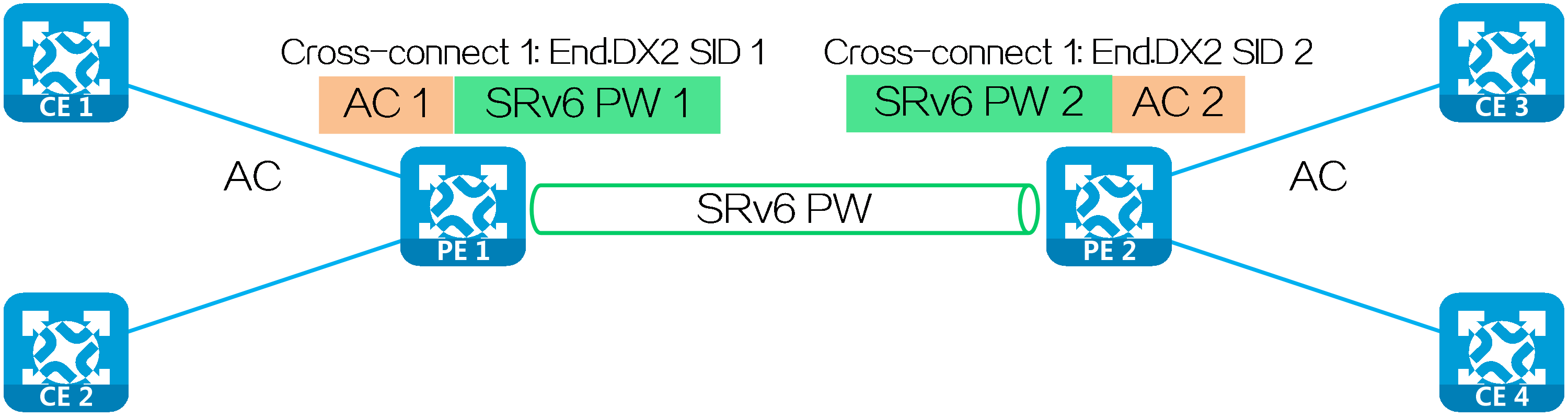

EVPN VPWS over SRv6 uses SRv6 PW tunnels to carry EVPN VPWS services. PEs advertise SRv6 SIDs through BGP EVPN routes, and establish an SRv6 tunnel. The SRv6 tunnel is used as an SRv6 PW that encapsulates and forwards Layer 2 data packets between different sites, implementing transparent forwarding of Layer 2 customer traffic over the IPv6 backbone network and establishment of point-to-point connections between customer sites over the IPv6 backbone network.

Figure41 EVPN VPWS over SRv6 architecture

Operating mechanism

Figure42 Operating mechanism of EVPN VPWS over SRv6

SRv6 SIDs

The SRv6 SIDs used in an EVPN VPWS over SRv6 network are typically End.DX2 SIDs.

End.DX2 SIDs

A PE assigns an End.DX2 SID to each cross-connect. PEs exchange End.DX2 SIDs through AD per EVI routes to establish an SRv6 PWs.

Upon receiving a packet carrying an End.DX2 SID, the PE decapsulates the packet, obtains the AC based on the cross-connect identified by the End.DX2 SID, and forwards the packet to the CE through the AC.

|

|

Figure43 EVPN VPWS over SRv6 networking

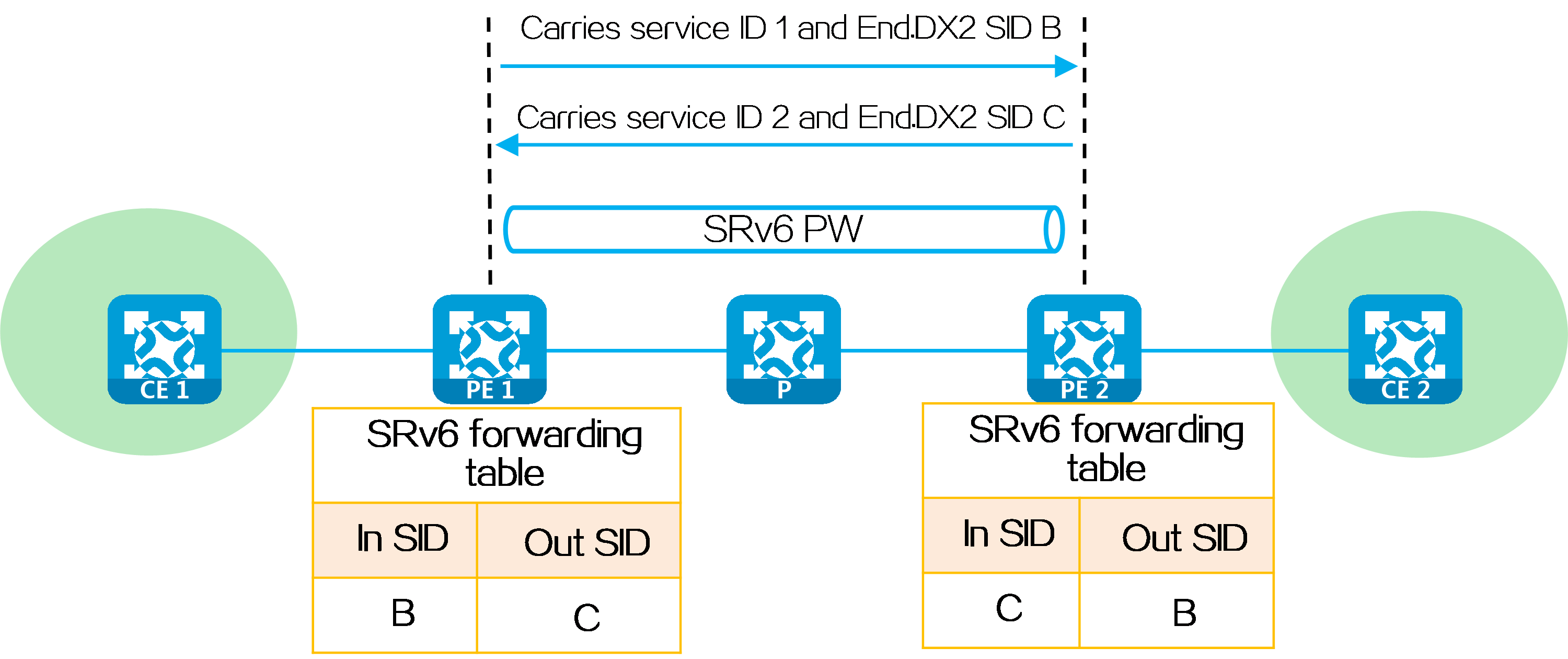

Establishing an SRv6 PW

Dynamically establishing an SRv6 PW

PEs exchange End.DX2 SIDs through BGP EVPN routes to dynamically establish an SRv6 PW. The process for dynamically establishing an SRv6 PW is as follows:

(1) When advertising an EVPN Ethernet auto-discovery route to PE 2, PE 1 carries the local service ID and the End.DX2 SID assigned to the cross-connect in the route.

(2) Upon receiving the EVPN route carrying the same service ID as the locally configured remote service ID, PE 2 establishes a single-hop SRv6 tunnel to the PE 1. The SID of the tunnel is the End.DX2 SID in the route.

(3) After PE 1 and PE 2 both advertise End.DX2 SIDs and establish unidirectional single-hop SRv6 tunnels to each other, the SRv6 tunnels form a PW to carry Layer 2 user data. The PW is called an SRv6 PW.

Figure44 Dynamically establishing an SRv6 PW

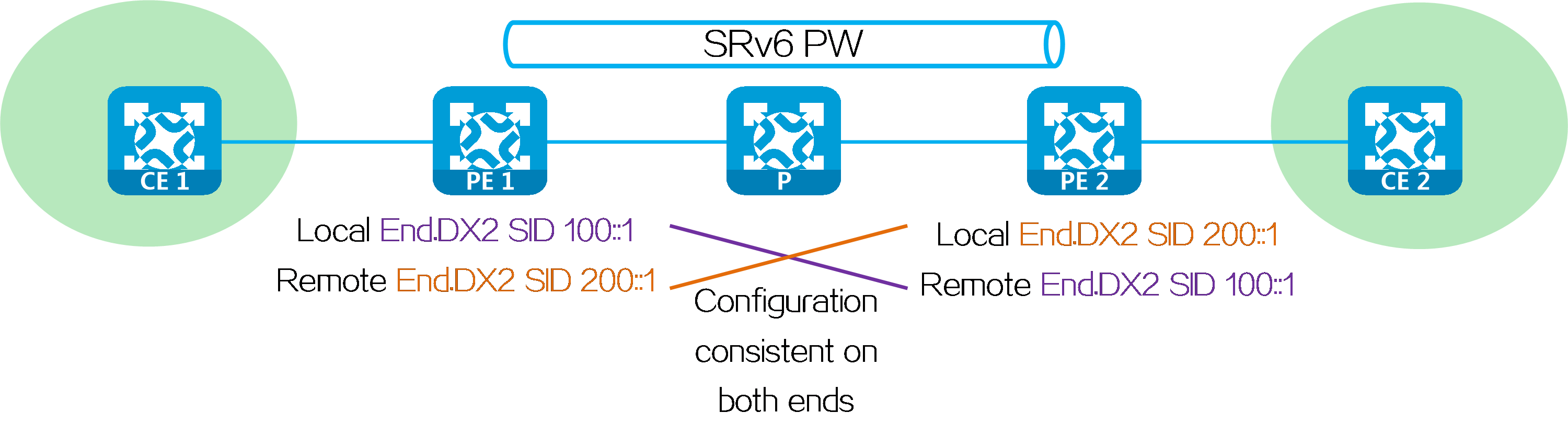

Statically establishing an SRv6 PW

To statically establish an SRv6 PW between two PEs, configure the SRv6 SIDs assigned by both the local and remote ends to the cross-connects on the PEs.

Figure45 Statically establishing an SRv6 PW

Packet forwarding

The packet forwarding modes supported by EVPN VPWS over SRv6 are SRv6-BE, SRv6-TE, and SRv6-TE and SRv6-BE hybrid modes. The packet forwarding modes have the following differences.

Table6 Differences between packet forwarding modes for EVPN VPWS over SRv6

|

Forwarding mode |

SRv6 TE mode |

SRv6 BE mode |

|

Forwarding principle |

Searches for a matching SRv6 TE policy based on packet attributes, adds the SRH containing the End.DX2 SID and SRv6 TE policy SID list to the packet, and forwards the packet through the SRv6 TE policy. |

Forwards the packet according to IPv6 routing table lookup based on the encapsulated End.DX2 SID. |

|

Forwarding path |

Supports traffic steering methods based on color and tunnel policy. You can flexibly select traffic steering methods according to forwarding requirements. Implements forwarding path control by planning SID lists in SRv6 TE policies. You can select appropriate forwarding paths according to service requirements. |

Uses IGP to calculate forwarding paths. The forwarding paths cannot be planned. |

|

Reliability |

An SRv6 TE policy contains multiple candidate paths, and supports backup between primary and backup paths. |

The speed of forwarding path switchover upon network failures depends on the route convergence speed. |

|

Load sharing |

A candidate path contains multiple SID lists, and can implement load sharing based on the SID list weights. |

Implements load sharing based on locator routes. |

In SRv6 TE and SRv6 BE hybrid mode, the SRv6 TE mode is preferentially used to select forwarding paths. If no SRv6 TE policy is available, the SRv6 BE mode is used to select forwarding paths.

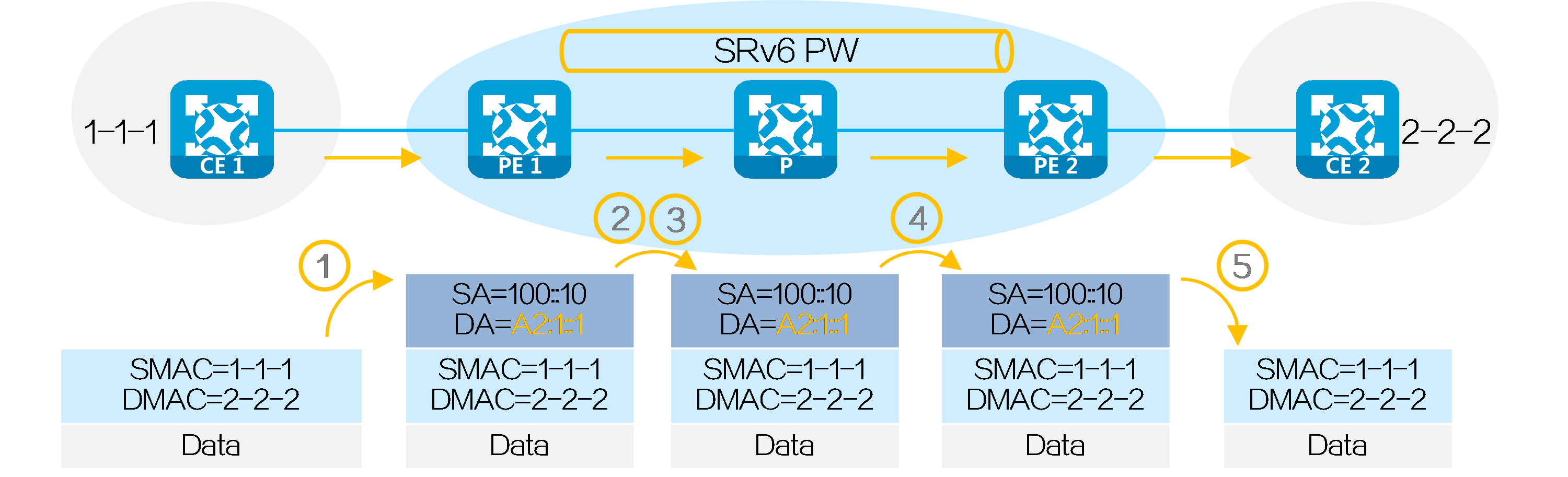

SRv6 BE mode

After SRv6 PW establishment is completed, CE 1 forwards a packet to CE 2 in the EVPN VPWS over SRv6 BE network as follows:

(1) CE 1 sends a Layer 2 packet with destination MAC address 2-2-2 to PE 1.

(2) After receiving the Layer 2 packet on the AC connected to CE 1, PE 1 searches for the SRv6 PW associated with the AC, and obtains End.DX2 SID A2:1::1 (End.DX2 SID assigned by PE 2 to the cross-connect). Then, PE 1 encapsulates the outer IPv6 packet header for the packet, with End.DX2 SID A2:1::1 as the destination IPv6 address and 100::10 (configured source address in the IPv6 packet header encapsulated for EVPN VPWS over SRv6) as the source IPv6 address.

(3) PE 1 searches the IPv6 routing table based on End.DX2 SID A2:1::1, and forwards the packet to P through the optimal IGP route.

(4) P searches the IPv6 routing table based on End.DX2 SID A2:1::1, and forwards the packet to PE 2 through the optimal IGP route.

(5) PE 2 searches the local SID table based on the End.DX2 SID, and executes the SID-associated action, that is, removing the outer IPv6 packet header, obtaining the AC matching the End.DX2 SID, and forwarding the packet to CE 2 through the AC.

Figure46 Packet forwarding process for EVPN VPWS over SRv6 BE

SRv6 TE mode

After SRv6 PW establishment is completed, PE 1, P, and PE 2 are SRv6 nodes, and P 1 and P 2 are non-SRv6 nodes. CE 1 forwards a packet to CE 2 in the EVPN VPWS over SRv6 TE network as follows:

(1) CE 1 sends a Layer 2 packet with destination MAC address 2-2-2 to PE 1.

(2) After receiving the Layer 2 packet on the AC connected to CE 1, PE 1 searches for the SRv6 PW associated with the AC, and obtains End.DX2 SID 4:3::1. The next hop of the route is an SRv6 TE policy. Then PE 1 performs the following operations:

· Encapsulates SID list <6:5::1, 4:3::10> of the SRv6 TE policy and End.DX2 SID 4:3::1 in the SRH.

· Encapsulates the user-configured source address and destination address 6:5::1 (the first SID in the SID list of the SRv6 TE policy) to the IPv6 basic header.

· Searches the routing table based on the encapsulated destination address in the IPv6 header, and encapsulates and forwards the packet to P 1.

(3) P 1 searches the IPv6 routing table based on the destination address, and forwards the packet to P through the optimal IGP route.

(4) Upon receiving the packet, P performs the following operations:

· Checks the SL value in the SRH header. If the SL is greater than 0, it decreases the value by 1. The destination address is as pointed by SL. Because the SL is 1, the destination address is IPv6 address 4:3::10 as pointed by Segment List [1].

· Searches the routing table based on the encapsulated destination address in the IPv6 header, and forwards the packet to P 2.

(5) P 2 searches the IPv6 routing table based on the destination address, and forwards the packet to PE 2 through the optimal IGP route.

(6) PE 2 searches the local SID table based on the End.DX2 SID, and executes the SID-associated action, that is, removing the outer IPv6 packet header, obtaining the AC matching the End.DX2 SID, and forwarding the packet to CE 2 through the AC.

Figure47 Packet forwarding process for EVPN VPWS over SRv6 TE

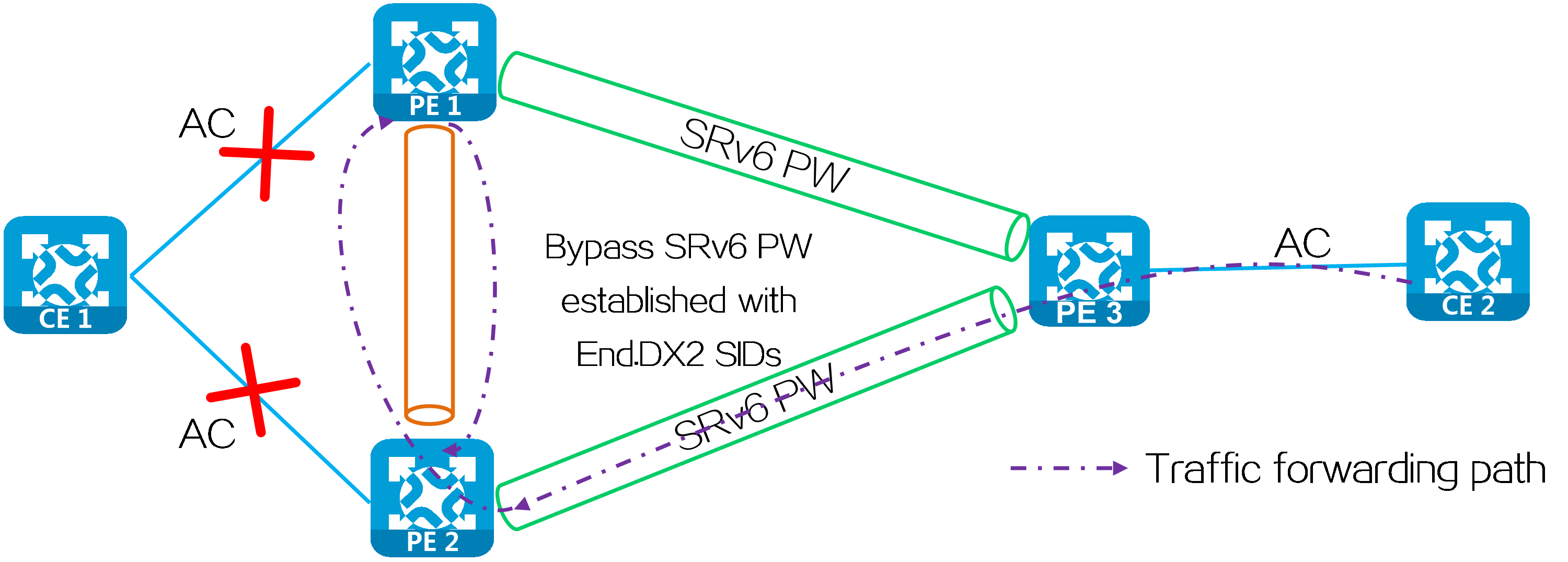

Bypass PW

In multi-homed site or primary/backup SRv6 PW network, when the AC on PE 2 fails, PE 2 notifies PE 1 and PE 3 of the local unreachable event. Then traffic is no longer forwarded through the SRv6 PW between PE 3 and PE 2. During this period of time, data packets sent from PE 3 to PE 2 cannot be forwarded to CE 1 and will be discarded. To address this issue, you can configure bypass PW for EVPN VPWS over SRv6 by establishing a bypass SRv6 PW between redundant PEs. When an AC failure occurs, PE 2 temporarily forwards traffic to PE 1 through the bypass SRv6 PW. PE 1 then forwards traffic to CE 1 to prevent traffic loss.

Figure48 Bypass PW networking

A loop might exist if PE 1 and PE 2 establish a bypass SRv6 PW between them through End.DX2 SID. When the ACs on PE 1 and PE 2 both fail, PE 1 or PE 2 forwards the packets received from its peer back to its peer through the bypass SRv6 PW. To resolve this issue, use End.DX2L SIDs to establish a bypass SRv6 PW between PE 1 and PE 2. The packets from a bypass SRv6 PW carry an End.DX2L SID. A PE does not forward the packets back to the bypass SRv6 PW.

Figure49 Bypass SRv6 PW established through End.DX2 SIDs

Typical networking

Multi-homed + FRR networking

CE 1 is dual-homed to PE 1 and PE 2 through link aggregation or S-Trunk. CE 2 is single-homed to PE 3. You can establish SRv6 PWs in the network to implement interconnection between customer sites. You can deploy bypass SRv6 PW and primary and backup SRv6 PWs to minimize impact on the network caused by AC or SRv6 PW failures, improving network reliability and stability.

Figure50 Multi-homed + FRR networking

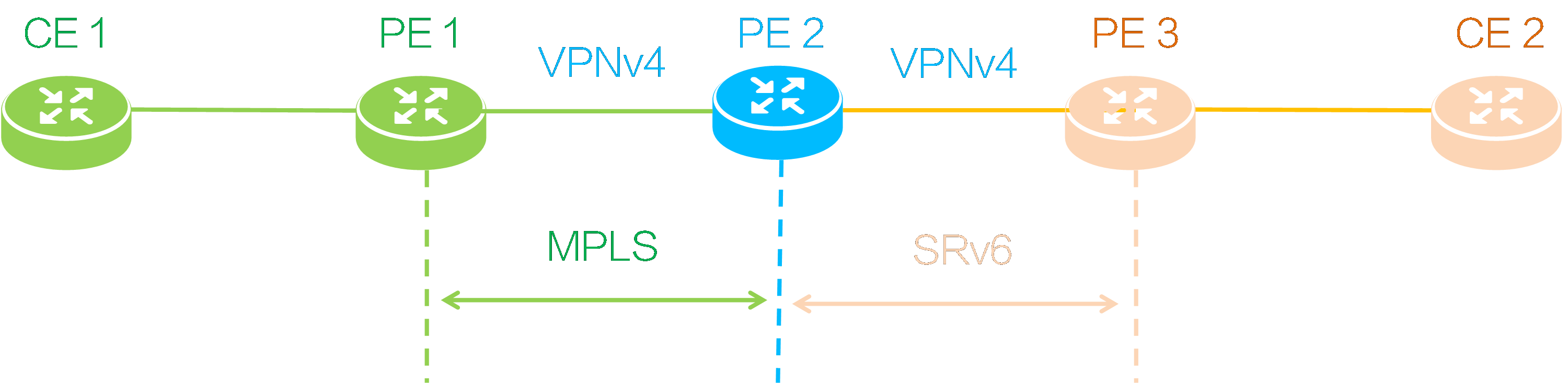

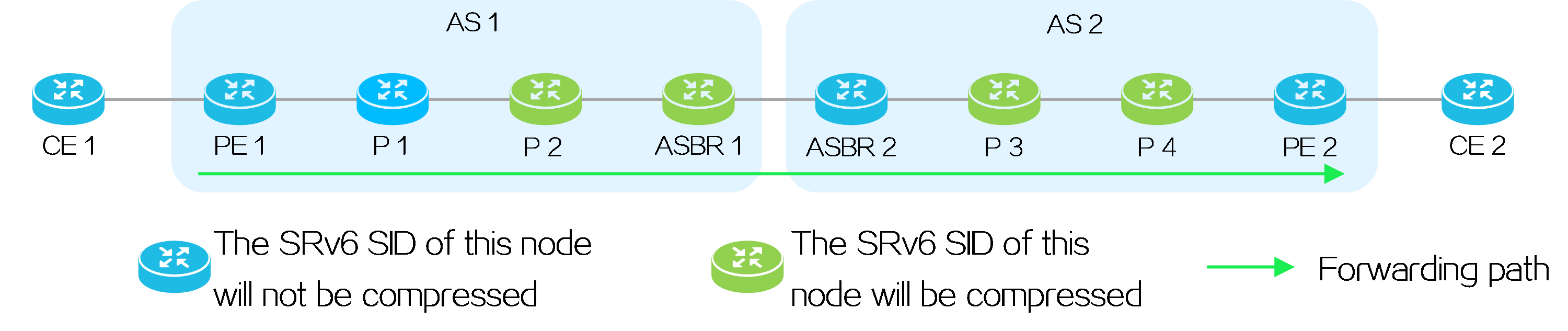

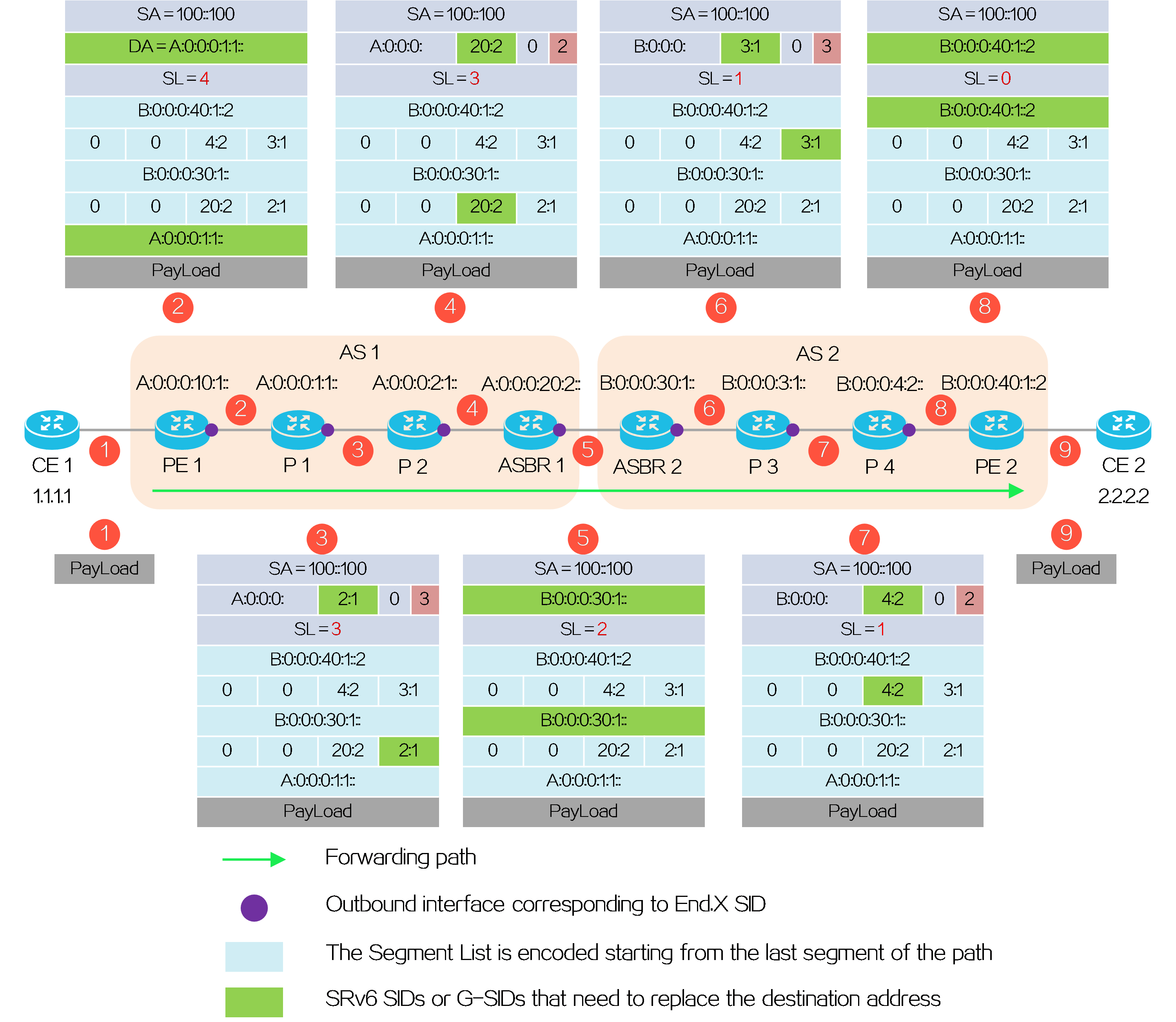

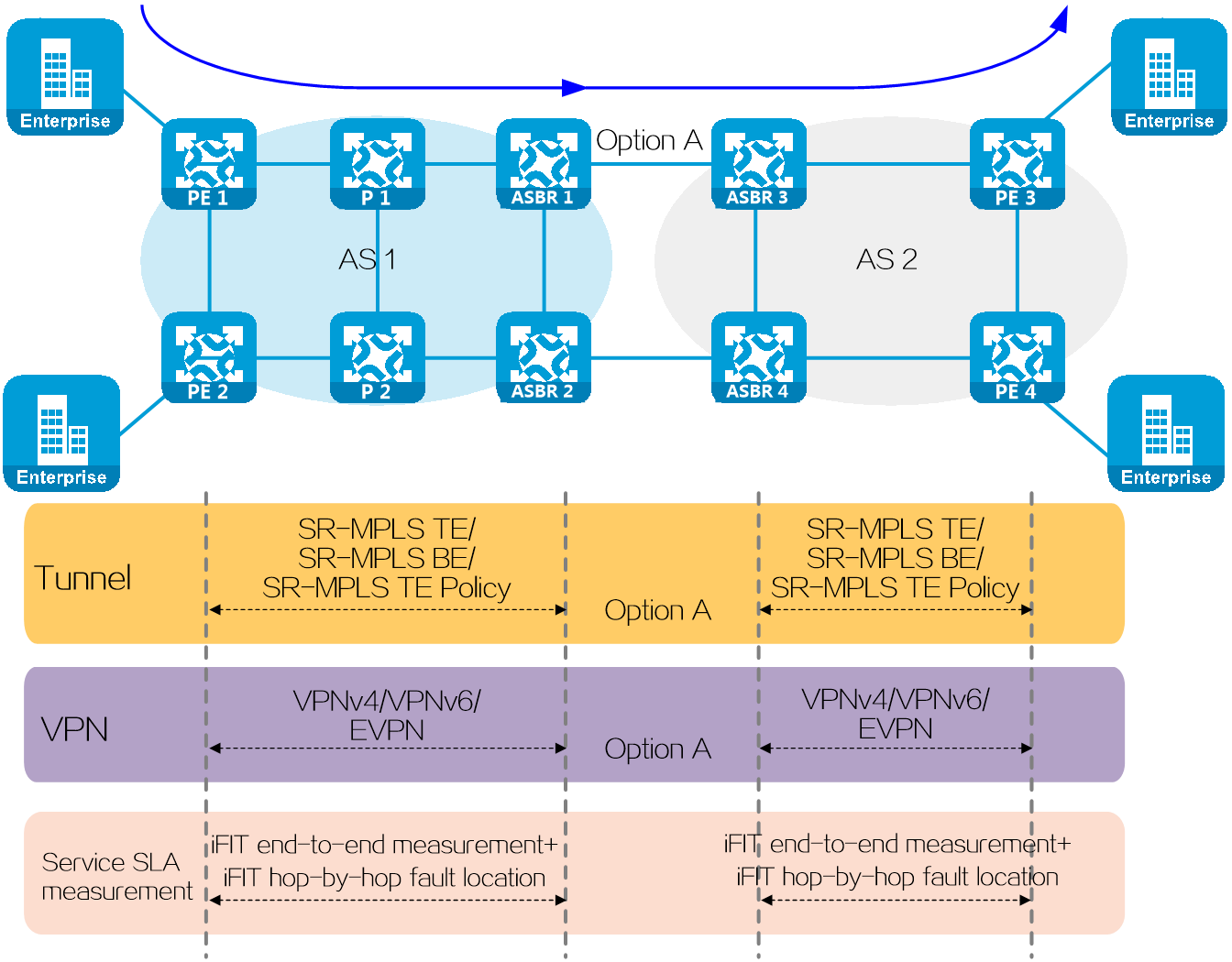

4.8 SRv6 and MPLS interworking

About SRv6 and MPLS interworking

With mature development of Internet, SRv6 and MPLS networks are gradually becoming representatives of efficient, reliable, and secure network solutions. However, in practical applications, due to the differences in routing information and packet encapsulation between the two networks, interoperability between SRv6 and MPLS networks has become an important issue to resolve.

Currently, there are two method to implement SRv6 and MPLS interworking: one is to deploy VPN instances on the network border device, and the other is to adopt the inter-AS Option B method without deploying VPN instances. The implementation principles of these two methods are different.

According to the different backbone network structures, SRv6 and MPLS interworking can be divided into the following types:

· Interworking between IP L3VPN SRv6 network and MPLS L3VPN network.

· Interworking between IP L3VPN SRv6 network and EVPN L3VPN network.

· Interworking between EVPN L3VPN SRv6 network and MPLS L3VPN network.

· Interworking between EVPN L3VPN SRv6 network and EVPN L3VPN network.

In summary, there are two interworking methods and four interworking types, totaling eight scenarios. When using the same interworking method, the implementations for the four types of network interworking are similar. The only difference lies in the BGP route types used to carry private network routing information. This document takes IP L3VPN over SRv6 interworking with MPLS L3VPN as an example to describe in details for two scenarios.

Comparison of interworking methods

Choose the interworking method based on a comprehensive consideration of the scenario conditions. The characteristics and advantages of the two methods are listed in the table below for your reference.

Table7 Comparison of SRv6 and MPLS interworking methods

|

VPN instance deployment on the border device |

Inter-AS Option B |

|

|

Characteristics |

Inter-AS is not required (inter-AS is also allowed). The network border device must reoriginate the received private network routes. |

Inter-AS is required. When the types of the BGP routes that carry private network routes on both sides of the networks are the same, the network border device does not need to reoriginate private network routes. |

|

Applicable scenarios |

The network border device has VPN instance deployment requirements. |

The network border device does not allow the deployment of VPN instances. |

|

Benefits |

· The network border device can collect private network routes from different sites and VPN instances into one VPN instance. It then assigns SRv6 SIDs to these private network routes within the same VPN instance before forwarding them to save SRv6 SID resources. · It is compatible with the HoVPN architecture to achieve SPE and UPE interconnection across different types of networks in the HoVPN architecture. |

· The network border device does not need to deploy VPN instances and can simply act as "transit stations" for private network routes, with low deployment difficulty. · Since there is no need to regenerate private network routes, the integrity of private network routing information can be maximally ensured when transmitting the private network routes. |

Interworking method: VPN instance deployment on the border device

Networking model

Figure51 Network diagram for deploying VPN instances on border devices

In this network, an MPLS L3VPN network is deployed between PE 1 and PE 2, establishing a BGP VPNv4 session. An IP L3VPN over SRv6 network is deployed between PE 2 and PE 3, establishing a BGP VPNv4 session. After deploying SRv6 and MPLS interworking, private network users connected to CE 1 and CE 2 can communicate with each other.

The SRv6 with MPLS interworking is mainly achieved at the border device (PE 2), and the interworking operating mechanisms include private network route advertisement and packet forwarding.

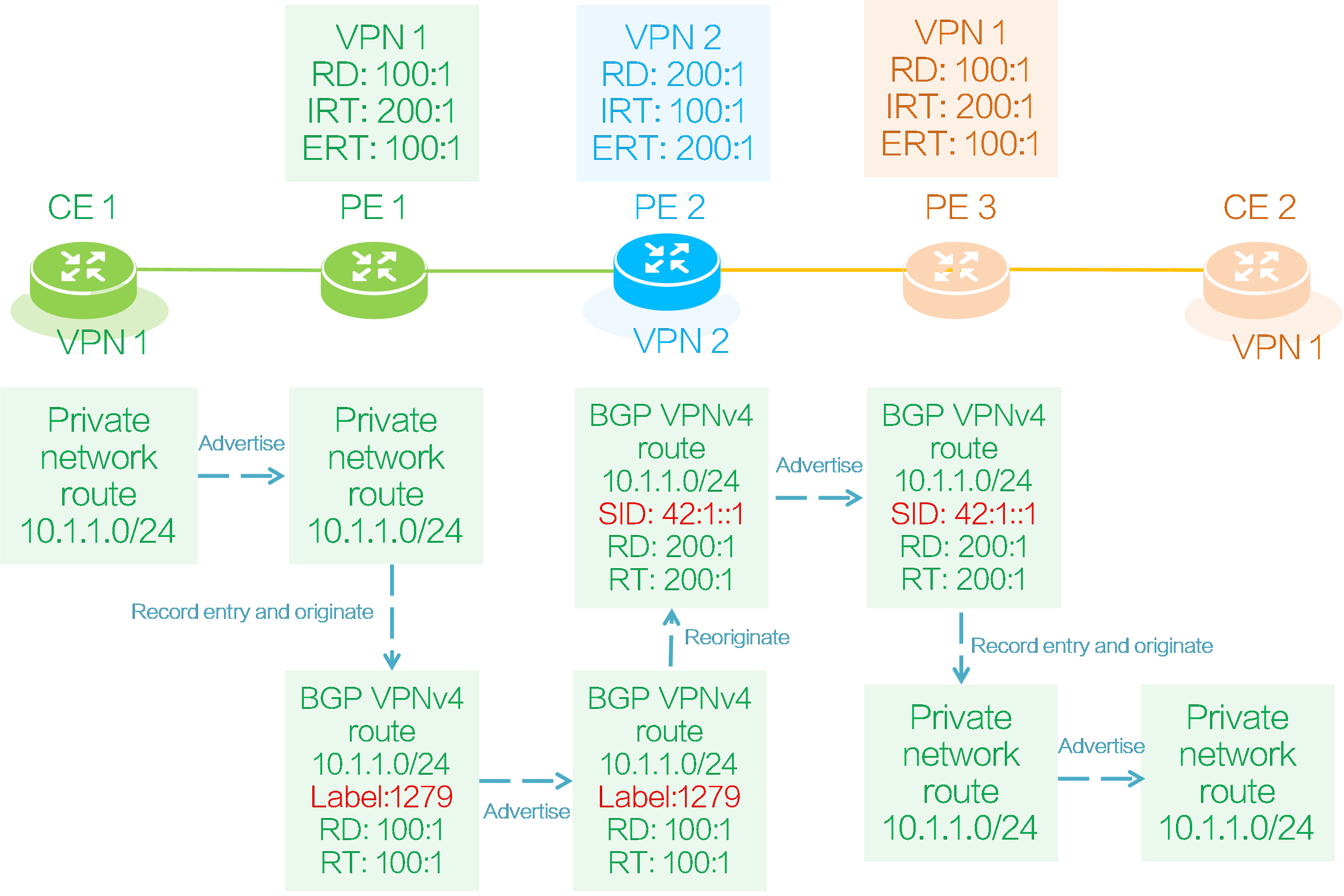

Private network route advertisement

CE 1 to CE 2 route advertisement

Figure52 Private network route advertisement

As shown in the figure above, both PE 1 and PE 2 deploy VPN instance 1 to connect CE 1 and CE 2. PE 2 deploys VPN instance 2. CE 1 sends a private network route to CE 2 as follows:

(1) CE 1 advertises its local private network route 10.1.1.0/24 to PE 1 through IGP, BGP, or static routing.

(2) PE 1 adds the received private network route to the IP routing table of VPN instance 1, and adds RD 100:1 and RT 100:1 attributes to the route to generate a BGP VPNv4 route.

(3) PE 1 assigns a private network label of 1279 to the BGP VPNv4 route, and then advertises the route to PE 2 through the BGP VPNv4 session. The VPN instance bound to private network label 1279 is VPN instance 1.

(4) The RT attribute carried in the BGP VPNv4 route 10.1.1.0/24 matches the locally configured IRT 100:1 of VPN instance 2 on PE 2, so PE 2 receives the route and adds it to the IP routing table of VPN instance 2.

(5) PE 2 reoriginates the BGP VPNv4 route 10.1.1.0/24 in VPN instance 2. The reoriginated BGP VPNv4 route 10.1.1.0/24 carries RD 200:1 and ERT 200:1 of VPN instance 2. Additionally, PE 2 assigns the route an SRv6 SID of 42:1::1 in VPN instance 2. (The SRv6 SID can be End.DT4 SID, End.DT6 SID, End.DT46 SID, End.DX4 SID, or End.EX6 SID. In this example, it is an End.DT4 SID.)

(6) PE 2 advertises the reoriginated BGP VPNv4 route 10.1.1.0/24 to PE 3.

(7) The RT attribute carried in the BGP VPNv4 route 10.1.1.0/24 matches the locally configured IRT attribute 200:1 of VPN instance 1 on PE 3, so PE 3 receives the route and adds it to the IP routing table of VPN instance 1.

(8) PE 3 advertises the private network route 10.1.1.0/24 of VPN instance 1 to CE 2 through IGP, BGP, or static routing.

The IP routing table entries formed on the devices in the backbone network during the private network route advertisement process are shown in the following table:

Table8 IP routes on backbone devices

|

Device Name |

Destination Address |

VPN instance |

Next Hop |

|

PE 1 |

10.1.1.0/24 |

VPN 1 |

CE 1 |

|

PE 2 |

10.1.1.0/24 |

VPN 2 |

Label forwarding path pointing to PE 1 |

|

PE 3 |

10.1.1.0/24 |

VPN 1 |

SRv6 tunnel pointing to PE 2 |

|

|

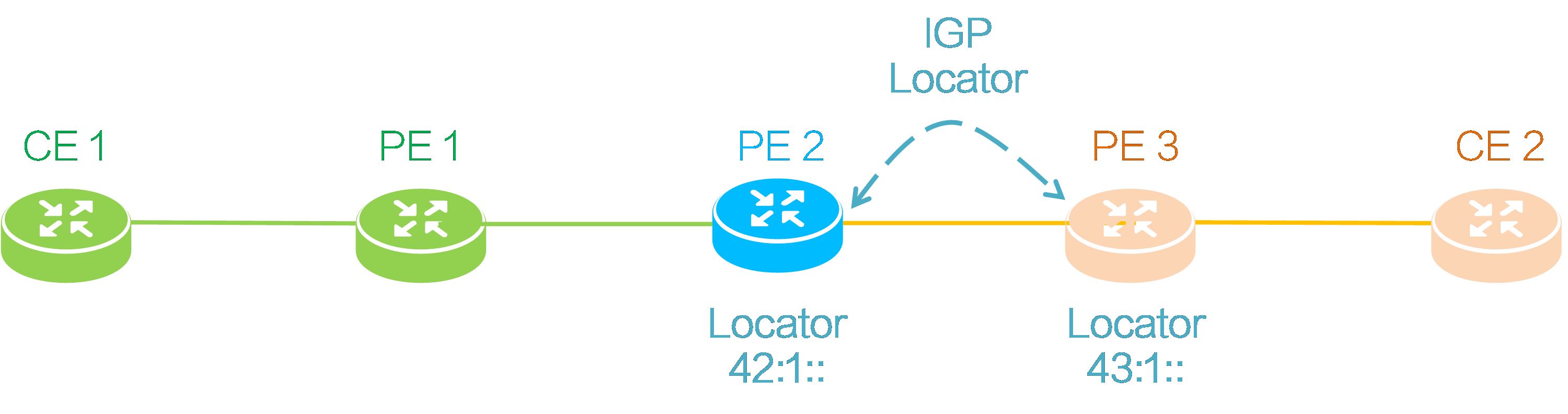

Locator subnet route advertisement

As shown in the figure below, PE 2 and PE 3 need to advertise their respective locator subnet route to each other through an IGP. The locator subnet route prefix advertised by PE 2 is 42:1::, and that advertised by PE 3 is 43:1::.

Figure53 Advertising locator subnet routes

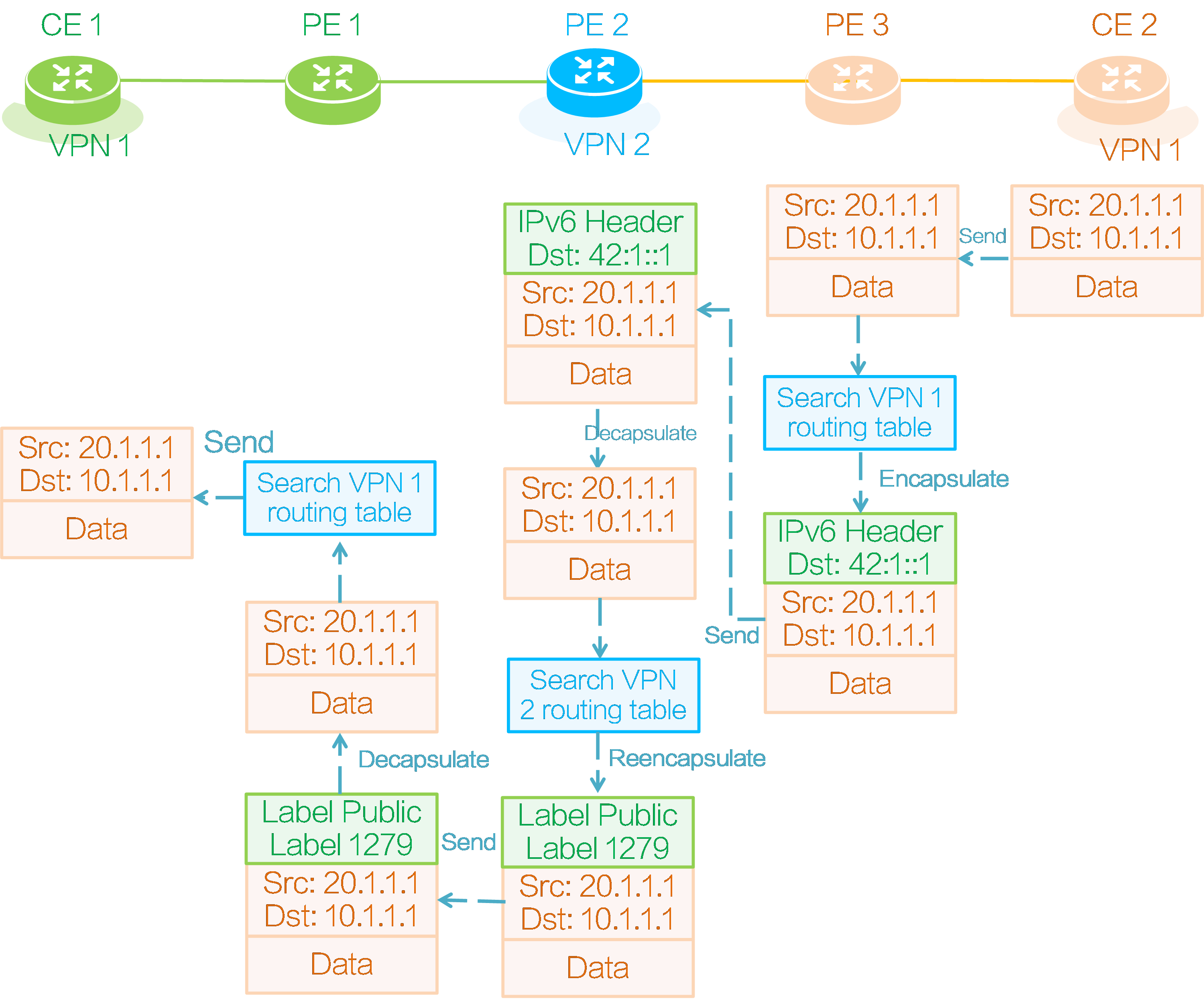

Packet forwarding

CE 2 to CE 1 packet forwarding

Figure54 Packet forwarding

As shown in the above figure, taking the SRv6 BE forwarding mode as an example, CE 2 forwards a packet to CE 1 as follows:

(1) CE 2 encapsulates an IPv4 packet header for the private network data, with the source address as 20.1.1.1 and the destination address as 10.1.1.1, forming a private network packet. Then, it sends the packet to PE 3.

(2) PE 3 looks up the IP routing table of VPN instance 1 after receiving the private network packet and finds the route with the prefix 10.1.1.0/24. The SRv6 SID corresponding to this route is 42:1::1. PE 3 encapsulates an outer IPv6 header for the private network packet, with the destination IPv6 address as the SRv6 SID.

(3) According to the outer destination IPv6 address of the encapsulated packet, PE 3 searches the public IP routing table and finds the locator subnet route advertised by PE 2, and then sends the packet to PE 2 based on that route.

(4) PE 2 receives the packet and finds that the outer destination IPv6 address is the local End.DT4 SID. It decapsulates the outer IPv6 header of the packet and looks up the IP routing table in VPN instance 2 corresponding to End.DT4 SID 42:1::1. PE 2 finds the route with the prefix 10.1.1.0/24 from PE 1, and the corresponding private network label for this route is 1279. Therefore, PE 2 encapsulates the inner private network label 1279 and the outer public network label, and then forwards the packet along the label forwarding path to PE 1.

(5) After receiving the packet, PE 1 pops out the outer public network label, identifies that the packet belongs to VPN instance 1 based on the inner private network label 1279, and then searches the routing table in VPN instance 1. PE 1 finds the route from CE 1 with the prefix 10.1.1.0/24, and then pops all the labels and forwards the packet to CE 1 based on that route.

|

|

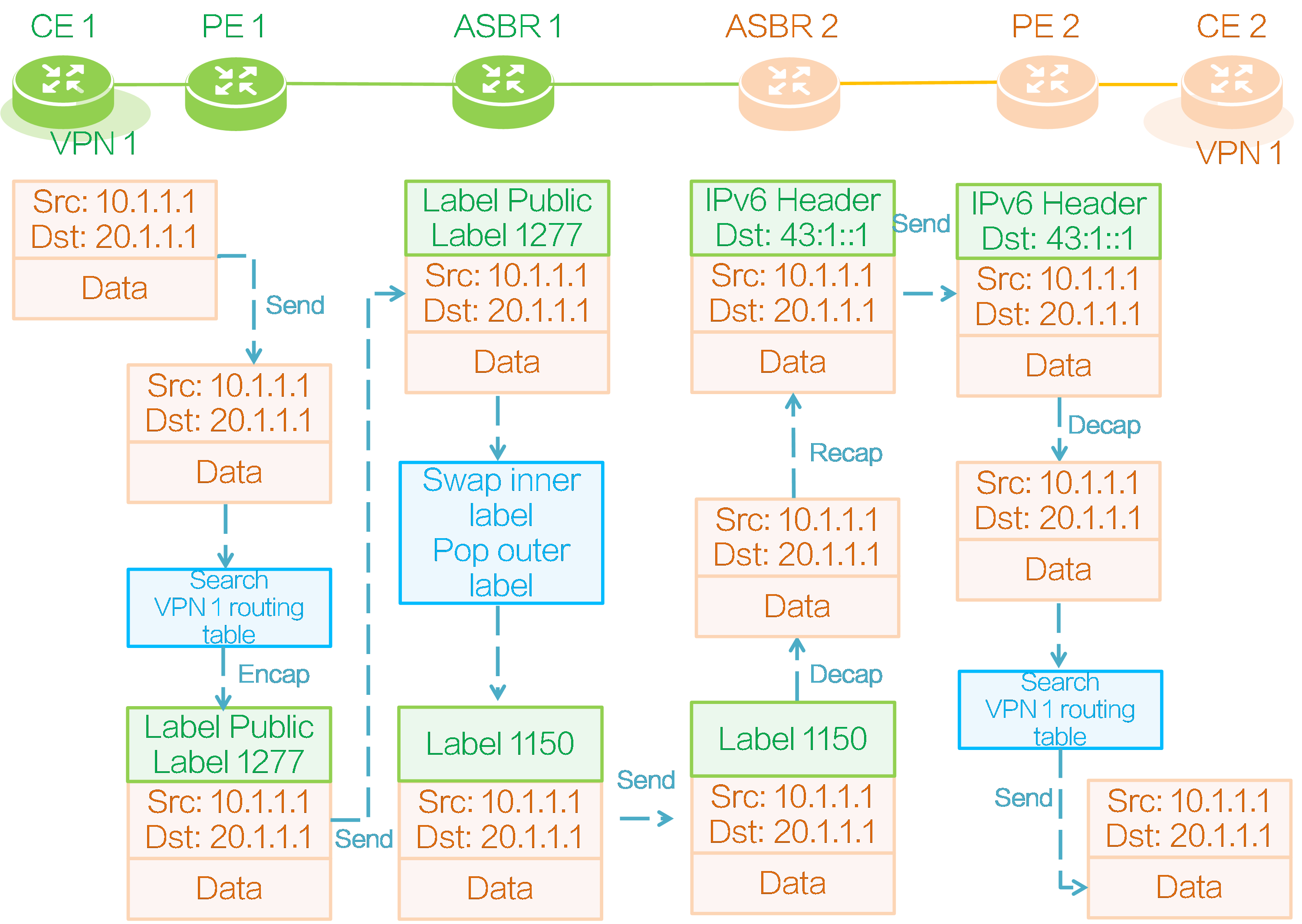

Interworking method: inter-AS option B

Networking model

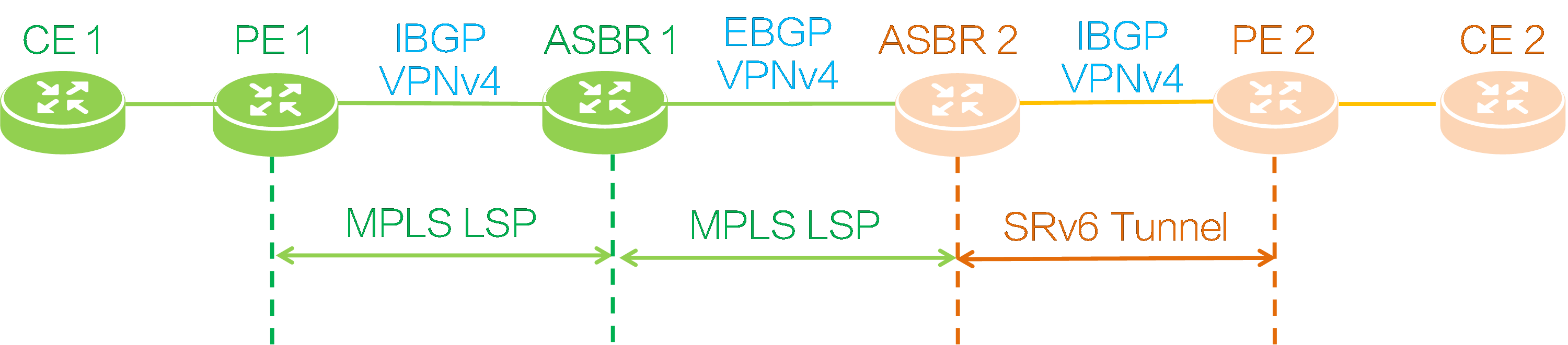

Figure55 Inter-AS option B networking

As shown in the figure above, deploy an MPLS L3VPN between PE 1 and ASBR 1, and establish IBGP VPNv4 session between them; deploy an MPLS L3VPN between ASBR 1 and ASBR 2, and establish EBGP VPNv4 session between them; deploy an IP L3VPN over SRv6 network between ASBR 2 and PE 2, and establish IBGP VPNv4 session between them. After SRv6 and MPLS interworking is deployed, private network users connected to CE 1 and CE 2 can communicate with each other.

End.T SID

End.T SIDs are required to achieve SRv6 and MPLS interworking by using inter-AS option B. The ASBR in the SRv6 network assigns and adds End.T SIDs to the private network routes from the MPLS network. The function of an End.T SID is removing the outer IPv6 header and looking up the IPv6 FIB table based on the End.T SID to forward packets.

Private network route advertisement

CE 1 to CE 2 route advertisement

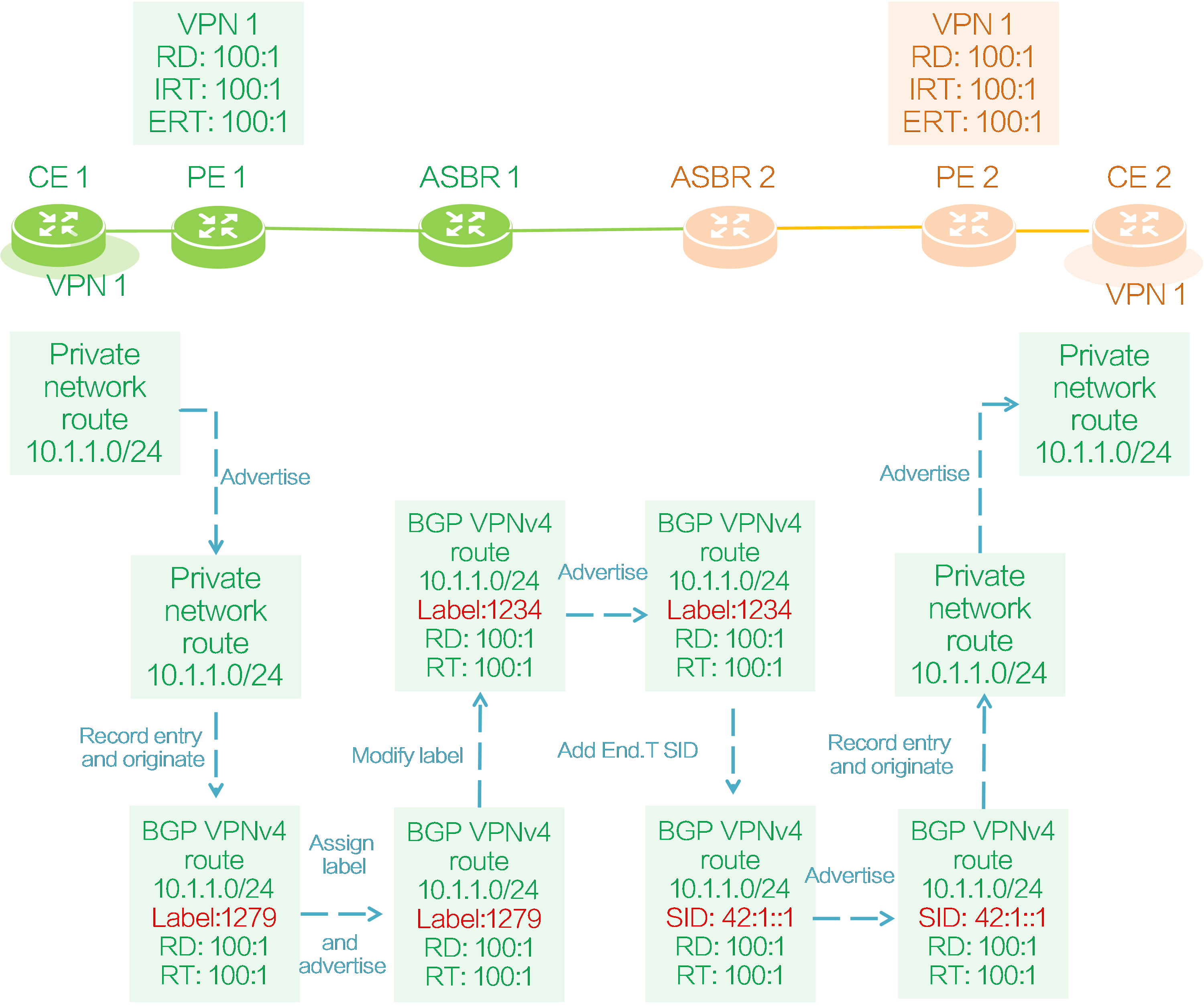

Figure56 CE 1 to CE 2 route advertisement

As shown in the figure above, both PE 1 and PE 2 deploy VPN instance 1 to connect CE 1 and CE 2. CE 1 advertises a private network route to CE 2 as follows:

(1) CE 1 advertises its local private network route 10.1.1.0/24 to PE 1 through IGP, BGP, or static routing.

(2) PE 1 adds the received private network route to the IP routing table of VPN instance 1, and adds RD 100:1 and RT 100:1 attributes to the route to generate a BGP VPNv4 route.

(3) PE 1 assigns a private network label 1279 to the route, and then advertises the route to ASBR 1 through the IBGP VPNv4 session. The VPN instance bound to private network label 1279 is VPN instance 1.

(4) ASBR 1 receives BGP VPNv4 route 10.1.1.0/24 advertised by PE 1, modifies the next hop of the route to its own IP address, and assigns a new private network label 1234 to the route to replace the original private network label 1279.

(5) ASBR 1 adds a mapping entry of BGP VPNv4 route 10.1.1.0/24 for the new and old labels in the local ILM table. If the incoming label is the new label 1234, it replaces the label with the old label 1279 and sends it to PE 1, as shown in the following diagram.

Table9 ASBR 1 label mapping

|

InLabel |

Operation |

SwapInfo |

Forwarding Info |

|

1234 |

SWAP |

1279 |

To PE 1 |

(6) ASBR 1 advertises the BGP VPNv4 route 10.1.1.0/24 with private network label 1234 to ASBR 2 through the EBGP VPNv4 session.

(7) After receiving the BGP VPNv4 route from ASBR 1, ASBR 2 modifies the next hop of the route to its own IP address, assigns the End.T SID 42:1::1 to the route, and generates an IPv6 FIB entry as shown in the following diagram. End.T SID 42:1::1 carried by the route is associated with the private network label 1234 carried by the route in the IPv6 FIB entry. According to this label and the NHLFE entry corresponding to private network label 1234, the packet with destination address End.T SID 42:1::1 will be encapsulated with the private network label 1234 on ASBR 2 and sent to ASBR 1.

Table10 ASBR IPv6 FIB entry

|

Destination |

Label |

|

42:1::1 |

1234 |

(8) ASBR 2 advertises BGP VPNv4 route 10.1.1.0/24 with End.T SID 42:1::1 to PE 2 through the IBGP VPNv4 session.

(9) The RT attribute carried in the BGP VPNv4 route 10.1.1.0/24 matches the IRT attribute 100:1 configured for local VPN instance 1 on PE 2, so PE 2 receives the route and adds it to the IP routing table of VPN instance 1.

(10) PE 2 advertises the private network route 10.1.1.0/24 of VPN instance 1 to CE 2 through IGP, BGP, or static routing.

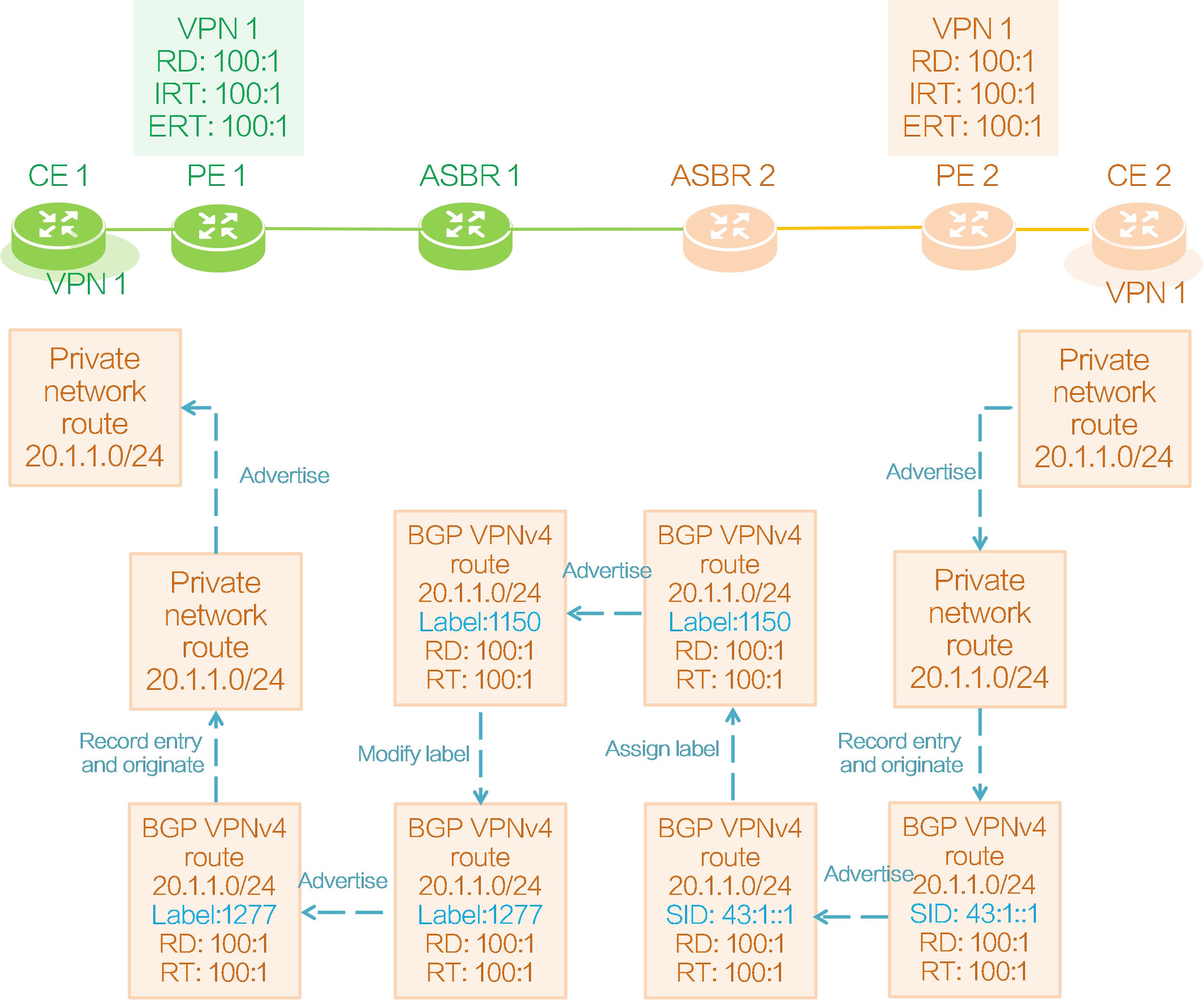

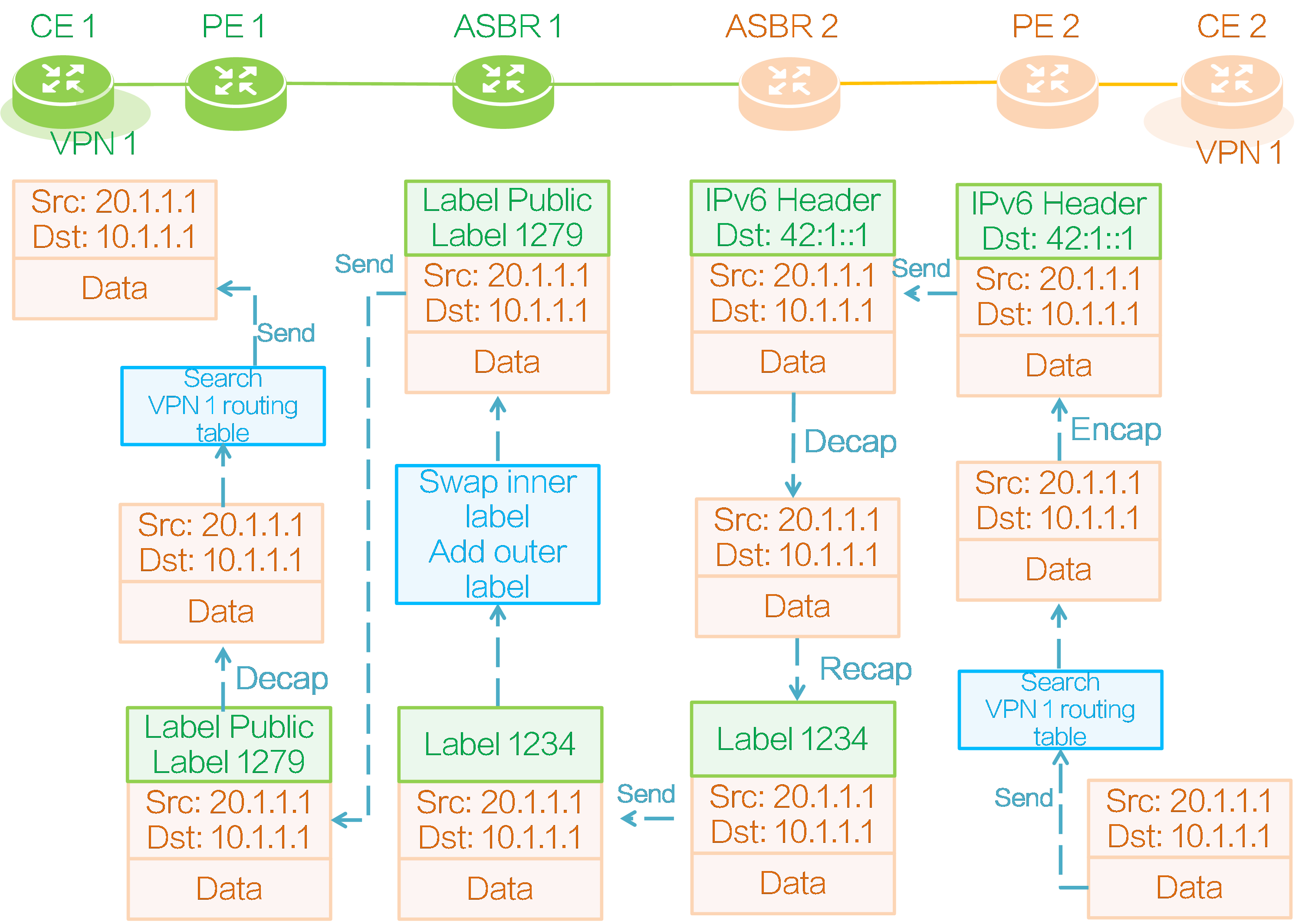

CE 2 to CE 1 route advertisement

Figure57 CE 2 to CE 1 route advertisement

As shown in the above figure, the route of CE 2 is advertised to CE 1 in the following process:

(1) CE 2 advertises its local private network route 20.1.1.0/24 to PE 2 through IGP, BGP, or static routing.

(2) Upon receiving the private network route, PE 2 adds it to the IP routing table of VPN instance 1, adds RD 100:1 and RT 100:1 to it, and reoriginates a BGP VPNv4 route.

(3) PE 2 requests SRv6 SID 43:1::1 for the reoriginated route in VPN instance 1, and then advertises the route to ASBR 2 through the IBGP VPNv4 session. (The SRv6 SID can be End.DT4 SID, End.DT6 SID, End.DT46 SID, End.DX4 SID, or End.EX6 SID. In this example, the SRv6 SID is End.DT4 SID.)

(4) ASBR 2 receives BGP VPNv4 route 20.1.1.0/24 advertised by PE 2, modifies the next hop of the route to its own IP address, assigns private network label 1150 to the route, and adds the following mapping entry for the private network label and SRv6 SID in the local ILM table. Through the ILM entry, ASBR 2 associates the End.DT4 SID 43:1::1 carried by the route with the private network label 1150 assigned to the route. The packet carrying the private network label 1150 will be encapsulated with the destination IPv6 address End.DT4 SID 43:1::1 on ASBR 2 and sent to PE 2.

Table11 ASBR 2 label mapping

|

InLabel |

Operation |

SwapInfo |

Forwarding Info |

|

1150 |

SWAP |

43:1:1 |

Sent to PE 2 |

(5) ASBR 2 advertises BGP VPNv4 route 20.1.1.0/24 with private network label 1150 to ASBR 1 through the EBGP VPNv4 session.

(6) ASBR 1 modifies the next hop of the BGP VPNv4 route received from ASBR 2 to its own IP address and assigns a new private network label 1277 to the route instead of the original label 1150.

(7) ASBR 1 adds a mapping entry for BGP VPNv4 route 20.1.1.0/24 with the new and old labels in the local ILM table. If the incoming label is the new label 1277, it replaces it with the old label 1150 and sends it to ASBR 2.

Table12 ASBR 1 label mapping

|

InLabel |

Operation |

SwapInfo |

Forwarding Info |

|

1277 |

SWAP |

1150 |

Sent to ASBR 2 |

(8) ASBR 1 advertises the BGP VPNv4 route 20.1.1.0/24 with the private network label 1277 to PE 1 through the IBGP VPNv4 session.

(9) The RT attribute carried in BGP VPNv4 route 20.1.1.0/24 matches IRT 100:1 configured for local VPN instance 1 on PE 1, so PE 1 receives the route, redistributes it into VPN instance 1, and adds it to the IP routing table of VPN instance 1.

(10) PE 2 advertises the private network route 20.1.1.0/24 of VPN instance 1 to CE 1 through IGP, BGP, or static routing.

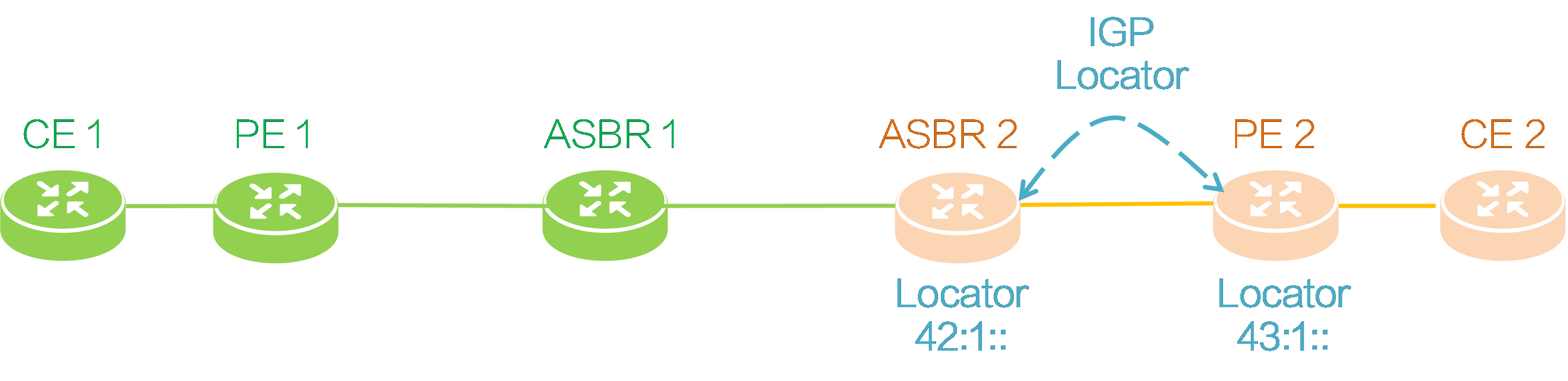

Locator subnet route advertisement

As shown in the figure below, ASBR 2 and PE 2 need to advertise their respective locator subnet route information to each other through IGP. The locator route prefix advertised by ASBR 2 is 42:1::, and that advertised by PE 2 is 43:1::.

Figure58 Locator subnet route advertisement

Packet forwarding

CE 1 to CE 2 packet forwarding

Figure59 Packet forwarding

As shown in the above figure, taking SRv6 BE forwarding mode as an example, a private network packet is forwarded from CE 1 to CE 2 is as follows:

(1) CE 1 encapsulates an IPv4 header for the private network data, with source address 10.1.1.1 and destination address 20.1.1.1, and sends the private network packet to PE 1.

(2) PE 1 looks up the IP routing table of VPN instance 1 after receiving the private network packet and finds a route with prefix 20.1.1.0/24. The private network label associated with this route is 1277. Therefore, PE 1 encapsulates the inner private network label 1277 and the outer public network label for the packet, and then forwards the packet along the label forwarding path to ASBR 1.

(3) After receiving the private network packet, ASBR 1 pops the outer public network label and replaces private network label 1277 with private network label 1150 according to the ILM entry corresponding to private network label 1277. Because ASBR 1 and ASBR 2 only have a single-hop label LSP, ASBR 1 directly forwards the private network packet with private network label 1150 to ASBR 2.

(4) After receiving the private network packet, based on the ILM entry corresponding to private network label 1150, ASBR 2 pops the private network label 1150 and re-encapsulates it with an outer IPv6 header. The destination IPv6 address of the encapsulated outer IPv6 header is End.DT4 SID 43:1::1 mapped to private network label 1150.

(5) ASBR 2 looks up the public IP routing table based on the outer destination IPv6 address of the encapsulated packet, finds the locator subnet route advertised by PE 2, and then sends the packet to PE 2 based on that route.

(6) PE 2 receives the packet and finds that the outer destination IPv6 address is the local End.DT4 SID. It then decapsulates the outer IPv6 header of the packet and looks up the IP routing table in VPN instance 1 corresponding to End.DT4 SID 43:1::1. PE 2 finds the route with prefix 10.1.1.0/24 from CE 2, and then forwards the packet to CE 2 based on that route.

CE 2 to CE 1 packet forwarding

Figure60 Packet forwarding

As shown in the above figure, taking the SRv6 BE forwarding mode as an example, CE 2 forwards a packet to CE 1 as follows:

(1) CE 2 encapsulates an IPv4 packet header for the private network data, with the source address as 20.1.1.1 and the destination address as 10.1.1.1, and then sends the private network packet to PE 2.

(2) Upon receiving the private network packet, PE 2 looks up the IP routing table of VPN instance 1, locates the route with prefix 10.1.1.0/24, identifies SRv6 SID 42:1::1 for that route, and encapsulates an outer IPv6 header for the packet, with the SRv6 SID as the destination IPv6 address in the encapsulated outer IPv6 packet header.

(3) PE 2 looks up the public IP routing table based on the outer destination IPv6 address of the encapsulated packet, finds the locator route advertised by ASBR 2, and then sends the packet to ASBR 2 based on the route.

(4) ASBR 2 receives the packet and finds that the outer destination IPv6 address is the local End.T SID. Then it decapsulates the outer IPv6 header of the packet and looks up the IPv6 FIB table locally. According to the found IPv6 FIB entry, ASBR 2 encapsulates the packet with inner private network label 1234. Because ASBR 1 and ASBR 2 only have a single-hop LSP, ASBR 2 directly forwards the private network packet with private network label 1234 to ASBR 1.

(5) After receiving the packet, ASBR 1 replaces the private network label corresponding to ILM table entry 1234 with private network label 1279, and adds an outer public network label to the packet. Then, it forwards the packet along the LSP to PE 1.

(6) After receiving the packet, PE 1 pops out the outer public network label, identifies that the packet belongs to VPN instance 1 based on the inner private network label 1279, and then searches the routing table in VPN instance 1. PE 1 finds the route with prefix 10.1.1.0/24 from CE 1, and then pops all the labels and forwards the packet to CE 1 based on that route.

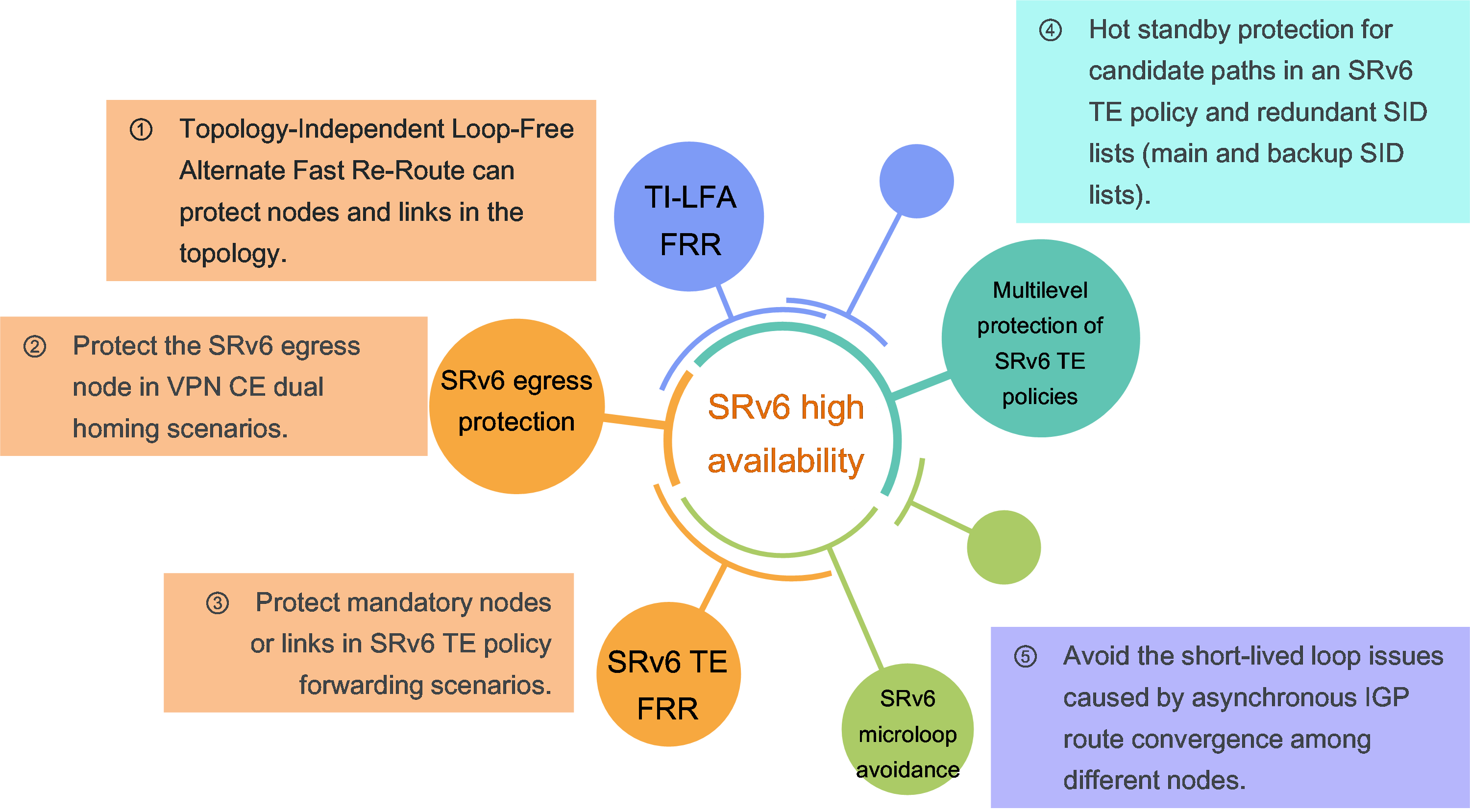

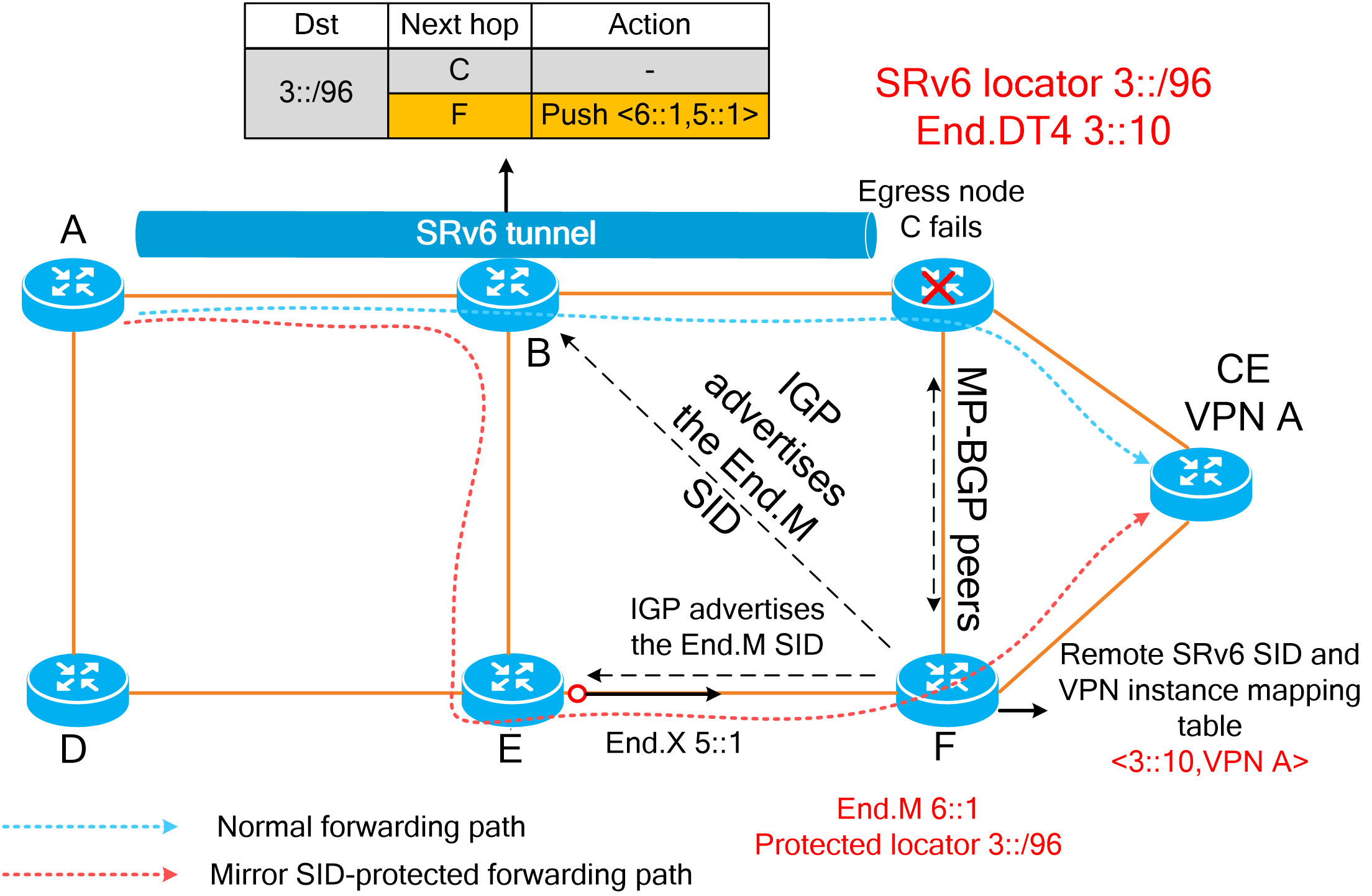

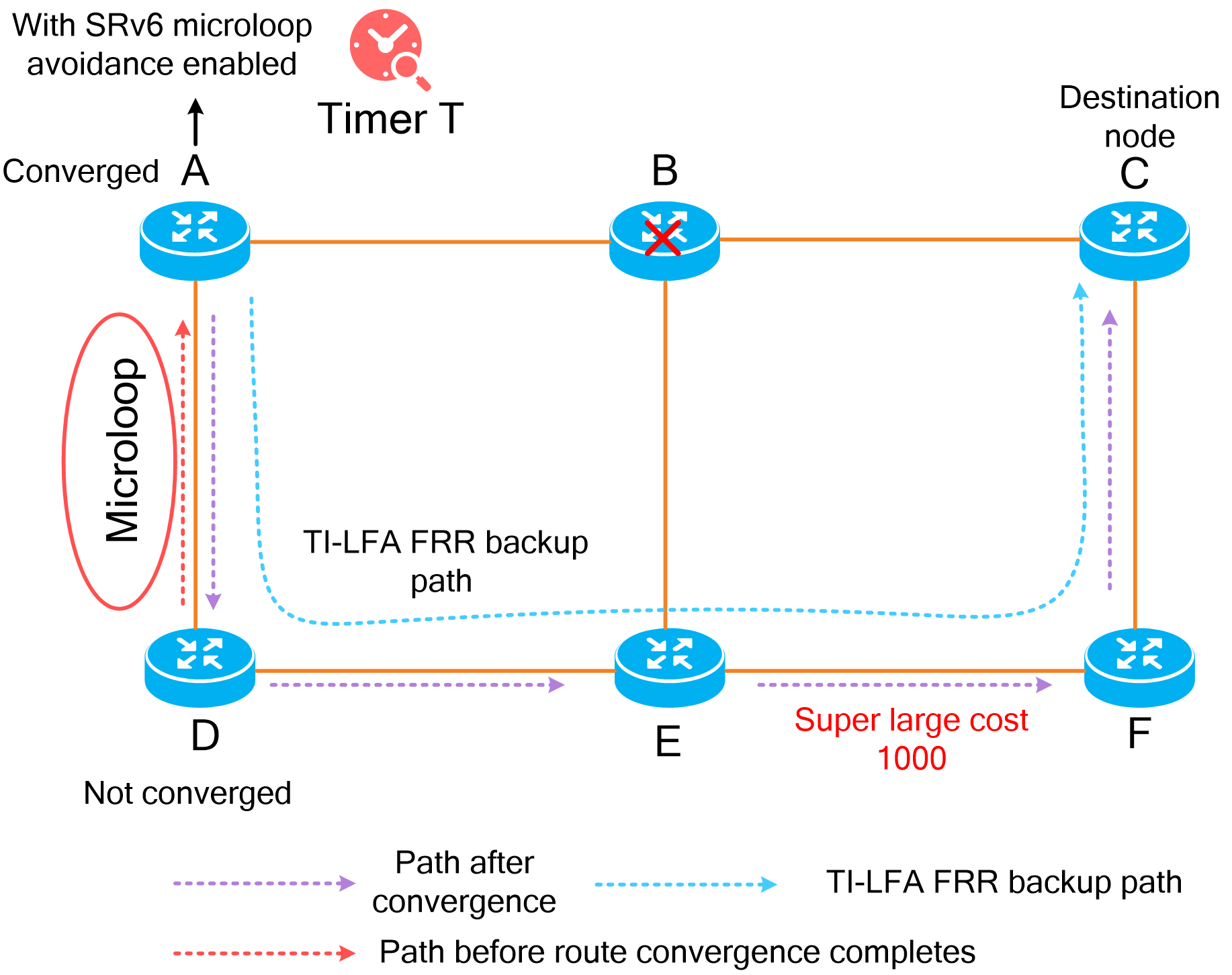

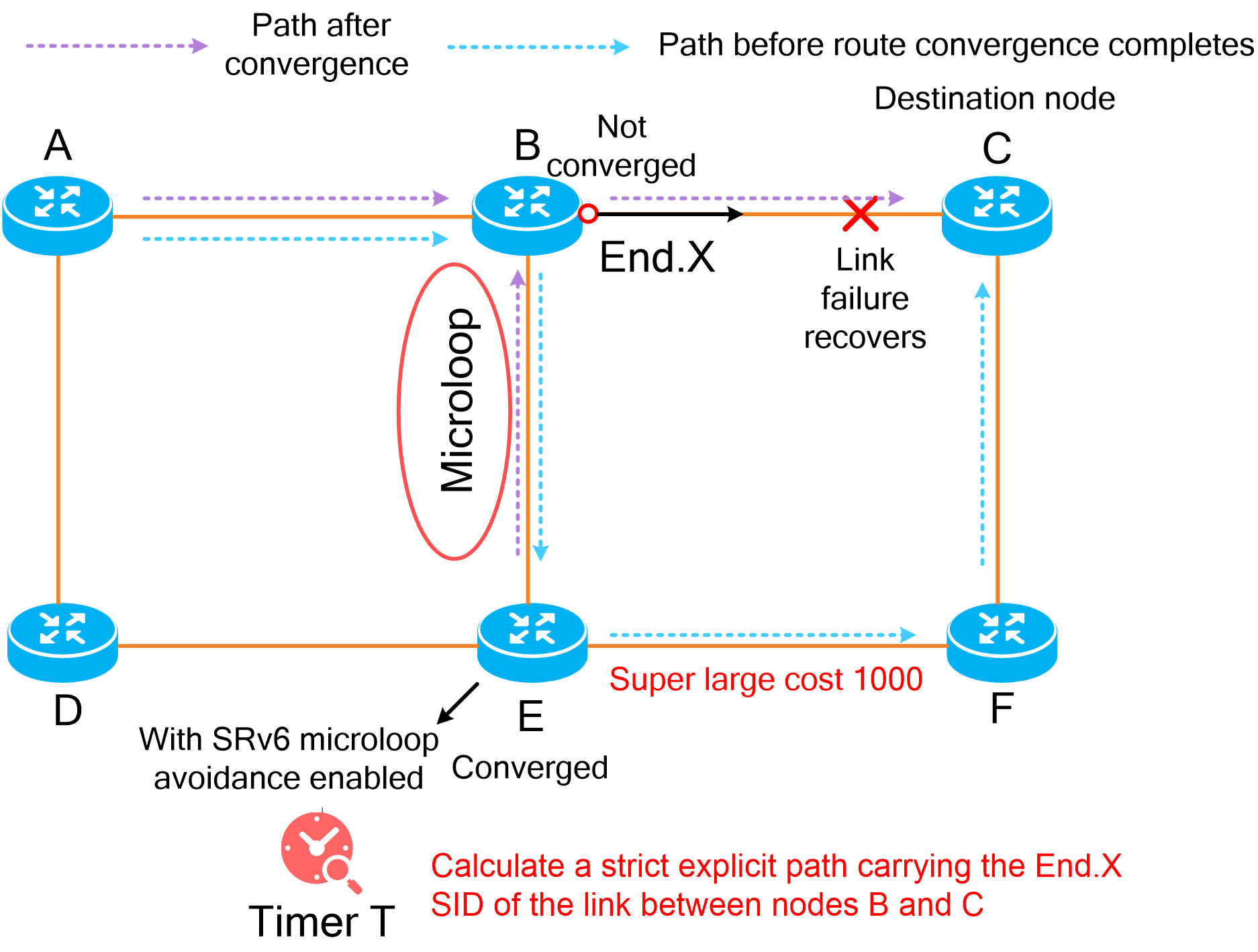

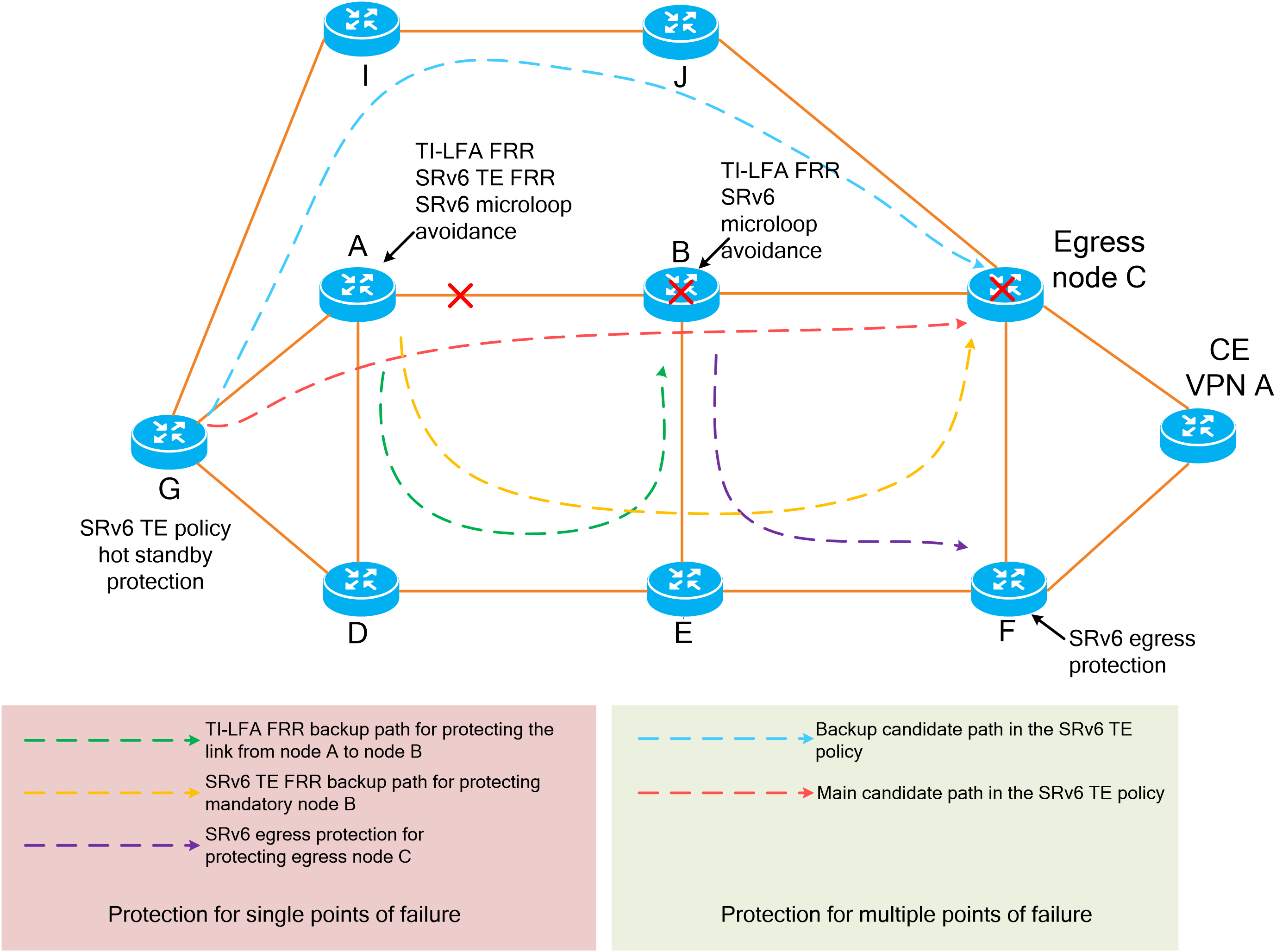

4.9 SRv6 high availability

Introduction

In an SRv6 network, packet loss might occur when a link or node fails or recovers from a failure. To ensure stable forwarding of service traffic in the SRv6 network, SRv6 provides high availability measures to avoid long interruptions of service traffic and improve network quality.

Figure61 SRv6 high availability

An SRv6 network provides the following high availability mechanisms:

· Node link-level protection—Includes TI-LFA FRR, SRv6 TE FRR, and SRv6 egress protection.

· Instantaneous loop protection—Includes SRv6 microloop avoidance.

· Path-level protection—Includes multilevel protection of SRv6 TE policies.

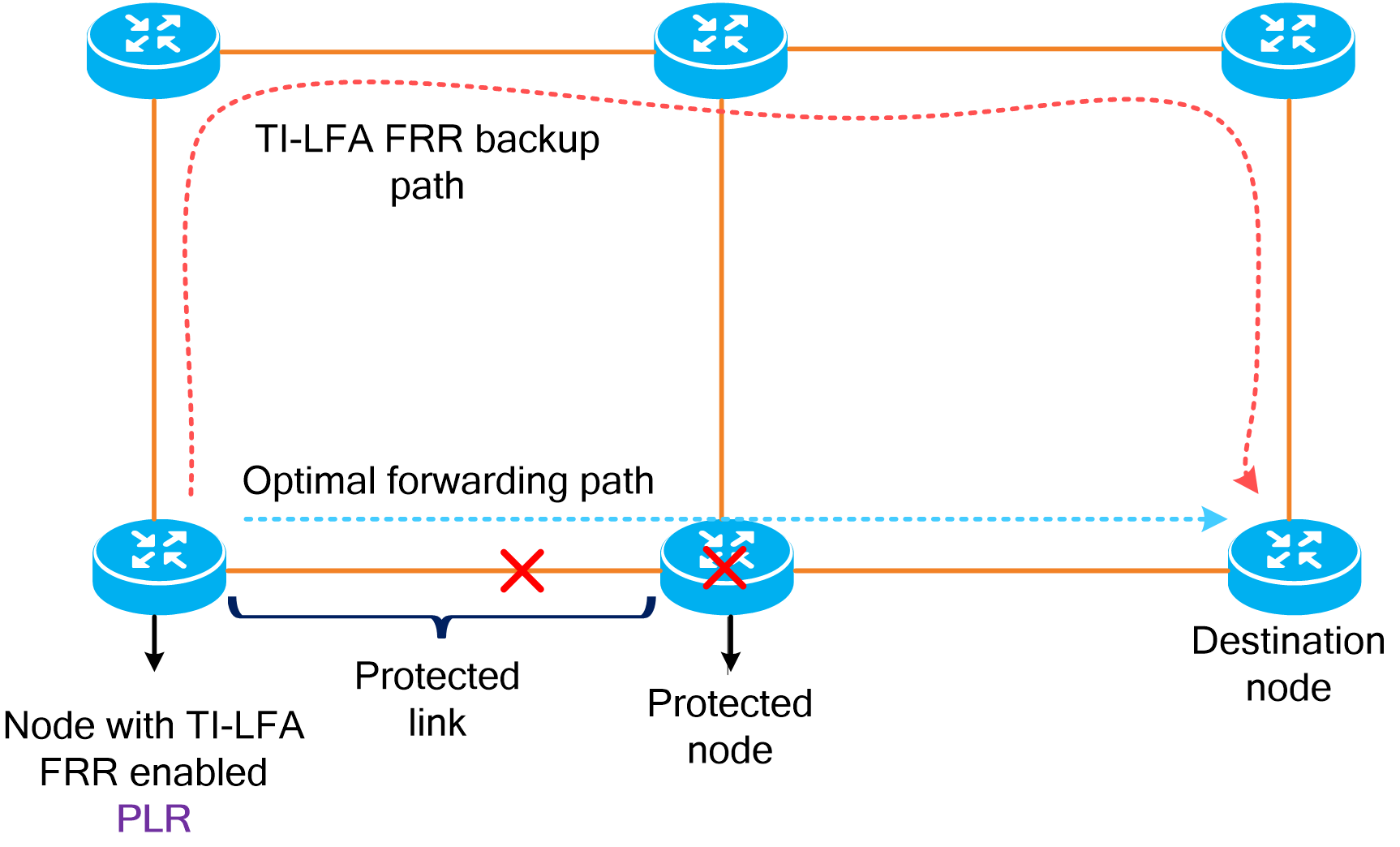

Node link-level protection

TI-LFA FRR

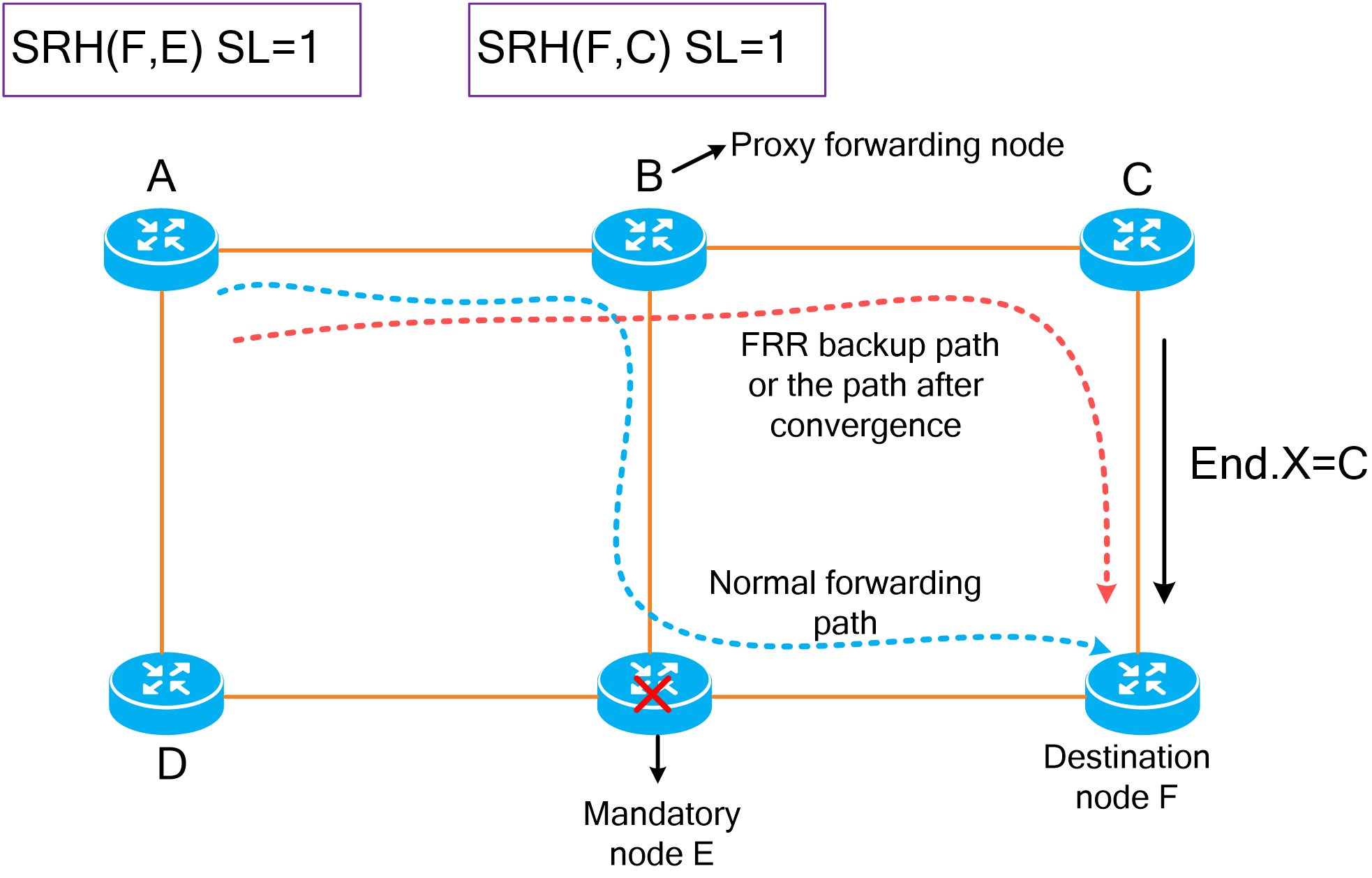

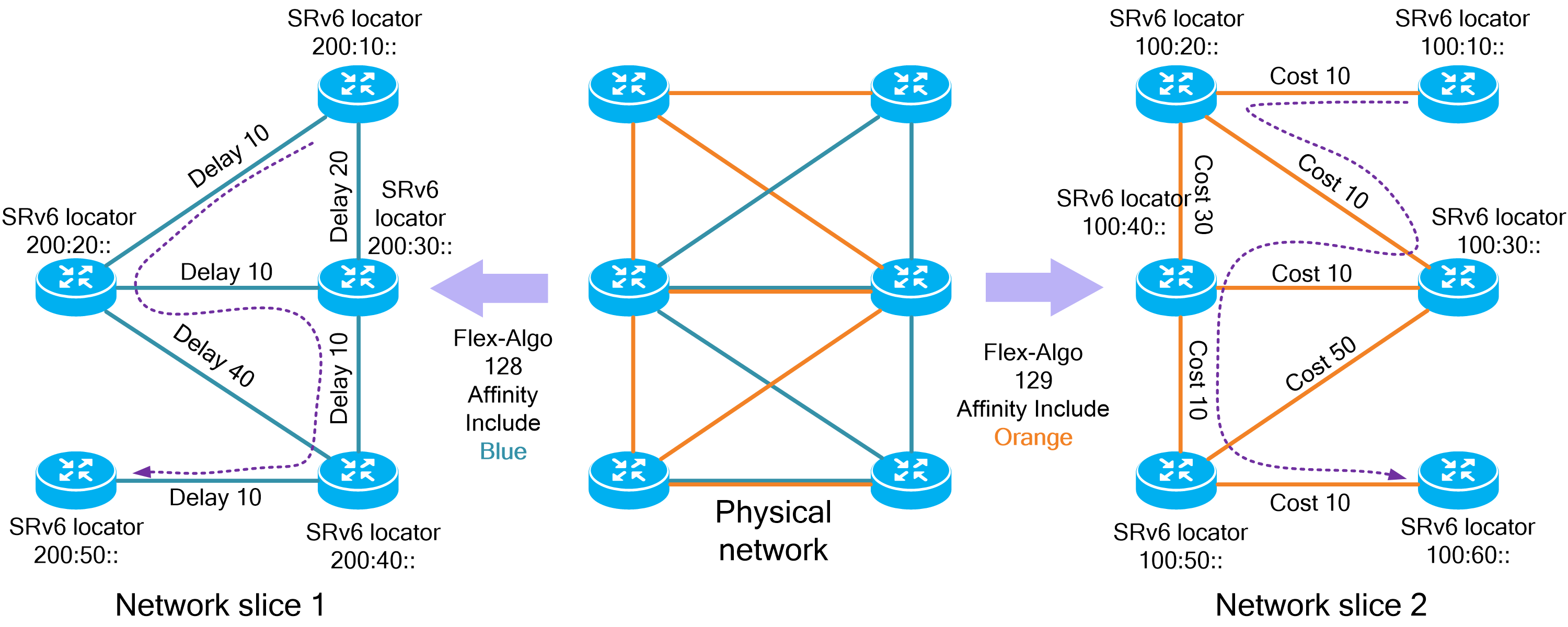

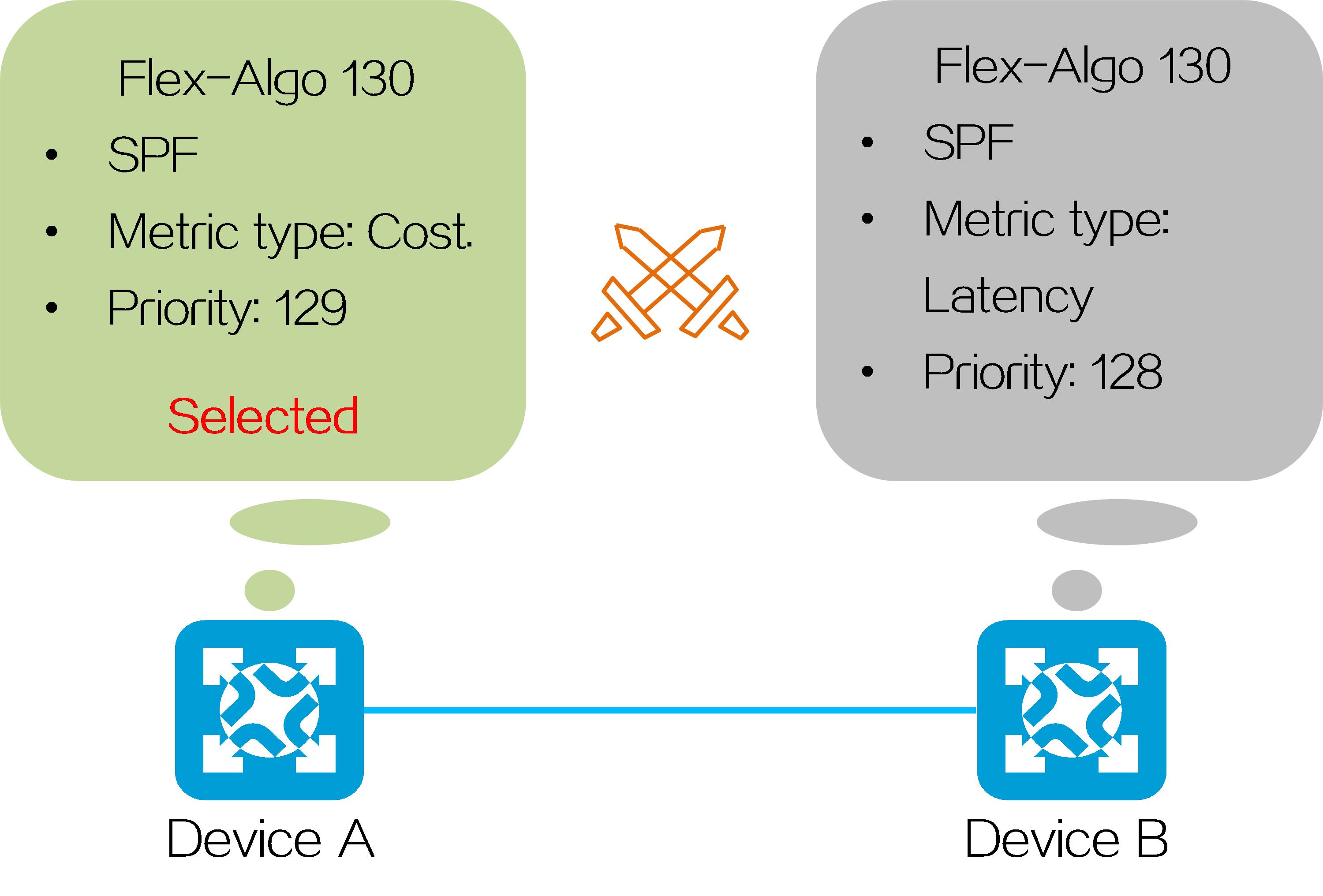

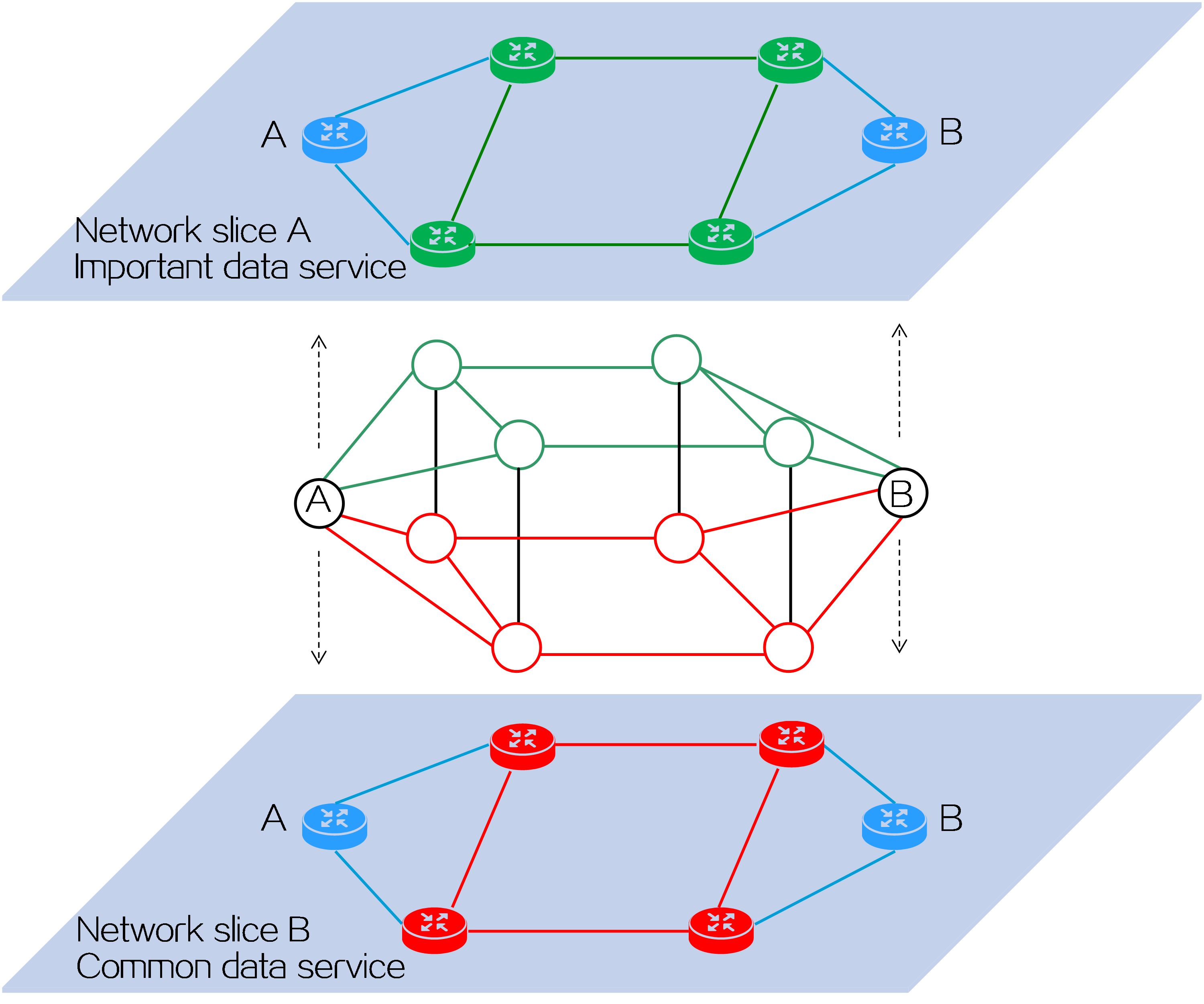

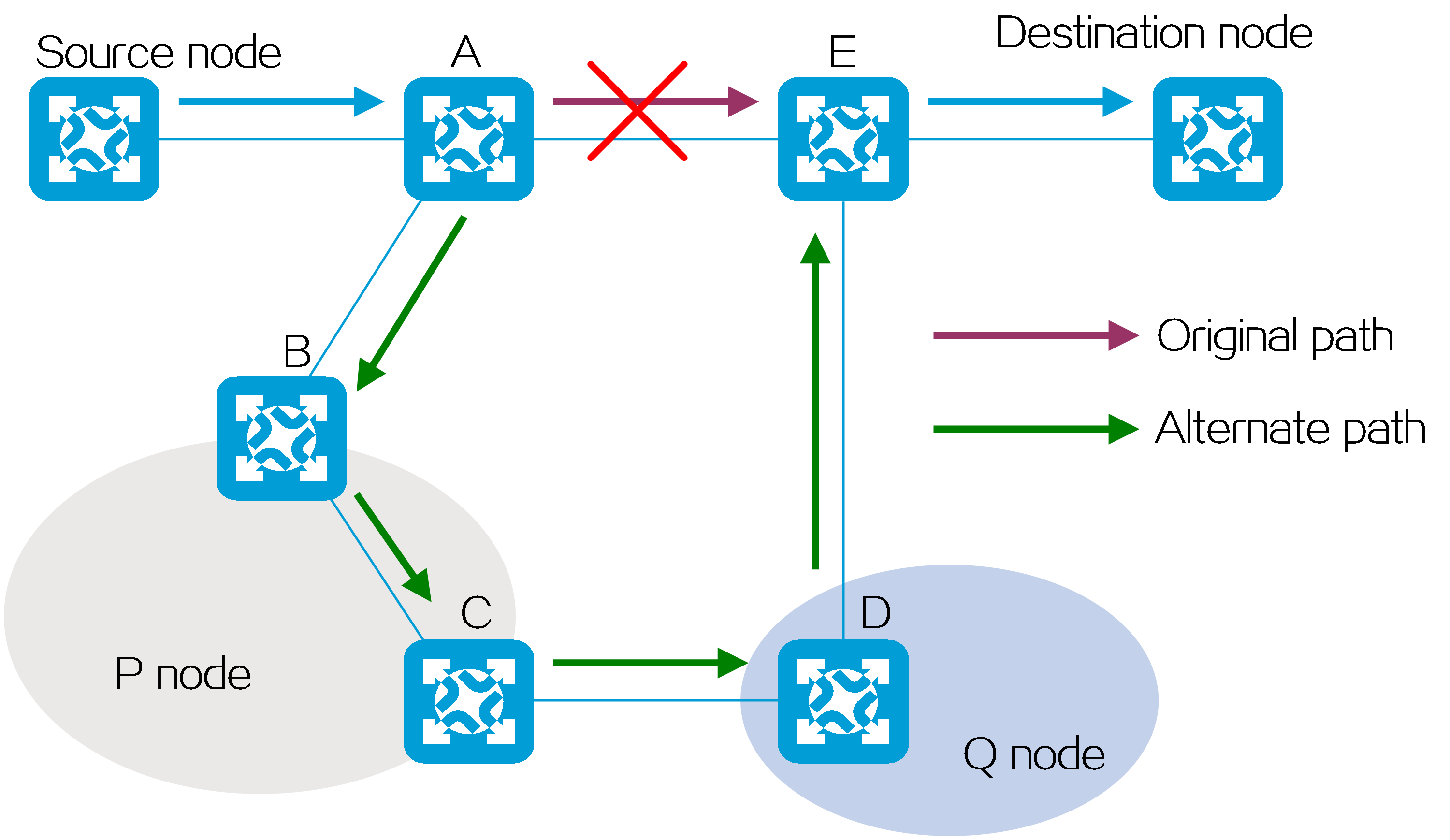

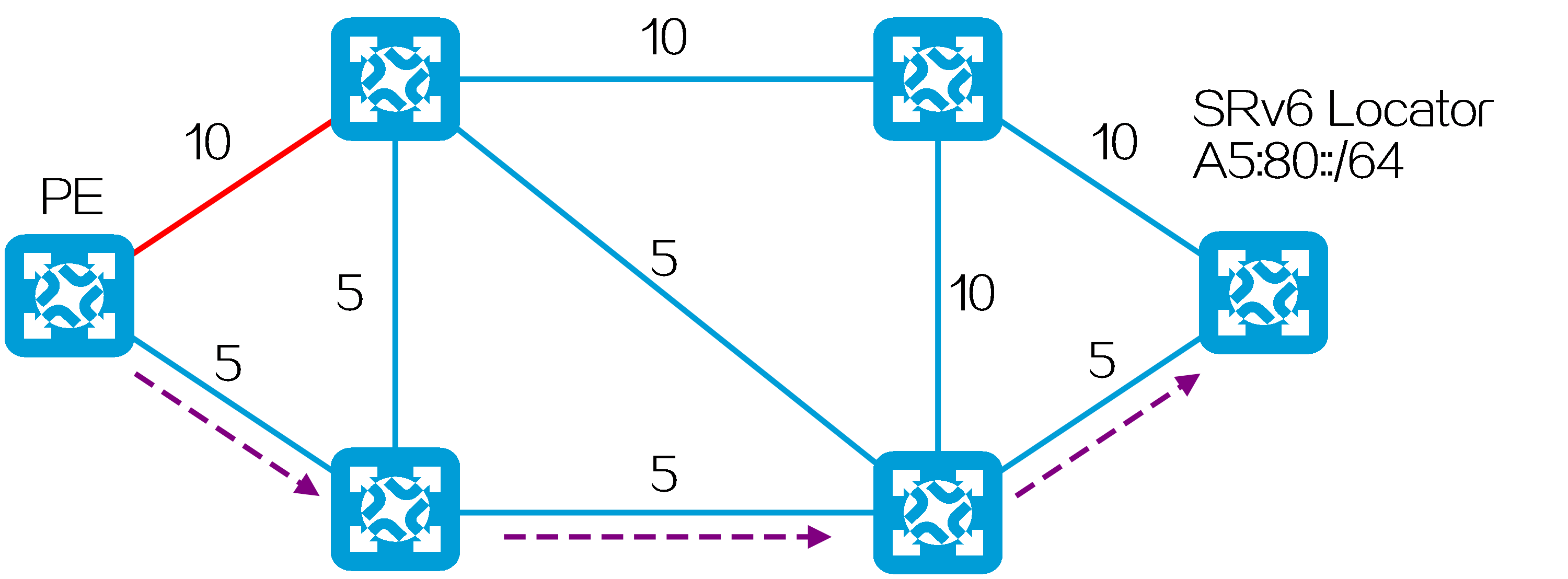

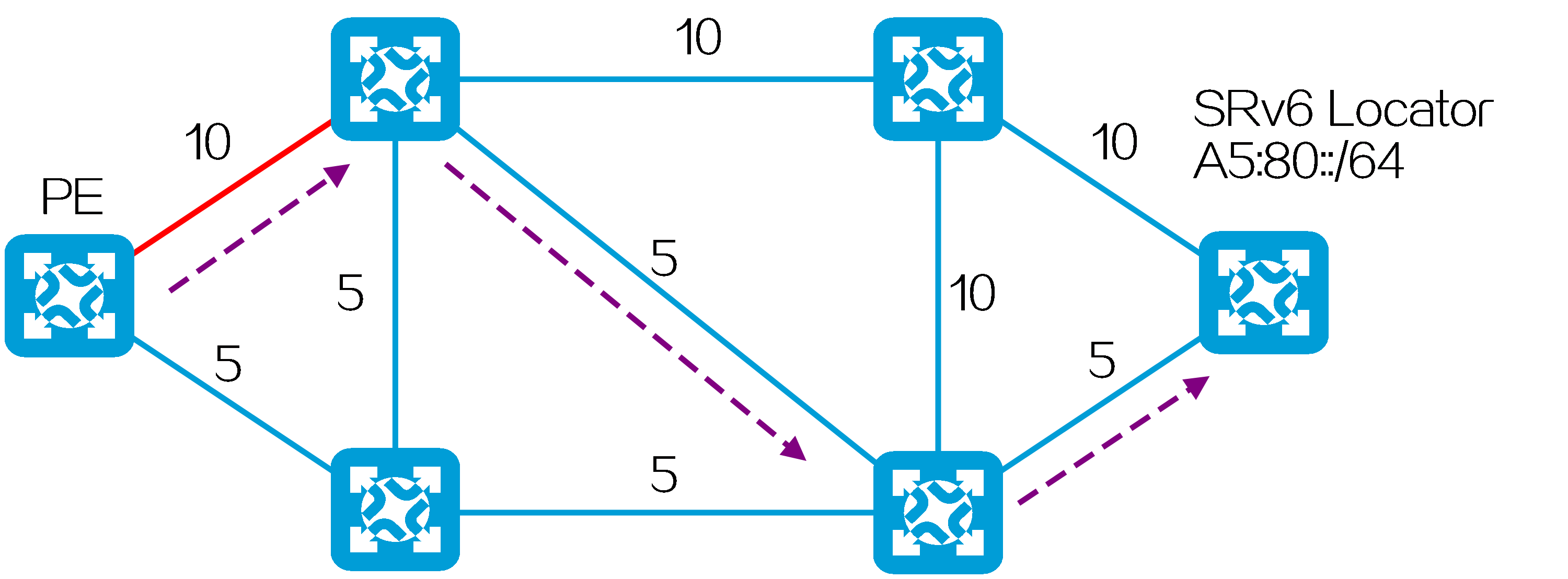

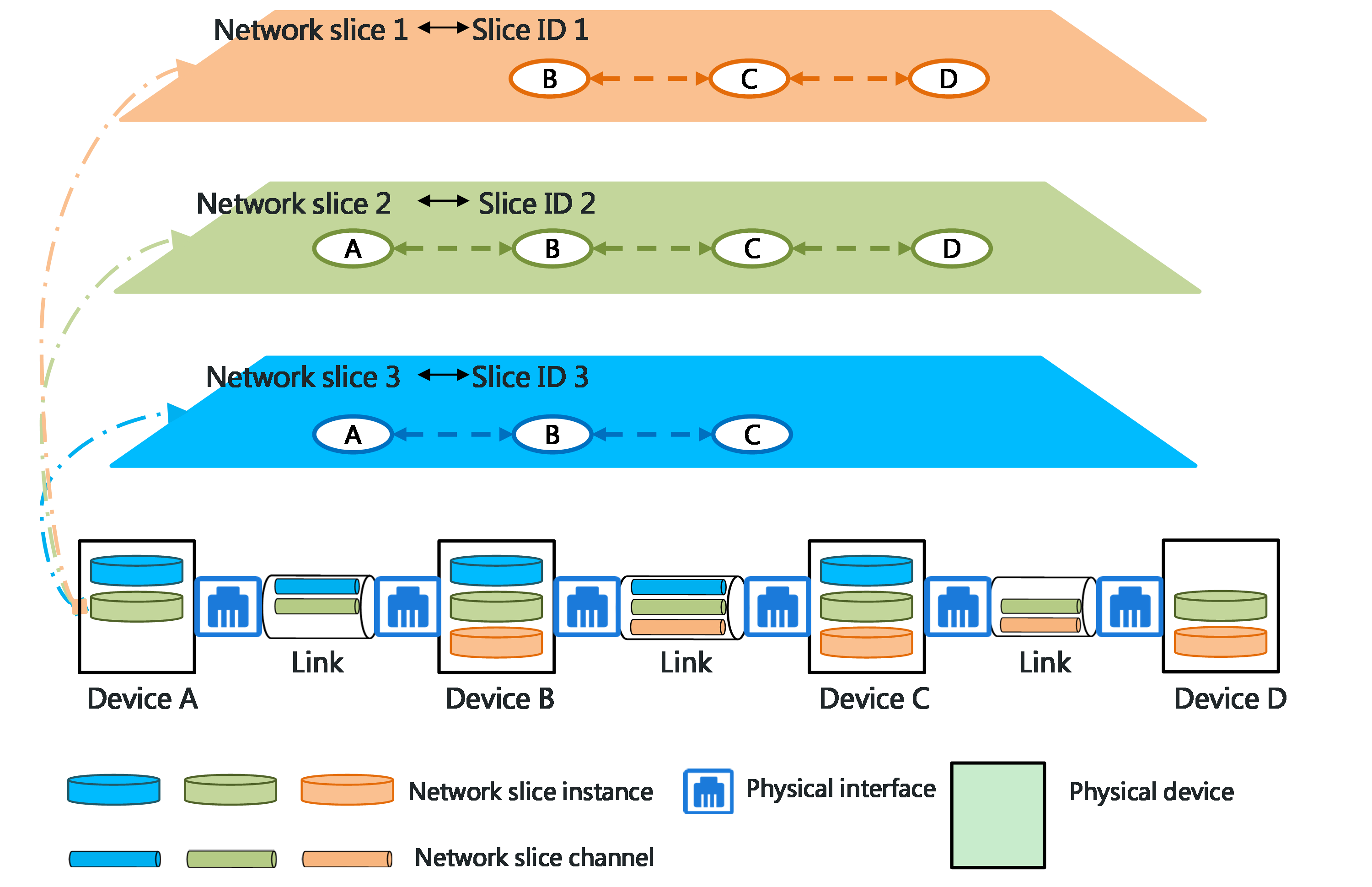

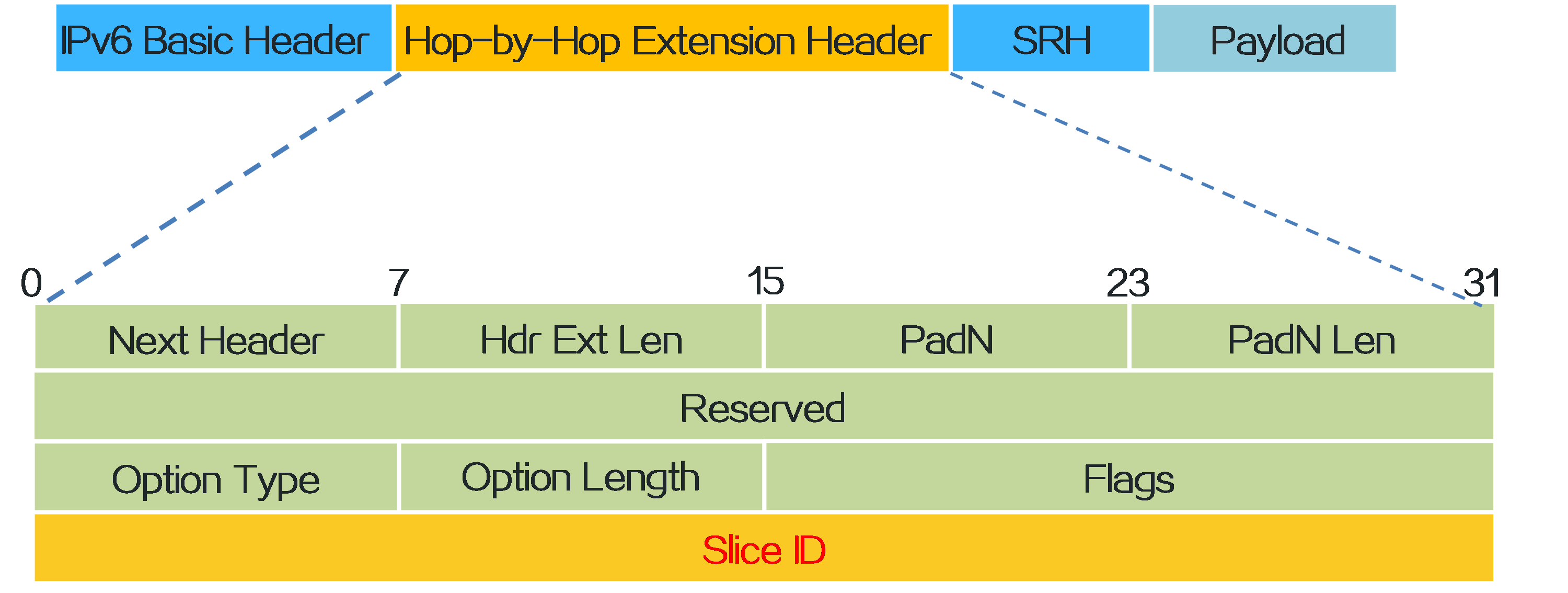

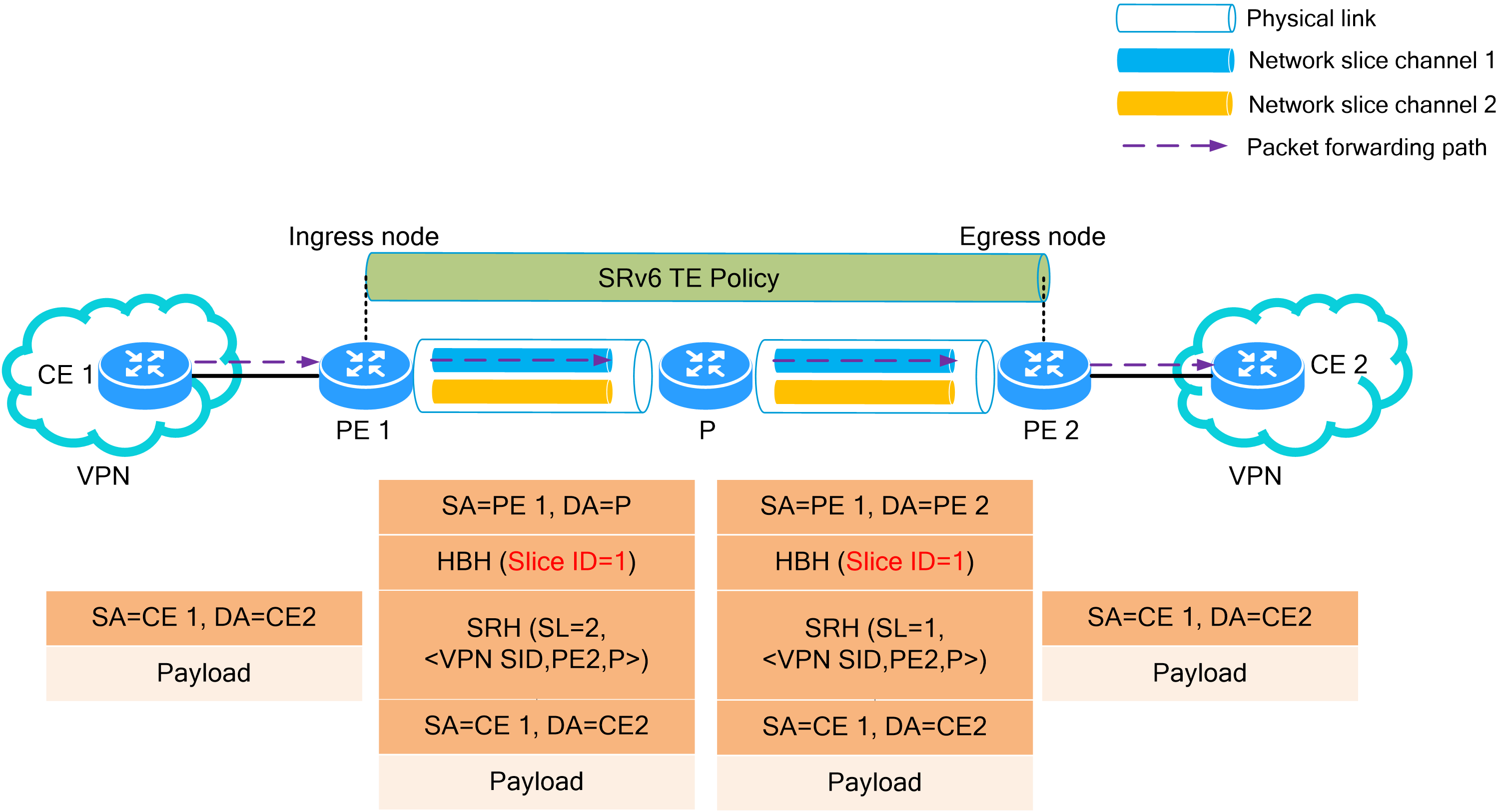

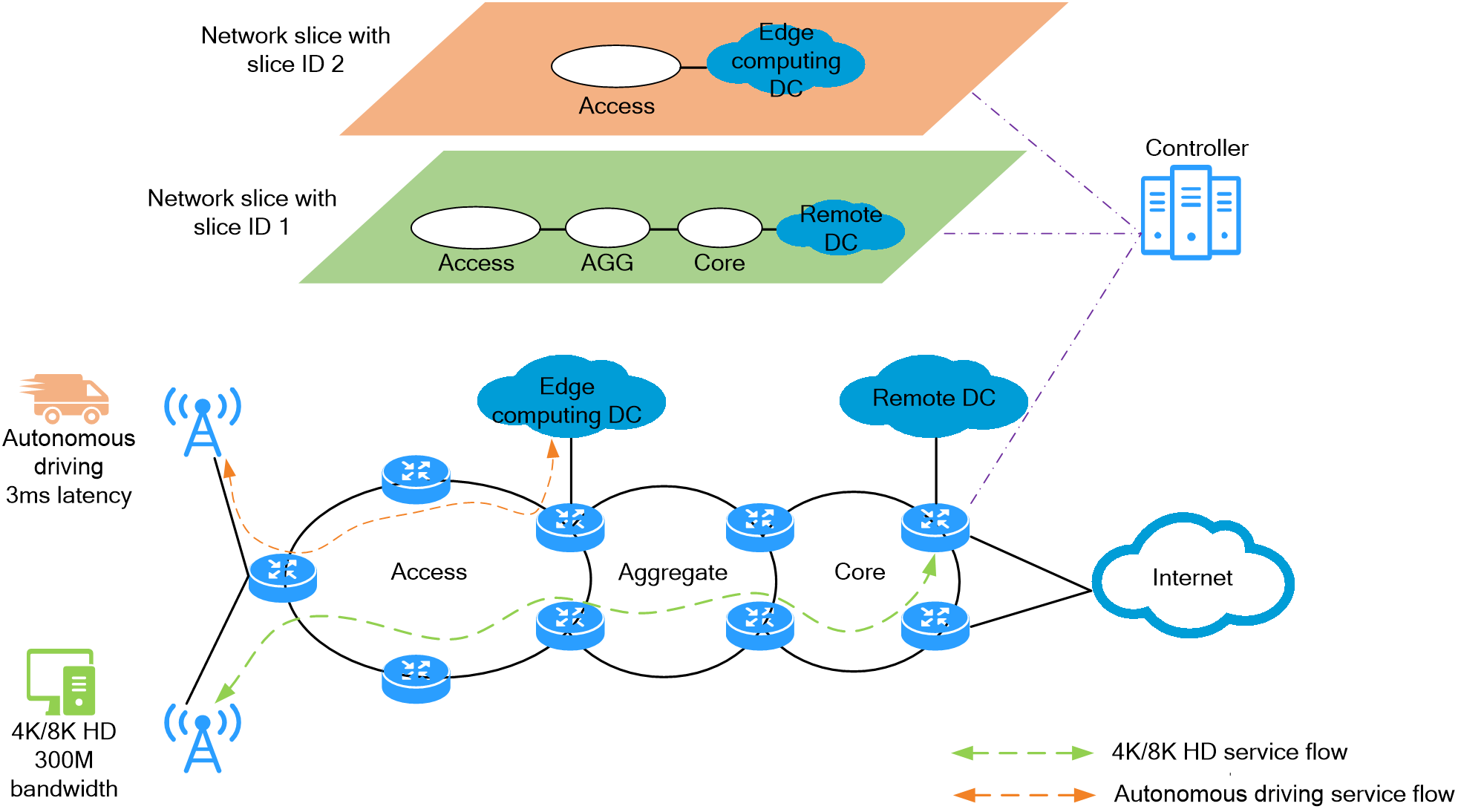

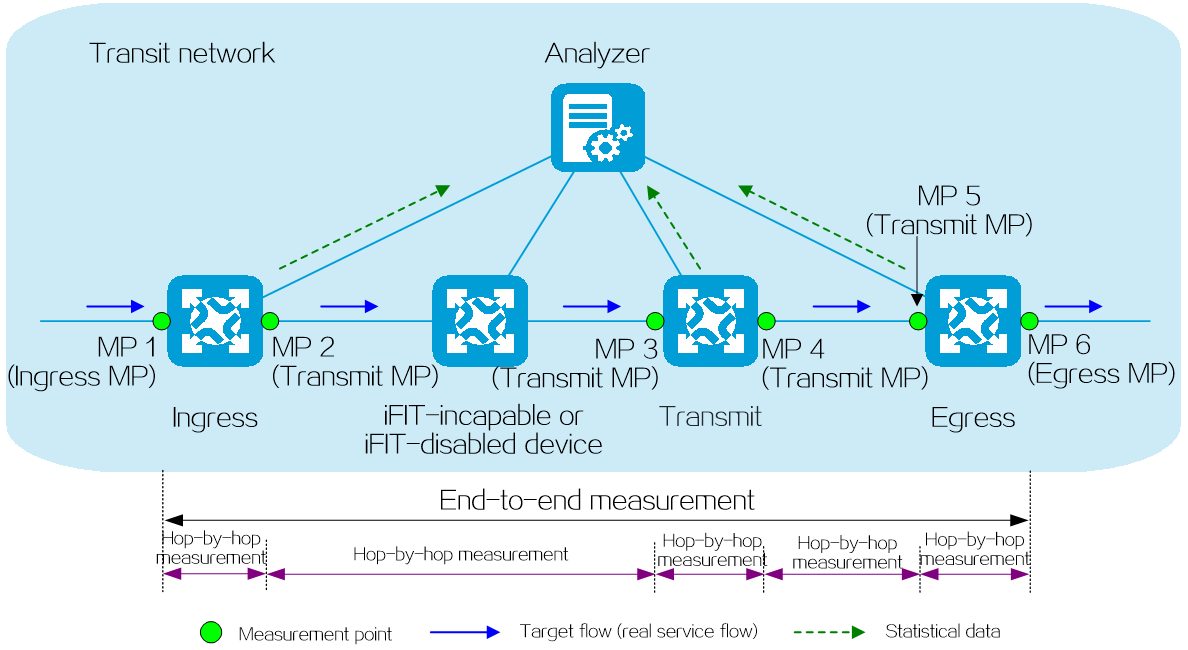

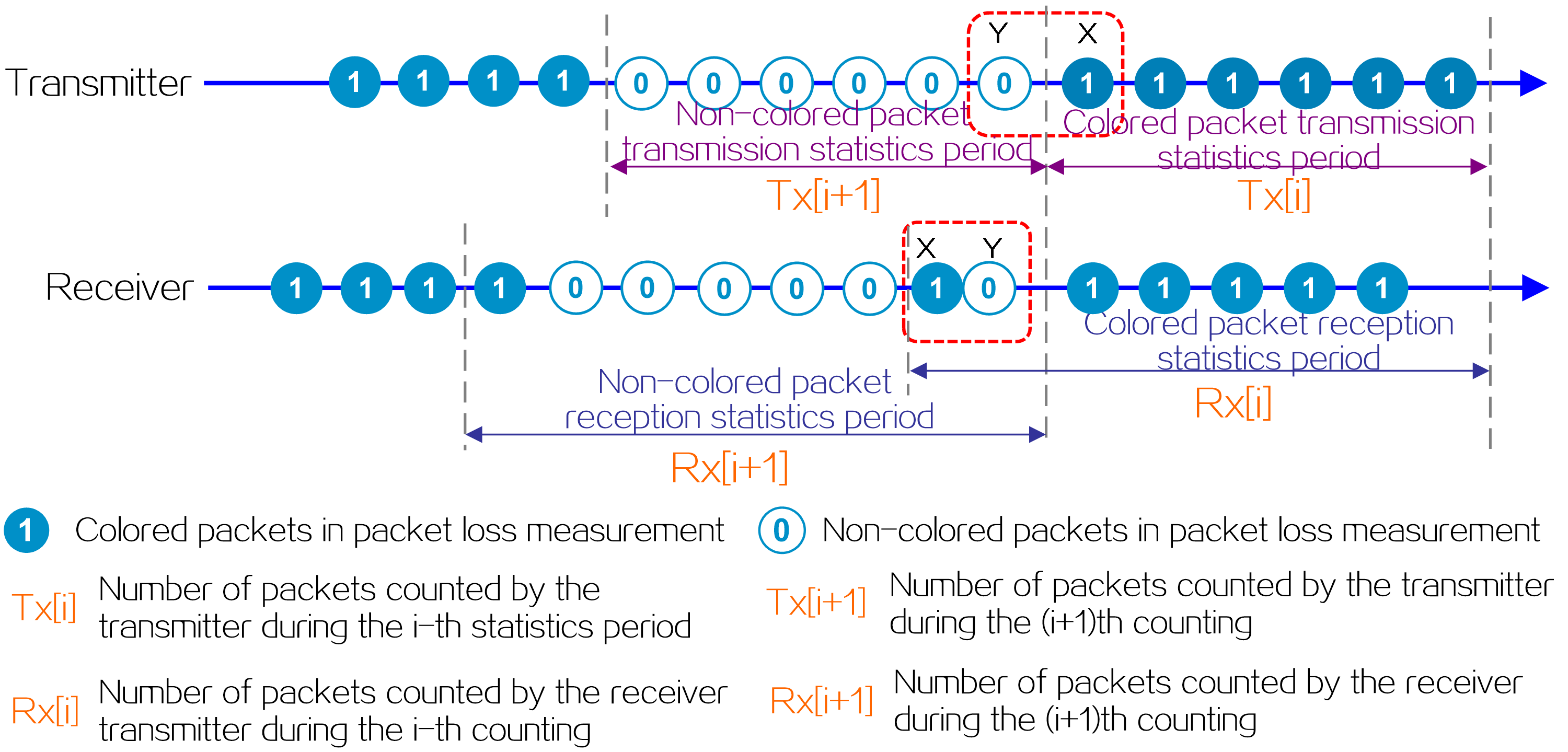

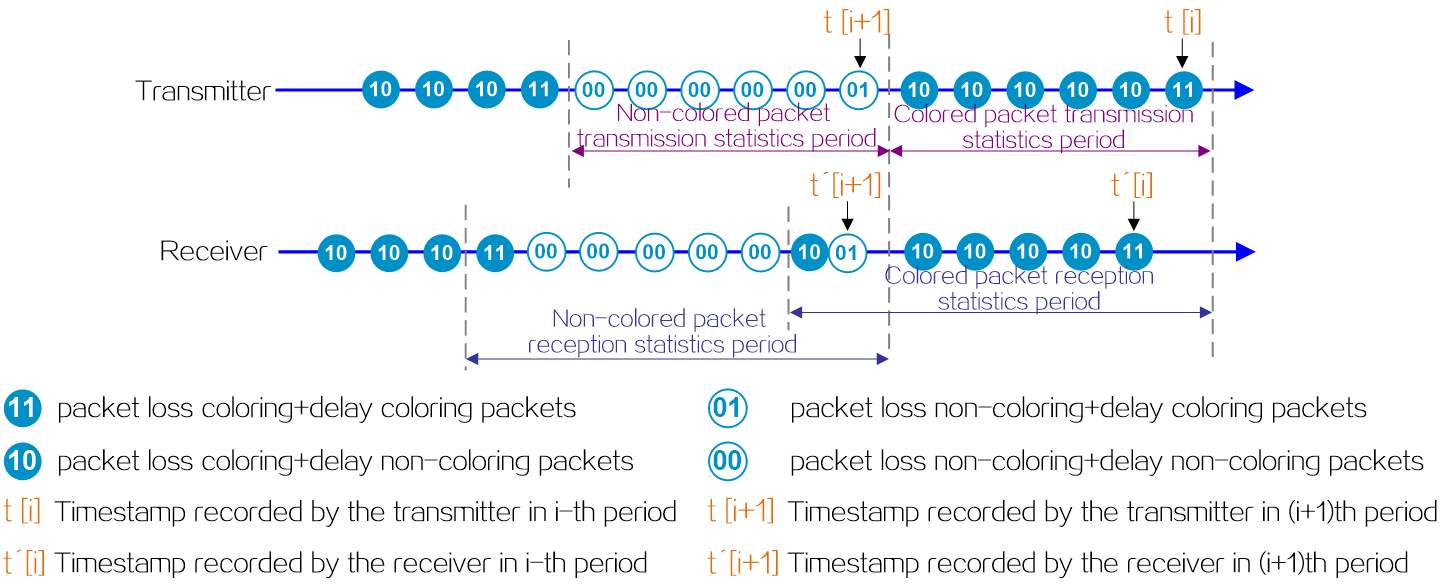

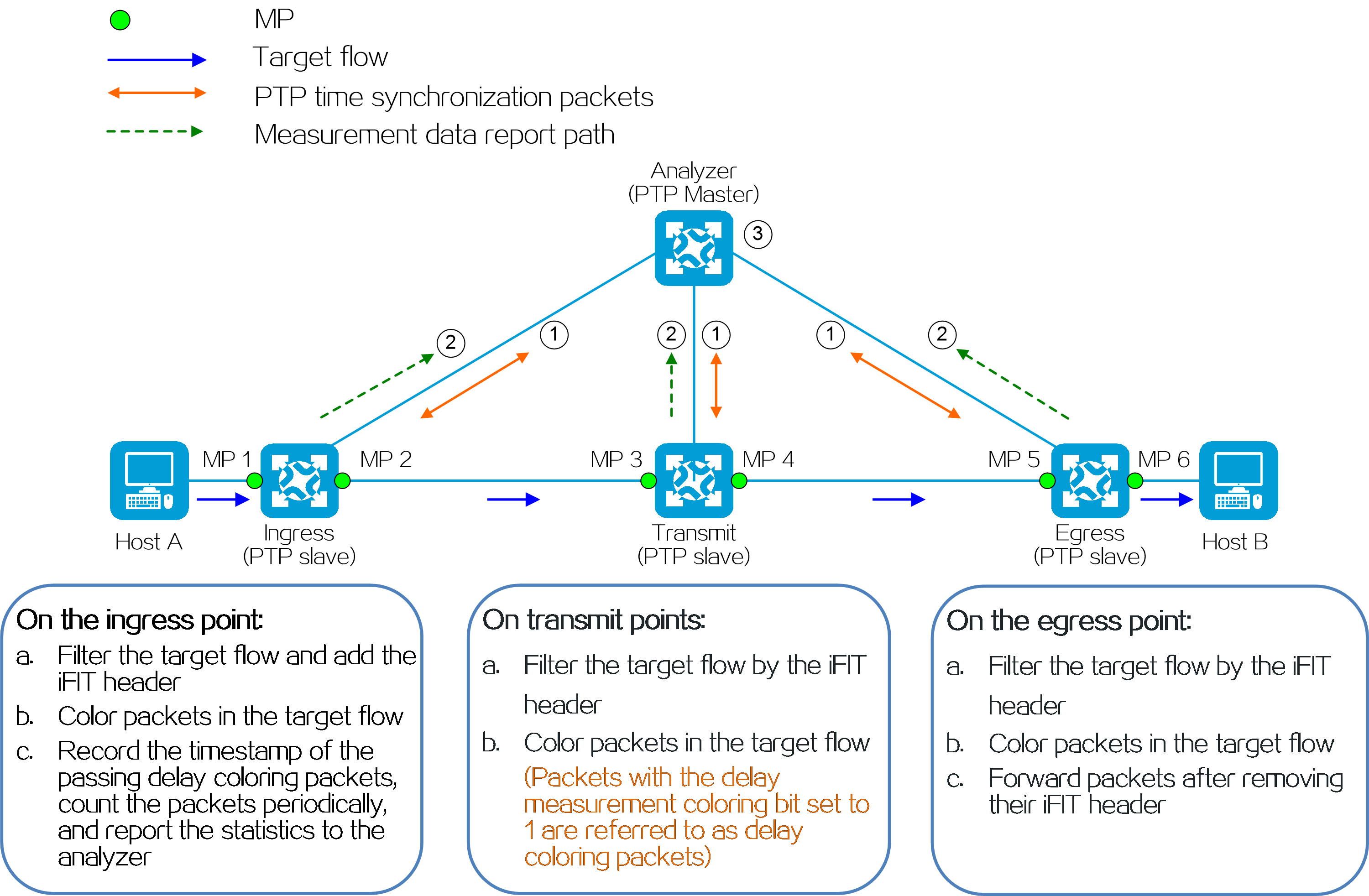

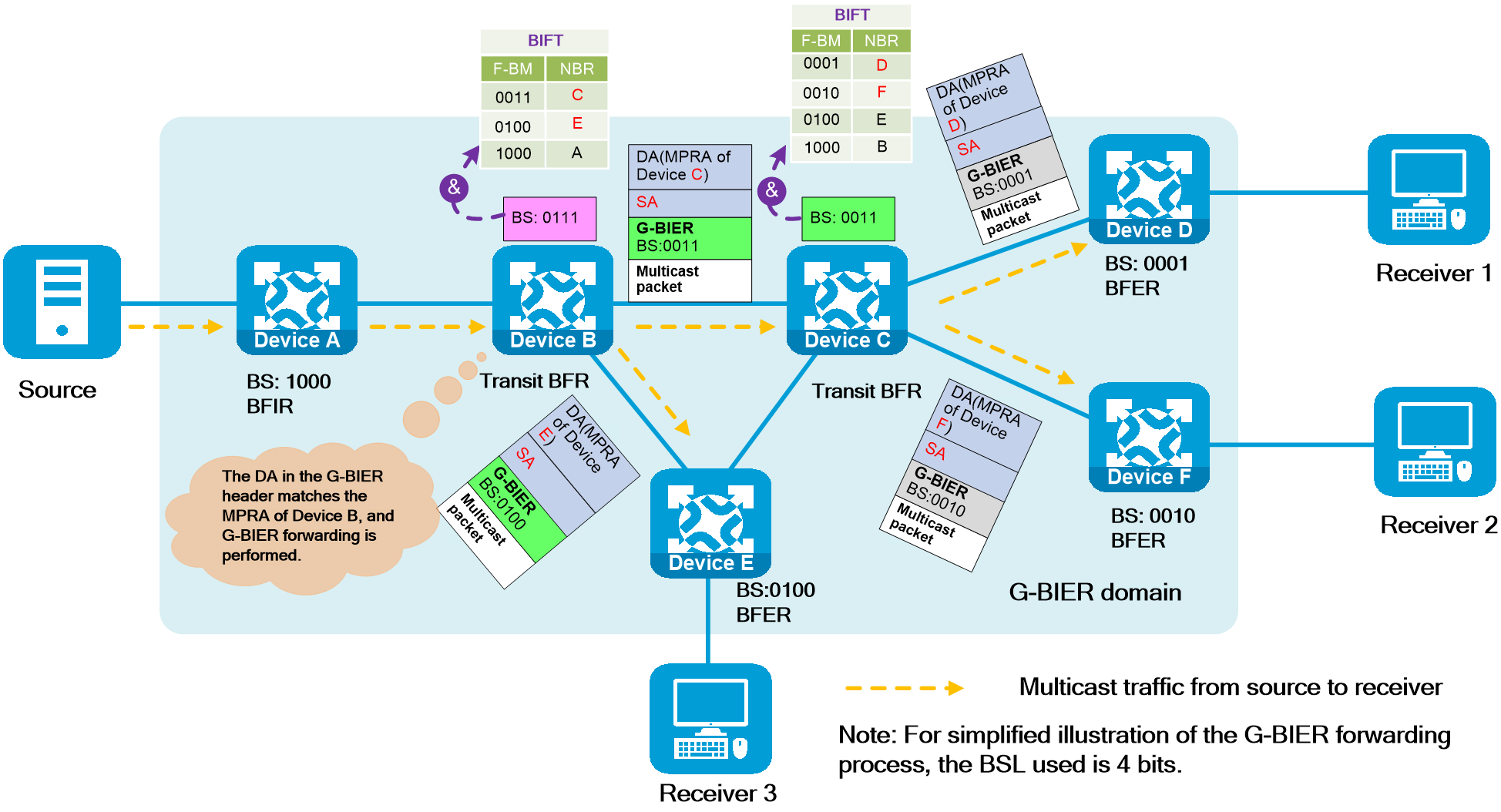

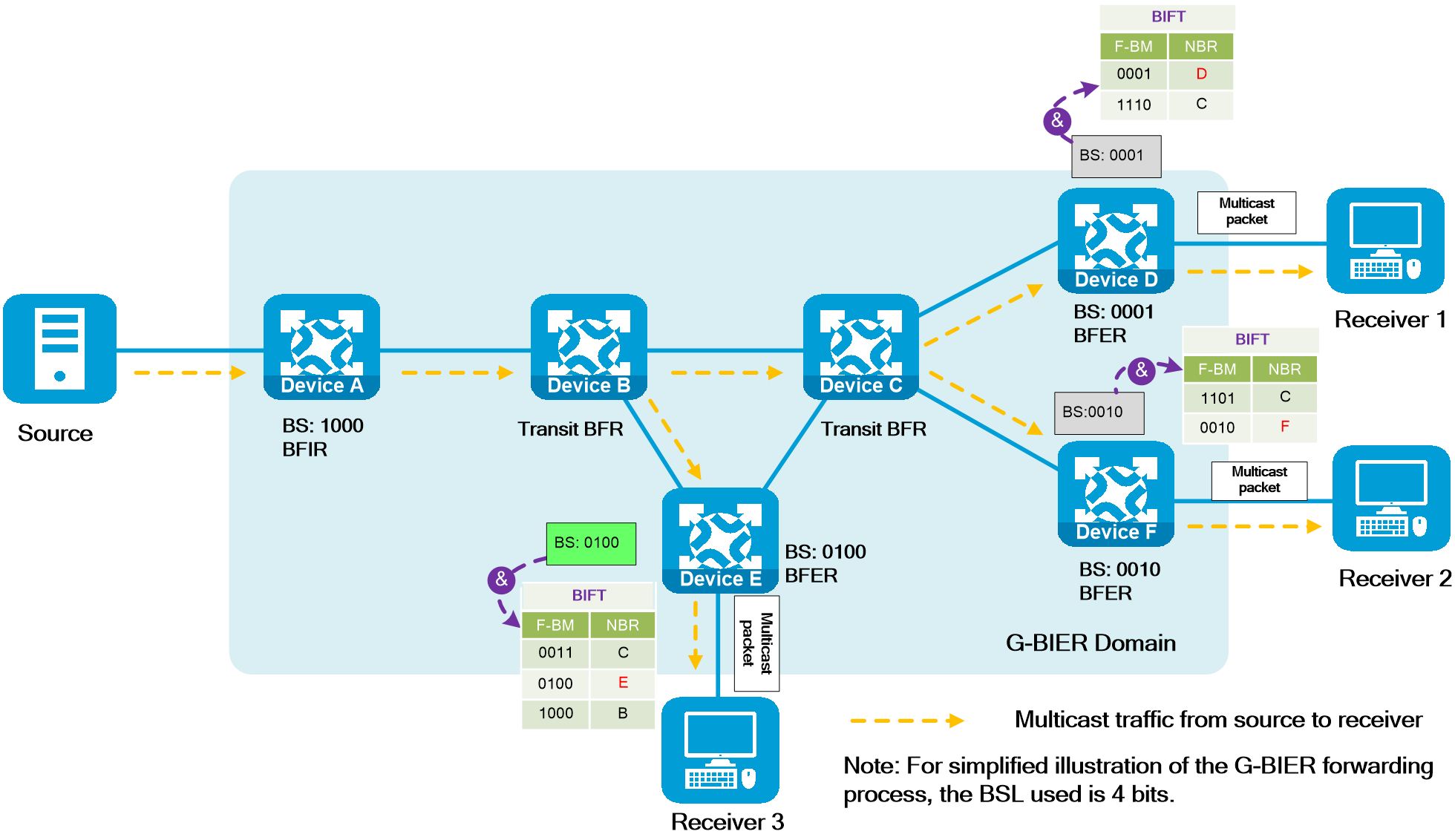

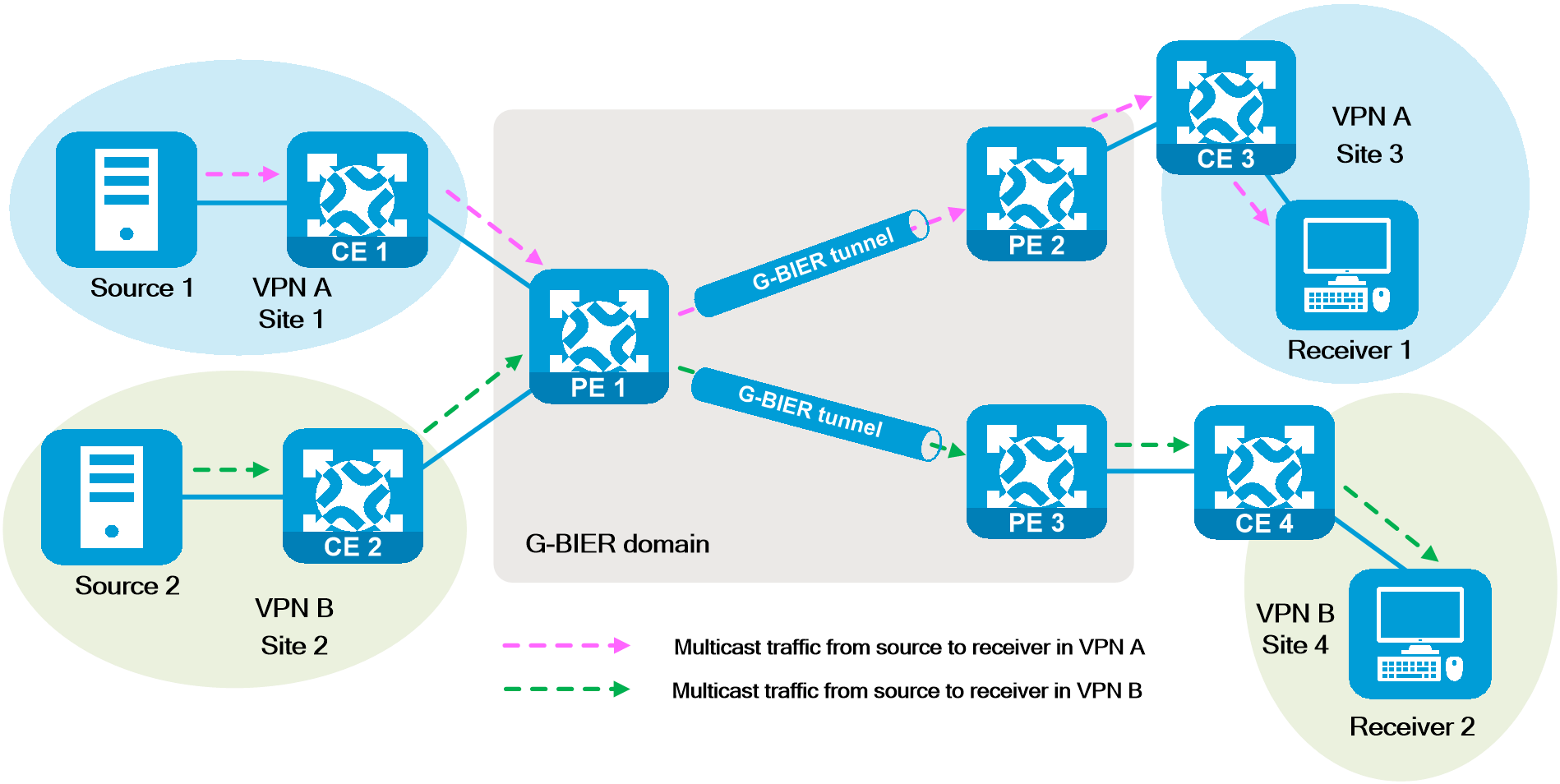

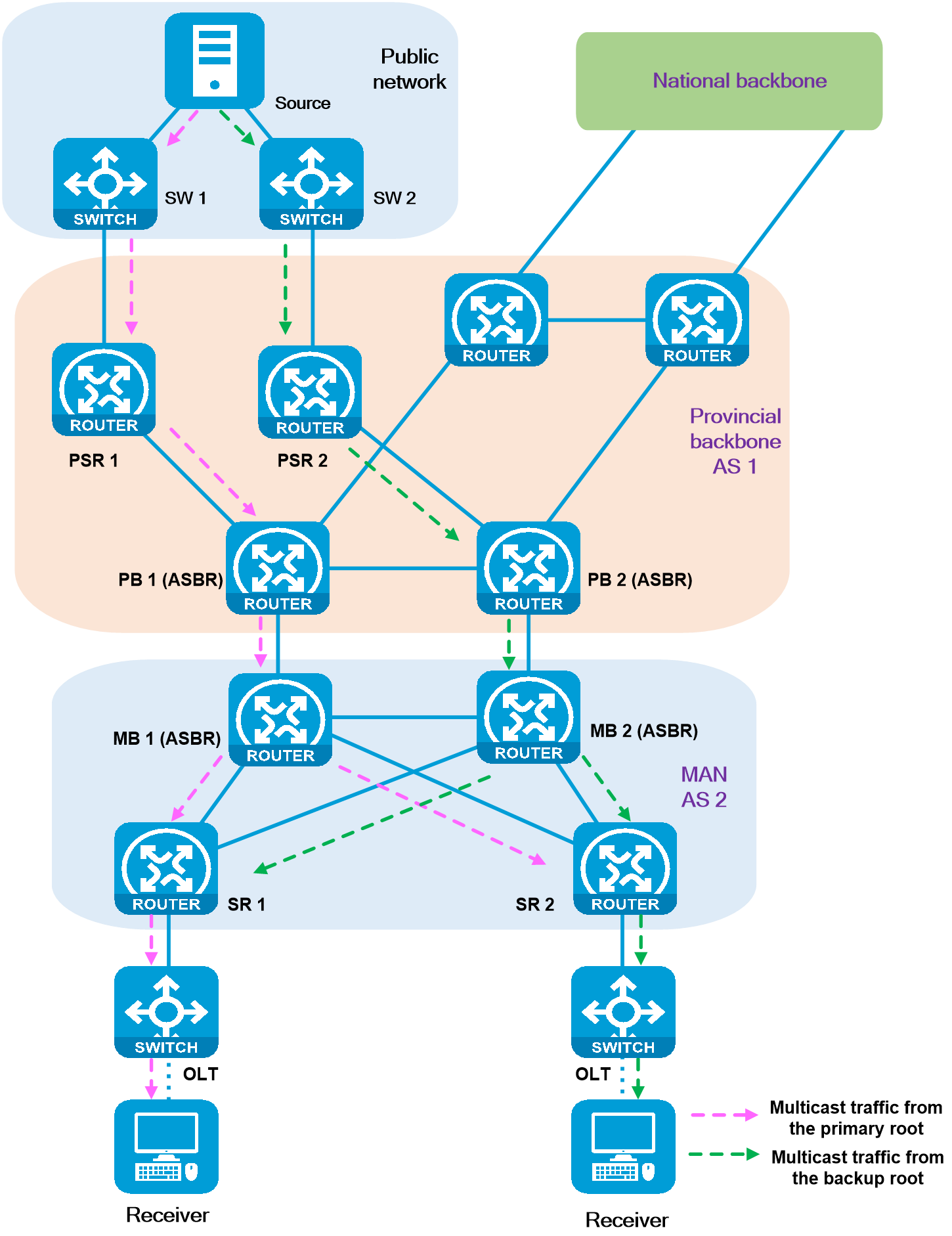

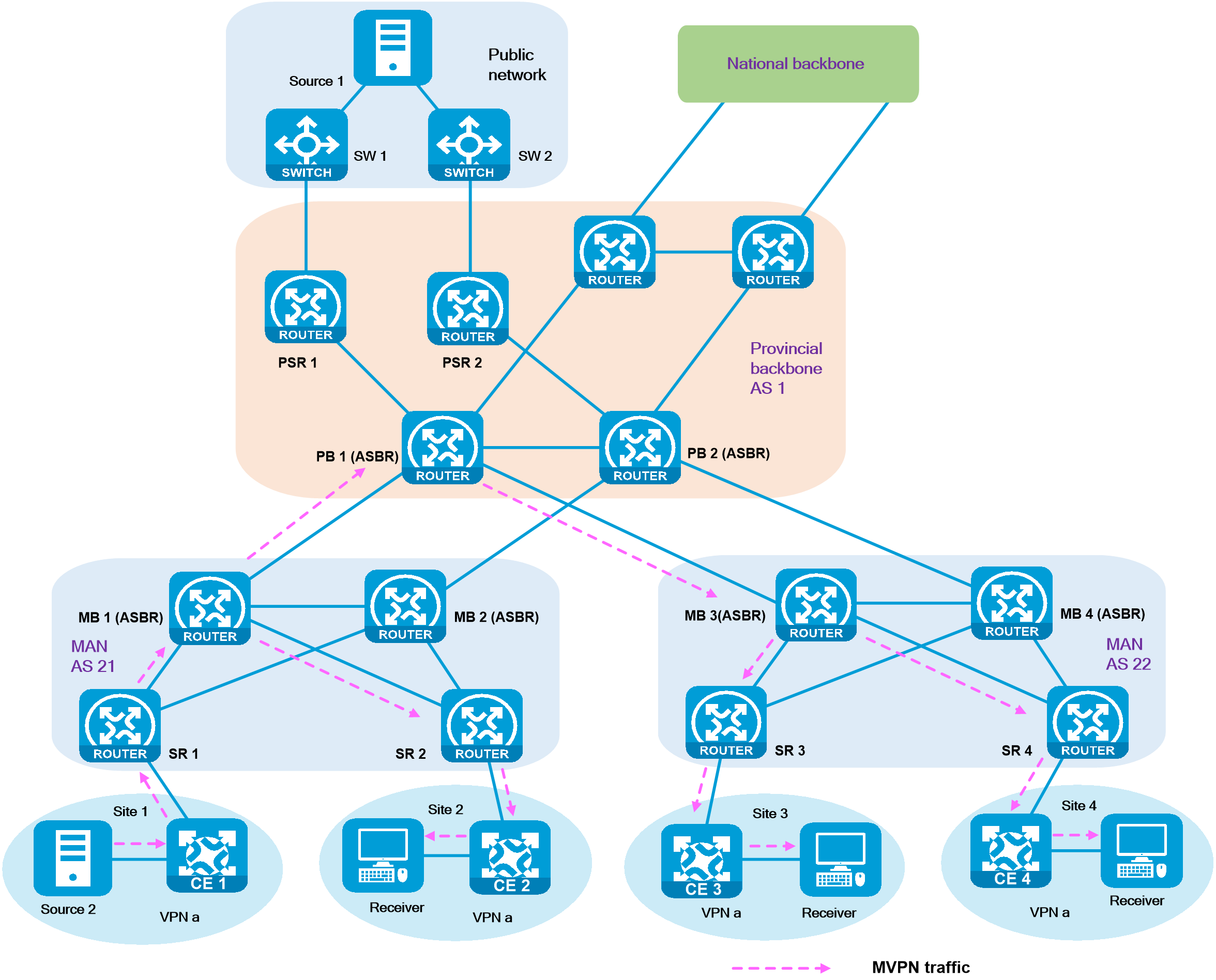

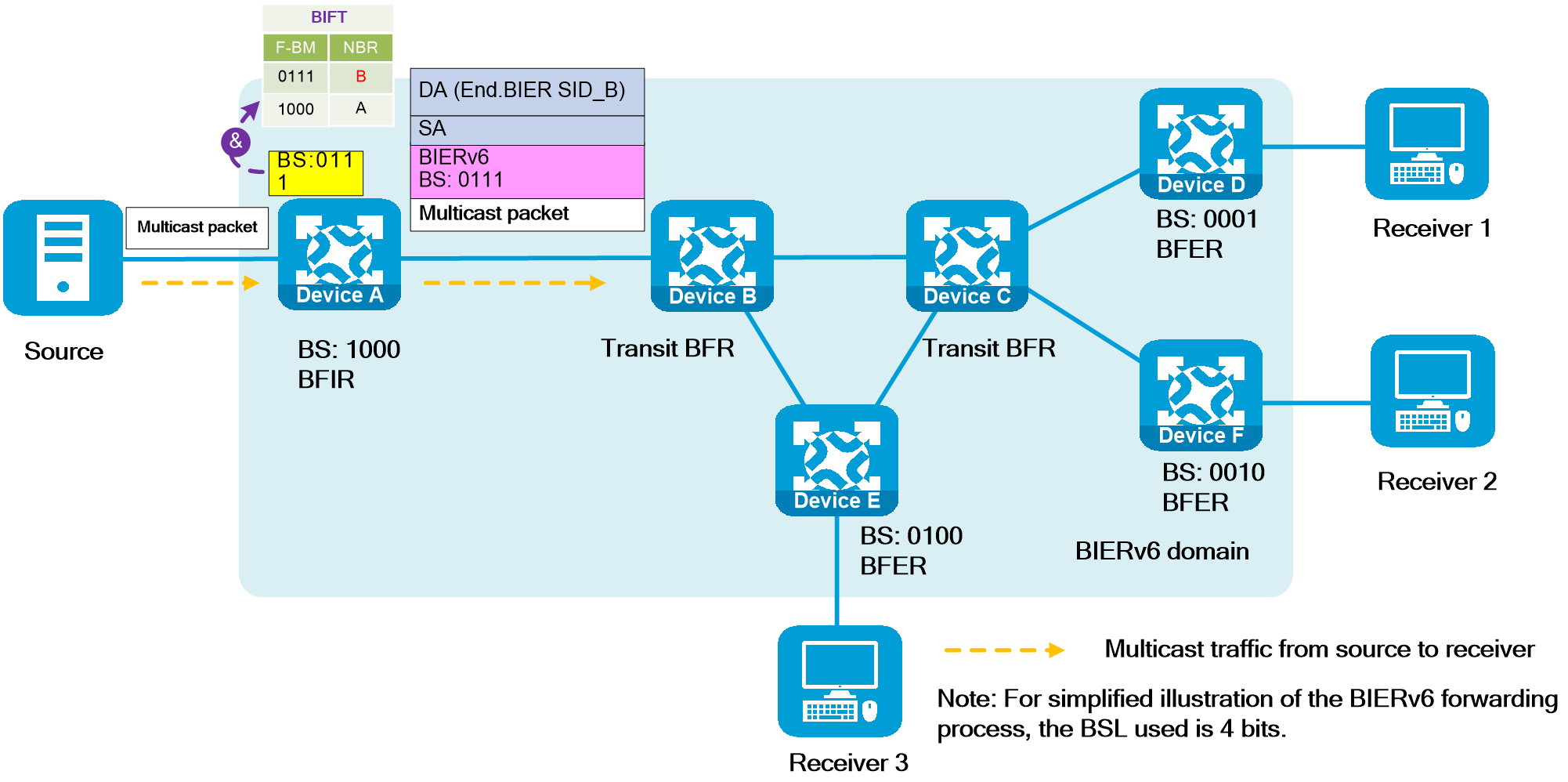

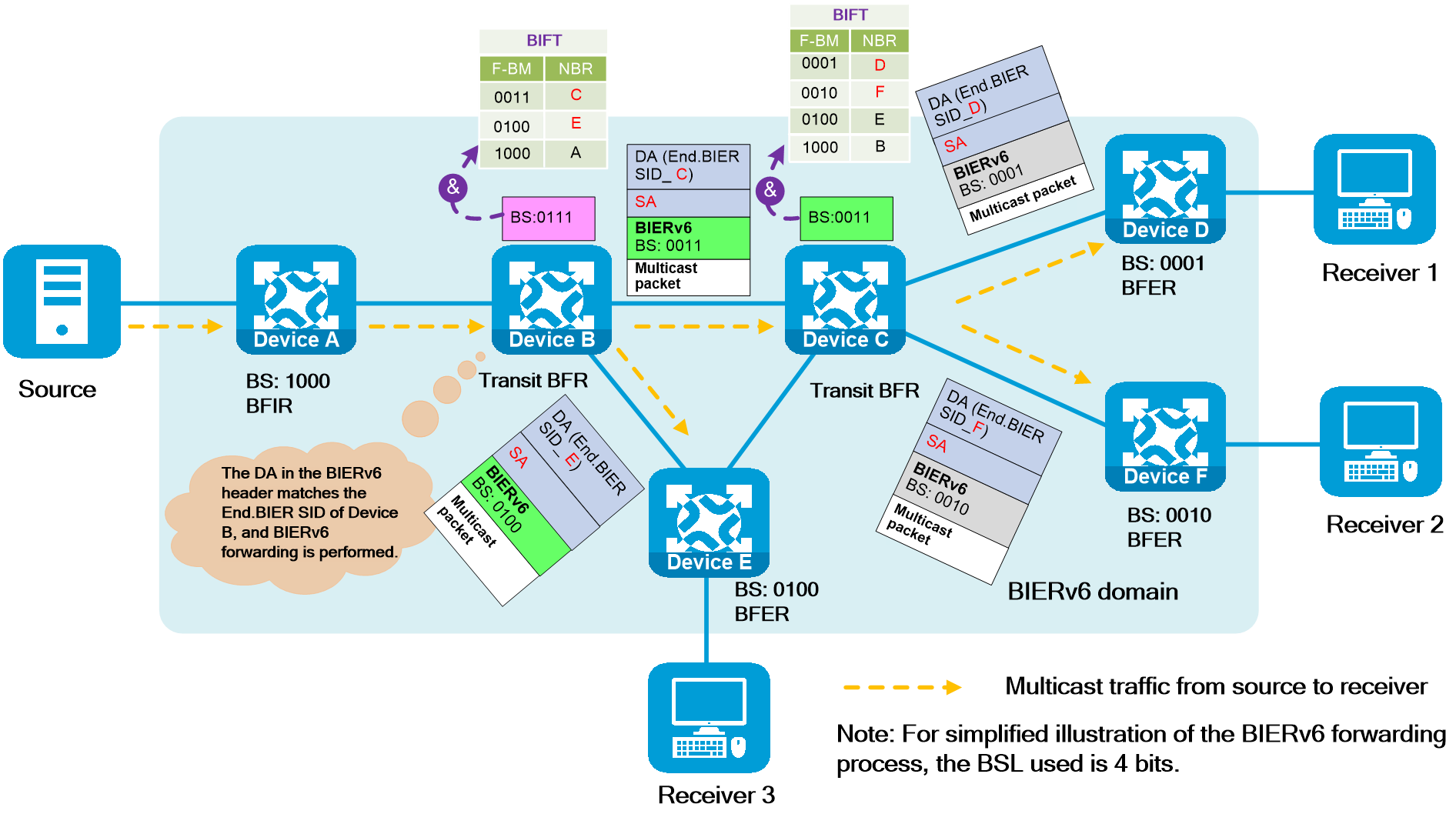

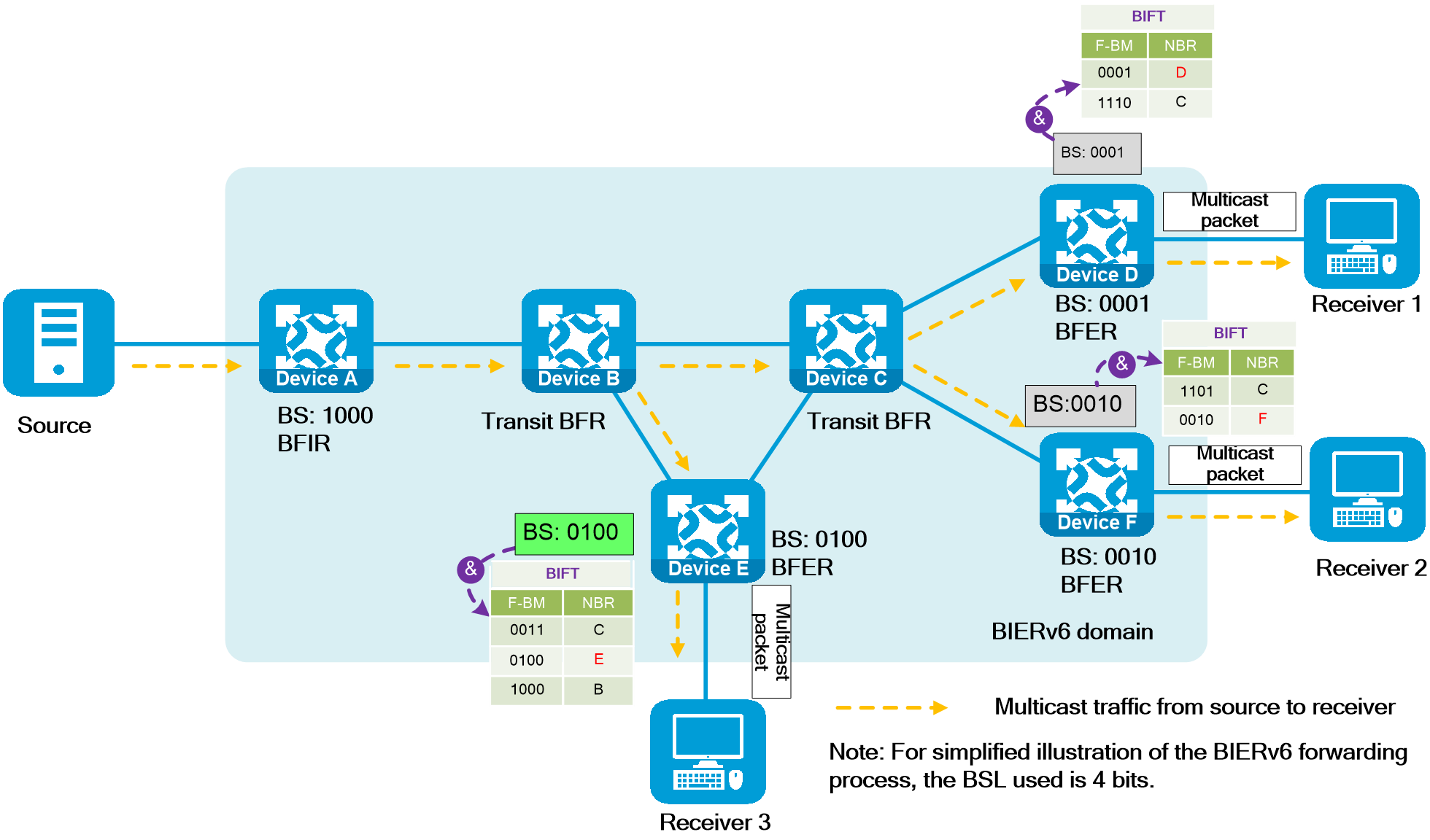

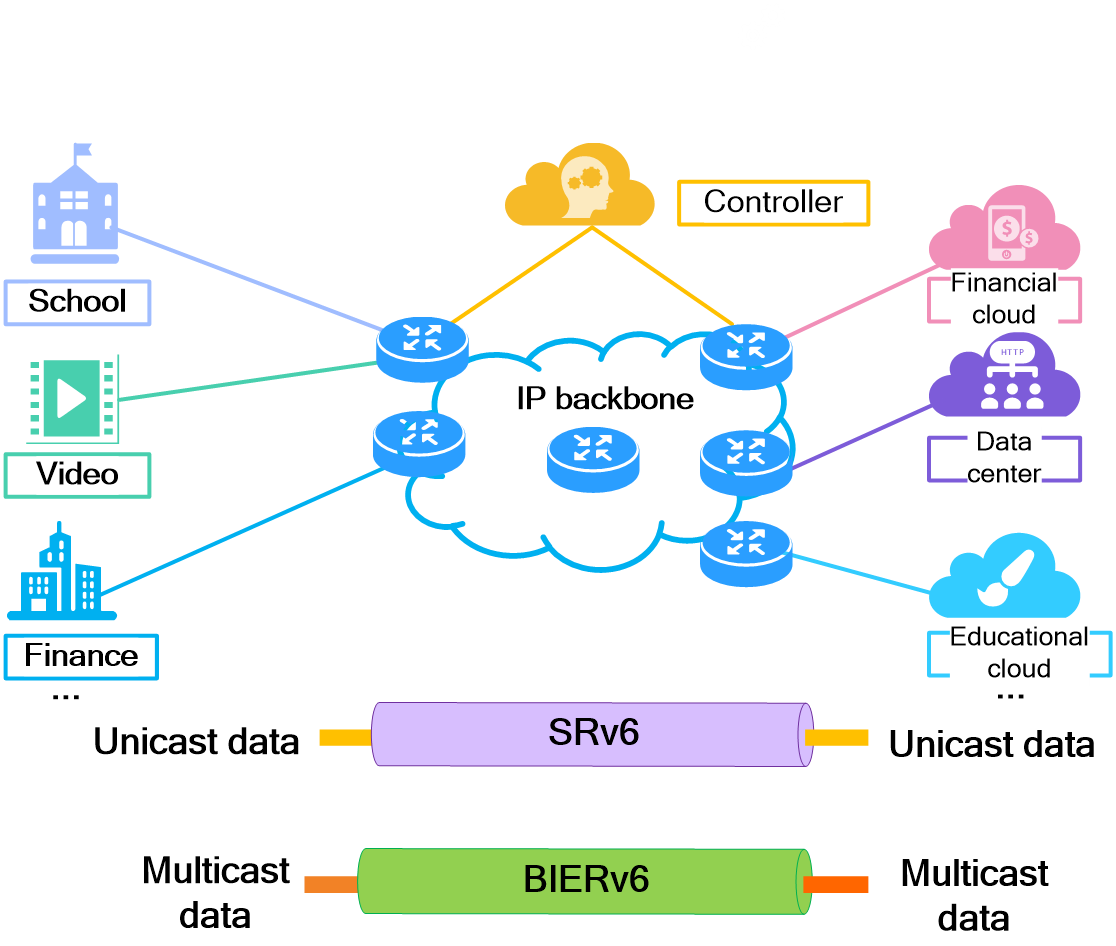

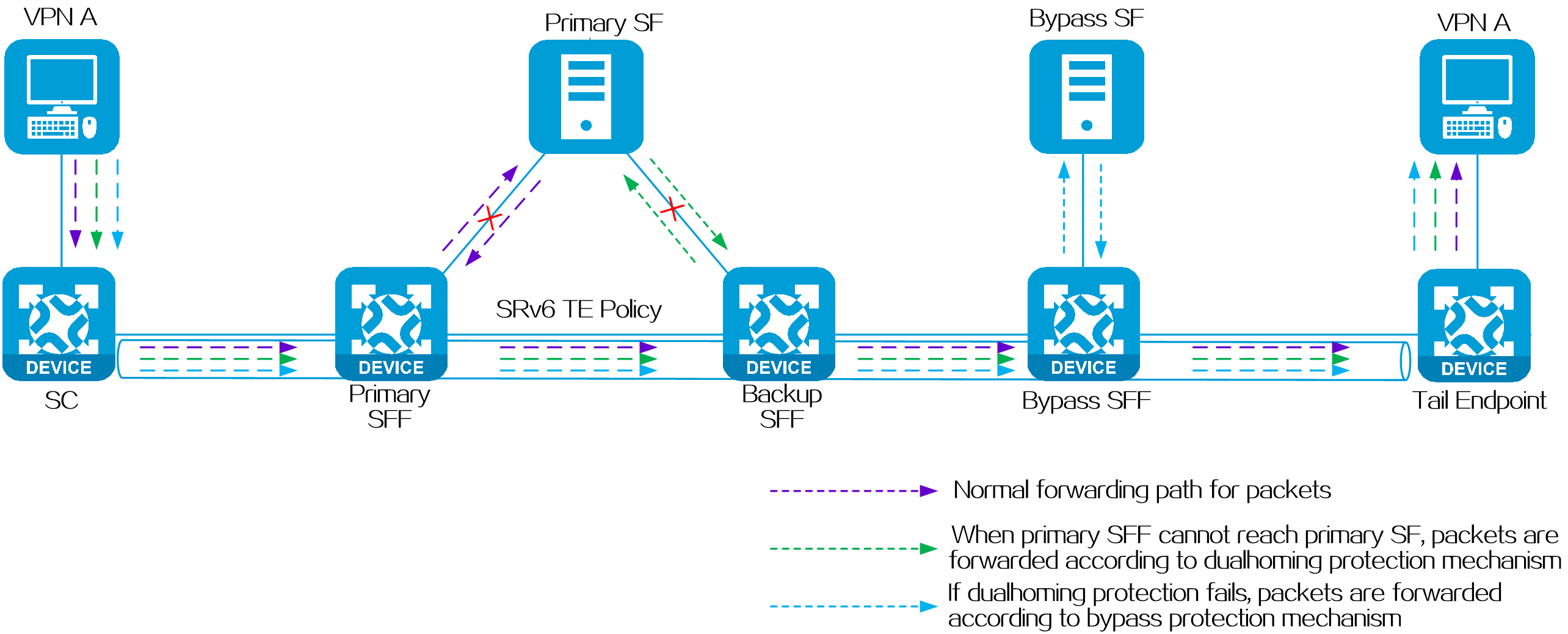

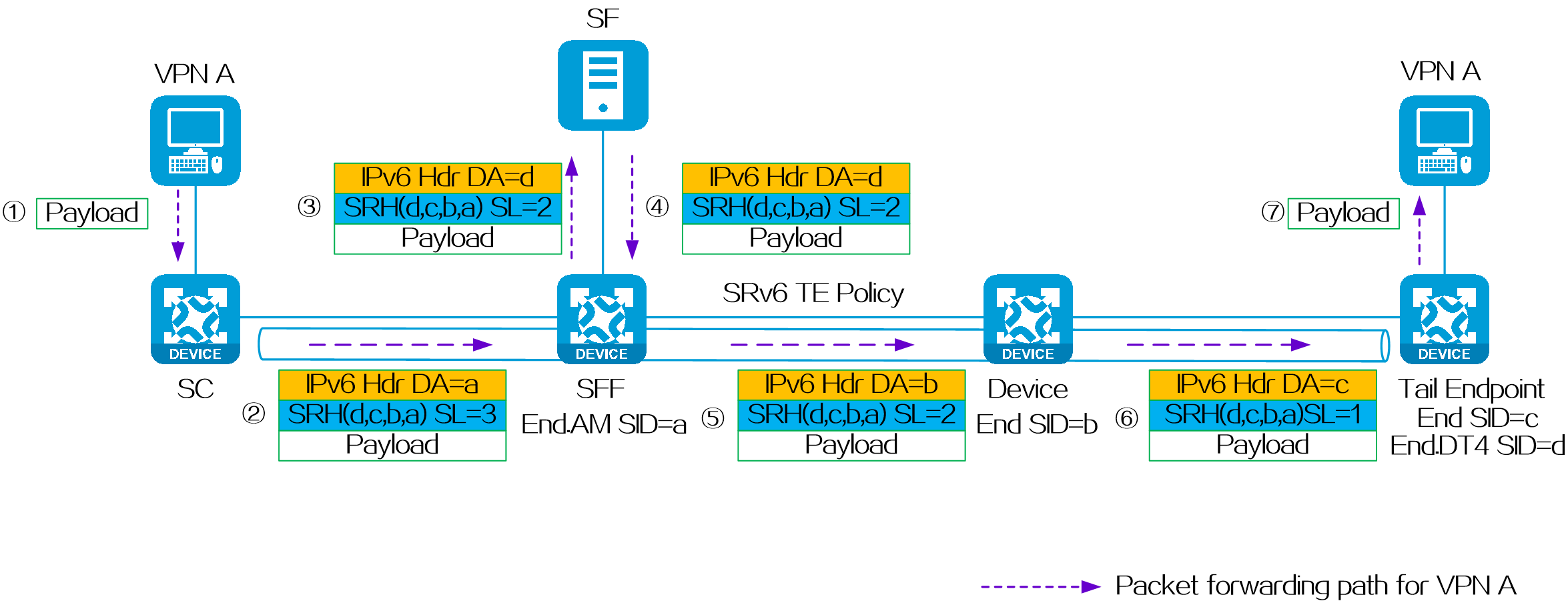

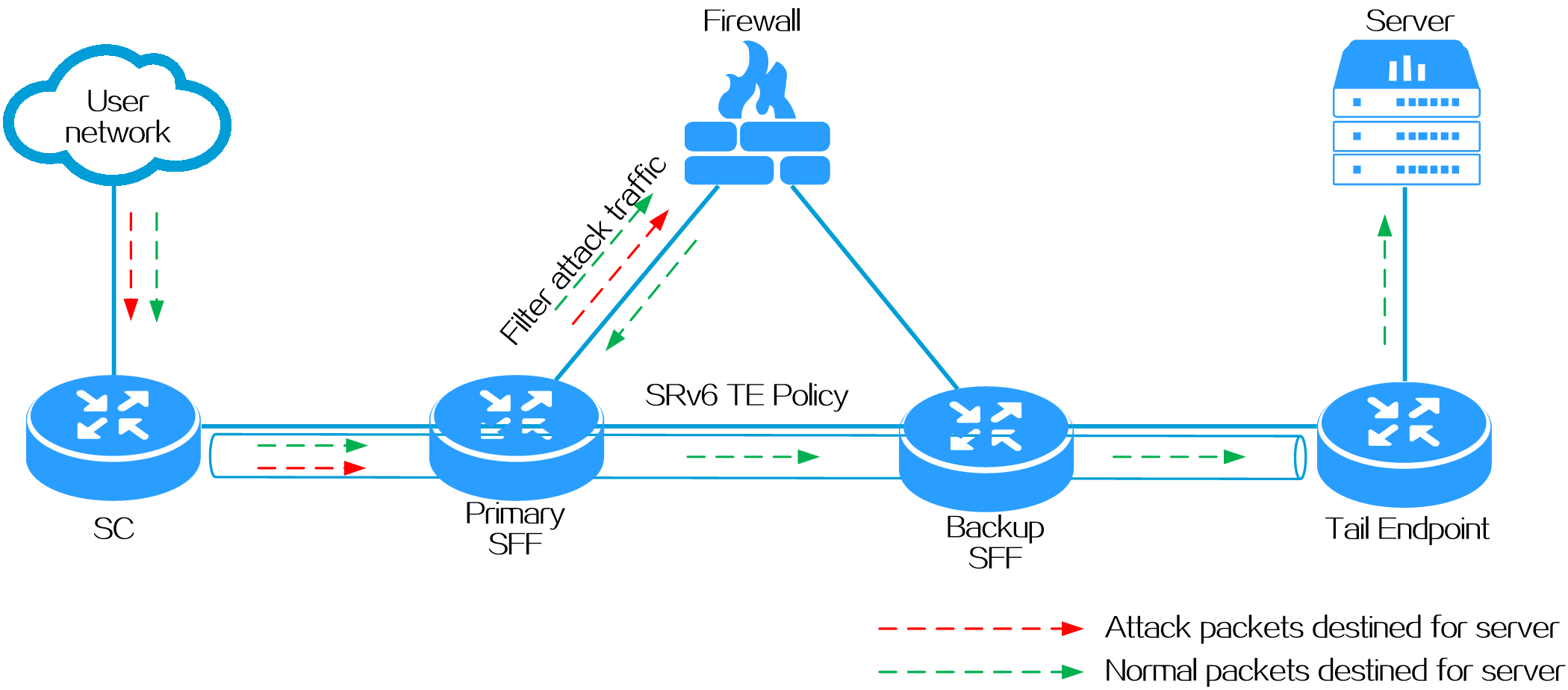

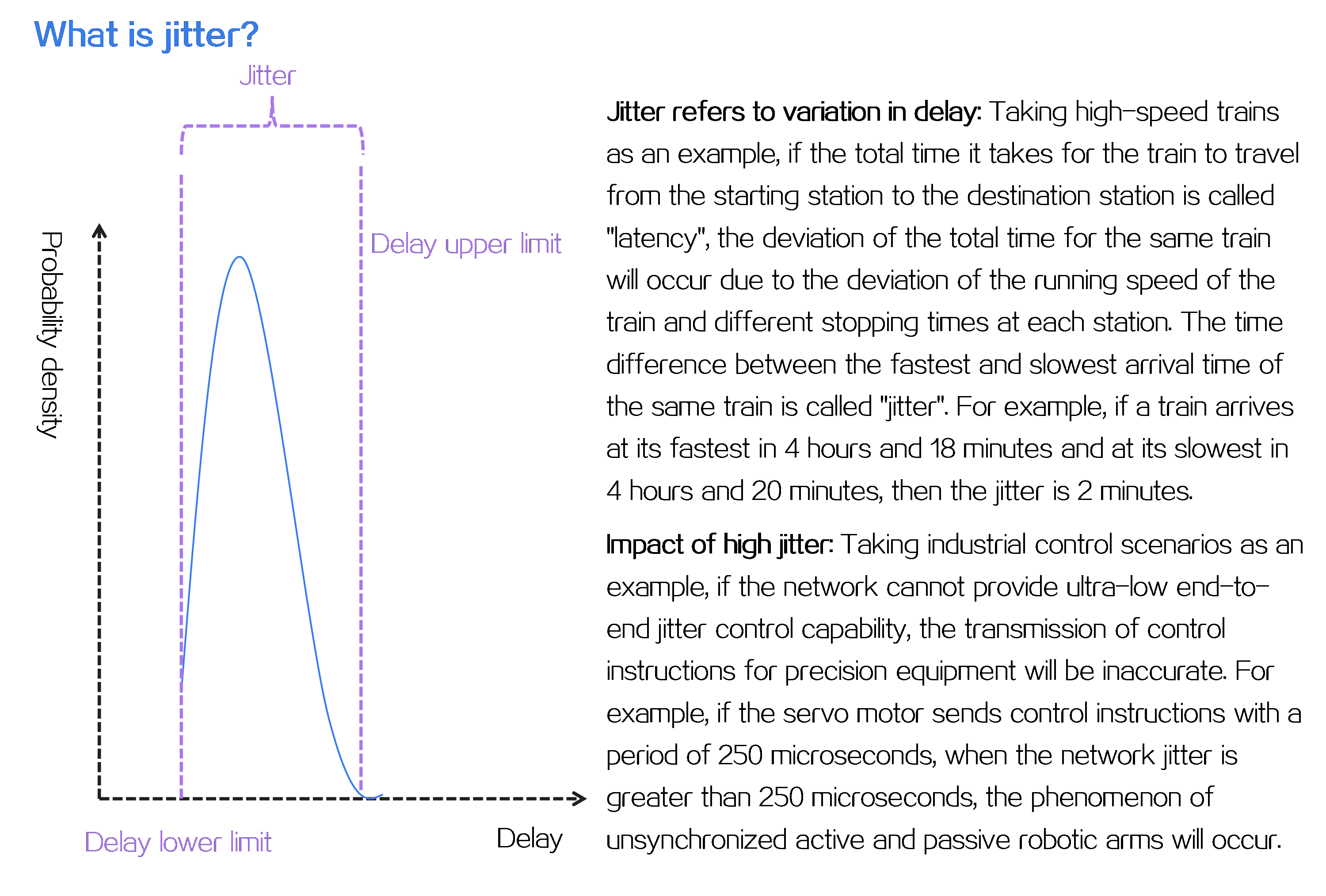

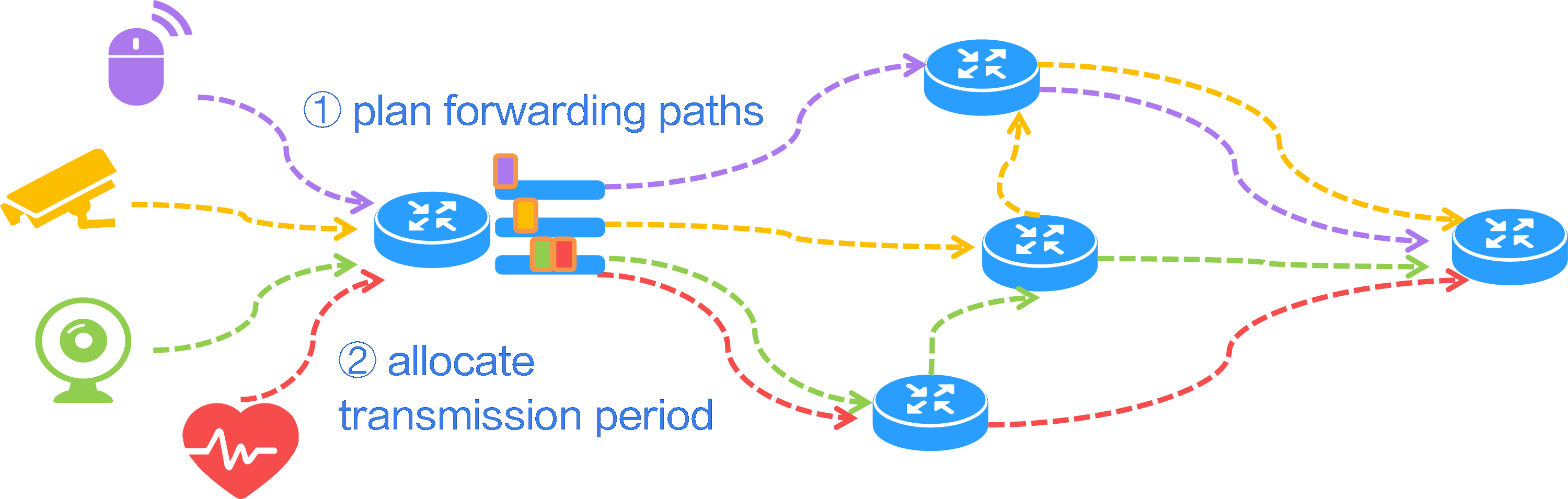

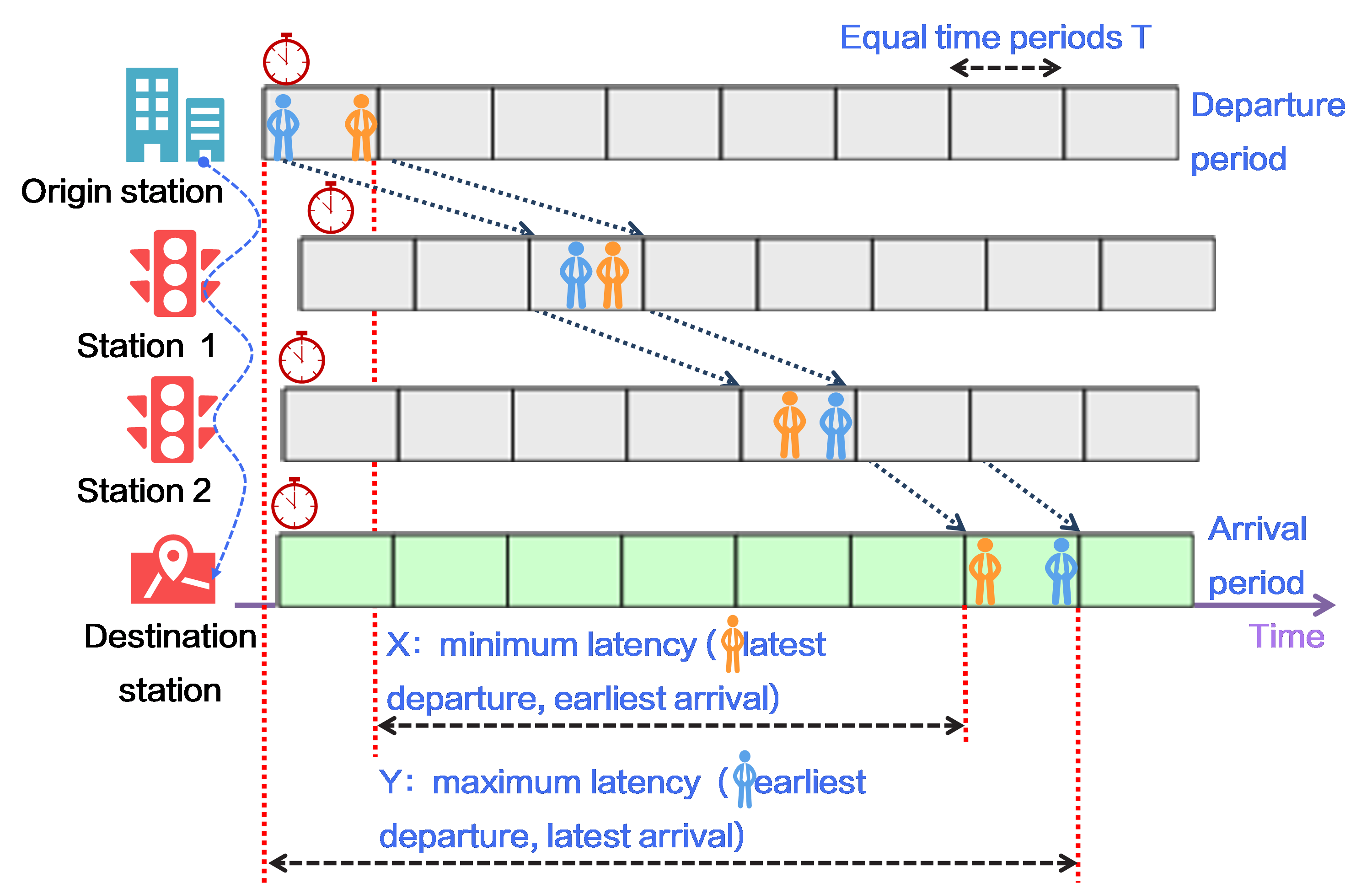

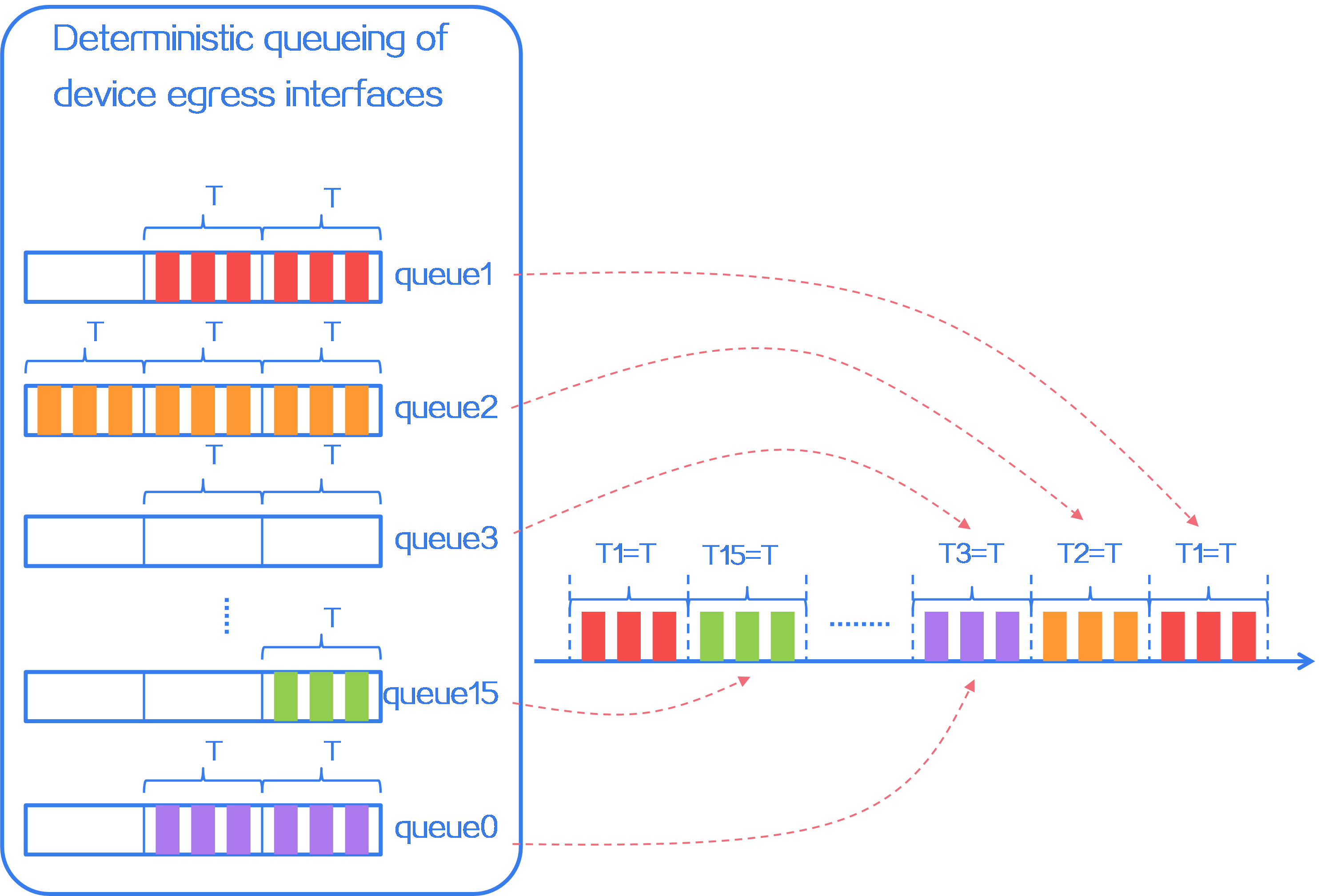

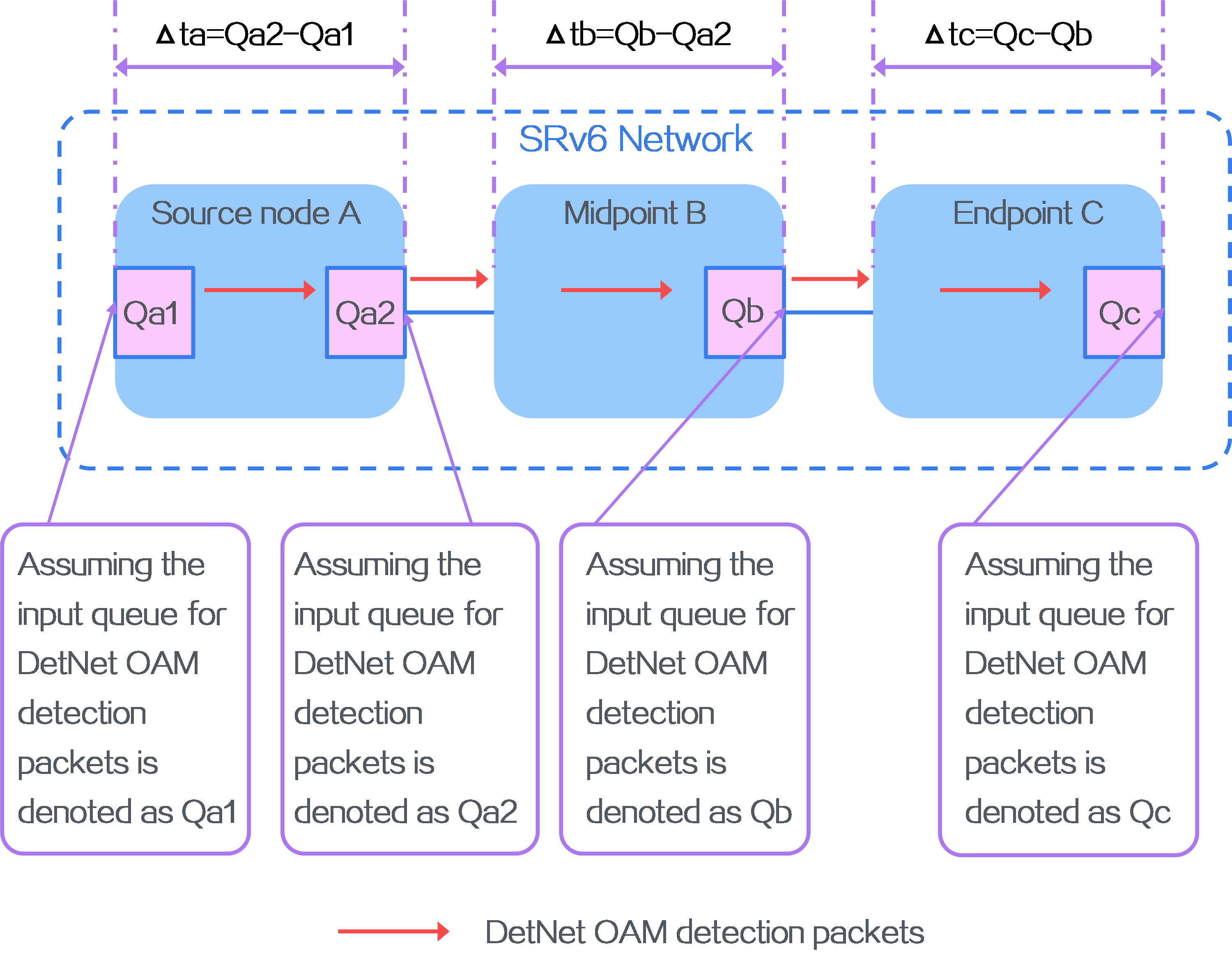

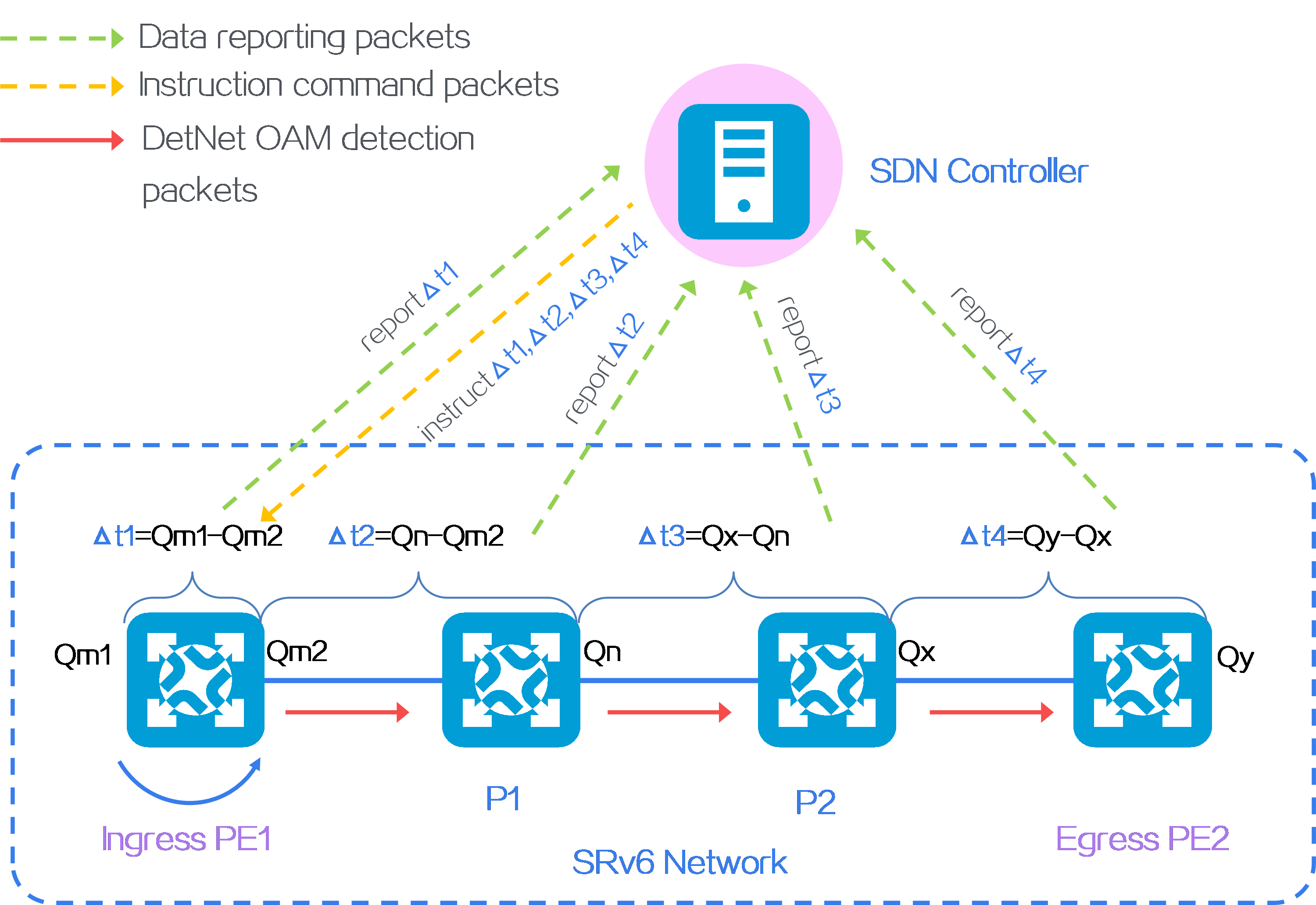

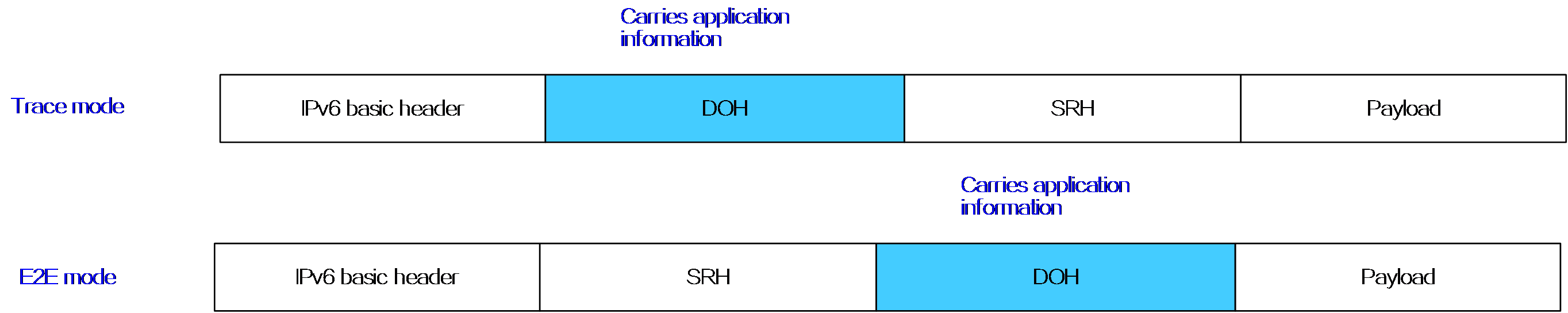

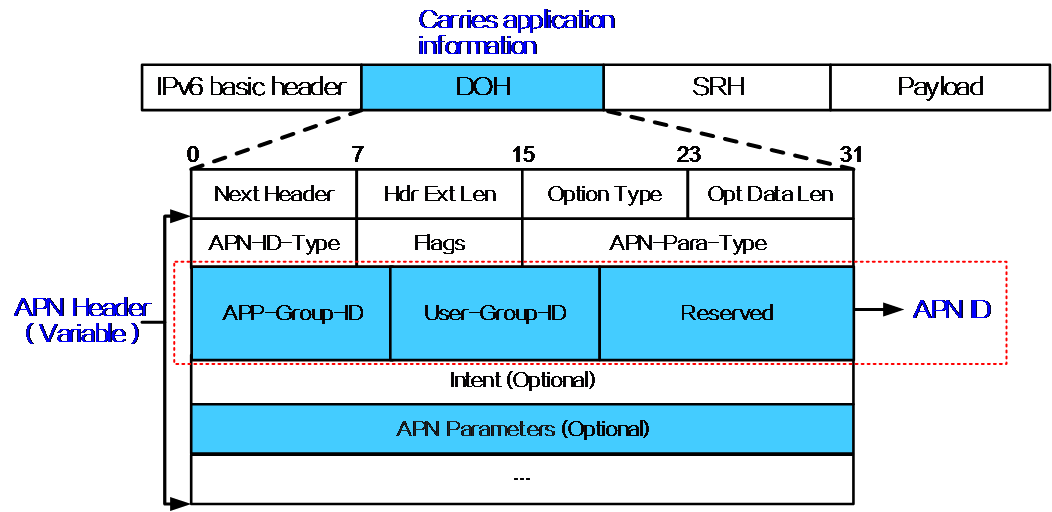

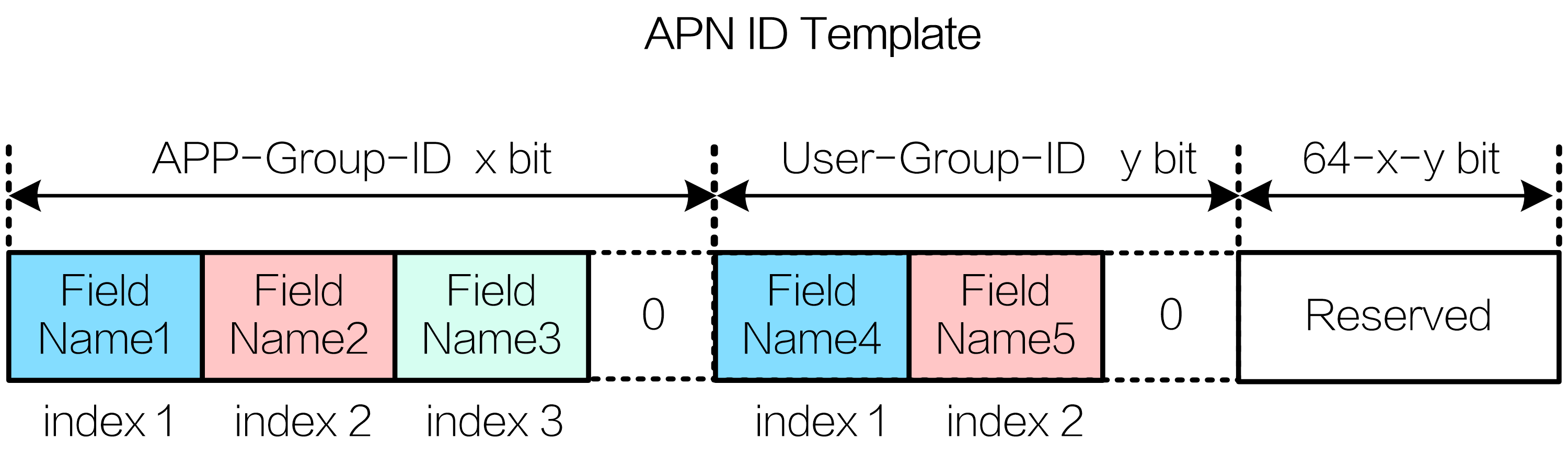

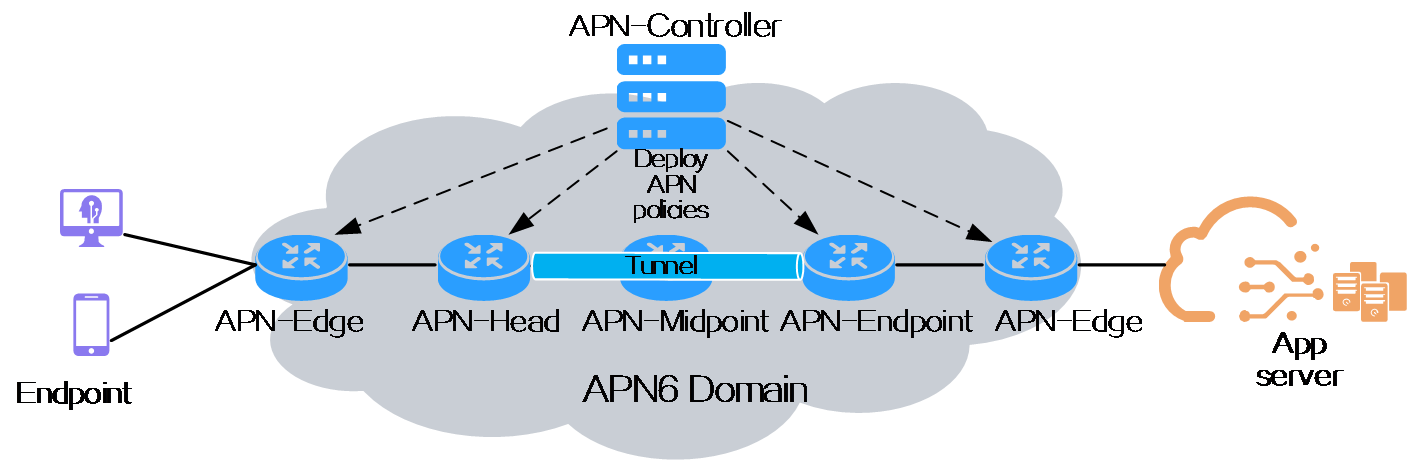

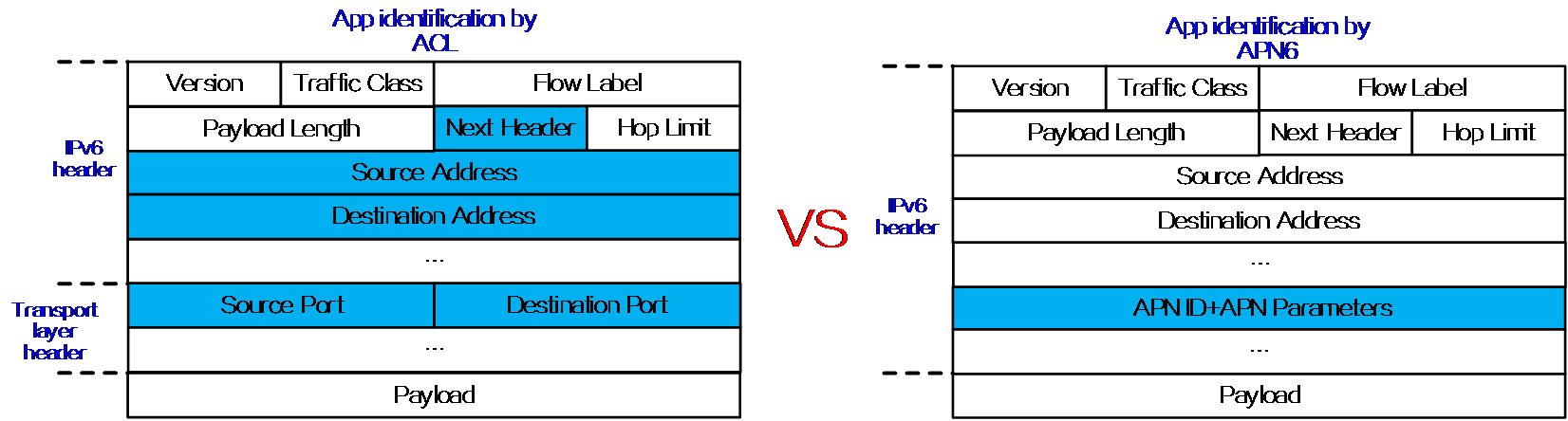

As shown in the figure below, the target flow reaches the destination node along the optimal forwarding path. You can select a link or node on the optimal forwarding path as a protected link or node and enable TI-LFA FRR on the upstream node directly connected to the protected link or the upstream neighbor node of the protected node. This upstream node is called the Point of Local Repair (PLR). TI-LFA FRR uses the following process to protect links and nodes: