About load balancers

As a cluster-based technology, the load balancing service distributes access traffic across multiple back-end real servers to improve service processing performance and reliability.

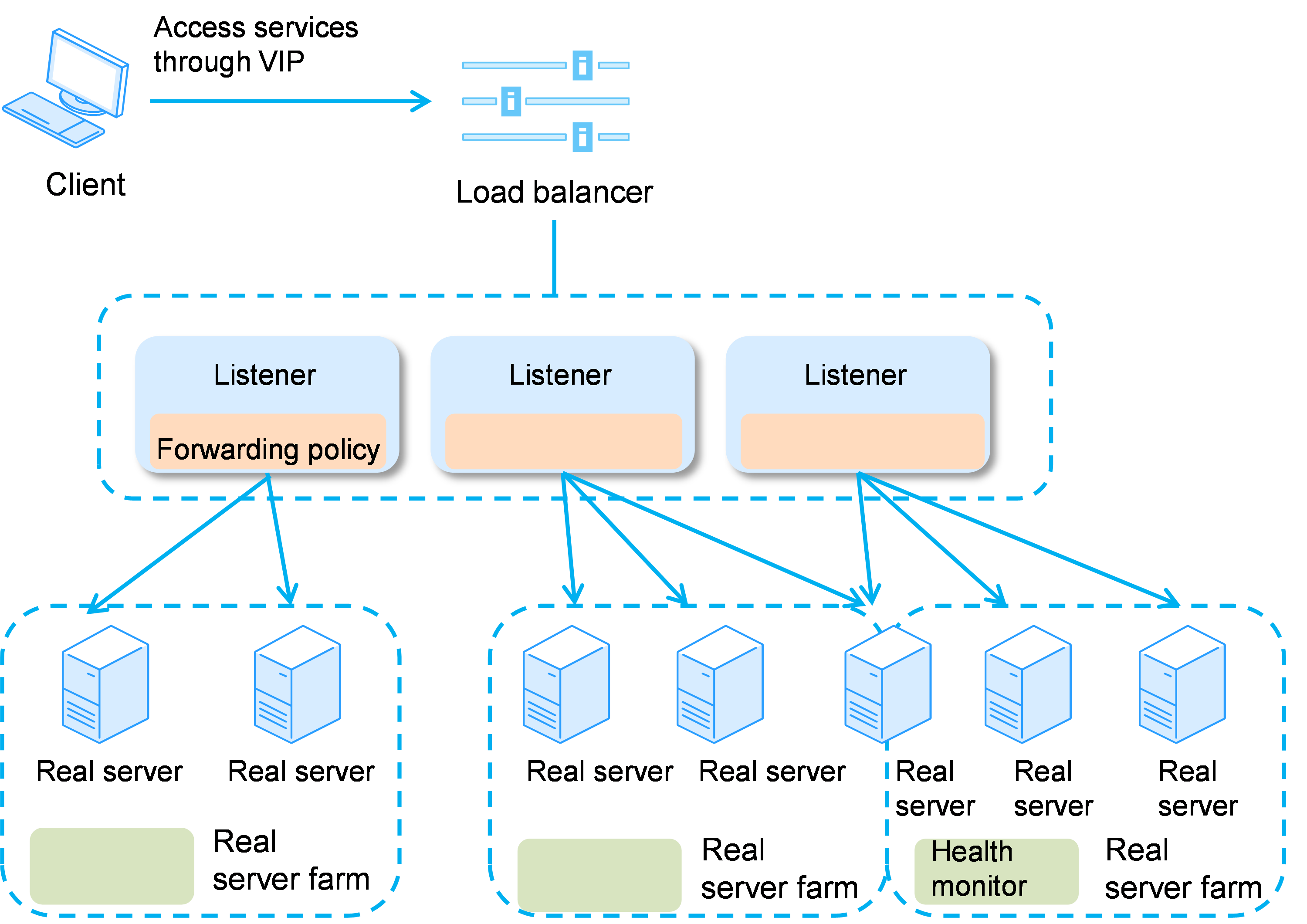

A load balancer can receive access requests after you assign it a virtual IP address. You can create one or more listeners for a load balancer. Each listener listens for client access requests on its configured protocol and port and forwards them to real servers according to its forwarding policy. You can also create a health monitor to check the running state of each real server. When the health monitor detects that a real server is abnormal, the load balancer stops distributing access requests to that server.
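The request path described above can be sketched in a few lines of Python. This is a minimal model under stated assumptions, not the product's implementation: the `Listener` class and its `mark_unhealthy`, `mark_healthy`, and `pick_server` methods are hypothetical names, round robin is used only as an example load balancing algorithm, and the IP addresses are placeholders. The point it illustrates is that a server flagged by the health monitor is simply skipped when requests are forwarded.

```python
from itertools import count


class Listener:
    """Hypothetical model of a listener that forwards requests to real servers."""

    def __init__(self, protocol, port, servers):
        self.protocol = protocol          # e.g. "HTTP"
        self.port = port                  # e.g. 80
        self.servers = list(servers)      # real server addresses, in order
        self.healthy = set(self.servers)  # kept up to date by a health monitor
        self._counter = count()           # round-robin position

    def mark_unhealthy(self, server):
        self.healthy.discard(server)

    def mark_healthy(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick_server(self):
        """Return the next healthy real server in round-robin order."""
        if not self.healthy:
            raise RuntimeError("no healthy real server available")
        n = len(self.servers)
        # At most n steps are needed to reach a healthy server.
        for _ in range(n):
            candidate = self.servers[next(self._counter) % n]
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy real server available")


lb = Listener("HTTP", 80, ["10.0.0.11", "10.0.0.12", "10.0.0.13"])
lb.mark_unhealthy("10.0.0.12")
print([lb.pick_server() for _ in range(4)])
# ['10.0.0.11', '10.0.0.13', '10.0.0.11', '10.0.0.13']
```

Once the health monitor reports the server as normal again, calling `mark_healthy` returns it to the rotation without any other change to the listener.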

Figure-1 Load balancing components

Concepts

Listener

A listener listens for client connections to the load balancer on a configured protocol and port number. You can also configure a forwarding policy for the listener to specify the load balancing algorithm and whether session persistence is enabled. With session persistence enabled, requests from the same client are forwarded to the same real server for the duration of the session lifetime. Each load balancer requires at least one listener.
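Session persistence can be pictured as a table that remembers which real server each client was assigned to, with an expiry time. The sketch below is a hypothetical model, not the product's mechanism: `StickySessions` and `route` are illustrative names, clients are identified by source IP address, and it assumes the session lifetime starts at the first assignment and is not extended by later requests. Once the lifetime ends, the listener's load balancing algorithm (here plain round robin) chooses a server again.

```python
from itertools import cycle


class StickySessions:
    """Hypothetical session-persistence table keyed by client IP address.

    Assumption: the session lifetime starts when a client is first assigned
    a server and is not extended by later requests.
    """

    def __init__(self, lifetime_s, choose_server):
        self.lifetime_s = lifetime_s
        self.choose_server = choose_server  # the listener's load balancing algorithm
        self._table = {}                    # client IP -> (server, expiry time)

    def route(self, client_ip, now):
        entry = self._table.get(client_ip)
        if entry is not None and now < entry[1]:
            return entry[0]  # session still alive: reuse the same real server
        server = self.choose_server()  # new or expired session: apply the algorithm
        self._table[client_ip] = (server, now + self.lifetime_s)
        return server


rr = cycle(["10.0.0.11", "10.0.0.12"])  # plain round robin as the algorithm
sessions = StickySessions(lifetime_s=300, choose_server=lambda: next(rr))
print(sessions.route("203.0.113.5", now=0))    # 10.0.0.11 (new session)
print(sessions.route("198.51.100.7", now=1))   # 10.0.0.12 (new session)
print(sessions.route("203.0.113.5", now=120))  # 10.0.0.11 (persisted)
```

Whether a real product refreshes the lifetime on every request is product-specific; changing the rule only requires updating the expiry in the persisted branch.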

Real server farm

A real server farm is a group of real servers that process the same type of service.

Real server

A real server is a VM that processes service requests.

Health monitor

A health monitor checks the running state of each real server. When it detects that a real server is abnormal, the load balancer stops distributing access requests to that server.
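A health monitor usually does not remove a server after a single failed check. The Python sketch below shows one common approach under assumed parameters: a TCP connect probe, plus hypothetical `unhealthy_threshold` and `healthy_threshold` settings that require several consecutive failures (or successes) before the server's state changes. The names and default values are illustrative and are not taken from this product.

```python
import socket


def tcp_probe(host, port, timeout_s=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout_s."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        return False


class HealthMonitor:
    """Hypothetical monitor: a server is marked abnormal after
    `unhealthy_threshold` consecutive failed probes, and normal again after
    `healthy_threshold` consecutive successful probes."""

    def __init__(self, probe, unhealthy_threshold=3, healthy_threshold=2):
        self.probe = probe  # callable: server -> bool
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.healthy = {}   # server -> current state (True = normal)
        self._streak = {}   # server -> consecutive results contradicting the state

    def check(self, server):
        ok = self.probe(server)
        is_up = self.healthy.setdefault(server, True)
        if ok == is_up:
            self._streak[server] = 0  # result agrees with the current state
        else:
            self._streak[server] = self._streak.get(server, 0) + 1
            limit = self.unhealthy_threshold if is_up else self.healthy_threshold
            if self._streak[server] >= limit:
                self.healthy[server] = ok  # flip the state
                self._streak[server] = 0
        return self.healthy[server]


# Example wiring (not executed here): probe each real server on port 80.
monitor = HealthMonitor(lambda server: tcp_probe(server, 80))
```

The thresholds trade reaction speed against stability: lower values remove a failing server sooner, while higher values avoid flapping when a single probe is lost.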

Benefits

Ensure service continuity: Supports multiple listening protocols, including Ping, TCP, HTTP, and HTTPS, to avoid service interruptions caused by a single point of failure.

Improve service fluency: Balances traffic across multiple cloud hosts to avoid response delays caused by overloaded hosts, improving service fluency and fault tolerance.

Support elastic scaling: Supports flexible real server scaling policies based on various monitoring metrics, so that users can focus on their services without worrying about capacity bottlenecks.

Application scenarios

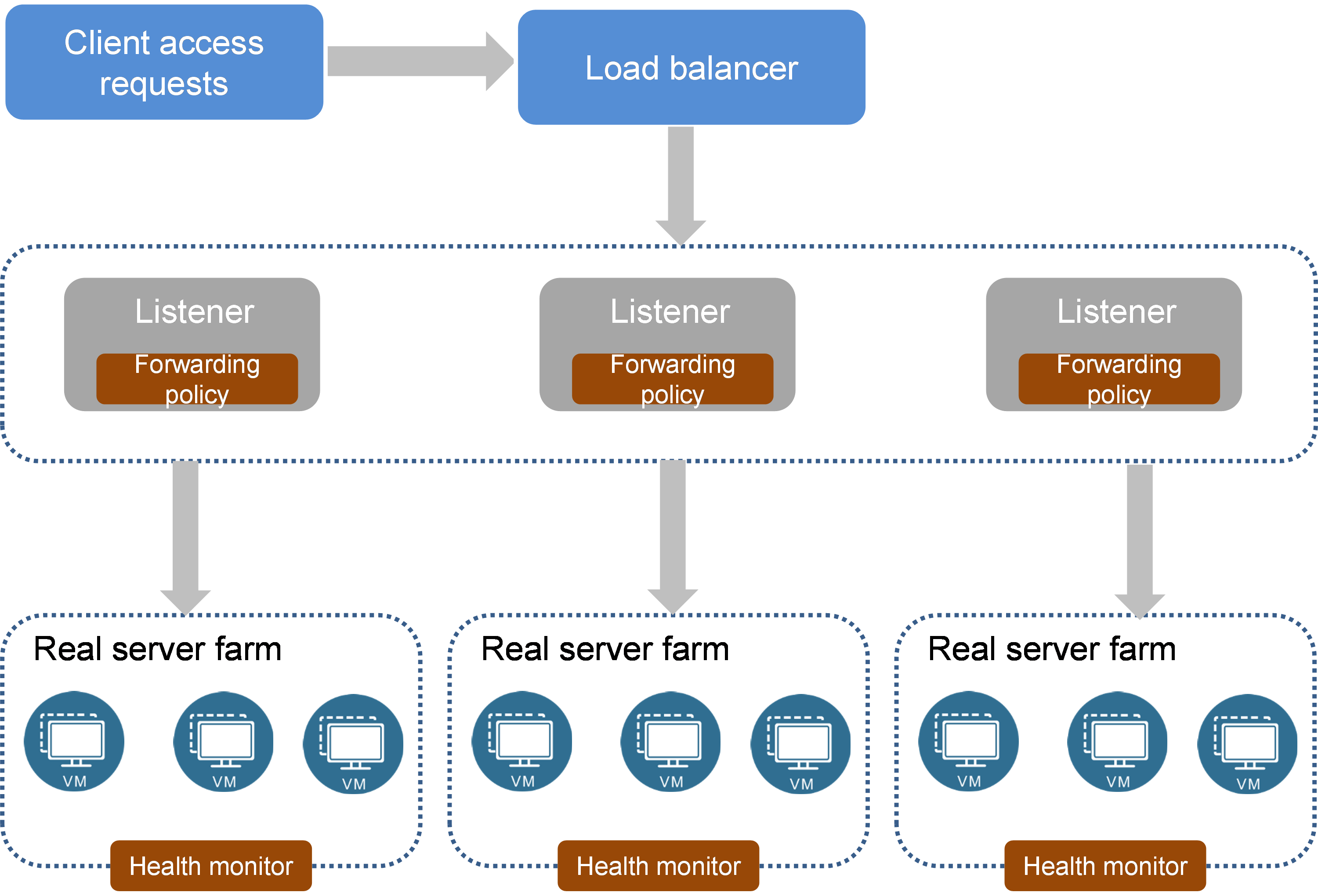

Service distribution

In high-traffic scenarios, configure a load balancing algorithm to distribute access requests across multiple real servers to balance the load. Enable session persistence so that access requests from the same client are distributed to the same real server, improving access efficiency. When a real server fails, the load balancer stops distributing requests to it, ensuring high availability of the service.

Figure-2 Service distribution scenario

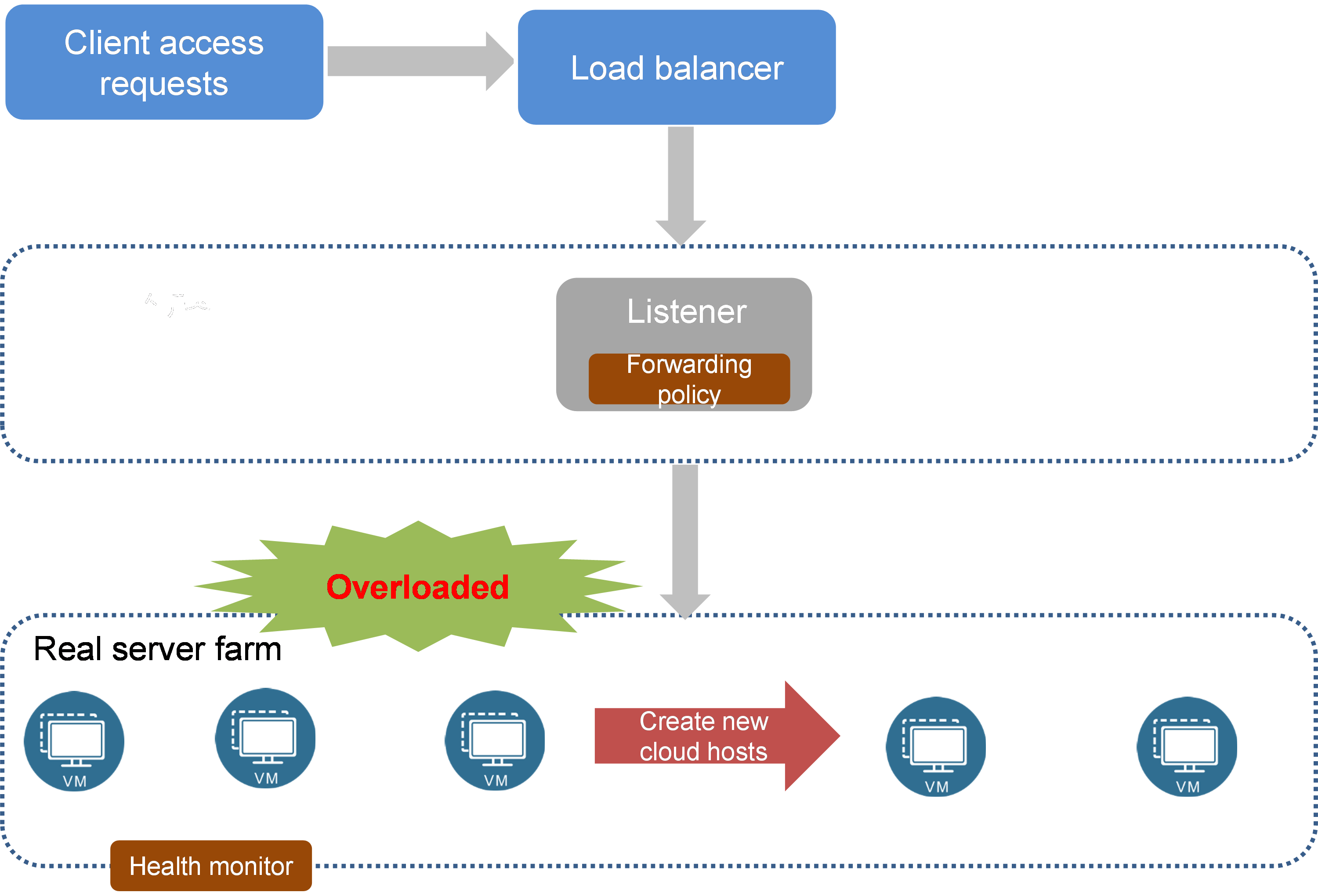

Dynamic resource extension

In scenarios where service systems' demand for IT resources fluctuates greatly, configure dynamic resource extension so that the system quickly creates the specified number of cloud hosts and adds them to the real server farm when a traffic burst occurs. After the access peak, the system automatically deletes or shuts down unnecessary cloud hosts to release resources.
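The scale-out and scale-in behavior described above can be expressed as a simple step policy. This is a hypothetical sketch, not the product's scaling engine: the metric (average CPU utilization), the thresholds, the step size, and the minimum and maximum host counts are all assumed values chosen for illustration. The function returns the target number of cloud hosts; the difference from the current count is how many hosts to create (positive) or delete or shut down (negative).

```python
def scaling_decision(current_hosts, avg_cpu_percent, *, scale_out_above=80.0,
                     scale_in_below=30.0, step=2, min_hosts=2, max_hosts=10):
    """Hypothetical step scaling policy: return the target cloud host count."""
    if avg_cpu_percent > scale_out_above:
        target = current_hosts + step  # traffic burst: add the specified number of hosts
    elif avg_cpu_percent < scale_in_below:
        target = current_hosts - step  # after the peak: release unneeded hosts
    else:
        target = current_hosts         # within the normal band: no change
    # Keep the real server farm within its configured size limits.
    return max(min_hosts, min(max_hosts, target))


print(scaling_decision(4, 92.0))  # 6: burst, add two hosts
print(scaling_decision(6, 20.0))  # 4: after the peak, remove two hosts
print(scaling_decision(9, 95.0))  # 10: capped at max_hosts
```

Scaling systems typically also apply a cooldown period between actions so that a short spike does not trigger repeated scale-out and scale-in.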

Figure-3 Dynamic resource extension scenario

Relationship with other cloud services

Table-1 Relationship with other cloud services

| Service name | Relationship with load balancer |
|---|---|
| Networks | Load balancers obtain virtual IP addresses from subnets in networks. |
| Cloud hosts | Cloud hosts act as real servers to receive and process service requests distributed by load balancers. |
| Public IP addresses | The external network accesses load balancers through public IP addresses. |